- 1 College of Computer Science and Technology, Changchun Normal University, Changchun, Jilin, China

- 2 College of Computer Science and Artificial Intelligence, Wenzhou University, Wenzhou, China

- 3 School of Surveying and Geospatial Engineering, College of Engineering, University of Tehran, Tehran, Iran

- 4 Department of Dermatology, The Affiliated Hospital of Medical School, Ningbo University, Ningbo, China

- 5 School of Medicine, Ningbo University, Ningbo, Zhejiang, China

Introduction: Atopic dermatitis (AD) is an allergic disease characterized by intense itching that greatly troubles patients. However, the diagnosis of AD depends on clinicians’ subjective judgment, which can lead to missed diagnoses or misdiagnoses.

Methods: This paper establishes, for the first time, a medical prediction model based on an enhanced particle swarm optimization (SRWPSO) algorithm and the fuzzy K-nearest neighbor (FKNN) classifier, called bSRWPSO-FKNN, and applies it to a dataset of patients with AD. In SRWPSO, the Sobol sequence is introduced into particle swarm optimization (PSO) to distribute the particles of the initial population more uniformly, thus improving the population’s diversity and its traversal of the search space. This study also adds a random replacement strategy and an adaptive weight strategy to the population update of PSO to overcome its poor convergence accuracy and its tendency to fall into local optima. The core of bSRWPSO-FKNN is to optimize the classification performance of FKNN through a binary version of SRWPSO.

Results: To establish the scientific significance of the study, this paper first demonstrates the core advantages of SRWPSO over well-known algorithms through benchmark function validation experiments. Second, it demonstrates the practical medical significance and effectiveness of bSRWPSO-FKNN on nine public datasets and a medical dataset.

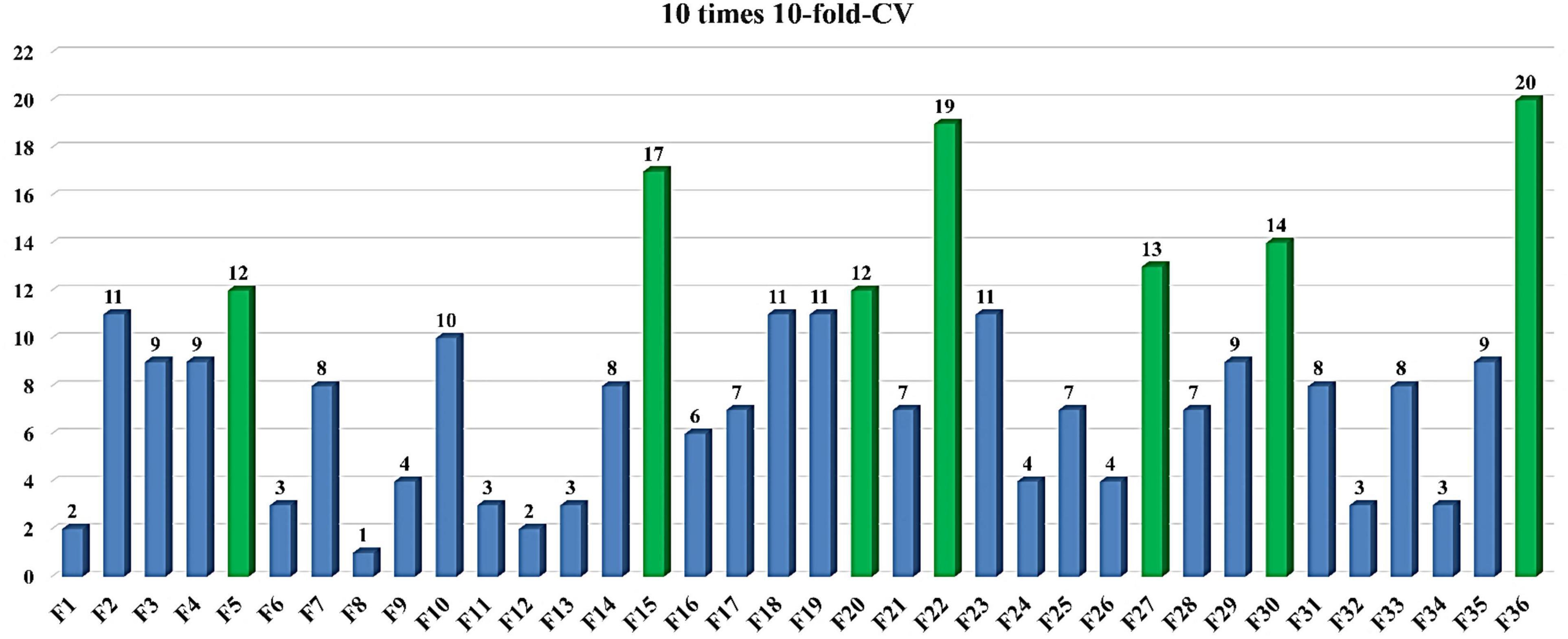

Discussion: Ten runs of 10-fold cross-validation demonstrate that bSRWPSO-FKNN can identify the key features of AD, including the content of lymphocytes (LY), Cat dander, Milk, Dermatophagoides Pteronyssinus/Farinae, Ragweed, Cod, and Total IgE. The established bSRWPSO-FKNN method can therefore serve as a practical aid in the diagnosis of AD.

1. Introduction

Atopic dermatitis (AD) is a chronic inflammatory skin disease accompanied by allergic reactions and characterized by itchy eczematous skin lesions and dry skin. It is common in childhood and affects at least 20% of children worldwide (Rehbinder et al., 2020; Asano et al., 2022; Johansson et al., 2022). Because AD is often the starting point of the atopic march, which continues with the development of food allergy, asthma, and allergic rhinitis, it is important to identify AD and intervene early (Spergel, 2021). Many diagnostic criteria for AD exist, such as the Hanifin and Rajka criteria, the Williams criteria, and the International Study of Asthma and Allergies in Childhood (ISAAC) questionnaire, all of which depend on the subjective judgment of dermatologists (Williams et al., 1994; Williams, 1996). The Williams criteria are the primary basis for diagnosing AD; they require an itchy skin condition in the last 12 months plus three or more minor criteria (Williams, 1996; Williams et al., 1996). Because diagnosis depends heavily on clinicians’ experience, some patients are missed or misdiagnosed. Diagnostic sensitivity improves when clinical assessment is combined with serological results (Poto et al., 2022). Consequently, comprehensive evaluation that combines clinical symptoms with serology has attracted growing attention.

However, exploring such questions requires large amounts of relevant data. Medical information systems, which help clinicians make faster and more accurate diagnoses, are a major research area (Li C. et al., 2022; Liu S. et al., 2022; Zhuang et al., 2022). For large volumes of data, manual analysis is impractical because it is time-consuming, inefficient, and error-prone. It is therefore essential to establish machine learning models for studying AD, which can help medical staff explore the factors and pathogenic features of AD more effectively and efficiently. Several machine learning studies have already focused on AD. Gustafson et al. (2017) described a machine learning-based phenotyping algorithm that achieved higher positive predictive values (PPV) than previous low-sensitivity algorithms and demonstrated the utility of natural language processing (NLP) and machine learning for phenotyping from electronic health records (EHR). Suhendra et al. (2019) used a multi-class SVM classifier to classify and predict AD severity from skin color, texture, and redness, with an overall accuracy of about 0.86. Maintz et al. (2021) analyzed the association of 130 factors with AD severity using a machine learning gradient boosting approach, cross-validated tuning, and multinomial logistic regression. The associations identified in that study contribute to a deeper understanding, prevention, and treatment of AD.

Guimarães et al. (2020) established a fully automated method that combines a convolutional neural network (CNN) with multiphoton tomography (MPT) imaging to predict AD. Li X. et al. (2021) used three machine learning models for AI-assisted AD diagnosis and AD severity subclassification, extracting features from volumetric vascular structures in 3D Raster Scanning Photoacoustic Mesoscopy (RSOM) images together with clinical information. Jiang et al. (2022) developed a precise, automatic machine learning classifier based on transcriptomic and microbiota data to predict the risk of AD; it accurately distinguished 161 subjects with AD from healthy individuals. Holm et al. (2021) developed two machine learning models based on clinically obtained biomarkers to predict AD and to explore the relationship between various serum immune markers and AD severity. Clayton et al. (2021) profiled the skin biopsy transcriptome in AD. Using co-expression clustering and machine learning tools with cross-validation across different skin inflammation conditions and disease stages, they revealed the impact of keratinocyte programming on skin inflammation and suggested that perturbing a single immune signaling axis alone may not be sufficient to resolve keratinocyte immunophenotype abnormalities. Berna et al. (2021) constructed a machine learning framework for exploring the association between AD pathogenesis and low-frequency and rare alleles. Although these studies have explored the prevention, diagnosis, and treatment of AD, the many factors that influence the physiological status of AD mean that existing research remains inadequate.

Therefore, to further explore the key factors affecting the physiological condition of AD, this paper proposes a novel and effective feature selection method, bSRWPSO-FKNN, which combines a swarm intelligence optimization algorithm with machine learning techniques. To improve the feature selection performance obtained by combining particle swarm optimization (PSO) with the fuzzy K-nearest neighbor (FKNN) classifier, we first enhance PSO. An improved PSO variant that incorporates Sobol sequence population initialization (SOB), a random replacement strategy (RRS), and an adaptive weight strategy (AWS), named SRWPSO, is proposed for the first time. In SRWPSO, SOB exploits the uniform distribution of low-discrepancy sequences to enhance the diversity of the initial population and the traversal of the population space, which makes it easier for PSO to approach the optimal position from the start of the search. RRS and AWS are also introduced into PSO, and together they address PSO’s poor convergence and its tendency to become trapped in local optima. The comprehensive performance of SRWPSO is then demonstrated on the 30 benchmark functions of CEC 2014 through mechanism combination experiments, quality analysis experiments, and comparisons with traditional algorithms, well-known variants, and new peer variants. These benchmark experiments show that, with the three enhancement strategies, SRWPSO has the best overall performance among all the compared algorithms. To apply SRWPSO to feature selection, a binary version named bSRWPSO is proposed, and bSRWPSO is then combined with FKNN to form the bSRWPSO-FKNN model.

To demonstrate the feature selection performance of the model, the article first uses nine public UCI datasets to compare bSRWPSO with 10 other FKNN-based binary algorithms through 10-fold cross-validation. It then sets up a series of comparison experiments on a medical dataset, also using 10-fold cross-validation: bSRWPSO combined with five classifiers, bSRWPSO-FKNN against other well-known classification models, and 11 FKNN models combined with swarm intelligence algorithms. Analyzing the results with four evaluation metrics, namely accuracy, sensitivity, Matthews correlation coefficient (MCC), and F-measure, we show that bSRWPSO-FKNN has a significant advantage over all the methods in the comparison. Finally, using bSRWPSO-FKNN and the medical (AD) dataset, the key features affecting AD are extracted through ten runs of 10-fold cross-validation; they mainly include the content of lymphocytes (LY), Cat dander, Milk, Dermatophagoides Pteronyssinus/Farinae, Ragweed, Cod, and Total IgE. The correctness and validity of these results are also verified in the context of clinical practice. The main contributions of this study are summarized below.

1. An improved variant is proposed based on the PSO, named SRWPSO, which has stronger convergence in global optimization tasks.

2. A binary algorithm is proposed for solving discrete problems, named bSRWPSO.

3. A novel and efficient medical prediction method is proposed by combining bSRWPSO and FKNN, named bSRWPSO-FKNN.

4. The bSRWPSO-FKNN is successfully applied to AD prediction and provides a scientific approach to diagnosing AD and other disorders.

The rest of the paper is structured as follows. Section 2 reviews work related to this article. Section 3 introduces the principle of the original PSO. Section 4 presents the improvements that make up SRWPSO. Section 5 describes the proposed bSRWPSO-FKNN. Section 6 reports a series of benchmark function experiments that verify the advantages of SRWPSO. Section 7 reports a series of feature selection experiments for bSRWPSO-FKNN and validates the potential of the method through ten runs of 10-fold cross-validation. Finally, Section 8 summarizes the paper and outlines future work.

2. Related works

In recent years, feature selection based on swarm intelligence algorithms and machine learning techniques has gained wide attention in medical diagnosis, and many excellent machine learning methods have been developed and applied to link diseases with various factors (El-Kenawy et al., 2020; Liu et al., 2020; Houssein et al., 2021; Hu et al., 2022a; Liu S. et al., 2021; Li Y. et al., 2022). For example, Hu et al. (2022b) presented a prediction framework based on an improved binary Harris hawks optimization (HHO) algorithm combined with a kernel extreme learning machine (KELM), which provides technical support for the early and accurate assessment of COVID-19 and the differentiation of its severity. Hu et al. (2022c) proposed a diagnostic model based on an improved binary mutation quantum grey wolf optimizer (MQGWO) and FKNN and validated it on hypoalbuminemia by predicting trends in serum albumin levels.

Liu et al. (2020) used their proposed COSCA method to optimize two critical parameters of the SVM and thereby built a medical model, named COSCA-SVM, that autonomously predicts cervical hyperextension injury. Wu S. et al. (2021) combined an improved variant of the sine cosine algorithm (LSCA) with FKNN to propose a medical prediction model, named LSCA-FKNN, and validated its effectiveness on three medical datasets and on lupus nephritis. Xia et al. (2022) built a new machine learning model, DFSCA-KELM, on the proposed dispersed foraging sine cosine algorithm (DFSCA) and the KELM, and confirmed its diagnostic value on six public datasets from the UCI repository and two real medical cases. Yang X. et al. (2022) proposed a feature selection framework called BSWEGWO-KELM and verified its effectiveness by analyzing 1,940 records from 178 HD patients. Ye H. et al. (2021) proposed a prediction model, HHO-FKNN, that uses HHO to optimize FKNN, and used it to distinguish the severity of COVID-19, one of the most difficult cases in medicine (Li H. et al., 2021). Zuo et al. (2013) proposed an effective and efficient system for diagnosing Parkinson’s disease (PD) based on PSO-enhanced FKNN, which provides strong technical support for PD diagnosis.

Optimization methods are among the oldest tools for quickly obtaining feasible solutions, either by using deterministic and gradient information (Cao et al., 2020b, 2021a,b) or without it (the metaheuristic class). As an emerging evolutionary computing technique, swarm intelligence algorithms have attracted growing attention from researchers. As the problems to be solved have grown in difficulty, many swarm intelligence optimization algorithms have emerged to suit different problems. Examples include ant colony optimization for continuous domains (ACOR) (Dorigo, 1992; Dorigo and Caro, 1999; Socha and Dorigo, 2008), particle swarm optimizer (PSO) (Cao et al., 2020a), differential evolution (DE) (Storn and Price, 1997), sine cosine algorithm (SCA) (Mirjalili, 2016), grey wolf optimization (GWO) (Mirjalili et al., 2014), hunger games search (HGS) (Yang et al., 2021), Harris hawks optimization (HHO) (Heidari et al., 2019b), slime mould algorithm (SMA) (Li S. et al., 2020), Runge Kutta optimizer (RUN) (Ahmadianfar et al., 2021), weighted mean of vectors (INFO) (Ahmadianfar et al., 2022), colony predation algorithm (CPA) (Tu et al., 2021b), whale optimization algorithm (WOA) (Mirjalili and Lewis, 2016), bat-inspired algorithm (BA) (Yang, 2010), moth-flame optimization (MFO) (Mirjalili, 2015), and wind-driven optimization (WDO) (Bayraktar et al., 2010). Over time, as problems have changed, the drawbacks of traditional swarm intelligence algorithms have become apparent, mainly slow convergence and low convergence accuracy. Many scholars have therefore proposed optimization variants based on the traditional algorithms.

Examples include hybridizing SCA with DE (SCADE) (Nenavath and Jatoth, 2018), chaotic BA (CBA) (Adarsh et al., 2016), modified SCA (m_SCA) (Qu et al., 2018), chaotic random spare ACO (RCACO) (Dorigo, 1992; Dorigo and Caro, 1999; Zhao et al., 2021), ACO with Cauchy and greedy Lévy mutations (CLACO) (Dorigo, 1992; Dorigo and Caro, 1999; Liu L. et al., 2021), hybridizing SCA with PSO (SCA_PSO) (Nenavath et al., 2018), double adaptive random spare reinforced WOA (RDWOA) (Chen et al., 2019), boosted GWO (OBLGWO) (Heidari et al., 2019a), and fuzzy self-tuning PSO (FSTPSO) (Nobile et al., 2018). These variants have been applied in many fields, such as resource allocation (Deng et al., 2022a), feature selection (Hu et al., 2022a; Liu Y. et al., 2022), complex optimization problems (Deng et al., 2022b), robust optimization (He et al., 2019, 2020), fault diagnosis (Yu et al., 2021), scheduling problems (Gao et al., 2020; Han et al., 2021; Wang et al., 2022), medical diagnosis (Chen et al., 2016; Wang et al., 2017), multi-objective problems (Hua et al., 2021; Deng et al., 2022d), solar cell parameter identification (Ye X. et al., 2021), expensive optimization problems (Li J.-Y. et al., 2020; Wu S.-H. et al., 2021), gate resource allocation (Deng et al., 2020a, 2022b), and airport taxiway planning (Deng et al., 2022c).

Inspired by the foraging behavior of bird flocks, Kennedy and Eberhart (1995) proposed PSO, a stochastic search algorithm based on group collaboration. Since then, many researchers have developed PSO variants for different problems. Zhou Q. et al. (2021) proposed a human-knowledge-integrated particle swarm optimization (Hi-PSO) scheme to globally optimize the design of the hydraulic-electromagnetic energy-harvesting shock absorber (HESA) for road vehicles. Nagra et al. (2019) put forward a hybrid algorithm (GSADMSPSO) that combines dynamic multi-swarm PSO (DMSPSO) with a gravitational search algorithm. Wang et al. (2021) proposed a dynamic modified chaotic PSO algorithm (DMO). Tu et al. (2020) proposed a novel quantum-inspired PSO (MQPSO) algorithm for electromagnetic applications. Zhen et al. (2020) proposed a hybrid optimization method (WPA-PSO) based on the wolf pack algorithm (WPA) and PSO and showed that it has clear advantages over either algorithm alone in estimating and predicting the parameters of software reliability models. These improved PSO algorithms are better at solving problems in one or several specific fields. However, by the no free lunch theorem (Wolpert and Macready, 1997), no algorithm is best for every problem: the methods above gain on some problems while exposing drawbacks on others. From these studies, we conclude that PSO is an excellent swarm intelligence optimization algorithm that still leaves much room for improvement. This paper therefore proposes an improved version of PSO, SRWPSO, which helps the classifier achieve better results in feature selection experiments.

3. An overview of PSO

During the search for food, PSO evaluates the fitness value of each individual’s location with an evaluation (fitness) function and uses this value to characterize how likely the individual is to find food there. For a minimization problem, the lower the evaluation value, the better the location. In addition, PSO gives each individual a memory that records its best position so far. The best of these positions over the whole flock determines the flock’s best foraging point, which is the global optimal position of the whole solution process. The PSO model is described below.

Before updating the particle population, the PSO initializes a random population space X, as shown in Eq. 1.

where X represents the initial population space, m represents the number of individuals in the population, and n represents the number of dimensions of each individual.

For each particle, the position is a potential solution to the optimization problem, and its fitness value is obtained from the evaluation function. This value is compared with the best fitness value recorded for that individual; if it is smaller, the record is replaced and the individual’s best position is updated. In each search process, the best position of each particle is recorded in pB, as shown in Eq. 2.

where pBi records the best foraging position found by the ith particle in the current population, and dim indicates that each individual has dim dimensions.

The update rule is shown in Eq. 3, where Xi(t + 1) represents the position of the individual after the current update, pBi(t + 1) represents the best position found by the individual after the (t + 1)-th update, and f(·) is the evaluation function that calculates each individual’s fitness value.

Across the whole population, the current position of every particle is a candidate for the global optimal solution. Over the whole update process, PSO searches for the single global optimal position, records it with Eq. 4, and updates it as shown in Eq. 5.

where gBest indicates the global optimal position.
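The personal-best and global-best bookkeeping of Eqs. 2-5 can be sketched as follows for a minimization problem. This is a minimal NumPy illustration under our own assumptions (vectorized over the whole population, with array names mirroring pB and gBest), not the paper’s implementation; the function name `update_bests` is hypothetical.

```python
import numpy as np


def update_bests(X, fitness, pB, pB_score, gBest, gBest_score):
    """Update personal and global bests for a minimization problem.

    X: (N, dim) current positions; fitness: (N,) fitness values of X.
    pB, pB_score: personal-best positions and scores, modified in place.
    Returns the (possibly new) gBest position and its score.
    """
    improved = fitness < pB_score            # keep a new position only if it is better
    pB[improved] = X[improved]
    pB_score[improved] = fitness[improved]
    best = int(np.argmin(pB_score))          # best of all personal bests
    if pB_score[best] < gBest_score:
        gBest, gBest_score = pB[best].copy(), float(pB_score[best])
    return gBest, gBest_score
```

Because `pB` and `pB_score` are updated in place, only the global best needs to be returned.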

The key element of PSO’s population update is the movement (velocity) vector of each particle, as shown in Eq. 6. Through this vector, PSO controls the update direction and step size of each particle, as represented by Eq. 7.

where c1 and c2 are learning factors that govern the movement of the particles toward pB and gBest, respectively. To keep particle movement within limits for a better search, PSO constrains the velocity vector to V ∈ [−Vmax, Vmax]. Velocities that fall outside this range are handled as shown in Eq. 8.

Finally, the update formula for individuals is shown in Eq. 9.
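Eqs. 6-9 are not reproduced in this text. For reference, the canonical velocity update, velocity clamping, and position update of the original PSO (Kennedy and Eberhart, 1995) are commonly written as below, with r1 and r2 uniform random numbers in [0, 1]; the paper’s exact notation and equation numbering may differ.

$$
\begin{aligned}
V_{i,j}(t+1) &= V_{i,j}(t) + c_1 r_1 \big(pB_{i,j}(t) - X_{i,j}(t)\big) + c_2 r_2 \big(gBest_{j}(t) - X_{i,j}(t)\big) \\
V_{i,j}(t+1) &\leftarrow \max\big(-V_{\max},\ \min(V_{\max},\ V_{i,j}(t+1))\big) \\
X_{i,j}(t+1) &= X_{i,j}(t) + V_{i,j}(t+1)
\end{aligned}
$$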

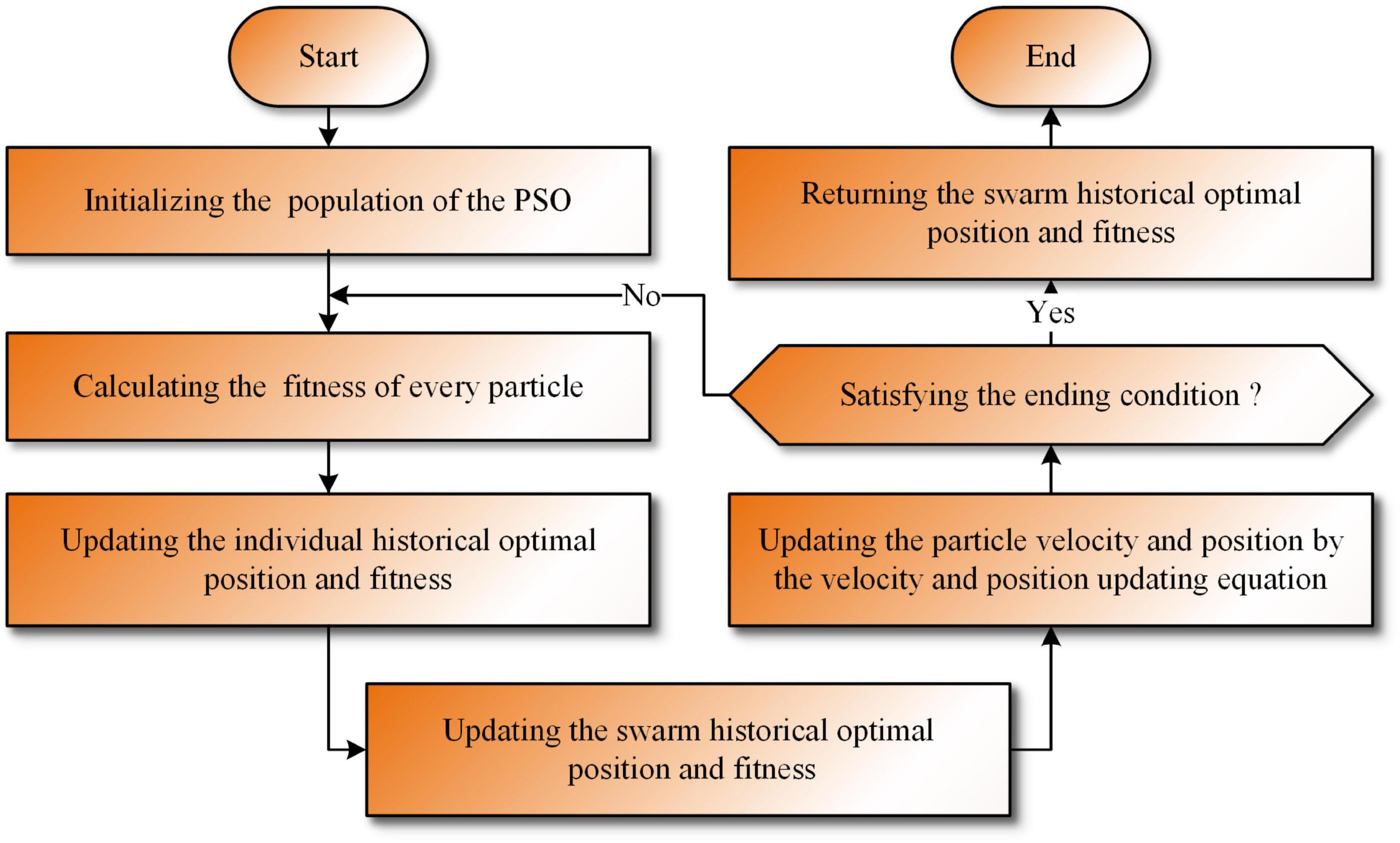

In summary, the workflow of the traditional PSO is shown in Algorithm 1 and Figure 1.

Algorithm 1. Pseudocode for the PSO

Input: the fitness function F(x), maximum number of evaluations (MaxFEs), population size (N), dimension (dim)

Output: the best location (gBest)

Initialize a random population X

Initialize the parameters: FEs, t, Vmax, c1, c2

Initialize the velocity vector: V = zeros(N, dim)

Initialize the best position and score of each individual: pB = zeros(N, dim), pB_score

Initialize the position and score of the best location: gBest, gBest_score

While (FEs < MaxFEs)

For i = 1: size(X, 1)

Keep each particle in the search space

Calculate the fitness value of each search particle

FEs = FEs + 1

Update the locations and scores of gBest and pBi

End for

For i = 1: size(X, 1)

For j = 1: size(X, 2)

Update the velocity vector Vi,j by Eq. 7 and Eq. 8

Update the location of particles by Eq. 9

End for

End for

t = t + 1

End while

Return gBest
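Algorithm 1 can be sketched in Python as follows. This is a minimal, hedged sketch for minimization: the learning factors c1 = c2 = 2, the choice Vmax = 0.2 * (ub - lb), and uniform random initialization are our assumptions, since the text does not fix them, and the function name `pso` is hypothetical.

```python
import numpy as np


def pso(fitness, lb, ub, dim, n=30, max_fes=3000, c1=2.0, c2=2.0, seed=0):
    """Minimal canonical PSO following Algorithm 1 (minimization, FEs budget)."""
    rng = np.random.default_rng(seed)
    lb = np.full(dim, lb, dtype=float)
    ub = np.full(dim, ub, dtype=float)
    vmax = 0.2 * (ub - lb)                       # assumed Vmax, not from the paper
    X = lb + rng.random((n, dim)) * (ub - lb)    # random initial population
    V = np.zeros((n, dim))
    pB, pB_score = X.copy(), np.full(n, np.inf)
    gBest, gBest_score = X[0].copy(), np.inf
    fes = 0
    while fes < max_fes:
        X = np.clip(X, lb, ub)                   # keep particles in the search space
        for i in range(n):
            if fes >= max_fes:
                break
            score = fitness(X[i])
            fes += 1
            if score < pB_score[i]:              # personal-best update
                pB[i], pB_score[i] = X[i].copy(), score
            if score < gBest_score:              # global-best update
                gBest, gBest_score = X[i].copy(), score
        r1, r2 = rng.random((n, dim)), rng.random((n, dim))
        V = V + c1 * r1 * (pB - X) + c2 * r2 * (gBest - X)   # velocity update
        V = np.clip(V, -vmax, vmax)                          # clamp to [-Vmax, Vmax]
        X = X + V                                            # position update
    return gBest, gBest_score
```

For example, `pso(lambda x: float(np.sum(x**2)), -10, 10, dim=5, max_fes=5000)` minimizes the 5-dimensional sphere function. As in Algorithm 1, the budget is counted in fitness evaluations rather than iterations.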

The time complexity of traditional PSO is determined mainly by initialization, population updating, and fitness evaluation. Population initialization dominates the initialization phase and costs O(Initializing) = O(N × dim). The population updating phase costs O(Updating) = O(N × dim), and the fitness evaluation phase costs O(Calculating) = O(N × dim). Thus, O(PSO) = O(N × dim) + O(T × (N × dim)) = O((T + 1) × N × dim). Here, N denotes the population size, dim the number of dimensions of each particle, and T = MaxFEs/M the number of iterations, which is determined by the total number of evaluations (MaxFEs) and the number of evaluations per iteration (M).

4. The proposed SRWPSO

4.1. Sobol sequence

Studies of metaheuristic algorithms show that the distribution of the initial population affects their convergence performance to some extent (Rahnamayan et al., 2007; Kazimipour et al., 2014; Dokeroglu et al., 2019). Therefore, this study adopts a more uniformly distributed low-discrepancy sequence (the Sobol sequence) instead of the traditional pseudo-random method. The low-discrepancy sample points aim to improve the diversity of the population and the algorithm’s traversal of the population space, and thus the efficiency of the search for the global optimal solution.

Many scholars have also studied population initialization. For example, Yang X. et al. (2022) used a sinusoidal initialization strategy (SS) to initialize the population of the GWO algorithm and enhanced the search capability of traditional GWO. Qi et al. (2022a) combined the Lévy flight strategy with the traditional initialization method, proposed a more effective Lévy flight initialization method, and used it to improve WOA. Arora and Anand (2019) used the Circle chaotic map to initialize the population and improve the grasshopper optimization algorithm (GOA). The initialization steps in this study are as follows.

Step 1: The initialized population space takes values X ∈ [lb, ub], where lb denotes the lower bound and ub the upper bound of the population space.

Step 2: The Sobol sequence generates quasi-random sample points with low-discrepancy properties, S ∈ [0, 1].

Step 3: The initialization method is defined as Eq. 10.

where Xi denotes the i-th particle in the population and i ∈ [1, N].

Step 4: Repeat Step 3 N times, once for each particle in a population of size N.
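The four steps can be sketched with SciPy’s quasi-Monte Carlo module. We assume Eq. 10 is the usual affine map Xi = lb + Si × (ub − lb); the function name `sobol_init` and the use of scrambled Sobol points (SciPy’s default) are our choices rather than details from the paper.

```python
import numpy as np
from scipy.stats import qmc


def sobol_init(n, dim, lb, ub):
    """Initialize an (n, dim) population from a scrambled Sobol sequence.

    Assumes Eq. 10 maps each Sobol point S_i in [0, 1) to [lb, ub] via
    X_i = lb + S_i * (ub - lb). lb and ub may be scalars or length-dim arrays.
    """
    sampler = qmc.Sobol(d=dim, scramble=True)   # low-discrepancy generator
    S = sampler.random(n)                       # (n, dim) points in [0, 1)
    lb = np.asarray(lb, dtype=float)
    ub = np.asarray(ub, dtype=float)
    return lb + S * (ub - lb)                   # scale into the search space
```

Choosing a population size that is a power of 2 (for example, 32) preserves the balance properties of the Sobol points; for other sizes SciPy still returns the points but emits a warning.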

4.2. Random replacement strategy

To move the population in a better direction, many scholars have tried to strengthen the population updating of traditional swarm intelligence algorithms in various ways. For example, the random replacement strategy has been used effectively in the literature (Gupta and Deep, 2018; Chen et al., 2019; Zhao et al., 2021). This strategy enriches population diversity by replacing the j-th dimension of the current individual’s position with the same dimension of the current best individual in the swarm, which increases the chance of exploiting the best individual.

Inspired by this method, this paper introduces the random replacement strategy into PSO, but changes the object being replaced. Exploiting the fact that PSO records the best position of each particle, we replace the j-th dimension of each particle’s current best position with the j-th dimension of the population’s best individual, as shown in Eq. 11.

During the search, even as the population’s current best position approaches the global best position, an individual’s best position may still hold excellent values in some dimensions. Therefore, replacement is not applied to every dimension; instead, a replacement probability is introduced, as shown in Eq. 12.

where C denotes a Cauchy random number, and a is a decay factor that decays linearly from 1 to 0 as the number of evaluations increases.
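The replacement of Eq. 11 can be sketched as below. Eq. 12, which sets the replacement probability from a Cauchy random number C and the decay factor a, is not reproduced in this text, so the sketch takes the probability as an input; the function name `random_replacement` and the parameter `p_replace` are hypothetical. Each dimension of a particle’s best position is overwritten by the corresponding dimension of gBest with probability `p_replace`.

```python
import numpy as np


def random_replacement(pB, gBest, p_replace, rng):
    """Copy gBest into randomly chosen dimensions of each personal best.

    pB: (N, dim) personal-best positions (left unchanged).
    gBest: (dim,) best position in the population.
    p_replace: per-dimension replacement probability. In SRWPSO this comes
    from Eq. 12, which is NOT reproduced here, so it is passed in directly.
    Returns an (N, dim) array of candidate positions.
    """
    candidates = pB.copy()
    mask = rng.random(pB.shape) < p_replace          # dimensions to replace
    candidates[mask] = np.broadcast_to(gBest, pB.shape)[mask]
    return candidates
```

The candidates are then evaluated and used to update pB and gBest, as in Algorithm 2.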

4.3. Adaptive weight strategy

In terms of convergence speed and accuracy, traditional PSO is easily trapped in local optima and lacks the ability to escape them in the early and middle stages of the update process. To remedy this deficiency, this paper introduces an adaptive weight ω into the velocity vector of traditional PSO. The aim is to increase the perturbation capacity of the velocity vector and thus the diversity of individuals in the population, which helps the particles explore and exploit the global optimum, as shown in Eq. 15.

where β is a perturbation parameter controlled by C1 and S; it allows ω to deviate from its linearly decreasing trajectory as ω decreases from 1 to 0. Like C, C1 denotes a Cauchy random number. S denotes an adaptive parameter with an initial value of 0.01, which is updated as shown in Eq. 17.

Therefore, the update of the velocity vector after the introduction of the adaptive weight ω can be expressed as Eq. 18.
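The weighted velocity update can be sketched as below. Because Eqs. 15-17 are not reproduced here, the weight form ω = (1 − FEs/MaxFEs) + S × C1, with C1 a standard Cauchy draw, is a hypothetical stand-in that only mirrors the description above (a linear decrease from 1 to 0, perturbed through C1 and S). The update of S by Eq. 17 is left out, and we assume that Eq. 18 multiplies the previous velocity by ω, as in inertia-weight PSO. The function name and the defaults c1 = c2 = 2 are also assumptions.

```python
import numpy as np


def adaptive_velocity(V, X, pB, gBest, fes, max_fes, S, rng, c1=2.0, c2=2.0):
    """Velocity update with an adaptive weight (HYPOTHETICAL stand-in for Eqs. 15-18).

    omega = (1 - fes/max_fes) + S * C1: the first term decreases linearly
    from 1 to 0, and C1 is a standard Cauchy random number scaled by S.
    One omega is drawn per call, i.e., once per iteration as in Algorithm 2.
    Returns the new velocity matrix and the omega that was used.
    """
    omega = (1.0 - fes / max_fes) + S * rng.standard_cauchy()
    r1 = rng.random(X.shape)
    r2 = rng.random(X.shape)
    V_new = omega * V + c1 * r1 * (pB - X) + c2 * r2 * (gBest - X)
    return V_new, omega
```

The heavy tails of the Cauchy draw occasionally produce large values of ω, which is the kind of perturbation that can help particles leave a local optimum; S controls how strong that effect is.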

4.4. Implementation of SRWPSO

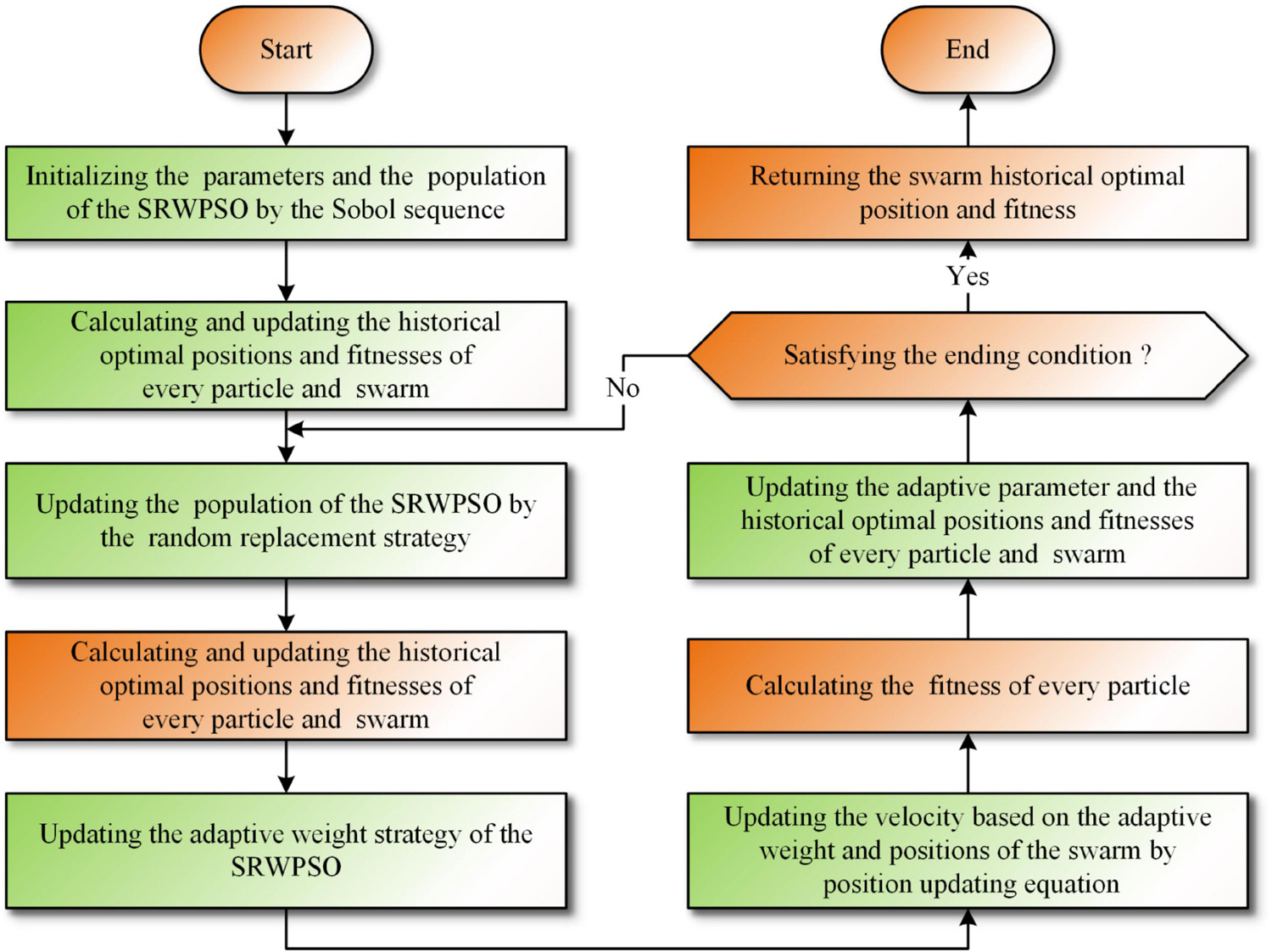

To improve the overall performance of PSO, this paper combines PSO with the three strategies introduced above for the first time and proposes an enhanced PSO named SRWPSO. First, the Sobol sequence is used to initialize the particle population, which improves the overall quality of the initial population and thus the algorithm’s traversal of the population space. Next, to raise the probability of moving toward the global optimal position, a random replacement strategy based on the current best positions of the particles is introduced. Finally, an adaptive weight strategy is introduced; by making the scale of the velocity vector adaptive, it increases the perturbation ability of the particle population and improves the algorithm’s ability to escape local optima during the search. The framework of SRWPSO is shown in Algorithm 2 and Figure 2.

Algorithm 2. Pseudocode for the SRWPSO

Input: the fitness function F(x), maximum number of evaluations (MaxFEs), population size (N), dimension (dim)

Output: the best location (gBest)

Initialize the population X with the Sobol sequence by Eq. 10

Initialize the parameters: FEs, t, Vmax, c1, c2, S

Initialize the velocity vector: V = zeros(N, dim)

Initialize the best position and score of each individual: pB = zeros(N, dim), pB_score

Initialize the position and score of the best location: gBest, gBest_score

For i = 1: size(X, 1)

Keep each particle in the search space

Calculate the fitness value of each search particle

FEs = FEs + 1

Update the locations and scores of gBest and pBi

End for

While (FEs < MaxFEs)

For i = 1: size(X, 1)

Update the positions of particles by Eq. 11 and Eq. 12

Keep each particle in the search space

Calculate the fitness value of each search particle

FEs = FEs + 1

Update the locations and scores of gBest and pBi

End for

Update the adaptive weight ω by Eq. 15

For i = 1: size(X, 1)

For j = 1: size(X, 2)

Update the velocity vector Vi,j by Eq. 18

Update the location of particles by Eq. 9

End for

End for

For i = 1: size(X, 1)

Keep each particle in the search space

Calculate the fitness value of each search particle

FEs = FEs + 1

Update the locations and scores of gBest and pBi

Update the adaptive factor S by Eq. 17

End for

t = t + 1

End while

Return gBest

Analyzing the above workflow, we find that the complexity of SRWPSO is determined mainly by the population size (N), the dimension (dim), and the maximum number of evaluations (MaxFEs). If the fitness function is evaluated M times in one iteration, the number of iterations is T = MaxFEs/M, which is determined by MaxFEs and the number of evaluations per iteration. The overall time complexity is therefore O(SRWPSO) = O(Sobol initialization) + O(assessment and selection of the initial population) + O(random replacement strategy) + O(adaptive weight strategy). The Sobol initialization costs O(N × dim), and the assessment and selection of the initial population costs O(N × dim). Per iteration, the random replacement strategy costs O(N × dim + 2 × N × dim) = O(3 × N × dim), and the adaptive weight strategy costs O(2 × 2 × N × dim) = O(4 × N × dim). In conclusion, O(SRWPSO) = O(2 × N × dim) + T × (O(4 × N × dim) + O(3 × N × dim)) = O(2 × N × dim + 7 × T × N × dim).

5. The proposed bSRWPSO-FKNN

5.1. Binary conversion method

Feature selection is a binary, discrete problem, whereas SRWPSO was designed for continuous problems. To make SRWPSO applicable to feature selection experiments, this subsection provides a binary conversion method that maps SRWPSO from the continuous domain to the feature selection problem, yielding a novel discrete binary version named bSRWPSO. The binary conversion process is described below.

(1) Initialize the problem domain as [0, 1]. Each dimension of an individual corresponds to one feature (attribute) of the dataset and takes a value between 0 and 1.

(2) Discretize the continuous problem. As shown in Eq. 19, each continuous value is converted to 0 or 1 by a V-shaped transfer function, indicating whether the corresponding feature is selected. A value of 1 means the feature is selected, and 0 means it is not.

where r is a random number from 0 to 1, Xd denotes the binary transformed position of the search agent, and V(⋅) is a V-shaped discretization equation, as shown in Eq. 20.
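Eqs. 19 and 20 are not reproduced in this section. The following Python sketch shows one common way to binarize a continuous position with a V-shaped transfer function, and both of its choices are assumptions. First, V(x) = |tanh(x)| is only one member of the V-shaped family. Second, the rule "select feature d when r < V(x_d)" is a simplified variant; the classic V-shaped update rule for binary PSO instead flips the current bit when r < V(x_d). The names v_transfer and binarize are hypothetical.

```python
import math
import random


def v_transfer(x):
    """Assumed V-shaped transfer function |tanh(x)|; Eq. 20 may use another member of the family."""
    return abs(math.tanh(x))


def binarize(position, rng):
    """Map a continuous position to a 0/1 feature mask.

    Assumed rule: feature d is selected (1) when r < V(x_d), with r ~ U(0, 1).
    """
    return [1 if rng.random() < v_transfer(x) else 0 for x in position]


rng = random.Random(42)
mask = binarize([0.05, 0.5, 0.95], rng)   # one 0/1 flag per feature
print(mask)
```

Because V(0) = 0, a dimension sitting at 0 is never selected under this rule, while larger values are selected with increasing probability.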

5.2. Fuzzy K-nearest neighbor

K-nearest neighbors (KNN) (Cover and Hart, 1967; Jadhav et al., 2018; Tang et al., 2020) is a simple, efficient, nonparametric classification method proposed by Cover and Hart (1967). It has since become one of the best-known machine learning algorithms. KNN assigns a query sample to the most common class among its k nearest neighbors. Keller et al. (1985) combined fuzzy set theory with KNN and proposed a fuzzy version, named FKNN (Keller et al., 1985; Chen et al., 2011, 2013; Mailagaha Kumbure et al., 2020). Unlike KNN, which assigns each sample to a single class, FKNN assigns the sample a fuzzy membership degree for every class according to Eq. 21.

In the above equation, i = 1, 2, 3, …, C and j = 1, 2, 3, …, k, where C denotes the number of classes and k the number of nearest neighbors. When computing the contribution of each neighbor to the membership value, FKNN weights the distances through the fuzzy strength parameter m, with m ∈ (1, ∞). ‖x − x_j‖ is the Euclidean distance between x and its j-th nearest neighbor x_j. μ_{i,j} is the membership degree of the training pattern x_j in class i, among the k nearest neighbors of x.
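Eq. 21 is not reproduced here, so the following Python sketch follows the standard formulation of Keller et al. (1985). In that formulation, the membership of x in class i is a distance-weighted average of the neighbors' memberships μ_{i,j}, with weights 1/‖x − x_j‖^{2/(m−1)}. The sketch assumes crisp neighbor memberships (μ_{i,j} = 1 if x_j belongs to class i, else 0), which is one of the labeling options Keller et al. describe. The function name and the small eps guard are hypothetical additions.

```python
import math


def fknn_memberships(x, train_X, train_y, n_classes, k=3, m=2.0):
    """Fuzzy class memberships of query x following Keller et al. (1985).

    Assumes crisp neighbour memberships: mu[i][j] = 1 if neighbour j has label i.
    """
    # k nearest training samples by Euclidean distance.
    neighbours = sorted((math.dist(x, xj), yj) for xj, yj in zip(train_X, train_y))[:k]
    eps = 1e-12  # hypothetical guard against division by zero when x coincides with a neighbour
    weights = [(1.0 / (d ** (2.0 / (m - 1.0)) + eps), yj) for d, yj in neighbours]
    total = sum(w for w, _ in weights)
    u = [0.0] * n_classes
    for w, yj in weights:
        u[yj] += w / total  # crisp mu: only the neighbour's own class receives its weight
    return u
```

The predicted class is the one with the largest membership, for example `max(range(len(u)), key=u.__getitem__)`. The memberships also indicate how confident that decision is.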

5.3. Implementation of bSRWPSO-FKNN

This section proposes a novel feature prediction model, named bSRWPSO-FKNN, based on the binary SRWPSO and FKNN, to support feature selection experiments. The principle is to use the global search ability of bSRWPSO to find a better feature subset and then use FKNN to perform prediction on the selected subset. In this way, the model exploits the potential of FKNN while also improving the efficiency and accuracy of the classification experiments.

In addition, to guide the search toward subsets that classify well with few features, this paper uses an evaluation (fitness) function based on the error rate and the size of the feature subset, as shown in Eq. 22.

where Error denotes the classification error rate (Error + Accuracy = 1); D denotes the number of features in the dataset; R denotes the number of features in the subset selected by the feature selection experiment; and α and β are two weight parameters with α + β = 1. Setting α = 0.99 reflects the greater importance of the error rate.
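Eq. 22 is not reproduced in this section. The definitions above describe a weighted sum, fitness = α × Error + β × (R/D), and the following minimal Python sketch assumes that form. The function name is hypothetical.

```python
def feature_subset_fitness(error_rate, n_selected, n_features, alpha=0.99):
    """Assumed form of Eq. 22: alpha * Error + beta * (R / D), with beta = 1 - alpha."""
    beta = 1.0 - alpha
    return alpha * error_rate + beta * (n_selected / n_features)


print(feature_subset_fitness(0.1, 5, 10))
```

For example, a subset with a 10% error rate that keeps 5 of 10 features scores 0.99 × 0.1 + 0.01 × 0.5 = 0.104. With the same error rate, a subset of 2 features scores 0.101. Because α is much larger than β, the subset size acts mainly as a tie-breaker between subsets with similar error rates.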

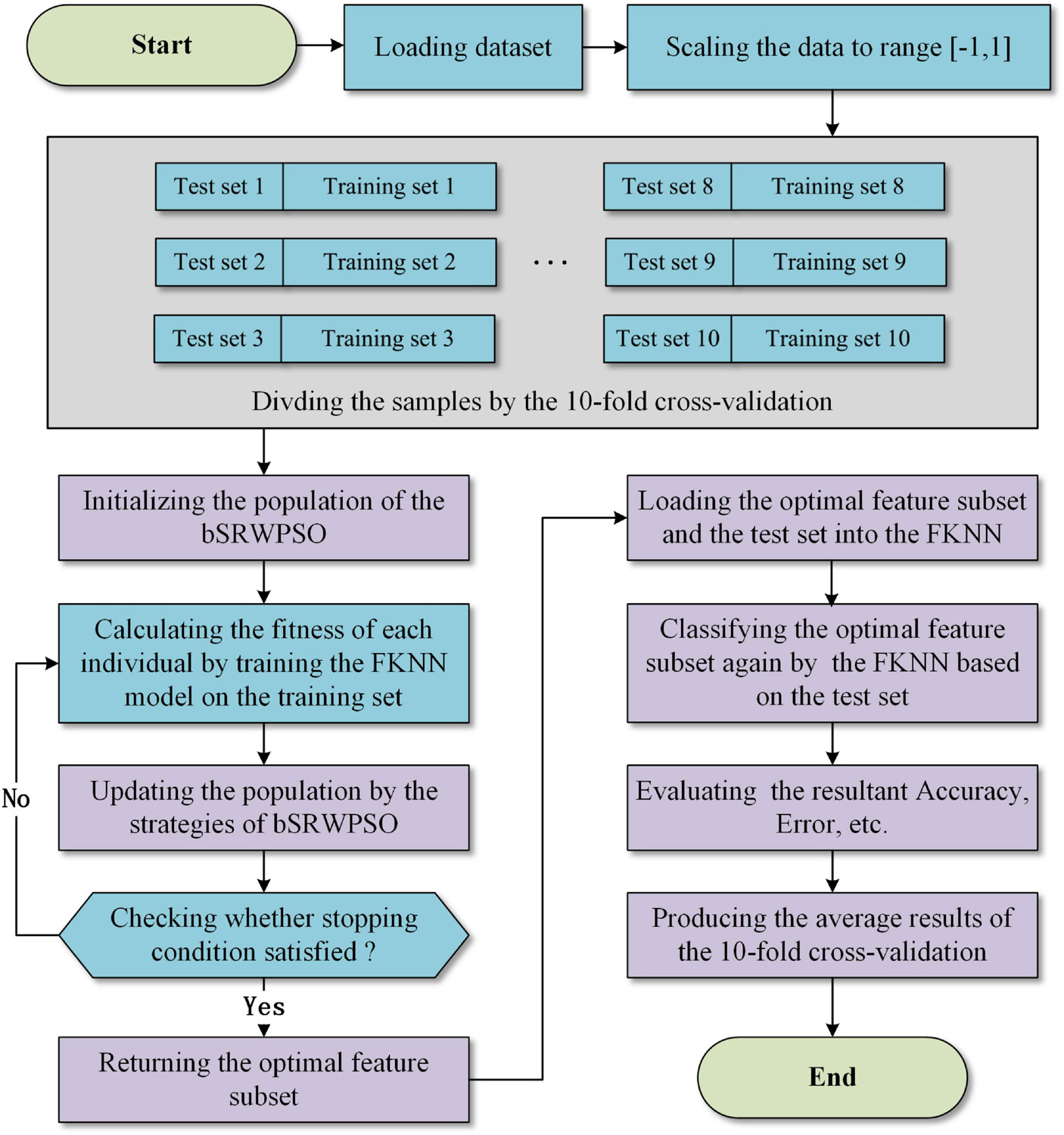

In summary, the workflow of the bSRWPSO-FKNN proposed in this paper is shown in Figure 3.

6. Benchmark function validation

In this section, the performance of SRWPSO is tested on the 30 benchmark functions of CEC 2014. The convergence process of SRWPSO is analyzed from several aspects, demonstrating its ability to escape local optima and search for the global optimum.

6.1. Experimental setup

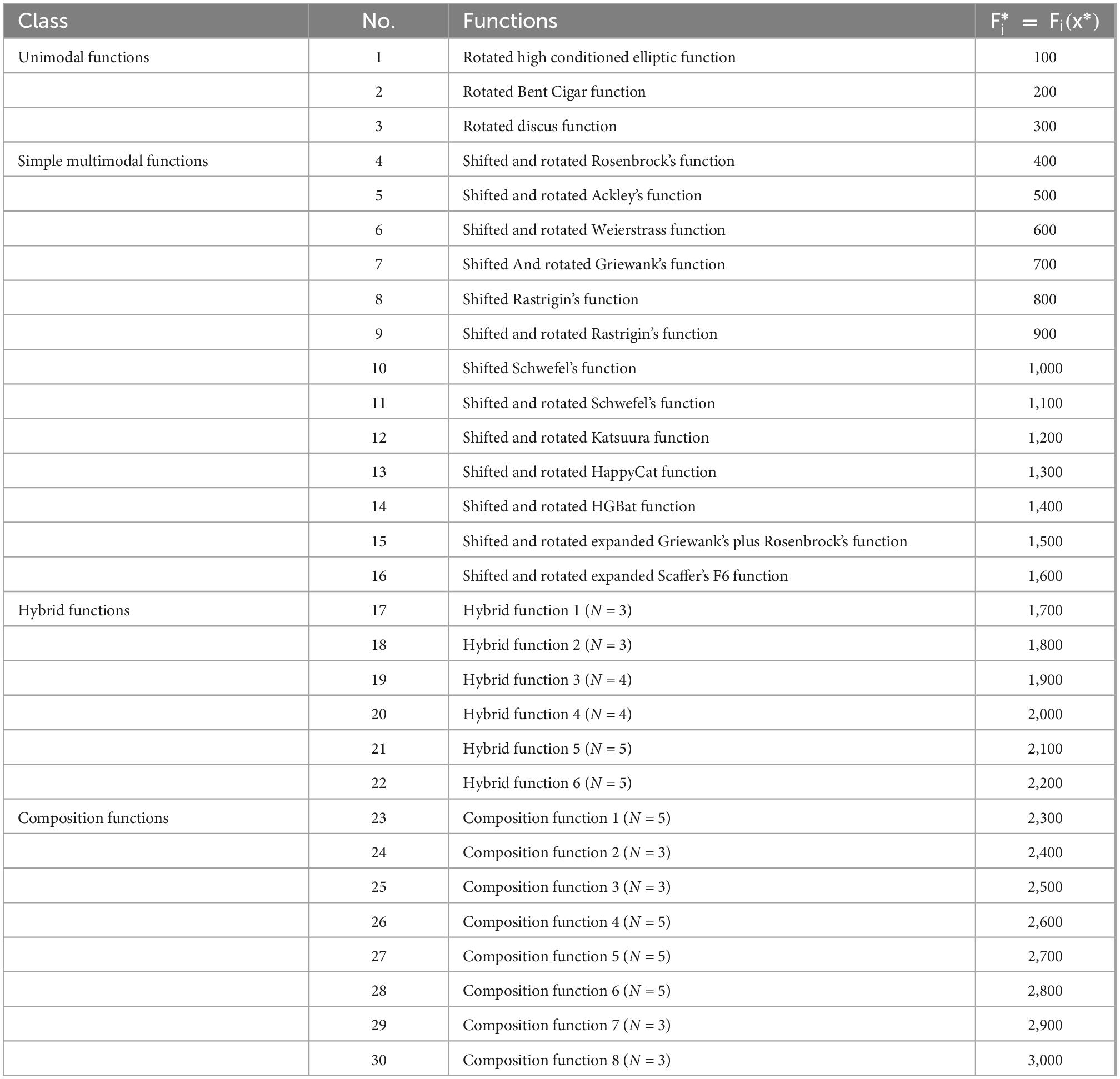

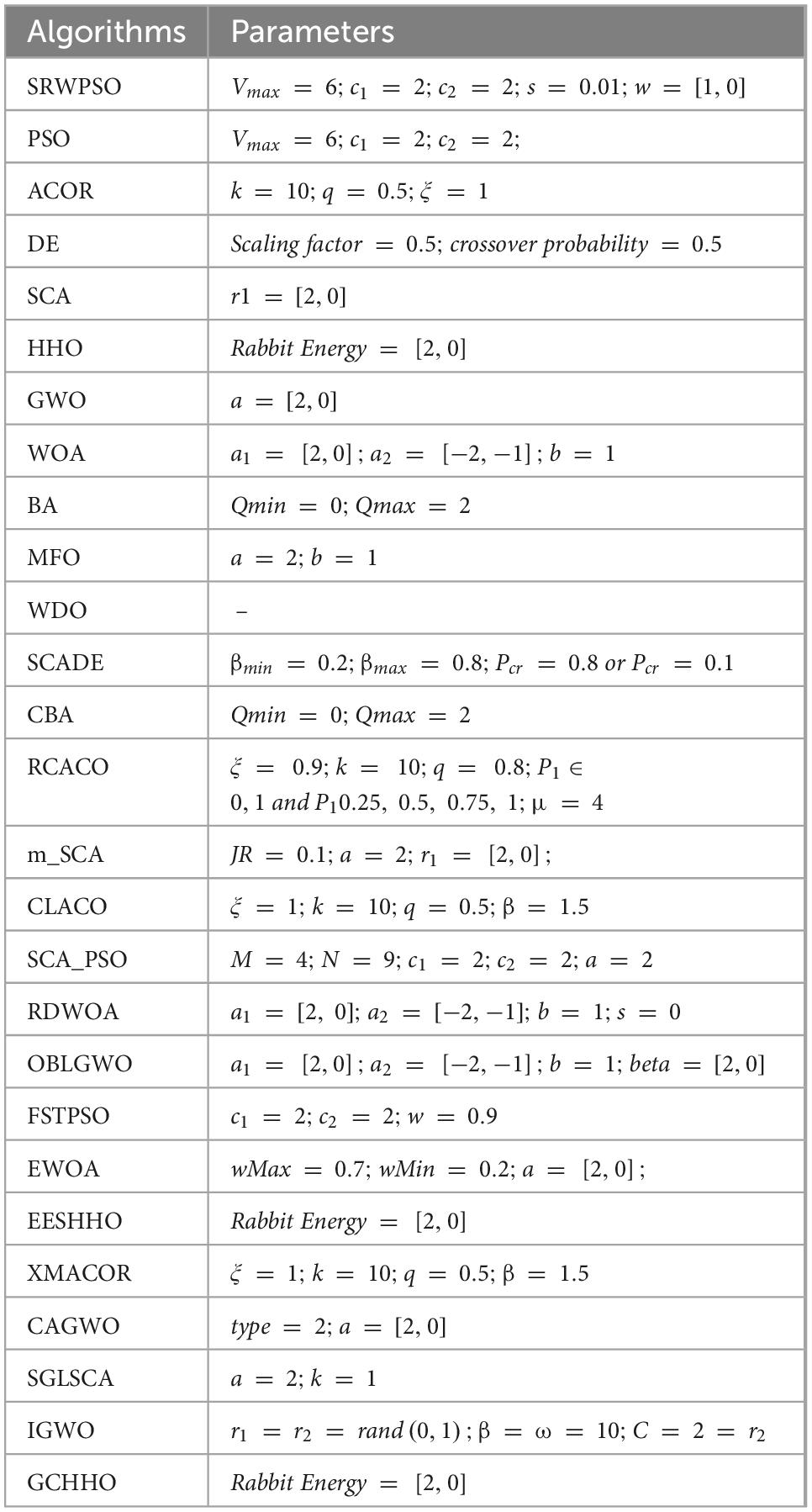

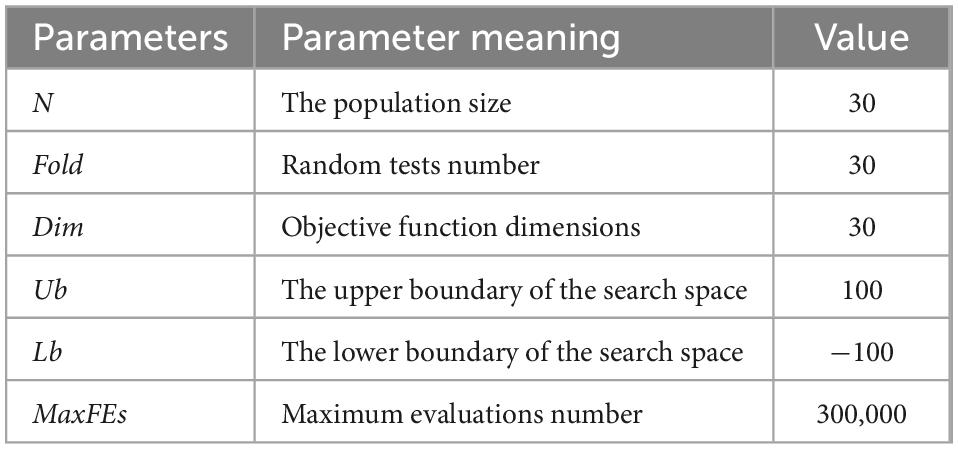

To verify the comprehensive ability of SRWPSO, this section evaluates it from five aspects: mechanism combination experiments, qualitative analysis experiments, and comparison experiments with traditional algorithms, with famous variants, and with new peer variants. Based on the experimental results, this section also analyzes the convergence process of SRWPSO. Table 1 gives the details of the CEC 2014 benchmark function set, and Table 2 lists the parameters of the algorithms involved in this paper.

To make the test outcomes more convincing, two statistics are used in the analysis: the average value (AVG) and the standard deviation (STD). AVG represents the overall capability of an algorithm, and a smaller value indicates better overall performance. STD reflects stability, and a smaller value indicates more stable performance. To further analyze the comparative results, two popular statistical methods are applied: the Wilcoxon signed-rank test (García et al., 2010) and the Friedman test (García et al., 2010). In the Wilcoxon signed-rank test, “+,” “=,” and “–” mean that SRWPSO is superior to, equal to, and inferior to the competitor, respectively. In the tables, the best results are highlighted in bold. Finally, selected convergence curves are plotted to visualize the convergence behavior of the algorithms.
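Friedman results in this kind of comparison are typically reported as average ranks across benchmark functions. The following Python sketch computes such average ranks from a table of mean results. Smaller values rank better, and tied values share the mean of their ranks. It does not compute the Friedman test statistic or its p-value, and the function name is hypothetical.

```python
def friedman_average_ranks(results):
    """results[p][a] = mean result of algorithm a on problem p (smaller is better).

    Returns each algorithm's rank averaged over problems; ties share the mean rank.
    """
    n_alg = len(results[0])
    totals = [0.0] * n_alg
    for row in results:
        order = sorted(range(n_alg), key=lambda a: row[a])
        i = 0
        while i < n_alg:
            # Extend j over the run of algorithms tied with order[i].
            j = i
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            avg_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
            for pos in range(i, j + 1):
                totals[order[pos]] += avg_rank
            i = j + 1
    return [s / len(results) for s in totals]


print(friedman_average_ranks([[1.0, 2.0, 3.0], [2.0, 1.0, 3.0], [1.0, 1.0, 3.0]]))
```

The algorithm with the smallest average rank is ranked first overall.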

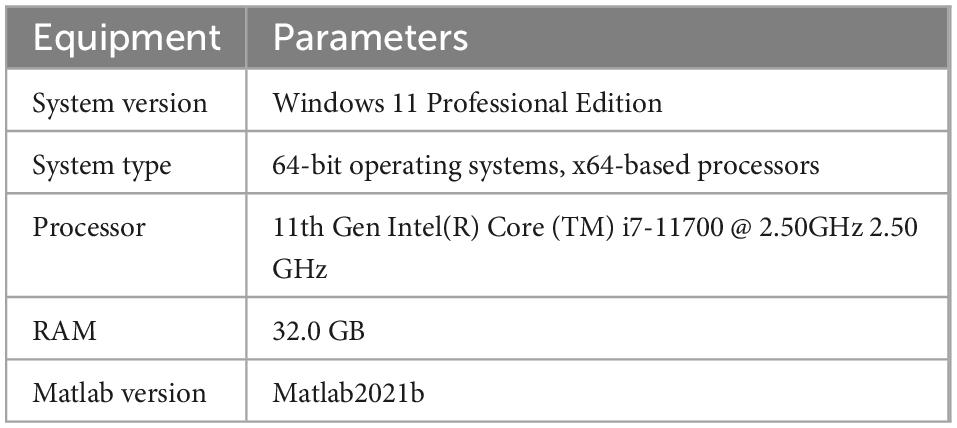

In addition, the experimental environment was unified both internally and externally to prevent the experimental procedure from biasing the outcomes. As displayed in Table 3, the population size, number of test runs, dimension, and other settings are identical for every algorithm. This eliminates the influence of internal experimental parameters on the performance of each algorithm. Note that the stopping criterion is the maximum number of function evaluations (MaxFEs) rather than a fixed number of iterations; the corresponding number of iterations can be derived from MaxFEs. As shown in Table 4, all experiments run on the same equipment to avoid interference from the external environment, further improving fairness and scientific rigor.

6.2. Impacts of components

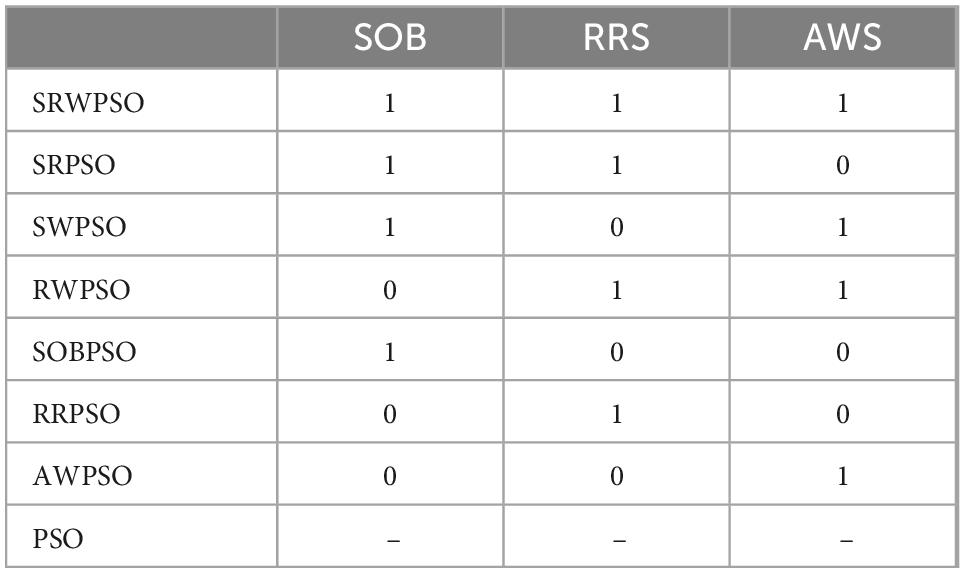

This subsection presents the ablation experiments for SRWPSO, exploring the impact of the three improvement strategies on the performance of PSO on the CEC 2014 benchmark function set. Table 5 lists the strategy combinations. SOB denotes the Sobol initialization strategy, RRS the random replacement strategy, and AWS the adaptive weight strategy.

Supplementary Appendix Table 1 reports the AVG and STD of the different strategy combinations. The data show that SRWPSO achieves the best results on the largest number of the 30 test functions, especially on the unimodal and composition functions. For AVG, a smaller value means better performance on the problem. The more often an algorithm attains the minimum AVG across the 30 functions, the more adaptable it is to different problems. For STD, a smaller value means more stable performance, and the number of minimum STD values likewise reflects, to a certain extent, an algorithm's adaptability. The single-strategy variants do not clearly outperform the original PSO and are even slightly worse on some problems. With two strategies, PSO performs relatively well overall, especially SWPSO and RWPSO. With all three strategies, SRWPSO shows the best overall performance in this comparison.

Supplementary Appendix Table 2 presents the p-values of the Wilcoxon signed-rank test. Values below 0.05 are shown in bold, indicating that the difference between SRWPSO and the corresponding competitor is statistically significant. Values below 0.05 clearly outnumber those above 0.05, especially in the comparison with the original PSO on the 30 benchmark functions. This indicates that SRWPSO outperforms the single-strategy variants, the two-strategy variants, and the original PSO in this experiment.

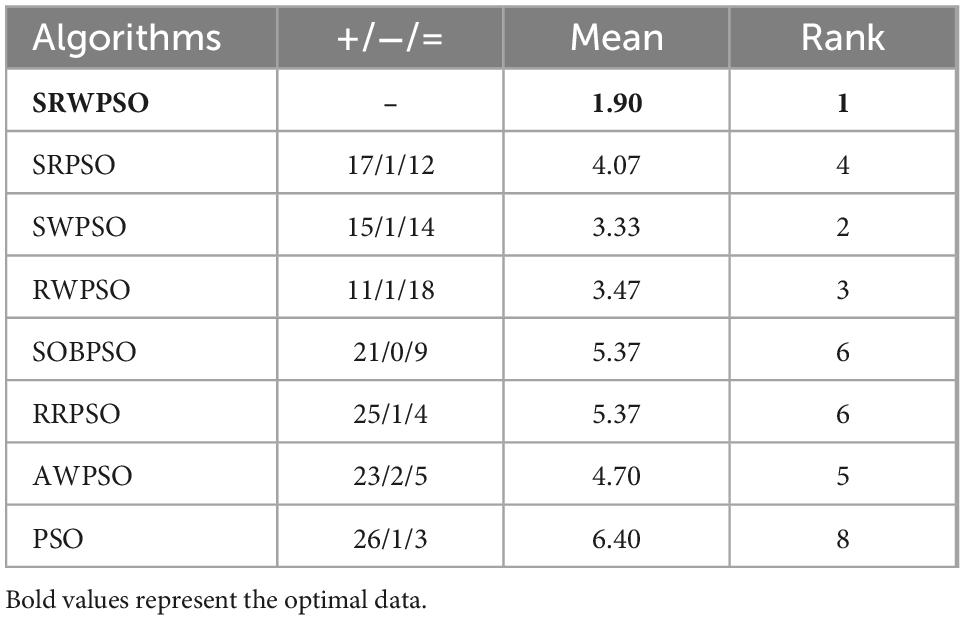

To make the results more convincing, Table 6 gives the results of the Wilcoxon signed-rank test. SRWPSO shows the best overall performance in this experiment, and its average rank is much smaller than that of the second-ranked SWPSO. SRWPSO outperforms SWPSO on 15 of the 30 benchmark problems and performs similarly on 14. Compared with the original PSO, SRWPSO is even stronger: it is better on 26 benchmark functions, equal on 3, and worse on only 1.
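The "+/=/–" counts in a Wilcoxon comparison table can be tallied from per-function p-values and mean results. The following minimal Python sketch assumes the common convention: a difference counts as a win or a loss only when p < 0.05, and otherwise it counts as a tie. The function name is hypothetical.

```python
def wilcoxon_tally(p_values, mean_ours, mean_theirs, alpha=0.05):
    """Count '+', '=', '-' for the proposed method against one competitor (minimization).

    Assumed convention: p < alpha and smaller mean -> '+'; p < alpha and larger mean -> '-';
    otherwise '='.
    """
    plus = equal = minus = 0
    for p, ours, theirs in zip(p_values, mean_ours, mean_theirs):
        if p < alpha and ours < theirs:
            plus += 1
        elif p < alpha and ours > theirs:
            minus += 1
        else:
            equal += 1
    return plus, equal, minus


print(wilcoxon_tally([0.01, 0.2, 0.03], [1.0, 2.0, 5.0], [2.0, 1.0, 3.0]))
```

Across 30 functions the three counts always sum to 30, as in the 26/3/1 split reported against the original PSO.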

To further support these results, Figure 4 gives the results of the Friedman test. SRWPSO obtains an average Friedman rank of 2.59, the smallest among the compared algorithms. This value is much smaller than that of the original PSO, which ranks last, and also smaller than that of the second-ranked SWPSO. This again indicates that SRWPSO has the best overall performance in this experiment and supports combining the three strategies in SRWPSO.

6.3. The qualitative analysis of SRWPSO

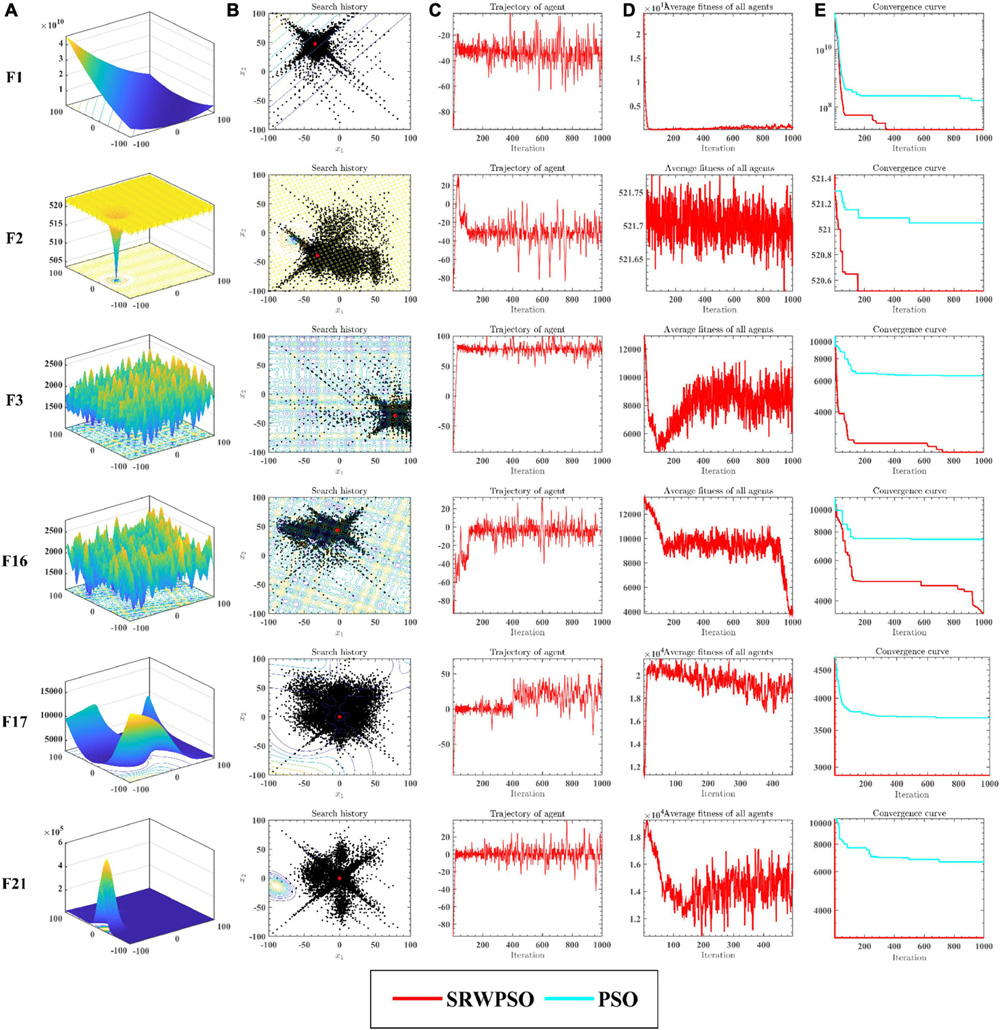

Figure 5 analyzes the performance of SRWPSO from several perspectives. Figure 5A provides a three-dimensional view of the benchmark function. Figure 5B shows the two-dimensional distribution of the historical search positions of SRWPSO. The red marker indicates the best position found during the search, and the black dots indicate the historical search positions. Figure 5C shows how the first dimension of each position changes during the iterations. Figure 5D gives the variation of the average fitness of all individuals in the population during the iterations. Figure 5E provides the convergence curves of SRWPSO and PSO.

The three-dimensional and two-dimensional distributions show that SRWPSO obtains good global solutions on benchmark functions of different complexity. The trajectory of the first dimension shows that the oscillation amplitude is small at the beginning of the iterations. As the iterations proceed, the amplitude increases and then stabilizes to a certain extent. This indicates that individuals traverse the search space well and maintain population diversity, which enhances the ability to escape local optima. Similarly, Figure 5D shows that the average fitness of SRWPSO oscillates strongly on F12, F16, F17, and F21, again indicating that population diversity is maintained during the search. The convergence curves in Figure 5E show that SRWPSO reaches better final accuracy than PSO and that its convergence ability is much stronger. The curves on F1, F2, and F16 also show that SRWPSO has a strong ability to escape from local optima, since each inflection point on a convergence curve indicates an escape from a local optimum.

Figure 5. The analysis results of SRWPSO and PSO from multiple perspectives. See section 6.3 for details.

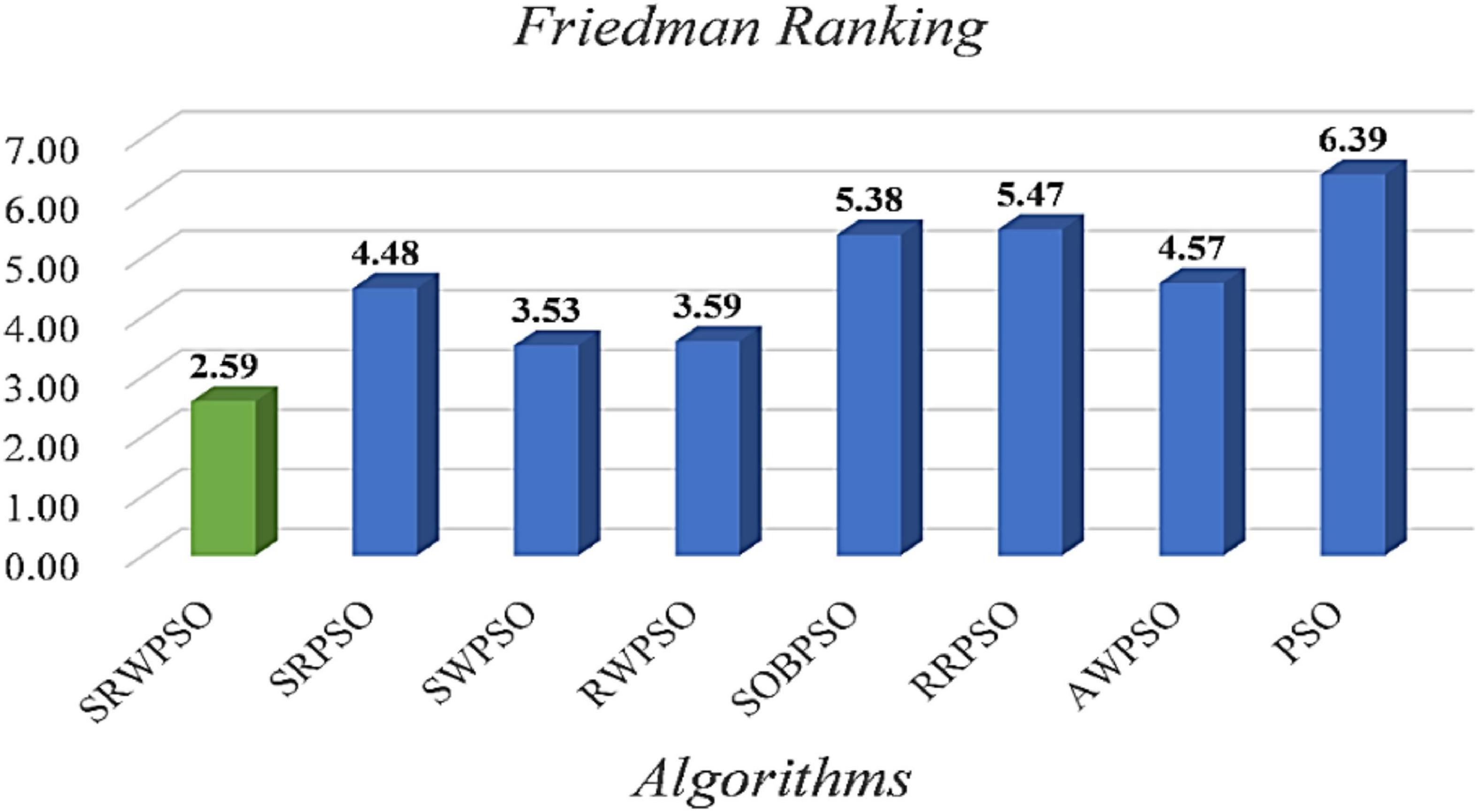

Figure 6 gives the results of the balance analysis on the benchmark functions shown in Figure 5. Comparing the balance plots of SRWPSO and PSO shows that SRWPSO has a significantly stronger exploitation capability than PSO. With the three strategies, SRWPSO strikes a better balance between exploration and exploitation. As a result, both its convergence speed and its final convergence accuracy are better than those of PSO.
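The section does not state how the balance in Figure 6 is measured. One widely used approach, shown here as an assumption rather than as the method behind Figure 6, computes a dimension-wise diversity Div at every iteration. Div is the mean absolute deviation of each coordinate from its population median, averaged over dimensions. Exploration is then reported as Div/Div_max × 100% and exploitation as |Div − Div_max|/Div_max × 100%. The function names are hypothetical.

```python
import statistics


def dimension_wise_diversity(population):
    """Div: mean over dimensions of the mean |median_j - x_ij| across individuals."""
    n, dim = len(population), len(population[0])
    total = 0.0
    for j in range(dim):
        col = [ind[j] for ind in population]
        med = statistics.median(col)
        total += sum(abs(med - v) for v in col) / n
    return total / dim


def exploration_exploitation(div_history):
    """Per-iteration exploration % and exploitation % relative to the maximum diversity."""
    div_max = max(div_history)
    xpl = [100.0 * d / div_max for d in div_history]
    xpt = [100.0 * abs(d - div_max) / div_max for d in div_history]
    return xpl, xpt


print(exploration_exploitation([2.0, 1.0, 0.5]))
```

Recording Div once per iteration and plotting the two percentages gives curves of the kind typically used to judge exploration/exploitation balance.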

6.4. Comparison with traditional algorithms

To further demonstrate the core advantages of SRWPSO, this subsection compares it with nine well-known traditional algorithms: ant colony optimization for continuous domains (ACOR) (Dorigo, 1992; Dorigo and Caro, 1999; Socha and Dorigo, 2008), differential evolution (DE) (Storn and Price, 1997), sine cosine algorithm (SCA) (Mirjalili, 2016), Harris hawks optimization (HHO) (Heidari et al., 2019b), grey wolf optimization (GWO) (Mirjalili et al., 2014), whale optimization algorithm (WOA) (Mirjalili and Lewis, 2016), bat-inspired algorithm (BA) (Yang, 2010), moth-flame optimization (MFO) (Mirjalili, 2015), and wind-driven optimization (WDO) (Bayraktar et al., 2010).

Supplementary Appendix Table 3 gives the AVG and STD of SRWPSO and the nine traditional algorithms. SRWPSO obtains the best solution on the largest number of the 30 benchmark functions and thus ranks first in this experiment. This indicates that SRWPSO not only has the best overall performance but also adapts well to different problems. Likewise, SRWPSO holds a clear advantage over the other nine algorithms in finding the global optimum.

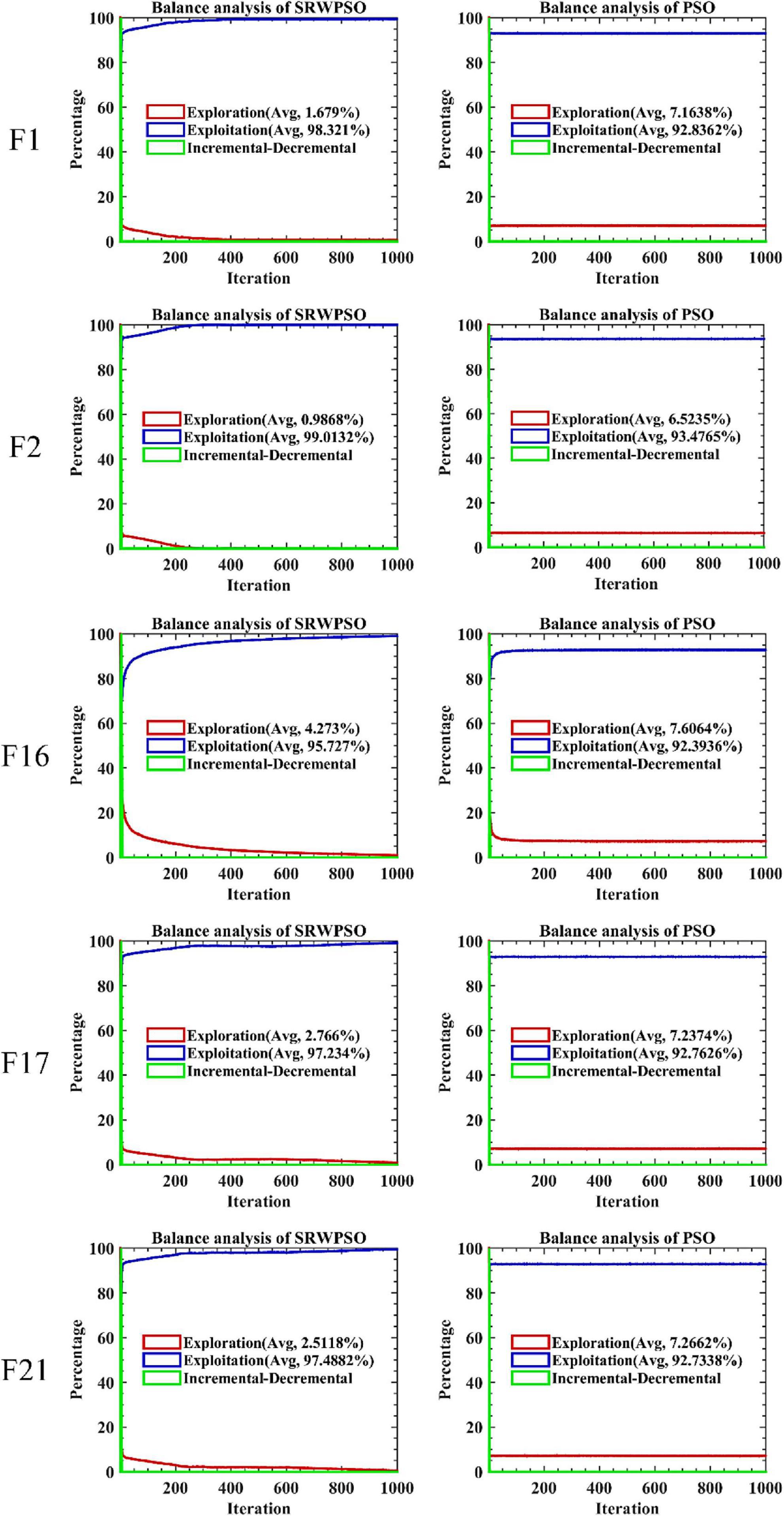

The Wilcoxon signed-rank test results in Table 7 show that SRWPSO ranks first in this comparison with an overall mean of 1.53. This is 1.67 lower than that of the second-ranked DE, and SRWPSO outperforms DE on 20 functions. The p-values of the Wilcoxon signed-rank test are presented in Supplementary Appendix Table 4. Values below 0.05 are shown in bold, indicating statistically significant differences. Almost all p-values are below 0.05, indicating that the advantage of SRWPSO over the other nine traditional algorithms is statistically reliable.

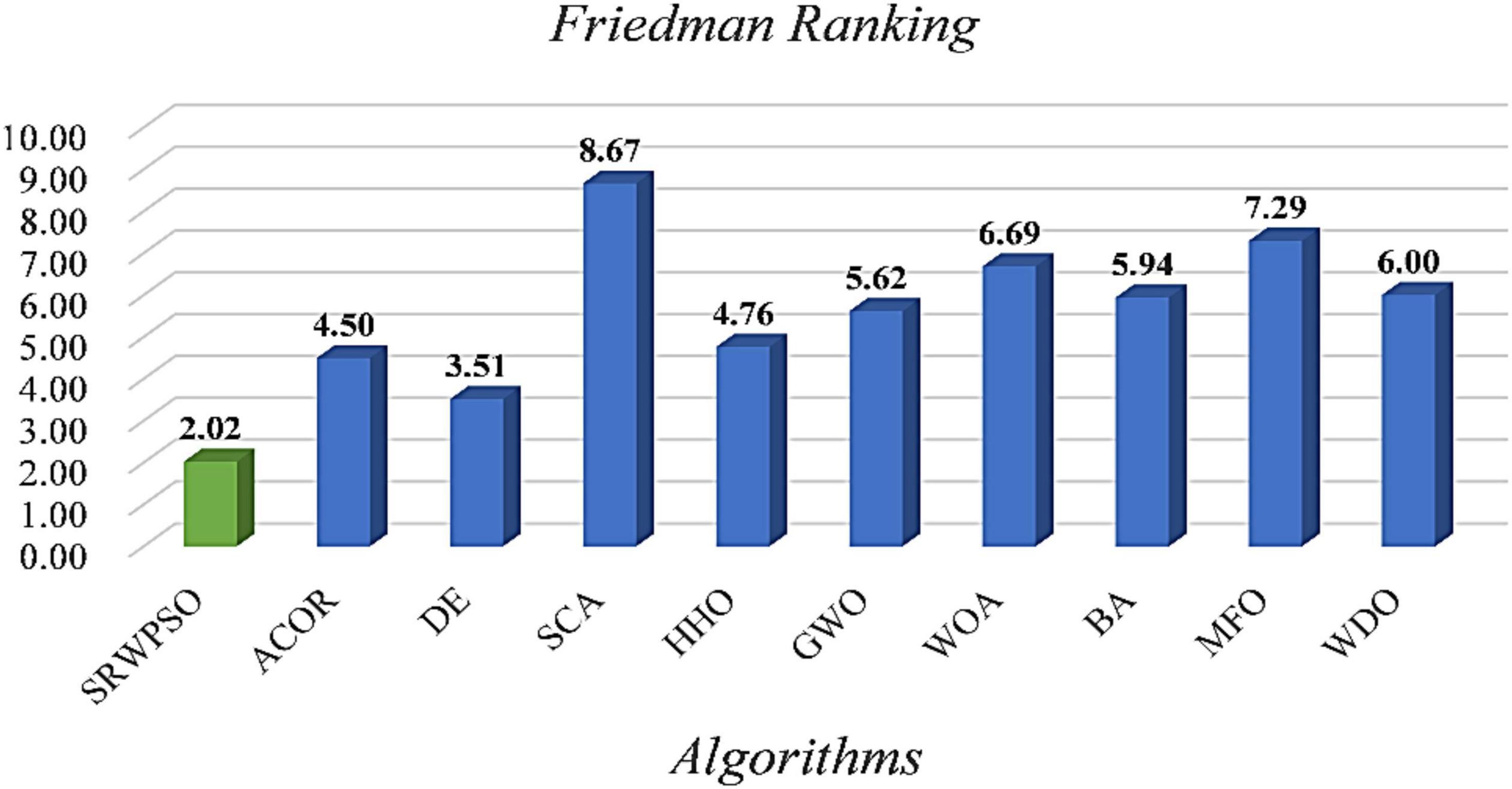

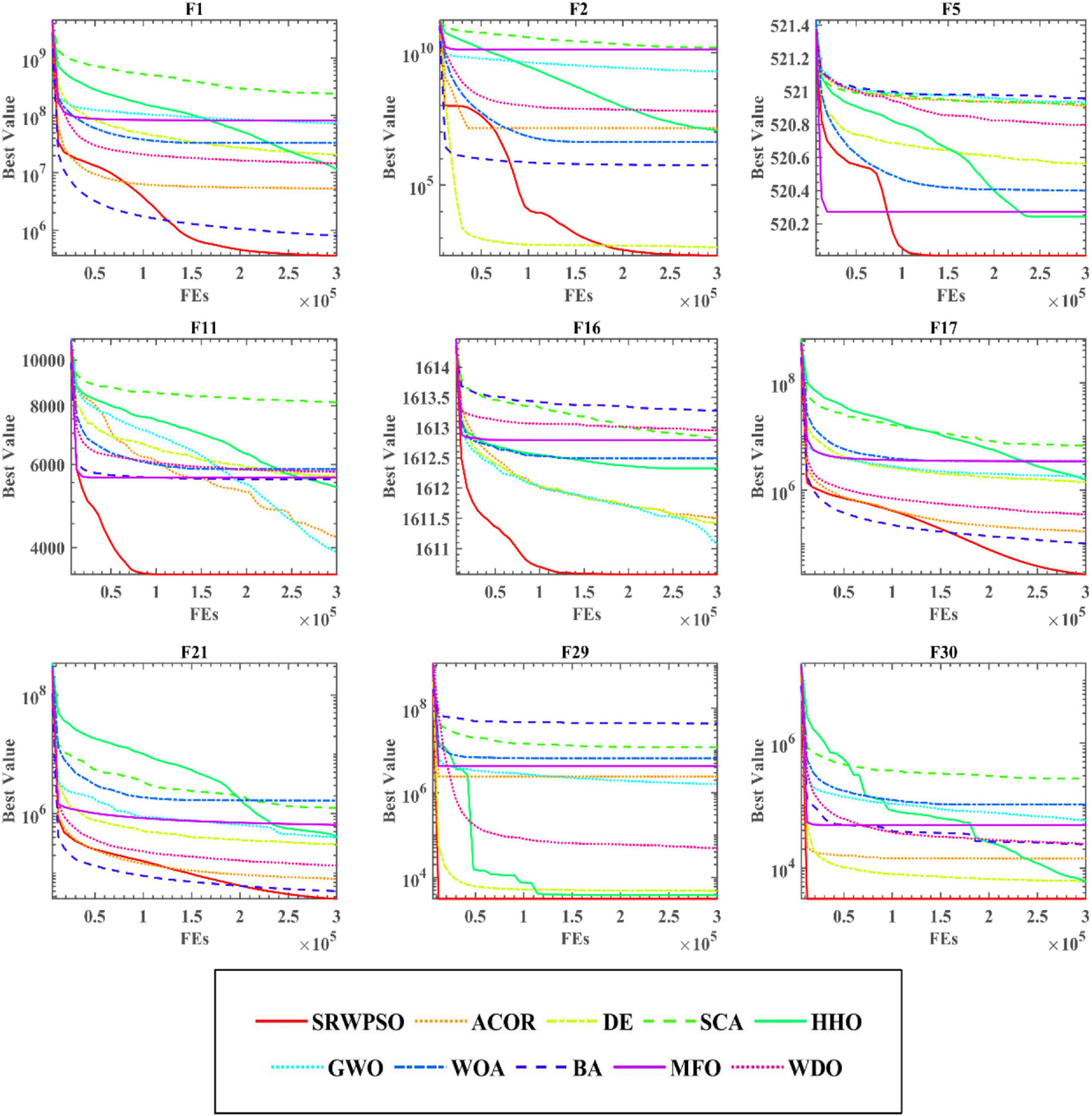

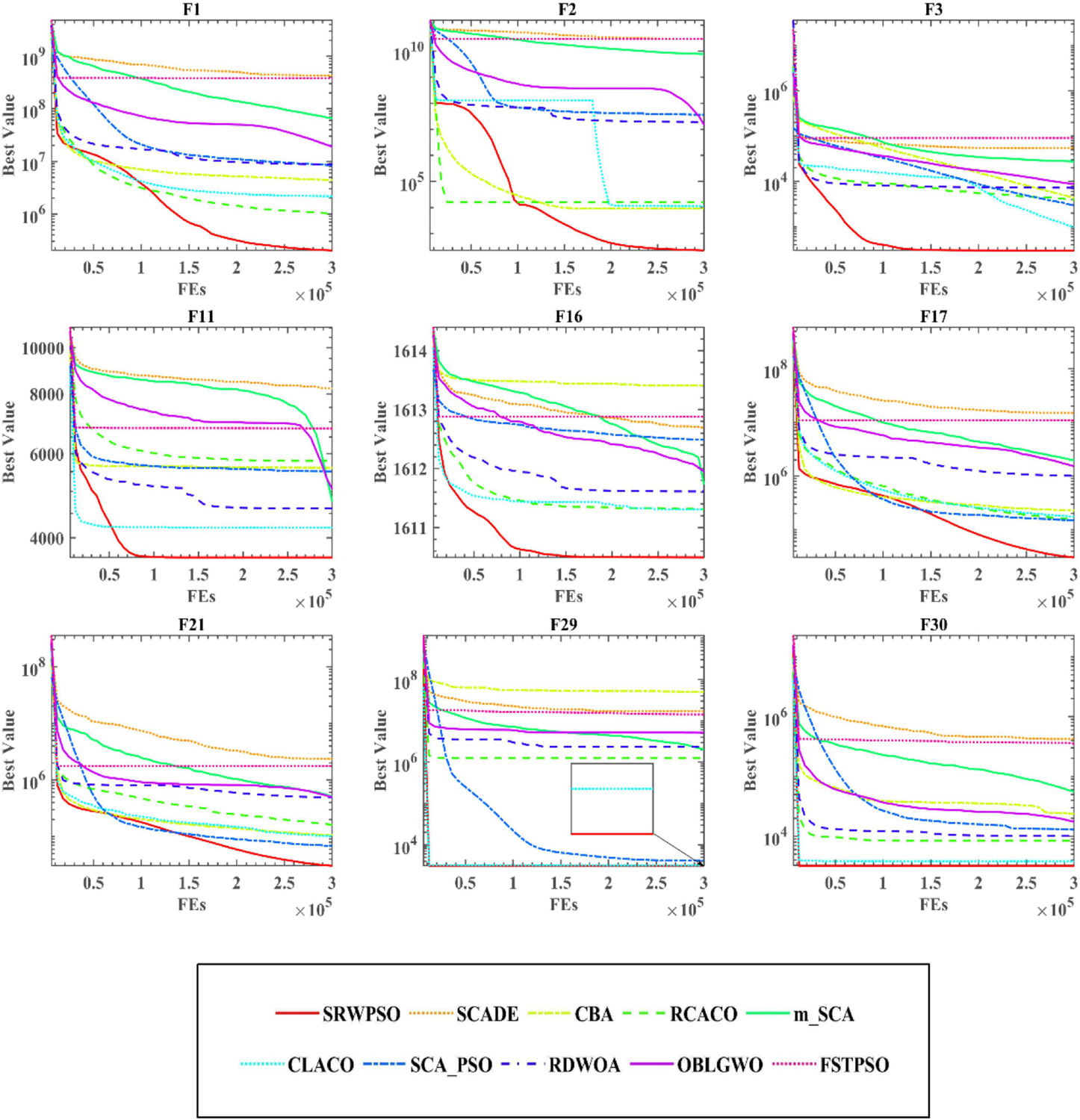

To further demonstrate the performance of SRWPSO, Figure 7 presents the results of the Friedman test. SRWPSO clearly ranks first with a Friedman result of 2.02, and DE ranks second with 3.48. This is further evidence that SRWPSO has a clear advantage over the original algorithms. Figure 8 shows representative convergence curves of SRWPSO and the other nine traditional algorithms. SRWPSO achieves significantly better convergence accuracy than the other algorithms, and it also converges noticeably faster on F11, F16, F29, and F30. On F1, F2, and F3, the convergence curves of SRWPSO show clear inflection points compared with the other algorithms. This indicates a stronger ability to escape from local optima on this type of problem. Overall, SRWPSO is more competitive than the other nine traditional algorithms in searching for the global optimum, which further demonstrates its core advantages over the original algorithms.

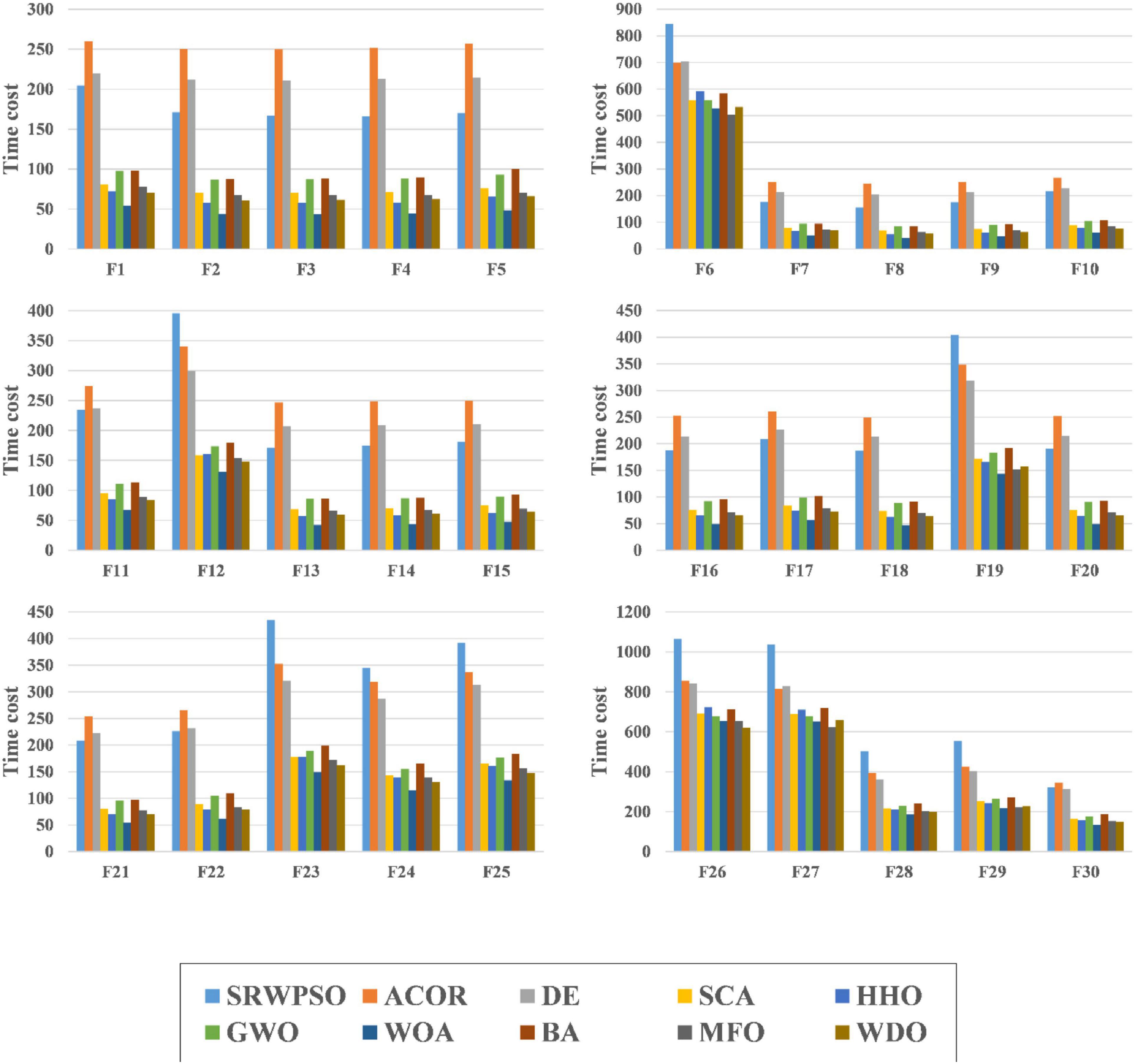

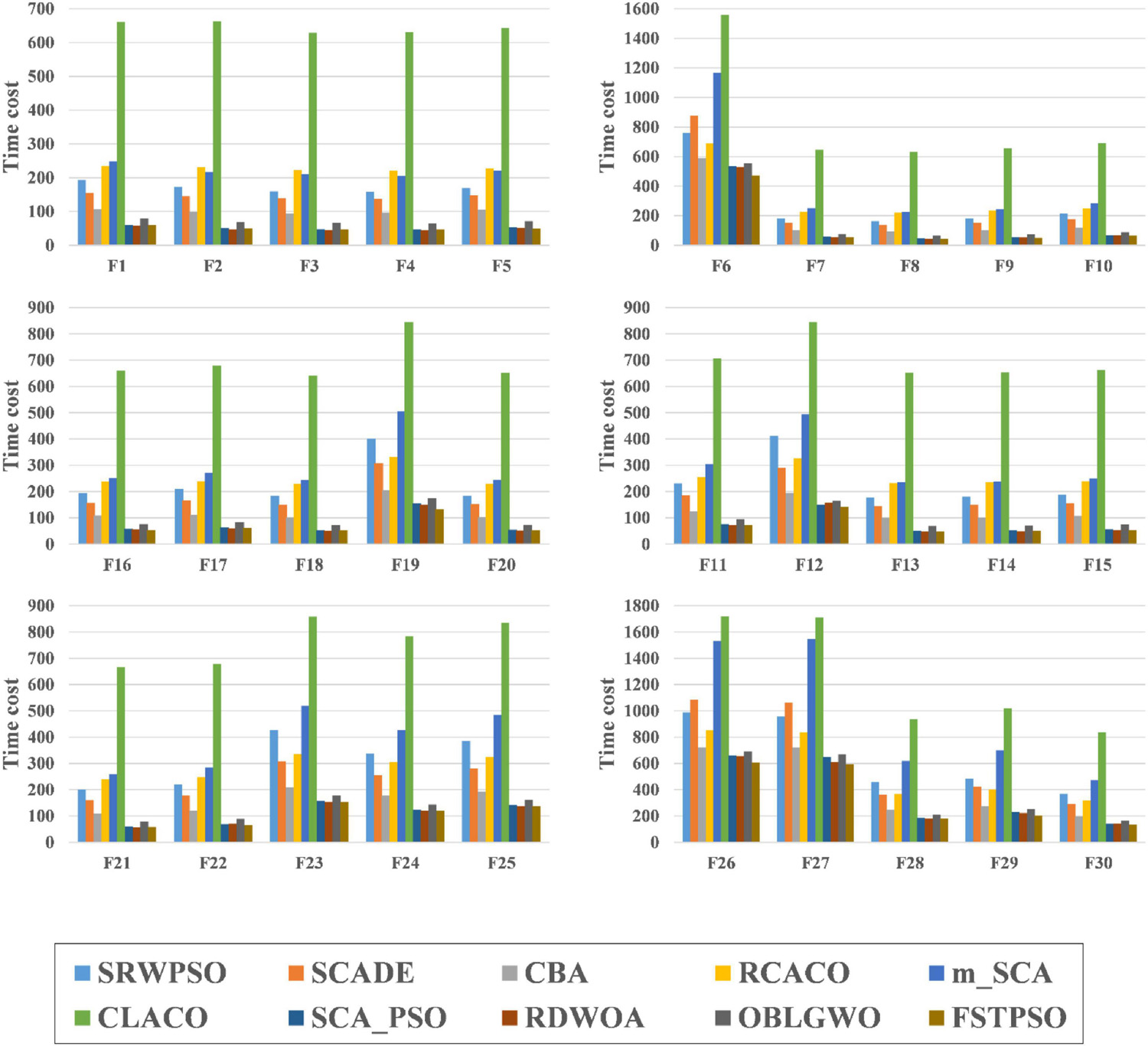

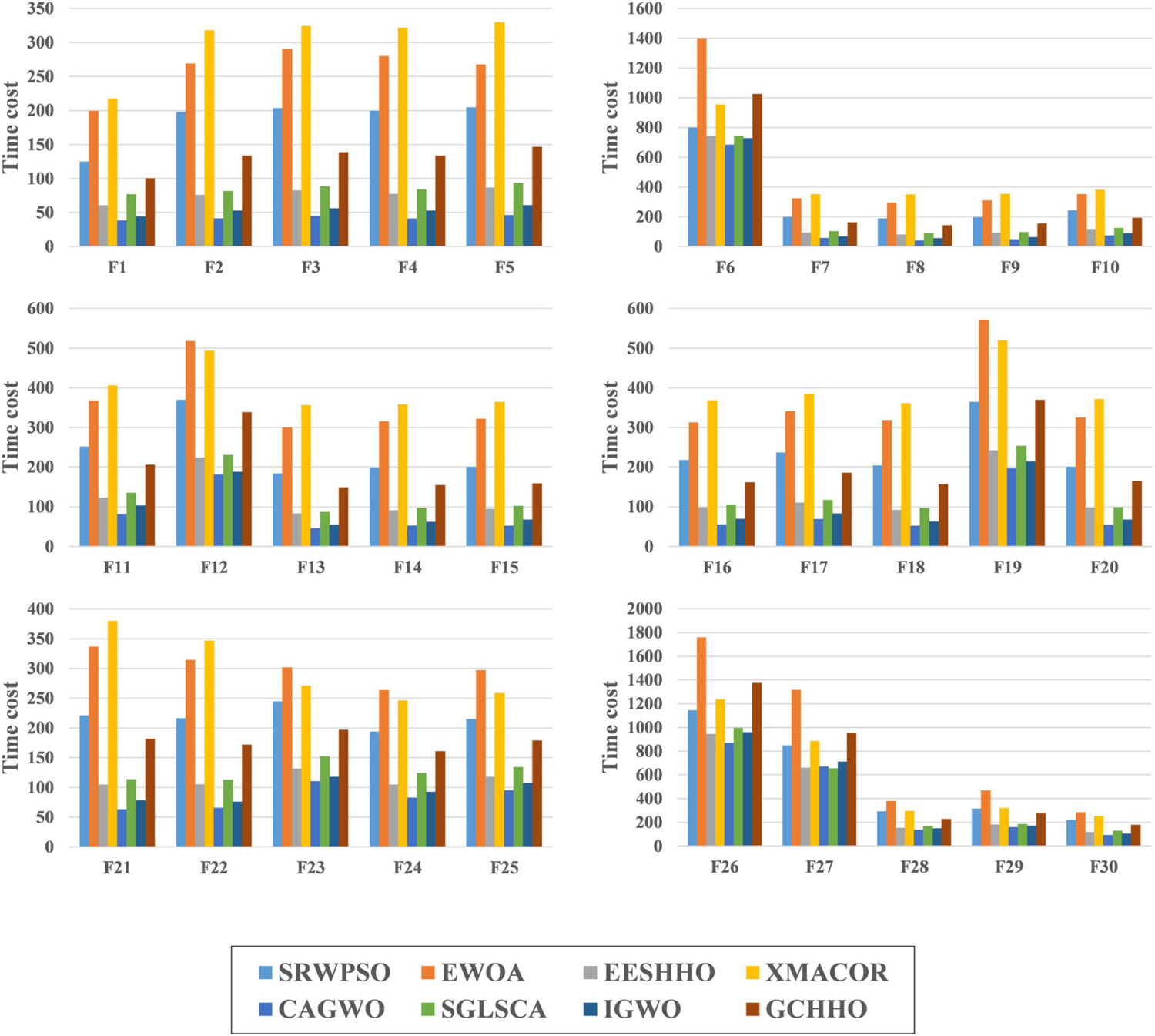

Figure 9 shows the running time of all algorithms on the 30 benchmark functions. Each color represents an algorithm, and times are given in seconds. SRWPSO takes more time than most of these original algorithms, which is expected given the additional improvement strategies it introduces. However, its running time is close to that of ACOR and DE and is even lower than theirs on most functions. Thus, the computational cost of SRWPSO remains competitive with that of some well-known original algorithms.

6.5. Comparison with famous variants

To further verify the advantages of SRWPSO, this subsection compares it with nine well-known algorithm variants proposed in recent years: hybrid SCA with DE (SCADE) (Nenavath and Jatoth, 2018), chaotic BA (CBA) (Adarsh et al., 2016), chaotic random spare ACO (RCACO) (Dorigo, 1992; Dorigo and Caro, 1999; Zhao et al., 2021), modified SCA (m_SCA) (Qu et al., 2018), ACO with Cauchy and greedy Lévy mutations (CLACO) (Dorigo, 1992; Dorigo and Caro, 1999; Liu L. et al., 2021), hybrid SCA with PSO (SCA_PSO) (Nenavath et al., 2018), double adaptive random spare reinforced WOA (RDWOA) (Chen et al., 2019), boosted GWO (OBLGWO) (Heidari et al., 2019a), and fuzzy self-tuning PSO (FSTPSO) (Nobile et al., 2018).

Supplementary Appendix Table 5 reports the AVG and STD obtained over 30 independent runs. SRWPSO obtains the minimum AVG most often, indicating that its convergence performance and adaptability are better than those of the other nine well-known variants in this comparison. SRWPSO also obtains the minimum STD most often, indicating more stable performance.

Table 8 gives the results of the Wilcoxon signed-rank test. SRWPSO obtains better solutions on most functions and ranks first with an overall mean of 1.87. The second column of the table shows that SRWPSO outperforms the second-ranked CLACO on 17 of the 30 benchmark functions, the third-ranked RCACO on 19, and the last-ranked FSTPSO on all 30. This indicates that SRWPSO has a strong advantage over all the other algorithms in this experiment. Supplementary Appendix Table 6 lists the p-values of the Wilcoxon signed-rank test. Values below 0.05 are shown in bold, indicating that the advantage of SRWPSO over the other nine well-known variants on the corresponding problems is statistically significant. The table shows that SRWPSO performs better in most comparisons.

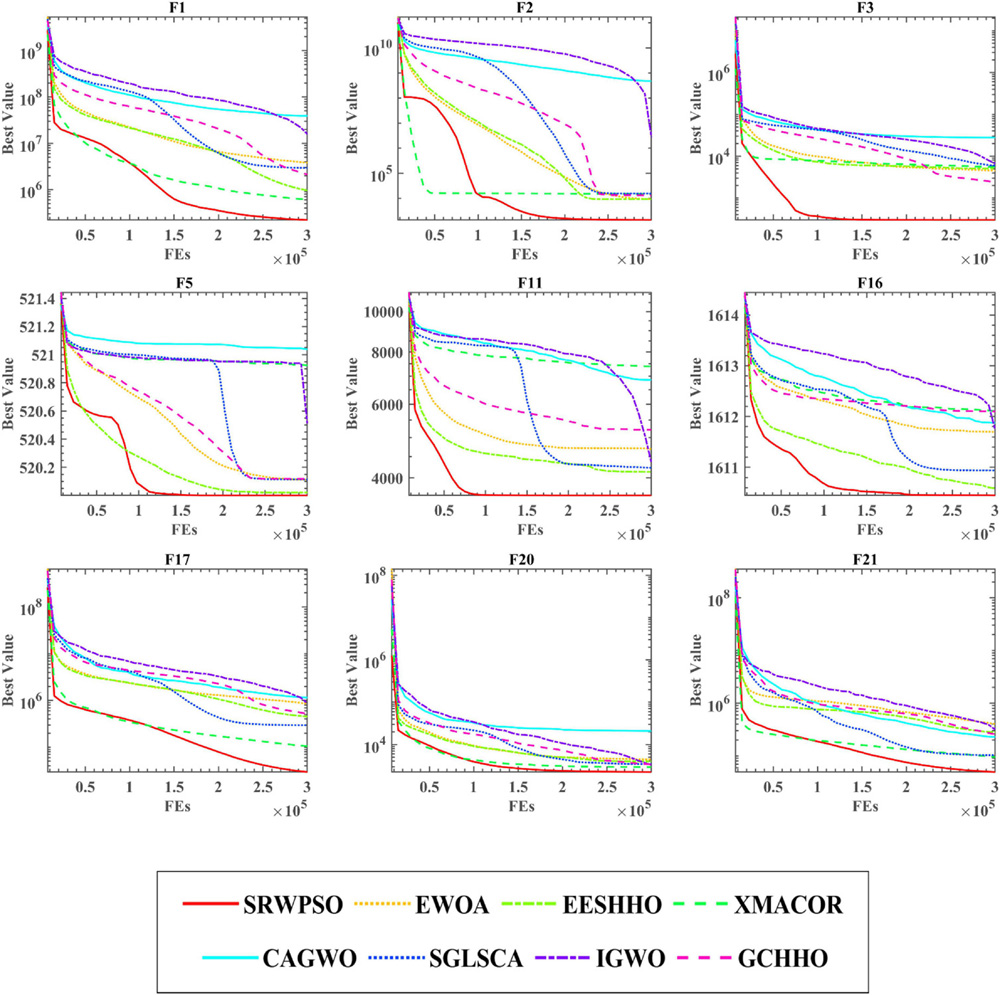

The Friedman test results in Figure 10 show that SRWPSO ranks first with 2.37 and CLACO ranks second with 3.34, confirming that SRWPSO outperforms the other nine methods. To further analyze the convergence capability of SRWPSO, Figure 11 shows selected convergence curves from this comparison. SRWPSO achieves the best convergence accuracy on all the listed benchmark functions. It converges faster on F2, F3, F11, F16, and F30, and on F1, F2, F17, and F21 it keeps improving its solution toward the global optimum. These results show that SRWPSO retains a significant advantage over other advanced variants.

Figure 12 shows the running time of SRWPSO and the nine famous variants on the 30 optimization tasks. Each color represents an algorithm, and times are given in seconds. SRWPSO takes less time than m_SCA and CLACO on all 30 tasks, with the largest advantage over CLACO. Closer inspection also shows that SRWPSO takes less time than RCACO on most tasks, and its difference from SCADE and CBA is small. SRWPSO is slower than several other variants. This is because each algorithm adds strategies of different complexity to a base algorithm of different complexity. Overall, SRWPSO has good computational efficiency compared with the famous variants.

6.6. Comparison with new peer variants

To highlight the strengths of SRWPSO, this section compares it with seven new peer variants: enhanced WOA (EWOA) (Tu et al., 2021a), elite evolutionary strategy-based HHO (EESHHO) (Li C. et al., 2021), ACOR based on directional crossover (DX) and directional mutation (DM) (XMACOR) (Qi et al., 2022b), cellular grey wolf optimizer with a topological structure (CAGWO) (Lu et al., 2018), multi-core SCA (SGLSCA) (Zhou W. et al., 2021), improved GWO (IGWO) (Cai et al., 2019), and HHO based on Gaussian mutation and cuckoo search (GCHHO) (Song et al., 2021).

Supplementary Appendix Table 7 compares SRWPSO with the new peer variants. SRWPSO performs best on the unimodal functions (F1, F2, and F3) and the composition functions (F23–F30). In addition, SRWPSO obtains the best AVG and STD most often in this experiment, indicating that it is the most adaptable to different problems. In other words, SRWPSO has both better optimization ability and better stability. Supplementary Appendix Table 8 shows the p-values for the comparison of SRWPSO with the new peer variants. Values in bold are greater than 0.05, meaning those differences are not statistically significant. The remaining values are significant and provide strong evidence for SRWPSO. Since most values are below 0.05, SRWPSO is better than the other algorithms on the corresponding functions.

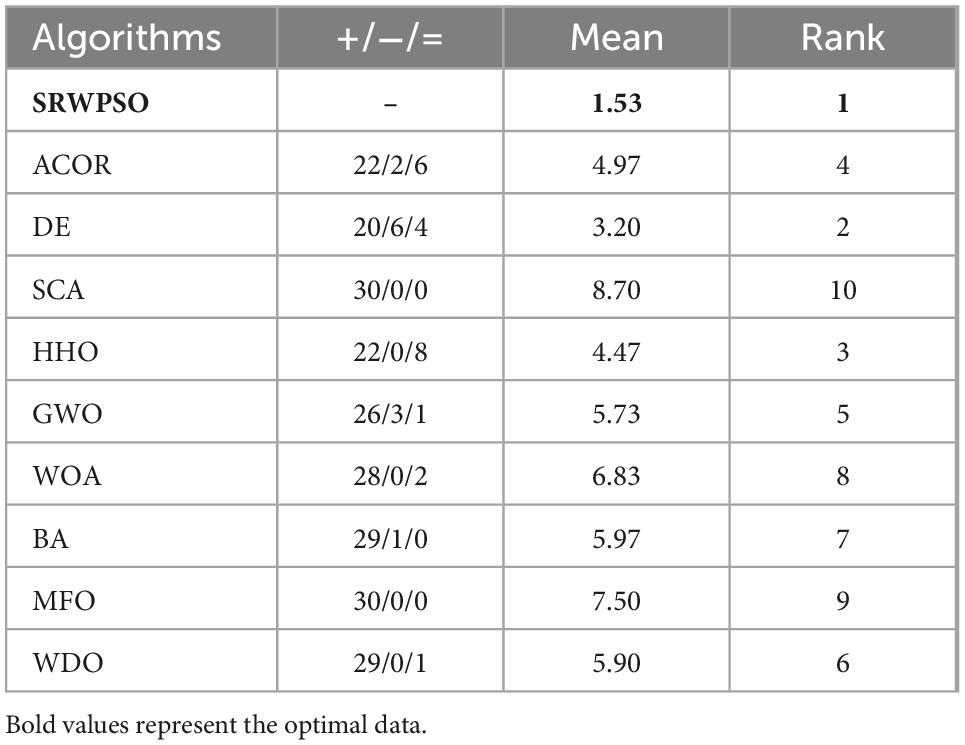

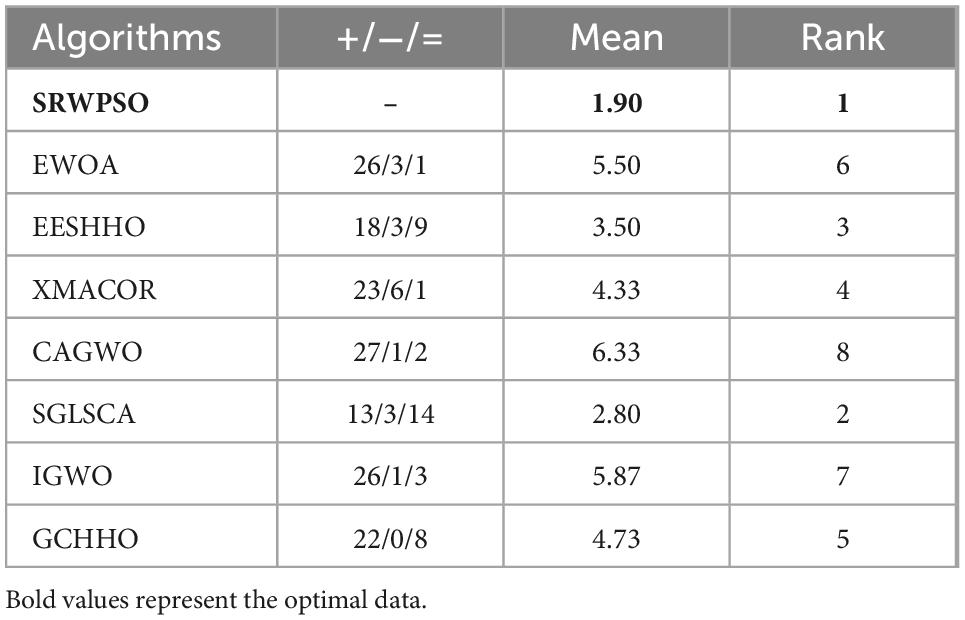

Table 9 shows the results of the Wilcoxon signed-rank test of SRWPSO and the new peer variants. SRWPSO ranks first with a mean score of 1.90, which is 0.9 lower than that of the second-ranked SGLSCA. Specifically, SRWPSO is better than SGLSCA on 13 functions, equal on 14, and worse on only 3. SRWPSO is also superior to EWOA, XMACOR, CAGWO, IGWO, and GCHHO on 22 or more functions. This test shows that SRWPSO retains a significant advantage over the new peer variants.

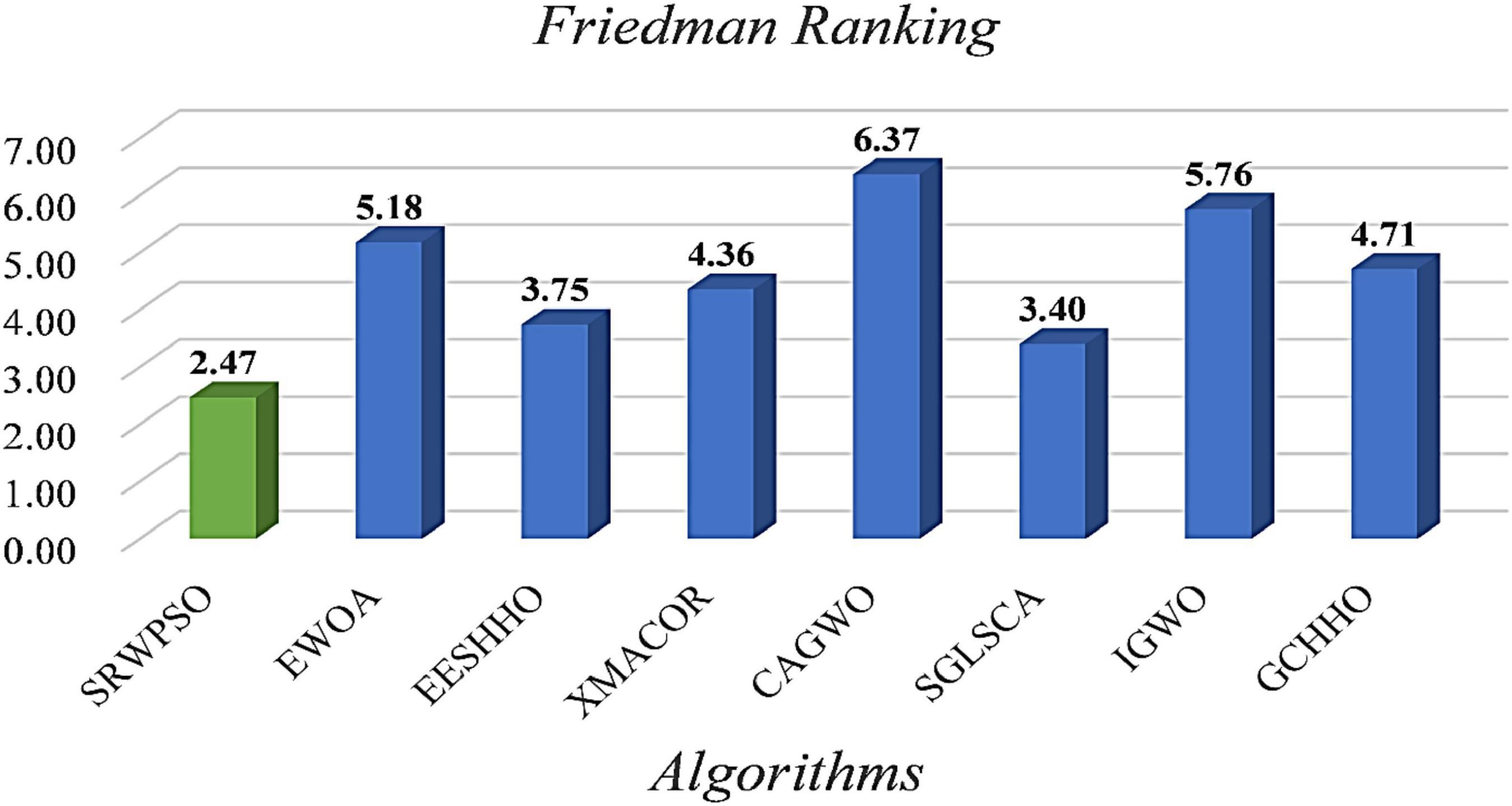

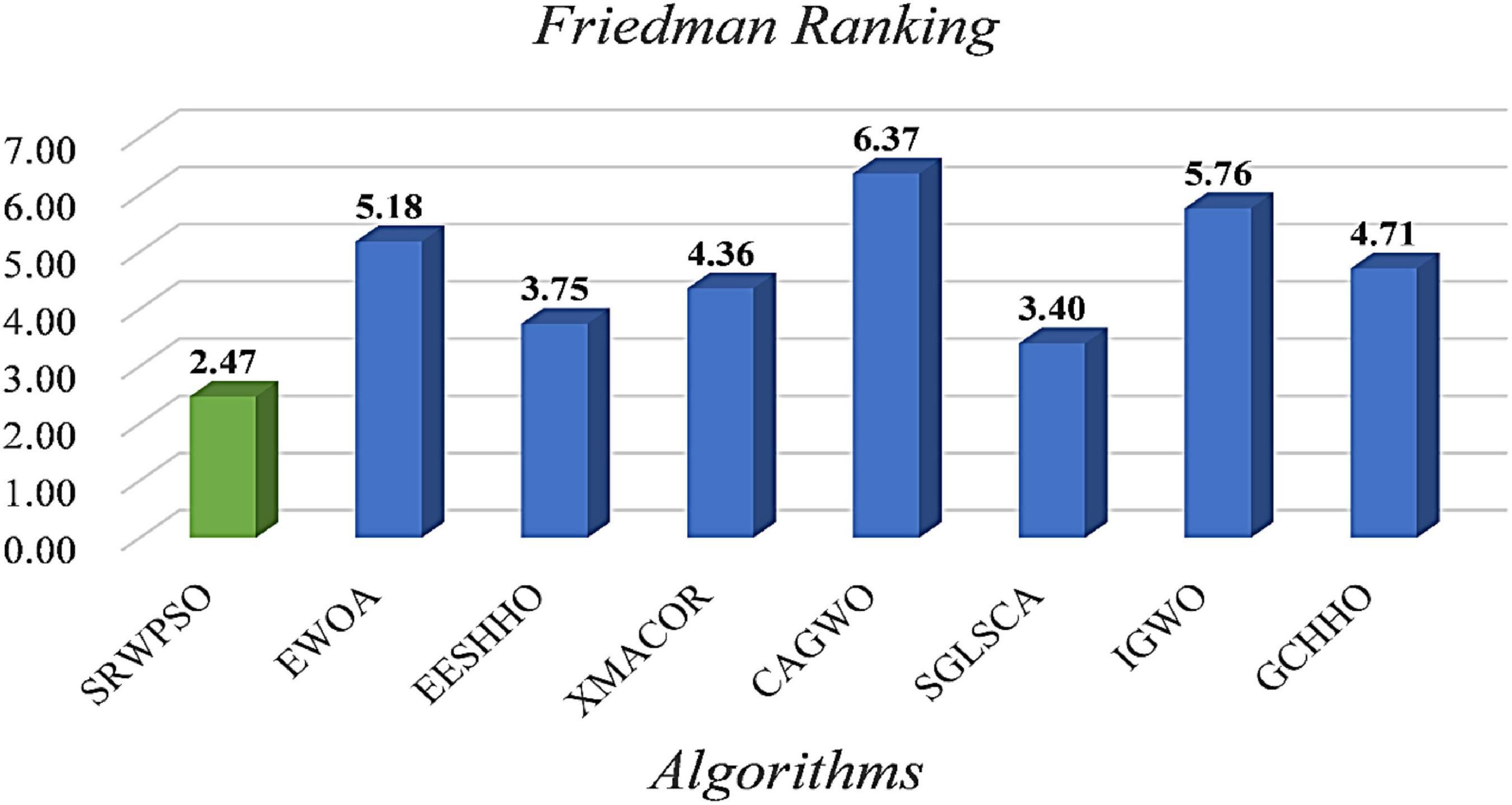

Figure 13 shows the results of the Friedman test of SRWPSO and the new peer variants. SRWPSO obtains the best Friedman score, 0.93 lower than that of the second-ranked SGLSCA and 3.9 lower than that of the last-ranked CAGWO. Figure 14 shows nine convergence plots of SRWPSO and the new peer variants, and SRWPSO obtains the best convergence accuracy in all nine. The convergence curves of SRWPSO on F1, F2, F3, F5, and F16 also show clear inflection points compared with the other variants. This indicates a stronger ability to escape from local optima on these functions. Moreover, SRWPSO converges faster. In conclusion, SRWPSO has a significant advantage over the new peer variants.

Figure 15 shows the running time of SRWPSO and the new peer variants in this experiment. Each color represents an algorithm, and times are given in seconds. SRWPSO takes significantly less time than EWOA and XMACOR on all 30 functions. It is more time-consuming than GCHHO, but the difference is small except on F1, F2, and F3. SRWPSO is also more time-consuming than EESHHO, SGLSCA, and IGWO. This is mainly because the algorithms add optimization strategies of different complexity to base algorithms of different complexity. Overall, the computational cost of SRWPSO is competitive with that of the new peer variants.

SRWPSO and future improved PSO variants can be applied in different fields, such as human activity recognition (Qiu et al., 2022), dynamic module detection (Ma et al., 2020; Li D. et al., 2021), recommender systems (Li et al., 2014, 2017), smart contract vulnerability detection (Zhang L. et al., 2022), privacy protection of electronic medical records (Wu et al., 2022), named entity recognition (Yang Z. et al., 2022), structured sparsity optimization (Zhang X. et al., 2022), microgrid planning (Cao et al., 2021c), location-based services (Wu et al., 2020; Wu Z. et al., 2021), disease prediction (Su et al., 2019; Li L. et al., 2021), medical data processing (Guo et al., 2022), drug discovery (Zhu et al., 2018; Li Y. et al., 2020), and object tracking (Zhang et al., 2015).

7. Feature selection experiments and analysis

In this section, the performance of bSRWPSO-FKNN is first tested on nine public datasets from the UCI repository. The proposed model is then validated on a medical dataset. Finally, the key features affecting the incidence of AD are extracted through 10 times 10-fold cross-validation experiments combined with clinical medical practice.
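The following Python sketch illustrates the 10 times 10-fold cross-validation protocol by generating index splits. Each of the 10 repetitions reshuffles the samples and partitions them into 10 folds, and each fold serves once as the test set. Plain (non-stratified) shuffling and the seed handling are assumptions; the paper may stratify by class. The function name is hypothetical.

```python
import random


def repeated_kfold_indices(n_samples, n_splits=10, n_repeats=10, seed=0):
    """Yield (repeat, train_idx, test_idx) for repeated k-fold cross-validation.

    Plain (non-stratified) shuffling is an assumption.
    """
    rng = random.Random(seed)
    for r in range(n_repeats):
        idx = list(range(n_samples))
        rng.shuffle(idx)                   # new random partition for every repetition
        for k in range(n_splits):
            test = idx[k::n_splits]        # every n_splits-th shuffled index forms fold k
            test_set = set(test)
            train = [i for i in idx if i not in test_set]
            yield r, train, test
```

For the 181 AD patients, for example, each test fold holds 18 or 19 samples. Each patient appears in exactly one test fold per repetition.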

7.1. Experimental setup

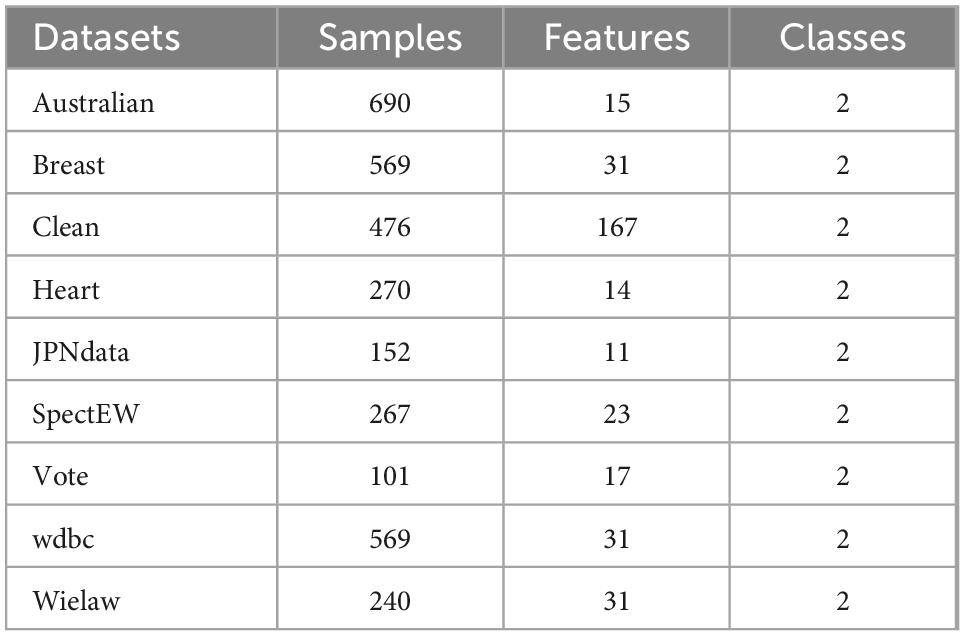

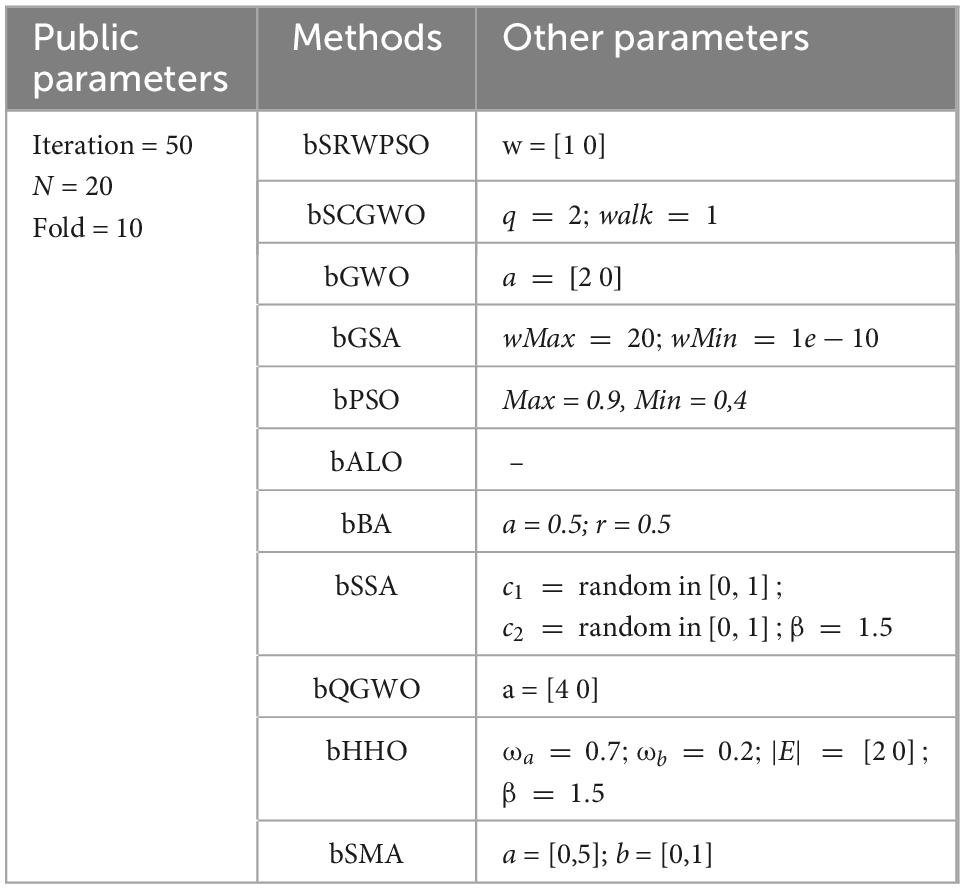

To verify that the proposed bSRWPSO-FKNN performs well in feature selection, a series of comparative tests is conducted between bSRWPSO and well-known algorithms in this field on nine public datasets and one medical dataset. The public datasets are described in Table 10. The binary swarm intelligence algorithms involved are bSCGWO, bGWO, bGSA, bPSO, bALO, bBA, bSSA, bQGWO, bHHO, and bSMA. Apart from being converted into binary versions suitable for feature selection, these methods keep their algorithm-specific parameters unchanged, as shown in Table 11.

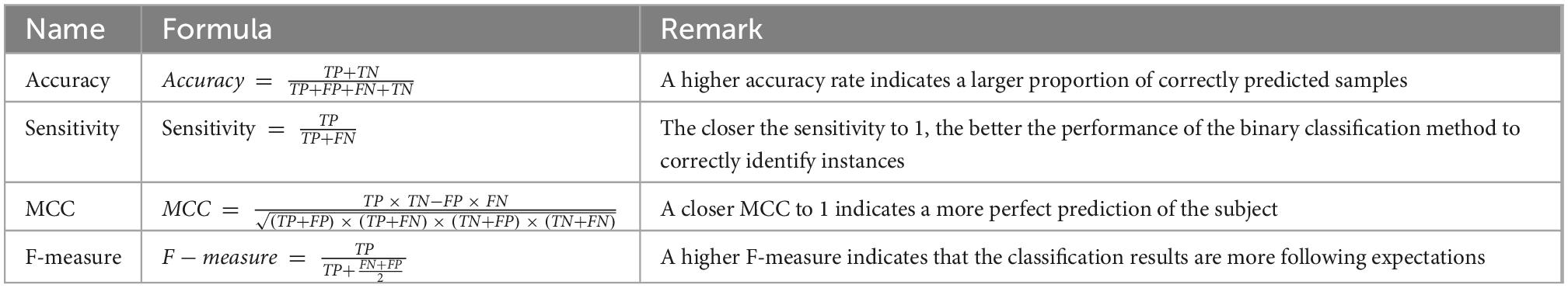

To verify the core advantages of bSRWPSO-FKNN, four evaluation criteria (Accuracy, Sensitivity, MCC, and F-measure) are used to evaluate the compared methods. The average value (AVG) and standard deviation (STD) obtained in the experiments are analyzed. Unlike in the benchmark function validation, a larger AVG here indicates better average performance. The best results are highlighted in bold. The evaluation criteria are described in Table 12.

In Table 12, true positives (TP) and true negatives (TN) are correct predictions, meaning that positive and negative samples, respectively, are predicted correctly. False positives (FP) and false negatives (FN) are errors. An FP is a negative sample wrongly predicted as positive, and an FN is a positive sample wrongly predicted as negative.
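Table 12 is not reproduced here. Assuming it uses the standard definitions, the four criteria can be computed from the confusion-matrix counts, as in the following Python sketch. Sensitivity is TP/(TP + FN), the F-measure is the harmonic mean of precision and sensitivity, and MCC = (TP × TN − FP × FN)/√((TP + FP)(TP + FN)(TN + FP)(TN + FN)). Returning 0 when a denominator is zero is an illustrative convention, and the function name is hypothetical.

```python
import math


def classification_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity, MCC, and F-measure from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0   # recall / true positive rate
    precision = tp / (tp + fp) if tp + fp else 0.0
    f_measure = (2 * precision * sensitivity / (precision + sensitivity)
                 if precision + sensitivity else 0.0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "mcc": mcc, "f_measure": f_measure}


print(classification_metrics(tp=40, tn=45, fp=5, fn=10))
```

Unlike accuracy, MCC uses all four counts symmetrically, so it stays informative when the classes are imbalanced.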

In addition, the models compared in the feature selection experiments are evaluated with the Friedman test, and the corresponding Friedman rankings are reported. This shows more directly that bSRWPSO-FKNN has relatively better feature selection performance. All experiments were conducted under identical, platform-independent settings, following the guidelines for unbiased comparison used in previous AI-based work (Duan et al., 2022; Jin et al., 2022; Yang B. et al., 2022). These settings are assumed to affect all methods equally, regardless of how each method is implemented (Li H. et al., 2021; Zhang Z. et al., 2022; Lu et al., 2023). Finally, to ensure fairness, the internal environment is kept consistent across all experiments, and the external environment is the same as in the benchmark function experiments.

7.2. Public dataset experiments

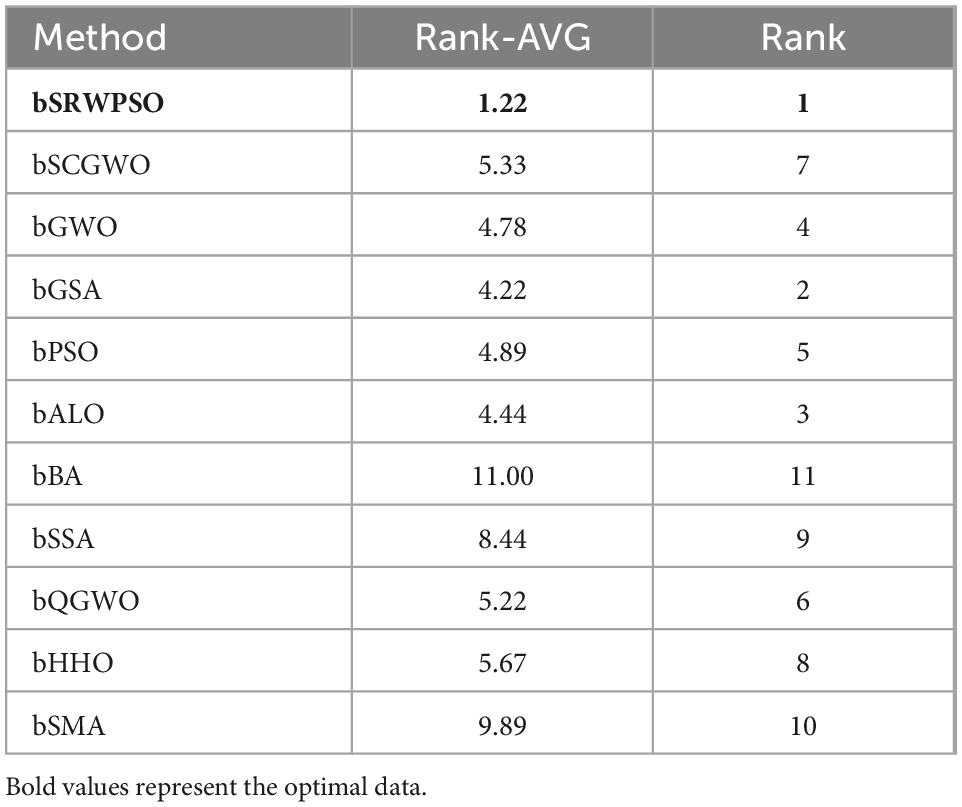

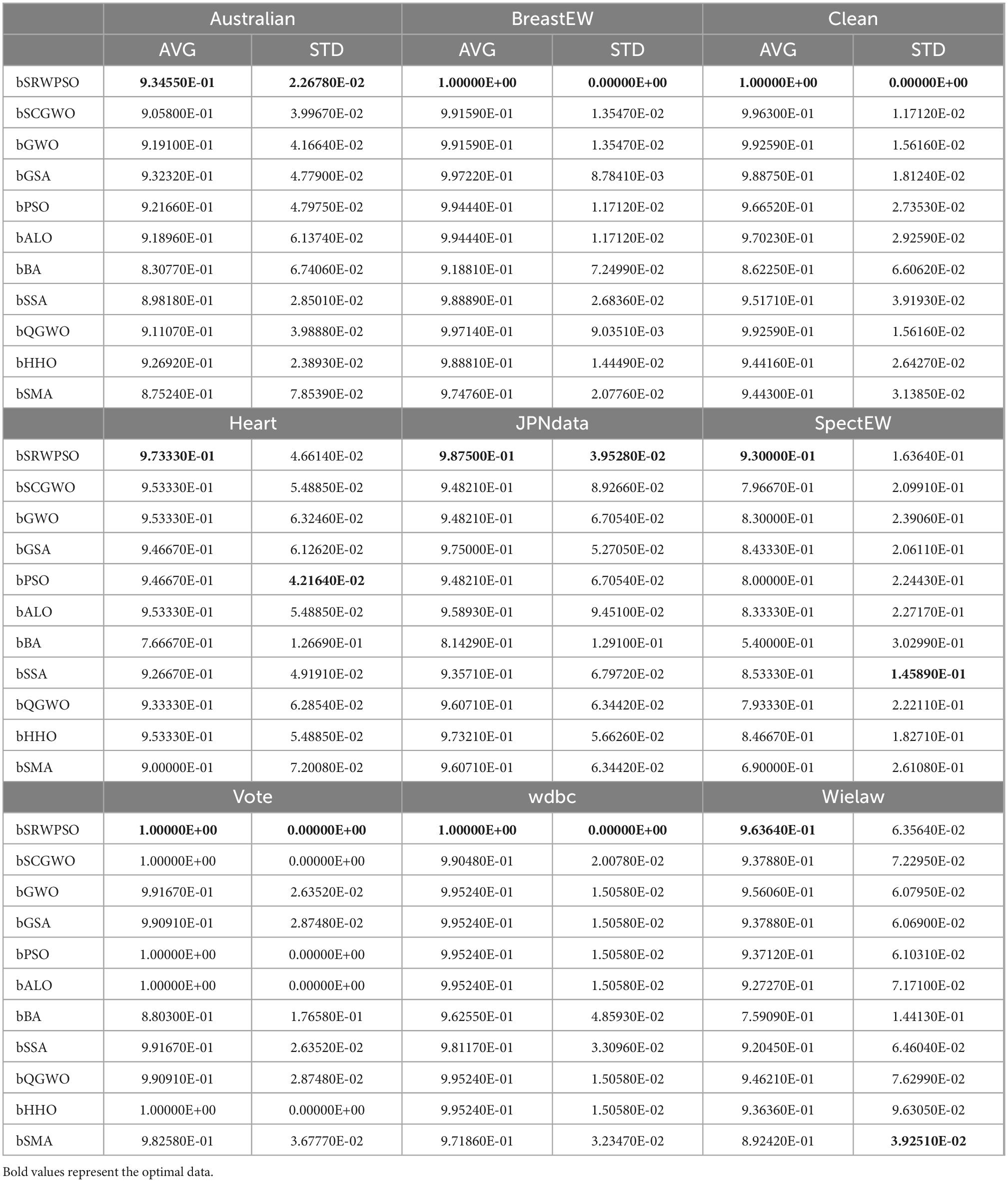

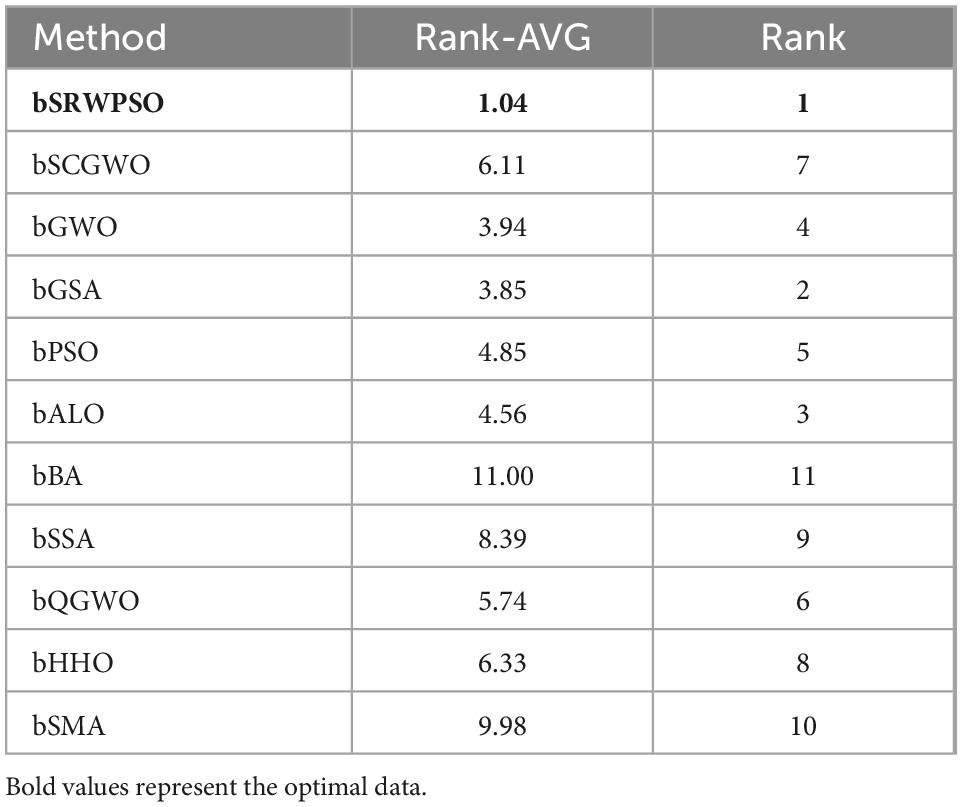

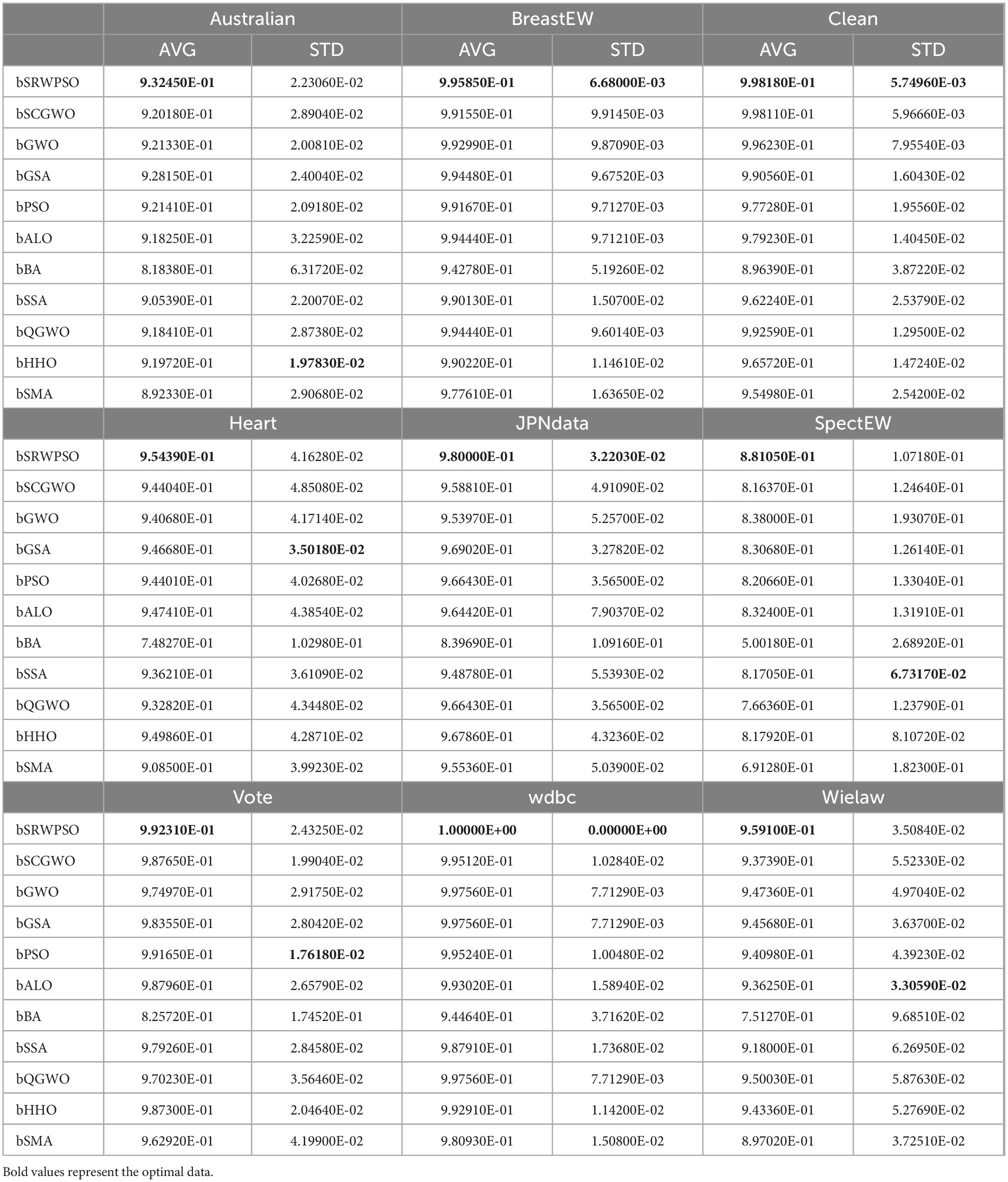

Table 13 gives the classification accuracy of bSRWPSO and the other ten binary algorithms. bSRWPSO achieves the highest average classification accuracy on all nine public datasets, indicating that it ranks first on these datasets. It is also the most stable of the compared methods. Table 14 shows the average Friedman ranks of the 11 methods on the nine public datasets. As shown in the second column, bSRWPSO has an average rank of 1.22, the smallest among all compared algorithms, so it ranks first in this classification accuracy test.

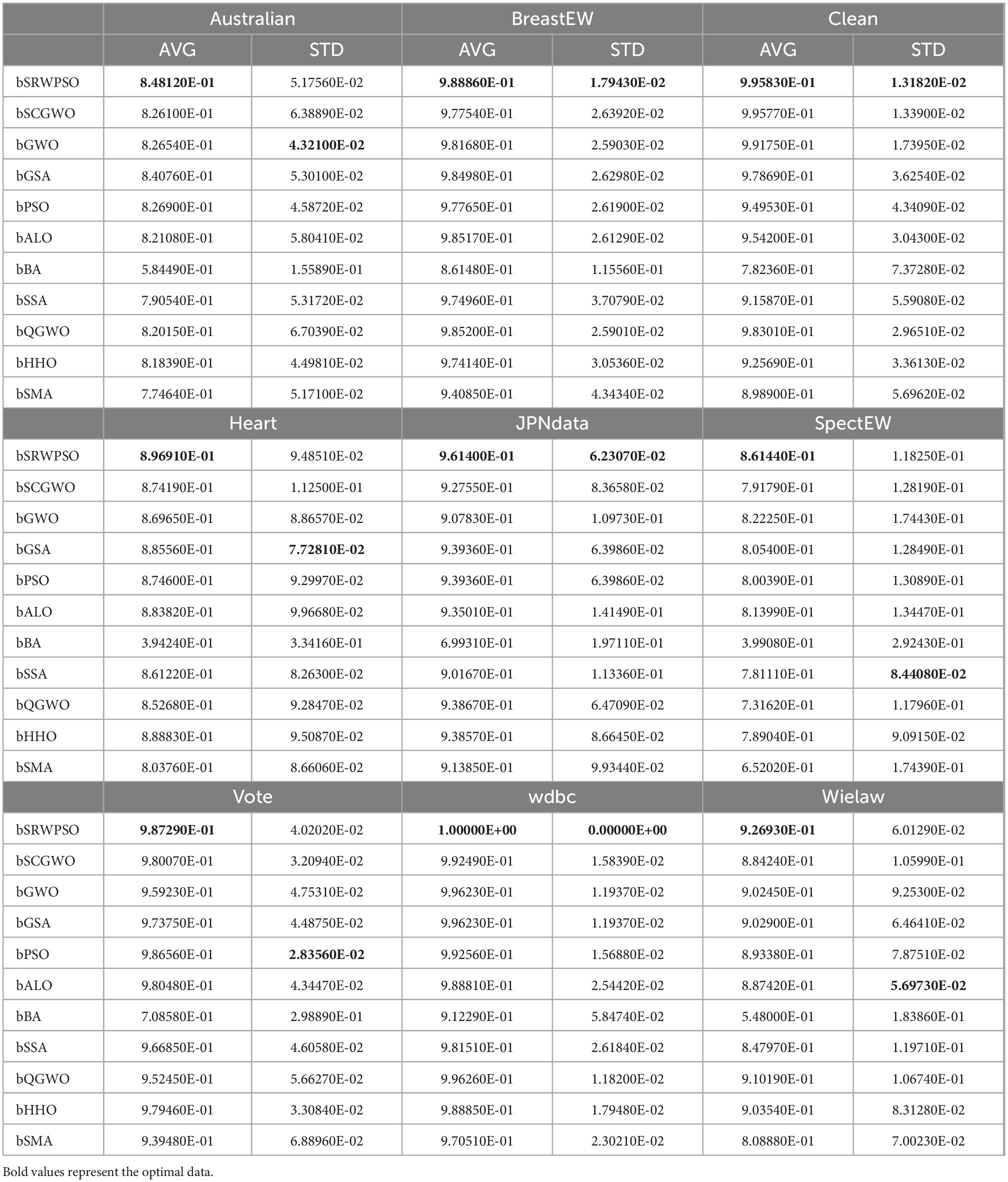

Table 15 reports the AVG and STD of the sensitivity of each method. bSRWPSO performs best on the nine public datasets. Except on Australian, SpectEW, and Wielaw, its sensitivity on the other six datasets exceeds 97%. bSRWPSO also attains the smallest STD on the largest number of datasets, indicating the most stable adaptation to different classification problems. Table 16 summarizes the average Friedman ranks of each algorithm on the nine public datasets. bSRWPSO ranks first, indicating that its sensitivity is the best among the compared methods.

Table 17 shows the AVG and STD of the MCC for bSRWPSO and the other algorithms. Except on Australian, heart, and SpectEW, the AVG of bSRWPSO on the public datasets lies between 0.92 and 1. Together with the STD values, this shows that bSRWPSO ranks first overall. As shown in Table 18, bSRWPSO also has the best average Friedman rank on the nine public datasets.

Table 19 reports the performance of bSRWPSO on the nine public datasets in terms of the F-measure, giving the AVG as a measure of average capability and the STD as a measure of stability. The lowest mean of bSRWPSO occurs on the SpectEW dataset, but it is still above 0.88. Overall, the F-measure of bSRWPSO generally exceeds 0.95, and bSRWPSO ranks first on every dataset. Table 20 gives the overall Friedman ranking for the F-measure in this experiment. bSRWPSO ranks first among the 11 compared algorithms with an average rank of 1, which is 3 lower than that of the second-ranked bGSA, whereas bPSO ranks fourth with an average rank of 4.56. These results indicate that the three improvement strategies introduced in this paper substantially improve the classification performance of bPSO.
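
The F-measure in this comparison is assumed to be the balanced F1 score, the harmonic mean of precision and recall. The short sketch below computes it from hypothetical confusion counts and is equivalent to the closed form 2TP / (2TP + FP + FN).

```python
def f_measure(tp, fp, fn):
    """F-measure (F1): harmonic mean of precision and recall."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts; equivalent closed form: 2*TP / (2*TP + FP + FN).
print(f_measure(tp=45, fp=10, fn=5))
```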

In summary, this section verifies the comprehensive performance of bSRWPSO in feature selection experiments in terms of classification accuracy, sensitivity, MCC, and F-measure. The comparison with ten other methods shows that bSRWPSO has a clear advantage in classification capability. Therefore, the proposed bSRWPSO is a novel feature selection method with stronger classification capability.

7.3. AD dataset experiments

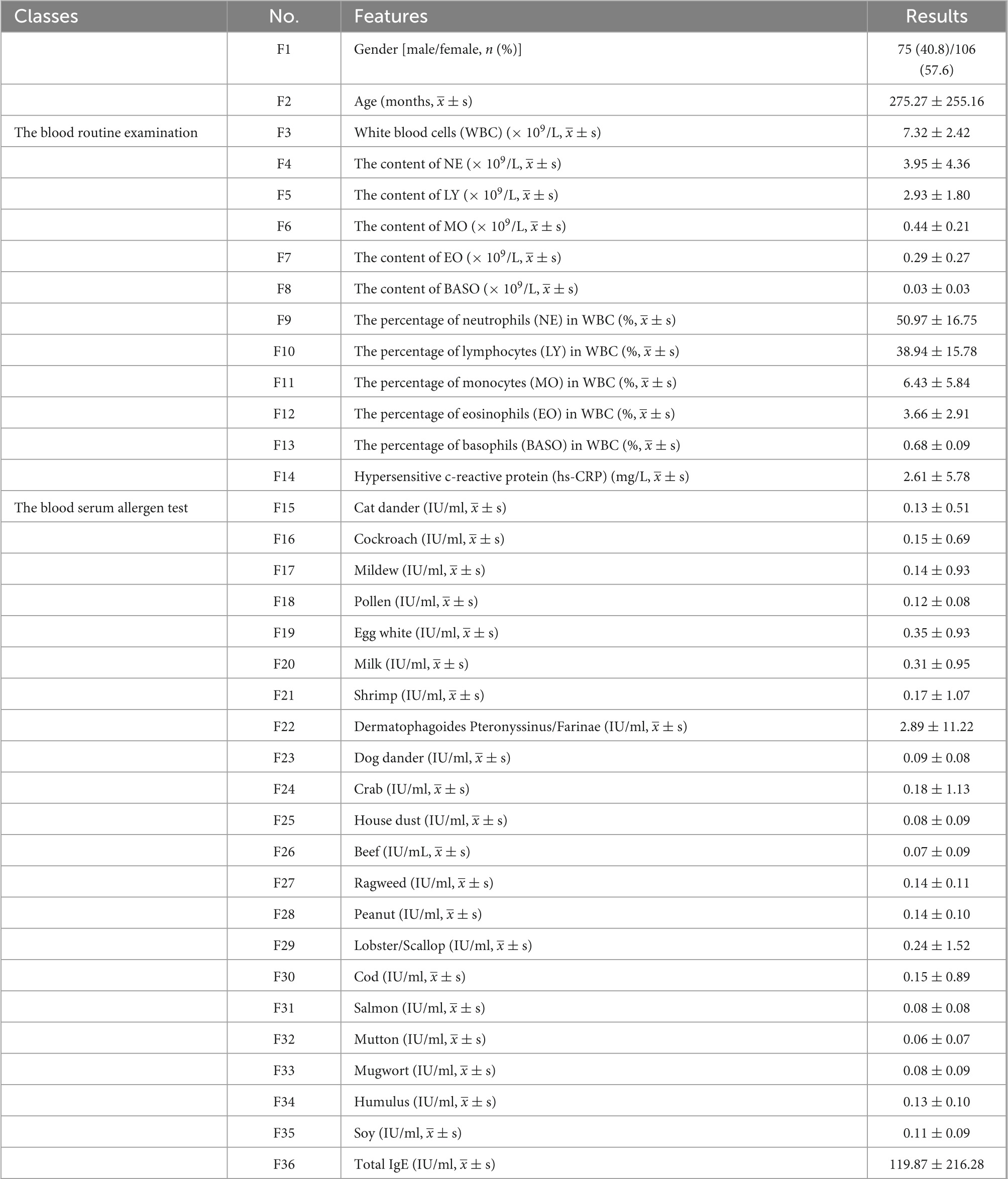

7.3.1. AD dataset

This medical dataset includes 181 patients diagnosed with AD who were enrolled at the Department of Dermatology, the Affiliated Hospital of Medical School, Ningbo University, between May 2021 and March 2022. It contains basic demographic data such as sex and age, together with typical laboratory characteristics comprising the routine blood examination, the serum allergen test, and serum Total IgE. The clinical and laboratory results of the patients with AD are presented in Table 21. Continuous data are expressed as mean ± standard deviation, and categorical data as percentages. The Ethics Commission of the Affiliated Hospital of Medical School approved this medical dataset (NO. KY20191208).
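
The summary format used in Table 21 (mean ± standard deviation for continuous variables, percentages for categorical ones) can be sketched as follows. The records are hypothetical placeholders, not values from Table 21, and the sample standard deviation is an assumption.

```python
from statistics import mean, stdev


def describe_continuous(values):
    """Format a continuous variable as 'mean ± SD' (sample SD, n - 1 denominator)."""
    return f"{mean(values):.2f} ± {stdev(values):.2f}"


def describe_categorical(values, category):
    """Percentage of records equal to `category`."""
    share = 100.0 * sum(1 for v in values if v == category) / len(values)
    return f"{share:.1f}%"


# Hypothetical records, not taken from Table 21.
ages = [25, 31, 40, 22, 35]
sexes = ["M", "F", "M", "M", "F"]
print(describe_continuous(ages), describe_categorical(sexes, "M"))
```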

7.3.2. Medical validation experiments

To further demonstrate the classification capability of bSRWPSO, this section sets up four experiments on the AD dataset. First, to illustrate the advantage of combining bSRWPSO with FKNN for feature selection, bSRWPSO is combined with FKNN, kernel extreme learning machine (KELM), KNN, SVM, and MLP, respectively, and the resulting models are compared. Then, to verify that bSRWPSO-FKNN classifies better than classical classifiers, bSRWPSO-FKNN is compared with five classical classifiers: BP, CART, RandomF, AdaBoost, and ELMforFS. Next, bSRWPSO and ten other binary swarm intelligence optimization algorithms are each combined with FKNN, and the classification advantage of bSRWPSO-FKNN is verified through feature selection comparison experiments. Finally, bSRWPSO-FKNN is used in ten times 10-fold cross-validation experiments on the AD dataset, which extract the key features affecting the onset of AD.
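
The ten times 10-fold cross-validation protocol can be sketched as below: the data are reshuffled and split into ten folds on each of ten repeats, giving 100 train/test splits. Stratification by class and the per-repeat seeds are assumptions, since the paper does not specify how the folds were drawn, and the labels are hypothetical. In practice, scikit-learn's `RepeatedStratifiedKFold` provides an equivalent splitter.

```python
import random


def stratified_kfold(labels, k=10, seed=0):
    """Split sample indices into k test folds, dealing each class round-robin
    so that class proportions stay roughly equal across folds."""
    rng = random.Random(seed)
    by_class = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds


def repeated_kfold(labels, k=10, repeats=10):
    """Yield (repeat, fold, train_idx, test_idx) for repeats x k runs,
    reshuffling with a different seed on every repeat."""
    n = len(labels)
    for r in range(repeats):
        for f, test_idx in enumerate(stratified_kfold(labels, k, seed=r)):
            held_out = set(test_idx)
            train_idx = [i for i in range(n) if i not in held_out]
            yield r, f, train_idx, test_idx


# Hypothetical binary labels for 181 samples.
labels = [0] * 100 + [1] * 81
runs = list(repeated_kfold(labels))
print(len(runs))  # 100 (10 repeats x 10 folds)
```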

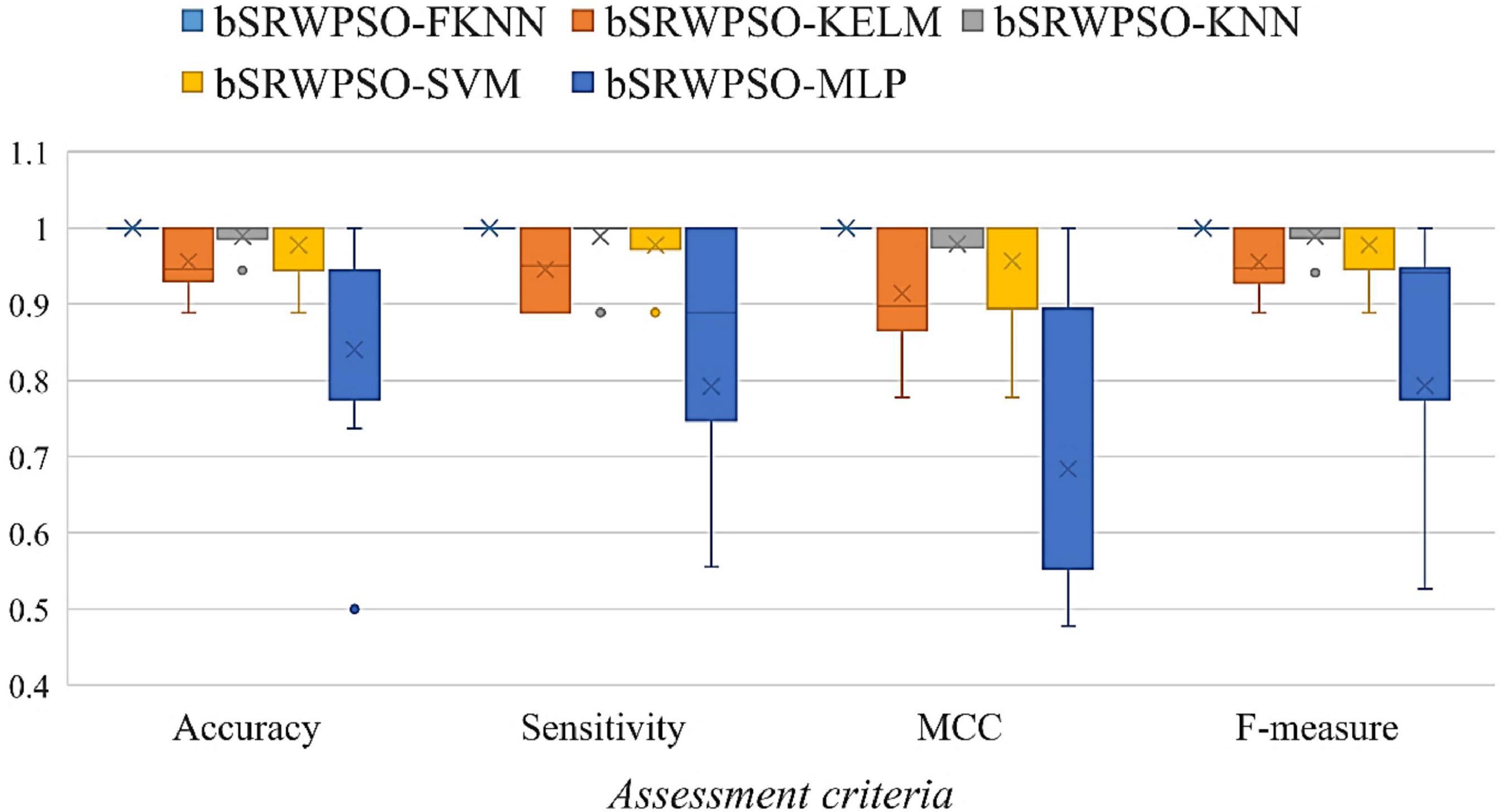

Figure 16 shows the results of the comparative experiments combining bSRWPSO with each of the five machine learning algorithms. As the box plots show, bSRWPSO-FKNN obtains the most concentrated results across the four evaluation criteria, indicating that its classification ability is the most stable in this experiment. In the figure, the marker × represents the mean of each group of data. The mean of all four evaluation criteria for bSRWPSO-FKNN is 1, higher than that of the other four combinations, indicating that bSRWPSO-FKNN has the best classification performance on this medical dataset.
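
FKNN is not redefined in this section. As a hedged sketch, the classifier below follows Keller et al.'s fuzzy k-nearest neighbor rule with crisp (0/1) training memberships and Euclidean distance: each of the k nearest neighbors contributes to its class a weight proportional to 1 / d^(2/(m-1)), and the class with the largest membership is predicted. The defaults k = 3 and m = 2 are assumptions for illustration; the settings used in bSRWPSO-FKNN are not stated here.

```python
import math
from collections import defaultdict


def fknn_predict(train_X, train_y, x, k=3, m=2.0):
    """Fuzzy k-nearest neighbor (Keller et al., 1985) with crisp training
    memberships. Returns (predicted_label, class_memberships)."""
    neighbors = sorted(
        ((math.dist(x, xi), yi) for xi, yi in zip(train_X, train_y)),
        key=lambda pair: pair[0],
    )[:k]
    # A neighbor at distance 0 would get infinite weight: assign its class outright.
    if neighbors[0][0] == 0:
        return neighbors[0][1], {neighbors[0][1]: 1.0}
    weights = [(d ** (-2.0 / (m - 1.0)), y) for d, y in neighbors]
    total = sum(w for w, _ in weights)
    memberships = defaultdict(float)
    for w, y in weights:
        memberships[y] += w / total  # normalized so memberships sum to 1
    label = max(memberships, key=memberships.get)
    return label, dict(memberships)


# Hypothetical 2-D training data with two classes.
train_X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = [0, 0, 0, 1, 1, 1]
print(fknn_predict(train_X, train_y, (0.2, 0.2)))
```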

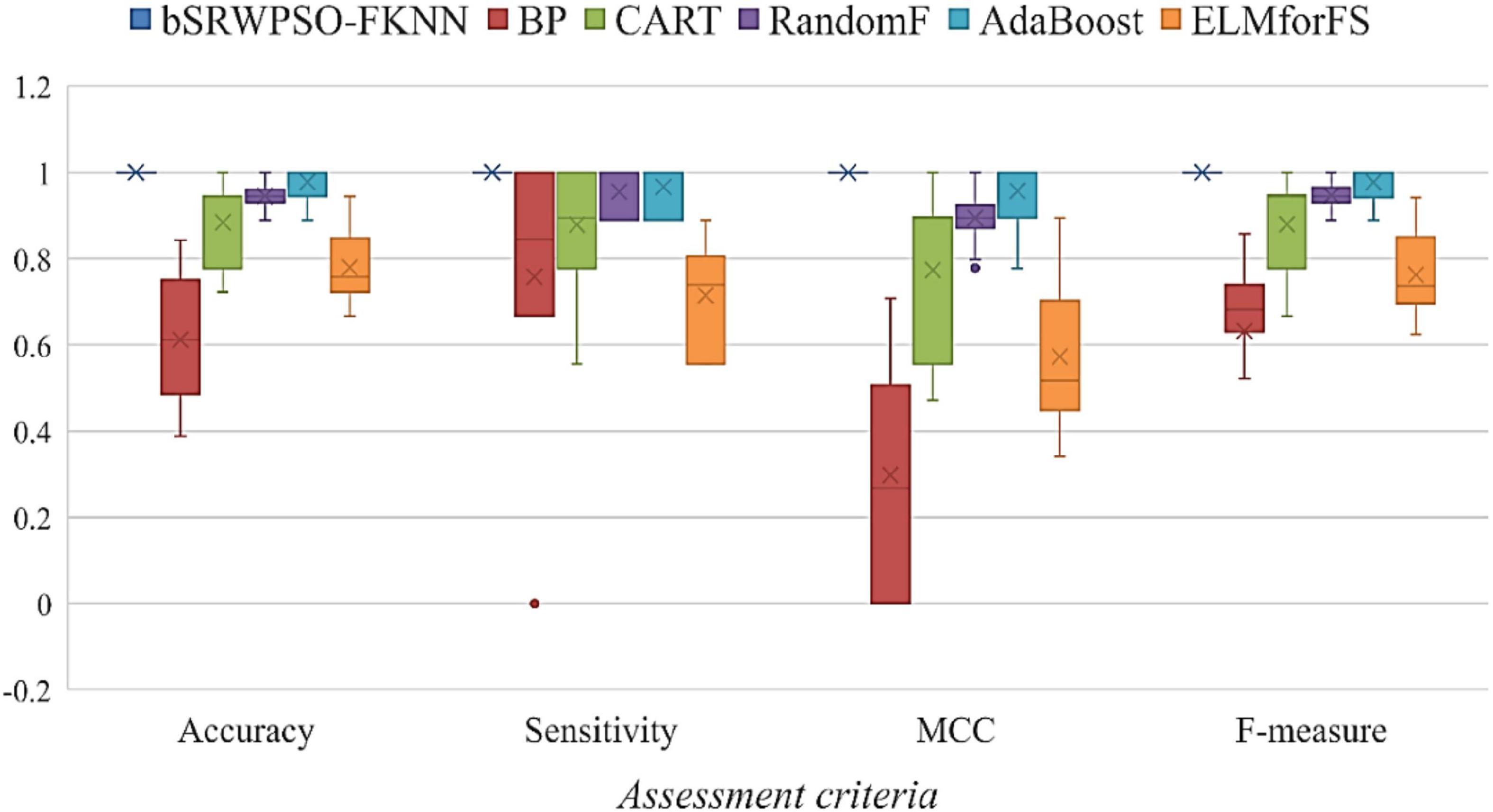

Figure 17 shows the results of comparing bSRWPSO-FKNN with five classical classifiers. The box plots show that bSRWPSO-FKNN has a clear advantage in this comparison: its classification performance is more stable and its overall classification ability is the best. By contrast, the results of the five classical classifiers on all four evaluation criteria are relatively scattered, indicating that their classification ability is less stable. Therefore, bSRWPSO-FKNN retains its advantage in this experiment.

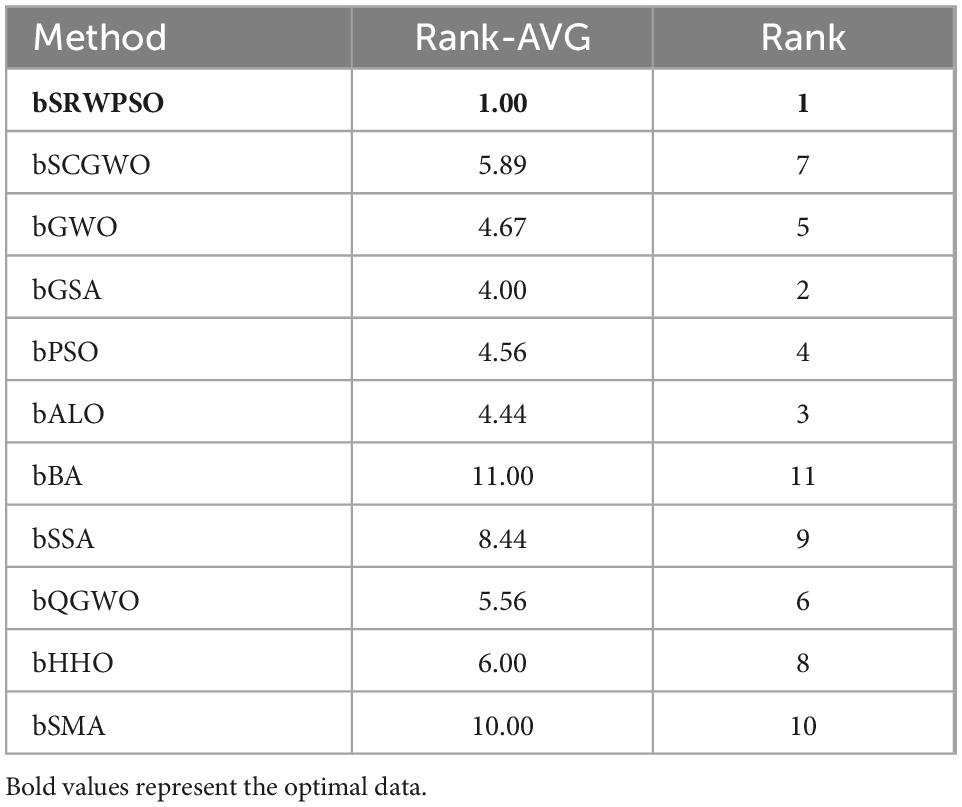

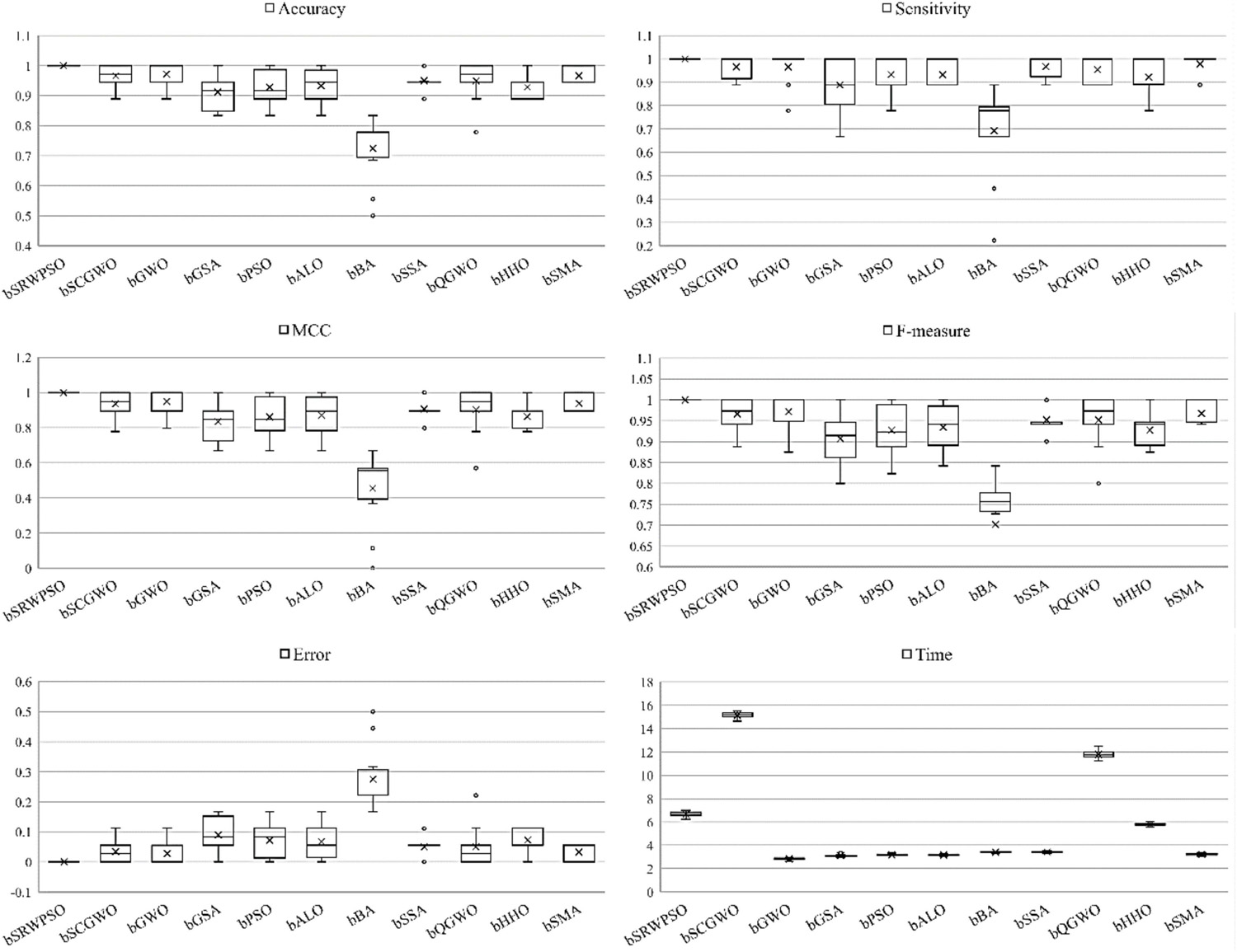

Figure 18 shows the experimental results comparing bSRWPSO with ten other binary swarm intelligence algorithms. The figure presents six box plots, one for each evaluation criterion: Accuracy, Sensitivity, MCC, F-measure, Error, and Time. Error is the classification error rate, so Error and Accuracy sum to 1. Time is the time each method spends in the feature selection experiments; a larger value means the method needs more time to extract the key features. For Accuracy, Sensitivity, MCC, and F-measure, bSRWPSO obtains the highest values, indicating that among all the swarm intelligence optimization algorithms in the experiment, bSRWPSO combines most successfully with FKNN. Its classification performance is not only the best but also the most stable. For Error, bSRWPSO has the lowest error rate. However, the Time results of the 11 methods reveal a shortcoming of bSRWPSO in computational cost: although its time cost is much lower than that of bSCGWO and bQGWO, it is higher than that of the other eight compared methods.

To further demonstrate the advantages of bSRWPSO-FKNN, Table 22 gives the average (AVG) and ranking (Rank) results of the Friedman test based on Accuracy, Sensitivity, MCC, and F-measure. bSRWPSO obtains the smallest average rank on all four evaluation criteria, so it ranks first on each criterion.

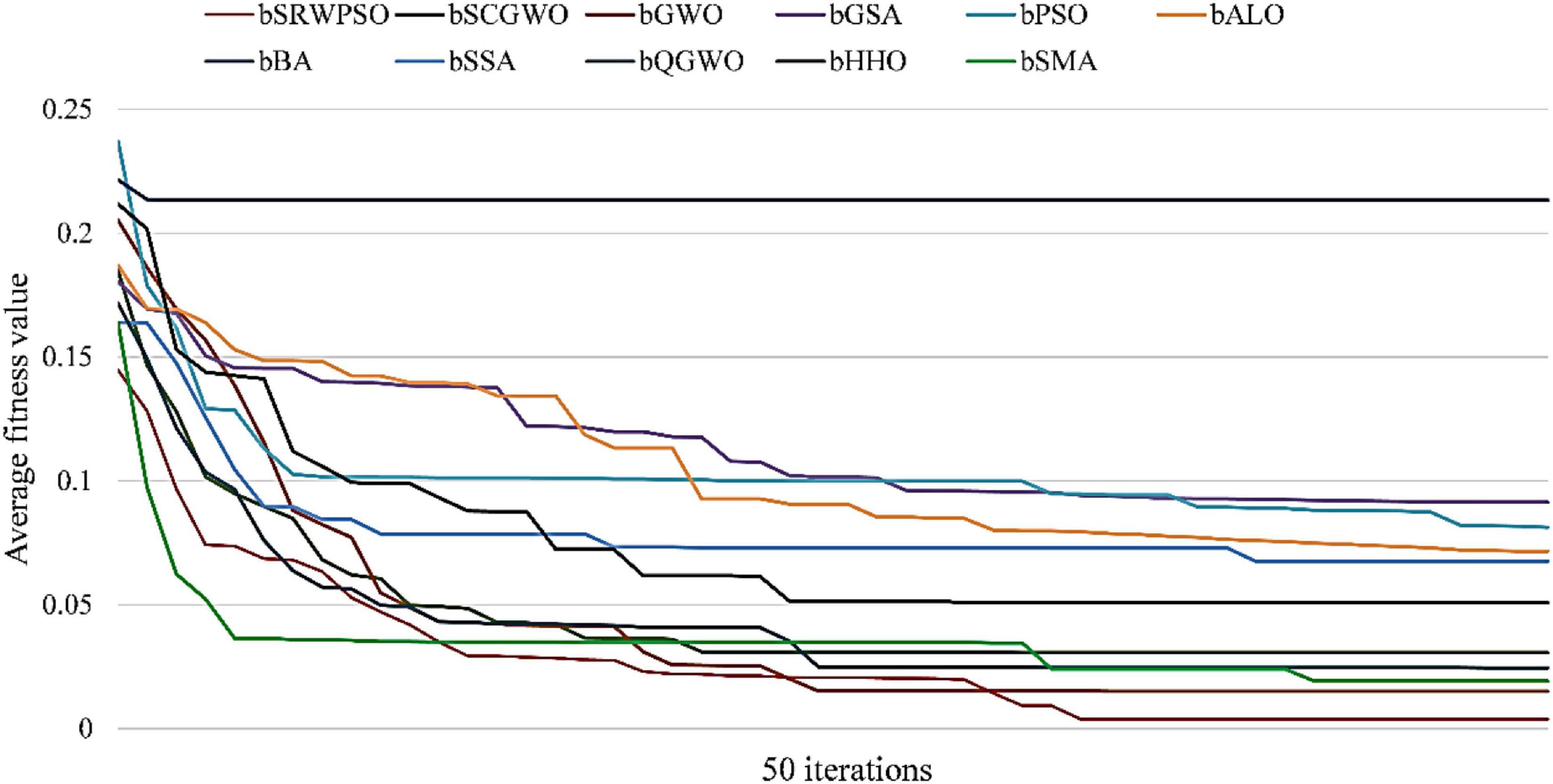

As shown in Figure 19, when the maximum number of iterations is reached, the convergence curve of bSRWPSO lies below those of the other methods, which means that bSRWPSO achieves the best optimization accuracy among the methods in the experiment. Therefore, among the compared optimizers, bSRWPSO is the most effective at improving the classification ability of FKNN.
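
Figure 19 plots fitness against iterations, but the fitness function is not restated in this section. Many wrapper feature selection studies minimize a weighted sum of the classification error and the fraction of selected features. The sketch below assumes that form with a hypothetical weight alpha = 0.99, which may differ from the paper's definition, and shows how a best-so-far convergence curve is built from the best fitness found in each iteration.

```python
def fitness(error_rate, n_selected, n_total, alpha=0.99):
    """Hypothetical wrapper fitness (lower is better): weighted classification
    error plus the fraction of features kept."""
    return alpha * error_rate + (1 - alpha) * n_selected / n_total


def convergence_curve(best_per_iteration):
    """Best-so-far fitness after each iteration; the curve never increases."""
    curve, best = [], float("inf")
    for value in best_per_iteration:
        best = min(best, value)
        curve.append(best)
    return curve


# Hypothetical best fitness found in each of five iterations.
print(convergence_curve([0.30, 0.20, 0.25, 0.12, 0.15]))  # [0.3, 0.2, 0.2, 0.12, 0.12]
```

Because the curve records the best value found so far, a curve that ends lower corresponds to a better final solution, which is how Figure 19 is read.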

Figure 20 shows the results of bSRWPSO-FKNN in ten times 10-fold cross-validation on the AD dataset. In the figure, the vertical axis indicates the number of times each attribute was selected, and the horizontal axis indicates the attributes of the AD dataset. The figure shows that features F5, F15, F20, F22, F27, F30, and F36 were selected by bSRWPSO-FKNN; they denote the content of LY, Cat dander, Milk, Dermatophagoides Pteronyssinus/Farinae, Ragweed, Cod, and Total IgE, respectively. In light of clinical medicine, these features are considered to have medical reference value. This experiment therefore indicates that bSRWPSO-FKNN is a sound and practical method for predicting the development of AD.
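
The selection counts plotted in Figure 20 can be tallied as in the sketch below, assuming that each of the 100 cross-validation runs produces one binary feature mask (1 = selected). The masks and feature names are hypothetical placeholders.

```python
from collections import Counter


def selection_frequency(masks, feature_names):
    """Count how many runs selected each feature (mask bit 1 = selected)."""
    counts = Counter()
    for mask in masks:
        for name, bit in zip(feature_names, mask):
            if bit:
                counts[name] += 1
    # Counter returns 0 for features that were never selected.
    return {name: counts[name] for name in feature_names}


def most_selected(freq, top_n):
    """Features sorted by selection count, highest first."""
    return sorted(freq, key=freq.get, reverse=True)[:top_n]


# Hypothetical masks from three runs over four features.
masks = [[1, 0, 1, 0], [1, 1, 0, 0], [1, 0, 1, 1]]
freq = selection_frequency(masks, ["F1", "F2", "F3", "F4"])
print(freq, most_selected(freq, 2))
```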

8. Conclusion and future work

This study proposes an improved PSO algorithm, called SRWPSO, that combines PSO with the Sobol sequence (SOB), the random replacement strategy (RRS), and the adaptive weight strategy (AWS). Next, we propose a binary version of SRWPSO, called bSRWPSO. We then combine bSRWPSO with FKNN to obtain a novel feature selection-based prediction model called bSRWPSO-FKNN. In SRWPSO, SOB improves the quality of the initial swarm and its coverage of the initial population space. RRS strengthens the ability of the original PSO to escape local optima, which improves its convergence accuracy. AWS perturbs the algorithm according to the progress of the search and enhances its exploration ability by controlling the displacement vector. Together, RRS and AWS increase the diversity of the particle swarm, which in turn strengthens the exploration and exploitation capability of the original PSO. In addition, the performance analysis experiments on SRWPSO and the original PSO show that the combination of the three strategies not only increases the population diversity of PSO but also balances its exploration and exploitation, which leads to stronger convergence. The comparison of SRWPSO with the original PSO, nine original algorithms, and nine improved algorithms on 30 benchmark functions shows that the core advantages of SRWPSO are faster convergence, higher convergence accuracy, and a greater ability to escape local optima. To obtain bSRWPSO, this paper applies a V-shaped transfer function to discretize SRWPSO. In feature selection experiments, bSRWPSO-FKNN first uses bSRWPSO to search for an optimal feature subset of each dataset and then uses FKNN to perform classification prediction on the selected subset.
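
The exact V-shaped transfer function used in bSRWPSO is not given in this section. As a minimal sketch, assuming the |tanh(v)| form and the common V-shaped rule of flipping a bit with probability V(v) (as described by Mirjalili and Lewis for binary PSO), a real-valued velocity can be turned into an updated binary feature mask as follows. Both the function and the update rule are assumptions and may differ from the paper's.

```python
import math
import random


def v_shaped(v):
    """V-shaped transfer function |tanh(v)|: maps a velocity to a value in [0, 1]."""
    return abs(math.tanh(v))


def update_bits(bits, velocity, rng):
    """Flip each bit with probability V(v); otherwise keep it unchanged."""
    return [1 - b if rng.random() < v_shaped(v) else b
            for b, v in zip(bits, velocity)]


rng = random.Random(42)
print(update_bits([0, 1, 1, 0], [0.0, 0.5, -2.0, 3.0], rng))
```

Unlike an S-shaped function, a V-shaped function treats large negative and large positive velocities alike, so a bit is likely to change whenever the velocity magnitude is large and is kept when the velocity is near zero.
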
To verify the classification capability of bSRWPSO-FKNN, this paper conducts a series of performance validation experiments, which show that the model performs best among the compared methods. In addition, we conducted ten times 10-fold cross-validation experiments with bSRWPSO-FKNN on the AD dataset and extracted the key features affecting the onset of AD, mainly the content of lymphocytes (LY), Cat dander, Milk, Dermatophagoides Pteronyssinus/Farinae, Ragweed, Cod, and Total IgE. Finally, we discussed the selected key features in the context of clinical practice, demonstrating that bSRWPSO-FKNN has practical medical significance.

However, the proposed approach has a limitation. Although the three improvement strategies greatly enhance the performance of the original PSO, they also increase its time complexity, so SRWPSO solves problems at a higher computational cost than the original PSO. Because bSRWPSO-FKNN is built on SRWPSO, an increase in the model's time complexity is unavoidable, as can be seen in Figure 18. Therefore, reducing the time complexity of the method will be one of the essential tasks of future work. In addition, more effective strategies for improving the original PSO can be explored in future work.

Data availability statement

The original contributions presented in this study are included in the article/Supplementary material; further inquiries can be directed to the corresponding authors.

Author contributions

YL, ZX, AH, XJ, ZL, MW, and QZ: writing—original draft, writing—review and editing, software, visualization, and investigation. DZ, HC, and SX: conceptualization, methodology, formal analysis, investigation, writing—review and editing, funding acquisition, and supervision. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by grants from the Major Science and Technology Program for Medicine and Health in Zhejiang Province (No. WKJ-ZJ-2012), the Project of NINGBO Leading Medical & Health Discipline (Project Number: 2022-F23), the Natural Science Foundation of Jilin Province (YDZJ202201ZYTS567), the “Thirteenth Five-Year” Science and Technology Project of Jilin Provincial Department of Education (JJKH20200829KJ), the Changchun Normal University Ph.D. Research Startup Funding Project (BS [2020]), the Natural Science Foundation of Zhejiang Province (LZ22F020005), and the National Natural Science Foundation of China (62076185 and U1809209).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fninf.2022.1063048/full#supplementary-material

References

Adarsh, B. R., Raghunathan, T., Jayabarathi, T., and Yang, X. S. (2016). Economic dispatch using chaotic bat algorithm. Energy 96, 666–675. doi: 10.1016/j.energy.2015.12.096

Ahmadianfar, I., Heidari, A., Gandomi, A., Chu, X., and Chen, H. (2021). RUN beyond the metaphor: An Efficient Optimization Algorithm Based on Runge Kutta Method. Expert Syst. Applic. 181:115079. doi: 10.1016/j.eswa.2021.115079

Ahmadianfar, I., Heidari, A., Noshadian, S., Chen, H., and Gandomi, A. (2022). INFO: An efficient optimization algorithm based on weighted mean of vectors. Expert Syst. Applic. 195:116516. doi: 10.1016/j.eswa.2022.116516

Arora, S., and Anand, P. (2019). Chaotic grasshopper optimization algorithm for global optimization. Neural Comput. Applic. 31, 4385–4405. doi: 10.1007/s00521-018-3343-2

Asano, K., Tamari, M., Zuberbier, T., Yasudo, H., Morita, H., Fujieda, S., et al. (2022). Diversities of allergic pathologies and their modifiers: Report from the second DGAKI-JSA meeting. Allergol. Int. 71, 310–317. doi: 10.1016/j.alit.2022.05.003

Bayraktar, Z., Komurcu, M., and Werner, D. H. (2010). “Wind Driven Optimization (WDO): A novel nature-inspired optimization algorithm and its application to electromagnetics,” in Proceedings of the 2010 IEEE Antennas and Propagation Society International Symposium, Toronto. doi: 10.1109/APS.2010.5562213

Berna, R., Mitra, N., Hoffstad, O., Wubbenhorst, B., Nathanson, K., Margolis, D., et al. (2021). Using a machine learning approach to identify low-frequency and rare FLG alleles associated with remission of atopic dermatitis. JID Innov. 1:100046. doi: 10.1016/j.xjidi.2021.100046

Cai, Z., Gu, J., Jie, L., and Zhang, Q. (2019). Evolving an optimal kernel extreme learning machine by using an enhanced grey wolf optimization strategy. Expert Syst. Applic. 138:112814. doi: 10.1016/j.eswa.2019.07.031