- 1Telecom SudParis, ARTEMIS Department, SAMOVAR Laboratory, Evry, France

- 2VIDMIZER, Paris, France

The last decades have seen video production and consumption rise significantly: TV/cinematography, social networking, digital marketing, and video surveillance have incrementally and cumulatively turned video content into the preferred type of data to be exchanged, stored, and processed. Belonging to the video processing realm, video fingerprinting (also referred to as content-based copy detection or near duplicate detection) groups research efforts devoted to identifying duplicated and/or replicated versions of a given video sequence (query) in a reference video dataset. The present paper reports on a state-of-the-art study on the past and present of video fingerprinting, while attempting to identify trends for its development. First, the conceptual basis and evaluation frameworks are set. This way, the methodological approaches (situated at the cross-roads of image processing, machine learning, and neural networks) can be structured and discussed. Finally, fingerprinting is confronted with the challenges raised by the emerging video applications (e.g., unmanned vehicles or fake news) and with the constraints they set in terms of content traceability and computational complexity. The relationship with other technologies for content tracking (e.g., DLT - Distributed Ledger Technologies) is also presented and discussed.

1 Introduction

TV/cinematography, social networking, digital marketing, and video surveillance have incrementally and cumulatively turned video content into the preferred type of data to be exchanged, stored, and processed. As an illustration, according to Statista, 2022, the TV over Internet traffic tripled between 2016 and 2021, reaching 42,000 petabytes of data per month.

Such a tremendous quantity of information, coupled with a myriad of domestic/professional usages, should be backed by strong scientific and methodological video processing paradigms, and video fingerprinting is one of these. Video fingerprinting identifies duplicated, replicated and/or slightly modified versions of a given video sequence (query) in a reference video dataset Douze et al., 2008, Lee and Yoo, 2008, Su et al., 2009, Wary and Neelima, 2019. It is also referred to as near duplicate detection, or content-based copy detection Law-To et al., 2007a. The term video hashing (or perceptual video hashing) is also in use for fingerprinting applications applied to very large video database search Nie et al., 2015, Liu, 2019, Anuranji and Srimathi, 2020.

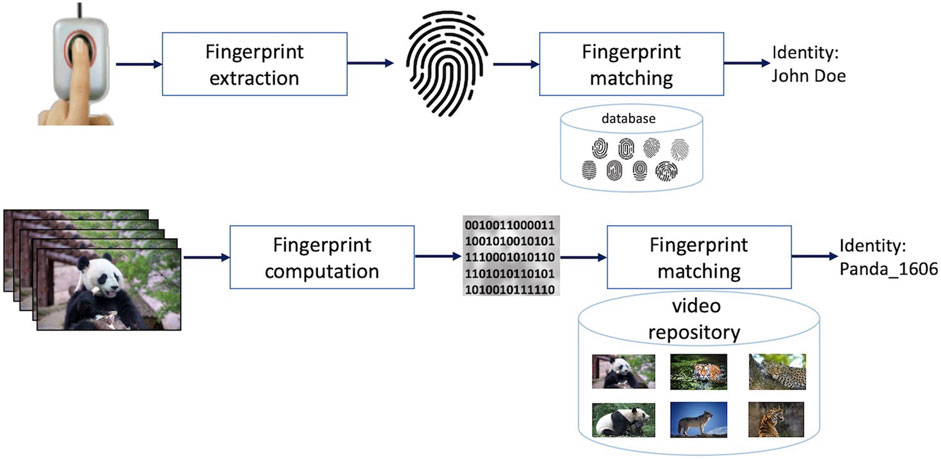

The video fingerprinting principle can be illustrated by analogy with human fingerprints Oostveen et al., 2002, Figure 1. The patterns of dermal ridges on human fingertips are natural identifiers for humans, as disclosed by Sir Francis Galton in 1893. Although they are tiny when compared to the entire human body, human fingerprints can uniquely identify a person regardless of changes in their physiognomy and potential disguises. Analogously, video fingerprints are meant to be video identifiers that shall uniquely identify videos even if their contents undergo a predefined, application-dependent set of transformations.

The conceptual premise being generic, the underlying research studies are very different, from both methodological and applicative perspectives. The present paper reports on a state-of-the-art study on the past and present of video fingerprinting while trying to identify trends for its future development. It solely considers the video component and leaves the multimodal approaches (video/audio, video/annotations, video/depth, etc.) outside its scope.

The paper is structured as follows. First, Section 2 identifies the fingerprinting scope with respect to two related yet complementary applicative frameworks, namely video indexing and video watermarking. The fingerprinting evaluation framework is set in Section 3. This way, the methodological approaches (situated at the cross-roads of image processing, machine learning (ML), and neural networks (NN)) can be objectively structured and presented in Section 4. Finally, fingerprinting is confronted with the challenges raised by emerging video processing paradigms in Section 5. Conclusions are drawn in Section 6. A list of acronyms (unless they are commonly known and/or unambiguous) is included after References.

2 Applicative scope

The applicative scope of video fingerprinting can be identified through its synergies and complementarities with video indexing Idris and Panchanathan, 1997 and video watermarking Cox et al., 2007. To this end, this section incrementally illustrates the principles of these three paradigms and identifies the relationships among them.

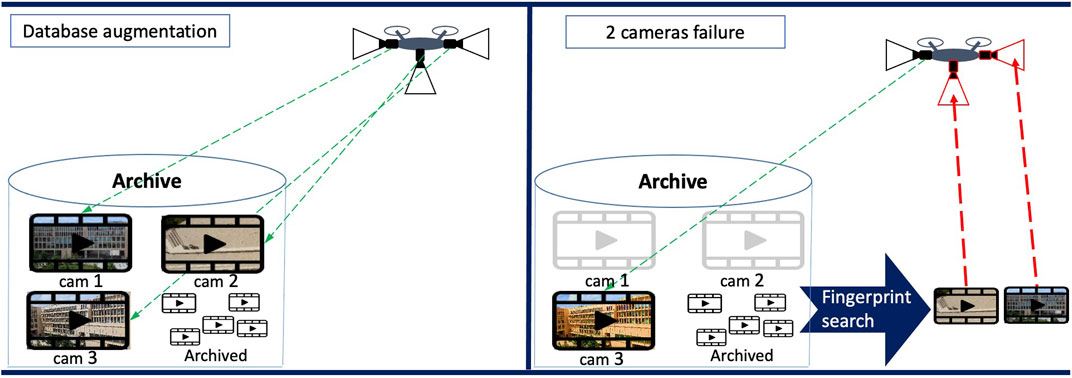

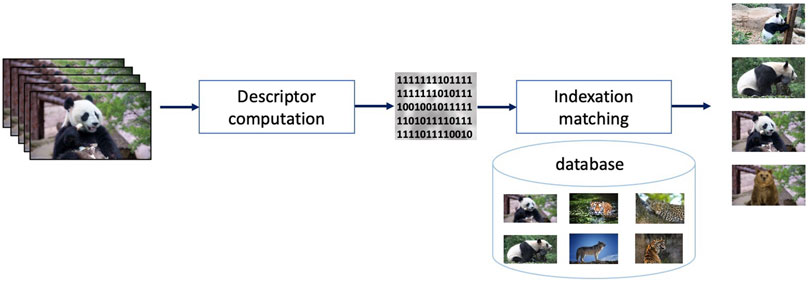

Video indexing might be considered as the first framework for content-based video searching and retrieval Idris and Panchanathan, 1997, Coudert et al., 1999. Assuming a video repository, the objective of video indexing is to find all the video sequences that are visually related to a query. For instance, assuming the query is a video showing some Panda bears and the repository consists of some wild animal sequences, a video indexing solution searches for all sequences in the repository that contain Panda bears, as well as images containing the same type of background, as illustrated in Figure 2. To this end, salient information (referred to as descriptor) is extracted from the query and compared to the descriptors of all the sequences in that repository (that were a priori computed and stored). Such a comparison implicitly assumes that a similarity measure for the visual proximity between two video sequences is defined and that a threshold according to which two descriptors can be matched is set.

FIGURE 2. Video indexing principle: a binary descriptor is extracted from a query video to retrieve any other related visual content in the dataset.

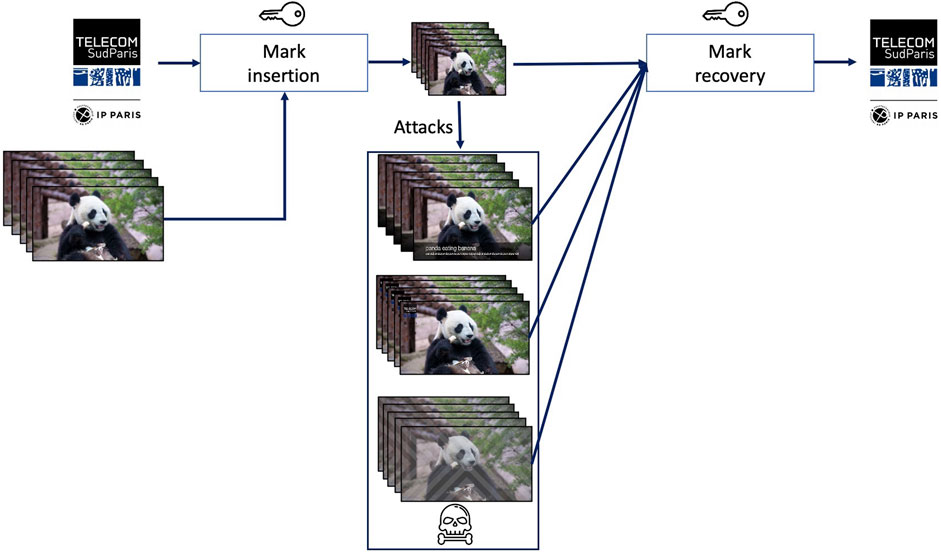

Digital watermarking Cox et al., 2007 deals with the identification of any modified version of video content, Figure 3. For instance, assuming again a video sequence representing some Panda bears is displayed on a screen and that the screen content is recorded by an external camera, the original content should be identifiable from the camcordered version. To this end, according to the digital watermarking framework, extra information (referred to as mark or watermark) is imperceptibly inserted (or, as a synonym, embedded) into the video content prior to its release (distribution, storage, display, … ). By detecting the watermark in a potentially modified version of the watermarked video content, the original content shall be unambiguously identified. Of course, the watermark shall not be recovered from any unmarked content (be it visually related to the original content or not).

FIGURE 3. Video watermarking principle: a binary watermark is imperceptibly inserted (embedded) in the video sequence; this way, the watermarked sequence can be subsequently identified even when its content is modified (maliciously or not).

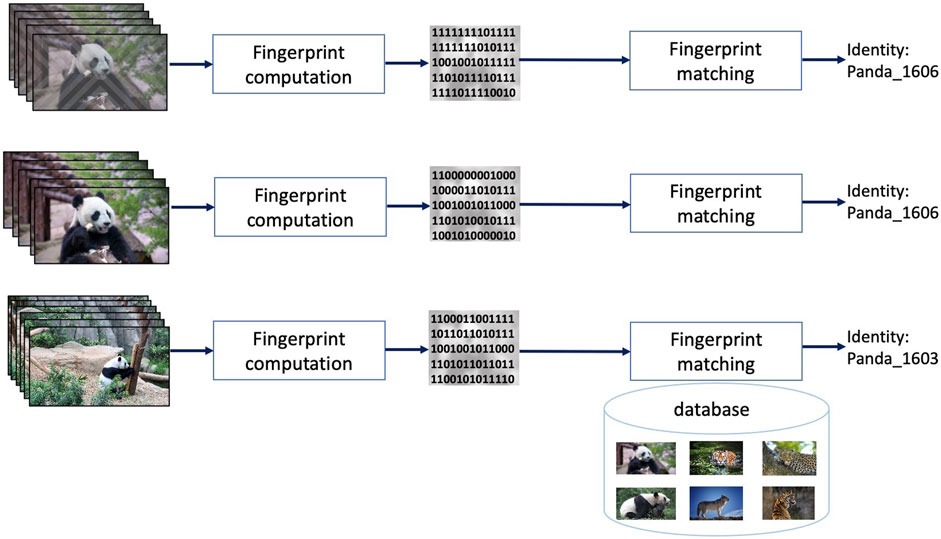

Video fingerprinting also deals with identifying slightly modified (replicated, or near duplicated) content, yet its approach is different with respect to both indexing and watermarking, as illustrated in Figure 4. Coming back to the previous two examples, video fingerprinting shall also track a near-duplicated video sequence (e.g., a screen-recorded Panda sequence) back to its original (e.g., the Panda original sequence) that is stored in a video repository. Yet, unlike indexing, any other sequence, even visually related to it (e.g., the same Panda bear at a different time of the day and/or in different postures) shall not be detected as identical. To this end, some salient information (referred to as fingerprint or perceptual hash) is extracted from the query video sequence (note that this information is not previously inserted in the content, as in the case of watermarking). By comparing (according to a similarity measure and a preestablished threshold) the query fingerprint to the reference sequence fingerprints, a decision on the visual identity between the video sequences shall be made.

FIGURE 4. Video fingerprinting principle: a binary descriptor extracted from a query video (fingerprint) can unambiguously identify all the near-duplicated versions of that content.
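The matching decision described above can be sketched in a few lines of Python. This is a minimal illustration rather than any method from the cited studies: it assumes binary fingerprints of equal length, a normalized Hamming distance as the similarity measure, and a hypothetical threshold of 0.2. The names `hamming_distance`, `identify`, and the toy bit strings are illustrative only.

```python
def hamming_distance(a: str, b: str) -> float:
    """Normalized Hamming distance between two equal-length bit strings."""
    if len(a) != len(b):
        raise ValueError("fingerprints must have the same length")
    return sum(x != y for x, y in zip(a, b)) / len(a)


def identify(query_fp, reference_fps, threshold=0.2):
    """Return the id of the closest reference within the threshold, else None.

    The threshold value is a hypothetical placeholder; in practice it is
    tuned on an evaluation dataset.
    """
    best_id, best_dist = None, threshold
    for ref_id, ref_fp in reference_fps.items():
        dist = hamming_distance(query_fp, ref_fp)
        if dist <= best_dist:
            best_id, best_dist = ref_id, dist
    return best_id


# Toy repository: fingerprints are assumed to be precomputed and stored.
references = {
    "panda_original": "1011001110001011",
    "tiger_clip": "0100110001110100",
}
screen_recorded_panda = "1011001010001011"  # near-duplicate: one bit differs
other_panda = "0010110111010010"            # visually related, different content

match_for_copy = identify(screen_recorded_panda, references)
match_for_other = identify(other_panda, references)
```

In this toy setting the near-duplicate is traced back to its original, while the visually related sequence is rejected because it is too far from every reference. That contrast is the difference from indexing stressed above.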

Three main properties are generally considered for fingerprinting.

First, the unicity (or uniqueness) property assumes that different contents (i.e., content that is neither the query nor one of its near-duplicated versions) result in different fingerprints (in the sense of the similarity measure and of its related threshold).

Secondly, the robustness property relates to the possibility of identifying near-duplicated sequences as similar. The transformations a video can undergo will be further referred to as modifications, distortions, or attacks, be they malicious or mundane. The video that is obtained through transformations, modifications, distortions, or attacks will be denoted as a copy, a replica video, a near duplicated video or an attacked video. While these terms are conceptually similar, fine distinctions among them can be made for some specific applicative fields. For instance, Liu et al., 2013 mention at least four different definitions related to near duplicated video content, ranging from “Identical or approximately identical videos close to the exact duplicate of each other, but different in file formats, encoding parameters, photometric variations (color, lighting changes), editing operations (caption, logo and border insertion), different lengths, and certain modifications (frames add/remove)” Wu et al., 2007a, Wu et al., 2007b to “Videos of the same scene (e.g., a person riding a bike) varying viewpoints, sizes, appearances, bicycle type, and camera motions. The same semantic concept can occur under different illumination, appearance, and scene settings, just to name a few.” Basharat et al., 2008. Our study will stay at a generic level and will use these terms as referred to in the cited studies.

Finally, a fingerprinting method is said to feature dataset search efficiency if the computation of the fingerprints and the matching procedure ensure low, application dependent computation time. The dataset search efficiency is assessed by the average computation time needed to identify a query in the context of a considered video fingerprinting use case (that is, execution time on a given processing environment and on a given repository).

Comparing these three methodological frameworks, it can be noted that:

• Indexing and fingerprinting share the concept of tracking content thanks to information directly extracted from that content (that is, both indexing and fingerprinting are passive tracking techniques); yet, while fingerprinting tracks the content per se, indexing rather tracks a whole semantic family related to that content. From the applicative point of view, indexing and fingerprinting differ in the unicity property.

• Watermarking and fingerprinting share the possibility of tracking both an original content and its replicas modified under a given level of accepted distortion; yet watermarking requires the insertion of additional information (that is, watermarking is an active tracking technique) while fingerprinting solely exploits information extracted from the very content to be tracked.

Moreover, note that video fingerprinting is also sometimes referred to as (perceptual) video hashing Nie et al., 2015, Liu, 2019, Anuranji and Srimathi, 2020. Yet, distinction should be made with respect to robust video hashing Fridrich and Goljan, 2000, Zhao et al., 2013, Ouyang et al., 2015 that belongs to the security and/or forensics applicative areas and generally refers to applications where distinction between content preserving and content manipulation attacks should be made. Robust video hashing is out of the scope of the present study.

These properties turn fingerprinting into a paradigm with potential impact in a large variety of applicative fields. The ability to identify and retrieve video even under distortions is a powerful tool for automatic video filtering and retrieval, copyright infringement prevention, media content broadcast monitoring over multi-broadcast channels, contextual advertising, or business analytics, to mention but a few Lefebvre et al., 2009, Lu, 2009, Seidel, 2009, Yuan et al., 2016, Wary and Neelima, 2019, Nie et al., 2021.

The analogy between the human and video fingerprints brings to light two key aspects. First, from the conceptual point of view, it implicitly assumes that video fingerprinting exists, that is, that a reduced set of information extracted from the video content makes it possible for the content to be tracked. As this concept cannot be a priori proved, it requires comprehensive a posteriori validation in a consensual evaluation framework, as discussed in Section 3. Secondly, from the methodological point of view, any video fingerprinting processing pipeline is composed of two main components: the fingerprint extractor (that is, the method for computing the fingerprint) and the fingerprint detector (that is, the method for searching similar content based on that fingerprint). Consequently, the state-of-the-art studies in Section 4 will be presented according to these two items.

3 Evaluation framework

In a nutshell, the performance of a video fingerprinting system can be objectively assessed by evaluating its properties (uniqueness, robustness, and dataset search efficiency) on a consensual, statistically relevant dataset, and this section is structured accordingly. Section 3.1 presents the quantitative measures that are most often considered in state-of-the-art studies, along with their statistical grounds. Section 3.2 deals with the datasets to be processed in video fingerprinting experiments and presents the principles for their specification as well as some key examples that will be further referred to in Section 4.

3.1 Property evaluation

The evaluation of the uniqueness and the robustness properties can be achieved by considering fingerprinting as a statistical binary decision problem. Consider a query sequence whose identity is looked up in a reference dataset with the help of a video fingerprinting system.

According to the binary decision principle, when comparing a query to a given sequence in the dataset, two hypotheses can be stated:

• H0: the query is a replica of a video sequence identified through the tested fingerprint.

• H1: the query is not a replica of the video sequence identified through the tested fingerprint.

The output of the system can be of two types: positive, when the query is identified as a replica of a video sequence, and negative otherwise.

When confronted with the ground truth, the statistical decisions can be labeled as true, when the result provided by the test is correct, and as false otherwise.

Consequently, four types of decisions are made:

• False positive (or false alarm, denoted by

• False negative (or missed detection, denoted by

• True positive (denoted by

• True negative (denoted by

The objective evaluation of a video fingerprinting system is achieved by deriving performance indicators from the four measures above.

To evaluate the uniqueness property, two measures are generally considered: the

To evaluate the robustness property, the

An efficient fingerprinting method (featuring both unicity and robustness) should jointly ensure low values for

Although

In practice, several other derived and/or complementary performance indicators can be considered, such as the F1 score, the ROC (Receiver Operating Characteristic), the AUC (Area Under the Curve), or the mAP (mean Average Precision).
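As a hedged illustration of how such indicators derive from the four decision counts, the sketch below uses the standard textbook definitions of precision, recall, false alarm rate, missed detection rate, and F1 score. The function names and the toy decision lists are assumptions made for this example, and the notation may differ from the one used in the cited studies.

```python
def decision_counts(decisions, ground_truth):
    """Count true/false positives/negatives from paired boolean lists."""
    tp = fp = fn = tn = 0
    for predicted, actual in zip(decisions, ground_truth):
        if predicted and actual:
            tp += 1
        elif predicted and not actual:
            fp += 1
        elif not predicted and actual:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def indicators(tp, fp, fn, tn):
    """Standard indicators derived from the four counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    false_alarm_rate = fp / (fp + tn) if fp + tn else 0.0
    missed_detection_rate = fn / (fn + tp) if fn + tp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "precision": precision,
        "recall": recall,
        "false_alarm_rate": false_alarm_rate,
        "missed_detection_rate": missed_detection_rate,
        "f1": f1,
    }


# Toy experiment: system outputs versus ground truth for eight queries.
decisions = [True, True, False, True, False, False, True, False]
ground_truth = [True, True, True, False, False, False, True, False]
counts = decision_counts(decisions, ground_truth)
scores = indicators(*counts)
```

ROC, AUC, and mAP are obtained by repeating this kind of computation while sweeping the decision threshold or ranking the retrieved candidates; they are not covered by this sketch.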

From a theoretical point of view, the dataset search efficiency can be expressed by the computational complexity, which expresses the number of elementary operations required for computing and matching fingerprints as a function of video sequence parameters (frame size, frame rate) and repository size. As such an approach is limiting for NN-based algorithms, the dataset search efficiency property is commonly assessed by the average processing time required by the video fingerprinting system to identify the query within the reference dataset and to output the result for a query. The average processing time can be obtained by averaging the processing time required by the system for the considered collection of queries. Of course, such an evaluation implicitly assumes that a detailed description is available about the performance of the computing configuration (CPU, GPU) as well as about the size of the dataset.
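A minimal sketch of such an average processing time measurement is given below. It assumes the fingerprinting system is exposed as a single callable (here the hypothetical `identify_fn`) and uses Python's `time.perf_counter`; the number of repeats and the toy lookup function are illustrative choices only.

```python
import time


def average_query_time(identify_fn, queries, repeats=3):
    """Average wall-clock time (in seconds) needed to process one query.

    identify_fn is a hypothetical callable wrapping the whole system
    (fingerprint extraction plus dataset search) for a single query.
    """
    per_query = []
    for query in queries:
        start = time.perf_counter()
        for _ in range(repeats):
            identify_fn(query)
        per_query.append((time.perf_counter() - start) / repeats)
    return sum(per_query) / len(per_query)


# Toy stand-in for a fingerprinting system: a set membership test.
toy_repository = {"clip_a", "clip_b", "clip_c"}
avg_seconds = average_query_time(lambda q: q in toy_repository,
                                 ["clip_a", "clip_z"])
```

The figure obtained this way is only meaningful when reported together with the hardware configuration and the repository size, as noted above.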

3.2 Evaluation dataset

Regardless of the evaluated property, the dataset plays a central role, and its design is expected to observe three constraints: statistical relevance, application completeness, and consensual usage.

The statistical relevance (and implicitly, the reproducibility of the results) mainly relates to the size of the dataset that should ensure the statistical error control (e.g., the sizes of

The application completeness mainly relates to the type of content included in the dataset, that is expected to serve and to cover the applicative scope of the developed method.

The consensual usage relates to the acceptance of the dataset by the research community: this item relates to the possibility of objectively comparing results reported in different studies.

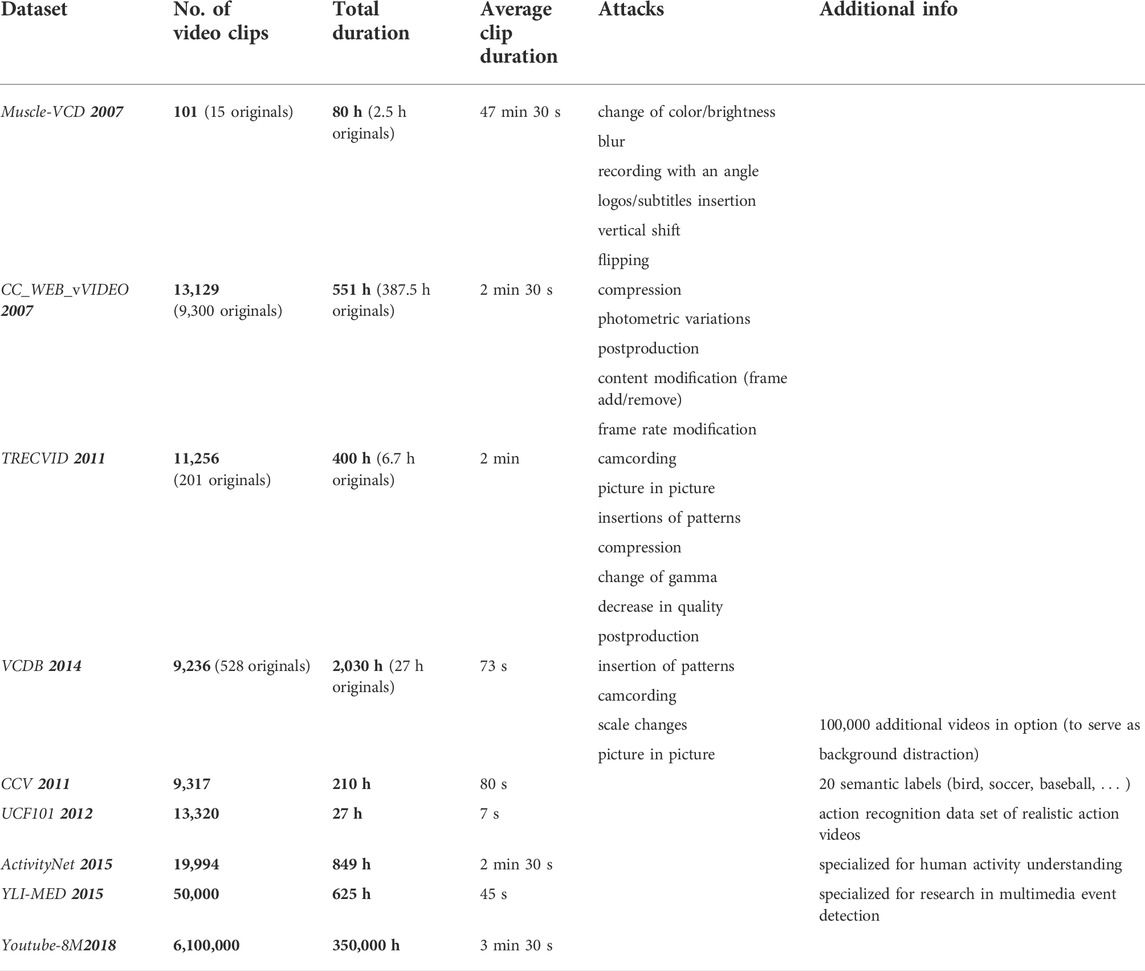

Of course, each dataset and each application evaluated on a specific dataset reach a different trade-off among these three desiderata. Table 1 provides a comparative view of some of the most often considered datasets (see Section 4); some of these corpora are introduced hereafter.

TABLE 1. Examples of datasets processed for fingerprinting evaluation. The lower part (last 5 rows) corresponds to corpora processed by fingerprinting methods exploiting NN.

The TRECVID (TREC Video Retrieval Evaluation) framework Trecvid, 2022, Douze et al., 2008 is a key example in this respect, as it provides substantial benchmarking datasets. Sponsored by the NIST (National Institute of Standards and Technology) with additional support from other US governmental agencies, TRECVID is structured around different “tasks”, each focused on a particular aspect of the multimedia retrieval problem, such as ad-hoc video search, instance search, and event detection, to mention but a few. TRECVID datasets consider video copies that are generated under video transformations, such as blurring, cropping, shifting, brightness changing, noise addition, picture-in-picture, frame removing, or text inserting Wang et al., 2016, Mansencal et al., 2018.

Related efforts are also carried out under different frameworks, such as Muscle-VCD (or simply Muscle) Law-To et al., 2007b or VCDB (Large-Scale Video Copy Detection Database) Jiang and Wang, 2016. Research institutes active in the field, like INRIA in France, also created INRIA Copy Days dataset Jegou et al., 2008. National and/or international research projects are also prone to generate datasets Open Video, 2022, Garboan and Mitrea, 2016.

With the advent of NN approaches, research groups affiliated with popular multimedia platform operators organized and made available large datasets, as presented in the last 5 rows in Table 1. For instance, YouTube-8M Segments dataset Abu-El-Haija et al., 2016 includes human-verified labels on about 237K segments and 1,000 classes, summing up to more than 6 million video IDs or more than 350,000 h of video. The dataset is organized in about 3,800 classes with an average of 3 labels per video. Of course, several other AI datasets coexist. For instance, Zhixiang et al., 2018 points to three of them: CCV (Columbia Consumer Video) Jiang et al., 2011, YLI-MED (YLI Multimedia Event Detection) Bend, 2015, Thomee, 2016, and ActivityNet Heilbron et al., 2015. Note that unlike the TRECVID datasets, the datasets mentioned in this paragraph are not specifically designed for fingerprinting applications but for general video tracking applications (including indexing): hence, the near duplicated content is expected to be created by the experimenter, according to the application requirements and the principles above.

4 Methodological frameworks

As no fingerprinting state-of-the-art study can today be both exhaustive and detailed, this section focusses on illustrating the main trends rather than on covering the impressive variety of studies. It is structured according to the two main steps in a generic fingerprinting computing pipeline: fingerprint extraction (that is, spatio-temporal salient information extraction) and fingerprint matching (that is, comparing salient information extracted from two different video sequences). These two basic steps are, in their turn, composed of several sub-steps Douze et al., 2008, Lee and Yoo, 2008, Su et al., 2009. On the one hand, the fingerprint computation generally includes video pre-processing (e.g., letterboxing removal, frame resizing, frame dropping and/or key-frame detection), local feature extraction, global feature extraction, local/global feature description, temporal information retrieval, and the means for accelerating the search in the dataset (inverted file, etc.). On the other hand, the detection procedure generally includes some time-alignment operations (time origin synchronization, jitter cancelation, … ), followed by information matching.

Significant differences occur in the ways these steps are implemented. Hence, this section will be structured into two categories, further referred to as conventional (Section 4.1) and NN-based fingerprinting (Section 4.2) methods. The former category relates to the earliest fingerprinting methods (e.g., 2009–2019) and stems from image processing and machine learning, being backboned by information theory concepts. The latter category is incremental with respect to the former one, as it (partially) considers concepts and tools belonging to the NN realm for achieving fingerprint extraction and matching. Of course, studies combining conventional and NN tools also exist Nie et al., 2015, Nie X. et al., 2017, Duan et al., 2019, Zhou et al., 2019 that will be discussed in Section 4.2.

4.1 Conventional methods

4.1.1 Main directions

As a common ground, these methods stem from image processing, machine learning, and information theory concepts and leverage the fingerprinting extraction on three incremental levels Garboan and Mitrea, 2016.

First, in an attempt to achieve frame aspect distortion invariance, the fingerprint is extracted from derived representations such as 2D-DWT (2D Discrete Wavelet Transform) coefficients Garboan and Mitrea, 2016, 3D-DCT (3D Discrete Cosine Transform) coefficients Coskun et al., 2006, pixel differences between consecutive frames, temporal ordinal measure of average intensity blocks in successive frames Hampapur and Bolle, 2001, visual attention regions Su et al., 2009, quantized block motion vectors, ordinal ranking of average gray level of frame blocks, quantized compact Fourier–Mellin transform coefficients, ordinal histograms of frames Kim and Vasudev, 2005, Sarkar et al., 2008, color layout descriptor, etc.

Secondly, frame content distortion invariance can be achieved by the complementarity between global features incorporating geometric information (e.g., centroid of gradient orientations of keyframes Lee and Yoo, 2008 or invariant moments of frames edge representation) and local features based on interest points (corner features, Hessian-Affine, Harris points, SIFT (Scale-Invariant Feature Transform), SURF (Speeded Up Robust Features)) generally described under the BoVW (Bag of Visual Words) framework Douze et al., 2008, Jiang et al., 2011.

Thirdly, video format distortion invariance is generally handled by using a large variety of additional synchronization mechanisms, designed jointly with the feature selection, ranging from synchronization blocks based on wavelet coefficients to K-Nearest Neighbors matching of interest points Law-To et al., 2007a or Viterbi-like algorithms Shikui et al., 2011.
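To make the first of these levels more concrete, the sketch below illustrates one of the listed representations, the ordinal ranking of the average gray level of frame blocks. It is a simplified illustration under stated assumptions (a frame given as a nested list of gray levels, a 2 × 2 block grid, no tie handling) and not the implementation of any cited study; the function names are hypothetical.

```python
def block_means(frame, rows, cols):
    """Average gray level of each block in a rows x cols partition."""
    height, width = len(frame), len(frame[0])
    bh, bw = height // rows, width // cols
    means = []
    for r in range(rows):
        for c in range(cols):
            block = [frame[y][x]
                     for y in range(r * bh, (r + 1) * bh)
                     for x in range(c * bw, (c + 1) * bw)]
            means.append(sum(block) / len(block))
    return means


def ordinal_signature(frame, rows=2, cols=2):
    """Rank of each block mean (0 = darkest block), in raster order."""
    means = block_means(frame, rows, cols)
    order = sorted(range(len(means)), key=lambda i: means[i])
    ranks = [0] * len(means)
    for rank, index in enumerate(order):
        ranks[index] = rank
    return ranks


frame = [
    [10, 12, 200, 210],
    [11, 13, 205, 215],
    [90, 95, 50, 55],
    [92, 97, 52, 57],
]
# Simulated photometric distortion: contrast reduction plus brightness offset.
distorted = [[0.5 * v + 40 for v in row] for row in frame]

signature = ordinal_signature(frame)
signature_distorted = ordinal_signature(distorted)
```

Because only the rank order of the block means is kept, any monotonically increasing change of the gray levels (as in the simulated distortion) leaves the signature unchanged, which is the kind of invariance this level aims for.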

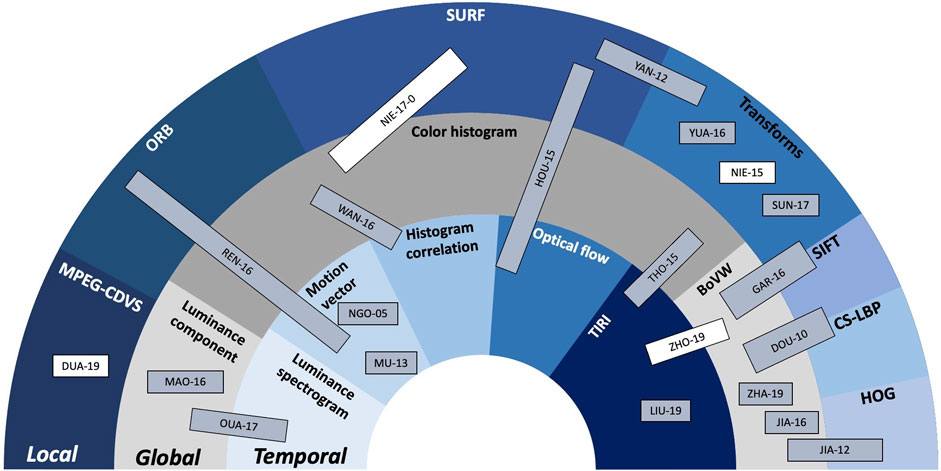

These main directions as well as their mutual combinations will be considered in the next section as structuring elements. They will be illustrated by a selection of 15 studies, published between 2009 and 2019, that will be presented in chronological order. The functional synergies among and between these studies are synoptically presented in Figure 5 that is structured in three layers, shaped as hemicycles:

• the outer blue layer relates to local feature description, exemplified through MPEG-CDVS (Compact Descriptors for Visual Search), ORB (Oriented FAST and Rotated BRIEF), SURF, Transformed domains, SIFT, CS-LBP (Center-symmetric Local Binary Patterns), and HOG (Histogram of Oriented Gradients).

• the middle gray layer relates to global features, exemplified through luminance component, color histograms, and BoVW.

• the inner blue layer relates to the temporal features, exemplified through luminance spectrogram, motion vectors, histogram correlation, optical flow, and TIRI (Temporal Informative Representative Image).

FIGURE 5. Conventional fingerprinting method synopsis: the hemicycles (areas) related to the local, global, and temporal features are located at the outer, middle, and inner parts of the figure, respectively. Inside each hemicycle, examples of state-of-the-art solutions are presented. Conventional methods are presented in gray-shadowed rectangles while NN-based methods that also include conventional modules are represented in white rectangles.

The order of the classes in each hemicycle is chosen to allow for a better visual representation of the synergies among them. The studies represented in gray-shadowed rectangles correspond to conventional methods while the studies represented in white rectangles correspond to NN-based methods that also include conventional modules.

4.1.2 Methods overview

The complementarity between visual similarity and temporal consistency is exploited in Tan et al. (2009) to achieve scalability during the detection and localization of video content replicas. The video content synchronization is modeled as a network flow problem. Specifically, the chronological matching of the frames between the two video sequences is replaced by the search for a path that carries the maximum capacity in a transmission network, under constraints of type must-link and cannot-link. As the theoretical solution thus obtained can feature a large complexity, the study also suggests an a posteriori simplification based on 7 heuristic constraints. The study exploits the idea that the temporal alignment leverages the constraints on visual feature effectiveness; to prove this, a Hessian-Affine detector and PCA-SIFT (Principal Component Analysis) features are considered in the experiments. On the detection side, key-point matching is considered. The experiments are structured at four levels: partial segments of full-length movies to videos crawled from YouTube, detection of near-duplicates in a dataset of more than 500 h, near-duplicate shot detection and copy detection on TRECVID and Muscle-VCD-2007 datasets, respectively.

A fingerprinting method that is optimized for searching for strongly modified sequences in reduced-size video datasets is presented in Douze et al. (2010). The fingerprints are computed from a subset of frames, either periodically sampled from the video sequence or chosen according to a visual content rule (key frames). The local visual information is extracted through Hessian-Affine detectors followed by SIFT and CS-LBP descriptors Heikkila et al., 2009. The descriptors are subsequently clustered by a bag of words approach combined with a Hamming Embedding procedure. To improve search efficiency, an inverted file structure is finally considered. For the fingerprint retrieval, a spatio-temporal verification is performed to reduce the number of potential candidates. The experiments are carried out on the TRECVID 2008 dataset and show how the method parameters can be adjusted to reach a trade-off between accuracy and efficiency.
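The role of the inverted file in such a pipeline can be sketched as follows. This is a deliberately reduced illustration, assuming that each video has already been described by a list of quantized visual word identifiers; the Hamming Embedding refinement and the spatio-temporal verification mentioned above are omitted, and all names and values are hypothetical.

```python
from collections import Counter, defaultdict


def build_inverted_file(video_words):
    """Map each visual word id to the set of videos in which it occurs."""
    index = defaultdict(set)
    for video_id, words in video_words.items():
        for word in words:
            index[word].add(video_id)
    return index


def candidates(index, query_words, top_k=2):
    """Vote for reference videos sharing visual words with the query."""
    votes = Counter()
    for word in set(query_words):
        for video_id in index.get(word, ()):
            votes[video_id] += 1
    return votes.most_common(top_k)


# Toy repository: visual word ids are assumed to come from a prior
# clustering (bag of words) of local descriptors.
video_words = {
    "video_a": [3, 17, 42, 8],
    "video_b": [5, 17, 99],
    "video_c": [1, 2, 4],
}
index = build_inverted_file(video_words)
shortlist = candidates(index, [3, 42, 8, 77])
```

Only the videos sharing at least one visual word with the query are ever touched, which is why this structure helps the search scale; a verification step would then be applied to the shortlisted candidates.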

The study reported in Yang et al. (2012) is based on SURF points Bay et al., 2008 that are first extracted at the frame level. After dividing the frame into 16 equal square blocks, the number of SURF points in each quadrant is traversed to build a third-order Hilbert curve that will pass through each quadrant, resulting in an adjacent grid that keeps the same neighborhood as the original image. Finally, the hash bits are computed as the differences of SURF points. To match two fingerprints, the CSR (Clip Similarity Rate) or the SSR (Sequence Similarity Rate) is calculated when the query and the reference videos have the same length or different lengths, respectively. The former (CSR) relates to the mean of the matching distances between the 2 hashes while the latter (SSR) represents a weighted average of matched, mismatched, and re-matched frames in the query video. The experiments select 40 source videos from the TRECVID 2011 framework and 60 of their replicas (logo insertion, picture in picture, video flipping, Gaussian noise). Three types of metrics are used to evaluate the method: Prec, Rec and ROC. For a preestablished Prec value (set at 0.8 in the experiments), the proposed algorithm has the best Rec value (0.92) compared to the solutions advanced in Zhao et al. (2008) (Rec = 0.78) and in Kim and Vasudev (2005) (Rec = 0.57).
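The Hilbert-curve traversal can be illustrated with the short sketch below. It is not the implementation of Yang et al. (2012): the per-block SURF point counts are assumed to be already available as a 4 × 4 grid, the curve is generated with the classical index-to-coordinate conversion, and the rule turning count differences into bits (1 when the count increases along the curve) is an assumption made for this example.

```python
def hilbert_d2xy(side, d):
    """Map index d along a Hilbert curve to (x, y) on a side x side grid.

    side must be a power of two.
    """
    x = y = 0
    t = d
    s = 1
    while s < side:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        if ry == 0:
            if rx == 1:
                x, y = s - 1 - x, s - 1 - y
            x, y = y, x  # rotate the quadrant
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_path(side):
    """All grid cells in the order in which the curve visits them."""
    return [hilbert_d2xy(side, d) for d in range(side * side)]


def hilbert_hash(counts):
    """One bit per pair of consecutive blocks along the curve.

    Assumed rule: bit = 1 if the count increases, 0 otherwise.
    """
    ordered = [counts[y][x] for x, y in hilbert_path(len(counts))]
    return [1 if b > a else 0 for a, b in zip(ordered, ordered[1:])]


# Hypothetical numbers of interest points detected in each of the 16 blocks.
surf_counts = [
    [5, 9, 2, 7],
    [3, 8, 6, 1],
    [4, 0, 9, 3],
    [7, 2, 5, 8],
]
frame_hash = hilbert_hash(surf_counts)
```

Since consecutive cells along the curve are always spatial neighbors, each bit compares two adjacent blocks, which is consistent with the neighborhood-preserving property mentioned above.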

Aiming at obtaining fingerprint invariance against rotations, Jiang et al., 2012 suggests the joint use of HOG and RMI (Relative Mean Intensity) to express the visual characteristics in the frames. The fingerprinting matching is based on the Chi-square statistics. The experimental results are obtained by processing the Muscle corpus and are expressed in terms of matching quality, computed as the ratio of correct answers to total number of queries.
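A Chi-square comparison of two histogram-like descriptors can be sketched as below. Several variants of this statistic are in use; the symmetric form chosen here, the normalization step, and the toy histograms are assumptions for illustration and are not taken from Jiang et al., 2012.

```python
def normalize(hist):
    """Scale a histogram so that its bins sum to 1."""
    total = sum(hist)
    return [h / total for h in hist] if total else list(hist)


def chi_square_distance(h1, h2, eps=1e-12):
    """Symmetric Chi-square distance between two histograms.

    eps avoids a division by zero when both bins are empty.
    """
    return 0.5 * sum((a - b) ** 2 / (a + b + eps) for a, b in zip(h1, h2))


# Toy orientation histograms (e.g., HOG-like bins) for three frames.
reference = normalize([12, 30, 45, 13])
near_copy = normalize([11, 31, 44, 14])
unrelated = normalize([40, 5, 10, 45])

d_copy = chi_square_distance(reference, near_copy)
d_unrelated = chi_square_distance(reference, unrelated)
```

A small distance indicates similar descriptors; the decision threshold separating replicas from unrelated content would have to be set experimentally.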

An early work presented in Ngo et al. (2005) considers an approach to video summarization that models the video as a temporal graph, by detecting its highlights based on analyzing motion vectors. That work is the backbone of the fingerprinting technique presented in Li and Vishal (2013) with a focus on the compactness of the fingerprint. The key steps of the algorithm are preprocessing and segment extraction, computing the SGM (Structural Graphical Model), graph partitioning using the graph normalized cuts method, fingerprint extraction, and fingerprint quantization by applying RAQ (Randomized Adaptive Quantizer). For the fingerprint extraction step, the authors selected the TIRI method, based on frame averaging followed by 2-D DCT, Esmaeili et al., 2011. The Hamming distance is used during the matching stage. To test the proposed method, 600 different videos were collected from YouTube, then copy videos were created using 8 different attacks of three major types: signal processing attacks, frame geometric attacks, and temporal attacks. The results present better accuracy particularly with restricted fingerprint length compared to TIRI Esmaeili et al., 2011, CGO (Centroids of Gradient Orientations) Lee and Yoo, 2006, and RASH (Radial hASHing) Roover et al., 2005.
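The TIRI-based extraction step (frame averaging followed by a 2-D DCT) and the Hamming matching can be illustrated with the pure-Python sketch below. The exponential weights, the unnormalized DCT-II, and the median-based binarization are assumptions made for this example; the structural graphical model, the graph partitioning, and the RAQ quantizer of Li and Vishal (2013) are not reproduced.

```python
import math


def tiri(frames, gamma=0.65):
    """Weighted temporal average of a segment (weights gamma**k, assumed)."""
    weights = [gamma ** k for k in range(len(frames))]
    total = sum(weights)
    height, width = len(frames[0]), len(frames[0][0])
    return [[sum(w * f[y][x] for w, f in zip(weights, frames)) / total
             for x in range(width)] for y in range(height)]


def dct2(block):
    """Unnormalized 2-D DCT-II of a small block (direct O(n^4) evaluation)."""
    n, m = len(block), len(block[0])
    return [[sum(block[y][x]
                 * math.cos(math.pi * (2 * y + 1) * u / (2 * n))
                 * math.cos(math.pi * (2 * x + 1) * v / (2 * m))
                 for y in range(n) for x in range(m))
             for v in range(m)] for u in range(n)]


def binary_fingerprint(frames):
    """Binarize the AC coefficients of the TIRI against their median."""
    coeffs = dct2(tiri(frames))
    ac = [c for u, row in enumerate(coeffs)
          for v, c in enumerate(row) if (u, v) != (0, 0)]
    median = sorted(ac)[len(ac) // 2]
    return [1 if c > median else 0 for c in ac]


def hamming(fp1, fp2):
    """Number of differing bits between two fingerprints."""
    return sum(a != b for a, b in zip(fp1, fp2))


# Toy segment: three 4 x 4 frames with deterministic synthetic gray levels.
segment = [[[(7 * x + 13 * y + 5 * k) % 32 for x in range(4)]
            for y in range(4)] for k in range(3)]
brighter = [[[2 * v for v in row] for row in frame] for frame in segment]

fp_original = binary_fingerprint(segment)
fp_brighter = binary_fingerprint(brighter)
```

Here a global gain applied to the segment scales all coefficients by the same factor, so the binarized fingerprint is unchanged and the Hamming distance between the two versions is zero.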

The method presented in Thomas and Sumesh (2015) stands for a simple yet robust color-based video copy detection technique. The first step consists of summarizing the video by extracting the key frames, then generating the TIRI, thus including temporal information in the fingerprint. The second step extracts the color correlation group of each pixel of the TIRIs. The color correlation is clustered into 6 groups, by comparing the intensity of each component in the RGB color space (e.g., group 1 corresponds to

A multifeatured video fingerprinting system, designed to jointly improve the accuracy and the robustness is advanced in Hou et al. (2015). The fingerprint computation starts by extracting spatial features from the key frames that have been preprocessed (size and frame rate uniformization), then partitioned into

Belonging to the DWT-based fingerprinting family, the study presented in Nie et al. (2015) also focusses on the fingerprint dimensionality. The advanced fingerprinting scheme consists of two types of coefficients, intra-cluster and inter-cluster, thus preserving both global and local information. After normalizing the video clips (at 300 × 240 pixels, 500 frames), the first step is to cluster the frames, according to a graph model based on the K-means algorithm, whose parameters are estimated from the relationships among frames. To select the feature that represents a frame, the fourth order Cumulant of Luminance Component is computed, thus ensuring invariance to different types of distortions (Gaussian noise addition, scaling, lossy compression, and low-pass filtering). The next step reduces the dimensionality while preserving the local and global structures thanks to an algorithm referred to as DOP (Double Optimal Projection): the dimensionality reduction is obtained by multiplying the cumulant coefficient matrix by a mapping matrix. The distance vector thus obtained results in two types of fingerprints: the statistical fingerprint, represented by the kurtosis coefficient of the distance vector and the geometrical fingerprint, represented by the binarization of the distance vector. The matching procedure is performed in two steps: first, according to the distance between the statistical fingerprints and then according to the Hamming distance between the geometric fingerprints (an empiric threshold of 0.18 is considered for the binary decision). The experiments are performed on a dataset of 300 original video clips and of some of their replicas (MPEG compression, letterboxing, frame change, blur, shifting, rotations) and result in both Prec and Rec larger than 0.95.

The technique presented in Mao et al. (2016) assumes that the probability that five identical successive scene frames occur in two different videos is very low. The fingerprint computation starts by frame resizing (down to 108 × 132) and division (into 9 × 11 sub-regions). For each sub-region, two types of information are extracted from the luminance component: the mean value of the sub-region and 4 differential elements of the sub-region sub-blocks. This process generates 720 elements in total counting 144 mean values and 576 differential values. The fingerprint is subsequently quantized and clustered. A matching technique based on binary search of inverted file is implemented. A test dataset was created by collecting 510 Hollywood film clips and 756 of their replicas (re-encoding, logo addition, noise addition, picture in picture, … ). An average detection rate of 0.98 is obtained.

The Shearlet transform is a multi-scale and multi-dimensional transform that is specifically designed to address anisotropic and directional information at different scales. This property can be exploited in fingerprinting applications, as demonstrated in Yuan et al. (2016), where a 4-scale Shearlet transform with 6 directions is considered. The fingerprint considers both low and high frequency coefficients and is defined as the normalized sum of SSCA (sub-band coefficient amplitudes). Low frequency coefficients are expected to be invariant with respect to common distortions and hence to ensure the fingerprint robustness. The high frequency coefficients, in turn, are expected to preserve the inner information of the visual content and hence to contribute to the fingerprint uniqueness. Such a frame-level fingerprint is coupled to a TIRI of the video. The search efficiency relies on an IIF (Inverted Index File) mechanism. The experiments are carried out on visual content sampled from TRECVID 2010 and from the INRIA Copydays dataset Jegou et al., 2008. The replicas are obtained through geometrical distortions (letterboxing, rotation), luminance distortions, noise addition (salt and pepper, Gaussian), text insertion, and JPEG compression. The quantitative results are expressed in terms of TPR, FPR, and F1 score and take as baselines two state-of-the-art methods, based on the DCT and on the Ordinal Intensity Signature (OIS). The main advantage of the method is its resilience to geometric transformations (gains of about 0.3 in F1 score).

The study in Wang et al., 2016 is centered around the usage of the temporal dimension, expressed as the temporal correlation among successive frames in a video sequence. To this end, the video sequence is structured into groups of frames centered on some key frames (that is, the temporal context for a key frame is computed based on both preceding and succeeding frames). A fingerprint is subsequently extracted from each group of frames. From a conceptual standpoint, the fingerprint is based on the color correlation histogram computed on the frame sequence. Yet, to enhance the overall method speed, this visual information is processed through several types of operations. First, the dimensionality is reduced by projection on a random, bipolar (+1/−1) matrix. Secondly, a binary code is defined based on a weighted addition of the color correlation histogram elements. Finally, the search speed is accelerated by an LSH (Locality Sensitive Hashing) algorithm Datar et al., 2004. The matching algorithm is based on the LCS (Longest Common Subsequence) algorithm. The experiments consider 8 transformations included in the TRECVID 2009 dataset and report results (expressed in terms of Prec and Rec) that are compared against a solution relying on BoVW and SIFT Zhao et al., 2010: according to the type of attack, absolute gains between 5 and 14% in Prec and between 6 and 12% in Rec are shown. Although the method was optimized for reducing the search time, no experimental result is reported in this respect.
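
Two of the building blocks named above, the projection on a random bipolar matrix and the LCS-based matching, can be sketched as follows. This is an illustrative sketch only: the function names, the seed handling, and the representation of each group of frames by a single hashable code are assumptions, not details taken from Wang et al., 2016.

```python
import numpy as np

def bipolar_projection(features, out_dim, seed=0):
    """Reduce dimensionality by projecting onto a random +1/-1 matrix."""
    features = np.asarray(features, dtype=np.float64)
    rng = np.random.default_rng(seed)
    matrix = rng.choice([-1.0, 1.0], size=(features.shape[-1], out_dim))
    return features @ matrix

def lcs_length(seq_a, seq_b):
    """Length of the longest common subsequence, by row-wise dynamic programming."""
    prev = [0] * (len(seq_b) + 1)
    for x in seq_a:
        curr = [0]
        for j, y in enumerate(seq_b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]
```

In such a scheme, the query and reference videos would each be turned into a sequence of per-group codes, and `lcs_length` divided by the query length would serve as a similarity score that tolerates dropped or inserted groups.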

A fingerprinting system based on the contourlet HMT (Hidden Markov Tree) model is designed in Sun et al. (2017). The contourlet is a multidirectional and multiscale transform that is expected to handle the directional plane information Do and Vetterli, 2004 better than the well-known wavelet transform. HMT generates links between the hidden state of the coefficients and their respective children. Before the extraction of the fingerprint, a normalization phase takes place. It unifies the frame rate, the width, and the height, and converts the frames to grayscale. Once normalized, each frame is partitioned into equal blocks, thus preserving the local features. The contourlet transform is then applied to each block to obtain the contourlet coefficients, which are fed to the HMT model to generate the standard deviation matrices. Finally, the SVD (Singular Value Decomposition) is used to reduce the dimension of the resultant standard deviation matrices. The video fingerprint is created by concatenating the fingerprints extracted from all the frames. This study adopts a 2-step matching algorithm. In the first step, the fingerprint of a random frame is used to compute its distance to all the fingerprints present in the dataset. The N best matches are further investigated in the second step, where the squared Euclidean distance between all the frames representing the query clip and a reference clip is calculated. The reference video with the minimum distance is identified as the matching result. Compared to the CGO-based method Lee and Yoo, 2008, the Sun et al., 2017 method achieves better performance in terms of the probability of false alarm and the probability of true detection.

Ouali et al., 2017 extends some basic concepts from audio to video fingerprinting. To this end, the video sequence is considered as a sequence of frames that are first resized. The fingerprint encodes the positions of several salient regions in some binary images generated from the luminance spectrogram; in this study, the term salient designates the regions featuring the highest spectral values. The selection of the salient areas can be done at the level of the frame or at the level of successive frames. The former considers a window of spectrogram coefficients centered on the related median while the latter considers the regions that have the highest variations compared to the same regions in the previous frame. The experimental results are carried out on the TRECVID 2009 and 2010 datasets and show that the fingerprint extracted on sequences of frames outperforms the fingerprint extracted at the level of frames.

The study presented in Liu (2019) addresses the issue of reducing the complexity and the execution time of the fingerprint matching in large datasets. The method to extract the fingerprint is referred to as rHash and is derived from the aHash method Yang et al., 2006. First, a pre-processing step reduces the frame rate to 10, uniformizes the resolution to 144x176, and generates the TIRIs Esmaeili and Ward, 2010. Secondly, the rHash involves 4 steps: image resizing, division into blocks, block-wise local mean computation, and the binarization of each pixel based on the corresponding block mean value. The rHash outputs a fingerprint composed of 12 words of 9 bits each. For the matching process, an algorithm based on a look-up table, word counting, and ordering operations is advanced. The TRECVID 2011 and the VCDB Jiang and Wang, 2016 datasets are processed when benchmarking the advanced method against the aHash and DCT-2ac hash Esmaeili et al., 2011 methods: higher accuracy as well as increased searching speed are thus demonstrated.
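
The four rHash steps and the look-up-table matching can be sketched under explicit assumptions. The text only states that the fingerprint has 12 words of 9 bits; the layout used below (a 9 × 12 pixel image split into a 3 × 4 grid of 3 × 3 blocks), the nearest-neighbor resizing, and the position-aware table are hypothetical choices made here to obtain that size, not the design of Liu (2019).

```python
from collections import Counter, defaultdict

import numpy as np

def resize_nearest(image, out_h, out_w):
    """Nearest-neighbor resize using integer index sampling."""
    h, w = image.shape
    rows = (np.arange(out_h) * h) // out_h
    cols = (np.arange(out_w) * w) // out_w
    return image[np.ix_(rows, cols)]

def block_mean_hash(image, grid=(3, 4), block=3):
    """Return one 9-bit word per block: each pixel is compared to its block mean."""
    small = resize_nearest(np.asarray(image, dtype=np.float64),
                           grid[0] * block, grid[1] * block)
    words = []
    for r in range(grid[0]):
        for c in range(grid[1]):
            patch = small[r * block:(r + 1) * block, c * block:(c + 1) * block]
            bits = (patch > patch.mean()).astype(int).ravel().tolist()
            words.append(int("".join(str(b) for b in bits), 2))
    return words

def build_table(reference):
    """Look-up table mapping (word position, word value) to the videos containing it."""
    table = defaultdict(set)
    for video_id, words in reference.items():
        for position, word in enumerate(words):
            table[(position, word)].add(video_id)
    return table

def rank(query_words, table):
    """Count shared words per reference video and order candidates by that count."""
    counts = Counter()
    for position, word in enumerate(query_words):
        for video_id in table.get((position, word), ()):
            counts[video_id] += 1
    return counts.most_common()
```

The appeal of such a word-based design is that matching reduces to table look-ups and counting, so no pairwise distance has to be computed against every reference fingerprint.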

As video content is predominantly recorded, stored, and transmitted in compressed formats, fingerprints extracted directly from the compressed stream eliminate the need for decoding operations. While early studies Ngo et al., 2005, Li and Vishal, 2013 already considered MPEG motion vectors as partial information in fingerprinting applications, Ren et al., 2016 can be considered as an incremental step: the fingerprint computation combines information extracted from the decompressed (pixel) domain with information extracted at the MPEG-2 stream level. First, from the decompressed I frames, key frames are selected according to their visual saliency. To this end, histogram-based contrast is computed for each I frame alongside the underlying image entropy. Then, key frames are selected according to Pearson's coefficient. For any selected key frame, both global and local features are extracted, as color histograms and ORB descriptors, respectively. Finally, motion vectors directly extracted from the MPEG-2 stream serve as local temporal information: specifically, motion vector angle histograms are computed. Hence, the key frame fingerprint is a combination of the color histograms, ORB descriptors and motion vector normalized histogram. The video fingerprint is computed as the set of key frame fingerprints. The matching procedure is individually performed at the level of the three components (i.e., based on their individual appropriate matching criteria) and the overall decision is achieved by fusing the decisions made on the individual features through a weighted additive voting model. In experiments, the color histogram, ORB descriptors and motion vector histograms weights are set to 0.2, 0.4, and 0.4, respectively. The experimental results are obtained by processing the TRECVID 2009 dataset and are compared against one state-of-the-art method based on SIFT. The gains of the advanced algorithm have been evaluated in terms of NDCR (Normalized Detection Cost Rate), F1 score, and copy detection processing time.
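
The motion vector angle histogram and the weighted additive voting described above can be illustrated by a short sketch. The weights 0.2, 0.4, and 0.4 come from the text; the number of bins, the 0/1 encoding of the per-feature decisions, and the decision threshold are assumptions introduced here, not values reported by Ren et al., 2016.

```python
import numpy as np

# Weights reported in the text for color histogram, ORB, and motion vector histogram.
WEIGHTS = {"color": 0.2, "orb": 0.4, "motion": 0.4}

def angle_histogram(motion_vectors, bins=8):
    """Normalized histogram of motion vector angles; `bins` is an assumed value."""
    vectors = np.asarray(motion_vectors, dtype=np.float64).reshape(-1, 2)
    angles = np.arctan2(vectors[:, 1], vectors[:, 0])
    counts, _ = np.histogram(angles, bins=bins, range=(-np.pi, np.pi))
    return counts / max(counts.sum(), 1)

def fused_score(votes, weights=WEIGHTS):
    """Weighted additive voting over per-feature decisions (1 = copy, 0 = not a copy)."""
    return sum(weights[name] * votes[name] for name in weights)

def is_copy(votes, threshold=0.5):
    """Final decision; the threshold is a hypothetical value, not taken from the paper."""
    return fused_score(votes) >= threshold
```

With these weights, a positive vote from the color histogram alone (score 0.2) cannot trigger a detection under the assumed threshold, whereas agreement between the ORB and motion components (score 0.8) can.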

4.1.3 Discussion

The previous section shows that the conventional fingerprinting methods form a fragmented landscape. While the general methodological framework is unitary (cf. Section 3), each study aims to address a different applicative challenge, from searching for strongly modified sequences in reduced-size video datasets to reducing the complexity and the execution time of the fingerprint matching. The evaluation criteria also differ, with a predominance of Prec, Rec and F1 that are generally computed on datasets sampled from the corpora presented in Table 1; yet, the criteria for sampling the reference datasets are not always specified. In this context, no general and/or precise conclusion about the pros and cons of the state-of-the-art methods can be drawn.

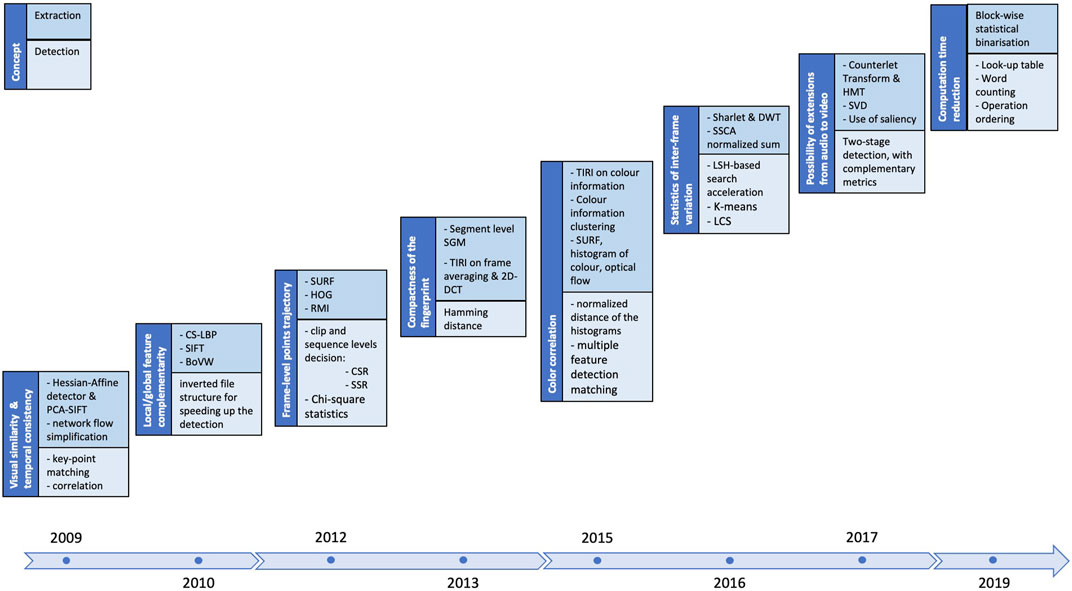

However, the value of these research efforts can be collectively judged by analyzing their steady evolution, as illustrated in Figure 6. This figure covers the 2009-2019 time span and presents, for each analyzed year, the key conceptual ideas (the dark-blue, left block) as well as the methodological enablers in fingerprinting extraction (the blue, right-upper block) and matching (the light-blue, right-lower block)2.

Figure 6 and Section 4.1.2 show that the state-of-the-art is versatile enough to pragmatically offer solutions to specific applicative fields, without being able to provide the ultimate fingerprinting method. As an attempt to reach such a solution, NN-based solutions are considered for some or all of the blocks in the fingerprinting scheme, as explained in Section 4.2.

4.2 NN-based methods

4.2.1 Main directions

The class of NN-based video fingerprinting methods can be considered as an additional direction with respect to the conventional fingerprinting methods presented in Section 4.1. They inherit the same basic conceptual workflow: pre-processing the video sequence, extracting spatial and temporal information, possibly aggregating them into various derived representations (be they binary or not), and matching.

However, NN-based video fingerprinting methods rely (at least partially) on various types of NN, from AlexNet Krizhevsky et al., 2012 and ResNet (Residual neural network) He et al., 2016 to CapsNet (Capsule Neural Network) Sabour et al., 2017 and LSTM Hochreiter and Schmidhuber, 1997, sometimes requiring specifically designed architectures Zhixiang et al., 2018. Yet, such an approach does not exclude the usage of partial conventional solutions in conjunction with NN, e.g., BoVW can be considered as an aggregation tool for visual features extracted by a CNN (Convolutional Neural Network) Zhang et al., 2019. Moreover, the matching algorithm generally comes along with the NN considered in the extraction phase.

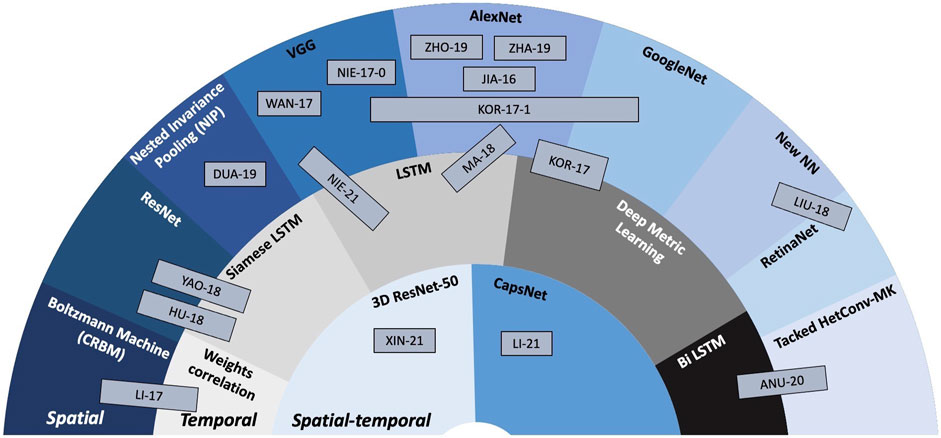

These main directions are illustrated by a selection of 20 studies, published since 2016, that are presented in chronological order. The relationships among them are depicted in Figure 7, which is also structured in three hemicycles (as Figure 5), yet their meanings are slightly different:

• the outer blue layer corresponds to the spatial features, exemplified through: CRBM (Conditional Restricted Boltzmann Machine), ResNet, NIP (Nested Invariance Pooling), VGGNet, AlexNet, GoogleNet, new structures designed for fingerprinting purposes, RetinaNet, and Tracked HetConv-MK (heterogeneous convolutional multi-kernel).

• the middle gray layer corresponds to temporal features, exemplified through: weight correlation, LSTM (Long-Short Term Memory), SiameseLSTM (Siamese LSTM), Deep Metric Learning, and BiLSTM (bidirectional LSTM).

• the inner blue layer corresponds to spatial-temporal features, exemplified through: 3D-ResNet50, and CapsNets structures.

FIGURE 7. NN-based fingerprinting method synopsis: the hemicycles (areas) related to the spatial, temporal, and spatial-temporal features are located at the outer, middle, and inner parts of the figure, respectively. Inside each hemicycle, examples of state-of-the-art solutions are presented.

The order of the classes in each hemicycle is again chosen to allow for a better visual representation of the synergies among them.

4.2.2 Methods overview

The work presented in Jiang and Wang (2016) is twofold. First, the VCDB is organized and presented by a comparison to other existing datasets (e.g., Muscle-VCD) used to evaluate video copy detection algorithms. In parallel, a fingerprinting method referred to as SCNN (Siamese Convolutional Neural Network) is advanced. SCNN is composed of two identical AlexNet Krizhevsky et al., 2012 followed by a connection function layer that computes the Euclidean distance between the two AlexNet outputs, and finally a contrastive loss layer Hadsell et al., 2006. The information thus obtained is structured by BoVW. The experiments focus on the relationship between the dataset and the efficiency of the system. The rule of thumb that is thus stated is “the bigger and the more heterogeneous the dataset, the harder for the systems to accurately detect copy videos”. Specifically, the SCNN achieves F1 = 0.69 on VCDB.
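
The contrastive loss placed on top of the two branches can be written compactly for a single pair of embeddings. The sketch below follows the usual formulation of Hadsell et al., 2006 with a Euclidean distance; the boolean `same` flag, the margin value, and the function name are assumptions of this illustration, and the two input vectors stand in for the outputs of the two AlexNet branches.

```python
import numpy as np

def contrastive_loss(embedding_a, embedding_b, same, margin=1.0):
    """Contrastive loss for one pair of embeddings.

    same=True  : the pair shows the same content, so the distance is penalized.
    same=False : the pair differs, so only distances below the margin are penalized.
    """
    a = np.asarray(embedding_a, dtype=np.float64)
    b = np.asarray(embedding_b, dtype=np.float64)
    distance = np.linalg.norm(a - b)
    if same:
        return 0.5 * distance ** 2
    return 0.5 * max(0.0, margin - distance) ** 2
```

Minimizing this quantity over many pairs pulls copies together in the embedding space while pushing unrelated clips at least `margin` apart, which is what makes a plain Euclidean distance usable at matching time.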

A two-level fingerprint approach is presented in Nie X. et al. (2017). First, LRF (Low-level Representation Fingerprint) is computed as a tensor-based model that fuses different visual features such as SURF and color histograms. Then, the DRF (Deep Representation Fingerprint) extracts the deep semantic features by using a pretrained VGGNet Karen and Andrew, 2014 containing five convolutional layers and 3 fully connected layers. The DRF takes 224 × 224 RGB images as input and outputs a 4096-dimension vector. The matching solution is also structured at two levels: the LRF component identifies a candidate set while the DRF further identifies the source video from the candidate set. The experiments consider both CC_WEB_VIDEO Wu et al., 2009 and Open Video Open Video, 2022 datasets, thus processing about 20,000 source clips. The method is benchmarked against four methods LRTA Li and Vishal, 2012, 3D DCT Baris et al., 2006, CGO Lee and Yoo, 2008, and CMF Nie et al., 2017b that it outperforms in terms of ROC curve.

The study in Schuster et al. (2017) discloses a video stream fingerprinting. The method takes advantage of the loophole in the MPEG-DASH standard Sodagar, 2011 that induces an outburst of content dependent packet bursts, despite the stream encryption. The video is represented as information bursts that are sent to the end user from the streaming services. The data traffic features are captured via a script on the client device or intruding detectors in the network. To this end, a CNN composed of 3 convolution layers, max pooling, and 2 dense layers is designed. To train the model, an Adam optimizer Kingma and Jimmy, 2014 was used as well as a categorical cross-entropy error function. The dataset is extracted from 100 Netflix titles, 3,558 YouTube videos, 10 Vimeo and 10 Amazon titles. A different model is trained for each streaming platform. The classifier achieved 92% accuracy. Inspired by these results, the study in Li (2018) further investigates the aspects specifically related to network, by extracting the information from the Wi-Fi traffic, where both transport and MAC (Media Access Control) layers are encrypted via TLS (Transport Layer Security), and WPA-2 (Wi-Fi Protected Access 2), respectively. The Multi-Layer Perceptron (MLP) model achieves 97% accuracy to identify videos from a small 10-video dataset.

Instead of using the output of the CNN as visual features, the study in Kordopatis-Zilos et al. (2017a) advances a method that extracts the image features starting from the activation values in the convolutional layers. The extracted information forms a frame-level histogram. A video-level histogram is then generated by summing all the frame-level histograms. For fast video retrieval, TF-IDF weighting is coupled to an inverted file indexing structure Sivic and Zisserman, 2003. To evaluate the proposed method, the CC_WEB_VIDEO Wu et al., 2009 dataset is used as well as 3 pre-trained CNNs, namely AlexNet Krizhevsky et al., 2012, GoogleNet Szegedy, 2015, and VGGNet Simonyan and Zisserman, 2014. GoogleNet performed the best (mAP = 0.958), followed by AlexNet (mAP = 0.951), then VGGNet (mAP = 0.937).
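
The aggregation and indexing steps (summing frame-level histograms, TF-IDF weighting, inverted file) can be sketched as follows. The histograms are assumed to be already computed from the CNN activations; the exact TF-IDF variant and the data layout are assumptions of this illustration rather than the choices of Kordopatis-Zilos et al. (2017a).

```python
import numpy as np

def video_histogram(frame_histograms):
    """Video-level histogram obtained by summing the frame-level histograms."""
    return np.asarray(frame_histograms, dtype=np.float64).sum(axis=0)

def tfidf_weights(video_histograms):
    """TF-IDF weighting of the visual words, one row per video."""
    hist = np.asarray(video_histograms, dtype=np.float64)
    tf = hist / np.maximum(hist.sum(axis=1, keepdims=True), 1e-12)
    doc_freq = (hist > 0).sum(axis=0)
    idf = np.log(hist.shape[0] / np.maximum(doc_freq, 1))
    return tf * idf

def inverted_file(video_histograms):
    """Map each visual word to the list of videos in which it occurs."""
    index = {}
    for video_id, hist in enumerate(video_histograms):
        for word in np.flatnonzero(hist):
            index.setdefault(int(word), []).append(video_id)
    return index
```

At query time, only the videos listed under the visual words of the query need to be scored, and words occurring in every video receive a zero weight and thus do not influence the ranking.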

A deep learning architecture with a focus on DML (Deep Metric Learning) is presented in Kordopatis-Zilos et al. (2017b). For feature extraction, the video is sampled to 1 frame per second then fed to a pre-trained CNN model (AlexNet Krizhevsky et al., 2012 and GoogleNet Szegedy, 2015 are considered). For the DML architecture, a triplet-based network is proposed where an anchor, a positive and a negative video are used to optimize the loss function. The first layer of the DML is composed of 3 parallel Siamese DNN (Deep Neural Network). In their turn, the Siamese DNN are composed of 3 dense fully connected layers followed by a normalization layer where the sizes of the layers and their outputs depend on the input size. The VCDB dataset Jiang and Wang, 2016 is used to train the DML. To evaluate the proposed system, the CC_WEB_VIDEO Wu et al., 2009 dataset is used. The system scores mAP = 0.969 using the GoogleNet and mAP = 0.964 when using AlexNet, thus increasing the performances presented in Kordopatis-Zilos et al. (2017a).
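
The triplet-based objective can be illustrated for a single (anchor, positive, negative) triplet. This is a generic triplet loss sketch: the squared Euclidean distance, the margin value, and the L2 normalization helper (standing in for the normalization layer mentioned above) are assumptions, not details confirmed for Kordopatis-Zilos et al. (2017b).

```python
import numpy as np

def l2_normalize(vector):
    """Scale a vector to unit Euclidean norm (zero vectors are returned unchanged)."""
    vector = np.asarray(vector, dtype=np.float64)
    norm = np.linalg.norm(vector)
    return vector if norm == 0 else vector / norm

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge loss forcing the anchor closer to the positive than to the negative."""
    anchor = np.asarray(anchor, dtype=np.float64)
    d_pos = np.sum((anchor - np.asarray(positive, dtype=np.float64)) ** 2)
    d_neg = np.sum((anchor - np.asarray(negative, dtype=np.float64)) ** 2)
    return float(max(0.0, d_pos - d_neg + margin))
```

The loss vanishes once the negative video is farther from the anchor than the positive one by at least `margin`, so only the triplets that still violate this ordering contribute to training.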

The study presented in Li and Chen (2017) develops a deep learning model capable of extracting spatio-temporal correlations among video frames based on a CRBM Taylor et al., 2007 that can simultaneously model the spatial and temporal correlations of a video. The spatial correlations are modeled by the connections between the visible and hidden layers at a given moment. The temporal correlations are modeled by the connections among the layers at different timestamps. The CRBM is paired with a denoising auto-encoder Vincent et al., 2010 module that reduces the dimension of the CRBM output by reducing the redundancies and discovering the invariants to distortions. This process can be applied recursively. A so-called post-processing module takes as input 2 video fingerprints and decides whether they are similar or not. The TRECVID 2011 dataset is used for benchmarking. The advanced method reaches F1 = 0.98, thus outperforming four state-of-the-art techniques: SGM Li and Vishal, 2013 (F1 = 0.91), 3D-DCT Esmaeili et al., 2011 (F1 = 0.89), CGO Lee and Yoo, 2008 (F1 = 0.78), and RASH Roover et al., 2005 (F1 = 0.79).

The study in Wang et al. (2017) investigates the influence of the frame sampling that is usually applied at the beginning of fingerprint extraction and sets its goal on computing a compact fingerprint without decreasing the frame rate (that is, without frame dropping). Three main steps are designed: frame feature extraction, video feature encoding, and video segment matching. The frame feature extraction is realized by means of a VGGNet-16 Simonyan and Zisserman, 2014 composed of 13 convolutional layers, 3 fully connected layers, and 5 max-pooling layers inserted after the convolutional layers. This step is followed by PCA whitening on the CNN output to reduce its dimensionality. The feature compression and aggregation are realized via sparse coding, and the timeline alignment by pooling the frame features into 1-second intervals (max-pooling is chosen). Fast retrieval of the matching features is ensured by using a KD-tree to store the fingerprints, and the temporal alignment is implemented according to the temporal network described in Jiang et al. (2014). To run the tests, the VCDB dataset Jiang and Wang, 2016 is used. The advanced method performs better than two baseline fingerprinting methods: CNN with AlexNet Jiang and Wang, 2016, and Fusion with SCNN Jiang and Wang, 2016. The experiments also study the impact of frame sampling: F1 = 0.7 when processing all the frames, dropping to F1 = 0.66 when processing 1 frame per second.
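
The pooling of frame features into 1-second intervals can be sketched in a few lines. The function name and the handling of a trailing incomplete second are assumptions of this illustration; the frame features are assumed to be already extracted and reduced.

```python
import numpy as np

def pool_per_second(frame_features, fps):
    """Max-pool frame-level features (N, D) into one vector per second of video."""
    features = np.asarray(frame_features)
    n_seconds = -(-len(features) // fps)  # ceiling division keeps a trailing partial second
    return np.stack([features[s * fps:(s + 1) * fps].max(axis=0)
                     for s in range(n_seconds)])
```

For instance, 5 frames of 2-dimensional features at `fps=2` yield 3 pooled vectors, the last one computed from the single remaining frame; all frames contribute to the fingerprint while its length grows with the duration rather than with the frame count.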

The study in Hu and Lu (2018) combines CNN and RNN (Recurrent Neural Network) architectures for video copy detection purposes. The method is divided into 2 main steps. First, a CNN architecture extracts content features from each frame: by a ResNet model He et al., 2016, each frame is represented by a 2048-component vector. Secondly, spatio-temporal representations are generated on top of the frame-level vectors. To this end, a Long-Short Term Memory based Siamese Recurrent Neural Network (SiameseLSTM) is trained. The training is achieved by selecting clips with the same length (20 frames) from CC_WEB_VIDEO Wu et al., 2009. For video searching/matching purposes, the video is cut into 20-frame clips, before their respective spatio-temporal representations are generated. To identify the copied segments, a graph-based temporal network algorithm is used Tan et al., 2009. This algorithm is tested using the VCDB dataset Jiang and Wang, 2016 and yields Prec = 0.9, Rec = 0.58, and F1 = 0.7233.

Liu, 2018 represents an example of spatial fingerprinting relying on CNN. The principle is to represent the video sequence as a collection of conceptual objects (in the computer vision sense) that are subsequently binarized. To compute the fingerprint, the video sequence is first down-sampled in space and time. For each down-sampled frame, visual objects are detected using the RetinaNet structure Lin et al., 2017. The binarization of the detected objects is achieved recursively and block-wise: each object is divided into a group of non-overlapping blocks and each block into several non-overlapping subblocks. The fingerprint bits are assigned according to a thresholding operation: the subblock pixel value average is compared to the average of all the pixels in the corresponding block. The matching technique considers an IIF structure and a weighted Hamming distance. The experimental results concern the values of Prec, Rec and F1, computed on the VCDB dataset, and show that a 10% higher recall rate can be achieved at the cost of a 1% decrease in precision [the comparison is made against an ML method based on SIFT descriptors as well as against the CNN method presented in Wang et al. (2017)].

The method presented by Zhixiang et al. (2018) proposes a nonlinear structural video hashing approach to retrieve videos in large datasets thanks to binary representations. To this purpose, a multi-layer neural network is designed to generate a compact L-bit binary representation for each frame of the video. To optimize the matching process, a subspace grouping method is applied to each video, thus decomposing the nonlinear representation to a set of linear subspaces. To compute the distance between 2 video clips, the distances between the underlying subspaces are integrated, where the Hamming distance is used to compute the distance between a pair of subspaces. CCV Jiang et al., 2011, YLI-MED Bend, 2015 and ActivityNet Heilbron et al., 2015 datasets are selected to test the performance of the algorithm that is benchmarked against DeepH (Deep Hashing) Liong et al., 2015, SDH (Supervised Discrete Hashing) Shen et al., 2015, and KSH (Kernel-Based Supervised Hashing) Liu et al., 2012. The experimental results show that the advanced method outperforms state-of-the-art solutions with the increase of code length.

An unsupervised learning video hashing technique is advanced in Ma et al. (2018). The first step is to extract the spatial features of the video frames using AlexNet Krizhevsky et al., 2012. The output of the CNN is fed to a single-layer LSTM network. Next, a time series pooling is applied. This step combines all the frame-level features to form a single video-level feature. Finally, an unsupervised hashing network extracts a compact binary representation of the video. To test its effectiveness, the UCF-101 Soomro et al., 2012 dataset is used together with 100 h of videos downloaded from YouTube. Several unsupervised hashing networks were evaluated and the ITQ-ST (Iterative Quantizing, Spatio-Temporal) and BA-ST (Binary Autoencoder, Spatio-Temporal) Carreira-Perpinán and Raziperchikolaei, 2015 methods worked the best to represent the videos, resulting into

The joint use of CNN (ResNet He et al., 2016) and RNN (SiameseLSTM) is studied in Yaocong and Xiaobo (2018). The selected CNN is ResNet50 that takes 224 × 224 RGB frames as input and outputs a 2048-dimension vector per frame. The RNN achieves the spatio-temporal fusion and sequence matching. To further optimize spatio-temporal feature extraction, positive pairs (similar video content) and negative pairs (dissimilar video content) are fed to the SiameseLSTM. The resulting feature vectors are considered as the video fingerprint. For the matching process, a graph based temporal network Tan et al., 2009 is used. For training, the CC_WEB_VIDEO Wu et al., 2009 dataset is used, and the video clips are normalized to 20 frames. For evaluation, the VCDB dataset Jiang and Wang, 2016 is used. The method yields Prec = 90% and Rec = 58%, which is slightly better than the solution advanced in Wang et al. (2017) and Jiang and Wang (2016).

The challenge of retrieving the top-k video clips from a single frame is taken in Zhang et al. (2019), where visual features are extracted by utilizing CNN and BoVW. The first step is to extract representative frames at fixed-time intervals and to resize them to 256 × 256 pixels. The second step is to feed all those images to a CNN feature extractor, implemented by the AlexNet architecture Krizhevsky et al., 2012. For each frame, a 4096-dimension feature vector is generated. This vector is the input for the BoVW module which aims to create a visual dictionary for the reference video dataset via a feature matrix. The extraction of visual words from visual features is done via the K-means clustering method. To optimize the retrieval time, frame pre-clustering is done, also based on K-means. A VWII (Visual Word Inverted Index) is deployed to improve search efficiency. The performance of the algorithm is benchmarked against SIFT Zhao et al., 2010, and BF-PI de Araújo and Girod, 2018 methods, on two datasets, namely Youtube-8M Abu-El-Haija et al., 2016 and Sports-1M Karpathy et al., 2014. The experimental results consider 4 criteria, namely precision evaluation on the size of dataset, precision evaluation on the number of visual words, efficiency evaluation (execution time) on the number of k results, and the efficiency evaluation (execution time) on the size of dataset.

Duan et al., 2019 presents an overview of the CDVA (Compact Descriptors for Video Analysis) standard promoted by ISO/IEC JTC 1 SC 29, a.k.a. MPEG. The CDVA framework is specified incrementally with respect to MPEG-CDVS. To extract video features, the key frames and the inter feature prediction are determined before being fed to a deep learning model based on CNN. The proposed CNN model is derived from NIP feature descriptors, which add robustness to the system. To make the system lightweight and multiplatform, the NN is compressed by using the Lloyd-Max algorithm. To reduce the video retrieval time, the output of the CNN is binarized via a one-bit scalar quantizer. A Hamming distance is used for the fingerprint matching. For testing, a dataset with source and attacked clips is created, gathering 4,693 matching pairs as well as 46,930 non-matching pairs. By coupling the deep learning extracted features with the handcrafted features proposed in CDVS, the system gains further precision.
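
The binarization and matching steps (one-bit scalar quantization followed by a Hamming distance) can be illustrated as follows. The quantization threshold of zero and the function names are assumptions of this sketch; the CDVA standard itself should be consulted for the normative procedure.

```python
import numpy as np

def one_bit_quantize(descriptor, threshold=0.0):
    """Keep one bit per component: 1 if the value exceeds the (assumed) threshold."""
    return (np.asarray(descriptor, dtype=np.float64) > threshold).astype(np.uint8)

def hamming(bits_a, bits_b):
    """Number of differing bits between two equal-length binary descriptors."""
    return int(np.count_nonzero(np.asarray(bits_a) != np.asarray(bits_b)))
```

Reducing each component to a single bit trades some discriminative power for compact storage and for a distance that can be computed with bitwise operations only.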

The study in Zhou et al. (2019) presents a video copy detection method establishing synergies between CNN and conventional computer vision tools. The first step consists in dividing the video into equal-length video sequences, from which frames are sampled with a fixed period, thus allowing the computation of the TIRI for each sequence. The second step consists in extracting the spatial features using a pre-trained AlexNet Krizhevsky et al., 2012 model, followed by a sum-pooling layer to reduce the matrix dimension. The model takes as input the TIRI and outputs a 256-dimension vector. The third step extracts the temporal features. In this respect, it starts by feeding all video sequence frames to the AlexNet and continues by averaging all frame matrices and by computing their centroids. Two matrices representing the distance in cylindrical coordinates (distance and angle) between the centroids are subsequently computed. The fourth step first creates a BoVW by clustering the extracted spatial features through a K-means algorithm and then structures the BoVW in an inverted index file. During the copy detection step, for each query-reference pair, three individual distances are computed: between spatial representations, between temporal distance representations and between temporal angle representations. These three distances are fused to compute a decision score that is compared to a pre-defined threshold, thus ascertaining whether the query is a copy version or not. Evaluated under the TRECVID 2008 framework, the method achieves mAP = 0.65.

A supervised stacked HetConv-MK Singh et al., 2019 and BiLSTM hashing model is designed in Anuranji and Srimathi (2020). The model integrates two main blocks devoted to spatial and temporal feature extraction, respectively. First, the convolutional block computes the spatial features by passing the frames through a stacked convolutional filter and a max-pooling layer. Secondly, the BiLSTM model processes the stream in both forward and backward directions. Finally, a fully connected layer generates a binary fingerprint that integrates the output of the previous units. The experimental results are obtained by processing 3 datasets: CCV Jiang et al., 2011, ActivityNet Heilbron et al., 2015, and HMDB (Human Motion Database) Kuehne et al., 2011, with a total of almost 30,000 clips. To determine the effectiveness of the algorithm, Hamming ranking and Hamming lookup are used in conjunction with mAP and Prec. The proposed method is compared to existing methods such as SDH (Supervised Discrete Hashing) Shen et al., 2015, supervised deep learning Liong et al., 2015, Deep Hashing Liong et al., 2015, and ITQ (Iterative Quantization) Gong et al., 2013. The results show improved accuracy on large-scale datasets.
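To make the evaluation protocol concrete, the sketch below ranks a toy database by Hamming distance to a query code and computes the average precision of the resulting list; mAP is then the mean of this value over all queries. The function names and the toy codes are assumptions of this example and are not taken from the cited study.

```python
from typing import List, Sequence


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of differing positions between two equal-length binary codes."""
    return sum(x != y for x, y in zip(a, b))


def hamming_ranking(query: Sequence[int], database: Sequence[Sequence[int]]) -> List[int]:
    """Database indices sorted by increasing Hamming distance to the query."""
    return sorted(range(len(database)), key=lambda i: hamming(query, database[i]))


def average_precision(ranked_relevance: Sequence[bool]) -> float:
    """Mean of the precision values measured at each relevant rank."""
    hits = 0
    precisions = []
    for rank, relevant in enumerate(ranked_relevance, start=1):
        if relevant:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


# Toy binary fingerprints; items 1 and 2 are assumed relevant to the query.
database = [[1, 1, 0, 0], [0, 0, 1, 1], [1, 0, 0, 0]]
query = [1, 0, 0, 0]
relevant_items = {1, 2}

ranking = hamming_ranking(query, database)
ap = average_precision([index in relevant_items for index in ranking])
```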

A video hashing framework, referred to as CEDH (Classification-Enhancement Deep Hashing), is conceived in Nie et al. (2021). CEDH is a deep learning model composed of 3 main layers. First, a VGGNet-19 Simonyan and Zisserman, 2014 layer extracts frame-level features. Then, an LSTM Hochreiter and Schmidhuber, 1997 network captures temporal features. Finally, a classification module is implemented to enhance the label information. To train the model, the loss term is matched to the peculiarities of each layer: triplet loss, classification loss, and code constraint terms, respectively. To evaluate its performance, 3 video datasets are processed: the FCVID (Fudan-Columbia VIDeo) dataset Jiang et al., 2018, HMDB Kuehne et al., 2011, and UCF-101 Soomro et al., 2012, thus resulting in a total of 7,070 video clips for training and 3,030 clips for testing. The CEDH is benchmarked against 8 state-of-the-art solutions, namely: locality sensitive hashing Datar et al., 2004, PCA hashing Wang et al., 2010, iterative quantization Gong et al., 2013, spectral hashing Weiss et al., 2009, density sensitive hashing Jin et al., 2014, shift-invariant kernel locality sensitive hashing Raginsky and Lazebnik, 2009, self-supervised video hashing Song et al., 2018, and deep video hashing Liong et al., 2017. The evaluation criteria are mAP, Prec and Rec.

A hybrid method combining deep learning and hashing techniques to achieve video fingerprinting is presented in Xinwei et al. (2021). The method is based on a quadruplet fully connected CNN, centered around 4 3D ResNet-50 networks that extract spatio-temporal features. The input is composed of 4 videos: the source clip, a copy of the clip (a modified version extracted from the original), and 2 clips that are not related to the original clip. The output consists of 2 elements: a 2048-dimension vector and a 16-bit binary code. For training and testing, three public datasets are considered: UCF-101 Soomro et al., 2012, HMDB Kuehne et al., 2011 and FCVID Jiang et al., 2018. A normalization process of the 4,986 videos takes place before the training, where each video is downsized to 320×240 and only the first 100 frames of each clip are used to identify the video. The proposed method is mainly compared to NL_Triplet, a deep learning method with a similar global architecture. The two methods show similar performance and behavior across the various benchmarking setups.

The study in Li et al. (2021) presents a fingerprinting method that takes advantage of the capabilities of the CapsNet Sabour et al., 2017 to model the relationships among compressed features. The architecture of the convolution layers is composed of two 3D-convolution modules extracting spatio-temporal features, followed by an average pooling module along the temporal dimension and finally by a 2D-convolution module. The role of the primary capsule layer is convolution computation and dimension transformation, while the advanced capsule is composed of 32 neurons and is responsible for matrix transformations and dynamic routing Sabour et al., 2017. The output of this architecture is a 32-dimension fingerprint. A triplet network is designed for the matching. During the training, the matching network requires three inputs: an anchor sample (original video), a positive sample (a copy/modified version of the original video), and a negative sample (non-related video). The dataset is composed of 4,000 videos randomly sampled from FCVID Jiang et al., 2018, TRECVID, and YouTube. The ROC and F1 scores are considered as evaluation criteria when comparing the proposed method to DML Kordopatis-Zilos et al., 2017b, CNN + LSTM Yaocong and Xiaobo, 2018, and TIRI Coskun et al., 2006. The proposed method achieves an F1 = 0.99 compared to F1 = 0.97 for DML, F1 = 0.94 for CNN + LSTM and F1 = 0.825 for TIRI.
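The anchor/positive/negative training principle can be illustrated with the standard hinge-style triplet loss sketched below. The Euclidean distance, the margin value and the 2-dimension toy fingerprints are assumptions of this example; the exact loss used in the cited study may differ.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length fingerprints."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(
    anchor: Sequence[float],
    positive: Sequence[float],
    negative: Sequence[float],
    margin: float = 1.0,
) -> float:
    """Zero once the negative is at least `margin` farther from the anchor than the positive."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Toy fingerprints (the method above outputs 32 dimensions; 2 are used here for brevity).
anchor = [0.0, 0.0]
positive = [0.3, 0.4]        # modified copy, close to the anchor
easy_negative = [3.0, 4.0]   # unrelated video, far from the anchor
hard_negative = [0.6, 0.8]   # unrelated video, still close to the anchor

loss_easy = triplet_loss(anchor, positive, easy_negative)
loss_hard = triplet_loss(anchor, positive, hard_negative)
```

Only the hard negative yields a non-zero loss, so training pushes unrelated videos away from the anchor until the margin is respected.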

4.2.3 Discussion

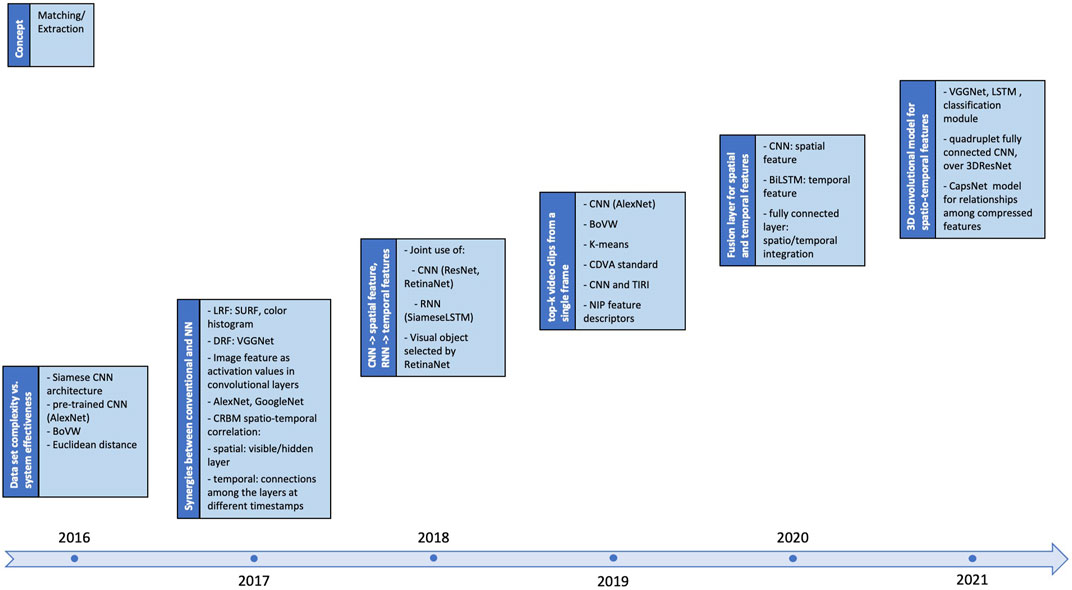

A global retrospective view on the investigated NN-based methods is presented in Figure 8, which is designed as a counterpart to Figure 6. It originates in 2016 and presents, for each analyzed year, the key conceptual ideas (the dark-blue, left block) as well as the methodological enablers in fingerprinting (the blue, right block). Note that in this case the fingerprint extraction and matching are merged (as they are tightly coupled).

The previous section shows that NN-based fingerprinting is still an emerging research field. It inherits its methodological framework from conventional fingerprinting, while updating both the fingerprint extraction and matching.

Since 2016, fingerprint extraction has gradually shifted from considering NN solutions at an individual level (e.g., spatial or temporal features) to holistic, 3D Nets able to simultaneously capture integrated spatio-temporal features. Intermediate solutions, combining NN and conventional image processing tools (e.g., SURF, TIRI, or BoVW), are also encountered. Fingerprint matching is generally designed jointly with fingerprint extraction.

The experimental testbed principles are also inherited from the case of conventional methods. Yet, the datasets differ in their size as well as in the fact that the experimenters generally create the attacked versions of the video content themselves (cf. the last 5 lines in Table 1). The evaluation criteria generally cover Prec, Rec, F1 and mAP. This variety in experimental conditions makes an objective performance comparison impossible.
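For reference, the Prec, Rec and F1 criteria can be computed from detection counts as in the minimal sketch below. The counts are purely illustrative and are not taken from any of the surveyed studies.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from true positive, false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f1 = 2.0 * precision * recall / (precision + recall)
    return precision, recall, f1


# Illustrative counts: 90 copies correctly detected, 10 false alarms, 30 missed copies.
prec, rec, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
```

Two studies reporting only Prec and only F1, respectively, on datasets of different sizes cannot be ranked from such figures alone, which is the comparison problem raised above.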

Figure 8 and Section 4.2.2 demonstrate that the exploratory work of using NN in conjunction with conventional tools can be considered successful and that the way towards effective NN-only solutions is open Li et al., 2021.

However, when comparing current-day conventional solutions to NN-based ones, the quantitative results seem unbalanced in favor of conventional methods. Yet, quick conclusions should be avoided, as the datasets are of significantly different sizes and the task complexity is significantly different. The generic evaluation criteria introduced in Section 3 are seldom jointly evaluated, with each study focusing on a specific metric and/or a pair of metrics. Moreover, note that the computational complexity is seldom discussed as a true evaluation criterion, thus making a clear-cut decision even more complicated.

5 Challenges and perspectives

Fingerprinting challenges and trends are structured according to the constraints set by current-day video production and distribution, and to the new applicative fields in which fingerprinting can help, as discussed in Sections 5.1 and 5.2, respectively.

5.1 Stronger constraints on video fingerprinting properties

While neither exhaustive nor detailed, Section 4 is meant to shed light on the very complex, fragmented yet well-structured landscape of video fingerprinting methods, as illustrated in Figures 5–8.

Despite clear incremental progress, achieving the ultimate method for generic video content (TV/movies/social media) fingerprinting is still an open research topic that will continue to face new challenges in terms of: 1) video content size and typology, 2) complexity of near-duplicated copies, 3) compressed stream extraction, and 4) energy consumption reduction.

First, the size of video content is expected to continuously increase. Social media, personalized video content, and business-oriented video content (e.g., videoconferencing) are expected to lead soon to an average of 38 h a week of video consumption per person in the US Delloite, 2022. Such a quantity of content is expected to be processed, stored and retrieved without impairing the user experience; hence, new challenges in reducing the complexity of fingerprint matching are expected to arise.

Secondly, image/video editing software as well as professional video transmission technologies (such as broadcasting, encoding, or publishing) will increase the number, the variety and the complexity of the near-duplicated copies to be dealt with. For the time being, these near-duplicated copies are considered one by one, and no attempt is made to exploit would-be statistical models representing them in a unified way; this trend is therefore expected to increase the constraints on fingerprinting robustness.

Thirdly, although video content is mainly generated in compressed format, only a few partial results related to fingerprint extraction directly from the stream syntax elements are reported Ngo et al., 2005, Li and Vishal, 2013, Ren et al., 2016, Schuster et al., 2017. This contrasts sharply with related applicative fields, like indexing and watermarking, where more advanced results have already been obtained Manerba et al., 2008, Benois-Pineau, 2010, Hasnaoui and Mitrea, 2014.

Finally, video fingerprinting is also expected to take up the challenge of reducing the computational complexity, following a green computing trend in video processing Ejembi and Bhatti, 2015, Fernandes et al., 2015, Katayama et al., 2016. This working direction is expected to be coupled to the previous one, namely designing green compressed video fingerprinting solutions.

With respect to the above-mentioned four items, short-term research efforts are expected to address several incremental aspects, from both methodological and applicative standpoints. The former encompasses aspects such as the explicability of the NN-based results, the relationship between semantics, content, and the human visual system, the questionable possibility of modeling the modifications induced in near-duplicated content, … The latter is expected to investigate the very applicative utility of conventional performance criteria, the balancing of computational complexity between extraction and detection in the context of NN-based methods and massive datasets, the possibility of identifying a unique structure or a set of structures per performance criterion to be optimized, etc.

As a final remark, note that no convergence towards a theoretical model able to accommodate the current-day efforts can be identified and, in this respect, information theory, statistics and/or signal/image processing are expected to remain central to long-term research efforts. Such a theoretical model is expected to have different beneficial effects, from allowing a rigorous comparison among existing methods to identifying the tools for answering the applicative expectations and/or the theoretical bounds.

5.2 Emerging applicative domains

Fingerprinting benefits are likely to become appealing for several new applicative domains, such as fake news identification and tracking, unmanned vehicles video processing, metaverse content tracking, or medical imaging, to mention but a few. This extension raises new challenges not only in terms of applicative integration between fingerprinting and other technologies but also in terms of content type and composition.

In the sequel, we shall detail the cases of fingerprinting for visual fake news and for the video captured by unmanned vehicles.

5.2.1 Visual fake news

While the concept of fake news still lacks a sharp and consensual definition Katarya and Massoudi, 2020, it can be considered that, in the video context, it relates to the malicious creation of new video content whose semantics are not genuine and/or whose interpretation leads to false conclusions. Fake news creation generally starts from some original video content that is subsequently edited. Hence, the problem is multifaceted and various types of solutions can be envisaged: detecting whether a content is modified or not, detecting the original content that has been manipulated, detecting the last authorized modification of the original content, etc. Consequently, various video processing paradigms can contribute (individually and/or combined) to elucidate some of these aspects Lago et al., 2018, Zhou et al., 2020, Agrawal and Sharma, 2021, Devi et al., 2021.

For instance, video forensics is generally considered as a tool to identify content modification, solely based on the analyzed content. For its part, watermarking provides effective solutions for identifying video content modifications and/or the last authorized user but requires the possibility of modifying the original content prior to its distribution.

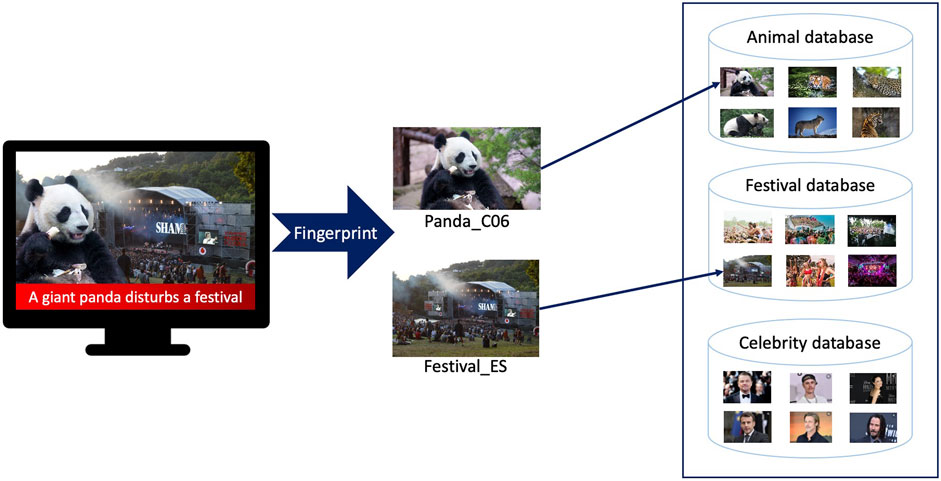

Video fingerprinting enables the detection of the original content that has been manipulated to create the fake news content. Figure 9 illustrates the case3 where two video contents, from two different repositories, are combined to create a fake content. In this respect, the challenge of designing fingerprinting methods robust to content cropping is expected to be taken up soon. This example shows that fingerprinting is complementary to forensics. With respect to watermarking, the main advantage of fingerprinting is its passive behavior (it does not require the original content to be modified).

Moreover, video fingerprinting is still expected to be complemented with security mechanisms, and blockchain (also referred to as Distributed Ledger Technologies—DLT) seems very promising in this respect.