- 1Department of Psychology, Lyon College, Batesville, AR, United States

- 2Research Center for Human-Animal Interaction, College of Veterinary Medicine, University of Missouri, Columbia, MO, United States

Facial Action Coding Systems (FACS) are among the most systematic and standardized methods for studying the physical form of animal facial behavior. Although FACS manuals provide some guidance on how to implement their coding systems, they often lack detailed recommendations for data collection protocols, which affects the types of data that can be coded. Furthermore, various methods for coding data are not always discussed in FACS manuals. In this perspective piece, I review some considerations related to the data collection and coding process and offer best practice recommendations, as well as alternatives. While this list of considerations and recommendations is not comprehensive, I hope it encourages further discussion and the sharing of additional guidelines and protocols for FACS. Doing so can further enhance the systematic approach and scientific rigor of FACS.

Introduction

The study of animal facial behavior is a valuable research avenue, providing a non-invasive and cost-effective way to analyze (and compare) biological processes, social dynamics, emotional responses, and cognitive mechanisms across species (Descovich et al., 2017). Since Darwin’s seminal work “The Expression of the Emotions in Man and Animals” was published in 1872, there have been significant advances in how the physical form of facial behavior is studied. Most studies conducted over the past 15 years have used protocols described in Facial Action Coding Systems [FACS (Ekman and Rosenberg, 2005)]. FACS is a systematic and standardized method for identifying both subtle and overt facial movements based on muscle contractions, referred to as Action Units (AUs). A facial behavior (or AU combination) consists of one or more AUs. Originally developed for humans (Ekman and Rosenberg, 2005), FACS have since been developed for numerous other primates and domesticated animals (Parr et al., 2007; Parr et al., 2010; Waller et al., 2012; Caeiro et al., 2013; Caeiro et al., 2013; Wathan et al., 2015; Waller et al., 2020; Correia-Caeiro et al., 2022; Waller et al., 2024; Correia-Caeiro et al., 2025).

FACS-based approaches offer several benefits, including: (A) rigorous training protocols (Clark et al., 2020) that integrate information from anatomical dissections and literature reviews on animal faces, along with video footage and intramuscular stimulation to elicit the relevant muscle contractions (Waller et al., 2020); (B) a certification test that evaluates inter-observer reliability against FACS experts, ensuring consistency across published studies and applications (Cohn et al., 2007); and (C) consistent use of Action Unit (AU) codes across species and their corresponding FACS, which promotes comparative research (Ekman and Rosenberg, 2005; Waller et al., 2020). One major drawback, however, is that learning and applying FACS is time-intensive. Obtaining FACS certification reportedly requires several months of training, and manually coding just 4–5 seconds of video footage with FACS-based approaches takes approximately one hour (Cross et al., 2023).

After completing training and obtaining certification from experts who contributed to the development of the system (Cohn et al., 2007; Waller et al., 2020), researchers must decide how to collect data and apply FACS to their specific projects on animal facial behavior. This process is not straightforward, as research questions and key variables of interest can differ substantially from one study to another. Various (and interconnected) approaches to data collection and coding can be employed, each with its own advantages and disadvantages, as detailed below. Because FACS coding requires such a significant time commitment, it is crucial to make decisions about data collection and coding early.

The goal of this perspective piece is to offer insights into some of these approaches, drawing on my 11 years of experience applying non-human FACS in my research, discussions with colleagues who also use FACS, and relevant published studies that incorporate FACS-based approaches. While FACS manuals offer guidance on using their coding systems, they usually do not include recommendations for data collection protocols, which affect the type of data that can be coded. FACS-based coding can also be applied to collected media in various ways that are not always discussed in the respective manuals. Although some researchers have published guidance on data collection and coding protocols for humans (e.g., for human FACS, see Cohn et al., 2007), this topic is rarely discussed for non-human animals, whose facial morphology and behavior can differ significantly from those of humans. Below, I summarize these approaches and provide best practice recommendations.

This perspective piece will concentrate exclusively on utilizing FACS to examine the physical form of animal facial behaviors. When studying the social function of animal facial behaviors, it is also essential to develop a clear plan for implementing FACS-based coding protocols. This careful planning can significantly enhance research efficiency and save valuable time and energy during the coding process.

Collecting facial behaviors

FACS manuals often include multiple images and short video examples for each Action Unit (AU). These examples illustrate the transition from a point where the AU is completely absent to a point where it is being produced, representing either the middle or the end of its production. This is sometimes achieved by providing images of a neutral face for comparison or by starting the video clip just before the AU is produced [as seen in the human FACS (Ekman and Rosenberg, 2005)]. For example, AU25 (lips part) is a facial muscle movement observed in both humans and non-human species (Ekman and Rosenberg, 2005; Waller et al., 2020). FACS manuals typically feature 1–3 still images (Ekman and Rosenberg, 2005; Parr et al., 2007; Parr et al., 2010; Waller et al., 2012; Caeiro et al., 2013; Caeiro et al., 2013; Correia-Caeiro et al., 2022; Correia-Caeiro et al., 2025) and 1–2 video clips (Ekman and Rosenberg, 2005; Caeiro et al., 2013; Caeiro et al., 2013; Wathan et al., 2015; Waller et al., 2024; Correia-Caeiro et al., 2025) in the AU25 entry. Still images of the same individuals with a neutral face (i.e., not producing AU25) are often provided for comparison (Parr et al., 2007; Waller et al., 2012; Caeiro et al., 2013). However, these entries do not capture every depiction of AU25 across manuals: because AU25 may also co-occur with other movements, it also appears in the entries for those movements. It is important to note that FACS coding is not limited to changes from a neutral face; it can also document individual AUs or sets of co-occurring AUs, regardless of the presence or absence of other AUs.

These considerations raise questions about whether to use images or video footage, and when to begin and end documentation. Video clips can vary in length: they may be short, lasting just a few seconds and focusing on a single facial behavior (as in most examples from FACS manuals), or longer, allowing multiple facial behaviors to be observed. Additionally, documentation can focus on a single individual or on multiple individuals, either simultaneously or over the course of the recording.

Recommendation 1: capture a neutral comparison point

Whether using images or video clips of any length, it is advisable to establish a neutral reference point to accurately identify facial muscle contractions. This helps account for individual variation in facial structure. For photos, a neutral reference point can be captured by photographing individuals exhibiting no facial muscle contractions [as seen in FACS for primates (Parr et al., 2007; Waller et al., 2012; Caeiro et al., 2013)]. For videos, this can be done by recording footage of the same individual producing no facial muscle contractions, either at the beginning or end of the recording. Because facial morphology can change over time, it is beneficial to periodically update reference points in this way. My colleagues and I had to implement this practice in our data collection with chimpanzees, as the facial morphology of the infant chimpanzees changed dramatically over the three-year data collection period (Florkiewicz et al., 2023; Florkiewicz and Lazebnik, 2025). Accurately recording neutral reference points is particularly important for researchers examining a limited number of AUs, as these may be obscured by other facial movements (Ekman and Rosenberg, 2005).

Recommendation 2: short video clips are ideal

My colleagues and I have previously found that images yield higher inter-observer reliability scores than videos, while longer video clips tend to yield the lowest scores (Florkiewicz et al., 2018; Molina et al., 2019). However, video recordings are preferable for observing muscle contractions, as they preserve the full facial movement, whereas a still image captures only the moment at which it was taken and may cut the movement off prematurely. Short video clips seem to be the most effective approach; they can be recorded opportunistically as facial behaviors occur, or created by editing longer clips into shorter segments around key moments of facial behavior. Longer clips should only be considered if they can capture multiple facial behaviors, as reviewing and coding longer clips is time-consuming (Florkiewicz and Campbell, 2021). When analyzing longer video clips, a key consideration is whether AUs should be coded continuously throughout the entire video (taking into account their presence or absence, intensity, duration, etc.) or only when a specific facial behavior ‘event’ of interest occurs (Cohn et al., 2007). We have found that event coding is ideal during focal sampling (where videos concentrate on a single individual or pair for extended periods of time), as it saves time in the coding process (Florkiewicz et al., 2018; Florkiewicz et al., 2023).

Video clips of any length should always be recorded at the highest possible quality, using video stabilization equipment or software to reduce the likelihood of missing observations due to poor video quality or camera movement. Frontal footage of the face is ideal. However, it may also be acceptable to record from the side (especially during social interactions), as long as all facial features are clearly visible and there are no concerns about unilateral movements. My colleagues and I frequently study the communicative interactions of primates and domesticated animals, so we often must record their faces from the side [as two individuals turn towards and interact with each other (Scheider et al., 2014; Florkiewicz et al., 2023)].

Recommendation 3: the fewer individuals, the better

My colleagues and I have also found that as the number of individuals in a frame increases, inter-observer reliability scores tend to decrease (Molina et al., 2019). Therefore, if a research project focuses on how AUs are used in a social setting, it may be ideal to concentrate on interactions between 2 and 3 individuals, which can help minimize the potential decline in coding performance. When this is not possible and many individuals are in the frame, recoding the video footage so that each pass focuses on a single individual or a smaller group may be the best approach. My colleagues and I needed to implement this strategy while studying the facial behaviors of chimpanzees (Florkiewicz and Lazebnik, 2025) and domesticated cats (Scott and Florkiewicz, 2023), which were housed with numerous individuals. Sometimes a video clip would display multiple interactions happening at once, and we found it easier to isolate and code one interaction at a time. If a research project examines facial behaviors without social interaction, it is best to focus on a single individual.

Coding facial behaviors

After obtaining certifications, researchers can begin coding Action Units (AUs) using FACS. However, researchers may gain additional benefits by carefully considering how they code observed AUs and what other variables they can include in their protocols.

Recommendation 4: secure FACS coders early

It is considered good practice to conduct inter-observer reliability on a sample of the entire coded dataset, even if all researchers have received their FACS certification. This enables researchers to detect type I (false positive) and type II (false negative) coding errors, which a single coder is unlikely to catch because intra-observer reliability typically remains high when revisiting one’s own coded facial behaviors (Florkiewicz et al., 2018). Agreement is typically assessed using Wexler’s ratio (Ekman and Rosenberg, 2005), which accounts for AUs that one coder scored and the other did not. Wexler’s ratio is calculated by multiplying the number of AUs on which two certified coders agree by two, and then dividing the result by the total number of AUs scored by both coders (Wathan et al., 2015). However, Wexler’s ratio is often calculated for combinations of AUs rather than for individual AUs. This practice has been known to cause issues in human FACS evaluations, as subtle AUs can be overlooked and others incorrectly categorized (Cohn et al., 2007). If a study focuses on individual AUs, it would be beneficial to assess agreement across the different dimensions of AU coding, for example by using Cohen’s kappa for presence/absence, intensity, and timing data (Sayette et al., 2001; Cohn et al., 2007). To date, only a limited number of studies involving animal FACS have used Cohen’s kappa to evaluate agreement on AUs directly (Bennett et al., 2017), as Wexler’s ratio appears to be far more commonly used (Ekman and Rosenberg, 2005; Parr et al., 2007; Wathan et al., 2015).
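To make the two agreement measures concrete, here is a minimal Python sketch. It assumes that each coder’s output for one facial behavior event is a set of AU labels (for Wexler’s ratio) and that the presence or absence of a single AU across events is recorded as lists of 0/1 values (for Cohen’s kappa). The function names and AU labels are illustrative only, and are not taken from any FACS manual or coding software.

```python
from typing import Sequence, Set


def wexler_ratio(coder_a: Set[str], coder_b: Set[str]) -> float:
    """Agreement for one event: (2 x AUs both coders scored) / (AUs scored by A + AUs scored by B)."""
    total_scored = len(coder_a) + len(coder_b)
    if total_scored == 0:
        # Assumption: if neither coder scored any AU, treat the event as full agreement.
        return 1.0
    agreed = len(coder_a & coder_b)
    return 2 * agreed / total_scored


def cohens_kappa(coder_a: Sequence[int], coder_b: Sequence[int]) -> float:
    """Cohen's kappa for one AU coded present (1) or absent (0) across the same events."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must rate the same, non-empty set of events")
    n = len(coder_a)
    # Observed agreement: proportion of events where both coders gave the same rating.
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement from each coder's marginal rate of coding "present".
    p_a = sum(coder_a) / n
    p_b = sum(coder_b) / n
    p_expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    if p_expected == 1:
        # Both coders used a single category throughout; kappa is undefined.
        raise ValueError("Kappa is undefined when chance agreement equals 1")
    return (p_observed - p_expected) / (1 - p_expected)


if __name__ == "__main__":
    # Hypothetical codes for one event: two AUs agreed, three scored by each coder.
    print(round(wexler_ratio({"AU1", "AU2", "AU25"}, {"AU1", "AU25", "AU26"}), 3))  # 0.667
    # Hypothetical presence/absence of one AU across eight events.
    print(cohens_kappa([1, 1, 0, 0, 1, 0, 1, 0], [1, 0, 0, 0, 1, 0, 1, 1]))  # 0.5
```

In this made-up example, Wexler’s ratio is 2 × 2 / (3 + 3) ≈ 0.667. For the eight events, observed agreement is 0.75 and chance agreement is 0.5, giving a kappa of 0.5. How per-event ratios are aggregated across a dataset should follow the conventions of the relevant FACS certification materials.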

Training and obtaining certification in FACS is a time-intensive process that often requires practice and patience. Unfortunately, this means that only a few FACS coders (usually 2–3) are available for a given study. I highly recommend recruiting research assistants and beginning the training process before data coding starts. Research assistants can often assist with other aspects of the project, potentially earning authorship on papers (see Molina et al., 2019; Scott and Florkiewicz, 2023; Mahmoud et al., 2025 for examples). Collaboration is another way to secure additional coders, which is essential for maintaining the systematic rigor of the FACS-based approach. Individuals responsible for issuing FACS certifications typically maintain a list of those who have already obtained certification, along with the reasons for their certification. As a last resort, it could be beneficial to reach out to these individuals to identify potential collaborators.

Recommendation 5: identify and focus on primary data

The ideal approach involves gathering detailed information on both individual AUs and their combinations. However, this may require additional coding time. Depending on the research questions being addressed, it might be more advantageous to focus on either individual AUs or their combinations (Cohn et al., 2007). Researchers can designate either AUs or AU combinations as their primary data and concentrate detailed coding of other relevant variables on that primary data. During the data analysis phase, basic secondary data on the other level (AUs or AU combinations) can then be extracted.

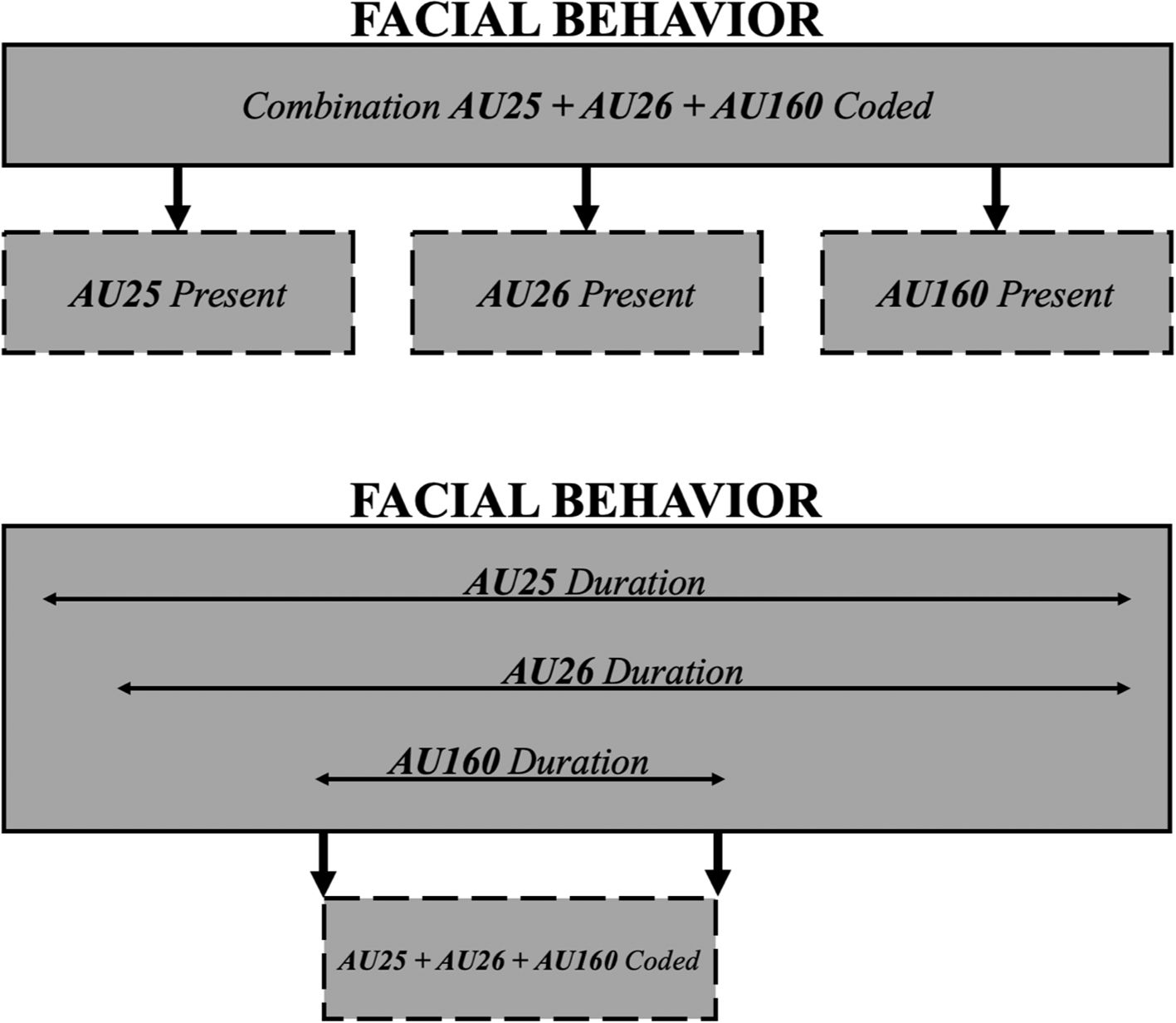

It is important to note that if the focus on AUs is superficial (such as being concerned only with presence/absence data) and there is a greater emphasis on their combinations (and associated properties), researchers should prioritize coding combinations first (as primary data). In this approach, combinations are identified at the peak of the facial behavior, the moment when all AUs associated with the facial behavior event are produced simultaneously. Combinations can then be broken down into their individual AU components for each facial signaling behavior (as secondary data; top of Figure 1). My colleagues and I have adopted this approach in our studies, as we are primarily interested in documenting the communicative repertoires of various species (Florkiewicz et al., 2018; Florkiewicz et al., 2023; Scott and Florkiewicz, 2023). Conversely, if the focus on AU combinations is superficial (such as being concerned only with which AUs appear at the apex of the facial behavior), researchers should prioritize coding individual AUs first (as primary data). In this approach, individual AUs are identified and coded at their maximum production, the moment when facial muscle contractions are most pronounced. For each facial behavior, the point of overlap where most or all AUs are present can then be used to generate the corresponding AU combination (as secondary data; bottom of Figure 1). Both ways of extracting secondary data are illustrated in Figure 1.

Figure 1. An illustration representing the different ways that secondary data on AUs and their combinations can be extracted from primary data. The first method (top figure) involves coding AU combinations as primary data, and then extracting secondary presence/absence data for individual AUs. The second method (bottom) involves coding individual AUs as primary data, and then extracting secondary AU combination data.
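The two extraction routes in Figure 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions: AU combinations are stored as “+”-joined strings (e.g., “AU1+AU25”), individual AUs are stored with onset and offset times in seconds, and the combination for an event is taken as the set of AUs active at the time point where the most AUs overlap. These data formats and function names are hypothetical and would need to match whatever your coding software exports.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Sequence


@dataclass(frozen=True)
class AUInterval:
    """One individual AU coded as primary data, with onset and offset in seconds."""
    au: str
    onset: float
    offset: float


def combinations_to_presence(
    combinations: Sequence[str], au_list: Sequence[str]
) -> List[Dict[str, int]]:
    """Method 1 (top of Figure 1): primary AU combinations -> secondary presence/absence rows."""
    rows = []
    for combo in combinations:
        present = set(combo.split("+"))
        rows.append({au: int(au in present) for au in au_list})
    return rows


def intervals_to_combination(intervals: Sequence[AUInterval]) -> FrozenSet[str]:
    """Method 2 (bottom of Figure 1): primary AU intervals -> secondary AU combination.

    The maximum overlap of closed intervals always occurs at some onset,
    so only onset times need to be checked.
    """
    best: FrozenSet[str] = frozenset()
    for candidate in intervals:
        t = candidate.onset
        active = frozenset(iv.au for iv in intervals if iv.onset <= t <= iv.offset)
        if len(active) > len(best):
            best = active
    return best


if __name__ == "__main__":
    print(combinations_to_presence(["AU1+AU25", "AU26"], ["AU1", "AU25", "AU26"]))
    event = [  # hypothetical timings for one facial behavior
        AUInterval("AU1", 0.0, 1.2),
        AUInterval("AU25", 0.4, 1.0),
        AUInterval("AU26", 0.6, 1.5),
        AUInterval("AU12", 1.3, 2.0),
    ]
    print("+".join(sorted(intervals_to_combination(event))))  # AU1+AU25+AU26
```

Ties between equally large overlaps are resolved in favor of the earliest onset here; a real protocol might instead anchor the combination to the apex annotations described in the text.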

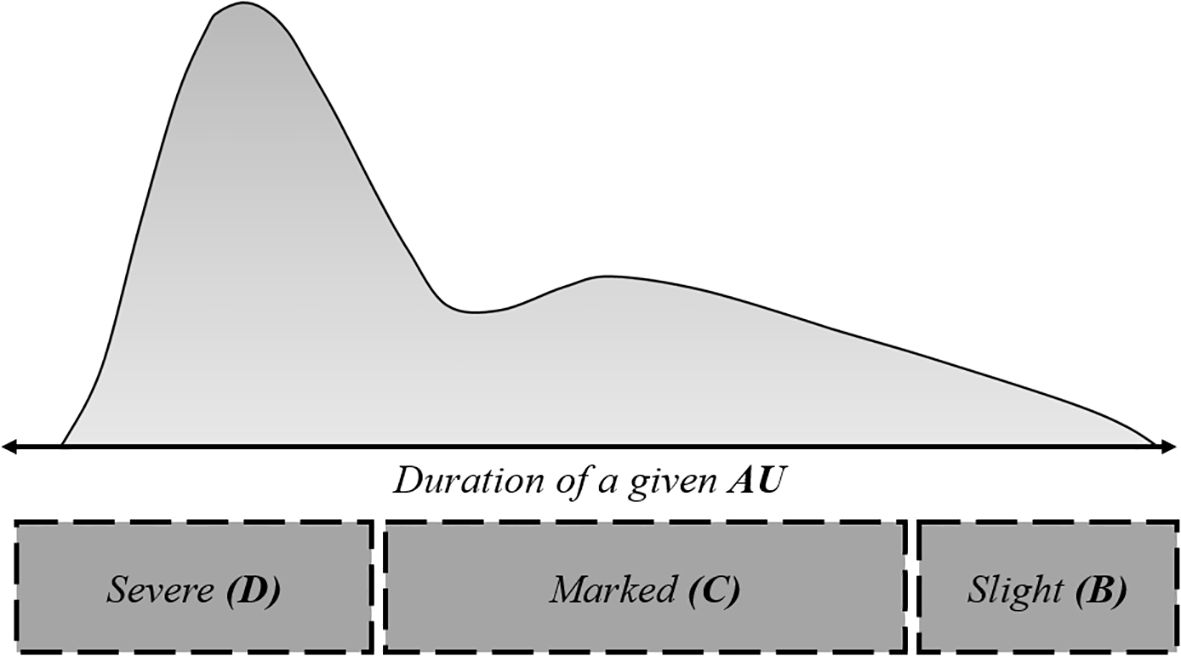

Recommendation 6: consider timing and intensity

In FACS coding protocols, individual AUs are often identified at the apex of their production, the moment when the associated facial contractions are most visible (Ekman and Rosenberg, 2005). AU combinations are often identified at the moment of overlap, when most or all AUs are markedly present. However, AUs and AU combinations can vary in their timing and duration, and individual AUs can also vary in intensity depending on the strength of the facial contractions. Some AUs may be produced quickly and intensely, whereas others may be produced slowly and subtly. If the timing and intensity of AUs are important to the research question, it may be beneficial not only to mark their onset and offset but also to divide this marked timeframe into segments based on changes in intensity (Figure 2).

Figure 2. An illustration depicting a specific AU, showing varying duration and intensity throughout the facial behavior. Intensity categories from FACS manuals may be applied, depending on the extent of muscle contraction.

Most FACS manuals include intensity rating scales that can be used. When coding only the presence or absence of an AU, the AU is considered present if it is clearly evident, that is, if it shows marked intensity, corresponding to a rating of at least C on the A to E scale (Vick et al., 2007). However, as illustrated in Figure 2, this means that the coded duration of the AU will be shortened, because the portions of the movement rated below C are not counted. Coding the intensity of individual AUs is also a subjective process, which can lead to lower inter-observer reliability scores (Vick et al., 2007). Many FACS manuals describe how the appearance of an AU varies with intensity [under the “appearance changes” section of each AU entry (Ekman and Rosenberg, 2005; Parr et al., 2007; Parr et al., 2010; Waller et al., 2012; Caeiro et al., 2013; Caeiro et al., 2013; Wathan et al., 2015; Waller et al., 2020; Correia-Caeiro et al., 2022; Waller et al., 2024; Correia-Caeiro et al., 2025)]. It is recommended to use this information to create a more structured ethogram, which should be tested for reliability before implementation.
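The effect of a presence threshold on coded duration can be sketched in Python. This minimal example assumes, hypothetically, that an AU has been rated frame by frame on the A to E intensity scale (with None for frames where it is absent) at a known frame rate; the ratings and frame rate are invented for illustration. `intensity_segments` divides the marked timeframe into runs of constant intensity, as in Figure 2, and `coded_duration` shows how requiring at least C shortens the duration counted as present.

```python
from itertools import groupby
from typing import List, Optional, Sequence, Tuple

# Ordinal ranks for the A (trace) to E (maximum) intensity scale.
INTENSITY_RANK = {"A": 1, "B": 2, "C": 3, "D": 4, "E": 5}


def intensity_segments(
    frame_ratings: Sequence[Optional[str]], fps: float
) -> List[Tuple[str, float, float]]:
    """Split a frame-by-frame rating sequence into (rating, start_s, end_s) runs."""
    segments = []
    frame = 0
    for rating, run in groupby(frame_ratings):
        n_frames = len(list(run))
        if rating is not None:  # skip frames where the AU is absent
            segments.append((rating, frame / fps, (frame + n_frames) / fps))
        frame += n_frames
    return segments


def coded_duration(
    frame_ratings: Sequence[Optional[str]], fps: float, min_intensity: str = "A"
) -> float:
    """Total seconds in which the AU is rated at or above min_intensity."""
    threshold = INTENSITY_RANK[min_intensity]
    n_frames = sum(
        1 for r in frame_ratings if r is not None and INTENSITY_RANK[r] >= threshold
    )
    return n_frames / fps


if __name__ == "__main__":
    # Hypothetical ratings for one AU at 10 frames per second.
    ratings = [None, "A", "A", "C", "C", "C", "A", None]
    print(intensity_segments(ratings, fps=10))
    print(coded_duration(ratings, fps=10))                     # any intensity
    print(coded_duration(ratings, fps=10, min_intensity="C"))  # at least C
```

With these made-up ratings, the AU spans 0.6 s at any intensity but only 0.3 s when a rating of at least C is required.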

Recommendation 7: focus on fewer AUs if possible

There are dozens of AUs described across animal FACS and, as a result, hundreds of thousands of possible AU combinations (Mahmoud et al., 2025). Although researchers can consider all AUs in a given FACS, they may want to narrow the scope, especially if some codes are not of particular importance to the research question. For instance, some studies on the communicative facial behaviors of animals omit action descriptors, codes for head and eye movements, unilateral action codes, and visibility codes (Florkiewicz et al., 2023; Scott and Florkiewicz, 2023). These omissions occur because it is often unclear whether these movements are communicative or result from non-communicative processes (such as biological maintenance). Concentrating on fewer AUs and combinations can also reduce type II coding errors. In studies of facial mobility, however, it may be more important to focus on action descriptors and movement codes (Vick et al., 2007).

If there is a particular interest in coding many different AUs and their combinations [as when documenting the novel AU combinations an animal can produce (Florkiewicz et al., 2023; Scott and Florkiewicz, 2023; Mahmoud et al., 2025)], it may be beneficial to break down the coding by facial region (ears, eyes, nose, mouth) or by direction of movement (horizontal, vertical). This approach can help minimize coding fatigue and the risk of overlooking certain AUs (Cohn et al., 2007). Researchers can either use specialized coding software that organizes AUs by facial region, or recode the video footage in separate passes, each focusing on a specific region or direction of movement.
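Splitting the coding into region-based passes can be sketched in Python as below. This is a hypothetical illustration: the `REGION_OF` mapping is invented for the example and does not reproduce the region groupings of any particular FACS manual, and each pass’s output is assumed to be a dictionary from an event ID to the set of AUs coded in that pass. `plan_coding_passes` groups the codes of interest by region, and `merge_passes` combines the per-region results into one AU set per event.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping, Set

# Hypothetical code-to-region assignments for illustration only; consult the
# relevant species' FACS manual for the actual codes and facial regions.
REGION_OF = {
    "EAD101": "ears",
    "AU1": "eyes", "AU2": "eyes", "AU5": "eyes",
    "AU9": "nose",
    "AU12": "mouth", "AU25": "mouth", "AU26": "mouth",
}


def plan_coding_passes(
    codes: Iterable[str], region_of: Mapping[str, str] = REGION_OF
) -> Dict[str, List[str]]:
    """Group the codes of interest so that each coding pass covers one facial region."""
    passes: Dict[str, List[str]] = defaultdict(list)
    for code in codes:
        # Codes missing from the mapping go to a separate "unassigned" pass.
        passes[region_of.get(code, "unassigned")].append(code)
    return dict(passes)


def merge_passes(pass_results: Iterable[Mapping[str, Set[str]]]) -> Dict[str, Set[str]]:
    """Union the AUs coded in each region-specific pass into one set per event ID."""
    merged: Dict[str, Set[str]] = defaultdict(set)
    for result in pass_results:
        for event_id, aus in result.items():
            merged[event_id].update(aus)
    return dict(merged)


if __name__ == "__main__":
    print(plan_coding_passes(["AU1", "AU25", "EAD101", "AU26"]))
    eyes_pass = {"event1": {"AU1"}}
    mouth_pass = {"event1": {"AU25"}, "event2": {"AU26"}}
    print(merge_passes([eyes_pass, mouth_pass]))
```

Routing unmapped codes to an “unassigned” pass means no AU of interest is silently dropped when the region mapping is incomplete.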

Recommendation 8: choose your coding software wisely

Researchers should ideally select a coding program that allows them to view photos and videos while simultaneously coding AUs and their combinations [such as ELAN or BORIS (Wittenburg et al., 2006; Friard and Gamba, 2016)]. This type of integrative software can streamline the coding process and facilitate the review of images and video footage, eliminating the need to consult separate data sheets and manually revisit images or videos (as is necessary when using Excel alongside video player software). In my experience, coding in Excel was relatively messy (Florkiewicz et al., 2018): it often took more time to clean and verify datasets than it would have taken to set up coding templates in ELAN or BORIS. I began using such coding templates in my studies with chimpanzees and domesticated cats (Florkiewicz et al., 2023; Scott and Florkiewicz, 2023; Florkiewicz and Lazebnik, 2025; Mahmoud et al., 2025). Guidelines have also been developed for implementing human FACS coding in ELAN (see Mulrooney et al., 2014, for an example).

For coding images, data labeling toolsets like Labelbox (https://labelbox.com/) are ideal as they allow users to identify key regions of the face and their associated movements. These data labeling toolsets have also been utilized to assist in developing automated FACS coding systems. Data labeling tools should be able to handle numerous images and export compiled data for analysis. For coding video clips, video annotation programs like BORIS and ELAN are ideal, as they allow researchers to utilize keystrokes, dropdown menus, and text boxes to mark observations on video timelines. This functionality simplifies the process of reviewing footage for recoding and conducting inter-observer reliability. Additionally, these programs often automatically add time stamps to observations, making it easier to track the timing of individual AUs and their combinations.

Programs that rely on a combination of keystrokes, drop-down menus, and text boxes [like ELAN (Wittenburg et al., 2006)] may be more suitable for coding numerous AUs, their combinations, intensity scores, and/or additional behavioral measures through nested hierarchies. Programs that rely exclusively on keystrokes may be more suitable for coding individual AUs and a few associated behavioral measures. To minimize the risk of typographical errors, programs that require researchers to enter data manually as free text are not recommended.
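When some free-text entry is unavoidable, a quick check against a controlled vocabulary can catch typographical errors before analysis. The Python sketch below is a hypothetical illustration: it assumes the exported annotations have already been read into a list of code strings and that the allowed codes are the subset of the FACS in use. It relies only on the standard library’s `difflib.get_close_matches` to suggest the nearest valid code.

```python
import difflib
from typing import Iterable, List, Sequence, Tuple


def validate_codes(
    annotations: Sequence[str], allowed_codes: Iterable[str]
) -> List[Tuple[int, str, List[str]]]:
    """Return (row index, invalid code, suggested replacements) for each code not in the vocabulary."""
    allowed = sorted(set(allowed_codes))
    allowed_set = set(allowed)
    problems = []
    for index, code in enumerate(annotations):
        if code not in allowed_set:
            # Suggest at most one close match; an empty list means no plausible match.
            suggestions = difflib.get_close_matches(code, allowed, n=1)
            problems.append((index, code, suggestions))
    return problems


if __name__ == "__main__":
    # Hypothetical export with a doubled keystroke ("AU255") and a case error ("Au26").
    exported = ["AU25", "AU255", "AU1", "Au26"]
    for row in validate_codes(exported, {"AU1", "AU25", "AU26"}):
        print(row)
```

With this input, the sketch flags rows 1 and 3 and suggests AU25 and AU26, respectively. Suggestions are only hints; a coder should confirm each correction against the footage.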

Discussion

When implementing FACS-based approaches to the study of animal facial behavior, several important factors must be considered. In discussing different data collection and coding procedures, I have included best practice recommendations along with guidance on how to proceed if these recommendations cannot be met due to limitations related to study subjects, equipment or software, the research team, or time constraints. While this list of approaches and considerations is not exhaustive, I hope it sparks further discussion, the sharing of additional guidelines and protocols for FACS, and the improvement of automated approaches. In recent years, there has been a push towards developing automated FACS coding approaches for studying human behavior (Kaiser and Wehrle, 1992; Lewinski et al., 2014) and landmark detection systems for non-human animals that are developed using FACS as guidance (Mills et al., 2024; Martvel et al., 2024a; Martvel et al., 2025). My colleagues and I have found landmark detection systems to be particularly effective for studying animal facial behavior, as they perform similarly to manual FACS coding (see, for example, Martvel et al., 2024b). Engaging in discussions about the considerations and constraints involved in data collection and coding can further enhance the systematic approach and scientific rigor of FACS.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

BF: Conceptualization, Investigation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Bennett V., Gourkow N., and Mills D. S. (2017). Facial correlates of emotional behaviour in the domestic cat (Felis catus). Behav. Processes. 141, 342–350. doi: 10.1016/j.beproc.2017.03.011

Caeiro C., Waller B., and Burrows A. (Eds.) (2013). The Cat Facial Action Coding System manual (CatFACS).

Caeiro C. C., Waller B. M., Zimmermann E., Burrows A. M., and Davila-Ross M. (2013). OrangFACS: A muscle-based facial movement coding system for orangutans (Pongo spp.). Int. J. Primatology 34, 115–129. doi: 10.1007/s10764-012-9652-x

Clark E. A., Kessinger J. N., Duncan S. E., Bell M. A., Lahne J., Gallagher D. L., et al. (2020). The facial action coding system for characterization of human affective response to consumer product-based stimuli: a systematic review. Front. Psychol. 11, 920. doi: 10.3389/fpsyg.2020.00920

Cohn J. F., Ambadar Z., and Ekman P. (2007). Observer-based measurement of facial expression with the Facial Action Coding System. Handb. Emotion elicitation assessment. 1, 203–221.

Correia-Caeiro C., Burrows A., Wilson D. A., Abdelrahman A., and Miyabe-Nishiwaki T. (2022). CalliFACS: The common marmoset Facial Action Coding System. PLoS One 17, e0266442. doi: 10.1371/journal.pone.0266442

Correia-Caeiro C., Costa R., Hayashi M., Burrows A., Pater J., Miyabe-Nishiwaki T., et al. (2025). GorillaFACS: the facial action coding system for the gorilla spp. PLoS One 20, e0308790. doi: 10.1371/journal.pone.0308790

Cross M. P., Hunter J. F., Smith J. R., Twidwell R. E., and Pressman S. D. (2023). Comparing, differentiating, and applying affective facial coding techniques for the assessment of positive emotion. J. Positive Psychol. 18, 420–438. doi: 10.1080/17439760.2022.2036796

Descovich K. A., Wathan J., Leach M. C., Buchanan-Smith H. M., Flecknell P., Farningham D., et al. (2017). Facial expression: An under-utilized tool for the assessment of welfare in mammals. ALTEX-Alternatives to Anim. experimentation. 34, 409–429. doi: 10.14573/altex.1607161

Ekman P. and Rosenberg E. L. (2005). What the Face Reveals: Basic and Applied Studies of Spontaneous Expression Using the Facial Action Coding System (FACS) (New York: Oxford University Press).

Florkiewicz B. N. and Campbell M. W. (2021). A comparison of focal and opportunistic sampling methods when studying chimpanzee facial and gestural communication. Folia Primatologica. 92, 164–174. doi: 10.1159/000516315

Florkiewicz B. N. and Lazebnik T. (2025). Combinatorics and complexity of chimpanzee (Pan troglodytes) facial signals. Anim. Cognition. 28, 34. doi: 10.1007/s10071-025-01955-0

Florkiewicz B. N., Oña L. S., Oña L., and Campbell M. W. (2023). Primate socio-ecology shapes the evolution of distinctive facial repertoires. J. Comp. Psychol. 138 (1), 32–44. doi: 10.1037/com0000350

Florkiewicz B., Skollar G., and Reichard U. H. (2018). Facial expressions and pair bonds in hylobatids. Am. J. Phys. Anthropology. 167, 108–123. doi: 10.1002/ajpa.23608

Friard O. and Gamba M. (2016). BORIS: a free, versatile open-source event-logging software for video/audio coding and live observations. Methods Ecol. Evol. 7, 1325–1330. doi: 10.1111/2041-210X.12584

Kaiser S. and Wehrle T. (1992). Automated coding of facial behavior in human-computer interactions with FACS. J. Nonverbal Behav. 16, 67–84. doi: 10.1007/BF00990323

Lewinski P., Uyl T., and Butler C. (2014). Automated facial coding: validation of basic emotions and FACS AUs in facereader. J. Neuroscience Psychology Econ. 7, 227–236. doi: 10.1037/npe0000028

Mahmoud A., Scott L., and Florkiewicz B. N. (2025). Examining Mammalian facial behavior using Facial Action Coding Systems (FACS) and combinatorics. PLoS One 20, e0314896. doi: 10.1371/journal.pone.0314896

Martvel G., Lazebnik T., Feighelstein M., Meller S., Shimshoni I., Finka L., et al. (2024a). Automated landmark-based cat facial analysis and its applications. Front. Vet. Sci. 11, 1442634. doi: 10.3389/fvets.2024.1442634

Martvel G., Scott L., Florkiewicz B., Zamansky A., Shimshoni I., and Lazebnik T. (2024b). Computational investigation of the social function of domestic cat facial signals. Sci. Rep. 14, 27533. doi: 10.1038/s41598-024-79216-2

Martvel G., Zamansky A., Pedretti G., Canori C., Shimshoni I., and Bremhorst A. (2025). Dog facial landmarks detection and its applications for facial analysis. Sci. Rep. 15, 21886. doi: 10.1038/s41598-025-07040-3

Mills D., Feighelstein M., Ricci-Bonot C., Hasan H., Weinberg H., Rettig T., et al. (2024). Automated recognition of emotional states of horses from facial expressions. PLoS One 19 (7), e0302893. doi: 10.1371/journal.pone.0302893

Molina A., Florkiewicz B., and Cartmill E. (2019). Exploring sources of variation in inter-observer reliability scoring of facial expressions using the chimpFACS. FASEB J. 33, 774.9–774.9. doi: 10.1096/fasebj.2019.33.1_supplement.774.9

Mulrooney K., Hochgesang J. A., Morris C., and Lee K. (Eds.) (2014). The “how-to” of integrating FACS and ELAN for analysis of non-manual features in ASL (European Language Resources Association (ELRA)). sign-lang@LREC 2014.

Parr L. A., Waller B. M., Burrows A. M., Gothard K. M., and Vick S. J. (2010). Brief communication: MaqFACS: A muscle-based facial movement coding system for the rhesus macaque. Am. J. Phys. Anthropology. 143, 625–630. doi: 10.1002/ajpa.21401

Parr L. A., Waller B. M., Vick S. J., and Bard K. A. (2007). Classifying chimpanzee facial expressions using muscle action. Emotion. 7, 172–181. doi: 10.1037/1528-3542.7.1.172

Sayette M. A., Cohn J. F., Wertz J. M., Perrott M. A., and Parrott D. J. (2001). A psychometric evaluation of the facial action coding system for assessing spontaneous expression. J. Nonverbal Behav. 25, 167–185. doi: 10.1023/A:1010671109788

Scheider L., Liebal K., Oña L., Burrows A., and Waller B. (2014). A comparison of facial expression properties in five hylobatid species. Am. J. Primatology. 76, 618–628. doi: 10.1002/ajp.22255

Scott L. and Florkiewicz B. N. (2023). Feline faces: Unraveling the social function of domestic cat facial signals. Behav. Processes. 213, 104959. doi: 10.1016/j.beproc.2023.104959

Vick S.-J., Waller B. M., Parr L. A., Smith Pasqualini M. C., and Bard K. A. (2007). A cross-species comparison of facial morphology and movement in humans and chimpanzees using the facial action coding system (FACS). J. Nonverbal Behav. 31, 1–20. doi: 10.1007/s10919-006-0017-z

Waller B., Correia Caeiro C., Peirce K., Burrows A., and Kaminski J. (2024). DogFACS: the dog facial action coding system (University of Lincoln).

Waller B. M., Julle-Daniere E., and Micheletta J. (2020). Measuring the evolution of facial ‘expression’ using multi-species FACS. Neurosci. Biobehav. Rev. 113, 1–11. doi: 10.1016/j.neubiorev.2020.02.031

Waller B. M., Lembeck M., Kuchenbuch P., Burrows A. M., and Liebal K. (2012). GibbonFACS: A muscle-based facial movement coding system for hylobatids. Int. J. Primatology. 33, 809–821. doi: 10.1007/s10764-012-9611-6

Wathan J., Burrows A. M., Waller B. M., and McComb K. (2015). EquiFACS: the equine facial action coding system. PLoS One 10, e0131738. doi: 10.1371/journal.pone.0131738

Keywords: facial behavior, facial action coding systems, FACS, action units, face coding

Citation: Florkiewicz BN (2025) Navigating the nuances of studying animal facial behaviors with Facial Action Coding Systems. Front. Ethol. 4:1686756. doi: 10.3389/fetho.2025.1686756

Received: 22 August 2025; Accepted: 12 September 2025;

Published: 24 September 2025.

Edited by:

Aras Petrulis, Georgia State University, United States

Reviewed by:

Temple Grandin, Colorado State University, United States

Copyright © 2025 Florkiewicz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Brittany N. Florkiewicz, brittany.florkiewicz@lyon.edu
