- 1Institute of Psychiatry and Neuroscience of Paris (IPNP), Université Paris Cité, INSERM U1266, Membrane Traffic in Healthy and Diseased Brain Team, Paris, France

- 2Membrane Mechanics and Dynamics of Intracellular Signaling Laboratory, Institut Curie Research Center, CNRS UMR3666, INSERM U1339, PSL Research University, Paris, France

In recent years, advances in microscopy and the development of novel fluorescent probes have significantly improved neuronal imaging. Many neuropsychiatric disorders are characterized by alterations in neuronal arborization, by neuronal loss (as in Parkinson’s disease) or by synaptic loss (as in Alzheimer’s disease). Neurodevelopmental disorders such as autism spectrum disorders and schizophrenia can also affect dendritic spine morphogenesis. In this review, we provide an overview of the various labeling and microscopy techniques available to visualize neuronal structure, including dendritic spines and synapses. Particular attention is given to available fluorescent probes, recent technological advances in super-resolution microscopy (SIM, STED, STORM, MINFLUX), and segmentation methods. Aimed at biologists, this review presents both classical segmentation approaches and recent tools based on deep learning methods, with the goal of remaining accessible to readers without programming expertise.

Neuronal morphology and synaptopathies

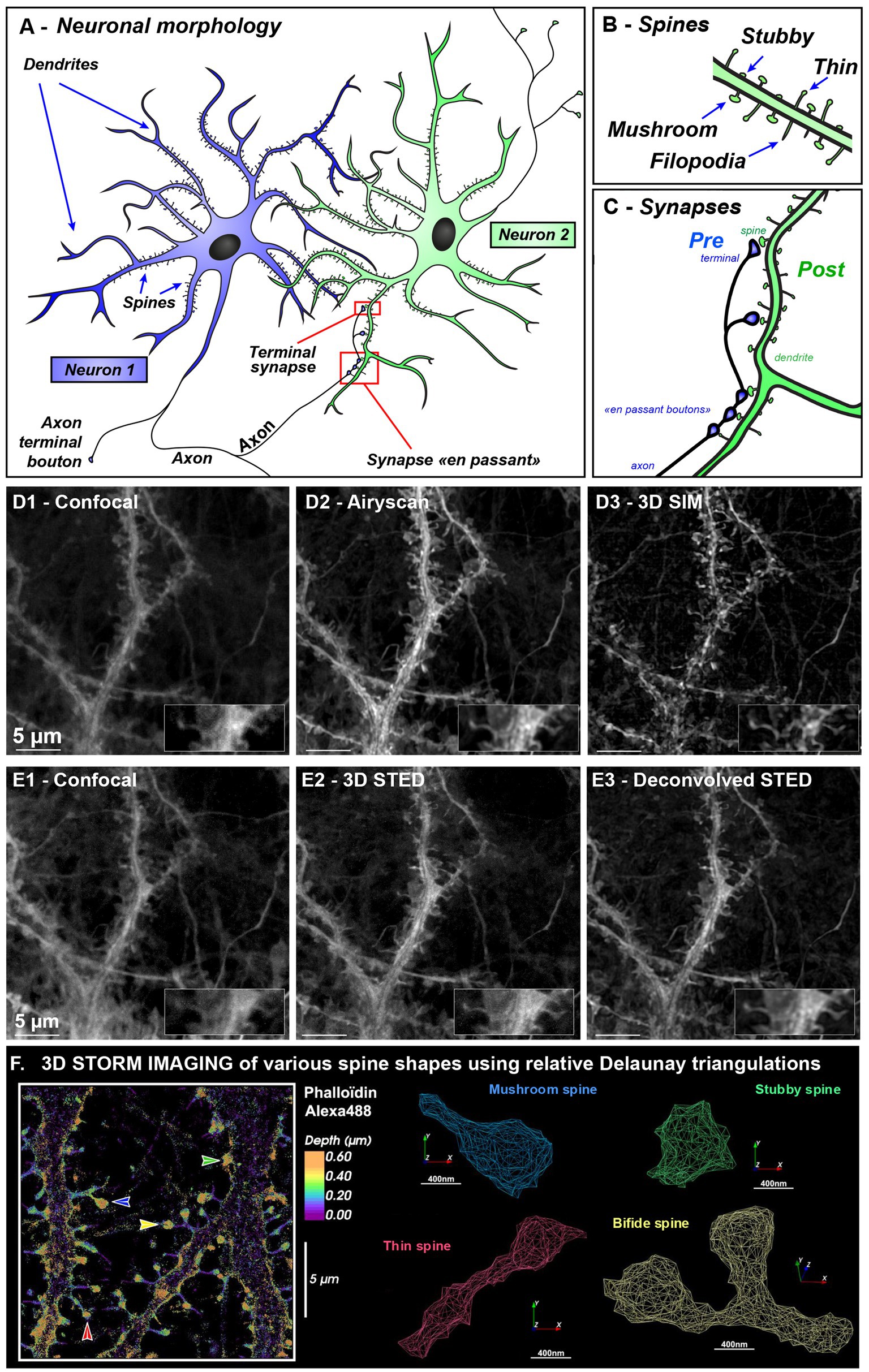

Neurons communicate through billions of synapses. Axons can contact a dendrite directly (shaft synapse) or establish contact with a dendritic membrane protrusion called a dendritic spine (Bucher et al., 2020) (Figure 1A). Dendritic spines are highly dynamic, actin-rich structures that change their shape and number during development, ageing and learning (Rao and Craig, 2000; Zhang and Benson, 2000; Konietzny et al., 2017; Bucher et al., 2020). They are filamentous during development [called filopodia (Herms and Dorostkar, 2016), Figure 1B] and can then either retract to form stubby spines (without a neck) (Harris, 1999) or develop a head (Hotulainen and Hoogenraad, 2010; Kashiwagi and Okabe, 2021) during or after learning. Spines with heads can be categorized as “thin” (long spines with small heads) or “mushroom” (short spines with a larger head and a constricted neck) (Harris, 2020). Such changes affect synaptic function and plasticity at the cellular level. Over the past decades, the importance of spine shape in neuropathological disorders has emerged with the development of biochemical, imaging and analysis tools. In particular, changes in dendritic spine shape and number are associated with a large number of brain disorders that involve deficits in information processing and cognition (Forrest et al., 2018). Recent evidence supports altered synaptic connectivity and plasticity during development in children and adolescents (autism spectrum disorders, ASD, and schizophrenia) but also in ageing-associated diseases (Alzheimer’s disease with memory deficits) (Glantz and Lewis, 2000; Selkoe, 2002; Tackenberg et al., 2009; Hutsler and Zhang, 2010).
Specifically, accumulating neuropathological evidence points toward synapse and dendritic spine loss in schizophrenia (Garey et al., 1998) and Alzheimer’s disease (Boros et al., 2017; Forrest et al., 2018; Rao et al., 2022), whereas ASD displays increased spine number and immature spine shapes (Hutsler and Zhang, 2010). Hence, dendritic spines could be seen as a common substrate for studying neuropsychiatric disorders involving cognitive deficits.

Figure 1. Neuronal and spine morphologies revealed using various microscopy modalities, illustrating the resolution gain needed for spine imaging. (A–C) Morphology of communication between two neurons (A). A neuron is made up of a cell body, an axon, and several dendrites. The axon can divide into collateral branches that contact several other neurons and release neurotransmitter at synaptic sites. Synapses are dedicated contact sites on the soma or dendrites that receive information from neighboring axons. A synapse can be located at the very end of the axon (at the presynaptic terminal) or can form an “en passant” contact along the axon. On the dendrite, the synaptic zone is called post-synaptic. The blue neuron sends information via its axon and the green neuron receives information on different dendritic spines (B), leading to the formation of synapses (C). (D,E) Correlative images of hippocampal neurons after 21 days in vitro labeled with the MemBright Cy3.5 probe. (D1–D3) were acquired on a Zeiss Elyra PS1 equipped with Airyscan and SIM, allowing correlative images across microscopy modalities, while (E1–E3) were imaged on a Leica confocal STED 3DX. The same sample and region were used for both (D,E). The insets display a magnified portion of the dendrite harboring one thin (left) and one mushroom spine (right). Deconvolution was done using Classic Maximum Likelihood Estimation in Huygens software. Scale bar: 5 μm. (F) 3D STORM imaging of dendritic spine classes was done using Alexa488-Phalloidin, which labels spine heads and necks. All STORM localizations were pooled and the external envelope was reconstructed using Delaunay triangulation. In contrast to membrane labeling, the spine head is underestimated here since actin does not fill the entire spine head volume, especially close to the PSD. Various spine types are shown with colored arrows.
Stubby spine (with no neck) is in green, mushroom spine (with short neck and big head) is in blue, thin spine (with long neck and small head) is in red, and a bifid spine is in yellow.

Imaging neurons and dendritic spines with cytosolic and membrane probes

Neuronal morphology has traditionally been visualized using confocal microscopy after transfection of cytosolic GFP or multicolor Brainbow variants (Livet et al., 2007), or after biolistic delivery of DiIC₁₈, a lipophilic dye that diffuses throughout the entire membrane (see Okabe, 2020; Wouterlood, 2023 for reviews). DiD, an oil version of DiIC₁₈, has also been used on hippocampal neurons in culture; however, variability in intensity levels between cells complicated quantitative segmentation (Collot et al., 2019). Spine morphogenesis can be monitored using spinning-disk live imaging with actin-GFP reporters (Supplementary Figure S1A). On fixed samples, spines are efficiently labeled with fluorescent phalloidin, a toxin that binds specifically to F-actin (Supplementary Figures S1B–D). When using phalloidin or cytosolic GFP, dendritic spine necks (red arrows) typically exhibit lower fluorescence than spine heads (green arrow), making them more difficult to visualize and quantify. A new variant of membrane-targeted GFP [Addgene 117858 (Wu et al., 2018); Supplementary Figures S1E,F] improved neck detection. Membranes of live cells can also be stained indirectly using fluorescent wheat germ agglutinin (WGA), a lectin that binds carbohydrates present at the cell surface. However, this labeling depends on carbohydrate distribution, which can sometimes be punctate, complicating neuronal segmentation (Collot et al., 2019). More recently, we developed the MemBright™ probes (Collot et al., 2019), lipophilic fluorescent dyes that label any plasma membrane after a 5 min incubation in cell culture medium (Supplementary Figures S1B–D in red). MemBright™ offers the key advantage of labeling all cell types without requiring transfection, making it suitable for both live and fixed samples.
By uniformly integrating into the plasma membrane, it enables clear visualization of both spine necks and heads (Supplementary Figures S1B–D, red arrows and Supplementary Figures S1G–I), facilitating accurate neuronal segmentation.

The advent of super-resolution microscopy has revolutionized neuroimaging by enabling 3D visualization of nanoscopic components of the nervous system. Techniques such as Structured Illumination Microscopy (SIM) and Airyscan now routinely achieve resolutions of approximately 100 nm and 140 nm, respectively (see Figures 1D2,D3 and the Glossary for the optical principles and a comparison of super-resolution techniques in terms of resolution and applications). These enhanced resolutions, combined with improved signal-to-noise ratios, enable the visualization of small structures such as pre- or post-synaptic protein clusters and small organelles like endosomes. The synaptic localization of such fine structures can then be assessed using Ripley’s function. We developed the Icy SODA plugin (Lagache et al., 2018) to detect coupling between non-overlapping pre- and post-synaptic proteins and to accurately measure their coupling distances. With its wide field of view, SIM enables large-scale screening of synaptic structures. From just 15 SIM images, we were able to analyze over 45,000 synapsin clusters and determine their associations with post-synaptic markers such as PSD95 (mean distance: 107 ± 73 nm) and Homer (138 ± 89 nm). The combination of SIM with the Icy SODA plugin thus provides a powerful method for the molecular mapping of synapses, facilitating the rapid identification of potential synaptopathies (Breton et al., 2024; De Koninck et al., 2024).
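
To make the idea of coupling distances concrete, the sketch below computes, for each presynaptic cluster centroid, the distance to its nearest postsynaptic centroid, together with a naive bivariate Ripley's K estimate. It is a minimal illustration assuming cluster centroids have already been detected and expressed in nm; it is not the SODA algorithm, which additionally corrects for boundary effects and tests coupling statistically (Lagache et al., 2018). Function names and the toy coordinates are hypothetical.

```python
import numpy as np
from scipy.spatial import cKDTree

def nearest_partner_distances(pre, post):
    """Distance from each presynaptic centroid to its closest postsynaptic centroid."""
    distances, _ = cKDTree(post).query(pre, k=1)
    return distances

def bivariate_ripley_k(pre, post, radii, area):
    """Naive bivariate Ripley's K, without edge correction.

    K(r) = area / (n_pre * n_post) * number of (pre, post) pairs at distance <= r.
    Under complete spatial randomness in 2D, K(r) is close to pi * r**2;
    values well above that at small r suggest coupling.
    """
    tree_pre, tree_post = cKDTree(pre), cKDTree(post)
    pair_counts = np.array(
        [tree_pre.count_neighbors(tree_post, r) for r in radii], dtype=float
    )
    return area * pair_counts / (len(pre) * len(post))

# Hypothetical cluster centroids (x, y) in nm.
pre = np.array([[0.0, 0.0], [1000.0, 0.0]])
post = np.array([[100.0, 0.0], [1000.0, 150.0]])
print(nearest_partner_distances(pre, post))  # -> [100. 150.]
```

Nearest-neighbour distances are easy to interpret but are biased by cluster density; this is why statistical frameworks such as SODA compare observed pair counts with what random placement would produce.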

For live-cell imaging, Airyscan’s inline acquisition technology provides image quality far superior to that of spinning disks and operates 4 to 5 times faster than conventional confocal microscopy, enabling dynamic monitoring of nanoscale synaptic components over time. Airyscan can also provide isotropic voxels, paving the way for improved 3D reconstruction of spine morphology (Figure 1D2 and Supplementary Figures S1B–I).
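
Isotropic voxels matter because most 3D morphometry assumes equal spacing along z, y and x. When a stack was acquired with anisotropic voxels, a common workaround is to interpolate it onto an isotropic grid. The minimal sketch below uses `scipy.ndimage.zoom`, assuming the voxel sizes are known; the function name and the example voxel sizes are hypothetical.

```python
import numpy as np
from scipy import ndimage

def to_isotropic(stack, voxel_size_zyx, target=None, order=1):
    """Resample a (z, y, x) stack so that every voxel edge equals `target`.

    By default the target is the finest of the three voxel sizes, so the
    coarser (usually axial) dimension is upsampled by interpolation
    (order=1 means linear interpolation).
    """
    voxel = np.asarray(voxel_size_zyx, dtype=float)
    target = float(voxel.min()) if target is None else float(target)
    zoom_factors = voxel / target
    return ndimage.zoom(stack, zoom=zoom_factors, order=order), target

# Hypothetical stack: 10 planes 300 nm apart, 64 x 64 pixels of 50 nm.
stack = np.random.default_rng(0).random((10, 64, 64)).astype(np.float32)
iso, spacing = to_isotropic(stack, (300.0, 50.0, 50.0))
print(iso.shape, spacing)  # -> (60, 64, 64) 50.0
```

Interpolation only resamples the data; it does not recover axial resolution that the optics did not capture, which is why acquiring isotropic voxels directly is preferable.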

3D-STED microscopy (Figures 1E2,E3 and Glossary) is particularly well suited for tissue imaging, resolving synaptic contacts even at depth. Unlike SIM, it is less prone to reconstruction (ringing) artifacts around clusters. The primary limitation in tissue imaging lies in the scattering nature of neural tissue, which restricts the depth of light penetration. This constraint can be overcome by a tissue-clearing step, which facilitates light propagation in thick samples (0.5 to 1 mm thick). We recently employed such a combined approach (Cauzzo et al., 2024), clearing thick brain sections from transgenic mice expressing cytosolic GFP in Purkinje cells. This enabled correlative imaging across scales, from low-magnification mosaic imaging (20x, covering several millimeters in width) to high-magnification 3D-STED imaging (93x). This multiscale strategy allowed us to correlate cellular population organization, neuronal dendritic arborization, and the morphology of dendritic spines, including necks as narrow as 100 nm.

Single-molecule localization microscopy (SMLM), with localization precisions of 10–30 nm for STORM and as low as 2–3 nm for MINFLUX, provides nanoscopic resolution previously reserved for electron microscopy. It has enabled detailed visualization of actin–spectrin networks around clathrin-coated pits (Bingham et al., 2023; Wernert et al., 2024) and in dendritic spines (Breton et al., 2024; Saladin et al., 2024). 3D-STORM imaging using MemBright or fluorescent phalloidin, combined with Delaunay triangulation (see Glossary), can reveal the spine neck constrictions characteristic of mushroom and thin spines (Figure 1F). Recently, MINFLUX has even tracked dynein stepping in live neurons (Schleske et al., 2024).
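
One common way to turn a Delaunay triangulation of 3D localizations into a non-convex envelope is alpha-shape filtering: tetrahedra whose circumscribed sphere is larger than a radius alpha are discarded, so concavities such as a neck constriction are not bridged over. The sketch below assumes this alpha-shape variant; the exact procedure used for Figure 1F may differ, and the function names, alpha value and random localizations are hypothetical.

```python
import numpy as np
from scipy.spatial import Delaunay

def circumradii(points, simplices):
    """Radius of the circumscribed sphere of each tetrahedron."""
    p = points[simplices]                    # (n, 4, 3) vertex coordinates
    edges = p[:, 1:, :] - p[:, :1, :]        # vectors from vertex 0, (n, 3, 3)
    rhs = np.sum(edges ** 2, axis=2)         # squared edge lengths, (n, 3)
    # The circumcenter c = p0 + u satisfies 2 * edge_i . u = |edge_i|^2.
    # pinv avoids errors on nearly flat (sliver) tetrahedra.
    u = (np.linalg.pinv(2.0 * edges) @ rhs[:, :, None])[:, :, 0]
    return np.linalg.norm(u, axis=1)

def alpha_envelope(points, alpha):
    """Keep Delaunay tetrahedra with circumradius < alpha; return the enclosed
    volume and the boundary triangles forming the reconstructed envelope."""
    tri = Delaunay(points)
    kept = tri.simplices[circumradii(points, tri.simplices) < alpha]
    if len(kept) == 0:
        return 0.0, np.empty((0, 3), dtype=int)
    edges = points[kept][:, 1:, :] - points[kept][:, :1, :]
    volume = float(np.abs(np.linalg.det(edges)).sum() / 6.0)
    faces = np.concatenate([kept[:, [0, 1, 2]], kept[:, [0, 1, 3]],
                            kept[:, [0, 2, 3]], kept[:, [1, 2, 3]]])
    faces, counts = np.unique(np.sort(faces, axis=1), axis=0, return_counts=True)
    # Faces shared by two kept tetrahedra are interior; the others form the envelope.
    return volume, faces[counts == 1]

# Hypothetical 3D localizations in nm.
locs = np.random.default_rng(0).normal(scale=100.0, size=(500, 3))
volume, envelope = alpha_envelope(locs, alpha=80.0)
```

With alpha set to infinity, all tetrahedra are kept and the envelope is simply the convex hull; smaller alpha values follow concavities more closely but can fragment the shape where localizations are sparse.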

Neuronal and dendritic spine segmentation pipeline

Much emphasis has been placed in the past decades on the correlation between structural changes (termed synaptic plasticity) and neurodegenerative diseases. Dendritic complexity can be evaluated through the total dendritic length together with the number and distribution of branching points. A pyramidal cell contains roughly 30,000 synapses, and its total dendritic length is about 2.3 to 2.7 times longer in the human cortex (14.5 mm) than in macaque (6.2 mm) or mouse (5.3 mm) (Mohan et al., 2015). Pyramidal cells in the human prefrontal cortex have 72% more dendritic spines than those of macaques and four times more than those of squirrel monkeys or of the mouse motor cortex (DeFelipe, 2011). Spine density ranges from 1–4 spines/μm in rat and mouse hippocampal neurons (Papa et al., 1995; Nwabuisi-Heath et al., 2012) up to 15 spines/μm in the cerebellum (Napper and Harvey, 1988). Precise, high-throughput quantification of spine morphology is therefore critical.
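
Total dendritic length, number of branch points and spine density are simple aggregates over a traced morphology. The sketch below assumes a reconstruction stored as an SWC-like table reduced to (node id, x, y, z in μm, parent id), with -1 marking the root; real SWC files also carry a type and a radius column. The toy tree and the spine count are hypothetical.

```python
import numpy as np

def dendrite_metrics(nodes, n_spines=None):
    """nodes: iterable of (node_id, x, y, z, parent_id); parent_id == -1 is the root.

    Returns (total length, number of branch points, spines per unit length).
    """
    coords = {nid: np.array([x, y, z], dtype=float) for nid, x, y, z, _ in nodes}
    n_children = {nid: 0 for nid, *_ in nodes}
    total_length = 0.0
    for nid, _, _, _, parent in nodes:
        if parent != -1:
            # Each node contributes the segment joining it to its parent.
            total_length += float(np.linalg.norm(coords[nid] - coords[parent]))
            n_children[parent] += 1
    branch_points = sum(1 for c in n_children.values() if c >= 2)
    density = None if n_spines is None else n_spines / total_length
    return total_length, branch_points, density

# Hypothetical tree: a 20 μm dendrite with a 5 μm side branch at node 2.
nodes = [(1, 0, 0, 0, -1), (2, 10, 0, 0, 1), (3, 20, 0, 0, 2), (4, 10, 5, 0, 2)]
print(dendrite_metrics(nodes, n_spines=50))  # -> (25.0, 1, 2.0)
```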

Spine segmentation pipelines generally fall into two categories: rule-based and data-driven approaches. Computational methods that segment and quantify dendritic spines in 2D or 3D images were exhaustively reviewed in 2020 (Okabe, 2020); here we complement that overview with the most recent approaches.

Rule-based pipelines rely on features defined by image analysts (or neurobiologists) from known dendritic spine characteristics. Most segmentation pipelines adopt a two-step strategy: first segmenting the entire dendritic tree and then extracting the spines.

Dendrite segmentation can rely on intensity thresholding, such as global Otsu thresholding (Li et al., 2022), multilevel thresholding (Kashiwagi et al., 2019) or adaptive thresholding (Ekaterina et al., 2023). Platforms like Vaa3D offer diverse segmentation methods with a user-friendly visualization interface (Peng et al., 2010).
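
The difference between global and adaptive thresholding can be sketched in a few lines of NumPy/SciPy. Otsu's method picks the single histogram split that maximizes the between-class variance, whereas an adaptive threshold compares each pixel with its own neighborhood, which tolerates uneven illumination. This is a minimal sketch with hypothetical function names and parameters; scikit-image ships tested implementations (`threshold_otsu`, `threshold_multiotsu`, `threshold_local`) that are preferable in practice.

```python
import numpy as np
from scipy import ndimage

def otsu_threshold(image, nbins=256):
    """Global Otsu threshold: the histogram split maximizing between-class variance."""
    hist, edges = np.histogram(np.ravel(image), bins=nbins)
    hist = hist.astype(float)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w_low = np.cumsum(hist)                  # pixel count at or below each bin
    w_high = np.cumsum(hist[::-1])[::-1]     # pixel count at or above each bin
    mean_low = np.cumsum(hist * centers) / np.maximum(w_low, 1e-12)
    mean_high = np.cumsum((hist * centers)[::-1])[::-1] / np.maximum(w_high, 1e-12)
    # Variance between the two classes for a split between bin i and bin i + 1.
    between = w_low[:-1] * w_high[1:] * (mean_low[:-1] - mean_high[1:]) ** 2
    return centers[np.argmax(between)]

def adaptive_mask(image, size=15, offset=0.0):
    """Local thresholding: keep pixels brighter than their neighborhood mean plus offset."""
    image = np.asarray(image, dtype=float)
    local_mean = ndimage.uniform_filter(image, size=size)
    return image > local_mean + offset

# Hypothetical dendrite image: a bright bar on an uneven, noisy background.
rng = np.random.default_rng(0)
img = np.linspace(0.0, 0.5, 128)[None, :] + 0.05 * rng.random((128, 128))
img[60:68, :] += 1.0
global_mask = img > otsu_threshold(img)
local_mask = adaptive_mask(img, size=31, offset=0.1)
```

The neighborhood size of the adaptive filter should exceed the width of the structure of interest; otherwise the interior of a thick dendrite is compared with itself and partly lost.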

Uniform staining quality significantly improves the performance of threshold-based approaches. When image quality is insufficient for reliable segmentation, preprocessing steps such as smoothing or denoising may be required (Smirnov et al., 2018; Das et al., 2021; Argunşah et al., 2022). To address the inherent signal heterogeneity of confocal and two-photon images, the SmRG algorithm integrates a region-growing (RG) procedure with a mixture model describing the signal statistics (Callara et al., 2020). This allows SmRG to compute local thresholds while iteratively growing the segmented structure, enabling 3D segmentation of complex neurons.
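
The region-growing principle can be illustrated with a minimal seeded sketch in which the acceptance criterion adapts to the running mean of the region. It is a deliberately simplified stand-in: SmRG derives its local thresholds from a statistical mixture model of the signal (Callara et al., 2020), which this code does not implement. The function name and the tolerance parameter are hypothetical.

```python
from collections import deque

import numpy as np

def region_grow(image, seed, tolerance):
    """Grow a region from `seed` (a tuple index), adding face-connected voxels
    whose intensity lies within `tolerance` of the current region mean."""
    image = np.asarray(image, dtype=float)
    mask = np.zeros(image.shape, dtype=bool)
    mask[seed] = True
    total, count = image[seed], 1
    # One step forward and backward along every axis (face connectivity).
    offsets = []
    for axis in range(image.ndim):
        for step in (-1, 1):
            off = [0] * image.ndim
            off[axis] = step
            offsets.append(off)
    queue = deque([tuple(seed)])
    while queue:
        p = queue.popleft()
        for off in offsets:
            q = tuple(pi + oi for pi, oi in zip(p, off))
            if any(c < 0 or c >= s for c, s in zip(q, image.shape)):
                continue  # outside the volume
            if not mask[q] and abs(image[q] - total / count) <= tolerance:
                mask[q] = True
                total += image[q]
                count += 1
                queue.append(q)
    return mask
```

Because the reference mean is updated as the region grows, the criterion drifts with slow intensity changes along a dendrite; SmRG's statistical model serves the same purpose in a more principled way.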

Dendritic spine segmentation strategies

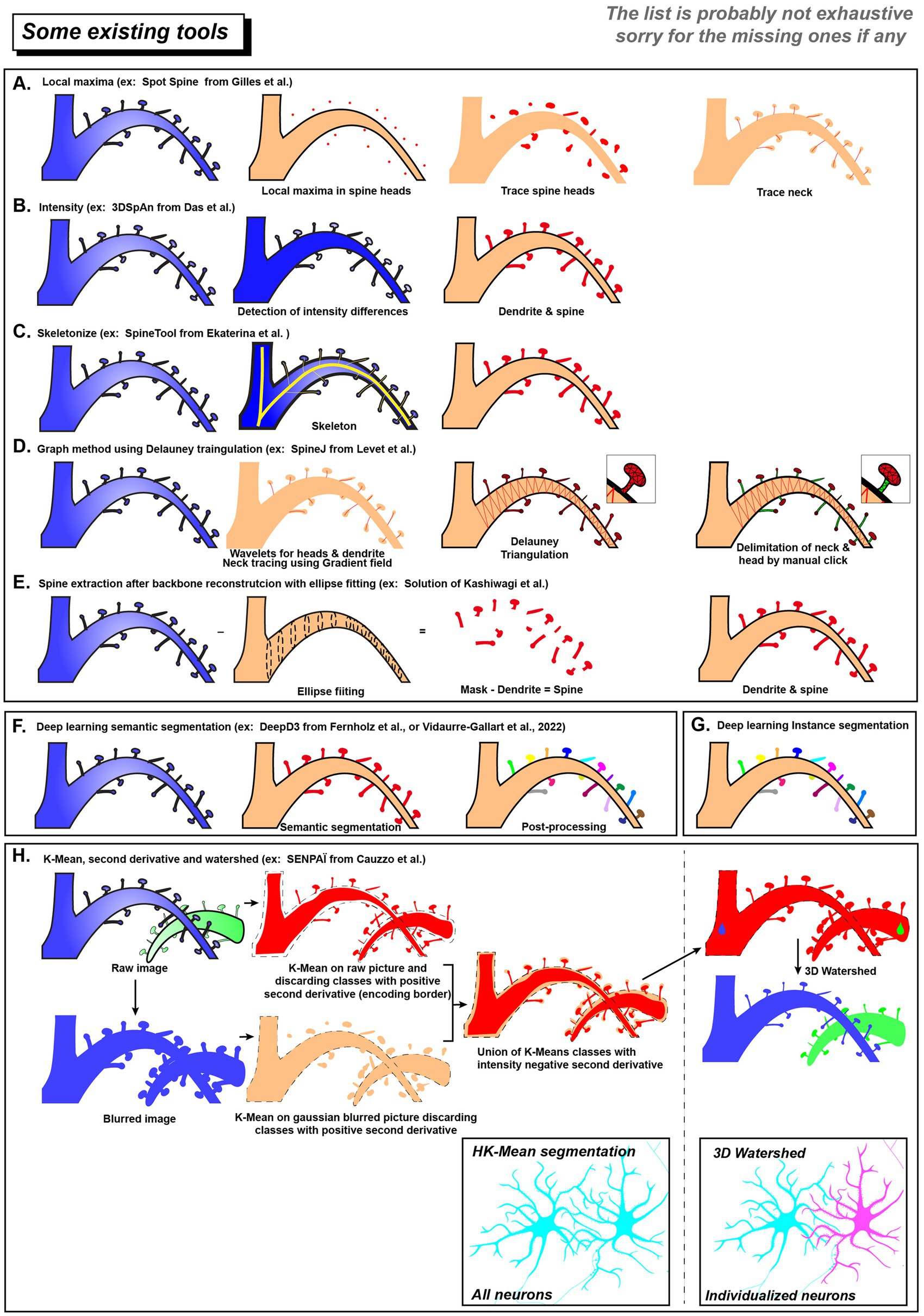

One strategy exploits differences in pixel intensity between dendritic spines and the shaft after staining (see Figures 2A,B). How intensity-based criteria are implemented varies among approaches. For example, Spot Spine assumes that spine heads have stronger signals and locates and segments them by finding local intensity maxima (Gilles et al., 2024). In contrast, 3dSpAn can handle cases where spines are dimmer (light blue in Figure 2B) than the shaft (Figure 2B, dark blue); it iteratively isolates spine structures by applying multi-scale morphological opening operations (Das et al., 2021).
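
The two primitives mentioned above can be sketched with SciPy: local intensity maxima as candidate spine heads, and the residue of a morphological opening, i.e. the thin parts of a binary mask that disappear when opened with a structuring element wider than a spine but narrower than the shaft. This is a minimal illustration, not the Spot Spine or 3dSpAn implementation; function names, the box-shaped structuring element and all sizes are assumptions.

```python
import numpy as np
from scipy import ndimage

def spine_head_candidates(image, size=3, min_intensity=0.0):
    """Coordinates of local intensity maxima above a floor (bright spine heads)."""
    local_max = image == ndimage.maximum_filter(image, size=size)
    return np.argwhere(local_max & (image > min_intensity))

def opening_residue(mask, size):
    """Parts of a binary mask removed by an opening with a size^ndim box.

    Protrusions thinner than the box vanish under opening, while the
    thicker shaft survives, so the difference holds spine candidates.
    """
    structure = np.ones((size,) * mask.ndim, dtype=bool)
    shaft = ndimage.binary_opening(mask, structure=structure)
    return mask & ~shaft

def multiscale_residues(mask, sizes):
    """Opening residues at several scales, e.g. to catch spines of different widths."""
    return {s: opening_residue(mask, s) for s in sizes}
```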

Figure 2. List of existing tools for spine and neuron segmentation. Schematic overview of dendritic spine segmentation using different rule-based approaches (A–E) or data-driven modalities (F–H). Deep learning methods can use semantic segmentation (F) or instance segmentation (G). (H) The SENPAÏ tool for neuron and spine segmentation uses both rule-based and data-driven approaches. In the end, each neuron in a dense population is segmented as an individual cell (blue and magenta).

Skeletonization is another strategy for spine segmentation (Figure 2C). Exploiting the fact that spines are considerably shorter than the dendritic shaft, Rusakov and Stewart and SpineTool skeletonized the dendritic structure and identified short branches as potential spines (Rusakov and Stewart, 1995; Ekaterina et al., 2023). In contrast, SpineJ (Figure 2D) infers a graph structure from the generated skeleton (Levet et al., 2020) and then identifies spines by analyzing the topological properties of its nodes (e.g., leaf nodes and their connectivity patterns).
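
The "short branch" criterion can be sketched on a 2D skeleton that has already been computed (for example with scikit-image's `skeletonize`): endpoints are skeleton pixels with a single neighbor, and each one is walked back until a junction (three or more neighbors) is reached. Terminal branches no longer than a chosen length become spine candidates. This is an illustrative sketch under those assumptions, not the code of the cited tools; the function names and `max_len` are hypothetical.

```python
import numpy as np

OFFSETS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]

def _neighbors(skel, p):
    """8-connected skeleton neighbors of pixel p."""
    h, w = skel.shape
    y, x = p
    return [(y + dy, x + dx) for dy, dx in OFFSETS
            if 0 <= y + dy < h and 0 <= x + dx < w and skel[y + dy, x + dx]]

def short_terminal_branches(skel, max_len):
    """Pixel paths of terminal branches (endpoint up to, not including, the
    junction) whose length is at most max_len pixels."""
    skel = np.asarray(skel, dtype=bool)
    pixels = [(int(y), int(x)) for y, x in np.argwhere(skel)]
    degree = {p: len(_neighbors(skel, p)) for p in pixels}
    branches = []
    for end in (p for p in pixels if degree[p] == 1):
        path, prev, cur, attached = [end], None, end, False
        while True:
            nxt = [q for q in _neighbors(skel, cur) if q != prev]
            if len(nxt) != 1:
                break                      # dead end or ambiguous configuration
            if degree[nxt[0]] >= 3:        # reached a junction on the shaft
                attached = True
                break
            prev, cur = cur, nxt[0]
            path.append(cur)
            if degree[cur] == 1:           # isolated segment with two endpoints
                break
        if attached and len(path) <= max_len:
            branches.append(path)
    return branches
```

Skeleton spurs created by boundary noise also look like short terminal branches, which is why such candidates are usually filtered further by intensity or shape.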

Other strategies first reconstruct the dendritic shaft and then consider external protrusions as spine candidates. Kashiwagi et al. (2019) proposed fitting the dendritic shaft with elliptical cross-sections (Figure 2E). Protrusions extending beyond the reconstructed shaft are regarded as candidate spines and are subsequently filtered according to geometric characteristics (volume or axial elongation).
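
The final filtering step can be sketched independently of how the shaft was reconstructed. Assuming a binary mask of protrusion candidates is already available (for example, object minus fitted shaft), each connected component is labeled, its voxel count is measured, and its elongation is estimated from the principal variances of its voxel coordinates. The elongation definition (square root of the ratio of the two largest principal variances) and the size cutoff are our illustrative choices, not those of Kashiwagi et al. (2019).

```python
import numpy as np
from scipy import ndimage

def describe_candidates(candidate_mask, min_voxels):
    """Label protrusion candidates, measure size and elongation, drop small ones."""
    labels, n = ndimage.label(candidate_mask)
    kept = np.zeros(candidate_mask.shape, dtype=bool)
    features = []
    for lab in range(1, n + 1):
        coords = np.argwhere(labels == lab).astype(float)
        n_vox = len(coords)
        if n_vox >= 3:
            # Principal variances of the voxel cloud, largest first.
            eig = np.sort(np.linalg.eigvalsh(np.cov(coords, rowvar=False)))[::-1]
            elongation = float(np.sqrt(eig[0] / max(eig[1], 1e-12)))
        else:
            elongation = float("nan")      # too few voxels to estimate a shape
        features.append({"label": lab, "voxels": n_vox, "elongation": elongation})
        if n_vox >= min_voxels:
            kept |= labels == lab
    return kept, features
```

Voxel counts should be converted to physical volumes with the voxel size before comparing datasets acquired at different magnifications.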

A key advantage of rule-based methods is their strong interpretability and the fact that they require no annotation, which makes them ideal when annotated datasets are scarce. However, since these methods rely on fixed logic, consistent imaging conditions (microscopy modality, laser intensity, magnification, numerical aperture, etc.) are crucial for optimal performance.

Data-driven pipelines, which include traditional machine learning methods [e.g., clustering methods such as K-means (MacQueen, 1967; Jain et al., 1999); see Glossary] and deep learning methods [such as the widely used U-Net for semantic segmentation (Ronneberger et al., 2015)], have been effectively applied to dendritic spine analysis.

Xiao et al. trained a fully convolutional network (FCN, see Glossary) to achieve automated detection of dendritic spines in 2D images (Xiao et al., 2018). Regarding 3D dendritic spine segmentation, Vidaurre-Gallart et al. elegantly developed a dataset of in vitro confocal images from healthy human tissue and trained a 3D U-Net model for segmentation (Vidaurre-Gallart et al., 2022). They created a graphical user interface allowing post-processing, such as reconnecting isolated dendrites and applying the watershed algorithm to separate overlapping dendritic spines.

Other tools, such as DeepD3, offer capabilities for both training and prediction and provide dendritic spine and dendrite segmentation (Fernholz et al., 2024). Its deep learning framework uses a diverse dataset derived from two-photon and confocal imaging, incorporating both in vivo and in vitro data and supporting various fluorophores (tdTomato, Alexa-594, EGFP). DeepD3 employs an enhanced U-Net architecture with a dual-decoder network that generates separate segmentation masks for dendrites and spines. Post-processing includes filtering small segments, dilating dendrite maps to ensure that spine candidates are close to dendrites, and applying methods such as flood-filling (or threshold-based 3D connected-component analysis) for region-of-interest detection, with adjustable parameters to refine segmentation results. A graphical user interface is provided for both training and prediction.
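
The post-processing steps listed above are generic enough to sketch with SciPy. Below, the two probability maps are assumed to come from some network with separate dendrite and spine outputs; the probability threshold, minimum segment size and dilation radius are hypothetical values, and this is not DeepD3's own implementation.

```python
import numpy as np
from scipy import ndimage

def postprocess(dendrite_prob, spine_prob, p_thresh=0.5, min_voxels=5, dilate_iter=2):
    """Binarize probability maps, drop small spine segments, and keep only
    spine segments lying close to the dendrite."""
    dendrite = dendrite_prob > p_thresh
    spines = spine_prob > p_thresh
    # 1) Remove connected spine segments smaller than min_voxels.
    labels, _ = ndimage.label(spines)
    sizes = np.bincount(labels.ravel())
    large = np.nonzero(sizes >= min_voxels)[0]
    spines = np.isin(labels, large[large > 0])
    # 2) Keep segments overlapping the dendrite mask dilated by dilate_iter steps.
    near_dendrite = ndimage.binary_dilation(dendrite, iterations=dilate_iter)
    labels, _ = ndimage.label(spines)
    touching = np.unique(labels[near_dendrite & (labels > 0)])
    return dendrite, np.isin(labels, touching), len(touching)
```

The dilation radius encodes a biological prior (a spine head cannot float far from its shaft) and should be expressed in physical units when voxel sizes differ between datasets.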

In addition to developing new segmentation models, extending existing deep learning tools plays an important role in making these technologies accessible to neuroscientists. As an example, Schünemann et al. (2025) employed the Zeiss arivis Cloud platform to train and deploy a model for human dendritic spine morphology analysis. Similarly, the recent RESPAN pipeline (Garcia et al., 2024) integrates CSBDeep (Weigert et al., 2018) for image restoration (improving signal and contrast) with nnU-Net (Isensee et al., 2021) for spine segmentation, offering a combined workflow solution.

Other data-driven techniques, such as clustering algorithms that operate without manual annotation, are also crucial. These approaches leverage intrinsic data properties to discern structures, offering distinct advantages for complex biological datasets where extensive annotation is prohibitive, notably in nanoscale imaging.

Techniques such as multiphoton and confocal microscopy allow the visualization of very dense neuronal arborizations. Their resolution and SNR are sufficient to resolve spine heads but limit spine neck detection. We previously developed an algorithm to infer the neck central line using geodesic distances (Jain et al., 2021). However, since neck width has been proposed to be related to electrical resistance, investing effort in super-resolution techniques makes neck detection more accurate and biologically relevant. 3D-STED microscopy, which overcomes the diffraction limit of light, enables the characterization of these small protrusions. Segmenting these neurons requires dedicated algorithms, but only a few can deal with dense cell packing (Li et al., 2019; Milligan et al., 2019; Callara et al., 2020).
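
Geodesic (minimum-cost) paths are one way to trace a faint neck between a detected head and the shaft: the path is forced to follow bright pixels rather than a straight line. The sketch below runs Dijkstra's algorithm on a 2D pixel grid where entering a pixel costs 1 / (intensity + eps). The cost function, 4-connectivity and function name are assumptions for illustration; this is not the algorithm of Jain et al. (2021).

```python
import heapq

import numpy as np

def geodesic_path(image, start, goal, eps=1e-3):
    """Minimum-cost 4-connected path from start to goal (pixel tuples);
    entering pixel q costs 1 / (image[q] + eps), so bright pixels are cheap."""
    image = np.asarray(image, dtype=float)
    h, w = image.shape
    dist = np.full(image.shape, np.inf)
    prev = {}
    dist[start] = 0.0
    heap = [(0.0, start)]
    while heap:
        d, p = heapq.heappop(heap)
        if p == goal:
            break
        if d > dist[p]:
            continue  # stale heap entry, a cheaper route was already found
        y, x = p
        for q in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if 0 <= q[0] < h and 0 <= q[1] < w:
                nd = d + 1.0 / (image[q] + eps)
                if nd < dist[q]:
                    dist[q] = nd
                    prev[q] = p
                    heapq.heappush(heap, (nd, q))
    if not np.isfinite(dist[goal]):
        return None
    # Walk back from goal to start through the predecessor map.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]
```

Restricting the search to a dilated foreground mask, or using 3D neighborhoods with voxel-size-weighted steps, are natural extensions for real stacks.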

We recently developed a new framework called SENPAI (SEgmentation of Neurons using PArtial derivative Information) that extracts information on single neurons at the micro- and nanoscale within brain tissue. As a proof of concept, we considered L7-GFP transgenic mice, which express cytosolic GFP in cerebellar Purkinje cells. SENPAI uses a two-step segmentation and parcellation process which, when combined with super-resolution, recovers neuronal morphology at the nanoscale.

SENPAI constitutes a topologically informed, data-driven approach to neuronal reconstruction. We used k-means clustering (Figure 2H) on the raw or the 3D Gaussian-smoothed image to retrieve, respectively, tiny details (spine necks) or larger structures (dendrites and spine heads). To delineate object borders (neuron or spine), we used a scalable k-means algorithm exploiting spatial derivatives of intensity. Indeed, fluorescence decreases smoothly at the neuronal border, and intensity second derivatives are negative on the inner side of this intensity transition. Classes with positive second derivatives were considered background, whereas the neuronal shape of interest lies in the classes with the highest average intensity that present negative second derivatives in all three directions (Figure 2H).
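
The derivative criterion can be illustrated with a toy version: cluster the smoothed intensities with k-means, compute Gaussian second derivatives along z, y and x, and keep the clusters whose mean second derivative is negative along all three axes. This is our simplified reading of the description above, not the published SENPAI code; the class-level rule, the 1D k-means on intensities only, `k` and `sigma` are all assumptions.

```python
import numpy as np
from scipy import ndimage

def kmeans_1d(values, k, n_iter=50):
    """Plain Lloyd's k-means on scalar intensities, centers initialized evenly."""
    centers = np.linspace(values.min(), values.max(), k)
    for _ in range(n_iter):
        labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = values[labels == j].mean()
    return labels, centers

def derivative_informed_mask(volume, k=2, sigma=1.0):
    """Cluster smoothed intensities, then keep the clusters whose mean second
    derivative is negative along z, y and x (inner side of the border)."""
    volume = np.asarray(volume, dtype=float)
    smooth = ndimage.gaussian_filter(volume, sigma)
    labels, _ = kmeans_1d(smooth.ravel(), k)
    labels = labels.reshape(volume.shape)
    # Gaussian second derivative along each axis (order 2 on one axis only).
    second = [ndimage.gaussian_filter(volume, sigma, order=o)
              for o in ((2, 0, 0), (0, 2, 0), (0, 0, 2))]
    mask = np.zeros(volume.shape, dtype=bool)
    for j in range(k):
        members = labels == j
        if members.any() and all(d[members].mean() < 0 for d in second):
            mask |= members
    return mask
```

Running the same logic on the raw instead of the smoothed volume (smaller `sigma`) trades robustness to noise for sensitivity to thin structures such as necks, mirroring the raw versus smoothed distinction described above.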

The second step of SENPAI is the parcellation of the segmented image, which exploits topographic distances. To separate individual neurons from the entire neuronal population, SENPAI applies a 3D watershed transform using cell body seeds as neuronal cores. This separation is applied to the previous segmentation mask, connecting each dendritic branch to its cell body seed so that both are labeled as a single neuronal entity. In high-resolution datasets in which spine necks are not detectable, the same strategy assigns small spines to a specific dendritic branch, which then serves as the neuronal core. Thus, the SENPAI pipeline can isolate and extract a neuron’s morphology (Figure 2H, pink neuron), from neuronal branches to dendritic spines, even in a dense arborization context such as brain tissue.
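
Seeded parcellation within a mask can be illustrated with a simpler relative of the watershed: each foreground voxel is assigned to the seed it can reach in the fewest steps without leaving the mask (a multi-source breadth-first search). This is a stand-in for illustration, not SENPAI's watershed; for a true marker-controlled watershed, scikit-image provides `skimage.segmentation.watershed`. The function name and seed format are hypothetical.

```python
from collections import deque

import numpy as np

def seeded_parcellation(mask, seeds):
    """Label each foreground voxel with the index (1, 2, ...) of the seed that
    reaches it first by face-connected steps inside the mask."""
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=int)
    queue = deque()
    for lab, seed in enumerate(seeds, start=1):
        labels[tuple(seed)] = lab
        queue.append(tuple(seed))
    offsets = []
    for axis in range(mask.ndim):
        for step in (-1, 1):
            off = [0] * mask.ndim
            off[axis] = step
            offsets.append(off)
    # All seeds expand in lockstep, so fronts meet midway along shared paths.
    while queue:
        p = queue.popleft()
        for off in offsets:
            q = tuple(a + b for a, b in zip(p, off))
            inside = all(0 <= c < s for c, s in zip(q, mask.shape))
            if inside and mask[q] and labels[q] == 0:
                labels[q] = labels[p]
                queue.append(q)
    return labels
```

Because distances are measured inside the mask, a dendrite is attached to the soma it is connected to rather than to the nearest soma in straight-line distance, which is the key property for densely packed tissue.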

Discussion

Enhancing segmentation performance: architectural and non-architectural strategies

Both rule-based and data-driven methods have made significant progress in 3D dendritic spine segmentation, each offering unique advantages in terms of interpretability and robustness.

Data-driven approaches, especially deep learning, enable different levels of segmentation. Semantic segmentation (Figure 2F & Glossary) classifies all spine pixels into a single category (spine) (Long et al., 2015); instance segmentation (Figure 2G) further identifies and distinguishes each individual spine instance (He et al., 2017); and panoptic segmentation integrates both, distinguishing individual spine instances while assigning a semantic label to every pixel, including the dendritic shaft, background, and other cellular structures (Kirillov et al., 2019).
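
The three output levels can be contrasted on a toy label image. Below, a semantic map assigns hypothetical class ids to background, shaft and spine pixels; splitting the spine class into connected components yields instances, and a panoptic map combines both by encoding each pixel as class id × 1000 + instance id (a Cityscapes-style convention, chosen here for illustration). Connected components only separate spines that do not touch, which is precisely the limitation of semantic masks discussed below.

```python
import numpy as np
from scipy import ndimage

BACKGROUND, SHAFT, SPINE = 0, 1, 2   # hypothetical class ids

def semantic_to_instances(semantic):
    """Split the 'spine' class into connected components, one id per spine."""
    instances, n = ndimage.label(semantic == SPINE)
    return instances, n

def panoptic(semantic, instances):
    """Encode each pixel as class_id * 1000 + instance_id (0 for non-spine classes)."""
    return semantic.astype(np.int64) * 1000 + instances

# Toy semantic map: a horizontal shaft with two one-pixel-wide spines.
sem = np.zeros((5, 10), dtype=int)
sem[2, :] = SHAFT
sem[0:2, 2] = SPINE
sem[0:2, 7] = SPINE
inst, n_spines = semantic_to_instances(sem)
pan = panoptic(sem, inst)
```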

Moving forward, combining the strengths of both approaches, such as refining lightweight semantic segmentation models with efficient, rule-based post-processing strategies, remains a promising direction. These hybrid methods strike a favorable balance between computational cost and segmentation accuracy, while offering strong potential for generalization across diverse datasets.

To further improve the accuracy of semantic segmentation models, DeepD3 has shown that modifying model architectures can lead to significant performance gains (Fernholz et al., 2024): its customized network outperforms the conventional U-Net model, highlighting the effectiveness of architectural optimization.

Improvements in segmentation quality depend not only on model architecture but also on non-architectural factors—such as image preprocessing, training procedures, inference strategies, and post-processing techniques—which play crucial roles. A representative example is the nnU-Net framework, which, although based on the standard U-Net architecture, adapts key non-architectural parameters using rule-based strategies (Isensee et al., 2021). These include image cropping based on object size and optimizing resampling resolutions based on voxel spacing. Such adaptive mechanisms have enabled nnU-Net to achieve leading performance across multiple 3D medical image segmentation tasks, underscoring the importance of these non-architectural aspects in performance optimization.

The vision and reality of instance segmentation: from ideal goals to feasible path

Semantic segmentation allows classifying pixels as belonging to spines or not. A further level of complexity involves distinguishing individual spines from one another. To achieve this, instance segmentation can be used to identify distinct spine instances within the ‘spine’ semantic class. For example, the Segment Anything Model (SAM) (Kirillov et al., 2023), a pre-trained instance segmentation model trained on 11 million 2D natural images, has demonstrated robust generalization capabilities. Subsequently, the Medical SAM Adapter (Med-SA) was developed by fine-tuning approximately 2% of the SAM model’s parameters (~13 million), effectively adapting the model to 17 different medical imaging modalities and enabling 3D image segmentation (Wu et al., 2025). These results indicate SAM’s strong potential for cross-domain adaptation and 3D instance segmentation.

However, despite their promise, the practical application of instance segmentation models remains challenging. For instance, training Med-SA requires substantial computational resources (four NVIDIA A100 GPUs with 80 GB of memory each). Such hardware is not readily accessible to most researchers or laboratories, especially when compared to the limited resources available on free platforms like Google Colab, which typically offer a single, less powerful GPU (NVIDIA T4) with around 16 GB of memory. Furthermore, the scale of existing 3D datasets for dendritic spine segmentation is significantly smaller than those available in the medical imaging domain. For comparison, the BraTS2021 dataset (Baid et al., 2021) used for training Med-SA includes 1,280 3D samples, whereas spine segmentation datasets are considerably more limited. These challenges in computational resources and data availability constrain the feasibility of adopting instance segmentation models in current research.

As a result, most existing pipelines continue to rely on semantic segmentation, where pixels are labeled as belonging to any spine without distinguishing between individual instances. While effective for detecting the presence of spines, semantic segmentation typically produces a single collective mask, making it difficult to separate adjacent spines, especially when they are densely distributed or intertwined. Given this limitation, rule-based post-processing methods remain essential for refining segmentation results and isolating individual spines. Optimizing semantic segmentation pipelines in combination with effective post-processing therefore remains a vital and pragmatic focus for advancing dendritic spine analysis. Achieving instance segmentation in the future will depend on continued breakthroughs in models and algorithms, broader access to computational resources, and larger datasets. Panoptic segmentation additionally requires labeling strategies that clearly reveal the microenvironment around dendritic spines, such as nearby axons. Membrane labeling techniques can help by providing the information needed for precise semantic annotation of every pixel in an image.

High-quality updated annotation and model adaptation with evolving microscopy technologies

Despite the availability of powerful pre-trained models, annotation and retraining on new datasets remain unavoidable. This is primarily due to continuous advances in imaging technologies and labeling techniques, which introduce characteristics that differ from those present in the original training sets. As a result, pre-trained models may experience performance degradation when applied to novel datasets. The typical workflow for 3D annotation and model training involves converting and preprocessing microscopy data, performing semantic annotation with specialized tools, organizing the data into a model-compatible format, and training deep learning models on the annotated dataset (Supplementary Figure S2). Both the quantity and the quality of annotated data play critical roles. However, generating precise 3D annotations is a time-consuming process involving meticulous layer-by-layer and pixel-by-pixel inspection.
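
The preprocessing and data-organization steps of this workflow can be sketched as z-score intensity normalization followed by random patch sampling, with a fraction of patches centered on annotated voxels so that sparse spines are well represented during training. Both are common practices in 3D segmentation training; the function names, patch size and foreground fraction below are hypothetical, and real frameworks such as nnU-Net configure these steps automatically.

```python
import numpy as np

def zscore(volume):
    """Normalize intensities to zero mean and unit variance."""
    v = np.asarray(volume, dtype=np.float32)
    return (v - v.mean()) / (v.std() + 1e-8)

def sample_patches(image, label, patch, n, fg_fraction=0.5, seed=0):
    """Draw n (image, label) patches; a fraction contains annotated voxels.

    Assumes every patch dimension is no larger than the volume dimension.
    """
    rng = np.random.default_rng(seed)
    image, label = zscore(image), np.asarray(label)
    patch = np.asarray(patch)
    fg = np.argwhere(label > 0)
    hi = np.array(image.shape) - patch           # largest valid patch corner
    xs, ys = [], []
    for _ in range(n):
        if len(fg) and rng.random() < fg_fraction:
            center = fg[rng.integers(len(fg))]   # patch around an annotated voxel
            corner = np.clip(center - patch // 2, 0, hi)
        else:
            corner = np.array([rng.integers(0, h + 1) for h in hi])
        window = tuple(slice(c, c + p) for c, p in zip(corner, patch))
        xs.append(image[window])
        ys.append(label[window])
    return np.stack(xs), np.stack(ys)
```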

3D annotation platforms

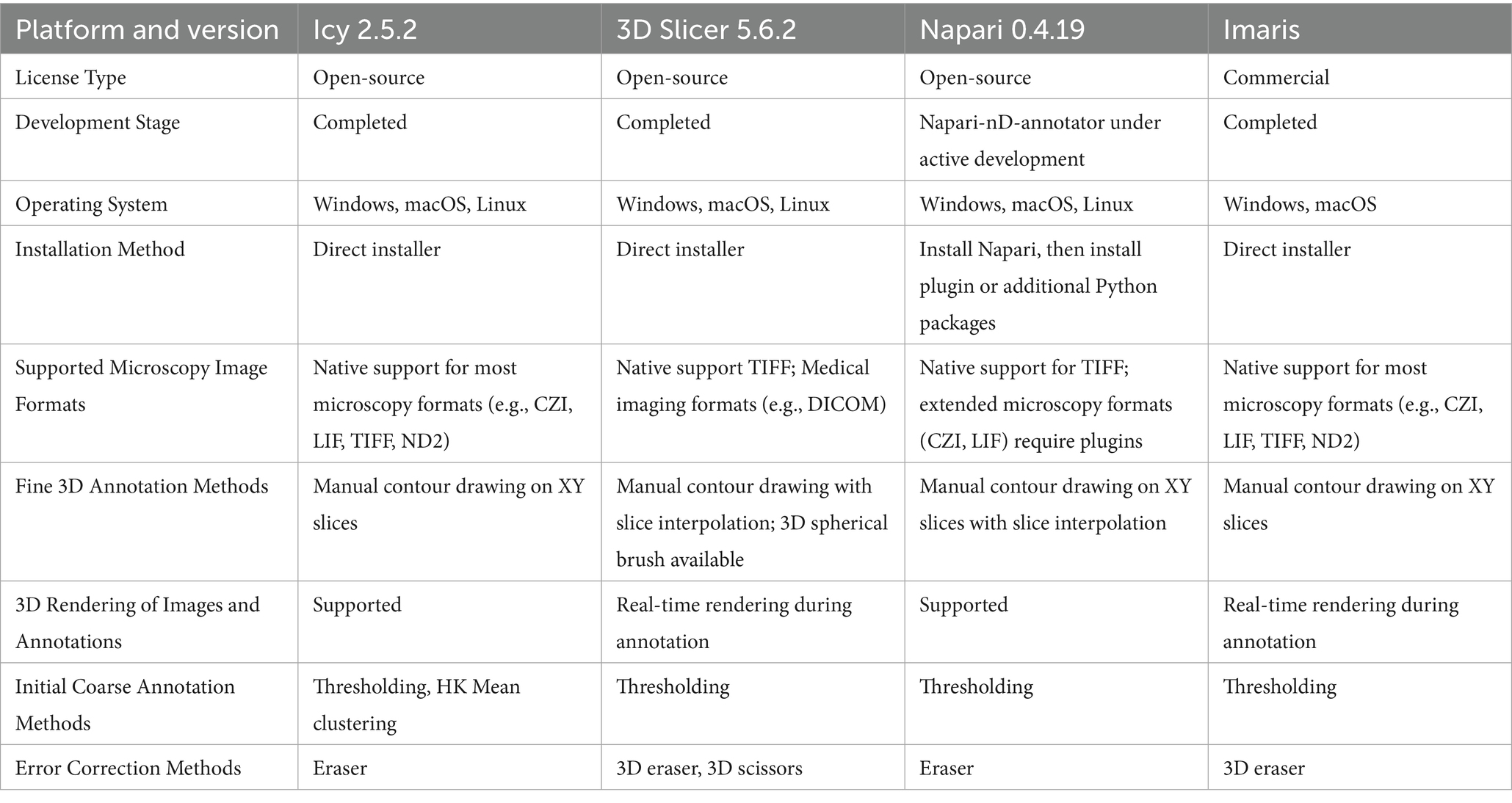

Several bioimage analysis platforms with 3D annotation capabilities have been developed, each offering distinct advantages, as exemplified in Table 1.

3D annotation requires broad support for importing and exporting various image formats. For example, the Icy platform provides wide compatibility with microscopy image formats, offering considerable flexibility during annotation (De Chaumont et al., 2012). Efficient pre-annotation methods can substantially reduce the manual workload; for instance, the HK-Mean method implemented in Icy enables threshold-based preliminary segmentation to expedite this process (Dufour et al., 2008). However, even with automated approaches, substantial manual verification and refinement are still required, making the process time-consuming. To accelerate 3D annotation, tools that support efficient interpolation and large-area corrections are highly valuable. A commonly used approach for 3D annotation involves contour-based manual labeling of individual slices. Tools such as Napari and 3D Slicer support automatic interpolation to fill unannotated slices between annotated ones, thereby improving annotation efficiency (Fedorov et al., 2012; Chiu and Clack, 2022). Notably, 3D Slicer extends the brush tool into a 3D sphere, enabling more efficient volumetric labeling and reducing the manual workload.
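
Two of these annotation aids can be sketched with NumPy/SciPy. Slice interpolation is illustrated with shape-based interpolation: each annotated 2D mask is converted to a signed distance map, the maps are blended linearly, and the blend is thresholded at zero. The 3D brush paints every voxel within a radius of the cursor. Shape-based interpolation is a standard technique, but Napari and 3D Slicer may implement interpolation differently; the function names are hypothetical and voxels are assumed isotropic.

```python
import numpy as np
from scipy import ndimage

def signed_distance(mask):
    """Signed distance map in pixels: negative inside the object, positive outside."""
    mask = np.asarray(mask, dtype=bool)
    return ndimage.distance_transform_edt(~mask) - ndimage.distance_transform_edt(mask)

def interpolate_slices(mask_a, mask_b, n_between):
    """Generate n_between masks between two annotated slices by blending
    their signed distance maps (shape-based interpolation)."""
    da, db = signed_distance(mask_a), signed_distance(mask_b)
    fractions = np.linspace(0.0, 1.0, n_between + 2)[1:-1]
    return [((1.0 - t) * da + t * db) < 0 for t in fractions]

def paint_sphere(labels, center, radius, value):
    """3D brush: set every voxel within `radius` of `center` to `value`, in place."""
    grids = np.ogrid[tuple(slice(0, s) for s in labels.shape)]
    dist2 = sum((g - c) ** 2 for g, c in zip(grids, center))
    labels[dist2 <= radius ** 2] = value
    return labels
```

Interpolated slices still need visual review, since the blend assumes the object changes smoothly between the annotated slices; a spine appearing or branching in between will not be reproduced.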

All platforms provide basic erasing functions, while 3D Slicer additionally supports direct 3D object clipping, enabling precise corrections. 3D Slicer also offers volumetric visualization, which facilitates prompt correction and thereby improves the overall efficiency and accuracy of the annotation process.

In the coming years, we expect that combining semantic segmentation with rule-based approaches on multi-scale imaging will provide valuable information on both neuronal dendritic tree complexity and dendritic spine morphology, which are crucial to deciphering many neuropsychiatric diseases.

Author contributions

PN: Writing – original draft, Methodology, Data curation, Visualization, Investigation, Formal analysis, Resources, Writing – review & editing. SX: Writing – original draft, Writing – review & editing, Resources, Investigation, Methodology, Visualization, Data curation. VB: Writing – review & editing, Methodology, Investigation, Visualization, Data curation, Resources. DB: Visualization, Resources, Writing – review & editing. LD: Writing – original draft, Writing – review & editing, Project administration, Supervision, Data curation, Formal analysis, Methodology, Validation, Investigation, Visualization, Resources, Conceptualization, Funding acquisition.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. We thank the French “Agence nationale de la recherche” (ANR) for supporting the project through grants to LD: ANR-21-CE11-0010; ANR-19-CE16-0012-03; ANR-22-CE17-0010; ANR-24-CE16-5973 and ANR-25-CE16-7573.

Acknowledgments

We warmly thank our colleagues Philippe Bun and Sylvain Jeannin for their respective advice concerning image analysis and super-resolution.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. The text and the literature review were conceived and written by the authors. AI was used sporadically to improve the English fluency of some sentences.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fninf.2025.1630133/full#supplementary-material

References

Argunşah, A. Ö., Erdil, E., Ghani, M. U., Ramiro-Cortés, Y., Hobbiss, A. F., Karayannis, T., et al. (2022). An interactive time series image analysis software for dendritic spines. Sci. Rep. 12:12405. doi: 10.1038/s41598-022-16137-y

Baid, U., Ghodasara, S., Mohan, S., Bilello, M., Calabrese, E., Colak, E., et al. (2021). The RSNA-ASNR-MICCAI BraTS 2021 benchmark on brain tumor segmentation and radiogenomic classification. arXiv preprint arXiv:2107.02314.

Bingham, D., Jakobs, C. E., Wernert, F., Boroni-Rueda, F., Jullien, N., Schentarra, E. M., et al. (2023). Presynapses contain distinct actin nanostructures. J. Cell Biol. 222:e202208110. doi: 10.1083/jcb.202208110

Boros, B. D., Greathouse, K. M., Gentry, E. G., Curtis, K. A., Birchall, E. L., Gearing, M., et al. (2017). Dendritic spines provide cognitive resilience against Alzheimer's disease. Ann. Neurol. 82, 602–614. doi: 10.1002/ana.25049

Breton, V., Nazac, P., Boulet, D., and Danglot, L. (2024). Molecular mapping of neuronal architecture using STORM microscopy and new fluorescent probes for SMLM imaging. Neurophotonics 11:014414. doi: 10.1117/1.NPh.11.1.014414

Bucher, M., Fanutza, T., and Mikhaylova, M. (2020). Cytoskeletal makeup of the synapse: shaft versus spine. Cytoskeleton 77, 55–64. doi: 10.1002/cm.21583

Callara, A. L., Magliaro, C., Ahluwalia, A., and Vanello, N. (2020). A smart region-growing algorithm for single-neuron segmentation from confocal and 2-photon datasets. Front. Neuroinform. 14. doi: 10.3389/fninf.2020.00009

Cauzzo, S., Bruno, E., Boulet, D., Nazac, P., Basile, M., Callara, A. L., et al. (2024). A modular framework for multi-scale tissue imaging and neuronal segmentation. Nat. Commun. 15:4102. doi: 10.1038/s41467-024-48146-y

Chiu, C.-L., and Clack, N. (2022). Napari: a Python multi-dimensional image viewer platform for the research community. Microsc. Microanal. 28, 1576–1577. doi: 10.1017/S1431927622006328

Collot, M., Ashokkumar, P., Anton, H., Boutant, E., Faklaris, O., Galli, T., et al. (2019). MemBright: a family of fluorescent membrane probes for advanced cellular imaging and neuroscience. Cell Chem. Biol. 26, 600–614.e7. doi: 10.1016/j.chembiol.2019.01.009

Das, N., Baczynska, E., Bijata, M., Ruszczycki, B., Zeug, A., Plewczynski, D., et al. (2021). 3dspan: an interactive software for 3D segmentation and analysis of dendritic spines. Neuroinformatics 20, 679–698. doi: 10.1007/s12021-021-09549-0

De Chaumont, F., Dallongeville, S., Chenouard, N., Hervé, N., Pop, S., Provoost, T., et al. (2012). Icy: an open bioimage informatics platform for extended reproducible research. Nat. Methods 9, 690–696. doi: 10.1038/nmeth.2075

De Koninck, Y., Alonso, J., Bancelin, S., Béïque, J.-C., Bélanger, E., Bouchard, C., et al. (2024). Understanding the nervous system: lessons from frontiers in neurophotonics. Neurophotonics 11:014415. doi: 10.1364/josaa.534150

DeFelipe, J. (2011). The evolution of the brain, the human nature of cortical circuits, and intellectual creativity. Front. Neuroanat. 5:29. doi: 10.3389/fnana.2011.00029

Dufour, A., Meas-Yedid, V., Grassart, A., and Olivo-Marin, J.-C. (2008). "Automated quantification of cell endocytosis using active contours and wavelets", in: 2008 19th International Conference on Pattern Recognition (IEEE), 1–4.

Ekaterina, P., Peter, V., Smirnova, D., Vyacheslav, C., and Ilya, B. (2023). Spinetool is an open-source software for analysis of morphology of dendritic spines. Sci. Rep. 13:10561. doi: 10.1038/s41598-023-37406-4

Fedorov, A., Beichel, R., Kalpathy-Cramer, J., Finet, J., Fillion-Robin, J.-C., Pujol, S., et al. (2012). 3D slicer as an image computing platform for the quantitative imaging network. Magn. Reson. Imaging 30, 1323–1341. doi: 10.1016/j.mri.2012.05.001

Fernholz, M. H., Guggiana Nilo, D. A., Bonhoeffer, T., and Kist, A. M. (2024). DeepD3, an open framework for automated quantification of dendritic spines. PLoS Comput. Biol. 20:e1011774. doi: 10.1371/journal.pcbi.1011774

Forrest, M. P., Parnell, E., and Penzes, P. (2018). Dendritic structural plasticity and neuropsychiatric disease. Nat. Rev. Neurosci. 19, 215–234. doi: 10.1038/nrn.2018.16

Garcia, S. B., Schlotter, A. P., Pereira, D., Polleux, F., and Hammond, L. A. (2024). RESPAN: An accurate, unbiased and automated pipeline for analysis of dendritic morphology and dendritic spine mapping. bioRxiv. doi: 10.1101/2024.06.06.597812

Garey, L., Ong, W., Patel, T., Kanani, M., Davis, A., Mortimer, A., et al. (1998). Reduced dendritic spine density on cerebral cortical pyramidal neurons in schizophrenia. J. Neurol. Neurosurg. Psychiatry 65, 446–453. doi: 10.1136/jnnp.65.4.446

Gilles, J.-F., Mailly, P., Ferreira, T., Boudier, T., and Heck, N. (2024). Spot spine, a freely available ImageJ plugin for 3D detection and morphological analysis of dendritic spines. F1000Res 13:176. doi: 10.12688/f1000research.146327.2

Glantz, L. A., and Lewis, D. A. (2000). Decreased dendritic spine density on prefrontal cortical pyramidal neurons in schizophrenia. Arch. Gen. Psychiatry 57, 65–73. doi: 10.1001/archpsyc.57.1.65

Harris, K. M. (1999). Structure, development, and plasticity of dendritic spines. Curr. Opin. Neurobiol. 9, 343–348. doi: 10.1016/S0959-4388(99)80050-6

He, K., Gkioxari, G., Dollár, P., and Girshick, R. (2017). "Mask R-CNN", in: Proceedings of the IEEE International Conference on Computer Vision, 2961–2969.

Herms, J., and Dorostkar, M. M. (2016). Dendritic spine pathology in neurodegenerative diseases. Ann. Rev. Pathol. 11, 221–250. doi: 10.1146/annurev-pathol-012615-044216

Hotulainen, P., and Hoogenraad, C. C. (2010). Actin in dendritic spines: connecting dynamics to function. J. Cell Biol. 189, 619–629. doi: 10.1083/jcb.201003008

Hutsler, J. J., and Zhang, H. (2010). Increased dendritic spine densities on cortical projection neurons in autism spectrum disorders. Brain Res. 1309, 83–94. doi: 10.1016/j.brainres.2009.09.120

Isensee, F., Jaeger, P. F., Kohl, S. A., Petersen, J., and Maier-Hein, K. H. (2021). nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 18, 203–211. doi: 10.1038/s41592-020-01008-z

Jain, S., Mukherjee, S., Danglot, L., and Olivo-Marin, J.-C. (2021). "Morphological reconstruction of detached dendritic spines via geodesic path prediction", in: 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI) (IEEE), 944–947.

Jain, A. K., Murty, M. N., and Flynn, P. J. (1999). Data clustering: a review. ACM Comput. Surv. 31, 264–323. doi: 10.1145/331499.331504

Kashiwagi, Y., Higashi, T., Obashi, K., Sato, Y., Komiyama, N. H., Grant, S. G. N., et al. (2019). Computational geometry analysis of dendritic spines by structured illumination microscopy. Nat. Commun. 10:1285. doi: 10.1038/s41467-019-09337-0

Kashiwagi, Y., and Okabe, S. (2021). Imaging of spine synapses using super-resolution microscopy. Anat. Sci. Int. 96, 343–358. doi: 10.1007/s12565-021-00603-0

Kirillov, A., He, K., Girshick, R., Rother, C., and Dollár, P. (2019). "Panoptic segmentation", in: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 9404–9413.

Kirillov, A., Mintun, E., Ravi, N., Mao, H., Rolland, C., Gustafson, L., et al. (2023). "Segment anything", in: Proceedings of the IEEE/CVF international conference on computer vision, 4015–4026.

Konietzny, A., Bär, J., and Mikhaylova, M. (2017). Dendritic actin cytoskeleton: structure, functions, and regulations. Front. Cell. Neurosci. 11:147. doi: 10.3389/fncel.2017.00147

Lagache, T., Grassart, A., Dallongeville, S., Faklaris, O., Sauvonnet, N., Dufour, A., et al. (2018). Mapping molecular assemblies with fluorescence microscopy and object-based spatial statistics. Nat. Commun. 9:698. doi: 10.1038/s41467-018-03053-x

Levet, F., Tønnesen, J., Nägerl, U. V., and Sibarita, J.-B. (2020). SpineJ: a software tool for quantitative analysis of nanoscale spine morphology. Methods (San Diego, Calif.) 174, 49–55. doi: 10.1016/j.ymeth.2020.01.020

Li, P., Zhang, Z., Tong, Y., Foda, B. M., and Day, B. (2022). ILEE: algorithms and toolbox for unguided and accurate quantitative analysis of cytoskeletal images. J. Cell Biol. 222:e202203024. doi: 10.1083/jcb.202203024

Li, R., Zhu, M., Li, J., Bienkowski, M. S., Foster, N. N., Xu, H., et al. (2019). Precise segmentation of densely interweaving neuron clusters using G-cut. Nat. Commun. 10:1549. doi: 10.1038/s41467-019-09515-0

Livet, J., Weissman, T. A., Kang, H., Draft, R. W., Lu, J., Bennis, R. A., et al. (2007). Transgenic strategies for combinatorial expression of fluorescent proteins in the nervous system. Nature 450, 56–62. doi: 10.1038/nature06293

Long, J., Shelhamer, E., and Darrell, T. (2015). "Fully convolutional networks for semantic segmentation", in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 3431–3440.

MacQueen, J. (1967). "Some methods for classification and analysis of multivariate observations", in: Proceedings of the fifth Berkeley symposium on mathematical statistics and probability, volume 1: Statistics: University of California Press, 281–298.

Milligan, K., Balwani, A., and Dyer, E. (2019). Brain mapping at high resolutions: challenges and opportunities. Curr. Opin. Biomed. Eng. 12, 126–131. doi: 10.1016/j.cobme.2019.10.009

Mohan, H., Verhoog, M. B., Doreswamy, K. K., Eyal, G., Aardse, R., Lodder, B. N., et al. (2015). Dendritic and axonal architecture of individual pyramidal neurons across layers of adult human neocortex. Cereb. Cortex 25, 4839–4853. doi: 10.1093/cercor/bhv188

Napper, R., and Harvey, R. (1988). Quantitative study of the Purkinje cell dendritic spines in the rat cerebellum. J. Comp. Neurol. 274, 158–167. doi: 10.1002/cne.902740203

Nwabuisi-Heath, E., LaDu, M. J., and Yu, C. (2012). Simultaneous analysis of dendritic spine density, morphology and excitatory glutamate receptors during neuron maturation in vitro by quantitative immunocytochemistry. J. Neurosci. Methods 207, 137–147. doi: 10.1016/j.jneumeth.2012.04.003

Okabe, S. (2020). Recent advances in computational methods for measurement of dendritic spines imaged by light microscopy. Microscopy 69, 196–213. doi: 10.1093/jmicro/dfaa016

Papa, M., Bundman, M. C., Greenberger, V., and Segal, M. (1995). Morphological analysis of dendritic spine development in primary cultures of hippocampal neurons. J. Neurosci. 15, 1–11. doi: 10.1523/JNEUROSCI.15-01-00001.1995

Peng, H., Ruan, Z., Long, F., Simpson, J. H., and Myers, E. W. (2010). V3D enables real-time 3D visualization and quantitative analysis of large-scale biological image data sets. Nat. Biotechnol. 28, 348–353. doi: 10.1038/nbt.1612

Rao, A., and Craig, A. M. (2000). Signaling between the actin cytoskeleton and the postsynaptic density of dendritic spines. Hippocampus 10, 527–541. doi: 10.1002/1098-1063(2000)10:5<527::AID-HIPO3>3.0.CO;2-B

Rao, Y. L., Ganaraja, B., Murlimanju, B., Joy, T., Krishnamurthy, A., and Agrawal, A. (2022). Hippocampus and its involvement in Alzheimer’s disease: a review. 3 Biotech 12:55. doi: 10.1007/s13205-022-03123-4

Ronneberger, O., Fischer, P., and Brox, T. (2015). "U-Net: convolutional networks for biomedical image segmentation", in: Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings, Part III (Springer), 234–241.

Rusakov, D. A., and Stewart, M. G. (1995). Quantification of dendritic spine populations using image analysis and a tilting disector. J. Neurosci. Methods 60, 11–21. doi: 10.1016/0165-0270(94)00215-3

Saladin, L., Breton, V., Le Berruyer, V., Nazac, P., Lequeu, T., Didier, P., et al. (2024). Targeted Photoconvertible BODIPYs based on directed photooxidation-induced conversion for applications in photoconversion and live super-resolution imaging. J. Am. Chem. Soc. 146, 17456–17473. doi: 10.1021/jacs.4c05231

Schleske, J. M., Hubrich, J., Wirth, J. O., D'Este, E., Engelhardt, J., and Hell, S. W. (2024). MINFLUX reveals dynein stepping in live neurons. Proc. Natl. Acad. Sci. USA 121:e2412241121. doi: 10.1073/pnas.2412241121

Schünemann, K. D., Hattingh, R. M., Verhoog, M. B., Yang, D., Bak, A. V., Peter, S., et al. (2025). Comprehensive analysis of human dendritic spine morphology and density. J. Neurophysiol. 133, 1086–1102. doi: 10.1152/jn.00622.2024

Selkoe, D. J. (2002). Alzheimer's disease is a synaptic failure. Science 298, 789–791. doi: 10.1126/science.1074069

Smirnov, M. S., Garrett, T. R., and Yasuda, R. (2018). An open-source tool for analysis and automatic identification of dendritic spines using machine learning. PLoS One 13:e0199589. doi: 10.1371/journal.pone.0199589

Tackenberg, C., Ghori, A., and Brandt, R. (2009). Thin, stubby or mushroom: spine pathology in Alzheimer's disease. Curr. Alzheimer Res. 6, 261–268. doi: 10.2174/156720509788486554

Vidaurre-Gallart, I., Fernaud-Espinosa, I., Cosmin-Toader, N., Talavera-Martínez, L., Martin-Abadal, M., Benavides-Piccione, R., et al. (2022). A deep learning-based workflow for dendritic spine segmentation. Front. Neuroanat. 16. doi: 10.3389/fnana.2022.817903

Weigert, M., Schmidt, U., Boothe, T., Müller, A., Dibrov, A., Jain, A., et al. (2018). Content-aware image restoration: pushing the limits of fluorescence microscopy. Nat. Methods 15, 1090–1097. doi: 10.1038/s41592-018-0216-7

Wernert, F., Moparthi, S. B., Pelletier, F., Lainé, J., Simons, E., Moulay, G., et al. (2024). The actin-spectrin submembrane scaffold restricts endocytosis along proximal axons. Science 385:eado2032. doi: 10.1126/science.ado2032

Wouterlood, F. G. (2023). “Techniques to render dendritic spines visible in the microscope” in Dendritic spines: Structure, function, and plasticity (Springer), 69–102.

Wu, Y., Ma, L., Duyck, K., Long, C. C., Moran, A., Scheerer, H., et al. (2018). A population of navigator neurons is essential for olfactory map formation during the critical period. Neuron 100, 1066–1082.e6. doi: 10.1016/j.neuron.2018.09.051

Wu, J., Wang, Z., Hong, M., Ji, W., Fu, H., Xu, Y., et al. (2025). Medical sam adapter: adapting segment anything model for medical image segmentation. Med. Image Anal. 102:103547. doi: 10.1016/j.media.2025.103547

Xiao, X., Djurisic, M., Hoogi, A., Sapp, R. W., Shatz, C. J., and Rubin, D. L. (2018). Automated dendritic spine detection using convolutional neural networks on maximum intensity projected microscopic volumes. J. Neurosci. Methods 309, 25–34. doi: 10.1016/j.jneumeth.2018.08.019

Zhang, W., and Benson, D. L. (2000). Development and molecular organization of dendritic spines and their synapses. Hippocampus 10, 512–526. doi: 10.1002/1098-1063(2000)10:5<>3.0.CO;2-M

Glossary

Reconstruction ringing-artifacts - A type of image distortion that appears as spurious ripples or oscillations around sharp edges or high-intensity spots in a reconstructed image. This often occurs due to limitations in the mathematical algorithms (like the Fourier transform) used to process the raw microscopy data, especially when high-frequency information is lost or truncated.

Delaunay triangulation - A computational geometry method for connecting a set of discrete points (or localizations) into a mesh of triangles. Triangles are chosen so that no point of the set lies inside the circumcircle of any triangle (the empty-circumcircle property).
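The empty-circumcircle property can be checked with the classic "in-circle" determinant. The sketch below is a verification routine for small examples, not a triangulation algorithm, and the function names are hypothetical.

```python
def in_circumcircle(a, b, c, p):
    """True if point p lies strictly inside the circumcircle of triangle (a, b, c)."""
    # Orient the triangle counter-clockwise so that det > 0 means "inside".
    orient = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    if orient < 0:
        b, c = c, b
    m = []
    for q in (a, b, c):
        dx, dy = q[0] - p[0], q[1] - p[1]
        m.append((dx, dy, dx * dx + dy * dy))
    det = (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
           - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
           + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))
    return det > 0


def is_delaunay(points, triangles):
    """Check that no point falls inside the circumcircle of any triangle."""
    for i, j, k in triangles:
        for idx, p in enumerate(points):
            if idx not in (i, j, k) and in_circumcircle(points[i], points[j], points[k], p):
                return False
    return True


# Four points forming a kite: only one of the two possible diagonals is Delaunay.
pts = [(0, 0), (2, -1), (4, 0), (2, 3)]
print(is_delaunay(pts, [(0, 1, 3), (1, 2, 3)]))   # → True
print(is_delaunay(pts, [(0, 1, 2), (0, 2, 3)]))   # → False
```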

Ripley’s function - A spatial statistical method. It is used to assess whether a set of points is clustered, regularly spaced, or randomly distributed. It evaluates the number of neighboring points within a given radius around each point, and compares this to what would be expected under complete spatial randomness. Commonly used in cell biology and neuroscience to analyze the spatial organization of cells, synapses, or molecules.
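A naive estimate of Ripley's K function counts, for each radius, the ordered pairs of points closer than that radius and rescales by the observation area; under complete spatial randomness K(r) is close to πr². The sketch below omits the edge correction that real analyses (including SODA) apply, so it is only indicative for radii that are small relative to the field of view. The function names are hypothetical.

```python
import numpy as np


def ripley_k(points, radii, area):
    """Naive Ripley's K: area / (n (n - 1)) x number of ordered pairs closer than r.
    No edge correction is applied."""
    pts = np.asarray(points, dtype=float)
    n = len(pts)
    diff = pts[:, None, :] - pts[None, :, :]
    d = np.sqrt((diff ** 2).sum(axis=-1))
    np.fill_diagonal(d, np.inf)                 # exclude each point's distance to itself
    return np.array([area * (d < r).sum() / (n * (n - 1)) for r in radii])


def besag_l_minus_r(k, radii):
    """L(r) - r with L(r) = sqrt(K(r) / pi): > 0 suggests clustering, < 0 regular spacing."""
    return np.sqrt(k / np.pi) - np.asarray(radii, dtype=float)


# Two close points and one distant point in a 10 x 10 window.
k = ripley_k([(0, 0), (1, 0), (10, 10)], radii=[0.5, 2.0], area=100.0)
print(k)
print(besag_l_minus_r(k, [0.5, 2.0]))
```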

SODA - SODA stands for Statistical Object Distance Analysis. It is a free plugin for the Icy software that uses Ripley’s function to analyze the spatial distribution of spots or STORM localizations. When applied to two-channel single-molecule localization microscopy images, SODA can indicate whether green molecules are randomly distributed or statistically associated with red molecules. Combined with a pre- or post-synaptic marker, SODA can also be used to test whether a protein is associated with synapses (see Lagache et al., 2018 for details, or Breton et al., 2024 for a review).

Super-resolution microscopy - A family of light microscopy techniques that circumvent the diffraction limit of light, using physical or computational methods, to achieve a spatial resolution better than the roughly 200 nm attainable with conventional light microscopes. This includes SIM, STED, single-molecule localization microscopy (SMLM) approaches such as STORM and PALM, and MINFLUX.

Airyscan - A detector technology for confocal laser scanning microscopy. It replaces the conventional single-point pinhole with an area detector to capture more spatial information. This information is then computationally processed to reconstruct an image with higher resolution and a better signal-to-noise ratio than standard confocal. This technique can be applied to both cultured cells and tissue samples. Resolution typically ranges from 120 to 140 nm. This technique gives higher resolution than confocal but less than “Super-resolution techniques”. Although the resolution is slightly lower compared to SIM, the signal homogeneity is particularly advantageous, as it greatly facilitates image segmentation and quantification.

SIM (Structured Illumination Microscopy) - A super-resolution technique. It illuminates the sample with patterned light (e.g., stripes). The interaction of this pattern with the sample's structure produces a "Moiré pattern" that shifts otherwise unresolvable fine detail into the range the microscope can detect; a higher-resolution image is then computationally reconstructed from multiple raw images acquired with different pattern positions and orientations. Resolution typically ranges between 100 and 120 nm. This technique is highly effective in neuronal cell cultures. Its application in tissue samples is possible but more challenging, as artifacts may arise with increasing imaging depth.
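The frequency mixing behind the Moiré effect can be shown in one dimension: multiplying a fine sample structure by a striped illumination creates a beat at the difference of their spatial frequencies. In this sketch the frequencies and the optical "cut-off" are arbitrary assumptions, and no SIM reconstruction is performed; it only shows which frequencies would be transmitted.

```python
import numpy as np


def moire_frequencies(f_sample, f_illum, cutoff, n=1000):
    """Spatial frequencies (cycles per field of view) of the emitted signal that fall
    inside a hypothetical optical pass-band |f| <= cutoff.

    Fluorescence is modelled as sample density x illumination intensity,
    both pure cosines on top of a constant offset.
    """
    x = np.arange(n) / n                              # unit-length field of view
    sample = 1 + np.cos(2 * np.pi * f_sample * x)     # fine structure
    illum = 1 + np.cos(2 * np.pi * f_illum * x)       # striped illumination
    spectrum = np.abs(np.fft.rfft(sample * illum))
    spectrum[0] = 0.0                                 # discard the constant (DC) term
    passed = spectrum[: cutoff + 1] > 1e-6 * spectrum.max()
    return np.nonzero(passed)[0].tolist()


print(moire_frequencies(230, 0, cutoff=100))    # uniform illumination → []
print(moire_frequencies(230, 200, cutoff=100))  # striped illumination → [30]
```

With uniform illumination the 230-cycle structure is lost entirely; with stripes, a 30-cycle beat carrying information about it passes through the cut-off.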

STED (Stimulated Emission Depletion) - A super-resolution technique. It uses two lasers: an excitation laser that illuminates a spot of fluorescent molecules, synchronized with a donut-shaped "depletion" laser that deactivates fluorescence in the outer region of the spot, effectively shrinking the point spread function. Resolution typically ranges from 40 to 80 nm. This technique can be applied to both cultured cells and tissue samples. However, imaging thick specimens may require clearing to minimize light scattering and improve acquisition quality.
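The shrinking of the effective spot is often summarized by the approximation d ≈ λ / (2 NA √(1 + I/I_sat)), where I/I_sat is the depletion intensity relative to the dye's saturation intensity. The sketch below evaluates this formula for an assumed 640 nm emission and an NA 1.4 objective; real resolution also depends on the sample, the dye and the optics.

```python
import math


def sted_resolution_nm(wavelength_nm, na, saturation_factor):
    """Approximate STED lateral resolution (nm): lambda / (2 NA sqrt(1 + I/I_sat)).
    With saturation_factor = 0 this reduces to the diffraction-limited value."""
    return wavelength_nm / (2 * na * math.sqrt(1 + saturation_factor))


for s in (0, 15, 50):
    print(s, round(sted_resolution_nm(640, 1.4, s), 1))   # → 228.6, 57.1, 32.0
```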

STORM (Stochastic Optical Reconstruction Microscopy) - A single-molecule localization-based super-resolution technique. It uses photoswitchable fluorescent probes, stochastically activating only a small, sparse subset of them in each camera frame. By precisely localizing these individual molecules and combining thousands of such frames, a high-resolution image is reconstructed. Resolution typically ranges from 10 to 50 nm. This technique can be applied to both cultured cells and tissue samples. However, imaging thick specimens is challenging because of light scattering and the limited penetration of the blinking buffer (for STORM) or of the oligonucleotide probes (for PAINT).
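Why localizing a single molecule beats the diffraction limit can be seen in a simulation: the centroid of N detected photons scatters around the true position with a spread of roughly σ/√N, where σ is the PSF width. This sketch is idealized (no background, no camera pixels, an assumed Gaussian PSF with σ = 120 nm), so it gives optimistic numbers compared with real STORM data.

```python
import numpy as np


def localize(photon_xy):
    """Estimate the emitter position as the centroid of detected photon positions."""
    return photon_xy.mean(axis=0)


def localization_precision_nm(n_photons, psf_sigma_nm=120.0, trials=2000, seed=0):
    """Empirical spread (nm) of centroid estimates for an emitter at the origin.
    Expected to be close to psf_sigma_nm / sqrt(n_photons)."""
    rng = np.random.default_rng(seed)
    estimates = np.array([
        localize(rng.normal(0.0, psf_sigma_nm, size=(n_photons, 2)))
        for _ in range(trials)
    ])
    return float(estimates[:, 0].std())


for n in (100, 1000):
    print(n, round(localization_precision_nm(n), 1), round(120 / np.sqrt(n), 1))
```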

MINFLUX (MINimal emission FLUXes) - A super-resolution technique that combines the principles of STED and single-molecule localization. It uses a donut-shaped excitation beam (dark in the center and bright around it) to probe fluorescence at different positions. By fitting the photon counts obtained from multiple targeted excitation positions surrounding the fluorophore, the system triangulates the molecule’s location with high precision while limiting photobleaching, owing to the reduced excitation intensity. It can achieve 1-3 nm precision and is fast enough to track molecules in living cells. To date, most published applications concern cell cultures.
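A one-dimensional toy model conveys the principle: near its dark center, the donut intensity grows roughly quadratically, so placing the center at −L/2 and +L/2 yields photon counts proportional to (x + L/2)² and (x − L/2)². Their ratio gives the emitter position x directly. This is a simplified sketch under that quadratic assumption; actual MINFLUX implementations use more exposure positions in 2D or 3D and typically shrink L iteratively.

```python
import math


def minflux_1d_position(n_left, n_right, L):
    """Estimate emitter position x in (-L/2, L/2) from counts with the donut
    center at -L/2 (n_left) and +L/2 (n_right). Requires n_right > 0."""
    r = math.sqrt(n_left / n_right)          # = (x + L/2) / (L/2 - x)
    return (L / 2) * (r - 1) / (r + 1)


# Emitter at x = 10 nm, exposures at +/-25 nm (L = 50 nm): counts proportional to 35**2 and 15**2
print(minflux_1d_position(1225, 225, L=50))   # ≈ 10 nm
```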

SNR (Signal-to-Noise Ratio) - A measure of signal quality. It compares the level of a desired signal to the level of background noise. A high SNR indicates a clean and reliable measurement.
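SNR has several conventions in microscopy. The sketch below uses one common contrast-based definition, (mean signal − mean background) / standard deviation of the background, with user-supplied signal and background masks; other definitions (e.g., mean signal / background standard deviation, or power ratios in decibels) give different numbers for the same image.

```python
import numpy as np


def snr(image, signal_mask, background_mask):
    """Contrast-based SNR: (mean signal - mean background) / std of background."""
    img = np.asarray(image, dtype=float)
    background = img[background_mask]
    return (img[signal_mask].mean() - background.mean()) / background.std()


img = np.array([[1, 3, 12],
                [3, 1, 12]])
signal = img > 10
print(snr(img, signal, ~signal))   # → 10.0
```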

PSF (Point-Spread Function) - This is a mathematical function that describes how the image of a single point is distorted by a microscopy system. For example, a fluorescent spherical bead with a physical diameter of 100 nm appears larger than 200 nm in fluorescence microscopy. The three-dimensional image of such a bead—commonly referred to as the point spread function (PSF)—reveals that the distortion is typically anisotropic in conventional microscopy, with greater deformation along the Z-axis than in the X and Y directions.
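The bead example and the axial anisotropy can be approximated with commonly quoted formulas: lateral resolution λ/(2 NA) and axial resolution 2λn/NA², where n is the refractive index of the immersion medium. The apparent size assumes that object and PSF widths add in quadrature, which is exact only for Gaussian profiles and is used here as a rough estimate. Wavelength, NA and refractive index below are assumed values.

```python
import math


def abbe_limits_nm(wavelength_nm, na, n_medium=1.518):
    """Commonly quoted approximations of lateral and axial resolution (nm)."""
    lateral = wavelength_nm / (2 * na)
    axial = 2 * wavelength_nm * n_medium / na ** 2
    return lateral, axial


def apparent_size_nm(object_nm, resolution_nm):
    """Rough apparent width when object and PSF widths add in quadrature."""
    return math.sqrt(object_nm ** 2 + resolution_nm ** 2)


lat, ax = abbe_limits_nm(520, 1.4)       # green emission, oil-immersion objective
print(round(lat), round(ax))             # → 186 805
print(round(apparent_size_nm(100, lat)), round(apparent_size_nm(100, ax)))
```

With these assumptions, a 100 nm bead appears about 210 nm wide laterally and several times longer along Z, consistent with the anisotropy described above.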

HK-Mean - This segmentation method applies N-class thresholding based on K-Means clustering of the image histogram. It then performs object extraction in a bottom-up manner, guided by user-defined minimum and maximum object size constraints. Conventional thresholding typically employs two classes, with black representing the background and white denoting the object of interest. By using HK-Means clustering, for example, it becomes possible to perform thresholding with four classes: white, black, and two intermediate grey levels.
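The idea of N-class thresholding followed by size-constrained object extraction can be sketched as follows: K-means on pixel intensities defines k grey-level classes, pixels brighter than the darkest class are grouped into 4-connected objects, and only objects within [min_size, max_size] are kept. This is an illustrative approximation written for this glossary, not the Icy implementation of HK-Means; the connectivity rule and the choice of "brighter than the darkest class" as foreground are assumptions.

```python
import numpy as np


def kmeans_1d(values, k, iters=100):
    """Plain K-means on pixel intensities (i.e., on the image histogram)."""
    values = np.asarray(values, dtype=float).ravel()
    centers = np.linspace(values.min(), values.max(), k)   # spread initial centers
    for _ in range(iters):
        labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
        new = np.array([values[labels == j].mean() if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return np.sort(centers)


def label_components(mask):
    """4-connected component labelling by flood fill (no SciPy needed)."""
    labels = np.zeros(mask.shape, dtype=int)
    count = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue
        count += 1
        labels[start] = count
        stack = [start]
        while stack:
            r, c = stack.pop()
            for rr, cc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if (0 <= rr < mask.shape[0] and 0 <= cc < mask.shape[1]
                        and mask[rr, cc] and not labels[rr, cc]):
                    labels[rr, cc] = count
                    stack.append((rr, cc))
    return labels, count


def hk_like_segment(image, k=4, min_size=2, max_size=50):
    """Classify pixels into k intensity classes, then keep connected objects brighter
    than the darkest class whose size lies within [min_size, max_size]."""
    img = np.asarray(image, dtype=float)
    centers = kmeans_1d(img, k)
    classes = np.argmin(np.abs(img[..., None] - centers), axis=-1)   # 0 = darkest
    labels, count = label_components(classes > 0)
    kept = np.zeros_like(labels)
    n_kept = 0
    for lab in range(1, count + 1):
        size = int((labels == lab).sum())
        if min_size <= size <= max_size:
            n_kept += 1
            kept[labels == lab] = n_kept
    return kept, n_kept


img = np.zeros((8, 8))
img[1:3, 1:3] = 100   # bright 4-pixel spot
img[5, 5] = 60        # isolated 1-pixel speck (below min_size)
img[5:8, 0:3] = 30    # dim 9-pixel patch
_, n = hk_like_segment(img, k=4, min_size=2, max_size=50)
print(n)              # → 2 (the spot and the dim patch)
```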

FCN (Fully Convolutional Network) - A type of neural network model where all layers perform convolutions. Unlike traditional networks that have fixed-size outputs, FCNs can process input images of any size and produce an output map of a corresponding size (e.g., a segmentation mask). This makes them ideal for pixel-level tasks like identifying all cells in an image.
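The size-agnostic behavior of an FCN follows from using only convolutions. The sketch below chains two zero-padded 3 × 3 convolutions with a ReLU and a per-pixel sigmoid, using untrained random weights. It deliberately omits the down-sampling and learned up-sampling of real FCNs (Long et al., 2015) and only shows that the output map matches the input size.

```python
import numpy as np


def conv2d_same(image, kernel):
    """2D cross-correlation (what deep-learning 'convolution' layers compute) with zero
    padding, so the output keeps the input height and width. Assumes an odd-sized kernel."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)))
    out = np.zeros(image.shape, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out


def tiny_fcn(image, k1, k2):
    """Conv -> ReLU -> conv -> sigmoid, giving a per-pixel probability map.
    There is no fully connected layer, so any input size is accepted."""
    hidden = np.maximum(conv2d_same(image, k1), 0.0)
    return 1.0 / (1.0 + np.exp(-conv2d_same(hidden, k2)))


rng = np.random.default_rng(0)
k1, k2 = rng.normal(size=(3, 3)), rng.normal(size=(3, 3))   # untrained, random weights
for shape in [(5, 7), (32, 20)]:
    prob = tiny_fcn(rng.random(shape), k1, k2)
    print(shape, prob.shape)   # the output map matches the input size
```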

U-Net - A widely used neural network model for biomedical image segmentation, named for its U-shaped architecture. The U is composed of two paths: a contracting encoder that extracts relevant features while progressively reducing spatial resolution, and an expanding decoder that restores resolution by up-sampling. Skip connections between matching levels of the two paths allow the network to combine an understanding of the overall image context with the preservation of fine details, so that it achieves highly precise segmentation even with limited training data. This makes it particularly effective in fields like medicine and biology, where large annotated datasets are often rare.
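The data flow of a U-Net can be traced without any learning: the encoder halves the resolution at each level while storing its features, and the decoder up-samples and concatenates the stored features (skip connections). This sketch uses max pooling and nearest-neighbor up-sampling only; the learnable convolutions of a real U-Net, which would also change the channel counts, are omitted.

```python
import numpy as np


def max_pool2(x):
    """2 x 2 max pooling on a (channels, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample2(x):
    """Nearest-neighbor 2x up-sampling of a (channels, H, W) array."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def unet_data_flow(x, depth=3):
    """Trace the U-shaped data flow. Height and width must be divisible by 2**depth."""
    skips, shapes = [], []
    for _ in range(depth):              # contracting path (encoder)
        skips.append(x)                 # keep full-resolution features for the decoder
        x = max_pool2(x)
        shapes.append(x.shape)
    for skip in reversed(skips):        # expanding path (decoder)
        x = np.concatenate([upsample2(x), skip], axis=0)   # skip connection
        shapes.append(x.shape)
    return x, shapes


out, shapes = unet_data_flow(np.zeros((1, 64, 64)))
for s in shapes:
    print(s)
```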

Dual-decoder network - An efficient neural network architecture designed to perform two related output tasks simultaneously from a single image. It operates in two steps: first, a shared Encoder analyzes the image to extract key feature information, performing an "understanding" role. Then, two separate Decoders use this shared information to construct different output maps. For instance, one decoder can generate the precise boundaries of cells (a boundary map), while the second decoder simultaneously generates a map identifying different cell types (a class map).

Panoptic segmentation - An image segmentation goal. It doesn't just distinguish "cell" from "background" (semantic segmentation), but also identifies each individual cell instance. The final result: every pixel in the image is labeled as either background or as belonging to a specific instance, like "cell 1", "cell 2", etc. This is crucial for accurate counting and single-cell analysis.

SAM (Segment Anything Model) - A foundation model for image segmentation. It was pre-trained on a massive general-purpose dataset containing 11 million images and over 1 billion segmentation masks. This extensive training equips it with powerful "zero-shot" segmentation capabilities, allowing it to segment virtually any unseen object in an image, often prompted by minimal user input like a single click or a box.

Med-SA (Medical SAM Adapter) - An efficient method for adapting the general-purpose SAM for medical image analysis. It enables the model to process various types of 3D medical data—a task the original 2D SAM cannot perform.

Med-SA fine-tuning - "Fine-tuning" means taking a smart model (here, Med-SA) that has already been trained on a vast amount of general medical images, and then giving it specialized training on our own smaller, specific dataset (e.g., our images of a particular neuron type). This allows the model to quickly adapt and become an expert on our unique analysis task without starting from scratch.

GPU memory - This refers to the dedicated, high-speed memory on a Graphics Processing Unit (GPU). In deep learning, GPUs perform massive parallel computations. GPU memory acts as the GPU's "workbench"; its size determines the size and complexity of the images and neural network models that can be processed at once. Large GPU memory is critical for handling high-resolution 3D images or large models.
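A quick calculation shows why GPU memory constrains 3D segmentation. The volume size and channel count below are hypothetical; the estimate covers a single array only, whereas training also stores activations of every layer, gradients and optimizer state. This is one reason why 3D networks are commonly trained on cropped patches rather than whole volumes.

```python
def tensor_mib(shape, bytes_per_value=4):
    """Memory (MiB) of a dense array; 4 bytes per value corresponds to float32."""
    n_values = 1
    for size in shape:
        n_values *= size
    return n_values * bytes_per_value / 2**20


stack = (128, 512, 512)               # z, y, x voxels of one 3D acquisition
print(tensor_mib(stack))              # → 128.0 MiB as float32
print(tensor_mib((64,) + stack))      # → 8192.0 MiB for a single 64-channel feature map
```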

Dataset scale - Refers to the amount of data used for training and testing a model. It can be measured in various ways, such as the total number of images, the total number of annotated objects, or the total data storage size (e.g., Gigabytes, Terabytes). The scale of the dataset directly impacts the complexity of the computational methods that can be applied and the robustness of the resulting model.

Keywords: super-resolution (SR), deep learning, neuron, dendrite, dendritic spine, labeling, segmentation (image processing), probe

Citation: Nazac P, Xu S, Breton V, Boulet D and Danglot L (2025) Super-resolution microscopy and deep learning methods: what can they bring to neuroscience: from neuron to 3D spine segmentation. Front. Neuroinform. 19:1630133. doi: 10.3389/fninf.2025.1630133

Edited by:

Maryam Naseri, Yale University, United States

Reviewed by:

Yutaro Kashiwagi, The University of Tokyo, Japan

Guilherme Bastos, Federal University of Itajubá, Brazil

Jagadish Sankaran, A*STAR Genome Institute of Singapore, Singapore

Copyright © 2025 Nazac, Xu, Breton, Boulet and Danglot. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Lydia Danglot, lydia.danglot@inserm.fr

‡ORCID: David Boulet, orcid.org/0009-0000-1262-7095

†These authors have contributed equally to this work
