- ¹Centre for Cognition and Decision Making, Institute for Cognitive Neuroscience, National Research University Higher School of Economics, Moscow, Russia

- ²Department of Psychology, National University of Singapore, Singapore, Singapore

- ³Department of Psychology, National Research University Higher School of Economics, Moscow, Russia

- ⁴Department of Psychology, York University, Toronto, ON, Canada

Identifying facial expressions is crucial for social interactions. Functional neuroimaging studies show that a set of brain areas, such as the fusiform gyrus and amygdala, become active when people view emotional facial expressions. The majority of functional magnetic resonance imaging (fMRI) studies investigating face perception employ static images of faces. However, studies that use dynamic facial expressions (e.g., videos) are accumulating and suggest that dynamic presentation may be more sensitive and ecologically valid for investigating faces. Using quantitative fMRI meta-analysis, the present study examined the concordance of brain regions associated with viewing dynamic facial expressions. We analyzed data from 216 participants who took part in 14 studies, which reported coordinates for 28 experiments. Our analysis revealed concordance in the bilateral fusiform and middle temporal gyri, left amygdala, left declive of the cerebellum, and right inferior frontal gyrus. These regions are discussed in terms of their relation to models of face processing.

Introduction

Effective face processing is essential for perceiving and recognizing intentions, emotions, and mental states in others. Facial expressions have traditionally been investigated using static pictures of faces rather than dynamic, moving faces (i.e., short video clips). Faces elicit activity in an established set of brain areas that includes the fusiform gyri, associated with face perception; the amygdala, associated with processing affect; and fronto-temporal regions, associated with knowledge about a person (see Fusar-Poli et al., 2009 for a meta-analysis). Some suggest that dynamic faces are more ecologically valid than static faces (Bernstein and Yovel, 2015) and facilitate the recognition of facial expressions (Ceccarini and Caudek, 2013). O'Toole et al. (2002) explain that when both static and dynamic identity information are available, people tend to rely primarily on static information for face recognition (the supplemental information hypothesis), whereas dynamic information such as motion contributes to the quality of the structural information accessible from a face (the representation enhancement hypothesis). This dynamic information plays a key role in social interactions when people evaluate the mood or intentions of others (Langton et al., 2000; O'Toole et al., 2002). The brain areas that respond to dynamic faces have not been fully characterized with up-to-date meta-analytic methods and recent findings in the field. The purpose of this study is to examine concordance in brain regions associated with dynamic facial expressions using quantitative meta-analysis.

Functional magnetic resonance imaging (fMRI) studies investigating face perception typically reveal activation within the fusiform and occipital gyri, areas that belong to the core system of face processing, which mediates the visual analysis of faces (O'Toole et al., 2002; Gobbini and Haxby, 2007). The extended system, associated with extracting meaning from faces, includes the inferior frontal cortex and amygdalae (Haxby et al., 2000). Notably, far fewer fMRI studies use dynamic than static face stimuli, likely because of the methodological and practical challenges of working with dynamic faces. Specifically, short videos of faces need to be standardized in terms of presentation speed (i.e., how fast a neutral face transforms into an emotional expression), and this speed must be consistent across emotions. Similarly, morphed stimuli are created by transforming a static photo from a neutral to an emotional expression over a series of frames. Thus, adopting a protocol for dynamic facial expressions (e.g., videos and morphs) requires more computational processing and, in turn, more preparation time.
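To make the point about standardized presentation speed concrete, the following is a minimal sketch of rendering a neutral-to-emotional transition at a fixed speed. It assumes the two photographs are already aligned arrays of equal size, and it shows only the intensity cross-dissolve; real morphing software also warps facial landmarks geometrically, which is omitted here. The function name `morph_frames` and the example duration and frame rate are hypothetical.

```python
import numpy as np


def morph_frames(neutral, emotional, duration_s, fps):
    """Return frames that blend linearly from `neutral` to `emotional`.

    Simplification: only pixel intensities are cross-dissolved; the
    landmark-based geometric warping used by real morphing tools is omitted.
    """
    if neutral.shape != emotional.shape:
        raise ValueError("images must have the same shape")
    # The frame count depends only on duration and frame rate, so every
    # emotion rendered with the same two values unfolds at the same speed.
    n_frames = int(round(duration_s * fps))
    if n_frames < 2:
        raise ValueError("need at least two frames")
    weights = np.linspace(0.0, 1.0, n_frames)  # 0 = neutral, 1 = full expression
    a, b = neutral.astype(float), emotional.astype(float)
    return [(1.0 - w) * a + w * b for w in weights]


# Hypothetical 1-s transition at 25 frames per second for two emotions.
neutral = np.zeros((64, 64))
happy, angry = np.full((64, 64), 0.8), np.full((64, 64), 0.6)
for name, target in [("happy", happy), ("angry", angry)]:
    frames = morph_frames(neutral, target, duration_s=1.0, fps=25)
    print(name, len(frames))
```

Fixing `duration_s` and `fps` across all emotions is what keeps the transition speed constant; only the target image changes.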

These additional efforts, however, have proven beneficial in populations with altered sensitivity to faces. For example, research shows that regions related to the visual properties of faces (i.e., the core system) and to their emotional/cognitive processing (i.e., the extended system) are hypoactive in patients with autism spectrum disorders (Hadjikhani et al., 2007; Bookheimer et al., 2008; see Nomi and Uddin, 2015 for a review). Dynamic changes in facial expressions were used to show that individuals with and without autism spectrum disorders exhibit equivalent activity in occipital regions but differential activity in the fusiform gyrus, amygdala, and superior temporal sulcus, suggesting a dysfunction in the relational and affective processing of faces (Pelphrey et al., 2007). Thus, in practice, dynamic stimuli may be advantageous when studying populations with difficulties in processing faces and emotions.

A recent review of the face perception literature adopted the model of core and extended systems to explain the processing of dynamic faces in typical adults (Bernstein and Yovel, 2015). This review supports a dorsal stream that encompasses the superior temporal sulcus and encodes low-frequency information such as face motion, head rotation, and the movement of facial parts (O'Toole et al., 2002; Peyrin et al., 2004, 2005, 2010; Saxe, 2006), and a ventral stream that comprises the bilateral inferior occipital cortex and fusiform gyrus and processes high-frequency information such as facial expressions and face parts (e.g., Eger et al., 2004; Iidaka et al., 2004; Corradi-Dell'Acqua et al., 2014). Because the dorsal stream processes more information about facial movement, dynamic facial expressions would be expected to engage the superior temporal lobe more strongly.

An early meta-analysis analyzed coordinates from 11 experiments on dynamic facial expressions and identified concordance in temporal, parietal, and frontal cortices (Arsalidou et al., 2011). Since then, the number of fMRI studies examining brain responses to dynamic faces has increased. Critically, the activation likelihood estimation (ALE) method has been improved (Turkeltaub et al., 2012), and implementation errors documented in older versions of the ALE software have since been corrected (Eickhoff et al., 2017); the software developers recommend re-analysis and evaluation of current and past meta-analyses. Thus, the purpose of the current paper was to examine brain areas associated with processing dynamic facial expressions in healthy adults and to establish their involvement above and beyond brain areas responding to static faces and other control tasks.

Methods

Literature Search and Article Selection

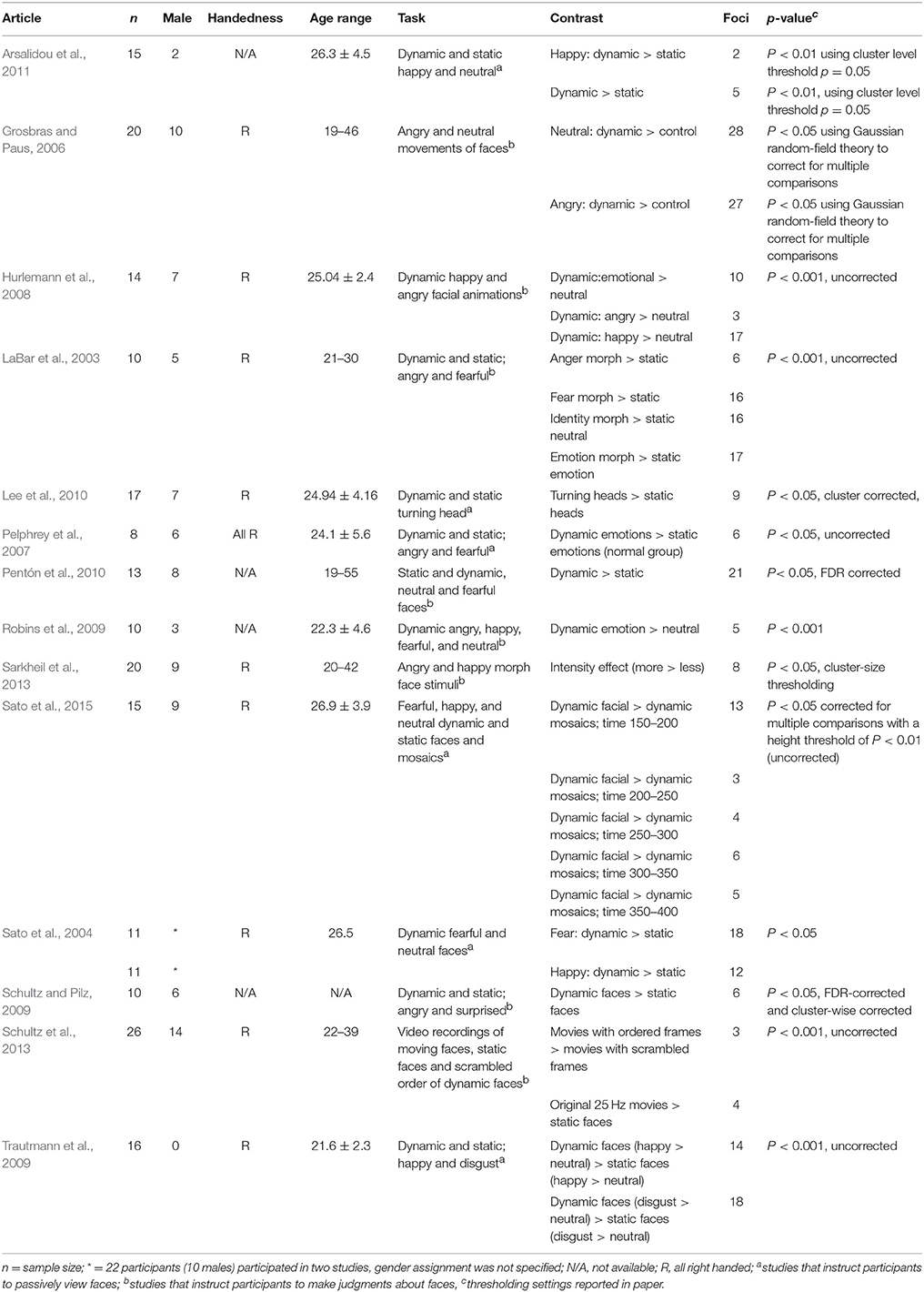

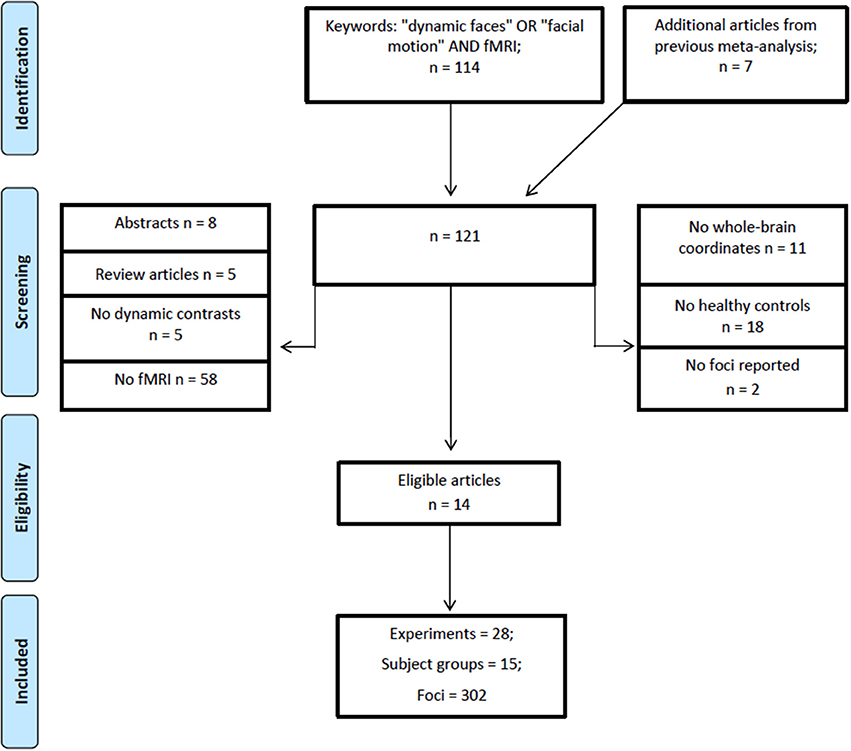

A literature search was performed using Web of Science (http://apps.webofknowledge.com/) on October 6, 2017, with the keywords (“dynamic faces” OR “facial motion” AND “fMRI”) for the years 1995–2017, yielding a total of 114 articles. Figure 1 shows the steps taken to identify eligible articles. Specifically, we excluded articles that: (1) reported no fMRI data; (2) did not report whole-brain analyses; (3) reported no data on healthy adults; (4) did not report fMRI coordinates; or (5) used irrelevant tasks. Articles surviving these criteria underwent an independent full-text review by two researchers (O.Z. and Z.Y.). The remaining articles included healthy adults and reported stereotaxic coordinates in Talairach or Montreal Neurological Institute (MNI) space from random-effects whole-brain analyses of a contrast (i.e., experiment) comparing dynamic with static faces. The articles included in a previous meta-analysis (Arsalidou et al., 2011), together with the eligible study reported in that same article, yielded 7 additional articles. All relevant experiments from each article were included in the analysis because the most recent algorithm applies a correction that avoids summation of within-group effects and provides increased power (Turkeltaub et al., 2012). Table 1 shows participant demographics and details for the total of 28 experiments from 14 articles, comprising 15 separate subject groups, that were included in the meta-analysis. The number of included experiments meets current recommendations (n = 17–20) for achieving sufficient statistical power (Eickhoff et al., 2017).
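The within-group correction cited above (Turkeltaub et al., 2012) can be illustrated with a toy sketch. It assumes a small voxel grid and a single fixed Gaussian kernel width (GingerALE instead derives the kernel width from each experiment's sample size), and the helper names (`kernel`, `ma_map`, `pooled_ma_maps`) and example foci are hypothetical. Foci from all experiments that share a subject group are pooled into one modeled activation (MA) map, and within a map overlapping foci are combined with a voxelwise maximum rather than a sum, so one subject group cannot inflate the ALE value by contributing several contrasts.

```python
import numpy as np
from collections import defaultdict

SHAPE = (20, 20, 20)   # toy voxel grid (assumption)
SIGMA = 2.0            # toy kernel width in voxels (assumption)
GRID = np.indices(SHAPE).astype(float)


def kernel(focus):
    """Gaussian probability for one focus, evaluated at every voxel."""
    d2 = sum((GRID[i] - focus[i]) ** 2 for i in range(3))
    return np.exp(-d2 / (2 * SIGMA ** 2)) * (2 * np.pi * SIGMA ** 2) ** -1.5


def ma_map(foci):
    """Modeled activation: voxelwise max over foci, so nearby foci do not add up."""
    return np.max([kernel(f) for f in foci], axis=0)


def ale(ma_maps):
    """ALE as the probabilistic union of MA maps treated as independent."""
    return 1.0 - np.prod([1.0 - m for m in ma_maps], axis=0)


def pooled_ma_maps(experiments):
    """Pool the foci of all experiments sharing a subject group into one MA map."""
    by_group = defaultdict(list)
    for group_id, foci in experiments:
        by_group[group_id].extend(foci)
    return [ma_map(foci) for foci in by_group.values()]


# Hypothetical input: two contrasts from the same subject group ("g1")
# report the same peak; a third contrast comes from an independent group ("g2").
experiments = [
    ("g1", [(10, 10, 10)]),
    ("g1", [(10, 10, 10)]),
    ("g2", [(10, 10, 11)]),
]
naive = ale([ma_map(foci) for _, foci in experiments])  # each contrast counted separately
pooled = ale(pooled_ma_maps(experiments))               # one map per subject group
print(naive.max() > pooled.max())  # → True
```

Without pooling, the two "g1" contrasts are treated as independent evidence and raise the ALE peak; with pooling, that subject group contributes only once.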

Figure 1. PRISMA flowchart for eligibility of articles (Template by Moher et al., 2009).

Meta-Analysis

The meta-analysis was performed using GingerALE software (version 2.3.6; available at http://www.brainmap.org/ale/), which implements ALE, a coordinate-based meta-analytic method (Eickhoff et al., 2009, 2017). Foci from the included experiments were used to create a probabilistic map of activation likelihood, which was compared against a random spatial distribution of foci. MNI coordinates were converted to Talairach space using the Lancaster et al. (2007) transformation. Significance was assessed using a cluster-level correction for multiple comparisons at p = 0.05, with a cluster-forming threshold of p = 0.001 (Eickhoff et al., 2012, 2017). GingerALE does not provide an option for estimating the replicability of the data. However, based on simulations of ALE analyses that tested sensitivity, the number of incidental clusters, and statistical power (Eickhoff et al., 2016), a minimum of 17–20 experiments has been recommended (Eickhoff et al., 2017). Moreover, a cluster-level threshold sets the minimum cluster volume such that only a chosen proportion (e.g., 5%) of clusters in the simulated null data exceed this size, reducing the possibility that an ALE peak is driven by a single study.
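The ALE computation and cluster-level inference described above can be sketched as follows. This is a simplified Monte Carlo stand-in under stated assumptions, not GingerALE's implementation: the grid, kernel width, number of simulations, and example foci are all hypothetical, and GingerALE computes the voxel-level null distribution analytically (Eickhoff et al., 2012) rather than by simulation as done here. ALE is the probabilistic union 1 − ∏(1 − MA) of per-experiment MA maps; null data place each experiment's foci at random voxels; the cluster-forming threshold corresponds to p = 0.001, and the extent threshold is the size exceeded by the largest null cluster in only 5% of simulations.

```python
import numpy as np

rng = np.random.default_rng(0)
SHAPE = (16, 16, 16)   # toy voxel grid, not a brain template (assumption)
SIGMA = 1.5            # toy kernel width in voxels (assumption)
GRID = np.indices(SHAPE).astype(float)
OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def kernel(focus):
    """Gaussian probability for one focus, evaluated at every voxel."""
    d2 = sum((GRID[i] - focus[i]) ** 2 for i in range(3))
    return np.exp(-d2 / (2 * SIGMA ** 2)) * (2 * np.pi * SIGMA ** 2) ** -1.5


def ale_map(experiments):
    """Union of per-experiment MA maps (MA = voxelwise max over that experiment's foci)."""
    ma = [np.max([kernel(f) for f in foci], axis=0) for foci in experiments]
    return 1.0 - np.prod([1.0 - m for m in ma], axis=0)


def cluster_sizes(mask):
    """Voxel counts of 6-connected clusters in a boolean mask (flood fill)."""
    seen = np.zeros(mask.shape, dtype=bool)
    sizes = []
    for start in zip(*np.nonzero(mask)):
        if seen[start]:
            continue
        seen[start] = True
        stack, size = [start], 0
        while stack:
            v = stack.pop()
            size += 1
            for d in OFFSETS:
                n = tuple(v[i] + d[i] for i in range(3))
                inside = all(0 <= n[i] < mask.shape[i] for i in range(3))
                if inside and mask[n] and not seen[n]:
                    seen[n] = True
                    stack.append(n)
        sizes.append(size)
    return sizes


def cluster_threshold(experiments, n_perm=100, p_voxel=0.001, p_cluster=0.05):
    """Monte Carlo null: same number of foci per experiment, placed at random."""
    null_maps = []
    for _ in range(n_perm):
        shuffled = [[tuple(rng.integers(0, s) for s in SHAPE) for _focus in foci]
                    for foci in experiments]
        null_maps.append(ale_map(shuffled))
    # Cluster-forming threshold: ALE value exceeded by p_voxel of null voxels.
    ale_cut = np.quantile(np.concatenate([m.ravel() for m in null_maps]), 1 - p_voxel)
    # Cluster-extent threshold: size exceeded by the largest null cluster
    # in only p_cluster of the simulations.
    max_sizes = [max(cluster_sizes(m > ale_cut), default=0) for m in null_maps]
    return ale_cut, np.quantile(max_sizes, 1 - p_cluster)


# Hypothetical data: 12 experiments share a focus near (8, 8, 8) and each
# also reports one isolated focus elsewhere in the grid.
SCATTERED = [(2, 2, 2), (2, 2, 13), (2, 13, 2), (2, 13, 13), (13, 2, 2), (13, 2, 13),
             (13, 13, 2), (13, 13, 13), (2, 8, 2), (13, 8, 13), (8, 2, 13), (8, 13, 2)]
experiments = [[(8, 8, 8 + k % 2), other] for k, other in enumerate(SCATTERED)]

observed = ale_map(experiments)
ale_cut, size_cut = cluster_threshold(experiments)
surviving = [s for s in cluster_sizes(observed > ale_cut) if s > size_cut]
print(f"cluster-forming ALE > {ale_cut:.4f}; extent > {size_cut:.0f} voxels; "
      f"surviving cluster sizes: {surviving}")
```

In this toy setup, the convergent foci near the grid centre form the cluster expected to survive, whereas the isolated foci, each reported by a single experiment, should not. Raising `n_perm` makes both thresholds more stable at the cost of run time.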

The majority of studies used tasks in which participants were instructed to passively observe facial stimuli (Sato et al., 2004; Trautmann et al., 2009; Pentón et al., 2010; Arsalidou et al., 2011) or to perform a simple target detection task (Pelphrey et al., 2007; Robins et al., 2009; Lee et al., 2010; Sato et al., 2015). Two studies asked participants to rank the presented emotional expressions (Grosbras and Paus, 2006; Sarkheil et al., 2013); three studies instructed participants to judge the gender of face stimuli (Hurlemann et al., 2008; Pentón et al., 2010; Ceccarini and Caudek, 2013); one study asked participants to rank the meaningfulness of moving faces and judge the fluidity of facial motion (Schultz et al., 2013); in another study, participants were asked to identify the category of face stimuli (LaBar et al., 2003); and in another, participants performed a one-back matching task (Schultz and Pilz, 2009). Five articles reported dynamic > static contrasts for specific emotions: anger (LaBar et al., 2003; Grosbras and Paus, 2006), fear (Sato et al., 2004), and happiness (Sato et al., 2004; Trautmann et al., 2009; Arsalidou et al., 2011). Six articles reported dynamic > static contrasts in which neutral faces were subtracted from emotional faces for one emotion (Hurlemann et al., 2008) or several emotions (Pelphrey et al., 2007; Robins et al., 2009; Schultz and Pilz, 2009), or in which faces carried no emotional component (Lee et al., 2010; Pentón et al., 2010). One article reported experiments on the effect of morph intensity in dynamic faces (Sarkheil et al., 2013), and two articles contrasted dynamic faces with mosaic stimuli (Sato et al., 2015; we note that this study reported coordinates obtained through combined magnetoencephalography (MEG) and fMRI data reconstruction) or with scrambled faces (Schultz et al., 2013).

Results

Analyses included data from 216 right-handed participants (mean age 27.24 ± 9.02 years; 39.81% men; see Table 1 for details).
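The reported percentage can be converted back into head counts, which helps when judging the sex balance of the pooled sample. This short check assumes that the 39.81% refers to all 216 participants.

```python
n_total = 216
pct_men = 39.81

n_men = round(n_total * pct_men / 100)  # 85.99 rounds to 86
n_women = n_total - n_men
# Check that the recovered count reproduces the reported percentage.
assert f"{100 * n_men / n_total:.2f}" == "39.81"
print(n_men, n_women, f"{100 * n_women / n_total:.2f}%")  # → 86 130 60.19%
```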

ALE Map

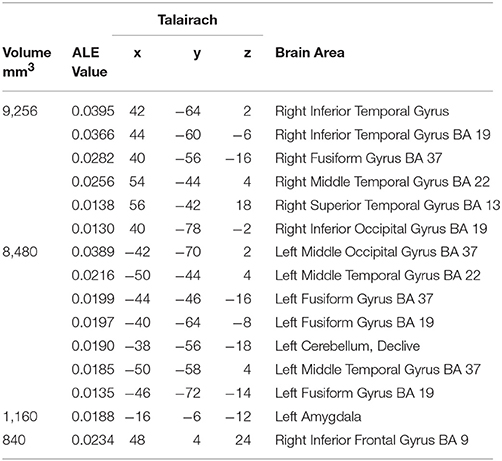

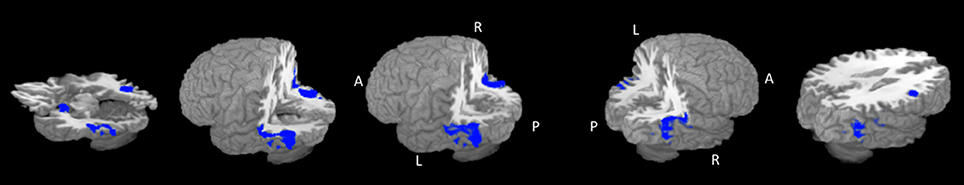

The largest cluster, with the highest ALE value, was found in the right hemisphere and extended from the inferior temporal and occipital gyri to the fusiform and superior temporal gyri (Figure 2, Table 2). The second cluster was found in the left hemisphere and extended from the middle occipital and temporal gyri to the fusiform gyrus and cerebellum. Other concordant regions included the left amygdala and right inferior frontal gyrus.

Figure 2. Rendered ALE map showing significant concordance across studies for dynamic facial expressions. A, anterior; P, posterior; L, left; R, right. All coordinates are listed in Table 2.

Discussion

We examined concordance across studies in brain areas that respond more strongly to dynamic facial expressions than to static faces and other control stimuli. We report concordance in: (a) areas associated with the core visual system for processing faces, such as the fusiform gyrus and posterior parts of the superior temporal gyrus; (b) areas associated with the extended system for processing faces, such as the left amygdala, inferior frontal gyrus, and anterior parts of the superior temporal gyrus; and (c) a cluster within the cerebellar declive, a region not previously highlighted in models of facial cognition. We build on previous models of face processing and discuss possible roles of these areas during the processing of dynamic faces.

Compared with the previous meta-analysis on dynamic faces (Arsalidou et al., 2011), the current analysis yields similar brain regions; however, it produced fewer clusters, which were larger and carried higher ALE values. When comparing the top clusters, the amygdala and cerebellar declive are found in the left hemisphere in both the current and previous analyses. Clusters in the right precuneus (BA 7) and cuneus and the left hypothalamus, previously found to be concordant (Arsalidou et al., 2011), were not observed in the current meta-analysis; these areas had both lower ALE scores and smaller cluster volumes. We note three methodological choices that may account for differences between the current and previous meta-analyses: (a) the current meta-analysis includes a larger number of experiments, which provides increased power; (b) the GingerALE algorithm controls for within-group effects and provides increased power (Turkeltaub et al., 2012); and (c) the thresholding approach uses a cluster-level threshold to control for multiple comparisons, which is more suitable for ALE meta-analyses (Eickhoff et al., 2016, 2017). Critically, the current meta-analysis shows that the overall size of the occipito-temporal clusters is similar in the right and left hemispheres, suggesting bilateral engagement.

Specifically, the bilateral occipito-temporal clusters comprise the fusiform and superior temporal gyri, the areas most associated with face processing. The fusiform gyri are implicated in configuring relations among visual features and in relying on high-spatial-frequency information to form face percepts as a whole (e.g., Vuilleumier et al., 2003; Iidaka et al., 2004; Sabatinelli et al., 2011) or in part (e.g., Rossion et al., 2003; Nichols et al., 2010; Yaple et al., 2016). This is consistent with models that classify the fusiform gyrus as part of the core visual processing system for faces (Gobbini and Haxby, 2007) and as part of the ventral stream of face processing (e.g., Bernstein and Yovel, 2015).

Moreover, we observed concordance in posterior and more dorsal parts of the superior temporal gyri. The superior temporal gyri are known for their involvement in the analysis of low-spatial-frequency information (i.e., global facial information), such as gaze direction and motion, associated with interpreting social signals (Allison et al., 2000; Taylor et al., 2009; Wegrzyn et al., 2015). According to the face perception model of Haxby and colleagues, posterior parts of the superior temporal sulcus belong to the core visual face processing system, responsible for basic visual analyses of faces, whereas adjacent, more anterior parts of the superior temporal gyri belong to the extended system, responsible for further processing of personal information (Haxby et al., 2000; Gobbini and Haxby, 2007). Our data are also consistent with the more recent proposal of a dorsal face processing pathway by Bernstein and Yovel (2015). Importantly, consistent with the representation enhancement hypothesis (O'Toole et al., 2002), we propose that dynamic faces may engage superior temporal cortices more strongly because they provide richer input for the brain to interpret.

As part of the left occipito-temporal cluster, we observed concordance in the cerebellar declive, an area not highlighted in face processing models. Traditionally, the cerebellum was known for its involvement in motor functioning. However, its role in cognitive and affective processing has been discussed (e.g., Brooks, 1984; Paulin, 1993; Doya, 2000; Stoodley and Schmahmann, 2010), and a generic role in timing mechanisms has been proposed (e.g., Ivry and Spencer, 2004). Past meta-analyses have identified concordance in the cerebellum for static facial expressions (Fusar-Poli et al., 2009); however, its role in social cognition remains unclear. In relation to social processes, some studies have shown that the cerebellum is associated with mirroring and mentalizing motor actions (Van Overwalle et al., 2014, 2015). We suggest that the cerebellum may track the temporal sequence of facial changes that convey a signal and update information about a face's perceptual features to predict possible changes, similar to its involvement in the motor system.

Concordance in the left amygdala and right inferior frontal gyrus is associated with emotional and cognitive processing of faces, respectively. The amygdala responds to a wide range of emotional stimuli and is implicated in fear processing and fear conditioning (LeDoux, 2003) as well as in reward and punishment (Gupta et al., 2011). Growing evidence suggests that amygdala activation is not specific to fearful expressions or to any particular emotion (van der Gaag et al., 2007) but rather reflects the processing of salient information in faces (Fitzgerald et al., 2006). It has been suggested that the amygdala contributes to social-emotional recognition (Adolphs et al., 2002; Adolphs and Spezio, 2006) and to the processing of salient face stimuli in unpredictable situations (Adolphs, 2010). Some have emphasized the evolutionary significance of the amygdalae, suggesting that they play a role in detecting relevant stimuli (Sander et al., 2003) and signaling potentially consequential events (Fitzgerald et al., 2006). Thus, based on past findings, processing dynamic faces may elicit increased amygdala activation owing to heightened vigilance toward the dynamically changing salient features of faces.

The inferior frontal gyrus, part of the ventrolateral prefrontal cortex, is associated with a wide range of cognitive functions, including response inhibition (Aron et al., 2003; Hampshire et al., 2009, 2010), working memory (Yaple and Arsalidou, in press), negative priming (Yaple and Arsalidou, 2017), and mental attention (Arsalidou et al., 2013). A hierarchical model of the prefrontal cortex suggests that the inferior frontal gyri are responsible for simple, non-abstract judgments (Christoff et al., 2009). Consistent with this hypothesis, several of the included studies asked participants to make simple judgments about the gender, emotion, or motion of faces. Regarding right lateralization and its relevance to social interactions, the right inferior frontal gyrus is active when processing social information such as cooperative interaction (Liu et al., 2015) and interpersonal interactions (Liu et al., 2016). The bilateral inferior frontal gyrus has also been identified as part of the dorsomedial network (Bzdok et al., 2013), which is involved in contemplating others' mental states (see Mar, 2011 for a meta-analysis). Alternatively, based on a trade-off between task difficulty and the mental-attentional capacity of the individual, the right hemisphere is hypothesized to be favored in simple, automatized processes (Pascual-Leone, 1989; see Arsalidou et al., 2018 for details). Overall, right inferior frontal gyrus activation during face perception may reflect the cognitive processing of social information or the maintenance of simple task requirements.

Limitations

Data presented here represent concordance across fMRI studies that investigated dynamic vs. static facial expressions and across different emotional states. ALE methodological limitations have been discussed elsewhere (Zinchenko and Arsalidou, 2018; Yaple and Arsalidou, in press) and include the lack of control over the statistical methods adopted by the original articles and the consideration of peak coordinates only. A shortcoming of the current study is that the data reported here are based mostly on female participants (60.19% of the pooled sample), because the original articles favored recruiting female participants, who may show a greater response to faces.

Conclusion

A coordinate-based meta-analysis was performed to assess the concordance of brain activations derived from experiments that identified more activity for dynamic faces than for static faces and other control tasks. We observed concordance across studies in brain areas well established in the face processing literature, as well as in the cerebellum, which is not included in current models of face processing. The observed results suggest that dynamic faces may require increased brain resources to process complex, dynamically changing facial features. The current data provide a stereotaxic set of brain regions that underlie the processing of dynamic facial expressions in typical adults. Practically, these normative data can serve as a benchmark for future studies with atypical populations, such as individuals with autism spectrum disorder. Theoretically, these findings provide further evidence for an extended set of areas involved in processing dynamic facial expressions. Overall, our findings can inform current models and help guide future studies on dynamic facial expressions.

Author Contributions

OZ helped collect and analyze data and prepared the first draft of the manuscript. ZY helped collect and analyze data and contributed to manuscript preparation. MA conceptualized research and contributed to manuscript preparation.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The article was in part supported by the Russian Science Foundation (#17-18-01047) to MA and prepared within the framework of the Basic Research Program at the National Research University Higher School of Economics (HSE) and in part supported within the framework of a subsidy by the Russian Academic Excellence Project 5–100.

References

Adolphs, R. (2010). What does the amygdala contribute to social cognition? Ann. N. Y. Acad. Sci. 1191, 42–61. doi: 10.1111/j.1749-6632.2010.05445.x

Adolphs, R., Baron-Cohen, S., and Tranel, D. (2002). Impaired recognition of social emotions following amygdala damage. J. Cogn. Neurosci. 14, 1264–1274. doi: 10.1162/089892902760807258

Adolphs, R., and Spezio, M. (2006). Role of the amygdala in processing visual social stimuli. Prog. Brain Res. 156, 363–378. doi: 10.1016/S0079-6123(06)56020-0

Allison, T., Puce, A., and McCarthy, G. (2000). Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 4, 267–278. doi: 10.1016/S1364-6613(00)01501-1

Aron, A. R., Bullmore, E. T., Sahakian, B. J., and Robbins, T. W. (2003). Stop-signal inhibition disrupted by damage to right inferior frontal gyrus in humans. Nat. Neurosci. 6, 115–117. doi: 10.1038/nn1003

Arsalidou, M., Morris, D., and Taylor, M. J. (2011). Converging evidence for the advantage of dynamic facial expressions. Brain Topogr. 24, 149–163. doi: 10.1007/s10548-011-0171-4

Arsalidou, M., Pascual-Leone, J., Johnson, J., Morris, D., and Taylor, M. J. (2013). A balancing act of the brain: activations and deactivations driven by cognitive load. Brain Behav. 3, 273–285. doi: 10.1002/brb3.128

Arsalidou, M., Pawliw-Levac, M., Sadeghi, M., and Pascual-Leone, J. (2018). Brain areas associated with numbers and calculations in children: meta-analyses of fMRI studies. Dev. Cogn. Neurosci. 30, 239–250. doi: 10.1016/j.dcn.2017.08.002

Bernstein, M., and Yovel, G. (2015). Two neural pathways of face processing: a critical evaluation of current models. Neurosci. Biobehav. Rev. 55, 536–546. doi: 10.1016/j.neubiorev.2015.06.010

Bookheimer, S. Y., Wang, A. T., Scott, A., Sigman, M., and Dapretto, M. (2008). Frontal contributions to face processing differences in autism: evidence from fMRI of inverted face processing. J. Int. Neuropsychol. Soc. 14, 922–932. doi: 10.1017/S135561770808140X

Bzdok, D., Langner, R., Schilbach, L., Engemann, D. A., Laird, A. R., Fox, P. T., et al. (2013). Segregation of the human medial prefrontal cortex in social cognition. Front. Hum. Neurosci. 7:232. doi: 10.3389/fnhum.2013.00232

Ceccarini, F., and Caudek, C. (2013). Anger superiority effect: the importance of dynamic emotional facial expressions. Vis. Cogn. 21, 498–540. doi: 10.1080/13506285.2013.807901

Christoff, K., Keramatian, K., Gordon, A. M., Smith, R., and Mädler, B. (2009). Prefrontal organization of cognitive control according to levels of abstraction. Brain Res. 1286, 94–105. doi: 10.1016/j.brainres.2009.05.096

Corradi-Dell'Acqua, C., Schwartz, S., Meaux, E., Hubert, B., Vuilleumier, P., and Deruelle, C. (2014). Neural responses to emotional expression information in high- and low-spatial frequency in autism: evidence for a cortical dysfunction. Front. Hum. Neurosci. 8:189. doi: 10.3389/fnhum.2014.00189

Doya, K. (2000). Complementary roles of basal ganglia and cerebellum in learning and motor control. Curr. Opin. Neurobiol. 10, 732–739. doi: 10.1016/S0959-4388(00)00153-7

Eger, E., Schyns, P. G., and Kleinschmidt, A. (2004). Scale invariant adaptation in fusiform face-responsive regions. Neuroimage 22, 232–242. doi: 10.1016/j.neuroimage.2003.12.028

Eickhoff, S. B., Bzdok, D., Laird, A. R., Kurth, F., and Fox, P. T. (2012). Activation likelihood estimation revisited. Neuroimage 59, 2349–2361. doi: 10.1016/j.neuroimage.2011.09.017

Eickhoff, S. B., Laird, A. R., Fox, P. M., Lancaster, J. L., and Fox, P. T. (2017). Implementation errors in the GingerALE Software: description and recommendations. Hum. Brain Mapp. 38, 7–11. doi: 10.1002/hbm.23342

Eickhoff, S. B., Laird, A., Grefkes, C., Wang, L., Zilles, K., and Fox, P. (2009). Coordinate-based activation likelihood estimation meta-analysis of neuroimaging data: a random-effects approach based on empirical estimates of spatial uncertainty. Hum. Brain Mapp. 30, 2907–2926. doi: 10.1002/hbm.20718

Eickhoff, S. B., Nichols, T. E., Laird, A. R., Hoffstaedter, F., Amunts, K., Fox, P. T., et al. (2016). Behavior, sensitivity, and power of activation likelihood estimation characterized by massive empirical simulation. Neuroimage 137, 70–85. doi: 10.1016/j.neuroimage.2016.04.072

Fitzgerald, D. A., Angstadt, M., Jelsone, L. M., Nathan, P. J., and Phan, K. L. (2006). Beyond threat: amygdala reactivity across multiple expressions of facial affect. Neuroimage 30, 1441–1448. doi: 10.1016/j.neuroimage.2005.11.003

Fusar-Poli, P., Placentino, A., Carletti, F., Landi, P., Allen, P., Surguladze, S., et al. (2009). Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J. Psychiatry Neurosci. 34, 418–432.

Gobbini, M. I., and Haxby, J. V. (2007). Neural systems for recognition of familiar faces. Neuropsychologia 45, 32–41. doi: 10.1016/j.neuropsychologia.2006.04.015

Grosbras, M. H., and Paus, T. (2006). Brain networks involved in viewing angry hands or faces. Cereb. Cortex 16, 1087–1096. doi: 10.1093/cercor/bhj050

Gupta, R., Koscik, T. R., Bechara, A., and Tranel, D. (2011). The amygdala and decision making. Neuropsychologia 49, 760–766. doi: 10.1016/j.neuropsychologia.2010.09.029

Hadjikhani, N., Joseph, R. M., Snyder, J., and Tager-Flusberg, H. (2007). Abnormal activation of the social brain during face perception in autism. Hum. Brain Mapp. 28, 441–449. doi: 10.1002/hbm.20283

Hampshire, A., Chamberlain, S. R., Monti, M. M., Duncan, J., and Owen, A. M. (2010). The role of the right inferior frontal gyrus: inhibition and attentional control. Neuroimage 50, 1313–1319. doi: 10.1016/j.neuroimage.2009.12.109

Hampshire, A., Thompson, R., Duncan, J., and Owen, A. M. (2009). Selective tuning of the right inferior frontal gyrus during target detection. Cogn. Affect. Behav. Neurosci. 9, 103–112. doi: 10.3758/CABN.9.1.103

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Hurlemann, R., Rehme, A. K., Diessel, M., Kukolja, J., Maier, W., Walter, H., et al. (2008). Segregating intra-amygdalar responses to dynamic facial emotion with cytoarchitectonic maximum probability maps. J. Neurosci. Methods 172, 13–20. doi: 10.1016/j.jneumeth.2008.04.004

Iidaka, T., Yamashita, K., Kashikura, K., and Yonekura, Y. (2004). Spatial frequency of visual image modulates neural responses in the temporo-occipital lobe. An investigation with event-related fMRI. Cogn. Brain Res. 18, 196–204. doi: 10.1016/j.cogbrainres.2003.10.005

Ivry, R. B., and Spencer, R. M. (2004). The neural representation of time. Curr. Opin. Neurobiol. 14, 225–232. doi: 10.1016/j.conb.2004.03.013

LaBar, K. S., Crupain, M. J., Voyvodic, J. T., and McCarthy, G. (2003). Dynamic perception of facial affect and identity in the human brain. Cereb. Cortex 13, 1023–1033. doi: 10.1093/cercor/13.10.1023

Lancaster, J. L., Tordesillas-Gutiérrez, D., Martinez, M., Salinas, F., Evans, A., Zilles, K., et al. (2007). Bias between MNI and Talairach coordinates analyzed using the ICBM-152 brain template. Hum. Brain Mapp. 28, 1194–1205. doi: 10.1002/hbm.20345

Langton, S. R., Watt, R. J., and Bruce, V. (2000). Do the eyes have it? Cues to the direction of social attention. Trends Cogn. Sci. 4, 50–59. doi: 10.1016/S1364-6613(99)01436-9

LeDoux, J. (2003). The emotional brain, fear, and the amygdala. Cell. Mol. Neurobiol. 23, 727–738. doi: 10.1023/A:1025048802629

Lee, L. C., Andrews, T. J., Johnson, S. J., Woods, W., Gouws, A., Green, G. G., et al. (2010). Neural responses to rigidly moving faces displaying shifts in social attention investigated with fMRI and MEG. Neuropsychologia 48, 477–490. doi: 10.1016/j.neuropsychologia.2009.10.005

Liu, T., Saito, H., and Oi, M. (2015). Role of the right inferior frontal gyrus in turn-based cooperation and competition: a near-infrared spectroscopy study. Brain Cogn. 99, 17–23. doi: 10.1016/j.bandc.2015.07.001

Liu, T., Saito, H., and Oi, M. (2016). Obstruction increases activation in the right inferior frontal gyrus. Soc. Neurosci. 11, 344–352. doi: 10.1080/17470919.2015.1088469

Mar, R. A. (2011). The neural bases of social cognition and story comprehension. Annu. Rev. Psychol. 62, 103–134. doi: 10.1146/annurev-psych-120709-145406

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., and PRISMA Group (2009). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 6:e1000097. doi: 10.1371/journal.pmed.1000097

Nichols, D. F., Betts, L. R., and Wilson, H. R. (2010). Decoding of faces and face components in face-sensitive human visual cortex. Front. Psychol. 1:28. doi: 10.3389/fpsyg.2010.00028

Nomi, J. S., and Uddin, L. Q. (2015). Face processing in autism spectrum disorders: from brain regions to brain networks. Neuropsychologia 71, 201–216. doi: 10.1016/j.neuropsychologia.2015.03.029

O'Toole, A. J., Roark, D. A., and Abdi, H. (2002). Recognizing moving faces: a psychological and neural synthesis. Trends Cogn. Sci. 6, 261–266. doi: 10.1016/S1364-6613(02)01908-3

Pascual-Leone, J. (1989). “An organismic process model of Witkin's field-dependence-independence,” in Human Development, Vol. 3, Cognitive Style and Cognitive Development, eds T. Globerson and T. Zelniker (Westport, CT: Ablex Publishing), 36–70.

Paulin, M. G. (1993). The role of the cerebellum in motor control and perception. Brain Behav. Evol. 41, 39–50. doi: 10.1159/000113822

Pelphrey, K. A., Morris, J. P., McCarthy, G., and Labar, K. S. (2007). Perception of dynamic changes in facial affect and identity in autism. Soc. Cogn. Affect. Neurosci. 2, 140–149. doi: 10.1093/scan/nsm010

Pentón, L. G., Fernández, A. P., León, M. A., Ymas, Y. A., García, L. G., Iturria-Medina, Y., et al. (2010). Neural activation while perceiving biological motion in dynamic facial expressions and point-light body action animations. Neural Regen. Res. 5, 1076–1083. doi: 10.3969/j.issn.1673-5374.2010.14.007

Peyrin, C., Baciu, M., Segebarth, C., and Marendaz, C. (2004). Cerebral regions and hemispheric specialization for processing spatial frequencies during natural scene recognition. An event-related fMRI study. Neuroimage 23, 698–707. doi: 10.1016/j.neuroimage.2004.06.020

Peyrin, C., Michel, C. M., Schwartz, S., Thut, G., Seghier, M., Landis, T., et al. (2010). The neural substrates and timing of top–down processes during coarse-to-fine categorization of visual scenes: a combined fMRI and ERP study. J. Cogn. Neurosci. 22, 2768–2780. doi: 10.1162/jocn.2010.21424

Peyrin, C., Schwartz, S., Seghier, M., Michel, C., Landis, T., and Vuilleumier, P. (2005). Hemispheric specialization of human inferior temporal cortex during coarse-to-fine and fine-to-coarse analysis of natural visual scenes. Neuroimage 28, 464–473. doi: 10.1016/j.neuroimage.2005.06.006

Robins, D. L., Hunyadi, E., and Schultz, R. T. (2009). Superior temporal activation in response to dynamic audio-visual emotional cues. Brain Cogn. 69, 269–278. doi: 10.1016/j.bandc.2008.08.007

Rossion, B., Caldara, R., Seghier, M., Schuller, A. M., Lazeyras, F., and Mayer, E. (2003). A network of occipito-temporal face-sensitive areas besides the right middle fusiform gyrus is necessary for normal face processing. Brain 126, 2381–2395. doi: 10.1093/brain/awg241

Sabatinelli, D., Fortune, E. E., Li, Q., Siddiqui, A., Krafft, C., Oliver, W. T., et al. (2011). Emotional perception: meta-analyses of face and natural scene processing. Neuroimage 54, 2524–2533. doi: 10.1016/j.neuroimage.2010.10.011

Sander, D., Grafman, J., and Zalla, T. (2003). The human amygdala: an evolved system for relevance detection. Rev. Neurosci. 14, 303–316. doi: 10.1515/REVNEURO.2003.14.4.303

Sarkheil, P., Goebel, R., Schneider, F., and Mathiak, K. (2013). Emotion unfolded by motion: a role for parietal lobe in decoding dynamic facial expressions. Soc. Cogn. Affect. Neurosci. 8, 950–957. doi: 10.1093/scan/nss092

Sato, W., Kochiyama, T., and Uono, S. (2015). Spatiotemporal neural network dynamics for the processing of dynamic facial expressions. Sci. Rep. 5:12432. doi: 10.1038/srep12432

Sato, W., Kochiyama, T., Yoshikawa, S., Naito, E., and Matsumura, M. (2004). Enhanced neural activity in response to dynamic facial expressions of emotion: an fMRI study. Cogn. Brain Res. 20, 81–91. doi: 10.1016/j.cogbrainres.2004.01.008

Saxe, R. (2006). Uniquely human social cognition. Curr. Opin. Neurobiol. 16, 235–239. doi: 10.1016/j.conb.2006.03.001

Schultz, J., Brockhaus, M., Bülthoff, H. H., and Pilz, K. S. (2013). What the human brain likes about facial motion. Cereb. Cortex 23, 1167–1178. doi: 10.1093/cercor/bhs106

Schultz, J., and Pilz, K. S. (2009). Natural facial motion enhances cortical responses to faces. Exp. Brain Res. 194, 465–475. doi: 10.1007/s00221-009-1721-9

Stoodley, C. J., and Schmahmann, J. D. (2010). Evidence for topographic organization in the cerebellum of motor control versus cognitive and affective processing. Cortex 46, 831–844. doi: 10.1016/j.cortex.2009.11.008

Taylor, M. J., Arsalidou, M., Bayless, S. J., Morris, D., Evans, J. W., and Barbeau, E. J. (2009). Neural correlates of personally familiar faces: parents, partner and own faces. Hum. Brain Mapp. 30, 2008–2020. doi: 10.1002/hbm.20646

Trautmann, S. A., Fehr, T., and Herrmann, M. (2009). Emotions in motion: dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion-specific activations. Brain Res. 1284, 100–115. doi: 10.1016/j.brainres.2009.05.075

Turkeltaub, P. E., Eickhoff, S. B., Laird, A. R., Fox, M., Wiener, M., and Fox, P. (2012). Minimizing within-experiment and within-group effects in activation likelihood estimation meta-analyses. Hum. Brain Mapp. 33, 1–13. doi: 10.1002/hbm.21186

van der Gaag, C., Minderaa, R. B., and Keysers, C. (2007). The BOLD signal in the amygdala does not differentiate between dynamic facial expressions. Soc. Cogn. Affect. Neurosci. 2, 93–103. doi: 10.1093/scan/nsm002

Van Overwalle, F., Baetens, K., Mariën, P., and Vandekerckhove, M. (2014). Social cognition and the cerebellum: a meta-analysis of over 350 fMRI studies. Neuroimage 86, 554–572. doi: 10.1016/j.neuroimage.2013.09.033

Van Overwalle, F., D'aes, T., and Mariën, P. (2015). Social cognition and the cerebellum: a meta-analytic connectivity analysis. Hum. Brain Mapp. 36, 5137–5154. doi: 10.1002/hbm.23002

Vuilleumier, P., Armony, J. L., Driver, J., and Dolan, R. J. (2003). Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat. Neurosci. 6, 624–631. doi: 10.1038/nn1057

Wegrzyn, M., Riehle, M., Labudda, K., Woermann, F., Baumgartner, F., Pollmann, S., et al. (2015). Investigating the brain basis of facial expression perception using multi-voxel pattern analysis. Cortex 69, 131–140. doi: 10.1016/j.cortex.2015.05.003

Yaple, Z. A., and Arsalidou, M. (in press). N-back working memory task. Meta-analysis of normative fMRI studies with children. Child Dev. doi: 10.1111/cdev.13080. [Epub ahead of print].

Yaple, Z. A., and Arsalidou, M. (2017). Negative priming: a meta-analysis of fMRI studies. Exp. Brain Res. 235, 3367–3374. doi: 10.1007/s00221-017-5065-6

Yaple, Z. A., Vakhrushev, R., and Jolij, J. (2016). Investigating emotional top down modulation of ambiguous faces by single pulse TMS on early visual cortices. Front. Neurosci. 10:305. doi: 10.3389/fnins.2016.00305

Keywords: dynamic faces, fMRI meta-analysis, activation likelihood estimate, social cognition, facial expressions

Citation: Zinchenko O, Yaple ZA and Arsalidou M (2018) Brain Responses to Dynamic Facial Expressions: A Normative Meta-Analysis. Front. Hum. Neurosci. 12:227. doi: 10.3389/fnhum.2018.00227

Received: 05 February 2018; Accepted: 16 May 2018; Published: 05 June 2018.

Edited by:

Wataru Sato, Kyoto University, Japan

Reviewed by:

Johannes Schultz, Max-Planck-Gesellschaft (MPG), Germany
Scott A. Langenecker, University of Illinois at Chicago, United States

Copyright © 2018 Zinchenko, Yaple and Arsalidou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marie Arsalidou, marie.arsalidou@gmail.com
