- ¹Institute for Neuroscience and Psychology, University of Glasgow, Glasgow, UK

- ²Max Planck Institute for Psycholinguistics, Nijmegen, Netherlands

- ³Department of Psychology, University of Edinburgh, Edinburgh, UK

The interactive-alignment account of dialogue proposes that interlocutors achieve conversational success by aligning their understanding of the situation under discussion. Such alignment occurs because they prime each other at different levels of representation (e.g., phonology, syntax, semantics), and this is possible because these representations are shared across production and comprehension. In this paper, we briefly review the behavioral evidence, and then consider how findings from cognitive neuroscience might lend support to this account, on the assumption that alignment of neural activity corresponds to alignment of mental states. We first review work supporting representational parity between production and comprehension, and suggest that the neural activity associated with phonological, lexical, and syntactic aspects of production is closely related to that associated with the same aspects of comprehension. We next consider evidence for the neural bases of the activation and use of situation models during production and comprehension, and how these demonstrate the activation of non-linguistic conceptual representations associated with language use. We then review evidence for alignment of neural mechanisms that are specific to the act of communication. Finally, we suggest some avenues of further research that need to be explored to test crucial predictions of the interactive alignment account.

Introduction

Conversation involves an extremely complicated set of processes in which participants have to interweave their activities with precise timing, and yet it is a skill that all speakers seem very good at (Garrod and Pickering, 2004). One argument for why conversation is so easy is that interlocutors tend to become aligned at different levels of linguistic representation and therefore find it easier to perform this joint activity than the individual activities of speaking or listening (Garrod and Pickering, 2009). Pickering and Garrod (2004) explain the process of alignment in terms of their interactive-alignment account. According to this account, conversation is successful to the extent that participants come to understand the relevant aspects of what they are talking about in the same way. More specifically, they construct mental models of the situation under discussion (i.e., situation models; Zwaan and Radvansky, 1998), and successful conversation occurs when these models become aligned. Interlocutors usually do not align deliberately. Rather, alignment is largely the result of the tendency for interlocutors to repeat each other's linguistic choices at many different levels, such as words and grammar (Garrod and Anderson, 1987; Brennan and Clark, 1996; Branigan et al., 2000). Such alignment is, therefore, a form of imitation. Essentially, interlocutors prime each other to speak about things in the same way, and people who speak about things in the same way tend to think about them in the same way as well.

At the level of situation models, interlocutors align on spatial reference frames: if one speaker refers to objects egocentrically (e.g., “on the left” to mean on the speaker's left), then the other speaker tends to use an egocentric perspective as well (Watson et al., 2004). More generally, they align on a characterization of the representational domain, for instance using coordinate systems (e.g., A4, D3) or figural descriptions (e.g., T-shape, right indicator) to refer to positions in a maze (Garrod and Anderson, 1987; Garrod and Doherty, 1994). They also repeat each other's referring expressions, even when they are unnecessarily specific (Brennan and Clark, 1996). Imitation also occurs for grammar, with speakers repeating the syntactic structure used by their interlocutors for cards describing events (Branigan et al., 2000; e.g., “the diver giving the cake to the cricketer”) or objects (Cleland and Pickering, 2003; e.g., “the sheep that is red”), and repeating syntax or closed-class lexical items in question-answering (Levelt and Kelter, 1982). Bilinguals even repeat syntax between languages, for example when one interlocutor speaks English and the other speaks Spanish (Hartsuiker et al., 2004). Finally, there is evidence for alignment of phonetics (Pardo, 2006), and of accent and speech rate (Giles et al., 1991).

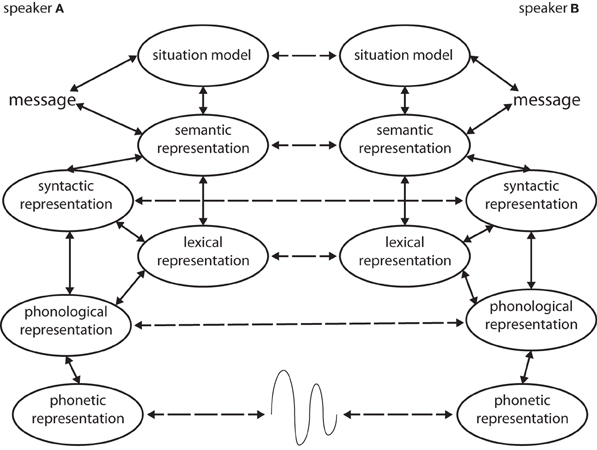

An important property of interactive alignment is that it is automatic in the sense that speakers are not aware of the process and that it does not appear effortful. Such automatic imitation or mimicry occurs in social situations more generally. Thus, Dijksterhuis and Bargh (2001) argued that many social behaviors are automatically triggered by perception of the actions of other people, in a way that often leads to imitation (e.g., Chartrand and Bargh, 1999). We propose that the automatic alignment channels linking different levels of linguistic representation operate in essentially the same fashion (see Figure 1). In other words, conversationalists do not need to decide to interpret each different level of linguistic representation for alignment to occur through all these channels (Pickering and Garrod, 2006). This is because the alignment channels reflect priming rather than deliberative processing. In addition, there are aspects of automatic non-linguistic imitation that can facilitate alignment at linguistic levels (Garrod and Pickering, 2009). For example, when speakers and listeners align their gaze to look at the same thing, this can facilitate alignment of interpretation (Richardson and Dale, 2005; Richardson et al., 2007). The reverse also appears to hold, with linguistic alignment enhancing romantic attraction, which presumably involves non-linguistic alignment (Ireland et al., 2011).

Figure 1. The interactive-alignment model (based on Pickering and Garrod, 2004). Speakers A and B represent two interlocutors in a dialogue in this schematic representation of the stages of comprehension and production according to the model. The dashed lines represent alignment channels.

The interactive alignment account makes two basic assumptions about language processing in dialogue. First, there is parity of representations used in speaking and listening. The same representations are used during production (when speaking) and comprehension (when listening to another person). This explains why linguistic repetition occurred in experiments such as Branigan et al. (2000), who had participants take turns to describe and match picture cards, and found that they tended to use the form of utterance just used by their partner. For example, they tended to use a “prepositional object” form such as “the pirate giving the book to the swimmer” following another prepositional object sentence but a “double object” form such as “the pirate giving the swimmer the book” following another double object sentence (though both sentences have essentially the same meaning). In such cases, the same grammatical representation is activated during speaking and listening. For a different form of evidence for syntactic parity, see Kempen et al. (2011).

Second, the processes of alignment at different levels (e.g., words, structure, meaning) interact in such a way that increased alignment at one level leads to increased alignment at other levels (i.e., alignment percolates between levels). For example, alignment of syntactic structure is enhanced by repetition of words, with participants being even more likely to say “The cowboy handing the banana to the burglar” after hearing “The chef handing the jug to the swimmer” than after “The chef giving the jug to the swimmer” (Branigan et al., 2000). Thus, alignment at one level (in this case, lexical alignment) enhances alignment at another level (in this case, grammatical alignment). Similarly, people are more likely to use an unusual form such as “the sheep that's red” (rather than “the red sheep”) after they have just heard “the goat that's red” than after they heard “the door that's red” (Cleland and Pickering, 2003). This is because alignment at the semantic level (in this case, with respect to animals) increases syntactic alignment. Furthermore, alignment of words leads to alignment of situation models: people who describe things the same way tend to think about them in the same way too (Markman and Makin, 1998). This means that alignment of low-level structure can eventually affect alignment at the crucial level of speakers' situation models, the hallmark of successful communication.

In this review, we appraise the neural evidence for the interactive alignment model. We focus on three central points. The first is that parity of representations exists between speaking and listening. This is a necessary, though not sufficient, condition for interactive alignment between interlocutors to be possible. The second is that alignment at one level of representation affects alignment at another. We review what evidence is available, and suggest concrete avenues for further research. The third is that alignment of representations should be related to mutual understanding. Further, we briefly explore how alignment between interlocutors may also play a role in controlling non-linguistic aspects of a conversation.

Neural Evidence

Evidence for Parity

If interactive alignment of different linguistic representations between speakers and listeners is to be possible, then these representations need to be coded in the same form irrespective of whether a person is speaking or listening. There needs to be parity of representations between language comprehension and production. If this parity exists, then presumably, the neuronal infrastructure underlying the processing of language at different levels of representation should be the same during speaking and listening. This is a prerequisite for neural alignment during conversation, in which both interlocutors speak and listen. Neural parity underlies Hasson et al.'s (2012) brain-to-brain coupling principle, in which parity emerges from the process by which the perceiver's perceptual system codes for an actor's behavior.

Below, we review the evidence for parity of neural representations in speaking and listening across different linguistic levels. We focus mainly on studies that have either directly compared the two modalities, or manipulated one while observing the other. The number of relevant studies is limited because neuroimaging evidence on language production is much scarcer than on language comprehension. Many of the studies, in particular those concerned with higher-level processes, investigate whether different modalities engage the same brain regions. This comparison yields less-than-perfect evidence for parity, because it is possible that the same brain region might code different representations, but it does provide suggestive evidence.

Perception and production of speech sounds

Much of the debate on the neuronal overlap between action and perception in language has focused on the role of the motor system. In their motor theory of speech perception, Liberman and Mattingly (1985) proposed that to perceive speech is to perceive the articulatory gestures one would make to produce the same speech. Thanks largely to the discovery of mirror neurons (Rizzolatti and Arbib, 1998), this theory has received renewed interest (Galantucci et al., 2006). It receives support from TMS studies showing that listening to speech affects the excitability of brain regions controlling articulatory muscles (Watkins et al., 2003; Pulvermüller et al., 2006; Watkins and Paus, 2006). fMRI has provided converging evidence for enhanced motor cortex activity when listening to speech compared to rest (Wilson et al., 2004).

These studies show motor cortex involvement in perceiving speech, but they do not make clear the exact role of the motor cortex. According to motor theory, the primary motor cortex activity should be specific to the sounds perceived. According to a proposal by Scott et al. (2009), motor cortex involvement in speech perception could instead reflect a process general to the act of perceiving speech. In this proposal, the motor involvement reflects a readiness on the part of the listener to take part in the conversation and hence commence speaking. However, TMS studies suggest that the motor cortex activity during speech perception is in fact specific to the sounds being perceived, as motor theory would predict: the excitability of articulators through TMS to the primary motor cortex is stronger when perceiving sounds that require those articulators than when perceiving sounds that require different articulators (Fadiga et al., 2002; D'Ausilio et al., 2009). These claims are further supported by recent behavioral evidence that the interference from distractor words on articulation is greater when the distractors contain sounds incompatible with the articulation target (Yuen et al., 2010).

In addition, primary motor cortex response to videos of words being uttered depends on the articulatory complexity of these words (Tremblay and Small, 2011). This suggests that the motor involvement when listening to speech is related to the effort required to produce the same speech, again suggesting that motor cortex involvement in speech perception is specific to the content of the perceived speech. A different measure of articulatory effort, sentence length, fails to support this observation: when listening to sentences, primary motor cortex response does not appear to depend on the length of sentences being heard (Menenti et al., 2011; also see below). Hence, it is possible that the effect of articulatory effort on motor involvement in speech perception is specific to observing videos, or that it is somehow observable when listening to single words but not when listening to sentences. In this context, it is worth noting that many of the studies showing motor involvement in perception use highly artificial paradigms (e.g., presenting phonemes in isolation or degrading the stimulus), and often compare speech to radically different, often less complex, acoustic stimuli, so it is possible that motor effects in natural speech perception could be less pronounced (McGettigan et al., 2010). The null finding in a study investigating motor involvement in more naturalistic speech perception (Menenti et al., 2011) could be an indication in this direction.

Now that there is clear evidence for some motor involvement in speech perception, the debate has shifted to whether this involvement is a necessary component of perceiving speech. Researchers from the mirror neuron tradition argue for a causal role of the motor cortex involvement in speech perception described above, much along the lines of the motor theory of speech perception (Pulvermüller and Fadiga, 2010). An alternative view holds that motor activation can occur, but that it is not necessary. The involvement has been characterized as modulatory (Hickok, 2009; Lotto et al., 2009; but see Wilson, 2009) or as being specific to certain situations and materials (Toni et al., 2008). In any case, evidence showing a link between specific properties of speech sounds being perceived and the articulators needed to produce them suggests that there is a link between representations.

In summary, there is considerable evidence for neural parity at the level of speech sounds. In contrast, the evidence for neural parity at higher linguistic levels is much scarcer. In particular, the technical difficulties associated with investigating speaking in fMRI increase as the stimuli become longer (e.g., words, sentences, narratives). Furthermore, psycholinguistics (unlike work on the articulation and perception of speech) has generally assumed that comprehension and production of language have little to do with each other. We now review what is available on lexical and syntactic processing in turn.

Parity of lexical processing

For processing of words, two similar studies contrasted processing of intransitive (e.g., jump) and transitive (e.g., hit) verbs in either speech production (Den Ouden et al., 2009) or comprehension (Thompson et al., 2007). In the production study, the verbs were elicited using pictures or videos, and in comprehension the subjects read the verbs. The two studies produced very different results for the two modalities: in the comprehension study (Thompson et al., 2007), only one cluster in the left inferior parietal lobe showed a significant difference between the two kinds of verb. Despite the fact that a much larger distributed network of areas showed the effect in production (Den Ouden et al., 2009), that one cluster was not part of the production network.

However, studies that directly compare production with comprehension or manipulate the one while investigating the other do find that words share neural processes between the two modalities. In an intra-operative mapping study, Ilmberger et al. (2001) directly stimulated the cortex during comprehension and production of words. The two tasks used had previously been shown to share a lot of variance, which was taken to indicate that they tapped into similar processes. Twelve out of 14 patients had sites where stimulation affected both naming and comprehension performance. Many of these sites were in left inferior frontal gyrus. This region contains Brodmann area (BA) 44, which has been shown to be involved both in lexical selection in speaking and in lexical decision in listening (Heim et al., 2007, 2009). Menenti et al. (2011) reported an fMRI adaptation study that compared semantic, lexical, and syntactic processes in speaking and listening. They found that repetition of lexical content across heard or spoken sentences induced suppression effects in the same set of areas (left anterior and posterior middle temporal gyrus and left inferior frontal gyrus) in both speaking and listening, although the precuneus additionally showed an adaptation effect in speaking but not listening. On the whole, then, there seems to be some evidence that the linguistic processing of words is accomplished by similar brain regions in speaking and listening.

Parity of syntax

There is somewhat clearer evidence for neural parity of syntax. Such work builds on theoretical and behavioral studies that support parity of syntactic representations (Branigan et al., 2000). Heim reviewed fMRI data on processing syntactic gender and concluded that speaking and listening rely on the same network of brain areas, in particular BA 44 (Heim, 2008). In addition, Menenti et al.'s (2011) fMRI adaptation study showed that repetition of syntactic structure (as found in active and passive transitive sentences) induced suppression effects in the same brain regions (BA 44 and BA 21) for speaking and listening. However, in a PET study on comprehension and production of syntactic structure, Indefrey and colleagues found effects of syntactic complexity in speech production (in BA 44), but not comprehension (Indefrey et al., 2004). They interpreted their data in terms of theoretical accounts in which listeners need not always fully encode syntactic structure but can instead rely on other cues to understand what is being said (Ferreira et al., 2002), but where speakers always construct complete syntactic representations. However, it is also possible that this lack of parity is due to task requirements rather than indicating general differences between production and comprehension.

Importantly, as mentioned above, studies showing that the same brain regions are involved in two modalities do not prove that the same representations or even the same processes are being recruited. Conceivably, different neuronal populations in the same general brain regions could process syntax in speaking and listening respectively. To address this issue, Segaert et al. (2011) used the same paradigm as Menenti et al. (2011), but this time intermixing comprehension and production trials within the same experiment. Participants therefore produced or heard transitive sentences in interspersed order and the syntactic structure of these sentences could be either novel or repeated across sentences. This produced cross-modal adaptation effects and no interaction between the size of the effect and whether priming was intra- or inter-modal. This strongly supports the idea that the same neuronal populations are being recruited for the production and comprehension of syntax in speaking and listening, and hence that the neural representations involved in the two modalities are alike.

So far we have reviewed evidence for parity of different types of linguistic representations in speaking and listening, but in intra-individual settings. While such parity is a necessary condition for alignment, it is not a sufficient one: a central tenet of interactive alignment is that representations become more aligned over the course of dialogue. Testing this tenet requires studies in which actual between-participant communication takes place, and in which different levels of representation can be segregated in terms of their neural signature. These studies, unfortunately, still need to be done.

Percolation

The interactive alignment account further predicts that alignment at one level of representation leads to alignment at other levels of representation as well. To test this prediction, it is necessary to conduct studies in communicative settings that somehow target at least two levels of representation. In the introduction, we have noted behavioral evidence from structural priming (Cleland and Pickering, 2003). In another behavioral study, Adank et al. (2010) showed that alignment of speech sounds can improve comprehension. Participants were tested on their comprehension of sentences in an unfamiliar accent presented in noise. They then underwent one of several types of training: no training; just listening to the sentences; transcribing the sentences; repeating the sentences in their own accent; repeating the sentences while imitating the accent; and doing so in noise so that they could not hear themselves speak. They were then tested on comprehension for a different set of similar sentences. Only the two imitation conditions improved comprehension performance in the post-test. This suggests that allowing a listener to align with the speaker at the sound-based level that is required to produce the output improves comprehension.

In a study investigating gestural communication, Schippers and colleagues scanned pairs of players in a game of charades (Schippers et al., 2010). They first scanned the gesturer and videotaped his or her gestures, and then they scanned the guesser while he or she was watching the videotape. Using Granger Causality Mapping, they looked for brain regions whose activity in the gesturer predicted that in the guesser. First, they found Granger-causation from the gesturer's “putative mirror neuron system” (pMNS; defined as dorsal and ventral premotor cortex, somatosensory cortex, anterior inferior parietal lobule, and middle temporal gyrus) to the guesser's pMNS. This provides further support for the extensive literature arguing for overlap in neural processes between action and perception (Hasson et al., 2012). In addition, they found Granger-causation between the gesturer's pMNS and the guesser's ventromedial prefrontal cortex, an area that is involved in inferring someone's intention (i.e., mentalizing; Amodio and Frith, 2006). Dale and colleagues used the tangram task, a dialogue task known to elicit progressively more similar lexical representations from interlocutors, to show that over time interlocutors' eye movements also become highly synchronous (Dale et al., 2011). Alignment in lexical representation here, therefore, co-occurs with alignment in behavior. Further, Broca's area has often been found to be involved both in producing and comprehending language at various levels (Bookheimer, 2002; Hagoort, 2005) and in producing and comprehending actions (Rossi et al., 2011), suggesting a potential neural substrate for percolation between these two levels of representation. Together, these data suggest that alignment between conversation partners occurs from lower to higher levels of representation, and also between non-linguistic and linguistic processes.
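The logic of asking whether one person's brain activity predicts another's can be made concrete with a toy calculation. The sketch below is not the Granger Causality Mapping procedure used by Schippers et al. (2010); it is a minimal lag-1 illustration on simulated data, and the function names (`solve`, `rss`, `granger_f`) and the simulated time courses are assumptions introduced here. It compares how well the target series is predicted from its own past alone with how well it is predicted once the source's past is added.

```python
import random

def solve(a, b):
    """Solve the small linear system a @ x = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def rss(rows, y):
    """Residual sum of squares of a least-squares fit of y on the predictor rows."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    beta = solve(xtx, xty)
    return sum((t - sum(b * v for b, v in zip(beta, r))) ** 2 for r, t in zip(rows, y))

def granger_f(source, target):
    """Lag-1 F statistic: does the source's past improve prediction of the target?"""
    y = target[1:]
    steps = range(len(target) - 1)
    restricted = [[1.0, target[t]] for t in steps]         # target's own past only
    full = [[1.0, target[t], source[t]] for t in steps]    # plus the source's past
    rss_r, rss_f = rss(restricted, y), rss(full, y)
    dof = len(y) - 3                                       # three parameters in the full model
    return (rss_r - rss_f) / (rss_f / dof)

# Simulated (not real) data: the "guesser" follows the "gesturer" with a one-step delay.
rng = random.Random(0)
gesturer = [rng.gauss(0, 1) for _ in range(200)]
guesser = [0.0] + [0.8 * gesturer[t - 1] + 0.5 * rng.gauss(0, 1) for t in range(1, 200)]

forward = granger_f(gesturer, guesser)   # gesturer -> guesser
reverse = granger_f(guesser, gesturer)   # guesser -> gesturer
print(f"forward F = {forward:.1f}, reverse F = {reverse:.1f}")
```

In this simulation the forward statistic is large and the reverse statistic is small, which is the asymmetry that a directed influence from gesturer to guesser would produce. A real analysis would need to model the haemodynamic response, use more than one lag, and correct for testing many brain regions.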

Admittedly, neural evidence for (or against) percolation is scarce. As mentioned in the introduction, the lexical boost in syntactic priming is one example of percolation. This lexical boost could be exploited in an fMRI study by comparing syntactic priming between interlocutors in conditions with and without lexical repetition. For example, if the study by Menenti et al. (2011) were repeated in an interactive setting, then the extent of lexical repetition suppression across participants should correlate with syntactic priming. If inter-subject correlations in brain activity reflect alignment (Stephens et al., 2010; see below), alignment at one level (e.g., sound) could be manipulated, and the extent of correlation between subjects as well as comprehension could be assessed. Phonological alignment should affect the inter-subject correlations, and in particular, it should affect those inter-subject correlations that also correlate with the comprehension score of the subject.

Ultimate Goal of Communication: Alignment of Situation Models

According to the interactive alignment account, conversation is successful to the extent that participants come to understand the relevant aspects of what they are talking about in the same way. Ultimately, therefore, alignment of situation models is crucial—both to communication and to the interactive alignment account.

In an fMRI study, Awad et al. (2007) showed that a similar network of areas is involved in comprehending and producing narrative speech. However, production and comprehension were each contrasted with radically different baseline conditions before being compared to one another, making the results hard to interpret. In their fMRI adaptation study on overlap between speaking and listening, Menenti et al. (2011) also looked at repetition of sentence-level meaning. As for lexical repetition and syntactic repetition, they found that the same brain regions (in this case, the bilateral temporoparietal junction) show adaptation effects irrespective of whether people are speaking or listening, suggesting a neuronal correlate for parity of meaning. This study, however, left unanswered the question of the level of meaning at which parity of representations held: was it the non-verbal situation model underlying the sentences, or the linguistic meaning of the sentences themselves?

In a follow-up study on sentence production, Menenti et al. (2012) thus further distinguished between repetition of the linguistic meaning of sentences (the sense) and repetition of the underlying mental representation (the reference). For example, if the sentence “The man kisses the woman” was used twice to refer to two different pictures, this constituted a repetition of sense. Conversely, the same picture of a man kissing a woman could be shown first with the sentence “The red man kisses the green woman” and then with the sentence “The yellow man kisses the blue woman”, leading to a repetition of reference. The brain regions previously shown to have similar semantic repetition effects in speaking and listening (Menenti et al., 2011) turned out to be mainly sensitive to repetition of referential meaning: they showed suppression effects when the same picture was repeated even if with a different sentence, but did not exhibit any such sensitivity to the repetition of sentences themselves if accompanied by different pictures. This suggests alignment of underlying non-linguistic representations in speaking and listening, rather than purely alignment of linguistic semantic structure.

It is also possible to investigate alignment of meaning in a more naturalistic way, while still allowing for a detailed analysis. The drawback of naturalistic experiments is often that interpretations are hard to draw because the relevant details of the stimulus are not clear. This problem can be circumvented by using subjects as models for each other, an inter-subject correlation approach (Hasson et al., 2004). The idea is that if there are areas where subjects' brain activity is the same over the whole time-course of a stimulus, these correlations in brain activity are likely to be driven by that stimulus, whatever the stimulus may be. Stephens et al. (2010) used this approach to investigate inter-subject correlations in fMRI between a speaker and a group of listeners. They first recorded a speaker in the scanner while she was telling an unrehearsed story, and then recorded listeners who heard that story. Correlations between the speaker and the listeners occurred in many different brain regions. These correlations were positively related to listeners' comprehension (as measured by a subsequent test). When a group of listeners was presented with a story in an unfamiliar language (Russian), these correlations disappeared. This suggests that alignment in brain activity between a speaker communicating information and a listener hearing it is tied to the understanding of that information.
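The inter-subject correlation idea can be sketched in a few lines. The example below is an illustration only and does not reproduce the analysis of Stephens et al. (2010): the time courses and comprehension scores are invented, the helper names (`pearson`, `speaker_listener_coupling`) are hypothetical, and real analyses work on many brain regions and typically allow for temporal lags between speaker and listener. It correlates one speaker's time course in a single region with each listener's time course, and then asks whether listeners with stronger coupling also have higher comprehension scores.

```python
import math

def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def speaker_listener_coupling(speaker, listeners):
    """Correlate the speaker's time course with each listener's time course."""
    return [pearson(speaker, ts) for ts in listeners]

# Invented data: activity in one region at six time points.
speaker = [0.1, 0.5, 0.9, 0.4, 0.2, 0.7]
listeners = [
    [0.2, 0.6, 1.0, 0.5, 0.3, 0.8],  # follows the speaker closely
    [0.3, 0.4, 0.8, 0.6, 0.1, 0.6],  # follows the speaker loosely
    [0.9, 0.1, 0.4, 0.8, 0.5, 0.2],  # does not follow the speaker
]
comprehension = [0.9, 0.7, 0.2]      # hypothetical post-test scores

coupling = speaker_listener_coupling(speaker, listeners)
coupling_vs_comprehension = pearson(coupling, comprehension)
print(coupling, coupling_vs_comprehension)
```

A positive value of `coupling_vs_comprehension` corresponds to the pattern described above, in which stronger speaker-listener correlation goes with better understanding. With only three listeners the number is of course meaningless statistically; the point is the structure of the computation.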

In a study on listeners only, Lerner et al. (2011) studied inter-subject correlations for four levels of temporal structure: reversed speech, a word list, a list of paragraphs, and a story. They found that as the temporal structure of the materials increased (i.e., they were closer to complete stories), the correlations between participants extended from auditory cortex further posterior and into the parietal lobes. This study was conducted with listeners only, and thus did not properly target alignment between interlocutors in communication. However, it provides indirect evidence: the interactive alignment account assumes that listeners align with speakers. Different listeners of the same speaker should, therefore, also align. Building on the speaker-listener correlations shown by Stephens et al. (2010), listener-listener correlations can, then, tell us something about neural alignment. These findings provide some evidence that alignment at several levels of representation leads to more extensive correlations in brain activity. However, for both Stephens et al. (2010) and Lerner et al. (2011) a word of caution is in order: both studies showed an effect (in this case, a correlation) in one condition but not the other (in Stephens et al., different languages; in Lerner et al., different temporal structures); they did not show that the conditions were significantly different from each other.
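The caution above can be illustrated numerically. The sketch below uses the standard Fisher r-to-z comparison of two correlations from independent samples; it is not the analysis used in either study, the numbers are hypothetical, and the function name `compare_independent_correlations` is introduced here for illustration. It also assumes independent observations, which fMRI time points are not, so in practice a permutation or bootstrap test would be more appropriate.

```python
import math

def compare_independent_correlations(r1, n1, r2, n2):
    """Fisher r-to-z test for the difference between two independent correlations.

    Returns the z statistic and the two-sided p-value under a normal approximation.
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)          # Fisher transformation
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))  # standard error of z1 - z2
    z = (z1 - z2) / se
    p = math.erfc(abs(z) / math.sqrt(2.0))           # two-sided normal tail area
    return z, p

# Hypothetical values: r = 0.45 in one condition, r = 0.20 in the other,
# each based on 30 independent observations.
z, p = compare_independent_correlations(0.45, 30, 0.20, 30)
print(f"z = {z:.2f}, p = {p:.2f}")
```

With 30 observations, a correlation of 0.45 differs reliably from zero at the conventional 0.05 level and a correlation of 0.20 does not, yet the direct comparison of the two falls well short of significance. This is the gap between "significant in one condition but not the other" and "significantly different between conditions".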

These studies provide evidence that situation models for even very complex stimuli can be usefully investigated by using novel analysis techniques. They suggest that alignment can be tracked in the brain, and can be measured in time as well (Hasson et al., 2012). More work is needed, though: while these studies suggest that alignment can be operationalized as inter-subject correlations, and that these are related to understanding, different levels of representation can only be distinguished indirectly, by mapping the findings onto other studies that have not necessarily targeted communication. An important avenue for further research, therefore, is to investigate in more detail which aspects of communication drive the correlations found in different brain regions. Furthermore, the interactive alignment account assumes that dialogue is not just an expanded monologue. Therefore, if we want to find out how dialogue works, we will need to go and study dialogue.

Non-Linguistic Aspects of Dialogue

The interactive-alignment model assumes that successful communication is supported by interlocutors aligning at many different levels of representation. Above, we have reviewed studies concerned with linguistic representations. But language alone is not sufficient to have a proper conversation (Enrici et al., 2010; Willems and Varley, 2010). Alignment between interlocutors may also be occurring for additional non-linguistic processes that are necessary to keep a conversation flowing. In Section “Percolation” we discussed a few examples of where alignment of non-linguistic processes may percolate into alignment of linguistic representations. Below, we touch upon proposals of how alignment of non-linguistic processes may help govern the act of holding a conversation.

During conversation, we do not only use language to convey our intentions. Body posture, prosody, and gesture are vital aspects of conversation and are taken into account effortlessly when trying to infer what a speaker intends. Abundant evidence suggests that gesture and speech comprehension and production are closely related (Willems and Hagoort, 2007; Enrici et al., 2012). Percolation between gesture and speech could, therefore, occur just like percolation within levels of representation in speech. The extensive literature on the mirror neuron system shows that action observation and action execution are intimately intertwined (Fabbri-Destro and Rizzolatti, 2008), suggesting a plausible neural correlate for alignment at the gestural level between interlocutors. Communicative gestures have indeed been shown to produce related brain activity in observers' and gesturers' pMNSs (Schippers et al., 2010).

Once a person has settled on a message, they may need to decide how best to convey it in a particular setting to a particular partner. Two studies targeted the generation or recognition of such communicative intentions, one in verbal and one in non-verbal communication. Both tasks were designed to make communication difficult and hence enhance the need for such processes: in the non-verbal experiment, participants devised a novel form of communication using only the movement of shapes in a grid (Noordzij et al., 2009). In the verbal experiment, participants described words to each other, but were not allowed to use words highly associated with the target (Willems et al., 2010). Both studies showed that sending and receiving these messages involved the same brain region: the right temporo-parietal junction in non-verbal communication, and the left temporo-parietal junction in verbal communication. These studies support parity for communicative processes in verbal and non-verbal communication, respectively. However, in neither study was feedback allowed, so the findings would need to be generalized to interactive dialogue.

Another important aspect of holding a smooth conversation is turn taking. While we may well be attuned to what our partner intends to say, if we fail to track when it is our turn to speak and when it is not, then we are likely to pause excessively between contributions, speak at the same time, or interrupt each other, leaving the conversation with little chance of success. One account has alignment of neural oscillations playing a major role in conversation (Wilson and Wilson, 2005). In this account, the production system of a speaker oscillates with a syllabic phase: the readiness to initiate a new syllable is at a minimum in the middle of a syllable and peaks half a cycle after syllable offset. Conversation partners' oscillation rates become entrained over the course of a conversation, but they are in anti-phase, so that the speaker's readiness to speak is at minimum when the listener's is at a maximum, and vice versa (Gambi and Pickering, 2011; Hasson et al., 2012). A further hypothesis is that the theta frequency range is central to this mechanism: across languages, typical speech production is 3–8 syllables per second (Drullman, 1995; Greenberg et al., 2003; Chandrasekaran et al., 2009). Auditory cortex has been shown to produce ongoing oscillations at this frequency (Buzsaki and Draguhn, 2004). A possibility is that the ongoing oscillations resonate with the incoming speech at the same frequency, thereby amplifying the signal. This means that the neural oscillations in the theta frequency band become entrained between listeners and speakers, and that this aids communication (Hasson et al., 2012).
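
The anti-phase relation described in this account can be sketched in a few lines. The cosine form of the readiness function and the 5 Hz syllable rate (chosen from within the 3–8 syllables per second range mentioned above) are our own simplifying assumptions for illustration; they are not the formal model of Wilson and Wilson (2005).

```python
import math

SYLLABLE_RATE_HZ = 5.0  # assumed rate, within the 3-8 syllables/s range


def readiness(t: float, rate_hz: float = SYLLABLE_RATE_HZ, phase: float = 0.0) -> float:
    """Readiness to initiate a syllable at time t (seconds), scaled to [0, 1].

    The cosine shape is an illustrative assumption.
    """
    return 0.5 * (1.0 + math.cos(2.0 * math.pi * rate_hz * t + phase))


def speaker_readiness(t: float) -> float:
    """Speaker's oscillator, taken as the phase reference."""
    return readiness(t, phase=0.0)


def listener_readiness(t: float) -> float:
    """Listener's oscillator: same rate, shifted by half a cycle (anti-phase)."""
    return readiness(t, phase=math.pi)


# Sample one cycle (0.2 s at 5 Hz) in steps of a quarter cycle.
for step in range(5):
    t = step * 0.05
    print(f"t={t:.2f}s speaker={speaker_readiness(t):.2f} listener={listener_readiness(t):.2f}")
```

Because the two oscillators share a rate but differ by half a cycle, the listener's readiness is highest exactly when the speaker's is lowest, and the two values always sum to one. Under these assumptions, simultaneous starts are unlikely even though both partners are entrained to the same rhythm.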

Entrainment at the syllable frequency, however, cannot be enough to explain turn-taking, as we do not normally want to interrupt our interlocutors at every syllable (Gambi and Pickering, 2011). Recently, Bourguignon et al. (2012) demonstrated coherence between a speaker's speech production (f0, the fundamental frequency) and a listener's brain oscillations around 0.5 Hz. This frequency is related to the prosodic envelope of speech. Indeed, the coherence was also present for unintelligible speech stimuli (a foreign language or a hummed text), but in different brain regions. Possibly, then, resonating with our interlocutor's speech patterns at different frequencies enables us to better predict when their turn will end (Giraud and Poeppel, 2012).

Future Directions

In the above, we have reviewed neural evidence relevant to the interactive alignment model of conversation. While neuroimaging studies on speech production of anything more complex than a single phoneme are still too scarce to provide a definite answer, the evidence is mounting that speakers and listeners generally employ the same brain regions for the same types of stimuli. Indeed, when communicating, speakers and listeners also show correlated brain activity. Alignment is, therefore, both possible and real.

But does neural alignment occur during interactive language? It would surely be surprising if neural alignment occurred when speakers and listeners were separated, but did not occur when they interacted (in part because psycholinguistic evidence for alignment is based on dialogue; Pickering and Garrod, 2004). However, the current literature does not yet directly answer this question. The field needs strategies to meaningfully study interacting participants. Promising approaches have been devised for non-linguistic live interaction (Newman-Norlund et al., 2007, 2008; Dumas et al., 2010, 2011; Redcay et al., 2010; Baess et al., 2012; Guionnet et al., 2012). It is time that neuroimaging research on language follows suit. This is not an easy challenge, as the dearth of studies attempting it shows. Technical challenges are not the only issue in studying conversation: with so little control over the stimulus, it is hard to devise experiments that provide precise and meaningful information. Gambi and Pickering (2011) provide suggestions for possible paradigms to study interactive language use; these may also be beneficial to neuroimaging research on the topic.

As the attention of neuroscience turns toward the role of prediction (Friston and Kiebel, 2009; Friston, 2010; Clark, in press), interactive alignment provides a natural mechanistic basis on which predictions can be built. Pickering and Garrod (in press) propose a “simulation” account in which comprehenders covertly imitate speakers and use those representations to predict upcoming utterances (and therefore prepare their own contributions accordingly). Comprehenders are more likely to predict appropriately when they are well-aligned with speakers; but in addition, the process of covert imitation provides a mechanism for alignment. This account assumes that production processes are used during comprehension (and in fact that comprehension processes are used during production).

Based on the interactive alignment model, we make the following predictions for further research on dialogue: (1) Alignment: speaking and listening make use of similar representations, and hence have largely overlapping neural correlates. We have reviewed the available evidence for this prediction above, but more work is needed, particularly studies targeting both speaking and listening simultaneously: the overlap in neuronal correlates for each level of representation should further be increased in an interactive, communicative setting compared to non-communicative settings. (2) Percolation: alignment at one level of representation leads to alignment at other levels. In particular, alignment at lower levels of representation leads to better alignment of situation models, and thus, better communication. We have reviewed the (scarce) evidence available above, but truly putting this prediction to the test requires that studies of interacting interlocutors manipulate different levels of representation simultaneously, and furthermore have an outcome measure of communicative success. (3) Language processes are complemented by processes specific to a communicative setting. By carefully targeting both linguistic and non-linguistic aspects of conversation, future research will hopefully be able to demonstrate how these processes interact.

Conclusion

We have reviewed neural evidence for the interactive alignment model of conversation. For linguistic processes, we have shown that representations in speaking and listening are similar, and that, hence, alignment between participants in a conversation is at least possible. We have further reviewed evidence pertaining to the goal of a conversation, which is to communicate. As the interactive alignment model predicts, the ease of constructing a situation model is associated with increased correlation in brain activity between participants. Finally, we have touched upon literature dealing with alignment of processes more specific to the act of communicating, and suggested how these might relate to the interactive-alignment model.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Adank, P., Hagoort, P., and Bekkering, H. (2010). Imitation improves language comprehension. Psychol. Sci. 21, 1903–1909.

Amodio, D. M., and Frith, C. D. (2006). Meeting of minds: the medial frontal cortex and social cognition. Nat. Rev. Neurosci. 7, 268–277.

Awad, M., Warren, J. E., Scott, S. K., Turkheimer, F. E., and Wise, R. J. S. (2007). A common system for the comprehension and production of narrative speech. J. Neurosci. 27, 11455–11464.

Baess, P., Zhdanov, A., Mandel, A., Parkkonen, L., Hirvenkari, L., Mäkelä, J. P., Jousmäki, V., and Hari, R. (2012). MEG dual scanning: a procedure to study real-time auditory interaction between two persons. Front. Hum. Neurosci. 6:83. doi: 10.3389/fnhum.2012.00083

Bookheimer, S. (2002). Functional MRI of language: new approaches to understanding the cortical organization of semantic processing. Annu. Rev. Neurosci. 25, 151–188.

Bourguignon, M., de Tiège, X., de Beeck, M. O., Ligot, N., Paquier, P., van Bogaert, P., Goldman, S., Hari, R., and Jousmäki, V. (2012). The pace of prosodic phrasing couples the listener's cortex to the reader's voice. Hum. Brain Mapp. doi: 10.1002/hbm.21442. [Epub ahead of print].

Branigan, H. P., Pickering, M. J., and Cleland, A. A. (2000). Syntactic co-ordination in dialogue. Cognition 75, B13–B25.

Brennan, S. E., and Clark, H. H. (1996). Conceptual pacts and lexical choice in conversation. J. Exp. Psychol. Learn. Mem. Cogn. 22, 1482–1493.

Buzsaki, G., and Draguhn, A. (2004). Neuronal oscillations in cortical networks. Science 304, 1926–1929.

Chandrasekaran, C., Trubanova, A., Stillittano, S., Caplier, A., and Ghazanfar, A. A. (2009). The natural statistics of audiovisual speech. PLoS Comput. Biol. 5:e1000436. doi: 10.1371/journal.pcbi.1000436

Chartrand, T. L., and Bargh, J. A. (1999). The chameleon effect: the perception–behavior link and social interaction. J. Pers. Soc. Psychol. 76, 893–910.

Clark, A. (in press). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci.

Cleland, A. A., and Pickering, M. J. (2003). The use of lexical and syntactic information in language production: evidence from the priming of noun-phrase structure. J. Mem. Lang. 49, 214–230.

Dale, R., Kirkham, N. Z., and Richardson, D. C. (2011). The dynamics of reference and shared visual attention. Front. Psychol. 2:355. doi: 10.3389/fpsyg.2011.00355

D'Ausilio, A., Pulvermüller, F., Salmas, P., Bufalari, I., Begliomini, C., and Fadiga, L. (2009). The motor somatotopy of speech perception. Curr. Biol. 19, 381–385.

Den Ouden, D.-B., Fix, S., Parrish, T. B., and Thompson, C. K. (2009). Argument structure effects in action verb naming in static and dynamic conditions. J. Neurolinguist. 22, 196–215.

Dijksterhuis, A., and Bargh, J. A. (2001). “The perception-behavior expressway: automatic effects of social perception on social behavior,” in Advances in Experimental Social Psychology, ed P. Z. Mark (San Diego, CA: Academic Press), 1–40.

Drullman, R. (1995). Temporal envelope and fine structure cues for speech intelligibility. J. Acoust. Soc. Am. 97, 585–592.

Dumas, G., Lachat, F., Martinerie, J., Nadel, J., and George, N. (2011). From social behaviour to brain synchronization: review and perspectives in hyperscanning. IRBM 32, 48–53.

Dumas, G., Nadel, J., Soussignan, R., Martinerie, J., and Garnero, L. (2010). Inter-brain synchronization during social interaction. PLoS ONE 5:e12166. doi: 10.1371/journal.pone.0012166

Enrici, I., Adenzato, M., Cappa, S., Bara, B. G., and Tettamanti, M. (2010). Intention processing in communication: a common brain network for language and gestures. J. Cogn. Neurosci. 23, 2415–2431.

Enrici, I., Adenzato, M., Cappa, S., Bara, B. G., and Tettamanti, M. (2012). Intention processing in communication: a common brain network for language and gestures. J. Cogn. Neurosci. 23, 2415–2431.

Fabbri-Destro, M., and Rizzolatti, G. (2008). Mirror neurons and mirror systems in monkeys and humans. Physiology 23, 171–179.

Fadiga, L., Craighero, L., Buccino, G., and Rizzolatti, G. (2002). Speech listening specifically modulates the excitability of tongue muscles: a TMS study. Eur. J. Neurosci. 15, 399–402.

Ferreira, F., Bailey, K. G. D., and Ferraro, V. (2002). Good-enough representations in language comprehension. Curr. Dir. Psychol. Sci. 11, 11–15.

Friston, K. (2010). The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 11, 127–138.

Friston, K., and Kiebel, S. (2009). Predictive coding under the free-energy principle. Philos. Trans. R. Soc. B Biol. Sci. 364, 1211–1221.

Galantucci, B., Fowler, C. A., and Turvey, M. T. (2006). The motor theory of speech perception reviewed. Psychon. Bull. Rev. 13, 361–377.

Gambi, C., and Pickering, M. J. (2011). A cognitive architecture for the coordination of utterances. Front. Psychol. 2:275. doi: 10.3389/fpsyg.2011.00275

Garrod, S., and Anderson, A. (1987). Saying what you mean in dialogue: a study in conceptual and semantic co-ordination. Cognition 27, 181–218.

Garrod, S., and Doherty, G. (1994). Conversation, co-ordination and convention: an empirical investigation of how groups establish linguistic conventions. Cognition 53, 181–215.

Garrod, S., and Pickering, M. J. (2009). Joint action, interactive alignment, and dialog. Top. Cogn. Sci. 1, 292–304.

Giles, H., Coupland, N., and Coupland, J. (eds.). (1991). Contexts of Accommodation: Developments in Applied Sociolinguistics. Cambridge, MA: Cambridge University Press.

Giraud, A.-L., and Poeppel, D. (2012). Cortical oscillations and speech processing: emerging computational principles and operations. Nat. Neurosci. 15, 511–517.

Greenberg, S., Carvey, H., Hitchcock, L., and Chang, S. (2003). Temporal properties of spontaneous speech – a syllable-centric perspective. J. Phon. 31, 465–485.

Guionnet, S., Nadel, J., Bertasi, E., Sperduti, M., Delaveau, P., and Fossati, P. (2012). Reciprocal imitation: toward a neural basis of social interaction. Cereb. Cortex 22, 971–978.

Hartsuiker, R. J., Pickering, M. J., and Veltkamp, E. (2004). Is syntax separate or shared between languages? Cross-linguistic syntactic priming in Spanish/English bilinguals. Psychol. Sci. 15, 409–414.

Hasson, U., Ghazanfar, A. A., Galantucci, B., Garrod, S., and Keysers, C. (2012). Brain-to-brain coupling: a mechanism for creating and sharing a social world. Trends Cogn. Sci. 16, 114–121.

Hasson, U., Nir, Y., Levy, I., Fuhrmann, G., and Malach, R. (2004). Intersubject synchronization of cortical activity during natural vision. Science 303, 1634–1640.

Heim, S. (2008). Syntactic gender processing in the human brain: a review and a model. Brain Lang. 106, 55–64.

Heim, S., Eickhoff, S., Friederici, A., and Amunts, K. (2009). Left cytoarchitectonic area 44 supports selection in the mental lexicon during language production. Brain Struct. Funct. 213, 441–456.

Heim, S., Eickhoff, S., Ischebeck, A., Supp, G., and Amunts, K. (2007). Modality-independent involvement of the left BA 44 during lexical decision making. Brain Struct. Funct. 212, 95–106.

Hickok, G. (2009). Speech Perception Does Not Rely on Motor Cortex: Response to D'Ausilio et al. [Online]. Available: http://www.cell.com/current-biology/comments_Dausilio (Accessed 10-11-2011).

Ilmberger, J., Eisner, W., Schmid, U., and Reulen, H.-J. (2001). Performance in picture naming and word comprehension: evidence for common neuronal substrates from intraoperative language mapping. Brain Lang. 76, 111–118.

Indefrey, P., Hellwig, F., Herzog, H., Seitz, R. J., and Hagoort, P. (2004). Neural responses to the production and comprehension of syntax in identical utterances. Brain Lang. 89, 312–319.

Ireland, M. E., Slatcher, R. B., Eastwick, P. W., Scissors, L. E., Finkel, E. J., and Pennebaker, J. W. (2011). Language style matching predicts relationship initiation and stability. Psychol. Sci. 22, 39–44.

Kempen, G., Olsthoorn, N., and Sprenger, S. (2011). Grammatical workspace sharing during language production and language comprehension: evidence from grammatical multitasking. Lang. Cogn. Process. 27, 345–380.

Lerner, Y., Honey, C. J., Silbert, L. J., and Hasson, U. (2011). Topographic mapping of a hierarchy of temporal receptive windows using a narrated story. J. Neurosci. 31, 2906–2915.

Levelt, W. J. M., and Kelter, S. (1982). Surface form and memory in question answering. Cogn. Psychol. 14, 78–106.

Liberman, A. M., and Mattingly, I. G. (1985). The motor theory of speech perception revised. Cognition 21, 1–36.

Lotto, A. J., Hickok, G. S., and Holt, L. L. (2009). Reflections on mirror neurons and speech perception. Trends Cogn. Sci. 13, 110–114.

Markman, A. B., and Makin, V. S. (1998). Referential communication and category acquisition. J. Exp. Psychol. Gen. 127, 331–354.

McGettigan, C., Agnew, Z. K., and Scott, S. K. (2010). Are articulatory commands automatically and involuntarily activated during speech perception? Proc. Natl. Acad. Sci. U.S.A. 107, E42–E42.

Menenti, L., Gierhan, S. M. E., Segaert, K., and Hagoort, P. (2011). Shared language. Psychol. Sci. 22, 1174–1182.

Menenti, L., Petersson, K. M., and Hagoort, P. (2012). From reference to sense: how the brain encodes meaning for speaking. Front. Psychol. 2:384. doi: 10.3389/fpsyg.2011.00384

Newman-Norlund, R. D., Bosga, J., and Meulenbroek, R. G. J. (2008). Anatomical substrates of cooperative joint-action in a continuous motor task: virtual lifting and balancing. Neuroimage 41, 169–177.

Newman-Norlund, R. D., van Schie, H. T., van Zuijlen, A. M. J., and Bekkering, H. (2007). The mirror neuron system is more active during complementary compared with imitative action. Nat. Neurosci. 10, 817–818.

Noordzij, M. L., Newman-Norlund, S. E., de Ruiter, J. P., Hagoort, P., Levinson, S. C., and Toni, I. (2009). Brain mechanisms underlying human communication. Front. Hum. Neurosci. 3:14. doi: 10.3389/neuro.09.014.2009

Pardo, J. S. (2006). On phonetic convergence during conversational interaction. J. Acoust. Soc. Am. 119, 2382–2393.

Pickering, M., and Garrod, S. (2006). Alignment as the basis for successful communication. Res. Lang. Comput. 4, 203–228.

Pickering, M. J., and Garrod, S. (2004). Toward a mechanistic psychology of dialogue. Behav. Brain Sci. 27, 169–190.

Pickering, M. J., and Garrod, S. (in press). An integrated theory of language production and comprehension. Behav. Brain Sci.

Pulvermüller, F., and Fadiga, L. (2010). Active perception: sensorimotor circuits as a cortical basis for language. Nat. Rev. Neurosci. 11, 351–360.

Pulvermüller, F., Huss, M., Kherif, F., Moscoso Del Prado Martin, F., Hauk, O., and Shtyrov, Y. (2006). Motor cortex maps articulatory features of speech sounds. Proc. Natl. Acad. Sci. U.S.A. 103, 7865–7870.

Redcay, E., Dodell-Feder, D., Pearrow, M. J., Mavros, P. L., Kleiner, M., Gabrieli, J. D. E., and Saxe, R. (2010). Live face-to-face interaction during fMRI: a new tool for social cognitive neuroscience. Neuroimage 50, 1639–1647.

Richardson, D. C., and Dale, R. (2005). Looking to understand: the coupling between speakers' and listeners' eye movements and its relationship to discourse comprehension. Cogn. Sci. 29, 1045–1060.

Richardson, D. C., Dale, R., and Kirkham, N. Z. (2007). The art of conversation is coordination. Psychol. Sci. 18, 407–413.

Rossi, E., Schippers, M., and Keysers, C. (2011). “Broca's area: linking perception and production in language and actions,” in On Thinking, eds S. Han and E. Pöppel (Berlin, Heidelberg: Springer Berlin Heidelberg), 169–184.

Schippers, M. B., Roebroeck, A., Renken, R., Nanetti, L., and Keysers, C. (2010). Mapping the information flow from one brain to another during gestural communication. Proc. Natl. Acad. Sci. U.S.A. 107, 9388–9393.

Scott, S. K., McGettigan, C., and Eisner, F. (2009). A little more conversation, a little less action: candidate roles for the motor cortex in speech perception. Nat. Rev. Neurosci. 10, 295–302.

Segaert, K., Menenti, L., Weber, K., Petersson, K. M., and Hagoort, P. (2011). Shared syntax in language production and language comprehension—an fMRI study. Cereb. Cortex. doi: 10.1093/cercor/bhr249. [Epub ahead of print].

Stephens, G. J., Silbert, L. J., and Hasson, U. (2010). Speaker-listener neural coupling underlies successful communication. Proc. Natl. Acad. Sci. U.S.A. 107, 14425–14430.

Thompson, C. K., Bonakdarpour, B., Fix, S. C., Blumenfeld, H. K., Parrish, T. B., Gitelman, D. R., and Mesulam, M. M. (2007). Neural correlates of verb argument structure processing. J. Cogn. Neurosci. 19, 1753–1767.

Toni, I., de Lange, F. P., Noordzij, M. L., and Hagoort, P. (2008). Language beyond action. J. Physiol. Paris 102, 71–79.

Tremblay, P., and Small, S. L. (2011). On the context-dependent nature of the contribution of the ventral premotor cortex to speech perception. Neuroimage 57, 1561–1571.

Watkins, K. E., and Paus, T. (2006). Modulation of motor excitability during speech perception: the role of Broca's area. J. Cogn. Neurosci. 16, 978–987.

Watkins, K. E., Strafella, A. P., and Paus, T. (2003). Seeing and hearing speech excites the motor system involved in speech production. Neuropsychologia 41, 989–994.

Watson, M. E., Pickering, M. J., and Branigan, H. P. (2004). “Alignment of reference frames in dialogue,” in Proceedings of the 26th Annual Conference of the Cognitive Science Society, (Mahwah, NJ).

Willems, R. M., de Boer, M., de Ruiter, J. P., Noordzij, M. L., Hagoort, P., and Toni, I. (2010). A dissociation between linguistic and communicative abilities in the human brain. Psychol. Sci. 21, 8–14.

Willems, R. M., and Hagoort, P. (2007). Neural evidence for the interplay between language, gesture, and action: a review. Brain Lang. 101, 278–298.

Willems, R. M., and Varley, R. A. (2010). Neural insights into the relation between language and communication. Front. Hum. Neurosci. 4:203. doi: 10.3389/fnhum.2010.00203

Wilson, M., and Wilson, T. P. (2005). An oscillator model of the timing of turn-taking. Psychon. Bull. Rev. 12, 957–968.

Wilson, S. M. (2009). Speech perception when the motor system is compromised. Trends Cogn. Sci. 13, 329–330.

Wilson, S. M., Saygin, A. P., Sereno, M. I., and Iacoboni, M. (2004). Listening to speech activates motor areas involved in speech production. Nat. Neurosci. 7, 701–702.

Yuen, I., Davis, M. H., Brysbaert, M., and Rastle, K. (2010). Activation of articulatory information in speech perception. Proc. Natl. Acad. Sci. U.S.A. 107, 592–597.

Keywords: dialogue, fMRI, language comprehension, language production, spoken communication

Citation: Menenti L, Pickering MJ and Garrod SC (2012) Toward a neural basis of interactive alignment in conversation. Front. Hum. Neurosci. 6:185. doi: 10.3389/fnhum.2012.00185

Received: 23 February 2012; Accepted: 02 June 2012;

Published online: 27 June 2012.

Edited by:

Chris Frith, Wellcome Trust Centre for Neuroimaging at University College London, UK

Reviewed by:

István Winkler, University of Szeged, Hungary

Kristian Tylen, Aarhus University, Denmark

Copyright: © 2012 Menenti, Pickering and Garrod. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Laura Menenti, Max Planck Institute for Psycholinguistics, P. O. Box 310, 6500 AH Nijmegen, Netherlands. e-mail: laura.menenti@mpi.nl