- ¹ Institute of Neuroscience, National Yang-Ming University, Taipei, Taiwan

- ² Department of Rehabilitation, National Yang-Ming University, Yilan, Taiwan

- ³ Department of Education and Research, Taipei City Hospital, Taipei, Taiwan

The human voice, which plays a pivotal role in communication, is processed in specialized brain regions. Although there is general consensus that the anterior insular cortex (AIC) plays a critical role in negative emotional experience, previous studies have not observed AIC activation in response to hearing disgust in voices. We used magnetoencephalography to measure the magnetic counterparts of mismatch negativity (MMNm) and P3a (P3am) in healthy adults while the emotionally meaningless syllables "dada", spoken with neutral, happy, or disgusted prosody, along with acoustically matched simple and complex tones, were presented in a passive oddball paradigm. Disgusted relative to happy syllables elicited stronger MMNm-related cortical activity in the right AIC and precentral gyrus along with the left posterior insular cortex, supramarginal cortex, transverse temporal cortex, and upper bank of the superior temporal sulcus. The AIC activity specific to disgusted syllables (corrected p < 0.05) was associated with the hit rate in the emotional categorization task. These findings may clarify the neural correlates of emotional MMNm and support a role for the AIC in processing emotional salience already at the preattentive level.

Introduction

Mismatch negativity (MMN) has recently been used as an index of the salience of emotional voice processing (Schirmer et al., 2005; Cheng et al., 2012; Fan et al., 2013; Hung et al., 2013; Fan and Cheng, 2014; Hung and Cheng, 2014). MMN reflects early saliency detection, that is, the discrimination of auditory stimuli based on perceptual processing of their physical features (Pulvermüller and Shtyrov, 2006; Thönnessen et al., 2010). Because the anterior insular cortex (AIC) plays a critical role in negative emotional experience (Craig, 2002, 2009), particularly in perceiving disgust, and because magnetoencephalography (MEG) can resolve the spatiotemporal dynamics of responses in a passive auditory oddball paradigm, we hypothesized that the AIC would be activated in association with emotional MMN.

The AIC is a polysensory cortex involved in the awareness of bodily sensations and subjective feelings (Craig, 2002, 2009). Smelling a disgusting odor, viewing disgusted faces, and imagining feeling disgust have consistently been shown to activate the AIC (e.g., Phillips et al., 1997, 2004; Adolphs et al., 2003; Krolak-Salmon et al., 2003; Wicker et al., 2003; Jabbi et al., 2008). Menon and Uddin (2010) suggested that the AIC is a key region of the emotional salience network that integrates external stimuli with internal states to guide behavior. However, previous studies have failed to identify AIC activation associated with disgusted vocal expressions (Phillips et al., 1998).

MEG enables non-invasive measurement of neural activity with sufficient spatial resolution and excellent temporal resolution. MMN and its magnetic equivalent (MMNm), as well as P3a and P3am, can be elicited in a passive auditory oddball paradigm, in which participants engage in another task and ignore a random series of stimuli, with one stimulus (the standard) occurring more frequently than the others (the deviants). P3a/P3am is associated with involuntary attention switches to sound changes (Alho et al., 1998). As a preattentive change-detection index, MMN/MMNm can reflect N-methyl-D-aspartate receptor function (Näätänen et al., 2011), which mediates sensory memory formation and emotional reactivity in various neuropsychiatric disorders (Campeau et al., 1992; Barkus et al., 2010). MMNm elicited by emotional (happy and angry) deviants in an oddball paradigm can reflect early stimulus processing of emotional prosody (Thönnessen et al., 2010). Recent studies have indicated that, in addition to indexing acoustic features of sounds such as frequency, duration, and phonetic content (e.g., Ylinen et al., 2006; Horvath et al., 2008), MMN can also index the salience of emotional voices (Cheng et al., 2012; Fan et al., 2013; Hung et al., 2013; Fan and Cheng, 2014; Hung and Cheng, 2014).

Various methods have been used for the acoustic control of emotional voices. For example, scrambling voices preserves the amplitude envelope (Belin et al., 2000). In one study, simple tones synthesized from the strongest formant of the vowel were used as control stimuli in the mismatch paradigm (Čeponienė et al., 2003). In other studies, physically identical stimuli were presented as both standards and deviants (Schirmer et al., 2005, 2008). Because no single acoustic parameter can fully explain strong neural responses to emotional prosody (Wiethoff et al., 2008), the present study, following the same rationale as Belin et al. (2000), employed two stringent sets of acoustic control stimuli, simple tones and complex tones, which preserved the temporal envelope together with a core spectral element of the emotional voices: the spectral centroid (fn) and the fundamental frequency (f0), respectively.

To elucidate the neural correlates underpinning the emotional salience of voices, we measured MMNm and P3am in a passive auditory oddball paradigm while presenting the syllables "dada", spoken with neutral, happy, and disgusted prosody, to young adults. We hypothesized that, if the AIC is involved in the preattentive processing of emotional salience, AIC activation would be observed in the source distribution of MMNm, together with the auditory cortices and at early response latencies. If AIC activation is specific to voices, then MMNm elicited by deviants carrying only the acoustic attributes, i.e., simple and complex tones, would not involve AIC activation. If neurophysiological changes can guide behavior (Menon and Uddin, 2010), then people exhibiting stronger AIC activation in response to emotional syllables should perform better in emotion recognition. Furthermore, because men and women might engage in dissimilar neural processing of emotional stimuli (Hamann and Canli, 2004), gender was included as a factor in the analyses.

Materials and Methods

Participants

Twenty healthy participants (10 men), aged 18–30 years (mean ± SD: 22 ± 1.9), underwent MEG recording and structural MRI scanning after providing written informed consent. The study was approved by the ethics committee of National Yang-Ming University and conducted in accordance with the Declaration of Helsinki. One participant was excluded from data analysis because of motion artifacts. All participants were right-handed and had no hearing or visual impairments and no neurological or psychiatric disorders. Participants received monetary compensation for their participation.

Auditory Stimuli

The stimulus material consisted of three categories: emotional syllables, simple tones, and complex tones. For the emotional syllables, a young female speaker produced the syllables "dada" with two emotional (happy and disgusted) prosodies and one neutral prosody. For each prosody, the speaker produced the syllables more than ten times to enable validation. Sound Forge 9.0 and Cool Edit Pro 2.0 were used to edit the syllables so that they were equal in length (550 ms) and loudness (max: 62 dB, mean: 59 dB).

Each syllable set was rated for emotionality on a 5-point Likert scale by 120 listeners (60 men). For the disgusted set, listeners rated each stimulus from extremely disgusted to not disgusted at all; for the happy set, from extremely happy to not happy at all; and for the neutral set, from extremely emotional to not emotional at all. The syllables consistently rated as the most disgusted and the most happy (i.e., the highest ratings) and as the most emotionless (i.e., the lowest rating) were used as stimuli. The mean (± SD) Likert ratings of the happy, disgusted, and neutral syllables were 4.34 ± 0.65, 4.04 ± 0.91, and 2.47 ± 0.87, respectively.

Although strictly controlling the spectral power distribution may result in the loss of the temporal flow associated with formant content in voices (Belin et al., 2000), synthesizing the temporal envelope and the core spectral elements of voices should enable maximal control of the spectral and temporal features of vocal sounds and their non-vocal counterparts (Schirmer et al., 2007; Remedios et al., 2009). To create a set of stimuli that retained acoustic correspondence with the emotional syllables, we synthesized simple and complex tones using Praat (Boersma, 2001) and MATLAB (The MathWorks, Inc., Natick, MA, USA). We extracted the fundamental frequency (f0) and the spectral centroid (fn) of each original syllable and used sine waveforms at these frequencies to produce the complex and simple tones, respectively (Supplementary Figures S1, S2). The f0 at each time point was determined by the lowest frequency component of the spectrogram. For the complex tones, the f0 contour over time was extracted to preserve the pitch contour. For the simple tones, the spectral centroid (fn), i.e., the center of mass of the spectrum, was extracted to reflect the brightness of the sounds. The waveforms synthesized at the extracted frequencies were then multiplied by the envelope of the original syllable. Hence, to control temporal features, all three categories (emotional syllables, complex tones, and simple tones) had identical temporal envelopes; to control spectral features, the complex tones retained the f0 and the simple tones retained the fn of the emotional syllables. The length (550 ms) and loudness (max: 62 dB; mean: 59 dB) of all stimuli were controlled.
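The synthesis steps described above can be sketched in Python with NumPy and SciPy. This is a minimal illustration under stated assumptions, not the authors' Praat/MATLAB pipeline: the Hilbert-magnitude envelope, the STFT-based spectral-centroid estimate, the 512-sample window, and all function names are hypothetical choices. The f0 contour for the complex tones is assumed to come from an external pitch tracker (such as Praat) and to be passed in as a per-sample frequency array.

```python
import numpy as np
from scipy.signal import hilbert, stft


def amplitude_envelope(x):
    """Temporal envelope as the magnitude of the analytic signal (assumed estimator)."""
    return np.abs(hilbert(x))


def spectral_centroid_track(x, fs, nperseg=512):
    """Per-sample spectral centroid (fn): magnitude-weighted mean frequency of each STFT frame."""
    f, t, Z = stft(x, fs=fs, nperseg=nperseg)
    mag = np.abs(Z)
    centroid = (f[:, None] * mag).sum(axis=0) / np.maximum(mag.sum(axis=0), 1e-12)
    # Interpolate the frame-wise centroids onto the sample time grid
    return np.interp(np.arange(len(x)) / fs, t, centroid)


def synthesize_tone(freq_track, envelope, fs):
    """Sine wave following a time-varying frequency, multiplied by the syllable envelope."""
    # Integrate instantaneous frequency into phase so the contour stays continuous
    phase = 2 * np.pi * np.cumsum(freq_track) / fs
    return envelope * np.sin(phase)


# Hypothetical usage for one syllable x sampled at fs:
# env = amplitude_envelope(x)
# simple_tone = synthesize_tone(spectral_centroid_track(x, fs), env, fs)
# complex_tone = synthesize_tone(f0_track, env, fs)  # f0_track from a pitch tracker
```

Integrating the frequency track into phase, rather than evaluating sin(2πf(t)t) directly, avoids phase jumps when the f0 or fn contour changes over the 550-ms stimulus.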

Procedures

During MEG recording, participants lay in a magnetically shielded chamber and watched a silent movie with subtitles while the task-irrelevant spoken or synthesized stimuli were presented. To ensure that the auditory stimuli remained task-irrelevant, participants watched the movie attentively and answered questions about its content after data recording.

Three sessions (emotional syllables, complex tones, and simple tones) were conducted, with the session order pseudorandomized across participants. In the emotional session, neutral syllables served as the standard (S), and happy and disgusted syllables served as two equiprobable deviants (D1, D2) in an oddball paradigm. In the complex and simple sessions, an identical oddball paradigm was applied to the corresponding synthesized tones, so that the relative acoustic features among S, D1, and D2 were controlled across all three categories. Each session consisted of 800 standards, 100 D1s, and 100 D2s. At least two standards were presented between any two deviants, and consecutive deviants were always of different types. The stimulus onset asynchrony was 1200 ms.
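The sequence constraints above (800 standards, 100 D1s, 100 D2s, at least two standards between deviants, consecutive deviants of different types, 1200-ms SOA) can be met with a simple constructive procedure. The sketch below is one hypothetical way to do it, not the stimulation script used in the study. With only two deviant types, the rule that consecutive deviants differ forces D1 and D2 to alternate; requiring two standards before the first deviant as well is an added assumption.

```python
import numpy as np


def make_oddball_sequence(n_std=800, n_dev=100, min_std=2, seed=None):
    """Return a list of 'S', 'D1', 'D2' labels satisfying the oddball constraints."""
    rng = np.random.default_rng(seed)
    n_devs_total = 2 * n_dev
    # Two deviant types that may never repeat back-to-back must alternate;
    # only the starting type is random.
    first, second = ("D1", "D2") if rng.integers(2) == 0 else ("D2", "D1")
    deviants = [first if i % 2 == 0 else second for i in range(n_devs_total)]

    # One gap of standards before each deviant, plus a trailing gap after the last one
    n_gaps = n_devs_total + 1
    gaps = np.zeros(n_gaps, dtype=int)
    gaps[:n_devs_total] = min_std
    spare = n_std - int(gaps.sum())
    if spare < 0:
        raise ValueError("too few standards for the minimum spacing")
    # Scatter the remaining standards uniformly at random over all gaps
    gaps += rng.multinomial(spare, np.full(n_gaps, 1.0 / n_gaps))

    seq = []
    for gap, dev in zip(gaps[:-1], deviants):
        seq.extend(["S"] * int(gap))
        seq.append(dev)
    seq.extend(["S"] * int(gaps[-1]))
    return seq


SOA_S = 1.2  # stimulus onset asynchrony in seconds
sequence = make_oddball_sequence(seed=1)
onsets_s = np.arange(len(sequence)) * SOA_S  # onset time of every stimulus
```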

After MEG recording, participants performed a forced-choice emotional categorization task. While listening to 45 stimuli, comprising five Ss, five D1s, and five D2s of each stimulus category, participants classified the emotion of each stimulus as one of three types (emotionless, happy, or disgusted) at their own pace. With three alternatives, the chance level was 33.33%.
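Scoring hit rates and comparing them with the 33.33% chance level can be sketched as follows. The text does not name the test behind the chance comparison; a one-tailed one-sample t test of per-participant hit rates against 1/3 is assumed here, and the function names are hypothetical. With five stimuli per type, each participant's hit rate for a given condition moves in steps of 0.2.

```python
import numpy as np
from scipy import stats

CHANCE = 1.0 / 3.0  # three response alternatives


def hit_rate(responses, targets):
    """Proportion of trials on which the chosen label matches the intended emotion."""
    return float(np.mean(np.asarray(responses) == np.asarray(targets)))


def above_chance(hit_rates, chance=CHANCE):
    """One-tailed one-sample t test of per-participant hit rates against chance (assumed test)."""
    res = stats.ttest_1samp(hit_rates, popmean=chance, alternative="greater")
    return res.statistic, res.pvalue
```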

Apparatus and Recordings

The data were recorded using a 157-channel axial gradiometer whole-head MEG system (Kanazawa Institute of Technology, Kanazawa, Japan). Prior to data acquisition, the locations of five head position indicator coils attached to the scalp and several additional scalp surface points were recorded with respect to fiducial landmarks (nasion and two preauricular points) using a 3-D digitizer, which captured each participant's head shape and localized the head inside the MEG helmet. Data were sampled at 1 kHz. Participants kept their heads steady during MEG recording. The head shape and head position indicator locations digitized at the onset of recording were later used to coregister the MEG coordinate system with each participant's structural MRI. Structural MR images were acquired on a 3 T Siemens Magnetom Trio-Tim scanner using a 3D MPRAGE sequence (TR/TE = 2530/3.5 ms, FOV = 256 mm, flip angle = 7°, matrix = 256 × 256, 176 slices/slab, slice thickness = 1 mm, no gap).

MEG Preprocessing and Analysis

In offline processing of the MEG data, we applied a low-pass filter at 20 Hz (Luck, 2005) and reduced noise using time-shifted principal component analysis (de Cheveigné and Simon, 2007; Hsu et al., 2011). The MEG data were then epoched for each trial type, time-locked to stimulus onset, from 100 ms before to 700 ms after onset. Epochs with a signal range exceeding 1.5 fT at any channel were excluded from averaging and subsequent statistical analyses; the deviant averages were based on at least 90 trials per participant. The amplitudes of the averaged MEG waveforms were measured relative to the 100-ms prestimulus baseline.
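The filtering, epoching, range-based rejection, and baseline steps can be sketched with NumPy and SciPy. This is a simplified illustration, not the authors' pipeline: time-shifted PCA denoising is omitted, the fourth-order zero-phase Butterworth design is an assumption, and the function names are hypothetical. The rejection threshold must be supplied in the same units as the data.

```python
import numpy as np
from scipy.signal import butter, filtfilt


def lowpass(data, sfreq=1000.0, cutoff=20.0, order=4):
    """Zero-phase Butterworth low-pass along the last (time) axis (assumed filter design)."""
    b, a = butter(order, cutoff, btype="low", fs=sfreq)
    return filtfilt(b, a, data, axis=-1)


def epoch_and_average(data, onsets, sfreq=1000.0, tmin=-0.1, tmax=0.7, reject_range=None):
    """Cut epochs around onsets, drop epochs whose peak-to-peak range exceeds
    reject_range on any channel, average, and subtract the prestimulus baseline.

    data: (n_channels, n_samples); onsets: sample indices of stimulus onsets.
    Returns (evoked, n_kept)."""
    start = int(round(tmin * sfreq))  # negative: samples before onset
    stop = int(round(tmax * sfreq))
    kept = []
    for onset in onsets:
        a, b = onset + start, onset + stop + 1
        if a < 0 or b > data.shape[1]:
            continue  # epoch would run past the recording
        epoch = data[:, a:b]
        if reject_range is not None and np.any(np.ptp(epoch, axis=1) > reject_range):
            continue  # artifact: range exceeded on at least one channel
        kept.append(epoch)
    if not kept:
        raise ValueError("all epochs were rejected")
    evoked = np.mean(kept, axis=0)
    baseline = evoked[:, :-start].mean(axis=1, keepdims=True)  # tmin up to 0 s
    return evoked - baseline, len(kept)
```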

Event-Related Fields (ERF)

For amplitude and latency analyses, we used the Isofield Contour Map to identify the channels with the strongest signals in each field direction. Because variation in head position might contribute unequally to the differential activity observed at individual sensors, we created a composite map by grand-averaging nine conditions, pooling the S, D1, and D2 of all three categories. For the ERF differences, the difference maps [disgusted MMNm (D2-S); happy MMNm (D1-S)] were averaged for each category. Based on the composite map of each ERF component, we selected the four clusters with the strongest signals in each direction. The amplitudes of the sensory ERF peaks (N1m and P2m), MMNm, and P3am were measured as the mean within a 60-ms window centered on each participant's individual peak latency. P3am was defined as the component immediately following MMNm, peaking at 300–500 ms. Two-tailed t tests were used to determine the statistical presence of each stimulus-related ERF peak (i.e., a difference from 0 fT).
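The difference-wave and peak-window measurement can be sketched as below. The 60-ms window centered on the individual peak follows the text; the default search range (150–300 ms), the polarity argument, and the function names are hypothetical, and the search range would be set per component (e.g., 300–500 ms for P3am).

```python
import numpy as np


def difference_wave(deviant, standard):
    """MMNm difference waveform, e.g. disgusted (D2-S) or happy (D1-S)."""
    return np.asarray(deviant) - np.asarray(standard)


def peak_window_amplitude(wave, times, search=(0.15, 0.30), width=0.060, polarity=1):
    """Find the individual peak inside a search window and return
    (peak latency, mean amplitude within a window of `width` s centered on it).

    polarity=+1 looks for a positive peak, -1 for a negative one."""
    wave = np.asarray(wave, dtype=float)
    idx = np.flatnonzero((times >= search[0]) & (times <= search[1]))
    peak = idx[np.argmax(polarity * wave[idx])]
    t_peak = times[peak]
    win = (times >= t_peak - width / 2) & (times <= t_peak + width / 2)
    return t_peak, wave[win].mean()
```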

Statistical analysis involved three-way mixed ANOVAs with two within-subject factors, category (emotional syllables, complex tones, or simple tones) and stimulus [neutral (S), happy (D1), or disgusted (D2)], and one between-subject factor, gender (male vs. female). The dependent variables were the amplitudes and latencies of each component. Bonferroni-corrected post hoc tests were conducted only following significant effects.
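The mixed ANOVA itself requires a dedicated statistics package and is not reproduced here. The Bonferroni-corrected follow-up comparisons among the three within-subject levels can, however, be sketched as pairwise paired t tests whose p values are multiplied by the number of comparisons. The choice of paired t tests as the post hoc test and the function name are assumptions.

```python
import itertools

import numpy as np
from scipy import stats


def bonferroni_pairwise(conditions):
    """Paired t tests between all pairs of within-subject levels, Bonferroni-corrected.

    conditions: dict mapping level name -> per-participant values (same participant order).
    Returns {(a, b): (t, raw_p, corrected_p)}."""
    pairs = list(itertools.combinations(conditions, 2))
    m = len(pairs)  # number of comparisons in the family
    results = {}
    for a, b in pairs:
        t, p = stats.ttest_rel(conditions[a], conditions[b])
        results[(a, b)] = (t, p, min(1.0, p * m))
    return results
```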

MEG Source Analysis

The structural MR images were processed using FreeSurfer (CorTechs Labs, La Jolla, CA and MGH/HMS/MIT Athinoula A. Martinos Center for Biomedical Imaging, Charlestown, MA) to reconstruct each participant's cortical surface. Minimum-norm estimates (MNEs) (Hämäläinen and Ilmoniemi, 1994) were computed from the combined anatomical MRI and MEG data using the MNE toolbox (MGH/HMS/MIT Athinoula A. Martinos Center for Biomedical Imaging, Charlestown, MA). For inverse computations, the cortical surface was decimated to 5000–10,000 vertices per hemisphere. We used the boundary-element model method to compute the forward solution, i.e., an estimate of the magnetic field at each MEG sensor resulting from activity at each vertex. The forward solution was then used to create the inverse solution, which identifies the spatiotemporal distribution of source activity that best accounts for each participant's averaged MEG data. The noise covariance matrix was estimated from the prestimulus baselines of the individual trials. Only activation components oriented normal to the cortical surface were retained in the minimum-norm solution. The MNE results were then converted into dynamic statistical parametric maps (dSPM), which express noise-normalized activation at each source and avoid several inaccuracies of standard minimum-norm calculations (Dale et al., 2000).
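The minimum-norm and dSPM steps can be illustrated with a simplified linear inverse. For a fixed-orientation gain matrix G and noise covariance C, a minimum-norm operator is W = Gᵀ(GGᵀ + λ²C)⁻¹, and dSPM divides each source estimate by its predicted noise standard deviation, the square root of (WCWᵀ)ᵢᵢ. The sketch assumes an identity source covariance, no depth weighting, λ² = 1/9, and a hypothetical trace-based scaling of λ²; it is not the MNE toolbox implementation, which additionally whitens the data and works through an SVD.

```python
import numpy as np


def mne_inverse_operator(G, C, lambda2=1.0 / 9.0):
    """Simplified minimum-norm operator W = G^T (G G^T + lambda2 * s * C)^-1.

    G: (n_sensors, n_sources) fixed-orientation gain; C: (n_sensors, n_sensors) noise covariance.
    s rescales C to the signal power so lambda2 acts like 1/SNR^2 (assumed normalization)."""
    GGt = G @ G.T
    s = np.trace(GGt) / np.trace(C)
    return G.T @ np.linalg.inv(GGt + lambda2 * s * C)


def dspm(W, C, data):
    """Noise-normalize the source estimate W @ data by sqrt(diag(W C W^T))."""
    noise_sd = np.sqrt(np.einsum("ij,jk,ik->i", W, C, W))
    return (W @ data) / noise_sd[:, None]
```

By construction, sensor noise drawn from C maps to unit-variance dSPM values at every source, which is what makes dSPM values comparable across locations.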

To test whether the evoked responses differed significantly between conditions, we addressed the multiple comparisons problem with a cluster-level permutation test across space. For each cortical location within each region of interest (ROI), a paired-samples t value was computed for the deviant-standard contrast or for the contrast between the two deviants (p = 0.05). We then selected all samples whose t value exceeded an a priori threshold (uncorrected p < 0.05) and grouped them into clusters based on spatial adjacency. Clustering neighboring cortical locations that show the same effect addresses the multiple comparisons problem while taking the dependency of the data into account. Cortical dipoles were considered neighbors if they were less than 12 mm apart, and a sample was included in a cluster only if it had at least two neighboring samples.
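A generic sign-flip, cluster-mass permutation test following the description above (paired t per vertex, uncorrected p < 0.05 threshold, 12-mm neighborhood, at least two supra-threshold neighbors) can be sketched as follows. The permutation scheme (random sign flips of each participant's difference), the cluster statistic (sum of |t|), the number of permutations, and all function names are assumptions, since the text does not specify them.

```python
import numpy as np
from scipy import stats
from scipy.sparse import csr_matrix
from scipy.sparse.csgraph import connected_components
from scipy.spatial.distance import cdist


def neighbor_matrix(coords_mm, max_dist=12.0):
    """Boolean adjacency: vertices closer than max_dist (mm) are neighbors."""
    adj = cdist(coords_mm, coords_mm) < max_dist
    np.fill_diagonal(adj, False)
    return adj


def paired_t(diffs):
    """Paired-samples t per vertex from per-participant differences (n_subj, n_vert)."""
    n = diffs.shape[0]
    return diffs.mean(axis=0) / (diffs.std(axis=0, ddof=1) / np.sqrt(n))


def find_clusters(t, adj, thresh, min_neighbors=2):
    """Same-sign supra-threshold clusters; returns [(vertex_indices, sum_of_abs_t)]."""
    clusters = []
    for sign in (1.0, -1.0):
        supra = sign * t > thresh
        n_nb = (adj & supra[None, :]).sum(axis=1)  # supra-threshold neighbors per vertex
        keep = np.flatnonzero(supra & (n_nb >= min_neighbors))
        if keep.size == 0:
            continue
        sub = csr_matrix(adj[np.ix_(keep, keep)].astype(float))
        n_comp, labels = connected_components(sub, directed=False)
        for c in range(n_comp):
            members = keep[labels == c]
            clusters.append((members, float(np.abs(t[members]).sum())))
    return clusters


def cluster_permutation_test(diffs, coords_mm, alpha=0.05, n_perm=1000, seed=0):
    """Cluster-mass permutation test with random sign flips of participants' differences."""
    n = diffs.shape[0]
    adj = neighbor_matrix(coords_mm)
    thresh = stats.t.ppf(1 - alpha / 2, df=n - 1)  # two-tailed uncorrected p < alpha
    observed = find_clusters(paired_t(diffs), adj, thresh)
    rng = np.random.default_rng(seed)
    null_max = np.empty(n_perm)
    for i in range(n_perm):
        flips = rng.choice([-1.0, 1.0], size=(n, 1))
        perm = find_clusters(paired_t(diffs * flips), adj, thresh)
        null_max[i] = max((mass for _, mass in perm), default=0.0)
    # Corrected p: share of permutations whose largest cluster is at least as large
    return [(members, mass, (np.sum(null_max >= mass) + 1) / (n_perm + 1))
            for members, mass in observed]
```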

Regions of Interest (ROI) and Source-Specific Time-Course Extraction

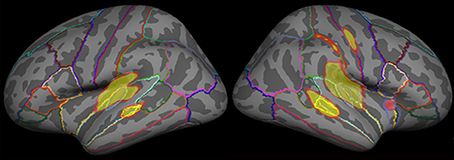

The cortical surface of each participant was normalized onto a standard brain provided by FreeSurfer, and the dSPM solutions of all participants were averaged for use within the defined regions and time windows of interest. Because the number of trials considerably affects inverse source estimation, we used only the standards immediately preceding a deviant to estimate cortical activity for standards, so that standard and deviant estimates were based on comparable numbers of trials. The dSPM solutions estimated for the standards immediately preceding D1 and D2 were then averaged to represent cortical activity for standard sound processing. To further clarify the neural correlates underlying MMNm and P3am qualitatively, a functional map was used to select the ROIs for all stimulus categories. Specifically, the grand-averaged functional maps evoked by D1 and D2 were each overlaid onto a common reconstructed cortical sphere. The ROIs were drawn along the borders of the functional maps together with an anatomical criterion in which the vertices were parceled according to gyral-sulcal patterns (Fischl et al., 1999; Sereno et al., 1999; Leonard et al., 2010). The ROIs for D1 and D2 were then combined to form an inclusive mask displaying significant dSPM activation for either deviant (D1 or D2). Finally, dSPM time courses were extracted from the predefined ROIs, and their amplitudes were measured as described above (Figure 1).
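Selecting the standards that immediately precede each deviant, and averaging a source estimate over an ROI, can be sketched as below. The function names and the 'S'/'D1'/'D2' label scheme are hypothetical. Pooling the D1 and D2 entries of the returned dictionary gives the set of standards that are averaged to represent standard sound processing.

```python
import numpy as np


def preceding_standard_indices(seq, standard="S"):
    """Map each deviant label to the indices of the standards that immediately precede it."""
    out = {}
    for i in range(1, len(seq)):
        if seq[i] != standard and seq[i - 1] == standard:
            out.setdefault(seq[i], []).append(i - 1)
    return out


def roi_time_course(source_estimate, roi_vertices):
    """Mean dSPM time course over the vertices of one ROI.

    source_estimate: (n_vertices, n_times) array; roi_vertices: vertex indices."""
    return np.asarray(source_estimate)[np.asarray(roi_vertices)].mean(axis=0)
```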

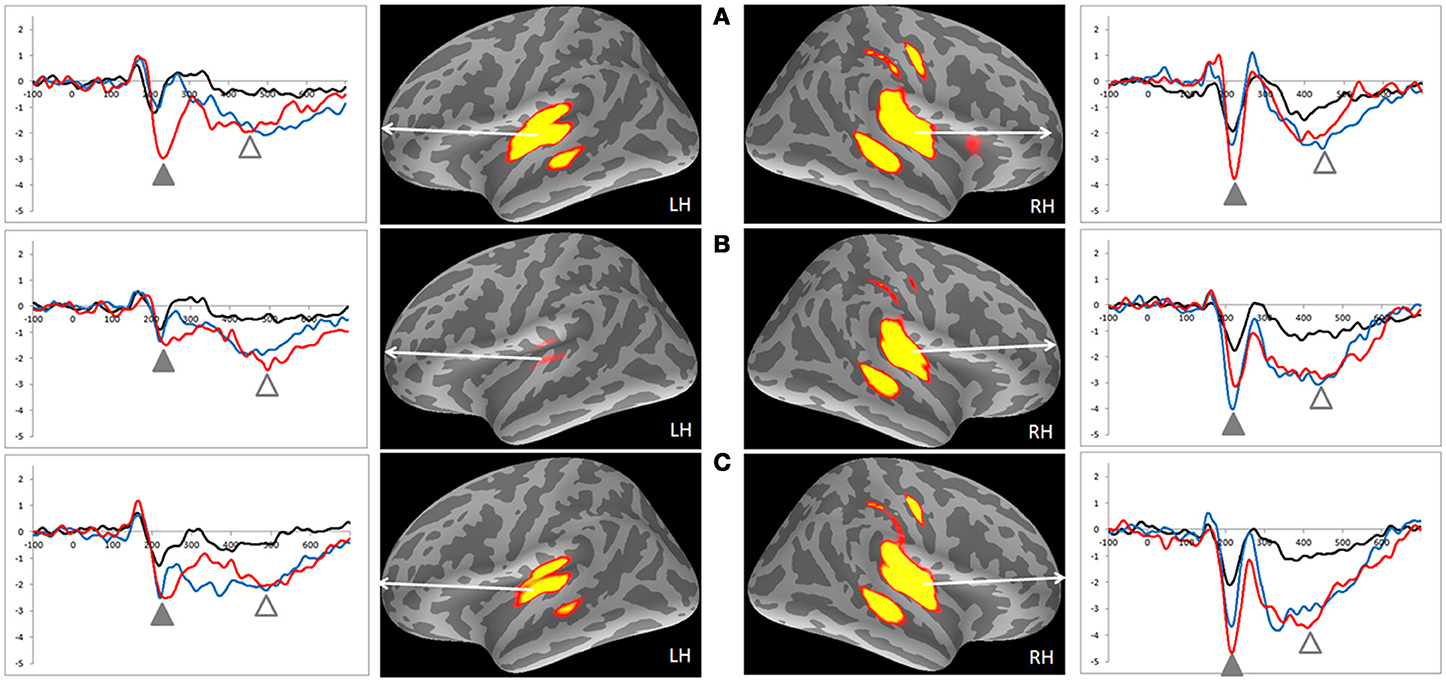

Figure 1. Grand-averaged functional map evoked by emotional syllables. The functional map of dSPM solutions estimated for each category (emotional syllables, complex tones, and simple tones) was superimposed onto a reconstructed anatomical parcellation in which the vertices were parceled according to gyral-sulcal patterns. As an example, the grand-averaged functional map in response to emotional syllables is shown overlaid onto the common reconstructed cortical sphere.

Results

Behavioral Performance

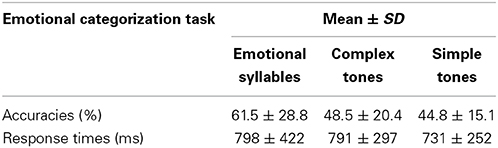

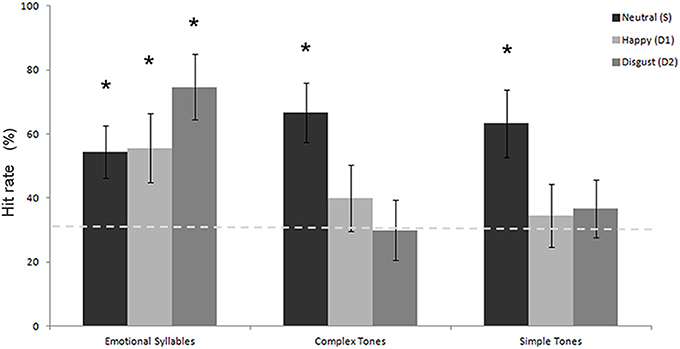

Table 1 shows performance on the emotional categorization task. A three-way mixed ANOVA on hit rates was computed with category (emotional syllables, complex tones, or simple tones) and stimulus (neutral, happy, or disgusted) as within-subject variables and gender (male vs. female) as the between-subject variable. The category effect [F(2, 32) = 4.90, p = 0.01] and the category × stimulus interaction [F(4, 64) = 2.80, p = 0.03] were significant (Figure 2). No significant effect of gender or gender-related interaction was observed. Performance was better for the emotional syllables than for the complex (p = 0.031) and simple (p = 0.005) tones. Post-hoc comparisons showed that neutral-derived tones were categorized more accurately than emotion-derived tones for both the complex tones [Neutral- > Happy-derived tones: t(17) = 1.9, Cohen's d = 0.70, one-tailed p = 0.035; Neutral- > Disgusted-derived tones: t(17) = 2.5, Cohen's d = 0.92, one-tailed p = 0.01] and the simple tones [t(17) = 1.8, Cohen's d = 0.67, one-tailed p = 0.04; t(17) = 1.8, Cohen's d = 0.67, one-tailed p = 0.04], but this pattern was not observed for the emotional syllables (p = 0.11; p = 0.94). Only the emotional syllables, and not the complex or simple tones, yielded above-chance hit rates (>33.33%) for all emotions, indicating that the acoustic controls were emotionally neutral.

Figure 2. Hit rate in the emotional categorization task. Performance was better for the emotional syllables than for the complex and simple tones. Only the emotional syllables attained above-chance hit rates for each emotion. The asterisk (*p < 0.05) indicates that the hit rate is significantly higher than the chance level (dashed line).

Sensory ERF

Each stimulus type of each category reliably elicited an N1-P2 complex, which is typically obtained in adults during fast stimulus presentation (Supplementary Table S1 and Figure S3) (Näätänen and Picton, 1987; Pantev et al., 1988; Tremblay et al., 2001; Shahin et al., 2003; Ross and Tremblay, 2009).

Statistical analyses for each identified cluster on the Isofield Contour Map revealed a category effect on N1m for the cluster over the right anterior region [F(2, 36) = 5.33, p = 0.009] and on P2m for the clusters over the left and right posterior regions [F(2, 36) = 15.03, p < 0.001; F(2, 36) = 13.65, p < 0.001]. Post-hoc analyses indicated that, for the right anterior cluster, the emotional syllables elicited stronger N1m than did the complex tones (p = 0.005) and simple tones (p = 0.02). For the left and right posterior clusters, the simple tones elicited stronger P2m than did the emotional syllables (left: p = 0.03; right: p = 0.02) and the complex tones (left: p = 0.01; right: p < 0.001). No significant effects of gender or gender-related interactions were found.

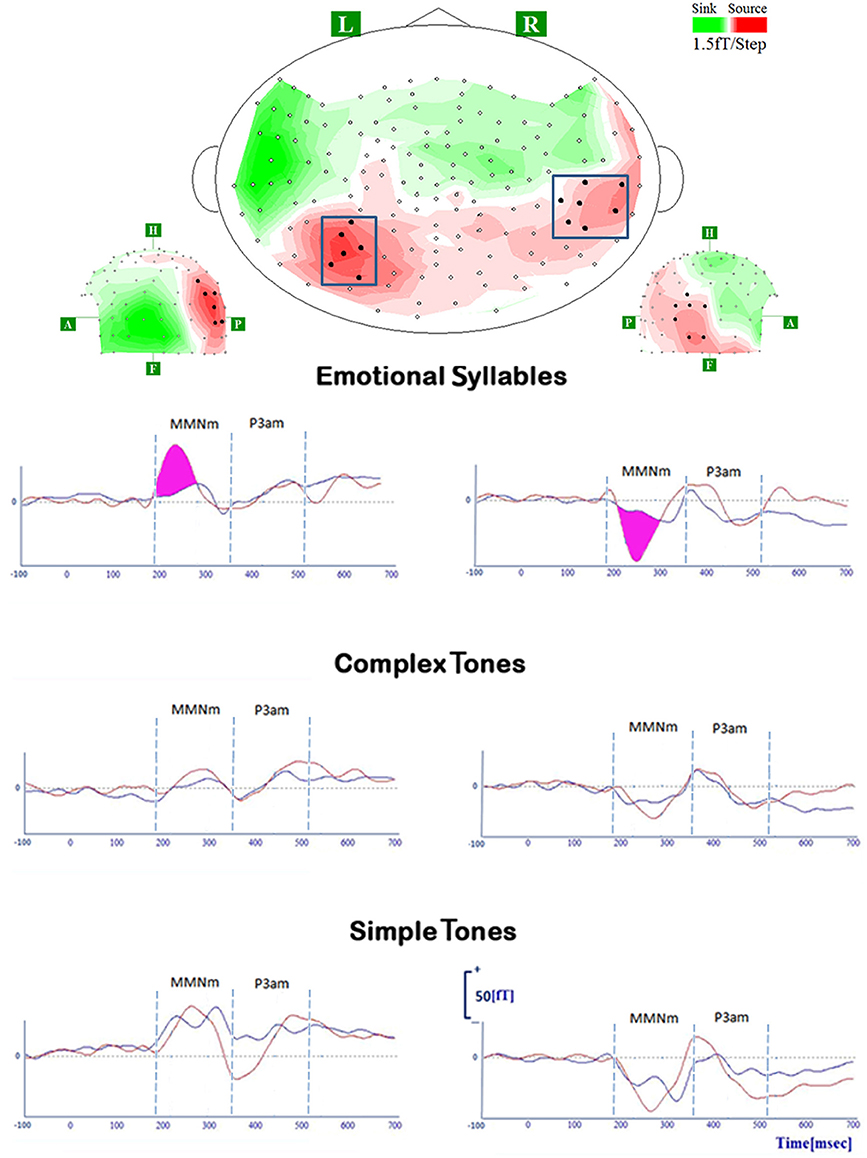

MMNm

All deviant stimuli of each category elicited significant MMNm. Statistical analyses for each identified cluster on the Isofield Contour Map revealed significant category × stimulus interactions over the left [F(2, 36) = 4.73, p = 0.015] and right [F(2, 36) = 5.05, p = 0.012] posterior clusters. In the left posterior cluster, post-hoc analyses showed a stimulus effect, with larger MMNm amplitudes for disgusted (D2) than for happy (D1) deviants, in the emotional syllables (p = 0.004) but not in the simple tones (p = 0.27) or complex tones (p = 0.41). In the right posterior cluster, this stimulus effect was found in the emotional syllables (p < 0.001) and the simple tones (p = 0.005). Gender and gender-related interactions were not significant (p > 0.05).

MMNm-Related Cortical Activities

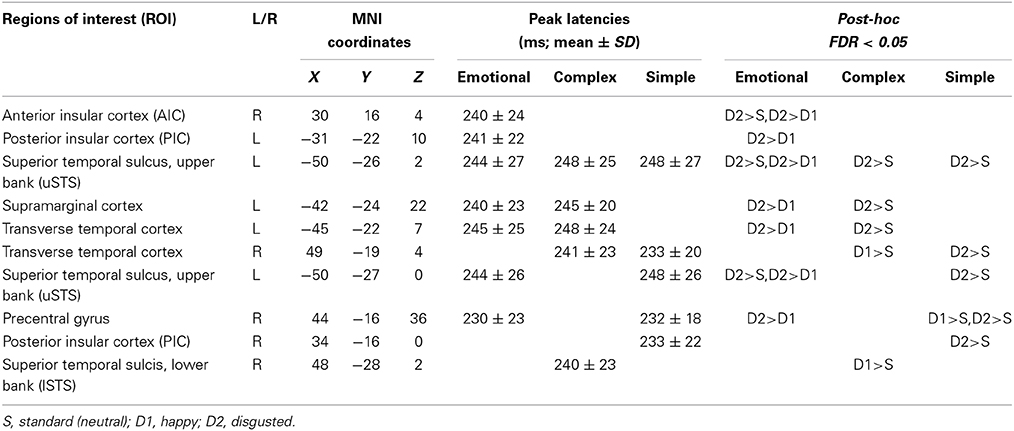

Table 2 lists the peak latencies used for analyzing source-specific amplitudes in each ROI. For each ROI, a three-way mixed ANOVA with category (emotional syllables, complex tones, or simple tones) and stimulus [neutral (S), happy (D1), or disgusted (D2)] as within-subject variables and gender (male vs. female) as the between-subject variable revealed a category × stimulus interaction in the right AIC [F(4, 68) = 3.35, p = 0.015], right precentral gyrus [F(4, 68) = 5.54, p = 0.001], left supramarginal cortex [F(4, 68) = 3.03, p = 0.023], upper and lower banks of the superior temporal sulcus (uSTS and lSTS) [F(4, 68) = 2.78, p = 0.03; F(4, 68) = 3.41, p = 0.03], left posterior insular cortex (PIC) [F(4, 68) = 5.09, p = 0.006], and left and right transverse temporal cortex [F(4, 68) = 4.71, p = 0.002; F(4, 68) = 3.02, p = 0.02] (Figure 3). Gender and gender-related interactions were non-significant. Post-hoc tests revealed emotional salience processing (Figure 4), indexed by stronger cortical activity for disgusted than for happy syllables (D2 > D1: FDR-corrected p < 0.05), in the right AIC [F(2, 34) = 7.84, p = 0.002] and precentral gyrus [F(2, 34) = 7.98, p = 0.005] along with the left PIC [F(2, 34) = 5.55, p = 0.008], supramarginal cortex [F(2, 34) = 6.71, p = 0.004], transverse temporal cortex [F(2, 34) = 8.95, p = 0.001], and uSTS [F(2, 34) = 8.49, p = 0.001].
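FDR correction of a family of p values can be sketched with the Benjamini-Hochberg step-up procedure. The text does not state which FDR procedure was used or how the family of tests was defined (for example, across ROIs), so Benjamini-Hochberg and the function name here are assumptions.

```python
import numpy as np


def fdr_bh(pvals):
    """Benjamini-Hochberg adjusted p values (step-up FDR), returned in the input order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    ranked = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity: each adjusted value is the minimum over all larger ranks
    ranked = np.minimum.accumulate(ranked[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.minimum(ranked, 1.0)
    return adjusted
```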

Figure 3. MMNm-related cortical activities and source-specific (dSPM) time courses of ROIs for emotional syllables, complex tones, and simple tones. (A) Emotional syllables. (B) Complex tones. (C) Simple tones. Positive dSPM values indicate current flowing outward from the cortical surface, whereas negative values indicate current flowing inward. Grand-average (n = 19 participants) time courses of the mean estimated current strength for happy (D1, blue line), disgusted (D2, red line), and neutral (S, black line) syllables were extracted from the primary auditory cortex. The functional maps show significant dSPM activation in the early and late time windows, indicated by solid and empty triangles, respectively.

Figure 4. MMNm and P3am in response to deviant stimuli of emotional syllables, complex tones, and simple tones. All deviant stimuli of each category (emotional syllables, complex tones, and simple tones) consistently elicited MMNm and P3am [red line: disgusted MMNm (D2-S); blue line: happy MMNm (D1-S)]. For illustration, the grand-average whole-head topography derived from emotional syllables is shown at the MMNm peak latency (265 ms). The magenta area indicates emotional salience processing, as shown by significant differences between disgusted MMNm and happy MMNm.

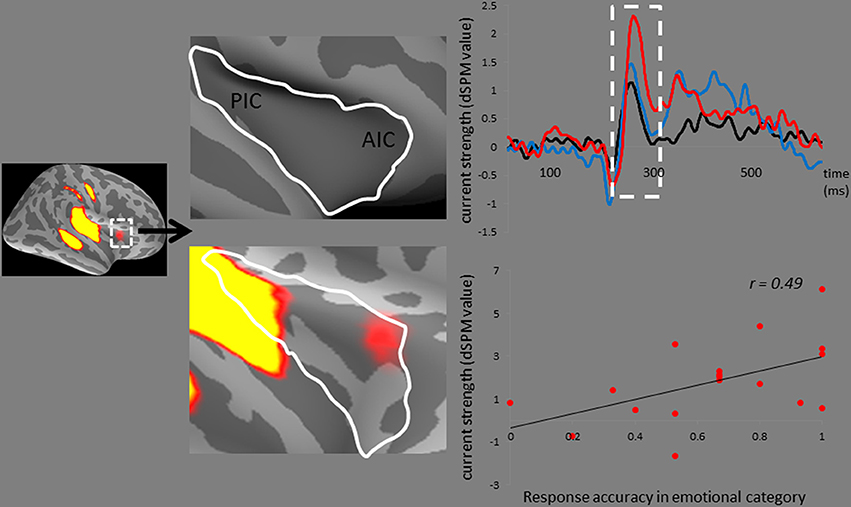

In addition, right AIC activity surpassing the dSPM criterion was specific to the disgusted syllables (Figure 5). Correlation analysis revealed that MMNm-related AIC activity was associated with the hit rates for emotional syllables in the emotional categorization task [r(18) = 0.49, p = 0.036]: participants with larger right AIC activation in response to disgusted syllables tended to perform better in the emotional categorization task.
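The brain-behavior association can be computed as a correlation between each participant's MMNm-related right AIC amplitude and hit rate. The sketch assumes Pearson's r (the text does not name the coefficient) and reports degrees of freedom as n − 2, the usual convention for a correlation coefficient; the function name is hypothetical.

```python
import numpy as np
from scipy import stats


def brain_behavior_correlation(roi_amplitudes, hit_rates):
    """Pearson correlation between per-participant ROI amplitudes and hit rates.

    Returns (r, two-tailed p, degrees of freedom = n - 2)."""
    x = np.asarray(roi_amplitudes, dtype=float)
    y = np.asarray(hit_rates, dtype=float)
    r, p = stats.pearsonr(x, y)
    return r, p, x.size - 2
```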

Figure 5. Anterior insular cortex activity in response to disgusted syllables. As indicated by MMNm-related cortical activities, only disgusted deviants elicited AIC activation. Grand-average (n = 19 participants) time courses of the mean estimated current strength were extracted from the right AIC (red line: disgust, D2; blue line: happy, D1; black line: standard, S). The AIC activation for disgusted deviants was positively correlated with hit rates in the emotional categorization task [r(18) = 0.49, p = 0.036].

P3am

Paired t-tests used to determine statistical presence (difference from 0 fT) indicated that all deviants of each category elicited P3am, temporally following MMNm (Supplementary Table S2). The ANOVA on P3am amplitudes for each identified cluster on the Isofield Contour Map revealed no significant effects of category, stimulus, or gender, nor any interactions among them (all p > 0.05).

P3am-Related Cortical Activities

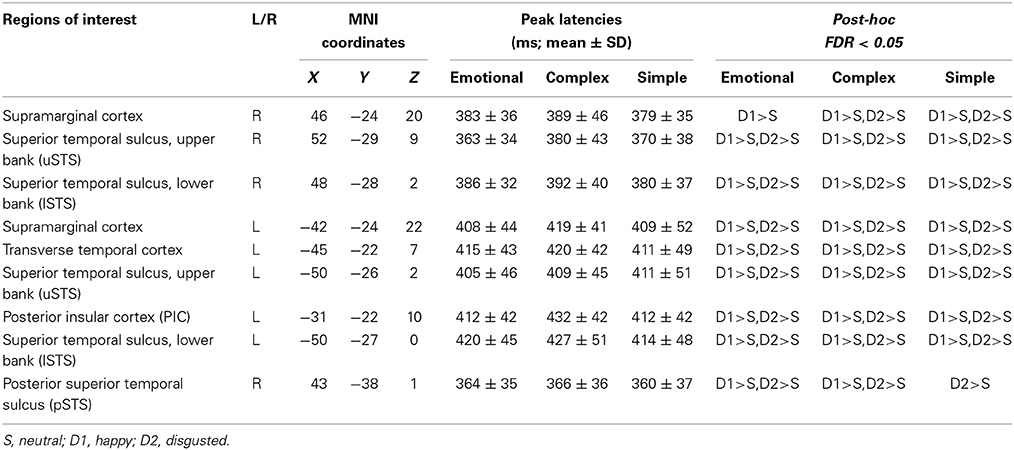

P3am and MMNm had similar cortical sources and appeared as two contiguous peaks in the dSPM source-specific time courses (Figure 3 and Table 3). P3am-related cortical activities were analyzed with a three-way mixed ANOVA with category (emotional syllables, complex tones, or simple tones) and stimulus [neutral (S), happy (D1), or disgusted (D2)] as within-subject variables and gender (male vs. female) as the between-subject variable. Regions exhibiting a stimulus effect included the right supramarginal cortex [F(2, 34) = 25.37, p = 0.032], uSTS [F(2, 34) = 23.48, p = 0.018], lSTS [F(2, 34) = 30.94, p = 0.006], and posterior superior temporal sulcus (pSTS) [F(2, 34) = 17.44, p = 0.004], as well as the left transverse temporal cortex [F(2, 34) = 16.67, p = 0.002], supramarginal cortex [F(2, 34) = 12.00, p = 0.008], PIC [F(2, 34) = 16.25, p = 0.006], uSTS [F(2, 34) = 14.81, p = 0.003], and lSTS [F(2, 34) = 26.42, p = 0.001]. No ROI showed a significant category × stimulus interaction. Gender and gender-related interactions were non-significant.

Discussion

Although the AIC plays a critical role in the negative experience of emotions (Craig, 2002, 2009; Menon and Uddin, 2010), including disgust (e.g., Phillips et al., 1997, 2004; Adolphs et al., 2003; Krolak-Salmon et al., 2003; Wicker et al., 2003; Jabbi et al., 2008), previous studies have not observed AIC activation in response to hearing disgusted voices. This leaves room for further research to clarify whether AIC activation is specific to disgust or instead reflects general aversive arousal in response to negative emotions. Contrary to the predicted correlation between AIC activation and disgust recognition, we found that AIC activation predicted emotion recognition performance in general within the emotional category. This may be partially attributable to the functional role of the AIC in salience processing (Seeley et al., 2007; Sridharan et al., 2008; Menon and Uddin, 2010; Legrain et al., 2011). One MEG study on disgusted faces reported that early insular activation occurs approximately 200 ms after the onset of emotionally arousing stimuli regardless of valence, whereas the later insular response (350 ms) differentiates disgusted from happy facial expressions (Chen et al., 2009). In line with this, AIC activation occurred in response to disgusted voices at approximately 250 ms in our study. The AIC is a brain region underpinning error awareness and saliency detection (Sterzer and Kleinschmidt, 2010; Harsay et al., 2012). Because this passive oddball study required no target detection, the AIC activation observed here cannot be attributed to task-related target detection or error monitoring. The AIC activation, which surpassed the dSPM criterion, responded specifically to disgusted rather than happy syllables, and participants exhibiting stronger AIC activity tended to have higher hit rates in the emotional categorization task (Figure 5).
In addition, disgusted relative to happy syllables elicited stronger MMNm-related cortical activity, supporting the notion that disgusted voices might be more acoustically salient than happy voices (Banse and Scherer, 1996; Simon-Thomas et al., 2009; Sauter et al., 2010).

Using MEG in a passive auditory oddball paradigm, we demonstrated the involvement of the AIC in the preattentive perception of disgusted voices. MMNm-related AIC activation was specific to disgusted syllables and was not observed for happy syllables. In addition, acoustically matched simple and complex tones did not activate the AIC in the same manner. Participants who exhibited stronger MMNm-related AIC activation tended to obtain higher hit rates in the emotional categorization task. The involvement of the AIC in emotional MMNm appears to be consistent across genders.

The MMNm response was sensitive to the valence of emotional voices, as indicated by stronger amplitudes elicited by disgusted than by happy syllables. In the left hemisphere in particular, emotional salience processing was specific to voices rather than to their acoustic attributes (see Figure 4). This is not surprising, because affective discrimination beyond acoustic distinction emerges as early as the neonatal period (Cheng et al., 2012). Hearing angry and fearful syllables, relative to happy syllables, has also been shown to elicit stronger MMN (Schirmer et al., 2005; Fan et al., 2013; Hung et al., 2013; Fan and Cheng, 2014; Hung and Cheng, 2014). From an evolutionary perspective, disgust, as an aversive emotion, is subject to a negativity bias, eliciting stronger responses than neutral events do (Lange, 1922; Huang and Luo, 2006).

MMNm-related AIC activation may reflect emotional salience at the preattentive level. This finding is notable because previous studies have not reported similar results in the auditory modality, despite widespread evidence of AIC involvement in the experience of negative emotions. In particular, attentively listening to disgusted voices did not activate the AIC (Phillips et al., 1998), suggesting that the AIC may be involved in the preattentive processing of disgusted voices. Theoretically, the passive auditory oddball paradigm should be an optimal approach for probing the preattentive processing of emotional voices, because MMN can index neural activity even in a comatose or deeply sleeping brain (Kotchoubey et al., 2002). Although presenting a silent movie cannot guarantee that participants were unaware of the auditory stimuli, limited attentional resources can indeed modulate the neurophysiological processing of emotional stimuli (Pessoa and Adolphs, 2010). We do not claim that the preattentive processing of the emotional salience of voices is dominated solely by the AIC. Rather, cortical-subcortical interactions mediated by the AIC, which coordinate the function of cortical networks, might form part of the neural mechanism underpinning the evaluation of the biological significance of affective voices.

The posterior insular cortex (PIC) exhibited stronger MMNm-related cortical activity for disgusted than for happy syllables, suggesting that it may be involved in representing emotional salience. The PIC, which has been functionally identified as part of the extended auditory cortex in primates, responds preferentially to vocal communication sounds (Remedios et al., 2009). Salient sensory information may reach multimodal cortical areas, such as the PIC, directly from the thalamus, bypassing the primary sensory cortices. This direct thalamocortical transmission runs in parallel with the modality-specific processing of stimulus attributes via projections from the thalamus to the relevant primary sensory cortices (Liang et al., 2013). In the present study, the absence of activation in the thalamus and anterior cingulate cortex in every condition could be attributed to the stringent dSPM statistical criterion as well as to the absence of target detection in the passive oddball task. The insular cortex, including the AIC and PIC, can monitor salience (appetitive and aversive) and integrate it with the effect of the stimulus on the state of the body (Deen et al., 2011).
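The dSPM criterion mentioned above refers to the noise-normalized minimum-norm estimate of Dale et al. (2000), which can be summarized as follows. This is a textbook-level sketch rather than the exact pipeline used here. The source covariance R and the regularization parameter λ² are generic symbols, and the threshold applied in this study is not reproduced.

```latex
% Linear inverse operator (minimum-norm estimate)
% G: gain (lead-field) matrix, R: source covariance, C: sensor noise covariance
W = R\,G^{\top}\left(G\,R\,G^{\top} + \lambda^{2} C\right)^{-1}

% Estimated current at source i and time t from sensor data x(t); w_i is row i of W
\hat{j}_i(t) = w_i\, x(t)

% dSPM: divide by the projected noise at that source
z_i(t) = \frac{w_i\, x(t)}{\sqrt{w_i\, C\, w_i^{\top}}}
```

Because each value is scaled by its own noise level, a fixed threshold on z_i(t) acts as a statistical criterion. A stringent threshold favors specificity over sensitivity, so weak deep sources may fall below it.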

In addition, the left transverse temporal cortex, part of the primary auditory cortex, was sensitive to the processing of emotional salience [disgust (D2) > happy (D1)]. This finding supports the view that vocal emotional expression might be processed beyond the right hemisphere, anchored within sensory, cognitive, and emotional processing systems at an early auditory discrimination stage (Schirmer and Kotz, 2006). The activation of the precentral gyrus observed across all three categories may reflect general attention and memory enhancement during information processing (Chen et al., 2010).

The present study also points to several areas for future inquiry. First, familiarity might confound the affective modulation observed here. Simple and complex tones are less familiar than emotional voices, and it might be impossible to categorize synthesized tones in the same way as natural speech sounds. Second, using a single pseudoword (dada) might limit the generalizability of the findings to other emotion representations. Additional studies using non-linguistic emotional vocalizations (Fecteau et al., 2007) are needed to verify whether the passive oddball paradigm is optimal for detecting emotional salience. Third, in the three-alternative categorization task, we defined the hit rate as the number of hits divided by the total number of trials in each stimulus category. This study did not control for false alarm rates with the traditional approach of Signal Detection Theory [SDT: d′ = Z(hit rate) − Z(false alarm rate)]. Performance for the acoustic controls showed a skewed distribution, in which participants tended to classify the emotion-derived tones as neutral. Accordingly, the higher hit rate for neutral-derived tones than for happy- or disgusted-derived tones might violate the assumption that the acoustic controls are emotionless. On the other hand, the summed hit rate for emotional syllables in the emotional categorization task, which guards against false alarms, predicted AIC activation better than did the hit rate specific to disgusted syllables. The hit rates of 75% for disgusted syllables and 55% for happy syllables are consistent with existing findings on the identification of prototypical disgust and pleasure vocal bursts (Simon-Thomas et al., 2009). However, the relatively low hit rate of 55% for happy syllables should be interpreted with caution. Future research should pre-evaluate the voices not only on the target emotion but also on other valence scales.
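The difference between a plain hit rate and the SDT index d′ can be made concrete with a short example. The following is a minimal sketch assuming trials are recorded as hypothetical (true category, response) pairs from the three-alternative task. For a given category, responses of that category to trials of other categories count as false alarms. The log-linear correction (adding 0.5 to counts and 1 to totals) is a common convention assumed here to keep the z-transform finite when a rate is 0 or 1. It is not described in the study.

```python
from statistics import NormalDist
from typing import Iterable, Tuple


def category_counts(trials: Iterable[Tuple[str, str]], category: str) -> Tuple[int, int, int, int]:
    """(hits, n_signal, false_alarms, n_noise) for one category from (true_label, response) pairs."""
    trials = list(trials)
    hits = sum(1 for true, resp in trials if true == category and resp == category)
    n_signal = sum(1 for true, _ in trials if true == category)
    false_alarms = sum(1 for true, resp in trials if true != category and resp == category)
    n_noise = len(trials) - n_signal
    return hits, n_signal, false_alarms, n_noise


def hit_rate(hits: int, n_signal: int) -> float:
    """Hit rate as defined in the text: hits divided by all trials of that category."""
    if n_signal <= 0:
        raise ValueError("category has no trials")
    return hits / n_signal


def d_prime(hits: int, n_signal: int, false_alarms: int, n_noise: int) -> float:
    """d' = Z(hit rate) - Z(false-alarm rate), with an assumed log-linear correction."""
    if n_signal <= 0 or n_noise <= 0:
        raise ValueError("need both signal and noise trials")
    h = (hits + 0.5) / (n_signal + 1)
    fa = (false_alarms + 0.5) / (n_noise + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(h) - z(fa)
```

For example, with a hypothetical 20 disgust trials and 18 hits, `hit_rate(18, 20)` gives 0.9 regardless of how often "disgust" was chosen for happy or neutral trials. `d_prime` penalizes those responses, which is the control the study notes it lacked.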

This MEG study demonstrated right AIC activation in response to disgusted deviants in a passive auditory oddball paradigm. The MMNm-related AIC activity was associated with emotional categorization performance. These findings may clarify the neural correlates of emotional MMN and support the view that the AIC is involved in the processing of emotional salience at the preattentive level.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The study was funded by the Ministry of Science and Technology (MOST 103-2401-H-010-003-MY3), National Yang-Ming University Hospital (RD2014-003), Health Department of Taipei City Government (10301-62-009), and Ministry of Education (Aim for the Top University Plan). None of the authors have any conflicts of interest.

Supplementary Material

The Supplementary Material for this article can be found online at: http://www.frontiersin.org/journal/10.3389/fnhum.2014.00743/abstract

References

Adolphs, R., Tranel, D., and Damasio, A. R. (2003). Dissociable neural systems for recognizing emotions. Brain Cogn. 52, 61–69. doi: 10.1016/S0278-2626(03)00009-5

Alho, K., Winkler, I., Escera, C., Huotilainen, M., Virtanen, J., Jaaskelainen, I. P., et al. (1998). Processing of novel sounds and frequency changes in the human auditory cortex: magnetoencephalographic recordings. Psychophysiology 35, 211–224. doi: 10.1111/1469-8986.3520211

Banse, R., and Scherer, K. R. (1996). Acoustic profiles in vocal emotion expression. J. Pers. Soc. Psychol. 70, 614–636. doi: 10.1037/0022-3514.70.3.614

Barkus, C., McHugh, S. B., Sprengel, R., Seeburg, P. H., Rawlins, J. N., and Bannerman, D. M. (2010). Hippocampal NMDA receptors and anxiety: at the interface between cognition and emotion. Eur. J. Pharmacol. 626, 49–56. doi: 10.1016/j.ejphar.2009.10.014

Belin, P., Zatorre, R. J., Lafaille, P., Ahad, P., and Pike, B. (2000). Voice-selective areas in human auditory cortex. Nature 403, 309–312. doi: 10.1038/35002078

Boersma, P. (2001). Praat, a system for doing phonetics by computer. Glot. Int. 5, 341–345. Available online at: http://www.fon.hum.uva.nl/praat/

Campeau, S., Miserendino, M. J., and Davis, M. (1992). Intra-amygdala infusion of the N-methyl-D-aspartate receptor antagonist AP5 blocks acquisition but not expression of fear-potentiated startle to an auditory conditioned stimulus. Behav. Neurosci. 106, 569–574. doi: 10.1037/0735-7044.106.3.569

Čeponienė, R., Lepisto, T., Shestakova, A., Vanhala, R., Alku, P., Näätänen, R., et al. (2003). Speech-sound-selective auditory impairment in children with autism: they can perceive but do not attend. Proc. Natl. Acad. Sci. U.S.A. 100, 5567–5572. doi: 10.1073/pnas.0835631100

Chen, T. L., Babiloni, C., Ferretti, A., Perrucci, M. G., Romani, G. L., Rossini, P. M., et al. (2010). Effects of somatosensory stimulation and attention on human somatosensory cortex: an fMRI study. Neuroimage 53, 181–188. doi: 10.1016/j.neuroimage.2010.06.023

Chen, Y. H., Dammers, J., Boers, F., Leiberg, S., Edgar, J. C., Roberts, T. P., et al. (2009). The temporal dynamics of insula activity to disgust and happy facial expressions: a magnetoencephalography study. Neuroimage 47, 1921–1928. doi: 10.1016/j.neuroimage.2009.04.093

Cheng, Y., Lee, S. Y., Chen, H. Y., Wang, P. Y., and Decety, J. (2012). Voice and emotion processing in the human neonatal brain. J. Cogn. Neurosci. 24, 1411–1419. doi: 10.1162/jocn_a_00214

Craig, A. D. (2002). How do you feel? Interoception: the sense of the physiological condition of the body. Nat. Rev. Neurosci. 3, 655–666. doi: 10.1038/nrn894

Craig, A. D. (2009). How do you feel-now? The anterior insula and human awareness. Nat. Rev. Neurosci. 10, 59–70. doi: 10.1038/nrn2555

Dale, A. M., Liu, A. K., Fischl, B. R., Buckner, R. L., Belliveau, J. W., Lewine, J. D., et al. (2000). Dynamic statistical parametric mapping: combining fMRI and MEG for high-resolution imaging of cortical activity. Neuron 26, 55–67. doi: 10.1016/S0896-6273(00)81138-1

de Cheveigné, A., and Simon, J. Z. (2007). Denoising based on time-shift PCA. J. Neurosci. Methods 165, 297–305. doi: 10.1016/j.jneumeth.2007.06.003

Deen, B., Pitskel, N. B., and Pelphrey, K. A. (2011). Three systems of insular functional connectivity identified with cluster analysis. Cereb. Cortex 21, 1498–1506. doi: 10.1093/cercor/bhq186

Fan, Y. T., and Cheng, Y. (2014). Atypical mismatch negativity in response to emotional voices in people with autism spectrum conditions. PLoS ONE 9:e102471. doi: 10.1371/journal.pone.0102471

Fan, Y. T., Hsu, Y. Y., and Cheng, Y. (2013). Sex matters: n-back modulates emotional mismatch negativity. Neuroreport 24, 457–463. doi: 10.1097/WNR.0b013e32836169b9

Fecteau, S., Belin, P., Joanette, Y., and Armony, J. L. (2007). Amygdala responses to nonlinguistic emotional vocalizations. Neuroimage 36, 480–487. doi: 10.1016/j.neuroimage.2007.02.043

Fischl, B., Sereno, M. I., Tootell, R. B., and Dale, A. M. (1999). High-resolution intersubject averaging and a coordinate system for the cortical surface. Hum. Brain Mapp. 8, 272–284. doi: 10.1002/(SICI)1097-0193(1999)8:4<272::AID-HBM10>3.0.CO;2-4

Hämäläinen, M., and Ilmoniemi, R. (1994). Interpreting magnetic fields of the brain: minimum norm estimates. Med. Biol. Eng. Comput. 32, 35–42. doi: 10.1007/BF02512476

Hamann, S., and Canli, T. (2004). Individual differences in emotion processing. Curr. Opin. Neurobiol. 14, 233–238. doi: 10.1016/j.conb.2004.03.010

Harsay, H. A., Spaan, M., Wijnen, J. G., and Ridderinkhof, K. R. (2012). Error awareness and salience processing in the oddball task: shared neural mechanisms. Front. Hum. Neurosci. 6:246. doi: 10.3389/fnhum.2012.00246

Horvath, J., Winkler, I., and Bendixen, A. (2008). Do N1/MMN, P3a, and RON form a strongly coupled chain reflecting the three stages of auditory distraction? Biol. Psychol. 79, 139–147. doi: 10.1016/j.biopsycho.2008.04.001

Hsu, C. H., Lee, C. Y., and Marantz, A. (2011). Effects of visual complexity and sublexical information in the occipitotemporal cortex in the reading of Chinese phonograms: a single-trial analysis with MEG. Brain Lang. 117, 1–11. doi: 10.1016/j.bandl.2010.10.002

Huang, Y. X., and Luo, Y. J. (2006). Temporal course of emotional negativity bias: an ERP study. Neurosci. Lett. 398, 91–96. doi: 10.1016/j.neulet.2005.12.074

Hung, A. Y., Ahveninen, J., and Cheng, Y. (2013). Atypical mismatch negativity to distressful voices associated with conduct disorder symptoms. J. Child Psychol. Psychiatry 54, 1016–1027. doi: 10.1111/jcpp.12076

Hung, A. Y., and Cheng, Y. (2014). Sex differences in preattentive perception of emotional voices and acoustic attributes. Neuroreport 25, 464–469. doi: 10.1097/WNR.0000000000000115

Jabbi, M., Bastiaansen, J., and Keysers, C. (2008). A common anterior insula representation of disgust observation, experience and imagination shows divergent functional connectivity pathways. PLoS ONE 3:e2939. doi: 10.1371/journal.pone.0002939

Kotchoubey, B., Lang, S., Bostanov, V., and Birbaumer, N. (2002). Is there a mind? Electrophysiology of unconscious patients. News Physiol. Sci. 17, 38–42. Available online at: http://physiologyonline.physiology.org/content/17/1/38.article-info

Krolak-Salmon, P., Henaff, M. A., Isnard, J., Tallon-Baudry, C., Guenot, M., Vighetto, A., et al. (2003). An attention modulated response to disgust in human ventral anterior insula. Ann. Neurol. 53, 446–453. doi: 10.1002/ana.10502

Lange, C. G. (1922). “The emotions: a psychological study,” in The Emotions, eds C. G. Lange and W. James (Baltimore, MD: Williams & Wilkins), 33–90.

Legrain, V., Iannetti, G. D., Plaghki, L., and Mouraux, A. (2011). The pain matrix reloaded: a salience detection system for the body. Prog. Neurobiol. 93, 111–124. doi: 10.1016/j.pneurobio.2010.10.005

Leonard, M. K., Brown, T. T., Travis, K. E., Gharapetian, L., Hagler, D. J. Jr., Dale, A. M., et al. (2010). Spatiotemporal dynamics of bilingual word processing. Neuroimage 49, 3286–3294. doi: 10.1016/j.neuroimage.2009.12.009

Liang, M., Mouraux, A., and Iannetti, G. D. (2013). Bypassing primary sensory cortices–a direct thalamocortical pathway for transmitting salient sensory information. Cereb. Cortex 23, 1–11. doi: 10.1093/cercor/bhr363

Luck, S. J. (2005). An Introduction to the Event-Related Potential Technique. Cambridge, MA: MIT Press.

Menon, V., and Uddin, L. Q. (2010). Saliency, switching, attention and control: a network model of insula function. Brain Struct. Funct. 214, 655–667. doi: 10.1007/s00429-010-0262-0

Näätänen, R., Kujala, T., Kreegipuu, K., Carlson, S., Escera, C., Baldeweg, T., et al. (2011). The mismatch negativity: an index of cognitive decline in neuropsychiatric and neurological diseases and in ageing. Brain 134, 3435–3453. doi: 10.1093/brain/awr064

Näätänen, R., and Picton, T. (1987). The N1 wave of the human electric and magnetic response to sound: a review and an analysis of the component structure. Psychophysiology 24, 375–425. doi: 10.1111/j.1469-8986.1987.tb00311.x

Pantev, C., Hoke, M., Lehnertz, K., Lutkenhoner, B., Anogianakis, G., and Wittkowski, W. (1988). Tonotopic organization of the human auditory cortex revealed by transient auditory evoked magnetic fields. Electroencephalogr. Clin. Neurophysiol. 69, 160–170. doi: 10.1016/0013-4694(88)90211-8

Pessoa, L., and Adolphs, R. (2010). Emotion processing and the amygdala: from a “low road” to “many roads” of evaluating biological significance. Nat. Rev. Neurosci. 11, 773–783. doi: 10.1038/nrn2920

Phillips, M. L., Williams, L. M., Heining, M., Herba, C. M., Russell, T., Andrew, C., et al. (2004). Differential neural responses to overt and covert presentations of facial expressions of fear and disgust. Neuroimage 21, 1484–1496. doi: 10.1016/j.neuroimage.2003.12.013

Phillips, M. L., Young, A. W., Scott, S. K., Calder, A. J., Andrew, C., Giampietro, V., et al. (1998). Neural responses to facial and vocal expressions of fear and disgust. Proc. Biol. Sci. 265, 1809–1817. doi: 10.1098/rspb.1998.0506

Phillips, M. L., Young, A. W., Senior, C., Brammer, M., Andrew, C., Calder, A. J., et al. (1997). A specific neural substrate for perceiving facial expressions of disgust. Nature 389, 495–498. doi: 10.1038/39051

Pulvermüller, F., and Shtyrov, Y. (2006). Language outside the focus of attention: the mismatch negativity as a tool for studying higher cognitive processes. Prog. Neurobiol. 79, 49–71. doi: 10.1016/j.pneurobio.2006.04.004

Remedios, R., Logothetis, N. K., and Kayser, C. (2009). An auditory region in the primate insular cortex responding preferentially to vocal communication sounds. J. Neurosci. 29, 1034–1045. doi: 10.1523/JNEUROSCI.4089-08.2009

Ross, B., and Tremblay, K. (2009). Stimulus experience modifies auditory neuromagnetic responses in young and older listeners. Hear. Res. 248, 48–59. doi: 10.1016/j.heares.2008.11.012

Sauter, D. A., Eisner, F., Calder, A. J., and Scott, S. K. (2010). Perceptual cues in nonverbal vocal expressions of emotions. Q. J. Exp. Psychol. (Hove). 63, 2251–2272. doi: 10.1080/17470211003721642

Schirmer, A., Escoffier, N., Zysset, S., Koester, D., Striano, T., and Friederici, A. D. (2008). When vocal processing gets emotional: on the role of social orientation in relevance detection by the human amygdala. Neuroimage 40, 1402–1410. doi: 10.1016/j.neuroimage.2008.01.018

Schirmer, A., and Kotz, S. A. (2006). Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn. Sci. 10, 24–30. doi: 10.1016/j.tics.2005.11.009

Schirmer, A., Simpson, E., and Escoffier, N. (2007). Listen up! Processing of intensity change differs for vocal and nonvocal sounds. Brain Res. 1176, 103–112. doi: 10.1016/j.brainres.2007.08.008

Schirmer, A., Striano, T., and Friederici, A. D. (2005). Sex differences in the preattentive processing of vocal emotional expressions. Neuroreport 16, 635–639. doi: 10.1097/00001756-200504250-00024

Seeley, W. W., Menon, V., Schatzberg, A. F., Keller, J., Glover, G. H., Kenna, H., et al. (2007). Dissociable intrinsic connectivity networks for salience processing and executive control. J. Neurosci. 27, 2349–2356. doi: 10.1523/JNEUROSCI.5587-06.2007

Sereno, M. I., Dale, A. M., Liu, A. K., and Tootell, R. B. H. (1999). A surface-based coordinate system for a canonical cortex. Neuroimage 9, 195–207. doi: 10.1016/S1053-8119(96)80254-0

Shahin, A., Bosnyak, D. J., Trainor, L. J., and Roberts, L. E. (2003). Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians. J. Neurosci. 23, 5545–5552. Available online at: http://www.jneurosci.org/content/23/13/5545.short

Simon-Thomas, E. R., Keltner, D. J., Sauter, D., Sinicropi-Yao, L., and Abramson, A. (2009). The voice conveys specific emotions: evidence from vocal burst displays. Emotion 9, 838–846. doi: 10.1037/a0017810

Sridharan, D., Levitin, D. J., and Menon, V. (2008). A critical role for the right fronto-insular cortex in switching between central-executive and default-mode networks. Proc. Natl. Acad. Sci. U.S.A. 105, 12569–12574. doi: 10.1073/pnas.0800005105

Sterzer, P., and Kleinschmidt, A. (2010). Anterior insula activations in perceptual paradigms: often observed but barely understood. Brain Struct. Funct. 214, 611–622. doi: 10.1007/s00429-010-0252-2

Thönnessen, H., Boers, F., Dammers, J., Chen, Y. H., Norra, C., and Mathiak, K. (2010). Early sensory encoding of affective prosody: neuromagnetic tomography of emotional category changes. Neuroimage 50, 250–259. doi: 10.1016/j.neuroimage.2009.11.082

Tremblay, K., Kraus, N., McGee, T., Ponton, C., and Otis, B. (2001). Central auditory plasticity: changes in the N1-P2 complex after speech-sound training. Ear Hear. 22, 79–90. doi: 10.1097/00003446-200104000-00001

Wicker, B., Keysers, C., Plailly, J., Royet, J. P., Gallese, V., and Rizzolatti, G. (2003). Both of us disgusted in My insula: the common neural basis of seeing and feeling disgust. Neuron 40, 655–664. doi: 10.1016/S0896-6273(03)00679-2

Wiethoff, S., Wildgruber, D., Kreifelts, B., Becker, H., Herbert, C., Grodd, W., et al. (2008). Cerebral processing of emotional prosody–influence of acoustic parameters and arousal. Neuroimage 39, 885–893. doi: 10.1016/j.neuroimage.2007.09.028

Keywords: mismatch negativity (MMN), magnetoencephalography (MEG), anterior insular cortex (AIC), emotional salience

Citation: Chen C, Lee Y-H and Cheng Y (2014) Anterior insular cortex activity to emotional salience of voices in a passive oddball paradigm. Front. Hum. Neurosci. 8:743. doi: 10.3389/fnhum.2014.00743

Received: 06 March 2014; Accepted: 03 September 2014;

Published online: 22 September 2014.

Edited by:

Sonja A. Kotz, Max Planck Institute Leipzig, Germany

Reviewed by:

Sascha Frühholz, University of Geneva, Switzerland

Alessandro Tavano, University of Leipzig, Germany

Copyright © 2014 Chen, Lee and Cheng. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yawei Cheng, Institute of Neuroscience, National Yang-Ming University, 155, Sec. 2, St. Linong, Dist. Beitou, Taipei 112, Taiwan e-mail: ywcheng2@ym.edu.tw
