- ¹Department of Psychology, University of Milano-Bicocca, Milan, Italy

- ²Milan Centre for Neuroscience, Milan, Italy

Neuropsychological and neuroimaging studies have shown that facial recognition and emotional expressions are dissociable. However, it is unknown if a single system supports the processing of emotional and non-emotional facial expressions. We aimed to understand if individuals with impairment in face recognition from birth (congenital prosopagnosia, CP) can use non-emotional facial expressions to recognize a face as an already seen one, and thus, process this facial dimension independently from features (which are impaired in CP), and basic emotional expressions. To this end, we carried out a behavioral study in which we compared the performance of 6 CP individuals to that of typical development individuals, using upright and inverted faces. Four avatar faces with a neutral expression were presented in the initial phase. The target faces presented in the recognition phase, in which a recognition task was requested (2AFC paradigm), could be identical (neutral) to those of the initial phase or present biologically plausible changes to features, non-emotional expressions, or emotional expressions. After this task, a second task was performed, in which the participants had to detect whether or not the recognized face exactly matched the study face or showed any difference. The results confirmed the CPs' impairment in the configural processing of the invariant aspects of the face, but also showed a spared configural processing of non-emotional facial expression (task 1). Interestingly and unlike the non-emotional expressions, the configural processing of emotional expressions was compromised in CPs and did not improve their change detection ability (task 2). These new results have theoretical implications for face perception models since they suggest that, at least in CPs, non-emotional expressions are processed configurally, can be dissociated from other facial dimensions, and may serve as a compensatory strategy to achieve face recognition.

Introduction

Prosopagnosia refers to a category-specific perceptual deficit in face recognition. It can be acquired (i.e., resulting from brain damage, mainly after lesions of occipito-temporal regions; Bodamer, 1947) or congenital (McConachie, 1976). Congenital prosopagnosia (CP) is not caused by brain lesions, but is present from birth, and it occurs along with intact sensory visual abilities and normal intelligence (Behrmann and Avidan, 2005). It has been described as a quite common cognitive disorder, which occurs in 2.47% of the population and almost always runs in families (Kennerknecht et al., 2006). It can be quite dysfunctional given the importance of faces in social life (Behrmann and Avidan, 2005).

Faces, in fact, are among the most important visual stimuli we perceive as they simultaneously convey several pieces of important social information. They inform us not only about a person's identity, gender, or age, but also about their mood, emotion, and direction of gaze. Thus, faces can be considered multi-dimensional stimuli. Although several behavioral and neuropsychological studies have brought evidence for the existence of cognitive and neural mechanisms dedicated to face perception (Kanwisher et al., 1997, 1999; Posamentier and Abdi, 2003; Kanwisher and Yovel, 2006), still little is known about how these various dimensions are coded and how they are integrated into a single face percept. A first classical distinction has been made between facial expression and facial recognition and identity, which would be processed along two separate routes after an initial stage of visual structural encoding (Bruce and Young, 1986; Kanwisher et al., 1997, 1999; Haxby et al., 2000; Posamentier and Abdi, 2003; Kanwisher and Yovel, 2006). Indeed, it has been reported that prosopagnosic patients with lesions in associative visual cortices, despite their deficit in face recognition can still recognize emotional facial expressions, whereas deficits in expression recognition can occur in patients without prosopagnosia (e.g., Kurucz and Feldmar, 1979; Adolphs et al., 1995), suggesting that expression and identity can be processed independently from each other.

Using fMRI, Haxby et al. (2000) proposed a distributed neural system model for face perception in which face responsive regions were grouped in two systems: the core system that includes areas involved with the visuo-perceptual analysis of a face, and the extended system that includes areas that are involved in the extraction of other information (such as semantics, speech, emotions). Within the core system they emphasize a further distinction between the representation of invariant and changeable aspects of faces. In particular, an important functional and anatomical distinction has been made for the processing of invariant aspects (i.e., eyes, nose, mouth, etc.) and that of changeable aspects of the face (such as eye-gaze direction, facial expression, lip movement, and pre-lexical speech perception), with the former being responsible for the processing of face identity, and the latter being involved in the perception of information that facilitates social interaction and communication (e.g., facial expression).

In the analysis of facial expressions the classical models implicitly assume an emotional content (Bruce and Young, 1986; Haxby et al., 2000). However, in everyday life people can show expressions on their faces which do not convey an emotional state. A good example is represented by celebrity impersonators who can mimic the ways in which famous people move their faces. Contrary to facial emotional expressions that are universally recognized and expressed in the same way by all individuals (Ekman and Friesen, 1976), this particular kind of facial expression (called dynamic facial signatures) is idiosyncratic, does not carry an emotional content and provides cues beyond the form of the face (Munhall et al., 2002; O'Toole et al., 2002).

The fact that an observer can quickly and easily recognize in the impersonator's performance the facial mimics of that particular famous actor or politician indicates that we have the ability to extract the identity of a face not only from its invariant aspects (e.g., visual appearance), but also from its changeable aspects (e.g., facial motion and expressions), and even when they do not convey an affective state. People move in unique ways and thus have dynamic facial signatures that perceivers can recognize (Lander et al., 1999). Hence, at least for familiar faces, the person's identity is conveyed both by emotional and non-emotional facial expressions (Hill and Johnston, 2001; Posamentier and Abdi, 2003; Lander and Metcalfe, 2007). Moreover, there is evidence that our brain and cognitive systems can also recognize people both from features and from facial expressions that do not convey an affective state (Knappmeyer et al., 2001). However, there are several outstanding issues regarding the processing of non-emotional facial expressions.

What happens when we perceive expressions that are not emotional (e.g., when somebody pulls his/her face in a meaningless but distinct way)? In keeping with the existing cognitive and neural models, would they be analyzed by the same mechanism and cortical regions underlying the processing of emotional facial expressions? Or instead, would they be processed and perceived as a change in the invariant features of the face? Although Haxby et al.'s model has been modified to accommodate the recognition of familiar faces through the processing of non-emotional facial expression by differentiating the role of visual familiarity from the role of person knowledge (O'Toole et al., 2002; Gobbini and Haxby, 2006), no claim has been made about a possible distinction between emotional and non-emotional facial expression in unfamiliar (unknown) faces.

Recently, it has been proposed that information about identity could be coded both in the FFA and in the STS. Specifically, the FFA would process static features for both familiar and unfamiliar faces, and the STS, as well as processing emotional facial expression, could also code face identity in the form of dynamic, non-emotional identity signatures (O'Toole et al., 2002). Dynamic information, in fact, contributes to face/person recognition particularly in poor viewing conditions and when invariant facial cues are degraded (Knight and Johnston, 1997; Lander et al., 1999, 2001; Lander and Bruce, 2000). This is because characteristic movements and gestures are reliable cues not only to identity, but also to the recognition of faces of unknown people that have already been seen. In other words, face recognition (i.e., the ability to categorize a face as already seen, although unknown) also relies on changeable features of the face and their dynamic patterns, as does face identity (i.e., the ability to recognize a face as familiar and retrieve our knowledge of it). Lander and Davies (2007), using a face recognition task, showed that characteristic motion information could be extracted very rapidly and efficiently when learning a new face, thus suggesting that as a face is learned, dynamic facial information is encoded with its identity and could be used for face recognition also in unfamiliar faces.

Although, like acquired prosopagnosic patients (Kurucz and Feldmar, 1979; Tranel et al., 1988; Adolphs et al., 1995; but see also Humphreys et al., 2007), congenital prosopagnosic individuals are indistinguishable from controls in perceiving emotional facial expressions (e.g., Behrmann and Avidan, 2005), very little investigation has been carried out to understand whether in this population non-emotional facial expressions can lead to person recognition, and are dissociable from other facial dimensions (i.e., facial features and emotional facial expressions).

The first evidence suggesting that non-emotional facial expressions could be processed in a specific way, dissociable from emotional facial expressions and other facial features, comes from a study by Comparetti et al. (2011) on typical development individuals (young adults). In this behavioral study both the changeable (emotional and non-emotional expressions) and the invariant (features) aspects of unfamiliar faces were manipulated to investigate a possible new dissociation between emotional and non-emotional facial expressions (i.e., expressions that do not have an affective meaning). Participants were asked to perform a recognition task (2AFC paradigm) and a change detection task, using upright and inverted faces. The faces to be recognized could be either identical to the ones presented in the exposure phase (a face bearing a neutral expression), the same but modified in their internal features, emotional and non-emotional facial expressions, or new faces. Once participants recognized a face as an already seen one, they had to detect whether it was identical to the one previously seen or contained a change. The change could regard the size of the eyes or the mouth (invariant feature manipulation) or the presence or absence of an emotional or a non-emotional facial expression. Accuracy and RT were measured. It was hypothesized that, if the emotional and non-emotional facial expressions were processed differently, a difference in performance for the three manipulations should emerge. The results showed that each of the three different manipulation conditions had a different impact on the inversion effect (i.e., a decrement in performance that occurs when faces are inverted, thought to reflect a disruption in configural processing and in encoding invariant features; Yin, 1969). In particular, the magnitude of the inversion effect differed in the three manipulations, indicating a difference not only in the processing of the invariant features and the emotional facial expressions, but also a further difference in the processing of non-emotional and emotional facial expressions.

These differences could be due to the fact that although both emotional facial expressions and non-emotional facial expressions convey biological motion, only the former would involve the emotional system (i.e., the extended system in Haxby et al.'s model). Since both types of facial expressions convey dynamic facial information, it is plausible that they are processed by the same area of the core system (i.e., the STS). However, other areas outside the core system could also be involved in processing them, causing the differences between emotional and non-emotional expressions (Gobbini and Haxby, 2006). Thus, it is an open question whether non-emotional facial expressions, which seem to be processed differently both from invariant features and emotional facial expressions, can lead to, or contribute to categorize a face as already seen (i.e., face recognition).

Following our previous study (Comparetti et al., 2011), we made the hypothesis that non-emotional and emotional expressions are processed separately as much as invariant features and changeable aspects.

Important hints come from the study of congenital prosopagnosics, who are impaired at recognizing faces, have difficulties in deriving the configural or holistic relations between face features, but can use facial movement information conveyed by a dynamic face to recognize facial identities (Steede et al., 2007) or to discriminate in a matching task whether two sequentially presented dynamic unfamiliar faces were or not the same identity (Lander et al., 2004). CP individuals, similar to patients affected by acquired prosopagnosia (Busigny and Rossion, 2010), are minimally affected by face inversion and some of them even show a better performance for inverted than for upright faces (the “inversion” superiority effect) (Avidan et al., 2011). Therefore, given that it has been found that in typical development individuals invariant features, emotional and non-emotional facial expressions differ in terms of configural face processing (Comparetti et al., 2011), CP individuals may process non-emotional facial expressions differently than invariant face features, and in the same way as typical development individuals. Moreover, if the processing of non-emotional facial expressions is intact in CP individuals, then it is possible that they use them as cues to facilitate face recognition, thus compensating for their face processing deficits.

The aim of the present study was two-fold. First, we wanted to investigate whether facial expressions that do not convey an affective state (i.e., non-emotional facial expressions) are processed in the same way as emotional facial expressions by congenital prosopagnosic individuals. Second, we wondered whether in CP individuals these expressions could be used as a cue to face recognition, given that CP individuals should be unimpaired, or less impaired, in processing the changeable aspects of a face (Steede et al., 2007). To this end, as in Comparetti et al. (2011), we used the face inversion paradigm and we presented static unfamiliar faces in which one of the following facial aspects was changed: emotional expression; “non-emotional” expression; size of invariant features. Two different tasks were used: a same/different person task (recognition task) and a change detection task. The first task allowed us to test the effect of our manipulations on face recognition processing, whereas the second one was designed to test whether, within the same identity, the change of a specific facial aspect was successfully detected. Moreover, we exploited the face inversion effect as an indicator of underlying perceptual processing. A difference in the magnitude of the face inversion effect for each manipulation in each task would reflect a difference in the processing of face recognition and emotional/non-emotional facial expressions.

Method

Participants

The study was conducted in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki and fulfilled the ethical standard procedure recommended by the Italian Association of Psychology (AIP). All experimental protocols were also approved by the ethical committee of the University of Milano-Bicocca. All the participants were volunteers and gave their informed consent to the study.

Six participants (3 F and 3 M; aged between 25 and 45 years old; mean = 35; SD = 8.83), who reported in a non-structured interview lifelong difficulties in face recognition and showed impaired performance on tests of face recognition, took part in the study. They were right-handed, had normal or corrected-to-normal vision, and had no neurological or neuropsychological deficit aside from the impairment in face processing.

Their performance was compared with that of a control group of 10 typical development individuals (6 F and 4 M). Controls did not have difficulties in face recognition (self-report) and were matched to the CP group by age [controls aged between 22 and 49 years old; mean = 33.8; SD = 9.55; CPs vs. controls t(14) = 0.25; n.s.].
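The age-matching statistic can be checked from the summary values reported for the two groups. The following is a minimal sketch, assuming a Student's t-test with pooled variance (the text does not name the variant, although 14 degrees of freedom is what a pooled test on 6 + 10 participants gives); the function name is illustrative.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t for two independent groups, computed from summary statistics.

    Assumes equal variances (pooled estimate); returns (t, degrees of freedom).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Ages reported in the text: CPs (n = 6) vs. controls (n = 10).
t_age, df_age = pooled_t_from_summary(35.0, 8.83, 6, 33.8, 9.55, 10)
print(f"t({df_age}) = {t_age:.2f}")
```

Under these assumptions the result agrees with the reported t(14) = 0.25.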

Assessment of Congenital Prosopagnosia

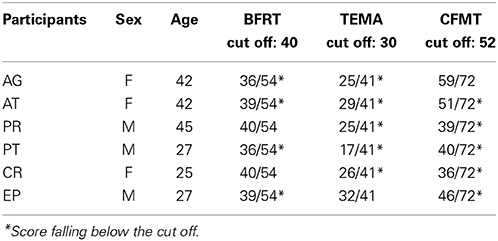

Because there is an ongoing debate on how to diagnose CP and on the heterogeneity of the deficit (Schmalzl et al., 2008), in the present study we assessed the face perception problems reported by the CP participants by means of more than one neuropsychological test. The problems reported in a non-structured pre-test interview concerned perceived face recognition difficulties, uncertainty in face recognition, prolonged recognition times, and the development of compensatory strategies, a pattern compatible with the presence of CP. The presence of CP was further confirmed by comparing the performance of each participant to normative data on three face processing tasks: the Benton Facial Recognition Test, the TEMA Subtest for memory faces, and the Cambridge Face Memory Test.

The Benton Facial Recognition Test (BFRT, Benton and Van Allen, 1968; Ferracuti and Ferracuti, 1992), widely used for acquired prosopagnosia, is a test to assess face recognition abilities. For each item, individuals are presented with a target face above six test faces, and they are asked to indicate which of the six images match the target face.

In the TEMA (Reynolds and Bigler, 1995), the subtest for memory faces requires the recognition of target faces from sets of photos of individuals differing in terms of age, gender and ethnic backgrounds, with an increasing number of targets and distracters.

The Cambridge Face Memory Test (CFMT, Duchaine and Nakayama, 2006; Bowles et al., 2009) is the most widely used and valid test to diagnose CP, and it measures face memory (Wilmer et al., 2012); participants learn six unfamiliar target faces and are subsequently required to recognize them from sets of three faces (one target and two distractor faces). The test faces differ from the learned ones (e.g., they are seen from different viewpoints, with visual noise, etc.). The CFMT includes two versions based on the orientation of faces, upright and inverted.

Table 1 shows the performance of our experimental group at each test. Inclusion criteria required a pathological performance at least in two out of three tests.

Stimuli

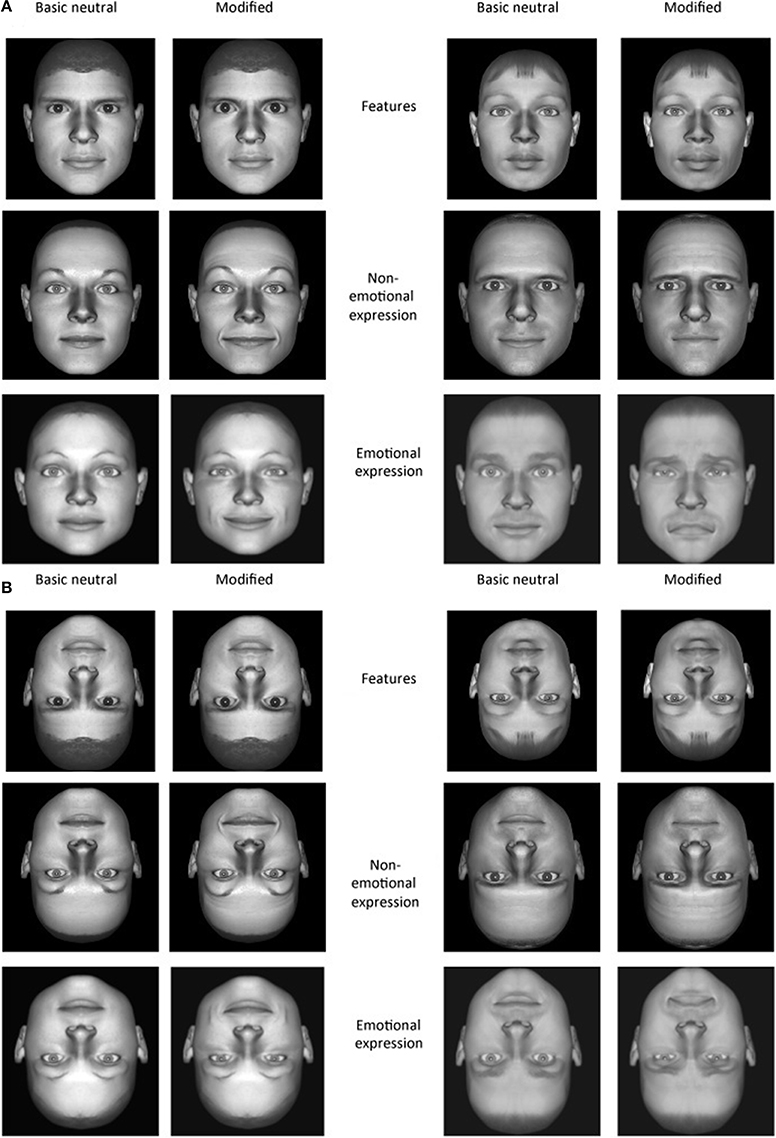

Stimuli were the same as used in Comparetti et al. (2011). The faces were created from digital photos of real faces by means of Adobe Photoshop and Poser 5.0 software (Curious Labs, Inc., and e-frontier, Inc., Santa Cruz, CA) as follows. Firstly, by means of Photoshop a completely symmetrical face was created by duplicating just one hemi-face of the original face. Therefore, the left and the right hemi-faces were perfect mirror-images of one another. This ensured that none of the stimuli used contained any intrinsic, unintended asymmetries that could facilitate recognition. Then, the mirrored digital photos were imported into a different software program (Poser 5.0) to generate 12 neutral basic stimuli. For every face, external features were almost entirely removed by the software so that face recognition could only be based on the internal features.

The stimuli comprised 12 neutral basic (unmodified) faces generated by Poser and three sets of modified faces in which different manipulations were made (features, emotions, and non-emotional facial expressions). Among the neutral stimuli, 4 were target stimuli (2 picturing females and 2 picturing males) and 8 were distracters (4 F and 4 M). In addition, 72 modified stimuli were generated from the target and distracter stimuli: for every manipulation, two different versions were created from each neutral face (12 neutral faces × 3 manipulations × 2 versions = 72; see Figure 1). The first manipulation regarded the size of features. From each target stimulus and from each distracter, one modified stimulus (version 1) was created in which the eyes were enlarged and another one was created in which the mouth was enlarged (version 2). Both changes consisted of an increase in size of 1 Poser software unit. This unit respects the boundaries of biological compatibility. The second manipulation regarded emotional facial expressions. Neutral stimuli were manipulated by means of Poser 5.0 software to show either a happy (version 1) or a sad (version 2) expression. Finally, the non-emotional facial expressions were created by manipulating the neutral faces in their upper (version 1) and lower part (version 2), respectively around the eyes and the mouth. In doing so, the resulting facial expressions did not express an affective state (i.e., non-emotional facial expressions).
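The composition of the stimulus set can be made explicit by enumerating it. The sketch below is only illustrative: the face and version labels are hypothetical names chosen here, and only the counts (4 targets, 8 distracters, 3 manipulations, 2 versions each) come from the description above.

```python
# Hypothetical labels; only the counts are taken from the text.
neutral_faces = [f"target_{i}" for i in range(1, 5)] + [
    f"distracter_{i}" for i in range(1, 9)
]

# Each manipulation has two versions, applied to every neutral face.
manipulations = {
    "features": ("eyes_enlarged", "mouth_enlarged"),
    "emotional": ("happy", "sad"),
    "non_emotional": ("upper_face", "lower_face"),
}

modified_stimuli = [
    (face, manipulation, version)
    for face in neutral_faces
    for manipulation, versions in manipulations.items()
    for version in versions
]

total_stimuli = len(neutral_faces) + len(modified_stimuli)
print(len(neutral_faces), len(modified_stimuli), total_stimuli)
```

Enumerated this way, the 72 modified faces arise from 12 neutral faces × 3 manipulations × 2 versions, and together with the 12 neutral faces the set contains 84 distinct images.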

Figure 1. (A) Examples of basic neutral faces (male and female) and their modified versions. The changes in features are depicted in the two upper rows: on the left, enlarged eye size; on the right, enlarged mouth size. The changes in non-emotional expressions are depicted in the middle rows: on the left, the change occurred in the upper part of the face; on the right, in the lower part of the face. The changes in emotional expression are depicted in the lower rows: on the left, a happy expression; on the right, a sad expression. (B) Stimuli are shown inverted. Each manipulation complied with the parameters of biological compatibility.

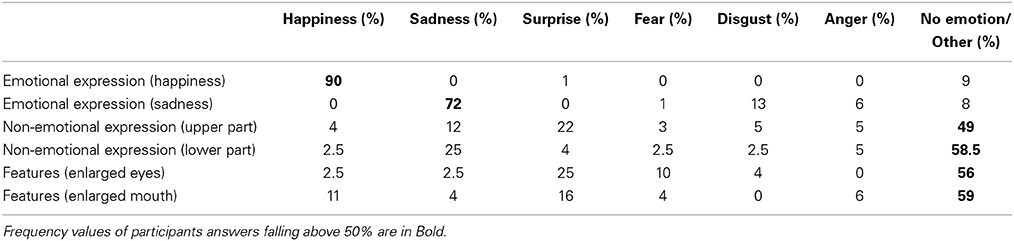

In order to validate the modified stimuli for use in the present and in other studies (e.g., Comparetti et al., 2011), a scalar rating was performed on a sample of 36 stimuli (12 randomly selected from each stimulus set) to evaluate whether or not they conveyed an emotional facial expression. The selected stimuli were presented in upright orientation on a PC display. Twenty typical development participants who did not take part in the present study (12 F and 8 M, aged between 18 and 32 years) had to evaluate the faces on a Likert-like scale from 0 (stimulus does not express any emotion) to 4 (stimulus clearly expresses an emotion) (see Table 2). Following that, they had to indicate which emotion they perceived. They could choose among 8 alternatives: happiness, sadness, anger, disgust, fear, surprise, “other,” or “non-emotions.” Each stimulus remained on screen until response.

Table 2. Mean percentage (%) of emotional and non-emotional ratings given to each type of modified stimulus.

A univariate Analysis of Variance (ANOVA) was performed on the mean percentages of emotional and non-emotional ratings. The effect of stimulus condition was significant [F(1, 19) = 81.19; p < 0.0001]. The results were as follows. In the case of manipulation of features and manipulation of non-emotional facial expressions the stimuli were generally perceived as not expressing a particular affective state and they did not differ from each other, whereas all the stimuli bearing an emotional facial expression were judged as expressing an emotion and differed both from those with modified features (p < 0.0001) and from those displaying a non-emotional facial expression (p < 0.0005). Moreover, faces expressing happiness were judged as happy stimuli, and those expressing sadness as sad stimuli. Therefore, the rating analysis corroborated the validity of our face stimuli.

In the present experiment, each face (7.1° × 9.2°) was presented in gray scale and against the same black colored background. All of the stimuli were presented both upright and inverted (see Figure 1).

Apparatus

The experiment took place in a dark, sound-attenuated room. Participants sat in front of a PC monitor at a distance of approximately 70 cm. The screen was framed with a circular black cardboard frame of about 15 cm in diameter. Stimulus presentation and the recording of task performance were controlled by the Presentation software (version 9.8). Two keyboards were used: one for the participants, covered by a black card with a hole in correspondence with the “yes” and “no” buttons (recognition task, see below), and one for the experimenter (same/different task, see below).
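The stimulus size reported in degrees of visual angle (7.1° × 9.2°) can be converted into an approximate physical size on the screen using the viewing distance given here. This is a rough sketch, assuming the reported angles are width × height, that the face is centered on the line of sight, and that the distance is exactly 70 cm (the text says "approximately"); the function name is illustrative.

```python
import math


def size_from_visual_angle(angle_deg, distance_cm):
    """Physical extent (cm) that subtends angle_deg at the given viewing distance."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# Assumption: 7.1 deg is the horizontal and 9.2 deg the vertical extent.
width_cm = size_from_visual_angle(7.1, 70)
height_cm = size_from_visual_angle(9.2, 70)
print(f"{width_cm:.1f} cm x {height_cm:.1f} cm")
```

Under these assumptions each face would measure roughly 8.7 cm × 11.3 cm on the screen.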

Procedure

The experiment was divided into two sessions, an exposure session and an experimental session. In the exposure session the participants saw the 4 target faces on the screen, one by one, 10 times, for 3 s each time. The experimental session followed the exposure session and was divided into four blocks: 2 of upright faces and 2 of inverted faces. In each block neutral and manipulated faces were presented in random order. For each experimental trial the sequence of events was as follows. The trial started with a fixation cross in the center of the screen, which lasted 250 ms; then the face stimulus was presented in the center for 500 ms, followed by a gray screen for each task (the same/different person task and the change detection task). For every stimulus participants were asked to indicate whether or not the face was one of the target stimuli. Participants had to press the “yes” button if they had seen the face in the exposure phase, or the “no” button if they did not recognize the face (2 Alternative Forced Choice paradigm). When a stimulus received a “yes” response, participants then had to judge whether the stimulus was exactly the same as the one seen in the exposure phase or whether there was some change. For the same/different task the experimenter registered the participant's answer on another keyboard by pressing the “same” or “different” key. For both the recognition task and the same/different task, accuracy was recorded and analyzed.

We used the presence of the inversion effect as a marker of configural processing (e.g., Rossion, 2008).

Results

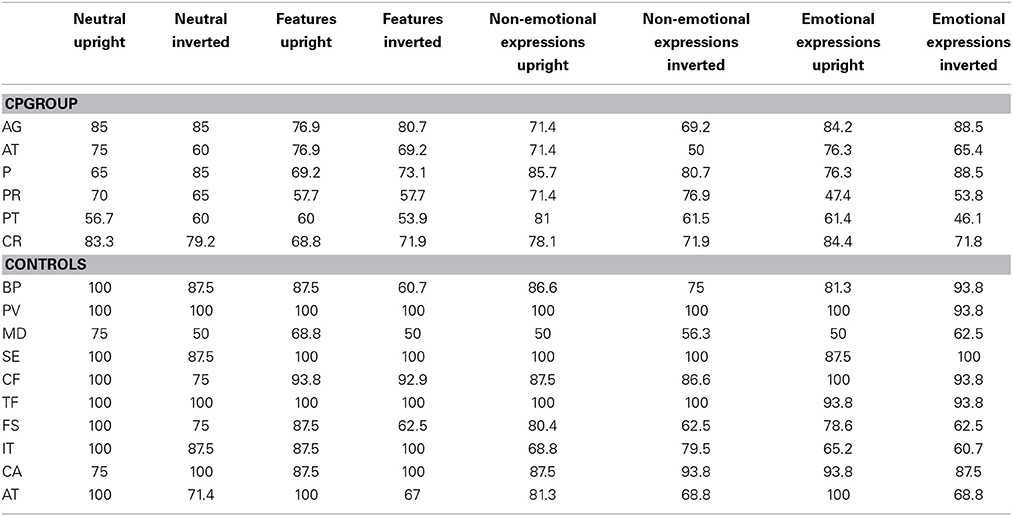

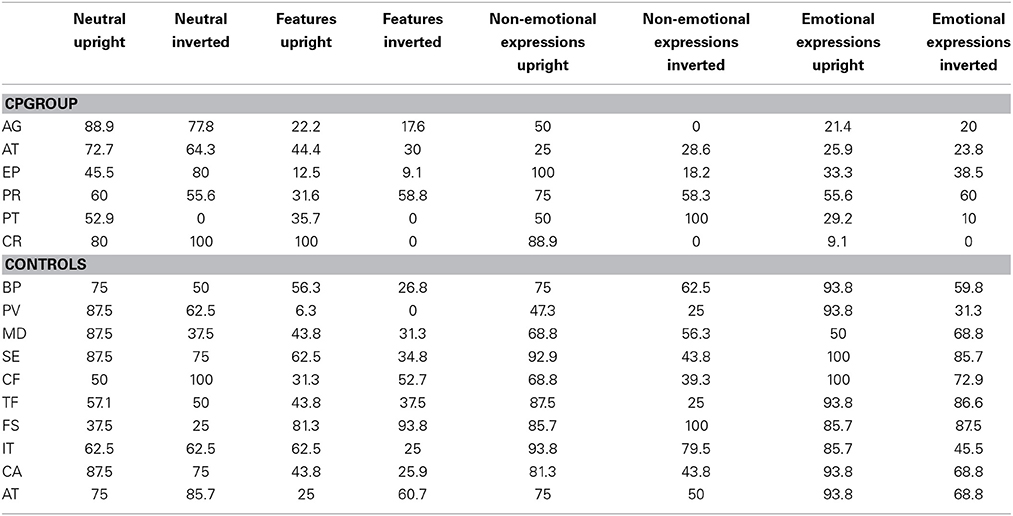

The percentage of correct responses was used as a dependent variable (Tables 3, 4).

Table 3. The mean percentages of correct responses for each participant subdivided for each condition in Task 1 (Recognition task).

Table 4. The mean percentages of correct responses for each participant subdivided for each condition in Task 2 (Change detection task).

An ANOVA was run separately for each task (recognition and change detection) and for each orientation (upright and inverted), with group (CPs and controls) as a between-subject factor and condition (neutral, features, non-emotional, and emotional expressions) as a within-subject factor. T-tests for independent samples were run as post-hoc tests to compare the performances of the two groups for significant interactions. One-sample t-tests against chance level (50%) were also performed in order to test that the effects were not due to chance.

All statistical analyses were conducted using the software package Statistica for Windows (version 8.0, Statsoft Inc., 2007). The variances between groups were assessed by Levene's test for the homogeneity of the variances.
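For readers who wish to see what the test against chance level involves, the following is a minimal sketch of a one-sample t-test against 50%. It is not the authors' procedure (their analyses were run in Statistica); the accuracy values are hypothetical and serve only to illustrate the computation for a group of 10 participants.

```python
import math
from statistics import mean, stdev


def one_sample_t(scores, chance=50.0):
    """One-sample t statistic of scores against a chance level; returns (t, df)."""
    n = len(scores)
    standard_error = stdev(scores) / math.sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(scores) - chance) / standard_error, n - 1


# Hypothetical percent-correct scores for 10 participants (not data from the study).
hypothetical_accuracy = [60, 70, 80, 90, 100, 80, 70, 60, 90, 80]
t_value, df = one_sample_t(hypothetical_accuracy)
print(f"t({df}) = {t_value:.3f}")
```

A positive t indicates performance above chance and a negative t performance below chance; the statistic is then evaluated against the t distribution with n − 1 degrees of freedom.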

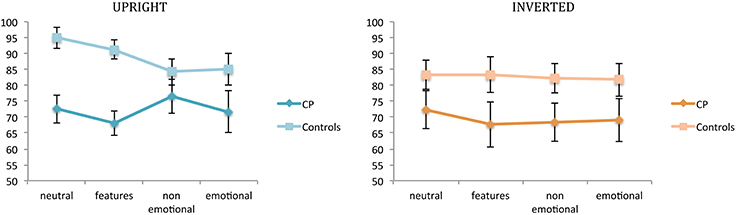

Figure 2 illustrates participants' performance (CPs and controls) at the first task for each experimental condition.

Figure 2. Mean percentages of correct responses in the recognition task (task 1). Percentages are plotted for upright and inverted presentation as a function of experimental manipulations. Error bars represent standard error of the mean. *Between subjects significant differences.

A first ANOVA was run for the recognition task and the upright condition.

A main effect of group emerged [F(1, 14) = 9.72; p = 0.007], confirming that the two groups came from different populations in terms of their ability to recognize unfamiliar faces. As expected, controls were better than CPs in recognition (88.87 vs. 72.19%, respectively). However, the significant interaction between group and condition [F(3, 42) = 3.196; p = 0.033] indicates that this was the case only for neutral faces [controls: 95% vs. CPs: 72.5%, t(14) = −4.084; p = 0.001; Levene test: F(1, 14) = 0.096; p = 0.761] and for faces with modified features size [controls: 91.26% vs. CPs: 68.08%, t(14) = −4.804; p = 0.0002; Levene test: F(1, 14) = 0.317; p = 0.582]. Both non-emotional facial expressions [controls: 84.21% vs. CPs: 76.51%, t(14) = −1.14; n.s.] and emotional facial expressions [controls: 85.02% vs. CPs: 71.66%, t(14) = −1.62; n.s.] did not differ between the two groups.

No significant main effect of condition emerged [F(3, 42) = 1.21; n.s.].
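As a consistency check, the overall group means reported for the upright recognition task can be compared with the unweighted averages of the four condition means given in the text. This is only a sketch; the dictionary keys are labels chosen here.

```python
from statistics import fmean

# Condition means (% correct) for the upright recognition task, as reported in the text.
upright_recognition = {
    "controls": {"neutral": 95.0, "features": 91.26, "non_emotional": 84.21, "emotional": 85.02},
    "CPs": {"neutral": 72.5, "features": 68.08, "non_emotional": 76.51, "emotional": 71.66},
}

# Unweighted average across the four conditions for each group.
group_means = {
    group: fmean(conditions.values())
    for group, conditions in upright_recognition.items()
}

for group, value in group_means.items():
    print(f"{group}: {value:.2f}%")
```

The averages agree, to within rounding, with the reported group means of 88.87% for controls and 72.19% for CPs.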

A second ANOVA was run for the recognition task and the inverted condition.

No significant main effect of group [F(1, 14) = 3.361; n.s.], or condition [F(3, 42) = 0.306; n.s.] emerged. Their interaction was also not significant [F(3, 42) = 0.183; n.s.].

These results are coherent with the idea that in control subjects a configural processing of features is triggered only by upright faces (e.g., Diamond and Carey, 1986), and is compromised in CP individuals (e.g., de Gelder and Rouw, 2000; Behrmann and Avidan, 2005).

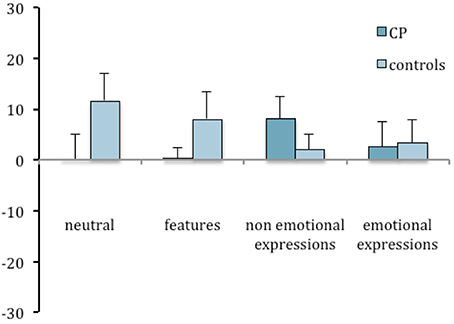

In order to assess configural face-specific mechanisms, the face inversion effect was computed as the difference in accuracy between upright and inverted faces, and CP individuals' performance was compared to that of controls for each task by means of an ANOVA. No significant effect occurred [Group: F(1, 14) = 0.446; n.s.; Condition: F(3, 42) = 0.245; n.s.; Interaction: F(3, 42) = 3.391; n.s.].

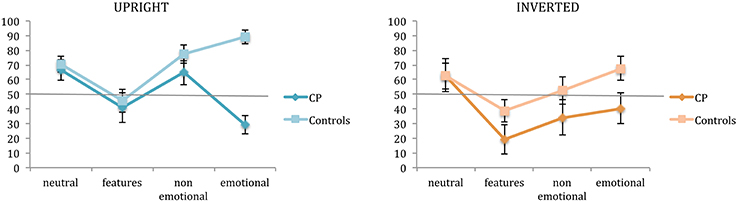

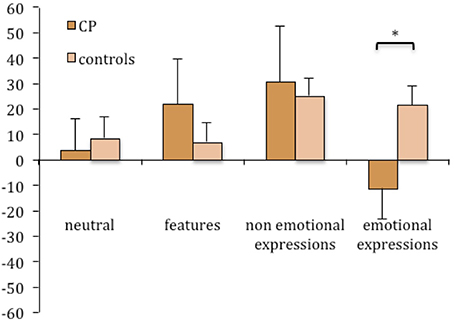

The detection of features, and non-emotional and emotional expression changes was assessed by the second task, in which participants were requested to judge if the faces recognized as already seen in the first task were exactly the same or somehow different from those seen in the exposure phase. Figure 3 illustrates the performance at the second task of controls and CP individuals for each experimental condition.

Figure 3. Mean percentages of correct responses in the change detection task (task 2). Percentages are plotted for upright and inverted presentation as a function of experimental manipulations. Error bars represent standard error of the mean. *Between subjects significant differences.

A third ANOVA was run on change detection accuracy for the upright condition.

A main effect of group emerged [F(1, 14) = 18.68; p = 0.0007], confirming a better performance of controls in this task (70.75 vs. 50.41%, respectively). A main effect of condition also emerged [F(3, 42) = 5.76; p = 0.002], as well as a significant interaction between group and condition [F(3, 42) = 6.459; p = 0.001]. In particular, these results indicated that the feature condition differed from all the other ones (all p < 0.005) and that a difference between the two groups was present only when faces had emotional expressions [controls: 89.04% vs. CPs: 29.08%, t(14) = −7.819; p < 0.0001; Levene test: F(1, 14) = 0.041; p = 0.842].

A fourth ANOVA was run on change detection accuracy for the inverted condition.

Only the main effects of group [F(1, 14) = 5.976; p = 0.028] and condition [F(3, 42) = 4.199; p = 0.01] emerged, confirming a slightly better performance of controls in this task (55.31 vs. 39.19%, respectively), and a different performance with feature-modified faces than with neutral (p = 0.004) and emotional expression faces (p = 0.014).

As can be seen in Figure 3, a change in the size of features was very hard to detect for both CP individuals and controls. Both groups performed below 50% with upright as well as inverted stimuli (CPs: 41.08% and 19.26%; controls: 45.66% and 38.85%, respectively). This result could be due to the fact that face processing mechanisms have a low sensitivity to such modifications, so as to guarantee efficient face identification even when some modifications to the face features (such as puffiness, for example) occur.

However, performance in the second task was generally very low in both groups, and for this reason we tested each condition in each group against the chance level (50%).

Controls performed above the chance level in the neutral [t(9) = 3.618; p = 0.006], the non-emotional [t(9) = 6.243; p = 0.0001] and the emotional expression [t(9) = 8.947; p < 0.0001] conditions with upright stimuli. In the inverted condition, performance was above chance level only in the emotional expression condition [t(9) = 3.051; p = 0.014].

In contrast, the CPs' performance was never significantly above the chance level, and in two conditions it was significantly below it: the features condition with inverted stimuli [t(5) = −3.348; p = 0.020] and the emotional expression condition with upright stimuli [t(5) = −3.325; p = 0.021].
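The comparisons against chance reported above are one-sample t-tests with 50% as the reference value. A minimal Python sketch of the statistic follows, using only the standard library; the helper name and the scores are hypothetical and are not taken from the study.

```python
from math import sqrt
from statistics import mean, stdev

CHANCE = 50.0  # chance level (%) in a same/different judgment, as stated in the text

def one_sample_t(scores, mu=CHANCE):
    """Return (t, df) for a one-sample t-test of the mean of `scores` against `mu`."""
    n = len(scores)
    standard_error = stdev(scores) / sqrt(n)  # sample SD with n - 1 in the denominator
    return (mean(scores) - mu) / standard_error, n - 1

# Hypothetical accuracies (%) for five participants; NOT the study's data.
scores = [52.0, 54.0, 56.0, 58.0, 60.0]

t, df = one_sample_t(scores)
print(f"t({df}) = {t:.3f}")
```

A negative t, as reported for two of the CP conditions, means the group mean lies below 50%. The two-tailed p-value would be read from the t distribution with n − 1 degrees of freedom, for example with a statistics package.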

It is interesting to note that the presence of emotional expressions facilitates the detection of change in the controls and reduces it in the CPs. This is not the case for non-emotional expressions.

Overall, the results of task 2 suggest a difference in the processing of emotional and non-emotional facial expressions.

The face inversion effect was computed for task 2 as well, and an ANOVA was run with group as a between-subject factor and condition as a within-subject factor. No significant effects were found [Group: F(1, 14) = 0.289; n.s.; Condition: F(3, 42) = 1.763; n.s.; Interaction: F(3, 42) = 1.694; n.s.]. Nevertheless, the inspection of Figures 4 and 5 suggests that CP individuals show a greater inversion effect in the condition of non-emotional expressions, in task 2 as much as in task 1.

Figure 4. Mean difference between upright and inverted conditions in the percentages of correct responses in the recognition task (task 1) plotted as a function of group and experimental manipulations. Error bars represent standard error of the mean.

Figure 5. Mean difference between upright and inverted conditions in the percentages of correct responses in the change detection task (task 2) plotted as a function of group and experimental manipulations. Error bars represent standard error of the mean. *Significant between-subjects differences.

Discussion

The aim of this investigation was to shed new light on emotional and non-emotional facial expression processing and to investigate whether in CP individuals these expressions could be used as a cue to face recognition, given that they should be less impaired, or not at all impaired (e.g., Steede et al., 2007). Two consecutive tasks were used. In the first task participants had to recognize static unfamiliar faces which could differ either in the emotional facial expressions, in the non-emotional facial expressions, or in the size of invariant features from a set of previously presented faces. The face stimuli were presented either upright or inverted. We also developed a new task (task 2—change detection task), in which participants were asked to detect whether or not a change occurred in the recognized face compared to the exposure session.

The first main result that emerged from our data was that in task 1, in the upright presentation condition, CPs had a significantly worse performance than controls only for two conditions: neutral and feature-modified faces. This is in line with the hypothesis proposed in the literature (e.g., de Gelder and Rouw, 2000; Behrmann and Avidan, 2005) in which congenital prosopagnosia is characterized by an impairment in processing the invariant features of faces.

The second main result was that we did not find a difference between CPs and controls in the recognition of unfamiliar faces (as our stimuli were) when the manipulations involved facial expressions (emotional and non-emotional), thus suggesting in CPs both a dissociation between changeable and invariant aspects, and a spared processing of the changeable aspects of the face. Although it has already been shown that dynamic facial expressions can help face recognition (Longmore and Tree, 2013), our finding further indicates that CPs could effectively use non-emotional facial expressions of static images as a cue to recognition, but this seems not to be the case for emotional ones (as evident from their performance in task 2).

Even though we did not formally assess CPs' ability to discriminate emotional expressions, it is worth noting that CP individuals were poor at detecting emotional expression changes, but this did not seem to affect their ability to detect a change in non-emotional facial expressions. This result is new and suggests that CPs' good performance in the detection of changes in facial expressions is likely to reflect the use of face motion cues even when they have to be derived from a static image of the face, as in the present study.

The anatomo-functional correlate of the processing of changeable aspects of a face is considered to be the Superior Temporal Sulcus (STS; Haxby et al., 2000), the same area which also underlies the processing of biological motion (Allison et al., 2000). It has been reported that responses to facial expressions and other changeable aspects of the face, such as gaze direction, have different locations in the STS (Engell and Haxby, 2007). Therefore, given the heterogeneity of the STS, and on the basis of our results, it could be argued that STS functionality is preserved in CPs. Accordingly, one may expect that CP individuals could also perceive biological motion, in the same way they could process the changeable aspects of a face. Future research is needed to clarify this issue.

Interestingly, our results also provide evidence of a further differentiation between emotional and non-emotional facial expression processing. This is a new finding that extends the results present in the literature (Longmore and Tree, 2013), and is not accommodated by many face recognition models (Bruce and Young, 1986; Kanwisher et al., 1997, 1999; Haxby et al., 2000; Posamentier and Abdi, 2003; Kanwisher and Yovel, 2006). Although CPs' performance in the recognition task did not differ from that of controls in the emotional and non-emotional expression conditions, in the second task it dropped severely when the change occurred in the emotional facial expressions.

Taken together, these data indicate that the processing of emotional and non-emotional facial expressions differs and that a successful recognition of unfamiliar faces can rely on the detection of non-emotional changeable facial features, at least in subjects affected by CP. A possible explanation for this is that emotions conveyed by facial expression have a more universal meaning than non-emotional facial expressions, which instead can be idiosyncratic and more suitable to face recognition (idiosyncratic dynamic facial signature, as defined by O'Toole et al., 2002). In other words, emotional facial expressions are less useful in recognizing an unfamiliar face which has been seen only once. Hence, non-emotional expressions can be used as a better cue to face identity even when the face is unfamiliar. Note also that our findings demonstrate an accurate detection of non-emotional expressions (task 2), in addition to a dissociation from emotional expressions. Therefore, they can be memorized and used independently from emotional expressions for correct face recognition by both controls and CPs.

We suggest that CPs could rely more on changeable features for improving face recognition, and this is why they could also be more sensitive to detecting differences in these face dimensions.

Our explanation is consistent with the results of a previous study by Lander and Davies (2007), who proposed that faces can be recognized from facial expressions even if they are unfamiliar, because as a face is learnt, information about its characteristic motion is encoded with identity. Indeed, it seems that typically developing individuals were able to extract and encode dynamic information even when viewing a face for a very short time, such as in our exposure session. Our findings are consistent with this idea and support the proposal of a rapid learning of characteristic “implied” motion patterns. In this vein, CPs may have developed a special ability to extract information on identity from the changeable aspects of faces at the expense of a more fine-tuned emotional expression processing.

In controls, the presence of an emotional expression, in fact, facilitates the detection of a difference in the recognized face, while in CPs the performance associated with these stimuli is greatly reduced (task 2). This indicates that in CPs the affective component of facial expression does not play a key role in face recognition.

In line with the model of O'Toole et al. (2002), we propose that the processing of changeable facial aspects can lead to face identification, since important cues to identity are extracted through it. These cues are useful for recognizing both familiar (Albonico et al., 2012) and unfamiliar faces, as shown by previous studies (Longmore and Tree, 2013) and the present study. In particular, we argue that the processing of non-emotional facial expressions is preserved and enhanced in CP individuals, who can then use it to compensate for their face recognition deficits. We also speculate that the nature of the processing of the changeable aspects of a face could be configural. Specifically, this is true for non-emotional expressions, as revealed by the presence of a large inversion effect in CP participants in both the recognition and the change detection task. Interestingly, in our second task (change detection task) the processing of emotional facial expressions seems to be analytic rather than holistic. In fact, not only did CPs show a very poor performance in the detection of a change in the emotional expressions, but they also showed an “inversion of the inversion effect” (i.e., a better performance for inverted than upright stimuli). This is in line with previous studies (Chen and Chen, 2010), which suggested that the information relevant for emotion detection is better extracted from the movements of single facial districts and is processed more analytically than non-emotional expression information.

We think that the configural processing of invariant features is the typical mode to reach face recognition and identification, but when this mechanism is impaired such as in congenital prosopagnosia, the analytic processing of single features and the processing of the non-emotional expressions (which are changeable aspects of a face and are processed via a different and dissociable pathway from that of the facial features) can help compensate for face recognition impairments.

In conclusion, congenital prosopagnosics, even if characterized by a deficit in the global processing of invariant features, could show a preserved analysis of changeable aspects, in particular of non-emotional facial expressions, which can be used for face recognition.

A speculative hypothesis, to be tested in future studies with a larger sample, could be that, although the configural mechanisms processing invariant features are impaired in CPs (in keeping with their difficulty in face recognition tests), the configural processing of changeable aspects could instead be preserved.

Author Contributions

Roberta Daini designed the experiment, analyzed the data, wrote the manuscript, discussed the results, and prepared the figures. Chiara M. Comparetti designed and performed the experiment, wrote the Method Section, and prepared the stimuli. Paola Ricciardelli discussed the results, and wrote and revised the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Paola Ricciardelli was supported by a grant from Università di Milano-Bicocca (Fondo di Ateneo 2011). We thank Andrea Albonico, Manuela Malaspina and Laura Corpaccini for helping with data collection.

References

Adolphs, R., Tranel, D., Damasio, H., and Damasio, A. R. (1995). Fear and the human amygdala. J. Neurosci. 15, 5879–5891.

Albonico, A., Malaspina, M., and Daini, R. (2012). Congenital prosopagnosia: the role of changeable and invariant aspects in famous face identification. Perception 41(Suppl. 106).

Allison, T., Puce, A., and McCarthy, G. (2000). Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 4, 267–278. doi: 10.1016/S1364-6613(00)01501-1

Avidan, G., Tanzer, M., and Behrmann, M. (2011). Impaired holistic processing in congenital prosopagnosia. Neuropsychologia 49, 2541–2552. doi: 10.1016/j.neuropsychologia.2011.05.002

Behrmann, M., and Avidan, G. (2005). Congenital prosopagnosia: face-blind from birth. Trends Cogn. Sci. 9, 180–187. doi: 10.1016/j.tics.2005.02.01

Benton, A. L., and Van Allen, M. W. (1968). Impairment in facial recognition in patients with cerebral disease. Trans. Am. Neurol. Assoc. 93, 38–42.

Bowles, D. C., McKone, E., Dawel, A., Duchaine, B., Palermo, R., Schmalzl, L., et al. (2009). Diagnosing prosopagnosia: effects of ageing, sex, and participant-stimulus ethnic match on the Cambridge face memory test and Cambridge face perception test. Cogn. Neuropsychol. 26, 423–455. doi: 10.1080/02643290903343149

Bruce, V., and Young, A. (1986). Understanding face recognition. Br. J. Psychol. 77, 305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x

Busigny, T., and Rossion, B. (2010). Acquired prosopagnosia abolishes the face inversion effect. Cortex 46, 965–981. doi: 10.1016/j.cortex.2009.07.004

Chen, M. Y., and Chen, C. C. (2010). The contribution of the upper and lower face in happy and sad facial expression classification. Vision Res. 50, 1814–1823. doi: 10.1016/j.visres.2010.06.002

Comparetti, C. M., Ricciardelli, P., and Daini, R. (2011). Caratteristiche invarianti di un volto, espressioni emotive ed espressioni non emotive: una tripla dissociazione? G. Ital. Psicol. 1, 215–224. doi: 10.1421/34846

de Gelder, B., and Rouw, R. (2000). Configural face processes in acquired and developmental prosopagnosia: evidence for two separate face systems? Neuroreport 11, 3145–3150. doi: 10.1097/00001756-200009280-00021

Diamond, R., and Carey, S. (1986). Why faces are and are not special: an effect of expertise. J. Exp. Psychol. Gen. 115, 107–117. doi: 10.1037/0096-3445.115.2.107

Duchaine, B. C., and Nakayama, K. (2006). The Cambridge face memory test: results for neurologically intact individuals and an investigation of its validity using inverted face stimuli and prosopagnosic participants. Neuropsychologia 44, 576–585. doi: 10.1016/j.neuropsychologia.2005.07.001

Ekman, P., and Friesen, W. V. (1976). Pictures of Facial Affect. Palo-Alto, CA: Consulting Psychologists Press.

Engell, A. D., and Haxby, J. (2007). Facial expression and gaze-direction in human superior temporal sulcus. Neuropsychologia 45, 3234–3241. doi: 10.1016/j.neuropsychologia.2007.06.022

Ferracuti, F., and Ferracuti, S. (1992). Taratura del campione italiano. In test di riconoscimento di volti ignoti. Firenze 26–29.

Gobbini, M. I., and Haxby, J. V. (2006). Neural response to the visual familiarity of faces. Brain Res. Bull. 71, 76–82. doi: 10.1016/j.brainresbull.2006.08.003

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Hill, H., and Johnston, A. (2001). Categorizing sex and identity from the biological motion of faces. Curr. Biol. 11, 880–885. doi: 10.1016/S0960-9822(01)00243-3

Humphreys, K., Avidan, G., and Behrmann, M. (2007). A detailed investigation of facial expression processing in congenital prosopagnosia as compared to acquired prosopagnosia. Exp. Brain Res. 176, 356–373. doi: 10.1007/s00221-006-0621-5

Kanwisher, N., McDermott, J., and Chun, M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311.

Kanwisher, N., Stanley, D., and Harris, A. (1999). The fusiform face area is selective for faces not animals. Neuroreport 10, 183–187.

Kanwisher, N., and Yovel, G. (2006). The fusiform face area: a cortical region specialized for the perception of faces. Philos. Trans. R. Soc. Lond. B Biol. Sci. 361, 2109–2128. doi: 10.1098/rstb.2006.1934

Kennerknecht, I., Grueter, T., Welling, B., Wentzek, S., Horst, J., Edwards, S., et al. (2006). First report of prevalence of non-syndromic hereditary prosopagnosia (HPA). Am. J. Med. Genet. A. 140, 1617–1622. doi: 10.1002/ajmg.a.31343

Knappmeyer, B., Thornton, I., and Bulthoff, H. H. (2001). Facial motion can determine facial identity. J. Vis. 3:338a. doi: 10.1167/1.3.338

Knight, B., and Johnston, A. (1997). The role of movement in face recognition. Vis. Cogn. 4, 265–274.

Kurucz, J., and Feldmar, G. (1979). Prosopo-affective agnosia as a symptom of cerebral organic disease. J. Am. Geriatr. Soc. 27, 225–230.

Lander, K., and Bruce, V. (2000). Recognizing famous faces: exploring the benefits of facial motion. Ecol. Psychol. 12, 259–272. doi: 10.1207/S15326969ECO1204_01

Lander, K., Bruce, V., and Hill, H. (2001). Evaluating the effectiveness of pixelation and blurring on masking the identity of familiar faces. Appl. Cogn. Psychol. 15, 101–116. doi: 10.1002/1099-0720(200101/02)

Lander, K., Christie, F., and Bruce, V. (1999). The role of movement in the recognition of famous faces. Mem. Cognit. 27, 974–985.

Lander, K., and Davies, R. (2007). Exploring the role of characteristic motion when learning new faces. Q. J. Exp. Psychol. (Hove). 60, 519–526. doi: 10.1080/17470210601117559

Lander, K., Humphreys, G., and Bruce, V. (2004). Exploring the role of motion in prosopagnosia: recognizing, learning and matching faces. Neurocase 10, 462–470. doi: 10.1080/13554790490900761

Lander, K., and Metcalfe, S. (2007). The influence of positive and negative facial expressions on face familiarity. Memory 15, 63–69. doi: 10.1080/09658210601108732

Longmore, C. A., and Tree, J. J. (2013). Motion as a cue to face recognition: evidence from congenital prosopagnosia. Neuropsychologia 51, 864–875. doi: 10.1016/j.neuropsychologia.2013.01.022

Munhall, K. G., Servos, P., Santi, A., and Goodale, M. A. (2002). Dynamic visual speech perception in a patient with visual form agnosia. Neuroreport 13, 1793–1796. doi: 10.1097/00001756-200210070-00020

O'Toole, A. J., Roark, D. A., and Abdi, H. (2002). Recognizing moving faces: a psychological and neural synthesis. Trends Cogn. Sci. 6, 261–266. doi: 10.1016/S1364-6613(02)01908-3

Posamentier, M. T., and Abdi, H. (2003). Processing faces and facial expressions. Neuropsychol. Rev. 13, 113–143. doi: 10.1023/A:1025519712569

Reynolds, C. R., and Bigler, E. D. (1995). Test di Memoria e Apprendimento. TEMA, Trento: Erickson. (Test of Memory and Learning. TOMAIL. Austin: PRO-ED, 1994).

Rossion, B. (2008). Picture-plane inversion leads to qualitative changes of face perception. Acta Psychol. 128, 274–289. doi: 10.1016/j.actpsy.2008.02.003

Schmalzl, L., Palermo, R., and Coltheart, M. (2008). Cognitive heterogeneity in genetically based prosopagnosia: a family study. J. Neuropsychol. 2, 99–117. doi: 10.1348/174866407X256554

Steede, L. L., Tree, J. J., and Hole, G. J. (2007). I can't recognize your face but I can recognize its movement. Cogn. Neuropsychol. 24, 451–466. doi: 10.1080/02643290701381879

Tranel, D., Damasio, A. R., and Damasio, H. (1988). Intact recognition of facial expression, gender, and age in patients with impaired recognition of face identity. Neurology 38, 690–696. doi: 10.1212/WNL.38.5.690

Wilmer, J. B., Germine, L., Chabris, C. F., Chatterjee, G., Gerbasi, M., and Nakayama, K. (2012). Capturing specific abilities as a window into human individuality: the example of face recognition. Cogn. Neuropsychol. 2, 360–392. doi: 10.1080/02643294.2012.753433

Keywords: face perception, congenital prosopagnosia, unfamiliar face recognition, emotional expressions, non-emotional expression processing

Citation: Daini R, Comparetti CM and Ricciardelli P (2014) Behavioral dissociation between emotional and non-emotional facial expressions in congenital prosopagnosia. Front. Hum. Neurosci. 8:974. doi: 10.3389/fnhum.2014.00974

Received: 30 April 2014; Accepted: 15 November 2014;

Published online: 03 December 2014.

Edited by:

Mark A. Williams, Macquarie University, Australia

Copyright © 2014 Daini, Comparetti and Ricciardelli. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Roberta Daini, Dipartimento di Psicologia, Università degli Studi di Milano-Bicocca, Piazza dell'Ateneo Nuovo, 1, 20126 Milano, Italy. E-mail: roberta.daini@unimib.it
