- ¹School of Computer Science and Technology, Tianjin Key Laboratory of Cognitive Computing and Application, Tianjin University, Tianjin, China

- ²State Key Laboratory of Intelligent Technology and Systems, National Laboratory for Information Science and Technology, Tsinghua University, Beijing, China

- ³Medical Imaging Research Institute, Binzhou Medical University, Yantai, China

- ⁴Department of Radiology, Yantai Affiliated Hospital of Binzhou Medical University, Yantai, China

How human beings efficiently recognize others' facial expressions is an important question in cognitive neuroscience, and previous studies have identified specific cortical regions that show preferential activation to facial expressions. However, the potential contribution of connectivity patterns to the processing of facial expressions remains unclear. The present functional magnetic resonance imaging (fMRI) study explored whether facial expressions could be decoded from functional connectivity (FC) patterns using multivariate pattern analysis combined with machine learning algorithms (fcMVPA). We employed a block design experiment and collected neural activity while participants viewed facial expressions of six basic emotions (anger, disgust, fear, joy, sadness, and surprise). Both static and dynamic expression stimuli were included in our study. A behavioral experiment after scanning confirmed the validity of the facial stimuli presented during the fMRI experiment in terms of classification accuracy and emotional intensity. We obtained whole-brain FC patterns for each facial expression and found that both static and dynamic facial expressions could be successfully decoded from the FC patterns. Moreover, we identified expression-discriminative networks for the static and dynamic facial expressions, which extend beyond the conventional face-selective areas. Overall, these results suggest that large-scale FC patterns may also contain rich expression information that allows facial expressions to be decoded accurately, pointing to a novel mechanism, based on general interactions between distributed brain regions, that contributes to human facial expression recognition.

Introduction

Facial expression is an important medium for social communication, as it conveys information about others' emotions. Humans can quickly and effortlessly decode emotional expressions from faces and perceive them categorically. The mechanism that enables the human brain to recognize facial expressions so efficiently has been studied intensively.

The usual way to explore facial expression perception is to record brain activity patterns while participants are presented with facial stimuli. Jin et al. (2012, 2014a,b) have made extensive efforts to improve stimulus presentation approaches with face stimuli. To accurately locate increased neural activity in brain areas, functional magnetic resonance imaging (fMRI) is widely used. Using fMRI, Haxby et al. (2000) proposed an early model of face perception comprising a "core" and an "extended" system that participate in the processing of facial signals. Subsequently, the core face network, which contains the fusiform face area (FFA), the occipital face area (OFA), and the face-selective area in the posterior superior temporal sulcus (pSTS), has been widely discussed in facial expression perception studies, and these regions are considered key (Haxby et al., 2000; Grill-Spector et al., 2004; Winston et al., 2004; Yovel and Kanwisher, 2004; Ishai et al., 2005; Rotshtein et al., 2005; Lee et al., 2010; Gobbini et al., 2011). Previous fMRI studies on facial expression perception mainly employed static expression images as stimuli (Gur et al., 2002; Murphy et al., 2003; Andrews and Ewbank, 2004). Because natural expressions involve movement, recent studies have suggested that dynamic stimuli are more ecologically valid than static stimuli and may be more appropriate for investigating the "authentic" mechanism of human facial expression recognition (Trautmann et al., 2009; Johnston et al., 2013). Studies with dynamic stimuli have found enhanced brain activation compared with static stimuli and have shown that, in addition to the conventional face-selective areas, motion-sensitive areas also respond significantly to facial expressions (Furl et al., 2012, 2013, 2015).

Most past fMRI studies on facial expression perception employed univariate statistics to analyze the stimulus-induced increases in neural activity in specific brain areas. Because interactions between different brain areas are expected, analyses of functional connectivity (FC), measured as the temporal correlation of fMRI activity between distinct brain areas, have attracted increasing attention (Smith, 2012; Wang et al., 2016). Analysis of FC patterns has been applied in recent studies of object categorization (He et al., 2013; Hutchison et al., 2014; Stevens et al., 2015; Wang et al., 2016), which generally observed that distinct brain regions are intrinsically interconnected. Considering this, FC patterns may also contribute to facial expression recognition. A recent fMRI study on face perception employed FC pattern analysis to construct the hierarchical structure of the face-processing network (Zhen et al., 2013). However, it focused only on the FC patterns among the face-selective areas, so the general FC interactions underlying facial expression recognition remain unclear. Consequently, exploring whole-brain FC patterns during the processing of different expressions is worthwhile.

Machine learning techniques exploit the multivariate nature of fMRI data and are increasingly applied to decode cognitive processes (Pereira et al., 2009). Previous studies of facial expression decoding combined machine learning with multi-voxel activation patterns to examine decoding performance in specific brain areas. Among these, Said et al. (2010) and Harry et al. (2013) highlighted the roles of the STS and the FFA, respectively, in facial expression decoding, and Wegrzyn et al. (2015) directly compared classification rates across the brain areas proposed by Haxby's model (Haxby et al., 2000). Additionally, Furl et al. (2012) and Liang et al. (2017) showed that both face-selective and motion-sensitive areas contributed to facial expression decoding. Although considerable attention has been paid to activation-based facial expression decoding in individual brain areas, the potential mechanisms by which expression information is represented in FC patterns remain unclear. Recently, Wang et al. (2016) successfully decoded various object categories from FC patterns. Their study motivated us to explore whether facial expression information can also be robustly decoded from FC patterns.

The present fMRI study explored the role of FC patterns in facial expression recognition. We hypothesized that expression information may also be represented in FC patterns. To address this issue, we collected neural activity while participants viewed facial expressions of six basic emotions (anger, disgust, fear, joy, sadness, and surprise) in a block design experiment. Both static and dynamic expression stimuli were included. After scanning, we conducted a behavioral experiment, following previous studies (Furl et al., 2013, 2015), to assess the validity of the facial stimuli; in it, we recorded the classification accuracy, the perceived emotional intensity, and the corresponding reaction times for each facial stimulus. A standard anatomical atlas [Harvard-Oxford atlas, FSL, Oxford University; Meng et al. (2014)] was used to define anatomical regions in the brain. We obtained whole-brain FC patterns for each facial expression and then applied multivariate pattern analysis with machine learning algorithms (fcMVPA) to examine decoding performance for facial expressions based on the FC patterns.

Materials and Methods

Participants

The data used in this study were collected in our previous study (Liang et al., 2017). Eighteen healthy, right-handed participants (nine females; age range 20–24 years) took part in our experiment. They were Chinese students recruited from Binzhou Medical University. None of the participants had a history of neurological or psychiatric disorders, and all had normal or corrected-to-normal vision. Participants provided signed informed consent before the experiment. This study was approved by the Institutional Review Board (IRB) of Tianjin Key Laboratory of Cognitive Computing and Application, Tianjin University.

fMRI Data Acquisition

All participants were scanned using a 3.0-T Siemens scanner with an eight-channel head coil at Yantai Affiliated Hospital of Binzhou Medical University. Foam pads and earplugs were used to reduce head motion and scanner noise. Functional images were obtained using a gradient echo-planar imaging (EPI) sequence (TR = 2000 ms, TE = 30 ms, voxel size = 3.1 mm × 3.1 mm × 4.0 mm, matrix size = 64 × 64, slices = 33, slice thickness = 4 mm, slice gap = 0.6 mm). In addition, a three-dimensional magnetization-prepared rapid-acquisition gradient echo (3D MPRAGE) sequence (TR = 1900 ms, TE = 2.52 ms, TI = 1100 ms, voxel size = 1 mm × 1 mm × 1 mm, matrix size = 256 × 256) was used to acquire T1-weighted anatomical images. The stimuli were displayed through high-resolution stereo 3D glasses within a VisualStim Digital MRI-compatible fMRI system (Choubey et al., 2009; Liang et al., 2017; Yang et al., 2018).

Procedure

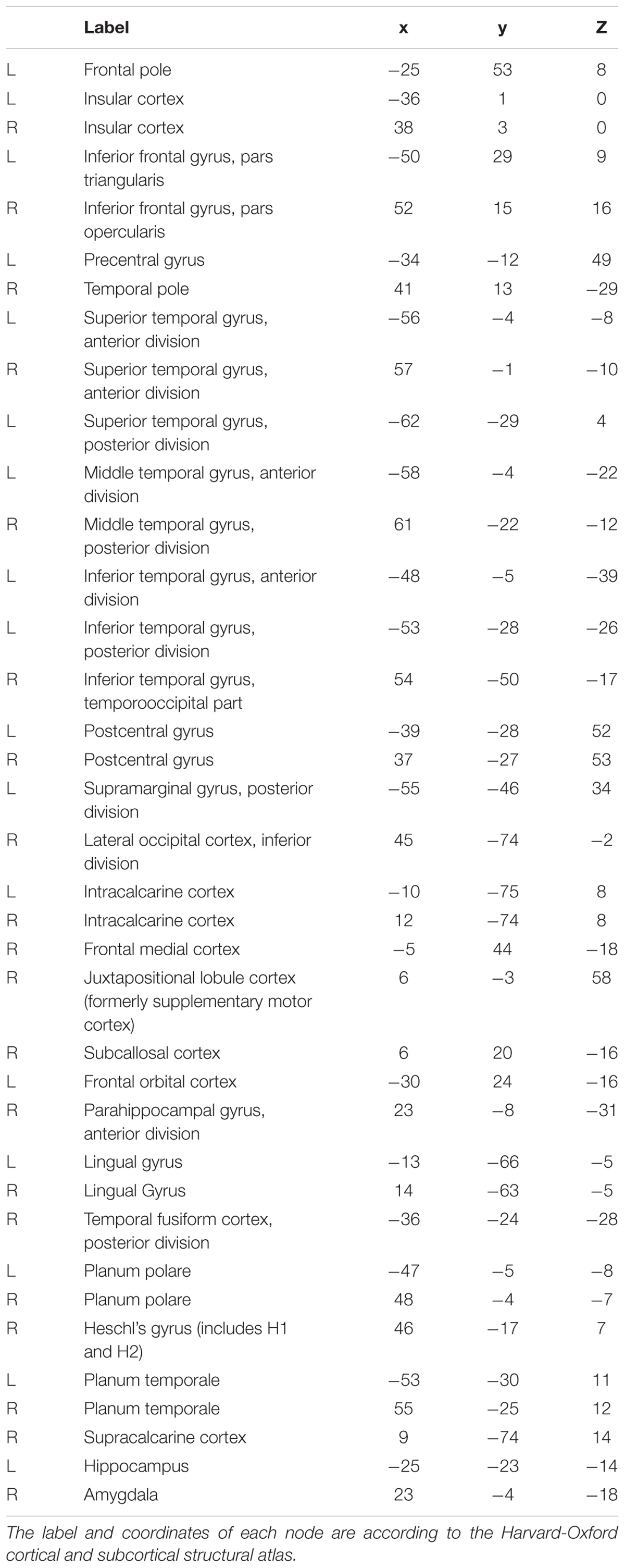

All facial expression stimuli were taken from the Amsterdam Dynamic Facial Expression Set (ADFES), a standard facial expression database containing both images and videos of basic emotions (van der Schalk et al., 2011). We chose video clips of 12 different identities (six males and six females) displaying six basic emotions (anger, disgust, fear, joy, sadness, and surprise). Exemplar stimuli for the six basic emotions are shown in Figure 1A. We created the dynamic expression stimuli by cropping all videos to 1520 ms, retaining the transition from a neutral expression to the expression apex, and used the apex expression image as the static stimulus (Furl et al., 2013, 2015).

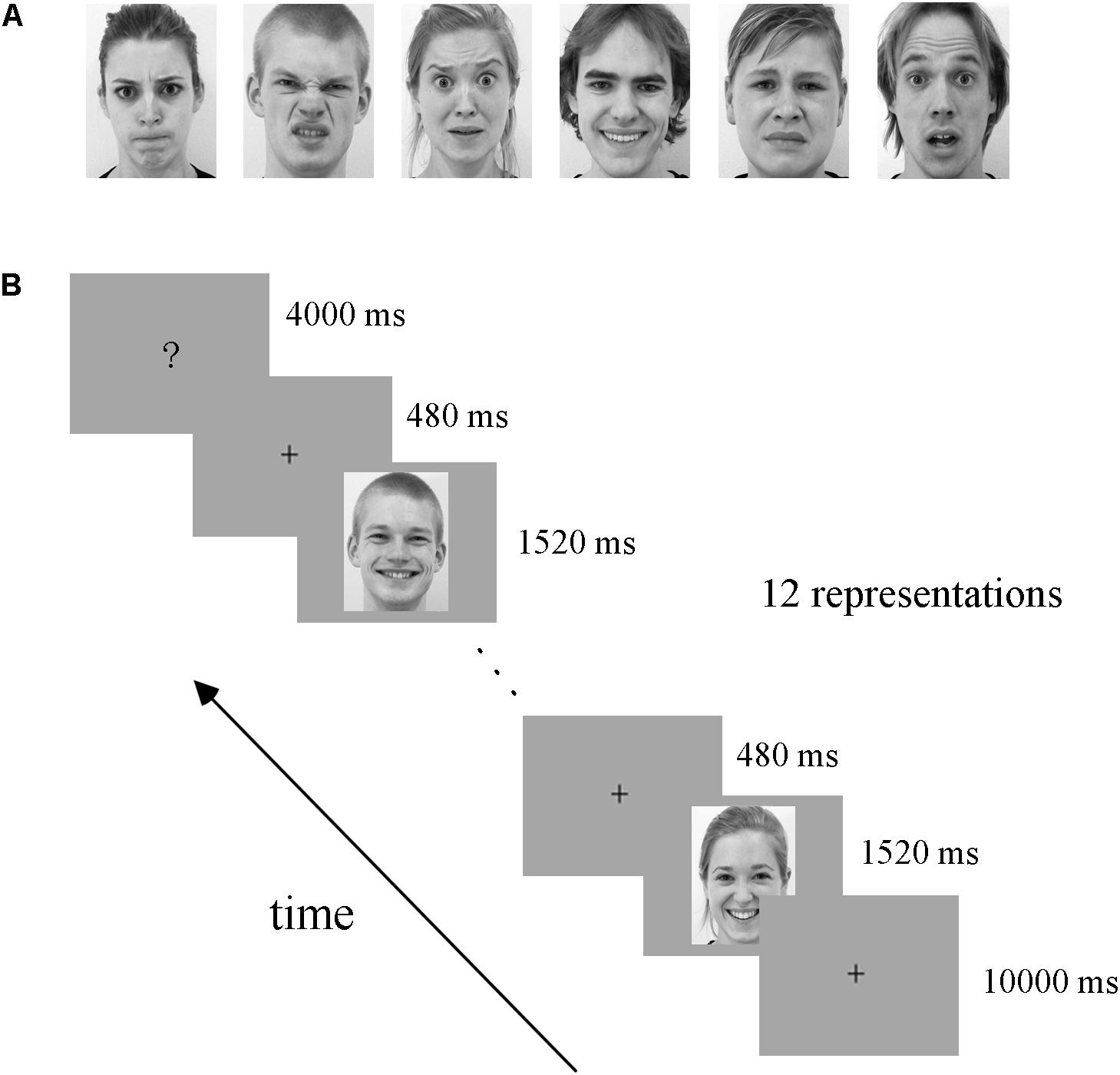

FIGURE 1. Exemplar facial stimuli (A) and schematic representation of the experimental paradigm (B). All facial expression stimuli were taken from the ADFES database. The experiment employed a block design with four runs. Each block was preceded by a fixation cross (10 s) and contained 12 expression stimuli. After each block, participants completed a button task to indicate which expression they had seen.

The experiment employed a block design with four runs. There were three conditions: static facial expressions, dynamic facial expressions, and dynamic expressions with an obscured eye region. Each condition included all six basic expressions: anger, disgust, fear, joy, sadness, and surprise. Data from the obscured condition were not analyzed in the current study but were collected for another study (Liang et al., 2017). Each run contained 18 blocks (6 expressions × 3 conditions), with each combination of expression and condition appearing once. The 18 blocks were presented in a pseudo-random order so that the same emotion or condition was never presented consecutively (Axelrod and Yovel, 2012; Furl et al., 2013, 2015). Figure 1B shows a schematic representation of the paradigm. At the beginning of each run, a fixation cross was shown for 10 s, followed by a 24 s stimulus block (a single condition and expression) and then a 4 s button task. Successive stimulus blocks were separated by a 10 s fixation cross. In each stimulus block, 12 expression stimuli were presented (each for 1520 ms) with an interstimulus interval (ISI) of 480 ms. During each stimulus block, participants were instructed to watch the facial stimuli carefully; after the block, a screen listing the six emotion categories and their corresponding buttons appeared, and participants pressed a button to indicate the facial expression they had seen in the previous block (Liang et al., 2017; Yang et al., 2018). Participants were given one response pad per hand, each with three buttons (Ihme et al., 2014), and they practiced with the button pads before scanning. The total duration of the experiment was 45.6 min, with each run lasting 11.4 min. Stimulus presentation was controlled with E-Prime 2.0 Professional (Psychology Software Tools, Pittsburgh, PA, United States).
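As a consistency check, the reported run and session durations can be reproduced from the timing parameters above. This sketch assumes that each of the 18 blocks is preceded by exactly one 10 s fixation and followed by one 4 s button task, with no extra rest period at the end of a run; the text does not state how a run ends, so this is an assumption that happens to match the reported totals.

```python
# Timing constants taken from the Procedure text (all in milliseconds).
STIM_MS = 1520           # presentation time of each facial stimulus
ISI_MS = 480             # interstimulus interval
STIMULI_PER_BLOCK = 12
FIXATION_MS = 10_000     # fixation cross before each block (assumed: one per block)
BUTTON_TASK_MS = 4_000   # response screen after each block
BLOCKS_PER_RUN = 6 * 3   # 6 expressions x 3 conditions
RUNS = 4


def block_ms() -> int:
    """Duration of one stimulus block: 12 x (stimulus + ISI)."""
    return STIMULI_PER_BLOCK * (STIM_MS + ISI_MS)


def run_ms() -> int:
    """One run = 18 x (fixation + stimulus block + button task)."""
    return BLOCKS_PER_RUN * (FIXATION_MS + block_ms() + BUTTON_TASK_MS)


if __name__ == "__main__":
    print(f"block: {block_ms() / 1000:.1f} s")          # block: 24.0 s
    print(f"run:   {run_ms() / 60000:.1f} min")         # run:   11.4 min
    print(f"total: {RUNS * run_ms() / 60000:.1f} min")  # total: 45.6 min
```

Each block therefore occupies 38 s (10 + 24 + 4), and 18 such blocks give 684 s per run.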

After scanning, participants completed a behavioral experiment outside the scanner, following previous studies (Furl et al., 2013, 2015). In this experiment, we recorded their classification of the emotion category, their emotional intensity rating, and the corresponding reaction times for each stimulus used in the fMRI experiment. Emotional intensity was rated on a 1–9 scale, with 1 indicating the lowest and 9 the highest emotional intensity (Furl et al., 2013). Each stimulus was presented once in random order, with the same duration as in the fMRI experiment.

Data Preprocessing

Functional image preprocessing was conducted using SPM8 software¹. For each run, the first five volumes were discarded to allow for T1 equilibration effects. The remaining functional images were corrected for slice timing and head motion. Next, the functional data were spatially normalized using unified segmentation of the structural image: the high-resolution structural image was co-registered with the functional images and then segmented into gray matter, white matter, and cerebrospinal fluid, and the normalization parameters estimated during unified segmentation were applied to normalize the functional images into standard Montreal Neurological Institute (MNI) space, with a resampled voxel size of 3 mm × 3 mm × 3 mm. Finally, the functional data were spatially smoothed with a 4-mm full-width at half-maximum (FWHM) Gaussian kernel.

Construction of Whole-Brain FC Patterns

Task-related whole-brain FC was estimated using the CONN toolbox² (Whitfield-Gabrieli and Nieto-Castanon, 2012) in MATLAB. For each participant, the normalized anatomical volume and the preprocessed functional data were submitted to CONN. We used the 112 cortical and subcortical regions of the Harvard-Oxford atlas³ (FSL, Oxford University; Meng et al., 2014) as network nodes. Functional MRI time series were extracted from each voxel and averaged within each ROI for each condition. CONN implements a component-based noise correction (CompCor) strategy to remove non-neural confounds: principal components associated with white matter (WM) and cerebrospinal fluid (CSF) were regressed out along with the six head motion parameters, and the data were band-pass filtered (0.01–0.1 Hz), as in previous task-induced connectivity analyses (Wang et al., 2016). We conducted ROI-to-ROI analysis to assess pairwise correlations between the ROIs. For both static and dynamic facial expressions, we obtained six FC matrices (112 × 112) for each participant, one per emotion category. Second-level analysis was performed for each facial expression to assess expression-related FC between ROIs at the group level (p < 0.001, FDR corrected at the connection level, two-sided).
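The denoising and ROI-to-ROI steps can be illustrated with a minimal numpy sketch, assuming the ROI-averaged time series are already available as a time × ROI array. This is not CONN's implementation: the five components per tissue class, the FFT-based filter, performing regression before filtering, and the omission of CONN's condition-specific weighting of scans are all simplifying assumptions. Connectivity is expressed as Fisher z-transformed Pearson correlation, and all inputs below are synthetic placeholders.

```python
import numpy as np


def compcor_regressors(noise_ts: np.ndarray, n_components: int = 5) -> np.ndarray:
    """Top principal components of WM or CSF voxel time series (time x voxels)."""
    centered = noise_ts - noise_ts.mean(axis=0)
    u, _, _ = np.linalg.svd(centered, full_matrices=False)
    return u[:, :n_components]


def regress_out(ts: np.ndarray, confounds: np.ndarray) -> np.ndarray:
    """Least-squares removal of confounds (plus an intercept) from ts (time x ROIs)."""
    design = np.column_stack([np.ones(len(ts)), confounds])
    beta, *_ = np.linalg.lstsq(design, ts, rcond=None)
    return ts - design @ beta


def bandpass(ts: np.ndarray, tr: float, low: float = 0.01, high: float = 0.1) -> np.ndarray:
    """Zero all FFT frequency bins outside [low, high] Hz along the time axis."""
    freqs = np.fft.rfftfreq(ts.shape[0], d=tr)
    spectrum = np.fft.rfft(ts, axis=0)
    spectrum[(freqs < low) | (freqs > high)] = 0
    return np.fft.irfft(spectrum, n=ts.shape[0], axis=0)


def roi_to_roi_fc(roi_ts: np.ndarray) -> np.ndarray:
    """Fisher z-transformed Pearson correlation matrix (ROIs x ROIs), zero diagonal."""
    r = np.corrcoef(roi_ts, rowvar=False)
    np.fill_diagonal(r, 0.0)
    return np.arctanh(r)


# Synthetic example: 200 volumes, 112 Harvard-Oxford ROIs, TR = 2 s.
rng = np.random.default_rng(0)
n_vols, n_rois = 200, 112
roi_ts = rng.standard_normal((n_vols, n_rois))
wm = rng.standard_normal((n_vols, 300))      # hypothetical WM voxel signals
csf = rng.standard_normal((n_vols, 150))     # hypothetical CSF voxel signals
motion = rng.standard_normal((n_vols, 6))    # six head-motion parameters
confounds = np.column_stack([compcor_regressors(wm), compcor_regressors(csf), motion])
clean = bandpass(regress_out(roi_ts, confounds), tr=2.0)
fc = roi_to_roi_fc(clean)
print(fc.shape)  # (112, 112)
```

One such matrix per participant and emotion category gives the six 112 × 112 FC matrices per condition described above.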

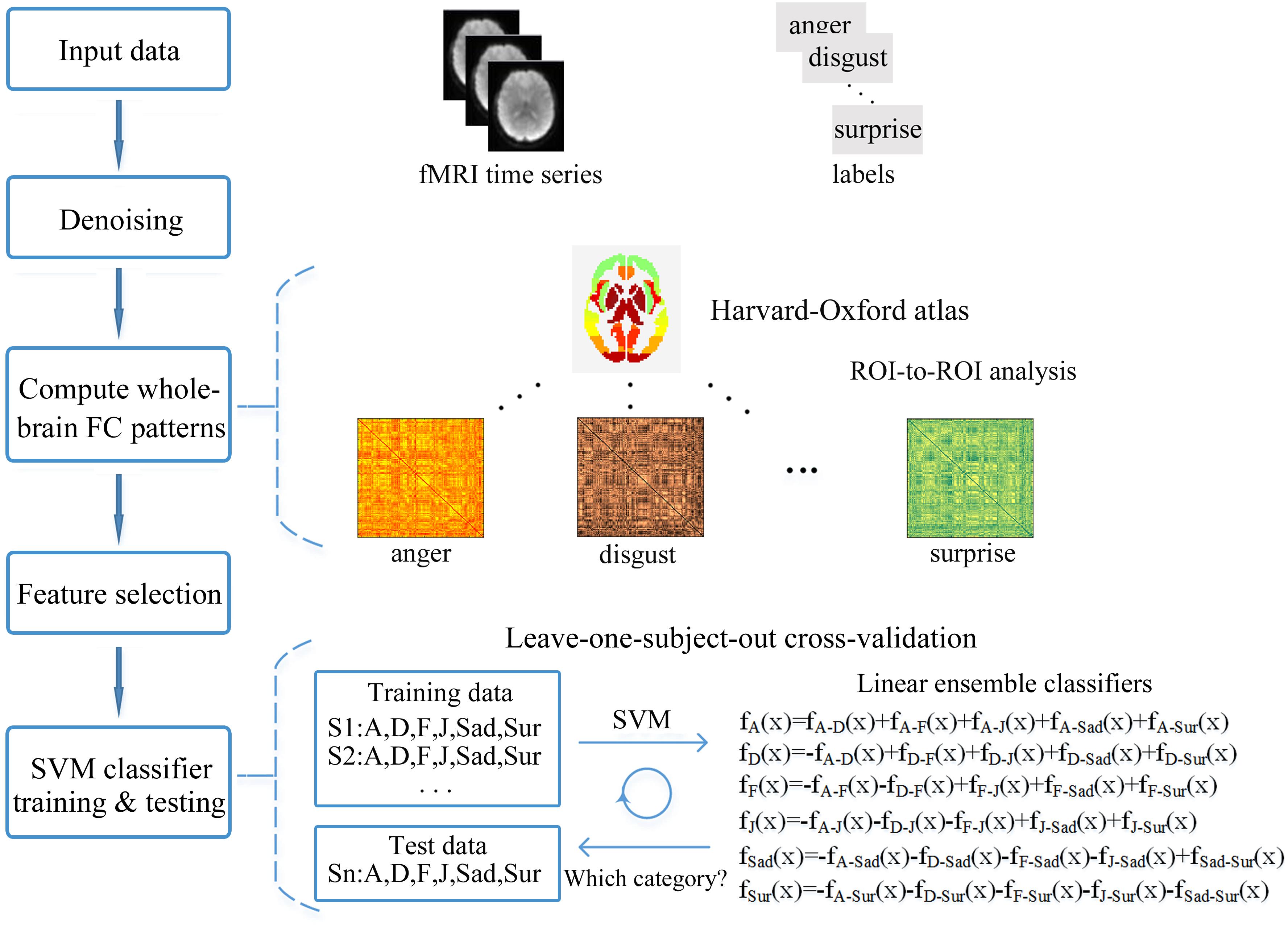

Across-Subject Expression Classification Based on the FC Patterns

We employed multivariate pattern analysis and machine learning to examine whether facial expression information could be decoded from FC patterns (fcMVPA). Figure 2 gives an overview of our fcMVPA classification framework. We performed six-way expression classification separately for the static and dynamic facial expressions. Because the interpretation of negative FCs remains controversial (Fox M.D. et al., 2009; Weissenbacher et al., 2009; Wang et al., 2016), we focused mainly on positive FCs in the current study. For each category, there were 6216 [(112 × 111)/2] connections in total. To obtain the positive FCs, we followed the procedure of Wang et al. (2016): for each category, we conducted a one-sample t-test across participants on each of the 6216 connections and retained the FCs with values significantly greater than zero, correcting for multiple comparisons with the false discovery rate (FDR, q = 0.01). This procedure identified 1540, 1792, 1466, 1838, 1799, and 1726 positive FCs for anger, disgust, fear, joy, sadness, and surprise, respectively, in the static condition, and 1944, 1798, 1696, 1703, 1608, and 1822 positive FCs, respectively, in the dynamic condition. Pooling the positive FCs separately for the static and dynamic conditions, we obtained a total of 3014 (static) and 2986 (dynamic) FCs that were significantly positive for at least one expression (Wang et al., 2016). For classification, we employed a linear support vector machine (SVM) classifier as implemented in LIBSVM⁴. A leave-one-subject-out cross-validation (LOOCV) scheme was used to evaluate performance (Liu et al., 2015; Wang et al., 2016; Jang et al., 2017). For multi-class classification, this implementation uses a one-against-one voting strategy.
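The positive-FC selection just described can be sketched as follows, assuming each participant's FC matrix has been vectorized to its 6216 upper-triangle connections. The sketch uses SciPy's `ttest_1samp` with `alternative="greater"` (available in SciPy 1.6 and later) and a hand-written Benjamini-Hochberg procedure; the synthetic data, in which only the first 1000 connections have a positive mean, are placeholders rather than the study's data.

```python
import numpy as np
from scipy import stats


def upper_triangle(fc: np.ndarray) -> np.ndarray:
    """Vectorize the unique off-diagonal connections of a symmetric FC matrix."""
    i, j = np.triu_indices(fc.shape[0], k=1)
    return fc[i, j]


def fdr_bh(pvals: np.ndarray, q: float) -> np.ndarray:
    """Benjamini-Hochberg step-up procedure; returns a mask of rejected nulls."""
    m = pvals.size
    order = np.argsort(pvals)
    thresholds = q * np.arange(1, m + 1) / m
    below = pvals[order] <= thresholds
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k = np.max(np.nonzero(below)[0])  # largest rank meeting its threshold
        reject[order[: k + 1]] = True
    return reject


def positive_fc_mask(edges: np.ndarray, q: float = 0.01) -> np.ndarray:
    """edges: subjects x connections. One-sided test of mean > 0, FDR-corrected."""
    _, p = stats.ttest_1samp(edges, popmean=0.0, axis=0, alternative="greater")
    return fdr_bh(p, q)


rng = np.random.default_rng(1)
n_subjects, n_rois, n_emotions = 18, 112, 6
n_edges = upper_triangle(np.zeros((n_rois, n_rois))).size  # 6216
masks = []
for _ in range(n_emotions):
    # Synthetic Fisher-z values; only the first 1000 connections are truly positive.
    edges = rng.normal(0.0, 0.2, size=(n_subjects, n_edges))
    edges[:, :1000] += 0.6
    masks.append(positive_fc_mask(edges))
pooled = np.logical_or.reduce(masks)  # positive for at least one expression
print(n_edges, int(pooled.sum()))
```

Taking the logical OR across the six expression masks mirrors the pooling step that yielded the 3014 (static) and 2986 (dynamic) features.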
In each LOOCV iteration, we trained the classifier on all but one participant and used the remaining participant as the test set. During training, we first obtained 15 pairwise classifiers, one for each pair of the six expressions (6 × 5/2 = 15), and then combined these pairwise classifiers into a linear ensemble classifier for each expression. Feature selection was performed using ANOVA (p < 0.05) on the training data of each LOOCV fold only, to avoid peeking. The statistical significance of decoding performance was evaluated with a permutation test, in which the same cross-validation procedure was repeated for 1000 random shuffles of the class labels (Liu et al., 2015; Wang et al., 2016; Fernandes et al., 2017). The p-value was calculated as the fraction of the 1000 permutation accuracies that were equal to or larger than the accuracy obtained with the correct labels, and the result was considered significant if p < 0.05, that is, if fewer than 5% of the permutation accuracies reached the actual accuracy.

FIGURE 2. Framework overview of fcMVPA. The preprocessed functional images and the corresponding expression-category labels were used as input. FC patterns were estimated using the CONN toolbox. Denoising removed motion, physiological, and other artifactual effects from the BOLD signals before connectivity was measured. Then, the whole-brain FC pattern for each of the six facial expressions was computed using ROI-to-ROI analysis with the 112 nodes defined by the Harvard-Oxford atlas. Feature selection was performed with ANOVA using only the training data. Finally, FC pattern classification of the six facial expressions was carried out in a leave-one-subject-out cross-validation scheme with an SVM classifier.
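The LOOCV scheme with in-fold ANOVA feature selection and the permutation p-value described in the Methods can be sketched with scikit-learn. This is an assumed equivalent, not the authors' code: scikit-learn's `SVC` wraps LIBSVM and uses one-vs-one voting for multi-class problems, `SelectFpr(f_classif, alpha=0.05)` keeps features whose ANOVA p-value is below 0.05, and the SVM regularization parameter is left at its default (C = 1.0) because the text does not report it. The p-value follows the definition in the text (fraction of permutation accuracies equal to or larger than the true accuracy), not scikit-learn's `permutation_test_score` convention. The data below are synthetic.

```python
import numpy as np
from sklearn.feature_selection import SelectFpr, f_classif
from sklearn.model_selection import LeaveOneGroupOut
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC


def loso_accuracy(X, y, subjects):
    """Leave-one-subject-out accuracy; ANOVA selection is refit inside each fold."""
    correct = 0
    for train, test in LeaveOneGroupOut().split(X, y, groups=subjects):
        # Feature selection sees only the training participants of this fold.
        model = make_pipeline(SelectFpr(f_classif, alpha=0.05), SVC(kernel="linear"))
        model.fit(X[train], y[train])
        correct += int(np.sum(model.predict(X[test]) == y[test]))
    return correct / len(y)


def permutation_p(X, y, subjects, n_perm=1000, seed=0):
    """Fraction of label-shuffled LOSO accuracies >= the accuracy with true labels."""
    rng = np.random.default_rng(seed)
    actual = loso_accuracy(X, y, subjects)
    null = np.array([loso_accuracy(X, rng.permutation(y), subjects) for _ in range(n_perm)])
    return actual, float(np.mean(null >= actual))


# Synthetic data: 18 participants x 6 expressions, one FC feature vector each.
rng = np.random.default_rng(0)
n_subjects, n_classes, n_features = 18, 6, 500
subjects = np.repeat(np.arange(n_subjects), n_classes)
y = np.tile(np.arange(n_classes), n_subjects)
centroids = rng.normal(0.0, 1.0, size=(n_classes, n_features))
X = 0.5 * centroids[y] + rng.normal(0.0, 1.0, size=(len(y), n_features))
acc, p = permutation_p(X, y, subjects, n_perm=20)  # 1000 permutations in the study
print(f"accuracy = {acc:.3f}, permutation p = {p:.3f}")
```

Refitting the selector inside every fold is what keeps the held-out participant's data out of feature selection.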

Results

Behavioral Results

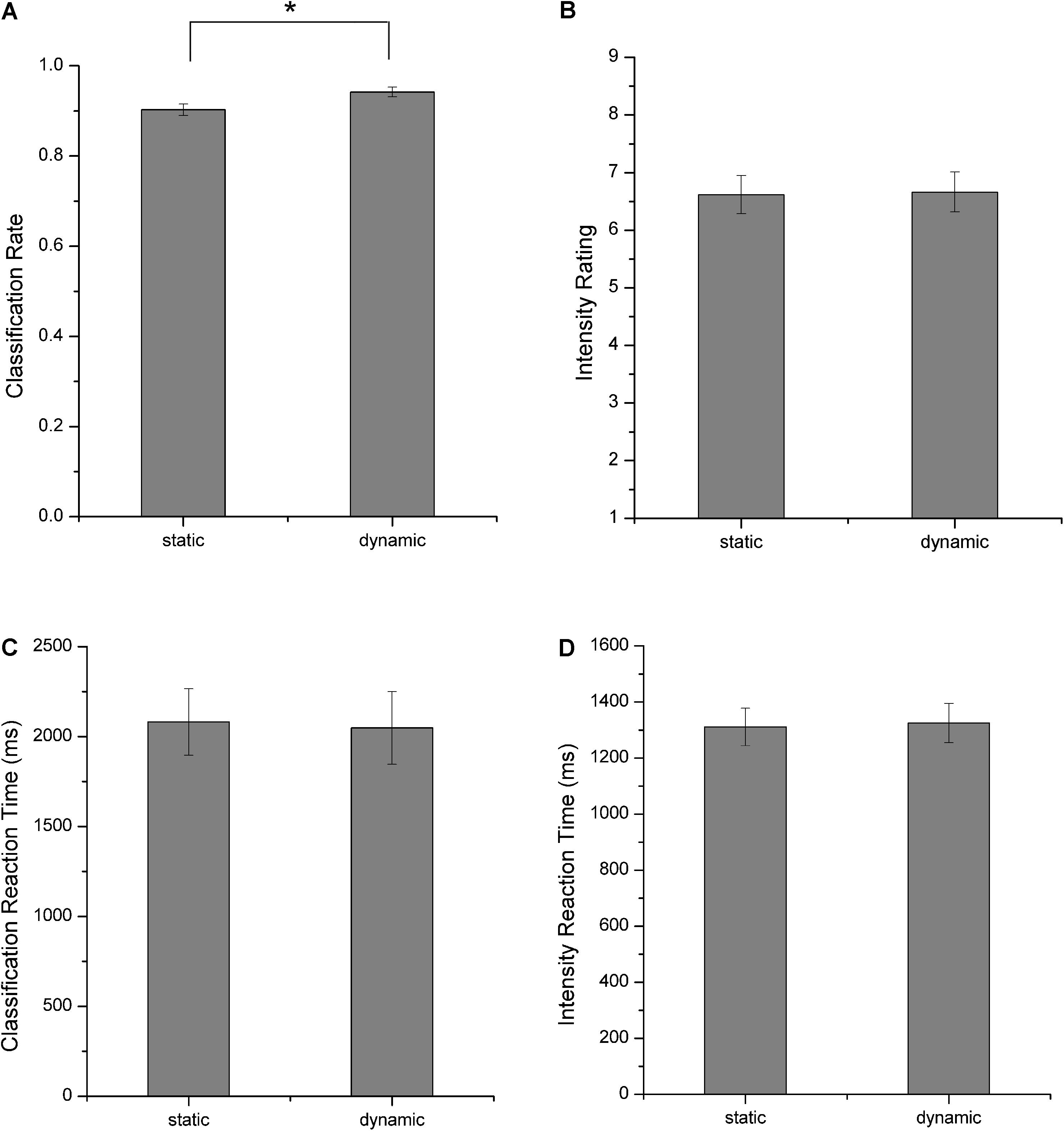

After scanning, participants completed a behavioral experiment in which they classified the emotion category and rated the perceived emotional intensity of each facial stimulus. Figure 3 shows the behavioral results. Both static and dynamic facial expression stimuli were classified with high accuracy (Figure 3A), supporting the validity of the facial stimuli used in our experiment. Comparing classification accuracies, intensity ratings, and reaction times between static and dynamic facial expressions, we found that participants classified dynamic facial expressions more accurately than static ones [one-tailed paired t-test, t(17) = 3.265, p = 0.002]. There were no significant differences in emotional intensity or reaction times.

FIGURE 3. Behavioral results. (A) Classification rates, (B) perceived emotional intensities, (C) reaction times for facial expression classification, and (D) reaction times for emotional intensity rating. All error bars indicate the SEM. ∗Indicates statistical significance with paired t-test, p < 0.05.
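For readers reproducing the behavioral comparison, the one-tailed paired t-test reported above computes t as the mean per-participant difference divided by its standard error, with n − 1 = 17 degrees of freedom for 18 participants. The sketch below checks that formula against SciPy's `ttest_rel` with `alternative="greater"`; the accuracy values are random placeholders, not the study's data.

```python
import numpy as np
from scipy import stats


def paired_one_tailed(dynamic, static):
    """One-tailed paired t-test of H1: mean(dynamic - static) > 0."""
    dynamic = np.asarray(dynamic, dtype=float)
    static = np.asarray(static, dtype=float)
    d = dynamic - static                                 # per-participant difference
    n = d.size
    t_manual = d.mean() / (d.std(ddof=1) / np.sqrt(n))   # t = mean(d) / (s_d / sqrt(n))
    result = stats.ttest_rel(dynamic, static, alternative="greater")
    return t_manual, result.statistic, result.pvalue, n - 1


# Placeholder accuracies for 18 participants (NOT the study's data).
rng = np.random.default_rng(2)
static_acc = rng.uniform(0.6, 0.9, size=18)
dynamic_acc = static_acc + rng.normal(0.05, 0.03, size=18)
t_manual, t_scipy, p_one_tailed, df = paired_one_tailed(dynamic_acc, static_acc)
print(f"t({df}) = {t_scipy:.3f}, one-tailed p = {p_one_tailed:.4f}")
```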

Whole-Brain FC Patterns for Each Facial Expression in Static and Dynamic Conditions

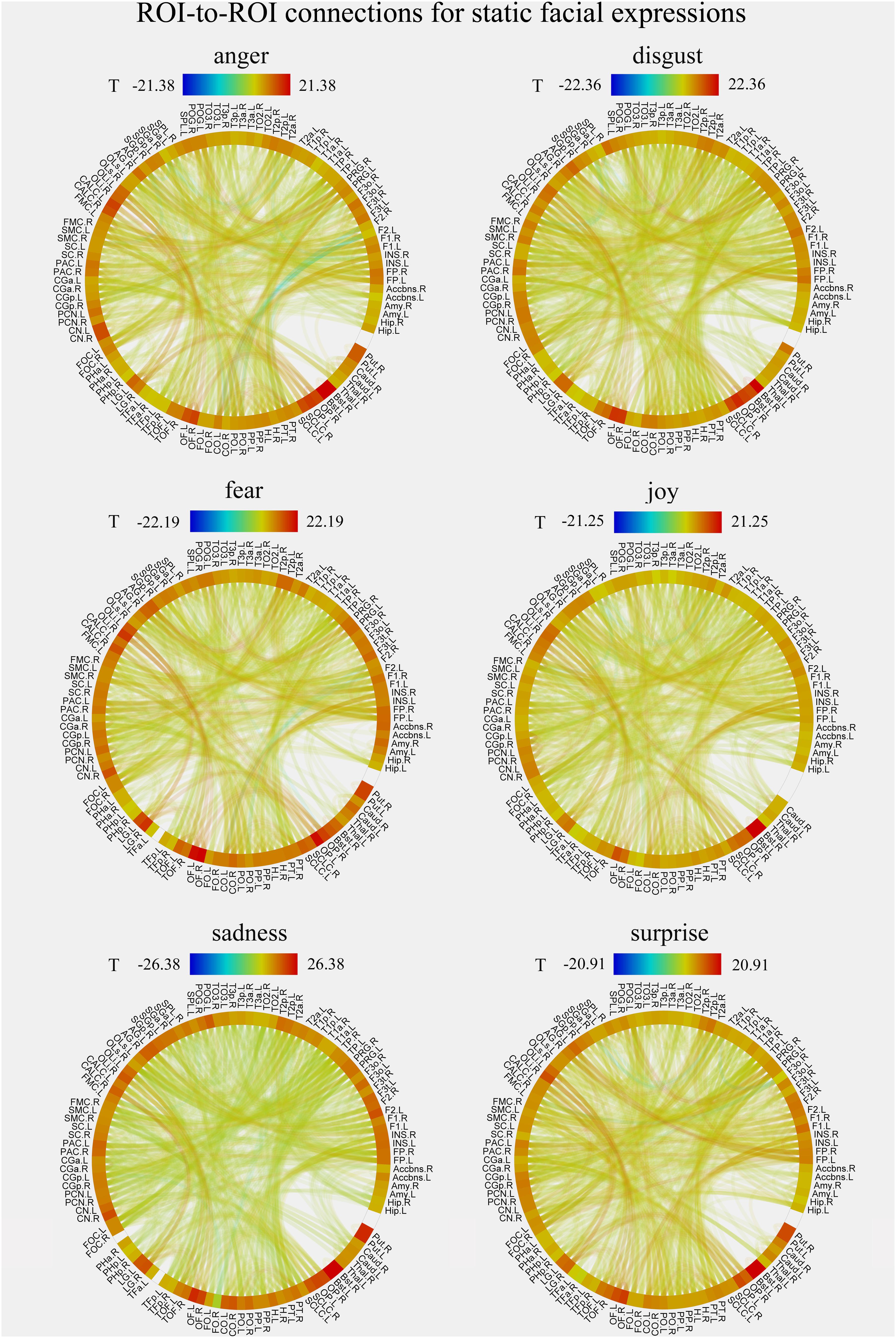

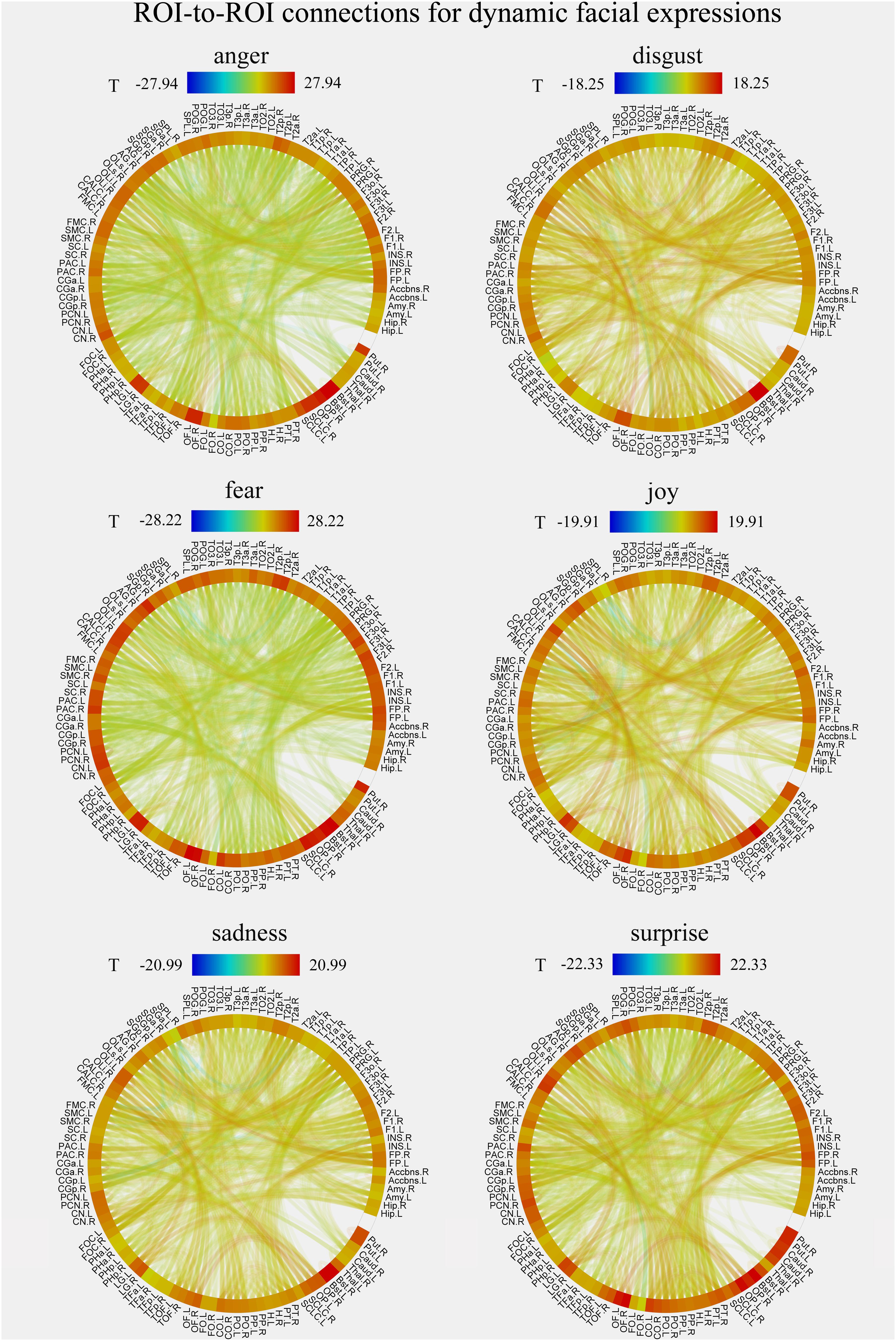

We constructed the whole-brain FC patterns for each facial expression separately for the static and dynamic stimuli. For each participant, we obtained 12 FC matrices, each containing 6216 [(112 × 111)/2] connections between the 112 predefined brain nodes. Second-level analysis was performed for each facial expression to assess these ROI-to-ROI connections at the group level. Figures 4 and 5 show the group-level FC patterns for each facial expression (p < 0.001, FDR corrected at the connection level, two-sided).

FIGURE 4. Group-level results of ROI-to-ROI connections for each facial expression (anger, disgust, fear, joy, sadness, and surprise) in the static condition (p < 0.001, FDR corrected at the connection level, two-sided). All ROIs are derived from the Harvard-Oxford brain atlas and are labeled with abbreviations for clarity.

FIGURE 5. Group-level results of ROI-to-ROI connections for each facial expression (anger, disgust, fear, joy, sadness, and surprise) in the dynamic condition (p < 0.001, FDR corrected at the connection level, two-sided). All ROIs are derived from the Harvard-Oxford brain atlas and are labeled with abbreviations for clarity.

Facial Expression Decoding Based on fcMVPA

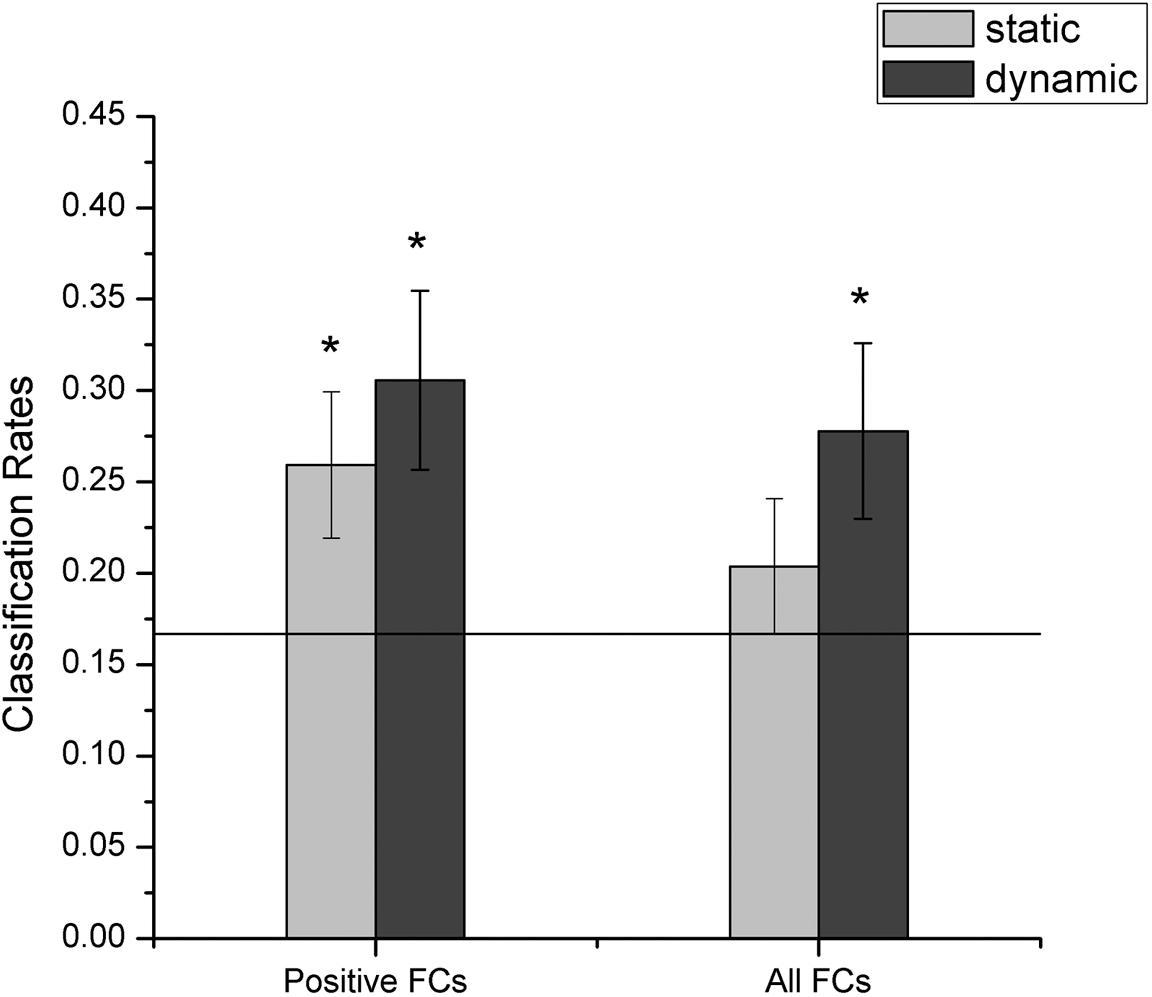

In this section, we explored whether facial expressions could be decoded from FC patterns using fcMVPA. Because the interpretation of negative FCs remains controversial (Fox M.D. et al., 2009; Weissenbacher et al., 2009; Wang et al., 2016), we focused on positive FCs in the fcMVPA classification. Separately for the static and dynamic conditions, we identified the positive FCs for each facial expression using one-sample t-tests across participants with FDR correction for multiple comparisons (q = 0.01); pooling these yielded 3014 (static) and 2986 (dynamic) FCs for the classification of static and dynamic facial expressions (Wang et al., 2016). The main results below are based on these positive FCs. Multiclass classification performance was evaluated with the LOOCV strategy. As shown in Figure 6 (left columns), classification accuracies based on the FC patterns were significantly above chance level for both static and dynamic facial expressions (p = 0.003 for static and p < 0.001 for dynamic facial expressions, 1000 permutations), indicating that expression information could be successfully decoded from the FC patterns.

FIGURE 6. Accuracies of decoding static and dynamic facial expressions using fcMVPA. The black line indicates the chance level, and all error bars indicate the SEM. ∗Represents statistical significance over 1000 permutation tests.

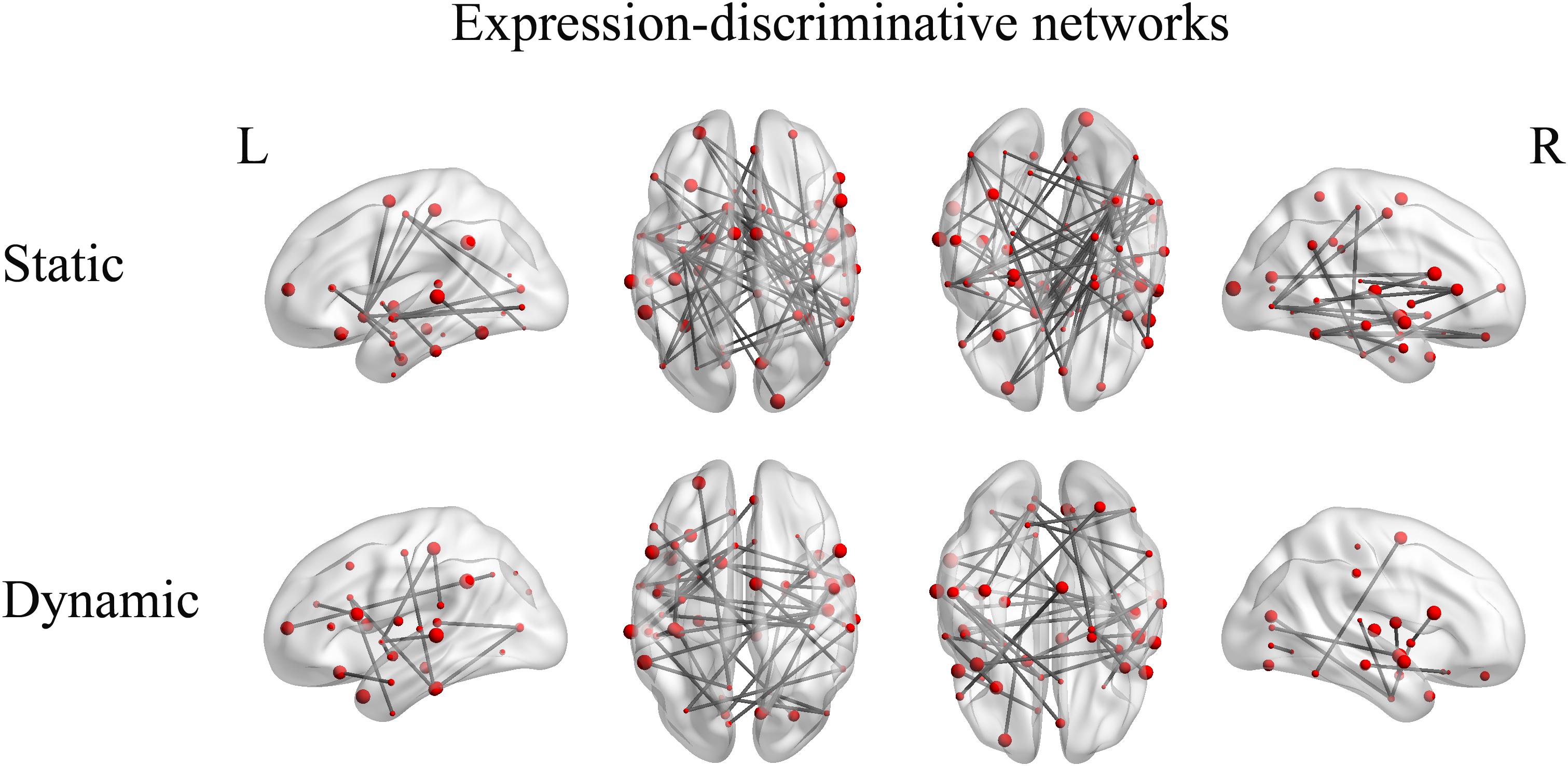

Furthermore, we identified the expression-discriminative networks for the static and dynamic facial expressions, defined as the FCs that contributed significantly to discriminating between expression categories. Connections selected in all LOOCV iterations of feature selection (consensus features, ANOVA p < 0.05) composed the expression-discriminative networks. Figure 7 shows the expression-discriminative networks for the static and dynamic facial expressions, both of which were widely distributed across the two hemispheres. Table 1 summarizes the brain regions involved in both the static and dynamic expression-discriminative networks. These included conventional face-selective areas, namely the insula, inferior frontal gyrus, superior temporal gyrus, lateral occipital cortex (inferior occipital gyrus), temporal fusiform cortex (fusiform gyrus), and amygdala, which have been commonly studied in previous fMRI studies on facial expression perception (Fox C.J. et al., 2009; Trautmann et al., 2009; Furl et al., 2013, 2015; Johnston et al., 2013; Harris et al., 2014). Moreover, the expression-discriminative networks contained brain regions far beyond these conventional face-selective areas. For instance, the middle temporal gyrus, which has been reported to be sensitive to facial motion (Furl et al., 2012; Liang et al., 2017), was also included. Other regions not classically considered in previous activation-based fMRI studies on facial expression perception were also included, such as the supramarginal gyrus, the lingual gyrus, and the parahippocampal gyrus.

FIGURE 7. Expression-discriminative networks for the static and dynamic facial expressions. Node coordinates follow the Harvard-Oxford brain atlas. Each brain region is scaled by its number of connections, and the results are mapped onto the cortical surface using BrainNet Viewer.
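The construction of the expression-discriminative networks can be sketched as a logical AND of the per-fold feature-selection masks, followed by a count of surviving connections per ROI, which is the quantity used to scale the nodes in Figure 7. The function names and the toy four-ROI example are hypothetical; in the study, the candidate connections are the pooled positive FCs and there are 18 LOOCV folds.

```python
import numpy as np


def consensus_mask(fold_masks):
    """Connections selected in every LOOCV fold (logical AND across folds)."""
    return np.logical_and.reduce(np.asarray(fold_masks, dtype=bool), axis=0)


def node_degree(edge_mask, candidate_edges, n_rois=112):
    """Number of consensus connections attached to each ROI.

    edge_mask: boolean mask over the candidate connections.
    candidate_edges: indices of those connections into the upper triangle.
    """
    rows, cols = np.triu_indices(n_rois, k=1)
    kept = candidate_edges[edge_mask]
    degree = np.zeros(n_rois, dtype=int)
    np.add.at(degree, rows[kept], 1)
    np.add.at(degree, cols[kept], 1)
    return degree


# Toy example with 4 ROIs; upper-triangle order is (0,1),(0,2),(0,3),(1,2),(1,3),(2,3).
candidate_edges = np.array([0, 3, 5])        # connections (0,1), (1,2), (2,3)
fold_masks = [[True, True, False],           # features selected in fold 1
              [True, True, True]]            # features selected in fold 2
consensus = consensus_mask(fold_masks)       # kept in every fold
degree = node_degree(consensus, candidate_edges, n_rois=4)
print(consensus.tolist(), degree.tolist())   # [True, True, False] [1, 2, 1, 0]
```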

Discussion

The main purpose of this study was to explore whether FC patterns contribute to human facial expression recognition. To address this issue, we employed a block design experiment and conducted fcMVPA. We obtained the whole-brain FC patterns for each facial expression separately for static and dynamic stimuli and found that both static and dynamic facial expressions could be successfully decoded from the FC patterns. We also identified the expression-discriminative networks for the static and dynamic facial expressions, composed of FCs that contributed significantly to the classification of different facial expressions.

Facial Expressions Are Decoded From the FC Patterns

Using multivariate connectivity pattern analysis and machine learning, we successfully decoded both static and dynamic facial expressions from FC patterns.

Previous studies on facial expression recognition have been dominated by identifying cortical regions that show preferential activation to facial expressions (Gur et al., 2002; Winston et al., 2004; Trautmann et al., 2009; Furl et al., 2013, 2015; Johnston et al., 2013; Harris et al., 2014). Although a few recent studies have started to explore the decoding of facial expressions, they conducted only activation-based classification analyses on individual brain regions (Said et al., 2010; Furl et al., 2012; Harry et al., 2013; Wegrzyn et al., 2015; Liang et al., 2017). The potential contribution of FC patterns to facial expression decoding has remained unexplored. Our study obtained the whole-brain FC patterns for each of the six basic expressions and, using fcMVPA, found that expression information could be successfully decoded from them. These results suggest that facial expression information may also be represented in FC patterns, adding to the growing body of evidence that FC patterns carry rich information for decoding individual brain maturity (Dosenbach et al., 2010), object categories (Wang et al., 2016), tasks (Cole et al., 2013), and mental states (Pantazatos et al., 2012; Shirer et al., 2012). Our study extends this evidence to facial expression decoding. In summary, our results suggest that FC patterns may also contain rich expression information and contribute to the recognition of facial expressions.

Expression-Discriminative Networks Contain Brain Areas Far Beyond Conventional Face-Selective Areas

Neuroscience studies on facial expressions have paid considerable attention to the face-selective areas, which show selectivity to facial stimuli in traditional activation analyses. Previous fMRI studies have indicated that face-selective areas are involved in the processing of facial expressions (Fox C.J. et al., 2009; Fox M.D. et al., 2009; Trautmann et al., 2009; Foley et al., 2011; Furl et al., 2013, 2015; Johnston et al., 2013; Harris et al., 2014). Our results are compatible with these findings: face-selective areas were involved in both the static and dynamic expression-discriminative networks. In particular, these included the lateral occipital cortex (inferior occipital gyrus), associated with early face perception (Rotshtein et al., 2005); the temporal fusiform cortex (fusiform gyrus), associated with the processing of facial features and identity (Fox M.D. et al., 2009); and the superior temporal gyrus, associated with the processing of transient facial signals (Hoffman and Haxby, 2000; Harris et al., 2014), which together constitute the "core face network." They also included a subset of brain areas in the extended face system, namely the amygdala, the insula, and the inferior frontal gyrus, which support the core system regions (Haxby et al., 2000; Fox C.J. et al., 2009; Trautmann et al., 2009; Johnston et al., 2013; Wegrzyn et al., 2015). Together, our fcMVPA results provide additional support for the importance of face-selective areas in facial expression recognition.

In addition, brain regions beyond these conventional face-selective areas participated in the expression-discriminative networks. The middle temporal gyrus, which has consistently been found to be sensitive to facial motion in previous studies (Schultz and Pilz, 2009; Trautmann et al., 2009; Foley et al., 2011; Pitcher et al., 2011; Grosbras et al., 2012; Furl et al., 2013, 2015; Johnston et al., 2013; Schultz et al., 2013), was also included in our discriminative networks. This suggests an important role for motion-sensitive areas in the processing of facial expressions, consistent with previous evidence that motion-sensitive areas also represent expression information and contribute to facial expression recognition (Furl et al., 2012; Liang et al., 2017). Other brain areas that previous studies have related to face or emotion perception were also included. For instance, the inferior temporal gyrus was related to the emotional processing of faces in a study of effective connectivity in face perception (Fairhall and Ishai, 2007); the supramarginal gyrus and parahippocampal gyrus showed a preference for the face category in an fcMVPA study (Wang et al., 2016); the lingual gyrus has been reported to respond to face stimuli independent of emotional valence (Fusar-Poli et al., 2009); and the hippocampus has conventionally been considered an emotion-related region involved in emotion processing, learning, and memory (Amunts et al., 2005; Xia et al., 2017). Furthermore, regions such as the postcentral gyrus and Heschl's gyrus, which have not classically been considered in activation-based studies of facial expression perception, were also included in the expression-discriminative networks. Together, these results suggest that regions classified as face-neutral by activation measures may contribute to the recognition of facial expressions.
In sum, our study showed that widespread brain regions beyond the conventional face-selective areas are involved in the expression-discriminative networks, suggesting a potential mechanism based on general interactions between distributed brain regions in human facial expression recognition.

Moreover, previous work has demonstrated a common neural substrate underlying the processing of static and dynamic facial expressions (Johnston et al., 2013). Our FC analysis supports this idea, showing that most of the brain regions involved in facial expression perception are shared by the discriminative networks for static and dynamic facial expressions.

In the present study, we employed a sample size comparable to those of previous fMRI studies on facial expression perception and MVPA-based analyses (Furl et al., 2012; Harry et al., 2013; Wegrzyn et al., 2015; Wang et al., 2016). Future studies with larger samples may further improve the implementation of the classification scheme and boost accuracy. Additionally, future studies that include both Eastern and Western emotional expressions as stimuli could investigate potential cultural effects on facial expression recognition. Beyond the emotion information perceived from faces, body parts also convey emotion information (Kret et al., 2011, 2013). Therefore, future studies that comprehensively explore the FC patterns for both facial and bodily emotions, including their similarities and differences, may help to better understand human emotion perception.

Conclusion

In summary, we show that expression information can be successfully decoded from FC patterns, and that the expression-discriminative networks include brain regions far beyond the conventional face-selective areas identified in previous studies. Our results highlight the important role of FC patterns in facial expression decoding. They provide new evidence that large-scale FC patterns may also contain rich expression information and effectively contribute to facial expression recognition. Our study extends traditional research on facial expression recognition and may further the understanding of the mechanisms by which the human brain achieves quick and accurate recognition of facial expressions.

Author Contributions

BL designed the study. YL, XL, and PW performed the experiments. YL analyzed the results and wrote the manuscript. YL and BL contributed to manuscript revision. All authors have approved the final manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Nos. U1736219 and 61571327), Shandong Provincial Natural Science Foundation of China (No. ZR2015HM081), and Project of Shandong Province Higher Educational Science and Technology Program (J15LL01).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

- ^ http://www.fil.ion.ucl.ac.uk/spm/software/spm8/

- ^ http://www.nitrc.org/projects/conn

- ^ http://www.cma.mgh.harvard.edu/fsl_atlas.html

- ^ http://www.csie.ntu.edu.tw/cjlin/libsvm/

References

Amunts, K., Kedo, O., Kindler, M., Pieperhoff, P., Mohlberg, H., Shah, N. J., et al. (2005). Cytoarchitectonic mapping of the human amygdala, hippocampal region and entorhinal cortex: intersubject variability and probability maps. Anat. Embryol. 210, 343–352. doi: 10.1007/s00429-005-0025-5

Andrews, T. J., and Ewbank, M. P. (2004). Distinct representations for facial identity and changeable aspects of faces in the human temporal lobe. Neuroimage 23, 905–913. doi: 10.1016/j.neuroimage.2004.07.060

Axelrod, V., and Yovel, G. (2012). Hierarchical processing of face viewpoint in human visual cortex. J. Neurosci. 32, 2442–2452. doi: 10.1523/JNEUROSCI.4770-11.2012

Choubey, B., Jurcoane, A., Muckli, L., and Sireteanu, R. (2009). Methods for dichoptic stimulus presentation in functional magnetic resonance imaging - a review. Open Neuroimag. J. 3, 17–25. doi: 10.2174/1874440000903010017

Cole, M. W., Reynolds, J. R., Power, J. D., Repovs, G., Anticevic, A., and Braver, T. S. (2013). Multi-task connectivity reveals flexible hubs for adaptive task control. Nat. Neurosci. 16, 1348–1355. doi: 10.1038/nn.3470

Dosenbach, N. U. F., Nardos, B., Cohen, A. L., Fair, D. A., Power, J. D., Church, J. A., et al. (2010). Prediction of individual brain maturity using fMRI. Science 329, 1358–1361. doi: 10.1126/science.1194144

Fairhall, S. L., and Ishai, A. (2007). Effective connectivity within the distributed cortical network for face perception. Cereb. Cortex 17, 2400–2406. doi: 10.1093/cercor/bhl148

Fernandes, O., Portugal, L. C. L., Alves, R. C. S., Arruda-Sanchez, T., Rao, A., and Volchan, E. (2017). Decoding negative affect personality trait from patterns of brain activation to threat stimuli. Neuroimage 145(Pt B), 337–345. doi: 10.1016/j.neuroimage.2015.12.050

Foley, E., Rippon, G., Thai, N. J., Longe, O., and Senior, C. (2011). Dynamic facial expressions evoke distinct activation in the face perception network: a connectivity analysis study. J. Cogn. Neurosci. 24, 507–520. doi: 10.1162/jocn_a_00120

Fox, C. J., Iaria, G., and Barton, J. J. S. (2009). Defining the face processing network: optimization of the functional localizer in fMRI. Hum. Brain Mapp. 30, 1637–1651. doi: 10.1002/hbm.20630

Fox, M. D., Zhang, D., Snyder, A. Z., and Raichle, M. E. (2009). The global signal and observed anticorrelated resting state brain networks. J. Neurophysiol. 101, 3270–3283. doi: 10.1152/jn.90777.2008

Furl, N., Hadj-Bouziane, F., Liu, N., Averbeck, B. B., and Ungerleider, L. G. (2012). Dynamic and static facial expressions decoded from motion-sensitive areas in the macaque monkey. J. Neurosci. 32, 15952–15962. doi: 10.1523/JNEUROSCI.1992-12.2012

Furl, N., Henson, R. N., Friston, K. J., and Calder, A. J. (2013). Top-down control of visual responses to fear by the amygdala. J. Neurosci. 33, 17435–17443. doi: 10.1523/JNEUROSCI.2992-13.2013

Furl, N., Henson, R. N., Friston, K. J., and Calder, A. J. (2015). Network interactions explain sensitivity to dynamic faces in the superior temporal sulcus. Cereb. Cortex 25, 2876–2882. doi: 10.1093/cercor/bhu083

Fusar-Poli, P., Placentino, A., Carletti, F., Landi, P., Allen, P., Surguladze, S., et al. (2009). Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J. Psychiatry Neurosci. 34, 418–432.

Gobbini, M. I., Gentili, C., Ricciardi, E., Bellucci, C., Salvini, P., Laschi, C., et al. (2011). Distinct neural systems involved in agency and animacy detection. J. Cogn. Neurosci. 23, 1911–1920. doi: 10.1162/jocn.2010.21574

Grill-Spector, K., Knouf, N., and Kanwisher, N. (2004). The fusiform face area subserves face perception, not generic within-category identification. Nat. Neurosci. 7, 555–562. doi: 10.1038/nn1224

Grosbras, M.-H., Beaton, S., and Eickhoff, S. B. (2012). Brain regions involved in human movement perception: a quantitative voxel-based meta-analysis. Hum. Brain Mapp. 33, 431–454. doi: 10.1002/hbm.21222

Gur, R. C., Schroeder, L., Turner, T., McGrath, C., Chan, R. M., Turetsky, B. I., et al. (2002). Brain activation during facial emotion processing. Neuroimage 16(3 Pt 1), 651–662. doi: 10.1006/nimg.2002.1097

Harris, R. J., Young, A. W., and Andrews, T. J. (2014). Dynamic stimuli demonstrate a categorical representation of facial expression in the amygdala. Neuropsychologia 56, 47–52. doi: 10.1016/j.neuropsychologia.2014.01.005

Harry, B., Williams, M. A., Davis, C., and Kim, J. (2013). Emotional expressions evoke a differential response in the fusiform face area. Front. Hum. Neurosci. 7:692. doi: 10.3389/fnhum.2013.00692

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

He, C., Peelen, M. V., Han, Z., Lin, N., Caramazza, A., and Bi, Y. (2013). Selectivity for large nonmanipulable objects in scene-selective visual cortex does not require visual experience. Neuroimage 79, 1–9. doi: 10.1016/j.neuroimage.2013.04.051

Hoffman, E. A., and Haxby, J. V. (2000). Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat. Neurosci. 3, 80–84. doi: 10.1038/71152

Hutchison, R. M., Culham, J. C., Everling, S., Flanagan, J. R., and Gallivan, J. P. (2014). Distinct and distributed functional connectivity patterns across cortex reflect the domain-specific constraints of object, face, scene, body, and tool category-selective modules in the ventral visual pathway. Neuroimage 96, 216–236. doi: 10.1016/j.neuroimage.2014.03.068

Ihme, K., Sacher, J., Lichev, V., Rosenberg, N., Kugel, H., Rufer, M., et al. (2014). Alexithymic features and the labeling of brief emotional facial expressions–An fMRI study. Neuropsychologia 64, 289–299. doi: 10.1016/j.neuropsychologia.2014.09.044

Ishai, A., Schmidt, C. F., and Boesiger, P. (2005). Face perception is mediated by a distributed cortical network. Brain Res. Bull. 67, 87–93. doi: 10.1016/j.brainresbull.2005.05.027

Jang, H., Plis, S. M., Calhoun, V. D., and Lee, J.-H. (2017). Task-specific feature extraction and classification of fMRI volumes using a deep neural network initialized with a deep belief network: evaluation using sensorimotor tasks. Neuroimage 145(Pt B), 314–328. doi: 10.1016/j.neuroimage.2016.04.003

Jin, J., Allison, B. Z., Kaufmann, T., Kübler, A., Zhang, Y., Wang, X., et al. (2012). The changing face of P300 BCIs: a comparison of stimulus changes in a p300 BCI involving faces, emotion, and movement. PLoS One 7:e49688. doi: 10.1371/journal.pone.0049688

Jin, J., Allison, B. Z., Zhang, Y., Wang, X., and Cichocki, A. (2014a). An ERP-based BCI using an oddball paradigm with different faces and reduced errors in critical functions. Int. J. Neural Syst. 24:1450027. doi: 10.1142/S0129065714500270

Jin, J., Daly, I., Zhang, Y., Wang, X., and Cichocki, A. (2014b). An optimized ERP brain-computer interface based on facial expression changes. J. Neural Eng. 11:036004. doi: 10.1088/1741-2560/11/3/036004

Johnston, P., Mayes, A., Hughes, M., and Young, A. W. (2013). Brain networks subserving the evaluation of static and dynamic facial expressions. Cortex 49, 2462–2472. doi: 10.1016/j.cortex.2013.01.002

Kret, M. E., Pichon, S., Grèzes, J., and de Gelder, B. (2011). Similarities and differences in perceiving threat from dynamic faces and bodies. An fMRI study. Neuroimage 54, 1755–1762. doi: 10.1016/j.neuroimage.2010.08.012

Kret, M. E., Stekelenburg, J. J., Roelofs, K., and de Gelder, B. (2013). Perception of face and body expressions using electromyography, pupillometry and gaze measures. Front. Psychol. 4:28. doi: 10.3389/fpsyg.2013.00028

Lee, L. C., Andrews, T. J., Johnson, S. J., Woods, W., Gouws, A., Green, G. G. R., et al. (2010). Neural responses to rigidly moving faces displaying shifts in social attention investigated with fMRI and MEG. Neuropsychologia 48, 477–490. doi: 10.1016/j.neuropsychologia.2009.10.005

Liang, Y., Liu, B., Xu, J., Zhang, G., Li, X., Wang, P., et al. (2017). Decoding facial expressions based on face-selective and motion-sensitive areas. Hum. Brain Mapp. 38, 3113–3125. doi: 10.1002/hbm.23578

Liu, F., Guo, W., Fouche, J.-P., Wang, Y., Wang, W., Ding, J., et al. (2015). Multivariate classification of social anxiety disorder using whole brain functional connectivity. Brain Struct. Funct. 220, 101–115. doi: 10.1007/s00429-013-0641-4

Meng, C., Brandl, F., Tahmasian, M., Shao, J., Manoliu, A., Scherr, M., et al. (2014). Aberrant topology of striatum’s connectivity is associated with the number of episodes in depression. Brain 137(Pt 2), 598–609. doi: 10.1093/brain/awt290

Murphy, F. C., Nimmo-Smith, I., and Lawrence, A. D. (2003). Functional neuroanatomy of emotions: a meta-analysis. Cogn. Affect. Behav. Neurosci. 3, 207–233. doi: 10.3758/CABN.3.3.207

Pantazatos, S. P., Talati, A., Pavlidis, P., and Hirsch, J. (2012). Decoding unattended fearful faces with whole-brain correlations: an approach to identify condition-dependent large-scale functional connectivity. PLoS Comput. Biol. 8:e1002441. doi: 10.1371/journal.pcbi.1002441

Pereira, F., Mitchell, T., and Botvinick, M. (2009). Machine learning classifiers and fMRI: a tutorial overview. Neuroimage 45(Suppl. 1), S199–S209. doi: 10.1016/j.neuroimage.2008.11.007

Pitcher, D., Dilks, D. D., Saxe, R. R., Triantafyllou, C., and Kanwisher, N. (2011). Differential selectivity for dynamic versus static information in face-selective cortical regions. Neuroimage 56, 2356–2363. doi: 10.1016/j.neuroimage.2011.03.067

Rotshtein, P., Henson, R. N. A., Treves, A., Driver, J., and Dolan, R. J. (2005). Morphing Marilyn into Maggie dissociates physical and identity face representations in the brain. Nat. Neurosci. 8, 107–113. doi: 10.1038/nn1370

Said, C. P., Moore, C. D., Engell, A. D., Todorov, A., and Haxby, J. V. (2010). Distributed representations of dynamic facial expressions in the superior temporal sulcus. J. Vis. 10:11. doi: 10.1167/10.5.11

Schultz, J., Brockhaus, M., Bülthoff, H. H., and Pilz, K. S. (2013). What the human brain likes about facial motion. Cereb. Cortex 23, 1167–1178. doi: 10.1093/cercor/bhs106

Schultz, J., and Pilz, K. S. (2009). Natural facial motion enhances cortical responses to faces. Exp. Brain Res. 194, 465–475. doi: 10.1007/s00221-009-1721-9

Shirer, W. R., Ryali, S., Rykhlevskaia, E., Menon, V., and Greicius, M. D. (2012). Decoding subject-driven cognitive states with whole-brain connectivity patterns. Cereb. Cortex 22, 158–165. doi: 10.1093/cercor/bhr099

Smith, S. M. (2012). The future of FMRI connectivity. Neuroimage 62, 1257–1266. doi: 10.1016/j.neuroimage.2012.01.022

Stevens, W. D., Tessler, M. H., Peng, C. S., and Martin, A. (2015). Functional connectivity constrains the category-related organization of human ventral occipitotemporal cortex. Hum. Brain Mapp. 36, 2187–2206. doi: 10.1002/hbm.22764

Trautmann, S. A., Fehr, T., and Herrmann, M. (2009). Emotions in motion: dynamic compared to static facial expressions of disgust and happiness reveal more widespread emotion-specific activations. Brain Res. 1284, 100–115. doi: 10.1016/j.brainres.2009.05.075

van der Schalk, J., Hawk, S. T., Fischer, A. H., and Doosje, B. (2011). Moving faces, looking places: validation of the Amsterdam dynamic facial expression set (ADFES). Emotion 11, 907–920. doi: 10.1037/a0023853

Wang, X. S., Fang, Y. X., Cui, Z. X., Xu, Y. W., He, Y., Guo, Q. H., et al. (2016). Representing object categories by connections: evidence from a mutivariate connectivity pattern classification approach. Hum. Brain Mapp. 37, 3685–3697. doi: 10.1002/hbm.23268

Wegrzyn, M., Riehle, M., Labudda, K., Woermann, F., Baumgartner, F., Pollmann, S., et al. (2015). Investigating the brain basis of facial expression perception using multi-voxel pattern analysis. Cortex 69, 131–140. doi: 10.1016/j.cortex.2015.05.003

Weissenbacher, A., Kasess, C., Gerstl, F., Lanzenberger, R., Moser, E., and Windischberger, C. (2009). Correlations and anticorrelations in resting-state functional connectivity MRI: a quantitative comparison of preprocessing strategies. Neuroimage 47, 1408–1416. doi: 10.1016/j.neuroimage.2009.05.005

Whitfield-Gabrieli, S., and Nieto-Castanon, A. (2012). Conn: a functional connectivity toolbox for correlated and anticorrelated brain networks. Brain Connect. 2, 125–141. doi: 10.1089/brain.2012.0073

Winston, J. S., Henson, R. N. A., Fine-Goulden, M. R., and Dolan, R. J. (2004). fMRI-Adaptation reveals dissociable neural representations of identity and expression in face perception. J. Neurophysiol. 92, 1830–1839. doi: 10.1152/jn.00155.2004

Xia, Y., Zhuang, K., Sun, J., Chen, Q., Wei, D., Yang, W., et al. (2017). Emotion-related brain structures associated with trait creativity in middle children. Neurosci. Lett. 658, 182–188. doi: 10.1016/j.neulet.2017.08.008

Yang, X., Xu, J., Cao, L., Li, X., Wang, P., Wang, B., et al. (2018). Linear representation of emotions in whole persons by combining facial and bodily expressions in the extrastriate body area. Front. Hum. Neurosci. 11:653. doi: 10.3389/fnhum.2017.00653

Yovel, G., and Kanwisher, N. (2004). Face perception: domain specific, not process specific. Neuron 44, 889–898. doi: 10.1016/j.neuron.2004.11.018

Keywords: facial expressions, fMRI, functional connectivity, multivariate pattern analysis, machine learning algorithm

Citation: Liang Y, Liu B, Li X and Wang P (2018) Multivariate Pattern Classification of Facial Expressions Based on Large-Scale Functional Connectivity. Front. Hum. Neurosci. 12:94. doi: 10.3389/fnhum.2018.00094

Received: 11 November 2017; Accepted: 27 February 2018;

Published: 19 March 2018.

Edited by:

Xiaochu Zhang, University of Science and Technology of China, China

Reviewed by:

Jing Jin, East China University of Science and Technology, China
Maria Clotilde Henriques Tavares, University of Brasília, Brazil

Copyright © 2018 Liang, Liu, Li and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Baolin Liu, liubaolin@tsinghua.edu.cn
