- ¹School of Psychology, The University of Auckland, Auckland, New Zealand

- ²School of Medicine, The University of Auckland, Auckland, New Zealand

- ³Brain Research New Zealand, Auckland, New Zealand

The mirror neuron network (MNN) has been proposed as a neural substrate of action understanding. Electroencephalography (EEG) mu suppression has commonly been studied as an index of MNN activity during the execution and observation of hand and finger movements. However, more research using social emotional stimuli is needed to establish its role in higher-order processes, such as recognizing and sharing emotions. The current study aims to contribute to our understanding of the sensitivity of mu suppression to facial expressions. Modulation of the mu and occipital alpha (8–13 Hz) rhythms was calculated in 22 participants while they observed dynamic video stimuli, including emotional (happy and sad) and neutral (mouth opening) facial expressions, and a non-biological stimulus (kaleidoscope pattern). Of the three face conditions, only the neutral face was associated with significantly stronger mu suppression than the non-biological stimulus. Occipital alpha suppression was significantly greater for the non-biological stimulus than for all the face conditions. Source estimation with standardized low-resolution electromagnetic tomography (sLORETA), comparing the neural sources of mu/alpha modulation between the neutral face and the non-biological stimulus, showed more suppression in central regions, including the supplementary motor and somatosensory areas, than in more posterior regions. The EEG and source estimation results may indicate that the reduced availability of emotional information in the neutral face condition requires more sensorimotor engagement to decipher emotion-related information than the full-blown happy or sad expressions, which are more readily recognized.

Introduction

Nonverbal communication is a crucial component of human social behavior, but its neural mechanisms are poorly understood. The ability to understand others’ mental states from their facial and bodily gestures allows us to respond effectively during social communication. Gallese and Goldman (1998) proposed a simulation theory of action understanding to account for the complexity of this process. According to this model, when observing an action, the observer subconsciously and automatically employs specialized neural circuitry to simulate the action using their own motor system, which in turn activates the mental states associated with executing the action and provides insight into the mental state of the actor. Mirror neurons were proposed as the neural substrate of this simulation (Gallese and Goldman, 1998).

Mirror neurons were first discovered in the motor areas of the monkey brain (di Pellegrino et al., 1992). They fired during both the execution and the observation of actions, such as grasping an object, putting it into the mouth, or breaking it. Moreover, for a subset of these neurons the sensory modality through which the action was experienced did not seem to matter: they were triggered by the sound of the action even when the action was not seen (Kohler et al., 2002). These findings implied that mirror neurons could code representations of actions, allowing an observer to recognize the movements involved in an action and to infer the intention behind it. Evidence for a similar mirroring mechanism in the human brain has come from functional magnetic resonance imaging (fMRI) and positron emission tomography (PET) studies (Caspers et al., 2010; Molenberghs et al., 2012), as well as from single-neuron recordings during surgery in humans (Mukamel et al., 2010).

In addition to metabolic brain imaging and in vivo cellular studies, electroencephalography (EEG) studies have measured mu rhythm desynchronization to infer mirroring activity. The mu rhythm, characterized by 8–13 Hz oscillations detected over the sensorimotor area, is mostly associated with the functions of the sensorimotor cortex (Niedermeyer, 2005). Mu power is high during physical inactivity and rest, and both movement execution and movement observation suppress it (Hari and Salmelin, 1997; Cochin et al., 1998, 1999; Fecteau et al., 2004; Muthukumaraswamy et al., 2004; Lepage and Théoret, 2006). Because of its responsiveness to action observation, mu suppression has been proposed to reflect mirror neuron activity related to viewing biological actions with or without object interaction, including finger movements (Babiloni et al., 1999; Cochin et al., 1999) and hand grip movements (Muthukumaraswamy et al., 2004), as well as to hearing sounds linked to actions, such as piano melodies (Wu et al., 2016).

Since the initial discovery of mirror neurons, research has focused on their potential role in social cognitive processes that rely on the ability to understand actions and intentions, such as empathy. Similar fMRI activity in brain regions during the execution and observation of facial expressions has been taken as evidence for a single mechanism of action representation that allows people to empathize with others (Carr et al., 2003). To further our knowledge of the role of the mirror neuron network (MNN) in social emotional information processing, several studies have used EEG mu suppression as a proxy for MNN activity to investigate the network’s sensitivity to emotional information, using stimuli depicting body parts in painful and non-painful situations, and found greater mu suppression in the painful than in the non-painful conditions (Yang et al., 2009; Cheng et al., 2014; Hoenen et al., 2015). In contrast to findings suggesting a heightened sensitivity of the mu rhythm to emotional information, others found similar levels of mu modulation during gender discrimination and emotion recognition tasks that entailed viewing point-light displays of walking human figures (Perry et al., 2010b). Facial expressions have been used as stimuli in EEG mu suppression research in only a handful of studies (Moore et al., 2012; Cooper et al., 2013; Rayson et al., 2016, 2017; Moore and Franz, 2017). Further research using different types of facial movements conveying varying levels of emotional information is necessary to investigate the differential sensitivity of the sensorimotor cortex to emotion-related information.

It is crucial to note that findings from some mu suppression studies indicate that mu can easily be confounded with occipital alpha activity: alpha suppression at the central electrodes may be similar while viewing biological and non-biological motion, and may be more pronounced for biological than for non-biological motion when the observed action depicts pain. As pointed out by Milston et al. (2013), most of the studies that have explored the relation between mu suppression and empathy have used only pain-eliciting stimuli (e.g., Yang et al., 2009; Perry et al., 2010a; Hoenen et al., 2015). Researchers have highlighted that processes other than empathy, such as attention, may be at work while viewing painful stimuli because of their threatening nature (Hoenen et al., 2013) or salience (Perry et al., 2010a). Even in tasks using non-emotional biological motion, it may be difficult to disentangle mu from alpha. For example, Aleksandrov and Tugin (2012) did not find any systematic differences in mu suppression between the observation of hand movements, the observation of non-biological objects, and mental counting. Similarly, Perry and Bentin (2010) observed very similar alpha suppression over central (mu) and occipital sites during the observation of hand movements toward an object. A recent study by Hobson and Bishop (2016) showed that different types of baseline used to measure mu suppression engage the attention system differently, thus directly affecting the degree of suppression recorded. They found that mu and occipital alpha modulation while viewing hand movements and kaleidoscope movements was consistent with MNN activity only when a static image of the stimulus, presented immediately before its dynamic video, was used as the baseline. Because of this posterior alpha confound associated with attentional processes, baseline and control conditions need to be chosen carefully.

The current study aims to contribute to our understanding of the simulation account by investigating the responsiveness of the sensorimotor cortex to emotional and non-emotional facial expressions. Our goal is to examine the differential sensitivity of the mu rhythm to different types of facial movements. To our knowledge, this is the first study to examine the sensitivity of the mu rhythm, while controlling for occipital alpha activity, to dynamic neutral and emotional facial expressions not depicting pain. A within-trial baseline method was adopted following Hobson and Bishop (2016): the 1,100 ms static image epoch was used as the baseline for quantifying activity in the subsequent 2,050 ms dynamic image epoch. It was hypothesized that mu suppression would be greater (1) in the happy, sad, and neutral face conditions than in the non-biological stimulus condition, and (2) in the happy and sad face conditions than in the neutral face condition, without a corresponding difference in occipital alpha suppression.

Materials and Methods

Participants

Twenty-five participants (16 female) between the ages of 19 and 36 (M = 26.5, SD = 6) were recruited through flyers placed around the University of Auckland campus. Each participant was compensated with a $20 supermarket voucher. Before data collection, a pre-screening questionnaire was emailed to the volunteers to determine whether they met the criteria for participation. Exclusion criteria were self-reported major head injury, psychiatric diagnosis, psychoactive medication use, or sensorimotor problems. The protocol was approved by the University of Auckland Human Participants Ethics Committee, and the study was carried out in accordance with its recommendations. All participants gave written informed consent in accordance with the Declaration of Helsinki.

Stimuli and Design

EEG was recorded during a 30-min computer task, which entailed the viewing of four types of dynamic image videos: happy face, sad face, neutral face (i.e., mouth opening) and non-biological stimulus (i.e., kaleidoscope). There were four blocks of 40 trials (160 total). In each block, there were 10 happy face, 10 sad face, 10 neutral face and 10 non-biological stimulus videos, presented in random order. Each video was 6,000 ms long. Participants were free to rest between the blocks for as long as they wanted.
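The block structure just described (four blocks, each with 10 trials per condition in random order) can be sketched as follows. This is a minimal Python illustration, not the presentation script used in the study; the condition labels and the `make_blocks` and `is_balanced` functions are hypothetical, and the assignment of particular actors or videos to trials is not modeled.

```python
import random
from collections import Counter

# Hypothetical labels for the four video types.
CONDITIONS = ["happy", "sad", "neutral", "kaleidoscope"]


def make_blocks(n_blocks=4, reps_per_condition=10, seed=None):
    """Build n_blocks blocks, each containing reps_per_condition trials of every
    condition in an independently shuffled order."""
    rng = random.Random(seed)
    blocks = []
    for _ in range(n_blocks):
        block = [cond for cond in CONDITIONS for _ in range(reps_per_condition)]
        rng.shuffle(block)
        blocks.append(block)
    return blocks


def is_balanced(block, reps_per_condition=10):
    """True if every condition appears exactly reps_per_condition times."""
    return Counter(block) == Counter({cond: reps_per_condition for cond in CONDITIONS})
```

With the defaults, `make_blocks(seed=42)` returns four lists of 40 labels (160 trials in total); passing a seed makes the order reproducible across sessions.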

Happy and sad face videos were taken from the Amsterdam Dynamic Facial Expression Set (ADFES; van der Schalk et al., 2011), which is freely available for research from the Psychology Research Unit at the University of Amsterdam. Neutral face videos were recorded by OK. Past research has validated videos of actors opening their mouths as non-emotional (Rayson et al., 2016). The videos used in the present study were made similar to Rayson et al.’s (2016) stimuli and the ADFES stimuli in duration, brightness, size, and contrast. The kaleidoscope images presented as the non-biological stimulus were those used in a previous study (Hobson and Bishop, 2016). All stimuli were converted to grayscale.

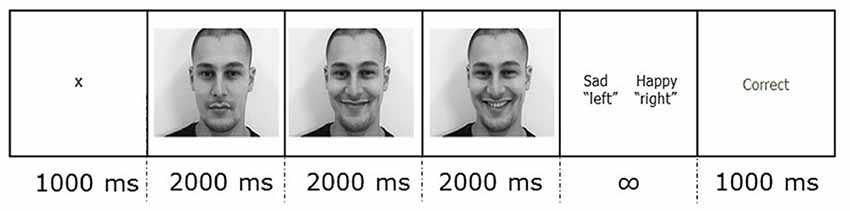

Participants were instructed to minimize movement throughout the experiment and blinking during trials. As Figure 1 illustrates, each trial started with a 1,000 ms fixation cross against a white background. The fixation cross was followed by the static image stimulus for 2,000 ms, then a 2,000 ms dynamic image in which the expression changed, and finally a 2,000 ms static image of the last frame of the video. A two-alternative forced-choice response slide, showing the correct label alongside one of the other three labels, then prompted the participant to categorize the stimulus as happy, sad, neutral, or other. The response slide remained on the screen until the participant responded using the keyboard, pressing “d” if the label on the left was correct and “k” if the label on the right was correct. The correct label was on the right in half of the trials and on the left in the other half. Each trial ended with a 1,000 ms feedback slide displaying the word “Correct” or “Incorrect” depending on the key press.

Figure 1. An example trial showing the duration of each section in ms. Each video was presented for 6,000 ms, of which the first 2,000 ms were static, the second 2,000 ms were dynamic, and the last 2,000 ms were static.
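The trial sequence and the two-alternative response slide can be sketched as below. This is a hypothetical Python illustration, not the study's experiment code: the names `TIMELINE`, `make_response_slides`, and `score` are invented here, the correct label for the kaleidoscope is assumed to be "other", and the foil is assumed to be drawn at random from the three remaining labels. The correct label is placed on the right in exactly half of the trials, matching the counterbalancing described for Figure 1.

```python
import random

LABELS = ["happy", "sad", "neutral", "other"]
KEY_TO_SIDE = {"d": "left", "k": "right"}

# Hypothetical trial timeline in ms; None means the slide stays until a key press.
TIMELINE = [
    ("fixation", 1000),
    ("static_first", 2000),
    ("dynamic", 2000),
    ("static_last", 2000),
    ("response", None),
    ("feedback", 1000),
]


def make_response_slides(correct_labels, seed=None):
    """Pair each correct label with a random foil and put the correct label on
    the right in exactly half of the trials (rounded down for odd counts)."""
    rng = random.Random(seed)
    n = len(correct_labels)
    sides = ["right"] * (n // 2) + ["left"] * (n - n // 2)
    rng.shuffle(sides)
    slides = []
    for label, side in zip(correct_labels, sides):
        foil = rng.choice([candidate for candidate in LABELS if candidate != label])
        left, right = (foil, label) if side == "right" else (label, foil)
        slides.append({"left": left, "right": right, "correct_side": side})
    return slides


def score(slide, key):
    """Feedback text for a key press: 'd' selects the left label, 'k' the right."""
    return "Correct" if KEY_TO_SIDE[key] == slide["correct_side"] else "Incorrect"
```

The three video phases in `TIMELINE` sum to the 6,000 ms video duration; only the response slide has a participant-determined length.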

Accuracy and reaction time were not analyzed; the responses and feedback served only to gauge attention. The highest number of incorrect answers for any participant was six (i.e., 4.5%), indicating sustained attention to the stimuli in all participants.

EEG Data Recording

EEG recording was conducted in an electrically shielded room (IAC Noise Lock Acoustic, Model 1375, Hampshire, United Kingdom) using 128-channel Ag/AgCl electrode nets (Tucker, 1993) from Electrical Geodesics Inc. (Eugene, OR, USA). EEG was recorded continuously at a 1,000 Hz sampling rate with Electrical Geodesics Inc. amplifiers (300 MΩ input impedance). Electrode impedances were kept below 40 kΩ, an acceptable level for this system (Ferree et al., 2001). The vertex electrode (Cz) served as the reference during recording. Electrolytic gel was applied before recording started. Each session consisted of two continuous recordings: after the first two blocks, recording was paused and electrolytic gel was re-applied to keep impedances low.

EEG Data Preprocessing and Analysis

EEG processing was performed using EEGLAB, an open-source MATLAB toolbox (Delorme and Makeig, 2004). For each participant, the continuous data were downsampled to 250 Hz and then high-pass filtered at 0.1 Hz. Epochs of 6,000 ms, starting at the onset of each dynamic image video (time zero), were then extracted to discard data recorded outside the video presentations. Line noise at 50 Hz and its harmonics was removed. Bad channels were identified using the EEGLAB pop_rejchan function (absolute threshold or activity probability limit of 5 SD, based on kurtosis) and interpolated. Data were re-referenced to the average of all electrodes. Infomax ICA was run on each preprocessed dataset with EEGLAB default settings. Components reflecting eye movements and large muscle artifacts were visually identified and removed for each participant. The EEG recordings of three participants were identified as very noisy during the cleaning stage and were excluded from further processing.
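Two of the preprocessing steps, kurtosis-based bad-channel detection and average re-referencing, can be illustrated with a short NumPy sketch. It is a simplified re-implementation written for this description, not EEGLAB's `pop_rejchan` code: kurtosis is z-scored across channels and channels beyond 5 SD are flagged, whereas EEGLAB's exact normalization may differ. The function names are hypothetical, and interpolation of flagged channels and ICA are not shown.

```python
import numpy as np


def channel_kurtosis(data):
    """Excess kurtosis of each channel; data has shape (n_channels, n_samples)."""
    centered = data - data.mean(axis=1, keepdims=True)
    m2 = np.mean(centered ** 2, axis=1)
    m4 = np.mean(centered ** 4, axis=1)
    return m4 / m2 ** 2 - 3.0


def flag_bad_channels(data, z_thresh=5.0):
    """Indices of channels whose kurtosis lies more than z_thresh SDs from the
    mean kurtosis across channels (a simplified stand-in for pop_rejchan)."""
    k = channel_kurtosis(data)
    z = (k - k.mean()) / k.std()
    return np.flatnonzero(np.abs(z) > z_thresh)


def average_reference(data):
    """Re-reference every channel to the instantaneous mean of all channels."""
    return data - data.mean(axis=0, keepdims=True)
```

A channel contaminated by sparse large spikes has a heavy-tailed amplitude distribution and therefore a very high kurtosis, which is why kurtosis is a convenient screening measure before ICA.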

From each 6,000 ms video epoch, an early epoch from 800 to 1,900 ms, corresponding to the static image, and a late epoch from 1,950 to 4,000 ms, corresponding to the dynamic image, were extracted. The analysis was conducted for the mu/alpha band of 8–13 Hz over two central clusters of six electrodes each, one centered on C3 over the left hemisphere (C3 and electrodes 30, 31, 37, 41, and 42) and one centered on C4 over the right hemisphere (C4 and electrodes 80, 87, 93, 103, and 105), and over three occipital electrodes (O1, Oz, and O2). For each of these 15 electrodes, the fast Fourier transform (FFT) was used to calculate the power spectral density (PSD) in each trial, separately for the early and late epochs. For each trial, mu/alpha suppression at each electrode was calculated as the ratio of the late epoch PSD to the early epoch PSD. Ratios rather than differences were used as the measure of suppression to control for between-individual variability in mu/alpha power due to differences in scalp thickness and electrode impedance (Cohen, 2014). Within the central and occipital clusters separately, a trial was excluded as an outlier if its PSD ratio was more than three scaled median absolute deviations from the median PSD ratio of the cluster. For each of the four conditions, the PSD ratios of the 12 central electrodes were averaged to obtain a single mu value, and those of the three occipital electrodes to obtain a single alpha value, resulting in eight scores per participant (i.e., suppression for the happy face, sad face, neutral face, and non-biological stimulus conditions at the central and occipital regions).
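The within-trial ratio and the outlier rule can be sketched in NumPy for a single electrode. This is an illustration under stated assumptions, not the study's MATLAB code: the PSD is a plain one-sided periodogram of each mean-removed epoch, band power is taken as the mean PSD across the 8–13 Hz FFT bins (so the 1,100 ms and 2,050 ms epochs are comparable despite their different frequency resolution), time zero is the video onset, and "scaled MAD" is assumed to mean the MAD multiplied by 1.4826, the usual constant that makes it consistent with the SD for normal data. All function names are hypothetical.

```python
import numpy as np

SFREQ = 250.0            # Hz, after downsampling
EARLY_MS = (800, 1900)   # static-image baseline window (ms from video onset)
LATE_MS = (1950, 4000)   # dynamic-image window (ms from video onset)
BAND_HZ = (8.0, 13.0)    # mu/alpha band


def window(signal, window_ms, sfreq=SFREQ):
    """Cut a time window (in ms) out of a single-trial, single-electrode signal."""
    start = int(round(window_ms[0] * sfreq / 1000))
    stop = int(round(window_ms[1] * sfreq / 1000))
    return signal[start:stop]


def band_psd(segment, sfreq=SFREQ, band=BAND_HZ):
    """Mean one-sided periodogram density (power/Hz) across the band's FFT bins."""
    n = segment.size
    spectrum = np.fft.rfft(segment - segment.mean())
    psd = np.abs(spectrum) ** 2 / (sfreq * n)
    # Fold in negative frequencies; for even n the Nyquist bin is doubled too,
    # which does not affect the 8-13 Hz band.
    psd[1:] *= 2
    freqs = np.fft.rfftfreq(n, d=1.0 / sfreq)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return psd[in_band].mean()


def suppression_ratio(trial, sfreq=SFREQ):
    """Late-epoch band PSD divided by early-epoch band PSD (< 1 means suppression)."""
    early = window(trial, EARLY_MS, sfreq)
    late = window(trial, LATE_MS, sfreq)
    return band_psd(late, sfreq) / band_psd(early, sfreq)


def mad_inliers(ratios, n_mad=3.0):
    """Boolean mask of ratios within n_mad scaled MADs of the cluster median."""
    ratios = np.asarray(ratios, dtype=float)
    median = np.median(ratios)
    scaled_mad = 1.4826 * np.median(np.abs(ratios - median))
    return np.abs(ratios - median) <= n_mad * scaled_mad
```

In use, `suppression_ratio` would be applied to every trial at every electrode, and `mad_inliers` to the trial-wise ratios of one cluster to decide which trials to keep.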

Because ratio data are not normally distributed, the ratios were log-transformed for statistical analysis. A log ratio below zero indicates suppression, zero indicates no change, and a value above zero indicates facilitation.
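A short sketch of the aggregation and log transform: the retained ratios of a cluster are averaged, and the average is log-transformed, so that a halving and a doubling of power lie at equal distances on either side of zero. The text does not state the logarithm base; natural log is assumed here, and the sign interpretation is the same for any base. Averaging over trials as well as electrodes before the log is an assumption about the aggregation order, and the function names are hypothetical.

```python
import math

import numpy as np


def log_ratio(ratio):
    """Log-transform a late/early power ratio (natural log assumed)."""
    return math.log(ratio)


def condition_score(ratios):
    """Average the retained ratios of one cluster (e.g., trials x electrodes)
    into a single value, then log-transform it."""
    return log_ratio(float(np.mean(ratios)))


def interpret(score, tol=1e-12):
    """Map a log ratio to the labels used in the text."""
    if score < -tol:
        return "suppression"
    if score > tol:
        return "facilitation"
    return "no change"
```

For example, `log_ratio(0.5)` and `log_ratio(2.0)` have the same magnitude and opposite signs, which is not true of the raw ratios (0.5 below versus 1.0 above the no-change value of 1).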

Source Estimation

The 128-channel EEG data were analyzed with standardized low-resolution electromagnetic tomography (sLORETA) source localization (Pascual-Marqui, 2002; free academic software available at http://www.uzh.ch/keyinst/loreta.htm). sLORETA is an inverse solution that produces images of standardized current density at each of 6,430 cortical voxels (spatial resolution 5 mm) in Montreal Neurological Institute (MNI) space (Pascual-Marqui, 2002). sLORETA images of mu/alpha band (8–13 Hz) activity during the late epochs of the neutral face and non-biological stimulus conditions were computed for each participant, and the group averages for the two conditions were then extracted. Mu/alpha band power during the late epochs of the two conditions was then compared. A whole-brain analysis was conducted to test whether the reduced mu/alpha band power during the late epoch in the neutral face condition, relative to the non-biological stimulus condition, was localized to central rather than posterior regions; such a pattern would indicate stimulus-related differences in sensory and motor activity rather than cortex-wide activity reflecting attention. Voxel-wise t-tests were performed on the frequency band-wise normalized and log-transformed sLORETA images. For all t-tests, the variance at each voxel was smoothed by combining half the variance at that voxel with half the mean variance across the image. Correction for multiple testing was applied using statistical nonparametric mapping (SnPM) with 5,000 permutations.
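The variance-smoothing rule and the permutation correction can be sketched for the paired neutral face versus non-biological stimulus comparison. This NumPy sketch assumes the per-participant difference images (neutral face minus non-biological stimulus, normalized log power) are already available as a participants × voxels array. It flips the sign of each participant's difference image at random and uses the minimum voxel-wise pseudo-t as the maximal statistic for the one-tailed test, which is the generic SnPM approach to family-wise error control; it is not the sLORETA software's own implementation, and the function names are hypothetical.

```python
import numpy as np


def smoothed_paired_t(diff):
    """Voxel-wise paired pseudo-t with variance smoothing.

    diff: array (..., n_participants, n_voxels) of within-participant differences.
    Each voxel's variance is replaced by half its own value plus half the mean
    variance across the image.
    """
    n = diff.shape[-2]
    mean = diff.mean(axis=-2)
    var = diff.var(axis=-2, ddof=1)
    var_smooth = 0.5 * var + 0.5 * var.mean(axis=-1, keepdims=True)
    return mean / np.sqrt(var_smooth / n)


def snpm_threshold(diff, n_perm=5000, alpha=0.05, seed=0, batch=50):
    """One-tailed (negative) family-wise threshold from sign-flip permutations."""
    rng = np.random.default_rng(seed)
    n = diff.shape[0]
    min_t = []
    for start in range(0, n_perm, batch):  # batches keep memory use bounded
        size = min(batch, n_perm - start)
        # Under H0 each participant's difference image is symmetric around zero,
        # so flipping its sign gives an equally likely data set.
        flips = rng.choice([-1.0, 1.0], size=(size, n, 1))
        perm_t = smoothed_paired_t(flips * diff[np.newaxis])
        # The minimum over voxels is the maximal statistic for "neutral < non-biological".
        min_t.append(perm_t.min(axis=1))
    return np.quantile(np.concatenate(min_t), alpha)


def significant_voxels(diff, **kwargs):
    """Observed pseudo-t, FWE threshold, and boolean mask of significant voxels."""
    t_obs = smoothed_paired_t(diff)
    threshold = snpm_threshold(diff, **kwargs)
    return t_obs, threshold, t_obs <= threshold
```

Pooling half of the image-wide variance into each voxel stabilizes the denominator when only about 20 participants contribute, and using the image-wide minimum t in every permutation is what makes the resulting threshold control the family-wise error rate across all voxels.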

Results

EEG Results

All statistical analyses were performed in RStudio (RStudio Team, 2016).

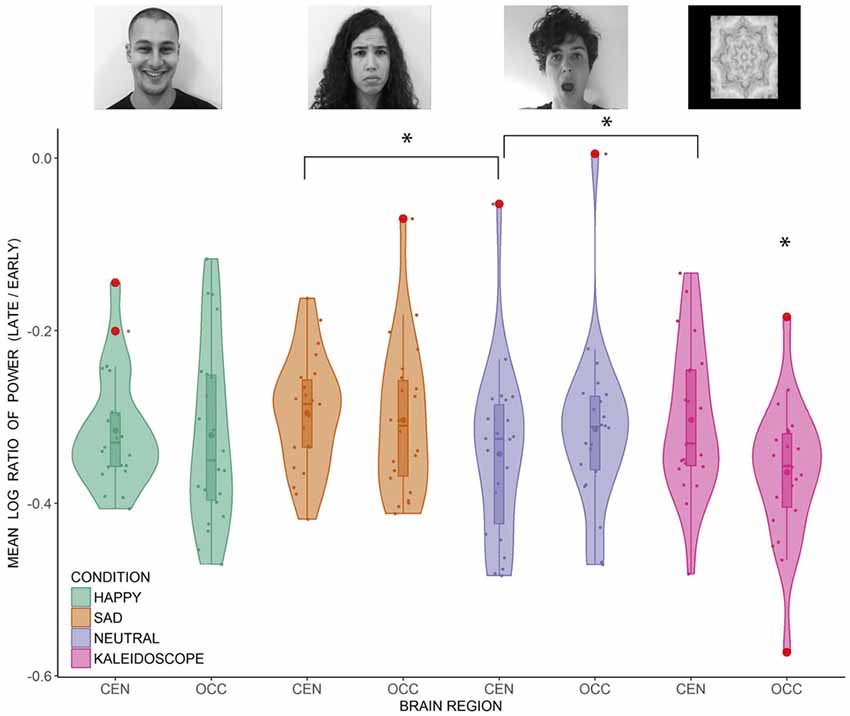

Data from 22 participants were included in the analysis. As explained in the “Materials and Methods” section, there were four conditions (i.e., happy face, sad face, neutral face, non-biological stimulus) and two brain regions (i.e., central, occipital) of interest. Before hypothesis testing, a t-test for each condition at each brain region was conducted to confirm that the PSD was significantly reduced during the late compared with the early epoch (all p-values < 0.001). After confirming suppression in each condition at both brain regions, a 4 × 2 repeated measures ANOVA was conducted. Mauchly’s test indicated that the assumption of sphericity was met for the condition variable. The main effect of condition was not significant (F(3,63) = 2.74, p = 0.051, ηp² = 0.115), nor was the main effect of region (F(1,21) = 1.520, p = 0.231, ηp² = 0.067). However, interpretation of these main effects is qualified by the significant interaction between condition and region (F(3,63) = 10.734, p < 0.001, ηp² = 0.338). The interaction was investigated further with two sets of pairwise comparisons across conditions, one at each brain region, with Benjamini and Hochberg’s (1995) false discovery rate (FDR) correction applied within each set (p < 0.05, FDR corrected). At the central region, only the neutral face condition showed significantly greater suppression than the non-biological stimulus (p < 0.05). The neutral face condition also showed greater central suppression than the sad face condition (p < 0.05). At the occipital region, suppression was significantly greater in the non-biological stimulus condition than in all the other conditions (all p-values < 0.05). The distribution of the data points can be seen in Figure 2. Three participants had at least one ratio score lying more than 1.5 times the interquartile range below the 25th percentile or above the 75th percentile. Removing them did not change the pattern of results, so the analyses are reported including these outliers.

Figure 2. Distribution of the individual mean log ratio scores of 22 participants (dots) in the happy, sad, neutral face and non-biological stimulus conditions at the central and occipital regions. Outliers (>1.5 × interquartile range) are represented by the red disks. Inside the boxplots, dots represent the means and horizontal lines represent the medians. The density plots around the data points represent the kernel probability density of the data at different values. CEN, central region; OCC, occipital region. Significant differences are marked by an asterisk [p < 0.05, false discovery rate (FDR) corrected].
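Two of the statistics reported above can be made concrete. Partial eta squared follows from an F statistic and its degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error), which reproduces the reported effect sizes (e.g., 2.74 × 3 / (2.74 × 3 + 63) ≈ 0.115). The Benjamini–Hochberg step-up adjustment used for the pairwise comparisons is sketched alongside it; it should give the same values as R's `p.adjust(p, method = "BH")`. This plain-Python sketch is illustrative only: the analyses themselves were run in R, the function names are hypothetical, and the p-values in the usage lines are made up.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def benjamini_hochberg(p_values):
    """Benjamini-Hochberg (1995) adjusted p-values, returned in input order.

    Each sorted p-value is scaled by m / rank, and a running minimum taken from
    the largest p-value downward keeps the adjusted values monotone.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


if __name__ == "__main__":
    print(round(partial_eta_squared(2.74, 3, 63), 3))    # 0.115 (condition)
    print(round(partial_eta_squared(1.520, 1, 21), 3))   # 0.067 (region)
    print(round(partial_eta_squared(10.734, 3, 63), 3))  # 0.338 (interaction)
    # Six made-up p-values, one per pairwise comparison among four conditions.
    print(benjamini_hochberg([0.003, 0.020, 0.041, 0.300, 0.012, 0.650]))
```

With four conditions there are six pairwise comparisons per region, so the correction is applied separately to each set of six p-values.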

Source Estimation Results

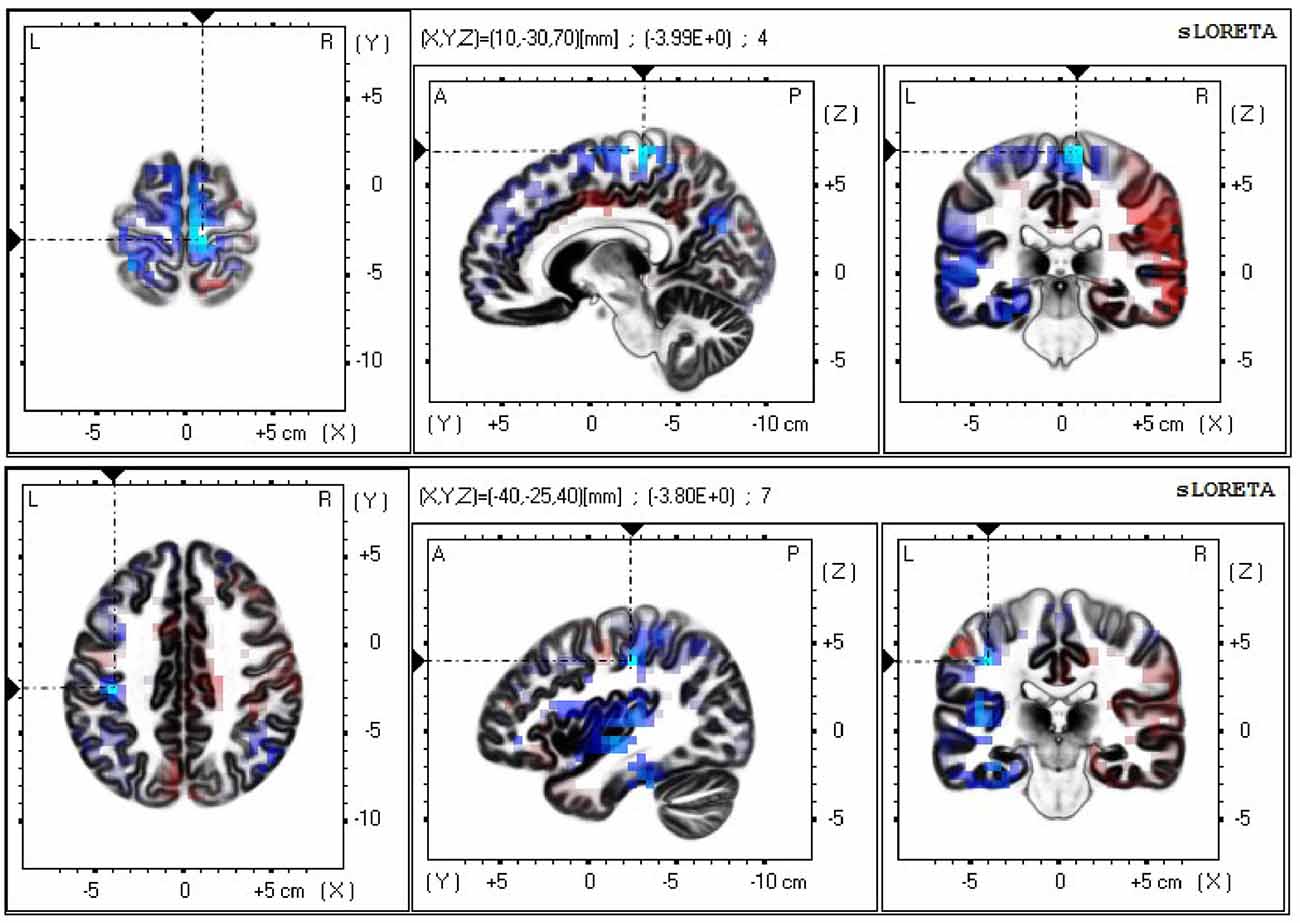

The neural sources of the difference in mu/alpha band current density power between the neutral face and non-biological stimulus conditions during the late epoch (neutral face minus non-biological stimulus) were analyzed using sLORETA with a one-tailed test (neutral face < non-biological stimulus). The exceedance proportion test output of the sLORETA analysis was used to identify the voxels at which the difference in mu/alpha power between the two conditions was significant (p < 0.05). Based on the exceedance proportion test results, which gave a threshold of t = −3.599 for a p-value of 0.0524, differences in mu/alpha power were localized to the fusiform gyrus (BA20; t = −4.03; X = −55, Y = −40, Z = −30, MNI coordinates), primary somatosensory cortex (BA3; t = −3.80; X = −40, Y = −25, Z = 40), prefrontal cortex (BA9; t = −5.14; X = 10, Y = 45, Z = 35), and medial premotor cortex (supplementary motor area; BA6; t = −3.99; X = 10, Y = −30, Z = 70; see Figure 3). In the color scale, blue indicates less mu/alpha power in the neutral face than in the non-biological stimulus condition, and red indicates the opposite.

Figure 3. Current density power analysis in the mu/alpha band (8–13 Hz), averaged across 22 participants, between the neutral face and non-biological stimulus conditions during the late epoch found significant voxels (p < 0.05) best matched to the supplementary motor area (top) and the primary somatosensory area (bottom). Horizontal (left), sagittal (middle), and coronal (right) sections through the voxel with the maximal t-statistic (local maximum) are displayed. Blue indicates less power in the alpha band in the neutral face than the non-biological stimulus condition.

Discussion

The current study investigated the modulation of the mu rhythm while participants observed videos of emotional and neutral face movements and of non-biological stimulus movements. Mu suppression, but not occipital alpha suppression, was predicted to be greater in the face conditions than in the non-biological stimulus condition, and greater for the emotional faces than for the neutral face. Contrary to our prediction, only the neutral faces were associated with stronger mu suppression than the non-biological stimulus. The lack of a difference in mu/alpha band power between the emotional faces and the non-biological stimulus at the central region made it difficult to distinguish mu from posterior alpha modulation during emotional face observation. Greater suppression in the neutral face than in the non-biological stimulus condition at the central region, accompanied by the opposite pattern at the occipital region, suggests that mu rhythm modulation associated with neutral face processing is distinct from the overall attenuation of alpha power associated with information processing and attention. Similar opposing trends of alpha and mu suppression for biological and non-biological movement were observed by Hobson and Bishop (2016). Greater occipital alpha suppression in the non-biological stimulus than in the neutral face condition may be explained by low-level visual differences between the two conditions, such as contrast and frequency-domain content, and/or by differing attentional demands.

In addition to the scalp-recorded EEG results, the source analysis provides further support for a localized rather than a global difference in mu/alpha band power between the neutral face and non-biological stimulus conditions, suggesting that the conditions differed in activity in a face-related area (the fusiform gyrus) and in MNN areas, specifically the primary somatosensory cortex, prefrontal cortex, and supplementary motor area. Greater activity in the fusiform gyrus in response to faces than to the non-biological stimulus was expected, as this area responds more to faces than to objects (Haxby et al., 2000). The premotor areas, including the supplementary motor area, and the primary somatosensory cortex are key regions implicated in sensorimotor simulation during action observation (for a review, see Wood et al., 2016) and motor imagery (Burianová et al., 2013; Filgueiras et al., 2018). The premotor cortex has been a primary focus of studies of action observation (Buccino et al., 2001; Johnson-Frey et al., 2003; Raos et al., 2004, 2007). While the motor representations of actions are stored in the premotor areas, the somatosensory areas may store tactile and proprioceptive representations of these actions (Gazzola and Keysers, 2009). In addition to the role of somatosensory activity in hand actions (Avikainen et al., 2002; Raos et al., 2004), there is evidence that somatosensory representations contribute to our ability to simulate basic emotions while observing facial expressions (Adolphs et al., 2000). The review by Wood et al. (2016) highlights the role of sensory simulation, in addition to motor simulation, in emotion recognition, pointing to a large overlap between the brain areas involved in producing and observing facial expressions. Signaling from the somatosensory cortex to the premotor cortex may be a necessary step for action understanding and imitation (Gazzola and Keysers, 2009). This signaling may explain why the source estimation results show significantly lower mu/alpha band power in these two brain areas in the neutral face movement condition than in the non-biological stimulus condition.

We offer a number of possible explanations for the EEG results showing the strongest mu suppression to the neutral face movement in the form of mouth opening. First, the results may be attributed to the sensitivity of the sensorimotor cortex to human-object interaction. Most research that has investigated the role of the MNN in action observation involves hand and finger movements that almost always suggest some sort of interaction with an object, such as a pincer movement with the thumb and index finger (e.g., Cochin et al., 1999), manipulating objects (e.g., Gazzola and Keysers, 2009), or bringing food to the mouth (Ferrari et al., 2003). In addition to limb movements, viewing oro-facial movements has also been observed to decrease mu power, with the greatest suppression to viewing object-directed actions compared with undirected sucking and biting movements, and the least suppression to viewing speech-like mouth movements (Muthukumaraswamy et al., 2006). In the present study, the sensorimotor cortex may have been engaged by the mouth-opening gesture, which may have been perceived as an action associated with eating, an action implying interaction with an object (i.e., food), thereby supporting intention understanding (i.e., eating). Second, the MNN may be involved in the recognition of deliberate, voluntary gestures rather than involuntary communicative actions. However, mu suppression is reported to be modulated by contextual information, such as the actor’s familiarity (Oberman et al., 2008) or reward value (Gros et al., 2015), or the gaming context in which hand gestures are viewed (Perry et al., 2011). In addition, viewing facial gestures that do not suggest object interaction or deliberate action also seems to modulate the mu rhythm (Moore et al., 2012; Rayson et al., 2016, 2017; Moore and Franz, 2017). Thus, explanations that restrict mu suppression to voluntary or object-related actions are unlikely.

A third explanation is that different types of facial movements may engage different MNN areas. An fMRI experiment by van der Gaag et al. (2007) found bilateral inferior frontal operculum activation to viewing emotional facial expressions but somatosensory activation to neutral movements (i.e., blowing up the cheeks). The authors attributed their findings to distinct processing pathways, more visceral for the former and more proprioceptive for the latter. A similar differential pathway may explain the current findings. Alternatively, if a single mirroring pathway underlies all types of facial movements, the greater ambiguity of the action and/or the emotion in the mouth-opening video may engage the MNN more than full-blown, easily recognized emotional expressions do. In other words, when emotional information is presented at high intensity, the recognition task may not be demanding enough to engage the MNN, so that the system is bypassed, as indexed by absent or reduced mu suppression.

Based on recent findings from connectivity research (see, for example, Gardner et al., 2015), our last explanation argues that rather than a global increase/decrease of activity in the totality of the network, a differential modulation of the signaling between the key MNN nodes is more likely to be at work during action observation. There is evidence for the existence of a subgroup of neurons in the human supplementary motor area that is excited by execution, but inhibited by observation of hand grasping actions and facial emotional expressions (Mukamel et al., 2010). These observation-inhibited neurons may be the mechanism for self-other discrimination process related to observing others’ actions, and the strength of their activity may modulate the amount of input from premotor areas to the sensorimotor cortex during action observation (Mukamel et al., 2010; Woodruff et al., 2011). Readily recognizable emotion-related information may activate the observation-inhibited mirror neurons in the premotor areas, leading to less excitatory input to the sensorimotor cortex. On the other hand, neutral facial movements that lack social and emotional information, as in mouth opening, may not activate observation-inhibited neurons as much as easily recognizable expressions do, resulting in stronger excitatory input to the sensorimotor cortex. Thus, in the face of subtle expressions, increased sensorimotor activity may aid action and emotion recognition.

Signaling between and within the key MNN areas during action observation and execution has recently been approached from a Bayesian perspective that suggests the existence of an updating mechanism which continuously attempts to minimize the difference (i.e., the error) between the predicted action and the observed or executed action to achieve an understanding of the most likely cause of an action (Keysers and Perrett, 2004; Kilner et al., 2007b). According to a predictive coding model of mirror neurons, when the mismatch between the predicted and observed actions of others is large due to the unfamiliarity, unusualness and unexpectedness of the observed action, the network generates a new prediction model, resulting in stronger motor activation (Kilner et al., 2007a,b). In line with this account, several studies have reported greater mu suppression in infants during observation of extraordinary actions (e.g., turning on a lamp with one’s forehead or lifting a cup to the ear) compared to ordinary actions (e.g., turning on a lamp with one’s hand or lifting a cup to the mouth), suggesting that as the deviation of the observed action from the expected action increases, motor activation increases (Stapel et al., 2010; Langeloh et al., 2018). In the current study, the unfamiliarity of the mouth-opening movement as a neutral gesture may have resulted in a greater error signal between the predicted, usual neutral gesture the participants would expect to see, and the observed, unusual neutral gesture they were instructed to categorize as such. Additional predictions that required updating in the mouth opening condition may have activated the sensorimotor areas more than the familiar and ordinary happy and sad gestures. Future research may examine the coordinated activity of the involved brain regions by connectivity analyses to quantify the differences in their associations or dependencies under different conditions.

Limitations

There are several important limitations of the current study. First, low-level visual properties of the face and non-biological stimuli, such as contrast and frequency-domain (spatial frequency) composition, were not matched. Future studies should match contrast and frequency components across conditions to reduce the influence of these task-irrelevant factors on mu/alpha activity. Second, in the face videos, each actor performed only one facial expression. Participants may therefore have learned which movement followed each static image, leading to habituation across blocks. Using the same actors for different facial expressions might help avoid habituation-driven changes in mu/alpha activity. Third, the amount of movement viewed in each condition was not controlled. Variability in the amount of movement displayed in the videos may have influenced mu and alpha power modulation across conditions. Thus, the greater mu suppression to neutral faces may reflect the more pronounced movement in the mouth-opening action compared with the happy and sad expressions, rather than differences in social emotional content. Furthermore, face videos may not induce the mu modulation that would naturally occur in real-life settings. Finally, the limited sample size and the lack of an a priori power analysis mean that replication studies are needed to clarify the modulatory influence of observed facial movements on the mu rhythm.
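
One way to act on the first recommendation is to quantify and equalize low-level image properties frame by frame before running an experiment. The sketch below assumes grayscale frames stored as NumPy arrays. It computes RMS contrast, linearly rescales a frame to a target mean luminance and RMS contrast, and gives a set of frames a common Fourier amplitude spectrum while keeping each frame's own phase spectrum. The frames are random placeholders rather than the study's stimuli, and the procedure is a generic approach, not the method of any particular study or toolbox.

```python
import numpy as np


def rms_contrast(img: np.ndarray) -> float:
    """RMS contrast: standard deviation of pixel intensities."""
    return float(np.std(img))


def match_mean_and_contrast(img: np.ndarray, target_mean: float,
                            target_rms: float) -> np.ndarray:
    """Linearly rescale a frame to a target mean luminance and RMS contrast."""
    z = (img - img.mean()) / img.std()   # zero mean, unit SD
    return z * target_rms + target_mean


def match_amplitude_spectrum(images: list) -> list:
    """Give every frame the average Fourier amplitude spectrum of the set,
    while keeping each frame's own phase spectrum (its spatial structure)."""
    spectra = [np.fft.fft2(im) for im in images]
    mean_amp = np.mean([np.abs(s) for s in spectra], axis=0)
    matched = []
    for s in spectra:
        recombined = mean_amp * np.exp(1j * np.angle(s))
        # For real-valued frames the result is real up to rounding error,
        # so the imaginary residue is discarded.
        matched.append(np.real(np.fft.ifft2(recombined)))
    return matched


# Hypothetical stand-ins for one frame per condition (values in 0..1).
rng = np.random.default_rng(0)
face_frame = rng.random((64, 64)) * 0.3 + 0.4   # lower contrast
kaleido_frame = rng.random((64, 64))            # higher contrast

kaleido_eq = match_mean_and_contrast(kaleido_frame, float(face_frame.mean()),
                                     rms_contrast(face_frame))
face_m, kaleido_m = match_amplitude_spectrum([face_frame, kaleido_frame])
print(rms_contrast(face_frame), rms_contrast(kaleido_frame),
      rms_contrast(kaleido_eq))
```

Matched frames can fall outside the displayable intensity range, and clipping them afterwards can reintroduce small differences, so it is worth recomputing these measures on the final stimuli.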

Conclusion

In conclusion, ambiguous or complex emotional information may result in greater activity in the sensorimotor areas if the difficulty of the emotion recognition task requires stronger engagement of the simulation system. The present findings support the involvement of the MNN in the simulation of facial expressions and indicate a complex relationship between sensorimotor activity and facial expression processing. They also call for further research on observation-related activity within and between the key brain areas involved in mimicry and social information processing. The explanations offered above, which attribute the observed effect to emotional ambiguity, could be tested in future studies by comparing the level of activity in the premotor, motor and somatosensory areas in response to social stimuli depicting various emotions at different intensities. Neuroimaging techniques with high spatial resolution, such as fMRI, could be used to investigate the involvement of the main MNN areas, as well as deeper brain regions, in the simulation of ambiguous motor and emotional information.

Data Availability

The datasets for this study will not be made publicly available because ethical clearance for sharing raw individual EEG data on public repositories was not obtained and cannot be obtained retrospectively. Preprocessed EEG data, in a form as close to the raw data as possible, will be made available to researchers upon request.

Author Contributions

OK performed data acquisition, analysis, interpretation of the data and wrote the first and final drafts of the manuscript. IK contributed substantially to the design of the experiment and critical revision of the manuscript. MM contributed substantially to data analysis and critical revision of the manuscript. All authors approved the final draft of the manuscript for publication (OK, MM, IK).

Funding

This work was supported by The University of Auckland Postgraduate Research Student Support (PReSS) Account (OK).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Veema Lodhia for assistance during data collection and all the participants who took part in the study. This article has been published as a pre-print in bioRxiv (Karakale et al., 2019).

References

Adolphs, R., Damasio, H., Tranel, D., Cooper, G., and Damasio, A. R. (2000). A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. J. Neurosci. 20, 2683–2690. doi: 10.1523/jneurosci.20-07-02683.2000

Aleksandrov, A. A., and Tugin, S. M. (2012). Changes in the mu rhythm in different types of motor activity and on observation of movements. Neurosci. Behav. Physiol. 42, 302–307. doi: 10.1007/s11055-012-9566-2

Avikainen, S., Forss, N., and Hari, R. (2002). Modulated activation of the human SI and SII cortices during observation of hand actions. Neuroimage 15, 640–646. doi: 10.1006/nimg.2001.1029

Babiloni, C., Carducci, F., Cincotti, F., Rossini, P. M., Neuper, C., Pfurtscheller, G., et al. (1999). Human movement-related potentials vs desynchronization of EEG alpha rhythm: a high-resolution EEG study. Neuroimage 10, 658–665. doi: 10.1006/nimg.1999.0504

Benjamini, Y., and Hochberg, Y. (1995). Controlling the false discovery rate: a practical and powerful approach to multiple testing. J. R. Stat. Soc. Series B Methodol. 57, 289–300. doi: 10.1111/j.2517-6161.1995.tb02031.x

Buccino, G., Binkofski, F., Fink, G. R., Fadiga, L., Fogassi, L., Gallese, V., et al. (2001). Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur. J. Neurosci. 13, 400–404. doi: 10.1111/j.1460-9568.2001.01385.x

Burianová, H., Marstaller, L., Sowman, P., Tesan, G., Rich, A. N., Williams, M., et al. (2013). Multimodal functional imaging of motor imagery using a novel paradigm. Neuroimage 71, 50–58. doi: 10.1016/j.neuroimage.2013.01.001

Carr, L., Iacoboni, M., Dubeau, M.-C., Mazziotta, J. C., and Lenzi, G. L. (2003). Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proc. Natl. Acad. Sci. U S A 100, 5497–5502. doi: 10.1073/pnas.0935845100

Caspers, S., Zilles, K., Laird, A. R., and Eickhoff, S. B. (2010). ALE meta-analysis of action observation and imitation in the human brain. Neuroimage 50, 1148–1167. doi: 10.1016/j.neuroimage.2009.12.112

Cheng, Y., Chen, C., and Decety, J. (2014). An EEG/ERP investigation of the development of empathy in early and middle childhood. Dev. Cogn. Neurosci. 10, 160–169. doi: 10.1016/j.dcn.2014.08.012

Cochin, S., Barthelemy, C., Lejeune, B., Roux, S., and Martineau, J. (1998). Perception of motion and qEEG activity in human adults. Electroencephalogr. Clin. Neurophysiol. 107, 287–295. doi: 10.1016/s0013-4694(98)00071-6

Cochin, S., Barthelemy, C., Roux, S., and Martineau, J. (1999). Observation and execution of movement: similarities demonstrated by quantified electroencephalography. Eur. J. Neurosci. 11, 1839–1842. doi: 10.1046/j.1460-9568.1999.00598.x

Cohen, M. X. (2014). Analyzing Neural Time Series Data: Theory and Practice. Cambridge, MA: MIT Press.

Cooper, N. R., Simpson, A., Till, A., Simmons, K., and Puzzo, I. (2013). Beta event-related desynchronization as an index of individual differences in processing human facial expression: further investigations of autistic traits in typically developing adults. Front. Hum. Neurosci. 7:159. doi: 10.3389/fnhum.2013.00159

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

di Pellegrino, G., Fadiga, L., Fogassi, L., Gallese, V., and Rizzolatti, G. (1992). Understanding motor events: a neurophysiological study. Exp. Brain Res. 91, 176–180. doi: 10.1007/bf00230027

Fecteau, S., Carmant, L., Tremblay, C., Robert, M., Bouthillier, A., and Théoret, H. (2004). A motor resonance mechanism in children? Evidence from subdural electrodes in a 36-month-old child. Neuroreport 15, 2625–2627. doi: 10.1097/00001756-200412030-00013

Ferrari, P. F., Gallese, V., Rizzolatti, G., and Fogassi, L. (2003). Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex. Eur. J. Neurosci. 17, 1703–1714. doi: 10.1046/j.1460-9568.2003.02601.x

Ferree, T. C., Clay, M. T., and Tucker, D. M. (2001). The spatial resolution of scalp EEG. Neurocomputing 38, 1209–1216. doi: 10.1016/s0925-2312(01)00568-9

Filgueiras, A., Conde, E. F. Q., and Hall, C. R. (2018). The neural basis of kinesthetic and visual imagery in sports: an ALE meta-analysis. Brain Imaging Behav. 12, 1513–1523. doi: 10.1007/s11682-017-9813-9

Gallese, V., and Goldman, A. (1998). Mirror neurons and the simulation theory of mind-reading. Trends Cogn. Sci. 2, 493–501. doi: 10.1016/s1364-6613(98)01262-5

Gardner, T., Goulden, N., and Cross, E. S. (2015). Dynamic modulation of the action observation network by movement familiarity. J. Neurosci. 35, 1561–1572. doi: 10.1523/jneurosci.2942-14.2015

Gazzola, V., and Keysers, C. (2009). The observation and execution of actions share motor and somatosensory voxels in all tested subjects: single-subject analyses of unsmoothed fMRI data. Cereb. Cortex 19, 1239–1255. doi: 10.1093/cercor/bhn181

Gros, I. T., Panasiti, M. S., and Chakrabarti, B. (2015). The plasticity of the mirror system: How reward learning modulates cortical motor simulation of others. Neuropsychologia 70, 255–262. doi: 10.1016/j.neuropsychologia.2015.02.033

Hari, R., and Salmelin, R. (1997). Human cortical oscillations: a neuromagnetic view through the skull. Trends Neurosci. 20, 44–49. doi: 10.1016/s0166-2236(96)10065-5

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/s1364-6613(00)01482-0

Hobson, H. M., and Bishop, D. V. (2016). Mu suppression – a good measure of the human mirror neuron system? Cortex 82, 290–310. doi: 10.1016/j.cortex.2016.03.019

Hoenen, M., Lübke, K. T., and Pause, B. M. (2015). Somatosensory mu activity reflects imagined pain intensity of others. Psychophysiology 52, 1551–1558. doi: 10.1111/psyp.12522

Hoenen, M., Schain, C., and Pause, B. M. (2013). Down-modulation of mu-activity through empathic top-down processes. Soc. Neurosci. 8, 515–524. doi: 10.1080/17470919.2013.833550

Johnson-Frey, S. H., Maloof, F. R., Newman-Norlund, R., Farrer, C., Inati, S., and Grafton, S. T. (2003). Actions or hand-object interactions? Human inferior frontal cortex and action observation. Neuron 39, 1053–1058. doi: 10.1016/s0896-6273(03)00524-5

Karakale, O., Moore, M. R., and Kirk, I. J. (2019). Mental simulation of facial expressions: Mu suppression to the viewing of dynamic neutral face videos. bioRxiv 457846. doi: 10.1101/457846

Keysers, C., and Perrett, D. I. (2004). Demystifying social cognition: a Hebbian perspective. Trends Cogn. Sci. 8, 501–507. doi: 10.1016/j.tics.2004.09.005

Kilner, J. M., Friston, K. J., and Frith, C. D. (2007a). The mirror-neuron system: a Bayesian perspective. Neuroreport 18, 619–623. doi: 10.1097/wnr.0b013e3281139ed0

Kilner, J. M., Friston, K. J., and Frith, C. D. (2007b). Predictive coding: an account of the mirror neuron system. Cogn. Process. 8, 159–166. doi: 10.1007/s10339-007-0170-2

Kohler, E., Keysers, C., Umilta, M. A., Fogassi, L., Gallese, V., and Rizzolatti, G. (2002). Hearing sounds, understanding actions: action representation in mirror neurons. Science 297, 846–848. doi: 10.3410/f.1010294.150158

Langeloh, M., Buttelmann, D., Matthes, D., Grassmann, S., Pauen, S., and Hoehl, S. (2018). Reduced mu power in response to unusual actions is context-dependent in 1-year-olds. Front. Psychol. 9:36. doi: 10.3389/fpsyg.2018.00036

Lepage, J. F., and Théoret, H. (2006). EEG evidence for the presence of an action observation-execution matching system in children. Eur. J. Neurosci. 23, 2505–2510. doi: 10.1111/j.1460-9568.2006.04769.x

Milston, S. I., Vanman, E. J., and Cunnington, R. (2013). Cognitive empathy and motor activity during observed actions. Neuropsychologia 51, 1103–1108. doi: 10.1016/j.neuropsychologia.2013.02.020

Molenberghs, P., Cunnington, R., and Mattingley, J. B. (2012). Brain regions with mirror properties: a meta-analysis of 125 human fMRI studies. Neurosci. Biobehav. Rev. 36, 341–349. doi: 10.1016/j.neubiorev.2011.07.004

Moore, A., Gorodnitsky, I., and Pineda, J. (2012). EEG mu component responses to viewing emotional faces. Behav. Brain Res. 226, 309–316. doi: 10.1016/j.bbr.2011.07.048

Moore, M. R., and Franz, E. A. (2017). Mu rhythm suppression is associated with the classification of emotion in faces. Cogn. Affect. Behav. Neurosci. 17, 224–234. doi: 10.3758/s13415-016-0476-6

Mukamel, R., Ekstrom, A. D., Kaplan, J., Iacoboni, M., and Fried, I. (2010). Single-neuron responses in humans during execution and observation of actions. Curr. Biol. 20, 750–756. doi: 10.3410/f.3038956.2718054

Muthukumaraswamy, S. D., Johnson, B. W., and McNair, N. A. (2004). Mu rhythm modulation during observation of an object-directed grasp. Brain Res. Cogn. Brain Res. 19, 195–201. doi: 10.1016/j.cogbrainres.2003.12.001

Muthukumaraswamy, S. D., Johnson, B. W., Gaetz, W. C., and Cheyne, D. O. (2006). Neural processing of observed oro-facial movements reflects multiple action encoding strategies in the human brain. Brain Res. 1071, 105–112. doi: 10.1016/j.brainres.2005.11.053

Niedermeyer, E. (2005). "The normal EEG of the waking adult," in Electroencephalography: Basic Principles, Clinical Applications and Related Fields, eds E. Niedermeyer and F. H. Lopes da Silva (Baltimore: Williams and Wilkins), 167–192. doi: 10.1016/b978-0-323-04233-8.50007-x

Oberman, L. M., Ramachandran, V. S., and Pineda, J. A. (2008). Modulation of mu suppression in children with autism spectrum disorders in response to familiar or unfamiliar stimuli: the mirror neuron hypothesis. Neuropsychologia 46, 1558–1565. doi: 10.1016/j.neuropsychologia.2008.01.010

Pascual-Marqui, R. D. (2002). Standardized low-resolution brain electromagnetic tomography (sLORETA): technical details. Methods Find. Exp. Clin. Pharmacol. 24, 5–12.

Perry, A., and Bentin, S. (2010). Does focusing on hand-grasping intentions modulate electroencephalogram μ and α suppressions? Neuroreport 21, 1050–1054. doi: 10.1097/wnr.0b013e32833fcb71

Perry, A., Bentin, S., Bartal, I. B.-A., Lamm, C., and Decety, J. (2010a). “Feeling” the pain of those who are different from us: Modulation of EEG in the mu/alpha range. Cogn. Affect. Behav. Neurosci. 10, 493–504. doi: 10.3758/CABN.10.4.493

Perry, A., Troje, N. F., and Bentin, S. (2010b). Exploring motor system contributions to the perception of social information: Evidence from EEG activity in the mu/alpha frequency range. Soc. Neurosci. 5, 272–284. doi: 10.1080/17470910903395767

Perry, A., Stein, L., and Bentin, S. (2011). Motor and attentional mechanisms involved in social interaction—Evidence from mu and alpha EEG suppression. Neuroimage 58, 895–904. doi: 10.1016/j.neuroimage.2011.06.060

RStudio Team (2016). RStudio: Integrated Development for R. Boston, MA: RStudio, Inc. Available online at: http://www.rstudio.com/

Raos, V., Evangeliou, M. N., and Savaki, H. E. (2004). Observation of action: grasping with the mind’s hand. Neuroimage 23, 193–201. doi: 10.1016/j.neuroimage.2004.04.024

Raos, V., Evangeliou, M. N., and Savaki, H. E. (2007). Mental simulation of action in the service of action perception. J. Neurosci. 27, 12675–12683. doi: 10.1523/jneurosci.2988-07.2007

Rayson, H., Bonaiuto, J. J., Ferrari, P. F., and Murray, L. (2016). Mu desynchronization during observation and execution of facial expressions in 30-month-old children. Dev. Cogn. Neurosci. 19, 279–287. doi: 10.1016/j.dcn.2016.05.003

Rayson, H., Bonaiuto, J. J., Ferrari, P. F., and Murray, L. (2017). Early maternal mirroring predicts infant motor system activation during facial expression observation. Sci. Rep. 7:11738. doi: 10.1038/s41598-017-12097-w

Stapel, J. C., Hunnius, S., van Elk, M., and Bekkering, H. (2010). Motor activation during observation of unusual versus ordinary actions in infancy. Soc. Neurosci. 5, 451–460. doi: 10.1080/17470919.2010.490667

Tucker, D. M. (1993). Spatial sampling of head electrical fields: the geodesic sensor net. Electroencephalogr. Clin. Neurophysiol. 87, 154–163. doi: 10.1016/0013-4694(93)90121-b

van der Gaag, C., Minderaa, R. B., and Keysers, C. (2007). Facial expressions: what the mirror neuron system can and cannot tell us. Soc. Neurosci. 2, 179–222. doi: 10.1080/17470910701376878

van der Schalk, J., Hawk, S. T., Fischer, A. H., and Doosje, B. (2011). Moving faces, looking places: validation of the Amsterdam Dynamic Facial Expression Set (ADFES). Emotion 11, 907–920. doi: 10.1037/a0023853

Wood, A., Rychlowska, M., Korb, S., and Niedenthal, P. (2016). Fashioning the face: sensorimotor simulation contributes to facial expression recognition. Trends Cogn. Sci. 20, 227–240. doi: 10.1016/j.tics.2015.12.010

Woodruff, C. C., Martin, T., and Bilyk, N. (2011). Differences in self- and other-induced mu suppression are correlated with empathic abilities. Brain Res. 1405, 69–76. doi: 10.1016/j.brainres.2011.05.046

Wu, C. C., Hamm, J. P., Lim, V. K., and Kirk, I. J. (2016). Mu rhythm suppression demonstrates action representation in pianists during passive listening of piano melodies. Exp. Brain Res. 234, 2133–2139. doi: 10.1007/s00221-016-4615-7

Keywords: EEG, mirror neuron, mu rhythm, face emotion, source estimation, sLORETA

Citation: Karakale O, Moore MR and Kirk IJ (2019) Mental Simulation of Facial Expressions: Mu Suppression to the Viewing of Dynamic Neutral Face Videos. Front. Hum. Neurosci. 13:34. doi: 10.3389/fnhum.2019.00034

Received: 05 November 2018; Accepted: 22 January 2019;

Published: 08 February 2019.

Edited by:

Kaat Alaerts, KU Leuven, Belgium

Reviewed by:

Hidetoshi Takahashi, National Center of Neurology and Psychiatry, Japan
Maria Serena Panasiti, Sapienza University of Rome, Italy

Copyright © 2019 Karakale, Moore and Kirk. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ozge Karakale, okar164@aucklanduni.ac.nz
