- 1Division of Psychological Medicine and Clinical Neurosciences, School of Medicine, Cardiff University, Cardiff, United Kingdom

- 2Cardiff University Brain Research Imaging Centre, School of Psychology, Cardiff University, Cardiff, United Kingdom

- 3School of Psychology, Cardiff University, Cardiff, United Kingdom

- 4Neuroinformatics Group, Cardiff University Brain Research Imaging Centre, School of Psychology, Cardiff University, Cardiff, United Kingdom

- 5Neuroscience and Mental Health Research Institute, Cardiff University, Cardiff, United Kingdom

- 6Brain Innovation B. V., Maastricht, Netherlands

A brain–computer interface (BCI) is a channel of communication that transforms brain activity into specific commands for manipulating a personal computer or other home or electrical devices. In other words, a BCI is an alternative way of interacting with the environment by using brain activity instead of muscles and nerves. For that reason, BCI systems are of high clinical value for targeted populations suffering from neurological disorders. In this paper, we present a new processing approach in three publicly available BCI data sets: (a) a well-known multi-class (N = 6) coded-modulated Visual Evoked potential (c-VEP)-based BCI system for able-bodied and disabled subjects; (b) a multi-class (N = 32) c-VEP with slow and fast stimulus representation; and (c) a steady-state Visual Evoked potential (SSVEP) multi-class (N = 5) flickering BCI system. Estimating cross-frequency coupling (CFC) and namely δ-θ [δ: (0.5–4 Hz), θ: (4–8 Hz)] phase-to-amplitude coupling (PAC) within sensor and across experimental time, we succeeded in achieving high classification accuracy and Information Transfer Rates (ITR) in the three data sets. Our approach outperformed the originally presented ITR on the three data sets. The bit rates obtained for both the disabled and able-bodied subjects reached the fastest reported level of 324 bits/min with the PAC estimator. Additionally, our approach outperformed alternative signal features such as the relative power (29.73 bits/min) and raw time series analysis (24.93 bits/min) and also the original reported bit rates of 10–25 bits/min. In the second data set, we succeeded in achieving an average ITR of 124.40 ± 11.68 for the slow 60 Hz and an average ITR of 233.99 ± 15.75 for the fast 120 Hz. In the third data set, we succeeded in achieving an average ITR of 106.44 ± 8.94. Current methodology outperforms any previous methodologies applied to each of the three free available BCI datasets.

Introduction

From the very first work of Farwell and Donchin (Farwell and Donchin, 1988), the majority of P300-based brain–computer interface (BCI) systems focused on creating new applications (Polikoff et al., 1995; Bayliss, 2003), and on constructing and testing new algorithms for the reliable detection of the P300 waveform from noisy data sets (Xu et al., 2003; Kaper et al., 2004; Rakotomamonjy et al., 2005; Thulasidas et al., 2006; Hoffmann et al., 2008). For a review of P300, an interested person can read the following: Reza et al. (2012), Farwell et al. (2013), and Piccione et al. (2006).

The majority of BCI systems are based on three major types of brain signals: the event-related resynchronization which is associated with the P300, the motor-imagery and the steady-state visual evoked potentials (SSVEP) (Wolpaw et al., 2002). P300 could give very good results, for example in a spelling device (Guger et al., 2009), but for continuous control in a daily scenario like steering a wheelchair in many different directions, SSVPE performed better (Lin and Kuo, 2016).

Other approaches to the traditional BCI systems are based on visual evoked potentials (VEPs) paradigms. VEPs are alternative brain signals that can be used in a BCI system. Frequently, the two methods are mostly employed to distinguish various visual targets, the phase and frequency coding (Wang et al., 2008). These VEPs are usually observed in the occipital area in response to a repetitive visual stimulus, and they encode the undergoing visual information processing in the brain. In the context of BCIs, a subject is focused (fixated) into a flashing image (target). Each target is coded differently and thus, is presented by a unique stimulus sequence. These results are unique visual responses easily identified in the brain activity. BCI VEP-based systems can be organized into three distinct categories depending on the design: time (t), frequency (f), and (c) code modulated. The reader is invited to consult review works such as Riechmann et al. (2016) for more details. In this work, we will focus on a c-VEP system in which pseudorandom sequences are used for presenting the stimuli.

The most common domain where BCI c-VEPs are employed is the matrix spellers. It has been previously shown that they outperform the traditional BCIs regarding ITR performance (Mohebbi et al., 2015; Riechmann et al., 2016) with classification accuracies also being comparable.

c-VEPs are just starting to gain popularity in another domain; that is the control of virtual or physical devices. In Mohebbi et al. (2015), the authors built a system in which a 12-target virtual agent was simulated in a 3D environment (accuracy around 80%). Their paradigm closely follows those using the classic matrix design, but they replaced the letters with navigation and interaction symbols accordingly. A real-world scenario is developed in Kapeller et al. (2013a) where users can control (in real time) a remote robot with reported accuracies up to 98.18%; though only four navigational symbols (left, right, forward, backward) were used. In the same spirit, an application was developed to control a wheelchair model in four directions (Mohebbi et al., 2015). Their subject-specific study reported an average of 97% accuracy when controlling the wheelchair.

A novel c-VEP study has proposed a high presentation rate of coding sequence up to 120 Hz compared to the traditional 60 Hz. This is a very significant study since the interface was based on 32 circular white targets following a sequence stimulation paradigm. Apart from the frequency of the coding sequence, they also introduced a novel decoding algorithm based on spatio-temporal beamforming. Wittevrongel et al. (2017) reported that the median ITR was 172.87 bits/min.

The core of BCI-SSVEP systems is based on oscillatory responses elicited when a light source flashes at a specific frequency (e.g., 60 Hz). These frequency-dependent responses are spatially oriented over the parieto-occipital cortex. The design of a BCI-SSVEP system is multi-targeted where each one flashes on a different frequency (Müller-Putz et al., 2005) or on the same frequency (Maye et al., 2017). The subject has to focus on one of the targets that are presented simultaneously. The outcome of the SSVEP response is the translation of the subject's decision tailored to the design of the BCI system like a speller (Chen et al., 2015; Maye et al., 2017).

The bibliography suggests that the dominant methodology in c-VEP studies (Kapeller et al., 2013a,b; Mohebbi et al., 2015; Riechmann et al., 2016) is the usage of spatial filters through Canonical Correlation Analysis (CCA) combined with a classification algorithm (most notably SVMs Farwell and Donchin, 1988; Spuller et al., 2012a or LDA Polikoff et al., 1995). Briefly, CCA finds projections of the original EEG signal to increase the distinct activity among EEG sensors and it is used as a common spatial filter (Kapeller et al., 2013a). A recent retinotopic multi-target SSVEP study adopted CCA as spatial filters of amplitude and phase domain of the single trials, achieving very high classification accuracy (Maye et al., 2017).

Thus, researchers are experimenting with modifying the protocol to achieve optimal results. For instance, in Bin et al. (2009), they experimented with different EEG buffer lengths (in seconds) to produce the CCA templates. The authors reported an improved accuracy score up to 99.21%; however, this configuration has a direct impact on the latency of the BCI system. Another more sophisticated approach is explored in (Spuller et al., 2012b), where the authors incorporated the Error-related Potentials to initially calibrate the system online; thus, directing the classifier to the correct class. This approach achieved a grand average accuracy of 96.18%.

Hoffmann et al. demonstrated a six-choice P300 paradigm which was tested in a group of five disabled and four able-bodied subjects. The experimental paradigm was six flashing images with the content of a home device (Hoffmann et al., 2008). They tested how the electrode configuration can influence the accuracy in order to detect the best channel selection. They finally succeeded in achieving increased communication rates and classification accuracies compared to previous studies (Piccione et al., 2006; Sellers and Donchin, 2006; McCane et al., 2015).1

Multiple feature extraction techniques have been used in BCI systems including the analysis of raw time series, the estimation of signal power, connectivity analysis and so on. The most famous algorithms include the fast fourier transform (Resalat and Saba, 2016), the Auto-Regressive Model (Pineda et al., 2003), the short-time fourier transform, and the wavelet decomposition (Nguyen et al., 2015). Compared to the past, brain connectivity attracts much attention for BCI systems (Kabbara et al., 2016). However, cross-frequency coupling (CFC) has not yet explored its potentiality to BCI systems and especially to c-VEP and SSVEP BCI systems.

In the present study, we used the data set from Hoffmann et al. to demonstrate an alternative algorithmic approach with the main scope of improving the bit rates up to the limits. Our study focused on the c-VEP subcomponent of the brain signals generated by the flashing images. The basic hypothesis is to decode the features from the brain activity that are directly related to the content of the flashing image. For that occasion, we adopted a CFC estimator, namely phase-to-amplitude coupling (PAC), to quantify how the phase of the lower frequency brain rhythms modulates the amplitude of the higher oscillations. The whole approach was followed on a trial basis and within sensors located over the parieto-occipital brain areas. PAC proved to be a valuable estimator in many applications like the design of a biomarker: for amnestic mild cognitive impairment subjects during an auditory oddball paradigm (Dimitriadis et al., 2015), for dyslexia (Dimitriadis et al., 2016) and for mild traumatic brain injury (Antonakakis et al., 2016). Our main goal is to improve the performance and the bit rates focusing on the c-VEP component of the brain activity.

To further enhance the proposed methodology based on CFC-PAC estimates, we also report the results from two freely available BCI data sets. The first data set is a c-VEP multi-target (32 targets) gaze BCI system with slow (60 Hz) and fast (120 Hz) stimulus representation (Wittevrongel et al., 2017). The second data set is a SSVEP and is associated with flickering stimuli at different frequencies (5 frequencies−5 targets) with the main scope of predicting the gaze direction (Georgiadis et al., 2018).

To the best of our knowledge, this work is the only one suggesting CFC features for a BCI system and especially for c-VEP and SSVEP.

The layout of the paper is as follows. In section Materials and Methods, we briefly describe the three EEG data sets, the subject population, the experimental set-up, the methods used for data preprocessing steps of the proposed pipeline and the classification procedure. The results are presented in section Results. The discussion is addressed in section Discussion.

Materials and Methods

c-VEP Flashing Images Data Set

Experimental Set-Up

Six targeted flashed images are illustrated in Figure 1. The images show: a television, a telephone, a lamp, a door, a window, and a radio. The images were flashed for 100 ms and during the following 300 ms, none of the images was flashed, i.e., the inter-stimulus-interval was 400 ms.

The EEG was recorded at a 2048 Hz sampling rate from 32 EEG sensors placed at the standard positions of the 10–20 international system. For further details see the original paper (Hoffmann et al., 2008).

Subjects

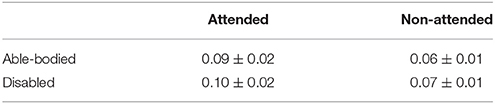

The system was tested with five disabled and four healthy subjects. The disabled subjects were all wheelchair-bound but had varying communication and limb muscle control abilities (see Table 1). Subjects 1–4 are the disabled group where in Table 1 one can see their description. Subjects 6–9 were Ph.D. students recruited from EPFL BCI group's laboratory (all males, age 30 ± 2.3 years). None of subjects 6–9 had known neurological deficits. Communication with Subject 5 was very difficult due to a severe hypophony and large fluctuations in the level of alertness.

Table 1. Subjects from which data were recorded in the study of the environment control system (Hoffmann et al., 2008).

Experimental Schedule

Each subject recruited to participate in this study completed four recording sessions, two sessions on 1 day and the remaining two sessions on a second day, with a maximum of 2 weeks between the two recording days. Each recording session consisted of a total number of six runs, one run per targeted image (Figure 1).

The duration of each run was 1 min and of the recording session was around 30 min. One session included on average 810 trials, while the whole data for each subject consisted of, on average, 3,240 trials. For further details about the protocol see Hoffmann et al. (2008).

Preprocessing of Single Trials

The impact of different single-sensor recordings on classification accuracy was tested in an offline procedure.

Before learning a classification function and cross-validation scheme, several preprocessing operations were applied to the data.

The preprocessing steps applied to the data set in this study are presented in the following steps:

(i) Referencing. We re-referenced single trials using the average signal from the two mastoid electrodes.

(ii) Filtering. A third-order zero phase Butterworth bandpass filter was used to filter the data. The MATLAB function butter was used to compute the filter coefficients and the function filtfilt was used for filtering. The predefined frequencies were: δ {0.5–4 Hz}, θ {4–8 Hz}, α1 {8–10 Hz}, α2 {10–13 Hz}, β1 {13–20 Hz}, β2 {20–30 Hz}, and γ1 {30–45 Hz}.

(iii) Downsampling. The EEG was downsampled from 2,048 to 512 Hz.

(iv) Single trial extraction. Single trials have a duration of 1,000 ms from the stimulus onset up to 1,000 ms after the stimulus onset.

(v) Electrode selection. We applied our analysis to recordings from single-sensor activity and mainly, PZ, OZ, P3, P4, P7, and P8.

vi) Feature vector construction. As an appropriate feature for each trial, we used PAC which has already shown its potentiality in building reliable biomarkers (Dimitriadis et al., 2015, 2016). PAC was estimated for each frequency pair (see ii). The description of PAC is given in the next section. As a complementary feature that can separate the counted stimuli from the non-counted stimuli, α relative signal powers have been estimated. Alpha power level can give us a valuable and objective criterion when a subject attends or does not attend the stimulus. Our idea is to create an initial binary classifier that will cut-off the attended from the non-attended stimuli for each subject prior to entering the main multi-class classifier.

CFC metric computation

CFC quantifies the strength of interactions between a time series of different frequency content. It can be estimated both within and also between sensors (Buzsáki, 2010; Canolty and Knight, 2010; Buzsáki et al., 2013). CFC can be estimated between power—power, amplitude—amplitude, and amplitude-phase representations of two time series with different frequency content. These representations can be derived by filtering twice one (within) or once two time series (between). The most common type of CFC interaction is PAC and it is the most common in the literature (Voytek et al., 2010). The PAC algorithm for a single EEG sensor is described below.

Let x(isensor, t), be the EEG time series at the isensor-th recording site, and t = 1, 2,.…T the sample points. Given a bandpassed filtered signal x(isensor,t), CFC is quantified under the notion that the phase of the lower frequency (LF) oscillations modulate the amplitude of the higher frequency (HF) oscillations. The following equations described the complex representations of both LF zLF(t) and HF oscillations zHF(t) produced via the Hilbert transform (HT):

The next step of the PAC algorithm is the estimation of the envelope of the HF oscillation AHF(t) which is then bandpass-filtered within the frequency range of LF oscillations. Afterward, the resulting time series is again Hilbert transformed in order to get its phase time series that describe phase dynamics ϕ'(t):

The aforementioned complex equation describes analytically the modulation of the amplitude of HF oscillation by the phase of LF oscillation.

The phase consistency between those two time series can be measured by the original phase-locking value (PLV) estimator (Lachaux et al., 1999) but also from its imaginary portion of PLV. The imaginary part of PLV (iPLV) can be used as an synchronization index that quantifies the strength of CFC-PAC coupling.

PLV is defined as follows:

and the iPLV as follows:

The iPLV is an estimator that is less affected compared to PLV from the volume conduction effect. Using iPLV for quantifying the strength of CFC interactions is an advantage over volume conduction. The iPLV is more sensitive to non-zero phase lag and for that reason is more resistant to any self-interactions that are directly linked to volume conductions (Nolte et al., 2004). For further details and applications, an interested reader can read our previous work Dimitriadis et al. (2015, 2016).

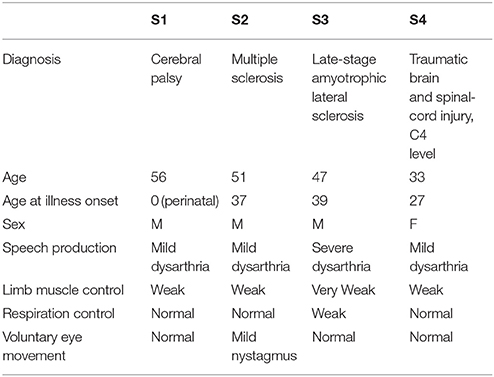

In the present study, as already mentioned, we used seven frequency bands which means that PAC is estimated for 7*6/2 = 21 cross-frequency pairs e.g., δϕ-θA, δϕ- where ϕ and A denote the phase and amplitude of each frequency band. Figure 2 demonstrates the preprocessing steps of the PAC estimator for a trial of Subject 6 at Target Image 6.

Figure 2. The algorithmic steps for PAC estimation. Using the first single-trial signal from session 1 and flashing image 1 (A), from the P300 of an able subject (subject 6), we demonstrate the detection of coupling between θ and β1 rhythm. To estimate θ-β1 PAC, the raw signal was band-pass filtered into both a (B) low-frequency θ (4–8 Hz) component where its envelope is extracted as well as (C) a high-frequency β1 (13–20 Hz) component where its instantaneous phase is extracted. (D) We then extracted the amplitude and the instantaneous phase of the band-passed β1 (13–20 Hz) and filtered this amplitude time series at the same frequency as θ (4–8 Hz), giving us the θ modulation in lower β amplitude. (E) We then extracted the instantaneous phase of both the θ-filtered signal and the θ-filtered lower-β amplitude and computed the phase-locking between these two signals. The latency depended differences (F), will be used in estimating the phase-locking (iPLV) that will reflect the PAC-interaction between the two involved brain rhythms. This phase-locking represents the degree to which the lower β (β1) amplitude is co-modulated with the θ phase.

For comparison purposes, we estimated the CFC phase-to-amplitude estimates via two alternative approaches: (1) In the first one, we followed the same analytic pathway as the one described above but instead of the imaginary part of PLV, PLV was estimated and (2) in the second approach, Canolty's et al. (2006) definitions were adopted based on mean vector length (MVL) and the complex estimation of modulation of the phase of slower rhythm to the amplitude of the higher oscillation. Hereafter, we will use the terms of PACiPLV, PACPLV and PACMVL to describe the CFC-PAC-based estimates with the three approaches.

We estimated the three different CFC estimates (PACiPLV, PACPLV and PACMVL) and relative signal power (RSP) for the first 32 samples (60 ms) increasing the window up to 500 ms (256 samples) with a step of 12 samples (5 ms).

Signal power

We estimated the relative power of each bandpass frequency signal segment with the following equations:

The first equation quantifies the signal power (SP) of each frequency as the sum of the filtered signal squared per sample (Equation 3) while Equation (4) divides the SP by the sum of the SP from all the frequencies which gives the RSP. The whole approach was repeated for every trial, sessions and subject. For the RSP estimation, we used the same predefined frequencies as for CFC-PAC estimates.

Machine learning and classification

The training data set includes on average 405/2025 target/non-target trials and the validation data sets consisted of 135/675 target/non-target trials.

Adopting, we used an unsupervised multi-class feature selection algorithm (Cai et al., 2010) to detect the characteristic cross-frequency pair via PAC value that gives the highest discrimination of each target image compared to the rest based on the training data set. Additionally, we used a sequential feature selection algorithm to detect the RSP that separate the counted flashing images from the non-counted images.

We trained a multi-class SVM classifier based on the selected PAC estimate from specific cross-frequency pairs and then we tested the classifier to the validation data to get the response tailored to each target image (Joachims, 1999). The training test consisted of the first session while the remaining three sessions were used for validating the whole analytic scheme. A k-nearest neighbor (k-NN) classifier was applied to differentiate the attended from the non-attended flashing images prior to a multi-class SVM classifier based on α RSP.

c-VEP Slow/Fast Stimulus Presentation

Experimental Set-Up

The time course of one trial of the experiment can be seen in Figure 1 in Wittevrongel et al. (2017). The design of the interface consisted of 32 circular white targets following an m-sequence stimulation paradigm (see further) and that were overlaid with static (i.e., non-flickering) gray letters or numbers arranged in a matrix of 4 (row) × 8 (columns).

The following m-sequence of a length of 63 was used to encode the targets:

where targets were lagged by integer multiples of two frames.

A trial started with the presentation of a target cue. Subjects were instructed to redirect their gaze to the cued target and then to press a button to start the trial/stimulation. After that, all targets were hidden but the characters were still shown in gray for 1 s, followed by the stimulation phase during which all targets adopted their unique lagged m-sequence and repeated this sequence either five or ten times for slow and fast stimulus representation, respectively.

The EEG was recorded at a 250 Hz sampling rate from 32 EEG sensors placed at the standard positions of the 10–20 international system. For further details see the original paper (see Figure 2 in Wittevrongel et al., 2017).

Subjects

Seventeen subjects with normal or corrected-to-normal vision participated in the experiment (14 female, 13 right handed, aged 22.35 ± 2.9, ranging from 18 to 30 years old). The data set and the preprocessing steps followed on from the original papers which are publicly available.2

Experimental Schedule

Every subject performed 5/10 m-sequence repetitions per trial for a 60/120 Hz stimulation rate. The total duration of a trial was 5.25 s.

The original goal of this study was dual. First, to assess the performance of the spatio-temporal beamforming algorithm for target identification when using cVEP-based encoding, and secondly to compare the performance for both slow-traditional (60 Hz) and high-speed (120 Hz) stimulus presentations (Wittevrongel et al., 2017).

Preprocessing of Single Trials

The impact of different single-sensor recordings on classification accuracy was tested in an offline procedure.

Before learning a classification function and cross-validation scheme, several preprocessing operations were applied to the data.

The preprocessing steps applied to the data set in this study are presented in the following steps:

(i) Referencing. We re-referenced single trials using the average reference signal instead of using the average signal from the mastoid as in the original data set.

(ii) Filtering. A third-order zero phase Butterworth bandpass filter was used to filter the data. The MATLAB function butter was used to compute the filter coefficients and the function filtfilt was used for filtering. The predefined frequencies were: δ {0.5–4 Hz}, θ {4–8 Hz}, α1 {8–10 Hz}, α2 {10–13 Hz}, β1 {13–20 Hz}, β2 {20–30 Hz}, and γ1 {30–45 Hz}.

(iii) Single trial extraction. Single trials have a duration of 5250 ms (5.25 s) from the stimulus onset up.

(iv) Electrode selection. We applied our analysis to recordings from single-sensor activity using the whole set of 32 EEG recording channels.

(v) Beamforming. We adopted the same strategy as in the original paper by building beamformers based on the training epochs for each subject. Beamformers act as spatial filterers and have shown their potentiality in event-related potential (ERP) studies (van Vliet et al., 2016). The activation patterns and the target and frequency specific beamformers were calculated from the training data where m is the number of channels, t is the number of samples and l is the number of epochs, as follows. For each epoch in training, a maximal number of t-second consecutive non-overlapping segments were extracted, where t represents the time needed to display one complete m-sequence.

The whole procedure was followed independently for each subject, target and frequency.

An LCMV beamformer was finally estimated for each target and frequency based on the testing data set. In the original paper, they applied the proposed beamformer within 4–31 Hz. For further details, see the original paper.

Our goal was not to use beamformers as a classifier but to diminish the effect of spurious activity among the EEG sensor channels.

(vi) Feature vector construction. As an appropriate feature for each trial, we used PAC which already has shown its potentiality in building reliable biomarkers (Dimitriadis et al., 2015, 2016). PAC was estimated for each frequency pair (see ii). We used a sliding-window of 100 ms (25 samples) that moved every 0.004 s (one sample). This approach leads to a PAC time series (PACts) of 501 samples long. Finally, for every subject and for each target, we have estimated a matrix with the following dimensions: trials x sensors (32) × CFC-pairs (21) x PACts (501). Trials refer to the testing data set following a five-fold cross-validation procedure.

Preprocessing steps have been applied independently to slow and fast stimulus representation.

Machine Learning and Classification

For each subject and at every five-fold of the cross-validation procedure, we thoroughly searched for the optimal set of channel selection, CFC-pairs and the time needed (length of PACts) to reach a plateau of classification performance or 100% absolute accuracy. Apart from the classification performance, time is an important parameter that further increases the optimal information transfer rate (ITR).

At every fold, we encoded the single trial PACts in the training set via a symbolisation procedure based on the neural-gas algorithm (Martinetz et al., 1993). This approach has already been used in single trial responses in a BCI-SSVEP system (Georgiadis et al., 2018) and also for transforming dynamic functional brain networks into functional connectivity microstates (Dimitriadis et al., 2013). Practically, for each PACts and for each sensor, we designed encoded prototypical code waves for the training data set.

To access the recognition accuracy of each channel and each PACts across the CFC-pairs and across time t (samples of the PACts), we employed the Wald-Wolfowitz (WW) test as a similarity index between training prototypical PACts and PACts from every single trial of the testing set. For further details regarding the WW-test, see section 2 in the Supplementary Material. The time window across the PACts was moved per sample in order to detect the best classification accuracy in a shorter time.

SSVEP Multi-Target Data Set

Experimental Set-Up

The visual stimulation included five violet squares, located as a cross (Figure 6 in Georgiadis et al., 2018) and flickering simultaneously at five different frequencies (6.66, 7.50, 8.57, 10.00, and 12.00 Hz).

The brain activity was recorded using a high-density EEG scanner with 256 electrodes [an EGI 300 Geodesic EEG System (GES 300)]. The sampling frequency was 250 Hz and the impedance for all electrodes was kept below 10 KΩ. During the recordings, an online bandpass filter (0.1 Hz−70 Hz) was applied to suppress noise and a 50 Hz notch filter to eliminate the power line interference (Georgiadis et al., 2018).

Subjects

Eleven healthy volunteers (8 males and 3 females mean ± SD age = 30.36 ± 5.20 years) participated in this study. The data set is publicly available.3

Experimental Schedule

Each subject participated in five sessions with 25 flickering windows per session leading to 125 trials of 5 s in total and 25 trials per target frequency. The order of the flickering targets (gaze directions) was randomly chosen.

The original goal of this study was to access the recognition accuracy of the SSVEP BCI system using multi-targets flickering at different frequencies (Wittevrongel et al., 2017). The selection of the frequencies focused on avoiding frequencies that are multiples of another frequency.

Preprocessing of Single Trials

The impact of different single-sensor recordings on classification accuracy was tested in an offline procedure.

Before learning a classification function and cross-validation scheme, several preprocessing operations were applied to the data.

The preprocessing steps applied to the data set in this study are presented in the following steps:

(i) Referencing. We re-referenced single trials using the average reference signal.

(ii) Filtering. A third-order zero phase Butterworth bandpass filter was used to filter the data. The MATLAB function butter was used to compute the filter coefficients and the function filtfilt was used for filtering. The predefined frequencies were: δ {0.5–4 Hz}, θ {4–8 Hz}, α1 {8–10 Hz}, α2 {10–13 Hz}, β1 {13–20 Hz}, β2 {20–30 Hz} and γ1 {30–45 Hz}.

(iii) Single trial extraction. Single trials have a duration of 5000 ms (5 s) from the stimulus onset up.

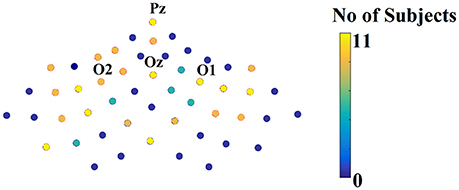

(iv) Electrode selection. We applied our analysis to recordings from single-sensor activity using the whole set of 52 parieto-occipital EEG recording channels (see Figure 6 in Oikonomou et al., 2016).

(v) Beamforming. We adopted the same strategy as in the second data set using the beamformers. Beamformers act as spatial filterers and have shown their potentiality in ERP studies (van Vliet et al., 2016). The activation patterns and the target and frequency specific beamformers were calculated from the training data, where m is the number of channels, t is the number of samples and l is the number of epochs, as follows. For each epoch in training, a maximal number of t-second consecutive non-overlapping segments were extracted, where t represents the time needed to display one complete m-sequence.

The whole procedure was followed independently for each subject, target and frequency.

An LCMV beamformer was finally estimated for each target and frequency based on the testing data set. For further details, see the original paper.

Our goal was not to use beamformers as a classifier but to diminish the effect of spurious activity among the EEG sensor channels.

(vi) Feature vector construction. As an appropriate feature for each trial, we used PAC, which has already shown its potentiality in building reliable biomarkers (Dimitriadis et al., 2015, 2016). PAC was estimated for each frequency pair (see ii). We used a sliding-window of 100 ms (25 samples) that moved every 0.004 s (one sample). This approach leads to a PAC time series (PACts) of 501 samples long. Finally, for every subject and for each target, we have estimated a matrix with the following dimensions: trials × sensors (25) × CFC-pairs (21) × PACts (501). Trials refer to the testing data set following a five-fold cross-validation procedure.

Preprocessing steps have been applied independently to slow and fast stimulus representation.

Machine Learning and Classification

For each subject and at every five-fold of the cross-validation procedure, we thoroughly searched for the optimal set of channel selection, CFC-pairs and the time needed (length of PACts) to reach a plateau of classification performance or 100% absolute accuracy. Apart from the classification performance, time is an important parameter that further increases the optimal ITR.

At every fold, we encoded the single trial PACts in the training set via a symbolisation procedure based on the neural-gas algorithm (Martinetz et al., 1993). This approach has already been used in single trial responses in a BCI-SSVEP system (Georgiadis et al., 2018) and also for transforming dynamic functional brain networks into functional connectivity microstates (Dimitriadis et al., 2013). Practically, for each PACts and for each sensor, we designed encoded prototypical code waves for the training data set.

To access the recognition accuracy of each channel and each PACts across the CFC-pairs and across time t (samples of the PACts), we employed the WW-test as a similarity index between training prototypical PACts and PACts from every single trial of the testing set. For further details regarding the WW-test, see section 2 in the Supplementary Material. The time window across the PACts was moved per sample in order to detect the best classification accuracy in a shorter time.

Performance Evaluation

Classification accuracy and ITR were estimated for the offline experiments. We estimated ITR (in bits per second) with the following Equation (5):

Where N is the number of classes/target images (i.e., six in this study), P is the accuracy of identification of the targeted image and t (seconds per selection) is the average time for a selection. ITR expressed the bits/symbol divided by the average time required to select a single symbol, T.

For data set 1, T = 0.4 s (300 ms duration of the flashing image + optimal time window from the response due to the decision) and N = 6 for both disabled and able-bodied subjects.

For data set 2, N = 32 and T was set to the optimal length of PACts plus an additional 500 ms to account for the time the subject would need to switch their gaze to the next target.

For data set 3, N = 5 and T was set to the optimal length of PACts plus an additional 5 s to account for the time the subject would need to switch their gaze to the next target.

Results

c-VEP Flashing Images Data Set

δ-θ PAC as a Valuable Feature for the BCI—c-VEP System

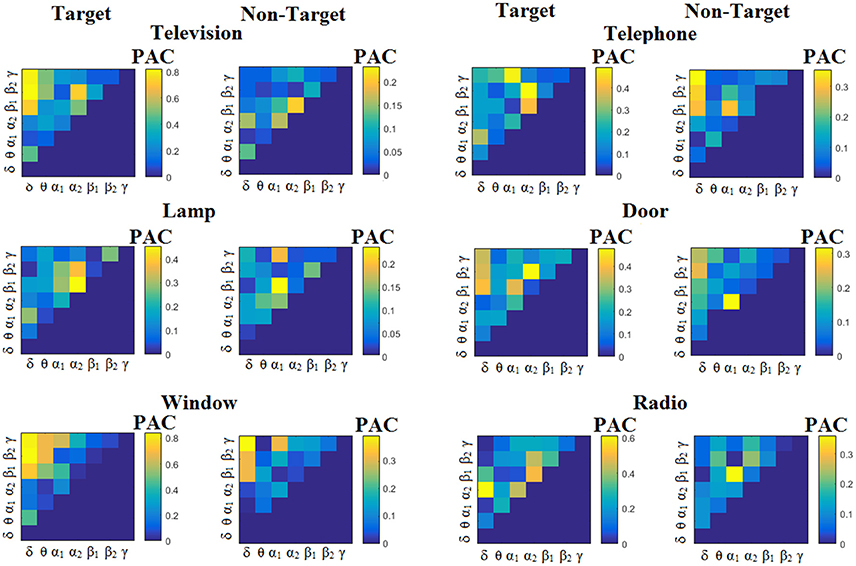

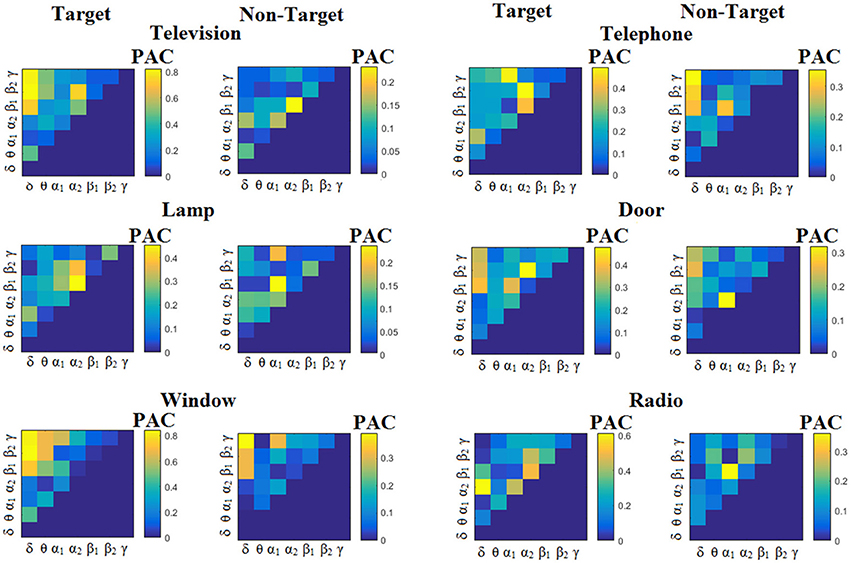

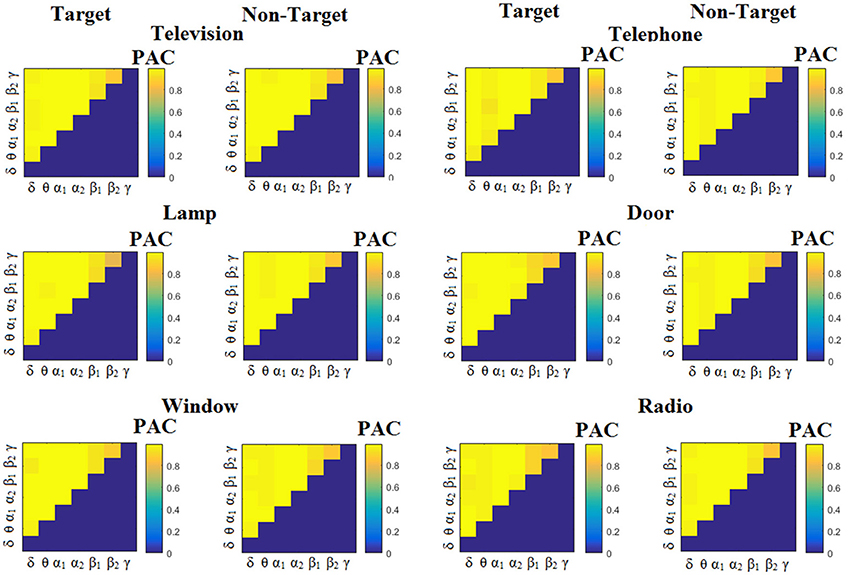

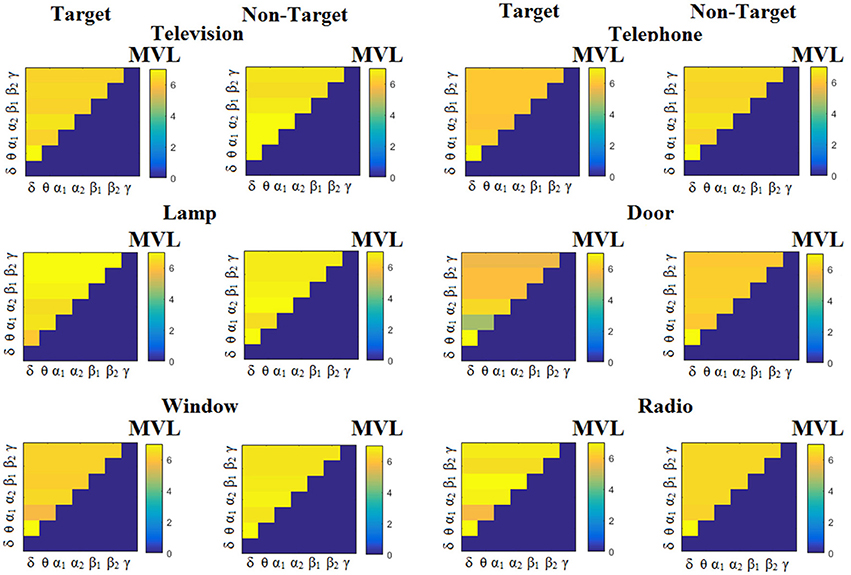

We estimated both PAC and RSP for the first 32 samples (60 ms) increasing the window up to 500 ms (256 samples) with a step of 12 samples (5 ms). The multi-class unsupervised algorithm (Cai et al., 2010) detected only one PAC feature from the 21 possible cross-frequency pairs as the unique candidate feature to separate the six classes of image stimuli. δϕ-θA was the selected feature for both disabled and able-bodied subjects for PACiPLV. In contrast, the selected CFC features based on PACPLV and PACMVL were completely random and different between and also within subjects. Trial-averaged comodulograms of PAC values for the three adapted estimators are demonstrated for two our of eight subjects (see Figures 3–5, 6–8). For the rest of the subjects see Supplementary Material.

Figure 3. Subject 6 (able bodied). Demonstrating the level of CFC in c-VEP responses for each flashing image. Trial-Averaged PACiPLV patterns from the c-VEP responses for each target image and for both attended vs. non-attended images.

The group-averaged classification performance was 99.96%±0.03 for each sensor location using the first 100 ms for both able-bodied and disabled subjects. The errors were detected on the trials where the subject missed the flashing image. The classification performance with the use of a kNN-classifier prior to the multi-class SVM was ~100 % for every subject and for all the pre-selected sensors namely PZ, OZ, P3, P4, P7, P8 EEG sensors.

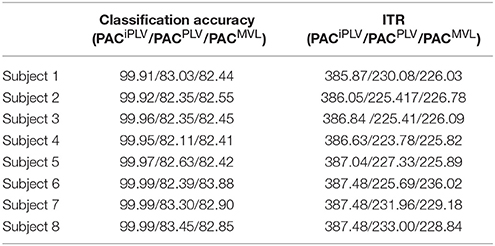

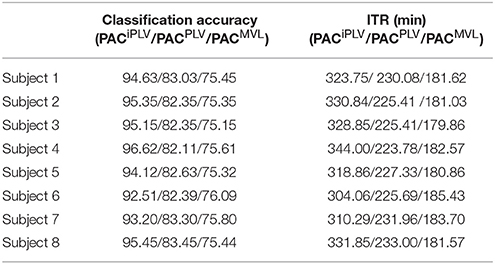

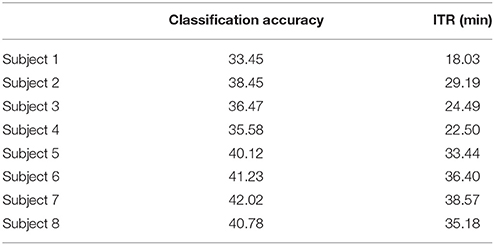

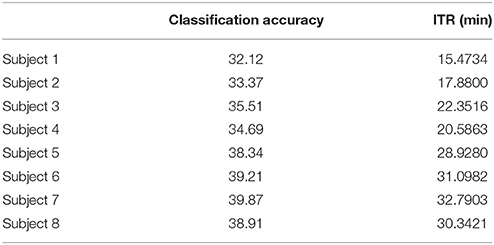

Table 2 summarizes the classification accuracy for every subject and connectivity estimator with the related ITR based on the Pz EEG sensor. The ITR obtained for both the disabled and able-bodied subjects reached the fastest reported level of 6.45 bits/s (or 387 bits/min) with the PACiPLV estimator compared to 3.79 bits/s (or 227 bits/min) with the PACPLV estimator and 3.80 bits/s (or 228 bits/min) with the PACMVL estimator. Additionally, our approach outperformed alternative signal features such as the RSP (1.09–1.37 bits/s) and raw time series analysis (1.05–1.25 bits/s) and also the original reported bit rates of 10–25 bits/min. The new preprocessing approach was based on recordings from the single-sensor Pz, while the classification accuracy was also tested for at the other electrodes.

Table 2. PAC-CFC: Single-subject classification and the related bit rates for the disabled (Subjects 1–4) and able-bodied (Subjects 5–8) subjects based on the Pz sensor and the three alternative CFC-PAC estimators.

We detected a significant difference between ITRiPLV and ITR original presented in Hoffmann et al. (2008) (Wilcoxon rank-sum test, p < 0.00001. Comparing ITRiPLV with ITRPLV and ITRMVL, we also detected significant differences (Wilcoxon rank-sum test, p < 0.00001). These results support the proposed iPLV estimator over the rest two.

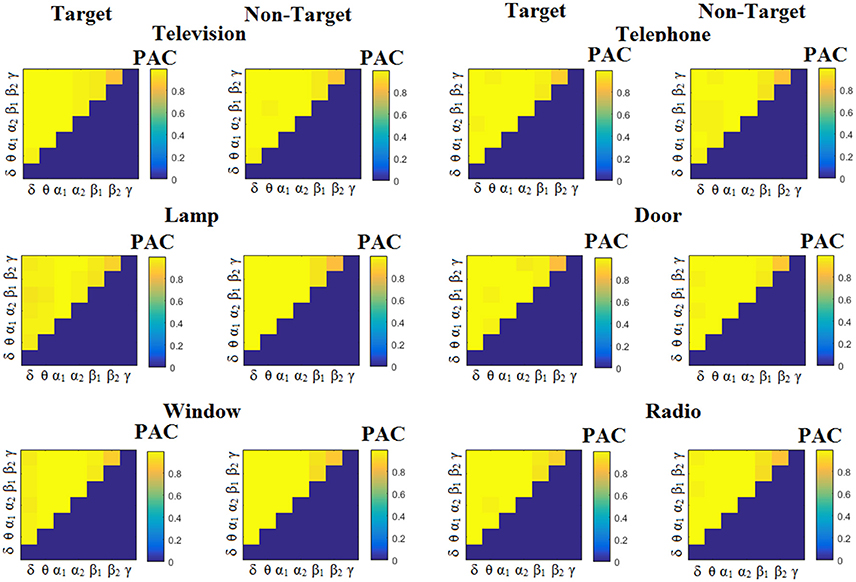

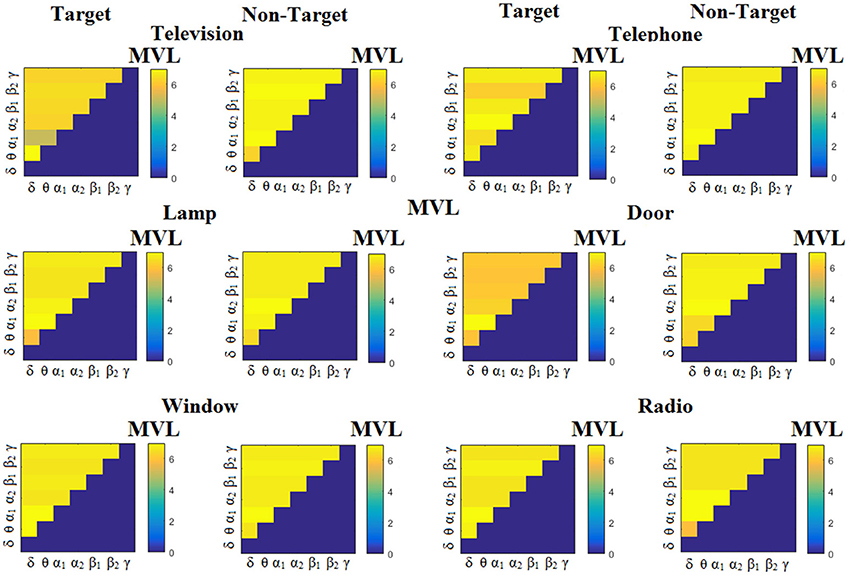

Figure 3 illustrates the trial-related (grand-averaged) PACiPLV -connectivity patterns (comodulograms) from the first able-bodied subject from target and non-target trials for each flashing image. In contrast, Figures 4, 5 demonstrate, similarly to Figure 3, the grand-averaged PACPLV-connectivity patterns and the grand-averaged PACMVL-connectivity patterns, respectively (Canolty's et al., 2006).

Figure 4. Subject 6 (able bodied). Demonstrating the level of CFC in c-VEP responses for each flashing image. Trial-Averaged PACPLV patterns from the c-VEP responses for each target image and for both attended vs. non-attended images.

Figure 5. Subject 6 (able bodied). Demonstrating the level of CFC in c-VEP responses for each flashing image. Trial-Averaged PACMVL patterns from the c-VEP responses for each target image and for both attended vs. non-attended images.

Similarly, Figure 6 demonstrates the grand-averaged PAC-connectivity patterns of from the first disabled subject using the PACiPLV comodulograms. For comparison purposes, Figures 7, 8 are dedicated to the grand-averaged PACPLV-connectivity patterns and the grand-averaged PACMVL -connectivity patterns, respectively (Canolty's et al., 2006). S.1–6 illustrate the grand-averaged PAC-connectivity patterns for the remaining six subjects of the data set (see Supplementary Material).

Figure 6. Subject 1(disabled). Demonstrating the level of CFC in c-VEP responses for each flashing image. Trial-Averaged PACiPLV patterns from the c-VEP responses for each target image and for both attended vs. non-attended images.

Figure 7. Subject 1(disabled). Demonstrating the level of CFC in c-VEP responses for each flashing image. Trial-Averaged PACPLV patterns from the c-VEP responses for each target image and for both attended vs. non-attended images.

Figure 8. Subject 1(disabled). Demonstrating the level of CFC in c-VEP responses for each flashing image. Trial-Averaged PACMVL patterns from the c-VEP responses for each target image and for both attended vs. non-attended images.

Comodulograms differed by contrasting target vs. non-target within each subject and target image but also between the two images. δϕ −θA was the unique and consistent feature for both disabled and able-bodied subjects based on the PACiPLV that can clearly predict the target image for both groups. Comodulograms derived from PACPLV and PACMVL are more random without succeeding to differentiate the 21 cross-frequency pairs in both conditions and across the flashing images. This observation further supports the non-consistency of feature selection across individuals for those two alternative PAC-CFC estimators.

Applying a Wilcoxon Rank Sum test of trial-based δϕ −θA between the able-bodied and disabled subjects, we detected significant different values (p < 0.01). Group-averaged δϕ −θA were higher for able-bodied subjects compared to disabled in the six-targeted images. However, the dataset is too small in order to make any conclusion regarding the sensitivity of δϕ −θA to detect abnormal visual decoding activity.

Attention and Alpha Power

Prior to multi-class SVM, we applied a kNN-classifier based on α1 SP which was selected as the feature that can discriminate counted from non-counted flashing images. The kNN-classifier performed 100% clear filtration of attended from non-attended trials for each subject and further improved the performance of multi-class SVM to 100%. We achieved this performance using an α1 signal relative power estimated from the first 100 ms for both able-bodied and disabled subjects.

The classification performance with the kNN-classifier was ~100% for every subject and for all the pre-selected sensors namely PZ, OZ, P3, P4, P7, P8 EEG sensors.

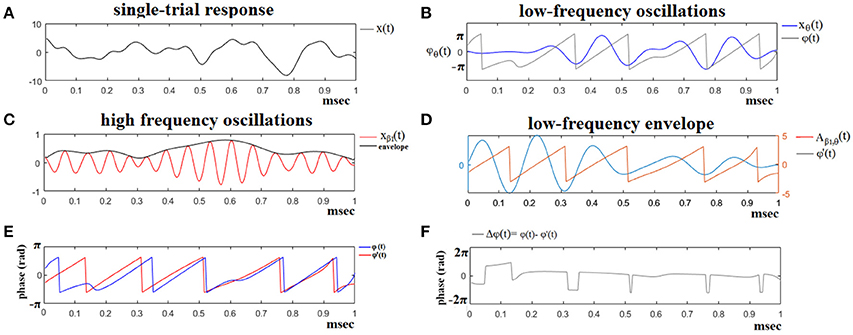

Table 3 summarizes the group-averaged RSP of an α1 frequency band for attended vs .non-attended images.

Managing the Cross-Session Transfer Learning Problem

In order to explore the effect on classification performance of collecting the data on two different days, we performed the same analysis using the trials derived from the second set of two sessions as a training set and the trials of the first set of two sessions as a testing set. Table 6, in complete analogy with Table 4, summarizes the classification accuracy and the ITR for every subject and CFC-PAC-connectivity estimator. The classification accuracy and bit rates diminished for the three CFC-PAC estimators compared to the original validation procedure (three sessions as a training set and the fourth as a testing set). Bit rates were: 5.4 bits/s for PACiPLV (Mean Classification Accuracy: 94.63), 3.02 bits/s for PACPLV (Mean Classification Accuracy: 75.40) and 3.03 bits/s for PACMVL (Mean Classification Accuracy: 75.52). Classification performance was still higher for PACiPLV compared to the two alternatives, while classification performance was still higher than the two alternative estimators and too high to support our approach as a key feature in the c-VEP BCI system.

Table 4. PAC-CFC: Single-subject classification and the related bit rates for the disabled (Subjects 1–4) and able-bodied (Subjects 5–8) subjects based on the Pz sensor and the three alternative CFC-PAC estimators.

δϕ −θA was again the selected feature for both disabled and able-bodied subjects for PACiPLV. In contrast, the selected CFC features based on PACPLV and PACMVL were completely random where in only two out of eight subjects, the selected feature was δϕ −θA.

We detected a significant difference between ITRiPLV and ITR original presented in Hoffmann et al. (2008) (Wilcoxon rank-sum test, p < 0.00001. Comparing ITRiPLV with ITRPLV and ITRMVL, we also detected significant differences (Wilcoxon rank-sum test, p < 0.00001). These results support the proposed iPLV estimator over the rest two.

PAC-CFC vs. Relative Power—Raw Time Series

To demonstrate the superiority of PAC-CFC to capture the local multiplexity of the human brain activity linked to c-VEP, we analyzed α1 relative power and raw time series filtered in α1. For comparison reasons, we used the first 100 ms after the end of the flashing images as we did with PAC-CFC. For the classification performance, we adopted multi-class Support Vector machines for both α1 relative power and α1 raw time series in order that the results be comparable with those derived from the three PAC-CFC estimators. Tables 5, 6 demonstrate the classification performance and ITR for each subject using recordings from the Pz sensor. Group-averaged bit rates for α1 relative power were 0.48 bits/s (29.73 bits/min) while for α1 raw time series were 0.41 bits/s (24.93 bits/min). Both alternative features extracted from the EEG recordings supported bit rates 12 times lower compared to the PACiPLV (5.4 bits/s or 324 bits/min). In general, PAC-CFC outperformed both α1 relative power and α1 raw time series in improving the bit rates further.

Table 5. α1 Relative Power: Single-subject classification and the related bit rates for the disabled (Subjects 1–4) and able-bodied (Subjects 5–8) subjects based on the Pz sensor.

Table 6. α1 Raw time series: Single-subject classification and the related bit rates for the disabled (Subjects 1–4) and able-bodied (Subjects 5–8) subjects based on the Pz sensor.

We detected a significant difference between ITRiPLV (Table 2) and α signal power (Table 5) and α raw time series (Table 6; Wilcoxon rank-sum test, p < 0.00001).

Performance Evaluation

In the present study, we succeeded ITR of 324.06 bits/min [see Table 4; with N = 6, P = 94.63, and T = 0.4 s (300 ms duration of the flashing image + 100 ms time window from the response due to the stimulus)] for both disabled and able-bodied subjects correspondingly for the Pz sensor location. The time for estimation of PAC and testing the trial was 0.00001 s on a Windows 7 Intel 7–8-core machine.

c-VEP Slow/Fast Stimulus Presentation

δ-θ PAC as a Valuable Feature for the BCI—c-VEP System

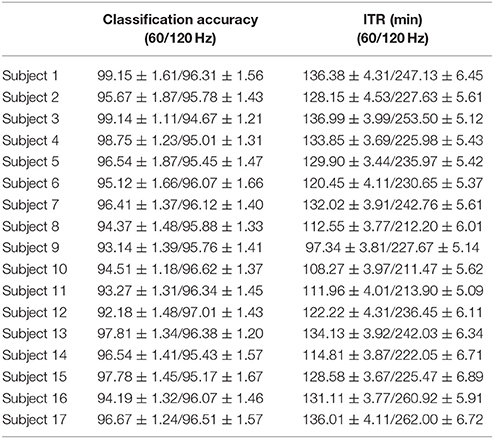

The group-averaged classification performance was 95.96 ± 2.15 for the 60 Hz and 95.91 ± 0.61 for the 120 Hz.

Table 7 summarizes the classification accuracy for every subject, the related ITR, the number of selected EEG sensors and the type of CFC-pairs. We succeeded an average ITR of 124.40 ± 11.68 for the slow 60 Hz and an average ITR of 233.99 ± 15.75 for the fast 120 Hz.

Table 7. PAC-CFC: Single-subject classification and the related bit rates for the 17 subjects based on the c-VEP data set in both slow and fast stimulus representation.

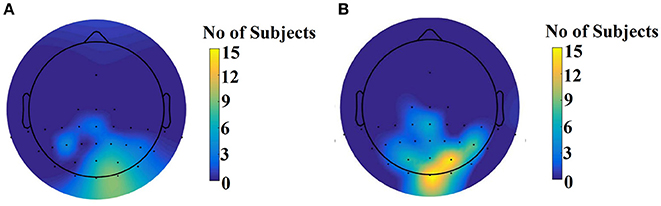

Figure 9 illustrates the number of times each channel was selected across subjects for the slow stimulation representation (60 Hz) and for the fast stimulation representation (120 Hz).

Figure 9. Scalp plot illustrating how many times each channel contributed to the best performance across subjects. (A) For the slow stimulation representation (60 Hz) and (B) For the fast stimulation representation (120 Hz).

We detected a significant difference between ITRiPLV and ITR original presented in Wittevrongel et al. (2017) (Wilcoxon rank-sum test, p < 0.00001).

SSVEP Multi-Target Data Set

δ-θ PAC as a Valuable Feature for the BCI—c-VEP System

The group-averaged classification performance was 94.25 ± 0.01 for the five targets.

Table 8 summarizes the classification accuracy for every subject, the related ITR, the number of selected EEG sensors and the type of CFC-pairs. We succeeded an average ITR of 106.44 ± 8.94.

Table 8. PAC-CFC: Single-subject classification and the related bit rates for the 11 subjects based on the SSVEP Multi-Target Data set.

Figure 10 illustrates the number of times each channel was selected across subjects over the parieto-occipital brain areas.

Figure 10. Scalp plot illustrating how many times each channel contributed to the best performance across subjects.

We detected a significant difference between ITRiPLV and ITR original presented in Georgiadis et al. (2018) (Wilcoxon rank-sum test, p < 0.00001).

Discussion

A novel approach of how to analyse single trials in a BCI system was introduced based on the estimation of CFC and namely PAC. PAC was estimated within EEG sensors from single trials recorded during a visually evoked experimental paradigm. The proposed analytic scheme is based on the extraction of unique features from the CFC patterns on a single trial basis, namely the δϕ −θA coupling. To evaluate the proposed analytic scheme and to further support the adaptation of CFC-PAC in BCI systems, we analyzed and presented our findings in three free publicly available EEG BCI data sets.

The first study referred to a well-known multi-class (N = 6) c-VEP-based BCI system for able-bodied and disabled subjects. Our experimentations showed a high classification rate (94.63%) based on the proposed PACiPLV estimator. In contrast, the two alternative PAC-CFC estimators succeeded in high classification accuracy and bit rates, but the choice of CFC features was random while the comodulograms were uniform across the cross-frequency pairs for every subject and flashing image.

The bit rates obtained for both the disabled and able-bodied subjects reached the fastest reported level of an ITR of 324 bits/min with the PACδ−θ estimator. Additionally, our approach outperformed alternative signal features such as the relative power ITR = 9.73 bits/min and raw time series analysis ITR = 24.93 bits/min and also the originally reported ITR = 10–25 bits/min. Our results outperformed the results presented on the original (324 bits/min vs 10–25 bits/min; Hoffmann et al., 2008). Using a binary classifier trained with α1 RSP prior to the multi-class SVM, we differentiated the attended from the non-attended stimuli which further improved the classification performance up to 100% in both groups.

The success of PACiPLV was further enhanced by the poorer classification accuracy and bit rates of the two comparable approaches (PACPLV, PACMVL). This further supported our analytic signal processing for the estimation of PAC-CFC estimates and the use of iPLV instead of PLV and also compared to MVL. In our previous studies, using the PACiPLV, we built a single-sensor and multi-sensor biomarker for mild cognitive impairment and reading disabilities using electro and magneto-encephalography recordings, respectively (Dimitriadis et al., 2015, 2016).

To properly manipulate any cross-session transfer between the two recording sessions, we repeated the whole classification analysis using, as a training set, the recordings from the second session and as a testing set, the recordings from the first session. The bit rates and the overall classification accuracy was decreased but the bit rates derived from the PACiPLV (5.4 bits/s) were still higher than the remaining two alternative CFC-PAC estimates and were kept at a very high level compared to other BCI studies. Overall, CFC-PAC estimates outperformed SP and raw time series analysis in α1 frequency further supporting the proposed analytic scheme.

In previous studies, like that of Sellers and Donchin (2006), the highest succeeded classification accuracy for the able-bodied and ALS subjects was 85 and 72%, respectively. Hoffmann et al. succeeded in absolute classification accuracy for both disabled and able-bodied subjects for the first demonstration of the current data set. However, they used a longer time series of over 15–20 s by concatenating trials in order to better train the classifier. Additionally, they used one classifier per image per each of the 20 blocks and the final outcome derived as the majority voting of the 20 classifiers.

The second BCI data set was a multi-class (N = 32) c-VEP with slow and fast stimulus representation. We succeeded in an average ITR = 124.40 ± 11.68 bits/min for the slow 60 Hz and an average ITR = 233.99 ± 15.75 bits/min for the fast 120 Hz. The major feature that contributes to this high classification accuracy was the PACδ−θ. Our results outperformed the ITR presented in the original paper (Wittevrongel et al., 2017), while our results further supported the introduction of LCMV beamformers in the BCI system (Wittevrongel and Van Hulle, 2016a; Wittevrongel et al., 2017).

The third BCI data set was a SSVEP multi-class (N = 5) flickering BCI system where we succeeded in an average ITR = 106.44 ± 8.94 bits/min. Like in the previous two data sets, the major feature that contributes to this high classification accuracy was the PACδ−θ. Our results outperformed the ITR presented in the original paper (Georgiadis et al., 2018), where they analyzed five out of 11 subjects based on broadband activity after first encoding single trials via a symbolization approach. Their analysis focused on the classification performance using only one EEG sensor at a time and the highest accuracies were achieved from sensors located over the parieto-occipital brain area.

The core of the bibliography in c-VEP studies, Kapeller et al. (2013a,b), Mohebbi et al. (2015) and Riechmann et al. (2016) suggests that as a dominant methodology, the usage of spatial filters through CCA combined with a classification algorithm [most notably SVMs (Farwell and Donchin, 1988; Spuller et al., 2012a) or LDA Polikoff et al., 1995] is considered. Here, alternatively, we proposed the usage of CFC-PAC as a descriptor that quantifies the local multiplexity of brain functions as each one oscillates on a characteristic frequency. In a recent retinotopic multi-class with single flickering frequency, they proposed a CCA spatial filter of the EEG responses in both the amplitude and phase domain (Maye et al., 2017). They achieved a high classification accuracy even in the nine classes referring to different visual angles across a visual circle. Additionally, in recent years, many researchers have introduced the notion of beamformers as spatial filters of scalp EEG activity (Wittevrongel and Van Hulle, 2016b; Wittevrongel et al., 2017). The results presented are comparable and even superior to SVM. In the second and third data set, we estimated PAC time series after first applying a LCMV beamformer.

The majority of BCI studies analyzed broadband signal while they preferred to analyse the preprocessed broadband raw time series using first a symbolization scheme Georgiadis et al. (2018), a CCA spatial filter (Maye et al., 2017) and a beamformer as a spatial filter (Wittevrongel et al., 2017). Even though the results are still high, they suppressed the enriched frequency information of EEG activity, the brain rhythms. Every frequency can encode different cognitive functions related to a task, while the CFC between two frequencies can bind two different cognitive functions when it is demanded by the conditions of the experiment.

This work is the only one suggesting CFC features and namely PAC for both c-VEP (slow and fast) and SSVEP BCI systems. The proposed analytic scheme has been validated on three publicly available data sets with different designs and a different number of classes. Additionally, our results outperformed the ITR of the original data sets even by a factor of up to three (data set 3).

According to Klimesch's α theory, α ‘directs the information flow toward to neural substrates that encodes information related to the system’ (e.g., visual stimulus to visual system, voice/sound to auditory system). The physiological main function of α is linked to inhibition. Klimesch's α theory hypothesizes that α enables the storage of information via the inhibition of task-irrelevant neuronal substrates and by synchronizing the brain activity in task-relevant neural systems. Many research findings have shown that both evoked α and phase-locking further support the successful encoding of a global stimulus-based feature within the post-interval of 0–150 ms (Klimesch et al., 2011).

Apart from the cross low-frequency-high-frequency coupling (e.g., θ-γ), there is much evidence (Lakatos et al., 2005; Cohen, 2008; Isler et al., 2008; Voytek et al., 2010; Engel et al., 2013; Jirsa and Müller, 2013) that CFC can be observed between the lower frequency bands (e.g., delta-theta, delta-alpha and theta-alpha). Lakatos et al. (2005) made a hypothesis about the “hierarchy” of EEG oscillations, suggesting that the amplitude of a lower frequency band may be modulated by the phase of a higher frequency. They revealed, in the primary auditory cortex of macaque monkeys, that δ (1–4 Hz) phase modulates θ (4–10 Hz) amplitude, and θ modulates γ (30–50 Hz) (Lakatos et al., 2005). This multiplexity of brain rhythms might reflect a general trend in the organization of brain rhythms, a true evidence in both humans and cats (Bragin et al., 1994 widespread basis including the occipital brain areas in orienting acoustic responses where novel sounds intermixed with frequent standard and infrequent target (Isler et al., 2008).

Evidence from the human auditory cortex untangled that δ-band modulates the amplitude of θ-band ICMs, whose phase modulates the amplitude of γ-band ICMs (Schroeder et al., 2008). This indirect enhanced effect employs the spontaneous activity of local neural activity in the primary auditory cortex. Their hypothesis supports the notion that neural oscillations reflect rhythmic shifting of excitability states of neural substrates between high and low levels. This hypothesis is further supported by the fact that oscillations can be predicted by visual input such that the auditory input arrives during a high excitability phase and is finally amplified. In the present study, we demonstrated that the δ (0.5–4 Hz) phase modulates θ (4–8 Hz) amplitude over visual brain areas due to flickering images and their content and was mainly observed on parieto-occipital EEG recording sites.

We should also mention that the reason why δϕ −θA coupling discriminates the flashing images can be directly linked to the content of the images. Visual attention sample image stimuli rhythmically demonstrate a peak of phase at 2 Hz (Dugué and VanRullen, 2014), while flashing images induce rhythmic fluctuations at higher frequencies (6–10 Hz) (Landau and Fries, 2012), here within the θ frequency range [4–8 Hz]. Finally, the work of Karakas et al. (2000) showed that the ERP represents an interplay between δ and θ frequencies and is directly linked to c-VEP (Demiralp et al., 2001).

δ-band oscillations long considered to be linked with deep sleep (Steriade, 2006). However, there are evidence that they play a key role in: (i) Controlling neuronal excitability, (ii) amplifying sensory inputs, (iii) in controlling and utilizing the attention, and (iv) unfolding the multiple operating modes responding to task demands (Schroeder and Lakatos, 2009).

Our results are aligned with findings in primary auditory cortex of macaque monkeys where δ (1–4 Hz) phase modulates θ (4–10 Hz) (Lakatos et al., 2005).

Ding et al. (2006) explored how attention modulates SSVEP power depending on the network triggered by the flickering frequency. They explored attentional effect at flicker frequencies within δ and α ranges. They found an occipital-frontal network to be phase-locked to the flicker when the flicker frequencies were within δ (2–4 Hz) and in upper α (10–11 Hz) when subject attending to the flicker. At flicker frequencies in the lower α (8–10 Hz), parietal and posterior frontal cortex, has higher amplitude when attention is directed away from the flicker. The major message from this study was that SSVEP amplitude and phase locking depends on which of two cortical networks, is selected by the flicker frequencies that have distinct spatial and dynamic properties.

There are strong evidence that slow-frequency ranges (δ, θ) play a pivotal role in controlling neuronal excitability and sensory processing and one would believe that play a key role also in attentional selection and especially during SSVEP (Morgan et al., 1996; Kim et al. (2007); Lakatos et al., 2008). There are findings that low-frequency oscillatory activity is enhanced by attentional demands during a task (Morgan et al., 1996; Kim et al. (2007); Lakatos et al., 2008). The coupling of δ-θ increased near visual stimulus onset during a visual attention task while it is decreased near visual stimulus onset in the auditory attention task (Lakatos et al., 2008). Finally, our results untangled that δϕ −θA coupling over parieto-occipital brain areas is a valuable feature for the improvement of BCI performance and the related ITR.

Conclusion

In this work, an efficient algorithmic approach was presented for two c-VEP-based BCI systems and a SSVE-BCI system with classes ranging from N = 6 to N = 32. We have demonstrated higher ITR in the three BCI systems outperforming the ITR presented in the original manuscripts. The proposed analytic scheme is based on CFC and namely PAC. Specifically, δ (0.5–4 Hz) phase modulates θ (4–8 Hz) amplitude and proved to be the candidate feature from PAC estimates that supported the highest classification accuracy, the fast ITR and the fast response time of the multi-class BCI systems.

Future improvements to the work presented could be the design of useful BCI-based application scenarios adapted to the needs of disabled subjects (King et al., 2014). Also, it might be useful to perform exploratory analysis on larger populations and in real time to further validate the results of the present work. Furthermore, many BCI systems based on c-VEP or SSVEP and tailored to different target populations could benefit from the current methodology to further improve ITRs (Lee et al., 2006).

Ethics Statement

The 3 datasets can be freely downloaded from the websites: Zurich (http://bci.epfl.ch/p300). Leuven: https://kuleuven.app.box.com/v/CVEP. Thessaloniki: https://physionet.org/physiobank/database/mssvepdb/.

Author Contributions

SD: Conception of the research; SD: Methods and design; SD and AM: Data analysis; SD: Drafting the manuscript; AM: Critical revision of the manuscript. Every author read and approved the final version of the manuscript.

Funding

This study was supported by the National Centre for Mental Health (NCMH) at Cardiff University and by MARIE-CURIE COFUND EU-UK RESEARCH FELLOWSHIP. We would like to acknowledge Cardiff RCUK funding scheme.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

SD was supported by MRC grant MR/K004360/1 (Behavioral and Neurophysiological Effects of Schizophrenia Risk Genes: A Multi-locus, Pathway Based Approach) and MARIE-CURIE COFUND EU-UK RESEARCH FELLOWSHIP. We would like also to acknowledge the reviewers for their valuable comments that further improve the quality of the current research article. AM was financially supported by the European Commission's Health Cooperation Work Program of the 7th Framework Program, under the Grant Agreement No. 602186 (BRAINTRAIN).

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fninf.2018.00019/full#supplementary-material

Footnotes

1. ^The data sets in Hoffmann et al. can be freely downloaded from the website of the EPFL BCI group (http://bci.epfl.ch/p300).

References

Antonakakis, M., Dimitriadis, S. I., Zervakis, M., Micheloyannis, S., Rezaie, R., Babajani-Feremi, A., et al. (2016). Altered cross-frequency coupling in resting-state MEG after mild traumatic brain injury. Int. J. Psychophysiol. 102, 1–11. doi: 10.1016/j.ijpsycho.2016.02.002

Bayliss, J. D. (2003). Use of the evoked P3 component for control in a virtual apartment. IEEE Trans. Neural Syst. Rehab. Eng. 11, 113–116. doi: 10.1109/TNSRE.2003.814438

Bin, G., Gao, X., Wang, Y., Hong, B., and Gao, S. (2009). “VEP-based brain-computer interfaces: time, frequency, and code modulations [Research Frontier],” in IEEE Computational Intelligence Magazine, Vol. 4 (IEEE), 22–26.

Bragin, A., Jando, G., Nadasdy, Z., Hetke, J., Wise, K., and Busaki, G. (1994). Gamma (40-100 Hz) oscillation in the hippocampus of the behaving rat. 1. J. Neurosci. 15, 47–60. doi: 10.1523/JNEUROSCI.15-01-00047.1995

Buzsáki, G. (2010). Neural syntax: cell assemblies, synapsembles, and readers. Neuron 68, 362–385. doi: 10.1016/j.neuron.2010.09.023

Buzsáki, G., Logothetis, N., and Singer, W. (2013). Scaling brain size, keeping timing: evolutionary preservation of brain rhythms. Neuron 80, 751–764. doi: 10.1016/j.neuron.2013.10.002

Cai, D., Chiyuan, Z., and Xiaofei, H. (2010). “Unsupervised feature selection for multi-cluster data,” in 16th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD'10) (Washington, DC).

Canolty, R. T., and Knight, R. T. (2010). The functional role of cross-frequency coupling. Trends Cogn. Sci. 14, 506–515. doi: 10.1016/j.tics.2010.09.001

Canolty, R. T., Edwards, E., Dalal, S. S., Soltani, M., Nagarajan, S. S., Kirsch, H. E., et al. (2006). High gamma power is phase-locked to theta oscillations in human neocortex. Science. 313, 1626–1628. doi: 10.1126/science.1128115

Chen, X., Wang, Y., Nakanishim, M., Gao, X., Jung, T. P., and Gao, S. (2015). High-speed spelling with a noninvasive brain–computer interface. Proc. Natl. Acad. Sci. U.S.A. 112, E6058–E6067. doi: 10.1073/pnas.1508080112

Cohen, M. X. (2008). Assessing transient cross-frequency coupling in EEG data. J. Neurosci. Methods 168, 494–499. doi: 10.1016/j.jneumeth.2007.10.012

Demiralp, T., Ademoglu, A., Comerchero, M., and Polich, J. (2001). Wavelet analysis of P3a and P3b. Brain Topogr. 13, 251–267. doi: 10.1023/A:1011102628306

Dimitriadis, S. I., Laskaris, N. A., and Tzelepi, A. (2013). On the quantization of time-varying phase synchrony patterns into distinct functional connectivity microstates (fcmustates) in a multi-trial visual Erp paradigm. Brain Topogr. 26, 397–409. doi: 10.1007/s10548-013-0276-z

Dimitriadis, S. I., Laskaris, N. A., Bitzidou, M. P., Tarnanas, I., and Tsolaki, M. N. (2015). A novel biomarker of amnestic MCI based on dynamic cross-frequency coupling patterns during cognitive brain responses. Front. Neurosci. 9:350. doi: 10.3389/fnins.2015.00350

Dimitriadis, S. I., Laskaris, N. A., Simos, P. G., Fletcher, J. M., and Papanicolaou, A. C. (2016). Greater repertoire and temporal variability of Cross-Frequency Coupling (CFC) modes in resting-state neuromagnetic recordings among children with reading difficulties. Front. Hum. Neurosci. 10:163. doi: 10.3389/fnhum.2016.00163

Ding, J., Sperling, G., and Srinivasan, R. (2006). Attentional modulation of SSVEP power depends on the network tagged by the flicker frequency. Cereb. Cortex 16, 1016–1029. doi: 10.1093/cercor/bhj044

Dugué, L., and VanRullen, R. (2014). The dynamics of attentional sampling during visual search revealed by Fourier analysis of periodic noise interference. J. Vis. 14:11. doi: 10.1167/14.2.11

Engel, A. K., Gerloff, C., Hilgetag, C. C., and Nolte, G. (2013). Intrinsic coupling modes: multiscale Interactions in ongoing brain activity. Neuron 80, 867–886. doi: 10.1016/j.neuron.2013.09.038

Farwell, L. A., and Donchin, E. (1988). Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 70, 510–523. doi: 10.1016/0013-4694(88)90149-6

Farwell, L. A., Richardson, D. C., and Richardson, G. M. (2013). Brain fingerprinting field studies comparing P300-MERMER and P300 brainwave responses in the detection of concealed information. Cogn. Neurodyn. 7, 263–299. doi: 10.1007/s11571-012-9230-0

Georgiadis, K., Laskaris, N., Nikolopoulos, S., and Kompatsiaris, I. (2018). Discriminative codewaves: a symbolic dynamics approach to SSVEP recognition for asynchronous BCI. J. Neural Eng. 15:026008. doi: 10.1088/1741-2552/aa904c

Guger, C., Daban, S., Sellers, E., Holzner, C., Krausz, G., Carabalona, R., et al. (2009). How many people are able to control a P300-based brain–computer interface (BCI)? Neurosci. Lett. 462, 94–98. doi: 10.1016/j.neulet.2009.06.045

Hoffmann, U., Vesin, J.-M., and Ebrahimi, T. (2008). An efficient P300-based brain–computer interface for disabled subjects. J. Neurosci. Methods 167, 115–125. doi: 10.1016/j.jneumeth.2007.03.005

Isler, J. R., Grieve, P. G., Czernochowski, D., Stark, R. I., and Friedman, D. (2008). Cross-frequency phase coupling of brain rhythms during the orienting response. Brain Res. 1232, 163–172. doi: 10.1016/j.brainres.2008.07.030

Jirsa, V., and Müller, V. (2013). Cross-frequency coupling in real and virtual brain networks. Front. Comput. Neurosci. 7:78. doi: 10.3389/fncom.2013.00078

Joachims, T. (1999). “Making large-scale SVM learning practical,” in Advances in Kernel Methods–Support Vector Learning, eds B. Schölkopf, C. Burges, and A. Smola (MIT Press).

Kabbara, A., Khalil, M., El-Falou, W., Eid, H., and Hassan, M. (2016). Functional brain connectivity as a new feature for P300 speller. PLoS ONE 11:e0146282. doi: 10.1371/journal.pone.0146282

Kapeller, C., Hintermuller, C., Abu-Alqumsan, M., Prückl, R., Peer, A., and Guger, C. (2013a). “A BCI using VEP for continuous control of a mobile robot,” in Engineering in Medicine and Biology Society (EMBC), 2013 35th Annual International Conference of the IEEE (Osaka: IEEE), 5254–5257.

Kapeller, C., Kamada, K., Ogawa, H., Prueckl, R., Scharinger, J., and Guger, C. (2013b). An electrocorticographic BCI using code-based VEP for control in video applications: a single-subject study. Front. Syst. Neurosci. 8:139 doi: 10.3389/fnsys.2014.00139

Kaper, M., Meinicke, P., Grosskathoefer, U., Lingner, T., and Ritter, H. (2004). Support vector machines for the P300 speller paradigm. IEEE Trans. Biomed. Eng. 51, 1073–1076. doi: 10.1109/TBME.2004.826698

Karakas, S., Erzengin, O. U., and Basar, E. (2000). A new strategy involving multiple cognitive paradigms demonstrates that ERP components are determined by the superposition of oscillatory responses. Clin. Neurophysiol. 111, 1719–1732. doi: 10.1016/S1388-2457(00)00418-1

Kim, Y. J., Grabowecky, M., Paller, K. A., Muthu, K., and Suzuki, S. (2007). Attention induces synchronization-based response gain in steady state visual evoked potentials. Nat. Neurosci. 10, 117–125. doi: 10.1038/nn1821

King, C. E., Dave, K. R., Wang, P. T., Mizuta, M., Reinkensmeyer, D. J., Do, A. H., et al. (2014). Performance assessment of a brain–computer interface driven hand orthosis. Ann. Biomed. Eng. 42, 2095–2105. doi: 10.1007/s10439-014-1066-9

Klimesch, W., Fellinger, R., and Freunberger, R. (2011). Alpha oscillations and early stages of visual encoding. Front Psychol. 2:118. doi: 10.3389/fpsyg.2011.00118

Lachaux, J. P., Rodriguez, E., Martinerie, J., and Varela, F. J. (1999). Measuring phase synchrony in brain signals. Hum. Brain Mapp. 8, 194–208. doi: 10.1002/(SICI)1097-0193(1999)8:4<194::AID-HBM4>3.0.CO;2-C

Lakatos, P., Karmos, G., Mehta, A. D., Ulbert, I., and Schroeder, C. E. (2008). Oscillatory entrainment as a mechanism of attentional selection. Science 320, 110–113. doi: 10.1126/science.1154735

Lakatos, P., Shah, A. S., Knuth, K. H., Ulbert, I., Karmos, G., and Schroeder, C. E. (2005). An oscillatory hierarchy controlling neuronal excitability and stimulus processing in the auditory cortex. J. Neurophysiol. 94, 1904–1911. doi: 10.1152/jn.00263.2005

Landau, A. N., and Fries, P. (2012). Attention samples stimuli rhythmically. Curr Biol. 22, 1000–1004 doi: 10.1016/j.cub.2012.03.054

Lee, P. L., Hsieh, J. C., Wu, C. H., Shyu, K. K., Chen, S. S., Yeh, T. C., et al. (2006). The brain computer interface using flash visual evoked potential and independent component analysis. Ann. Biomed. Eng. 34, 1641–1654. doi: 10.1007/s10439-006-9175-8

Lin, Y. T., and Kuo, C. H. (2016). “Development of SSVEP-based intelligent wheelchair brain computer interface assisted by reactive obstacle avoidance,” in Proc. 2016 IEEE International Conference on Industrial Technology, (Saratov), 1572–1577.

Martinetz, T. M., Berkovich, S. G., and Schulten, K. J. (1993). ‘Neural-gas’ network for vector quantization and its application to time-series IEEE Trans. Neural Netw. 4, 558–569. doi: 10.1109/72.238311

Maye, A., Zhang, D., and Engel, A. K. (2017). Utilizing retinotopic mapping for a multi-target SSVEP BCI with a single flicker frequency. IEEE Trans. Neural Syst. Rehabil. Eng. 25, 1026–1036. doi: 10.1109/TNSRE.2017.2666479

McCane, L. M., Heckman, S. M., McFarland, D. J., Townsend, G., Mak, J. N., Sellers, E. W., and Zeitlin, D. (2015). P300-based Brain-Computer Interface (BCI) Event-Related Potentials (ERPs): people with Amyotrophic Lateral Sclerosis (ALS) vs. age-matched controls. Clin. Neurophysiol. 126, 2124–2131. doi: 10.1016/j.clinph.2015.01.013

Mohebbi, A., Engelsholm, S. K., Puthusserypady, S., Kjaer, T. W., Thomsen, C. E., and Sorensen, H. B. (2015). “A brain computer interface for robust wheelchair control application based on pseudorandom code modulated visual evoked potential,” in Engineering in Medicine and Biology Society (EMBC), 2015 37th Annual International Conference of the IEEE (Osaka: IEEE), 602–605.

Morgan, S. T., Hansen, J. C., and Hillyard, S A. (1996). Selective attention to stimulus location modulates the steady-state visual evoked potential. Proc. Natl. Acad. Sci. U.S.A. 93, 4770–4774. doi: 10.1073/pnas.93.10.4770

Müller-Putz, G. R., Scherer, R., Brauneis, C., and Pfurtscheller, G. (2005). Steady-state visual evoked potential (SSVEP)-based communication: impact of harmonic frequency components J. Neural Eng. 123–130. doi: 10.1088/1741-2560/2/4/008

Nguyen, T., Khosravi, A., Creighton, D., and Nahavandi, S. (2015). EEG signal classification for BCI applications by wavelets and interval type-2 fuzzy logic systems. Expert Syst. Appl. 42, 4370–4380. doi: 10.1016/j.eswa.2015.01.036

Nolte, G., Bai, O., Wheaton, L., Mari, Z., Vorbach, S., and Hallett, M. (2004). Identifying true brain interaction from EEG data using the imaginary part of coherency. Clin. Neurophysiol. 115, 2292–2307. doi: 10.1016/j.clinph.2004.04.029

Oikonomou, V. P., Liaros, G., Georgiadis, K., Chatzilari, E., Adam, K., Nikolopoulos, S., et al. (2016). Comparative Evaluation of State-of-the-Art Algorithms for SSVEP-Based BCIs. Technical Report. e-print.

Piccione, F., Giorgi, F., Tonin, P., Priftis, K., Giove, S., Silvoni, S., et al. (2006). P300-based brain–computer interface: reliability and performance in healthy and paralysed participants. Clin. Neurophysiol. 117, 531–537. doi: 10.1016/j.clinph.2005.07.024

Pineda, J. A., Silverman, D. S., Vankov, A., and Hestenes, J. (2003). Learning to control brain rhythms: making a brain-computer interface possible. IEEE Trans. Neural Syst. Rehabil. Eng. 11, 181–184. doi: 10.1109/TNSRE.2003.814445

Polikoff, J., Bunnell, H., and Borkowski, W. (1995). “Toward a P300-based computer interface,” in Proceedings of the RESNA'95 Annual Conference (Vancouver, BC).

Rakotomamonjy, A., Guigue, V., Mallet, G., and Alvarado, V. (2005). “Ensemble of SVMs for improving brain–computer interface P300 speller performances,” in Proceedings of International Conference on Neural Networks (ICANN) (Warsaw).

Resalat, S. N., and Saba, V. A (2016). Study of various feature extraction methods on a motor imagery based brain computer interface system. Basic Clin. Neurosci. 7, 13–19.

Reza, F.-R., Brendan, A. Z., Christoph, G., Eric, S. W., Sonja, K. C., and Andrea, K. (2012). P300 brain computer interface: current challenges and emerging trends. Front. Neuroeng. 5:14. doi: 10.3389/fneng.2012.00014

Riechmann, H., Finke, A., and Ritter, H. (2016). Using a cVEP-based brain-computer interface to control a virtual agent. IEEE Trans. Neural Syst. Rehabil. Eng. 24, 692–699. doi: 10.1109/TNSRE.2015.2490621

Schroeder, C. E., and Lakatos, P. (2009). Low-frequency neuronal oscillations as instruments of sensory selection. Trends Neurosci. 32, 9–18. doi: 10.1016/j.tins.2008.09.012

Schroeder, C. E., Lakatos, P., Kajikawa, Y., Partan, S., and Puce, A. (2008). Neuronal oscillations and visual amplification of speech. Trends Cogn. Sci. 12, 106–113 doi: 10.1016/j.tics.2008.01.002

Sellers, E., and Donchin, E. A. (2006). P300-based brain–computer interface: initial tests by ALS patients. Clin. Neurophysiol. 117, 538–548. doi: 10.1016/j.clinph.2005.06.027

Spuller, M., Rosenstiel, W., and Bogdan, M. (2012a). “One class svm andcanonical correlation analysis increase performance in a c-vep based brain-computer interface (bci),” in Proceedings of 20th European Symposium on Artificial Neural Networks (ESANN 2012) (Bruges), 103–108.

Spuller, M., Rosenstiel, W., and Bogdan, M. (2012b). Online adaptation of a c-VEP brain-computer interface (BCI) based on error-related potentials and unsupervised learning. PLoS ONE 7:e51077. doi: 10.1371/journal.pone.0051077

Steriade, M. (2006). Grouping of brain rhythms in corticothalamic systems. Neuroscience 137, 1087–1106 doi: 10.1016/j.neuroscience.2005.10.029

Thulasidas, M., Guan, C., and Wu, J. (2006). Robust classification of EEG signal for brain–computer interface. IEEE Trans. Neural Syst. Rehab. Eng. 14, 24–29. doi: 10.1109/TNSRE.2005.862695

van Vliet, M., Chumerin, N., De Deyne, S., Wiersema, J. R., Fias, W., Storms, G., et al. (2016). Single-trial erp component analysis using a spatiotemporal lcmv beamformer. IEEE Trans. Biomed. Eng. 63, 55–66 doi: 10.1109/TBME.2015.2468588

Voytek, B., Canolty, R. T., Shestyuk, A., Crone, N. E., Parvizi, J., and Knight, R. T. (2010). Shifts in Gamma phase–amplitude coupling frequency from Theta to alpha over posterior cortex during visual tasks. Front. Hum. Neurosci. 4:191 doi: 10.3389/fnhum.2010.00191

Wang, Y., Gao, X., Hong, B., Jia, C., and Gao, S. (2008). Brain-computer interfaces based on visual evoked potentials. IEEE Eng. Med. Biol. Mag. 27, 64–71. doi: 10.1109/MEMB.2008.923958

Wittevrongel, B., and Van Hulle, M. M. (2016a). Frequency- and phase encoded ssvep using spatiotemporal beamforming. PLoS ONE 11:e0159988. doi: 10.1371/journal.pone.0159988

Wittevrongel, B., and Van Hulle, M. M. (2016b). Faster p300 classifier training using spatiotemporal beamforming. Int. J. Neural Syst. 26:1650014. doi: 10.1142/S0129065716500143

Wittevrongel, B., Van Wolputte, E., and Van Hulle, M. M. (2017). Code-modulated visual evoked potentials using fast stimulus presentation and spatiotemporal beamformer decoding. Sci. Rep. 7:15037. doi: 10.1038/s41598-017-15373-x