- 1College of Mechanical Engineering, Quzhou University, Quzhou, China

- 2Department of Rehabilitation, The Quzhou Affiliated Hospital of Wenzhou Medical University, Quzhou People’s Hospital, Quzhou, China

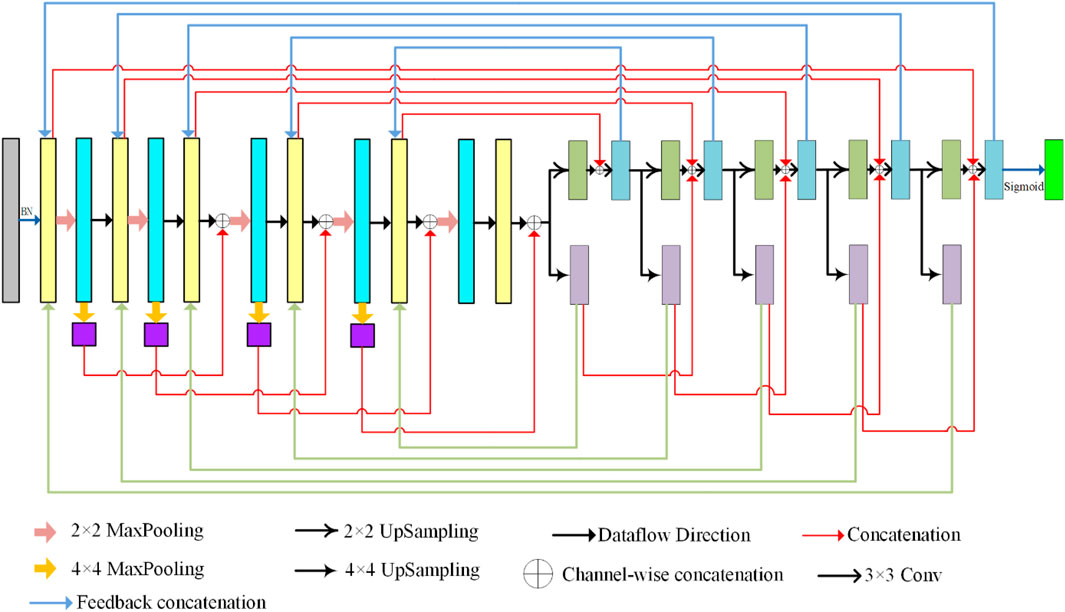

Accurate segmentation of skin lesions in dermoscopic images plays an important role in improving the survival rate of patients. However, due to the blurred boundaries of pigmented regions, the diversity of lesion features, and the mutation and metastasis of diseased cells, achieving effective and robust skin image segmentation remains challenging. For this reason, we propose a bi-directional feedback dense connection network framework (called BiDFDC-Net), which can segment skin lesions accurately. First, within the U-Net framework, we integrate edge modules into each layer of the encoder to mitigate the gradient vanishing and information loss caused by network deepening. Then, each layer of our model takes input from the previous layer and passes its feature map to the densely connected subsequent layers to achieve information interaction and enhance feature propagation and reuse. Finally, in the decoder stage, a two-branch module feeds the dense feedback branch and the ordinary feedback branch back to the same layer of the encoder, realizing the fusion of multi-scale features and multi-level context information. Tested on the ISIC-2018 and PH2 datasets, the model achieved accuracies of 93.51% and 94.58%, respectively.

1 Introduction

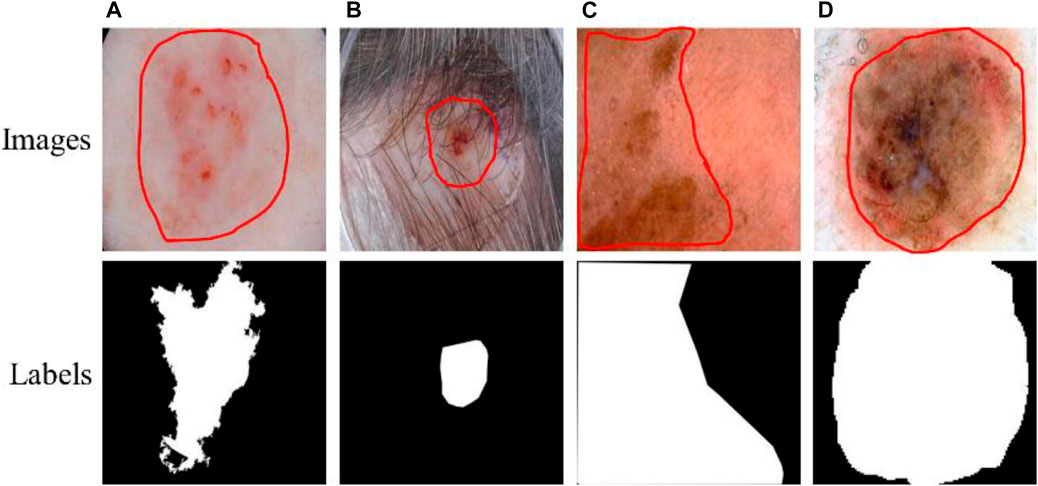

Skin cancer is a widespread, invasive, and potentially fatal cancer. In the early stage, the characteristics of the lesions are not obvious and are very similar to those of other skin diseases, which allows cancer cells to grow and spread rapidly. Therefore, the early detection of lesions is very important to save the lives of patients. At present, skin diagnosis is mainly based on dermatoscopic imaging technology to visualize the diseased area, which can improve the doctor's visual clarity and relieve visual fatigue. However, the number of physicians with clinical experience is small, and diagnostic decisions based on experience may be inaccurate or subjective. Recently, with the development of computer vision, computer-aided diagnosis technology has been widely used in the field of medical image segmentation. Because some lesions have irregular and fuzzy contours and the images contain interference such as hair, ebony, and bubbles, it is difficult to achieve accurate segmentation, as shown in Figure 1. Therefore, studying an efficient and accurate segmentation method is of great value in both the medical and academic fields.

FIGURE 1. Lesions and corresponding labels. (A) Irregular and fuzzy contours, (B) Hair, (C) Ebony, (D) Bubbles.

Researchers have published many segmentation algorithms for skin images. Generally, these methods can be classified as traditional methods or deep learning methods. Traditional segmentation methods include genetic algorithms (Rouhollahi et al., 2020; Da Silva et al., 2021), multilevel thresholding (Resma and Nair, 2021; Singh et al., 2021; Abualigah et al., 2022), watershed (Genitha et al., 2020; Indriyan et al., 2021), fuzzy clustering (Li, 2020; Qu and Wang, 2021), partial differential equations (Guo et al., 2020; Tian and Chen, 2020), and so on. These methods use digital image processing knowledge and mathematical equations to define the segmentation features, so they are computationally simple and fast. Nevertheless, they also have some disadvantages. For instance, the watershed algorithm iteratively labels gradient images for image segmentation, but subtle variations such as image noise and object texture may affect the smoothness of the image gradient, causing over-segmentation. In multilevel thresholding, the optimal set of thresholds divides the image into several regions, but it is difficult to choose a suitable range: the method relies heavily on a large number of control parameters and is expensive and complicated to operate. Its segmentation effect is also not ideal, especially for images with different kinds of noise or low contrast between foreground and background. In short, traditional methods are sensitive to image noise, which easily leads to low segmentation accuracy. Moreover, they require a large number of parameters to be defined manually and features to be extracted by hand, which limits their ability to generalize.

With the advent of computational techniques and artificial intelligence, deep learning has started to be applied to medical images. Its most prominent tool is the convolutional neural network (CNN) (Tyagi and Mehra, 2020), which is loosely modeled on brain neurons. Not only can it learn the features of local regions, but it is also sensitive to the size, position, and orientation of objects. However, because image detail is lost in convolution and pooling, it cannot segment the specific contours of an image exactly. For this reason, Shelhamer et al. (2017) proposed a fully convolutional network (FCN), which achieves image segmentation effectively by replacing the fully connected layers with convolutional layers. However, its segmentation depends only on context information, so the results are not precise enough. Hence, some researchers have proposed variations of the FCN, such as SegNet (Badrinarayanan et al., 2017), RefineNet (Lin et al., 2017), and U-Net (Ronneberger et al., 2015). Among them, the U-Net structure proposed by Ronneberger et al. achieved a better segmentation effect in cell segmentation and other tasks, which attracted many scholars to improve the model. Badshah and Ahmad (2021) fused residual blocks and a bi-directional ConvLSTM (BConvLSTM) network into U-Net, yielding ResBCU-Net. First, it uses residual blocks and batch normalization in the encoding path to prevent learning too many extraneous features and avoid overfitting. Then, dense convolutional connections are used in the bottleneck part of the model to enhance feature propagation and encourage feature reuse. Finally, a bidirectional long short-term memory network is added to the decoding path to avoid gradient vanishing during long-term training. Yang et al. (2020) proposed a multiscale U-Net that uses multi-scale spatial pyramid pooling at the end of the down-sampling path to expand the receptive field and uses dilated convolutional residual blocks to improve the skip connections, which performs well in brain tumor segmentation. Du et al. (2021) proposed a densely connected U-Net structure with multi-scale features. The main idea of the model is to use the initial block structure and the dense block structure of U-Net to achieve multiple feature extraction and efficient feature utilization through series or parallel connections.

The above methods are suitable for the automatic segmentation of most skin images. However, their accuracy and robustness are still insufficient because of the irregular shapes of the objects to be segmented and the imbalance between target and background, which severely hinders the application of deep learning methods in medical diagnosis. This paper focuses on overcoming these shortcomings of the traditional U-Net in the automatic segmentation of skin images. To this end, we propose a novel bidirectional feedback densely connected network framework called BiDFDC-Net. The main contributions of this paper are as follows.

(1) After convolving the encoder in U-Net, the edge module was introduced to eliminate the problem of gradient vanishing and extract richer multiscale feature information.

(2) The dense connection network was applied to strengthen information fusion between U-Net layers. Each layer reads the state from its previous layer and writes it to the next layer, helping to train a deeper network architecture. In addition, dense connections have a regularizing effect, reducing overfitting to the training set.

(3) Using the two-branch module of dense feedback branch and ordinary feedback branch instead of single-branch upsampling can ensure that the network still has high segmentation accuracy in the case of deepening.

(4) We tested the proposed BiDFDC-Net network and compared it with some classical models and U-Net networks on the ISIC-2018 dataset. Experimental results showed that the BiDFDC-Net network achieves remarkable performance. In addition, to verify the accuracy and appropriateness of the network, the network trained on the ISIC-2018 dataset was tested on a common dataset named PH2.

2 Materials and methods

In this section, we present the details of the proposed bi-directional feedback dense connection network framework (called BiDFDC-Net). The network framework is shown in Figure 2. The model fuses shallow features with deep semantic feature information through dense connections, which reduces, to a certain extent, the loss of effective features caused by operations such as convolution. At the same time, the edge module is used as the main component of the encoder to extract the relationships between different pixels and further extract the feature information of the image. Finally, the outputs of each layer on each path of the decoder are fed back to the corresponding layer of the encoder, establishing feedback connections that enhance feature reuse and information transfer and make training more efficient and flexible. We introduce the bi-directional feedback, edge module, and dense connections in the following subsections.

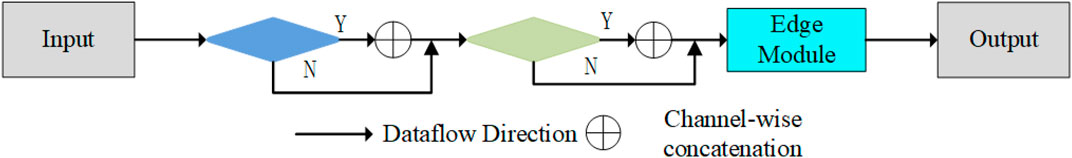

2.1 Bi-directional feedback

The network learns the mapping between its input and output using context-dependent information. However, the ability of existing models to obtain contextual information is limited, and network recursion gradually degrades the segmentation ability. To this end, we feed the decoding information back to the encoder in both directions (BiD) to obtain more global information and retain more effective features. In the model, the output of each path of the two-branch decoder is fed back to the encoder layers. The feedback connections are shown in Figure 3. At each connection, the network checks whether feedback information is present: the connection is made if there is input, and skipped otherwise. This improves accuracy when the image is restored to its original size. In addition, similar to U-Net, skip connections are used in our network to fuse the semantic features of the encoding and decoding parts, as shown in Figure 2.
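
The conditional connection described in this subsection (connect when feedback is present, skip otherwise) could be sketched as follows. This is a minimal illustration rather than the authors' implementation: feature maps are represented as plain lists of channel labels, fusion by channel-wise concatenation is an assumption, and `fuse_with_feedback` and the channel names are hypothetical.

```python
from typing import List, Optional

FeatureMap = List[str]  # each entry stands in for one channel of a feature map


def fuse_with_feedback(encoder_features: FeatureMap,
                       dense_feedback: Optional[FeatureMap] = None,
                       ordinary_feedback: Optional[FeatureMap] = None) -> FeatureMap:
    """Concatenate whichever feedback branches are present (assumed fusion rule)."""
    fused = list(encoder_features)
    for feedback in (dense_feedback, ordinary_feedback):
        if feedback is not None:  # connect only when feedback information exists
            fused.extend(feedback)
    return fused


# First pass: no decoder output exists yet, so both connections are skipped.
first_pass = fuse_with_feedback(["enc0", "enc1"])
# Later pass: both decoder branches feed back to the same encoder layer.
second_pass = fuse_with_feedback(["enc0", "enc1"], ["dense0"], ["plain0"])
```

In a real network the same check would decide whether a layer receives extra input channels on a given pass.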

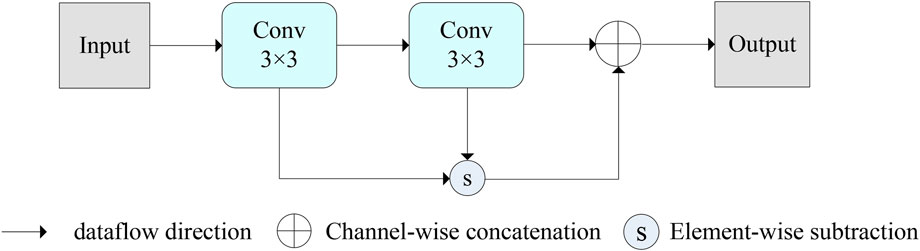

2.2 Edge module

To improve the accuracy of image segmentation, an edge module was built into the encoder to extract the edge information of the image. The structure of the edge module is illustrated in Figure 4. It follows a traditional edge detection technique, the difference of Gaussians, which is integrated into the convolution to capture the edge information of the image and reduce the influence of noise on the segmentation. The module is mainly composed of two identical 3 × 3 convolutional layers, an element-wise subtraction, and a concatenation. The convolutional layers obtain the feature information of the image, while the other operations preserve image edge features at different semantic scales. The module acts like a Gaussian filter added to the image, removing the effect of irrelevant background such as noise or artifacts on the segmentation. Its purpose is to extract the edge information of the image and hence improve the sensitivity to image boundaries. Its formula is expressed as:

where OutputEdge is the output of the edge module, Conv represents the convolution operation, and input is the input of the module.
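
The difference-of-Gaussians principle that the edge module builds on can be illustrated on a one-dimensional step edge. The sketch below shows only the classical, non-learned technique, not the authors' module (which uses learned 3 × 3 convolutions); the function names, sigma values, and kernel radius are assumptions chosen for illustration.

```python
import math


def gaussian_kernel(sigma, radius):
    """Normalized 1-D Gaussian kernel with 2 * radius + 1 taps."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(signal, kernel):
    """Convolve a 1-D signal with a kernel, replicating the border values."""
    radius = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * signal[j]
        out.append(acc)
    return out


def difference_of_gaussians(signal, sigma_small=1.0, sigma_large=2.0, radius=6):
    """Subtract a strongly smoothed copy from a lightly smoothed copy."""
    fine = smooth(signal, gaussian_kernel(sigma_small, radius))
    coarse = smooth(signal, gaussian_kernel(sigma_large, radius))
    return [f - c for f, c in zip(fine, coarse)]


step = [0.0] * 20 + [1.0] * 20           # flat background, then a flat lesion
dog = difference_of_gaussians(step)
edge_index = max(range(len(dog)), key=lambda i: abs(dog[i]))
```

The response is essentially zero in the flat regions and peaks next to the transition between indices 19 and 20, which is why the element-wise subtraction of two smoothed feature maps emphasizes boundaries.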

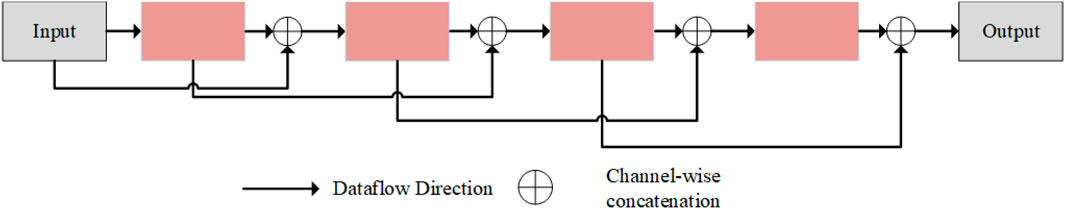

2.3 Dense connections

As displayed in Figure 5, we designed dense connections that link the layers of the network so as to integrate the semantic information and feature information of different layers. In contrast to other dense concatenation schemes, the input of a layer is not formed from the outputs of all previous layers; only the parallel outputs of the previous layers are concatenated. In the encoder, the concatenated input is the result of parallel down-sampling; in the decoder, the concatenation is performed on the parallel up-sampling results. This not only reduces the number of learned parameters but also improves the training speed, and it makes the network model more flexible and easier to transfer. In the decoder, we also turned the original single up-sampling path into a double path. One path is simply an up-sampling operation that passes information to the next level. The other path concatenates the up-sampled results with the input information of the upper layer and then applies two 3 × 3 convolutions. This allows the network to add more detail, resulting in a more accurate output.
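
The two decoder paths can be sketched in plain Python to make the data flow concrete. This is only an illustration under stated assumptions: nearest-neighbour up-sampling by a factor of 2 is assumed, the two 3 × 3 convolutions on the second path are omitted, and `upsample_nearest` and `two_path_decode` are hypothetical names.

```python
def upsample_nearest(channel, factor=2):
    """Nearest-neighbour up-sampling of one 2-D channel (a list of rows)."""
    out = []
    for row in channel:
        wide = [value for value in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def two_path_decode(deep, skip):
    """deep: channels at low resolution; skip: channels at twice that resolution."""
    up = [upsample_nearest(channel) for channel in deep]
    ordinary_path = up        # path 1: plain up-sampling passed to the next level
    dense_path = up + skip    # path 2: channel-wise concatenation with same-scale input
    return ordinary_path, dense_path  # convolutions on path 2 are omitted here


deep = [[[1, 2], [3, 4]]]              # 1 channel, 2 x 2
skip = [[[0] * 4 for _ in range(4)]]   # 1 channel, 4 x 4
ordinary, dense = two_path_decode(deep, skip)
```

Here `ordinary` holds one 4 × 4 channel and `dense` holds two, which shows why the second path carries more detail into the following convolutions.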

3 Experiments and results

The dermoscopic images from the ISIC-2018 (Tschandl et al., 2018) and PH2 (Garg and Balkrishan, 2022) datasets were used to assess the performance of BiDFDC-Net. All models were implemented in Python 3 using the Keras API with TensorFlow as the backend. The experiments were run on a workstation with Windows 10 and an NVIDIA Quadro RTX 6000 GPU with 24 GB of memory. The segmentation network was trained for 300 epochs using the Adam optimizer (learning rate = 0.001) (Cui et al., 2023) with a batch size of 8. After every epoch, the validation loss, calculated using the Dice loss (Ma et al., 2021), was monitored, and the model with the smallest validation loss during training was kept.
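
As a rough illustration of the Dice loss and of keeping the model with the smallest validation loss, a framework-free sketch is given below. It is not the authors' training code: the smoothing constant is a common convention assumed here, and `dice_loss` and `best_epoch` are hypothetical helper names.

```python
def dice_loss(pred, target, smooth=1.0):
    """Soft Dice loss over flattened per-pixel probabilities and binary labels."""
    intersection = sum(p * t for p, t in zip(pred, target))
    denominator = sum(pred) + sum(target)
    # smooth (an assumed constant) avoids division by zero on empty masks
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


def best_epoch(val_losses):
    """Return the 1-based epoch whose validation loss is smallest."""
    return min(range(len(val_losses)), key=val_losses.__getitem__) + 1


perfect = dice_loss([1, 0, 1, 0], [1, 0, 1, 0])    # prediction equals label
disjoint = dice_loss([1, 1, 0, 0], [0, 0, 1, 1])   # no overlap at all
chosen = best_epoch([0.5, 0.3, 0.4])
```

A perfect prediction gives a loss of 0, while disjoint masks give a loss close to 1, so minimizing the loss maximizes overlap with the label.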

3.1 Evaluation metrics

Several quantitative metrics, including Sensitivity (SN), Specificity (SP), Accuracy (ACC), Dice index coefficient (DIC), and Jaccard score (JAC), were applied to evaluate the performance of the models. They are defined as follows:

$$\mathrm{SN} = \frac{TP}{TP + FN}, \qquad \mathrm{SP} = \frac{TN}{TN + FP}, \qquad \mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{DIC} = \frac{2\,TP}{2\,TP + FP + FN}, \qquad \mathrm{JAC} = \frac{TP}{TP + FP + FN}$$

where TP, TN, FP, and FN represent the number of true positives, true negatives, false positives, and false negatives at the pixel level, respectively.
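
As a quick numerical check of these confusion-matrix metrics, the sketch below computes them from pixel counts using their standard definitions. The function name and the example counts are hypothetical and are not results from the paper.

```python
def segmentation_metrics(tp, tn, fp, fn):
    """Standard pixel-level metrics computed from confusion-matrix counts."""
    return {
        "SN": tp / (tp + fn),                    # sensitivity (recall)
        "SP": tn / (tn + fp),                    # specificity
        "ACC": (tp + tn) / (tp + tn + fp + fn),  # accuracy
        "DIC": 2 * tp / (2 * tp + fp + fn),      # Dice coefficient
        "JAC": tp / (tp + fp + fn),              # Jaccard score
    }


# Hypothetical counts for a 200-pixel image.
m = segmentation_metrics(tp=80, tn=100, fp=10, fn=10)
```

With these counts the accuracy is 0.9 and the Jaccard score is 0.8; the Dice and Jaccard values are linked by JAC = DIC / (2 − DIC), so they always rank models in the same order.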

3.2 Experimental results on two datasets

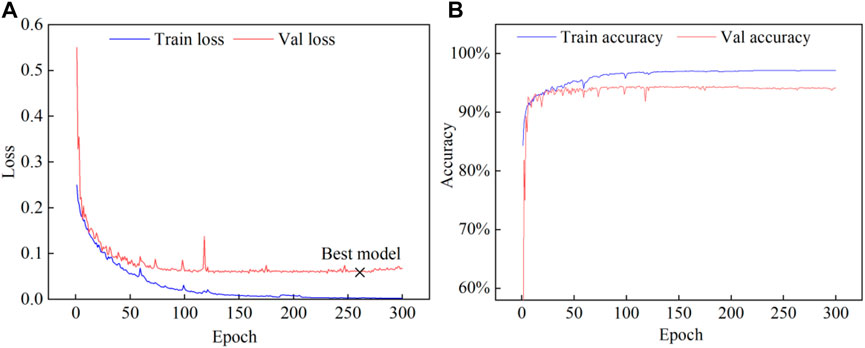

The changes in loss and accuracy during training on the ISIC-2018 dataset are shown in Figure 6. The loss stabilized after 150 epochs, and the best model was obtained at epoch 259. These results indicate that the chosen number of epochs was appropriate.

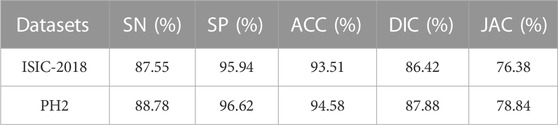

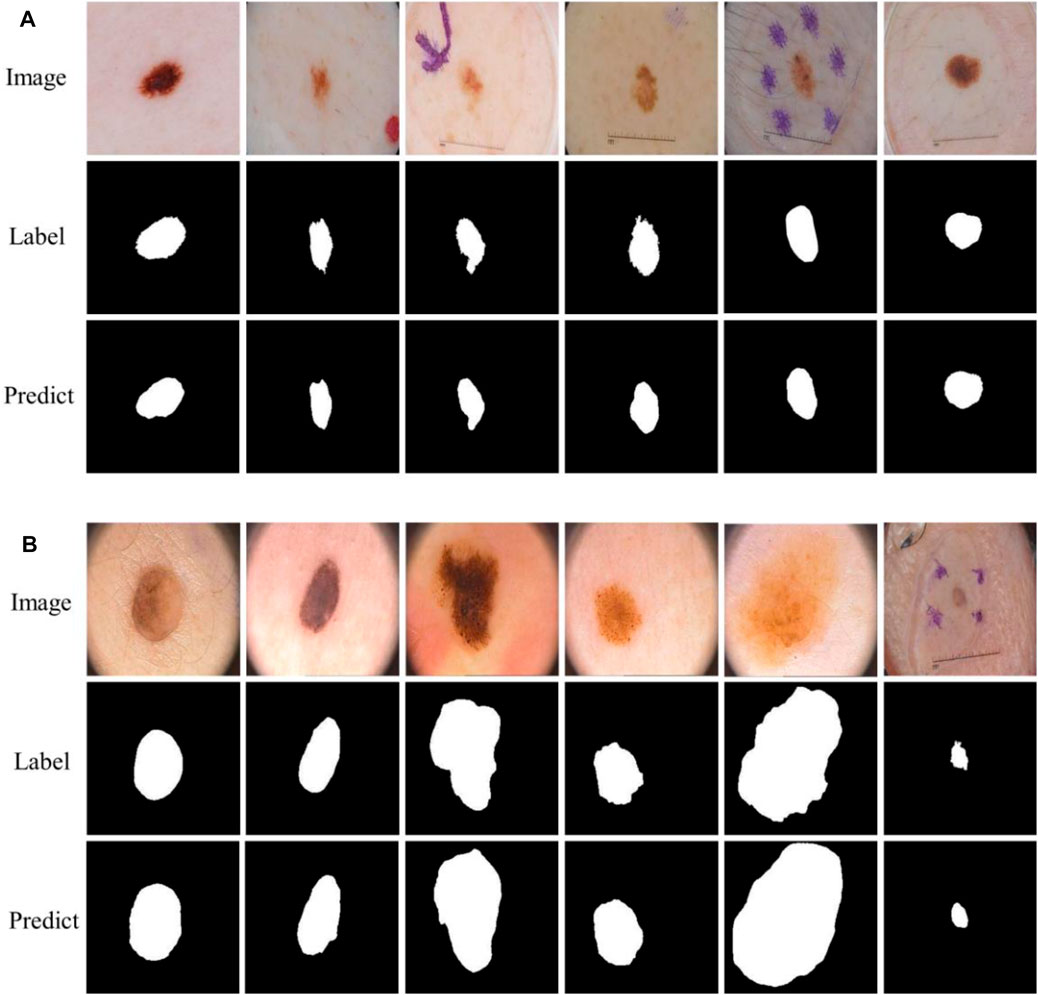

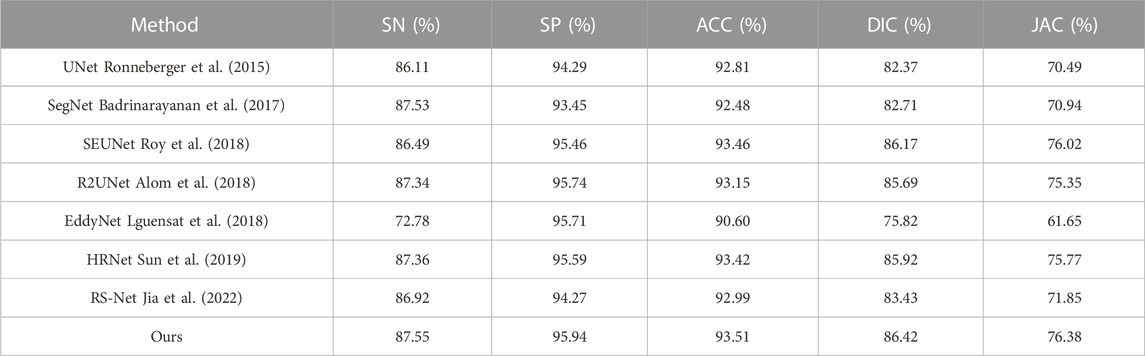

The evaluation metrics of the model on the two datasets are shown in Table 1. Additionally, examples of the segmentation results on the datasets are shown in Figure 7, which show that the proposed model can effectively segment abnormal regions and accurately describe the boundaries of the lesion area in dermoscopic images.

3.3 Ablation experiment

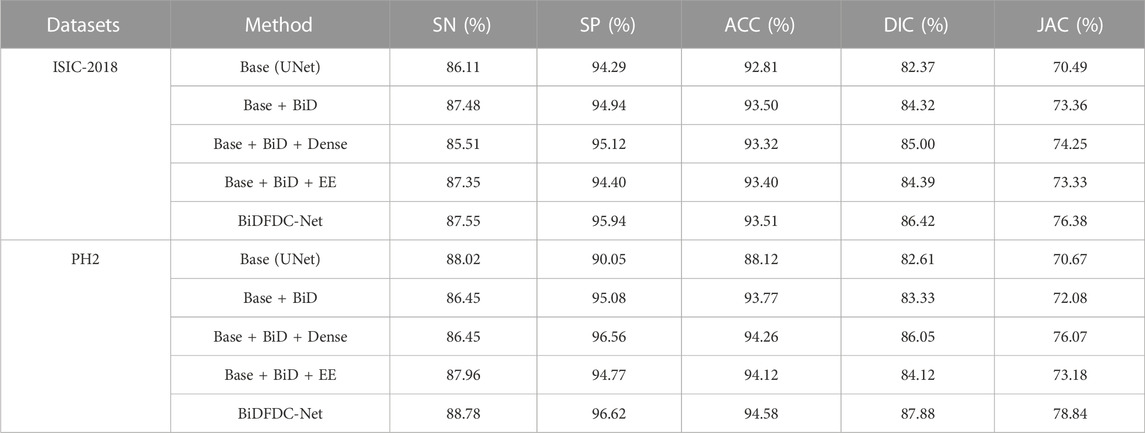

Ablation experiments were conducted based on the different structures to show how the enhanced structure affected the model. As seen in Table 2, the strategy we proposed produced the best metrics when compared to other methods.

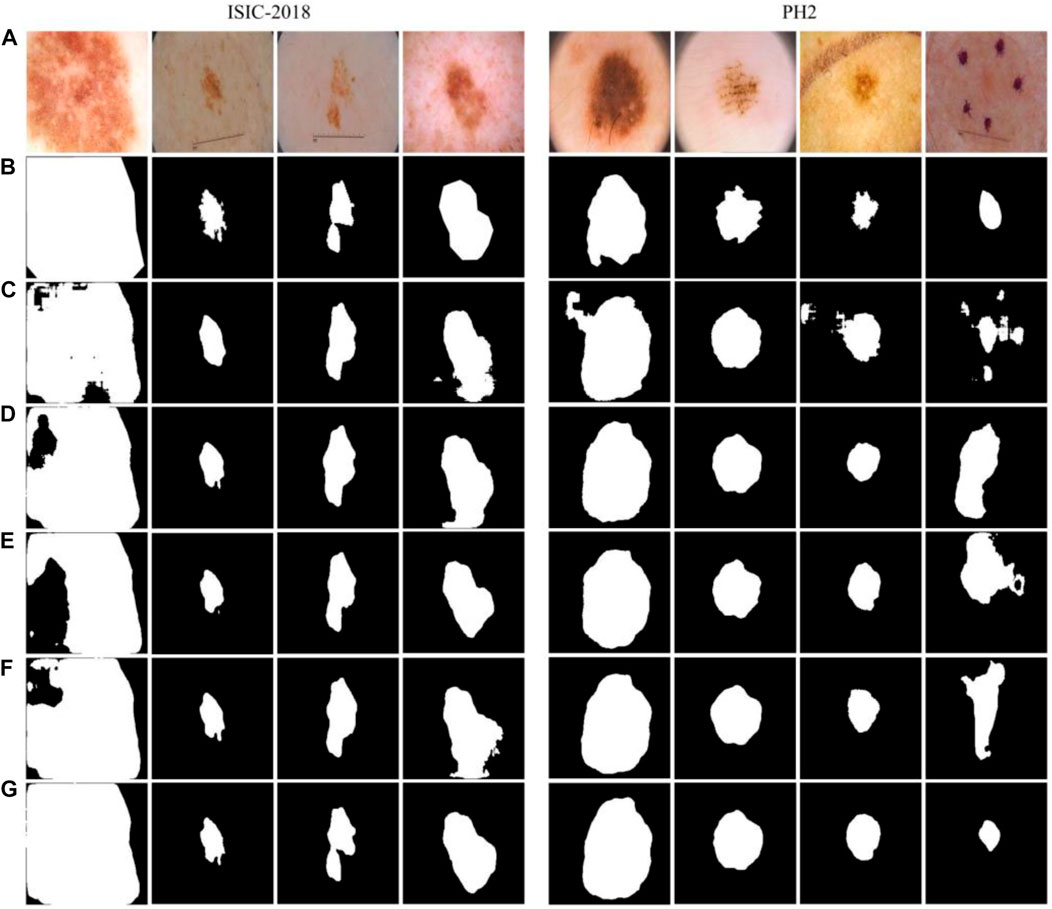

Some segmentation examples are presented to show the impact of each structure. Comparing the results in Figures 8C,D, it can be seen that the network with the bidirectional feedback mechanism classified pixels more stably, and misjudgments of distant pixels were rare. As shown in Figures 8D,E, the model with dense connections strengthens and effectively reuses the extracted features but mixes up information because of excessive fusion. The network using the edge module incorrectly segments dermoscopic images with significant background interference because there is no semantic relationship between the upper and lower parts of the image, as seen in Figure 8F. The proposed method combines the benefits of both to produce better results, attaining the segmentation boundaries closest to the labels among the compared structures, as shown in Figure 8G.

FIGURE 8. Examples of segmentation results of different structures. (A) Images, (B) Labels, (C) Base, (D) Base + BiD, (E) Base + BiD + Dense, (F) Base + BiD + EE, (G) BiDFDC-Net.

3.4 Comparison with other models

3.4.1 Results of the ISIC-2018 dataset

To illustrate the effect of the proposed method, the model results were compared with some classical models. According to the data in Table 3, BiDFDC-Net outperforms all other models in the comparison in terms of segmentation evaluation metrics.

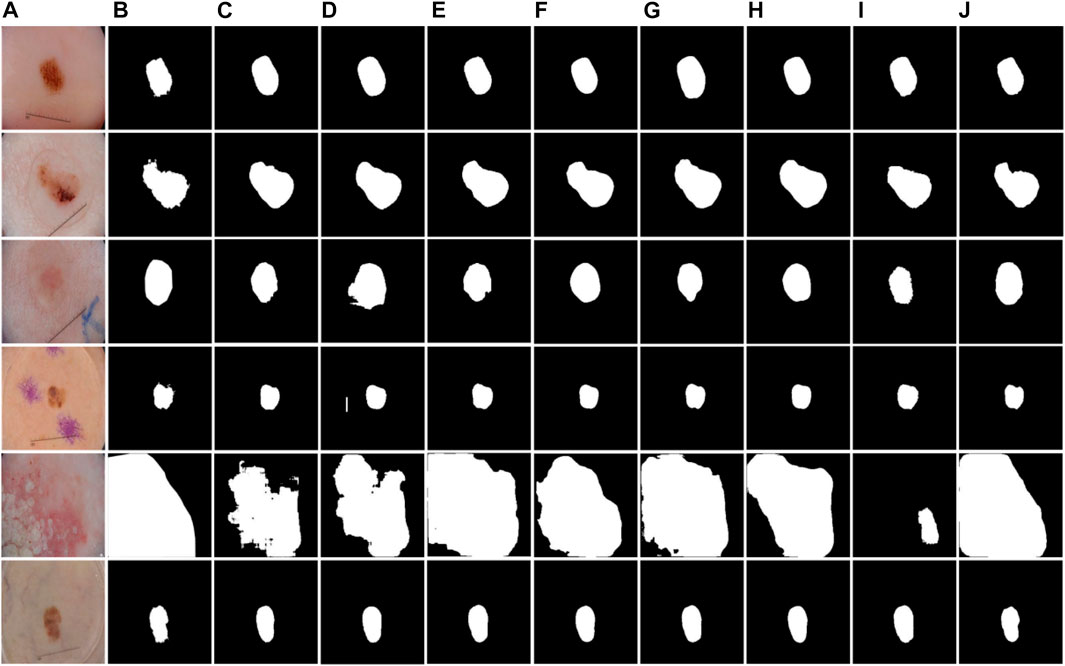

Examples of the segmentation results of different models are displayed in Figure 9. Because they lack an association between high-level and low-level semantic features, UNet, SegNet, R2UNet, and EddyNet miss pixels on dermoscopic images with large background interference and complex color changes, as shown in Figures 9C,D,H,I. As demonstrated in Figure 9E, although HRNet fuses the context information to some extent, the lack of an edge module prevents it from describing the edge finely. For the models with an attention mechanism, although the location of the lesion area is accurate, the delineation of the edge is still lacking, as can be seen in Figures 9F,G. The best results are obtained by our proposed method.

FIGURE 9. Examples of segmentation results of different models on the ISIC-2018 dataset. (A) Images, (B) Labels, (C) UNet, (D) SegNet, (E) HRNet, (F) RS-Net, (G) SEUNet, (H) R2UNet, (I) EddyNet, (J) Ours.

3.4.2 Results of the PH2 dataset

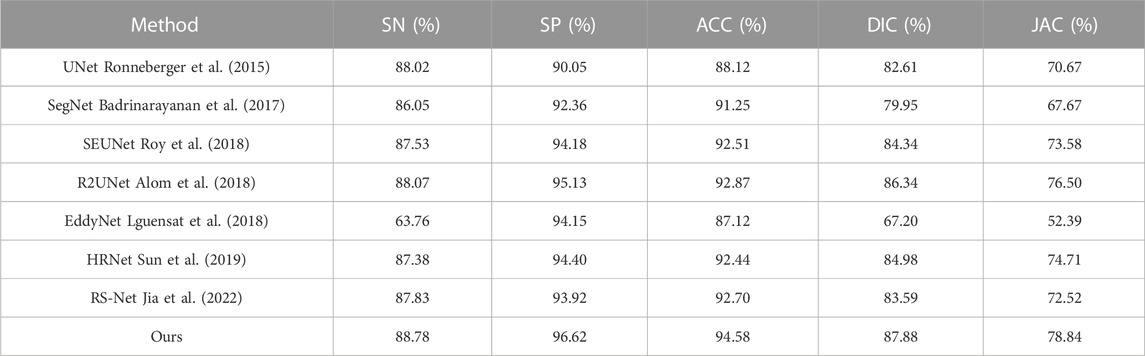

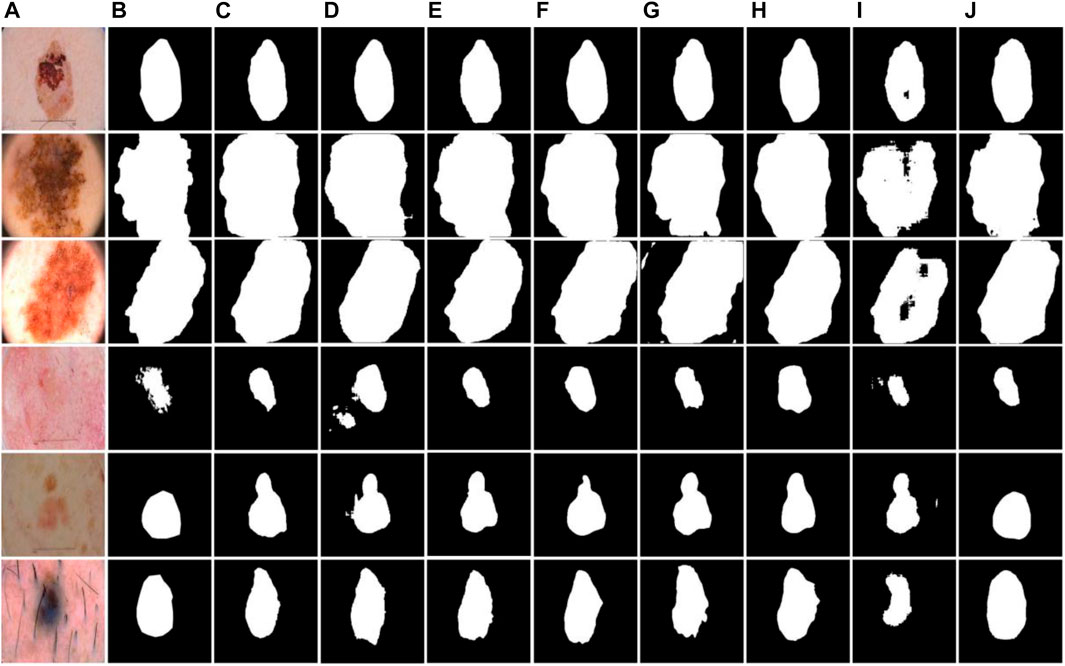

The test results of BiDFDC-Net on the PH2 dataset are superior to those of the other compared models in all metrics, as shown in Table 4. Additionally, as shown in Figure 10, the results of the other models on the PH2 dataset contain mistakes and omissions, which is consistent with the predictions on the ISIC-2018 dataset. These outcomes show that the proposed model also achieves excellent segmentation performance on the PH2 dataset.

FIGURE 10. Examples of segmentation results of different models on the PH2 dataset. (A) Images, (B) Labels, (C) UNet, (D) SegNet, (E) HRNet, (F) RS-Net, (G) SEUNet, (H) R2UNet, (I) EddyNet, (J) Ours.

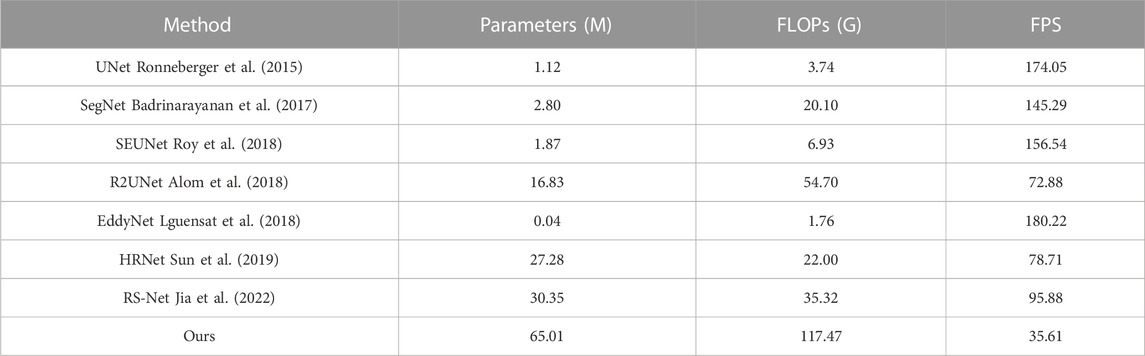

Table 5 presents a comprehensive comparison of the different models. Our network has more parameters and FLOPs than the other models, and its processing speed is slightly slower, but these values remain within an acceptable range. In addition, for medical images we pay more attention to recognition accuracy, and trading some efficiency for higher accuracy is acceptable for this research. We will continue to explore segmentation models with higher accuracy and efficiency in subsequent research.
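
For orientation on how parameter size and FLOPs scale, the sketch below counts both for a single convolutional layer. The layer sizes are hypothetical and not taken from BiDFDC-Net, and the FLOP count assumes one multiplication plus one addition per weight per output position; conventions that count multiply-accumulates instead give half this value.

```python
def conv2d_params(kernel, c_in, c_out, bias=True):
    """Learnable parameters of a kernel x kernel convolution layer."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def conv2d_flops(kernel, c_in, c_out, h_out, w_out):
    """Approximate FLOPs: 2 operations per weight per output position (bias ignored)."""
    return 2 * kernel * kernel * c_in * c_out * h_out * w_out


# Hypothetical 3 x 3 layer mapping 64 channels to 128 on a 56 x 56 output.
params = conv2d_params(3, 64, 128)
flops = conv2d_flops(3, 64, 128, 56, 56)
```

Because concatenation raises `c_in` for the layers that follow it, dense connections and a second decoder branch increase both counts, which is consistent with the trade-off reported above.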

4 Conclusion

In this study, we proposed a new network architecture to solve the problem of poor robustness of semantic segmentation of skin lesion images. The main conclusions are as follows.

(1) We proposed a network framework with bidirectional feedback and dense connectivity and evaluated it on two publicly available datasets, ISIC-2018 and PH2. The results showed that our model achieves high accuracies of 93.51% and 94.58%, respectively.

(2) Bi-directional feedback was applied in the model to establish close connections between codec networks, which can enhance feature reuse and information transfer. An edge module was added to the encoding to ensure that image edge information is not lost. Dense connections were used in the model to integrate semantic and contextual information at different resolutions.

(3) The segmentation performance of our network model was quantitatively compared with that of U-Net and other classical models. Experimental results showed that our network structure has better results than other network structures.

It is worth noting that although our method achieves an advantage in segmentation accuracy, it comes at the cost of running efficiency. In the future, researching a lightweight model with high accuracy and efficiency is the direction we need to work on.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Ethics statement

The Institutional Ethics Review Committee of Quzhou University approved this retrospective study. All data were fully anonymized.

Author contributions

JJ: Writing-original draft, design; ZS: Investigation, conceptualization; QZ: Formal analysis, visualization; KL: Methodology, validation; XJ: Supervision; YW: Writing-review and editing. All authors contributed to the article and approved the submitted version.

Funding

This work was supported by the National Natural Science Foundation of China (Nos 62102227, 51805124, and 62101206), Zhejiang Basic Public Welfare Research Project (Nos LZY22E050001, LZY22D010001, LGG19E050013, LZY21E060001, TGS23E030001, and LTGC23E050001), Science and Technology Major Projects of Quzhou (2021K29, 2022K56).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abualigah, L., Al-Okbi, N. K., Abd Elaziz, M., and Houssein, E. H. (2022). Boosting marine predators algorithm by salp swarm algorithm for multilevel thresholding image segmentation. Multimed. Tools Appl. 81, 16707–16742. doi:10.1007/s11042-022-12001-3

Alom, M. Z., Hasan, M., Yakopcic, C., Taha, T. M., and Asari, V. K. (2018). Recurrent residual convolutional neural network based on U-Net (R2U-Net) for medical image segmentation. arXiv:1802.06955.

Badrinarayanan, V., Kendall, A., and Cipolla, R. (2017). SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Patt. Anal. Mach. Intell. 39, 2481–2495. doi:10.1109/TPAMI.2016.2644615

Badshah, N., and Ahmad, A. (2021). ResBCU-Net: Deep learning approach for segmentation of skin images. Biomed. Signal Process. Control 40, 103137–137. doi:10.1016/j.bspc.2021.103137

Cui, R., Yang, R., Liu, F., and Geng, H. (2023). HD2A-Net: A novel dual gated attention network using comprehensive hybrid dilated convolutions for medical image segmentation. Comput. Biol. Med. 152, 106384. doi:10.1016/j.compbiomed.2022.106384

Da Silva, R. R., Escarpinati, M. C., and Backes, A. R. (2021). Sugarcane crop line detection from UAV images using genetic algorithm and Radon transform. Signal Image Video Process 15, 1723–1730. doi:10.1007/s11760-021-01908-3

Du, X. F., Wang, J. S., and Sun, W. Z. (2021). Densely connected U-Net retinal vessel segmentation algorithm based on multi-scale feature convolution extraction. Med. Phys. 48, 3827–3841. doi:10.1002/mp.14944

Garg, S., and Balkrishan, J. (2022). Skin lesion segmentation in dermoscopy imagery. Int. Arab. J. Inf. Technol. 19, 29–37. doi:10.34028/iajit/19/1/4

Genitha, C. H., Sowmya, M., and Sri, T. (2020). Comparative analysis for the detection of marine vessels from satellite images using FCM and marker-controlled watershed segmentation algorithm. J. Indian Soc. Remote Sens. 48, 1207–1214. doi:10.1007/s12524-020-01148-x

Guo, R., Shen, X. J., and Kang, H. (2020). Image segmentation algorithm based on partial differential equation. J. Intell. Fuzzy Syst. 38, 3903–3909. doi:10.3233/jifs-179614

Indriyan, T., Utoyo, M. I., and Rulaningtyas, R. (2021). A new watershed algorithm for pothole image segmentation. Stud. Inf. Control 30, 131–139. doi:10.24846/v30i3y202112

Jia, W. K., Zhang, Z. H., Shao, W. J., Ji, Z., and Hou, S. J. (2022). RS-Net: Robust segmentation of green overlapped apples. Precis. Agric. 23, 492–513. doi:10.1007/s11119-021-09846-3

Lguensat, R., Sun, M., Fablet, R., Mason, E., Tandeo, P., and Chen, G. (2018). “EddyNet: A deep neural network for pixel-wise classification of oceanic eddies,” in IEEE international geoscience and remote sensing symposium, 1764–1767. arXiv:1711.03954.

Li, H. (2020). Examination on image segmentation method of ischemic optic neuropathy based on fuzzy clustering theory. J. Intell. Fuzzy Syst. 38, 3625–3633. doi:10.3233/JIFS-179585

Lin, G., Milan, A., Shen, C., and Reid, I. (2017). “RefineNet: Multi-path refinement networks for high-resolution semantic segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 5168–5177. arXiv:1611.06612.

Ma, J., Chen, J., Ng, M., Huang, R., Li, Y., Li, C., et al. (2021). Loss odyssey in medical image segmentation. Med. Image Anal. 71, 102035. doi:10.1016/j.media.2021.102035

Qu, Y. J., and Wang, Y. J. (2021). Segmentation of corpus callosum based on tensor fuzzy clustering algorithm. J. X-Ray Sci. Technol. 29, 931–944. doi:10.3233/XST-210928

Resma, K. P. B., and Nair, M. S. (2021). Multilevel thresholding for image segmentation using krill herd optimization algorithm. J. King Saud. University-Comput. Inf. Sci. 33, 528–541. doi:10.1016/j.jksuci.2018.04.007

Ronneberger, O., Fischer, P., and Brox, T. (2015). “U-Net: Convolutional networks for biomedical image segmentation,” in International conference on medical image computing and computer-assisted intervention (Springer), 234–241. arXiv:1505.04597.

Rouhollahi, A., Ilegbusi, O., and Foroosh, H. (2020). Segmentation and pore structure estimation in SEM images of tissue engineering scaffolds using genetic algorithm. Ann. Biomed. Eng. 49, 1033–1045. doi:10.1007/s10439-020-02638-2

Roy, A. G., Navab, N., and Wachinger, C. (2018). “Concurrent spatial and channel ‘squeeze & excitation’ in fully convolutional networks,” in International conference on medical image computing and computer-assisted intervention, 421–429. arXiv:1803.02579.

Shelhamer, E., Long, J., and Darrell, T. (2017). Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39, 640–651. doi:10.1109/TPAMI.2016.2572683

Singh, S., Mittal, N., and Singh, H. (2021). A multilevel thresholding algorithm using HDAFA for image segmentation. Soft Comput. 25, 10677–10708. doi:10.1007/s00500-021-05956-2

Sun, K., Xiao, B., Liu, D., and Wang, J. D. (2019). “Deep high-resolution representation learning for human pose estimation,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 5693–5703. arXiv:1902.09212.

Tian, C., and Chen, Y. G. (2020). Image segmentation and denoising algorithm based on partial differential equations. IEEE Sens. J. 20, 11935–11942. doi:10.1109/JSEN.2019.2959704

Tschandl, P., Rosendahl, C., and Kittler, H. (2018). The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. data 5, 180161–180169. doi:10.1038/sdata.2018.161

Tyagi, A., and Mehra, R. (2020). An optimized CNN based intelligent prognostics model for disease prediction and classification from dermoscopy images. Multimed. Tools Appl. 79, 26817–26835. doi:10.1007/s11042-020-09074-3

Keywords: image segmentation, skin, bi-directional feedback, dense connection, U-Net

Citation: Jiang J, Sun Z, Zhang Q, Lan K, Jiang X and Wu J (2023) BiDFDC-Net: a dense connection network based on bi-directional feedback for skin image segmentation. Front. Physiol. 14:1173108. doi: 10.3389/fphys.2023.1173108

Received: 24 February 2023; Accepted: 12 June 2023;

Published: 20 June 2023.

Edited by:

Yiling Lu, University of Derby, United Kingdom

Reviewed by:

Yucheng Song, Central South University, China

Chao Liu, University of Maryland, United States

Tianqiao Zhang, Guilin University of Electronic Technology, China

Zheng Fang, University of Saskatchewan, Canada

Copyright © 2023 Jiang, Sun, Zhang, Lan, Jiang and Wu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zitong Sun, c3Vueml0b25nQHF6Yy5lZHUuY24=; Qile Zhang, emp6aGFuZ3FpbGVAMTYzLmNvbQ==

†These authors have contributed equally to this work and share first authorship

Jinyun Jiang1†, Zitong Sun