- ¹Department of Computer Science, Chiba Institute of Technology, Narashino, Japan

- ²Graduate School of Information and Computer Science, Chiba Institute of Technology, Narashino, Japan

- ³Department of Neuropsychiatry, University of Fukui, Fukui, Japan

- ⁴Research Center for Child Mental Development, Kanazawa University, Kanazawa, Japan

- ⁵Uozu Shinkei Sanatorium, Uozu, Japan

- ⁶Research Center for Mathematical Engineering, Chiba Institute of Technology, Narashino, Japan

- ⁷Department of Preventive Intervention for Psychiatric Disorders, National Center of Neurology and Psychiatry, National Institute of Mental Health, Tokyo, Japan

Introduction: Fear is a fundamental emotion essential for survival; however, excessive fear can lead to anxiety disorders and other adverse consequences. Monitoring fear states is crucial for timely intervention and improved mental well-being. Although functional magnetic resonance imaging (fMRI) has provided valuable insights into the neural networks associated with fear, its high cost and environmental constraints limit its practical application in daily life. Electroencephalography (EEG) offers a more accessible alternative but struggles to capture deep brain activity. Physiological measures such as pupil dynamics and heart rate can provide indirect insights into these deeper processes, yet they are often studied in isolation. In this context, we aimed to evaluate the practical effectiveness and limitations of a multimodal approach that combines pupil dynamics and heart rate—indirect indicators of deep brain activity—with EEG, a temporally precise but spatially limited measure of cortical responses.

Methods: We simultaneously recorded EEG, pupillometry, and heart rate in 40 healthy male participants exposed to fear-inducing and neutral visual stimuli, while also assessing their psychological states.

Results: Fear-inducing stimuli elicited distinct physiological responses, including increased occipital theta power, pupil dilation, and decreased heart rate. Notably, pupil size was the most sensitive discriminator of emotional state, though the integration of modalities yielded only limited improvement in classification accuracy.

Discussion: These findings provide empirical support for the feasibility of multimodal physiological monitoring of fear and underscore the need for further refinement for real-world applications.

1 Introduction

Fear is a fundamental emotion triggered by realistic and severe threats [reviewed in Blanchard and Blanchard (2008); Davis et al. (2010); Grupe and Nitschke (2013); Malezieux et al. (2023)]. Its neural basis involves the transmission of environmental information from primary and associative sensory cortices and the thalamus to the amygdala, where this information is evaluated for threat detection. When a threat is detected, the outcome of this evaluation triggers behavioral and physiological responses through connections to the hypothalamus and brainstem. Additionally, the amygdala projects to extensive cortical regions and the hippocampus, adjusting cognitive functions such as attention, memory, and decision-making. Thus, fear plays a vital role in survival by facilitating rapid responses and enhancing learning and memory (Phelps and LeDoux, 2005; LeDoux, 2013). However, excessive fear can negatively affect the body and lead to anxiety disorders, phobias, avoidance behaviors, and activity limitations [reviewed in Pittig et al. (2018)]. Therefore, accurately and efficiently monitoring an individual's fear state—especially through non-invasive, real-time techniques—is essential for enabling appropriate interventions and adaptive support in daily life. Multimodal physiological monitoring, combining neural and autonomic indicators, holds promise as a practical approach for this purpose (Sheikh et al., 2021; Williams and Pykett, 2022).

One method of monitoring fear involves assessing the activity of neural networks centered on the amygdala, a deep brain structure involved in fear evaluation [reviewed in Domínguez-Borràs and Vuilleumier (2022)]. Over the past few decades, functional magnetic resonance imaging (fMRI) studies have revealed the characteristics of these neural networks, particularly the interactions between the amygdala and the cerebral cortex, hippocampus, thalamus, and hypothalamus (Richardson et al., 2004; Kral et al., 2024). However, this approach is limited by high costs and environmental constraints, making it challenging to monitor mental states in everyday settings. Alternatively, electroencephalography (EEG)-based approaches are more cost-effective and limited by fewer environmental constraints. Although EEG has inherent limitations in spatial resolution and direct access to deep brain structures, its high temporal resolution and portability make it a promising tool for monitoring cortical responses related to emotional states in real-world settings (Chien et al., 2017; Sperl et al., 2019; Chen et al., 2021). Recent advancements in wearable EEG technology have spurred increasing interest in their application for monitoring mental states in real-world environments (Anders and Arnrich, 2022).

In addition to EEG, other physiological data, such as pupil dilation and heart rate, serve as powerful tools for monitoring mental states related to the neural activity of the amygdala and the locus coeruleus (LC), a central hub of the noradrenergic (NA) system [reviewed in Sheikh et al. (2021); Johansson and Balkenius (2018)]. Through this neural activity, emotions such as fear influence both behavioral and physiological responses. Furthermore, these physiological responses reflect activity in deep brain regions that cannot be captured by EEG alone. Therefore, combining EEG with behavioral and physiological data in a multimodal approach can complement the spatiotemporal coverage of each modality, enhancing the accuracy of mental state estimation (Anders and Arnrich, 2022). Although this approach offers potential advantages, multimodal strategies for monitoring mental states remain underexplored, as most studies focus on either EEG alone (Chien et al., 2017; Sperl et al., 2019; Anders and Arnrich, 2022) or single-modality approaches using behavioral or physiological data (Leuchs et al., 2017; Rafique et al., 2020).

In this context, we aimed to evaluate the practical effectiveness and limitations of a multimodal approach that combines pupil dynamics and heart rate—which are considered indirect indicators of deep brain activity—with EEG, a temporally precise but spatially limited measure of cortical activity. Rather than seeking to uncover novel neural mechanisms, the present study focuses on validating whether integrating these complementary modalities improves the accuracy of emotional state detection, particularly for fear. To this end, we conducted simultaneous EEG, pupillometry, and heart rate measurements in 40 male participants while presenting fear-inducing and neutral visual stimuli. The participants were also assessed for trait and state anxiety, enabling us to evaluate multimodal indicators in relation to individual emotional characteristics. By evaluating the relative utility and limitations of each physiological modality, this study seeks to contribute to the development of practical, real-world emotional monitoring systems. Our results revealed that pupil dilation following the stimuli was the most sensitive indicator distinguishing between fear and neutral conditions. However, integrating multiple modalities yielded limited classification improvements. These findings offer empirical insights into the relative utility of each physiological signal and suggest future directions for real-world emotional state monitoring.

2 Materials and methods

2.1 Participants

Forty male participants (aged 18–25 years) were recruited from the student body at Chiba Institute of Technology for this study. None of the participants had neurological impairments or behavioral difficulties, and all had normal vision or vision corrected to normal with contact lenses. Based on an a priori power analysis assuming a medium effect size [partial η² = 0.06 (Cohen, 2013)], a statistical power of 0.80, and two repeated measures, the minimum required sample size was estimated to be 33 participants. Female participants were excluded to avoid the potential influence of menstrual-related psychological stress on the state anxiety test. Participants were instructed to refrain from consuming caffeinated or alcoholic beverages and from engaging in strenuous exercise on the day before and the day of the measurements. Written informed consent was obtained prior to participation. The Ethics Committee of Chiba Institute of Technology approved all experimental protocols and methods for this study (approval number: 2023-05-01). All procedures were conducted in accordance with the principles outlined in the Declaration of Helsinki.
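For reference, the medium effect size quoted above can also be written as Cohen's f, the form that many power-analysis tools expect. The conversion below is the standard one; which of the two quantities the authors' software took as input is not stated in the text.

```latex
f = \sqrt{\frac{\eta_p^2}{1 - \eta_p^2}}
  = \sqrt{\frac{0.06}{1 - 0.06}}
  \approx 0.253
```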

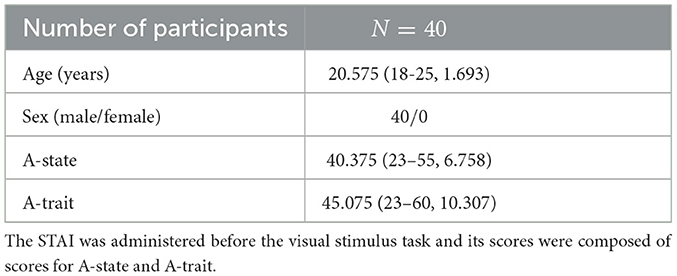

Table 1 presents the data for all participants. The Japanese version of the State-Trait Anxiety Inventory (STAI), developed by Spielberger and colleagues, was used to measure participants' anxiety levels (Spielberger et al., 1971). The STAI is widely recognized for its reliability and validity and is frequently used in educational and clinical settings. It evaluates anxiety across two distinct dimensions: state anxiety (A-State), which captures transient feelings of anxiety that vary with situational factors, and trait anxiety (A-Trait), which reflects an individual's general tendency to experience anxiety over time. Each dimension consists of 20 items. Participants rated their own psychological state on a 4-point Likert scale, ranging from 1 (rarely) to 4 (almost always). Item scores were summed to give a total score for each dimension, with possible scores ranging from 20 to 80; higher scores indicate higher levels of anxiety. The STAI was administered before the visual stimulus task to assess participants' baseline anxiety levels.

Table 1. Demographic characteristics of all participants are represented as means (range, standard deviation [SD]).

2.2 Experimental procedures

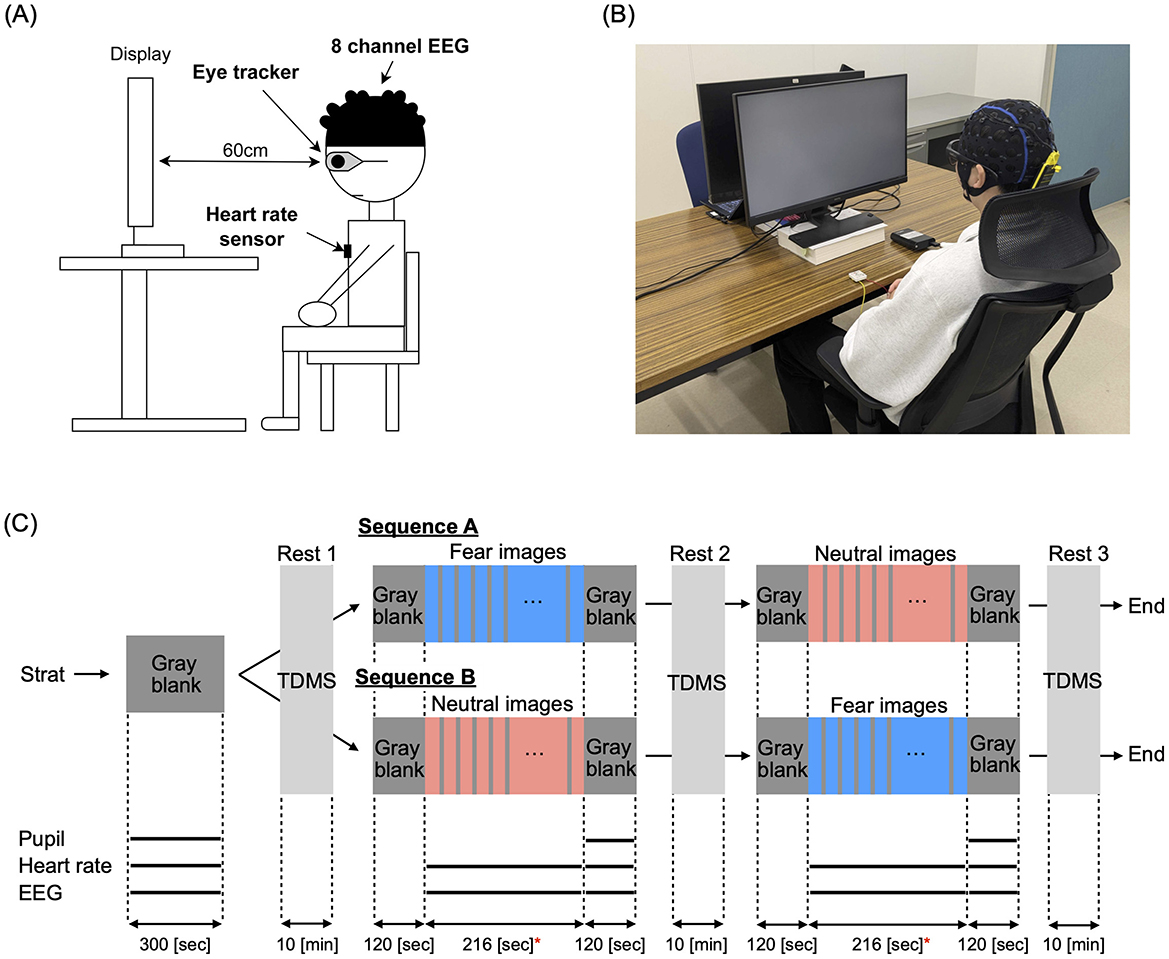

Figure 1A presents an overview of the experimental setup. The measurement equipment included a glasses-type eye tracker (Tobii Pro Glasses 3, Tobii Technology K.K.), an 8-channel wearable EEG device (NB1-EEG, East Medic Corporation), and a wearable heart rate monitor (my Beat, WHS-1, UNION TOOL CO.). Participants sat in a chair with a backrest while wearing the eye tracker, EEG device, and heart rate monitor during the experiments. An LCD monitor with a horizontal size of 597.6 mm (1,920 pixels) and a vertical size of 336.2 mm (1,080 pixels) was used for visual stimulus presentation. The effective field of view for the participants was ±26.47° horizontally and ±6.48° vertically. The experiment was conducted in a laboratory with an illuminance range of 300 lx to 600 lx, measured using a lux meter compliant with JIS C 1609-1:2006 Class A linear type. Figure 1B shows the measurement scene during the presentation of a gray blank image.

Figure 1. (A) Overview of the experimental setup. Measurement equipment included a glasses-type eye tracker (Tobii Pro Glasses 3, Tobii Technology K.K.), an 8-channel wearable electroencephalography (EEG) device (NB1-EEG, East Medic Corporation), and a wearable heart rate monitor (my Beat, WHS-1). An LCD monitor was used for visual stimulus presentation. (B) Measurement scene during the presentation of a gray blank image. (C) Schedule for presenting visual stimuli, including gray blank images, fear images, and neutral images. Fear and neutral images were presented for 10 s, followed by a 2-s gray blank image. During each 10-min resting period, participants' momentary mood states were evaluated using the Two-Dimensional Mood Scale (TDMS). The black solid lines at the bottom represent the evaluation periods for pupil diameter, heart rate, and EEG measurements.

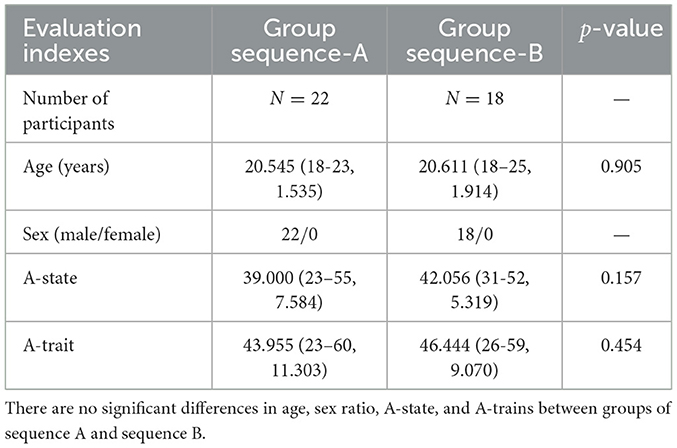

For the visual stimulation task, we selected 36 images from the International Affective Picture System (IAPS) based on each image's scores (mean pleasure/arousal), consisting of 18 neutral images (mean pleasure/arousal = 4.5, 6.2) and 18 fear images (mean pleasure/arousal = 2.1, 6.6). The fear images included depictions of weapons, corpses, and murder scenes, while the neutral images featured items like snakes, spiders, and sharks. Additionally, we prepared gray (neutral) images that only displayed a gray blank image with R = 0, G = 0, B = 0 in the RGB scale. In the experiment, to balance the presentation order of the images, participants were randomly divided into two groups: group sequence-A (22 participants) and group sequence-B (18 participants) (see demographic characteristics of both groups in Table 2). As demonstrated in Figure 1C, group sequence-A was first shown a gray blank image for 5 min, followed by a 10-min break, and then shown fear images for 216 s. After another 10-min break, participants were shown neutral images for 216 s, followed by a final 10-min break. Group sequence B was first shown gray images for 5 min, followed by a 10-min break, then neutral images for 216 s, another 10-min break, and finally fear images for 216 s, ending with a 10-min break. Additionally, gray images were presented for 2 min before and after each presentation of fear and neutral images. During each presentation, participants viewed each image for 10 s, followed by a 2-s gray image, and then another 10-s display, repeating this cycle for a total of 216 s. A 10-min resting period was given before and after the visual stimulation task to ensure participants had ample rest.
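The timing of one stimulus block can be checked with a short sketch. The counts and durations (18 images, 10 s per image, 2 s gray blank) come from the text. The function names and event labels are illustrative assumptions, not the authors' presentation code.

```python
# Sketch of one stimulus block: 18 images, each shown for 10 s and followed
# by a 2 s gray blank (values from the text; names are illustrative).
N_IMAGES = 18
IMAGE_S = 10
BLANK_S = 2

def build_block(n_images=N_IMAGES, image_s=IMAGE_S, blank_s=BLANK_S):
    """Return a list of (onset_s, duration_s, label) tuples for one block."""
    events = []
    t = 0
    for i in range(n_images):
        events.append((t, image_s, f"image_{i + 1}"))
        t += image_s
        events.append((t, blank_s, "gray_blank"))
        t += blank_s
    return events

def block_duration(events):
    """Total duration is the end time of the last event."""
    onset, duration, _ = events[-1]
    return onset + duration

events = build_block()
print(block_duration(events))  # 18 * (10 + 2) = 216
```

The total of 216 s matches the block length reported for both the fear and neutral image sets.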

Table 2. Demographic characteristics of sequence-A and sequence-B participants are represented as means (range, standard deviation [SD]).

Furthermore, to evaluate participants' momentary mood states during each resting period, we used the Two-Dimensional Mood Scale (TDMS) (Sakairi et al., 2013). The TDMS is a psychological assessment tool developed for self-monitoring and self-regulation, assessing mood states based on two dimensions: pleasure and arousal. This scale allows participants to self-assess their mood using eight adjectives, such as “energized” and “relaxed,” rated on a 6-point Likert scale (0: not at all, 5: very much). Each mood state is classified into one of the following four quadrants based on the combination of pleasure and arousal: high-energy pleasure (e.g., lively), high-energy discomfort (e.g., tense), low-energy pleasure (e.g., calm), and low-energy discomfort (e.g., lethargic). This approach visually represents momentary psychological states, enabling a quantitative understanding of mood changes. In this study, TDMS was administered at three time points: before the task, after the first image presentation, and after the second image presentation. To evaluate mood fluctuations, difference scores were calculated by subtracting the pre-task baseline from each post-task score. This approach allowed us to capture short-term, task-induced changes in pleasure and arousal in response to specific psychological stimuli. For example, a decrease in energy levels and a decline in emotional stability following exposure to fear-inducing stimuli may indicate a strong psychological impact.
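The difference-score step described above amounts to a simple subtraction per dimension. The sketch below assumes the TDMS pleasure and arousal scores have already been computed for each time point. The dictionary keys and the example values are hypothetical.

```python
def tdms_difference(baseline, post):
    """Subtract the pre-task baseline from a post-task TDMS score pair."""
    return {key: post[key] - baseline[key] for key in ("pleasure", "arousal")}

# Hypothetical scores for one participant (not data from the study).
rest1 = {"pleasure": 6, "arousal": -2}      # before the task
rest2 = {"pleasure": 1, "arousal": 3}       # after the first image set
print(tdms_difference(rest1, rest2))
```

A negative pleasure difference together with a positive arousal difference would correspond to the pattern reported after fear-inducing images.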

2.3 Recording

2.3.1 Pupil size

Pupil diameter was measured using the glasses-type eye tracker (Tobii Pro Glasses 3) with a sampling frequency of 100 Hz. For segments missing due to blinking, linear interpolation was performed, treating the range, including 0.1 s before and after the missing segment, as missing values. Furthermore, all segments of the pupil responses for the gray blank images, fear images, and neutral images in any participant that deviated by more than three SDs from that participant's mean for the corresponding condition were treated as missing values, and linear interpolation was performed accordingly. Finally, a 10 Hz low-pass filter was applied. The average pupil diameter during the 5-min presentation of a gray blank image was used as the baseline for each participant. The pupil size was then calculated as the difference between this baseline and the time-averaged pupil diameter during the 3-min presentation of a gray blank image following the visual stimulus task.
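The blink-handling and baseline steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: missing samples are encoded as `None`, gaps touching either end of the trace are filled with the nearest valid value, and the three-SD rejection and the 10 Hz low-pass filter are omitted for brevity. All function names are hypothetical and do not reflect the authors' actual pipeline.

```python
from statistics import mean

FS_HZ = 100   # sampling frequency stated in the text
PAD_S = 0.1   # margin treated as missing on each side of a blink

def pad_missing(samples, fs_hz=FS_HZ, pad_s=PAD_S):
    """Extend every missing sample (None) by pad_s seconds on both sides."""
    pad = int(round(pad_s * fs_hz))
    out = list(samples)
    for i, value in enumerate(samples):
        if value is None:
            for j in range(max(0, i - pad), min(len(samples), i + pad + 1)):
                out[j] = None
    return out

def interpolate_linear(samples):
    """Fill None gaps linearly; edge gaps take the nearest valid value."""
    valid = [i for i, s in enumerate(samples) if s is not None]
    if not valid:
        raise ValueError("no valid samples to interpolate from")
    out = list(samples)
    for i in range(valid[0]):
        out[i] = samples[valid[0]]
    for i in range(valid[-1] + 1, len(out)):
        out[i] = samples[valid[-1]]
    for a, b in zip(valid, valid[1:]):
        for i in range(a + 1, b):
            frac = (i - a) / (b - a)
            out[i] = samples[a] + frac * (samples[b] - samples[a])
    return out

def baseline_change(baseline_trace, post_trace):
    """Mean post-task diameter minus mean baseline diameter."""
    return mean(post_trace) - mean(baseline_trace)

# Hypothetical trace in mm: a 5-sample blink between two stable levels.
trace = [4.0] * 30 + [None] * 5 + [5.0] * 30
clean = interpolate_linear(pad_missing(trace))
```

Padding before interpolating removes the partially occluded samples at the edges of a blink, which would otherwise bias the interpolated segment.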

2.3.2 Heart rate

The heart rate measured by the wearable heart rate sensor (my Beat, WHS-1/RRD-1) was used to calculate the average R-R interval (RRI) during a 5-min presentation of a gray blank image, which served as the baseline for each participant. The difference from this baseline was then calculated for the average RRI during the visual stimulus tasks with fear and neutral image groups, as well as during the gray blank image presentation following the visual stimulus tasks.
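Because RRI and heart rate move in opposite directions, a small sketch may help when reading the results. It assumes RRI values are given in milliseconds, which the text does not state. The function names and example values are hypothetical.

```python
from statistics import mean

def rri_change(baseline_rri_ms, condition_rri_ms):
    """Mean RRI in a condition minus the mean RRI during the baseline."""
    return mean(condition_rri_ms) - mean(baseline_rri_ms)

def rri_to_bpm(rri_ms):
    """Convert an R-R interval in milliseconds to beats per minute."""
    return 60000.0 / rri_ms

# Hypothetical intervals (ms), not data from the study.
baseline = [800, 820, 780]
during_fear = [850, 870, 830]
print(rri_change(baseline, during_fear))
```

A positive change means longer intervals between beats, that is, a slower heart rate relative to baseline.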

2.3.3 Electroencephalography

EEG data were recorded using a wearable 8-electrode EEG device (NB1-EEG) with a sampling rate of 121.37 Hz. Using EEGLAB 2023.1, the data from each segment, consisting of a 5-min gray blank image, the visual stimulation task, and a gray blank image after the task, were resampled to 120 Hz and filtered with a 1-40 Hz band-pass filter. Independent Component Analysis (ICA) was then performed on each segment to classify each component into Brain, Muscle, Eye, Heart, or Other, according to the highest probability. Components classified as categories other than the Brain were removed. For the analysis, electrodes from the frontal (F3, F4) and parieto-occipital (PO3, PO4) regions were selected because these sites have been reliably associated with emotional and visual processing (Keil et al., 2002; Hajcak et al., 2010). The relative powers of the theta, alpha, and beta waves were calculated for each electrode, and averaged within the frontal and occipital regions.

2.3.4 Simultaneous measurements

The start timing of pupil size and EEG measurements was controlled using Python, and timestamps of visual stimuli during the measurements were also recorded. For the heart rate monitor, the start switch was pressed within 1 s before or after the start of pupil diameter and EEG measurements.

2.4 Evaluation index

The evaluation indices for pupil size, heart rate, and EEG were calculated using the average of the left and right pupil diameters, the average RRI value, and the relative power from the frontal (F3, F4) and occipital (PO3, PO4) electrodes, respectively. For EEG, the relative power of theta (4–8 Hz), alpha (8–13 Hz), and beta (13–30 Hz) bands was calculated for each electrode and averaged for the frontal and occipital regions. For pupil size, deviation from baseline was determined by subtracting the baseline value from the time-averaged left and right pupil diameters during the presentation of the gray blank image following the visual stimulation task. During the visual stimulation task, image luminance was not held constant, in order to ensure a vivid and naturalistic presentation. As a result, pupil diameter was affected not only by arousal and emotional valence, but also by luminance fluctuations. To minimize this confounding influence, we excluded the stimulation period from the pupil-based evaluation. In contrast, RRI values and EEG relative power were calculated by averaging data from both the visual stimulation period and the subsequent gray blank image.
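The relative band power index can be illustrated with a dependency-free sketch. Several choices here are assumptions rather than details from the text: the theta band is taken as the conventional 4–8 Hz, power is normalized by the total power in the 1–40 Hz passband, and a direct DFT stands in for whatever spectral estimator was used in EEGLAB or MATLAB.

```python
import cmath
import math

FS_HZ = 120  # sampling rate after resampling, from the text
# Band limits in Hz; the theta limits are the conventional ones (assumption).
BANDS_HZ = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}

def power_spectrum(signal, fs_hz):
    """One-sided power spectrum via a direct DFT (slow but dependency-free)."""
    n = len(signal)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        freqs.append(k * fs_hz / n)
        power.append(abs(coeff) ** 2)
    return freqs, power

def relative_band_power(signal, fs_hz=FS_HZ, total_range=(1.0, 40.0)):
    """Band power divided by total power in total_range (assumed normalizer)."""
    freqs, power = power_spectrum(signal, fs_hz)
    lo, hi = total_range
    total = sum(p for f, p in zip(freqs, power) if lo <= f <= hi)
    if total == 0:
        raise ValueError("signal has no power in the normalization range")
    return {name: sum(p for f, p in zip(freqs, power) if b_lo <= f < b_hi) / total
            for name, (b_lo, b_hi) in BANDS_HZ.items()}

def region_average(per_electrode):
    """Average relative band power over the electrodes of one region."""
    names = list(per_electrode)
    return {band: sum(per_electrode[e][band] for e in names) / len(names)
            for band in BANDS_HZ}

# Hypothetical 2 s test signal: a 6 Hz component plus a weaker 10 Hz component.
signal = [math.sin(2 * math.pi * 6 * t / FS_HZ)
          + 0.5 * math.sin(2 * math.pi * 10 * t / FS_HZ) for t in range(240)]
rel = relative_band_power(signal)
occipital = region_average({"PO3": rel, "PO4": rel})
```

For real recordings, an averaged estimator such as Welch's method would usually be preferred over a single DFT because it reduces the variance of the spectrum.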

2.5 Statistical analysis

The difference between neutral- and fear-induced responses was evaluated using repeated measures analysis of variance (ANOVA) for crossover trial assessment. The between-subject factor was sequence (sequence-A and sequence-B), while the within-subject factors were image type (neutral or fear) and presentation period. Each response was evaluated based on the change from the baseline value measured during the initial gray image presentation. Statistical significance was set at p < 0.05 using the Greenhouse-Geisser correction for both psychological responses (arousal and comfort levels) and physiological responses (pupil size, RRI, EEG power). To control for the risk of Type I error due to multiple comparisons among physiological indices, Bonferroni correction was additionally applied.
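The Bonferroni step can be sketched as a comparison of each p-value against the significance level divided by the number of tests. The index names and p-values below are hypothetical, and the number of physiological indices entering the correction is not stated in the text.

```python
def bonferroni(p_values, alpha=0.05):
    """Return per-test significance flags under Bonferroni correction."""
    threshold = alpha / len(p_values)
    return {name: p < threshold for name, p in p_values.items()}

# Hypothetical p-values for three indices (not results from the study).
flags = bonferroni({"index_a": 0.001, "index_b": 0.02, "index_c": 0.2})
print(flags)
```

With three tests the per-test threshold becomes 0.05 / 3, so a p-value of 0.02 that would pass uncorrected no longer counts as significant.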

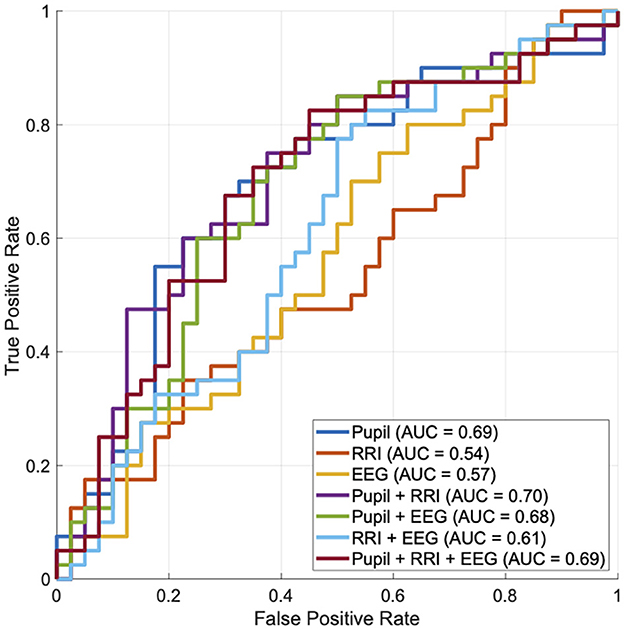

We utilized the receiver operating characteristic (ROC) curve and its area under the curve (AUC) based on logistic regression to assess the ability of biosignals to distinguish which stimulus (fear-induced or neutral) was presented to participants. For cross-validation, leave-one-out cross-validation (LOOCV) was applied. A random classification corresponds to AUC = 0.5, while a perfect classification corresponds to AUC = 1.0.
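The evaluation scheme above (logistic regression, LOOCV, then AUC on the held-out scores) can be sketched without external libraries. This is an illustration only: the solver is plain batch gradient descent with arbitrary hyperparameters, standing in for the SPSS or MATLAB routines the authors used, and the feature values are hypothetical.

```python
import math

def predict_proba(x, w, b):
    """Logistic model output for one feature vector."""
    z = b + sum(wj * xj for wj, xj in zip(w, x))
    z = max(-30.0, min(30.0, z))  # clamp to keep exp() well-behaved
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, y, lr=0.1, n_iter=2000):
    """Fit logistic regression by batch gradient descent (assumed solver)."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(n_iter):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(xi, w, b) - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def loocv_scores(X, y):
    """Score each sample with a model trained on all other samples."""
    scores = []
    for i in range(len(X)):
        w, b = fit_logistic(X[:i] + X[i + 1:], y[:i] + y[i + 1:])
        scores.append(predict_proba(X[i], w, b))
    return scores

def auc(scores, labels):
    """Probability that a positive outscores a negative (ties count 0.5)."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0
               for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical single-feature data: label 1 = fear, 0 = neutral.
X = [[0.0], [0.1], [0.2], [1.8], [1.9], [2.0]]
y = [0, 0, 0, 1, 1, 1]
held_out = loocv_scores(X, y)
print(auc(held_out, y))
```

Combining modalities in this sketch only requires adding columns to each row of `X`, for example pupil size and RRI change side by side.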

All statistical analyses were conducted using IBM SPSS Statistics (Version 29.0.2) or MATLAB (Version R2023b).

3 Results

3.1 Psychological responses and their relation to trait and state anxiety

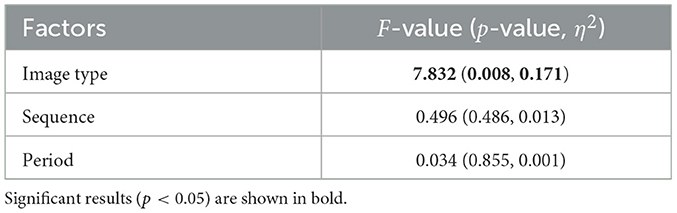

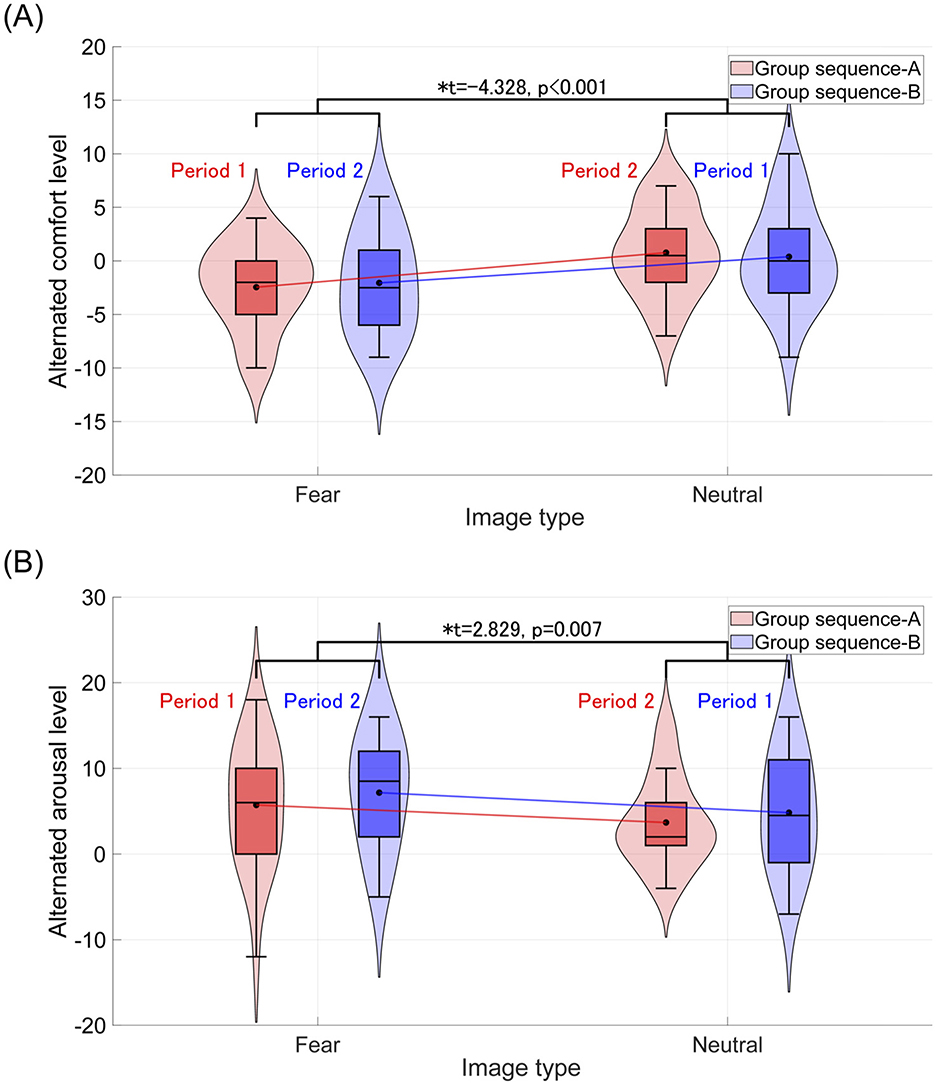

For each group, sequence A and sequence B, comfort and arousal levels following the visual stimulus presentation were measured using TDMS and evaluated through a crossover trial. The results of comfort and arousal evaluations are presented in Tables 3, 4, respectively. Both comfort and arousal levels showed a significant main effect related to image type (fear/neutral). Additionally, Figure 2 displays violin plots and boxplots showing the distribution of TDMS alternated scores after the stimuli compared to the baseline (beginning of the tasks) in the sequence A and sequence B groups. Subplots (A) and (B) correspond to the comfort level and arousal level, respectively. The presentation of fear-inducing images resulted in a significant decrease in comfort compared to neutral images. Conversely, fear-inducing images led to a significant increase in arousal compared to neutral images.

Table 3. Results of repeated-measures analysis of variance (ANOVA) for crossover trial on comfort levels with factors of image type, presentation sequence, and measurement period.

Table 4. Results of repeated-measures analysis of variance (ANOVA) for crossover trial on arousal levels with factors of image type, presentation sequence, and measurement period.

Figure 2. Violin plots and boxplots show the distribution of TDMS alternated scores after the stimuli (rest 2 and rest 3) compared to the beginning of the tasks (rest 1) in the sequence A and sequence B groups. *Indicates significance at p < 0.05. (A) Distribution of comfort levels. The average comfort level across groups (sequence A and sequence B) after presenting the fear-inducing images decreases compared to that after the neutral stimulus. (B) Distribution of arousal levels. The average arousal level across groups (sequence A and sequence B) after presenting the fear-inducing images increases compared to that after the neutral stimulus.

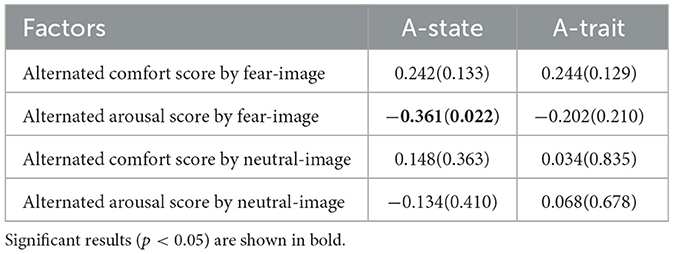

Moreover, correlations between A-state and A-trait in the STAI scores were analyzed. The results showed a significant and strong Pearson's correlation, r = 0.66 (p < 0.001). Regarding the relationship between alternated scores in the TDMS and the STAI scores, Table 5 presents the results of the correlation analysis, combining both groups for each type of image. A strong negative correlation was observed between the A-state and alternated arousal scores. This pattern suggests that participants with higher A-state scores tended to exhibit smaller changes in arousal, which is consistent with previous findings indicating reduced autonomic reactivity and restricted autonomic flexibility in high-anxiety individuals (Thayer and Lane, 2000; Friedman, 2007; Porges, 2007; Asbrand et al., 2022).

Table 5. Pearson's correlation r-value (p-value) between STAI scores and TDMS alternated scores based on the score after presenting a gray blank image.

3.2 Physiological responses for pupil size, heart rate, and electroencephalography

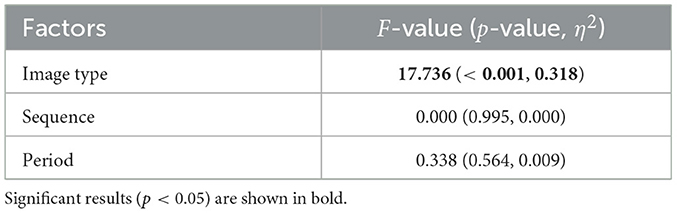

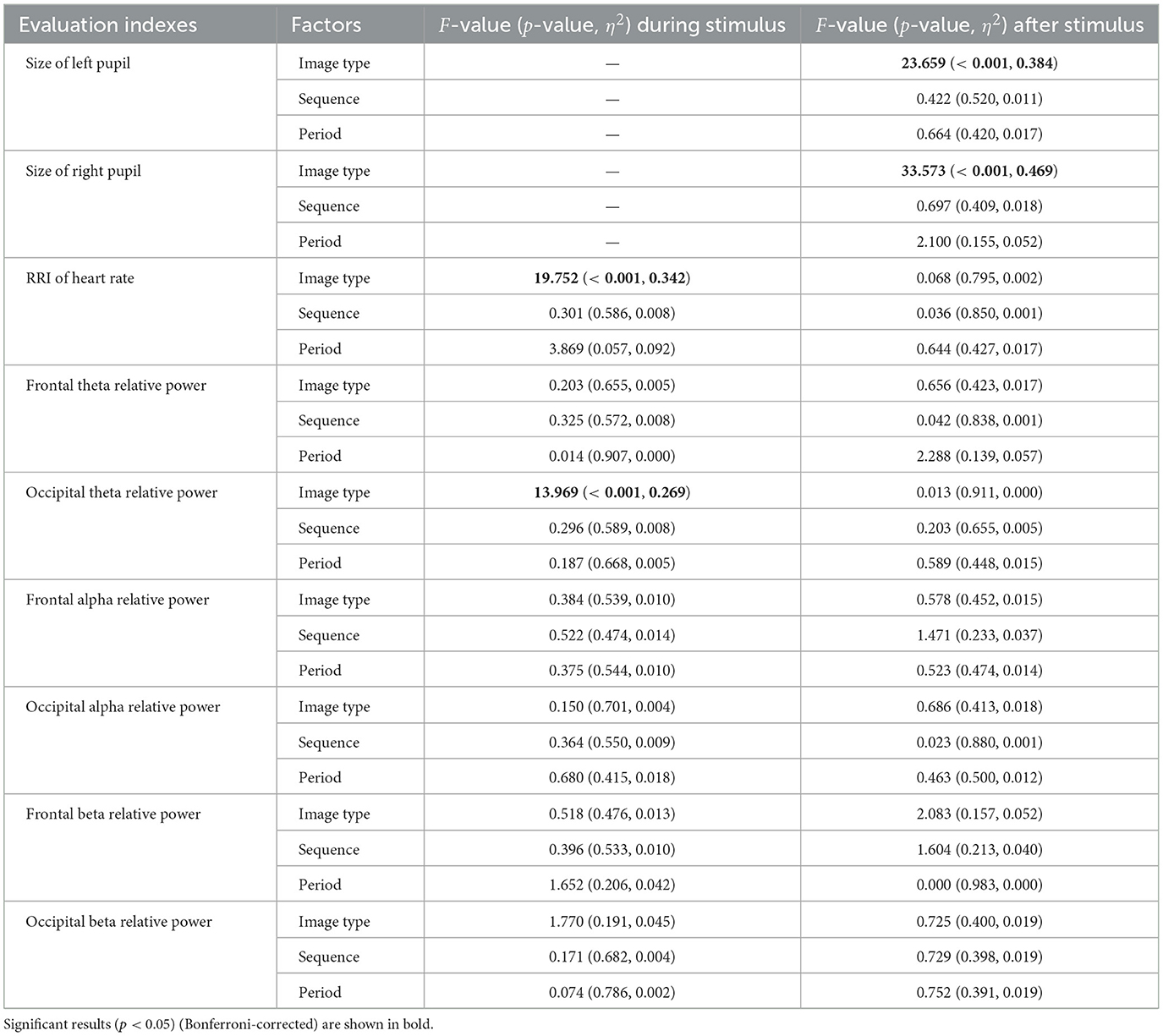

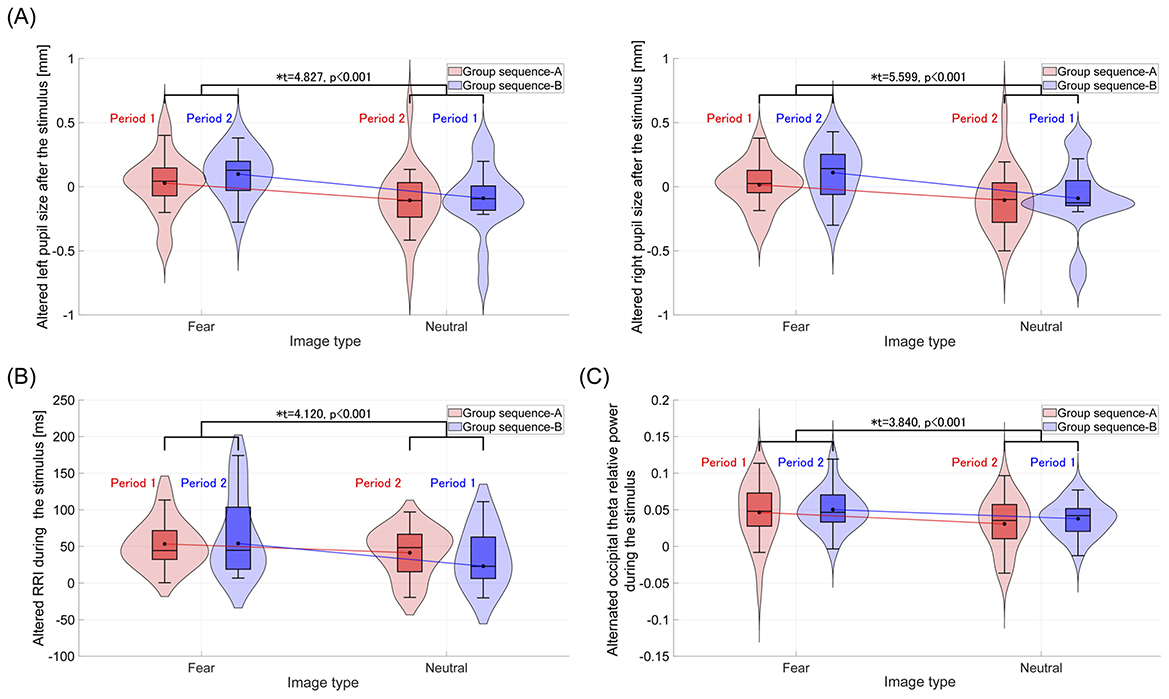

For each group, sequences A and B, the alternated pupil size, RRI, and frontal/occipital relative powers during and/or after the visual stimulus presentation were evaluated in a crossover trial, as shown in Table 6. Significant main effects of image type (fear vs. neutral) were observed in left and right pupil diameters following stimulus presentation, with corresponding Cohen's f values of f = 0.789 (left) and f = 0.939 (right), indicating large effect sizes. Similarly, significant effects were found for RRI and occipital theta relative power during stimulus presentation, with Cohen's f values of f = 0.720 and f = 0.606, respectively, also indicating large effect sizes. Figures 3A–C display violin plots and boxplots that illustrate the distribution of these alternated physiological responses. The results indicate that the average alternated values of these physiological responses across groups (sequence A and sequence B) significantly increased following fear-inducing images compared to neutral images.
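The "large" label for these effect sizes follows Cohen's conventional benchmarks for f (0.10 small, 0.25 medium, 0.40 large). The sketch below applies those benchmarks to the four reported values and also converts f back to partial η² for comparison with the power analysis. The function names are illustrative.

```python
def cohen_f_label(f):
    """Label |f| using Cohen's conventional benchmarks (0.10, 0.25, 0.40)."""
    f = abs(f)
    if f >= 0.40:
        return "large"
    if f >= 0.25:
        return "medium"
    if f >= 0.10:
        return "small"
    return "negligible"

def f_to_partial_eta_squared(f):
    """Standard conversion from Cohen's f to partial eta squared."""
    return f * f / (1.0 + f * f)

# Cohen's f values reported in the text.
reported = {"pupil_left": 0.789, "pupil_right": 0.939,
            "rri": 0.720, "occipital_theta": 0.606}
labels = {name: cohen_f_label(f) for name, f in reported.items()}
print(labels)
```

All four reported values exceed the 0.40 benchmark, well above the medium effect size assumed in the a priori power analysis.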

Table 6. Results of repeated measures ANOVA for crossover trial regarding physiological responses for pupil size, heart rate, and EEG.

Figure 3. Violin plots and boxplots illustrate the distribution of alternated physiological responses after or during the stimuli, compared to the baseline (rest 1), in sequence A and B groups. *Indicates significance at p < 0.05. (A) After the stimulus, the distribution of alternated pupil sizes in the left and right eyes. The average alternated pupil sizes in both eyes across groups (sequence A and sequence B) significantly increase following fear-inducing images compared to neutral images. (B) Distribution of alternated RRI during the stimulus. Compared to neutral images, the average alternated RRI across groups significantly increases during fear-inducing images. (C) Distribution of alternated occipital theta relative power during the stimulus. The average occipital theta relative power across groups significantly increases during fear-inducing images compared to neutral images.

Next, we evaluated the ability to distinguish which stimulus (fear-inducing or neutral) was presented to participants, based on pupil size after the stimulus, as well as RRI and occipital theta relative power during the stimulus. Because the right and left pupil sizes were highly correlated (Pearson's correlation r = 0.930), their values were averaged to obtain a single pupil size measure. Figure 4 presents the ROC curves and AUC values obtained using logistic regression. Pupil size showed the highest ability to distinguish stimuli among the unimodal biosignals (AUC = 0.69). Among combinations of these biosignals, combining RRI with pupil size only slightly improved the detection ability (AUC = 0.70).

Figure 4. The ability to distinguish whether participants were presented with fear-induced or neutral stimuli was evaluated using the receiver operating characteristic (ROC) curve, based on pupil size after the stimulus, as well as the RRI and occipital theta relative power during the stimulus. Here, the right and left pupil sizes show a high correlation (Pearson's correlation r = 0.930); therefore, their values are averaged to obtain a single pupil size measure. Based on each ROC curve, the area under the curve (AUC) is shown in the legends.

4 Discussion

In this study, we aimed to investigate the neural activity associated with fear-induced cortical and deep brain responses, as reflected in heart rate and pupil changes. We simultaneously measured EEG, pupillometry, and heart rate in participants exposed to fear-inducing and neutral visual stimuli alongside assessments of their psychological responses. The results showed that fear-inducing stimuli decreased comfort levels and increased arousal, and these changes correlated more strongly with state anxiety than with trait anxiety. Furthermore, fear-inducing stimuli led to increased pupil size after the stimulus, as well as increased RRI and increased occipital theta band power during the stimulus.

4.1 Psychological responses

We observed that viewing the fear-inducing images lowered pleasantness and increased arousal, with a strong positive correlation between trait and state anxiety. This result is consistent with prior findings that an individual's enduring predisposition toward anxiety (trait anxiety) strongly influences their short-term anxiety in specific situations (state anxiety) (Leal et al., 2017). Regarding the effect of trait anxiety on responses to neutral- and fear-inducing images, no significant correlation was observed in either comfort or arousal. These results suggest that trait anxiety may not strongly influence physiological arousal responses to intense fear stimuli. In contrast, individuals with higher state anxiety exhibited lower changes in arousal (see Table 5). As noted in the Methods section (Section 2.2), the fear-inducing images were selected based on normative ratings from IAPS, with low valence (mean = 2.1) and high arousal (mean = 6.6), indicating strong emotional unpleasantness and intensity. Given the high baseline arousal induced by these stimuli, it is possible that their effects were already near ceiling levels, thus reducing observable modulation by individual differences in trait anxiety.

4.2 Physiological responses

4.2.1 Individual response

We discuss the reasons underlying the observed individual physiological responses. First, regarding the increase in RRI during the presentation of fear-inducing images, previous studies have reported a decrease in heart rate due to vagal activation triggered by fear stimuli (Bradley et al., 2008; Hermans et al., 2013). Our findings are consistent with those of earlier studies. Second, concerning the increase in pupil size following the presentation of fear-inducing stimuli, it is notable that the LC, the origin of the neural pathway controlling pupil size, strongly influences pupil dynamics via the NA system (Aston-Jones and Cohen, 2005). The heightened arousal induced by fear stimuli likely caused pupil dilation, which aligns with the psychological response of increased arousal measured using the TDMS (see Figure 2). Finally, the enhancement of occipital theta power during fear-inducing stimuli can be explained by the following mechanism: when the amygdala evaluates a stimulus as a threat, it provides attentional connections to the basal forebrain and various cortical areas (Pinto et al., 2013; Peck and Salzman, 2014; Chaves-Coira et al., 2018), leading to increased theta power in the temporal and occipital regions (Torrence et al., 2021). Our results align with this mechanism, showing enhanced occipital theta power in response to fear-inducing stimuli.

We discuss why pupil dilation exhibited the highest ability to distinguish between fear-induced and neutral stimuli, compared to RRI and occipital theta relative power. First, pupil size reflects neural activity in deep brain regions related to fear and arousal, such as the LC and amygdala (Johansson and Balkenius, 2018), which cannot be detected by EEG signals. Furthermore, the LC is directly involved in the neural pathway controlling pupil diameter, serving as its primary source. Therefore, pupil dilation more accurately reflects fear and arousal compared to other physiological signals.

It should be noted that, unlike EEG and RRI, pupil dilation was analyzed only during the post-stimulus blank period. This decision was made because the luminance of the fear-inducing and neutral images varied in order to maintain naturalistic and vivid presentation, which can significantly influence pupil size. Although some studies have attempted to correct for luminance-related effects using regression-based models (Hermans et al., 2013), such methods require careful calibration and validation, especially in dynamic visual environments. In this initial study, we prioritized isolating emotionally driven pupil responses by excluding the stimulation period altogether. Nevertheless, future work should consider incorporating luminance correction models or presenting stimuli with controlled brightness to enable more direct cross-modal comparisons of temporal dynamics.

One limitation of this study is the assumption that all images categorized as “neutral” or “fear-inducing” would elicit consistent emotional responses across participants. In particular, certain stimuli considered neutral (e.g., snakes or spiders) may evoke fear in some individuals, while others may not perceive fear-inducing images as strongly aversive. This variability in subjective perception could have influenced the distribution of behavioral responses, as reflected in the skewness observed in Figure 3. Future studies should include individual-level ratings of emotional valence for each image to better account for such inter-individual differences. Another limitation concerns the analysis of hemispheric asymmetries in neural and physiological signals. While we focused on averaged activity across hemispheres in the current study, previous research suggests that emotional processing may involve lateralized neural responses (Harmon-Jones and Gable, 2018). A thorough investigation of hemispheric effects would also require examining potential lateralization in other modalities such as pupil dynamics, which may be influenced by lateralized neural circuits such as the bilateral LCs (Samuels and Szabadi, 2008; Liu et al., 2017; Nobukawa et al., 2021; Kumano et al., 2022). However, clarifying these possibilities requires comprehensive multimodal analyses, such as examining hemispheric asymmetries in neural activity and pupil dynamics, which were beyond the scope of the present study. Future research should address this issue using a multimodal approach that explicitly incorporates hemisphere-specific analyses.

4.2.2 Multimodal response

We discuss why pupil dilation was observed exclusively during the post-stimulus period following the presentation of fear-inducing images. The LC-NA system strongly influences pupil size. In contrast, the enhancement of theta power in brain waves is affected by cortical feedback from the amygdala, while the increase in RRI is driven by vagal activity. Recent studies have shown that LC neurons exhibit two distinct firing modes: tonic discharge, which refers to a constant firing frequency, and phasic discharge, which occurs in response to specific stimuli or events. Under conditions of sustained stress, the LC transitions from a moderate tonic mode to a high tonic mode, and phasic mode activity is suppressed (Janitzky, 2020). Additionally, stress responses have been reported to prolong the activity of the NA system (Valentino and Van Bockstaele, 2008). Based on these findings, the sustained pupil dilation observed during the gray image period following the fear-inducing stimuli may be attributed to an acute stress state induced by the stimuli, which triggered a strong tonic mode in the LC. This sustained dilation likely elevated the baseline pupil size, with effects persisting for a relatively long duration. However, further studies are necessary to validate this hypothesis under experimental conditions that better isolate LC-NA system activity. Such investigations will be essential for a deeper understanding of this phenomenon.

Our findings confirmed that pupil dilation was the most sensitive single indicator of fear, consistent with previous literature on emotional arousal and sympathetic activation (Bradley et al., 2008; Laeng et al., 2012; Mathôt, 2018). While this result does not represent a novel discovery, its replication within a multimodal monitoring context provides empirical support for the robustness of pupillary measures (Mathôt, 2018). Importantly, the study contributes by systematically comparing pupil-based responses with EEG and heart rate measures under controlled conditions, highlighting the practical implications of modality selection for emotional state monitoring.

Regarding additional limitations of this study, although pupil dilation exhibited the highest accuracy in distinguishing which stimulus (fear-inducing or neutral) was presented, combining it with EEG and RRI resulted in only limited accuracy improvement (see Figure 4). One possible reason for this limited synergy is that the three modalities may partially reflect overlapping physiological processes related to fear responses, reducing the incremental value of integration. Additionally, differences in temporal dynamics across modalities may have complicated effective integration. From an analytical perspective, our EEG analysis focused primarily on power changes at the sensor level. Importantly, we did not employ source localization or connectivity-based analyses, which could have provided deeper insights into the cortical or large-scale network dynamics associated with fear processing. As EEG and RRI reflect distinct aspects of neural activity beyond autonomic arousal, future studies should consider employing more advanced analytical approaches, such as source-level inference, functional connectivity analyses (ideally using high-density EEG), and complexity measures that capture non-linear dynamics indicative of large-scale neural interactions (Nobukawa et al., 2024), to better delineate the complementary contributions of these modalities in emotional state monitoring. In addition, heart rate variability (HRV) indices may offer more informative features than simple RRI measures and should be incorporated into future multimodal designs.

Another limitation of this study is that only male participants were included. This decision was made to control for hormonal fluctuations related to the menstrual cycle, which can affect stress reactivity and emotional processing. Previous studies have shown that hormonal phases can modulate amygdala activity and autonomic responses to emotional stimuli (Andreano and Cahill, 2010). To reduce variability in physiological responses, we chose a homogeneous sample in this initial investigation. However, future studies should incorporate female participants and consider controlling for menstrual cycle phase or hormonal levels to enhance the generalizability and inclusivity of the findings.

Furthermore, while we evaluated classification performance using LOOCV, we did not report confidence intervals for the AUC scores. This is primarily due to the small sample size (N = 40), which limited the feasibility of reliable resampling-based confidence interval estimation. LOOCV was chosen to maximize training data per fold, a common approach in low-N settings, but it does not provide a variance estimate across folds. Future studies with larger cohorts should adopt cross-validation schemes that allow for statistical resampling (e.g., k-fold cross-validation with bootstrapping) to quantify uncertainty and improve the robustness of classification metrics.
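To make the suggested uncertainty quantification concrete, the following Python sketch computes an AUC from held-out classifier scores and a percentile bootstrap confidence interval around it. It is an illustrative sketch under stated assumptions, not the analysis performed in this study. The function names (`auc`, `bootstrap_auc_ci`), the example labels and scores, and the choice of a percentile bootstrap are all hypothetical. The sketch treats each held-out score as an independent observation; if several observations came from the same participant, the participant rather than the observation would be the appropriate resampling unit.

```python
import random


def auc(labels, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count 0.5)."""
    pos = [s for lab, s in zip(labels, scores) if lab == 1]
    neg = [s for lab, s in zip(labels, scores) if lab == 0]
    if not pos or not neg:
        raise ValueError("both classes are required")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def bootstrap_auc_ci(labels, scores, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the AUC of fixed held-out scores."""
    rng = random.Random(seed)
    n = len(labels)
    stats = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        resampled = [labels[i] for i in idx]
        if 0 < sum(resampled) < n:  # skip resamples that lack one of the classes
            stats.append(auc(resampled, [scores[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Hypothetical held-out scores (assumptions, not study data):
# label 1 = fear-inducing stimulus, label 0 = neutral stimulus.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1]

point_estimate = auc(labels, scores)
interval = bootstrap_auc_ci(labels, scores)
print(point_estimate, interval)
```

Because the classifier is not refitted within each resample, an interval obtained this way reflects only the sampling variability of the held-out scores and not the variability introduced by model training.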

4.2.3 Potential applications of multimodal fear detection

Despite the limited accuracy gains from multimodal integration observed in this study, the use of multiple physiological measures remains promising for future applications in emotional state monitoring. While each modality—pupil size, EEG, and heart rate—may partly reflect overlapping processes (Nobukawa et al., 2024), they also capture distinct neurophysiological dimensions of fear, such as sympathetic activation, cortical arousal, and parasympathetic regulation. These complementary features may prove advantageous in more ecologically valid or high-stakes settings where robustness is critical.

Potential applications of such multimodal monitoring include clinical domains (e.g., assessment of preoperative anxiety or PTSD) (Senaratne et al., 2022), or safety-critical environments (e.g., driver monitoring, aviation) (Rastgoo et al., 2018). Furthermore, with advancements in wearable technologies and data fusion algorithms, it may become feasible to implement such systems in real-world contexts, allowing for continuous, adaptive support based on an individual's affective state. Future research should explore how to optimally leverage the complementary features of each modality to improve emotion monitoring in real-world applications.

5 Conclusions

In this study, we evaluated psychological and multimodal physiological responses to fear-inducing stimuli. The results revealed characteristic responses in each modality, including heart rate, pupil size, and EEG, which likely reflect processes occurring in different neural systems. These findings suggest the potential for future applications in monitoring an individual's fear state by integrating such multimodal neural responses.

Data availability statement

The datasets presented in this article are not readily available because the informed consent did not include the declaration regarding publication of data. Requests to access the datasets should be directed to nobukawa@cs.it-chiba.ac.jp.

Ethics statement

The studies involving humans were approved by the Ethics Committee of the Chiba Institute of Technology. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

YE: Visualization, Formal analysis, Writing – original draft, Data curation, Software, Investigation, Validation, Methodology, Resources, Conceptualization, Writing – review & editing. TS: Data curation, Software, Investigation, Writing – review & editing, Resources, Conceptualization, Validation, Writing – original draft, Formal analysis, Visualization, Methodology. IW: Software, Data curation, Writing – review & editing. AU: Visualization, Writing – review & editing, Formal analysis. TI: Investigation, Writing – review & editing, Conceptualization. TT: Investigation, Validation, Writing – review & editing, Methodology, Funding acquisition, Project administration, Conceptualization. SN: Resources, Validation, Project administration, Funding acquisition, Formal analysis, Supervision, Writing – review & editing, Data curation, Investigation, Visualization, Methodology, Writing – original draft, Conceptualization.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by JSPS KAKENHI: Grant-in-Aid for Scientific Research (C) (JP22K12183 [SN], JP23K06983 [TT]), Grant-in-Aid for Scientific Research (B) (JP25K03198 [SN]), and Grant-in-Aid for Transformative Research Areas (A) (JP20H05921 and JP25H02626 [SN]). Additional financial support was provided by TOPPAN Edge Inc. under a collaborative research agreement. TOPPAN Edge Inc. was not involved in the study design, collection, analysis, interpretation of data, the writing of this article, or the decision to submit it for publication.

Acknowledgments

At the final proofing stage of this manuscript, we utilized the services of Editage for English language editing (www.editage.com).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Gen AI was used in the creation of this manuscript. Portions of the text, including language refinement and editing, were generated with the assistance of ChatGPT-4, an AI language model developed by OpenAI.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Anders, C., and Arnrich, B. (2022). Wearable electroencephalography and multi-modal mental state classification: a systematic literature review. Comput. Biol. Med. 150:106088. doi: 10.1016/j.compbiomed.2022.106088

Andreano, J. M., and Cahill, L. (2010). Menstrual cycle modulation of medial temporal activity evoked by negative emotion. Neuroimage 53, 1286–1293. doi: 10.1016/j.neuroimage.2010.07.011

Asbrand, J., Vögele, C., Heinrichs, N., Nitschke, K., and Tuschen-Caffier, B. (2022). Autonomic dysregulation in child social anxiety disorder: an experimental design using CBT treatment. Appl. Psychophysiol. Biofeedback 47, 199–212. doi: 10.1007/s10484-022-09548-0

Aston-Jones, G., and Cohen, J. D. (2005). An integrative theory of locus coeruleus-norepinephrine function: adaptive gain and optimal performance. Annu. Rev. Neurosci. 28, 403–450. doi: 10.1146/annurev.neuro.28.061604.135709

Blanchard, D. C., and Blanchard, R. J. (2008). Defensive behaviors, fear, and anxiety. Handbook Behav. Neurosci. 17, 63–79. doi: 10.1016/S1569-7339(07)00005-7

Bradley, M. M., Miccoli, L., Escrig, M. A., and Lang, P. J. (2008). The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology 45, 602–607. doi: 10.1111/j.1469-8986.2008.00654.x

Chaves-Coira, I., Rodrigo-Angulo, M. L., and Núñez, A. (2018). Bilateral pathways from the basal forebrain to sensory cortices may contribute to synchronous sensory processing. Front. Neuroanat. 12:5. doi: 10.3389/fnana.2018.00005

Chen, S., Tan, Z., Xia, W., Gomes, C. A., Zhang, X., Zhou, W., et al. (2021). Theta oscillations synchronize human medial prefrontal cortex and amygdala during fear learning. Sci. Adv. 7:eabf4198. doi: 10.1126/sciadv.abf4198

Chien, J., Colloca, L., Korzeniewska, A., Cheng, J. J., Campbell, C., Hillis, A., et al. (2017). Oscillatory EEG activity induced by conditioning stimuli during fear conditioning reflects salience and valence of these stimuli more than expectancy. Neuroscience 346, 81–93. doi: 10.1016/j.neuroscience.2016.12.047

Davis, M., Walker, D. L., Miles, L., and Grillon, C. (2010). Phasic vs sustained fear in rats and humans: role of the extended amygdala in fear vs anxiety. Neuropsychopharmacology 35, 105–135. doi: 10.1038/npp.2009.109

Domínguez-Borràs, J., and Vuilleumier, P. (2022). Amygdala function in emotion, cognition, and behavior. Handb. Clin. Neurol. 187, 359–380. doi: 10.1016/B978-0-12-823493-8.00015-8

Friedman, B. H. (2007). An autonomic flexibility-neurovisceral integration model of anxiety and cardiac vagal tone. Biol. Psychol. 74, 185–199. doi: 10.1016/j.biopsycho.2005.08.009

Grupe, D. W., and Nitschke, J. B. (2013). Uncertainty and anticipation in anxiety: an integrated neurobiological and psychological perspective. Nat. Rev. Neurosci. 14, 488–501. doi: 10.1038/nrn3524

Hajcak, G., MacNamara, A., and Olvet, D. M. (2010). Event-related potentials, emotion, and emotion regulation: an integrative review. Dev. Neuropsychol. 35, 129–155. doi: 10.1080/87565640903526504

Harmon-Jones, E., and Gable, P. A. (2018). On the role of asymmetric frontal cortical activity in approach and withdrawal motivation: an updated review of the evidence. Psychophysiology 55:e12879. doi: 10.1111/psyp.12879

Hermans, E. J., Henckens, M. J., Roelofs, K., and Fernández, G. (2013). Fear bradycardia and activation of the human periaqueductal grey. Neuroimage 66, 278–287. doi: 10.1016/j.neuroimage.2012.10.063

Janitzky, K. (2020). Impaired phasic discharge of locus coeruleus neurons based on persistent high tonic discharge—a new hypothesis with potential implications for neurodegenerative diseases. Front. Neurol. 11:371. doi: 10.3389/fneur.2020.00371

Johansson, B., and Balkenius, C. (2018). A computational model of pupil dilation. Conn. Sci. 30, 5–19. doi: 10.1080/09540091.2016.1271401

Keil, A., Bradley, M. M., Hauk, O., Rockstroh, B., Elbert, T., and Lang, P. J. (2002). Large-scale neural correlates of affective picture processing. Psychophysiology 39, 641–649. doi: 10.1111/1469-8986.3950641

Kral, T., Williams, C., Wylie, A., McLaughlin, K., Stephens, R., Mills-Koonce, W., et al. (2024). Intergenerational effects of racism on amygdala and hippocampus resting state functional connectivity. Sci. Rep. 14:17034. doi: 10.1038/s41598-024-66830-3

Kumano, H., Nobukawa, S., Shirama, A., Takahashi, T., Takeda, T., Ohta, H., et al. (2022). Asymmetric complexity in a pupil control model with laterally imbalanced neural activity in the locus coeruleus: a potential biomarker for attention-deficit/hyperactivity disorder. Neural Comput. 34, 2388–2407. doi: 10.1162/neco_a_01545

Laeng, B., Sirois, S., and Gredebäck, G. (2012). Pupillometry: a window to the preconscious? Perspect. Psychol. Sci. 7, 18–27. doi: 10.1177/1745691611427305

Leal, P. C., Goes, T. C., da Silva, L. C. F., and Teixeira-Silva, F. (2017). Trait vs. state anxiety in different threatening situations. Trends Psychiat. Psychother. 39, 147–157. doi: 10.1590/2237-6089-2016-0044

LeDoux, J. E. (2013). Emotion circuits in the brain. Fear Anxiety. 2013, 259–288. doi: 10.1146/annurev.neuro.23.1.155

Leuchs, L., Schneider, M., Czisch, M., and Spoormaker, V. I. (2017). Neural correlates of pupil dilation during human fear learning. Neuroimage 147, 186–197. doi: 10.1016/j.neuroimage.2016.11.072

Liu, Y., Rodenkirch, C., Moskowitz, N., Schriver, B., and Wang, Q. (2017). Dynamic lateralization of pupil dilation evoked by locus coeruleus activation results from sympathetic, not parasympathetic, contributions. Cell Rep. 20, 3099–3112. doi: 10.1016/j.celrep.2017.08.094

Malezieux, M., Klein, A. S., and Gogolla, N. (2023). Neural circuits for emotion. Annu. Rev. Neurosci. 46, 211–231. doi: 10.1146/annurev-neuro-111020-103314

Mathôt, S. (2018). Pupillometry: psychology, physiology, and function. J. Cognition 1:1. doi: 10.5334/joc.18

Nobukawa, S., Shirama, A., Takahashi, T., Takeda, T., Ohta, H., Kikuchi, M., et al. (2021). Pupillometric complexity and symmetricity follow inverted-U curves against baseline diameter due to crossed locus coeruleus projections to the Edinger-Westphal nucleus. Front. Physiol. 12:92. doi: 10.3389/fphys.2021.614479

Nobukawa, S., Shirama, A., Takahashi, T., and Toda, S. (2024). Recent trends in multiple metrics and multimodal analysis for neural activity and pupillometry. Front. Neurol. 15:1489822. doi: 10.3389/fneur.2024.1489822

Peck, C. J., and Salzman, C. D. (2014). The amygdala and basal forebrain as a pathway for motivationally guided attention. J. Neurosci. 34, 13757–13767. doi: 10.1523/JNEUROSCI.2106-14.2014

Phelps, E. A., and LeDoux, J. E. (2005). Contributions of the amygdala to emotion processing: from animal models to human behavior. Neuron 48, 175–187. doi: 10.1016/j.neuron.2005.09.025

Pinto, L., Goard, M. J., Estandian, D., Xu, M., Kwan, A. C., Lee, S.-H., et al. (2013). Fast modulation of visual perception by basal forebrain cholinergic neurons. Nat. Neurosci. 16, 1857–1863. doi: 10.1038/nn.3552

Pittig, A., Treanor, M., LeBeau, R. T., and Craske, M. G. (2018). The role of associative fear and avoidance learning in anxiety disorders: gaps and directions for future research. Neurosci. Biobehav. Rev. 88, 117–140. doi: 10.1016/j.neubiorev.2018.03.015

Porges, S. W. (2007). The polyvagal perspective. Biol. Psychol. 74, 116–143. doi: 10.1016/j.biopsycho.2006.06.009

Rafique, S., Kanwal, N., Karamat, I., Asghar, M. N., and Fleury, M. (2020). Towards estimation of emotions from eye pupillometry with low-cost devices. IEEE Access 9, 5354–5370. doi: 10.1109/ACCESS.2020.3048311

Rastgoo, M. N., Nakisa, B., Rakotonirainy, A., Chandran, V., and Tjondronegoro, D. (2018). A critical review of proactive detection of driver stress levels based on multimodal measurements. ACM Comp. Surv. 51, 1–35. doi: 10.1145/3186585

Richardson, M. P., Strange, B. A., and Dolan, R. J. (2004). Encoding of emotional memories depends on amygdala and hippocampus and their interactions. Nat. Neurosci. 7, 278–285. doi: 10.1038/nn1190

Sakairi, Y., Nakatsuka, K., and Shimizu, T. (2013). Development of the two-dimensional mood scale for self-monitoring and self-regulation of momentary mood states. Jap. Psychol. Res. 55, 338–349. doi: 10.1111/jpr.12021

Samuels, E. R., and Szabadi, E. (2008). Functional neuroanatomy of the noradrenergic locus coeruleus: its roles in the regulation of arousal and autonomic function Part I: principles of functional organisation. Curr. Neuropharmacol. 6, 235–253. doi: 10.2174/157015908785777229

Senaratne, H., Oviatt, S., Ellis, K., and Melvin, G. (2022). A critical review of multimodal-multisensor analytics for anxiety assessment. ACM Trans. Comp. Healthc. 3, 1–42. doi: 10.1145/3556980

Sheikh, M., Qassem, M., and Kyriacou, P. A. (2021). Wearable, environmental, and smartphone-based passive sensing for mental health monitoring. Front. Digital Health 3:662811. doi: 10.3389/fdgth.2021.662811

Sperl, M. F., Panitz, C., Rosso, I. M., Dillon, D. G., Kumar, P., Hermann, A., et al. (2019). Fear extinction recall modulates human frontomedial theta and amygdala activity. Cereb. Cortex 29, 701–715. doi: 10.1093/cercor/bhx353

Spielberger, C. D., Gonzalez-Reigosa, F., Martinez-Urrutia, A., Natalicio, L. F., and Natalicio, D. S. (1971). The state-trait anxiety inventory. Interamerican J. Psychol. 5, 3–4.

Thayer, J. F., and Lane, R. D. (2000). A model of neurovisceral integration in emotion regulation and dysregulation. J. Affect. Disord. 61, 201–216. doi: 10.1016/S0165-0327(00)00338-4

Torrence, R. D., Troup, L. J., Rojas, D. C., and Carlson, J. M. (2021). Enhanced contralateral theta oscillations and N170 amplitudes in occipitotemporal scalp regions underlie attentional bias to fearful faces. Int. J. Psychophysiol. 165, 84–91. doi: 10.1016/j.ijpsycho.2021.04.002

Valentino, R. J., and Van Bockstaele, E. (2008). Convergent regulation of locus coeruleus activity as an adaptive response to stress. Eur. J. Pharmacol. 583, 194–203. doi: 10.1016/j.ejphar.2007.11.062

Keywords: electroencephalography, fear monitoring, multimodal measurement, pupil dynamics, heart rate

Citation: Ebato Y, Saki T, Wakita I, Ueno A, Ishibashi T, Takahashi T and Nobukawa S (2025) Evaluating multimodal physiological signals for fear detection: relative utility of pupillometry, heart rate, and EEG. Front. Hum. Neurosci. 19:1605577. doi: 10.3389/fnhum.2025.1605577

Received: 03 April 2025; Accepted: 30 June 2025;

Published: 29 July 2025.

Edited by: Kuldeep Singh, Guru Nanak Dev University, India

Reviewed by: Amadeu Quelhas Martins, European University of Lisbon, Portugal; Fatemeh Hadaeghi, University Medical Center Hamburg-Eppendorf, Germany

Copyright © 2025 Ebato, Saki, Wakita, Ueno, Ishibashi, Takahashi and Nobukawa. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sou Nobukawa, nobukawa@cs.it-chiba.ac.jp
