- ¹Interdepartmental Graduate Program in Dynamical Neuroscience, University of California, Santa Barbara, Santa Barbara, CA, United States

- ²Department of Mechanical Engineering, University of California, Santa Barbara, Santa Barbara, CA, United States

In this paper, we calculate magnitude-constrained optimal stimuli for desynchronizing a population of neurons by maximizing the Lyapunov exponent for the phase difference between pairs of neurons while simultaneously minimizing the energy used. This theoretical result informs how optimal inputs can be designed for deep brain stimulation in cases where there is a biological or electronic constraint on the amount of current that can be applied. By exploring a range of parameter values, we characterize how the constraint magnitude affects the Lyapunov exponent and energy usage. Finally, we demonstrate the efficacy of this approach by considering a computational model for a population of neurons with repeated event-triggered optimal inputs.

1 Introduction

As deep brain stimulation (DBS) emerges as an effective therapy for a wide range of neurological disorders, theoretical perspectives are increasingly being developed to help inform effective stimulation protocols. DBS involves surgically implanting electrodes that deliver electrical stimuli over time to specific brain regions; wires connect the implanted electrodes to an implantable pulse generator, which can be programmed to set the DBS parameters (Lozano and Lipsman, 2013; Sandoval-Pistorius et al., 2023; Frey et al., 2022). Novel technologies enable the optimization of DBS parameters, including the input pulse’s shape, amplitude, frequency, and interstimulus interval (Najera et al., 2023; Krauss et al., 2021). Computational analysis of the interaction between an applied electrical stimulus and the spiking dynamics of neural populations can inform the design of DBS parameters that enhance clinical outcomes while respecting device engineering constraints.

Among other conditions, deep brain stimulation has become an effective treatment for Parkinson’s disease, where pathological synchronization of the basal ganglia-cortical loop is associated with dopaminergic denervation of the striatum, which over time leads to motor impairment including tremors, bradykinesia, and akinesia (Hammond et al., 2007; Neumann et al., 2007). In particular, the parkinsonian low-dopamine state is observed to be related to excessive synchronization in the beta frequency band (15–30 Hz) in the subthalamic nucleus (STN) of the basal ganglia (Rubchinsky et al., 2012; Asl et al., 2022). It has been proposed that the symptoms of parkinsonian resting tremors are caused by excessive synchronization in populations of neurons firing at a similar frequency to that of the tremor (Tass, 2006). Deep brain stimulation works as a therapeutic intervention by modulating such synchronization patterns, often ameliorating motor impairment for patients living with Parkinson’s disease. DBS is also used in the treatment of essential tremor (ET), epilepsy, Tourette’s syndrome, obsessive compulsive disorder, and treatment-resistant depression.

The most commonly offered protocol for DBS therapy is continuous high-frequency stimulation (Lozano and Lipsman, 2013; Sandoval-Pistorius et al., 2023). Despite its clinical success, conventional high-frequency DBS faces several limitations, including diminishing efficacy over time, stimulation-induced side effects, and high energy consumption that necessitates battery replacements. Furthermore, because conventional DBS is open-loop (the device applies electrical inputs continuously whenever it is on), its static parameters do not adapt to the dynamic nature of disease symptoms, which limits its long-term effectiveness. In recent years, there has been growing interest in developing alternative stimulation paradigms that can effectively disrupt pathological synchrony while minimizing energy consumption and side effects. Several desynchronization methods have been proposed and tested on patients, including coordinated reset stimulation (Tass, 2003; Manos et al., 2021), adaptive deep brain stimulation (aDBS) (Sandoval-Pistorius et al., 2023), and phase-specific stimulation approaches (Cagnan et al., 2017). Such techniques often leverage theoretical frameworks from nonlinear dynamics and control theory to design stimuli that can efficiently desynchronize neural populations.

In particular, coordinated reset uses multiple electrode implants that deliver identical impulses separated by a time delay between implants (Tass, 2003; Lysyansky et al., 2011; Lücken et al., 2013; Popovych and Tass, 2014; Kubota and Rubin, 2018; Khaledi-Nasab et al., 2022). This leads to clustering behavior in the neural population, in which each cluster fires at a different time, giving (partial) desynchronization of the dynamics. This approach has achieved preliminary clinical success (Adamchic et al., 2014; Manos et al., 2021). In adaptive deep brain stimulation (aDBS), closed-loop systems monitor biomarkers in real time and can initiate changes in stimulation parameters (Sandoval-Pistorius et al., 2023; Oehrn et al., 2024). The goal of aDBS is to improve clinical outcomes by designing control signals based on neural data so that stimulation is delivered only when needed. For instance, in Parkinson’s disease, a potential biomarker is the amplitude of the pathological beta rhythms, with stimulation becoming active when this amplitude exceeds a prescribed threshold. On-demand stimulation through aDBS can prolong battery life, thereby extending device lifetime and the interval between surgeries in clinical treatment protocols. Finally, in phase-specific stimulation the inputs are delivered at a particular dynamical phase in order to disrupt synchrony (Cagnan et al., 2017; Cagnan et al., 2019; cf. Holt et al., 2016).

In parallel, there have been a number of computational studies exploring the mechanisms by which DBS might be working (e.g., Santaniello et al., 2015; Spiliotis et al., 2022). There have also been theoretical and computational studies exploring different strategies for desynchronizing neural populations (Wilson and Moehlis, 2022), with approaches including delayed feedback control (Rosenblum and Pikovsky, 2004a; Rosenblum and Pikovsky, 2004b; Popovych et al., 2006; Popovych et al., 2017), phase randomization through optimal phase resetting (Danzl et al., 2009; Nabi et al., 2013; Rajabi et al., 2025), phase distribution control (Monga et al., 2018; Monga and Moehlis, 2019), cluster control (Wilson and Moehlis, 2015a; Matchen and Moehlis, 2018; Wilson, 2020; Qin et al., 2023), machine learning and data-driven approaches (Matchen and Moehlis, 2021; Vu et al., 2024), and chaotic desynchronization (Wilson et al., 2011; Wilson and Moehlis, 2014b; Wilson and Moehlis, 2014a). Each of these approaches has advantages and disadvantages based on the control objective and what is known about and what can be measured for the neural dynamics (Wilson and Moehlis, 2022).

In this paper, we focus on chaotic desynchronization, for which an energy-optimal stimulus exponentially desynchronizes a population of neurons. This approach relies on phase reduction methods, which have proven particularly valuable in analyzing and controlling neural oscillators (Monga et al., 2019; Wilson and Moehlis, 2022). These methods allow for the simplification of complex neuronal dynamics into phase models, where the behavior of an oscillating neuron can be characterized by its phase and response to perturbations, captured by the phase response curve (PRC). Unlike previous studies of chaotic desynchronization, here we include a constraint on stimulus magnitude. Such constraints are important engineering considerations for the practical applications of DBS, as there can exist both biological limitations on the maximum electrical stimulation that can be safely applied to brain tissue as well as electronic limitations on the current that stimulation devices can reliably store and deliver over time.

Specifically, in this paper we calculate magnitude-constrained optimal stimuli that maximize the Lyapunov exponent for the phase difference between pairs of neurons while simultaneously minimizing energy consumption. The Lyapunov exponent quantifies the exponential divergence rate of initially close trajectories, making it an appropriate measure of desynchronization efficiency. By systematically exploring different constraint magnitudes, we characterize the tradeoff between maximum allowable stimulus amplitude, desynchronization efficacy, and energy usage. We set up the optimal control problem in Section 2.1. In Section 2.2 we describe several canonical phase response curves representing different types of neuronal dynamics: Sinusoidal, SNIPER, Hodgkin-Huxley, and Reduced Hodgkin-Huxley models. In Section 3.1, we investigate our approach for each PRC, computing the optimal stimulus under various magnitude constraints and evaluating its performance in desynchronizing initially synchronized neurons. In Section 3.2, we validate our approach using computational simulations of neural populations with coupling and noise, demonstrating that our magnitude-constrained optimal stimuli can effectively desynchronize neural populations. Finally, a discussion of our results is given in Section 4. Overall, this work provides a theoretical foundation for designing energy-efficient DBS protocols that respect hardware and biological constraints while effectively disrupting pathological neural synchronization. We respectfully present this study as a tribute to the pioneering work of Hermann Haken on the control of complex systems.
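As a minimal sketch of what this Lyapunov exponent measures, assume (for illustration only) two identical phase oscillators obeying dθ_i/dt = ω + Z(θ_i)u(t), with a hypothetical sinusoidal PRC Z(θ) = sin θ, ω = 1, and an arbitrary stimulus u(t) = 0.3 cos t; none of these values are taken from the paper. For a small phase difference φ = θ_2 − θ_1, linearization gives dφ/dt ≈ Z'(θ_1)u(t)φ, so over a duration T the finite-time exponent is Λ = (1/T)∫_0^T Z'(θ_1(t))u(t)dt. The code integrates the exact phase-difference dynamics and checks that the measured divergence rate matches this integral.

```python
import numpy as np
from scipy.integrate import solve_ivp

# Hypothetical phase model dtheta/dt = OMEGA + Z(theta) * u(t) (illustrative values only).
OMEGA = 1.0
T = 2 * np.pi
PHI0 = 1e-6                          # small initial phase difference between the neurons

def Z(th):  return np.sin(th)        # hypothetical sinusoidal PRC
def dZ(th): return np.cos(th)        # its derivative Z'(theta)
def u(t):   return 0.3 * np.cos(t)   # arbitrary illustrative stimulus

def rhs(t, y):
    th1, phi, integral = y
    ut = u(t)
    return [OMEGA + Z(th1) * ut,              # phase of neuron 1
            (Z(th1 + phi) - Z(th1)) * ut,     # exact (nonlinear) phase-difference dynamics
            dZ(th1) * ut]                     # running integral of Z'(theta_1) u(t)

sol = solve_ivp(rhs, (0.0, T), [0.0, PHI0, 0.0], rtol=1e-10, atol=1e-14)
th1_T, phi_T, integral_T = sol.y[:, -1]
lyap_direct = np.log(phi_T / PHI0) / T   # measured exponential divergence rate
lyap_linear = integral_T / T             # prediction from the linearized equation
print(f"direct: {lyap_direct:.8f}  linearized: {lyap_linear:.8f}")
```

A positive value means that nearby phases separate on average over the stimulus window, which is the sense in which maximizing Λ promotes desynchronization; the two estimates differ only by terms of order PHI0.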

2 Methods

2.1 Optimal control problem

We present a procedure for finding an energy-optimal stimulus which maximizes the Lyapunov exponent associated with the phase difference between a pair of neurons, while accounting for a constraint on the stimulus magnitude. This approach is based on the phase reduction of neural oscillators in the presence of an input (see, for example, Monga et al., 2019), and only requires knowledge of a neuron’s phase response curve (PRC). We note that the PRC can in principle be measured experimentally (Netoff et al., 2012), or can be calculated numerically if the model is known (Ermentrout, 2002; Monga et al., 2019). In particular, we consider the following set of equations:

where

Following Wilson and Moehlis (2014b), we suppose that the neurons are nearly synchronized

Linearizing about

Here

where the goal is to maximize the Lyapunov exponent while minimizing the energy used, where the energy is the integral of the square of the control stimulus

In order to account for this constraint, we use a Hamiltonian formulation for the optimal control problem (Kirk, 1998), with the Hamiltonian given in Equation 6:

where

This defines a two-point boundary value problem which must be solved subject to the boundary conditions

where

In particular, the optimal
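One possible numerical approach to a two-point boundary value problem of this kind is simple shooting on the initial costate; the sketch below illustrates it and is not necessarily the solver used for the results in this paper. It assumes a cost of the form ∫_0^{T1}[u² − βZ'(θ)u]dt (energy traded against the Lyapunov-exponent integral), boundary conditions θ(0) = 0 and θ(T1) = 2π, and the constraint |u(t)| ≤ u_max, giving the Hamiltonian H = u² − βZ'(θ)u + λ(ω + Z(θ)u). Because H is a convex quadratic in u, its minimizer over the admissible interval is the unconstrained stationary point (βZ'(θ) − λZ(θ))/2 saturated at ±u_max. The PRC Z(θ) = sin θ and the values of ω, T1, β and u_max are hypothetical.

```python
import numpy as np
from scipy.integrate import solve_ivp
from scipy.optimize import brentq

# Hypothetical parameters chosen for illustration only.
OMEGA = 1.0            # natural frequency (natural period 2*pi)
T1 = 2 * np.pi         # assumed stimulus duration
BETA = 1.0             # assumed weight on the Lyapunov-exponent term
U_MAX = 0.5            # assumed magnitude bound |u(t)| <= U_MAX

def Z(th):   return np.sin(th)     # hypothetical sinusoidal PRC
def dZ(th):  return np.cos(th)     # Z'(theta)
def d2Z(th): return -np.sin(th)    # Z''(theta)

def control(th, lam):
    # Minimize H(u) = u^2 - BETA*Z'(th)*u + lam*(OMEGA + Z(th)*u) over |u| <= U_MAX.
    # H is convex in u, so the constrained minimizer is the stationary point, saturated.
    u_free = (BETA * dZ(th) - lam * Z(th)) / 2.0
    return np.clip(u_free, -U_MAX, U_MAX)

def rhs(t, y):
    th, lam, _, _ = y
    u = control(th, lam)
    return [OMEGA + Z(th) * u,                       # dtheta/dt =  dH/dlam
            BETA * d2Z(th) * u - lam * dZ(th) * u,   # dlam/dt   = -dH/dtheta
            dZ(th) * u,                              # accumulates int Z'(theta) u dt
            u * u]                                   # accumulates energy int u^2 dt

def shoot(lam0):
    return solve_ivp(rhs, (0.0, T1), [0.0, lam0, 0.0, 0.0],
                     rtol=1e-8, atol=1e-10, dense_output=True)

def residual(lam0):
    # Mismatch in the terminal condition theta(T1) = 2*pi (the next spike).
    return shoot(lam0).y[0, -1] - 2 * np.pi

# For this PRC a large positive lam0 slows the neuron and a large negative lam0
# speeds it up, so [-50, 50] brackets a root of the residual.
lam0_star = brentq(residual, -50.0, 50.0, xtol=1e-10, maxiter=200)
sol = shoot(lam0_star)
theta_T, _, lyap_integral, energy = sol.y[:, -1]
lyap = lyap_integral / T1
t_grid = np.linspace(0.0, T1, 501)
th_grid, lam_grid = sol.sol(t_grid)[:2]
u_grid = control(th_grid, lam_grid)
print(f"lambda(0)={lam0_star:.5f}  Lyapunov exponent={lyap:.4f}  energy={energy:.4f}")
```

Dropping the np.clip call gives the unconstrained optimality condition, although the bracket for lam0 would then need to be re-checked, since the costate is no longer guaranteed to keep its sign.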

2.2 Example phase response curves

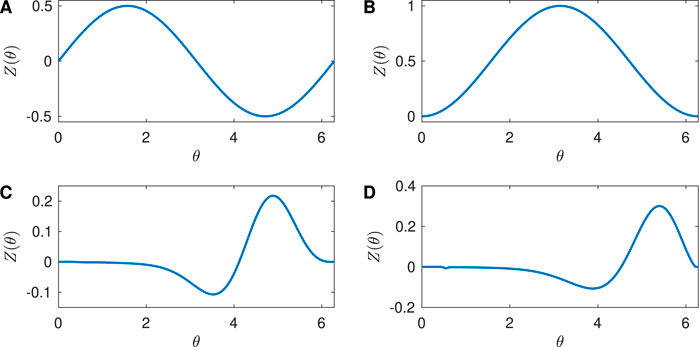

The PRC quantifies the effect of an external stimulus on the phase of a periodic orbit. In this paper, we consider four example PRCs: Sinusoidal, SNIPER, Hodgkin-Huxley, and Reduced Hodgkin-Huxley, as shown in Figure 1.
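To make the definition concrete, the sketch below applies the direct method (a brief kick at a known phase, followed by measurement of the asymptotic phase shift) to a toy oscillator whose PRC is known exactly. The toy model, a radial isochron clock with dr/dt = r(1 − r²) and dθ/dt = 1, is an assumption chosen for illustration and is not one of the four models in this paper. Its PRC for kicks in the x direction is −sin θ, a sinusoidal shape of the kind that arises near a supercritical Hopf bifurcation (Brown et al., 2004).

```python
import numpy as np
from scipy.integrate import solve_ivp

# Hypothetical toy oscillator (radial isochron clock), written in Cartesian form:
#   dr/dt = r (1 - r^2),  dtheta/dt = 1
def clock(t, z):
    x, y = z
    r2 = x * x + y * y
    return [x * (1 - r2) - y, y * (1 - r2) + x]

def direct_prc(theta0, eps=1e-4, t_relax=20.0):
    """Direct-method PRC estimate: kick x by eps at phase theta0, let the
    trajectory relax back to the limit cycle, and return (phase shift) / eps."""
    kicked = [np.cos(theta0) + eps, np.sin(theta0)]
    sol = solve_ivp(clock, (0.0, t_relax), kicked, method="DOP853",
                    rtol=1e-11, atol=1e-12)
    x, y = sol.y[:, -1]
    # Compare with the unperturbed phase theta0 + t_relax, wrapped to (-pi, pi].
    shift = np.angle(np.exp(1j * (np.arctan2(y, x) - (theta0 + t_relax))))
    return shift / eps

thetas = np.linspace(0, 2 * np.pi, 13)[:-1]
prc_est = np.array([direct_prc(th) for th in thetas])
prc_exact = -np.sin(thetas)      # known x-direction PRC of this clock
print(f"max |estimate - exact| = {np.max(np.abs(prc_est - prc_exact)):.2e}")
```

The ratio of phase shift to kick size converges to the infinitesimal PRC as eps shrinks; the remaining error here is of order eps.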

Figure 1. Phase Response Curves for the neuron models considered in the paper. (A) Sinusoidal with

The Sinusoidal PRC

shown in Figure 1A with

Periodic orbits can also arise from a SNIPER bifurcation, which stands for Saddle-Node Infinite Period bifurcation; this is also often called a SNIC bifurcation, which stands for Saddle-Node Invariant Circle bifurcation. Here, for a parameter on one side of the bifurcation there is a stable fixed point and a saddle fixed point that lie on an invariant circle. As the parameter is varied, these fixed points annihilate in a saddle-node bifurcation, and when the parameter is on the other side of the bifurcation there is a stable periodic orbit whose period approaches infinity as the bifurcation is approached. For a periodic orbit near a SNIPER bifurcation, the PRC is approximately given by Equation 13 (Ermentrout, 1996; Brown et al., 2004):

shown in Figure 1B with
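The infinite-period behavior near a SNIPER bifurcation can be checked numerically on the theta neuron, dθ/dt = (1 − cos θ) + (1 + cos θ)η, a standard canonical model for this bifurcation that is used here only as an illustrative assumption (it is not the SNIPER PRC model of this paper). For −1 < η < 0 the circle carries a pair of fixed points (one stable, one unstable) that annihilate at η = 0; for η > 0 the neuron oscillates with period π/√η, which diverges as η → 0⁺. The sketch computes the period as the integral of dθ/θ' over one revolution and compares it with this closed form.

```python
import numpy as np
from scipy.integrate import quad

def theta_neuron_period(eta):
    """Period of dtheta/dt = (1 - cos th) + (1 + cos th) * eta for eta > 0,
    computed as the integral of 1 / speed over one revolution."""
    speed = lambda th: (1 - np.cos(th)) + (1 + np.cos(th)) * eta
    # The integrand peaks sharply at th = 0 for small eta, so split there.
    period, _ = quad(lambda th: 1.0 / speed(th), -np.pi, np.pi, points=[0.0], limit=200)
    return period

for eta in [1.0, 0.1, 0.01, 0.001]:
    print(f"eta={eta:<6} numerical T={theta_neuron_period(eta):10.5f}  "
          f"pi/sqrt(eta)={np.pi / np.sqrt(eta):10.5f}")
```

Each tenfold decrease of η lengthens the period by a factor of √10, the η^(−1/2) scaling expected as the saddle-node on the invariant circle is approached.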

The Hodgkin-Huxley equations are a well-studied conductance-based model for neural activity, and were developed to describe the dynamics for a squid giant axon (Hodgkin and Huxley, 1952). Mathematically, they are a four-dimensional set of coupled ordinary differential equations for the voltage across the neural membrane and three gating variables associated with the flow of ions across the membrane. The full equations are given in the Supplementary Appendix. We chose a baseline current value

Finally, the Reduced Hodgkin-Huxley equations are an approximation to the full Hodgkin-Huxley equations (Keener and Sneyd, 1998; Moehlis, 2006). Mathematically, they are a two-dimensional set of coupled ordinary differential equations for the voltage across the neural membrane and one gating variable. The equations are given in the Supplementary Appendix. We chose a baseline current value

3 Results

3.1 Results for pairs of neurons

In this section, we consider the dynamics of a pair of neurons satisfying Equation 1, where

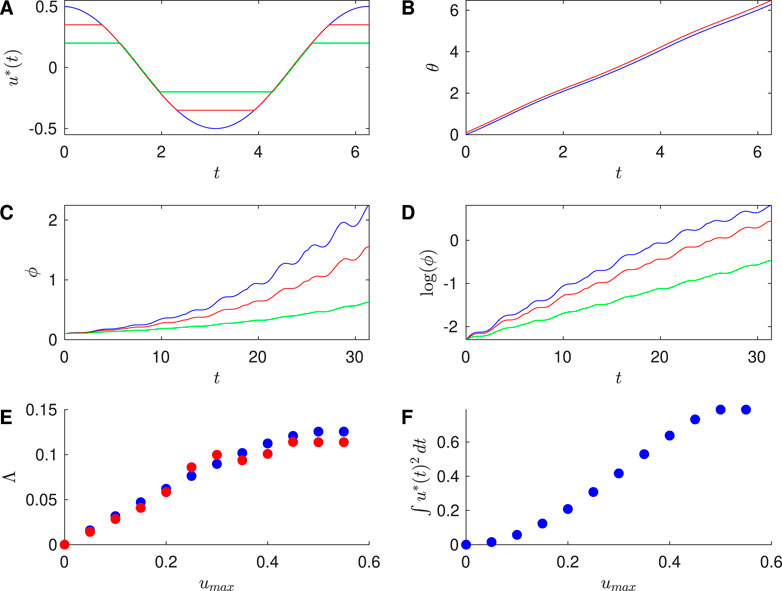

Figure 2 shows results for the Sinusoidal PRC with

Figure 2. Results for Sinusoidal PRC, with

To investigate the efficacy of desynchronization between Neurons 1 and 2, we ran simulations of the phases of Neurons 1 and 2 over a full cycle of the control stimulus, with initial conditions

Next, we apply successive control stimuli to pairs of neurons with the event-based approach described above. We compute the phase difference

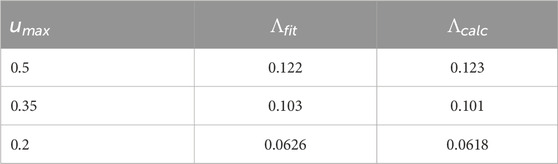

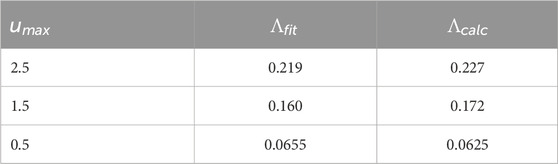

Table 1 compares

used over one cycle of the control stimulus for a range of values of

Table 1. For the Sinusoidal PRC, comparison of the Lyapunov exponent

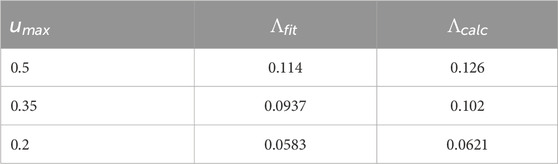

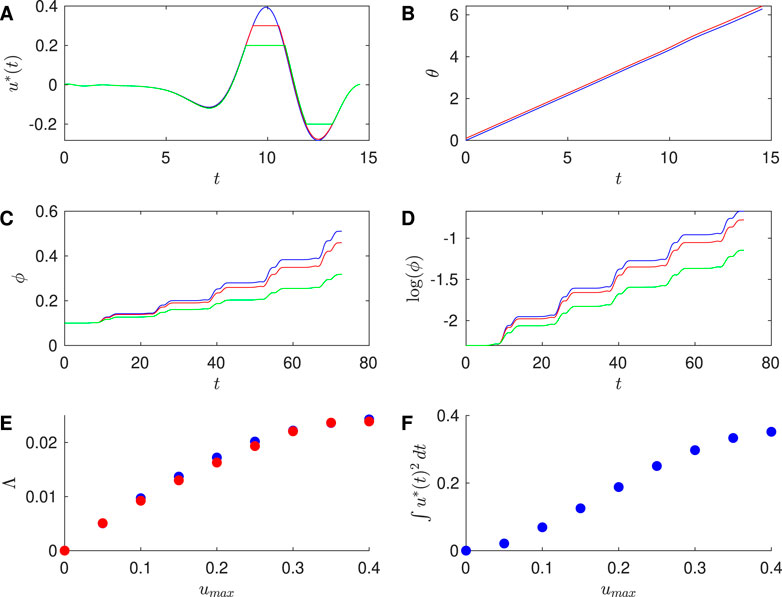

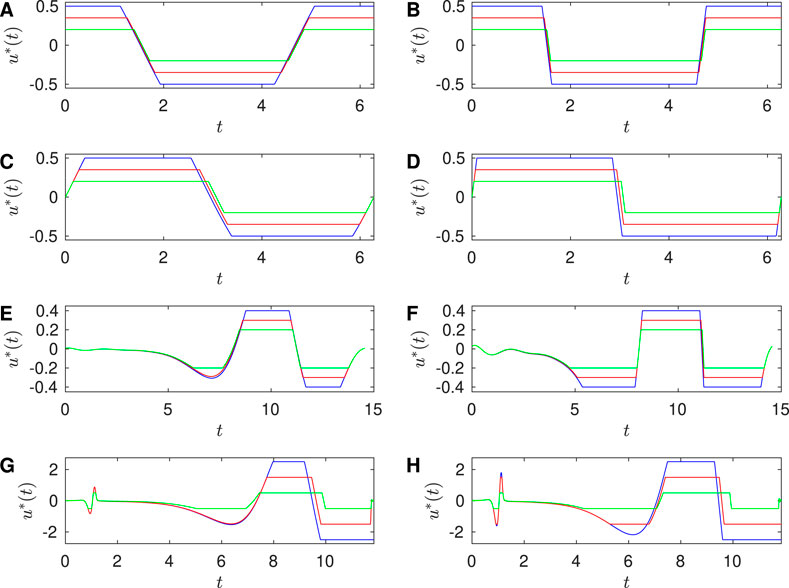

Similarly, Figure 3 shows results for the SNIPER PRC with

Figure 3. Results for SNIPER PRC with

Table 2. For the SNIPER PRC, comparison of the Lyapunov exponent

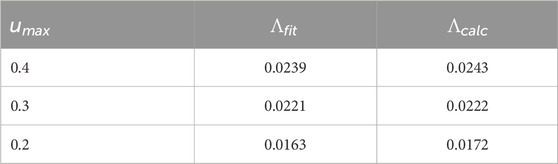

Figure 4. Results for Hodgkin-Huxley PRC with

Table 3. For the Hodgkin-Huxley PRC, comparison of the Lyapunov exponent

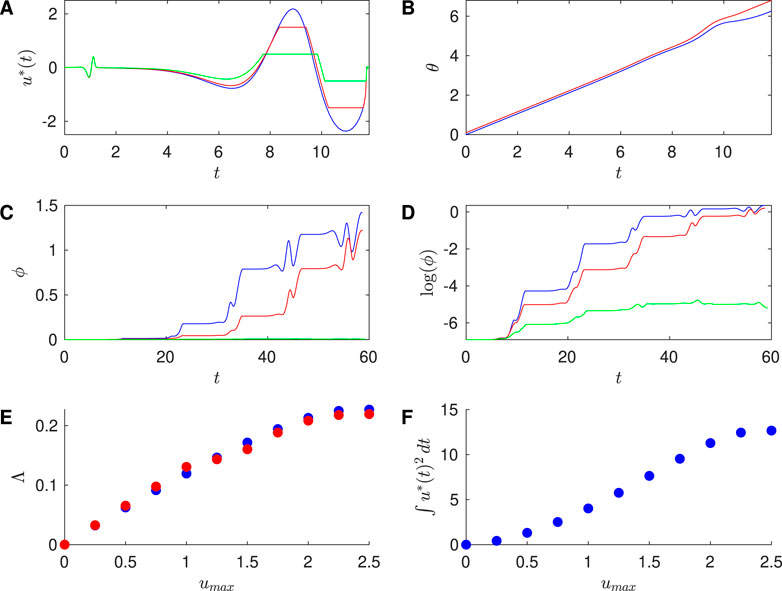

Figure 5. Results for Reduced Hodgkin-Huxley PRC with

Table 4. For the Reduced Hodgkin-Huxley PRC, comparison of the Lyapunov exponent

Here we make the new observation that in some cases the magnitude-constrained optimal input resembles the unconstrained input simply “chopped off” at the constraint, i.e.,

When

Figure 6. Approaching Bang-bang control in the large
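The “chopped off” observation has a pointwise explanation under Pontryagin's minimum principle, sketched here under an assumed Hamiltonian H = u² − βZ'(θ)u + λ(ω + Z(θ)u) with a hypothetical PRC Z(θ) = sin θ and hypothetical values of β, ω and u_max. Because H is a convex quadratic in u, its minimizer over |u| ≤ u_max is the unconstrained stationary point clipped to the bound, which the check below confirms by brute-force minimization for random (θ, λ) pairs. One plausible reading is that the constrained optimal input equals the chopped unconstrained input only when the costate trajectory λ(t) is nearly unchanged by the constraint, which would explain why the resemblance holds in some cases but not all.

```python
import numpy as np

BETA, OMEGA, U_MAX = 2.0, 1.0, 0.5            # hypothetical values
rng = np.random.default_rng(0)
u_candidates = np.linspace(-U_MAX, U_MAX, 20001)   # fine grid of admissible inputs

def hamiltonian(u, th, lam):
    # Assumed H = u^2 - BETA*Z'(th)*u + lam*(OMEGA + Z(th)*u) with Z = sin.
    return u**2 - BETA * np.cos(th) * u + lam * (OMEGA + np.sin(th) * u)

max_gap = 0.0
for _ in range(1000):
    th, lam = rng.uniform(0, 2 * np.pi), rng.normal(scale=3.0)
    u_free = (BETA * np.cos(th) - lam * np.sin(th)) / 2.0   # unconstrained stationary point
    u_sat = np.clip(u_free, -U_MAX, U_MAX)                  # "chopped off" at the bound
    u_best = u_candidates[np.argmin(hamiltonian(u_candidates, th, lam))]
    max_gap = max(max_gap, abs(u_sat - u_best))
print(f"largest gap between clipped and brute-force minimizer: {max_gap:.1e}")
```

The gap is bounded by the grid spacing. Holding λ fixed, increasing β makes |u_free| exceed U_MAX over more of the cycle, so the saturated input spends more time at ±U_MAX, consistent with the approach to bang-bang control shown in Figure 6.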

3.2 Results for population-level simulations of neurons

While the results in the previous section illustrate that stimuli with and without magnitude constraints can give positive Lyapunov exponents for the phase difference between pairs of neural oscillators, we are also interested in how such inputs perform for a larger population of coupled neural oscillators. In this section, we consider a population of Reduced Hodgkin-Huxley neurons with all-to-all electrotonic coupling and independent additive noise for each neuron. The governing equations are:

where

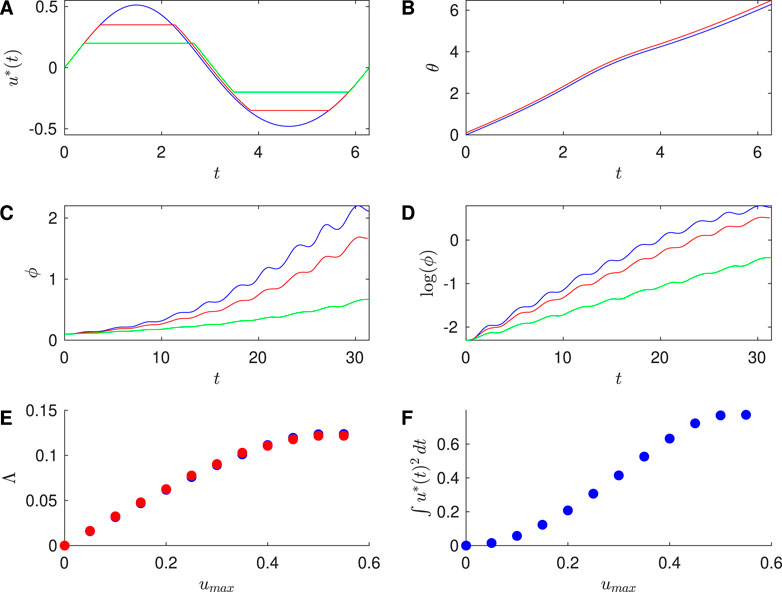

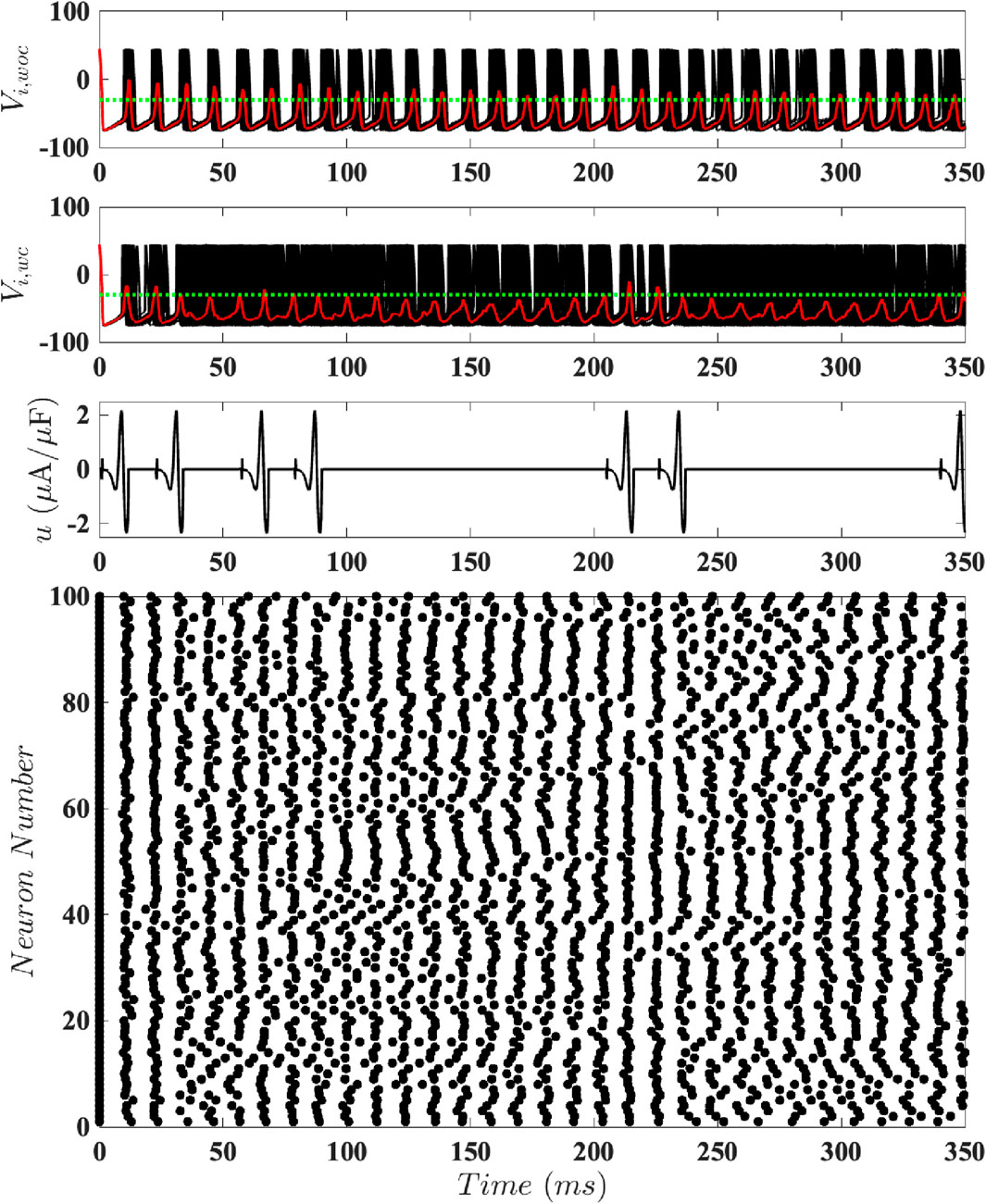

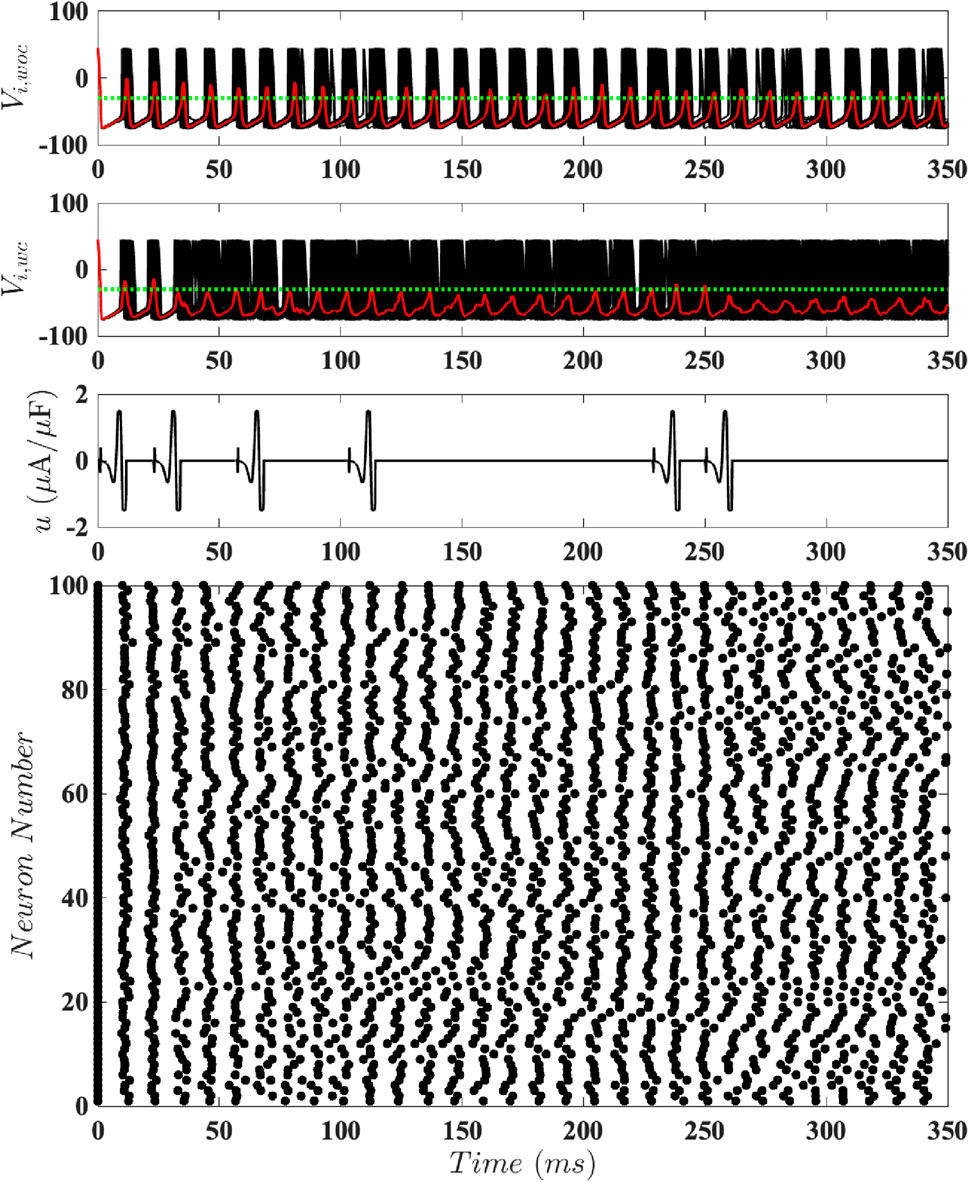

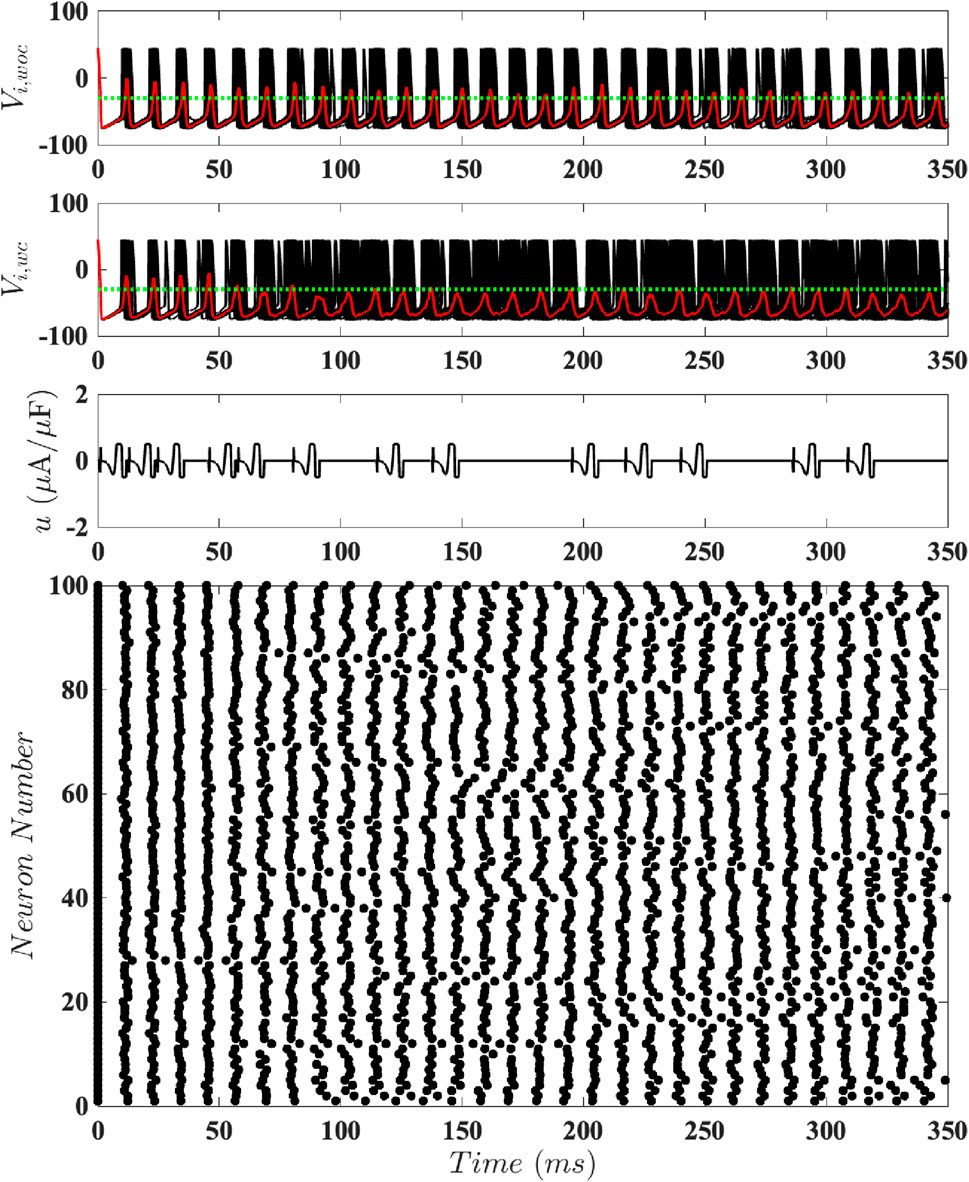

To test our magnitude-constrained optimal inputs

Figure 7. Results for Population-Level Simulations with Unconstrained Input. Here

Figure 8. Results for Population-Level Simulations with Constrained Input at

Figure 9. Results for Population-Level Simulations with Constrained Input at

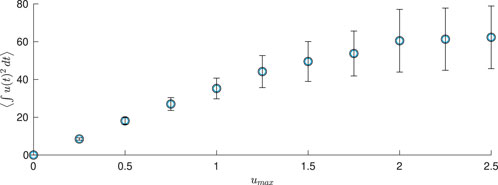

This motivates us to better understand how the average amount of energy required to keep the neural population desynchronized with this event-based scheme depends on the magnitude constraint. To investigate this, we consider 100 different population-level simulations for different values of

where

Figure 10. Energy used in population-level simulations for 350

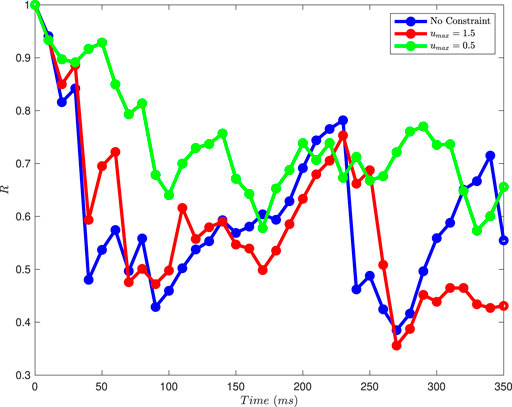

We used the average voltage as a measure of synchronization because this is easily defined, and one expects this to be related to the local field potential, which can be measured experimentally. However, a more common measure of synchronization is the Kuramoto order parameter (Kuramoto, 1984), whose amplitude is

where

To obtain the order parameter

Figure 11 shows results from this order parameter calculation for the plots shown in Figure 7 (no constraint on the magnitude of

Figure 11. The magnitude of the Kuramoto order parameter for different constraints on the magnitude of the control input, calculated at 10
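The order parameter itself is simple to compute once each neuron has been assigned a phase. The sketch below assumes one common convention, in which a neuron's phase rises linearly from 0 to 2π between consecutive spikes; the phase-assignment procedure used for Figure 11 may differ. It then evaluates R = |(1/N)Σ_j e^{iθ_j}|, which equals 1 for perfect synchrony and is near 0 for phases spread evenly around the circle. The spike trains are synthetic.

```python
import numpy as np

def phase_from_spikes(spike_times, t):
    """Phase in [0, 2*pi) at time t, rising linearly between consecutive spikes
    (an assumed convention for illustration)."""
    spikes = np.asarray(spike_times, dtype=float)
    k = np.searchsorted(spikes, t, side="right") - 1   # index of last spike <= t
    if k < 0 or k + 1 >= len(spikes):
        raise ValueError("t must lie between two recorded spikes")
    return 2 * np.pi * (t - spikes[k]) / (spikes[k + 1] - spikes[k])

def kuramoto_r(phases):
    """Amplitude R = |(1/N) * sum_j exp(i * theta_j)| of the Kuramoto order parameter."""
    return float(np.abs(np.mean(np.exp(1j * np.asarray(phases)))))

# Synthetic spike trains: N = 10 neurons with period 10 (arbitrary time units).
N, PERIOD, T_EVAL = 10, 10.0, 25.0
synced = [[PERIOD * k for k in range(5)] for _ in range(N)]
staggered = [[j * PERIOD / N + PERIOD * k for k in range(5)] for j in range(N)]

r_sync = kuramoto_r([phase_from_spikes(s, T_EVAL) for s in synced])
r_stag = kuramoto_r([phase_from_spikes(s, T_EVAL) for s in staggered])
print(f"synchronous R = {r_sync:.3f}, evenly staggered R = {r_stag:.3f}")
```

Here the synchronous population gives R = 1 and the evenly staggered one gives R ≈ 0; intermediate values indicate partial synchrony.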

4 Discussion

Motivated by deep brain stimulation treatment of neurological disorders including Parkinson’s disease, there has been much recent interest in using control theory to design optimal input stimuli which desynchronize neural populations. One such approach used chaotic desynchronization to achieve this goal in an energy-optimal fashion (Wilson and Moehlis, 2014b). In this paper, we generalized this optimal chaotic desynchronization methodology by including a magnitude constraint on the input. In particular, we showed how to calculate the optimal stimuli which satisfy such a constraint, and demonstrated that such inputs lead to exponential desynchronization of pairs of neurons and effective desynchronization of populations of coupled, noisy neurons. This approach is based on a phase reduction of the dynamics of a neuron, with the phase response curve quantifying the effect of an external input on a neuron’s phase. Based on knowledge of a neuron’s phase response curve, we calculated the inputs that are optimally desynchronizing while minimizing energy utilization, using methods from optimal control. The addition of a magnitude constraint allows for the design of optimal stimulation inputs with a maximum amplitude that can be customized based on biological and electronic considerations. Interestingly, while these constrained inputs use less energy, they still achieve population-level desynchronization.

We note an extension of the current paper which could be of interest in future work: incorporation of both magnitude and charge-balance constraints on the control stimulus. This follows from the observation that non-charge-balanced stimuli, such as those considered in this paper, can cause harmful Faradaic reactions that may damage the DBS electrode or neural tissue (Merrill et al., 2005). This has motivated the use of a charge-balance constraint for optimal control design (Nabi and Moehlis, 2009; Wilson and Moehlis, 2014b). However, this presents additional challenges because it increases the dimension of the two-point boundary value problem which must be solved numerically. Our approach could also be applied to other types of neurons, including those in common neurostimulation targets such as the subthalamic nucleus or the thalamus, and even to periodically bursting neurons, as long as the phase response curve can be determined. If it is not possible to obtain this from electrophysiological measurements (Netoff et al., 2012), or if there is no accurate mathematical model that would allow numerical techniques to be used (Ermentrout, 2002; Monga et al., 2019), an approach such as that described in Wilson and Moehlis (2015b), which can estimate the phase response curve from aggregate measurements such as the local field potential, could be used. Moreover, we expect that similar population-level control results would be found for other types of coupling, such as synaptic coupling and/or heterogeneous coupling, provided that the coupling strength is not too strong.
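To see why a charge-balance constraint enlarges the boundary value problem, one standard route, sketched here under an assumed cost ∫_0^{T_1}[u² − βZ'(θ)u]dt and not a formulation taken from this paper, is to append the delivered charge q(t) = ∫_0^t u(s)ds as an extra state with q(0) = q(T_1) = 0, where sat clips its argument to [−u_max, u_max]:

```latex
\begin{aligned}
\dot\theta &= \omega + Z(\theta)\,u, \qquad \dot q = u, \\
H &= u^2 - \beta Z'(\theta)\,u + \lambda_\theta\bigl(\omega + Z(\theta)\,u\bigr) + \lambda_q\,u, \\
\dot\lambda_\theta &= -\frac{\partial H}{\partial \theta} = \beta Z''(\theta)\,u - \lambda_\theta Z'(\theta)\,u,
\qquad \dot\lambda_q = -\frac{\partial H}{\partial q} = 0, \\
u^*(t) &= \operatorname{sat}_{u_{\max}}\!\left(\frac{\beta Z'(\theta) - \lambda_\theta Z(\theta) - \lambda_q}{2}\right).
\end{aligned}
```

The constant multiplier λ_q becomes a second unknown and the extra end condition q(T_1) = 0 must hold alongside θ(T_1) = 2π, so a shooting method would search over the pair (λ_θ(0), λ_q) instead of a single scalar.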

We imagine that the results from this paper can be useful to the neuroscience community in cases where there are biological or electronic hardware considerations which limit the allowed input magnitude for a stimulus. With deep brain stimulation becoming an increasingly adopted therapeutic technique for treatment of neurological disorders, this research extends ongoing research efforts to theoretically inform the optimal design of deep brain stimulation protocols.

Data availability statement

The datasets presented in this study can be found in an online repository: https://github.com/michaelzimet/MgOptChaoticDesync (DOI: 10.5281/zenodo.15595745).

Author contributions

MZ: Software, Writing – review and editing, Methodology, Investigation, Writing – original draft, Conceptualization. FR: Conceptualization, Writing – review and editing, Software. JM: Writing – review and editing, Conceptualization, Methodology, Supervision, Software.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnetp.2025.1646391/full#supplementary-material

References

Abouzeid, A., and Ermentrout, B. (2009). Type-II phase resetting curve is optimal for stochastic synchrony. Phys. Rev. E 80, 011911. doi:10.1103/PhysRevE.80.011911

Adamchic, I., Hauptmann, C., Barnikol, U. B., Pawelczyk, N., Popovych, O., Barnikol, T. T., et al. (2014). Coordinated reset neuromodulation for Parkinson’s disease: proof-of-concept study. Mov. Disord. 29, 1679–1684. doi:10.1002/mds.25923

Asl, M. M., Vahabie, A.-H., Valizadeh, A., and Tass, P. A. (2022). Spike-timing-dependent plasticity mediated by dopamine and its role in Parkinson’s disease pathophysiology. Front. Netw. Physiology 2, 817524. doi:10.3389/fnetp.2022.817524

Brown, E., Moehlis, J., and Holmes, P. (2004). On the phase reduction and response dynamics of neural oscillator populations. Neural Comput. 16, 673–715. doi:10.1162/089976604322860668

Cagnan, H., Pedrosa, D., Little, S., Pogosyan, A., Cheeran, B., Aziz, T., et al. (2017). Stimulating at the right time: phase-specific deep brain stimulation. Brain 140, 132–145. doi:10.1093/brain/aww286

Cagnan, H., Denison, T., McIntyre, C., and Brown, P. (2019). Emerging technologies for improved deep brain stimulation. Nat. Biotechnol. 37, 1024–1033. doi:10.1038/s41587-019-0244-6

Danzl, P., Hespanha, J., and Moehlis, J. (2009). Event-based minimum-time control of oscillatory neuron models: phase randomization, maximal spike rate increase, and desynchronization. Biol. Cybern. 101, 387–399. doi:10.1007/s00422-009-0344-3

Ermentrout, B. (1996). Type I membranes, phase resetting curves, and synchrony. Neural Comput. 8, 979–1001. doi:10.1162/neco.1996.8.5.979

Ermentrout, B. (2002). Simulating, analyzing, and animating dynamical systems: a guide to XPPAUT for researchers and students. Philadelphia: Society for Industrial and Applied Mathematics.

Frey, J., Cagle, J., Johnson, K. A., Wong, J. K., Hilliard, J. D., Butson, C. R., et al. (2022). Past, present, and future of deep brain stimulation: hardware, software, imaging, physiology and novel approaches. Front. Neurol. 13, 825178. doi:10.3389/fneur.2022.825178

Guckenheimer, J., and Holmes, P. J. (1983). Nonlinear oscillations, dynamical systems and bifurcations of vector fields. New York: Springer-Verlag.

Hammond, C., Bergman, H., and Brown, P. (2007). Pathological synchronization in Parkinson’s disease: networks, models and treatments. Trends Neurosci. 30, 357–364. doi:10.1016/j.tins.2007.05.004

Hansel, D., Mato, G., and Meunier, C. (1995). Synchrony in excitatory neural networks. Neural Comput. 7, 307–337. doi:10.1162/neco.1995.7.2.307

Hodgkin, A. L., and Huxley, A. F. (1952). A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117, 500–544. doi:10.1113/jphysiol.1952.sp004764

Holt, A. B., Wilson, D., Shinn, M., Moehlis, J., and Netoff, T. I. (2016). Phasic burst stimulation: a closed-loop approach to tuning deep brain stimulation parameters for Parkinson’s disease. PLoS Comput. Biol. 12, e1005011. doi:10.1371/journal.pcbi.1005011

Khaledi-Nasab, A., Kromer, J. A., and Tass, P. A. (2022). Long-lasting desynchronization of plastic neuronal networks by double-random coordinated reset stimulation. Front. Netw. Physiology 2, 864859. doi:10.3389/fnetp.2022.864859

Krauss, J. K., Lipsman, N., Aziz, T., Boutet, A., Brown, P., Chang, J. W., et al. (2021). Technology of deep brain stimulation: current status and future directions. Nat. Rev. Neurol. 17, 75–87. doi:10.1038/s41582-020-00426-z

Kubota, S., and Rubin, J. E. (2018). Numerical optimization of coordinated reset stimulation for desynchronizing neuronal network dynamics. J. Comput. Neurosci. 45, 45–58. doi:10.1007/s10827-018-0690-z

Lozano, A. M., and Lipsman, N. (2013). Probing and regulating dysfunctional circuits using deep brain stimulation. Neuron 77, 406–424. doi:10.1016/j.neuron.2013.01.020

Lücken, L., Yanchuk, S., Popovych, O. V., and Tass, P. A. (2013). Desynchronization boost by non-uniform coordinated reset stimulation in ensembles of pulse-coupled neurons. Front. Comput. Neurosci. 7, 63. doi:10.3389/fncom.2013.00063

Lysyansky, B., Popovych, O. V., and Tass, P. A. (2011). Desynchronizing anti-resonance effect of m: n on-off coordinated reset stimulation. J. Neural Eng. 8, 036019. doi:10.1088/1741-2560/8/3/036019

Manos, T., Diaz-Pier, S., and Tass, P. A. (2021). Long-term desynchronization by coordinated reset stimulation in a neural network model with synaptic and structural plasticity. Front. Physiology 12, 716556. doi:10.3389/fphys.2021.716556

Matchen, T., and Moehlis, J. (2018). Phase model-based neuron stabilization into arbitrary clusters. J. Comput. Neurosci. 44, 363–378. doi:10.1007/s10827-018-0683-y

Matchen, T., and Moehlis, J. (2021). Leveraging deep learning to control neural oscillators. Biol. Cybern. 115, 219–235. doi:10.1007/s00422-021-00874-w

Merrill, D., Bikson, M., and Jefferys, J. (2005). Electrical stimulation of excitable tissue: design of efficacious and safe protocols. J. Neurosci. Methods 141, 171–198. doi:10.1016/j.jneumeth.2004.10.020

Moehlis, J. (2006). Canards for a reduction of the Hodgkin-Huxley equations. J. Math. Biol. 52, 141–153. doi:10.1007/s00285-005-0347-1

Moehlis, J., Zimet, M., and Rajabi, F. (2025). Nearly optimal chaotic desynchronization of neural oscillators.

Monga, B., and Moehlis, J. (2019). Phase distribution control of a population of oscillators. Phys. D. 398, 115–129. doi:10.1016/j.physd.2019.06.001

Monga, B., Froyland, G., and Moehlis, J. (2018). “Synchronizing and desynchronizing neural populations through phase distribution control,” in 2018 Annual American Control Conference (ACC), 2808–2813. doi:10.23919/ACC.2018.8431114

Monga, B., Wilson, D., Matchen, T., and Moehlis, J. (2019). Phase reduction and phase-based optimal control for biological systems: a tutorial. Biol. Cybern. 113, 11–46. doi:10.1007/s00422-018-0780-z

Nabi, A., and Moehlis, J. (2009). “Charge-balanced optimal inputs for phase models of spiking neurons,” in Proceedings of the ASME 2009 Dynamic Systems and Control Conference, 685–687. doi:10.1115/DSCC2009-2541

Nabi, A., Mirzadeh, M., Gibou, F., and Moehlis, J. (2013). Minimum energy desynchronizing control for coupled neurons. J. Comput. Neurosci. 34, 259–271. doi:10.1007/s10827-012-0419-3

Najera, R. A., Mahavadi, A. K., Khan, A. U., Boddeti, U., Bene, V. A. D., Walker, H. C., et al. (2023). Alternative patterns of deep brain stimulation in neurologic and neuropsychiatric disorders. Front. Neuroinform. 17, 1156818. doi:10.3389/fninf.2023.1156818

Netoff, T., Schwemmer, M. A., and Lewis, T. J. (2012). “Experimentally estimating phase response curves of neurons: theoretical and practical issues,” in Phase response curves in neuroscience. Editors N. W. Schultheiss, A. A. Prinz, and R. J. Butera (New York, NY: Springer), 95–129.

Neumann, W.-J., Horn, A., and Kühn, A. A. (2007). Insights and opportunities for deep brain stimulation as a brain circuit intervention. Trends Neurosci. 46, 472–487. doi:10.1016/j.tins.2023.03.009

Oehrn, C. R., Cernera, S., Hammer, L. H., Shcherbakova, M., Yao, J., Hahn, A., et al. (2024). Chronic adaptive deep brain stimulation versus conventional stimulation in Parkinson’s disease: a blinded randomized feasibility trial. Nat. Med. 30, 3345–3356. doi:10.1038/s41591-024-03196-z

Popovych, O. V., and Tass, P. A. (2014). Control of abnormal synchronization in neurological disorders. Front. Neurology 5, 268. doi:10.3389/fneur.2014.00268

Popovych, O. V., Hauptmann, C., and Tass, P. A. (2006). Control of neuronal synchrony by nonlinear delayed feedback. Biol. Cybern. 95, 69–85. doi:10.1007/s00422-006-0066-8

Popovych, O. V., Lysyansky, B., Rosenblum, M., Pikovsky, A., and Tass, P. A. (2017). Pulsatile desynchronizing delayed feedback for closed-loop deep brain stimulation. PLoS One 12, e0173363. doi:10.1371/journal.pone.0173363

Qin, Y., Nobili, A. M., Bassett, D. S., and Pasqualetti, F. (2023). Vibrational stabilization of cluster synchronization in oscillator networks. IEEE Open J. Control Syst. 2, 439–453. doi:10.1109/OJCSYS.2023.3331195

Rajabi, F., Gibou, F., and Moehlis, J. (2025). Optimal control for stochastic neural oscillators. Biol. Cybern. 119, 9. doi:10.1007/s00422-025-01007-3

Rosenblum, M. G., and Pikovsky, A. S. (2004a). Controlling synchronization in an ensemble of globally coupled oscillators. Phys. Rev. Lett. 92, 114102. doi:10.1103/PhysRevLett.92.114102

Rosenblum, M. G., and Pikovsky, A. S. (2004b). Delayed feedback control of collective synchrony: an approach to suppression of pathological brain rhythms. Phys. Rev. E 70, 041904. doi:10.1103/PhysRevE.70.041904

Rubchinsky, L. L., Park, C., and Worth, R. M. (2012). Intermittent neural synchronization in Parkinson’s disease. Nonlinear Dyn. 68, 329–346. doi:10.1007/s11071-011-0223-z

Sandoval-Pistorius, S. S., Hacker, M. L., Waters, A. C., Wang, J., Provenza, N. R., de Hemptinne, C., et al. (2023). Advances in deep brain stimulation: from mechanisms to applications. J. Neurosci. 43, 7575–7586. doi:10.1523/JNEUROSCI.1427-23.2023

Santaniello, S., McCarthy, M. M., Montgomery, Jr., E. B., Gale, J. T., Kopell, N., and Sarma, S. V. (2015). Therapeutic mechanisms of high-frequency stimulation in Parkinson’s disease and neural restoration via loop-based reinforcement. Proc. Natl. Acad. Sci. 112, E586–E595. doi:10.1073/pnas.1406549111

Spiliotis, K., Starke, J., Franz, D., Richter, A., and Köhling, R. (2022). Deep brain stimulation for movement disorder treatment: exploring frequency-dependent efficacy in a computational network model. Biol. Cybern. 116, 93–116. doi:10.1007/s00422-021-00909-2

Tass, P. A. (2003). A model of desynchronizing deep brain stimulation with a demand-controlled coordinated reset of neural subpopulations. Biol. Cybern. 89, 81–88. doi:10.1007/s00422-003-0425-7

Tass, P. A. (2006). Phase resetting in medicine and biology: stochastic modelling and data analysis. Berlin: Springer.

Vu, M., Singhal, B., Zeng, S., and Li, J.-S. (2024). Data-driven control of oscillator networks with population-level measurement. Chaos 34, 033138. doi:10.1063/5.0191851

Wilson, D. (2020). Optimal open-loop desynchronization of neural oscillator populations. J. Math. Biol. 81, 25–64. doi:10.1007/s00285-020-01501-1

Wilson, D., and Moehlis, J. (2014a). Locally optimal extracellular stimulation for chaotic desynchronization of neural populations. J. Comput. Neurosci. 37, 243–257. doi:10.1007/s10827-014-0499-3

Wilson, D., and Moehlis, J. (2014b). Optimal chaotic desynchronization for neural populations. SIAM J. Appl. Dyn. Syst. 13, 276–305. doi:10.1137/120901702

Wilson, D., and Moehlis, J. (2015a). Clustered desynchronization from high-frequency deep brain stimulation. PLoS Comput. Biol. 11, e1004673. doi:10.1371/journal.pcbi.1004673

Wilson, D., and Moehlis, J. (2015b). Determining individual phase response curves from aggregate population data. Phys. Rev. E 92, 022902. doi:10.1103/PhysRevE.92.022902

Wilson, D., and Moehlis, J. (2022). Recent advances in the analysis and control of large populations of neural oscillators. Annu. Rev. Control 54, 327–351. doi:10.1016/j.arcontrol.2022.05.002

Wilson, C. J., Beverlin II, B., and Netoff, T. (2011). Chaotic desynchronization as the therapeutic mechanism of deep brain stimulation. Front. Syst. Neurosci. 5, 50. doi:10.3389/fnsys.2011.00050

Keywords: optimal control, desynchronization, deep brain stimulation, Lyapunov exponent, network physiology

Citation: Zimet M, Rajabi F and Moehlis J (2025) Magnitude-constrained optimal chaotic desynchronization of neural populations. Front. Netw. Physiol. 5:1646391. doi: 10.3389/fnetp.2025.1646391

Received: 13 June 2025; Accepted: 29 September 2025;

Published: 21 October 2025.

Edited by:

Eckehard Schöll, Technical University of Berlin, Germany

Reviewed by:

Alexander Neiman, Ohio University, United States

Louis M Pecora, Naval Research Laboratory, United States

Copyright © 2025 Zimet, Rajabi and Moehlis. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jeff Moehlis, moehlis@ucsb.edu

Michael Zimet, Faranak Rajabi², Jeff Moehlis