- 1 Department of Neuroscience, Neuroscience of Imagination, Cognition, and Emotion Research Lab, Carleton University, Ottawa, ONT, Canada

- 2 Children’s Hospital of Eastern Ontario Research Institute, Ottawa, ONT, Canada

- 3 Department of Clinical Neurosciences, University of Geneva and Geneva University Hospitals, Geneva, Switzerland

- 4 Centre National de la Recherche Scientifique, Strasbourg University, Paris, Strasbourg, France

In this perspective, we introduce the Precision Principle as a unifying theoretical framework to explain self-organization across biological systems. Drawing from neurobiology, systems theory, and computational modeling, we propose that precision, understood as constraint-driven coherence, is the key force shaping the architecture, function, and evolution of nervous systems. We identify three interrelated domains: Structural Precision (efficient, modular wiring), Functional Precision (adaptive, context-sensitive circuit deployment), and Evolutionary Precision (selection-guided architectural refinement). Each domain is grounded in local operations such as spatial and temporal averaging, multiplicative co-activation, and threshold gating, which enable biological systems to achieve robust organization without centralized control. Within this framework, we introduce the Precision Coefficient,

1 Introduction

When most people think of a nervous system, they imagine vast webs of interconnected neurons shooting signals back and forth. This assumption raises an intriguing question: Do single-celled organisms have nervous systems?

If we define a nervous system by its ability to connect an organism to its external environment, allowing it to respond, adapt, and survive, then unicellular organisms arguably qualify. Certain bacteria and unicellular eukaryotes navigate their environment using molecular structures such as flagella and microvilli (Lynch, 2024). Through chemical and physical sensors such as gated ion channels, these structures interact with the environment and initiate changes in the organism’s movement (Saimi et al., 1988). Such reactions are not directed by a central command. They emerge from dynamic interactions between internal structures and external cues. Even single-celled life thus exhibits primitive forms of organization, sensory integration, and environmental adaptation. These mechanisms, rooted in self-preservation and stimulus response, represent an early blueprint for the nervous systems of complex organisms.

From this foundation, the principle of minimal, self-evoked structure scales upward (Dresp-Langley, 2024). As organisms evolved nervous systems, the purpose of those systems remained constant even as their complexity increased; here, order arises out of chaos. Nervous systems integrate, prioritize, and represent the world (Wan et al., 2024) while adhering to the same logic: organisms self-organize not because they are told to, but because their survival demands it. In this perspective, we call this internal drive the Precision Principle. It is a unifying theory of constraint-driven coherence that governs how nervous systems organize, adapt, and endure across scales. We express this logic with a simple quantitative form, the Precision Coefficient,

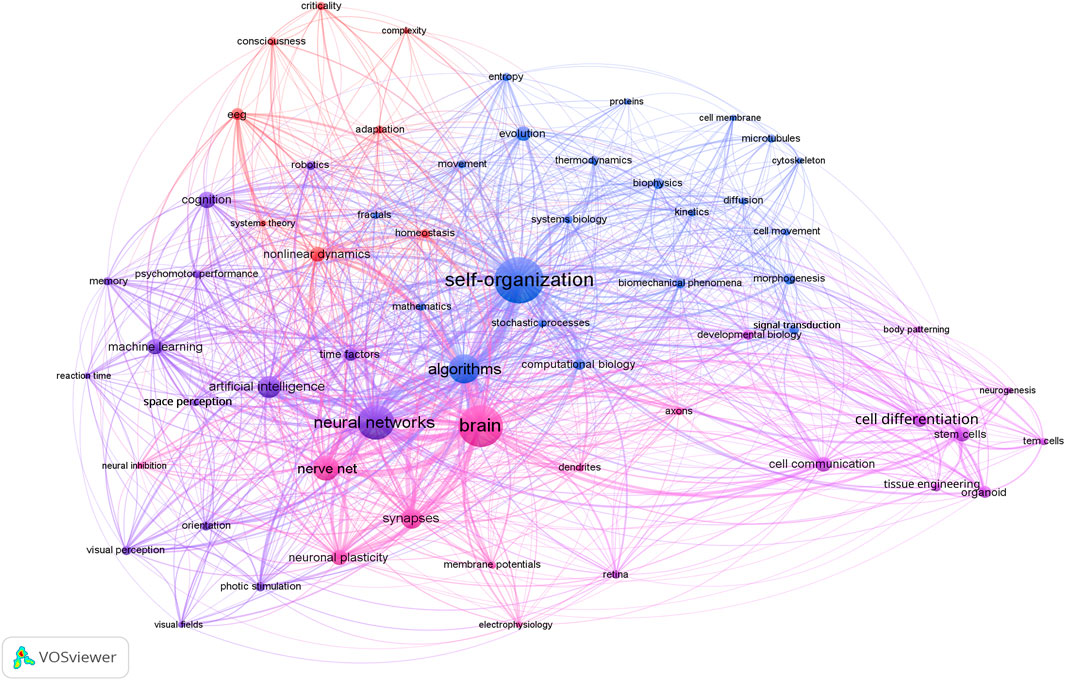

To underscore the scope and centrality of self-organization across disciplines, we present Figure 1. It depicts a global co-occurrence network of key concepts extracted from 1,810 peer-reviewed articles on biological and artificial systems. Node size scales with term frequency, and edge thickness scales with co-occurrence strength. At the heart of the network, “self-organization” has the highest centrality. It is densely connected to topics spanning thermodynamics, developmental biology, neural information processing, machine learning, robotics, and cognitive dynamics. This prominence indicates that self-organization functions as a unifying principle across physical, biological, and computational disciplines. It coordinates pattern formation, information flow, and adaptive behavior in systems that maintain Precision in the face of variability, a notion we define in Section 1.2.
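The construction described for Figure 1 can be sketched in a few lines of Python. This is not the pipeline used for the 1,810 articles; the toy corpus, the term list, and the function name are hypothetical. The sketch only shows how three quantities could be derived from a set of texts:

- term frequency, which sets node size;
- per-document co-occurrence counts, which set edge weight;
- a simple weighted-degree centrality.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_network(docs, terms):
    """Return term frequencies, pairwise co-occurrence counts, and weighted degree.

    docs  : list of lowercase strings (one per article)
    terms : list of key concepts to track (hypothetical term list)
    """
    freq = Counter()
    edges = Counter()
    for doc in docs:
        # Terms present in this document, each counted once per document.
        present = sorted(t for t in terms if t in doc)
        freq.update(present)
        # Every pair of terms found in the same document co-occurs once.
        for a, b in combinations(present, 2):
            edges[(a, b)] += 1
    # Weighted degree: total co-occurrence weight attached to each term.
    degree = Counter()
    for (a, b), w in edges.items():
        degree[a] += w
        degree[b] += w
    return freq, edges, degree

# Hypothetical toy corpus, for illustration only.
docs = [
    "self-organization and thermodynamics in living matter",
    "self-organization in neural networks and machine learning",
    "robotics with self-organization and machine learning",
    "thermodynamics of neural networks",
]
terms = ["self-organization", "thermodynamics", "neural networks",
         "machine learning", "robotics"]
freq, edges, degree = cooccurrence_network(docs, terms)
hub = max(degree, key=degree.get)
print(hub, freq[hub], degree[hub])
```

In this toy corpus, "self-organization" becomes the hub because it co-occurs with every other tracked term.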

1.1 Entropy

The concept of entropy describes how isolated systems tend to evolve toward more probable states, that is, states realizable by a greater number of micro-configurations. This evolution corresponds to an increase in disorder or uncertainty. Although disorder tends to grow, living organisms maintain their internal organization by exporting entropy to their environment: they take in ordered energy and release it in degraded, less useful forms.
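The link between "more probable" macrostates and "more micro-configurations" can be made concrete with a standard textbook toy system. The system is not taken from the original text. It consists of N two-state units, and a macrostate is the number k of units that are "up". The multiplicity W of a macrostate is the binomial coefficient C(N, k), and Boltzmann's relation S = k_B ln W assigns the highest entropy to the most mixed macrostate.

```python
import math

def multiplicity(n, k):
    """Number of micro-configurations with k of n two-state units 'up'."""
    return math.comb(n, k)

def entropy_in_kb(n, k):
    """Boltzmann entropy in units of k_B: S / k_B = ln W for macrostate (n, k)."""
    return math.log(multiplicity(n, k))

n = 10
for k in (0, 2, 5):
    w = multiplicity(n, k)
    # Probability of the macrostate if all 2**n micro-configurations are equally likely.
    print(f"k={k}: W={w}, S/k_B={entropy_in_kb(n, k):.3f}, P={w / 2**n:.4f}")
```

A system wandering at random among all 1,024 configurations spends most of its time near k = 5. That macrostate has 252 configurations, compared with a single configuration for the perfectly ordered k = 0 state.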

Under the second law of thermodynamics, the total entropy of the world tends to increase. Against this backdrop, we regard the self-organizing forces of living systems, shaped by evolution toward functional stability, as counteracting entropy locally. Self-organization gives rise to our nervous system and brain, coordinating billions of cells to interact with reality. In this paper, we propose that the brain’s capacity for self-organization and multimodular plasticity is guided by a fundamental internal drive toward what we term Precision.

1.2 Precision

Precision in this context may be seen as an emergent, constraint-driven optimization criterion that governs both the architecture of brain circuits and their functional dynamics. It sculpts their structure and activity in ways that maximize efficiency while preserving internal coherence. In what follows, “precision” refers to emergent coherence under constraint rather than to fine-tuning or generic optimization. We use the Precision Principle as a unifying framework to organize the levels of analysis developed here. Intermolecular forces (e.g., London dispersion forces) determine how molecules settle into stable configurations under physical constraints. In the same way, biological systems exploit analogous principles to drive toward precision in structure, function, and evolution.

Drawing on and extending ideas from Hebbian learning, memory consolidation, cross-modal plasticity, and predictive coding, the Precision Principle offers an alternative to theories such as the Free Energy Principle (FEP). The FEP and the Precision Principle yield similar outcomes, namely pattern recognition and internal coherence, but their underlying mechanisms differ fundamentally.

The FEP characterizes cognition as a predictive engine constantly questioning “what’s next?”, inherently driven by minimizing surprise through alignment with external reality (Friston, 2010). A closely related account, Bayesian brain theory, likewise portrays cognition as probabilistic inference, combining prior beliefs with sensory likelihoods in a precision-weighted manner to produce posterior estimates (Knill and Pouget, 2004; Yuille and Kersten, 2006). However, such personification, whether of a brain that minimizes free energy or one that optimally updates posteriors, misrepresents biological reality; the biological brain and conscious mind are distinct entities acting in tight conjunction (D’Angiulli and Sidhu, 2025).
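For comparison, the precision-weighted combination invoked by Bayesian brain accounts has a simple closed form in the Gaussian case. Here "precision" means the statistical quantity (inverse variance), not Precision in the sense of this paper. The sketch is a standard textbook illustration of the view being contrasted, and its numbers are arbitrary.

```python
def gaussian_posterior(prior_mean, prior_var, obs, obs_var):
    """Combine a Gaussian prior with a Gaussian likelihood.

    Precision = 1 / variance. The posterior precision is the sum of the two
    precisions; the posterior mean is their precision-weighted average.
    """
    prior_prec = 1.0 / prior_var
    obs_prec = 1.0 / obs_var
    post_prec = prior_prec + obs_prec
    post_mean = (prior_prec * prior_mean + obs_prec * obs) / post_prec
    return post_mean, 1.0 / post_prec

# A reliable observation (small variance) pulls the estimate strongly toward itself.
mean, var = gaussian_posterior(prior_mean=0.0, prior_var=4.0, obs=10.0, obs_var=1.0)
print(mean, var)  # 8.0 0.8
```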

The Precision Principle challenges the centrality of surprise minimization (and of ideal Bayesian optimality). It proposes instead that cognitive coherence and pattern recognition emerge primarily from internal, self-organizing neurobiological processes. In this framing, cognition arises through intrinsic molecular interactions and neural dynamics rather than through alignment with an external reality. Indeed, surprise minimization itself can be considered an emergent property of consciousness, resulting from deeper biological tendencies toward efficiency and equilibrium within an ever-changing environment. Precision, not merely prediction, organizes the brain’s structural and molecular logic.

Attributing surprise minimization to intrinsic neural dynamics is analogous to projecting human morality onto the animal kingdom, as when we root for a gazelle escaping a lion. Such moral narratives have no intrinsic validity outside human consciousness. Similarly, cognitive coherence is fundamentally a product of internal neural organization, which, while grounded in the environment, is not constrained by it.

2 Precision-driven self-organization: a three-part classification

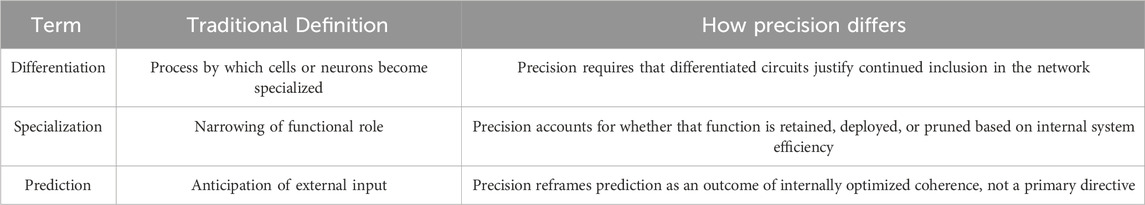

While differentiation delineates how neurons acquire distinct molecular and functional identities and specialization describes their progressive narrowing to a single computational role, Precision imposes an orthogonal constraint: circuits must not only exist but justify their persistence in the network’s economy. Thus, building on the foundational notion of Precision as constraint-driven structure, we propose that it manifests in three interwoven domains: Structural Precision, Functional Precision, and Evolutionary Precision.

Beyond its impact on individual circuits, Precision operates as a unifying principle across multiple timescales. It is not a theological or metaphysical invocation of design. Precision here refers to biologically grounded rules (e.g., energy efficiency, redundancy minimization, metabolic optimization) that shape neural architecture through interaction, pruning, and constraint rather than through foresight or intention. Precision acts in three ways:

- structurally, it generates compact, high-efficiency modules with minimal redundancy;
- functionally, it governs on-the-fly redeployment and robust buffering of circuits via gated short-term memory traces;
- evolutionarily, it determines which functionally validated configurations become encoded in the genome under selective pressure.

By embedding the same core rules across developmental, operational, and phylogenetic contexts, Precision offers a single, mechanistic framework. That framework explains how the brain continually balances stability against adaptability in service of resilient, energy-efficient computation. Table 1 further clarifies this framework by contrasting traditional definitions with their reinterpretation under Precision.

Table 1. Common definitions in the study of cell organization, and their key differences from precision.

2.1 Structural Precision: from local synapses to global network design

As previously stated, Structural Precision shapes modular architectures that minimize redundancy by promoting tight local clustering among co-activated neurons. It also establishes long-range pathways for distributed integration, an emergent pattern of modular connectivity grounded in unsupervised, activity-dependent learning (Dresp-Langley, 2020). Through long-range links, modules exchange information, so specialized circuits can operate independently while still integrating seamlessly into global computations. In such self-organizing networks, these circuit interactions are mirrored at the molecular scale. There, structural modifications accumulate as long-term memory (LTM) traces along synaptic pathways (Milano et al., 2023).

This coupling of local change and global coordination arises through unsupervised Hebbian learning. Each synapse adjusts its weight solely on the basis of the timing of pre- and postsynaptic activity, without an external supervisor (Dresp-Langley, 2020). In this way, synaptic microstructure and global topology evolve together in a tightly coupled process. As Grossberg (1993) showed in his additive formulation (Equation 1), each LTM trace can be described by a differential equation with a selective gated-decay term that restricts plasticity to periods of significant postsynaptic activation:

where the gating function $h_j(z_j)$, a thresholded sigmoid, ensures that only sufficiently strong postsynaptic responses permit plasticity. It thereby captures the balance between Hebbian growth and synaptic decay. Here, $z_{ji}$ is the persistent component of synaptic strength from presynaptic neuron $i$ to postsynaptic neuron $j$, reflecting the accumulated history of neural interactions. The decay constant $K_{ji}$ counteracts spurious potentiation. The Hebbian term $L_{ji} f_i(z_i)$ reinforces synapses in proportion to the presynaptic signal $f_i(z_i)$ (Grossberg, 1993). The same decay–gating–potentiation rule applies across scales. As a result, small synaptic changes propagate outward to create local-to-global organization, in which distant nodes link into coherent functional motifs (Dresp-Langley, 2020). Underlying this is circular causality: local changes shape global structure, which in turn biases future local activity. This circularity lets the brain form scale-free hubs without centralized control.
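Read together, the definitions above are consistent with a gated-decay learning law of the following form. It is reconstructed from the named terms: the gate $h_j(z_j)$, the decay constant $K_{ji}$, and the Hebbian term $L_{ji} f_i(z_i)$. It should be treated as an assumption about Equation 1 rather than a verbatim copy of it:

$$
\frac{dz_{ji}}{dt} = h_j(z_j)\,\bigl[-K_{ji}\,z_{ji} + L_{ji}\,f_i(z_i)\bigr]
$$

When $h_j(z_j) \approx 0$, the weight is frozen, so there is neither learning nor forgetting. When the gate is open, $z_{ji}$ relaxes toward $L_{ji} f_i(z_i)/K_{ji}$. The long-run weight therefore tracks presynaptic activity only during epochs of strong postsynaptic activation.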

Over developmental and evolutionary timescales, the same multiplicative co-activation and threshold-gating rules also govern how the network’s wiring diagram evolves (Knoblauch, 2017). Synaptic pathways that repeatedly co-activate across environmental inputs accumulate LTM weight. They form densely interconnected modules that mirror the statistical “shape” of those inputs, while weakly used connections decay and are pruned.

For example, imagine a network repeatedly exposed to vertical edges in natural scenes. Each time two neurons co-fire on a vertical contour, their connecting weight $z_{ji}$ receives a small boost, but only if the postsynaptic cell itself is “engaged” through the gating function $h_j(z_j)$. Across thousands of such episodes, clusters of vertical-edge detectors self-assemble into densely wired modules while less-used cross-links atrophy. The resulting architecture is so well tuned to vertical features that it functions as a biologically evolved edge detector, analogous to orientation columns in visual cortex.

This continual cycle of selective potentiation and pruning exemplifies dynamic system growth. It allows a fixed-size network to explore an exponential repertoire of functional states without adding neurons (Dresp-Langley, 2020). By reinforcing only connections that improve system fitness and suppressing the rest, the brain dynamically reallocates resources to emerging computational niches. This balance between strengthening coherence and reducing redundancy directly anticipates the variables of the Precision Coefficient,
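The vertical-edge thought experiment can be simulated directly. The sketch below is a hypothetical toy, not a model from the cited work:

- Four input units project onto one postsynaptic cell; two are tuned to vertical contours and two to horizontal ones.
- Each weight follows an Euler-discretized gated update, Δz = η·g·(−K·z + L·x). Here x is the presynaptic input and g is a hard-threshold stand-in for the sigmoid gate.
- Weights that atrophy below an arbitrary cutoff are pruned.
- Vertical contours are presented nine times as often as horizontal ones.

```python
def train_edge_detector(n_episodes=500, eta=0.1, K=1.0, L=1.0,
                        theta=0.5, prune_below=0.05):
    """Toy gated Hebbian learning onto one postsynaptic cell (hypothetical parameters)."""
    vertical = [1.0, 1.0, 0.0, 0.0]     # inputs 0-1 respond to vertical contours
    horizontal = [0.0, 0.0, 1.0, 1.0]   # inputs 2-3 respond to horizontal contours
    z = [0.5, 0.5, 0.5, 0.5]            # initial LTM weights
    for t in range(n_episodes):
        # Deterministic schedule: every tenth episode shows a horizontal edge.
        pre = horizontal if t % 10 == 9 else vertical
        post = sum(w * x for w, x in zip(z, pre))   # postsynaptic drive
        gate = 1.0 if post > theta else 0.0         # hard-threshold stand-in for h_j
        # Gated decay: weights change only while the postsynaptic gate is open.
        z = [w + eta * gate * (-K * w + L * x) for w, x in zip(z, pre)]
    # Prune connections that have atrophied below the cutoff.
    return [w if w >= prune_below else 0.0 for w in z]

weights = train_edge_detector()
print([round(w, 3) for w in weights])  # [1.0, 1.0, 0.0, 0.0]
```

During vertical episodes the cell is engaged while the horizontal inputs are silent, so horizontal weights decay. Once their combined drive falls below threshold, the gate never opens for horizontal patterns again. Those weights are then pruned while the vertical weights saturate.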

The same LTM update rule, driven only by local activity, is enforced on every outgoing synapse. This local symmetry means the network not only converges stably but also gains the ability to incorporate new patterns without biasing older ones (Pena and Cerqueira, 2017). In practical terms, when novel inputs arrive (say, while learning a new texture), the machinery of Structural Precision carves out fresh modules in parallel with existing ones. It does so without corrupting or destabilizing existing feature maps. This symmetry-enforced balance between plasticity and stability is a hallmark of cortical development. It underlies phenomena such as map reorganization after sensory deprivation or injury, where the same constraint-driven coherence quietly shapes both adaptation and learning (Rabinowitch and Bai, 2016).

Therefore, Structural Precision is encoded in the very equations of LTM, where selective reinforcement and decay rules bias networks toward coherence under constraint. Cultured neural networks illustrate the same principle at a different scale. When left to self-organize, they form compact architectures that minimize metabolic cost while preserving representational power. Dense hubs emerge around high-utility features, while less informative connections recede into sparse links. These dynamics echo the formation of cortical columns and the evolvable architectures of sensory and motor maps across species. Thus, Structural Precision is not an abstract label but an intrinsic outcome of the rules that govern synaptic change and network design.

2.2 Functional Precision: adaptive reconfiguration without structural overhaul

Functional Precision describes how existing processing modules are transiently redeployed in response to novel or missing inputs, preserving latent computational mappings even as their active functions shift. A critical mechanism underlying this flexibility is the brain’s use of short-term memory (STM) traces, rapidly evolving activation patterns that buffer contextual information without altering long-term synaptic weights (Johnson et al., 2013). Within this framework, each node’s STM trace

Here, Functional Precision arises not from fixed architecture but from the dynamic regulation of transient neural activity. In this context, the decay term $-a z_i$ ensures that modules deactivate without sustained input, minimizing interference. At the same time, the co-activation term

Rather than requiring structural rewiring, this framework supports conditional reuse. Latent mappings are conserved and expressed only when internal and external signals converge, and decay naturally restores baseline functions once the drive subsides (Gillary et al., 2017). Accordingly, the equation does not model function directly. It models the constraints that regulate functional access, capturing a reversible, selectively gated precision that avoids both rigidity and chaos. What emerges is the need for a compact expression of how coherence rises under constraint, with access tuned rather than prescribed. We formalize this logic in the Precision Coefficient (Section 2.4).
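The reversible, gated access described above can be illustrated with a leaky short-term trace. For illustration only, the sketch assumes a single module obeying dz/dt = −a·z + b·E·I. Here E is an external (e.g., auditory) input and I is an internal (e.g., task or attention) signal. The drive is multiplicative, so neither signal alone recruits the module. This form is a simplification assembled from the terms named above (a decay term and a multiplicative co-activation drive) and should not be read as the full STM equation. The signal names and numbers are hypothetical.

```python
def simulate_stm(external, internal, a=1.0, b=1.0, theta=0.5, dt=0.01):
    """Euler-integrate a leaky STM trace driven by multiplicative co-activation.

    Returns the trace and, per time step, whether the module is recruited
    (trace above threshold theta).
    """
    z = 0.0
    trace, recruited = [], []
    for e, i in zip(external, internal):
        z += dt * (-a * z + b * e * i)   # decay plus multiplicative co-activation
        trace.append(z)
        recruited.append(z > theta)      # threshold gating of functional access
    return trace, recruited

steps = 1000                              # 10 time units at dt = 0.01
external = [1.0] * steps                  # sustained nonvisual input throughout
internal = [1.0] * 500 + [0.0] * 500      # task drive present, then removed
trace, recruited = simulate_stm(external, internal)
print(recruited[499], recruited[-1])      # True False
```

With both signals present, the trace crosses threshold and the module is recruited. When the internal drive is withdrawn, decay alone returns the module to baseline, with no change to any long-term weight.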

Empirical work illustrates how such constraint-driven coherence unfolds in real networks. In dissociated rat cortical cultures devoid of structured external input, neurons self-assemble into small-world functional networks within 4 weeks in vitro. One longitudinal study used planar microelectrode arrays (Downes et al., 2012). It reported a clustering coefficient that rose from approximately 1.2 at day in vitro (DIV) 14 to 2.3 by DIV35. Because raw clustering cannot exceed 1, these values are normalized to random reference networks. Over the same period, the small-world index exceeded 1.2 (p < 0.01), reflecting a transition from segregated modules to integrated hub-and-spoke architectures. Transfer-entropy reconstructions show that, by DIV21, effective connectivity exhibits pronounced clustering and short path lengths characteristic of small-world topology (Orlandi et al., 2014). At the same time, spontaneous firing organizes into neuronal avalanches whose event-size distributions follow a power law with exponent α ≈ −1.5 across four orders of magnitude. This indicates critical dynamics that maximize dynamic range and information throughput (Beggs and Plenz, 2003). In addition, rich-club analyses reveal the early emergence of highly connected hub neurons by DIV14, which consolidate by DIV28 to coordinate global network bursts (Hahn et al., 2010).
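The small-world index used in such studies is commonly defined as σ = (C/C_rand)/(L/L_rand). C is the mean clustering coefficient, L the mean shortest-path length, and the subscript denotes a random reference network; σ > 1 indicates small-world organization. The sketch below is not the analysis pipeline of the cited studies. It uses a Watts–Strogatz graph as a stand-in for a reconstructed functional network, together with the standard Erdős–Rényi approximations C_rand ≈ ⟨k⟩/n and L_rand ≈ ln n / ln⟨k⟩. It also shows why clustering values above 1 must be normalized: the raw coefficient is bounded by 1.

```python
import math
import random
from collections import deque

def watts_strogatz(n, k, p, seed=0):
    """Ring lattice (each node linked to its k nearest neighbors), rewired with probability p."""
    rng = random.Random(seed)
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for d in range(1, k // 2 + 1):
            j = (i + d) % n
            adj[i].add(j)
            adj[j].add(i)
    for i in range(n):
        for d in range(1, k // 2 + 1):
            j = (i + d) % n
            if j in adj[i] and rng.random() < p:
                # Move this edge to a random new partner (no self-loops, no duplicates).
                candidates = [m for m in range(n) if m != i and m not in adj[i]]
                if candidates:
                    new = rng.choice(candidates)
                    adj[i].discard(j)
                    adj[j].discard(i)
                    adj[i].add(new)
                    adj[new].add(i)
    return adj

def average_clustering(adj):
    """Mean local clustering coefficient (bounded between 0 and 1)."""
    total = 0.0
    for nbrs in adj.values():
        deg = len(nbrs)
        if deg < 2:
            continue
        links = sum(1 for u in nbrs for v in nbrs if u < v and v in adj[u])
        total += 2.0 * links / (deg * (deg - 1))
    return total / len(adj)

def average_path_length(adj):
    """Mean shortest-path length over all reachable ordered pairs (BFS from every node)."""
    total, pairs = 0, 0
    for src in adj:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs

def small_world_index(adj):
    """Return (sigma, C / C_rand, raw C) using Erdos-Renyi approximations for the reference."""
    n = len(adj)
    mean_deg = sum(len(v) for v in adj.values()) / n
    c_rand = mean_deg / n
    l_rand = math.log(n) / math.log(mean_deg)
    c = average_clustering(adj)
    path_len = average_path_length(adj)
    return (c / c_rand) / (path_len / l_rand), c / c_rand, c

graph = watts_strogatz(n=200, k=10, p=0.1, seed=42)
sigma, c_norm, c_raw = small_world_index(graph)
print(f"raw C = {c_raw:.2f}, normalized C = {c_norm:.1f}, sigma = {sigma:.1f}")
```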

Crucially, functional reassignment depends on multiplicative co-activation and biologically gated thresholds. Potentiation of downstream targets via the term $b_{im} S_m z_m$ occurs only when both pre- and postsynaptic STM traces exceed a biologically meaningful threshold

Empirical studies in early-blind humans provide further compelling support for this STM-trace gating model. Sustained auditory or tactile stimulation can transiently raise STM traces above threshold in occipital areas normally devoted to vision. Those modules can then be recruited for nonvisual processing without any structural rewiring (Rabinowitch and Bai, 2016; Kılınç et al., 2025; van der Heijden et al., 2019). For example, pitch discrimination by blind listeners activates V1 and surrounding cortex when prolonged auditory stimulation maintains STM traces long enough for functional recruitment (Pang et al., 2024). Activity reverts to baseline visual mappings once the drive is removed.

More broadly, simple stimuli reshape primary cortices (e.g., V1) only when attention or task demands raise their STM traces above threshold. In contrast, higher-order areas such as V5/MT have broader receptive fields and lower gating thresholds, and they can adopt new functions even during passive stimulation (Lewis et al., 2010). The same gating logic builds in functional resilience: latent pathways can rapidly compensate when primary circuits falter, preserving core computations under perturbation (Dresp-Langley, 2020). Because STM traces decay only when truly unused, transient disruptions do not permanently silence the network’s critical routes.

Thus, STM-mediated gating, multiplicative co-activation, and threshold gating jointly furnish the reversible substrate of Functional Precision (Hansel and Yuste, 2024). When particular circuits are repeatedly co-opted under stable demands, this reversible reuse gives way to functional plasticity, in which pathways gain lasting readiness without structural rewiring (Dresp-Langley, 2020).

This is further reinforced by the gradual lowering of gating thresholds for frequently engaged modules. Lower thresholds make familiar tasks ever more efficient while keeping them reversible if contexts shift again. Reversible co-option explains cross-modal plasticity in blindness. It also exemplifies a general mechanism by which the brain dynamically allocates existing circuits to novel tasks. That mechanism preserves latent computational mappings and enables rapid restoration of original functions when the driving input subsides. Functional Precision, therefore, constitutes the dynamic basis by which neural networks sustain adaptability to shifting environments while safeguarding the coherence of their architectural design.
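The use-dependent lowering of gating thresholds can be sketched as a simple update rule. The rule and its parameters are illustrative assumptions. Each engagement moves a module's threshold part of the way toward a floor, and each idle step lets it relax back toward a resting value, so the facilitation remains reversible.

```python
def update_threshold(theta, engaged, theta_rest=1.0, theta_floor=0.4,
                     learn=0.2, relax=0.05):
    """Hypothetical threshold dynamics for a frequently engaged module."""
    if engaged:
        # Familiar use lowers the threshold toward a floor (faster recruitment).
        return theta + learn * (theta_floor - theta)
    # Disuse lets the threshold drift back toward its resting value.
    return theta + relax * (theta_rest - theta)

theta = 1.0
for _ in range(20):                 # a block of repeated engagements
    theta = update_threshold(theta, engaged=True)
after_practice = theta
for _ in range(100):                # a long period without engagement
    theta = update_threshold(theta, engaged=False)
print(round(after_practice, 3), round(theta, 3))
```

After 20 engagements the threshold sits close to its floor. After a long idle period it has relaxed almost completely back to rest.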

2.3 Evolutionary Precision: selection as the final editor of neural design

Across phylogenetic timescales, Evolutionary Precision extends self-organizing principles. It shows how natural selection sculpts not only survival-critical traits but also the internal efficiency of neural architectures. One plausible example of Evolutionary Precision under selective constraint is Eigenmannia vicentespelea, a blind, cave-dwelling electric fish. When its surface-dwelling ancestors became isolated in caves less than 20,000 years ago, the visual system quickly lost relevance (Fortune et al., 2020). Yet instead of simply degrading, the nervous system reorganized around a new sensory priority: electrosensation. Over generations, natural selection favored fish with stronger electric organ discharges (EODs), larger electric organs, and more flexible signal patterns. These traits enhanced electrolocation and electrocommunication in complete darkness. Selection acted on inherited neural traits that maintained coherence between sensory input and behavior under the new environmental conditions. What began as functional re-weighting eventually stabilized as inherited neural architecture (Fortune et al., 2020).

Such a process reflects evolutionary self-organization, where local neural interactions converge on stable, adaptive configurations without central control. Underlying rules filter out noise, reinforce consistency, and refine structure. In E. vicentespelea, the electrosensory network did not emerge by chance; it arose via distributed adjustments that strengthened relevant pathways while de-emphasizing unused ones (Soares et al., 2023). Reiterated across generations, this consistent filtering produces a directional selection pressure that shapes circuitry. This aligns with multimodular plasticity: the nervous system reused and scaled pre-existing motor and sensory modules rather than building new circuits. Vision regressed not arbitrarily but because its computational role was no longer useful, a form of Precision through removal.

Put simply, the cavefish’s brain did not become more complex; it became more coherent. Precision acted as an organizing force, guiding evolution toward efficient, modular reuse attuned to persistent darkness. Crucially, this is not Lamarckian: use does not strengthen traits across generations. Random mutation supplies variation, and non-random selection in an aphotic environment favors variants that improve nonvisual sensing. Over the long run, this gives the appearance of reinforcement. Within-lifetime recalibration sets the context, but only genetic changes that support it are retained and, across many generations, encoded.

Although Evolutionary Precision unfolds on the longest timescales, it does not stand above the others; it ultimately crystallizes what Structural and Functional Precision continually generate. Processes like these underscore the need for a general formalism capable of capturing how coherence is preserved across structural, functional, and evolutionary scales. Together, the three domains are co-emergent expressions of a single logic, each shaping, constraining, and refining the others in a recursive dance of adaptation and coherence.
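The non-Lamarckian logic can be checked with a toy mutation–selection model. It is not a model of E. vicentespelea. Everything in it is a hypothetical simplification:

- a single heritable trait stands in for electric organ discharge strength;
- truncation selection keeps the top fraction of each generation;
- offspring inherit a parent's value plus Gaussian mutation;
- all parameter values are arbitrary.

```python
import random
import statistics

def evolve(pop_size=200, generations=50, mut_sd=0.05, keep_frac=0.5, seed=1):
    """Mutation-selection on one heritable trait; returns the final mean trait value.

    Offspring inherit a surviving parent's genetic value plus random mutation only;
    nothing an individual does during its lifetime is passed on.
    """
    rng = random.Random(seed)
    pop = [1.0] * pop_size
    n_keep = max(1, int(pop_size * keep_frac))
    for _ in range(generations):
        # Non-random selection: in darkness, larger trait values fare better.
        survivors = sorted(pop, reverse=True)[:n_keep]
        # Random mutation: offspring vary around their parent's genotype.
        pop = [rng.choice(survivors) + rng.gauss(0.0, mut_sd) for _ in range(pop_size)]
    return statistics.mean(pop)

with_selection = evolve()
without_selection = evolve(keep_frac=1.0)   # every individual reproduces: no selection
print(f"mean trait with selection: {with_selection:.2f}, without: {without_selection:.2f}")
```

No lifetime use ever enters an offspring's trait value. Even so, the population mean rises steadily under selection, whereas without selection it merely drifts.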

2.4 The Precision Coefficient: a quantitative outline of the Precision Principle

To make the Precision Principle more concrete without turning it into a full optimization routine, we introduce a quantitative expression that we call the Precision Coefficient,

This equation defines the Precision Coefficient,

where

where

In practice, the coefficient can be read operationally as a driver of learning. When activity updates tend to increase

This interpretation is consistent with established neural learning equations. Grossberg’s long-term memory rule (Equation 1) describes how persistent synaptic weights

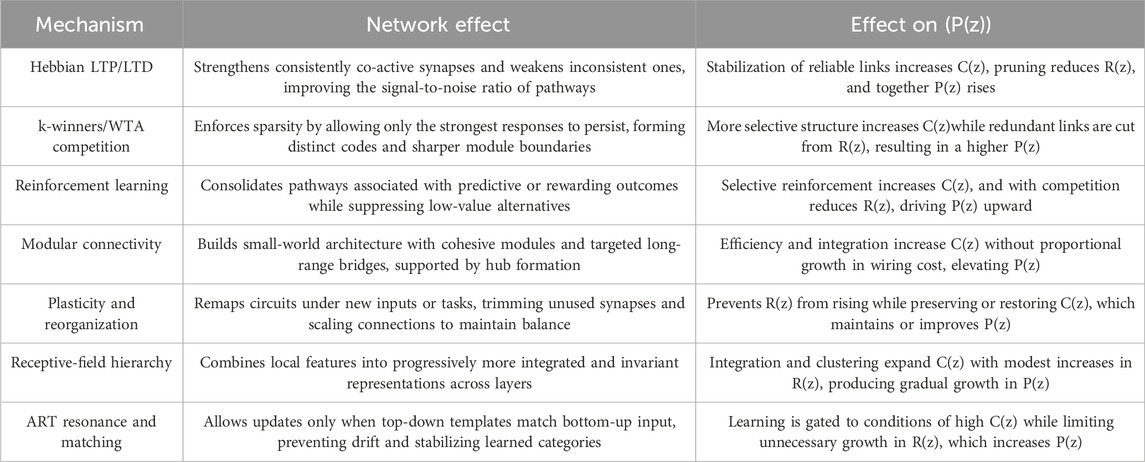

Conceptually, precision coding is an allocation rule on limited representational capacity. Hebbian correlation makes candidate features locally available; competition (winner-take-all or k-winners) selects the active subset; and resonance or template matching stabilizes categories when top-down and bottom-up signals agree (Dresp-Langley, 2024). Increases in

To make the role of the Precision Coefficient concrete, we outline representative learning mechanisms that map changes in synaptic strength, competition, and connectivity to

Table 2. Summary of representative neural learning mechanisms and their effects on the Precision Coefficient (Dresp-Langley, 2024).

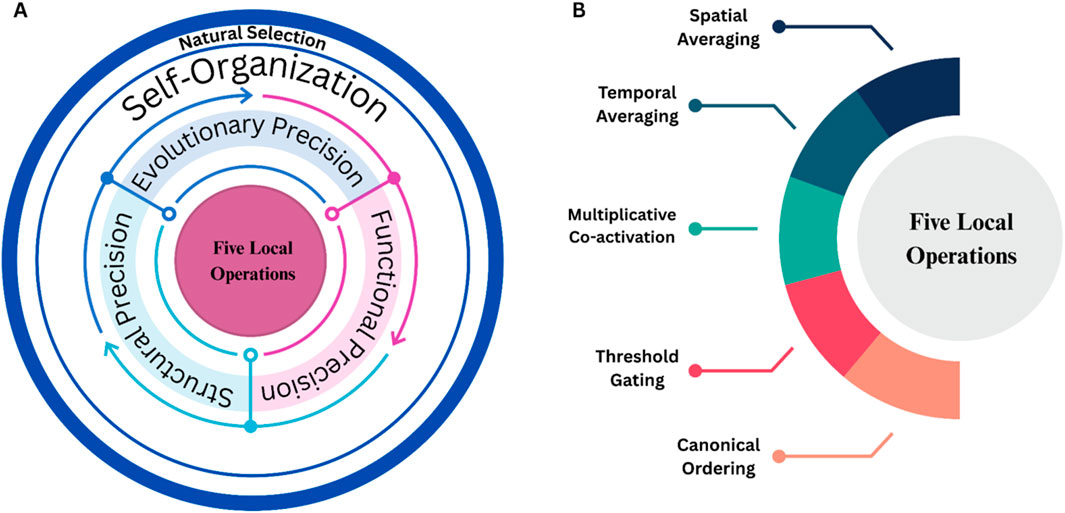
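The allocation rule described in Section 2.4, Hebbian correlation followed by competition among candidates, can be illustrated with a textbook competitive-learning step. This sketch is illustrative only and is not drawn from Table 2:

- activations are dot products between each unit's weights and the input;
- a k-winners-take-all step selects the active subset;
- only the winners move their weights toward the input (an instar-style update).

Resonance or template matching is not modeled, and the unit count, learning rate, and patterns are arbitrary.

```python
def k_winners(activations, k):
    """Indices of the k most active units (ties broken by lower index)."""
    order = sorted(range(len(activations)), key=lambda i: (-activations[i], i))
    return sorted(order[:k])

def competitive_hebbian_step(weights, x, k=1, eta=0.5):
    """One competitive-learning step: only the k winners move toward input x."""
    acts = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in weights]
    winners = k_winners(acts, k)
    for j in winners:
        weights[j] = [w_i + eta * (x_i - w_i) for w_i, x_i in zip(weights[j], x)]
    return winners

weights = [[0.6, 0.4], [0.4, 0.6], [0.5, 0.5]]
patterns = [[1.0, 0.0], [0.0, 1.0]] * 10
for x in patterns:
    competitive_hebbian_step(weights, x, k=1)
print([[round(v, 2) for v in w] for w in weights])  # [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
```

The third unit never wins and keeps its initial weights. This illustrates how competition leaves spare capacity uncommitted until some input pattern recruits it.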

2.5 The core operations: a mechanistic basis of precision

To illustrate the mechanistic basis of the Precision Principle, we present Figure 2. It identifies five core local operations: Spatial Averaging, Temporal Averaging, Multiplicative Co-activation, Threshold Gating, and Canonical Ordering. These operations enable the emergence of adaptive, cluster-rich network architectures. Together, they form the universal substrate of Structural, Functional, and Evolutionary Precision.

Figure 2. Panel (A) illustrates the overall recursive framework. At its center are five local operations, which both give rise to and are continuously shaped by three parallel “precision” arenas (middle annuli): Structural, Functional, and Evolutionary Precision. Together, these form the Precision Principle. Panel (B) highlights the five core operations: spatial averaging, temporal averaging, multiplicative co-activation, threshold gating, and canonical ordering. The depiction avoids hierarchy, as no single form of Precision contains the others. It also preserves recursion: local processes generate Precision, Precision generates self-organization, and self-organization yields variation. Natural selection acts on that variation, which in turn influences the local rules over generations.
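The five operations are named in Figure 2 but not given explicit functional forms. The sketch below assigns each a simple, hypothetical form to show how they could compose into one local update:

- spatial averaging: a clipped neighborhood mean;
- temporal averaging: an exponential moving average;
- multiplicative co-activation: an elementwise product;
- threshold gating: a hard threshold;
- canonical ordering: a fixed update sequence.

Function names, parameters, and the input pattern are illustrative assumptions, not the authors' model.

```python
def spatial_average(x, radius=1):
    """Spatial averaging: mean of each unit and its neighbors within `radius` (edges clipped)."""
    n = len(x)
    out = []
    for i in range(n):
        window = x[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out

def temporal_average(trace, x, tau=5.0):
    """Temporal averaging: each trace relaxes toward x with a time constant of tau steps."""
    return [t + (xi - t) / tau for t, xi in zip(trace, x)]

def co_activation(pre, post):
    """Multiplicative co-activation: large only when both factors are large."""
    return [p * q for p, q in zip(pre, post)]

def threshold_gate(values, theta):
    """Threshold gating: pass values at or above theta, silence the rest."""
    return [v if v >= theta else 0.0 for v in values]

def precision_step(weights, trace, raw_input, post, theta=0.4, decay=0.05, eta=0.5):
    """One local update; the fixed sequence below plays the role of canonical ordering."""
    trace = temporal_average(trace, spatial_average(raw_input))   # averaging: spatial, then temporal
    weights = [w * (1.0 - decay) for w in weights]                # decay
    drive = threshold_gate(co_activation(trace, post), theta)     # gated multiplicative co-activation
    weights = [w + eta * d for w, d in zip(weights, drive)]       # potentiation
    return weights, trace

weights, trace = [0.0] * 5, [0.0] * 5
pattern = [0.0, 1.0, 1.0, 0.0, 0.0]   # a recurring input feature
post = [1.0] * 5                       # postsynaptic cell assumed active
for _ in range(50):
    weights, trace = precision_step(weights, trace, pattern, post)
print([round(w, 2) for w in weights])
```

Units with strong, sustained input are potentiated once their averaged trace crosses the gate. The weakly driven unit (index 3) and the silent unit (index 4) are never potentiated. Swapping the order of decay and potentiation inside `precision_step` would change the resulting weights. In this sense the ordering itself is one of the operations.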

According to the Free Energy Principle (FEP), the brain functions as a surprise-minimizing engine: perception, action, and learning are all directed toward reducing prediction error, and any neural reconfiguration follows wherever free energy can be lowered (Friston, 2010). Presenting a contrasting logic for brain self-organization, the Precision Principle suggests that the brain is intrinsically compelled to preserve and refine its own circuitry. Prediction errors serve as targeted prompts within this broader structural refinement process rather than the sole guiding force. At its core, the deeper imperative of the Precision Principle is to maintain circuit coherence, an ongoing process that ensures neural networks remain economical, modular, and easily reusable.

Underlying mechanisms of this intrinsic self-organization unfold through molecular and developmental programs. Neuropeptide-gated pruning during critical periods, time-locked growth and stabilization phases, and long-term consolidation dynamics all contribute to actively streamlining connections and aligning network hubs to patterns of input. Refinement driven by coherence proceeds even when salient prediction errors are absent, allowing for elegant microcircuits and hierarchical modules to emerge reliably across individuals and species (Chatterjee and Zielinski, 2020).

Viewed from this perspective, behavior extends beyond mere mismatch avoidance with the external world; it also reinforces patterns that safeguard internal network integrity (D’Angiulli and Roy, 2024). Actions that stabilize coherent mappings grow increasingly valuable, further entrenching efficient architectures (D’Angiulli and Roy, 2024). The Precision Principle treats intrinsic circuit coherence, not just surprise minimization, as the key engine of self-organization. On that basis, it clarifies how rapid adaptation, developmental sensitivity, and evolutionary stability all arise from the brain’s internal structural logic.

3 Discussion and limitations

The Precision Principle deliberately avoids conflating biological self-organization with conscious intention. Even so, more work is needed to define how it interacts with higher-order phenomena such as imagination, reflection, and volitional planning. The proposed mechanism likely operates at multiple nested scales, from local microcircuits up to large-scale brain networks, with each of the five local operations tuned differently depending on the level of processing. In primary sensory areas, spatial and temporal averaging windows may be narrow, sharpening feature detection. In associative and prefrontal regions, broader windows could support the integration of diverse inputs over longer time spans, enabling sustained deliberation and planning (Chaudhuri et al., 2015). Exploring how these multi-scale precision parameters develop over childhood or become altered in conditions such as autism or schizophrenia could yield new biomarkers for cognitive flexibility and rigidity.

While the Precision Coefficient provides a compact expression of the balance between coherence and cost, it should be regarded as a generative lens rather than a closed optimization program. Its strength lies in clarifying how different pressures interact, but its limitations must also be explicit. The precise value of

Another important condition concerns dissipation, since neural circuits cannot sustain coherence without exporting entropy and consuming energy. The resource term must therefore reflect not only structural wiring costs but also the metabolic expenditure needed to maintain activity. In our formulation, dissipation falls under the metabolic component of R(z), yet in practice it should be tracked explicitly through physiological measures such as glucose uptake, oxygen use, or hemodynamic response. This ensures that increases in coherence that require disproportionate energy will reduce P(z), keeping the coefficient grounded in biological feasibility rather than abstract topology.

Building on this, models of perception should capitalize on biologically grounded self-organization to reduce explicit structural complexity while improving both Structural and Functional Precision, letting robustness arise from the interaction of simple local rules rather than from ever-deeper engineered architectures. For example, the Digital Hormone Model (DHM) simulates how uniform skin cells can self-organize into complex, biologically accurate patterns such as feather buds, which are the early developmental structures from which feathers grow in birds. These buds emerge in precise spatial arrangements not through centralized control, but via local hormone-like signaling between neighboring cells. The model demonstrates how simple, biologically inspired rules can generate functionally robust and structurally organized outcomes, illustrating the power of self-organization over engineered complexity (Shen and Chuong, 2002).
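The core idea, regularly spaced structures arising from purely local signaling, can be illustrated with a deliberately simplified lateral-inhibition rule. This is not the Digital Hormone Model and does not reproduce its hormone dynamics. It is a hypothetical sketch in which identical cells on a grid are visited in random order. A cell becomes a bud only if no existing bud lies within a short inhibitory radius.

```python
import random

def place_buds(width, height, inhibition_radius, seed=0):
    """Greedy lateral-inhibition patterning on a grid of identical cells.

    Cells are visited in random order; a cell becomes a 'bud' only if no existing
    bud lies within `inhibition_radius`, mimicking a short-range inhibitory signal
    emitted by buds. No cell ever consults the global pattern.
    """
    rng = random.Random(seed)
    cells = [(x, y) for x in range(width) for y in range(height)]
    rng.shuffle(cells)
    buds = []
    r2 = inhibition_radius ** 2
    for (x, y) in cells:
        inhibited = any((x - bx) ** 2 + (y - by) ** 2 < r2 for bx, by in buds)
        if not inhibited:
            buds.append((x, y))
    return buds

buds = place_buds(30, 30, inhibition_radius=4)
print(len(buds))
```

Every pair of buds ends up at least `inhibition_radius` apart, and every cell lies within that radius of some bud. A regular, space-filling pattern therefore appears even though each decision uses only local information.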

Additionally, while the five core local operations offer a useful minimal scaffold, several cautions must be considered. Much of the supporting evidence comes from in vitro preparations with limited neuromodulation and constrained inputs, which can artificially inflate apparent stability due to spatial and temporal averaging (Orlandi et al., 2014; Shew et al., 2009). Reports of modular, small-world, and near-critical organization are sensitive to thresholding, temporal binning, and finite-size effects, so parametric sweeps and surrogate-data tests are needed to separate biological structure from analysis artifacts (Gireesh and Plenz, 2008; Klaus et al., 2011). Coincidence-based potentiation and gating can discard weak-but-predictive signals or sequence codes, and in vivo they are likely tempered by state-dependent control and homeostatic regulation (Mizraji and Lin, 2015; Shen and Loew, 2016; Elliott, 2024). Finally, a single canonical ordering may not capture laminar, cell-type, or behavioral-state differences in decay, gating, and potentiation kinetics (Elliott, 2024). Overall, the operations remain a valuable constraint set, but their boundary conditions should be made explicit and tested across states, timescales, and analysis choices.
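The dependence of avalanche statistics on temporal binning, and the value of a surrogate comparison, can be illustrated with a small Python sketch on synthetic spike times. It assumes the widely used operational definition of an avalanche as a run of non-empty time bins bounded by empty bins (Beggs and Plenz, 2003); the burst parameters, bin widths, and the uniform-redistribution surrogate are illustrative choices rather than the procedures of the studies cited above.

```python
import numpy as np

def bin_spikes(spike_times, bin_width, duration):
    """Population spike counts in consecutive bins of width `bin_width`."""
    n_bins = int(np.ceil(duration / bin_width))
    edges = np.arange(n_bins + 1) * bin_width
    counts, _ = np.histogram(spike_times, bins=edges)
    return counts

def avalanche_sizes(counts):
    """Avalanche = run of non-empty bins bounded by empty bins; size = total spikes."""
    sizes, current = [], 0
    for c in counts:
        if c > 0:
            current += int(c)
        elif current > 0:
            sizes.append(current)
            current = 0
    if current > 0:
        sizes.append(current)
    return sizes

rng = np.random.default_rng(1)
duration = 10_000.0                                     # ms, synthetic recording
onsets = rng.uniform(0.0, duration, size=50)            # 50 burst onsets
data = np.concatenate([t + rng.normal(0.0, 2.0, 10) for t in onsets])  # 10 jittered spikes each
data = data[(data >= 0.0) & (data < duration)]
surrogate = rng.uniform(0.0, duration, size=data.size)  # same spike count, no temporal structure

# Parametric sweep over bin width, data versus surrogate.
for width in (1.0, 4.0, 16.0):
    for label, spikes in (("data", data), ("surrogate", surrogate)):
        sizes = avalanche_sizes(bin_spikes(spikes, width, duration))
        print(f"bin={width:>4.0f} ms  {label:<9}  avalanches={len(sizes):>4}  "
              f"mean size={np.mean(sizes):.2f}")
```

At wide bins, even the structureless surrogate tends to merge independent spikes into longer avalanches, so a claim about organization is more convincing when it holds across the sweep and differs clearly from the surrogate.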

As the study of self-organization across disciplines illustrates, solutions discovered in one context can inform another. This perspective therefore aims to guide future work in perceptual research and artificial intelligence: favor parsimonious, self-organizing mechanisms with well-specified boundary conditions over highly elaborate constructions whose functional resilience is uncertain, so that complexity emerges from dynamics rather than design. Within neural systems, the same logic is implemented by neuromodulators that regulate when and how plasticity is expressed. For example, transient bursts of acetylcholine or dopamine can lower the threshold for potentiation and widen temporal averaging during learning episodes, admitting novel patterns into the network, and then restore stricter gating and faster decay during consolidation to protect established assemblies (Zannone et al., 2018). Dynamic modulation in this sense links precision to arousal, attention, and motivation, and it predicts that pharmacological or optogenetic manipulation of neuromodulators will shift the balance between plasticity and stability in systematic ways (Muir et al., 2024). Viewed through the lens of the coefficient, such surges can be read as temporary adjustments of the effective α, tilting the system between integration and economy across learning, consolidation, and arousal, thereby anchoring biochemical control to the coherence–cost equilibrium formalized in this paper.
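The gating logic described here can be sketched in Python, assuming a single scalar modulator level that simultaneously lowers the potentiation threshold and slows the decay of a co-activity trace. The linear mappings, parameter values, and function names are hypothetical and are not taken from Zannone et al. (2018).

```python
def plasticity_params(modulator):
    """Hypothetical mapping from modulator level in [0, 1] to gating parameters.

    Higher modulator -> lower potentiation threshold and slower trace decay
    (i.e., wider temporal averaging), as in a learning episode.
    """
    threshold = 0.8 - 0.5 * modulator
    retention = 0.5 + 0.4 * modulator  # fraction of the co-activity trace kept per step
    return threshold, retention

def run_episode(pre, post, weight, modulator, lr=0.1):
    """Gate bounded potentiation on a decaying trace of pre * post co-activation."""
    threshold, retention = plasticity_params(modulator)
    trace = 0.0
    for x, y in zip(pre, post):
        trace = retention * trace + x * y   # multiplicative co-activation, temporally averaged
        if trace >= threshold:              # threshold gating
            weight += lr * (1.0 - weight)   # potentiation bounded at 1.0
    return weight

# A weak, intermittent pairing: presynaptic drive 0.5, postsynaptic activity every other step.
pre = [0.5] * 20
post = [1, 0] * 10

for level in (0.0, 1.0):  # consolidation-like versus learning-like state
    print(f"modulator={level:.1f}  final weight={run_episode(pre, post, 0.2, level):.3f}")
```

In the low-modulator setting, the trace saturates near 0.67 and never crosses the 0.8 threshold, so the weight stays at 0.2; in the high-modulator setting, the same weak pairing is admitted and potentiated.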

At a conceptual level, the mechanistic foundations of the Precision Principle intersect with broader debates on the role of information, both within neural systems and as a building block of reality, and this framing sets the stage for how precision governs what gets connected and kept. The “information-as-power” model is a naïve view that treats information as an objective representation of reality and a linear route to truth and control. In contrast, an enlightened perspective emphasizes information as a connector, valued for sustaining meaningful relationships within a system (Harari, 2024), which prepares the ground for a formal account of how such relationships are weighted and updated.

Building on this relational view, embedding the Precision Principle within active-inference frameworks recasts the five local operations as implementations of confidence weighting in predictive coding. Spatial averaging aggregates prediction errors across feature channels (Sweeny et al., 2009), while temporal averaging corresponds to the autocorrelation of error signals over time (Stier et al., 2025). Volitional planning and reflective thought can then be seen as top-down adjustments of these confidence weights, selectively amplifying or dampening error signals to guide belief updating toward desired goals. Empirical tests might therefore pair electrophysiological markers of neural precision with behavioral measures of decision confidence, which in turn raises the question of which biological operations realize these weighting processes.
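Confidence weighting can be sketched in Python using the standard Gaussian form of precision weighting, in which the belief moves toward the observation by the prediction error multiplied by the ratio of sensory precision to total precision. Mapping spatial averaging to a precision-weighted mean across channels, temporal averaging to a lag-1 autocorrelation, and top-down control to a multiplicative gain on sensory precision are illustrative assumptions, not the authors' formalism.

```python
import numpy as np

def precision_weighted_update(mu_prior, pi_prior, x, pi_obs):
    """Gaussian belief update: the prediction error is scaled by relative precision."""
    pi_post = pi_prior + pi_obs
    error = x - mu_prior
    mu_post = mu_prior + (pi_obs / pi_post) * error
    return mu_post, pi_post

def pooled_error(errors, precisions):
    """'Spatial averaging': precision-weighted mean of errors across feature channels."""
    errors = np.asarray(errors, dtype=float)
    precisions = np.asarray(precisions, dtype=float)
    return float(np.sum(precisions * errors) / np.sum(precisions))

def lag1_autocorrelation(errors):
    """'Temporal averaging' proxy: lag-1 autocorrelation of an error time series."""
    e = np.asarray(errors, dtype=float)
    e = e - e.mean()
    return float(np.dot(e[:-1], e[1:]) / np.dot(e, e))

# Top-down control modeled as a gain on sensory precision (a hypothetical choice).
for gain in (0.5, 1.0, 4.0):
    mu, _ = precision_weighted_update(mu_prior=0.0, pi_prior=1.0, x=2.0, pi_obs=1.0 * gain)
    print(f"precision gain={gain:>3}: belief moves from 0.0 to {mu:.2f}")
```

Raising the gain amplifies the influence of the same prediction error on the belief, and lowering it dampens that influence, which is the sense in which top-down adjustments of confidence weights steer belief updating.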

At the biological level, reinforcement and pruning become the concrete operations that determine when a synapse earns persistence. Correlated spiking under a permissive modulatory state consolidates a subset of weights, while local inhibitory circuits and synaptic scaling impose competition by normalizing total excitatory drive, so that only strongly supported pathways remain. Glial mechanisms contribute further by eliminating low-utility synapses when activity chronically falls below threshold (Schafer et al., 2012). In the coefficient formalism, these operations add positive change to coherence when they stabilize paths that enhance communicability and strengthen integrative hubs, and they reduce resource cost when they shorten wiring and remove duplicate routes. Over-elimination can depress coherence even as cost falls, so homeostatic rules restore the balance that keeps the net change in the coefficient non-negative under stable demands. The outcome is a family of metastable, high-coherence configurations that can be re-entered after perturbation, which provides the foundation for a systems-level reinterpretation of brain function.
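The three operations can be combined in a minimal Python sketch, assuming rate-coded activity vectors and a row-wise normalization as a stand-in for synaptic scaling. The learning rate, target sum, and pruning threshold are illustrative, and the pruning step is only a crude proxy for the activity-dependent, glia-mediated elimination described by Schafer et al. (2012).

```python
import numpy as np

def reinforce_scale_prune(W, pre, post, lr=0.05, target_sum=1.0, prune_below=0.02):
    """One update of a weight matrix W (rows = postsynaptic, cols = presynaptic).

    1) Hebbian reinforcement of correlated pre/post activity; eliminated
       synapses (weight 0) do not regrow.
    2) Multiplicative synaptic scaling: each row's total weight is renormalized
       to target_sum, so strengthening one input weakens the others.
    3) Pruning: weights below prune_below are eliminated (set to 0).
    """
    alive = W > 0
    W = W + lr * np.outer(post, pre) * alive
    row_sums = W.sum(axis=1, keepdims=True)
    W = W * (target_sum / np.where(row_sums > 0, row_sums, 1.0))
    W[W < prune_below] = 0.0
    return W

W = np.full((1, 5), 0.2)                   # one neuron, five equal inputs
pre = np.array([1.0, 1.0, 0.0, 0.0, 0.0])  # only the first two inputs are co-active
post = np.array([1.0])
for _ in range(50):
    W = reinforce_scale_prune(W, pre, post)
print(np.round(W, 3))
```

With these settings, the three silent inputs shrink geometrically under scaling until they cross the pruning threshold (after about 25 updates) and are eliminated, leaving the two co-active inputs sharing the full weight budget.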

Viewed through this lens, the brain is better described as a connection-maximizer than as a truth-maximizer. Learning, on this view, is not merely the correction of prediction errors but a process of connective rebalancing. Information is therefore retained not for external accuracy alone but because its incorporation actively reinforces and reshapes the functional architecture of the brain. Extending beyond neurobiology, one can imagine computational models that evaluate incoming data not by reward signals alone but by an “integration gain” metric that measures how much each update improves network topology (modularity, hub centrality, small-worldness), thereby linking biological coherence to formal design principles for artificial systems.
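The “integration gain” metric is only outlined in the text. The Python example below is one hypothetical way to operationalize it: an update is scored by the weighted change in global efficiency (an integration measure related to path length) plus the change in average clustering (the segregation ingredient of small-worldness). Modularity and hub centrality are omitted for brevity, and all names and weights are illustrative.

```python
from collections import deque
from itertools import combinations

def graph_from_edges(edges):
    """Undirected graph as a dict mapping each node to its set of neighbors."""
    adj = {}
    for a, b in edges:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj

def global_efficiency(adj):
    """Mean inverse shortest-path length over ordered node pairs (unreachable -> 0)."""
    nodes = list(adj)
    n = len(nodes)
    if n < 2:
        return 0.0
    total = 0.0
    for s in nodes:
        dist = {s: 0}
        queue = deque([s])
        while queue:  # breadth-first search from s
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(1.0 / d for node, d in dist.items() if node != s)
    return total / (n * (n - 1))

def average_clustering(adj):
    """Mean local clustering coefficient (nodes with degree < 2 contribute 0)."""
    coeffs = []
    for u, nbrs in adj.items():
        k = len(nbrs)
        if k < 2:
            coeffs.append(0.0)
            continue
        links = sum(1 for a, b in combinations(nbrs, 2) if b in adj[a])
        coeffs.append(2.0 * links / (k * (k - 1)))
    return sum(coeffs) / len(coeffs)

def integration_gain(before, after, w_eff=1.0, w_clust=1.0):
    """Hypothetical score: weighted change in efficiency plus change in clustering."""
    return (w_eff * (global_efficiency(after) - global_efficiency(before))
            + w_clust * (average_clustering(after) - average_clustering(before)))

ring = [(i, (i + 1) % 8) for i in range(8)]
before = graph_from_edges(ring)
after = graph_from_edges(ring + [(0, 4)])  # one long-range shortcut
print(f"integration gain of adding a shortcut: {integration_gain(before, after):+.3f}")
```

A positive score marks an update that improves integration or local segregation; in practice, the relative weights `w_eff` and `w_clust` would need to be chosen, or learned, for the task at hand.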

Translating the Precision Principle into neuromorphic hardware then becomes a natural step, using leaky integrator circuits for temporal averaging, programmable comparators for threshold gating, and coincidence-detecting synapses for multiplicative co-activation, all cycling through ordered decay and potentiation phases (Indiveri et al., 2011; Davies et al., 2018; Prezioso et al., 2018). Embedded in silicon, these processes could yield processors that self-organize into efficient, robust architectures, capable of adapting under noisy conditions while consuming minimal power. In this way, the hardware instantiates the full pipeline from relational information to confidence weighting to coherence-preserving plasticity, realizing the Precision Principle as a principle of efficient and resilient self-organization.
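A software analogue of this pipeline can be sketched in Python, assuming a discrete-time leaky integrator, a fixed comparator threshold, and a simple bounded potentiation rule applied after a per-step decay phase. It is not a model of Loihi or of any specific circuit in the cited work, and all parameter values and names are illustrative.

```python
import numpy as np

def run_neuromorphic_unit(inputs_a, inputs_b, w=(0.6, 0.6), leak=0.2, threshold=1.0,
                          w_decay=0.01, w_gain=0.05, w_max=1.0):
    """Discrete-time sketch of one neuromorphic unit with two input synapses.

    - Leaky integrator: v <- leak * v + w . x  (temporal averaging)
    - Comparator: spike and reset when v >= threshold  (threshold gating)
    - Decay phase: all weights relax by a factor (1 - w_decay) each step
    - Potentiation phase: on a spike, active inputs are strengthened in
      proportion to pre * post coincidence, bounded by w_max
    """
    w = np.array(w, dtype=float)
    v, spikes = 0.0, []
    for a, b in zip(inputs_a, inputs_b):
        x = np.array([a, b], dtype=float)
        v = leak * v + float(w @ x)
        fired = v >= threshold
        spikes.append(int(fired))
        if fired:
            v = 0.0
        w *= 1.0 - w_decay                 # decay phase
        if fired:
            w += w_gain * x * (w_max - w)  # potentiation phase
    return spikes, w

coincident, w_c = run_neuromorphic_unit([1] * 10, [1] * 10)
alternating, w_alt = run_neuromorphic_unit([1, 0] * 5, [0, 1] * 5)
print("coincident inputs :", coincident, np.round(w_c, 3))
print("alternating inputs:", alternating, np.round(w_alt, 3))
```

Because the leak is strong, a single input never drives the integrator to threshold, so only coincident inputs fire the unit and are potentiated, while uncorrelated inputs slowly decay.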

Taken together, these considerations suggest that the Precision Coefficient should be regarded not as a narrow formula but as an expression of the Precision Principle’s role as a driver of neural learning. It foregrounds the interplay of coherence, dissipation, and selective elimination, showing how local mechanisms such as Hebbian reinforcement, k-winners competitive selection, and the elimination of weaker synaptic connections scale upward to organize networks and, in due course, entire brains.
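k-winners competitive selection is not specified further here; a common reading is k-winners-take-all (k-WTA), in which only the k most strongly driven units remain active and learn. The Python sketch below, an illustrative assumption rather than the authors' implementation, combines k-WTA with a standard competitive Hebbian update in which the winners move their weight vectors toward the current input.

```python
import numpy as np

def k_winner_indices(activations, k):
    """Indices of the k largest activations (ties resolved by lower index)."""
    a = np.asarray(activations, dtype=float)
    return np.argsort(-a, kind="stable")[:k]

def k_winners(activations, k):
    """k-winners-take-all: keep the k largest activations, silence the rest."""
    a = np.asarray(activations, dtype=float)
    out = np.zeros_like(a)
    idx = k_winner_indices(a, k)
    out[idx] = a[idx]
    return out

def competitive_step(W, x, k=1, lr=0.1):
    """Competitive Hebbian learning: only the k winners move their weights toward x."""
    W = W.copy()
    for i in k_winner_indices(W @ x, k):
        W[i] += lr * (x - W[i])
    return W

W = np.eye(2)  # two units with identity initial weights
patterns = [np.array([1.0, 0.1]), np.array([0.1, 1.0])]
for t in range(100):
    W = competitive_step(W, patterns[t % 2])
print(np.round(W, 3))
```

Each unit ends up specialized for one input pattern, because losers receive no update on a given step; with random initial weights, k-WTA schemes can instead leave some units permanently losing, which is one reason homeostatic mechanisms are often paired with them.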

Ultimately, the Precision Principle as proposed in this paper transcends mere wiring rules, emerging as a scale-invariant, universal force that sculpts coherence at every level of organization. By filtering, gating, and temporally sculpting neural activity, the brain does not just record reality; it architects its own structure, demanding that every spark of imagination, every strategic plan, and every reflective thought earn its place by reinforcing the network’s integrity. In this light, precision is not passive order-taking but the active crucible in which noise is forged into narratives, randomness into reason, and fleeting sparks into enduring circuitry.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Author contributions

RR: Writing – original draft, Writing – review and editing, Conceptualization. KS: Writing – original draft, Writing – review and editing. GB: Writing – original draft, Writing – review and editing. AD: Writing – original draft, Writing – review and editing. BD-L: Writing – original draft, Writing – review and editing, Conceptualization.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgements

R. Roy would like to thank Valsa Johnson, Elezebeth Fernandez, Stern Fernandez, Natasha Roy, and Neal Bhullar for their invaluable support and guidance during the creation of this manuscript. This work would not have been possible without you, my dear Ammachi, Aunty, Uncle, Sister, and Cousin. The authors would also like to thank Professor Gerry Leisman for his feedback on this manuscript. In addition, the authors are grateful to Professor Peter A. Tass from Stanford University, the editor of this manuscript, and Dr. Flavio Rusch from the University of São Paulo, the reviewer, for their guidance and critiques regarding the improvement of this work.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Beggs, J. M., and Plenz, D. (2003). Neuronal avalanches in neocortical circuits. J. Neurosci. 23 (35), 11167–11177. doi:10.1523/jneurosci.23-35-11167.2003

Chatterjee, S., and Zielinski, P. (2020). Making coherence out of nothing at all: measuring the evolution of gradient alignment. ArXiv.org.

Chaudhuri, R., Knoblauch, K., Gariel, M.-A., Kennedy, H., and Wang, X.-J. (2015). A large-scale circuit mechanism for hierarchical dynamical processing in the primate cortex. Neuron 88 (2), 419–431. doi:10.1016/j.neuron.2015.09.008

D’Angiulli, A., and Roy, R. (2024). The frog-manikin holding the blue parasol umbrella: imaginative generativity in evolution, life, and consciousness. Curr. Opin. Behav. Sci. 58, 101397. doi:10.1016/j.cobeha.2024.101397

D’Angiulli, A., and Sidhu, K. (2025). Functionalist emergentist materialism: a pragmatic framework for consciousness. Imagination Cognition Personality 44, 387–415. doi:10.1177/02762366251316255

Davies, M., Srinivasa, N., Lin, T.-H., Chinya, G., Cao, Y., Choday, S. H., et al. (2018). Loihi: a neuromorphic manycore processor with On-Chip learning. IEEE Micro 38 (1), 82–99. doi:10.1109/mm.2018.112130359

Downes, J. H., Hammond, M. W., Xydas, D., Spencer, M. C., Becerra, V. M., Warwick, K., et al. (2012). Emergence of a small-world functional network in cultured neurons. PLoS Comput. Biol. 8 (5), e1002522. doi:10.1371/journal.pcbi.1002522

Dresp-Langley, B. (2020). Seven properties of self-organization in the human brain. Big Data Cognitive Comput. 4 (2), 10. doi:10.3390/bdcc4020010

Dresp-Langley, B. (2024). Self-organization as a key principle of adaptive intelligence. Lect. Notes Netw. Syst., 249–260. doi:10.1007/978-3-031-65522-7_23

Elliott, T. (2024). Stability against fluctuations: a two-dimensional study of scaling, bifurcations and spontaneous symmetry breaking in stochastic models of synaptic plasticity. Biol. Cybern. 118 (1-2), 39–81. doi:10.1007/s00422-024-00985-0

Fortune, E. S., Andanar, N., Madhav, M., Jayakumar, R. P., Cowan, N. J., Bichuette, M. E., et al. (2020). Spooky interaction at a distance in cave and surface dwelling electric fishes. Front. Integr. Neurosci. 14, 561524. doi:10.3389/fnint.2020.561524

Friston, K. (2010). The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 11 (2), 127–138. doi:10.1038/nrn2787

Gillary, G., Heydt, R., and Niebur, E. (2017). Short-term depression and transient memory in sensory cortex. J. Comput. Neurosci. 43 (3), 273–294. doi:10.1007/s10827-017-0662-8

Gireesh, E. D., and Plenz, D. (2008). Neuronal avalanches organize as nested theta- and beta/gamma-oscillations during development of cortical layer 2/3. Proc. Natl. Acad. Sci. 105 (21), 7576–7581. doi:10.1073/pnas.0800537105

Grossberg, S. (1993). Self-organizing neural networks for stable control of autonomous behavior in a changing world. 139–197. doi:10.1016/s0924-6509(08)70037-0

Hahn, G., Petermann, T., Havenith, M. N., Yu, S., Singer, W., Plenz, D., et al. (2010). Neuronal avalanches in spontaneous activity in vivo. J. Neurophysiology 104 (6), 3312–3322. doi:10.1152/jn.00953.2009

Hansel, C., and Yuste, R. (2024). Neural ensembles: role of intrinsic excitability and its plasticity. Front. Cell. Neurosci. 18, 1440588. doi:10.3389/fncel.2024.1440588

Harari, Y. N. (2024). Nexus: a brief history of information networks from the Stone Age to AI. First U.S. edition. Random House.

Indiveri, G., Linares-Barranco, B., Hamilton, T. J., Schaik, A. van, Etienne-Cummings, R., Delbruck, T., et al. (2011). Neuromorphic silicon neuron circuits. Front. Neurosci. 5, 73. doi:10.3389/fnins.2011.00073

Johnson, S., Marro, J., and Torres, J. J. (2013). Robust short-term memory without synaptic learning. PLoS ONE 8 (1), e50276. doi:10.1371/journal.pone.0050276

Kılınç, H., Yıldız, E., Alaydın, H. C., Boran, H. E., and Cengiz, B. (2025). Vision loss and neural plasticity: enhanced multisensory integration and somatosensory processing. J. Physiology 603, 4051–4061. doi:10.1113/jp288503

Klaus, A., Yu, S., and Plenz, D. (2011). Statistical analyses support power law distributions found in neuronal avalanches. PLoS ONE 6 (5), e19779. doi:10.1371/journal.pone.0019779

Knill, D. C., and Pouget, A. (2004). The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 27 (12), 712–719. doi:10.1016/j.tins.2004.10.007

Knoblauch, A. (2017). Impact of structural plasticity on memory formation and decline. Academic Press, 361–386. doi:10.1016/B978-0-12-803784-3.00017-2

Lewis, L. B., Saenz, M., and Fine, I. (2010). Mechanisms of cross-modal plasticity in early-blind subjects. J. Neurophysiology 104 (6), 2995–3008. doi:10.1152/jn.00983.2009

Lynch, M. R. (2024). Environmental sensing. Oxford University Press EBooks, 570–600. doi:10.1093/oso/9780192847287.003.0022

Milano, G., Cultrera, A., Boarino, L., Callegaro, L., and Ricciardi, C. (2023). Tomography of memory engrams in self-organizing nanowire connectomes. Nat. Commun. 14 (1), 5723. doi:10.1038/s41467-023-40939-x

Mizraji, E., and Lin, J. (2015). Modeling spatial–temporal operations with context-dependent associative memories. Cogn. Neurodynamics 9 (5), 523–534. doi:10.1007/s11571-015-9343-3

Muir, J., Anguiano, M., and Kim, C. K. (2024). Neuromodulator and neuropeptide sensors and probes for precise circuit interrogation in vivo. Science 385 (6716), eadn6671. doi:10.1126/science.adn6671

Orlandi, J. G., Stetter, O., Soriano, J., Geisel, T., and Battaglia, D. (2014). Transfer entropy reconstruction and labeling of neuronal connections from simulated calcium imaging. PLoS ONE 9 (6), e98842. doi:10.1371/journal.pone.0098842

Pang, W., Xia, Z., Zhang, L., Shu, H., Zhang, Y., and Zhang, Y. (2024). Stimulus-responsive and task-dependent activations in occipital regions during pitch perception by early blind listeners. Hum. Brain Mapp. 45 (2), e26583. doi:10.1002/hbm.26583

Pena, A., and Cerqueira, F. (2017). Stability analysis for mobile robots with different time-scales based on unsupervised competitive neural networks. IEEE, 1–6. doi:10.1109/sbr-lars-r.2017.8215270

Prezioso, M., Mahmoodi, M. R., Bayat, F. M., Nili, H., Kim, H., Vincent, A., et al. (2018). Spike-timing-dependent plasticity learning of coincidence detection with passively integrated memristive circuits. Nat. Commun. 9 (1), 5311. doi:10.1038/s41467-018-07757-y

Rabinowitch, I., and Bai, J. (2016). The foundations of cross-modal plasticity. Commun. and Integr. Biol. 9 (2), e1158378. doi:10.1080/19420889.2016.1158378

Saimi, Y., Martinac, B., Gustin, M. C., Culbertson, M. R., Adler, J., and Kung, C. (1988). Ion channels in paramecium, yeast, and Escherichia coli. Cold Spring Harb. Symposia Quantitative Biol. 53 (0), 667–673. doi:10.1101/sqb.1988.053.01.076

Sajikumar, S., and Korte, M. (2011). Different compartments of apical CA1 dendrites have different plasticity thresholds for expressing synaptic tagging and capture. Learn. and Mem. 18 (5), 327–331. doi:10.1101/lm.2095811

Schafer, D. P., Lehrman, E. K., Kautzman, A. G., Koyama, R., Mardinly, A. R., Yamasaki, R., et al. (2012). Microglia sculpt postnatal neural circuits in an activity and complement-dependent manner. Neuron 74 (4), 691–705. doi:10.1016/j.neuron.2012.03.026

Shen, W.-M., and Chuong, C.-M. (2002). Simulating self-organization with the digital hormone model. Springer EBooks, 149–157. doi:10.1007/978-94-017-2376-3_16

Shen, J., and Loew, M. (2016). Hierarchical temporal and spatial memory for gait pattern recognition. IEEE, 1–9. doi:10.1109/aipr.2016.8010602

Shew, W. L., Yang, H., Petermann, T., Roy, R., and Plenz, D. (2009). Neuronal avalanches imply maximum dynamic range in cortical networks at criticality. J. Neurosci. 29 (49), 15595–15600. doi:10.1523/jneurosci.3864-09.2009

Soares, D., Gallman, K., Bichuette, M. E., and Fortune, E. S. (2023). Adaptive shift of active electroreception in weakly electric fish for troglobitic life. Front. Ecol. Evol. 11, 1180506. doi:10.3389/fevo.2023.1180506

Stier, C., Balestrieri, E., Fehring, J., Focke, N. K., Wollbrink, A., Dannlowski, U., et al. (2025). Temporal autocorrelation is predictive of age—An extensive MEG time-series analysis. Proc. Natl. Acad. Sci. 122 (8), e2411098122. doi:10.1073/pnas.2411098122

Sweeny, T. D., Grabowecky, M., Paller, K. A., and Suzuki, S. (2009). Within-hemifield perceptual averaging of facial expressions predicted by neural averaging. J. Vis. 9 (3), 1–11. doi:10.1167/9.3.2

van der Heijden, K., Formisano, E., Valente, G., Zhan, M., Kupers, R., and de Gelder, B. (2019). Reorganization of sound location processing in the auditory cortex of blind humans. Cereb. Cortex 30 (3), 1103–1116. doi:10.1093/cercor/bhz151

Wan, Q., Ardalan, A., Fulvio, J. M., and Postle, B. R. (2024). Representing context and priority in working memory. J. Cognitive Neurosci. 36 (7), 1374–1394. doi:10.1162/jocn_a_02166

Yuille, A., and Kersten, D. (2006). Vision as Bayesian inference: analysis by synthesis? Trends Cognitive Sci. 10 (7), 301–308. doi:10.1016/j.tics.2006.05.002

Keywords: self-organization, brain, evolution, neural circuits, neural learning, precision, plasticity, networks

Citation: Roy R, Sidhu K, Byczynski G, D’Angiulli A and Dresp-Langley B (2025) The precision principle: driving biological self-organization. Front. Netw. Physiol. 5:1678473. doi: 10.3389/fnetp.2025.1678473

Received: 08 September 2025; Accepted: 15 October 2025;

Published: 12 November 2025.

Edited by:

Peter A. Tass, Stanford University, United States

Reviewed by:

Flavio Rusch, University of São Paulo, Brazil

Copyright © 2025 Roy, Sidhu, Byczynski, D’Angiulli and Dresp-Langley. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Raymond Roy, raymondroy@cmail.carleton.ca; Birgitta Dresp-Langley, birgitta.dresp@cnrs.fr
