- 1Indian Institute of Technology Madras, Chennai, India

- 2Virginia Tech, Blacksburg, VA, United States

This study presents a general trainable network of Hopf oscillators to model high-dimensional electroencephalogram (EEG) signals across different sleep stages. The proposed architecture consists of two main components: a layer of interconnected oscillators and a complex-valued feed-forward network designed with and without a hidden layer. Incorporating a hidden layer in the feed-forward network leads to lower reconstruction errors than the simpler version without it. Our model reconstructs EEG signals across all five sleep stages and predicts the subsequent 5 s of EEG activity. The predicted data closely aligns with the empirical EEG regarding mean absolute error, power spectral similarity, and complexity measures. We propose three models, each representing a stage of increasing complexity from initial training to architectures with and without hidden layers. In these models, the oscillators initially lack spatial localization. However, we introduce spatial constraints in the final two models by superimposing spherical shells and rectangular geometries onto the oscillator network. Overall, the proposed model represents a step toward constructing a large-scale, biologically inspired model of brain dynamics.

1 Introduction

Using extracranial electrodes, EEG measures the extracellular ionic current produced by graded postsynaptic potentials of vertically oriented pyramidal neurons in cortical layers III, V, and VI (Nunez and Srinivasan, 2006). The electrical dipole field created by the soma and apical dendrites of pyramidal neurons propagates through the layers of the cortex, cerebrospinal fluid (CSF), skull, and scalp via volume conduction (Schaul, 1998) and can be recorded at the scalp (Mule et al., 2021). EEG technology has diverse applications, including characterizing brain dynamics in the early stages of Parkinson’s disease (PD) (Han et al., 2013), epileptic seizure detection (Zhou et al., 2018), motor imagery and movement classification in brain-computer interfaces (Thomas et al., 2009), and emotion classification (Alhagry et al., 2017).

Oscillations are a key feature of sleep EEG and are crucial in various physiological and cognitive processes (Lambert and Peter-Derex, 2023). For example, stage 1 sleep is marked by the presence of theta waves and a reduction in alpha waves; stage 2 sleep is characterized by bursts of oscillatory activity and increased cortical synchrony; delta waves are a marker of deep sleep and are also affected by disorders such as insomnia (Bakker et al., 2023); and theta oscillations occur in the hippocampus during the REM stage. EEG-based coherence (Watanabe et al., 2023) and phase synchronization (Ojha and Panda, 2024) are quantitative measures of functional connectivity during sleep and of the temporal coordination of neuronal activity. Achermann and Borbély (1997) showed the significance of neuronal synchrony during deep sleep and the increase in delta (0.1–4 Hz) and theta (4–7 Hz) band power following sleep onset, particularly in the fronto-central region. Studies also report the earlier emergence of alpha (8–12 Hz) and frontal theta oscillations after sleep deprivation (Gorgoni et al., 2019), the effect of transcranial oscillatory stimulation on memory consolidation during NREM sleep (Marshall et al., 2006), and the role of oscillations as biomarkers of sleep homeostasis, such as theta activity during wakefulness and slow-wave (delta) activity during sleep (Finelli et al., 2000). Sleep also plays a major role in synaptic homeostasis and memory consolidation, emphasizing the importance of oscillatory activity in brain function (Esser et al., 2007; Pankka et al., 2024; Bahador et al., 2021; Paul, 2020; Sano et al., 2018; Michielli et al., 2019; Zhao et al., 2024; Burke and de Paor, 2004; Weigenand et al., 2014; Ospeck, 2019; Ghosh et al., 2025; Krishnamurthy et al., 2022; Buzsáki, 2006; Achermann et al., 1993). The EEG-based sleep transition model (Ising model) describes the synchronous dynamics of the neuronal population (Acconito et al., 2023), and the Kuramoto model describes phase synchronization among different brain regions during sleep (Ingendoh et al., 2023).

Over the past decades, several efforts have been made to model the brain as a nonlinear dynamical system and to describe brain dynamics using complex nonlinear dynamical networks (Başar, 1983). A phenomenological model comprising van der Pol–Duffing double oscillator networks was used to model EEG signals from healthy controls as well as Alzheimer’s disease patients (Ghorbanian et al., 2015b). The model results compare favorably with experimental results in terms of time series, power spectrum, and Shannon entropy (Ghorbanian et al., 2014; Ghorbanian et al., 2015a; Ghorbanian et al., 2015b). Another model used coupled Duffing–van der Pol oscillators to generate ictal EEG patterns from the temporal lobe (Szuflitowska and Orlowski, 2021). By analyzing EEG time series, Babloyantz et al. (1985) described the presence of low-dimensional chaos in the NREM N1 and REM sleep stages. Stochastic limit cycle oscillators have also been used to model EEG data from healthy subjects (Rankine et al., 2006; Burke and de Paor, 2004).

Hopf oscillator networks offer a robust framework for modeling complex brain dynamics across different states (Burke and de Paor, 2004). Neural mass models have been used to explain slow-wave activity and K-complexes (Weigenand et al., 2014). Additionally, Hopf oscillators provide a powerful approach to modeling the oscillatory neural dynamics underlying memory consolidation and sleep spindles (Ospeck, 2019). Their properties closely align with observed sleep and memory research phenomena, offering insights into how the brain processes, stores, and consolidates information through oscillatory activity patterns. These models connect the neuronal-level dynamics and population-level behavior observed in EEG recordings.

Despite these efforts, the link between mesoscopic EEG activity and the dynamics of the underlying neuronal circuits is still not fully understood. A weighted mean potential of a weakly coupled, local cluster of Hindmarsh-Rose (HR) neurons collectively shows near-synchronization behavior and can optimally reconstruct epileptic EEG time series (Ren et al., 2017). A similar line of work was proposed by Phuong and colleagues, in which networks of HR neurons and Kuramoto oscillators were used to reconstruct EEG data in healthy and epileptic conditions (Nguyen et al., 2020). Ibáñez-Molina and Iglesias-Parro (2016) used a mean-field Kuramoto oscillator model to explore the dynamics of EEG complexity during mind-wandering episodes. This work contributes to understanding how neural mechanisms underpin spontaneous thought processes and their representation in EEG signals. In another study (Das and Puthankattil, 2022), the authors used a phenomenological computational model based on Kuramoto oscillators to investigate functional connectivity and EEG complexity in mild cognitive impairment (MCI), a precursor to Alzheimer’s disease (AD). By combining empirical structural and functional connectivity data with computational models of coupled (Kuramoto) oscillators, a further study revealed that the brain’s dynamic repertoire results from the interplay between network topology and oscillatory dynamics (Cabral et al., 2022). This research highlights the role of synchronization mechanisms in shaping large-scale brain dynamics and offers a framework for understanding how the connectome supports diverse neural functions. Similar approaches have also been taken to model functional magnetic resonance imaging (fMRI) (Logothetis et al., 2001) signals using nonlinear oscillator (Kuramoto, Hopf) models (Cabral et al., 2023; Breakspear, 2017; Deco et al., 2017). Hopf oscillatory models have also been developed to explain several physiological and clinical phenomena, such as cognitive behavior, the sleep–wake cycle, schizophrenia, and Alzheimer’s disease (Deco et al., 2015; Deco et al., 2017; Deco et al., 2021; Luppi et al., 2022; López-González et al., 2021; Deco and Kringelbach, 2014).

There is a growing interest in modeling large-scale brain activity using networks of nonlinear oscillators. A notable example of this kind is The Virtual Brain (TVB) framework, which uses large oscillatory networks to model various manifestations of functional brain dynamics like EEG, functional Magnetic Resonance Imaging (fMRI), and Magnetoencephalogram (MEG) (Sanz Leon et al., 2013). Al-Hossenat et al. (2019) proposed modeling of slow wave activity (delta and theta) of EEG from eight different regions using Jansen and Rit’s (JR) neural mass model and anatomical connectivity using the TVB framework. In another modeling study, using a similar kind of neural mass model, alpha wave activity was reproduced from four different brain regions (Al-Hossenat et al., 2017).

Several deep learning models have been proposed for EEG time series forecasting, including WaveNet (Pankka et al., 2024), correlation-based approaches (Bahador et al., 2021), and LSTM-based networks (Paul, 2020). LSTM-RNN networks have been applied to detect sleep stages from EEG signals (Sano et al., 2018; Michielli et al., 2019) and to reconstruct sleep EEG (Zhao et al., 2024), leveraging their ability to capture temporal dependencies in sleep EEG data. However, these networks operate primarily on the raw time series and do not explicitly represent key biological oscillatory features such as phase, frequency, and amplitude.

Feedforward spiking neural network models (Singanamalla and Lin, 2021; Zenke and Ganguli, 2018) generate synthetic EEG signals for motor imagery and SSVEP EEG data. Yang et al. (2024) introduced a biologically inspired unsupervised learning framework for spiking neural networks (SNNs), enhancing neuromorphic vision systems with robust, efficient, and energy-adaptive visual perception for embodied applications in robotics and AI. The same group advanced SNN capabilities with a surrogate gradient learning framework (Yang and Chen, 2023a), demonstrating superior temporal precision and efficiency for neuromorphic computing and AI. The same authors also proposed SNIB (Yang and Chen, 2023b; Yang and Chen, 2024), a framework that applies the nonlinear information bottleneck (NIB) principle to optimize the trade-off between information compression and retention, enabling more efficient, robust, and adaptive spike-based learning. A model of linearly coupled Hopf oscillators can explain the complex dynamics and information processing of the human brain; its dynamics depend on the coupling coefficients among the oscillators and on the oscillator amplitude (μ) (Deco et al., 2017). However, models of this kind cannot accurately predict neuroimaging data such as fMRI (Hahn et al., 2021; Iravani et al., 2021).

Compared with fMRI, fewer brain modeling approaches have been explored with EEG. EEG is less expensive than fMRI, more easily available, portable, and has high temporal resolution, and these advantages can be exploited to understand brain dynamics. With this motivation, we propose a network of Hopf oscillators described in the complex domain and show how the network can be trained to model high-dimensional EEG data from the waking and sleep stages, as well as the publicly available Bonn epilepsy dataset (Andrzejak et al., 2001). Sleep is a complex, naturally recurring dynamic process that occurs periodically in most animals (Březinová, 1974). Three critical physiological mechanisms or rhythms regulate sleep, viz., circadian rhythm, homeostasis, and ultradian rhythm (Fisher et al., 2013; Achermann and Borbély, 2003). The polysomnogram (Wolpert, 1969), which jointly measures brain electrical activity (EEG), muscle activity (electromyogram, EMG), eye movement (electrooculogram, EOG), and heart rate (electrocardiogram, ECG), is a standard method of recording sleep activity. Sleep can be broadly categorized into five stages: waking, non-rapid eye movement N1 (NREM N1), non-rapid eye movement N2 (NREM N2), non-rapid eye movement N3 (NREM N3), and rapid eye movement (REM) sleep (Šušmáková, 2004). Sleep facilitates important neural and physiological functions, including memory consolidation (Siegel, 2001) and emotion control (Goldstein and Walker, 2014).

In earlier work, we showed how to achieve a stable phase relationship between oscillators with arbitrarily different frequencies using a special form of coupling known as power coupling (Biswas et al., 2021). The difficulties that arise in a pair of coupled oscillators, depicted by Arnold tongues, seem to be overcome effectively with power coupling. It was shown how networks of such coupled oscillator systems can be trained to learn a small number of EEG channels. In the present study, we add a hidden layer of sigmoidal neurons and geometrically constrain the network to accurately learn high-dimensional, “whole brain” EEG signals under various sleep conditions.

In this work, we have developed a Deep Oscillatory Neural Network (DONN) to reconstruct and predict sleep EEG and epileptic EEG time series. This type of model combines oscillatory neurons and sigmoid neurons (Ghosh et al., 2025). RNNs with gating mechanisms (Krishnamurthy et al., 2022) excel at sequence processing but lack biological plausibility. In contrast, neural activity in the brain exhibits complex dynamics characterized by key frequency bands such as alpha, beta, gamma, and theta (Buzsáki, 2006). Our proposed oscillatory network consists of Hopf oscillators, where each unit exhibits both amplitude and phase dynamics. The oscillators in the network effectively carry out a Fourier decomposition of the teaching signal (Biswas et al., 2021). Additionally, we demonstrate that a single oscillatory neuron is computationally more efficient than a single LSTM neuron in terms of processing time, and that the DONN has significantly fewer trainable parameters than an LSTM (Ghosh et al., 2025).

Our study shows that the EEG signal can be reconstructed and predicted more accurately than with the Kuramoto and HR neuron-based model of Nguyen et al. (2020). Our model can reconstruct the EEG and predict the next 5 s of data (2,500 data points) for all five sleep stages. Signal features of the model-predicted EEG, such as the power spectral density, Hurst exponent (Supplementary section 11), and Higuchi fractal dimension (Supplementary section 12), show good agreement with those of the empirical EEG data. The key contributions of this work are summarized below (1–5):

1. Modeling of different stages of sleep data. Reconstruction as well as prediction of future EEG data.

2. Statistical tests and error-bar comparisons with the existing literature, which show a significant improvement over existing approaches.

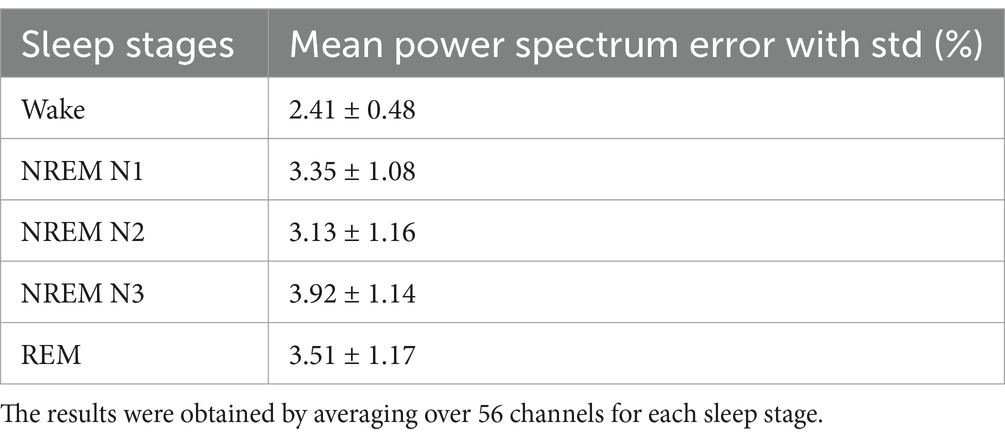

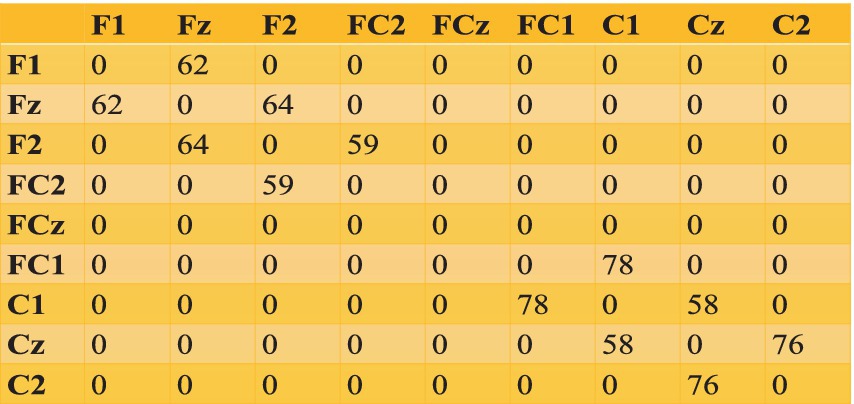

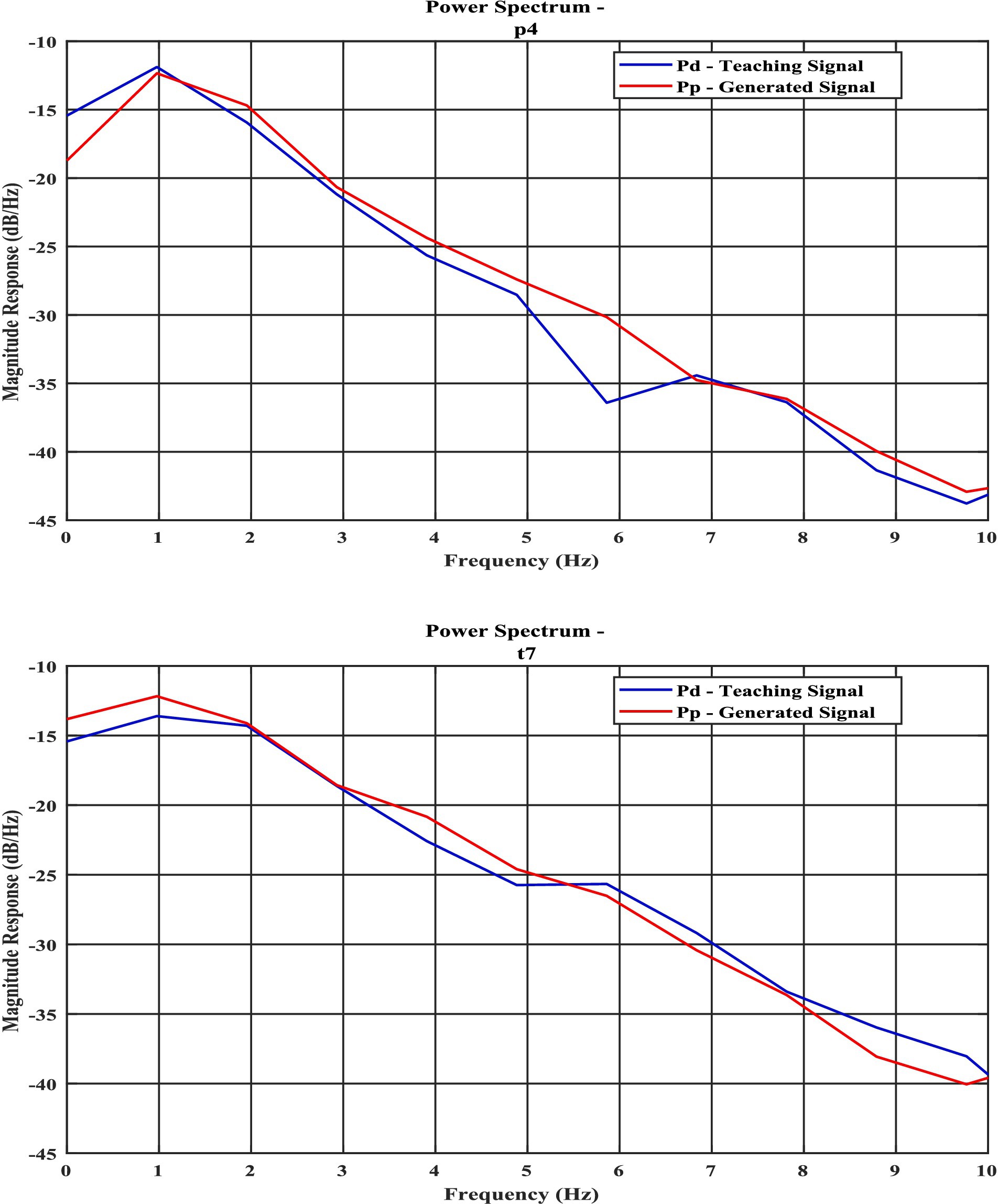

3. The model-predicted signal exhibits significant similarity to the empirical EEG data across different sleep stages, as evidenced by its power spectral density and Hurst exponent characteristics (Figure 1, Table 1; Supplementary sections 11–13).

4. We have created a spherical shell oscillatory model of the whole brain, where oscillators are spatially localized, which is a stepping stone toward large-scale brain modeling.

5. Identification of the optimal model parameters [oscillator amplitude (μ), coupling coefficient, β, and the additional hidden layer] (Section 3.4).

Figure 1. Power spectral density curves of the experimental signal and the model-predicted signal for (a) NREM N1, P4 channel; (b) NREM N3, T7 channel. The blue line shows the power spectrum of the actual EEG data (Pd) and the orange line shows the model-predicted power spectrum (Pp).

The outline of the paper is as follows. This article begins with an account of sleep EEG recording followed by preprocessing methodology. The two stages of training of the proposed network are described in the ‘1st stage of training’ and ‘2nd stage of training’ sections, respectively. The section ‘Insertion of hidden layer’ describes the deep oscillator network, which is a combination of an oscillatory layer and a feedforward network. The section ‘Prediction of EEG Data’ describes the prediction of EEG data using a trained Hopf oscillator model. The section ‘spatial distribution of oscillator’ shows how oscillators are distributed on a rectangular grid and spherical shell geometry. Results from the reconstruction, EEG data prediction, and statistical analysis are described in the results section. A discussion of the work is presented in the last section.

2 Materials and methods

2.1 EEG recording

The polysomnogram (PSG) datasets, comprising 56 EEG, two electrooculogram (EOG), and four EMG electrodes, were recorded from two healthy subjects (full night, 8 h) at the School of Neuroscience, Virginia Tech, USA. During data collection, all necessary instructions, such as restrictions on caffeine and alcohol use, were adhered to.

The different stages of sleep are scored according to the American Academy of Sleep Medicine (AASM) rules by two sleep experts (Singh et al., 2019). A night’s sleep consists of periods of rapid eye movement (REM) sleep and periods of non-rapid eye movement (NREM) sleep; the latter consists of three stages, NREM N1, NREM N2, and NREM N3, also known as slow-wave sleep. NREM N1 is often when a transition occurs between waking and sleep; the awake state is characterized by low amplitude and relatively high-frequency waves. NREM N1 occurs for 3%–8% of total full night sleep duration and is dominated by theta waves (4–7 Hz) (Březinová, 1974). NREM N2 is defined by sleep spindles (11–16 Hz) and K-complexes. NREM N3 is also called slow-wave sleep, as there is a prominent activity of the delta band (0.1 to 4 Hz). REM sleep is somewhat similar to the wake stage, which occurs more frequently late at night and occupies 20% of total sleep (Fisher et al., 2013). REM sleep is marked by muscle atonia and conjugate eye movements.

These datasets are in European Data Format (.EDF). For further analysis, we converted them into .mat format using the EEGLAB (Iversen and Makeig, 2019) plugin in Matlab and extracted 10-s chunks (training) and the subsequent 5-s chunks (testing) from each of the 56 channels of EEG data. EEG is inherently a noisy signal influenced by non-neural factors [e.g., muscle movement measured by electromyogram (EMG), eye movement measured by electrooculogram (EOG), and cardiac activity measured by electrocardiogram (ECG)], as well as equipment noise such as power line interference (50/60 Hz), impedance fluctuations, and cable movements. In addition, the EEG data are normalized to remove the DC offset. The sampling frequency of the system is 500 Hz.
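The segment extraction described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the function name `split_and_normalize` is hypothetical, the input is synthetic noise rather than EEG, and the normalization (subtracting the per-channel training mean and scaling by the peak training amplitude) is an assumption, since the text only states that the data are normalized to remove the DC component.

```python
import numpy as np

FS = 500      # sampling frequency in Hz (from the text)
TRAIN_S = 10  # training segment length in seconds (from the text)
TEST_S = 5    # held-out segment length in seconds (from the text)


def split_and_normalize(eeg, start=0, fs=FS, train_s=TRAIN_S, test_s=TEST_S):
    """Split a (channels, samples) array into training and test segments.

    Assumed normalization: each channel is centred on its training mean
    (removing the DC offset) and scaled by its peak training amplitude.
    """
    n_train, n_test = train_s * fs, test_s * fs
    stop = start + n_train + n_test
    if stop > eeg.shape[1]:
        raise ValueError("recording too short for the requested segments")
    train = eeg[:, start:start + n_train].astype(float)
    test = eeg[:, start + n_train:stop].astype(float)
    offset = train.mean(axis=1, keepdims=True)
    scale = np.abs(train - offset).max(axis=1, keepdims=True)
    scale[scale == 0] = 1.0  # guard against flat channels
    return (train - offset) / scale, (test - offset) / scale


# Synthetic stand-in for a 56-channel recording (not real EEG).
rng = np.random.default_rng(0)
fake_eeg = rng.standard_normal((56, 20 * FS)) + 3.0
train, test = split_and_normalize(fake_eeg)
```

The test segment is normalized with the training statistics only, so no information from the held-out 5 s leaks into the training data.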

2.2 A network of neural oscillators

For our current purpose of modelling multi-dimensional EEG signals, we use an enhanced version of a network of neural oscillators described in Biswas et al. (2021). The original model of Biswas et al. (2021) consists of a layer of Hopf oscillators with lateral coupling connections and an output layer that is directly connected by a single linear weight stage to the oscillator layer. The dynamics of the Hopf oscillators were described in the complex domain, coupled using a unique form of coupling known as power coupling. The layer of oscillators is connected to the output layer using all-to-all linear forward weights. Thus, the given time series is modelled as a linear sum of the outputs of the layer of oscillators.

In the present model, a hidden layer of sigmoid neurons is inserted between the oscillatory layer and the output, immensely reducing the fitting error. The original network of Biswas et al. (2021) has two components: the input oscillatory layer consisting of a network of coupled Hopf oscillators and a feedforward linear weight stage that maps the oscillator’s outputs onto the network’s output node(s). We use the Hopf oscillator in the supercritical regime where the oscillator exhibits a stable limit cycle. In the previous study, we introduced ‘power coupling’, which shows how to achieve a constant normalized phase difference between a pair of coupled Hopf oscillators with arbitrary intrinsic frequencies (Biswas et al., 2021). The dynamics of the oscillatory layer are described by Equations 1a–1e.

2.3 The network of Hopf oscillators

The complex domain representation of a single Hopf oscillator is described as:

where z is a state variable,

The dynamics of N-coupled Hopf oscillators without external input can be described as:

The polar coordinate representation of Equation 1c is:

where r and ϕ are the state variables, with r the amplitude of oscillation, and μ and β are bifurcation parameters. In this study, μ = 1 and β = −20. The complex coupling coefficient between a pair of oscillators is specified by its magnitude and its angle, and each oscillator i has its own phase and intrinsic frequency.
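To illustrate the supercritical regime for the stated parameter values, the sketch below integrates a single uncoupled oscillator in polar coordinates. It assumes the standard Hopf normal form, dz/dt = (μ + iω + β|z|²)z, i.e., dr/dt = μr + βr³ and dϕ/dt = ω; the exact form used in the paper may differ in detail. Under this assumption, the stable limit cycle has radius sqrt(−μ/β), which is about 0.224 for μ = 1 and β = −20. The function name and the 5 Hz frequency are illustrative choices.

```python
import numpy as np


def simulate_hopf(mu=1.0, beta=-20.0, freq_hz=5.0, r0=1.0, fs=500, duration_s=10.0):
    """Euler integration of one Hopf oscillator in polar coordinates.

    Assumed dynamics: dr/dt = mu*r + beta*r**3 and dphi/dt = 2*pi*freq_hz.
    Returns the complex state z = r * exp(i*phi) at every time step.
    """
    n = int(round(duration_s * fs))
    dt = 1.0 / fs
    r = np.empty(n)
    phi = np.empty(n)
    r[0], phi[0] = r0, 0.0
    for k in range(n - 1):
        r[k + 1] = r[k] + dt * (mu * r[k] + beta * r[k] ** 3)
        phi[k + 1] = phi[k] + dt * 2.0 * np.pi * freq_hz
    return r * np.exp(1j * phi)


z = simulate_hopf()
limit_cycle_radius = np.sqrt(1.0 / 20.0)  # sqrt(-mu / beta) for mu = 1, beta = -20
```

Integrating in polar rather than Cartesian form is a deliberate choice here: a forward Euler step on the rotation term inflates the amplitude slightly at every step, which would bias the apparent limit-cycle radius at this step size.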

The network described above is trained in two stages: in stage 1, the intrinsic frequencies of the oscillators and the coupling weights among the oscillators in the oscillatory layer are trained; in stage 2, the feedforward linear weights between the oscillatory layer and the network output are trained.

2.4 1st stage of training

The aim of the 1st stage of training (Figure 2a) is to train the intrinsic frequencies of the oscillators, which are initialized by sampling from a uniform random distribution over the interval [0, 10] Hz. The modified network dynamics are described in Equation 2a, where the error signal drives each oscillator. The teaching signal used for training is an EEG signal of finite duration. The power coupling weight in Equation 2b is the complex lateral connection between a pair of oscillators, characterized by its angle and its magnitude. The oscillator activations are summed using feedforward weights, which in this stage of training are small real numbers (0.2 for all oscillators), and the lateral connections are initialized with complex numbers according to Equation 2b. The training of the intrinsic frequencies is described in Equation 2c, which involves a learning rate, the error signal e(t), and the oscillator phase. The whole network is driven by the error signal e(t), which is the difference between the network’s predicted signal and the desired signal (Equation 2d). The real feedforward weights are trained by a modified delta rule (Equation 2e) with its own learning rate, and the network-reconstructed signal s(t) is given by Equation 2f. The network performs a Fourier-like decomposition of the target signal, with each oscillator learning the frequency component of the signal closest to its own intrinsic frequency. In the 1st stage, the intrinsic frequencies, the angles of the power coupling weights, and the amplitudes of the signal components (the real feedforward weights) are trained. Lateral connections are trained by a complex-valued Hebbian rule (Equation 2g).

The weight of power coupling can be written as Equation 2b:

Frequency adaptation is done by the following rule:

where s(t) is the network-predicted time series, e(t) is the output error, and the teaching signal is the EEG time series.

Hebbian learning of the complex power coupling weights is given by Equation 2g, whose rate is set by a time constant.

2.5 2nd stage of training

In the 2nd stage of training (Figure 2b), the oscillatory network with the intrinsic frequencies and lateral connections learned in the previous stage is used as a starting point. [Note that the oscillatory layer with trained parameters may be compared to the reservoir in reservoir computing (Biswas et al., 2021).]

The main difference in this stage is that the feedforward weights are allowed to be complex (they were real in the 1st stage of training) and are trained once again, using a supervised batch-mode learning rule. The complex feedforward weights are defined in Equations 3a, 3b, and their magnitudes and angles are updated according to Equations 3c, 3d.

Complex feedforward weight learning is run for 5,000 epochs; the learning rates for the weight magnitude and angle are reported in Section 3.1.2.
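The idea of training complex feedforward weights in polar form can be sketched as below. This is an illustrative stand-in, not the update rules of Equations 3c, 3d: it uses plain batch gradient descent on the mean squared error, fixed unit-amplitude oscillators at hypothetical frequencies (3, 5, and 7 Hz), a synthetic two-component teaching signal, and learning rates chosen for fast convergence on this toy problem rather than the values used in the paper.

```python
import numpy as np

FS = 500
t = np.arange(2 * FS) / FS                           # 2 s of samples
freqs_hz = np.array([3.0, 5.0, 7.0])                 # hypothetical learned frequencies
z = np.exp(1j * 2 * np.pi * freqs_hz[:, None] * t)   # unit-amplitude oscillator outputs

# Synthetic teaching signal built from two of the oscillator frequencies.
target = 0.5 * np.cos(2 * np.pi * 3 * t + 0.7) + 0.3 * np.cos(2 * np.pi * 7 * t - 0.4)


def reconstruct(mag, ang):
    """Real part of the complex-weighted sum of oscillator outputs."""
    weights = mag * np.exp(1j * ang)
    return np.real(weights @ z)


def train_polar_weights(epochs=2000, lr_mag=0.5, lr_ang=0.5):
    """Batch gradient descent on the magnitude and angle of each weight."""
    mag = np.full(len(freqs_hz), 0.2)   # start from the small stage-1 value
    ang = np.zeros(len(freqs_hz))
    for _ in range(epochs):
        err = target - reconstruct(mag, ang)
        phase = np.angle(z) + ang[:, None]
        # Gradients of 0.5 * mean(err**2) with respect to magnitude and angle.
        mag += lr_mag * np.mean(err * np.cos(phase), axis=1)
        ang -= lr_ang * np.mean(err * mag[:, None] * np.sin(phase), axis=1)
    return mag, ang


mag, ang = train_polar_weights()
```

In this toy setting the magnitudes converge to the component amplitudes (0.5, 0, 0.3) and the angles of the two active oscillators to the component phases (0.7, −0.4), which is the sense in which a complex weight captures both amplitude and phase where a real weight captures only amplitude.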

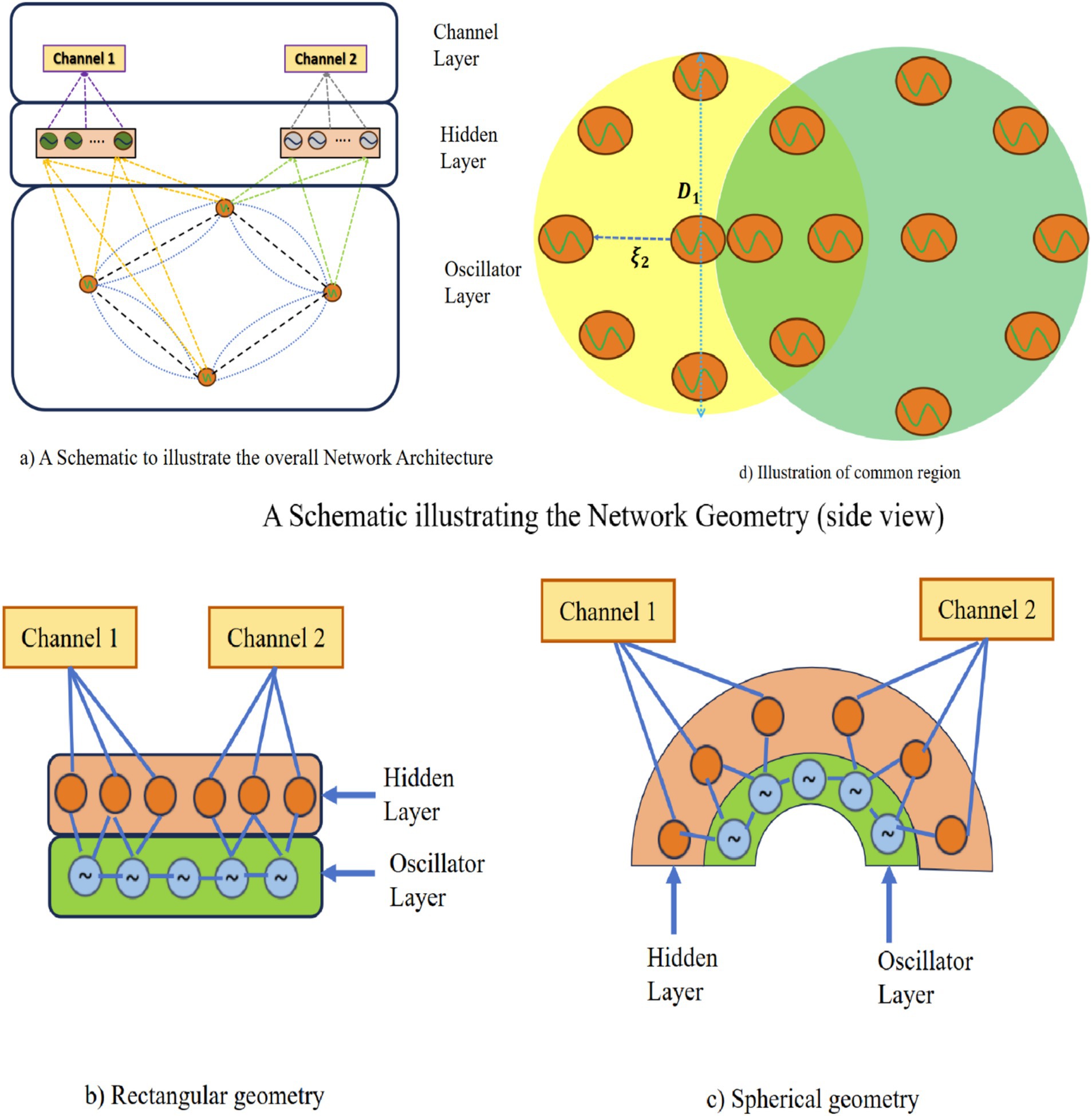

2.6 Insertion of the hidden layer

As we will see subsequently in the Results section, despite its theoretical advantages, the model described above does not yield satisfactory approximations of the empirical EEG signals. To improve the approximation performance, we insert a hidden layer of sigmoidal neurons between the oscillatory layer and the output (Figure 3a). In the new version of the model with the hidden layer, the intrinsic frequencies of the oscillators and their lateral connections are trained using the learning mechanisms of the 1st stage of training described above (Equations 2a–2f). The mathematical derivation for the hidden layer is given in Supplementary section 3. We have also introduced two geometrical configurations in this latest version of the model, (a) rectangular and (b) spherical (Figures 3b,c), in which a few oscillators are shared among EEG channels (Figure 3d).

Figure 3. (a) A schematic to illustrate the overall network architecture. (b,c) A schematic illustrating the network geometry (side view). (d) A schematic illustration of shared oscillator’s common region between two channels (top view).

2.7 Generation of EEG

So far, using supervised training, we have only reconstructed the data. To validate our model, we have to generate the output of the model without the network being driven by the training signal. The network can generate the next 5 s (2,500 samples) of the EEG signal without any external input. During generation, the phases and intrinsic frequencies of the oscillators and all feedforward weights (oscillatory layer to hidden layer and hidden layer to output layer) are taken from the trained network. The r and ϕ dynamics (Equations 4a, 4b) are derived by transforming the complex variable representation (Equation 2a) to polar coordinates. The r and ϕ dynamics are given below:

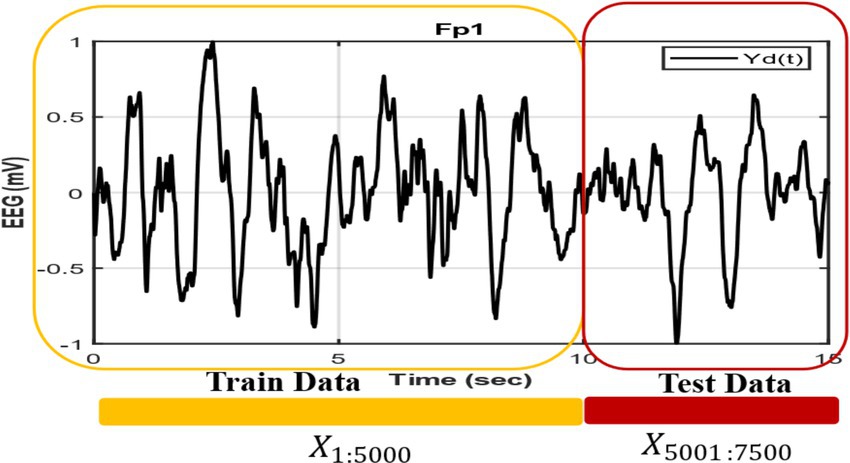

Figure 4 shows the duration of the training and generation segments: the first 5,000 points (10 s) of the empirical EEG data are used for training, and the last 2,500 points (5 s), which are not used during the training phase, are compared with the network-generated output. To validate our model, we compare it with the method proposed in Nguyen et al. (2020), in which Kuramoto oscillators and Hindmarsh-Rose (HR) neurons are used to produce the EEG signal. Note that we extracted the training data in such a way that the next 5-s segment belongs to the same sleep stage as the training segment (Figure 4).

Figure 4. EEG signals are divided into training and testing segments, where an initial 10-s interval is used for training and the following 5-s segment is used for testing.

2.8 Spatial distribution of oscillators

In the models described above, no spatial organization is imposed on the oscillators. To impart greater realism and biological plausibility, we now impose a spatial organization on the proposed oscillator network. To this end, we consider two spatial distributions of the oscillators within a “cortical layer”, which is modeled in two ways: (1) a spherical shell, and (2) a rectangular grid (Supplementary material). Electrodes are placed on top of the cortical layer, inside another layer named the “electrode layer”. The real-world 10–20 electrode geometry is used in the “spherical shell” case.

Next, we specify which oscillators in the cortical layer contribute to which electrodes in the electrode layer. This is done by a simple nearest-neighbor criterion: only the oscillators that lie within a threshold distance from a given electrode contribute to that electrode, as defined in Equation 5a.

Equation 5a involves the distance from a given electrode in the electrode layer to a given oscillator in the cortical layer and the distance from the electrode layer to the cortical layer; the electrode and oscillator positions are expressed in Cartesian coordinates.
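The electrode-to-oscillator assignment can be sketched as a boolean mask over pairwise Euclidean distances. Everything concrete in this sketch is an assumption made for illustration: the Fibonacci-lattice placement of oscillators, the unit radius of the cortical shell, the 1.1 radius of the electrode layer, the three electrode sites, and the threshold of 0.5. The paper takes electrode positions from EEGLAB and defines its threshold in Equation 5a.

```python
import numpy as np


def fibonacci_sphere(n_points, radius):
    """Quasi-uniform points on a sphere (placement scheme is an assumption)."""
    i = np.arange(n_points)
    z = 1.0 - 2.0 * (i + 0.5) / n_points
    rho = np.sqrt(1.0 - z ** 2)
    theta = np.pi * (3.0 - np.sqrt(5.0)) * i  # golden-angle increments
    return radius * np.column_stack((rho * np.cos(theta), rho * np.sin(theta), z))


def electrode_oscillator_mask(electrodes, oscillators, threshold):
    """mask[e, o] is True when oscillator o lies within `threshold` of electrode e."""
    diff = electrodes[:, None, :] - oscillators[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    return dist <= threshold, dist


oscillators = fibonacci_sphere(200, radius=1.0)  # cortical layer on a unit sphere
electrodes = 1.1 * np.array([[0.0, 0.0, 1.0],    # hypothetical electrode sites
                             [1.0, 0.0, 0.0],
                             [0.0, 1.0, 0.0]])
mask, dist = electrode_oscillator_mask(electrodes, oscillators, threshold=0.5)
```

Each row of `mask` selects the oscillators that feed one electrode; rows may overlap, which corresponds to oscillators being shared between neighboring channels.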

There is also another layer, named the “hidden layer”, between the cortical layer and the electrode layer. No specific spatial location is assigned to the neurons in the hidden layer. The model architecture is similar to that described in Section 2.6 (Figure 3a), with one important difference: a separate hidden layer of neurons is introduced for every electrode. In the schematic shown in Figures 3b,c, two different hidden layers are depicted, corresponding to two distinct electrodes, along with the corresponding oscillators. Note that although the hidden layers are not shared between two electrodes, the corresponding oscillators can be partially shared (Figures 3b,c).

The thresholding process described above determines the connectivity between the electrodes and the oscillators. A second threshold determines the lateral connections among the oscillators: we use the spatial locations of the oscillators in the cortical layer to specify local connectivity using another distance-based threshold, defined in Equation 5b. Long-range connections among the oscillators are thus avoided.

Consider the distance between a pair of oscillators. A pair of oscillators whose mutual distance exceeds the distance threshold is not connected. For connected pairs, the magnitude of the complex-valued connection between the two oscillators is set to 0.001. Thus, only those oscillator pairs that lie within the threshold distance of each other are connected and trained (Equation 5b), with the distance computed from the Cartesian coordinates of the two oscillators.

Note that the angle of the coupling connections is calculated by Hebbian learning as per Equation 2g.
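The distance-limited lateral connectivity can be sketched as follows. The connection magnitude of 0.001 is taken from the text; the value of 0 for unconnected pairs, the absence of self-coupling, the random oscillator positions on a unit sphere, and the threshold of 0.4 are assumptions made for illustration. The function name is hypothetical.

```python
import numpy as np


def lateral_coupling_magnitudes(positions, threshold, magnitude=0.001):
    """Magnitude matrix: `magnitude` for pairs within `threshold`, 0 otherwise."""
    diff = positions[:, None, :] - positions[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    connected = dist <= threshold
    np.fill_diagonal(connected, False)  # assumption: no self-coupling
    return np.where(connected, magnitude, 0.0)


# Hypothetical oscillator positions: random points on a unit sphere.
rng = np.random.default_rng(0)
points = rng.standard_normal((200, 3))
points /= np.linalg.norm(points, axis=1, keepdims=True)
A = lateral_coupling_magnitudes(points, threshold=0.4)
```

Only the magnitudes are fixed by this mask; the angles of the connected pairs would then be learned separately.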

2.9 Spatial distribution of the oscillators in a spherical shell

In this section, we describe a model in which the cortical layer is modeled as a spherical shell. In reality, EEG electrodes are not confined to a planar surface, so we place the electrodes on a spherical surface on top of a spherical cortical layer. We extracted the precise electrode locations from EEGLAB (Iversen and Makeig, 2019). Based on the position and radius of the electrode layer, we create a spherical shell. A set of 8 electrodes is shown on the cortical layer in Figure 3c. As in the case of the rectangular grid (Supplementary section 10), there is a hidden layer between the cortical layer, which consists of oscillators, and the electrode layer. Network training is performed as per the equations described in Section 2.4 (Equations 2a–2g and the hidden layer equations described in Supplementary section 3).

3 Results

In this section, we describe the performance of the models described in the previous section on modeling high-dimensional, whole-brain EEG data (56 electrodes). To model a large number of electrodes, as well as the essential frequency band (0.1 to 20 Hz), a large number of oscillators (N = 200) is used. Although the model is trained on the original EEG time series, we depict the signal spectrum using the averaged periodogram method known as the “Welch method” (Naderi and Mahdavi-Nasab, 2010) rather than a plain FFT. We use the Welch method with a Hamming window of size 1 s and 50% overlap throughout the paper.
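The spectral estimate with these settings can be reproduced with SciPy's `scipy.signal.welch`, as in the sketch below. At a sampling rate of 500 Hz, a 1-s window corresponds to 500 samples and a 50% overlap to 250 samples, giving a frequency resolution of 1 Hz. The wrapper name `welch_psd` and the synthetic 6 Hz test signal are illustrative; the authors' own implementation is not specified in the text.

```python
import numpy as np
from scipy.signal import welch

FS = 500  # sampling frequency in Hz (from the text)


def welch_psd(signal, fs=FS):
    """Welch PSD with a 1-s Hamming window and 50% overlap."""
    return welch(signal, fs=fs, window="hamming", nperseg=fs, noverlap=fs // 2)


# Synthetic check signal: a 6 Hz sinusoid standing in for a theta-band rhythm.
t = np.arange(10 * FS) / FS
freqs, psd = welch_psd(np.sin(2 * np.pi * 6.0 * t))
peak_hz = freqs[np.argmax(psd)]
```

Applying the same function to an empirical segment and to the corresponding model output gives two curves that can be compared on a common frequency axis.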

3.1 Reconstruction without hidden layer

3.1.1 1st stage of training

Following the method described in Section 2.4, we show how the intrinsic frequencies of the oscillators adapt to the nearby frequency components present in the desired signal. The intrinsic frequencies of the oscillators are initialized by drawing from a uniform distribution over the interval [0, 10] Hz. The real feedforward weight that connects each oscillator to the single output node is initialized to the same small real value (0.2) for all oscillators. The complex-valued lateral connections are initialized according to Equation 2b. Note that in this stage, we do not use the hidden layer in the feedforward stage. Training is performed for 30 epochs.

EEG chunks of duration 10 s are used for training. The frequency learning rate is applied in Equation 2c, the amplitude learning rate is 0.0001 (Equation 2e), and the learning rate for the coefficient of the lateral connection weight, which determines the magnitude of oscillator-to-oscillator connections (Equation 2b), is 0.001.

3.1.2 2nd stage of training

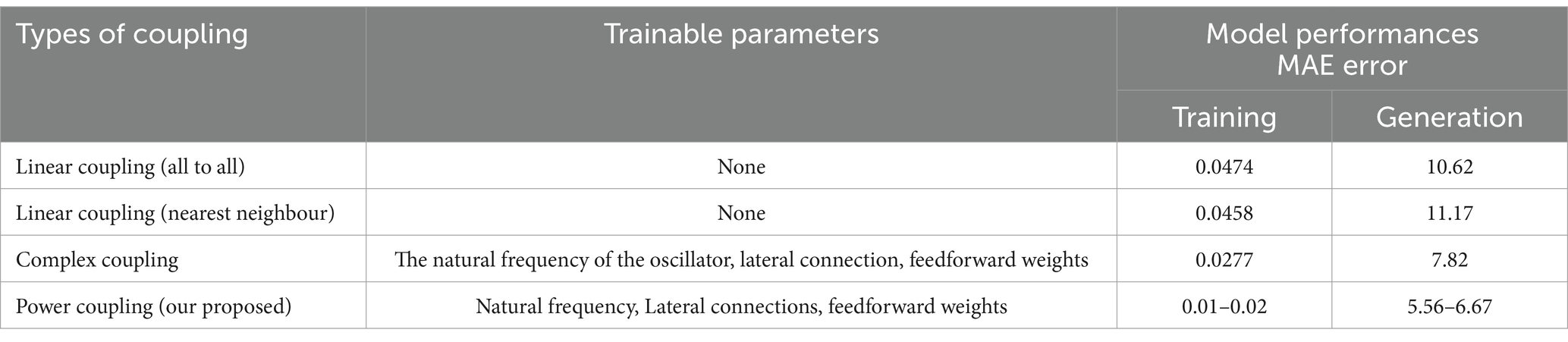

Following the method described in Section 2.5, in this stage we reuse the intrinsic frequencies and lateral connections learned in the 1st stage of training, while the amplitudes and phases of the complex feed-forward weights are trained further. Although the equation shows a single electrode signal, a matrix of feedforward weights lets us reconstruct any number of channels. The learning rate for the weight magnitude is 0.00003, and the learning rate for the weight angle is 0.000001; n is the number of channels to be reconstructed. With this additional training, the predicted signals from the 2nd stage (Supplementary Figure 2) look better than those from the 1st stage (Supplementary Figure 1). Whereas in the 1st stage we use real feedforward weights, in the 2nd stage we use complex feedforward weights: this is the only difference between the two stages of training. The ‘power coupling’ rule for coupling the oscillators was developed to produce a constant normalized phase difference among the oscillators (Biswas et al., 2021). We compare the results obtained with power coupling against other forms of coupling in Table 2.

3.2 Reconstruction with hidden layer (an alternative approach to 2nd stage training)

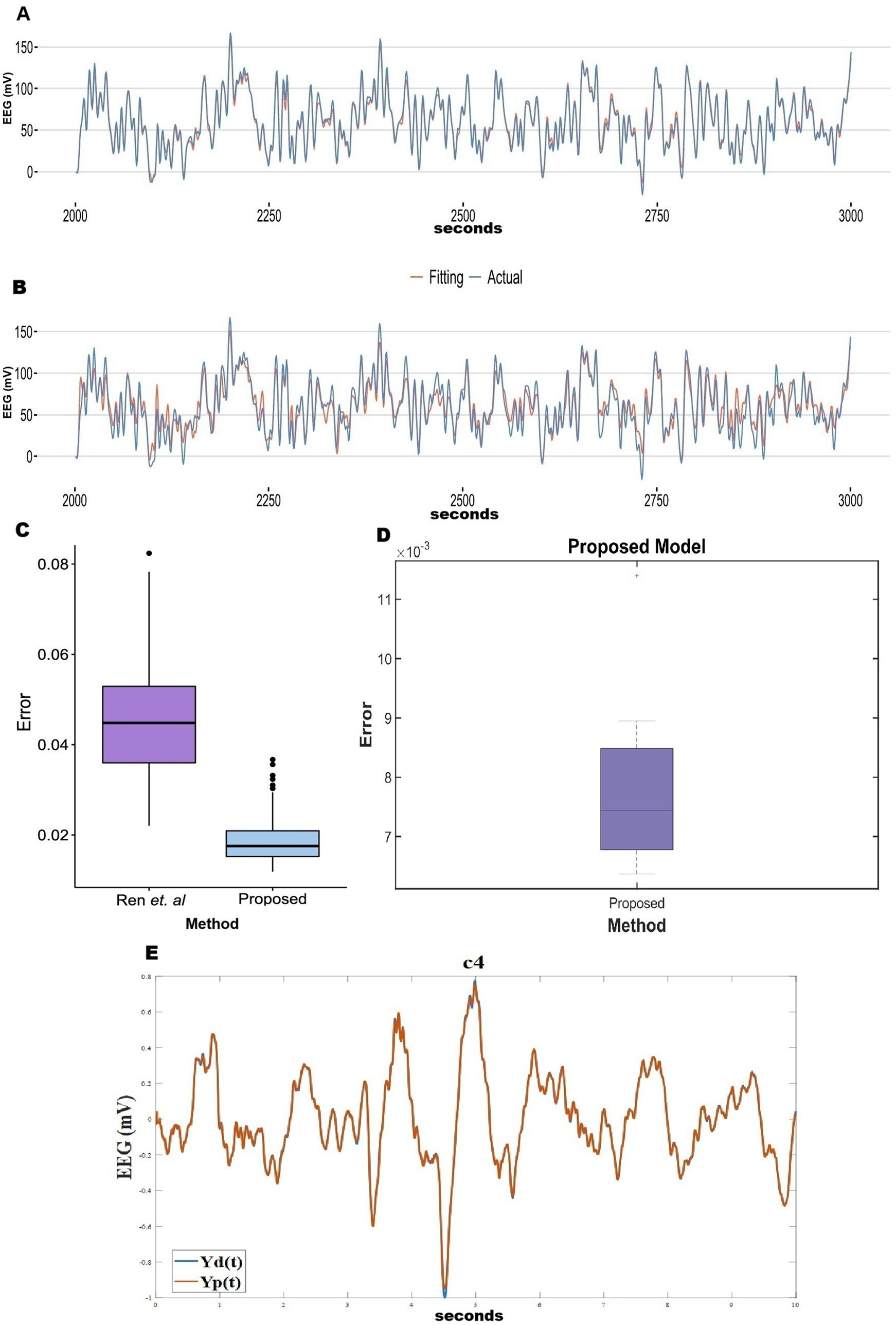

In the previous section, we observed that the reconstruction error is high when there is no hidden layer. To improve model performance, following the method described in Section 2.6, we inserted a hidden layer of 100 sigmoidal neurons between the oscillatory layer and the output layer in the 2nd stage of training (both learning rates set to 0.001). This modification greatly improved performance, with the RMSE values dropping by an order of magnitude. Model predictions “without hidden layer” and “with hidden layer” are compared for all five stages of sleep in a bar plot (Supplementary Figure 4). The change in reconstruction RMSE with the number of hidden layer neurons is shown in a bar plot (Supplementary Figure 5). The generality of the network is also described in terms of functional connectivity analysis (Supplementary section 8). The mean absolute error (MAE) of our model's reconstruction is compared with Ren et al. (2017) and Nguyen et al. (2020) in Figure 5. Our proposed model has a reconstruction error in the range 0.007–0.019. Time series fits and training error bar plots for our model and for Ren et al. (2017) and Nguyen et al. (2020) are shown in Figures 5A–E. Figures 5A–C are adapted from Nguyen et al. (2020); licensed under CC BY 4.0. From Figures 5C,E we conclude that our proposed method performs better than the other two methods (Ren et al., 2017; Nguyen et al., 2020).

Figure 5. (A–C) Adapted from Nguyen et al. (2020). (C) Comparison between Ren et al. (2017) and Nguyen et al. (2020). (D) Distribution of the mean absolute error between reconstructed and actual EEG signals during training (our proposed method). (E) Blue line shows the actual EEG and orange line shows the model reconstruction during training (our proposed method).

3.3 Generation of EEG data using trained network

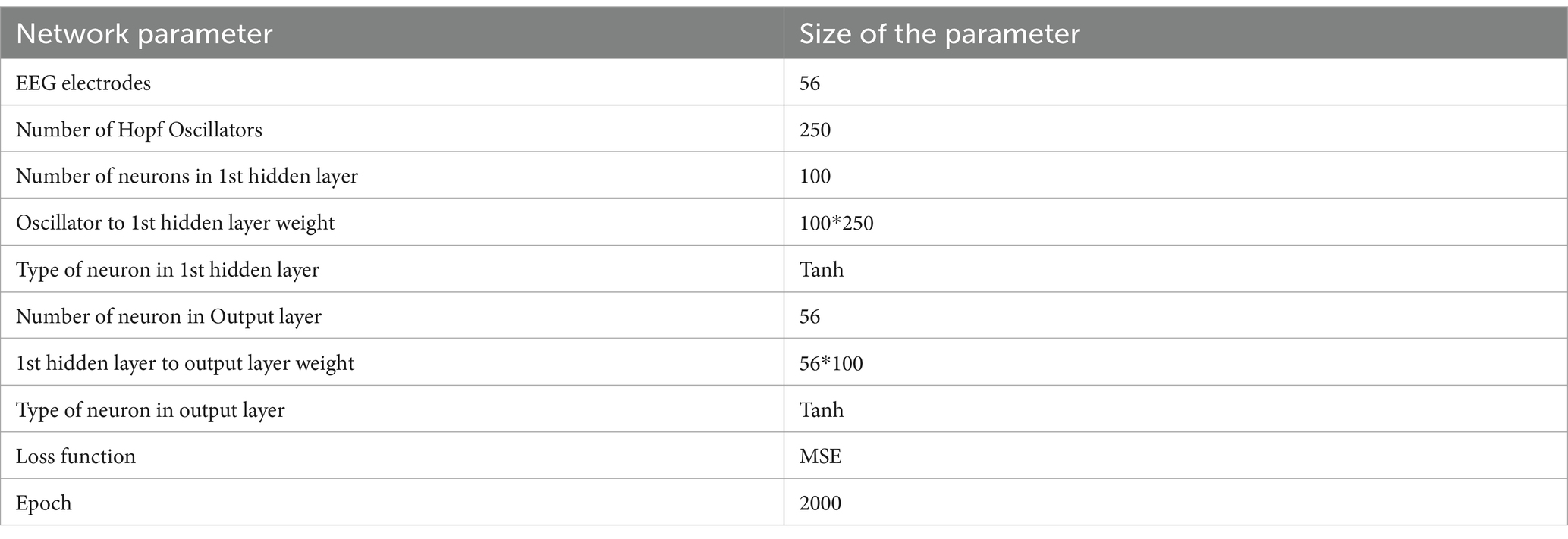

Here we generate EEG data for 5 s (after training with a 10 s-long EEG segment) using the trained parameters: the intrinsic frequencies of the oscillators, the lateral coupling weights among oscillators, and the feed-forward weights [training of the intrinsic frequencies and lateral connections is discussed in Section 2.5; the feed-forward weight training rules are described in Section 2.6 (insertion of hidden layer)]. We use the MSE loss function in this model. The model parameters of this network are listed in Table 3.

Table 3. Model parameters of the “Insertion of hidden layer” model described in Figure 3a.

The power spectrum (Figures 1a,b), time series (Figures 6a,b), Hurst exponent (HC) (Supplementary section 11), and Higuchi fractal dimension (HFD) (Supplementary section 12) of the predicted signal are compared with those of the next 5 s segment of empirical EEG data. The time series of the training segment and the generated segment are plotted for two sleep stages (Figures 6a,b); the other three sleep stages are shown in Supplementary Figures 7a–c.
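For readers unfamiliar with the Higuchi fractal dimension used as a complexity measure above, a compact sketch of the standard Higuchi algorithm follows. It is not taken from the paper's supplementary material: the function name `higuchi_fd` and the choice `kmax=10` are assumptions for illustration, and the test signals are synthetic.

```python
import numpy as np

def higuchi_fd(x, kmax=10):
    """Estimate the Higuchi fractal dimension of a 1-D signal.

    For each delay k, the mean normalized curve length L(k) is computed over
    the k possible starting offsets; the HFD is the slope of log L(k)
    against log(1/k).
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    ks = np.arange(1, kmax + 1)
    mean_lengths = np.empty(kmax)
    for j, k in enumerate(ks):
        lengths = []
        for m in range(k):                     # starting offset
            idx = np.arange(m, n, k)           # subsampled indices
            n_int = len(idx) - 1               # number of increments
            if n_int < 1:
                continue
            curve = np.abs(np.diff(x[idx])).sum()
            # Higuchi normalization factor (n - 1) / (n_int * k), then / k
            lengths.append(curve * (n - 1) / (n_int * k) / k)
        mean_lengths[j] = np.mean(lengths)
    slope, _ = np.polyfit(np.log(1.0 / ks), np.log(mean_lengths), 1)
    return slope

# Sanity checks on synthetic signals (not EEG data).
hfd_line = higuchi_fd(np.arange(1000.0))       # smooth curve
rng = np.random.default_rng(0)
hfd_noise = higuchi_fd(rng.normal(size=2500))  # white noise
```

A straight line gives a dimension close to 1 and white noise a dimension close to 2; EEG segments typically fall between these two limits, which is what makes the measure useful for comparing generated and empirical signals.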

Figure 6. (a,b) Empirical EEG data and model output (reconstructed and generated) for two sleep stages [(a) NREM N1: F8 channel; (b) NREM N3: Fp2 channel]. The blue line shows the actual EEG data (Yd(t)) and the orange line (Yp(t)) shows the model output: the initial 10 s are reconstructed and the next 5 s are generated by the model.

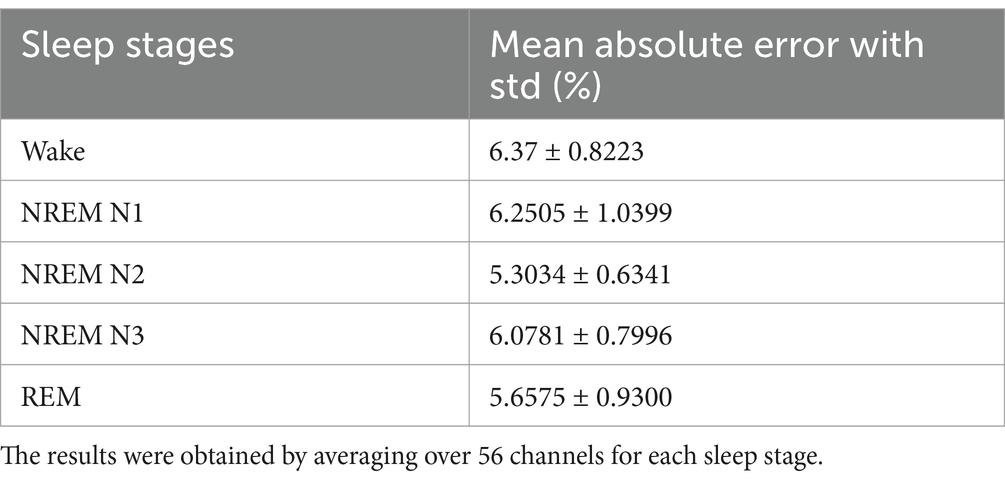

To evaluate model performance, we calculated the mean absolute error (MAE) (Nguyen et al., 2020), which measures the time-averaged absolute difference between the network-simulated data (model prediction) and the empirical EEG data. MAE can be expressed mathematically as Equation 6a:

$$\mathrm{MAE} = \frac{1}{T}\sum_{t=1}^{T}\left|Y_p(t) - Y_d(t)\right| \tag{6a}$$

where $Y_p(t)$ is the predicted EEG over a time interval $T$, $Y_d(t)$ is the actual EEG, and their absolute difference is averaged over the time window.

The average error score (average over all channels for each sleep stage) was calculated using MAE (Equation 6a). The result of MAE values along with standard deviation are listed in Table 4.
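The per-stage score described above (MAE per channel, then mean and standard deviation across channels) can be computed as in the sketch below. The array names and the tiny two-channel example are hypothetical and serve only to show the shapes involved; they are not data from the study.

```python
import numpy as np

def mean_absolute_error(y_pred, y_true):
    """MAE along the time axis; inputs are (channels, time) arrays."""
    y_pred = np.asarray(y_pred, dtype=float)
    y_true = np.asarray(y_true, dtype=float)
    return np.mean(np.abs(y_pred - y_true), axis=-1)

# Hypothetical example: 2 channels, 4 time points.
y_true = np.array([[0.0, 0.0, 0.0, 0.0],
                   [1.0, 1.0, 1.0, 1.0]])
y_pred = np.array([[1.0, -1.0, 1.0, -1.0],
                   [1.0, 2.0, 1.0, 2.0]])

mae_per_channel = mean_absolute_error(y_pred, y_true)
mae_mean = mae_per_channel.mean()   # average over channels
mae_std = mae_per_channel.std()     # spread over channels
```

For the 56-channel recordings the same call would return 56 values per sleep stage, from which the mean and standard deviation are taken.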

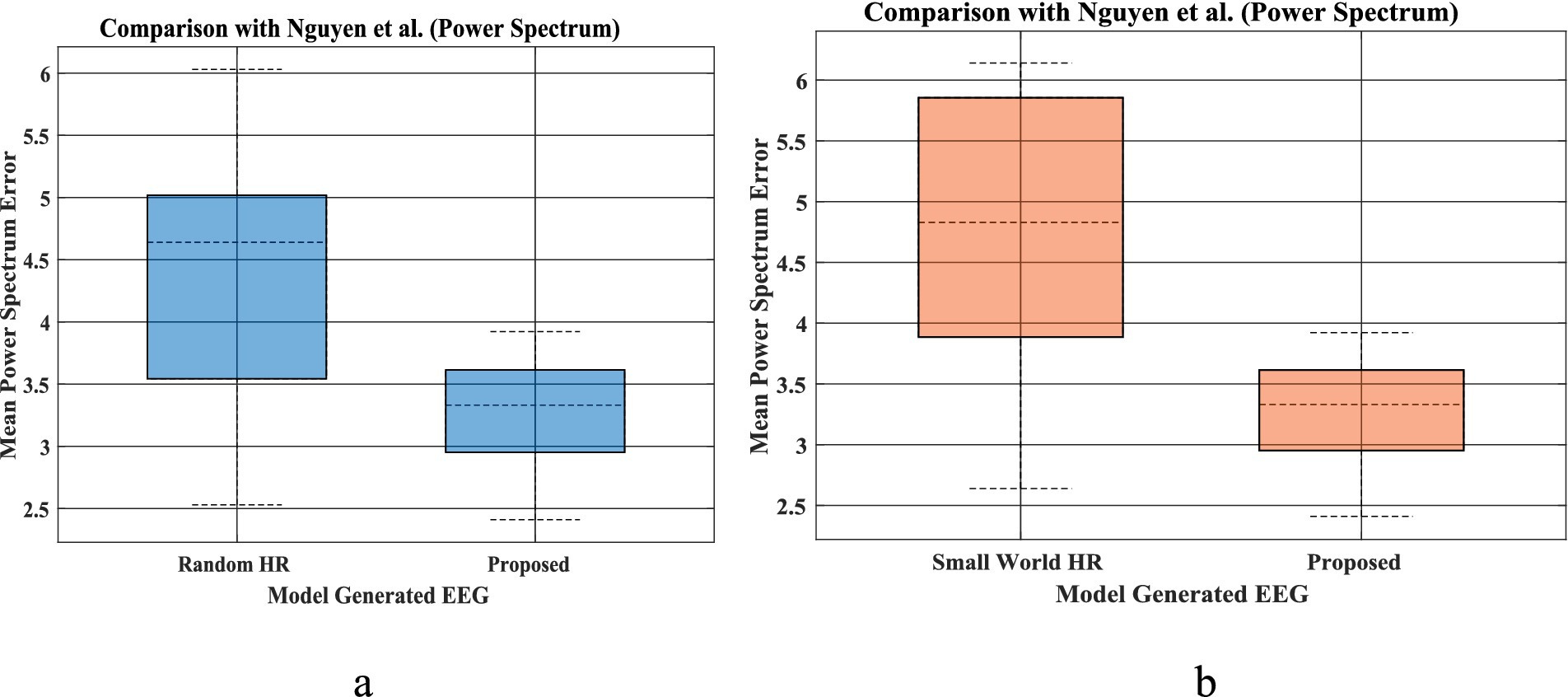

Power spectral density is one of the key measures used to quantify EEG data. It represents the frequency content of a signal. The spectral features of the generated EEG segments are compared over the test duration [the next 5 s after the training duration (Figures 1a,b)]. The empirical power is calculated from the actual EEG data and the predicted spectrum from the model-predicted signal. The average power spectrum error over 56 channels for each sleep stage between model-predicted and empirical EEG is shown in Table 1. Note that, compared to Nguyen et al., our model's power spectrum prediction error is significantly lower (Figures 7a,b).

Figure 7. (a,b) EEG mean power spectrum during prediction for the networks proposed by Nguyen et al. (left bar in each figure) and for our proposed coupled Hopf network with hidden layer (Figure 3a, Section 2.6) (right bar in each figure). The bar plot is calculated using the power spectrum prediction error for all 5 sets of EEGs [(i)-Set A, (ii)-Set B, (iii)-Set C, (iv)-Set D, (v)-Set E] for the different networks proposed by Nguyen et al. Similarly, the power spectrum prediction error is calculated from our proposed model for all 5 sleep stages [(i)-Wake, (ii)-NREM N1, (iii)-NREM N2, (iv)-NREM N3, (v)-REM]. [(a) Comparison between random HR and proposed; (b) comparison between small-world HR and proposed].

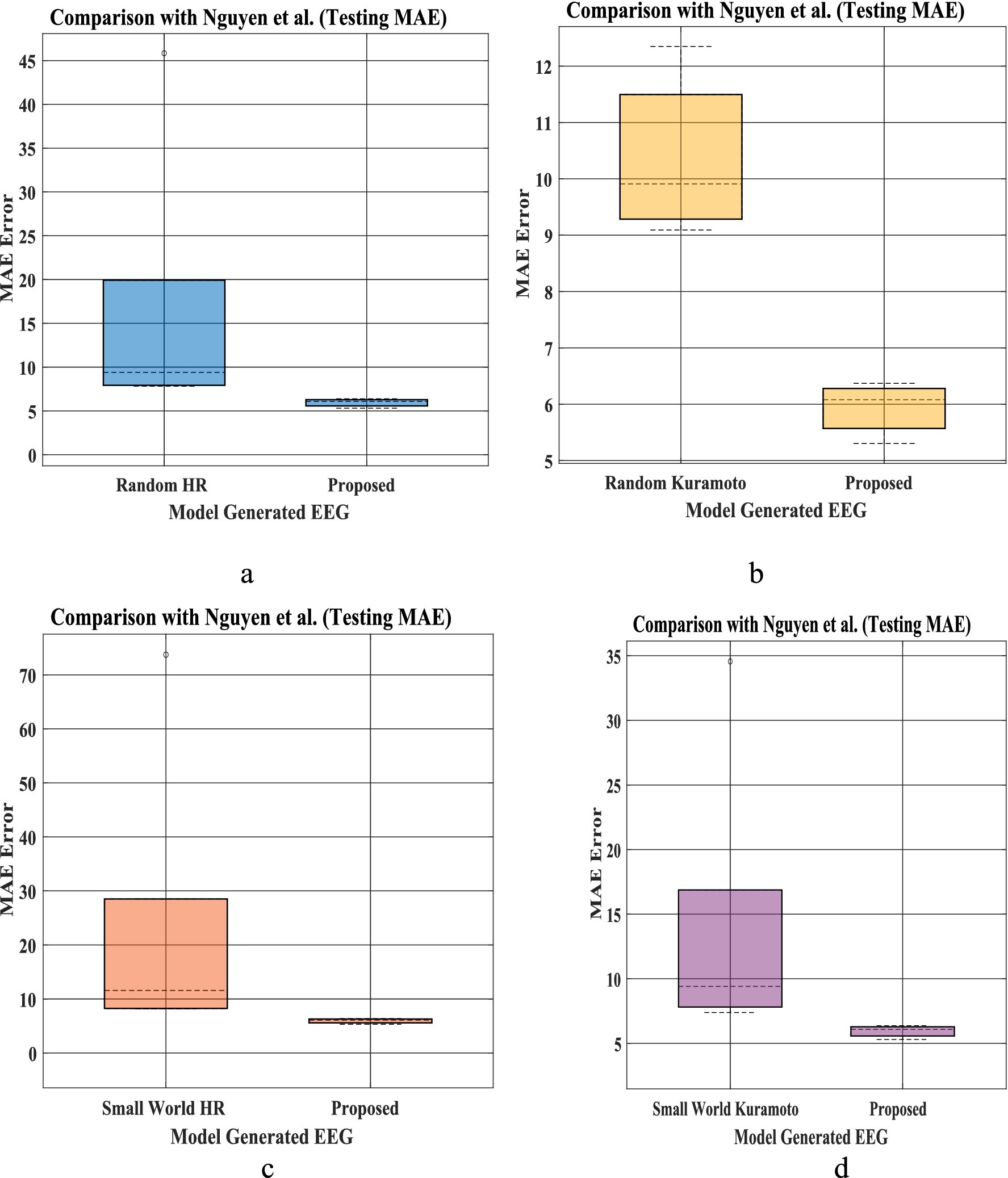

The bar plots in Figures 8a–d show that the mean absolute error between predicted and empirical EEG data is lower for our model than for the method proposed by Nguyen et al. To compare the mean error of our proposed network with that of Nguyen et al., we conduct a Wilcoxon signed-rank test. Our model produces a better fit [the test statistic (2) is less than the critical value (8) at an alpha value of 0.05], indicating a significant difference between our proposed model and the model proposed by Nguyen et al. In addition, a univariate t-test was performed to assess the significance of differences in power spectral density between the model-predicted EEG signal and the empirical EEG data (Supplementary section 13).

Figure 8. (a–d) EEG mean absolute error (MAE) distribution for prediction by the networks proposed by Nguyen et al. (2020) (left bar in each figure, for five sets of EEG data) and by our proposed model with hidden layer (Figure 3a, Section 2.6) (right bar in each figure). The bar plot is calculated using the MAE of prediction for all 5 sets of EEG [(i)-Set A, (ii)-Set B, (iii)-Set C, (iv)-Set D, (v)-Set E, provided in the testing result section of Nguyen et al. (2020)] for the 4 different networks proposed by Nguyen et al. Similarly, the MAE of prediction is calculated from our proposed model for all 5 sleep stages [(i)-Wake, (ii)-NREM N1, (iii)-NREM N2, (iv)-NREM N3, (v)-REM]. [(a) Comparison between random HR and proposed; (b) comparison between small-world HR and proposed; (c) comparison between random Kuramoto and proposed; (d) comparison between small-world Kuramoto model and the proposed model].

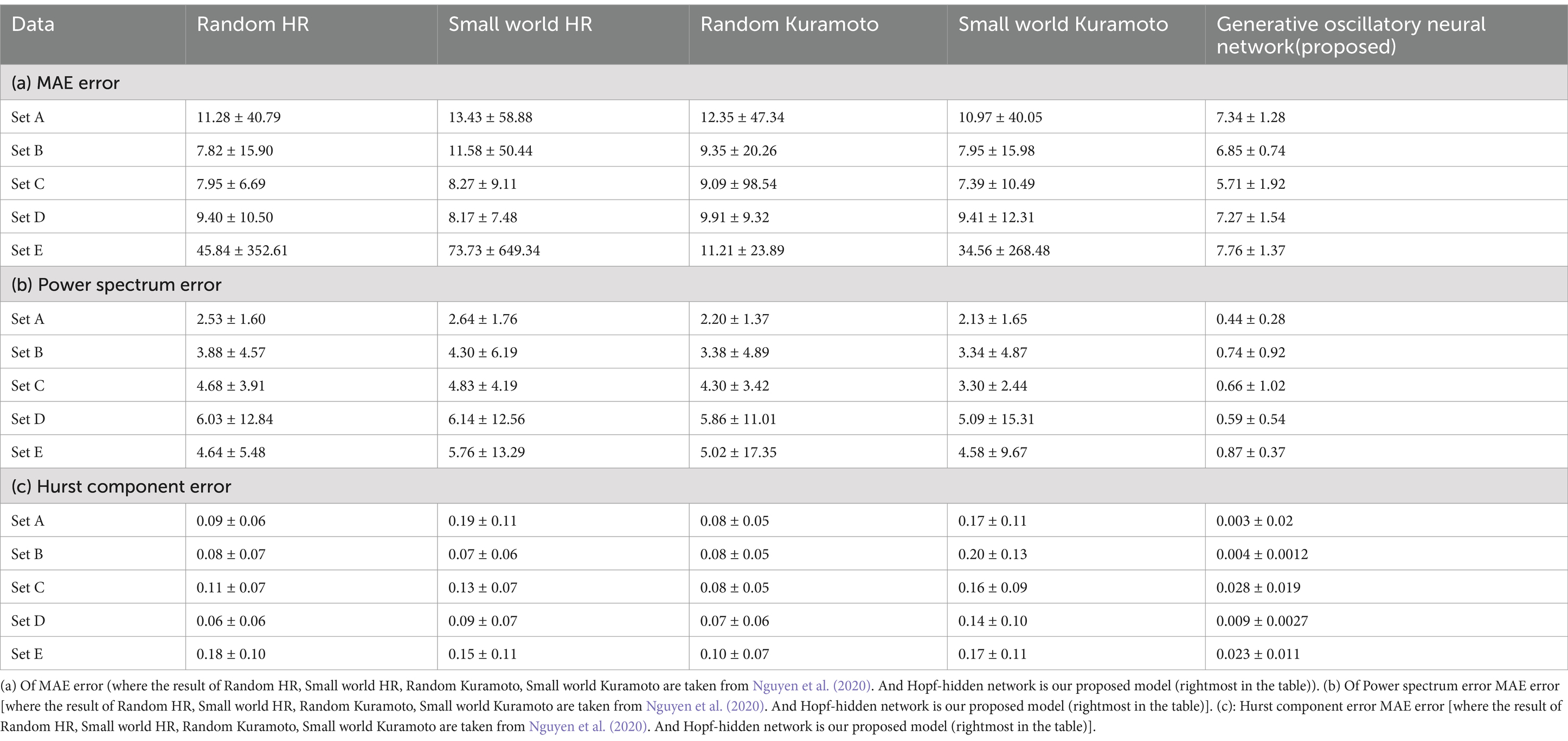

In this study, we also use an additional benchmark dataset from Nguyen et al. (2020) to evaluate the performance of our Hopf network. Comparing the MAE, power spectrum error, and Hurst exponent error metrics used in Nguyen et al. (2020), we find that our model outperforms the reference model. For this comparison we use the BONN dataset (Andrzejak et al., 2001), which is described in detail in Supplementary section 15. To maintain consistency with the previous literature (Nguyen et al., 2020), we again train on the first 2,000 time points of EEG and use the next 1,000 time points for testing (see Table 5).

Table 5. Comparison with Nguyen et al. (2020) and our proposed model on BONN dataset.

3.4 Sensitivity analysis

In our model, several parameters can be varied and their effect on network performance assessed. For this purpose, we consider four parameters:

Oscillator amplitude (μ).

Coupling coefficient, in Equation 1f.

Beta (β) parameter in the Hopf oscillator.

Additional Hidden Layer.

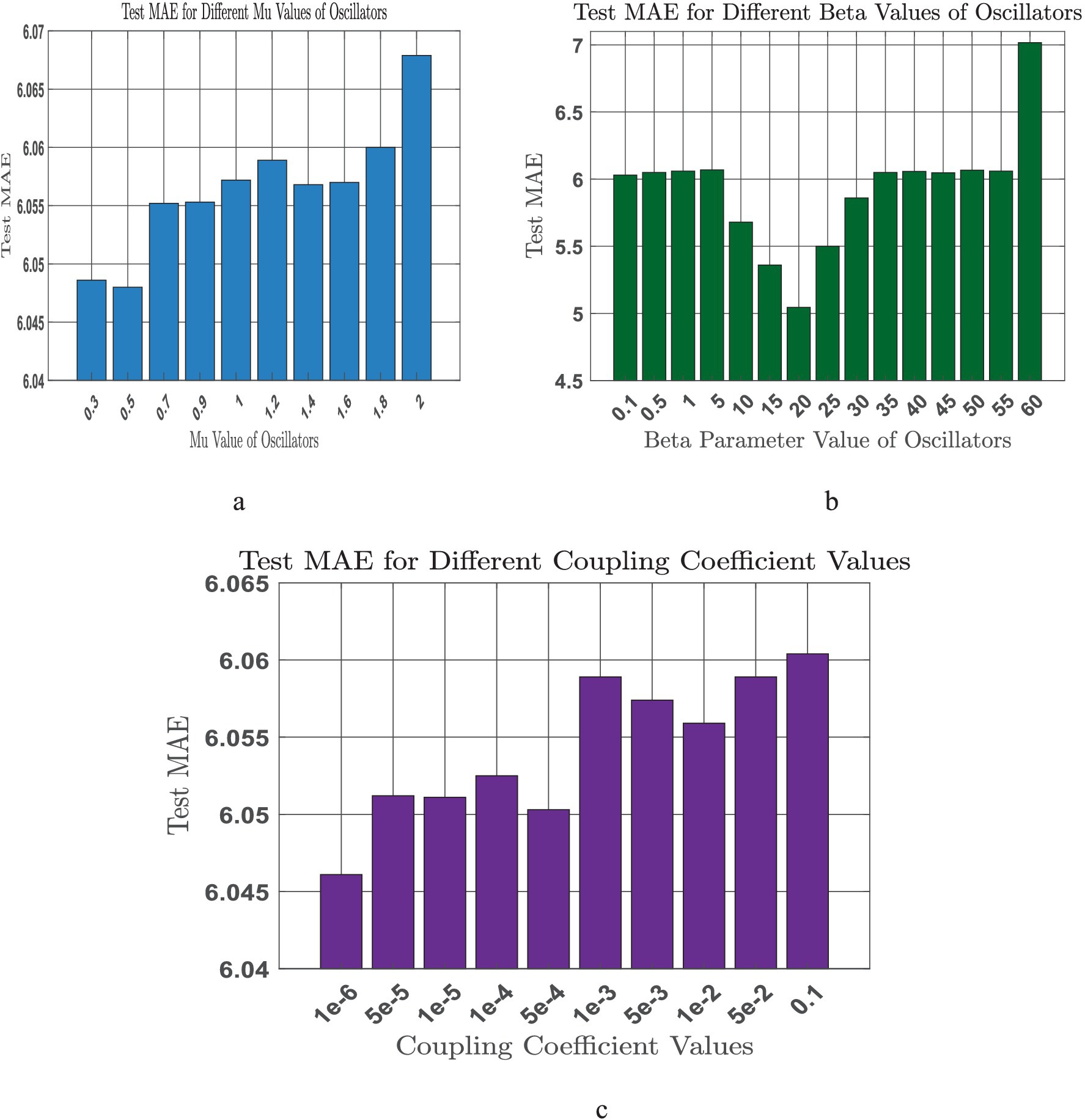

The oscillator amplitude parameter (μ) is varied over the range 0.5 to 2, and the corresponding mean absolute error is shown in Figure 9a. We observe no significant change in the output error as μ increases over this range. This is probably because any change in μ is offset by a compensating change in the weights from the oscillators to the hidden layer, so as to produce the same output error.

Figure 9. (a–c) Sensitivity analysis on tunable model parameters [(a) oscillator amplitude (μ); (b) coupling coefficient; (c) beta (β)].

Next, we vary the magnitude of the coupling coefficient, which scales the coupling among the oscillators. We find that the MAE increases with the coupling coefficient (Figure 9b). This is probably because any change in the coupling coefficient is offset by a compensating change in the coupling weights among the oscillators, so as to produce the same output error.

We vary the β parameter over the range 0.1 to 60, and the corresponding mean absolute error is shown in Figure 9c. We observe that the model gives the lowest error in the neighborhood of β = 20. Finally, we optimized the model parameters.

We also compared performance after adding one more hidden layer (Table 6) and observed no significant change in model training and testing performance.

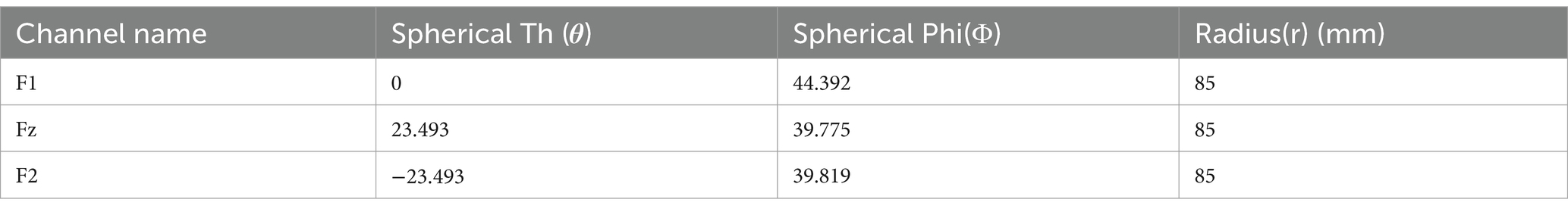

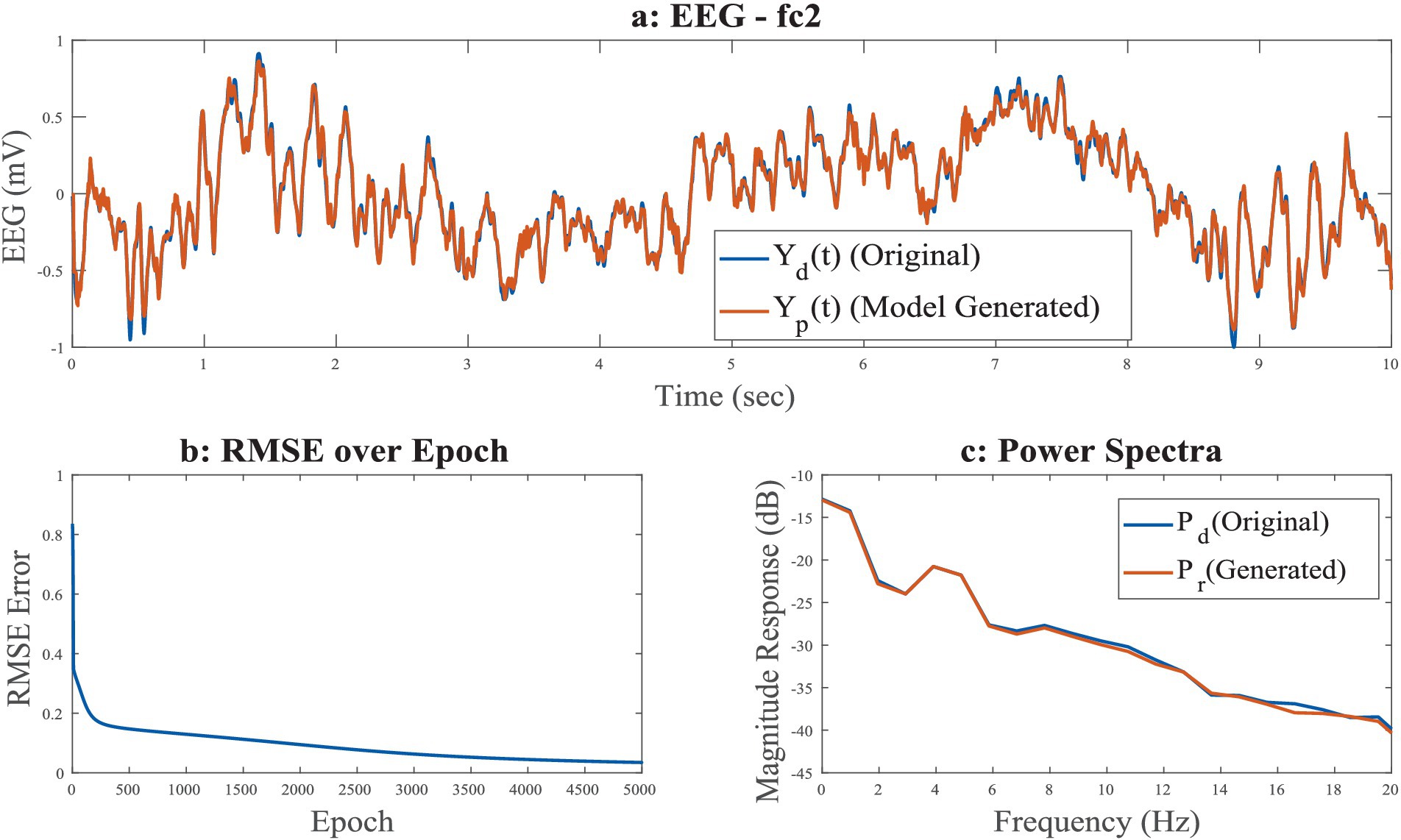

3.5 Spherical shell model

In this study we create a spherical surface (radius 85 mm, obtained from EEGLab) on which the electrodes are placed. Underneath this surface, we place two more spherical surfaces forming a hollow spherical shell of inner radius r_1 = 70 mm and outer radius r_2 = 75 mm. Within this shell we distribute 1,000 Hopf oscillators. Sample locations for a few channels in spherical coordinates are shown in Table 7, and sample electrode locations in Cartesian coordinates are shown in Supplementary Table S4.

Using a distance threshold similar to that defined in Equation 6a, we allocate oscillators to the various electrodes (Supplementary Table S5). The number of oscillators shared between each pair of electrodes is given in Figure 10. Connectivity among oscillators is determined by the threshold value.
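The geometry described above can be sketched as follows. This is an illustrative construction under stated assumptions rather than the authors' procedure: oscillators are drawn uniformly in volume within the 70 to 75 mm shell (the paper only says they are randomly distributed), the two electrode positions on the 85 mm sphere are made up, and the 40 mm assignment threshold `d_th` is a hypothetical value.

```python
import numpy as np

def sample_shell(n, r_in, r_out, rng):
    """Draw n points uniformly (in volume) inside a spherical shell."""
    direction = rng.normal(size=(n, 3))
    direction /= np.linalg.norm(direction, axis=1, keepdims=True)
    # Uniform in r^3 gives uniform density in volume.
    radius = np.cbrt(rng.uniform(r_in ** 3, r_out ** 3, size=n))
    return direction * radius[:, None]

def assign_to_electrodes(osc, elec, d_th):
    """Boolean (n_osc, n_elec) matrix: oscillator within d_th of electrode."""
    dist = np.linalg.norm(osc[:, None, :] - elec[None, :, :], axis=-1)
    return dist <= d_th

rng = np.random.default_rng(0)
osc = sample_shell(1000, r_in=70.0, r_out=75.0, rng=rng)

# Two hypothetical electrodes on the 85 mm sphere, 0.4 rad apart.
elec = 85.0 * np.array([[0.0, 0.0, 1.0],
                        [np.sin(0.4), 0.0, np.cos(0.4)]])

member = assign_to_electrodes(osc, elec, d_th=40.0)
counts = member.sum(axis=0)                       # oscillators per electrode
shared = int((member[:, 0] & member[:, 1]).sum()) # oscillators shared by both
```

Because a single fixed threshold is applied to randomly placed oscillators, `counts` generally differs from electrode to electrode, and neighboring electrodes share a subset of oscillators.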

We can see that the oscillator distribution is unequal between the rectangular grid and the spherical shell, owing to their geometrical shapes. In the rectangular grid, a total of 972 oscillators are distributed over three layers, with one oscillator in each unit of the grid, whereas in the spherical shell, 1,000 oscillators are randomly distributed within the shell (see Figure 11).

Figure 11. (a) Time series of the reconstructed signal and the desired signal for the FC2 channel (one of 8 channels) in the spherical shell model during the training stage; (b) RMSE w.r.t. training epochs; (c) power spectrum of the desired and reconstructed signals.

In the case of the rectangular grid, no proper electrode geometry was followed: all 8 electrodes are in the same plane, and the oscillators assigned to different channels are not far from each other (see Supplementary section 10). In the case of the spherical shell, however, the electrode layer also has a spherical geometry with the same curvature as that of the oscillator layer, and the oscillator distribution within the layer is random. For example, channel Cz is estimated by 219 oscillators and its nearest channel, C2, by 149 oscillators. One reason might be that the same fixed threshold is used to determine the oscillators belonging to each channel. Also, in comparison with the rectangular grid, a larger number of oscillators are assigned here.

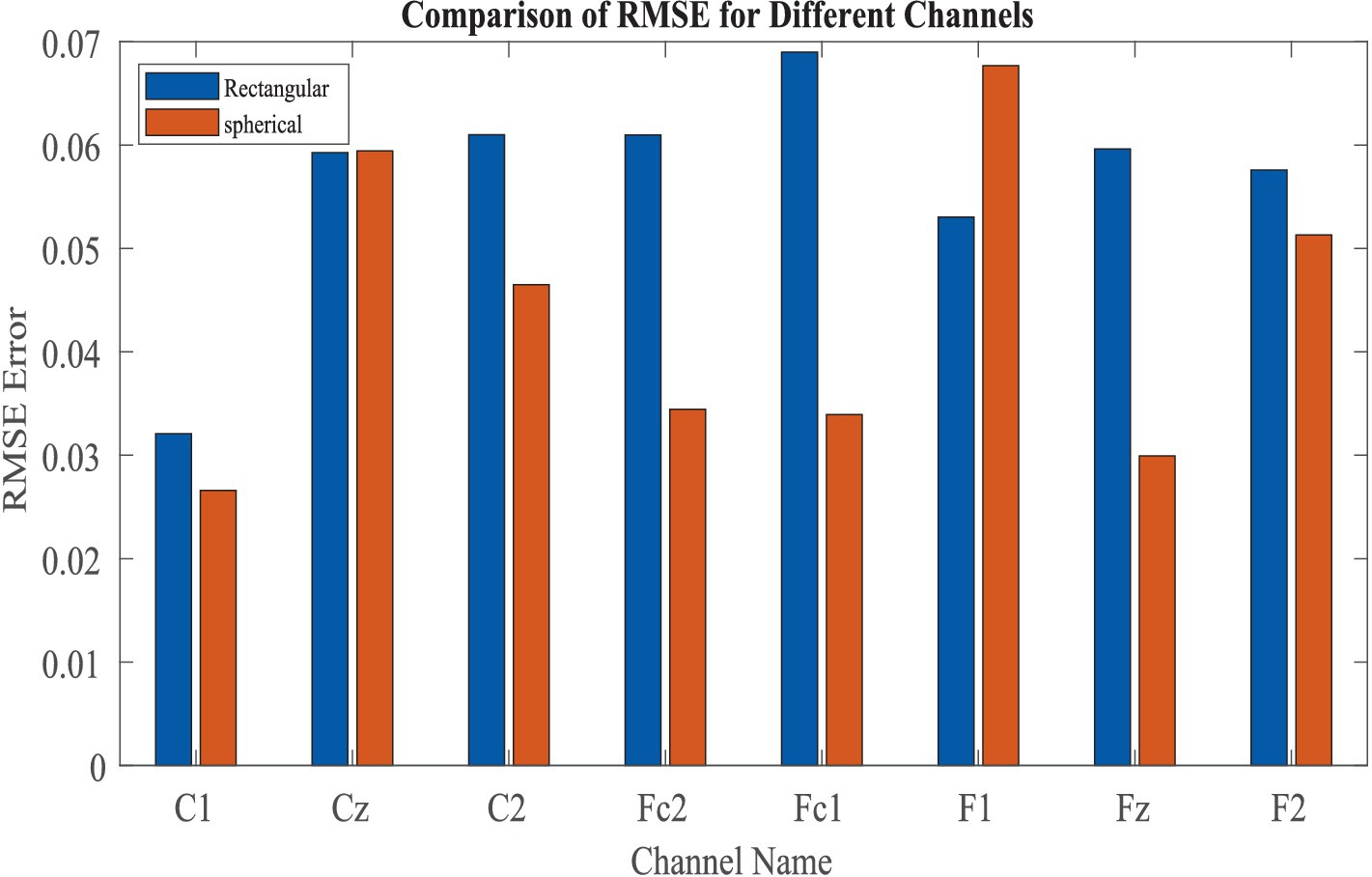

We evaluated the reconstruction errors across eight channels for two model architectures, (a) rectangular and (b) spherical, and show them as a bar plot (Figure 12). The results indicate that the spherical model provides a better overall fit. However, for the F1 channel, the rectangular model achieves higher reconstruction accuracy.

Figure 12. Comparison of reconstruction error between the “spherical shell” and “rectangular grid” models.

4 Discussion

In the present study, we model a 56-channel EEG signal with a network of oscillatory neurons. The proposed network is able to model (both reconstruct and predict) whole-brain EEG data. It successfully predicts test signals over a significant duration (5 s) beyond the training duration, and retains properties (Hurst exponent and Higuchi fractal dimension) of the actual EEG signals over the frequency band of interest.

In the present study, inserting a hidden layer between the oscillator layer and the output layer is shown to improve reconstruction quality significantly (Supplementary section 7). Without a hidden layer, the output signal is essentially approximated by a finite set of sinusoidal signals represented by the oscillators of the input layer. Once the hidden layer is added in our 2nd network, those sinusoids pass through the nonlinear sigmoid functions of the hidden layer. Even when a single sinusoid passes through a nonlinear sigmoid, an infinite series of harmonics is generated. Furthermore, when a mixture of sinusoids is passed through a sigmoid function, we get not only the harmonics of the original frequencies but also combination frequencies of the mixture (e.g., sums and differences of multiples of the original frequencies). Hence, the hidden layer expands the spectrum that is available at the output layer: the finite number of frequencies available without a hidden layer grows to an infinite set of frequencies when a hidden layer is applied.
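The spectral-expansion argument can be checked numerically with a short sketch. The frequencies (5 Hz and 7 Hz), the input gain of 3, and the 500 Hz sampling rate are arbitrary choices for the demonstration, and a real-valued logistic sigmoid is used here as a stand-in for the hidden-layer nonlinearity.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def amplitude_spectrum(x, fs):
    """One-sided amplitude spectrum after removing the mean."""
    x = x - x.mean()
    spec = np.abs(np.fft.rfft(x)) * 2.0 / len(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return freqs, spec

fs = 500
t = np.arange(2 * fs) / fs                      # 2 s, 0.5 Hz resolution
single = np.sin(2 * np.pi * 5 * t)              # one oscillator
mix = single + np.sin(2 * np.pi * 7 * t)        # two oscillators

freqs, spec_linear = amplitude_spectrum(single, fs)
_, spec_single = amplitude_spectrum(sigmoid(3 * single), fs)
_, spec_mix = amplitude_spectrum(sigmoid(3 * mix), fs)

def amp_at(spec, f):
    """Amplitude at the frequency bin closest to f (Hz)."""
    return spec[np.argmin(np.abs(freqs - f))]

third_harmonic_linear = amp_at(spec_linear, 15.0)   # absent without sigmoid
third_harmonic_sigmoid = amp_at(spec_single, 15.0)  # 3 x 5 Hz
intermod_low = amp_at(spec_mix, 3.0)                # 2 x 5 - 7 Hz
intermod_high = amp_at(spec_mix, 17.0)              # 2 x 5 + 7 Hz
```

The raw sinusoid has no energy at 15 Hz, whereas its sigmoid-transformed version does; the two-tone mixture additionally acquires components at 3 Hz and 17 Hz that are present in neither input. For a zero-mean input the logistic sigmoid is odd about its midpoint, so only odd-order terms appear in this particular demonstration; a bias at the hidden unit would introduce even-order terms as well.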

In Nguyen et al. (2020), 3,000 neurons were used to simulate EEG data, whereas in our model a network with only 250 Hopf oscillators predicted more accurately, even without using data reduction techniques such as PCA.

Our proposed method can predict EEG data on the order of 5 s (2,500 data points). The predicted signal agrees well with the actual EEG with respect to power spectrum, Hurst exponent (Supplementary material), and a complexity measure (Higuchi fractal dimension) (Supplementary material), which are the key results of our study. A potential application of our network is synthetic EEG generation for research and education.

Also, we have successfully demonstrated spatial localization architecture for oscillator reservoirs. The performance of spatially arranged oscillators with a hidden layer is good, as demonstrated by RMSE values. However, in our spatial geometry of oscillators, we only considered locally connected regions. Also, real structural data can be implemented on this proposed model to realize a large-scale TVB type of model (Sanz Leon et al., 2013; Al-Hossenat et al., 2019). In the future, we can easily expand our model to a real MRI-based surface where oscillators are placed according to structural-functional connectivity nodes. Thus, the problem regarding the unequal distribution of oscillators in the case of “spherical shell” geometry can be eliminated.

Each EEG channel was modeled by a single Hindmarsh-Rose neuron in an ADHD study (Ansarinasab et al., 2023); since EEG represents the collective activity of populations of neurons, in the present model each EEG channel is modeled by a network of oscillators. Also, a graph-based brain topology was used in the same study (Ansarinasab et al., 2023), whereas in the proposed model we distribute the oscillators spatially as per two different geometries: rectangular and spherical. It is very difficult to predict a non-stationary signal like EEG beyond the training duration. Moreover, deep learning generative models [GAN-type models (Panwar et al., 2020; Aznan et al., 2019a)] require a huge number of training samples; our proposed oscillatory generative model can also address that problem.

While both works, like a good number of existing models of brain dynamics (Ghorbanian et al., 2014; Ghorbanian et al., 2015a; Ghorbanian et al., 2015b; Szuflitowska and Orlowski, 2021; Babloyantz et al., 1985; Rankine et al., 2006; Burke and de Paor, 2004; Ren et al., 2017; Nguyen et al., 2020; Logothetis et al., 2001; Cabral et al., 2023; Breakspear, 2017; Deco et al., 2015; Deco et al., 2017; Deco et al., 2021; Luppi et al., 2022; López-González et al., 2021; Deco and Kringelbach, 2014; Ponce-Alvarez and Deco, 2024), utilize a network of oscillators (Hopf oscillators in our case) as the basic source of oscillations, the specific methods and the outcomes of the two studies are significantly different.

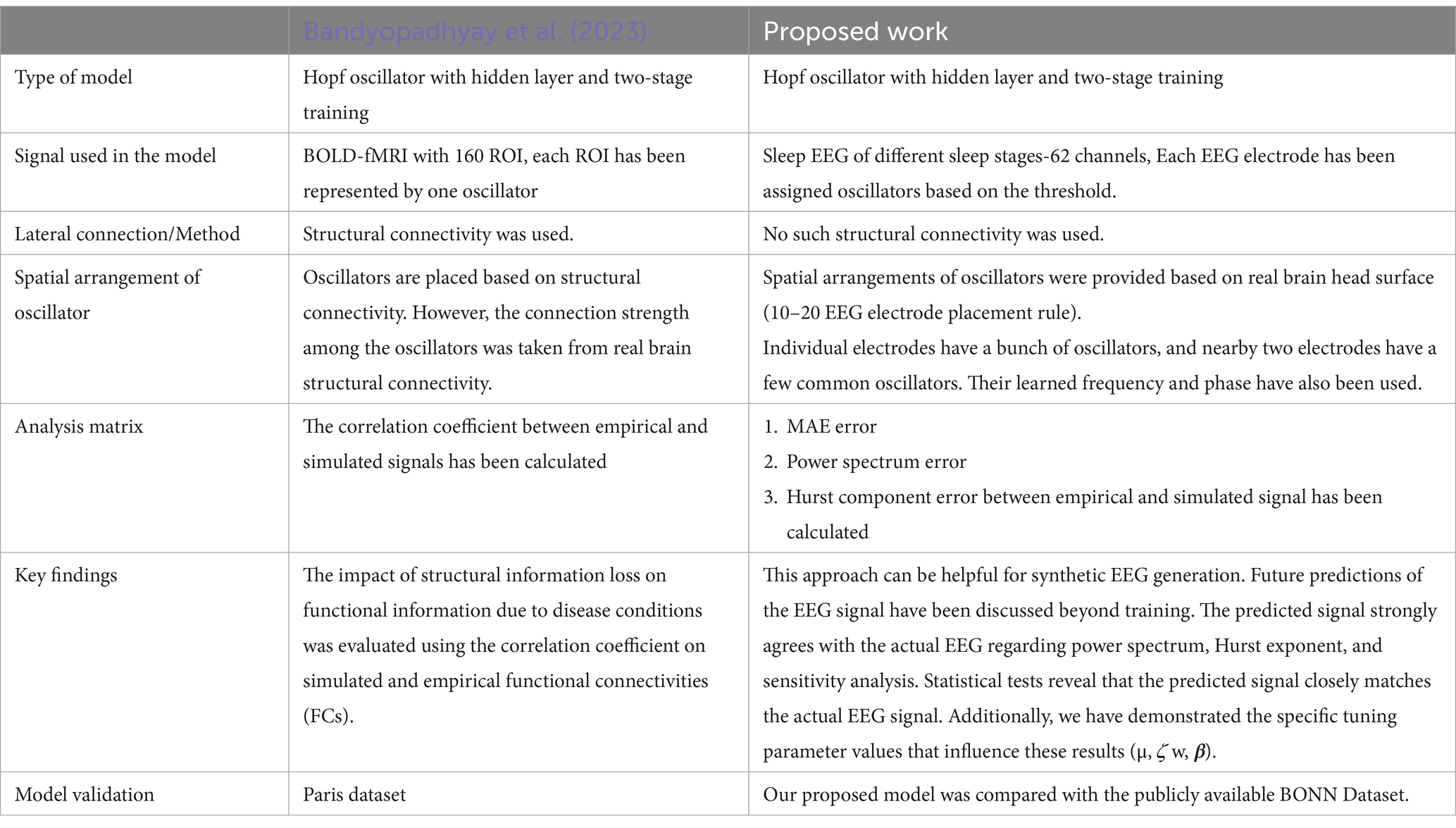

Our group recently developed a similar network using fMRI signals. In that work, Bandyopadhyay et al. (2023) demonstrated a strong alignment between predicted and empirical functional connectivity (FC), as validated through graph-theoretical analysis. The authors showed that structural damage resulted in cascading disruptions in static and dynamic FC patterns. Computational interventions revealed that optimizing the coupling coefficient (μ) could restore functional integrity. This in silico perturbation study further highlighted how targeted parameter adjustments could compensate for structural degradation, providing insights into potential therapeutic applications. These findings underscore the model’s potential in understanding and addressing disruptions in brain network dynamics.

However, a key distinction between our proposed study and that of Bandyopadhyay et al. (2023) lies in the testing regime. While their work primarily focused on training, signal reconstruction, and the connection between structural and functional connectivity, they did not discuss the network’s behavior beyond training. In contrast, our proposed network can retain learned patterns beyond the training signal. Additionally, we show that the network-generated signals preserve key properties in both the time and frequency domains. Furthermore, we introduced a spherical shell model based on the 10–20 EEG electrode system, enabling EEG signal reconstruction while utilizing shared oscillators’ natural frequencies and phases. We also explored optimal network parameters to achieve the best fit, enhancing the model’s reliability and performance. A comparison of our method with that of Bandyopadhyay et al. (2023) is given in Table 8.

Data augmentation for EEG has gained significant research attention in recent years (Torma and Szegletes, 2025; Aznan et al., 2019b; Kalaganis et al., 2020; Hartmann et al., 2018). Collecting EEG data poses several challenges, primarily due to the strict requirements on the recording environment and the variability in subjects’ psychological and physiological conditions. Because high-density EEG data are of limited availability, applying deep learning models is challenging. Data augmentation techniques have emerged as a viable solution to this issue, and the present deep oscillatory neural generative model offers strong potential for synthesizing realistic EEG data.

Table 8. Comparison with Bandyopadhyay et al. (2023).

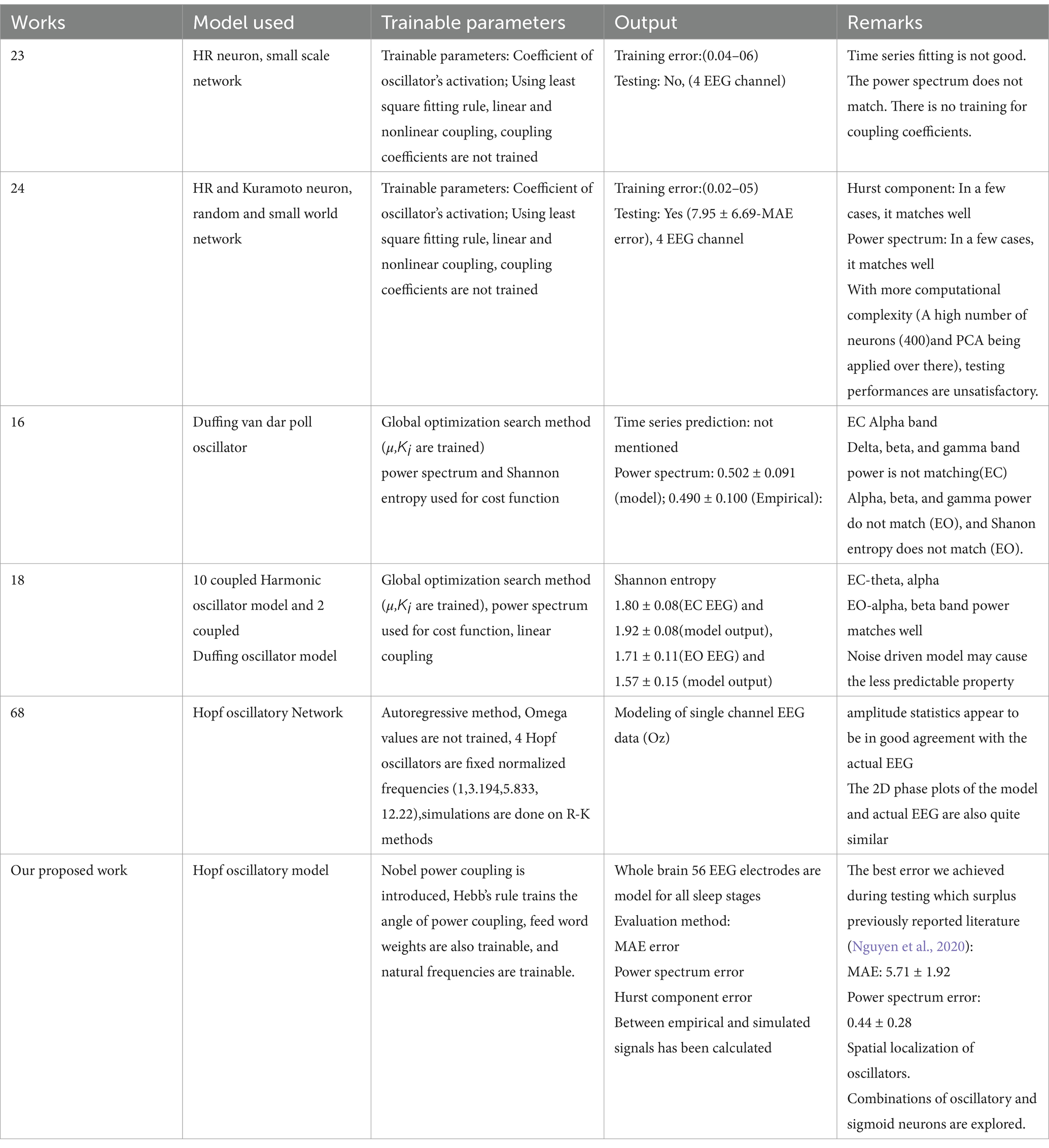

Baseline models Ren et al. (2017), Ghorbanian et al. (2015a), and Ghorbanian et al. (2015b) utilized the same modeling and training methodologies. Our assessment of existing EEG modelling approaches highlights significant differences and enhancements in our proposed framework (Table 9). This structured comparison emphasizes our framework’s advancements in achieving a more biologically plausible network with improved computational traceability.

Additionally, we introduced a spherical shell model based on the 10–20 electrode geometry, enabling the reconstruction of EEG signals. In this model, the natural frequencies and phases of the shared oscillators were utilized effectively. Furthermore, we explored and identified optimal network parameters to achieve the best fit, ensuring superior signal reconstruction and validation performance.

Future efforts will be directed to developing the current model into a more realistic model of sleep dynamics. In the current modelling approach, separate networks are trained to produce EEG signals of various sleep stages and the waking stage. However, in a more authentic model of sleep, it is desirable to generate the various stages in a single model and explicitly demonstrate the transitions from one stage to the next. Ideally, such a model will show the sleep–wake cycle at a longer or diurnal time scale, and also the entire architecture of sleep substages within the 8-h long sleep stage. The model also will permit a minimal representation of the main neural substrates of sleep regulation such as the Suprachiasmatic Nucleus (SCN), hypothalamic and thalamic nuclei and the neuromodulatory systems involved in sleep regulation, and the Reticular Activating System (RAS). Our approach combines empirical EEG data with a mathematical model to create virtual representations of the brain’s oscillatory dynamics. It can help to explore the impact of neuronal excitability, synaptic plasticity, and network connectivity on sleep stage transitions (Tatti and Cacciola, 2023).

In the future sleep model that we envisage, the interactions among the various subcortical circuits and neural systems produce the subrhythms of sleep, which, acting on the cortex, generate the EEG activity patterns characteristic of the relevant sleep stage. In this paper, we primarily focus on sleep EEG. However, our model is a general-purpose, universal framework that can be applied to any EEG dataset. To validate its universality, we tested the model on the publicly available BONN dataset, demonstrating improved performance compared to the previously reported study (Nguyen et al., 2020).

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Virginia Tech Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

SG: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. DB: Supervision, Visualization, Writing – review & editing. NR: Visualization, Writing – review & editing. SV: Supervision, Visualization, Writing – review & editing. VC: Funding acquisition, Investigation, Project administration, Resources, Supervision, Visualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by DBT project and Parkinson and Therapeutic Lab, IIT Madras. SV was supported by funding by grant W911NF-23-1-0014 from the U.S. Army Research Office and by grant 2047529 from the National Science Foundation. NR is funded by CSIR.

Acknowledgments

We acknowledge the suggestions from Dipayan Biswas towards developing the model.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fninf.2025.1513374/full#supplementary-material

References

Acconito, C., Angioletti, L., and Balconi, M. (2023). Visually impaired people and grocery shopping in store: first evidence from brain oscillations electroencephalogram. Human Fact. Ergon. Manuf. Serv. Ind. 33, 246–258. doi: 10.1002/hfm.20981

Achermann, P., and Borbély, A. A. (1997). Low-frequency (< 1 Hz) oscillations in the human sleep electroencephalogram. Neuroscience 81, 213–222. doi: 10.1016/S0306-4522(97)00186-3

Achermann, P., and Borbély, A. A. (2003). Mathematical models of sleep regulation. Front. Biosci. Landmark 8, s683–s693. doi: 10.2741/1064

Achermann, P., Dijk, D. J., Brunner, D. P., and Borbély, A. A. (1993). A model of human sleep homeostasis based on EEG slow-wave activity: quantitative comparison of data and simulations. Brain Res. Bull. 31, 97–113. doi: 10.1016/0361-9230(93)90016-5

Alhagry, S., Fahmy, A. A., and El-Khoribi, R. A. (2017). Emotion recognition based on EEG using LSTM recurrent neural network. Int. J. Adv. Comput. Sci. Appl. 8, 355–358. doi: 10.14569/IJACSA.2017.081046

Al-Hossenat, A., Wen, P., and Li, Y. (2017). Simulation α of EEG using brain network model. In Proceedings of the first MoHESR and HCED Iraqi scholars conference in Australasia 2017 (ISCA 2017) (pp. 336–345). Swinburne University of Technology.

Al-Hossenat, A., Wen, P., and Li, Y. (2019). Modelling and simulating different bands of EEG signals with the virtual brain. Int. J. Electr. Electron. Data Commun. 7, 66–70. http://iraj.in

Andrzejak, R. G., Lehnertz, K., Mormann, F., Rieke, C., David, P., and Elger, C. E. (2001). Indications of nonlinear deterministic and finite-dimensional structures in time series of brain electrical activity: dependence on recording region and brain state. Phys. Rev. E 64:061907. doi: 10.1103/PhysRevE.64.061907

Ansarinasab, S., Parastesh, F., Ghassemi, F., Rajagopal, K., Jafari, S., and Ghosh, D. (2023). Synchronization in functional brain networks of children suffering from ADHD based on Hindmarsh-rose neuronal model. Comput. Biol. Med. 152:106461. doi: 10.1016/j.compbiomed.2022.106461

Aznan, N. K. N., Atapour-Abarghouei, A., Bonner, S., Connolly, J. D., Al Moubayed, N., and Breckon, T. P. (2019a). Simulating brain signals: creating synthetic EEG data via neural-based generative models for improved SSVEP classification. In 2019 international joint conference on neural networks (IJCNN) (pp. 1–8). IEEE.

Aznan, N. K. N., Connolly, J. D., Al Moubayed, N., and Breckon, T. P. (2019b). Using variable natural environment brain-computer interface stimuli for real-time humanoid robot navigation. In 2019 international conference on robotics and automation (ICRA) (pp. 4889–4895). IEEE.

Babloyantz, A., Salazar, J. M., and Nicolis, C. (1985). Evidence of chaotic dynamics of brain activity during the sleep cycle. Phys. Lett. A 111, 152–156. doi: 10.1016/0375-9601(85)90444-X

Bahador, N., Jokelainen, J., Mustola, S., and Kortelainen, J. (2021). Reconstruction of missing channel in electroencephalogram using spatiotemporal correlation-based averaging. J. Neural Eng. 18:056045. doi: 10.1088/1741-2552/ac23e2

Bakker, J. P., Ross, M., Cerny, A., Vasko, R., Shaw, E., Kuna, S., et al. (2023). Scoring sleep with artificial intelligence enables quantification of sleep stage ambiguity: hypnodensity based on multiple expert scorers and auto-scoring. Sleep 46:zsac154. doi: 10.1093/sleep/zsac154

Bandyopadhyay, A., Ghosh, S., Biswas, D., Chakravarthy, V. S., and Bapi, R. S. (2023). A phenomenological model of whole brain dynamics using a network of neural oscillators with power-coupling. Sci. Rep. 13:16935. doi: 10.1038/s41598-023-43547-3

Başar, E. (1983). “Synergetics of neuronal populations. A survey on experiments” in Synergetics of the brain. Eds. Erol Başar, Hans Flohr, Hermann Haken, Arnold J. Mandell (Berlin, Heidelberg: Springer), 183–200.

Biswas, D., Pallikkulath, S., and Chakravarthy, V. S. (2021). A complex-valued oscillatory neural network for storage and retrieval of multidimensional aperiodic signals. Front. Comput. Neurosci. 15:551111. doi: 10.3389/fncom.2021.551111

Breakspear, M. (2017). Dynamic models of large-scale brain activity. Nat. Neurosci. 20, 340–352. doi: 10.1038/nn.4497

Březinová, V. (1974). Sleep cycle content and sleep cycle duration. Electroencephalogr. Clin. Neurophysiol. 36, 275–282. doi: 10.1016/0013-4694(74)90169-2

Burke, D. P., and de Paor, A. M. (2004). A stochastic limit cycle oscillator model of the EEG. Biol. Cybern. 91, 221–230. doi: 10.1007/s00422-004-0509-z

Cabral, J., Castaldo, F., Vohryzek, J., Litvak, V., Bick, C., Lambiotte, R., et al. (2022). Metastable oscillatory modes emerge from synchronization in the brain spacetime connectome. Commun. Physics 5:184. doi: 10.1038/s42005-022-00950-y

Cabral, J., Fernandes, F. F., and Shemesh, N. (2023). Intrinsic macroscale oscillatory modes driving long range functional connectivity in female rat brains detected by ultrafast fMRI. Nat. Commun. 14:375. doi: 10.1038/s41467-023-36025-x

Das, S., and Puthankattil, S. D. (2022). Functional connectivity and complexity in the phenomenological model of mild cognitive-impaired Alzheimer's disease. Front. Comput. Neurosci. 16:877912. doi: 10.3389/fncom.2022.877912

Deco, G., and Kringelbach, M. L. (2014). Great expectations: using whole-brain computational connectomics for understanding neuropsychiatric disorders. Neuron 84, 892–905. doi: 10.1016/j.neuron.2014.08.034

Deco, G., Kringelbach, M. L., Jirsa, V. K., and Ritter, P. (2017). The dynamics of resting fluctuations in the brain: metastability and its dynamical cortical core. Sci. Rep. 7:3095. doi: 10.1038/s41598-017-03073-5

Deco, G., Tononi, G., Boly, M., and Kringelbach, M. L. (2015). Rethinking segregation and integration: contributions of whole-brain modelling. Nat. Rev. Neurosci. 16, 430–439. doi: 10.1038/nrn3963

Deco, G., Vidaurre, D., and Kringelbach, M. L. (2021). Revisiting the global workspace orchestrating the hierarchical organization of the human brain. Nat. Hum. Behav. 5, 497–511. doi: 10.1038/s41562-020-01003-6

Esser, S. K., Hill, S. L., and Tononi, G. (2007). Sleep homeostasis and cortical synchronization: I. Modeling the effects of synaptic strength on sleep slow waves. Sleep 30, 1617–1630. doi: 10.1093/sleep/30.12.1617

Finelli, L. A., Baumann, H., Borbély, A. A., and Achermann, P. (2000). Dual electroencephalogram markers of human sleep homeostasis: correlation between theta activity in waking and slow-wave activity in sleep. Neuroscience 101, 523–529. doi: 10.1016/S0306-4522(00)00409-7

Fisher, S. P., Foster, R. G., and Peirson, S. N. (2013). The circadian control of sleep. Circadian Clocks 217, 157–183. doi: 10.1007/978-3-642-25950-0_7

Ghorbanian, P., Ramakrishnan, S., and Ashrafiuon, H. (2015a). Stochastic non-linear oscillator models of EEG: the Alzheimer's disease case. Front. Comput. Neurosci. 9:48. doi: 10.3389/fncom.2015.00048

Ghorbanian, P., Ramakrishnan, S., Whitman, A., and Ashrafiuon, H. (2014). Nonlinear dynamic analysis of EEG using a stochastic Duffing-van der Pol oscillator model. In Dynamic systems and control conference (Vol. 46193, p. V002T16A001). American Society of Mechanical Engineers.

Ghorbanian, P., Ramakrishnan, S., Whitman, A., and Ashrafiuon, H. (2015b). A phenomenological model of EEG based on the dynamics of a stochastic Duffing-van der Pol oscillator network. Biomed. Signal Process. Control 15, 1–10. doi: 10.1016/j.bspc.2014.08.013

Ghosh, S., Chandrasekaran, V., Rohan, N. R., and Chakravarthy, V. S. (2025). Electroencephalogram (EEG) classification using a bio-inspired deep oscillatory neural network. Biomed. Signal Process. Control 103:107379. doi: 10.1016/j.bspc.2024.107379

Goldstein, A. N., and Walker, M. P. (2014). The role of sleep in emotional brain function. Annu. Rev. Clin. Psychol. 10, 679–708. doi: 10.1146/annurev-clinpsy-032813-153716

Gorgoni, M., Bartolacci, C., D’Atri, A., Scarpelli, S., Marzano, C., Moroni, F., et al. (2019). The spatiotemporal pattern of the human electroencephalogram at sleep onset after a period of prolonged wakefulness. Front. Neurosci. 13:312. doi: 10.3389/fnins.2019.00312

Hahn, G., Zamora-López, G., Uhrig, L., Tagliazucchi, E., Laufs, H., Mantini, D., et al. (2021). Signature of consciousness in brain-wide synchronization patterns of monkey and human fMRI signals. NeuroImage 226:117470. doi: 10.1016/j.neuroimage.2020.117470

Han, C. X., Wang, J., Yi, G. S., and Che, Y. Q. (2013). Investigation of EEG abnormalities in the early stage of Parkinson’s disease. Cogn. Neurodyn. 7, 351–359. doi: 10.1007/s11571-013-9247-z

Hartmann, K. G., Schirrmeister, R. T., and Ball, T. (2018). EEG-GAN: generative adversarial networks for electroencephalographic (EEG) brain signals. arXiv preprint arXiv:1806.01875.

Ibáñez-Molina, A. J., and Iglesias-Parro, S. (2016). Neurocomputational model of EEG complexity during mind wandering. Front. Comput. Neurosci. 10:20. doi: 10.3389/fncom.2016.00020

Ingendoh, R. M., Posny, E. S., and Heine, A. (2023). Binaural beats to entrain the brain? A systematic review of the effects of binaural beat stimulation on brain oscillatory activity, and the implications for psychological research and intervention. PLoS One 18:e0286023. doi: 10.1371/journal.pone.0286023

Iravani, B., Arshamian, A., Fransson, P., and Kaboodvand, N. (2021). Whole-brain modelling of resting state fMRI differentiates ADHD subtypes and facilitates stratified neuro-stimulation therapy. NeuroImage 231:117844. doi: 10.1016/j.neuroimage.2021.117844

Iversen, J. R., and Makeig, S. (2019). “MEG/EEG data analysis using EEGLAB” in Magnetoencephalography: from signals to dynamic cortical networks. Eds. Selma Supek, Cheryl J. Aine (Cham: Springer), 391–406.

Kalaganis, F. P., Laskaris, N. A., Chatzilari, E., Nikolopoulos, S., and Kompatsiaris, I. (2020). A data augmentation scheme for geometric deep learning in personalized brain–computer interfaces. IEEE Access 8, 162218–162229. doi: 10.1109/ACCESS.2020.3021580

Krishnamurthy, K., Can, T., and Schwab, D. J. (2022). Theory of gating in recurrent neural networks. Phys. Rev. X 12:011011. doi: 10.1103/PhysRevX.12.011011

Lambert, I., and Peter-Derex, L. (2023). Spotlight on sleep stage classification based on EEG. Nat. Sci. Sleep 15, 479–490. doi: 10.2147/NSS.S401270

Logothetis, N. K., Pauls, J., Augath, M., Trinath, T., and Oeltermann, A. (2001). Neurophysiological investigation of the basis of the fMRI signal. Nature 412, 150–157. doi: 10.1038/35084005

López-González, A., Panda, R., Ponce-Alvarez, A., Zamora-López, G., Escrichs, A., Martial, C., et al. (2021). Loss of consciousness reduces the stability of brain hubs and the heterogeneity of brain dynamics. Commun. Biol. 4:1037. doi: 10.1038/s42003-021-02537-9

Luppi, A. I., Cabral, J., Cofre, R., Destexhe, A., Deco, G., and Kringelbach, M. L. (2022). Dynamical models to evaluate structure–function relationships in network neuroscience. Nat. Rev. Neurosci. 23, 767–768. doi: 10.1038/s41583-022-00646-w

Marshall, L., Molle, M., and Born, J. (2006). Oscillating current stimulation–slow oscillation stimulation during sleep. Nat. Protoc. 9. doi: 10.1038/nprot.2006.299

Michielli, N., Acharya, U. R., and Molinari, F. (2019). Cascaded LSTM recurrent neural network for automated sleep stage classification using single-channel EEG signals. Comput. Biol. Med. 106, 71–81. doi: 10.1016/j.compbiomed.2019.01.013

Mule, N. M., Patil, D. D., and Kaur, M. (2021). A comprehensive survey on investigation techniques of exhaled breath (EB) for diagnosis of diseases in human body. Inform. Med. Unlocked 26:100715. doi: 10.1016/j.imu.2021.100715

Naderi, M. A., and Mahdavi-Nasab, H. (2010). Analysis and classification of EEG signals using spectral analysis and recurrent neural networks. In 2010 17th Iranian Conference of Biomedical Engineering (ICBME) (pp. 1–4). IEEE.

Nguyen, P. T. M., Hayashi, Y., Baptista, M. D. S., and Kondo, T. (2020). Collective almost synchronization-based model to extract and predict features of EEG signals. Sci. Rep. 10:16342. doi: 10.1038/s41598-020-73346-z

Nunez, P. L., and Srinivasan, R. (2006). Electric fields of the brain: the neurophysics of EEG. Oxford: Oxford University Press.

Ojha, P., and Panda, S. (2024). Resting-state quantitative EEG spectral patterns in migraine during ictal phase reveal deviant brain oscillations: potential role of density spectral array. Clin. EEG Neurosci. 55, 362–370. doi: 10.1177/15500594221142951

Pankka, H., Lehtinen, J., Ilmoniemi, R. J., and Roine, T. (2024). Forecasting EEG time series with WaveNet. bioRxiv, 2024-01.

Panwar, S., Rad, P., Jung, T. P., and Huang, Y. (2020). Modeling EEG data distribution with a Wasserstein generative adversarial network to predict RSVP events. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 1720–1730. doi: 10.1109/TNSRE.2020.3006180

Paul, A. (2020). Prediction of missing EEG channel waveform using LSTM. In 2020 4th international conference on computational intelligence and networks (CINE) (pp. 1–6). IEEE.

Ponce-Alvarez, A., and Deco, G. (2024). The Hopf whole-brain model and its linear approximation. Sci. Rep. 14:2615. doi: 10.1038/s41598-024-53105-0

Rankine, L., Stevenson, N., Mesbah, M., and Boashash, B. (2006). A nonstationary model of newborn EEG. IEEE Trans. Biomed. Eng. 54, 19–28. doi: 10.1109/TBME.2006.886667

Ren, H. P., Bai, C., Baptista, M. S., and Grebogi, C. (2017). Weak connections form an infinite number of patterns in the brain. Sci. Rep. 7:46472. doi: 10.1038/srep46472

Sano, A., Chen, W., Lopez-Martinez, D., Taylor, S., and Picard, R. W. (2018). Multimodal ambulatory sleep detection using LSTM recurrent neural networks. IEEE J. Biomed. Health Inform. 23, 1607–1617. doi: 10.1109/JBHI.2018.2867619

Sanz Leon, P., Knock, S. A., Woodman, M. M., Domide, L., Mersmann, J., McIntosh, A. R., et al. (2013). The virtual brain: a simulator of primate brain network dynamics. Front. Neuroinform. 7:10. doi: 10.3389/fninf.2013.00010

Schaul, N. (1998). The fundamental neural mechanisms of electroencephalography. Electroencephalogr. Clin. Neurophysiol. 106, 101–107. doi: 10.1016/S0013-4694(97)00111-9

Siegel, J. M. (2001). The REM sleep-memory consolidation hypothesis. Science 294, 1058–1063. doi: 10.1126/science.1063049

Singanamalla, S. K. R., and Lin, C. T. (2021). Spiking neural network for augmenting electroencephalographic data for brain computer interfaces. Front. Neurosci. 15:651762. doi: 10.3389/fnins.2021.651762

Singh, A., Meshram, H., and Srikanth, M. (2019). American Academy of Sleep Medicine guidelines, 2018. Int. J. Head Neck Surg. 10, 102–103. doi: 10.5005/jp-journals-10001-1379

Szuflitowska, B., and Orlowski, P. (2021). “Analysis of complex partial seizure using non-linear Duffing Van der Pol oscillator model” in International conference on computational science. Eds. Derek Groen, Clélia de Mulatier, Maciej Paszynski, Valeria V. Krzhizhanovskaya, Jack J. Dongarra, Peter M. A. Sloot (Cham: Springer), 433–440.

Tatti, E., and Cacciola, A. (2023). The role of brain oscillatory activity in human sensorimotor control and learning: bridging theory and practice. Front. Syst. Neurosci. 17:1211763. doi: 10.3389/fnsys.2023.1211763

Thomas, K. P., Guan, C., Lau, C. T., Vinod, A. P., and Ang, K. K. (2009). A new discriminative common spatial pattern method for motor imagery brain–computer interfaces. IEEE Trans. Biomed. Eng. 56, 2730–2733. doi: 10.1109/TBME.2009.2026181

Torma, S., and Szegletes, L. (2025). Generative modeling and augmentation of EEG signals using improved diffusion probabilistic models. J. Neural Eng. 22:016001. doi: 10.1088/1741-2552/ada0e4

Watanabe, T., Itagaki, A., Hashizume, A., Takahashi, A., Ishizaka, R., and Ozaki, I. (2023). Observation of respiration-entrained brain oscillations with scalp EEG. Neurosci. Lett. 797:137079. doi: 10.1016/j.neulet.2023.137079

Weigenand, A., Schellenberger Costa, M., Ngo, H. V. V., Claussen, J. C., and Martinetz, T. (2014). Characterization of K-complexes and slow wave activity in a neural mass model. PLoS Comput. Biol. 10:e1003923. doi: 10.1371/journal.pcbi.1003923

Wolpert, E. A. (1969). A manual of standardized terminology, techniques and scoring system for sleep stages of human subjects. Arch. Gen. Psychiatry 20, 246–247. doi: 10.1001/archpsyc.1969.01740140118016

Yang, S., and Chen, B. (2023a). Effective surrogate gradient learning with high-order information bottleneck for spike-based machine intelligence. IEEE Trans. Neural Netw. Learn. Syst. 36, 1734–1748. doi: 10.1109/TNNLS.2023.3329525

Yang, S., and Chen, B. (2023b). SNIB: improving spike-based machine learning using nonlinear information bottleneck. IEEE Trans. Syst. Man Cybern. Syst. 53, 7852–7863. doi: 10.1109/TSMC.2023.3300318

Yang, S., and Chen, B. (2024). Neuromorphic intelligence: learning, architectures and large-scale systems. Cham: Springer Nature.

Yang, S., He, Q., Lu, Y., and Chen, B. (2024). Maximum entropy intrinsic learning for spiking networks towards embodied neuromorphic vision. Neurocomputing 610:128535. doi: 10.1016/j.neucom.2024.128535

Zenke, F., and Ganguli, S. (2018). Superspike: supervised learning in multilayer spiking neural networks. Neural Comput. 30, 1514–1541. doi: 10.1162/neco_a_01086

Zhao, C., Li, J., and Guo, Y. (2024). Sequence signal reconstruction based multi-task deep learning for sleep staging on single-channel EEG. Biomed. Signal Process. Control 88:105615. doi: 10.1016/j.bspc.2023.105615

Keywords: EEG, Hopf oscillator, sleep stages modeling, large scale brain dynamics, biomedical signal analysis, Hopf oscillator model

Citation: Ghosh S, Biswas D, Rohan NR, Vijayan S and Chakravarthy VS (2025) Modeling of whole brain sleep electroencephalogram using deep oscillatory neural network. Front. Neuroinform. 19:1513374. doi: 10.3389/fninf.2025.1513374

Edited by: Viktor Jirsa, Aix-Marseille Université, France

Reviewed by: Shuangming Yang, Tianjin University, China; Hongzhi Kuai, Maebashi Institute of Technology, Japan

Copyright © 2025 Ghosh, Biswas, Rohan, Vijayan and Chakravarthy. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: V. Srinivasa Chakravarthy, schakra@ee.iitm.ac.in
