- ¹ PhD Program in Health Sciences and Engineering, Universidad de Valparaíso, Valparaíso, Chile

- ² Faculty of Engineering, School of Biomedical Engineering, Universidad de Valparaíso, Valparaíso, Chile

- ³ Center of Interdisciplinary Biomedical and Engineering Research for Health - MEDING, Universidad de Valparaíso, Valparaíso, Chile

- ⁴ Millennium Institute for Intelligent Healthcare Engineering (iHealth), Santiago, Chile

- ⁵ Faculty of Medicine, School of Medical Technology, Universidad de Valparaíso, Valparaíso, Chile

- ⁶ Servicio de Imagenología, Hospital Carlos van Buren, Valparaíso, Chile

- ⁷ Centro para la Investigación Traslacional en Neurofarmacología (CITNE), Universidad de Valparaíso, Valparaíso, Chile

- ⁸ Departamento de Informática, Universidad Técnica Federico Santa María, Santiago, Chile

Introduction: Brain tumors are a leading cause of mortality worldwide, and early, accurate diagnosis is essential for effective treatment. Although Deep Learning (DL) models perform strongly in tumor detection and segmentation on magnetic resonance imaging (MRI), their black-box nature hinders clinical adoption because their decisions are difficult to interpret.

Methods: We present a hybrid AI framework that integrates a 3D U-Net Convolutional Neural Network for MRI-based tumor segmentation with radiomic feature extraction. Dimensionality reduction is performed with machine learning-based feature selection, and an Adaptive Neuro-Fuzzy Inference System (ANFIS) is employed to produce interpretable decision rules. Each experiment is restricted to a small set of high-impact radiomic features to improve clarity and reduce complexity.

Results: The framework was validated on the BraTS2020 dataset, achieving an average Dice score of 82.94% for tumor core segmentation and 76.06% for edema segmentation. Classification accuracies were 95.43% for the binary task (healthy vs. tumor) and 92.14% for the multi-class task (healthy vs. tumor core vs. edema). A concise set of 18 fuzzy rules was generated to provide clinically interpretable outputs.

Discussion: Our approach balances high diagnostic accuracy with enhanced interpretability, addressing a critical barrier to applying DL models in clinical settings. Integrating ANFIS with radiomics supports transparent decision-making, fostering greater trust and applicability in real-world diagnostic assistance.

1 Introduction

The incidence of primary brain and other central nervous system (CNS) tumors has increased, likely due to advances in diagnostic technologies, revisions of classification systems, and wider access to diagnostic imaging devices worldwide (Louis et al., 2021; de Robles et al., 2015; Low et al., 2022). These tumors are a significant public health concern because of their high mortality and disability rates, particularly in their malignant forms, which account for 1.4% of all cancers and 2.3% of cancer-related deaths (McNeill, 2016). Approximately 30,000–35,000 new cases are expected each year in the United States alone. In adults, the most frequently observed non-brain malignancies are breast, prostate, and colorectal cancer and cutaneous melanoma (Neff et al., 2023; Sancar et al., 2017). Notably, brain tumors (BT) are the second most common cancer type in pediatric populations (Zhang et al., 2017). Treatment strategies vary with tumor type and location; glioblastomas and meningiomas are the most common malignant and non-malignant tumors, respectively (Low et al., 2022; Chabert et al., 2024). A major challenge in managing BT is their infiltrative growth pattern. Unlike many other cancers, which may exhibit well-defined margins, glioblastomas can infiltrate the surrounding neural tissue, making complete surgical resection difficult (Ban et al., 2021). Because tumors have different radiological characteristics, edema hinders the accurate delineation of the boundaries of specific affected regions in medical images, which complicates treatment planning (Csaholczi et al., 2020; Khan and Park, 2024).

Diagnosis of BT traditionally depends on the expertise of neuroradiologists, who perform detailed clinical analyses and thorough evaluations of imaging results. However, the global shortage of specialized professionals makes this process time-consuming and resource-intensive (Ali et al., 2022). Medical imaging techniques are essential tools for visualizing and diagnosing anatomical structures and physiological processes; the most commonly used are Magnetic Resonance Imaging (MRI), Computed Tomography (CT), Positron Emission Tomography (PET), and X-rays (Bahkali and Semwal, 2021; Hussain et al., 2022). Compared with the other modalities, MRI is considered the reference method for diagnosing and characterizing BT because of its superior anatomical resolution and its ability to differentiate soft tissue structures without ionizing radiation (Song et al., 2016; Zhou et al., 2022). This makes it an optimal modality for patients who require repeated examinations, such as pediatric patients or individuals under long-term follow-up (Iradat et al., 2024; Jamieson et al., 2013). To help address the shortage of specialists, Artificial Intelligence (AI) models have emerged as powerful tools to assist in tumor detection and classification using MRI (Dixit and Thakur, 2023; Bouhafra and El Bahi, 2024; Rasheed et al., 2023). Machine Learning (ML) and Deep Learning (DL) techniques have been widely adopted for BT detection, using MRI scans to provide fast and accurate predictions (Tabatabaei et al., 2023; Özkaraca et al., 2023; Mohanty et al., 2024). These AI-driven approaches increasingly help medical professionals improve patient care by increasing diagnostic efficiency and accuracy (Cè et al., 2023; Veloz et al., 2011). Nevertheless, the limitations of implementing DL techniques in healthcare must be considered. The requirement for extensive annotated datasets is a significant impediment (Vrochidou et al., 2023).
The computational resources needed to process and analyze these datasets are substantial, often requiring high-performance computing infrastructure that may not be readily accessible in healthcare environments (Zhang et al., 2024; Filippini et al., 2023). This challenge is exacerbated by the labor-intensive nature of data annotation, which is crucial for training DL models but can be prohibitively resource-intensive (Mitchell et al., 2021). The advent of transfer learning has further accelerated progress, enabling AI models to achieve high accuracy even with limited datasets while significantly reducing training time (Alnemer and Rasheed, 2021). Still, the performance of DL algorithms depends heavily on the availability of large amounts of training data, and healthcare data are often limited in volume and quality because of patient sparsity, variability in medical practices, and strict privacy regulations (Chen et al., 2019). Chen et al. (2021) discuss the difficulty of generalizing DL models trained on limited datasets and highlight the importance of diverse training sets for robust performance across settings and patient demographics. Variations in data acquisition protocols between institutions can also produce discrepancies in image characteristics that affect model performance. DL offers advantages over traditional ML approaches by automatically extracting high-level features from input data, and it has proven effective in complex medical imaging tasks such as disease classification and tumor segmentation (Torres-Velázquez et al., 2020). However, implementing DL in medical settings remains challenging because of generalization issues (Yoon et al., 2023). Despite these advances, a persistent challenge is the lack of transparency in AI methods (Burkart and Huber, 2021).
This opacity undermines trust in AI-driven systems and raises ethical concerns among healthcare professionals, posing a significant barrier to widespread clinical adoption.

Explainable Artificial Intelligence (XAI) addresses these problems by designing transparent and interpretable models, that is, models that provide explanatory information in a form accessible to humans (Schiavon et al., 2023). The goal of XAI is to increase human trust in AI systems and thereby enable better interaction between human experts and machines (Ugalde et al., 2023). Several methods have been proposed to explain the ML models currently used for BT classification and segmentation, including class activation maps (CAMs) (Schiavon et al., 2023), attention maps (Tehsin et al., 2024), model-agnostic methods such as SHAP (SHapley Additive exPlanations) (Ahmed et al., 2023), and radiomics-based interpretation of key features (Afshar et al., 2019; Ponce et al., 2024). Such approaches show potential to provide quantitative and reproducible metrics for interpreting and validating medical imaging data (Zhang X. et al., 2022).

Most current explainability methods for BT classification algorithms offer little insight into the models' internal mechanisms; they typically produce heatmaps that only indicate the relevance of specific input features or variables. Although useful, these approaches do not fully explain the rationale behind a prediction. In this work, we propose interpretable decision rules based on fuzzy logic that support the prediction process and provide insight into each prediction. In the proposed approach, MRI scans are preprocessed and tumor regions are segmented using a 3D U-Net convolutional neural network (CNN) (Cavieres et al., 2023). Radiomic features describing compression, texture, and pixel values are then extracted (Ponce et al., 2024), and dimensionality reduction methods retain only the most discriminative features to improve efficiency. Finally, an Adaptive Network-based Fuzzy Inference System (ANFIS) is trained to classify BT and to obtain a set of decision rules that facilitate clinical interpretation (Querales et al., 2023; Allende-Cid et al., 2016). This approach aims to maintain high diagnostic performance while providing accurate and interpretable explanations, enhancing the clinical reliability and applicability of AI-assisted diagnostic solutions.

This article is structured as follows. Section 2 reviews related work, highlighting previous studies and current methodologies for BT detection and classification, as well as strategies for explaining the models and interpreting their predictions. Section 3 details the methodology, including the main steps: database, pre-processing, segmentation, feature extraction, and classification. Section 4 presents the results, highlighting the performance and explainability of the proposed model and its advantages over traditional approaches. Section 5 analyzes the results, highlighting the novelty of the proposal and its contribution to assisted diagnosis through detailed tumor characterization. Finally, Section 6 summarizes the main findings and suggests future research directions to improve the clinical applicability and interpretability of the models.

2 Related works

The integration of Explainable Artificial Intelligence (XAI) into BT classification and segmentation has become a critical area of research, addressing the dual challenge of improving diagnostic accuracy while ensuring model interpretability. For example, Ullah et al. (2024) and Saeed et al. (2024) address BT segmentation and classification using advanced architectures such as DeepLabV3+. Ullah et al. (2024) introduce a comprehensive two-component framework that incorporates Bayesian optimization for hyperparameter tuning; it leverages models such as the Inverted Residual Bottleneck to enhance classification performance and uses Local Interpretable Model-Agnostic Explanations (LIME) to provide insight into predictions. Similarly, Saeed et al. (2024) integrate self-attention modules into the DeepLabV3+ architecture, combining features extracted from CNN architectures such as Darknet53 and MobileNetV2 with a Bayesian-optimized Support Vector Machine (SVM), and employ Grad-CAM to visualize heatmaps that give clinicians a clearer understanding of model predictions. In contrast, Selvapandian and Manivannan (2018) and Schiavon et al. (2023) explore alternative methodologies for BT classification. Selvapandian and Manivannan (2018) employ morphological operations for glioma segmentation, followed by texture analysis and classification with ANFIS, reporting good performance. Meanwhile, Schiavon et al. (2023) highlight the role of CNN architectures in classification, using XAI techniques such as Grad-CAM and CAMs to interpret predictions. This underscores the importance of identifying critical features in medical images, which offers valuable insights for clinical decision-making.

Recent studies have explored machine learning (ML) approaches for brain tumor (BT) classification using MRI. Various algorithms have been evaluated, including k-Nearest Neighbors (k-NN), Random Forest (RF), Linear Discriminant Analysis (LDA), Decision Trees (DT), Logistic Regression (LR), and Multilayer Perceptron (MLP) (Çınarer and Emiroğlu, 2019; Ferdous et al., 2021; Kale et al., 2024; Yin and Wang, 2024). For instance, Kale et al. (2024) analyzed MRI brain scans to classify tumor and non-tumor tissue using LR, MLP, and RF, achieving accuracies of 96%, 95%, and 96%, respectively. Similarly, Sahoo et al. (2020) assessed the effectiveness of various ML algorithms for BT classification and reported that k-NN achieved an average detection accuracy of 96.4%. For multi-class classification, Saraswathi and Gupta (2019) showed that RF achieved 88.7% accuracy in distinguishing between tumor types. Additionally, Latif et al. (2018) proposed an enhanced classification methodology based on hybrid statistical and wavelet features, achieving 96.72% accuracy for high-grade gliomas and 96.04% for low-grade gliomas with an MLP. These studies illustrate the successful application of ML classification methods to BT detection and segmentation, and they provide a benchmark against which the performance of the proposed framework can be compared.

Additional studies, such as Afshar et al. (2019) and Padmapriya and Devi (2024), explore the interpretability of alternative architectures for BT analysis. Afshar et al. (2019) focus on Capsule Networks, employing techniques such as activation maximization to bridge the gap between automated classification and human-understandable reasoning, offering a novel perspective on interpretability. Similarly, Padmapriya and Devi (2024) develop a computer-aided diagnosis (CAD) system that uses Grad-CAM to visualize critical regions on MRI scans, improving clinician confidence through intuitive visual explanations. In addition, Benyamina et al. (2022) advance the explainability of deep transfer learning models by using SHAP values to clarify the decision-making process in tumor classification. By analyzing diverse MRI datasets, this study underscores the persistent challenge of balancing high classification accuracy with interpretability, highlighting the importance of transparent decision-making in clinical applications.

Although several studies have used ANFIS for BT classification and achieved high accuracy, they often lack interpretability, which limits their clinical applicability. For example, Shanmugam and Surampudi (2022) achieved high accuracy in BT classification using ANFIS but did not provide mechanisms to explain how radiomic features influenced the decisions. Anitha et al. (2023) combined the curvelet transform with ANFIS for meningioma detection and segmentation, achieving high accuracy while partially addressing interpretability by linking specific tumor features to the classification results. Kalam et al. (2021) presented an optimized ANFIS classifier with improved precision, recall, and sensitivity for detecting meningiomas, gliomas, and pituitary tumors, but did not clarify the contribution of individual features to the decision-making process. Likewise, Kshirsagar et al. (2022) used Gray Level Co-occurrence Matrix (GLCM) features with ANFIS to classify BT as normal, benign, or malignant, achieving high accuracy without addressing the interpretability of the decision process. To our knowledge, there remains a gap in explainability mechanisms that clarify how AI techniques combine and prioritize the most relevant features for classification. To address this gap, this work proposes a methodology for constructing interpretable rules that elucidate the key elements used to determine whether tissue is cancerous.

3 Materials and methods

3.1 Database

This study assesses the performance of a model designed for interpretable BT classification using the BraTS2020 dataset. The dataset, described by Menze et al. (2015), is a benchmark resource for BT segmentation and consists of multi-contrast MRI scans. It includes four imaging sequences, native T1-weighted, post-contrast T1-weighted (T1ce), T2-weighted, and Fluid Attenuated Inversion Recovery (FLAIR), collected from 366 patients. Each MRI volume measures 240 × 240 × 155 voxels and is accompanied by manually segmented, expert-validated tumor labels. These labels differentiate three key regions: enhancing tumor (ET), tumor core (TC), and edema (E).

To prepare the BraTS2020 images for segmentation, several pre-processing steps were applied. First, voxel intensities were normalized to the range 0–1, minimizing intensity variations caused by differences in imaging equipment. The images were then resized to a uniform size of 128 × 128 × 128 voxels, which keeps model inputs consistent across the dataset and supports robust training (Zubair Rahman et al., 2024). Quadratic interpolation was used to resize the images, while nearest-neighbor interpolation was used for the segmentation masks to preserve their discrete labels. The process also crops non-informative regions, such as dark or empty areas around the edges, while preserving the brain region; this mitigates class imbalance and reduces computational cost, improving the model's efficiency (Das et al., 2022). For model training, 80% of the dataset was allocated, with the remaining 20% reserved for testing.
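A minimal sketch of these steps on nested Python lists follows. It assumes per-volume min-max scaling and a patient-level 80/20 split, neither of which is specified above, and the function names are illustrative. Only the nearest-neighbor resizing used for the masks is shown; quadratic resizing of intensity images would typically rely on a library routine such as `scipy.ndimage.zoom(volume, factors, order=2)`.

```python
import random


def min_max_normalize(volume):
    """Scale the intensities of a 3D volume (nested lists) to the range [0, 1]."""
    flat = [v for plane in volume for row in plane for v in row]
    lo, hi = min(flat), max(flat)
    scale = (hi - lo) or 1.0  # avoid division by zero for constant volumes
    return [[[(v - lo) / scale for v in row] for row in plane] for plane in volume]


def resize_nearest(volume, new_shape):
    """Nearest-neighbor resize of a 3D label volume.

    Each output voxel copies the source voxel at the scaled (floored) index,
    so no new label values are created and the mask stays discrete.
    """
    d, h, w = len(volume), len(volume[0]), len(volume[0][0])
    nd, nh, nw = new_shape
    return [
        [
            [volume[z * d // nd][y * h // nh][x * w // nw] for x in range(nw)]
            for y in range(nh)
        ]
        for z in range(nd)
    ]


def split_patients(patient_ids, train_fraction=0.8, seed=0):
    """Shuffle patient IDs and split them into training and test sets."""
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)
    cut = round(train_fraction * len(ids))
    return ids[:cut], ids[cut:]


# Toy example: a 2 x 2 x 2 label mask upsampled to 4 x 4 x 4
mask = [[[0, 2], [4, 1]], [[2, 2], [0, 4]]]
resized = resize_nearest(mask, (4, 4, 4))
image = min_max_normalize([[[10.0, 20.0], [30.0, 50.0]]])
train_ids, test_ids = split_patients(range(366))
print(len(resized), len(train_ids), len(test_ids))
```

Splitting by patient rather than by slice or window keeps all data from one patient on the same side of the split, which avoids optimistic test scores.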

3.1.1 Class imbalance adjustments

Class imbalance is a significant challenge in data classification, as it directly impacts model performance and parameter optimization (Luque et al., 2019). Imbalanced datasets impede the learning process, particularly for minority classes, often resulting in their misclassification (Rezvani and Wang, 2023). Although random undersampling and oversampling are commonly used baseline methods, our approach prioritizes undersampling to achieve a more effective balance between majority and minority classes. This strategy is especially useful for our dataset, where one class is disproportionately over-represented (Rezvani and Wang, 2023). Although oversampling can be advantageous in certain scenarios, it carries the risk of overfitting by duplicating examples from minority classes, which can reduce the generalizability of the model (Fernández et al., 2018; Estabrooks et al., 2004).

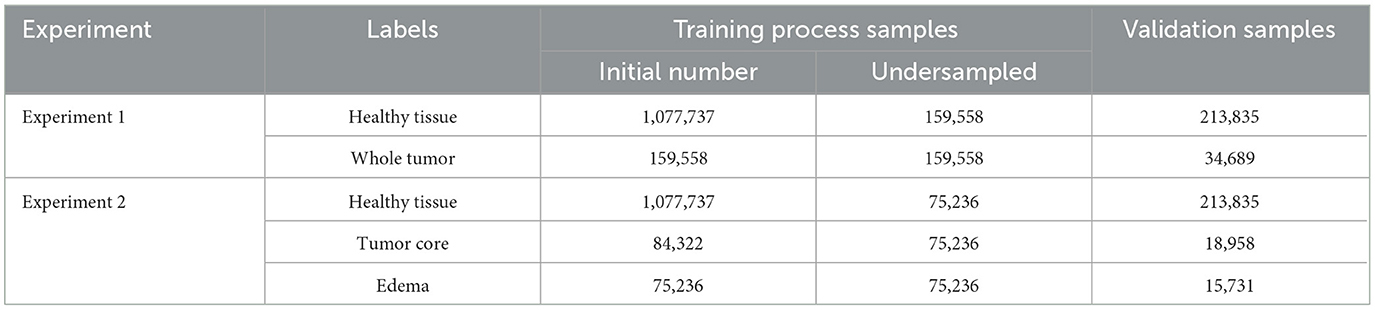

The label adjustment was applied exclusively to the training set, following two experimental setups. In Experiment 1, the focus was on distinguishing healthy tissue from whole tumor tissue, while in Experiment 2 the differentiation extended to three categories: healthy tissue, tumor core, and edema. In both experiments, the minority class served as the reference, and each class was adjusted independently to address the imbalance. Table 1 presents the results for both experimental setups, showing the impact of the adjustments on class distribution.
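The two label schemes and the undersampling strategy can be sketched as follows. The sketch assumes the standard BraTS2020 voxel labels (0 background, 1 necrotic/non-enhancing tumor core, 2 peritumoral edema, 4 enhancing tumor), treats tumor core as labels 1 and 4, and randomly undersamples every class to the minority-class size without replacement. The helper names `map_labels` and `undersample` are hypothetical and do not come from the authors' code.

```python
import random
from collections import Counter

# Assumed BraTS2020 voxel labels: 0 = background/healthy tissue,
# 1 = necrotic/non-enhancing tumor core, 2 = peritumoral edema, 4 = enhancing tumor
TUMOR_CORE_LABELS = {1, 4}
EDEMA_LABEL = 2


def map_labels(brats_label, experiment):
    """Map a BraTS voxel label to a class index for Experiment 1 or 2 (hypothetical)."""
    if experiment == 1:
        # Experiment 1: 0 = healthy tissue, 1 = whole tumor (labels 1, 2 and 4)
        return 0 if brats_label == 0 else 1
    if experiment == 2:
        # Experiment 2: 0 = healthy tissue, 1 = tumor core, 2 = edema
        if brats_label in TUMOR_CORE_LABELS:
            return 1
        if brats_label == EDEMA_LABEL:
            return 2
        return 0
    raise ValueError("experiment must be 1 or 2")


def undersample(samples, labels, seed=0):
    """Randomly undersample every class to the size of the minority class.

    Sampling is without replacement, so no training example is duplicated.
    """
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    minority_size = min(len(group) for group in by_class.values())
    balanced = [(sample, label)
                for label, group in sorted(by_class.items())
                for sample in rng.sample(group, minority_size)]
    rng.shuffle(balanced)
    return balanced


# Toy training set: 10 healthy, 4 tumor-core and 3 edema samples (BraTS labels)
raw = [0] * 10 + [1, 4, 1, 4] + [2, 2, 2]
y = [map_labels(v, experiment=2) for v in raw]
balanced = undersample(list(range(len(y))), y)
print(Counter(label for _, label in balanced))
```

Because the sketch only removes majority-class examples, it avoids the duplication that the oversampling discussion above warns about, at the cost of discarding data.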

3.2 Proposed framework

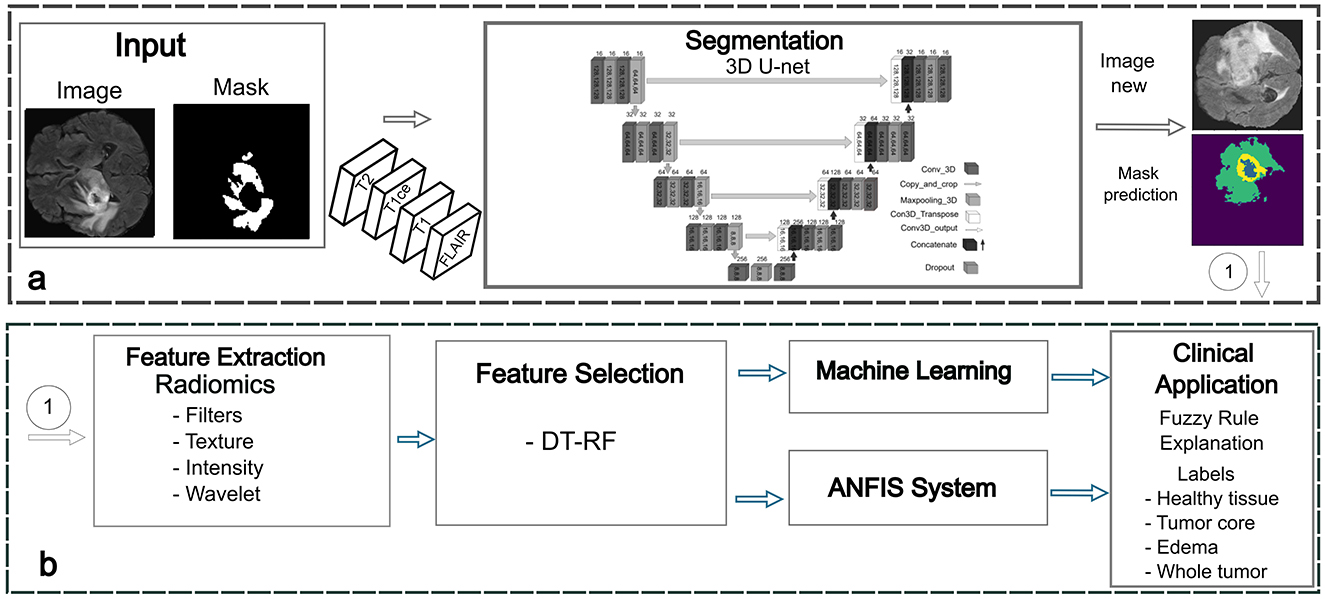

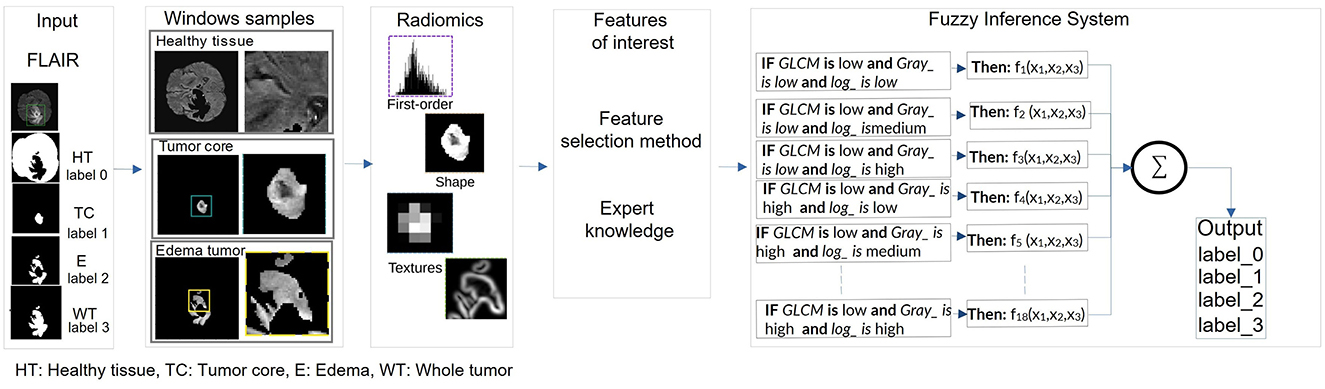

The proposed framework consists of four key stages: segmentation to identify regions of interest (ROI), feature extraction, feature selection, and classification using a fuzzy system to improve explainability (Figure 1). The following sections provide a detailed description of the methodology, highlighting each step in the process.

Figure 1. Proposed framework for BT detection. (a) Phase 1: segmentation stage for accurate delineation of tumor regions. (b) Phase 2: feature extraction and selection using radiomics. Phase 3: classification using machine learning and ANFIS techniques. Phase 4: fuzzy rules generation and optimization.

3.2.1 Phase 1: tumor segmentation using deep learning

A U-Net-like Convolutional Neural Network (CNN), initially introduced by Basnet et al. (2021), was utilized for the segmentation phase. Although the network was originally designed to segment gray matter, white matter, and cerebrospinal fluid (CSF), it was later adapted to segment BT on multimodal MRI. The 3D U-Net architecture employed in this framework enables the precise delineation of tumor boundaries by accounting for variations in tumor geometry and adjacent cerebral structures. The model integrates T1-weighted, T1ce, T2-weighted, and FLAIR sequences, each providing complementary imaging characteristics of tumor regions. For instance, T1-weighted images are crucial for identifying pathological changes and delineating tumor contours. Areas of abnormal vascularity and blood-brain barrier (BBB) breakdown, commonly observed in malignant tumors, appear highlighted in T1-weighted images (Shiroishi et al., 2015; Paek et al., 2013). The T1ce sequence, enhanced with a gadolinium-based contrast agent, improves tumor visibility by emphasizing regions where the BBB is compromised, making it particularly valuable for tumor characterization. This distinction between tumor tissue and healthy brain parenchyma is essential for surgical planning and treatment decisions (Paek et al., 2014). In glioblastoma, for example, the degree of contrast enhancement correlates with tumor aggressiveness and BBB disruption, making T1ce a critical modality for assessing tumor burden and guiding surgical interventions (Hattingen et al., 2017). T2-weighted and FLAIR images play a key role in evaluating edema and necrosis, both critical for tumor characterization. T2-weighted images highlight hyperintense regions of vasogenic edema, often associated with BBB disruption (Champ et al., 2012; Hung et al., 2023). 
FLAIR sequences suppress cerebrospinal fluid signals, improving the visualization of cortical and periventricular lesions, thus aiding in the detection of infiltrative tumor components that may not be visible with contrast-enhanced imaging (Zúñiga et al., 2023). This is particularly important in gliomas, where neoplastic cells often extend beyond the regions of enhancement (Huse et al., 2013; Zeineldin et al., 2020). The 3D U-Net architecture processes volumetric data through 3D convolutions, max-pooling, and upsampling operations, capturing spatial dependencies and contextual information across adjacent MRI slices. This approach is fundamental for accurately segmenting tumor regions and distinguishing between various tumor subtypes with high precision. The modified architecture used patch-wise learning, where 128 × 128 × 128 patches were extracted from input images at each training step [similar to the proposal of Mellado et al. (2023)].

The network was trained with a loss function combining cross-entropy and Dice loss (Equation 1), which was evaluated at the end of each epoch to quantify performance. The Adaptive Moment Estimation (Adam) optimizer was used to update the model parameters.

For each input sample b in a batch of size N and each segmentation label c, the loss compares the ground-truth segmentation Y with the network-predicted segmentation Ŷ, that is, the predicted probability that the voxels of sample b belong to label c. The network was trained for 300 epochs with a batch size of 8 and an initial learning rate of 2 × 10⁻⁴, which was halved every 30 epochs to improve convergence.
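The following sketch uses one common formulation of a combined cross-entropy and Dice loss; it is an assumption and may differ from Equation 1 in weighting and normalization. Here the loss is the mean voxel-wise cross-entropy plus a soft Dice loss averaged over classes, summed with equal weights, and the step schedule from the text (initial rate 2 × 10⁻⁴, halved every 30 epochs) is included. Inputs are flattened lists of voxels, each holding per-class values.

```python
import math


def cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean voxel-wise cross-entropy.

    y_true: list of voxels, each a one-hot list over classes.
    y_prob: list of voxels, each a list of predicted class probabilities.
    """
    total = 0.0
    for t_row, p_row in zip(y_true, y_prob):
        total -= sum(t * math.log(max(p, eps)) for t, p in zip(t_row, p_row))
    return total / len(y_true)


def soft_dice_loss(y_true, y_prob, eps=1e-7):
    """One minus the soft Dice coefficient, averaged over classes."""
    n_classes = len(y_true[0])
    dice_sum = 0.0
    for c in range(n_classes):
        intersection = sum(t[c] * p[c] for t, p in zip(y_true, y_prob))
        denominator = sum(t[c] for t in y_true) + sum(p[c] for p in y_prob)
        dice_sum += (2.0 * intersection + eps) / (denominator + eps)
    return 1.0 - dice_sum / n_classes


def combined_loss(y_true, y_prob):
    """Cross-entropy plus Dice loss with equal weights (the weighting is assumed)."""
    return cross_entropy(y_true, y_prob) + soft_dice_loss(y_true, y_prob)


def learning_rate(epoch, base_lr=2e-4, step=30, factor=0.5):
    """Step schedule: start at 2e-4 and halve the rate every 30 epochs."""
    return base_lr * factor ** (epoch // step)


y_true = [[1, 0], [0, 1], [0, 1]]              # three voxels, two classes
perfect = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
noisy = [[0.7, 0.3], [0.4, 0.6], [0.2, 0.8]]
print(combined_loss(y_true, perfect), combined_loss(y_true, noisy))
print([learning_rate(e) for e in (0, 29, 30, 60, 299)])
```

With hard (0/1) predictions, one minus the Dice term reduces to the mean per-class Dice score, the metric reported for segmentation.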

3.2.2 Phase 2: feature extraction and selection using radiomics

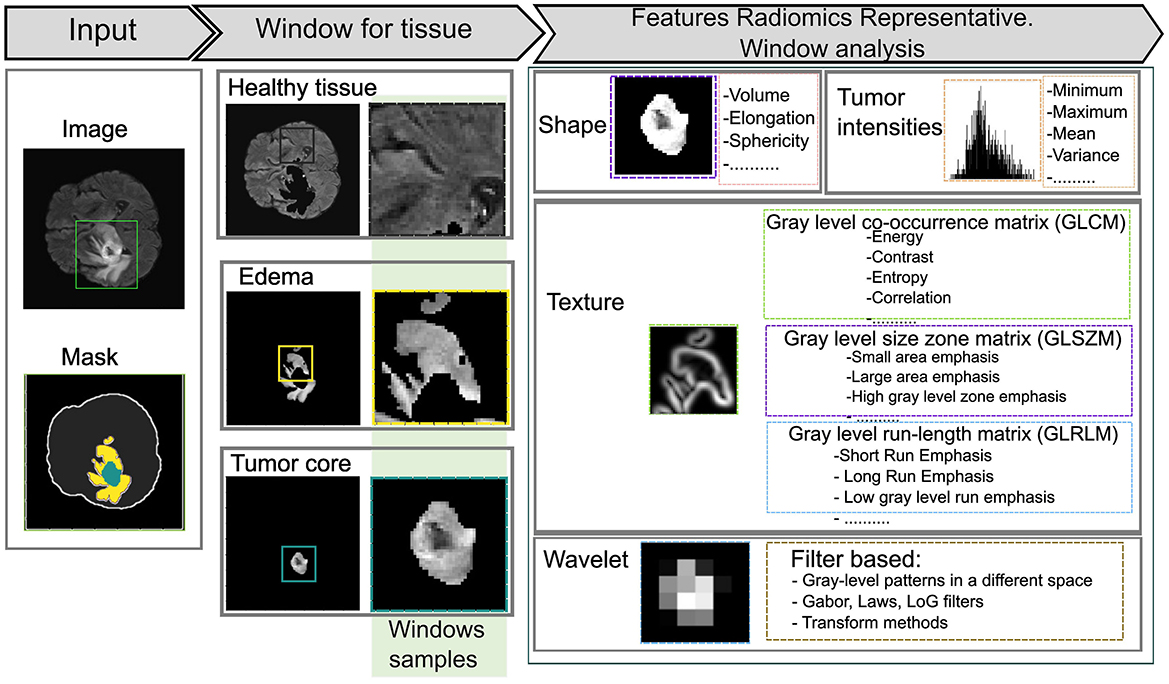

Radiomics involves extracting a large number of quantitative features from medical images, generating variables that can be analyzed to support clinical decision-making and enhance diagnostic accuracy (Saini et al., 2023). By transforming medical images into high-dimensional data, radiomics enables the identification of complex patterns and correlations that provide a deeper understanding of the lesion or region of interest. The extracted features are typically grouped into the following types, as shown in Figure 2:

• Morphological features: describe the shape and volume of anatomical structures or lesions, providing information on their geometric properties.

• Histogram-based (first-order) features: quantify the distribution of pixel or voxel intensities, reflecting overall intensity patterns within the region of interest (ROI).

• Texture-based (second-order) features: derived, for example, from the Gray Level Co-occurrence Matrix (GLCM) and the Gray Level Dependence Matrix (GLDM), these capture spatial relationships and gray-level variability, providing information about tissue complexity and heterogeneity.

• Transformation-based features: computed after applying wavelet or Laplacian of Gaussian (LoG, parameterized by sigma) filters, these highlight details at multiple scales or frequencies, revealing finer structural information.

Figure 2. Representation of radiomic features using various statistical approaches. A 45 × 45 kernel is applied to extract features within a defined window of the region of interest (ROI).
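To illustrate how a second-order texture feature is obtained, the sketch below builds a symmetric, normalized GLCM for a single pixel offset on an image already quantized to integer gray levels, then computes two classic GLCM features, contrast and joint energy. PyRadiomics computes many more GLCM features and aggregates over several angles and distances, so this is a simplified illustration rather than its implementation.

```python
def glcm(image, levels, offset=(0, 1), symmetric=True):
    """Gray Level Co-occurrence Matrix for one offset (dy, dx), normalized to sum 1."""
    dy, dx = offset
    matrix = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols:
                i, j = image[y][x], image[ny][nx]
                matrix[i][j] += 1
                if symmetric:
                    matrix[j][i] += 1  # count the pair in both directions
    total = sum(map(sum, matrix)) or 1.0
    return [[v / total for v in row] for row in matrix]


def glcm_contrast(p):
    """Contrast: sum of P(i, j) * (i - j)^2; high for abrupt gray-level changes."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


def glcm_joint_energy(p):
    """Joint energy: sum of P(i, j)^2; high for homogeneous textures."""
    return sum(v * v for row in p for v in row)


flat = [[1, 1, 1], [1, 1, 1]]      # uniform patch, gray levels 0..3
stripes = [[0, 3, 0], [3, 0, 3]]   # alternating patch
for name, patch in (("flat", flat), ("stripes", stripes)):
    p = glcm(patch, levels=4)
    print(name, glcm_contrast(p), glcm_joint_energy(p))
```

The uniform patch yields zero contrast and maximal energy, while the alternating patch yields high contrast, which is the kind of heterogeneity signal the texture features are meant to capture.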

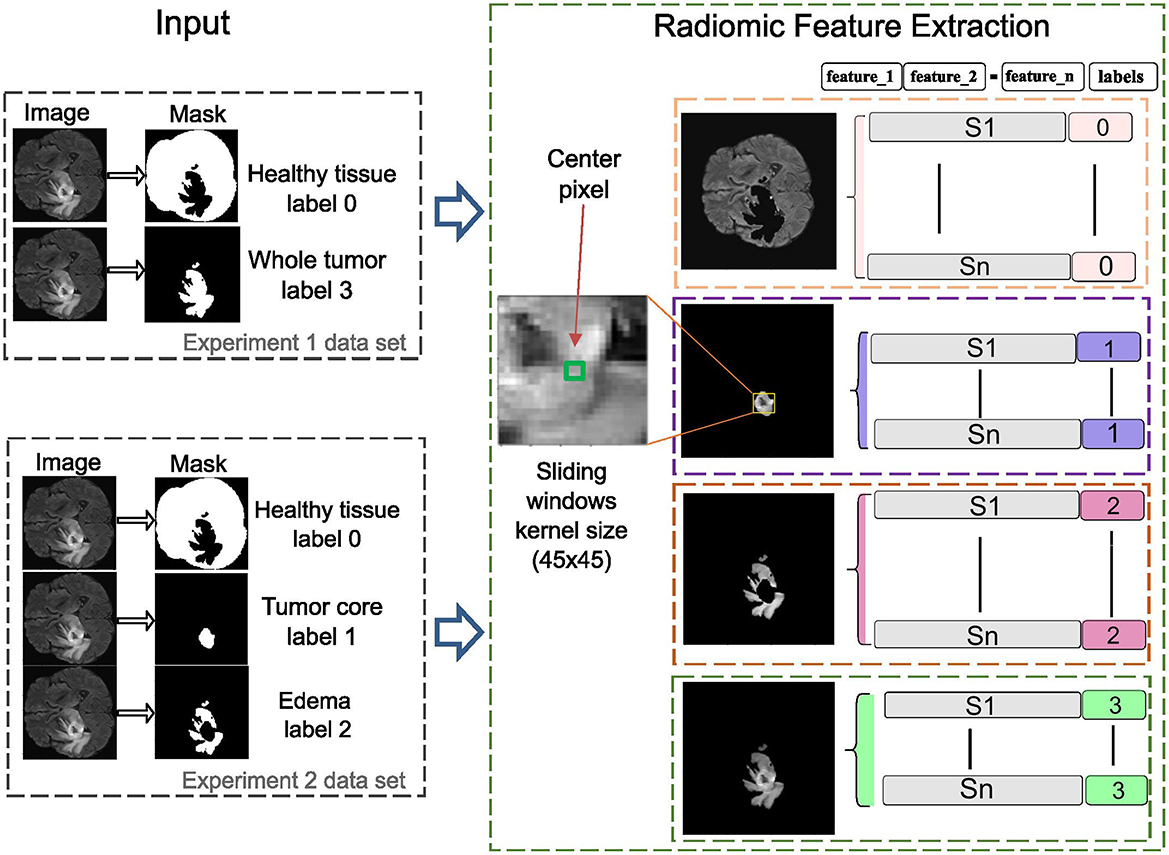

In this phase, radiomic features are extracted through sliding-window analysis with a 45 × 45 kernel, capturing fine detail within the ROIs corresponding to healthy tissue, whole tumor, tumor core, and edema, depending on the experiment. Each window is processed through the SimpleITK interface to generate image and mask objects compatible with feature extraction. The PyRadiomics toolbox then computes the radiomic features of each region while preserving its association with the original image and its corresponding label. In the resulting feature matrix, the computed features form the columns and the objects associated with each label form the rows, as illustrated in Figure 3. Because many features are computed for each window, this method substantially increases data dimensionality.

Figure 3. The radiomics-based feature extraction methodology employs sliding window analysis to construct a feature matrix, with columns representing extracted features and rows corresponding to labeled objects in the images. A 45 × 45 kernel is used to enhance detail in each image segment, improving the accuracy of clinically relevant diagnoses.
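A simplified, dependency-free version of the sliding-window construction follows. In the described pipeline, each window would instead be converted to SimpleITK image and mask objects and passed to PyRadiomics (for example through `featureextractor.RadiomicsFeatureExtractor().execute(image, mask)`); here a few first-order features stand in for the full feature set. The non-overlapping stride and the majority-vote window label are assumptions, since the text does not specify either.

```python
import statistics
from collections import Counter

FEATURE_NAMES = ["energy", "max", "mean", "min", "std"]


def first_order_features(values):
    """A few first-order (histogram-based) features of the intensities in a window."""
    return {
        "energy": sum(v * v for v in values),
        "max": max(values),
        "mean": statistics.fmean(values),
        "min": min(values),
        "std": statistics.pstdev(values),
    }


def sliding_window_matrix(image, labels, kernel=45, stride=45):
    """Return (X, y): one row per kernel x kernel window, one column per feature.

    Each window is labeled with its most frequent mask label (an assumption).
    """
    X, y = [], []
    height, width = len(image), len(image[0])
    for top in range(0, height - kernel + 1, stride):
        for left in range(0, width - kernel + 1, stride):
            coords = [(r, c) for r in range(top, top + kernel)
                      for c in range(left, left + kernel)]
            feats = first_order_features([image[r][c] for r, c in coords])
            X.append([feats[name] for name in FEATURE_NAMES])
            y.append(Counter(labels[r][c] for r, c in coords).most_common(1)[0][0])
    return X, y


# Toy 4 x 4 slice and mask (0 = healthy, 1 = tumor core, 2 = edema), 2 x 2 windows
image = [[0, 0, 5, 5], [0, 0, 5, 5], [1, 1, 9, 9], [1, 1, 9, 9]]
mask = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 0, 2, 2], [0, 0, 2, 2]]
X, y = sliding_window_matrix(image, mask, kernel=2, stride=2)
print(FEATURE_NAMES)
for row, label in zip(X, y):
    print(row, label)
```

The rows of `X` and the entries of `y` correspond one to one, matching the row/column layout of the feature matrix described above.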

Feature selection is a critical step in radiomics analysis, particularly in medical imaging, where extracted features often include irrelevant or redundant information, which can negatively impact model performance. Various techniques, including Sequential Forward Selection (SFS), Sequential Backward Selection (SBS), Recursive Feature Elimination (RFE), and Least Absolute Shrinkage and Selection Operator (LASSO), are widely employed to identify the most relevant features (Naveed et al., 2021; Johnpeter and Ponnuchamy, 2019; Bhattacharjee et al., 2022; Zhang J. et al., 2022). Among feature selection approaches, Decision Trees (DT) effectively assess feature importance, reduce dimensionality, and enhance classification accuracy (Kutikuppala et al., 2023; Paja, 2016). Similarly, Random Forest (RF) is well-suited for handling high-dimensional data and provides robust feature importance rankings (Lefkovits et al., 2017; Kumar et al., 2023).
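The idea behind tree-based feature importance can be sketched with single-split decision trees (stumps): each feature is scored by the largest Gini impurity decrease it achieves, and the top-k features are kept. Random Forests sum such decreases over all splits and trees (scikit-learn exposes this as the `feature_importances_` attribute of `RandomForestClassifier`); the stump version below is a simplified stand-in, and the feature names in the example are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split_gain(column, labels):
    """Largest Gini decrease achievable by one threshold split on a single feature."""
    parent = gini(labels)
    n = len(labels)
    best = 0.0
    # Candidate thresholds: every distinct value except the largest
    for threshold in sorted(set(column))[:-1]:
        left = [lab for v, lab in zip(column, labels) if v <= threshold]
        right = [lab for v, lab in zip(column, labels) if v > threshold]
        child = (len(left) * gini(left) + len(right) * gini(right)) / n
        best = max(best, parent - child)
    return best


def select_top_features(X, y, names, k):
    """Rank features by stump-based Gini gain and keep the k best."""
    gains = {name: best_split_gain([row[j] for row in X], y)
             for j, name in enumerate(names)}
    ranked = sorted(gains, key=gains.get, reverse=True)
    return ranked[:k], gains


names = ["feat_contrast", "feat_noise", "feat_mean"]  # hypothetical feature names
X = [[9.0, 0.3, 0.10], [8.5, 0.9, 0.20], [1.0, 0.4, 0.15], [0.5, 0.8, 0.12]]
y = [1, 1, 0, 0]  # 1 = tumor, 0 = healthy
top, gains = select_top_features(X, y, names, k=1)
print(top, gains)
```

Keeping only a handful of top-ranked features is what allows the later fuzzy rules to stay short enough to read.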

3.2.3 Phase 3: classification using machine learning and ANFIS models

In this phase, several conventional machine learning models were implemented to assess their performance in BT classification tasks using the radiomic features extracted during the previous phase. These models include:

• Linear Discriminant Analysis (LDA): a statistical method that projects data onto a lower-dimensional space to maximize class separability by modeling the relationship between input features and class labels through linear decision boundaries.

• Decision Trees (DT): a rule-based model that partitions the dataset into subsets based on feature values, creating a hierarchical tree structure where each internal node represents a decision rule and the leaf nodes correspond to predicted outcomes.

• Random Forest (RF): an ensemble learning technique that combines multiple decision trees to improve accuracy and robustness. It reduces overfitting by averaging the predictions of many trees, making it particularly effective for complex datasets.

• Logistic Regression (LR): a probabilistic model used for binary or multi-class classification that estimates the likelihood of class membership based on a logistic function applied to weighted input features.

• Multilayer Perceptron (MLP): a type of artificial neural network consisting of multiple layers of interconnected neurons. It learns complex, non-linear relationships in the data through backpropagation and is well-suited for high-dimensional feature spaces.

• k-Nearest Neighbors (k-NN): a non-parametric, instance-based learning algorithm that classifies data points by comparing them to their nearest neighbors in the feature space, relying on majority voting to determine class membership.
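As an example of how these classifiers operate on the selected radiomic features, k-NN can be written in a few lines (scikit-learn's `KNeighborsClassifier` provides a production implementation). The sketch assumes Euclidean distance, k = 3, and toy two-dimensional feature vectors; these choices are illustrative, not the study's settings.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


def accuracy(y_true, y_pred):
    """Fraction of correctly classified samples."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy radiomic feature vectors: 0 = healthy, 1 = tumor
train_X = [[0.1, 0.2], [0.2, 0.1], [0.9, 0.8], [0.8, 0.9], [0.85, 0.95]]
train_y = [0, 0, 1, 1, 1]
test_X = [[0.15, 0.15], [0.9, 0.9]]
preds = [knn_predict(train_X, train_y, q, k=3) for q in test_X]
print(preds, accuracy([0, 1], preds))
```

Because k-NN relies on raw distances, features on very different scales would normally be standardized before classification.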

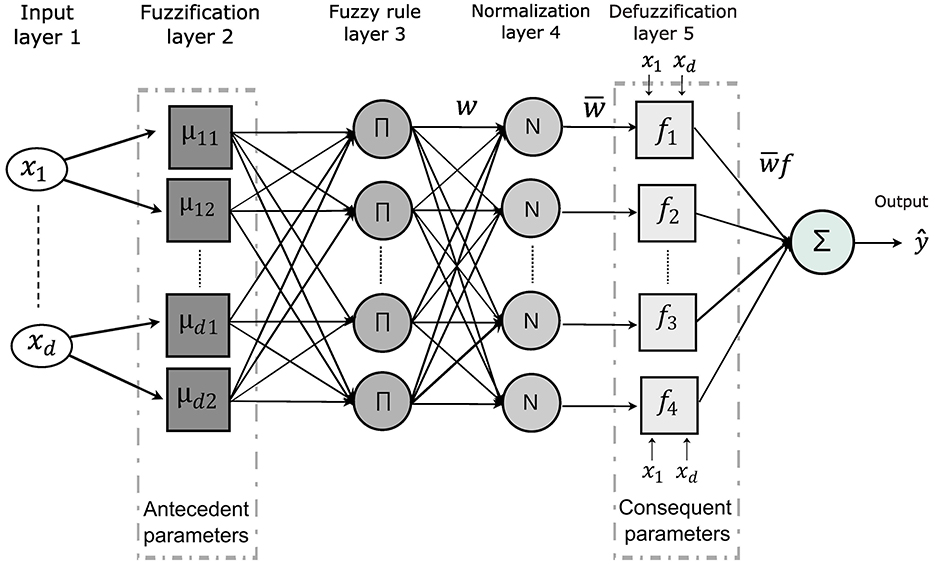

The Adaptive Neuro-Fuzzy Inference System (ANFIS) model, introduced by Jang (1993), combines neural networks with fuzzy logic systems to form a neuro-fuzzy framework. Built on the Takagi-Sugeno (TS) fuzzy inference system, ANFIS utilizes fuzzy logic for rule-based decision-making while adapting its parameters based on input data (Anggara and Munandar, 2023; Allende-Cid et al., 2016; Querales et al., 2023).

The architecture of ANFIS, illustrated in Figure 4, consists of multiple layers dedicated to processing fuzzy rules. The input layer applies Gaussian membership functions to perform the fuzzification of the input data. These membership functions are mathematically defined as follows:

where $m_{kj}$ denotes the mean and $\sigma_{kj}$ the standard deviation, determining the center and width of each Gaussian function. After the inputs are fuzzified, the model calculates the firing strength of each fuzzy rule. This is done by computing the product of the membership values for all input variables associated with the corresponding rule:

where the product aggregates the degrees of membership, indicating the extent to which the inputs satisfy each rule's antecedents. The firing strengths are then normalized by dividing each rule's strength by the sum of all rule strengths. This ensures that the relative contribution of each rule is proportionate:

This normalization step keeps the combined influence of the rules balanced and consistent across the system. The normalized firing strengths then weight the consequent of each rule, a linear function of the input variables, so that each rule contributes to the final prediction in proportion to its firing strength. The overall output of the model is computed by summing the weighted contributions of all rules:

Here, $p_{ij}$ and $q_i$ are the consequent parameters of the $i$-th rule. This final equation integrates the contributions of all rules applied to the input data, yielding the final prediction or classification.
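The four steps described above (Gaussian fuzzification, product firing strength, normalization, and weighted summation of linear consequents) can be chained into a single forward pass. The NumPy sketch below illustrates the computation rather than reproducing the authors' implementation. It assumes per-rule parameters stored as `(K, n)` arrays, and the function name `anfis_forward` is hypothetical.

```python
import numpy as np


def anfis_forward(x, m, sigma, p, q):
    """First-order Takagi-Sugeno ANFIS output for one input vector.

    x: (n,) inputs; m, sigma, p: (K, n) per-rule parameters; q: (K,) consequent offsets.
    """
    mu = np.exp(-((x - m) ** 2) / (2.0 * sigma ** 2))  # Gaussian memberships mu_kj(x_j)
    w = mu.prod(axis=1)                                # firing strength of each rule
    w_bar = w / w.sum()                                # normalized firing strengths
    f = p @ x + q                                      # linear consequent of each rule
    return float(w_bar @ f)                            # weighted sum of rule outputs


# Two rules on one input: centres at 0 and 1, constant consequents 0 and 1
m = np.array([[0.0], [1.0]])
sigma = np.ones((2, 1))
p = np.zeros((2, 1))
q = np.array([0.0, 1.0])
print(anfis_forward(np.array([0.5]), m, sigma, p, q))  # -> 0.5 (both rules fire equally)
```

Moving the input toward one centre shifts the output toward that rule's consequent, which is what makes each rule's contribution readable.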

Figure 4. The ANFIS model architecture highlights the key parameters involved in decision rule development. The second layer performs fuzzification, defining membership functions for each input variable and extracting antecedent parameters. In the fifth layer, defuzzification computes the consequent parameters. The classification process culminates in the generation of decision rules and the interpretation of the model's outputs.

3.2.4 Phase 4: fuzzy rule generation and optimization

The core of the ANFIS model is its set of fuzzy IF-THEN rules, which map input variables to output decisions in a transparent and interpretable way. The antecedents of these rules are fuzzy sets describing the degree to which the input variables satisfy certain conditions, while the consequents are typically linear functions or singletons representing the system's output classes.

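These rules follow the Takagi-Sugeno formulation, in which the premise of each rule maps the input vector $\mathbf{x}$ to a corresponding output function $y_k = f_k(\mathbf{x})$. Each rule evaluates a specific condition and contributes proportionally to the final output. For $D$ input variables and $K$ rules, this can be expressed as:

$$R_k:\ \text{IF } x_1 \text{ is } \mu_{k1} \text{ AND } \dots \text{ AND } x_D \text{ is } \mu_{kD} \text{ THEN } y_k = f_k(\mathbf{x}), \qquad k = 1, \dots, K$$

$$\hat{y} = Y\left(y_1, \dots, y_K\right)$$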

Here, $\mu_{kd}$ is the fuzzy set associated with input variable $x_d$ in the $k$-th rule, $K$ is the total number of fuzzy rules, and $Y$ is the fuzzy aggregation operator that combines the outputs of all rules. In this work, the consequents are fuzzy singletons representing the output classes.

The rules of the ANFIS model were optimized with the Particle Swarm Optimization (PSO) algorithm (Shihabudheen et al., 2018). PSO is inspired by the collective behavior of animals such as schools of fish or flocks of birds. It searches the parameter space with a swarm of particles, each representing a candidate solution, and adjusts both the antecedent and consequent parameters of the decision rules (Shami et al., 2022). In the PSO-ANFIS framework, each particle encodes a candidate set of ANFIS parameters, and PSO fine-tunes these parameters to find the best-performing configuration (Moayedi et al., 2020). Each particle is characterized by a position and a velocity, which are updated iteratively during learning.

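In an $n$-dimensional search space, the position of particle $i$, $x_i(t)$, is updated by adding its new velocity, $v_i(t+1)$, to the current position:

$$x_i(t+1) = x_i(t) + v_i(t+1)$$

The velocity is calculated as:

$$v_i(t+1) = v_i(t) + c_1 r_1 \odot \left(p_i^{\text{best}} - x_i(t)\right) + c_2 r_2 \odot \left(g^{\text{best}} - x_i(t)\right)$$

where $c_1$ and $c_2$ are acceleration coefficients, $r_1$ and $r_2$ are random vectors with components drawn uniformly from $[0, 1]$, $\odot$ denotes element-wise multiplication, and $p_i^{\text{best}}$ and $g^{\text{best}}$ are the best position found so far by particle $i$ and the best position found by the whole swarm, respectively.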
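The velocity and position updates described above translate directly into vectorized NumPy for a whole swarm (rows are particles). This is a literal sketch of the update rule only. The function name `pso_step` is hypothetical, and the random vectors are passed in explicitly so the step is reproducible.

```python
import numpy as np


def pso_step(x, v, p_best, g_best, c1, c2, r1, r2):
    """Apply the PSO velocity and position updates to a whole swarm.

    x, v, p_best, r1, r2: arrays of shape (n_particles, dim); g_best: shape (dim,).
    """
    v_new = v + c1 * r1 * (p_best - x) + c2 * r2 * (g_best - x)  # cognitive + social terms
    x_new = x + v_new                                            # x_i(t+1) = x_i(t) + v_i(t+1)
    return x_new, v_new


rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(5, 3))  # 5 particles in a 3-D search space
v = np.zeros_like(x)
p_best = x.copy()                        # each particle's best position so far
g_best = x[0].copy()                     # pretend particle 0 holds the swarm's best
x, v = pso_step(x, v, p_best, g_best, 2.05, 2.05, rng.random(x.shape), rng.random(x.shape))
```

Practical implementations usually add an inertia weight or constriction factor and clamp the velocity to keep the swarm stable. These are omitted here so the step stays identical to the update rule.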

For the classification tasks, the initial PSO-ANFIS parameter settings were taken from the literature (Mercangöz, 2021; Gad, 2022; Shami et al., 2022). The process begins with dataset preparation, including collection, normalization, and preprocessing. Parameters such as the particle population size, acceleration coefficients, number of iterations, and linguistic fuzzy sets are then configured. Because the initial configuration strongly affects performance, the following values were chosen based on Adewuyi et al. (2022), Shami et al. (2022), and Mercangöz (2021):

• Group of informants: 15

• Confidence coefficient: 2.05

• Velocity factor: 0.9

• Number of agents (particles): 40

• Number of iterations: 200

• Variation in the mean of the premise functions: 0.2

• Center and standard deviation of the premise functions: 0.5 and 0.2, respectively

• Exponent range of the premise functions: 1.0 to 3.0

• Range of values for the consequent functions: -10.0 to 10.0

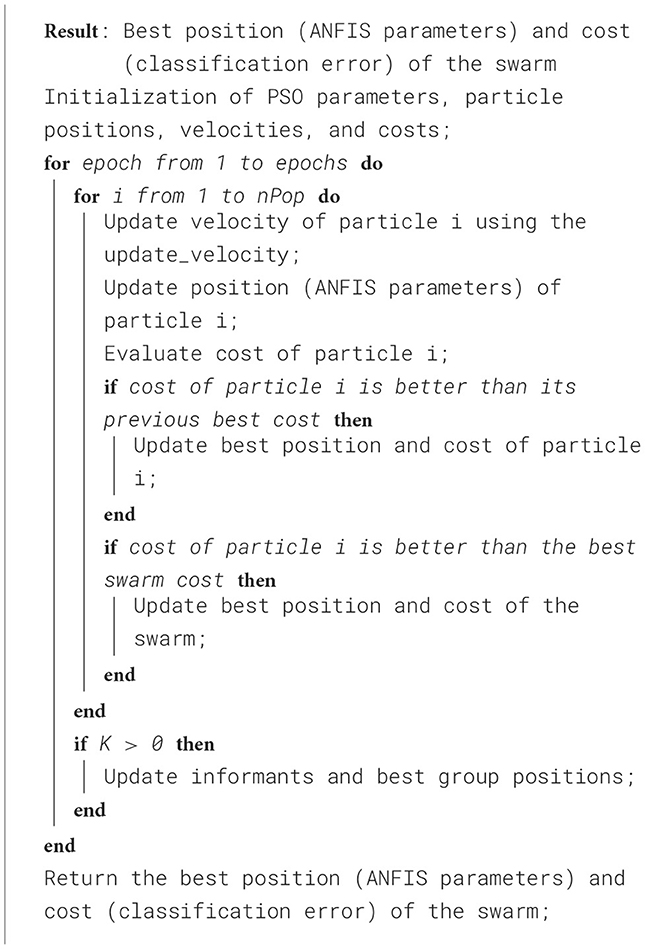

To clarify the proposed approach, Algorithm 1 gives the pseudocode for applying PSO-ANFIS to the classification of regions of interest (ROIs). The process begins by setting the PSO parameters, including the number of particles (nPop), the number of iterations (epochs), the confidence coefficient (phi), and the velocity factor (vel_fact). The positions and velocities of the particles are initialized to represent the ANFIS parameters. In each iteration, the velocities and positions are updated based on a cognitive component (each particle's best position) and a social component (the swarm's best global position). Each configuration is evaluated with a cost function, and the best positions and their costs are recorded to guide the swarm's global search. At the end of the process, the algorithm outputs the optimal configuration of the ANFIS parameters and the corresponding minimized classification error. Algorithm 2 details the velocity update for each particle, which combines the cognitive and social components to refine the parameter adjustments. This iterative procedure tunes the antecedent and consequent parameters of the ANFIS and improves its classification performance.
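Algorithm 1 itself is not reproduced in the text, so the following is only a hedged sketch of the same idea on a toy problem. Each particle is a flat vector of hypothetical ANFIS parameters (Gaussian centres and widths plus singleton consequents), and PSO minimizes a cost over that vector. Several choices here are assumptions rather than the authors' settings:

- a 2-input, 4-rule grid partition;
- mean squared error as the cost;
- a global-best topology instead of a group of informants;
- reading the confidence coefficient `phi` as c1 = c2 and the velocity factor `vel_fact` as a velocity clamp;
- a Clerc-Kennedy constriction factor added for stability.

The population size, iteration count, `phi`, `vel_fact`, and consequent range follow the values listed above.

```python
import numpy as np

rng = np.random.default_rng(0)
N_IN, N_TERMS = 2, 2              # 2 inputs x 2 linguistic terms -> 4 rules (toy setting)
N_RULES = N_TERMS ** N_IN
N_MF = N_IN * N_TERMS             # number of Gaussian membership functions


def anfis_output(theta, X):
    """Zero-order Takagi-Sugeno ANFIS with grid-partitioned Gaussian premises."""
    m = theta[:N_MF].reshape(N_IN, N_TERMS)               # MF centres
    s = theta[N_MF:2 * N_MF].reshape(N_IN, N_TERMS)       # MF widths
    q = theta[2 * N_MF:]                                  # singleton consequents
    mu = np.exp(-((X[:, :, None] - m) ** 2) / (2 * s ** 2))  # (samples, inputs, terms)
    w = (mu[:, 0, :, None] * mu[:, 1, None, :]).reshape(len(X), N_RULES)  # rule (t0, t1)
    w_bar = w / (w.sum(axis=1, keepdims=True) + 1e-12)    # guard against all-inactive rules
    return w_bar @ q


def pso(cost, lb, ub, n_pop=40, epochs=200, phi=2.05, vel_fact=0.9):
    """Global-best PSO with constriction factor, velocity clamping and bound handling."""
    dim = lb.size
    phi_tot = 2 * phi
    chi = 2 / abs(2 - phi_tot - np.sqrt(phi_tot ** 2 - 4 * phi_tot))  # about 0.7298
    v_max = vel_fact * (ub - lb)
    x = rng.uniform(lb, ub, size=(n_pop, dim))
    v = rng.uniform(-v_max, v_max, size=(n_pop, dim))
    p_best = x.copy()
    p_cost = np.array([cost(p) for p in x])
    for _ in range(epochs):
        g_best = p_best[p_cost.argmin()]
        r1, r2 = rng.random((n_pop, dim)), rng.random((n_pop, dim))
        v = chi * (v + phi * r1 * (p_best - x) + phi * r2 * (g_best - x))
        v = np.clip(v, -v_max, v_max)
        x = np.clip(x + v, lb, ub)
        c = np.array([cost(p) for p in x])
        better = c < p_cost                   # update each particle's personal best
        p_best[better] = x[better]
        p_cost[better] = c[better]
    return p_best[p_cost.argmin()], p_cost.min()


# Toy binary problem standing in for ROI features scaled to [0, 1]
X = rng.random((200, N_IN))
y = (X.sum(axis=1) > 1.0).astype(float)
lb = np.concatenate([np.zeros(N_MF), np.full(N_MF, 0.05), np.full(N_RULES, -10.0)])
ub = np.concatenate([np.ones(N_MF), np.ones(N_MF), np.full(N_RULES, 10.0)])

theta, best_mse = pso(lambda t: np.mean((anfis_output(t, X) - y) ** 2), lb, ub)
accuracy = np.mean((anfis_output(theta, X) >= 0.5) == y)
print(f"best MSE = {best_mse:.4f}, training accuracy = {accuracy:.1%}")
```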

3.3 Performance metrics

The predicted labels on the test set were compared with the actual labels to construct a confusion matrix. Accuracy, precision, recall, and F1-score were computed from its components: true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). Here, TP is the number of ROIs correctly classified as tumor, FP the number of normal ROIs incorrectly predicted as tumor, TN the number of ROIs correctly classified as normal, and FN the number of tumor ROIs incorrectly predicted as normal.

Performance metrics considered in this study were defined as follows:

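• Accuracy: the proportion of correctly classified ROIs among all ROIs:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

• Precision: the proportion of correctly predicted tumor ROIs among all predicted tumor ROIs:

$$\text{Precision} = \frac{TP}{TP + FP}$$

• Recall (Sensitivity): the proportion of correctly predicted tumor ROIs among all actual tumor ROIs:

$$\text{Recall} = \frac{TP}{TP + FN}$$

• F1 Score: the harmonic mean of Precision and Recall, providing a balanced evaluation:

$$F1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$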
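The four metrics can be computed directly from the confusion-matrix counts. In this sketch the function name is hypothetical, the counts in the example are made-up toy values, and returning 0.0 when a denominator is zero is an assumed convention.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall and F1 from binary confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0  # assumed: 0 when nothing is predicted positive
    recall = tp / (tp + fn) if tp + fn else 0.0     # assumed: 0 when there are no actual positives
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Toy counts: 8 TP, 2 FP, 85 TN, 5 FN
print(classification_metrics(tp=8, fp=2, tn=85, fn=5))
```

With these counts, accuracy is high (0.93) while recall is only 8/13. This illustrates why the study reports precision, recall, and F1 alongside accuracy.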

The DICE Score is a widely used metric for evaluating the overlap between two regions of interest (ROIs) in an image, particularly in segmentation tasks. It is defined as:

$$\text{DICE} = \frac{2\,|A \cap B|}{|A| + |B|}$$

where A represents the predicted ROI, B represents the ground truth ROI, |A ∩ B| is the number of pixels (or voxels) common to both A and B (the intersection), |A| is the total number of pixels in the predicted ROI, and |B| is the total number of pixels in the ground truth ROI. A DICE Score close to 1 signifies a near-perfect overlap between the predicted and ground truth ROIs, whereas a score of 0 indicates no overlap at all.
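For voxel masks, the DICE Score reduces to counting overlapping and total foreground voxels. This minimal NumPy sketch works for 2-D or 3-D binary masks. The function name is hypothetical, and returning 1.0 when both masks are empty is an assumed convention.

```python
import numpy as np


def dice_score(pred, truth):
    """DICE overlap between two binary masks of any dimensionality (2-D or 3-D)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * np.logical_and(pred, truth).sum() / denom


# Toy 3-D volumes: a 2x2x2 ground-truth cube and a prediction shifted by one voxel
truth = np.zeros((4, 4, 4), dtype=bool)
truth[1:3, 1:3, 1:3] = True
pred = np.zeros_like(truth)
pred[1:3, 1:3, 2:4] = True
print(dice_score(pred, truth))  # -> 0.5 (4 shared voxels, 8 + 8 total)
```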

4 Results

4.1 Segmentation results

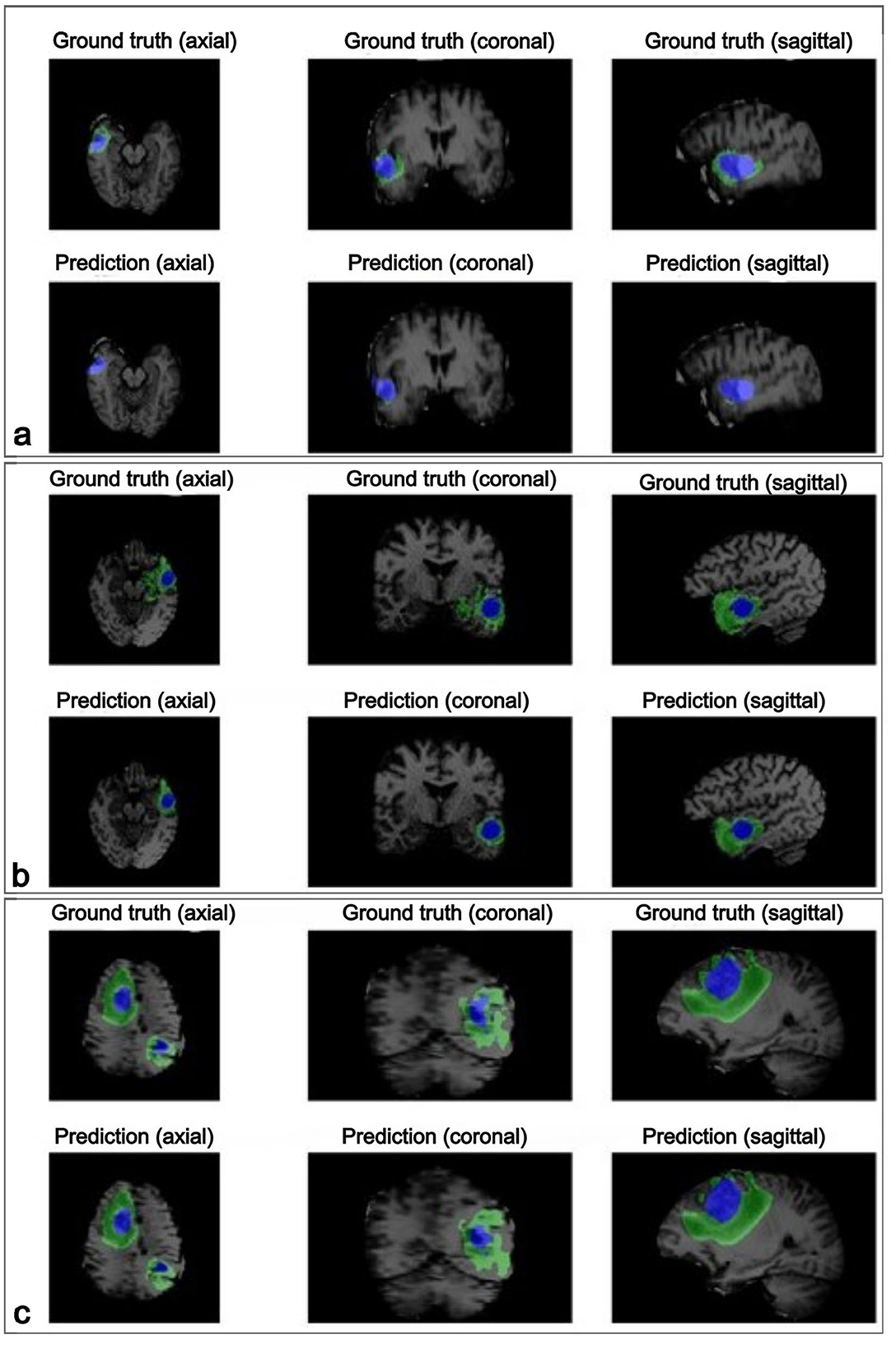

The neural network was trained for 300 epochs, reaching an average DICE Score of 86.07% during training. Convergence occurred around epoch 50, after which the validation accuracy fluctuated minimally and the loss function approached its minimum values. On the test set, the mean ± standard deviation of the DICE Score was 82.94 ± 16.92% for the tumor core (TC), 76.06 ± 17.27% for edema (E), and 99.90 ± 0.06% for healthy tissue.

Figure 5 shows a further evaluation on three test subjects in the axial, coronal, and sagittal planes, highlighting the predicted regions of interest (tumor core (TC), edema (E), and healthy tissue). For the subject in Figure 5a, the model achieved precisions of 90.47% for tumor core, 62.29% for edema, and 99.91% for healthy tissue. For the subject in Figure 5b, the precisions were 85.83% for tumor core, 10.43% for edema, and 99.93% for healthy tissue. Lastly, for the subject in Figure 5c, the model achieved precisions of 96.10% for tumor core, 93.12% for edema, and 99.89% for healthy tissue.

Figure 5. Ground truth and predicted tumor segmentation in the axial, coronal, and sagittal planes for three representative subjects (a–c). For each subject, the top row shows the ground truth and the bottom row shows the model's prediction, illustrating the close agreement between the predicted and labeled tumor regions. Blue regions represent the tumor core, and green regions indicate the edema surrounding it. Case (a) shows a small, localized tumor core with minimal edema; case (b) shows a moderately sized tumor core with more noticeable surrounding edema; and case (c) presents a large tumor with an extensive tumor core and widespread edema affecting multiple areas of the brain.

These results show that the model segments healthy tissue and tumor core accurately, with consistent performance across these classes. Edema segmentation, however, was more variable, which makes it the most challenging class and calls for further refinement.

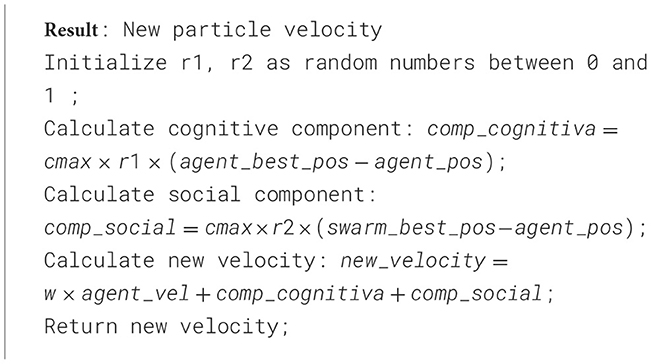

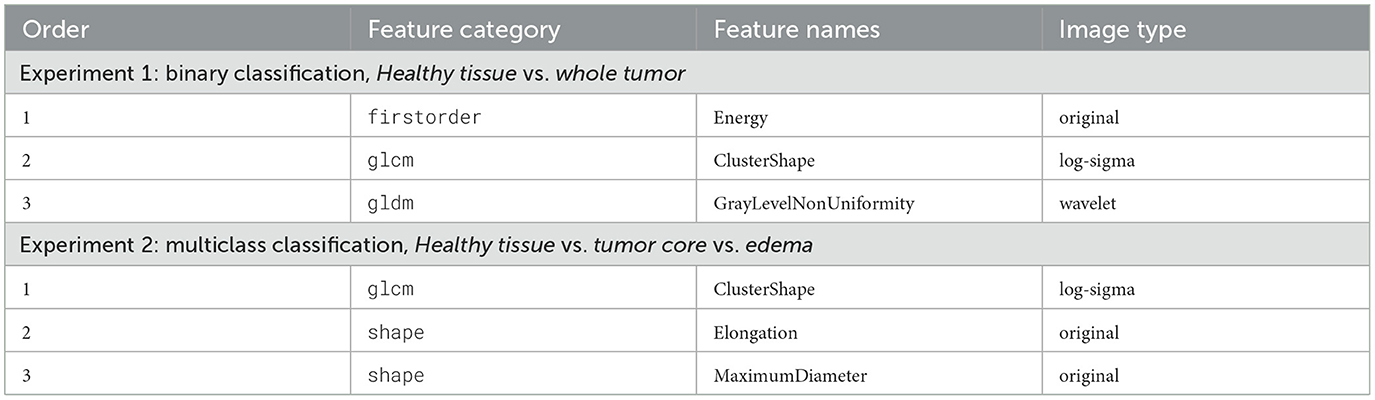

4.2 Radiomic features selected by importance

After extensively evaluating various feature selection methods, a combination of the top-ranked features from Decision Trees (DT) and Random Forest (RF) yielded the best performance in our study. Features were ranked based on their importance scores, and the most relevant ones from both models were selected to identify the most discriminative attributes for label identification (Renugadevi et al., 2023; Srinivasan et al., 2019). To ensure consistency and prevent numerical scale differences from impacting model performance, all features were normalized to a range of 0 to 1. Among the 218 extracted features, the top 20 were selected for further analysis based on their importance scores, ensuring that the most informative attributes were retained for classification.
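The text does not specify how the Decision Tree and Random Forest rankings were combined. The scikit-learn sketch below therefore assumes one simple option: averaging the two models' impurity-based `feature_importances_`. The helper name `rank_features` is hypothetical, and the data are synthetic (218 features to mirror the extracted set), not BraTS radiomics.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.preprocessing import MinMaxScaler
from sklearn.tree import DecisionTreeClassifier


def rank_features(X, y, k=20, seed=0):
    """Return indices of the k features with the highest mean DT/RF importance."""
    # Rescale every feature to [0, 1]; in a real pipeline, fit the scaler on training data only
    X01 = MinMaxScaler().fit_transform(X)
    dt = DecisionTreeClassifier(random_state=seed).fit(X01, y)
    rf = RandomForestClassifier(n_estimators=100, random_state=seed).fit(X01, y)
    # Assumption: combine the two rankings by averaging their importances
    score = (dt.feature_importances_ + rf.feature_importances_) / 2.0
    return np.argsort(score)[::-1][:k]


# Synthetic stand-in for the 218 radiomic features
X, y = make_classification(n_samples=500, n_features=218, n_informative=10, random_state=0)
top20 = rank_features(X, y)
print(top20)
```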

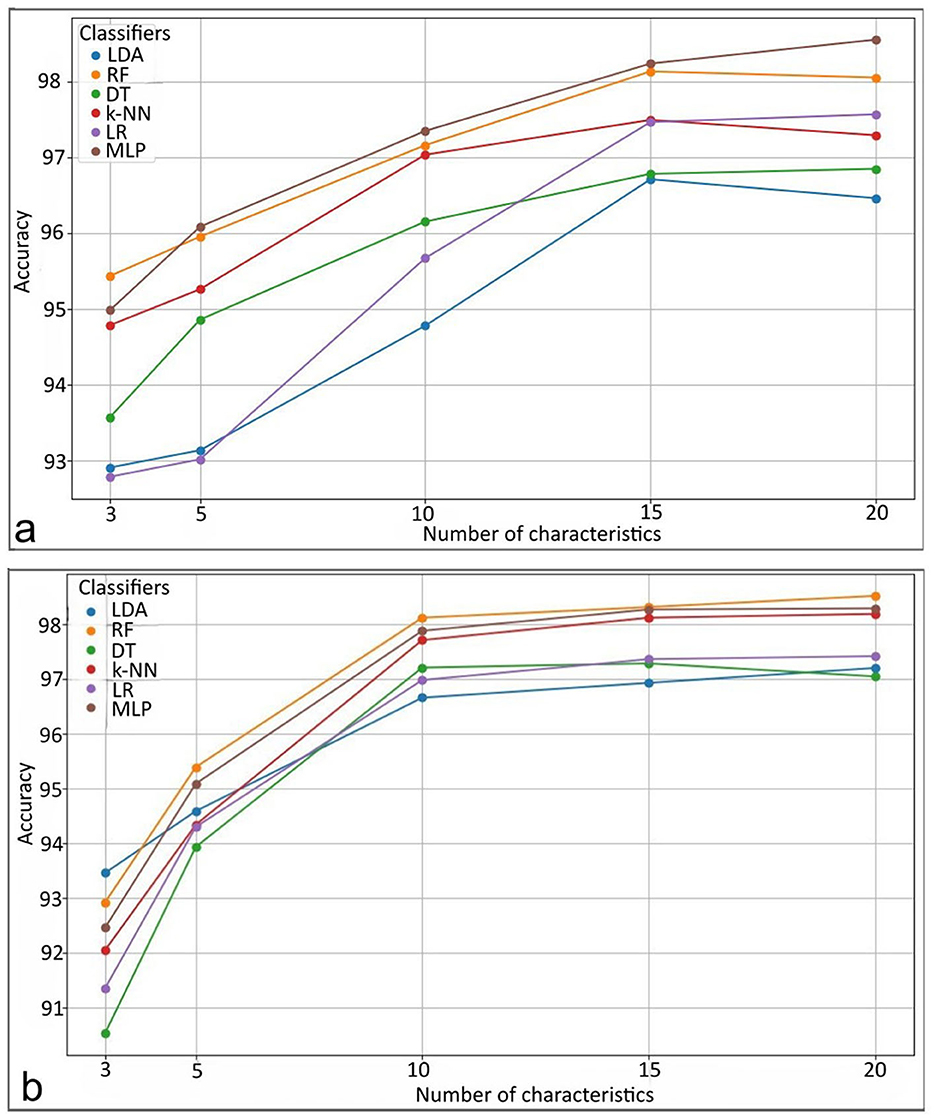

An exploratory analysis was conducted to assess how the performance of various machine learning (ML) models for BT classification varies with the number of features used. As illustrated in Figure 6, model performance improved as more features were added in both experiments. The Multilayer Perceptron (MLP) achieved the highest performance in distinguishing between healthy and affected tissue. The Random Forest (RF) model performed closely, showing strong accuracy in classifying healthy tissue, tumor core (TC), and edema (E). A minimal set of three features was also tested and yielded the lowest performance of the feature-set sizes evaluated.

Figure 6. Performance results of the classifiers. For (a) distinguishing between healthy and affected tissue. For (b) distinguishing between healthy tissue, tumor core, and edema. LDA, Linear Discriminant Analysis; RF, Random Forest; DT, Decision Trees; k-NN, k-Nearest Neighbors; LR, Logistic Regression; MLP, Multilayer Perceptron.

Table 2 lists the ten most influential features in both experiments, ranked by relevance according to the DT-RF methods. These findings suggest that performance comparable to existing proposals can be achieved with a subset of only three features. For rule-based models such as ANFIS, using a small number of selected features facilitates interpretability, yielding clearer explanations without sacrificing classification performance.

Several studies have identified key radiomic features that enhance the predictive accuracy of models in BT classification. Zhang et al. (2020) reported that specific textural characteristics, such as cellularity and peritumoral edema, vary between tumor types and play a significant role in classification. Çinarer et al. (2020) highlighted the importance of wavelet-based radiomic features in predicting glioma grades, demonstrating a strong association between certain wavelet features and both tumor grade and patient survival. Similarly, Choi et al. (2020) emphasized the role of Gray Level Co-occurrence Matrix (GLCM) features in quantifying glioblastoma texture patterns, noting that contrast, correlation, energy, and homogeneity serve as strong prognostic factors. Chen et al. (2018) used a diverse set of radiomic features (18 first-order, 13 shape, and 74 texture features) to identify gliomas effectively. These findings align with the current work, which highlights radiomic features that capture variability in gray level, texture, complexity, and contrast through advanced transformation techniques. Key feature categories include first-order statistics, GLCM, Gray Level Dependence Matrix (GLDM), and shape features. In addition, intensity, energy, and clustering metrics are extracted from multiple image domains, including original, log-sigma, and wavelet-transformed images, providing a comprehensive representation of tumor characteristics.

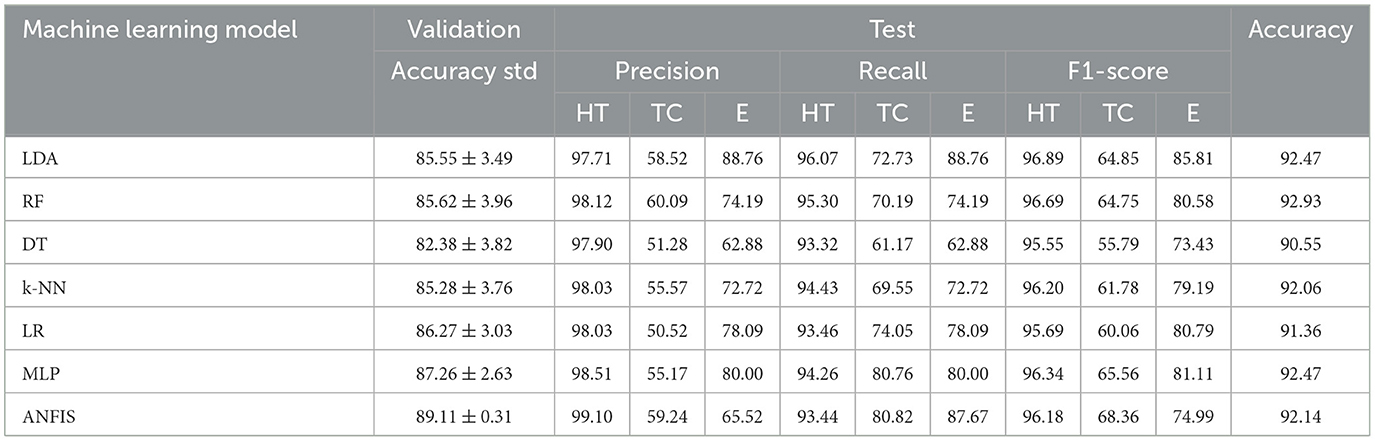

4.3 Evaluation of the classifier performances

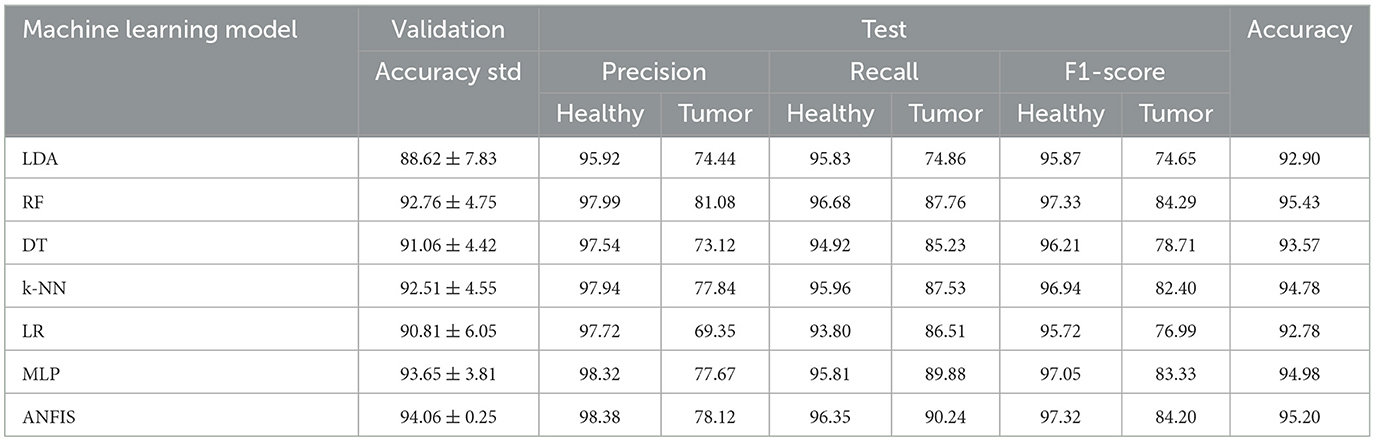

Using fewer features improves the interpretability of the classification results, giving clearer insight into the decision-making process. The performance results for the two experiments are summarized in Tables 3 and 4. Five-fold cross-validation was used to evaluate the tissue classification methods. In each of the 5 folds, the data were split into 80% training and 20% validation, and the mean and standard deviation of the validation accuracy across the folds were computed. Finally, generalization performance was assessed on a fully independent test set.
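The evaluation protocol (5-fold cross-validation reported as mean ± standard deviation, followed by a single evaluation on an independent test set) can be sketched with scikit-learn. The synthetic data, the Random Forest classifier, stratified folds, and the 20% test hold-out are illustrative assumptions, not the study's exact split.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split

# Synthetic stand-in for the ROI feature table
X, y = make_classification(n_samples=600, n_features=20, random_state=0)

# Hold out an independent test set first (20% here is an assumption)
X_dev, X_test, y_dev, y_test = train_test_split(X, y, test_size=0.2, stratify=y, random_state=0)

clf = RandomForestClassifier(n_estimators=100, random_state=0)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)  # each fold: 80% train / 20% validation
scores = cross_val_score(clf, X_dev, y_dev, cv=cv, scoring="accuracy")
print(f"5-fold validation accuracy: {scores.mean():.2%} ± {scores.std():.2%}")

# Refit on all development data and evaluate once on the held-out test set
test_accuracy = clf.fit(X_dev, y_dev).score(X_test, y_test)
print(f"Test accuracy: {test_accuracy:.2%}")
```

Keeping the test set outside the cross-validation loop is what makes the final figure an estimate of generalization rather than of model selection.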

Table 4. Validation and test results for the multi-class problem: healthy tissue (HT) vs. tumor core (TC) vs. edema (E).

In Experiment 1 (Table 3), which focused on distinguishing healthy tissue from tumor tissue, the Random Forest (RF) and ANFIS classifiers outperformed the other models, with test accuracies above 95%. ANFIS performed best overall, combining higher accuracy with lower variability during cross-validation (standard deviation of ±0.25%). This indicates robust and consistent behavior across data splits. Furthermore, RF and ANFIS recorded the highest per-label F1-scores, above 97% for healthy tissue and 84% for tumor tissue.

In Experiment 2 (Table 4), which involved multi-class classification, several methods, including LDA, RF, k-NN, MLP, and ANFIS, achieved test accuracies above 92%, showing their effectiveness on this more complex classification task. ANFIS again had the lowest variability among all models (standard deviation of ±0.31%), further supporting its reliability. LDA performed best on edema, with an F1-score of 85.81%, while ANFIS was strongest at identifying the tumor core, with an F1-score above 68%. These results show that ANFIS, through its rule-based approach, combines interpretability with competitive performance.

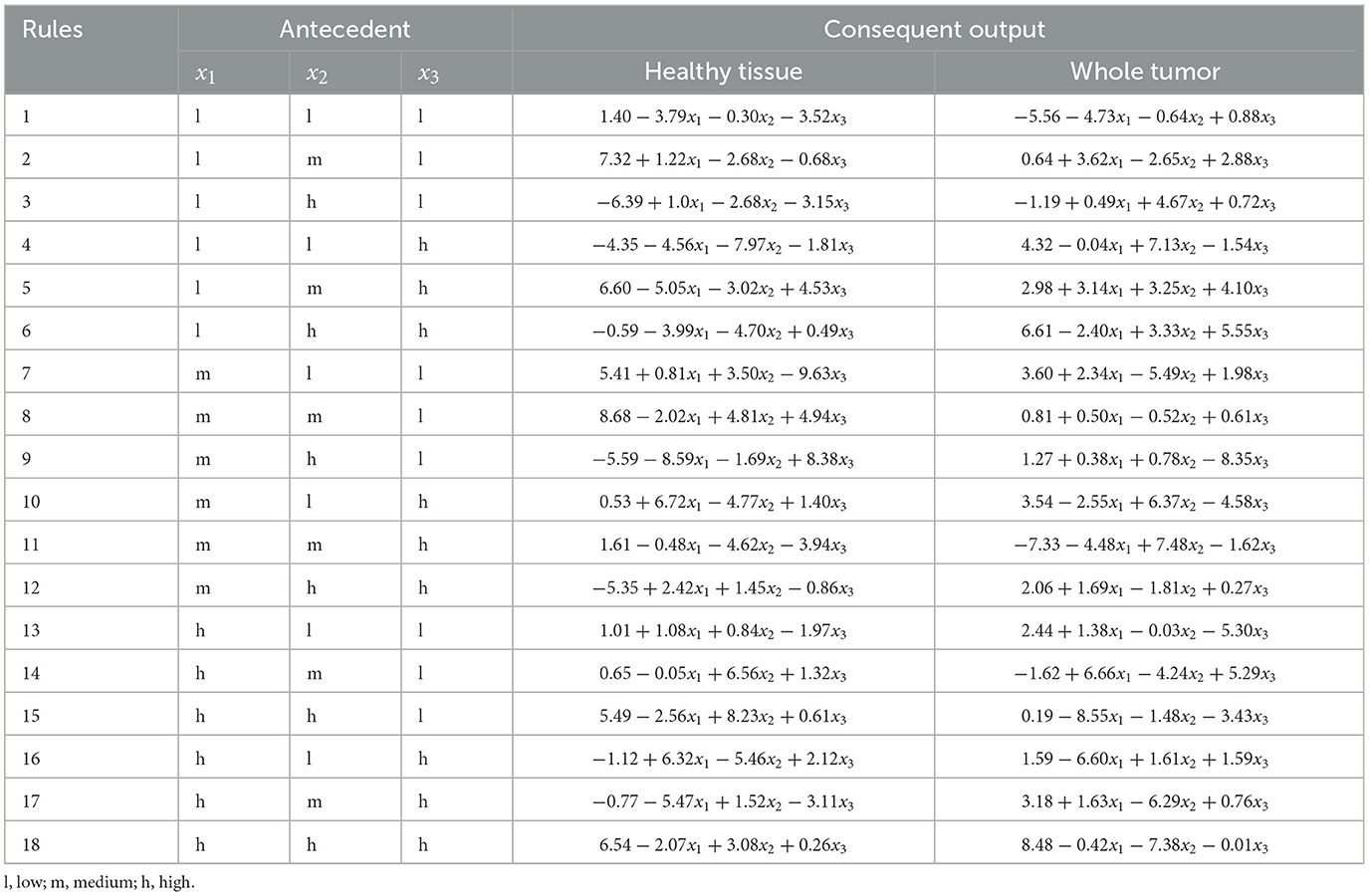

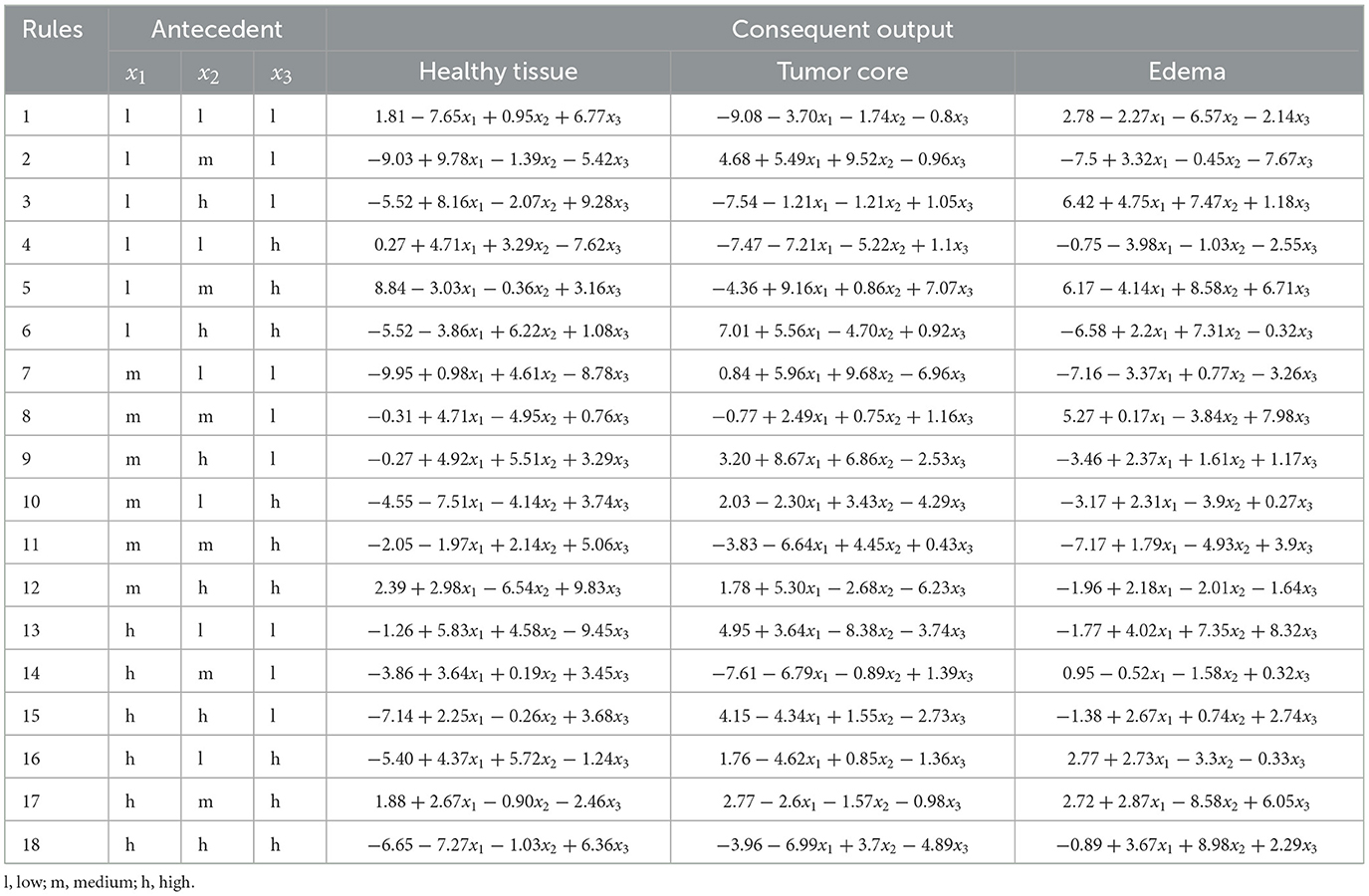

4.4 Interpretability analysis

The three most relevant radiomic features, ranked by importance as shown in Table 2, were used as inputs to the ANFIS model. Each feature was assigned linguistic terms according to its value range, for a total of eight terms in a (3, 3, 2) structure: the first two features had three linguistic terms each, and the third had two. The membership functions of the three selected features are shown in Figure 7a for the analysis of healthy tissue vs. whole tumor and in Figure 7b for the analysis of healthy tissue vs. tumor core vs. edema.

Figure 7. Representation of the membership functions generated by the ANFIS model. For (a) labels healthy tissue vs. whole tumor, and (b) for labels healthy tissue vs. tumor core vs. edema, divided into fuzzy subsets for both experiments.

In the second experiment, the selected radiomic features are likewise modeled using linguistic variables. As in Experiment 1, the first two features are divided into three terms (“low,” “medium,” and “high”), and the third is divided into two (“low” and “high”). In this case, however, the analysis covers three tissue classes: healthy tissue, tumor core, and edema. Because the features are selected by their quantitative importance, expert knowledge is needed to confirm that they represent meaningful patterns for tissue classification. These linguistic variables enable fuzzy rules that capture the nonlinear relationships between input features and tissue classes, providing both accurate classification and interpretability.

In both experiments, the selected features help interpret the tissue regions (healthy tissue, tumor core, edema, and whole tumor), as listed in Table 5.

Expert knowledge was essential in defining appropriate ranges for the linguistic variables used to analyze the selected radiomic features. The corresponding membership functions are illustrated in Figure 7a for Experiment 1 and Figure 7b for Experiment 2. The structure of the linguistic variables and the number of fuzzy rules were kept the same in both experiments, ensuring comparable conditions for the two classification tasks. The Cartesian product of the linguistic terms (3 × 3 × 2) generates 18 fuzzy rules that combine antecedents (based on the features) with consequents (the system outputs). These rules capture the nonlinear relationships between inputs and tissue labels and allow the results to be interpreted, as shown in Tables 6 and 7.
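The rule count follows directly from the Cartesian product of the linguistic terms. This short sketch enumerates the antecedents. The feature names are hypothetical placeholders, while the (3, 3, 2) term structure follows the text.

```python
from itertools import product

# Hypothetical feature names; the (3, 3, 2) term structure follows the text
terms = {
    "feature_1": ["low", "medium", "high"],
    "feature_2": ["low", "medium", "high"],
    "feature_3": ["low", "high"],
}

rules = list(product(*terms.values()))  # Cartesian product of the linguistic terms
print(len(rules))  # -> 18

for a, b, c in rules[:3]:
    print(f"IF feature_1 is {a} AND feature_2 is {b} AND feature_3 is {c} THEN <learned consequent>")
```

Adding a fourth feature with three terms would raise the count to 54. This is the growth in rule complexity that motivates keeping the input set small.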

Table 7. ANFIS rules: antecedents and consequents for labels healthy tissue vs. tumor core vs. edema.

The decision rules shown in Figure 8 illustrate how the ANFIS model, with only 18 fuzzy rules, makes the classification of brain tissue interpretable in both experiments. Although this study uses automatic feature selection, the framework can also accommodate feature selection guided by expert knowledge. Tables 6 and 7 show the learned parameters, with each input variable assigned linguistic labels based on expert knowledge. The fuzzy rules produce the classification by passing the input features through a weighted combination of rules to determine the final output.

Figure 8. Pipeline of the extraction of the radiomic features for the kernel size and the classification of the radiomic features by ANFIS for the tumor characterization.

Training the ANFIS model yields the antecedent and consequent parameters, which determine how the decision rules weight each candidate label (e.g., healthy tissue, tumor core, edema) during inference. For each input case, the model applies the IF-THEN rules, each with a degree of activation that reflects how well the input features match its linguistic conditions. For example, the first fuzzy rule in Experiment 1 evaluates whether the input corresponds to healthy tissue (output 0) or whole tumor (output 1). Each rule assigns a weight, or degree of confidence, to the possible outputs depending on how well the input feature values satisfy its conditions.

The system then aggregates these weighted outputs across all 18 rules using a weighted average, a step known as rule aggregation, and selects the output with the highest cumulative weight as the final classification label. In this case, the model decides whether to classify the tissue as healthy (label_0) or tumor (label_3) based on the overall strength of the activated fuzzy rules.
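Rule aggregation can be illustrated end to end with the 18-rule (3, 3, 2) structure. This is a hedged sketch, not the learned model from Tables 6 and 7. The membership-function centres and width, the rule consequents, and the helper name `classify` are all hypothetical, and labels are coded 0 (healthy) and 1 (tumor) as in the Experiment 1 example.

```python
import itertools

import numpy as np

TERMS = (3, 3, 2)                                             # linguistic terms per feature
CENTERS = [np.linspace(0.0, 1.0, n) for n in TERMS]           # hypothetical MF centres on [0, 1]
SIGMA = 0.2                                                   # hypothetical shared MF width
RULES = list(itertools.product(*(range(n) for n in TERMS)))   # 18 antecedent combinations
# Hypothetical singleton consequents: 1 (tumor) if the antecedent terms are mostly "high"
CONSEQUENT = np.array([1 if sum(rule) >= 3 else 0 for rule in RULES])


def classify(x):
    """Return (label, per-class cumulative weight) for a 3-feature input scaled to [0, 1]."""
    mu = [np.exp(-((x[j] - CENTERS[j]) ** 2) / (2 * SIGMA ** 2)) for j in range(len(TERMS))]
    w = np.array([mu[0][a] * mu[1][b] * mu[2][c] for a, b, c in RULES])  # firing strengths
    w_bar = w / w.sum()                                                    # normalize
    class_weight = np.bincount(CONSEQUENT, weights=w_bar, minlength=2)     # aggregate per class
    return int(class_weight.argmax()), class_weight


label, weights = classify(np.array([0.9, 0.9, 0.9]))
print(label, weights.round(3))
```

With 0/1 singleton consequents, the weighted-average output equals the cumulative weight of class 1. Picking the class with the highest cumulative weight is therefore the same as thresholding that average at 0.5.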

5 Discussion

This study introduces a novel framework for tumor characterization in MRI that combines ANFIS with a feature selection approach. Integrating these methods aims to enhance the precision and efficiency of tumor analysis, addressing current challenges in medical imaging. The proposed model demonstrated good segmentation performance on the BraTS2020 dataset, achieving DICE Scores of 99.90% for healthy tissue, 82.94% for tumor core (TC), and 76.06% for edema. These results are comparable to those reported by Baid et al. (2020), who achieved DICE Scores of 92% for the whole tumor, 90% for the tumor core, and 81% for the enhancing tumor. Similarly, Akbar et al. (2021) reported DICE Scores of 78.02%, 80.73%, and 89.07% for the enhancing tumor, tumor core, and whole tumor, respectively, using a 3D U-Net architecture. The DICE Score for edema (76.06%) is lower than for the other regions, primarily because of edema's diffuse nature, structural complexity, and the inherent difficulty of distinguishing it from tumor infiltration. Even so, the model is able to identify these challenging structures.

Several studies using ANFIS classification mechanisms for BT have shown promising results in identifying brain tissue. For example, Shankar et al. (2020) present an ANFIS method that classifies brain MRI into benign and malignant tumors with an accuracy of 96.23%. Mathiyalagan and Devaraj (2021) and Nagarathinam and Ponnuchamy (2019) propose ANFIS-based methods for tumor classification, reporting glioma recognition accuracies above 98%. However, while these works demonstrate high performance, they do not explain how their results can be interpreted, and their methodologies do not describe how output decisions are derived from the input data. The present approach, based on ANFIS and radiomics, integrates fuzzy decision rules that offer insight into the diagnostic process. The ANFIS-based model achieved an accuracy of 95.20% in the healthy tissue versus whole tumor experiment and 92.14% in the classification of healthy tissue, tumor core, and edema, closely matching the performance of the other machine learning classifiers. Beyond accuracy, this methodology addresses a critical gap by providing a comprehensive mechanism to explain how radiomic features contribute to the decision-making process, improving interpretability.

The challenge of balancing performance and interpretability is particularly evident in ANFIS models. Increasing the number of radiomic features can improve performance, but it often leads to an exponential increase in rule complexity, making models less interpretable and harder to validate clinically. Previous studies have attempted to reduce the number of rules, but have often produced rule sets that remain impractical for clinical use. For example, Hien et al. (2022) reduced the number of rules in their ANFIS models, but not to a level low enough to facilitate interpretability. The feature selection approach used here is consistent with studies that emphasize the role of radiomic features in improving classification accuracy while controlling model complexity (Tahosin et al., 2023; Lefkovits et al., 2017; Khanna et al., 2024). This study addresses these challenges by minimizing rule complexity without sacrificing classification accuracy. A streamlined feature selection process ensures that only the most informative features are used, resulting in a concise set of interpretable rules that capture the essence of the diagnostic patterns. This approach reduces computational overhead and increases transparency, allowing clinicians to understand and validate the model's reasoning. Furthermore, by linking radiomic features to diagnostic assistance outcomes, the generated fuzzy rules provide a framework for interpreting the classification process.

6 Conclusion

This study presents an integrated system that combines a CNN-based segmentation model with an interpretability framework for clinically relevant and accurate tumor characterization to assist diagnosis from MRI data. The model performed well in the segmentation stage, with average DICE Scores of 99.90% for healthy tissue, 82.94% for tumor core (TC), and 76.06% for edema. These results demonstrate the model's effectiveness in identifying relevant tissue regions, particularly healthy tissue and the tumor core. Edema segmentation, however, was more variable owing to its diffuse nature, structural complexity, and the challenges posed by differential diagnosis.

Following segmentation, an interpretability framework was introduced, integrating radiomics-based feature extraction with an ANFIS model to interpret classification results through fuzzy rules. Initially, 218 radiomic features were extracted and a feature selection process was applied using Decision Tree and Random Forest to identify the most relevant features. These selected features were evaluated in two experiments: the first distinguishing between healthy tissue and whole tumor, and the second differentiating among healthy tissue, tumor core, and edema. The results demonstrated high accuracy, achieving over 95% in the two-class experiment and 92% in the three-class experiment. The combination of radiomics and ANFIS proved effective in delivering interpretability through 18 decision rules generated from the three most relevant radiomic features.

However, some limitations were observed. As the number of input features increases, the number of rules in ANFIS grows, which can reduce the system's interpretability. The proposed framework was also tested exclusively on FLAIR images, so the analysis should be extended to other MRI modalities. Future research should address these limitations by exploring techniques to manage the growing number of fuzzy rules, such as rule reduction strategies or hybrid methods that balance interpretability and accuracy. Efforts should also include integrating additional MRI modalities and extending to 3D imaging to provide a more comprehensive and clinically relevant framework. In doing so, radiomics for feature extraction and ANFIS for classification can be further validated as promising approaches to improve both the accuracy and interpretability of BT diagnosis and characterization.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found at: https://www.med.upenn.edu/cbica/brats2020/data.html.

Ethics statement

Ethical approval was not required for the study involving humans in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was not required from the participants or the participants' legal guardians/next of kin in accordance with the national legislation and the institutional requirements. Written informed consent was not obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article because the datasets analyzed in this study are publicly available as part of the BRATS 2020 dataset.

Author contributions

LM-R: Writing – original draft, Writing – review & editing, Formal analysis, Investigation, Methodology, Software, Validation. EC: Investigation, Software, Methodology, Writing – original draft. MS: Investigation, Methodology, Software, Writing – original draft. DM: Investigation, Software, Writing – original draft. SP: Investigation, Methodology, Software, Visualization, Writing – original draft. FT: Investigation, Writing – review & editing, Validation. SC: Funding acquisition, Investigation, Writing – review & editing, Validation. MQ: Conceptualization, Supervision, Writing – review & editing, Investigation, Validation, Writing – original draft. JS: Conceptualization, Investigation, Methodology, Supervision, Writing – review & editing, Formal analysis, Validation. RS: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Supervision, Writing – review & editing, Formal analysis, Resources, Validation, Visualization, Writing – original draft.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the National Agency for Research and Development (ANID) through the National Scholarship Program: Becas Doctorado Nacional 2023 -21231767, FONDECYT No. 1221938 “An Explainable Deep Neuro-Fuzzy Inference System for the segmentation of BT in multi-contrast magnetic resonance imaging”, FONDECYT No. 11200481 and No. 1231268, and ANID Millennium Science Initiative Program ICN2021 004.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI Statement

The author(s) declare that Gen AI was used in the creation of this manuscript. In writing and refining this article, Generative AI (GenAI) tools were utilized exclusively to improve the clarity, coherence, and fluency of the English language. The use of AI was limited to language correction and stylistic enhancement, ensuring that the core content, research insights, and intellectual contributions remain original and entirely the product of the author(s). The author(s) retain full responsibility for the article's content and no AI was used to generate ideas, research, data analysis, or conceptual development.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Adewuyi, O. B., Folly, K. A., Oyedokun, D. T., and Ogunwole, E. I. (2022). Power system voltage stability margin estimation using adaptive neuro-fuzzy inference system enhanced with particle swarm optimization. Sustainability 14:15448. doi: 10.3390/su142215448

Afshar, P., Plataniotis, K. N., and Mohammadi, A. (2019). “Capsule networks' interpretability for brain tumor classification via radiomics analyses,” in 2019 IEEE International Conference on Image Processing (ICIP) (Taipei: IEEE), 3816–3820.

Ahmed, S., Nobel, S. N., and Ullah, O. (2023). “An effective deep CNN model for multiclass brain tumor detection using MRI images and SHAP explainability,” in 2023 International Conference on Electrical, Computer and Communication Engineering (ECCE) (Chittagong: IEEE), 1–6.

Akbar, A. S., Fatichah, C., and Suciati, N. (2021). “UNet3D with multiple atrous convolutions attention block for brain tumor segmentation,” in International MICCAI Brainlesion Workshop (Cham: Springer), 182–193.

Ali, S., Li, J., Pei, Y., Khurram, R., Rehman, K. U., and Mahmood, T. (2022). A comprehensive survey on brain tumor diagnosis using deep learning and emerging hybrid techniques with multi-modal MR image. Arch. Comp. Methods Eng. 29, 4871–4896. doi: 10.1007/s11831-022-09758-z

Allende-Cid, H., Salas, R., Veloz, A., Moraga, C., and Allende, H. (2016). Sonfis: structure identification and modeling with a self-organizing neuro-fuzzy inference system. Int. J. Comp. Intellig. Syst. 9, 416–432. doi: 10.1080/18756891.2016.1175809

Alnemer, A., and Rasheed, J. (2021). “An efficient transfer learning-based model for classification of brain tumor,” in 2021 5th International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT) (Ankara: IEEE), 478–482.

Anggara, Y., and Munandar, A. (2023). Implementation of hybrid RNN-ANFIS on forecasting Jakarta Islamic Index. Jambura J. Mathem. 5, 419–430. doi: 10.34312/jjom.v5i2.20407

Anitha, R., Sundaramoorthy, K., Selvi, S., Gopalakrishnan, S., and Sheela, M. S. (2023). Detection and segmentation of meningioma brain tumors in MRI brain images using curvelet transform and ANFIS. Int. J. Elect. Electron. Res. 11, 412–417. doi: 10.37391/ijeer.110222

Bahkali, I., and Semwal, S. K. (2021). “Medical visualization using 3D imaging and volume data: a survey,” in Proceedings of the Future Technologies Conference (FTC) 2020 (Cham: Springer), 251–261.

Baid, U., Talbar, S., Rane, S., Gupta, S., Thakur, M. H., Moiyadi, A., et al. (2020). A novel approach for fully automatic intra-tumor segmentation with 3D U-Net architecture for gliomas. Front. Comput. Neurosci. 14:10. doi: 10.3389/fncom.2020.00010

Ban, J., Li, S., Zhan, Q., Li, X., Xing, H., Chen, N., et al. (2021). PMPC modified PAMAM dendrimer enhances brain tumor-targeted drug delivery. Macromol. Biosci. 21:2000392. doi: 10.1002/mabi.202000392

Basnet, R., Ahmad, M. O., and Swamy, M. (2021). A deep dense residual network with reduced parameters for volumetric brain tissue segmentation from MR images. Biomed. Signal Process. Control 70:103063. doi: 10.1016/j.bspc.2021.103063

Benyamina, H., Mubarak, A. S., and Al-Turjman, F. (2022). “Explainable convolutional neural network for brain tumor classification via MRI images,” in 2022 International Conference on Artificial Intelligence of Things and Crowdsensing (AIoTCs) (Nicosia: IEEE), 266–272.

Bhattacharjee, S., Prakash, D., Kim, C.-H., Kim, H.-C., and Choi, H.-K. (2022). Texture, morphology, and statistical analysis to differentiate primary brain tumors on two-dimensional magnetic resonance imaging scans using artificial intelligence techniques. Healthc. Inform. Res. 28, 46–57. doi: 10.4258/hir.2022.28.1.46

Bouhafra, S., and El Bahi, H. (2024). Deep learning approaches for brain tumor detection and classification using MRI images (2020 to 2024): a systematic review. J. Imaging Inform. Med. 2024, 1–31. doi: 10.1007/s10278-024-01283-8

Burkart, N., and Huber, M. F. (2021). A survey on the explainability of supervised machine learning. J. Artif. Intellig. Res. 70, 245–317. doi: 10.1613/jair.1.12228

Cavieres, E., Tejos, C., Salas, R., and Sotelo, J. (2023). “Automatic segmentation of brain tumor in multi-contrast magnetic resonance using deep neural network,” in 18th International Symposium on Medical Information Processing and Analysis (Bellingham, WA: SPIE), 81–89.

Cè, M., Irmici, G., Foschini, C., Danesini, G. M., Falsitta, L. V., Serio, M. L., et al. (2023). Artificial intelligence in brain tumor imaging: a step toward personalized medicine. Curr. Oncol. 30, 2673–2701. doi: 10.3390/curroncol30030203

Chabert, S., Salas, R., Cantor, E., Veloz, A., Cancino, A., González, M., et al. (2024). Hemodynamic response function description in patients with glioma. J. Neuroradiol. 51:101156. doi: 10.1016/j.neurad.2023.10.001

Champ, C. E., Siglin, J., Mishra, M. V., Shen, X., Werner-Wasik, M., Andrews, D. W., et al. (2012). Evaluating changes in radiation treatment volumes from post-operative to same-day planning MRI in high-grade gliomas. Radiat. Oncol. 7, 1–8. doi: 10.1186/1748-717X-7-220

Chen, D., Liu, S., Kingsbury, P., Sohn, S., Storlie, C. B., Habermann, E. B., et al. (2019). Deep learning and alternative learning strategies for retrospective real-world clinical data. NPJ Digit. Med. 2:43. doi: 10.1038/s41746-019-0122-0

Chen, W., Liu, B., Peng, S., Sun, J., and Qiao, X. (2018). Computer-aided grading of gliomas combining automatic segmentation and radiomics. Int. J. Biomed. Imaging 2018:2512037. doi: 10.1155/2018/2512037

Chen, X., Li, Y., Yao, L., Adeli, E., and Zhang, Y. (2021). Generative adversarial U-Net for domain-free medical image augmentation. arXiv [preprint] arXiv:2101.04793. doi: 10.1016/j.patrec.2022.03.022

Choi, S. W., Cho, H.-H., Koo, H., Cho, K. R., Nenning, K.-H., Langs, G., et al. (2020). Multi-habitat radiomics unravels distinct phenotypic subtypes of glioblastoma with clinical and genomic significance. Cancers 12:1707. doi: 10.3390/cancers12071707

Çinarer, G., Emiroğlu, B. G., and Yurttakal, A. H. (2020). Prediction of glioma grades using deep learning with wavelet radiomic features. Appl. Sci. 10:6296. doi: 10.3390/app10186296

Çınarer, G., and Emiroğlu, B. G. (2019). "Classification of brain tumors by machine learning algorithms," in 2019 3rd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT) (Ankara: IEEE), 1–4.

Csaholczi, S., Iclănzan, D., Kovács, L., and Szilágyi, L. (2020). “Brain tumor segmentation from multi-spectral MR image data using random forest classifier,” in International Conference on Neural Information Processing (Cham: Springer), 174–184.

Das, S., Swain, M. K., Nayak, G. K., Saxena, S., and Satpathy, S. (2022). Effect of learning parameters on the performance of U-Net model in segmentation of brain tumor. Multimed. Tools Appl. 81, 34717–34735. doi: 10.1007/s11042-021-11273-5

de Robles, P., Fiest, K. M., Frolkis, A. D., Pringsheim, T., Atta, C., St. Germaine-Smith, C., et al. (2015). The worldwide incidence and prevalence of primary brain tumors: a systematic review and meta-analysis. Neuro-Oncol. 17, 776–783. doi: 10.1093/neuonc/nou283

Dixit, A., and Thakur, M. K. (2023). Advancements and emerging trends in brain tumor classification using MRI: a systematic review. Netw. Model. Analy. Health Inform. Bioinform. 12:34. doi: 10.1007/s13721-023-00428-z

Estabrooks, A., Jo, T., and Japkowicz, N. (2004). A multiple resampling method for learning from imbalanced data sets. Comput. Intell. 20, 18–36. doi: 10.1111/j.0824-7935.2004.t01-1-00228.x

Ferdous, G. J., Sathi, K. A., and Hossain, M. A. (2021). “Application of hybrid classifier for multi-class classification of MRI brain tumor images,” in 2021 5th International Conference on Electrical Engineering and Information Communication Technology (ICEEICT) (IEEE), 1–6.

Fernández, A., García, S., Galar, M., Prati, R. C., Krawczyk, B., and Herrera, F. (2018). Learning from Imbalanced Data Sets, Volume 10. Cham: Springer.

Filippini, F., Lublinsky, B., de Bayser, M., and Ardagna, D. (2023). “Performance models for distributed deep learning training jobs on ray,” in 2023 49th Euromicro Conference on Software Engineering and Advanced Applications (SEAA) (Durres: IEEE), 30–35.

Gad, A. G. (2022). Particle swarm optimization algorithm and its applications: a systematic review. Arch. Comp. Methods Eng. 29, 2531–2561. doi: 10.1007/s11831-021-09694-4

Hattingen, E., Müller, A., Jurcoane, A., Mädler, B., Ditter, P., Schild, H., et al. (2017). Value of quantitative magnetic resonance imaging T1-relaxometry in predicting contrast-enhancement in glioblastoma patients. Oncotarget 8:53542. doi: 10.18632/oncotarget.18612

Hien, N. T., Nhung, N. P., and Linh, N. T. (2022). Adaptive neuro-fuzzy inference system classifier with interpretability for cancer diagnostic. J. Military Sci. Technol. 2022, 56–64. doi: 10.54939/1859-1043.j.mst.CSCE6.2022.56-64

Hung, N. D., Anh, N. N., Minh, N. D., Huyen, D. K., and Duc, N. M. (2023). Differentiation of glioblastoma and primary central nervous system lymphomas using multiparametric diffusion and perfusion magnetic resonance imaging. Biomed. Reports 19:82. doi: 10.3892/br.2023.1664

Huse, J. T., Edgar, M., Halliday, J., Mikolaenko, I., Lavi, E., and Rosenblum, M. K. (2013). Multinodular and vacuolating neuronal tumors of the cerebrum: 10 cases of a distinctive seizure-associated lesion. Brain Pathol. 23, 515–524. doi: 10.1111/bpa.12035

Hussain, S., Mubeen, I., Ullah, N., Shah, S. S. U. D., Khan, B. A., Zahoor, M., et al. (2022). Modern diagnostic imaging technique applications and risk factors in the medical field: a review. Biomed Res. Int. 2022:5164970. doi: 10.1155/2022/5164970

Iradat, P., Vitraludyono, R., and Yupono, K. (2024). Dexmedetomidine as an ambulatory sedation agent for abdominal MRI in patients with suspected pheochromocytoma. J. Anaesthesia Pain 5, 20–23. doi: 10.21776/ub.jap.2024.005.01.04

Jamieson, D. H., Shipman, P., and Jacobson, K. (2013). Magnetic resonance imaging of the perineum in pediatric patients with inflammatory bowel disease. Can. J. Gastroenterol. Hepatol. 27, 476–480. doi: 10.1155/2013/624141

Jang, J.-S. (1993). ANFIS: adaptive-network-based fuzzy inference system. IEEE Trans. Syst. Man Cybern. 23, 665–685. doi: 10.1109/21.256541

Johnpeter, J. H., and Ponnuchamy, T. (2019). Computer aided automated detection and classification of brain tumors using CANFIS classification method. Int. J. Imaging Syst. Technol. 29, 431–438. doi: 10.1002/ima.22318

Kalam, R., Thomas, C., and Rahiman, M. A. (2021). Detection of brain tumor in MRI images using optimized ANFIS classifier. Int. J. Uncert. Fuzzin. Knowl.-Based Syst. 29, 1–29. doi: 10.1142/S0218488521400018

Kale, P. V., Gadicha, A. B., and Dalvi, G. (2024). “Detection and classification of brain tumor using machine learning,” in 2024 Third International Conference on Smart Technologies and Systems for Next Generation Computing (ICSTSN) (Villupuram: IEEE), 1–6. doi: 10.1109/ICSTSN61422.2024.10670906

Khan, M. A., and Park, H. (2024). A convolutional block base architecture for multiclass brain tumor detection using magnetic resonance imaging. Electronics 13:364. doi: 10.3390/electronics13020364

Khanna, S. T., Khatri, S. K., and Sharma, N. K. (2024). Neutrosophic ANFIS machine learning model and explainable AI interpretation in identification of oral cancer from clinical images. Int. J. Neutrosophic Sci. 2, 198–198. doi: 10.54216/IJNS.240218

Kshirsagar, P. R., Manoharan, H., Siva Nagaraju, V., Alqahtani, H., Noorulhasan, Q., Islam, S., et al. (2022). Accrual and dismemberment of brain tumours using fuzzy interface and grey textures for image disproportion. Comput. Intell. Neurosci. 2022:2609387. doi: 10.1155/2022/2609387

Kumar, A., Jha, A. K., Agarwal, J. P., Yadav, M., Badhe, S., Sahay, A., et al. (2023). Machine-learning-based radiomics for classifying glioma grade from magnetic resonance images of the brain. J. Pers. Med. 13:920. doi: 10.3390/jpm13060920

Kutikuppala, S., Kondamadugula, P., Katakam, P. S., Kotla, P. R., Kanjarla, S. S., and Kakde, Y. (2023). Decision tree learning based feature selection and evaluation for image classification. Int. J. Res. Appl. Sci. Eng. Technol. 11, 2668–2674. doi: 10.22214/ijraset.2023.54035

Latif, G., Iskandar, D. A., Alghazo, J. M., and Mohammad, N. (2018). Enhanced MR image classification using hybrid statistical and wavelets features. IEEE Access 7, 9634–9644. doi: 10.1109/ACCESS.2018.2888488

Lefkovits, L., Lefkovits, S., Emerich, S., and Vaida, M. F. (2017). “Random forest feature selection approach for image segmentation,” in Ninth International Conference on Machine Vision (ICMV 2016) (Bellingham, WA: SPIE), 227–231.

Louis, D. N., Perry, A., Wesseling, P., Brat, D. J., Cree, I. A., Figarella-Branger, D., et al. (2021). The 2021 WHO classification of tumors of the central nervous system: a summary. Neuro-Oncology 23, 1231–1251. doi: 10.1093/neuonc/noab106

Low, J. T., Ostrom, Q. T., Cioffi, G., Neff, C., Waite, K. A., Kruchko, C., et al. (2022). Primary brain and other central nervous system tumors in the United States (2014–2018): a summary of the CBTRUS statistical report for clinicians. Neuro-Oncology Pract. 9, 165–182. doi: 10.1093/nop/npac015

Luque, A., Carrasco, A., Martín, A., and de Las Heras, A. (2019). The impact of class imbalance in classification performance metrics based on the binary confusion matrix. Pattern Recognit. 91, 216–231. doi: 10.1016/j.patcog.2019.02.023

Mathiyalagan, G., and Devaraj, D. (2021). A machine learning classification approach based glioma brain tumor detection. Int. J. Imaging Syst. Technol. 31, 1424–1436. doi: 10.1002/ima.22590

McNeill, K. A. (2016). Epidemiology of brain tumors. Neurol. Clin. 34, 981–998. doi: 10.1016/j.ncl.2016.06.014

Mellado, D., Querales, M., Sotelo, J., Godoy, E., Pardo, F., Lever, S., et al. (2023). “A deep learning classifier using sliding patches for detection of mammographical findings,” in 2023 19th International Symposium on Medical Information Processing and Analysis (SIPAIM) (Mexico City: IEEE), 1–5.

Menze, B. H., Jakab, A., Bauer, S., Kalpathy-Cramer, J., Farahani, K., Kirby, J., et al. (2015). The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans. Med. Imaging 34, 1993–2024. doi: 10.1109/TMI.2014.2377694

Mercangöz, B. A. (2021). Applying Particle Swarm Optimization: New Solutions and Cases for Optimized Portfolios, Volume 306. Cham: Springer.

Mitchell, B. R., Cohen, M. C., and Cohen, S. (2021). Dealing with multi-dimensional data and the burden of annotation: easing the burden of annotation. Am. J. Pathol. 191, 1709–1716. doi: 10.1016/j.ajpath.2021.05.023

Moayedi, H., Raftari, M., Sharifi, A., Jusoh, W. A. W., and Rashid, A. S. A. (2020). Optimization of ANFIS with GA and PSO estimating α ratio in driven piles. Eng. Comput. 36, 227–238. doi: 10.1007/s00366-018-00694-w

Mohanty, B. C., Subudhi, P., Dash, R., and Mohanty, B. (2024). Feature-enhanced deep learning technique with soft attention for MRI-based brain tumor classification. Int. J. Inform. Technol. 16, 1617–1626. doi: 10.1007/s41870-023-01701-0

Nagarathinam, E., and Ponnuchamy, T. (2019). Image registration-based brain tumor detection and segmentation using ANFIS classification approach. Int. J. Imaging Syst. Technol. 29, 510–517. doi: 10.1002/ima.22329

Naveed, N., Madhloom, H. T., and Husain, M. S. (2021). Breast cancer diagnosis using wrapper-based feature selection and artificial neural network. Appl. Comp. Sci. 17, 19–30. doi: 10.35784/acs-2021-18

Neff, C., Price, M., Cioffi, G., Kruchko, C., Waite, K. A., Barnholtz-Sloan, J. S., et al. (2023). Complete prevalence of primary malignant and nonmalignant brain tumors in comparison to other cancers in the United States. Cancer 129, 2514–2521. doi: 10.1002/cncr.34837

Özkaraca, O., Bağrıaçık, O. İ., Gürüler, H., Khan, F., Hussain, J., et al. (2023). Multiple brain tumor classification with dense CNN architecture using brain MRI images. Life 13:349. doi: 10.3390/life13020349

Padmapriya, S., and Devi, M. G. (2024). “Computer-aided diagnostic system for brain tumor classification using explainable AI,” in 2024 IEEE International Conference on Interdisciplinary Approaches in Technology and Management for Social Innovation (IATMSI) (Gwalior: IEEE), 1–6.

Paek, S. H., Hwang, J. H., Kim, D. G., Choi, S. H., Sohn, C.-H., Park, S. H., et al. (2014). A case report of preoperative and postoperative 7.0 T brain MRI in a patient with a small cell glioblastoma. J. Korean Med. Sci. 29, 1012–1017. doi: 10.3346/jkms.2014.29.7.1012

Paek, S. L., Chung, Y. S., Paek, S. H., Hwang, J. H., Sohn, C.-H., Choi, S. H., et al. (2013). Early experience of pre- and post-contrast 7.0 T MRI in brain tumors. J. Korean Med. Sci. 28, 1362–1372. doi: 10.3346/jkms.2013.28.9.1362