- 1Université Paris Saclay, Université Paris Cité, ENS Paris Saclay, CNRS, SSA, INSERM, Centre Borelli, Gif-sur-Yvette, France

- 2SNCF, Technologies Department, Innovation and Research, Saint Denis, France

- 3Thales AVS France, Training and Simulation, Osny, France

- 4Ophthalmology Department, Hôpital Universitaire Necker-Enfants malades, AP-HP, Paris, France

- 5Université Paris-Saclay, Inria, CIAMS, Gif-sur-Yvette, France

- 6Institute of Information and Control, Hangzhou Dianzi University, Hangzhou, Zhejiang, China

Eye-tracking research offers valuable insights into human gaze behavior by examining the neurophysiological mechanisms that govern eye movements and their dynamic interactions with external stimuli. This review explores the foundational principles of oculomotor control, emphasizing the neural subsystems responsible for gaze stabilization and orientation. Although controlled laboratory studies have significantly advanced our understanding of these mechanisms, their ecological validity remains a critical limitation. However, the emergence of mobile eye tracking technologies has enabled research in naturalistic environments, uncovering the intricate interplay between gaze behavior and inputs from the head, trunk, and sensory systems. Furthermore, rapid technological advancements have broadened the application of eye-tracking across neuroscience, psychology, and related disciplines, resulting in methodological fragmentation that complicates the integration of findings across fields. In response to these challenges, this review underscores the distinctions between head-restrained and naturalistic conditions, emphasizing the importance of bridging neurophysiological insights with experimental paradigms. By addressing these complexities, this work seeks to elucidate the diverse methodologies employed for recording eye movements, providing critical guidance to mitigate potential pitfalls in the selection and design of experimental paradigms.

1 Introduction

Eye movements are controlled by three pairs of agonist-antagonist extra-ocular muscles. The lateral and medial recti generate horizontal movements, while the superior rectus, inferior rectus, superior oblique, and inferior oblique—collectively known as cyclovertical muscles—work together to produce both vertical and torsional rotations (Leigh and Zee, 2015). Sherrington’s law of reciprocal innervation states that the contraction of an ocular muscle is paired with the inhibition of its antagonist, while Hering’s law of equal innervation ensures equal neural input to synergistic muscles in both eyes for coordinated movements (Allary, 2018). Human oculomotor control is generally assumed to be governed by five distinct neural subsystems: the vestibulo-ocular reflex (VOR), the optokinetic reflex (OKR), the saccadic system, the smooth pursuit system, and the vergence system (Robinson et al., 1981; Büttner and Büttner-Ennever, 1988; Duchowski and Duchowski, 2017).

Early research on the neural pathways governing motor neurons of the extraocular muscles led researchers to adopt reductionist approaches in controlled laboratory environments, which limited natural human behavior. However, studies suggest that these artificial constraints, particularly the restriction of head movements, can alter the true functioning of the oculomotor system, leading to biased representations of its behavior in natural contexts (Dorr et al., 2010). This bias arises not only from isolating neural subsystems with controlled stimuli, but also from the recording methods themselves, which require stabilizing the head with devices such as chin rests and chin bars (Eggert, 2007; Wade, 2010; Wade, 2015).

More recently, the increasing availability of mobile eye trackers has significantly advanced the study of eye movements in natural, or ecological, settings (Kothari et al., 2020). However, analyzing eye movement data in such conditions remains challenging. Natural gaze exploration involves simultaneous movements of the eyes, head, trunk, and feet, and it has been shown that the properties of eye movements recorded in these contexts differ from those in laboratory settings (Carnahan and Marteniuk, 1991; Land, 1992; Land, 2004; Klein and Ettinger, 2019). While lab experiments typically target specific subsystems, natural eye movements result from the combined action of multiple neural pathways. Indeed, automatic reflexes like the VOR and the OKR, as well as higher-order cognitive processes, motor signals, and sensory inputs, all contribute to ocular motoneuron activity during body movement (Anastasopoulos et al., 2009). The complexity of eye movement physiology and the limited number of studies available hinder a full understanding of eye movements in ecological contexts.

On the other hand, the growing accessibility of eye movement recording technologies has led to their integration across various research fields, such as neuroscience, marketing, psychology, and medicine, fostering the development of specialized communities. Each discipline has contributed significantly to advancing eye movement research. However, this rapid growth has also caused fragmentation, with insights dispersed across a wide range of literature. Since each field often pursues distinct goals, methodologies and findings are typically field-specific, limiting their applicability across disciplines. This review examines the neurophysiology of eye movements and the experimental paradigms employed in this field, with the aim of synthesizing studies of the oculomotor system across different research communities. Given the extensive scope of the topic, this review is not intended to be exhaustive; rather, it highlights key physiological insights into gaze control mechanisms. The objective is to inform the design of experimental protocols for investigating eye movements, both in controlled environments and in more ecologically valid settings—particularly those without physical constraints on head movements. It is important to note that this brief review focuses solely on the characteristics and description of ocular movements and does not explicitly address visual behavior or the allocation of visual attention.

With a primary emphasis on findings from human studies and on the functional aspects of eye movement, the following sections offer an overview of current knowledge on major eye movement types. This work distinguishes findings obtained under controlled laboratory conditions (Section 2) from those derived in more natural, head-free environments (Section 3). Finally, building on these neurophysiological insights, we discuss practical considerations to support researchers in designing experimental protocols in Section 4.

This review stands at the intersection of multiple contributions in the existing literature, providing an overview of eye movements with a clear distinction between findings obtained under controlled laboratory conditions and those from ecological contexts. While aligned with prior works such as Lappi (2016), it is less exhaustive than the comprehensive treatment in Leigh and Zee (2015), which delves into the neurophysiology, neural circuits, and models underlying saccadic and smooth pursuit movements. Our goal is to offer foundational knowledge for researchers interested in integrating eye-tracking methodologies into their studies. The practical section of this review—highlighting methods for recording and analyzing eye-tracking data—distinguishes it from more theoretical works, aligning more closely with reviews focused on practical considerations (Singh and Singh, 2012; Lim et al., 2020; Klaib et al., 2021) or best practices in data acquisition (Carter and Luke, 2020). In summary, this work provides a concise synthesis of key knowledge on the neurophysiology of eye movements with a practical focus. By bridging theoretical insights and practical applications, it aims to help researchers develop robust experimental protocols.

2 When the head is physically restrained

Most laboratory protocols for studying eye movements are performed with the head constrained. In these conditions, gaze reorientation relies exclusively on eye movements. The following sections outline the canonical components of eye movements under such laboratory settings, i.e., saccades, smooth pursuits, fixational eye movements as well as the vestibulo-ocular reflex (VOR) and optokinetic reflex (OKR).

2.1 Saccades

Saccades are rapid, ballistic eye movements that typically occur at a frequency of

Functionally, saccades can be categorized as either reflexive, also known as visually guided (Klein and Ettinger, 2019), or volitional in nature (Pierrot-Deseilligny et al., 1995; Patel et al., 2012; Leigh and Zee, 2015). These two saccade types are controlled by parallel subsystems (Patel et al., 2012): visually guided saccades are primarily driven by external stimuli, while volitional saccades are internally generated, relying more on cognitive processes like attention, inhibition, and working memory (Seideman et al., 2018; Klein and Ettinger, 2019). Volitional saccades include tasks like predictive saccades, where eye movements anticipate a target’s appearance based on learned temporal or spatial patterns, such as tracking a stimulus appearing rhythmically at predictable locations (Leigh and Zee, 2015), and memory-guided saccades, which direct gaze toward a remembered target location without current visual input, engaging working memory to recall the target’s position (Seideman et al., 2018). Similarly, antisaccades require suppressing a reflexive saccade toward a sudden stimulus to instead look at the opposite location, relying on inhibitory control and attention as a measure of cognitive flexibility (Klein and Ettinger, 2019), while saccade sequencing involves planning and executing a series of saccades in a specific order to multiple targets, integrating attention, working memory, and motor planning for precise coordination (Patel et al., 2012).

These tasks highlight the cognitive demands of volitional saccades and distinguish them from reflexive saccades. Earlier work hinted at these mechanisms: Bahill et al. (1981) observed that intrinsic saccade properties, such as peak velocity, amplitude, and duration, were influenced by factors such as attention, muscle fatigue, and tiredness. Importantly, the separation between reflexive and volitional saccades should be understood as a continuum rather than a strict dichotomy, as internal cognitive motivations and decision-making processes influence both saccade types (Klein and Ettinger, 2019).

Saccadic eye movements are generated by a distributed network of cortical and subcortical structures. The frontal eye fields (FEF), supplementary eye fields (SEF), and posterior parietal cortex (PPC) initiate voluntary and goal-directed saccades by sending commands to the superior colliculus (SC) and brainstem saccade generators (Leigh and Zee, 2015; Pierrot-Deseilligny et al., 2004). The SC, particularly its intermediate and deep layers, integrates multisensory inputs and contributes to both reflexive and voluntary saccades (Wurtz and Goldberg, 1972). Premotor structures in the brainstem, including the paramedian pontine reticular formation (PPRF) for horizontal saccades and the rostral interstitial nucleus of the medial longitudinal fasciculus (riMLF) for vertical and torsional saccades, generate high-frequency burst activity. These work in conjunction with omnipause neurons in the nucleus raphe interpositus, which inhibit saccade initiation and regulate timing (Scudder et al., 2002). The cerebellum, especially the fastigial nucleus and dorsal vermis, refines saccadic metrics and mediates motor learning and adaptation (Optican and Robinson, 1980; Robinson and Fuchs, 2001). This network operates both hierarchically and in parallel, integrating sensory, cognitive, and motor information to guide rapid eye movements.

Saccade kinematics are typically characterized by a stereotyped, symmetrical velocity profile for movements ranging from 5 to 25°, with larger saccades tending to display a skewed profile, where the deceleration phase is often longer than the acceleration phase. Saccades also exhibit a linear duration-amplitude relationship, with the slope estimated to be between 1.5 and 3 milliseconds per degree, as well as a non-linear relationship between peak velocity and amplitude (Bahill et al., 1981; Klein and Ettinger, 2019). This latter relationship is commonly referred to as the main sequence, a term introduced by Bahill et al. (1975) and borrowed from astronomy, which has since become a major focus of research (Freedman, 2008; Gibaldi and Sabatini, 2021). Notably, it has been observed that the peak velocity of a saccade increases as a function of its amplitude, reaching a peak at approximately

At the end of a typical saccadic eye movement, just before settling into steady fixation, the pupil signal often shows a damped oscillation, with one or two observable cycles before attenuation (Nyström et al., 2013b; Hooge et al., 2015). These post-saccadic oscillations (PSOs) typically have an amplitude of around 2°, with oscillation periods averaging about 20 milliseconds (Hooge et al., 2015). The origin of PSOs, long debated, is now believed to be due to dynamic deformations of the iris’s inner edge during saccades (Nyström et al., 2013b; Hooge et al., 2016). Specifically, these oscillations result from movements of the pupil within the eyeball, referred to as iris wobbling or the eye wobbling phenomenon. It’s important to note that PSO characteristics can vary significantly depending on the eye-tracking methods used (Hooge et al., 2016), the direction of the saccade (Hooge et al., 2015), and individual differences, such as the observer’s age (Mardanbegi et al., 2018) and pupil size (Nyström et al., 2016).
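As a practical illustration of the linear duration-amplitude relationship noted earlier in this section, the following minimal Python sketch fits a straight line to saccade durations and checks whether the fitted slope falls within the 1.5 to 3 milliseconds per degree range reported above. The function names and the sample values are hypothetical and serve only as an illustration; they are not taken from any cited study.

```python
from statistics import mean


def fit_duration_amplitude(amplitudes_deg, durations_ms):
    """Ordinary least-squares fit of duration = intercept + slope * amplitude."""
    if len(amplitudes_deg) != len(durations_ms) or len(amplitudes_deg) < 2:
        raise ValueError("need at least two (amplitude, duration) pairs")
    a_mean = mean(amplitudes_deg)
    d_mean = mean(durations_ms)
    sxx = sum((a - a_mean) ** 2 for a in amplitudes_deg)
    if sxx == 0:
        raise ValueError("amplitudes must not all be identical")
    sxy = sum((a - a_mean) * (d - d_mean)
              for a, d in zip(amplitudes_deg, durations_ms))
    slope = sxy / sxx                     # milliseconds per degree
    intercept = d_mean - slope * a_mean   # milliseconds
    return slope, intercept


def slope_in_reported_range(slope, low=1.5, high=3.0):
    """True if the slope lies in the range quoted in the text (ms per degree)."""
    return low <= slope <= high


# Hypothetical saccades (illustrative values only, not measured data).
amplitudes = [2.0, 5.0, 10.0, 15.0, 20.0]   # degrees
durations = [25.4, 32.0, 43.0, 54.0, 65.0]  # milliseconds
slope, intercept = fit_duration_amplitude(amplitudes, durations)
print(f"slope = {slope:.2f} ms/deg, plausible: {slope_in_reported_range(slope)}")
```

A slope far outside this range in a recorded dataset may point to a saccade detection or calibration problem rather than to a physiological effect, although this check alone cannot tell the two apart.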

Saccade metrics were found to be stable within and across trials, thereby making them suitable biometric data for authentication, identification or to reveal differences in perceptual-motor style between individuals (Klein and Ettinger, 2019; Vidal and Lacquaniti, 2021). For example, the pioneering work of Holland and Komogortsev (2013) and Rigas and Komogortsev (2016) demonstrated the robustness of individual-specific eye movement characteristics for recognition purposes with different types of visual stimulus. Their approach led to the development of the complex eye movement extended biometrics, which consists of several fixation and saccade-related characteristics that together constitute an individual’s biometric fingerprint. While these approaches do not yet offer a realistic alternative to existing biometric standards, they represent a promising field of research.

In neurological and psychiatric disorders, abnormalities in saccadic eye movements provide insights into impaired motor planning, inhibitory control, and neural circuit dysfunction. In Parkinson’s disease (PD), saccades typically exhibit hypometria (reduced amplitude) and prolonged latencies, particularly for volitional saccades. These deficits stem from dysfunction in the basal ganglia, supplementary eye fields, and frontal eye fields (FEF), which impairs the generation and execution of planned movements (Terao et al., 2011; Lal and Truong, 2019). Huntington’s disease (HD) is associated with increased antisaccade latencies and high error rates, reflecting early degeneration of the striatum and prefrontal cortex, both critical for suppressing automatic responses. Antisaccade errors in HD may precede overt motor symptoms and serve as early markers of cognitive decline (Lal and Truong, 2019). In progressive supranuclear palsy (PSP), vertical saccades, particularly downward ones, are severely impaired due to degeneration of the rostral interstitial nucleus of the medial longitudinal fasciculus (riMLF) and midbrain structures (Leigh and Zee, 2015). Cerebellar disorders, such as spinocerebellar ataxias, result in dysmetric saccades, which either overshoot (hypermetria) or undershoot (hypometria) the target, and in poor saccadic adaptation. These effects are attributed to damage in the dorsal vermis and fastigial nucleus, which modulate saccadic accuracy.

In schizophrenia, antisaccade errors are markedly increased and latencies highly variable, indicating core deficits in inhibitory control and executive functioning. These deficits are linked to dysfunction in the dorsolateral prefrontal cortex (DLPFC) and its connections with the FEF and basal ganglia. Impaired antisaccade performance is considered a potential endophenotype for schizophrenia (Gooding and Basso, 2008). Similarly, individuals with attention-deficit/hyperactivity disorder (ADHD) demonstrate increased antisaccade error rates and variable reaction times, pointing to immature or dysfunctional prefrontal inhibitory mechanisms (Munoz et al., 2003). These deficits reflect challenges in voluntary response suppression and sustained attention. Lastly, saccadic intrusions, such as square-wave jerks—involuntary saccades that briefly displace fixation—are common across neurodegenerative disorders and may interfere with steady gaze. While not volitional, these intrusions further signal brainstem or cerebellar dysfunction (Leigh and Kennard, 2004).

2.2 Smooth pursuit

Ocular pursuit movements are triggered primarily by the continuous motion of a target, causing its image to drift across the retinal surface, and their primary function is to preserve visual acuity by stabilizing the moving image on or near the fovea. The primary input driving these movements is the retinal slip velocity, which refers to the relative motion of the target across the retina (Binder et al., 2009; Klein and Ettinger, 2019). In contrast, saccadic eye movements are typically triggered by discrete positional changes, such as when a target suddenly jumps outside the foveal region, to rapidly recenter the target’s image on the fovea. Unlike the saccadic system, which operates in discrete bursts, the smooth pursuit system is continuous and does not exhibit a refractory period (Robinson, 1965). Typical optimal pursuit speeds range from 15 to 30° per second (Rashbass, 1961; Meyer et al., 1985; Ettinger et al., 2003; Klein and Ettinger, 2019), although efficient tracking of velocities up to 100° per second has been observed for predictable motion patterns. This suggests that pursuit control involves higher-level extra-retinal mechanisms, such as anticipation and predictive processes.

Smooth pursuit movements consist of two phases. The initial phase, known as pursuit initiation, is driven solely by visual motion information. It is characterized by a latency period—the time required for the eyes to begin tracking the target after it starts moving—which ranges between 120 and 180 milliseconds in healthy individuals, depending on task conditions and experience (Klein and Ettinger, 2019). During the first 100 milliseconds of pursuit initiation, the response is based solely on the initial appearance of the target, unaffected by changes in the retinal image due to eye movement. In this phase, pursuit operates in an open-loop manner, relying on target movement without feedback from eye position. This open-loop phase can be modified by experience as the system adapts to changes in target velocity, a process known as pursuit adaptation (Chou and Lisberger, 2004).

The second phase, pursuit maintenance, aims to stabilize the target on the fovea. It combines visual feedback with predictions of target velocity to maintain the image within the zone of optimal visual acuity. In this closed-loop phase, any deviations from the ideal trajectory are corrected through compensatory eye movements (Thier and Ilg, 2005). Retinal velocity, image acceleration (Lisberger et al., 1987), and target position relative to the fovea (Blohm et al., 2005) all serve as error signals guiding pursuit. While pursuit is largely feedback-driven, cognitive factors like experience with target motion and stimulus predictability can modulate its performance (Barnes, 2008).
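The transition from open-loop initiation to closed-loop maintenance can be illustrated with a toy simulation. The sketch below is a deliberately simplified delayed negative-feedback model driven by retinal slip velocity only; it is not a model proposed by the studies cited above. The latency of 150 milliseconds is an assumed value within the 120 to 180 millisecond range given in the text, the 100-millisecond feedback delay mirrors the open-loop window described above, and the loop gain is an arbitrary illustrative choice.

```python
def simulate_pursuit(target_velocity, duration_s=1.5, dt=0.001,
                     latency_s=0.15, feedback_delay_s=0.1, loop_gain=5.0):
    """Toy pursuit model: eye velocity (deg/s) after a target velocity step at t = 0.

    All parameter defaults are illustrative assumptions, not fitted values.
    """
    n_steps = int(round(duration_s / dt))
    latency_steps = int(round(latency_s / dt))
    delay_steps = int(round(feedback_delay_s / dt))
    eye_velocity = [0.0] * n_steps
    for i in range(1, n_steps):
        if i < latency_steps:
            continue  # latency period: the eye has not started moving yet
        # The controller only sees the eye velocity from delay_steps ago, so
        # for the first feedback_delay_s of the response the slip signal is
        # unaffected by the eye's own motion (open-loop behaviour).
        j = i - 1 - delay_steps
        delayed_eye_velocity = eye_velocity[j] if j >= 0 else 0.0
        retinal_slip = target_velocity - delayed_eye_velocity
        eye_velocity[i] = eye_velocity[i - 1] + loop_gain * retinal_slip * dt
    return eye_velocity


trace = simulate_pursuit(target_velocity=20.0)
print(f"eye velocity at end of open-loop window: {trace[249]:.1f} deg/s")
print(f"eye velocity at end of simulation: {trace[-1]:.1f} deg/s")
```

In this sketch the eye accelerates at a constant rate during the open-loop window, because the delayed slip signal still equals the full target velocity. Feedback then brings eye velocity toward target velocity. Error signals related to image acceleration and target position, which the text also mentions, are intentionally left out.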

Smooth pursuit eye movements are controlled by an interconnected network of cortical, subcortical, brainstem, and cerebellar structures. The frontal eye fields (FEF), particularly their pursuit-related subregion, initiate and sustain voluntary tracking, while the lateral intraparietal area (LIP) modulates attentional focus and target selection (Tanaka and Lisberger, 2002; Thier and Ilg, 2005). Visual motion signals are primarily processed in the middle temporal (MT) and medial superior temporal (MST) areas, which compute retinal slip velocity and convey motion-related input to pursuit pathways (Newsome et al., 1985). These signals are relayed to the dorsolateral pontine nuclei (DLPN) in the brainstem, which project to the cerebellum to help generate smooth pursuit commands (Mustari et al., 1988). The cerebellum, especially the flocculus and posterior vermis, refines pursuit accuracy and supports adaptation through motor learning mechanisms (Miles and Fuller, 1975; Thier and Ilg, 2005).

Due to delays in the visual pathways and the limitations of eye velocity and acceleration, smooth pursuits are often supplemented by corrective or catch-up saccades. These rapid saccades are important for maintaining target tracking when smooth pursuit alone cannot compensate for unpredictable target movement or rapidly varying velocities, leading to retinal error accumulation (Haller et al., 2008). Catch-up saccades are highly controlled and executed without visual feedback, with their precision essential for effective pursuit. Research has shown that their amplitudes are closely aligned with both positional error and retinal slip (De Brouwer et al., 2002). For a comprehensive discussion of saccade-pursuit interactions, see the recent review by Goettker and Gegenfurtner (2021).

Interestingly, studies have demonstrated that the horizontal component of pursuit eye movements is more accurate than the vertical component (Rottach et al., 1996; Grönqvist et al., 2006; Ingster-Moati et al., 2009; Ke et al., 2013). This increased accuracy in horizontal tracking has been observed not only for targets moving strictly along the horizontal or vertical axes but also for horizontal and vertical components in bidirectional pursuit sequences (Ke et al., 2013). Moreover, horizontal pursuit mechanisms are found to develop earlier in children, supporting a developmental asymmetry in pursuit capabilities (Grönqvist et al., 2006). These directional differences align with findings indicating distinct neurophysiological substrates for horizontal and vertical pursuit pathways (Saito and Sugimura, 2020; Kettner et al., 1996; Chubb et al., 1984), which in turn suggest independent feedback control mechanisms. For instance, Rottach et al. (1996) demonstrated that horizontal smooth pursuit in healthy subjects is more accurate and exhibits lower variability than vertical pursuit, with these differences persisting across horizontal, vertical, and diagonal target trajectories. This asymmetry is further supported by Rottach et al. (1997), who studied Niemann-Pick type C disease and found that horizontal and vertical saccades are independently affected, implying separate neural feedback loops for each axis. These findings suggest that horizontal pursuit relies on more robust control circuits, potentially involving the medial superior temporal area and pontine nuclei, while vertical pursuit engages distinct brainstem and cerebellar pathways, which may be less precise or more susceptible to disruption (Saito and Sugimura, 2020; Kettner et al., 1996). Such independent control underscores the functional and developmental differences observed in pursuit performance.

Aberrant smooth pursuit eye movements, characterized by impaired tracking of a moving target, serve as sensitive biomarkers for neurological and psychiatric disorders. In schizophrenia, reduced pursuit gain—eye velocity divided by target velocity—and increased phase lag reflect impaired motion processing in the middle temporal and medial superior temporal areas (MT/MST) and disrupted prefrontal control, particularly in the dorsolateral prefrontal cortex (DLPFC) (Chen et al., 1999; O’Driscoll and Callahan, 2008; Lencer et al., 2015). Cerebellar ataxias, such as spinocerebellar ataxia type 3 (SCA3), exhibit low-gain pursuit, irregular tracking, and frequent catch-up saccades, stemming from floccular and posterior vermal dysfunction that impairs motor learning and predictive pursuit (Miles and Fuller, 1975; Buttner et al., 1998). In Parkinson’s disease, pursuit gain is mildly reduced, especially for unpredictable target trajectories, due to basal ganglia deficits disrupting movement initiation and predictive control (Lekwuwa et al., 1999; Frei, 2021). Attention-deficit/hyperactivity disorder (ADHD) patients show fluctuating pursuit gain and elevated velocity errors, linked to frontoparietal attentional control impairments (Karatekin, 2007). Unlike saccadic disorders, which produce discrete spatial errors—e.g., hypometria, square-wave jerks—pursuit dysfunction manifests as continuous tracking inaccuracies, notably altered gain and phase delay, quantifiable via high-resolution eye-tracking (Thier and Ilg, 2005).
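Pursuit gain, defined above as eye velocity divided by target velocity, can be estimated from sampled velocity traces. The following minimal sketch takes the median of the sample-wise ratio after discarding samples that are likely to belong to catch-up saccades. The function name, the speed threshold used to exclude saccadic samples, and the minimum target speed are assumptions chosen for illustration and should be adapted to the recording setup.

```python
from statistics import median


def pursuit_gain(eye_velocity, target_velocity,
                 saccade_speed_threshold=150.0, min_target_speed=1.0):
    """Median ratio of eye velocity to target velocity (both in deg/s).

    Samples are skipped when the target is almost stationary (the ratio is
    ill-defined) or when eye speed exceeds the assumed saccade threshold.
    """
    ratios = []
    for eye, target in zip(eye_velocity, target_velocity):
        if abs(target) < min_target_speed:
            continue  # avoid dividing by a near-zero target velocity
        if abs(eye) > saccade_speed_threshold:
            continue  # probably a catch-up saccade, not smooth pursuit
        ratios.append(eye / target)
    if not ratios:
        raise ValueError("no valid pursuit samples left after exclusion")
    return median(ratios)


# Hypothetical traces: a 20 deg/s target tracked at 16 deg/s, with one
# catch-up saccade sample (300 deg/s) that should be ignored.
target = [20.0] * 10
eye = [16.0] * 8 + [300.0, 16.0]
print(f"pursuit gain = {pursuit_gain(eye, target):.2f}")
```

The median is used here instead of the mean so that a few residual saccadic samples have little influence on the estimate. For sinusoidal targets, gain and phase lag are often estimated jointly, which this sketch does not attempt.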

2.3 Fixational eye movements

A fixation is defined as a period during which gaze is directed at a specific location, projecting the image onto the high-resolution processing region of the retina, the fovea centralis. Despite efforts to maintain a steady gaze, the eyes exhibit continuous, involuntary motion, influencing much of our visual experience. This creates a contradiction in the visual system: while gaze remains fixed on an object, the eyes are never entirely still. The precise roles of fixational eye movements—namely, tremors, drifts, and microsaccades (Martinez-Conde et al., 2004; Martinez-Conde, 2006) — in the visual process remain unclear and are the subject of ongoing discussion. It is believed that one function of these movements is to counteract neural adaptation by introducing small, random displacements of the retinal image. This helps ensure continuous stimulation of different photoreceptor cells in the fovea, preventing perceptual fading that would occur if the retinal image remained stationary (Pritchard, 1961). Additionally, fixational eye movements are proposed to play a role in the acquisition and processing of visual information by optimizing retinal sampling and enhancing the fine details of the visual scene (Klein and Ettinger, 2019).

2.3.1 Tremors

Ocular micro-tremors, sometimes called physiological nystagmus, are tiny, high-frequency, involuntary eye oscillations that occur naturally in healthy eyes. These movements typically vibrate at 70–100 cycles per second—though some studies report a broader range of 50–200 cycles per second—with amplitudes smaller than 0.01° (Martinez-Conde et al., 2004; Collewijn and Kowler, 2008; Klein and Ettinger, 2019). As a normal feature of vision, micro-tremors are not a sign of disease but one of three types of fixational eye movements, alongside slow drifts and microsaccades, which together maintain clear vision during steady gaze. They originate from the rapid, asynchronous firing of fast-twitch motor units in the extraocular muscles, controlled by motor neurons in the brainstem’s motor nuclei (Ezenman et al., 1985; Collewijn and Kowler, 2008).

The neuroanatomy of micro-tremors centers on the brainstem’s extraocular motor nuclei—abducens, oculomotor, and trochlear—which send precise signals to the six extraocular muscles that position the eyes (Leigh and Zee, 2015). These nuclei produce high-frequency firing patterns that create the microscopic oscillations observed in micro-tremors (Ezenman et al., 1985). The pontine reticular formation, a brainstem region involved in coordinating gaze, likely refines the timing of these signals, contributing to the tremors’ rapid frequency (Sparks, 2002). Often described as neural “noise” in the ocular motor system, micro-tremors may serve a functional role. One hypothesis suggests they facilitate stochastic resonance, where subtle noise enhances the detection of faint visual signals, such as slight environmental shifts (Simonotto et al., 1997; Hennig et al., 2002). This idea remains speculative, however, and further research is needed to confirm its significance in visual processing.

Early research proposed that micro-tremors in each eye were independent (Riggs and Ratliff, 1951; Ditchburn and Ginsborg, 1953). More recent studies, however, have observed partial synchronization, evidenced by peaks in spectral coherence between the two eyes, likely mediated by shared neural pathways like the medial longitudinal fasciculus (Spauschus et al., 1999). This brainstem structure connects the abducens and oculomotor nuclei, enabling coordinated eye movements. The mechanisms driving this synchronization are not fully understood, highlighting an active area of investigation.

Studying micro-tremors is challenging because their small amplitude often falls below the noise threshold of standard eye-tracking systems, their high frequency demands high sampling rates, and they can overlap with other eye movements, such as drifts or microsaccades (Klein and Ettinger, 2019). Despite these difficulties, advancements in high-precision technologies, including video-based systems, scleral search coils, and specialized devices, have enabled accurate measurements, confirming the tremors’ small amplitude and rapid frequency (Collewijn and Kowler, 2008; McCamy et al., 2013; McCamy et al., 2014). These movements are thought to help prevent visual fading, known as Troxler fading, during fixation (Engbert and Kliegl, 2004). By introducing subtle motion across the retina, micro-tremors may refresh visual input, supporting sharp, high-resolution vision and potentially aiding tasks requiring fine visual detail.

2.3.2 Microsaccades

Microsaccades are small-amplitude saccadic eye movements, occurring approximately once or twice per second (Rolfs, 2009). While traditionally considered a type of fixational eye movement, emerging research suggests that microsaccades share neural pathways with larger saccades (Hafed, 2011) and exhibit many similar characteristics (Abadi and Gowen, 2004; Otero-Millan et al., 2013), notably adhering to the main sequence (Zuber et al., 1965). As such, microsaccades may be viewed as part of the broader continuum of saccadic movements. Interestingly, microsaccades are often regarded as involuntary or unconscious, yet they are regulated by the same endogenous control mechanisms that govern larger saccades (Collewijn and Kowler, 2008). Furthermore, the assumption that humans are unaware of their microsaccades requires reconsideration, as individuals can exert a degree of control over them with appropriate training. For example, studies have demonstrated that individuals with experience in laboratory fixation tasks are capable of suppressing their microsaccades for several seconds during tasks requiring high visual acuity (Bridgeman and Palca, 1980; Steinman et al., 1967; Winterson and Collewijn, 1976).
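Because microsaccades resemble small saccades, they are commonly detected with velocity-based criteria. The sketch below illustrates one such approach under stated assumptions: velocity is computed over a five-sample moving window, the noise level is estimated with a median-based standard deviation, and samples are flagged when they leave an elliptic threshold in velocity space. The threshold multiplier of 6, the minimum duration of 3 samples, and the synthetic trace are illustrative choices, not recommendations from the studies cited above.

```python
import math
from statistics import median


def velocities(samples, dt):
    """Five-sample moving-window velocity (deg/s); the two edge samples stay 0."""
    v = [0.0] * len(samples)
    for i in range(2, len(samples) - 2):
        v[i] = (samples[i + 2] + samples[i + 1]
                - samples[i - 1] - samples[i - 2]) / (6.0 * dt)
    return v


def median_sd(v):
    """Median-based spread estimate, robust to the rare high-velocity samples."""
    m = median(v)
    return math.sqrt(max(median([x * x for x in v]) - m * m, 0.0))


def detect_microsaccades(x, y, sampling_rate_hz, lam=6.0, min_samples=3):
    """Return (onset_index, offset_index) pairs of candidate microsaccades."""
    dt = 1.0 / sampling_rate_hz
    vx, vy = velocities(x, dt), velocities(y, dt)
    eta_x, eta_y = lam * median_sd(vx), lam * median_sd(vy)
    if eta_x == 0.0 or eta_y == 0.0:
        raise ValueError("velocity noise estimate is zero; cannot set a threshold")
    above = [(a / eta_x) ** 2 + (b / eta_y) ** 2 > 1.0 for a, b in zip(vx, vy)]
    events, start = [], None
    for i, flag in enumerate(above + [False]):  # sentinel closes a trailing run
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            if i - start >= min_samples:
                events.append((start, i - 1))
            start = None
    return events


# Synthetic 500 Hz trace: small jitter plus one 0.3 degree horizontal step
# spread over five samples starting at sample 100 (illustrative values only).
x = [0.005 * math.sin(0.7 * n) + 0.06 * min(max(n - 100, 0), 5) for n in range(300)]
y = [0.005 * math.cos(0.9 * n) for n in range(300)]
print(detect_microsaccades(x, y, sampling_rate_hz=500))
```

In practice, detections are usually also screened for binocular overlap and for a minimum interval between events. These steps are omitted here for brevity.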

The neuroanatomy underlying microsaccades involves a distributed network of brain regions that overlaps significantly with the neural circuitry responsible for larger saccadic eye movements. Key structures include the superior colliculus, which integrates sensory and motor signals to initiate microsaccades (Hafed, 2011), and the frontal eye fields, which contribute to their modulation, particularly in voluntary contexts (Tian et al., 2016). The brainstem, particularly the pontine reticular formation and the oculomotor nuclei, plays a critical role in generating the precise motor commands for these rapid eye movements (Scudder et al., 2002). Additionally, the cerebellum fine-tunes microsaccade amplitude and timing, ensuring their accuracy during fixation tasks (Otero-Millan et al., 2011). Neuroimaging and electrophysiological studies suggest that the same cortical and subcortical pathways that govern saccades are recruited for microsaccades, supporting the view that they are part of a continuum of oculomotor behavior (Martinez-Conde et al., 2009). This shared neural substrate enables the endogenous modulation of microsaccades, as seen in trained individuals who can suppress them to enhance visual acuity in specific tasks (Steinman et al., 1973).

Several studies have examined how anticipation affects microsaccade frequency. Betta and Turatto (2006) demonstrated that anticipating a motor response could reduce the microsaccade rate, while uncertainty about the motor response did not have the same effect (Rolfs, 2009). Similarly, anticipatory responses to sensory events can lead to a phenomenon called oculomotor freezing, characterized by a transient reduction in spontaneous microsaccade frequency lasting 100–400 milliseconds after the onset of an auditory, tactile, or visual stimulus.

The functional role of microsaccades remains a highly debated issue in the literature. Cornsweet (1956) and Krauskopf et al. (1960) hypothesized that microsaccades help counteract the random drift of the eyes, serving a corrective role in both fixation position and binocular disparity, that is, the slight difference between the retinal images of the left and right eyes. Other studies suggested that microsaccades may mitigate retinal adaptation by maintaining motion on the retina with respect to the visual environment (Ditchburn and Ginsborg, 1952; Riggs et al., 1953). Additional research suggests that, over short time scales, microsaccades prevent retinal adaptation by promoting super-diffusive dynamics of gaze, in which the gaze trajectory during fixation spreads faster than a normal random walk. Over longer time scales, sub-diffusive dynamics of gaze, characterized by a slower spread of gaze trajectories than a normal random walk, mitigate fixation errors and reduce binocular disparity more effectively than an uncorrelated random walk (Engbert and Kliegl, 2004; Moshel et al., 2008; Roberts et al., 2013). Finally, some authors remain skeptical of the idea that microsaccades serve a unique role in sustaining fixation or preventing retinal adaptation, suggesting that these functions could be adequately fulfilled by smooth pursuit or slow drift movements (Collewijn and Kowler, 2008; Kowler, 2011; Klein and Ettinger, 2019). In fact, some researchers have even suggested that microsaccades represent an evolutionary enigma (Kowler and Steinman, 1980; Martinez-Conde et al., 2004).
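The distinction between super-diffusive and sub-diffusive gaze dynamics can be made concrete with the mean squared displacement (MSD), which for an uncorrelated random walk grows linearly with the time lag. The following is a minimal sketch on synthetic trajectories, not the analysis pipeline of the studies cited above: the function names, the lag range, and the three toy processes are illustrative assumptions. A log-log slope near 1 indicates an uncorrelated random walk, a slope above 1 a faster (super-diffusive) spread, and a slope below 1 a slower (sub-diffusive) spread.

```python
import numpy as np

def mean_squared_displacement(trajectory, lags):
    """Mean squared displacement (MSD) of a gaze trajectory at each lag, in samples."""
    traj = np.asarray(trajectory, dtype=float)
    if traj.ndim == 1:
        traj = traj[:, None]  # treat a 1-D trace as a single-column trajectory
    return np.array([
        np.mean(np.sum((traj[lag:] - traj[:-lag]) ** 2, axis=1)) for lag in lags
    ])

def scaling_exponent(trajectory, lags):
    """Slope of log(MSD) against log(lag); about 1 for an uncorrelated random walk."""
    msd = mean_squared_displacement(trajectory, lags)
    slope, _intercept = np.polyfit(np.log(lags), np.log(msd), 1)
    return float(slope)

rng = np.random.default_rng(0)
n_samples = 20000
lags = np.array([1, 2, 4, 8, 16, 32])  # illustrative lag range (assumption)

# Uncorrelated random walk: independent Gaussian steps (exponent close to 1).
random_walk = np.cumsum(rng.normal(size=(n_samples, 2)), axis=0)

# Persistent motion: constant-velocity displacement (exponent of 2, super-diffusive).
persistent = np.cumsum(np.ones((n_samples, 2)), axis=0)

# Mean-reverting motion: each sample is pulled back toward zero (exponent below 1).
noise = rng.normal(size=n_samples)
mean_reverting = np.zeros(n_samples)
for t in range(1, n_samples):
    mean_reverting[t] = 0.9 * mean_reverting[t - 1] + noise[t]

alpha_walk = scaling_exponent(random_walk, lags)
alpha_persistent = scaling_exponent(persistent, lags)
alpha_reverting = scaling_exponent(mean_reverting, lags)
print(alpha_walk, alpha_persistent, alpha_reverting)
```

With recorded fixation data, the same exponent would be estimated separately over short and long lag ranges, since the cited work describes different regimes at different time scales.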

Part of the confusion surrounding the functional role of microsaccades stems from ambiguity in their definition. Traditionally, microsaccades are distinguished from regular saccades by amplitude thresholds, with movements below a certain threshold classified as microsaccades. Early studies defined microsaccades as movements ranging from approximately 0.20–0.25° (Boyce, 1967; Cunitz and Steinman, 1969; Ditchburn and Foley-Fisher, 1967). Recent studies, however, have expanded the threshold to include movements up to

2.3.3 Drifts

Ocular drifts are slow, continuous eye movements occurring during inter-saccadic intervals, producing gaze trajectories that approximate a random walk—small, stochastic displacements with varying directions and amplitudes, typically shifting the retinal image by approximately 0.13° at velocities below 0.5° per second (Cornsweet, 1956; Engbert and Kliegl, 2004; Collewijn and Kowler, 2008; Klein and Ettinger, 2019). While often stochastic, drifts may exhibit subtle directional influences from visual or attentional factors. Neuroanatomically, they stem from tonic activity in the brainstem’s neural integrator, particularly the nucleus prepositus hypoglossi (NPH) and medial vestibular nucleus (MVN), which sustain low-frequency motor neuron firing to extraocular muscles (Cannon and Robinson, 1987; Fuchs et al., 1988). The superior colliculus (SC) modulates fixational stability, while the cerebellar flocculus and vermis fine-tune drift amplitude via feedback (Hafed et al., 2009; Arnstein et al., 2015). Drifts are involuntary and, alongside microsaccades, help maintain fixation, especially when microsaccades are limited, and contribute to retinal image motion that prevents neural adaptation, supporting continuous perception of visual detail (Engbert and Mergenthaler, 2006; Rucci and Victor, 2015).

Research investigating the respective roles of drift and microsaccades in correcting fixation disparity and stabilizing overall fixation position has developed along parallel lines. Early studies suggested that only microsaccades could adjust both binocular disparity and inaccurate fixation positions. However, later findings demonstrated that drifts also contribute to these corrections, particularly in the horizontal direction—for fixation position (Steinman et al., 1967) and fixation disparity (St.Cyr and Fender, 1969). More recent evidence indicates that both microsaccades and drifts can adjust fixation position on a timescale greater than 100 milliseconds, though only microsaccades appear to be involved in correcting fixation disparity over this relatively extended timescale (Engbert and Kliegl, 2004). The relative roles of microsaccades and drifts in maintaining stable binocular fixation were further examined by Møller et al. (2006), whose findings suggest that drift-related eye movements—known as slow control—primarily maintain the alignment of the visual line of sight within the foveal center during steady fixation.

A recent body of research has explored the role of inter-saccadic fixational eye movements—specifically, ocular drifts and tremors—in forming visual spatial representations (Aytekin et al., 2014; Rucci and Poletti, 2015; Poletti et al., 2015). Evidence indicates that the Brownian, or random-like, motion generated by these movements converts the static spatial information of the visual scene into a dynamic spatio-temporal signal on the retina. This movement causes retinal photoreceptors to encounter fluctuating luminance inputs, enhancing high spatial frequencies that emphasize object contours within the environment (Rucci and Victor, 2015). Thus, inter-saccadic fixational movements contribute to visual processing by encoding spatial information through temporal modulation, aiding in the extraction of features at early stages of visual processing (Rucci and Poletti, 2015; Rucci and Victor, 2015).
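The idea that random fixational motion turns static spatial structure into a temporal signal, with stronger modulation at higher spatial frequencies, can be illustrated with a toy simulation. This is only a sketch under simplifying assumptions and not the model used in the cited studies: the stimulus is a one-dimensional sinusoidal grating, drift is a Gaussian random walk with an arbitrary step size, and receptors are idealized points at fixed retinal positions. All names and parameter values are hypothetical.

```python
import numpy as np

def temporal_power(spatial_freq_cpd, drift_deg, receptor_positions_deg):
    """Mean temporal variance of luminance at fixed receptors viewing a jittering grating."""
    # Phase of the grating at each receptor (columns) for each time sample (rows).
    phase = 2 * np.pi * spatial_freq_cpd * (
        receptor_positions_deg[None, :] + drift_deg[:, None]
    )
    luminance = np.sin(phase)
    # Variance over time at each receptor, averaged across receptors.
    return float(np.mean(np.var(luminance, axis=0)))

rng = np.random.default_rng(1)

# Brownian-like drift: cumulative sum of small Gaussian steps, in degrees (assumed scale).
drift_deg = np.cumsum(rng.normal(scale=0.001, size=200))

# Receptors spread evenly over one degree so that all grating phases are sampled.
receptors_deg = np.linspace(0.0, 1.0, 64, endpoint=False)

# Temporal power for gratings of 1, 2, and 4 cycles per degree.
powers = {f: temporal_power(f, drift_deg, receptors_deg) for f in (1, 2, 4)}
for freq, power in powers.items():
    print(f"{freq} cpd: temporal power = {power:.6f}")
```

For displacements that are small relative to the grating period, the temporal power in this toy setting grows roughly with the square of the spatial frequency, which is one way to picture the enhancement of fine detail described above.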

2.4 Vestibulo-ocular reflex

The vestibulo-ocular reflex (VOR) stabilizes the retinal image during head movements by producing compensatory eye movements in the direction opposite to head motion. This action maintains visual fixation on a static target in a stationary environment, thus preventing visual blurring. Laboratory research on the VOR has been constrained by practical considerations, notably safety requirements that limit the range and intensity of vestibular stimuli for participants. Furthermore, laboratory protocols primarily assess passive head movements in the dark, focusing on controlled conditions in which the head is physically restrained or directly manipulated (Büttner and Büttner-Ennever, 2006), which prevents neck proprioception and visual information from coming into play. In healthy humans, passive whole-body motion using a rotating chair (with low-frequency sinusoidal oscillation or persistent rotation in one direction) or passive head rotations using a torque helmet are typically employed (Collewijn and Smeets, 2000; Bronstein et al., 2015).

The vestibulo-ocular reflex (VOR) is initiated when the vestibular system detects head motion, primarily through the semicircular canals and otolith organs of the inner ear. The semicircular canals sense angular acceleration resulting from rotational head movements; fluid displacement within the canals deflects hair cells in the crista ampullaris, transducing head rotation into neural signals that encode direction and velocity (Fernandez and Goldberg, 1971). In contrast, the otolith organs—the utricle and saccule—detect linear acceleration and head tilt by transducing otoconia displacement into hair cell activation, signaling translational motion and orientation relative to gravity (Angelaki and Cullen, 2008). These vestibular signals are conveyed via the vestibular nerve to the vestibular nuclei in the medulla and pons, where input from both ears and other sensory systems is integrated (Cullen, 2012). From there, signals are transmitted through the medial longitudinal fasciculus (MLF) to the oculomotor, trochlear, and abducens nuclei. This pathway drives compensatory, conjugate eye movements in the direction opposite to head motion, thereby stabilizing retinal images during movement (Leigh and Zee, 2015). The cerebellum, particularly the flocculus, nodulus, and posterior vermis, modulates the VOR by calibrating its gain and adapting reflex responses through motor learning. This allows for precise gaze stabilization even under varying head velocities, altered visual feedback, or long-term changes in sensorimotor conditions (Lisberger, 1988).

Functionally, the VOR manifests as vestibular nystagmus, a rhythmic pattern of compensatory slow phases interrupted by quick phases during sustained head rotations (Robinson, 1977; Chun and Robinson, 1978; Barnes, 1979; Land and Tatler, 2009). The slow phase counteracts head movement by moving the eyes in the opposite direction, stabilizing the visual field. Ideally, the eye velocity during the slow phase matches the head’s velocity in the opposite direction, yielding a gain (eye velocity divided by head velocity) close to 1. The slow phase also demonstrates adaptability in response to visual or vestibular impairment, a process known as VOR adaptation or gain adjustment. For instance, when altered visual feedback is introduced, the slow phase incrementally adjusts its gain to restore stability, reflecting adaptation under changing conditions (Shelhamer et al., 1992). For more details on VOR adaptation mechanisms, see the review by Schubert and Migliaccio (2019).
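As a worked illustration of the gain definition, the sketch below estimates VOR gain from paired eye and head velocity traces by least-squares regression through the origin. It is a minimal example under stated assumptions: the traces are synthetic, quick phases are assumed to have been removed beforehand, and the function name `vor_gain` and the sign convention (compensatory eye velocity is opposite in sign to head velocity) are choices made here for illustration.

```python
import numpy as np

def vor_gain(eye_velocity_deg_s, head_velocity_deg_s):
    """Least-squares VOR gain: magnitude of eye velocity per unit of head velocity.

    Assumes slow-phase samples only and that compensatory eye velocity has the
    opposite sign to head velocity, hence the leading minus sign.
    """
    eye = np.asarray(eye_velocity_deg_s, dtype=float)
    head = np.asarray(head_velocity_deg_s, dtype=float)
    return float(-np.dot(eye, head) / np.dot(head, head))

# Synthetic 0.5 Hz sinusoidal head rotation sampled at 250 Hz (assumed values).
t = np.arange(0.0, 4.0, 0.004)
head = 100.0 * np.sin(2 * np.pi * 0.5 * t)  # head velocity, deg/s
eye = -0.95 * head                          # slightly under-compensating eye velocity

gain = vor_gain(eye, head)
print(f"Estimated VOR gain: {gain:.2f}")
```

Regression over the whole trace is less sensitive to noise near zero head velocity than a sample-by-sample ratio, which is why it is used in this sketch.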

In contrast, the quick phase is a rapid saccadic movement that repositions the eyes centrally after the slow phase, allowing continued compensatory slow phases during sustained head rotation. Eye and head coordination during gaze orientation can follow two strategies, depending on the influence of slow and quick phases of vestibular nystagmus on eye eccentricity (Lestienne et al., 1984). The first strategy, seen in highly alert animals, directs the gaze with head motion, known as the “look where you go” strategy. In this case, the overall eccentricity of the eye displacement in the orbit, also known as the beating field or schlagfeld, aligns with the head’s movement, as quick phases dominate the slow ones. The second strategy, “look where you came from”, involves directing the gaze opposite the head’s motion. Here, slow phases dominate, causing the beating field to shift contralaterally. These strategies represent the extremes of a spectrum, with intermediate patterns influenced by factors such as the level of alertness, behavioral context, and sensory-motor demands.

The VOR consists of rotational and translational components that stabilize vision during head movements. The rotational VOR compensates for angular rotations around the three principal axes, driven by semicircular canals detecting angular acceleration, ensuring near-complete visual stabilization during rapid movements (Leigh and Zee, 2015). The translational VOR stabilizes gaze during linear displacements (forward, backward, or lateral) via otolith organs, which detect linear acceleration and gravitational forces. However, the translational VOR is subject to limitations due to tilt-translation ambiguity, as the otolith organs respond similarly to both linear acceleration and changes in head tilt relative to gravity (Angelaki and Yakusheva, 2009). Resolving this ambiguity requires multimodal integration of signals from the semicircular canals, visual inputs, target distance, and image eccentricity (Paige and Tomko, 1991; Telford et al., 1997; Angelaki, 1998). These findings suggest that the VOR is only one contributor to eye stabilization, which relies on multimodal sensory integration combining vestibular, visual, and proprioceptive information to optimize both precision and adaptability. Additionally, distinct VOR mechanisms are likely engaged during actively generated head movements, as opposed to passively induced ones (Cullen and Roy, 2004; Büttner and Büttner-Ennever, 2006). These perspectives contrast with previous findings from controlled laboratory settings and will be elaborated in Section 3.1.2.
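The tilt-translation ambiguity can be illustrated with a short calculation. Under the simplifying assumption that an otolith organ acts as an ideal accelerometer sensing the component of gravito-inertial acceleration along one head axis, a static head tilt by an angle θ produces the same signal along that axis as a linear acceleration of g·sin(θ) with the head upright. The sketch below is only this idealized physics, not a model of otolith afferents; the function names are hypothetical.

```python
import math

G = 9.81  # standard gravitational acceleration, m/s^2

def shear_from_tilt(tilt_deg):
    """Gravity component along the interaural axis for a static roll tilt (ideal sensor)."""
    return G * math.sin(math.radians(tilt_deg))

def tilt_equivalent_to_acceleration(acceleration_m_s2):
    """Static tilt (degrees) producing the same interaural signal as a linear acceleration."""
    return math.degrees(math.asin(acceleration_m_s2 / G))

tilt_deg = 10.0
equivalent_acceleration = shear_from_tilt(tilt_deg)
recovered_tilt = tilt_equivalent_to_acceleration(equivalent_acceleration)

print(f"A {tilt_deg:.0f} deg tilt and a {equivalent_acceleration:.2f} m/s^2 "
      f"lateral acceleration give the same interaural signal.")
```

Because the two situations are indistinguishable along that single axis, an additional signal, such as canal input about the rotation that produced the tilt, is needed to tell them apart, consistent with the multimodal integration described above.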

Abrupt head movements, known as head impulses, challenge the vestibulo-ocular reflex (VOR) to stabilize vision by producing eye movements that counteract rapid head rotations, typically at velocities of 150–300° per second. The VOR relies primarily on the inner ear’s semicircular canals (Leigh and Zee, 2015). During passive head impulses, such as when a clinician swiftly turns a patient’s head, the reflex depends almost entirely on this vestibular input, with little influence from neck muscle feedback or voluntary control. This isolation highlights the VOR’s ability to maintain gaze stability, achieving a gain—eye velocity divided by head velocity—close to 1 in healthy individuals, ensuring smooth compensatory eye movements that keep the visual world steady (Halmagyi and Curthoys, 1988). When vestibular disorders like vestibular neuritis disrupt this process, reduced gain causes the eyes to lag behind head motion, leading to retinal slip—blurred vision as the image drifts across the retina—often corrected by saccades to refocus on the target (Strupp and Brandt, 2009).

The advent of high-frequency video head impulse testing (vHIT) has transformed how clinicians evaluate VOR performance during these rapid movements. Using high-speed infrared cameras sampling at 250–500 Hz, vHIT captures eye and head movements with high spatial precision. This technology quantifies VOR gain and detects covert saccades, which are quick, involuntary eye adjustments that compensate for inadequate reflex performance, offering a sensitive measure of vestibular health (MacDougall et al., 2009). In unilateral vestibular hypofunction, such as in vestibular neuritis, vHIT reveals diminished gain and corrective saccades when the head turns toward the affected side. Bilateral vestibulopathy, often triggered by ototoxic drugs like aminoglycosides, shows severely reduced gain in both directions, resulting in oscillopsia, a disorienting visual motion that disrupts daily activities like walking (Zingler et al., 2007). Central disorders, particularly those affecting the cerebellum’s flocculus and nodulus, impair the brain’s ability to fine-tune VOR gain, leading to inconsistent eye responses across head velocities due to disrupted cerebellar modulation (Migliaccio et al., 2004; Kheradmand and Zee, 2011).

These impairments underscore the VOR’s vulnerability to disruptions in the semicircular canals, brainstem circuits, or cerebellar pathways, all of which can compromise the reflex’s ability to stabilize gaze. By pinpointing whether deficits stem from peripheral issues, like inner ear damage, or central causes, such as cerebellar lesions, vHIT provides critical diagnostic clarity, guiding tailored vestibular rehabilitation strategies to restore gaze stability (Tarnutzer et al., 2016; Sulway and Whitney, 2019).

2.5 Optokinetic reflex

The optokinetic reflex (OKR) is a visually mediated reflex that engages when a large segment of the visual field moves relative to the eyes, typically triggered when the surrounding environment appears to move while the observer remains stationary (Fletcher et al., 1990; Büttner and Büttner-Ennever, 2006; Tarnutzer and Straumann, 2018). This reflex primarily responds to “retinal slip”, the relative movement of images across the retina during both environmental and self-induced motion (Fletcher et al., 1990). The OKR works synergistically with the vestibulo-ocular reflex (VOR) to process optic flow, responding either to rotational motion around the individual (rotational OKR) or to fronto-parallel translational motion (translational OKR). This synergy is especially important for low-frequency motions below 0.2 Hz, for which the gain of the VOR is low (Fletcher et al., 1990; Schweigart et al., 1997; Büttner and Büttner-Ennever, 2006; Land and Tatler, 2009). Although OKR and VOR share neural substrates, the OKR operates with a longer latency, around 150 milliseconds, due to its reliance on visual input (Land and Tatler, 2009).

The optokinetic reflex (OKR) is driven by a complex neural network involving the retina, brainstem, and cerebellum (Cohen et al., 1977; Leigh and Zee, 2015). Retinal ganglion cells detect large-field visual motion and transmit signals through the accessory optic system, including the nucleus of the optic tract (NOT) and dorsal terminal nucleus, which process directional motion cues (Simpson, 1984; Mustari and Fuchs, 1990). These brainstem structures integrate sensory input and collaborate with the vestibular nuclei to produce compensatory eye movements (Büttner-Ennever and Horn, 2002; Giolli et al., 2006). The cerebellum, particularly the flocculus and paraflocculus, fine-tunes OKR responses by modulating motor output based on visual feedback and predictive learning, ensuring precise gaze stabilization during head or environmental motion (Waespe et al., 1983; Voogd and Barmack, 2006).

Experimentally, the OKR is commonly induced by rotating a striped drum—known as the Bárány nystagmus drum—around the subject, who observes alternating black and white stripes or dot patterns (Fletcher et al., 1990; Distler and Hoffmann, 2011). This setup typically elicits a reflexive, oscillatory eye movement characterized by an alternating sequence of quick and slow phases (Garbutt et al., 2003; Büttner and Büttner-Ennever, 2006). Quick phases are fast, ballistic eye movements directed opposite to the direction of the visual flow. These movements share properties with ocular saccades and function to reposition the eyes toward a central orbital position, countering the visual motion stimulus (Fletcher et al., 1990; Kaminiarz et al., 2009). In contrast, the slow phases are low-velocity compensatory movements that align with the stimulus motion. The correction, however, is not perfect, as the gain—defined as the ratio of slow-phase velocity to stimulus velocity—is less than one and decreases as stimulus speed increases (Fletcher et al., 1990; Land and Tatler, 2009).
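The gain definition for optokinetic nystagmus can be illustrated on a synthetic sawtooth trace. The sketch below separates slow phases from quick phases with a fixed velocity threshold and takes the median slow-phase velocity relative to stimulus velocity. It is a simplified example rather than a validated detection method: the 100°/s threshold, the sampling rate, the function name `okn_gain`, and the idealized instantaneous quick phases are all assumptions made for illustration.

```python
import numpy as np

def okn_gain(eye_position_deg, sampling_rate_hz, stimulus_velocity_deg_s,
             quick_phase_threshold_deg_s=100.0):
    """Slow-phase gain: median slow-phase eye velocity divided by stimulus velocity."""
    # Eye velocity from position by finite differences, converted to deg/s.
    velocity = np.gradient(np.asarray(eye_position_deg, dtype=float)) * sampling_rate_hz
    # Samples slower than the threshold are treated as slow phase (assumed criterion).
    slow_phase = np.abs(velocity) < quick_phase_threshold_deg_s
    return float(np.median(velocity[slow_phase]) / stimulus_velocity_deg_s)

sampling_rate_hz = 500
stimulus_velocity_deg_s = 30.0
slow_phase_velocity_deg_s = 24.0  # eyes follow the stimulus at 80% of its speed

# Sawtooth: 0.5 s slow phases in the stimulus direction, reset by instantaneous quick phases.
sample_index = np.arange(4 * sampling_rate_hz)
time_in_beat_s = (sample_index % 250) / sampling_rate_hz
eye_position_deg = slow_phase_velocity_deg_s * time_in_beat_s - 6.0

gain = okn_gain(eye_position_deg, sampling_rate_hz, stimulus_velocity_deg_s)
print(f"Estimated OKN slow-phase gain: {gain:.2f}")
```

Real recordings contain noise and quick phases of finite duration, so a dedicated saccade-detection step would normally replace the fixed threshold used here.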

From a computational perspective, three primary models explain the alternation between quick and slow phases in optokinetic nystagmus:

Studies of the optokinetic reflex (OKR) primarily focus on its slow-phase components, often considered analogous to smooth pursuit (Robinson, 1968; Klein and Ettinger, 2019). The slow phase consists of two components: the direct component, or ocular following response (Büttner and Kremmyda, 2007), and the indirect component, also known as the velocity-storage mechanism (Raphan et al., 1979; Fletcher et al., 1990; Büttner and Büttner-Ennever, 2006). Despite similarities to smooth pursuit, these movements differ in key aspects. The direct component has a much shorter onset latency (60–70 ms; Büttner and Kremmyda, 2007), is triggered by motion across a large visual field rather than a single target, and is reflexive rather than volitional. In humans, it accounts for most reflexive OKR movements at velocities up to 120°/s (Büttner and Büttner-Ennever, 2006; Büttner and Kremmyda, 2007). The indirect component, in contrast, develops gradually during sustained stimulation, integrating visual, vestibular, and somatosensory inputs to maintain slow-phase eye velocity (Raphan et al., 1979; Fletcher et al., 1990). Although the direct component dominates during initial stimulation (Van den Berg and Collewijn, 1988), the velocity-storage function of the indirect component is evident in optokinetic after-nystagmus, a gradually diminishing nystagmus that continues even after an abrupt transition to complete darkness, reflecting its sustained influence (Magnusson et al., 1985; Büttner and Büttner-Ennever, 2006; Tarnutzer and Straumann, 2018).

Abnormalities in the optokinetic reflex (OKR) are valuable diagnostic markers in a range of neurological and vestibular disorders. In cerebellar ataxias, particularly spinocerebellar ataxia type 1 (SCA1), OKR gain—defined as the ratio of slow-phase eye velocity to stimulus velocity—is typically reduced. The slow phases may appear irregular due to floccular dysfunction, which impairs the velocity storage mechanism that sustains the reflex (Leigh and Zee, 2015; Lal and Truong, 2019). These abnormalities reflect cerebellar contributions to OKR calibration and integration with vestibular signals. In vestibular neuritis, OKR responses become asymmetrical, with significantly diminished gain toward the side of the lesion. This reflects impaired visual-vestibular integration within the vestibular nuclei, particularly in the absence of peripheral vestibular input (Strupp and Brandt, 2009). Progressive supranuclear palsy (PSP), on the other hand, is associated with profound OKR impairment, especially for vertical motion stimuli, where slow-phase responses are either absent or show severely reduced gain. This is attributed to midbrain degeneration, notably involving the nucleus of the optic tract (NOT) and rostral interstitial nucleus of the medial longitudinal fasciculus (riMLF) (Chen et al., 2010; Leigh and Zee, 2015). OKR dysfunction specifically reflects compromised large-field visual motion processing and its integration with cerebellar and brainstem control systems. OKR gain and slow-phase variability, typically measured using rotating drum setups or full-field optokinetic stimulation, serve as sensitive, non-invasive biomarkers for both central and peripheral pathologies (Büttner and Kremmyda, 2007).

2.6 Vergence eye movements

Vergence eye movements are vital for binocular vision, enabling both eyes to align precisely on objects at varying distances. This alignment produces a single, fused visual image and supports stereoscopic depth perception, essential for activities such as reading, driving, or navigating complex environments (Leigh and Zee, 2015). Unlike saccades, which rapidly shift gaze, or smooth pursuit, which tracks moving objects, vergence involves simultaneous rotation of the eyes in opposite directions. Convergence directs the eyes inward for near objects, while divergence directs them outward for distant ones. These movements depend on four interdependent mechanisms—fusional, accommodative, proximal, and tonic—each responding to distinct visual or perceptual cues (Schor and Ciuffreda, 1983).

Fusional vergence, driven by retinal disparity, eliminates image misalignment between the eyes to maintain a single percept, proving vital for dynamic tasks like tracking moving objects (Schor and Ciuffreda, 1983). Accommodative vergence, linked to lens focusing, initiates convergence when ciliary muscles contract to sharpen a blurred image, functioning effectively even in monocular viewing or low-contrast conditions (Fincham and Walton, 1957). Proximal vergence, triggered by perceived object nearness through cues like object size or looming motion, enables rapid eye pre-alignment before disparity or blur cues fully engage, such as when approaching a book to read (Rosenfield and Rosenfield, 1997). Tonic vergence establishes a baseline eye alignment through sustained extraocular muscle tone, maintaining stable posture during rest or minimal visual stimulation (Schor, 1985). These mechanisms work together seamlessly. For instance, during reading, accommodative vergence initiates convergence to focus on text, fusional vergence fine-tunes alignment for single vision, proximal vergence adjusts to the perceived page distance, and tonic vergence ensures stable eye posture (Ciuffreda and Tannen, 1995).

A sophisticated neural network coordinates these vergence mechanisms. The midbrain supraoculomotor area, near the oculomotor nucleus, encodes vergence angle and velocity, integrating disparity, blur, and proximity cues to govern fusional, accommodative, and proximal vergence (Mays, 1984). The superior colliculus aligns vergence with saccades for smooth gaze shifts between near and far objects, while the cerebellum, through its vermis and flocculus, calibrates interactions to prevent misalignment (Gamlin, 2002). Cortical regions process complex cues: the visual cortex handles disparity for fusional vergence, the parietal cortex processes depth, and the frontal eye fields integrate vergence with saccades (Cumming and DeAngelis, 2001). The brainstem’s pontine reticular formation and pretectal area orchestrate the near triad, linking accommodative vergence to lens accommodation and pupillary constriction (Leigh and Zee, 2015). Disparity-sensitive neurons in the visual cortex drive fusional vergence, blur-sensitive pathways via the Edinger-Westphal nucleus trigger accommodative vergence, the middle temporal area processes motion and depth for proximal vergence, and sustained midbrain motor neuron activity maintains tonic vergence (Gamlin, 2002; Cumming and DeAngelis, 2001).

Physiologically, vergence relies on extraocular muscles. The medial rectus muscles, controlled by the oculomotor nerve, power convergence, while the lateral rectus muscles, controlled by the abducens nerve, facilitate divergence (Von Noorden, 1996). Vergence operates more slowly than saccades, achieving velocities of 10–20° per second with latencies of 160–200 milliseconds, relying on visual feedback from disparity and blur (Leigh and Zee, 2015). The near triad integrates accommodative vergence with autonomic processes: accommodation sharpens focus through ciliary muscle contraction, and pupillary constriction enhances depth of field via the sphincter pupillae (Von Noorden, 1996). Fusional vergence employs fine motor adjustments to align retinal images, proximal vergence initiates broader movements based on perceptual cues, and tonic vergence maintains stability through muscle spindle feedback (Schor, 1985). In young, healthy adults, convergence amplitude typically reaches 25 to 30 prism diopters—a unit measuring eye deviation—while divergence amplitude ranges from 6 to 10 prism diopters, reflecting the greater physiological demand for near vision. These amplitudes may decrease with age or in pathological conditions (Hung et al., 1986).
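To relate the prism-diopter amplitudes above to angles, recall that one prism diopter corresponds to a deviation of 1 cm at a distance of 1 m, so an amplitude of Δ prism diopters corresponds to an angle of arctan(Δ/100). The sketch below applies this conversion and also computes the geometric vergence angle for a target on the midline. The interpupillary distance of 63 mm and the viewing distance of 40 cm are illustrative assumptions, and the function names are hypothetical.

```python
import math

def prism_diopters_to_degrees(prism_diopters):
    """Angular deviation in degrees for a given amplitude in prism diopters."""
    return math.degrees(math.atan(prism_diopters / 100.0))

def vergence_angle_deg(interpupillary_distance_m, target_distance_m):
    """Angle between the two lines of sight for a target on the midline."""
    return math.degrees(2.0 * math.atan(interpupillary_distance_m / (2.0 * target_distance_m)))

# Convergence and divergence amplitudes quoted in the text, converted to degrees.
for amplitude in (25, 30, 6, 10):
    print(f"{amplitude} prism diopters = {prism_diopters_to_degrees(amplitude):.1f} deg")

# Vergence angle at a typical reading distance (assumed IPD and distance).
reading_angle = vergence_angle_deg(0.063, 0.40)
print(f"Vergence angle at 40 cm with a 63 mm IPD: {reading_angle:.1f} deg")
```

The second function returns the total angle between the two eyes' lines of sight, so each eye rotates by roughly half of it for a midline target.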

Clinical evaluation of vergence is crucial for diagnosing binocular vision disorders. The near point of convergence test measures the closest point at which the eyes maintain single binocular vision, typically 5–10 cm, assessing fusional and accommodative vergence. A receded near point often indicates convergence insufficiency (Scheiman et al., 2003). Vergence facility testing evaluates the ability to alternate efficiently between convergence and divergence, reflecting the adaptability of fusional and proximal vergence during prolonged near tasks (Gall et al., 1998). Prism vergence testing, using base-in and base-out prisms, quantifies the range of fusional vergence, determining the maximum disparity overcome before double vision occurs, critical for assessing compensatory capacity in latent deviations—phorias (Evans, 2021). The cover test, performed unilaterally or alternately, detects phorias and tropias by evaluating alignment under dissociated viewing conditions, revealing deficits in tonic vergence or overall coordination (Von Noorden, 1996).

Common dysfunctions include convergence insufficiency, marked by eye strain, intermittent double vision, headaches, or difficulty sustaining attention during reading or screen use, often due to impaired fusional or accommodative vergence (Scheiman et al., 2003). Other abnormalities, such as excessive convergence, limited divergence, or vergence paralysis, may stem from midbrain lesions, strabismus, or uncorrected refractive errors (Leigh and Zee, 2015). Management strategies include vision therapy targeting the deficient mechanism, prism lenses to aid compensation, or orthoptic exercises to enhance fusional reserves and vergence facility. These approaches, particularly effective for convergence insufficiency, are supported by clinical evidence (Scheiman et al., 2005; Scheiman and Wick, 2008).

3 Ecological conditions

First and foremost, the term ecological must be nuanced. Rather than striving for ecological validity in its broadest sense, an evolving concept across cognitive sciences and neurophysiology (Holleman et al., 2020), the focus here is on experimental paradigms that do not impose physical constraints on the observer’s body. Under such conditions, gaze reorientation involves coordinated movements of not only the eyes but also the head, trunk, and feet (Anastasopoulos et al., 2009; Land, 2004). In contrast to tightly controlled environments, particularly experimental settings that involve physical head restraint and in which gaze is typically studied in isolation, natural gaze behavior arises from a dynamic system that integrates vestibular, proprioceptive, and visual inputs into task-specific motor outputs.

To investigate eye movements in unconstrained settings, most studies have focused on eye-head coordination, a specific subset of the broader problem (Zangermeister and Stark, 1982; Afanador et al., 1986; Fuller, 1992; Guitton, 1992; Stahl, 1999; Stahl, 2001; Pelz et al., 2001; Einhäuser et al., 2007; Thumser et al., 2008). Coordinating gaze shifts with head movements introduces additional complexity, even within this more constrained framework (Freedman, 2008). Freedman (2008) posed key questions regarding eye-head coordination: “Are the eye and head components of gaze shifts tightly linked, or are they dissociable? What factors determine the extent of head involvement? […] When the head contributes to gaze shifts, it moves concurrently and in the same direction as the eyes, so what role does the vestibulo-ocular reflex (VOR) play?” While these issues have been extensively explored and numerous hypotheses have been proposed, they remain subjects of active investigation.

3.1 Gaze stabilizing movements

Although maintaining a relatively stable retinal image is crucial for high visual acuity, the human head is almost always in motion. Consequently, tasks that demand fine visual focus, such as reading, would be unfeasible without robust compensatory systems to offset these head movements. Fortunately, a number of mechanisms come into play to provide a stable gaze.

3.1.1 Fixational eye movements

The role of microsaccades, and even their status as a canonical constituent of eye movements, is more contentious under natural viewing conditions than when the head is restrained. Some researchers posit that microsaccades contribute to visual attention (Fischer and Weber, 1993b), enhance visual processing (Melloni et al., 2009), or may indicate levels of concentration (Buettner et al., 2019). However, others have observed that microsaccades are exceedingly rare in real-world activities. Malinov et al. (2000), for instance, analyzed eye movements during a naturalistic task and found that only 2 of the 3,375 saccades recorded could be classified as microsaccades. As Collewijn and Kowler (2008) summarize: “A special role for microsaccades seemed particularly unlikely to emerge under natural conditions, when head movements are permitted during either fixation or during the performance of active visual tasks.”

On the other hand, the precise measurement of fine eye movements, including ocular drift and micro-tremor, under natural conditions only became feasible in the 1990s with the development (Edwards et al., 1994) of the Maryland Revolving-Field Monitor (MRFM). To our knowledge, the MRFM remains the only eye tracker with a precision demonstrated to be sufficient to record these fixational eye movements during normal head movements (Aytekin et al., 2014; Rucci and Poletti, 2015). This field of research thus constitutes somewhat of a niche reserved for a few laboratories with such a set-up. However, a limitation of the MRFM system is the requirement that participants remain within the magnetic field of the device, restricting studies to tasks that involve minimal body movement.

Furthermore, during unconstrained fixation, ocular drift appears anticorrelated with involuntary head movements (Aytekin et al., 2014), effectively compensating for, and even anticipating (Poletti et al., 2015), the fixational instability of the head (Aytekin et al., 2014). This compensation, however, is only partial (Poletti et al., 2015), which keeps retinal image motion close to that experienced when the head is restrained (Poletti et al., 2015). As a result, the retinal stimulation produced by fixational head and eye movements in natural conditions retains key characteristics of the signal observed in head-fixed ocular drift, including correlated temporal structures and similar spatio-temporal retinal stimulation patterns (Roberts et al., 2013; Rucci and Poletti, 2015).

3.1.2 VOR and OKR

Introduced in Sections 2.4 and 2.5, respectively, the Vestibulo-Ocular Reflex (VOR) and Optokinetic Reflex (OKR) are two fundamental eye-stabilizing mechanisms that act to maintain retinal image stability during body movements. In brief, the VOR counteracts head movements within a stationary environment, while the OKR compensates for movements within the visual field (Robinson, 1968). In practice, both mechanisms operate in tandem to achieve visual stability. Indeed, head movements are inevitably present during natural viewing, so the VOR is highly active; these movements generate vestibular inputs but also displace the visual field (Fletcher et al., 1990). Therefore, although not predominant compared to the VOR (Pelisson et al., 1988) and with a higher latency (Collewijn and Smeets, 2000), the OKR is at least partly active as well. In sum, VOR and OKR interact naturally through visuovestibular mechanisms (Green, 2003), a phenomenon known as visually enhanced VOR, which has garnered substantial clinical interest for its potential applications (Arriaga et al., 2006; Szmulewicz et al., 2014; Rey-Martinez et al., 2018; Halmágyi et al., 2022).

Investigations of VOR and OKR in contexts of free head and body motion have revealed their multi-modal nature, i.e., numerous indirect sensory modalities are implicated in the neural circuits underlying these reflexes, suggesting a need for holistic analysis. For instance, primate studies found that neck muscle proprioception, activated during head movement, projects to neurons within the vestibular nuclei (Gdowski and McCrea, 2000). Furthermore, there is evidence that active head movement may lead to partial suppression of vestibular input through extra-retinal mechanisms (Roy and Cullen, 2004). In particular, abrupt head movements, known as head impulses, reveal differences between active (self-generated) and passive (externally applied) responses. In passive head impulses, such as those delivered by a clinician rotating the head, the VOR relies heavily on vestibular input, with minimal contribution from neck proprioception or voluntary control, making it a sensitive measure of vestibular function (Halmagyi and Curthoys, 1988). Active head impulses, where individuals initiate their own rapid head turns, engage additional mechanisms, including pre-programmed motor commands and cervical proprioceptive feedback, which can enhance VOR gain and reduce latency compared to passive conditions (Cullen and Roy, 2004).

Beyond vestibular input, active impulses incorporate pre-programmed motor commands from the brain’s motor cortex and feedback from cervical proprioceptors, which sense neck muscle activity. These contributions can increase VOR gain and reduce response latency compared to passive conditions, reflecting the brain’s ability to predict and optimize eye-head coordination (Cullen and Roy, 2004). For example, during active impulses, healthy individuals may achieve gains slightly above 1, as predictive mechanisms anticipate head motion, ensuring seamless gaze stabilization.
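To make the notion of VOR gain concrete, the following minimal sketch estimates it as the negative slope of eye velocity regressed on head velocity during a head impulse. The function name `vor_gain`, the bell-shaped impulse profile, and the gain values of 0.95 and 1.05 are illustrative assumptions, not data or methods from the cited studies.

```python
import numpy as np

def vor_gain(head_velocity, eye_velocity):
    """Estimate VOR gain as minus the slope of eye velocity regressed on head velocity."""
    head = np.asarray(head_velocity, dtype=float)
    eye = np.asarray(eye_velocity, dtype=float)
    # Least-squares fit through the origin: eye ~ -gain * head
    return -float(np.dot(head, eye) / np.dot(head, head))

# Hypothetical head impulse: bell-shaped velocity profile, 150 ms, sampled at 1 kHz
t = np.linspace(0.0, 0.15, 151)
head = 200.0 * np.sin(np.pi * t / 0.15)   # head velocity in deg/s

# Synthetic compensatory eye responses (assumed gains, for illustration only)
eye_passive = -0.95 * head
eye_active = -1.05 * head

gain_passive = vor_gain(head, eye_passive)
gain_active = vor_gain(head, eye_active)
print(f"passive gain: {gain_passive:.2f}, active gain: {gain_active:.2f}")
```

A gain of 1 corresponds to eye velocity that is equal and opposite to head velocity. Real recordings would additionally require removing saccades and accounting for response latency before the regression.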

Interestingly, during locomotion, studies report distinct compensatory roles for rotational and translational VOR components. Specifically, rotational head movements are fully compensated by the VOR, while translational motion is stabilized only within a fixation plane, such that objects in front of this plane exhibit relative motion opposite to the translation direction (Miles, 1998; Miles, 1997). This limitation implies that simultaneous stabilization of near and far objects is not achievable. Subsequent research suggests that, during ambulation, the brain resolves this through an optimized stabilization plane that maximizes visual clarity over distances (Zee et al., 2017).
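The geometry behind this limitation can be sketched with a small-angle approximation. The symbols are introduced here for illustration only and do not come from the cited studies: $v$ is the lateral translation speed of the head, $d_f$ the distance of the fixation plane, and $d$ the distance of another stationary object lying roughly straight ahead. Counting angular velocities as positive in the direction of translation, the eye rotation that stabilizes the fixation plane and the residual retinal slip of the other object are approximately:

$$
\omega_{\text{eye}} \approx -\frac{v}{d_f}, \qquad
\omega_{\text{slip}}(d) \approx -\frac{v}{d} - \omega_{\text{eye}} = -v\left(\frac{1}{d} - \frac{1}{d_f}\right)
$$

Under these assumptions the slip vanishes only at $d = d_f$. It is negative (opposite to the translation) for nearer objects, $d < d_f$, and positive for farther ones, so no single eye rotation can stabilize near and far objects at once.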

Furthermore, the incorporation of extra-vestibular information into early vestibular processing enables VOR modulation based on behavioral goals. For instance, the VOR remains robust across a range of velocities and frequencies when gaze stabilization is the primary objective. However, during intentional gaze shifts, an efference copy of the motor command temporarily suppresses the VOR (Laurutis and Robinson, 1986; Cullen, 2019). Nonetheless, vestibular feedback remains accessible to the oculomotor system, as demonstrated by the rapid recovery of VOR function following mechanical perturbations of the head during gaze shifts (Freedman, 2008; Boulanger et al., 2012). This dynamic inhibition of the VOR is thought to be a function of the gaze error, defined as the disparity between intended and actual gaze positions (Pelisson et al., 1988; Boulanger et al., 2012).

An intriguing area in the study of nystagmus fast phases is the phenomenon of the beating field shift. Specifically, research has shown (Watanabe, 2001) that during optokinetic nystagmus, the average gaze position, or beating field, shifts in the direction of the fast phases—meaning it moves opposite to the motion of the visual field. This shift has been observed not only in humans (Abadi et al., 1999; Watanabe, 2001) but also across multiple species (Schweigart, 1995; Bähring et al., 1994). A similar directional shift occurs during vestibular nystagmus, where the mean eye position shifts in the direction of head rotation (Vidal et al., 1982; Chun and Robinson, 1978).

Observations in optokinetic and vestibular nystagmus suggest that the beating field shift may be a goal-directed involuntary response, acting as a reflexive orienting mechanism toward a center of interest (Crommelinck et al., 1982; Vidal et al., 1982; Siegler et al., 1998). This shift likely helps align gaze with self-motion, enhancing target detection within the moving visual field. Siegler et al. (1998) proposed that cognitive factors influence its magnitude, reflecting an individual’s preference for allocentric or egocentric reference frames. Additionally, proprioceptive feedback modulates the beating field by adjusting fast phase amplitude and frequency during nystagmus (Botti et al., 2001).

3.2 Gaze orienting movements

In this section, we review the mechanisms that enable foveal reorientation in ecological conditions. As we will discuss, the involvement of head and sometimes hand movements adds complexity to understanding these processes.

3.2.1 Gaze shifts

Under natural conditions, while gaze-orienting eye movements can occur without significant head or body segment involvement (Freedman, 2008), head movements frequently accompany gaze shifts (Pelz et al., 2001), even for small gaze amplitudes, such as those observed during reading tasks (Kowler et al., 1992; Lee, 1999). Importantly, for large-amplitude gaze shifts, coordinated movements between the eyes and head are necessary. Fuller (1992) observed that head movements were essential for horizontal gaze shifts exceeding 40°. Below this threshold, individual differences emerged, with some participants showing a tendency to move their heads with each gaze shift, reflecting an intrinsic behavioral inclination towards head involvement in gaze changes. This variability led to the categorization of individuals as head movers and non-head movers (Fuller, 1992; Afanador et al., 1986; Stahl, 1999).

Interestingly, individual predisposition for head movement during gaze shifts was not associated with differences in ocular motor control impairments at high eccentricities (Stahl, 2001). Instead, this tendency to activate the head during gaze shifts appears to be linked to the innate representation of visual space in the central nervous system (Fuller, 1992). Several factors influence the extent of head movement in gaze shifts, including the initial eccentricity of the eyes within their orbits. When the eyes are offset in the same direction as the intended gaze shift, head contribution tends to increase, and the opposite occurs when the offset is in the opposite direction (Freedman, 2008). Furthermore, head dynamics also impact eye movement properties; for instance, ocular saccade amplitude is inversely related to head velocity, with faster head movements resulting in smaller saccades (Guitton and Volle, 1987).

It might be hypothesized that the intrinsic properties of saccadic eye movements, such as the main sequence—the relationship between saccade amplitude, duration, and peak velocity—remain unchanged during combined eye-head gaze shifts. However, evidence reveals significant interactions between saccades and concurrent head movements that modify saccade kinematics, particularly the peak velocity-amplitude relationship. In head-free conditions, the peak velocity of saccades is often reduced compared to head-fixed saccades of the same amplitude, as the vestibulo-ocular reflex (VOR), which stabilizes gaze during head movement, interacts with the saccadic system to coordinate eye and head motion (Freedman and Sparks, 1997). While the main sequence relationship generally holds, the slope or scaling of the velocity-amplitude curve is altered, reflecting modified saccade dynamics influenced by head movement. Additionally, studies show that for horizontal gaze shifts with eyes and head aligned, saccade amplitude increases linearly for small gaze shifts but plateaus as head contribution grows for larger shifts (Guitton and Volle, 1987; Stahl, 1999). While this amplitude saturation could theoretically result from mechanical constraints of the eyes within the orbits, experimental data indicate that recorded saccade amplitudes rarely approach the physical limits of the orbital range (Guitton and Volle, 1987; Phillips et al., 1995; Freedman and Sparks, 1997).
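As an illustration of how a change in the velocity-amplitude scaling could be quantified, the sketch below fits a saturating exponential, V = V_max (1 - exp(-A / c)), which is one commonly used descriptive form of the main sequence. The choice of this form, the function names, and all parameter values are assumptions made for the example; the synthetic head-free data simply encode a lower peak velocity and are not taken from the cited studies.

```python
import numpy as np

def main_sequence(amplitude_deg, v_max, c):
    """Saturating-exponential main sequence: peak velocity (deg/s) vs amplitude (deg)."""
    return v_max * (1.0 - np.exp(-np.asarray(amplitude_deg, dtype=float) / c))

def fit_main_sequence(amplitude_deg, peak_velocity, c_grid=None):
    """Grid search over c; for each c, v_max has a closed-form least-squares solution."""
    amplitude = np.asarray(amplitude_deg, dtype=float)
    velocity = np.asarray(peak_velocity, dtype=float)
    if c_grid is None:
        c_grid = np.linspace(1.0, 40.0, 391)  # candidate constants in 0.1 deg steps
    best = None
    for c in c_grid:
        basis = 1.0 - np.exp(-amplitude / c)
        v_max = np.dot(basis, velocity) / np.dot(basis, basis)
        sse = np.sum((velocity - v_max * basis) ** 2)
        if best is None or sse < best[0]:
            best = (sse, v_max, c)
    return float(best[1]), float(best[2])

# Hypothetical, noise-free saccade data for the two conditions
amplitudes = np.array([2.0, 5.0, 10.0, 15.0, 20.0, 30.0, 40.0])
head_fixed = main_sequence(amplitudes, v_max=500.0, c=12.0)
head_free = main_sequence(amplitudes, v_max=400.0, c=12.0)  # assumed reduced peak velocity

vmax_fixed, c_fixed = fit_main_sequence(amplitudes, head_fixed)
vmax_free, c_free = fit_main_sequence(amplitudes, head_free)
print(f"head-fixed: V_max={vmax_fixed:.0f} deg/s, c={c_fixed:.1f} deg")
print(f"head-free:  V_max={vmax_free:.0f} deg/s, c={c_free:.1f} deg")
```

Comparing the fitted parameters between conditions gives a compact summary of how the curve is rescaled. With noisy recordings, a dedicated nonlinear optimizer and confidence intervals would be preferable to the coarse grid used here.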

From a descriptive perspective, eye-head coordination typically begins with a rapid saccadic eye movement toward the object of interest, immediately followed by a head movement in the same direction (Bartz, 1966; Barnes, 1979; Pelisson et al., 1988; Boulanger et al., 2012). This coordination results in a characteristic sequence as outlined by Freedman (2008). The gaze shift initiates with a high-velocity saccade—approximately

This sequence typically introduces a delay of 25–75 milliseconds between eye and head movement onset (Zangemeister and Stark, 1981; Zangemeister and Stark, 1982; Freedman, 2008). This delay is thought to result from the greater visco-inertial load on the neck muscles compared to the lower visco-elastic resistance required for eye movement (Zangemeister and Stark, 1981; Zangemeister and Stark, 1982). Electromyography (EMG) studies show that neck muscles exhibit an increase in agonist activity and a decrease in antagonist activity about 20 milliseconds before a similar change in eye muscle EMG activity (Bizzi et al., 1972; Zangemeister and Stark, 1981). These findings suggest that neural signals for coordinated eye-head movements are first dispatched to neck muscles, followed shortly by eye muscles (Bizzi et al., 1972).
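The eye-head onset delay discussed above can be measured from velocity traces with a simple threshold criterion, as in the following minimal sketch. The function name `movement_onset`, the thresholds of 30 deg/s for the eye and 10 deg/s for the head, and the synthetic step-like traces are assumptions chosen for illustration; published studies use a variety of onset criteria.

```python
import numpy as np

def movement_onset(velocity, threshold, sample_rate_hz):
    """Return the time (s) of the first sample whose speed exceeds threshold, or None."""
    above = np.flatnonzero(np.abs(np.asarray(velocity, dtype=float)) > threshold)
    if above.size == 0:
        return None
    return above[0] / sample_rate_hz

fs = 1000  # sampling rate in Hz (assumed)

# Synthetic traces: the eye starts moving at 200 ms, the head at 250 ms
eye_velocity = np.zeros(1000)
eye_velocity[200:260] = 300.0    # deg/s, brief saccade
head_velocity = np.zeros(1000)
head_velocity[250:600] = 50.0    # deg/s, slower and longer head movement

eye_onset = movement_onset(eye_velocity, threshold=30.0, sample_rate_hz=fs)
head_onset = movement_onset(head_velocity, threshold=10.0, sample_rate_hz=fs)
eye_head_delay_ms = (head_onset - eye_onset) * 1000.0
print(f"eye-head onset delay: {eye_head_delay_ms:.0f} ms")
```

Because the measured delay depends on the thresholds and on signal filtering, onset criteria should be reported alongside any latency values.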

This raises the question: Could synchronous eye-head movements be driven by a common, shared motor command? This idea has intrigued researchers. Lestienne et al. (1984) highlighted the close coupling between saccadic eye and attempted head movements, shown by neck muscle EMG in head-restrained subjects. They suggested that while eye-head coupling may not be mandatory in primates, it likely serves as a mechanism for coordination, particularly involving reticulo-spinal neurons (Vidal et al., 1983). Further studies have shown that the covariance of eye and head movement velocities, the timing correlation of latencies, and the linear phase-plane relationship between head acceleration and eye velocity during rapid gaze shifts support the hypothesis of a shared motor command driving both movements (Guitton et al., 1990; Galiana and Guitton, 1992).

Despite the strong coupling between eye and head movements and the possibility of a shared motor command, numerous studies show that eye and head movements can be initiated separately. The timing of these movements is influenced by factors such as target predictability (Bizzi et al., 1972; Zangemeister and Stark, 1982), gaze shift amplitude (Barnes, 1979; Guitton and Volle, 1987; Freedman and Sparks, 1997), and individual tendencies for head movement (Stahl, 1999). For instance, in non-human primates, as gaze shift amplitude increases, the time from saccade onset to head movement onset decreases, eventually reaching synchrony or even showing head movement preceding saccades (Freedman and Sparks, 1997). Similar findings in humans show that head movements can sometimes precede saccades, particularly when the target is predictable (Moschner and Zangemeister, 1993). Moreover, experimentally delaying saccadic onset by stimulating the pontine omnipause neurons does not affect head movement initiation, further supporting the partial independence of eye and head command signals in brainstem structures governing coordinated eye-head actions.

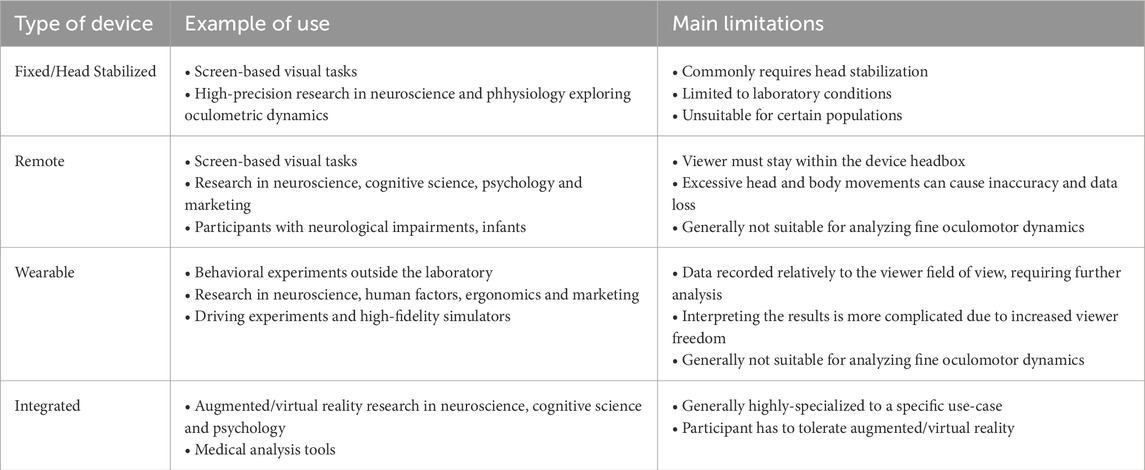

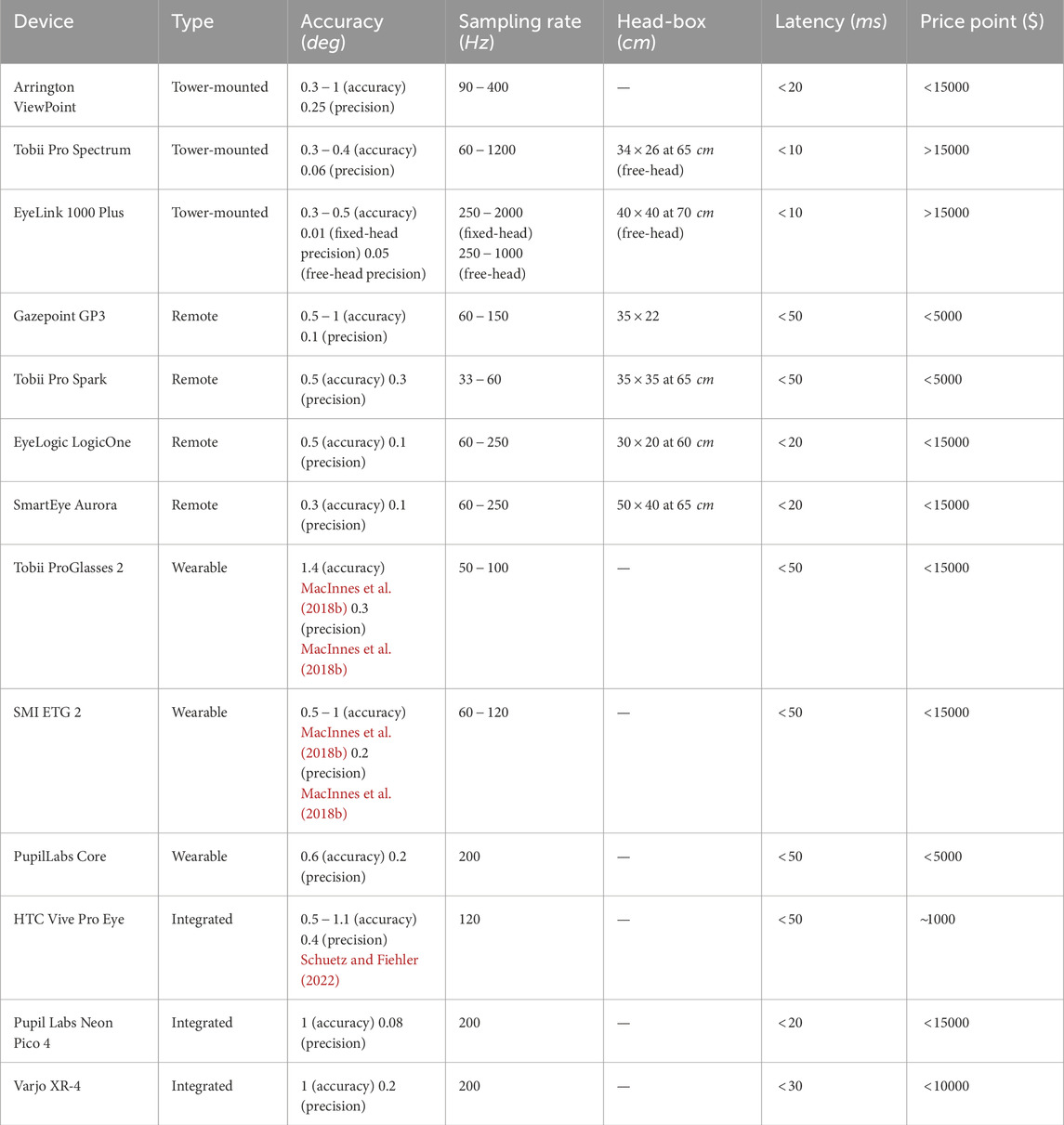

Yet, despite the relative independence of eye and head command signals, gaze itself—the sum of eye and head contributions—remains tightly controlled, preserving accuracy throughout movement. This precision holds even when the head is subjected to perturbations during its trajectory (Guitton and Volle, 1987; Boulanger et al., 2012). These observations have led some researchers to propose a gaze-feedback model in which VOR-saccade interactions are guided by a gaze-error signal (Guitton and Volle, 1987; Boulanger et al., 2012).
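The logic of gaze-error feedback can be illustrated with a deliberately simplified toy loop, which is not the model proposed in the cited studies. Here gaze is the sum of eye-in-head and head-in-space positions, the head follows a prescribed velocity profile, and the eye is driven by a command proportional to the remaining gaze error. The proportional controller, the feedback gain of 60 per second, the 40 degree target, and the head profiles are all assumptions; orbital limits and neural delays are ignored.

```python
import numpy as np

def simulate_gaze_shift(target_deg, head_velocity, dt=0.001, feedback_gain=60.0):
    """Toy gaze-feedback loop; returns eye, head and gaze traces in degrees."""
    eye, head = 0.0, 0.0
    eye_trace, head_trace = [], []
    for hv in head_velocity:
        gaze_error = target_deg - (eye + head)   # gaze = eye-in-head + head-in-space
        eye += feedback_gain * gaze_error * dt   # eye command driven by gaze error
        head += hv * dt                          # head follows its prescribed profile
        eye_trace.append(eye)
        head_trace.append(head)
    eye_trace = np.array(eye_trace)
    head_trace = np.array(head_trace)
    return eye_trace, head_trace, eye_trace + head_trace

n = 600                                   # 600 ms at 1 kHz
normal_head = np.zeros(n)
normal_head[:300] = 100.0                 # head moves at 100 deg/s for 300 ms
perturbed_head = normal_head.copy()
perturbed_head[100:150] = 0.0             # head transiently braked for 50 ms

eye_a, head_a, gaze_a = simulate_gaze_shift(40.0, normal_head)
eye_b, head_b, gaze_b = simulate_gaze_shift(40.0, perturbed_head)
print(f"final gaze: {gaze_a[-1]:.2f} vs {gaze_b[-1]:.2f} deg")
print(f"final head: {head_a[-1]:.1f} vs {head_b[-1]:.1f} deg")
```

In this sketch the final gaze position matches the target in both runs even though the head contributes different amounts, because the eye absorbs the difference. This mirrors, in a highly idealized way, the observation that gaze accuracy is preserved when the head trajectory is perturbed.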