- 1 Université Paris-Saclay, Université Paris Cité, ENS Paris-Saclay, CNRS, SSA, INSERM, Centre Borelli, Gif-sur-Yvette, France

- 2 SNCF, Technologies Department, Innovation & Research, Saint-Denis, France

- 3 Thales AVS France, Training & Simulation, Osny, France

- 4 Université Paris-Saclay, Inria, CIAMS, Gif-sur-Yvette, France

Eye movement analysis provides critical insights across domains such as perception, cognition, neurological diagnostics, and human-computer interaction. However, reliable quantification of oculomotor behavior remains challenging due to the lack of clear boundaries between fixations, saccades, and smooth pursuits, and to variability across individuals and contexts. This article reviews methods for segmenting oculometry data into canonical oculomotor events, and the computational tools that can be used to characterize them. Binary segmentation employs mostly threshold-based and learning-based algorithms to distinguish fixations from saccades. Ternary segmentation additionally considers smooth pursuits, using primarily threshold-based approaches and deep learning techniques. Common challenges in the practical application of segmentation algorithms are highlighted, namely parameter sensitivity, noise, and head movement artifacts in mobile eye trackers, and the need for standardized benchmarks is emphasized. The usual oculomotor metrics that can be inferred from the canonical movements are described, encompassing temporal, spatial, and kinematic features. The critical insights they provide for cognitive and clinical research in fields such as reading comprehension, neurological disorder diagnostics, and sensorimotor development are outlined. Finally, relatively underexplored methods from signal processing, including spectral, stochastic, and topological methods, are presented. Their potential in revealing oscillatory patterns and structural complexities in gaze dynamics is detailed. Together, these approaches enhance our understanding of eye movement behavior, with significant implications for psychology, neuroscience, and human-computer interaction.

1 Introduction

Eye movement research has a rich history, beginning with foundational work by Dodge and Cline (1901) in the early

A primary goal in eye movement research is to extract metrics that characterize the oculomotor system. Due to its close link with visual attention, eye movement analysis is a powerful tool for studying cognitive and behavioral processes. Studies have integrated eye movement analysis into cognitive psychology, exploring areas like language processing, reading, and problem-solving (Rayner, 1998). Research has also investigated connections between eye movements, visual attention, and perception (Collins and Doré-Mazars, 2006; Schütz et al., 2011). Additionally, individual differences in oculomotor patterns have paved the way for eye movement biometrics (Rigas and Komogortsev, 2016).

Clinical research increasingly employs eye movement analysis as a non-invasive method to identify neural irregularities linked to neurodegenerative and neurological disorders (MacAskill and Anderson, 2016). Distinct oculomotor patterns have been observed in individuals with early-stage Alzheimer’s disease (Fernández et al., 2013) and Parkinson’s disease (Wetzel et al., 2011), highlighting their potential as biomarkers for early diagnosis and disease monitoring. Furthermore, a growing body of evidence explores oculomotor features in behavioral disorders such as attention deficit hyperactivity disorder (ADHD) (Fried et al., 2014) and autism spectrum disorder (ASD) (Klin et al., 2002; Shirama et al., 2016), offering valuable insights into the neurocognitive mechanisms underlying these conditions.

The rapid growth of eye movement research has also brought significant challenges. The increasing volume of publications can obscure critical insights, while fragmentation across sub-disciplines hinders effective knowledge integration. As the different research communities pursue distinct objectives, definitions and methodologies often become highly specialized, which limits their generalizability. This has contributed to a fragmented conceptual framework within the field. Notably, a recent study highlights that even fundamental terms such as fixation and saccade are defined inconsistently, resulting in conceptual confusion (Hessels et al., 2018). These definitions vary considerably depending on whether the perspective is functional, oculomotor, or computational, with little consensus even within individual subfields.

Beyond conceptual and terminological inconsistencies, the field lacks standardized methods for defining and extracting eye movement features. Most studies emphasize feature subsets tailored to specific research questions, and the methodological variability in segmenting raw gaze data into canonical movements (fixations, saccades, and smooth pursuits) undermines reproducibility. The growing availability of portable, cost-effective eye-tracking devices has facilitated the study of naturalistic behavior in both laboratory and real-world settings (Hayhoe and Ballard, 2005; Land, 2009). However, the absence of standardized analysis protocols limits comparability between studies and hinders the integration of knowledge. This work aims to address these challenges by proposing a unified methodological framework that improves interoperability across research communities and facilitates comparison across experimental contexts.

This review focuses on methods for segmenting, extracting and analyzing fixations, saccades, and smooth pursuits, building on prior comprehensive reviews of fixation and saccade features (Sharafi et al., 2015; Rigas et al., 2018; Brunyé et al., 2019; Skaramagkas et al., 2021; Mahanama et al., 2022a; Spering, 2022) and pursuit-based features (Skaramagkas et al., 2021; Mahanama et al., 2022a; Spering, 2022). Some reviews target specific domains, such as emotional and cognitive processes (Skaramagkas et al., 2021) or decision-making (Spering, 2022). Additionally, several studies, including Komogortsev et al. (2010b); Birawo and Kasprowski (2022); Startsev and Zemblys (2023), evaluate segmentation algorithms, often comparing their performance on open-source datasets and proposing quality metrics. This work aligns with these efforts by reviewing segmentation methods and their associated oculomotor features.

Specifically, this review surveys methodologies for quantifying oculomotor system activity and explores their diverse applications. While not exhaustive due to the breadth and specialization of some methods, it provides a concise overview of key approaches for characterizing canonical eye movements and their oculometric signals. The remainder of this article is organized as follows. Section 2 introduces segmentation algorithms for classifying fixations, saccades, and smooth pursuits. Two primary analytical approaches are then explored: physiological analysis (Section 3), which extracts meaningful features like shape, dynamics, and kinematics from segmented sequences, and signal-based analysis (Section 4), which applies time-series descriptors to examine eye movement behavior from a global dynamic perspective. Although a detailed discussion of metrics is beyond the scope of this review, we aim to provide a unified framework for oculometric signal analysis.

This article is part of a series of four reviews dedicated to methods for analyzing oculomotor signals and gaze trajectories. The overarching goals of the series are to evaluate the application of eye movement and gaze analysis techniques across diverse scientific disciplines and to work toward a unified methodological framework by defining standardized representations and concepts for quantifying eye-tracking data. The first article in the series, already published in Frontiers in Physiology (Laborde et al., 2025), provided an overview of current knowledge on canonical eye movements, with particular emphasis on distinguishing findings obtained in controlled laboratory settings from those observed in more natural, head-free conditions.

2 Segmentation algorithms

Three archetypal gaze patterns can typically be observed in eye-tracking data: periods of relative stability, rapid eye shifts, and slower shifts corresponding to the tracking of moving objects. These are commonly assumed to reflect the three main canonical oculomotor events that direct gaze movements, namely, fixations, saccades and smooth pursuits. Thus, a necessary preliminary step in eye-movement analysis is often to identify these canonical events from a continuous stream of gaze data using segmentation algorithms. Segmentation algorithms employ a number of predefined criteria, based on the underlying characteristics of the oculomotor events, in order to distinguish them. Such a process aligns with the traditional neurophysiological view, which postulates that distinct neural mechanisms govern specific movement types, such as the superior colliculus for saccades or the cerebellum for smooth pursuits.

However, the organization of the oculomotor system as a discrete set of events has been questioned, notably in the context of natural viewing conditions (Steinman et al., 1990). Under ecological conditions, a richer repertoire of ocular behavior can be observed. This results in potential overlap between the characteristics of the oculomotor events, which makes the segmentation task more challenging. Therefore, it seems more appropriate to refer to segmentation algorithms as event classification rather than event detection, since they merely assign a discrete event type to each data period based on some computationally inferred features—e.g., velocity thresholds for saccades or duration thresholds for fixations. This distinction is critical, as misclassification can distort interpretations of visual attention in fields such as psychology, neuroscience, and human-computer interaction.

A major challenge in eye movement segmentation is the dependence on user-defined parameters, such as velocity thresholds for saccades or minimum fixation durations. Although these events are grounded in physiological phenomena, no theoretical consensus exists on parameter values that definitively distinguish movement types. For instance, the transition from slow movements, such as smooth pursuits or drifts, to rapid saccades lacks a clear, physiologically validated threshold. Studies investigating optimal parameterization for specific algorithms (Blignaut, 2009; Shic et al., 2008) indicate that variations in parameter settings significantly influence classification outcomes (Komogortsev et al., 2010b; Salvucci and Goldberg, 2000). This sensitivity hampers reproducibility and can distort findings in fields requiring precise event classification, such as psychology or human-computer interaction. In psychology, for example, precision in detecting fixations is crucial for analyzing attention strategies, such as in studies on reading or visual information processing (Rayner, 1998). For instance, in experimental paradigms measuring cognitive load, accurate identification of fixations enables reliable quantification of the time spent on specific stimuli, thereby revealing underlying attentional processes (Duchowski and Duchowski, 2017). In human-computer interaction (HCI), precise classification of eye movement events is equally important for evaluating the usability of user interfaces (Jacob and Karn, 2003). Correct detection of saccades and fixations, for example, allows for the identification of interface areas that attract users’ attention or pose accessibility issues, directly influencing the design of more intuitive interfaces.

Conversely, errors in the detection of fixations or saccades can have significant repercussions on the interpretation of data in cognitive psychology and human-computer interaction (HCI) studies. As shown by Duchowski and Duchowski (2017) and Nyström and Holmqvist (2010), erroneous classification of eye movement events can bias the analysis of attentional processes or user behaviors. For example, a fixation incorrectly identified as a saccade can distort measures of cognitive load in experimental paradigms, leading to erroneous conclusions about underlying cognitive mechanisms (Rayner, 1998). Similarly, in HCI, imprecise detection of eye movement events can result in an incorrect evaluation of an interface’s usability, affecting recommendations for its optimization (Jacob and Karn, 2003). Threshold-based methods, including velocity or dispersion thresholding, thus provide computational interpretations of oculomotor events, but their criteria often vary across studies and implementations. Because of this insufficient standardization, identical gaze data can be classified inconsistently, which compromises the reproducibility of results in contexts requiring high precision (Holmqvist et al., 2011).

Finally, researchers must consider the coordinate system used when analyzing eye-tracking data, particularly with mobile eye trackers that permit free head movement. Unlike stationary trackers, which use a head-referenced coordinate system, mobile trackers record gaze in a world-referenced system, where head movements can complicate event classification. To avoid such conceptual confusion, researchers should ensure proper head movement compensation and clearly report their coordinate system. For a detailed discussion of challenges in defining oculomotor events, see the review by Hessels et al. (2018). Note that considerations regarding the utilization and transformation of these coordinates in relation to a moving observer’s visual field are addressed in the first part of this review series (Laborde et al., 2025).

Although some authors have called for the standardization of eye movement classification algorithms and evaluation tools (Komogortsev et al., 2010a; Startsev and Zemblys, 2023), there is currently no clear consensus on how to benchmark these methods. This lack of agreement poses challenges to the development and comparison of new segmentation approaches. To address this gap, several concrete proposals have been suggested in the literature. First, minimal reporting standards could be established, requiring authors to clearly specify algorithm parameters, eye-tracker sampling rates, stimulus types, and data preprocessing steps. Second, the use of shared, openly available datasets would enable reproducible evaluation across diverse conditions, including static, dynamic, and naturalistic stimuli. Third, benchmark competitions or challenges could be organized, similar to practices in computer vision and machine learning, where algorithms are tested on identical datasets using standardized metrics such as precision, recall, F1-score, Cohen’s Kappa, and RMSD. By adopting these practices, the field could facilitate more transparent, reproducible, and comparable assessments of eye movement segmentation algorithms, ultimately accelerating methodological improvements.
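As an illustration of one of the agreement metrics mentioned above, the following minimal sketch computes a sample-level Cohen's Kappa between two label sequences, for example a human annotation and an algorithm output. The function name `cohen_kappa` and the single-letter labels are hypothetical choices made for this example and do not come from any of the cited benchmarks.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Sample-level Cohen's Kappa between two equally long label sequences."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of samples that received the same label.
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, computed from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_chance = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if p_chance == 1.0:
        return 1.0  # both raters used one and the same label throughout
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical annotations: "F" for fixation samples, "S" for saccade samples.
human = ["F", "F", "F", "S", "S", "F", "F", "F"]
algorithm = ["F", "F", "S", "S", "S", "F", "F", "F"]
kappa = cohen_kappa(human, algorithm)
```

Because Kappa corrects for chance agreement, it tends to be more informative than raw accuracy when one event type, typically fixation, dominates the recording.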

In this review, we focus on fixations, saccades, and smooth pursuit eye movements, as these are the most commonly studied and well-characterized oculomotor events in the literature. Other canonical eye movement events, such as vergence, optokinetic reflexes, and vestibulo-ocular reflex (VOR), are not included. These events are less frequently analyzed in eye-tracking studies, and their detection often requires specialized experimental setups or instrumentation beyond conventional gaze-tracking paradigms. By concentrating on fixations, saccades, and pursuits, we ensure that the discussion is grounded in well-supported empirical evidence while acknowledging that additional eye movement types remain an important direction for future work. Despite these challenges, the following sections provide an overview of widely used segmentation methods (Salvucci and Goldberg, 2000; Komogortsev and Karpov, 2013; Andersson et al., 2016).

2.1 Separating saccades from fixations

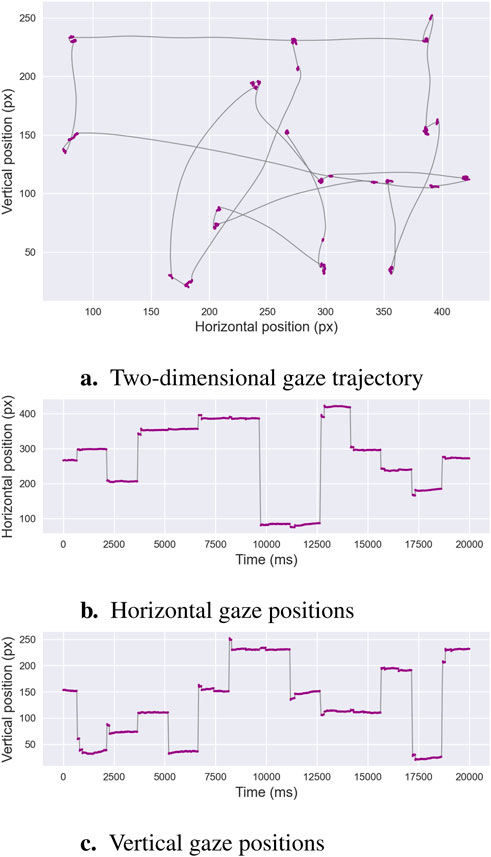

Numerous algorithms have been developed to address the challenge of distinguishing saccades from fixations, a process known as binary segmentation. This is illustrated in Figure 1, which depicts alternating periods of relative gaze stability—fixations, marked in purple—and rapid gaze reorientations—saccadic eye movements. The recording shown in Figure 1 is of exceptionally high quality, with minimal noise or signal loss. In contrast, real-world eye-tracking data often exhibit lower quality due to several factors. For instance, blinks or partial eyelid closures interrupt the signal, while head movements or poor participant stabilization can introduce spatial jitter. Changes in lighting conditions or reflections on glasses can reduce the accuracy of gaze detection, and low sampling rates or occasional data dropouts may cause missing or irregular samples. Additionally, physiological variability, such as micro-saccades or pupil size fluctuations, can further complicate event classification. These factors collectively increase the difficulty of distinguishing fixations from saccades, emphasizing the need for robust segmentation algorithms that can tolerate noise and handle incomplete or variable-quality data.

Figure 1. Binary Segmentation. This example illustrates an oculomotor recording containing both fixations and saccades. Panel (a) depicts the two-dimensional gaze trajectory, with alternating periods of stability—fixations shown in purple—and rapid ballistic reorientations—saccades shown in gray. Panels (b,c) present the horizontal and vertical gaze positions over time, respectively, using the same color scheme. These characteristic patterns form the basis of binary segmentation algorithms, which aim to distinguish fixation sequences from saccadic sequences.

Binary segmentation algorithms are broadly categorized into threshold-based and learning-based approaches. Threshold-based methods rely on predefined computational criteria, such as velocity or spatial dispersion, to classify fixations and saccades, ensuring transparent, rule-based classification. In contrast, learning-based methods, encompassing machine learning and deep learning techniques, infer patterns from annotated training data, which reflect expert or task-specific interpretations of fixations and saccades. These annotations may reduce the transparency of classification criteria compared to threshold-based methods due to their reliance on subjective or context-dependent definitions.

2.1.1 Threshold-based algorithms

The velocity-threshold identification (I-VT) algorithm (Salvucci and Goldberg, 2000) is a widely adopted method for distinguishing fixations from saccades in eye movement data. It leverages the distinct velocity profiles of eye movements: low velocities characterize fixations, while high velocities indicate saccades. The I-VT algorithm calculates the absolute velocity between consecutive gaze samples and classifies each sample as a fixation or saccade based on a user-defined velocity threshold. To address the subjectivity of manual threshold selection, Nyström and Holmqvist (2010) proposed an adaptive I-VT variant that dynamically computes thresholds for peak velocities and saccade onset/offset detection based on statistical properties of the data. This method incorporates constraints derived from the physical characteristics of eye movements—such as minimum and maximum velocities, accelerations, and event durations—to filter noise and enhance classification accuracy.
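The basic fixed-threshold I-VT rule described above can be sketched as follows. This is a minimal illustration, not a reference implementation: the function name `ivt`, the units (degrees of visual angle and seconds), and the threshold value used in the example are assumptions, and the adaptive variant of Nyström and Holmqvist (2010) is not covered.

```python
import math


def ivt(x, y, t, velocity_threshold):
    """Label each gaze sample as 'fixation' or 'saccade' from its velocity.

    x, y: gaze positions (assumed in degrees of visual angle).
    t: strictly increasing timestamps (assumed in seconds).
    velocity_threshold: user-defined threshold (assumed in degrees/second).
    """
    if len(t) < 2:
        return ["fixation"] * len(t)
    labels = []
    for i in range(1, len(t)):
        dt = t[i] - t[i - 1]
        # Absolute point-to-point velocity between consecutive samples.
        speed = math.hypot(x[i] - x[i - 1], y[i] - y[i - 1]) / dt
        labels.append("saccade" if speed > velocity_threshold else "fixation")
    # The first sample has no predecessor, so it reuses the second label.
    return labels[:1] + labels


# Hypothetical 1 kHz recording with one rapid horizontal shift.
x = [0.0, 0.01, 0.02, 2.0, 4.0, 4.01]
y = [0.0] * 6
t = [i * 0.001 for i in range(6)]
labels = ivt(x, y, t, velocity_threshold=100.0)
```

In practice, consecutive samples sharing a label would then be merged into events, and the raw signal is usually smoothed before velocities are computed.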

In contrast to velocity-based methods, the dispersion-threshold identification (I-DiT) algorithm offers an alternative approach by leveraging the tendency of fixation points—characterized by relatively low velocity—to cluster spatially (Salvucci and Goldberg, 2000; Komogortsev et al., 2010a; Andersson et al., 2016). The I-DiT algorithm distinguishes fixations from saccades based on the spatial dispersion of consecutive gaze points within a defined temporal window. Dispersion is quantified by summing the ranges—i.e., the differences between the maximum and minimum values—of the gaze coordinates in both the horizontal and vertical dimensions. If the resulting dispersion value falls below a predefined threshold, the corresponding gaze points are classified as a fixation. Otherwise, if the dispersion exceeds the threshold, the sequence is identified as a saccade.
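The dispersion rule described above can be sketched as follows. The sketch assumes the commonly used sliding-window formulation, in which a window of minimum length is grown for as long as its dispersion stays below the threshold; the names `dispersion`, `idit`, and `min_samples`, as well as the numerical values, are hypothetical.

```python
def dispersion(xs, ys):
    """Sum of the horizontal and vertical ranges of a set of gaze points."""
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idit(x, y, dispersion_threshold, min_samples):
    """Label samples as 'fixation' or 'saccade' using a dispersion threshold."""
    n = len(x)
    labels = ["saccade"] * n  # samples not absorbed by a fixation stay saccadic
    start = 0
    while start + min_samples <= n:
        end = start + min_samples  # exclusive end of the current window
        if dispersion(x[start:end], y[start:end]) <= dispersion_threshold:
            # Grow the window while the dispersion stays below the threshold.
            while end < n and dispersion(
                x[start:end + 1], y[start:end + 1]
            ) <= dispersion_threshold:
                end += 1
            for i in range(start, end):
                labels[i] = "fixation"
            start = end
        else:
            start += 1  # slide the window forward by one sample
    return labels


# Hypothetical trace: two stable periods separated by a rapid shift.
x = [0.0, 0.1, 0.05, 0.1, 5.0, 10.0, 10.1, 10.05, 10.0]
y = [0.0] * 9
labels = idit(x, y, dispersion_threshold=0.5, min_samples=3)
```

Here `min_samples` plays the role of the temporal window, expressed as a sample count; at a known sampling rate it would be derived from a minimum fixation duration.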

Another notable approach is the minimum spanning tree (MST)-based method (Goldberg and Schryver, 1995; Salvucci and Goldberg, 2000; Komogortsev et al., 2010a; Andersson et al., 2016), which also employs a dispersion-based strategy to evaluate local gaze dispersion within a temporal window of eye position data. Unlike traditional methods, MST-based algorithms model gaze points as nodes in a graph, with edges weighted by the Euclidean distance between corresponding positions. A minimum spanning tree is constructed, typically using Prim’s algorithm (Camerini et al., 1988), to connect all nodes while minimizing total edge length. The identification by minimum spanning tree (I-MST) algorithm classifies gaze points by applying edge-distance thresholds: points connected by edges shorter than the threshold are grouped as fixation components, while those separated by longer edges are classified as saccadic components. Thresholds may be applied globally across the graph (Komogortsev et al., 2010a) or adapted locally based on vertex density (Goldberg and Schryver, 1995). The MST-based approach offers flexibility, adapts to local data structures, and demonstrates robustness in handling missing or noisy data, making it suitable for complex eye-tracking datasets.
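The global-threshold variant can be sketched as follows for the gaze points of a single temporal window. The sketch builds the tree with Prim's algorithm, cuts the edges longer than the threshold, and labels the remaining connected components. The `min_points` criterion for accepting a component as a fixation is an assumption added for this example, as are the function names `prim_mst` and `imst`.

```python
import math


def prim_mst(points):
    """Return the MST edges (i, j, length) of 2-D points via Prim's algorithm."""
    n = len(points)
    in_tree = [False] * n
    best_dist = [math.inf] * n  # shortest known edge linking a node to the tree
    best_parent = [-1] * n
    if n:
        best_dist[0] = 0.0
    edges = []
    for _ in range(n):
        # Add the node outside the tree that is closest to the tree.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best_dist[i])
        in_tree[u] = True
        if best_parent[u] >= 0:
            edges.append((best_parent[u], u, best_dist[u]))
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best_dist[v]:
                    best_dist[v], best_parent[v] = d, u
    return edges


def imst(points, edge_threshold, min_points):
    """Label points as 'fixation' or 'saccade' from thresholded MST edges."""
    n = len(points)
    parent = list(range(n))  # union-find forest over the short edges

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i, j, length in prim_mst(points):
        if length <= edge_threshold:  # keep short edges, cut long ones
            parent[find(i)] = find(j)
    sizes = {}
    for i in range(n):
        root = find(i)
        sizes[root] = sizes.get(root, 0) + 1
    # Assumption: a component needs at least `min_points` samples to be a fixation.
    return ["fixation" if sizes[find(i)] >= min_points else "saccade" for i in range(n)]


points = [(0.0, 0.0), (0.1, 0.0), (0.1, 0.1), (3.0, 3.0), (6.0, 6.0), (6.1, 6.0), (6.0, 6.1)]
labels = imst(points, edge_threshold=0.5, min_points=3)
```

This version ignores the temporal order of samples inside the window, which is why the method is usually applied window by window rather than to a whole recording at once.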

The Density-Threshold Identification (I-DeT) algorithm is an adaptation of the DBSCAN clustering method (Ester et al., 1996). I-DeT extends DBSCAN by incorporating the temporal dimension of gaze data, ensuring that segmented events reflect the sequential nature of eye movements. As introduced by Li et al. (2016), a gaze point is classified as a core point if:

Building on classical signal processing, Kalman filter-based algorithms (I-KF) model eye movements as a dynamic system. The two-state Kalman filter, as proposed by Komogortsev and Khan (2007), represents eye movements using position and velocity states, assuming linear dynamics and Gaussian noise. The algorithm operates recursively in two phases: (i) the predict phase, which forecasts the next state based on the system model, and (ii) the update phase, which refines the prediction using observed data to produce a more accurate state estimate. Saccade detection employs a Chi-square test (Sauter et al., 1991) to assess discrepancies between predicted and observed gaze velocities, with a threshold determining whether a sample is classified as a saccade—high velocity—or fixation—low velocity. This approach excels in handling noisy data by combining predictive modeling with statistical testing, offering a robust framework for eye movement classification applicable in fields such as human-computer interaction and clinical research.
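The predict and update phases, together with a Chi-square style test on velocity discrepancies, can be sketched for a one-dimensional gaze trace as follows. This is a simplified illustration under stated assumptions rather than the published I-KF: the constant-velocity model, the diagonal process noise (`q_pos`, `q_vel`), the measurement noise `r`, the velocity scale `velocity_std`, the window length, and the threshold are all hypothetical choices.

```python
def ikf_1d(z, dt, q_pos, q_vel, r, velocity_std, window, chi2_threshold):
    """Label samples of a non-empty 1-D gaze trace as 'fixation' or 'saccade'.

    z: measured gaze positions; dt: sampling period.
    All noise and threshold parameters are illustrative assumptions.
    """
    p, v = z[0], 0.0         # state estimate: position and velocity
    a, b, c = 1.0, 0.0, 1.0  # state covariance [[a, b], [b, c]]
    squared_errors = []      # history of (observed - predicted velocity)^2
    labels = ["fixation"]    # the first sample has no velocity estimate
    for i in range(1, len(z)):
        # Predict phase: constant-velocity model.
        p_pred, v_pred = p + dt * v, v
        a_pred = a + 2.0 * dt * b + dt * dt * c + q_pos
        b_pred = b + dt * c
        c_pred = c + q_vel
        # Chi-square style statistic over the last `window` discrepancies.
        v_obs = (z[i] - z[i - 1]) / dt
        squared_errors.append((v_obs - v_pred) ** 2)
        chi2 = sum(squared_errors[-window:]) / velocity_std ** 2
        labels.append("saccade" if chi2 > chi2_threshold else "fixation")
        # Update phase: correct the prediction with the measured position.
        s = a_pred + r
        k_pos, k_vel = a_pred / s, b_pred / s  # Kalman gain
        innovation = z[i] - p_pred
        p, v = p_pred + k_pos * innovation, v_pred + k_vel * innovation
        a, b, c = a_pred * (1.0 - k_pos), b_pred * (1.0 - k_pos), c_pred - k_vel * b_pred
    return labels


# Hypothetical noise-free trace: stable gaze, then a rapid 10-degree shift.
z = [0.0] * 20 + [5.0, 10.0] + [10.0] * 5
labels = ikf_1d(z, dt=0.001, q_pos=1e-4, q_vel=1.0, r=0.01,
                velocity_std=30.0, window=5, chi2_threshold=15.0)
```

Because the statistic is summed over a window, samples immediately following a saccade may still be flagged until the large discrepancy leaves the window, so the window length trades noise robustness against temporal precision.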

2.1.2 Learning-based algorithms

The Hidden Markov Model Identification (I-HMM) algorithm, introduced by Salvucci and Goldberg (2000), extends the velocity-threshold identification (I-VT) approach by employing a probabilistic framework to segment eye movements into fixations and saccades. I-HMM models eye movements as a sequence of two latent states—fixation and saccade—each characterized by a Gaussian velocity distribution. Fixations typically exhibit low mean velocity, while saccades are defined by high mean velocity—e.g.,

The Two-Means Clustering Identification (I2MC) algorithm, introduced by Hessels et al. (2017), is designed to extract fixations from gaze data with high noise levels, such as those recorded from infants. The algorithm employs two-means clustering—k-means with

Building upon established machine learning techniques, Zemblys et al. (2018) introduced the Random Forest Classifier (I-RF) algorithm to distinguish fixations, saccades, and potentially other eye movement events from raw gaze data. The I-RF model is trained on a set of 14 features, including spatial measures—e.g., root mean square of sample-to-sample displacement, standard deviation of gaze positions, bivariate contour ellipse area—and statistical measures—e.g., sample dispersion, kurtosis. The random forest classifier leverages these features to model complex, non-linear relationships, achieving high classification accuracy. However, a key limitation is the reliance on hand-tagged training data, which is labor-intensive and hinders scalability. Reproducibility is also challenging, as model performance depends on the quality and representativeness of training datasets. Additional limitations include the computational cost of feature extraction and the risk of overfitting to specific datasets. Nevertheless, I-RF is particularly valuable in eye-tracking research for applications in cognitive psychology, human-computer interaction, and clinical diagnostics, offering robustness to noise and the potential to detect diverse eye movement types when trained appropriately.

Binary segmentation algorithms, which aim to distinguish fixations from saccades, have been evaluated in benchmark studies that compare algorithm outputs to human coders using high-frequency datasets that include static images, text, moving dots, and videos (Andersson et al., 2016). These studies provide a valuable baseline for assessing segmentation quality. Performance is generally summarized using metrics such as Cohen’s Kappa, which captures agreement with human annotations, or RMSD for event durations, which reflects temporal precision. However, reported values vary considerably depending on the dataset, the type of stimulus, and the specific evaluation protocol, making it difficult to directly compare results across studies.

Among threshold-based methods, the velocity-threshold approach (I-VT) typically reaches Kappa values around

Learning-based approaches tend to report more robust and generalizable performance, particularly in challenging or noisy datasets. Hidden Markov models (I-HMM) achieve balanced results across stimulus types, with Kappa values close to 0.7 for saccades (Andersson et al., 2016). The two-means clustering method (I2MC), developed specifically for noisy infant recordings, reports an average F1-score of 0.83 across seven independent datasets, consistently outperforming several threshold-based methods (Hessels et al., 2017). Random forest classifiers (I-RF) have achieved state-of-the-art sample-level results, with F1-scores near 0.97 and Kappa values around 0.85 in validation data, though performance decreases to about 0.70 on independent test sets (Zemblys et al., 2018).

In summary, threshold-based methods are attractive for their simplicity and efficiency and remain effective under controlled static conditions, but they degrade substantially in noisy or dynamic environments. Learning-based methods demonstrate greater resilience, adaptability, and the ability to model complex data patterns, although they require annotated training datasets and greater computational resources. It is important to emphasize that these are reported performances drawn from heterogeneous studies, and differences in dataset characteristics, sampling frequency, and evaluation protocols likely account for a substantial part of the observed variability across algorithms.

2.2 Separating smooth pursuits from fixations and saccades

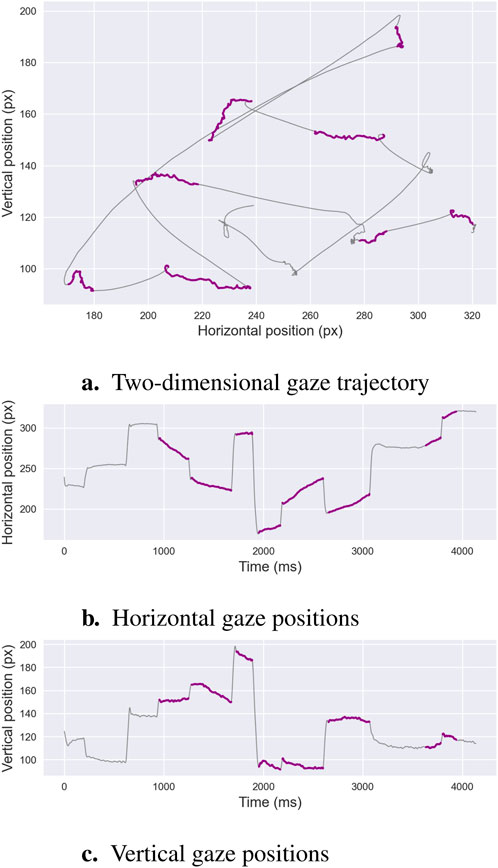

The detection of smooth pursuit events, characterized by low-velocity, consistent-directionality eye movements that track moving targets, has received less attention than saccade and fixation classification. This task, known as ternary segmentation (classifying fixations, saccades, and smooth pursuits), is illustrated in Figure 2, which depicts smooth pursuits, marked in blue, alongside fixations and saccades in high-quality eye-tracking data. Methods for identifying smooth pursuits are broadly categorized into threshold-based and learning-based approaches. Both approaches encounter the same limitations outlined in Section 2.1, including sensitivity to predefined thresholds in threshold-based methods and reliance on annotated training datasets in learning-based methods, which can be labor-intensive and specific to the dataset. Smooth pursuit detection is particularly challenging in noisy or low-quality data (e.g., from low-frequency eye trackers or studies involving infants), often necessitating preprocessing steps such as noise filtering or blink removal to improve accuracy.

Figure 2. Ternary Segmentation. This example illustrates an oculomotor recording comprising fixations, saccades, and smooth pursuits. Panel (a) shows the two-dimensional gaze trajectory, where fixations are marked in purple, saccades in gray, and smooth pursuits in blue. Panels (b,c) display the corresponding horizontal and vertical gaze positions over time, highlighting the gradual directional displacements characteristic of smooth pursuit movements. These distinguishing features are the focus of ternary segmentation algorithms, which aim to isolate pursuit sequences from other phases.

2.2.1 Threshold-based algorithms

Typically, a simple velocity threshold is first applied to isolate saccadic events, followed by a second step to distinguish between the remaining movements, namely, fixation and pursuit events. A straightforward but effective method for this task, known as the I-VVT approach, was proposed by Komogortsev and Karpov (2013). This method builds upon the I-VT algorithm by introducing a second velocity threshold to specifically isolate fixation events. Any remaining data points are then classified as pursuit events. However, a potential limitation of this approach is that eye movement velocities can vary between individuals and even within the same individual depending on the specific task being performed. As such, establishing universally effective thresholds to differentiate smooth pursuits from fixations—both of which are low-velocity movements—presents a challenge. This variability can complicate the application of this algorithm in real-world scenarios, particularly those involving dynamic scenes (Kasneci et al., 2015).
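The two-threshold rule described above can be sketched as follows. The function name `ivvt`, the units, and the threshold values are assumptions chosen for illustration; as noted above, suitable values vary between individuals and tasks.

```python
import math


def ivvt(x, y, t, saccade_threshold, fixation_threshold):
    """Label samples as 'fixation', 'pursuit', or 'saccade' from velocity.

    Positions are assumed to be in degrees of visual angle, timestamps in
    seconds (strictly increasing), and thresholds in degrees per second.
    """
    if len(t) < 2:
        return ["fixation"] * len(t)
    labels = []
    for i in range(1, len(t)):
        dt = t[i] - t[i - 1]
        speed = math.hypot(x[i] - x[i - 1], y[i] - y[i - 1]) / dt
        if speed > saccade_threshold:
            labels.append("saccade")   # first threshold isolates saccades
        elif speed < fixation_threshold:
            labels.append("fixation")  # second threshold isolates fixations
        else:
            labels.append("pursuit")   # everything in between is pursuit
    # The first sample has no predecessor, so it reuses the second label.
    return labels[:1] + labels


# Hypothetical 1 kHz trace with speeds of roughly 2, 20, and 300 deg/s.
x = [0.0, 0.002, 0.022, 0.322]
y = [0.0] * 4
t = [0.0, 0.001, 0.002, 0.003]
labels = ivvt(x, y, t, saccade_threshold=100.0, fixation_threshold=5.0)
```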

To reduce reliance on velocity thresholds, Komogortsev and Karpov (2013) proposed to distinguish between pursuit and fixation movements using a dispersion threshold combined with a temporal window—an approach commonly referred to as I-VDT. This method naturally extends the I-DiT approach by isolating fixation samples based on their spatial proximity. Similarly, Lopez (2009) proposed an alternative strategy where the standard deviation of movement direction within a time window is used to differentiate between fixation and pursuit events. This approach provides an additional method for segmentation that focuses on directional variability rather than relying solely on velocity-based thresholds.

The Velocity and Movement Pattern Identification (I-VMP) algorithm, proposed by Lopez (2009), provides an advanced method for detecting smooth pursuits in eye-tracking data. I-VMP employs a two-stage approach: it first applies a velocity threshold to isolate saccades, then analyzes the angular displacement between consecutive gaze points to identify smooth pursuits among low-velocity movements. Specifically, the angle between the horizontal axis and the line connecting successive gaze points is projected onto a unit circle, and a Rayleigh score is computed to quantify directional consistency within a defined temporal window. High Rayleigh scores indicate stable directionality, characteristic of smooth pursuits, distinguishing them from fixations, which exhibit random or minimal directional changes. While this method reduces dependence on velocity thresholds compared to traditional approaches, it requires preprocessing steps, such as noise filtering and blink removal, and knowledge of stimulus motion for optimal performance.
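The directional-consistency measure can be illustrated with the mean resultant length of the movement directions in a window, which is the statistic underlying the Rayleigh test. Whether I-VMP uses exactly this normalization is an assumption of this sketch, and the function name `rayleigh_score` is hypothetical.

```python
import math


def rayleigh_score(x, y):
    """Mean resultant length of sample-to-sample directions, in [0, 1].

    Values near 1 indicate a stable movement direction within the window;
    values near 0 indicate directions that cancel each other out.
    """
    # Angle between the horizontal axis and the line joining successive points.
    # Note that atan2(0, 0) is 0, so stationary samples count as direction 0.
    angles = [
        math.atan2(y[i + 1] - y[i], x[i + 1] - x[i]) for i in range(len(x) - 1)
    ]
    if not angles:
        return 0.0
    # Project each angle onto the unit circle and average the unit vectors.
    mean_cos = sum(math.cos(a) for a in angles) / len(angles)
    mean_sin = sum(math.sin(a) for a in angles) / len(angles)
    return math.hypot(mean_cos, mean_sin)


# Hypothetical windows: a steady drift versus a back-and-forth jitter.
pursuit_like = rayleigh_score([0.0, 1.0, 2.0, 3.0], [0.0, 0.5, 1.0, 1.5])
jitter_like = rayleigh_score([0.0, 1.0, 0.0, 1.0, 0.0], [0.0] * 5)
```

A window would then be labeled as pursuit when its score exceeds a user-defined threshold, which again has to be tuned to the noise level of the recording.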

Finally, Santini et al. (2016) introduced a Bayesian decision theory-based approach (I-BDT), specifically designed for the classification of smooth pursuit eye movements when viewing dynamic stimuli. Unlike earlier methods that rely on a velocity-based initial step to isolate non-saccadic sequences, this approach directly separates smooth pursuits from saccades and fixations without the need for an initial velocity threshold. Grounded in physiological hypotheses, the I-BDT approach incorporates explicit formulas to compute the likelihoods and priors for each type of eye movement—fixation, saccade, and smooth pursuit. These formulas enable the efficient classification of eye movement events by applying Bayes’ theorem, offering a probabilistic framework for distinguishing between different types of oculomotor behavior.

2.2.2 Learning-based algorithms

Fuhl et al. (2018) introduced the Histogram of Oriented Velocities (I-HOV) method, which adapts a computer vision technique to classify fixations, saccades, and smooth pursuits in eye-tracking data. The I-HOV algorithm computes velocity-weighted angles between a gaze point and its predecessors or successors within a defined temporal window, generating a histogram that serves as a meta-representation of local gaze behavior for each sample. These histograms are used as feature vectors for machine learning algorithms, such as random forests, k-nearest neighbors, and support vector machines, to classify eye movement types. Similar to the I-VMP algorithm (Lopez, 2009), I-HOV leverages the consistent directionality and low-velocity profiles of smooth pursuits to distinguish them from fixations and saccades. While effective for ternary segmentation, I-HOV relies on high-quality annotated training data and is computationally intensive. Its performance is also sensitive to noise and the limitations of low-frequency eye trackers, which may reduce the accuracy of velocity and angle calculations.

Recent advances in eye movement classification have leveraged deep learning techniques to distinguish smooth pursuit sequences from fixations and saccades. One such approach, proposed by Hoppe and Bulling (2016), employs a convolutional neural network (CNN) combined with data windowing. In this method, gaze points within each temporal window are transformed into the frequency domain using a Fourier transform and then input to the CNN, which classifies the eye movement type. Similarly, Fuhl et al. (2021) introduced a CNN-based method, termed I-CNN, that operates directly on windowed raw eye data to isolate oculomotor events. These deep learning approaches demonstrate significant effectiveness, particularly when trained on datasets tailored to specific experimental conditions and eye-tracking devices, underscoring their potential for robust eye movement classification. However, their performance remains heavily dependent on the quality and annotation of training data, which can substantially impact model accuracy and generalizability.

Ternary segmentation, tasked with classifying fixations, saccades, and smooth pursuits, presents greater challenges than binary segmentation due to the subtle low-velocity characteristics of smooth pursuits. Insights from Komogortsev and Karpov (2013), Santini et al. (2016), Fuhl et al. (2018), and Fuhl et al. (2021), evaluated on varied datasets with dynamic stimuli, provide a foundation for assessing performance, although quantitative benchmarks remain less comprehensive than for binary segmentation. Moreover, the different evaluations were conducted on distinct datasets, making it challenging to provide a reliable comparative analysis of the various segmentation methods. As such, the following paragraphs will focus on qualitative considerations.

Among threshold-based approaches, extensions of velocity- and dispersion-threshold methods—e.g., I-VVT, I-VDT—have been applied to pursuits, while variants such as I-VMP incorporate directional information to reduce velocity ambiguities. Bayesian decision theory (I-BDT) has been reported to outperform dispersion-based methods (I-VDT) on several dynamic datasets at

In summary, ternary segmentation highlights the intrinsic difficulty of reliably detecting smooth pursuits, particularly at low velocities where they overlap with fixations. Threshold-based methods capture faster pursuits but remain sensitive to noise and sampling rate. Bayesian and direction-based extensions have been reported to reduce some of these ambiguities, though results vary across datasets. Learning-based methods appear more promising for handling complex or noisy recordings, especially with CNNs and histogram-based approaches, yet their effectiveness still depends on the availability of well-annotated training corpora. Reported performances point to relative strengths of each family of methods, but the absence of standardized benchmarks makes it difficult to establish a consensus hierarchy of algorithms.

3 Physiological features

Applying the segmentation algorithms presented in Section 2 produces a sequence of fixations, saccades, and possibly smooth pursuits from raw gaze data. The following sections will review the most common metrics found in the literature to describe and analyze these oculomotor events.

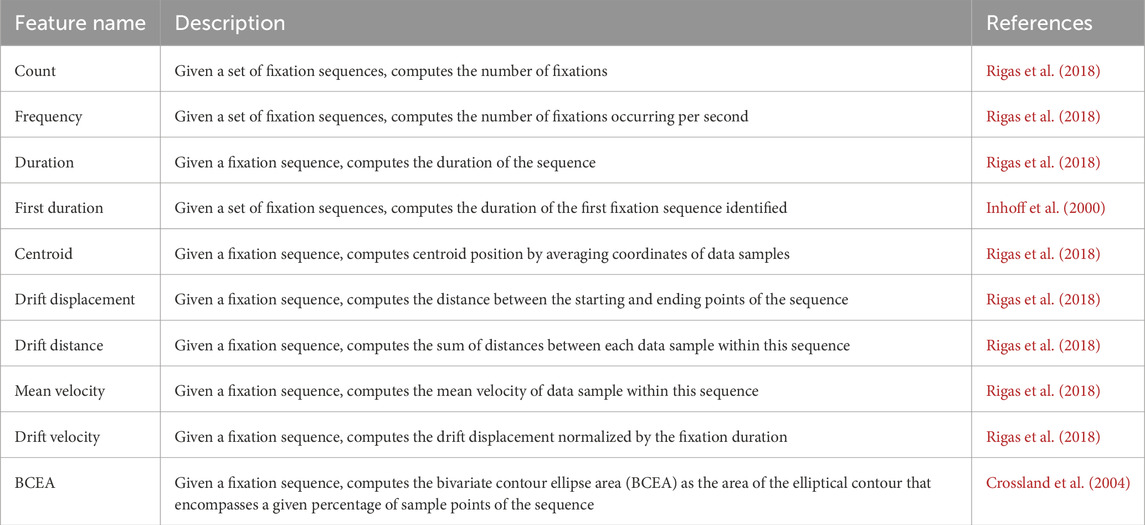

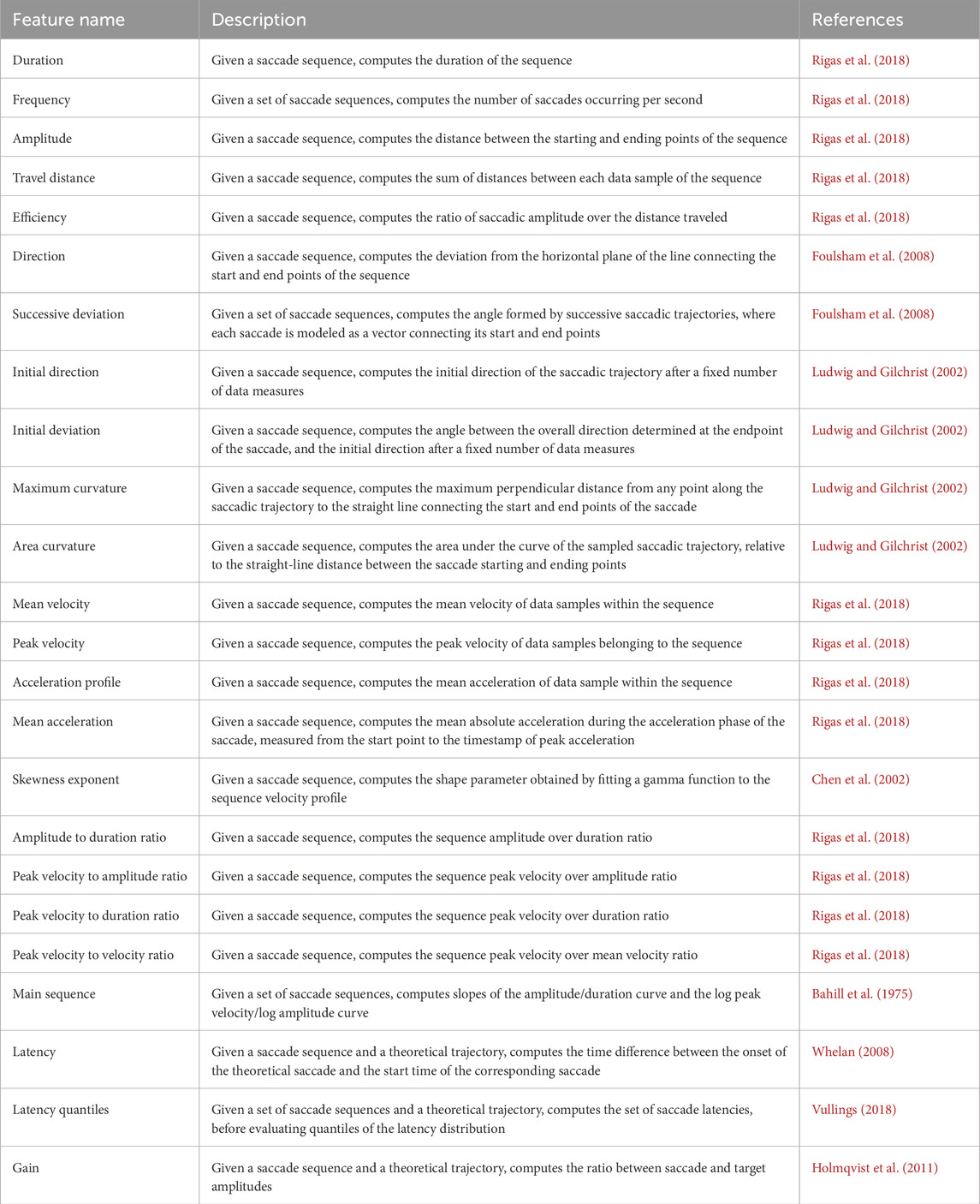

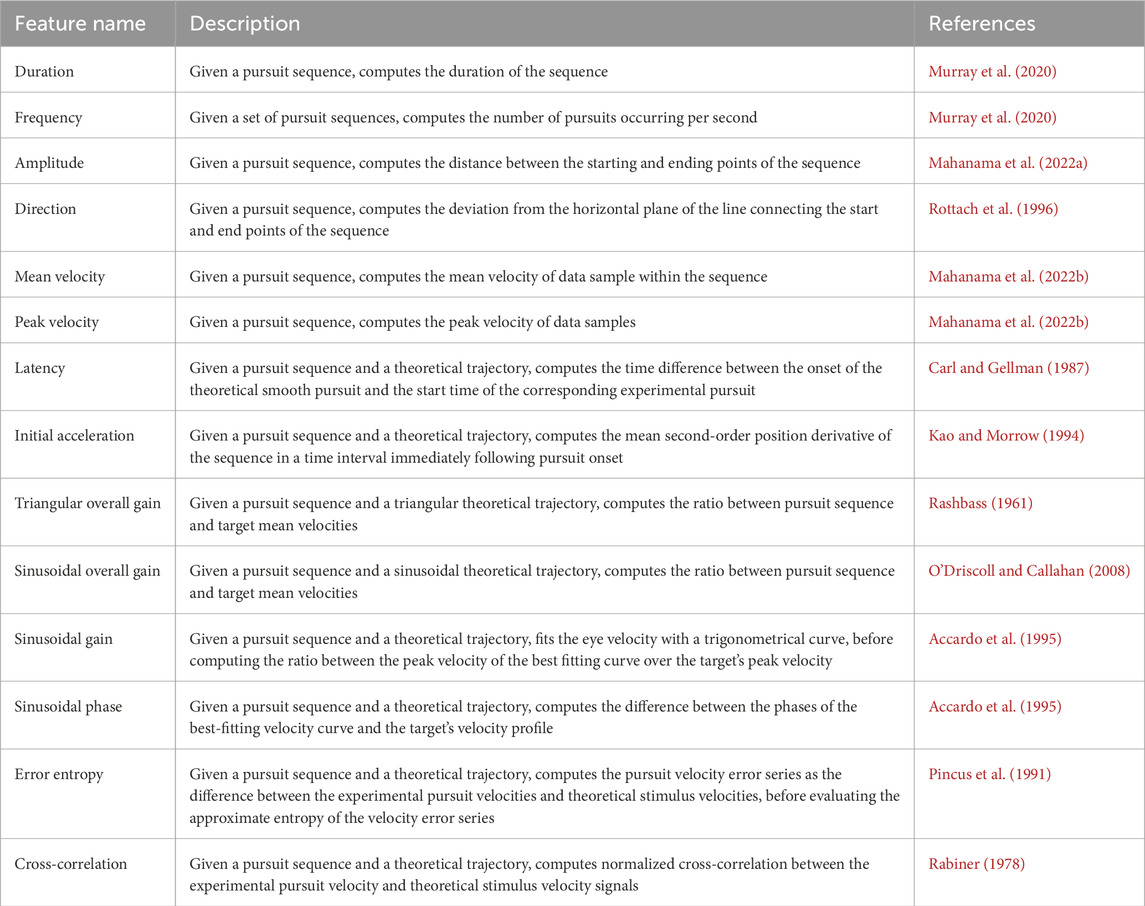

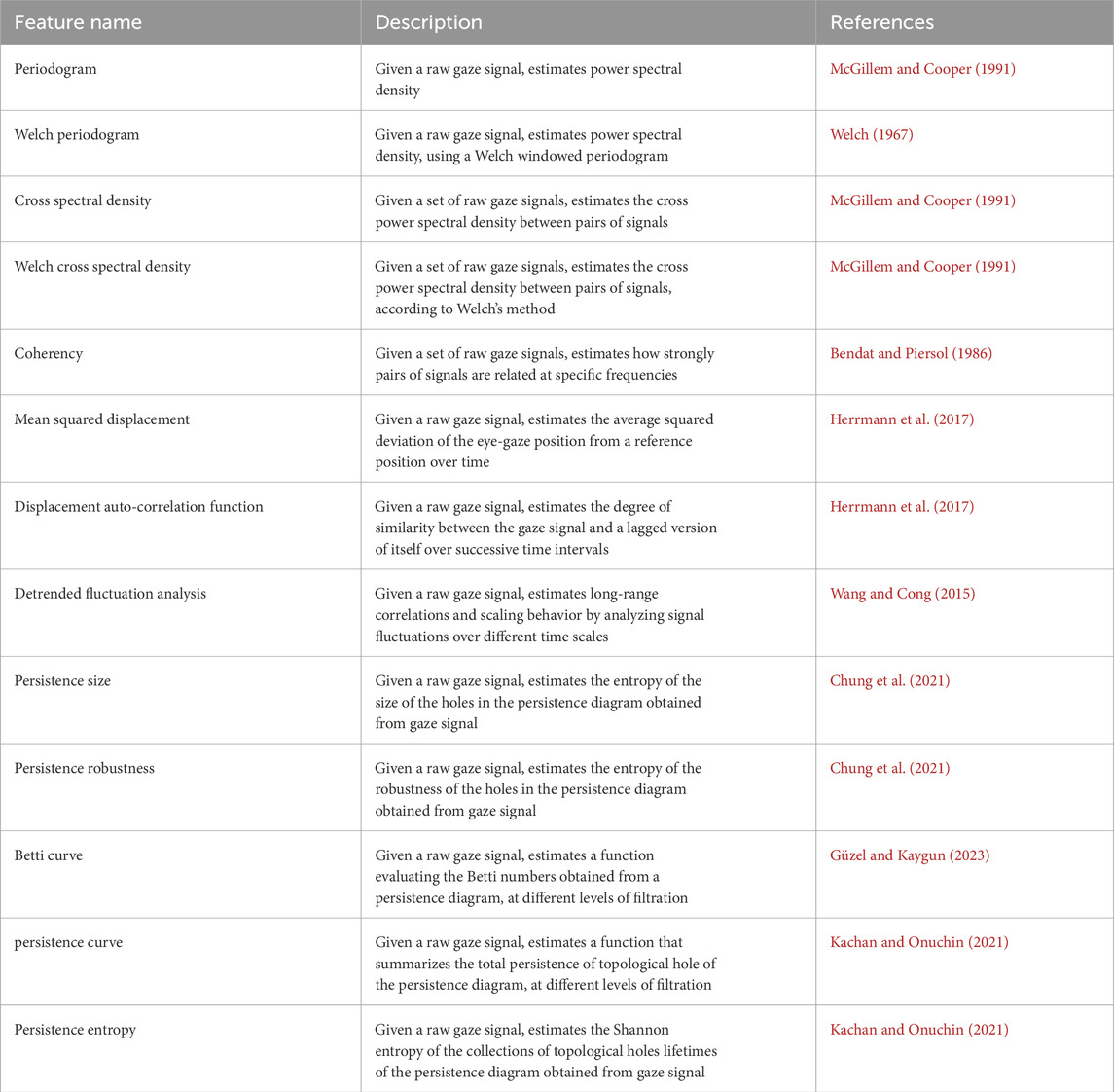

The fundamental features and metrics for fixations, saccades, and smooth pursuits are summarized in Tables 1–3, respectively. The tables provide a concise description of each feature and references from the literature that offer guidance for their implementation.

3.1 Fixation measures

A fixation is defined as a period during which the gaze is stabilized on a specific spatial location, projecting visual stimuli onto the fovea centralis, the retinal region with maximal photoreceptor density and visual acuity. Despite attempts to maintain steady fixation on a stationary target, the eyes exhibit continuous, involuntary micromovements, including microsaccades (rapid, small-amplitude saccades), drifts (slow, curvilinear deviations), and tremors (high-frequency, low-amplitude oscillations). This section examines the quantitative features characterizing fixations, including temporal features, positional attributes, and dynamic characteristics. These properties are typically analyzed under head-constrained conditions using high-resolution eye-tracking systems to isolate oculomotor behavior.

3.1.1 Temporal features

Fixation count is defined as the total number of fixations within a defined time interval or stimulus region. Despite its simplicity, the fixation count remains a cornerstone metric in eye-tracking research due to its robustness and interpretability. It is frequently employed in exploratory analyses before applying more advanced techniques. Fixation count is widely utilized to assess visual attention allocation to regions of interest (ROIs) in textual or pictorial stimuli (Scheiter and Eitel, 2017), infer the depth and efficiency of visual processing (Jacob and Karn, 2003; Park et al., 2015), and investigate how expertise influences oculomotor behavior in visual tasks (Schoonahd et al., 1973; Megaw and Richardson, 1979).

Pioneering work by Goldberg and Kotval (1999) highlighted that a higher number of fixations directed at a stimulus often indicates inefficiency in the search for relevant information. As such, fixation count has been used in eye-tracking studies to identify visual regions that attract more attention or to infer the amount of cognitive effort required for a particular task. For example, in challenging tasks such as source code reading, a higher fixation count could signify increased visual effort and processing time (Binkley et al., 2013; Sharif et al., 2012). The fixation count is often expressed per unit of time or relative to a specific task or sub-task. For example, in reading tasks, the fixation count can be normalized to the length of the text by dividing the number of fixations by the number of words (Sharafi et al., 2015).

Another critical metric, fixation duration, quantifies the temporal dynamics of gaze behavior. Typical fixations last between 200 and 300 milliseconds; however, longer durations on a stimulus may indicate greater processing complexity (Jacob and Karn, 2003; Krejtz et al., 2016b; Liu and Chuang, 2011). This metric is frequently employed in eye-tracking studies to examine complex cognitive functions such as reading comprehension (Raney et al., 2014), learning processes (Liu, 2014), and mental workload assessment (Liu et al., 2022). Furthermore, individual fixation durations may be analyzed independently. A notable example is the first fixation duration during reading, which is a commonly reported linguistic measure used to assess initial processing of a word or phrase (Inhoff et al., 2000; Underwood et al., 2000).

The temporal characteristics of eye fixations are often analyzed in relation to specific regions within the visual field that are visually explored. These areas of interest (AoIs) may represent regions that are particularly relevant to the task at hand or that carry semantic meaning. Under this formalism, fixation duration metrics are also used, albeit with slight variations. For instance, the dwell time is defined as the cumulative duration of all fixations during a single visit to an AoI. The total dwell time sums all dwell times within a specific AoI over the entire experimental session. Additional AoI-specific metrics offer further granularity, such as the fixation ratio, defined as the sum of fixation durations within an AoI divided by the total fixation duration across all AoIs, or the average fixation duration within an AoI, derived by normalizing the sum of fixation durations by the number of fixations in that AoI. The concept of AoI as a symbolic tool will be explored in greater detail in the Areas of Interest part of this review series (Part 4).
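The AoI-based duration metrics defined above can be computed from a chronological list of labeled fixations. The following sketch assumes that each fixation has already been assigned to exactly one AoI; the input format and the function name `aoi_metrics` are hypothetical conventions adopted for this example.

```python
from collections import defaultdict

def aoi_metrics(fixations):
    """fixations: chronological list of (aoi_label, duration_ms) tuples."""
    visits = defaultdict(list)   # per AoI: dwell time of each separate visit
    counts = defaultdict(int)    # per AoI: number of fixations
    previous = None
    for aoi, duration in fixations:
        if aoi != previous:      # entering the AoI starts a new visit
            visits[aoi].append(0.0)
        visits[aoi][-1] += duration
        counts[aoi] += 1
        previous = aoi
    total = sum(duration for _, duration in fixations)
    return {
        aoi: {
            "dwell_times": dwell,
            "total_dwell_time": sum(dwell),
            "fixation_ratio": sum(dwell) / total,
            "mean_fixation_duration": sum(dwell) / counts[aoi],
        }
        for aoi, dwell in visits.items()
    }
```

For example, with the sequence `[("A", 200), ("A", 300), ("B", 250), ("A", 250)]`, AoI A receives two visits with dwell times of 500 ms and 250 ms, a total dwell time of 750 ms, and a fixation ratio of 0.75.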

3.1.2 Position and drift

The location of visual fixations is widely studied across various contexts, as it is often assumed to reflect the allocation of visual attention (Findlay and Gilchrist, 2003). A robust method for modeling the central position of fixations is the fixation centroid, calculated by averaging the coordinates of gaze points within individual fixation sequences. Analyzing the spatial distribution of these centroids provides valuable insights into the regions of a stimulus that are prioritized during task-specific processing, offering direct evidence of underlying cognitive processes (Henderson, 2003; Rayner, 1998).

For instance, in studies related to face processing, analyses of fixation patterns have identified specific gaze patterns, such as directing attention to a point just below the eyes (Hsiao and Cottrell, 2008; Peterson and Eckstein, 2012). Similarly, in reading tasks, research has shown that both the likelihood of misidentifying a word and the time required for identification decrease when the eyes fixate near the center of the word (O’Regan and Jacobs, 1992; Brysbaert et al., 1996). These phenomena, known as optimal viewing position effects, are thought to stem from the rapid decline in visual acuity as retinal eccentricity increases (Nazir et al., 1998).

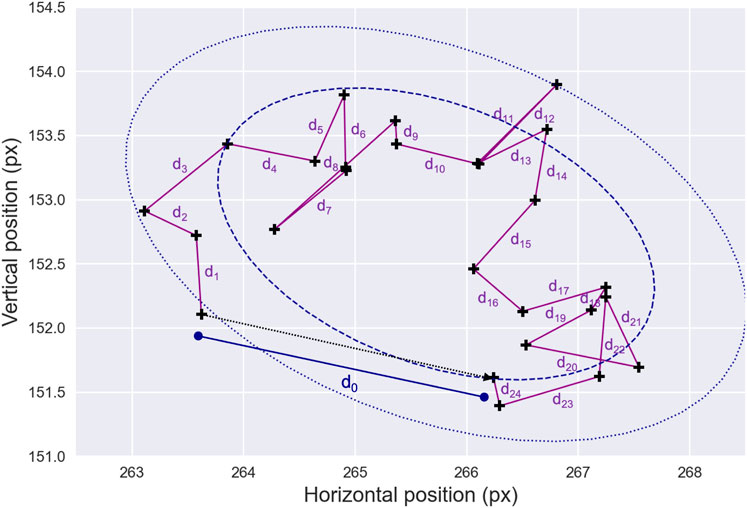

While fixational sequences typically exhibit limited eye mobility, the variability in the micro-movements can provide valuable information related to oculomotor function. Consequently, several additional features, many of which are illustrated in Figure 3, have been proposed in the literature to better characterize fixational micro-movements.

Figure 3. Fixation Drift and Stability. An example of gaze data—black crosses—representing a fixation sequence is shown. Note that the raw data have been largely downsampled for presentation clarity. In this illustration, the drift displacement between the starting and ending points of the fixation sequence is denoted as

As such, the drift displacement is calculated as the distance between the starting and ending points of each fixation sequence. Similarly, the cumulative drift distance, which reflects ocular stability during fixation, is obtained by summing the distances between all consecutive fixational data samples from a given fixation sequence. Another feature, the drift mean velocity, is computed as the average of the first-order position derivatives of the fixation data samples and can be used to characterize the minor movements occurring during fixation sequences. Together, these measures can provide valuable insights into the stability of eye movements during fixation, which may be particularly useful for detecting pathological conditions, such as sight impairments and cerebellar diseases (Leech et al., 1977; Schor and Westall, 1984).
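These three drift measures follow directly from the sample coordinates of a fixation sequence. The sketch below assumes positions in degrees and interprets the drift mean velocity as the mean magnitude of the point-to-point velocity; other conventions (for instance, per-axis signed means) are possible, and the function name is hypothetical.

```python
import numpy as np

def drift_features(x, y, fs):
    """Drift measures for one fixation; x, y in degrees, fs in Hz."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    steps = np.hypot(np.diff(x), np.diff(y))   # distance between consecutive samples
    return {
        "drift_displacement": float(np.hypot(x[-1] - x[0], y[-1] - y[0])),
        "cumulative_drift_distance": float(steps.sum()),
        "drift_mean_velocity": float((steps * fs).mean()),   # deg/s
    }
```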

Lastly, fixation stability can be quantified by computing the area of the elliptical contour that encompasses a given percentage of fixation points (Steinman, 1965; Crossland et al., 2004). Assuming that the fixation positions follow a bivariate normal distribution, the dispersion of these positions is represented by an ellipse. The bivariate contour ellipse area (BCEA) thus provides a measure of fixation stability, with smaller values indicating more stable fixation. This metric is considered the current gold standard to measure the stability of fixation (Crossland et al., 2009) and has been widely used to examine changes in fixational eye movements, particularly in clinical contexts (Shaikh et al., 2016; Montesano et al., 2018; Leonard et al., 2021; Ghasia and Wang, 2022).
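Under the bivariate normal assumption, the BCEA is commonly written as $2k\pi\,\sigma_x\sigma_y\sqrt{1-\rho^2}$, where $\sigma_x$ and $\sigma_y$ are the standard deviations of horizontal and vertical gaze position, $\rho$ is their correlation, and $k$ is tied to the enclosed proportion $P$ through $P = 1 - e^{-k}$. A minimal sketch of this computation is given below; the default proportion of 0.68 is one frequently used choice and is an assumption of this example.

```python
import numpy as np

def bcea(x, y, p=0.68):
    """Bivariate contour ellipse area enclosing a proportion p of fixation samples.

    The result is in squared units of x and y (e.g., deg^2).
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    k = -np.log(1.0 - p)                      # from p = 1 - exp(-k)
    sx, sy = np.std(x, ddof=1), np.std(y, ddof=1)
    rho = np.corrcoef(x, y)[0, 1]             # Pearson correlation between x and y
    return float(2.0 * k * np.pi * sx * sy * np.sqrt(1.0 - rho ** 2))
```

Note that the correlation is undefined when either coordinate is constant over the fixation, so degenerate sequences should be handled separately.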

3.2 Saccade measures

Saccades are rapid, ballistic eye movements that direct the fovea toward objects of interest, enabling high-acuity vision. Since the inception of eye movement research, the kinematic properties—e.g., velocity, amplitude—and shape characteristics—e.g., trajectory, curvature—of saccadic eye movements have been extensively studied using diverse measurement techniques, which we will now review and discuss.

In experimental settings, saccadic behavior is investigated using paradigms involving both predictable and unpredictable target conditions. The metrics presented in the following sections are designed to quantify the dynamics of saccadic eye movements in two settings, namely free-viewing scenarios and those involving target-based stimuli. These metrics offer critical insights into saccade dynamics and their modulation by experimental manipulations.

3.2.1 Temporal features

Saccade duration is a commonly analyzed metric in eye movement research, with typical values ranging from 30 to 70 milliseconds. While these values may vary slightly across studies, various factors have been identified in the literature as influencing saccade duration. For example, during coordinated reaching movements, saccades that accompany hand motions tend to have shorter durations (Donkelaar et al., 2004; Snyder et al., 2002). Conversely, repeated saccades to the same visual stimulus often result in longer durations (Golla et al., 2008; Chen-Harris et al., 2008). The measurement of saccade duration typically involves estimating the onset and offset of the saccade. Given the brief nature of saccadic movements, the accuracy of this measurement is highly sensitive to the thresholds applied to segment raw gaze data—see Section 2.

In addition to duration, saccade count and saccade rate—or saccade frequency—are widely used metrics to characterize saccadic sequences. Generally, saccade frequency tends to decrease with increasing task difficulty (Nakayama et al., 2002) or under conditions of fatigue (Van Orden et al., 2000). Like saccade duration, saccade count is a simple and robust measure commonly employed in studies that investigate cognitive processes such as reading or scene perception (Inhoff and Radach, 1998). Furthermore, deviations from typical saccadic temporal characteristics, such as prolonged saccade duration, can serve as early indicators of neural disorders (Ramat et al., 2007).

In experimental paradigms that involve target-directed saccades, the temporal aspect of saccadic movements is frequently examined using saccadic latency, which is the time delay between stimulus onset and saccade initiation. For any given target, while saccade duration, velocity, and amplitude tend to remain relatively consistent, latency is notably variable across trials, ranging from 100 to 1,000 milliseconds (Liversedge et al., 2011). The distribution of saccadic latency is generally skewed toward shorter latencies, with a long tail representing longer latencies. Additionally, the distribution is often unimodal, although a second peak—referred to as express saccades—can sometimes appear, representing shorter saccadic responses (Fischer and Weber, 1993).

The mean saccade latency is typically used to describe the central tendency of reaction times, while the standard deviation is used to assess variability (Whelan, 2008). However, since the latency distribution is not Gaussian, these statistics may not fully capture the nature of the distribution. As a result, more robust statistical measures, such as the median or quantile estimators, are increasingly adopted to describe saccadic latency distributions more accurately (Vullings, 2018). In clinical contexts, saccadic latency distributions have shown promise as biomarkers for various neurological conditions. For instance, Michell et al. (2006) demonstrated that saccadic latency could be used as a diagnostic marker for Parkinson’s disease, highlighting its potential utility in clinical assessments of cognitive and motor dysfunctions.
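The contrast between classical and robust summaries of a skewed latency distribution can be illustrated with a short sketch. The function name and the choice of the interquartile range as the robust spread measure are assumptions made for this example.

```python
import numpy as np

def latency_summary(latencies_ms):
    """Classical and robust summaries of saccadic latencies (milliseconds)."""
    lat = np.asarray(latencies_ms, dtype=float)
    q25, median, q75 = np.percentile(lat, [25, 50, 75])
    return {
        "mean": float(lat.mean()),
        "sd": float(lat.std(ddof=1)),
        "median": float(median),
        "iqr": float(q75 - q25),
    }
```

With the latencies `[150, 160, 170, 180, 900]`, the single slow response pulls the mean to 312 ms, whereas the median stays at 170 ms, which shows why quantile-based estimators are preferred for long-tailed distributions.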

3.2.2 Amplitude features

Describing saccade morphology is essential for a comprehensive understanding of eye movement dynamics. Among the various morphological features, saccade amplitude serves as a fundamental and easily accessible descriptor that reflects the distance the eye travels during a saccadic movement. It is typically calculated as the spatial distance between the starting and ending points of each identified saccade sequence. Alternatively, to model the non-linearity of saccade trajectory, the traveled distance can be computed by summing the distances between consecutive saccadic data samples within a saccade sequence. Lastly, saccade efficiency, derived as the ratio of saccadic amplitude to the total distance traveled, is often used to quantify the complexity and non-linearity of the saccadic trajectory. This metric provides insight into the degree to which the eye movement follows a straight path versus a more convoluted or inefficient trajectory.
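The three quantities described above can be obtained from the samples of a single saccade, as in the following sketch. The function name is hypothetical and coordinates are assumed to be in degrees of visual angle.

```python
import numpy as np

def saccade_amplitude_features(x, y):
    """Amplitude, traveled distance and efficiency of one saccade."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    amplitude = float(np.hypot(x[-1] - x[0], y[-1] - y[0]))       # straight-line distance
    traveled = float(np.hypot(np.diff(x), np.diff(y)).sum())      # path length
    efficiency = amplitude / traveled if traveled > 0 else float("nan")
    return {"amplitude": amplitude, "traveled_distance": traveled, "efficiency": efficiency}
```

An efficiency of 1 corresponds to a perfectly straight trajectory, and lower values indicate increasingly curved or convoluted paths.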

Saccade amplitude is highly context-dependent, varying according to the task and visual environment. For example, in reading tasks, saccades are typically constrained to around 2 degrees of visual angle horizontally (Rayner et al., 2012). In contrast, during scene perception, the average saccade amplitude increases with the size of the visual stimulus, reflecting the broader spatial search required to process larger or more complex images (von Wartburg et al., 2007). Cognitive factors also influence saccade amplitude, with increases in task difficulty often leading to a decrease in the amplitude of saccadic movements. Phillips and Edelman (2008) demonstrated that variability in performance during visual scanning tasks was related to oculomotor variables such as amplitude, with smaller saccades indicating a reduced perceptual span. Similarly, May et al. (1990) provided evidence that this metric could serve as an indicator of cognitive workload, with smaller amplitudes reflecting greater cognitive demands. It should also be mentioned that saccade amplitude is closely related to its duration and peak velocity through the main sequence relationship—see Section 3.2.7 for further details. These oculomotor characteristics—amplitude, duration, and peak velocity—are often analyzed together as they provide complementary insights into the saccadic process.

When viewers are instructed to follow a visual target, the saccadic gain—the ratio between the amplitude of the saccade performed and the amplitude of the target displacement—becomes a critical measure. Saccadic gain is particularly useful in assessing saccadic dysmetria, a condition characterized by errors in saccade accuracy. In neurological studies, saccadic dysmetria is often investigated to identify impairments in saccadic control. For instance, in overshoot dysmetria, the saccade initially overshoots the target, requiring a corrective saccade in the opposite direction. While overshoots can occur in healthy individuals, they typically reduce over time as the oculomotor system adjusts to the target location. Persistent overshooting, however, is indicative of a cerebellar lesion (Selhorst et al., 1976; Ritchie, 1976). Conversely, undershoot dysmetria occurs when the initial saccade is too small, and a corrective saccade is required to bring the eye to the target. Significant undershooting is often associated with basal ganglia disorders, such as Parkinson’s disease (MacAskill et al., 2002) or progressive supranuclear palsy (Troost and Daroff, 1977).
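As a simple illustration, the gain of a primary saccade can be computed as below. The function names are hypothetical, and the labels only describe the sign of the amplitude error; deciding whether an error is clinically significant requires normative data that this sketch does not provide.

```python
def saccadic_gain(saccade_amplitude, target_displacement):
    """Ratio of executed saccade amplitude to target displacement (same units)."""
    if target_displacement == 0:
        raise ValueError("target displacement must be non-zero")
    return saccade_amplitude / target_displacement

def gain_direction(gain):
    """Describe the sign of the amplitude error for a given gain."""
    if gain < 1.0:
        return "undershoot"   # hypometric primary saccade
    if gain > 1.0:
        return "overshoot"    # hypermetric primary saccade
    return "accurate"
```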

More intriguingly, saccadic dysmetria—particularly hypometric saccades—has been proposed as a potential objective biomarker for neurodegenerative diseases. Abnormally hypometric saccades, along with other eye movement deficits, have shown promise as early indicators of conditions like Alzheimer’s disease, making them valuable targets for early diagnosis (Fletcher and Sharpe, 1986; Cerquera-Jaramillo et al., 2018). This highlights the importance of saccade morphology not only for understanding normal visual behavior but also as a potential tool for identifying and monitoring the progression of neurological disorders.

3.2.3 Direction and curvature

The direction of a saccadic trajectory—or sequence of saccades—provides a crucial descriptive measure of eye movements. This direction is typically quantified as the angle, measured in degrees or radians, between the horizontal axis and the line connecting the starting and ending points of the saccade. For instance, Walker et al. (2006) employed saccadic direction to examine the effects of target predictability, while Foulsham et al. (2008) explored the horizon bias during natural scene viewing, revealing a prevalent tendency for horizontal saccades. More recently, studies have employed saccadic direction to classify task-specific gaze patterns, offering valuable insights for designing effective learning strategies (Mozaffari et al., 2020).
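A minimal sketch of this angle computation is given below. It assumes a coordinate system in which the vertical axis points upward; with screen coordinates, where the vertical axis typically points downward, the sign of the vertical difference would need to be flipped. The function name is hypothetical.

```python
import numpy as np

def saccade_direction(x_start, y_start, x_end, y_end):
    """Saccade direction in degrees within [0, 360), measured from the horizontal axis."""
    angle = np.degrees(np.arctan2(y_end - y_start, x_end - x_start))
    return float(angle % 360.0)
```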

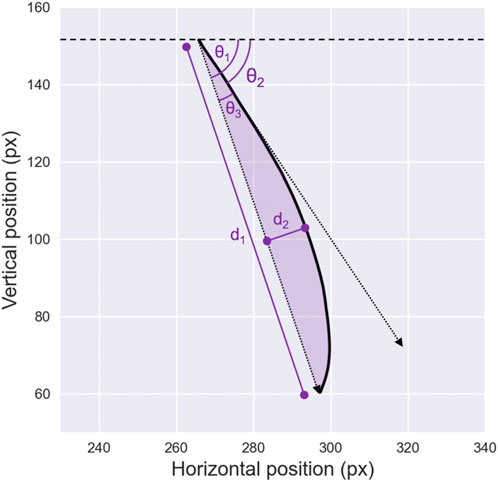

However, simple metrics such as amplitude and efficiency (both discussed in Section 3.2.2) and direction are insufficient on their own for fully capturing the complexity and non-linearity of saccadic trajectories. To address this gap, several additional features have been developed to better characterize the curvature of saccadic movements (Ludwig and Gilchrist, 2002).

One such metric is initial deviation, which measures the angle between the initial direction of the saccade, computed after a fixed time interval, e.g., 20 milliseconds (Van Gisbergen et al., 1987), and the overall direction of the saccade at its endpoint. A limitation of this method is that it assigns varying curvature values to saccades with identical trajectories but different velocities, because it relies on a fixed time interval. Another common metric is maximum curvature, defined as the greatest perpendicular distance between a point on the saccadic trajectory and the straight line connecting the starting and ending points of the saccade (Smit and Van Gisbergen, 1990). Although widely used, this approach has limitations, as it relies on a single point to represent the curvature of a trajectory. This can be especially problematic for double-curved saccades, where the trajectory may involve multiple directional changes (Ludwig and Gilchrist, 2002).

To address these shortcomings, the area curvature metric has emerged as a more robust and popular approach, as it incorporates the entire trajectory of the saccadic eye movement (Walker et al., 2006). This metric is typically calculated by evaluating the area beneath the curve formed by the sampled trajectory, relative to the direct distance between the starting and ending points of the saccade. The curvature metrics discussed so far are illustrated in Figure 4. Additionally, Ludwig and Gilchrist (2002) proposed deriving saccade curvature directly from second- and third-order polynomial fits. Like the area curvature approach, this method uses the full set of samples from a given saccade, which enhances its robustness by making it less sensitive to sampling noise.
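The initial deviation, maximum curvature, and area curvature metrics can all be derived after expressing the trajectory relative to the straight line joining the saccade start and end points. The sketch below follows this idea; the normalization of the area by the saccade amplitude, the sign convention, and the function name are assumptions of this example, since conventions vary across studies.

```python
import numpy as np

def curvature_metrics(x, y, fs, initial_ms=20.0):
    """Curvature metrics for one saccade; x, y in degrees, fs in Hz."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx, dy = x[-1] - x[0], y[-1] - y[0]
    amplitude = np.hypot(dx, dy)
    ux, uy = dx / amplitude, dy / amplitude            # unit vector along the chord
    rx, ry = x - x[0], y - y[0]
    along = rx * ux + ry * uy                          # position along the chord
    perp = -rx * uy + ry * ux                          # signed perpendicular deviation
    # Maximum curvature: largest perpendicular deviation, sign preserved.
    max_curvature = perp[np.argmax(np.abs(perp))]
    # Area curvature: trapezoidal area between trajectory and chord.
    area = np.sum(0.5 * (perp[1:] + perp[:-1]) * np.diff(along))
    # Initial deviation: direction after a fixed time, relative to the chord.
    k = min(x.size - 1, max(1, int(round(initial_ms * fs / 1000.0))))
    initial_deviation = np.degrees(np.arctan2(perp[k], along[k]))
    return {
        "initial_deviation_deg": float(initial_deviation),
        "max_curvature": float(max_curvature),
        "area_curvature": float(area / amplitude),
    }
```

Because the area term integrates over all samples, a double-curved saccade whose deviations cancel out yields a value close to zero, which may or may not be desirable depending on the research question.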

Figure 4. Saccade Direction and Curvature. Illustration of various metrics used to describe saccade non-linearity in the literature. The line connecting the starting point and the endpoint of the saccade, with amplitude

To investigate the inherent tendency for curvature observed in saccadic movements, which is particularly prominent in oblique saccades (Viviani and Swensson, 1982), early research primarily focused on target location and the type of saccade being performed (Viviani, 1977; Smit and Van Gisbergen, 1990). More recent studies, however, have shown that both the direction and magnitude of saccadic curvature can be modulated by a variety of factors. Notably, strong correlations have been observed between saccade curvature and the modulation of eye movements by distractors. For example, Doyle and Walker (2001) found that both reflexive and voluntary saccades tended to curve away from irrelevant distractor stimuli when a target was presented. Similarly, Sheliga et al. (1995, 1997) demonstrated that saccades deviated from a previously attended location. These variations in saccadic trajectory have been attributed to antagonistic interactions between different populations of neurons in the superior colliculus, which help resolve conflicts caused by competing targets in the vicinity at the onset of movement (McPeek et al., 2003).

3.2.4 Velocity features

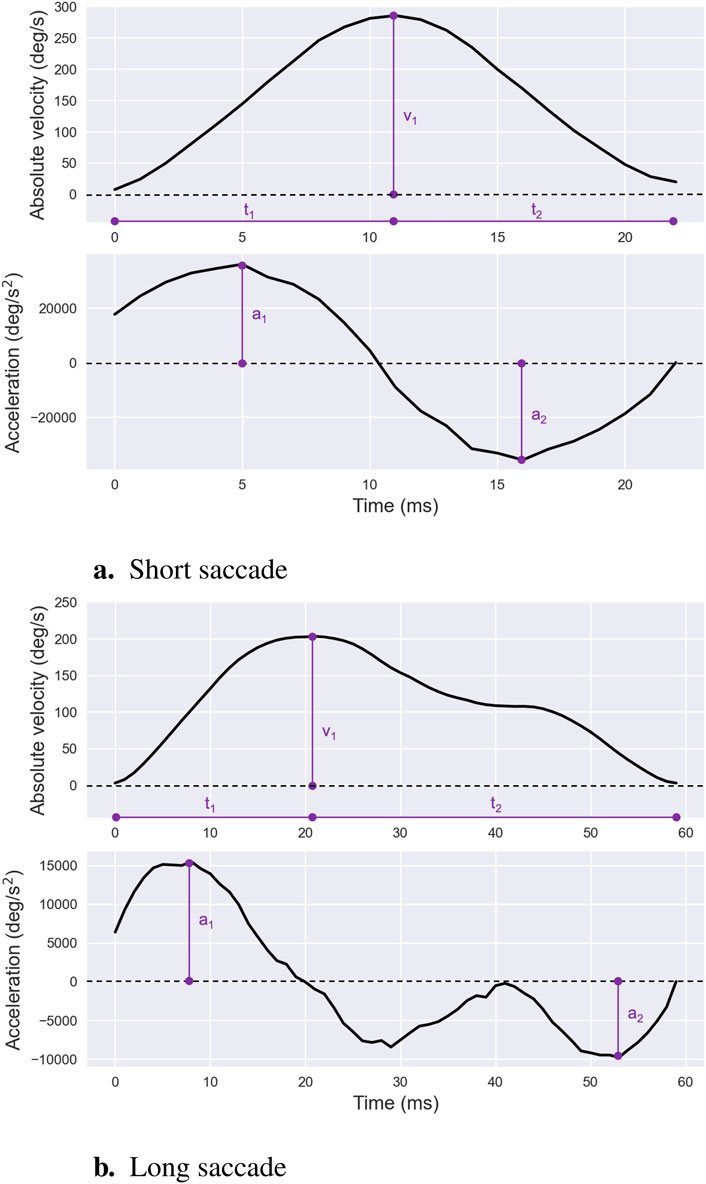

The velocity waveform of a saccade is generally described as symmetrical with comparable durations for the acceleration and deceleration phases—Figure 5a. Peak saccadic velocity, the maximum speed attained during a saccade, typically coincides with the cessation of the neural signal pulse and aligns with the point of maximum firing rate of burst neurons within the pontine reticular formation that project to oculomotor neurons (Galley, 1989; Leigh and Zee, 2015). It is noteworthy that average and peak saccadic velocities are frequently analyzed together due to their strong correlation. Their absolute values generally exhibit a consistent ratio of approximately

Figure 5. Saccade Velocity and Acceleration Profiles. Examples of saccade velocity and acceleration profiles for short (a) and long (b) saccades, illustrating differences in peak values and overall shapes. For both types of saccades, the peak velocity is denoted as

More specifically, saccade mean velocity is regarded as a reliable metric for assessing the velocity of small saccades, particularly those with symmetrical velocity waveforms. The properties of saccadic velocity have been thoroughly investigated across numerous fields and clinical applications (Di Stasi et al., 2013). Early research observed that external factors such as alcohol, drugs, and fatigue lead to reductions in saccadic velocity (Dodge and Benedict, 1915; Miles, 1929), a phenomenon attributed to diminished central nervous system activation. More recently, studies have highlighted saccadic velocity as a marker for fluctuations in sympathetic nervous system activity (Di Stasi et al., 2013), variations in the intrinsic value of visual stimuli (Xu-Wilson et al., 2009), and the effects of task experience on oculomotor control (McGregor and Stern, 1996). Clinically, abnormally low saccadic velocities, commonly termed slow saccades, are symptomatic of midbrain disorders such as progressive supranuclear palsy, spinocerebellar ataxia type 2, and various cerebellar pathologies (Jensen et al., 2019).

While mean velocity provides a useful summary metric, it becomes less effective for saccades larger than 10°, which often exhibit asymmetric velocity profiles—Figure 5b. For such larger saccades, saccade peak velocity is typically preferred as it reflects the highest firing rates of burst neurons driving the movement (Galley, 1989). Unlike mean velocity, peak velocity has computational advantages: it remains consistent regardless of segmentation thresholds—see Section 2 for further details—making it robust to variations in how sharply a saccade terminates during its final phase.

Several methodological considerations are important when calculating velocity features, particularly for saccades, though these principles extend to other canonical gaze movements as well. The simplest and most common method calculates velocity by applying a two-point central difference algorithm to the eye position signal (Schmidt et al., 1979). However, this straightforward approach has significant drawbacks. First, the numerical derivative is inherently highly sensitive to noise. Depending on the specific eye-tracking device, characterizing and removing measurement noise can be challenging or even infeasible. While filtering techniques can mitigate noise, they may inadvertently alter velocity estimates, particularly the crucial peak velocity. Second, this method is strongly influenced by sampling frequency. Since saccade peak velocity typically occurs between recorded samples, devices with low sampling rates often underestimate this key measure.
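For reference, the two-point central difference scheme and the resulting mean and peak velocities can be sketched as follows. The one-sided differences used at the first and last samples are an implementation choice of this example, and the function names are hypothetical.

```python
import numpy as np

def central_difference_velocity(pos, fs):
    """Two-point central difference of a position signal sampled at fs Hz."""
    pos = np.asarray(pos, dtype=float)
    vel = np.empty_like(pos)
    vel[1:-1] = (pos[2:] - pos[:-2]) * fs / 2.0   # interior samples
    vel[0] = (pos[1] - pos[0]) * fs               # one-sided at the edges
    vel[-1] = (pos[-1] - pos[-2]) * fs
    return vel

def saccade_velocity_features(x, y, fs):
    """Mean and peak speed of one saccade from its position samples."""
    speed = np.hypot(central_difference_velocity(x, fs),
                     central_difference_velocity(y, fs))
    return {"mean_velocity": float(speed.mean()), "peak_velocity": float(speed.max())}
```

Since the derivative is taken over a span of two sampling intervals, the peak estimate is smoothed relative to the true instantaneous maximum, an effect that becomes more pronounced at low sampling rates.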

To address these limitations, more sophisticated and robust methods have been developed. These include the eight-point central difference derivative algorithm (Inchingolo and Spanio, 1985; Federighi et al., 2011), which enhances noise resilience, as well as velocity profile fitting using gamma functions (Smit et al., 1987), and saccade trajectory curve fitting using sigmoid functions (Gibaldi and Sabatini, 2021), both of which provide refined estimates by leveraging model-based approaches. These advanced techniques are robust against noise and sampling artifacts, enabling accurate velocity estimation even when using low-cost, low-sampling-rate eye trackers. This compatibility with accessible technologies broadens the utility of such methods for a wide range of research and practical applications.

3.2.5 Acceleration features

To effectively quantify saccade acceleration characteristics, several metrics can be derived from the acceleration profile. As such, saccade peak acceleration is defined as the maximum absolute value of acceleration during the acceleration phase, which spans the interval from saccade onset to saccade peak velocity. Conversely, saccade peak deceleration represents the maximum absolute value of acceleration during the deceleration phase, occurring from peak velocity to saccade termination.

An additional metric of interest is the acceleration/deceleration ratio, computed as the ratio of the duration of the acceleration phase to that of the deceleration phase. This ratio reflects the skewness of the velocity profile. As expected, it tends to approximate one for small saccades but decreases as saccade amplitude increases. Finally, saccade skewness can be directly quantified through curve fitting, typically using a gamma function applied to the velocity profile. The resulting shape parameter provides a reliable estimate of skewness (Chen et al., 2002).
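Given the speed profile of a single saccade, the peak acceleration, peak deceleration, and acceleration/deceleration ratio can be sketched as below. The example differentiates the speed signal with `numpy.gradient`, which uses central differences in the interior; the function name is hypothetical, and no noise filtering is applied.

```python
import numpy as np

def acceleration_features(speed, fs):
    """Acceleration metrics from a saccade speed profile (deg/s) sampled at fs Hz."""
    speed = np.asarray(speed, dtype=float)
    acc = np.gradient(speed) * fs                 # deg/s^2
    peak = int(np.argmax(speed))                  # index of peak velocity
    n_acc = peak                                  # intervals from onset to peak
    n_dec = speed.size - 1 - peak                 # intervals from peak to offset
    return {
        "peak_acceleration": float(np.abs(acc[: peak + 1]).max()),
        "peak_deceleration": float(np.abs(acc[peak:]).max()),
        "acc_dec_ratio": n_acc / n_dec if n_dec > 0 else float("nan"),
    }
```

For the profile `[0, 100, 300, 200, 100, 50, 0]`, the acceleration phase spans two sampling intervals and the deceleration phase four, giving a ratio of 0.5, which is the pattern expected for larger saccades.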

As briefly discussed in Section 3.2.4, the acceleration and deceleration characteristics of saccades vary markedly with saccade amplitude. Specifically, larger saccades exhibit left-skewed velocity profiles, where the acceleration phase constitutes roughly one-third of the total saccade duration (Baloh et al., 1975; Lin et al., 2004). This asymmetry correlates strongly with both saccade amplitude and, even more so, its duration (Van Opstal and Van Gisbergen, 1987). While the duration of the deceleration phase increases with saccade amplitude and duration, the duration of the acceleration phase remains relatively constant (Becker, 1991).

The asymmetry in saccade velocity profiles, as well as its relationship with saccade duration, has been consistently observed and documented over several decades. However, the physiological significance and underlying mechanisms of this phenomenon remain unclear, with no definitive hypothesis currently available in the literature. Research suggests that saccade acceleration characteristics may be subject to modification through motor learning processes (Collins et al., 2008). Furthermore, these characteristics have been linked to neurodevelopmental conditions, such as autism spectrum disorder, where abnormal acceleration and deceleration profiles have been observed (Schmitt et al., 2014). These findings highlight the potential for saccade dynamics to serve as biomarkers for both cognitive and neurological assessments.

3.2.6 Saccadic ratios

Various ratios derived from saccadic characteristics have been extensively studied, revealing valuable insights into the interconnections between oculomotor mechanisms. For instance, Garbutt et al. (2003) identified abnormally high peak velocity-to-mean velocity ratios in saccadic trajectories recorded from patients with progressive supranuclear palsy. This anomaly suggested that these movements might not be purely saccadic but rather comprise a sequence of small-amplitude saccades.

In healthy individuals, saccadic ratios have been shown to reflect low-level idiosyncrasies. For example, these ratios have been employed as biometric features for individual identification among other eye-movement metrics (Rigas and Komogortsev, 2016). Extending this analysis to higher cognitive functions, Gupta and Routray (2012) demonstrated a significant correlation between the peak velocity-to-duration ratio and human alertness, suggesting its utility for vigilance monitoring. These findings underscore the potential of saccadic ratios as versatile markers, ranging from physiological baselines to cognitive states.

Shifting focus to broader measures of eye movement dynamics, the saccade-fixation ratio, introduced by Goldberg and Kotval (1999), highlights the balance between exploratory behavior—searching—and cognitive processing—information extraction. A higher value for this ratio reflects increased searching relative to processing. This metric has been used in comparative studies of different layouts or visual representations. Both the total fixation-to-saccade duration ratio and the average fixation-to-saccade duration ratio per occurrence can be derived from this measure. These simple yet powerful metrics have been employed in diverse experimental contexts to assess attention and cognitive information processing levels (Bhoir et al., 2015; Berges et al., 2023).
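A minimal sketch of these two ratios is given below. The function name `saccade_fixation_ratios` is hypothetical, and the ratio is oriented here as saccade time over fixation time, an assumption chosen so that higher values correspond to more searching relative to processing, as stated above. Durations are assumed to come from a prior segmentation step and to share the same unit.

```python
def saccade_fixation_ratios(fixation_durations, saccade_durations):
    """Total and per-occurrence saccade/fixation duration ratios (illustrative sketch)."""
    total_fix = sum(fixation_durations)
    total_sac = sum(saccade_durations)
    mean_fix = total_fix / len(fixation_durations)
    mean_sac = total_sac / len(saccade_durations)
    return {
        # Time spent in saccades relative to time spent fixating.
        "total_ratio": total_sac / total_fix,
        # Average saccade duration relative to average fixation duration.
        "mean_ratio": mean_sac / mean_fix,
    }
```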

Finally, we mention the K coefficient introduced by Krejtz et al. (2016a, 2017). This metric has emerged as an extension of the saccade-fixation ratio and is inherently linked to scanpath analysis. As such, it will be described in greater detail in the corresponding article of this review series.

3.2.7 Main sequence

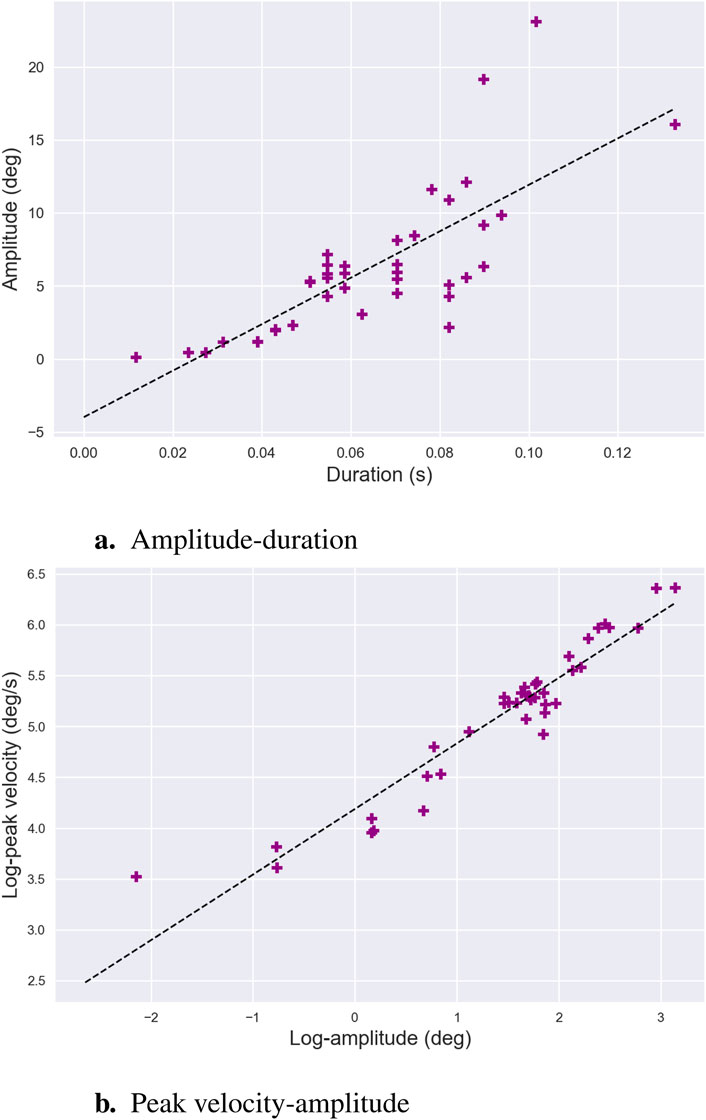

The term main sequence describes a consistent relationship between three fundamental saccadic parameters: amplitude, duration, and velocity (Bahill et al., 1975). Specifically, the relationship between saccadic peak velocity and amplitude demonstrates three key trends:

Figure 6. Main Sequence. Main-sequence relationships for saccades, along with the respective linear regression fits, are shown for amplitude-duration (a) and the logarithms of peak velocity-amplitude (b). Each colored dot represents a saccade from a set performed by the same individual during a reading task. The data emphasize the linear relationship between the logarithms of amplitude and peak velocity for saccades of moderate amplitude. While the amplitude-duration relationship is well-established in the literature, its experimental clarity appears to be less consistent.

The main sequence is widely employed in clinical research as a diagnostic tool to evaluate the integrity of the saccadic system. Deviations from its expected patterns and abnormalities in saccadic behavior are indicative of various neurological and ocular conditions, including palsy of extraocular muscles (Metz et al., 1970; Garbutt et al., 2003), myasthenia gravis (Yee et al., 1976), cerebellar disorders (Selhorst et al., 1976), and multiple sclerosis (Frohman et al., 2002; Bijvank et al., 2019). Recent work by Guadron et al. (2023) further highlighted the diagnostic relevance of the main sequence by examining patients with central and peripheral retinal defects. Their findings revealed that the characteristic relationships between saccadic parameters were most disrupted when targets were located within the subjects’ blind fields. This disruption underscores the critical role of visual input in planning saccadic kinematics, reinforcing the main sequence as a valuable lens through which the interplay between sensory input and motor control can be assessed.

Despite its widespread utility, there remains no universal consensus on the best mathematical model to describe the main sequence, particularly the non-linear relationship between peak velocity and saccade amplitude. Early work adopted power-law models to capture the non-linear growth of peak velocity with amplitude (Yarbus and Yarbus, 1967; Baloh et al., 1975; Lebedev et al., 1996). These models have proven useful for detecting performance deficits in clinical settings (Garbutt et al., 2003). For larger saccades,

In pursuit of greater robustness, alternative approaches have explored simpler models. For example, square-root models have been proposed to enhance the reliability of main sequence estimation (Lebedev et al., 1996). These models demonstrate strong generalization and repeatability, as highlighted in a recent review by Gibaldi and Sabatini (2021). Despite their simplicity, square-root models effectively capture the main sequence’s three primary trends when applied to saccades larger than 1°—a threshold that aligns with the typical amplitude range of microsaccades (Martinez-Conde et al., 2009). In conclusion, while multiple modeling approaches exist, the main sequence remains a foundational tool for understanding saccadic dynamics, with applications ranging from clinical diagnostics to explorations of the fundamental mechanisms underlying oculomotor control.
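To make the two model families concrete, the sketch below fits a power-law model and a square-root model to amplitude and peak-velocity data. The parameterizations $V = K A^{L}$ and $V = S\sqrt{A}$, the function names, and the use of ordinary least squares are assumptions made for illustration and may differ from the exact formulations used in the cited studies.

```python
import numpy as np

def fit_power_law(amplitude, peak_velocity):
    """Fit V = K * A**L by linear regression on log-transformed data."""
    slope, intercept = np.polyfit(np.log(amplitude), np.log(peak_velocity), 1)
    return float(np.exp(intercept)), float(slope)  # K, L

def fit_square_root(amplitude, peak_velocity):
    """Fit V = S * sqrt(A) by least squares, with S the only free parameter."""
    root = np.sqrt(np.asarray(amplitude, dtype=float))
    v = np.asarray(peak_velocity, dtype=float)
    # Closed-form least-squares solution for a one-parameter linear model.
    return float(np.dot(root, v) / np.dot(root, root))
```

The power-law fit corresponds to a straight line in log-log coordinates, while the square-root model can be seen as a power law with the exponent fixed at 0.5, leaving a single parameter to estimate.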

3.3 Smooth pursuit measures

Smooth pursuits represent another type of eye movement from which valuable metrics can be extracted. In natural scene viewing conditions, smooth pursuits occur alongside fixations and saccades to track moving objects within the field of view. To isolate these pursuit sequences, algorithms outlined in Section 2.2 must first be applied. In real-world scenarios, targets often move unpredictably, changing speed and direction rapidly. Such stimuli are rarely used in laboratory settings, as the performance of the smooth pursuit system is limited under these conditions, often resulting in interfering saccades that complicate the analysis.

In controlled experimental conditions, smooth pursuit tasks typically require the viewer to follow targets moving horizontally or vertically at a fixed frequency, back and forth. Two common types of stimuli used in these protocols are triangular and sinusoidal motion profiles. Triangular stimuli move the target at a constant velocity in one direction before abruptly reversing direction, forming a triangle in position-time space. This constant-velocity motion allows researchers to precisely measure the pursuit system’s ability to maintain a steady eye velocity and to detect catch-up saccades when the eye lags behind the target. In contrast, sinusoidal stimuli move the target in a smooth, oscillating pattern where velocity continuously varies, peaking at mid-path and slowing near the reversal points. Sinusoidal motion more closely mimics naturalistic motion and tests the pursuit system’s ability to adapt to continuously changing velocities. In these experimental setups, it is typically assumed that the oculomotor signal reflects primarily smooth pursuit eye movements, along with any catch-up saccades, without the inclusion of fixation sequences. The pursuit system is expected to generate smooth, coordinated eye movements that closely follow the target’s trajectory, minimizing interruptions from fixational pauses.
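For illustration, the two stimulus profiles can be generated as follows. This is a sketch under simple assumptions: one-dimensional target position in degrees, time in seconds, and hypothetical function names. The triangular profile is obtained here by folding a sine phase with `arcsin`, which is only one of several equivalent constructions.

```python
import numpy as np

def sinusoidal_target(t, amplitude, frequency):
    """Target position oscillating smoothly; speed peaks at mid-path."""
    return amplitude * np.sin(2 * np.pi * frequency * t)

def triangular_target(t, amplitude, frequency):
    """Target position moving at constant speed with abrupt reversals."""
    # arcsin(sin(x)) is a triangle wave bounded by +/- pi/2; rescale to +/- amplitude.
    return amplitude * (2 / np.pi) * np.arcsin(np.sin(2 * np.pi * frequency * t))
```

Under this construction, the triangular target moves at a constant speed of 4 × amplitude × frequency between reversals, whereas the sinusoidal target reaches a peak speed of 2π × amplitude × frequency at mid-path.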

3.3.1 Temporal and velocity features

The analysis of smooth pursuit eye movements typically starts with the estimation of fundamental descriptors, such as pursuit duration, pursuit count, and pursuit rate—or pursuit frequency. However, interpreting these metrics is not as straightforward as it might initially appear. This complexity arises primarily from the influence of catch-up saccades, which are corrective eye movements that compensate for discrepancies between the target’s position and the smooth pursuit response. These saccades interrupt smooth pursuit sequences, effectively shortening their duration while increasing the overall pursuit frequency.

More specifically, catch-up saccades are rapid eye movements that occur during smooth pursuit when the eye falls behind the target. They help correct the eye’s position by quickly redirecting the gaze to the moving target. These saccades occur when the smooth pursuit mechanism, which is responsible for maintaining the eye’s tracking of a moving object, is unable to keep up with sudden changes in the target’s velocity or direction. Catch-up saccades are particularly common when the target moves too fast for the smooth pursuit system to follow continuously or during pursuit of targets with unexpected changes in velocity or direction (Boman and Hotson, 1992). Instead of maintaining a smooth motion, the eyes make these corrective jumps to catch up with the target, thus ensuring the target stays within the central vision. Additionally, their occurrence is modulated by factors such as target properties (Heinen et al., 2016) and clinical conditions, including schizophrenia and affective disorders (Abel et al., 1991).

Characterizing the velocity profile of smooth pursuit typically involves measurements of pursuit mean velocity and pursuit peak velocity. Smooth pursuit velocities are generally modest, ranging between 15 and 30° per second (Meyer et al., 1985; Zuber et al., 1968; Ettinger et al., 2003; Klein and Ettinger, 2019), significantly lower than saccadic velocities. However, trained observers or tasks involving accelerating stimuli can elicit higher peak velocities. For instance, Barmack (1970) reported peak pursuit velocities of up to 100° per second during acceleration tasks. In humans, peak eye velocity typically occurs between 200 and 300 milliseconds after pursuit onset when following targets moving at velocities up to 30° per second (Robinson et al., 1986).

Importantly, the velocity profile is closely linked to temporal characteristics: as stimulus velocity increases, the frequency of catch-up saccades also rises to correct for larger retinal offsets. A valuable descriptor for exploring this relation between velocity and compensation mechanisms is eye crossing time, defined as the duration required for the eye to align with the target at constant velocity. De Brouwer et al. (2002) demonstrated that catch-up saccades are initiated when the eye crossing time reaches the saccade zone, indicating that smooth acceleration alone is insufficient for target capture.
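Under the constant-velocity assumption in this definition, the eye crossing time follows directly from the position error and the retinal slip. The sketch below assumes that position error is computed as target minus eye position and retinal slip as target minus eye velocity, so that the crossing time equals minus their ratio. The function name and sign conventions are illustrative and should be checked against the conventions of the study being replicated.

```python
def eye_crossing_time(target_pos, eye_pos, target_vel, eye_vel):
    """Time (s) until eye and target positions coincide at constant velocities.

    Positions in degrees, velocities in deg/s. A negative value means the
    trajectories crossed in the past and are currently diverging.
    """
    position_error = target_pos - eye_pos  # assumed convention: target minus eye
    retinal_slip = target_vel - eye_vel    # rate of change of the position error
    if retinal_slip == 0:
        # Equal velocities: the position error never changes.
        return 0.0 if position_error == 0 else float("inf")
    # Solve position_error + retinal_slip * t = 0 for t.
    return -position_error / retinal_slip
```

For example, an eye lagging 2° behind a target moving at 10°/s, while itself moving at 20°/s, would reach the target after 0.2 s.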

However, simple spatio-temporal features such as pursuit mean velocity and pursuit duration do not fully capture the complexity of smooth pursuit dynamics. Smooth pursuit consists of two distinct phases: open-loop and closed-loop. In the open-loop phase, the eye’s movement is primarily driven by the initial target presentation, with little to no influence from the retinal image changes caused by the eye movement. In contrast, during the closed-loop phase, the eye continuously adjusts to changes in the retinal image that result from its own movements, maintaining the pursuit of the target. Sections 3.3.2 and 3.3.3 introduce methods to quantify the initiation and maintenance of pursuit, respectively.

3.3.2 Smooth pursuit latency and acceleration

In this section, we introduce two classes of features used to characterize the pursuit initiation phase, namely, pursuit latency and pursuit acceleration. In target pursuit paradigms, pursuit latency, or pursuit onset, is commonly defined as the delay between the initiation of target motion and the start of ocular pursuit. The onset of smooth pursuit is typically calculated as the intersection point between two regression lines (Carl and Gellman, 1987). The first line represents the pre-response baseline and is fitted to the velocity signal over a time window from 100 milliseconds before target motion onset to 80 milliseconds after it. This baseline duration may vary depending on the experimental setup, particularly when anticipation of the target motion is expected (De Hemptinne et al., 2006). The second regression line is fitted to the pursuit initiation velocity signal, typically over a 50-millisecond window following the pre-response baseline. The placement of this window may differ across studies, and it often begins at the first time point at which eye velocity exceeds three to four standard deviations of the baseline velocity (Krauzlis and Miles, 1996).
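The two-regression procedure can be sketched as follows. This is an illustrative implementation under stated assumptions, not the exact method of the cited studies: the function name `pursuit_latency` and its parameters are hypothetical, time is expressed in seconds relative to target motion onset, the velocity trace is assumed to be saccade-free, and the initiation window starts at the first sample whose deviation from the baseline mean exceeds `n_sd` baseline standard deviations.

```python
import numpy as np

def pursuit_latency(t, velocity, baseline=(-0.100, 0.080),
                    init_window=0.050, n_sd=3.0):
    """Pursuit onset (s) as the intersection of baseline and initiation fits."""
    t = np.asarray(t, dtype=float)
    v = np.asarray(velocity, dtype=float)

    # First regression line: pre-response baseline.
    in_base = (t >= baseline[0]) & (t <= baseline[1])
    base_slope, base_icpt = np.polyfit(t[in_base], v[in_base], 1)

    # Start of the initiation window: first supra-threshold sample after baseline.
    deviation = np.abs(v - v[in_base].mean())
    candidates = np.flatnonzero(
        (t > baseline[1]) & (deviation > n_sd * v[in_base].std())
    )
    if candidates.size == 0:
        return float("nan")  # no detectable pursuit response
    t_start = t[candidates[0]]

    # Second regression line: pursuit initiation.
    in_init = (t >= t_start) & (t <= t_start + init_window)
    init_slope, init_icpt = np.polyfit(t[in_init], v[in_init], 1)
    if np.isclose(init_slope, base_slope):
        return float("nan")  # parallel lines never intersect

    # Intersection of the two fitted lines.
    return float((base_icpt - init_icpt) / (init_slope - base_slope))
```

Because the onset is extrapolated from the initiation fit, the estimate can precede the first supra-threshold sample, which is the intended behaviour of the intersection method.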

Pursuit typically exhibits much shorter latency than saccades, with pursuit latency ranging from 100 to 125 milliseconds, compared to 200–250 milliseconds for saccades (Krauzlis, 2004). In experimental conditions involving anticipation, pursuit latency can be reduced to zero or even become negative, especially when pursuit begins before the target motion, such as when the direction and velocity of the stimulus are highly predictable (Burke and Barnes, 2006; De Hemptinne et al., 2006). Spering and Gegenfurtner (2007) further demonstrated that pursuit latency is influenced by the surrounding visual context, particularly by contrast and distracting motion orientation. They found that latency decreases when the context moves in the same direction as the target, while a rapidly moving context in the opposite direction tends to pull the eyes back, delaying pursuit onset. Additionally, higher contrast enhances the effect of co-linear drifting context motion, further reducing the latency before the pursuit begins.

In addition to latency, pursuit initiation is often examined through pursuit initial acceleration (Kao and Morrow, 1994). This is typically calculated as the mean second-order position derivative of the saccade-free component extracted from the tracking response within the first 100 milliseconds following pursuit onset. During this initial phase, acceleration continues until the eye velocity matches that of the target. The pursuit initial peak acceleration can also be assessed during this period. The first 20–30 milliseconds of eye acceleration show a modest increase with target velocity (Tychsen and Lisberger, 1986). However, between 60 and 80 milliseconds after pursuit onset, eye acceleration becomes much more strongly modulated by target velocity, and is also influenced by the eccentricity of the initial eye position (Fukushima et al., 2013).
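As a sketch of how the initial acceleration measures could be computed, the example below differentiates a position trace twice and summarizes acceleration over the first 100 milliseconds after pursuit onset. The function name is hypothetical, the input is assumed to be a saccade-free position signal with pursuit onset already estimated, and the finite-difference derivatives would normally be combined with low-pass filtering on real data.

```python
import numpy as np

def pursuit_initial_acceleration(t, position, onset, window=0.100):
    """Mean and peak acceleration (deg/s^2) in the window following pursuit onset.

    t: time stamps in seconds; position: saccade-free eye position in degrees.
    """
    t = np.asarray(t, dtype=float)
    x = np.asarray(position, dtype=float)
    velocity = np.gradient(x, t)             # first-order derivative (deg/s)
    acceleration = np.gradient(velocity, t)  # second-order derivative (deg/s^2)
    sel = (t >= onset) & (t <= onset + window)
    return {
        "mean": float(acceleration[sel].mean()),
        "peak": float(np.abs(acceleration[sel]).max()),
    }
```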