- ¹Science Research Center of Sports Training, China Institute of Sport Science, Beijing, China

- ²School of Physical Education, Shanghai University of Sport, Shanghai, China

- ³Physical Fitness Training Research Center, China Institute of Sport Science, Beijing, China

- ⁴Xinxin School, The Affiliated High School of Peking University, Beijing, China

Objective: Digital visual training (VT) is widely used to improve visual-cognitive performance, yet its efficacy may be confounded by the “learning effect”. This systematic review and meta-analysis examined whether the “learning effect” moderates VT outcomes.

Methods: A systematic literature search was conducted across PubMed, Web of Science, MEDLINE, SPORTDiscus, and Cochrane Library, covering all studies published up to 8 May 2025. The search was limited to peer-reviewed articles written in English. Only randomized controlled trials (RCTs) that included both baseline and post-intervention measures of visual-cognitive performance were eligible. Subgroup analysis was conducted based on the presence or absence of task similarity between training and testing conditions, to assess potential bias introduced by the “learning effect”.

Results: The search identified 3,798 articles, of which 33 RCTs involving 1,048 participants met the inclusion criteria for meta-analysis. VT significantly improved visual attention, reaction time, decision-making time, decision-making accuracy, and eye–hand coordination. Subgroup analyses revealed that studies classified as “learning effect present” (LE+) consistently reported larger effect sizes than those classified as “learning effect absent” (LE−). Significant between-group differences were observed for visual attention (SMD = 1.65 vs. 0.07; p < 0.01), reaction time (SMD = 2.66 vs. 0.50; p < 0.01), and decision-making accuracy (SMD = 1.46 vs. 0.62; p = 0.03), indicating that task similarity may artificially inflate performance outcomes.

Conclusion: These findings indicate that observed improvements may reflect task familiarity rather than true cognitive enhancement. To improve evaluation validity, future studies should avoid task redundancy, incorporate retention testing, and adopt structurally distinct outcome measures.

1 Introduction

Sports vision refers to the integrated skills used to perceive, process, and respond to critical environmental information in competitive scenarios (Erickson, 2021). It serves as a vital bridge between decision-making and motor execution. It also directly affects athletic performance during high-speed confrontations, during tactical adaptations, and under extreme time pressure (Erickson et al., 2011). Enhancing sports vision has become a major focus of research and practice in elite athletic training (Appelbaum and Erickson, 2018; Laby and Appelbaum, 2021). The integration of sports science and digital technology has led to the widespread adoption of diverse visual training (VT) methods in elite sports. These methods aim to improve athletes’ visual function and to optimize information processing and decision-making under pressure (Kittel et al., 2024; Jothi et al., 2025). Empirical studies and systematic reviews have shown that several digital VT methods can significantly improve key visual-cognitive skills such as attention, reaction time, and decision-making. These include stroboscopic visual training (Jothi et al., 2025; Zwierko et al., 2023; Zwierko et al., 2024), perceptual-cognitive training (Müller et al., 2024; Kassem et al., 2024; Zhu et al., 2024), and virtual reality training (Liu et al., 2024; Skopek et al., 2023), and these findings suggest strong potential for practical implementation. However, Fransen (2024) argued that current scientific evidence is insufficient to support the “far transfer” of perceptual or cognitive training to athletic performance. Many commercial digital training tools appear to facilitate “near transfer” but fail to improve on-field performance (Harris et al., 2018). This discrepancy may result from structural similarities between training and testing tasks, which can produce a so-called “learning effect” (Basner et al., 2020).
The term “learning effect” denotes performance improvements driven by procedural familiarity with tasks or devices rather than genuine skill acquisition. In such cases, repeated exposure enhances test scores through familiarity alone, independent of true training-induced adaptation.

Recent studies (Basner et al., 2020; Chaloupka and Zeithamova, 2024) have highlighted that structural overlap between training and testing tasks, a common feature of cognitive training research, can trigger a “learning effect”. In that case, participants improve on post-tests because they are familiar with the stimuli, response formats, or device interfaces, not because their skills have truly improved. If not adequately controlled, this bias may produce gains driven by procedural memory or task-specific strategy optimization rather than genuine gains in visual-cognitive skills. Similar issues are evident in VT research. Krasich et al. (2016) reported that repeated testing with digital devices led to linear performance improvements over a short period, largely because participants grew familiar with the equipment. The large training effects reported in this field may therefore not reflect visual system plasticity and may instead overestimate efficacy because of the “learning effect”. Meta-analyses by Müller et al. (2024) and Zhu et al. (2024) found that perceptual-cognitive training improved decision-making more in laboratory tests than in field-based assessments (SMD = 1.26 vs. 0.85 and 1.51 vs. 0.65, respectively), highlighting insufficient “far transfer”. To date, no study has systematically examined the “learning effect” as a moderator of VT outcomes. This gap is an important methodological limitation, as task-related familiarity may artificially inflate post-test performance and mask the true efficacy of training interventions. Consequently, the bias introduced by learning effects has remained largely unaddressed in the literature.

Therefore, this study conducted a systematic review and meta-analysis to examine whether the “learning effect” moderates the outcomes of VT interventions. Subgroup analyses compared studies with and without a “learning effect” in order to identify a potential source of bias that may have been overlooked in previous research. The findings are intended to provide methodological guidance and empirical evidence for future studies on intervention design, outcome measure selection, and interpretative frameworks.

2 Materials and methods

This systematic review and meta-analysis followed the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines (Moher et al., 2010) and was preregistered on PROSPERO (ID: CRD420251020142).

2.1 Search strategy

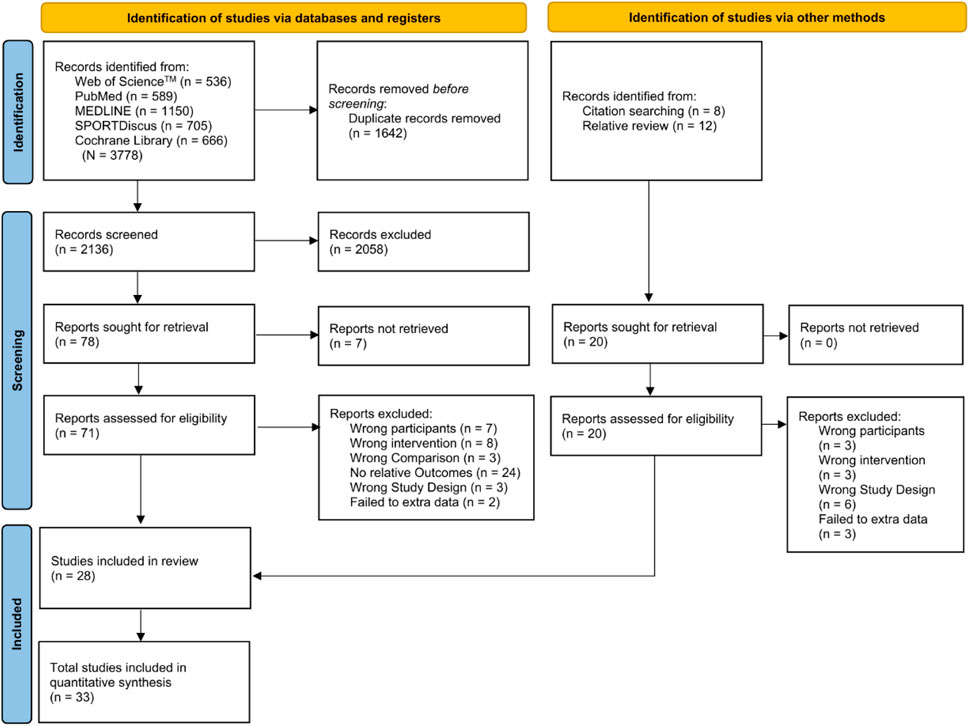

A comprehensive search of literature published up to 8 May 2025 was conducted across five electronic databases: PubMed, Web of Science (Core Collection), MEDLINE, SPORTDiscus, and Cochrane Library. The Boolean operators “AND” and “OR” were used to combine the following keywords: “visual training”, “vision training”, “eye training”, “visuomotor training”, “visual motor training”, “perceptual training”, “perceptual-cognitive training”, “temporal occlusion training”, “strobe training”, “stroboscopic training”, “virtual reality training”, “VR training”, “visual-spatial training”, “visual search training”, “multiple object tracking training”, “randomized controlled trial”, “random allocation”, “RCT”, “randomized” and “randomly”. The full search strategy is provided in Supplementary Appendix 1. The reference lists of included studies were also searched manually, and related narrative and systematic reviews (Jothi et al., 2025; Müller et al., 2024; Zhu et al., 2024; Lochhead et al., 2024) were retrieved. Automated duplicate detection and title-abstract screening were performed using Rayyan software (Ouzzani et al., 2016). After duplicates were removed, two reviewers (YG and JQ) independently assessed the identified publications against predetermined criteria. Any disagreements were resolved by consultation with a third reviewer (MY). Full texts were retrieved when the title and abstract suggested that an article might meet the inclusion criteria. If a full text was unavailable, the corresponding author was contacted by email. The study selection process is illustrated in Figure 1.
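The keyword combination described above can be sketched as a small query-building routine. This is a minimal illustration that assumes a particular grouping: intervention terms are joined by OR, study-design terms are joined by OR, and the two groups are joined by AND. The database-specific syntax actually used is given in Supplementary Appendix 1. The names `INTERVENTION_TERMS`, `DESIGN_TERMS`, `or_block`, and `build_query` are hypothetical.

```python
# Hypothetical grouping of the keywords listed in Section 2.1; the exact
# per-database syntax used in the review is in Supplementary Appendix 1.
INTERVENTION_TERMS = [
    "visual training", "vision training", "eye training", "visuomotor training",
    "visual motor training", "perceptual training", "perceptual-cognitive training",
    "temporal occlusion training", "strobe training", "stroboscopic training",
    "virtual reality training", "VR training", "visual-spatial training",
    "visual search training", "multiple object tracking training",
]
DESIGN_TERMS = ["randomized controlled trial", "random allocation", "RCT", "randomized", "randomly"]


def or_block(terms: list[str]) -> str:
    """Quote each phrase, join the phrases with OR, and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(*groups: list[str]) -> str:
    """Join OR-blocks with AND, so a record must match at least one term per group."""
    return " AND ".join(or_block(g) for g in groups)


query = build_query(INTERVENTION_TERMS, DESIGN_TERMS)
```

Field tags, such as title/abstract restrictions, are deliberately left out because their syntax differs between PubMed, Web of Science, and the other platforms.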

2.2 Inclusion and exclusion criteria

Inclusion and exclusion criteria were established using a revised PICOS framework (Amir-Behghadami and Janati, 2020). Only English-language randomized controlled trials published in peer-reviewed journals were included; studies in other languages or those that were non-randomized, uncontrolled, or cross-sectional were excluded.

2.2.1 Types of population

Participants were not restricted by gender but were required to have a defined level of sport experience and engagement in a specific sport discipline. Based on the participant classification framework of McKay et al. (2022), all participants had to be classified as Tier 2 (Trained) or above, because individuals at this level have a relatively stable foundation in physical fitness, technical skills, and sport-specific performance. This criterion improves the reliability and validity of performance-related data and minimizes measurement error and bias associated with low physical activity levels. Participants were also required to be older than 10 years (Sánchez-González et al., 2022; Leat et al., 2009) and younger than 60 years (Mehta, 2015). This age window avoids the confounding effects of growth, development, and age-related decline on visual and motor functions. All participants had to be healthy, with no musculoskeletal injuries (e.g., chronic ankle instability) or visual impairments (e.g., high myopia) that could influence the outcomes of visual ability assessments.

2.2.2 Types of intervention

According to a recent review (Lochhead et al., 2024), VT is a structured, task-specific intervention aimed at enhancing the visual-perceptual and visual-cognitive skills that are critical to athletic performance. Based on their intended mechanisms (Appelbaum and Erickson, 2018), digital VT approaches can be classified into three categories: Component Skill Training, Naturalistic Training Approaches, and Integrated Training Batteries. Included studies were therefore required to align with the core characteristics of these training modalities. Only interventions lasting at least 1 week were included. This threshold excluded acute or single-session studies, which primarily capture immediate practice effects rather than training-based adaptations (Appelbaum and Erickson, 2018; Smith and Mitroff, 2012).

2.2.3 Types of comparison

Single-arm trials and two-arm VT studies without a valid comparator were excluded. Control groups could be either active controls (e.g., regular training or training without a visual intervention) or passive controls (no intervention). When a study included both active and passive control conditions, the passive control group was prioritized. Passive controls minimize confounding effects from alternative training programs and provide a clearer estimate of the true efficacy of VT.
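The comparator rule above can be expressed as a simple preference order. This is a minimal sketch that assumes each study's arms have already been labelled as “passive” or “active”. The function name and arm labels are hypothetical.

```python
from typing import Optional


def select_control(arms: dict[str, str]) -> Optional[str]:
    """Return the comparator arm to analyse, preferring passive over active controls.

    `arms` maps a hypothetical arm label to "passive" (no intervention) or
    "active" (e.g., regular training without a visual component).
    """
    for preferred in ("passive", "active"):
        for label, kind in arms.items():
            if kind == preferred:
                return label
    return None  # no valid comparator: the study would be excluded
```

For example, a study with a regular-training arm and a no-training arm would contribute the no-training arm as its comparator.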

2.2.4 Types of outcomes

The visual skill measures were categorized into two main domains: visual-perceptual and visual-cognitive skills. Krasich et al. (2016) observed significant learning effects in tasks with high visuomotor control demands (Perception Span, Hand Reaction Time, Go/No Go, and Eye-Hand Coordination). In contrast, they found no significant progress in tasks involving only visual sensitivity, which are also difficult to improve through specific VT (Shekar et al., 2021). This suggests that the performance gains from repeated testing with digital devices were primarily attributable to participants’ increased familiarity with the equipment rather than to the intervention itself. The present meta-analysis therefore focused on visual-cognitive skills, including visual attention, reaction time, decision-making skills, and eye-hand coordination. Studies that did not use digital devices to assess these outcomes were excluded. Digital devices were defined as electronic or computerized tools that provide standardized visual stimuli and/or automatically record responses, ensuring objective and reproducible measurement. Eligible devices included video-based testing platforms, multiple-object tracking software, light-board systems, and virtual reality headsets. By contrast, studies relying solely on in-game performance indicators (e.g., passing or shooting accuracy) or on subjective coach observation without digital instrumentation were excluded. To allow effect sizes (ES) to be calculated, studies were required to provide adequate statistical information, including pre-post repeated measures and/or change scores with their corresponding standard deviations. Beyond the requirement for digital assessment, studies were not excluded based on the specific methods used to assess these outcomes.

2.2.5 Types of study design

Only randomized controlled trials (RCTs) were included.
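Taken together, the criteria in Section 2.2 amount to a conjunction of checks. The sketch below encodes them under stated assumptions. The record fields are hypothetical and are not the authors’ screening form. Applying the age bounds to the youngest and oldest participants, and the tier criterion to the lowest-tier participant, are interpretive assumptions.

```python
from dataclasses import dataclass


@dataclass
class StudyRecord:
    """Hypothetical screening record; field names are illustrative only."""
    language: str
    peer_reviewed: bool
    randomized: bool
    has_valid_comparator: bool
    youngest_age: float
    oldest_age: float
    lowest_tier: int          # participant tier per McKay et al. (2022)
    healthy: bool             # no musculoskeletal injury or visual impairment
    duration_weeks: float
    digital_outcome: bool     # visual-cognitive outcome measured with a digital device
    has_pre_post_stats: bool  # pre-post means/SDs or change scores reported


def is_eligible(s: StudyRecord) -> bool:
    """Return True only when every criterion from Section 2.2 holds."""
    checks = [
        s.language == "English" and s.peer_reviewed,   # 2.2: language and publication type
        s.randomized and s.has_valid_comparator,       # 2.2.3 and 2.2.5
        s.youngest_age > 10 and s.oldest_age < 60,     # 2.2.1: age window
        s.lowest_tier >= 2 and s.healthy,              # 2.2.1: Tier 2 or above, healthy
        s.duration_weeks >= 1,                         # 2.2.2: no acute interventions
        s.digital_outcome and s.has_pre_post_stats,    # 2.2.4: measurable digital outcome
    ]
    return all(checks)
```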

2.3 Data extraction procedures

Two reviewers (YG and JQ) independently extracted the data using a customized Excel worksheet (Microsoft Corp., Redmond, WA, United States). Discrepancies were resolved through discussion, with arbitration by a third reviewer (MY) when consensus could not be reached. The following data were extracted from each included study:

(1) authors and year of publication;
(2) participant characteristics, including sample size, sex, age, sport type, and performance level;
(3) intervention characteristics, such as training modality, frequency, and duration;
(4) outcome measures, including the test instruments used and the reported means, standard deviations, and standard errors for both intervention and control groups.

Following the approach of Thiele et al. (2020), when multiple outcomes were reported, the outcome with the most significant result was prioritized. For studies lacking complete numerical data or reporting results only in graphical form, the original authors were contacted to obtain the necessary information. If the data could not be obtained through author correspondence, values were estimated from figures using the WebPlotDigitizer web application (https://automeris.io/WebPlotDigitizer) (Burda et al., 2017).
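Two conversions that are commonly needed at this stage can be sketched as follows. The first recovers a standard deviation from a reported standard error of the mean, using SD = SE × √n. The second maps a pixel coordinate read off a figure to data units by linear interpolation between two calibrated axis ticks, which is the general principle behind figure digitizing. Both helper names are hypothetical. The second function is not WebPlotDigitizer’s code, and it assumes a linear rather than logarithmic axis.

```python
import math


def sd_from_se(se: float, n: int) -> float:
    """Recover a group SD from the standard error of its mean: SD = SE * sqrt(n)."""
    return se * math.sqrt(n)


def pixel_to_value(px: float, px_ref1: float, val_ref1: float,
                   px_ref2: float, val_ref2: float) -> float:
    """Convert a pixel coordinate to data units using two calibrated ticks on a linear axis.

    Works for inverted image y-axes too, because the scale simply becomes negative.
    """
    scale = (val_ref2 - val_ref1) / (px_ref2 - px_ref1)
    return val_ref1 + (px - px_ref1) * scale
```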

2.4 Risk of bias

The risk of bias and methodological quality of the included studies were independently evaluated by two reviewers (YG and JQ) using the Cochrane Risk of Bias 2.0 (RoB 2) framework (Burda et al., 2017). This tool assesses potential bias across five domains: the randomization process (D1), deviations from intended interventions (D2), missing outcome data (D3), measurement of the outcome (D4), and selection of the reported result (D5). Each domain was rated as “low risk”, “some concerns”, or “high risk”. Any discrepancies between the two reviewers were resolved through consensus discussions, with arbitration by a third reviewer (MY) when necessary.

2.5 Statistical analysis

2.5.1 Data synthesis and effect measures

To evaluate the effectiveness of VT on visual-cognitive skills and to investigate whether the “learning effect” influenced the outcomes of existing studies, the meta-analysis followed the procedures outlined below. After data extraction, the first step was to calculate two quantities for each group (intervention and control): the mean pre-to-post change (MDdiff), using Equation 1, and the standard deviation of that change (SDdiff), using Equation 2. When the pre-post correlation coefficient (Corr) was not explicitly reported, it was calculated from the raw data. If raw data were not available, the original research teams were contacted to request them. If neither approach was feasible, Corr was assumed to be 0.5, as suggested by the Cochrane Handbook (Cumpston et al., 2019). This intermediate value balances the potential under- and over-estimation of variability in the absence of study-specific correlation data.
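Equations 1 and 2 are not reproduced in this text. Assuming they follow the standard Cochrane Handbook form for change scores, computed separately for the VT and control groups, they would read as shown below. With Corr = 0.5, the second expression reduces to the square root of (SDpre² + SDpost² − SDpre·SDpost). When SDpre = SDpost = SD, this simplifies to SD itself.

```latex
\begin{aligned}
\mathrm{MD}_{\mathrm{diff}} &= M_{\mathrm{post}} - M_{\mathrm{pre}} \\
\mathrm{SD}_{\mathrm{diff}} &= \sqrt{\mathrm{SD}_{\mathrm{pre}}^{2} + \mathrm{SD}_{\mathrm{post}}^{2}
  - 2\,\mathrm{Corr}\,\mathrm{SD}_{\mathrm{pre}}\,\mathrm{SD}_{\mathrm{post}}}
\end{aligned}
```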

Following Hedges and Olkin (1985), standardized mean differences (SMD) were corrected for small-sample bias using the factor 1 − 3/(4N − 9), where N is the total sample size of the two groups. Because most included studies had small samples, this bias-corrected Hedges’ g (Equations 3 and 4) was used as the effect size for each study.
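Equations 3 and 4 are not reproduced in this text. Assuming they take the conventional form implied by the correction factor and by the symbols defined in the following paragraph, they can be written as shown below. The sign convention is also an assumption: positive g favours VT, and the sign would be reversed for outcomes such as reaction time, where lower values are better.

```latex
\begin{aligned}
g &= \left(1 - \frac{3}{4N - 9}\right)
     \frac{M_{\mathrm{change,VT}} - M_{\mathrm{change,CON}}}{\mathrm{SD}_{\mathrm{pooled}}},
     \qquad N = n_1 + n_2 \\
\mathrm{SD}_{\mathrm{pooled}} &= \sqrt{\frac{(n_1 - 1)\,\mathrm{SD}_1^{2} + (n_2 - 1)\,\mathrm{SD}_2^{2}}{n_1 + n_2 - 2}}
\end{aligned}
```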

In the above equations, Mchange is the mean change from pre- to post-intervention in the VT and control groups, respectively. SDpooled is the pooled standard deviation of the change scores across both groups. n1 and n2 are the sample sizes of the two groups, and SD1 and SD2 are their standard deviations. Hedges’ g values were classified as small (<0.50), medium (0.50–0.80), and large (≥0.80) (Hedges and Olkin, 1985).
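The effect-size steps above can be sketched in Python. This assumes the conventional formulas: a change-score SD with Corr defaulting to 0.5, a pooled SD, and the 1 − 3/(4N − 9) correction. The sampling-variance line uses one common large-sample approximation, which may differ slightly from the variance computed internally by the R packages the authors used. All function names are hypothetical.

```python
import math


def change_sd(sd_pre: float, sd_post: float, corr: float = 0.5) -> float:
    """SD of pre-to-post change scores; corr defaults to 0.5 when unreported."""
    return math.sqrt(sd_pre**2 + sd_post**2 - 2 * corr * sd_pre * sd_post)


def hedges_g(m_change_vt: float, sd_vt: float, n1: int,
             m_change_con: float, sd_con: float, n2: int) -> tuple[float, float]:
    """Bias-corrected SMD of change scores (VT minus control) and an approximate variance."""
    sd_pooled = math.sqrt(((n1 - 1) * sd_vt**2 + (n2 - 1) * sd_con**2) / (n1 + n2 - 2))
    d = (m_change_vt - m_change_con) / sd_pooled
    j = 1 - 3 / (4 * (n1 + n2) - 9)  # small-sample correction with N = n1 + n2
    # Large-sample approximation to the variance of d, scaled by j^2 for g
    var_g = j**2 * ((n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2)))
    return j * d, var_g


def magnitude(g: float) -> str:
    """Classify |g| using the thresholds stated in Section 2.5.1."""
    size = abs(g)
    if size < 0.5:
        return "small"
    if size < 0.8:
        return "medium"
    return "large"


# Hypothetical example: two groups of 10 with change-score SDs of 2.0
g, var_g = hedges_g(5.0, 2.0, 10, 3.0, 2.0, 10)
```

In the example, the uncorrected d is 1.0 and the correction factor is 1 − 3/71. This shows how much the adjustment shrinks effects in small samples.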

2.5.2 Meta-analysis and test for heterogeneity

The meta-analysis and data visualization were conducted using the “meta” and “metafor” packages in R software (version 4.3.3, R Core Team, Vienna, Austria). A conventional two-level meta-analysis approach was applied, utilizing the inverse-variance weighting method. Effect sizes were synthesized under a random-effects model based on the DerSimonian–Laird method (DerSimonian and Laird, 1986). This model assumes that effect sizes are drawn from a distribution of true effects rather than from a single homogeneous population (Cumpston et al., 2019). By incorporating between-study variability, it allows for a more generalizable and accurate estimation of the overall effect size. To avoid unit-of-analysis errors, when multiple intervention groups were compared against a shared control group, the sample size of the shared group was evenly divided across comparisons (Poon et al., 2024).
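The pooling step can be sketched as a Python re-implementation of the DerSimonian–Laird estimator. It is an illustration, not the authors’ R code; in metafor the corresponding call would be `rma(yi, vi, method = "DL")`. The helper `split_shared_control` shows the even division of a shared control group’s sample size. Both function names are hypothetical.

```python
import math


def split_shared_control(n_control: int, n_comparisons: int) -> float:
    """Divide a shared control group's n evenly across the comparisons that reuse it."""
    return n_control / n_comparisons


def dersimonian_laird(effects, variances, z=1.959964):
    """Inverse-variance random-effects pooling with the DerSimonian-Laird tau^2."""
    w = [1 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0  # truncated at zero
    w_star = [1 / (v + tau2) for v in variances]     # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return {"estimate": pooled, "se": se,
            "ci": (pooled - z * se, pooled + z * se), "tau2": tau2}
```

When the studies are homogeneous, tau² is truncated to zero and the result coincides with a fixed-effect estimate. When heterogeneity is present, the added tau² widens the confidence interval.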

Between-study heterogeneity was evaluated using both the I² statistic and Cochran’s Q (chi-square) test. The degree of heterogeneity, as indicated by I², was categorized as low (<25%), moderate (25%–50%), high (50%–75%), or considerable (≥75%) in accordance with established guidelines (Higgins et al., 2003). These metrics indicate the extent to which variability in effect sizes is attributable to true heterogeneity rather than sampling error.
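Q and I² can be computed directly from the study effects and variances. This is a minimal sketch that assumes the usual definitions: Q uses fixed-effect weights, and I² = max(0, (Q − df)/Q) × 100. The banding reproduces the categories stated above. The function names are hypothetical.

```python
def heterogeneity(effects, variances):
    """Return Cochran's Q, its degrees of freedom, and I^2 (in percent)."""
    w = [1 / v for v in variances]
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


def i2_category(i2: float) -> str:
    """Bands from Section 2.5.2: low <25, moderate 25-50, high 50-75, considerable >=75."""
    if i2 < 25:
        return "low"
    if i2 < 50:
        return "moderate"
    if i2 < 75:
        return "high"
    return "considerable"
```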

2.5.3 Subgroup analysis

To explore whether the presence of a “learning effect” moderated VT outcomes, a subgroup analysis was performed. The full texts were systematically evaluated for consistency between the training tasks and the outcome assessments, including the devices used. Studies were classified into the Learning Effect Present (LE+) group if both of the following criteria were met: (1) the digital device used for training and testing was identical or highly similar (Poltavski et al., 2021), and (2) the structure and mode of the training task closely matched those of the outcome measure (Fransen, 2024). Studies that met only one criterion, or neither, were assigned to the Learning Effect Absent (LE−) group. For example, a study was classified as “LE+” if the intervention involved a multiple-object tracking task on the same or a highly similar digital platform as the outcome test. The same applied if a computerized visual reaction-time training program was evaluated with the same reaction-time software. Differences in pooled effect sizes between subgroups were tested using a mixed-effects model (Christ, 2009). When fewer than five studies were available for an outcome (Deeks et al., 2019), subgroup analysis was not performed, and that outcome was summarized without subgroup comparison.
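The classification rule and the between-subgroup test can be sketched for the two-subgroup case used here. This assumes that the test compares the two subgroup estimates with Q = (θ₁ − θ₂)² / (SE₁² + SE₂²) on 1 degree of freedom. For df = 1, the chi-square upper tail equals erfc(√(Q/2)). Standard errors are back-calculated from the published, rounded 95% CIs, so the results are approximate. With more than two subgroups, df = k − 1 and a general chi-square tail function (e.g., from SciPy) would be needed. The function names are hypothetical.

```python
import math


def classify_learning_effect(same_or_similar_device: bool, matching_task_structure: bool) -> str:
    """LE+ only when both criteria hold; meeting one or neither gives LE-."""
    return "LE+" if same_or_similar_device and matching_task_structure else "LE-"


def se_from_ci(lower: float, upper: float, z: float = 1.959964) -> float:
    """Back-calculate a standard error from a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * z)


def subgroup_difference(est1: float, se1: float, est2: float, se2: float):
    """Q test for a difference between two subgroup estimates (df = 1)."""
    q = (est1 - est2) ** 2 / (se1**2 + se2**2)
    p = math.erfc(math.sqrt(q / 2))  # chi-square upper tail for df = 1
    return q, p


# Visual attention subgroups as reported in Section 3.3 (LE- vs. LE+)
q_va, p_va = subgroup_difference(0.07, se_from_ci(-0.25, 0.38), 1.65, se_from_ci(1.15, 2.15))
# Decision-making time subgroups as reported in Section 3.3
q_dt, p_dt = subgroup_difference(0.41, se_from_ci(-0.30, 1.12), 0.74, se_from_ci(0.30, 1.19))
```

With these inputs, the decision-making time comparison gives p of about 0.44, in line with the value reported in the Results. The visual attention comparison gives Q of about 27 and p well below 0.001.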

2.5.4 Risk of publication bias and sensitivity analysis

To evaluate publication bias, contour-enhanced funnel plots (Peters et al., 2008) were generated and Egger’s test (Fernández-Castilla et al., 2021) was performed, provided that the analysis included ten or more studies. A p-value greater than 0.05 was interpreted as indicating no significant evidence of publication bias. These methods allow both visual and statistical assessment of asymmetry in the distribution of effect sizes, which helps to judge the robustness and reliability of the pooled estimates. A leave-one-out sensitivity analysis was also conducted, in which each study was excluded in turn. This assessed whether the pooled effect size was disproportionately influenced by any single study.
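Both checks can be sketched in Python. Egger’s test is shown as the classical ordinary least-squares regression of the standardized effect (effect/SE) on precision (1/SE), where an intercept far from zero suggests funnel-plot asymmetry. The leave-one-out analysis re-pools the remaining studies with a compact DerSimonian–Laird estimator. This is an illustrative re-implementation, not the authors’ R code. Converting the returned t statistic to a p-value requires a t-distribution with k − 2 degrees of freedom, for example `scipy.stats.t.sf`, which is not used here. All function names are hypothetical.

```python
import math


def egger_test(effects, ses):
    """Regress effect/SE on 1/SE; return intercept, its SE, t statistic, and df = k - 2."""
    y = [e / s for e, s in zip(effects, ses)]
    x = [1 / s for s in ses]
    k = len(x)
    mx, my = sum(x) / k, sum(y) / k
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se_int = math.sqrt(rss / (k - 2) * (1 / k + mx**2 / sxx))
    return intercept, se_int, intercept / se_int, k - 2


def dl_pool(effects, variances):
    """Compact DerSimonian-Laird random-effects estimate."""
    w = [1 / v for v in variances]
    fixed = sum(a * b for a, b in zip(w, effects)) / sum(w)
    q = sum(a * (b - fixed) ** 2 for a, b in zip(w, effects))
    c = sum(w) - sum(a * a for a in w) / sum(w)
    tau2 = max(0.0, (q - (len(w) - 1)) / c) if c > 0 else 0.0
    w_star = [1 / (v + tau2) for v in variances]
    return sum(a * b for a, b in zip(w_star, effects)) / sum(w_star)


def leave_one_out(effects, variances):
    """Re-pool the data k times, each time omitting one study."""
    return [dl_pool(effects[:i] + effects[i + 1:], variances[:i] + variances[i + 1:])
            for i in range(len(effects))]
```

If every leave-one-out estimate stays close to the full pooled estimate and on the same side of zero, no single study drives the result. This is the pattern the Results describe as confirming robustness.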

3 Results

3.1 Study characteristics

A total of 3,798 studies were retrieved from PubMed (n = 589), Web of Science™ (n = 536), MEDLINE (n = 1,150), SPORTDiscus (n = 705), and Cochrane Library (n = 666). Additionally, 20 records were identified through manual search. After removing duplicates and applying predefined inclusion and exclusion criteria, 33 studies (Gabbett et al., 2007; Maman et al., 2011; Paul et al., 2011; Serpell et al., 2011; Schwab and Memmert, 2012; Lorains et al., 2013; Murgia et al., 2014; Nimmerichter et al., 2015; Alder et al., 2016; Alsharji and Wade, 2016; Hohmann et al., 2016; Milazzo et al., 2016; Romeas et al., 2016; Gray, 2017; Brenton et al., 2019a; Brenton et al., 2019b; Petri et al., 2019; Romeas et al., 2019; Liu et al., 2020; Schumac et al., 2020; Bidil et al., 2021; Ehmann et al., 2022; Harenberg et al., 2022; Theofilou et al., 2022; Fortes et al., 2023; Phillips et al., 2023; Zwierko et al., 2023; Di et al., 2024; Guo et al., 2024; Lachowicz et al., 2024; Lucia et al., 2024; Mancini et al., 2024; Rodrigues et al., 2025) were included in the meta-analysis (Figure 1).

All included studies adopted a randomized controlled trial design, involving a total of 1,048 participants. The participants were athletes from a variety of sports, including soccer (Lorains et al., 2013; Murgia et al., 2014; Nimmerichter et al., 2015; Romeas et al., 2016; Schumac et al., 2020; Ehmann et al., 2022; Harenberg et al., 2022; Theofilou et al., 2022; Fortes et al., 2023; Phillips et al., 2023; Rodrigues et al., 2025), volleyball (Zwierko et al., 2023; Mancini et al., 2024), softball (Gabbett et al., 2007), tennis (Maman et al., 2011), table tennis (Paul et al., 2011), rugby (Serpell et al., 2011), hockey (Schwab and Memmert, 2012), badminton (Alder et al., 2016; Romeas et al., 2019; Bidil et al., 2021), handball (Alsharji and Wade, 2016; Hohmann et al., 2016), karate (Milazzo et al., 2016; Petri et al., 2019), baseball (Gray, 2017; Liu et al., 2020), cricket (Brenton et al., 2019a; Brenton et al., 2019b), fencing (Di et al., 2024), skeet shooting (Guo et al., 2024), esports (Lachowicz et al., 2024) and basketball (Lucia et al., 2024). The sample sizes of individual studies ranged from 15 to 80, with intervention durations spanning 1 week to 6 months. Training frequency varied between one and seven sessions per week, and each session lasted from 6 to 180 min.

The VT interventions were classified into the following categories: (1) perceptual-cognitive training [n = 10 studies (Gabbett et al., 2007; Serpell et al., 2011; Lorains et al., 2013; Murgia et al., 2014; Nimmerichter et al., 2015; Alder et al., 2016; Alsharji and Wade, 2016; Hohmann et al., 2016; Brenton et al., 2019a; Schumac et al., 2020)]; (2) visuomotor coordination training [n = 13 studies (Maman et al., 2011; Paul et al., 2011; Schwab and Memmert, 2012; Brenton et al., 2019a; Brenton et al., 2019b; Liu et al., 2020; Bidil et al., 2021; Theofilou et al., 2022; Di et al., 2024; Guo et al., 2024; Lucia et al., 2024; Mancini et al., 2024; Rodrigues et al., 2025)]; (3) multiple object tracking training [n = 4 studies (Romeas et al., 2016; Romeas et al., 2019; Ehmann et al., 2022; Phillips et al., 2023)]; (4) stroboscopic visual training [n = 3 studies (Liu et al., 2020; Fortes et al., 2023; Zwierko et al., 2023)]; and (5) virtual reality training [n = 3 studies (Gray, 2017; Petri et al., 2019; Lachowicz et al., 2024)]. After detailed evaluation of the methodological rigor across studies, only eleven were considered to report outcome measures that were not confounded by a potential “learning effect”. Further details regarding study characteristics and intervention protocols are summarized in Supplementary Appendix Table 2.1.

3.2 Risk of bias

The risk of bias was evaluated using the Cochrane Risk of Bias 2.0 (RoB 2) tool, and the results are presented in Supplementary Appendix Figures 3.1 and 3.2. Although all included studies identified themselves as randomized controlled trials, only 11 clearly described the method used for random sequence generation. Consequently, the domain D1 (randomization process) was rated as “low risk” in these studies, while the others were judged as having “some concerns” due to insufficient reporting on randomization procedures. For D3 (missing outcome data), six studies were classified as “high risk” owing to substantial attrition that resulted in marked imbalance between groups. In terms of D4 (measurement of the outcome), most studies (n = 23) were rated as having “high risk” due to employing subjective evaluation methods and the presence of “learning effect”. In addition, nine other studies were judged as having “some concerns” due to the lack of blinding in outcome assessment. All included studies were marked as having “some concerns” for D5 (selection of the reported result), primarily due to incomplete reporting or absence of pre-specified analysis plans. Overall, 22 studies were deemed to be at “high risk”, and the remaining were categorized as having “some concerns”. It should be noted that the high proportion of studies rated as “high risk” was primarily driven by Domain 4, where the presence of “learning effects” compromised the validity of outcome measures. In addition, several studies suffered from high attrition rates. These limitations may have inflated the reported effects and should be considered when interpreting the overall findings.

3.3 Main analyses

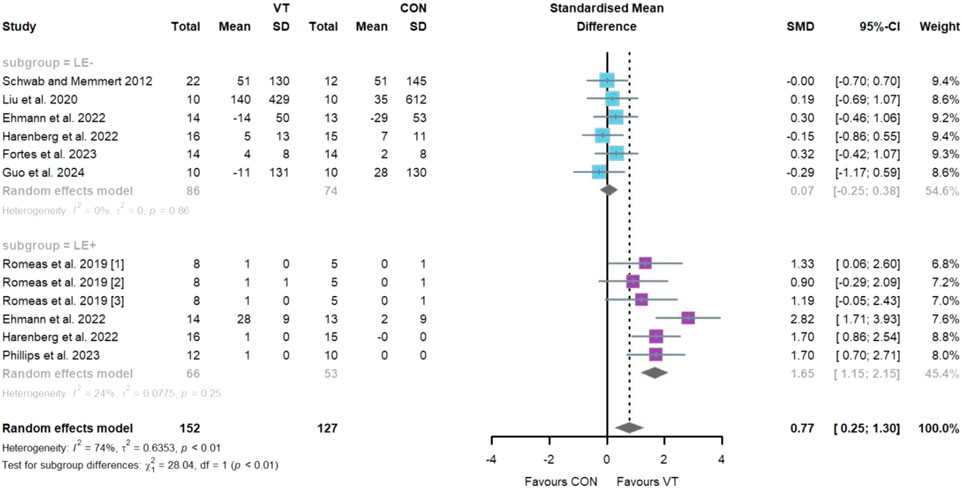

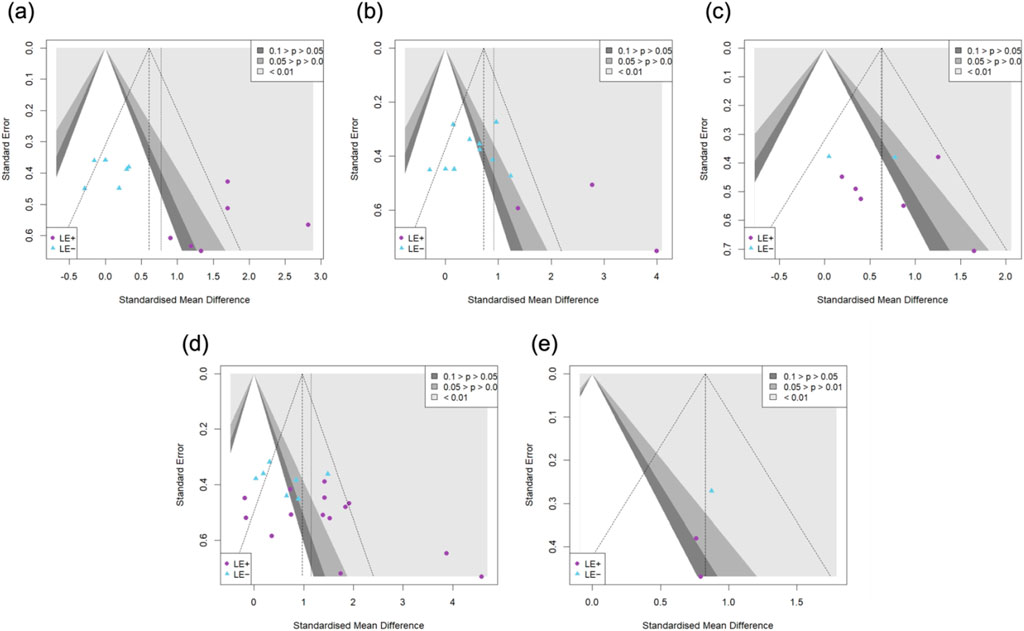

Regarding the impact of VT on visual attention (Figure 2), eight studies comprising twelve intervention groups and a total of 269 participants were included. The meta-analysis revealed a statistically significant improvement [SMD = 0.77; 95% CI = (0.25–1.30); I² = 74%; p < 0.01], indicating a medium effect size. Heterogeneity was high. Egger’s test indicated potential publication bias in the pooled effect size (p = 0.03), supported by asymmetry in the funnel plot (Figure 7a). Sensitivity analysis confirmed the robustness of the pooled estimate (Supplementary Appendix Figure 4.1). In the subgroup analysis, the “LE−” group did not exhibit a statistically significant improvement [k = 6, n = 160, SMD = 0.07; 95% CI = (−0.25 to 0.38); I² = 0% (low); p > 0.05]. The “LE+” group demonstrated a significant improvement with a large effect size [k = 6, n = 109, SMD = 1.65; 95% CI = (1.15–2.15); I² = 24% (low); p < 0.05]. A significant between-group difference was detected (p < 0.01).

Figure 2. Random-effects meta-analysis of the comparative effects of visual training on visual attention between the “LE−” and “LE+” groups.

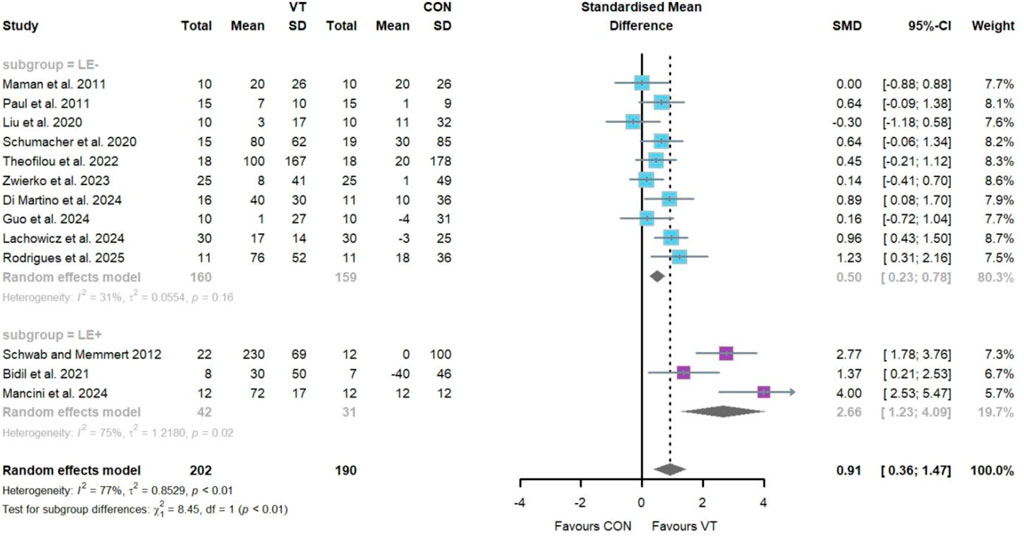

Regarding the impact of VT on reaction time (Figure 3), thirteen studies involving thirteen intervention groups and a total of 392 participants were included. The meta-analysis revealed a statistically significant improvement [SMD = 0.91; 95% CI = (0.36–1.47); I² = 77%; p < 0.01], indicating a large effect size. Considerable heterogeneity was observed. Egger’s test did not indicate significant publication bias (p = 0.07), although visual inspection revealed asymmetry in the funnel plot (Figure 7b). Sensitivity analysis confirmed the robustness of the pooled effect size (Supplementary Appendix Figure 4.2). In the subgroup analysis, the “LE−” group exhibited a statistically significant improvement with a medium effect size [k = 10, n = 319, SMD = 0.50; 95% CI = (0.23–0.78); I² = 31% (moderate); p < 0.05]. The “LE+” group demonstrated a large and significant effect [k = 3, n = 73, SMD = 2.66; 95% CI = (1.23–4.09); I² = 75% (considerable); p < 0.05]. A statistically significant difference was observed between the two subgroups (p < 0.01).

Figure 3. Random-effects meta-analysis of the comparative effects of visual training on reaction time between the “LE−” and “LE+” groups.
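Reported p-values can be cross-checked against their confidence intervals, because a 95% CI that excludes zero implies p < 0.05. The sketch below assumes symmetric normal-approximation CIs. Standard errors back-calculated from rounded bounds are approximate. `p_from_ci` is a hypothetical helper.

```python
import math


def p_from_ci(estimate: float, lower: float, upper: float, z_crit: float = 1.959964) -> float:
    """Two-sided p-value implied by a symmetric 95% CI under a normal approximation."""
    se = (upper - lower) / (2 * z_crit)
    z = estimate / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


# Reaction-time subgroups as reported in Section 3.3
p_le_minus = p_from_ci(0.50, 0.23, 0.78)
p_le_plus = p_from_ci(2.66, 1.23, 4.09)
```

Both reaction-time subgroup intervals exclude zero, so both implied p-values fall well below 0.05.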

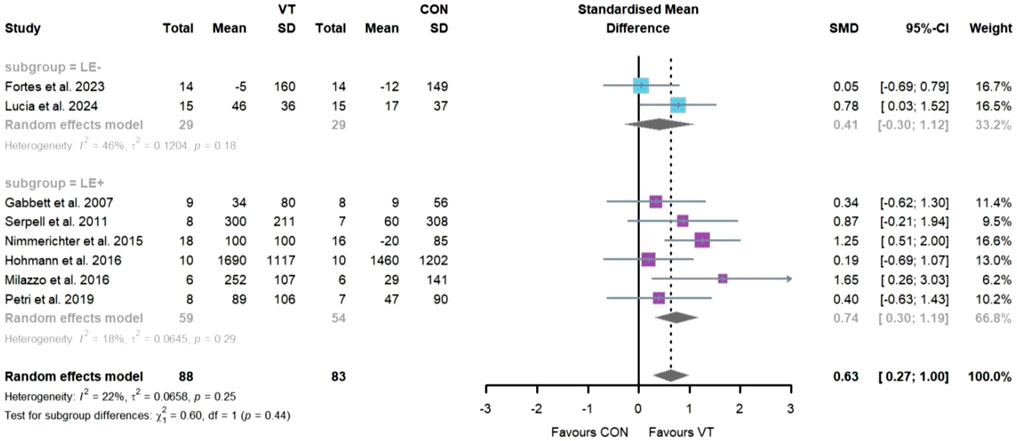

Regarding the impact of VT on decision-making time (Figure 4), eight studies comprising eight intervention groups and a total of 171 participants were included. The meta-analysis showed a statistically significant improvement with a medium effect size [SMD = 0.63; 95% CI = (0.27–1.00); I² = 22%; p < 0.01], and heterogeneity was low. Egger’s test indicated no significant publication bias (p = 0.55), consistent with the symmetrical distribution observed in the funnel plot (Figure 7c). Sensitivity analysis supported the robustness of the pooled effect size (Supplementary Appendix Figure 4.3). In the subgroup analysis, the “LE−” group did not show a statistically significant improvement [k = 2, n = 58, SMD = 0.41; 95% CI = (−0.30 to 1.12); I² = 46% (moderate); p > 0.05]. The “LE+” group exhibited a significant improvement with a medium effect size [k = 6, n = 113, SMD = 0.74; 95% CI = (0.30–1.19); I² = 18% (low); p < 0.05]. No significant difference was detected between the two subgroups (p = 0.44).

Figure 4. Random-effects meta-analysis of the comparative effects of visual training on decision-making time between the “LE−” and “LE+” groups.

Regarding the impact of VT on decision-making accuracy (Figure 5), seventeen studies comprising twenty-one intervention groups and a total of 471 participants were included. The meta-analysis revealed a statistically significant improvement [SMD = 1.15; 95% CI = (0.69–1.61); I² = 77%; p < 0.01], indicating a large effect size. Considerable heterogeneity was observed. Egger’s test indicated potential publication bias (p = 0.01), consistent with the asymmetry observed in the funnel plot (Figure 7d). Sensitivity analysis confirmed the robustness of the pooled effect size (Supplementary Appendix Figure 4.4). In the subgroup analysis, the “LE−” group demonstrated a statistically significant improvement with a medium effect size [k = 7, n = 194, SMD = 0.62; 95% CI = (0.23–1.00); I² = 47% (moderate); p < 0.05]. The “LE+” group exhibited a significant improvement with a large effect size [k = 14, n = 277, SMD = 1.46; 95% CI = (0.80–2.12); I² = 80% (considerable); p < 0.05]. A statistically significant difference was observed between the two subgroups (p = 0.03).

Figure 5. Random-effects meta-analysis of the comparative effects of visual training on decision-making accuracy between the “LE−” and “LE+” groups.

Regarding the impact of VT on eye-hand coordination (Figure 6), three studies comprising three intervention groups and a total of 110 participants were included. The meta-analysis revealed a statistically significant improvement [SMD = 0.83; 95% CI = (0.44–1.22); I² = 0%; p < 0.01], indicating a large effect size with low heterogeneity. Egger’s test indicated no evidence of publication bias (p = 0.39), consistent with the symmetrical distribution observed in the funnel plot (Figure 7e). Sensitivity analysis supported the robustness of the pooled effect size (Supplementary Appendix Figure 4.5). Because fewer than five studies were included, subgroup analysis was not conducted.

![Forest plot illustrating a meta-analysis of three studies by Paul et al. 2011, Guo et al. 2024, and Lachowicz et al. 2024. The chart compares standardized mean differences of VT versus CON with a random effects model. Study weights are 27.6%, 18.2%, and 54.2%, respectively. Combined effect size is 0.83 with a 95% confidence interval of [0.44; 1.22]. Results indicate minimal heterogeneity with I² = 0%. The plot shows individual and pooled effect sizes, favoring VT.](https://www.frontiersin.org/files/Articles/1664572/fphys-16-1664572-HTML/image_m/fphys-16-1664572-g006.jpg)

Figure 6. Random-effects meta-analysis of the comparative effects of visual training on eye-hand coordination.

Figure 7. Funnel plots for studies reporting (a) visual attention; (b) reaction time; (c) decision-making time; (d) decision-making accuracy; (e) eye-hand coordination.
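The funnel plots in Figure 7 and the Egger’s test p-values reported for each outcome rest on the same idea: without small-study effects, study results should scatter symmetrically around the pooled estimate regardless of their precision. The sketch below shows the classic Egger regression, which regresses each study’s standardized effect (SMD/SE) on its precision (1/SE) and examines whether the intercept differs from zero. It uses only the Python standard library; the input values are hypothetical, and the final p-value step (a t distribution with k − 2 degrees of freedom, available for example as `scipy.stats.t.sf`) is not computed here. The Cochrane Handbook advises using funnel-plot asymmetry tests only when at least ten studies are available, so a test based on three studies carries little information.

```python
import math


def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry.

    Regresses each study's standardized effect (effect / SE) on its precision
    (1 / SE) by ordinary least squares and returns
    (intercept, SE of intercept, t statistic, degrees of freedom).
    An intercept far from zero suggests small-study effects.
    """
    k = len(effects)
    if k < 3:
        raise ValueError("Egger's test needs at least three studies")
    x = [1.0 / s for s in std_errors]                    # precision
    z = [y / s for y, s in zip(effects, std_errors)]     # standardized effect
    x_bar, z_bar = sum(x) / k, sum(z) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxz = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z))
    slope = sxz / sxx
    intercept = z_bar - slope * x_bar                    # asymmetry coefficient
    residuals = [zi - intercept - slope * xi for xi, zi in zip(x, z)]
    df = k - 2
    sigma2 = sum(r ** 2 for r in residuals) / df         # residual variance
    se_intercept = math.sqrt(sigma2 * (1.0 / k + x_bar ** 2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else math.nan
    return intercept, se_intercept, t_stat, df


# Hypothetical SMDs and standard errors for five studies.
smd = [1.9, 1.4, 0.6, 0.9, 2.3]
se = [0.55, 0.45, 0.20, 0.30, 0.70]
b0, se_b0, t, df = egger_test(smd, se)
print(f"intercept = {b0:.2f} (SE {se_b0:.2f}), t = {t:.2f} on {df} df")
```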

4 Discussion

4.1 Influence of “learning effect” on the effectiveness of visual training

This study conducted a systematic subgroup analysis to examine the moderating role of the “learning effect” on the efficacy of VT across various visual-cognitive outcomes. Of the 33 included RCTs, 22 met the predefined criteria for the presence of a “learning effect” (LE+). Across four key outcomes (visual attention, reaction time, decision-making time, and decision-making accuracy), the “LE+” group consistently exhibited larger effect sizes than the “LE−” group. Significant between-group differences were observed for visual attention (p < 0.01), reaction time (p < 0.01), and decision-making accuracy (p = 0.03).

These findings suggest that when test tools and procedures are structurally similar to the training tasks, participants tend to perform better, likely because they are familiar with the device interface, stimulus presentation, and response format. Such improvements do not necessarily reflect a genuine enhancement of neural processing capabilities; they may instead result from task-dependent procedural memory or strategic response optimization, known as the “learning effect” (Basner et al., 2020). This is consistent with Fransen (2024), who argued that the benefits of such training have not been shown to transfer robustly (“far transfer”) to actual athletic performance. In VT interventions, particularly perceptual-cognitive and visuomotor coordination training, the “learning effect” has been a systematically overlooked source of bias: structural overlap between training and test tasks inflates training outcomes and thereby obscures the actual extent of neuroplasticity in the visual system (Krasich et al., 2016; Yoon et al., 2019). For visual attention, for example, the “LE+” studies (Romeas et al., 2019; Ehmann et al., 2022; Harenberg et al., 2022; Phillips et al., 2023) included multiple object tracking tasks during training and reported significant improvements (SMD = 1.65). In contrast, when training tasks lacked similarity to the tests, even when they contained attention-related components (Ehmann et al., 2022; Harenberg et al., 2022; Guo et al., 2024), no meaningful improvement was observed (SMD = 0.07).

A similar pattern was observed for reaction time: the “LE+” group showed markedly stronger effects than the “LE−” group (SMD = 2.66 vs. 0.50), and all three “LE+” studies (Schwab and Memmert, 2012; Bidil et al., 2021; Mancini et al., 2024) used choice reaction time tasks. Choice reaction time tasks typically require discriminating and matching multiple stimuli and making rule-based judgments, which makes them more complex than simple reaction time tasks (Rosenbaum, 2010). This complexity allows participants to develop task-specific strategies or response patterns through repeated exposure, so that gains may reflect strategic responding rather than genuine improvements in neural conduction speed or visual-cognitive processing. In addition, this type of test may be influenced by participants’ compensatory mechanisms for slower responses (Guo et al., 2024), further inflating test scores. Notably, our previous study (Guo et al., 2024) found no significant effect of VT on reaction time, whereas the current analysis did; this discrepancy may be due to the inclusion here of Tier 2 (trained) athletes, who likely have greater room for improvement than the elite athletes examined in that study.

In decision-making assessments, both response time and accuracy appeared to be affected by the “learning effect”, suggesting that improvements in decision-making following VT may be substantially influenced by test design and task structure. Decision-making inherently involves rapidly identifying external cues, making judgments based on experiential rules, and selecting appropriate behavioral responses, a process that requires the coordination of visual perception (Zhu et al., 2024; Klatt and Smeeton, 2022), working memory (Glavaš et al., 2023; Wu et al., 2025), attentional allocation (Silva et al., 2022), and cognitive control (Heilmann et al., 2024). Decision-making tests often simulate realistic competitive scenarios, such as anticipating an opponent’s movement direction (Milazzo et al., 2016; Petri et al., 2019; Di et al., 2024), predicting ball trajectories (Lucia et al., 2024), or selecting the optimal response from multiple alternatives (Lucia et al., 2024; Mancini et al., 2024; Rodrigues et al., 2025). Because these tasks are complex, test validity depends largely on the logic and realism of the testing context. However, when test tasks closely resemble the training conditions in stimulus presentation, the number and structure of decision options, or feedback mechanisms, participants may develop fixed decision pathways or strategy templates through repeated exposure. Such gains, rooted in familiarity and procedural memory, reflect automated processing of specific tasks rather than true cognitive transfer or improved decision-making under dynamic conditions (Cretton et al., 2025).

For example, most studies in the “LE+” group (Gabbett et al., 2007; Lorains et al., 2013; Nimmerichter et al., 2015; Alder et al., 2016; Alsharji and Wade, 2016; Milazzo et al., 2016; Brenton et al., 2019a; Brenton et al., 2019b) used test stimuli and discrimination formats nearly identical to those used in training, often on the same visual simulation software or platform. While this setup ensured procedural alignment between training and testing, it also substantially increased the likelihood of test-dependent learning, thereby inflating the observed effect sizes. This bias was particularly evident for decision-making accuracy, where the “LE+” group showed a notably larger effect size than the “LE−” group (SMD = 1.46 vs. 0.62), with a statistically significant between-group difference (p = 0.03).

Therefore, performance gains that depend on task structure not only compromise the external validity of VT evaluations but also complicate the development of subsequent intervention strategies. If researchers overlook the influence of the “learning effect” on assessment outcomes, they may mistakenly credit closed, task-specific training protocols with generalizable transfer value and extend them to other sports or populations. In reality, the effectiveness of VT hinges on its ability to promote generalizable cognitive processing and to enhance strategic decision-making skills.

4.2 Recommendations for research design and outcome assessment

The findings of this study suggest that when test tasks closely resemble the structure of training content, the “learning effect” may substantially inflate the observed benefits of VT, compromising the validity and interpretability of experimental outcomes. This issue is particularly salient in current studies that rely heavily on digital tools and standardized test methods, where performance gains driven by task familiarity and procedural memory, rather than by actual ability, have emerged as a critical source of bias in evaluating training effectiveness (Fransen, 2024). Proactively identifying and mitigating the “learning effect” during the design phase is therefore a crucial prerequisite for improving the methodological quality of VT intervention studies. To this end, researchers should make deliberate, systematic decisions about the structural design of intervention and testing tasks, the selection of outcome measures, and the implementation of evaluation procedures.

First, researchers should avoid test tools and task designs that closely resemble the training conditions in interface layout, stimulus type, response format, or feedback mechanisms. When training and testing share the same platform, procedures, or task logic, participants may rely on previously formed procedural strategies during testing, potentially masking the true effects of the intervention on visual–cognitive skills (Lloyd et al., 2025). In contrast, structurally dissimilar but functionally equivalent assessment tasks can better capture transferable improvements and enhance the interpretability and generalizability of research findings. Second, because the “learning effect” is typically most pronounced in the early stages of repeated testing and tends to diminish as participants become more familiar with the task (Hammers et al., 2024), researchers should plan the timing of intervention and test sessions carefully, ensuring that key evaluations are conducted after participants have adapted to the task and the “learning effect” has stabilized or dissipated. Third, to assess the true effects of VT more accurately, researchers should prioritize gold-standard visual assessment tools with high reliability and validity. Such tests should not only demonstrate robust psychometric properties but also distinguish ability-based improvements from strategy-based gains driven by task familiarity. Fourth, retention testing should become routine: only seven of the included studies conducted retention tests, ranging from 1 to 10 weeks post-intervention. Retention assessments help distinguish short-term strategy-based gains from true neural adaptation and ability consolidation, and thereby indicate the durability and stability of training effects, which is essential for evaluating the long-term value of VT (Willey and Liu, 2018).

In addition, future trials should adopt standardized protocols to minimize potential learning effects, such as randomizing device configurations and using alternate stimulus sets across training and testing.
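The last recommendation can be operationalized in several ways. As a minimal sketch, assuming a hypothetical pool of digital video clips and a set of interchangeable device configurations (all names and sizes below are illustrative and not drawn from any included study), the code draws disjoint training and test stimulus sets and gives each participant a test configuration that differs from the one used during training. Seeding the random generator keeps the allocation reproducible, so it can be reported alongside the protocol.

```python
import random


def split_stimulus_pool(stimuli, n_test, seed):
    """Shuffle a stimulus pool and split it into disjoint training and test sets."""
    if not 0 < n_test < len(stimuli):
        raise ValueError("n_test must leave at least one stimulus for each set")
    rng = random.Random(seed)              # seeded so the allocation can be audited
    pool = list(stimuli)
    rng.shuffle(pool)
    return pool[n_test:], pool[:n_test]    # (training set, test set)


def assign_configurations(participant_ids, configurations, seed):
    """Randomly give each participant a training and a different test configuration."""
    if len(configurations) < 2:
        raise ValueError("need at least two device configurations")
    rng = random.Random(seed)
    plan = {}
    for pid in participant_ids:
        train_cfg, test_cfg = rng.sample(configurations, 2)  # two distinct entries
        plan[pid] = {"train": train_cfg, "test": test_cfg}
    return plan


# Hypothetical pool of 120 video clips and three hypothetical display layouts.
clips = [f"clip_{i:03d}" for i in range(120)]
train_clips, test_clips = split_stimulus_pool(clips, n_test=30, seed=2025)
layouts = ["layout_A", "layout_B", "layout_C"]
plan = assign_configurations(["P01", "P02", "P03"], layouts, seed=7)
print(len(train_clips), len(test_clips), plan["P01"])
```

Keeping the test stimuli out of the training pool addresses stimulus-level familiarity, while varying the configuration addresses interface-level familiarity; neither removes similarity in task logic, which still has to be handled at the design stage.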

The ultimate goal of VT is to enhance sport-specific performance. Therefore, relying solely on laboratory-based visual metrics may be insufficient to fully capture the practical benefits of such training (Fransen, 2024). Study designs should incorporate field-based assessments of sport-specific skills, such as motor responses, decision-making execution, and technical performance under competitive conditions, to evaluate whether improvements in visual abilities effectively transfer to athletic performance. Integrating laboratory-based evaluations with field tests that offer higher ecological validity allows for a more comprehensive assessment of VT outcomes and provides a stronger foundation for optimizing and scaling intervention programs (Laby and Appelbaum, 2021).

4.3 Limitations of the present study

The present study has several limitations. (1) Although relatively clear criteria were established to classify the presence of the “learning effect”, this process still involved a degree of subjective judgment. Some included studies lacked detailed reporting of training and testing task characteristics, which may have led to misclassification bias. This subjectivity could have influenced the subgroup comparisons and either inflated or underestimated the differences observed between the LE+ and LE− groups. Future studies should report task characteristics in a more standardized and transparent way to allow more objective classification and replication across reviews. (2) Although all included participants were experienced athletes at Tier 2 or above, there was considerable heterogeneity in sport type, training background, age, and gender. These factors may influence participants’ receptiveness to training and their learning rates, thereby moderating intervention outcomes. Furthermore, variations in training frequency and intervention duration across studies made accurate effect synthesis more difficult. (3) This review included only peer-reviewed RCTs published in English, which may have introduced both language and publication bias: potentially relevant studies published in other languages or in the gray literature, where null or negative findings are more likely to appear, would have been excluded. As a result, the pooled estimates presented in this review may be somewhat inflated. Future reviews should consider incorporating multilingual databases and trial registries to reduce the risk of such bias and provide a more comprehensive evidence base. (4) Finally, the potential for small-study effects should be acknowledged. Although we performed leave-one-out sensitivity analyses to test the robustness of the findings, the limited number of studies and participants in some subgroups increases the likelihood that effect sizes were inflated by small-study effects. These results should therefore be interpreted with caution until they are confirmed by larger, well-controlled trials.

5 Conclusion

This meta-analysis examined the moderating role of the “learning effect” on the outcomes of VT across different visual–cognitive skills. Studies in which the “learning effect” was present reported substantially larger effect sizes, with significant between-group differences for visual attention, reaction time, and decision-making accuracy, indicating that the effectiveness of VT may be overestimated under these conditions. When training and testing tasks shared high structural similarity, participants likely developed task-specific response strategies through familiarity with the interface, procedures, and task format, inflating test performance without genuine improvement in sports vision. The “learning effect” may therefore constitute a significant source of systematic bias that warrants greater attention and control in future research. To improve the validity and interpretability of future findings, researchers are advised to avoid high structural overlap between training and testing tasks, or to incorporate sufficient familiarization periods and retention tests to distinguish short-term strategic gains from true neural adaptations.

Author contributions

YG: Conceptualization, Data curation, Methodology, Visualization, Writing – original draft, Writing – review and editing. TY: Conceptualization, Funding acquisition, Methodology, Writing – original draft, Writing – review and editing. MY: Conceptualization, Data curation, Methodology, Writing – review and editing, Writing – original draft. JQ: Conceptualization, Data curation, Methodology, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This study was supported by the Fundamental Research Funds for the China Institute of Sport Science, the Basic Project (no. 24-19) and the General Administration of Sport of China.

Acknowledgments

The authors acknowledge the assistance of ChatGPT, which was used for translation and language polishing.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Generative AI was used in the creation of this manuscript. The authors acknowledge the assistance of ChatGPT, which was used for translation and language polishing.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fphys.2025.1664572/full#supplementary-material

References

Alder D., Ford P. R., Causer J., Williams A. M. (2016). The effects of High- and low-anxiety training on the anticipation judgments of elite performers. J. Sport Exerc. Psychol. 38 (1), 93–104. doi:10.1123/jsep.2015-0145

Alsharji K. E., Wade M. G. (2016). Perceptual training effects on anticipation of direct and deceptive 7-m throws in handball. J. Sports Sci. 34 (2), 155–162. doi:10.1080/02640414.2015.1039463

Amir-Behghadami M., Janati A. (2020). Population, intervention, comparison, outcomes and study (PICOS) design as a framework to formulate eligibility criteria in systematic reviews. Emerg. Med. J. 37 (6), 387. doi:10.1136/emermed-2020-209567

Appelbaum L. G., Erickson G. (2018). Sports vision training: a review of the state-of-the-art in digital training techniques. Int. Rev. Sport Exerc. Psychol. 11 (1), 160–189. doi:10.1080/1750984x.2016.1266376

Basner M., Hermosillo E., Nasrini J., Saxena S., Dinges D. F., Moore T. M., et al. (2020). Cognition test battery: adjusting for practice and stimulus set effects for varying administration intervals in high performing individuals. J. Clin. Exp. Neuropsychol. 42 (5), 516–529. doi:10.1080/13803395.2020.1773765

Bidil S., Arslan B., Bozkurt K., Düzova S. C., Örs B. S., Onarici Güngör E., et al. (2021). Investigation of the effect of badminton-specific 8 week reaction training on visual-motor reaction time and visual cognitive dual task. Turkiye Klinikleri J. Sports Sci. 13 (1), 33–40. doi:10.5336/sportsci.2019-71769

Brenton J., Muller S., Dempsey A. (2019a). Visual-perceptual training with acquisition of the observed motor pattern contributes to greater improvement of visual anticipation. J. Exp. Psychol. Appl. 25 (3), 333–342. doi:10.1037/xap0000208

Brenton J., Müller S., Harbaugh A. G. (2019b). Visual-perceptual training with motor practice of the observed movement pattern improves anticipation in emerging expert cricket batsmen. J. Sports Sci. 37 (18), 2114–2121. doi:10.1080/02640414.2019.1621510

Burda B. U., O’Connor E. A., Webber E. M., Redmond N., Perdue L. A. (2017). Estimating data from figures with a web-based program: considerations for a systematic review. Res. Synth. Methods 8 (3), 258–262. doi:10.1002/jrsm.1232

Chaloupka B., Zeithamova D. (2024). Differential effects of location and object overlap on new learning. Front. Cognition 2, 1325246. doi:10.3389/fcogn.2023.1325246

Christ A. (2009). Mixed effects models and extensions in ecology with R. Book Rev. 32 (1), 1–3. doi:10.18637/jss.v032.b01

Cretton A., Ruggeri P., Brandner C., Barral J. (2025). When random practice makes you more skilled: applying the contextual interference principle to a simple aiming task learning. J. Cognitive Enhanc. 9 (2), 167–183. doi:10.1007/s41465-025-00317-5

Cumpston M., Li T., Page M. J., Chandler J., Welch V. A., Higgins J. P., et al. (2019). Updated guidance for trusted systematic reviews: a new edition of the cochrane handbook for systematic reviews of interventions. Cochrane Database Syst. Rev. 10 (10), Ed000142. doi:10.1002/14651858.ED000142

Deeks J. J., Higgins J. P. T., Altman D. G., on behalf of the Cochrane Statistical Methods Group (2019). “Analysing data and undertaking meta-analyses,” in Cochrane handbook for systematic reviews of interventions, 241–284.

DerSimonian R., Laird N. (1986). Meta-analysis in clinical trials. Control Clin. Trials 7 (3), 177–188. doi:10.1016/0197-2456(86)90046-2

Di M. G., Giommoni S., Esposito F., Alessandro D., Della Valle C., Iuliano E., et al. (2024). Enhancing focus and short reaction time in épée fencing: the power of the science vision training academy system. J. Funct. Morphol. Kinesiol 9 (4), 213. doi:10.3390/jfmk9040213

Ehmann P., Beavan A., Spielmann J., Mayer J., Ruf L., Altmann S., et al. (2022). Perceptual-cognitive performance of youth soccer players in a 360°-environment – an investigation of the relationship with soccer-specific performance and the effects of systematic training. Psychol. Sport Exerc. 61, 102220. doi:10.1016/j.psychsport.2022.102220

Erickson G. B. (2021). Topical review: visual performance assessments for sport. Optom. Vis. Sci. 98 (7), 672–680. doi:10.1097/OPX.0000000000001731

Erickson G. B., Citek K., Cove M., Wilczek J., Linster C., Bjarnason B., et al. (2011). Reliability of a computer-based system for measuring visual performance skills. Optometry 82 (9), 528–542. doi:10.1016/j.optm.2011.01.012

Fernández-Castilla B., Declercq L., Jamshidi L., Beretvas S. N., Onghena P., Van den Noortgate W. (2021). Detecting selection bias in meta-analyses with multiple outcomes: a simulation study. J. Exp. Educ. 89 (1), 125–144. doi:10.1080/00220973.2019.1582470

Fortes L. S., Faro H., Faubert J., Freitas-Júnior C. G., Lima-Junior D., Almeida S. S. (2023). Repeated stroboscopic vision training improves anticipation skill without changing perceptual-cognitive skills in soccer players. Appl. Neuropsychol. Adult 32, 1123–1137. doi:10.1080/23279095.2023.2243358

Fransen J. (2024). There is no supporting evidence for a far transfer of general perceptual or cognitive training to sports performance. Sports Med. 54 (11), 2717–2724. doi:10.1007/s40279-024-02060-x

Gabbett T., Rubinoff M., Thorburn L., Farrow D. (2007). Testing and training anticipation skills in softball fielders. Int. J. Sports Sci. Coach. 2 (1), 15–24. doi:10.1260/174795407780367159

Glavaš D., Pandžić M., Domijan D. (2023). The role of working memory capacity in soccer tactical decision making at different levels of expertise. Cogn. Res. Princ. Implic. 8 (1), 20. doi:10.1186/s41235-023-00473-2

Gray R. (2017). Transfer of training from virtual to real baseball batting. Front. Psychol. 8, 2183. doi:10.3389/fpsyg.2017.02183

Guo Y., Yuan T., Peng J., Deng L., Chen C. (2024). Impact of sports vision training on visuomotor skills and shooting performance in elite skeet shooters. Front. Hum. Neurosci. 18, 1476649. doi:10.3389/fnhum.2024.1476649

Hammers D. B., Bothra S., Polsinelli A., Apostolova L. G., Duff K. (2024). Evaluating practice effects across learning trials - ceiling effects or something more? J. Clin. Exp. Neuropsychol. 46 (7), 630–643. doi:10.1080/13803395.2024.2400107

Harenberg S., McCarver Z., Worley J., Murr D., Vosloo J., Kakar R. S., et al. (2022). The effectiveness of 3D multiple object tracking training on decision-making in soccer. Sci. Med. Footb. 6 (3), 355–362. doi:10.1080/24733938.2021.1965201

Harris D. J., Wilson M. R., Vine S. J. (2018). A systematic review of commercial cognitive training devices: implications for use in sport. Front. Psychol. 9, 709. doi:10.3389/fpsyg.2018.00709

Heilmann F., Knöbel S., Lautenbach F. (2024). Improvements in executive functions by domain-specific cognitive training in youth elite soccer players. BMC Psychol. 12 (1), 528. doi:10.1186/s40359-024-02017-9

Higgins J. P. T., Thompson S. G., Deeks J. J., Altman D. G. (2003). Measuring inconsistency in meta-analyses. BMJ 327 (7414), 557–560. doi:10.1136/bmj.327.7414.557

Hohmann T., Obelöer H., Schlapkohl N., Raab M. (2016). Does training with 3D videos improve decision-making in team invasion sports? J. Sports Sci. 34 (8), 746–755. doi:10.1080/02640414.2015.1069380

Jothi S., Dhakshinamoorthy J., Kothandaraman K. (2025). Effect of stroboscopic visual training in athletes: a systematic review. J. Hum. Sport Exerc. 20, 562–573. doi:10.55860/vm1j3k88

Kassem L., Pang B., Dogramaci S., MacMahon C., Quinn J., Steel K. A. (2024). Visual search strategies and game knowledge in junior Australian rules football players: testing potential in talent identification and development. Front. Psychol. 15, 1356160. doi:10.3389/fpsyg.2024.1356160

Kittel A., Lindsay R., Le Noury P., Wilkins L. (2024). The use of extended reality technologies in sport perceptual-cognitive skill research: a systematic scoping review. Sports Med. Open 10 (1), 128. doi:10.1186/s40798-024-00794-6

Klatt S., Smeeton N. J. (2022). Processing visual information in elite junior soccer players: effects of chronological age and training experience on visual perception, attention, and decision making. Eur. J. Sport Sci. 22 (4), 600–609. doi:10.1080/17461391.2021.1887366

Krasich K., Ramger B., Holton L., Wang L., Mitroff S. R., Gregory Appelbaum L. (2016). Sensorimotor learning in a computerized athletic training battery. J. Mot. Behav. 48 (5), 401–412. doi:10.1080/00222895.2015.1113918

Laby D. M., Appelbaum L. G. (2021). Review: vision and on-field performance: a critical review of visual assessment and training studies with athletes. Optometry Vis. Sci. 98 (7), 723–731. doi:10.1097/OPX.0000000000001729

Lachowicz M., Serweta-Pawlik A., Konopka-Lachowicz A., Jamro D., Zurek G. (2024). Amplifying cognitive functions in amateur esports athletes: the impact of short-term virtual reality training on reaction time, motor time, and eye-hand coordination. Brain Sci. 14 (11), 1104. doi:10.3390/brainsci14111104

Leat S. J., Yadav N. K., Irving E. L. (2009). Development of visual acuity and contrast sensitivity in children. J. Optometry 2 (1), 19–26. doi:10.3921/joptom.2009.19

Liu S., Ferris L. M., Hilbig S., Asamoa E., LaRue J. L., Lyon D., et al. (2020). Dynamic vision training transfers positively to batting practice performance among collegiate baseball batters. Psychol. Sport Exerc. 51, 101759. doi:10.1016/j.psychsport.2020.101759

Liu P. X., Pan T. Y., Lin H. S., Chu H. K., Hu M. C. (2024). VisionCoach: design and effectiveness study on VR vision training for basketball passing. IEEE Trans. Vis. Comput. Graph. 30 (10), 6665–6677. doi:10.1109/TVCG.2023.3335312

Lloyd M., Curley T., Hertzog C. (2025). Does perceptual learning contribute to practice improvements during speed of processing training? J. Cognitive Enhanc. 9 (2), 192–205. doi:10.1007/s41465-025-00320-w

Lochhead L., Jiren F., Appelbaum L. G. (2024). Training vision in athletes to improve sports performance: a systematic review of the literature. Int. Rev. Sport Exerc. Psychol., 1–23. doi:10.1080/1750984x.2024.2437385

Lorains M., Ball K., MacMahon C. (2013). An above real time training intervention for sport decision making. Psychol. Sport Exerc. 14 (5), 670–674. doi:10.1016/j.psychsport.2013.05.005

Lucia S., Digno M., Madinabeita I., Di Russo F. (2024). Integration of cognitive-motor dual-task training in physical sessions of highly-skilled basketball players. J. Sports Sci. 42 (18), 1695–1705. doi:10.1080/02640414.2024.2408191

Maman P., Gaurang S., Sandhu J. S. (2011). The effect of vision training on performance in tennis players. Serbian J. Sports Sci. 5 (1), 11–16.

Mancini N., Di Padova M., Polito R., Mancini S., Dipace A., Basta A., et al. (2024). The impact of perception–action training devices on quickness and reaction time in female volleyball players. J. Funct. Morphol. Kinesiol. 9 (3), 147. doi:10.3390/jfmk9030147

McKay A. K. A., Stellingwerff T., Smith E. S., Martin D. T., Mujika I., Goosey-Tolfrey V. L., et al. (2022). Defining training and performance caliber: a participant classification framework. Int. J. Sports Physiol. Perform. 17 (2), 317–331. doi:10.1123/ijspp.2021-0451

Mehta S. (2015). Age-related macular degeneration. Prim. Care 42 (3), 377–391. doi:10.1016/j.pop.2015.05.009

Milazzo N., Farrow D., Fournier J. F. (2016). Effect of implicit perceptual-motor training on decision-making skills and underpinning gaze behavior in combat athletes. Percept. Mot. Ski. 123 (1), 300–323. doi:10.1177/0031512516656816

Moher D., Liberati A., Tetzlaff J., Altman D. G., PRISMA Group (2010). Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Int. J. Surg. 8 (5), 336–341. doi:10.1016/j.ijsu.2010.02.007

Müller S., Morris-Binelli K., Hambrick D. Z., Macnamara B. N. (2024). Accelerating visual anticipation in sport through temporal occlusion training: a meta-analysis. Sports Med. 54 (10), 2597–2606. doi:10.1007/s40279-024-02073-6

Murgia M., Sors F., Muroni A. F., Santoro I., Prpic V., Galmonte A., et al. (2014). Using perceptual home-training to improve anticipation skills of soccer goalkeepers. Psychol. Sport Exerc. 15 (6), 642–648. doi:10.1016/j.psychsport.2014.07.009

Nimmerichter A., Weber N. J. R., Wirth K., Haller A. (2015). Effects of video-based visual training on decision-making and reactive agility in adolescent football players. Sports (Basel, Switzerland) 4 (1), 1. doi:10.3390/sports4010001

Ouzzani M., Hammady H., Fedorowicz Z., Elmagarmid A. (2016). Rayyan—a web and mobile app for systematic reviews. Syst. Rev. 5 (1), 210. doi:10.1186/s13643-016-0384-4

Paul M., Biswas S. K., Sandhu J. S. (2011). Role of sports vision and eye hand coordination training in performance of table tennis players. Braz. J. Biomotricity 5, 106–116.

Peters J. L., Sutton A. J., Jones D. R., Abrams K. R., Rushton L. (2008). Contour-enhanced meta-analysis funnel plots help distinguish publication bias from other causes of asymmetry. J. Clin. Epidemiol. 61 (10), 991–996. doi:10.1016/j.jclinepi.2007.11.010

Petri K., Masik S., Danneberg M., Emmermacher P., Witte K. (2019). Possibilities to use a virtual opponent for enhancements of reactions and perception of young karate athletes. Int. J. Comput. Sci. Sport (Sciendo) 18 (2), 20–33. doi:10.2478/ijcss-2019-0011

Phillips J., Dusseault M., Andre T., Nelson H., Polly Da Costa Valladão S. (2023). Test transferability of 3D-MOT training on soccer specific parameters. Res. Directs Strength Perform. 3 (1). doi:10.53520/rdsp2023.10566

Poltavski D., Biberdorf D., Praus Poltavski C. (2021). Which comes first in sports vision training: the software or the hardware update? Utility of electrophysiological measures in monitoring specialized visual training in youth athletes. Front. Hum. Neurosci. 15, 732303. doi:10.3389/fnhum.2021.732303

Poon E. T.-C., Wongpipit W., Li H.-Y., Wong S. H.-S., Siu P. M., Kong A. P.-S., et al. (2024). High-intensity interval training for cardiometabolic health in adults with metabolic syndrome: a systematic review and meta-analysis of randomised controlled trials. Br. J. Sports Med. 58 (21), 1267–1284. doi:10.1136/bjsports-2024-108481

Rodrigues P., Woodburn J., Bond A. J., Stockman A., Vera J. (2025). Light-based manipulation of visual processing speed during soccer-specific training has a positive impact on visual and visuomotor abilities in professional soccer players. Ophthalmic Physiol. Opt. 45 (2), 504–513. doi:10.1111/opo.13423

Romeas T., Guldner A., Faubert J. (2016). 3D-Multiple object tracking training task improves passing decision-making accuracy in soccer players. Psychol. Sport Exerc. 22, 1–9. doi:10.1016/j.psychsport.2015.06.002

Romeas T., Chaumillon R., Labbe D., Faubert J. (2019). Combining 3D-MOT with sport decision-making for perceptual-cognitive training in virtual reality. Percept. Mot. Ski. 126 (5), 922–948. doi:10.1177/0031512519860286

Rosenbaum D. A. (2010). “Chapter 9 - keyboarding,” in Human motor control. 2nd Ed. (San Diego: Academic Press), 277–321.

Sánchez-González M. C., Palomo-Carrión R., De-Hita-Cantalejo C., Romero-Galisteo R. P., Gutiérrez-Sánchez E., Pinero-Pinto E. (2022). Visual system and motor development in children: a systematic review. Acta Ophthalmol. 100 (7), e1356–e1369. doi:10.1111/aos.15111

Schumacher N., Reer R., Braumann K. M. (2020). On-field perceptual-cognitive training improves peripheral reaction in soccer: a controlled trial. Front. Psychol. 11, 1948. doi:10.3389/fpsyg.2020.01948

Schwab S., Memmert D. (2012). The impact of a sports vision training program in youth field hockey players. J. Sports Sci. Med. 11 (4), 624–631.

Serpell B. G., Young W. B., Ford M. (2011). Are the perceptual and decision-making components of agility trainable? A preliminary investigation. J. Strength Cond. Res. 25 (5), 1240–1248. doi:10.1519/JSC.0b013e3181d682e6

Shekar S. U., Erickson G. B., Horn F., Hayes J. R., Cooper S. (2021). Efficacy of a digital sports vision training program for improving visual abilities in collegiate baseball and softball athletes. Optom. Vis. Sci. 98 (7), 815–825. doi:10.1097/OPX.0000000000001740

Silva A. F., Afonso J., Sampaio A., Pimenta N., Lima R. F., Castro H. O., et al. (2022). Differences in visual search behavior between expert and novice team sports athletes: a systematic review with meta-analysis. Front. Psychol. 13, 1001066. doi:10.3389/fpsyg.2022.1001066

Skopek M., Heidler J., Hnizdil J., Kresta J. (2023). The use of virtual reality in table tennis training: a comparison of selected muscle activation in upper limbs during strokes in virtual reality and normal environments. J. Phys. Educ. Sport 23 (7), 1736–1741. doi:10.7752/jpes.2023.07213

Smith T. Q., Mitroff S. R. (2012). Stroboscopic training enhances anticipatory timing. Int. J. Exerc. Sci. 5 (4), 344–353. doi:10.70252/OTSW1297

Theofilou G., Ladakis I., Mavroidi C., Kilintzis V., Mirachtsis T., Chouvarda I., et al. (2022). The effects of a visual stimuli training program on reaction time, cognitive function, and fitness in young soccer players. Sensors 22 (17), 6680. doi:10.3390/s22176680

Thiele D., Prieske O., Chaabene H., Granacher U. (2020). Effects of strength training on physical fitness and sport-specific performance in recreational, sub-elite, and elite rowers: a systematic review with meta-analysis. J. Sports Sci. 38 (10), 1186–1195. doi:10.1080/02640414.2020.1745502

Willey C. R., Liu Z. (2018). Long-term motor learning: effects of varied and specific practice. Vis. Res. 152, 10–16. doi:10.1016/j.visres.2017.03.012

Wu C., Zhang C., Li X., Ye C., Astikainen P. (2025). Comparison of working memory performance in athletes and non-athletes: a meta-analysis of behavioural studies. Memory 33 (2), 259–277. doi:10.1080/09658211.2024.2423812

Yoon J. S., Roque N. A., Andringa R., Harrell E. R., Lewis K. G., Vitale T., et al. (2019). Intervention comparative effectiveness for adult cognitive training (ICE-ACT) trial: rationale, design, and baseline characteristics. Contemp. Clin. Trials 78, 76–87. doi:10.1016/j.cct.2019.01.014

Zhu R., Zheng M., Liu S., Guo J., Cao C. (2024). Effects of perceptual-cognitive training on anticipation and decision-making skills in team sports: a systematic review and meta-analysis. Behav. Sci. (Basel) 14 (10), 919. doi:10.3390/bs14100919

Zwierko M., Jedziniak W., Popowczak M., Rokita A. (2023). Effects of in-situ stroboscopic training on visual, visuomotor and reactive agility in youth volleyball players. PeerJ 11, e15213. doi:10.7717/peerj.15213

Keywords: digital-based training, visual-cognitive skills, practice effect, task similarity, sports vision training

Citation: Guo Y, Yuan T, Yang M and Qiu J (2025) Does the “learning effect” caused by digital devices exaggerate sports visual training outcomes? A systematic review and meta-analysis. Front. Physiol. 16:1664572. doi: 10.3389/fphys.2025.1664572

Received: 12 July 2025; Accepted: 28 August 2025; Published: 05 September 2025.

Edited by: Xian Song, Zhejiang University, China

Reviewed by: Matthias Nuernberger, University Hospital Jena, Germany; Carlos Humberto Andrade-Moraes, Federal University of Rio de Janeiro, Brazil; Hemantajit Gogoi, Rajiv Gandhi University, India

Copyright © 2025 Guo, Yuan, Yang and Qiu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tinggang Yuan, yuantinggang@ciss.cn

†These authors have contributed equally to this work

Yuqiang Guo, Tinggang Yuan1*