- 1Department of Orthopedics, Suzhou Ninth People’s Hospital, Suzhou, Jiangsu, China

- 2Department of Orthopedics, The First Affiliated Hospital of Soochow University, Suzhou, Jiangsu, China

Introduction: This study investigates the application of a deep learning model, YOLOv8-Seg, for the automated classification of osteoporotic vertebral fractures (OVFs) from computed tomography (CT) images.

Methods: A dataset of 673 CT images from patients admitted between March 2013 and May 2023 was collected and classified according to the European Vertebral Osteoporosis Study Group (EVOSG) system. Of these, 643 images were used for training and validation, while a separate set of 30 images was reserved for testing.

Results: The model achieved a mean Average Precision (mAP50-95) of 85.9% in classifying fractures into crush, anterior wedge, and biconcave types.

Discussion: The results demonstrate the high proficiency of the YOLOv8-Seg model in identifying OVFs, indicating its potential as a decision-support tool to streamline the current manual diagnostic process. This work underscores the significant potential of deep learning to assist medical professionals in achieving early and precise diagnoses, thereby improving patient outcomes.

1 Introduction

Osteoporotic vertebral fractures (OVFs) are often caused by minor external forces and typically present as mild compression fractures of the vertebral body. In severe osteoporosis, or after inappropriate treatment, these fractures can progress to vertebral body burst fractures, significant collapse, and kyphosis (1–3). OVFs can cause symptoms of spinal cord and nerve injury and can also impair cardiopulmonary and gastrointestinal function, thereby increasing mortality risk. Such conditions place substantial burdens on individuals, families, and society at large (4–6). Therefore, thorough investigation into the prevention and treatment of OVFs is crucial to improve patients' quality of life and reduce mortality (7, 8).

Imaging serves as the principal method for diagnosing orthopedic conditions, including fractures, osteoarthritis, bone tumors, etc. (9). Misdiagnosis, often due to image misinterpretation or misjudgment, is prevalent in clinical settings. This issue is frequently linked to the inexperience or heavy workload of the radiologist, compounded by the subtle or atypical nature of the lesions (10). Addressing misdiagnoses in orthopedic diseases is vital, as incorrect diagnoses can severely impact patient outcomes. For example, a misdiagnosed fracture could delay surgical intervention, leading to complications like malunion or osteoarthritis. Similarly, a delayed diagnosis of a bone tumor could prevent timely surgical intervention, resulting in exacerbated symptoms and reduced functionality (11). From a clinical perspective, developing a user-friendly diagnostic model that facilitates early and accurate medical image diagnosis by less experienced physicians is essential. The integration of deep learning technology in clinical settings primarily aims at the swift identification of abnormal structures or regions within medical images, providing critical reference points for physicians' assessments and diagnoses (12–16). This study introduces a novel fracture classification method leveraging YOLOv8-Seg technology, aimed at refining the complex manual diagnostic processes.

2 Methods

2.1 Study subjects

The dataset comprised 673 computed tomography (CT) images of osteoporotic vertebral fractures collected from our hospital and affiliated institutions between March 2013 and May 2023; 643 of these were used for training and validation and 30 were reserved for testing. All images originated from patients treated for osteoporotic vertebral fractures and clearly showed the fractures.

2.2 YOLOv8-based fracture classification

This research introduces a fracture classification method based on the YOLOv8 deep learning network. YOLOv8 was trained on a dataset of pre-labeled CT images representing the different fracture types. The network extracts distinctive features from these samples, which serve as inputs to the classifier and allow test images to be assigned to the corresponding fracture type. We used the official Ultralytics YOLOv8-Seg implementation (version 8.0.*) with an initial learning rate of lr0 = 0.01, a final learning rate of lrf = 0.01, a batch size of batch = 16, and epochs = 300 training epochs. Augmentation relied on the model's built-in pipeline: Mosaic (enabled for the first 90% of epochs), horizontal flipping, scaling (±10%), and rotations (±10 degrees). The optimizer was AdamW with a weight decay of 5 × 10⁻⁵.
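As an illustration only, the settings listed above could be passed to the Ultralytics training API roughly as follows. This is a sketch under stated assumptions, not the authors' script: the dataset file name passed to `train()` is hypothetical, the flip probability is not given in the text and is assumed here, and "Mosaic for the first 90% of epochs" is expressed through `close_mosaic`, which disables Mosaic for the final epochs. Note that in Ultralytics `lrf` is a fraction of `lr0` rather than an absolute rate.

```python
# Hyperparameters quoted in the text, written as Ultralytics train() keyword arguments.
EPOCHS = 300

TRAIN_ARGS = {
    "epochs": EPOCHS,
    "batch": 16,
    "lr0": 0.01,                   # initial learning rate
    "lrf": 0.01,                   # final learning rate, as a fraction of lr0
    "optimizer": "AdamW",
    "weight_decay": 5e-5,
    "fliplr": 0.5,                 # ASSUMPTION: flip probability is not stated in the text
    "scale": 0.1,                  # +/-10% scaling
    "degrees": 10.0,               # +/-10 degree rotations
    "mosaic": 1.0,                 # Mosaic augmentation enabled...
    "close_mosaic": EPOCHS // 10,  # ...and switched off for the last 10% of epochs
}


def train(data_yaml: str, weights: str = "yolov8n-seg.pt"):
    """Train a YOLOv8-Seg model; requires the `ultralytics` package.

    `data_yaml` is a hypothetical path to a dataset description file.
    """
    # Imported lazily so the configuration can be inspected without the package.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml, **TRAIN_ARGS)
```

A call such as `train("ovf_dataset.yaml")` would start training; the file name is a placeholder for whatever dataset description is actually used.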

2.3 Data preprocessing

In preparation for input into YOLOv8, the CT images undergo a preprocessing stage. This stage involves standardizing the images to a uniform size and resolution and converting them to grayscale. The conversion to grayscale, which reduces the number of image channels, enhances the efficiency of image processing. LABELME, a Python-based tool, is utilized for implementing this grayscale processing (17).

2.4 Dataset construction

Under the supervision of an experienced orthopedic surgeon, a medical trainee classified and confirmed CT images using the Imaging Labeling system, thus constructing the training and validation sets for the deep learning networks. This process followed data preprocessing guidelines. According to the 1999 EVOSG OVF classification, vertebral fractures are categorized as crush (complete collapse of the vertebral body), anterior wedge (collapse of the anterior border of the vertebral body), and biconcave (collapse of the central portion of the body) (6). For this study, 643 osteoporotic vertebral fracture images were selected for training, including 120 crush, 198 anterior wedge, and 325 biconcave types. Additionally, 30 images were chosen as the test sample, with 10 of each fracture type. Because the three fracture types were markedly imbalanced in the training data, we employed a class-weighted loss function, which increases the loss penalty for misclassifications of the minority classes.
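The text does not specify how the class weights were derived. One common choice, shown here purely as an assumption, is inverse-frequency weighting, where each class weight is N / (K × n_c) for N training images, K classes, and n_c images in class c. Applied to the training counts reported above, it gives the largest weight to crush fractures, the smallest class.

```python
# Training-set counts reported in the text.
train_counts = {"crush": 120, "anterior_wedge": 198, "biconcave": 325}


def inverse_frequency_weights(counts):
    """Return a weight per class: total / (num_classes * class_count).

    ASSUMPTION: this weighting scheme is illustrative; the source only
    states that a class-weighted loss was used.
    """
    total = sum(counts.values())
    num_classes = len(counts)
    return {name: total / (num_classes * n) for name, n in counts.items()}


weights = inverse_frequency_weights(train_counts)
for name, weight in weights.items():
    print(f"{name}: {weight:.3f}")
```

With this scheme the weighted class counts are equal, so each class contributes the same total weight to the loss.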

2.5 Applying YOLOv8 to extract features

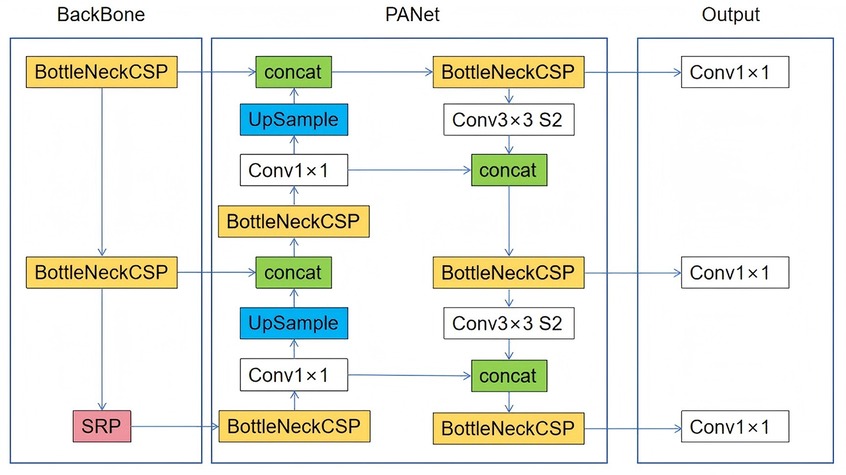

YOLOv8, the latest iteration of the YOLO object detection model (18, 19), retains the architecture of its predecessors but incorporates several enhancements, such as a new neural network architecture that integrates both the Feature Pyramid Network (FPN) and the Path Aggregation Network (PAN), along with a new labeling tool that streamlines the annotation process (18, 19). The FPN progressively decreases the spatial resolution of the input image while augmenting the number of feature channels, thereby generating feature maps capable of detecting objects across various scales and resolutions. The PAN architecture amalgamates features from different levels of the network through skip connections, enabling the network to capture features at multiple scales and resolutions effectively. This capability is crucial for accurately detecting objects of varying sizes and shapes. Figure 1 shows the architecture of YOLOv8.

2.6 Evaluation indicators

In this study, overall classification performance was evaluated using mean average precision (mAP) values derived from the test set. mAP, a widely used metric for object detection, averages the average precision (AP) across all classes at predetermined Intersection over Union (IoU) thresholds. Precision is determined from the true positives and false positives in object detection. A prediction is deemed a true positive if the IoU between the predicted box and the ground truth exceeds the set IoU threshold, and a false positive if the IoU falls below this threshold. The AP for each class is calculated over a series of thresholds, starting from an IoU of 0.5 and increasing in steps of 0.05 up to 0.95; the class AP is the average precision across this range. Computing this value for all classes and averaging the results yields the mAP50-95.
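The true-positive rule described above can be sketched in a few lines. The box format `(x1, y1, x2, y2)` and the example coordinates are assumptions made for illustration; a full evaluator would also match each prediction to at most one ground-truth object.

```python
def iou(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds used for mAP50-95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def is_true_positive(pred_box, gt_box, threshold):
    """A prediction counts as a true positive when its IoU exceeds the threshold."""
    return iou(pred_box, gt_box) > threshold


# Hypothetical boxes: the prediction covers the ground truth with IoU 0.72.
pred, gt = (0, 0, 10, 10), (0, 0, 8, 9)
passed = [t for t in IOU_THRESHOLDS if is_true_positive(pred, gt, t)]
print(iou(pred, gt), passed)
```

The same prediction is a true positive at the looser thresholds and a false positive at the stricter ones, which is why mAP50-95 is more demanding than mAP50.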

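Formula 1.

$$AP = \sum_{k=0}^{n-1} \left[ \mathrm{Recalls}(k) - \mathrm{Recalls}(k+1) \right] \times \mathrm{Precisions}(k)$$

where Recalls(n) = 0, Precisions(n) = 1, and n = number of thresholds.

Formula 2.

$$mAP = \frac{1}{n} \sum_{k=1}^{n} AP_k$$

where AP_k = the AP of class k, and n = the number of classes.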
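For readers who prefer code, the two formulas can be written as below. The sketch assumes the standard AP summation, AP = Σ [Recalls(k) − Recalls(k+1)] × Precisions(k) for k = 0 … n−1, with the boundary values Recalls(n) = 0 and Precisions(n) = 1 appended, and it assumes the input lists are ordered from the lowest confidence threshold (highest recall) to the highest. All numeric values are made up for illustration and are not results from this study.

```python
def average_precision(recalls, precisions):
    """AP as a sum of recall steps weighted by precision (Formula 1)."""
    r = list(recalls) + [0.0]     # Recalls(n) = 0
    p = list(precisions) + [1.0]  # Precisions(n) = 1
    return sum((r[k] - r[k + 1]) * p[k] for k in range(len(recalls)))


def mean_average_precision(ap_per_class):
    """mAP as the mean of the per-class AP values (Formula 2)."""
    return sum(ap_per_class.values()) / len(ap_per_class)


# Hypothetical precision-recall points for one class.
example_ap = average_precision([1.0, 0.8, 0.5], [0.6, 0.8, 1.0])

# Hypothetical per-class AP values, keyed by the labels used in the figures.
example_map = mean_average_precision({"ys": 0.9, "xx": 0.8, "sa": 0.7})
print(example_ap, example_map)
```

Repeating this calculation at each IoU threshold from 0.5 to 0.95 and averaging the results gives the mAP50-95 value.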

2.7 Experimental environment

The experiments were run on an NVIDIA A6000 graphics card using the YOLOv8n-seg model, which performs object detection and instance segmentation concurrently.

3 Results

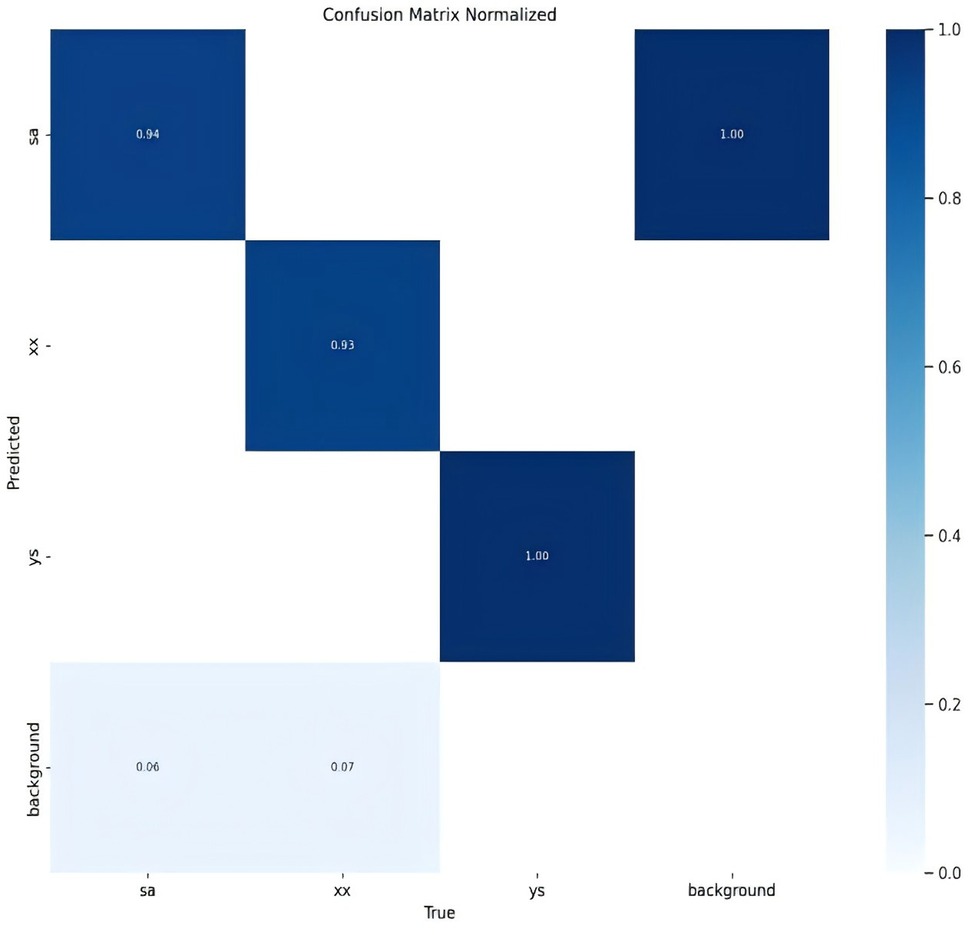

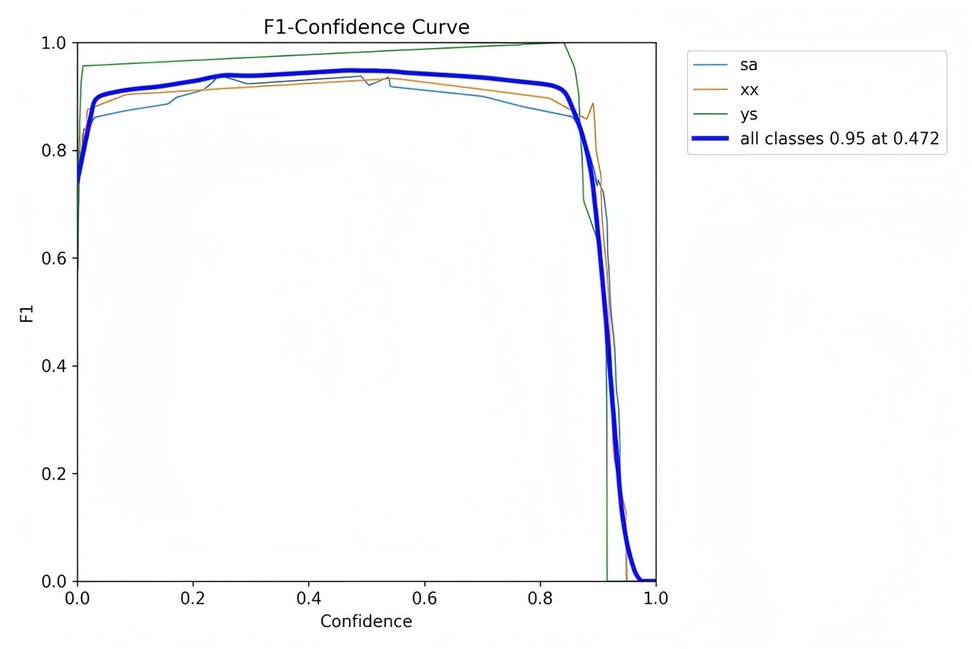

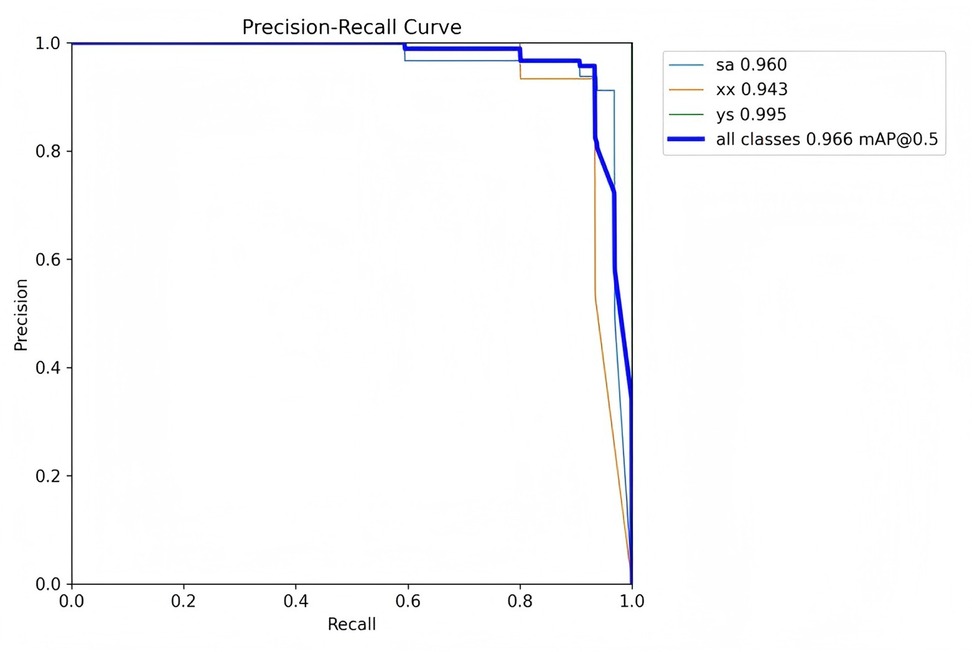

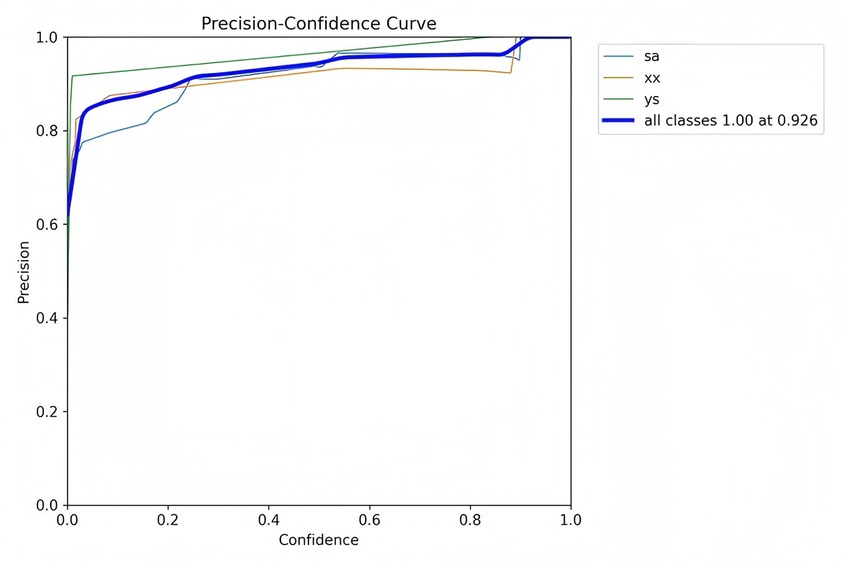

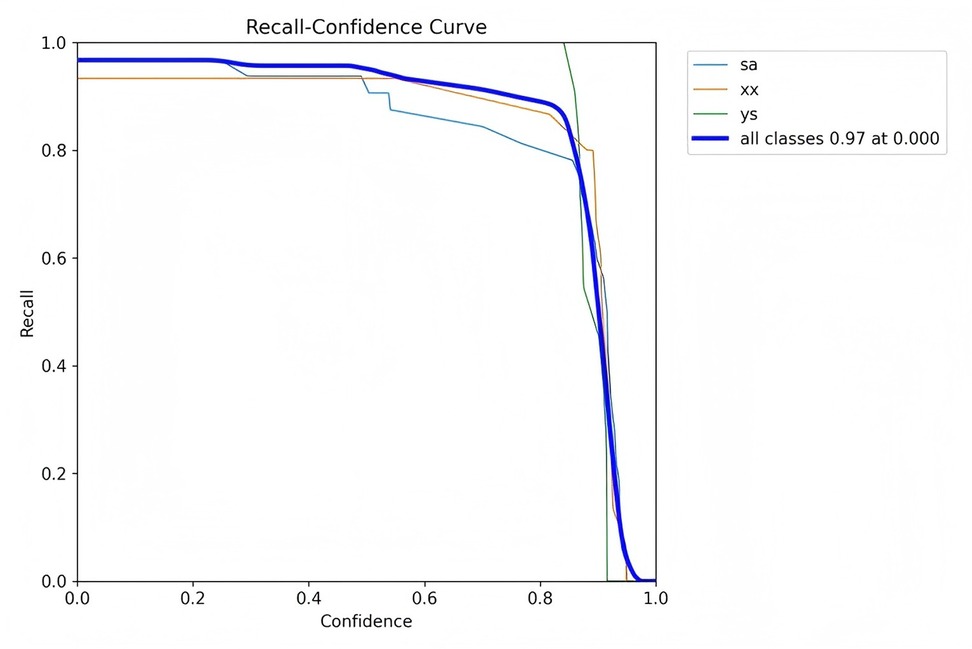

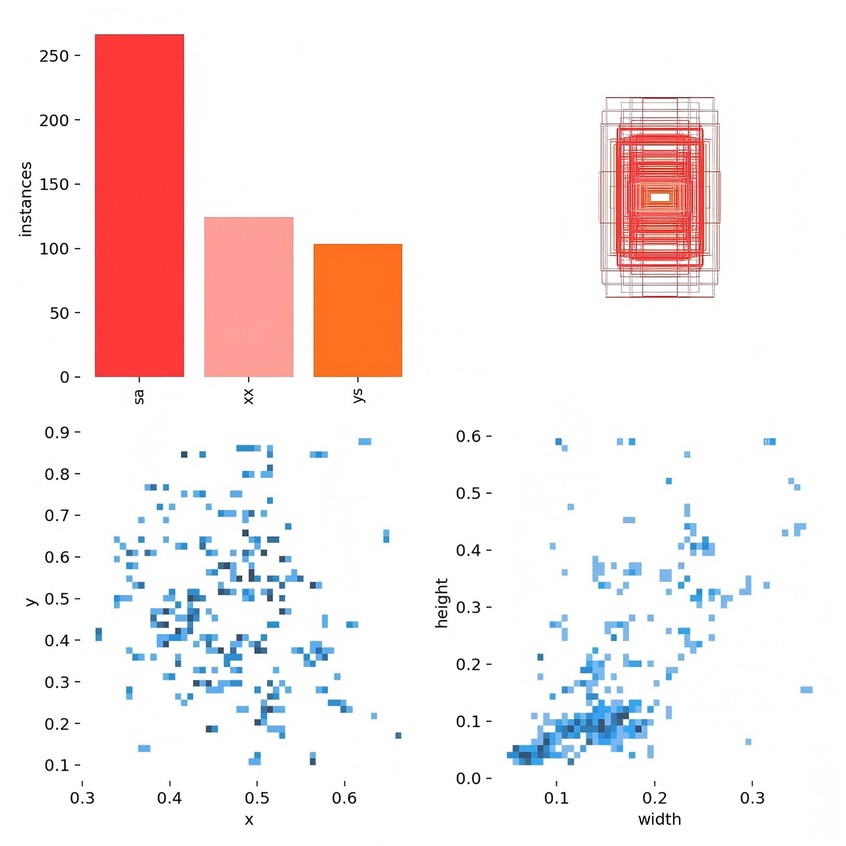

For clarity in the labeling process, this study adopts specific nomenclature: “ys” for crush fractures, “xx” for wedge fractures, and “sa” for biconcave fractures. This nomenclature is consistently used in the figures.

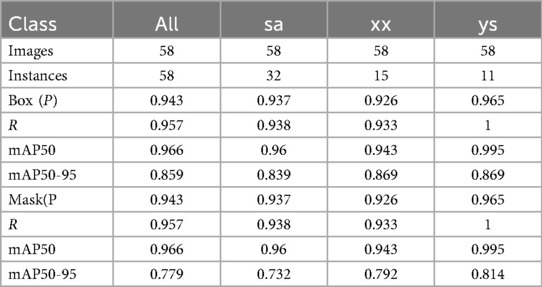

The classification results for the three types of osteoporotic vertebral fractures (OVF)—biconcave fractures, anterior wedge fractures, and crush fractures—are detailed in Figure 2 and Table 1. The YOLOv8 algorithm's performance was assessed using a comprehensive dataset. Out of the 315 biconcave fractures analyzed, 6% were not detected, while 94% were correctly identified, indicating a high level of accuracy in detecting biconcave fractures. Similarly, of the 188 anterior wedge fractures examined, 7% were not detected, and 93% were accurately identified, demonstrating the algorithm's effectiveness in recognizing anterior wedge fractures. Notably, each of the 110 crush fractures was correctly identified, resulting in a perfect detection rate of 100%. The overall mean Average Precision (mAP50-95) for the classification of these fractures was calculated to be 85.9%, reflecting the robust performance of the YOLOv8 algorithm in accurately classifying different types of osteoporotic vertebral fractures.

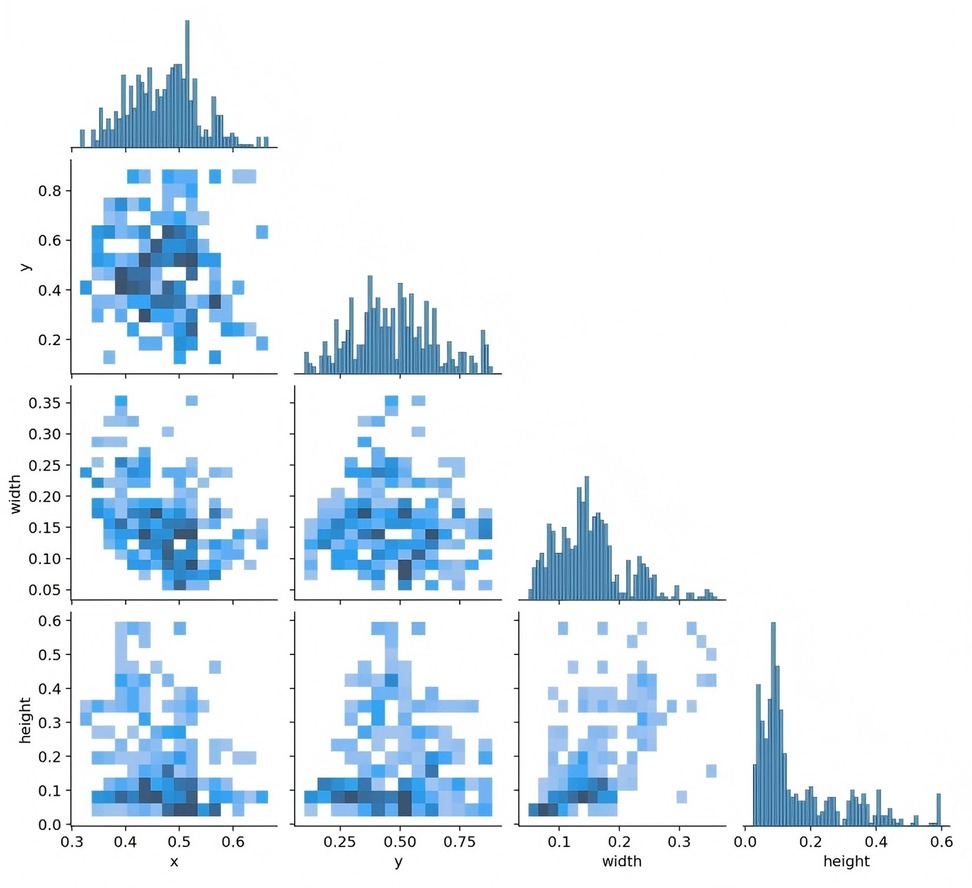

Figure 3 presents data on the training set, including the number of instances in each category, the dimensions and number of frames, the position of the center point relative to the entire map, and the height-to-width ratio of the target within the map.

Figure 3. The quantity of data in the training set, the number of instances in each category, the dimensions and number of frames, the position of the center point in relation to the entire map, and the height-to-width ratio of the target in the map relative to the entire map.

Figure 4 depicts the relationship between the horizontal and vertical coordinates of the center point and the dimensions of the frame.

Figure 4. The horizontal and vertical coordinates of the center point and the height and width of the frame.

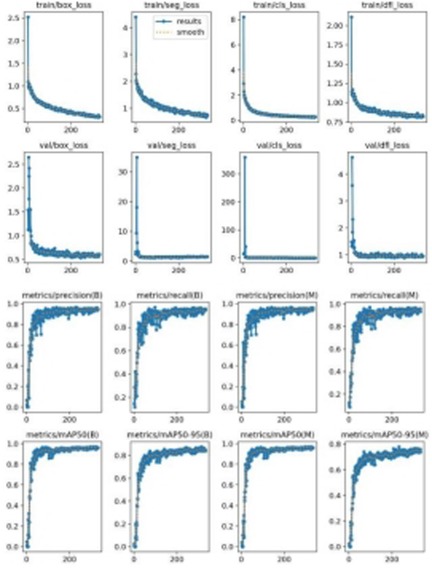

Figure 5 illustrates the model's loss functions, which quantify the discrepancy between the predicted and actual values. These loss functions guide the training process towards more accurate predictions. The positioning loss (box_loss) is the error between the predicted box and the ground-truth (calibration) box, calculated using the Generalized Intersection over Union (GIoU) metric. A lower GIoU loss indicates better localization, reflecting a closer overlap between the predicted and true bounding boxes. The confidence loss (obj_loss) measures the network's confidence in its predictions; a lower value suggests higher accuracy in determining the presence of an object, improving the model's ability to separate objects from the background. Lastly, the classification loss (cls_loss) evaluates how accurately each predicted box is classified relative to its ground-truth label; a lower value indicates more precise assignment of detected objects to their categories. Together, these loss functions drive the optimization of the YOLOv8 model in both detection precision and classification accuracy.
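To make the GIoU-based positioning loss concrete, the sketch below computes GIoU for two boxes and the corresponding loss, 1 − GIoU. It illustrates the metric named in the text under the assumption of `(x1, y1, x2, y2)` boxes with positive area; the loss actually used inside a given YOLO release may differ in form.

```python
def giou(box_a, box_b):
    """Generalized IoU: IoU minus the share of the enclosing box not covered by the union."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter

    # Smallest axis-aligned box enclosing both inputs.
    cx1, cy1 = min(box_a[0], box_b[0]), min(box_a[1], box_b[1])
    cx2, cy2 = max(box_a[2], box_b[2]), max(box_a[3], box_b[3])
    enclosing = (cx2 - cx1) * (cy2 - cy1)

    return inter / union - (enclosing - union) / enclosing


def giou_loss(pred_box, gt_box):
    """Loss is 0 for a perfect match and grows as the boxes drift apart."""
    return 1.0 - giou(pred_box, gt_box)


print(giou_loss((0, 0, 10, 10), (0, 0, 10, 10)))  # identical boxes
print(giou_loss((0, 0, 1, 1), (2, 0, 3, 1)))      # disjoint boxes
```

Unlike plain IoU, GIoU remains informative when the boxes do not overlap, because the enclosing-box term still reflects how far apart they are.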

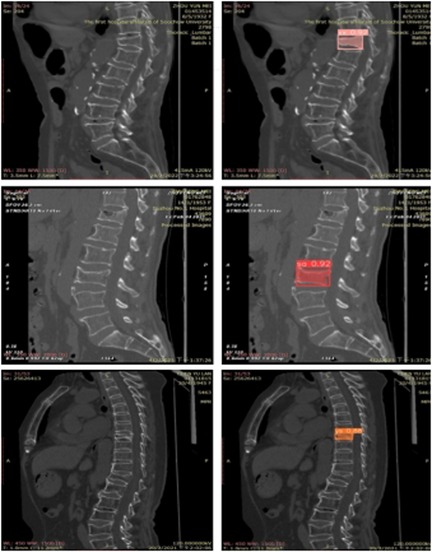

Extensive experiments were conducted on multiple spinal computed tomography (CT) images to validate the performance of the YOLOv8 algorithm. Figure 6 illustrates some of the detection results. In this figure, regions identified as wedge fractures and biconcave fractures by the YOLOv8 algorithm are highlighted, with corresponding confidence scores annotated. Specifically, the upper row of images in Figure 6 displays the detection of wedge fractures, labeled as “xx,” while the lower row of images shows the detection of biconcave fractures, labeled as “sa.” Both types of fractures are detected with a high confidence score of 0.92.

Precision measures the accuracy of the model's positive predictions, calculated as the ratio of correctly identified positive instances (true positives) to all instances predicted as positive (true positives plus false positives). A higher precision indicates that the model commits fewer false positive errors, so that most of its positive predictions are correct. Recall, in contrast, is the proportion of actual positive samples that the model correctly identifies, calculated as the ratio of true positives to the sum of true positives and false negatives. It measures the model's ability to find all relevant instances within the dataset.

The mean Average Precision (mAP) is an aggregate metric that encapsulates both precision and recall into a singular value. It is determined by the area under the precision-recall curve, where precision is plotted on the y-axis and recall on the x-axis. The mAP offers a balanced assessment of the model's performance, considering both the accuracy and completeness of the positive predictions.

Figures 7–10 depict various relationships involving the F1 score, confidence, precision, and recall. The F1 score, a harmonic mean of precision and recall, provides a single metric that balances these two aspects. These figures elucidate how alterations in the confidence threshold influence the F1 score, precision, and recall, providing insights into the trade-offs between these metrics. By examining these relationships, one can gain a deeper understanding of the model's performance and make informed decisions regarding the optimal confidence thresholds for various applications.
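The trade-off these curves describe can be reproduced on a toy example. The sketch below sweeps a confidence threshold over a handful of made-up detections, each marked correct or incorrect, and reports precision, recall, and F1 at every threshold. The detections and the ground-truth count are hypothetical and are not taken from this study.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and their harmonic mean (F1) from raw counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


def sweep(detections, n_ground_truth, thresholds):
    """Evaluate metrics at each threshold; detections are (confidence, is_correct) pairs."""
    rows = []
    for t in thresholds:
        kept = [correct for conf, correct in detections if conf >= t]
        tp = sum(kept)
        fp = len(kept) - tp
        fn = n_ground_truth - tp
        rows.append((t, *precision_recall_f1(tp, fp, fn)))
    return rows


# Hypothetical detections for four ground-truth fractures.
detections = [(0.95, True), (0.90, True), (0.80, False), (0.60, True), (0.40, False)]
rows = sweep(detections, n_ground_truth=4, thresholds=[0.5, 0.85])
for t, p, r, f1 in rows:
    print(f"threshold={t:.2f} precision={p:.2f} recall={r:.2f} F1={f1:.2f}")
```

Raising the threshold removes the false positive but also discards a correct detection, so precision rises while recall falls; the F1 curve shows where the two balance.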

The YOLOv8-Seg model demonstrated superior performance in terms of mAP50-95 and inference speed against a benchmark Mask R-CNN model (with a ResNet-50 backbone) trained and tested on our dataset under identical conditions.

4 Discussion

This study utilized the YOLOv8-Seg deep learning model to classify osteoporotic vertebral fractures (OVFs)—specifically biconcave, wedge, and crush types—based on spinal CT images. The model achieved an overall mean Average Precision (mAP50-95) of 85.9%, demonstrating high proficiency in detecting and segmenting fracture regions. Notably, the model showed perfect detection (100%) for crush fractures, which may be attributable to their more pronounced morphological deformation compared to wedge and biconcave types.

Our results align with recent advances in applying deep learning to medical image analysis, particularly in orthopedics. For instance, Yang et al. (16) reported comparable mAP values in fracture detection using convolutional neural networks, though their model did not perform instance segmentation. The incorporation of both FPN and PAN in YOLOv8-Seg allows multi-scale feature aggregation, enhancing detection across fracture types of varying shapes and sizes—a challenge noted in earlier studies such as Zhou et al. (20). Moreover, our model's lightweight architecture offers faster inference speeds compared to heavier networks like Mask R-CNN, making it more suitable for real-time clinical applications.

The integration of YOLOv8-Seg into clinical workflows has the potential to significantly reduce diagnostic time and assist less experienced radiologists in identifying OVFs accurately. By providing automated, high-confidence fracture classifications and localizations, the system can serve as a decision-support tool, particularly in high-volume settings. Future implementation may involve embedding the model into PACS systems for real-time inference during image reading, thereby offering immediate diagnostic suggestions.

While this study demonstrates the promising performance of YOLOv8-Seg in classifying osteoporotic vertebral fractures, several limitations should be acknowledged. First, the model was trained and validated on a single-center dataset with inherent demographic and diagnostic biases, which may limit its generalizability to other populations or imaging protocols. Future studies should incorporate multi-institutional data to improve robustness and external validity. Second, the dataset exhibited significant class imbalance, particularly with underrepresentation of crush and wedge fractures. Although class-weighted loss was employed to mitigate this issue, future work could explore advanced techniques such as synthetic minority oversampling (SMOTE) or generative adversarial networks (GANs) to create more balanced training sets.

Furthermore, the current study lacks comparative validation against other state-of-the-art segmentation models or clinician performance (21–23). A future reader study involving radiologists of varying experience levels would help contextualize the model's diagnostic accuracy and practical utility. Finally, the translation of such AI tools into clinical practice faces practical barriers, including integration into existing Picture Archiving and Communication Systems (PACS), compliance with healthcare data privacy regulations, and the need for real-time inference capabilities. Future efforts should also address clinician trust and interpretability by incorporating explainable AI techniques, such as attention maps or uncertainty quantification, to provide deeper insight into model predictions.

5 Conclusion

This study addresses the prevalent challenges in diagnosing spinal fractures, an issue that is increasingly significant due to the aging population. It introduces cutting-edge deep learning methods for the classification of fracture diagnoses, contributing significantly to the enhancement of medical image diagnosis, assisted decision-making, and pre-operative planning for various clinical treatments. Furthermore, this research advances the precision of invasive operations and the execution of plans in areas such as surgical robotics.

It is important to note that the study's limitations include a relatively small sample size, which impacts the classification accuracy of the proposed method. Additionally, the potential for misidentification and misclassification increases with a smaller dataset. Future research will aim to overcome these limitations by developing a more robust network structure and algorithm, enhancing the accuracy of learning fracture patterns.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding authors.

Author contributions

FY: Conceptualization, Data curation, Writing – original draft, Writing – review & editing. YQ: Conceptualization, Data curation, Investigation, Methodology, Writing – original draft, Writing – review & editing. HX: Conceptualization, Investigation, Methodology, Project administration, Resources, Software, Writing – original draft. ZG: Data curation, Formal analysis, Resources, Writing – review & editing. XZ: Resources, Visualization, Writing – original draft. YC: Methodology, Validation, Visualization, Writing – review & editing. HS: Conceptualization, Data curation, Investigation, Validation, Writing – original draft, Writing – review & editing. YL: Conceptualization, Writing – review & editing. YW: Conceptualization, Resources, Visualization, Writing – original draft, Writing – review & editing. LW: Conceptualization, Data curation, Software, Writing – original draft. YQ: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. TC: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the National Natural Science Foundation of China (Grant No. 82572432).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Bassani JE, Galich FM, Petracchi MG. Osteoporotic vertebral fractures. In: Slullitel P, Rossi L, Camino-Willhuber G, editors. Orthopaedics and Trauma: Current Concepts and Best Practices. Cham: Springer International Publishing (2024). p. 691–700.

2. Ballane G, Cauley JA, Luckey MM, El-Hajj Fuleihan G. Worldwide prevalence and incidence of osteoporotic vertebral fractures. Osteoporos Int. (2017) 28(5):1531–42. doi: 10.1007/s00198-017-3909-3

3. Al Taha K, Lauper N, Bauer DE, Tsoupras A, Tessitore E, Biver E, et al. Multidisciplinary and coordinated management of osteoporotic vertebral compression fractures: current state of the art. J Clin Med. (2024) 13(4):930. doi: 10.3390/jcm13040930

4. Balasubramanian A, Zhang J, Chen L, Wenkert D, Daigle SG, Grauer A, et al. Risk of subsequent fracture after prior fracture among older women. Osteoporos Int. (2019) 30(1):79–92. doi: 10.1007/s00198-018-4732-1

5. Compston JE, McClung MR, Leslie WD. Osteoporosis. Lancet. (2019) 393(10169):364–76. doi: 10.1016/s0140-6736(18)32112-3

6. Ismail AA, Cooper C, Felsenberg D, Varlow J, Kanis JA, Silman AJ, et al. Number and type of vertebral deformities: epidemiological characteristics and relation to back pain and height loss. Osteoporos Int. (1999) 9(3):206–13. doi: 10.1007/s001980050138

7. Jang H-D, Kim E-H, Lee JC, Choi S-W, Kim K, Shin B-J. Current concepts in the management of osteoporotic vertebral fractures: a narrative review. Asian Spine J. (2020) 14(6):898–909. doi: 10.31616/asj.2020.0594

8. Patel D, Liu J, Ebraheim NA. Managements of osteoporotic vertebral compression fractures: a narrative review. World J Orthop. (2022) 13(6):564–73. doi: 10.5312/wjo.v13.i6.564

9. Wu M, Sun H, Sun Z, Guo X, Duan L, Tan Y, et al. A machine learning-based method for automatic diagnosis of ankle fracture using x-ray images. Int J Imaging Syst Technol. (2022) 32(3):831–42. doi: 10.1002/ima.22665

10. Hsu W, Hearty TM. Radionuclide imaging in the diagnosis and management of orthopaedic disease. J Am Acad Orthop Sur. (2012) 20(3):151–9. doi: 10.5435/JAAOS-20-03-151

11. Leithner A, Windhager R. Guidelines for the biopsy of bone and soft tissue tumours. Orthopade. (2007) 36(2):167–74. doi: 10.1007/s00132-006-1039-2

12. Chang MC, Canseco JA, Nicholson KJ, Patel N, Vaccaro AR. The role of machine learning in spine surgery: the future is now. Front Surg. (2020) 7:54. doi: 10.3389/fsurg.2020.00054

13. Zech JR, Santomartino SM, Yi PH. Artificial intelligence (AI) for fracture diagnosis: an overview of current products and considerations for clinical adoption, from the AJR special series on AI applications. Am J Roentgenol. (2022) 219(6):869–78. doi: 10.2214/AJR.22.27873

14. Zhou X, Wang H, Feng C, Xu R, He Y. Emerging applications of deep learning in bone tumors: current advances and challenges. Front Oncol. (2022) 12:908873. doi: 10.3389/fonc.2022.908873

15. Lee J, Chung SW. Deep learning for orthopedic disease based on medical image analysis: present and future. Appl Sci. (2022) 12(2):681. doi: 10.3390/app12020681

16. Yang S, Yin B, Cao W, Feng C, Fan G, He S. Diagnostic accuracy of deep learning in orthopaedic fractures: a systematic review and meta-analysis. Clin Radiol. (2020) 75(9):713.e17–e28. doi: 10.1016/j.crad.2020.05.021

17. Russell BC, Torralba A, Murphy KP, Freeman WT. Labelme: a database and web-based tool for image annotation. Int J Comput Vis. (2008) 77(1–3):157–73. doi: 10.1007/s11263-007-0090-8

18. Hussain M. YOLOv1 to v8: unveiling each variant-A comprehensive review of YOLO. IEEE Access. (2024) 12:42816–33. doi: 10.1109/ACCESS.2024.3378568

19. Li Y, Fan Q, Huang H, Han Z, Gu Q. A modified YOLOv8 detection network for UAV aerial image recognition. Drones. (2023) 7(5):304. doi: 10.3390/drones7050304

20. Zhou Z, Wang S, Zhang S, Pan X, Yang H, Zhuang Y, et al. Deep learning-based spinal canal segmentation of computed tomography image for disease diagnosis: a proposed system for spinal stenosis diagnosis. Medicine (Baltimore). (2024) 103(18):e37943. doi: 10.1097/MD.0000000000037943

21. Zhang S, Niu Y. LcmUNet: a lightweight network combining CNN and MLP for real-time medical image segmentation. Bioengineering. (2023) 10(6):712. doi: 10.3390/bioengineering10060712

22. Jiang X, Hu Z, Wang S, Zhang Y. Deep learning for medical image-based cancer diagnosis. Cancers (Basel). (2023) 15(14):3608. doi: 10.3390/cancers15143608

Keywords: deep learning, osteoporotic vertebral fracture, YOLOv8-Seg, computed tomography (CT), fracture classification

Citation: Yang F, Qian Y, Xiao H, Gao Z, Zhao X, Chen Y, Sun H, Li Y, Wang Y, Wang L, Qiao Y and Chen T (2025) YOLOv8-Seg: a deep learning approach for accurate classification of osteoporotic vertebral fractures. Front. Radiol. 5:1651798. doi: 10.3389/fradi.2025.1651798

Received: 22 June 2025; Accepted: 29 September 2025;

Published: 14 October 2025.

Edited by:

Abhirup Banerjee, University of Oxford, United Kingdom

Copyright: © 2025 Yang, Qian, Xiao, Gao, Zhao, Chen, Sun, Li, Wang, Wang, Qiao and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yu Wang, MTIyNjEwNzg5MUBxcS5jb20=; Lingjie Wang, MzI2OTA4NzI2NUBxcS5jb20=; Yusen Qiao, cWlhb3l1c2VuODYxMkBzdWRhLmVkdS5jbg==; Tonglei Chen, dGxjaGVuMjAwMkAxNjMuY29t

†These authors have contributed equally to this work

Feng Yang1,†, Yuchen Qian, Zhiheng Gao, Haifu Sun, Yusen Qiao