- 1Systems Neuroscience & Neurotechnology Unit, Faculty of Medicine, Saarland University, Homburg, Germany

- 2School of Engineering, Saarland University of Applied Sciences, Saarbruecken, Germany

- 3Research Computing Center and Organismal Biology and Anatomy, University of Chicago, Chicago, IL, United States

- 4Leibniz-Institute for New Materials, Saarbruecken, Germany

The phase-reset model of oscillatory EEG activity has received a lot of attention in the last decades for decoding different cognitive processes. Based on this model, the ERPs are assumed to be generated as a result of phase reorganization in ongoing EEG. Alignment of the phase of neuronal activities can be observed within or between different assemblies of neurons across the brain. Phase synchronization has been used to explore and understand perception, attentional binding and considering it in the domain of neuronal correlates of consciousness. The importance of the topic and its vast exploration in different domains of the neuroscience presses the need for appropriate tools and methods for measuring the level of phase synchronization of neuronal activities. Measuring the level of instantaneous phase (IP) synchronization has been used extensively in numerous studies of ERPs as well as oscillatory activity for a better understanding of the underlying cognitive binding with regard to different set of stimulations such as auditory and visual. However, the reliability of results can be challenged as a result of noise artifact in IP. Phase distortion due to environmental noise artifacts as well as different pre-processing steps on signals can lead to generation of artificial phase jumps. One of such effects presented recently is the effect of low envelope on the IP of signal. It has been shown that as the instantaneous envelope of the analytic signal approaches zero, the variations in the phase increase, effectively leading to abrupt transitions in the phase. These abrupt transitions can distort the phase synchronization results as they are not related to any neurophysiological effect. These transitions are called spurious phase variation. In this study, we present a model to remove generated artificial phase variations due to the effect of low envelope. The proposed method is based on a simplified form of a Kalman smoother, that is able to model the IP behavior in narrow-bandpassed oscillatory signals. In this work we first explain the details of the proposed Kalman smoother for modeling the dynamics of the phase variations in narrow-bandpassed signals and then evaluate it on a set of synthetic signals. Finally, we apply the model on ongoing-EEG signals to assess the removal of spurious phase variations.

1. Introduction

The assessment of voltage changes of measured neural activities in terms of their level of synchronization has been one of the main evaluation methods for understanding the behavior of numerous cognitive processes and biological systems (Tass et al., 1998; Lachaux et al., 1999; Rosenblum et al., 2001; Fell and Axmacher, 2011; Park and Rubchinsky, 2012; Mortezapouraghdam et al., 2014, 2016; Thounaojam et al., 2014; Watanabe et al., 2015; Noda et al., 2017). Phase synchronization is observed from a single cell recording where groups of neurons from the same or different populations fire simultaneously, up to a larger scale where different regions of the brain exhibit a synchronized and coherent behavior in their neural activities (Siapas et al., 2005; Laine et al., 2012). Analyzing the phase of neural activities from invasive to non-invasive scales has been found to be informative for decoding the underlying neural mechanisms, understanding the propagation of neural firing and thereby giving us insights on the association between different neural assemblies (Lutz et al., 2002; Nolte et al., 2004; Busch et al., 2009; Uhlhaas et al., 2009; Canavier, 2015; Voloh and Womelsdorf, 2016).

The coherent activity in neural population can be observed among different neural assemblies for different cognitive and motor tasks. It has been particularly used to study the effect of cognitive binding with regards to different stimulations (Makeig et al., 2002; Strauss et al., 2005; Klimesch et al., 2007). For example, in Mortezapouraghdam et al. (2015) the level of phase alignment has been used to differentiate between different processes of habituation and non-habituation. In Bernarding et al. (2013) phase synchronization has been used to objectively determine the level of selective attention to auditory stimulations. Abnormal activities in phase synchronization of neural oscillators have been investigated across different spectrums of neuropsychiatric disorders such as schizophrenia and attention deficit disorders (Tcheslavski and Beex, 2006; Bob et al., 2008). Epilepsy is associated with a hypersynchronous state of neuronal activities across the brain. Using the phase synchrony techniques, different approaches for prediction of epileptic episodes have been proposed (Zheng and Voight, 2006).

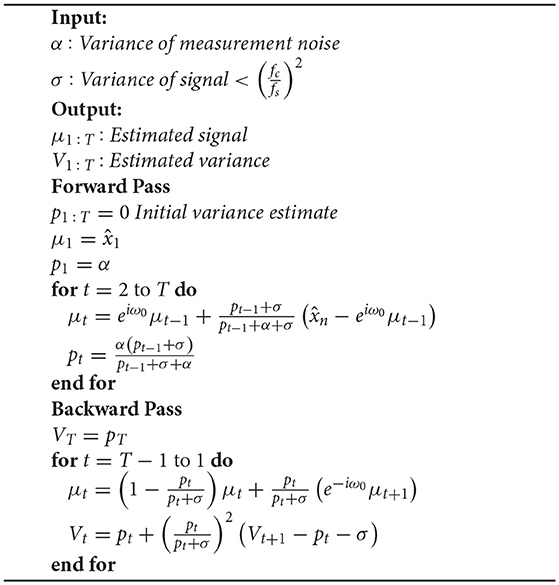

The role of phase synchronization of ongoing oscillatory activities has been deeply discussed in the area of ERP generation as well (Min et al., 2007). The phase modulation view of ERP genesis states that the generation of evoked-related potential (ERP) is not independent from the background (ongoing) EEG activities (see Sayers et al., 1974; Yeung et al., 2004 and the reference therein for more details). It is assumed that ERPs are generated by the re-organization of stimulus induced phase resets of ongoing EEG rhythms (Sayers et al., 1974; Makeig et al., 2002; Penny et al., 2002). Thereby, the ERP generation is not solely based on superposition of evoked, fixed-latency and fixed-polarity responses that are independent from the ongoing EEG activity (Kolev and Yordanova, 1997; Sauseng et al., 2007). Based on this definition, the background EEG activity comprise an important part of the ERP generation process. This view is also referred to as phase modulation (PM), in contrast to the classical view, namely the amplitude modulation(AM). Figure 1 illustrates the classical view of ERP generation against the phase-resetting model.

Figure 1. (A) The evoked classical model assumes that the evoked components of averaged ERP is generated by a constant evoked response that is added onto EEG activity. The evoked responses have the same latency and polarity for all trials. The average of all responses over all trials will yield the averaged ERP. (B) Another explanation of ERP genesis model, the average evoked response is based on phase-resetting of background EEG activity. Averaging over all trials by which phase reset has occurred, the same results as in (A) is produced.

Given the importance and the broad applicability of phase synchronization across different domains, applying proper tools and techniques for measuring the level of phase synchrony of neural activities is crucial. Phase locking value (PLV) is among the most common approaches used for measuring the phase synchrony (Rosenblum et al., 2000; Hurtado et al., 2004; Park and Rubchinsky, 2012; Aydore et al., 2013). Empirical mode decomposition (EMD), frequency flow analysis (FFA), phase-amplitude coupling and event-related synchronization are among examples of methods used for quantifying the level of phase synchronization among different neural oscillatory activities (Lachaux et al., 1999; Rudrauf et al., 2006; Dvorak and Fenton, 2014). In Mortezapouraghdam et al. (2014, 2016), a Bayesian framework has been used to detect the significant changes in the phase synchronization level. Thereby, a reliable phase estimation is important for obtaining a realistic and stable estimate of the level of phase synchrony and coherency of neural activities.

Removal of artificial phase variations in the signal is one of the important steps for obtaining a reliable measure of phase synchronization. Spurious phase variation in this context refers to phase resets which are not related to any underlying neurophysiological activities. In the recent study by Sameni and Seraj (2016, 2017), one of the effects that lead to artificial phase variations in EEG signals has been thoroughly explained. It has been demonstrated that the low envelope of an analytical signal after narrow band-passing can cause abrupt changes in the instantaneous phase (IP) or instantaneous frequency (IF) of the signal. As a consequence, these spurious phase variations can be falsely correlated and interpreted as a response to stimulations or cognitive activities and distort the results. These artificial phase alignments are called spurious phase-resets. In Sameni and Seraj (2016), a robust measure based on a Monto-carlo estimation scheme has been proposed for computing a more reliable estimate of the phase.

As a contribution to the proposed method in Sameni and Seraj (2016) and Seraj and Sameni (2017) and the concept of removal of noisy phase resets, we present a model that is able to remove spurious phase variations in the IP component of signals by modeling the behavior of IP over time. Our proposed method is a special case of a Kalman smoother (KS), which is applied after applying a set of different narrow-bandpassed filters with slight parameter variations for a robust estimation [an initial study of the proposed methodology has been published in Mortezapouraghdam and Strauss (2017)]. The IP and IE are modeled using a multivariate complex Gaussian distribution and illustrate how the information in the IE of narrow-bandpassed signals can contribute to elimination of spurious phase jitters.

The organization of the paper is as follows: We first describe the proposed methodology in detail and test it on synthetic data with spurious phase variations. The method is evaluated using different signal-to-noise (SNR) ratios. We apply the proposed method on examples of ongoing EEG measurements to examine the applicability of the model to real measurements1. Finally, we discuss the setting of the KS parameters and its potential use for future studies.

2. Materials and Methods

In this section, we briefly explain the effect of spurious phase variation followed by describing the proposed approach for removing the phase slips. In the second part of this section, the experimental setting and the measuring procedures are explained.

2.1. Phase Singularities: Definition of Spurious Phase Slips and Types

One of the main steps before extraction of IP is the filtering process. To obtain a meaningful interpretation of phase modulations, the signal is narrow-bandpassed (Chavez et al., 2006; Sameni and Seraj, 2017). A FIR (finite impulse response) filter is usually applied to avoid the distortion of phase information (see chapter 5 of Handy, 2005 for more references). The IP is obtained by computing the analytic signal, commonly by either applying a wavelet transformation (WT) or Hilbert transformation (HT). The HT of a signal x(t) is represented as

such that a real-valued signal is converted to a complex plane using HT. Measures such as the IE and IP are computed from and respectively, where θ ∈ [−π, π), t ∈ ℝ. The IP transits to −π when it reaches π. One of the techniques for tracking the modulations of IP in long time intervals is analyzing the unwrapped version of the IP. The unwrapped IP is obtained by adding 2π every time a reset occurs. We use p:ℝ → ℝ+ for unwrapped phase. Using the unwrapped phase, the angular frequency is defined as with Δt being the sampling period.

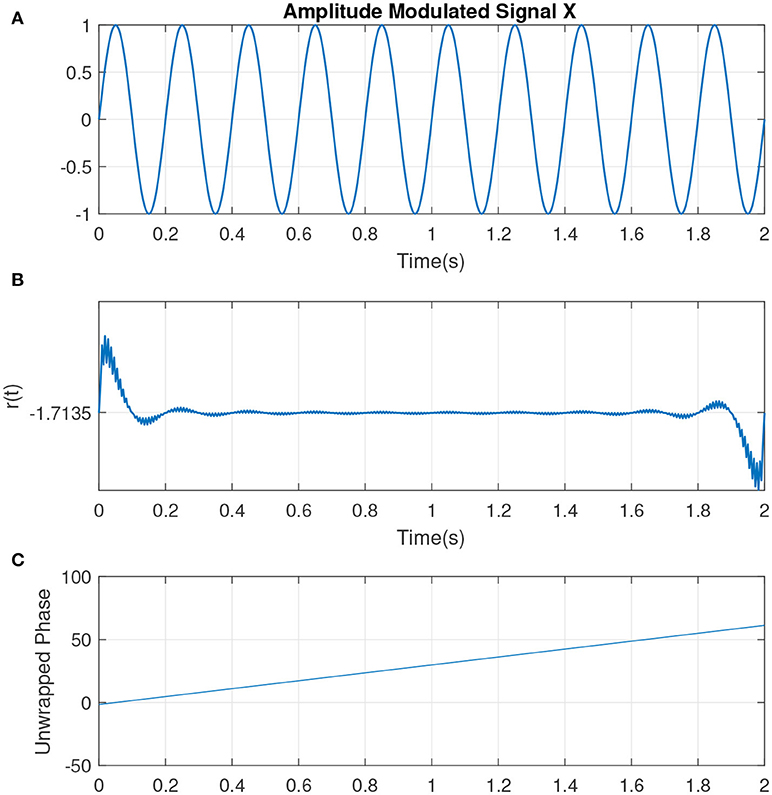

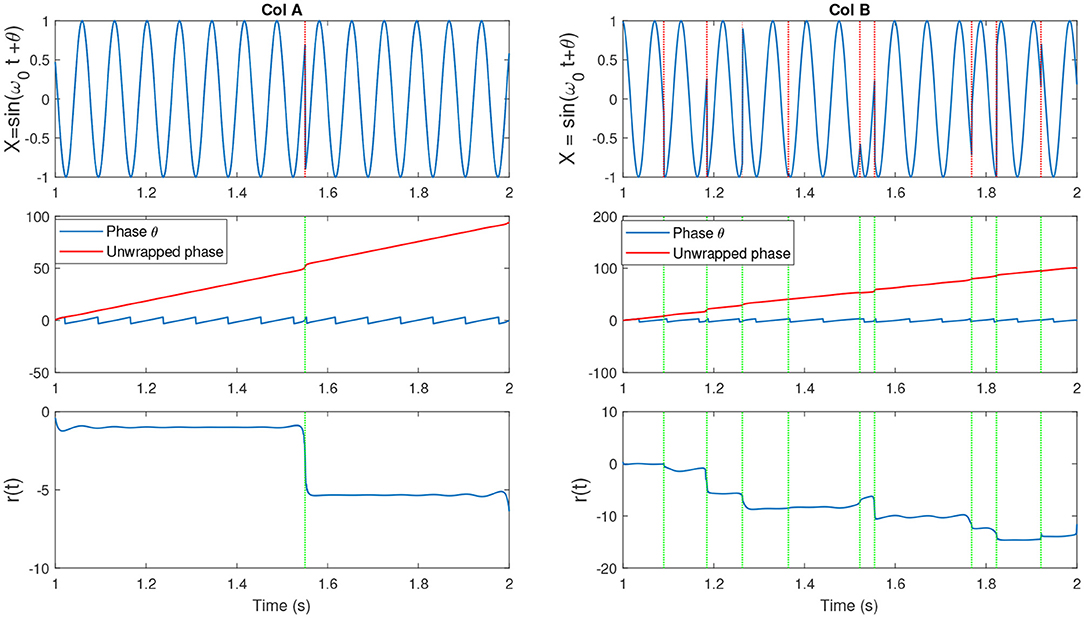

The slope of p(t) is related to the mean frequency. The slope of a signal with center frequency fc which best determines the activities at that particular frequency is given by ω0 = 2πfc (measured in rad/sec). If the IP contains no additional resets, it is uniformly distributed. This can represented by a sine wave with complete cycle, at which the IP is uniformly distributed (see Figure 2, illustrating the simplest condition along with the parameters such as unwrapped phase and residual defined earlier as indicators for the variations in the phase of the signal). Hence the change to the uniformity can be represented by the difference between p(t) and the line ω0t, i.e., r(t) = p(t) − ω0t. See Figure 3 which is an example of two sine waves with high and low number of resets along with r(t).

Figure 2. (A) An example of a sine wave X = sin(ω0t) with a center frequency of fc = 5Hz or ω0 ≈ 31 (rad/s). (B) The residual r(t). As there are no phase shifts in the signal, no jumps is observed in r(t). The small jitters at the beginning correspond to the filtering. (C) The unwrapped phase that is reflecting the same interpretation as in (B).

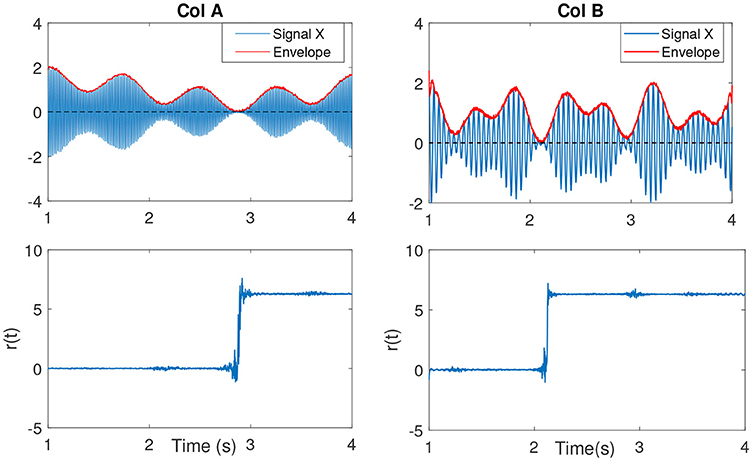

Figure 3. (Col A, top) An example of a sine wave X = sin(ω0t + θ) with fc = 7. We introduced one artificial shift in the signal. (Middle) The unwrapped IP of X [denoted as (p(t)] and the wrapped version θ(t). (Bottom) The residual r(t) which is computed as r(t) = p(t) − ω0t. The shift in the signal has been shown as an abrupt jump in the residual. (Col B, top) An example of a sine wave with more random shifts in the signal. Corresponding phase and residual, similar to previous plot have been illustrated. Figure with some modifications has been adapted from Mortezapouraghdam and Strauss (2017).

2.1.1. Spurious Phase Variation

After applying a narrow band-pass filter to the measured EEG oscillations, the resulting xt, θt can be described by the additive model of

where θt is the residual phase r(t), , and Xt is the envelope. It is assumed that the EEG signal is a superposition of background activities (considered as noise) and the desired neural activity. The noise or the background EEG is modeled by Wt and it is assumed to be Gaussian distributed . The specific desired neural activity is denoted as st. The IP θt (θt ∈ [−π, π)) and the envelope Xt of the narrow-bandpassed signal are slowly varying functions of t. Hence, it is expected that the changes between θt and θt + 1 to be small.

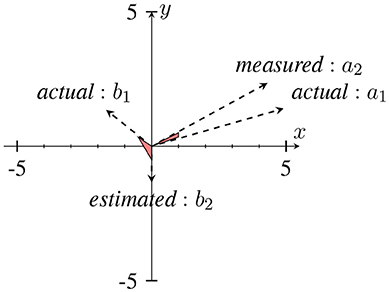

In Sameni and Seraj (2016), it has been shown that as the IE becomes small (near zero), the IP will contain sudden changes or jitters (see Figure 4) that can be falsely correlated to phase-resets induced from an event. We can describe the effect of spurious phase variation in a small example as in Figure 5 using four samples of an analytic signal. The distance between the samples |a1 − a2| and |b1 − b2| are the same. However, the envelope, which can be represented as the distance of the individual samples to the origin are different. Considering a2 and b2 represent the noisy samples, the noise effect between the actual sample of a1 and a2 yields an angle of ~ 13°, where as in case of sample b, this is about 116°. Such variation in this example relates to the low envelope of the signal.

Figure 4. (Col A, first plot) An example of an amplitude modulated signal with a mean frequency fc = 50Hz. Red line shows the IE. The second plot shows r(t). As the IE of the signal approaches to zero, the phase variations increase, best seen as an abrupt change. This is clearly evident in comparison to the other envelope values throughout the signal at other time samples. (Col B, first plot) The same explanation as in Col A, however with a lower mean frequency signal fc = 15Hz.

Figure 5. An example of a sudden phase change between b1 and b2 due to the low envelope in comparison to a1 and a2. The distance between (a1, a2) and (b1, b2) are approximately the same, however with different envelopes. This has been adapted from Mortezapouraghdam and Strauss (2017). Figure with some modifications has been adapted from Mortezapouraghdam and Strauss (2017).

This effect has been studied in Chavez et al. (2006), Rudrauf et al. (2006), and Sameni and Seraj (2017) where the effect of spurious phase jumps have been described in terms of calculation of the phase. The IP is computed using the arctan operator . Minor changes due to noise or background EEG variations to the real and imaginary parts of the analytic signal (which are narrow-bandpassed) can lead to significant changes to the computation of phase as the numerator and denominator values are small (see Rudrauf et al., 2006 and the references therein). Hence, the aim of this study is to estimate the true phase using the IE and IP and have a more reliable measure of the IP such that the spurious phase variations due to a low envelope will be removed.

2.2. Modeling the Variations Between Instantaneous Phase and Amplitude

The analytic form of the signal st ∈ ℝ is denoted as . We assume that the measurements are corrupted by Gaussian noise with mean 0 and variance α. The noise of the measurements is denoted by Wt. It's analytical form is modeled using a symmetric complex Gaussian distribution . This can be shown as follows: as stated, the bandpassed signal is modeled as

It's HT is denoted by

using the linearity of the HT. Here is also a Gaussian distributed random variable (see chapter 8 of Davenport and Root, 1958; Sameni and Seraj, 2016) with mean 0 and variance α. The analytic form of the signal xt using the HT is then

where the analytic form of Wt is modeled by a complex Gaussian distribution with a zero mean and independent real and imaginary part with σ2 as the variance2. The resulting complex Gaussian distribution is a symmetric distribution with non-diagonal elements set to zero.

The analytic form of the measurements are hence modeled as , where ŝt ∈ ℂ is the analytic form of the signal without noise. We work directly with the complex analytic signals, as we can later obtain the denoised version of the IP to simplify our model. With our assumption of st, the observations can be modeled using a complex Gaussian distribution , where ŝt is the mean and α is the variance. The signal st is filtered with a narrow bandpass filter centered around fc prior to computing the analytic signal (see Equation 1). Thus we can assume that , using the linearity of HT. Here, is the denoised analytical signal that we want to recover. The ratio of successive values of ŝt is

The results are obtained based on the assumption that at a narrow frequency, At and θt are varying slowly compared to ω0. We can therefore express the phase modulations over time using our assumptions as , and model , where . This means that the analytic signal at time t + 1 is obtained using the phase at time t multiplied by a small factor of with some additive noise. The additive noise η correspond to the simplifications that have been applied for obtaining Equation (3). We assume that the additive noise follows a Gaussian distribution. The proposed model for the phase guarantees that the changes in phase of a narrow-bandpassed signal are rather slow and gradual over time.

2.2.1. Model Derivation

We describe the model derivation in two phases of forward and backward pass. The model assumptions as described previously are as follows:

with the following assumptions about the distributions of states and the data observations:

We can write the above expressions as a two dimensional real linear Gaussian state-space model by separating the real and imaginary parts. In the following we take to be two dimensional vectors of real numbers consisting of the real and imaginary parts of the underlying complex number. With this convention we have:

In this form we can directly apply the Kalman smoother that has been described in Briers et al. (2010). This consists of a forward and a backward pass which we describe in the following two sections.

The forward pass

To estimate the state (an estimation of the analytic form of the signal), we have to derive the following posterior distribution:

by using the first order Markov property that the current data at time t is independent from the past given the state at time ŝt. Simplifying the normalization factor we can write:

after expanding the inner bracket, we obtain:

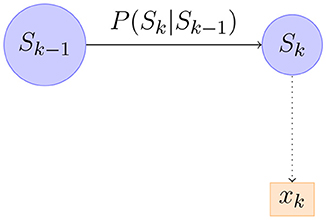

Equation 7 can be realized as in Figure 6. Figure 6 shows a part of the first order Markov model. We can compute the distribution of recursively starting from P(ŝ0). It turns out that in our model the distribution of is always Gaussian so it suffices to compute its mean and covariance matrix. Writing μt, Pt for the mean and covariance matrix of we have the following equations from Briers et al. (2010).

Furthermore, it turns out that Pt is always diagonal of the form ptI. This allows us to simplify the equations above. To see this, assume that Pt = ptI and plug this expression into the equations above.

The kalman gain factor is an expression for determining how reliable the measurement at time t + 1 is compared to the estimated state (signal) based on the level of noise in the data. If data has a small level of SNR (high noise), the algorithm will rely more on the estimated value than the measurement. Therefore, a realistic and good estimate of SNR can improve the performance of the KS.

Figure 6. An illustration of part of a Bayesian Network corresponding to forward-passing of information.

The backward pass

Given we compute recursively starting from and moving backwards. In general we have

and hence we can compute given . Again, we find that is always a Gaussian distributed random variable. Writing for the mean and covariance matrix of we have the following equations from Briers et al. (2010).

As in the forward pass this simplifies in our model, since we only deal with symmetric covariance matrices of the form and since BT = B−1. In the following we assume that . We have

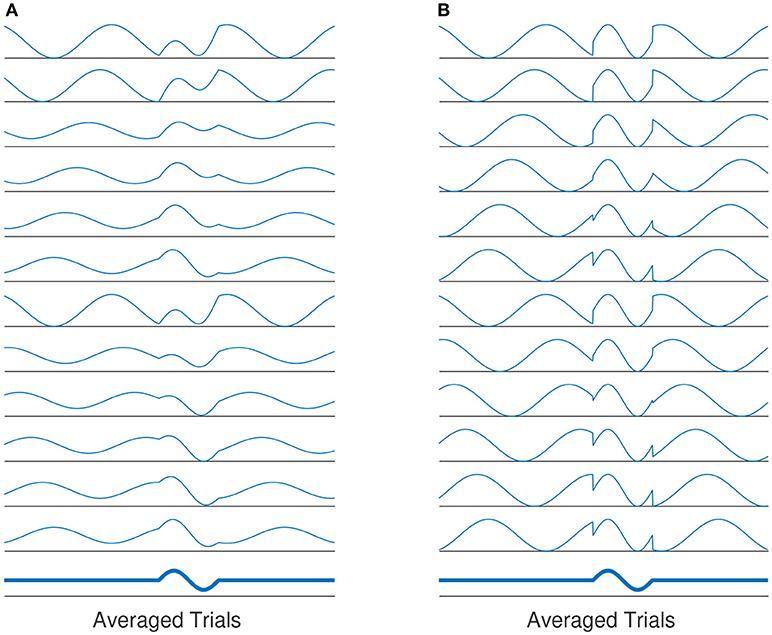

The steps for applying a KS are shown in the algorithm below:

3. Results

3.1. Analysis of Synthetic Generated Spurious Phase Variation

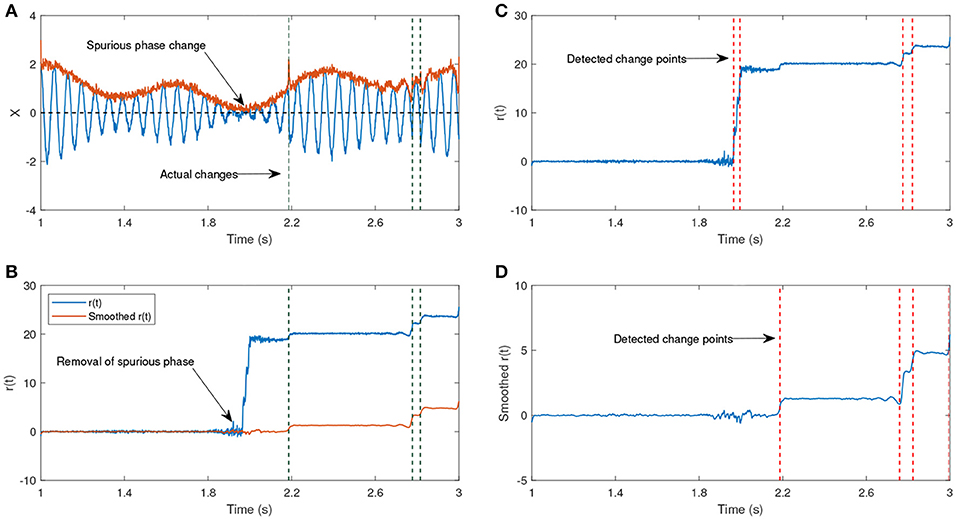

In Figure 7, we show an example of an amplitude modulated signal with phase reset at the time step t = 2.18s. The signal has been generated according to

The signal generated after the time step u is shifted by π, hence the time step u is the actual change point. The parameters ω0 and ω1 have different frequencies, such that one of them corresponds to the generation of a lower amplitude signal. The shift after the time step u will lead to a phase reset that is related to the signal and we are interested to track this change over time. The other phase jitters which we aim to remove correspond to the low envelope of the signal. Figure 7b shows the estimated phase before and after applying the KS. Before applying KS, there are two phase jitters due to the low envelope and the actual shift in the signal. After applying the KS, the jitter corresponding to the low envelope has been diminished. In addition, the standard deviation of the estimated phase is an indicator for the degree of reliability of the phase jitter. A low standard deviation in the estimated signal phase indicates that it is less likely that the phase variation is due to the low envelope, whereas a high standard deviation indicates a higher likelihood that the phase variation has been generated due to noise.

Figure 7. (A) An example of an oscillatory signal which contains a low envelope. The signal length is about 2 s. The vertical lines show the actual shifts in the signal which are random. (B) The phase after removing the line corresponding to the center frequency before and after applying a Kalman smoother (KS). (C) The detected changes in r(t) without any smoothing have been plotted. (D) The detected changes in r(t) after applying KS. (A,B) Have been adapted from Mortezapouraghdam and Strauss (2017) with some modifications.

In this example, the standard deviation from the sample t = 300 increases, indicating a high uncertainty due to the artificial phase reset. To assess the accuracy of the model, we generated 500 synthetic time-series with different levels of added noise and random shifts in the signal. For every number of change points n, we randomly shift the signal at n random time steps u between and . This generates n instances at which the signal has been shifted, and the other phase variations correspond to either the noise or low envelope of the signal (see Figure 7).

We apply a KS to remove the noisy variations in the signal. To get an estimate of how reliably the change points have been removed, we apply a change point algorithm to detect the time steps that the mean of the signal has been significantly altered3. The detected time instances are recorded as the estimated significant change points before and after applying KS (see Figure 7). If the difference of the estimated change points and the actual random change points are less than 10 samples, the estimated change point is assumed to be correct (true positive, TP). However, if the difference is larger than the determined threshold, the data point has been falsely recognized as a change point (and is referred to false positive, fp). If the change point algorithm fails to detect the actual change point, then the point is referred to as false negative, fn.

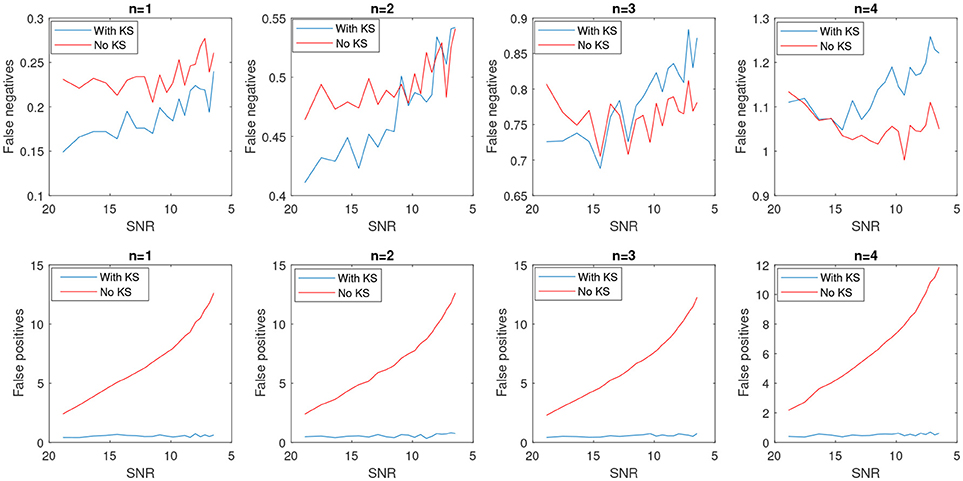

In Figure 8, the average number of false positives (fp) and false negatives (fn) for different number of change points have been plotted. For every number of change points n, we generated 2000 batches of data with different levels of noise and reported the average number of fn and fp. After applying KS, the average number of fps is significantly reduced. However, the case with no filtering yields very unstable results as the noise level increases. The average false negatives is however lower for the case that we apply no smoothing compared to results after KS. This is mainly due to the fact that more random changes are detected in the pre-smoothing condition. Therefore, as many indices will be assigned correctly as change points, satisfying the minimum distance criteria. In the case of smoothing, the overlap of a change point and low envelope can cause an increase in the number of detected false negatives. Using the measured rates of false negatives and positives, we computed the Matthews correlation coefficient, MCC. MCC takes the number of false/true positive/negatives and returns a correlation coefficient between the observed and predicted binary classifier. It is computed as

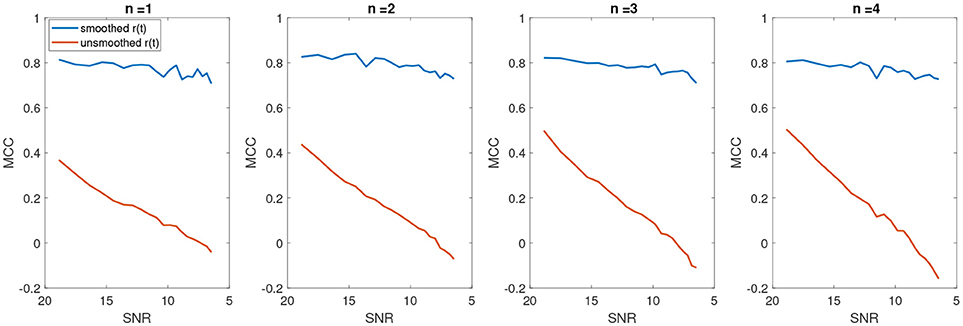

A coefficient of +1 indicates a perfect prediction (i.e., in our case a perfect detection of change points at the correct indices), zero indicates no better than random assignment of change points and a coefficient of -1 means a complete disagreement between the predicted change points and the actual ones. Figure 9 shows the average MCC for different SNRs for varying change points n. The MCC significantly decreases as the SNR increases (as noise level decreases), indicating a random assignment of change points. This is also consistent with the average results of falsely detected change points. As the number of falsely detected change points increases, we have a more random assignment of change points. This is however not the case for the post-KS condition. The higher average of MCC indicates a significant improvement in the accuracy of detected change points.

Figure 8. Average number of false positives and false negatives for 2,000 batch of synthetic time-series signals with length of 1,500 samples. The number of actual changes in the signal are indicated as n and results are shown for an increasing SNR.

Figure 9. The average Matthews correlation coefficient for different number of SNRs and different number of change points.

3.2. Analysis of IP of Synthetic EEG Signal

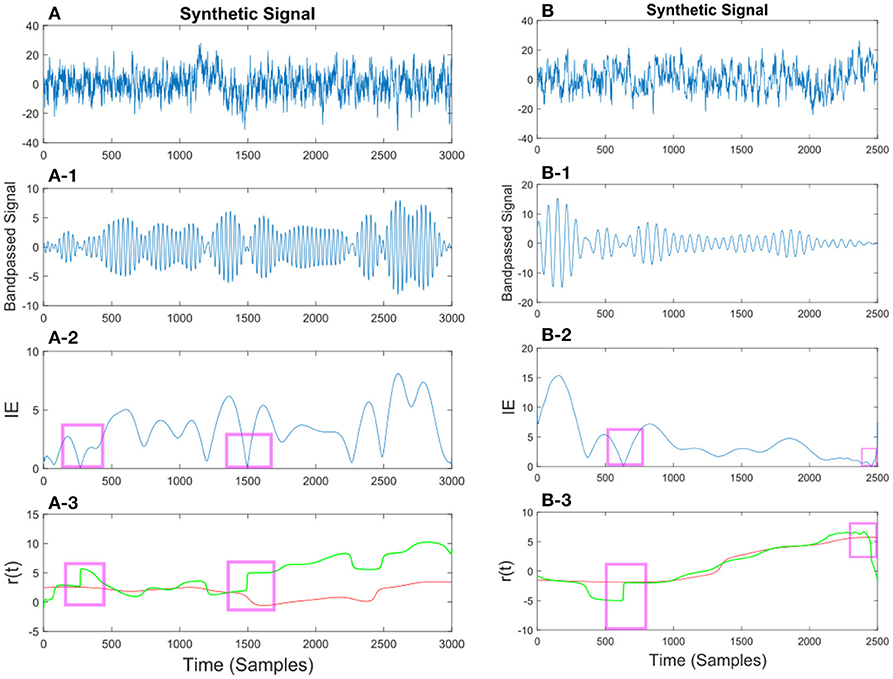

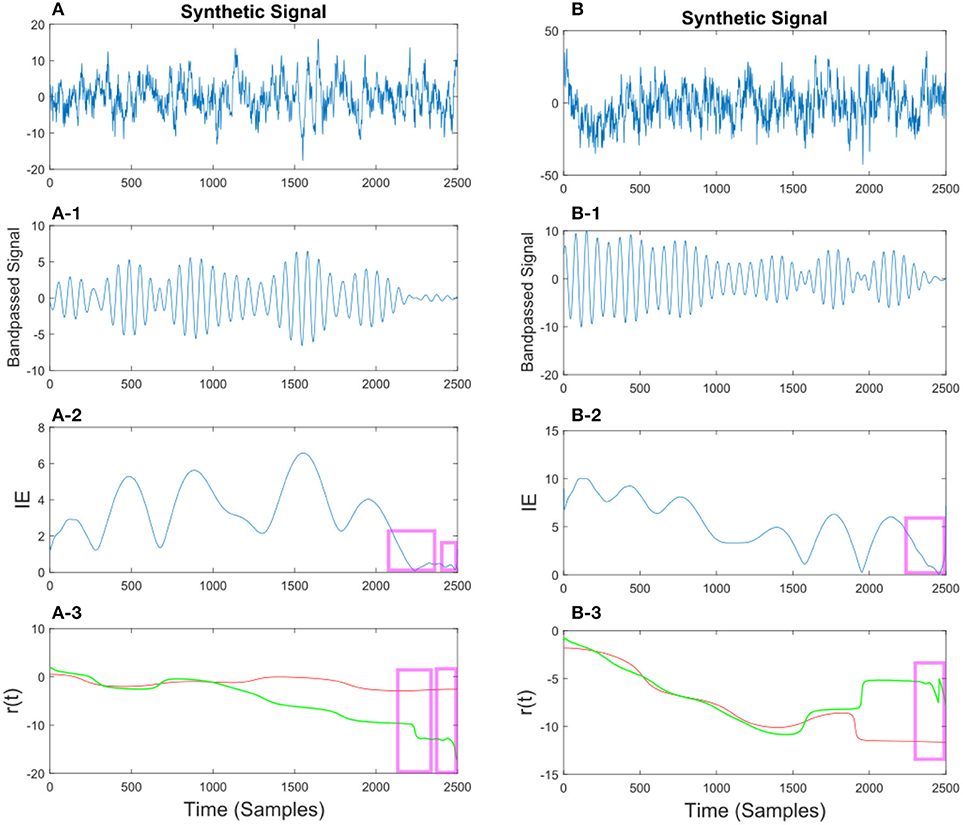

In this section we generate additional synthetic signals for testing the effect of spurious phase variations. The synthetic signals are generated as a superposition of sinusoidal signals and noise with different amplitudes4. The signals has been narrow-bandpassed using a FIR filter to a center frequency of 7.6 Hz. The goal is to analyze the effect of the KS on removing the spurious phase variation for regions where the envelope is low. In this case, we considered the IP of signal corresponding to envelopes below 0.2 to be noise, and therefore need to be removed.

In Figures 10, 11 we show different examples of synthetic EEG signals where at some instances the corresponding envelope of the band-passed signal approaches zero. The regions corresponding to the low envelope has been illustrated in a red box. We show how applying the method with proper set of parameters can remove the spurious changes in the IP of the signal. The main incentive is to remove the spurious variations in r(t). As we are considering the phase information in a narrow-bandpass, we are required to filter the data accordingly. In section 3.4 we describe in more detail on the choices of parameter setting.

Figure 10. (A) Synthetic EEG signal generated at a fs = 250Hz with a SNR of 0.11 (high noise variance) bandpassed at fc = 7.6 Hz. Different noise amplitudes have been used for the two examples. The instantaneous envelope (IE) has been plotted with red boxes showing the regions with a low envelope below 0.2. In the last plot the resulting r(t) has been plotted for the smoothed (red) and non-smoothed (green) signal. The red regions show the effect of the smoothing on the regions with a low IE. (B) Same description as in (A) applies to (B) with a SNR of 0.13.

Figure 11. (A) Synthetic EEG signal generated at a fs = 250 Hz with a SNR of 0.43 bandpassed at fc = 7.6 Hz. The instantaneous envelope (IE) (A-2) has been plotted with red boxes showing the regions with a low envelope below 0.2. In the last (A-3) plot the resulting r(t) has been plotted for the smoothed (red) and non-smoothed (green) signal. The red regions show the effect of the smoothing on the regions with a low IE. (B) Same description as in (A) applies to (B) with a SNR of 0.047.

3.3. Application of KS on EEG Recordings

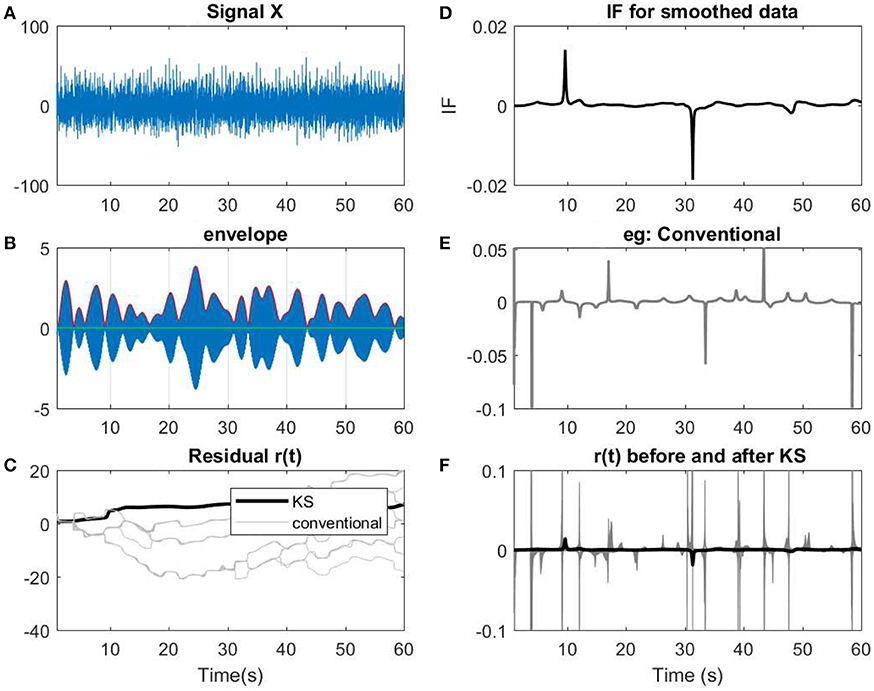

In this section, we apply the proposed model on few examples of an EEG recording (See Figures 12, 13). The recording measurements are obtained from the right and left mastoid electrodes that have been obtained during an experimental listening paradigm (for more information about the data measurement and the details see Corona-Strauss and Strauss, 2017). The EEG signals were bandpassed between 1–70 Hz. In order to test the applicability of the model, we need to narrow-bandpass the signal and then estimate the IP of the filtered data.

Figure 12. (A) A 60 s segment of measured EEG data. (B) Data has been narrow band-passed with a center frequency of 7 Hz. The envelope of the analytic band-passed signal has been plotted in red. (C) The residual of IP before and after applying KS has been applied. The term conventional corresponds to the filtered signals using a narrow band-pass filter before applying KS. As described previously, for every filter, a slight perturbation has been applied to the filter. (D) The IF of the smoothed data. (E) The resulting IF of one of the instances of the filtering process that has been applied on the data. As shown, in the conventional method, more spikes or variations are observed in te IF. (F) Comparing the IF of smoothed narrow-bandpassed signal (dark line) with IF of all the non-smoothed signals. The changes in regard to low envelopes in (B) can be observed as fewer abrupt changes after applying KS in the smoothed version than the conventional approach.

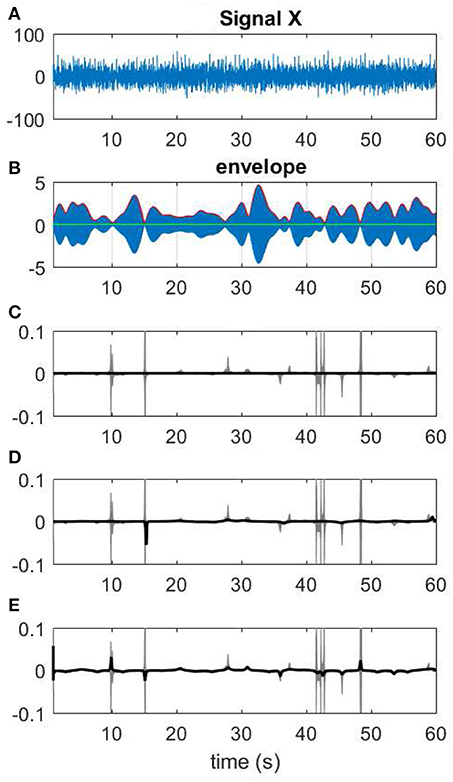

Figure 13. Different choices of Kalman smoothing factor. (A) A 60-s segment of EEG measurement. (B) The narrow band-passed signal along with its envelope. (C) The extreme case of zero factor yields a line for IF. (D) A factor of 0.005. (E) IF resulting from a high factor >100.

We compute the IP using the analytic form of the signal with the Hilbert transformation. To have a meaningful interpretation of the IP, the signal is narrow-bandpassed around a certain center frequency of interest. A zero-phase forward backward IIR elliptic filter is used to narrow-bandpass the signal. As noted in Seraj and Sameni (2017), in many studies a FIR filter is used to avoid phase distortion, however as the signal is narrow-bandpassed, the order of the filter can significantly increase which leads to long transient response episode. The transient response are usually discarded for analysis. We therefore applied an IIR filter which requires a much lower order than a FIR filter and a zero-phase forward backward filtering ensures a zero-phase distortion.

In Sameni and Seraj (2016), it is shown that variations to the filter parameters in filtering process can lead to changes in the IP and IF responses. Therefore, a robust estimation method that estimates the IP from the average ensemble of infinitesimal perturbations to the filter parameters is presented by Seraj and Sameni (2017). To estimate the IP, we apply a narrow-bandpass filter with slight variations in the frequency range for M = 100 iterations. At every iteration, the filter parameters are as follows: the filter order is 6, the reduction in the stop-band is 50dB, the ripple-passband is 0.01 and the filter bandwidth is set to 0.5. The center frequency is fc = 7.4Hz and at every iteration, a slight perturbation is being applied to the center frequency. It has to be noted that the changes to filter parameters are very small that the effect is irrelevant for the study of most physiological effects.

3.4. The Setting of the Parameters

One of the important settings in using the proposed algorithm is the setting of the variance of noise of the measurement and signal (α and σ, respectively). In the examples provided in Figures 7 10, 11, we have access to the original signal without noise. This is equivalent to having prior knowledge of the distribution or shape of the signal for real scenarios. However, in most cases, we don't have access to the actual distribution of the signal and hence a proper estimation of the parameters is required. In this section we give suggestions for setting the parameters of the KS.

As described in Equation (4), the additive noise of the signal and measurement are defined as and . By reordering , we have . Therefore the variance of noise of the signal σ can be estimated as the variance of the difference equation of the analytic signal. In case of real measurements, as we don't have access to the actual signal, we can estimate the variance of the noise as follows:

As stated earlier Wt + 1 and Wt are samples from a Gaussian distribution with a variance of . Due to symmetry of the Gaussian distributions, the sum of two normal distribution is a Gaussian with the summation of the means and the variances. Therefore, in our case, the resulting distribution will be . By taking the variance of both sides in Equation (10), we have

Hence the variance of noise of the signal can be estimated as . Setting , the optimal σ is in the range σ ∈ [max(ϵ − 2α, 0), ϵ] where ϵ is the upper-bound of our estimation. The input for EEG measurements is that is the average over all M filters. Another possibility for controlling the level of smoothing is to optimize over βvar(ηt) where β is a free parameter that controls the degree we rely on the measurements over the predictions. In the extreme case of β = 0, the KS will remove all variations in IP which can be observed as a straight line in the corresponding IF. This has been shown in Figure 13 where different KS parameters are used for smoothing the data.

The setting of α is based on the study that is presented in Sameni and Seraj (2016). We set the variance of the measurement noise to be the variance of the M estimated phases obtained from infinitesimal perturbations to the filter parameters. The signal that we use at the end is the ensemble average of the bandpassed signals. The setting of the parameters rely mostly on the application and condition of the experiment. If data is prone to a lot of noise sources, we need to smooth out the noise more, therefore higher β values are used. In case of a classification task between different neural cognitive processes, we can optimize the range of σ such that the distance between the analyzing measure of the two processes is maximized. In general, setting of the KS parameters require more investigation given the specifics of the experiment and its goals.

4. Discussion

In order to investigate phase of neural oscillatory activities in general, the need of better analytic machinery to exactly characterize the phase is high. The general limitations can come from the preprocessing of wide-band neural signals being recorded, and phase computation methods themselves. By taking a parametric approach, we focused on removing the spurious phase variations that can produce misleading or in-comprehensive results.

We apply a linear Gaussian state space model to the dynamics of the analytical signal at a narrow band around a specific frequency. The method was tested on synthetic signals as well as variants of EEG signals where low envelopes were considered as potential instances of spurious phase variations. Given the flexibility in the model parameters (α and σ) we are able to remove the jitters in phase as the envelope approaches zero.

In the current approach, the specific setting of the σ and α parameters are important for removing the spurious phase variations as they are used for computing the Kalman gain factor. The Kalman gain factor determines how strongly the model relies on its own prediction over the measurement. In this framework, we needed to assume that α was constant throughout the signal. However, in real EEG applications or neural oscillatory activities in general, the noise can be varying over time due to different sources of artifacts and the signals have a strong non-stationary behavior, especially around the event times of scientific interests. For example, β oscillation is known to increase its amplitude around the external visual or auditory cues and to decrease behavioral onset (e.g., movement onsets) in various cortical areas (Takahashi et al., 2015; Watanabe et al., 2015; Noda et al., 2017; Rule et al., 2017), while the phase dynamics locked to the sensory cues or behavioral onsets where the temporal evolution of the phase dynamics remain unclear (Rubino et al., 2006; Takahashi et al., 2011, 2015; Watanabe et al., 2015; Rule et al., 2017). A particular challenge to characterize phase oscillation dynamics is one of the motivations for our current work - how to precisely characterize oscillation phase when the amplitude of the oscillation is attenuated. Successful characterization of such transient dynamics will unveil underlying neural mechanism that is responsible for neural oscillation in general.

We therefore aim to additionally investigate the effect of a varying α in time and its impact on the smoothing procedure. We will also investigate the impact of the proposed method in different experimental settings where IP is heavily used for classification between different neural processes. In many past studies (Strauss et al., 2008; Mortezapouraghdam et al., 2015), the phase locking of IP has been used as an indicator for separating the presence and absence of attentional-binding due to different auditory stimulations. We therefore require proper methods to measure the level of phase locking of the neural activities in response to different stimulations and denoising the spurious phase variations by optimizing over KS parameters such that the phase resets bounded to neurological activities are preserved. In this regard, the overlap of the IP resets due to neural activities with the ones due to low envelope has to be investigated.

Furthermore, thanks to recent interest to investigate spatiotemporal dynamics of neural oscillaations using various types of array recording methods to simultaneously capture dynamics of evoked responses or phase variations across multiple channels over space, mostly horizontally, the demand to precisely characterize phase of neural oscillation has been arising (Liotti et al., 2010; Takahashi et al., 2011, 2015; Keane and Gong, 2015; Moon et al., 2015; Watanabe et al., 2015; Rule et al., 2017; Denker et al., 2018) using various types of multiple channel devices such as EEG, ECoG, or intracortical arrays. Although these recent work or methods developed therein can characterize various spatiotemporal patterns of oscillation activities, but those results are heavily relying on oscillation phases that are not as faithfully computed as in our current work.

Moreover, another trend in neuroscience is to characterize variability of neural signals in single trials or attempt to relate between neural variability and sensory/motor variations being observed (Matsuoka, 1990; Szymanski et al., 2011; Matsuo et al., 2013; Cui et al., 2016; Barczak et al., 2018; Dechery and MacLean, 2018). Therefore, we would like to extend our current method to characterize single trial neural oscillation data as well.

5. Conclusion

One of the main incentives of the current study is to remove the spurious variations in IP for a more reliable assessment of phase information. We present a model based on a Kalman smoother that models the variations of phase in a narrow-bandpassed signal. We evaluated the model for synthetic signals with spurious and actual phase jitters. We added different level of noises to signals and evaluated the number of true and false positives as an indicator for correct detection of actual phase jumps. Results show a significant improvement in reducing the number of false positives. The method is also applied on synthetic EEG signals generated as the superposition of sinusoidal waves with noise to assess the removal of spurious phase variations. Inspecting on various settings the method is able to remove the rapid transitions in phase that correspond to a low envelope. In both cases of known and unknown underlying phase shifts, an estimation to the variance of signal and noise measurements has been presented. We use the same approach on ongoing EEG recordings for testing the applicability of the approach. The proposed approach shows success in removing the spurious phase variations corresponding to a low envelope.

Ethics Statement

The design of the experiment was planned and in accordance with ethics guidelines and the Declaration of Helsinki and the study was also approved as a scientific study by the local ethics committee [Arztekammer des Saarlandes (Medical Council of the Saarland), Germany]. Every person had the free choice to abandon the procedure and withdraw their participation at any time.

Author Contributions

FC-S and DS were both involved in implementation of the study, measurements, and discussions. ZM is involved in proposing the methodology part of the study and analysis of data. KT has participated in the discussion of the results, future directions of the study, and contributing in the revision of the paper in its accepted form. All authors participated in the discussion of the results.

Funding

This work was partially supported by the German Federal Ministry of Education and Research, grant number BMBF-FZ 03FH004IX5.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1. ^The measurements are derived from a repetition of the seminal dichotic tone detection experiment of Hillyard et al. (1973) which demonstrated the N1–effect of late auditory evoked responses for the first time and their relation to early auditory selective attention. In a study conducted by Corona-Strauss and Strauss (2017), they report on the analysis of the collected data using different circular analysis techniques to the (Hardy space projected) segmented ERP and unsegmented ongoing EEG data suggesting a unified framework to analyze neural correlates of selective attention in ERPs and their ongoing EEG activity.

2. ^For simplicity of notation, we write instead of in the rest of the paper.

3. ^The function findchangepts in MATLAB using the mean returns the time instances at which the mean of the data changes abruptly.

4. ^The code for generating the signals has been mainly used from https://github.com/pchrapka/phasereset with slight variation.

References

Aydore, S., Pantazis, D., and Leahy, R. M. (2013). A note on the phase locking value and its properties. Neuroimage 74, 231–244. doi: 10.1016/j.neuroimage.2013.02.008

Barczak, A., O'Connell, M. N., McGinnis, T., Ross, D., Mowery, T., Falchier, A., et al. (2018). Top-down, contextual entrainment of neuronal oscillations in the auditory thalamocortical circuit. Proc. Natl. Acad. Sci. U.S.A. 115:201714684. doi: 10.1073/pnas.1714684115

Bernarding, C., Strauss, D. J., Hannemann, R., Seidler, H., and Corona-Strauss, F. I. (2013). Neural correlates of listening effort related factors: influence of age and hearing impairment. Brain Res. Bull. 91, 21–30. doi: 10.1016/j.brainresbull.2012.11.005

Bob, P., Palus, M., Susta, M., and Glaslova, K. (2008). Eeg phase synchronization in patients with paranoid schizophrenia. Neurosci. Lett. 447, 73–77. doi: 10.1016/j.neulet.2008.09.055

Briers, M., Doucet, A., and Maskell, S. (2010). Smoothing algorithms for state–space models. Ann. Inst. Stat. Math. 62, 61–89. doi: 10.1007/s10463-009-0236-2

Busch, N. A., Dubois, J., and VanRullen, R. (2009). The phase of ongoing eeg oscillations predicts visual perception. J. Neurosci. 29, 7869–7876. doi: 10.1523/JNEUROSCI.0113-09.2009

Canavier, C. C. (2015). Phase-resetting as a tool of information transmission. Curr. Opin. Neurobiol. 31, 206–213. doi: 10.1016/j.conb.2014.12.003

Chavez, M., Besserve, M., Adam, C., and Martinerie, J. (2006). Towards a proper estimation of phase synchronization from time series. J. Neurosci. Methods 154, 149–160. doi: 10.1016/j.jneumeth.2005.12.009

Corona-Strauss, F. I., and Strauss, D. J. (2017). “Circular organization of the instantaneous phase in erps and the ongoing eeg due to selective attention,” in Neural Engineering (NER), 2017 8th International IEEE/EMBS Conference on (Shanghai: IEEE), 625–628.

Cui, Y., Liu, L. D., McFarland, J. M., Pack, C. C., and Butts, D. A. (2016). Inferring cortical variability from local field potentials. J. Neurosci. 36, 4121–4135. doi: 10.1523/JNEUROSCI.2502-15.2016

Davenport, W. B. Jr, and Root, W. L. (1958). An Introduction to the Theory of Random Signals and Noise, Vol. 159. New York, NY: McGraw-Hill.

Dechery, J. B., and MacLean, J. N. (2018). Functional triplet motifs underlie accurate predictions of single-trial responses in populations of tuned and untuned v1 neurons. PLoS Comput. Biol. 14:e1006153. doi: 10.1371/journal.pcbi.1006153

Denker, M., Zehl, L., Kilavik, B. E., Diesmann, M., Brochier, T., Riehle, A., et al. (2018). Lfp beta amplitude is linked to mesoscopic spatio-temporal phase patterns. Sci. Rep. 8, 1–21. doi: 10.1038/s41598-018-22990-7

Dvorak, D., and Fenton, A. A. (2014). Toward a proper estimation of phase–amplitude coupling in neural oscillations. J. Neurosci. Methods 225, 42–56. doi: 10.1016/j.jneumeth.2014.01.002

Fell, J., and Axmacher, N. (2011). The role of phase synchronization in memory processes. Nat. Rev. Neurosci. 12:105. doi: 10.1038/nrn2979

Hillyard, S. A., Hink, R. F., Schwent, V. L., and Picton, T. (1973). Electrical signs of selective attention in the human brain. Science 182, 177–180. doi: 10.1126/science.182.4108.177

Hurtado, J. M., Rubchinsky, L. L., and Sigvardt, K. A. (2004). Statistical method for detection of phase-locking episodes in neural oscillations. J. Neurophysiol. 91, 1883–1898. doi: 10.1152/jn.00853.2003

Keane, A., and Gong, P. (2015). Propagating waves can explain irregular neural dynamics. J. Neurosci. 35, 1591–1605. doi: 10.1523/JNEUROSCI.1669-14.2015

Klimesch, W., Sauseng, P., Hanslmayr, S., Gruber, W., and Freunberger, R. (2007). Event-related phase reorganization may explain evoked neural dynamics. Neurosci. Biobehav. Rev. 31, 1003–1016. doi: 10.1016/j.neubiorev.2007.03.005

Kolev, V., and Yordanova, J. (1997). Analysis of phase-locking is informative for studying event-related eeg activity. Biol. Cybern. 76, 229–235. doi: 10.1007/s004220050335

Lachaux, J.-P., Rodriguez, E., Martinerie, J., and Varela, F. J. (1999). Measuring phase synchrony in brain signals. Hum. Brain Mapp. 8, 194–208. doi: 10.1002/(SICI)1097-0193(1999)8:4<194::AID-HBM4>3.0.CO;2-C

Laine, C. M., Nickerson, L. A., and Bailey, E. F. (2012). Cortical entrainment of human hypoglossal motor unit activities. J. Neurophysiol. 107, 493–499. doi: 10.1152/jn.00769.2011

Liotti, M, Ingham, J. C., Takai, O., Paskos, D. K., Perez, R., and Ingham, R. J. (2010). Spatiotemporal dynamics of speech sound perception in chronic developmental stuttering. Brain Lang. 115, 141–147. doi: 10.1016/j.bandl.2010.07.007

Lutz, A., Lachaux, J. P., Martinerie, J., and Varela, F. J. (2002). Guiding the study of brain dynamics by using first-person data: synchrony patterns correlate with ongoing conscious states during a simple visual task. Proc. Natl. Acad. Sci. U.S.A. 99, 1586–1591. doi: 10.1073/pnas.032658199

Makeig, S., Westerfield, M., Jung, T. P., Enghoff, S., Townsend, J., Courchesne, E., et al. (2002). Dynamic brain sources of visual evoked responses. Science 295, 690–694. doi: 10.1126/science.1066168

Matsuo, T., Kawai, K., Uno, T., Kunii, N., Miyakawa, N., Usami, K., et al. (2013). Simultaneous recording of single-neuron activities and broad-area intracranial electroencephalography: electrode design and implantation procedure. Neurosurgery 73(Suppl. 2):146–154. doi: 10.1227/01.neu.0000430327.48387.e1

Matsuoka, S. (1990). Theta rhythms: state of consciousness. Brain Topogr. 3, 203–208. doi: 10.1007/BF01128877

Min, B. K., Busch, N. A., Debener, S., Kranczioch, C., Hanslmayr, S., Engel, A. K., et al. (2007). The best of both worlds: phase-reset of human EEG alpha activity and additive power contribute to ERP generation. Int. J. Psychophysiol. 65, 58–68. doi: 10.1016/j.ijpsycho.2007.03.002

Moon, I. J., Won, J. H., Kang, H. W., Kim, D. H., An, Y. H., and Shim, H. J. (2015). Influence of tinnitus on auditory spectral and temporal resolution and speech perception in tinnitus patients. J. Neurosci. 35, 14260–14269. doi: 10.1523/JNEUROSCI.5091-14.2015

Mortezapouraghdam, Z., Haab, L., Corona-Strauss, F. I., Steidl, G., and Strauss, D. J. (2015). Assessment of long-term habituation correlates in event-related potentials using a von Mises model. IEEE Trans. Neural Syst. Rehabil. Eng. 23, 363–373. doi: 10.1109/TNSRE.2014.2361614

Mortezapouraghdam, Z., Haab, L., Steidl, G., and Strauss, D. J. (2014). Detection of change points in phase data: a bayesian analysis of habituation processes. Conf. Proc. IEEE Eng. Med. Biol. Soc. 1, 1014–1017. doi: 10.1109/EMBC.2014.6943765

Mortezapouraghdam, Z., and Strauss, D. (2017). Removal of spurious phase variations in oscillatory signals. Conf. Proc. IEEE Eng. Med. Biol. Soc. 2017, 2209–2212. doi: 10.1109/EMBC.2017.8037293

Mortezapouraghdam, Z., Wilson, R. C., Schwabe, L., and Strauss, D. J. (2016). Bayesian modeling of the dynamics of phase modulations and their application to auditory event related potentials at different loudness scales. Front. Comput. Neurosci. 10:2. doi: 10.3389/fncom.2016.00002

Noda, T., Amemiya, T., Shiramatsu, T. I., and Takahashi, H. (2017). Stimulus phase locking of cortical oscillations for rhythmic tone sequences in rats. Front. Neural Circuits 11:2. doi: 10.3389/fncir.2017.00002

Nolte, G., Bai, O., Wheaton, L., Mari, Z., Vorbach, S., and Hallett, M. (2004). Identifying true brain interaction from eeg data using the imaginary part of coherency. Clin. Neurophysiol. 115, 2292–2307. doi: 10.1016/j.clinph.2004.04.029

Park, C., and Rubchinsky, L. L. (2012). Potential mechanisms for imperfect synchronization in parkinsonian basal ganglia. PLoS ONE 7:e51530. doi: 10.1371/journal.pone.0051530

Penny, W. D., Kiebel, S. J., Kilner, J. M., and Rugg, M. D. (2002). Event-related brain dynamics. Trends Neurosci. 25, 387–389. doi: 10.1016/S0166-2236(02)02202-6

Rosenblum, M., Pikovsky, A., Kurths, J., Schäfer, C., and Tass, P. A. (2001). “Phase synchronization: from theory to data analysis,” in Handbook of Biological Physics, Vol. 4, ed A. J. Hoff (Elsevier), 279–321.

Rosenblum, M., Tass, P., Kurths, J., Volkmann, J., Schnitzler, A., and Freund, H.-J. (2000). “Detection of phase locking from noisy data: application to magnetoencephalography,” in Chaos In Brain? (World Scientific), 34–51.

Rubino, D., Robbins, K. A., and Hatsopoulos, N. G. (2006). Propagating waves mediate information transfer in the motor cortex. Nat. Neurosci. 9, 1549–1557. doi: 10.1038/nn1802

Rudrauf, D., Douiri, A., Kovach, C., Lachaux, J. P., Cosmelli, D., Chavez, M., et al. (2006). Frequency flows and the time-frequency dynamics of multivariate phase synchronization in brain signals. Neuroimage 31, 209–227. doi: 10.1016/j.neuroimage.2005.11.021

Rule, M. E., Vargas-Irwin, C. E., Donoghue, J. P., and Truccolo, W. (2017). Dissociation between sustained single-neuron spiking and transient β-lfp oscillations in primate motor cortex. J. Neurophysiol. 117, 1524–1543. doi: 10.1152/jn.00651.2016

Sameni, R., and Seraj, E. (2016). A robust statistical framework for instantaneous electroencephalogram phase and frequency estimation and analysis. Available online at: https://hal.archives-ouvertes.fr/hal-01355465

Sameni, R., and Seraj, E. (2017). A robust statistical framework for instantaneous electroencephalogram phase and frequency estimation and analysis. Physiol. Meas. 38:2141. doi: 10.1088/1361-6579/aa93a1

Sauseng, P., Klimesch, W., Gruber, W. R., Hanslmayr, S., Freunberger, R., and Doppelmayr, M. (2007). Are event–related potential components generated by phase resetting of brain oscillations? A critical discussion. Neuroscience 146, 1435–1444. doi: 10.1016/j.neuroscience.2007.03.014

Sayers, B. M., Beagley, H., and Henshall, W. (1974). The mechanism of auditory evoked EEG responses. Nature 247, 481–483. doi: 10.1038/251608a0

Seraj, E., and Sameni, R. (2017). Robust electroencephalogram phase estimation with applications in brain-computer interface systems. Physiol. Meas. 38:501. doi: 10.1088/1361-6579/aa5bba

Siapas, A. G., Lubenov, E. V., and Wilson, M. A. (2005). Prefrontal phase locking to hippocampal theta oscillations. Neuron 46, 141–151. doi: 10.1016/j.neuron.2005.02.028

Strauss, D. J., Corona-Strauss, F. I., and Froehlich, M. (2008). “Objective estimation of the listening effort: towards a neuropsychological and neurophysical model,” in Engineering in Medicine and Biology Society, 2008, EMBS 2008, 30th Annual International Conference of the IEEE (Vancouver, BC: IEEE), 1777–1780.

Strauss, D. J., Delb, W., D'Amelio, R., and Falkai, P. (2005). “Neural synchronization stability in the tinnitus decompensation,” in Proceedings of the 2st Int. IEEE EMBS Conference on Neural Engineering (Arlington, VA), 186–189.

Szymanski, F. D., Rabinowitz, N. C., Magri, C., Panzeri, S., and Schnupp, J. W. (2011). The laminar and temporal structure of stimulus information in the phase of field potentials of auditory cortex. J. Neurosci. 31, 15787–15801. doi: 10.1523/JNEUROSCI.1416-11.2011

Takahashi, K., Kim, S., Coleman, T. P., Brown, K. A., Suminski, A. J., Best, M. D., et al. (2015). Large-scale spatiotemporal spike patterning consistent with wave propagation in motor cortex. Nat. Commun. 6, 1–11. doi: 10.1038/ncomms8169

Takahashi, K., Saleh, M., Penn, R., and Hatsopoulos, N. (2011). Propagating waves in human motor cortex. Front. Hum. Neurosci. 5:40. doi: 10.3389/fnhum.2011.00040

Tass, P., Rosenblum, M., Weule, J., and Kurths, J. (1998). Detection of n: m phase locking from noisy data: application to magnetoencephalography. Phys. Rev. 81, 3291–3294.

Tcheslavski, G. V., and Beex, A. L. (2006). Phase synchrony and coherence analyses of eeg as tools to discriminate between children with and without attention deficit disorder. Biomed. Signal Process. Control 1, 151–161. doi: 10.1016/j.bspc.2006.08.001

Thounaojam, U. S., Cui, J., Norman, S. E., Butera, R. J., and Canavier, C. C. (2014). Slow noise in the period of a biological oscillator underlies gradual trends and abrupt transitions in phasic relationships in hybrid neural networks. PLoS Comput. Biol. 10:e1003622. doi: 10.1371/journal.pcbi.1003622

Uhlhaas, P., Pipa, G., Lima, B., Melloni, L., Neuenschwander, S., Nikolić, D., et al. (2009). Neural synchrony in cortical networks: history, concept and current status. Front. Integr. Neurosci. 3:17. doi: 10.3389/neuro.07.017.2009

Voloh, B., and Womelsdorf, T. (2016). A role of phase-resetting in coordinating large scale neural networks during attention and goal-directed behavior. Front. Syst. Neurosci. 10:18. doi: 10.3389/fnsys.2016.00018

Watanabe, H., Takahashi, K., and Isa, T. (2015). “Phase locking of β oscillation in electrocorticography (ecog) in the monkey motor cortex at the onset of emgs and 3d reaching movements,” in Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, Vol. (Milan).

Yeung, N., Bogacz, R., Holroyd, C. B., and Cohen, J. D. (2004). Detection of synchronized oscillations in the electroencephalogram: an evaluation of methods. Psychophysiology 41, 822–832. doi: 10.1111/j.1469-8986.2004.00239.x

Keywords: instantaneous phase, spurious phase, Kalman smoother, phase synchronization, phase reset

Citation: Mortezapouraghdam Z, Corona-Strauss FI, Takahashi K and Strauss DJ (2018) Reducing the Effect of Spurious Phase Variations in Neural Oscillatory Signals. Front. Comput. Neurosci. 12:82. doi: 10.3389/fncom.2018.00082

Received: 22 May 2018; Accepted: 12 September 2018;

Published: 08 October 2018.

Edited by:

Jonathan D. Victor, Cornell University, United StatesReviewed by:

Carmen Castro Canavier, LSU Health Sciences Center New Orleans, United StatesSurjo R. Soekadar, Universitätsklinikum Tübingen, Germany

Copyright © 2018 Mortezapouraghdam, Corona-Strauss, Takahashi and Strauss. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zeinab Mortezapouraghdam, emVpbmFiLm1vcnRlemFwb3VyYWdoZGFtQHVuaS1zYWFybGFuZC5kZQ==

Zeinab Mortezapouraghdam1,2*

Zeinab Mortezapouraghdam1,2* Daniel J. Strauss

Daniel J. Strauss