- 1School of Electronic Information, Hunan First Normal University, Changsha, Hunan, China

- 2School of Computer and Information Science, Hunan Institute of Technology, Hengyang, China

- 3School of Data Science and Artificial Intelligence, Wenzhou University of Technology, Wenzhou, China

- 4School of Software Quanzhou University of Information Engineering, Quanzhou, China

- 5School of Intelligent Manufacturing and Equipment, Shenzhen University of Information Technology, Shenzhen, China

Circular RNAs (circRNAs) are a unique class of non-coding RNAs with stable covalently closed structures that play key regulatory roles in gene expression and drug response. However, experimental identification of circRNA-drug sensitivity remains labor-intensive. To overcome these limitations, we introduce DMAGCL, a Dual-Masked Graph Contrastive Learning framework, whose core innovations include: (1) a synergistic dual-masking strategy (path- and edge-level) that forces the model to learn robust representations against both macro-level path disruptions and micro-level edge noise; (2) an adaptive contrastive loss with a scheduled temperature parameter (t) to dynamically balance exploration and exploitation during training; and (3) an attention-based fusion classifier (AFC) that explicitly models complex cross-modal interactions between circRNA sequences and drug molecular graphs for adaptive multi-source information fusion. Comprehensive evaluations demonstrate that DMAGCL achieves state-of-the-art performance, attaining an average AUC of 0.8940 and AUPR of 0.9006 under five-fold cross-validation, and a slightly higher average AUC of 0.8982 under the more stringent ten-fold cross-validation, consistently surpassing strong baselines including GATECDA and MNGACDA. This performance advantage stems from our core design choices, as evidenced by systematic ablation studies confirming the indispensable and complementary roles of the dual-masking strategy and the effectiveness of the adaptive loss and fusion classifier. Case studies on four representative anticancer drugs (doxorubicin, gefitinib, sorafenib, and paclitaxel) achieved an average experimental validation rate of 80%, highlighting the framework’s predictive reliability and biological relevance. In conclusion, this study makes three primary contributions: (1) it introduces the novel DMAGCL framework, establishing a new paradigm for circRNA-drug association prediction via its synergistic dual-masking, adaptive learning, and attentive fusion components; (2) it delivers a highly robust and interpretable model with validated predictive reliability through extensive experiments and case studies (80% average validation rate); and (3) it provides a scalable computational tool that offers valuable insights for discovering novel circRNA-drug associations, understanding drug resistance mechanisms, and informing precision therapy design, with clear pathways for extension to other biological interaction tasks.

1 Introduction

Circular RNAs (circRNAs) exist stably through their covalently closed structure and have been proven to be key regulatory factors for tumor drug resistance (Chen and Yang, 2015; Suzuki and Tsukahara, 2014). As competitive endogenous RNAs, they can “sponge” miRNAs through miRNA response elements and thereby interfere with post-transcriptional silencing of mRNAs (Min et al., 2019). However, the complete molecular network of circRNA-drug sensitivity (CDS) remains to be elucidated. For instance, miRNAs can mediate post-transcriptional silencing by binding to the 3′-untranslated region of target mRNAs, while circRNAs can antagonize this process through molecular sponge effects (Bartel, 2004). circRNAs are pivotal regulators of tumor drug resistance (Min et al., 2019), yet computational elucidation of their interactions with drug sensitivity remains challenging (Bartel, 2004). Multiple studies have indicated that circHIPK3, Circ_0006528, etc. participate in tumor occurrence by regulating apoptosis and proliferation pathways (Hanahan and Weinberg, 2000), and can serve as diagnostic or prognostic markers (Li et al., 2018). At the level of drug resistance, circ_0076305 is upregulated by miR-186-5p to induce ABCC1 expression, thereby enabling non-small cell lung cancer to tolerate cisplatin (Liu et al., 2018); while circ_0072083 enhances the resistance of glioma to temozolomide through the miR-1252-5p/ALKBH5/NANOG axis (Wang et al., 2023). Although functional evidence is constantly emerging, experimental identification of CDS associations remains time-consuming and labor-intensive, and there is an urgent need for efficient computational frameworks to systematically analyze their network patterns. Additionally, in bladder cancer, CircNR3C1 can bind to BRD4 and interfere with the formation of the oncogenic complex between C-myc, thereby inhibiting tumor progression (Ding et al., 2021); studies have also shown that the ectopic expression of C-myc can partially reverse the tumor suppressive effect of this circRNA in vivo (Xie et al., 2020). These findings systematically reveal the important regulatory functions of circRNAs in tumor resistance. However, the complete molecular network of their interaction with drug sensitivity still needs to be further elucidatedExtensive experiments demonstrate that DMCL achieves state-of-the-art performance. Case studies on four anti-cancer drugs show an average experimental validation rate of 80%, underscoring its predictive reliability and biological relevance. This work provides a powerful computational tool and offers new insights into non-coding RNA mechanisms in drug resistance.

In the circRNA and drug sensitivity studies, the statistical models based on correlations play a crucial role. The core of these models lies in using computational methods to predict and uncover potential circRNA-drug sensitivity correlations, thereby effectively avoiding the time-consuming and labor-intensive drawbacks of traditional biological experiments. Computational models offer an efficient approach for large-scale identification of such associations. Existing methods primarily rely on strategies such as graph neural networks (GNNs), multi-source feature integration, or random walks. Multi-source biological information integration and machine learning algorithms have become the mainstream approach for predicting drug sensitivity of circRNAs(Wei et al., 2023a). By introducing graph regularization into protein language models, it has been verified that graph constraints can improve the prediction of TCR-epitope binding specificity (Fu et al., 2025b); Zhou et al. proposed a global-local dual perspective to achieve multi-scale feature fusion of drug-protein interactions (Zhou et al., 2024b). The multi-source feature framework emphasizes the crucial regulatory role of circular RNAs in drug sensitivity and efficacy (Yin et al., 2024), while MNCLCDA reveals the association between circular RNAs and drug resistance and tumor progression through mixed neighborhood contrast learning (Li et al., 2023). Given the natural advantages of graph neural networks in complex biological networks, studies usually combine attention mechanisms to strengthen key features (Ru et al., 2022): MNGACDA uses graph autoencoders to construct multimodal networks (Yang and Chen, 2023), DeepWalk-GAT combines convolutional neural networks to extract topological sequence information (Li et al., 2023), Graph Attention Autoencoder (Deng et al., 2022), HETACDA (Xiao et al., 2023), Double-Layer Multi-Core Attention Network (Lu et al., 2023), and multimodal graph representation framework (Liu Z. et al., 2025) all effectively predict circular RNA-drug sensitivity through computational means, compensating for the lack of experimental throughput (Ru et al., 2021a). However, these methods have inherent limitations: GNN models are susceptible to information redundancy and noise within networks, and their decision-making process often lacks transparency. Similarity-based methods, on the other hand, struggle to capture complex non-linear biological relationships. To address these challenges, we propose a double-mask contrastive learning framework. The core innovation involves the simultaneous application of a path mask and an edge mask. The path mask is designed to deconstruct high-order semantic information in heterogeneous circRNA-drug relationships, while the edge mask enhances the model’s robustness to local critical interactions. This dual design effectively suppresses information redundancy and noise in biological networks through contrastive learning. Furthermore, we introduce a dynamic temperature parameter strategy that adaptively optimizes the learning process based on the training state. Random walks and similarity measures are intertwined, forming a network that, through topological propagation, triggers potential correlations: an asymmetric dual-walk strategy re-evaluates the connection between circular RNAs and diseases, providing algorithmic support for rapid target identification (Toprak, 2024). The multi-head attention mechanism is used to convert the potential vocabulary between non-coding RNAs and proteins into interaction codes (Zhou et al., 2023). Graph collaborative filtering and multi-view contrastive learning are combined, enabling miRNAs to confront their own sensitivity to drugs in the generated embedding space (Wei et al., 2023b). Pairwise learning guides dual graph convolution to shape a clearer interface representation of ncRNA-protein pairs (Zhuo et al., 2022), while the head-tail sampling protocol extracts key nodes from the sparse interaction wasteland, providing replenishment for downstream GNNs(Wei et al., 2023a). Benchmarking and framework parallel development: an evaluation standard for circular RNA-disease prediction was established and quietly expanded to the field of drug sensitivity (Lan et al., 2023); through the method of extracting graph skeletons and fusing attention, researchers gradually revealed the progressive process of non-coding RNA-mediated drug resistance (Zhang et al., 2023); a comprehensive study revealed how deep learners locate binding sites of RNA-binding proteins on circular RNAs and how this binding implicitly regulates drug responses (Wang Z. et al., 2025). Multidimensional data convergence, complex algorithms take off, the statistical-graph hybrid now charts a high-throughput, low-cost route for drug sensitivity based on circular RNAs, accelerating the transformation of potential biomarkers and therapeutic targets from the realm of stars to clinical reality (Ru et al., 2021b).

Network models have emerged as a pivotal tool in bioinformatics and systems biology for modeling complex biological processes and diseases. For instance, Peng et al. developed an explainable multi-scale framework for circRNA-miRNA interaction prediction, highlighting the utility of multi-scale feature engineering in circRNA research (Peng et al., 2025b). In another study, Peng et al. introduced metaCDA, a meta-learning framework for circRNA-driven drug discovery, showcasing the potential of adaptive aggregation and meta-knowledge in circRNA studies (Peng et al., 2025a). By modeling biological entities and their interactions as graphs, network science has been widely applied in protein-protein interaction networks, gene regulatory networks, and brain connectomes. For instance, Milan and Canataro applied network inference to the research of cancer and neurodegenerative diseases (Milano and Cannataro, 2023); through the heterogeneous GCN combined with pseudo-path bidirectional attention (Niu et al., 2025), NSL2CD’s adaptive subspace embedding (Xiao et al., 2021b), AAECDA’s MSCNN-adversarial autoencoder pipeline (Wang Y. et al., 2025), and Xiao’s propagation, path, matrix and depth-based classification framework (Xiao et al., 2021a), as well as the cross-modal ternary attention in ET-PROTACs (Cai et al., 2025), Kolmogorov-Arnold drug KANs (Fu et al., 2025a), and masked path GAM-MDR (Zhou et al., 2024a), they jointly advanced the frontier of topological research. These studies have jointly promoted the development of circRNA-drug sensitivity association prediction based on network topological structures, providing new perspectives and methods for biomarker identification and drug development in complex diseases.

Machine learning is playing an increasingly important role in drug discovery and prediction, especially in drug sensitivity prediction. In the field of predicting drug sensitivity for cancer cell lines, even when the training samples are limited, traditional molecular fingerprints have been surpassed or even equalled by end-to-end TextCNN (Baptista et al., 2022). Breakthroughs have also been frequently observed in the circRNA domain, such as LSNSCDA, which overcomes the limitations of fixed step size and negative sample noise through a local smoothing graph neural network and reliable negative sampling (Fan et al., 2024); MAGSDMF framework, which precisely captures the potential circRNA-drug sensitivity associations through multiple attention, graph learning, and deep matrix decomposition (Ai et al., 2024); DCDA uses a feedforward-self-encoding hybrid to integrate multi-source data to shape circRNA-disease features and performs exceptionally well (Turgut et al., 2023); SNFTPGd-CDA achieves high AUC in nonlinear fusion of multi-source information through similar network fusion-tensor product graph diffusion parallel cascaded forest (Liu et al., 2024); JLCRB multi-view collaborative representation network enhances cross-view consistency to locate circRNA binding sites, and the average AUC has reached a new high (Du and Xue, 2022). Looking at the entire process of drug development, ModDRDSP uses deep bidirectional GRU and message passing networks to jointly depict multimodal drug information, and then integrates cell line multi-omics data, and is completed by a deep forest for sensitivity prediction, with the model’s performance comprehensively leading existing methods (Song et al., 2024).

In the research on the association between circRNAs and drug sensitivity prediction, statistical models based on correlations, models based on graph neural networks (GNN) and attention mechanisms, as well as models based on random walks and similarity measures each have their own advantages and limitations. The statistical model based on 155-dimensional correlation coefficients integrates multi-source biological data and employs machine learning algorithms to construct a prediction framework. It can simultaneously capture correlation signals in multiple dimensions such as expression profiles, drug structures, and clinical phenotypes, thereby enhancing prediction coverage and accuracy (Chen et al., 2022). Due to its high dependence on data quality and completeness, this method is prone to overfitting when the training samples are insufficient or the feature dimensions are excessive, resulting in a decline in generalization performance. In contrast, the combination of graph neural networks and attention mechanisms utilizes topological structure information for embedding learning of circRNAs and drugs, highlighting key node features through dynamic weights, demonstrating strong association mining capabilities in large-scale network scenarios (Li X. et al., 2025). However, this strategy has high computational costs, high memory usage, and a large number of hyperparameters, resulting in significant tuning costs. With the random walk and similarity measurement methods constructing a similarity network of biological entities and performing information propagation on it, potential associations can be rapidly scanned with linear complexity, suitable for initial screening tasks of large datasets (Li M. et al., 2025). Its performance is highly dependent on the accuracy of similarity measurement, and because the model is essentially a linear method, it is difficult to depict the nonlinear interactions within the biological system, resulting in insufficient performance in complex association prediction scenarios. Moreover, during the process of dimensionality reduction or network embedding, important biological details may be lost, affecting the precision of the prediction.

In summary, these three types of methods have distinct characteristics and complement each other in the prediction of the association between circRNA and drug sensitivity. Future research can integrate the advantages of these methods, such as combining the interpretability enhancement technology of GNN and the integration of multi-source features, to improve the prediction accuracy and biological relevance (Ma et al., 2024). At the same time, introducing data augmentation techniques, interpretability tools, and establishing standardized benchmark test datasets and evaluation indicators will help further optimize the model performance and promote the progress of this field (Liu T. et al., 2025). Moreover, exploring the application of these methods in other biomedical fields, such as gene regulatory networks and protein interaction networks, will further verify their universality and effectiveness.

2 Methods

We put forward a novel framework entitled DMAGCL. This framework is specifically designed to concurrently tackle three crucial challenges during the process of graph representation learning: information redundancy, negative sample noise, and class imbalance issues. Through an innovative architectural design, our method integrates graph encoding, masked reconstruction, dynamic negative sampling, ensemble learning, and ranking optimization into a unified end-to-end learning objective. This integration allows for the coordinated evolution of pseudo-label generation, sampling distribution adjustment, model ensemble, and evaluation metric optimization throughout the training process. The overall process is shown in Figure 1.

Figure 1. The computational flowchart of DMAGCL. (A) CircRNA and drug feature extraction (B) Dual view masking graph learning, (C) Adaptive contrastive learning (D) circRNA-drug graph resonstration.

2.1 Multi-source feature extraction

To comprehensively characterize the characteristics of nodes in the network, we systematically extracted multi-source features from two dimensions: circRNAs and drugs. We then constructed a unified feature representation through an effective fusion strategy, providing high-quality feature inputs for subsequent graph representation learning. For circRNA nodes, we integrated two complementary feature sources. Based on the nucleic acid sequence information of circular RNA host genes, we used the edit distance algorithm to calculate the similarity between sequences, and constructed a circRNA sequence similarity matrix

Here,

The nuclear bandwidth parameter

Ultimately, we obtained the comprehensive feature matrix

Here,

The parameter

The similarity features of the two drugs are fused through the same weighted strategy under the same conditions, resulting in a comprehensive feature matrix

2.2 Dual masked graph views

While graph structure is vital for representation learning, standard GNNs can be limited in capturing complex topological dependencies. To address this, we propose a self-supervised contrastive learning framework with a dual-masking strategy to enhance the robustness and quality of graph representations. This method constructs two masking views with different structural perturbation intensities, capturing local structural patterns at the path level and edge level respectively, and leveraging the contrastive learning mechanism to improve the model’s perception of graph structure information.

Specifically, we designed two complementary masking modules: the Path Masking Module (MaskPath) and the Edge Masking Module (MaskEdge). The Path Masking Module randomly samples the node paths in the graph and performs overall masking on the nodes and their associated edges within the path, thereby generating the first perturbed view

Among them,

We employ a shared-weight graph encoder

The encoder

2.3 Multi-view graph encoder

In the field of graph neural networks, the effective modeling and feature extraction of multi-view graph data has always been a key and challenging research direction. Since multi-view graph data typically contains heterogeneous structural information from different sources or different feature spaces, how to fully integrate the effective features from each view and retain their inherent topological relationships is an important issue for improving the representational learning ability of graphs. To effectively integrate heterogeneous information from our constructed multi-view graph (e.g., sequence similarity, GIP similarity), we propose a Multi-View Graph Encoder. This encoder combines GCN for intra-view feature extraction and GAT for inter-view fusion, ensuring a balanced and comprehensive representation. The core design of this method consists of two main stages: local structure modeling within views and global feature fusion between views. Firstly, for the structural characteristics within each view, GCN is used to aggregate the neighborhood of nodes to capture the specific local topological patterns of the view. Specifically, for the

Here,

After obtaining the node representations of each view, in order to further integrate the information from multiple views, this paper introduces the GAT mechanism to adaptively learn the contribution weights of different views to the current view. Specifically, for the target view

Here,

Here,

Finally, the feature representations of each view, weighted by attention, are integrated and input into a fully connected layer or a specific downstream task module to generate the final graph representation or node representation. The integrated output can be expressed as:

Here,

In conclusion, the multi-view graph encoder proposed in this paper combines the advantages of GCN in modeling the internal structure of views with the flexibility of GAT in integrating views, which not only effectively extracts the local features of each view, but also captures the global correlations between views. Thus, it achieves more comprehensive and robust feature learning in multi-view graph data processing.

2.4 Adaptive contrastive loss

As a robust and effective alternative, we introduce a Scheduled Contrastive Loss featuring a dynamic temperature parameter (t) that follows a predefined linear decay schedule. Specifically, this mechanism sets a higher temperature value in the early training stage to encourage the model to conduct more extensive exploration and avoid prematurely settling into suboptimal solutions; while gradually reducing the temperature value in the later training stage to enhance the model’s ability to distinguish between positive and negative samples, thereby improving its generalization performance.

The mathematical definition of the adaptive contrastive loss function is as follows. Let the input feature vectors be

Here,

Here,

During the training process, we first initialize the key parameters, including

2.5 Attention fusion classifier

Simple feature fusion (e.g., concatenation) fails to capture complex cross-modal interactions between circRNA sequences and drug molecular graphs. We therefore propose an Attention-based Fusion Classifier (AFC) to dynamically model these correlations and adaptively fuse multi-source information. Compared with traditional feature concatenation methods, AFC incorporates a multi-head attention structure, which can extract and fuse the interaction information between the circRNA sequence mode and the drug molecule graph mode from multiple subspaces, effectively enhancing the discriminative ability of the feature representation. Additionally, to further improve the robustness and generalization ability of the model, the classification layer adopts a hierarchical multi-layer perceptron (MLP) structure and combines batch normalization (BatchNorm) for regularization processing. Experiments have shown that AFC exhibits superior performance in multiple multimodal classification tasks.

Specifically, the core component of AFC is the multi-head attention mechanism. This mechanism can dynamically adjust the fusion weights based on the correlation between the input features of the circRNA sequence mode and the drug molecule graph mode, thereby focusing on the cross-modal information that is more helpful for the current task. Assuming the input is the feature embeddings of the circRNA sequence mode and the drug molecule graph mode, which are

Among them,

The attention weight

Through the multi-head attention mechanism, AFC can jointly capture the complex dependencies between the circRNA sequence mode and the drug molecule graph mode from multiple representation subspaces, thereby more comprehensively integrating cross-modal information. In the classification module, AFC employs a hierarchical MLP structure to conduct deep semantic encoding of the fused features, and combines BatchNorm technology to enhance the training stability and generalization ability of the model. Specifically, the fused features

Among them,

Ultimately, the normalized high-level features

Among them,

3 Experiment

3.1 Experimental setup and parameter settings

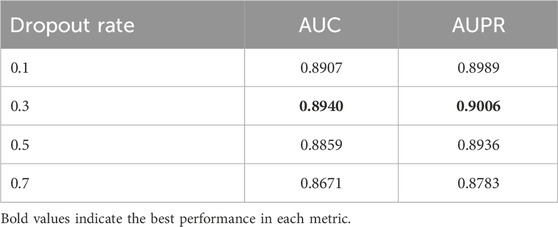

To ensure the reproducibility of our experimental results, we provide a comprehensive description of our implementation details and hyperparameter settings. All experiments were conducted under the same computational environment, and the following key parameters were utilized throughout the study: Network Architecture: The number of layers for both the GCN and GAT in our multi-view graph encoder was set to 3. The hidden dimension of this encoder was configured to 32. Training Strategy: The model was trained for 300 epochs. The Adam optimizer was employed with a learning rate of 0.001. Regularization: A Dropout rate of 0.3 was applied to mitigate overfitting. Reproducibility: A fixed random seed of 42 was used to guarantee the stability of all experimental outcomes and to facilitate replication. The rationale behind the selection of these hyperparameters, including the learning rate and model depth, is further supported by the sensitivity and ablation studies presented in subsequent sections.

3.2 Performance comparison

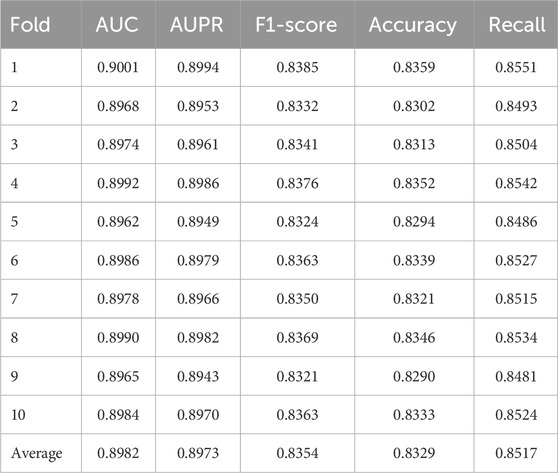

The five-fold cross-validation results, detailed in Table 1, demonstrate the remarkable stability of our model across different data partitions. The AUC values across all five folds exhibited minimal fluctuation, ranging narrowly from 0.8918 to 0.8962, yielding a high average AUC of 0.8940. This consistency, mirrored in other metrics such as AUPR, F1-score, Accuracy, and Recall, indicates that the model’s performance is not dependent on a particular split of the data and reliably reproduces the performance of the complete model. This low variance is a strong indicator of the model’s robustness. Other indicators, including AUPR, F1-score, Accuracy, and Recall, also showed similar consistency. This indicates that the model has good adaptability to different training-test data partitions. To further stress-test the model’s robustness, we conducted a more stringent ten-fold cross-validation. The results, presented in Table 2, reveal an even higher level of consistency across ten different data splits. The average AUC improved slightly to 0.8982, with values ranging from 0.8962 to 0.9001. This minor performance improvement under a more granular data division suggests that the model can effectively leverage larger training subsets. The consistently high performance and low standard deviations across all key metrics (AUPR, F1-score, etc.) in this setting provide compelling statistical evidence for the model’s generalizability and stability. The AUC values ranged from 0.8962 to 0.9001, with an average of 0.8982, and this result was even slightly higher than that of the five-fold cross-validation. Other key indicators such as AUPR (average 0.8973), F1-score (average 0.8354), Accuracy (average 0.8329), and Recall (average 0.8517) also remained at a high level, and the standard deviations between each fold were all within a relatively small range.

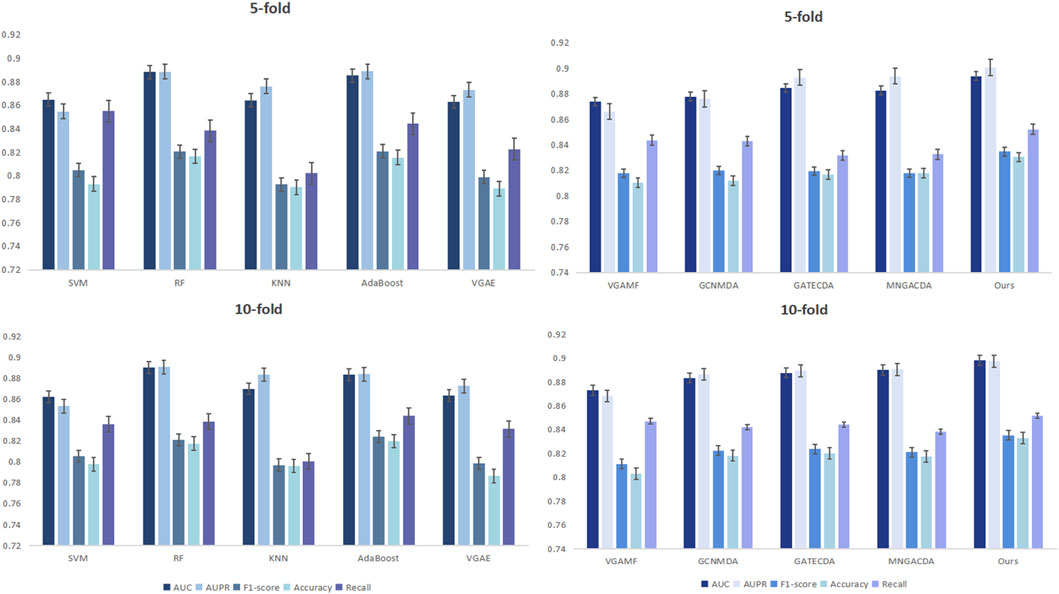

Figure 2 can more intuitively show the comparison of the results of DMAGCL with the baseline model 5-fold and 10-fold cross-validation. The results of cross-validation fully demonstrate from a statistical perspective that the model has excellent generalization ability and stability. Under different data divisions, the model can maintain excellent performance, indicating that our method not only has a good fitting ability for the training data, but also has reliable predictive performance for unknown data.

We evaluated our dual-mask graph contrastive learning framework against several representative methods for circRNA-drug sensitivity association prediction. The baselines included traditional machine learning models (SVM, RF, KNN, AdaBoost) and recent graph neural networks (VGAE, VGAMF, GCNMDA, GATECDA, MNGACDA). All experiments were conducted under the same experimental environment and dataset, using five-fold and ten-fold cross-validation to ensure the statistical reliability of the results.

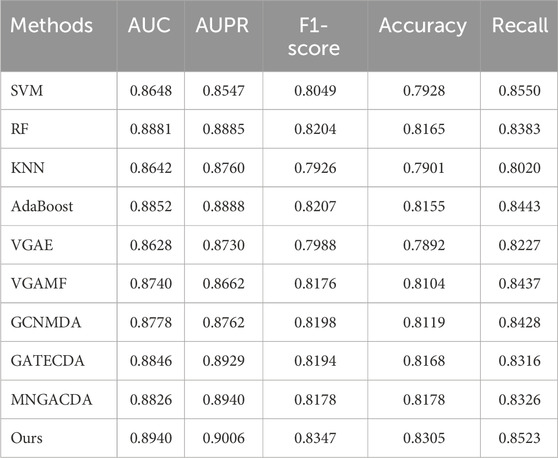

A comprehensive performance comparison against various baseline methods under five-fold cross-validation is summarized in Table 3. Our proposed method consistently outperforms all competitors, achieving the top scores across all five evaluation metrics. Notably, it attains an AUC of 0.8940 and an AUPR of 0.9006, representing a clear margin over the strongest baselines. The results reveal a key trend: graph neural network-based methods (e.g., GATECDA, MNGACDA) generally surpass traditional machine learning models (e.g., RF, SVM), underscoring the critical importance of explicitly modeling the graph structure for this prediction task. However, even among these advanced graph methods, our dual-mask framework establishes a new state-of-the-art. Including AUC, AUPR, F1-score, accuracy, and recall rate. The AUC reached 0.8940, and AUPR reached 0.9006, significantly outperforming other comparison methods. Traditional methods like RF and AdaBoost showed competitive results but were limited in capturing complex graph topology. Graph-based methods generally outperformed them, underscoring the importance of graph structure modeling. Notably, even attention-based methods like GATECDA and MNGACDA were outperformed by our framework, suggesting that our dual-mask strategy and contrastive learning paradigm better capture the intricate circRNA-drug associations than attention mechanisms alone.

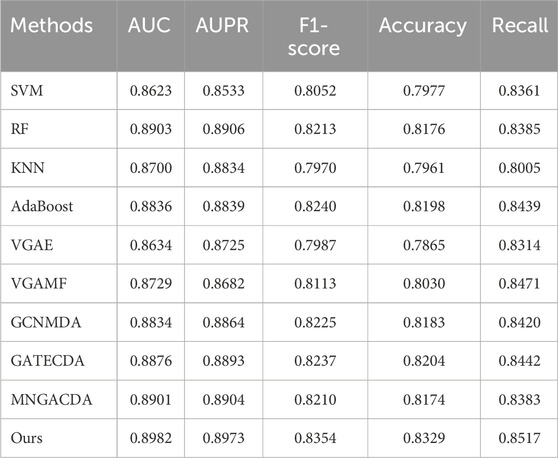

The superiority of our method is further confirmed under the more granular ten-fold cross-validation, as detailed in Table 4. Here, our model maintains its leading advantage with an average AUC of 0.8982 and AUPR of 0.8973. It is worth noting that while some baselines like GATECDA also showed improved performance with more folds, the performance gap between our method and these strong competitors persists. This consistent outperformance across both five-fold and ten-fold validations strongly suggests that the gains from our dual-mask contrastive learning framework are robust and not merely an artifact of a specific evaluation setup. Other indicators also show a similar trend. Compared with the five-fold cross-validation, the performance fluctuations of each method under the ten-fold validation are smaller, indicating that the method proposed in this paper has better adaptability to different data divisions. It is worth noting that in the ten-fold validation, the performance of the GATECDA method has improved compared to the five-fold validation, but its AUC (0.8876) and AUPR (0.8893) are still lower than that of the method proposed in this paper, thereby further demonstrating the superiority of the dual-mask contrastive learning framework we proposed in capturing complex biological association patterns.

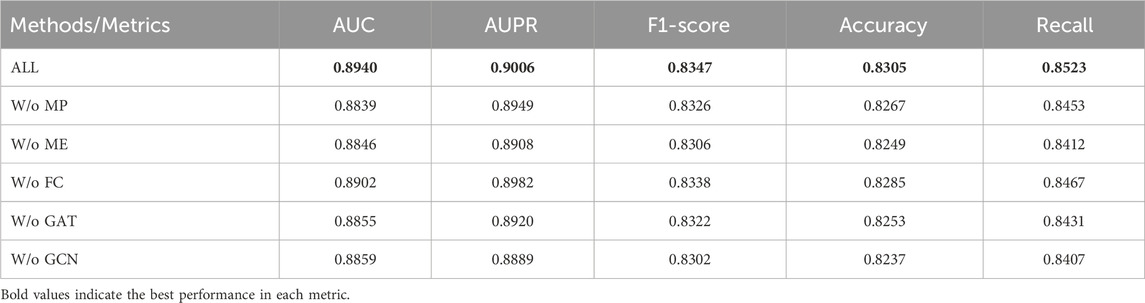

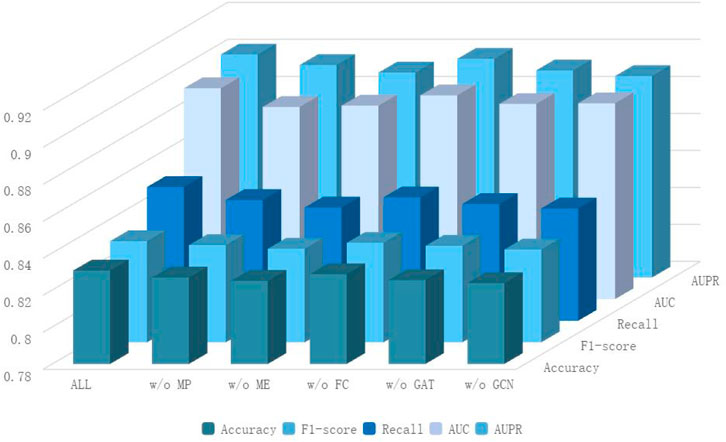

3.3 Ablation experiment

To dissect the contribution of each core component in our framework, we conducted a systematic ablation study, with results detailed in Table 5; Figure 3. We evaluated five ablated variants: removing the path mask (w/o MP), removing the edge mask (w/o ME), replacing the attention fusion classifier with an MLP (w/o FC), and removing either the GAT (w/o GAT) or GCN (w/o GCN) component from the encoder. The complete model achieves the best performance (AUC: 0.8940), confirming the synergistic design. The removal of either masking strategy (w/o MP or w/o ME) caused a substantial and nearly equivalent performance drop (AUC

The complete model achieved the best performance (AUC: 0.8940), demonstrating the synergy among its components. Removing either the path mask (w/o MP) or edge mask (w/o ME) caused a noticeable performance drop (AUC: 0.884). This indicates that both masking strategies contribute uniquely to learning robust representations: path masking helps capture higher-order dependencies, while edge masking forces the model to rely on broader neighborhood structures. When the path masking module was removed, it was observed that all indicators showed significant declines, especially the AUC value dropping to 0.8839 and the recall rate dropping to 0.8453. This phenomenon indicates that the path-level structural information plays an important role in capturing the complex association patterns between circRNAs and drugs. The path masking effectively enhances the model’s reasoning ability for graph structure dependencies by simulating the absence of local connectivity patterns. Similarly, the removal of the edge masking module also led to a significant performance reduction, with AUC dropping to 0.8846 and recall rate dropping to 0.8412. Edge masking weakens the direct connections between nodes at a finer granularity, prompting the model to pay more attention to other structural cues within the neighborhood. This strategy is crucial for learning robust graph representations. Notably, the performance decline of the path masking and edge masking modules was similar but with different focuses, indicating that the two masking strategies capture graph structure information at different levels, and their combined use can produce a complementary effect, jointly enhancing the model’s representation learning ability.

In terms of the feature fusion mechanism, when we replaced the attention-based feature fusion classifier with a simple multi-layer perceptron, the performance showed a slight but consistent decline, with AUC dropping to 0.8902. This result indicates that the attention mechanism can effectively allocate the importance weights of different features when integrating multi-view features, thereby improving the effect of feature fusion. However, the relatively small decline in performance also suggests that the MLP can still capture the interaction relationships between features to a certain extent, but lacks the ability to focus on important features prominently. The ablation experiments on the graph neural network components further revealed the complementary characteristics of GCN and GAT. When only using GCN and removing GAT, AUC dropped to 0.8855; while only using GAT and removing GCN, AUC dropped to 0.8859. GCN captures the local topological patterns of the graph through the aggregation of neighbor node features, while GAT dynamically emphasizes important structural features through attention weights. The combination of the two enables the model to fully utilize the regular structure of the graph and adaptively focus on key information.

3.4 Case experiment

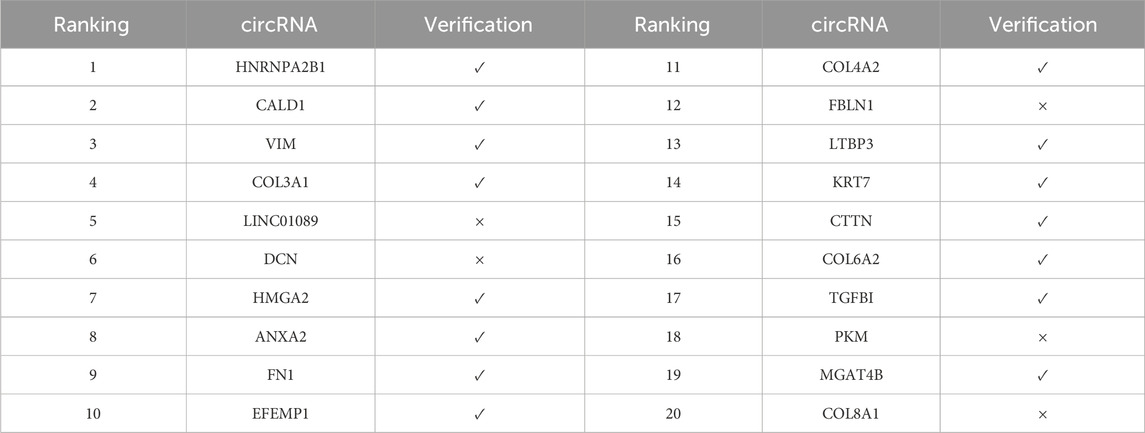

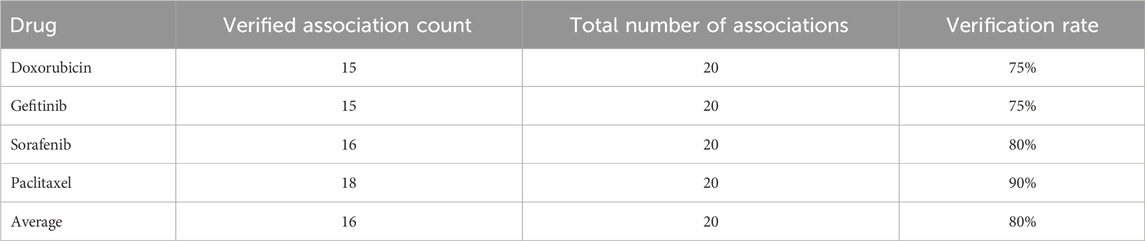

In this study, representative anticancer drugs (Doxorubicin, Gefitinib, Sorafenib, and Paclitaxel) from the CTRP (Cancer Therapeutics Response Portal) database were selected as an independent validation set to test the model’s generalization capability and biological interpretability in real biological scenarios. Numerous existing works have utilized public drug response databases (such as CTRP, GDSC, CCLE) to validate the extrapolation performance of drug–gene or drug–RNA prediction models. Compared to these studies, the differences of this work lie in: (1) We predict circRNA–drug sensitivity associations, which represent a structured yet relatively sparse and noisy biological network problem; (2) The model employs multi-view encoding and various self-supervised enhancement strategies to improve the representation learning capability for complex graph structures, thereby aiming to maintain prediction accuracy even for unseen drugs/samples. By testing the model’s top 20 candidate circRNAs predicted on CTRP and comparing them with known experimental or literature-validated results in the database, we can directly compare our method with existing sequence/network-based or single GNN-based methods: if the validation rate on the independent database is significantly higher than random or baseline models, it indicates that our method holds an advantage in capturing biologically relevant signals, thereby establishing a connection with and surpassing relevant prior works. These drugs include doxorubicin, gefitinib, sorafenib, and paclitaxel, which represent different mechanisms of action of chemotherapy drugs and have good clinical representativeness and biological diversity. The experiment sorted the circRNA-drug sensitivity associations by prediction scores, selected the top 20 high-confidence associations in the CTRP database for independent external validation, and used the validation rate as the main evaluation indicator.

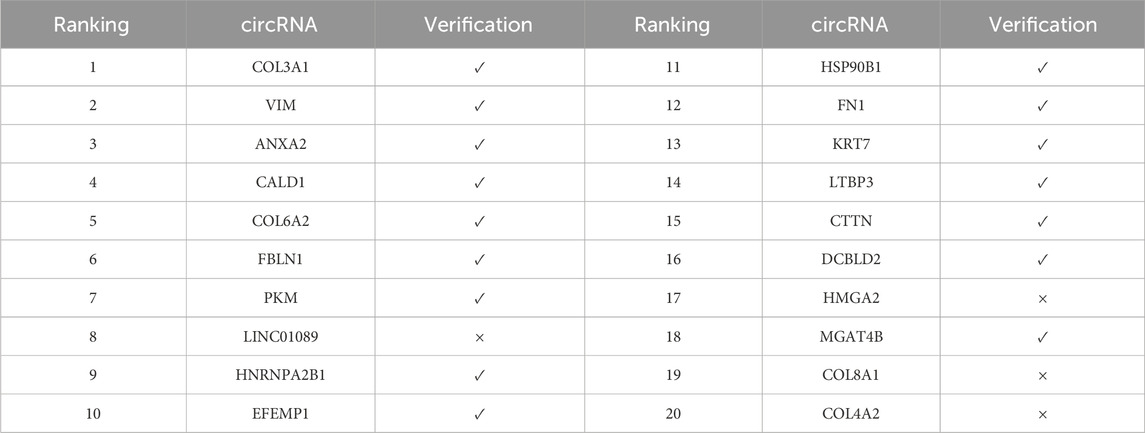

In the validation results of doxorubicin (Table 6), 15 out of the top 20 predicted circRNAs were validated, with a validation rate of 75%. It is noteworthy that the top 4 circRNAs, including HNRNPA2B1, CALD1, VIM, and COL3A1, were all validated as significantly associated, indicating that the model has a good ability to identify highly confident associations. Doxorubicin, as an anthracycline broad-spectrum anti-tumor drug, induces DNA damage by integrating into the DNA double helix and activates the apoptosis signaling pathway. These validated circRNAs may play an important regulatory role in its mechanism of action.

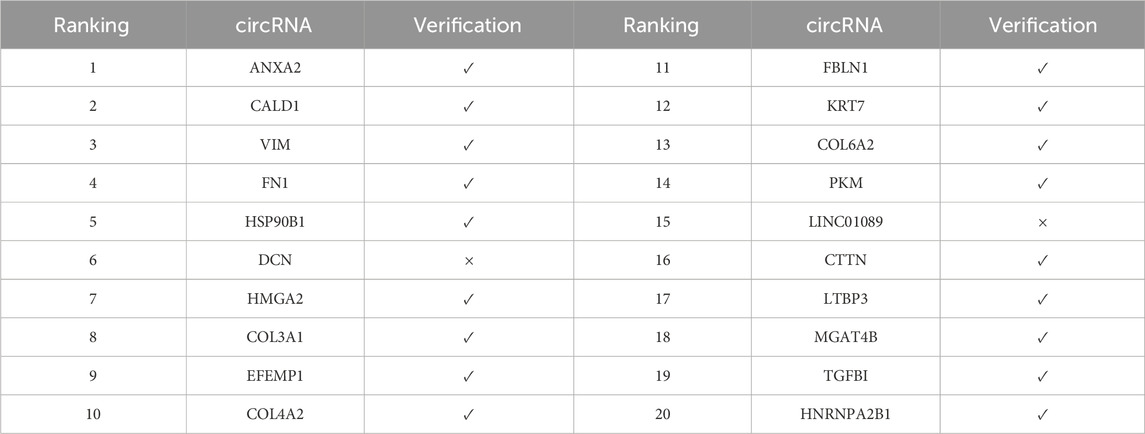

Gefitinib, as a selective EGFR tyrosine kinase inhibitor, is mainly used for the treatment of EGFR-mutated non-small cell lung cancer. According to the verification results (Table 7), 15 out of the top 20 predicted associations were verified, with a verification rate of 75%. It is worth noting that EFEMP2 ranked 10th was not verified, while HNRNPA2B1 ranked 18th was verified. This phenomenon indicates that the model still has room for optimization in the discrimination of certain moderate confidence associations, possibly related to the expression heterogeneity of circRNA in specific cell lines.

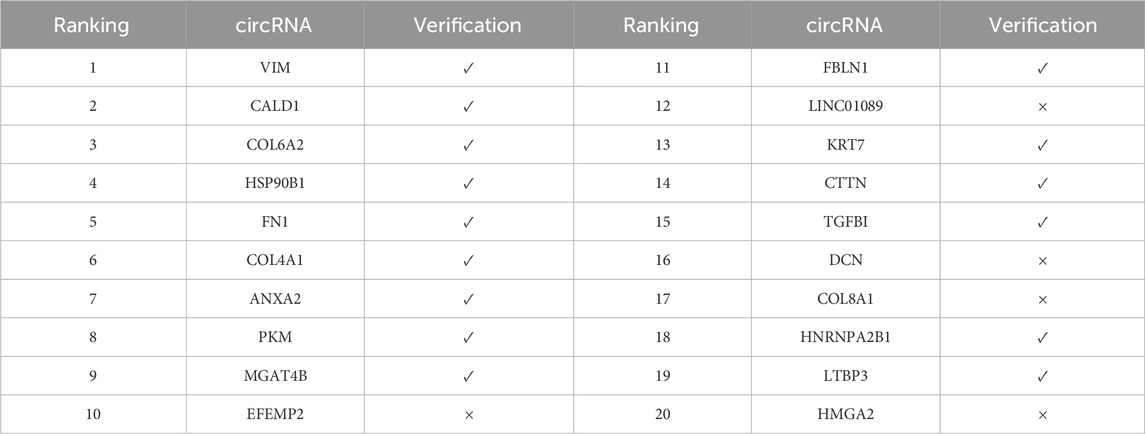

In the validation analysis of sorafenib (Table 8), the model demonstrated superior predictive performance, with 16 out of 20 predicted associations confirmed, yielding a validation rate of 80%. As a multi-target tyrosine kinase inhibitor, sorafenib is widely used in the treatment of hepatocellular carcinoma and renal cell carcinoma. Notably, all of the top six ranked circRNAs—including COL3A1, VIM, and ANXA2—were experimentally validated, indicating high prediction accuracy for top-ranking associations. These circRNAs may modulate tumor cell sensitivity to sorafenib through the regulation of multiple signaling pathways.

The most encouraging result comes from the validation analysis of paclitaxel (Table 9). Among the first 20 predicted correlations, 18 were validated, with a validation rate of up to 90%. Paclitaxel, as a microtubule stabilizer, inhibits microtubule depolymerization to block the cell cycle and is widely used in the treatment of breast cancer and ovarian cancer. This excellent predictive performance indicates that the dual-mask graph contrastive learning framework proposed in this paper has a special advantage for the prediction of sensitivity correlations of microtubule-targeted drugs. From the validation results, it can be seen that multiple cytoskeleton-related circRNAs including ANXA2, CALD1, and VIM have been validated, which is highly consistent with the mechanism of paclitaxel. Through the comprehensive analysis of the validation results of the four drugs (Table 10), the average validation rate of this model reached 80%, demonstrating good generalization ability. Further analysis revealed that some circRNAs were predicted to have high-ranking correlations in multiple drugs and were validated, such as VIM, CALD1, ANXA2, and FN1, etc. These circRNAs may be involved in the universal drug resistance mechanism of tumor cells, and their molecular functions are worthy of in-depth study through subsequent experiments. Additionally, the model’s predictions for some circRNAs (such as LINC01089, COL8A1) showed deviations, which may be related to the incomplete annotation or low expression level of these circRNAs in the CTRP database.

3.5 Parameter experiment

To determine the optimal parameter configuration of the model and to deeply understand the influence mechanism of each hyperparameter on the model’s performance, we conducted further parameter experiments on three key parameters: the number of GCN and GAT layers, the hidden layer dimension of the multi-view graph encoder, and the Dropout rate. These parameters respectively affect the model’s expression ability and generalization performance from three different dimensions: model depth, representation ability, and regularization strength. All experiments were conducted under the setting of five-fold cross-validation, with AUC and AUPR as the main evaluation indicators to ensure the statistical reliability of the results. Table 11 shows the impact of the number of GCN and GAT layers on the model’s performance. As the number of network layers increased from 1 layer to 3 layers, the model’s AUC improved from 0.8911 to 0.8940, and AUPR improved from 0.8957 to 0.9006. This indicates that appropriately increasing the network depth helps the model capture more complex graph structure features. However, when the number of layers continued to increase to 4 layers and 5 layers, the performance slightly decreased, with AUC dropping to 0.8914 and 0.8897 respectively. This might be due to the overfitting or gradient vanishing problem caused by an overly deep network. This phenomenon is consistent with the theoretical analysis of graph neural networks, that is, an excessively deep GNN layer may lead to the oversmoothing of node features, thereby reducing the model’s discriminative ability. Finally, 3 layers were selected as the optimal configuration for GCN and GAT, achieving a good balance between model expression ability and training stability.

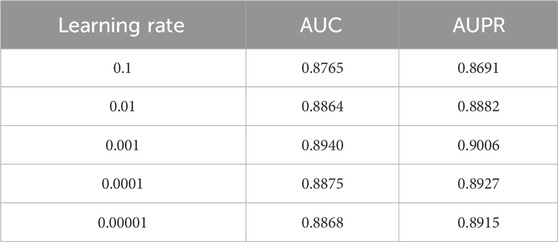

To determine the optimal learning rate for model training, we conducted a sensitivity analysis by evaluating a spectrum of values ranging from 0.1 to 0.00001. The performance, measured by AUC and AUPR, is summarized in Table 12. The results demonstrate that the learning rate significantly impacts model efficacy. A relatively high learning rate of 0.1 led to suboptimal performance (AUC = 0.8765, AUPR = 0.8691), suggesting potential instability during the gradient descent process. As the learning rate decreased to 0.01, the performance improved notably. The best performance was achieved with a learning rate of 0.001, yielding the highest AUC (0.8940) and AUPR (0.9006). Further decreasing the learning rate to 0.0001 and 0.00001 resulted in a slight but consistent performance degradation, indicating that overly small learning rates might hinder the model’s convergence to an optimal solution. Consequently, a learning rate of 0.001 was selected as the optimal configuration for all subsequent experiments, striking a balance between training efficiency and model performance.

In terms of the selection of the hidden layer dimensions in the multi-view graph encoder, we compared different dimension settings ranging from 16 to 256. The experimental results are shown in Table 13. When the hidden layer dimension was set to 32, the model achieved the best performance, with an AUC of 0.8940 and an AUPR of 0.9006. Smaller dimension settings (such as 16) might limit the model’s representational ability, resulting in an AUC of only 0.8882; while overly large dimensions (such as 64, 128, 256) might introduce too many parameters, increasing the risk of overfitting and causing performance to decline to varying degrees. This result indicates that an appropriate hidden layer dimension can achieve the best balance between model capacity and generalization ability, ensuring sufficient feature representation space while avoiding optimization difficulties caused by parameter redundancy.

The Dropout rate, as a key parameter for controlling the regularization intensity of the model, has an impact as shown in Table 14. When the Dropout rate is set to 0.3, the model exhibits the best performance, with an AUC of 0.8940 and an AUPR of 0.9006. A lower Dropout rate (0.1) may lead to insufficient regularization, causing the model to overfit the training data; while a higher Dropout rate (0.5 and 0.7) may overly suppress the neuron activation, resulting in underfitting of the model, especially when the Dropout rate reaches 0.7, the performance significantly drops to an AUC of 0.8671 and an AUPR of 0.8783. This trend clearly demonstrates the dual role of the Dropout mechanism in deep learning models: an appropriate Dropout rate can effectively prevent overfitting and enhance the model’s generalization ability; but an overly strong Dropout rate will damage the model’s learning ability and lead to performance degradation.

Based on the results of the parameter experiments, the optimal parameter configuration of the model was determined: the number of layers for GCN and GAT is 3, the hidden layer dimension is 32, and the Dropout rate is 0.3. This configuration achieved the best performance in all three key parameters. Through this experiment, not only did it provide a reliable parameter basis for the method in this paper, but it also offered valuable references for other bioinformatics tasks based on graph neural networks.

4 Conclusion

This study introduces a dual-mask graph contrastive learning (DMAGCL) framework for predicting circRNA-drug sensitivity associations. The core innovation lies in its synergistic use of path- and edge-level masking to learn robust node representations, an adaptive contrastive loss to dynamically balance exploration and exploitation during training, and an attention fusion classifier to effectively integrate multi-modal features. Extensive evaluations confirmed that DMAGCL achieves state-of-the-art performance, with case studies on anti-cancer drugs demonstrating its biological relevance and an average verification rate of 80 Looking forward, this work opens several promising directions: First, the framework could be applied to related tasks like miRNA-disease association prediction, specifically to model the complex interactions in competing endogenous RNA (ceRNA) networks. Second, integrating multi-omics data (e.g., transcriptomics from specific cell lines) could help address the heterogeneity observed in some predictions and improve cell context-specific modeling. Finally, exploring the integration of large language models for biological sequence encoding could further enrich feature representations. This study thus establishes a robust and extensible computational paradigm with significant potential for biomarker discovery and drug sensitivity analysis in precision oncology.

Data availability statement

The data and code presented in the study are publicly available at https://github.com/yuqi23-guo/DMAGCL.

Author contributions

PW: Methodology, Data curation, Supervision, Funding acquisition, Resources, Writing – review and editing, Conceptualization, Writing – original draft. YG: Writing – review and editing, Formal Analysis, Software, Data curation. ZL: Writing – original draft, Visualization, Software. DT: Software, Visualization, Writing – original draft. MQ: Investigation, Validation, Writing – review and editing, Supervision. ZZ: Writing – original draft, Investigation, Validation. LZ: Writing – review and editing, Resources.

Funding

The authors declare that financial support was received for the research and/or publication of this article. This research was financially supported by the National Natural Science Foundation of China (No. 62172158), the Natural science foundation of Fujian Province (Grant No.2024J08372) and the National Natural Science Foundation of China (No. 62471318).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer WL declared a past co-authorship with the author ZL to the handling editor.

Generative AI statement

The authors declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ai, N., Yuan, H., Liang, Y., Lu, S., Ouyang, D., Lai, Q. H., et al. (2024). Multi-view multiattention graph learning with stack deep matrix factorization for circrna-drug sensitivity association identification. IEEE J. Biomed. Health Inf. 28, 7670–7682. doi:10.1109/jbhi.2024.3431693

Baptista, D., Correia, J., Pereira, B., and Rocha, M. (2022). Evaluating molecular representations in machine learning models for drug response prediction and interpretability. J. Integr. Bioinforma. 19, 20220006. doi:10.1515/jib-2022-0006

Bartel, D. P. (2004). Micrornas: genomics, biogenesis, mechanism, and function. Cell 116, 281–297. doi:10.1016/s0092-8674(04)00045-5

Cai, L., Yue, G., Chen, Y., Wang, L., Yao, X., Zou, Q., et al. (2025). Et-protacs: modeling ternary complex interactions using cross-modal learning and ternary attention for accurate protac-induced degradation prediction. Briefings Bioinforma. 26, bbae654. doi:10.1093/bib/bbae654

Chen, L.-L., and Yang, L. (2015). Regulation of circrna biogenesis. RNA Biol. 12, 381–388. doi:10.1080/15476286.2015.1020271

Chen, Y., Wang, J., Wang, C., Liu, M., and Zou, Q. (2022). Deep learning models for disease-associated circrna prediction: a review. Briefings Bioinforma. 23, bbac364. doi:10.1093/bib/bbac364

Deng, L., Liu, Z., Qian, Y., and Zhang, J. (2022). Predicting circrna-drug sensitivity associations via graph attention auto-encoder. BMC Bioinforma. 23, 160. doi:10.1186/s12859-022-04694-y

Ding, C., Yi, X., Chen, X., Wu, Z., and You, H. (2021). Warburg effect-promoted exosomal circ_0072083 releasing up-regulates NANGO expression through multiple pathways and enhances temozolomide resistance in glioma. J. Exp. and Clin. Cancer Res. 40, 164. doi:10.1186/s13046-021-01942-6

Du, X., and Xue, Z. (2022). Jlcrb: a unified multi-view-based joint representation learning for circrna binding sites prediction. J. Biomed. Inf. 136, 104231. doi:10.1016/j.jbi.2022.104231

Fan, Z., Zhang, Y., Li, Y., Zhong, Z., and Deng, L. (2024). “Lsnscda: unraveling circrna-drug sensitivity via local smoothing graph neural network and credible negative samples,” in 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM).

Fu, X., Du, Z., Chen, Y., Chen, H., Zhuo, L., Lu, A., et al. (2025a). Drugkans: a paradigm to enhance drug-target interaction prediction with Kans. IEEE J. Biomed. Health Inf., 1–12. doi:10.1109/JBHI.2025.3566931

Fu, X., Peng, L., Chen, H., Rong, M., Chen, Y., Cao, D., et al. (2025b). Grape: graph-regularized protein language modeling unlocks tcr-epitope binding specificity. Briefings Bioinforma. 26, bbaf522. doi:10.1093/bib/bbaf522

Hanahan, D., and Weinberg, R. A. (2000). The hallmarks of cancer. Cell 100, 57–70. doi:10.1016/s0092-8674(00)81683-9

Lan, W., Dong, Y., Zhang, H., Li, C., Chen, Q., Liu, J., et al. (2023). Benchmarking of computational methods for predicting circrna-disease associations. Briefings Bioinforma. 24, bbac613. doi:10.1093/bib/bbac613

Li, X., Yang, L., and Chen, L.-L. (2018). The biogenesis, functions, and challenges of circular rnas. Mol. Cell 71, 428–442. doi:10.1016/j.molcel.2018.06.034

Li, G., Li, Y., Liang, C., and Luo, J. (2023). Deepwalk-aware graph attention networks with cnn for circrna-drug sensitivity association identification. Briefings Funct. Genomics 23, 418–428. doi:10.1093/bfgp/elad053

Li, M., Coşkun, M., and Koyutürk, M. (2025a). Topological-similarity based canonical representations for biological link prediction. IEEE Trans. Comput. Biol. Bioinforma. 22, 1278–1287. doi:10.1109/tcbb.2024.3462730

Li, X., Wang, J., and Yan, Z. (2025b). Can graph neural networks be adequately explained? A survey. ACM Comput. Surv. 57, 1–36. doi:10.1145/3711122

Liu, Y., Dong, Y., Zhao, L., Su, L., and Luo, J. (2018). Circular rna-mto1 suppresses breast cancer cell viability and reverses monastrol resistance through regulating the traf4/eg5 axis. Int. J. Oncol. 53, 1752–1762. doi:10.3892/ijo.2018.4485

Liu, H., Chen, C., Su, Y., Zuo, E., Wu, L., Li, M., et al. (2024). Tensor product graph diffusion based on nonlinear fusion of multi-source information to predict circrna-disease associations. Appl. Soft Comput. 152, 111215. doi:10.1016/j.asoc.2023.111215

Liu, T., Wang, S., Zhang, Y., Pang, S., Yin, W., Wu, W., et al. (2025a). Deciphering circrna-drug sensitivity associations via global-local heterogeneous matrix factorization and hypergraph contrastive learning. Expert Syst. Appl. 292, 128548. doi:10.1016/j.eswa.2025.128548

Liu, Z., Dai, Q., Yu, X., Duan, X., and Wang, C. (2025b). Predicting circrna-drug resistance associations based on a multimodal graph representation learning framework. IEEE J. Biomed. Health Inf. 29, 1838–1848. doi:10.1109/jbhi.2023.3299423

Lu, S., Liang, Y., Li, L., Liao, S., Zou, Y., Yang, C., et al. (2023). Inferring circrna-drug sensitivity associations via dual hierarchical attention networks and multiple kernel fusion. BMC Genomics 24, 796. doi:10.1186/s12864-023-09899-w

Ma, J., Qin, T., Zhai, M., and Cai, L. (2024). Agcnaf: predicting disease-gene associations using gcn and multi-head attention to fuse the similarity features. Eng. Res. Express 6, 045221. doi:10.1088/2631-8695/ad8c9f

Milano, M., and Cannataro, M. (2023). Network models in bioinformatics: modeling and analysis for complex diseases. Briefings Bioinforma. 24, bbad016. doi:10.1093/bib/bbad016

Min, S., Xiao, Y., and Ma, J. (2019). Circular rnas in cancer: emerging functions in hallmarks, stemness, resistance and roles as potential biomarkers. Mol. Cancer 18, 1–17. doi:10.1186/s12943-019-1002-6

Niu, M., Wang, C., Chen, Y., Zou, Q., and Luo, X. (2025). Interpretable multi-instance heterogeneous graph network learning modelling circrna-drug sensitivity association prediction. BMC Biol. 23, 131. doi:10.1186/s12915-025-02223-w

Peng, L., Li, H., Yuan, S., Meng, T., Chen, Y., Fu, X., et al. (2025a). Metacda: a novel framework for circrna-driven drug discovery utilizing adaptive aggregation and meta-knowledge learning. J. Chem. Inf. Model. 65, 2129–2144. doi:10.1021/acs.jcim.4c02193

Peng, L., Wang, W., Yang, Z., Fu, X., Liang, W., and Cao, D. (2025b). Leveraging explainable multi-scale features for fine-grained circrna-mirna interaction prediction. BMC Biol. 23, 121. doi:10.1186/s12915-025-02227-6

Ru, X., Ye, X., Sakurai, T., and Zou, Q. (2021a). Application of learning to rank in bioinformatics tasks. Briefings Bioinforma. 22, bbaa394–11. doi:10.1093/bib/bbaa394

Ru, X., Ye, X., Sakurai, T., Zou, Q., Xu, L., and Lin, C. (2021b). Current status and future prospects of drug–target interaction prediction. Briefings Funct. Genomics 20, 312–322. doi:10.1093/bfgp/elab031

Ru, X., Ye, X., Sakurai, T., and Zou, Q. (2022). Nerltr-dta: drug-target binding affinity prediction based on neighbor relationship and learning to rank. Bioinformatics 38, 1964–1971. doi:10.1093/bioinformatics/btac048

Song, J., Wei, M., Zhao, S., Zhai, H., Dai, Q., and Duan, X. (2024). Drug sensitivity prediction based on multi-stage multi-modal drug representation learning. Interdiscip. Sci. Comput. Life Sci. 17, 231–243. doi:10.1007/s12539-024-00668-1

Suzuki, H., and Tsukahara, T. (2014). A view of pre-mrna splicing from rnase r resistant rnas. Int. J. Mol. Sci. 15, 9331–9342. doi:10.3390/ijms15069331

Toprak, A. (2024). circrna-disease association prediction with an improved unbalanced bi-random walk. J. Radiat. Res. Appl. Sci. 17, 100858. doi:10.1016/j.jrras.2024.100858

Turgut, H., Turanli, B., and Boz, B. (2023). Dcda: circrna-disease association prediction with feed-forward neural network and deep autoencoder. Interdiscip. Sci. Comput. Life Sci. 16, 91–103. doi:10.1007/s12539-023-00590-y

Wang, X., Wang, H., Jiang, H., Qiao, L., and Guo, C. (2023). Circular rna circ_0076305 promotes cisplatin (ddp) resistance of non-small cell lung cancer cells by regulating abcc1 through mir-186-5p. Cancer Biotherapy Radiopharm. 38, 293–304. doi:10.1089/cbr.2020.4153

Wang, Y., Lei, X., Chen, Y., Guo, L., and Wu, F.-X. (2025a). Circular rna-drug association prediction based on multi-scale convolutional neural networks and adversarial autoencoders. Int. J. Mol. Sci. 26, 1509. doi:10.3390/ijms26041509

Wang, Z., Lei, X., Zhang, Y., Wu, F.-X., and Pan, Y. (2025b). Recent progress of deep learning methods for rbp binding sites prediction on circrna. Curr. Bioinforma. 20, 487–505. doi:10.2174/0115748936308564240712053215

Wei, J., Zhuo, L., Pan, S., Lian, X., Yao, X., and Fu, X. (2023a). Headtailtransfer: an efficient sampling method to improve the performance of graph neural network method in predicting sparse ncrna–protein interactions. Comput. Biol. Med. 157, 106783. doi:10.1016/j.compbiomed.2023.106783

Wei, J., Zhuo, L., Zhou, Z., Lian, X., Fu, X., and Yao, X. (2023b). Gcfmcl: predicting mirna-drug sensitivity using graph collaborative filtering and multi-view contrastive learning. Briefings Bioinforma. 24, bbad247. doi:10.1093/bib/bbad247

Xiao, Q., Dai, J., and Luo, J. (2021a). A survey of circular rnas in complex diseases: databases, tools and computational methods. Briefings Bioinforma. 23, bbab444. doi:10.1093/bib/bbab444

Xiao, Q., Fu, Y., Yang, Y., Dai, J., and Luo, J. (2021b). Nsl2cd: identifying potential circrna-disease associations based on network embedding and subspace learning. Briefings Bioinforma. 22, bbab177. doi:10.1093/bib/bbab177

Xiao, X., Qian, Y., Huang, Z., Zheng, R., and Deng, L. (2023). “Predicting associations between circrnas and drug sensitivity using heterogeneous graphs and graph attention networks,” in 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), 191–196. doi:10.1109/bibm58861.2023.10385513

Xie, F., Xiao, X., Tao, D., Huang, C., Wang, L., Liu, F., et al. (2020). circnr3c1 suppresses bladder cancer progression through acting as an endogenous blocker of brd4/c-myc complex. Mol. Ther. - Nucleic Acids 22, 510–519. doi:10.1016/j.omtn.2020.09.016

Yang, B., and Chen, H. (2023). Predicting circrna-drug sensitivity associations by learning multimodal networks using graph auto-encoders and attention mechanism. Briefings Bioinforma. 24, bbac596. doi:10.1093/bib/bbac596

Yin, S., Xu, P., Jiang, Y., Yang, X., Lin, Y., Zheng, M., et al. (2024). Predicting the potential associations between circrna and drug sensitivity using a multisource feature-based approach. J. Cell. Mol. Med. 28, e18591. doi:10.1111/jcmm.18591

Zhang, P., Wang, Z., Sun, W., Xu, J., Zhang, W., Wu, K., et al. (2023). Rdrgse: a framework for noncoding rna-drug resistance discovery by incorporating graph skeleton extraction and attentional feature fusion. ACS Omega 8, 27386–27397. doi:10.1021/acsomega.3c02763

Zhou, Z., Du, Z., Wei, J., Zhuo, L., Pan, S., Fu, X., et al. (2023). Mham-npi: predicting ncrna-protein interactions based on multi-head attention mechanism. Comput. Biol. Med. 163, 107143. doi:10.1016/j.compbiomed.2023.107143

Zhou, Z., Du, Z., Jiang, X., Zhuo, L., Xu, Y., Fu, X., et al. (2024a). Gam-mdr: probing mirna–drug resistance using a graph autoencoder based on random path masking. Briefings Funct. Genomics 23, 475–483. doi:10.1093/bfgp/elae005

Zhou, Z., Liao, Q., Wei, J., Zhuo, L., Wu, X., Fu, X., et al. (2024b). Revisiting drug–protein interaction prediction: a novel global–local perspective. Bioinformatics 40, btae271. doi:10.1093/bioinformatics/btae271

Keywords: attention fusion classifier, circRNA, drug sensitivity prediction, dual-masked graph contrastive, multi-source feature integration

Citation: Wang P, Guo Y, Li Z, Tang D, Qi M, Zhu Z and Zhang L (2025) DMAGCL: A dual-masked adaptive graph contrastive learning framework for predicting circRNA-drug sensitivity. Front. Genet. 16:1721716. doi: 10.3389/fgene.2025.1721716

Received: 09 October 2025; Accepted: 30 October 2025;

Published: 26 November 2025.

Edited by:

Xiaoqing Ru, Hebei University of Engineering, ChinaCopyright © 2025 Wang, Guo, Li, Tang, Qi, Zhu and Zhang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zejun Li, bHpqZm94QGhuaXQuZWR1LmNu; Mingming Qi, MjAyMTAzMDFAd3p1dC5lZHUuY24=; Lichao Zhang, emhhbmdsaWNoYW9Ac3ppaXQuZWR1LmNu

Peng Wang

Peng Wang Yuqi Guo

Yuqi Guo Zejun Li

Zejun Li Di Tang1

Di Tang1 Lichao Zhang

Lichao Zhang