- ¹School of Interactive Arts and Technology, Simon Fraser University, Burnaby, BC, Canada

- ²Department of Computer Science, University of Calgary, Calgary, AB, Canada

In the coming years, emergency calling services in North America will begin to incorporate new modalities for reporting emergencies, including video-based calling and picture sharing. The challenge is that we know little of how future call-taking systems should be designed to support emergency calls with rich multimedia and what benefits or challenges they might bring. We have conducted three studies, along with design work, as part of our research to address this problem. First, we conducted observations and contextual interviews within three emergency response call centers to investigate call taking practices and reactions to the incorporation of rich multimedia in emergency call taking practices. Following this, we created user interface design mock-ups and conducted two additional studies with call takers. One involved low-fidelity designs and one involved the use of a medium-fidelity digital prototype. Across the studies, our results show that 9-1-1 call takers will need a next generation interface that supports multimedia, including video calling, as part of calls. Yet user interfaces will need to be different from commercial video conferencing applications that are commonplace today. Design features for 9-1-1 systems must focus on supporting camera work and the capture of emergency scenes; situational awareness of incidents across call takers, including current and historical media associated with them; and, the regulation of media flow to balance privacy concerns and the viewing of potentially traumatic visuals.

Introduction

In Canada, people experiencing an emergency situation can call the number 9-1-1 to be connected with an emergency call center. They share information about their situation with a call taker, and a dispatcher relays the information to a first responder (e.g., fire, police, or ambulance). First responders then attend the scene. In the next few years, emergency calling services in Canada will move toward Next Generation 9-1-1 (NG911) and include support for text messaging, video calling, and the sharing of photos or videos between callers and 9-1-1 call centers (Camp et al., 2000; CRTC, 2016; NG911 Now Coalition, n.d.). In turn, this will involve new ways of sharing information among 9-1-1 call takers, first responders, and dispatchers.

Studies have explored emergency call taking practices and we know that calls to 9-1-1 can be stressful for call takers and dispatchers to handle (Adams et al., 1995; Forslund et al., 2004; Neustaedter et al., 2018). Research has also uncovered details around collaboration in call taking centers that illustrate the ways that call takers and dispatchers work together and maintain situational awareness, that is, an understanding of the moment-to-moment actions of others that can help orient and guide one's own work (Artman and Wærn, 1999; Mancero et al., 2009). Yet there is a gap when it comes to understanding how user interfaces should be designed for 9-1-1 call takers and dispatchers as we move toward supporting NG911 and rich multimedia in the form of video calls and photo sharing. Our prior work explores the ways that 9-1-1 callers imagine NG911 user interfaces should work and how they would benefit from them (Singhal and Neustaedter, 2018). In this article, we focus on addressing the research gap by exploring the design and evaluation of 9-1-1 call taking software from the call taker/dispatcher perspective. Our efforts are directed at how to design software that allows call takers to deal with small-scale emergencies like burglaries, house fires, car accidents, and medical emergencies. This contrasts with emergency response in crisis situations (e.g., earthquake response and flooding), which are typically less frequent and often require large units of first responders over a prolonged period of time (Blum et al., 2014). Much of our work emphasizes video calling, given the rich information that video can provide to 9-1-1 call takers about an emergency situation.

Although the emergency number 9-1-1 in Canada matches that of the USA, the jurisdiction over operations is completely separate between the two nations and has no geographical overlap. A person calling 9-1-1 within Canada is always routed to a call center located in Canada. Furthermore, call centers are generally regionally based within Canadian provinces, and calls are routed to the call center directly responsible for that area's 9-1-1 response. Thus, our studies focus specifically on call taking/dispatcher practices and needs in Canada. However, given the similarity between 9-1-1 emergency services in Canada and those in other countries (e.g., USA and UK), our results are likely to generalize to these countries.

First, we conducted studies within three 9-1-1 call centers in Canada. We observed police, fire, and ambulance call takers and dispatchers during their normal work practices and conducted contextual interviews with them about their work. We probed about a possible future with video calling and photo sharing technologies incorporated into 9-1-1 services given that they can offer rich visual information about a situation (Neustaedter et al., 2018). Our overarching goal was to understand what factors would be important for the design of such call taking systems if 9-1-1 services were expanded to include video calling and photo sharing. By video calling, we are referring to Skype-like calls that could occur between a caller and call taker. Photo sharing would be akin to how people presently share photos with others through text messaging applications. Early results of this work are published in Neustaedter et al. (2018). In the current article, we recast this knowledge and tie it to the user interface design of NG911 call taking systems.

Second, we built on our first series of studies with user interface design work and formative evaluations of our designs. We used the knowledge from our previous observations and studies to create low-fidelity paper prototypes of a 9-1-1 call taking system that supports media-based calling, including video conferencing capabilities, picture sharing, and text messaging. Next, we worked with another 9-1-1 call center to conduct a study in which 9-1-1 call takers tried out the system for a range of small-scale emergency situations using mock calls that were created based on our first observational study and 9-1-1 call taking manuals. Call takers gave feedback on the design and its associated workflow. Our evaluation focused heavily on camera work: the continual movement and reorientation of a caller's mobile phone camera (Jones et al., 2015) in order to capture the necessary information of the scene for the call taker. Third, we made changes to the user interface and created a medium-fidelity digital version of the prototype. We then, again, had 9-1-1 call takers work through a series of mock calls with the interface and provide feedback. The goal of both interface design studies was to understand how 9-1-1 call takers interact with prototype software that allows them to receive video calls, images, and text messages as data, and how we should design such systems to best meet the workflow and information acquisition needs of call takers.

Overall, our results show that 9-1-1 call-taking in the future will need a next generation interface that supports multimedia, including video calling, as part of calls. Yet user interfaces will need to be different from commercial video conferencing applications like Zoom, FaceTime, or Skype that are commonplace today. Design features for 9-1-1 systems must focus on supporting camera work (moving and zooming the camera to properly frame the scene), situational awareness, and the flow of media. This includes designing to allow call takers to maintain situation awareness at call centers with incoming multimedia; allowing call takers to provide visual and verbal instructions to callers on their phones to guide camera work; and, reducing the risk of call takers viewing traumatizing media through the regulation of media flow (e.g., video feeds being on/off and visuals appearing).

Related Work

During an emergency when people call 9-1-1, a call taker asks them a series of questions (Forslund et al., 2004; Mann, 2004; Mancero et al., 2009) and records this information in a Computer-Aided Dispatch (CAD) system (Whalen and Zimmerman, 1998). This information is then dispatched to a first responder. The call taker relies on the information provided by the caller. Sometimes the information is not accurate, or it can be difficult to acquire (Whalen and Zimmerman, 1998; De Vasconcelos Filho et al., 2009). 9-1-1 call takers face the challenge of acquiring information from callers who are panicked and sometimes people who have language barriers (Artman and Wærn, 1999; Forslund et al., 2004). Call takers ask specific questions in a specific order to take control and structure calls as clearly as possible (Neustaedter et al., 2018). Research has shown that 9-1-1 call takers can benefit from video calls as they could allow them to assess a situation better by seeing firsthand what is happening during an emergency (Neustaedter et al., 2018). We also know that during crisis management, first responders find strong benefits in seeing live video (Ludwig et al., 2013).

Managing and answering 9-1-1 calls is by no means easy. Call takers can face a great deal of distress from dealing with traumatic situations and have had to rely on coping mechanisms such as counseling (Mann, 2004; Dicks, 2014) and peer support (Adams et al., 1995; Shakespeare-Finch et al., 2015). Call taking often becomes complicated because the information received by call takers can be ambiguous, callers may find it difficult to describe what is happening, or callers may not speak English well (Adams et al., 1995; Forslund et al., 2004). Sometimes call takers imagine the scene that the caller is telling them about and this can add to the stress level (Adams et al., 1995; Rigden, 2009). Call takers do shift work, and some work at night, which can create additional stress and make sleeping difficult (Shakespeare-Finch et al., 2015; Hayes, 2017).

Situation awareness is critical in emergency call centers (Bowers and Martin, 1999). Situation awareness involves a moment-to-moment understanding of what is happening during an incident and how this information should be acted upon (Endsley and Jones, 2011; Adams et al., 2015). Call takers can gain it by listening to others in an open call center environment, by purposefully looking around to see if people are in calls or not, or by noticing information in one's visual or aural periphery (Bentley et al., 1992; Heath, 1992; Hughes et al., 1992; Bowers and Martin, 1999; Toups and Kerne, 2007). For example, call centers may use large displays to show information about the latest calls. Call takers use situation awareness to maintain an understanding of incoming calls to ensure that multiple calls about the same incident are known (Artman and Wærn, 1999; Pettersson et al., 2004; Mancero et al., 2009).

Some researchers have looked at providing a software-based solution for the collection and management of media data curated by third party volunteers (Blum et al., 2014). In natural disaster response, decentralized uses of media have been shown to be critical (Liu et al., 2008; Bica et al., 2017), provided that the information can be deemed credible (Sarcevic et al., 2012). Our work is similar in that we explore how everyday people can share videos of a scene with a 9-1-1 call taker. To improve efficiency when watching a large number of videos, various software solutions can change playback speed based on the content of the videos (Camp et al., 2000; Kurihara, 2012; Higuchi et al., 2017). Our work builds on this literature.

Some researchers have looked at the ways on-line photo sharing on social media can help in disaster management by first responders (Liu et al., 2008; Wu et al., 2011; Ludwig et al., 2015). Similarly, text message archives and dedicated crisis-oriented Twitter accounts have been used to interact with the public during ongoing emergencies (Sarcevic et al., 2012; Denef et al., 2013; Hughes et al., 2014). During the response to an emergency, short videos from incident commanders have been found to provide important contextual information for other crewmembers (Bergstrand and Landgren, 2009). While this research is valuable, the focus of our work is not on large-scale crisis management. Instead, we focus on small-scale emergency scenarios. We explore how media like photos and videos shot on an ordinary citizen's smartphone can be leveraged by 9-1-1 call takers.

Several researchers have envisioned a full-featured next-generation emergency system that moves beyond just audio-based calling. Some have explored the challenges and benefits associated with video-based calls for 9-1-1 callers and found promise for such technology (Singhal and Neustaedter, 2018). However, most research work focuses on the technical aspects of implementing such a system or testing basic functionality (Yang et al., 2009; Markakis et al., 2017) as opposed to our focus on user interface design. For example, research has explored the feasibility of implementing IP-based network hubs, so that they can be used for enabling audio and video calls to call takers (Markakis et al., 2017). Others have tried utilizing better location-based services to track callers' mobile phone positions and develop better tracing software for call takers (Yang et al., 2009). These works mostly discuss the software infrastructure that is needed to carry out a transition from existing 9-1-1 systems to futuristic ones. Some look at implementing specific functionalities that make operations better. However, most research work has not focused on understanding the requirements for building new call-taking interfaces that would enable call takers to leverage rich media during 9-1-1 calls and give them better control over camera work. This is our focus: we explore call needs and workflows with an emphasis on video calling and photo sharing. We combine this with prototyping and user studies of user interfaces.

More generally, video calls are now commonplace in work and domestic settings. For example, mobile devices are commonly used to support video calling between family and friends, yet they require careful camera work to sufficiently show the remote viewer a scene (O'Hara et al., 2006; Massimi and Neustaedter, 2014; Procyk et al., 2014; Jones et al., 2015). By camera work, we refer to changes in camera orientation to find appropriate camera angles and zoom levels to show an ideal view of the scene (Inkpen et al., 2013; Rae et al., 2015; Jones et al., 2016). This act has been found to be difficult for people to do for family activities because sometimes it requires holding cameras at awkward angles (Inkpen et al., 2013; Jones et al., 2015; Rae et al., 2015). To overcome these challenges, design work has focused on combining or providing multiple camera views (Endsley and Jones, 2011; Engström et al., 2012; Kim et al., 2014; Reeves et al., 2015; Neustaedter et al., 2017). We use this work as a backdrop for our own investigations and compare our findings on video calling within 9-1-1 emergencies to video calls in domestic situations.

Study 1: 9-1-1 Call Center Observations and Interviews

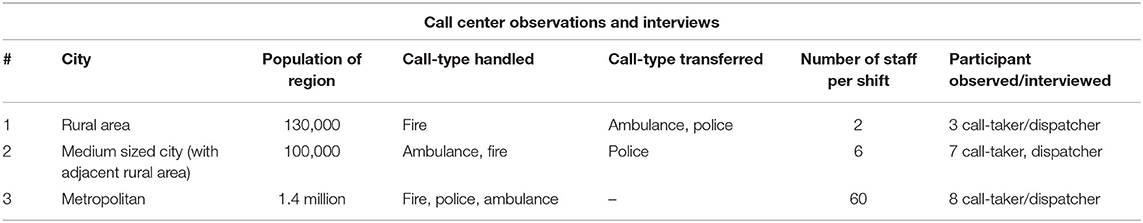

First, we conducted a study of 9-1-1 call centers to provide an initial understanding of the design space. We were interested in learning about the benefits and challenges that call takers/dispatchers would find with video-based 9-1-1 calls. The study was approved by our research ethics board. We worked with three 9-1-1 call centers in Western Canada that represented a range of geographical areas in terms of their size and scope for handling 9-1-1 calls. Table 1 summarizes details for each call center.

We spent between 7 and 10 h at each of the call centers observing call taker and dispatcher work practices, listening in on calls, and conducting in situ interviews. During our observations, we watched and listened to how call takers and dispatchers worked, what software and hardware systems they used, how they organized their work and work area, how they maintained situation awareness and what they asked callers during calls. We also conducted interviews with nine participants outside of our observations. Our interview questions focused on understanding work practices in terms of what was done during calls and why. We also probed specifically about future technology usage where we asked questions about the possibility of using video calling and picture sharing for 9-1-1 calls. Full details on the study methods are found in Neustaedter et al. (2018).

Results Summary

Design to Support Camera Work

When a caller called 9-1-1, the call taker answered the phone call and began by asking what service the caller required (fire, police, or ambulance) and then asked for the caller's name, phone number, and location for re-contact in case the call was dropped. Next, call takers asked a sequence of questions about the caller and their situation based on the emergency response card questions. Information was entered and stored textually in the CAD system, where it could be seen by call dispatchers and by emergency responders or police services within their own CAD systems. Despite the systematic process used to acquire these details, information was not always clear, complete, or easily acquired from callers. The incorporation of video calling and picture sharing was seen by participants as a means to acquire more contextual information about the call situation in order to disambiguate what was happening and gain a deeper understanding.

We also learned that if callers were able to share live video or even pictures of a situation, the types of information call takers would need to extract would be highly varied. On one hand this might mean trying to understand an entire situation if someone who could not speak well called in (e.g., a child, person with an accent, or non-English speaker). It could also mean trying to use visual information for higher-level scene size-up compared to up-close video of specific information. It could even be used to acquire information to augment (e.g., acquiring more-specific location information) or change one's understanding of the situation to be more accurate (e.g., overcoming dishonesty). Together, this illustrates that different types of camera work are needed for capturing information about emergency situations. Callers might need to take close-up video, far-out video, pan the scene, or hold a camera steady on a single location, for example. This could certainly help call takers gather better on-field information to maintain situational awareness regarding any ongoing situation.

Call takers are trained to take control of a call and extract valuable information from frantic or angry callers. Some call takers felt that the protocols followed might be disrupted with the introduction of video in a call as they would also have to focus on the incoming media. However, call takers felt that the main challenge would be to guide the caller to perform the necessary camera work, like pan, zoom, change camera view and focus during a live video stream. If a caller was already in distress, the act of trying to show the right camera angle or viewpoint could be additionally challenging. This could be in addition to calming a caller down enough to get a verbal description. They felt that further amendments to call-taking protocols and different training sessions to handle multimedia would be necessary to gain control over a call. This suggests that designs should allow call takers to direct camera work through the user interface, while finding ways to mitigate user challenges in acquiring the necessary camera footage.

Design to Manage Information Overload and Trauma

All call takers in our study used three to five displays placed in front of them in a single row. These monitors displayed the central CAD system, radio dispatch software, email client, web browser and one or two maps. They felt that any additional windows that dealt with multimedia must be placed “front and center” or close to it. They felt that although video was important and had the potential to reveal crucial missing information, it would demand a lot of visual attention and they could get distracted by it. A distraction could mean not entering important data into the CAD system. They felt that they would use audio for the most part and would only switch to video when they felt it was necessary, akin to a Skype or FaceTime call, with the help of a toggle button. This suggests that designs should consider ways to turn video information on or off at the call taker's discretion and to fluidly migrate between audio-only calls and audio calls augmented with video. This might be akin to how users can turn their video feed on and off within video calling applications like Skype or FaceTime, at the push of a button. While such a design choice would present an asymmetry in the call experience, this asymmetry would reflect the roles each user is playing, and the clear “leadership-and-control” structure that the call taker wishes to maintain.

In general, participants felt that as a result of potentially challenging scenes being shown on a video call or the possibility of sexual harassment, video should be considered secondary in nature. Audio could act as the primary communication mode for handling calls and video could augment it periodically, possibly being turned on and off at the discretion of the call taker. Together, these findings suggest that video should not be considered a primary tool for 9-1-1 that is always available and going. Instead, call takers need to be able to fluidly migrate between audio-only calls and audio coupled with video. This could help call takers decide if and when to show video. When video first appears, one could also consider strategies such as blurring video and allowing call takers to progressively adjust the level of blur so that more details are shown and they can decide if they are okay seeing the scene.

Design for Asymmetrical Sharing of Video

We talked with our participants about whether they would want to have themselves visible in the video call so callers could see them. However, this was strongly seen as being undesirable. First, call takers must manage many things at the same time when a call comes in. Their viewpoint changes between the CAD system where they enter notes, the map where they look up locations, and sometimes a dispatch screen that shows where various units are located. They felt that, with a synchronous video mode on, the caller would clearly be aware of the fact that call takers do not focus all their attention on the caller during a call. This could instill anger or mistrust among 9-1-1 callers. Second, many call takers had strong feelings about their identity staying anonymous. They did not want people to know they were a 9-1-1 call taker because they felt there was a chance of harm if a person saw them in public, e.g., if situations had gone poorly during a call, or an assailant somehow knew they had handled a call about them. This suggests that call takers should not be forced to show their own camera view when connecting with callers. Such features should be regarded as optional components of an NG911 system. At times, a call taker may decide to show themselves, e.g., to create empathy, but it should not be considered a requirement.

Study 2: Low-Fidelity Prototyping and Design

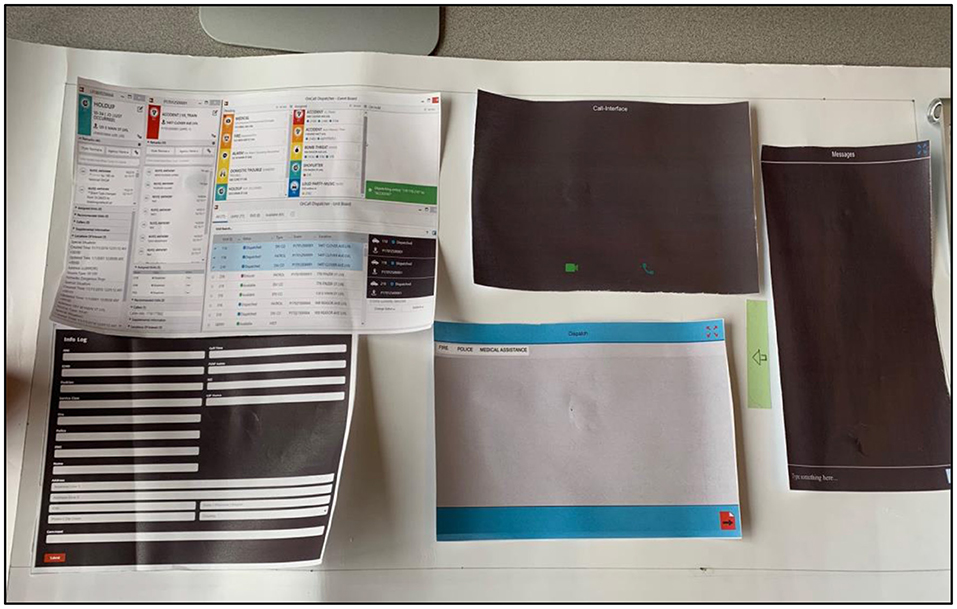

Based on our first study's findings, we conducted our next phase of the research, which involved designing low- and medium-fidelity prototypes to test out our design ideas. We began our design explorations by brainstorming and iteratively creating paper prototypes of possible call-taking interfaces for 9-1-1 call centers (for example, see Figure 1). The primary objective was to understand how to design a 9-1-1 call-taking software interface that allows call takers to conduct an audio or video-based call, receive photos or video clips, and exchange text messages. We also wanted to use our design work and the forthcoming evaluations of it to validate the findings from our initial studies of 9-1-1 call centers.

Our design ideas were based on an analysis of the related literature along with the study described in the previous section. Through our prototyping efforts, we explored different ways of laying out video call components, presenting photos shared by callers, and methods of forwarding this information to dispatchers and, subsequently, first responders. We chose to create paper prototypes so that we could engage with 9-1-1 call takers in an early state of design and solicit their feedback.

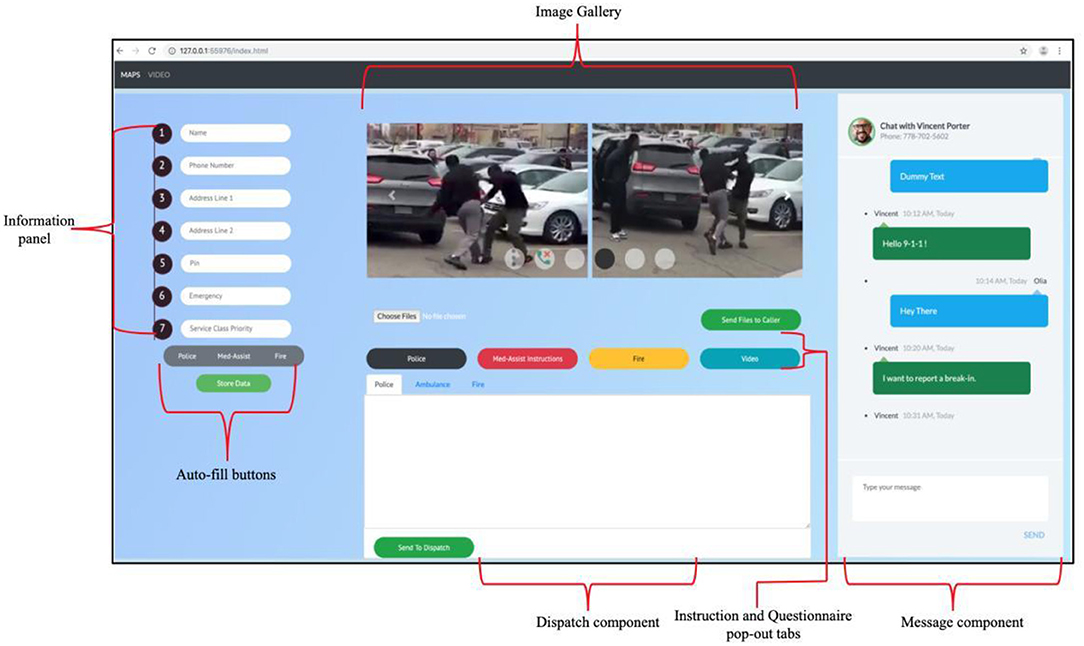

Our design work led us to converge on the paper prototype (stylized) shown in Figures 2, 3. This prototype included interface components printed on paper and used for Wizard-of-Oz interactions. Our first study showed that call takers use multiple monitors in call-taking centers, so we emulated this with our prototype. We printed interface components on two A3 sheets of paper and placed them on 27-inch monitors to emulate typical screen sizes. One of the paper sheets showed the proposed new call-taking interface, which we call the Media Screen (Figure 2). The other sheet emulated a mapping system that builds on their existing mapping software, described more shortly. We call this the Map Screen (Figure 3). Most 9-1-1 CAD systems use generic white/gray screens and interface components. We purposefully chose a slightly more colorful design in hopes of shifting the thinking of call takers to be more future looking. The colors we chose were not necessarily meant to suggest an ideal color scheme.

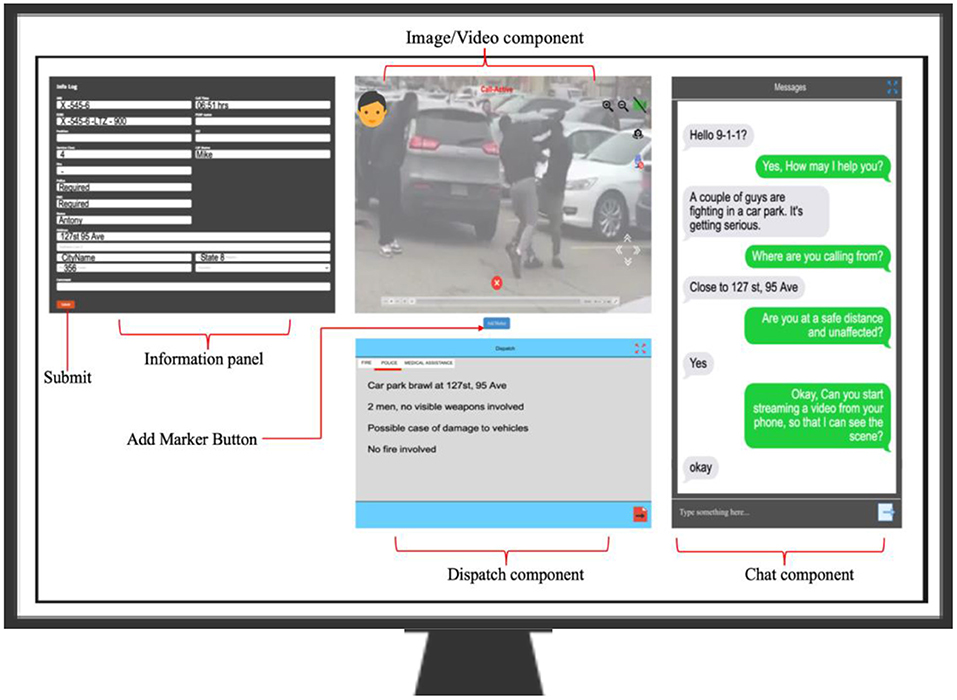

The Media Screen included the essential CAD system components used by present day 9-1-1 call centers to enter and store data. This included name, address, age, cause of distress, phone number, approximate coordinates, service emergency category, etc. (Figure 2, left). Users use a keyboard and mouse to interact. In addition to this information, we included components to support futuristic call-taking scenarios. We discuss these features next.

Video and Picture Viewing

The middle column of the Media Screen shows an audio/video component on the top of the screen (Figure 2, middle). When a call comes in to 9-1-1, the call taker clicks a “phone” icon to answer it (not shown in Figure 2 since a call is already in progress). All calls begin as audio-only calls by default, based on the findings in our first study. This reflects concerns that callers may not be comfortable immediately showing video and that call takers want to assess the situation first before seeing video. The call taker can push the green camera button in the top right corner of the component to ask the caller to switch to video mode. The caller receives a request and can choose to turn on video from their phone, if their phone supports video calling. Once video mode is on, the UI looks like the one shown in Figure 2. To stay anonymous or maintain privacy, the call taker can choose to not show their face and keep their camera off by clicking on the face icon present in the top left of the video call component. In Figure 2, the call taker has decided not to show their face to the caller and a face icon is shown in the top left of the Image/Video component. If the call taker does not want to see traumatic or disturbing footage, they can switch off the caller's video feed by clicking on the cancel-camera icon in the top right corner of the window. In a real version of this system, video could continue to stream in (for record-keeping purposes) but would not be shown to the call taker.
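
The media states described above can be sketched as a small state model. This is only an illustrative sketch and not part of the paper prototype: the names `CallMode`, `CallMediaState`, and all method names are hypothetical, and the default of keeping the call taker's own camera off is an assumption made here for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class CallMode(Enum):
    AUDIO_ONLY = "audio_only"
    VIDEO_REQUESTED = "video_requested"
    VIDEO = "video"


@dataclass
class CallMediaState:
    """Hypothetical per-call media state for the Image/Video component."""
    mode: CallMode = CallMode.AUDIO_ONLY  # every call starts as audio only
    show_caller_video: bool = True        # call taker may hide the incoming feed
    share_own_camera: bool = False        # assumption: call taker hidden by default
    recording: bool = False               # stream kept for record keeping

    def request_video(self) -> None:
        """Call taker presses the camera button; the caller must still accept."""
        if self.mode is CallMode.AUDIO_ONLY:
            self.mode = CallMode.VIDEO_REQUESTED

    def caller_responds(self, accepted: bool) -> None:
        """Caller accepts or declines the video request on their phone."""
        if self.mode is not CallMode.VIDEO_REQUESTED:
            return
        if accepted:
            self.mode = CallMode.VIDEO
            self.recording = True
        else:
            self.mode = CallMode.AUDIO_ONLY

    def toggle_caller_video(self) -> None:
        """Hide or show the caller's feed locally; recording is unaffected."""
        self.show_caller_video = not self.show_caller_video

    def toggle_own_camera(self) -> None:
        """Show or hide the call taker's own face to the caller."""
        self.share_own_camera = not self.share_own_camera

    def visible_to_call_taker(self) -> bool:
        """True only when video is active and the call taker has not hidden it."""
        return self.mode is CallMode.VIDEO and self.show_caller_video
```

Keeping `recording` separate from `show_caller_video` reflects the idea that video could continue to stream in for record keeping while the call taker chooses not to view it.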

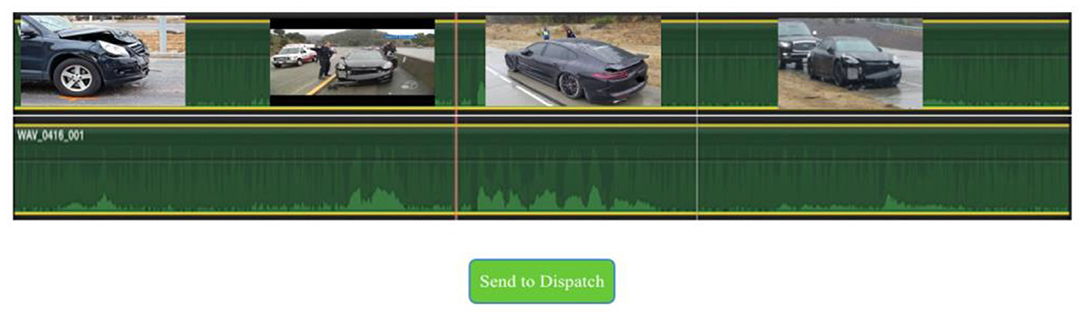

In addition to simply talking to callers and telling them what to capture over video, we allow call takers to guide the camera work in the call using the magnifying glass icons in the top right corner of the Image/Video component. Clicking on the icons sends a suggestion to the caller to zoom in on their video feed. While not shown on this paper prototype, this suggestion could show up on the caller's end as a visual annotation. Arrow icons in the bottom right corner of the Image/Video window allow the call taker to suggest that the caller move their camera up, down, left, or right. For example, a mouse-click on the left arrow sends visual feedback to the caller's mobile phone. This visual feedback is overlaid on the caller's screen as an arrow suggesting that they move their phone camera in the direction indicated. The add marker button (Figure 2, middle) can be pushed when the call taker wants to create timestamps in the video to mark important events. If timestamps are created, a photo timeline is shown under the video as shown in Figure 4. If a caller sends in photos from the scene, these appear in the same photo timeline.
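
As a rough illustration of the camera guidance and photo timeline described above, the sketch below models a guidance suggestion as a small JSON message and the timeline as a chronologically sorted list of markers and photos. The message fields, the `Guidance` values, and the class names are hypothetical; the paper prototype does not specify a wire format.

```python
import json
from dataclasses import dataclass, field
from enum import Enum


class Guidance(Enum):
    ZOOM_IN = "zoom_in"
    MOVE_UP = "move_up"
    MOVE_DOWN = "move_down"
    MOVE_LEFT = "move_left"
    MOVE_RIGHT = "move_right"


def guidance_message(call_id: str, guidance: Guidance) -> str:
    """Serialize a suggestion that the caller's phone could draw as an overlay."""
    return json.dumps({
        "call_id": call_id,
        "type": "camera_guidance",
        "suggestion": guidance.value,
    })


@dataclass(order=True)
class TimelineItem:
    seconds_into_call: float                       # the only field used for ordering
    kind: str = field(compare=False)               # "marker" or "photo"
    label: str = field(compare=False, default="")


@dataclass
class PhotoTimeline:
    """Markers set by the call taker and photos sent by the caller, in one strip."""
    items: list = field(default_factory=list)

    def add_marker(self, seconds_into_call: float, label: str = "") -> None:
        self._add(TimelineItem(seconds_into_call, "marker", label))

    def add_photo(self, seconds_into_call: float, label: str = "") -> None:
        self._add(TimelineItem(seconds_into_call, "photo", label))

    def _add(self, item: TimelineItem) -> None:
        self.items.append(item)
        self.items.sort()  # keep the strip in chronological order
```

For example, `guidance_message("call-1", Guidance.MOVE_LEFT)` would produce the message behind a click on the left arrow, and a photo received after a marker would appear to its right in the timeline.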

Information Sharing With First Responders

The Dispatch component located at the bottom of the Audio/Video component (Figure 2, bottom middle) is used to send useful information to the appropriate first responder, e.g., police, ambulance, or fire department. This component is very similar to what already exists in 9-1-1 CAD software. After entering data into boxes on the left of the screen, the call taker can select the appropriate data and copy/paste it into the dispatch window. Photos or timestamped video clips are saved into a database for an ongoing case and are automatically available in the dispatch window. The call taker can choose to remove any photos they feel are not necessary for the case. The call taker can type in the dispatch window and add any information that they feel may be required by the first responders; this is presently standard practice. When the call taker clicks the red send icon (bottom right corner of Dispatch component), all the photos and text comments are sent to the first responders. The ability to attach and send media to first responders is important, as identified in our first study. First responders like police officers and firefighters can make more informed decisions based on photographic evidence rather than just relying on text descriptions.
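
The dispatch workflow above could be modeled along the following lines. This is a minimal sketch under stated assumptions: `DispatchPackage` and its methods are hypothetical names, media items are represented only by file names, and `send` simply returns the payload rather than contacting any real system.

```python
from dataclasses import dataclass, field


@dataclass
class DispatchPackage:
    """Hypothetical bundle of notes and media sent to a first responder."""
    service: str                                 # "police", "ambulance", or "fire"
    notes: list = field(default_factory=list)    # text typed or pasted by the call taker
    media: list = field(default_factory=list)    # photo and clip names for the case

    def attach_case_media(self, case_media) -> None:
        """Photos and timestamped clips saved for the case are attached automatically."""
        self.media = list(case_media)

    def remove_media(self, name: str) -> None:
        """The call taker can drop items they consider unnecessary."""
        self.media = [m for m in self.media if m != name]

    def add_note(self, text: str) -> None:
        self.notes.append(text)

    def send(self) -> dict:
        """Return the payload that would go to first responders (no real transport)."""
        return {
            "service": self.service,
            "notes": list(self.notes),
            "media": list(self.media),
        }
```

Attaching all case media by default and letting the call taker remove items mirrors the opt-out behavior described in the text.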

Instant Messaging

The rightmost column shown in Figure 2 supports the ability for call takers to exchange text messages with 9-1-1 callers. There are situations where texting may be preferable, especially if a person is trying to connect with 9-1-1 in a covert manner. A call taker sees incoming messages assigned to them inside the messaging window and is then able to talk to the caller through text messages. This interface is like commercially available instant messaging systems, e.g., Facebook Messenger and iMessage.

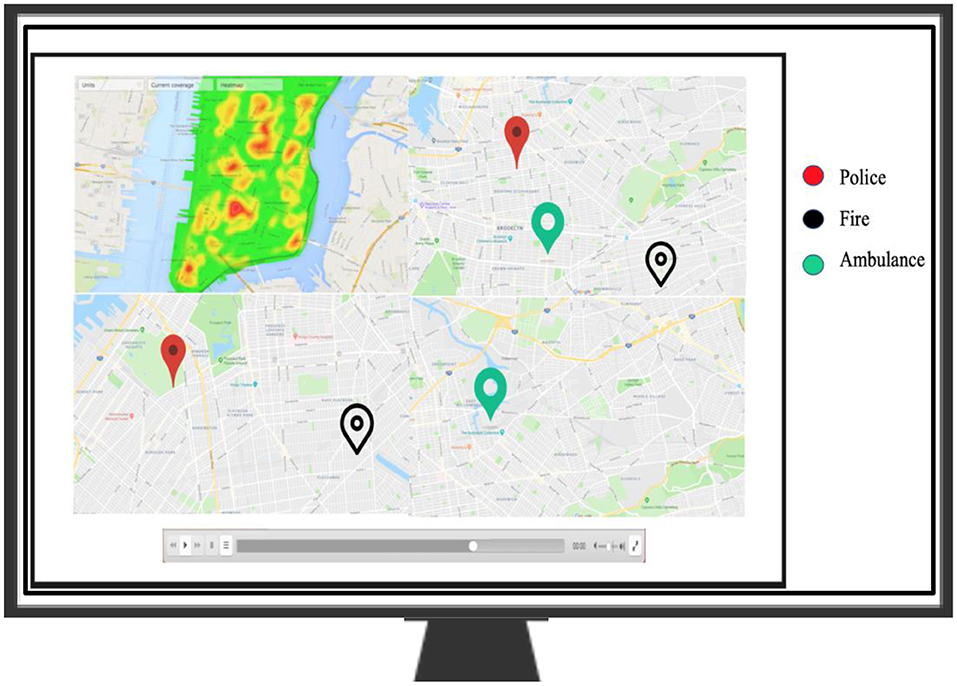

History and Mapping

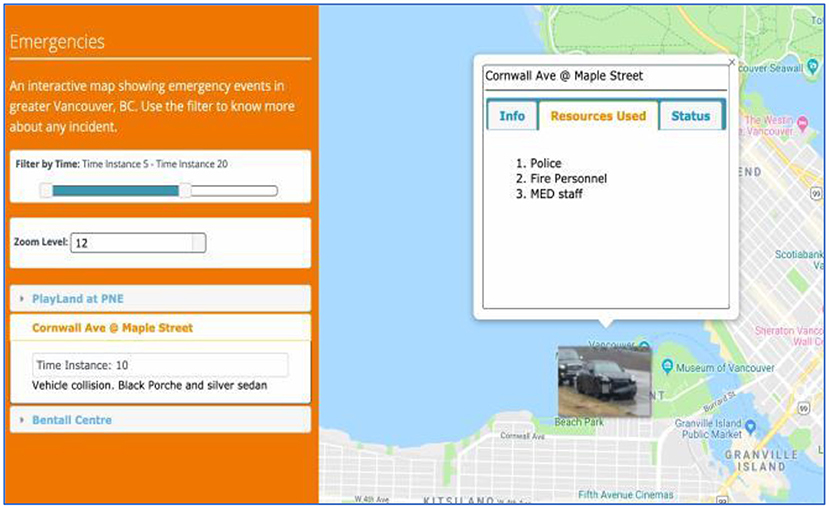

While our primary design focus was on the main call taking window, we also explored the presentation of call history information as part of a map interface. Figure 3 shows the Map Screen. There are two main components: a map and a slider. The map supports zooming, which can be done using a mouse scroll-wheel or through keyboard interaction. The slider is interactive and can be moved left or right (i.e., “scrubbed”) like a timeline in a video player. The timeline goes from oldest to newest. Based on the scrub position, the map updates to show 9-1-1 call events that have occurred within a 24-h time window of the selected time in the timeline. Events appear as pins located across the map based on their respective locations. The call taker can click on a pin to view information on the call.
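
The slider behavior above amounts to a time-window filter over past call events. The sketch below is one possible reading and not the prototype's implementation: it assumes the 24-h window ends at the selected time, and the names `CallEvent`, `slider_to_time`, and `events_to_pin` are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class CallEvent:
    call_id: str
    occurred_at: datetime
    latitude: float
    longitude: float


def slider_to_time(position: float, oldest: datetime, newest: datetime) -> datetime:
    """Map a slider position in [0, 1] onto the timeline (oldest to newest)."""
    position = min(max(position, 0.0), 1.0)  # clamp out-of-range positions
    return oldest + (newest - oldest) * position


def events_to_pin(events, selected: datetime,
                  window: timedelta = timedelta(hours=24)):
    """Return events in the window ending at the selected time (an assumption)."""
    start = selected - window
    return [e for e in events if start <= e.occurred_at <= selected]
```

A window centered on the selected time would be an equally plausible reading; only the computation of `start` and the upper bound would change.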

Paper Prototype Sessions With Call Takers

Next, we worked with a different 9-1-1 call-taking center in a major metropolitan city in Canada to explore our paper prototype design. We worked with a different call center due to access and proximity to our university location. This call center handled more than 8,000 calls a day for the metropolitan area and various other regions within the province. Here we focused on understanding how our prototype could be used by 9-1-1 call takers to carry out their tasks, how call takers could use video/photo data, and how they could help callers capture media by guiding them to perform appropriate camera work. Across all these topics, we were interested in learning how our interface could be redesigned to improve workflows, what design elements were important to include, and how they should be included. This study was approved by our research ethics board.

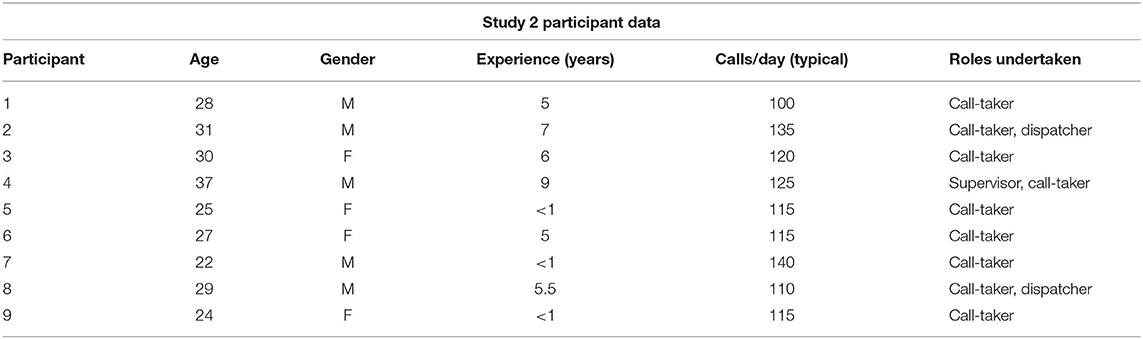

Participants

We conducted our study with nine call takers (five men, four women, Table 2 provides a summary) from the call center. Our participants' ages ranged between 22 and 37 (μ = 28.1, SD = 4.17). Three of the nine participants had job experience of less than a year. The remaining had more than 5 years of experience. Each participant, on average, dealt with around 120 calls in an 8-h work shift. All participants had some experience in video calling in their personal lives using software like Skype.

Methods

We started off by asking the participants basic background questions like age, experience as a 9-1-1 operator, the number of calls they received in a day, and the categories these calls fell into. Next, we had our participants go through six mock scenarios where they interacted with the paper prototype for each scenario. The scenarios were designed to test out a range of NG911 capabilities and cover a relatively broad set of circumstances. These scenarios were based on situations presented in the guidebook used by call takers during a call (Neustaedter et al., 2018). The guide presents the series of questions that call takers ask for different types of calls and covers a wide range of police, fire, and medical emergencies. We also based our scenarios on the types of situations that we observed in our first study. Furthermore, as part of the current study, we had participants comment on the realism of each scenario to ensure that they accurately depicted likely call situations. Thus, our scenarios are highly representative of what actually happens in 9-1-1 call centers within Canada.

For each scenario, participants used the prototype and its new features in some way or another. First, we had the call takers read through a printed script for each scenario. This script described the conversation between the caller and a call taker. Next, participants would enact the scenario using the paper prototype where a researcher would manually change pieces of paper to update the screens, depending on what the participant did (e.g., touch for mouse clicks). The scenarios were:

Scenario 1—Break and Enter: A person sees an assailant attempting to break into a house across the street. The person engages in a 9-1-1 audio call and is asked by the call taker to capture and share photos of the scene.

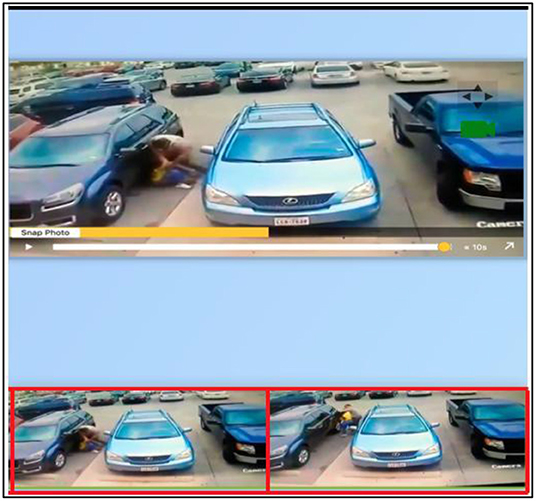

Scenario 2—Parking Lot Brawl: A person sees two people quarrel in a parking lot and witnesses it escalate to a physical altercation. The person notifies 9-1-1 over text and is asked by the call taker to stream a video of the ongoing altercation. The call taker saves the video and bookmarks certain moments for future referencing.

Scenario 3—Medical Assistance at Home: A person calls 9-1-1 about an unconscious grandparent. The person is asked by the call taker to check if the victim is still breathing and, upon receiving a negative response, is asked to perform cardiopulmonary resuscitation (CPR). The call taker sends a short video clip of CPR to guide the caller. Medical assistance is then sent out.

Scenario 4—Roadside Accident: A driver is in a car accident while taking a turn. He calls 9-1-1 for assistance. The operator asks him to check the health condition of his passenger and decides whether immediate assistance (such as CPR) is required. A backup ambulance is sent. In the meantime, the caller is asked to send photos of the situation and later is asked to stream a video. The call taker saves the photos and marks important scenes from the video.

Scenario 5—Roadside Accident with Map: A caller calls in to report a car accident that she had witnessed a few blocks before. The caller is asked for a description of the vehicles. The operator uses the map's time slider to check if this is a redundant case and finds out that it is. They then notify the caller.

Scenario 6—Fire: A caller calls in to report a fire coming from a transmission line. The caller is asked to immediately stream a video so that the size of the fire can be gauged. Some camera work like zooming and tilting is suggested by the call taker. Dispatch is sent to fire units and the call is ended.

We probed our participants about our prototype's design in relation to each scenario along with the scenario's workflow. We asked questions such as:

• Do you think a video or photo of the scenario would be useful? Why or why not?

• What would you like to see in the streamed video?

• How would this type of media affect your work?

• What features in the prototype do you think would be helpful for you? Why?

• What other functionalities would you want added?

We also asked participants for layout suggestions of the components, if they had any. Each session lasted about 50–60 min. Sessions were done individually in a separate room away from the call-taking floor and in private so that each participant could openly express their opinions.

Data Collection and Analysis

We audio recorded all sessions and kept handwritten notes. We transcribed the audio recordings and conducted open and axial coding of our notes and the transcripts. We used NVivo (software) for organizing our transcribed data and for all our coding purposes. A researcher went through all the participants' data to identify insightful views and problem points across all the transcripts. Then the researcher performed inductive coding by using portions of text in the transcripts that had insightful views as starting points. The main open codes revealed issues with texting, work-flow impact, camera-control, likes and dislikes in viewing videos, and more general UI suggestions. The researcher also maintained a list of code definitions and cited rules to generate codes from the text. The open codes were then grouped into categories, such as information gain and video data, which became our axial codes. The coding was done by a single researcher initially, and then reviewed with a second researcher. The second researcher used the same cited rules stated by the first researcher for the generation of codes and created codes independently. The two researchers then compared codes and made minor modifications to the codes to come up with the axial codes. Lastly, both researchers performed a selective coding pass which focused on drawing core themes out from our axial groups. This involved two researchers exploring and talking about the themes. We report our high-level themes in the Results section. We show quotes from participants with P#.

Results: Video and Picture Viewing

Participants firmly believed that having visuals in the form of videos or images would improve the comprehensibility of an emergency situation and improve call-taking efficiency, similar to our initial study.

We introduced participants to the idea of being able to guide the remote caller in capturing good footage of the emergency situation. This involved conducting the appropriate camera work, which includes holding the phone properly so that videos and photos were captured at valuable angles. We also wanted to ensure that, apart from getting the basic angles correct, the call taker could ask the caller to move their phone or themselves in any direction. We let call takers imagine a futuristic software feature which would allow them to relay instructions to the caller's screen as a visual overlay, like in augmented reality, where call takers could input what to show on callers' phones. Participants really valued this idea. They felt that in noisy or crowded places, a visual overlay would be useful. Participants felt that such a feature would be beneficial for older adults who may have limited experience using a mobile phone camera, and for those who face language barriers. These people could look at on-screen visuals as opposed to trying to understand verbal instructions.

Yet, despite such a feature, it became clear that participants did not always know how to direct the camera work. That is, they did not always know what to ask the caller to do with their camera and what types of actions would produce the best shots. Our interface lacked features that might help them out with suggestions based on different types of emergencies. This is somewhat akin to the standard manual that call takers have on hand that lists what questions to ask and in what order for a certain emergency. No such suggestions or manuals are currently available for camera work. Participants were also concerned that, even if there was guidance or they knew what to tell the caller to do with their phone, the caller might not be able to perform the actions due to reasons such as stress. Moreover, participants did not know whether incoming videos needed to be shown on a large screen to provide situational awareness to others at the call center, or whether others should be viewing incoming video. Participants felt that in cases pertaining to large events like games or festivals, a video or photo of a situation replayed at the call center may help other call takers/dispatchers handle situations or manage resources better.

These results build on our initial study of 9-1-1 call taking centers to show implications for how support for camera work needs to be designed. Our first study showed user interfaces for 9-1-1 need to support varied camera work, and now we see that designs need to provide call takers with guidance for suggesting appropriate camera work to callers. Existing video conferencing systems (e.g., Skype and FaceTime) do not provide camera work suggestions, nor ways to share this information to people on the other end of a call. Thus, it is clear that the standard design paradigms for video conferencing systems need to be modified or extended for future 9-1-1 video calling systems.

Results: History and Mapping

The existing CAD system in the call center only allowed “open” cases to be seen. Participants liked the fact that they could go back in time with our paper prototype and check for incidents that were closed using the Map screen. This could provide them with situation awareness of the broader set of emergencies being handled by the 9-1-1 call center as well as the ability to refresh their memory about cases they handled themselves.

To aid situation awareness, participants talked about wanting to see additional details about each scene in the map view without having to open each individual case. Currently our design just showed balloons for each incident. Participants felt that seeing media from the cases directly on the map could make it easy to spot repeat calls. Yet they were cautious that showing video or images of an emergency directly on the map could easily present traumatic situations that they may not want to necessarily see when searching.

Some participants were concerned that when they adjusted the slider in the timeline, they might miss out on knowing what was inside or outside the selected time range. Participants also wanted additional filters, such as incident type and date.

“It's very interesting. This would be pretty beneficial to have the slider bar and be able to go back on the timeline. Yeah. It maybe would also be nice to have an option where you can type in exactly how many minutes you want to go or filter files through different category types.” - P9

These results build on our initial study to show that 9-1-1 call takers would value historical multimedia information about video calls, which could aid situation awareness. Yet this type of feature should be designed cautiously so as not to create additional trauma for call takers who may view the information. Such information could be stress-inducing if gory or traumatic scenes are depicted in the video or photo history.

Results: Instant Messaging

Texting between call takers and callers was mostly seen to be efficient in cases where the texter had to be surreptitious, like in the case of an abusive relationship. Based on the knowledge they had gained from a trial implementation of texting services at another 9-1-1 facility, participants felt that texting came with many problems. They said that people often used it to abuse 9-1-1 operators or send inappropriate content. Moreover, people with emergencies often texted abruptly and did not respond fast enough, which left 9-1-1 call takers anticipating the worst, when in most cases the reality was not necessarily as serious. For these reasons, participants were cautious about the benefits of text messaging and preferred communication over audio or video, unless the caller was forced to communicate via text. In terms of our user interface, participants liked the basic layout and the fact that they could easily transfer text from the messaging window to the dispatch window.

“Text messaging wise, having the option to directly link it into the file would be helpful because right now we have to retype everything that comes.” - P4

Many existing video conferencing systems are designed to have text messaging capabilities alongside video (e.g., Skype and Zoom). Based on our results, we, again, see that this common design paradigm has challenges when it comes to 9-1-1 emergency calling. While text messaging may be valuable in some situations, call takers prefer interfaces that focus on video and audio calling.

Study 3: Medium Fidelity Digital Prototype

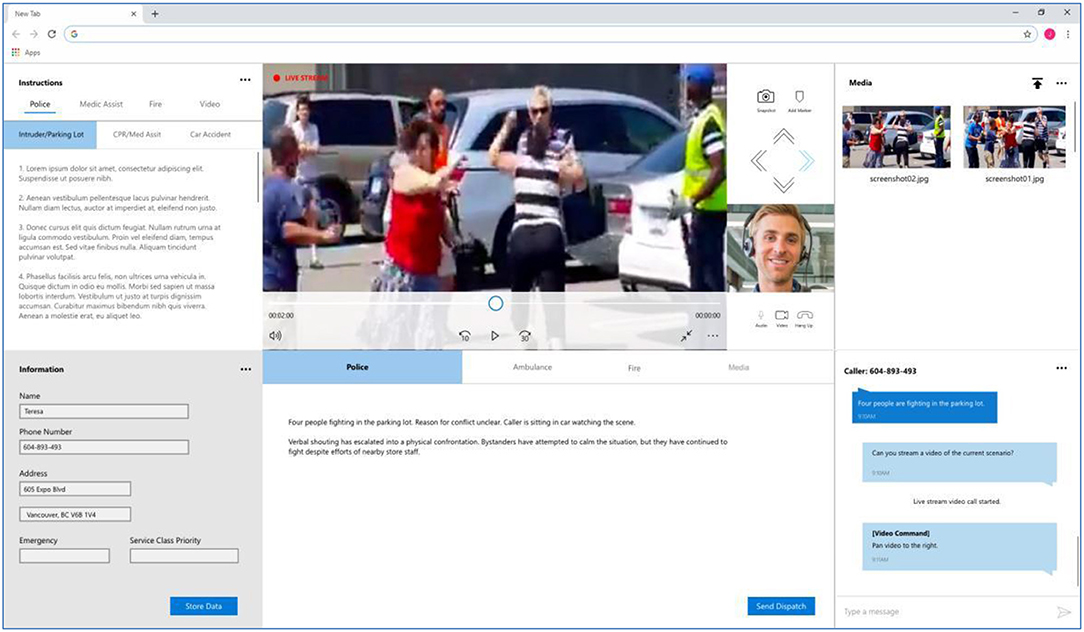

Based on our study findings, we iterated our design and created a medium-fidelity prototype to test out our design ideas as an interactive user interface. We used HTML, CSS and JavaScript to create a front-end web application that showcased our design ideas. The prototype was created as a responsive web design that could scale to any layout. The system was medium fidelity in the sense that it did not integrate with the 9-1-1 call-taking network and infrastructure. This was purposeful because we were focused on understanding user experience needs, rather than infrastructure needs. We did not create a user interface for the caller side as we were focused on the call taker's interactions and experiences. Our goal for the digital prototype was to probe call takers on some of our refined design ideas and gain feedback on the interface through interactive use of it on a computer. We also wanted to provide further triangulation to the results of our previous studies, if possible.

We kept the same main features in our interface. Figure 5 shows the digital prototype's Media Screen, which contains the same basic components as the paper prototype. Currently the figure shows images shared by a 9-1-1 caller as part of an Image Gallery. If the “Video” button is pushed, this view changes to show live video, as shown in Figure 6 (top). We made a custom video player that plays the live video (we used “fake” live video for the purpose of illustration in our prototype) and provides the call taker with the ability to take snapshots of the video stream. These snapshots are time-stamped. Figure 6 shows two such snapshots taken at different timepoints. Based on the findings from our paper prototype study, we made the following main changes to the design:

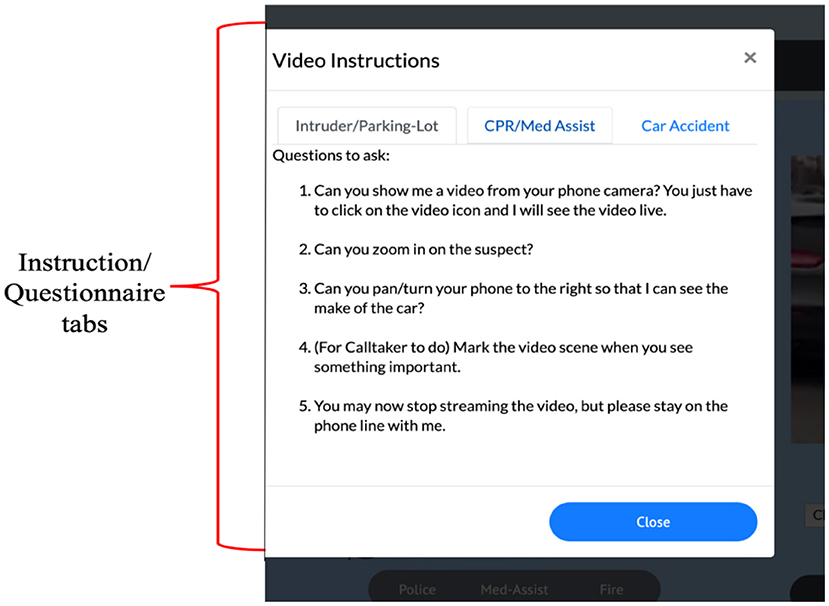

a. Instructions: Like the paper prototype, the call taker could suggest directions for moving or zooming the phone and these would appear on the caller's phone as visual overlays. We also added an “instructions” window (Figure 7). Clicking the “Police,” “Med-Assist Instructions,” or “Fire” button in the middle of the screen (Figure 5) opens the “instructions” window, which lists a series of questions to ask callers for specific types of emergencies. To better support camera work, we added a “Video Instructions” tab. Clicking it shows steps to suggest to the caller to best capture scene footage for the specific type of emergency.

b. Fast Interactions: To improve interaction speeds, we added keyboard shortcuts. For example, users can press “tab” on their keyboard to easily move between data entry fields, press “spacebar” to pause the video feed, “M” to save a snapshot from the live video feed, etc. When a live video feed is open, call takers can press arrow keys on the keyboard to suggest directions for the caller to move their camera as a part of their camera work. These appear as visual overlays on the caller's phone. Ctrl/Cmd + arrows can be pressed to suggest tilting the camera. Alternatively, users can click areas of the video with their mouse to trigger visual overlays of arrows on the caller's phone.

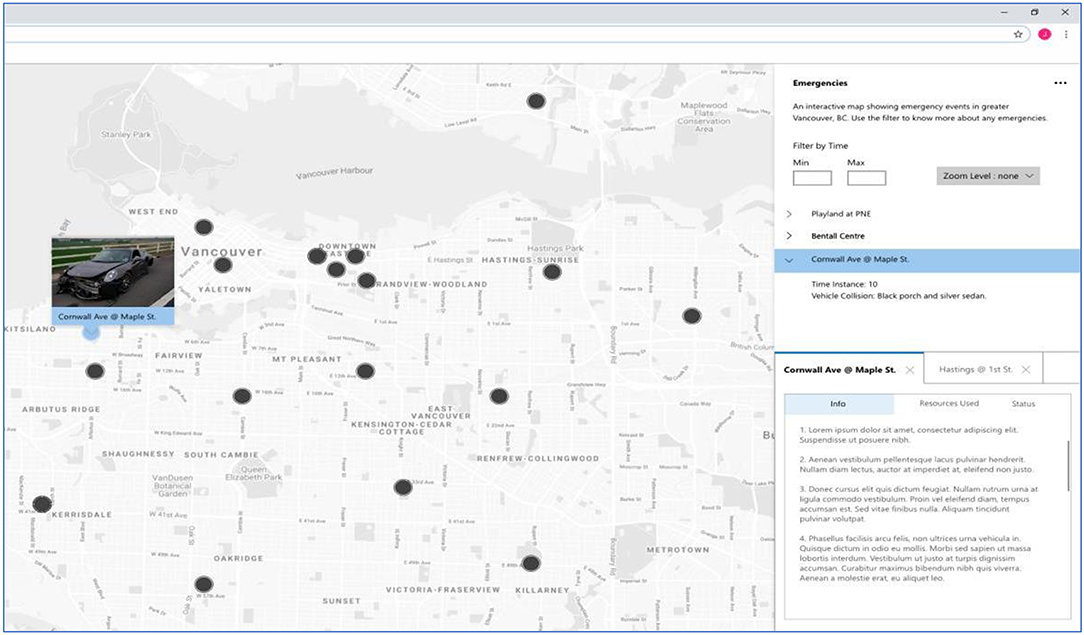

c. Media and Filters for the Map: Figure 8 shows the Map Screen that ideally is opened on a separate computer display. We enhanced the features in the map interface by letting call takers filter cases shown on the map based on the type of emergency (fire, police, ambulance) and select date ranges. While participants in our paper prototype study were cautious about seeing visuals from each emergency on the map, we added this functionality to further probe call takers about the idea.
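The keyboard shortcuts listed under Fast Interactions can be sketched as a mapping from key presses to interface actions. This is a minimal sketch rather than the prototype's code: the `Action` type and `actionForKey` function are hypothetical, while the key strings ("Tab", " ", "ArrowUp", and so on) are the standard values reported by the browser's `KeyboardEvent.key` property.

```typescript
type Direction = "up" | "down" | "left" | "right";

// Hypothetical action names; the paper does not specify the prototype's internals.
type Action =
  | { kind: "nextField" }
  | { kind: "togglePause" }
  | { kind: "saveSnapshot" }
  | { kind: "suggestMove"; direction: Direction }
  | { kind: "suggestTilt"; direction: Direction };

// Subset of the DOM KeyboardEvent fields used here, so the function can be
// exercised without a browser.
interface KeyInput {
  key: string;
  ctrlKey: boolean;
  metaKey: boolean;
}

function directionForKey(key: string): Direction | null {
  switch (key) {
    case "ArrowUp": return "up";
    case "ArrowDown": return "down";
    case "ArrowLeft": return "left";
    case "ArrowRight": return "right";
    default: return null;
  }
}

function actionForKey(input: KeyInput, liveVideoOpen: boolean): Action | null {
  const direction = directionForKey(input.key);
  if (direction !== null) {
    // Arrow keys only suggest camera work while a live video feed is open.
    if (!liveVideoOpen) return null;
    // Ctrl (or Cmd on macOS) plus an arrow suggests tilting instead of moving.
    return input.ctrlKey || input.metaKey
      ? { kind: "suggestTilt", direction }
      : { kind: "suggestMove", direction };
  }
  switch (input.key) {
    case "Tab": return { kind: "nextField" };
    case " ": return { kind: "togglePause" };
    case "m":
    case "M": return { kind: "saveSnapshot" };
    default: return null;
  }
}
```

In a browser, a `keydown` listener could pass the event straight to `actionForKey`, since `KeyboardEvent` has all three fields. A real interface would also need to ignore single-letter shortcuts such as “M” while a text field has focus, which this sketch does not attempt.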

Medium-Fidelity Prototype Evaluation

We conducted a study at the same call center to evaluate the digital medium-fidelity prototype. We focused on understanding how our digital prototype would be used by 9-1-1 call takers to carry out emergency calls. This included further exploring the design features and the changes we had made since our first prototype study. We also wanted to understand if call takers had different views of the user interface now that they could interact with a higher-fidelity version of it.

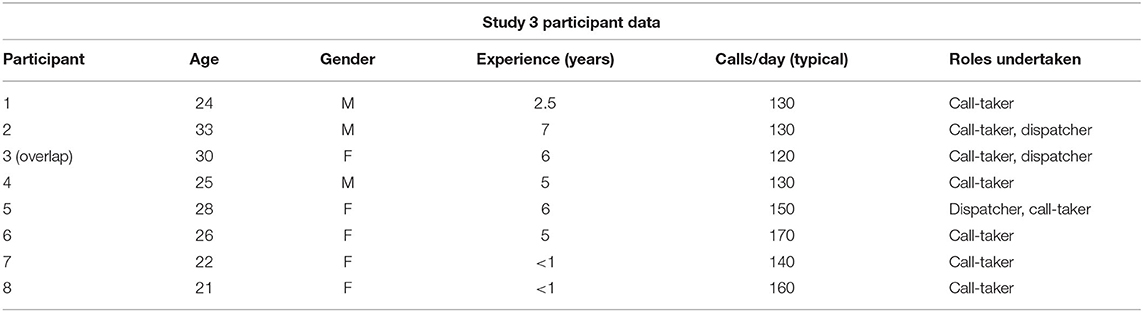

Participants

We worked with eight participants: three men and five women (Table 3 provides a summary). Only one participant, P3, overlapped with the previous study due to availability issues. We also wanted to get different perspectives, if possible. Participants' ages ranged from 21 to 33 (μ = 26.1, SD = 3.78). Two participants had job experience of less than a year, while the remaining had more than 2 years of experience. Each participant, on average, dealt with around 140 calls during their 8-h work shifts.

Methods

We asked our participants background questions regarding their age and work experience as a call taker. We then had participants work through the same mock scenarios that we used in our last study. Participants were now shown the digital version of the system and asked to interact with the medium-fidelity prototype. For the scenarios, we showed them videos of each incident using example video clips that we had gathered from YouTube. These videos were played in our digital prototype as if they were incoming live video streams, and participants had to perform tasks based on the scenes they saw. After going through the scenarios, we interviewed them to find out their reactions to the prototype and interactions with it. We probed them with questions like:

• How do instruction menus help you in guiding camera work of a caller?

• How helpful do you find the mechanism for suggesting better camera work?

• What do you think about the photo sharing with callers and how will it impact your work?

We also asked for suggested improvements to the interface. Each session lasted about 40–45 min.

Data Collection and Analysis

We audio recorded the study sessions and kept handwritten notes. We transcribed the audio for analysis and then conducted open and axial coding. We performed the same procedure for data collection and coding as discussed in the previous study. Open codes revealed issues around camera control, saving snapshots, uploading files, and slider control. Axial codes grouped open codes into categories such as workflow impact and media interaction. We then performed a selective coding pass that created high level themes, which we report in the Results section. Like the last study, the analysis was initially done by one researcher and then reviewed and adjusted based on conversations with a second researcher. In the results, quotes are listed with P#.

Results: Video and Picture Viewing

Again, participants believed that videos, both live and recorded, were important, as they could reveal more about an emergency than was possible with just a verbal description. Participants highly valued the ability to turn video on/off. Most participants felt that, beyond a certain point, visual information could be unnecessary, and they would choose to ignore it to lessen traumatic experiences or cognitive overload. The visual arrow markings that would suggest camera work directions were seen as an important add-on. Participants valued the keyboard shortcuts for interacting with media and suggesting camera work to the caller.

Like the last study, participants found value in being able to send photos to dispatchers. They also liked the added ability to capture and share snapshots of live videos as a means of personally curating content for dispatchers. Participants felt that this additional feature would reduce their reliance on photos sent in by callers alone. Participants thought that callers might simply not notice important information in the scene, and these features would help the call taker add to the information available to dispatchers and responders.

“You may be able to get those specific snapshots of what's going on and then later help the police with our investigation or court and stuff like that. So I think the ability to be able to take an actual picture of somebody's face or tattoo would be incredibly useful from a video.” - P15.

Participants felt that snapshots could potentially save a lot of time for police officers and dispatchers in gaining information like suspect descriptions or license plates. This could lead to better on-field situation awareness among first responders. Images of tattoos, specific car mods or other known symbols could help police identify the nature and affiliation of criminals and would help them prepare better for emergencies.

Results: Call Taking and Camera Work Instructions

We had participants use the Instructions window (Figure 7) in our mock tasks. Most participants found the “Video Instructions” useful for suggesting appropriate camera work. They felt such a list would be helpful for both experienced and new employees in dealing with future 9-1-1 technologies. Participants explained that the instruction set for videography should be customized for different scenarios, as the camera work required for a “break and enter” would be different from, for example, that required for a car accident. Participants suggested that the software could possibly detect scenes in the background and start suggesting corrective camera work. For example, if a lot of sky appeared in a caller's camera stream, the software could suggest that the call taker or caller point the phone at a target.
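To make the participants' suggestion concrete, the following is a deliberately crude sketch of how a frame might be checked for “a lot of sky.” It was not part of our prototype. The color rule, the threshold, and all function names are hypothetical and unvalidated, and a usable system would likely need proper scene understanding rather than a color threshold. The input is assumed to be RGBA pixel data in the layout used by the browser's canvas `ImageData.data`.

```typescript
// Hypothetical threshold: fraction of sky-like pixels above which a
// correction is suggested. Not derived from any study data.
const SKY_FRACTION_THRESHOLD = 0.6;

// Crude, unvalidated rule: a bright pixel whose blue channel is the largest.
// Overcast or night skies would not match.
function isSkyLike(r: number, g: number, b: number): boolean {
  return b > 150 && b >= r && b >= g;
}

// rgba holds 4 values per pixel (red, green, blue, alpha), each 0-255.
function skyFraction(rgba: ArrayLike<number>): number {
  const pixelCount = Math.floor(rgba.length / 4);
  if (pixelCount === 0) return 0;
  let skyPixels = 0;
  for (let i = 0; i < pixelCount; i++) {
    const offset = i * 4;
    if (isSkyLike(rgba[offset], rgba[offset + 1], rgba[offset + 2])) {
      skyPixels++;
    }
  }
  return skyPixels / pixelCount;
}

// Returns a suggestion for the call taker, or null if none is needed.
function suggestCameraCorrection(
  rgba: ArrayLike<number>,
  threshold: number = SKY_FRACTION_THRESHOLD
): string | null {
  return skyFraction(rgba) > threshold
    ? "Ask the caller to tilt the camera down toward the scene"
    : null;
}
```

Routing the suggestion to the call taker rather than directly to the caller keeps the call taker in control of the call.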

Results: Situation Awareness

We wanted to learn how situation awareness can be affected with the introduction of multimedia at a call center. There was a feeling among many call takers that they would have to undergo training just to be able to look at an incoming video and take notes without getting distracted. Some of the call takers felt that a dedicated “media” team of trained personnel could be assigned to look at videos and photos. In that case the call-taking procedure and workflow of the call taker would not change vastly. The call taker could just engage with the caller and ask them to shoot video and photos and send the media to 9-1-1. The media would simply get forwarded to the media team who could look at the media, extract important clips, write information in the CAD system and forward necessary details to a dispatcher. This also meant that whenever a call taker decided it was necessary to see multimedia data, they would have to “tag” the multimedia team present at the call center into the call. The call taker would engage with the caller over audio, while any instructions for camera work would now have to be provided by the media team via visual/textual inputs.

Currently at most call centers there is a division of tasks and responsibilities between various personnel (call taker, dispatcher, first-responders). Call takers and dispatchers remain aware of each other's activity, due to physical proximity or through communication via software like CAD systems, radios, and overhead displays. A potential introduction of a media team would mean that designs have to add features to allow the easy sharing of curated media from the media team to provide situation awareness to call takers while they are taking a call.

Results: History and Mapping

When it came to the Map screen, some participants liked the idea of being able to visually search faster with thumbnails of events shown on the screen, while some, again, expressed concerns over the possibility of gory images being shown. Again, there was a tradeoff between situation awareness and privacy concerns. Participants also felt that maps with stored data could help supervisors and legal departments gather information from past incidents quickly, thus broadening the concept of situation awareness to other team members and employees. In some instances, break-ins or burglaries are linked to a particular geography and time of the year. Stored visual data like markers and heat maps could help create awareness about the nature and probability of crimes occurring in a region at any given time of the year. This could help law enforcement agencies plan better patrolling in a given area of a city.

Some participants raised concerns that thumbnails could clutter the map if they had to see too many clustered together. A potential solution suggested by a participant is to display icons while the map is zoomed out, and display photos while the map is zoomed in. Participants also wanted additional filters to more deeply sort cases based on incident types, such as motor vehicle accidents, burglaries, etc. Thus, the categorization of police, fire, or ambulance that we provided was still not fine-grained enough.
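The zoom-dependent display and the finer-grained filters that participants asked for can be sketched together. This is an illustration only: the types, the `markersFor` function, the zoom threshold of 15, and the `allowPhotos` switch are all hypothetical. The switch reflects participants' wish to avoid seeing potentially traumatic media while searching.

```typescript
type Service = "police" | "fire" | "ambulance";

// Hypothetical incident record; incidentType is the finer-grained category
// participants asked for (e.g., "burglary", "motor vehicle accident").
interface Incident {
  id: string;
  service: Service;
  incidentType: string;
  thumbnailUrl: string | null;
}

// A null list means "do not filter on this field".
interface MapFilter {
  services: Service[] | null;
  incidentTypes: string[] | null;
}

type Marker =
  | { incidentId: string; display: "icon" }
  | { incidentId: string; display: "photo"; thumbnailUrl: string };

// Hypothetical zoom level at or above which photos replace icons.
const PHOTO_ZOOM_THRESHOLD = 15;

function matches(incident: Incident, filter: MapFilter): boolean {
  const serviceOk =
    filter.services === null || filter.services.indexOf(incident.service) !== -1;
  const typeOk =
    filter.incidentTypes === null ||
    filter.incidentTypes.indexOf(incident.incidentType) !== -1;
  return serviceOk && typeOk;
}

// Icons while zoomed out, photos while zoomed in, and icons only whenever
// the call taker has switched photos off.
function markersFor(
  incidents: Incident[],
  filter: MapFilter,
  zoom: number,
  allowPhotos: boolean
): Marker[] {
  const showPhotos = allowPhotos && zoom >= PHOTO_ZOOM_THRESHOLD;
  return incidents
    .filter((incident) => matches(incident, filter))
    .map((incident): Marker =>
      showPhotos && incident.thumbnailUrl !== null
        ? { incidentId: incident.id, display: "photo", thumbnailUrl: incident.thumbnailUrl }
        : { incidentId: incident.id, display: "icon" }
    );
}
```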

Final Design

Based on the findings from our medium-fidelity prototype study, we refined the design further (Figures 9, 10). This involved building on participants' suggestions and adjusting the layout to better utilize the available screen space and improve the flow of interactions (Figure 9). In Figure 9, at the top middle section and to the right, four arrows (chevron signs) allow the call taker to input desired directions of camera movement for the caller. These symbols would appear as overlays on the caller's phone screen. We enlarged the dispatch window and added a media tab for dispatchers (Figure 9, bottom middle) to reflect participant comments. We also stacked the media gallery and messaging windows on top of each other (Figure 9, right) and made them collapsible so that only one is shown. This would allow the visible item to display in the full height of the window. Events on the map (Figure 10) could be clicked on to reveal media and show details of the call. More filters were added for various types of emergencies.

Discussion and Conclusions

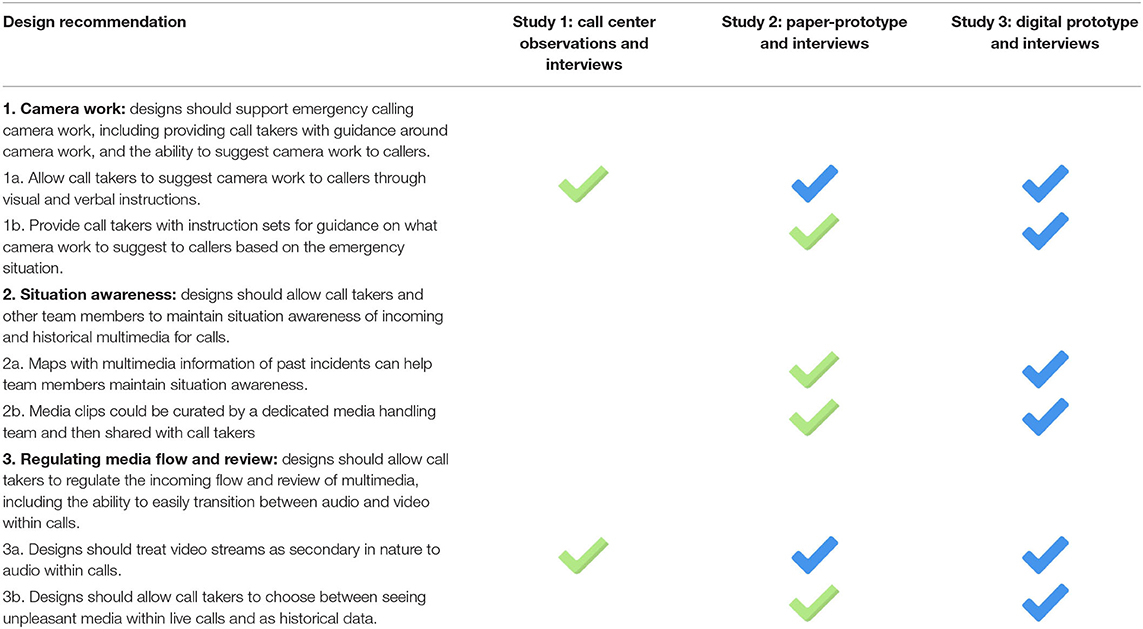

Our studies point to a range of user interface design ideas and highlight some challenges that designers should keep in mind when building NG911 call-taking systems. Here we triangulate the results from our studies and show where findings from one study validate the findings from another. To be clear, we are not proposing that we have produced a best-case user interface for call taking. Instead, we have used our design explorations to draw out an understanding of what is important to consider when designing emergency call-taking interfaces. We also provide thoughts on generalizing our work beyond NG911 settings and compare our findings on video calling to the related literature on video calling in other settings. Table 4 summarizes our design recommendations and lessons. Green checkmarks illustrate the first time a recommendation was seen in our studies, while blue checkmarks illustrate where additional studies validated and extended the recommendation.

Overall, our work shows that, in the future, 9-1-1 call-taking will need a next generation interface that supports video calling and picture sharing. Yet designs will need to be different from commercial applications like Zoom or FaceTime: design features must focus on supporting camera work, providing situational awareness, and allowing call takers to regulate the flow of media. We break these down further into the following design recommendations.

Designs Should Support Emergency Calling Camera Work, Including Providing Call Takers With Guidance Around Camera Work, and the Ability to Suggest Camera Work to Callers

All of our studies pointed to the value that video calling and picture sharing would bring to NG911. Our first study of call-taking centers showed that call takers were concerned about the quality of media captured and shared by callers. Unlike domestic situations between family and friends, where video calling does not need to be precise in terms of what to show (Judge and Neustaedter, 2010; Massimi and Neustaedter, 2014), with 9-1-1 it is very important that the right information is shown quickly. In our design studies, call takers felt that the ability to take snapshots of a livestream or recorded video may be crucial to future 9-1-1 call-taking operations, as it allowed them to circumvent challenges with callers' footage.

Our first study showed that 9-1-1 call taking is largely about control: control over what information is received and when. Our design studies validated this finding. As systems transition to support NG911, it is important that control stays with the call taker, so they can direct the call and the information-acquisition process. NG911 systems must inherently provide an asymmetric experience in which the call taker remains in control while assessing the caller's situation. Similar to how managing asymmetries in workplace video conferencing helps maintain and promote people's roles and the workplace culture (Saatçi et al., 2019), asymmetries in NG911 systems must be designed and managed to reflect and maintain the desired roles and relationship structure in a 9-1-1 call: one in which the call taker is the leader, the controller, and the consoler; while the caller is the information provider and the follower.

This creates a very different situation for video conferencing systems than is found in family/friend connections where a person, for example, may be streaming a family event such as a birthday party. In that case, the local person with the camera likely has a good idea of what is relevant to show the remote person (Massimi and Neustaedter, 2014). In contrast, with an emergency video call, the remote call taker may have specific things they want to see that may be unknown to the caller. As such, our design studies revealed opportunities for providing mechanisms to allow call takers to provide instructions to callers about what footage they should capture with their phones. This extends prior work looking at the caller side of 9-1-1 video calls (Singhal and Neustaedter, 2018). In our designs, call takers provided camera-work instructions by interacting with the interface to provide visual markers on callers' phones (Table 4, Recommendation #1a).

Yet providing suggestions for camera work was not always seen as being easy for call takers. Our design studies showed that call takers needed support and would value guidance when it came to suggesting footage, often specific to the type of emergency being reported. Our proposed solution used instruction windows listing steps for how call takers could direct camera work (Table 4, Recommendation #1b). This was valued by participants and is potentially a good first step. Moving forward, it would be pertinent to explore additional instruction sets that can support a range of non-emergency or emergency situations. Of course, there is a chance that not all emergency situations will easily fit a particular pattern and it could be challenging to use a set of generic instructions. Alternative solutions may involve additional computational processing that looks for elements within a photo or video and suggests context-specific instructions on the fly. For example, systems could check for large amounts of sky or ground in a camera feed, which may not be useful, and make suggestions for camera adjustments to the call taker. Commercially available systems do not provide such features and we also do not see related research that has explored how designs could provide such functionality. We know from the related work that information received from callers can sometimes be ambiguous and difficult to discern (Adams et al., 1995; Forslund et al., 2004). Our results extend this work to illustrate that multimedia may add to this ambiguity unless callers are able to be directed in ways to capture useful and relevant footage.
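As a rough illustration of the on-the-fly processing idea above, the following minimal sketch estimates how much of a single frame looks like sky and, if that share is large, returns a suggestion the call taker could relay. Everything in it is an assumption made for illustration and not part of our prototypes: the function names, the color thresholds, the 60% cut-off, and the representation of a frame as nested lists of RGB tuples. Detecting ground, or anything more reliable than a color heuristic, would require a different approach.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), each 0-255
Frame = List[List[Pixel]]      # rows of pixels; a hypothetical frame format


def is_sky_like(pixel: Pixel) -> bool:
    """Crude color test: bright and blue-dominant (illustrative thresholds)."""
    r, g, b = pixel
    return b > 150 and b > r + 20 and b >= g


def sky_fraction(frame: Frame) -> float:
    """Return the share of pixels in the frame that pass the sky test."""
    total = sum(len(row) for row in frame)
    if total == 0:
        return 0.0
    sky = sum(1 for row in frame for pixel in row if is_sky_like(pixel))
    return sky / total


def suggest_adjustment(frame: Frame, threshold: float = 0.6) -> Optional[str]:
    """Return a hint for the call taker, or None if no hint applies."""
    if sky_fraction(frame) >= threshold:
        return ("Feed shows mostly sky: consider asking the caller "
                "to tilt the phone down.")
    return None
```

In this sketch the hint is offered to the call taker rather than sent to the caller directly, which keeps control of the call with the call taker.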

Designs Should Allow Call Takers and Other Team Members to Maintain Situation Awareness of Incoming and Historical Multimedia for Calls

At a call center, different kinds of information, including multimedia, could come from a variety of people such as citizens and first responders. As such, software can play an important role in connecting the relevant personnel involved with a case. This could, for example, be done through pop-up notifications, text data, and radio communication, while at the same time excluding call takers from receiving data that does not pertain to them. Software could also help supervisors stay up to date with respect to ongoing situations within a call center and allow them to step in, communicate with personnel handling a call, and guide employees if necessary.

At present, call takers remain aware of their immediate surroundings through physical proximity and hearing others, or through the help of radios, CAD software, or overhead displays showing case information (Bentley et al., 1992; Heath, 1992; Hughes et al., 1992; Bowers and Martin, 1999; Toups and Kerne, 2007). This encompasses situation awareness. Our results extend these ideas to illustrate the ways that situation awareness can be created and shared when calls include multimedia like video and photos. Here we see the value of having call takers gain access to historical multimedia information about calls, which we provided on a map view (Table 4, Recommendation #2a). Yet this information is not always easy for call takers to view because it could be considered traumatic; we touch on this idea in our third recommendation below.

Our work also shows that designers need to think more broadly about how streamed video can be reviewed, curated, and segmented as images or clips for further sharing with other individuals (e.g., dispatchers and first responders) (Table 4, Recommendation #2b). In the future, 9-1-1 call centers may use a dedicated “media” team of trained personnel who can be assigned to look at videos and photos streamed or sent by callers. Media could be viewed and then sent to the appropriate call taker during a call to aid situation awareness. This, in turn, could cause large changes in the way that situation awareness is maintained, as workers may need to work in a much more tightly coupled fashion to exchange information, especially since calls happen in real time and information must be received and viewed very quickly. This is perhaps similar to the way that call takers and dispatchers must work closely together if the roles are separated between two people: the former receives information from 9-1-1 callers and the latter dispatches emergency first responders based on the information from the call taker (Neustaedter et al., 2018). Our current findings extend these workflows to show the increased reliance on information exchange if multimedia viewing is separated across roles in call centers. The resulting implication is that designs would need to support the easy exchange of multimedia call information between people in a call center.

Designs Should Allow Call Takers to Regulate the Incoming Flow and Review of Multimedia, Including the Ability to Easily Transition Between Audio and Video Within Calls

Across all our studies, participants had many concerns when it came to their own autonomy: being able to choose what they saw on video, when they saw it, and whether their own video would appear. Some calls may be challenging or undesirable to see despite the potential value for assessing scenes and gaining additional contextual information. This is different from video calling that has been reported in family-and-friend situations, where people tend to be okay seeing the video being shared with them (Judge and Neustaedter, 2010; Kirk et al., 2010; Brubaker et al., 2012; Massimi and Neustaedter, 2014). Overall, designs should consider ways to turn video information on or off at the call taker's discretion and to fluidly migrate between audio-only calls and audio calls augmented with video. In this way, audio should be considered the primary medium for communicating with callers while video and other media are secondary in nature (Table 4, Recommendation #3a). If call takers are able to choose when they view media, they can work so as not to be overwhelmed by too much media or traumatized by incoming media content (Table 4, Recommendation #3b). Media could be used when the call taker feels that a visual aid would greatly improve the understanding of a situation or reveal important details. We supported this through buttons that would turn such features on or off and ask callers to begin or stop sharing such media, while not permitting media to simply “pop-up” on-screen during a call. This was seen as being an appropriate solution in our design studies. Similarly, our results point out that designs need to be cautious with history systems (e.g., a map showing past calls) such that traumatic visuals are not shown to call takers unless they are comfortable viewing them (Table 4, Recommendation #3b).
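The regulation of media flow described above can be sketched as a small gate that sits between incoming media and the call taker's screen. This is a minimal illustration under stated assumptions, not the implementation used in our prototypes: the class name `MediaGate`, its methods, and the idea of holding media in a queue until the call taker opts in are all hypothetical. Audio is assumed to be always on and is not modeled.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MediaGate:
    """Holds incoming media until the call taker chooses to view it."""
    video_enabled: bool = False                    # off by default: audio is primary
    held: List[str] = field(default_factory=list)  # media IDs waiting for review

    def enable_video(self) -> None:
        """Call taker presses the (hypothetical) 'show media' button."""
        self.video_enabled = True

    def disable_video(self) -> None:
        """Call taker returns to an audio-only call."""
        self.video_enabled = False

    def on_incoming_media(self, media_id: str) -> Optional[str]:
        """Return the media ID to display now, or None if it is held back."""
        if self.video_enabled:
            return media_id
        self.held.append(media_id)  # never pops up on-screen unrequested
        return None

    def review_held(self) -> List[str]:
        """Hand over held media when the call taker asks, then clear the queue."""
        items, self.held = self.held, []
        return items
```

For example, a photo arriving before the call taker has enabled video would be queued rather than displayed, and would only appear once `review_held()` is called. The same opt-in step could, in principle, guard historical media on a map view.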

Limitations and Future Work

Our study was conducted specifically in Canada, though at a general level, our findings likely apply to emergency-call-taking services in most Western countries where procedures are relatively similar. Nonetheless, future work should explore call taking and associated user interfaces in other regions of the world. We specifically chose to present early designs to participants in order to gain feedback for improving our design ideas. This means that we do not know what call takers' reactions and interactions might be if working with a fully-fledged NG911 interface. Building and testing actual NG911 systems is incredibly challenging, as such systems would need to include front-end user interfaces as well as back-end systems that integrate into existing call networks and infrastructure. For these reasons, it is typically not feasible to evaluate designs that are of a higher fidelity than we have produced. Such evaluations would require large software and hardware development teams.

We did not focus on perceptions of race, gender, or other identifying characteristics of callers and how these factors may impact decision making by 9-1-1 call takers. With video calling, these factors come more into play as callers become easily identifiable. Although 9-1-1 call takers are trained to overlook such factors and deal with just the situation at hand, it may still be worth exploring whether any biases exist and whether video plays a role in bringing these biases forward even further. However, studying such nuanced human psychology is hard and at the very least requires observation of a fully implemented system. For this reason, we did not focus on this aspect in our work.

Our work is also limited in that we did not create or test an interface for 9-1-1 callers. This was purposeful so we could deeply explore the call taker side first. Future work should look more deeply into the caller side, in order to gain more insights on callers' needs and interactions. It is especially important to understand how stress and danger could influence how callers use NG911 systems, and how they might interact with a call taker through such a system while in distress. This work could provide additional important design considerations for call-taking interfaces as well.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by School of Interactive Arts and Technology, SFU Ethics Application Number: 2017s0043. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

BJ proofread and helped with theme generation. All authors contributed to the article and approved the submitted version.

Funding

This work was funded by NSERC and SSHRC Canada.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We thank the 9-1-1 call centers and employees who participated in our research.

References

Adams, K., Shakespeare-Finch, J., and Armstrong, D. (2015). An interpretative phenomenological analysis of stress and well-being in emergency medical dispatchers. J. Loss Trauma. 20, 430–448. doi: 10.1080/15325024.2014.949141

Adams, M. J., Tenney, Y. J., and Pew, R. W. (1995). Situation awareness and the cognitive management of complex systems. Hum. Fact. 37, 85–104. doi: 10.1518/001872095779049462

Artman, H., and Wærn, Y. (1999). Distributed cognition in an emergency co-ordination center. Cogn. Technol. Work 1, 237–246. doi: 10.1007/s101110050020

Bentley, R., Hughes, J. A., Randall, D., Rodden, T., Sawyer, P., Shapiro, D., et al. (1992). “Ethnographically-informed systems design for air traffic control,” in Proceedings of the 1992 ACM Conference on Computer-Supported Cooperative Work (New York, NY), 123–129.

Bergstrand, F., and Landgren, J. (2009). “Information sharing using live video in emergency response work,” in ISCRAM 2009 - 6th International Conference on Information Systems for Crisis Response and Management: Boundary Spanning Initiatives and New Perspectives, May (Brussels).

Bica, M., Palen, L., and Bopp, C. (2017). “Visual representations of disaster,” in Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing (New York, NY), 1262–1276.

Blum, J. R., Eichhorn, A., Smith, S., Sterle-Contala, M., and Cooperstock, J. R. (2014). Real-time emergency response: improved management of real-time information during crisis situations. J. Multimodal User Interf. 8, 161–173. doi: 10.1007/s12193-013-0139-7

Bowers, J., and Martin, D. (1999). Informing collaborative information visualisation through an ethnography of ambulance control. ECSCW 99, 311–330. doi: 10.1007/978-94-011-4441-4_17

Brubaker, J. R., Venolia, G., and Tang, J. C. (2012). Focusing on shared experiences: moving beyond the camera in video communication. Proc. Desig. Inter. Syst. Conf. 12, 96–105. doi: 10.1145/2317956.2317973

Camp, P. J., Hudson, J. M., Keldorph, R. B., Lewis, S., and Mynatt, E. D. (2000). “Supporting communication and collaboration practices in safety-critical situations,” in CHI'00 Extended Abstracts on Human Factors in Computing Systems (New York, NY), 249–250.

CRTC 2016 (2016). Available online at: http://www.crtc.gc.ca/eng/archive/2016/2016-116.html (accessed April 4, 2020).

De Vasconcelos Filho, J. E., Inkpen, K. M., and Czerwinski, M. (2009). “Image, appearance and vanity in the use of media spaces and videoconference systems,” in GROUP'09 - Proceedings of the 2009 ACM SIGCHI International Conference on Supporting Group Work (New York, NY), 253–261.

Denef, S., Bayerl, P. S., and Kaptein, N. (2013). “Social media and the police-tweeting practices of British police forces during the August 2011 riots,” in Conference on Human Factors in Computing Systems - Proceedings, August 2011 (New York, NY), 3471–3480.

Dicks, R.-L. H. (2014). Prevalence of PTSD Symptoms in Canadian 911 Operators. Fraser Valley, BC: University of the Fraser Valley.

Endsley, M. R., and Jones, D. G. (2011). Designing for Situation Awareness: Understanding Situation Awareness in System Design. Boca Raton, FL: CRC Press.

Engström, A., Zoric, G., Juhlin, O., and Toussi, R. (2012). “The mobile vision mixer: a mobile network based live video broadcasting system in your mobile phone,” in Proceedings of the 11th International Conference on Mobile and Ubiquitous Multimedia, MUM 2012 (New York, NY), 3–6.

Forslund, K., Kihlgren, A., and Kihlgren, M. (2004). Operators' experiences of emergency calls. J. Telemed. Telecare 10, 290–297. doi: 10.1258/1357633042026323

Hayes, E. A. (2017). Commonly Identified Symptoms of Stress Among Dispatchers: A Descriptive Assessment of Emotional, Mental, and Physical Health Consequences. St Cloud, MN.

Heath, C. (1992). Crisis management and multimedia technology in London underground line control rooms. J. Comp. Supp. Cooperat. Work 1, 24–48. doi: 10.1007/BF00752451

Higuchi, K., Yonetani, R., and Sato, Y. (2017). “EgoScanning: quickly scanning first-person videos with egocentric elastic timelines,” in Conference on Human Factors in Computing Systems - Proceedings 2017-May (New York, NY), 6536–6546.

Hughes, A. L., St. Denis, L. A., Palen, L., and Anderson, K. M. (2014). “Online public communications by police and fire services during the 2012 Hurricane Sandy,” in Conference on Human Factors in Computing Systems – Proceedings (New York, NY), 1505–1514.

Hughes, J. A., Randall, D., and Shapiro, D. (1992). “Faltering from ethnography to design,” in Proceedings of the 1992 ACM Conference on Computer-Supported Cooperative Work (New York, NY), 115–122.

Inkpen, K., Taylor, B., Junuzovic, S., Tang, J., and Venolia, G. (2013). “Experiences2Go: sharing kids' activities outside the home with remote family members,” in Proceedings of the ACM Conference on Computer Supported Cooperative Work, CSCW (New York, NY), 1329–1339.