Abstract

Interactive systems based on augmented reality (AR) and tangible user interfaces (TUI) hold great promise for enhancing how abstract phenomena are learned and understood. In particular, they combine the advantages of numerical simulation and pedagogical support while keeping the learner involved in true physical experimentation. In this paper, we discuss three examples of such AR and TUI systems that address concepts that are hard to perceive. The first example, Helios, targets K-12 learners in the field of astronomy. The second one, Hobit, is dedicated to experiments in wave optics. Finally, the third one, Teegi, lets users learn more about brain activity. These three hybrid interfaces have emerged from a common basis that combines research and development work in the fields of instructional design and human–computer interaction (HCI), from theoretical to practical aspects. Based on investigations carried out in the context of real use and grounded in work in education and HCI, we formalize how and why the hybridization of the real and the virtual improves the way learners understand intangible phenomena in Science education.

Introduction

Many concepts are not “tangible” in the real world and therefore remain diffuse in Science education. The learning of Science is complex in the sense defined by Van Merriënboer and Kirschner (2012). It requires pupils and students to mobilize and/or construct integrated knowledge (e.g., light properties, the motions of celestial bodies, or neuronal electrical activity in our applications), skills (e.g., knowing how and what to observe, manipulate, and/or adjust on technical equipment), and attitudes (e.g., formulating and validating questions or hypotheses, and arguing). As a result, learners generally have difficulty constructing and integrating scientific knowledge when facing the complexity of the real world. They may fail to build the link between what they perceive around them and what they are asked to do during practical work or theoretical courses.

The standard human–computer interaction (HCI) paradigm based on 2D screens, mice, and keyboards has shown undeniable benefits in a number of fields. This paradigm is well suited to a large number of interactive educational applications, including text editing, web search, and computer-assisted experimentation. On the other hand, the paradigm reaches its limits when rich user experiences are targeted. Thanks to augmented reality (AR) and tangible user interfaces (TUIs), new forms of learning interaction have been emerging beyond standard 2D flat screens and mouse pointing. These new input and output modalities push the frontiers of the digital world further. They open up new perspectives for tomorrow’s teaching applications. The purpose of this work is to show how the hybridization of the real and the virtual, associated with tangible interactions, could enhance the learning of intangible concepts in a real learning context.

Related Work

Many studies have been carried out using AR in formal education, from pre-school [e.g., Campos et al. (2011), Huang et al. (2015), Bhadra et al. (2016), and Yilmaz et al. (2016)] to higher education [e.g., Alexander (2004), Liarokapis and de Freitas (2010), and Munnerley et al. (2014)]. Probably because it is difficult to conduct ecological studies in a school context, implementing the use of AR in a primary school remains uncommon (Kerawalla et al., 2006; Freitas and Campos, 2008; Luckin and Fraser, 2011; Chen and Tsai, 2012). Compared to standard displays, AR offers new visualization opportunities. This is notably true in fields that rely on 3D modeling and spatial perception, such as architecture, chemistry, and mathematics [e.g., Kaufmann and Schmalstieg (2002), Chen (2006), Blum et al. (2012), Di Serio et al. (2013), and Ibáñez et al. (2014)]. Most of the studies have concluded that AR supports understanding by providing unique visual and interactive experiences that combine real and virtual information. This helps teachers to communicate abstract problems to learners (Billinghurst and Kato, 2002; Regenbrecht and Wagner, 2002; Kaufmann and Schmalstieg, 2003; Campos et al., 2011). However, even if AR’s high level of interactivity could enhance learning [e.g., Shelton (2002), Billinghurst and Duenser (2012), and Chiang et al. (2014)], the usability of the underlying technologies could cause discomfort resulting in learners’ loss of attention, especially with mobile AR and HMD-AR [e.g., Tang et al. (2002), Kerawalla et al. (2006), Morrison et al. (2009), and Shah et al. (2012)]. This could be to the detriment of interaction with the content.

Because TUIs enable users to physically interact with both digital and real worlds, they may have many advantages in education. Some examples of TUIs are props that are associated with multi-touch or interactive tables [e.g., Jordà et al. (2007) and Brown et al. (2015)], physical blocks for constructive assembly [e.g., Horn and Jacob (2007) and Horn et al. (2012)], or tokens in a constrained system [e.g., Ullmer et al. (2005)]. As in our approach, TUIs can also be associated with AR [e.g., Bonnard et al. (2012)]. Computer augmentations make abstractions “touchable” (Schneider et al., 2011). The association of AR and TUIs may be used as a very efficient way to scaffold the understanding of theoretical content by embedding dynamic graphics on physical objects. Tangible interaction provides a very hands-on approach by offering different input affordances (as well as physical constraints) to the user, while AR offers a flexible and situated way to give feedback. The content can be manipulated and augmented in many dimensions of space and time in order to influence the learning processes of intangible and complex scientific concepts. Furthermore, it is well reported that TUIs enhance and support collaborative tasks [e.g., Kaufmann and Schmalstieg (2003) and Do-Lenh et al. (2010)]. Interested readers are directed to the various reviews proposed by Marshall (2007), Shaer and Hornecker (2010), Schneider et al. (2011), Horn et al. (2012), Cuendet et al. (2015), or Zhou (2015).

Only a few examples of systems based on TUIs have been evaluated in real school contexts (Cochrane et al., 2015; Cuendet et al., 2015; Kubicki et al., 2015). The investigations in this domain tend to be limited to the pedagogical models and psychological perspective of AR and TUIs toward learning [e.g., Bujak et al. (2013) and Wu et al. (2013)]. However, in educational contexts, the learning benefits are related to the effective activities implemented to achieve the instruction goals (knowledge and/or skills). In order to be efficient, “technological applications have to be well designed, based on learning and pedagogical principles, used under appropriate conditions, and be well integrated into the school curriculum” (Lai, 2008). Consequently, the real impact of such approaches in education is still unclear, and experiments need to be conducted. In this paper, we report three experiments that enabled us to gain knowledge about the relevance of tangible and augmented approaches in real learning tasks.

Three Hybrid Interfaces for Understanding Intangible Phenomena

We aim to provide adapted pedagogical supports to learners in order to help them discover new scientific concepts and overcome misconceptions [i.e., information collected by means of personal experience that contradicts contemporary scientific theory; Vosniadou (1992)]. In situations of Science learning, misconceptions frequently lead to errors. They could create obstacles to understanding, especially if the concepts are abstract. In a more general sense, concepts rely on a set of perceptual and behavioral features reactivated in thought, and sensorimotor patterns enable the learner to visually and physically interact with them. To overcome their potentially wrong representations, the learners should be put in a situation to question them (Vosniadou et al., 2001; Duit and Treagust, 2003).

The learning environment could then act as a pedagogical support for reflection. In this context, as in all activities involving abstract knowledge, the learners’ actions on objects and the observation of the objects’ reactions are both important (Kamii and DeVries, 1993). Interacting with hybrid interfaces, which associate TUIs and AR, could provide the multisensory spatial and temporal information that is the foundation of cognition [e.g., Klatzky et al. (2003) and Lederman and Klatzky (2009)].

In the following sections, we will present three examples of hybrid interfaces that have been designed in our research teams (see list of participants in the Acknowledgment Section) and whose goals are to help learners to get to know more about intangible phenomena in the fields of astronomy, wave optics, and brain activity (see Table 1).

Table 1

| | Helios | Hobit | Teegi |
|---|---|---|---|
| Topics | Astronomy | Wave optics | Physiology |
| Theme | Earth in the solar system | Michelson interferometry | Brain electrical activity |
| Learning goals: understand | Night and day alternation; origins of seasons; origin of Moon phases; time difference; motions involved in the solar system (rotation and revolution) | Origin of interference fringes; origin of interference rings; role of the different optical elements of the Michelson interferometer; influence of the nature of the light source (mono or polychromatic); adjustment of the interferometer for each example | Active zones as a function of three factors (1: motor activity; 2: visual activity; 3: meditation); brain structures; neuronal electric activity; EEG principles |
| Required skills | Observation; manipulation; inquiry | Observation; precision adjustment; inquiry | Observation; manipulation; inquiry |
| Previous knowledge | Solid geometry; motions | Mathematics; basics of ray optics; basics of wave optics | None |
| Education levels | Primary school (grades 4 and 5) | Higher education (Institute of Technology) | General public |

Pedagogical characteristics of the hybrid interfaces Helios, Hobit, and Teegi.

Summary of the Design Process

The three hybrid interfaces were designed with specific regard to instructional design (ID) principles. ID consists of elaborating learning environments (i.e., defining learning goals, pedagogical content and supports, activities, and assessments) with particular care for the intrinsic and extrinsic factors that affect learning efficacy and efficiency. Like HCI design processes, which are focused on the acceptability and usability of an environment [see, e.g., Nielsen (1994) and Hassenzahl (2003)], ID relies on a user/learner-centered process. ID integrates the learners’ specificities that are linked to the content to be learned, in accordance with learning theories, educational and/or developmental psychology, and the curriculum [e.g., Sweller (1999) and Reigeluth (2013)].

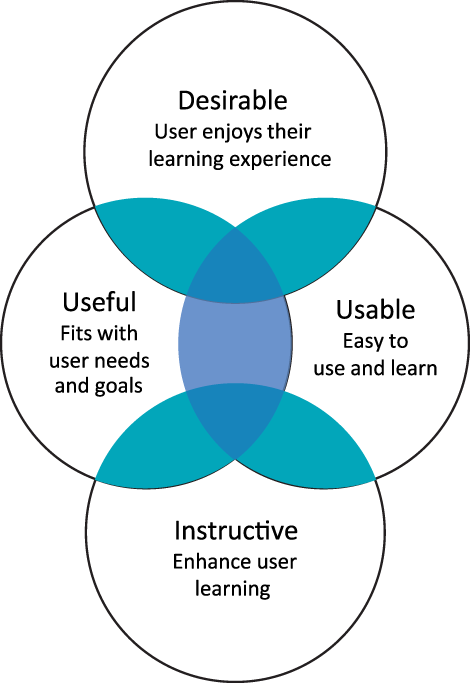

In our approach, we couple HCI and ID holistically in order to create relevant learning experiences that overcome learning barriers (Figure 1). The objective is to define the learning goals in accordance with the official curricula and the learners’ profiles for each level of study (e.g., school or university). This implies taking into account the learners’ age, developmental stage, capabilities, and personal needs with respect to the content to be constructed or to what they expect from the training, and so on. Learning content and activities are then defined after identifying the requirements focused on learning barriers. This includes a literature review, analyses of preliminary work produced by pupils or students, and interviews with teachers.

Figure 1

Main targets of an interface aiming at enhancing user–learner experience.

The three prototypes that will be presented in the following sections have been designed with our colleagues following this general approach. For each of these prototypes, we have targeted high levels of acceptability and usability, and we have conducted dedicated user studies to this end (including co-design sessions and user experience survey). All the results led us to refine the prototypes as expected in iterative design processes.

Evaluations were conducted as empirical studies comparing each interface with classical systems. They were carried out in real teaching conditions (except for Teegi, see below, owing to the high complexity of deploying the system in a school), and all were included in a pedagogical sequence or scenario.

Our analyses of learning benefits are based on qualitative and quantitative results. We focused on the users’ experience (UX, i.e., with respect to usability, usefulness, and meaningfulness), their learning achievement (efficacy), and the behaviors and interactions observed when the tasks were executed. In all studies, we used UX surveys (questionnaires and interviews) to assess the users’ pragmatic and hedonic perceptions. The learning assessments were conducted with pre-tests and post-tests centered on learning goals defined by the teachers. All the learning sessions were video recorded, after authorization and consent had been obtained from the educational establishments and from the students or, for children, their parents. Video recordings were viewed and coded separately using The Observer XT (Noldus Information Technology, Wageningen, The Netherlands). Coding was based on a behavior grid containing four behavior classes: (i) task involvement (e.g., duration of activity, dropout rate, and amount of aid requested); (ii) verbal/social behaviors; (iii) gestural interaction typologies; and (iv) visual interaction typologies (e.g., amount, nature). With the help of The Observer XT, behavior frequencies and durations were computed.
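The final step, computing a frequency and a total duration per behavior class, can be sketched as follows. This is a minimal illustration only: the event tuples, the class labels, and the `summarize` helper are hypothetical and do not reflect The Observer XT's export format or the analysis actually run in the studies.

```python
from collections import defaultdict

# Hypothetical coded events: (behavior_class, start_s, end_s).
# Illustrative values only; not data from the studies.
events = [
    ("task_involvement", 0.0, 42.5),
    ("verbal_social", 10.0, 18.0),
    ("gestural", 12.0, 15.5),
    ("visual", 14.0, 20.0),
    ("gestural", 30.0, 36.0),
]


def summarize(coded_events):
    """Return {behavior_class: (frequency, total_duration_s)}."""
    counts = defaultdict(int)
    durations = defaultdict(float)
    for behavior, start, end in coded_events:
        if end < start:
            raise ValueError(f"event ends before it starts: {behavior}")
        counts[behavior] += 1
        durations[behavior] += end - start
    return {behavior: (counts[behavior], durations[behavior]) for behavior in counts}


summary = summarize(events)
for behavior, (frequency, duration) in sorted(summary.items()):
    print(f"{behavior}: n={frequency}, total={duration:.1f} s")
```

Keeping frequency and duration side by side makes it possible to tell many short interactions apart from a few long ones, which is the kind of distinction a behavior grid is meant to capture.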

Astronomy

Learning Barriers

In astronomy, one of the most difficult skills to acquire for children and many adults is the ability to construct the causal relationship between the position of the light source and the relative position of the moving celestial bodies. Children in particular, whose spatial cognition is still developing, have great difficulty assimilating geometrical optics problems that are linked to astronomy (Piaget and Inhelder, 1956; Piaget et al., 1960; Spencer et al., 1989). The movements of non-material natural entities such as energy, heat, or light are complex to understand because they are detached from perceptual experiences (Palacios et al., 1989; Ravanis et al., 2013). For example, learners find it very difficult to understand the light path between a light source and an object because most of them ignore the space in which light beams propagate (Ravanis et al., 2013). A light path is immaterial and imperceptible. Consequently, learners do not spontaneously anticipate the shape of the resulting shadows. Moreover, what is self-evident to an adult when perceiving the environment is not yet fully developed in children. Children have to use concrete, topological spatial relationships (properties of a single object or configuration) before being able to use projective or Euclidean representations. In addition, to understand phenomena such as lunation or seasons, learners have to mentally construct a 3D model of moving celestial bodies from an allocentric point of view and also understand how light propagates. The problem is that the geocentric position of the observations (i.e., egocentric point of view) and the large scale of the phenomena constitute barriers for children to understand astral motions and the related influence of the Sun’s position on the shadows that are cast onto the Earth and the Moon.

Helios: Hybrid Environment to Learn the Influence of the Sunlight in the Solar System

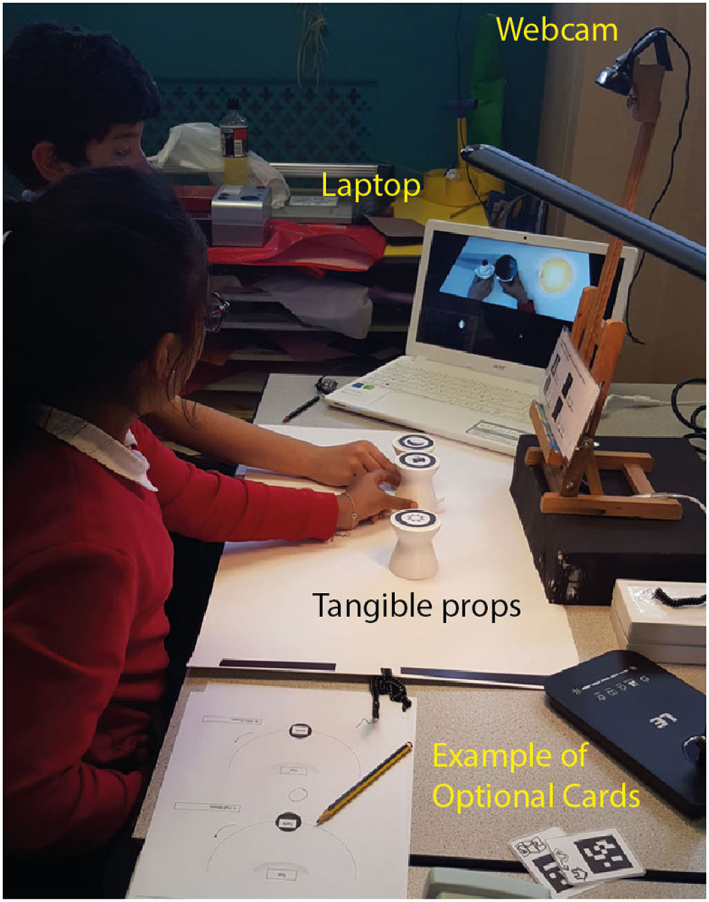

Based on this diagnosis, Helios was developed (Fleck and Simon, 2013). It is an AR platform that aims at enhancing the understanding of abstract concepts in astronomy, specifically for the primary school curriculum and children aged from 8 to 11. In order to provide physical evidence for the influence of sunlight on the Earth and the Moon, and for the consequences of their relative positions, the learning tasks are designed on inquiry-based learning principles. Children have to test their own hypotheses by using tangible props and a set of cards that trigger dedicated pedagogical activities (e.g., seasons and the Earth’s revolution around the Sun, the origin of lunation, the Earth’s rotation, and time measurement).

Helios basically consists of a standard laptop computer, a webcam, and printable AR markers placed on tangible props and on dedicated pedagogical cards (Figure 2). The Sun, the Earth, and the Moon, each associated with a specific AR prop marker, appear realistic because they are rendered as 3D spheres textured with space imagery. The virtual Sun is an omnidirectional light source that produces self-shadows on the Earth and the Moon. Shadow cones are made visible through appropriate visual feedback. Dashed lines are drawn between the center of the Sun and the centers of the Moon and the Earth. This helps the learner to understand the relationships between the three celestial bodies and to perceive the light paths.

Figure 2

(A) Example of first person perspective view of the Helios environment. Visual guides support learners: (a) dashed lines between celestial bodies’ centers; (b) a terrestrial observer; (c) shadow cone; and (d) viewport showing the terrestrial observer’s view in real time. (B) Tangible props and optional pedagogical AR cards.

Young users can then freely move the virtual Sun, Moon, and Earth by manipulating the dedicated tangible props. They can observe the results of their actions on the augmented scene displayed on a screen (see Figure 3). This enables them to perceive the astronomical position of the celestial bodies from an allocentric point of view and to observe, for example, that only one half of the Moon and of the Earth is illuminated. By interacting with the system, children can solve problems such as “why do the Moon phases change daily?,” “in which configuration will an observer located in Japan see a half-moon?,” or “why is the weather warmer in summer?”
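The geometry behind the half-moon question can be illustrated with a short sketch. It is not the Helios implementation: it assumes a flat 2D layout in arbitrary tabletop units, treats the Sun as a point source, and uses the standard relation between the phase angle (the Sun–Moon–Earth angle measured at the Moon) and the fraction of the lunar disk that an Earth-based observer sees lit. The function name and the coordinates are hypothetical.

```python
import math


def illuminated_fraction(sun, earth, moon):
    """Fraction of the Moon's disk seen lit from the Earth (0 = new, 1 = full).

    Positions are (x, y) pairs in arbitrary units. The phase angle is the
    angle at the Moon between the direction to the Sun and the direction
    to the Earth; the lit fraction is (1 + cos(phase angle)) / 2.
    """
    to_sun = (sun[0] - moon[0], sun[1] - moon[1])
    to_earth = (earth[0] - moon[0], earth[1] - moon[1])
    dot = to_sun[0] * to_earth[0] + to_sun[1] * to_earth[1]
    norm = math.hypot(*to_sun) * math.hypot(*to_earth)
    return (1.0 + dot / norm) / 2.0


# Hypothetical prop positions: Earth at the origin, Sun far away on the x axis.
sun, earth = (100.0, 0.0), (0.0, 0.0)
configurations = {
    "Moon between Earth and Sun": (1.0, 0.0),
    "Moon at a right angle": (0.0, 1.0),
    "Moon opposite the Sun": (-1.0, 0.0),
}
for label, moon in configurations.items():
    print(f"{label}: {illuminated_fraction(sun, earth, moon):.2f} of the disk lit")
```

Half of the Moon is always lit by the Sun; what changes with the configuration is how much of that lit half faces the observer, which is the relationship the allocentric view is meant to make visible.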

Figure 3

Actual Helios prototype. Pupils manipulate tangible props and observe the augmented scene on a laptop.

Additionally, a dedicated viewport makes it possible to visualize what would be seen by an observer standing at a specific location on the Earth. This feature may help children to connect various perceptions of the same phenomenon depending on their point of view (i.e., perception from space vs. perception from Earth). It may also enhance their understanding of the causal links. To support investigation, dedicated cards are used to activate options. For example, with one of these cards, one can visualize the angle at which the Sun’s rays strike the Earth. Another one enables the users to change the location of the observer on the Earth.
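Why the angle of the Sun's rays matters for the seasons can be shown with a small worked example. This sketch is ours and is not part of Helios: it uses the textbook approximation for the Sun's elevation at local solar noon, ignores the atmosphere and day length, and the observer latitude of 45° is an arbitrary assumption.

```python
import math

AXIAL_TILT_DEG = 23.44  # approximate obliquity of the Earth's axis


def noon_elevation_deg(latitude_deg, declination_deg):
    """Elevation of the Sun above the horizon at local solar noon."""
    return 90.0 - abs(latitude_deg - declination_deg)


def relative_ground_irradiance(elevation_deg):
    """Share of the beam's power received per unit of horizontal ground.

    Proportional to sin(elevation); 0 when the Sun is below the horizon.
    """
    return max(0.0, math.sin(math.radians(elevation_deg)))


latitude = 45.0  # hypothetical observer at a mid-northern latitude
for label, declination in (("June solstice", AXIAL_TILT_DEG),
                           ("December solstice", -AXIAL_TILT_DEG)):
    elevation = noon_elevation_deg(latitude, declination)
    irradiance = relative_ground_irradiance(elevation)
    print(f"{label}: noon elevation {elevation:.2f} deg, "
          f"relative irradiance {irradiance:.2f}")
```

The same beam is spread over more ground when it arrives at a lower angle, so the tilt of the Earth's axis, and not the Earth–Sun distance, accounts for the difference between the two solstices in this simplified model.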

Several studies involving more than 150 children from 7 primary classrooms showed the efficacy of Helios for learning (Fleck and Simon, 2013; Fleck et al., 2014a,b, 2015). Helios provides a learning environment that is easy to use for pupils in grades 4 and 5. It significantly enhances astronomical learning; the learning achieved with Helios was twice as good as that achieved in a classical environment (see Figure 4A). Helios helps children to overcome their misconceptions of intangible astronomical phenomena. For further details about these experiments, please refer to the related papers cited above.

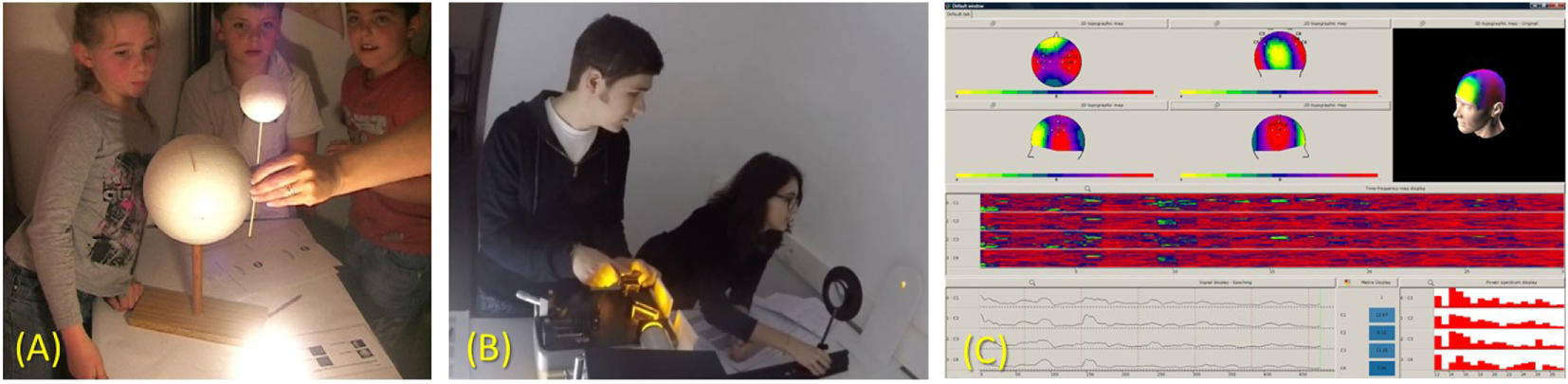

Figure 4

Example of standard learning models used to teach (A) astronomy at K-12 school; (B) interferometry; and (C) brain activity (OpenVIBE).

Wave Optics

Learning Barriers

Like astronomy for children, wave optics may be difficult to learn for many university students. It requires strong abstraction ability and scientific reasoning to understand wave properties and to construct the corresponding mental images [e.g., Galili and Hazan (2000), Colin et al. (2002), and Djanette and Fouad (2014)]. Students often neither consider the critical role of reasoning nor understand what constitutes an explanation in physics. Many university students do not easily understand the theoretical physical models. These students are then not able to develop a coherent framework for important optical concepts, despite having finished their introductory physics studies. Connections among concepts, formal representations, and the real world are often missing after traditional instruction (McDermott, 2001). Moreover, previously learned ray optics may induce misconceptions in wave optics. For example, the superposition of two waves coming from a split light source may result in a dark area, as demonstrated with a Michelson interferometer (see Figure 4B), which contradicts the ray-optics intuition that adding light can only increase brightness. Therefore, to ease the understanding of wave optics and to introduce students to the technical adjustments of optical benches, experiments are needed. They enable students to “touch” and experience what they have learned during theory classes. The problem with current optics experiments is that they are generally expensive and fragile. They require time to set up and to maintain, and they may be dangerous (e.g., when using laser sources). Moreover, the teachers who participated in our work indicated that observations during learning processes could enhance understanding, but only if the students could find relevant guidance directly in their learning environment. In addition, despite taking active control of the experiment, students may still have difficulty operating with the appropriate precision. They may have difficulty understanding the link between their actions (adjustments of the optical components) and the resulting observed phenomena if they are not guided step by step by the teacher.

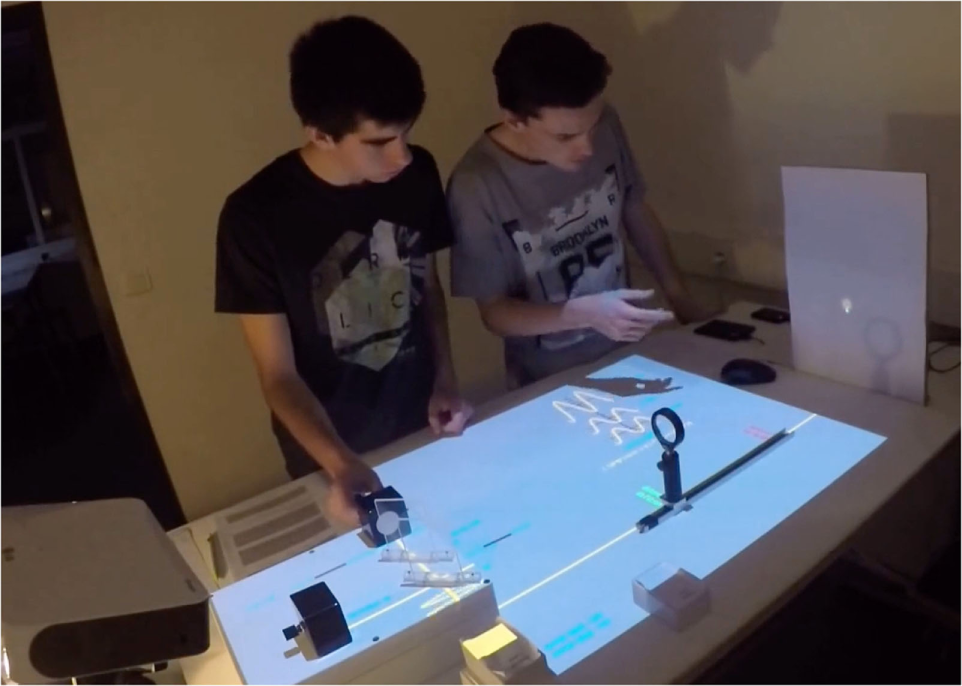

Hobit: Hybrid Optical Bench for Innovative Teaching

To overcome these limitations, we are currently exploring an approach that combines physical manipulation, a digital simulation, and pedagogical augmentations. We participated in the design of a platform called Hobit, for Hybrid Optical Bench for Innovative Teaching, where the optical elements (e.g., lenses, mirrors mounted on supports equipped with micrometric screws) are replaced by 3D-printed replicas that are equipped with electronic sensors (Furio et al., 2015). Compared to related works, especially Underkoffler and Ishii (1998) and Agarwal and Tripathi (2015), Hobit targets hybrid experiments that mimic and augment true optical experiments and not only conceptual setups. In particular, we have built a hybrid version of the well-known Michelson interferometer on our platform.

The primary goal of this hybrid environment is to enhance the technical skills of university optics students. The manipulation of the hybrid platform is very close to what experimenters are used to doing in their standard activities. The users modify the parameters by manipulating the optical replicas with mechanical adjustments (translation, rotation) in a way that is similar to what is done with a real optical bench. They observe the patterns resulting from their actions directly on a projection screen (see Figure 5). Technically, a network of communicating sensors captures the users’ actions and feeds them into a numerical simulation. This simulation computes a physical model in real time, whose result is projected onto a screen. Beyond the simulation of a real experimental setup, Hobit takes advantage of spatial augmented reality (SAR) to augment the experiment. Digital information can optionally be projected on top of the experimental board (see Figure 5). This provides the opportunity to augment the working area with pedagogical content during the learning processes. Hence, the manipulation task can be supported if necessary. Augmentation is done through a projector located above the board, which projects visual elements that are co-located with the physical components. These can be information that gives feedback about the state of the system (e.g., angle of a mirror), highlights of the light paths, or additional pedagogical supports such as formulas or graphs (see Figure 5). These augmentations have been designed to support students’ needs. The final objective is to help students to understand how their actions affect the observable phenomenon and to bring out the underlying scientific concepts.
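To give a sense of what such a simulation has to compute, the following sketch evaluates the textbook two-beam interference law for an ideal Michelson interferometer. It is not the Hobit model: it assumes a monochromatic source, two beams of equal intensity, perfectly aligned mirrors, and no extra phase shift at the beam splitter. The wavelength, the function names, and the arm-difference values standing in for sensor readings are all hypothetical.

```python
import math

WAVELENGTH_M = 632.8e-9  # assumed He-Ne laser line; not specified in the text


def michelson_intensity(theta_rad, arm_difference_m, wavelength_m=WAVELENGTH_M):
    """Normalized intensity (0 = dark, 1 = bright) at angle theta from the axis.

    The optical path difference is 2 * d * cos(theta), where d is the
    difference in arm length; the two beams add as (1 + cos(phase)) / 2.
    """
    path_difference = 2.0 * arm_difference_m * math.cos(theta_rad)
    phase = 2.0 * math.pi * path_difference / wavelength_m
    return 0.5 * (1.0 + math.cos(phase))


def radial_profile(arm_difference_m, max_angle_rad=0.05, samples=60):
    """Intensity sampled from the center of the ring pattern outward."""
    angles = [max_angle_rad * i / (samples - 1) for i in range(samples)]
    return [michelson_intensity(angle, arm_difference_m) for angle in angles]


def render(profile):
    """Crude text rendering of a profile, from dark (' ') to bright ('#')."""
    shades = " .:-=+*#"
    return "".join(shades[min(int(v * len(shades)), len(shades) - 1)] for v in profile)


# Hypothetical mirror translations, as a sensor on the replica might report them.
for arm_difference in (1000 * WAVELENGTH_M, 1000.25 * WAVELENGTH_M, 4000 * WAVELENGTH_M):
    print(render(radial_profile(arm_difference)))
```

Moving the mirror by a quarter of a wavelength turns the bright center into a dark one, and a larger arm difference packs more rings into the same field of view, which is the kind of action-to-pattern link the platform aims to make explicit.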

Figure 5

The Hobit platform makes it possible to simulate and augment a Michelson interferometer experiment. Standard optical elements are replaced by 3D-printed replicas equipped with electronic sensors (right). The observed patterns result from a numerical simulation (left). Pedagogical supports are embedded within the experiment (yellow and blue texts and drawings) (Furio et al., 2015).

This hybrid setup was evaluated during a large user study with 101 students and 7 teachers. Hobit was compared to a standard Michelson setup over 3 months of regular Michelson practical work. The full analysis of the recorded data is in progress. However, first observations enable us to discuss the benefit of a hybrid approach compared to a standard Michelson interferometer. First, Hobit proved clearly more convenient than a real experiment: teachers and students were able to start and reset the experiment instantaneously, which is not possible with a standard setup. Second, a pre-test/post-test set of exercises showed that the students who learned with Hobit obtained higher scores than those who learned with its conventional counterpart. This tends to show that the augmentations co-located with the physical objects manipulated by the students play a role in constructing knowledge.

Brain Activity

Learning Barriers

Brain functions and the means to access them are important for the general public. Our society is naturally concerned with public health questions (e.g., brain injuries, stroke, sleep disturbances, epilepsy, and the aging of the population) and with the brain mechanisms and disorders related to learning. However, brain activity is difficult to understand because it cannot be sensed, contrary to other physiological activities (e.g., respiration, muscular contraction) that can be perceived through sensory–motor feedback. Numerous studies have indicated that misconceptions about brain functions prevail in the general public [e.g., Herculano-Houzel (2002), Simons and Chabris (2011), and Dekker et al. (2012)]. In the case of brain activities with no body perception (motor functions, sensory–motor perceptions, and so on), no mental cause-and-effect representation can be conceptualized. Cerebral activities are immaterial when they are not associated with a body self-image. Hence, brain activities need to be conceptualized. Traditional computer/screen interfaces are widely used to show EEG signals (see examples in Figure 4C). However, the visualization of EEG signals with such interfaces is designed for professional use. The provided information remains inaccessible to the general public. This maintains the idea that brain activity is a complex concept, which can be understood only by specialists. Consequently, it could lead to an estrangement from knowledge and to a lack of simple access to understanding. Even if the model is significant, it could be difficult for an observer to understand the causes and effects between cerebral activities and specific ongoing actions (e.g., motor, sensory–motor, cognitive).

Teegi: Tangible Electroencephalography Interface

Teegi is a tangible electroencephalography interface that exploits SAR and tangible interaction (Frey et al., 2014). It has been designed to use physical manipulation to ease the understanding of complex phenomena that are linked to brain activity. The whole design process of Teegi combines knowledge in psychophysiology, educational techniques, and playful interaction. Users, equipped with an EEG helmet, can observe their own cerebral activity through a visualization projected onto a physical puppet in real time (Figure 6). For example, when moving their right hand, they can see which brain zones are active directly on the puppet’s head. They can also select filters, or various visualizations, by manipulating physical objects directly on the table. Three filters can be applied to the raw data, and each of them is selected by placing a trackable iconic doll representing the corresponding filter in a tracked area (see Figure 6). Several additional trackable artifacts and associated visual feedback are used to interact with the visualized brain activity (e.g., amplitude of the signal). To help the user visualize the relationship between data recorded from their scalp and activity in the brain, a tracked brain model is also used as a projection target. Compared to Mind Mirror (Mercier-Ganady et al., 2014), which superimposes brain visualizations directly onto the user’s head, the Teegi project focuses on an approach that gives great importance to physical manipulation and collaboration.

Figure 6

Teegi: brain activity is visualized directly onto the head of a physical doll (top left). Tangible objects (mini-Teegi) allow activating filters to concentrate on dedicated brain activities: motor (situated here in the detection zone), meditation, and vision (from right to left) (Frey et al., 2014).

Technically, OpenVIBE is used to associate a given visualization with the recorded brain signal. This visualization is projected onto the puppet head, which is tracked with an Optitrack tracking system. VVVV is used to orchestrate the whole application. Additional details on the system are given in Frey et al. (2014).

Due to the great complexity of deploying Teegi in an ecological context such as a classroom (i.e., installation and calibration issues), we conducted a classical evaluation in laboratory conditions with 10 subjects who performed pedagogical activities. This preliminary study showed that the subjects enjoyed manipulating a physical puppet to visualize their brain activity. Even if we cannot draw conclusions about the relevance of this approach for the learning process as a whole, it appeared that manipulating physical objects that are augmented in real time produced a motivational effect. First results show that all the subjects understood the basic functioning of the brain thanks to the Teegi platform.

Lessons Learnt and Discussion

Helios, Hobit, and Teegi enable learners to become conscious of their misconceptions. As discussed above, they contribute to the construction of scientific mental models for the majority of learners (see related papers). The experiments that have been conducted with these three interfaces provide evidence that hybrid environments may favor and enhance conceptual change. In order to explore how and why hybrid interfaces may empower this learning, we propose an overview of their advantages drawn from our own results as well as from the grounding works in education and HCI, which corroborate the design choices that were made for Helios, Hobit, and Teegi. We also highlight some limits of such approaches that need to be taken into account during the design processes.

Hybridization Enhances Contents Significance

In Science learning, pupils have to mobilize or construct integrated knowledge. From a cognitive point of view, the subjects synthesize (construct) their knowledge on the basis of two information sources: observations of the world and explanations given by other people (Vosniadou and Brewer, 1992), which include documents, models, and artifacts. Modeling the learning contents should then appear significant to the learners.

In contrast, classical pedagogical supports (e.g., books, simulation, physical models) generally present low significance to the learners. The gap between the learners’ previous conceptions and those of the person who designed the support could make the content too complex for the students. Moreover, the lack of concrete references or of anchoring in a significant environment could affect scientific learning (Kahneman and Tversky, 1973; Rolando, 2004). For example, 57% of the children who used the physical models without augmentation in the Helios study declared during the UX survey that it was difficult for them to figure out that a ball was not a ball but the Earth or the Moon (see Figure 4A), as previously observed by Shelton and Hedley (2002) in the case of adults. Similarly, in the Hobit study, the students tended to use classical interferometers somewhat randomly due to the gap between their theoretical courses and their concrete manipulations.

To summarize, standard supports and models can often be understood only by those who already know the subject matter. This is paradoxical, as they are supposed to support the learners’ needs. Since the nature of the content modeling plays an important role in the learning process, designers have to consider it from the first steps of the design process.

The three interfaces described above were instructionally designed by taking into account the content structure and the main learning barriers. In order to make not only the interface but also the contents appealing, esthetic and attractive environments were designed. They correspond to relevant models, as reliable as possible when seen from scientific and pedagogical points of view. For the three learning interfaces, the following components were emphasized: (i) photo-realistic rendering (e.g., textures coming from true astronomy images in Helios, and realistic interference patterns in Hobit), (ii) significant augmented representations that can easily be understood by the learners from their previous learning (e.g., shadow cones, wavelength, EEG signal transformed in a colored map), and (iii) meaningful references to the physical and sociocultural world such as semiotic AR markers or real objects (e.g., tangible props and option cards in Helios, replicas of optical components in Hobit, and filters and anthropomorphic tangible supports in Teegi; see Figures 2B, 5 and 6).

With the three systems, the video analyses of all studies indicated that the visual augmentations and the tangible supports were immediately identified, without any confusion. The users found the systems appealing, and they expressed how surprising it was for them to be able to perceive what is usually impossible to see. In the UX survey, the users of the three interfaces endorsed the significance of the modeling. It led to a focus on contents, and these positive emotions initiated a desire to learn. This was evidenced by the fact that, with each interface, all learners, whether children or adults, started their tasks very quickly, regularly before their teachers had finished giving the instructions. These hybrid interfaces reduced the need for working instructions. This tends to show that the hybridization ensured a significant coexistence of virtual and physical information, which reduces the learner’s perception of the abstraction level.

The symbolic significance and the concrete aspect of the hybridized supports raised the learners’ awareness of scientific explanation. For example, with Helios, all children declared that the realistic rendering of the asters and the symbolic light path provided by AR were the main factors that helped them to learn better. Similarly, with Hobit, 83% of the students declared that the hybrid display helped them (i) to make the link between the experiment and the theoretical courses, which became more understandable, (ii) to connect their technical knowledge to the problem-solving tasks of an interferometer, and (iii) to enhance their perception of the underlying wave phenomenon. The most obvious example of the importance of the modeling choices was observed with Teegi. Video analyses indicated that the participants did not find it difficult to observe and understand the signals on Teegi’s head. They also interacted with Teegi as if it were a human being (e.g., the subjects used morphological zones specific to human interactions while manipulating it). They were able to quickly understand the consequence of their action (e.g., moving my left hand activates the right zone of my brain dedicated to motor activities) even if they did not have previous knowledge about the cerebral structures. Teegi, which mediated the EEG signals through an anthropomorphic model (i.e., a physical character), provided here a consistent self-representation and an implicit self-perception of one’s own brain activity.

By “making the invisible visible,” hybrid interfaces with specifically designed content thus provide the opportunity to go beyond the limits of classical physical models.

Hybridization Engages in Relevant Learning Tasks

In our approach, hybridization seeks to make real-world phenomena easier to deal with by simplifying matters and by highlighting significant parameters to understand. Moreover, in order to construct skills and become conscious of complex phenomena and/or change their misconceptions, learners should investigate and manipulate (Vosniadou, 1994; Duit and Treagust, 2003). Hershkowitz et al. (2001) defined “abstraction as a process in which students vertically reorganize previously constructed contents into a new structure. […] The process of abstraction is influenced by the task(s) on which students work; it may capitalize on tools and other artifacts; it depends on the personal histories of students and teachers; and it takes place in a particular social and physical setting.” Ideally, pupils and students would then mobilize the skills required for scientific and technological inquiry for solving problems such as observation or for testing hypotheses by direct manipulation. This process leads to constructive theoretical models that are logically consistent (Piaget and Inhelder, 1966).

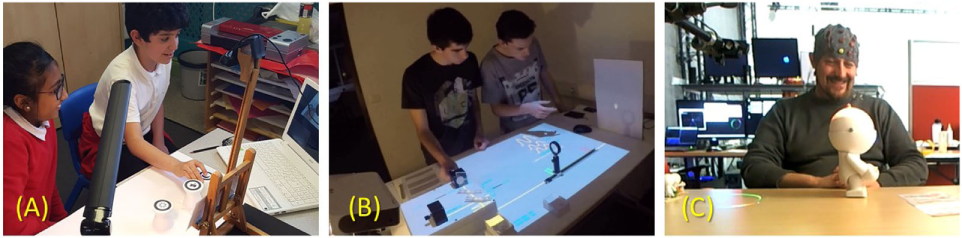

Therefore, in order to stimulate this active control in the learning tasks, the hybrid environments should be designed in a way that invites users to interact physically with the system. This is closely related to the concept of affordance in the ergonomics of HCI (Gibson, 2000). However, as emphasized by Hornecker (2012), “the affordances of physical objects are potentially endless and users creatively select those that fit their understanding of the system, their aims and the situation.” We therefore tried to emphasize learning tasks by following an epistemic approach (Kastens et al., 2008): we coupled the added value of AR modeling with the design of tangible interaction techniques in an iterative process. The main objective was that learners could change the nature of their mental tasks (i.e., epistemic action) by gathering information and not only by engaging in pragmatic actions. The design of the learning interfaces described in this paper also integrated inquiry-based learning (Helios) or problem-based learning (Hobit and Teegi) tasks. These choices were motivated by the aim of designing hybrid interfaces that engage users to fully enter into their task. For example, with Teegi, the users were inactive during only 1.9% (SD = 1.7) of the session duration. Regarding visual attention, learners continuously gazed at the monitor (Helios) or at the augmented physical objects (Hobit and Teegi) during the experiments while manipulating at the same time. Our results confirm previous studies indicating that TUIs could enhance the immersion in the task (Price et al., 2003; Xie et al., 2008). In addition, video analyses indicated that the learners’ visual attention was essentially related to their manipulation toward the inquired phenomena. For all these hybrid interfaces, behavior analyses indicated that participants made predictions and hypotheses and tested them immediately by conducting experiments.
This could be explained not only by the tangibility of the interaction but also by the task affordance provided by AR and pedagogical scenarios. According to Bottiroli et al. (2010), task affordance stems from a situation or artifact that activates a learner’s knowledge about a specific strategy to use and from the degree to which it affords that strategy. This suggests that the hybrid environments described in this paper, which are easy to use and to learn (i.e., usable) and which are rather transparent compared to more classical interfaces, provide meaningful elements that trigger the desire to interact and engage learners in achieving tasks (i.e., useful). Moreover, the visual augmentations with substantial pedagogical supports, as well as the tangibility of the interactions and the problem-solving challenges, mobilize the learners’ attention. This involves personal active control of the task and of the inquiry processes. In addition, as confirmed by video analyses, these hybrid environments involved learners in a pleasant and enjoyable experience (even for the Teegi users, who wore a constraining EEG helmet). They were highly motivated by what they had to do and by the difficulty of the challenge they had to face (see Figure 7).

Figure 7

Example of typical learning interactions in situ with (A) Helios; (B) Hobit; and (C) Teegi.

Therefore, by using such hybrid interfaces, designed by coupling ID and HCI principles, learners are able to perceive both the challenge they need to tackle to solve the scientific problems and their ability and skills to deal with this challenge. These factors are linked to intrinsic motivation and the leverage of flow states (Jackson and Csikszentmihalyi, 1999; Viau, 2009). By supporting learners’ motivation and task achievement, the hybrid environments scaffold learners to understand technical and scientific principles and their mutual relationships. In education, scaffolding refers to a variety of instructional techniques that are tailored to the needs of the learner and that are used to progressively move the latter toward stronger understanding and higher independence in his or her learning process.

Hybridization Integrates Learners in Situated Experiments

The hybrid environments presented above allow the co-location of digital and physical information in a unique shared space. In the case of Helios, this hybridization is achieved through a screen. Hobit and Teegi rely on a SAR approach, where the physical space is directly augmented by way of overhead projectors, in a similar way to what was done by Raskar et al. (2001). In all cases, these environments can be characterized as situated learning environments. Indeed, situated learning is a matter of creating meaning from activities related to daily life, even if the learning occurs in a teaching environment (Lave and Wenger, 1991; Stein, 1998).

Thus, why did the learners grasp the abstract scientific concepts that are emphasized by such hybrid environments? First, in our examples, the hybrid systems were conceived to provide the learners with concrete and topological information about the real world, augmented with digital content. Supported by the perception of a known physical environment, the learners can plainly, visually, and gesturally study the hybrid layout to perform their problem-solving tasks. Beyond visual perception, body movements are important levers of learning. The registration of movements in the proprioceptive system contributes to becoming conscious of the surrounding world (Borghi, 2005; Gallagher, 2005). Therefore, supported by multimodal interactions, most learners were able to engage, on their own initiative, in various strategies in order to test hypotheses involving heuristics or adjustment accuracy. The most obvious examples were observed during our investigations with Helios. In the related study, motions of tangible supports were recorded during the learning session, and the analysis of these movements enabled us to highlight the underlying processes by which children planned to work out where the Moon’s phases come from. Five different motions were identified, representing children’s hypotheses and their metacognitive mental models (Fleck et al., 2015). The learners thus engaged not only in movements but also in gestures, which are expressive movements, not necessarily made consciously, that contribute to the accomplishment of thought (Gallagher, 2005). In all studies, the learners focused on their learning goals (e.g., find the relative position of the Moon around the Earth in order to visualize the first quarter; adjust the interferometer in order to observe interference rings; identify the active brain zone during a visual task) rather than on the gestural strategies used to achieve them.
Unlike in virtual environments where, for example, the perception of distance can be complex for the user, in a hybrid but also familiar environment, the learners can easily adopt accurate gestural strategies. This minimizes bodily effort and maximizes the chance of finding the answer efficiently.

Compared to Helios, Hobit and Teegi benefit from an augmentation that occurs directly within the physical space. This enhances the proximity between concrete and abstract concepts. The users are thus integrated in an in situ learning experience with an identifiable and meaningful learning tool, “just like in the real world.” For example, with the Hobit system, students (and their teachers) found the tangible replicas similar to the original ones. Moreover, all of them qualified Hobit as easier to use than a classic optical bench because of the immediate vicinity of dedicated contents (e.g., formulas and additional drawings) and of adjuster marking (see Figure 5) while interacting with the tangible optical replicas. Contrary to standard systems, where learners need to relate what they manipulate on the one hand to pedagogical supports (e.g., paper forms) on the other hand, the complexity of the task is here reduced. This leads to a decrease in the learner’s cognitive load. The Hobit design enabled students to acquire, develop, and use cognitive tools in an authentic domain activity. Compared to students who used a single simulated version of an interferometer, the Hobit users effectively reinvested the learning gains that were constructed with a realistic but also augmented interferometer. Our results are in line with previous ones supporting the idea that co-locating pedagogical supports with the manipulated content could reduce the extraneous cognitive load (Bujak et al., 2013) and that this frees cognitive capacity for building knowledge (Sweller et al., 2011). Finally, as noticed in numerous previous works, co-location encourages collaborative work. In our studies with Hobit and Helios, we indeed observed that learners tended to adopt collaborative or cooperative strategies to solve the problems (e.g., task sharing, co-manipulating). Collaboration is one of the factors that may favor the building of knowledge (Dillenbourg, 1999; Hutchins, 2001).
Students or pupils frequently exchanged their points of view and ideas, worked together toward the same purpose (e.g., one moved tangible supports and the second provided screen control), and manipulated the system alternately. All these specific social skills are crucial in Science learning to develop reasoning skills and critical judgment. This would have been more difficult to achieve with standard 2D screens, mouse, and keyboard interfaces. Social interactions are at the core of the pedagogical benefit that is sought. With Teegi, an anthropomorphic cartoon metaphor was chosen. Surprisingly, the majority of participants spoke with Teegi as they would with a child or a friend. As described by Wallace and Maryott (2009) for virtual worlds, the use of avatars for representation can increase a student’s sense of social presence, i.e., the feeling that others are present with the user in the mediated environment. Thanks to SAR, the identification with a childlike character can be constructed as associations between character-related concepts and self-perception. This augmented social perception could partially explain the learning enhancement by creating a feeling of affinity, friendship, and similarity, as also observed in video game or avatar-based supports (Paiva et al., 2005; Klimmt et al., 2010).

Learning is a function of the activity, context, and culture in which it occurs (Lave and Wenger, 1991). Such SAR hybridizations have been little explored in the scope of education (Johnson and Sun, 2013). However, we believe that they open new opportunities to enhance the learning and understanding of abstract phenomena.

Risks and Limitations

Usability of the systems is a key factor for ensuring effective learning. To learn efficiently, learners should not be disturbed by technical reliability issues such as offsets between the displays and the physical supports, temporal delay, or occlusions. For example, many problems of occlusion were observed when children used the first prototype of Helios (called AstroRA 1.0), which was based on standard flat AR cardboards (Fleck and Simon, 2013). Even if the simple displacement of the virtual celestial objects was strongly endorsed (93.1% of the pupils), 23% of the pupils showed signs of irritation due to the wrist movements required to rotate the markers. This induced a lack of attention to the learning goal, dropout, and/or a feeling of frustration. This led us to modify the form factor of the manipulated objects to improve the ergonomic quality of the system. The design of the tangible props is based on the results of a co-design process with children (Roussel and Fleck, 2015), which led to 157 different ideas and to the design of the current 3D-printed props (see Figure 3). We modified the form factor of the tangible supports in order to get closer to children’s morphology and skills (e.g., hand size, ability to manipulate various objects at the same time). Recent experiments conducted with 20 children in France and England indicated that these tangible supports afford manipulation tasks. They make rotation and revolution of the markers easier. They also limit the number of occlusions induced by the children’s hands on top of the markers.

Similarly, gestural interaction, which has to focus on the task to scaffold the learning, should not be too demanding. Gestural interaction should focus more on the learner’s attention regarding the action than on the contents. This was also observed by Cuendet et al. (2012) in mathematics learning supported by TUIs. The design of hybrid and interactive environments has to be adapted to pedagogical goals and integrated in a pedagogical scenario composed of not only manipulation activities but also reflection times. Moreover, the design of task affordance has to be considered in the light of the learners’ perception of the usefulness of this task for their learning. For example, with Teegi, all the filters were used. However, it is worth noticing that the vision filter, which was the one for which subjects had more initial knowledge, was used less (16.9% of the session duration) than the other filters (between 26 and 31% of the time). It is interesting to observe that the progress in the knowledge associated with this vision filter was lower than for the other filters. This confirms our previous observation that the learners’ prior knowledge of the content and/or their personal learning goals affect their learning experiences in hybrid environments.

Furthermore, the in situ studies over a long period with Helios (i.e., 6 weeks in each school) and Hobit (i.e., 3 months) showed that technological attractiveness is not sufficient to enduringly engage the learners in their tasks. Their eagerness to use the systems rapidly decreases once they get used to them. For these pupils and students, who are generally frequently exposed to new technologies, the motivation comes mainly from the scientific question and its challenge goal and not from the attractiveness of the new technology. Therefore, our results, which were obtained in ecological situations, indicate the importance of taking this motivational aspect into account before drawing conclusions about the influence of a new environment in an educational context. Thus, to improve learning and performance, a hybrid environment needs to be built as close as possible to the learners’ various previous knowledge about the content to learn and to their pedagogical needs, in order to help them perceive the intangible scientific content through a reliable model. In other words, designers have to identify what remains “invisible” to the learners. So, with regard to current learning barriers, at the early stages of the design process, each designer has to ask what the learners need to know. What do they have to do to succeed? Which types of interactions and tasks are relevant? What are their capabilities toward these tasks? And what could be useful for them to learn efficiently?

For the design of interactive hybrid learning environments that are relevant to the diversity of learners’ representations, all these limits raise the same challenge as the one described by Hornecker (2012) on the question of affordance. This encourages us to continue our work on the learners’ perception of the contents, in a sociocultural approach, beyond the usability and usefulness aspects of the learning interfaces.

Conclusion

The hybrid interfaces presented in this paper address very different topics in Science education. However, all of these interfaces enable learners to explore inferential logic, causality, and complementarity. AR and tangible interaction, when designed in an instructional and user-centered way, offer relevant supports for scaffolding learning. In accordance with constructivist principles, the possibilities for inquiry provided by the tangible supports enable the learners to access intangible concepts. The coexistence of co-located virtual and real objects lowers the learners’ abstraction levels by providing situated, concrete, spatial, and scientific references. In our experiments, most learners overcame their misconceptions and then understood the abstract concepts differently. By coupling HCI and ID principles, the hybridization of virtual and physical elements in unique seamless environments gathers most of the factors that foster quality learning in Science. Therefore, as evidenced by the results obtained with Helios, Hobit, and Teegi, hybridization helps to gain learners’ attention by providing positive emotions and relevant visual supports. These learning environments provide the stimulus toward the contents to learn. The learning of the tasks to achieve is favored by the significant virtual and physical models. Our first experiment showed that these types of interfaces succeed in initiating a motivational process that acts positively on the desire to learn and on the quality of learning. Moreover, the pedagogical supports, which guide the learners, appear to be essential. They help the students to understand by giving meaning to the content to learn (semantic encoding). Problem-solving performance is facilitated by ergonomic technological supports, co-locations, and co-working facilities. This stimulates and motivates the learners to engage in manipulation and critical thought in the process of problem solving.
Thanks to the integration of active learning principles, gestural interactions associated with relevant visualizations enable the learner to take active control of the tasks. This encourages self-determination and autonomous decision making. Moreover, these interfaces provide relevant multimodal feedback on the learning processes engaged by the learner and thus scaffold the construction of mental models. They favor the awareness and understanding of the causal effects of learners’ gestures and, consequently, of the underlying complex scientific phenomena. Finally, the situated aspect of such interfaces favors social interactions. All these criteria, which are crucial in learning, make hybrid environments very powerful.

When thought of as pedagogical supports, by involving ID and HCI principles, hybrid interfaces provide a framework for guidance that ensures the stability of Science learning. Helios, Hobit, and Teegi are only three examples among the countless domains where hybridization can benefit learning processes. In the future, new hybrid interfaces should be designed to help learners, from kindergarten to senior residential homes, to (continue to) discover and understand their surrounding world.

Statements

Ethics statement

The evaluation protocol for Helios went through an agreement procedure with educational establishments, teachers, and parents of the pupils. The procedure for the storage and exploitation of the data for Hobit was validated by “Correspondant Informatique et Libertés Inria.” With Teegi, the authors only collected feedback from 10 subjects and did not go through Ethics Committee validation. In all studies, the authors went through an agreement procedure with all participants (consent forms, image rights).

Author contributions

SF was the main designer of Helios, and she participated in the discussions on the augmentations of Hobit. MH participated with his colleagues in Bordeaux in the whole design of Hobit and Teegi, and he took part in the evolutions of Helios. The evaluation protocols were conceived by SF and MH. The analysis of the data was performed by SF. Interpretation and writing were done by SF and MH.

Acknowledgments

The three projects discussed in this paper come from initial works conducted with our following colleagues. Helios: Robin Gourdel, Benoit Roussel, Jérémy Laviole, Christian Bastien, and Gilles Simon. This project was financially supported by University of Lorraine and SATT Grand-Est. Hobit: Bruno Bousquet, Jean-Paul Guillet, Lionel Canioni, David Furio, Benoit Coulais, Jérémy Bergognat, and Patrick Reuter. This project was supported by Inria and Idex—Université de Bordeaux. Teegi: Renaud Gervais, Jérémy Frey, and Fabien Lotte. The authors thank the schools of the academy of Lorraine and of Manchester City, the technological institute of the University of Bordeaux, their teachers, and all the learners who took part in these studies. Finally, they also thank Marjorie Antoni for her careful proofreading.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1

AgarwalB.TripathiR. (2015). “Sketch-play-learn-an augmented paper based environment for learning the concepts of optics,” in Proceedings of the 2015 ACM SIGCHI Conference on Creativity and Cognition (Glasgow: ACM), 213–216.

2

AlexanderB. (2004). Going nomadic: mobile learning in higher education. Educ. Rev.39, 28–35.

3

BhadraA.BrownJ.KeH.LiuC.ShinE.-J.WangX.et al (2016). “ABC3D- Using an augmented reality mobile game to enhance literacy in early childhood,” in 2016 IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops) (Sidney: IEEE), 1–4.

4

BillinghurstM.DuenserA. (2012). Augmented reality in the classroom. Computer7, 56–63.10.1109/MC.2012.111

5

BillinghurstM.KatoH. (2002). Collaborative augmented reality. Commun. ACM45, 64–70.10.1145/514236.514265

6

BlumT.KleebergerV.BichlmeierC.NavabN. (2012). “Mirracle: an augmented reality magic mirror system for anatomy education,” in Virtual Reality Short Papers and Posters (VRW), 2012 IEEE (Costa Mesa, CA: IEEE), 115–116.

7

BonnardQ.VermaH.KaplanF.DillenbourgP. (2012). “Paper interfaces for learning geometry,” in 21st Century Learning for 21st Century Skills: 7th European Conference of Technology Enhanced Learning, EC-TEL 2012, Saarbrücken, Germany, September 18–21, 2012, eds RavenscroftA.LindstaedtS.KloosC. D.Hernández-LeoD. (Berlin, Heidelberg: Springer-Verlag), 37–50.

8

BorghiA. (2005). “Object concepts and action,” in Grounding Cognition: The Role of Perception and Action in Memory, Language, and Thinking, eds PecherD.ZwaanR. A. (New York: Cambridge University Press), 8–34.

9

BottiroliS.DunloskyJ.GueriniK.CavalliniE.HertzogC. (2010). Does task affordance moderate age-related deficits in strategy production?Neuropsychol. Dev. Cogn. B Aging Neuropsychol. Cogn.17, 591–602.10.1080/13825585.2010.481356

10

BrownM.ChinthammitW.NixonP. (2015). “An implementation of tangible interactive mapping to improve adult learning for preparing for bushfire,” in Proceedings of the Annual Meeting of the Australian Special Interest Group for Computer Human Interaction (Parkville, VIC: ACM), 545–548.

11

BujakK. R.RaduI.CatramboneR.MacintyreB.ZhengR.GolubskiG. (2013). A psychological perspective on augmented reality in the mathematics classroom. Comput. Educ.68, 536–544.10.1016/j.compedu.2013.02.017

12

CamposP.PessanhaS.JorgeJ. (2011). Fostering collaboration in kindergarten through an augmented reality game. Int. J. Virtual Real.10, 33–39.

13

ChenC.-M.TsaiY.-N. (2012). Interactive augmented reality system for enhancing library instruction in elementary schools. Comput. Educ.59, 638–652.10.1016/j.compedu.2012.03.001

14

ChenY.-C. (2006). “A study of comparing the use of augmented reality and physical models in the chemistry education,” in VRCIA ’06 Proceedings of the 2006 ACM International Conference on Virtual Reality Continuum and Its Applications (Hong Kong: ACM), 369–372.

15

ChiangT. H.YangS. J.HwangG.-J. (2014). An augmented reality-based mobile learning system to improve students’ learning achievements and motivations in natural science inquiry activities. Educ. Technol. Soc.17, 352–365.

16

CochraneT.PanH.HuiE. (2015). “Three little pigs in a sandwich: towards characteristics of a sandwiched storytelling based tangible system for Chinese primary school English,” in Proceedings of the 15th New Zealand Conference on Human-Computer Interaction (Hamilton: ACM), 5–8.

17

ColinP.ChauvetF.ViennotL. (2002). Reading images in optics: students’ difficulties and teachers’ views. Int. J. Sci. Educ.24, 313–332.10.1080/09500690110078923

18

CuendetS.Dehler-ZuffereyJ.OrtolevaG.DillenbourgP. (2015). An integrated way of using a tangible user interface in a classroom. Int. J. Comput. Support. Collab. Learn.10, 183–208.10.1007/s11412-015-9213-3

19

CuendetS.JermannP.DillenbourgP. (2012). “Tangible interfaces: when physical-virtual coupling may be detrimental to learning,” in Proceedings of the 26th Annual BCS Interaction Specialist Group Conference on People and Computers (Birmingham: British Computer Society), 49–58.

20

DekkerS.LeeN. C.Howard-JonesP.JollesJ. (2012). Neuromyths in education: prevalence and predictors of misconceptions among teachers. Front. Psychol.:3.10.3389/fpsyg.2012.00429

21

Di SerioÁIbáñezM. B.KloosC. D. (2013). Impact of an augmented reality system on students’ motivation for a visual art course. Comput. Educ.68, 586–596.10.1016/j.compedu.2012.03.002

22

DillenbourgP. (ed.). (1999). “What do you mean by collaborative learning?” in Collaborative-Learning: Cognitive and Computational Approaches (Oxford: Elsevier), 1–19.

23

DjanetteB.FouadC. (2014). Determination of university students’ misconceptions about light using concept maps. Proc. Soc. Behav. Sci.152, 582–589.10.1016/j.sbspro.2014.09.247

24

Do-LenhS.JermannP.CuendetS.ZuffereyG.DillenbourgP. (2010). “Task performance vs. learning outcomes: a study of a tangible user interface in the classroom,” in Sustaining TEL: From Innovation to Learning and Practice, eds WolpersM.KirschnerP.ScheffelM.LindstaedtS.DimitrovaV. (Berlin, Heidelberg: Springer), 78–92.

25

DuitR.TreagustD. F. (2003). Conceptual change: a powerful framework for improving science teaching and learning. Int. J. Sci. Educ.25, 671–688.10.1080/09500690305016

26

FleckS.HachetM.BastienJ. M. C. (2015). “Marker-based augmented reality: instructional-design to improve children interactions with astronomical concepts,” in ACM SIGCHI 14th International Conference on Interaction Design and Children (Boston, MA: ACM), 21–28.

27

FleckS.SimonG. (2013). “An augmented reality environment for astronomy learning in elementary grades: an exploratory study,” in 25ème ACM conférence francophone sur l’Interaction Homme-Machine-IHM 2013 (Bordeaux: ACM), 14–22.

28

FleckS.SimonG.BastienC. (2014a). “AIBLE: an inquiry-based augmented reality environment for teaching astronomical phenomena,” in 13th IEEE International Symposium on Mixed and Augmented Reality – ISMAR (Munich: IEEE), 65–66.

29

FleckS.SimonG.DinetJ.BastienC.BarcenillaJ. (2014b). “Astronomy learning in elementary grades: influences of an augmented reality learning environment on conceptual changes,” in 28th International Congress of Applied Psychology, Paris.

30

Freitas, R., and Campos, P. (2008). “SMART: a system of augmented reality for teaching 2nd grade students,” in Proceedings of the 22nd British HCI Group Annual Conference on People and Computers: Culture, Creativity, Interaction, Vol. 2 (Liverpool: British Computer Society), 27–30.

31

Frey, J., Gervais, R., Fleck, S., Lotte, F., and Hachet, M. (2014). “Teegi: tangible EEG interface,” in 27th ACM User Interface Software and Technology Symposium, UIST 2014 (Honolulu: ACM), 301–308.

32

Furio, D., Hachet, M., Guillet, J.-P., Bousquet, B., Fleck, S., Reuter, P., et al. (2015). “AMI: augmented Michelson interferometer,” in SPIE 9793 ETOP-Education and Training in Optics and Photonics (Bordeaux: SPIE), 97930M–97931M.

33

Galili, I., and Hazan, A. (2000). Learners’ knowledge in optics: interpretation, structure and analysis. Int. J. Sci. Educ. 22, 57–88. doi: 10.1080/095006900290000

34

Gallagher, S. (2005). How the Body Shapes the Mind. Oxford: Clarendon Press.

35

Gibson, E. J. (2000). Where is the information for affordances? Ecol. Psychol. 12, 53–56. doi: 10.1207/S15326969ECO1201_5

36

Hassenzahl, M. (2003). “The thing and I: understanding the relationship between user and product,” in Funology: From Usability to Enjoyment, eds M. A. Blythe, K. Overbeeke, A. F. Monk, and P. C. Wright (Dordrecht: Springer), 31–42.

37

Herculano-Houzel, S. (2002). Do you know your brain? A survey on public neuroscience literacy at the closing of the decade of the brain. Neuroscientist 8, 98–110. doi: 10.1177/107385840200800206

38

Hershkowitz, R., Schwarz, B. B., and Dreyfus, T. (2001). Abstraction in context: epistemic actions. J. Res. Math. Educ. 32, 195–222. doi: 10.2307/749673

39

Horn, M. S., Crouser, R. J., and Bers, M. U. (2012). Tangible interaction and learning: the case for a hybrid approach. Pers. Ubiquitous Comput. 16, 379–389. doi: 10.1007/s00779-011-0404-2

40

Horn, M. S., and Jacob, R. J. (2007). “Tangible programming in the classroom with tern,” in CHI’07 Extended Abstracts on Human Factors in Computing Systems (San Jose, CA: ACM), 1965–1970.

41

Hornecker, E. (2012). “Beyond affordance: tangibles’ hybrid nature,” in Proceedings of the Sixth International Conference on Tangible, Embedded and Embodied Interaction (Kingston: ACM), 175–182.

42

Huang, Y., Li, H., and Fong, R. (2015). Using Augmented Reality in early art education: a case study in Hong Kong kindergarten. Early Child Dev. Care 186, 879–894. doi: 10.1080/03004430.2015.1067888

43

Hutchins, E. (2001). “Distributed cognition,” in International Encyclopedia of the Social and Behavioral Sciences, eds N. Smelser and P. Baltes (Oxford: Elsevier Science), 2068–2072.

44

Ibáñez, M. B., Di Serio, Á., Villarán, D., and Delgado Kloos, C. (2014). Experimenting with electromagnetism using augmented reality: impact on flow student experience and educational effectiveness. Comput. Educ. 71, 1–13. doi: 10.1016/j.compedu.2013.09.004

45

Jackson, S. A., and Csikszentmihalyi, M. (1999). Flow in Sports. Champaign: Human Kinetics.

46

Johnson, A. S., and Sun, Y. (2013). “Exploration of spatial augmented reality on person,” in 2013 IEEE Virtual Reality (VR) (Orlando: IEEE), 59–60.

47

Jordà, S., Geiger, G., Alonso, M., and Kaltenbrunner, M. (2007). “The reactable: exploring the synergy between live music performance and tabletop tangible interfaces,” in Proceedings of the 1st International Conference on Tangible and Embedded Interaction, 139–146.

48

Kahneman, D., and Tversky, A. (1973). On the psychology of prediction. Psychol. Rev. 80, 237. doi: 10.1037/h0034747

49

Kamii, C., and DeVries, R. (1993). Physical Knowledge in Preschool Education: Implications of Piaget’s Theory. New York: Teachers College Press.

50

Kastens, K. A., Liben, L. S., and Agrawal, S. (2008). “Epistemic actions in science education,” in Spatial Cognition VI. Learning, Reasoning, and Talking about Space: International Conference Spatial Cognition 2008, Freiburg, Germany, September 15–19, 2008, eds C. Freksa, N. S. Newcombe, P. Gärdenfors, and S. Wölfl (Berlin, Heidelberg: Springer), 202–215.

51

Kaufmann, H., and Schmalstieg, D. (2002). “Mathematics and geometry education with collaborative augmented reality,” in ACM SIGGRAPH Conference Abstracts and Application (New York, NY: ACM), 37–41.

52

Kaufmann, H., and Schmalstieg, D. (2003). Mathematics and geometry education with collaborative augmented reality. Comput. Graph. 27, 339–345. doi: 10.1016/S0097-8493(03)00028-1

53

Kerawalla, L., Luckin, R., Seljeflot, S., and Woolard, A. (2006). “Making it real”: exploring the potential of augmented reality for teaching primary school science. Virtual Real. 10, 163–174. doi: 10.1007/s10055-006-0036-4

54

Klatzky, R. L., Lippa, Y., Loomis, J. M., and Golledge, R. G. (2003). Encoding, learning, and spatial updating of multiple object locations specified by 3-D sound, spatial language, and vision. Exp. Brain Res. 149, 48–61. doi: 10.1007/s00221-002-1334-z

55

Klimmt, C., Hefner, D., Vorderer, P., Roth, C., and Blake, C. (2010). Identification with video game characters as automatic shift of self-perceptions. Media Psychol. 13, 323–338. doi: 10.1080/15213269.2010.524911

56

Kubicki, S., Wolff, M., Lepreux, S., and Kolski, C. (2015). RFID interactive tabletop application with tangible objects: exploratory study to observe young children’ behaviors. Pers. Ubiquitous Comput. 19, 1259–1274. doi: 10.1007/s00779-015-0891-7

57

Lai, K.-W. (2008). “ICT supporting the learning process: the premise, reality, and promise,” in International Handbook of Information Technology in Primary and Secondary Education, eds J. Voogt and G. Knezek (Boston, MA: Springer US), 215–230.

58

Lave, J., and Wenger, E. (1991). Situated Learning: Legitimate Peripheral Participation. New York, NY: Cambridge University Press.

59

Lederman, S. J., and Klatzky, R. L. (2009). Haptic perception: a tutorial. Atten. Percept. Psychophys. 71, 1439–1459. doi: 10.3758/APP.71.7.1439

60

Liarokapis, F., and de Freitas, S. (2010). “A case of study of augmented reality serious games,” in Looking Towards the Future of Technology Enhanced Education: Ubiquitous Learning and the Digital Native, eds M. Ebner and M. Schiefner (Hershey, New York: Information Science Reference), 178–191.

61

Luckin, R., and Fraser, D. S. (2011). Limitless or pointless? An evaluation of augmented reality in the school and home. Int. J. Technol. Enhanc. Learn. 3, 510–524. doi: 10.1504/IJTEL.2011.042102

62

Marshall, P. (2007). “Do tangible interfaces enhance learning?” in Proceedings of the 1st International Conference on Tangible and Embedded Interaction (Baton Rouge: ACM), 163–170.

63

McDermott, L. C. (2001). Oersted medal lecture 2001: “physics education research – the key to student learning”. Am. J. Phys. 69, 1127–1137. doi: 10.1119/1.1389280

64

Mercier-Ganady, J., Lotte, F., Loup-Escande, E., Marchal, M., and Lécuyer, A. (2014). “The mind-mirror: see your brain in action in your head using EEG and augmented reality,” in 2014 IEEE Virtual Reality (VR) (Minneapolis: IEEE), 33–38.

65

Morrison, A., Oulasvirta, A., Peltonen, P., Lemmela, S., Jacucci, G., Reitmayr, G., et al. (2009). “Like bees around the hive: a comparative study of a mobile augmented reality map,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Boston, MA: ACM), 1889–1898.

66

Munnerley, D., Bacon, M., Fitzgerald, R., Wilson, A., Hedberg, J., and Steele, J. (2014). Augmented Reality: Application in Higher Education. Sydney, Australia.

67

Nielsen, J. (1994). “Usability inspection methods,” in Conference Companion on Human Factors in Computing Systems (Boston, MA: ACM), 413–414.

68

Paiva, A., Dias, J., Sobral, D., Aylett, R., Woods, S., Hall, L., et al. (2005). Learning by feeling: evoking empathy with synthetic characters. Appl. Artif. Intell. 19, 235–266. doi: 10.1080/08839510590910165

69

Palacios, F. J. P., Cazorla, F. N., and Madrid, A. C. (1989). Misconceptions on geometric optics and their association with relevant educational variables. Int. J. Sci. Educ. 11, 273–286. doi: 10.1080/0950069890110304

70

Piaget, J., and Inhelder, B. (1956). The Child’s Conception of Space. New York: Humanities Pr.

71

Piaget, J., and Inhelder, B. (1966). L’image mentale chez l’enfant. Paris: PUF.

72

Piaget, J., Inhelder, B., and Szeminska, A. (1960). The Child’s Conception of Geometry. New York: Routledge & Kegan Paul.

73

Price, S., Rogers, Y., Scaife, M., Stanton, D., and Neale, H. (2003). Using ‘tangibles’ to promote novel forms of playful learning. Interact. Comput. 15, 169–185. doi: 10.1016/S0953-5438(03)00006-7

74

Raskar, R., Welch, G., Low, K.-L., and Bandyopadhyay, D. (2001). “Shader lamps: animating real objects with image-based illumination,” in Rendering Techniques 2001: Proceedings of the Eurographics Workshop in London, United Kingdom, June 25–27, 2001, eds S. J. Gortler and K. Myszkowski (Vienna: Springer), 89–102.

75

Ravanis, K., Christidou, V., and Hatzinikita, V. (2013). Enhancing conceptual change in preschool children’s representations of light: a sociocognitive approach. Res. Sci. Educ. 43, 2257–2276. doi: 10.1007/s11165-013-9356-z

76

Regenbrecht, H. T., and Wagner, M. T. (2002). “Interaction in a collaborative augmented reality environment,” in CHI’02 Extended Abstracts on Human Factors in Computing Systems (Minneapolis: ACM), 504–505.

77

Reigeluth, C. M. (2013). Instructional-Design Theories and Models: A New Paradigm of Instructional Theory. New York, NY: Routledge.

78

Rolando, J.-M. (2004). Astronomie à l’école élémentaire: quelques réfléxions sur la construction des competences. Grand N 74, 99–107.

79

Roussel, B., and Fleck, S. (2015). “Moi, voilà ce que je voudrais que tu me fabriques! (Lucie, 9 ans): design participatif pour l’utilisabilité de marqueurs tangibles en contexte scolaire,” in 27ème ACM Conférence Francophone sur l’Interaction Homme-Machine-IHM 2015, Vol. 2 (Toulouse: ACM), 1–9.

80

Schneider, B., Jermann, P., Zufferey, G., and Dillenbourg, P. (2011). Benefits of a tangible interface for collaborative learning and interaction. IEEE Trans. Learn. Technol. 4, 222–232. doi: 10.1109/TLT.2010.36

81

Shaer, O., and Hornecker, E. (2010). Tangible user interfaces: past, present, and future directions. Found. Trends Hum. Comput. Interact. 3, 1–137. doi: 10.1561/1100000026

82

Shah, M. M., Arshad, H., and Sulaiman, R. (2012). “Occlusion in augmented reality,” in 8th International Conference on Information Science and Digital Content Technology (ICIDT) (Hyatt Regency Jeju: IEEE), 372–378.

83

Shelton, B. E. (2002). Augmented reality and education. Current projects and the potential for classroom learning. New Horiz. Learn. 9.

84

Shelton, B. E., and Hedley, N. R. (2002). “Using augmented reality for teaching Earth-Sun relationships to undergraduate geography students,” in Augmented Reality Toolkit, the First IEEE International Workshop (Darmstadt: IEEE), 8.

85

Simons, D. J., and Chabris, C. F. (2011). What people believe about how memory works: a representative survey of the US population. PLoS ONE 6:e22757. doi: 10.1371/journal.pone.0022757

86

Spencer, C., Blades, M., and Morsley, K. (1989). The Child in the Physical Environment: The Development of Spatial Knowledge and Cognition. Chichester: Wiley.

87

Stein, D. (1998). Situated Learning in Adult Education. ERIC Digest. ED418250 (No. 195), 1–7.

88

Sweller, J. (1999). Instructional Design in Technical Areas. Australian Education Review. Melbourne: ACER Press.

89

Sweller, J., Ayres, P., and Kalyuga, S. (2011). Cognitive Load Theory. New York, NY: Springer Science + Business Media.

90

Tang, A., Owen, C., Biocca, F., and Mou, W. (2002). “Experimental evaluation of augmented reality in object assembly task,” in IEEE International Symposium on Mixed and Augmented Reality – ISMAR (Darmstadt: IEEE), 265.

91

Ullmer, B., Ishii, H., and Jacob, R. J. K. (2005). Token+constraint systems for tangible interaction with digital information. ACM Trans. Comput. Hum. Interact. 12, 81–118. doi: 10.1145/1057237.1057242

92

Underkoffler, J., and Ishii, H. (1998). “Illuminating light: an optical design tool with a luminous-tangible interface,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Los Angeles, CA: ACM Press/Addison-Wesley Publishing Co.), 542–549.

93

Van Merriënboer, J. J., and Kirschner, P. A. (2012). Ten Steps to Complex Learning: A Systematic Approach to Four-Component Instructional Design. New York, NY: Routledge.

94

Viau, R. (2009). La Motivation en Contexte Scolaire. Bruxelles: De Boeck.

95

Vosniadou, S. (1992). Knowledge acquisition and conceptual change. Appl. Psychol. 41, 347–357. doi: 10.1111/j.1464-0597.1992.tb00711.x

96

Vosniadou, S. (1994). Capturing and modeling the process of conceptual change. Learn. Instruct. 4, 45–69. doi: 10.1016/0959-4752(94)90018-3

97

Vosniadou, S., and Brewer, W. F. (1992). Mental models of the earth: a study of conceptual change in childhood. Cogn. Psychol. 24, 535–585. doi: 10.1016/0010-0285(92)90018-W

98

Vosniadou, S., Ioannides, C., Dimitrakopoulou, A., and Papademetriou, E. (2001). Designing learning environments to promote conceptual change in science. Learn. Instruct. 11, 381–419. doi: 10.1016/S0959-4752(00)00038-4

99

Wallace, P., and Maryott, J. (2009). The impact of avatar self-representation on collaboration in virtual worlds. Innovate J. Online Educ. 5, 3.

100

Wu, H.-K., Lee, S. W.-Y., Chang, H.-Y., and Liang, J.-C. (2013). Current status, opportunities and challenges of augmented reality in education. Comput. Educ. 62, 41–49. doi: 10.1016/j.compedu.2012.10.024

101

Xie, L., Antle, A. N., and Motamedi, N. (2008). “Are tangibles more fun? Comparing children’s enjoyment and engagement using physical, graphical and tangible user interfaces,” in Proceedings of the 2nd International Conference on Tangible and Embedded Interaction (Bonn: ACM), 191–198.

102

Yilmaz, R. M., Kucuk, S., and Goktas, Y. (2016). Are augmented reality picture books magic or real for preschool children aged five to six? Br. J. Educ. Technol. doi: 10.1111/bjet.12452

103

Zhou, Y. (2015). “Tangible user interfaces in learning and education,” in International Encyclopedia of the Social & Behavioral Sciences, ed. J. D. Wright (Oxford: Elsevier), 20–25.

Summary

Keywords

augmented reality, tangible interaction, human–computer interaction, instructional design, education

Citation

Fleck S and Hachet M (2016) Making Tangible the Intangible: Hybridization of the Real and the Virtual to Enhance Learning of Abstract Phenomena. Front. ICT 3:30. doi: 10.3389/fict.2016.00030

Received

24 August 2016

Accepted

28 November 2016

Published

19 December 2016

Volume

3 - 2016

Edited by