- Niigata University of International and Information Studies, Niigata, Japan

Gamified lecture courses are defined as lecture courses formatted as games, for the purposes of this research. This paper presents an example of a traditional instruction-based lecture course that was redesigned using a game-like design. First, confrontations specific to gaming situations were considered, to derive goals for students in a classroom. Students fought against a game system in these experiments. The teacher acted as a game administrator and controlled all the game materials. He also became an interface between the game system and students. Redesigned lecture courses were compared with traditional instruction-based lecture courses for their effects on relieving student dissatisfaction with the classroom. The achievement levels of students showed no improvement in the gamified design compared to the traditional instruction-based format.

1. Gamified Lecture Course

1.1. What Is a Gamified Lecture Course?

Gamified lecture courses are defined as lecture courses formatted as games, for the purposes of this research. In other words, they are lecture courses that employ gamification. Gamification is often defined as the use of game components in non-game activities (Deterding et al., 2011; Huotari and Hamari, 2012). This definition implies the addition of something to activities. However, the focus of this study is on the redesign of activities based on game design methods.

Hamari et al. (2014) list components and design perspectives that are often used in game design: points, leaderboards, badges, story, clear goals, subgoals, feedback, progress, quests, meaningful play, and motivational affordances. Confrontation is a game component that refers to the design of a user’s enemy. It is a key point in gamified lecture courses because it does not seem to be explicitly considered in traditional lecture course design. In gamified lecture courses, confrontation is also employed in order to derive lecture course goals.

1.2. Redesign from Instruction-Based to Gamified Lecture Courses

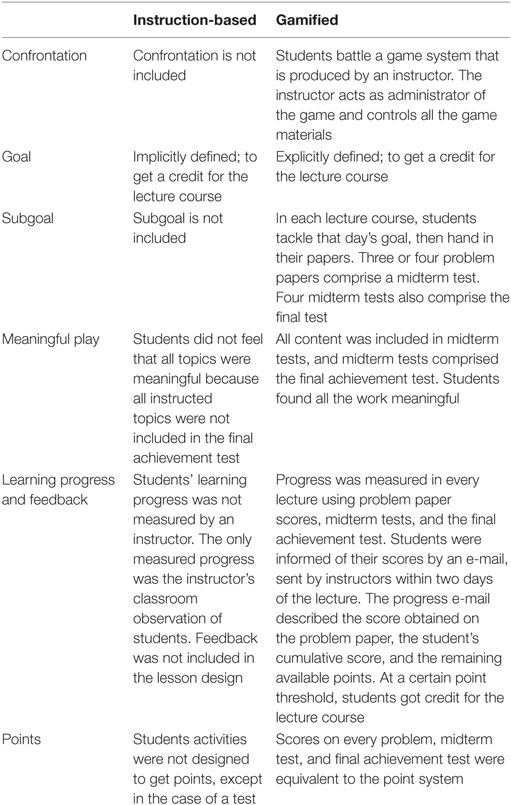

No single, widely accepted method for game design exists. Moreover, the definition of a game, which is the starting point of game design, is also controversial. Research on games was started by Huizinga (1964) and Caillois (1958). More recently, Salen and Zimmerman (2004) laid out some perspectives on game design. With regard to gamification, Hamari et al. (2014) organized previous gamification studies by their game components. In this study, a traditional instruction-based lecture course is redesigned by drawing on this previous research. A description of the redesign is shown in Table 1.

The redesign process proceeded roughly from the top row to the bottom row of Table 1. Confrontation was considered first. In this study, the confrontation was designed such that students battled a game system produced by an instructor. The instructor acted as administrator of the game and controlled all game materials. Classroom instructors thus also became interfaces between the game system and students. They produced problems for the students. The students’ goal was to solve the problems in order to get credit for the lecture course. This goal was divided into subgoals, which included midterm tests and a final achievement test. Meaningful play, which Rules of Play (Salen and Zimmerman, 2004) treats as the most important component of game design, was also regarded as an important component of student classroom activities. All classroom activities were linked to a midterm test, and the midterm tests together were linked to the final achievement test. Each student’s activity progress and midterm and final test scores were measured and immediately fed back to him/her. Each student’s measured progress was translated into points, and when a certain number of points had been accrued, the student received credit for the lecture course. This represented the attainment of a goal. In other words, the student would thus have won the game. Badges, rewards, leaderboards, collaboration, quests, and stories are additional components often used in gamification and active learning, but these were not included in the study. Additionally, this redesign focused on students’ learning processes in the classroom. Homework was, therefore, not considered in this study.
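The progress-to-credit rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name `StudentProgress`, the `credit_threshold` parameter, and the point values are hypothetical, since the paper does not report the actual point scheme.

```python
from dataclasses import dataclass, field


@dataclass
class StudentProgress:
    """Points one student has accrued from classroom activities and tests."""

    credit_threshold: int  # hypothetical number of points needed for credit
    points: int = 0
    history: list = field(default_factory=list)

    def record(self, activity: str, earned: int) -> str:
        """Add points for an activity and return immediate feedback text."""
        self.points += earned
        self.history.append((activity, earned))
        remaining = max(self.credit_threshold - self.points, 0)
        return f"{activity}: +{earned} points, {remaining} to go"

    def has_credit(self) -> bool:
        """The goal is attained once enough points have been accrued."""
        return self.points >= self.credit_threshold
```

For example, `StudentProgress(credit_threshold=60).record("midterm 1", 25)` returns the feedback string `"midterm 1: +25 points, 35 to go"`, which mirrors the immediate feedback of progress described in the text.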

1.3. Research Design

The study hypothesis was that gamified lecture courses would enable students to study without stress. This might be reflected in changes in students’ qualitative evaluation of gamified lecture courses. However, student achievement, as measured by tests, might not be significantly improved by gamification because it often depends on many other factors.

In this study, traditional lecture courses were changed to gamified ones, and both student evaluations and final achievement tests were used to determine the efficacy of these modifications.

2. Experiments

2.1. Experimental Environment

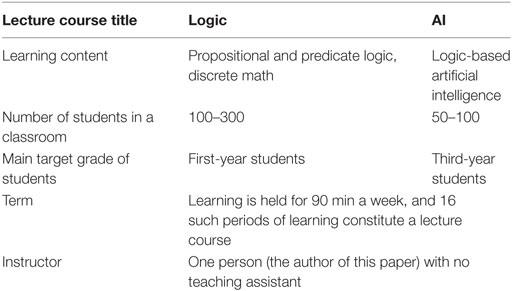

Experiments were performed on lecture courses held at the Niigata University of International and Information Studies in Japan. Two types of lecture courses were redesigned from instruction-based to gamified lecture courses. From 2008 to 2011, these two types of lecture courses were performed in the instruction-based format. From 2012 to 2014, they were performed in the gamified mode. Descriptions of the lecture courses are shown in Table 2. The lecture courses were conducted by the author of this paper. The total number of students in the experiments was 1,658, across a period of 7 years.

2.2. One Day of Instruction-Based Lecture Course

An instructor initially explained a topic. Once the explanation was finished, students tackled problems. The instructor walked around the classroom and observed students’ states. As there were many students, the instructor could not give each student individual attention. The instructor used the states of a sample of students to determine whether to explain the same topic again or to move on to the next topic. When the instructor judged that many students had finished solving the problems, explanation of the next topic was started. At this point, all the students had to stop solving the previous problems, even though they may not have finished. The end point of the lecture was announced by the instructor, after he/she considered the remaining time and students’ learning progress.

2.3. One Day of Gamified Lecture Course

An instructor first delivered one paper containing written problems to all the students. Students could tackle the problems immediately, or after the instructor’s explanation. The instructor observed students’ states before starting the explanation of the problem paper topic. If students seemed to be at a loss as to how to approach the problems, the instructor explained what the problems were and how to solve them. If most students had already tackled the problems, the instructor would first give no information and then would explain the problem that most students had difficulty approaching. When the students had solved all problems, they could finish the day’s lecture. If a student could not solve the problems within the lecture time, the instructor would show and explain the answers to them before the end of the lecture.

3. Results

3.1. Improvement in Student Evaluation and No Improvement in Exam Scores

The gamified lecture courses were evaluated qualitatively and quantitatively in comparison to the instruction-based lecture courses. Qualitative ratings consisted of student questionnaires, which were conducted every semester, for all university lecture courses. Quantitative evaluations consisted of a final achievement test, a paper test held once on the final day of the course. The exam topics included everything that was learnt in the class. To allow comparison, the format of the final exam was not changed extensively between the instruction-based and gamified lecture courses.

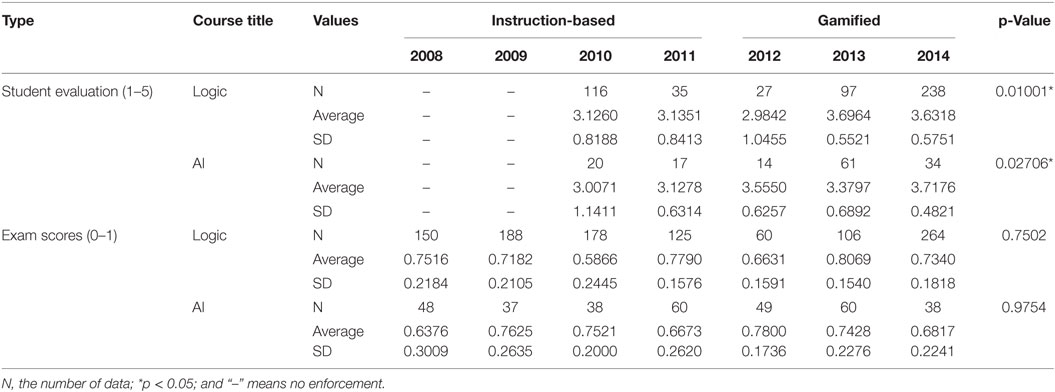

The student evaluations of the logic and AI lecture courses were analyzed using Scheffé’s method of multiple comparisons. Instruction-based and gamified lecture courses were significantly different, F(4, 508) = 3.35590, p-value = 0.01001 for the logic lecture course and F(4, 141) = 2.82770, p-value = 0.02706 for the AI lecture course. Note that a significant difference could not be confirmed for another lecture course taught by the same teacher as in these experiments, F(4, 67) = 0.390390, p-value = 0.8148, because that lecture course was not redesigned from instruction-based to gamified.

The exam scores were not significantly different, F(6, 1,064) = 0.5754, p-value = 0.7502 for the logic lecture course and F(6, 323) = 0.2039, p-value = 0.9754 for the AI lecture course. For the other lecture course, which was not redesigned, statistical analysis could not be performed because it was not graded by an exam. These statistics are depicted in Table 3.
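As a rough illustration of where F values of this kind come from, the sketch below computes a one-way ANOVA F statistic in plain Python. It assumes that each group corresponds to one academic year, which matches the reported degrees of freedom (five evaluation years, seven exam years) but is not stated explicitly in the text; the function name `one_way_anova_f` is hypothetical, and the sketch is not the author's analysis code. Obtaining a p-value additionally requires the F distribution, and Scheffé's pairwise comparisons are not shown.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of numbers."""
    k = len(groups)                    # number of groups, e.g. years
    n = sum(len(g) for g in groups)    # total number of observations
    grand_mean = mean(x for g in groups for x in g)

    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of observations around their own group mean
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between, df_within = k - 1, n - k
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within
```

With seven yearly groups and 1,071 scores in total, this function would report degrees of freedom (6, 1,064), the same shape as the logic exam result above.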

3.2. Student Evaluation

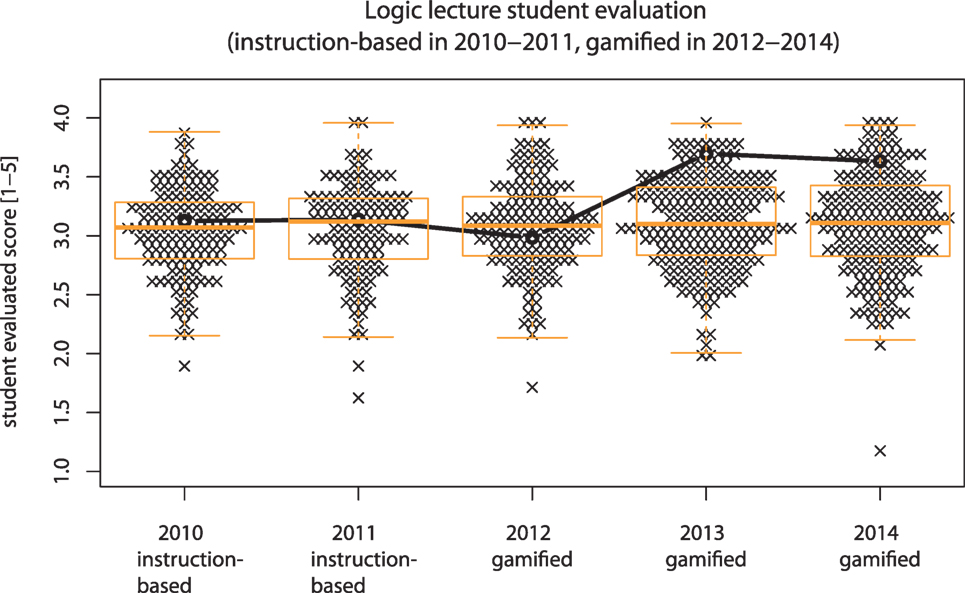

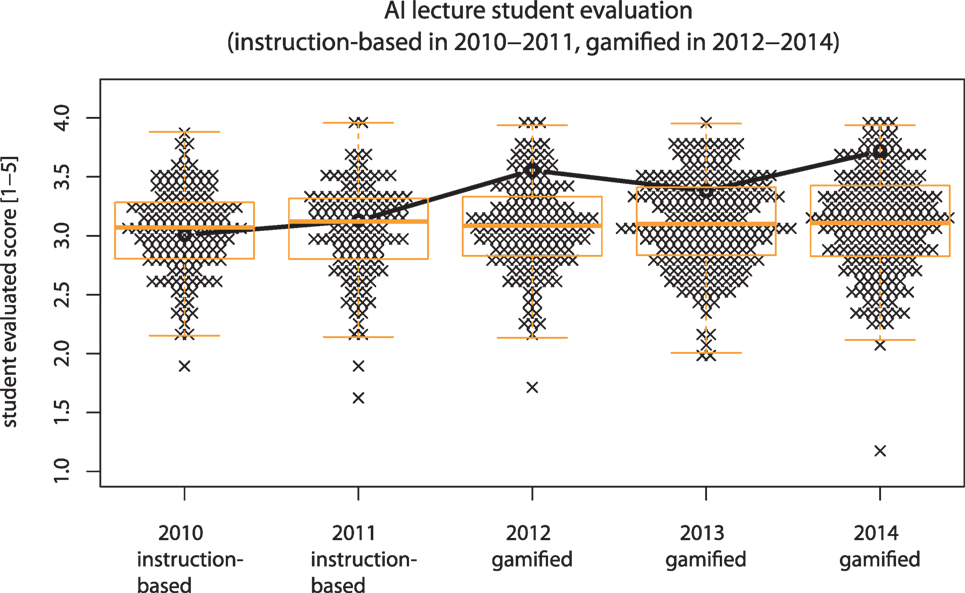

Students evaluated every university lecture course, each term, using questionnaires. The questionnaires contained many items, but only the question “What did you like about the lecture course?”, located toward the end of the questionnaire, was used in the evaluation. Note that the sentence as written here is a translation; the actual questionnaire sentences were presented in Japanese. Answers were collected using a 5-point Likert scale (Likert, 1932). These answers were translated to an interval scale using the sigma method, in which larger values are more positive. Figures 1 and 2 show the mean evaluation values of all lecture courses held in a semester for the logic and AI lecture courses, respectively. Each cross in Figures 1 and 2 represents the evaluation score of one lecture course, and the bold circles and the line connecting them indicate the average scores for the logic lecture course in Figure 1 and the AI lecture course in Figure 2. Evaluation scores are on the y-axis; higher scores are better. The years in which the lecture courses were performed are on the x-axis. Lecture courses with the same score in the same year were spread horizontally for visibility. The logic and AI lecture courses began in 2008, but 2010 is the earliest point plotted on these graphs because student evaluations were only performed starting in 2010. These figures also contain box and whisker plots, in which the lower, central, and upper lines represent the first quartile, median, and third quartile, respectively. The whiskers represent confidence intervals. Lecture courses with fewer than five participating students were removed from the analysis.

In all cases but the 2012 logic lecture course, gamified lecture course scores increased relative to scores on instruction-based lecture courses. These data suggest that gamified lecture courses can get higher student evaluations than instruction-based lecture courses.
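The conversion from Likert categories to an interval scale can be illustrated as follows. This sketch uses one common formulation of the sigma method, in which each category is assigned the mean of the standard normal distribution over the segment delimited by that category's cumulative response proportions. Whether the study used exactly this formulation is an assumption, and the function name `sigma_scale` and the example counts are hypothetical.

```python
from statistics import NormalDist


def sigma_scale(counts):
    """Map ordered Likert category counts to interval-scale values.

    counts[i] is the number of responses in category i, ordered from the
    most negative to the most positive answer.
    """
    std_normal = NormalDist()
    total = sum(counts)

    def density_at(p):
        # Normal density at the quantile of cumulative proportion p;
        # it vanishes in the tails (p = 0 or p = 1).
        if p <= 0.0 or p >= 1.0:
            return 0.0
        return std_normal.pdf(std_normal.inv_cdf(p))

    values = []
    seen = 0
    for count in counts:
        lower = seen / total
        upper = (seen + count) / total
        if count == 0:
            values.append(None)  # unused category: no value can be assigned
        else:
            # Mean of a standard normal variable restricted to this segment
            values.append((density_at(lower) - density_at(upper)) / (upper - lower))
        seen += count
    return values
```

For a symmetric response distribution such as `[10, 20, 40, 20, 10]`, the middle category maps to approximately zero and the outer categories to values of equal magnitude and opposite sign, with larger values being more positive.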

3.3. Student Achievement

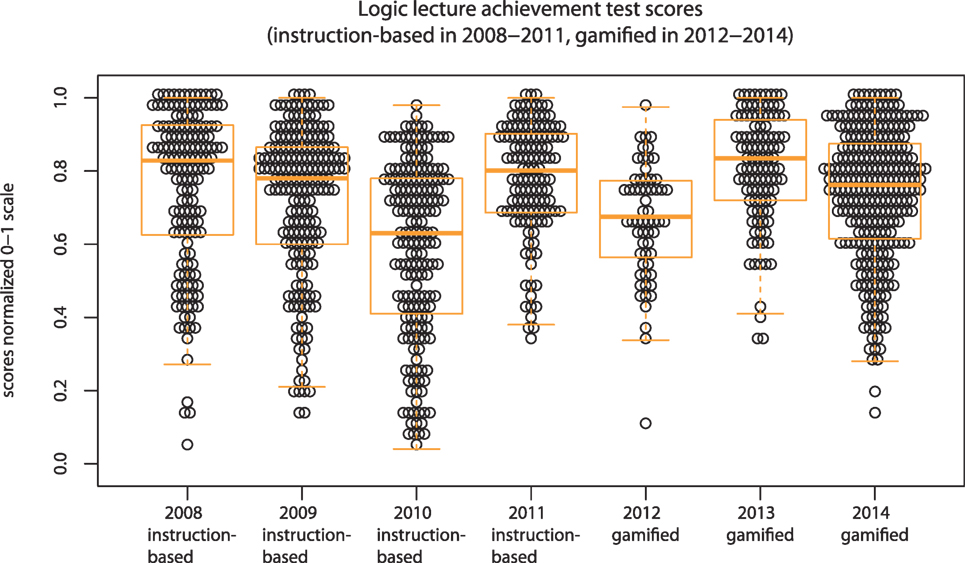

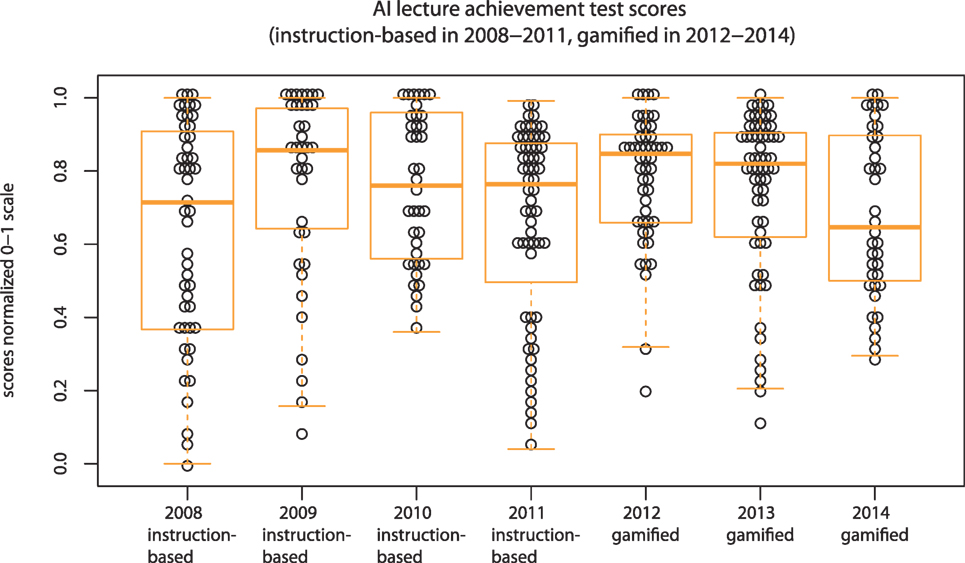

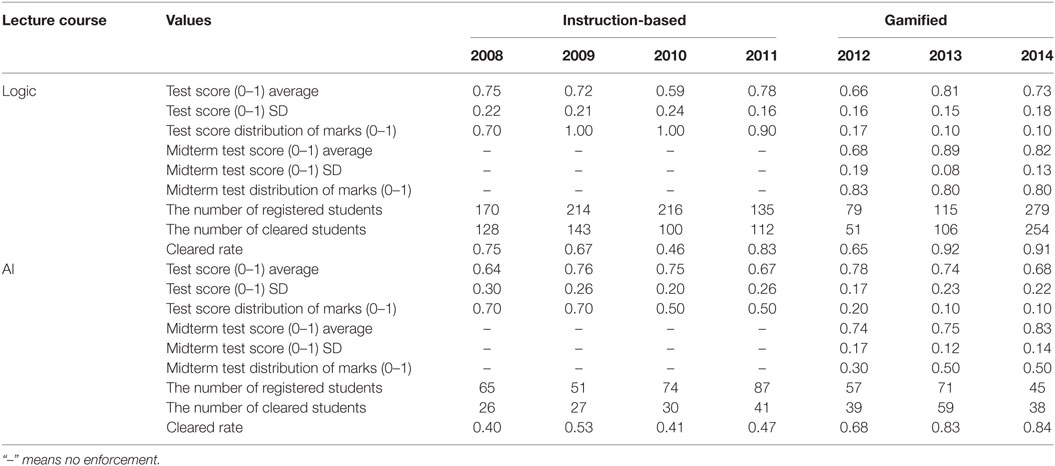

Table 4 shows some characteristics of the quantitative student evaluations, including final achievement test and midterm test scores, and the number of registered/cleared students. Final achievement tests were given once, toward the end of the lecture series. In gamified lecture courses only, midterm tests were performed four times during the term. Since midterm tests were not performed in instruction-based lecture courses, final achievement tests from gamified lecture courses were compared to those from instruction-based lecture courses, even though the metrics used to determine final grades for lecture course credit were largely different between these two class formats. In gamified lecture courses, midterm test scores tend to be better than final test scores, because the range of exam topics on midterm tests is relatively small, and the period between learning a topic and taking its test is short. Therefore, the final achievement test scores are the most valid metric for comparison of instruction-based and gamified lecture courses in this study.

Table 4. Quantitative student evaluations: final achievement test scores, midterm test scores, and the number of registered and cleared students.

The final logic and AI test scores are shown in Figures 3 and 4. Since variance is very important to consider in evaluating test scores, the scores of all students are plotted in these figures. Each circle represents a student’s score. Because the scales used changed between 2008 and 2014, scores are standardized to between 0.0 and 1.0. As in Figures 1 and 2, the lines of the boxes represent the first quartile, median, and third quartile from bottom to top. The whiskers represent confidence intervals. The center lines of the boxes in Figures 3 and 4 change independently of lecture course type. Therefore, it is reasonable to suggest that student achievement remained unchanged between instruction-based and gamified lecture courses.
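The rescaling and box summaries described above can be sketched as below. The sketch assumes that rescaling means dividing each raw score by the maximum attainable score of that year's exam, which the text does not specify; the function names and the quartile interpolation method are likewise illustrative choices.

```python
from statistics import quantiles


def normalize_scores(raw_scores, max_score):
    """Rescale raw exam scores to the range 0.0 to 1.0."""
    return [score / max_score for score in raw_scores]


def box_summary(scores):
    """Return (first quartile, median, third quartile) of the scores."""
    q1, median, q3 = quantiles(scores, n=4, method="inclusive")
    return q1, median, q3
```

Once every year's scores are on the same 0.0 to 1.0 scale, the medians returned by `box_summary` can be compared across years even though the raw scales differed.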

3.4. Other Characteristics of Gamified Lecture Courses

In Table 4, the rate of cleared students seems to have moderately improved. However, this rate varies widely. The worst cleared student rate among the gamified lecture courses is that of the logic lecture course in 2012, and it is smaller than the corresponding values in the 2008 and 2011 instruction-based logic lecture courses. Therefore, we cannot verify that gamified lecture courses enhance the cleared student rate above that of instruction-based lecture courses. The number of registered students also seems to be independent of lecture course type.

4. Discussion

4.1. Gamification

Gamified lecture courses are an application of gamification in education. Quest to Learn (Salen, 2011; Shute and Torres, 2012) is also one of the trial applications used for school-scale gamification. The differences between Quest to Learn and this paper’s research are that this study focused on a larger lecture with a maximum of 300 students, and that it focused on how to change from traditional instruction-based lecture courses to gamified lecture courses. Generally, gamification is defined in many areas, as shown by Huotari and Hamari (2012) and Deterding et al. (2011). While there are many definitions of gamification, a widely accepted definition is “the use of game components to enhance non-game activities.” However, this definition only describes the methods of gamification and does not specify the user model of the gamification system. Hamari et al. (2014) surveyed many gamification projects and proposed the following model:

In Hamari’s research, the objective of a system using gamification is to encourage user interest and to introduce users to the system. The users are usually autonomous and may not use the system if they do not think that it is necessary. Therefore, the first evaluation of gamification systems is a measure of user participation. Next, the quality of the participants is also discussed. Farzan and Brusilovsky (2011) created a course recommender system and stated that gamification mechanisms should be applied to the substance of the systems. When the periphery of the system is enhanced through gamification, such as by giving points, users may become absorbed in the peripheral task. Such a phenomenon was called “gaming” by Bretzke and Vassileva (2003). Cheng and Vassileva (2006) also said that the use of rewards, which are a component of gamification, should be restricted, because many rewards may enhance the participation of both desired users and those whose objectives do not match those of the system designer.

In gamified lecture courses, the first evaluations of gamification by users are hard to estimate because student users normally attend these lecture courses whether they are gamified or instruction-based. The only data for estimating the first evaluation of gamified lecture courses is thus the cleared student rate. The rate shows a moderate improvement, but this is not always reliable, because instruction-based lecture courses sometimes exceed gamified lecture courses on the metric of cleared student rates. The next evaluation, quality of user behavior, is more important for gamified lecture courses. The desired user behavior is, of course, that students study with positive attitudes. If the quality of students’ behavior can be estimated by student evaluations, the evaluations are good. However, student evaluations seem to be lecture impressions and hence do not represent good or bad classroom behavior. For example, when a student watches a favorite movie in the classroom, the student will respond by giving the lecture a good evaluation, even though the student did not study anything.

4.2. What Did Student Evaluations Measure?

In this study, questionnaire items located toward the end of the whole questionnaire were often those asking about the overall impression of the lecture course. Responses to this item were used to evaluate lecture course quality. Interpretation of student evaluations is controversial because student and instructor characteristics and cultures are different. In previous studies, discussions about student evaluations cover the following:

1. Is evaluation strongly influenced by an instructor’s personality rather than method of instructing?

2. Can students evaluate lecture courses with validity?

3. Does evaluation represent student motivation in a lecture course?

4. Does evaluation represent student satisfaction in a lecture course?

4.2.1. Is Evaluation Strongly Influenced by an Instructor’s Personality Rather than Method of Instructing?

The phenomenon wherein student evaluations are influenced by an instructor’s personality rather than the method of instructing is the so-called Dr. Fox effect (Naftulin et al., 1973; Ware and Williams, 1975). This effect implies that student evaluations of instructors are not accurate, because lectures conducted by a passionate actor tend to be evaluated as better than those of professionals, even when the actor’s content is intentionally not well-organized, or the actor’s content knowledge is low. Marsh and Ware (1982) found that when students were not given incentives to learn, expressiveness had a much greater effect on student ratings than lecture content. This effect is thus supported only in circumstances in which students have no incentives, for instance, in any form of entertainment. Normally, students in a university have some incentives for taking lecture courses. Therefore, the Dr. Fox effect is normally not supported in university lecture courses. In other words, only if students have no requirement to learn something in a lecture course, and the lecture course is entertaining, are better evaluations likely to be a result of the Dr. Fox effect.

4.2.2. Can Students Evaluate Lecture Courses with Validity?

Even though the Dr. Fox effect is likely not supported here, student evaluations are still controversial. Sproule (2002) argued that using student evaluations to rate instructors is pseudoscience. Since students’ perspectives on lecture content are normally narrower than that of the instructor, instructor evaluations become a part of lecture assessments. This situation is called underdetermination, and Sproule regards estimation techniques that rely on underdetermined evaluations as a kind of pseudoscience. Meanwhile, Aleamoni (1999) surveyed research about student evaluations and found that many myths about student evaluations are not true. Aleamoni laid out 16 myths that are often used to criticize student evaluations. One example is that the ease of getting credit for a lecture course positively influences student evaluations. Another example of a myth is that students cannot produce valid evaluations of lecture courses because they are immature. These myths were refuted with evidence supporting the contrary. However, evidence supporting these myths is given in Wachtel (1998). Therefore, interpretations of student evaluations are dependent on situations that determine how to gather questionnaires, how to instruct students to fill out the questionnaires, the characteristics of students, and so on.

4.2.3. Does Evaluation Represent Student “Motivation” in a Lecture Course?

Gamification is defined as being able to create motivational affordances. Therefore, it is necessary to evaluate whether gamified lecture courses can create motivational affordances. If student evaluations represent student motivation and evaluations are returned at a high rate, it is reasonable to deduce that motivational affordances increased. However, it is not reasonable to consider the results of student evaluations as student motivation because student achievement showed no improvement. From the definition of motivation, if student motivation increases, then the students will study hard. Carroll (1963) showed that learning is a function of time, i.e., if students study for a long time, then achievement should improve. In this study, student achievement did not improve but the student evaluations improved. Therefore, it is reasonable to deduce that student evaluations do not represent student motivation.

4.2.4. Does Evaluation Represent Student “Satisfaction” in a Lecture Course?

Satisfaction is one’s overall impression of something. While satisfaction is not directly related to motivation, in that it does not act as a factor that makes someone do something, it might generate motivation. Herzberg (1966) said that satisfaction can be divided into motivator and hygiene factors. Motivator factors are those that enhance activity when they are satisfied but do not decrease it when they are not satisfied. On the other hand, hygiene factors are those that do not enhance activity even when they are satisfied but decrease it when they are not satisfied. Thus, Herzberg’s theory is that factors that enhance and decrease motivation are different. Herzberg also said that the opposite of satisfaction is not dissatisfaction, but no-satisfaction, and that the opposite of dissatisfaction is no-dissatisfaction. The factors behind satisfaction and dissatisfaction are therefore different.

In this study, student evaluations represented a reversed dissatisfaction indicator, like the hygiene factor proposed by Herzberg (1966). Some students wished to avoid stressful lecture courses, and this became their reason for participating in the student evaluation system. Chen and Hoshower (2003) revealed that students think of lecture course evaluations as capable of improving lecture courses; they also implied that students do not like stressful lecture courses. Marlin (1987) showed that students tend to view evaluations as a “vent to let off student steam.” According to Jacobs (1987), students reported hearing of others who were plotting to get back at instructors by collectively giving low ratings on evaluation forms.

While some criticize Herzberg’s methodology, the concepts of hygiene and motivator factors have influenced many other studies. Student satisfaction with university libraries was surveyed by Stamatoplos and Mackoy (1998), using the concepts of hygiene and motivator factors. Evans (1998) also argued that while Herzberg’s research methods were not sound, his notions were useful. Wu et al. (2008) used this theory to examine user studies on search engines.

4.3. Why Did Student Achievement Not Improve?

O’Donovan et al. (2013) created game-based lecture courses. They did not find significant improvements in student achievement. However, because student achievement is often influenced by many other factors, this result is understandable. In this comparative study, students in instruction-based and gamified lecture courses showed different degrees of dissatisfaction but similar achievement, which implies that their dissatisfaction did not strongly influence their achievement. Based on this evidence, the lack of an effect of gamified lecture courses on achievement is considered here.

Many teachers and researchers believe that learning time strongly influences learning achievement. Even though achievement efficiency is different for appropriate and inappropriate instruction methodologies, students tend to achieve at levels proportional to their learning time. Carroll (1963) proposed that learning is a function of time engaged, relative to the time needed for learning. Learning time is considered to be not only an element of achievement but also a measure of the effectiveness of a lecture course. Gettinger and Seibert (2002) proposed best practices for allocating learning time for students in a classroom.
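Carroll's proposal is commonly summarized by the following relation. The wording of the two quantities follows the usual textbook statement of the model and is not quoted from this paper:

```latex
\[
\text{degree of learning}
  = f\!\left(\frac{\text{time actually spent on learning}}{\text{time needed for learning}}\right)
\]
```

Under this reading, a change that relieves dissatisfaction but leaves the time actually spent unchanged leaves the ratio, and hence the expected achievement, unchanged.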

From the experiment herein, the following model is hypothesized. Decreased student dissatisfaction is not directly related to student achievement because student achievement was not improved even though student evaluations were improved. However, increased learning time is strongly related to student achievement (Carroll, 1963). Thus, the model posits a weak relationship between decreasing student dissatisfaction and increasing learning time (this is represented by a dashed arrow below).

4.4. Other Related Pedagogy

4.4.1. Characteristics of Gamified Lecture Courses in Relation to Traditional Instructional Theories

There are many instructional theories in the fields of educational psychology, educational technology, sociology of education, and so on. They are recognized as perspectives on the methodology of teaching. The gamified lecture course, which is based on gamification, is also one of these perspectives. Compared with these traditional theories, a key point of the gamified lecture course is that it is based on service. Recently, a new research area about service, e.g., service science (Spohrer et al., 2007), has emerged. In service science, a service is designed for a user experience, rather than for the equipment or systems of the service. User experience is considered in game design regardless of whether it is computer based or not. Education is, of course, one of the aforementioned services. Therefore, knowledge derived from the experience of game design is useful for the design of a lecture course.

4.4.2. Student-Centered Learning

Student-centered learning (Jonassen and Land, 2012) is a recently proposed learning theory, in which students learn knowledge of their choice, using their own learning methods, actively and autonomously. Teachers are supporters of student learning and serve as designers of the learning environment. This is similar to the ways in which scientists simulate problems to uncover new knowledge and solve problems that arise in practical work using appropriate scaffolds and guidelines. The important thing in student-centered learning is that learners themselves can control their own learning activities. This process is also called metacognition (Bransford et al., 1999).

Boud (2000) proposed sustainable assessment, and Boud and Molloy (2013) also presented efficient feedback that is related to the student-centered learning theory. This feedback theory is more active than those previously known. Its most important element is to enable learners to rethink their own learning by themselves when given feedback. This kind of feedback appears in many video games. For example, when a player is about to die, the player has to think of new strategies for staying alive. Video games thereby make effective learning tools; they give constant and immediate feedback to players (Gee and Shaffer, 2010). Foster et al. (2013) proposed educational games for learning programming based on an analysis of traditional popular games.

In this study, gamified lecture courses conformed to the student-centered learning theory, as the game presupposes that the user actively and autonomously moves. However, feedback to improve students’ learning methods was not inherently different from that in conventional instruction-based lecture courses, even though the timing and response speed of feedback were improved. This problem persists in a large number of lecture courses and will require further research to solve.

4.4.3. Gamified Lecture Course Involves Active Learning

The gamified lecture course is related to active learning (Johnson et al., 1991), which has captured the attention of educators, as it focuses on how to enhance student activities in a classroom. Since a game is an intrinsically user-initiated activity, students in gamified lecture courses study autonomously. The difference between active learning and the gamified lecture course is that confrontation is an element of the gamified lecture course. This element is useful for creating clearer student goals and relationships between students’ activities.

4.4.4. Gamified Lecture Course Is Problem-Based Learning

Our lecture courses also contain elements of problem-based learning (Hmelo-Silver, 2004). In problem-based learning, students are first given problems, then they survey relevant information and discuss this with other students. In the current study, the gamified lecture course was problem-based, but was more comprehensive than typical problem-based exercises, as the gamified model mainly focused on educational contexts in which problems were one component.

4.4.5. The Differences between Gamified Lecture Course and Entertainment Education

Games are a form of entertainment. There is already a lecture style based on entertainment without games, called entertainment education (Slater and Rouner, 2002). Entertainment education lecture styles are very different from gamified lecture courses because students in entertainment education are mainly passive. In entertainment education, students often receive learning content via movies or verbal stories. This lecture style requires students to recognize instructional messages. If a student does not have the ability to do this, he or she would be unable to study anything. This phenomenon is described by Slater and Rouner (2002) as the peripheral route in the Elaboration Likelihood Model (Petty and Cacioppo, 1986). In contrast, gamified lecture courses encourage active learning.

5. Conclusion

Herein, an empirical study about redesigning instruction-based lecture courses into gamified lecture courses was introduced. Gamified lecture courses use game design in the design of lecture courses. Confrontation was considered in order to help students to derive lecture course goals. These goals were divided into subgoals, and all requirements for the achievement of course credit were linked to these subgoals. The connections between students’ classroom activities, subgoals, and final lecture course goals were considered “meaningful play.” Students could also easily and quantitatively see their own progress. Experiments were performed in three sessions, which were compared to instruction-based lecture courses, held before the change to gamified lecture courses. Student evaluations showed that lecture course quality was improved through gamification. However, students’ final achievement test scores showed no improvement with lecture course gamification.

Gamification, therefore, improved qualitative but not quantitative aspects of the course. This suggests that gamified lecture courses have the potential to improve the learning environment. Unfortunately, this improved learning environment does not directly influence student achievement, perhaps because learning time still seemed to be the same between gamified and instruction-based lecture courses. While student achievement was not improved in this study, gamified lecture courses nonetheless hold promise for improving student achievement. If students’ dissatisfaction is high, it is hard to give students many problems to solve. On the other hand, if students’ dissatisfaction is nearly zero or low, students may tackle more problems. Thus, the relief of student dissatisfaction achieved herein might increase student learning time.

Ethics Statement

As an educational audit, ethical review and approval were not required for this type of study according to the national and institutional requirements. Consent from the students was implied by the return of the questionnaires, and the use of their anonymous data was in accordance with the terms and conditions of the Niigata University of International and Information Studies information security policies. Permission for the curriculum change was granted by Mitsuo Kobayashi, the dean of faculty of Information Culture, Niigata University of International and Information Studies.

Author Contributions

The author confirms being the sole contributor of this work and approved it for publication.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Aleamoni, L. M. (1999). Student rating myths versus research facts from 1924 to 1998. J. Pers. Eval. Educ. 13, 153–166. doi:10.1023/A:1008168421283

Boud, D. (2000). Sustainable assessment: rethinking assessment for the learning society. Stud. Contin. Educ. 22, 151–167. doi:10.1080/713695728

Boud, D., and Molloy, E. (2013). Feedback in Higher and Professional Education: Understanding It and Doing It Well. London: Routledge.

Bransford, J. D., Brown, A. L., and Cocking, R. R. (1999). How People Learn: Brain, Mind, Experience, and School. Washington, DC: National Academy Press.

Bretzke, H., and Vassileva, J. (2003). “Motivating cooperation on peer to peer networks,” in 9th International Conference, UM 2003, eds P. Brusilovski, A. Corbett, F. de Rosis (Johnstown, PA).

Chen, Y., and Hoshower, L. B. (2003). Student evaluation of teaching effectiveness: an assessment of student perception and motivation. Assess. Eval. High. Educ. 28, 71–88. doi:10.1080/02602930301683

Cheng, R., and Vassileva, J. (2006). Design and evaluation of an adaptive incentive mechanism for sustained educational online communities. User Model. User-adapt. Interact. 16, 321–348. doi:10.1007/s11257-006-9013-6

Deterding, S., Dixon, D., Khaled, R., and Nacke, L. (2011). “From game design elements to gamefulness: defining gamification,” in Proceedings of the 15th International Academic MindTrek Conference: Envisioning Future Media Environments (New York: ACM), 9–15.

Farzan, R., and Brusilovsky, P. (2011). Encouraging user participation in a course recommender system: an impact on user behavior. Comput. Human Behav. 27, 276–284. doi:10.1016/j.chb.2010.08.005

Foster, S. R., Esper, S., and Griswold, W. G. (2013). “From competition to metacognition: designing diverse, sustainable educational games,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Paris: ACM), 99–108.

Gee, J. P., and Shaffer, D. (2010). Looking where the light is bad: video games and the future of assessment. Phi Delta Kappa Int. EDge 6, 3–19.

Gettinger, M., and Seibert, J. K. (2002). Best practices in increasing academic learning time. Best Pract. Sch. Psychol. IV 1, 773–787.

Hamari, J., Koivisto, J., and Sarsa, H. (2014). “Does gamification work? – a literature review of empirical studies on gamification,” in 47th Hawaii International Conference on System Sciences (Hawaii, USA: IEEE), 3025–3034.

Hmelo-Silver, C. E. (2004). Problem-based learning: what and how do students learn? Educ. Psychol. Rev. 16, 235–266. doi:10.1023/B:EDPR.0000034022.16470.f3

Huizinga, J. (1964). Homo Ludens; A Study of the Play-Element in Culture. (Translated from the German Ed.). Boston: Beacon Press.

Huotari, K., and Hamari, J. (2012). “Defining gamification: a service marketing perspective,” in Proceeding of the 16th International Academic MindTrek Conference (Tampere: ACM), 17–22.

Jacobs, L. C. (1987). University Faculty and Students’ Opinions of Student Ratings. Indiana Studies in Higher Education. Washington, DC: ERIC Clearinghouse.

Johnson, D. W., Johnson, R. T., and Smith, K. A. (1991). Active Learning: Cooperation in the College Classroom. Edina, MN: Interaction Book Company.

Jonassen, D., and Land, S. (2012). Theoretical Foundations of Learning Environments. London: Routledge.

Marlin, J. W. Jr. (1987). Student perceptions of end-of-course evaluations. J. Higher Educ. 58, 704–716. doi:10.2307/1981105

Marsh, H. W., and Ware, J. E. (1982). Effects of expressiveness, content coverage, and incentive on multidimensional student rating scales: new interpretations of the Dr. Fox effect. J. Educ. Psychol. 74, 126. doi:10.1037/0022-0663.74.1.126

Naftulin, D. H., Ware, J. E. Jr., and Donnelly, F. A. (1973). The Doctor Fox lecture: a paradigm of educational seduction. Acad. Med. 48, 630–635. doi:10.1097/00001888-197307000-00003

O’Donovan, S., Gain, J., and Marais, P. (2013). “A case study in the gamification of a university-level games development course,” in Proceedings of the South African Institute for Computer Scientists and Information Technologists Conference, SAICSIT ’13 (New York, NY: ACM), 242–251.

Petty, R., and Cacioppo, J. (1986). Communication and Persuasion: Central and Peripheral Routes to Attitude Change. New York: Springer-Verlag. doi:10.1007/978-1-4612-4964-1

Salen, K., and Zimmerman, E. (2004). Rules of Play: Game Design Fundamentals. Cambridge, MA: MIT Press.

Shute, V. J., and Torres, R. (2012). Where Streams Converge: Using Evidence-Centered Design to Assess Quest to Learn. Technology-Based Assessments for 21st Century Skills: Theoretical and Practical Implications from Modern Research. Charlotte, NC: Information Age Publishing, 91–124.

Slater, M. D., and Rouner, D. (2002). Entertainment-education and elaboration likelihood: understanding the processing of narrative persuasion. Commun. Theory 12, 173–191. doi:10.1111/j.1468-2885.2002.tb00265.x

Spohrer, J., Maglio, P. P., Bailey, J., and Gruhl, D. (2007). Steps toward a science of service systems. Computer 40, 71–77. doi:10.1109/MC.2007.33

Sproule, R. (2002). The underdetermination of instructor performance by data from the student evaluation of teaching. Econ. Educ. Rev. 21, 287–294. doi:10.1016/S0272-7757(01)00025-5

Stamatoplos, A., and Mackoy, R. (1998). Effects of library instruction on university students satisfaction with the library: a longitudinal study. Coll. Res. Libr. 59, 322–333. doi:10.5860/crl.59.4.322

Wachtel, H. K. (1998). Student evaluation of college teaching effectiveness: a brief review. Assess. Eval. High. Educ. 23, 191–212. doi:10.1080/0260293980230207

Ware, J. E. Jr., and Williams, R. G. (1975). The Dr. Fox effect: a study of lecturer effectiveness and ratings of instruction. Acad. Med. 50, 149–156. doi:10.1097/00001888-197502000-00006

Keywords: gamification, education, large-scaled lecture course design, game design, student evaluation, meaningful play

Citation: Nakada T (2017) Gamified Lecture Courses Improve Student Evaluations but Not Exam Scores. Front. ICT 4:5. doi: 10.3389/fict.2017.00005

Received: 01 September 2016; Accepted: 23 March 2017; Published: 12 April 2017

Edited by: Leman Figen Gul, Istanbul Technical University, Turkey

Reviewed by: Anthony Philip Williams, Avondale College of Higher Education, Australia; Aydin Oztoprak, TOBB University of Economics and Technology, Turkey

Copyright: © 2017 Nakada. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Toyohisa Nakada, nakada@nuis.ac.jp
