- Department of Psychology, Institute for Psychology, Goethe-University Frankfurt am Main, Frankfurt am Main, Germany

Recent psychophysical research supports the notion that horizontal information of a face is primarily important for facial identity processes. Even though this has been demonstrated to be valid for young adults, the concept of horizontal information as the primary informative source has not yet been applied to older adults’ ability to correctly identify faces. In the current paper, the role different filtering methods might play in an identity processing task is examined for young and old adults, both taken from student populations. Contrary to most findings in the field of developmental face perception, only a near-significant age effect is apparent for upright, un-manipulated stimuli, whereas a bigger difference between age groups can be observed for a condition which removes all but horizontal information of a face. It is concluded that a critical feature of human face perception, the preferential processing of horizontal information, is less efficient past the age of 60 and is involved in the recognition processes whose age-related decline is usually reported in the literature.

Introduction

Humans rely heavily on the sense of vision, and there is perhaps no stimulus of greater social importance than a face. It is easy to understand its evolutionary significance if the influences faces have on behavior, such as face symmetry on attractiveness or others’ degree of resemblance to one’s kin on willingness to help others, are regarded as vital characteristics for mate selection, altruistic behavior, and other routine social interactions (Alvergne et al., 2007; Bressan and Zucchi, 2009). Predicting other people’s intentions or behavior is one such important social interaction that is mediated by face processing (Baron-Cohen, 1994, 1995).

This kind of visual processing has been demonstrated to be susceptible to an aging brain: older adults (OA) are found to exhibit lower sensitivity scores than younger adults (YA; Grady et al., 2000; Firestone et al., 2007) as well as higher latencies (Maylor and Valentine, 1992). The former seems to occur mainly because of higher false alarm rates (Bartlett et al., 1989; Fulton and Bartlett, 1991; Edmonds et al., 2012) and the latter appears to be due to decision-making rather than sensory or perceptual processing impairments (Pfütze et al., 2002). Taking into account these specific differences, research on developmental trajectories indicates two key phases of adult development that show a decreased ability for face perception: the first has been noted to occur around the age of 50, and the second, more noticeable one is believed to take place between 60 and 80 years of age (Crook and Larrabee, 1992; Chaby and Narme, 2009). Since face perception and its importance to memory processes is a key cognitive component that ensures adequate functioning at a higher age, the fields of memory, cognition, and human lifespan development are putting effort into finding possible reasons for this apparent age-related decline.

Since many vital behaviors hinge on face recognition, young and old humans must form an identity for each individual; they must transfer identities to memory, link them with other information such as name or personality traits, and retrieve this kind of information at any given time. For a face to be recognized by its observer, the most widely known – and perhaps most complex – perceptual network, the visual system, has to carry out a number of intricate processes.

The human visual system is a complex array of cells with the retina, lateral geniculate nucleus, and visual cortex being its main perceptive components (with the prefrontal cortex arguably being the first integrative cognitive component). Even at the level of the retina, different neurons are highly specialized for detecting orientation of lines, edges, color, movement, shape, and contrast (among others) and convey this information to the lateral geniculate nucleus. This structure in turn receives reciprocal innervations from cortical layers and acts as a relay station that directs visual information to the occipital lobe. The lion’s share of visual processing is consequently done by the visual cortex and its association cortices, which ultimately results in the separation of two streams (a ventral “what” stream integrating recognition, categorization, and identification as well as a dorsal “where” stream, which mainly handles spatial attention of visual information) that converge at the level of higher cortical processing (Mishkin and Ungerleider, 1982). In order to simulate how the visual system operates while initially breaking down a stimulus, image filtering is done to imitate the first stages of human visual perception, as displayed in various computational models in visual- and neuropsychological research (Watt, 1994; Watt and Dakin, 2010).

Specifically, breaking down (and thereby filtering) an image into its spatial frequencies is of special importance, as it has been linked to face perception (Dakin and Watt, 2009; Goffaux and Dakin, 2010). Filtering images spatially results in the exclusion of certain spatial frequencies; image information is restricted by the kind of filter that is applied. If, for example, an orientation pass-filter of 90° is applied to an image, all spatial frequencies are filtered so that information primarily aligned at a 90° angle will pass, thus filtering an image horizontally (a small proportion of frequencies aligned at other angles passes as well, due to the application of a wrapped Gaussian profile; for further information see Dakin and Watt, 2009; Goffaux and Dakin, 2010). These filtered images serve as stimuli that are used to test the influence of such spatial frequencies on early visual processes in human vision. Since psychophysical data show higher recognition sensitivity for horizontally filtered stimuli (as opposed to vertical ones), the notion of a “biological bar code” in the human visual system that drives human face perception by preferential processing of horizontal spatial frequencies has been put forth (Dakin and Watt, 2009). When the visual system has to operate on limited and degraded information during the presentation of orientation-discriminate (filtered) stimuli, the “bar code” describes the likelihood of horizontally filtered faces to be recognized. Horizontal spatial frequencies are an informative source for face identification and a good approximation of an image that contains all information, mainly due to the alignment of prominent features such as eyes, mouth, nose, as well as brow and chin regions.
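The orientation filtering described above can be illustrated with a minimal sketch. It is not the Matlab code used in this study; it assumes a plain Fourier-domain filter in which each frequency component is weighted by a Gaussian of its angular distance from the pass-band center, wrapped over 180°. The function name `orientation_filter`, the parameter names, and the default bandwidth of 20° are hypothetical choices made for illustration only, and `center_deg` is interpreted here as an angle in the frequency plane.

```python
import numpy as np

def orientation_filter(image, center_deg, bandwidth_deg=20.0):
    """Keep Fourier energy whose frequency-plane angle lies near center_deg (mod 180).

    Illustrative sketch only: bandwidth_deg is an assumed value, not one
    taken from the study.
    """
    rows, cols = image.shape
    fy = np.fft.fftfreq(rows)[:, None]   # vertical frequency of each component
    fx = np.fft.fftfreq(cols)[None, :]   # horizontal frequency of each component
    theta = np.degrees(np.arctan2(fy, fx))
    # Angular distance to the pass-band center, wrapped to [-90, 90),
    # because orientation is periodic over 180 degrees.
    delta = (theta - center_deg + 90.0) % 180.0 - 90.0
    gain = np.exp(-0.5 * (delta / bandwidth_deg) ** 2)
    gain[0, 0] = 1.0                     # preserve mean luminance (DC term)
    # The tiny imaginary residue from the inverse transform is discarded.
    return np.real(np.fft.ifft2(np.fft.fft2(image) * gain))

# Horizontal stripes vary only along the vertical axis, so their energy sits
# at a frequency-plane angle of 90 degrees.
y = np.arange(64)[:, None]
stripes = np.sin(2 * np.pi * 8 * y / 64) * np.ones((1, 64))
kept = orientation_filter(stripes, center_deg=90.0)
removed = orientation_filter(stripes, center_deg=0.0)
```

Under this convention, a filter centered on 90° passes the horizontal stripes essentially unchanged, whereas a filter centered on 0° removes almost all of their contrast.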

Other, more global, influential theories concerning age-related cognitive decline have described internal processing stages as mediating factors between sensory and motor processes, where peripheral sensory processes may not be affected at all (Cerella, 1985). These stages have been further linked to theories of cognitive decline with increasing age, such as theories attempting to explain apparent age differences in terms of either a decrease of efficiency in localized frontal lobe structures (West, 1996, 2000) or a decrease in less localized network-based connections (Greenwood and Parasuraman, 2010; Zanto et al., 2011). Furthermore, age-related decreases in various cognitive tasks, including face perception, have been attributed to a more synchronous and lateralized brain in OA, whereas in comparison YA show more localized activity in each hemisphere (Cabeza, 2002).

In order to attribute decreases in cognitive functioning to specific structures or processes, the method of stimulus presentation and its influence on response behavior, especially in OA, must be considered. There is still an ongoing debate whether encoding or retrieval difficulties for OA are responsible for an age-related decline in declarative memory. This debate is rooted in arguments for retrieval impairment due to an increasing lack of internal organization with age (Craik and Lockhart, 1972; Burke and Light, 1981) and arguments for encoding impairment due to increasing lack of encoding strategies with age (Sanders et al., 1980; Craik and Byrd, 1982). More specifically, studies measuring regional cerebral blood flow in episodic memory tasks including face perception have shown that YA encode information using the left prefrontal cortex and retrieve information using its right counterpart (Cabeza et al., 1997; Grady, 1998). OA do not exhibit the same pattern: very little regional cerebral blood flow during encoding can be seen over the entire prefrontal cortex and only slightly more can be observed during retrieval. However, OA show heightened regional cerebral blood flow in other areas as compared to YA, such as the thalamus and hippocampus. This pattern suggests that the prefrontal cortex carries out complex visual analysis in YA, but OA’s brains involve more scattered areas of activation, possibly to compensate for organization difficulties (Grady et al., 1998). Additionally, during presentation of degraded stimuli, OA and YA alike shift activation from visual association cortices to the prefrontal cortex during encoding (possibly for a greater need of complex visual analysis), even though OA spread the shift of activation over other areas as well, giving rise to the idea that a more localized activity (as in YA) translates to superior response behavior (Grady et al., 1994). 
The importance of the prefrontal cortex during encoding seems apparent by its clear activation pattern in YA; OA show – at least physiologically – greater deficits during encoding, which in turn raises the question if confronting OA with manipulated stimuli during the encoding phase in face perception tasks is methodologically sound (the face-inversion effect is a popular choice at this point, where encoding and target stimuli are traditionally orientation-congruent; Grady et al., 2000).

There are various theories that attempt to explain age differences. More global theories fare well when explaining an overall set of abilities that diminish with age, but are susceptible to complex interactions of individual abilities that may or may not undergo age-related decline and may or may not have an impact on other abilities. An approach that focuses on perception of specific aspects of a face (its spatial frequencies) might in fact help to explain an age-related decline in face perception, in the sense that it is a focused approach to a single perceptual ability that has been shown to undergo age-related decline. It has been demonstrated (for YA) that horizontally filtered images carry more information about a face than vertically filtered images, and it is therefore supposed that neurons in the visual cortex can decode this information more meaningfully, which leads to the statement that preferential processing of horizontal frequencies is necessary for the ability of face processing (Goffaux and Dakin, 2010).

From a developmental standpoint, it remains to be seen if the same holds true for OA and if a possible deficit in such preferential processing might shed light on age-related decline in face perception. In this experiment, OA from a comparatively cognitively and perceptually challenging social background were compared to young university students under relatively realistic learning situations, where an un-manipulated and upright face had to be encoded and compared to faces of various orientation and filter conditions during recall.

Materials and Methods

Participants

Thirty (22 female) young participants (M = 21.07 years, SD = 2.83 years) and 30 (21 female) old participants (M = 66.2 years, SD = 4.75 years) were assessed in this study. Age of male and female participants did not significantly differ in either young or old age group [t(28) = 0.51, p = 0.615; t(28) = 0.85, p = 0.402, respectively]. All participants were right-handed and had normal or corrected-to-normal vision. All young participants were undergraduate students of Frankfurt’s Goethe-University; all old participants were enrolled in the university’s U3L (“University of the third age”) program for education at a higher age.

Procedure

Subjects took a computerized motor reaction time test and two short paper-and-pencil tests. The paper-and-pencil tests consisted of a (digit-span) subtest of the WAIS-R (Wechsler Adult Intelligence Scale, Tewes, 1994) for working memory assessment and the FAIR (Frankfurt Attention Inventory, Moosbrugger and Oehlschlägel, 1996) for attention assessment. After a short break, subjects took part in the face recognition experiment. For all tests, dummy trials were used to familiarize the subject with the specifics of the test. Overall, the session lasted about 90 min. Informed consent was obtained from all subjects. The experiment was conducted in accordance with the ethical guidelines of the German Psychological Society and is also in line with the Ethical Principles of Psychologists and Code of Conduct of the American Psychological Association. This research was conducted in the absence of any commercial or financial relationships.

Face Recognition Experiment

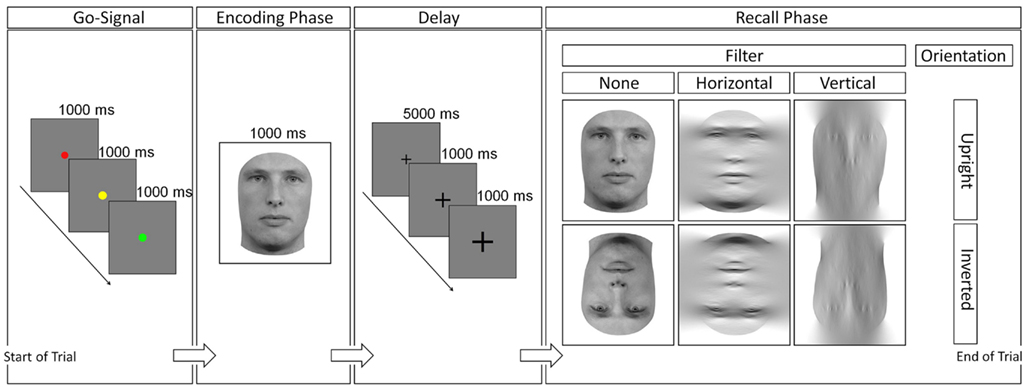

The psychophysical experiment was presented by E-Prime (E-Prime 2.0, Psychology Software Tools, Inc., Sharpsburg, PA, USA). Subjects sat at a distance of roughly 60 cm from the screen. The experiment consisted of 132 trials which served to measure latency and sensitivity of the subjects’ responses. Each trial commenced after a 3000 ms “get-ready” signal, which took the form of a dot (2° visual angle) that changed in color from red to yellow to green, with each colored dot being presented for 1 s. No subject reported difficulties identifying different colors. Subsequently, the stimulus of the learning phase was shown for 1000 ms while subjects were instructed to remember the face of this part of the trial, as it would have to be compared to a face in the testing phase. The latter appeared after 7000 ms, during which a fixation cross was shown in the center of the screen for 5000 ms and became enlarged twice in the last 2000 ms in order to prepare the subject for the beginning of the testing phase (2°, 4°, and 6° visual angle, respectively). During the testing phase, subjects were instructed to respond as quickly and as accurately as possible to the question “does this face appear familiar in comparison to the face just learned?” (Figure 1). Subjects were thus forced to give a yes-or-no answer, and accordingly pressed different keys on the keyboard. These keys were assigned so that half of the subjects pressed the “x” key for a positive (“yes”) and the “m” key for a negative (“no”) response, whereas the other half had the opposite assignment, so as to eliminate the possibility of a bias toward eliciting a positive response with the left hand and a negative response with the right hand, or vice versa. There were no differences in sensitivity or latency for the different assignments within the young and old age group [for sensitivity: t(28) = 0.84, p = 0.406; t(28) = 0.74, p = 0.462, respectively and for latency: t(28) = 0.34, p = 0.735; t(28) = 0.27, p = 0.786, respectively].

Figure 1. Procedure of a trial. The go-signal was followed by the encoding phase, a fixation cross accompanying a short delay, and finally the recall phase. The response was given at the last stage and served as the basis for the sensitivity and latency measures. One stimulus of the combination upright/inverted (orientation) and unfiltered/horizontally filtered/vertically filtered (filter) was presented in the recall phase.

Stimuli

Stimuli in the learning phase were presented in an upright, unfiltered manner, to maximize potential learning of the stimulus. The target image in the testing phase could either be upright or inverted, as well as filtered (exclusion of horizontal or vertical spatial frequencies) or unfiltered. In addition to orientation and filter levels, the test stimulus could take one of 11 different morph levels – 0–100% learning phase stimulus content (LSC) in 10% increments. This was done to introduce ambiguity and to prevent subjects from learning to accept or reject the stimulus based on a completely different or entirely identical appearance. Stimuli in the experiment showed young, Caucasian, male or female human faces with neutral expressions (hair and ears were completely removed). Stimuli did not exhibit beards or other distinctive features such as jewelry, scars, or the like. Stimuli that did not meet these criteria were excluded based on the judgment of four individual raters. Images were normalized and gray-scaled to HSV color space. Stimuli were presented on a 38 by 30 (width by height) cm monitor, with a resolution of 1280 × 1024 pixels. Stimuli varied slightly from 522 to 690 pixels in height (M = 607.18, SD = 39.45) to preserve the individual aspect ratio, but always had a width of 400 pixels (at an approximate viewing distance of 60 cm, 11.3° and 16.9° of visual angle in width and height, respectively). Stimuli were provided by a face database as well as colleagues (Langner et al., 2010; special thanks to R. C. L. Lindsay and Queen’s University Legal Studies Lab Members). Morpheus Photo Morpher 3.16 (Morpheus Software LLC, Santa Barbara, CA, USA) and Gimp 2.6 (The Gimp Team, www.gimp.org) were used to crop and edit the stimuli; filtering was done by Matlab 7.13 (The Mathworks, Inc., Natick, MA, USA). 
The Matlab code was provided by its developer (for details of the filtering method see Dakin and Watt, 2009; Goffaux and Dakin, 2010; special thanks to S. C. Dakin).
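The reported visual angles follow from the monitor geometry given above. The short sketch below reproduces that arithmetic, assuming square-on viewing at exactly 60 cm and using the mean stimulus height of 607.18 pixels; the function name `visual_angle_deg` is introduced here for illustration only.

```python
import math

def visual_angle_deg(size_px, screen_px, screen_cm, distance_cm):
    """Visual angle (in degrees) subtended by a stimulus of size_px pixels."""
    size_cm = size_px * screen_cm / screen_px           # physical size on screen
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

# Monitor: 38 x 30 cm at 1280 x 1024 pixels, viewed from roughly 60 cm.
width_deg = visual_angle_deg(400, 1280, 38.0, 60.0)     # fixed stimulus width
height_deg = visual_angle_deg(607.18, 1024, 30.0, 60.0) # mean stimulus height
print(round(width_deg, 1), round(height_deg, 1))        # 11.3 16.9
```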

Motor Reaction Assessment

The motor reaction time assessment consisted of a psychophysical test, also programmed in E-Prime, in which subjects simply pressed a key on the keyboard once the stimulus, a black dot (2° visual angle) on a gray background, appeared. Subjects completed the test with each hand separately (2 × 60 trials), pressing the same two keys they later did in the face recognition experiment. Each subject’s motor reaction time mean was assessed for each hand and subsequently subtracted from the appropriate hand in the face recognition experiment in order to obtain individual cognitive latencies as clean as possible. This is especially important since motor reaction times between the age groups differed [t(58) = 3.81, p = 0.003].
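The subtraction described above amounts to removing each hand's mean simple reaction time from the latencies of the responses given with that hand. A minimal sketch follows; the function name, the data layout, and the numbers in the usage example are made up for illustration and are not taken from the study.

```python
from statistics import mean

def cognitive_latencies(trials, motor_rts_by_hand):
    """Subtract each hand's mean motor reaction time from its trial latencies.

    trials: list of (hand, latency_ms) pairs from the recognition task.
    motor_rts_by_hand: dict mapping hand -> list of simple reaction times (ms).
    """
    baseline = {hand: mean(rts) for hand, rts in motor_rts_by_hand.items()}
    return [latency - baseline[hand] for hand, latency in trials]

# Hypothetical values, for illustration only.
motor = {"left": [250, 270], "right": [240, 260]}
trials = [("left", 1200), ("right", 900)]
print(cognitive_latencies(trials, motor))  # [940, 650]
```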

Covariates

Subjects completed the FAIR attention test, which requires the test taker to highlight as many correct stimuli (geometric figures) as possible in a given amount of time (2 × 3 min). They also took the WAIS-R digit-span subtest, which required subjects to remember a steadily growing chain of numbers that was read aloud by the experimenter and to repeat it in the same order as announced, as well as in the reverse order (during a subsequent second test).

Results

Data Analysis

For sensitivity, latency, and hit/false alarm rates, 2 (age group) × 2 (orientation) × 3 (filter) mixed-model repeated-measures ANOVAs were used to analyze the results. The “age group” factor is the only between-subjects factor and compares the means of YA and OA, whereas the other factors are within-subjects factors. The “orientation” factor compares means of upright faces versus inverted faces, and the “filter” factor describes the comparison of unfiltered versus horizontally filtered versus vertically filtered stimuli. SPSS 19 (IBM Corporation, Armonk, NY, USA) was used for all analyses.

Removal of Outliers

Prior to descriptive statistical analysis, outliers were removed: simple motor reaction time data points were excluded if the subject had pressed the key before the stimulus appeared on the screen, or if s/he took less than 100 ms or more than 400 ms to respond. Individual outliers of the remaining data points were treated by means of inter-quartile range (IQR) outlier exclusion [values below (mean-1.5*first IQR) as well as above (mean + 1.5*third IQR), Hoaglin et al., 1983; Tukey, 1977]. In the end, a mean motor reaction time for each subject’s hand was created so that this latency could be subtracted from the latency of the corresponding hand in the face recognition experiment. For face recognition reaction time data, lenient low and high cut-offs as well as an IQR exclusion were applied (low cut-off below 500 ms; high cut-off above 4000 ms). After outlier exclusion, 4.54% of data points from the young age group and 9.42% from the old age group were not available for statistical analysis, which is an acceptable amount of data loss (Ratcliff, 1993). For trials that had latency outliers, matching sensitivity measures were also excluded. For the dependent variable sensitivity, a d′-analysis was carried out, which included 80–100% LSC-stimuli and 0–20% LSC-stimuli grouped in Hit/Miss and Correct Rejection/False Alarm categories, respectively. Morph levels spanning the upper and lower 20% of either end of the morph-degree spectrum were grouped together to perform a more robust analysis, since accuracy rates for these morph levels were very similar across orientation and filter conditions within the age groups [YA: F(2,29) = 0.89, p = 0.416; OA: F(2,29) = 0.42, p = 0.662]. In addition to a d′-analysis, a Hit/False Alarm rate analysis as well as a Reaction Time analysis were carried out on the same data set.
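The two-step trimming of recognition latencies can be sketched as follows. The absolute cut-offs (500 ms and 4000 ms) are taken from the text; the IQR step assumes the conventional Tukey fences (first quartile minus 1.5 × IQR, third quartile plus 1.5 × IQR), which may differ in detail from the rule as worded above, so this is an illustration rather than the exact procedure of the study. The function name `trim_latencies` and the sample values are hypothetical.

```python
import numpy as np

def trim_latencies(rt_ms, low_ms=500.0, high_ms=4000.0, k=1.5):
    """Apply absolute cut-offs, then drop values outside assumed Tukey fences."""
    rt = np.asarray(rt_ms, dtype=float)
    rt = rt[(rt >= low_ms) & (rt <= high_ms)]   # lenient absolute cut-offs
    if rt.size == 0:
        return rt
    q1, q3 = np.percentile(rt, [25, 75])
    iqr = q3 - q1
    # Assumption: standard Tukey fences built from the quartiles.
    return rt[(rt >= q1 - k * iqr) & (rt <= q3 + k * iqr)]

# Hypothetical latencies (ms) for one subject and condition.
sample = [450, 900, 950, 1000, 1050, 1100, 3900, 4500]
kept = trim_latencies(sample)
```

In this made-up sample, 450 ms and 4500 ms fall outside the absolute cut-offs, and 3900 ms is then removed by the IQR step.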

Covariates

Standardized scores (according to age category) were obtained from raw score measurements. For the FAIR, an independent t-test yielded no significant difference between the age groups [MYA = 6.87, SDYA = 1.76, MOA = 6.17, SDOA = 1.72, t(58) = 1.56, p = 0.125]; for the WAIS-R, the difference was significant, favoring OA [MYA = 10.37, SDYA = 2.63, MOA = 11.83, SDOA = 1.95, t(58) = 2.45, p = 0.017].

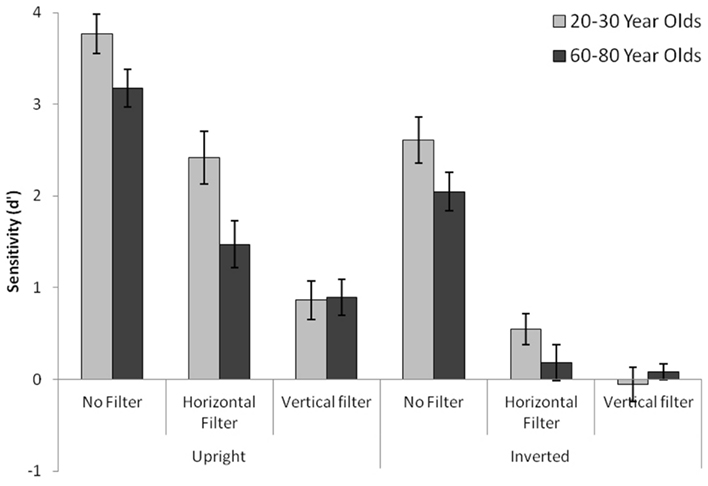

Face Recognition: Sensitivity

A descriptive d′-analysis as a function of age and stimulus type can be seen in Figure 2. An analysis of variance with the dependent variable Sensitivity (d′) revealed a significant age group main effect [MYA = 1.69, SDYA = 0.74, MOA = 1.32, SDOA = 0.52, F(1,58) = 5.1, p = 0.028], a significant orientation main effect [MUpright = 2.11, SDUpright = 1.66, MInverted = 0.9, SDInverted = 1.46, F(1,58) = 142.91, p < 0.001], a significant filter main effect [MUnfiltered = 2.9, SDUnfiltered = 1.35, MHorizontal = 1.16, SDHorizontal = 1.53, MVertical = 0.45, SDVertical = 1.05, F(2,57) = 172.34, p < 0.001], and a significant filter with age group interaction [F(1,58) = 4.32, p = 0.015]. Independent t-tests identified upright horizontally filtered stimuli as the only condition with a significant age group difference in d′-values: MYA = 2.42, SDYA = 1.56, MOA = 1.47, SDOA = 1.41, t(58) = 2.33, p = 0.023, Cohen’s d = 0.69. Furthermore, comparisons of horizontal and vertical conditions for each orientation within each age group were significant for YA but not for OA [YA, upright orientation: MHorizontal = 2.42, SDHorizontal = 1.56, MVertical = 0.86, SDVertical = 1.14, t(29) = 5.52, p < 0.001; YA, inverted orientation: MHorizontal = 0.54, SDHorizontal = 0.93, MVertical = −0.06, SDVertical = 2.33, t(29) = 2.38, p = 0.027; OA, upright orientation: MHorizontal = 1.52, SDHorizontal = 1.41, MVertical = 0.9, SDVertical = 1.07, t(29) = 1.98, p = 0.057; OA, inverted orientation: MHorizontal = 0.18, SDHorizontal = 1.07, MVertical = 0.08, SDVertical = 0.46, t(29) = 0.44, p = 0.666].
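For readers less familiar with signal detection measures, d′ is the difference between the z-transformed hit rate and the z-transformed false alarm rate. The sketch below shows this computation from response counts. The log-linear correction (adding 0.5 to each count) is an assumption made here to avoid infinite z-scores when a rate equals 0 or 1; the text does not state how such cases were handled, and the function name `d_prime` is illustrative.

```python
from statistics import NormalDist

def d_prime(hits, misses, false_alarms, correct_rejections):
    """Return d' = z(hit rate) - z(false alarm rate) from response counts."""
    # Assumed log-linear correction: keeps both rates strictly between 0 and 1.
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    z = NormalDist().inv_cdf                 # inverse standard normal CDF
    return z(hit_rate) - z(fa_rate)

# Hypothetical counts: more hits than false alarms gives a positive d'.
example = d_prime(hits=20, misses=10, false_alarms=10, correct_rejections=20)
```

A value of zero indicates that "yes" responses were equally likely for learned and unlearned faces, and a negative value (such as the slightly negative mean reported for inverted vertically filtered stimuli in YA) arises when the false alarm rate exceeds the hit rate.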

Figure 2. Sensitivity scores for both age groups across different filter and orientation conditions.

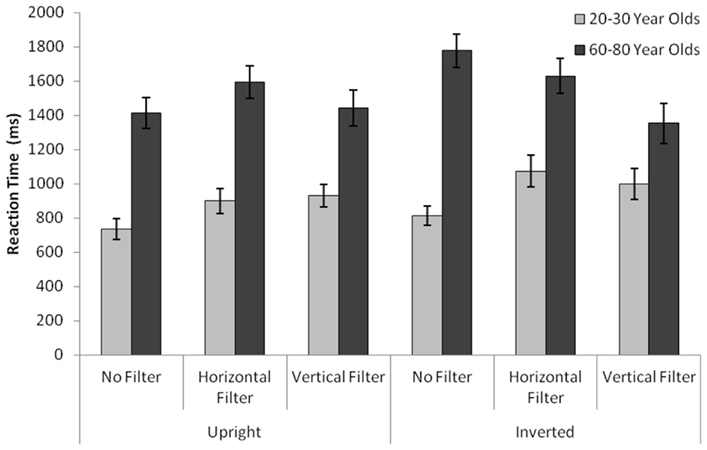

Face Recognition: Latency

Mean reaction times as a function of age and stimulus type can be seen in Figure 3. The analysis of variance with the dependent variable Reaction Time (milliseconds) revealed a significant age group main effect [MYA = 908.14, SDYA = 416.13, MOA = 1534.6, SDOA = 568.65, F(1,58) = 34.49, p < 0.001], a significant orientation main effect [MUpright = 1168.84, SDUpright = 553.81, MInverted = 1273.9, SDInverted = 617.73, F(1,58) = 12.72, p = 0.001], a significant filter main effect [MUnfiltered = 1183.71, SDUnfiltered = 608.99, MHorizontal = 1298.88, SDHorizontal = 587.04, MVertical = 1175.59, SDVertical = 564.89, F(2,57) = 4.66, p = 0.011], a significant filter with age group interaction [F(2,57) = 9.64, p < 0.001], and a significant orientation with filter interaction [F(2,57) = 8.84, p < 0.001].

Figure 3. Time it took for young and old subjects to respond to stimuli of different conditions. “Cognitive” latencies are depicted; each subject’s individual motor reaction time was subtracted from the total latency.

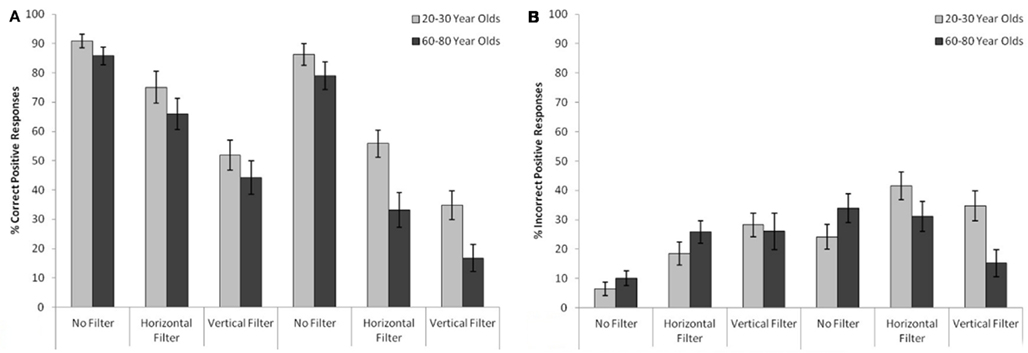

Face Recognition: Hit/False Alarm Rates

Hit rates as well as false alarm rates (given in percent of total answers) are shown in Figures 4A,B as a function of age and stimulus type. Further analyses of variance similar to the sensitivity ANOVA were carried out in order to test not just for an overall sensitivity difference, but also for hit rate and false alarm rate differences between the groups separately. The false alarm rate ANOVA yielded no significant age group difference, MYA = 25.61%, SDYA = 25.22%, MOA = 23.67%, SDOA = 26.8%, F(1,58) = 0.27, p = 0.606. Conversely, the hit rate ANOVA yielded a significant age group difference, MYA = 65.8%, SDYA = 31.5%, MOA = 54.17%, SDOA = 36.74%, F(1,58) = 8.49, p = 0.005, with the two inverted filtered conditions being the only significant age group comparisons. For inverted horizontally filtered stimuli: MYA = 55.88%, SDYA = 25.4%, MOA = 33.18%, SDOA = 32.99%, t(58) = 2.99, p = 0.004, Cohen’s d = 1.25. For inverted vertically filtered stimuli: MYA = 34.74%, SDYA = 26.82%, MOA = 16.77%, SDOA = 25.18%, t(58) = 2.68, p = 0.01, Cohen’s d = 0.95.

Figure 4. (A) Hit rates. Amount of correct “yes” responses for both age groups for different stimulus types. (B) False alarm rates. Amount of incorrect “yes” responses for both age groups for different stimulus types.

Discussion

The ability to recognize a face has been attributed to the specific arrangement of horizontal information that a face possesses. Indeed, subjects in this experiment had lower sensitivity scores when confronted with a vertically filtered stimulus as opposed to a horizontally or unfiltered one.

This was found to be true for both upright and inverted orientation conditions: learned image information can be retrieved relatively accurately when the target stimulus does not differ from the encoding stimulus, less accurately when horizontal spatial frequency processing had to be used exclusively to extrapolate the target stimulus’s identity from encoded information, and least accurately when the stimulus contained only vertical spatial frequencies. This pattern was found for both age groups alike, although the statistical differences between the various filter levels differ within each age group.

Whereas young participants showed clear-cut, significant decreases for each filter level in its respective orientation, older participants only showed significantly higher d′-values for unfiltered stimuli. In other words, older subjects’ ability to recognize a learned identity did not differ significantly between horizontally and vertically filtered stimuli, regardless of orientation. Older subjects do not recognize horizontally filtered faces better than vertically filtered stimuli – at least statistically – even though a trend toward significance does exist for this comparison. However, taking into account the evenly graded decrease in recognition sensitivity across filter methods in YA, the departure of OA from this pattern suggests that older subjects are not as able to make use of horizontal spatial frequency processing as younger subjects are. Furthermore, a bigger age effect for all informative recall-stimuli conditions could possibly have been found had the subject pool been extended beyond older university students to include regular older individuals; this is certainly a topic for further research.

The noticeable difference between horizontal and vertical conditions that was only observed in young adults also results in a difference between the two age groups, as sensitivity scores in vertically filtered conditions were nearly identical among young and old adults: young and old subjects clearly differed in their judgment of upright horizontally filtered stimuli. Thus, with this being the only condition that differed significantly between the age groups, the hypothesis that processing of horizontal spatial frequencies undergoes age-related decline is strengthened.

Older participants did perform worse on this recognition task for all relevant stimuli (if vertically filtered stimuli are excluded as an informative source), but the means of the two age groups differ enough to generate a statistical difference only in the condition which offers the preferential processing of horizontal spatial frequencies as a useful recognition tool. If the purpose of such filtering methods is to simulate how the visual system operates during early stages of stimulus break-down (in this case mainly break-down due to edge detection of spatial frequencies), and given the finding that OA are seemingly less efficient in a condition which requires the use of preferential processing of horizontal spatial frequencies, it seems plausible that the general disadvantage of OA can be attributed to this relative lack of ability. At the same time, however, the less obvious difference for the unfiltered upright condition, albeit statistically near-significant, might be due to some adaptation mechanism that takes place at an older age, where the filtering of spatial frequencies does not play as big a role as it does for YA.

There are possible reasons why older subjects performed almost on par with young subjects. Expertise for identifying faces is acquired through repeated contact with individuals, where learning new faces, identifying, and remembering them are important tasks with social consequences. An influential model, the in-group/out-group model of face recognition (Sporer, 2001, see also Levin, 1996), proposes that in-group faces are automatically processed due to underlying perceptual expertise, whereas out-group faces are merely categorized as not belonging to the same group as their observer. Further, the model states that there is a strong possibility that this out-group coding does not extend beyond initial labeling as being different, thereby limiting the motivation to develop expertise for out-group faces. At the same time, subjects have been noted to perform better on stimuli depicting faces closer to their own age, which might be linked to the time that is spent with individuals of their own age. This finding has been confirmed for young and old age groups and has subsequently been coined the own age bias (Anastasi and Rhodes, 2005; Perfect and Moon, 2005). Recent visual experience with other-age groups, especially with previously unknown individuals (such as other university students as opposed to own young family members whose configural information has been consolidated over years), has been theorized to change the behavior of an individual, in this case the ability to discriminate identities of young faces. 
Based on these findings it appears plausible that a more cognitively stimulating environment (compared to OA who do not attend university at a higher age) affects cognitive abilities in a positive way: unlike most studies researching age differences, the present older age group was a select group of older university students, which increased the “face time” older subjects had interacting with young university students, thereby familiarizing OA with the configuration of young faces.

This study confronted subjects with stimuli exclusively depicting young faces. It is entirely possible that these near-significant differences disappear if older faces are tested as well. Despite conflicting findings concerning the own age bias (OAB), findings indicate a stronger bias for OA than for YA that hinges strongly on the target stimulus’s perceived age (Freund et al., 2011). A goal of future research thus is to investigate whether horizontal spatial frequency processing is intact for the identification of older faces or remains impaired. A third age group, subjects in their mid-thirties, could be of significant interest as well, as it was recently stated that face recognition reaches its peak later than previously thought (Germine et al., 2011). It would be interesting to see how these subjects perform for age groups below and above their own age, as the postulated higher perceptual abilities go along with expertise for face configurations of older and younger individuals alike (i.e., individuals in their mid-thirties increasingly spend more time with representatives of both age groups).

The present findings indicate that, although it is not entirely evident that OA and YA perform equally in un-manipulated conditions, OA perform less accurately when it comes to identifying faces using the preferential processing of horizontal spatial frequencies. In fact, the greatest difference in sensitivity performance is observed for this horizontal filter condition.

Reaction times differ between the age groups in a more clear-cut way than sensitivity measures do. Latencies show that OA need more time to make a judgment about the identity of a face. These differences are present for each condition that was tested, indicating that, for these age groups, latency is independent of the information content that has to be processed. This raises the question of which components are responsible for this consistent finding. Since motor reaction times were accounted for by subtracting them individually from the latency during the face recognition experiment, motor reactivity can be excluded as a factor. There is no way to tell from the present data whether sensory perception was slower for OA, or whether it was indeed decision-making that led to a heightened reaction time. Interestingly, however, YA appear to have a higher tendency to respond with “yes” answers during ambiguous or non-informative conditions (inverted filtered conditions). Such answers in general take more time to make, as more parts of the target face have to be compared to the stored encoded information to ensure resemblance. In contrast, a “no” response can be given as soon as differences are found while matching the target image with the learned stimulus from memory (Lockhead, 1972). If YA exhibit a higher percentage of decisions requiring more decision-making time, the higher latencies of OA suggest that sensory processes are indeed slower in OA, which runs contrary to research stating that sensory processes remain relatively intact and that decision-making drives higher latencies (Pfütze et al., 2002).
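The motor correction described above can be illustrated with a minimal sketch. It assumes one baseline motor reaction time per subject that is subtracted from each of that subject's recognition latencies; the subject labels, the millisecond values, and the use of the median as a summary are hypothetical and are not taken from the study's data or analysis.

```python
from statistics import median

def corrected_latencies(recognition_rts_ms, motor_rt_ms):
    """Subtract one subject's baseline motor reaction time from each
    of that subject's recognition latencies (all values in ms)."""
    return [rt - motor_rt_ms for rt in recognition_rts_ms]

# Hypothetical example data, not values from the experiment.
subjects = {
    "YA_01": {"motor_rt": 310.0, "recognition_rts": [905.0, 870.0, 990.0]},
    "OA_01": {"motor_rt": 420.0, "recognition_rts": [1250.0, 1180.0, 1310.0]},
}

# Corrected latencies per subject, then one summary value per subject.
corrected = {
    sid: corrected_latencies(data["recognition_rts"], data["motor_rt"])
    for sid, data in subjects.items()
}
median_corrected = {sid: median(values) for sid, values in corrected.items()}
```

Because the subtraction is done per subject, any latency difference that remains between the groups cannot be attributed to slower key presses alone, which is the reasoning used in the paragraph above.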

Future research in this field could flourish if such a select group of subjects is studied more closely. Psychophysical evidence from this experiment hints at high-performing OA being relatively spared when it comes to identifying unknown faces. This is accompanied by better working memory performance and by sensitivity differences that fail to reach significance. At this time it is pure speculation, but this select group of older individuals might indeed possess a different physiology than other people of their own age. This could be of substantial relevance given Cabeza’s HAROLD model (Cabeza, 2002), Grady’s plasticity theory (Grady, 1998; Grady et al., 1998), and Gazzaley’s account of impaired attention processes (Gazzaley et al., 2005, 2008) and their involvement in face processing. Perhaps these OA show a smaller degree of compensatory mechanisms, namely less bilateral brain activation and a more localized blood flow to prefrontal cortices.

Also open to debate is the notion that encoding processes are responsible for a difference in performance between age groups: when encoding and recall conditions were the same, the difference between the groups was not statistically significant and was much less apparent than in a scenario in which subjects had to use horizontal processing to gather information about identity and compare it to a learned identity. This is supported by previous research that determined memory load during encoding to be the factor on which accurate face recognition hinges (Lamont et al., 2005). Encoding of horizontally filtered stimuli would clearly not increase the memory load, but would still pose a challenge for older individuals, as impaired cognitive processes (such as the abstraction from a horizontally filtered to an unfiltered face) might already impact the encoding processes (which, as theorized, might also be a factor in impaired face recognition).

Another fact supporting this notion concerns what normally seems to be a crucial difference between younger and older age groups, a higher false alarm rate in OA. This finding cannot be supported in this study, since false alarm rates did not differ in conditions where an informed judgment could be made (i.e., unfiltered or horizontally filtered stimuli), but only in conditions in which guessing would most likely contribute to the overall low sensitivity scores. This could be explained by a general tendency for OA to respond with a “no,” whereas YA respond to ambiguous scenarios more often with a “yes.” The same pattern is present for hit rates, where OA did not show a different response behavior except perhaps in the least informative condition (inverted, vertically filtered), in which OA gave fewer positive responses and thus had a lower hit rate. These findings are consistent with each other and further explain the overall relatively evenly distributed response behaviors.
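The separation of sensitivity from response tendency discussed above can be made concrete with a short sketch. It assumes that sensitivity is expressed as the signal detection index d′ and response bias as the criterion c; the measure actually used in this study is not restated in this section, and the rates and counts below are hypothetical. The log-linear correction in `rates` is one common convention for avoiding rates of exactly 0 or 1, not necessarily the one applied here.

```python
from statistics import NormalDist

def rates(hits, misses, false_alarms, correct_rejections):
    """Hit and false alarm rates with a log-linear correction
    (0.5 added to each count) so that neither rate is exactly 0 or 1."""
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)
    return hit_rate, fa_rate

def d_prime_and_criterion(hit_rate, fa_rate):
    """Return (d', c): d' = z(H) - z(FA), c = -(z(H) + z(FA)) / 2.
    Negative c indicates a tendency to answer "yes", positive c "no"."""
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return d_prime, criterion

# Hypothetical observers: one "yes"-prone, one "no"-prone.
d_yes, c_yes = d_prime_and_criterion(hit_rate=0.9, fa_rate=0.5)
d_no, c_no = d_prime_and_criterion(hit_rate=0.5, fa_rate=0.1)

# Hypothetical counts for one condition of one subject.
example_hit_rate, example_fa_rate = rates(18, 2, 10, 10)
```

In this constructed example the two observers have the same d′ but criteria of opposite sign: the "no"-prone observer shows both a lower hit rate and a lower false alarm rate without being less sensitive. This is the kind of pattern the paragraph above describes for OA in the least informative conditions.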

Gathering information from a horizontally filtered stimulus and comparing it to stored information appears to be more challenging for OA than for YA, despite OA’s generally comparable performance, especially given the disadvantage to which they were exposed by the exclusive use of young-faced stimuli. Thus, a difference between YA and OA concerning general face recognition ability can be further explained if preferential processing of horizontal spatial frequencies is considered to be a major prerequisite for this ability.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Alvergne, A., Faurie, C., and Raymond, M. (2007). Differential facial resemblance of young children to their parents: who do children look like more? Evol. Hum. Behav. 28, 135–144.

Anastasi, J. S., and Rhodes, M. G. (2005). An own-age bias in face recognition for children and older adults. Psychon. Bull. Rev. 12, 1043–1047.

Baron-Cohen, S. (1994). How to build a baby that can read minds: cognitive mechanisms in mind-reading. Curr. Psychol. Cogn. 13, 513–552.

Baron-Cohen, S. (1995). Mindblindness: An Essay on Autism and Theory of Mind. Cambridge, MA: MIT Press/Bradford Books.

Bartlett, J. C., Leslie, J. E., Tubbs, A., and Fulton, A. (1989). Aging and memory for pictures of faces. Psychol. Aging 4, 276–283.

Bressan, P., and Zucchi, G. (2009). Human kin recognition is self- rather than family referential. Biol. Lett. 5, 336–338.

Burke, D. M., and Light, L. L. (1981). Memory and aging: the role of retrieval processes. Psychol. Bull. 90, 513–546.

Cabeza, R. (2002). Hemispheric asymmetry reduction in older adults: the HAROLD model. Psychol. Aging 17, 85–100.

Cabeza, R., Grady, C. L., Nyberg, L., McIntosh, A. R., Tulving, E., Kapur, S., Jennings, J. M., Houle, S., and Craik, F. I. M. (1997). Age-related differences in neural activity during memory encoding and retrieval: a positron emission tomography study. J. Neurosci. 17, 391–400.

Chaby, L., and Narme, P. (2009). Processing facial identity and emotional expression in normal aging and neurodegenerative diseases. Psychol. Neuropsychiatr. Vieil. 7, 31–42.

Craik, F. I. M., and Byrd, M. (1982). “Aging and cognitive deficits: the role of attentional resources,” in Aging and Cognitive Processes, eds F. I. M. Craik, and S. Trehub (New York: Plenum Press), 191–211.

Craik, F. I. M., and Lockhart, R. S. (1972). Levels of processing: a framework for memory research. J. Verb. Learn. Verb. Behav. 11, 671–684.

Crook, T. H., and Larrabee, G. J. (1992). Changes in facial recognition memory across the adult life span. J. Gerontol. 47, 138–141.

Edmonds, E. C., Glisky, E. L., Bartlett, J. C., and Rapcsak, S. Z. (2012). Cognitive mechanisms of false facial recognition in older adults. Psychol. Aging 27, 54–60.

Firestone, A., Turk-Browne, N. B., and Ryan, J. D. (2007). Age-related deficits in face recognition are related to underlying changes in scanning behavior. Neuropsychol. Dev. Cogn. B Aging Neuropsychol. Cogn. 14, 594–607.

Freund, A. M., Kourilova, S., and Kuhl, P. (2011). Stronger evidence for own-age effects in memory for older as compared to younger adults. Memory 19, 429–448.

Fulton, A., and Bartlett, J. C. (1991). Young and old faces in young and old heads: the factor of age in face recognition. Psychol. Aging 6, 623–630.

Gazzaley, A., Clapp, W., Kelley, J., McEvoy, K., Knight, R. T., and D’Esposito, M. (2008). Age-related top-down suppression deficit in the early stages of cortical visual memory processing. Proc. Natl. Acad. Sci. U.S.A. 105, 13122–13126.

Gazzaley, A., Cooney, J. W., Rissman, J., and D’Esposito, M. (2005). Top-down suppression deficit underlies working memory impairment in normal aging. Nat. Neurosci. 8, 1298–1300.

Germine, L. T., Duchaine, B., and Nakayama, K. (2011). Where cognitive development and aging meet: face learning ability peaks after age 30. Cognition 118, 201–210.

Goffaux, V., and Dakin, S. C. (2010). Horizontal information drives the behavioral signatures of face processing. Front. Psychol. 1:143. doi: 10.3389/fpsyg.2010.00143

Grady, C. L. (1998). Brain imaging and age-related changes in cognition. Exp. Gerontol. 33, 661–674.

Grady, C. L., Maisog, J. M., Horwitz, B., Ungerleider, L. G., Mentis, M. J., Salerno, J. A., Pietrini, P., Wagner, E., and Haxby, J. V. (1994). Age-related changes in cortical blood flow activation during visual processing of faces and location. J. Neurosci. 14, 1450–1462.

Grady, C. L., McIntosh, A. R., Bookstein, F., Horwitz, B., Rapoport, S. I., and Haxby, J. V. (1998). Age-related changes in regional cerebral blood flow during working memory for faces. Neuroimage 8, 409–425.

Grady, C. L., McIntosh, A. R., Horwitz, B., and Rapoport, S. I. (2000). Age-related changes in the neural correlates of degraded and non-degraded face processing. Cogn. Neuropsychol. 17, 165–186.

Greenwood, P. M., and Parasuraman, R. (2010). Neuronal and cognitive plasticity: a neurocognitive framework for ameliorating cognitive aging. Front. Aging Neurosci. 2:150. doi: 10.3389/fnagi.2010.00150

Hoaglin, D. C., Mosteller, F., and Tukey, J. W. (1983). Understanding Robust and Exploratory Data Analysis. New York, NY: Wiley.

Lamont, A. C., Stewart-Williams, S., and Podd, J. (2005). Face recognition and aging: effects of target age and memory load. Mem. Cognit. 33, 1017–1024.

Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D. H. J., Hawk, S. T., and van Knippenberg, A. (2010). Presentation and validation of the Radboud Faces Database. Cogn. Emot. 24, 1377–1388.

Levin, D. T. (1996). Classifying faces by race: the structure of face categories. J. Exp. Psychol. Learn. Mem. Cogn. 22, 1364–1382.

Maylor, E. A., and Valentine, T. (1992). Linear and nonlinear effects of aging on categorizing and naming faces. Psychol. Aging 7, 317–323.

Mishkin, M., and Ungerleider, L. G. (1982). Contribution of striate inputs to the visuospatial functions of parieto-preoccipital cortex in monkeys. Behav. Brain Res. 6, 57–77.

Moosbrugger, H., and Oehlschlägel, J. (1996). Frankfurter Aufmerksamkeits-Inventar (FAIR). Bern: Verlag Hans Huber.

Perfect, T. J., and Moon, H. (2005). “The own-age effect in face recognition,” in Measuring the Mind, eds J. Duncan, P. McLeod, and L. Phillips (Oxford: Oxford University Press), 317–340.

Pfütze, E. M., Sommer, W., and Schweinberger, S. R. (2002). Age-related slowing in face and name recognition: evidence from event-related brain potentials. Psychol. Aging 17, 140–160.

Sanders, R. E., Murphy, M. D., Schmitt, F. A., and Walsh, K. K. (1980). Age differences in free recall rehearsal strategies. J. Gerontol. 35, 550–558.

Sporer, S. L. (2001). Recognizing faces of other ethnic groups: an integration of theories. Psychol. Public Policy Law 7, 36–97.

Tewes, U. (1994). HAWIE-R. Hamburg-Wechsler-Intelligenztest für Erwachsene, Revision 1991; Handbuch und Testanweisung. Bern: Verlag Hans Huber.

Watt, R. J. (1994). Computational analysis of early visual mechanisms. Ciba Found. Symp. 184, 104–117.

Watt, R. J., and Dakin, S. C. (2010). The utility of image descriptions in the initial stages of vision: a case study of printed text. Br. J. Psychol. 101, 1–26.

West, R. L. (1996). An application of prefrontal cortex function theory to cognitive aging. Psychol. Bull. 120, 272–292.

West, R. L. (2000). In defense of the frontal lobe hypothesis. J. Int. Neuropsychol. Soc. 6, 727–729.

Keywords: face perception, age differences, memory, spatial frequencies

Citation: Obermeyer S, Kolling T, Schaich A and Knopf M (2012) Differences between old and young adults’ ability to recognize human faces underlie processing of horizontal information. Front. Aging Neurosci. 4:3. doi: 10.3389/fnagi.2012.00003

Received: 27 February 2012; Accepted: 02 April 2012;

Published online: 23 April 2012.

Edited by:

Hari S. Sharma, Uppsala University, Sweden

Reviewed by:

Luis Francisco Gonzalez-Cuyar, University of Washington School of Medicine, USA

Gregory F. Oxenkrug, Tufts University, USA

Copyright: © 2012 Obermeyer, Kolling, Schaich and Knopf. This is an open-access article distributed under the terms of the Creative Commons Attribution Non Commercial License, which permits non-commercial use, distribution, and reproduction in other forums, provided the original authors and source are credited.

*Correspondence: Sven Obermeyer, Department of Psychology, Goethe-University Frankfurt, Georg-Voigt-Strasse 8, Frankfurt 60325, Germany. e-mail:b2Jlcm1leWVyQHBzeWNoLnVuaS1mcmFua2Z1cnQuZGU=

Thorsten Kolling