- 1Department of Mathematics, MOE-LSC, and Institute of Natural Sciences, Shanghai Jiao Tong University, Shanghai, China

- 2Courant Institute of Mathematical Sciences and Center for Neural Science, New York University, New York, NY, USA

- 3NYUAD Institute, New York University Abu Dhabi, Abu Dhabi, UAE

Granger causality (GC) is a powerful method for causal inference for time series. In general, the GC value is computed using discrete time series sampled from continuous-time processes with a certain sampling interval length τ, i.e., the GC value is a function of τ. Using the GC analysis for the topology extraction of the simplest integrate-and-fire neuronal network of two neurons, we discuss behaviors of the GC value as a function of τ, which exhibits (i) oscillations, often vanishing at certain finite sampling interval lengths, (ii) the GC vanishes linearly as one uses finer and finer sampling. We show that these sampling effects can occur in both linear and non-linear dynamics: the GC value may vanish in the presence of true causal influence or become non-zero in the absence of causal influence. Without properly taking this issue into account, GC analysis may produce unreliable conclusions about causal influence when applied to empirical data. These sampling artifacts on the GC value greatly complicate the reliability of causal inference using the GC analysis, in general, and the validity of topology reconstruction for networks, in particular. We use idealized linear models to illustrate possible mechanisms underlying these phenomena and to gain insight into the general spectral structures that give rise to these sampling effects. Finally, we present an approach to circumvent these sampling artifacts to obtain reliable GC values.

1. Introduction

There have been great advances in experimental observational techniques in neuroscience, from single-unit, multi-unit, local field potential (LFP), to non-invasive electroencephalography (EEG), magnetoencephalography (MEG), and functional Magnetic Resonance Imaging (fMRI). These experimental tools have expanded the examination of physiological correlations of neuronal responses to stimuli to causal effects of how different parts of the nervous system influence one another. One of the fundamental questions is how one can infer causal relations between neurons or between neuronal populations through measured time series, which represent neural activities of different neurons or neuronal populations.

There is a long history of studying causal relations between time series. Granger causality (GC) has been developed to study how one time series is influencing the other. Recently GC has been applied to extract causal or structural information from time series (Strogatz, 2001; Boccaletti et al., 2006; Bressler and Seth, 2011). It has been applied to analyze times series obtained through invasive techniques, such as single-unit, multi-unit (Passaro et al., 2008), LFP (Brovelli et al., 2004; Bressler et al., 2007) recordings as well as non-invasive techniques, such as EEG (Astolfi et al., 2006), MEG (Gow et al., 2008), and fMRI (Roebroeck et al., 2005; Deshpande et al., 2008; Hamilton et al., 2010). The GC theory aims to analyze causal influence of one time series Xt on the other Yt by examining whether the prediction of Yt can be improved upon the incorporation of information of Xt (Wiener, 1956; Granger, 1969; Geweke, 1982). Although GC has proven to be a powerful framework to study directed causal connectivity within the brain, there are many challenges in its application to acquire reliable results. Usually the application of GC requires the time series to be linear. When time series are linear and Gaussian, GC is equivalent to the transfer entropy (Barnett et al., 2009; Bressler and Seth, 2011). For non-linear, non-Gaussian time series, it remains an active research field to study the applicability of the GC. Note that, the notion of GC is statistical rather than structural, i.e., through statistical features of responses, it identifies directed statistical functional connectivity, which may be different from physical causal interactions in the systems.

As a statistical method, one of the important issues in the GC analysis is how to sample the dynamics in order to obtain suitable time series for a reliable result from GC analysis. It has been pointed out that GC is not invariant to the sampling interval (McCrorie and Chambers, 2006). Spurious causality may arise if sampling is not sufficiently fine to capture the evolution of dynamical variables (McCrorie and Chambers, 2006). In its application to the analysis of fMRI data, one is confronted with the unavoidable issue of low-pass filtering and down-sampling in processing the BOLD signal (Roebroeck et al., 2005; Danks and Plis, 2013).

However, the directed statistical functional connectivity as revealed in the GC analysis is intimately related to the structural connectivity of networks. Previously, we have applied the GC analysis to reconstruct network structural connectivity and our results demonstrate that the GC analysis can be successfully used to reconstruct the coupling structures of the integrate-and-fire (I&F) networks (Zhou et al., 2013b, 2014). We note in passing that for smoothly coupled oscillator networks (with or without noisy inputs), techniques including chaotic synchronization or phase dynamics have been used to uncover the structural connectivity via random phase reset or their responses to perturbations (Yu et al., 2006; Timme, 2007; Napoletani and Sauer, 2008; Smirnov and Bezruchko, 2009; Ren et al., 2010; Levnajić and Pikovsky, 2011), whereas, for some simple non-smooth network dynamics, e.g., δ-pulse coupled, current-based integrate-and-fire systems, their topologies can be reconstructed by designing a specific set of external inputs (Bussel et al., 2011). However, in general, it remains a challenging task to directly uncover the network topology for a non-smooth, non-linear system, such as the conductance-based I&F networks, given the condition that only the dynamical activity at individual nodes can be measured. The GC analysis in our network construction uses time series measured from the network dynamics response, such as the membrane potential, to a natural input. In this approach, we do not design specific inputs to probe the specific response of the system in order to uncover the network topology.

Because most dynamical quantities are continuous in time, GC values are computed using the discrete time series sampled from these continuous time processes. In this work, we analyze sampling artifacts in the GC analysis. In particular, we examine the reliability of the I&F network topology reconstructed using the GC analysis, by studying behaviors of the GC value as a function of sampling interval length. We will term this function as the GC sampling structure. As will be shown below, surprisingly, there are oscillations in the GC sampling structure and the GC may even vanish on a set of finite sampling interval lengths even if there are physical couplings between neurons. The phenomenon of vanishing GC on a set of sampling interval lengths obviously complicates the interpretation of causal inference and raises the issue of the reliability of network topology reconstruction using the GC analysis. In addition, if sampling interval is not sufficiently small, as mentioned above, GC may not vanish even when there is actually no causal influence (McCrorie and Chambers, 2006). Therefore, in general, without properly taking into account the sampling effect, different conclusions can be drawn about causality, due to the difference in sampling, when the GC analysis is applied to experimental observational data. One may presume that if we used discrete time series sampled using ever finer interval lengths from a continuous process in time, a more reliable GC value for causal inference could be obtained since the sampled time series in such case is more and more close to the continuous dynamical process. However, as we will show below, the GC value actually approaches zero linearly in proportion to the sampling interval length. Therefore, in the GC analysis for the network topology reconstruction, one of the main theoretical challenges is what is an appropriate sampling interval in order to obtain reliable GC values.

To answer the question of how to determine the sampling interval, we first characterize various features associated with the GC sampling structures arising from the network topology reconstruction. We will use idealized linear models to illustrate possible mechanisms underlying these structures and to gain insight into reliability of the GC analysis applied to linear and non-linear systems. Finally, we will present general strategies to circumvent these complications in the GC analysis. It is important to point out that these sampling issues arise from how discrete time series for the GC analysis are sampled from continuous time processes and they need to be addressed regardless of whether one uses a parametric method or a non-parametric method for the GC evaluation (Geweke, 1982; Dhamala et al., 2008).

This article is organized as follows. In the section of Methods, we briefly introduce the I&F network model, the GC analysis and its application in the network reconstruction. In the section of Results, first, we numerically study the GC sampling structures for the I&F networks. We observe that GC values oscillate with respect to the sampling interval length τ and approach zero linearly in proportion to τ as τ tends to zero. These two phenomena may give rise to complications for reliable GC analysis of the I&F network, in addition to the possible spurious GC in the absence of causal influence. Second, we study mechanisms of oscillations in GC sampling structures through linear models. Third, we study the limiting behavior of GC as τ tends to zero and discuss the underlying mechanisms. In the section of Discussion and Conclusion, we describe our approach of eliminating the sampling artifacts in the application of GC and present our conclusions. In Appendices A–C of Supplementary Material, we present related mathematical details.

2. Materials and Methods

2.1. I&F Network Model

As we mentioned in the Introduction, we have applied GC analysis to reconstruct the topological connectivity of I&F networks. Experimentally, it has been shown that models of I&F neurons are able to capture rather well the subthreshold dynamics of a real neuron in terms of linear response properties as well as statistical firing properties of a real neuron (Carandini et al., 1996; Rauch et al., 2003; Burkitt, 2006). The models of I&F neurons have been extensively used in simulations of large-scale neuronal networks in the modeling of cortical phenomena in the brain (Somers et al., 1995; Troyer et al., 1998; McLaughlin et al., 2000; Tao et al., 2004; Cai et al., 2005; Rangan et al., 2005; Zhou et al., 2013a). In this work, we study the network of the conductance-based, I&F neurons of excitatory type and its dynamics is governed by the following equations:

where Vi and Gi are the membrane potential and conductance for the ith neuron in the network, respectively, GL is the leak conductance, ϵL is the resting potential, ϵE is the reversal potential, σ is the conductance time constant. When Vi is between the threshold Vth and the resting potential ϵL, the dynamics of a neuron is described by Equation (1). However, when the membrane potential of a neuron reaches the threshold Vth, it is reset to the resting potential ϵL for a certain period τref (refractory period). An action potential of a real neuron is modeled here by the event of the threshold crossing and the voltage resetting. The event of this threshold-reset dynamics is referred to as a firing event (spike) of a neuron. sji is the connection strength from the presynaptic neuron j to the postsynaptic neuron i. For simplicity, we study a homogeneously coupled network, i.e., sji = sAji, where s is the connection strength, A = (Aji) is the adjacency matrix of the network. Tj,k is the time of the kth spike of the jth neuron, whereas TPi,l is the arrival time of the lth spike of the input to the ith neuron. The input is a Poisson process with rate ν and strength λ.

In our work, we use dimensionless unit for membrane potentials, in particular, Vth = 1, ϵL = 0, ϵE = . These correspond to unscaled physiological values, Vth = −55 mV, ϵL = −70 mV, and ϵE = 0 mV (McLaughlin et al., 2000; Cai et al., 2005; Rangan et al., 2005; Zhou et al., 2013a). Time constants retain its dimension, for which we use ms for its unit. We set τref = 2 ms and the conductance time constant σ = 2 ms. Conductance has the unit ms−1. We have GL = 0.05 ms−1, which corresponds to the physiological membrane time constant 20 ms. The method we used to solve system (1) numerically is a fourth order Runge-Kutta method with spike-spike corrections, the details of which can be found in Rangan and Cai (2007).

In addition to voltage signals, we also use time series constructed from spike trains:

where, δ(t − Tif) is the Dirac delta function, Tif is the firing time of the ith spike. In order to avoid the infinity in the Dirac δ-function, we convolve these spikes with a kernel. The simplest kernel is the indicator function with width d. Then, a spike train can be represented as the following:

where, Id(x) = 1 for |x| ≤ and Id(x) = 0 for |x| > . We use sufficiently small d so there are no overlaps between spikes. Then, we can obtain a time series of binary type by sampling signal (2) with a certain sampling interval length.

In the following, we will perform the GC analysis on the time series of voltage or spike trains to reconstruct the I&F network topology, i.e., the adjacency matrix A = (Aji) and discuss the sampling issues in this approach.

2.2. Brief Introduction to the GC Analysis

The GC analysis is based on the idea that, if one can reduce the prediction error about a time series after incorporating the history of the second time series, then there must be a causal influence of the second time series on the first (Wiener, 1956; Granger, 1969; Geweke, 1982). The GC analysis can be naturally formulated in a bivariate form. However, the causality analysis can also be naturally generalized to a multivariate form using conditional Granger causality (Geweke, 1984; Ding et al., 2006). In the following, for ease of later discussions, we briefly recapitulate properties of Granger causality in the bivariate setting.

For two stationary time series Xt and Yt, one can perform autoregression to obtain

where ϵ1t and η1t are autoregression residuals representing the prediction errors when we consider the history of each time series separately. Here, Σ1 = var(ϵ1t), Γ1 = var(η1t). The corresponding joint regression has the following form for times series Xt and Yt

where ϵ2t and η2t are joint regression residuals representing the prediction errors after we consider a shared history for both time series. The covariance matrix for ϵ2t and η2t is denoted by

where Σ2 = var(ϵ2t), Γ2 = var(η2t), ϒ2 = cov(ϵ2t, η2t). By the Gauss-Markov theorem (Scheffé, 1959), the series {ϵ1t}, {ϵ2t}, {η1t}, and {η2t} are white noise time series. It is evident from Equations (3) and (4) that Σ2 ⩽ Σ1 and Γ2 ⩽ Γ1, that is, one can never obtain a worse prediction (i.e., greater residual variance) of one time series after incorporating information from the other time series.

Following the idea of Granger causality, if Σ2 = Σ1, i.e., the prediction error cannot be reduced by the joint regression, then there is no causal influence from Yt to Xt. However, if Σ2 < Σ1, there is a causal influence from Yt to Xt. This causal influence is characterized by the Granger causality which is defined as

Clearly, Fy→x ⩾ 0 because Σ2 ⩽ Σ1; Fy→x = 0 when Σ2 = Σ1. Similarly, Granger causality from {Xt} to {Yt} is defined as

The idea of instantaneous causality is to quantify the mutual instantaneous influence of both time series without any time lag. If ϒ2 = 0, i.e., ϵ2t and η2t are uncorrelated, then incorporation of the new instantaneous information of Xt (ϵ2t) cannot help to reduce the variance of η2t and vice versa. Therefore, the instantaneous causality can be defined as

where |Σ| is the determinant of the matrix Σ. Note that, when ϒ2 = 0, |Σ| = Γ2Σ2 − ϒ22 = Γ2Σ2, therefore Fx.y vanishes, i.e., no instantaneous causality. The total Granger causality between {Xt} and {Yt} can now be defined as

which can easily be seen to be the sum of all the three causality terms Equations (5), (6), and (7):

Note that, in the GC analysis of our network topology reconstruction, we mainly focus on the values of Fy→x and Fx→y because they quantify the directional causal strengths. As can be seen below, Fy→x and Fx→y can be related to the directional connectivity of a network (Zhou et al., 2013b, 2014).

For our later discussions, in addition to the time-domain description of GC, we also briefly summarize the description of GC in the frequency domain (Geweke, 1982; Ding et al., 2006). For the bivariate time series Xt, Yt, the spectral matrix S(ω) is represented as , where Sxx(ω) is the auto-spectrum of the time series Xt, defined by , Syy(ω) is the auto-spectrum of the time series Yt, Sxy(ω) is the cross-spectrum of Xt and Yt, defined by . Note that Syx(ω) is the complex conjugate of Sxy(ω). The covariances of residuals possess the following spectral representations [see (Geweke, 1982; Ding et al., 2006) for details]:

where |S(ω)| = Sxx(ω)Syy(ω) − Sxy(ω)Syx(ω) is the determinant of the spectral matrix S(ω).

2.3. Extraction of the Network Topology

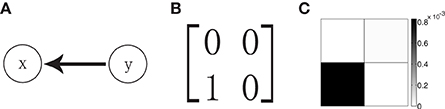

We now turn to the reconstruction of the network topology using the GC analysis. For a two-neuron network as shown in Figure 1A in which neuron x is postsynaptic to neuron y whereas there is no synaptic input from neuron x to neuron y, the corresponding adjacency matrix of the network is shown in Figure 1B. Applying the GC analysis on voltage time series with sampling interval length τ = 0.5 ms, time length T = 106 ms and regression order p = 20, we obtain Fy→x = 8.3 × 10−4 and Fx→y = 0.1 × 10−4 (Figure 1C). Note that, in our work, the Bayesian information criterion (Schwarz, 1978) is used to determine the regression order p. In our example in Figure 1, we have Fy→x » Fx→y and the GC value from neuron y to neuron x is almost two orders of magnitude larger than the inverse direction. From this, one may conclude that GC values are linked to the adjacency matrix of the network, i.e., Ayx = 1 and Axy = 0. This is also consistent with the result by performing statistical significance test (see Appendix C in Supplementary Material for details) (Zhou et al., 2013b, 2014). In the present work, we focus on the implication of sampling effects and on the assessment of the reliability of this reconstruction by the GC analysis.

Figure 1. (A) Schematic representation of a unidirectional two-neuron network with only neuron y synaptically connected to the postsynaptic neuron x. (B) The corresponding adjacency matrix A with Ayx = 1 for the network in (A). (C) The grayscale grid representation of the GC matrix F (Fij = Fi → j) for the network. The gray scale bar indicates the value of GC. The parameters of the network are ν = 1ms−1, λ = 0.012, and s = 0.02.

3. Results

3.1. Effects of Sampling

As discussed in the Introduction, the GC analysis has emerged as a popular tool in detecting causal relations among times series of physiological data measured in the brain. Note that most of the dynamical quantities underlying these times series are continuous in time, therefore, a natural and important issue arises about what is the proper way of constructing discrete time series from continuous quantities when applying the GC analysis. To address this issue, we examine the following two related questions: one is whether it makes great differences when discrete time series sampled at different time intervals are used and the other is whether the GC analysis would become more reliable as the sampling interval length shrinks to zero. We will answer these two questions using our I&F network dynamics below. Due to its own importance, as mentioned previously, we will refer to the GC value as a function of sampling interval length τ as the GC sampling structure.

3.1.1. Oscillations in the GC sampling structure

In the topology extraction for the network dynamics of two neurons (see Methods) with unidirectional interactions, whose topology is shown in Figure 1A, we perform the GC analysis on both voltage and spike train signals of the neurons. In order to examine possible differences of GC values with different sampling intervals, we first perform a scan of interval lengths in sampling of the dynamical variables from the I&F network. Note that we evaluate the GC values with different sampling interval lengths based on the same original signal, i.e., the same total duration of the signal.

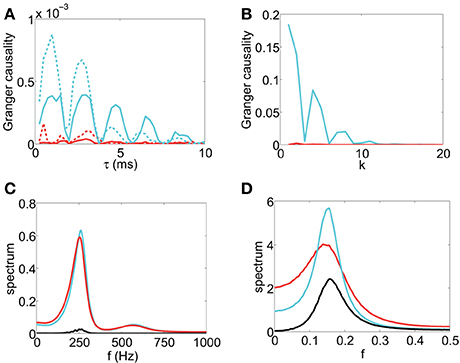

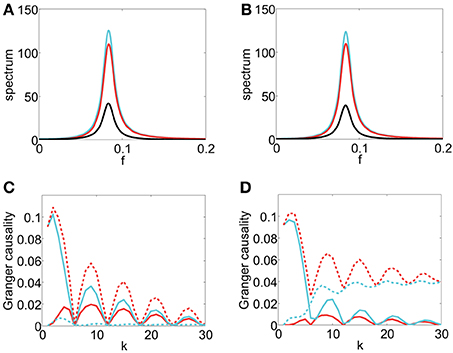

The scanning result is summarized in Figure 2A, which displays the GC value as a function of sampling interval length τ for the I&F dynamics. From Figure 2A, for GC values obtained from both the voltage and spike train time series, we have the following Observations: (i) there are oscillations in the GC sampling structure, (ii) the GC value becomes almost zero periodically, and (iii) the GC value oscillates almost at a constant frequency (approximately 500 Hz in Figure 2A), (iv) the GC value decays to zero as τ becomes sufficiently large. It is easy to appreciate Observation (iv) because for time series with a sufficiently large sampling interval length, much of the information is lost, resulting in unreliable causal detection, i.e., vanishing GC values. These observations demonstrate that for I&F dynamics there are great effects of sampling interval length on the GC values regardless of whether the voltage or spike train time series are used.

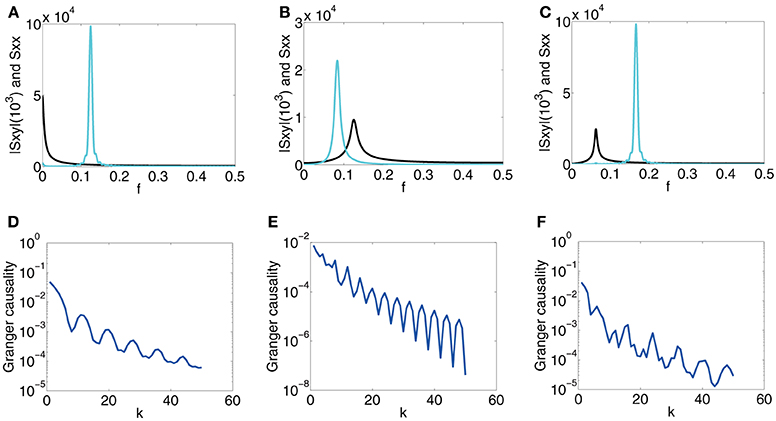

Figure 2. The GC sampling structure and corresponding spectra. The top row of panel is GC vs. sampling interval length: (A) Fx → y (red), Fy → x (cyan) obtained from voltage time series and Fx → y (red dash), Fy → x (cyan dash) obtained from spike train time series with sampling interval τ. The time series are generated by the I&F network whose topology is shown in Figure 1A with parameters ν = 1ms−1, λ = 0.066, s = 0.02. (B) Fx → y (red) and Fy → x (cyan) with sampling interval length k (see text) for the second order autoregressive model (13). (C) and (D): Corresponding spectra of the voltage time series for (A) and the autoregressive time series for (B), respectively: Sxx (cyan), Syy (red), |Sxy| (black).

In general, there are oscillations, with a narrow spectral band or a broad spectral band, in time series from the I&F networks. It is natural to use the frequency domain to examine these features of the time series. We ask whether there is a relation between the oscillations of time series which can be revealed by the spectra and the GC sampling structure. In Figure 2C, the peak frequencies of all spectra Sxx, |Sxy| and Syy are approximately 250 Hz which is half of the oscillation frequency 500 Hz in the GC sampling structure in Figure 2A. In the following, we will investigate this relation.

From the results of Figure 2A, a question naturally arises: whether the oscillation phenomenon is general and is not confined to the time series from the non-linear dynamics of the I&F networks. In particular, we ask whether the oscillations exist for linear autoregressive models, for which the GC framework is established. In order to answer this question, we study a simple example of linear models. A representative second-order autoregressive model we study is

where ϵt and ηt are Gaussian white noise time series with variances var(ϵt) = 0.5, var(ηt) = 1 and covariance cov(ϵt, ηt) = 0.2. Figure 2B displays the GC values computed using the time series generated by the model (13) with different “sampling interval length” τ = k, i.e., we use every datum point after skipping k − 1 points in between. From Figure 2B, we can also observe the oscillatory feature in the GC sampling structure, which also exhibits the behavior of GC that almost periodically vanishes. Note that we have examined many other parameters for second-order autoregressive models and found that the oscillatory features are quite common, as will be addressed below. In Figure 2D, the peak frequencies of all spectra for this model are approximately which is again half of the oscillation frequency in the GC sampling structure in Figure 2B.

In summary, it can be seen that the oscillations of the GC values with respect to sampling interval length is a common feature, not only for I&F networks but also for linear models. These features in the GC sampling structure have strong ramifications in the application of the GC analysis. Importantly, it is disconcerting to observe that the GC value almost become zero at some sampling interval lengths despite the fact that there are clearly physical connections in the network or the coupling in the linear model between the two time series. We further note that, in Figure 2A, Fx→y from both voltage and spike train time series displays non-zero values for some ranges of τ, in contrast to the physical couplings in the system for which there is no synaptic connection from neuron x to y. As will be shown below, this spurious GC also arises for linear systems. Evidently, the phenomena of vanishing GC and spurious GC will greatly complicate the reliability of any conclusions about the causal influence and will make the validity of the network topology reconstruction questionable. Later, we will return to the resolution of these issues.

3.1.2. The GC sampling structure as τ→0

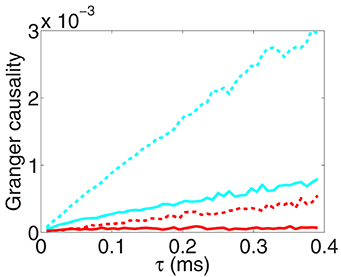

As demonstrated through the oscillation phenomenon of GC above, clearly, we cannot choose sampling interval length arbitrarily in the application of GC analysis. Then, an important issue arises: what should be the criteria of choosing a correct sampling interval length to obtain discrete time series in measurement for reliable GC analysis. For a natural discrete-time dynamics, we can simply use their intrinsic intervals, e.g., for discrete time series generated by an autoregressive model, and we can then obtain reliable GC values for causal interpretations because the entire information is incorporated in the causal analysis. However, most physical quantities are continuous in time, one does not have particular intrinsic intervals for sampling. In order to obtain reliable GC values, one possible scenario, similar to the discrete case, is that it is always better if one uses more finely sampled time series because apparently more information is incorporated for causality determination. To examine this scenario, we study the convergence property of the GC sampling structure as the sampling interval length τ tends to 0. The corresponding numerical results are shown in Figure 3 for a two-neuron I&F network.

Figure 3. The GC sampling structure as sampling interval length tends to zero. Fx→y (red), Fy→x (cyan) obtained from voltage time series and Fx→y (red dash), Fy→x (cyan dash) obtained from spike train time series with sampling interval length τ. Note that, by the asymptotic distribution theory of GC (Geweke, 1982), the estimator of a directed GC has a bias , where p is the regression order and n is the length of the discrete time series. We have used the Bayesian information criterion (Schwarz, 1978) to determine the regression order p and have subtracted this type of biases in the figure (see Appendix C in Supplementary Material for details). The time series are generated by the I&F network whose topology is shown in Figure 1A with parameters ν = 1 ms−1, λ = 0.0177, s = 0.02.

From Figure 3, we observe that for both voltage and spike train time series, GC values approach 0 almost linearly as the sampling interval length τ tends to 0. This phenomenon of vanishing GC values implies that we eventually fail to extract real causal relation through GC analysis when sampling interval length vanishes regardless of whether or not there are interactions between two time series. In this situation, obviously, we cannot use the limit GC value as τ → 0 to infer the causal interaction especially for the I&F networks. Here, we note that this limit effect of sampling is general for continuous-time processes and the underlying mechanism will be demonstrated later.

In summary, the phenomena shown in Figures 2, 3 demonstrate that time series obtained by using different sampling interval lengths lead to great differences in GC values and a finer sampling does not result in more reliable GC values for causal interpretation. Therefore, in order to obtain meaningful interpretation through GC, how to choose a sampling interval length becomes an important issue in practice. Without properly taking this issue into account, GC analysis may produce unreliable or even opposite conclusions about causal influence when applied to empirical data sampled with different sampling interval lengths. In what follows, we first use linear regressive models and spectral methods to study theoretically possible mechanisms underlying the oscillation phenomenon. With these idealized models we can gain insight into possible origins of general oscillations in the GC sampling structure.

3.2. Mechanism of Oscillations in the GC Sampling Structure

3.2.1. Spectra and the GC sampling structure

We will invoke the frequency domain description of GC for our analysis of the GC sampling structure. First, we discuss the relation between the spectrum S(ω) for the time series {X0, X1, X2, …} whose sampling time interval is τ, and spectrum S(k)(ω) for time series {X0, Xk, X2k, …}, which is a time series sampled at the interval length kτ, k being a positive integer. Using the Wiener-Khinchin theorem, we can relate the spectra S(ω) and S(k)(ω) of these two time series to their time correlations, thus enabling us to show that

The details of the proof can be found in Appendix A in Supplementary Material. Note that Equation (14) is also valid for cross-spectrum Sxy(ω) of time series Xt and Yt, which is defined by .

A direct implication of Equation (14) is that if S(ω) = C (C is a constant), then S(k)(ω) ≡ C. That is, if the original time series is white, then the time series obtained by sampling with any interval length remains to be white. In addition, we have the following results about GC in the case in which one of the bivariate time series is white and the other has no correlation with it. If Yt, t is an integer, is a white noise series and cov(Yt, Xt − i) = 0 for any positive integer i > 0, then F(k)x→y ≡ 0. In particular, when cov(Yt, Xt − i) = 0 for any integer i ⩾ 0, F(k)x→y ≡ F(k)x·y ≡ 0. These facts conform with our intuition that there is no causal flow if there is no correlation between Xt and Yt and the Yt remains always white (see Appendix A in Supplementary Material for details). We will use these facts in our discussions below.

We now turn to the question of what is the structure in time series that will give rise to oscillations in the GC sampling structure. A possible origin of oscillations in the GC sampling structure may be a consequence of oscillations in the original time series. Our strategy of tackling this question is as follows: First, we note that the spectrum of the original time series characterizes the oscillation strength of the time series over different frequencies. We will construct simplified models in which we can focus on the relationship between the peak frequencies of the spectrum and oscillations in the GC sampling structure. We will construct different classes of models which allow us to directly analyze the GC sampling structure through the spectral representation (14). Using Equation (14), we can derive an expression of the spectral matrix S(k) with sampling interval k, , and S(k) can be directly used to compute the total causality F(k)x,y through Equation (12) as follows

Because the total causality in Equation (8) involves three different terms and it is usually difficult to derive the analytical formula for each term, one way of tackling this issue is to consider each term separately. In particular, we construct a special model for which we have F(k)x→y ≡ F(k)x.y ≡ 0. Then, the directional GC F(k)y→x is identical to the total causality F(k)x,y and we can therefore analyze the GC sampling structure F(k)y→x through the total causality in Equation (15). If the condition S(k)xx(ω)S(k)yy(ω) » S(k)xy(ω)S(k)yx(ω) holds, we can obtain the following simplified approximation for the directional GC F(k)y→x:

In the following, we will use simplified models to reveal the origin of oscillations in the GC sampling structure, thus, providing insight into an intuitive understanding of the possible origin of oscillations in the GC sampling structure for the I&F network as well as for other general situations.

3.2.2. Idealized model I

The first idealized linear model we have constructed is as follows

where {ϵt} and {ηt} are independent white noise series with covariance matrix . L is the lag operator satisfying LXt = Xt − 1. a(L) = 1 + a1L + a2L2 + …, b(L) = b1L + b2L2 + …. We can obtain the spectra of Xt, Yt, which are

i.e., Yt is a white noise time series, and

where and , which are the Fourier transforms of a(L) and b(L), respectively.

By the result of the previous section (also see Appendix A in Supplementary Material for details), we have F(k)x·y ≡ F(k)x→y ≡ 0 for this model, therefore, the other directional GC is equal to the total causality, i.e., F(k)y→x ≡ F(k)x,y. Then, we can use Equations (15) and (16) to analyze the relation between F(k)y→x and sampling interval length k.

To illustrate clearly the sampling phenomena, we discuss two classes of a(L) and b(L) in the following.

For the first class, we fix Sxx = a(ω)a*(ω)μ + b(ω)b*(ω) = C, where C is constant and we always assume C » max(b(ω)b*(ω)) so as to guarantee that Equation (16) is a good approximation. Under this condition, there are no oscillations in the time series Xt as discussed previously and the only spectrum that varies over k is the cross-spectrum Sxy. Then, we can find explicit asymptotic expressions and approximations of F(k)y→x in order to study the relation between the peak frequency of Sxy(ω) and the oscillations of F(k)y→x with respect to the sampling interval length k. The spectrum Sxy corresponds to the Fourier transform of the correlation function between Xt and Yt. In applications, it is common that a correlation function has damping and oscillation structures. Therefore, we will study the following three cases in the first class:

Case 1.1 .

Case 1.2 .

Case 1.3 .

Here, τd, β and ϕ are constants. We note that there are no oscillations in b(L) in Case 1.1, and there are oscillations in b(L) in Cases 1.2 and 1.3 and with a further phase shift in Case 1.3.

For the second class, we do not fix Sxx(ω) as a constant, i.e., the time series Xt is no longer white. Instead, we study some special combinations of a(L) and b(L). For this class, we cannot derive explicit analytic forms of F(k)y→x. Therefore, we turn to GC values obtained numerically through Equations (14) and (15) to study the relation between the oscillations of F(k)y→x and the peak frequencies of Sxx(ω) and |Sxy(ω)|. We will study the following cases in this class:

Case 2.1 ,

Case 2.2 .

Here, τd, β1, β2 are constants. We note that in Cases 2.1 and 2.2 there are oscillations in the time series in Xt with a non-oscillatory b(L) in Case 2.1 and with an oscillatory b(L) in Case 2.2.

We now turn to the detailed discussion of the properties of F(k)y→x in all the listed cases.

Case 1.1 We consider the case which has no oscillations in b(L) and, therefore, the peak frequency of |Sxy(ω)| is at 0. We will examine whether F(k)y→x oscillates with respect to k. From Equation (16), Under Approximation I: C » bk(ω)bk(ω)*, we can derive the following asymptotic result (see Appendix A in Supplementary Material for details)

for which, we can clearly see that GC decreases exponentially with respect to k.

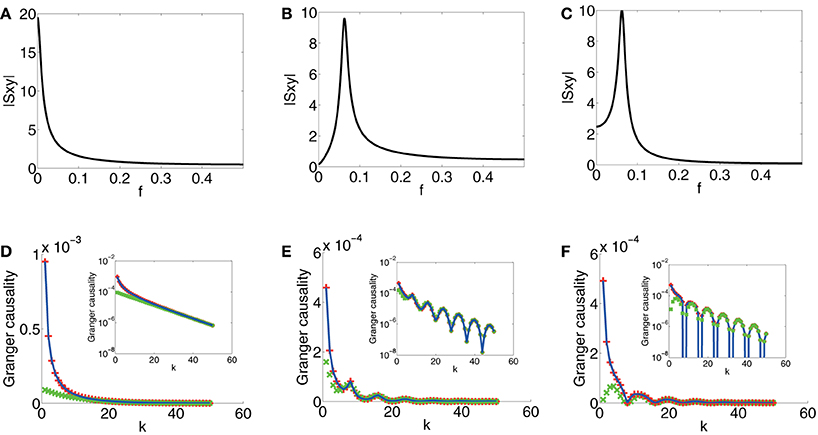

In Figure 4A, which displays spectrum |Sxy|, we can observe that the magnitude of Sxy concentrates near 0 which signifies that there are no oscillations induced by the coupling b(L). In Figure 4D, which displays the GC sampling structure for this case, clearly, there are no oscillations. It can also be seen that GC obtained through the asymptotic result, [Equation (2) in Supplementary Material], agrees very well with the numerically obtained GC for all k's and the asymptotic formula Equation (18) approximates GC rather well when k is large. Therefore, we can conclude that if there are no oscillations in the coupling b(L), as signified in the peak frequency of |Sxy| near 0 and if Sxx and Syy are constant (i.e., both are white), then F(k)y→x does not oscillate with respect to k.

Figure 4. Contrast between spectra and GC sampling structures for the first class. Magnitude of Sxy (|Sxy|) vs. frequency f (f = ) (black) for (A) Case 1.1. (B) Case 1.2. (C) Case 1.3. (D) Comparison of F(k)y → x obtained through numerical solution Equation (15) (blue), asymptotic expressions Equation (2) in Supplementary Material (red plus) and Equation (18) (green cross) vs. sampling interval k for Case 1.1. (E) Comparison of F(k)y → x obtained through numerical solution Equation (15) (blue), Equation (5) in Supplementary Material (red plus) and Equation (19) (green cross) vs. sampling interval k for Case 1.2. (F) Comparison of F(k)y → x obtained through numerical solution Equation (15) (blue), Equation (9) in Supplementary Material (red plus) and Equation (21) (green cross) vs. sampling interval k for Case 1.3. Insets are corresponding log-linear plots of GC vs. sampling interval length k. The exponential decay is clearly seen in the insets. The parameters are C = 104, τd = 0.05, and β = .

Case 1.2 From Case 1.1 in Model I, no oscillations in b(L) implies no oscillations in the GC sampling structure. Next, we consider to examine whether oscillations in the coupling b(L) may induce oscillations in the GC sampling structure.

Under Approximation II, i.e., large τ and k, we can obtain the following asymptotic expression through Equation (16) (see Appendix A in Supplementary Material for details)

from which we can observe that there are oscillations in the GC sampling structure.

In Figure 4B, the peak of |Sxy(ω)| is located approximately at f = , which faithfully reflects the oscillation frequency f0 (f0 = = ) of b(L). In Figure 4E, the oscillation frequency f′0 of F(k)y→x is approximately , which is twice of f0. In addition, the curve obtained by using Approximation I [Equation (5) in Supplementary Material] overlaps with the numerically computed curve, and for large k, the curve obtained by using Approximation II, Equation (19), fits well with the numerically computed curve. Therefore, from Equation (19), we can conclude that oscillations at frequency f0 in the coupling b(L), manifested as the peak frequency f = f0 = in |Sxy|, implies double frequency oscillations (f′0 = 2f0 = ) in the GC sampling structure.

Case 1.3 By the study of Case 1.2, we can further ask how the phase of oscillations in b(L) regulates the oscillations in the GC sampling structure. We consider oscillations with phase ϕ in b(L), that is, . Under Approximation II, we obtain following asymptotic form through Equation (16) (see Appendix A in Supplementary Material for details)

For a special case, when ϕ = − , Equation (20) becomes

In Figure 4C, which depicts the spectrum of Sxy, the peak frequency of |Sxy| is the same as the peak frequency f = in Figure 4B, and this reflects the same frequency of the oscillations in the coupling term b(L). In Figure 4F, the oscillation frequency f′0 of F(k)y→x is again twice of the peak frequency of |Sxy| [as can be seen in Equation (21)]. In addition, the GC sampling structure via the approximation of Equation (9) in Supplementary Material is in excellent agreement with numerically solved GC sampling structure for all k's. For large k, the asymptotic expression of Equation (21) fits well with numerically solved GC sampling structure. However, in contrast to Case 1.2, there is a shift in k for the points at which F(k)y→x attains minimum (or maximum). Therefore, from Equation (20), we can conclude that the phase factor ϕ in b(L) does not affect the frequency of F(k)y→x but it regulates the phase of the oscillations, i.e., it changes the locations of the extrema of F(k)y→x depending on ϕ. We also note that, in the present case, the minimum value of F(k)y→x in oscillations approaches 0.

Case 2.1 and Case 2.2 For the first class we studied above, we have S(k)xx(ω) ≡ C and S(k)yy(ω) ≡ 1, which do not contribute to the variations of F(k)y→x. Then, we obtain a direct relation between the cross-spectrum Sxy(ω) and the GC sampling structure. However, because Sxx(ω) often has peaks at some frequencies especially for time series in neural systems, we further examine the role of Sxx(ω) [or Syy(ω)] in the oscillations of the GC sampling structure. Therefore, we turn to the study of the second class. In order to examine how the oscillation of the causality depends on the oscillations of a(L) and b(L), we examine the large μ limit, and thus, the location of frequency in the peak of Sxx(ω) is determined by a(L), whereas the peak of Sxy(ω) is determined by b(L).

First, for , , from which there are no oscillations in the coupling b(L), we study the behavior of F(k)y→x to look for the relation between the oscillations in F(k)y→x and oscillations in a(L). In Figure 5A, which displays spectra of Sxx and |Sxy|, the peak of Sxx is located approximately at f = , which reflects the oscillation frequency in a(L) and |Sxy| concentrates near 0, which reflects the non-oscillatory nature of b(L). In Figure 5D, which displays the corresponding GC sampling structure, the oscillation frequency f0 in the GC sampling structure is approximately , which equals to the peak frequency of Sxx as well as the oscillation frequency in a(L). In this case, we conclude that Sxx with a peak at f0 can induce oscillations with frequency f0 in the GC sampling structure.

Figure 5. Contrast between spectra and the GC sampling structure for the second class. The top row of the panel is spectra Sxx (cyan) and |Sxy| (black), which is three orders of magnitude smaller than that of Sxx and is magnified by a factor 103, vs. frequency f, (A) Case 2.1 with parameters β1 = , τd = 0.02, μ = 200. (B) Case 2.2 with parameters β1 = , β2 = , τd = 0.05, μ = 200. (C) Case 2.2 with parameters β1 = , β2 = , τd = 0.02, μ = 200. (D–F) the corresponding log-linear plots of F(k)y → x obtained numerically (blue) with respect to sampling interval length k.

Second, for the case of , and , in which both have oscillations with frequency β1 and β2, respectively, we investigate the interactions of different oscillations in a(L) and b(L) in inducing the oscillations in F(k)y→x. From the studies above, one may suspect that the oscillations in F(k)y→x should be a combination of frequency and , implying that we may encounter complicated oscillation structures for F(k)y→x with frequency consisting of and . Shown in Figures 5E,F are such cases. In Figure 5B, the peak frequencies of Sxx and |Sxy| meet the oscillation frequency of a(L) ( = ) and b(L) ( = ), respectively. The GC sampling structure is shown in Figure 5E, where F(k)x,y oscillates at frequency which is twice of and is three times of . In Figure 5C, the peak frequencies of Sxx and |Sxy| meet the oscillation frequency of a(L) ( = ) and b(L) ( = ), respectively. The GC sampling structure is displayed in Figure 5F, in which the oscillation features are complicated, making it difficult to discern simple frequencies. Therefore, the results in Figure 5 confirm that both oscillations of a(L) and b(L) can contribute to the oscillations in the GC sampling structure whose oscillation frequency can be a combination of those in a(L) and b(L).

3.2.3. Idealized model II

In Model I, there is no oscillation in the white noise time series Yt. For Case 1, the oscillations in the GC sampling structure are related to the peak in Sxy, whereas, for Case 2, the GC oscillations are related to the interplay between peaks in Sxy and Sxx. However, in the reconstruction of the topology of the simple two-neuron network, as shown in Figure 2C, there are peaks at approximately the same frequency in Sxx, |Sxy|, Syy, and this frequency is half of the oscillation frequency in the GC sampling structure. We consider the following linear model that possesses the same properties as those in the I&F network case, i.e., Sxx, |Sxy|, and Syy all possess a peak at the same frequency:

where the covariance matrix Σ for the white noise time series ϵt, ηt is . Then, Sxx = a(ω)a*(ω) + b(ω)b*(ω), Syy = c(ω)c*(ω), and Sxy = b(ω)c*(ω). In this case, we set , , , where τd, β, γ, ϕ are constants. Note that this model is different from Idealized Model I in that we no longer have F(k)y→x ≡ F(k)x,y and F(k)x→y ≡ F(k)x·y ≡ 0.

From the spectrum plots shown in Figures 6A,B, it can be seen that the peak frequencies of all spectra are approximately . Figures 6C,D display the corresponding GC sampling structure. We can observe that the oscillation frequencies in the GC sampling structure are approximately , which is again twice of the peak frequency in Figures 6A,B. Therefore, for both the linear model (22) and the I&F networks, there is the same relation between the frequency in the GC sampling structure and that of the peaks in the spectra as those shown in Figure 2. We note that the phase difference ϕ between b(L) and a(L) [or c(L)] may lead to a rather different behavior of the instantaneous Granger causality, Fx·y, as demonstrated in the two cases shown in Figures 6C,D (ϕ = in Figure 6C and ϕ = in Figure 6D). Note that, by construction, there is no causal influence from Xt to Yt in Equation (22). However, Fx→y in Figures 6C,D show significantly non-zero values for ranges of k. Clearly, these GC values are spurious and potentially give rise to erroneous causal inferences even in this linear setting. As pointed out previously, this spurious GC phenomenon also occurs in the I&F neuronal networks (see Figure 2A).

Figure 6. Contrast between spectra and the GC sampling structure obtained numerically for Model II. Sxx (cyan), |Sxy| (black), Syy (red) with parameters τd = 0.05, β = , γ = 0.4, (A) ϕ = , (B) ϕ = . Fx → y (red), Fy → x (cyan), Fx,y (red dash), Fx·y (cyan dash) with respect to sampling interval length k with the parameters (C) same as the parameters in (A). (D) same as the parameters in (B).

In summary, from the results above, we see that the oscillations in the GC sampling structure are related to the oscillations in the original time series and in the coupling of the time series regardless of their linear or non-linear nature. For special cases, there is a doubling frequency in the GC oscillations in comparison with the peak frequency in spectra. However, in general, there may not be a simple relation between these oscillation frequencies. Nevertheless, we can infer that, in general, all oscillations manifested as peaks of Sxx, |Sxy| and Syy may give rise to oscillations in the GC sampling structure.

3.3. Vanishing GC as τ→0

We have already observed in Figure 3 that the GC value vanishes as the sampling interval length τ tends to 0 for GC obtained from both voltage and spike train time series. Moreover, we note that Figure 3 also exhibits that the GC value approaches 0 in a manner almost linearly proportional to the sampling interval length τ as τ → 0. As mentioned before, this sampling effect gives rise to the paradox that GC analysis seems to be less reliable as more information is incorporated as τ → 0. In the following, we will study the mechanisms of such effect and address the question of extracting reliable causal interaction as τ → 0 in order to resolve this paradox.

Because GC can be analyzed through the spectra, we first study the limit of the spectral matrix S(τ)(ω), which is the spectrum of the time series with sampling interval length τ. Suppose that we perform a uniform sampling of a bivariate continuous-time stationary process Xt and Yt with sampling interval length τ to obtain Xnτ, Ynτ, where n is an integer. Using the Wiener-Khinchin theorem, through the relation between the time correlation of discrete time series and that of the original continuous signal, we can obtain the relation between the corresponding spectral matrix S(τ)(ω) and the power spectral density P(f) (ω = 2πτf) of the continuous-time processes Xt and Yt as follows (see Appendix B in Supplementary Material for details):

as τ → 0. Therefore, S(τ)(ω) is scaled as as τ → 0.

Using Equation (12), total Granger causality F(τ)x,y with sampling interval length τ can be directly computed using each component of spectral matrix S(τ)(ω), which is

Taking the limit of τ → 0 and using Equation (23), we obtain

as τ → 0, where is the coherence of continuous-time processes Xt, Yt.

Because a spectral matrix possesses the property of factorization (Wilson, 1972), i.e., S(ω) = A(eiω)A*(eiω), where * denotes matrix adjoint, the factorization of the spectral matrix gives rise to the decomposition S(τ)(ω) = H(τ)(ω)Σ(τ) H(τ)(ω)* of spectral matrix S(τ)(ω), where H(τ)(ω) = A(τ)(eiω)A(τ)(0)−1, and the covariance matrix Σ(τ) = A(τ)(0)A(τ)(0)*, , (see Appendix B in Supplementary Material for details). Defining , , we obtain the limit expressions for F(τ)x→y, F(τ)y→x and F(τ)x·y, which are

as τ → 0.

Then, if is finite, we can easily show that F(τ)x,y, F(τ)x→y, F(τ)y→x and F(τ)x·y all approach finite values in the limit of τ → 0. Therefore, the Granger causality is linearly proportional to the sampling interval length τ for small τ. Hence, vanishing F(τ)x,y, F(τ)x→y, F(τ)y→x and F(τ)x·y as τ → 0. The corresponding limits are related to the intrinsic properties of the continuous-time processes.

4. Discussion and Conclusion

4.1. Selection of Sampling Intervals in the Application of GC

From our results above, it becomes evident that one has to be careful in interpreting causal inference using the GC analysis. For sufficiently large sampling interval length, the GC in general decays exponentially to zero because much of information over dominant frequencies is lost and the GC value becomes small and unreliable for causal inference. When there is causal flow, to avoid a possible vanishing GC value due to zeroes in the oscillations in the GC structure, one should use a range of sampling interval lengths to obtain discrete time series to ascertain the general features of the GC sampling structure, such as Figure 2, so as to avoid using accidental vanishing GC values for causal inference and to obtain a reliable interpretation of causality. When there is no causal flow, spurious causality may also arise (e.g., Figures 2, 6) when the sampling interval length is large because the loss of high frequency information for self prediction of one time series can possibly be compensated by the other time series.

In order to preserve the high frequency information, one would use very fine sampling intervals to sample a continuous-time process. However, as we have pointed out above that the GC values exhibit a linear relation with τ as the sampling interval vanishes, thus, again leading to seemingly unreliable inference of causality. We can circumvent this difficulty by using the following procedure.

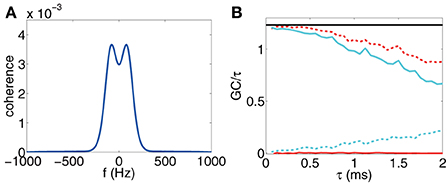

First, for a range of small τ's, we ascertain the linear range of the GC value as a function of τ, then we plot the ratio of the GC value to the corresponding sampling interval length τ to extract its limiting value as τ → 0, e.g., by Equation (25), Figure 7 shows such an example for time series sampled from the I&F network dynamics. It is clear that the directed couplings of the neuronal network dynamics are correctly inferred by the clearly non-zero ratio for the presence of the synaptic coupling and a vanishing ratio for the absence of synaptic coupling. According to asymptotic distributions of GCs, the GC computed from time series is a biased estimate, e.g., the bias is for Fx → y, where p is the regression order, n is the length of time series. To plot the ratio of GC to τ, we used the numerical computed GC with the bias subtracted (see Appendix C in Supplementary Material for details).

Figure 7. (A) Coherence vs. frequency f. (b) F(τ)x → y (red), F(τ)y → x (cyan), F(τ)x,y (red dash), F(τ)x · y (cyan dash) for voltage time series and Fx,y computed through Equation (25) (black horizontal line) vs. sampling interval length τ. The time series are generated by the I&F network whose topology is shown in Figure 1A with parameters ν = 1 ms−1, s = 0.02, λ = 0.0177. Note that we have subtracted the estimation bias of GC from the estimate of GC values for (B). The procedure of removing biases is described in Appendix C in Supplementary Material.

In applications, we may wonder how we determine this linear range and whether this linear range is sufficiently large for the GC extraction. Here, we present an estimation using Equation (25). The idea is that if is a good approximation of for some τ0, which implies that the causal information with frequency higher than can be ignored, then, τ0 is inside the linear range for small GCs. We can determine such range by inspecting directly the figure of C(f) and choose a proper cut-off frequency f0 such that C(f) is sufficiently small, thus can be ignored when |f| > f0. We take the voltage time series of a two-neuron I&F network as an example. Figure 7A displays the coherence function C(f). It can be seen from the figure that we can choose the cut-off frequency f0 = 500 Hz, above which the coherence almost vanishes. Then, we can conclude that the linear range for GC is from 0 ms to ~1 ms. Figure 7B demonstrates the validity of our estimate where F(τ)x,y is approximately a constant over the range from 0 ms to ~1 ms of the sampling interval. Therefore, it is sufficient to use voltage time series sampled with time interval 0.5 ms or less to extract good approximations of the limiting GC-to-τ ratios. We note in passing that, in the Method section, we used τ = 0.5 ms to evaluate the GC values for the network reconstruction.

4.2. Conclusions

In this work, we have discussed general features of the GC sampling structure and their strong implications for causal inference by using the GC analysis. We have also discussed a general approach that can overcome these artifacts arising from sampling interval lengths to obtain reliable GC values. We note that these issues arise when we sample continuous-time processes to obtain discrete time series for the GC analysis. Therefore, the issues should be examined regardless of whether one uses parametric or non-parametric methods for the GC estimation. Furthermore, the general strategies of overcoming these sampling issues are not limited to the bivariate time series with unidirectional connection as discussed in this work. They are also applicable to the GC analysis of bivariate time series with bidirectional connections (shown in Supplementary Figure 2) as well as multivariate time series with any general connectivity structure (Zhou et al., 2013b, 2014).

Author Contributions

Conceived and designed the research: Douglas Zhou, Yaoyu Zhang, Yanyang Xiao, and David Cai. Performed experiments and analyzed data: Douglas Zhou, Yaoyu Zhang, Yanyang Xiao, and David Cai. Wrote the paper: Douglas Zhou, Yaoyu Zhang, Yanyang Xiao, and David Cai.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Douglas Zhou is supported by Shanghai Pujiang Program (Grant 10PJ1406300), National Science Foundation in China (Grants No. NSFC-11101275 and No. 91230202) and the Scientific Research Foundation for the Returned Overseas Chinese Scholars from State Education Ministry in China. David Cai is supported by Grant NSF-DMS-1009575. All authors are supported by the NYUAD Research Institute Grant No. G1301. Yanyang Xiao and Yaoyu Zhang were partially supported by an Undergraduate Research Program in Zhiyuan College at Shanghai Jiao Tong University.

Supplementary Material

The Supplementary Material for this article can be found online at: http://www.frontiersin.org/journal/10.3389/fncom.2014.00075/abstract

References

Astolfi, L., Cincotti, F., Mattia, D., Marciani, M. G., Baccala, L. A., de Vico Fallani, F., et al. (2006). Comparison of different cortical connectivity estimators for high-resolution EEG recordings. Hum. Brain Mapp. 28, 143–157. doi: 10.1002/hbm.20263

Barnett, L., Barrett, A. B., and Seth, A. K. (2009). Granger causality and transfer entropy are equivalent for gaussian variables. Phys. Rev. Lett. 103:238701. doi: 10.1103/PhysRevLett.103.238701

Boccaletti, S., Latora, V., Moreno, Y., Chavez, M., and Hwang, D.-U. (2006). Complex networks: structure and dynamics. Phys. Rep. 424, 175–308. doi: 10.1016/j.physrep.2005.10.009

Bressler, S. L., Richter, C. G., Chen, Y., and Ding, M. (2007). Cortical functional network organization from autoregressive modeling of local field potential oscillations. Stat. Med. 26, 3875–3885. doi: 10.1002/sim.2935

Bressler, S. L., and Seth, A. K. (2011). Wiener–Granger causality: a well established methodology. Neuroimage 58, 323–329. doi: 10.1016/j.neuroimage.2010.02.059

Brovelli, A., Ding, M., Ledberg, A., Chen, Y., Nakamura, R., and Bressler, S. L. (2004). Beta oscillations in a large-scale sensorimotor cortical network: directional influences revealed by granger causality. Proc. Natl. Acad. Sci. U.S.A. 101, 9849–9854. doi: 10.1073/pnas.0308538101

Burkitt, A. N. (2006). A review of the integrate-and-fire neuron model: I. homogeneous synaptic input. Biol. Cybern. 95, 1–19. doi: 10.1007/s00422-006-0068-6

Bussel, F. V., Kriener, B., and Timme, M. (2011). Inferring synaptic connectivity from spatio-temporal spike patterns. Front. Comput. Neurosci. 5:3. doi: 10.3389/fncom.2011.00003

Cai, D., Rangan, A. V., and McLaughlin, D. W. (2005). Architectural and synaptic mechanisms underlying coherent spontaneous activity in v1. Proc. Natl. Acad. Sci. U.S.A. 102, 5868–5873. doi: 10.1073/pnas.0501913102

Carandini, M., Mechler, F., Leonard, C., and Movshon, J. (1996). Spike train encoding by regular-spiking cells of the visual cortex. J. Neurophysiol. 76, 3425–3441.

Chatfield, C. (2003). The Analysis of Time Series: An Introduction, Vol. 59. London: Chapman & Hall/CRC.

Danks, D., and Plis, S. (2013). “Learning causal structure from undersampled time series,” in JMLR: Workshop and Conference Proceedings, Vol. 1, (Nevada), 1–10.

Deshpande, G., LaConte, S., James, G. A., Peltier, S., and Hu, X. (2008). Multivariate granger causality analysis of fMRI data. Hum. Brain Mapp. 30, 1361–1373. doi: 10.1002/hbm.20606

Dhamala, M., Rangarajan, G., and Ding, M. (2008). Estimating granger causality from fourier and wavelet transforms of time series data. Phys. Rev. Lett. 100:018701. doi: 10.1103/PhysRevLett.100.018701

Ding, M., Chen, Y., and Bressler, S. L. (2006). “Granger causality: basic theory and application to neuroscience,” in Handbook of Time Series Analysis, eds B. Schelter, T. Winterhalder, and J. Timmer (Berlin: Wiley-VCH Verlag GmbH & Co. KGaA), 437–460.

Geweke, J. (1982). Measurement of linear dependence and feedback between multiple time series. J. Am. Stat. Assoc. 77, 304–313. doi: 10.1080/01621459.1982.10477803

Geweke, J. (1984). Measures of conditional linear dependence and feedback between time series. J. Am. Stat. Assoc. 79, 907–915. doi: 10.1080/01621459.1984.10477110

Gow, D. W., Segawa, J. A., Ahlfors, S. P., and Lin, F. H. (2008). Lexical influences on speech perception: a granger causality analysis of MEG and EEG source estimates. Neuroimage 43, 614–623. doi: 10.1016/j.neuroimage.2008.07.027

Granger, C. W. J. (1969). Investigating causal relations by econometric models and cross-spectral methods. Econometrica 37, 424–438. doi: 10.2307/1912791

Hamilton, J. P., Chen, G., Thomason, M. E., Schwartz, M. E., and Gotlib, I. H. (2010). Investigating neural primacy in major depressive disorder: multivariate granger causality analysis of resting-state fMRI time-series data. Mol. Psychiatry 16, 763–772. doi: 10.1038/mp.2010.46

Levnajić, Z., and Pikovsky, A. (2011). Network reconstruction from random phase resetting. Phys. Rev. Lett. 107, 34101. doi: 10.1103/PhysRevLett.107.034101

McCrorie, J. R., and Chambers, M. J. (2006). Granger causality and the sampling of economic processes. J. Econ. 132, 311–336. doi: 10.1016/j.jeconom.2005.02.002

McLaughlin, D., Shapley, R., Shelley, M., and Wielaard, D. J. (2000). A neuronal network model of macaque primary visual cortex (v1): Orientation selectivity and dynamics in the input layer 4Cα. Proc. Natl. Acad. Sci. U.S.A. 97, 8087–8092. doi: 10.1073/pnas.110135097

Napoletani, D., and Sauer, T. D. (2008). Reconstructing the topology of sparsely connected dynamical networks. Phys. Rev. E Stat. Nonlin. Soft Matt. Phys. 77, 26103–26103. doi: 10.1103/PhysRevE.77.026103

Passaro, P., Harris, C., Seth, A., O'Shea, M., and Husbands, P. (2008). Spike sorting and functional connectivity analysis using self-organizing maps and granger causality. Front. Neuroinform. Conference Abstract: Neuroinformatics. doi: 10.3389/conf.neuro.11.2008.01.099. Available online at: http://www.frontiersin.org/10.3389/conf.neuro.11.2008.01.099/event_abstract

Rangan, A., and Cai, D. (2007). Fast numerical methods for simulating large-scale integrate-and-fire neuronal networks. J. Comput. Neurosci. 22, 81–100. doi: 10.1007/s10827-006-8526-7

Rangan, A. V., Cai, D., and McLaughlin, D. W. (2005). Modeling the spatiotemporal cortical activity associated with the line-motion illusion in primary visual cortex. Proc. Natl. Acad. Sci. U.S.A. 102, 18793–18800. doi: 10.1073/pnas.0509481102

Rauch, A., La Camera, G., Lüscher, H.-R., Senn, W., and Fusi, S. (2003). Neocortical pyramidal cells respond as integrate-and-fire neurons to in vivo–like input currents. J. Neurophysiol. 90, 1598–1612. doi: 10.1152/jn.00293.2003

Ren, J., Wang, W.-X., Li, B., and Lai, Y.-C. (2010). Noise bridges dynamical correlation and topology in coupled oscillator networks. Phys. Rev. Lett. 104, 58701. doi: 10.1103/PhysRevLett.104.058701

Rieke, F., Warland, D., van Steveninck, R. D. R., and Bialek, W. (1999). Spikes: Exploring the Neural Code. Cambridge: MIT press.

Roebroeck, A., Formisano, E., and Goebel, R. (2005). Mapping directed influence over the brain using granger causality and fMRI. Neuroimage 25, 230–242. doi: 10.1016/j.neuroimage.2004.11.017

Schwarz, G. (1978). Estimating the dimension of a model. Ann. Stat. 6, 461–464. doi: 10.1214/aos/1176344136

Smirnov, D. A., and Bezruchko, B. P. (2009). Detection of couplings in ensembles of stochastic oscillators. Phys. Rev. E 79:046204. doi: 10.1103/PhysRevE.79.046204

Somers, D. C., Nelson, S. B., and Sur, M. (1995). An emergent model of orientation selectivity in cat visual cortical simple cells. J. Neurosci. 15, 5448–5465.

Tao, L., Shelley, M., McLaughlin, D., and Shapley, R. (2004). An egalitarian network model for the emergence of simple and complex cells in visual cortex. Proc. Natl. Acad. Sci. U.S.A. 101, 366–371. doi: 10.1073/pnas.2036460100

Timme, M. (2007). Revealing network connectivity from response dynamics. Phys. Rev. Lett. 98, 224101. doi: 10.1103/PhysRevLett.98.224101

Troyer, T. W., Krukowski, A. E., Priebe, N. J., and Miller, K. D. (1998). Contrast-invariant orientation tuning in cat visual cortex: thalamocortical input tuning and correlation-based intracortical connectivity. J. Neurosci. 18, 5908–5927.

Wiener, N. (1956). The Theory of Prediction Modern Mathematics for Engineers. New York, NY: McGraw-Hill.

Wilson, G. T. (1972). The factorization of matricial spectral densities. SIAM J. Appl. Math. 23, 420–426. doi: 10.1137/0123044

Yu, D., Righero, M., and Kocarev, L. (2006). Estimating topology of networks. Phys. Rev. Lett. 97, 188701. doi: 10.1103/PhysRevLett.97.188701

Zhou, D., Rangan, A. V., McLaughlin, D. W., and Cai, D. (2013a). Spatiotemporal dynamics of neuronal population response in the primary visual cortex. Proc. Natl. Acad. Sci. U.S.A. 110, 9517–9522. doi: 10.1073/pnas.1308167110

Zhou, D., Xiao, Y., Zhang, Y., Xu, Z., and Cai, D. (2013b). Causal and structural connectivity of pulse-coupled nonlinear networks. Phys. Rev. Lett. 111:054102. doi: 10.1103/PhysRevLett.111.054102

Keywords: Granger causality, sampling rate, causal inference, reliability, neuronal dynamics, topology extraction

Citation: Zhou D, Zhang Y, Xiao Y and Cai D (2014) Analysis of sampling artifacts on the Granger causality analysis for topology extraction of neuronal dynamics. Front. Comput. Neurosci. 8:75. doi: 10.3389/fncom.2014.00075

Received: 11 April 2014; Accepted: 29 June 2014;

Published online: 30 July 2014.

Edited by:

Si Wu, Beijing Normal University, ChinaReviewed by:

Gregor Kovacic, Rensselaer Polytechnic Institute, USAIlya Timofeyev, University of Houston, USA

Copyright © 2014 Zhou, Zhang, Xiao and Cai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Douglas Zhou and David Cai, Institute of Natural Sciences, Shanghai Jiao Tong University, 800 Dongchuan Road, Minhang District, Shanghai 200240, China e-mail:emR6QHNqdHUuZWR1LmNu;Y2FpQGNpbXMubnl1LmVkdQ==

Douglas Zhou

Douglas Zhou Yaoyu Zhang

Yaoyu Zhang Yanyang Xiao

Yanyang Xiao David Cai

David Cai