- ¹ École des Neurosciences de Paris, Université Pierre et Marie Curie, Paris, France

- ² Department of Biology, Loyola University Chicago, Chicago, IL, USA

- ³ Bioinformatics Program, Loyola University Chicago, Chicago, IL, USA

- ⁴ Department of Psychology, Loyola University Chicago, Chicago, IL, USA

- ⁵ Department of Medical and Social Sciences, Northwestern University, Chicago, IL, USA

- ⁶ Department of Computer Science, Loyola University Chicago, Chicago, IL, USA

To accurately perceive the world, people must efficiently combine internal beliefs and external sensory cues. We introduce a Bayesian framework that explains how internal balance cues and visual stimuli jointly determine perceived eye level (PEL), a self-reported measure of elevation angle. This framework provides a single, coherent model that explains a set of experimentally observed PEL measurements over a range of experimental conditions. Further, it provides a parsimonious explanation for the additive effect of low-fidelity cues as well as the averaging effect of high-fidelity cues, as also found in other Bayesian cue-combination psychophysical studies. Our model accurately estimates PEL and explains the form of previous equations used to describe PEL behavior. Most importantly, the proposed Bayesian framework for PEL is more powerful than previous behavioral modeling: it permits behavioral estimation in a wider range of cue-combination and perceptual studies than previously reported models.

Introduction

On a daily basis, our bodies integrate millions of separate stimuli to enable our perception of the world, ranging from visual and auditory stimuli to internal cues. Our brain integrates all of these separate stimuli into what appear to be clear perceptions (Ernst, 2006). As a result, in order to fully understand perception, we must understand how various stimuli are combined and interpreted. Various methods have been used to accomplish this in previous research, such as maximum-likelihood estimation (Hillis et al., 2004), modified weak fusion (Landy et al., 1995), perturbation analysis (Young et al., 1993), and Bayesian analysis (Kersten et al., 2004). Importantly, all these behavioral modeling approaches use relatively simple mathematical frameworks to explain what can appear to be complex behavioral phenomena.

The use of Bayesian analysis in perceptual studies is well justified by previous models of perceptual decision making. The Bayesian coding hypothesis assumes that the brain represents information probabilistically (Knill and Pouget, 2004). Although direct neural correlates of these statistical distributions are not always readily apparent, the ability to simulate behavior using these principles suggests that this type of computation plays a substantial high-level role in perceptual processing. Previous research has applied this type of Bayesian analysis to cue combination, object perception (Kersten et al., 2004), spatial localization (Battaglia et al., 2003), and other forms of visual perception (Bridgeman, 2003).

Perceived eye level (PEL) is a self-reported measure of elevation angle inferred from a combination of visual and internal cues. Estimating PEL is important because an accurate PEL plays a role in judging the height, distance, elevation, and size of objects. However, several factors can confound our perception of eye level, causing PEL to differ from the actual eye level. These factors can be classified as internal, such as the head's position relative to gravity, or external, such as the strength of visual stimuli. Visual stimuli in particular can contribute to errors in perceived eye level, because we make a wide variety of assumptions about the orientation of objects relative to gravity. For example, we have a strong disposition to assume that near-vertical lines on a distant wall are truly vertical, so an observed pitch of such lines can be attributed to perspective from above or below the horizontal. Estimating this behavior can therefore be structured as a Bayesian cue-combination problem that models the effect of multiple stimuli on the perception of eye level.

In this study, we use Bayesian analysis to derive a simple framework for estimating perception from multiple sensory cues. This model provides a parsimonious explanation of the additive effect of low-fidelity cues, as well as the averaging effect of high-fidelity cues, previously documented in other Bayesian cue-combination psychophysical studies (Young et al., 1993; Gu et al., 2008). Further, our model accurately predicts PEL and provides a means of deriving an appropriate analytical function to describe behavior. We demonstrate that the Bayesian model is a principled approach to estimating PEL.

Methods

Experimental Design

An experiment can be conducted to measure the effect of multiple visual cues on PEL; for comparison, we follow the experimental design of Matin and Li (2000). Subjects are seated upright with their heads stationary on a chin rest, one meter away from a blackened wall with pitched illuminated lines (the visual cue). A laser point target is shown on the wall along the subject's midsagittal plane. With no visual cues present in the room, the subject is asked to indicate where their eye level is located by repositioning the laser target. This PEL, which reflects internal cues alone, is recorded. After this initial measurement, a pair of illuminated lines of different lengths, pitched from vertical, is introduced on the wall, and the subject again lines up the laser target with their eye level. This PEL is also recorded. The procedure is repeated with illuminated lines of different pitches and lengths until multiple measurements have been collected for each subject. From this description, we apply a Bayesian framework to derive a model of PEL from first principles, allowing us to predict the form of PEL expected before performing the experiment.

Gaussian Cue Combination

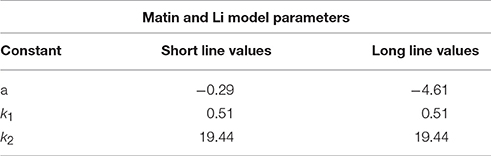

In order to use a Bayesian framework, we must define the prior, likelihood, and posterior distributions. Our prior in this experiment is the body-referenced mechanism for PEL estimation: the internal cues structure our belief about eye level before any visual stimulus is presented. We approximate this prior as a Gaussian distribution with mean μb and standard deviation σb. There is also an estimate of eye level that comes directly from a geometric interpretation of visual information. In this case, lines can be pitched to give the appearance of a particular tilt from vertical, on the assumption that the observed line is truly vertical but appears tilted due to geometric perspective. This purely visual contribution to the percept has mean μvi for an individual visual cue and μv for the combination of visual cues, with standard deviations σvi and σv, respectively. The posterior distribution, which captures the combined effect of n visual stimuli and the internal cues on PEL, is proportional to the product of the body prior and the visual likelihood, and has mean μp and standard deviation σp. This posterior distribution then provides the estimate of perceived eye level. These distributions and their combinations are illustrated in Figure 1.

Figure 1. Perceived eye level using a Bayesian model with Gaussian priors and likelihoods. (A) The subject's perceived eye level distribution in the dark from proprioceptive and vestibular cues—the “body prior.” (B) Two-line stimuli of differing lengths and pitch angles. (C) The likelihood for eye level estimation from the 2-line visual stimuli, with means centered on the angle expected from a geometric interpretation and variances dependent on the length of the lines. (D) The resulting posterior distribution, the combination of the internal stimuli and the visual stimuli, as determined by Bayes rule. (E) The means of the posterior distributions, the expected reported value for perceived eye level, are then calculated, based on the Bayesian model.
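As a minimal sketch of the prior-times-likelihood step described above (not code from the original study), the following Python function computes the posterior for a Gaussian body prior and a single Gaussian visual likelihood. The function name and the numeric values in the usage line are hypothetical, chosen only for illustration.

```python
def gaussian_posterior(mu_b, var_b, mu_v, var_v):
    """Posterior N(mu_p, var_p) from prior N(mu_b, var_b) and likelihood N(mu_v, var_v)."""
    # Precisions (inverse variances) add when two Gaussians are multiplied.
    precision_p = 1.0 / var_b + 1.0 / var_v
    var_p = 1.0 / precision_p
    # The posterior mean is the precision-weighted average of the two means.
    mu_p = var_p * (mu_b / var_b + mu_v / var_v)
    return mu_p, var_p


if __name__ == "__main__":
    # Hypothetical values in degrees: body prior at true eye level (0),
    # purely visual estimate at 20, with a broader visual likelihood.
    mu_p, var_p = gaussian_posterior(mu_b=0.0, var_b=4.0, mu_v=20.0, var_v=12.0)
    print(f"posterior mean {mu_p:.2f} deg, variance {var_p:.2f}")
```

With these illustrative numbers the posterior mean lands between the prior and the visual estimate, closer to the prior because the visual likelihood is broader, and the posterior variance is smaller than either input variance.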

To derive the equation for PEL in this experimental condition, we first combine the likelihoods of multiple pitched lines. Because the individual likelihoods are Gaussian, we can combine them by multiplying them together; the (renormalized) product is also Gaussian, with mean μv and variance σv²:

$$\mu_v = \sigma_v^2 \sum_{i=1}^{n} \frac{\mu_{vi}}{\sigma_{vi}^2} \tag{1}$$

$$\sigma_v^2 = \left( \sum_{i=1}^{n} \frac{1}{\sigma_{vi}^2} \right)^{-1} \tag{2}$$

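Because σv² appears in both equations, we can simplify through substitution to obtain the following likelihood estimate based only on visual information:

$$\mu_v = \frac{\sum_{i=1}^{n} \mu_{vi} / \sigma_{vi}^2}{\sum_{i=1}^{n} 1 / \sigma_{vi}^2} \tag{3}$$

This is the composite likelihood function that will be used in the derivation of μp.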
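The combination of several Gaussian visual likelihoods described above can be sketched as follows. This is an illustrative Python helper (its name and the example angles and variances are hypothetical), showing that precisions add and that the combined mean is a precision-weighted average of the individual line estimates.

```python
def combine_likelihoods(means, variances):
    """Combine independent Gaussian likelihoods N(means[i], variances[i]).

    The normalised product of Gaussians is Gaussian: the combined precision
    is the sum of the individual precisions, and the combined mean is the
    precision-weighted average of the individual means.
    """
    if not means or len(means) != len(variances):
        raise ValueError("need at least one cue and one variance per mean")
    precisions = [1.0 / v for v in variances]
    total_precision = sum(precisions)
    mu_v = sum(m * p for m, p in zip(means, precisions)) / total_precision
    var_v = 1.0 / total_precision
    return mu_v, var_v


if __name__ == "__main__":
    # Hypothetical pair of pitched lines at -10 and +20 degrees,
    # where the second line is noisier (larger variance).
    print(combine_likelihoods([-10.0, 20.0], [1.0, 2.0]))
```

When all variances are equal, the weights cancel and the combined mean reduces to the plain average of the line angles, which is the equal-length case used in the Results.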

Estimating Variances for the Model

To use Gaussian distributions for the priors and likelihoods, two parameters are needed for each stimulus: the mean and the variance. The mean of the body prior can be assumed or easily measured, and the means of the individual visual stimuli can be estimated from their geometric interpretation. The variances, however, must be determined experimentally.

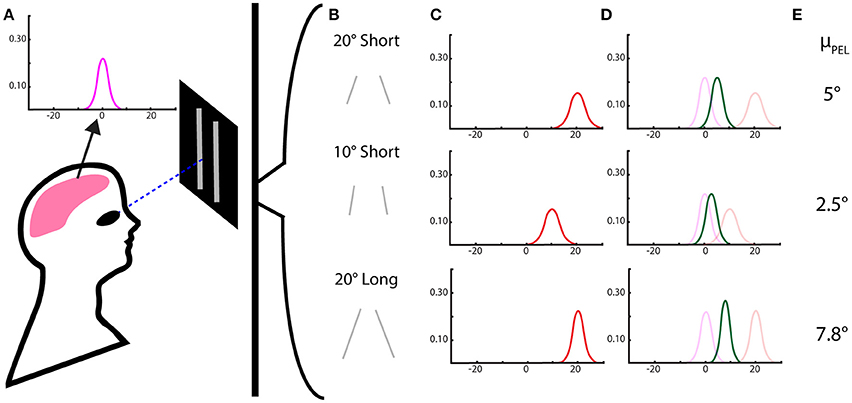

A common method for quantifying the effect of priors and likelihoods in Bayesian analysis is to observe the change in the percept as the mean of the likelihood is altered. When plotted, as in Figure 2, the slope indicates the relative strength of the visual likelihood compared with the body-based prior. Analytically, this slope is directly related to the ratio of the variances: because the posterior mean is μp = (σv²μb + σb²μv)/(σb² + σv²), its slope with respect to the likelihood mean μv is

$$m = \frac{\sigma_b^2}{\sigma_b^2 + \sigma_v^2} \tag{4}$$

Using experimental data of true angle versus perceived angle from Matin and Li, this slope, m, can be determined experimentally.

Figure 2. This graph depicts the experimental trends of average perceived eye level as a function of pitch. The prior (black), the body-referenced mechanism, is centered on the true eye level. The likelihood (blue) represents PEL if only the visual information were used. The experimentally measured PEL is shown for a single short line (purple) and a single long line (green). The short-line regression is y = 0.1415x − 1.869, whereas the long-line regression is y = 0.3234x − 4.9429. These single-line slopes are used to solve for the variances in our model, as shown in Equation (5).

Because Equation (4) contains only two unknown variances, knowing one of them is sufficient to determine the other. Fortunately, σb² is readily available experimentally from the standard deviation of the PEL estimates recorded when no visual information is present. Using this value, we can then estimate the variance of the combined visual likelihood using Equation (5):

$$\sigma_v^2 = \sigma_b^2 \, \frac{1 - m}{m} \tag{5}$$
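The slope relation and its inversion can be sketched as follows. This is a minimal Python illustration, not the authors' code; it uses the two single-line slopes from the Figure 2 caption, and because σb² is not reported in this section, it assumes a hypothetical σb² = 1 so that the output is the variance ratio σv²/σb² rather than an absolute variance.

```python
def slope_from_variances(var_b, var_v):
    """Slope of the posterior mean with respect to the likelihood mean."""
    return var_b / (var_b + var_v)


def visual_variance_from_slope(m, var_b):
    """Invert the slope relation to recover the visual variance."""
    if not 0.0 < m < 1.0:
        raise ValueError("slope must lie strictly between 0 and 1")
    return var_b * (1.0 - m) / m


if __name__ == "__main__":
    # Single-line regression slopes reported in the Figure 2 caption.
    slopes = {"short (12 deg)": 0.1415, "long (64 deg)": 0.3234}
    # Hypothetical body-prior variance; with var_b = 1 the result is the
    # ratio sigma_v^2 / sigma_b^2 rather than an absolute variance.
    var_b = 1.0
    for label, m in slopes.items():
        var_v = visual_variance_from_slope(m, var_b)
        print(f"{label}: sigma_v^2 / sigma_b^2 = {var_v:.2f}")
```

Under this assumption the short line yields a variance ratio of roughly 6.1 and the long line roughly 2.1, consistent with the longer line being the more reliable cue.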

Results

Derivation of the Bayesian Model

Proceeding from Equation (3), if line length is constant, all visual stimuli will have equal variance (σvi is the same for all values of i). The common factor 1/σvi² can then be factored out of both summations and cancels, so the denominator reduces to n. Further, the likelihood mean from each line, μvi, can be relabeled θi for clarity, since the pitch angle is known and does not need to be estimated. This gives a new estimated likelihood mean based on visual cues of the same line length, with the corresponding variance following from Equation (2):

$$\mu_v = \frac{1}{n} \sum_{i=1}^{n} \theta_i, \qquad \sigma_v^2 = \frac{\sigma_{vi}^2}{n} \tag{6}$$

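We then introduce the prior internal ("body") mean, μb, and variance, σb², which are equivalent to the mean and variance of PEL in complete darkness. Using the same multiplication-of-Gaussians approach described in the Methods section to find the mean and variance of the posterior, we arrive at the following formulation for the mean PEL:

$$\mu_p = \frac{\sigma_v^2 \mu_b + \sigma_b^2 \mu_v}{\sigma_b^2 + \sigma_v^2} = \frac{\frac{\sigma_{vi}^2}{n} \mu_b + \frac{\sigma_b^2}{n} \sum_{i=1}^{n} \theta_i}{\sigma_b^2 + \frac{\sigma_{vi}^2}{n}} \tag{7}$$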

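This equation can be algebraically rearranged and simplified to:

$$\mu_p = \frac{\sigma_{vi}^2 \, \mu_b}{n \sigma_b^2 + \sigma_{vi}^2} + \frac{\sum_{i=1}^{n} \theta_i}{n + \sigma_{vi}^2 / \sigma_b^2} \tag{8}$$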
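A short numerical check, under the equal-length assumption above, that the direct posterior formula and its rearranged form agree. This is an illustrative Python sketch rather than the authors' code; the function names and input values are hypothetical.

```python
def pel_direct(thetas, mu_b, var_b, var_vi):
    """Mean PEL for n equal-length lines via the combined visual likelihood."""
    n = len(thetas)
    mu_v = sum(thetas) / n      # equal variances: plain average of the angles
    var_v = var_vi / n          # n equal precisions add up
    # Posterior mean of a Gaussian prior times a Gaussian likelihood.
    return (var_v * mu_b + var_b * mu_v) / (var_b + var_v)


def pel_rearranged(thetas, mu_b, var_b, var_vi):
    """Same quantity written as a body term plus a scaled sum of angles."""
    n = len(thetas)
    body_term = var_vi * mu_b / (n * var_b + var_vi)
    return body_term + sum(thetas) / (n + var_vi / var_b)


if __name__ == "__main__":
    args = ([-10.0, 20.0], 1.0, 2.0, 6.0)  # thetas, mu_b, var_b, var_vi
    print(pel_direct(*args), pel_rearranged(*args))
```

The rearranged form separates a body-prior term from a scaled sum of pitch angles, which is the structure the length-dependent model builds on.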

However, σvi depends on line length, and we would like to express the model in terms of a quantity that is independent of line length if possible. If we assume each additional segment of line length provides an independent sample for establishing the slope of the line, we can approximate the relationship between line length and visual variance as inversely proportional. This independence assumption is clearly more questionable as line length increases; however, it provides a theoretically justifiable approximation for parametrically estimating behavior when line length varies. Under this assumption, the relationship is similar to the standard error of a sample mean, σvi = σvl/√l, where σvl is independent of line length. Substituting σvi² = σvl²/l into the rearranged equation above yields:

$$\mu_p = \frac{\sigma_{vl}^2 \, \mu_b}{n l \sigma_b^2 + \sigma_{vl}^2} + \frac{\sum_{i=1}^{n} \theta_i}{n + \dfrac{\sigma_{vl}^2}{l \, \sigma_b^2}} \tag{9}$$
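The effect of the inverse-length assumption can be illustrated with a small Python sketch. The line lengths follow the 12° and 64° stimuli used by Matin and Li, but the values of μb, σb², and σvl² below are hypothetical, as are the function names.

```python
def line_variance(length, var_vl):
    """Assumed model: visual variance inversely proportional to line length."""
    return var_vl / length


def pel_with_length(thetas, length, mu_b, var_b, var_vl):
    """Mean PEL for n lines of a common length under the 1/length assumption."""
    n = len(thetas)
    body_term = var_vl * mu_b / (n * length * var_b + var_vl)
    visual_term = sum(thetas) / (n + var_vl / (length * var_b))
    return body_term + visual_term


if __name__ == "__main__":
    # Hypothetical parameters; line lengths follow the 12 and 64 deg stimuli.
    for length in (12.0, 64.0):
        pel = pel_with_length([20.0], length, mu_b=0.0, var_b=1.0, var_vl=60.0)
        var_vi = line_variance(length, 60.0)
        print(f"length {length:.0f} deg: var_vi = {var_vi:.2f}, PEL = {pel:.2f} deg")
```

For a single line pitched at 20°, the longer line pulls PEL further from the prior toward the pitch angle, while PEL never exceeds the pitch angle itself.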

This is our final model; however, a few substitutions simplify its use in an experimental setting. The first term in Equation (9) can be written as a function of line length, abbreviated a(l), as given in Equation (11). Note that this indicates that an increase in the number of lines or in line length should diminish the value of a(l). Further, σvl²/σb² is composed of constants, so it can be replaced by a single constant, k, as shown in Equation (12). This yields a simple form of the model to predict average PEL, given in the following set of equations:

$$\mu_p = a(l) + \frac{\sum_{i=1}^{n} \theta_i}{n + k/l} \tag{10}$$

$$a(l) = \frac{k \, \mu_b}{n l + k} \tag{11}$$

$$k = \frac{\sigma_{vl}^2}{\sigma_b^2} \tag{12}$$
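The compact form with a(l) and k can be checked against a direct multiplication of the prior and every line likelihood. The following Python sketch does this under the same assumptions as the derivation (Gaussian prior, line variance σvl²/l); the parameter values and function names are hypothetical.

```python
def k_constant(var_vl, var_b):
    """k: ratio of the length-independent visual variance to the prior variance."""
    return var_vl / var_b


def a_of_l(mu_b, n, length, k):
    """Body-prior offset; shrinks as the number of lines or their length grows."""
    return k * mu_b / (n * length + k)


def mean_pel(thetas, length, mu_b, k):
    """Mean PEL = a(l) + sum(theta_i) / (n + k / l)."""
    n = len(thetas)
    return a_of_l(mu_b, n, length, k) + sum(thetas) / (n + k / length)


def mean_pel_brute_force(thetas, length, mu_b, var_b, var_vl):
    """Reference: multiply the prior and every line likelihood directly."""
    precision = 1.0 / var_b
    weighted = mu_b / var_b
    for theta in thetas:
        p = length / var_vl          # precision of one line, 1 / (var_vl / l)
        precision += p
        weighted += p * theta
    return weighted / precision


if __name__ == "__main__":
    # Hypothetical parameters for two 12 deg lines at -10 and +20 deg.
    thetas, length, mu_b, var_b, var_vl = [-10.0, 20.0], 12.0, 2.0, 1.5, 30.0
    k = k_constant(var_vl, var_b)
    print(mean_pel(thetas, length, mu_b, k))
    print(mean_pel_brute_force(thetas, length, mu_b, var_b, var_vl))
```

Both calls should print the same value (about 3.64 for these inputs), confirming that the closed form is just a regrouping of the precision-weighted posterior mean.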

Predictive Ability of the Bayesian Model

To test the validity of our derived Bayesian model under experimental conditions, we used data from the experiment outlined in the Methods section, performed by Matin and Li (2001). The experiment used 2 line lengths (short, 12°; long, 64°) with pitches from −30° to 30° in 10° increments. According to the Bayesian model, as the pitch angle (θi) increases, the mean PEL increases as well; however, PEL never exceeds the pitch angle. This is demonstrated in Figure 2. As such, PEL lies between the estimate from internal cues (the prior) and the pitch angle. Similarly, as the strength (line length) of a visual input increases, so does the influence of the visual stimuli on the perception of eye level, and PEL moves away from the prior. Both of these phenomena have been demonstrated experimentally (Matin and Li, 2000, 2001).

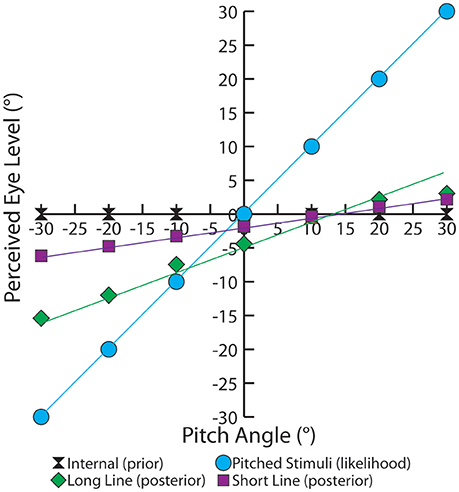

The interpretation of multiple visual cues reveals an interesting perceptual trend. When two visual cues are presented separately, each yields its own distinct PEL. When the cues are presented together, the resulting PEL falls into one of two distinct regimes: for weak cues (e.g., short lines), the single-cue effects on PEL are additive, whereas for strong cues (e.g., long lines), they average. This pattern has been observed and explained in previous psychophysical studies (Young et al., 1993; Gu et al., 2008) as well as in studies of neural cue integration (Fetsch et al., 2013). It was also demonstrated experimentally for PEL: long-line stimuli nearly averaged in their influence on PEL, whereas short-line stimuli appeared to be summed (Matin and Li, 1999, 2000). Figure 3 is an adaptation of the result from Matin and Li demonstrating this phenomenon. Equation (10) shows the same behavior: as line length increases, the denominator tends toward n, approaching an averaging effect, whereas for shorter line lengths the constant k (through the term k/l) dominates the denominator, leading to a summation effect. The compensatory effect of a(l) should be minimal, given the tendency of the body prior to be near 0. In this way, the observed combination effect follows analytically from our model.

Figure 3. Experimental data, collected by Matin and Li. A linear regression for the data is displayed (black), as well as lines displaying the summation of single-line PELs (red) and averaging of single-line PELs (blue). (A) This depicts long-line experimental data with m = 0.666, signifying a near-averaging relationship between high-fidelity cues (blue dotted line). (B) This chart depicts short-line experimental data with m = 0.9998, signifying a summative relationship between low-fidelity cues (red dotted line).

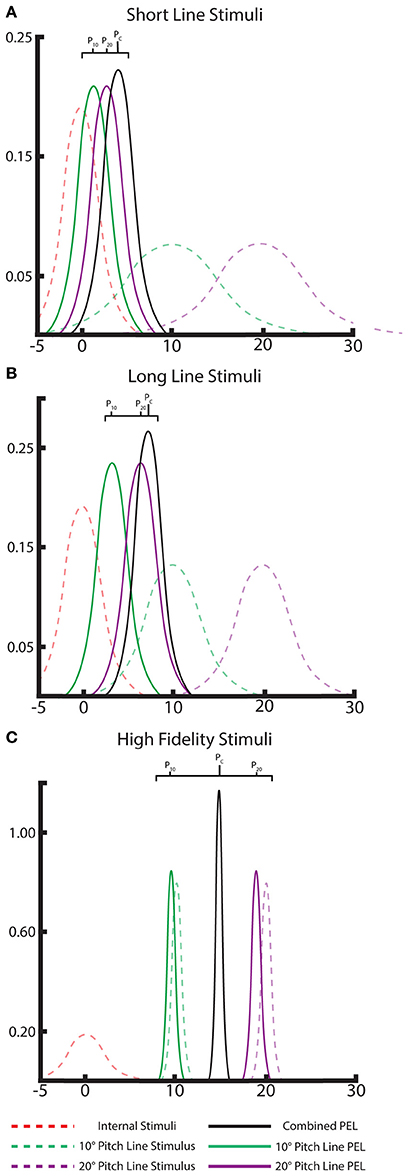

Note that this additive/averaging effect in cue combination is well understood in the Bayesian literature. Our model exhibits these effects straightforwardly by adjusting the likelihood variances based on line length. Weak, short-line stimuli produce shallow, high-variance likelihoods that individually move the posterior only marginally from the body prior, but together move the combined posterior farther than either one alone ("additive"). With the strong, low-variance likelihoods from long-line stimuli, the posteriors are dominated by the likelihoods, so two strong long-line stimuli yield a combined posterior that lies between the likelihoods ("averaging"). Figure 4 illustrates this effect within the Bayesian framework. The Bayesian approach thus explains the effects observed with multiple cues.

Figure 4. A Bayesian interpretation of the “averaging” and “additive” effects of multiple cues in PEL. Gaussian distributions of the internal priors (dashed, red), likelihood of the individual visual lines (dashed, green and purple), and the single-line posterior distributions/PELs for each visual cue (solid, green and purple). The location of these PEL posterior means can be compared to the combined-cue posterior (black) to note the averaging or additive effect of combining multiple cues. This is shown for both long-line cues (A) and short-line cues (B) and displays the “nearly averaging” and additive effects, respectively, as seen in the data. Ideal, high-fidelity stimuli are shown as well (C) demonstrating a clear averaging effect.
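The additive and averaging regimes can be reproduced numerically from the compact model. This Python sketch assumes a body prior mean of 0 (as noted above, the prior tends to be near 0) and a hypothetical k = 60; the line lengths 12° and 64° follow Matin and Li, and a very long line stands in for the ideal, high-fidelity case.

```python
def mean_pel(thetas, length, k, mu_b=0.0):
    """Mean PEL = a(l) + sum(theta_i) / (n + k / l), with a(l) = k*mu_b/(n*l + k)."""
    n = len(thetas)
    a = k * mu_b / (n * length + k)
    return a + sum(thetas) / (n + k / length)


def compare_regimes(theta1, theta2, length, k):
    """Compare the two-line PEL with the sum and average of single-line PELs."""
    single1 = mean_pel([theta1], length, k)
    single2 = mean_pel([theta2], length, k)
    return {
        "sum of singles": single1 + single2,
        "average of singles": (single1 + single2) / 2.0,
        "combined": mean_pel([theta1, theta2], length, k),
    }


if __name__ == "__main__":
    k = 60.0  # hypothetical constant
    for length in (12.0, 64.0, 1e6):  # short, long, near-ideal line
        result = compare_regimes(10.0, 20.0, length, k)
        print(length, {name: round(value, 2) for name, value in result.items()})
```

With μb = 0 and two angles of the same sign, a little algebra shows the combined PEL always lies between the average and the sum of the single-line PELs, and is closer to the sum exactly when k/l > 2; in this sketch the short line falls in the additive regime and the long line in the near-averaging regime.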

Comparison to Previous Model

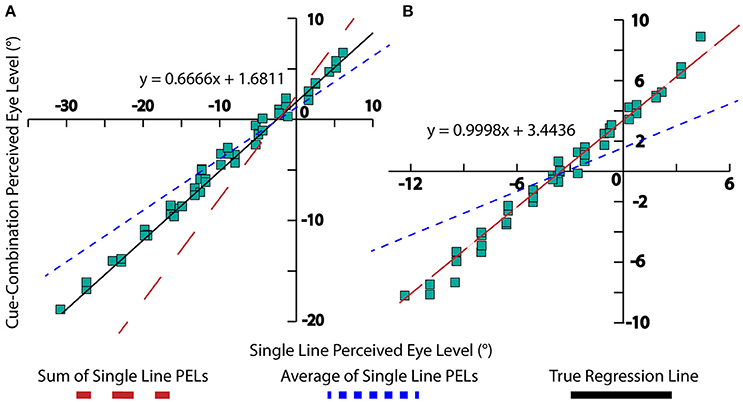

From experimental results and fitting the behavioral data, a model was previously developed to explain the effects of multiple lines on the perception of eye level (Matin and Li, 2001).

Bayesian Model:

$$\mu_p = a(l) + \frac{\sum_{i=1}^{n} \theta_i}{n + k/l} \tag{13}$$

Matin and Li Model:

The Matin and Li model is very similar to our model. The notable difference between the two is the presence of k1 in the Matin and Li model. Another small difference is that a is defined as a constant in the Matin and Li model, whereas in our model it is derived as a function of line length. Our framework also allows the model to be derived for other scenarios, such as multiple lines of different lengths, and it can be extended to other studies, perhaps even ones unrelated to PEL.
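The extension to lines of different lengths mentioned above is not written out in this paper; the following is a hedged Python sketch of one way it could look, assuming each line's variance is σvl²/lᵢ as in the equal-length derivation and that all cues are combined with the Gaussian body prior by precision weighting. The function name and parameter values are hypothetical.

```python
def mean_pel_mixed_lengths(thetas, lengths, mu_b, var_b, var_vl):
    """Mean PEL for lines of different lengths (illustrative extension).

    Each line i is treated as a Gaussian likelihood N(theta_i, var_vl / l_i),
    the same inverse-length assumption used for equal-length lines, and the
    body prior is N(mu_b, var_b). All likelihoods are combined with the prior
    by precision weighting.
    """
    if len(thetas) != len(lengths):
        raise ValueError("need one length per line angle")
    precision = 1.0 / var_b
    weighted_sum = mu_b / var_b
    for theta, length in zip(thetas, lengths):
        line_precision = length / var_vl
        precision += line_precision
        weighted_sum += line_precision * theta
    return weighted_sum / precision


if __name__ == "__main__":
    # Hypothetical parameters: one long (64 deg) and one short (12 deg) line.
    params = dict(mu_b=0.0, var_b=1.0, var_vl=60.0)
    print(mean_pel_mixed_lengths([10.0, 20.0], [64.0, 12.0], **params))
    print(mean_pel_mixed_lengths([10.0, 20.0], [12.0, 64.0], **params))
```

Under these assumptions the longer line dominates the percept, so swapping which pitch is shown on the long line shifts PEL toward that line's angle; with equal lengths the function reduces to the equal-length model.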

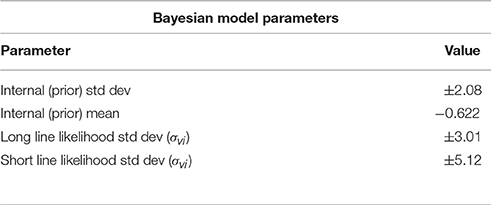

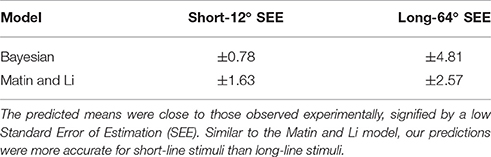

From the experimental results shown in Figure 2, we calculated variance estimates for single-line cues from a linear fit of the data. These results are presented in Table 1. With the variance estimates for the prior and likelihood distributions, all necessary values are known for a complete Bayesian model. The fitted constants for Matin and Li's behavioral model (Equation 14) are displayed in Table 2. For each model, we predicted the mean PEL for each experimental condition and measured the accuracy of these predictions using the standard error of estimation, displayed in Table 3. Both models are accurate (no standard error exceeds 3°), with the Matin and Li model slightly more accurate for long-line stimuli and the Bayesian model performing better for short-line stimuli. This is consistent with the independence assumption for how line segments contribute to variance estimates, which suggested that the Bayesian model may not hold as well for longer line stimuli.

Table 1. Standard deviation and mean estimates for single-line inputs calculated from experimental data as discussed in Methods.

Table 3. Using variance estimates from Table 1 in our Bayesian model and constant values from Table 2 in the Matin and Li Model, we predict the effect of two short or long pitched-from-vertical line stimuli on perceived eye level.

Discussion

Our Bayesian framework derived a model to predict PEL from first principles, without the need to fit its functional form to experimental data. Whereas the equation derived by Matin and Li could only account for n visual stimuli of the same length, our framework can be extended analytically to n visual stimuli of varying lengths, using the same basic principles demonstrated in this manuscript. The simplicity of the Bayesian framework is also an indication of its latent predictive power. The framework can be derived analytically and extended to other studies in a straightforward way, including multiple lines of varying lengths, non-line visual stimuli, and manipulations of the body prior for PEL. The same tools can be applied to interpret how these, or additional, stimuli combine to form the percept.

It is important to stress that this Bayesian interpretation does not preclude a more detailed, biophysical explanation. The approach explains the computational principles likely involved, but not how they are implemented, consistent with David Marr's levels of analysis (Marr, 1982). Marr categorizes models into three levels: the computational level, the algorithmic level, and the implementation level. Here, we address only the first level, which constrains but does not conflict with other potential models that explain this behavior. Matin and Li's experimentally justified Equation (14) provides a succinct, high-level summary of behavior; however, such fitting to the data may explain “what” is occurring, whereas a Bayesian derivation gives a clearer picture of “why” such an equation fits the data. Again, this Bayesian interpretation does not preclude other, lower-level interpretations. For example, Matin and Li (2001) proposed a neurophysiological model to explain this behavior. That model operates at a different level of analysis from the Bayesian approach and should not be considered in conflict with it, or with other potential algorithmic or implementation-level explanations of the observations.

Our Bayesian PEL model adds to the growing repertoire of Bayesian studies addressing a wide range of perceptual topics. Bayesian principles have explained visual illusions in end-point-occluded or low-contrast rhombus motion (Weiss et al., 2002). Bayesian inference can predict the effect of visual and vestibular cues on the determination of heading (Butler et al., 2010). Bayesian integration has also successfully modeled the effect of visual and auditory signals on spatial localization (Battaglia et al., 2003). Our Bayesian analysis of PEL adds to this body of knowledge, further demonstrating the power of Bayesian techniques in perceptual studies.

We have demonstrated a Bayesian framework for modeling cue combination in a perceptual study of PEL. Using this approach, we derived a model of PEL from first principles that matched the experimentally determined behavior reported by Matin and Li. Further, the model explains trends and phenomena associated with PEL, providing a straightforward, parsimonious explanation of the “summing” and “averaging” effects on PEL when weak and strong visual cues are combined. Most importantly, the Bayesian approach more readily incorporates different experimental conditions, such as varied line lengths or additional stimulus types. This framework is more parsimonious, and consequently more powerful, enabling further perceptual studies.

Author Contributions

EO and MA designed the study and performed the necessary derivations. EO extracted the behavioral data, performed the analyses, and created the initial draft of the manuscript. RP aided in the initial analysis steps. LK confirmed the results and completed the manuscript.

Funding

MA was funded through startup costs for the Pervasive and Ambient Computing (PAC) lab at Loyola University Chicago. The work of author LK was funded by NIH Grant R01NS063399 to Konrad Kording.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We would like to thank the students of the Computational Neuroscience course at Loyola University Chicago who supported the initial development of this work.

References

Battaglia, P. W., Jacobs, R. A., and Aslin, R. N. (2003). Bayesian integration of visual and auditory signals for spatial localization. J. Opt. Soc. Am. A 20:1391. doi: 10.1364/JOSAA.20.001391

Bridgeman, B. (2003). [Book review: probabilistic models of the brain: perception and neural function]. Q. Rev. Biol. 78, 124–125. doi: 10.1086/377908

Butler, J. S., Smith, S. T., Campos, J. L., and Bülthoff, H. H. (2010). Bayesian integration of visual and vestibular signals for heading. J. Vis. 10:23. doi: 10.1167/10.11.23

Ernst, M. O. (2006). “A Bayesian view on multimodal cue integration,” in Human Body Perception from the Inside Out, Vol. 131, eds G. Knoblich, I. M. Thornton, M. Grosjean, and M. Shiffrar (New York, NY: Oxford University Press), 105–131.

Fetsch, C. R., DeAngelis, G. C., and Angelaki, D. E. (2013). Bridging the gap between theories of sensory cue integration and the physiology of multisensory neurons. Nat. Rev. Neurosci. 14, 429. doi: 10.1038/nrn3503

Gu, Y., Angelaki, D. E., and DeAngelis, G. C. (2008). Neural correlates of multisensory cue integration in macaque MSTd. Nat. Neurosci. 11, 1201–1210. doi: 10.1038/nn.2191

Hillis, J. M., Watt, S. J., Landy, M. S., and Banks, M. S. (2004). Slant from texture and disparity cues: optimal cue combination. J. Vis. 4, 1–1. doi: 10.1167/4.12.1

Kersten, D., Mamassian, P., and Yuille, A. (2004). Object perception as Bayesian inference. Annu. Rev. Psychol. 55, 271–304. doi: 10.1146/annurev.psych.55.090902.142005

Knill, D. C., and Pouget, A. (2004). The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 27, 712–719. doi: 10.1016/j.tins.2004.10.007

Landy, M. S., Maloney, L. T., Johnston, E. B., and Young, M. (1995). Measurement and modeling of depth cue combination: in defense of weak fusion. Vis. Res. 35, 389–412. doi: 10.1016/0042-6989(94)00176-M

Marr, D. (1982). Vision: A Computational Investigation into the Human Representation and Processing of Visual Information. New York, NY: Freeman.

Matin, L., and Li, W. (1999). Averaging and summation of influences on visually perceived eye level between two long lines differing in pitch or roll-tilt. Vis. Res. 39, 307–329. doi: 10.1016/S0042-6989(98)00059-5

Matin, L., and Li, W. (2000). Linear combinations of signals from two lines of the same or different orientations. Vis. Res. 40, 517–527. doi: 10.1016/S0042-6989(99)00182-0

Matin, L., and Li, W. (2001). Neural model for processing the influence of visual orientation on visually perceived eye level (VPEL). Vis. Res. 41, 2845–2872. doi: 10.1016/S0042-6989(01)00150-X

Weiss, Y., Simoncelli, E. P., and Adelson, E. H. (2002). Motion illusions as optimal percepts. Nat. Neurosci. 5, 598–604. doi: 10.1038/nn0602-858

Keywords: Bayesian analysis, perceived eye level, cue combination, elevation estimation, visual psychophysics

Citation: Orendorff EE, Kalesinskas L, Palumbo RT and Albert MV (2016) Bayesian Analysis of Perceived Eye Level. Front. Comput. Neurosci. 10:135. doi: 10.3389/fncom.2016.00135

Received: 16 September 2016; Accepted: 01 December 2016;

Published: 15 December 2016.

Edited by: Si Wu, Beijing Normal University, China

Reviewed by: He Wang, Hong Kong University of Science and Technology, Hong Kong; Wenhao Zhang, Carnegie Mellon University, USA

Copyright © 2016 Orendorff, Kalesinskas, Palumbo and Albert. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mark V. Albert, mva@cs.luc.edu

Elaine E. Orendorff, Laurynas Kalesinskas, Robert T. Palumbo⁴,⁵ and Mark V. Albert