- 1Laboratório de Sistemas Neurais, Department of Physics, School of Philosophy, Sciences and Letters of Ribeirão Preto, University of São Paulo, São Paulo, Brazil

- 2Theory of Complex Systems and Neurophysics, Bernstein Center for Computational Neuroscience, Berlin, Germany

- 3Department of Physics, Humboldt Universität zu Berlin, Berlin, Germany

Recurrent networks of spiking neurons can be in an asynchronous state characterized by low or absent cross-correlations and spike statistics which resemble those of cortical neurons. Although spatial correlations are negligible in this state, neurons can show pronounced temporal correlations in their spike trains that can be quantified by the autocorrelation function or the spike-train power spectrum. Depending on cellular and network parameters, correlations display diverse patterns (ranging from simple refractory-period effects and stochastic oscillations to slow fluctuations) and it is generally not well-understood how these dependencies come about. Previous work has explored how the single-cell correlations in a homogeneous network (excitatory and inhibitory integrate-and-fire neurons with nearly balanced mean recurrent input) can be determined numerically from an iterative single-neuron simulation. Such a scheme is based on the fact that every neuron is driven by the network noise (i.e., the input currents from all its presynaptic partners) but also contributes to the network noise, leading to a self-consistency condition for the input and output spectra. Here we first extend this scheme to homogeneous networks with strong recurrent inhibition and a synaptic filter, in which instabilities of the previous scheme are avoided by an averaging procedure. We then extend the scheme to heterogeneous networks in which (i) different neural subpopulations (e.g., excitatory and inhibitory neurons) have different cellular or connectivity parameters; (ii) the number and strength of the input connections are random (Erdős-Rényi topology) and thus different among neurons. In all heterogeneous cases, neurons are lumped in different classes each of which is represented by a single neuron in the iterative scheme; in addition, we make a Gaussian approximation of the input current to the neuron. These approximations seem to be justified over a broad range of parameters as indicated by comparison with simulation results of large recurrent networks. Our method can help to elucidate how network heterogeneity shapes the asynchronous state in recurrent neural networks.

1. Introduction

The autonomous dynamics of recurrent networks of spiking neurons is an important topic in computational neuroscience. Networks of randomly connected excitatory and inhibitory integrate-and-fire (IF) neurons are often used in the study of this problem, because this model is computationally efficient for numerical simulations and even sometimes permits analytical insights (see e.g., Abbott and van Vreeswijk, 1993; Brunel, 2000; Lindner et al., 2005; Richardson, 2009; Deger et al., 2014). Exploring the possible spike statistics in such network models may help us to further our understanding of healthy and pathological neural activity in different brain areas and brain states. Moreover, understanding the autonomous (i.e., spontaneous) activity is also a necessary prerequisite for the comprehension of the network response to external signals and signal transmission and processing capabilities of the network in general.

Recurrent networks of IF neurons can already show a rich repertoire of activity states (Brunel, 2000) shaped by pronounced synchronization and by oscillations on which many computational studies have focused (see e.g., van Vreeswijk et al., 1994; Hopfield and Herz, 1995; Ermentrout et al., 2001; Timme et al., 2006; Ladenbauer et al., 2012). One state that lacks obvious collective effects but still can show a statistically rich behavior is the asynchronous state with low or absent cross-correlations among neurons. This state is found in many network models (van Vreeswijk and Sompolinsky, 1996; Brunel, 2000; Renart et al., 2010; Helias et al., 2014) and also in experimental recordings in different brain areas in the awake and attentive animal (Poulet and Petersen, 2008; Harris and Thiele, 2011).

Although it is frequently assumed in theoretical studies, approximating the asynchronous activity as Poisson spiking with a total lack of temporal correlations is generally not justified. Despite the characteristic absence or weakness of spatial correlations among neurons, neural spike trains in the asynchronous state can still show a pronounced temporal correlation: experiments have revealed non-flat (i.e., non-Poissonian) spike-train power spectra exhibiting reduced power at low frequency (Edwards et al., 1993; Bair et al., 1994), peaks attained at frequencies close to the firing rate and multiples (Pesaran et al., 2002) or increased power at low frequencies indicating slow fluctuations or bursting (Bair et al., 1994). Some of these features (but also additional ones) have been found for spike-train power spectra from neurons in the sensory periphery (Neiman and Russell, 2011; Grewe et al., 2017) that lack synaptic input from other neurons but are subject to channel noise and other signal-unrelated fluctuations. Theoretically, some (but not all) of these spectral shapes can be already understood if we consider simple stochastic models, e.g., a Poisson process with refractory period (Bair et al., 1994; Jarvis and Mitra, 2001) or, more elaborate, integrate-and-fire models driven by white (Lindner et al., 2002; Richardson, 2008; Vilela and Lindner, 2009b) or colored noise (Middleton et al., 2003; Bauermeister et al., 2013; Droste and Lindner, 2017).

Interestingly, even if completely deterministic neuron models are connected in a random network, corresponding observations of random spiking can be made: the total chaotic input from the network impinging on the single cell acts as an effectively stochastic drive and the resulting spike-train power spectra exhibit in many cases a non-trivial (in particular, non-flat, i.e., non-Poissonian) shape. Depending on cellular parameters as the reset value after spiking (Dummer et al., 2014) or on the strength of synaptic coupling (Ostojic, 2014; Wieland et al., 2015), the spectrum can change drastically (e.g., from strongly peaked spectra to low-frequency dominated spectra with a 1/fα form). How spike-train power spectra depend on system parameters in a recurrent network is generally poorly understood [for some effects of presynaptic refractoriness, slow presynaptic rate changes, and short-term synaptic plasticity, see (Schwalger et al., 2015), for effects of the postsynaptic refractory period, see (Bair et al., 1994; Franklin and Bair, 1995)]. Some progress has been achieved though for a related but distinct statistics at a higher modeling level, namely, the power spectrum of the population activity, for which different approximations and numerical schemes have been put forward (Knight, 1972; Brunel and Hakim, 1999; Spiridon and Gerstner, 1999; Mattia and Giudice, 2002; Lindner et al., 2005; Trousdale et al., 2012; Deger et al., 2014; Schwalger et al., 2017). In our paper we focus exclusively on single spike-train power spectra.

According to early work by Mari (2000) and, particularly, by Lerchner et al. (2006), a strong theoretical argument against the white-noise approximation is the self-consistency of the fluctuation statistics. If we think of a homogeneous network of statistically equivalent neurons (identical neural parameters and a fixed number of input connections as in the popular Brunel network; Brunel, 2000), the output statistics of a cell should be related to the input statistics because in the network every driven cell is also a driving cell. In the simple case of current-pulse-coupled IF neurons without a synaptic filter (homogeneous Brunel network), the power spectrum of the input current should be proportional to the power spectrum of the spike train generated by the neuron. As the output power spectrum of a white-noise driven IF neuron is generally not flat (Poisson-like) (Lindner et al., 2002; Vilela and Lindner, 2009a) and, contrary to some claims in the literature, summing many presynaptic spike trains does not remove the temporal correlations of the single process (Lindner, 2006), the flat white-noise spectrum cannot be a self-consistent solution for the network neuron, unless all neurons are poised deep in the subthreshold fluctuation-driven regime of very rare firing (rates are smaller than 1Hz).

The self-consistency of the temporal correlations of input and output in random networks is not an entirely new idea: in statistical physics it has been used to derive correlation functions of disordered spin systems (Sompolinsky and Zippelius, 1982; Eissfeller and Opper, 1992); in neuroscience, it was applied to random networks of coupled rate units by Sompolinsky et al. (1988) (for various recent extensions, see Aljadeff et al., 2015; Kadmon and Sompolinsky, 2015; Mastrogiuseppe and Ostojic, 2017). Generally, the self-consistency condition of the asynchronous state can be employed to determine correlation functions or power spectra without actually simulating the network but by simulating a single element iteratively. If we make a Gaussian approximation for the incoming stream of input spikes, we may ask how correlated (“colored”) this Gaussian noise has to be to evoke a neural spike train with a temporal correlation proportional to that of the driving noise; equivalently, we can ask about the proportionality of power spectra. This idea can be translated into an iterative scheme that finds this solution numerically (if it exists). Such a scheme has first been developed for a spin system (Eissfeller and Opper, 1992); in the neural context it works essentially as follows (Lerchner et al., 2006; Dummer et al., 2014): A single neuron is driven by a Gaussian noise, the output spike train is recorded, its power spectrum is estimated and serves to generate a new Gaussian noise to again stimulate the neuron in the next generation (step in the iterative scheme). Repeating this procedure over a few generations only, for a network with (nearly) balanced recurrent input and moderate synaptic amplitudes, yields an excellent quantitative agreement with the single-cell statistics of a neuron in a large network (Dummer et al., 2014) (Lerchner et al., 2006 used the equivalent correlation function). The simplest version of the procedure fails in the case of strong inhibition and is naturally restricted to homogeneous networks, in which all neurons (excitatory and inhibitory ones) share the same cellular and presynaptic connection parameters. Cortical neural networks are strongly heterogeneous (Meunier et al., 2010; Tomov et al., 2014; Harrison et al., 2015) and, hence, an extension of the method to cases in which neural and connection parameters vary across the network is desirable.

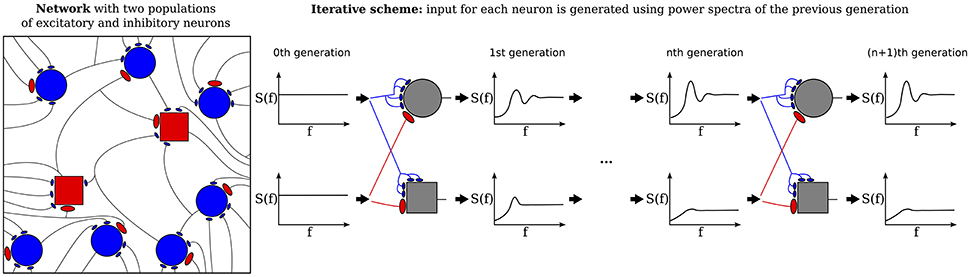

The purpose of the present study is to extend the iterative scheme in several directions. First, we develop a simple method to deal with the instability of the iterative scheme at strong recurrent inhibition, which makes the scheme applicable to a much broader range of network parameters. Secondly, as sketched in Figure 1, we study a heterogeneous network, in which excitatory and inhibitory neurons have different parameters (either cellular or with respect to their connectivity) or we consider even several (more than two) populations, which differ in their parameters. As indicated in Figure 1, every population is then represented by a single cell in the iterative scheme, and the input statistics to each cell in a certain generation will be determined from all output spectra of the previous generation.

Figure 1. Heterogenous network of excitatory and inhibitory neurons differing in intrinsic parameters. Sketch of the network (left) and the corresponding iterative scheme where a single neuron is simulated to represent one population (right). The input of a neuron in the next generation is composed of all power spectra from the previous generations. The power spectrum of each population converges after the nth generation.

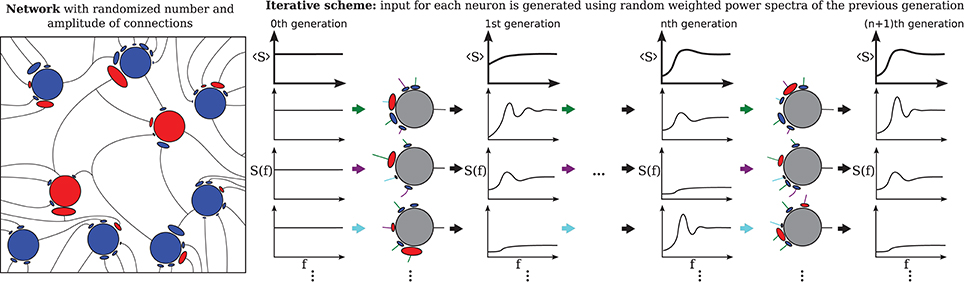

The third extension, illustrated in Figure 2 concerns the number and strength of synaptic input connections that is in reality certainly not constant and fixed, respectively, as in the Brunel network studied with the iterative scheme by Dummer et al. (2014). Just choosing a simple Erdős-Rényi topology, yields a broad distribution of firing rates and this kind of heterogeneity can be captured in the iterative scheme as well if we simulate a sufficient number of representative neurons, i.e., a sample of the network neurons (cf. Figure 2).

Figure 2. Heterogeneous network with distributed numbers and strengths of synaptic connections (Erdős-Rényi) where the number of presynaptic neurons and the synaptic weights follow a distribution (left) and the corresponding iterative scheme where few neurons represent the distributions (right). For each neuron in each generation these parameters are drawn from a distribution and the input is composed randomly from all power spectra from the previous generation. The average power spectrum over all neurons converges after the nth generation.

Our motivation for all these extensions of the method is twofold. For once, in cases in which the single-neuron correlation statistics is of interest, e.g., for the emergence of slow fluctuations in recurrent networks (Litwin-Kumar and Doiron, 2012; Ostojic, 2014; Wieland et al., 2015), our extended scheme provides a numerically efficient method that does not require large-network simulations. Hence, if the temporal correlation statistics of the asynchronous state is studied, our results permit to explore the role of network heterogeneity in shaping those correlations. The second purpose of our study is to trigger interest in the self-consistent description of the Gaussian colored noise generated by recurrent spiking networks. Showing that the numerical scheme works in a physiologically relevant parameter regime can also be regarded as a demonstration of the colored-Gaussian-noise approximation's validity and may encourage looking for an analytical description of the network noise via Markovian embedding (Schwalger et al., 2015).

Our paper is organized as follows. Section 2 presents the neuron and network models, introduces important spike-train statistics, shows how to stabilize the iterative procedure such that it works also for strong recurrent inhibition and how to incorporate a synaptic filter, and extends the scheme to the different heterogeneous cases. In section 3, we consider first the fluctuation statistics of the spike trains in the so-called “heterogeneous asynchronous state” of a homogeneous network with strong recurrent inhibition (Ostojic, 2014). Here we demonstrate that slow fluctuations emerge due to their preferred amplification by the network. We review briefly the effect of a synaptic filter and then turn to the different heterogeneous cases. All power spectra found with the iterative scheme are compared to numerical simulations of large and sparse networks. We conclude with a brief discussion of our findings.

2. Methods

2.1. Neuron Model and Spike-Train Statistics

A single neuron is described by the standard leaky integrate-and-fire neuron model (Gerstner et al., 2014). The membrane voltage v evolves according to:

When v(t) > vth, a spike is emitted and, after a refractory period of τR = 2 ms, the voltage is reset to v(t) = vr. The parameter τm in Equation (1) is the membrane time constant, which may be different depending on whether the neuron belongs to the excitatory or the inhibitory population. The input current (scaled by the membrane resistance R), is denoted by RI.

The statistics inspected in this work are based on spike-trains, which are defined as sums of Delta functions

where ti is the time instant of the ith spike. The instantaneous firing rate ν is the (generally time-dependent) average of the spike train, ν = 〈x(t)〉, and can be determined for a specific neuron within the network by an average over different runs with randomized initial conditions. We are only interested in the asynchronous state, in which ν does not depend on time. In practice, we often average the rate over the population (if appropriate, i.e., if the neurons are statistically equivalent) which is indicated by 〈.〉 (ensemble average) and over time:

For the calculation of spectral measures, we define the Fourier transform by

where T is our time window and is set in our simulations to T = 2 s if not mentioned otherwise. In all simulations we neglect a transient period of 1 s before extracting the statistics over the next T = 2 s. The power spectrum of a spike train is then defined by

where is the complex conjugate of . We note that the power spectrum saturates for infinite frequency at the firing rate, .

Two important statistical measures can be extracted from the power spectrum. The first is the Fano factor FF which is defined as the variance of the spike count over its mean, an expression that can be related to the power spectrum at zero frequency:

The second statistical measure is the correlation time τc. Following Neiman et al. (2007) and Wieland et al. (2015), we consider the spike train's correlation function c(τ) = 〈x(t)x(t + τ)〉−〈x(t)〉〈x(t + τ)〉 (note that here 〈.〉 indicates a time average) and its continuous part ĉ(τ) = c(τ) − νδ(τ) to define the correlation time as an integral over the squared and normalized ĉ(τ)

an integral which in turn can be related to an integral over the power spectrum via the Parseval theorem on the right side.

2.2. Network Model

Different network compositions are studied, many of which are based on the work of Brunel (2000), specifically on his Model B, a heterogeneous random network with fixed in-degree. In contrast to Brunel (2000), we use a larger number of neurons, i.e., an excitatory population size instead of . Independently of the number of populations, there is always a mixture of excitatory to inhibitory neurons with a ratio of 4:1, i.e., NI = γNE where γ = 0.25. Therefore, the total network size is N = NE + NI.

The ℓth neuron from the network has the dynamics

The external input current to each neuron and its membrane time constant depend on the population it belongs to which is here indicated by index α. The ℓth neuron receives a fixed number of () excitatory (inhibitory) randomly selected neurons connections from population α = {E, I}. The local input is described by:

where gα is the ratio between average inhibitory and average excitatory synaptic weights, which depends via α on the target neuron (α ∈ E, I) The number of presynaptic neurons will be constant in some cases (fixed in-degree, in sections 2.3 and 2.4 as well as from 3.1 to 3.3) and random in others (as a consequence of a Erdős-Rényi topology in sections 2.5 and 3.4). The excitatory (inhibitory) input neurons are picked randomly from the network and the set of the neuron indexes is denoted by mℓ,k and nℓ,i. The synaptic coupling strength (also called synaptic weight or synaptic efficacy) will be either constant, Jℓj = J, (in sections 2.3–3.4) or exponentially distributed with mean value 〈Jℓj〉 = J (in section 3.4). We fix the transmission delay at τD = 1.5 ms unless otherwise indicated, and K(t) is a an optional synaptic filter. In most cases, the filter is not used, which means K(t) = δ(t). Otherwise, it is a simple exponential filter:

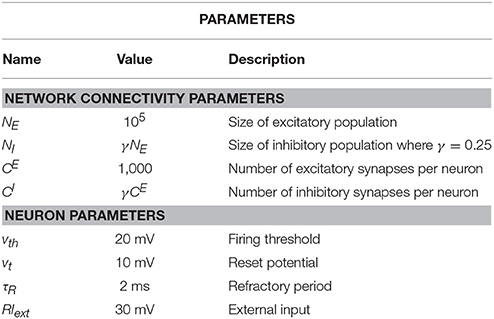

where θ(t) is the Heaviside function and τs is the synaptic filter time. Note that in the limit τs → 0, the case without synaptic filter is recovered. If not explicitly stated otherwise, we use the parameter values that are given in Table 1.

2.3. Self-Consistent Scheme for a Homogeneous Population—Stabilization of the Scheme for Strong Recurrent Inhibition

The iterative self-consistent scheme developed by Lerchner et al. (2006) and Dummer et al. (2014) is able to reproduce the single-spike-train power spectrum for homogeneous populations close to the balanced regime. In this procedure, in one generation a single neuron is stimulated with a colored noise over many trials, the power spectrum of its spike train is estimated, and using this spectrum and the output firing rate, a new surrogate colored Gaussian noise is generated which is used as the stimulus in the next generation. This procedure is repeated iteratively until the mean value and the spectrum of the driving noise matches in a self-consistent manner approximately the firing rate and the power spectrum of the resulting spike train. We present in detail the heterogeneous iterative self-consistent scheme in section 2.4, for further details of the homogeneous scheme we refer to Dummer et al. (2014).

The version of the scheme by Lerchner et al. (2006) and Dummer et al. (2014) is unable to reproduce self-consistently the statistics of single neurons in a recurrent network with strong relative inhibition g. More specifically, in cases where the inhibition is high, the scheme loses stability and the measured firing rate ν oscillates as a function of the generations (Dummer et al., 2014) (a numerical instability in the balanced case is reported in Lerchner et al., 2006, which is unrelated to the instability at strong recurrent inhibition). As a result, network regimes of low firing rate (such as those seen in cortex) cannot be captured.

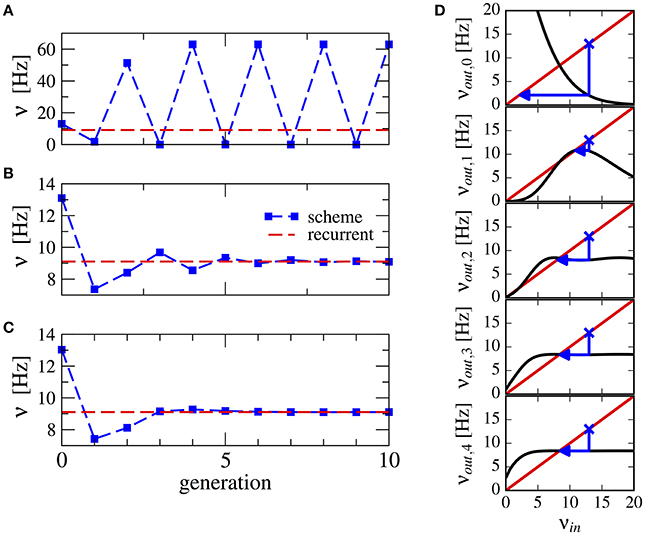

Here, we propose a method to ensure convergence even with strong recurrent inhibition. We observed that the firing rate oscillations are around the target firing rate, therefore we can use the average firing rate over all past n generations as input to the next generation:

This procedure stabilizes the scheme, see Figure 3 for a numerical example. Note that averaging over a higher number of past generations can yield a faster convergence (cf. Figures 3B,C). The effect can be visualized using a similar approach as in Dummer et al. (2014): a map from the input rate to the output rate. We calculate the output rate from the input rate with the approximation for synaptically filtered white noise (Brunel and Sergi, 1998). The effect of the averaging over resulting output rate and input can be captured by the functions νout,0 = νout(νin), ,…, which are shown in Figure 3D. These functions display an increasingly flatter shape in the dependence on the initial firing rate illustrating the stabilizing effect of the averaging.

Figure 3. Stabilization of the iterative scheme by averaging over previous generations (inhibition-dominated regime). Convergence of the firing rate in the iterative scheme (blue line) using different procedures. Parameters are g = 5.5, J = 0.2 mV, τs = 10 ms, γ = 0.25, and RIext = 30 mV. Recurrent network (red line) is firing at ν = 9.1 Hz. (A) No average is considered, only the previous generation. (B) Firing rate is averaged over the past two generations. (C) Firing rate is averaged over all past generations. (D) Visualization of the averaging procedure: firing rate resulting from i iterations of the averaging procedure νout,i of a neuron driven by the firing rate νin (see text). The function νout(νin) is approximated using the expression for a LIF neuron driven by synaptically filtered white noise (Brunel and Sergi, 1998). The fixed point νin = νout, 1 is unstable because |dνout/dνin| > 1 (see Dummer et al., 2014). Few iterations suffice to yield a flat curve indicating a stable fixed point.

The procedure of averaging the rate over the past generations will be used only in cases of unstable convergence. Typically, if excitation and inhibition are (nearly) balanced, the scheme is stable and we do not need to apply the averaging procedure.

2.4. The Self-Consistent Scheme for Several Populations

The self-consistent scheme for a homogeneous population can be generalized in different ways. First of all, real networks consist of several types of neurons, that all differ with respect to their physiological parameters. A first important step is to distinguish between excitatory and inhibitory neurons not solely with respect to their postsynaptic effect but to endow inhibitory neurons also with other cellular parameters (membrane time constant, leak potential, mean input current) than excitatory cells. Generally, we distinguish between PE excitatory and PI inhibitory populations. In the self-consistent scheme each population is represented by one neuron.

2.4.1. Determination of the Second-Order Statistics

In the situation considered here, every neuron in the network receives a fixed number of inputs. First of all, the mean recurrent input to a given population α is determined by the firing rates of the presynaptic neurons and by the connection parameters in the network:

where νE,k and νI,k are the excitatory and inhibitory firing rates determined by the kth presynaptic neuron. Furthermore, by writing the effective input in the Fourier domain, we can obtain the power spectrum of the effective input to a neuron in the α population given by:

where and are the spike-train power spectra from the kth E and lth I-cells that provide synaptic input to the population α, respectively, and is the Fourier transformed synaptic filter in Equation (10). Note that in order to distinguish it from the output spectra, the input spectra to the population α is identified by a bar, i.e., . If more than two populations are present, in Equations (12, 13) their contributions are taken into account by the number PE and PI of populations.

2.4.2. Gaussian Approximation of the Input

We want to use Equations (12, 13) to create an input with the same first- and second-order statistics. For a large number of presynaptic neurons that are only weakly correlated, this statistics will be approximately Gaussian by virtue of the central limit theorem1. To generate an input to a neuron embedded in the αth population with a prescribed power spectrum, we generate the Fourier transform

of a time-dependent function RIG,α(t) by drawing two independent Gaussian numbers , with unit variance and zero mean in each frequency bin. The frequency resolution is set by the length of the time window, Δf = T−1. Finally, we generate the time-dependent current RIG,α(t) by inverse Fourier transformation of RĨG,α(f).

We start with Gaussian white noise as input as the 0-th generation in the scheme and drive P neurons, where P = PE + PI is the number of populations. The neurons are simulated over a number of trials, the output spike-trains are measured and their power spectra, , are estimated (1st generation). For the next generation, an input is created using the spike-train power spectra of the first generation in the Gaussian approximation described above. The procedure is repeated until the output power spectra matches the input power spectra, i.e., self-consistency is achieved. In all simulations of the scheme we observed that iterating up to the 30th generation and using 10,000 trials for each generation was enough to reach a self-consistent solution, provided that the scheme converged for the given parameters.

In summary, we simulate the single LIF neuron representing the population α with

2.5. Self-Consistent Scheme for a Single Population with Distributed Connection Parameters

Here, we generalize the iterative scheme to the case where the number of input neurons and the synaptic weights are not the same for the same type of neuron. We deal with a fixed connection probability (Erdős-Rényi topology) and an exponential distribution of synaptic weights, resulting in heterogeneity of firing rates and spike-train spectra. For simplicity, we use the same membrane time constant for inhibitory and excitatory neurons τE = τI = τ = 20 ms and do not use a synaptic filter. We represent the neurons of the network in the iterative scheme by a number of M neurons in each generation. The connection parameters of each neuron in each generation are randomly drawn from the assumed distributions. The input to each neuron in the subsequent generation is determined independently from the other neurons taking into account all power spectra of the previous generation.

In case of an Erdős-Rényi topology the numbers of presynaptic neurons are binomially distributed. Likewise, in the scheme we draw binomially distributed random numbers and as numbers of presynaptic neurons connected to neuron α with power spectrum Sk(f) from the previous generation. The mean of these random numbers is and respectively. In case of exponentially distributed synaptic weights we draw excitatory synaptic weights and inhibitory synaptic weights for each neuron in the previous generation from the exponential distribution. The input current to the αth neuron is constructed with the mean input

and with the power spectrum of the effective input that is now taken to be

We assume here that E and I cells have the same spectrum if their connection parameters are equal. With given spectrum and mean input we calculate the voltage dynamics of neuron α as in section 2.4. To adapt the stabilization method described in section 2.3 to the case of a single heterogeneous population described by M single neurons, we sum a constant to all firing rates in the n-th generation, in order to set its mean firing rate to the average of all generations

where νk,n is the firing rate of the k-th neuron in generation n and is the transformed firing rate that should be used to calculate the input of the next generation. Note that the mean of the first two terms is zero. These are used in place of νk in Equation (16) to stabilize the scheme in case of strong inhibition.

For the simulation of these networks, we use the Brian spiking network simulator (Goodman and Brette, 2009).

3. Results

3.1. Homogeneous Network With Strong Recurrent Inhibition and Additional Synaptic Filtering

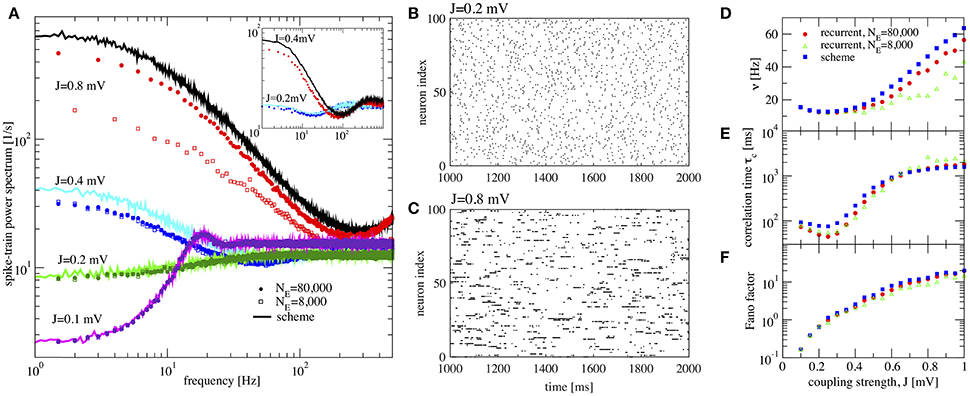

We would like to start with results for the inhibition-dominated network (g > 4), in which firing rates are low. In this regime, the iterative scheme as proposed by Dummer et al. (2014) is highly unstable and we only obtain convergence with the averaging procedure described in section 2.3. To demonstrate that the averaging procedure works in such a situation, we consider in Figure 4 the network studied by Ostojic (2014) who found two contrasting asynchronous states when varying the synaptic strength J.

Figure 4. Large amplification of slow fluctuations explains heterogeneous asynchronous state in a homogeneous network. Same set-up as in Ostojic (2014), τE = τI = 20 ms, RIext = 24 mV, γ = 0.25, τD = 0.55 ms, g = 5, and τR = 0.5 ms. This is a parameter region where the network fires at low-frequency regime and high inhibition g = 5 is set. (A) Single-neuron spike-train power spectra from recurrent network (circles for NE = 80,000 and squares for NE = 8,000) and self-consistent scheme (solid lines) for different values of J. Inset: power spectra for a large network (NE = 100, 000) of exponential IF neurons (single-neuron dynamics is where τE = τI = 20 ms, RIext = 30 mV, γ = 0.25, τD = 0.55 ms, g = 5, τR = 0.5 ms, and ΔT = 0.2) for two different values of the synaptic coupling, showing the same qualitative difference in low-frequency power as the LIF networks. (B,C) Raster plots containing 100 neurons from the LIF network with NE = 80,000 for J = 0.2 mV, and J = 0.8 mV, respectively. (D–F) Firing rate ν, correlation time τc, and Fano factor for different values of J for both recurrent and self-consistent scheme evaluated in a simulation of T = 100 s.

In Figure 4A spike-train spectra for strong recurrent inhibition (g = 5) for different values of J and different network sizes are shown. The power spectra of these network simulations are close to those of the iterative scheme in most cases. For weak coupling, the agreement between spectra is always good; discrepancies for large J become smaller with increasing network size because cross-correlations become less important in this limit. An additional reason for discrepancy is that the Gaussian approximation becomes less accurate for strong synaptic strength. The change in spike-train power spectra upon increase of the synaptic coupling does not hinge on the specific nature of the subthreshold function in the IF model. If we replace the leaky IF model by an exponential IF model (Fourcaud-Trocmé et al., 2003) in the network and in the recurrent scheme, we observe a similarly drastic change in low-frequency power if the synaptic strength is doubled (inset of Figure 4A). Also for this single-neuron model the agreement between spectra from the network and from the self-consistent scheme is fairly good.

When the coupling strength J increases, the firing rate first decreases and then increases (Figure 4D). More interestingly, with increasing coupling we see a transition from Poisson-like irregular firing (Figure 4B) to bursty firing of single neurons (Figure 4C), i.e., periods of strong firing are separated by pauses. In the latter state one can observe a broad distribution of spike counts, and that is why this state has been referred to as heterogeneous asynchronous state (Ostojic, 2014). In terms of the power spectrum this transition becomes manifest as an amplification at low frequencies (Wieland et al., 2015); correspondingly the Fano factor increases (Figure 4F). Together with the minimum in the correlation time (Figure 4E) (attained at a coupling where the Fano factor is about unity), our results confirm that the transition described by Ostojic (2014) in the inhibition-dominated regime is essentially the same as the one observed and explained by Wieland et al. (2015) for the balanced case g = 4.

In summary, the results in Figure 4 indicate that the emergence of a new heterogeneous asynchronous state for strong synaptic coupling can be explained only using the properties of a single neuron and the self-consistency condition, here demonstrated by our iterative single-neuron scheme.

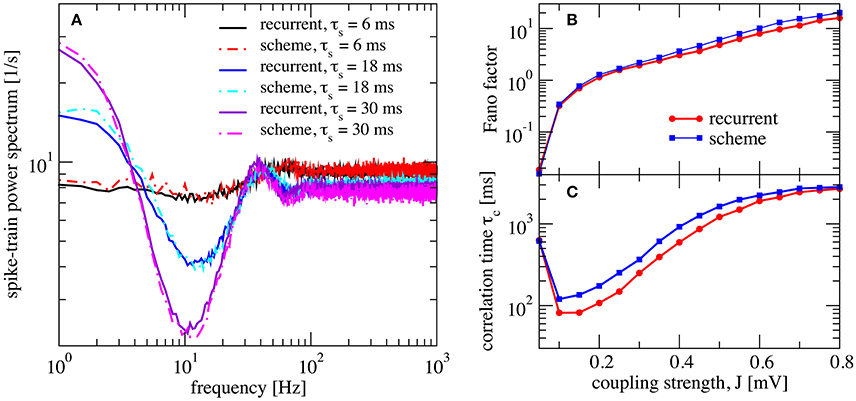

We now investigate the effect of a finite synaptic filter, Equation (10). Not surprisingly, a pronounced synaptic filter (large τs) leads to a long time scale in the network dynamics, as revealed by the increased power at low frequencies (Figure 5). The synaptic filter, Equation (10) is scaled such that the total charge per input spike remains constant. Therefore, an increased time constant for the exponential decay renders the postsynaptic response smaller in amplitude and longer in duration. This longer duration of postsynaptic responses extends the range of temporal correlations in the input to the neuron, which in turn causes the slow fluctuations in the neuron's activity. The resulting power spectrum (Figure 5A), especially for long synaptic time constant, looks similar to that of a colored-noise driven perfect IF model (see Figure 9 in Middleton et al., 2003). We emphasize that the emergence of the slow time scale is here imposed by the long-lasting synaptic filter which is in marked contrast to the network amplification of slow fluctuations for strong synaptic coupling discussed before in Figure 4.

Figure 5. Self-consistent scheme also works for a network with synaptic filtering. (A) Effect of τs on the recurrent network and iterative scheme, the plot displays power spectra for different values of τs. This is an inhibition-dominated regime with exponential synapses and J = 0.2 mV. In this region, the network fires at low firing rates. In (B,C) we fix τs at 10 ms and show Fano factor and correlation time τc for different values of J for both recurrent and self-consistent scheme. Other parameters as in Figure 4.

We also verified that the synaptic filter does not change qualitatively the emergence of slow fluctuations for strong coupling (i.e., the heterogeneous asynchronous state discussed above). Using τs = 10 ms we still see the characteristic strong increase in Fano factor (Figure 5B) and a minimum in the correlation time (Figure 5C).

3.2. Networks With Different Parameters for Excitatory and Inhibitory Neurons

In the following, we return to the limit of instantaneous synapses τs → 0, i.e., K(t) = δ(t), and introduce different parameter values for excitatory neurons (E-cells) and inhibitory neurons (I-cells). First of all, in order to test whether the applicability of the scheme hinges on the exact value of crucial parameters, we choose a small change of the membrane time constant between E and I-cells: τI = 19 ms and τE = 20 ms. Secondly, we make the relative strength of recurrent inhibition, gE and gI, different for the two populations in order to see whether the generalized iterative scheme with two neurons can cope with this heterogeneous situation.

In Figure 6 we show power spectra obtained from simulations of the recurrent network and of the iterative scheme for different combinations (gE,gI). In Figure 6A the two populations are statistically rather different with an E-cell firing rate of νE = 3.2 Hz whereas I-cells fire at νI = 9.7 Hz. Both spectra are well reproduced by the iterative scheme and show a “green” shape (in the colored noise lingo, this is white minus red noise). That means, the spectra exhibit a dip at low frequencies, but this is much more pronounced for the I-cells. Even when we increase the difference in recurrent inhibition and the two types of neurons fire at lower frequencies of νE = 0.1 Hz and νI = 7.4 Hz, the agreement of the spectra from the iterative scheme and from the network simulations is excellent (Figure 6C). If we choose the relative recurrent inhibition to be the same, the neural dynamics differ only by the small difference of the membrane time constants, which does neither cause significant differences in the firing rates (νE = 128.9 Hz and νI = 129.9 Hz) nor in the shape of the power spectra (cf. Figure 6B).

Figure 6. Example spectra for heterogeneous network of excitatory and inhibitory neurons differing in their parameters. Comparison of power spectra of the recurrent network with two different populations and the self-consistent iterative scheme with two neurons. Parameters are in (A) (gE, gI) = (4.2, 4.0), in (B) (gE, gI) = (3.7, 3.7), and in (C) (gE, gI) = (4.25, 3.6).

In order to explore the quality of the approximation systematically, we evaluated the discrepancy using the relative integrated error

where fcut = 2νI (we use the inhibitory firing rate because it is usually higher).

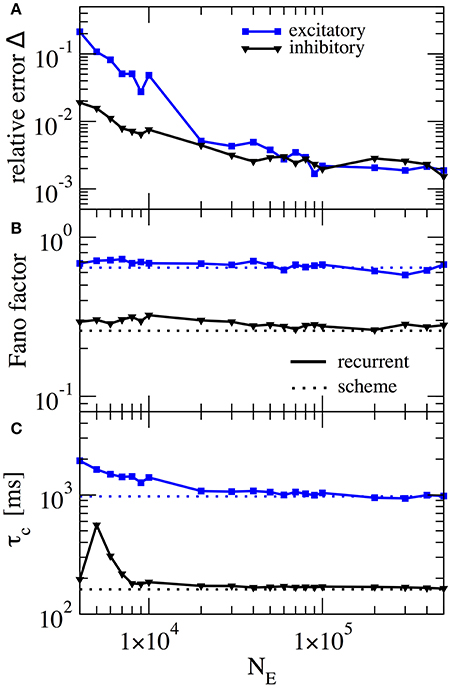

In our scheme the assumption of weak cross-correlations among neurons in the network is crucial - indeed we assume an infinitely sparse system that is in a perfectly asynchronous state. This is, of course, a somewhat artificial limit and thus it is interesting how, for a fixed number of connections (about 103), the squared deviation as well as important statistics such as the Fano factor depend on the system size. In Figure 7 this dependence is illustrated for the case where gE = 4.2 and gI = 4.0, the same parameters as in Figure 6A. For the chosen connectivity, a minimal number of NE = 20, 000 E-cells seems to be required to reach a good approximation (relative error below 1% for both E and I cells) with the self-consistent scheme. This plot illustrates that although sparsity is an important assumption for the self-consistent determination of spike-train power spectra, it does not lead to the necessity to consider exorbitantly large networks.

Figure 7. Performance of iterative scheme improves with network size. The performance of the iterative scheme depends on the network size. Curves were produced for the case gE = 4.2 and gI = 4.0, same parameters as in Figure 6A. (A) Relative error dependence of the scheme for a network size NE evaluated with the integrated relative error defined in Equation (19). (B) Fano factor dependence where dotted line represents the iterative scheme prediction and solid lines the recurrent network. (C) correlation time τc dependence.

3.3. Networks With Three Distinct Populations and Distinct Modules

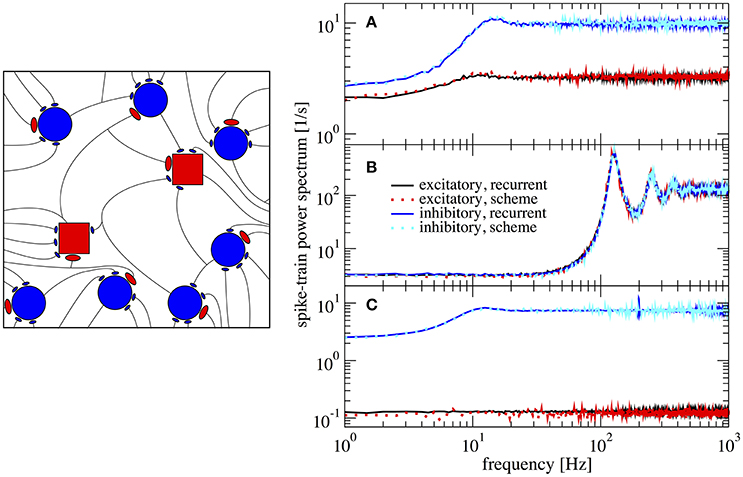

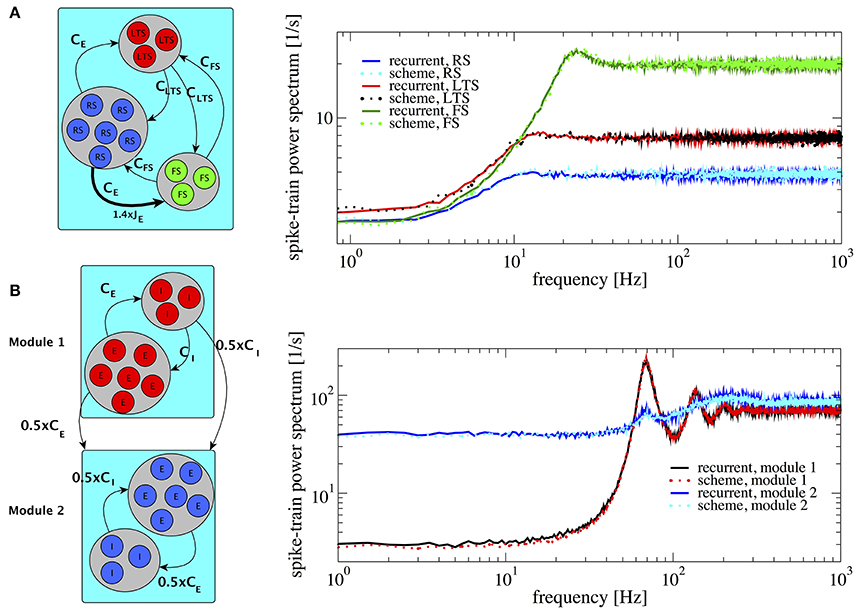

In principle, the proposed iterative scheme is applicable to any number of populations. As long as the resulting activity is sufficiently asynchronous (implying weak cross-correlations) and the synaptic strength is not excessively large (needed for the Gaussian approximation), the iterative scheme should converge to a self-consistent result. Here we demonstrate that the extended scheme also works for networks with more than two populations and study two cases: a network with three distinct populations and a modular network.

An example of three populations is given by a combination of one excitatory and two inhibitory populations (Figure 8A), biologically inspired by a cortical network with excitatory regular spiking neurons (RS), inhibitory fast-spiking (FS), and low-threshold spiking (LTS) neurons (see Izhikevich, 2003; Tomov et al., 2014 and references therein). Their firing rates are ordered such that νFS > νLTS > νRS. This heterogeneous situation is achieved by changing both membrane time constants, which are chosen to be τFS = 21 ms, τLTS = 20 ms, and τRS = 19 ms, and making one of the synaptic weights in the network (connecting RS neurons to FS neurons) 1.4 times stronger (indicated by the thick arrow in Figure 8A, left). This setting illustrates how heterogeneity of connectivity and membrane time constants shape the power spectra statistics.

Figure 8. Heterogeneous networks with more than two populations. Comparison between power spectra of the recurrent network (solid lines) with the iterative scheme (dashed lines). (A) Three different populations (RS, LTS, and FS) whereby the inhibitory population is composed of LTS (NLTS = 0.25 × NE, CLTS = 0.25 × CE) and of FS (NFS = 0.05 × NE, CFS = 0.05 × CE). Parameters are g = 4.0, τRS = 20 ms, τLTS = 19 ms, τFS = 21 ms. We multiply the synaptic strength by 1.4 for the excitatory weight to inhibitory neuron from population 2. (B) Modular network, modules 1 and 2 communicate through connections represented at the sketch in the left. Each module contains 1.25 × 105 cells with E:I ratio 4:1. In both modules τ = 20 ms. At module 1 J = 0.1 mV and at module 2 J = 0.27 mV, for all modules g = 4.0. Module 2 exhibits a spectral hump around 80 Hz which comes from the interaction with module 1.

The resulting spectra are well-captured by the iterative scheme; they all display the effect of neural refractoriness by the dip at low frequencies (Bair et al., 1994; Franklin and Bair, 1995) but to a different degree. The dip is most pronounced for the fast spiking neurons; the regular spiking neurons fire with a statistics that is closest to a Poisson process with a flat power spectrum.

According to a common view, the cortex possesses a modular structure (Boucsein et al., 2011; Tomov et al., 2014, 2016), a feature that we take into account in the next setup. We consider two different modules as shown in Figure 8B. The two modules are equal to each other with respect to the population size and each consists of an E-I network with NE = 100, 000 and membrane time constants τ = 20 ms, requiring the simulation of two neurons in total in the self-consistent scheme. For module 1 and 2 we choose J = 0.1 mV and J = 0.27 mV, respectively. With this choice, the two modules operate in different regimes: module 1 in a fast-fluctuation mode with low Fano factor and peaked power spectrum, module 2 in a regime of dominating slow fluctuations (cf. section 3.1). In module 2, we rewired 50% of the connections so that they come from module 1, i.e., it receives 0.5CE excitatory inputs and 0.5CI inhibitory inputs from module 1. This results in a highly heterogeneous situation which is reflected in the power spectrum of module 2: in contrast to the behavior observed in Figure 4, the power spectrum of module 2 contains an additional hump around 80 Hz. The power spectra of all different neurons in this setup are well represented by the iterative scheme, cf. Figure 8B.

The result in Figure 8B demonstrates that the iterative scheme can capture complex situations involving the interaction among different modules. The simulated network contained in total 250,000 neurons and the iterative scheme reproduced the single neuron correlation statistics with high accuracy using only two neurons.

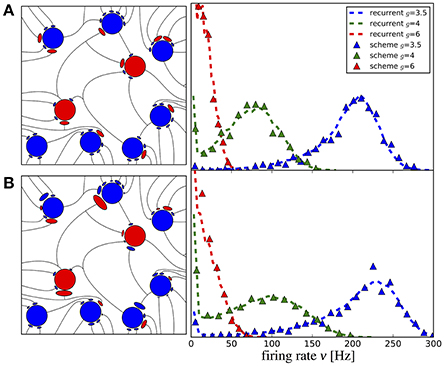

3.4. Network With Randomized Number and Weight of Synaptic Inputs

Here we use the iterative scheme to represent network dynamics for distributed connection parameters in a single population. As a first example, we consider a network in which connections are made with a fixed probability (Erdos and Rényi, 1960) instead of a fixed in-degree (as in all previous examples). This Erdős-Rényi topology yields a binomially distributed in-degree which is certainly a more realistic scenario than the fixed in-degree. Furthermore, a distributed in-degree leads to a distribution of firing rates, as seen in cortical networks (Griffith and Horn, 1966; Koch and Fuster, 1989; Shafi et al., 2007; Hromádka et al., 2008; O'Connor et al., 2010; Roxin et al., 2011). In addition to a random number of connections, in cortical networks also the synaptic weights are not fixed but follow a long-tailed distribution (Song et al., 2005; Gilson and Fukai, 2011). This feature can be approximated in the recurrent network model by drawing the weights from an exponential distribution.

In this version of the iterative scheme, we have M neurons that represent independent samples of the distributions of excitatory and inhibitory in-degrees and weights. Consequently, the firing rates and power spectra of the M neurons will differ and reflect the heterogeneity of these measures in the recurrent network (for further details, see section 2.5).

The first striking feature for a randomized connectivity is a broad distribution of firing rates. The stronger source for the rate variability seems to origin in the random number of connections (the case shown in Figure 9A); an exponential distribution of the synaptic strength makes the histograms only somewhat broader (case with additional weight variability, shown in Figure 9B). The histograms obtained from our iterative scheme with a modest number of M = 50 representative neurons (data are collected over several generations to improve the sampling) agree well with those from the network simulations. Note that analytical expressions for self-consistent distributions of the firing rate have been found previously using the diffusion approximation (Amit and Brunel, 1997; Roxin et al., 2011).

Figure 9. Iterative scheme reproduces firing rate distributions of Erdős-Rényi networks. Firing rate histograms of the iterative scheme with M = 50 neurons per generation (symbols) and the network simulation (dashed lines) for (A) binomially distributed numbers of presynaptic neurons [all excitatory (inhibitory) weights are the same as indicated on the left] and (B) additionally randomized synaptic weights (weights are drawn from distributions, sketched on the left). To build the histograms of the scheme, we used all firing rates from the 6th to the 20th generation (after transients have vanished). Parameters are as in Figure 10.

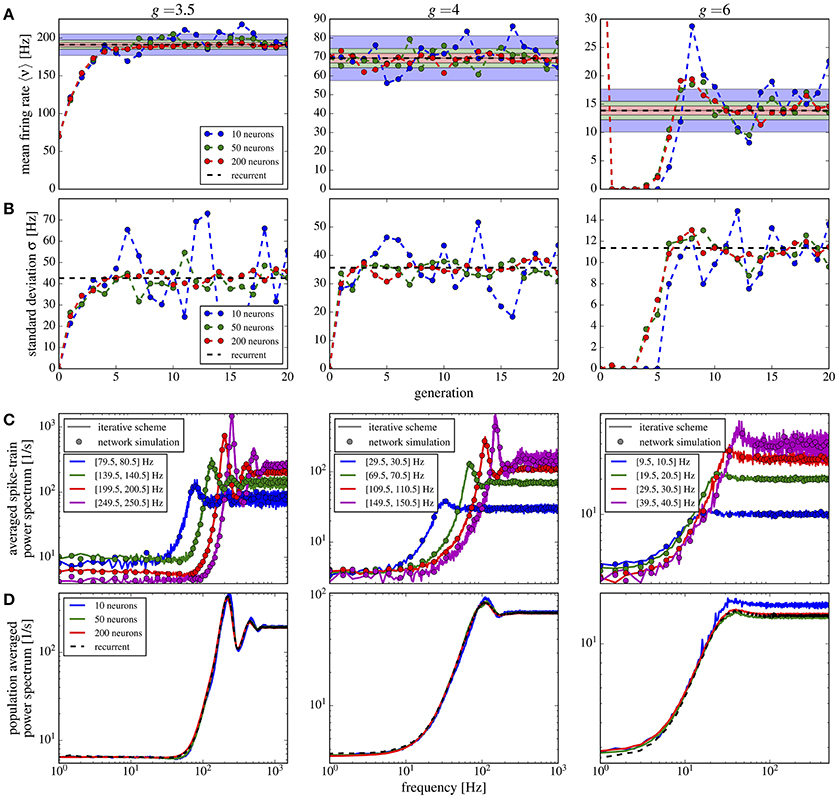

In Figure 10 we show the mean Figure 10A and the standard deviation Figure 10B of the firing rate histograms of the iterative scheme as a function of the generation. Because the number M of neurons per generation (i.e., the number of samples) is finite we consider the standard error represented by the colored areas in Figure 10A, where σ is the standard deviation of the firing rates measured from the network. The mean firing rate and standard deviation of the iterative scheme fluctuate around the network values within the expected standard error (colored areas), which indicates that the scheme works even for rather limited numbers of neurons M ≪ N. For the considered parameter sets, we observe fast convergence within five iterations for all used numbers of neurons except in the case of strong recurrent inhibition with g = 6 for which we observe convergence only after the 10th generation.

Figure 10. Iterative scheme captures the heterogeneous statistics due to Erdős-Rényi topology. Statistics from the iterative scheme and recurrent network for binomially distributed numbers of presynaptic neurons and different recurrent inhibition as indicated; remaining parameters: 〈CE〉 = 1000, 〈CI〉 = 250, J = 0.1 mV, τm = 20 ms, τD = 2 ms. For each neuron 100 trials of 10 s were used. From top to bottom: (A) mean firing rate for each generation of the scheme and the mean network rate (dashed line); standard error (with σ being the standard deviation of the network firing rates) for different numbers of neurons M in the iterative scheme (colored areas). (B) Standard deviation of firing rates in the iterative scheme vs. generation compared to σ (dashed line). (C) Power spectra averaged over single neuron spectra with firing rates within the indicated intervals in the iterative scheme (lines, data from generations 6 to 20 for g = 3.5, 4 and 11–20 for g = 6) and from neurons in the network (circles). (D) Power spectra averaged over all neurons in the scheme (solid lines, data from generations 11 to 20) and network (dashed). For g = 6 we used the stabilization procedure from section 2.5.

Power spectra are different for all neurons in the network and depend most strongly on the mean input that the respective cell receives. In the network we group neurons with similar firing rates (within a 1 Hz interval) and average their spectra within each group. We compare these spectra to those resulting from the self-consistent scheme having firing rates in the same interval (Figure 10C); we find a good agreement for a modest number of neurons M = 50 used in the scheme. Note that averaged spectra are very different from each other and from the average over all neurons in the network (Figure 10D). Even for a low number M = 10, the iterative scheme reproduces this average spectrum well.

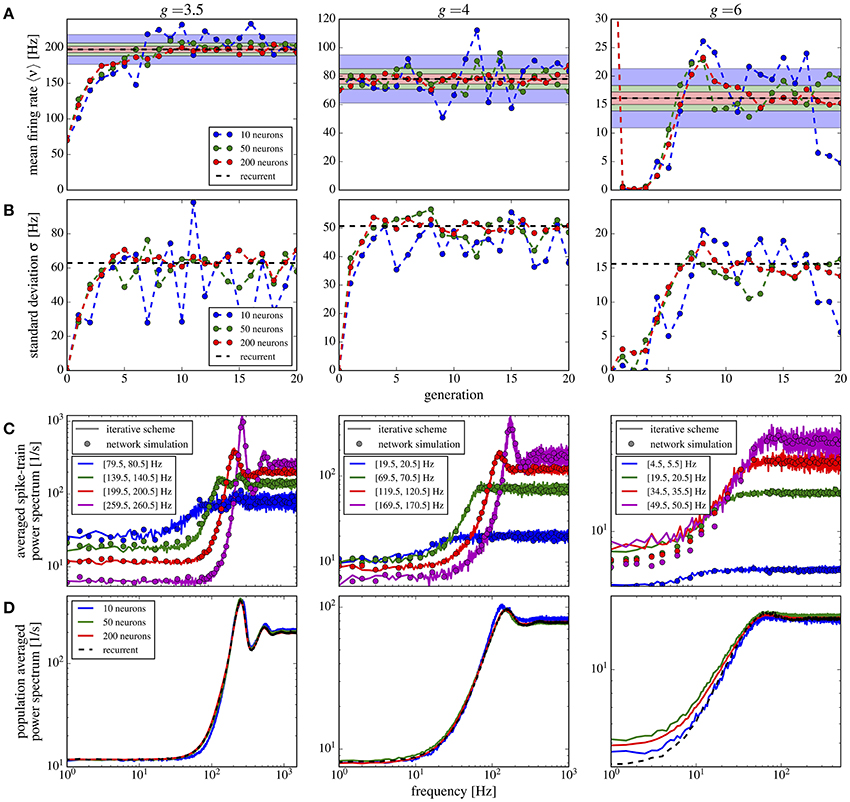

If we use in addition to the random number of connections also randomly distributed synaptic weights, the overall picture does not change qualitatively (Figure 11). Compared to the case of equal weights, the variance in the firing rates goes up by about 50% (Figure 11B) whereas the mean firing rate increases only slightly (Figure 11A). Power spectra look similar to the previous case and the agreement between scheme and network simulation is again good, except for the case of strong inhibition g = 6, for which we find a discrepancy between the averaged power spectra for the scheme and the network (Figure 11D) even for a large M (similarly for the single neuron spectra in Figure 11C). Due to the very low firing rates and some high synaptic weights, the Gaussian approximation becomes inaccurate. We calculated the relative error defined in Equation (19) for the averaged power spectra for M = 10 up to M = 100, using fcut = 2〈ν〉. All observed relative errors were smaller than five percent.

Figure 11. Iterative scheme captures the heterogeneous statistics due to Erdős-Rényi topology and randomly distributed synaptic weights. First- and second-order statistics from an iterative scheme for binomial distributed numbers of presynaptic neurons and additionally exponentially distributed synaptic weights. See caption of Figure 10 for details.

The results of this section demonstrate how a relatively small number of neurons in the self-consistent scheme can be used to capture the first- and second-order statistics of neurons in a network with a variable number of inputs and synaptic weights.

3.5. Deviations Due to Non-Gaussianity of Current Input

The iterative scheme assumes neuronal input that obeys a Gaussian distribution. This assumption is only accurate for a large number of pre-synaptic spikes with small amplitude. A low firing rate or an increase of (some) synaptic weights (as observed for stronger recurrent inhibition, i.e., larger values of g) shifts the statistics from approximately Gaussian to a distribution with pronounced non-Gaussian features (skewed and with fat tails). In fact, even the most basic statistics of the output, the firing rate, can systematically deviate from the Gaussian setting if synaptic amplitudes are sufficiently large (Richardson and Swarbrick, 2010). We can thus expect that at large values of g the iterative scheme (that assumes Gaussianity) may provide power spectra that deviate from those measured in the network; indeed, this is what we observed above. Here we want to illustrate the relation between the deviations of power spectra in the network and for the isolated neuron on the one side and non-Gaussian features of the network input statistics on the other side.

We simulate the heterogeneous network from section 3.4 with exponentially distributed weights and either g = 4 (as in Figure 11, middle column) or g = 6 (as in Figure 11, right column). We pick out 1,000 representative neurons randomly. For each of these neurons we measure the input current spectrum and the output spike-train spectrum. Input currents are integrated over a time bin of 2 ms (10% of the membrane time constant), in which the membrane voltage does not change too drastically but we can expect to collect many input spikes and thus Gaussian statistics. Clearly, for a much smaller time bin of the order of 0.01 ms (where is the average firing rate of the presynaptic neurons), one single spike at most would typically fall into one time bin. Thus, on a very fine temporal scale, the input has always a highly non-Gaussian statistics. Nevertheless, the Gaussian approximation works well if the temporally integrated input noise has approximately Gaussian statistics for an effective time step, in which the voltage does not change much. As the deterministic drift causes already a change by several mV over a time of 2 ms, our choice of the effective time step is conservative in the sense that non-Gaussian features of the input in this time step will certainly become noticeable in the output statistics of the driven cell.

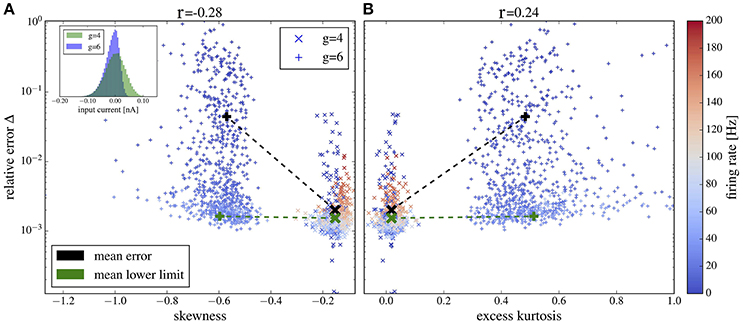

For each of the 1,000 selected neurons, we first measure the skewness and excess kurtosis of its input current to quantify non-Gaussianity. Then, we generate a Gaussian noise with the same power spectrum as the input current to this specific neuron and use it to drive an isolated LIF neuron. Finally, we calculate the relative error, Equation (19), between the output power spectrum of this isolated neuron and the corresponding neuron from the recurrent network. Note that for this comparison, we do not iterate the self-consistent scheme but rather use a Gaussian version of the (neuron-specific) network input noise for the isolated cell. In this way, we isolate non-Gaussianity of the input noise as one potential source of deviation between network spectra and spectra from the self-consistent scheme.

Plots of the relative error vs. skewness and excess kurtosis are presented in Figures 12A,B, respectively. For g = 4 (balanced case, moderate inhibitory synaptic amplitudes), the relative error is generally small and skewness and excess kurtosis deviate only little from zero. In contrast, for stronger inhibitory synaptic weigths g = 6 (inhibition-dominated recurrent feedback), the input current has a pronounced negative skew and fat tails because of large inhibitory amplitudes, resulting in stronger deviations between the spectra of isolated neurons and the corresponding network neurons. Consequently, we find a correlation between non-Gaussianity and relative error (cf. the Pearson correlation coefficients given atop of the figure). To gauge the relative error, we need a lower limit. To this end, we consider the deviation between two measurements of the same power spectrum for a given neuron of the network. The average of this lower bound over the 1,000 selected neurons is shown in Figure 12 for comparison. It is close to the mean error for g = 4 but is significantly lower than the mean error for g = 6, and it barely depends on the skewness and kurtosis, again confirming the relation between non-Gaussianiaty of the input noise and the relative error between isolated neuron and network neuron.

Figure 12. Deviation between spectra vs. statistical measures of non-Gaussianity of the input current. Relative error, Equation (19), of spike-train spectra from neurons in the network and from neurons driven by corresponding Gaussian noise plotted vs. skewness (A) and excess kurtosis (B) of the input current. Inset in (A) probability distributions for g = 4 and g = 6 as indicated. Color in (A,B) encodes the firing rate of each neuron (cf. scale on the right). We used the heterogeneous network from section 3.4 with exponentially distributed synaptic weights and g = 4 (crosses) and g = 6 (plusses) and picked 1,000 representative neurons randomly. For these neurons, we determined the skewness and excess kurtosis as well as the power spectrum of the input current. For the former two, we used the input current integrated over an effective time step of 2 ms (see main text for a discussion, how to choose this time step). We computed the relative error for each spike train-power spectrum from the network and its corresponding spike-train spectrum for the isolated neuron driven by the Gaussian version of the input noise (mean values shown by black symbols). The Pearson correlation coefficients are shown atop and indicate that deviations from Gaussian input statistics and deviations between network and single-neuron spectra co-occur. As a lower limit of the relative error, we computed Δ for two independent measurements of the power spectrum of a network neuron; this lower bound for the specific cell was then averaged over all neurons in the network and is shown by green crosses for both networks with g = 4 and g = 6 (there is no significant dependence of the mean lower bound on g).

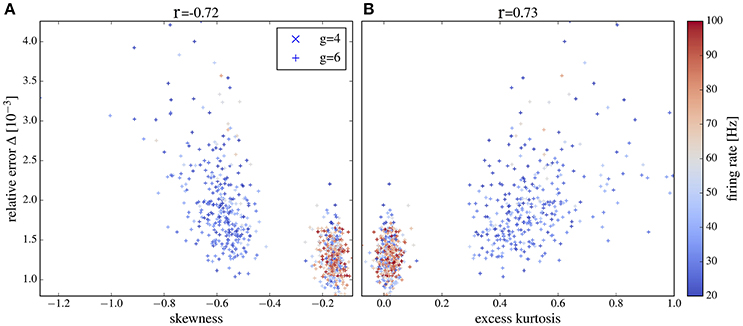

For both values of g we obtain a high relative error for exceptionally large firing rates (>100Hz) (because the time step of 2 ms becomes too large in comparison to the mean ISI) or exceptionally low firing rate (because spectra are very noisy in this case). If we consider only a range of moderate firing rates (Figure 13) then the correlation between the deviation from Gaussianity and the discrepancy between network and isolated becomes even clearer (cf. Pearson correlations coefficients stated atop of the figure).

Figure 13. Deviation between spectra vs. statistical measures of non-Gaussianity of the input current for an intermediate range of output firing rates. Correlations between the relative error and skewness (A) and excess (B) are higher than in Figure 12 if only neurons with firing rates from 20 to 100 Hz are selected. Other parameters as in Figure 12.

4. Conclusions

In this work we extended the self-consistent scheme described by Dummer et al. (2014) to situations with strong inhibition, synaptic filtering, networks with subpopulations of distinct neuron types, and networks with random (instead of constant) number and strength of synaptic connections. In all cases we employed the Gaussian approximation and in the heterogeneous systems considered we used a small number of neurons each representing a certain subclass of similar neurons. Despite these approximations, our comparison of the determined spike-train power spectra with those found by numerical simulations of large and sparse recurrent networks revealed a good quantitative agreement.

Admittedly, with an increasing number of subtypes of neurons, we loose some of the numerical advantages of the scheme compared to a network simulation because in order to get reliable estimates of the power spectrum, we have to simulate the few neurons in each generation many times. If the convergence of the scheme is slow then, adding up all neurons in all generations and all trials, we may have to simulate in the end as many neurons as in the network (however, the typical bottleneck of many simulations, to keep track of all synaptic connections, is still absent in the scheme). It is thus questionable, whether much more complicated situations than discussed here can be studied in depth by our scheme.

Another short-coming of the approach concerns cases in which neural cross-correlations (a very vivid topic of current research, see Doiron et al., 2016) cannot be neglected anymore or in which weak cross-correlations still have a significant impact on the population activity (Schneidman et al., 2006). There are different causes for cross-correlations including common (shared) input, spatially homogeneous external stimuli, and a slight overall synchronization in the network (some of which are reviewed by Helias et al., 2014; Doiron et al., 2016). Not all of these factors can be taken into account by extending the scheme to pairs of neurons that are stimulated by correlated Gaussian noise processes2. We may still learn something from finding situations in which neural cross-correlations can quantitatively be described by extensions of the scheme to pairs of neurons in each generation.

Nevertheless, the results and the approach put forward in our paper are useful in several respects. If the single-neuron statistics is of interest (because this is what is recorded or this is what shows particularly interesting features), our method provides a computationally cheap solution to calculate the spike-train power spectrum and to study its dependence on cellular and network parameters without the need to simulate a network. The scheme is particularly suited for the idealized case of a perfectly asynchronous network that is difficult to study numerically because an almost completely asynchronous state can be reached only in a very sparse, hence, very large network. This case is interesting because it often permits analytical calculations via a density equation for the membrane voltage (Knight, 1972; Abbott and van Vreeswijk, 1993; Amit and Brunel, 1997; Brunel, 2000; Mattia and Giudice, 2002) and thus our scheme might be useful for comparison to simpler theories.

As already mentioned in the introduction, we can regard our results as a confirmation that the approximation of the synaptic input by a correlated Gaussian noise is a reasonable one over a physiological range of parameters for a sparse recurrent network in the asynchronous state. Using Markovian embedding, an arbitrary colored Gaussian noise can be described by a (possibly very high-dimensional) Ornstein-Uhlenbeck process, an idea that has been worked out in the neural context by Schwalger et al. (2015); for examples from the physics literature, see, for instance, Schimansky-Geier and Zülicke (1990); Hänggi and Jung (1995); Siegle et al. (2010). Hence, a stochastic mean-field theory in terms of the corresponding multidimensional Fokker-Planck equation seems to be in reach, generalizing the successful framework of the diffusion approximation, which was based on the Poissonian (white-noise) approximation and thus led to a one-dimensional Fokker-Planck equation. A theory using the colored-noise Fokker-Planck equation would faithfully reproduce the second-order temporal correlations of the spiking neurons and, possibly, provide novel insights into the bifurcation between asynchronous and synchronous states. This may be particularly relevant for larger synaptic amplitudes (Ostojic, 2014; Wieland et al., 2015), for which the color of the noise becomes more and more important.

Author Contributions

RP, SV, DB, ACR, and BL: Conceived the work; RP and SV: Developed the codes and performed the computations; RP, SV, DB, and BL: Analyzed the results; RP, SV, DB, ACR, and BL: Wrote the manuscript.

Funding

This paper was developed within the scope of the IRTG 1740/TRP 2015/50122-0, funded by DFG/FAPESP. RP and ACR are also part of the Research, Innovation and Dissemination Center for Neuromathematics FAPESP grant (2013/07699-0). RP is supported by a FAPESP scholarship (2013/25667-8). ACR is partially supported by a CNPq fellowship (grant 306251/2014-0). DB is supported by the DFG (GRK 1589/2).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1. ^This is evident for a finite synaptic filter, that leads to a summation of many independent continuous functions. For delta synapses, we add up spike trains and so it becomes questionable, how they can approach a Gaussian statistics. In this latter case we should consider the effect of the summed shot noise on a dynamical system such as the integrate-and-fire neuron: the sum over a small time interval (smaller than the membrane time constant but large enough to collect many independent spikes from the presynaptic neurons) yields a spike count which can be well approximated by a Gaussian variable; this is similar to the common diffusion approximation (Tuckwell, 1988), which, however, additionally involves the assumption of a Poissonian input spike train. In this sense, the Gaussian approximation can be applied to sums of spike trains (see also Figure 12 and the surrounding discussion, for a test of the Gaussianity of the input current).

2. ^For the simpler but still formidable problem of how neuron pairs respond to cross-correlated Gaussian white noise sources, see, for instance, Doiron et al. (2004); de la Rocha et al. (2007); Shea-Brown et al. (2008); Ostojic et al. (2009); Vilela and Lindner (2009b); Deniz and Rotter (2017).

References

Abbott, L., and van Vreeswijk, C. (1993). Asynchronous states in networks of pulse-coupled oscillators. Phys. Rev. E 48:1483.

Aljadeff, J., Stern, M., and Sharpee, T. (2015). Transition to chaos in random networks with cell-type-specific connectivity. Phys. Rev. Lett. 114:088101. doi: 10.1103/PhysRevLett.114.088101

Amit, D. J., and Brunel, N. (1997). Dynamics of a recurrent network of spiking neurons before and following learning. Netw. Comput. Neural Syst. 8:373.

Bair, W., Koch, C., Newsome, W., and Britten, K. (1994). Power spectrum analysis of bursting cells in area MT in the behaving monkey. J. Neurosci. 14, 2870–2892.

Bauermeister, C., Schwalger, T., Russell, D., Neiman, A. B., and Lindner, B. (2013). Characteristic effects of stochastic oscillatory forcing on neural firing: analytical theory and comparison to paddlefish electroreceptor data. PLoS Comput. Biol. 9:e1003170. doi: 10.1371/journal.pcbi.1003170

Boucsein, C., Nawrot, M. P., Schnepel, P., and Aertsen, A. (2011). Beyond the cortical column: abundance and physiology of horizontal connections imply a strong role for inputs from the surround. Front. Neurosci. 5:32. doi: 10.3389/fnins.2011.00032

Brunel, N. (2000). Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J. Comput. Neurosci. 8, 183–208. doi: 10.1023/A:1008925309027

Brunel, N., and Hakim, V. (1999). Fast global oscillations in networks of integrate-and-fire neurons with low firing rates. Neural Comput. 11, 1621–1671.

Brunel, N., and Sergi, S. (1998). Firing frequency of leaky integrate-and-fire neurons with synaptic current dynamics. J. Theor. Biol. 195, 87–95.

de la Rocha, J., Doiron, B., Shea-Brown, E., Josic, K., and Reyes, A. (2007). Correlation between neural spike trains increases with firing rate. Nature 448, 802–806. doi: 10.1038/nature06028

Deger, M., Schwalger, T., Naud, R., and Gerstner, W. (2014). Fluctuations and information filtering in coupled populations of spiking neurons with adaptation. Phys. Rev. E 90:062704. doi: 10.1103/PhysRevE.90.062704

Deniz, T., and Rotter, S. (2017). Solving the two-dimensional Fokker-Planck equation for strongly correlated neurons. Phys. Rev. E 95:012412. doi: 10.1103/PhysRevE.95.012412

Doiron, B., Lindner, B., Longtin, A., Maler, L., and Bastian, J. (2004). Oscillatory activity in electrosensory neurons increases with the spatial correlation of the stochastic input stimulus. Phys. Rev. Lett. 93:048101. doi: 10.1103/PhysRevLett.93.048101

Doiron, B., Litwin-Kumar, A., Rosenbaum, R., Ocker, G. K., and Josić, K. (2016). The mechanics of state-dependent neural correlations. Nat. Neurosci. 19, 383–393. doi: 10.1038/nn.4242

Droste, F., and Lindner, B. (2017). Exact analytical results for integrate-and-fire neurons driven by excitatory shot noise. J. Comp. Neurosci. 43, 81–91. doi: 10.1007/s10827-017-0649-5

Dummer, B., Wieland, S., and Lindner, B. (2014). Self-consistent determination of the spike-train power spectrum in a neural network with sparse connectivity. Front. Comp. Neurosci. 8:104. doi: 10.3389/fncom.2014.00104

Edwards, B. W., Wakefield, G. H., and Powers, N. L. (1993). The spectral shaping of neural discharges by refractory effects. J. Acoust. Soc. Am. 93, 3353–3364.

Eissfeller, H., and Opper, M. (1992). New method for studying the dynamics of disordered spin systems without finite-size effects. Phys. Rev. Lett. 68, 2094–2097.

Erdos, P., and Rényi, A. (1960). On the evolution of random graphs. Publ. Math. Inst. Hung. Acad. Sci 5, 17–60.

Ermentrout, B., Pascal, M., and Gutkin, B. (2001). The effects of spike frequency adaptation and negative feedback on the synchronization of neural oscillators. Neural Comput. 13, 1285–1310. doi: 10.1162/08997660152002861

Fourcaud-Trocmé, N., Hansel, D., van Vreeswijk, C., and Brunel, N. (2003). How spike generation mechanisms determine the neuronal response to fluctuating inputs. J. Neurosci. 23, 11628–11640. doi: 10.3410/f.1016851.201577

Franklin, J., and Bair, W. (1995). The effect of a refractory period on the power spectrum of neuronal discharge. SIAM J. Appl. Math. 55, 1074–1093.

Gerstner, W., Kistler, W. M., Naud, R., and Paninski, L. (2014). Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition. Cambridge: Cambridge University Press.

Gilson, M., and Fukai, T. (2011). Stability versus neuronal specialization for stdp: long-tail weight distributions solve the dilemma. PLoS ONE 6:e25339 doi: 10.1371/journal.pone.0025339

Goodman, D. F., and Brette, R. (2009). The brian simulator. Front. Neurosci. 3:192. doi: 10.3389/neuro.01.026.2009

Grewe, J., Kruscha, A., Lindner, B., and Benda, J. (2017). Synchronous spikes are necessary but not sufficient for a synchrony code. Proc. Natl. Acad. Sci. U.S.A. 114, E1977–E1985. doi: 10.1073/pnas.1615561114

Griffith, J., and Horn, G. (1966). An analysis of spontaneous impulse activity of units in the striate cortex of unrestrained cats. J. Physiol. 186, 516–534.

Harris, K. D., and Thiele, A. (2011). Cortical state and attention. Nat. Rev. Neurosci. 12, 509–523. doi: 10.1038/nrn3084

Harrison, P. M., Badel, L., Wall, M. J., and Richardson, M. J. E. (2015). Experimentally verified parameter sets for modelling heterogeneous neocortical pyramidal-cell populations. PLoS Comput. Biol. 11:8. doi: 10.1371/journal.pcbi.1004165

Helias, M., Tetzlaff, T., and Diesmann, M. (2014). The correlation structure of local neuronal networks intrinsically results from recurrent dynamics. PLoS Comput. Biol. 10:e1003428. doi: 10.1371/journal.pcbi.1003428

Hopfield, J. J., and Herz, A. V. M. (1995). Rapid local synchronization of action potentials: towards computation with coupled integrate-and-fire neurons. Proc. Natl. Acad. U.S.A. 92, 6655–6662.

Hromádka, T., DeWeese, M. R., and Zador, A. M. (2008). Sparse representation of sounds in the unanesthetized auditory cortex. PLoS Biol. 6:e16. doi: 10.1371/journal.pbio.0060016

Izhikevich, E. M. (2003). Simple model of spiking neurons. IEEE Trans. Neural Netw. 14, 1569–1572. doi: 10.1109/TNN.2003.820440

Jarvis, M., and Mitra, P. (2001). Sampling properties of the spectrum and coherency of sequences of action potentials. Neural Comput. 13, 717–749. doi: 10.1162/089976601300014312

Kadmon, J., and Sompolinsky, H. (2015). Transition to chaos in random neuronal networks. Phys. Rev. X 5 5:041030. doi: 10.1103/PhysRevX.5.041030

Knight, B. W. (1972). Relationship between firing rate of a single neuron and level of activity in a population of neurons - experimental evidence for resonant enhancement in population response. J. Gen. Physiol. 59, 767–778.

Koch, K., and Fuster, J. (1989). Unit activity in monkey parietal cortex related to haptic perception and temporary memory. Exp. Brain Res. 76, 292–306.

Ladenbauer, J., Augustin, M., Shiau, L., and Obermayer, K. (2012). Impact of adaptation currents on synchronization of coupled exponential integrate-and-fire neurons. PLoS Comput. Biol. 8:e1002478. doi: 10.1371/journal.pcbi.1002478

Lerchner, A., Sterner, G., Hertz, J., and Ahmadi, M. (2006). Mean field theory for a balanced hypercolumn model of orientation selectivity in primary visual cortex. Netw. Comput. Neural Syst. 17, 131–150. doi: 10.1080/09548980500444933

Lindner, B. (2006). Superposition of many independent spike trains is generally not a poisson process. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 73:022901. doi: 10.1103/PhysRevE.73.022901

Lindner, B., Doiron, B., and Longtin, A. (2005). Theory of oscillatory firing induced by spatially correlated noise and delayed inhibitory feedback. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 72:061919. doi: 10.1103/PhysRevE.72.061919

Lindner, B., Schimansky-Geier, L., and Longtin, A. (2002). Maximizing spike train coherence or incoherence in the leaky integrate-and-fire model. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 66:031916. doi: 10.1103/PhysRevE.66.031916

Litwin-Kumar, A., and Doiron, B. (2012). Slow dynamics and high variability in balanced cortical networks with clustered connections. Nat. Neurosci. 15, 1498–1505. doi: 10.1038/nn.3220

Mari, C. F. (2000). Random networks of spiking neurons: instability in the Xenopus tadpole moto-neural pattern. Phys. Rev. Lett. 85, 210–213. doi: 10.1103/PhysRevLett.85.210

Mastrogiuseppe, F., and Ostojic, S. (2017). Intrinsically-generated fluctuating activity in excitatory-inhibitory networks. PLoS Comput. Biol. 13:e1005498. doi: 10.1371/journal.pcbi.1005498

Mattia, M., and Giudice, P. D. (2002). Population dynamics of interacting spiking neurons. Phys. Rev. E 66:051917. doi: 10.1103/PhysRevE.66.051917

Meunier, D., Lambiotte, R., and Bullmore, E. T. (2010). Modular and hierarchically modular organization of brain networks. Front. Neurosci. 4:200. doi: 10.3389/fnins.2010.00200

Middleton, J., Chacron, M., Lindner, B., and Longtin, A. (2003). Firing statistics of a neuron model driven by long-range correlated noise. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 68:021920. doi: 10.1103/PhysRevE.68.021920

Neiman, A. B., and Russell, D. F. (2011). Sensory coding in oscillatory electroreceptors of paddlefish. Chaos 21:047505. doi: 10.1063/1.3669494

Neiman, A. B., Yakusheva, T. A., and Russell, D. F. (2007). Noise-induced transition to bursting in responses of paddlefish electroreceptor afferents. J. Neurophysiol. 98, 2795–2806. doi: 10.1152/jn.01289.2006

O'Connor, D. H., Peron, S. P., Huber, D., and Svoboda, K. (2010). Neural activity in barrel cortex underlying vibrissa-based object localization in mice. Neuron 67, 1048–1061. doi: 10.1016/j.neuron.2010.08.026

Ostojic, S. (2014). Two types of asynchronous activity in networks of excitatory and inhibitory spiking neurons. Nat. Neurosci. 17, 594–600. doi: 10.1038/nn.3658

Ostojic, S., Brunel, N., and Hakim, V. (2009). How connectivity, background activity, and synaptic properties shape the cross-correlation between spike trains. J. Neurosci. 29, 10234–10253. doi: 10.1523/JNEUROSCI.1275-09.2009

Pesaran, B., Pezaris, J., Sahani, M., Mitra, P., and Andersen, R. (2002). Temporal structure in neuronal activity during working memory in macaque parietal cortex. Nat. Neurosci. 5, 805–811. doi: 10.1038/nn890

Poulet, J. F. A., and Petersen, C. C. H. (2008). Internal brain state regulates membrane potential synchrony in barrel cortex of behaving mice. Nature 454, 881–885. doi: 10.1038/nature07150

Renart, A., De La Rocha, J., Bartho, P., Hollender, L., Parga, N., Reyes, A., et al. (2010). The asynchronous state in cortical circuits. Science 327, 587–590. doi: 10.1126/science.1179850

Richardson, M. J. E. (2008). Spike-train spectra and network response functions for non-linear integrate-and-fire neurons. Biol. Cybern. 99, 381–392. doi: 10.1007/s00422-008-0244-y

Richardson, M. J. E. (2009). Dynamics of populations and networks of neurons with voltage-activated and calcium-activated currents. Phys. Rev. E Stat. Nonlin. Soft Matter Phys. 80:021928. doi: 10.1103/PhysRevE.80.021928

Richardson, M. J. E., and Swarbrick, R. (2010). Firing-rate response of a neuron receiving excitatory and inhibitory synaptic shot noise. Phys. Rev. Lett. 105:178102. doi: 10.1103/PhysRevLett.105.178102

Roxin, A., Brunel, N., Hansel, D., Mongillo, G., and van Vreeswijk, C. (2011). On the distribution of firing rates in networks of cortical neurons. J. Neurosci. 31, 16217–16226. doi: 10.1523/JNEUROSCI.1677-11.2011

Schimansky-Geier, L., and Zülicke, C. (1990). Harmonic noise: effect on bistable systems. Z. Phys. B 79, 451–460.

Schneidman, E., Berry, M. J., Segev, R., and Bialek, W. (2006). Weak pairwise correlations imply strongly correlated network states in a neural population. Nature 440, 1007–1012. doi: 10.1038/nature04701

Schwalger, T., Deger, M., and Gerstner, W. (2017). Towards a theory of cortical columns: From spiking neurons to interacting neural populations of finite size. PLoS Comput. Biol. 13:e1005507. doi: 10.1371/journal.pcbi.1005507

Schwalger, T., Droste, F., and Lindner, B. (2015). Statistical structure of neural spiking under non-poissonian or other non-white stimulation. J. Comput. Neurosci. 39, 29–51. doi: 10.1007/s10827-015-0560-x

Shafi, M., Zhou, Y., Quintana, J., Chow, C., Fuster, J., and Bodner, M. (2007). Variability in neuronal activity in primate cortex during working memory tasks. Neuroscience 146, 1082–1108. doi: 10.1016/j.neuroscience.2006.12.072

Shea-Brown, E., Josić, K., de la Rocha, J., and Doiron, B. (2008). Correlation and synchrony transfer in integrate-and-fire neurons: basic properties and consequences for coding. Phys. Rev. Lett. 100:108102. doi: 10.1103/PhysRevLett.100.108102

Siegle, P., Goychuk, I., P. Talkner, P., and Hänggi, P. (2010). Markovian embedding of non-Markovian superdiffusion. Phys. Rev. E 81:011136. doi: 10.1103/PhysRevE.81.011136

Sompolinsky, H., Crisanti, A., and Sommers, H. J. (1988). Chaos in random neural networks. Phys. Rev. Lett. 61:259. doi: 10.1103/PhysRevLett.61.259

Sompolinsky, H., and Zippelius, A. (1982). Relaxational dynamics of the edwards-anderson model and the mean-field theory of spin-glasses. Phys. Rev. B 25:6860. doi: 10.1103/PhysRevB.25.6860

Song, S., Sjöström, P. J., Reigl, M., Nelson, S., and Chklovskii, D. B. (2005). Highly nonrandom features of synaptic connectivity in local cortical circuits. Plos Comput. Biol. 3:e68. doi: 10.1371/journal.pbio.0030068

Spiridon, M., and Gerstner, W. (1999). Noise spectrum and signal transmission through a population of spiking neurons. Netw. Comput. Neural Syst. 10, 257–272.