- 1School of Electronics and Information Engineering, Taizhou University, Zhejiang, Taizhou, China

- 2Data Mining Research Center, Xiamen University, Fujian, Xiamen, China

- 3School of Mathematics and Statistics, Central South University, Hunan, Changsha, China

In this study, we investigate a new neural network method for solving Volterra and Fredholm integral equations based on the sine-cosine basis function and the extreme learning machine (ELM) algorithm. The improved model is designed by combining the ELM algorithm and sine-cosine basis functions for several classes of integral equations. The network consists of an input layer, a hidden layer, and an output layer, in which the hidden layer is eliminated by utilizing the sine-cosine basis function. Because the ELM algorithm sets the input weights and hidden layer biases automatically, without iterative tuning, the model complexity is greatly reduced and the calculation speed is improved. Furthermore, the problem of finding the network parameters is converted into solving a set of linear equations. One advantage of this method is that it yields good numerical solutions not only for the first- and second-kind Volterra integral equations but also for the first- and second-kind Fredholm integral equations and Volterra–Fredholm integral equations. Another advantage is that the improved algorithm provides the approximate solution of several kinds of linear integral equations in closed form (i.e., continuous and differentiable), so the solution can be evaluated at any point. Several numerical experiments on various types of integral equations illustrate the reliability and efficiency of the proposed method. The experimental results verify that the proposed method achieves very high accuracy and strong generalization ability.

1. Introduction

Volterra and Fredholm integral equations have many applications in natural sciences and engineering. A linear phenomenon appearing in many applications in scientific fields can be modeled by linear integral equations (Abdou, 2002; Isaacson and Kirby, 2011). For example, as mentioned by Lima and Buckwar (2015), a class of integro-differential equations, known as neural field equations, describes the large-scale dynamics of spatially structured networks of neurons. These equations are widely used in the field of neuroscience and robotics, and they also play a crucial role in cognitive robotics. The reason is that the architecture of autonomous robots, which are able to interact with other agents in dealing with a mutual task, is strongly inspired by the processing principles and the neuronal circuitry in the primate brain.

This study aims to consider several kinds of linear integral equations. The general form of linear integral equations is defined as follows:

Here the functions k1(x, t), k2(x, t), and g(x) are known, y(x) is the unknown function to be determined, a and b are constants, and ϵ, λ, and μ are parameters. In particular:

(i) Equation (1) is called a linear Fredholm integral equation of the first kind if ϵ = μ = 0 and λ = 1.

(ii) Equation (1) is called a linear Volterra integral equation of the first kind if ϵ = λ = 0 and μ = 1.

(iii) Equation (1) is called a linear Fredholm integral equation of the second kind if μ = 0 and ϵ = λ = 1.

(iv) Equation (1) is called a linear Volterra integral equation of the second kind if λ = 0 and ϵ = μ = 1.

(v) Equation (1) is called a linear Volterra–Fredholm integral equation if μ = ϵ = λ = 1.
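One general form that is consistent with the five cases listed above is sketched below. The pairing of k1 with the Fredholm term and k2 with the Volterra term, the placement of g(x), and the signs of the integral terms are assumptions; only the roles of ϵ, λ, and μ can be inferred from the classification.

```latex
\epsilon\, y(x) = g(x)
  + \lambda \int_{a}^{b} k_1(x,t)\, y(t)\, \mathrm{d}t
  + \mu \int_{a}^{x} k_2(x,t)\, y(t)\, \mathrm{d}t,
  \qquad x \in [a, b]
```

Under this convention, setting λ = 0 and ϵ = μ = 1 leaves y(x) = g(x) plus the integral over [a, x], which is the usual second-kind Volterra form, while setting ϵ = 0 removes the unknown from outside the integrals and gives a first-kind equation.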

Many methods for the numerical solution of Volterra integral equations, Fredholm integral equations, and Volterra–Fredholm integral equations have been presented in recent years. Orthogonal polynomials and related bases [e.g., wavelets (Maleknejad and Mirzaee, 2005), Bernstein polynomials (Mandal and Bhattacharya, 2007), and Chebyshev polynomials (Dastjerdi and Ghaini, 2012)] were proposed for solving integral equations. The Taylor collocation method (Wang and Wang, 2014), Lagrange collocation method (Wang and Wang, 2013; Nemati, 2015), and Fibonacci collocation method (Mirzaee and Hoseini, 2016) were effective and convenient for solving integral equations. The Sinc-collocation method (Rashidinia and Zarebnia, 2007) and Galerkin method (Saberi-Nadjafi et al., 2012) also perform well in solving Volterra integral equation problems. However, most of these traditional methods share a disadvantage: they provide the solution as an array of values at preassigned mesh points in the domain, and they need an additional interpolation procedure to yield the solution over the whole domain. To obtain an accurate solution, one has to either increase the order of the method or decrease the step size, and both choices increase the computational cost.

The neural network has excellent application potential in many fields (Habib and Qureshi, 2022; Li and Ying, 2022) owing to its universal function approximation capabilities (Hou and Han, 2012; Hou et al., 2017, 2018). Consequently, the neural network is widely used as an effective tool for solving differential equations, integral equations, and integro-differential equations (Mall and Chakraverty, 2014, 2016; Jafarian et al., 2017; Pakdaman et al., 2017; Zuniga-Aguilar et al., 2017; Rostami and Jafarian, 2018). Golbabai and Seifollahi presented radial basis function networks for solving linear Fredholm and Volterra integral equations of the second kind (Golbabai and Seifollahi, 2006), and they also solved a system of nonlinear integral equations (Golbabai and Seifollahi, 2009). Effati and Buzhabadi presented multilayer perceptron networks for solving Fredholm integral equations of the second kind (Effati and Buzhabadi, 2012). Jafarian and Nia proposed a feedback neural network method for solving linear Fredholm and Volterra integral equations of the second kind (Jafarian and Nia, 2013a,b). Jafarian et al. presented artificial neural network-based modeling for solving systems of Volterra integral equations (Jafarian et al., 2015). However, the traditional neural network algorithms have some problems, such as over-fitting, difficulty in determining the number of hidden layer nodes, the need to optimize model parameters, a tendency to become trapped in local minima, slow convergence, and reduced learning speed and efficiency when the input data are large or the network structure is complex (Huang and Chen, 2008).

Huang et al. (2006a,b) proposed the extreme learning machine (ELM) algorithm for single-hidden-layer feed-forward neural networks. The ELM algorithm only requires the number of hidden nodes to be set; the input weights and bias values need no adjustment, and the output weights are determined by the Moore–Penrose generalized inverse operation. The ELM algorithm provides faster learning speed and better generalization performance with the least human intervention. Owing to these advantages, the ELM algorithm has been widely applied to many real-world problems, such as regression and classification (Wong et al., 2018). Many neural network methods based on improved ELM algorithms have been developed for solving ordinary differential equations (Yang et al., 2018; Lu et al., 2022), partial differential equations (Sun et al., 2019; Yang et al., 2020), the ruin probabilities of the classical risk model and the Erlang(2) risk model (Zhou et al., 2019; Lu et al., 2020), and one-dimensional asset-pricing models (Ma et al., 2021). Chen et al. (2020, 2021, 2022) proposed the trigonometric exponential neural network, the Laguerre neural network, and the neural finite element method for the ruin probability, the generalized Black–Scholes differential equation, and the generalized Black–Scholes–Merton differential equation, respectively. Inspired by these studies, we present the sine-cosine ELM (SC-ELM) algorithm to solve linear Volterra integral equations of the first and second kinds, linear Fredholm integral equations of the first and second kinds, and linear Volterra–Fredholm integral equations.

In recent studies, a linear integral equation of the third kind with fixed singularities in the kernel was studied by Gabbasov and Galimova (2022), and Volterra integral equations of the first kind on a bounded interval were considered by Bulatov and Markova (2022). For more results, see Din et al. (2022) and Usta et al. (2022).

In this study, we propose a neural network method based on the sine-cosine basis function and the improved ELM algorithm to solve linear integral equations. Specifically, the hidden layer is eliminated by expanding the input pattern with the sine-cosine basis function, which simplifies the calculation to some extent. Moreover, the improved ELM algorithm can automatically satisfy the boundary conditions, and it transforms the problem into solving a linear system, which is convenient for calculation. Furthermore, the model yields a closed-form solution, from which the approximate solution of a linear integral equation can be evaluated at any point.

The remainder of the article is organized as follows. In Section 2, a brief review of the ELM algorithm is provided. In Section 3, a novel neural network method based on the sine-cosine basis function and the ELM algorithm for solving integral equations in the form of Equation (1) is discussed. In Section 4, we show several numerical examples to demonstrate the accuracy and efficiency of the improved neural network algorithm. In Section 5, concluding remarks are presented.

2. The ELM algorithm

The ELM algorithm originated from the single-hidden-layer feed-forward network (SLFN) and was then developed into a generalized SLFN algorithm (Huang and Chen, 2007). The ELM algorithm is implemented fully automatically, without iterative tuning, and it also tends to reach the minimum training error. It requires the least human intervention and offers faster learning speed and better generalization performance. Therefore, the ELM algorithm is widely used in classification and regression tasks (Huang et al., 2012; Cambria and Huang, 2013).

For a data set with N + 1 different training samples (xi, gi) ∈ ℝ × ℝ (i = 0, 1, ..., N), the neural network with M + 1 hidden neurons is expressed as follows:

Where f is the activation function, wj is the input weight of the j-th hidden layer node, bj is the bias value of the j-th hidden layer node, and βj is the output weight connecting the j-th hidden layer node and the output node.

The error function of SLFN is as follows:

Assuming the error between the output value oi of SLFN and the exact value gi is zero, the relationship between xi and gi can be modeled as follows:

Where both the input weight wj and the bias value bj are randomly generated. Equation (4) can be rewritten in the following matrix form:

Where H is the output matrix of the hidden layer, and it is defined as follows:

A minimum-norm least-squares solution of the linear system in Equation (5) is obtained by applying the Moore–Penrose generalized inverse of H.
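The ELM recipe described in this section can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' implementation: the function names `elm_fit` and `elm_predict`, the tanh activation, the uniform range of the random weights, the target function, and the `rcond` cutoff passed to `numpy.linalg.pinv` are all assumptions made for the example.

```python
import numpy as np

def elm_fit(x, g, n_hidden, activation=np.tanh, seed=0, rcond=1e-10):
    """Fit a single-hidden-layer network by the ELM recipe (illustrative sketch)."""
    rng = np.random.default_rng(seed)
    w = rng.uniform(-3.0, 3.0, size=n_hidden)  # random input weights w_j, never tuned
    b = rng.uniform(-3.0, 3.0, size=n_hidden)  # random biases b_j, never tuned
    H = activation(np.outer(x, w) + b)         # hidden-layer output matrix, one row per sample
    # Output weights from the Moore-Penrose pseudoinverse; rcond is an assumed
    # cutoff that discards tiny singular values for numerical stability.
    beta = np.linalg.pinv(H, rcond=rcond) @ g
    return w, b, beta

def elm_predict(x, w, b, beta, activation=np.tanh):
    """Evaluate the trained network at the points x."""
    return activation(np.outer(x, w) + b) @ beta

# Hypothetical regression target, used only to exercise the sketch.
x_train = np.linspace(0.0, 1.0, 41)
g_train = np.sin(2.0 * np.pi * x_train)
w, b, beta = elm_fit(x_train, g_train, n_hidden=20)
train_mse = np.mean((elm_predict(x_train, w, b, beta) - g_train) ** 2)
```

The only quantity that is solved for is `beta`; the hidden-layer parameters are drawn once and then kept fixed, which is what reduces training to a single linear least-squares problem.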

3. The proposed method

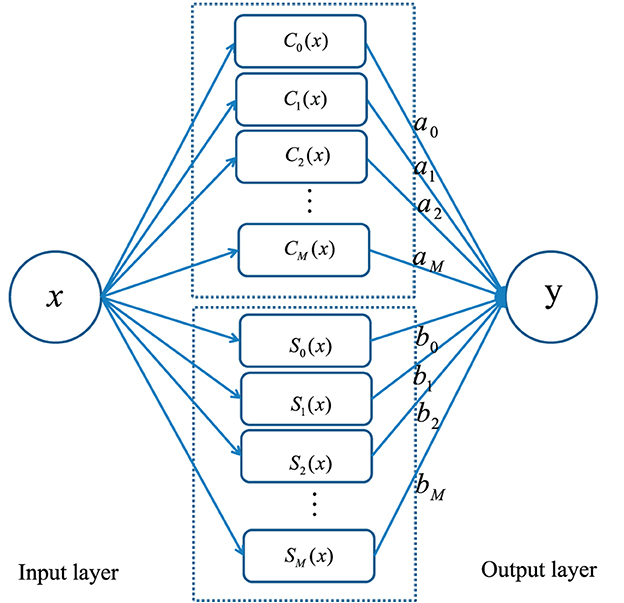

In this section, we propose a neural network method based on the sine-cosine basis function and the extreme learning machine algorithm to solve linear integral equations. The single-hidden-layer sine-cosine neural network consists of three layers: an input layer, a hidden layer, and an output layer. The single hidden layer consists of two parts: the first part uses the cosine basis function, and the second part uses the sine basis function. The structure of the sine-cosine neural network method is shown in Figure 1.

Figure 1. The structure of sine-cosine neural network method for solving several kinds of linear integral equations.

The steps of the sine-cosine neural network method for solving several kinds of linear integral equations are as follows:

Step 1: Discretize the interval [a, b] into a series of collocation points Ω = {a = x0 < x1 < ... < xN = b}.

Step 2: Construct the approximate solution by using sine-cosine basis as an activation function, that is

Step 3: According to the problem and the data set at hand, we substitute the trial solution ŷSC-ELM into Equation (1). Then, we convert this equation into a matrix form:

Step 4: From the theory of Moore–Penrose generalized inverse of matrix H, we can obtain the net parameters as

Step 5: Select the number of neurons M, together with the corresponding connection parameters aj, bj, that yields the smallest MSE. These values are taken as the optimal number of neurons and the optimal output weights.

Step 6: Substitute aj, bj, j = 0, 1, 2, …, M into Equation (7) to get the new numerical solution.
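Steps 1 to 4 and Step 6 can be sketched as follows for a second-kind Volterra equation written as y(x) = g(x) + ∫ₐˣ k(x, t) y(t) dt. This is an illustrative sketch under stated assumptions, not the authors' code: the sign convention, the helper names `sc_basis` and `sc_elm_volterra2`, the use of Gauss–Legendre quadrature for the integrals, the `rcond` cutoff, and the test equation y(x) = 1 + ∫₀ˣ y(t) dt (exact solution eˣ) are all choices made for the example. The basis frequencies follow the choice wj = jπ/(b − a), bj = −jπa/(b − a) reported in the numerical experiments, and Step 5 (selecting M by MSE) is omitted for brevity.

```python
import numpy as np

def sc_basis(x, a, b, M):
    """Columns cos(j*pi*(x-a)/(b-a)) followed by sin(j*pi*(x-a)/(b-a)), j = 0..M."""
    j = np.arange(M + 1)
    arg = np.outer(np.asarray(x, dtype=float) - a, j * np.pi / (b - a))
    return np.hstack([np.cos(arg), np.sin(arg)])

def sc_elm_volterra2(kernel, g, a, b, N=50, M=10, n_quad=40, rcond=1e-12):
    """Collocation solve of y(x) = g(x) + int_a^x kernel(x, t) y(t) dt (assumed form)."""
    x = np.linspace(a, b, N + 1)                       # Step 1: equidistant collocation points
    xi, wq = np.polynomial.legendre.leggauss(n_quad)   # quadrature rule on [-1, 1] (assumption)
    H = sc_basis(x, a, b, M)                           # Step 2: trial solution at the x_i
    for i, xv in enumerate(x):                         # Step 3: subtract the integral of each basis function
        t = a + 0.5 * (xv - a) * (xi + 1.0)            # quadrature nodes mapped to [a, x_i]
        w = 0.5 * (xv - a) * wq                        # quadrature weights mapped to [a, x_i]
        H[i] -= (w * kernel(xv, t)) @ sc_basis(t, a, b, M)
    coef = np.linalg.pinv(H, rcond=rcond) @ g(x)       # Step 4: pseudoinverse solve for the output weights
    # Step 6: closed-form approximation that can be evaluated at any point
    return lambda s: sc_basis(np.atleast_1d(s), a, b, M) @ coef

# Hypothetical test problem: y(x) = 1 + int_0^x y(t) dt, whose exact solution is exp(x).
y_hat = sc_elm_volterra2(lambda x, t: np.ones_like(t), lambda x: np.ones_like(x), 0.0, 1.0)
s = np.array([0.05, 0.37, 0.62, 0.91])
max_err = np.max(np.abs(y_hat(s) - np.exp(s)))
```

Because the unknown coefficients enter the equation linearly, each row of `H` is simply the basis evaluated at a collocation point minus the integral of the basis against the kernel, so no iterative training is needed. The sine column for j = 0 is identically zero; the pseudoinverse assigns it a zero coefficient.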

Some advantages of the single-layer sine-cosine neural network method for solving integral equations are as follows:

(i) The hidden layer is eliminated by expanding the input pattern using the sine-cosine basis function.

(ii) The sine-cosine neural network algorithm only needs to determine the weights of the output layer. The problem could be transformed into a linear system, and the output weights can be obtained by a simple generalized inverse matrix, which greatly improves the calculation speed.

(iii) We can obtain the closed-form solution by using this model, and most important of all, the approximate solution of any point for linear integral equations can be given from it. It provides a good method for solving integral equations.

4. Numerical experiments

In this section, some numerical experiments are performed to demonstrate the reliability and effectiveness of the improved neural network algorithm. The sine-cosine neural network method based on the sine-cosine basis function and the ELM algorithm is applied to solve linear Volterra integral equations of the first kind, linear Volterra integral equations of the second kind, linear Fredholm integral equations of the first kind, linear Fredholm integral equations of the second kind, and linear Volterra–Fredholm integral equations.

The algorithm is evaluated with MATLAB R2021a running on an Intel Xeon Gold 6226R CPU with 64.0 GB RAM. The training set is obtained by taking points at equal intervals, and the testing set is randomly selected. The validation set is the set of midpoints V = {v_i | v_i = (x_i + x_{i+1})/2, i = 0, 1, ..., N}, where the x_i are the training points in the following studies. We use the mean square error (MSE), absolute error (AE), mean absolute error (MAE), and root mean square error (RMSE) to measure the error of the numerical solution. They are defined as follows:

Here y(xi) denotes the exact solution and ŷ(xi) represents the approximate solution obtained by the proposed algorithm. Note that wj = jπ/(b − a) and bj = −jπa/(b − a) (j = 0, 1, 2, ..., M) are selected in our proposed method. Moreover, the number M of hidden neurons is selected as the value that gives the minimum mean squared error on the validation set.
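The four error measures and the midpoint validation set can be computed as in the sketch below. It assumes the standard textbook definitions of AE, MAE, MSE, and RMSE; the function names `error_metrics` and `midpoints` and the sample numbers are hypothetical. The midpoints are taken between consecutive training points, so N + 1 training points give N validation points.

```python
import numpy as np

def error_metrics(y_exact, y_approx):
    """Pointwise absolute error plus MAE, MSE, and RMSE (standard definitions assumed)."""
    err = np.asarray(y_approx, dtype=float) - np.asarray(y_exact, dtype=float)
    ae = np.abs(err)                      # absolute error at each point
    mse = float(np.mean(err ** 2))        # mean square error
    return {"AE": ae, "MAE": float(np.mean(ae)), "MSE": mse, "RMSE": float(np.sqrt(mse))}

def midpoints(x):
    """Validation points v_i = (x_i + x_{i+1}) / 2 between consecutive training points."""
    x = np.asarray(x, dtype=float)
    return 0.5 * (x[:-1] + x[1:])

# Made-up numbers, used only to exercise the two helpers.
x_train = np.linspace(0.0, 1.0, 5)
v = midpoints(x_train)
m = error_metrics([1.0, 2.0, 3.0], [1.0, 2.5, 2.0])
```

Evaluating `error_metrics` on the validation midpoints for each candidate M, and keeping the M with the smallest MSE, gives one way to carry out the model selection described above.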

4.1. Example 1

Consider the linear Volterra integral equation of the second kind (Guo et al., 2012) as

The analytical solution is f(x) = cos(x).

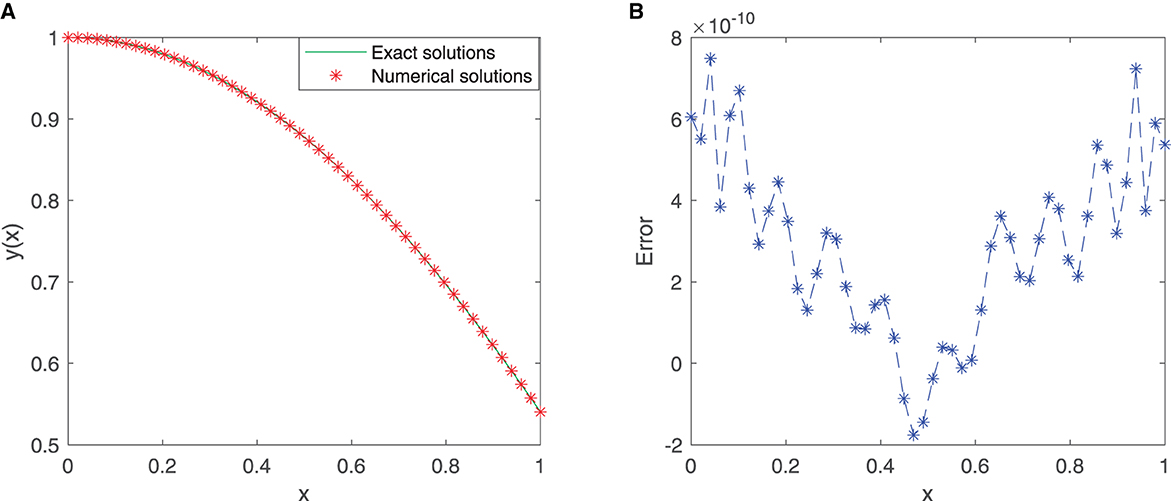

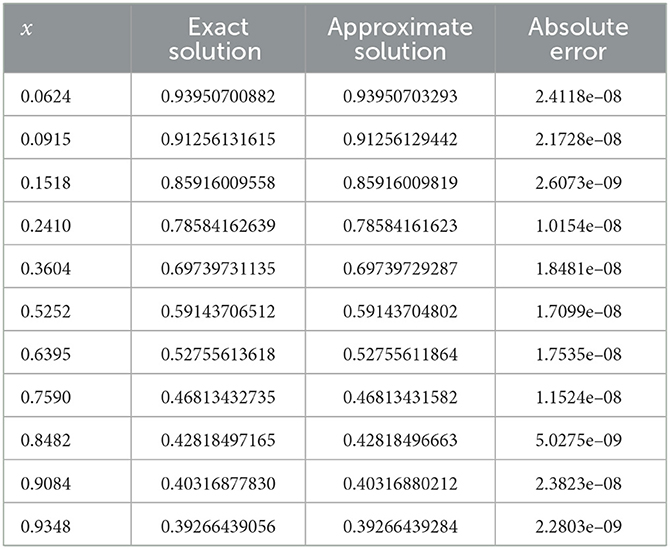

We train the proposed neural network on 50 equidistant points in the interval [0, 1] with the first 12 sine-cosine basis functions. The exact solution and the approximate solution obtained by our improved neural network algorithm are compared in Figure 2A, and the error between them is plotted in Figure 2B. The mean squared error is 1.3399 × 10⁻¹⁹, and the maximum absolute error is approximately 7.4910 × 10⁻¹⁰.

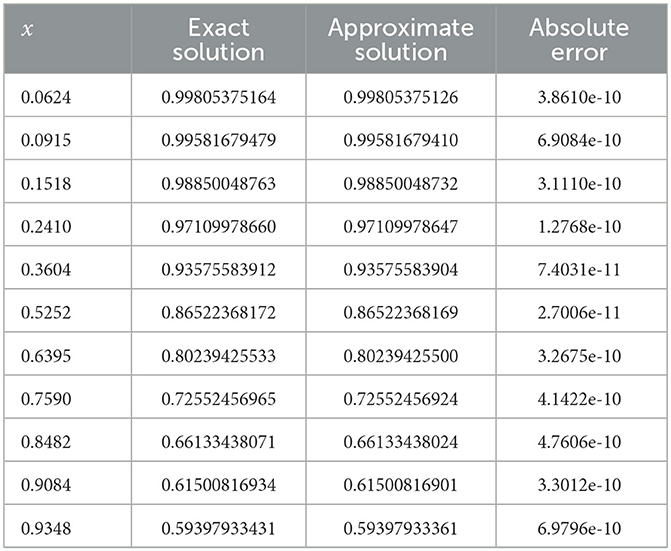

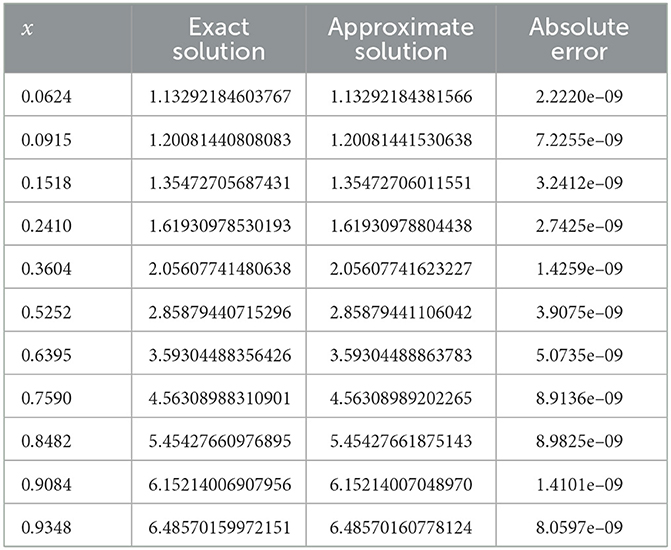

Table 1 lists the exact solution and the approximate solution obtained by our proposed neural network algorithm for 11 testing points at unequal intervals in the domain [0, 1], together with the absolute errors. The mean squared error is approximately 1.6789 × 10⁻¹⁹. These results indicate that the proposed method has high accuracy.

Table 2 compares the proposed method with the LS-SVR method. The maximum absolute error of the proposed method is approximately 6.8246 × 10⁻¹⁰, whereas the maximum absolute error reported in Table 5 of Guo et al. (2012) is approximately 2.4981 × 10⁻⁷. The proposed algorithm is therefore more accurate on this example.

4.2. Example 2

Consider the linear Volterra integral equation of the first kind (Masouri et al., 2010) as

The analytical solution is f(x) = e^(−x).

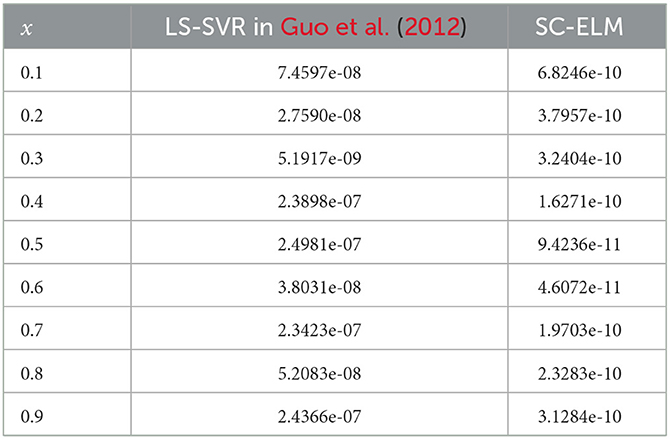

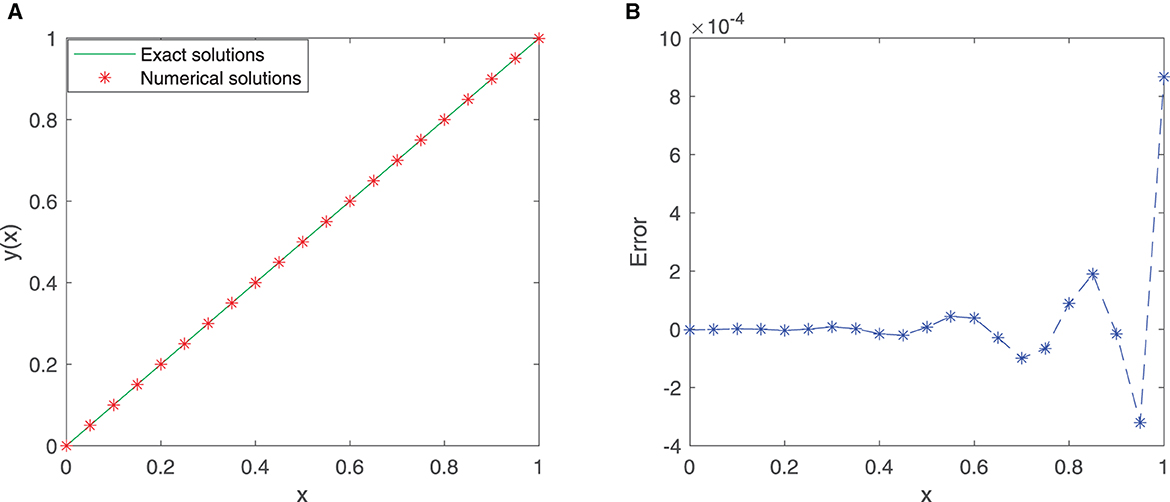

A total of 21 equidistant points in the given interval [0, 1] are used as the training points, and the neural network adopts the first 10 sine-cosine basis functions. Figures 3A, B show that the exact solution and the approximate solution are highly consistent. The maximum absolute error is approximately 1.3959 × 10⁻⁶.

Table 3 lists the results of the exact solution and the approximate solution via our proposed neural network algorithm in the domain [0, 1]. The mean squared error is approximately 2.5781 × 10⁻¹⁶. These findings provide strong support for the effectiveness of our proposed method.

4.3. Example 3

We consider the linear Fredholm integral equation of the first kind (Rashed, 2003) as

The analytical solution is f(x) = x.

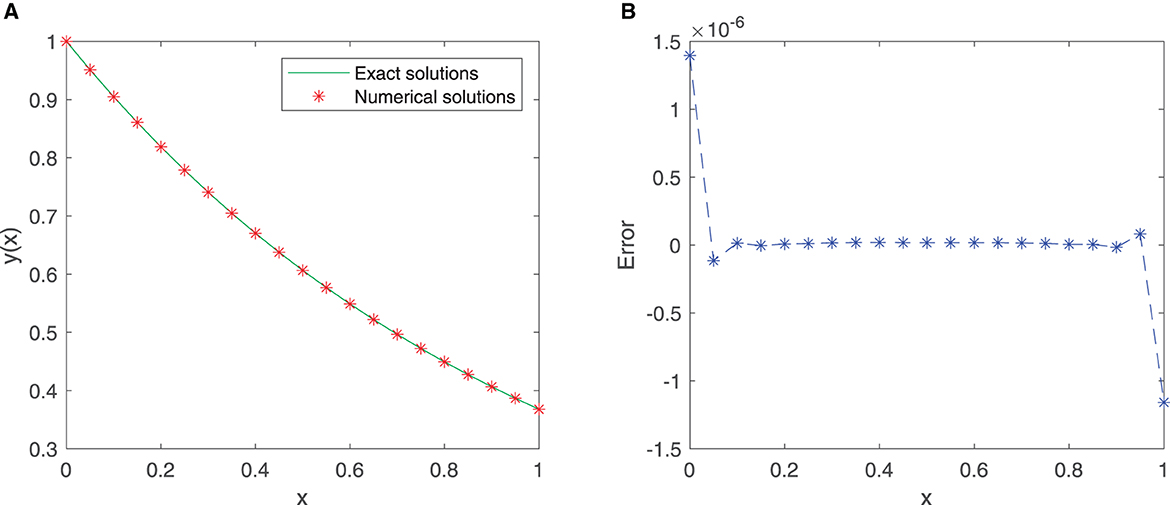

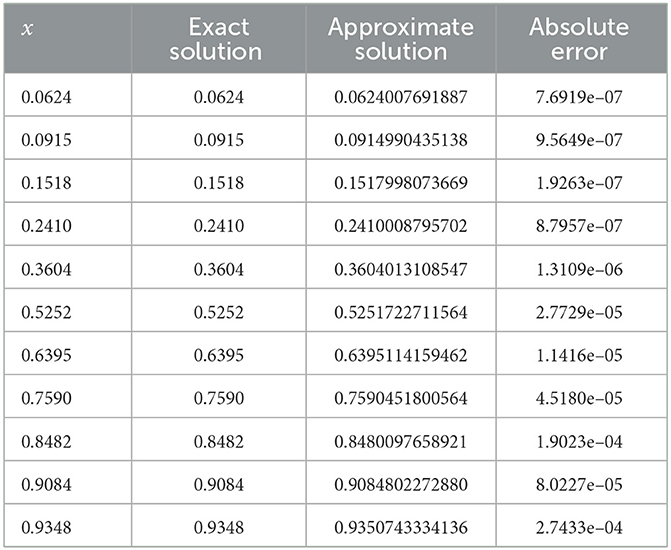

This problem is solved with our proposed neural network model in the given interval [0, 1]. We use 21 equidistant points in the domain [0, 1] and the first six sine-cosine basis functions to train the model. The exact solution and the approximate solution obtained by our improved neural network algorithm are compared in Figure 4A, and the error is plotted in Figure 4B. The mean squared error is 4.5915 × 10⁻⁸ for these training points.

Table 4 lists the exact solution and the approximate solution obtained by our proposed neural network algorithm for 11 testing points at unequal intervals in the domain [0, 1]. The maximum absolute error is approximately 2.7433 × 10⁻⁴. The results show that this new neural network has a good generalization ability.

4.4. Example 4

We consider the linear Fredholm integral equation of the second kind (Golbabai and Seifollahi, 2006) as

The analytical solution is f(x) = e^(2x).

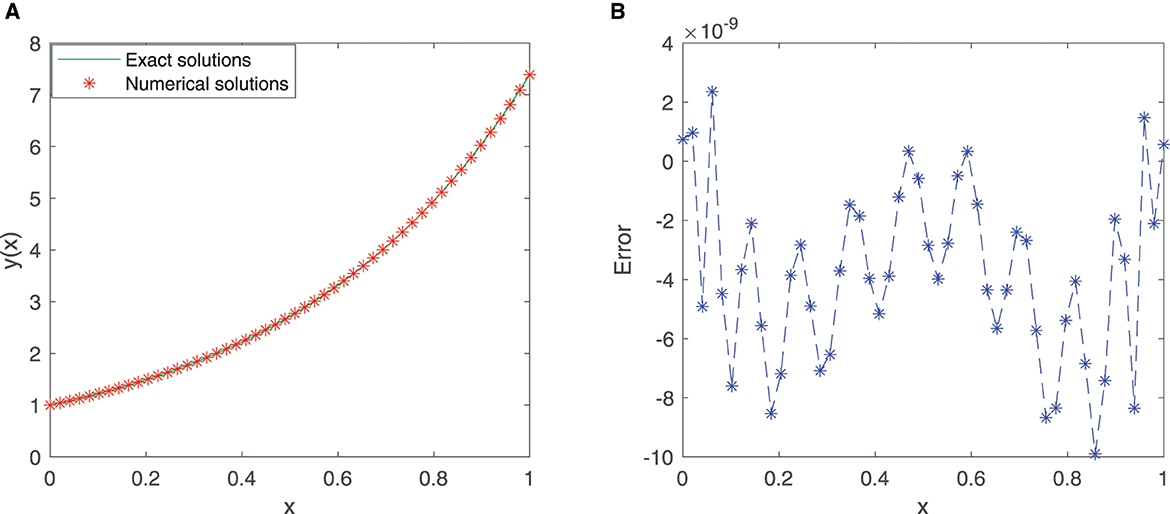

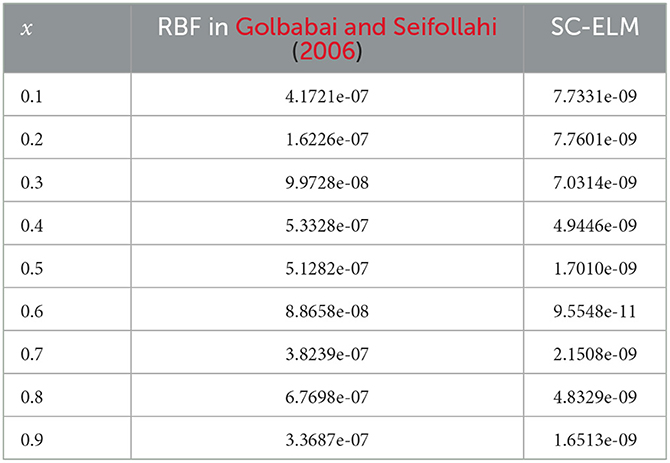

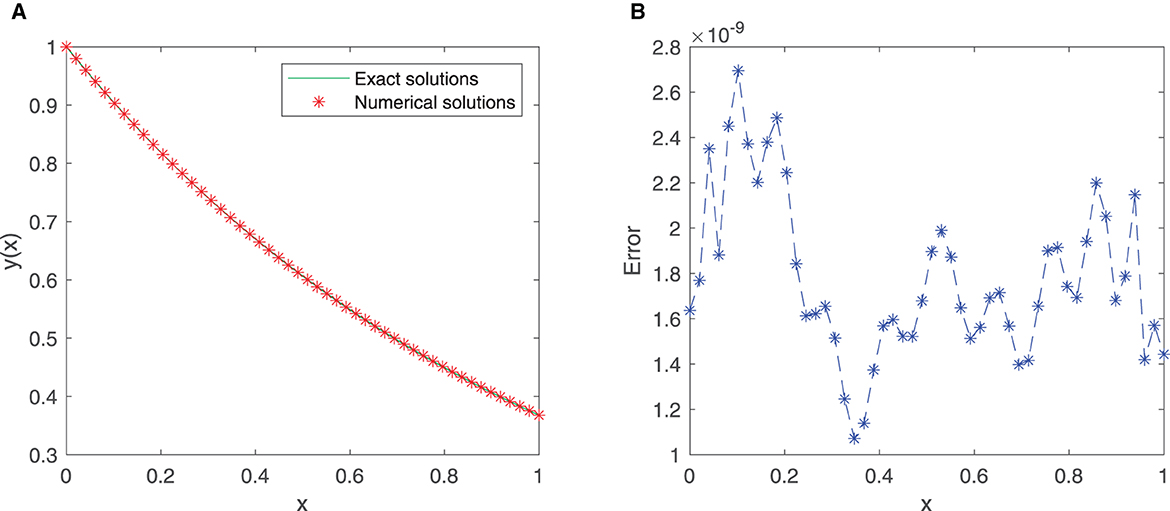

The improved neural network algorithm for the linear Fredholm integral equation of the second kind is trained on 50 equidistant points in the given interval [0, 1] with the first 12 sine-cosine basis functions. The approximate solution obtained by the improved neural network algorithm and the exact solution are shown in Figure 5A, and the error function is displayed in Figure 5B. In particular, the mean squared error is 2.3111 × 10⁻¹⁷, and the maximum absolute error is approximately 9.8998 × 10⁻⁹, which demonstrates the accuracy of the improved neural network algorithm.

Finally, Table 5 provides the results of the exact solution and the approximate solution via our proposed neural network algorithm for 11 testing points at unequal intervals in the domain [0, 1]. As shown in Table 5, the mean squared error is approximately 3.1391 × 10⁻¹⁷, which shows the effectiveness of the proposed method on testing points.

Table 6 compares the proposed method with RBF networks. The maximum absolute error of our proposed method is approximately 7.7601 × 10⁻⁹, whereas the maximum absolute error reported in Table 1 of Golbabai and Seifollahi (2006) is approximately 6.7698 × 10⁻⁷. The proposed algorithm is therefore more accurate on this example.

4.5. Example 5

Consider the linear Volterra–Fredholm integral equation (Wang and Wang, 2014) as

The analytical solution is f(x) = e^(−x).

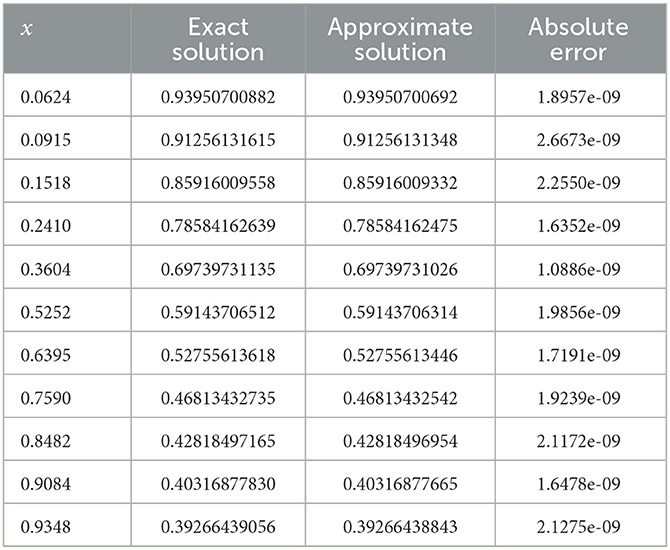

A total of 50 equidistant points in the given interval [0, 1] and the first 11 sine-cosine basis functions are used to train the neural network model. The comparison and error plots of the exact solution and the approximate solution are shown in Figures 6A, B. The mean squared error is 3.3499 × 10⁻¹⁸.

Table 7 shows the results of the exact solution and the approximate solution via the improved ELM method for 11 testing points at unequal intervals in the domain [0, 1]. As shown in the table, the maximum absolute error is approximately 2.6673 × 10⁻⁹, which indicates that the improved neural network algorithm has high accuracy.

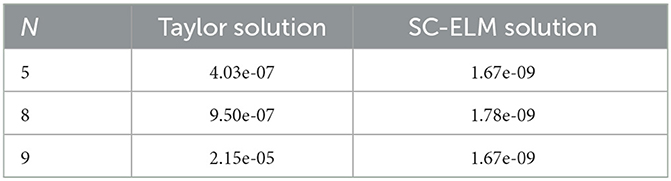

We compare the RMSE of our proposed method with that of the Taylor collocation method in Wang and Wang (2014). Table 8 shows that our algorithm is more accurate than the Taylor collocation method: when 5, 8, and 9 points are tested, the RMSEs of the Taylor collocation method in Wang and Wang (2014) are approximately 4.03 × 10⁻⁷, 9.50 × 10⁻⁷, and 2.15 × 10⁻⁵, whereas the RMSEs of our proposed method are 1.67 × 10⁻⁹, 1.78 × 10⁻⁹, and 1.67 × 10⁻⁹, respectively.

4.6. Example 6

We consider the linear Volterra integral equation of the second kind (Saberi-Nadjafi et al., 2012).

The analytical solution is f(x) = sin(2x)+cos(2x).

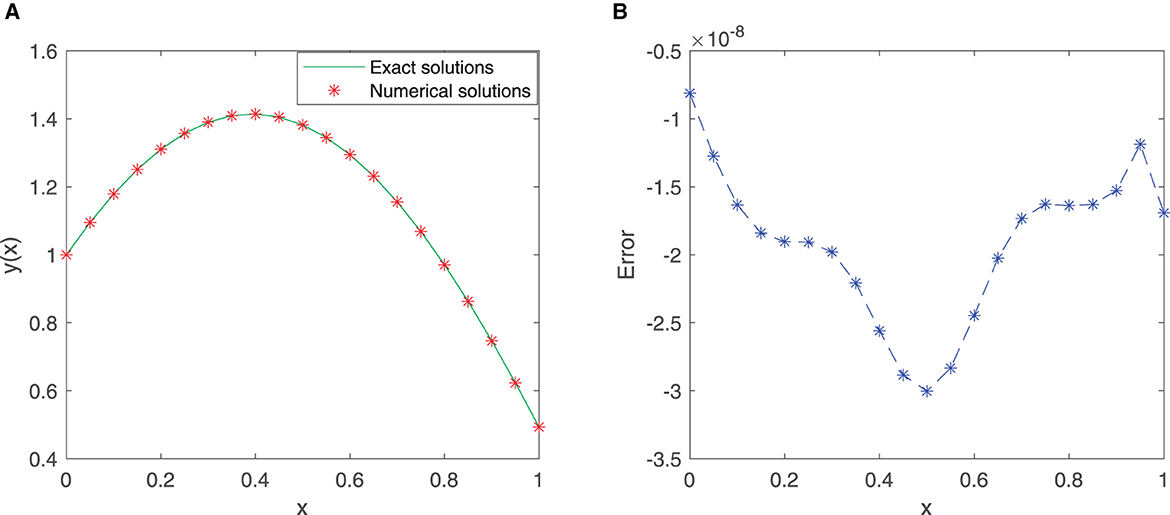

A total of 21 equidistant discrete points and the first 11 sine-cosine basis functions are used to construct the neural network model. The comparison and error plots of the exact solution and the approximate solution are displayed in Figures 7A, B. The MSE is 4.2000 × 10⁻¹⁶, which indicates that the proposed algorithm has high accuracy.

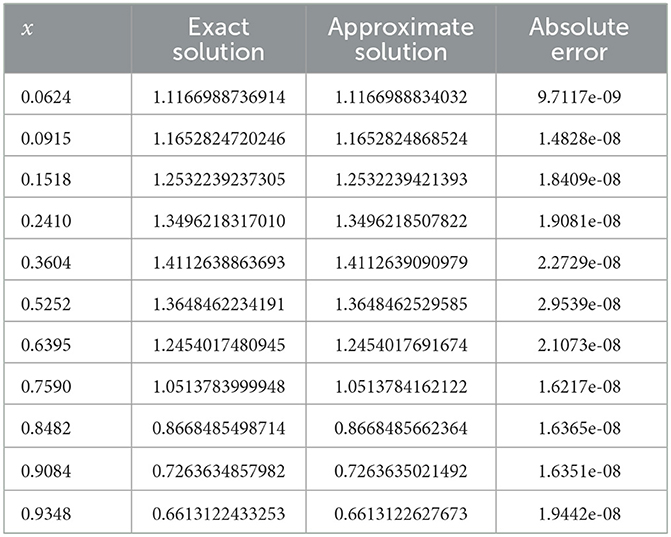

To verify the effectiveness of our proposed method, Table 9 provides the results of the exact solution and the approximate solution via the improved ELM method for 11 testing points at unequal intervals in the domain [0, 1]. As shown in the table, the maximum absolute error is approximately 2.9539 × 10⁻⁸, which shows that the proposed algorithm has good generalization ability.

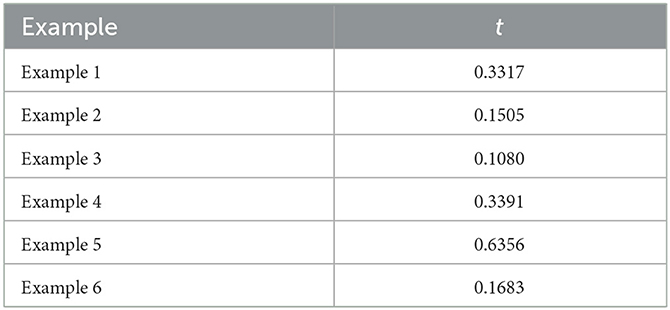

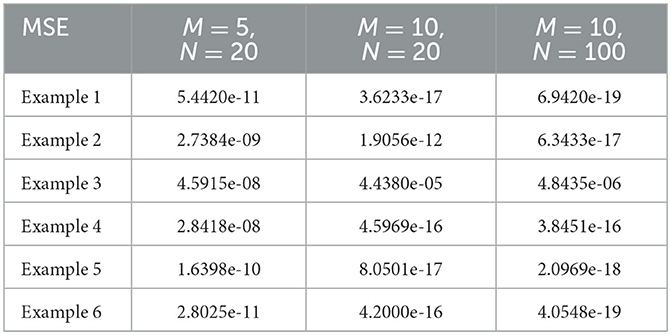

Table 10 compares the MSE of the numerical solutions obtained by the SC-ELM model when more training points are added and different numbers of hidden layer neurons are configured. From these results, it can be seen that the proposed method can achieve good accuracy. The calculation time of different examples is listed in Table 11. These data suggest that our method is efficient and feasible.

Table 10. Comparison of the MSE for the different examples with different numbers of training points and hidden neurons.

5. Conclusion

In this study, the improved neural network algorithm based on the sine-cosine basis function and extreme learning machine algorithm has been developed for solving linear integral equations. The accuracy of the improved neural network has been checked by solving a linear Volterra integral equation of the first kind, a linear Volterra integral equation of the second kind, a linear Fredholm integral equation of the first kind, a linear Fredholm integral equation of the second kind, and a linear Volterra-Fredholm integral equation. The experimental results of the improved ELM approach with different types of integral equations show that the simulation results are close to the exact results. Therefore, the proposed model is very precise and could be a good tool for solving linear integral equations.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Acknowledgments

The authors sincerely thank all the reviewers and the editor for their careful reading and valuable comments, which improved the quality of this study.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdou, M. A. (2002). Fredholm-Volterra integral equation of the first kind and contact problem. Appl. Math. Comput. 125, 177–193. doi: 10.1016/S0096-3003(00)00118-1

Bulatov, M. V., and Markova, E. V. (2022). Collocation-variational approaches to the solution to Volterra integral equations of the first kind. Comput. Math. Math. Phys. 62, 98–105. doi: 10.1134/S0965542522010055

Cambria, E., and Huang, G. B. (2013). Extreme learning machine: trends and controversies. IEEE Intell. Syst. 28, 30–59. doi: 10.1109/MIS.2013.140

Chen, Y., Wei, L., Cao, S., Liu, F., Yang, Y., and Cheng, Y. (2022). Numerical solving for generalized Black-Scholes-Merton model with neural finite element method. Digit. Signal Process. 131, 103757. doi: 10.1016/j.dsp.2022.103757

Chen, Y., Yi, C., Xie, X., Hou, M., and Cheng, Y. (2020). Solution of ruin probability for continuous time model based on block trigonometric exponential neural network. Symmetry 12, 876. doi: 10.3390/sym12060876

Chen, Y., Yu, H., Meng, X., Xie, X., Hou, M., and Chevallier, J. (2021). Numerical solving of the generalized Black-Scholes differential equation using Laguerre neural network. Digit. Signal Process. 112, 103003. doi: 10.1016/j.dsp.2021.103003

Dastjerdi, H. L., and Ghaini, F. M. M. (2012). Numerical solution of Volterra-Fredholm integral equations by moving least square method and Chebyshev polynomials. Appl. Math. Model 36, 3283–3288. doi: 10.1016/j.apm.2011.10.005

Din, Z. U., Islam, S. U., and Zaman, S. (2022). Meshless procedure for highly oscillatory kernel based one-dimensional Volterra integral equations. J. Comput. Appl. Math. 413, 114360. doi: 10.1016/j.cam.2022.114360

Effati, S., and Buzhabadi, R. (2012). A neural network approach for solving Fredholm integral equations of the second kind. Neural Comput. Appl. 21, 843–852. doi: 10.1007/s00521-010-0489-y

Gabbasov, N. S., and Galimova, Z. K. (2022). On numerical solution of one class of integral equations of the third kind. Comput. Math. Math. Phys. 62, 316–324. doi: 10.1134/S0965542522020075

Golbabai, A., and Seifollahi, S. (2006). Numerical solution of the second kind integral equations using radial basis function networks. Appl. Math. Comput. 174, 877–883. doi: 10.1016/j.amc.2005.05.034

Golbabai, A., and Seifollahi, S. (2009). Solving a system of nonlinear integral equations by an RBF network. Comput. Math. Appl. 57, 1651–1658. doi: 10.1016/j.camwa.2009.03.038

Guo, X. C., Wu, C. G., Marchese, M., and Liang, Y. C. (2012). LS-SVR-based solving Volterra integral equations. Appl. Math. Comput. 218, 11404–11409. doi: 10.1016/j.amc.2012.05.028

Habib, G., and Qureshi, S. (2022). Global Average Pooling convolutional neural network with novel NNLU activation function and HYBRID parallelism. Front. Comput. Neurosci. 16, 1004988. doi: 10.3389/fncom.2022.1004988

Hou, M., and Han, X. (2012). Multivariate numerical approximation using constructive L2(R) RBF neural network. Neural Comput. Appl. 21, 25–34. doi: 10.1007/s00521-011-0604-8

Hou, M., Liu, T., Yang, Y., Zhu, H., Liu, H., Yuan, X., et al. (2017). A new hybrid constructive neural network method for impacting and its application on tungsten price prediction. Appl. Intell. 47, 28–43. doi: 10.1007/s10489-016-0882-z

Hou, M., Yang, Y., Liu, T., and Peng, W. (2018). Forecasting time series with optimal neural networks using multi-objective optimization algorithm based on AICc. Front. Comput. Sci. 12, 1261–1263. doi: 10.1007/s11704-018-8095-8

Huang, G. B., and Chen, L. (2007). Letters: Convex incremental extreme learning machine. Neurocomputing 70, 3056–3062. doi: 10.1016/j.neucom.2007.02.009

Huang, G. B., and Chen, L. (2008). Enhanced random search based incremental extreme learning machine. Neurocomputing 71, 3460–3468. doi: 10.1016/j.neucom.2007.10.008

Huang, G. B., Chen, L., and Siew, C. K. (2006a). Universal approximation using incremental constructive feedforward networks with random hidden nodes. IEEE Trans. Neural Netw. 17, 879–892. doi: 10.1109/TNN.2006.875977

Huang, G. B., Zhou, H., Ding, X., and Zhang, R. (2012). Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. B Cybern. 42, 513–529. doi: 10.1109/TSMCB.2011.2168604

Huang, G. B., Zhu, Q. Y., and Siew, C. K. (2006b). Extreme learning machine: theory and applications. Neurocomputing 70, 489–501. doi: 10.1016/j.neucom.2005.12.126

Isaacson, S. A., and Kirby, R. M. (2011). Numerical solution of linear Volterra integral equations of the second kind with sharp gradients. J. Comput. Appl. Math. 235, 4283–4301. doi: 10.1016/j.cam.2011.03.029

Jafarian, A., Measoomy, S., and Abbasbandy, S. (2015). Artificial neural networks based modeling for solving Volterra integral equations system. Appl. Soft. Comput. 27, 391–398. doi: 10.1016/j.asoc.2014.10.036

Jafarian, A., Mokhtarpour, M., and Baleanu, D. (2017). Artificial neural network approach for a class of fractional ordinary differential equation. Neural Comput. Appl. 28, 765–773. doi: 10.1007/s00521-015-2104-8

Jafarian, A., and Nia, S. M. (2013a). Feedback neural network method for solving linear Volterra integral equations of the second kind. Int. J. Math. Model. Numer. Optim. 4, 225–237. doi: 10.1504/IJMMNO.2013.056531

Jafarian, A., and Nia, S. M. (2013b). Using feed-back neural network method for solving linear Fredholm integral equations of the second kind. J. Hyperstruct 2, 53–71.

Li, Y. F., and Ying, H. (2022). Disrupted visual input unveils the computational details of artificial neural networks for face perception. Front. Comput. Neurosci. 16, 1054421. doi: 10.3389/fncom.2022.1054421

Lima, P. M., and Buckwar, E. (2015). Numerical solution of the neural field equation in the two-dimensional case. SIAM J. Sci. Comput. 37, B962–B979. doi: 10.1137/15M1022562

Lu, Y., Chen, G., Yin, Q., Sun, H., and Hou, M. (2020). Solving the ruin probabilities of some risk models with Legendre neural network algorithm. Digit. Signal Process. 99, 102634. doi: 10.1016/j.dsp.2019.102634

Lu, Y., Weng, F., and Sun, H. (2022). Numerical solution for high-order ordinary differential equations using H-ELM algorithm. Eng. Comput. 39, 2781–2801. doi: 10.1108/EC-11-2021-0683

Ma, M., Zheng, L., and Yang, J. (2021). A novel improved trigonometric neural network algorithm for solving price-dividend functions of continuous time one-dimensional asset-pricing models. Neurocomputing 435, 151–161. doi: 10.1016/j.neucom.2021.01.012

Maleknejad, K., and Mirzaee, F. (2005). Using rationalized Haar wavelet for solving linear integral equations. Appl. Math. Comput. 160, 579–587. doi: 10.1016/j.amc.2003.11.036

Mall, S., and Chakraverty, S. (2014). Chebyshev neural network based model for solving Lane-Emden type equations. Appl. Math. Comput. 247, 100–114. doi: 10.1016/j.amc.2014.08.085

Mall, S., and Chakraverty, S. (2016). Application of Legendre neural network for solving ordinary differential equations. Appl. Soft. Comput. 43, 347–356. doi: 10.1016/j.asoc.2015.10.069

Mandal, B. N., and Bhattacharya, S. (2007). Numerical solution of some classes of integral equations using Bernstein polynomials. Appl. Math. Comput. 190, 1707–1716. doi: 10.1016/j.amc.2007.02.058

Masouri, Z., Babolian, E., and Hatamzadeh-Varmazyar, S. (2010). An expansion-iterative method for numerically solving Volterra integral equation of the first kind. Comput. Math. Appl. 59, 1491–1499. doi: 10.1016/j.camwa.2009.11.004

Mirzaee, F., and Hoseini, S. F. (2016). Application of Fibonacci collocation method for solving Volterra-Fredholm integral equations. Appl. Math. Comput. 273, 637–644. doi: 10.1016/j.amc.2015.10.035

Nemati, S. (2015). Numerical solution of Volterra-Fredholm integral equations using Legendre collocation method. J. Comput. Appl. Math. 278, 29–36. doi: 10.1016/j.cam.2014.09.030

Pakdaman, M., Ahmadian, A., Effati, S., Salahshour, S., and Baleanu, D. (2017). Solving differential equations of fractional order using an optimization technique based on training artificial neural network. Appl. Math. Comput. 293, 81–95. doi: 10.1016/j.amc.2016.07.021

Rashed, M. T. (2003). Numerical solution of the integral equations of the first kind. Appl. Math. Comput. 145, 413–420. doi: 10.1016/S0096-3003(02)00497-6

Rashidinia, J., and Zarebnia, M. (2007). Solution of Volterra integral equation by the Sinc-collocation method. J. Comput. Appl. Math. 206, 801–813. doi: 10.1016/j.cam.2006.08.036

Rostami, F., and Jafarian, A. (2018). A new artificial neural network structure for solving high-order linear fractional differential equations. Int. J. Comput. Math. 95, 528–539. doi: 10.1080/00207160.2017.1291932

Saberi-Nadjafi, J., Mehrabinezhad, M., and Akbari, H. (2012). Solving Volterra integral equations of the second kind by wavelet-Galerkin scheme. Comput. Math. Appl. 63, 1536–1547. doi: 10.1016/j.camwa.2012.03.043

Sun, H., Hou, M., Yang, Y., Zhang, T., Weng, F., and Han, F. (2019). Solving partial differential equation based on Bernstein neural network and extreme learning machine algorithm. Neural Process. Lett. 50, 1153–1172. doi: 10.1007/s11063-018-9911-8

Usta, F., Akyiğit, M., Say, F., and Ansari, K. J. (2022). Bernstein operator method for approximate solution of singularly perturbed Volterra integral equations. J. Math. Anal. Appl. 507, 125828. doi: 10.1016/j.jmaa.2021.125828

Wang, K. Y., and Wang, Q. S. (2013). Lagrange collocation method for solving Volterra-Fredholm integral equations. Appl. Math. Comput. 219, 10434–10440. doi: 10.1016/j.amc.2013.04.017

Wang, K. Y., and Wang, Q. S. (2014). Taylor collocation method and convergence analysis for the Volterra-Fredholm integral equations. J. Comput. Appl. Math. 260, 294–300. doi: 10.1016/j.cam.2013.09.050

Wong, C., Vong, C., Wong, P., and Cao, J. (2018). Kernel-based multilayer extreme learning machines for representation learning. IEEE Trans. Neural Netw. Learn. Syst. 29, 757–762. doi: 10.1109/TNNLS.2016.2636834

Yang, Y., Hou, M., and Luo, J. (2018). A novel improved extreme learning machine algorithm in solving ordinary differential equations by Legendre neural network methods. Adv. Diff. Equat. 469, 1–24. doi: 10.1186/s13662-018-1927-x

Yang, Y., Hou, M., Sun, H., Zhang, T., Weng, F., and Luo, J. (2020). Neural network algorithm based on Legendre improved extreme learning machine for solving elliptic partial differential equations. Soft Comput. 24, 1083–1096. doi: 10.1007/s00500-019-03944-1

Zhou, T., Liu, X., Hou, M., and Liu, C. (2019). Numerical solution for ruin probability of continuous time model based on neural network algorithm. Neurocomputing 331, 67–76. doi: 10.1016/j.neucom.2018.08.020

Zuniga-Aguilar, C. J., Romero-Ugalde, H. M., Gomez-Aguilar, J. F., Jimenez, R. F. E., and Valtierra, M. (2017). Solving fractional differential equations of variable-order involving operators with Mittag-Leffler kernel using artificial neural networks. Chaos Solitons Fractals 103, 382–403. doi: 10.1016/j.chaos.2017.06.030

Keywords: Volterra-Fredholm integral equations, approximate solutions, neural network algorithm, sine-cosine basis function, extreme learning machine

Citation: Lu Y, Zhang S, Weng F and Sun H (2023) Approximate solutions to several classes of Volterra and Fredholm integral equations using the neural network algorithm based on the sine-cosine basis function and extreme learning machine. Front. Comput. Neurosci. 17:1120516. doi: 10.3389/fncom.2023.1120516

Received: 10 December 2022; Accepted: 13 February 2023; Published: 09 March 2023.

Edited by:

Jia-Bao Liu, Anhui Jianzhu University, China

Reviewed by:

Yinghao Chen, Eastern Institute for Advanced Study, China

Zhengguang Liu, Xi'an Jiaotong University, China

Copyright © 2023 Lu, Zhang, Weng and Sun. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hongli Sun
