- 1Department of Ultrasound, The Second Affiliated Hospital of Fujian Medical University, Quanzhou, China

- 2Department of Computed Tomography and Magnetic Resonance Imaging (CT/MRI), The Second Affiliated Hospital of Fujian Medical University, Quanzhou, China

Purpose: This study aims to automatically classify carotid plaques in color Doppler ultrasound images into two categories, high-risk vulnerable plaque and stable plaque, to support stroke risk prediction.

Method: We used a deep learning framework based on transfer learning to classify color Doppler images into two categories: high-risk vulnerable carotid plaque and stable carotid plaque. The data were collected at the Second Affiliated Hospital of Fujian Medical University from 87 patients with risk factors for atherosclerosis and include both stable and vulnerable cases. We used 230 color Doppler ultrasound images per category and divided them into training and test sets in a 70:30 ratio. We implemented the pre-trained Inception V3 and VGG-16 models for this classification task.

Results: Within the proposed framework, we implemented two transfer learning models, Inception V3 and VGG-16. After fine-tuning and adjusting the hyperparameters for our classification problem, the highest accuracy, 93.81%, was achieved by Inception V3.

Conclusion: We classified color Doppler ultrasound images into high-risk vulnerable and stable carotid plaques by fine-tuning pre-trained deep learning models on our dataset. The proposed framework may help reduce incorrect diagnoses caused by low image quality, differences in operator experience, and other factors.

1. Introduction

With population aging, cerebrovascular disease has become one of the world's three leading causes of death and disability (1). Atherosclerosis is a systemic, progressive disease whose progression is reflected in the transformation of stable and unstable lipid plaques in the arterial lumen. Cerebrovascular disease is closely related to carotid atherosclerotic plaque, and carotid plaque vulnerability is a significant risk factor for recurrent cerebral infarction (2). Among patients with ischemic cerebral infarction, the recurrence rate within 7 days is as high as 8.1% (3). Carotid color Doppler ultrasound is a routine method for examining carotid plaque. Conventional two-dimensional ultrasound can accurately determine the plaque's location, size, shape, and echogenicity, and it can show the degree of carotid artery stenosis and even surface ulceration and intraplaque hemorrhage (4). Different echo patterns reflect differences in plaque composition, so plaque stability can be preliminarily judged from plaque morphology, echogenicity, thickness, and the integrity of the fibrous cap. Studies have shown that hypoechoic plaques are associated with a higher risk of stroke than isoechoic and hyperechoic plaques (5). However, conventional ultrasound has limited ability to detect ulcerated plaque and intraplaque hemorrhage, and plaque characterization depends mainly on the operator's experience and subjective judgment (6). Multiple studies have used numerical simulations to analyze the fluid–structure interaction between the vessel wall and the blood flowing through elastic arteries with eccentric stenotic plaque (7). With the rapid development of imaging techniques, contrast-enhanced ultrasound and ultra-microvascular imaging can display neovascularization within plaques, better assess plaque vulnerability, and improve the prediction of cerebral infarction recurrence (8, 9). However, because of equipment limitations and contraindications to contrast media, these techniques cannot be used for routine screening.

Numerous imaging approaches, such as 3D imaging, autofluorescence imaging (AFI), and narrow-band imaging (NBI), have been developed to enhance diagnosis and overcome these constraints (10). Precise 3D reconstruction and modeling (11) of segmented cardiac structures (12) is crucial, because hemodynamic modeling of these structures aids in evaluating blood flow dynamics. Nevertheless, a computer-aided autonomous framework is still needed to improve the efficiency and quality of diagnosis in daily clinical practice (13). Deep learning has recently permeated many areas of medical research and has taken center stage in modern science and technology.

Deep learning can exploit large amounts of data, automatically learn features from them, support clinicians in making accurate and rapid diagnoses, and increase medical efficiency. Both traditional machine learning and deep learning methods have been widely used in medical image diagnosis (14), and ensemble learning techniques have also been applied to various medical examinations (15). Song et al. (16) applied deep learning to the automatic segmentation of cardiac radiography images and obtained significant results. Meshram et al. (17) segmented carotid plaque in longitudinal B-mode ultrasound images with a U-Net architecture whose bottleneck used dilated convolution layers, reporting Dice coefficients of 0.55 for automatic segmentation and 0.84 for semi-automatic segmentation of the same plaques. Savaş et al. (18) used a neural network with multiple hidden layers to detect and classify intima-media thickness and achieved 89.1% accuracy. As the processing capacity of modern hardware continues to grow, deep learning models pre-trained on massive datasets have shown their superiority over conventional approaches, which makes transfer learning a natural choice for image classification problems. Transfer learning with convolutional neural networks has achieved several state-of-the-art results in medical image analysis (19). Chatterjee et al. (20) used MobileNet and various feature selection techniques to quantify plaque in the carotid artery for cardiac risk prediction and achieved 95% accuracy on the validation set.

We exploited pre-trained deep learning models to enhance diagnosis and overcome these limitations. Our deep learning framework supports the examination of carotid plaque with carotid color Doppler ultrasound. We used the pre-trained VGG-16 and Inception V3 models and adjusted their hyperparameters to fit our classification task.

The rest of this paper is organized as follows. Section 2 describes the methodology, including the dataset, feature extraction, and implementation details of the deep learning models. Section 3 presents the results and discussion. Section 4 presents the conclusion and possible directions for future research.

2. Materials and methods

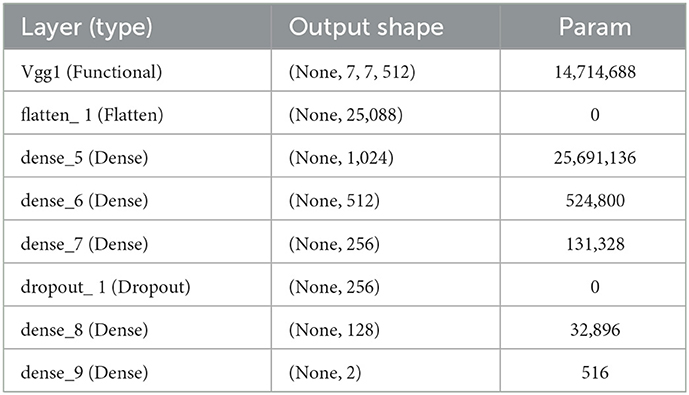

A computer-aided autonomous framework is needed to classify color Doppler ultrasound images into two types and thereby enhance carotid plaque diagnosis. Deep learning, which has recently taken center stage in medical research (21), can learn discriminative features directly from large amounts of data. Our framework uses transfer learning to classify color Doppler ultrasound images into vulnerable and stable carotid plaques: we fine-tuned the pre-trained VGG-16 and Inception V3 models and adjusted their hyperparameters for our classification problem. The proposed framework for addressing this research gap is shown in Figure 1.

Figure 1. Proposed framework for classifying color Doppler ultrasound images. Images are fed to the feature extraction module of a neural network, and the data are then split into training and test sets. Inception V3 and VGG-16 are trained on the dataset to classify images into two classes.

2.1. Data collection and statistics

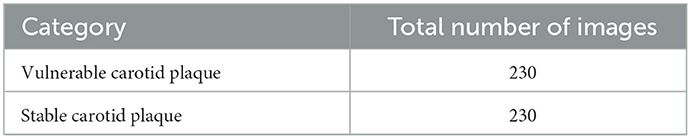

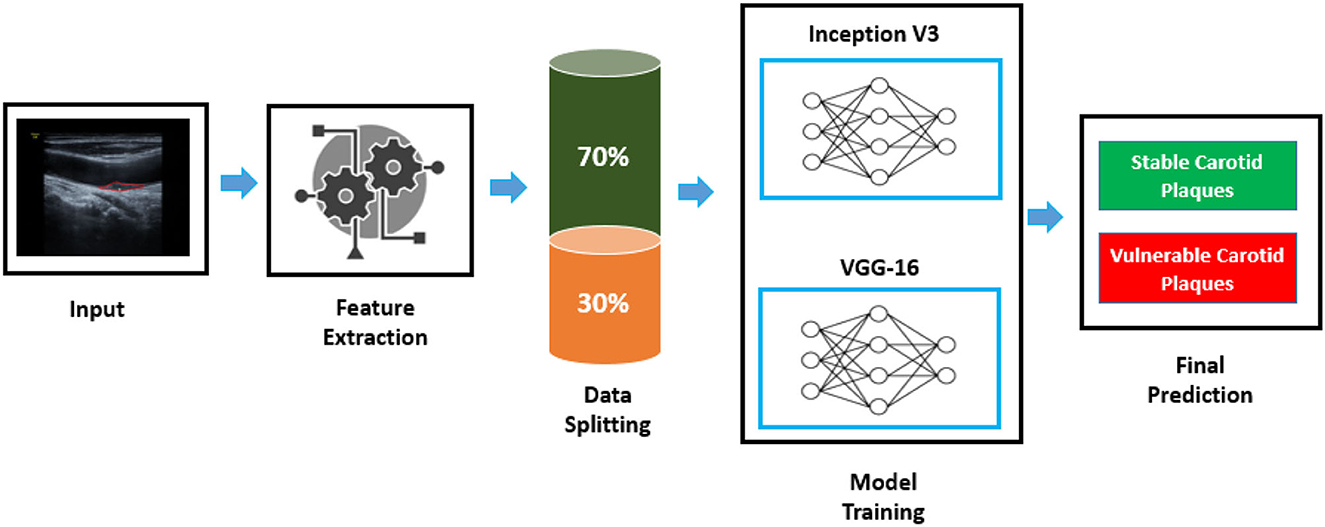

The data were collected at the Second Affiliated Hospital of Fujian Medical University and include stable and vulnerable cases from 87 patients with risk factors for atherosclerosis. Because medical images are complex and the results must be highly accurate, medical images are currently analyzed mainly by experienced personnel. We randomly selected 230 color Doppler ultrasound images of vulnerable carotid plaque and, likewise, 230 images of stable carotid plaque. The dataset was divided into training and test sets in a 70:30 ratio. Sample images are shown in Figure 2.
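The 70:30 split can be sketched as a class-stratified random split, so that the training and test sets both keep the 1:1 ratio of vulnerable to stable images. This is a minimal standard-library sketch under assumptions: the function name `stratified_split`, the seed, and per-class rounding are illustrative choices, not the authors' code.

```python
import random

def stratified_split(labels, train_frac=0.7, seed=42):
    """Split sample indices into train/test sets, preserving each class's share."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train, test = [], []
    for label, indices in sorted(by_class.items()):
        indices = indices[:]                 # copy before shuffling
        rng.shuffle(indices)
        n_train = round(len(indices) * train_frac)
        train.extend(indices[:n_train])
        test.extend(indices[n_train:])
    return sorted(train), sorted(test)

# 230 vulnerable (label 1) and 230 stable (label 0) images, as in the study
labels = [1] * 230 + [0] * 230
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))  # 322 138
```

With 230 images per class, this yields 161 training and 69 test images per class. The sketch splits by image; if several images come from the same patient, grouping indices by a patient identifier before splitting would keep each patient's images in a single subset.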

Figure 2. Sample dataset. (A) Vulnerable carotid plaque ultrasound image scans and (B) stable carotid plaque ultrasound image scans, where (i) shows the color Doppler image scans and (ii) their respective enlarged views for clarity.

The dataset statistics are summarized in Table 1.

Carotid color ultrasound confirmed the presence of a homogeneous hypoechoic carotid plaque (with the sternocleidomastoid muscle as the reference, the echo is slightly lower than that of the muscle, possibly with a hyperechoic fibrous cap) or a heterogeneous hypoechoic plaque (echoes marginally lower than those of the sternocleidomastoid muscle, mainly hypoechoic, with hyperechoic and isoechoic parts making up <25%). Patients with diabetes, hypertension, and hyperlipidemia should receive regular symptomatic treatment.

The two-dimensional ultrasound diagnostic criteria for vulnerable plaques are as follows: the overall plaque shape is irregular; the fibrous cap is thin or its surface is not smooth; the internal lipid core is hypoechoic or hypo- to anechoic and heterogeneous; the echo continuity of the basal line is poor or discontinuous; or there is an ulcer on the plaque surface. Examinations were performed with a RESONA 7OB diagnostic ultrasound system (Mindray Medical International, Shenzhen, Guangdong Province, China) using a 3–11 MHz linear array probe. The patient lay supine without a pillow, with the head turned slightly to one side (avoiding hyperextension), and conventional color Doppler ultrasound of the carotid artery was performed; plaque thickness was observed according to the characteristics of the first echo. Homogeneous or heterogeneous hypoechoic plaques were selected as representative plaques. When multiple plaques were present, the homogeneous plaque with the lowest visual echogenicity was selected. After the target plaque was determined, its long-axis section was imaged while the probe was held steady.

2.2. Feature extraction

We start from a pre-trained model, use it to extract features, and change the weights of the final (classification) layers from which predictions are obtained. This approach is called feature extraction because the output layer is replaced and the pre-trained CNN is applied as a fixed feature extractor (22). As depth increases, convolutional neural networks progress from learning the edge features of the input image to high-level semantic information about parts of objects or whole objects. Both the convolutional layers and the fully connected layers of a CNN can be used to extract deep image features; however, convolutional feature maps are high-dimensional, which makes the subsequent dimensionality reduction difficult (23). The last layer is a softmax layer, and the model is trained with the categorical cross-entropy loss (E) between the symptomatic and asymptomatic classes, given in Equation 1.

where y is the binary indicator for the observed class, “*” denotes multiplication, and p is the predicted probability, computed by the deep learning model, that the plaque belongs to a specific class.
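For the two-class case, the categorical cross-entropy with these symbols is commonly written as E = −Σc yc · log(pc), summed over the two classes and averaged over samples. The sketch below computes this standard form; the function name, the clipping constant `eps`, the label encoding, and the example probabilities are hypothetical.

```python
import math

def categorical_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean over samples of E = -sum_c y_c * log(p_c).

    y_true: one-hot label vectors, e.g. [1, 0] for class 0 and [0, 1] for class 1.
    p_pred: predicted probability vectors (e.g. softmax outputs) of the same length.
    eps keeps log() finite when a predicted probability is exactly 0.
    """
    total = 0.0
    for y, p in zip(y_true, p_pred):
        total -= sum(yc * math.log(max(pc, eps)) for yc, pc in zip(y, p))
    return total / len(y_true)

# Hypothetical predictions for one vulnerable ([0, 1]) and one stable ([1, 0]) plaque
y = [[0, 1], [1, 0]]
p = [[0.2, 0.8], [0.9, 0.1]]
print(round(categorical_cross_entropy(y, p), 4))  # 0.1643
```

Only the probability assigned to the true class contributes to each sample's loss, so confident correct predictions drive E toward 0.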

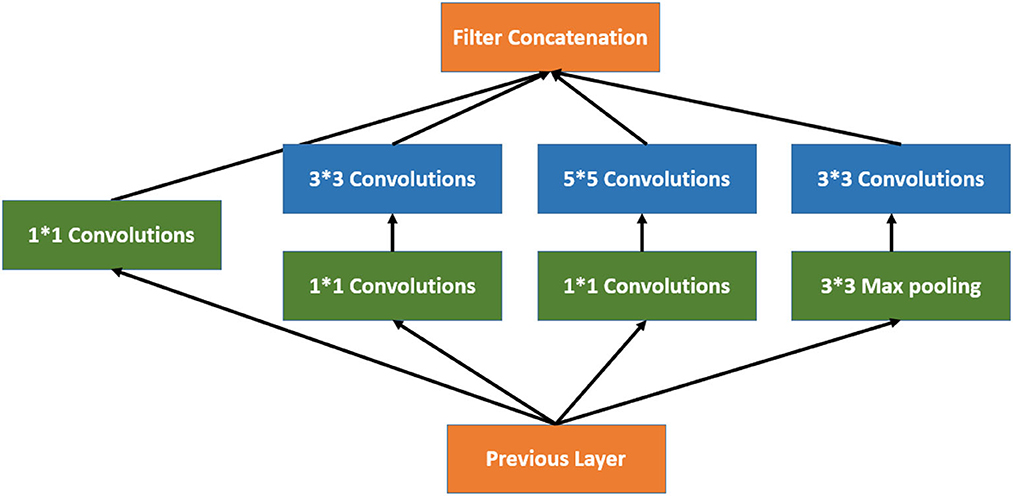

2.3. Inception V3

Inception V3 is a convolutional neural network 48 layers deep (Equation 2). The pre-trained network was trained on more than one million images from a subset of the ImageNet database. A typical inception module contains one max-pooling branch and three convolutions of different sizes (24), all applied to the output of the preceding layer; the branch outputs are concatenated along the channel dimension and then fused non-linearly. This design is intended to reduce overfitting while improving the network's representational capacity and adaptability to different scales, as shown in Figure 3.

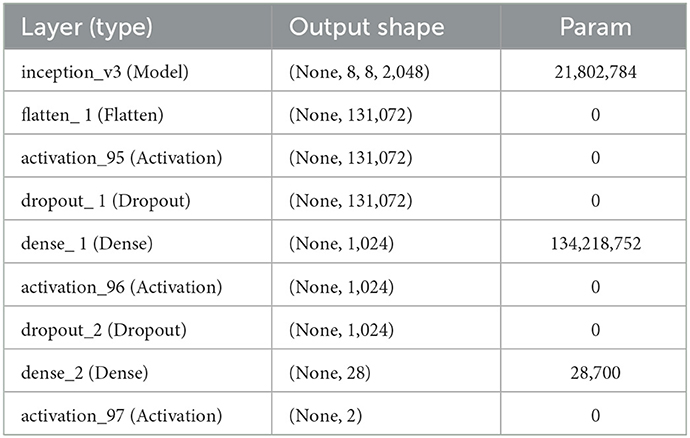

To adapt Inception V3 while limiting overfitting, we flattened the output of its convolutional base into a one-dimensional vector and added a fully connected layer with 1,024 hidden units and ReLU activation (Equation 3), a dropout layer with a rate of 0.4, and a sigmoid output layer for classification. The neuronal weights of the classification layers are initialized with the algorithm described in (25), as shown in Equation 4.

where U(–a, b) is the uniform distribution on the interval [–a, b], m is the size of the previous layer, and Wk denotes the weight parameters of the CNN at iteration k. The complete model architecture and hyperparameter details are shown in Table 2.
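The classification head described above (flatten, a 1,024-unit ReLU layer, dropout at 0.4, and a sigmoid output) can be sketched as a plain-Python forward pass. This illustrates the structure under stated assumptions and is not the authors' implementation: biases are omitted, a hypothetical 64-dimensional feature vector stands in for the much larger flattened Inception V3 output, and the symmetric bound a = b = 1/√m for the uniform initialization is an assumed choice that depends on the previous-layer size m.

```python
import math
import random

def uniform_init(m, n_out, rng):
    # Weights drawn from U(-a, a) with a = 1/sqrt(m); this bound is an assumption.
    a = 1.0 / math.sqrt(m)
    return [[rng.uniform(-a, a) for _ in range(m)] for _ in range(n_out)]

def relu(v):
    # ReLU (Equation 3): max(0, x) applied element-wise
    return [max(0.0, x) for x in v]

def dropout(v, rate, rng, training):
    # Inverted dropout: zero each unit with probability `rate` and rescale the
    # survivors by 1 / (1 - rate) during training; identity at inference time.
    if not training:
        return v
    keep = 1.0 - rate
    return [x / keep if rng.random() < keep else 0.0 for x in v]

def matvec(W, x):
    # Dense layer without bias: each output is the dot product of a weight row with x
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def head_forward(features, W1, W2, rng, training=False, rate=0.4):
    # Flattened features -> Dense(1024, ReLU) -> Dropout(rate) -> Dense(1, sigmoid)
    hidden = relu(matvec(W1, features))
    hidden = dropout(hidden, rate, rng, training)
    return sigmoid(matvec(W2, hidden)[0])

rng = random.Random(0)
features = [rng.random() for _ in range(64)]  # hypothetical stand-in for the flattened CNN output
W1 = uniform_init(64, 1024, rng)              # hidden layer: 1,024 units
W2 = uniform_init(1024, 1, rng)               # output layer: one sigmoid unit
p_vulnerable = head_forward(features, W1, W2, rng)
print(0.0 < p_vulnerable < 1.0)  # True
```

In Keras, a comparable head would stack `Flatten`, `Dense(1024, activation="relu")`, `Dropout(0.4)`, and `Dense(1, activation="sigmoid")` layers on the pre-trained base; Keras' `Dropout` is likewise active only during training.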

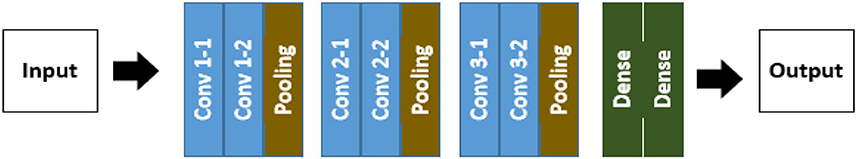

2.4. VGG-16

The VGG-16 convolutional neural network was created and trained by the Visual Geometry Group (VGG) at the University of Oxford (26). The architecture of VGG-16, shown in Figure 4, consists of convolution, pooling, and dense layers. Consider a layer y = f(x); we are interested in which components of x affect which components of y.

The set of input components that affect a given output component is its receptive field. Thus, the output component y(i″, j″) depends only on the input components x(i, j) with (i, j) ∈ Ω(i″, j″), where the rectangle Ω(i″, j″) is defined by Equations 5 and 6.

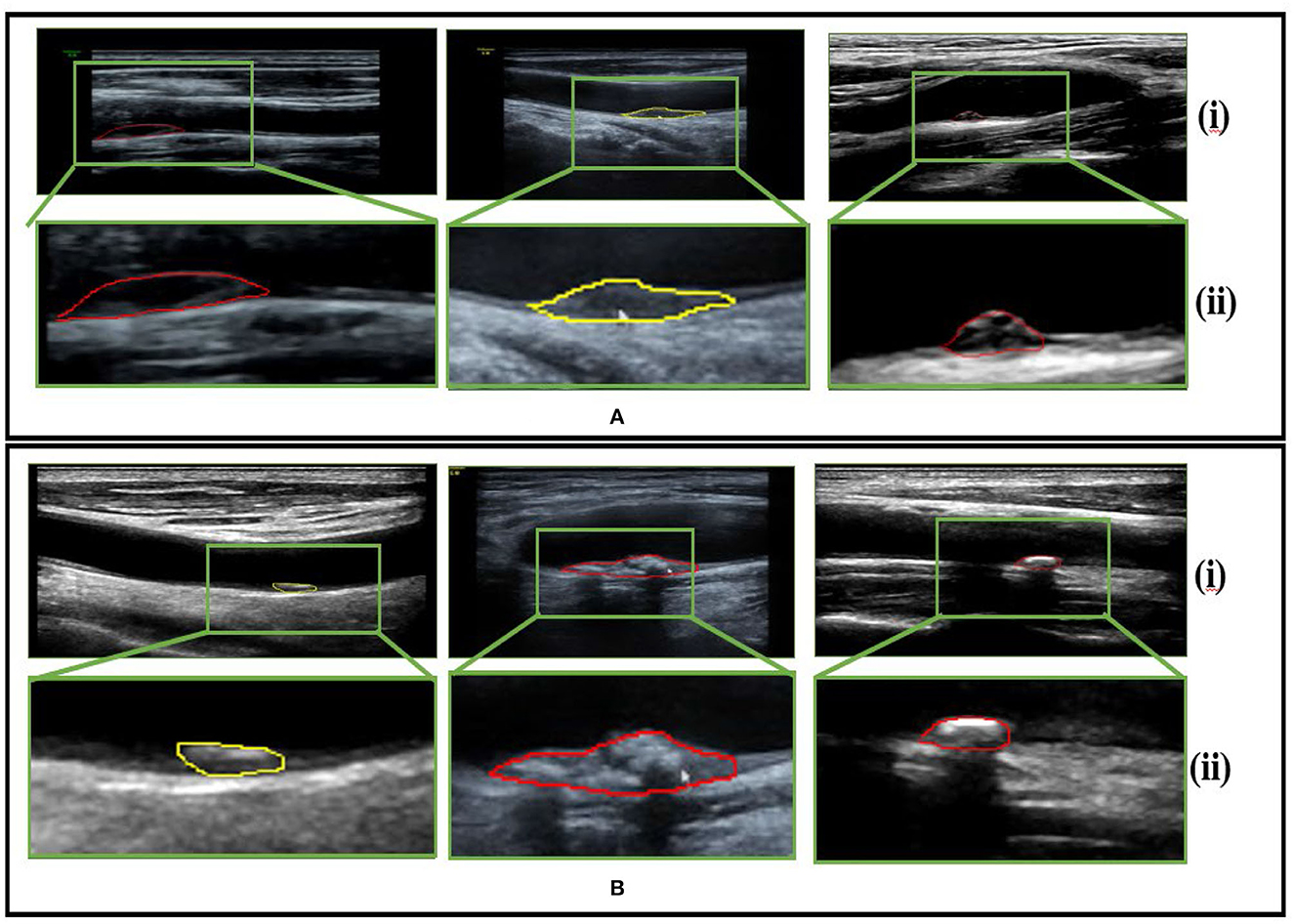

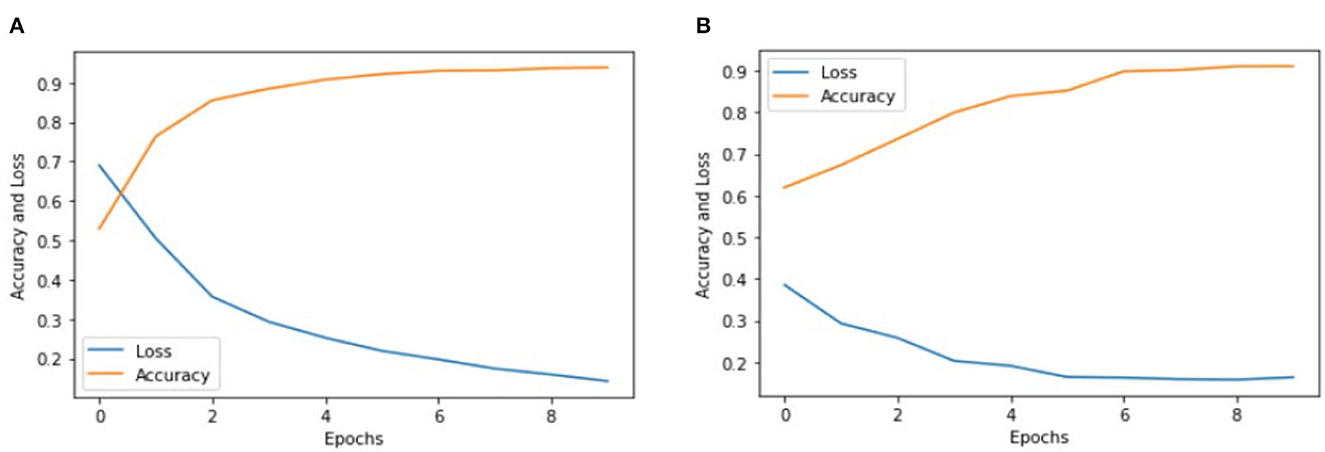

where (αh, αv) is the stride, (βh, βv) is the offset, and (Δh, Δv) is the receptive field size. We adapted the pre-trained VGG-16 model in Keras (27) and fine-tuned it on our dataset. The complete model architecture and hyperparameter details are shown in Table 3.
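For square kernels, the horizontal and vertical parameters coincide, so one dimension suffices to illustrate the stride α and field size Δ. The sketch below applies the standard receptive-field composition rule (strides multiply across layers, and each layer widens the field by (kernel - 1) times the accumulated stride) to VGG-16's thirteen 3 × 3 stride-1 convolutions and five 2 × 2 stride-2 max-pooling layers. It leaves out the offset β, which also depends on padding; the function name is hypothetical.

```python
def receptive_field(layers):
    """Compose (kernel, stride) layers from input to output.

    Returns (stride, size): stride is the cumulative step (alpha) between adjacent
    output positions, in input pixels; size is the receptive field size (Delta).
    """
    stride, size = 1, 1
    for kernel, layer_stride in layers:
        size += (kernel - 1) * stride  # widen by the kernel span at the current scale
        stride *= layer_stride         # downsampling multiplies the step size
    return stride, size

conv, pool = (3, 1), (2, 2)  # VGG-16: 3x3 stride-1 convolutions, 2x2 stride-2 max pooling
vgg16 = ([conv] * 2 + [pool] + [conv] * 2 + [pool]
         + [conv] * 3 + [pool] + [conv] * 3 + [pool] + [conv] * 3 + [pool])
print(receptive_field(vgg16))  # (32, 212)
```

After the last pooling layer, each feature-map position therefore summarizes a 212 × 212 pixel region, with neighboring positions 32 pixels apart, a large share of the standard 224 × 224 VGG-16 input.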

Our VGG-16 model includes a dense (fully connected) layer, in which every neuron receives signals from all neurons of the preceding layer, so the layer computes a matrix-vector product. The standard formula for a matrix-vector product is shown in Equation 7.

where x is the input vector (a matrix with a single column) and α is an (M × N) matrix. The entries of α are trainable parameters and, like the parameters of earlier layers, are updated by backpropagation. We assessed our model's performance using accuracy, loss graphs, and ROC curves, as described in Equations 8–10.

where true positive (TP) is the number of correctly identified positive cases, true negative (TN) is the number of correctly identified negative cases, and false positive (FP) and false negative (FN) are the numbers of instances whose ground truth is negative or positive but that are classified as positive or negative, respectively (28).
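The accuracy, sensitivity, and FPR used in this section all derive from the four counts defined above. The sketch computes them, together with specificity and precision; the counts in the example are hypothetical, chosen only to fit a 138-image test set (30% of 460 images, 69 per class), and are not the study's results.

```python
def binary_metrics(tp, tn, fp, fn):
    """Confusion-matrix metrics for a two-class problem."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),   # true positive rate (TPR)
        "specificity": tn / (tn + fp),   # true negative rate
        "fpr": fp / (fp + tn),           # false positive rate = 1 - specificity
        "precision": tp / (tp + fp),     # positive predictive value
    }

# Hypothetical counts for a 138-image test set with 69 images per class
metrics = binary_metrics(tp=64, tn=66, fp=3, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

With these counts, 130 of 138 images are classified correctly, so the accuracy is about 0.942.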

In the loss function, y is the ground-truth label of the input patch; during training, the loss L is minimized by computing its gradient with respect to the network weights W. The receiver operating characteristic (ROC) curve (29) is used to assess a test's overall diagnostic performance and to compare the performance of two or more diagnostic tests. The area under the ROC curve, commonly referred to as ROC-AUC, can be interpreted as shown in Equation 10.

where X1 and X0 are continuous random variables giving the score output by our binary classifier for a randomly chosen positive sample and a randomly chosen negative sample, respectively. The ROC curve shows the trade-off between sensitivity (TPR) and the false positive rate (FPR, equal to 1 – specificity), given in Equations 11 and 12, respectively.
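The probabilistic reading of the ROC-AUC, P(X1 > X0), can be checked numerically: counting, over all positive-negative pairs, how often the positive sample scores higher (with ties counted as 1/2) gives the same value as the trapezoidal area under the empirical ROC curve. The scores and function names below are hypothetical, and the threshold sweep predicts "positive" whenever a score is at least T.

```python
def auc_pairwise(pos_scores, neg_scores):
    """Empirical P(X1 > X0) over all pairs, counting ties as 1/2."""
    wins = 0.0
    for s1 in pos_scores:
        for s0 in neg_scores:
            if s1 > s0:
                wins += 1.0
            elif s1 == s0:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

def roc_points(pos_scores, neg_scores):
    """(FPR, TPR) pairs obtained by sweeping the threshold T from high to low."""
    thresholds = sorted(set(pos_scores) | set(neg_scores), reverse=True)
    points = [(0.0, 0.0)]
    for t in thresholds:
        tpr = sum(s >= t for s in pos_scores) / len(pos_scores)
        fpr = sum(s >= t for s in neg_scores) / len(neg_scores)
        points.append((fpr, tpr))
    return points

def auc_trapezoid(points):
    """Area under a piecewise-linear ROC curve (trapezoidal rule)."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

pos = [0.9, 0.8, 0.7, 0.55, 0.4]  # hypothetical scores, positive class
neg = [0.6, 0.5, 0.35, 0.3, 0.1]  # hypothetical scores, negative class
print(auc_pairwise(pos, neg), auc_trapezoid(roc_points(pos, neg)))  # both approximately 0.88
```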

Following Pérez-Fernández et al. (30), the TPR at threshold T is, by definition, the probability of correctly classifying a randomly chosen positive sample, so TPR(T) = P(X1 > T) = 1 – P(X1 ≤ T) = 1 – F1(T), where F1 is the cumulative distribution function of X1, as shown in Equation 13.

By definition, the ROC curve is given by Equation 14.

A change of variable turns the integral into Equation 15.

Simplifying Equation 15 yields Equation 16.

Applying the change of variable in Equation 17 to the inner integral, we obtain Equation 18.

More generally, with the change of variable u = v + T in the inner integral, the equation becomes Equation 19.

Assuming convergence, and using the convolution theorem to write the density of X1 – X0 = X1 + (–X0) as the convolution of the densities of X1 and –X0, the ROC-AUC becomes Equation 20.
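The final step, integrating the density of X1 − X0 over positive values, has a closed form if the scores are assumed to be independent and normally distributed (the binormal model, an assumption not made in the text): X1 − X0 is then normal with mean μ1 − μ0 and variance σ1² + σ0², so AUC = Φ((μ1 − μ0)/√(σ1² + σ0²)), where Φ is the standard normal CDF. The sketch compares this value with a Monte Carlo estimate of P(X1 > X0); the parameter values and function names are hypothetical.

```python
import math
import random

def binormal_auc(mu1, sigma1, mu0, sigma0):
    """AUC = P(X1 - X0 > 0) for independent normal scores X1 (positive) and X0 (negative)."""
    z = (mu1 - mu0) / math.sqrt(sigma1 ** 2 + sigma0 ** 2)
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF at z

def monte_carlo_auc(mu1, sigma1, mu0, sigma0, n=200_000, seed=1):
    """Estimate P(X1 > X0) by sampling independent score pairs."""
    rng = random.Random(seed)
    hits = sum(rng.gauss(mu1, sigma1) > rng.gauss(mu0, sigma0) for _ in range(n))
    return hits / n

exact = binormal_auc(1.0, 1.0, 0.0, 1.0)        # Phi(1 / sqrt(2)), about 0.760
estimate = monte_carlo_auc(1.0, 1.0, 0.0, 1.0)  # should agree to about two decimals
print(round(exact, 4), round(estimate, 4))
```

When both score distributions coincide, the difference X1 − X0 is symmetric about 0 and the AUC is exactly 0.5, which is the chance level.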

3. Experimental results and discussion

Doppler ultrasound is a non-invasive diagnostic test that assesses the hemodynamic characteristics of blood flow through the cardiovascular vessels using high-frequency sound waves (ultrasound) reflected from circulating red blood cells. Hemodynamic quantification of cardiovascular flow is vital for evaluating plaque rupture (30, 31), and ultrasound imaging can provide such information. Color Doppler ultrasound estimates blood flow velocity from the frequency shift between the transmitted and the reflected waves. During the examination, a trained sonographer presses a small handheld transducer against the patient's skin and moves it over the area being examined. As noted in the Introduction, hypoechoic plaques carry a higher risk of stroke (5), but conventional ultrasound has limited ability to detect ulcerated plaque and intraplaque hemorrhage, and plaque characterization depends largely on the operator's experience and subjective judgment. A computer-aided autonomous framework is therefore needed to improve the efficiency and quality of color Doppler diagnosis in daily clinical practice (31). In this research, we collected data from the Second Affiliated Hospital of Fujian Medical University, including stable and vulnerable cases.
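The link between the Doppler frequency shift and blood flow velocity is the standard Doppler equation, v = c · Δf / (2 · f0 · cos θ), where f0 is the transmitted frequency, Δf the measured shift, θ the angle between the ultrasound beam and the flow direction, and c the speed of sound. The sketch assumes the conventional soft-tissue value c = 1540 m/s; the function name and the example reading are hypothetical (5 MHz lies within the 3–11 MHz range of the probe used in this study).

```python
import math

def doppler_velocity(delta_f_hz, f0_hz, angle_deg, c=1540.0):
    """Blood flow velocity in m/s from the Doppler shift: v = c * df / (2 * f0 * cos(theta)).

    c = 1540 m/s is the conventional average speed of sound in soft tissue;
    angle_deg is the insonation angle between the beam and the flow direction.
    """
    cos_theta = math.cos(math.radians(angle_deg))
    if abs(cos_theta) < 1e-9:
        raise ValueError("a 90-degree insonation angle gives no measurable Doppler shift")
    return c * delta_f_hz / (2.0 * f0_hz * cos_theta)

# Hypothetical reading: 5 MHz transmit frequency, 1 kHz shift, 60-degree insonation angle
print(round(doppler_velocity(1_000.0, 5e6, 60.0), 3))  # 0.308
```

The cos θ term is why angle correction matters: the same measured shift corresponds to twice the velocity at 60° as at 0°.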

We implemented two pre-trained deep learning models, Inception V3 and VGG-16, to classify color Doppler ultrasound images into two categories: high-risk vulnerable carotid plaque and stable carotid plaque. Both models were fine-tuned (32) on our dataset. The trained Inception V3 model achieved 93.81% accuracy and VGG-16 achieved 91.13% accuracy. Figure 5A shows the training accuracy and loss of Inception V3, and Figure 5B shows those of VGG-16 on our dataset. ROC curves are typically used to represent graphically the trade-off between clinical sensitivity and specificity at each potential cutoff for a test or combination of tests.

Figure 5. Accuracy and loss curves of Inception V3 and VGG-16. (A) Training accuracy and loss of Inception V3 and (B) training accuracy and loss of VGG-16 on our dataset.

Figures 5A, B show the training accuracy and loss of the Inception V3 and VGG-16 models over eight epochs. The accuracy of Inception V3 is higher than that of VGG-16, and its loss decreases from about 0.7 to 0.2. Both models used the same number of epochs and the same hyperparameters, and the results indicate that Inception V3 is better at classifying the color Doppler ultrasound images into the two categories. Figure 6 compares the two models using ROC curves; Figure 6A shows the performance of the Inception V3 model in classifying color Doppler ultrasound images.

Figure 6. ROC curves of Inception V3 and VGG-16. (A) ROC curve of Inception V3 and (B) ROC curve of VGG-16.

Similarly, Figure 6B shows the performance of the VGG-16 model in classifying color Doppler ultrasound images into the two categories. The ROC curve is a probability curve that plots the true positive rate (TPR) against the false positive rate (FPR) at various threshold values, thereby separating the “signal” from the “noise” (33). The area under the curve (AUC) summarizes the ROC curve and measures the classifier's ability to distinguish between classes. When the AUC is near 1, the classifier can correctly distinguish almost all positive-class points from negative-class points (34). In Figure 6A, the AUCs for class 0 and class 1 are 0.85 and 0.92, respectively, indicating that Inception V3 discriminates well between the two classes of color Doppler ultrasound images. Similarly, in Figure 6B, the AUCs for both classes are not far from 1, so the VGG-16 classifier is also likely to distinguish positive-class samples from negative-class samples.

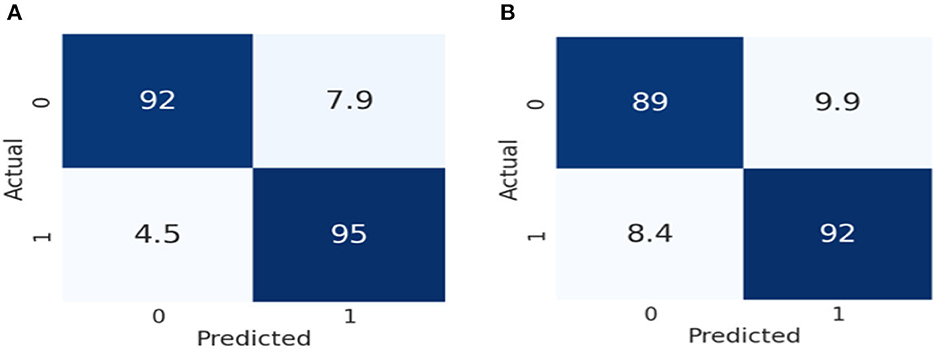

Figure 7 shows the performance of the two models as 2 × 2 confusion matrices. Figure 7A shows the performance of Inception V3, and Figure 7B shows that of VGG-16, in classifying color Doppler ultrasound images into high-risk vulnerable and stable carotid plaque.

Figure 7. Confusion matrices of our models on our dataset: (A) Inception V3 and (B) VGG-16.

As Figure 7A shows, the true positive (TP) cell of the Inception V3 confusion matrix contains 92% of the data; in addition, 7.9% of the data were negative but falsely predicted as positive, 4.5% were positive but falsely predicted as negative, and 95% were negative and correctly predicted as negative. Similarly, in Figure 7B, the TP cell of the VGG-16 confusion matrix contains 89% of the data; 9.9% of the data were negative but falsely predicted as positive, 8.4% were positive but falsely predicted as negative, and 92% were negative and correctly predicted as negative. These results indicate that Inception V3 performs better than VGG-16 in classifying color Doppler ultrasound images. When VGG-16 is fine-tuned, the fifth block is tuned first, and tuning proceeds backward until the first block, so that finally the whole network is tuned (35). Inception V3 has 11 inception blocks; backward tuning starts from the mixed 10 inception module and proceeds to the entire base convolutional network (36). We fine-tuned both models on our dataset; the trained Inception V3 achieved 93.81% accuracy, and VGG-16 achieved 91.13%. Furthermore, we compared the performance of our proposed framework with previously proposed approaches, as shown in Table 4.
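The backward tuning order described above (tune the top block first, then progressively unfreeze earlier blocks until the whole network is trained) can be expressed as a simple schedule. The sketch builds the stages for VGG-16's five convolutional blocks; the helper name is hypothetical, and it only produces block lists, leaving the training loop out.

```python
def unfreezing_stages(block_names):
    """Backward (top-down) fine-tuning schedule.

    block_names is ordered from input to output. Stage k makes the last k
    blocks trainable, so the first stage tunes only the top block and the
    final stage tunes the whole network.
    """
    n = len(block_names)
    return [block_names[n - k:] for k in range(1, n + 1)]

# VGG-16 has five convolutional blocks, block1 (input side) to block5 (top)
vgg_blocks = [f"block{i}" for i in range(1, 6)]
for stage in unfreezing_stages(vgg_blocks):
    print(stage)
```

Keras' `VGG16` application names its layers `block1_conv1` through `block5_pool`, so one way to apply a stage would be to set `layer.trainable = layer.name.split("_")[0] in stage` for each layer of the base model and then recompile, because changes to `trainable` take effect only after `compile` is called again.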

4. Conclusion

In this study, we found that deep learning-based methods are promising for carotid risk assessment. Our trained Inception V3 model achieved 93.81% accuracy and VGG-16 achieved 91.13% accuracy in classifying color Doppler ultrasound images. We assessed our models using accuracy, loss graphs, and ROC curves and compared them with previous methods, and the proposed framework outperformed the compared methods. The framework may help avoid inaccurate diagnoses caused by inadequate image quality and individual experience. Overall, the assessments in this study show that this methodology achieves reasonable results for Doppler ultrasound image classification. In future work, extreme learning machines may be used as a more advanced classifier for plaque classification. Because of differences between patients and in plaque appearance, future studies should test the model on a larger dataset. Although these results demonstrate the potential of deep learning for different kinds of images, further studies should be carried out clearly and transparently, with accessible databases and reproducible methods, in order to develop useful tools that aid health professionals. In addition to stenosis in the carotid bifurcation and related arteries, blockage of the heart vessels may also occur and sometimes leads to left atrial enlargement; detailed analysis is required to understand the causes of such blockages and to find effective ways to address these cardiac health problems (40).

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author/s.

Ethics statement

The studies involving human participants were reviewed and approved by the Ethics Committee of the Second Affiliated Hospital of Fujian Medical University (approval No. [2022] 288). The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

S-SS proposed the study, discussed the contents with medical experts, and wrote the paper. L-YL performed the experiments, checked the equipment, and studied the results. YW checked all experimental image results and prepared the statistics in the paper. Y-ZL suggested improvements, modified parts of the experiment, and checked the entire manuscript. All authors contributed to the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Dagenais GR, Leong DP, Rangarajan S, Lanas F, Lopez-Jaramillo P, Gupta R, et al. Variations in common diseases, hospital admissions, and deaths in middle-aged adults in 21 countries from five continents (PURE): a prospective cohort study. Lancet. (2020) 395:785–94. doi: 10.1016/S0140-6736(19)32007-0

2. Hosseini AA, Kandiyil N, MacSweeney ST, Altaf N, Auer DP. Carotid plaque hemorrhage on magnetic resonance imaging strongly predicts recurrent ischemia and stroke. Ann Neurol. (2013) 73:774–84. doi: 10.1002/ana.23876

3. Rantner B, Goebel G, Bonati LH, Ringleb PA, Mas JL, Fraedrich G, et al. The risk of carotid artery stenting compared with carotid endarterectomy is greatest in patients treated within 7 days of symptoms. J Vasc Surg. (2013) 57:619–26. doi: 10.1016/j.jvs.2012.08.107

4. Wong KK, Thavornpattanapong P, Cheung SC, Tu JY. Biomechanical investigation of pulsatile flow in a three-dimensional atherosclerotic carotid bifurcation model. J Mech Med Biol. (2013) 13:1350001. doi: 10.1142/S0219519413500012

5. AbuRahma AF, Wulu JT Jr, Crotty B. Carotid plaque ultrasonic heterogeneity and severity of stenosis. Stroke. (2002) 33:1772–5. doi: 10.1161/01.STR.0000019127.11189.B5

6. Liu Y, Hua Y, Feng W, Ovbiagele B. Multimodality ultrasound imaging in stroke: current concepts and future focus. Expert Rev Cardiovasc Ther. (2016) 14:1325–33. doi: 10.1080/14779072.2016.1254043

7. Liu G, Wu J, Huang W, Wu W, Zhang H, Wong KK, et al. Numerical simulation of flow in curved coronary arteries with progressive amounts of stenosis using fluid-structure interaction modelling. J Med Imaging Health Inform. (2014) 4:605–11. doi: 10.1166/jmihi.2014.1301

8. Zhang M, Xie Z, Long H, Ren K, Hou L, Wang Y, et al. Current advances in the imaging of atherosclerotic vulnerable plaque using nanoparticles. Mater Today Bio. (2022) 14:100236. doi: 10.1016/j.mtbio.2022.100236

9. Rafailidis V, Li X, Sidhu PS, Partovi S, Staub D. Contrast imaging ultrasound for the detection and characterization of carotid vulnerable plaque. Cardiovasc Diagn Ther. (2020) 10:965. doi: 10.21037/cdt.2020.01.08

10. East JE, Suzuki N, Palmer N, Thapar C, Swain D, Saunders BP. Autofluorescence imaging (AFI) and narrow band imaging (NBI) with magnification in colonoscopy: an early experience. Gastrointest Endosc. (2006) 63:AB230. doi: 10.1016/j.gie.2006.03.583

11. Zhao C, Lv J, Du S. Geometrical deviation modeling and monitoring of 3D surface based on multi-output Gaussian process. Measurement. (2022) 199:111569. doi: 10.1016/j.measurement.2022.111569

12. Zhao M, Wei Y, Lu Y, Wong KK. A novel U-Net approach to segment the cardiac chamber in magnetic resonance images with ghost artifacts. Comput Methods Programs Biomed. (2020) 196:105623. doi: 10.1016/j.cmpb.2020.105623

13. Wong KK, Sun Z, Tu J, Worthley SG, Mazumdar J, Abbott D. Medical image diagnostics based on computer-aided flow analysis using magnetic resonance images. Comput Med Imaging Graph. (2012) 36:527–41. doi: 10.1016/j.compmedimag.2012.04.003

14. Wong KK, Fortino G, Abbott D. Deep learning-based cardiovascular image diagnosis: a promising challenge. Future Gener Comput Syst. (2020) 110:802–11. doi: 10.1016/j.future.2019.09.047

15. Lu Y, Fu X, Chen F, Wong KK. Prediction of fetal weight at varying gestational age in the absence of ultrasound examination using ensemble learning. Artif Intell Med. (2020) 102:101748. doi: 10.1016/j.artmed.2019.101748

16. Song Y, Ren S, Lu Y, Fu X, Wong KK. Deep learning-based automatic segmentation of images in cardiac radiography: a promising challenge. Comput Methods Programs Biomed. (2022) 220:106821. doi: 10.1016/j.cmpb.2022.106821

17. Meshram NH, Mitchell CC, Wilbrand S, Dempsey RJ, Varghese T. Deep learning for carotid plaque segmentation using a dilated U-Net architecture. Ultrason Imaging. (2020) 42:221–30. doi: 10.1177/0161734620951216

18. Savaş S, Topaloglu N, Kazci Ö, Koşar PN. Classification of carotid artery intima media thickness ultrasound images with deep learning. J Med Syst. (2019) 43:1–12. doi: 10.1007/s10916-019-1406-2

19. Zhu X, Wei Y, Lu Y, Zhao M, Yang K, Wu S, et al. Comparative analysis of active contour and convolutional neural network in rapid left-ventricle volume quantification using echocardiographic imaging. Comput Methods Programs Biomed. (2021) 199:105914. doi: 10.1016/j.cmpb.2020.105914

20. Chatterjee A, Nair JR, Ghoshal T, Latha S, Samiappan D. Diagnosis of atherosclerotic plaques in carotid artery using transfer learning. In: 2020 5th International Conference on Communication and Electronics Systems (ICCES). Coimbatore: IEEE (2020). doi: 10.1109/ICCES48766.2020.9138052

21. Litjens G, Kooi T, Bejnordi BE, Setio AA, Ciompi F, Ghafoorian M, et al. A survey on deep learning in medical image analysis. Med Image Anal. (2017) 42:60–88. doi: 10.1016/j.media.2017.07.005

22. Ahmed KB, Hall LO, Goldgof DB, Liu R, Gatenby RA. Fine-tuning convolutional deep features for MRI based brain tumor classification. In: Medical Imaging 2017: Computer-Aided Diagnosis. Orlando, FL: SPIE (2017). doi: 10.1117/12.2253982

23. Jogin M, Madhulika MS, Divya GD, Meghana RK, Apoorva S. Feature extraction using convolution neural networks (CNN) and deep learning. In: 2018 3rd IEEE International Conference on Recent Trends in Electronics, Information & Communication Technology (RTEICT). Bangalore: IEEE (2018). doi: 10.1109/RTEICT42901.2018.9012507

24. Guan Q, Wan X, Lu H, Ping B, Li D, Wang L, et al. Deep convolutional neural network inception-v3 model for differential diagnosing of lymph node in cytological images: a pilot study. Ann Transl Med. (2019) 7:307. doi: 10.21037/atm.2019.06.29

25. Atitallah SB, Driss M, Boulila W, Koubaa A, Atitallah N, Ghézala HB. An enhanced randomly initialized convolutional neural network for columnar cactus recognition in unmanned aerial vehicle imagery. Proc Comput Sci. (2021) 192:573–81. doi: 10.1016/j.procs.2021.08.059

26. Guan Q, Wang Y, Ping B, Li D, Du J, Qin Y, et al. Deep convolutional neural network VGG-16 model for differential diagnosing of papillary thyroid carcinomas in cytological images: a pilot study. J Cancer. (2019) 10:4876. doi: 10.7150/jca.28769

27. Poojary R, Pai A. Comparative study of model optimization techniques in fine-tuned CNN models. In: 2019 International Conference on Electrical and Computing Technologies and Applications (ICECTA). Ras Al Khaimah: IEEE (2019). doi: 10.1109/ICECTA48151.2019.8959681

28. Beauxis-Aussalet E, Hardman L. Simplifying the visualization of confusion matrix. In: 26th Benelux Conference on Artificial Intelligence (BNAIC) (2014).

29. Nahm FS. Receiver operating characteristic curve: overview and practical use for clinicians. Korean J Anesthesiol. (2022) 75:25–36. doi: 10.4097/kja.21209

30. Pérez-Fernández S, Martínez-Camblor P, Filzmoser P, Corral N. Visualizing the decision rules behind the ROC curves: understanding the classification process. Adv Stat Anal. (2021) 105:135–61. doi: 10.1007/s10182-020-00385-2

31. Rodrigues JF Jr, Paulovich FV, de Oliveira MC, de Oliveira ON Jr. On the convergence of nanotechnology and big data analysis for computer-aided diagnosis. Nanomedicine. (2016) 11:959–82. doi: 10.2217/nnm.16.35

32. Vasan D, Alazab M, Wassan S, Naeem H, Safaei B, Zheng Q. IMCFN: image-based malware classification using fine-tuned convolutional neural network architecture. Comput Netw. (2020) 171:107138. doi: 10.1016/j.comnet.2020.107138

33. Henderson AR. Assessing test accuracy and its clinical consequences: a primer for receiver operating characteristic curve analysis. Ann Clin Biochem. (1993) 30:521–39. doi: 10.1177/000456329303000601

34. Brzezinski D, Stefanowski J. Prequential AUC: properties of the area under the ROC curve for data streams with concept drift. Knowl Inform Syst. (2017) 52:531–62. doi: 10.1007/s10115-017-1022-8

35. Luo JH, Wu J, Lin W. ThiNet: a filter level pruning method for deep neural network compression. In: Proceedings of the IEEE International Conference on Computer Vision (2017). doi: 10.1109/ICCV.2017.541

36. Lin C, Li L, Luo W, Wang KC, Guo J. Transfer learning based traffic sign recognition using inception-v3 model. Period Polytech Transport Eng. (2019) 47:242–50. doi: 10.3311/PPtr.11480

37. Yekkala I, Dixit S, Jabbar MA. Prediction of heart disease using ensemble learning and particle swarm optimization. In: 2017 International Conference on Smart Technologies for Smart Nation (SmartTechCon). Bangalore: IEEE (2017). p. 691–8. doi: 10.1109/SmartTechCon.2017.8358460

38. Wankhede J, Sambandam P, Kumar M. Effective prediction of heart disease using hybrid ensemble deep learning and tunicate swarm algorithm. J Biomol Struct Dyn. (2021) 40:13334–45. doi: 10.1080/07391102.2021.1987328

39. Uddin MN, Halder RK. An ensemble method based multilayer dynamic system to predict cardiovascular disease using machine learning approach. Inform Med Unlocked. (2021) 24:100584. doi: 10.1016/j.imu.2021.100584

Keywords: color Doppler ultrasound images, stroke risk prediction, deep learning, transfer learning, carotid artery

Citation: Su S-S, Li L-Y, Wang Y and Li Y-Z (2023) Stroke risk prediction by color Doppler ultrasound of carotid artery-based deep learning using Inception V3 and VGG-16. Front. Neurol. 14:1111906. doi: 10.3389/fneur.2023.1111906

Received: 30 November 2022; Accepted: 16 January 2023;

Published: 14 February 2023.

Edited by: Kelvin Kian Loong Wong, University of Saskatchewan, Canada

Reviewed by: Defu Qiu, China University of Mining and Technology, China; Jianshe Shi, Huaqiao University Affiliated Strait Hospital, China

Copyright © 2023 Su, Li, Wang and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Shan-Shan Su, susan@fjmu.edu.cn; Li-Ya Li, lly7473@fjmu.edu.cn
