- ¹Department of Mathematics and Statistics, Swarthmore College, Swarthmore, PA, United States

- ²School of Mathematical Sciences, Shanghai Jiao Tong University, Shanghai, China

- ³Ministry of Education Key Laboratory of Scientific and Engineering Computing, Shanghai Jiao Tong University, Shanghai, China

- ⁴Institute of Natural Sciences, Shanghai Jiao Tong University, Shanghai, China

Determining the structure of a network is of central importance to understanding its function in both neuroscience and applied mathematics. However, recovering the structural connectivity of neuronal networks remains a fundamental challenge both theoretically and experimentally. While neuronal networks operate in certain dynamical regimes, which may influence their connectivity reconstruction, there is widespread experimental evidence of a balanced neuronal operating state in which strong excitatory and inhibitory inputs are dynamically adjusted such that neuronal voltages primarily remain near resting potential. Utilizing the dynamics of model neurons in such a balanced regime in conjunction with the ubiquitous sparse connectivity structure of neuronal networks, we develop a compressive sensing theoretical framework for efficiently reconstructing network connections by measuring individual neuronal activity in response to a relatively small ensemble of random stimuli injected over a short time scale. By tuning the network dynamical regime, we determine that the highest fidelity reconstructions are achievable in the balanced state. We hypothesize the balanced dynamics observed in vivo may therefore be a result of evolutionary selection for optimal information encoding and expect the methodology developed to be generalizable for alternative model networks as well as experimental paradigms.

1. Introduction

The connectivity of neuronal networks is fundamental for establishing the link between brain structure and function (Boccaletti et al., 2006; Stevenson et al., 2008; Gomez-Rodriguez et al., 2012); however, recovering the structural connectivity in neuronal networks is still a challenging problem both theoretically and experimentally (Salinas and Sejnowski, 2001; Song et al., 2005; Friston, 2011; Kleinfeld et al., 2011; Bargmann and Marder, 2013). Recent experimental advances, such as diffusion tensor imaging (DTI), dense electron microscopy (EM), and highly resolved tracer injections, have facilitated improved measurement of network connectivity, but constructing complete neuronal wiring diagrams for networks of thousands or more neurons is currently infeasible due largely to the small spatial scale and the dense packing of nervous tissue (Lichtman and Denk, 2011; Sporns, 2011; Briggman and Bock, 2012; Markov et al., 2013). Likewise, modern mathematical approaches for recovering network connectivity based on measured neuronal activity, such as Granger causality, information theory, and Bayesian analysis, typically demand linear dynamics or long observation times (Aertsen et al., 1989; Sporns et al., 2004; Timme, 2007; Eldawlatly et al., 2010; Friston, 2011; Hutchison et al., 2013; Zhou et al., 2013b, 2014; Goñi et al., 2014). Is it possible to achieve the successful reconstruction of network connectivity from the measurement of individual non-linear neuronal dynamics within a short time scale?

To address this central question, we develop a novel theoretical framework for the recovery of neuronal connectivity based on both network sparsity and balanced dynamics. Sparse connectivity among neurons is widely observed on large (inter-cortical) and small (local circuit) spatial scales (Mason et al., 1991; Markram et al., 1997; Achard and Bullmore, 2007; He et al., 2007; Ganmor et al., 2011), and, therefore, the amount of observed activity required to reconstruct network connectivity may be significantly smaller than suggested by estimates using only the total network size. Compressive sensing (CS) theory has emerged as a useful methodology for sampling and reconstructing sparse signals (Candes et al., 2006; Donoho, 2006; Gross et al., 2010; Wang et al., 2011b) and has primarily been utilized in estimating the connectivity of linear or time-invariant network models (Hu et al., 2009; Mishchenko and Paninski, 2012). In the case of realistic neuronal networks, their non-linear dynamics in time pose a major conceptual difficulty, particularly in isolating the impact of direct network connections on recorded activity from the effects of indirect neuronal interactions and the external drive.

We demonstrate that the reconstruction of neuronal connectivity based on compressive sensing of non-linear network dynamics is indeed possible in an appropriately balanced dynamical regime in which fluctuations in neuronal input largely drive firing events. Numerous experimental studies demonstrate that neuronal firing events are generally irregular, with large excitatory and inhibitory inputs dynamically balanced such that the voltage of a neuron typically resides near the resting potential for a broad class of external stimulation (Britten et al., 1993; Shadlen and Newsome, 1998; Compte et al., 2003; Haider et al., 2006; Tan and Wehr, 2009; London et al., 2010; Runyan et al., 2010; Isaacson and Scanziani, 2011; Xue et al., 2014). Theoretical work corroborates the existence of this operating regime for balanced network models in which neurons are sparsely connected while strongly coupled, such that neuronal activity is highly variable and heterogeneous across the network (van Vreeswijk and Sompolinsky, 1996, 1998; Troyer and Miller, 1997; Vogels and Abbott, 2005; Miura et al., 2007; Mongillo et al., 2012). Here, we utilize the same binary-state network model as such previous studies and demonstrate that using a small ensemble of random inputs and corresponding time-averaged measurements of neuronal dynamics collected over a short time scale, it is possible to achieve high fidelity reconstructions of recurrent connectivity for sparsely connected networks of excitatory and inhibitory neurons. We show that the quality of this reconstruction improves as the network dynamics are further balanced, expecting that for physiological networks, once in the balanced state, CS-based estimates of network connectivity are feasible. We hypothesize that the balanced operating regime may have arisen in sensory systems from evolution as a means of optimally encoding both connectivity and stimulus information through network dynamics.

2. Results

2.1. Compressive Sensing of Balanced Dynamics

To investigate the reconstruction of neuronal network connectivity in the balanced state, we consider a mechanistic binary-state model with non-linear dynamics (van Vreeswijk and Sompolinsky, 1996, 1998). The model network is composed of N neurons, such that NE neurons are excitatory (E) and NI neurons are inhibitory (I). The state $\sigma_k^i(t)$ of the ith neuron in the kth population (k = E, I) at time t is prescribed by

$$\sigma_k^i(t) = H\left(u_k^i(t) - \theta_k\right), \qquad (1)$$

where H(·) denotes the Heaviside function and θk is the firing threshold for the neurons in population k. The total synaptic drive $u_k^i(t)$ into the ith neuron in the kth population at time t is

$$u_k^i(t) = \sum_{l=E,I} \sum_{j=1}^{N_l} R_{kl}^{ij}\, \sigma_l^j(t) + f_k p_k^i, \qquad (2)$$

where $R_{kl}^{ij}$ denotes the connection strength between the ith post-synaptic neuron in the kth population and the jth pre-synaptic neuron in the lth population (l = E, I), and $f_k p_k^i$ is the total external input into the ith neuron in the kth population. The connection strength $R_{kl}^{ij}$ is chosen to be $R_{kl}/\sqrt{K}$ with probability K/Nl and 0 otherwise. In this case, the excitatory connection strength RkE > 0 and the inhibitory connection strength RkI < 0. Since each neuron is expected to receive projections from K pre-synaptic excitatory neurons and K pre-synaptic inhibitory neurons, sparse connectivity is reflected by the assumption that K ≪ NE, NI. In advancing the model dynamics for each neuron, the mean time between subsequent updates is τE = 10 ms for excitatory neurons and τI = 9 ms for inhibitory neurons, reflecting experimental estimates of cortical membrane potential time constants (McCormick et al., 1985; van Vreeswijk and Sompolinsky, 1996; Shelley et al., 2002). Based on the total synaptic drive at each time the system is updated, a given neuron is either in a quiescent ($\sigma_k^i = 0$) or firing ($\sigma_k^i = 1$) state.

To partition the model across the two subpopulations, the neurons and their corresponding activity variables may also be indexed from l = 1, …, N, with the first NE indices corresponding to neurons in the excitatory population and the remaining NI indices corresponding to neurons in the inhibitory population. Using this choice of indexing, R is the N × N recurrent connectivity matrix and p is the N-vector of static external inputs for the network. The external input p is selected such that the external drive is $O(\sqrt{K})$ for each neuron, thereby comparable to the total synaptic drive from each population. Analogously, the feed-forward connectivity matrix F is N × N and diagonal, such that diagonal entries Fii = fE for i = 1, …, NE and Fii = fI for i = NE+1, …, NE+NI, thereby scaling the relative external input strength for each respective population. Since the absolute scale of the neuronal input is inconsequential in this non-dimensional model, we assume connectivity parameters REE = RIE = 1, so the primary parameters that determine the inhibition relative to excitation are the post-synaptic connection strengths for the inhibitory neurons and the external input strengths.
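The model described above can be sketched numerically as follows. This is a minimal illustration, not the authors' implementation: the function names `build_network` and `simulate`, the small network size, the discrete time step `dt`, the rule that each neuron is updated with probability `dt / tau` per step, and the uniform distribution chosen for `p` are all assumptions made here for concreteness. The connection strengths, thresholds, input scalings, and update times are taken from the parameter values quoted in the text.

```python
import numpy as np

def build_network(NE, NI, K, strengths, rng):
    """Random sparse connectivity: block (k, l) has entries R_kl / sqrt(K)
    with probability K / N_l and 0 otherwise."""
    sizes, starts = (NE, NI), (0, NE)
    R = np.zeros((NE + NI, NE + NI))
    for k in range(2):            # post-synaptic population (0 = E, 1 = I)
        for l in range(2):        # pre-synaptic population (0 = E, 1 = I)
            mask = rng.random((sizes[k], sizes[l])) < K / sizes[l]
            rows = slice(starts[k], starts[k] + sizes[k])
            cols = slice(starts[l], starts[l] + sizes[l])
            R[rows, cols] = mask * strengths[k][l] / np.sqrt(K)
    return R

def simulate(R, ext, theta, tau, T=2500.0, dt=0.5, rng=None):
    """Return the time-averaged state x and time-averaged total input mu.
    Assumed update rule: each step, neuron i is refreshed with probability
    dt / tau[i] and then fires iff its total drive exceeds theta[i]."""
    rng = np.random.default_rng() if rng is None else rng
    N = R.shape[0]
    sigma = (rng.random(N) < 0.5).astype(float)   # arbitrary initial state
    steps = int(T / dt)
    x_sum, mu_sum = np.zeros(N), np.zeros(N)
    for _ in range(steps):
        u = R @ sigma + ext                       # total drive into each neuron
        x_sum += sigma                            # accumulate at the same time point as u
        mu_sum += u
        update = rng.random(N) < dt / tau
        sigma = np.where(update, (u > theta).astype(float), sigma)
    return x_sum / steps, mu_sum / steps

rng = np.random.default_rng(0)
NE, NI, K = 80, 20, 8                             # small illustrative sizes (assumption)
strengths = [[1.0, -2.0], [1.0, -1.8]]            # [[R_EE, R_EI], [R_IE, R_II]]
R = build_network(NE, NI, K, strengths, rng)
F = np.diag(np.r_[np.full(NE, 1.2), np.full(NI, 1.0)])   # f_E = 1.2, f_I = 1
p = np.sqrt(K) * rng.uniform(0.0, 1.0, NE + NI)   # O(sqrt(K)) drive (assumed distribution)
theta = np.r_[np.full(NE, 1.0), np.full(NI, 0.7)]
tau = np.r_[np.full(NE, 10.0), np.full(NI, 9.0)]  # mean update times in ms
x, mu = simulate(R, F @ p, theta, tau, rng=rng)
```

Because the drive and the state are accumulated at the same time points, the returned averages satisfy `mu = R @ x + F @ p` up to floating-point error, whatever the update rule.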

Since Rkl as well as θk are $O(1)$ and the external drive is $O(\sqrt{K})$, if the excitatory and inhibitory inputs are not balanced, the total synaptic drive is $O(\sqrt{K})$ and thus each neuron either fires with an excessively high rate or remains nearly quiescent. In the balanced operating regime, however, the excitatory and inhibitory inputs instead dynamically cancel and produce physiological firing dynamics, leaving the mean synaptic input nearly vanishing with relatively large input fluctuations responsible for the exact timing of firing events and their irregular distribution. This leads to theoretical conditions on the connection strength parameters (van Vreeswijk and Sompolinsky, 1996, 1998):

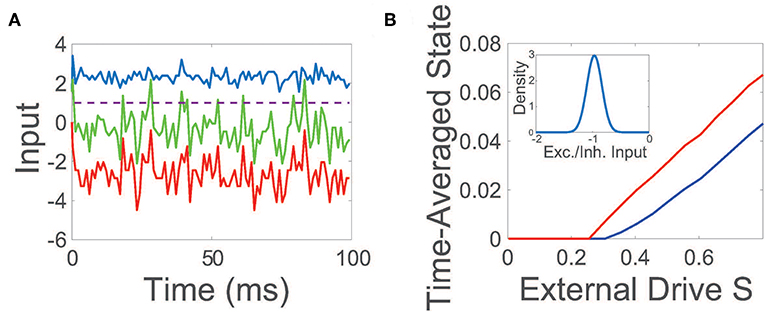

The net input into a representative neuron in the balanced state is plotted in Figure 1A, demonstrating a dynamic tracking of excitatory and inhibitory inputs such that the mean total input is far below threshold. On the larger scale of the entire network, an equilibrium between excitation and inhibition is also achieved in the balanced regime, with the time-averaged mean of the ratio between the excitatory and inhibitory input (E/I input ratio) across the network narrowly distributed near −1.
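As a rough illustration of the balance diagnostic discussed here, the sketch below computes a per-neuron E/I input ratio and its deviation from −1. The function names are hypothetical, and two simplifications are assumed: the ratio is formed from time-averaged inputs (rather than averaging an instantaneous ratio), and the excitatory external drive is counted as part of the excitatory input.

```python
import numpy as np

def ei_input_ratio(R, x, ext, NE):
    """Ratio of excitatory to inhibitory time-averaged input for each neuron.
    R: N x N connectivity, x: time-averaged states, ext: external drive,
    NE: number of excitatory neurons (indexed first)."""
    exc = R[:, :NE] @ x[:NE] + ext    # recurrent excitation plus external drive (assumption)
    inh = R[:, NE:] @ x[NE:]          # recurrent inhibition (non-positive)
    # Neurons receiving no inhibition would give a division by zero here.
    return exc / inh

def balance_deviation(ratio):
    """Absolute difference between the mean E/I input ratio and -1."""
    return abs(np.mean(ratio) + 1.0)

# Tiny hand-checkable example: one excitatory and one inhibitory neuron.
R_demo = np.array([[0.5, -1.0],
                   [1.0, -2.0]])
x_demo = np.array([1.0, 1.0])
ext_demo = np.array([0.5, 1.0])
ratio_demo = ei_input_ratio(R_demo, x_demo, ext_demo, NE=1)
```

In this toy example the excitatory input exactly cancels the inhibitory input for both neurons, so every ratio equals −1 and the deviation is zero.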

Figure 1. Balanced network dynamics. (A) Excitatory (blue), inhibitory (red), and net (green) inputs into a sample excitatory neuron in a balanced network. The dashed line indicates the firing threshold. (B) The time-averaged state of the excitatory (blue) and inhibitory (red) population as a function of external input scaling strength S. Inset: Probability density of the time-averaged ratio of excitatory and inhibitory inputs across the network. Unless otherwise specified, parameters utilized are REE = RIE = 1, RII = −1.8, REI = −2, NE = 800, NI = 200, fE = 1.2, fI = 1, K = 0.03NE, θE = 1, and θI = 0.7. The external drive into a neuron in the kth population is an excitatory constant current that is independently generated and identically uniformly-distributed with magnitude scaled by fk.

While the sparsity of R in principle reduces the necessary data for a successful reconstruction of the network connectivity, compressive sensing theory generally only applies to the recovery of sparse inputs into linear and time-invariant systems (Candes et al., 2006; Donoho, 2006), rather than from measurements of the non-linear and time-evolving dynamics of a neuronal network. To overcome this theoretical challenge, it is important to note that for a broad class of physiological neurons as well as realistic neuron models, the neuronal firing activity exhibits linear dependence on relatively strong external inputs in the proper dynamical regime (Brunel and Latham, 2003; Rauch et al., 2003; Fourcaud-Trocmé and Brunel, 2005; La Camera et al., 2006; Barranca et al., 2014a). Considering the dynamic balance between excitatory and inhibitory inputs facilitates a rapid and robust linear response to external inputs (van Vreeswijk and Sompolinsky, 1996, 1998), we hypothesize that balanced neuronal network dynamics are critical to the efficient CS reconstruction of sparse network connectivity.

For the binary-state balanced network model, the temporal expectation of Equation (2) yields a natural linear input-output mapping in response to a single input vector p

$$\mu = Rx + Fp, \qquad (4)$$

where μ is an N-dimensional vector denoting the time-averaged total input into each neuron and x is an N-dimensional vector denoting the time-averaged state of each neuron.

To demonstrate the generality of our network reconstruction framework with respect to external inputs and to avoid specializing their design, we drive the network with an ensemble of r random input vectors with independent identically uniformly distributed elements, denoted by $p^{(1)}, \ldots, p^{(r)}$, and measure the evoked time-averaged net input and state of the neurons, denoted by $\mu^{(1)}, \ldots, \mu^{(r)}$ and $x^{(1)}, \ldots, x^{(r)}$, respectively, over a short time duration. From a physiological standpoint, on a given trial, we inject into each neuron a distinct constant current of magnitude determined by a uniformly distributed random variable and measure the evoked dynamics across the network, subsequently reconstructing the network connectivity from a linear mapping relating these quantities. To facilitate efficient recovery, the number of trials utilized satisfies $r \ll N^2$. Here the $N^2$ entries of R are to be recovered using only Nr state measurements, leading to a highly underdetermined inverse problem. However, since R is sparse, CS may still potentially yield a successful reconstruction (see the Methods section for details).

While conventional balanced network theory assumes a constant and homogeneous excitatory external input is injected into each population (van Vreeswijk and Sompolinsky, 1996, 1998), note that we choose the excitatory external input vector p to be composed of independent and identically distributed random variables. Even for these heterogeneous external inputs, balanced dynamics are still well-maintained under population scalings with fE > fI. The maintenance of balance across the majority of the network can be seen in the inset of Figure 1B, plotting the mean E/I input ratio across the network, which closely resembles the distribution for the constant homogeneous input case in its narrow peak near −1 (van Vreeswijk and Sompolinsky, 1996, 1998; Barranca et al., 2019). To further probe the evoked network dynamics, we empirically examine the response of the network to increasingly large random external inputs in Figure 1B, adjusting scaled external input SFp by increasing the scaling strength S. We observe that as the external drive strength is increased, the time-averaged state of both the excitatory and inhibitory populations intensifies linearly with S for sufficiently large inputs, thereby demonstrating linear gain in agreement with Equation (4) and as expected theoretically in the large network limit in the case of homogeneous external inputs (van Vreeswijk and Sompolinsky, 1996, 1998).

With linear input-output mapping (4), we obtain a system of equations relating the network input, evoked dynamics, and the connectivity structure of R. To recover the ith row of R in this case, denoted Ri*, it is necessary to utilize the full set of inputs, $p^{(1)}, \ldots, p^{(r)}$, the respective time-averaged inputs into the ith neuron, $\mu_i^{(1)}, \ldots, \mu_i^{(r)}$, and the respective evoked time-averaged network states, $x^{(1)}, \ldots, x^{(r)}$.

The resultant underdetermined linear system in recovering the ith row, Ri*, of the recurrent connectivity matrix is

$$
\begin{bmatrix} x_1^{(1)} & \cdots & x_N^{(1)} \\ \vdots & \ddots & \vdots \\ x_1^{(r)} & \cdots & x_N^{(r)} \end{bmatrix} R_{i*}^{T} = \begin{bmatrix} \mu_i^{(1)} - F_{ii}\, p_i^{(1)} \\ \vdots \\ \mu_i^{(r)} - F_{ii}\, p_i^{(r)} \end{bmatrix}, \qquad (5)
$$

where X denotes the r × N matrix of time-averaged states on the left-hand side.

Since R is sparse and the respective average states in X are approximately uncorrelated in the balanced regime (van Vreeswijk and Sompolinsky, 1996, 1998), the optimal row reconstruction is the solution to Equation (5) with minimal L1 norm (Candes et al., 2006; Donoho, 2006) in accordance with CS theory. Considering the resultant L1 minimization problem is solvable in polynomial time (Donoho and Tsaig, 2008) and since Equation (5) represents a sequence of independent linear systems with respect to the row index i, parallelization furnishes a computationally efficient reconstruction of R.
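The row-wise sparse recovery can be sketched as below. Note the swapped-in technique: the text calls for the minimal-L1-norm solution of the linear system, whereas this sketch solves the closely related L1-penalized least-squares (LASSO) problem with iterative soft thresholding (ISTA), chosen only because it needs nothing beyond NumPy. The names `recover_rows`, `lam`, and `n_iter` are hypothetical, `X` is assumed to be the r × N matrix of time-averaged states, and column i of `B` is assumed to hold the r time-averaged inputs into neuron i with the external drive subtracted.

```python
import numpy as np

def soft_threshold(Z, t):
    """Entrywise soft-thresholding operator used by ISTA."""
    return np.sign(Z) * np.maximum(np.abs(Z) - t, 0.0)

def recover_rows(X, B, lam=1e-2, n_iter=3000):
    """Estimate R from X @ R.T ~= B by solving, for every row of R at once,
        min_W 0.5 * ||X W - B||_F^2 + lam * sum |W_ij|
    with ISTA. The columns of W decouple, so column i of W is the estimate
    of row i of R; the transpose is returned."""
    step = 1.0 / np.linalg.norm(X, 2) ** 2      # 1 / (largest singular value)^2
    W = np.zeros((X.shape[1], B.shape[1]))
    for _ in range(n_iter):
        grad = X.T @ (X @ W - B)                # gradient of the quadratic term
        W = soft_threshold(W - step * grad, step * lam)
    return W.T
```

Since the rows are independent, the same routine could be applied to blocks of columns of `B` on separate workers. In practice a dedicated basis-pursuit or linear-programming solver would match the equality-constrained formulation more closely.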

In Figure 2A, we consider a sparsely connected network with balanced dynamics and 0.05 connection density, and reconstruct its connectivity matrix composed of $N^2 = 10^6$ entries using Equation (5) for i = 1, …, N, recording the network response to r = 900 random inputs for 2.5 s each. The connectivity matrix for a subset of 100 excitatory neurons is depicted alongside the corresponding reconstruction error, demonstrating that the majority of connections, or lack thereof, are indeed captured. Improving significantly upon preexisting approaches for reconstructing network connectivity, which commonly require long observation times and focus primarily on excitatory networks (Timme, 2007; Eldawlatly et al., 2010; Hutchison et al., 2013; Zhou et al., 2013b; Goñi et al., 2014), this reconstruction framework successfully distinguishes between excitatory and inhibitory connection types over short observation times. Since the neuron types are not assumed to be known a priori, no constraint on the sign of excitatory and inhibitory connections is directly enforced in solving optimization problem (5) via L1 minimization. Nevertheless, with sufficiently rich measurements of the network dynamics, the connectivity reconstructions are generally able to identify both connection signs and magnitudes, as indicated by the small relative error obtained in recovering the connectivity matrix.

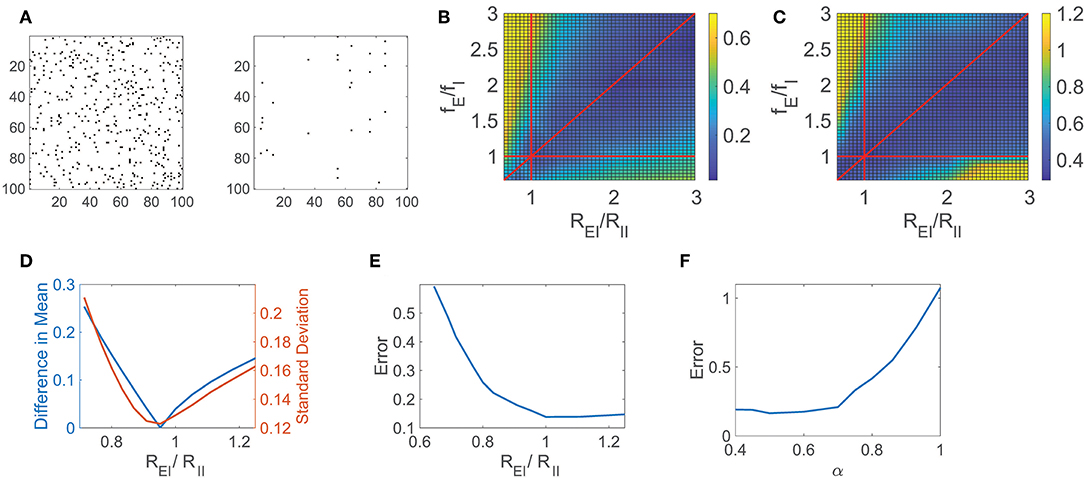

Figure 2. CS network reconstruction and dynamical regime. (A) The connectivity matrix R for a 100 excitatory neuron subset of a balanced network with NE = 800, NI = 200, and 0.05 connection density is depicted on the left. Existing connections are marked in black. On the right, errors in the CS reconstruction of R are marked in black. The relative reconstruction error is ϵ = 0.14. (B) Difference in absolute value between the mean of the time-averaged ratio of excitatory and inhibitory input across the network (E/I input ratio) and −1, the value expected in the balanced state, as a function of the quotients REI/RII and fE/fI. (C) Relative reconstruction error of R as a function of REI/RII and fE/fI. In (B,C), red lines denote REI/RII = 1, fE/fI = 1, and REI/RII = fE/fI. (D) Statistics of the E/I input ratio across the network as a function of REI/RII for fixed fE/fI = 1.2. Left ordinate axis: Difference in absolute value between the mean E/I input ratio and −1, Right ordinate axis: Standard deviation of E/I input ratio. (E) Relative reconstruction error of R as a function of REI/RII for fixed fE/fI = 1.2. (F) Relative reconstruction error of R as a function of the exponent α. Each reconstruction utilizes r = 900 inputs with 2.5 s observation time.

To quantify the accuracy of the entire connectivity matrix reconstruction, Rrecon, we measure the relative reconstruction error, ϵ = ∥R−Rrecon∥/∥R∥, using the Frobenius norm, $\|R\| = \sqrt{\sum_{i,j} R_{ij}^2}$. In this particular case, utilizing significantly fewer trials than entries in R, the network relative reconstruction error is only ϵ = 0.14, yielding close agreement with the original connection matrix. We remark that in this network the ratio of excitatory to inhibitory neurons is chosen to be 4:1 in agreement with estimates in the primary visual cortex (Gilbert, 1992; Liu, 2004; Cai et al., 2005; Zhou et al., 2013a), though this framework is adaptable to other distributions of neuron types corresponding to alternative cortical regions. While in this work we specifically consider the role of balanced dynamics in the context of an analytically tractable binary-state model setting, the compressive sensing reconstruction framework naturally generalizes to alternative model networks. In the case of the integrate-and-fire model (Lapicque, 1907; Burkitt, 2006; Mather et al., 2009; Barranca et al., 2014a), for example, rather than requiring detailed knowledge of the networks' inputs as in the binary-state model, the network input-output mapping may instead involve the time-averaged neuronal membrane potentials and firing rates (Barranca et al., 2014b), yielding a framework that is more amenable to experimental settings.
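For concreteness, the relative reconstruction error can be computed as in the short sketch below; the function name `relative_error` and the toy matrices are illustrative choices, not taken from the original work.

```python
import numpy as np

def relative_error(R, R_recon):
    """Relative reconstruction error ||R - R_recon|| / ||R|| in the Frobenius norm."""
    return np.linalg.norm(R - R_recon, "fro") / np.linalg.norm(R, "fro")

# Toy example: one of two connections is missed entirely.
R_toy = np.array([[3.0, 0.0],
                  [0.0, 4.0]])
R_toy_recon = np.array([[3.0, 0.0],
                        [0.0, 0.0]])
eps_toy = relative_error(R_toy, R_toy_recon)   # ||diff|| = 4, ||R|| = 5
```

Here the error norm is 4 and the norm of the true matrix is 5, so the relative error is 0.8; a perfect reconstruction gives 0.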

2.2. Balanced Network Characteristics for Optimal Reconstruction

We posit that the network functioning in the balanced operating regime is fundamental to the success of the CS reconstruction and demonstrate that the relative reconstruction error indeed increases as the network departs from the balanced state. We confirm the central role of the balanced state in network reconstruction by varying several network connectivity parameters, which crucially determine the network operating state, and examining the resultant impact on the CS reconstruction of R.

For the network dynamics to be appropriately balanced in the large K limit, Equation (3) gives restrictions on the external and cortical input strengths for the network. These parameter restrictions hold approximately for the sparsely-connected networks of large yet finite size that we examine, and we analyze the impact of these parameters on the network reconstruction accuracy. Since we are considering the connectivity reconstruction for networks composed of a finite number of neurons and therefore Equation (3) only holds approximately, in many cases the dynamics may be well-balanced even though the corresponding theoretical condition in the large network limit is violated (van Vreeswijk and Sompolinsky, 1996; Gu et al., 2018). For this reason, to gauge the degree to which a finite-sized network exhibits balanced dynamics, we analyze the absolute difference between the mean E/I input ratio for all neurons and −1, the expected value for balanced dynamics, as depicted in Figure 2B across network parameters. Here we vary the quotients, REI/RII and fE/fI, which are each crucial to Equation (3), observing a clear region of well-balanced dynamics. Investigating the impact of the network dynamical regime on the CS reconstruction of R, we plot in Figure 2C the corresponding relative reconstruction error over the same parameter space. The highest quality reconstructions are generally achieved when the mean E/I input ratio is near −1, and the network is consequently in the balanced operating regime, with degradation in accuracy incurred as the mean E/I input ratio departs from −1.

Similarly, we examine a detailed one-dimensional slice of these plots in Figures 2D,E, respectively, as we fix fE/fI = 1.2 and vary the quotient REI/RII. In particular, we plot the absolute difference between the mean E/I input ratio and −1 as well as the standard deviation of the E/I input ratio across the network in Figure 2D to further classify the network operating state. We observe that when Equation (3) is approximately satisfied, the difference between the mean E/I input ratio and −1 is small. In this same regime, the standard deviation of the E/I input ratio is also near zero, indicating a dynamic balance between the excitatory and inhibitory inputs over the entire network. For nearly identical parameter choices as those producing balanced dynamics, we observe that the corresponding relative reconstruction error, depicted in Figure 2E, is minimal. As the reconstruction accuracy diminishes, increasingly large proportions of neurons remain either active with unrealistically high firing rate or are completely quiescent. Since the relatively rare and irregular threshold crossings due to input fluctuations in the balanced regime largely reflect the impact of the network connectivity on dynamics, nearly frozen or excessively high neuronal activity results in significantly diminished reconstruction quality.

Another crucial assumption in formulating the balanced network model is strong synapses. Similar models could be formulated with connection strengths of form $R_{kl}/K^{\alpha}$. However, the dynamics are only well-balanced in the large K limit for α = 1/2. For 1/2 < α ≤ 1, the weaker synapse case, the temporal input fluctuations decrease with K, scaling as $K^{1/2-\alpha}$, leading to mean-driven dynamics in the large K limit. In contrast, for 0 < α < 1/2, the stronger synapse case, input fluctuations instead grow with K, and thus the net input wildly fluctuates well above and below threshold.
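The fluctuation scaling quoted above can be motivated by a short heuristic estimate. This is a sketch under simplifying assumptions (each neuron receives roughly K approximately independent inputs from a given population, each of strength proportional to $K^{-\alpha}$, with O(1) mean activity $m_l$), not a derivation taken from the original work.

```latex
% Heuristic scaling of the input from pre-synaptic population l, assuming
% about K independent inputs of strength R_{kl}/K^{\alpha} and mean activity m_l = O(1).
\begin{align*}
\text{mean input} &\sim K \cdot \frac{R_{kl}}{K^{\alpha}}\, m_l = O\!\left(K^{1-\alpha}\right),\\
\text{input variance} &\sim K \cdot \frac{R_{kl}^{2}}{K^{2\alpha}} \cdot O(1) = O\!\left(K^{1-2\alpha}\right),\\
\text{input fluctuations} &\sim \sqrt{K^{1-2\alpha}} = K^{1/2-\alpha}.
\end{align*}
% \alpha = 1/2: fluctuations are O(1), comparable to the O(1) thresholds.
% \alpha > 1/2: fluctuations vanish as K grows (mean-driven dynamics).
% \alpha < 1/2: fluctuations grow without bound as K grows.
```

Only for α = 1/2 do the fluctuations remain comparable to the O(1) firing thresholds as K grows, which is consistent with the balanced case singled out in the text.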

Using our CS framework to reconstruct the network connectivity R, we examine the reconstruction error achieved for the network model initialized across choices of α in Figure 2F while fixing K and the remaining model parameters. The optimal reconstruction is achieved near α = 0.5, when the network is in the balanced operating regime, with error generally increasing as α moves away from 0.5 and the mean E/I input ratio deviates from balance. Note that while here we study the impact of α for network realizations with a fixed and finite choice of K, the theoretical considerations in the large network limit suggest that these effects become more pronounced for larger networks with correspondingly larger K. Considering that the reconstruction error increases especially rapidly as α → 1, we hypothesize that weaker synapses in non-balanced network models are not conducive to the reconstruction of network connectivity, particularly in the mean-field limit. While mean-driven dynamics generally well encode information regarding network inputs and feed-forward connectivity (Barranca et al., 2016b), in this case, we instead observe that the balanced dynamical regime is better suited for encoding recurrent interactions in the network dynamics.

2.3. Robustness of Connectivity Reconstruction

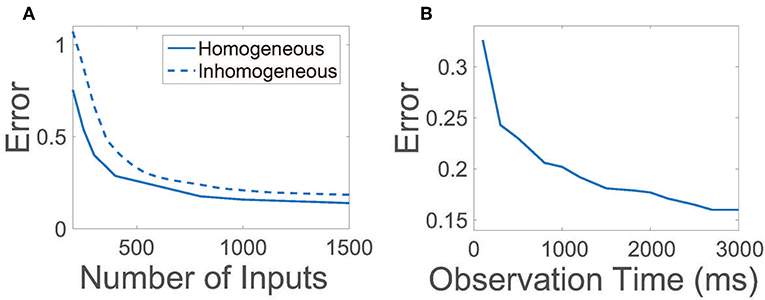

For efficiency, it is desirable to achieve an accurate reconstruction of the network connections using a relatively small number of random inputs and also by collecting the evoked network activity over a small observation time. In Figure 3A, we plot the relative reconstruction error for R as the number of input vectors is increased given a fixed observation time. Initially, as the number of inputs is increased, the error rapidly decreases. Once the number of inputs utilized is sufficiently large, approximately 800, only marginal improvements are garnered, at which point additional experiments are of less utility. Hence, only a relatively small number of trials is necessary to yield near-maximum reconstruction quality.

Figure 3. Efficiency and robustness of CS network reconstruction. (A) Relative reconstruction error as a function of the number of random input vectors r utilized. Solid line depicts error using homogeneous thresholds θE = 1, θI = 0.7. Dashed line depicts error using inhomogeneous thresholds, in which the threshold of each neuron is offset from its population value by an inhomogeneity, where inhomogeneities are uniformly distributed random variables with strength d = 0.3. In each case, the observation time is 2.5 s. (B) Relative reconstruction error as a function of observation time. In each case, 900 random input vectors are utilized.

Given a sufficient number of inputs such that the reconstruction error saturates, we next examine the duration of time over which data must be recorded for successful connectivity reconstruction in Figure 3B. The relative reconstruction error precipitously drops for small observation times, leveling off for sufficiently large time durations over 2 s. Thus, for each set of inputs utilized, it is only necessary to record neuronal activity over a short time duration.

Similar dependence on input ensemble size and observation time holds for networks of alternative sizes with analogous connection density and architecture, yielding comparably accurate reconstructions by using a relatively small number of input vectors. As the irregular dynamics of neurons in the balanced state are crucial to the success of the CS recovery framework, we note that regardless of the observation time and number of inputs utilized, reconstruction of R remains intractable if the network dynamics are not sufficiently well-balanced.

In our original binary-state model, we assumed that all excitatory neurons and inhibitory neurons are statistically homogeneous. We now examine the effect of inhomogeneity in the network on the reconstruction of R by varying the firing threshold for each neuron. In this case, the firing threshold for the ith neuron in the kth population is offset from the population threshold θk by an inhomogeneity, with inhomogeneities prescribed by identically uniformly distributed random variables of strength d. In Figure 3A, we plot the reconstruction error dependence on the number of random inputs for inhomogeneity strength d = 0.3 ≈ 0.43θI, observing only a minor degradation in reconstruction quality relative to the homogeneous threshold case. Thus, we expect that even if a network is composed of neurons of many types, as long as the neuronal dynamics are robustly balanced, it is still possible to utilize our CS framework to reconstruct the network connectivity.

3. Discussion

Addressing the current theoretical and experimental difficulties in measuring the structural connectivity in large neuronal networks, we show that the high degree of sparsity in network connections makes it feasible to accurately reconstruct network connectivity from a relatively small number of measurements of evoked neuronal activity via CS theory. The success of this reconstruction depends on the dynamical regime of the network, with the balanced operating state facilitating optimal recovery. Just as the connectivity matrix R may be recovered from dynamical activity based on an underlying linear mapping, such as Equation (4), unknown network feed-forward connectivity as well as natural stimuli may analogously be reconstructed (Barranca et al., 2016b). We have empirically verified such reconstructions are also improved when the network is in the balanced operating regime. In light of this, we hypothesize that evolution may have fine-tuned much of the cortical network connectivity to optimize both the encoding of sensory inputs as well as local connectivity based on balanced network dynamics.

It is important to note that while the compressive sensing theory leveraged in this work is well-suited for the reconstruction of sparse signals, the reconstruction of densely-connected neuronal networks in the brain with potentially strongly correlated dynamics remains a challenging area for future investigation (Wang et al., 2011a; Markov et al., 2013; Yang et al., 2017). Though we considered a balanced network model with statistically homogeneous random connectivity among neuron types, physiological neuronal circuits observed in experiment typically exhibit a complex network structure (Massimini et al., 2005; Bonifazi et al., 2009; Markov et al., 2013), which may induce stronger correlations and oscillations in neuronal dynamics (Honey et al., 2007; Wang et al., 2011a; Yang et al., 2017). While prolonged synchronous dynamics may make it infeasible to reconstruct network connections using our methodology, intermixed periods of irregular dynamics may provide sufficient neuronal interaction data or, otherwise, connections between functional modules may be potentially identifiable. Recent theoretical analysis demonstrates that even for networks with small-world or scale-free structure, balanced dynamics can persist in these neuronal networks with various types of single-neuron dynamics, particularly in an embedded active core of neurons hypothesized to play a key role in sparse coding (Gu et al., 2018). For such a balanced core in a network with heterogeneous connectivity, the primary dynamical assumptions of our reconstruction framework hold as does compressive sensing theory in the presence of mildly structured sampling matrices (Elad, 2007; Barranca et al., 2016a; Adcock et al., 2017), and thus it may be possible to extend our framework in recovering connections within the balanced core.

While this work utilized specific modeling choices for which the balanced state is well-characterized, in alternative settings, a similar framework can potentially be utilized to reconstruct sparse network connectivity as long as the dynamics are in the balanced operating regime. Linear mappings in the balanced state similar to Equation (4) have been well-established for various classes of neuronal network models, including those with more physiological dynamics (Brunel and Latham, 2003; Fourcaud-Trocmé and Brunel, 2005; Barranca et al., 2014a, 2019; Gu et al., 2018), and experimental measurements of neuronal firing-activity also generally exhibit a similar linear dependence on input strength (Rauch et al., 2003; La Camera et al., 2006). Advances in multiple neuron recording, such as multiple-electrode technology, optical recording with fast voltage-sensitive dyes, and light-field microscopy, have facilitated the recording of increasingly large numbers of neurons simultaneously (Stevenson and Kording, 2011; Prevedel et al., 2014; Frost et al., 2015), and combined with new optogenetic as well as optochemical techniques for precisely stimulating specific neurons (Banghart et al., 2004; Rickgauer et al., 2014; Packer et al., 2015), we expect the theoretical framework developed to be generalizable by combining these techniques in experiment. To circumvent potential experimental difficulties in simultaneously stimulating specific neurons and recording their evoked dynamics, we expect it also to be possible to extend our theoretical framework by driving a subset of neurons and recording the response of a random group of neurons in each trial. Since a particular subnetwork of neurons in the brain generally receives inhomogeneous and unknown input from external neurons, the development and utilization of an accurate input-output mapping involving only the recorded network dynamics and applied drive in experiments marks a key area for future exploration. While there are known mappings that make no assumption of the detailed input into each neuron, they do assume that external inputs are fully characterized. Since such mappings are quite robust in the presence of noise (Barranca et al., 2014b,c, 2016b), it may still be possible to accurately discern recurrent connections even in the presence of unknown external neuronal inputs for sufficiently strong forcing applied in experiments.

4. Methods

4.1. Compressive Sensing Theory

Compressive sensing theory states that for sparse data, the number of measurements required for a successful reconstruction in a static and linear system is determined by the number of dominant non-zero components in the data (Candes et al., 2006; Donoho, 2006). Using this reasoning, optimally reconstructing sparse data from a small number of samples requires selecting the sparsest reconstruction consistent with the measured data, since such a signal is most compressible. CS theory thus provides a significant improvement in sampling efficiency over the conventional Shannon-Nyquist theorem, which asserts that the sampling rate should instead be determined by the full bandwidth of the data (Shannon, 1949).

The reconstruction of time-invariant data from a small number of samples in a linear system can be considered an underdetermined inverse problem. For an n-component signal, y, m discrete samples of y can be represented by Ay, where A is an m×n measurement matrix composed of rows which are each a set of measurement weights. This yields an m-component measured signal, b. Reconstructing the true data y from the measured data b is therefore equivalent to solving

$$Ay = b. \tag{6}$$

When the number of samples taken is significantly smaller than the number of components in y, i.e., m ≪ n, the above system is highly underdetermined with an infinite number of possible solutions. While one approach to selecting the most compressible solution is to choose the sparsest y satisfying Equation (6), this is generally too computationally expensive for real-world signals.
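To make the non-uniqueness concrete, the following minimal sketch builds a small underdetermined system and computes one of its infinitely many solutions, the minimum-norm least-squares solution. The dimensions, the Gaussian measurement matrix, and all variable names are illustrative assumptions and are not taken from the simulations in this work.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes (assumptions): n-component signal, m samples, k non-zeros.
n, m, k = 100, 30, 5

# Sparse ground-truth signal y with k non-zero components.
y_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
y_true[support] = rng.uniform(1.0, 2.0, size=k)

# Random m x n measurement matrix A and measured signal b = A y.
A = rng.standard_normal((m, n))
b = A @ y_true

# One of infinitely many solutions of A y = b: the minimum l2-norm solution.
y_min_norm = np.linalg.pinv(A) @ b

residual = np.linalg.norm(A @ y_min_norm - b)
nonzeros_true = np.count_nonzero(y_true)
nonzeros_min_norm = np.count_nonzero(np.abs(y_min_norm) > 1e-8)

print("residual of minimum-norm solution:", residual)
print("non-zeros in true signal:", nonzeros_true)
print("non-zeros in minimum-norm solution:", nonzeros_min_norm)
```

The minimum-norm solution fits the measurements but is generically dense, so consistency with the measured data alone does not identify the sparse signal; an additional sparsity criterion is needed.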

For sufficiently sparse y and a broad class of measurement matrices, CS theory shows that a viable surrogate is in fact minimizing the $\ell_1$ norm, $\|y\|_{\ell_1} = \sum_{i=1}^{n} |y_i|$, subject to Equation (6) (Candes and Wakin, 2008), which is efficiently solvable in polynomial time using numerous algorithms (Tropp and Gilbert, 2007; Donoho and Tsaig, 2008). From an experimental standpoint, it is relatively straightforward to devise sampling schemes such that the corresponding measurement matrices are amenable to CS. Measurement matrices exhibiting randomness in their structure are particularly viable candidates (Baraniuk, 2007; Candes and Wakin, 2008; Barranca et al., 2016a), and, consequently, the response matrix X in the left-hand side of Equation (5) is suited for CS reconstructions in the balanced regime since X demonstrates little correlation among its entries.
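As an illustration of sparse recovery from few random measurements, the sketch below implements orthogonal matching pursuit, the greedy algorithm of Tropp and Gilbert (2007). It is a swapped-in stand-in for a sparse solver and is not necessarily the algorithm used for the reconstructions in this work; the function name `omp`, the Gaussian measurement matrix, and the problem sizes are assumptions chosen for demonstration, and the sparsity level k is assumed to be known.

```python
import numpy as np

def omp(A, b, k):
    """Orthogonal matching pursuit: greedy estimate of a k-sparse y with A y = b."""
    n = A.shape[1]
    residual = b.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(k):
        # Select the column most correlated with the current residual.
        correlations = np.abs(A.T @ residual)
        if support:
            correlations[support] = 0.0  # never reselect a chosen column
        support.append(int(np.argmax(correlations)))
        # Least-squares fit of b on the selected columns, then update the residual.
        coef, *_ = np.linalg.lstsq(A[:, support], b, rcond=None)
        residual = b - A[:, support] @ coef
    y = np.zeros(n)
    y[support] = coef
    return y

rng = np.random.default_rng(1)

# Illustrative sizes (assumptions): m = 80 samples of an n = 200 signal with k = 4 non-zeros.
n, m, k = 200, 80, 4

y_true = np.zeros(n)
idx = rng.choice(n, size=k, replace=False)
y_true[idx] = rng.uniform(1.0, 2.0, size=k) * rng.choice([-1.0, 1.0], size=k)

A = rng.standard_normal((m, n))  # random measurement matrix
b = A @ y_true                   # m measured samples

y_rec = omp(A, b, k)
recovery_error = np.linalg.norm(y_rec - y_true) / np.linalg.norm(y_true)
print("relative recovery error:", recovery_error)
```

In this noiseless setting the sparse signal is recovered from far fewer samples than components, in contrast to a generic solution of the underdetermined system. A convex solver for the minimization problem itself could be substituted for `omp` without changing the surrounding setup.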

Data Availability Statement

All datasets generated for this study are included in the manuscript/supplementary files.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

Funding

This work was supported by NSF DMS-1812478 (VB), by a Swarthmore Faculty Research Support Grant (VB), by NSFC-11671259, NSFC-11722107, NSFC-91630208, SJTU-UM Collaborative Research Program, and by the Student Innovation Center at Shanghai Jiao Tong University (DZ).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank the late David Cai for initial thoughtful discussions of this work.

References

Achard, S., and Bullmore, E. (2007). Efficiency and cost of economical brain functional networks. PLoS Comput. Biol. 3:e17. doi: 10.1371/journal.pcbi.0030017

Adcock, B., Hansen, A. C., Poon, C., and Roman, B. (2017). “Breaking the coherence barrier: a new theory for compressed sensing,” in Forum of Mathematics, Sigma, Vol. 5. (Cambridge, UK: Cambridge University Press).

Aertsen, A. M., Gerstein, G. L., Habib, M. K., and Palm, G. (1989). Dynamics of neuronal firing correlation: modulation of “effective connectivity”. J. Neurophysiol. 61, 900–917.

Banghart, M., Borges, K., Isacoff, E., Trauner, D., and Kramer, R. H. (2004). Light-activated ion channels for remote control of neuronal firing. Nat. Neurosci. 7, 1381–1386. doi: 10.1038/nn1356

Baraniuk, R. (2007). Compressive sensing. IEEE Signal Process. Mag. 24, 118–120. doi: 10.1109/MSP.2007.4286571

Bargmann, C. I., and Marder, E. (2013). From the connectome to brain function. Nat. Methods 10, 483–490. doi: 10.1038/nmeth.2451

Barranca, V. J., Huang, H., and Li, S. (2019). The impact of spike-frequency adaptation on balanced network dynamics. Cogn. Neurodyn. 13, 105–120. doi: 10.1007/s11571-018-9504-2

Barranca, V. J., Johnson, D. C., Moyher, J. L., Sauppe, J. P., Shkarayev, M. S., Kovačič, G., et al. (2014a). Dynamics of the exponential integrate-and-fire model with slow currents and adaptation. J. Comput. Neurosci. 37, 161–180. doi: 10.1007/s10827-013-0494-0

Barranca, V. J., Kovačič, G., Zhou, D., and Cai, D. (2014b). Network dynamics for optimal compressive-sensing input-signal recovery. Phys. Rev. E 90:042908. doi: 10.1103/PhysRevE.90.042908

Barranca, V. J., Kovačič, G., Zhou, D., and Cai, D. (2014c). Sparsity and compressed coding in sensory systems. PLoS Comput. Biol. 10:e1003793. doi: 10.1371/journal.pcbi.1003793

Barranca, V. J., Kovačič, G., Zhou, D., and Cai, D. (2016a). Improved compressive sensing of natural scenes using localized random sampling. Sci. Rep. 6:31976. doi: 10.1038/srep31976

Barranca, V. J., Zhou, D., and Cai, D. (2016b). Compressive sensing reconstruction of feed-forward connectivity in pulse-coupled nonlinear networks. Phys. Rev. E 93:060201. doi: 10.1103/PhysRevE.93.060201

Boccaletti, S., Latora, V., Moreno, Y., Chavez, M., and Hwang, D.-U. (2006). Complex networks: structure and dynamics. Phys. Rep. 424, 175–308. doi: 10.1016/j.physrep.2005.10.009

Bonifazi, P., Goldin, M., Picardo, M. A., Jorquera, I., Cattani, A., Bianconi, G., et al. (2009). GABAergic hub neurons orchestrate synchrony in developing hippocampal networks. Science 326, 1419–1424. doi: 10.1126/science.1175509

Briggman, K. L., and Bock, D. D. (2012). Volume electron microscopy for neuronal circuit reconstruction. Curr. Opin. Neurobiol. 22, 154–161. doi: 10.1016/j.conb.2011.10.022

Britten, K. H., Shadlen, M. N., Newsome, W. T., and Movshon, J. A. (1993). Responses of neurons in macaque MT to stochastic motion signals. Vis. Neurosci. 10, 1157–1169. doi: 10.1017/S0952523800010269

Brunel, N., and Latham, P. (2003). Firing rate of the noisy quadratic integrate-and-fire neuron. Neural Comput. 15, 2281–2306. doi: 10.1162/089976603322362365

Burkitt, A. N. (2006). A review of the integrate-and-fire neuron model: I. Homogeneous synaptic input. Biol. Cybern. 95, 1–19. doi: 10.1007/s00422-006-0068-6

Cai, D., Rangan, A. V., and McLaughlin, D. W. (2005). Architectural and synaptic mechanisms underlying coherent spontaneous activity in V1. Proc. Natl. Acad. Sci. U.S.A. 102, 5868–5873. doi: 10.1073/pnas.0501913102

Candes, E. J., Romberg, J. K., and Tao, T. (2006). Stable signal recovery from incomplete and inaccurate measurements. Commun. Pur. Appl. Math. 59, 1207–1223. doi: 10.1002/cpa.20124

Candes, E. J., and Wakin, M. B. (2008). An introduction to compressive sampling. IEEE Signal Process. Mag. 25, 21–30. doi: 10.1109/MSP.2007.914731

Compte, A., Constantinidis, C., Tegner, J., Raghavachari, S., Chafee, M. V., Goldman-Rakic, P. S., et al. (2003). Temporally irregular mnemonic persistent activity in prefrontal neurons of monkeys during a delayed response task. J. Neurophysiol. 90, 3441–3454. doi: 10.1152/jn.00949.2002

Donoho, D. L. (2006). Compressed sensing. IEEE Trans. Inform. Theory 52, 1289–1306. doi: 10.1109/TIT.2006.871582

Donoho, D. L., and Tsaig, Y. (2008). Fast solution of l1-norm minimization problems when the solution may be sparse. IEEE Trans. Inform. Theory 54, 4789–4812. doi: 10.1109/TIT.2008.929958

Elad, M. (2007). Optimized projections for compressed sensing. IEEE Trans. Signal Process. 55, 5695–5702. doi: 10.1109/TSP.2007.900760

Eldawlatly, S., Zhou, Y., Jin, R., and Oweiss, K. G. (2010). On the use of dynamic Bayesian networks in reconstructing functional neuronal networks from spike train ensembles. Neural Comput. 22, 158–189. doi: 10.1162/neco.2009.11-08-900

Fourcaud-Trocmé, N., and Brunel, N. (2005). Dynamics of the instantaneous firing rate in response to changes in input statistics. J. Comput. Neurosci. 18, 311–321. doi: 10.1007/s10827-005-0337-8

Friston, K. J. (2011). Functional and effective connectivity: a review. Brain Connect. 1, 13–36. doi: 10.1089/brain.2011.0008

Frost, W. N., Brandon, C. J., Bruno, A. M., Humphries, M. D., Moore-Kochlacs, C., Sejnowski, T. J., et al. (2015). Monitoring spiking activity of many individual neurons in invertebrate ganglia. Adv. Exp. Med. Biol. 859, 127–145. doi: 10.1007/978-3-319-17641-3_5

Ganmor, E., Segev, R., and Schneidman, E. (2011). The architecture of functional interaction networks in the retina. J. Neurosci. 31, 3044–3054. doi: 10.1523/JNEUROSCI.3682-10.2011

Gomez-Rodriguez, M., Leskovec, J., and Krause, A. (2012). Inferring networks of diffusion and influence. T. Knowl. Discov. D. 5:21. doi: 10.1145/2086737.2086741

Goñi, J., van den Heuvel, M. P., Avena-Koenigsberger, A., Velez de Mendizabal, N., Betzel, R. F., Griffa, A., et al. (2014). Resting-brain functional connectivity predicted by analytic measures of network communication. Proc. Natl. Acad. Sci. U.S.A. 111, 833–838. doi: 10.1073/pnas.1315529111

Gross, D., Liu, Y. K., Flammia, S. T., Becker, S., and Eisert, J. (2010). Quantum state tomography via compressed sensing. Phys. Rev. Lett. 105:150401. doi: 10.1103/PhysRevLett.105.150401

Gu, Q. L., Li, S., Dai, W. P., Zhou, D., and Cai, D. (2018). Balanced active core in heterogeneous neuronal networks. Front. Comput. Neurosci. 12:109. doi: 10.3389/fncom.2018.00109

Haider, B., Duque, A., Hasenstaub, A. R., and McCormick, D. A. (2006). Neocortical network activity in vivo is generated through a dynamic balance of excitation and inhibition. J. Neurosci. 26, 4535–4545. doi: 10.1523/JNEUROSCI.5297-05.2006

He, Y., Chen, Z. J., and Evans, A. C. (2007). Small-world anatomical networks in the human brain revealed by cortical thickness from MRI. Cereb. Cortex 17, 2407–2419. doi: 10.1093/cercor/bhl149

Honey, C. J., Kötter, R., Breakspear, M., and Sporns, O. (2007). Network structure of cerebral cortex shapes functional connectivity on multiple time scales. Proc. Natl. Acad. Sci. U.S.A. 104, 10240–10245. doi: 10.1073/pnas.0701519104

Hu, T., Leonardo, A., and Chklovskii, D. (2009). “Reconstruction of sparse circuits using multi-neuronal excitation (RESCUME),” in Advances in Neural Information Processing Systems 22 (Vancouver, BC), 790–798.

Hutchison, R. M., Womelsdorf, T., Allen, E. A., Bandettini, P. A., Calhoun, V. D., Corbetta, M., et al. (2013). Dynamic functional connectivity: promise, issues, and interpretations. Neuroimage 80, 360–378. doi: 10.1016/j.neuroimage.2013.05.079

Isaacson, J. S., and Scanziani, M. (2011). How inhibition shapes cortical activity. Neuron 72, 231–243. doi: 10.1016/j.neuron.2011.09.027

Kleinfeld, D., Bharioke, A., Blinder, P., Bock, D. D., Briggman, K. L., Chklovskii, D. B., et al. (2011). Large-scale automated histology in the pursuit of connectomes. J. Neurosci. 31, 16125–16138. doi: 10.1523/JNEUROSCI.4077-11.2011

La Camera, G., Rauch, A., Thurbon, D., Lüscher, H. R., Senn, W., and Fusi, S. (2006). Multiple time scales of temporal response in pyramidal and fast spiking cortical neurons. J. Neurophysiol. 96, 3448–3464. doi: 10.1152/jn.00453.2006

Lapicque, L. (1907). Recherches quantitatives sur l'excitation électrique des nerfs traitée comme une polarisation. J. Physiol. Pathol. Gen. 9, 620–635.

Lichtman, J. W., and Denk, W. (2011). The big and the small: challenges of imaging the brain's circuits. Science 334, 618–623. doi: 10.1126/science.1209168

Liu, G. (2004). Local structural balance and functional interaction of excitatory and inhibitory synapses in hippocampal dendrites. Nat. Neurosci. 7, 373–379. doi: 10.1038/nn1206

London, M., Roth, A., Beeren, L., Häusser, M., and Latham, P. E. (2010). Sensitivity to perturbations in vivo implies high noise and suggests rate coding in cortex. Nature 466, 123–127. doi: 10.1038/nature09086

Markov, N. T., Ercsey-Ravasz, M., Van Essen, D. C., Knoblauch, K., Toroczkai, Z., and Kennedy, H. (2013). Cortical high-density counterstream architectures. Science 342:1238406. doi: 10.1126/science.1238406

Markram, H., Lübke, J., Frotscher, M., Roth, A., and Sakmann, B. (1997). Physiology and anatomy of synaptic connections between thick tufted pyramidal neurones in the developing rat neocortex. J. Physiol. 500, 409–440.

Mason, A., Nicoll, A., and Stratford, K. (1991). Synaptic transmission between individual pyramidal neurons of the rat visual cortex in vitro. J. Neurosci. 11, 72–84.

Massimini, M., Ferrarelli, F., Huber, R., Esser, S. K., Singh, H., and Tononi, G. (2005). Breakdown of cortical effective connectivity during sleep. Science 309, 2228–2232. doi: 10.1126/science.1117256

Mather, W., Bennett, M. R., Hasty, J., and Tsimring, L. S. (2009). Delay-induced degrade-and-fire oscillations in small genetic circuits. Phys. Rev. Lett. 102:068105. doi: 10.1103/PhysRevLett.102.068105

McCormick, D. A., Connors, B. W., Lighthall, J. W., and Prince, D. A. (1985). Comparative electrophysiology of pyramidal and sparsely spiny stellate neurons of the neocortex. J. Neurophysiol. 54, 782–806. doi: 10.1152/jn.1985.54.4.782

Mishchenko, Y., and Paninski, L. (2012). A Bayesian compressed-sensing approach for reconstructing neural connectivity from subsampled anatomical data. J. Comput. Neurosci. 33, 371–388. doi: 10.1007/s10827-012-0390-z

Miura, K., Tsubo, Y., Okada, M., and Fukai, T. (2007). Balanced excitatory and inhibitory inputs to cortical neurons decouple firing irregularity from rate modulations. J. Neurosci. 27, 13802–13812. doi: 10.1523/JNEUROSCI.2452-07.2007

Mongillo, G., Hansel, D., and van Vreeswijk, C. (2012). Bistability and spatiotemporal irregularity in neuronal networks with nonlinear synaptic transmission. Phys. Rev. Lett. 108:158101. doi: 10.1103/PhysRevLett.108.158101

Packer, A. M., Russell, L. E., Dalgleish, H. W., and Häusser, M. (2015). Simultaneous all-optical manipulation and recording of neural circuit activity with cellular resolution in vivo. Nat. Methods 12, 140–146. doi: 10.1038/nmeth.3217

Prevedel, R., Yoon, Y. G., Hoffmann, M., Pak, N., Wetzstein, G., Kato, S., et al. (2014). Simultaneous whole-animal 3D imaging of neuronal activity using light-field microscopy. Nat. Methods 11, 727–730. doi: 10.1038/nmeth.2964

Rauch, A., La Camera, G., Luscher, H.-R., Senn, W., and Fusi, S. (2003). Neocortical pyramidal cells respond as integrate-and-fire neurons to in vivo-like input currents. J. Neurophysiol. 90, 1598–1612. doi: 10.1152/jn.00293.2003

Rickgauer, J. P., Deisseroth, K., and Tank, D. W. (2014). Simultaneous cellular-resolution optical perturbation and imaging of place cell firing fields. Nat. Neurosci. 17, 1816–1824. doi: 10.1038/nn.3866

Runyan, C. A., Schummers, J., Van Wart, A., Kuhlman, S. J., Wilson, N. R., Huang, Z. J., et al. (2010). Response features of parvalbumin-expressing interneurons suggest precise roles for subtypes of inhibition in visual cortex. Neuron 67, 847–857. doi: 10.1016/j.neuron.2010.08.006

Salinas, E., and Sejnowski, T. J. (2001). Correlated neuronal activity and the flow of neural information. Nat. Rev. Neurosci. 2, 539–550. doi: 10.1038/35086012

Shadlen, M. N., and Newsome, W. T. (1998). The variable discharge of cortical neurons: implications for connectivity, computation and information coding. J. Neurosci. 18, 3870–3896.

Shelley, M., McLaughlin, D., Shapley, R., and Wielaard, J. (2002). States of high conductance in a large-scale model of the visual cortex. J. Comput. Neurosci. 13, 93–109. doi: 10.1023/A:1020158106603

Song, S., Sjöström, P. J., Reigl, M., Nelson, S., and Chklovskii, D. B. (2005). Highly nonrandom features of synaptic connectivity in local cortical circuits. PLoS Biol. 3:e68. doi: 10.1371/journal.pbio.0030068

Sporns, O. (2011). The human connectome: a complex network. Ann. N. Y. Acad. Sci. 1224, 109–125.

Sporns, O., Chialvo, D. R., Kaiser, M., and Hilgetag, C. C. (2004). Organization, development and function of complex brain networks. Trends Cognit. Sci. 8, 418–425. doi: 10.1016/j.tics.2004.07.008

Stevenson, I. H., and Kording, K. P. (2011). How advances in neural recording affect data analysis. Nat. Neurosci. 14, 139–142. doi: 10.1038/nn.2731

Stevenson, I. H., Rebesco, J. M., Miller, L. E., and Kording, K. P. (2008). Inferring functional connections between neurons. Curr. Opin. Neurobiol. 18, 582–588. doi: 10.1016/j.conb.2008.11.005

Tan, A. Y., and Wehr, M. (2009). Balanced tone-evoked synaptic excitation and inhibition in mouse auditory cortex. Neuroscience 163, 1302–1315. doi: 10.1016/j.neuroscience.2009.07.032

Timme, M. (2007). Revealing network connectivity from response dynamics. Phys. Rev. Lett. 98:224101. doi: 10.1103/PhysRevLett.98.224101

Tropp, J. A., and Gilbert, A. C. (2007). Signal recovery from random measurements via orthogonal matching pursuit. IEEE Trans. Inform. Theory 53, 4655–4666. doi: 10.1109/TIT.2007.909108

Troyer, T. W., and Miller, K. D. (1997). Physiological gain leads to high ISI variability in a simple model of a cortical regular spiking cell. Neural Comput. 9, 971–983.

van Vreeswijk, C., and Sompolinsky, H. (1996). Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science 274, 1724–1726.

van Vreeswijk, C., and Sompolinsky, H. (1998). Chaotic balanced state in a model of cortical circuits. Neural Comput. 10, 1321–1371.

Vogels, T. P., and Abbott, L. F. (2005). Signal propagation and logic gating in networks of integrate-and-fire neurons. J. Neurosci. 25, 10786–10795. doi: 10.1523/JNEUROSCI.3508-05.2005

Wang, S. J., Hilgetag, C. C., and Zhou, C. (2011a). Sustained activity in hierarchical modular neural networks: self-organized criticality and oscillations. Front. Comput. Neurosci. 5:30. doi: 10.3389/fncom.2011.00030

Wang, W.-X., Lai, Y.-C., Grebogi, C., and Ye, J. (2011b). Network reconstruction based on evolutionary-game data via compressive sensing. Phys. Rev. X 1:021021. doi: 10.1103/PhysRevX.1.021021

Xue, M., Atallah, B. V., and Scanziani, M. (2014). Equalizing excitation-inhibition ratios across visual cortical neurons. Nature 511, 596–600. doi: 10.1038/nature13321

Yang, D. P., Zhou, H. J., and Zhou, C. (2017). Co-emergence of multi-scale cortical activities of irregular firing, oscillations and avalanches achieves cost-efficient information capacity. PLoS Comput. Biol. 13:e1005384. doi: 10.1371/journal.pcbi.1005384

Zhou, D., Rangan, A., McLaughlin, D., and Cai, D. (2013a). Spatiotemporal dynamics of neuronal population response in the primary visual cortex. Proc. Natl. Acad. Sci. U.S.A. 110, 9517–9522. doi: 10.1073/pnas.1308167110

Zhou, D., Xiao, Y., Zhang, Y., Xu, Z., and Cai, D. (2013b). Causal and structural connectivity of pulse-coupled nonlinear networks. Phys. Rev. Lett. 111:054102. doi: 10.1103/PhysRevLett.111.054102

Keywords: neuronal networks, balanced networks, signal processing, network dynamics, connectivity reconstruction

Citation: Barranca VJ and Zhou D (2019) Compressive Sensing Inference of Neuronal Network Connectivity in Balanced Neuronal Dynamics. Front. Neurosci. 13:1101. doi: 10.3389/fnins.2019.01101

Received: 18 August 2019; Accepted: 30 September 2019;

Published: 17 October 2019.

Edited by: Chunhe Li, Fudan University, China

Reviewed by: Changsong Zhou, Hong Kong Baptist University, Hong Kong; Mainak Jignesh Patel, College of William & Mary, United States

Copyright © 2019 Barranca and Zhou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Victor J. Barranca, vbarran1@swarthmore.edu; Douglas Zhou, zdz@sjtu.edu.cn
