- ¹Laboratory of Lighting Technology, Department of Electrical Engineering and Information Technology, Technical University of Darmstadt, Darmstadt, Germany

- ²Interactive Graphic Systems, Department of Computer Science, Technical University of Darmstadt, Darmstadt, Germany

Human pupil behavior has gained increased attention due to the discovery of the intrinsically photosensitive retinal ganglion cells and the afferent pupil control path’s role as a biomarker for cognitive processes. Diameter changes in the range of 10⁻² mm are of interest, requiring reliable and characterized measurement equipment to accurately detect neurocognitive effects on the pupil. Pupillometry mostly relies on commercial measurement devices, which are associated with high investments. Moreover, commercial systems rely on closed software, restricting conclusions about the used pupil-tracking algorithms. Here, we developed an open-source pupillometry platform consisting of hardware and software competitive with high-end commercial stereo eye-tracking systems. Our goal was to make a professional remote pupil measurement pipeline for laboratory conditions accessible for everyone. This work’s core outcome is an integrated cross-platform (macOS, Windows and Linux) pupillometry software called PupilEXT, featuring a user-friendly graphical interface covering the relevant requirements of professional pupil response research. We offer a selection of six state-of-the-art open-source pupil detection algorithms (Starburst, Swirski, ExCuSe, ElSe, PuRe and PuReST) to perform the pupil measurement. A developed 120-fps pupillometry demo system was able to achieve a calibration accuracy of 0.003 mm and an averaged temporal pupil measurement detection accuracy of 0.0059 mm in stereo mode. The PupilEXT software has extended features in pupil detection, measurement validation, image acquisition, data acquisition, offline pupil measurement, camera calibration, stereo vision, data visualization and system independence, all combined in a single open-source interface, available at https://github.com/openPupil/Open-PupilEXT.

Introduction

The pupil diameter is an essential metric in visual neuroscience, as it has a direct impact on the retinal irradiance, visual acuity and visual performance of the eye (Campbell, 1957; Campbell and Gubisch, 1966; Woodhouse, 1975; Schwiegerling, 2000). Since the early days of pupillary research (Reeves, 1918), the modeling of the pupil light response and its retinal processing path was the main focus of investigations (Zandi and Khanh, 2021). Additionally, the pupil diameter is used as a biomarker in research disciplines such as cognitive science (Aminihajibashi et al., 2020; Cherng et al., 2020; Clewett et al., 2020; Sibley et al., 2020), circadian photoentrainment (Münch et al., 2012; Bonmati-Carrion et al., 2016; Spitschan et al., 2019; Tähkämö et al., 2019; Van Egroo et al., 2019), clinical diagnostics (Lim et al., 2016; Joyce et al., 2018; Chougule et al., 2019) or neuroscience (Schwalm and Jubal, 2017; Carle et al., 2019). Pupil changes of 0.015 to 0.5 mm are the range of interest in such studies, leading to increased resolution and robustness requirements for pupil measurement equipment. Closed commercial eye-tracking systems are common in pupil examinations, associated with high investments without offering the possibilities of validating the pupil detection’s measurement accuracy. Additionally, with closed systems, it is not possible to identify the applied pupil detection algorithm, making it challenging to reproduce experiments since small inaccuracies in a range of 0.01 mm could propagate errors to the statistical evaluation of the pupil diameter. Apart from commercial solutions, there is currently a lack of an end-to-end open-source measurement platform that can be easily set up for high-precision pupillometry under laboratory conditions. Therefore, we developed a freely available hardware and software platform for pupil measurements to support the increased interest of interdisciplinary research groups in studying the pupil behavior. 
Our proposed platform is a comprehensive solution for performing accurate, verifiable and reproducible pupil examinations, competitive with high-end commercial stereo eye-tracking systems.

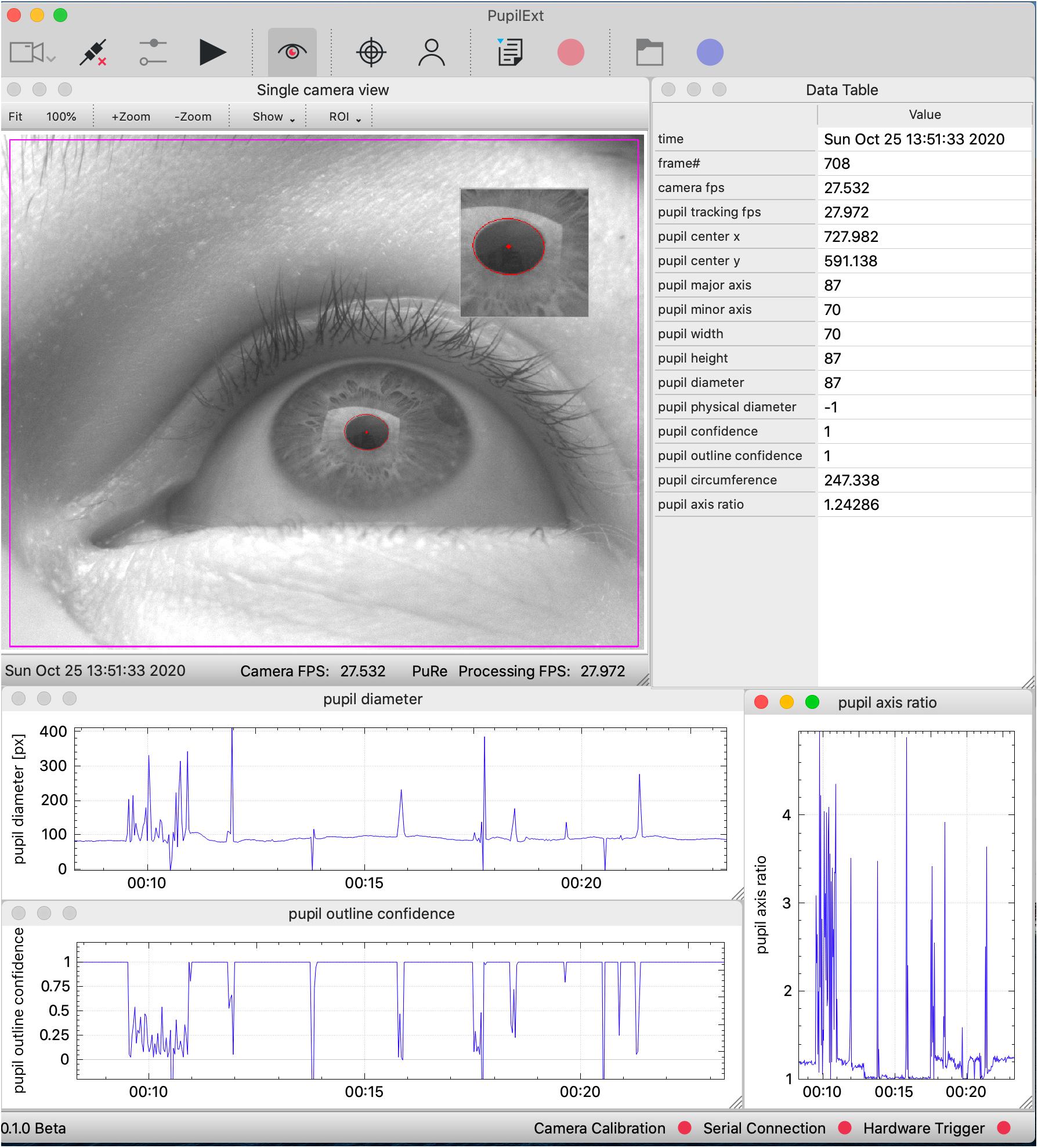

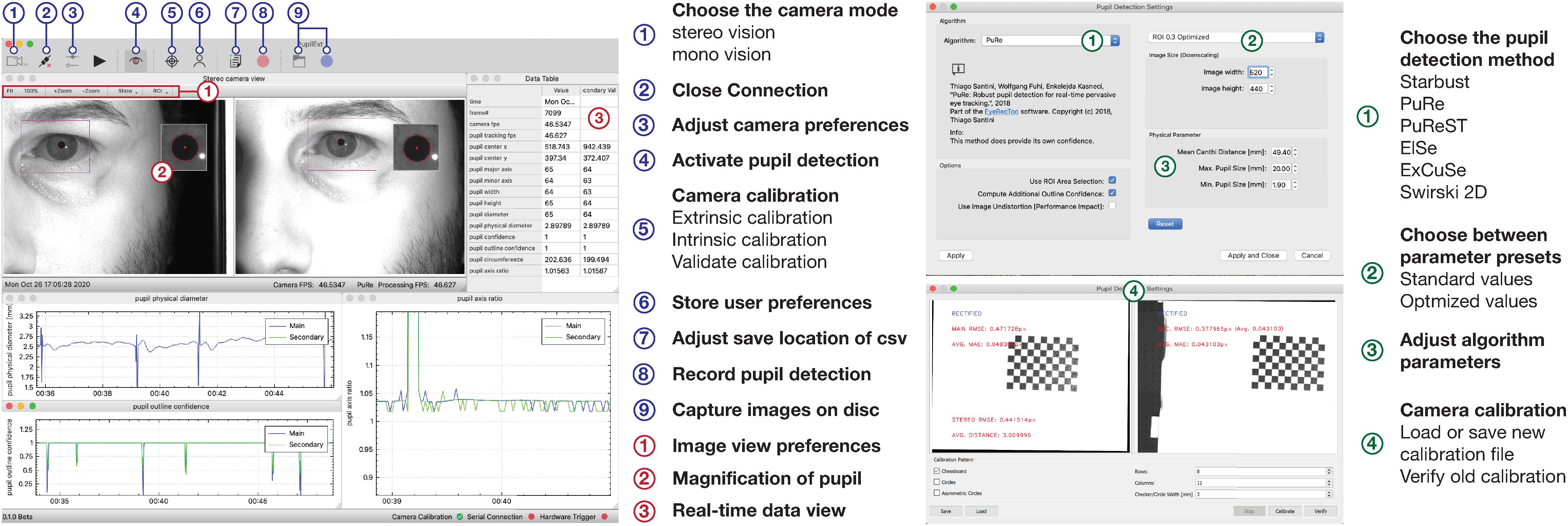

The core outcome of this work is an integrated cross-platform (macOS, Windows and Linux) pupillometry software called PupilEXT, featuring a user-friendly graphical interface (C++, Qt), covering the relevant requirements of professional pupil behavior research (Figure 1). The open-source philosophy offers insight into how the pupil measurement framework performs, encouraging more transparency in collecting pupil data. We aimed to provide a plug-and-play integrated hardware and software platform that allows interdisciplinary research groups to conduct precise pupil behavior research without high investments. The proposed software is designed to incorporate high-resolution industrial cameras that can be run either individually or in a stereo camera arrangement. We guarantee a stable frame rate and synchronous operation of stereo cameras by using a microcontroller as an external hardware trigger. The integrated solution with hardware and software is provided in a way that even scientists with a non-technical background can reproduce the system. Users simply need to purchase industrial cameras and run the proposed PupilEXT software.

Figure 1. The graphical user interface of the PupilEXT software during a pupil measurement with one connected industrial camera. The measured pupil values are listed in real-time in a table or can be visualized graphically. We provide a selection of six state-of-the-art pupil detection algorithms from the literature. Stereo camera systems can be connected and calibrated seamlessly to acquire the absolute pupil diameter. The accuracy of a pupil measurement or calibration can be verified by implemented routines.

Inspired by the eye-tracking software EyeRecToo (Santini et al., 2017), we offer end-users a selection of six state-of-the-art open-source pupil detection algorithms (Starburst, Swirski, ExCuSe, ElSe, PuRe and PuReST) to perform the pupil measurement. The system allows researchers to report the used pupil algorithm with the respective parameters since the pupil detection method itself could influence the captured data. Additionally, end-users will be able to determine the pupil diameter from externally acquired image sequences through the software suite. The integrated platform is available to other research groups as an open-source project, ensuring continuous development in the future. We aimed to bridge the gap between visual neuroscience or experimental psychology and engineering sciences, making professional remote pupil measurements under laboratory conditions accessible for everyone, without sacrificing the features of commercial solutions.

The first section of this work deals with the scientific background of pupil behavior research and the rising popularity of this topic, from which we derive the motivation of the proposed pupil measurement platform. Based on that, the current state of pupillometry and the availability of suitable open-source frameworks are highlighted. Next, we conducted a meta-analysis of existing pupil detection algorithms from the literature, intending to select and integrate appropriate algorithms in the proposed PupilEXT software. The functionality of the platform is covered by starting with the hardware components, consisting of cameras, a microcontroller and a near-infrared (NIR) illumination. Here, we describe the possible hardware topologies with which end-users can conduct a pupil measurement or offline analysis of externally captured images. In particular, we show the possibilities of validating a pupil measurement and camera calibration with the PupilEXT software. Finally, the performance of the system is demonstrated with an experiment concerning the pupil light response, clarifying the provided pupil metrics for reliable data evaluation.

The Rising Popularity of Pupil Light Response Research

The human retina contains receptors with distinct photopigments, capable of transforming light quanta of different wavelengths λ into frequency-coded action potentials with information on color and brightness features from a visual stimulus. Photoreceptors in the retina are classified according to their broad spectral sensitivity in the visible spectrum range and respective peak response λPeak. In the photopic adapted eye, the retinal image-forming pathway is mainly controlled by the short-wavelength (S, λPeak 420 nm), medium-wavelength (M, λPeak 535 nm) and long-wavelength (L, λPeak 565 nm) sensitive cones (Stockman and Sharpe, 2000; Solomon and Lennie, 2007; Lucas et al., 2014). At scotopic and mesopic light conditions, the more sensitive rods (λPeak 498 nm) dominate the vision. Both cones and rods transmit, depending on the adaptation state of the eye, integrated signals in different stages through ganglion cells to the visual cortex of the brain (Van Meeteren, 1978; Smith et al., 2008; Jennings and Martinovic, 2014). In 1924, the International Commission on Illumination (CIE) introduced the photopic luminous efficiency function V(λ) to estimate the visual effectiveness of light spectra for humans (Bodmann, 1992; Sharpe et al., 2005; Sagawa, 2006).

A standard value in estimating the human brightness perception is the luminance L given in cd/m², which is a V(λ) weighted photometric quantity (Berman et al., 1990; Lennie et al., 1993; Withouck et al., 2013). The luminance is merely a first approximation of the brightness perception, as only the additive contribution of L- and M-cones to the image-forming pathway is managed by V(λ) (CIE, 2011; Besenecker and Bullough, 2017; Hermans et al., 2018; Zandi et al., 2021). Since 1926, about eight pupil models were proposed that integrated the luminance as a main dependent parameter, assuming that the afferent pupil control pathway can be described by a V(λ) weighted quantity (Holladay, 1926; Crawford, 1936; Moon and Spencer, 1944; de Groot and Gebhard, 1952; Stanley and Davies, 1995; Blackie and Howland, 1999; Barten, 1999; Watson and Yellott, 2012; Zandi et al., 2020).

The discovery of a new type of receptors in the inner retina called intrinsically photosensitive retinal ganglion cells (ipRGCs) was a turning point of vision science (Provencio et al., 1998, 2000; Gooley et al., 2001; Berson et al., 2002; Hattar, 2002; Mure, 2021), which has led to a rethinking of classical retinal processing models. This subset of ganglion cells is part of the non-image-forming mechanism of the eye because of their projection to regions of the suprachiasmatic nucleus (SCN) and olivary pretectal nucleus (OPN) (Ruby et al., 2002; Berson, 2003; Hattar et al., 2003; Do et al., 2009; Ecker et al., 2010; Allen et al., 2019; Do, 2019). As a result, the ipRGCs can modulate the circadian rhythm (Freedman, 1999; Brainard et al., 2001; Thapan et al., 2001; Rea and Figueiro, 2018; Truong et al., 2020) and pupil light response (Lucas et al., 2001, 2020; Gamlin et al., 2007; Young and Kimura, 2008; Barrionuevo et al., 2018; Murray et al., 2018) via a processing path that works independently of the classical image-forming pathway (Hattar et al., 2006; Güler et al., 2008; Schmidt et al., 2014; Spitschan, 2019a). Recent studies showed that the pupil light response cannot be described by the V(λ) weighted luminance alone, making a revision of classical pupil models necessary (Zandi et al., 2018, 2020; Spitschan, 2019b; Zele et al., 2019). Therefore, one key topic in pupillary research is the development of a valid empirical model (Zandi et al., 2020), providing a spectral and time-variant function with dynamic receptor weighting to predict the temporal aperture across individuals (Rao et al., 2017; Zandi and Khanh, 2021). When using stimulus spectra along the Planckian locus for triggering the pupil light response, it is essential in measurements that amplitudes in the range of 0.1 to 0.4 mm are captured accurately to specify intrasubject variability (Kobashi et al., 2012) in a pupil model. 
However, a special requirement for pupil measurements arises when the pupil is used as a biomarker for quantifying the cognitive state (Morad et al., 2000; Merritt et al., 2004; Murphy et al., 2014; Ostrin et al., 2017; Tkacz-Domb and Yeshurun, 2018; Hu et al., 2019; Van Egroo et al., 2019; de Winter et al., 2021; Van der Stoep et al., 2021) or clinical symptoms of diseases (Hreidarsson, 1982; Maclean and Dhillon, 1993; Connelly et al., 2014; Lim et al., 2016; Granholm et al., 2017; Wildemeersch et al., 2018; Chougule et al., 2019). Cognitive processes such as memory load, arousal, circadian status, or sleepiness have a transient impact (Watson and Yellott, 2012) on the pupil diameter with aperture changes of 0.015 to 0.53 mm (Beatty and Wagoner, 1978; Beatty, 1982; Schluroff et al., 1986; Jepma and Nieuwenhuis, 2011; Pedrotti et al., 2014; Bombeke et al., 2016; Tsukahara et al., 2016; Winn et al., 2018), making the reproducibility of such effects difficult if the accuracy of the measurement equipment has not been sufficiently validated.

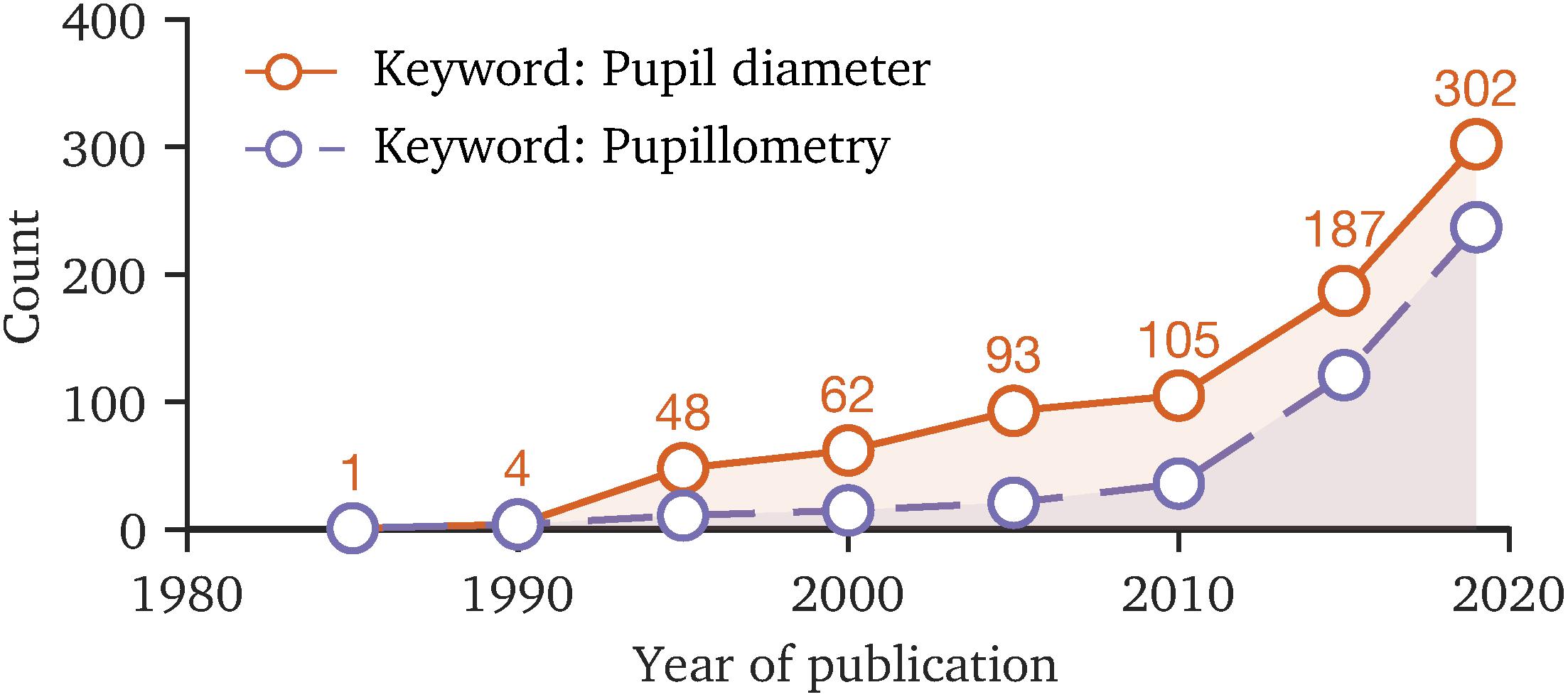

Today, the pupil behavior has become an interdisciplinary field of research (La Morgia et al., 2018; Schneider et al., 2020; Joshi, 2021; Pinheiro and da Costa, 2021) in which the number of involved scientists rises, as the trend of the number of publications with the keywords “pupil diameter” or “pupillometry” reveals (Figure 2). The renewed attention to the temporal pupil aperture (Binda and Gamlin, 2017), its application in clinical diagnostics (Granholm et al., 2017; Joyce et al., 2018; Chougule et al., 2019; Kercher et al., 2020; Tabashum et al., 2021) and increasing popularity of chromatic pupillometry (Rukmini et al., 2017; Crippa et al., 2018) topics requires additional efforts in terms of standardization and provision of consistent tools, contributing to comparability in measurement and pre-processing methodologies. For instance, one key point of standardization is the prevention of artificially induced changes to raw data by the used tools, as in cognitive or vision-related pupillary research small diameter margins are of interest. The main methodology factors that could influence the research results or reliability of pupil behavior studies are as follows:

(1) Number and depth of described experimental metrics when reporting the results concerning the stimulus modality or pre-conditioning state of the subjects.

(2) The used pre-processing method to smooth out and clean the measured pupil raw data.

(3) The used measurement hardware and software framework in collecting pupil data.

Figure 2. The number of publications with the keywords “pupil diameter” and “pupillometry” from 1985 to 2019, based on the Web of Science database. The rising count of publications in recent years indicates that the topic of pupil behavior is becoming more important. Due to the interdisciplinary field of research, standardization of measurement methodology and data processing is favorable, making study results comparable.

In order to minimize the influencing factors, there are actions in the research community to provide the essential tools for pupil research, to lower the barrier to entering the topic and to ensure the comparability of future research. A major step in this direction was the work “Standards in Pupillography” by Kelbsch et al., which summarized the current knowledge on pupil behavior and defined recommendations to be considered by author groups when reporting pupil study results (Kelbsch et al., 2019). The standardization approach mainly dealt with the minimal set of metrics that authors need to specify in published research, allowing third parties to reproduce experiments when necessary. Regarding the topic of data pre-processing, the focus is on which methods should be used to detect and remove artificially induced pupil changes, caused by eye blinks and fast gaze jumps during pupil recording sessions. Ranging from catching artifacts to smoothing out the measured raw data, a large number of software libraries and guidelines exist that can assist researchers in carrying out such tasks (Pedrotti et al., 2011; Canver et al., 2014; Lemercier et al., 2014; Attard-Johnson et al., 2019; Kret and Sjak-Shie, 2019; van Rij et al., 2019).

The research area of pupil behavior benefits from the interdisciplinarity of the research groups, which is promoted by the provision of tools and predefined standardized methodologies. However, the pupillometry technique itself is a significant hurdle, since there are no standardized requirements or reliable end-to-end open-source systems for recording pupil data in high-precision experiments under laboratory conditions.

The Issue of Pupillometry

Typically, a pupil measurement can be performed manually by using a double-pinhole pupillometer (Holladay, 1926) or photographs with a reference object (Crawford, 1936), or through an integrated eye-tracking system. A higher proportion of pupil behavior studies is conducted by using an eye-tracking system, as identifying the pupil region is often a necessary step before estimating the gaze position (Lee et al., 2012). Commercial eye trackers from Tobii Pro, Smart Eye Pro or Eyelink are common solutions, which are easy to set up and usable without a technical background but cost approximately between 5,000 and 40,000 euros (Hosp et al., 2020; Manuri et al., 2020). Purchasing a set of high-resolution professional industrial cameras costs about 200 to 600 euros, with which an optical accuracy of 0.01 mm/px or better could be achieved. Thus, the price gap to commercial products results from the integrated software and license fees.

Commercial systems rely on closed software, thereby restricting conclusions about the used pupil-tracking algorithms, which is essential for reproducibility. Additionally, to the best of the authors’ knowledge, no commercial eye-tracking system states the accuracy of its measured pupil diameter in the datasheet, nor is a manual validation possible, as the primary focus of these solutions is on gaze tracking. Especially in studies where pupil diameter effects are in a range of 10⁻² mm, a validation of the system’s pupil measurement accuracy through a reference object is desirable.

The open-source head-mounted eye tracker project by Pupil Labs (Kassner et al., 2014) is an alternative to fully commercialized solutions, allowing free head movements and experiments in natural environments where a classic remote eye-tracking set-up is not possible. However, we do not recommend this system for precise pupil measurement applications, due to the cameras’ positions, which are highly off-axis and cause pupil foreshortening errors (Hayes and Petrov, 2016). Additionally, the absolute pupil diameter is calculated indirectly by a method whose conversion accuracy is not yet fully validated for pupil measurements. Therefore, the solution provided by Pupil Labs is more suitable for experiments in which only the relative pupil diameter is of interest.

Remote tracking systems, positioned on the optical axis of the eye, are better suited for reliable pupil measurements. Various published approaches provide isolated components to build a custom remote stereo camera system (Hiley et al., 2006; Long et al., 2007; Kumar et al., 2009; San Agustin et al., 2010), which is not always feasible for interdisciplinary research groups, leading to a preference for commercial solutions. However, a groundbreaking project called EyeRecToo by Santini et al. (2017) has taken the first steps in establishing the idea of a competitive open eye-tracking software suite, which even has the option of choosing between different state-of-the-art pupil detection algorithms. Unfortunately, the software is mainly designed for head-mounted eye trackers or webcams, and the use-cases are not targeted at the experimental pipeline of pupil research under laboratory conditions. For instance, a stereo camera arrangement with extrinsic calibration and the subsequent validation of a camera’s accuracy is not possible, to the best of our knowledge. Additionally, the software does not offer the option of evaluating externally captured images from a stereo or mono camera system.

The success of the Pupil Labs project shows that end-users wish to have a fully integrated system consisting of hardware and software, packed with the functionalities of a professional commercial solution. Thus, in developing our proposed platform, we have focused not only on the functionalities and requirements of pupil researchers but also on the end-user’s experience, which should provide an easy way to build and run a pupil measurement system.

Choosing Pupil Detection Algorithms for PupilEXT

The main application for an eye-tracking system is the estimation of a subject’s gaze location, which usually needs to recognize the pupil contour and its center position. Due to the high contrast between the sclera and the pupil region in a digital image, the recognition of the pupil is in principle possible through a combination of thresholding, edge detection and morphological operations (Goñi et al., 2004; Keil et al., 2010; Topal et al., 2017). State-of-the-art pupil detection approaches have additional steps in the image processing pipeline, ensuring a more robust contour fit while having a high and accurate detection rate. Under laboratory conditions, eye images are mainly captured using a NIR light source to avoid cornea reflections of the ambient environment, leading to optimized pupil detection. Accurate pupil detection is an essential step in eye-tracking systems since a flawed edge detection could have an impact on the performance of an eye tracker (Santini et al., 2018a). Therefore, pupil detection methods intended for eye-tracking systems can also be used for pupil measurement, if an algorithm features the detection of aperture sizes.
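The thresholding step mentioned above can be illustrated with a minimal sketch. It is not one of the six algorithms integrated in PupilEXT and omits edge detection, morphological operations and contour fitting; the function names, the synthetic test image and the threshold value are assumptions chosen for illustration only.

```python
import math


def detect_dark_pupil(image, threshold):
    """Return (center_x, center_y, diameter_px) of the dark region, or None.

    image: list of rows, each a list of 8-bit gray values.
    threshold: gray values strictly below it are counted as pupil
               (hypothetical value, must be tuned per set-up).
    """
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value < threshold:
                xs.append(x)
                ys.append(y)
    area = len(xs)
    if area == 0:
        return None
    # Centroid of all pixels classified as pupil.
    center_x = sum(xs) / area
    center_y = sum(ys) / area
    # Diameter of a circle with the same area as the segmented region.
    diameter = 2.0 * math.sqrt(area / math.pi)
    return center_x, center_y, diameter


def synthetic_eye(size=41, radius=10, pupil_gray=20, iris_gray=120):
    """Build a test image: a dark disc (pupil) on a brighter background."""
    c = size // 2
    return [
        [pupil_gray if (x - c) ** 2 + (y - c) ** 2 <= radius ** 2 else iris_gray
         for x in range(size)]
        for y in range(size)
    ]


if __name__ == "__main__":
    print(detect_dark_pupil(synthetic_eye(), threshold=60))
```

A pure threshold fails as soon as eyelashes, shadows or reflections fall below the threshold, which is why the state-of-the-art approaches add further processing steps.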

There are three different illumination set-ups proposed for capturing a series of eye images that need to be in line with the used pupil detection algorithm (Li et al., 2005). In the bright-pupil method, a NIR light source is placed close to the optical axis of a camera, resulting in a positive contrast between the iris and pupil region (Hutchinson et al., 1989). Due to the retinal reflection of the illumination back to the camera, the pupil region appears brighter than the iris and sclera itself (Li et al., 2005). In the dark-pupil method, the light source is placed off-axis to the camera. Thus, the pupil appears as a dark spot surrounded by the brighter iris (negative contrast). A third method called the image-difference technique leverages the image difference between dark- and bright-pupil to extract the pupil’s contour. For this, one NIR illumination should be positioned close to the camera’s optical axis (NIR 1) and a second one off-axis (NIR 2). By synchronizing the illuminations’ flashing interval with the sampling rate of a camera, one positive contrast image can be captured in a first frame (NIR 1, ON; NIR 2, OFF) and a second frame with negative contrast (NIR 1, OFF; NIR 2, ON). This approach can lead to a more robust pupil detection but has the drawback that more effort has to be invested in the illumination. Furthermore, two frames are needed for each captured pupil size value, reducing the overall sampling rate. Previous work by Ebisawa (1994, 2004), Morimoto et al. (2002), and Hiley et al. (2006) used this image-difference technique.
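The image-difference technique can be sketched as follows, assuming two already aligned gray-value frames of equal size. The function names and the difference threshold are hypothetical and not taken from the cited works; the second function only expresses the halved sampling rate described above.

```python
def difference_mask(bright_frame, dark_frame, min_difference):
    """Mark pixels that are clearly brighter in the bright-pupil frame.

    bright_frame: frame captured with NIR 1 ON and NIR 2 OFF.
    dark_frame:   frame captured with NIR 1 OFF and NIR 2 ON.
    min_difference: gray-value difference above which a pixel is
                    treated as pupil (assumed, set-up dependent).
    """
    return [
        [1 if b - d >= min_difference else 0 for b, d in zip(bright_row, dark_row)]
        for bright_row, dark_row in zip(bright_frame, dark_frame)
    ]


def effective_sampling_rate(camera_fps):
    """Two frames yield one pupil value, so the rate is halved."""
    return camera_fps / 2.0


if __name__ == "__main__":
    bright = [[100, 100, 100], [100, 220, 100], [100, 100, 100]]
    dark = [[100, 100, 100], [100, 20, 100], [100, 100, 100]]
    print(difference_mask(bright, dark, min_difference=100))
    print(effective_sampling_rate(120))
```

Since iris and sclera look similar in both frames, they cancel in the difference and only the pupil region remains, at the cost of half the sampling rate.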

However, the core of a pupil measurement system is the algorithm that is used to determine the pupil diameter. Recently published works developed state-of-the-art approaches that can be applied in our proposed software PupilEXT. Similar to the work of Topal et al. (2017), we conducted a meta-analysis of 35 published pupil detection methods (Table 1) to evaluate and select suitable algorithms for our proposed measurement platform.

The potential algorithms need to estimate the pupil size, as this is the main focus of this work. From the 35 evaluated algorithms, we can rule out 11 approaches since they are not able to output the pupil size (Table 1). We decided to consider only algorithms designed for dark-pupil detection, which allows more freedom in setting up the position of the NIR light source. Another criterion for the selection was the availability of the implementation since we started from the working hypothesis that published procedures with existing programming code are ready for practical applications. Since our graphical user interface (GUI) should offer real-time pupil detection, only C++-implemented approaches were of interest.

Based on these criteria and taking the algorithms’ recency into account, we selected a total of six pupil detection approaches for PupilEXT. First, we decided to use the robust Starburst algorithm by Li et al. (2005), which was considered a standard approach in pupil detection for a long time and has been implemented in several works throughout the years. Furthermore, we added the algorithm by Świrski et al. (2012), ExCuSe by Fuhl et al. (2015), ElSe by Fuhl et al. (2016a), PuReST by Santini et al. (2018b) and PuRe by Santini et al. (2018a). The algorithms ElSe, ExCuSe, PuRe and PuReST are licensed for non-commercial use only. The pupil detection algorithm from Świrski et al. is licensed under MIT, and the Starburst algorithm under GNU GPL. More details about the licensing terms of the detection algorithms can be found on the project page of PupilEXT.

We did not select pupil detection approaches based on neural networks (Mazziotti et al., 2021). Models such as DeepEye (Vera-Olmos et al., 2018) and PupilNet (Fuhl et al., 2016b, 2017) reveal promising results, but their computational complexity is still too high for real-time pupil measurement applications without special hardware.

The user has the option to choose between these state-of-the-art algorithms for pupil measurement in the proposed PupilEXT platform. Additionally, the algorithms’ parameters can be checked and adjusted in the user interface to increase the software-based measurement accuracy, if necessary. By default, the PuRe algorithm is selected because it is considered a top performer and its number of parameters is relatively small, making it a generalized procedure for different measurement settings (Santini et al., 2018a, b). Since the algorithms are solely based on recent publications from various author groups, the interested readership is referred to the original works of the respective pupil detection methods or works that already reviewed the algorithms (Topal et al., 2017; Manuri et al., 2020).

Hardware Set-Up of the Camera System

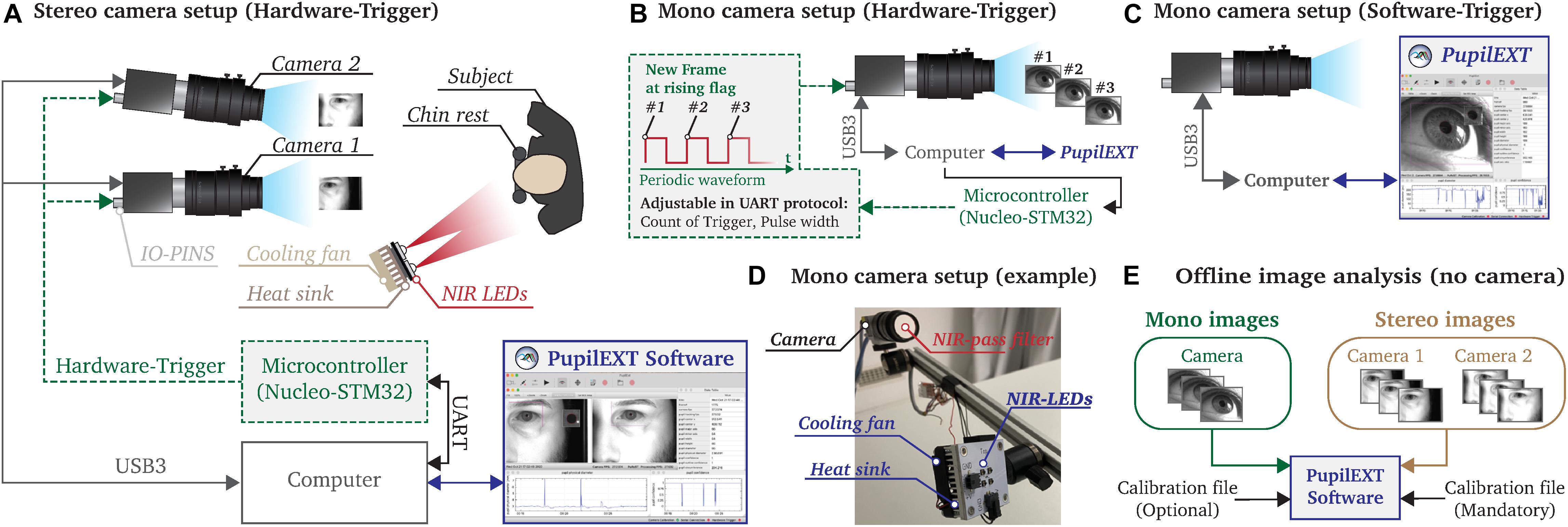

We linked the PupilEXT software with a specific camera brand (Basler) to provide a comprehensive platform for pupillometry. In this way, we allow a plug-and-play usage of the proposed system since the software is adapted to the hardware. The Pylon SDK is used to interface the cameras with the measurement software PupilEXT. Thus, any Basler branded industrial camera is integrable into the pupillometry platform. We explicitly do not support consumer webcams since PupilEXT is intended for reliable and accurate research applications. Generally, live or post-acquisition pupil measurements are supported through different measurement configurations (Figure 3).

Figure 3. Illustration of the possible measurement configurations that can be realized with the PupilEXT software. (A) In stereo vision mode, two cameras are connected to the computer via USB3.0. A microcontroller is connected to the IO-pins of the camera, which triggers a synchronized image acquisition on both cameras. We recommend using a Nucleo-STM32 microcontroller since the provided source code can be used to flash the electronics. We implemented a UART communication protocol in the microcontroller so that PupilEXT can automatically control and synchronize the stereo camera system via the connected electronics. (B) In mono camera vision mode, it is possible to control the image acquisition by an external hardware trigger, which has the advantage that the recording time can be set accurately. When capturing multi-image sequences, the hardware trigger consists of a square wave signal in which each rising edge triggers an image acquisition. (C) The use of a microcontroller is optional when connecting a single camera. Without the use of a microcontroller, a software trigger is used for image acquisition. (D) Prototype of a pupillometry set-up with a single camera and respective near-infrared (NIR) illumination unit. (E) The software PupilEXT can be used without connected cameras in an offline mode to detect the pupil diameter from externally captured images.

Two cameras are needed for the stereo camera arrangement to detect the absolute pupil diameter directly (Figure 3A). One essential factor in the processing accuracy of such a configuration is the synchronization level between the cameras. Therefore, we synchronized the cameras through an external hardware trigger, leading to a stable system comparable with a professionally manufactured commercial solution. Such a hardware trigger is needed to acquire images from both cameras simultaneously. In low-budget systems, the image acquisition is usually made by a software trigger that cannot guarantee synchronized image acquisitions, leading to reduced measurement accuracy. In our proposed system, the trigger signal is generated through a microcontroller, which is automatically controlled by PupilEXT. Additionally, we support pupil measurements with a single camera (Figures 3B–D). Here, the integration of a microcontroller for triggering an image acquisition is optional (Figure 3B). However, by including a microcontroller in the one-camera set-up, the duration of a recording session can be set. Note that when using a single camera, the pupil diameter is measured in pixels. Through an extra recording trial with a reference object, the pixel values can be manually converted to millimeters. If cameras are connected to PupilEXT, a real-time pupil measurement with one of the six pupil detection algorithms can be carried out. Furthermore, we support the option of recording images without pupil detection. In this way, it is possible to analyze the images in a post-acquisition mode without connected cameras (Figure 3E). In such an offline mode, image sequences from externally recorded cameras can also be loaded, making it possible to leverage the software on already existing pupil image datasets.
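The manual conversion from pixels to millimeters in the single-camera set-up can be sketched as a simple scale factor. This is an illustrative example and not part of PupilEXT; the function names and the reference values are hypothetical, and the conversion is only valid if the reference object was recorded in the same plane and at the same distance as the pupil.

```python
def mm_per_pixel(reference_mm, reference_px):
    """Scale factor from a reference object of known physical size.

    reference_mm: known diameter of the reference object in millimeters.
    reference_px: diameter of that object measured in the image in pixels.
    """
    if reference_mm <= 0 or reference_px <= 0:
        raise ValueError("reference sizes must be positive")
    return reference_mm / reference_px


def to_millimeters(diameters_px, scale_mm_per_px):
    """Convert a series of pupil diameters from pixels to millimeters."""
    return [d * scale_mm_per_px for d in diameters_px]


if __name__ == "__main__":
    # Hypothetical trial: a 5 mm reference disc spans 250 px in the image.
    scale = mm_per_pixel(reference_mm=5.0, reference_px=250.0)
    print(to_millimeters([200.0, 150.0], scale))
```

Any change of the working distance or the lens focus after the reference trial invalidates the scale factor, which is one reason why the stereo arrangement is preferable for absolute measurements.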

We recommend a NIR illumination unit to avoid corneal light reflections in the eye from the visible spectrum, which could impact the accuracy of pupil detection. For this, a NIR bandpass filter should be mounted in front of the camera’s lens. The advantage of a NIR-based measurement is that the image quality does not suffer in pupil light response experiments. Both the source code of the microcontroller for generating the hardware trigger and the respective NIR circuit board design (Figure 3D) are provided together with the PupilEXT software, allowing users to set up the system with little effort. The following subsections deal with the different operational configurations of the platform (Figure 3) and the needed hardware elements in more detail, ensuring the reproducibility of the measurement platform.

Camera Set-Up

We built a prototype consisting of two Basler acA2040-120um cameras with 50-mm lenses to validate the pupillometry platform in a sample study. The cameras operated in stereo vision mode to measure the absolute pupil diameter. The cameras support a resolution of 2,048 px × 1,536 px with a maximal frame rate of 120 fps. We positioned the system in front of an observer at a working distance of 700 mm, with a baseline distance between the cameras of 75 mm in which the secondary camera has an angle of 8° to the main camera (Figure 3A). A NIR illumination unit, consisting of four LEDs with a peak wavelength of 850 nm (SFH-4715AS), is placed near the subject’s head without obstructing the view of the cameras. Furthermore, the camera lenses are equipped with a high-pass infrared filter (Schneider IF 092 SH) with a transmission range of 747 to 2,000 nm, which should reduce artifacts from the ambient illumination.

The cameras are connected through their USB 3.0 interface with the computer for data transmission. Additionally, the IO-Pin connector of the cameras is used to adjust the timing, execution and synchronization of the image capturing. A microcontroller (Nucleo STM32F767ZI) is integrated into the pupillometry platform, controlling the cameras’ capturing interval through a shared digital signal.

For this, the microcontroller transmits a periodic square-wave signal with a voltage amplitude of 3.3 V. Each rising edge of the signal triggers an image (Figure 3B). The frequency and duration of the square-wave signal are adjustable through PupilEXT, affecting the frame rate and recording time of the camera. While the use of a microcontroller is obligatory when recording in stereo vision mode, it can be used optionally in the single-camera set-up (Figures 3B,C). Before an absolute pupil measurement can be carried out in stereo vision mode, extrinsic and intrinsic calibrations of the cameras need to be performed in PupilEXT.

Embedded Hardware Trigger

In stereo vision mode, the microcontroller must be connected to the computer so that PupilEXT can communicate with the embedded electronics via UART. We have implemented a simple text-based protocol in the microcontroller for starting and stopping the trigger signal. Control commands can be dispatched via the graphical interface in PupilEXT or manually through a serial port terminal application like CoolTerm or HTerm. If the provided embedded microcontroller source code is not used, users can implement the protocol themselves on their preferred microcontroller.

To start a trigger signal, the parameters COUNT_OF_TRIGGER and TIME_TRIGGER_ON must be set in the protocol. The parameter COUNT_OF_TRIGGER indicates how many rising edges should be transmitted. The parameter TIME_TRIGGER_ON sets the pulse width in microseconds, which determines the sampling rate of the camera. Both parameters are set with the string command <TxCOUNT_OF_TRIGGERxTIME_TRIGGER_ON> via the UART interface of the microcontroller. The “x” term is used as a separator between the parameters. For instance, if a trigger signal should be used for capturing a total of 100 images at an interval of 10 ms (100 fps), the command would be <Tx100x5000>; in this example, the pulse width of 5,000 µs is half of the 10-ms trigger period. A detailed introduction of how to flash and install the embedded electronics is provided on the project’s webpage.
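A minimal sketch of how such a start command could be assembled on the host side. The function name is hypothetical, and the 50% duty cycle (pulse width equal to half the trigger period) is an assumption inferred from the example above rather than taken from the firmware source.

```python
def build_trigger_command(count_of_trigger: int, period_ms: float) -> str:
    """Assemble a start command of the form <TxCOUNT_OF_TRIGGERxTIME_TRIGGER_ON>.

    Assumption: the pulse width is half of the trigger period (50% duty cycle),
    and the command is sent without embedded whitespace.
    """
    if count_of_trigger <= 0 or period_ms <= 0:
        raise ValueError("count_of_trigger and period_ms must be positive")
    # TIME_TRIGGER_ON is given in microseconds
    time_trigger_on_us = round(period_ms * 1000 / 2)
    return f"<Tx{count_of_trigger}x{time_trigger_on_us}>"


# 100 images, one every 10 ms
print(build_trigger_command(100, 10.0))  # <Tx100x5000>
```

The resulting string could then be written to the serial port of the microcontroller, for example from a terminal application.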

The Cross-Platform Software Suite

The core of the pupillometry platform consists of the software PupilEXT, structured and implemented based on the requirements of scientifically oriented pupil behavior research. Although pupil measurements can be performed with commercial eye-tracking solutions, their closed system design obscures which pupil detection algorithm is used and prevents its pupil measurement accuracy from being determined. Moreover, such commercial systems are not fully intended for absolute pupil measurements. With PupilEXT, we offer not only a free alternative to commercial solutions but also extended features in the topics of pupil detection, measurement resolution, data acquisition, image acquisition, offline measurement, camera calibration, stereo vision, data visualization and system independence, all combined in a single open-source interface.

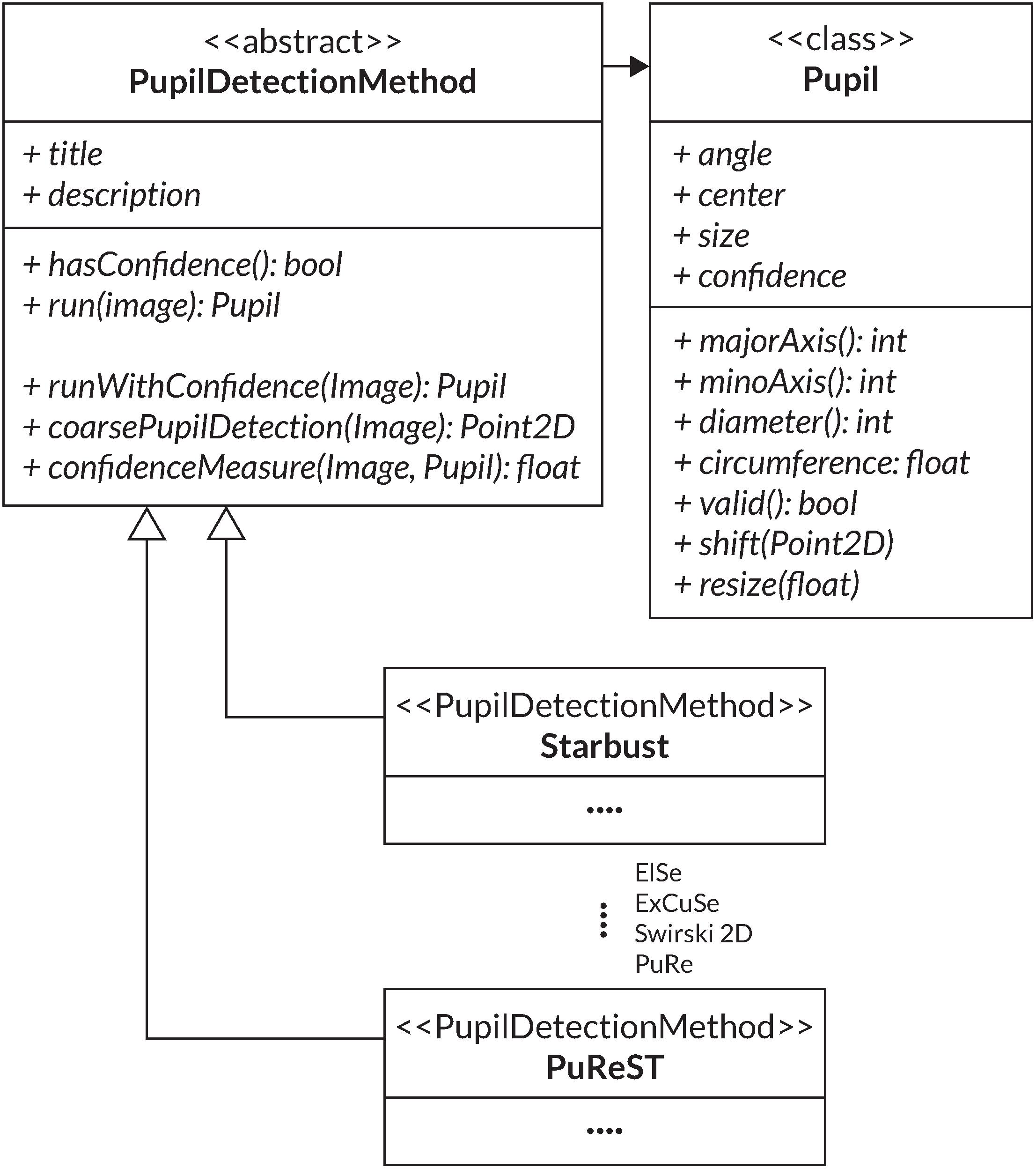

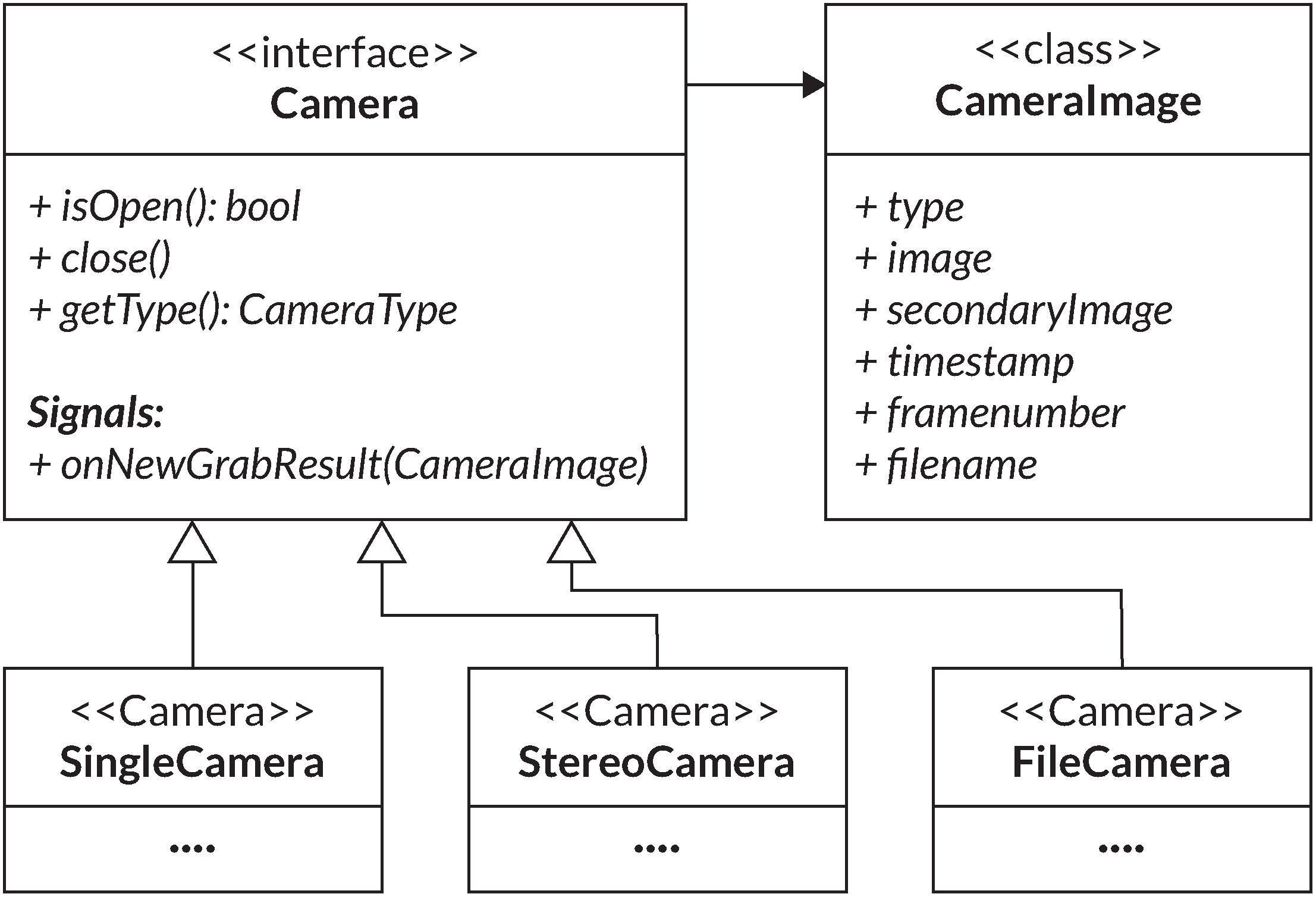

It is possible to choose between the six discussed pupil algorithms (Starburst, Swirski, ExCuSe, ElSe, PuRe and PuReST) and to freely adjust their processing parameters in order to optimize the detection accuracy of the pupil contour. Additionally, the parameters of a pupil detection method can be reported, which increases the reproducibility of pupil examinations. We have integrated the pupil detection methods into one unified framework by using a standard pupil detection interface (Figure 4).

Figure 4. UML diagram of the PupilDetectionMethod interface used to implement the various pupil detection algorithms. Additionally, the Pupil class is used for collecting a pupil detection result.

For this, the PupilDetectionMethod interface is adapted from the EyeRecToo eye-tracking software (Santini et al., 2017), which employs an interface to integrate multiple pupil detection algorithms. It defines a set of abstract methods like run and hasConfidence, which are concretized through the specific algorithm implementation (Santini et al., 2017). The run method defines the respective pupil detection algorithm that returns a detected pupil from an image. Through hasConfidence, we verify the availability of a confidence measure from a respective algorithm. The interface provides a general confidence measure that can be used if an algorithm does not provide its confidence measure (Santini et al., 2018a). An additional component that is adapted from EyeRecToo (Santini et al., 2017) is the Pupil class, which aggregates all data of a detected pupil and its fitted ellipse into one class. A simplified UML diagram of the adapted structure is illustrated in Figure 4.
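The interface pattern described above can be illustrated with a short sketch. This is not the PupilEXT or EyeRecToo source code: the field names of the Pupil class, the helper run_with_confidence and the toy detector are hypothetical, and the general confidence measure is left as a placeholder.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pupil:
    """Aggregates a detection result and its fitted ellipse (illustrative fields)."""
    center: Tuple[float, float] = (0.0, 0.0)
    axes: Tuple[float, float] = (0.0, 0.0)  # ellipse width and height in pixels
    angle: float = 0.0
    confidence: float = -1.0  # -1 marks "no confidence available"

    def diameter(self) -> float:
        return max(self.axes)


class PupilDetectionMethod(ABC):
    @abstractmethod
    def run(self, frame) -> Pupil:
        """Detect a pupil in a greyscale frame."""

    @abstractmethod
    def has_confidence(self) -> bool:
        """Report whether the algorithm computes its own confidence."""

    def general_confidence(self, frame, pupil: Pupil) -> float:
        """Placeholder for the algorithm-independent measure (not implemented here)."""
        raise NotImplementedError

    def run_with_confidence(self, frame) -> Pupil:
        # Fall back to the general measure if the algorithm has none of its own
        pupil = self.run(frame)
        if not self.has_confidence():
            pupil.confidence = self.general_confidence(frame, pupil)
        return pupil


class FixedEllipseDetector(PupilDetectionMethod):
    """Toy detector that ignores the frame; it only demonstrates the interface."""

    def run(self, frame) -> Pupil:
        return Pupil(center=(10.0, 12.0), axes=(40.0, 36.0))

    def has_confidence(self) -> bool:
        return False

    def general_confidence(self, frame, pupil: Pupil) -> float:
        return 0.5  # arbitrary stand-in value


result = FixedEllipseDetector().run_with_confidence(frame=None)
print(result.diameter(), result.confidence)
```

With such a structure, the calling code only depends on the abstract interface, so that an algorithm can be exchanged without touching the rest of the pipeline.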

In PupilEXT, the camera frame rate is adjustable up to 120 Hz. Pupil measurement data are stored in a comma-separated value (CSV) file containing the pupil diameter, confidence measure and ellipse parameters. Besides recording real-time pupil data, the software features storage of raw images for later pupil evaluation. A comprehensive stereo and mono calibration procedure within the software guarantees an accurate and validatable measurement pipeline. The unique feature is the integration of professional industrial cameras with stereo vision capabilities, dedicated to absolute pupil diameter measurements. Metrics are visualized in real-time during pupil measurements, providing an ad-hoc evaluation of metrics.

Camera Interface

Before PupilEXT can perform a remote pupil detection, images must be grabbed from the camera(s). We access the Basler cameras with their USB 3.0 interface using a manufacturer-provided programming library called Pylon. Through the library, we configure the camera preferences and activate an image capturing trigger that passes the images to the image processing pipeline. We distinguish between two image acquisition modes of a camera. With a software trigger, the camera acquisition is controlled over the Pylon library interface to record images continuously at a specified frame rate. In the single-camera mode, the software trigger is commonly used, and the hardware trigger is optional. The hardware trigger is mainly implemented for the stereo vision mode, in which two cameras synchronously capture images upon receiving a rising signal edge on an IO-pin. In stereo camera set-ups, the integration of the hardware trigger is obligatory. In such set-ups, a software trigger cannot guarantee that both cameras capture an image at the same time, degrading the performance of a stereo system. Connection establishment and message transmission to the microcontroller are accomplished via a serial port. The microcontroller configuration includes the settings for the camera frame rate as well as the duration of the recording.

To integrate the camera(s) in PupilEXT, a Camera interface was created, defining a set of functions for all camera types (Figure 5). Three types of cameras are differentiated: a single camera, a file camera and a stereo camera consisting of the main and secondary cameras (Figure 5). The file camera can be viewed as a camera emulation used in offline pupil detection sessions with previously recorded images retrieved from disk storage. By emulating the playback of images as a camera object, it can be integrated seamlessly into existing functions of PupilEXT. For the representation of the camera image, the CameraImage class is defined (Figure 5). The image distribution in the PupilEXT software from the camera(s) is organized with an internal event handler function. For this, the Pylon camera library provides an interface that is called every time a corresponding camera acquires a new image. However, for a stereo camera set-up, an image recording consists of two corresponding images that are delivered by two separate function calls.

Figure 5. UML diagram of the Camera interface and its implementations modeling for different types of cameras in the PupilEXT software. The CameraImage class is used to represent resulting images and their corresponding metadata.

The initial approach was to leverage the cameras’ internal timestamps to associate the two corresponding images. However, matching the internal timestamps of corresponding images from the two cameras led to a faulty image rectification. Therefore, it was necessary to find a more reliable approach. Besides the internal timestamp, the Pylon API provides additional metadata such as an internal frame count. As long as both cameras start the acquisition simultaneously, the frame counts match. This approach ensures a fixed and reliable order of stereo image acquisitions processed by PupilEXT.
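The frame-count matching can be sketched as follows. The Frame tuple and the StereoPairer class are hypothetical names for illustration; the sketch only assumes that every delivered image carries a camera label and the frame count reported by the camera API.

```python
from collections import namedtuple

# Hypothetical container for one delivered image and its metadata
Frame = namedtuple("Frame", ["camera", "frame_count", "image"])


class StereoPairer:
    """Pairs main and secondary images that share the same frame count."""

    def __init__(self):
        self._pending = {}  # frame_count -> Frame still waiting for its partner

    def push(self, frame):
        """Store a frame; return (main, secondary) once both have arrived."""
        partner = self._pending.get(frame.frame_count)
        if partner is None or partner.camera == frame.camera:
            self._pending[frame.frame_count] = frame
            return None
        del self._pending[frame.frame_count]
        if partner.camera == "main":
            return (partner, frame)
        return (frame, partner)


pairer = StereoPairer()
# The two callbacks may arrive in any order
print(pairer.push(Frame("main", 0, "main image 0")))
print(pairer.push(Frame("secondary", 0, "secondary image 0")))
```

Because the pairing key is the frame count and not the arrival time, the order in which the two camera callbacks fire does not matter.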

Image Recording and Reading for Offline Analysis

For retrospective detection of the pupil diameter, raw image sequences from the camera can be stored directly on the hard disk. Here, a decision about the format of the images needs to be made. Users can choose between Windows Bitmap (BMP), Tagged Image File Format (TIFF) and JPEG in the preferences of PupilEXT. The BMP format represents an uncompressed image format, resulting in large file sizes. In contrast, JPEG is a lossy compressed format commonly used in consumer photography due to its small size. TIFF cannot be directly assigned to either of these classes, as it represents an adaptable container that can hold both compressed and uncompressed image formats. A clear-cut decision on which format to use cannot be made easily. While uncompressed formats such as BMP would result in the highest quality of images, the amount of data that needs to be handled should not be underestimated. For the use case of recording images on a disk, one needs to be able to write image data at a rate up to the camera’s maximal frame rate, i.e., 120 fps.

Given the camera(s) of the reference system with a resolution of 2,048 px × 1,536 px and assuming a bit depth of 8 bits for greyscale images, the resulting image size is ≈3.15 MB. With 120 images per second, this results in a required writing speed of ≈377.49 MB/s for a single camera and ≈755 MB/s for the stereo set-up. The image size for compressed formats such as JPEG cannot be estimated as easily. Thus, an average image size observed from sample recordings of the reference system is taken, which yielded greyscale images with an average size of around 840 kB. Consequently, JPEG requires a writing speed of up to ≈100 MB/s for a single camera and around 200 MB/s in a stereo camera setting. Solely based on the required writing speed, without incorporating delays from, e.g., the computational overhead of compression, the speed of traditional hard disk drives (HDDs) is only sufficient for writing JPEG images in a single-camera set-up. More modern hardware in the form of SATA 3 solid-state drives (SSDs) can handle both single and stereo camera set-ups for JPEG images, or just a single camera using BMP images. For recent NVMe-based SSDs, the writing speed is theoretically sufficient for writing BMP images in a stereo camera set-up. Note that the discussed rates all refer to the maximal frame rate of 120 fps. Saving images for later analysis is generally recommended for short recordings where the accuracy of the various pupil detection algorithms is of interest.
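The writing speeds stated above can be reproduced with a few lines of arithmetic. The function name is hypothetical, and the sketch assumes decimal megabytes (1 MB = 10^6 bytes) and ignores file headers and compression overhead.

```python
def required_write_rate_mb_s(image_bytes: float, fps: float, cameras: int = 1) -> float:
    """Sustained writing speed in MB/s (1 MB = 1e6 bytes) for raw image storage."""
    return image_bytes * fps * cameras / 1e6


# Uncompressed 8-bit greyscale image of the reference system
bmp_bytes = 2048 * 1536 * 1      # 3,145,728 bytes, i.e., about 3.15 MB
# Average JPEG size observed in the sample recordings
jpeg_bytes = 840e3

print(f"BMP single:  {required_write_rate_mb_s(bmp_bytes, 120):.2f} MB/s")      # 377.49
print(f"BMP stereo:  {required_write_rate_mb_s(bmp_bytes, 120, 2):.2f} MB/s")   # 754.97
print(f"JPEG single: {required_write_rate_mb_s(jpeg_bytes, 120):.2f} MB/s")     # 100.80
print(f"JPEG stereo: {required_write_rate_mb_s(jpeg_bytes, 120, 2):.2f} MB/s")  # 201.60
```

Lower frame rates scale these values linearly; for instance, a stereo BMP recording at 30 fps requires one quarter of the stated 120-fps rate.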

Pupil Diameter Recording

Pupil data are recorded in CSV files that store all acquired values of a pupil measurement. Pupil values can be recorded in an online measurement with connected cameras or in an offline measurement in which images are loaded in PupilEXT for post-acquisition evaluation. For online measurements, each pupil measurement is associated with a timestamp provided by the system time in milliseconds since the Unix epoch, which is synchronized with the camera’s internal hardware clock. In offline measurements, where images are read from files, no timestamp is available. Thus, the corresponding filename is used to identify each measurement. The fitted ellipse can be reconstructed from the stored ellipse parameters: width, height, center position and angle. Further recorded data for analysis are the pupil diameter, circumference and confidence measure. The pupil diameter is stated in pixels by default, and in stereo mode, it is additionally stated in absolute units.

Regarding the pupil detection confidence, a value is only available when the applied pupil detection algorithm provides such a measure. However, a second confidence value called outline confidence is provided independently of the used algorithm. This confidence measure is based on the outline contrast of the inner and outer regions of the fitted ellipse (Santini et al., 2018a). The goal of such a value is to describe the reliability of the detected pupil diameter. These measures are useful to directly filter pupil detections that may constitute a false detection or include high uncertainty. Filtering out such detections is a common practice in the pre-processing of pupil detection results (Kret and Sjak-Shie, 2019). Santini et al. (2018a, b) apply a combination of different metrics for their confidence measure. Besides the outline confidence, the ellipse axis ratio and an angular edge spread metric are used. The ellipse axis ratio describes the ratio between the major and minor axes of the ellipse, aiming to state the degree of distortion of the pupil fit. The angular edge spread measures the spread of the found points on the fitted ellipse. If the points are evenly distributed, it is more likely that they originate from an exact pupil contour. We simplified the accessibility of the data by using a tabular text-based format, i.e., in the form of a CSV file. This format is independent of the used system and is commonly used for measurement recordings.

Camera Calibration

The goal of the camera calibration is to remove distortions caused by the camera lens and to estimate a projective transformation for mapping world coordinates to image coordinates. A camera projection matrix in the form of M = K[R|T] is used for mapping. K denotes the intrinsic parameter matrix and [R|T] the extrinsic parameter matrix. The intrinsic matrix K projects points in the camera coordinate system to the image coordinate system with the values of the focal lengths (fx, fy) and the optical center (cx, cy) of a camera. These parameters are independent of the viewed scene and are reusable. The extrinsic matrix [R|T] represents the projection of world coordinates to camera coordinates, consisting of a 3 × 3 rotation matrix R and the 3 × 1 translation vector T (OpenCV, 2020). By using the camera projection matrix M, an image coordinate Pc can be related to the associated world coordinates PW. Such a projection is typically applied in stereo vision, where the camera matrices of two or more cameras are used to estimate the depth and position of a point in world coordinates captured by these cameras. A further application of camera calibration is the correction of lens-induced distortion. Here, two types of distortion exist, radial and tangential distortions. For correcting distortions in a pinhole camera model, the calibration process estimates coefficients representing the distortions in the image, resulting in the five distortion coefficients C = (k1, k2, p1, p2, k3).
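As a small worked example of the pinhole model, the following sketch projects a world point into image coordinates by first applying the extrinsic parameters (R, T) and then the intrinsic matrix K. The numeric values are illustrative and not the calibration of the prototype, the function name is hypothetical, and lens distortion is ignored.

```python
def project_point(K, R, T, Pw):
    """Project a 3D world point Pw to pixel coordinates (u, v) with M = K[R|T]."""
    # World -> camera coordinates: Pc = R * Pw + T
    Pc = [sum(R[i][j] * Pw[j] for j in range(3)) + T[i] for i in range(3)]
    # Camera -> homogeneous image coordinates: p = K * Pc
    p = [sum(K[i][j] * Pc[j] for j in range(3)) for i in range(3)]
    # Dehomogenize by dividing by the third component
    return p[0] / p[2], p[1] / p[2]


# Illustrative intrinsics: fx = fy = 1000 px, optical center at (1024, 768)
K = [[1000.0, 0.0, 1024.0],
     [0.0, 1000.0, 768.0],
     [0.0, 0.0, 1.0]]
# Camera placed at the world origin: identity rotation, zero translation
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T = [0.0, 0.0, 0.0]

print(project_point(K, R, T, [0.0, 0.0, 1.0]))    # (1024.0, 768.0)
print(project_point(K, R, T, [0.1, 0.05, 1.0]))   # (1124.0, 818.0)
```

A point on the optical axis lands on the optical center (cx, cy), while off-axis points are shifted in proportion to the focal length and inversely to their depth.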

Implementing Single-Camera Calibration

In PupilEXT, we perform the single-camera calibration, i.e., the estimation of the camera parameters K, with the computer vision library OpenCV and its calibration routines. For this, a total of 30 images are collected at a rate of 0.5 fps, independently of the configured camera frame rate. After one image is collected, the depicted calibration pattern is detected, and feature points of the pattern are extracted. Successfully detected feature points and their positions are then stored and visualized in the calibration interface of PupilEXT. If the detection was not successful, the image is discarded, and the process is applied again to the next camera image. This procedure is repeated until the specified number of images is collected. The camera calibration process is performed when enough feature points are collected. The calibration routine optimizes the camera parameters by minimizing the reprojection error according to the algorithm of Zhang (Zhang, 2000). The reprojection error describes the root mean square error (RMSE) distance between the reprojection of the observed feature points using the current camera parameters and their known position in the calibration pattern.

After successful camera calibration, the quality of the resulting calibration is an essential metric. Its quality is primarily dependent on the accuracy of the detected feature points, which is an edge detection task similar to pupil detection. We report in the PupilEXT interface the final reprojection error in the form of the RMSE. However, as this error constitutes a root mean squared distance, it may be less intuitive for the user. Therefore, we compute an additional error using the mean absolute error (MAE), measuring the arithmetic mean of the absolute distances between the observed feature points and their projected estimation. The reprojection procedure for the MAE is identical to that of the reprojection error returned by the calibration routine. A set of ideal feature points of the calibration pattern in world coordinates is projected into the image plane using the estimated intrinsic and extrinsic parameters K, R and T. After the projection of the ideal feature point positions into their estimated image coordinates, they can be compared with the actual detected feature points in the captured image. The deviation is stated in the form of the Euclidian distance between the detected and idealized point positions, describing how well the camera parameters approximate the actual camera projection.
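The difference between the two error measures can be illustrated with a short sketch. It assumes that both errors are computed over the per-point Euclidian distances between detected and reprojected feature points; the exact aggregation used by the calibration routine may differ, and the function name and sample points are hypothetical.

```python
import math


def reprojection_errors(detected, projected):
    """Return (RMSE, MAE) of the point-wise distances between two 2D point lists."""
    distances = [math.hypot(d[0] - p[0], d[1] - p[1])
                 for d, p in zip(detected, projected)]
    rmse = math.sqrt(sum(x * x for x in distances) / len(distances))
    mae = sum(distances) / len(distances)
    return rmse, mae


# Two illustrative feature points: one is off by 5 px, the other matches exactly
detected = [(3.0, 4.0), (10.0, 10.0)]
projected = [(0.0, 0.0), (10.0, 10.0)]
rmse, mae = reprojection_errors(detected, projected)
print(f"RMSE = {rmse:.4f} px, MAE = {mae:.4f} px")  # RMSE = 3.5355 px, MAE = 2.5000 px
```

Because the distances are squared before averaging, the RMSE weights single large deviations more strongly than the MAE and is never smaller than it.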

Validate Single-Camera Calibration

The reported reprojection error is based on the camera’s projection matrix, optimized for the collected set of images during calibration. Therefore, the reprojection error may contain a bias due to overfitting. For quantifying potential overfitting, an additional verification feature is implemented in PupilEXT, performing the same procedure as in the calibration step but using fixed camera parameters. For this, we capture new calibration pattern images during the verification and calculate the reprojection error again, representing an unbiased approximation of the calibration quality. For instance, our prototyped single-camera system (Figure 3D) achieved an RMSE reprojection error of 0.341 px, where values under one pixel are commonly referred to as good calibration quality. For the MAE reprojection error, we achieved a value of 0.041 px, meaning that the average feature point coordinate was projected into the image plane with such a distance error. The verification with a new set of images showed a MAE reprojection error of 0.040 px.

In PupilEXT, the calibration parameters are stored to support the reuse at a later point. For this, a configuration file is saved after a successful calibration is completed. The file contains all essential information to reproduce and assess the state of the camera calibration, such as the attributes of the calibration pattern, the estimated camera parameter matrices and all projection error measures. The functionality of saving and restoring the calibration configuration enables an additional use case, the correction of image distortions in offline pupil measurements.

Stereo Camera Calibration

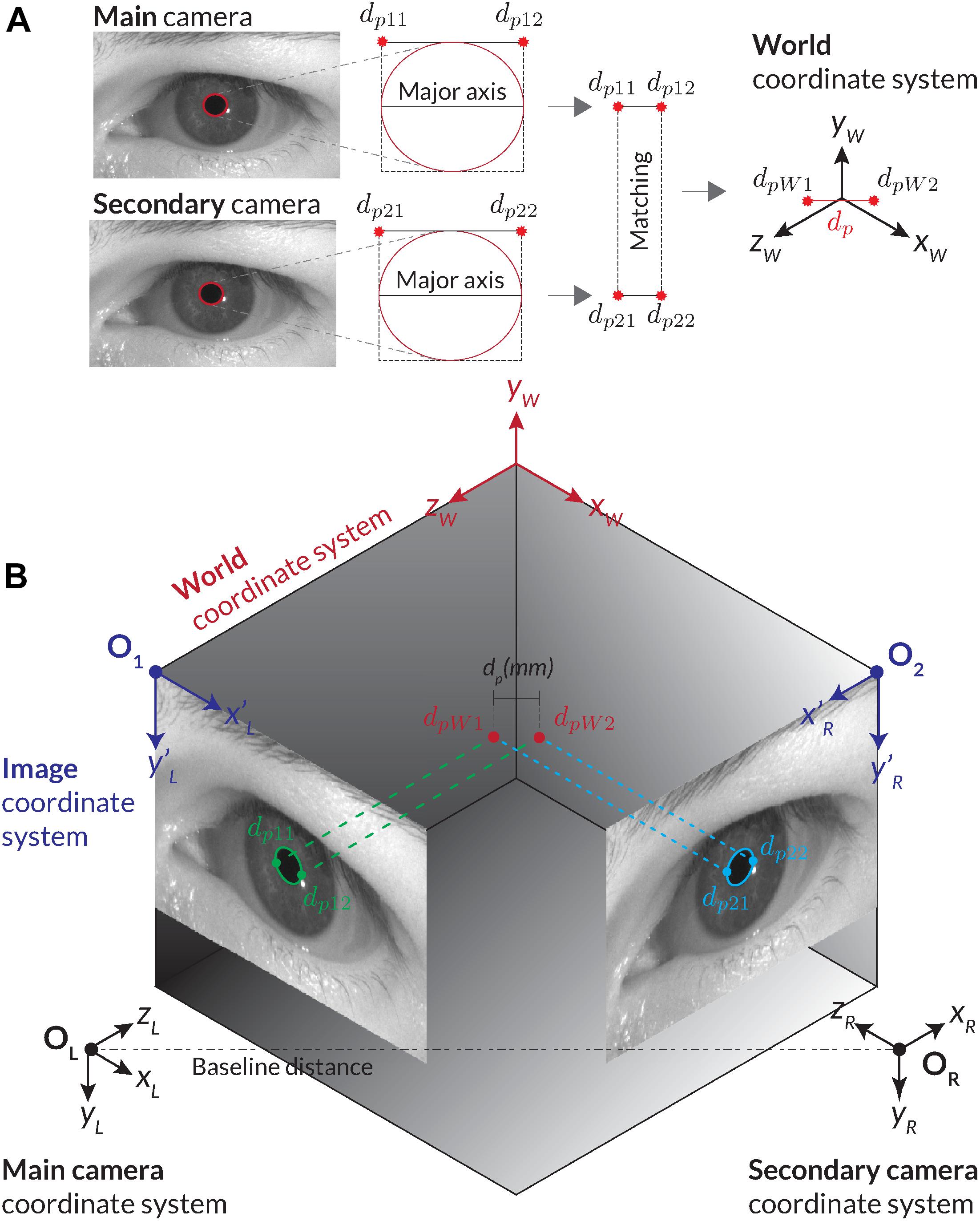

Stereo vision offers the possibility of tracking the depth information and absolute pupil size from two or more images captured by cameras of known position. By using the calibration matrices Mi of two cameras, it is possible to triangulate image coordinates in both images to their corresponding world coordinates PW. For this, matched points in both images must be found. Therefore, the pupil detection must be applied to images from both cameras (Figure 6A). For absolute pupil size calculation, the ends of the major axis of the ellipse are extracted and triangulated into world coordinates, and their separation is computed as the Euclidian distance (Figure 6A).

Figure 6. Illustration of calculating the absolute pupil diameter with the stereo vision set-up. (A) For the corresponding stereo images, two pupil detections are carried out. We use the ellipses of the pupil detections and their minimal encompassing rectangle as feature points for matching. Through triangulation, the corresponding stereo images are transformed into world coordinates. The absolute pupil diameter is calculated with the Euclidian distance between the two world coordinates. (B) Procedure of the stereo transformation with the main and second cameras’ images.

Triangulation determines the world position of an image point through its perspective projection in two or more images. Each projection point in an image corresponds to a projection line in world coordinates, representing all possible world coordinate positions that could have projected this point into the image. The projection lines of corresponding points can be used to determine their intersection in world coordinates. Figure 6B shows two corresponding image points of the main and secondary cameras (dp11, dp21) and their intersection point dpW1 in the world coordinate system. There are two challenges with this approach. First, the corresponding pupil detections in both images are required to retrieve matching points.

Second, extraction of feature points from a pupil contour may be ambiguous due to blurriness of the edge. If an identical pupil detection in both images cannot be guaranteed, potential deviations can be prevented by detecting and filtering those situations from the data stream. In PupilEXT, we use the corners of the minimal encompassing rectangle of the fitted ellipse, (dp11, dp12) and (dp21, dp22), as feature points for triangulation (Figure 6A). The corner points correspond to the major axis of the ellipse, which provides a more robust feature selection in both images.

Implementation of Stereo Vision

Given the two recognized pupil ellipse results from the main and second cameras (Figure 6B), we check the success of pupil detection and confidence in both images. Naturally, if one of the detections failed, no matching points (Figure 6A) can be extracted or triangulated into the world coordinate system. In valid cases, the feature points (dp11, dp12) and (dp21, dp22) of both ellipse fits are extracted. Here, the bounding rectangle of the ellipse fit is leveraged, and the corner points from the major axis are extracted (Figure 6A).

Assuming the calibration parameters of both cameras are available, the paired ellipse image point coordinates (dp11, dp12) and (dp21, dp22) are corrected for potential distortions using the distortion coefficient matrices. Next, the corresponding image feature points (dp11, dp21) and (dp12, dp22) are triangulated using the OpenCV function cv::triangulatePoints. The triangulation results PH1 and PH2 are represented in homogeneous coordinates, which then are converted into Cartesian coordinates (Eqs. 1 and 2).

With the transformed points in the world coordinate system (dpW1, dpW2), we determine the absolute pupil diameter through the Euclidian distance (Figure 6B). In the experiments, the computation time of this procedure (feature extraction, distortion correction and triangulation) was on average 0.03 ms, which should not significantly influence the maximum possible processing rate of pupil measurements.
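The last two steps, converting the homogeneous triangulation results into Cartesian coordinates and measuring their distance, can be sketched as follows. The conversion shown is the standard division of the first three components by the fourth; the function names and the sample coordinates are hypothetical.

```python
import math


def to_cartesian(ph):
    """Convert a homogeneous point (x, y, z, w) to Cartesian (x/w, y/w, z/w)."""
    x, y, z, w = ph
    return (x / w, y / w, z / w)


def absolute_diameter(ph1, ph2):
    """Euclidian distance between two triangulated homogeneous points."""
    p1, p2 = to_cartesian(ph1), to_cartesian(ph2)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p1, p2)))


# Illustrative triangulation results in millimeter-scaled world coordinates
PH1 = (0.0, 0.0, 1400.0, 2.0)   # -> (0, 0, 700) mm
PH2 = (8.0, 0.0, 1400.0, 2.0)   # -> (4, 0, 700) mm
print(absolute_diameter(PH1, PH2))  # 4.0
```

The unit of the result follows the unit in which the calibration pattern was specified, here assumed to be millimeters.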

However, no further criteria are applied for checking the reliability of the stereo vision result, as it is left open for the user applying the post-processing procedure. We did not consider a general threshold for pre-filtering to be necessary since the user should have full control over the evaluation of the data. For this, we provide all necessary raw data from both cameras in the recorded CSV file.

Calibration of Stereo Vision

A requirement for the stereo triangulation is the projection matrices Mi of both cameras. As discussed in the Camera Calibration section, the parameters of a single camera are estimated in the calibration procedure, resulting in the intrinsic parameters of the cameras. As the projection matrix M consists of both the intrinsic and extrinsic parameters, the extrinsic parameters are estimated through an OpenCV stereo calibration procedure, which takes the intrinsic parameters of each camera and returns the extrinsic parameters in the form of the rotation matrix R and the translation vector T. Thereby, (R, T) describe the relative position and orientation of the main camera with respect to the secondary camera coordinate system (OpenCV, 2020). After the estimation of these extrinsic parameters, the projection matrices M1 and M2 can be calculated with the equation M = K[R|T]. Notably, in a stereo camera set-up, the main camera is typically selected as the origin of the stereo camera coordinate system. Thus, the projection matrix of the main camera does not apply rotation or translation and is therefore given by M1 = K⋅[I|0], where R is replaced with the identity matrix I and T is replaced with the zero vector.

Validate Quality of Calibration

Similar to the single-camera calibration, the reprojection error is returned as RMSE by the stereo calibration procedure. In stereo vision mode, the reprojection error states the distance between the observed and reprojected feature points combined for both cameras in image coordinates. However, for the user, it would be more useful to be able to assess the quality of the stereo calibration in terms of absolute units. Therefore, we leveraged the predefined size of the calibration pattern to calculate the measurement error of the calibration in absolute units. For this, we measure the absolute square size of, e.g., the chessboard pattern, using the detected feature points from both cameras in the calibration routine. The detected feature points of the calibration pattern are undistorted, stereo triangulated and converted into Cartesian world coordinates.

Next, the measured square size is compared with the known distance between two corner feature points of the calibration pattern. As a result, we report the calculated error of the stereo camera system in absolute units calculated by the distance deviation between the measured and idealized sizes of the pattern. However, the stated error again could be biased by the overfitting in the calibration routine. Therefore, we implemented a verification routine that checks the absolute measurement error using a new set of images with the calculated projection matrices. Similar to the single-camera mode, the stereo calibration matrix can be saved and loaded into the software for the next usage, reducing new calibration effort. Here, we recommend verifying the old calibration before a pupil measurement is conducted. If the lens settings or camera position are slightly changed, the transformation matrix needs to be re-created by a new calibration procedure. The necessity can be quickly checked using the verification function in PupilEXT.
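A minimal sketch of such an absolute error estimate is given below. It assumes that the triangulated pattern corners are available in row-major order and that the error is aggregated as the mean absolute deviation of neighboring corner distances from the known square size; how PupilEXT aggregates the deviations is not specified here, and the function name and sample values are hypothetical.

```python
import math


def square_size_error(corners, cols, square_size):
    """Mean absolute deviation between measured and known square size.

    corners: triangulated 3D corner points in row-major order
    cols:    number of corners per pattern row
    """
    deviations = []
    for i in range(len(corners) - 1):
        if (i + 1) % cols == 0:
            continue  # do not measure across the end of a row
        measured = math.sqrt(sum((a - b) ** 2
                                 for a, b in zip(corners[i], corners[i + 1])))
        deviations.append(abs(measured - square_size))
    return sum(deviations) / len(deviations)


# Illustrative 2 x 3 corner grid with 5 mm squares; one corner is off by 0.2 mm
corners = [(0.0, 0.0, 700.0), (5.0, 0.0, 700.0), (10.2, 0.0, 700.0),
           (0.0, 5.0, 700.0), (5.0, 5.0, 700.0), (10.0, 5.0, 700.0)]
print(f"{square_size_error(corners, cols=3, square_size=5.0):.3f} mm")  # 0.050 mm
```

Running the same computation on a newly captured set of pattern images with fixed projection matrices corresponds to the verification step described above.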

Performance of PupilEXT

The performance of PupilEXT in pupil measurements depends on various factors such as the processing power of the system, the frame rate of the camera and the applied pupil detection algorithm. As listed in Table 1, the runtimes of the pupil detection algorithms vary significantly. For the goal of conducting pupil measurements with a frame rate of 120 fps, a maximal runtime of around 8.3 ms (1/120 s) per image is necessary. Additional computations such as correcting lens distortion can increase the needed computation time per image. We reduce the computational load in PupilEXT by using a region of interest (ROI), reducing the number of pixels that need to be processed. The ROI can be adjusted interactively by the user in the interface.

In combination with the PuRe pupil detection algorithm, we achieved a stable pupil measurement at 120 fps on full images. With manually specified ROI selection, the frame rate can be pushed further, as PupilEXT is completely implemented in C++, supported by parallel computation using CPU threads.

The Graphical User Interface of PupilEXT

Figure 7 illustrates the GUI of PupilEXT during a pupil measurement in the stereo camera mode. The essential functions of the software are accessible via the taskbar of the GUI (Figure 7, points 1 to 9, blue). Before a pupil measurement, the camera mode and the respective cameras must be selected to establish a connection (Figure 7, point 1, blue). In the camera settings, a connection to the microcontroller can also be established if a hardware trigger is required. After successful connection to the cameras, a window with a live image view of the cameras is opened. Camera parameters such as gain factor, exposure time or maximum frame rate can be changed at any time via a quick start button (Figure 7, point 3, blue). Next, one of the six pupil algorithms can be selected in the pupil detection preferences (Figure 7, point 1, green). In addition to the algorithm, the parameters of the method can be set to optimize the detection accuracy when necessary (Figure 7, point 3, green). We have provided a set of parameter presets that can be selected (Figure 7, point 2, green). In addition to the standard parameters from the original papers, we have added optimized values that are adapted to different ROI sizes. We have set the PuRe method as the default method in PupilEXT.

Figure 7. The graphical user interface of the programmed software PupilEXT during a pupil recording in stereo camera mode. In the main window’s taskbar, various functions can be accessed for quick actions. In the pupil detection sub-window, a pupil algorithm with respective parameters can be adjusted. The camera calibration can be done directly in PupilEXT. Calibration files can be saved and validated to give an outline of the camera system’s edge detection accuracy, which is essential for a pupil measurement pipeline.

The pupil detection of the captured live images can be started with the eye symbol in the main window (Figure 7, point 4, blue). In the live view window, we provide a quick action menu (Figure 7, point 1, red), which can be used to adjust the image size, set the ROI or display a magnified view of the pupil. The ROI feature allows placement of a rectangular area over the eye to improve performance further when recordings at a high frame rate of 120 Hz are needed. Note that for the stereo camera mode, a calibration should be carried out; otherwise, the absolute pupil diameter will not be available. The calibration window can be reached through the taskbar in the main window (Figure 7, point 5, blue).

In the calibration window (Figure 7, point 4, green), one can select the type of calibration pattern. Next, the calibration can be started, resulting in the calibration file that is saved locally on the hard disk. If a calibration file already exists, it can be loaded via the calibration window (Figure 7, point 4, green), and its validity can be again verified. The stated calibration accuracy can be recorded in a CSV file during the validation procedure.

After the calibration is completed, the absolute pupil diameter is displayed in the data view, which also lists all tracked pupil values in real-time (Figure 7, point 3, red). Each of these values can be visualized in a real-time plot by selecting the specific value in the data view. For recording the pupil measurements, a disk location can be selected to save the pupil data in a CSV file (Figure 7, point 7, blue). The data can be saved continuously with the recording button (Figure 7, point 8, blue). The raw images can be saved with the blue recording button (Figure 7, point 9, blue) for later offline pupil detection in PupilEXT. In the Supplementary Materials, we have added hands-on video materials to illustrate the usage pipeline and the features. Additionally, we offer the feature of creating and loading custom profiles (Figure 7, point 6, blue), which opens the software in a specified state to avoid repeated configuration the next time PupilEXT is started.

Demonstration of a Measurement Pipeline With PupilEXT

To illustrate the measuring procedure with PupilEXT, we performed an exemplary experiment on the wavelength-dependent pupil light response. We recorded the pupil diameter of an observer with six repetitions (trials) using PupilEXT, while different light spectra were turned on at a steady luminance. For this, a subject looked into a 700 mm × 700 mm sized homogeneously illuminated observation chamber. The illumination was generated by a custom-made temperature-controlled (30°C ± 0.1°C) multi-channel LED luminaire, which was used to trigger the pupil diameter with chromatic stimulus spectra (Zandi et al., 2020). Pupil foreshortening error (Hayes and Petrov, 2016) was minimized by using a chin rest for positioning the subject’s head. Additionally, the gaze point was fixed with a 0.8° sized fixation target (Thaler et al., 2013) in the middle of the adaptation area. On the left eye’s optical axis, a stereo camera system consisting of two Basler acA2040-120um cameras with 50-mm lenses was set up (Figure 3A).

The pupil diameter was triggered using chromatic LED spectra with peak wavelengths λPeak of 450 nm [full width at half maximum (FWHM): 18 nm, L = 100.4 cd/m² ± SD 0.23 cd/m²] and 630 nm (FWHM: 16 nm, L = 101 cd/m² ± SD 0.31 cd/m²), which were switched on for 30 s. Before each stimulus spectrum, a phosphor-converted white LED with a correlated color temperature of 5,500 K (L = 201 cd/m² ± SD 0.48 cd/m²) was presented to adapt the pupil diameter to its baseline. The order of the chromatic stimulus spectra was randomized. One pupil measurement trial lasted 240 s, as the anchor spectrum (5,500 K) was switched on twice for 90 s, once before each chromatic stimulus, and the main stimuli (450 and 630 nm) were each switched on for 30 s. The spectra were measured 20 times before and after the experiment using a Konica Minolta CS2000 spectroradiometer. We controlled the luminaire with a custom-made MATLAB script, which stored the switch-on times of the spectra in a CSV file. Possible switch-on latencies during the command transmission from MATLAB to the luminaire’s hardware were taken into account by tracking the processing time in the embedded software. We recorded stereo eye images at 30 fps (*.bmp) during each pupil examination trial (240 s), making it possible to detect the pupil diameter from the images later with different detection algorithms, using the offline pupil analysis mode of PupilEXT. The pupil data were synchronized with the luminaire’s switch-on times afterward using a MATLAB script.
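
The synchronization of pupil samples with the stored switch-on times can be illustrated with a short Python sketch. It is not the MATLAB script used in the study; the function name `label_samples`, the label strings, and the example schedule (one possible stimulus order within a 240 s trial) are assumptions made purely for illustration.

```python
import numpy as np

def label_samples(sample_times, switch_times, labels):
    """Assign each pupil sample the label of the most recent switch-on event.

    sample_times : 1-D sequence of sample timestamps in seconds
    switch_times : sorted 1-D sequence of switch-on timestamps in seconds
    labels       : one label per switch-on event
    Samples recorded before the first switch-on event get the label None.
    """
    sample_times = np.asarray(sample_times, dtype=float)
    switch_times = np.asarray(switch_times, dtype=float)
    # Index of the last switch-on event at or before each sample (-1 if none).
    idx = np.searchsorted(switch_times, sample_times, side="right") - 1
    return [labels[i] if i >= 0 else None for i in idx]

# Hypothetical schedule of one trial: anchor 90 s, stimulus 30 s, twice.
switch_times = [0.0, 90.0, 120.0, 210.0]
labels = ["5500K", "450nm", "5500K", "630nm"]
fps = 30
sample_times = np.arange(240 * fps) / fps  # one timestamp per recorded frame
sample_labels = label_samples(sample_times, switch_times, labels)
```

With such labels, the samples of each stimulus period can be selected and averaged across trials. In practice, the switch-on timestamps would first be corrected for the transmission latency mentioned above.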

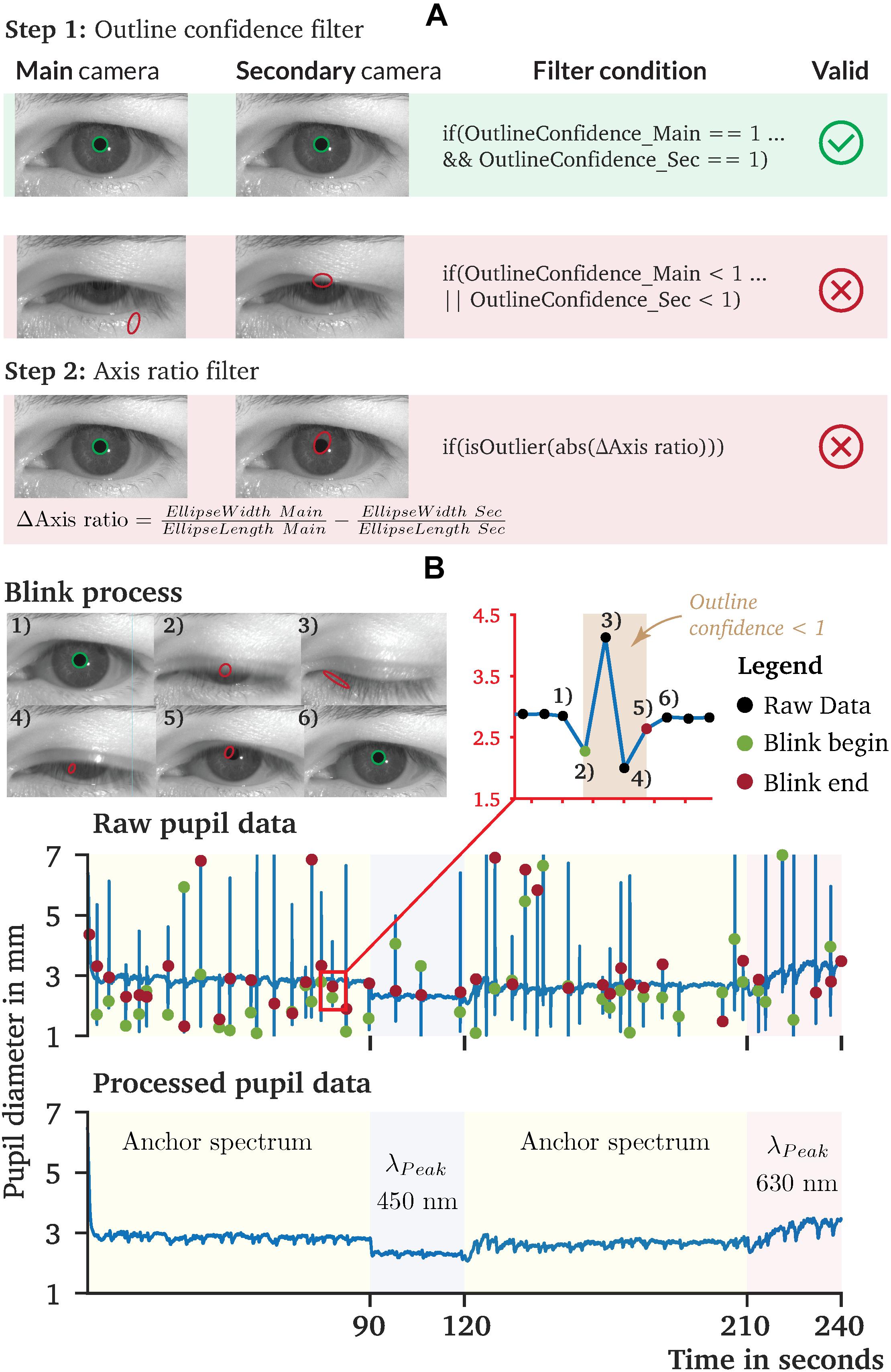

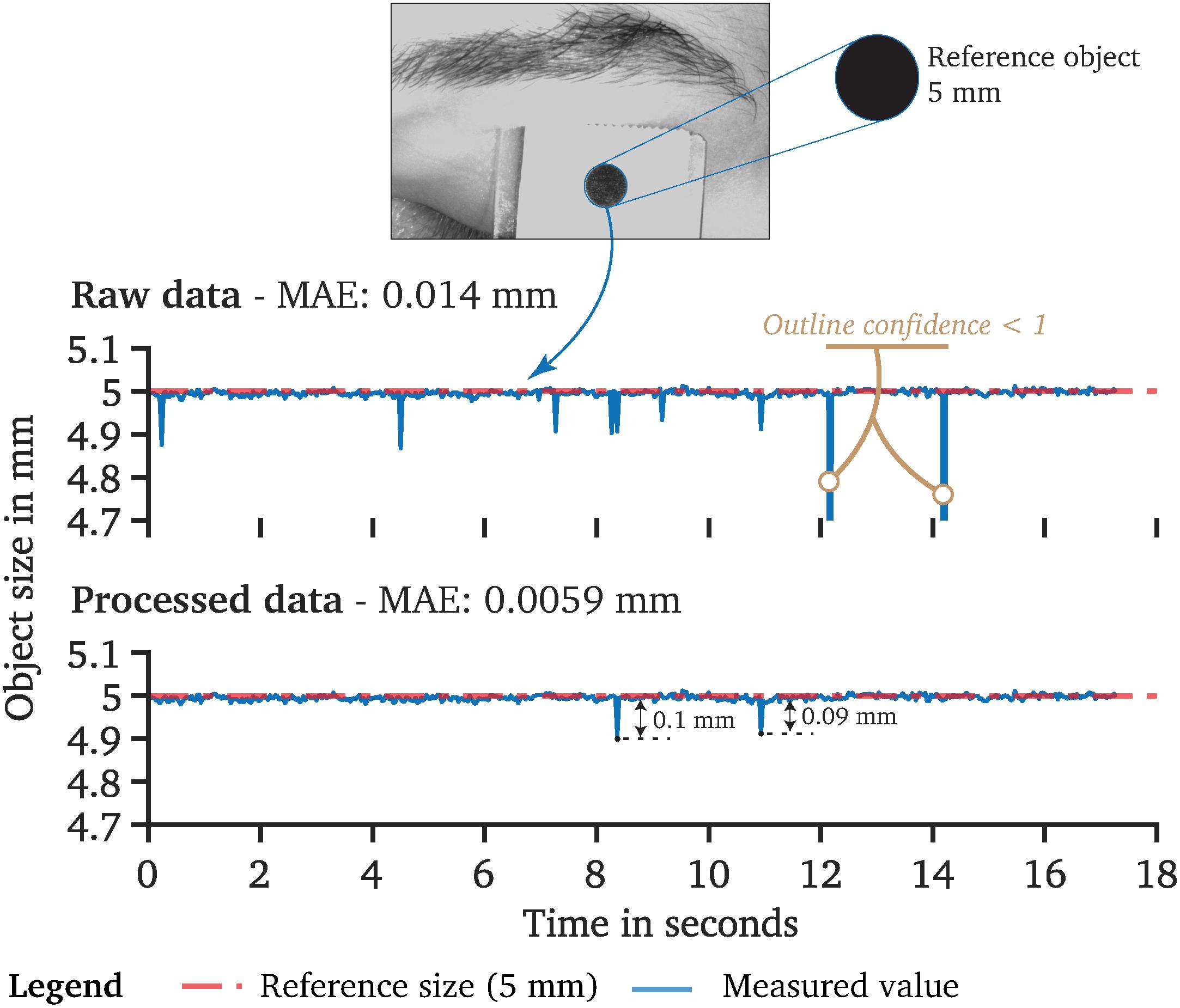

Pre-processing the Measured Raw Data

Recorded raw pupil data are usually contaminated by artifacts or other non-physiological pupil changes that need to be removed by pre-processing (Figure 8A). For the pupil data recorded by PupilEXT, we recommend a two-step filtering procedure. First, every data point that has an outline confidence measure (Santini et al., 2018a) lower than 1 should be discarded. With this step, artifacts caused by eye blinks are detected robustly (Figure 8A). Other artifacts can occur if the matching points (Figure 6B) between the first and second cameras differ, resulting in a non-physiological shift of the pupil diameter, visible as slight peaks in the data. We identify matching point errors by comparing the stated axis ratio of the ellipses between the main and second cameras. The axis ratios differ because the second camera’s position causes a perspective change of the pupil area. However, the difference in axis ratio between the ellipses of cameras 1 and 2 should remain constant within a certain range. Thus, the reliability of the matching points (Figure 6B) can be assessed by calculating the difference of the axis ratios across the data points and removing all strong outliers from the sample dataset (Figure 8A). We have pre-processed the recorded pupil data according to this two-step procedure. The results of one raw pupil measurement trial (240 s) using the PuRe algorithm and the respective pre-processed pupil data are shown in Figure 8B. Eye blinks can be tracked approximately by identifying the intervals in which the outline confidence falls below 1. However, eye-blink detection via the outline confidence measure can only work if the algorithm’s detection rate is robust, i.e., the pupil is detected in more than 90% of valid eye image cases. We implemented the proposed two-step pre-processing method in MATLAB. The script is available on the GitHub repository of the PupilEXT project. Additionally, the recorded eye images are made available online together with the stereo calibration file. The data can be loaded directly into PupilEXT for a hands-on experience.
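
The two-step procedure described above can be sketched in Python as follows. This is an illustrative approximation, not the MATLAB script from the repository. The function name `two_step_filter`, its arguments, and in particular the outlier rule (more than `k` scaled median absolute deviations from the median axis-ratio difference) are assumptions, since the text only states that strong outliers are removed.

```python
import numpy as np

def two_step_filter(diameter, confidence, ratio_main, ratio_second, k=3.0):
    """Return a copy of `diameter` with unreliable samples set to NaN.

    Step 1: discard samples whose outline confidence is below 1.
    Step 2: discard samples whose axis-ratio difference between the two
            cameras is a strong outlier. Assumed rule: more than k scaled
            MADs away from the median of the samples that passed step 1.
    """
    diameter = np.asarray(diameter, dtype=float)
    confidence = np.asarray(confidence, dtype=float)
    ratio_diff = (np.asarray(ratio_main, dtype=float)
                  - np.asarray(ratio_second, dtype=float))

    keep = confidence >= 1.0  # step 1: outline confidence filter

    if keep.any():
        med = np.median(ratio_diff[keep])
        # 1.4826 scales the MAD to the standard deviation of a normal distribution.
        mad = 1.4826 * np.median(np.abs(ratio_diff[keep] - med))
        if mad > 0:
            keep &= np.abs(ratio_diff - med) <= k * mad  # step 2: axis-ratio filter

    cleaned = diameter.copy()
    cleaned[~keep] = np.nan
    return cleaned
```

Marking rejected samples as NaN instead of deleting them keeps the time axis intact, so that gaps can still be interpolated or counted afterward.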

Figure 8. Recorded pupil data and proposed pre-processing procedure of pupil diameter data collected by PupilEXT. (A) A two-step pre-processing procedure is proposed, which uses the outline confidence and the axis ratio of the cameras’ tracked ellipses. (B) Recorded pupil data from our sample experiment to illustrate the performance of PupilEXT. The outline confidence can be used to approximately identify eye occlusions in the data. The two-step pre-processing can remove artifacts and other non-physiological pupil diameter changes.

Comparison of the Pupil Detection Approaches

The majority of pupil detection algorithms were evaluated based on their accuracy in estimating the pupil center (Table 1), as they are mainly intended for eye-tracking applications. One of the works evaluating the pupil fit was Świrski et al. (2012), in which their approach was compared against the Starburst algorithm. The pupil fit was assessed using hand-labeled pupil measurements and the Hausdorff distance. The Hausdorff distance (Rote, 1991) describes the maximum Euclidean distance of one ellipse to any point on the other ellipse (Świrski et al., 2012). The results show that the Swirski algorithm improves the detection rate for a five-pixel error threshold from 15% for Starburst to 87%, showing that not every eye-tracking algorithm is suited for pupil measurements. Fuhl et al. (2015) evaluated the ExCuSe algorithm, comparing their approach with the Swirski and Starburst algorithms. However, only the distance between the pupil center estimate and the ground truth was evaluated. The evaluation was performed on 18 datasets of pupil images captured under highly challenging real-world conditions. The detection rate for a five-pixel error threshold shows an average rate of 17% for Starburst, 40% for Swirski and 63% for ExCuSe.
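
To make the pupil-fit metric more concrete, the following Python sketch approximates the Hausdorff distance between two ellipses by sampling points along their outlines. It is an illustration only and not the evaluation code of the cited works. The ellipse parameterization (center, semi-axes, rotation angle in radians), the number of sample points, and the function names are assumptions.

```python
import numpy as np

def ellipse_points(cx, cy, a, b, theta=0.0, n=360):
    """Sample n points on an ellipse with center (cx, cy), semi-axes a and b,
    rotated counter-clockwise by theta (radians)."""
    t = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
    x, y = a * np.cos(t), b * np.sin(t)
    c, s = np.cos(theta), np.sin(theta)
    return np.column_stack((cx + c * x - s * y, cy + s * x + c * y))

def hausdorff(p, q):
    """Symmetric Hausdorff distance between two point sets of shape (n, 2).

    For each point, the distance to the nearest point of the other set is
    taken; the result is the largest of these nearest-point distances,
    evaluated in both directions.
    """
    d = np.linalg.norm(p[:, None, :] - q[None, :, :], axis=2)
    return max(d.min(axis=1).max(), d.min(axis=0).max())

# Example: a hand-labeled pupil outline and a fit shifted by 3 px.
labeled = ellipse_points(0.0, 0.0, 10.0, 10.0)
fitted = ellipse_points(3.0, 0.0, 10.0, 10.0)
distance_px = hausdorff(labeled, fitted)
```

A fit could then be counted as a successful detection if `distance_px` stays below the chosen error threshold, for example five pixels.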

A similar evaluation was repeated in the works on ElSe (Fuhl et al., 2016a), PuRe (Santini et al., 2018a), and PuReST (Santini et al., 2018b), which conducted evaluations using overlapping datasets and the pupil center distance as a performance value. Within a five-pixel error threshold, the Starburst algorithm shows a detection rate of 13.44%, compared with 28 to 36% for Swirski, 50 to 58% for ExCuSe, 66 to 69% for ElSe, 72% for PuRe and 87% for PuReST. In these evaluations, a performance loss for highly challenging recorded images was observed. Specifically, images with low-intensity contrast and pupils containing small reflections impaired the pupil detection algorithms. Santini et al. showed that the average runtime of the PuReST algorithm is 1.88 ms, compared with 5.17 ms for PuRe (Santini et al., 2018b), making PuReST the fastest approach with the highest pupil center detection rate. Note that these results apply to images that do not occur under laboratory conditions. Topal et al. (2017) evaluated the APPD algorithm together with Starburst, ElSe and Swirski. The pupil fit and processing time were used to quantify the performance of the algorithms. For the pupil fit, the pupil localization was used, which quantifies the overlap ratio between the detected ellipse and the ground truth as a value in the range [0, 1]. The results indicate a high pupil localization of 0.97 for APPD compared with 0.93 for Swirski, 0.92 for ElSe and 0.77 for Starburst. Additionally, Topal et al. measured an average computation time of 5.37 ms for APPD, 7.12 ms for ElSe (7 ms), 47.17 ms for Swirski (3.77 ms) and 49.22 ms for Starburst (100 ms). The numbers in parentheses are the originally reported runtimes of the respective algorithms.
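
The detection rate at a pixel error threshold, as used in these evaluations, can be computed along the following lines. This is a minimal Python sketch under stated assumptions: the function name `detection_rate` is hypothetical, frames without a detection are encoded as NaN and counted as misses, and an error exactly equal to the threshold counts as a hit. The cited works may handle these cases differently.

```python
import numpy as np

def detection_rate(pred_centers, true_centers, threshold_px=5.0):
    """Fraction of frames whose pupil center error is within threshold_px.

    pred_centers, true_centers : arrays of shape (n_frames, 2) in pixels.
    Frames without a detection can be encoded as NaN; their error is NaN,
    which compares as False and is therefore counted as a miss.
    """
    pred = np.asarray(pred_centers, dtype=float)
    true = np.asarray(true_centers, dtype=float)
    err = np.linalg.norm(pred - true, axis=1)  # Euclidean center distance
    return float(np.mean(err <= threshold_px))

# Example with four frames: errors of 0 px, 5 px, 10 px and one missed frame.
truth = [[100, 100]] * 4
pred = [[100, 100], [103, 104], [110, 100], [np.nan, np.nan]]
rate = detection_rate(pred, truth)
```

Because only the center distance enters this metric, a high detection rate does not by itself say anything about the accuracy of the fitted pupil outline or diameter.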

Based on the literature, it can be stated that PuReST is the top performer when evaluating the pupil center detection rate on highly noisy images. However, these results represent the detection rate with a five-pixel error threshold and do not state the accuracy of the pupil size measurements. Only the evaluations of Topal et al. (2017) and Świrski et al. (2012) carried out a performance test on the pupil fit. Their results paint a different picture, with Swirski performing better than ElSe.

Another aspect that could significantly affect the performance of a pupil detection algorithm is the number of parameters. Each algorithm has a set of parameters that need to be tuned by the user to match the image composition. Selecting appropriate values may be a challenge for the user. Thus, the fewer parameters an algorithm has, the simpler its application. Comparing the number of parameters of the pupil detection algorithms, Swirski includes 11, followed by Starburst with five and PuRe and PuReST with three. ElSe and ExCuSe have only two parameters. In our proposed software PupilEXT, we have stored the standard values of the algorithms as stated by the authors and additionally optimized three sets of parameters for pupil measurement applications under different image compositions.

Validation of the Pupil Detection Algorithms

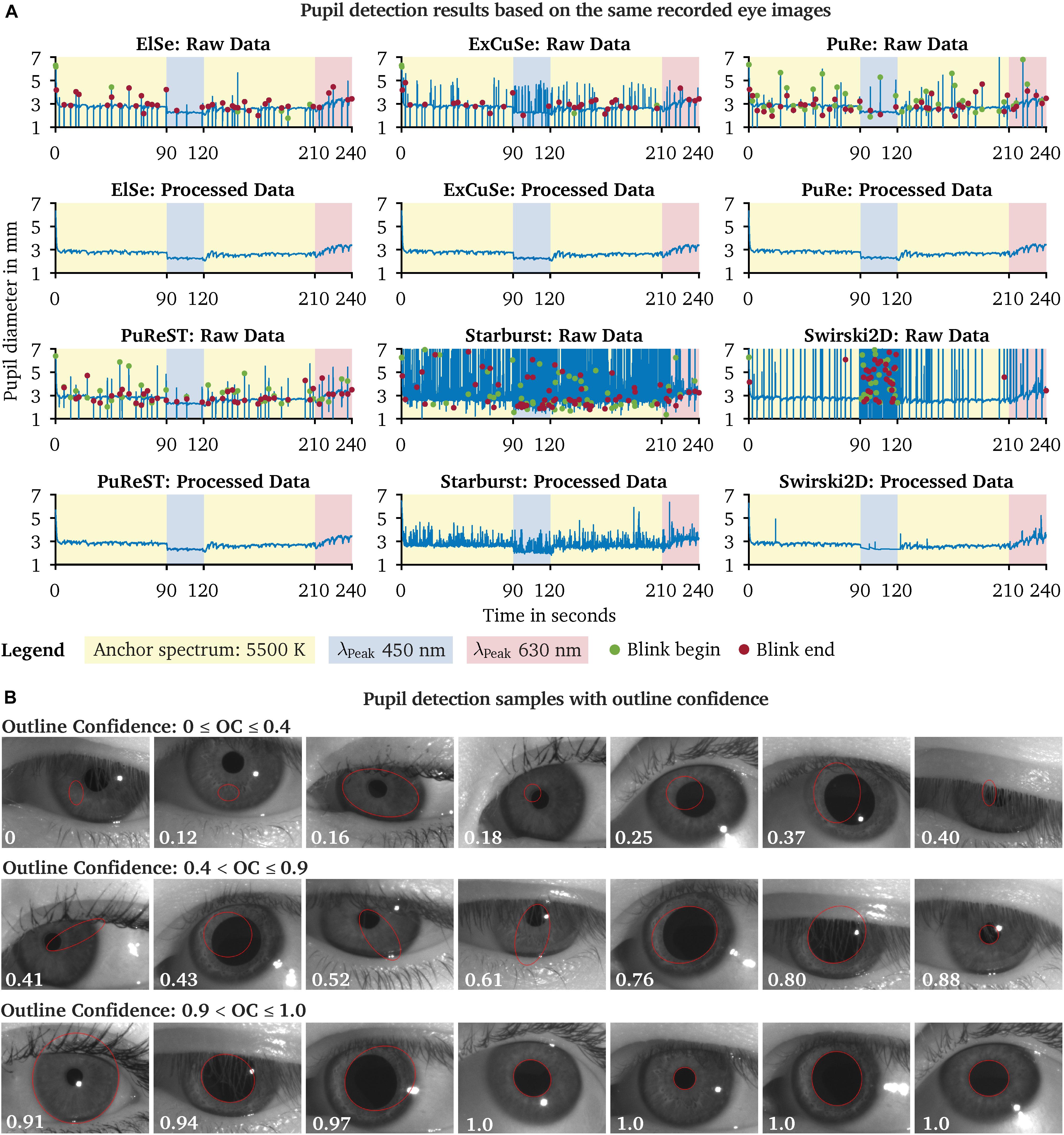

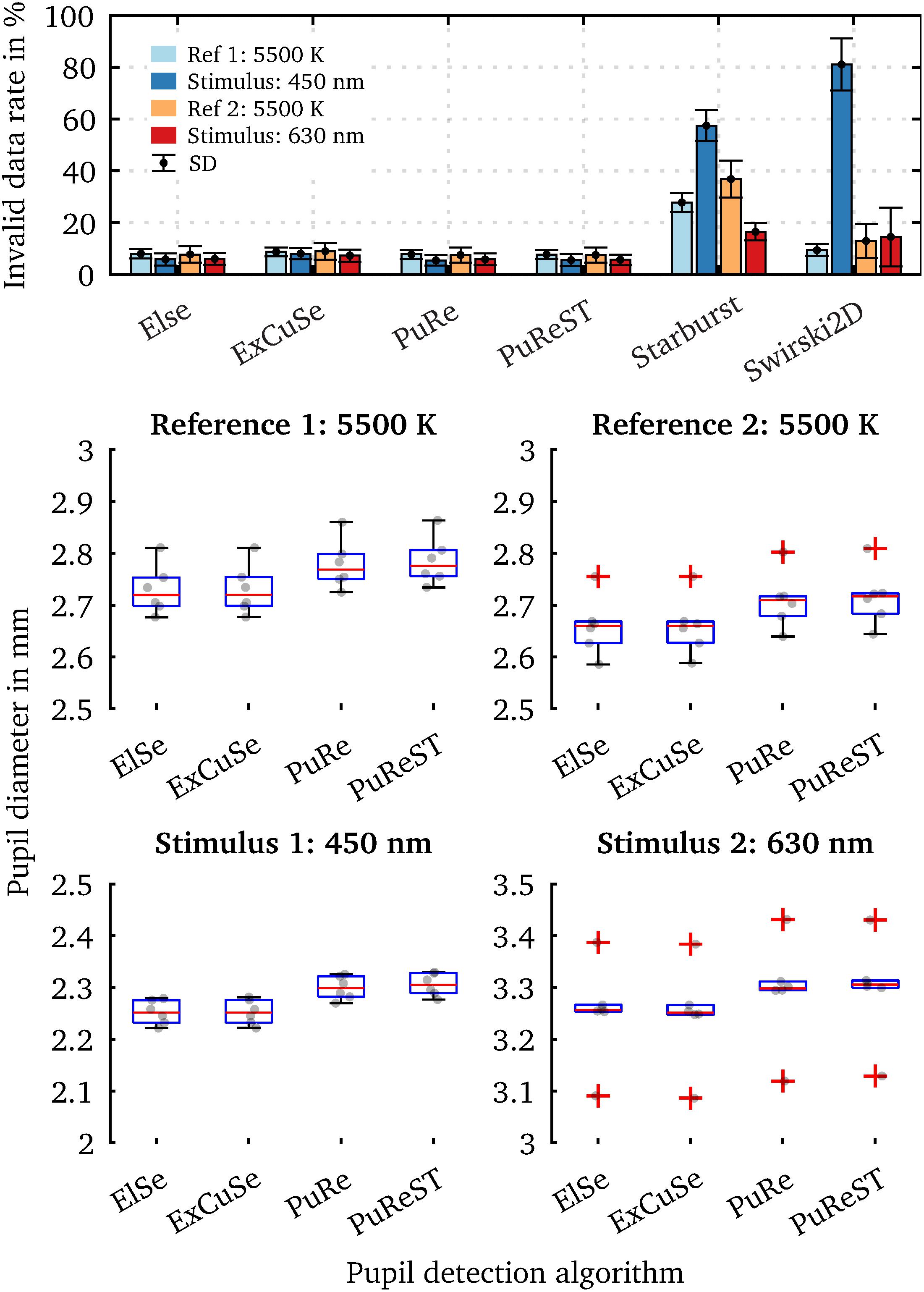

We evaluated the captured eye images from our pupil experiment using the six available pupil detection algorithms in PupilEXT. Ideally, the pupil diameter should remain the same across the detection algorithms, as the same eye image sets were used for evaluation. However, due to the algorithms’ different parameter settings and approaches, the measured diameter may differ. In Figure 9A, we have plotted the detected raw pupil diameter from one experimental trial (240 s) to illustrate how differently the algorithms perform on the same acquired image set. For each raw data plot panel, the respective pre-processed pupil data are illustrated, which were obtained using the proposed two-step method. The ElSe, ExCuSe, PuRe and PuReST algorithms achieved an acceptable pupil detection rate, visually noticeable through the lower number of artifacts in the respective raw dataset (Figure 9A). As discussed, the artifacts in the raw data can be filtered by removing every detected pupil diameter with an outline confidence of less than 1. In Figure 9B, we illustrate a sample of recorded pupil images with the respective outline confidence, showing that an invalid pupil fit can be detected and removed when using such a metric.

Figure 9. Comparison of the pupil detection algorithms based on the same eye image set and visualization of the pupil ellipse fit as a function of the outline confidence. (A) Eye images from one subject were recorded during a chromatic pupillometry experiment using PupilEXT. The pupil was exposed to LED spectra of the peak wavelengths 450 nm (L = 100.4 cd/m² ± SD 0.23) and 630 nm (L = 101 cd/m² ± SD 0.31) for 30 s. An anchor spectrum with a correlated color temperature (CCT) of 5,500 K (L = 201 cd/m² ± SD 0.48) was turned on for 90 s between each stimulus. The pupil diameter from the recorded images was extracted using the available algorithms in PupilEXT and pre-processed to illustrate the algorithms’ detection differences. (B) For each detected diameter, an outline confidence measure is provided and used as an indicator to filter unreliable pupil fits from the dataset. Pupil fits from different measurement sessions are illustrated as a function of the outline confidence. We recommend discarding all pupil diameters with an outline confidence measure lower than 1.

The Starburst algorithm caused a higher number of artifacts. Subsequent pre-processing of the raw data using the two-step method was not helpful, as the Starburst algorithm caused too many false detections. The Swirski algorithm had difficulties in detecting small pupil diameters at the 450-nm stimulus. After the invalid pupil data were filtered from the 450-nm time frame, there were almost no valid data left for linearly interpolating the missing values. The Swirski algorithm also did not achieve a robust detection rate for the pupil recording with the 630-nm spectrum. However, the cameras’ lenses were equipped with optical IR-high-pass filters, so the spectral-dependent detection quality was not due to the type of light spectrum. Each pupil detection algorithm has a certain number of parameters that need to be adjusted depending on the image resolution or how large the pupil is in relation to the image size. An incorrect combination of parameters could affect the pupil detection at differently sized diameters, as the algorithm itself could rule out smaller pupils.

The proposed technique for detecting eye blinks based on the outline confidence (Figure 8B) is highly affected by the detection rate. For example, at a higher rate of pupil detection artifacts, it is no longer possible to distinguish between a false pupil fit and a closed eyelid (Figure 9A). Additionally, the ExCuSe algorithm offers a threshold value that can be used to detect eye blinks. In this way, values that indicate a closed eyelid are automatically removed by the pupil detection algorithm itself, so that a subsequent analysis of eye blinks is no longer possible. Therefore, eye-blink recognition using the outline confidence seems to work well only with PuRe and PuReST.
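
As a rough illustration of this approach, the Python sketch below groups consecutive samples with an outline confidence below 1 into candidate blink intervals. The function name `low_confidence_runs` and the optional minimum duration are assumptions. As noted above, such intervals can also stem from false pupil fits, so they are only blink candidates when the detection rate is robust.

```python
import numpy as np

def low_confidence_runs(confidence, fps, min_duration_s=0.0):
    """Return (start_s, end_s) intervals in which the outline confidence
    stays below 1 for consecutive samples.

    confidence     : 1-D sequence of outline confidence values per frame
    fps            : frame rate of the recording
    min_duration_s : hypothetical lower bound to ignore very short runs
    """
    low = np.asarray(confidence, dtype=float) < 1.0
    # Pad with False so that every run has a rising and a falling edge.
    padded = np.concatenate(([False], low, [False]))
    edges = np.diff(padded.astype(int))
    starts = np.flatnonzero(edges == 1)   # first frame index of each run
    ends = np.flatnonzero(edges == -1)    # index one past the last frame
    return [(s / fps, e / fps) for s, e in zip(starts, ends)
            if (e - s) / fps >= min_duration_s]

# Example: eight frames recorded at 10 fps with two low-confidence runs.
conf = [1, 1, 0.2, 0.1, 1, 1, 0.5, 1]
candidates = low_confidence_runs(conf, fps=10)
```

Counting the returned intervals per minute would give an approximate blink rate, provided the intervals are not dominated by detection artifacts.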