- Korea Policy Center for the Fourth Industrial Revolution (KPC4IR), Korea Advanced Institute of Science and Technology, Daejeon, South Korea

Governing emerging technologies is one of the most important issues of the twenty-first century, and primarily concerns the public, private, and social initiatives that can shape the adoption and responsible development of digital technologies. This study surveys the emerging landscape of blockchain and artificial intelligence (AI) governance and maps the ecosystem of emerging governance platforms within industry, the public sector, and civil society. We identify the major players in public, private, and civil society organizations and their underlying motivations. We then examine where these motivations diverge and converge, and how they are likely to shape the future governance of these emerging technologies. There is a broad consensus that these technologies represent the present and future of economic growth, but they also pose significant risks to society. Indeed, there is considerable confusion and disagreement among the major players about how to strike the delicate balance between promoting these innovations and mitigating the risks they pose. While some in industry are calling for self-regulation, others are calling for strong laws and state regulation to monitor these technologies. These disagreements are likely to persist for the foreseeable future and may derail the optimal development of governance ecosystems across jurisdictions. Therefore, we propose that players consider erecting new safeguards and using existing frameworks to protect consumers and society from the harms and dangers of these technologies. One way to do this is to re-examine existing legal and institutional arrangements, check whether they cater to the emerging issues raised by new technologies, and make the necessary updates or amendments as needed.
Further, there may be cases where existing legal and regulatory systems are completely outdated and cannot cover new technologies. Examples include AI used to influence political outcomes, cryptocurrency fraud, and AI-powered autonomous vehicles, and such cases call for agile governance regimes. This is important because different players in government, industry, and civil society are still coming to terms with the governance challenges that these emerging technologies pose to society. No one has a clear answer on the optimal way to promote these technologies while limiting the dangers they pose to users.

Introduction

Fourth Industrial Revolution (4IR) technologies, such as blockchain and artificial intelligence (AI), are being adopted rapidly by industry and governments worldwide, and consumers use services that depend on these technologies every day. The increased adoption of blockchain and AI has led to far-reaching changes in every aspect of human life—from getting a job to keeping it; from how people connect and with whom to how people date; from how the police keep us safe to who gets imprisoned and who gets released, among many other use cases (McKinsey Company, 2019)—disrupting the business processes of traditional industries in almost every sector (Quintais et al., 2019).

However, the increased adoption of these digital technologies has also introduced new and unprecedented challenges, such as privacy breaches, digital fraud, new forms of money laundering, intensified bias and discrimination, new safety and liability issues, technology-related unemployment, expanded surveillance, the potential for destructive robot-powered wars (Dempsey, 2020), and market polarization (Acemoglu and Restrepo, 2020), among others. These technologies have opened a Pandora's Box of legal, ethical, policy, regulatory, and governance issues with which many actors in government, industry, and civil society are currently grappling.

Thus, the question of governing1 disruptive digital technologies has become one of the most important questions of the twenty-first century (Winfield and Jirotka, 2018). We broadly define governance as the methods by which the public and private sectors monitor technologies in order to promote potential digital innovations and mitigate the risks those innovations pose. Governance is not just about mitigating the risks of emerging technologies. It is also about making changes to ensure that emerging technologies can be legally deployed and their full potential realized. Since 2017, we have witnessed a rising global debate (Pagallo, 2018) in private, public, academic, and social arenas on the governance of digital technologies (Winfield and Jirotka, 2018). During this period, for example, civil society and inter-governmental platforms have published over 200 AI principles that ask AI developers to adopt “ethics by design” principles. Regulators, meanwhile, have expressed strong concerns about potential abuse of blockchain-powered applications, such as crypto assets.

Within industry, there is an ongoing debate among entrepreneurs, shareholders, and BigTech firms. Some are calling for regulation of digital technologies (BBC, 2020), while other tech firms are keen to protect their innovations from stifling regulations. Many tech firms have published their own ethical principles (Jobin et al., 2019) and established ethics committees. Additionally, two inter-industry associations advocating for the responsible development of emerging technologies, the Partnership on AI (PAI) and the Blockchain Association, were launched recently. Both are attempts to promote self-regulation and to influence public policy so that innovations can thrive.

Moreover, the public sector is responding with a varied mixture of governance frameworks aimed at promoting digital innovations and guarding against the risks they may pose to society (OECD, 2019). In the past 2 years alone, almost all major countries have published digital policies and investment plans aimed at promoting digital innovations. Furthermore, some, such as South Korea and the UK, have experimented with regulatory sandboxes (Financial Conduct Authority, 2015). These are regulatory tools that permit innovators to experiment and introduce their innovations to the market under minimal, controlled regulation and supervision. During the same period, countries have issued over 450 regulatory proposals targeting digital innovations, more than 200 in Europe alone (Hogan Lovells, 2019). Governments thus face an apparent paradox: they want to invest in digital innovations while at the same time seeking to regulate digital technologies. This is fueling a growing sense of confusion about how best to govern these emerging technologies across all major jurisdictions.

Amid this debate, only a few studies have focused on emerging platforms for digital innovation governance. Some scholars have focused more on the ethical governance of emerging technologies. For example, Winfield and Jirotka (2018) proposed a framework that guides the ethical governance of AI and robotics, showing how ethics feed into standards, which, in turn, lead to regulations. Furthermore, Cath (2018) studied the question of governing high-risk technologies and the appropriate frameworks, and provided suggestions on digital technology governance. Another expert, Etzioni2, proposed a framework of three rules for AI regulation:
- AI should be subject to existing laws, which must be updated to suit AI systems.
- AI systems should fully disclose that they are not human.
- AI systems must not keep or publish users' private information without their explicit permission.

Similarly, Takanashi et al. (2020) called for multiple stakeholders to establish governance mechanisms for emerging technologies, and Feijóo et al. (2020) highlighted emerging platforms and called for technology diplomacy. These contributions are important in answering critical questions about emerging technology governance.

However, two things remain poorly understood: the role of emerging technology governance platforms in the public, private, and civil society arenas, and how these platforms already interact (working for, with, and against each other) in governing digital technologies. There is broad agreement that the public and private sectors, as well as civil society, need to work together. The missing piece of the puzzle is how this can be realized in practice.

As noted above, some argue for self-regulation, but how this would work is not yet clear. For example, should the public sector trust private-sector firms that rely on emerging technologies such as AI and blockchain to self-regulate? Others argue for a strong public hand in governing emerging technologies. They suggest that most private-sector players, especially BigTech, will not self-regulate without strong government pressure (Kwoka and Valletti, 2021). However, they may be overestimating the ability or “power” of most governments to have a say in the emerging technology governance debate. The reality is that the majority of governments may lack the ability, know-how, and resources to effectively govern and regulate emerging technologies (Cusumano et al., 2021). What, then, of civil society players? Do they have an impactful say in shaping the rules that govern emerging technologies?

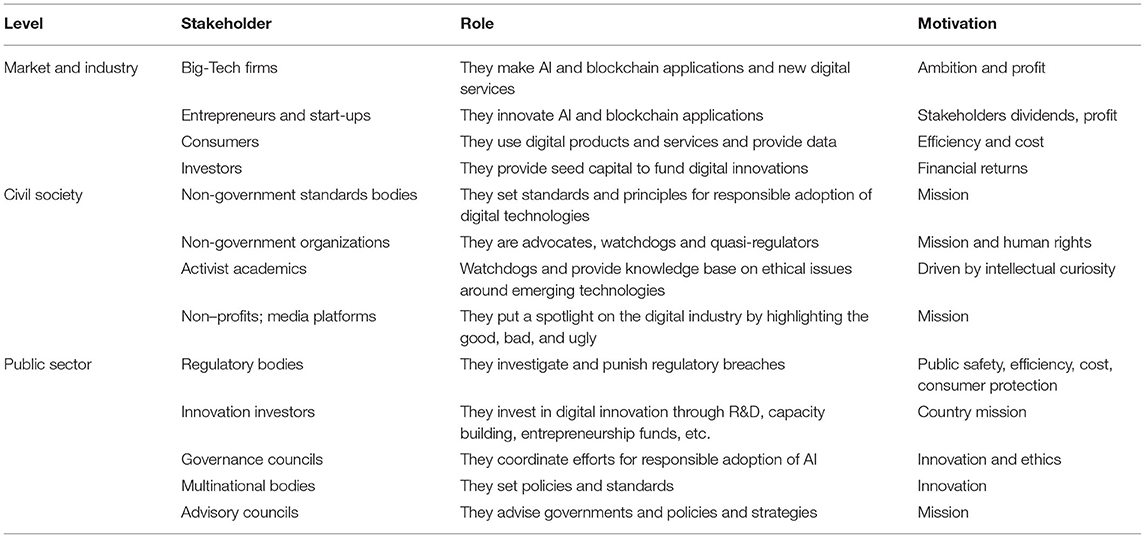

This study argues that the forces shaping digital technology governance across different jurisdictions emerge from the governance struggles and evolution of these emerging platforms. Therefore, we map out the ecosystem of emerging platforms in the public, civil society, and private sectors to improve our overall understanding of emerging technology governance. We seek to identify the major players in the governance space for new digital technologies and their underlying motivations. We also outline where these motivations differ and where they converge (see the outline in Table 1 above). Finally, we consider (preliminarily) their relative strength and power to shape how emerging technologies are governed. Through this approach, this study contributes to the governance debate by attempting to provide a realistic view of each major player and its role in shaping the governance of emerging technologies. In the following sections, we examine some of the emerging platforms and the perspectives of the players.

Defining Emerging Technology Governance Platforms

Throughout this article, we use the concept of emerging technology governance “platforms.” By this we specifically mean an organization or grouping of organizations from the public, private, or civil society sectors that uses its organizational base or collective voice to do any of the following regarding the governance of emerging technologies: push for policies, standards, laws, or principles, or voice opinions. This definition should not be confused with AI-powered digital platforms (e.g., Facebook, Google) or blockchain-powered platforms (e.g., Binance). Those platforms facilitate interactions across a large number of participants using modern software, hardware, and networking technologies (Cusumano et al., 2019).

The latter are now dominated by global tech behemoths whose technologies and platforms are used by billions of users across the globe. These platforms bring speed and convenience to interactions, whether financial transactions across borders, the buying and selling of products and services, or personal connections. Although such platforms offer enormous benefits to users, they also pose risks such as privacy breaches, fraud, and cybersecurity breaches. Such incidents can instantly affect millions of users, and their impact can reverberate to far-flung parts of the world. For example, a privacy breach at Facebook or Google can instantly affect users across the globe. Similarly, crypto frauds and scams affect small investors from South Korea to Uganda, among them the recent “Squid Game crypto” scam (BBC, 2021b) and hundreds of others.

Emerging Approaches to Governing the Fourth Industrial Revolution

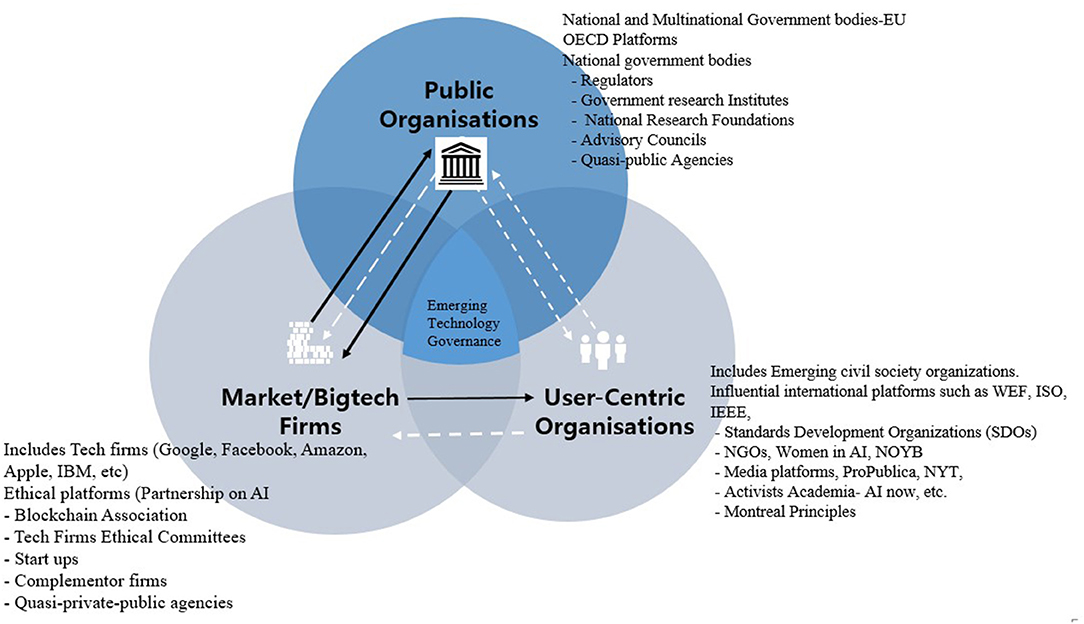

In Figure 1 below, we propose a simple analytical model adapted from Lynn (2010) to provide a descriptive understanding of the many layers and players in the governance of emerging technologies.

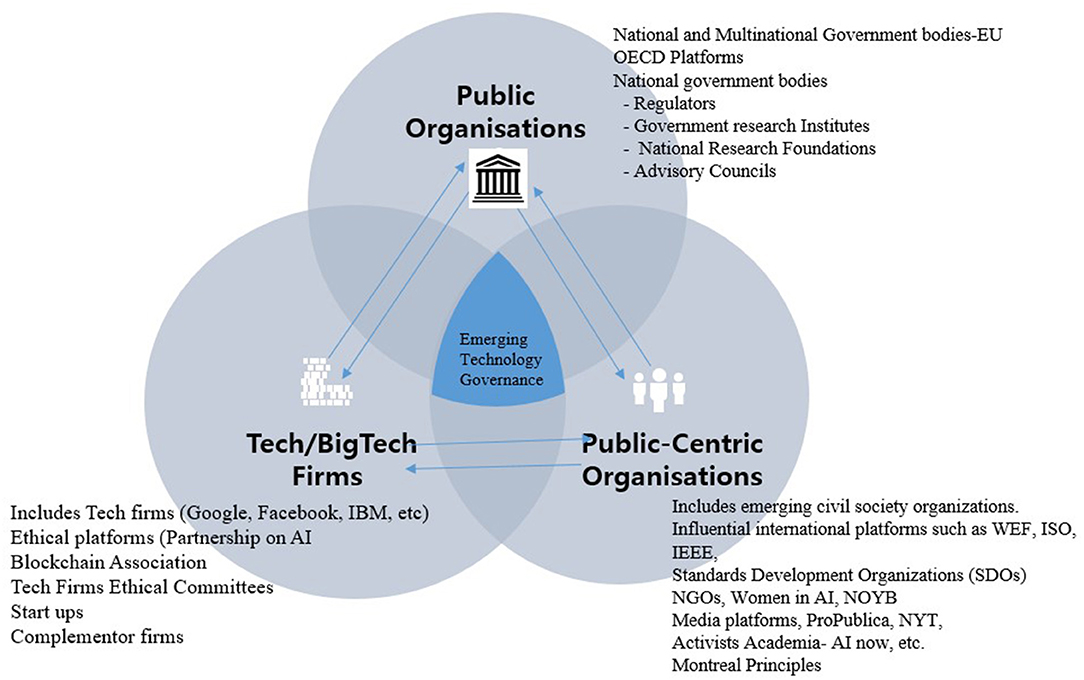

Emerging Technology Governance Centered in the Market

In this governance model (Figure 2), the institutional platforms for the governance of emerging technologies are dictated by the market or industry. Public sector players (regulators) and civil society play a limited role in governance and innovation promotion. This model prevails in countries such as the US, where the IT industry, in general, has a long history of self-regulation or light-touch regulation. In this model, industry, especially US BigTech, is primarily motivated by profit, and the profit lens plays a dominant role in shaping technology governance. Firms view emerging technologies as “real-world applications technology, which is part of every fabric of society,3” capable of transforming industries through smarter algorithms and big data. These firms also recognize that new technologies are a source of business and competitive advantage, even though they may pose significant risks to users.

Tech firms have adopted various approaches to harness the business potential of emerging technologies and deal with the numerous challenges they face. The first and most common approach is to deploy and market emerging technologies first and to deal with ethical, legal, and governance issues later. In other words, this approach prioritizes business first and ethics second (Murgia and Shrikanth, 2019).

We look at the recent case of AI-powered smart speakers to illustrate how this practice works. A UN report and a number of consumer complaints have been leveled against these “sexist and discriminatory speakers” (Rawlinson, 2019). The speakers have nevertheless been released to the market. Indeed, aside from some typically dismissive public relations responses, the firms manufacturing and selling these products have barely addressed the ethical issues they raise. There are similar cases in the blockchain context, where products and services such as initial coin offerings (ICOs) of cryptocurrencies have turned out to offer only fraudulent, valueless tokens.

This model of putting profits before ethical considerations has forced regulators to take reactive measures. Experts argue, however, that the global nature of digital technologies makes it difficult for regulators to come up with comprehensive and adequate measures beyond issuing warnings and guidance (Takanashi et al., 2020). Other examples include disputes between Google and its employees over the company's plans to build contentious technologies. Amazon, likewise, planned to sell facial recognition software to governments even after stakeholders and employees raised ethical concerns (Waters, 2018). The participation of these companies in profitable but ethically questionable projects reinforces the narrative that industry is not serious about ethical and social issues (Gregg and Greene, 2019). 
In this view, their recent governance initiatives represent only “ethics washing”; their focus will always be on profits first and ethics later.

However, ethical issues continue to raise concerns among corporate customers as well. For example, a 2018 Deloitte survey of 1,400 executives showed that 32% were concerned about ethical issues around emerging technologies. Thus, major tech firms, including IBM, Microsoft, Amazon, and Google, have started embracing “ethical and responsible” platforms for technology governance. For example, in 2016, they established a powerful inter-industry association, the PAI. The Blockchain Association has since emerged to play a similar role for responsible blockchain innovation.

However, experts such as Yochai Benkler and Yoshua Bengio (see Benkler, 2019; Castelvecchi, 2019) have interpreted such moves in two ways. They see them as attempts to create lobbying platforms that influence the formation of rules governing emerging technologies (Simonite, 2019), and as attempts to avoid regulation (Wagner, 2018). The industry argues that self-regulation is preferable because regulation stifles innovation. Even so, the strategies of “ethics washing” (Peukert and Kloker, 2020) and lobbying are fueling fears of regulatory inertia. They are also fueling fears of a failure to address the fundamental challenges posed by unaccountable digital technologies.

In addition, tech firms have established technology ethics committees and principles as platforms for shaping the governance of emerging technologies. These are meant to address issues of fairness, safety, privacy, transparency, inclusiveness, and accountability. However, many experts suggest that these industry efforts fall short of demonstrating a commitment to addressing emerging ethical and governance issues. Critics say that, at best, the industry is engaging in ethics washing (Wagner, 2018, 6–7). They point to the fact that the principles proposed by tech firms are not binding, and there are no mechanisms or frameworks to ensure that they are implemented. The ethics committees are merely advisory and have no power to ensure that companies follow their advice. Moreover, there are no mechanisms for verifying or auditing adherence to ethics principles. Thus far, Amazon and Google have moved ahead with contentious projects despite protests from employees and stakeholders. These examples confirm critics' fears that tech firms lack any genuine commitment to addressing ethical and governance issues.

Further, tech firms are shaping governance rules through the standards platforms of the International Telecommunication Union (ITU) (Gross et al., 2019). For example, recent reports indicate that Chinese AI firms, such as ZTE and Dahua, are working with the ITU to propose standards for AI-powered facial recognition technologies. The ability to influence standards gives companies a competitive and market advantage over others. It also gives them the ability to influence the technology policies adopted by countries, especially in the developing world. AI firms are therefore writing standards for technologies that have proven contentious globally (especially in the US, China, and the UK), and this should be treated with caution. Such efforts offer tech firms a chance to self-regulate and set rules with minimal oversight from civil society4 and consumer advocacy agencies. These two constituencies are consistently underrepresented in drafting these standards. In addition, these standards are, again, voluntary, so it is difficult to guarantee that other companies will not introduce rogue digital products and services.

As tech firms continue to push for self-regulation, critics and experts argue that the industry should not be trusted to self-regulate, given the high-stakes ethical issues accompanying AI (Benkler, 2019). As one critic puts it, “self-regulation is not going to work for emerging technologies, because companies that follow ethical guidelines would be disadvantaged with respect to the companies that do not. It's like driving. Whether it is on the left or the right side, everybody needs to drive in the same way; otherwise, we are in trouble.”

In general, tech firms have unmatched influence and power relative to other platforms (public or civil society) in the technology governance debate. Except in a handful of jurisdictions, such as the EU, most jurisdictions can barely muster a coherent governance strategy toward these firms and their services, as highlighted above. This is especially true in emerging economies. A further example is blockchain-powered platforms such as Binance, which operates in multiple countries and is used to trade billions of dollars in crypto assets without an office or regulatory oversight5. Other examples are AI-powered platforms such as Airbnb or Uber, which are “illegal” in a number of countries (South Korea is one such example). Nevertheless, they continue to operate there as if those countries' legal systems did not matter. Such cases are numerous, and it is beyond the scope of this article to discuss them in detail.

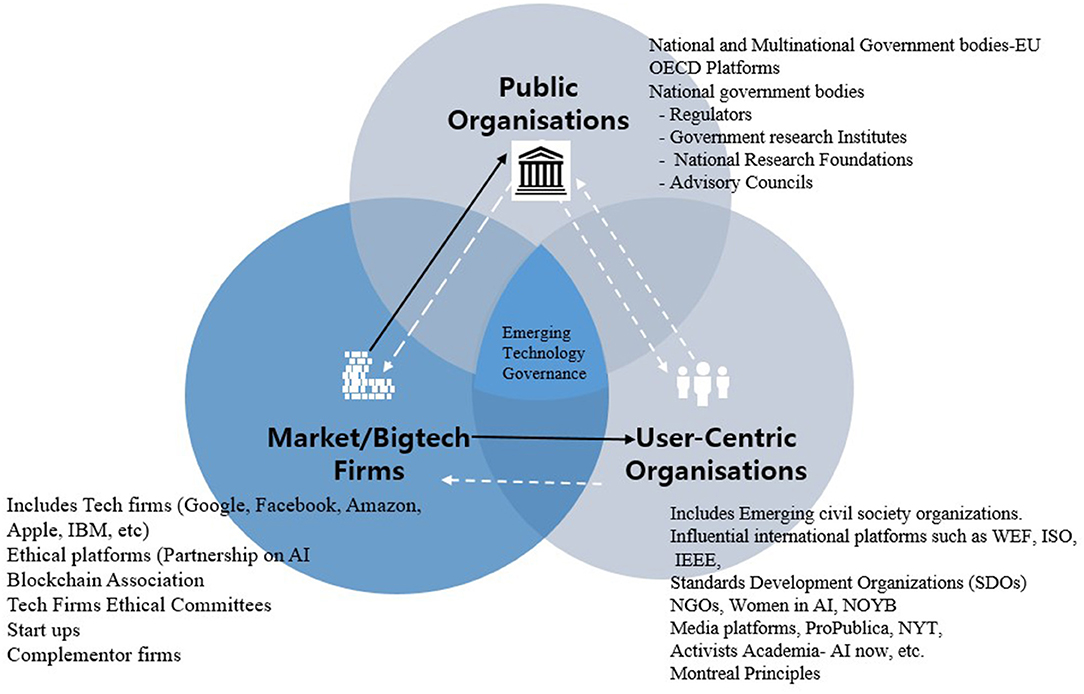

Digital Governance Driven by User-Centric Organizations

Figure 3 captures the emerging efforts and initiatives of civil society actors to establish policies, standards, and institutional mechanisms that govern digital technologies. The institutional players emerging in this space include the following, among others:
- the influential International Organization for Standardization (ISO)
- the World Economic Forum (WEF)
- the Institute of Electrical and Electronics Engineers (IEEE)
- international media houses
- non-government organizations (NGOs)

These organizations are playing roles as advocates, quasi-regulators, watchdogs, and policy and standards setters, all of which form building blocks for the global governance of emerging technologies.

As advocates and quasi-regulators, civil society organizations have published over 100 AI principles. Examples include the Top 10 principles for ethical AI by the UNI Global Union, the Toronto Declaration by Amnesty International and Access Now, and the Universal Guidelines for AI by the Public Voice Coalition. These initiatives underline the growing importance of mitigating the risks and dangers posed by unregulated and unaccountable adoption of emerging technologies. They also point toward regulatory reform and industrial policy in governing new technologies. These efforts have brought global attention to the ethical, human rights, and social problems that have surfaced with the increasing application of new digital technology. They have also provided critical input for digital policy-making in the public and private sectors. Such efforts have also increased the pressure on industry players to adopt “ethics by design” models in their technology development chains.

As a policy- and standard-setter, civil society is emerging as a quasi-regulator. For example, the ISO, through ISO/IEC JTC 1/SC 42 (ISO, 2017), its joint subcommittee with the International Electrotechnical Commission, is drafting standards that focus on the responsible adoption and use of AI. Similarly, IEEE, the global association of engineers, has established a global initiative for ethical considerations in AI and autonomous systems (IEEE SA, 2017). This initiative has given rise to the following standards projects:
- IEEE P7004™ Standard for Child and Student Data Governance
- IEEE P7005™ Standard for Transparent Employer Data Governance
- IEEE P7006™ Standard for Personal Data Artificial Intelligence (AI) Agent

Although these standards are voluntary, they give civil society some form of regulatory control over the AI industry. This control is weak, however, given the scale of standards required for the pervasive adoption of AI.

As watchdogs, civil society players and international media organizations have highlighted the unethical and biased application of emerging technologies developed by industry. This practice has been termed “whipping, naming, and shaming” the private and public sectors into rethinking the development and adoption of AI and blockchain products and services. For instance, outlets such as ProPublica, The New York Times, and CNN have given global consumers a daily dose of news on the dangers of unethical digital products from tech firms (Murgia and Shrikanth, 2019). Similarly, NGOs such as Women in AI and NOYB (None of Your Business) have called on tech firms and national governments to shun ethically questionable and human-rights-abusing products and services. Such activism and campaigns using mass media platforms have increased global consumer awareness. They have also led to some companies being barred from participating in some markets (Knight, 2019). In some cases, activism and campaigns have become critical tools and levers for “civil society to control the emerging technology industry6.” For example, campaigns highlighting issues of AI algorithm bias, discrimination, and racism have resulted in firms such as Google and Facebook issuing apologies (Grush, 2015) and fixing flaws in their algorithms (Gillum and Tobin, 2019).

Activism and complaints focusing on privacy and data protection have prompted governments to intervene through regulation and monetary penalties, which have affected tech firms. For example, complaints by Max Schrems, who later founded NOYB, led to the invalidation of the Safe Harbor agreement, a framework designed to allow transfers of personal data from the EU to the US. NOYB's other campaigns have resulted in monetary fines issued by authorities for breaches of privacy laws.

The above steps are moving in the right direction in terms of effective governance of emerging technologies. Even so, an important unanswered question concerns the translation of these standards, principles, and any associated research into practice. How should society be organized to govern disruptive technologies? Who will be responsible for governing these products and services by monitoring, certifying, and approving them? Moreover, how can we ensure that the work of civil society translates into policy and regulatory action?

The relevant activities of civil society have been limited to date. First, they largely target ethics and consumer protection in rich countries, resulting in an “ethics and governance divide.7” Because of regulation, industry prioritizes privacy and data protection in European countries, while users elsewhere are left to the mercy of domineering BigTech firms (Kelion, 2019). For instance, the “right to be forgotten” applies only to European consumers. Second, civil society groups have pushed industry to respond only through ethics washing (Wagner, 2018) rather than by taking fundamental steps to address the ethical problems posed by their products. Despite many civil society initiatives, these efforts have had a limited impact, barely touching the ethical, legal, and governance challenges associated with the adoption of new digital technologies.

Questions are therefore emerging as to whether civil society can ensure the responsible adoption of these technologies. Some proposed governance models envisage civil society groups evolving from advocacy and watchdog roles into tech-certification platforms, similar to the case of environmental civil society. These models see such an evolution as a means of creating sustainable and impactful governance.

This preliminary review thus suggests that civil society platforms have limited enforceable power beyond publishing principles and nudging tech firms to adopt “ethics by design.” In particular, they have little power to translate these principles, guidelines, and standards into practices that tech firms will adopt. Tech firms in particular have shown disdain and an “untouchable” attitude when ethical and governance issues are raised. A case in point is Google's rebuffing of such ethics concerns. Google forced out a co-lead of its own ethical AI team after she pointed out ethical issues with its AI-powered algorithms (Karen, 2020). Similar allegations have been made against Facebook in the ongoing “Facebook Files” scandal.

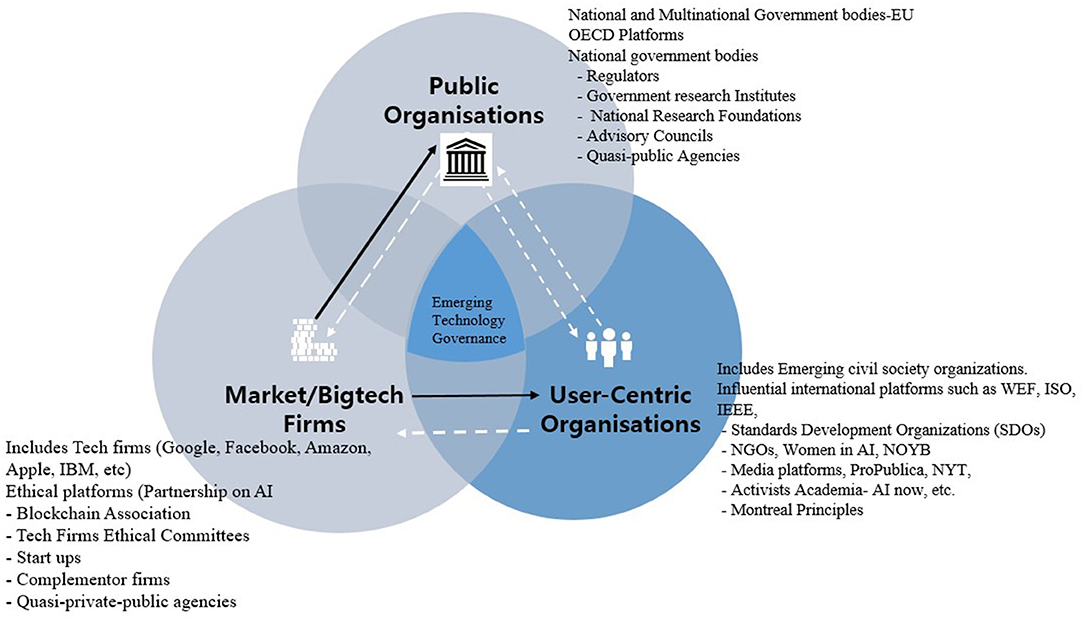

Emerging Digital Governance in the Public Sector

Next, this section discusses the emerging tech governance platforms of various governments and tries to untangle their association with other players in promoting digital innovations and good governance. These emerging platforms consist of government and inter-government initiatives focusing on policy, institutional, and capacity-building efforts for the governance of emerging technologies (Figure 4). In this model, governments take on complex and changing roles, as follows:
- regulators or non-regulators
- buyers of digital technologies
- innovation policy-makers
- taxation bodies
- standards- and principle-setters
- self-regulation monitors

A combination of these roles typically helps governments and public agencies achieve the dual objectives of promoting digital innovations and safeguarding the public against the risks and dangers posed by these technologies. Typically, the role(s) of a particular government also dictate the kinds of platforms needed to achieve these objectives. Emerging governance platforms tend to be divided into two groups. Innovation-promoting platforms focus on innovation and investment in new technologies. Governance platforms deal with the risks and dangers of new automated technologies.

In this context, the governance efforts of governments vary along two dimensions. First, they range from governments that exert a strong public hand in promoting innovation to those that leave this role to the market. Second, some governments push for comprehensive technological governance regimes with strong laws enforced by national regulatory agencies, which have the authority to investigate and punish regulatory breaches in a given jurisdiction (see Newman, 2012). Others favor restrained regimes with soft regulation and a limited government role in emerging technology governance. In the latter approach, tech firms are typically left to monitor themselves. Below, we untangle the emerging, complex web of governance platforms within the public sector.

Governments as Digital Innovation Policymakers

Many governments view emerging technologies as a new economic growth engine (Kalenzi et al., 2020). The strategies adopted by major countries focus on four main areas (OECD, 2019):
- the adoption and diffusion of digital technologies
- strategies for collaborative innovation
- research and innovation
- digital entrepreneurship

These strategies also vary between countries that advocate strong government promotion of digital innovation and those that leave it to the market.

National and Multinational Initiatives on AI Governance

Governments of major countries have reached a consensus that the new digital technologies, including AI and blockchain, are a new, endless frontier (Kalenzi et al., 2020). For instance, on AI technologies, governments in developed countries have committed significant investment and developed comprehensive policy agendas to support the development and adoption of these technologies. In China, the government published the New Generation Artificial Intelligence Development Plan, which envisions spending 150 billion dollars to establish China's leadership in AI (Future of Life Institute8). China has also put in place institutions to properly execute the policy, such as a new AI promotion office to coordinate their policy and investment plans on AI.

In the US, we see similar initiatives aimed at maintaining America's innovation and technology leadership in critical technologies, including AI and blockchain. In June 2021, the Senate passed a bipartisan 250 billion dollar tech bill to maintain US global leadership by funding advanced research on emerging technologies, including AI, blockchain, and robotics (Franck, 2021). Moreover, the US benefits from substantial private investment in digital innovations. Relatedly, the EU has followed the US and China with the 806.9 billion euro NextGenerationEU recovery instrument for the EU economy. Part of this investment goes to promoting AI, digital innovations, and renewable energy (European Commission9).

Other governments have also joined the race to develop new economies powered by digital innovations and AI. In 2020, the South Korean government launched the Digital New Deal (Yonhap News, 2020), a massive investment and policy strategy to renew the Korean economy on the basis of AI, data, 5G, and other digital technologies. In Singapore, the government established the Digitalizing Singapore agenda. In Australia, the Digital Transformation Strategy, with an AU$1.2 billion investment plan, targets goals similar to those of the others (Pash, 2021). Others, such as Canada, Israel, Japan, Switzerland, and France, have similar policies, investment plans, and institutional frameworks to promote the development and adoption of AI and digital technologies.

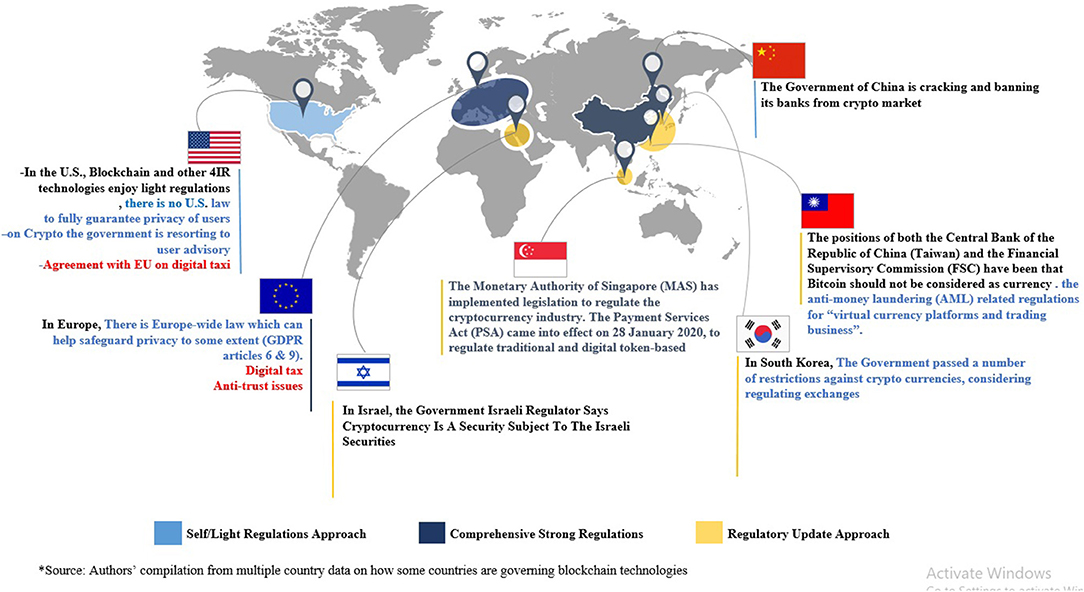

National and Multinational Initiatives on Blockchain Governance

Similar to the AI promotion policies above, governments of major countries are taking a strong interest in developing and adopting blockchain technologies. However, unlike in AI, most blockchain investment in the majority of countries is driven by the private sector and startups. Among public actors, the European Union is one of the leading contenders. For example, for 2016–2019, the European Commission provided over 180 million euros in grants under the Horizon 2020 program to fund the development of blockchain technologies by startups (European Commission10). Further, the Commission is streamlining the standardization and usage of data, which is critical to developing blockchain and AI ecosystems. Prominent initiatives include the 4.9 million euro DECODE project11 and the 3.4 million euro MyHealthMyData project (European Commission12). Regarding institutional arrangements, Europe established the European Blockchain Partnership (EBP) to coordinate efforts to build transnational blockchain infrastructure for public services.

In China, the government is cautiously promoting the development of blockchain/distributed ledger technologies (DLT). In recent years, the Chinese government has launched a National Blockchain-Based Service Network as its leading platform for public and private sector companies to collaborate in developing blockchain technologies.

In the US and UK, blockchain technology development is driven by private sector investment, BigTech, and startups. They together represent the biggest innovators and promoters of blockchain technologies. On a smaller scale, similar initiatives are going on in Korea, Singapore, and Canada, among others.

The preliminary lesson from these diverse policy agendas is that, when it comes to promoting AI, blockchain, and other digital technologies, the main concern is whether a country can gain and maintain a competitive advantage or will stagnate in the lower ranks of global value chains. Although policy execution differs from country to country, ranging from a strong government hand (China, Korea), to a mixed public and private approach, to leaving the market to take the lead, there is no doubt that major countries are taking solid action to renew their economies on the pillars of AI, blockchain, data, and digital technologies.

Governments as AI and Blockchain Regulators

As mentioned above, there is broad agreement across countries on a policy agenda to promote the development and adoption of AI, blockchain, and other digital technologies. However, there is also broad agreement that these technologies can entrench monopolies, pose privacy risks, increase inequality, endanger democracies, and amplify misinformation, fraud, bias, and safety problems, and that these issues call for regulatory mechanisms to limit their negative impacts.

Despite this policy consensus, there are vast disagreements among governments, the private sector, and civil society organizations on how to achieve optimal regulation to mitigate these harms. The only exception is the recent global agreement on digital taxes. Consider cryptocurrencies: many offer cross-border payments and trading, among other applications. Given the multinational operations of cryptocurrency platforms, one might expect players, especially in the public and private sectors, to agree on common rules and standards to regulate the industry. In fact, however, while some major countries, such as China, have recently banned all cryptocurrency transactions (BBC, 2021a), and others, such as South Korea and Japan, are proposing strong mechanisms to regulate cryptocurrencies and blockchain technologies, still others, such as the US and UK, are taking softer approaches such as consumer advisories and fines. As a result of these disagreements, the effective regulation, and hence the development and adoption, of cross-border blockchain applications in areas such as healthcare (COVID-19 digital certificates), supply chains, and payments faces significant challenges.

The disagreements are even more pronounced in the governance of AI and related digital technologies. Here, countries are taking different approaches: some, such as the EU and China, favor strong and comprehensive regimes; others, such as the US and UK, take light or soft strategies (Floridi, 2018); still others take a mixed approach; and the majority belong to the wait-and-see camp. To illustrate, in Europe, besides the well-known GDPR, which regulates privacy and data sharing (Albrecht, 2016), the European Commission is proposing new rules and policy actions aimed at furthering the responsible development of AI. These include a communication on fostering a European approach to AI, a coordinated plan with member states, and the Artificial Intelligence Act, which lays down harmonized rules to regulate AI. Still, even within Europe, the UK has so far taken a softer approach to AI regulation. For example, the UK Centre for Data Ethics and Innovation was established to oversee, but not regulate, AI and data-driven technologies. More recently, however, discussions have been under way on a proposed Online Safety Bill, which, if passed, would regulate online platforms, including their data- and AI-driven services.

At one extreme of this debate is the US, which primarily embraces market-driven “self-regulation” and has no comprehensive federal data and privacy regulation, apart from state and local measures such as the California Consumer Privacy Act (Bukaty, 2019) and San Francisco's ban on AI-powered facial recognition technologies, the latter somewhat ironic given the city's status as the center of the US tech industry, including blockchain and AI. Beyond these, the US largely relies on light approaches: principles, standards, self-regulation, and generally non-binding guidelines. The absence of regulation has forced private companies and user-centric organizations such as media groups to “step into the breach” and defend consumers from the actual and potential harmful effects of AI. For example, Apple has recently upgraded privacy settings on all its devices to give users more control over their data. Relatedly, media organizations such as The New York Times, The Wall Street Journal, and ProPublica are pursuing a sustained campaign to alert users in the US and globally to the abuses and dangers of AI-powered applications. However, the effects of these efforts, and whether they can lead to fundamental changes that mitigate the risks of AI, remain in question.

The broader takeaway from public efforts in the governance debate is that, although many governments have a good grasp of innovation policies to promote these technologies, they are far less equipped to govern them. This article agrees with Cusumano et al. (2021) that many governments (except in a handful of jurisdictions, such as Europe and China) are relatively weak at governing emerging technologies: “Most do not have the skills, or resources, to regulate and monitor the dynamic, on-going changes with digital platforms and their complex technologies and operations.”

Multi-National Platforms for Governing AI and Digital Technologies

Because of these weaknesses, most countries (especially developing countries) have taken a wait-and-see approach and remained silent on the question of governing emerging technologies. Recently, however, the debate has taken on a more multinational dimension, with some countries grouping together to shape the regulation and responsible development of AI and data-driven technologies. For instance, in May 2019, OECD member countries and non-member countries such as Argentina, Brazil, and Romania signed and adopted AI principles (Budish and Gasser, 2019), which, although non-binding, send a strong message to the private sector that these countries want responsible development and adoption of AI technologies. OECD member countries, including the US and Poland, have followed up by establishing the Global Partnership on Artificial Intelligence (GPAI), a new platform that aims to advocate for principles of responsible stewardship and for global coordination of national policies and international cooperation on trustworthy AI. It remains to be seen whether such efforts will lead to a global agreement on standard and enforceable rules for the responsible development and adoption of AI; this may prove difficult given competing interests, disagreements on how to regulate AI, the race to develop AI, and hegemonic technology struggles among major “developer” nations.

Worse still, these multi-national groupings are essentially “rich nations' clubs,” exclusive groups that have left the Global South on the sidelines of the AI governance debate.

Is There Common Ground?

In this overview, we consider disparate emerging platforms from the perspectives of the public sector, the private sector, and civil society. Based on our preliminary analysis, there is a clear convergence of interests among all parties in promoting the responsible development and adoption of emerging technologies. For example, as shown, all three major players have established technology principles and codes, have developed or are developing standards, and have established frameworks to realize them. In industry, we see the development of ethics committees; in government, a rise in advisory committees and digital innovation watchtowers; and in civil society, the rise of advocacy groups.

In principle, these three groups of actors (firms, government, and civil society) want similar things. However, their divergent interests are rooted in their underlying motivations and surface as they act to shape the rules governing emerging technologies. In this study, we argue that these motivations may be key to understanding how technology governance is being shaped and how it may evolve. Ultimately, the question of responsible development and adoption of emerging innovations is one of the public (represented by the public sector and civil society) versus the market, and of whether industry will put the interests of users ahead of profit. It also turns on whether governments have the resolve to withstand pressure from tech firms and the digital innovation race in order to safeguard the public interest. Finally, there is the question of whether civil society in the emerging technology governance space can generate the clout to translate advocacy into action.

In this preliminary analysis, we show that the current motivation for industry is profit and that critics might be correct in suggesting that industry actions, such as establishing powerless ethics committees and technology principles, are classic examples of ethics washing or paying lip service while, in fact, doing everything possible to profit from these new, flawed yet powerful technologies (e.g., facial recognition technologies, ICOs, fraudulent cryptocurrencies, participation in military AI projects).

Emerging Self-Regulation Platforms

Our overview shows a convergence of interest among nations in promoting emerging innovations. For example, all major countries have published digital innovation policies and followed them up with concrete investment plans to develop new technology capabilities. Similar principles have been adopted by the OECD and the G20, whose members include the US. However, governments diverge in how they develop and apply safeguards and laws to protect users from the unethical use of these powerful innovations. Countries whose tech firms have vested interests in digital innovation, such as China and the US, are opting for self-regulation and softer regimes. In the US, hard standards, such as the California consumer privacy law and San Francisco's ban on AI-powered facial recognition technologies, do exist at the state and local levels (and have generated global buzz). In the foreseeable future, self-regulation platforms are likely to consolidate in countries with large tech sectors.

Comprehensive and Strong Government Platforms

Among the rest of the world, Europe is taking the lead in promoting innovation (through policies and funding) while regulating tech firms relatively strictly through laws and codes of conduct. It can do this because it has recognized market power and strong incentives to promote and protect the budding tech industry in some European countries. However, most developing countries and others in the “wait-and-see” group are unlikely to exert any serious regulatory or governance effort, and certainly not on the scale of the GDPR, which may upset tech firms. A case in point is the recent “right to be forgotten” ruling in the case against Google, which held that the right applies within Europe but not in other countries. Europe, therefore, is likely to remain a bedrock of comprehensive regulation, while most other countries, especially developing ones, remain laggards (running wait-and-see platforms) in the emerging technology governance space.

Soft Law Platforms

Between these two sides, self-regulation and strong government regulation, lie many middle powers, such as Singapore, France, Sweden, and New Zealand. Their actions include large digital innovation funding schemes, governance frameworks, advisory committees, and joint initiatives (e.g., among Canada, France, and New Zealand), combining strong regulations, such as France's digital taxation policy and Singapore's anti-fake news laws, with market-friendly soft regulation in several areas. We suggest that the motivations behind these approaches vary. Singapore, Asia's hub for AI BigTech firms and economically quite dependent on their presence, is caught between a rock (rubbing BigTech the wrong way) and a hard place (protecting users); its middle-ground actions should be interpreted in that light. France, Sweden, and much of Europe may feel left behind in the digital technology race and, thus, may be eager to adopt aggressive policies to promote innovation while taking protective measures against tech firms.

We also show that civil society groups largely agree with industry and public sector actors on principles and standards. However, beyond publishing principles, advocacy, and calling out BigTech firms, civil society is in an unenviably weak position. Its actions are at best reactive, and although its organizations have proposed principles and values, they have no teeth. This is akin to having laws or values without the means to implement them. Thus, we propose that for civil society to be effective, it will have to evolve and develop stronger certified platforms.

Conclusion

In this study, we survey the approaches of different players to the governance of emerging digital technologies, with a focus on AI and blockchain. Our preliminary analysis suggests that the relevant parties are just beginning to come to terms with the governance challenges that emerging technologies pose for society. Within industry, we see the emergence of entities pushing for self-regulation, such as the PAI and the Blockchain Association; the formation of ethics committees; published principles; and emerging global collaboration on standards and ethical frameworks. Within civil society, by contrast, we see a rise in advocacy and calls for standard platforms, established by governments, that promote both innovation and the responsible development and adoption of new digital technologies.

There appears to be a broad consensus on the need for innovation policies and for effective principles, standards, and frameworks for responsible digital innovation. However, we have shown that firms, governments, and civil society are driven by different motivations and vested interests; we believe these differences will challenge any broad consensus on the governance of these new technologies.

Because of these different motivations, governance platforms will evolve and mature, or stagnate, depending on the environment. In some environments, such as the US, where BigTech firms have a dominant presence, governance issues will likely take a back seat, and we may see a consolidation of self-regulation approaches, which tend to focus on profits and their stakeholders' interests (the “regulation stifles innovation” narrative). In consumer-protection-oriented environments, such as Europe, there will likely be a consolidation of comprehensive approaches that regulate and promote digital innovation, protecting consumers as well as the budding industry in order to grow Europe's own digital technology base. Amid such a power play, civil society players will likely remain insignificant in the emerging technology governance space unless there is a shift toward a more active and stronger civil society.

It is now widely accepted that these emerging technologies are engines of present and future economic growth but also pose significant risks to society. Given the lack of global consensus on how to mitigate emerging problems, we suggest that it is up to each country to erect safeguards in each domain. This is particularly true in healthcare, security, public safety, and transportation, where digital technologies offer significant potential but also carry serious bias, safety, and privacy risks. Immediate safeguarding mechanisms should be implemented to protect consumers from the rogue and unaccountable use of digital technologies. One good example of such safeguards is the FDA's action plan for AI/ML-based software as a medical device, which puts in place measures such as the total product life cycle (TPLC) approach to the safety of AI and ML medical devices.

Other safeguards could follow the example of Britain's Centre for Data Ethics and Innovation, whose mission is to identify how users can enjoy the full potential benefits of data-driven technology within the ethical and social constraints of liberal democracy. By adopting such a mission, players, especially governments, could avoid both the command-and-control narrative favored by some (in government, civil society, and even industry) and the self-regulation favored by others, especially in the tech industry. Instead, players could focus on the practicalities: how emerging technologies can feasibly be governed, how to build trust across cooperating entities, where to start in dealing with ethical and social issues, and how to work together toward optimal governance and regulation.

Finally, governments and the private sector could use their purchasing and licensing power, especially for large AI and blockchain projects such as city-wide facial recognition systems and shipping supply chains, to push industry to adopt ethics-by-design principles. Beyond this, they could put in place adequate guidelines and principles for responsible agencies, such as police and security agencies, on the safe and ethical use of these technologies.

As for further research, we use AI and blockchain as representative cases in emerging technology governance even though they are different technologies, with different origins and maturity levels, because they are among the leading forces shaping every sector of public and private life in the digital era. Both are now used widely: AI is in “virtually everything,” though it is most associated with the recommender systems that power leading platforms such as Facebook, Google, and Amazon. Similarly, blockchain technologies power the current cryptocurrency mania but are also applied in numerous other use cases across the globe. Everyday users across the world enjoy the benefits and conveniences of these technologies but also suffer collectively when the technologies are abused, as discussed earlier. Notwithstanding this, further research could examine one of the two technologies more thoroughly. For instance, the ongoing debate on governing blockchain technologies and applications such as cryptocurrencies, including fraud and privacy issues, could benefit from similar earlier studies in the AI space.

Another question that merits further research: How can the different governance platforms in the public sector, private sector, and civil society collaborate to create better governance systems, given their relative positions?

Author Contributions

All authors contributed equally to the conception and design of the study, as well as researching, drafting, revisions, and approving the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^“Governance refers to processes of governing; or changed conditions of ordered rule; or new methods by which society is governed” (Rhodes, 2012). In this article, we expand on this definition in the context of emerging technology governance to include all initiatives aimed at promoting technologies and mitigating the risks they pose to society.

2. ^“Nevertheless, even A.I. researchers like me recognize that there are valid concerns about its impact on weapons, jobs, and privacy. It's natural to ask whether we should develop A.I. at all” (Etzioni, 2017).

3. ^According to Google, “AI has now become a real-world application technology and part of every fabric of society. Harnessed appropriately, we believe AI can deliver great benefits for economies and societies” (Google, 2019).

4. ^According to the World Bank: “Civil society... refers to a wide array of organizations: community groups, non-governmental organizations [NGOs], labor unions, indigenous groups, charitable organizations, faith-based organizations, professional associations, and foundations”; for definition see, https://www.weforum.org/agenda/2018/04/what-is-civil-society/.

5. ^The Wall Street Journal. $76 Billion a Day: How Binance Became the World's Biggest Crypto Exchange. The trading platform surged by operating from nowhere in particular, without offices, licenses or headquarters. Now governments are insisting on taking some control. WSJ News. November 11, 2021. https://www.wsj.com/articles/binance-became-the-biggest-cryptocurrency-exchange-withoutlicenses-or-headquarters-thats-coming-to-an-end-11636640029 (accessed November 23, 2021).

6. ^“In some instances, civil society campaigns are critical social controls of industry.” For detailed discussion see Christopher et al. (2012).

7. ^We define the “ethics and governance divide” as a situation where technology ethics and governance considerations are determined by the location of implementation. Richer and stronger countries, for example, have strong ethics and governance systems in place compared with less developed countries, hence the divide.

8. ^Future of Life Institute. AI Policy China. https://futureoflife.org/ai-policy-china/.

9. ^European Commission. Recovery Plan for Europe. https://ec.europa.eu/info/strategy/recovery-plan-europe_en#nextgenerationeu (accessed October 28, 2021).

10. ^European Commission. Blockchain Funding and Investment. https://digital-strategy.ec.europa.eu/en/policies/blockchain-funding.

11. ^See Decode project. https://decodeproject.eu/.

12. ^European Commission. My Health - My Data. https://cordis.europa.eu/project/id/732907 (accessed June 30, 2021).

References

Acemoglu, D., and Restrepo, P. (2020). The wrong kind of AI? Artificial intelligence and the future of labour demand. Cambridge J. Reg. Econ. 13, 25–35. doi: 10.1093/cjres/rsz022

Albrecht, J. P. (2016). How the GDPR will change the world. Eur. Data Prot. Law Rev. 2, 287–289. doi: 10.21552/edpl/2016/3/4

BBC (2020). Google boss Sundar Pichai calls for AI Regulation. BBC News (January 20, 2020). Available online at: https://www.bbc.com/news/technology-51178198 (accessed January 10, 2021).

BBC (2021a). China declares all crypto-currency transactions illegal. BBC News (September 24, 2021). Available online at: https://www.bbc.com/news/technology-58678907 (accessed September 26, 2021).

BBC (2021b). Squid Game crypto token collapses in apparent scam. BBC News (November 2, 2021). Available online at: https://www.bbc.com/news/business-59129466 (accessed November, 2021).

Benkler, Y. (2019). Don't let industry write the rules for AI. World View. Nature (May 1). Available online at: https://www.nature.com/articles/d41586-019-01413-1?utm_source=commission_junctionandutm_medium=affiliate (accessed December 15, 2020).

Budish, R., and Gasser, U. (2019). What Are the OECD Principles on AI? https://www.oecd.org/digital/artificial-intelligence/ai-principles/ (accessed January 4, 2020).

Castelvecchi, D. (2019). AI pioneer: “The dangers of abuse are very real”. Nature (April 4). Available online at: https://www.nature.com/articles/d41586-019-00505-2 (accessed April 30, 2020).

Cath, C. (2018). Governing artificial intelligence: ethical, legal and technical opportunities and challenges. Phil. Trans. R. Soc. A 376, 20180080. doi: 10.1098/rsta.2018.0080

Christopher, T. B., Bartley, T., and Roberts, W. T. (2012). “NGOs: between advocacy, service provision, and regulation,” in The Oxford Handbook of Governance, ed D. Levi-Faur (New York, NY: Oxford University Press). Available online at: https://www.oxfordhandbooks.com/view/10.1093/oxfordhb/9780199560530.001.0001/oxfordhb-9780199560530-e-23 (accessed November 12, 2019).

Cusumano, M., Gawer, A., and Yoffie, D. (2019). The Business of Platforms: Strategy in the Age of Digital Competition, Innovation, and Power. New York, NY: Harper Business.

Cusumano, M., Gawer, A., and Yoffie, D. (2021). Can self-regulation save digital platforms? Ind. Corp. Change. 30, 1259–1285. doi: 10.1093/icc/dtab052

Dempsey, M. (2020). Robot Tanks: On patrol But Not Allowed to Shoot – ‘Yet'. BBC News (January 2021). Available online at: https://www.bbc.com/news/business-50387954 (accessed July 10, 2021).

Etzioni, O. (2017). How to Regulate Artificial Intelligence. New York Times (September 1). Available online at: https://www.nytimes.com/2017/09/01/opinion/artificial-intelligence-regulations-rules.html (accessed April 25, 2020).

Feijóo, C., Kwon, Y., Bauer, J. M., Bohlin, E., Howell, B., Jain, R., et al. (2020). Harnessing artificial intelligence (AI) to increase wellbeing for all: the case for a new technology diplomacy. Telecomm. Policy 44:101988. doi: 10.1016/j.telpol.2020.101988

Financial Conduct Authority (2015). Regulatory Sandbox (November). Available online at: http://www.ifashops.com/wp-content/uploads/2015/11/regulatory-sandbox.pdf (accessed June 2, 2020).

Floridi, L. (2018). Soft ethics, the governance of the digital and the general data protection regulation. Philos. Trans. A Math. Phys. Eng. Sci. 376, 20180081. doi: 10.1098/rsta.2018.0081

Franck, T. (2021). Senate passes $250 billion bipartisan tech and manufacturing bill aimed at countering China. CNBC (June 9). Available online at: https://www.cnbc.com/2021/06/08/senate-passes-bipartisan-tech-and-manufacturing-bill-aimed-at-china.html (accessed August 28, 2021).

Gillum, J., and Tobin, A. (2019). Facebook won't let employers, landlords or lenders discriminate in ads anymore. Propublica (March 19). Available online at: https://www.propublica.org/article/facebook-ads-discrimination-settlement-housing-employment-credit (accessed April 15, 2021).

Google (2019). Perspectives on Issues in AI Governance (January). Available online at: https://ai.google/static/documents/perspectives-on-issues-in-ai-governance.pdf (accessed February 14, 2021).

Gregg, A., and Greene, J. (2019). Fierce backlash against Amazon paved the way for Microsoft's stunning Pentagon cloud win. Washington Post (October 31). Available online at: https://www.washingtonpost.com/business/2019/10/30/fierce-backlash-against-amazon-paved-way-microsofts-stunning-pentagon-cloud-win/ (accessed June 03, 2020).

Gross, A., Murgia, M., and Yang, Y. (2019). Chinese tech groups shaping UN facial recognition standards. Financial Times (December 1). Available online at: https://www.ft.com/content/c3555a3c-0d3e-11ea-b2d6-9bf4d1957a67 (accessed January 26, 2020).

Grush, L. (2015). Google engineer apologizes after photos app tags two black people as gorillas. The Verge (July 1). Available online at: https://www.theverge.com/2015/7/1/8880363/google-apologizes-photos-app-tags-two-black-people-gorillas (accessed February 15, 2019).

Hogan Lovells (2019). A Turning Point for Tech – Global Survey on Digital Regulation (October). Hogan Lovells Publication. Available online at: https://www.hoganlovells.com/en/publications/a-turning-point-for-tech-global-survey-on-digital-regulation (accessed January 15, 2020).

IEEE SA (2017). IEEE Global Initiative for Ethical Considerations in Artificial Intelligence (AI) and Autonomous Systems (AS) drives, together with IEEE Societies, New Standards Projects; Releases New Report on Prioritizing Human Well-Being. (July 19). Available online at: https://standards.ieee.org/news/2017/ieee_p7004.html (accessed June 28, 2020).

ISO (2017). ISO/IEC JTC 1/SC 42 Artificial Intelligence. Available online at: https://www.iso.org/committee/6794475.html (accessed January 19, 2020).

Jobin, A., Ienca, M., and Vayena, E. (2019). Artificial Intelligence: The Global Landscape of Ethics Guidelines.

Kalenzi, C., Yup, S., and Lee Kim, S. Y. (2020). The Fourth Industrial Revolution: A New Endless Frontier. Available online at: https://www.apctt.org/techmonitor/technological-innovations-control-covid-19-pandemic (accessed April 27, 2021).

Karen, H. (2020). We read the paper that forced Timnit Gebru out of Google. Here's what it says. MIT Technology Review (December 4). Available online at: https://www.technologyreview.com/2020/12/04/1013294/google-ai-ethics-research-paper-forced-out-timnit-gebru/ (accessed November 22, 2021).

Kelion, L. (2019). Google wins landmark right to be forgotten case. BBC News (September 24). Available online at: https://www.bbc.com/news/technology-49808208 (accessed May 20, 2020).

Knight, W. (2019). The US bans trade with six Chinese companies, ostensibly for their work against Uighurs. Wired (October 9). Available online at: https://www.wired.com/story/trumps-salvo-against-china-targets-ai-firms/ (accessed January 15, 2020).

Kwoka, J., and Valletti, T. (2021). Scrambled eggs and paralyzed policy: breaking up consummated mergers and dominant firms. Ind. Corp. Change. Available online at SSRN: https://ssrn.com/abstract=3736613

Lynn, L. E. (2010). Adaptation? Transformation? Both? Neither? The Many Faces of Governance. Available online at: http://regulation.huji.ac.il (accessed March 7, 2019).

McKinsey & Company (2019). Notes from the AI Frontier: Insights From Hundreds of Use Cases. Available online at: https://www.mckinsey.com/~/media/mckinsey/featured%20insights/artificial%20intelligence/notes%20from%20the%20ai%20frontier%20applications%20and%20value%20of%20deep%20learning/notes-from-the-ai-frontier-insights-from-hundreds-of-use-cases-discussion-paper.ashx (accessed January 17, 2020).

Murgia, M., and Shrikanth, S. (2019). How big tech is struggling with the ethics of AI. Financial Times (April 29). Available online at: https://www.ft.com/content/a3328ce4-60ef-11e9-b285-3acd5d43599e (accessed December 20, 2019).

Newman, A. L. (2012). “The governance of privacy,” in The Oxford Handbook of Governance, ed. D. Levi-Faur (New York, NY: Oxford University Press). Available online at: https://www.oxfordhandbooks.com/view/10.1093/oxfordhb/9780199560530.001.0001/oxfordhb-9780199560530-e-42 (accessed August 08, 2020).

OECD (2019). The Digital Innovation Policy Landscape in 2019. Technology and Industry Policy Papers. Available online at: https://www.oecd-ilibrary.org/science-and-technology/the-digital-innovation-policy-landscape-in-2019_6171f649-en (accessed January 19, 2021).

Pagallo, U. (2018). Apples, oranges, robots: Four misunderstandings in today's debate on the legal status of AI systems. Philos. Trans. A Math. Phys. Eng. Sci. 376, 20180168. doi: 10.1098/rsta.2018.0168

Pash, C. (2021). Federal budget - the $1.2 billion digital economy strategy. Adnews (May 12). Available online at: https://www.adnews.com.au/news/federal-budget-the-1-2-billion-digital-economy-strategy (accessed August 15, 2021).

Peukert, C., and Kloker, S. (2020). “Trustworthy AI: how ethicswashing undermines consumer trust,” in WI2020 Zentrale Tracks, ed P. Z. Heiden (Berlin: GITO Verlag), 1100–1115. doi: 10.30844/wi_2020_j11-peukert

Quintais, S. P., Bodo, B., Giannopoulou, A., and Ferrari, V. (2019). Blockchain and the law: a critical evaluation, Stan. J. Blockchain. Law Pol.

Rawlinson, K. (2019). Digital assistants like Siri and Alexa entrench gender biases, says UN. The Guardian (May 22). Available online at: https://www.theguardian.com/technology/2019/may/22/digital-voice-assistants-siri-alexa-gender-biases-unesco-says (accessed January 4, 2020).

Rhodes, R. A. W. (2012). “Waves of governance,” in The Oxford Handbook of Governance, ed. D. Levi-Faur (New York, NY: Oxford University Press). Available online at: https://www.oxfordhandbooks.com/view/10.1093/oxfordhb/9780199560530.001.0001/oxfordhb-9780199560530-e-3

Simonite, T. (2019). How tech companies are shaping the rules governing AI. Wired (May 16). Available online at: https://www.wired.com/story/how-tech-companies-shaping-rules-governing-ai/ (accessed February 19, 2020).

Takanashi, Y., Matsuo, S., Burger, E., Sullivan, C., Miller, J., and Sato, H. (2020). Call for Multi-Stakeholder Communication to Establish a Governance Mechanism for the Emerging Blockchain-Based Financial Ecosystem, Part 2 of 2. Stanford Journal of Blockchain Law & Policy. Available online at: https://stanford-jblp.pubpub.org/pub/multistakeholder-comm-governance2

Wagner, B. (2018). “Ethics as an escape from regulation: from ‘ethics-washing’ to ethics-shopping?” in Being Profiled: Cogitas Ergo Sum: 10 Years of Profiling the European Citizen, eds. E. Bayamlioglu, I. Baraliuc, L. Janssens, and M. Hildebrandt (Amsterdam: Amsterdam University Press). Available online at: https://www.jstor.org/stable/pdf/j.ctvhrd092.18.pdf (accessed January 19, 2021).

Waters, R. (2018). Google staff protest at company's AI work with the Pentagon. Financial Times (April 4). Available online at: https://www.ft.com/content/b0ca4b9a-384a-11e8-8b98-2f31af407cc8 (accessed December 20, 2020).

Winfield, A. F. T., and Jirotka, M. (2018). Ethical governance is essential to building trust in robotics and artificial intelligence systems. Philos. Trans. A Math. Phys. Eng. Sci. 376, 20180085. doi: 10.1098/rsta.2018.0085

Yochai, B. (2019). Don't let industry write the rules for AI: World view. Nature 569, 161. Available online at: https://www.nature.com/articles/d41586-019-01413-1

Yonhap News (2020). S. Korea to invest 160 trillion won in ‘New Deal’ projects, create 1.9 million jobs. Yonhap News Agency (July 14). Available online at: https://en.yna.co.kr/view/AEN20200714004851320 (accessed June 17, 2021).

Keywords: artificial intelligence, blockchain, governance, innovation policy, AI ethics, AI principles

Citation: Kalenzi C (2022) Artificial Intelligence and Blockchain: How Should Emerging Technologies Be Governed? Front. Res. Metr. Anal. 7:801549. doi: 10.3389/frma.2022.801549

Received: 25 October 2021; Accepted: 17 January 2022;

Published: 11 February 2022.

Edited by:

Marina Ranga, European Commission, Joint Research Centre, Spain

Copyright © 2022 Kalenzi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Cornelius Kalenzi, kalenzi.c@kaist.ac.kr; kalenzi.c@gmail.com

Cornelius Kalenzi
