- 1State Key Laboratory of Theoretical Physics, Institute of Theoretical Physics, Chinese Academy of Sciences, Beijing, China

- 2Department of Physics, Hong Kong Baptist University, Kowloon Tong, Hong Kong

- 3Centre for Nonlinear Studies, Beijing-Hong Kong-Singapore Joint Centre for Nonlinear and Complex Systems, Institute of Computational and Theoretical Studies, Hong Kong Baptist University, Kowloon Tong, Hong Kong

- 4Beijing Computational Science Research Center, Beijing, China

- 5Research Centre, HKBU Institute of Research and Continuing Education, Shenzhen, China

In neural systems, synaptic plasticity is usually driven by spike trains. Due to the inherent noises of neurons and synapses as well as the randomness of connection details, spike trains typically exhibit variability such as spatial randomness and temporal stochasticity, resulting in variability of synaptic changes under plasticity, which we call efficacy variability. How the variability of spike trains influences the efficacy variability of synapses remains unclear. In this paper, we try to understand this influence under pair-wise additive spike-timing dependent plasticity (STDP) when the mean strength of plastic synapses into a neuron is bounded (synaptic homeostasis). Specifically, we systematically study, analytically and numerically, how four aspects of statistical features, i.e., synchronous firing, burstiness/regularity, heterogeneity of rates and heterogeneity of cross-correlations, as well as their interactions influence the efficacy variability in converging motifs (simple networks in which one neuron receives from many other neurons). Neurons (including the post-synaptic neuron) in a converging motif generate spikes according to statistical models with tunable parameters. In this way, we can explicitly control the statistics of the spike patterns, and investigate their influence onto the efficacy variability, without worrying about the feedback from synaptic changes onto the dynamics of the post-synaptic neuron. We separate efficacy variability into two parts: the drift part (DriftV) induced by the heterogeneity of change rates of different synapses, and the diffusion part (DiffV) induced by weight diffusion caused by stochasticity of spike trains. Our main findings are: (1) synchronous firing and burstiness tend to increase DiffV, (2) heterogeneity of rates induces DriftV when potentiation and depression in STDP are not balanced, and (3) heterogeneity of cross-correlations induces DriftV together with heterogeneity of rates. We anticipate our work important for understanding functional processes of neuronal networks (such as memory) and neural development.

1. Introduction

Neuronal spike trains typically exhibit spatial randomness and temporal stochasticity. For example, firing rates are long-tailed distributed in many brain areas (Shafi et al., 2007; O'Connor et al., 2010; Buzsáki and Mizuseki, 2014), spatio-temporal correlations within neuronal population often exhibit rich structures (Funahashi and Inoue, 2000; Kohn and Smith, 2005; Dragoi and Buzsáki, 2006; Schneidman et al., 2006); and two neurons will not emit the same spike train even if they are receiving exactly the same stimuli (Allen and Stevens, 1994; Mainen and Sejnowski, 1995; Shadlen and Newsome, 1998). The spatial randomness may emerge from the randomness of the connection details (Ostojic et al., 2009; Roxin et al., 2011), and the temporal stochasticity may be due to the inner stochasticity of neurons and synapses (Allen and Stevens, 1994; Mainen and Sejnowski, 1995; Shadlen and Newsome, 1998), both of which are inherent properties of neurons, synapses or networks so that the exact spike patterns of the network cannot be fully determined by its inputs. Note that the inputs of a network may exhibit spatial heterogeneity and temporal fluctuation; here, by spatial randomness and temporal stochasticity, we mean the spatial heterogeneity and temporal fluctuation of spike patterns that emerge from the inner randomness and stochasticity of the network. As synaptic plasticity depends on the spike times in the pre- and post-synaptic spike trains (Dan and Poo, 2006; Caporale and Dan, 2008; Markram et al., 2012), these variabilities of spike trains should result in the variability of synaptic changes during plasticity, which we call efficacy variability in this paper (See Section 2.1 for more discussions on efficacy variability). When the synapses are fixed, downstream neurons may work under the variability of spike trains by reading out the coded information through spatial and temporal averaging; however, how the variability of the spike trains influences the ability of the neuronal population to facilitate information processing under synaptic plasticity remains poorly understood.

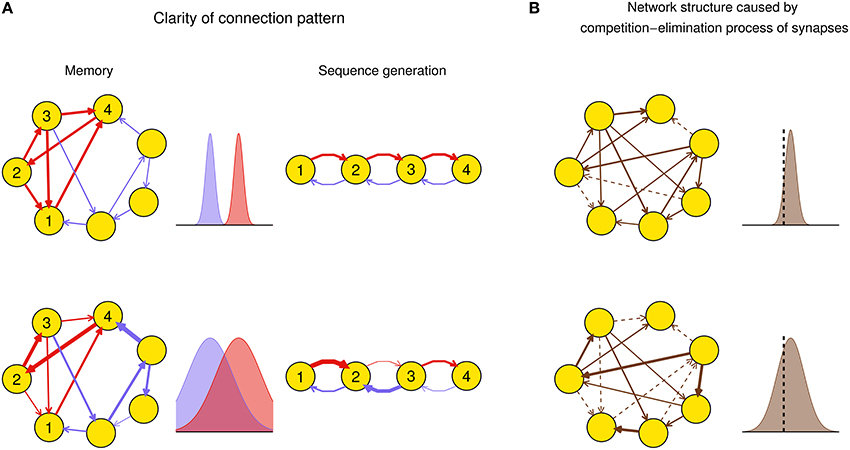

Efficacy variability may have important influence on the function of a network after plasticity. For example, suppose a function of a neuronal network, say memory (Mongillo et al., 2008) or spike sequence generation (Long et al., 2010), requires a connection pattern in which a few synapses (foreground synapses) have stronger efficacies than the others (background synapses). When the efficacy variability is small, both the foreground and background synapses tend to be uniform around their mean values, respectively: thus the connection pattern is clear-cut. However, when the efficacy variability is large, some foreground synapses can be very weak and some background ones can be very strong, which destroys the connection pattern even if the mean strength of the foreground synapses is still larger than that of the background ones (Figure 1A). As another example, synaptic competition and elimination is a classical scenario for the formation of neural network structure during development, when synapses compete with each other for strength and those that are too weak will disappear (Cancedda and Poo, 2009). In this case, efficacy variability quantifies the degree of competition. If we suppose that the total synaptic strength before elimination is constrained by, say, synaptic homeostasis (Watt and Desai, 2010), then when the efficacy variability is small, only a few synapses are below the elimination threshold and get eliminated, and those left also have similar strength; when the efficacy variability is large, a larger portion of synapses get below the elimination threshold, while the remaining ones have a wider efficacy distribution with also a larger mean value than the case of small efficacy variability (Figure 1B). This is consistent with the scenario found in the early development of auditory cortex (Clause et al., 2014): if the spontaneous activity of the medial nucleus of the trapezoid body (MNTB) is modified using genetic methods, then its feedforward projection to the lateral superior olive (LSO) becomes denser and weaker, which suggests that the normal pattern induces stronger efficacy variability than the genetically modified one.

Figure 1. Biological implications of efficacy variability. (A) Connection patterns used for, say, memory (left column), or spike sequence generation (right column) are defined as a few synapses (red) being stronger than the others (blue). In the left column, a network of excitatory neurons stores a memory using its attractor dynamics after the intra-connections within a subpopulation (here, neurons 1–4) are strengthened (the inhibitory population that keeps the total activity of the network is not shown). When the efficacy variability is small (upper row), this subpopulation will exhibit persistently high activity if a sufficiently large number of neurons in the subpopulation have high activities initially, so the memory is retrieved. When the efficacy variability is large (lower row), this memory retrieval will fail even if the mean strength of the intra-connections (red) is stronger than that of the other ones (blue). In the right column, when the efficacy variability is small (upper row), the network is able to generate spike sequence from neuron 1 to neuron 4; but when the efficacy variability is large (lower row), it cannot generate such sequence even if the mean strength of the red synapses is larger than that of the blue ones. Widths of arrows indicate synaptic strengths. (B) Efficacy variability causes different network structures by controlling the degree of synaptic competition. When the efficacy variability is small (upper), only a few synapses are weaker than the elimination threshold (black dashed vertical line) and get eliminated during neural development, so most synapses are left and their strengths tend to be uniform; when the efficacy variability is large (lower), more synapses are eliminated, and the left ones are more heterogeneous and also stronger than the upper case on average. Dashed arrows represent eliminated synapses.

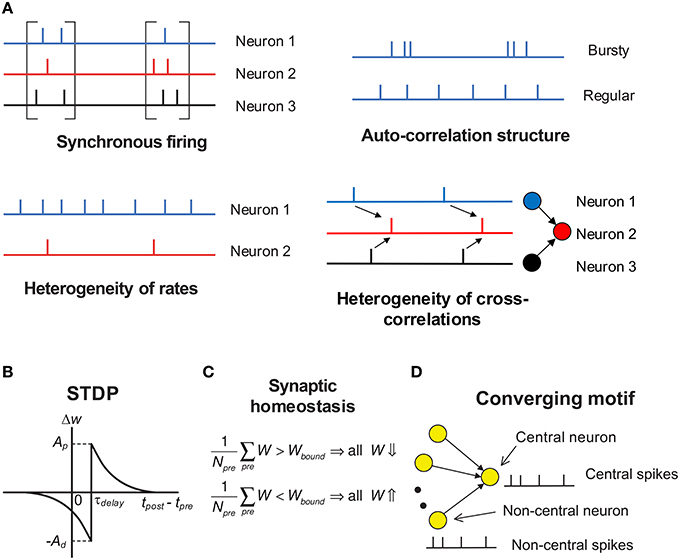

Under temporal stochasticity and spatial heterogeneity, spike trains may exhibit a variety of statistical features, which form rich spike pattern structures. Groups of neurons may spurt firing activity (synchronous firing) (Kamioka et al., 1996; Buzsáki and Draguhn, 2004; Bartos et al., 2007), the spike train of a single neuron can be bursty or regular (auto-correlation structure) (Softky and Koch, 1993; Schwindt and Crill, 1999; Jacob et al., 2012), firing rates of cortical neurons are typically long-tailed distributed in vivo (heterogeneity of rates) (Shafi et al., 2007; O'Connor et al., 2010; Buzsáki and Mizuseki, 2014), and spike trains of different neurons also reveal rich degrees of interdependence (heterogeneity of cross-correlations) (Funahashi and Inoue, 2000; Schneidman et al., 2006; Ostojic et al., 2009; Trousdale et al., 2012, see Figure 2A). As synaptic plasticity is driven by spike trains, spike pattern structure must have strong influence on efficacy variability, thereby inducing neuronal networks with sharply different structures even under the same population rate. How spike pattern structures influence the efficacy variability of synapses remains unclear. In this study, we will move a step forward along this direction by studying this influence under a conventional pair-wise additive STDP (Gerstner et al., 1996), which involves potentiation of the synapse when presynaptic spikes precede postsynaptic spikes, and depression for the reverse ordering (Figure 2B). Neuronal networks with STDP alone are not stable due to effects of positive resonance which results in runaway excitation; and a neuron may conserve its activity level by adjusting its total input strength, which is called synaptic homeostasis (Turrigiano and Nelson, 2004; Turrigiano, 2011). In this study, we model synaptic homeostasis by supposing that the mean strength of all the synapses input to a neuron is dynamically bounded (see Figure 2C, Equation 7 in Section 2).

Figure 2. Schematic of key concepts in our modeling work. (A) The four aspects of pattern structure studied in this paper. By “synchronous firing”, we typically mean the spurt of firing activity of a population. For stationary spike trains, “auto-correlation structure” reflects the burstiness/regularity of the spike trains, which is quantified by coefficient of variance (CV) in this paper. Here, by “burstiness”, we typically mean the irregular structure of spike trains, instead of the regular burstiness in the spike patterns of, say, central pattern generator. For spike trains with synchronous firing, we consider three-types of “auto-correlation structure” to reflect the burstiness/regularity features of the spike patterns (see Figure 8). By “heterogeneity of rates”, we mean that the time-averaged firing rates are different for different neurons. By “heterogeneity of cross-correlations”, we mean that different pre-synaptic neurons of a neuron tends to fire spikes at different times relative to the spikes of the neuron. For example, in the right-bottom subplot, before a spike of neuron 2, neuron 1 tends to fire before neuron 3. (B) The STDP time window used in our work. The synapses are updated according to the time difference between when the post-synaptic spike is back-propagated to the dendritic end and when the pre-synaptic spike arrives at the axonal terminal. τdelay is the difference between the axonal delay and the dendritic delay. In this paper, we generally discuss the case when τdelay > 0 (i.e., the axonal delay is larger than the dendritic delay); and for the convenience of discussion, we set the dendritic delay to be zero, so τdelay is the axonal delay. The case when τdelay < 0 is then discussed in Section 3.8. The STDP updatings of all spike pairs are summed together. (C) Synaptic homeostasis. The synapses input to a neuron are subject to a bound on their mean strength: when their mean strength is different from this bound, all the incoming synapses of that neuron will undergo an adjustment. (D) Converging motif, on which we conduct all the simulations in this paper. We call the neuron that receives from many neurons in a converging motif to be the central neuron, and call the other neurons the non-central neurons; we then call the spikes emitted by the central neuron (or non-central neurons) to be central spikes (or non-central spikes). Synaptic homeostasis is imposed onto the central neuron of a converging motif. Modeling details are presented in Section 2.

Synaptic efficacies under STDP can be regarded as particles doing 1-dimensional random walks driven by the stochastic processes of spike trains: a synapse gets depressed at the time when a pre-synaptic spike arrives at the axonal terminal, and gets potentiated at the time when a post-synaptic spike is back-propagated to the dendritic end. A group of particles doing random walks starting from the same point can have displacement variability either because that they have different trial-averaged drift velocities with each other, or because of diffusion. Similarly, the efficacies of two synapses can also get dissimilar because of two reasons. One is that they have different tendencies to be potentiated or depressed. For example, the first (second) pre-synaptic neuron tends to fire spikes before (after) the first (second) post-synaptic neuron. The other reason is the variability of the synaptic changes caused by the stochasticity of the spike trains, which can be accumulated with time, inducing dissimilarity even if the two synapses have the same tendency to be potentiated or depressed. In general, the total variance (TotalV) can be written as the summation of the variance caused by heterogeneity of learning rates (DriftV, short for “drift variance”) and the variance caused by diffusion (DiffV, short for “diffusion variance”) (see Equation 3 in Section 2)

During plasticity, DriftV is usually caused by the spatial heterogeneity of spike trains. For example, in classical Hebbian learning, synapses sharing the same presynaptic neuron may have different learning rates depending on the firing rates of the post-synaptic neurons; if the plasticity is spike-timing dependent, the heterogeneity of cross-correlations can induce different learning rates even if the firing rates are the same. Because of the inner stochasticity of neurons and synapses, even two neurons receiving exactly the same stimuli emit different spike trains, causing DiffV. In our STDP model, synaptic updatings do not depend on current synaptic weights, and the contributions of all spike pairs are added together; what's more, we do not consider synaptic bounds, and assume that synapses are free of bounds for simplicity. These make DriftV ∝ t2 and DiffV ∝ t during time t of running, so that the synaptic variances caused by drift velocities and diffusion in our model have the same order of time-dependence as those in Brownian motion.

In this paper, we systematically study how the four aspects of spike pattern structures, i.e., synchronous firing, auto-correlation structure, heterogeneity of rates and heterogeneity of cross-correlations as well as their interactions, influence efficacy variability under STDP and synaptic homeostasis using converging motifs (i.e., simple networks in which one neuron receives from many other neurons). See Figure 2 for the concepts above. The activities of all the neurons in converging motifs (including the non-central neurons and the central neuron, see Figure 2D) are generated using statistical models (Section 2.3), which explicitly control different aspects of pattern structure while keeping population rate constant.

The reason why we study converging motifs here is that in a recurrent network, all the synapses input to a neuron form the links of a converging motif, and all the synapses of the recurrent network can be considered as the union of the links in all the converging motifs. Under synaptic homeostasis, the mean synaptic strengths in these converging motifs are almost the same, therefore, the efficacy variability of the recurrent network is approximately to be the mean of the efficacy variability of all the converging motifs in it (see Equation 8 in Section 2). Therefore, converging motifs are basic units to understand the efficacy variability of a recurrent network.

Using statistical models (see Section 2.3), we generate spike patterns with different statistical features (such as coefficients of variance (CV), firing rate distributions, distributions of the spike number that a neuron fires during a synchronous event, and so on), and then study how they influence the efficacy variability of converging motifs. These spike generating models do not aim to generate spike trains with precise pre-determined spatio-temporal characteristics (see, for example, Krumin and Shoham, 2009; Macke et al., 2009; Gutnisky and Josić, 2010), but instead aim to implement physical intuitions on spatio-temporal characteristics in a simple way. For example, we use Gamma processes with different shape parameters to model bursty or regular spike trains, and use lognormal distributions to model heterogeneity of rates. Note that in our study, the spike trains of the central neuron are not generated by integrating its inputs, but instead are also generated according to statistical models, independent of the synaptic weights in converging motifs. In this way, we can explicitly control the statistical features of both the central and non-central spike trains using statistical models, and study their influences onto the efficacy variability without worrying about the feedback of the synaptic changes onto the spike train of the post-synaptic neuron as usually happens in biologically more realistic models.

The spike trains of both the central and non-central neurons are generated according to the same model, so that they have similar auto-correlation structure and synchronous firing rate fluctuation. This makes our results particularly suitable to understand the efficacy variability of a recurrent network without hidden feedforward dynamics (Ganguli and Latham, 2009). For example, in a random excitatory-inhibitory network that works in the balanced state (van Vreeswijk and Sompolinsky, 1996, 1998), spike trains exhibit irregularity and stochasticity due to being driven by fluctuation. If the dynamics of the network is unstable to perturbations of population firing rates, then oscillation may emerge (Brunel and Hakim, 1999; Brunel, 2000), resulting in synchronous firing (Figure 2A). If its dynamics is unstable to heterogeneous perturbations, then the neurons may fire with strong burstiness (Ostojic, 2014) (auto-correlation structure). Due to the heterogeneity of the input degrees and nonlinear current-rate relationship of neurons, their firing rates are typically long-tailed distributed (Roxin et al., 2011) (heterogeneity of rates). Cross-correlations between neurons may result from connectivity details such as unidirectional connections or input sharing (Ostojic et al., 2009) (heterogeneity of cross-correlations). In this paper, we aim to mimick these spike patterns using statistical models, thereby gaining understanding on their influence onto the efficacy variability of a network. In another paper that will be published soon (Bi and Zhou, in preparation), we will study the influence of spike pattern structures onto the efficacy variability of recurrent networks by implementing sophisticated spike shuffling methods onto the spike patterns self-organized by recurrent LIF networks, thereby examining the results of this paper in a biologically more plausible manner.

The Results part of this paper is organized as follows. We will first study how auto-correlation structure, synchronous firing, heterogeneity of rates and their interactions influence DiffV and DriftV (Sections 3.1–3.6), then discuss the influence of heterogeneity of cross-correlations onto DriftV (Section 3.7). Under STDP, the main effect of heterogeneity of cross-correlations is to change the drift velocities of different synapses by different degrees, thereby influencing DriftV; so we don't seriously consider how heterogeneity of cross-correlations influence DiffV, except for briefly discussing it in Supplementary Materials Section S6. In this paper, we generally discuss the case when τdelay > 0 in STDP (i.e., the axonal delay is larger than the dendritic delay, see Figure 2B); and for the convenience of discussion, we set the dendritic delay to be zero, so τdelay becomes the axonal delay. The case when τdelay < 0 is then discussed in Section 3.8. The results of this paper are then summarized in Section 3.9.

2. Materials and Methods

2.1. The Definition of Efficacy Variability

In this subsection, we give the exact definition of efficacy variability, explain the meanings of DiffV and DriftV, and show our general strategy to study efficacy variability using simulations.

Suppose a set of synapses in a neuronal network . Now we run a plasticity process in for several trials (or on several ensembles), and construct a matrix ΔW, each column of which represents the weight changes of in one trial, and different columns represent different trials. Then we define efficacy variability of during the plasticity process to be the variance of the elements of the matrix ΔW, i.e., VarS, T(ΔW), in which the subscript S represents integrating over row index, i.e., structural index, and T represents integrating over column index, i.e., trial index.

The law of total variance says that if the probability space of Y is decomposed into several subspaces labeled by X, then the variance of Y in the whole space is equal to the summation of the variance of the expectations in these subspaces and the expectation of the variances in these subspaces, i.e.,

Using the law of total variance, efficacy variability can be written as

Here, ET(ΔW) represents the trial expectations of the changes of all the synapses in ; and VarS(ET(ΔW)) is the variance of these trial expectations, representing DriftV. VarT(ΔW) represents the trial-to-trial variances caused by diffusion, and ES(VarT(ΔW)) is the average of these variances over all the synapses, representing DiffV. Equation 3 is the formal writing of Equation 1 in the introduction.

The law of total variance can decompose VarS, T(ΔW) in another way:

Here VarT(ES(ΔW)) is the trial-to-trial variability of the mean synaptic change of the whole network. But a real biological process only allows a single trial, so this trial-to-trial variability cannot contribute to biological functions except for individual differences. Fortunately, usually VarT(ES(ΔW)) ~ (1∕||), with || being the number of synapses in . So when || is large enough, Equation 4 becomes VarS, T(ΔW) ≈ ET(VarS(ΔW)), which means that the trial expectation of the efficacy variance (i.e., ET(VarS(ΔW))) can be used to approximate the efficacy variability VarS, T(ΔW). Under this insight, Equation 3 becomes

In our simulations, we use ET(VarS(ΔW)) to quantify efficacy variability. As we mentioned in the introduction, DriftV is usually caused by the spatial heterogeneity of spike trains, and DiffV is by temporal stochasticity. Methodologically, when we analyze DiffV, we set the spatial properties of spike trains (here, firing rates of neurons and cross-correlations between neuronal pairs) homogeneous, so that DriftV = 0. When we analyze DriftV, we use the fact that DriftV ~ (t2) and DiffV ~ (t) after time t of running, so the efficacy variability after a sufficiently long time largely reflects DriftV.

2.2. Converging Motifs, STDP and Synaptic Homeostasis

Converging motifs are simple networks in which one neuron receives inputs from many other neurons (Figure 2D). We call the neuron that receives from many neurons in a converging motif to be the central neuron, and call the other neurons the non-central neurons; we then call the spikes emitted by the central neuron (or non-central neurons) to be central spikes (or non-central spikes). All the simulations in this paper are conducted on converging motifs.

In this paper, the STDP updating caused by a pair of pre- and post-synaptic spike (here, non-central and central spike) at tpre and tpost is

with τdelay being the difference between the delay caused by the propagation of pre-synaptic spike along the axon and the delay caused by the back-propagation of the post-synaptic spike along the dendrite. τdelay > 0 (or τdelay < 0) if the axonal delay is larger (or smaller) than the dendritic delay. In this paper, we generally discuss the case when τdelay > 0 (i.e., the axonal delay is larger than the dendritic delay); and for the convenience of discussion, we set the dendritic delay to be zero, so τdelay becomes the axonal delay. The case when τdelay < 0 is then discussed in Section 3.8. The contribution of all pairs of pre- and post-synaptic spikes are added together. τSTDP = 20 ms throughout the paper, and τdelay = 1ms by default. For simplicity, synaptic weights in this paper are free of boundaries.

Under synaptic homeostasis, the synaptic efficacies are updated every ΔT time according to

with wa being the synaptic efficacy between the ath non-central neuron and the central neuron, Nin being the in-degree of central neuron, wbound being the ground line of synaptic homeostasis, and ϵ being the plasticity rate. Therefore, synaptic homeostasis dynamically bounds the mean strength of the synapses to the central neuron, and it does not change the efficacy variability of a converging motif due to its additive nature.

According to the law of total variance Equation 2, the efficacy variability of a large recurrent network can be written as

with Δwab being the synaptic change from neuron b to neuron a, and ∂a being all the neurons that input to neuron a in the network. If synaptic homeostasis Equation 7 is imposed onto the synapses input to each neuron in the network, Vara(Eb∈∂a(Δwab)) ≈ 0, especially if the plasticity rate is high (i.e., ϵ ≈ 1 in Equation 7). Therefore, the efficacy variability of the recurrent network is approximately the mean of the efficacy variability of all the converging motifs in it. This is the reason why we study converging motifs in this paper.

2.3. Spike Generating Models

Here are the statistical models we use to generate the spike trains of the neurons in a converging motif (Figure 2D). The spike trains of the central neuron and the non-central neurons are generated according to the same model, mimicking the dynamics of converging motifs embedded in a recurrent network without hidden feedforward dynamics (Ganguli and Latham, 2009).

2.3.1. Model Auto

This model generates spike trains with stationary firing rate, whose auto-correlation structure (burstiness/regularity) can be controlled.

Spikes trains are Gamma processes with inter-spike intervals following the distribution

The rate of the Gamma process is β∕α, and the coefficient of variance is .

We use α to control the burstiness/regularity of the spike train, while adjusting β to keep the firing rate at 20 Hz. The spike train becomes more bursty when α is smaller, and more regular when α is large.

2.3.2. Model Sync

This model generates synchronous firing patterns whose broadness of the distribution of spike number per neuron per synchronous event can be explicitly controlled.

In this model, a synchronous event lasts for τcross. The spike number per neuron per synchronous event is distributed the same as the spike number of an underlying Gamma process within an interval of length τcross. The rate of the Gamma process is p∕τcross and its coefficient of variance is CVSpikeNum. So the mean value of the distribution of spike number per neuron per synchronous event is p, and this distribution is narrow if CVSpikeNum is small, and gets broadened when CVSpikeNum increases. If a neuron is to fire M spikes in a synchronous event, then the spike times of these M spikes will be randomly and uniformly chosen within this time interval of duration τcross. The occurrence of synchronous events is a Poisson process with rate r0∕p, so that the firing rate of a neuron is kept at r0 = 20Hz when p changes.

2.3.3. Model Long Tail

This model generates long-tailed distributed firing rates for the non-central neurons in a converging motif, the firing rate of the central neuron is always kept at r0 = 20Hz.

The firing rates of the non-central neurons are lognormal distributed as

The mean of this distribution is at . Parameter s is used to control the shape, while m is accordingly adjusted to keep the mean at r0 = 20Hz. This distribution is a δ function when s = 0, and gradually becomes long tailed when s increases.

This model generates homogeneous Poisson processes by default, but it can be also combined with other models to introduce heterogeneity of rates into the spike patterns with other aspects of pattern structure.

2.3.4. Model Sync-Auto-LongTail

In this model, the firing rates of the non-central neurons follow log-normal distribution with mean r0 = 20Hz (with r0 being the firing rate of the central neuron) and shape parameter s. A synchronous event lasts for τcross, and the mean spike number per neuron per synchronous event is p. The occurrence of synchronous events is a Gamma process with coefficient of variance CVevents and rate r0∕p, so that the population firing rate is kept at r0 when p changes. The spike train of a neuron with firing rate ri is generated as follows: we generate a Gamma process with rate pri∕r0 and coefficient of variance CVrescale, and divide the Gamma spike train into bins of length τcross. Then we randomly shuffle the order of these bins to destroy possible spike dependency between adjacent bins, and the piece of spike train within the jth bin after shuffling is to be the piece of spike train of the neuron within the jth synchronous event.

In this model, the auto-correlation structure of the occurrence of synchronous events (see Figure 10) is controlled by the parameter CVevents, while the auto-correlation structure of a spike train in the rescaled time is controlled by the parameter CVrescale.

3. Results

3.1. The Scheme to Investigate the Influence of Synchronous Firing and Auto-correlation Structure onto DiffV

When the firing rates of neurons and the cross-correlations between neurons are homogeneous, synchronous firing and auto-correlation structure influence efficacy variability as a DiffV effect. To understand how these two pattern statistics influence DiffV, we write the total change of the ath synapse as

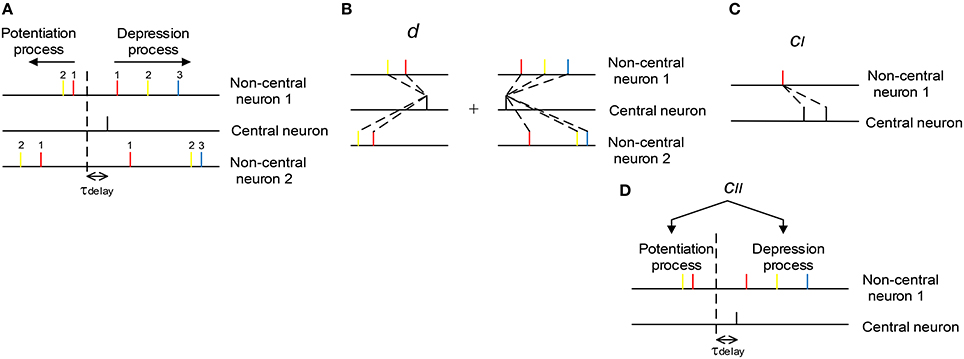

with Δwa, p being the total potentiation value on the synapse, Δwa, p(ti, tj|a, i, p) being the potentiation value pairing the ith spike of central neuron and the jth spike of the ath non-central neuron (the index of j depends on a, i, and k, see below), and Δwa, d and Δwa, d(ti, tj|a, i, d) being the corresponding depression values. The index of i starts from the beginning of the spike train of the central neuron; but the indexing of j, however, is a little complicated, and depends on a, i and k. In our modeling work, axons have delay τdelay (τdelay = 1 ms by default), and the sign of STDP updatings depends on the time difference between ti − τdelay and tj|a, i, k. For the potentiation process (k = p) of the ath non-central neuron, we let j start from the spike immediately before ti − τdelay in the spike train, and go backward along the spike train; for the depression process (k = d), we let j start from the spike immediately after ti − τdelay, and go forward along the spike train (Figure 3A). In the following discussions, we sometimes do not explicitly write the dependence of j on a, i and k for simplicity.

Figure 3. The scheme we use to understand the influence of synchronous firing and auto-correlation structure onto DiffV. (A) The indexing of j (see text). The non-central spikes of the same color have the same j index (indicated by the numbers). The dashed vertical line represents the separation of the potentiation and depression process caused by the black spike of the central neuron. Synapses between the central and non-central neurons have axonal delay τdelay. (B) d represents the mean efficacy variability caused by a single central spike in the potentiation process plus that in the depression process. (C) cI represents the efficacy variability contributed by the correlation between synaptic changes caused by adjacent central spikes. (D) cII represents the efficacy variability contributed by the correlation between the total potentiation and depression value imposed on the same synapse.

Using Equation 9, we can rewrite the variance of efficacy changes per spike like this:

with being the expectation of the spike number of the central neuron during simulation time (r0 is the firing rate of the central neuron, and T is the duration of simulation), and

and

Apparently, d means the mean efficacy variability contributed by a single spike of the central neuron. To understand cII, note that

with being the correlation coefficient between the total potentiation and depression value imposed on the same synapse. Therefore,

with

being the coupling factor. Similarly,

with being the correlation coefficient between the synaptic changes contributed by the mth and nth spikes of the central neuron, which is particularly non-zero for two central spikes adjacent in time, and

being the coupling factors.

Therefore, we can see that there are three factors that are important to understand the efficacy variability Vara(Δwa) (Figures 3B–D):

(1) The mean efficacy variability contributed by a single spike of the central neuron, which is represented by d.

(2) The correlation between the synaptic changes caused by adjacent spikes of the central neuron, which contributes to Vara(Δwa) through cI.

(3) The correlation between the total potentiation and depression value imposed on a synapse, which contributes to Vara(Δwa) through cII.

In the following discussions, we will generate spike patterns with different synchronous firing and auto-correlation structure with homogeneous firing rates and cross-correlations using spike-generating models, and investigate how the efficacy variability as well as d, cI and cII change with model parameters; then we will discuss the physical pictures underlying these phenomena, aiming to giving the readers an intuitive understanding on how synchronous firing and temporal structure influences DiffV.

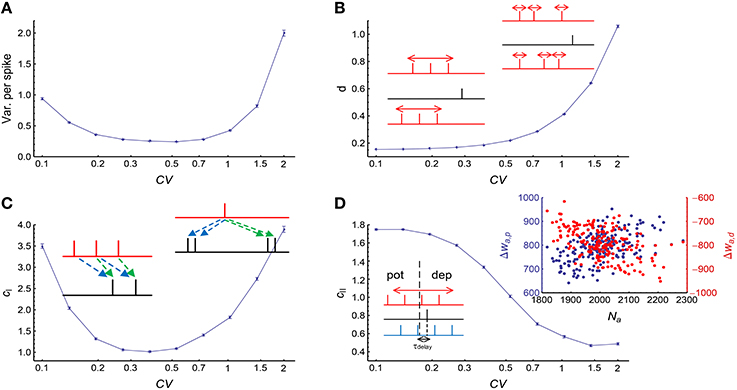

3.2. The Influence of Auto-correlation Structure onto DiffV in Stationary Spike Trains

We used Gamma processes (Model Auto in Section 2) to model the auto-correlation structure of stationary spike trains (whose firing rates do not change with time). We changed the shape parameter α of the Gamma processes while conserving the firing rates at r0 = 20Hz. The coefficient of variance (CV) of a Gamma process is . When CV gets larger, spike trains are burstier; when CV gets smaller, spikes are more regular. We found that both burstiness and strong regularity in auto-correlation structure increased the efficacy variability, and the efficacy variability gets its minimal value when CV is around 0.3 ~ 0.7 (Figure 4A), which is the range most neurons lie within in vivo (Softky and Koch, 1993).

Figure 4. The influence of auto-correlation structure onto DiffV in stationary spike trains. (A) Variance per spike (, see Equation 10) as a function of CV, when spike trains are generated according to Model Auto (see Section 2). (B) d as a function of CV. Left inset: when the non-central spike trains are regular, the non-central spikes move left or right almost simultaneously. Right inset: when the non-central spike trains get bursty, each spike can move with a larger freedom, thereby contributing to the increase of DiffV. Black lines in the insets represent the central spike train, and colored (here, red) lines represent the non-central spike trains: the same color scheme is also used in the insets of the following panels. (C) cI as a function of CV. Left inset: when the spike trains are regular, the synaptic updatings caused by adjacent central spikes are correlated because of transient cross-correlation. Right inset: when the central spike train gets bursty, the synaptic updatings caused by adjacent spikes in the same bursting events are correlated. Dashed arrows of the same color in the two insets represent similar synaptic updatings during STDP. (D) cII as a function of CV. Left inset: when the non-central spike trains are regular, the spikes that potentiate and depress the synapses move left or right almost simultaneously, which correlates the total potentiation and depression values. In this inset, the red spike train cause weaker potentiation and stronger depression than the blue spike train. Right inset: when the spike trains are bursty (here, CV = 2), the spike number of a non-central neuron (horizontal coordinate) is positively correlated with the total potentiation (blue) value and negatively correlated with the total depression (red) value. In (A–D), error bars represent s.e.m., the converging motif has 200 non-central neurons. Parameters for STDP: Ad = Ap = 1 (see Figure 2B and Equation 6 in Section 2). All synaptic efficacies were 0 at the beginning, and simulations were run for 100 s biological time, with 32 trials.

Spike trains in this model are stationary processes, and we also let Ap = Ad in our model, with Ap and Ad being the strengths of the exponentially decayed STDP windows for potentiation and depression (see Figure 2B). Therefore,

(1) the variance caused by potentiation and depression processes should be equal. From Equation 16, this means that fPD = 1, so that cII can link with ρPD in a direct way:

(2) does not change with the i index. From Equations 17 and 18, this means that cI can link with ρm, n; k in a direct way:

with N0 being the spike number of the central neuron.

Equations 19 and 20 mean that in this model the change of cI and cII with model parameters directly reflect the change of the correlations.

To understand Figure 4A, we investigated how d, cI and cII change with CV (Figures 4B–D). We found that d monotonically increases with CV (Figure 4B), cI gets its minimum when CV is around 0.3 ~ 0.7, and gets large both when CV is too large or too small (Figure 4C), and cII monotically decreases with CV (Figure 4D). With the help of Equations 19 and 20, we can understand their changes with CV, and thus gain insight on the reason for the change of DiffV with CV.

3.2.1. Understanding the Change of d with CV

To understand the change of d with CV, consider the term in Equation 13. When the spike trains of the non-central neurons are regular, tj|a, i, p ≈ t1|a, i, p − (j − 1)∕r0, with r0 being the firing rate, so the difference of for different as mainly comes from the difference of t1|a, i, p (Figure 4B, left inset). What's more, {t1|a, i, p}a approaches to a uniform distribution within the interval (ti − τdelay − 1∕r0, ti − τdelay) when the spike trains get regular. When the spike trains get burstier, not only the distribution of t1|a, i, p gets broader, but the other spikes tj≠1|a, i, p can also move with a larger freedom, which increases the variability of (Figure 4B, right inset). Similar arguments also apply to the k = d case. It is easy to show (Supplementary Material Section S1.2) that under strict regularity (CV = 0),

with Δt = 1∕r0 being the inter-spike interval; and under Poisson process (CV = 1),

As (Supplementary Material Section S1.2), dreg < dPoi.

3.2.2. Understanding the Change of cI with CV

cI does not change monotonically with CV, it is large both when CV is large (i.e., spike trains are bursty) and when CV is very small (i.e., spike trains are very regular), and gets its minimal value when CV is around 0.3 ~ 0.7 (Figure 4B). Now we try to understand the change of cI with CV.

Suppose there are two spikes of the central neuron at time x and y. Because the spike trains in this model are stationary processes, the correlation between the potentiation (or depression) value caused by these two spikes only depends on y − x:

with Δwa, k(x, j) being the synaptic updating caused by the interaction of x and a spike of the ath non-central neuron at time j. This ρk(l) function depends on the CV of the spike trains of the non-central neurons. Because of the time-reversal invariance of the statistics of the spike trains, ρp(l) = ρd(l)≡ρ(l) in our model. Using ρ(l), cI (Equation 20) can be rewritten as

with r0 being the firing rate of the central neuron, and C0(l) being the auto-correlation of the central neuron. means the probability density to find another central spike at time l given an central spike at time 0, which can be written as

with qi(l) being the distribution of the interval between the spike at time 0 and the next ith spike. Spike trains in our model are Gamma processes, so that the inter-spike interval follows . It is easy to show that qi(l) = Γ(l|iα, β) (http://mathworld.wolfram.com/GammaDistribution.html).

From Equation 25, we know that the CV of the non-central spike trains influences cI through ρ(l), while the CV of the central spike train influences cI through q0(l).

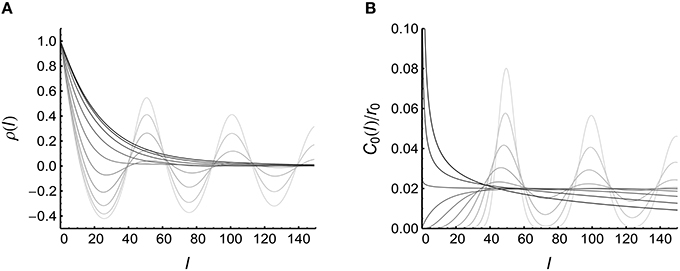

To understand the change of cI with CV, we plot the functions ρ(l) and C0(l)∕r0 under different CV values (Figure 5). We find that:

(1) When spike trains are bursty (CV > 1), ρ(l) increases with CV, and C0(l)∕r0 becomes more concentrated near zero (at which point ρ(l) gets its maximal value) when CV increases. Both of these two factors increase the integration in Equation 25, contributing to the increase of cI with CV when spike trains are bursty.

(2) When spike trains are very regular (CV is very small), both ρ(l) and C0(l)∕r0 become more concentrated near l = N∕r0 (with r0 = 20 Hz being the firing rate and N being an positive integer): in this case, also increases because of the overlap of the peaks of ρ(l) and C0(l)∕r0.

Figure 5. Understanding the influence of auto-correlation structure onto cI (see Equation 25). (A) ρ(l) when CV = 0.1, 0.139, 0.195, 0.271, 0.379, 0.528, 0.737, 1.03, 1.43, 2.00 (represented by lines from light to dark). (B) C0(l)∕r0 when CV takes values the same as in (A). In (A), the results are from 10,000 trials of simulations on a converging motif with 200 non-central neurons. (B) is plotted using Equation 26.

Intuitively, the change of cI with CV can be understood like this:

(1) When CV is large, the non-central spike trains tend to be clustered into bursting events, and the time scale τauto of the bursting events increases with CV (it is easy to show that when CV > 1, see Supplementary Material Section S1.1): this strengthened and broadened auto-correlation makes ρ(l) increase with CV when CV > 1. At the same time, more adjacent central spikes are also gathered closer by bursting events, which increases the correlation between the synaptic changes caused by adjacent central spikes (Figure 4C, right inset): this is the effect of C0(l)∕r0.

(2) When CV is very small, the spike trains are very regular. In this case, two adjacent spikes {ti, ti+m} of the central neuron have the relation ti+m ≈ ti+m∕r0 if they are well within the time scale τauto of the oscillating decaying auto-correlation (it is easy to show that when CV < 1, see Supplementary Material Section S1.1); and two spikes {tj|a, i, k, tj|a, i+m, k} of the ath non-central neuron also have the relation tj|a, i+m, k ≈ tj|a, i, k+m∕r0. This means that ti−tj|a, i, k ≈ ti+m−tj|a, i+m, k, which makes the synaptic changes caused by ti and ti+m almost the same (i.e., ) (Figure 4C, left inset). This increases the correlation ρi, i+m; k caused by adjacent central spikes, thereby increasing cI.

In this model (Model Auto in Section 2), we suppose that the firing rates of the non-central neurons r* are the same as the firing rate of the central neuron r0. What if r* ≠ r0? Figure 5 suggests that when spike trains are very regular, ρ(l) peaks at N∕r* (with N being a positive integer), and C0(l)∕r0 peaks at N∕r0 when CV ≪ 1. Equation 25 indicates that cI gets its maximum value when the peaks of ρ(l) overlap with the peaks of C0(l)∕r0. This overlap is strong when r* = r0, but can also occur when N1r* = N2r0, with N1 and N2 being two positive integers with no common divisor larger than 1. For the simplicity of the following argument, we call the increase of the correlation ρm, n; k caused by adjacent central spikes under strong regularity and N1r* = N2r0 to be the mechanism of transient cross-correlation. Because of the decaying oscillation of ρ(l) and C0(l)∕r0, N1 and N2 tend to be small integers.

3.2.3. Understanding the Change of cII with CV

We find that cII > 1 when spike trains are regular, which means that the potentiation and depression values are positively correlated (ρPD > 0) in this case; and cII < 1 when spike trains are bursty, which means that the potentiation and depression values are negatively correlated (ρPD < 0). Now we try to understand the change of cII with CV.

(1) Under strong regularity, if tj|a, i, k are well within the time scale of τauto with t1|a, i, p, then tj|a, i, p ≈ t1|a, i, p − (j − 1)∕r0 and tj|a, i, d ≈ t1|a, i, p+j∕r0. This means that if t1|a, i, p moves farther away from ti, the potentiation value will get weakened, while the depression value will get strengthened (Figure 4D, left inset), which means that and positively correlated. This makes and are also positively correlated. By the definition of ρPD, we know that ρPD > 0 in this case.

(2) To understand the case when CV is large, note that the Fano factor of a long time scale approaches to CV2 (Cox, 1962; Tuckwell, 1988; Nawrot et al., 2008), which means that different non-central neurons may emit quite different numbers of spikes during simulation when CV is large. If the ath non-central neuron fires more (less) spikes, then both the potentiation and depression values imposed on the ath synapses tend to be strong (weak) (Figure 4D, right inset). This is the reason why ρPD is negative when spike trains are bursty.

Comparing Figure 4A with Figures 4B–D, we know that both d and cI are the reasons for the steep increase of DiffV under large CV, while cI (i.e., the mechanism of transient cross-correlation) is the reason for the fast increase of DiffV with the decrease of CV under small CV. cII, however, does not significantly contribute to the change of DiffV under large or small CV.

3.2.4. The Interaction of Auto-correlation Structure with Heterogeneity of Rates

Till now, we only considered the case when the spike trains are Gamma processes with the same firing rate. In Supplementary Material Section S2, we will consider the case that the Gamma processes of different non-central neurons have different firing rates, while their mean firing rate is still the same as the firing rate of the central neuron r0. Our simulations suggest that heterogeneity of rates does not strongly influence DiffV (as different synapses have different diffusion strengths under heterogeneity of rates, DiffV here means the mean diffusion strength over all the synapses) when the spike trains are bursty, but discounts the increase of DiffV with the decrease of CV under strong regularity because of it destroying transient cross-correlation (Supplementary Figure 1). This makes DiffV tend to monotonically increase with CV.

3.3. The Influence of Synchronous Firing onto DiffV

There are usually two scenarios regarding to synchronous firing: one is spike synchrony, which means that the spikes of neurons are emitted almost simultaneously; the other one is rate synchrony, which means that the firing rates of neurons rise and fall at the same time. The key difference between these two scenarios is the distribution of the spike number of a neuron within a synchronous event. For spike synchrony, a neuron can fire no more than a single spike in a synchronous event, so that this distribution is narrow; but for rate synchrony, different neurons can fire a different number of spikes in a synchronous event, so that this distribution is broad. To understand how DiffV is influenced by synchronous firing under different distributions of spike numbers per neuron per synchronous event, we generated spike trains using Model Sync (Section 2). In this model, a synchronous event lasts for τcross. The spike number per neuron per synchronous event is distributed the same as the spike number of an underlying Gamma process within an interval of length τcross. The rate of this Gamma process is p∕τcross and its coefficient of variance is CVSpikeNum. So the mean value of the distribution of spike number per neuron per synchronous event is p, and this distribution is narrow if CVSpikeNum is small, and gets broadened when CVSpikeNum increases. If a neuron is to fire M spikes in a synchronous event, then the spike times of these M spikes will be randomly and uniformly chosen within this time interval of duration τcross. The occurrence of synchronous events is a Poisson process with rate r0∕p, so that the firing rate of a neuron is kept at r0 = 20Hz when p changes. We focus on the case that τcross ≪ τSTDP and τcross ≪ p∕r0 (with p∕r0 being the average inter-event interval) in our study. It is easy to think that if τcross ≪ p∕r0 but τcross > τSTDP, STDP updatings will tend to zero so that DiffV will decrease with τcross; and if τcross > p∕r0, the spike pattern will approach to asynchronous state.

For spike patterns with synchronous firing, we don't have simple equations like Equations 19 and 20 to directly link ρm, n; k and ρPD with cI and cII. The coupling factors fPD (Equation 16) and fm, n; k (Equation 18) must be considered. As

and the equality is obtained only when , fm, n; k reflects the inhomogeneity of for different m. And similarly, fPD reflects the difference between and relative to their total values.

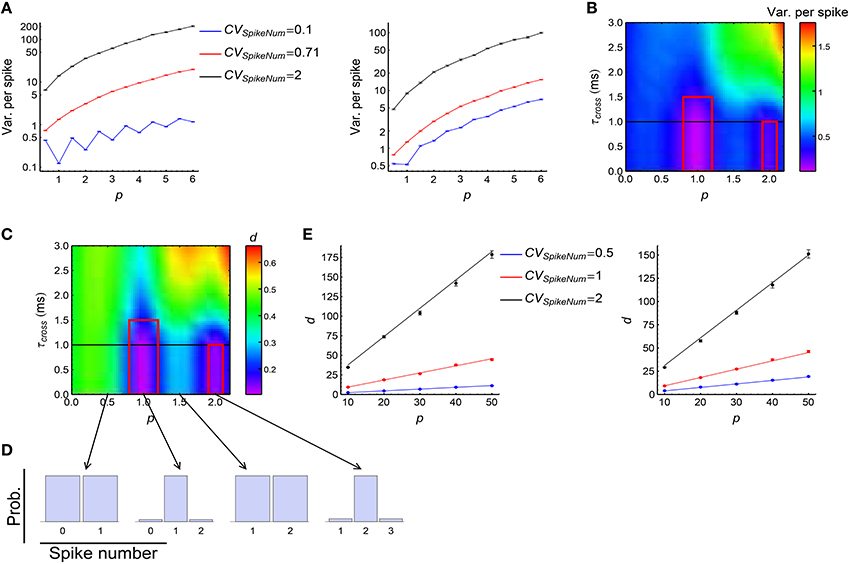

We investigated how DiffV changes with p, τcross and the CVSpikeNum. Our simulations suggest:

(1) DiffV tends to increase with the strength of synchronous firing (i.e., p) and the broadness of the distribution of the spike number per neuron per synchronous event (i.e., CVSpikeNum) (Figure 6A, see Supplementary Figures 2A1,B1,C1 for more information);

(2) when CVSpikeNum is small enough, DiffV can be significantly decreased if p is close to an integer and τcross < τdelay (the red squares in Figure 6B).

Figure 6. The influence of synchronous firing onto DiffV and the contribution of d. (A) Variance per spike (, see Equation 10) as a function of p under different CVSpikeNum values for τcross = 0.5 ms (left) and τcross = 2.5 ms (right), when spike trains are generated according to Model Sync (see Section 2). The efficacy variances span a large range, so here we use log scale to better show their changes. (B) Variance per spike as a function of p and τcross when CVSpikeNum = 0.1. Note the reduction of variance per spike in the red squares. The black horizontal line represents the axonal delay τdelay = 1 ms. (C) d as a function of p and τcross when CVSpikeNum = 0.1. Note the reduction of d in the red squares. (D) The distribution of spike number per neuron per synchronous event under different p values indicated by the starting points of the arrows, when CVSpikeNum = 0.1. Note that the distribution peaks at p when p is an integer. (E) Comparison of the numeric results (dots with error bars) of d with the analytic results (solid lines) given by Equations 28 and 29 for τcross = 0.5 ms (left) and τcross = 2.5ms (right). In (A–E), the size of the converging motif, the parameters for STDP as well as the simulation time and trials are the same as in Figure 4. Error bars represent s.e.m.

Here we explain the underlying physical pictures of these phenomena based on the observation of the change of d, cI and cII with model parameters:

(1) d is the only reason for the second phenomenon above (the red squares in Figure 6C, compare with the changes of cI and cII in Supplementary Figures 2A3,A4). To understand this, suppose a synchronous event happening during [t1, t2] (t2 = t1 + τcross), then the central neuron will receive its afferents during [t1 + τdelay, t2 + τdelay], with τdelay being the axonal delay. If τcross ≤ τdelay, the spike time t0 of the central neuron t0 < t1 + τdelay, which means that the central neuron always starts to receive spikes after its own firing in a synchronous event, so that all the spikes it receives depress the corresponding synapses after the synchronous event. If τcross ≤ τdelay ≪ τSTDP, the depression value Δw contributed by each non-central spike is approximately the same. If p is an integer and CVSpikeNum is small enough, the distribution of the spike number per neuron per synchronous event will sharply peak at p (Figure 6D). In this case, nearly all the non-central neurons fire exactly p spikes during a synchronous event, so that almost all the synapses are depressed by pΔw after this synchronous event. This is the reason why d is so small in this case. Mathematically, it can be shown (Supplementary Materials Section S3.1) that when τcross ≤ τdelay ≪ τSTDP,

with ET represent average over trials, and Vara(Na) being the variance of spike numbers of the non-central neurons in a synchronous event. We can see that d gets reduced if Vara(Na) is small, which is the case when CVSpikeNum is small and p is an integer. When τcross > τdelay, however, it is possible that t1 + τdelay < t0 < t2 + τdelay, so that all the in-coming spikes of the central neuron during [t1 + τdelay, t0) potentiate the corresponding synapses, and all the in-coming spikes during (t0, t2 + τdelay] depress the corresponding synapses. This may split the synapses into different directions, thereby increasing d (note the large d value when τcross > τdelay in Figure 6C).

(2) d tends to increase with p or CVSpikeNum (Figure 6E, see Supplementary Figures 2A2,B2,C2 for more information), thereby contributing to the tendency of the increase of DiffV with p or CVSpikeNum. In this paper, we generally suppose that τcross ≪ τSTDP and τdelay ≪ τSTDP. When p large, it can be shown (Supplementary Materials Section S3.1) that if τcross ≤ τdelay, then

with ET representing trial average; and if τcross > τdelay, then

with

We compare the analytic results with numeric results in Figure 6E, we can see the tendency that d increases with both p and CVSpikeNum. The first terms of Equations 28 and 29 represent the contribution of the synchronous event 0 that the central spike ti belongs to, and the last terms represent the contributions of the other synchronous events. p only exists in the first terms of Equations 28 and 29, which suggests that p increases d through increasing the synchrony strength of 0. CVSpikeNum exists in both terms, which suggests that the broadness of the spike number distribution in both 0 and the other synchronous events contribute to d.

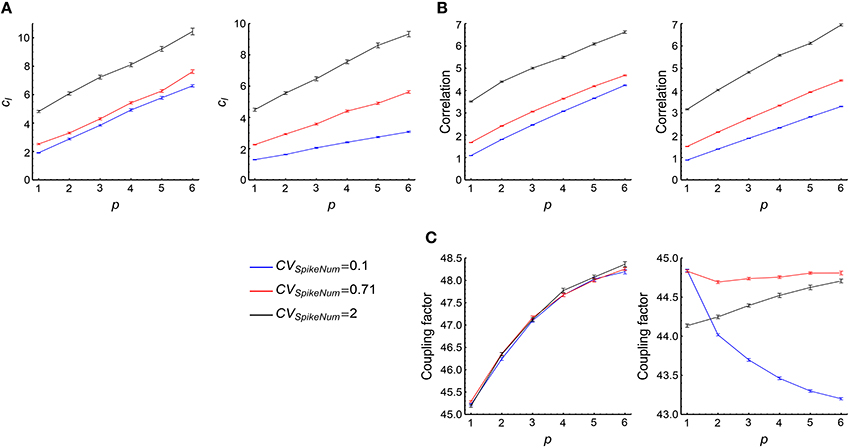

(3) cI tends to increase with both p and CVSpikeNum (Figure 7A, see more in Supplementary Figures 2A3, B3, C3), thereby contributing to the tendency of the increase of DiffV with p or CVSpikeNum. From Equation 17, we know that the change of cI may either caused by the correlation ρm, n; k or by the coupling factor fm, n; k. To estimate their contributions to cI, we define

and

We find that the change of with model parameters is far slower than the change of (Figures 7B,C), which suggests that the coupling factors fm, n; k do not significantly contribute to the change of cI, and the correlation ρm, n; k between synaptic updatings caused by adjacent central spikes is the main reason for the change of cI. It is easy to think that if two spikes of the central neuron are in the same synchronous event, then the correlation of the synaptic updatings caused by them tends to be large; but if they are in different synchronous events, then the correlation of the synaptic changes caused by them tends to be small. To understand the increase of cI with p and CVSpikeNum, let us consider the following toy model. Suppose that ρmn = a0 if m and n are in the same synchronous event (this assumption is particularly correct when τcross < τdelay), and ρmn = 0 if they are in different synchronous events. Suppose that the central neuron fires Ns spikes in the sth synchronous event, then the total value of the correlation coefficients will be

with M being the number of synchronous events, and q(Ns) being the distribution of the spike number per synchronous event. If p is sufficiently large, with . In this case

with N0 being the total spike number of the central neuron. From Equation 32, we can see that tend to increase with both p and CVSpikeNum, which explains why cI tend to increase with p and CVSpikeNum. Intuitively, p gathers more spikes into the same synchronous event; and CVSpikeNum, which induces heterogeneity of spike numbers in a synchronous event, concentrates spikes into fewer synchronous events. Both of them increase the number of spike pairs that lie in the same synchronous event, thereby increasing .

(4) We found that when p is large, the change of cII with p is small (Supplementary Figures 2A4, B4, C4), which suggests that the contribution of cII to the increase of DiffV with p is small compared to the other factors. The change of cII with CVSpikeNum is not strong when τcross ≤ τdelay; and cII tends to decrease with CVSpikeNum when τcross > τdelay (Supplementary Figures 2A4, B4, C4), which negatively contributes to the increase of DiffV with CVSpikeNum. These suggest that cII is not an important factor to understand the change of DiffV under synchronous firing. We move more discussions on cII into Supplementary Materials Section S3.2.

Figure 7. The contribution of cI to the influence of synchronous firing onto DiffV. (A) cI as a function of p under different CVSpikeNum values for τcross = 0.5 ms (left) and τcross = 2.5 ms (right), when spike trains are generated according to Model Sync (see Section 2). (B) as a function of p under different CVSpikeNum for τcross = 0.5 ms (left) and τcross = 2.5 ms (right). We found that ρl, k safely decays to zero when l ≥ 50 in our parameter range, therefore we cut off l at l = 50 when calculating the summation. (C) as a function of p for τcross = 0.5 ms (left) and τcross = 2.5 ms (right). Note that the percentage that changes with p or CVSpikeNum is far smaller than that of . In (A–C), the size of the converging motif, the parameters for STDP as well as the simulation time and trials are the same as in Figure 4. Error bars represent s.e.m.

From the discussion above, we know that both the mean (controlled by p) and variance (controlled by CVSpikeNum) of the spike number per neuron per synchronous event are important factors to understand the change of DiffV under synchronous firing. They contribute to DiffV mainly through d and cI.

3.4. The Influence of the Interaction of Auto-correlation Structure And Synchronous Firing onto DiffV

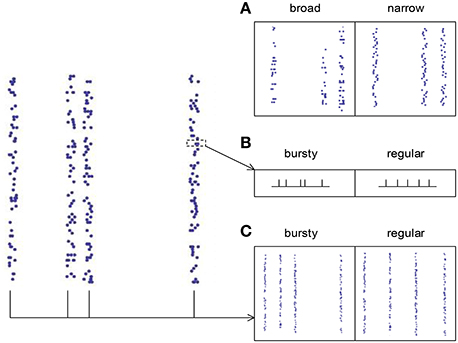

The spike pattern of a real neuronal population possesses both synchronous firing and auto-correlation structure, so it is desirable to know how these two pattern structures interact to influence DiffV. This is a complicated problem, because auto-correlation structure comes into spike patterns with synchronous firing in at least three ways (Figure 8):

(1) The broadness of the distribution of the spike numbers a neuron fires in different synchronous events (ATSpikeNum). If the distribution gets broad, a neuron may burst several spikes in some synchronous events, while keep silent in some others.

(2) The burstiness/regularity of the pieces of spike trains within synchronous events (ATWithinEvent).

(3) The burstiness/regularity of the occurrence of synchronous events (ATevents).

Figure 8. The three types of auto-correlation structure we consider under synchronous firing. (A) The broadness of the distribution of the spike numbers a neuron fires in different synchronous events (ATSpikeNum). Note that in the left panel, a neuron fires quite different number of spikes during different synchronous events; while in the right panel, the spike numbers of a neuron during different synchronous events are almost the same. (B) The burstiness/regularity of the pieces of spike trains within synchronous events (ATWithinEvent). (C) The burstiness/regularity of the occurrence of synchronous events (ATevents).

There may be other auto-correlation structures under synchronous firing. For example, in biological systems, the amplitudes of synchronous events may exhibit strong variability (Gireesh and Plenz, 2008; Petermann et al., 2009), which further increases the complexity of the problem. However, we argue that this varying-amplitude situation may be included into the constant-amplitude scenario using ATevents, after noting that strong synchronous events can be regarded as the burstiness of many small synchronous events. For simplicity, we will not consider this varying-amplitude situation in our following discussions.

We have already discussed the effect of ATSpikeNum in the previous subsection, and we will perform detailed discussions on how ATWithinEvent and ATevents influence DiffV through d, cI and cII in Supplementary Material Section S4. Here we summarize our main results:

(1) The broadness of the distribution of the spike numbers a neuron fires in different synchronous events increases DiffV through increasing d and cI. When τcross ≤ τdelay, it does not significantly change cII; when τcross > τdelay, it decreases cII, which negatively contributes to the increase of DiffV (see the previous subsection).

(2) The burstiness of the piece of spike train within a synchronous event significantly increases DiffV when τcross > τdelay. It does this through increasing d and cII, it does not significantly influence cI (Supplementary Material Section S4.1, Supplementary Figure 3).

(3) The burstiness of the occurrence of synchronous events increases DiffV through increasing d and cI. It decreases cII, which negatively contributes to the increase of DiffV (Supplementary Material Section S4.2, Supplementary Figure 7).

In Supplementary Material Section S4 and Supplementary Figures 3–10, we explain the underlying mechanisms of these influences using both numeric and analytic approaches. We find that broader distribution of spike number per neuron per synchronous event, burstier spike trains within synchronous events, and burstier occurrence of synchronous events tend to increase DiffV. These results can be concluded into a rule of thumb: the burstiness of spike trains tends to increase DiffV, while the regularity tends to decrease DiffV.

From Section 3.2, we know that when the spike trains are stationary processes, DiffV increases with the decrease of CV if CV ≪ 1 (Figure 4A), because of transient cross-correlation. However, here we find that after synchronous firing is added into the spike pattern, DiffV usually monotonically decreases with the decrease of CV. To understand this, note that in our model, we suppose that the pieces of spike trains that belong to different synchronous events are independent of each other. In this case, transient cross-correlation may be fragile under synchronous firing, because the synaptic changes caused by two central spikes tm and tn (i.e., and ) are hard to be correlated if tm and tn belong to different synchronous events, even if m and n are nearby by index.

3.5. The Influence of Heterogeneity of Rates onto DriftV

Under heterogeneity of rates or heterogeneity of cross-correlations, different synapses may drift with different velocities, thereby inducing DriftV. Heterogeneity of rates and heterogeneity of cross-correlations may also influence the diffusion of synapses. However, as DriftV ∝ t2 and DiffV ∝ t, DriftV will dominate in a long run. Therefore, we will focus on their influence onto DriftV in the main text, their influence on DiffV will be briefly discussed in the Supplementary Materials Section S6.

Suppose that the activities of the central and non-central neurons are time-modulated simultaneously, so that the firing rate of the central neuron r0(t) = 〈r0(t)〉x(t) while the firing rate of the ath non-central neuron ra(t) = 〈ra(t)〉x(t), with 〈·〉 representing time average, and x(t) representing a function with 〈x(t)〉 = 1. Then, the change of the ath synapse per unit time is

with H(τ) being the STDP time window and C(−τdelay − τ) = 〈x(t)x(t − τdelay − τ)〉 being the auto-correlation of x(t). If we average the equation above over a, we will have

The two equations above togther give

Therefore,

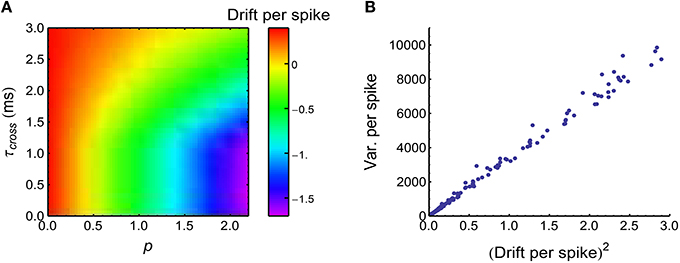

This equation suggests that the heterogeneity of rates 〈ra(t)〉 can induce DriftV; but if the population rate Ea(〈ra(t)〉) is kept constant, DriftV also depends on |Ea(va)|, which quantifies the imbalance of the strengths of the potentiation and depression process (P-D imbalance). If Ea(va) = 0, which means that STDP cannot change the mean synaptic strength (P-D balance), then DriftV = 0; if |Ea(va)| is large, which means that P-D imbalance is strong, then DriftV is also large. From Equation 34, we know that if the firing rates 〈r0(t)〉 and Ea(〈ra(t)〉) are kept constant, then P-D imbalance is controlled by the time-modulated pattern x(t), which will be changed if synchronous firing is added into the spike pattern. Intuitively, during a synchronous event, the axonal delay in the converging motif tends to make the central neuron receive spikes from the non-central neurons after its own spikes, which depresses the synapses under STDP (Figure 9A, a similar phenomenon has been observed in Lubenov and Siapas, 2008). Under heterogeneity of rates, this makes DriftV become accordingly changed (Figure 9B). In summary, heterogeneity of rates makes use of P-D imbalance to induce DriftV; and synchronous firing can influence P-D imbalance, thereby influencing DriftV under heterogeneity of rates.

Figure 9. Synchronous firing influences P-D imbalance, and heterogeneity of rates makes use of P-D imbalance to change DriftV. (A) Drift per spike (, with being the trial-averaged spike number of the central neuron) as a function of p and τcross, when the spike trains have synchronous firing and the firing rates of neurons are long-tailed distributed (Model Long Tail in Section 2; s = 1). Note that synchronous firing strengthens depression in this model. (B) , which is consistent with Equation 36. Different dots represent different (τcross, p) pairs that are uniformly distributed in the range of (A). In (A,B), Ap = 2, Ad = 1. The size of the converging motif, and the simulation time and trials are the same as in Figure 4.

3.6. The Interaction of Heterogeneity of Rates, Synchronous Firing And Auto-correlation Structure

From the arguments above, we know that if spike trains are stationary processes (with x(t) = 1 for any t), then auto-correlation structure cannot influences DriftV under heterogeneity of rates (because auto-correlation structure cannot change x(t)). Its influences onto DiffV in this case are discussed in Supplementary Materials Section S3. Interesting things happen when synchronous firing also comes into the picture. As we mentioned in Section 3.4, under synchronous firing, auto-correlation structure may come into the spike pattern in three ways (Figure 8):

(1) The broadness of the distribution of spike number per neuron per synchronous event (ATSpikeNum).

(2) The burstiness/regularity of the pieces of spike trains within synchronous events (ATWithinEvent).

(3) The burstiness/regularity of the occurrence of synchronous events (ATevents).

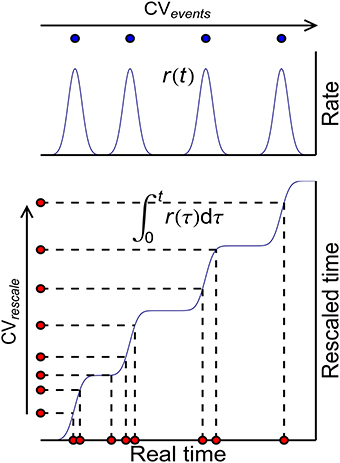

These three auto-correlation structures can be classified into two kinds: ATSpikeNum and ATWithinEvent do not change x(t), so that they do not change P-D imbalance, nor DriftV under heterogeneity of rates; ATevents, however, changes x(t), thereby changing P-D imbalance, and DriftV under heterogeneity of rates. An idea to separate these two kinds of auto-correlation structure is to define rescaled time using the accumulative function of population firing rate (Pillow, 2009)

which stretches the inter-spike intervals in proportion to the firing rate (Figure 10). Auto-correlation structure then comes into the picture in two ways: the auto-correlation structure of the spikes in the rescaled time, which can be quantified by the CV value CVrescale, and the auto-correlation structure of the occurrence of synchronous events, which can be quantified by CVevents. Apparently, CVrescale has no influence on x(t), thereby nor DriftV under heterogeneity of rates; but CVevents influences x(t), thereby DriftV under heterogeneity of rates. Combining the discussion on the influence of temporal structure onto DiffV in Section 3.4, we come to the conclusion that under synchronous firing and heterogeneity of rates,

(1) The temporal factors that increases either CVrescale or CVevents increase DiffV.

(2) The temporal factors that influences CVrescale do not change P-D imbalance, thereby nor DriftV under heterogeneity of rates.

(3) The temporal factors that influences CVevents change P-D imbalance, thereby DriftV under heterogeneity of rates.

Figure 10. The scheme to classify auto-correlation structure under synchronous firing. Blue curves represent the population firing rate (upper) and the accumulative function of the population firing rate (lower) in the real time. Blue dots (upper) represent the times when the synchronous events occur, whose auto-correlation structure is quantified by their CV value CVevents. Red dots (lower) represent the spikes in the real time and their correspondences in the rescaled time, whose auto-correlation structure is quantified by their CV value CVrescale.

Indeed, we find that both ATSpikeNum and ATWithinEvent increase CVrescale and don't influence P-D imbalance (Supplementary Figures 11A,B); while ATevents increases CVevents and may change P-D imbalance especially when CVevents > 1 (Supplementary Figures 11C,D). The influence of CVevents onto P-D imbalance seems complicated, and depends on the values of τdelay, Ap and Ad (see Supplementary Figure 12, the physical mechanisms will be explained in Supplementary Material Section S5.2):

(1) Suppose during a synchronous event 0, the central neuron fires at time t0. Because of the axonal delay τdelay, there is usually a time interval between t0 and when the spikes from non-central neurons arrive at the axonal terminal, and the typical length of this interval is τdelay. If synchronous events are not close to each other, so that no non-central spikes from synchronous events other than 0 arrive at the central neuron during this interval (i.e., different synchronous events do not overlap with each other), then Ea, T(Δwa) will increases (or decreases) with CVevents if Apexp(τdelay∕τSTDP) > Adexp(−τdelay∕τSTDP) (or Apexp(τdelay∕τSTDP) < Adexp(−τdelay∕τSTDP)). In this paper, typically τdelay ≪ τSTDP, so these conditions become Ap > Ad or Ap<Ad.

(2) If synchronous events are allowed to overlap with each other, then CVevents will increase the chance of this overlapping when it is large (typically when CVevents > 1). In this case, Ea, T(Δwa) will decrease (or increase) with CVevents if τdelay > 0 (or τdelay < 0). See the meaning of τdelay < 0 in Figure 2B.

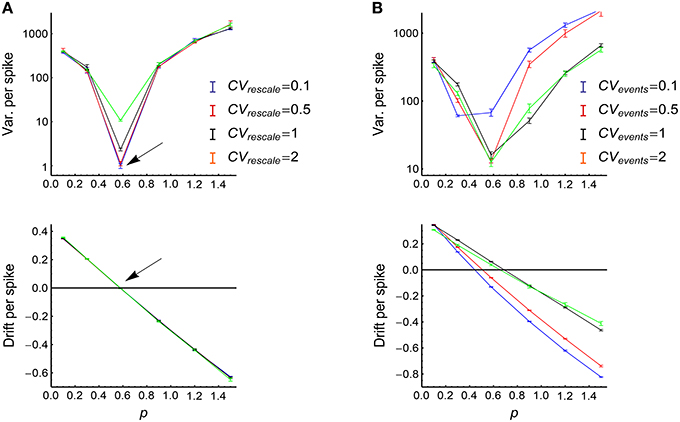

To get a better understanding on how heterogeneity of rates, synchronous firing and auto-correlation structure interact with each other, we used Model Sync-Auto-LongTail (see Section 2) to generate spikes. In this model, for simplicity, the auto-correlation structure in the rescaled time and the auto-correlation structure of the occurrence of the synchronous events are respectively controlled by a single parameter (i.e., CVrescale and CVevents). We generated spike patterns using this model, and investigated how the efficacy variability changes with model parameters (Figure 11). The results validate our arguments above:

(1) CVrescale hardly influences P-D imbalance (Figure 11A, lower panel).

(2) When the strength of firing events is adjusted so that the potentiation and depression almost balance each other (so that DriftV ≈ 0), the efficacy variability significantly increases with CVrescale (Figure 11A, upper panel). However, when the potentiation and depression are imbalanced, the efficacy variability becomes hardly influenced by CVrescale (Figure 11A, upper panel). This is because that CVrescale can only increase DiffV, and can hardly influence DriftV. As DiffV ∝ t while DriftV ∝ t2, the contribution of CVrescale to efficacy variability after a long run is only obvious when DriftV ≈ 0, which is realized at P-D balance.

(3) The influence of CVevents onto efficacy variability is non-monotonically complicated (Figure 11B, upper panel). The reason is that CVevents not only influences DiffV, but also influences DriftV in a complicated way (Figure 11B, lower panel), and DriftV is also influenced by p. Therefore, potentiation and depression are balanced at different p values for different CVevents values.

Figure 11. The influence of auto-correlation structure onto the efficacy variability under synchronous firing and heterogeneity of rates. (A) Upper: Variance per spike as a function of p at different CVrescale, keeping CVevents = 0.71 and s = 1 (see Model Sync-Auto-LongTail in Section 2). Lower: the corresponding mean efficacy changes per spike, which represent P-D imbalance. The arrows indicate the p value at which the mean efficacy change is almost zero (indicated by the horizontal black line), so DriftV ≈ 0 at this point due to P-D balance. Error bars represent s.e.m. in normal scale and relative errors corresponding to s.e.m. in log scale. (B) The same as (A) except that different lines represent different CVevents values, keeping CVrescale = 0.71. In (A,B), τcross = 2ms, Ap = 2, Ad = 1, τdelay = 1 ms. The size of the converging motif, as well as the simulation time and trials are the same as in Figure 4.

3.7. The Influence of Heterogeneity of Cross-correlations onto DriftV

Heterogeneity of cross-correlations mainly influences efficacy variability in DriftV manner. Suppose the firing rate of the central neuron is r0(t) = r0·x0(t) and the firing rate of the ath non-central neuron is ra(t) = ra·xa(t), with r0 and ra being their time-averaged firing rates and 〈x0(t)〉 = 〈xa(t)〉 = 1 (here 〈·〉 represent time average), then similarly as Equation 33, we have

with Ca(τ) = 〈x0(t)xa(t + τ)〉 being the unit cross-correlation between the central neuron and the ath non-central neuron. Therefore,

so the heterogeneity of Ca(τ) influences DriftV together with the heterogeneity of ra.

For simplicity, we did not investigate how the heterogeneity of Ca(τ) interact with other pattern structures. Under STDP, the main effect of the heterogeneity of Ca(τ) is to influence DriftV with its interaction with the heterogeneity of firing rates through Equation 38, which will not change even when the other pattern statistics are considered. The influence of the heterogeneity of Ca(τ) onto DriftV can be understood by comparing Equation 38 with

which quantifies the DriftV caused by heterogeneity of rates under P-D imbalance.

3.8. The Case When τdelay < 0

In the discussions above, we generally suppose that τdelay ≥ 0, which means that the axonal delay for pre-synaptic spikes is no smaller than the dendritic delay for post-synaptic back-propagated spikes. Here we discuss the case when τdelay < 0, i.e., the dendritic delay is larger than the axonal delay.

To understand the relationship between the case τdelay > 0 and the case τdelay < 0, let us first consider a synapse from neuron a to neuron b in the following two cases. Case I: suppose neuron a (neuron b) fires a spike at time ta (tb), and Ap = A, Ad = B in the STDP time window Equation 6. In this case, whether the synapse is potentiated or depressed depends on the time difference between tb and ta + τdelay (i.e., the sign of Δt1 ≡ tb − (ta + τdelay)). Case II: now we let the spike delay become −τdelay, and Ap = B, Ad = A in Equation 6, and we also reverse the spike trains (just like showing the two neurons a backward movie), then neuron a will fire at time T−ta (with T being the total duration of the spike pattern), and neuron b will fire at time T−tb. In this case, whether the synapse is potentiated or depressed depends on the sign of Δt2 ≡ (T − tb) − ((T − ta) − τdelay) = −Δt1. If Δt1 > 0, then the synapse in Case I will be potentiated by Aexp(−Δt1∕τSTDP), and the synapse in Case II will be depressed by −Aexp(−Δt1∕τSTDP); if Δt1 < 0, then the synapse in Case I will be depressed by −Bexp(Δt1∕τSTDP), and the synapse in Case II will be potentiated by Bexp(Δt1∕τSTDP). After applying this argument to every pair of spikes in the spike trains of the central neuron and the ath non-central neuron in a converging motif, we have that

Therefore, the total synaptic change Δwa = Δwa, p + Δwa, d satisfies

which means that

but

The spike trains generated by our statistical models (Section 2.3) are all statistically time-reversal invariant (i.e., people cannot tell whether a spike pattern is forward or backward by analyzing its statistics). Therefore, from Equation 43, our results on how auto-correlation structure, synchronous firing and heterogeneity of rates influence the efficacy variability are all valid for τdelay < 0, after replacing τdelay by |τdelay|. The influence of heterogeneity of cross-correlations can be obtained by directly substituting τdelay < 0 into Equation 38.

3.9. A Summary on the Efficacy Variability in Converging Motifs

Here we summarize our results in the previous subsections on the efficacy variability in converging motifs.

3.9.1. DiffV

(1) In stationary spike trains (i.e., spike trains with constant trial-averaged firing rate over time) with homogeneity of rates, DiffV gets large either when the spike trains get bursty or strongly regular, and DiffV is smallest when CV is within the range 0.3~0.7 (Section 3.2). The only reason why DiffV is large under strong regularity and homogeneity of rates is transient cross-correlation. In stationary spike trains with heterogeneity of rates, DiffV tends to monotonically increase with CV (Supplementary Material Section S2), because the transient cross-correlation under strong regularity is destroyed by heterogeneity of rates.