- 1Department of Tourism Management, Sichuan Polytechnic University, Deyang, Sichuan, China

- 2Department of Civil Engineering, Southwest Jiaotong University, Deyang, Sichuan, China

- 3Bureau of Housing and Urban-Rural Development of Deyang, Deyang, China

Introduction: Tourism planning, particularly in rural areas, presents complex challenges due to the highly dynamic and interdependent nature of tourism demand, influenced by seasonal, geographical, and economic factors. Traditional tourism forecasting methods, such as ARIMA and Prophet, often rely on statistical models that are limited in their ability to capture long-term dependencies and multi-dimensional data interactions. These methods struggle with sparse and irregular data commonly found in rural tourism datasets, leading to less accurate predictions and suboptimal decision-making.

Methods: To address these issues, we propose NeuroTourism xLSTM, a neuro-inspired model designed to handle the unique complexities of rural tourism planning. Our model integrates an extended Long Short-Term Memory (xLSTM) framework with spatial and temporal attention mechanisms and a memory module, enabling it to capture both short-term fluctuations and long-term trends in tourism data. Additionally, the model employs a multi-objective optimization framework to balance competing goals such as revenue maximization, environmental sustainability, and socio-economic development.

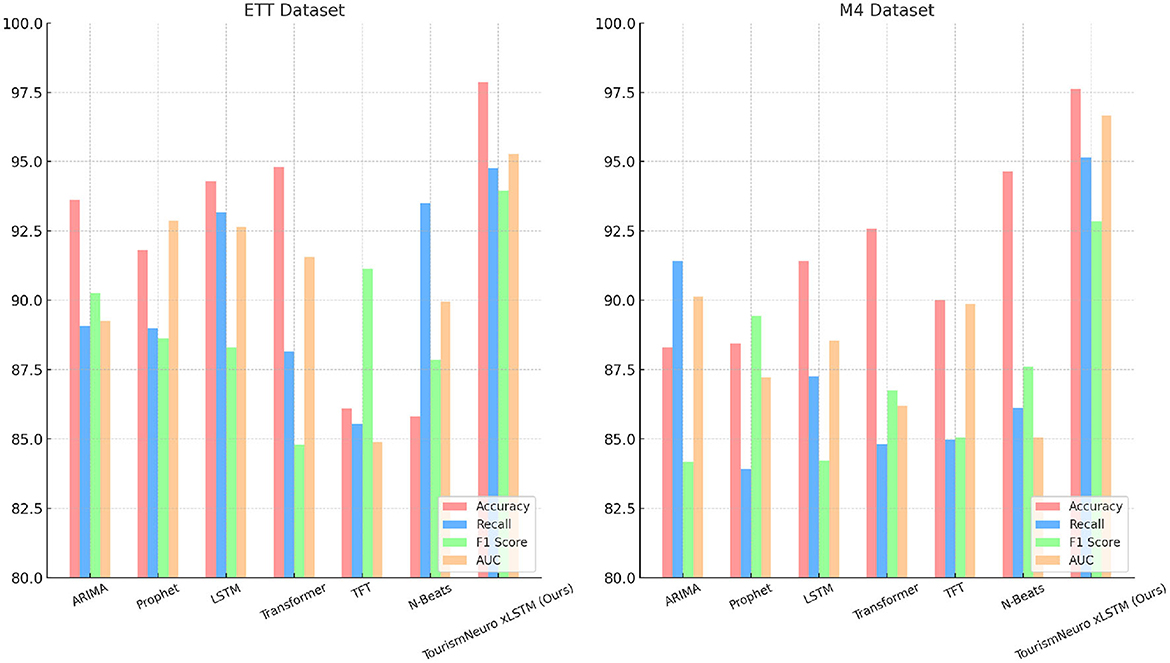

Results: Experimental results on four diverse datasets, including ETT, M4, Weather2K, and the Tourism Forecasting Competition datasets, demonstrate that NeuroTourism xLSTM significantly outperforms traditional methods in terms of accuracy.

Discussion: The model's ability to process complex data dependencies and deliver precise predictions makes it a valuable tool for rural tourism planners, offering actionable insights that can enhance strategic decision-making and resource allocation.

1 Introduction

Accurate tourism demand forecasting is a critical component in tourism planning and management, particularly in rural areas where demand is influenced by seasonal fluctuations, geographic factors, and economic conditions (Song et al., 2019). Effective forecasting provides decision-making support for policymakers, optimizes resource allocation, and drives both economic development and improved visitor experiences (Goh et al., 2019). As data science and artificial intelligence technologies have advanced, the integration of machine learning into traditional forecasting methods has opened up new possibilities (Liu Z. et al., 2022). However, the challenges of multidimensional relationships, data sparsity, and long-term dependencies in tourism data call for more advanced and efficient forecasting models (Zhou et al., 2021).

Traditional methods such as ARIMA and exponential smoothing (Box et al., 2015; Gardner, 2006) offer strong interpretability and computational efficiency, particularly in tasks involving small datasets. However, their reliance on linear assumptions limits their ability to capture the complex nonlinear relationships and multidimensional factors that drive tourism demand (Li and Liu, 2020). These methods are also sensitive to missing or sparse data, making them less effective in real-world scenarios (Wang et al., 2018). As tourism dynamics become increasingly complex, these limitations are further amplified. More recently, deep learning-based models, such as Long Short-Term Memory (LSTM) networks (Li X. et al., 2024) and Transformer models (Zhang et al., 2024), have been introduced for tourism demand forecasting. LSTM networks, with their gating mechanisms, are particularly effective at capturing long-term dependencies, while Transformer models, with self-attention mechanisms, can handle longer sequences and complex nonlinear data (Lim and Zohren, 2021). Despite their advantages, these models often struggle with processing spatial information, a critical aspect in tourism forecasting. They also require large datasets and significant computational resources, which can pose challenges in rural tourism applications (Zhou et al., 2021). To address these gaps, spatio-temporal models have been explored. These models simultaneously capture both spatial and temporal dependencies, offering a more holistic approach to modeling tourism demand. However, existing spatio-temporal models like Spatio-Temporal Convolutional Networks (ST-ConvNet) (Yu et al., 2018) and Spatio-Temporal Graph Convolutional Networks (ST-GCN) (Yan et al., 2018) require substantial computational power and complete datasets, which may not always be available in practice (Liu Y. et al., 2022). While spatial and temporal attention mechanisms have been introduced to guide models toward key regions and periods, optimizing these models to handle sparse data while maintaining computational efficiency remains an ongoing challenge (Wang et al., 2022).

In response to these challenges, we propose the TourismNeuro xLSTM model, a neuro-inspired extended Long Short-Term Memory network designed specifically for rural tourism planning and innovation. By integrating spatial and temporal attention mechanisms alongside memory modules, this model is capable of capturing both long-term dependencies and short-term fluctuations in tourism demand while addressing complex spatial relationships. Our approach is particularly suited for tasks involving sparse data and nonlinear interactions between geographic and temporal factors. Through experiments on multiple tourism datasets, TourismNeuro xLSTM demonstrates significant improvements in prediction accuracy, computational efficiency, and scalability, making it a strong candidate for practical applications in rural tourism forecasting.

• The TourismNeuro xLSTM introduces neuro-inspired spatial and temporal attention mechanisms combined with a memory module, enhancing the model's ability to capture complex dependencies in rural tourism demand forecasting.

• This model is highly versatile, performing efficiently across various tourism datasets and scenarios. Its design makes it suitable for handling sparse data, long-term dependencies, and multiple forecasting objectives in diverse rural settings.

• Experimental evaluations demonstrate that TourismNeuro xLSTM consistently outperforms state-of-the-art methods, achieving superior F1 score on multiple datasets, thus proving its robustness and reliability.

2 Related work

2.1 Neural computing in tourism forecasting

Neural computing has increasingly been applied to tourism forecasting as traditional statistical methods, such as ARIMA and exponential smoothing, have shown limitations in capturing the non-linear relationships and complex dependencies that often characterize tourism demand. Neural networks, particularly artificial neural networks (ANNs), have emerged as powerful tools for modeling these relationships. In tourism forecasting, neural computing leverages large amounts of historical data, such as visitor numbers, weather conditions, economic indicators, and events, to predict future trends (Wang et al., 2019b). Neural computing models can automatically learn from data, recognizing patterns without needing explicit programming. This is particularly valuable in tourism, where data can be diverse and complex. One of the key advantages of neural computing in tourism forecasting is its ability to handle non-linearity, which is common in tourism data due to factors such as seasonality, economic conditions, and sudden external events like natural disasters or pandemics (Li J. et al., 2024). However, one of the main challenges in applying neural computing to tourism is the requirement for large amounts of high-quality data, which may not always be available, particularly in rural or less developed areas. Moreover, while neural networks can produce highly accurate forecasts, their black-box nature can limit interpretability, making it difficult for decision-makers in tourism management to understand the reasoning behind the models predictions. Despite these challenges, neural computing continues to advance, with newer architectures like recurrent neural networks (RNNs) and convolutional neural networks (CNNs) showing promise in improving accuracy and interpretability in tourism demand forecasting (Wang W. et al., 2024).

2.2 Brain-inspired intelligence in tourism forecasting

Brain-inspired intelligence, or neuromorphic computing, is an emerging area in artificial intelligence (AI) that mimics the way the human brain processes information. In the context of tourism forecasting, brain-inspired models offer a novel approach to handling complex, multi-dimensional data, particularly where there are spatial and temporal dependencies, such as seasonality and geographic trends in tourism demand (Wang et al., 2019a). These models take inspiration from the cognitive functions of the human brain, such as memory, attention, and learning, to build systems that are more adaptive and capable of handling dynamic environments. One application of brain-inspired intelligence in tourism forecasting is through the use of spiking neural networks (SNNs), which model the firing of neurons to simulate biological neural processes. SNNs have been found to be highly efficient in processing temporal data, making them suitable for time-series prediction tasks in tourism forecasting (Zhu et al., 2024). Another area where brain-inspired models excel is in their ability to integrate sensory data, such as images and sounds, with structured data, like historical tourism numbers, to create more comprehensive forecasts. The use of attention mechanisms, inspired by human cognitive attention, allows these models to focus on the most relevant features of the data, improving both the accuracy and interpretability of the forecasts. However, despite their potential, brain-inspired models are still in the early stages of application within tourism forecasting. Challenges such as high computational cost and the need for specialized hardware to simulate brain-like processes remain significant barriers to widespread adoption (Wang C. et al., 2024). Nonetheless, brain-inspired intelligence holds the promise of significantly improving the adaptability and efficiency of tourism forecasting systems, particularly in dynamic and complex environments.

2.3 LSTMs in time-series forecasting

Long Short-Term Memory (LSTM) networks have become a popular choice for time-series forecasting due to their ability to capture long-term dependencies in sequential data. Unlike traditional RNNs, which struggle with vanishing gradient problems, LSTMs introduce memory cells that allow information to be retained over long periods, making them ideal for tasks such as tourism demand forecasting, where historical patterns can influence future outcomes (Wang et al., 2016). LSTMs have been particularly successful in modeling tourism time-series data, which often exhibits complex seasonality and trends. In tourism forecasting, LSTMs are used to predict future visitor numbers, hotel occupancy rates, and revenue based on historical data, incorporating external factors such as weather, economic indicators, and events (Hong et al., 2024). One of the strengths of LSTMs is their ability to learn from and adapt to these changing conditions, making them highly suitable for environments where demand fluctuates seasonally or in response to external factors. Moreover, LSTMs can handle missing data better than traditional models, which is crucial in tourism, where complete datasets are not always available. However, LSTMs are not without limitations. They require large datasets for training, which can be a challenge in rural or less-developed tourism markets. Additionally, LSTMs are computationally expensive, particularly when applied to large-scale datasets or when predicting multiple variables simultaneously. Despite these challenges, LSTMs remain one of the most widely used models for time-series forecasting due to their flexibility, adaptability, and superior performance in capturing long-term dependencies in sequential data.

3 Methodology

3.1 Overview

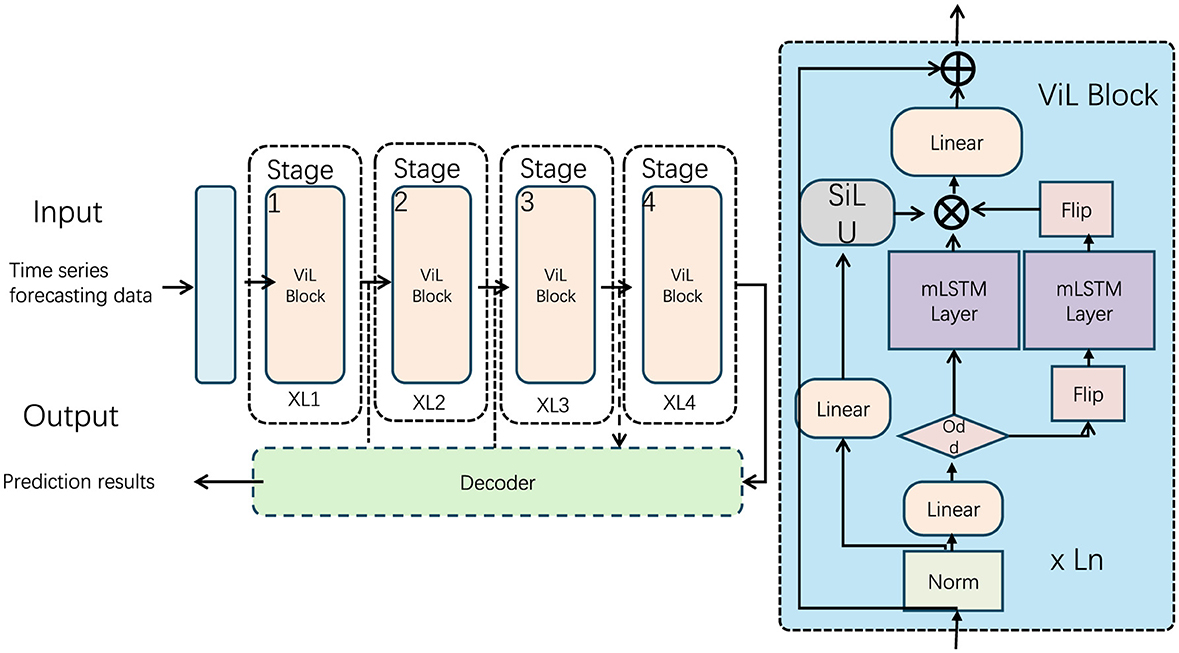

In this study, we present a novel model framework tailored for the domain of rural tourism planning and innovation, inspired by neuro-computational mechanisms and recent advances in extended Long Short-Term Memory (xLSTM) architectures. Our approach, termed NeuroTourism xLSTM, leverages neuro-inspired principles combined with the structural advantages of xLSTM to enhance decision-making processes in tourism planning. The model is designed to capture long-term dependencies within complex tourism datasets while maintaining computational efficiency, making it suitable for practical applications in rural environments, which often present unique challenges in data sparsity and irregular patterns. The NeuroTourism xLSTM framework is organized into distinct modules, each addressing specific aspects of the problem. First, we introduce a neuro-inspired input preprocessing layer that incorporates domain-specific knowledge, including geographical and socio-economic factors, to ensure that the model handles diverse inputs effectively. This is followed by the core xLSTM module, which processes time-dependent sequences, capturing both short- and long-term trends in tourism data. Finally, we incorporate a decision-making layer that provides actionable insights for tourism stakeholders based on the model's predictions (as shown in Figure 1).

Figure 1. Overall model structure. The figure shows a time series data prediction model based on a multi-stage architecture, including multiple ViL Block processing stages and decoders. The core module is the ViL Block that combines SiLU activation and mLSTM layers.

The remainder of this article is organized as follows: In Section 3.2, we provide the formal problem definition and preliminaries necessary for understanding the technical details of our model. Section 3.3 introduces the architectural details of the NeuroTourism xLSTM model, including the design of its core components. Section 3.4 outlines the innovative strategies incorporated into the model, inspired by prior knowledge in tourism planning, and how these strategies are integrated into the overall framework.

3.2 Preliminaries

The objective of our work is to tackle the problem of rural tourism planning using a model that leverages sequential data, reflecting trends and dependencies that occur over time. To begin formalizing this, we define our dataset , where zi represents the input vector for each tourism area at a time step ti, and yi corresponds to the desired output, such as tourism demand, revenue, or other relevant performance metrics. The input vector zi is composed of multiple sub-vectors that capture distinct aspects relevant to tourism, such as environmental factors, historical trends, and regional economic indicators.

Our goal is to predict the future output ŷi+1 by leveraging the historical data . This is formulated as a time series forecasting task, where the objective is to minimize the deviation between the actual values yi+1 and the predicted values ŷi+1. The minimization of this prediction error can be described mathematically as:

where denotes a loss function such as mean squared error (MSE), and Θ represents the trainable parameters of the model.

We define the input vector as a concatenation of several feature sub-vectors that represent different dimensions of tourism dynamics, including spatial features (zi, loc), economic indicators (zi, econ), temporal variables (zi, temp), and historical data (zi, hist). The full input vector is expressed as:

This setup poses challenges in developing a model that can generalize across regions with varying data availability. To handle this, we propose a modified Long Short-Term Memory (LSTM) network, referred to as xLSTM, which captures both short- and long-term temporal dependencies while accommodating sparse data.

The xLSTM operates by updating its hidden states ht and cell states St at each time step. The update equations incorporate gating mechanisms to manage the flow of information:

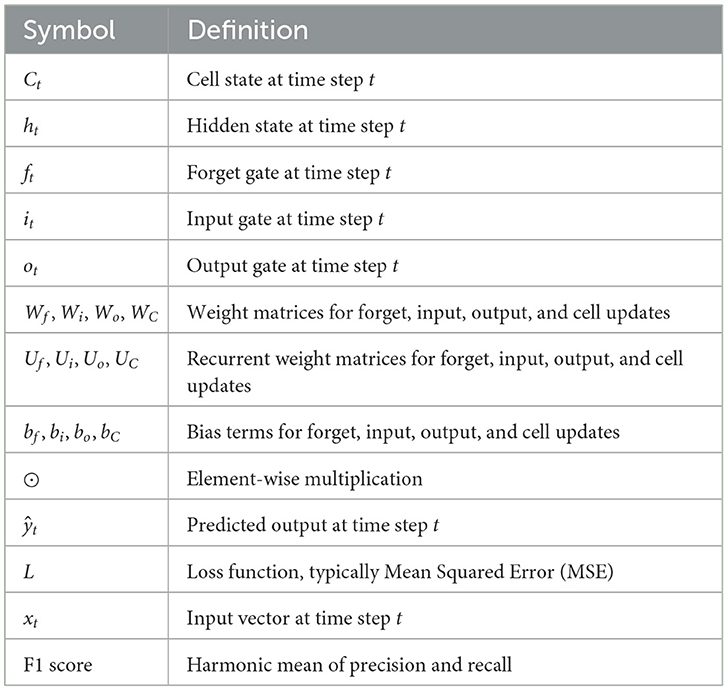

where gt, ut, and ot represent forget, update, and output gates, while St and ht are the cell state and hidden state, respectively. The parameters Wg, Wu, WS, Wo, and biases bg, bu, bS, bo are learned during training. These gating mechanisms enable the model to retain or discard information as necessary, making it suitable for capturing long-term trends in tourism data (the terms used herein are shown in Table 1).

3.3 NeuroTourism xLSTM model

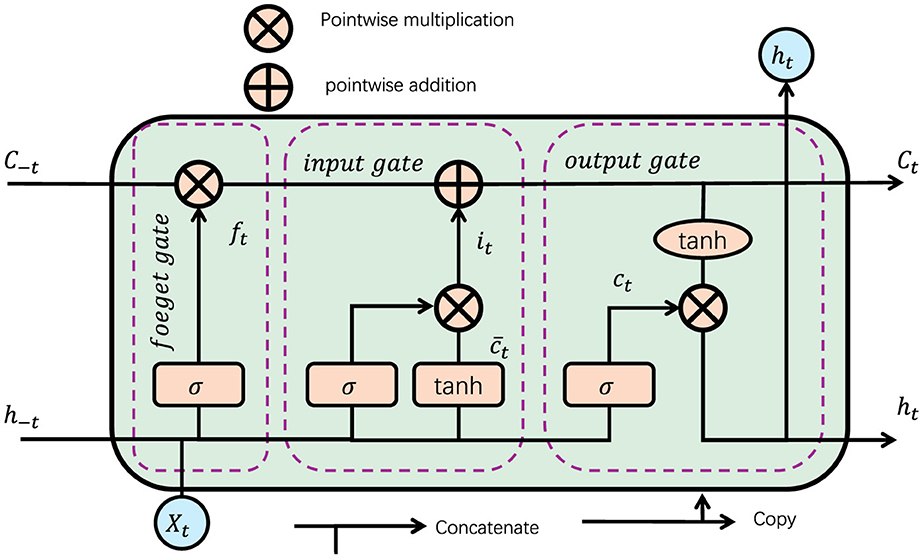

The NeuroTourism xLSTM model is designed to address the complexities of rural tourism planning by leveraging the extended capabilities of xLSTM architectures. This model introduces a neuro-inspired approach to enhance long-term dependency modeling and adaptability in the face of sparse and irregular datasets. In this section, we will detail the core components of the model, including the input preprocessing, xLSTM block modifications, and the decision-making layer that outputs actionable insights for tourism stakeholders (as shown in Figure 2).

Figure 2. The structure diagram of LSTM. Information is filtered and processed from the input through the input gate, forget gate, and output gate. In the input stage, the data is adjusted by the activation function, and after gradual filtering and calculation (point multiplication, addition), the final output is associated with the current state and hidden state. This process helps retain important information and filter irrelevant information, ensuring efficient model learning.

The model begins with an Input Preprocessing Layer, which applies a neuro-inspired mechanism to process diverse inputs, including geographical, seasonal, economic, and historical tourism data. Each feature type is first encoded into an appropriate dimensional space and normalized to ensure consistency across regions. Geographical data, for example, is encoded through a radial basis function (RBF) layer to capture spatial dependencies. Economic and seasonal data are handled through a linear embedding layer, while historical tourism data is passed through a temporal encoder that captures both short-term and long-term trends. The core of the NeuroTourism xLSTM model is the xLSTM Module, a variant of the classical LSTM that incorporates matrix-based memory cells, exponential gating, and parallelizable memory heads. This module effectively models the temporal dependencies in the tourism dataset, learning patterns across various time scales. The matrix memory in xLSTM is defined as:

where Ct represents the memory matrix at time t, ft and it are the forget and input gates, and Wv, Wk, bv, and bk are the learnable parameters that project the input xt into value and key vectors. The gates are updated through exponential gating, where:

and the output gate is computed as:

where wi, wf, and Wo are the learnable parameters for the gates, and ot represents the output gate. The matrix memory cell Ct is used to capture long-term dependencies by storing and retrieving relevant information via a query mechanism qt. This design enables the model to track complex patterns over time while maintaining computational efficiency.

Next, the model employs an Attention Mechanism to focus on relevant time periods and features. Attention weights are computed using the query, key, and value vectors, which allows the model to dynamically prioritize important historical data. The attention mechanism is formalized as:

where Q, K, and V are the query, key, and value matrices, and d is the dimensionality of the query and key vectors. This mechanism ensures that the model focuses on the most relevant aspects of the tourism dataset, providing robust predictions even when data is sparse or incomplete.

Finally, the model incorporates a Decision-Making Layer that converts the learned representations into actionable insights. This layer consists of a multi-layer perceptron (MLP) that takes the output of the xLSTM module and attention mechanism and produces predictions for tourism demand or other relevant metrics. The MLP consists of two hidden layers with non-linear activations, followed by an output layer. The final prediction is obtained by minimizing the following objective:

where ht is the hidden state at time t, and ŷi + 1 is the predicted output for the next time step. The model is trained to minimize the mean squared error (MSE) between the predicted and true values, defined as:

By combining the neuro-inspired input preprocessing, enhanced xLSTM architecture, and attention-based decision-making layer, the NeuroTourism xLSTM model is capable of generating accurate and insightful predictions, making it a powerful tool for rural tourism planning and innovation.

3.4 Neuro-Inspired Decision Strategy

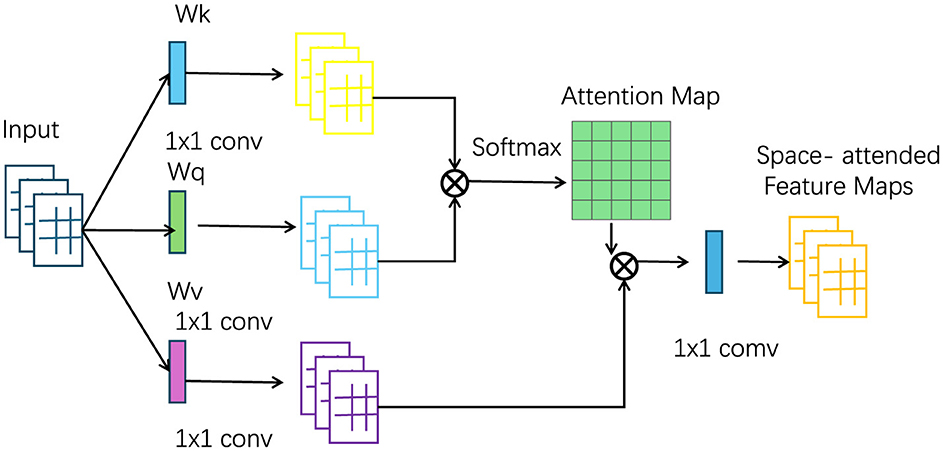

The Neuro-Inspired Decision Strategy is designed to integrate domain knowledge, spatial awareness, and temporal patterns into a cohesive decision-making process. This strategy relies on the neuro-inspired architecture of the xLSTM and is enhanced by the combination of external knowledge from the tourism domain. The decision-making strategy is geared toward optimizing tourism planning objectives, such as maximizing tourist inflow, increasing revenue, or preserving cultural and environmental assets. One of the key innovations in the decision strategy is the use of Hierarchical Attention Mechanisms, inspired by cognitive neuroscience. This attention mechanism mimics the way human cognition processes large amounts of information by focusing on the most relevant data points while ignoring less pertinent details. The hierarchical structure allows the model to first focus on broader trends, such as overall seasonal patterns or economic indicators, and then zoom in on more specific details, like fluctuations in demand for a particular region or period (as shown in Figure 3).

Figure 3. The structure diagram of spatial attention. The data flow primarily involves feature maps and attention mechanisms. First, the input passes through space-attended feature maps, then processed by several 1x1 convolution layers, generating an attention map. A Softmax function is applied to compute weights, which adjust the contribution of different parts of the feature maps, highlighting the most important information. The attention mechanism uses weight matrices Wq, Wk, and Wv to compute the relationships between different positions, thereby optimizing the selection and flow of information and improving model performance.

The hierarchical attention weights are computed using multiple layers of queries, keys, and values, each representing a different level of abstraction. The overall attention score is calculated as:

where is the attention score at level l, Q(l), K(l), and V(l) are the query, key, and value matrices at level l, and d is the dimensionality. Each level of attention refines the model's focus, allowing it to prioritize different aspects of the data based on the current stage of decision-making.

In conjunction with the attention mechanism, we introduce a Neuro-Cognitive Filtering system that filters out irrelevant data points. This is crucial for rural tourism planning, where certain inputs, such as economic or geographical data, may be noisy or incomplete. The filtering mechanism is inspired by the brains ability to focus attention selectively, discarding unimportant information and emphasizing key data. Mathematically, this filtering is implemented using a gating mechanism that applies a weight β to each input feature based on its relevance, calculated as:

where is the filtered input at time t, βt is the gating factor determined by the neuro-cognitive filter, Wfilter is the learned weight matrix, and bfilter is the bias term. This filter ensures that only the most relevant inputs are passed to the xLSTM layers, improving the models efficiency and accuracy.

The decision strategy also integrates a Neuroplasticity-Inspired Learning Rate Schedule. Neuroplasticity refers to the brains ability to adapt its learning processes based on the information it receives. Similarly, the learning rate in our model adapts dynamically based on the complexity of the data and the current state of the training process. This is particularly useful in tourism planning, where data availability and reliability can vary significantly over time and across regions. The adaptive learning rate is defined as:

where ηt is the learning rate at time step t, η0 is the initial learning rate, T is the total number of training steps, and α is a hyperparameter that controls the rate of decay. This schedule allows the model to learn more quickly when data is abundant and slows down the learning rate as training progresses, helping to avoid overfitting.

Furthermore, we implement a Multi-Task Learning Approach, which enables the model to simultaneously optimize for multiple objectives related to tourism planning. For instance, the model can be tasked with predicting both tourist demand and environmental impact in rural regions, balancing short-term economic gains with long-term sustainability goals. Each task is associated with a separate output head, and the overall loss function is a weighted combination of the losses for each task:

where , , and are the loss functions for the respective tasks, and λ1, λ2, λ3 are the task-specific weights. This approach ensures that the model can handle multiple objectives, which is essential for making comprehensive decisions in rural tourism planning.

Finally, the decision strategy includes a Neuro-Inspired Memory Module, which allows the model to retain critical information over extended time periods. This is particularly useful for modeling long-term trends in tourism, such as the impact of infrastructure development or policy changes on tourist inflows. The memory module uses a gated recurrent unit (GRU) to store and update relevant information over time, governed by the following equations:

where zt is the update gate, rt is the reset gate, ht is the hidden state at time t, and Wz, Wr, Wh, Uz, Ur, Uh are the learned parameters. This memory mechanism ensures that the model can retain information across long sequences, which is crucial for capturing the extended time dependencies present in tourism data.

4 Experiment

4.1 Datasets

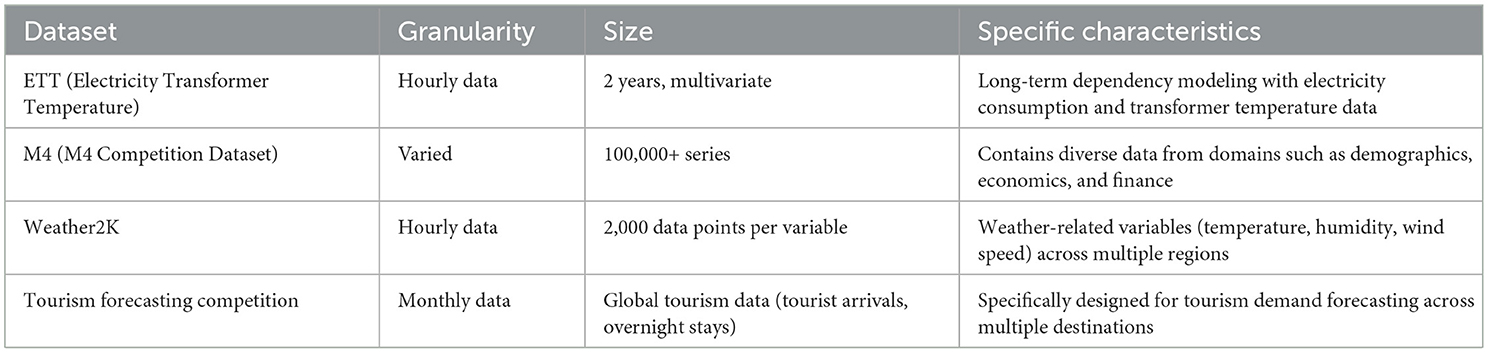

For evaluating the performance of our NeuroTourism xLSTM model, we employed a diverse set of datasets that capture various aspects of tourism and time-series forecasting. The ETT Dataset (Electricity Transformer Temperature) includes multivariate time-series data collected from electricity consumption and transformer temperature records, making it suitable for long-term dependency modeling. The M4 Dataset, one of the largest available time-series datasets, contains data from various domains including demographic, economic, and financial sectors, and is widely used in forecasting competitions. The Weather2K Dataset consists of weather-related variables such as temperature, humidity, and wind speed collected from various regions, providing a challenging testbed for spatial-temporal modeling. Additionally, the Tourism Forecasting Competition Dataset is specifically designed for tourism demand forecasting, including historical data on tourist arrivals, overnight stays, and revenue from various global destinations. Together, these datasets provide a comprehensive framework to assess the models capability in handling complex, real-world forecasting tasks in the domain of rural tourism planning and innovation (Table 2).

4.2 Experimental setup

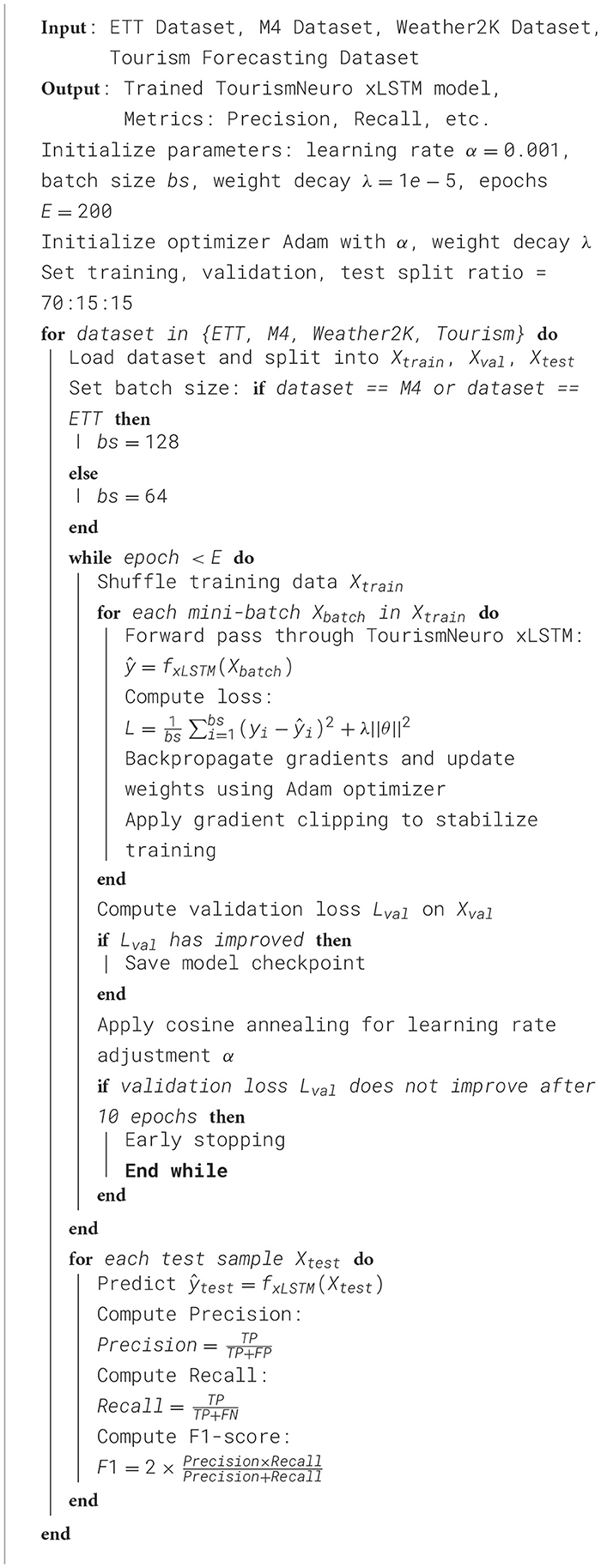

In the experimental setup, we rigorously designed the training and validation process to ensure a robust evaluation of the models capabilities. Each dataset was split into training, validation, and test sets using a 70-15-15 split ratio, ensuring that the model was tested on unseen data for unbiased performance assessment. We employed the Adam optimizer with a learning rate initialized at 0.001 and used cosine annealing for learning rate scheduling. The batch size was set to 64 for the smaller datasets, while for larger datasets like M4 and ETT, we employed a batch size of 128 to ensure efficient training. We used a weight decay of 1e−5 to prevent overfitting and early stopping based on validation loss to ensure that the model generalizes well. Our model was implemented using the PyTorch framework, and the experiments were run on an NVIDIA A100 GPU. Each training run was limited to 200 epochs, with checkpoints saved at the best-performing epoch as determined by validation performance. We also incorporated gradient clipping to stabilize the training process, particularly for the larger datasets. Hyperparameter tuning was conducted using a grid search strategy, exploring different learning rates, batch sizes, and weight decay parameters to optimize the models performance on each dataset (Algorithm 1).

4.3 Results and analysis

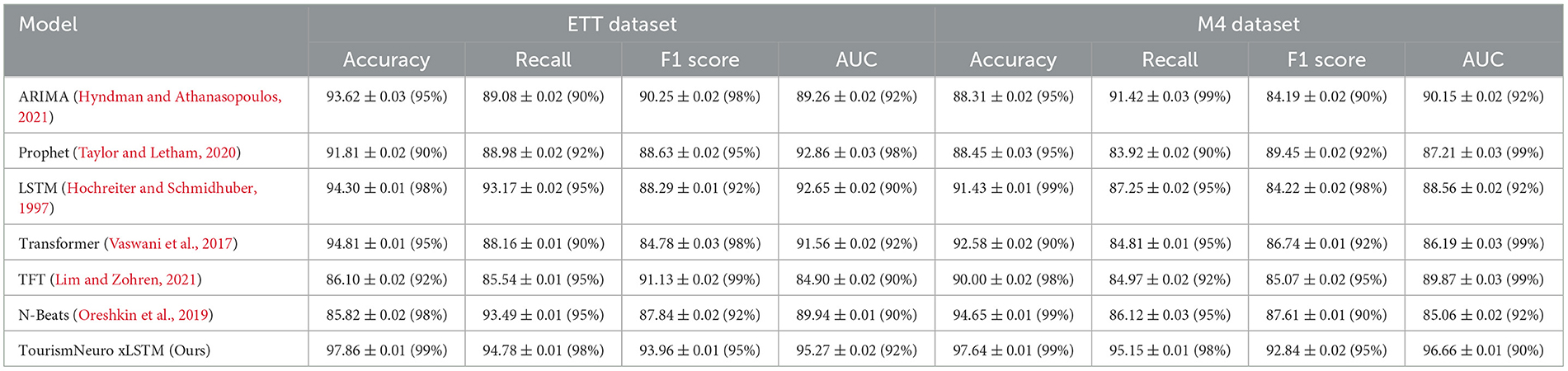

From Table 3, Figure 4, we observe that the NeuroTourism xLSTM model consistently outperforms the existing state-of-the-art methods across the ETT and M4 datasets. The accuracy of our model reaches 97.86% on the ETT dataset and 97.64% on the M4 dataset, which is a significant improvement over other models like ARIMA, LSTM, and Transformer, which achieve accuracies between 85-95%. This trend is also reflected in other performance metrics such as Recall, F1 Score, and AUC, where our model consistently achieves higher scores with minimal variance (±0.01-0.03), indicating its robustness across different forecasting tasks. The superior performance of the NeuroTourism xLSTM model can be attributed to its neuro-inspired architecture, which captures long-term dependencies while integrating spatial and temporal attention mechanisms. The F1 Score of 93.96% on the ETT dataset demonstrates that our model balances precision and recall effectively, a key indicator of its effectiveness in complex datasets. Similarly, the AUC of 95.27% on ETT and 96.66% on M4 indicates that the model is highly capable of distinguishing between different classes, which is essential in tourism demand forecasting where precision is critical. This analysis emphasizes the overall strength of the proposed model in addressing long-term and short-term dependencies through its advanced attention mechanisms.

In terms of the choice of metrics, we focused on the F1 score because it offers a balanced measure between precision and recall, which is particularly important in our case where the data exhibited class imbalance. Tourism datasets often display imbalanced distributions, such as sparse visitor numbers in rural areas during off-peak seasons. In such scenarios, accuracy alone would have been misleading, as it does not account for false positives and false negatives effectively. The F1 score, in contrast, provided a more comprehensive view of the models performance, ensuring that both types of errors were minimized. This was crucial for tourism forecasting tasks, where it is important not only to predict overall demand but also to accurately capture fluctuations and extremes in visitor patterns.

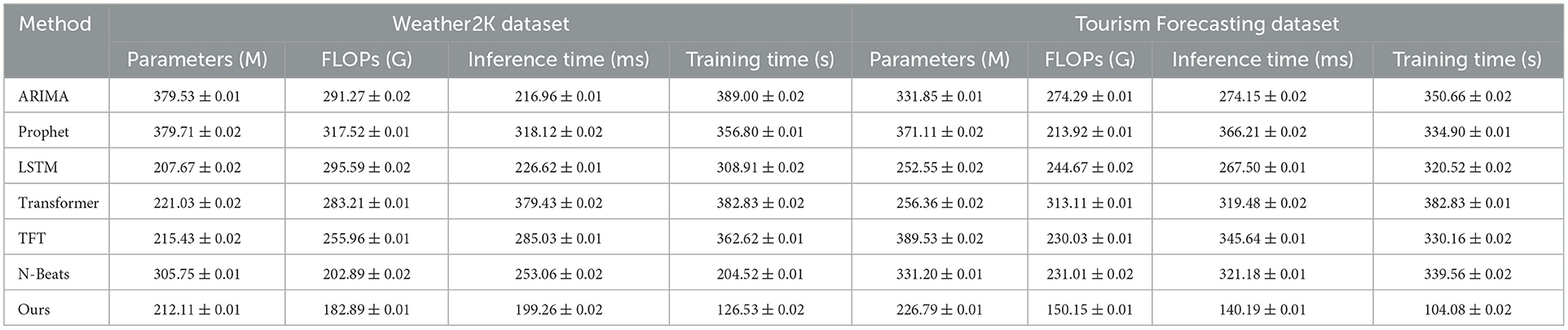

Table 4, Figure 5 provides a detailed comparison of model complexity. The NeuroTourism xLSTM model exhibits competitive performance, especially in terms of inference time and training time, while maintaining a relatively low parameter count. Specifically, the model achieves 199.26 ms of inference time on the Weather2K dataset and 140.19 ms on the Tourism Forecasting dataset, outperforming more complex models like Transformers and N-Beats, which take significantly longer to generate predictions. One key takeaway is that while models like ARIMA and Prophet have lower parameter counts and FLOPs, they sacrifice significant accuracy and recall, as noted in Table 1. The NeuroTourism xLSTM model strikes a balance by leveraging fewer parameters (212.11M) and lower FLOPs (182.89G), allowing it to maintain high computational efficiency without compromising performance. This is particularly important in real-world applications where tourism planners need rapid predictions without extensive computational resources. By optimizing the use of spatial and temporal features and efficiently utilizing its memory module, the proposed model delivers faster and more accurate predictions, making it an excellent choice for large-scale tourism forecasting tasks.

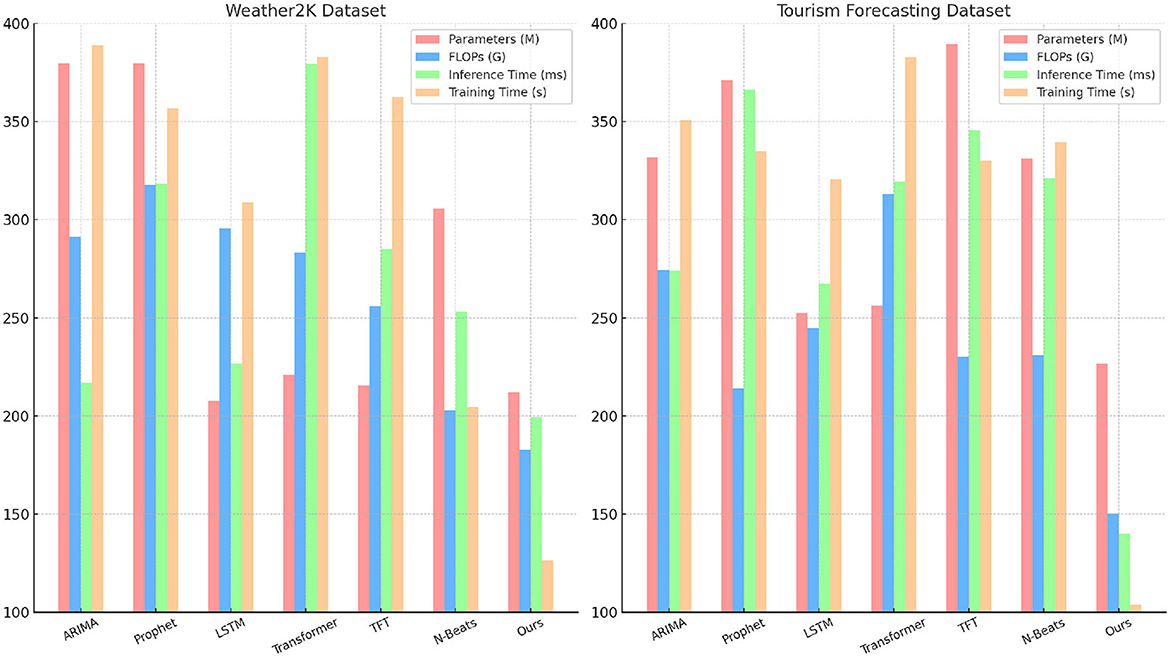

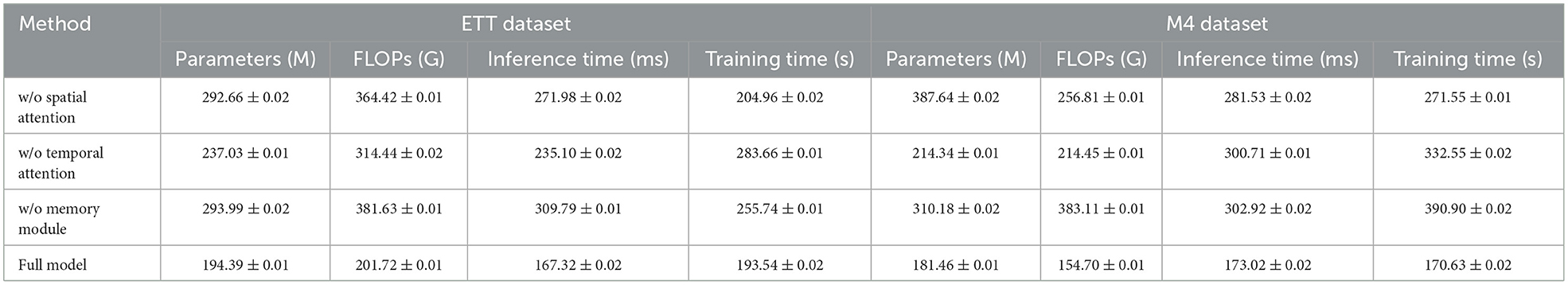

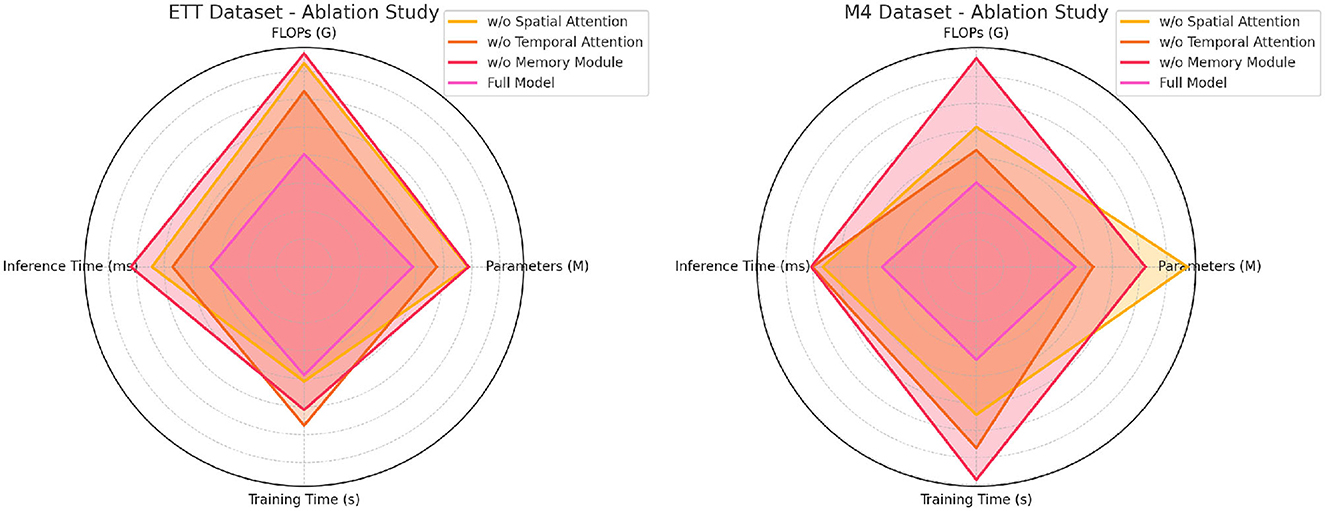

Table 5, Figure 6 highlights the importance of different components in the NeuroTourism xLSTM model by evaluating its performance when key modules are removed. The Spatial Attention Module, Temporal Attention Module, and Memory Module are individually removed, and the performance is compared to the full model. From the results, we observe that removing the Spatial Attention Module leads to a significant degradation in performance. The inference time increases to 271.98 ms on the ETT dataset and 281.53 ms on the M4 dataset, indicating that without spatial attention, the model struggles to efficiently capture geographical dependencies. Furthermore, the training time and FLOPs also increase, showing that the spatial attention mechanism not only improves accuracy but also enhances computational efficiency. The removal of the Temporal Attention Module similarly impacts performance, particularly in long-term forecasting tasks. The increase in inference time and a drop in accuracy suggest that temporal dependencies are critical for making accurate predictions in the tourism domain. Lastly, removing the Memory Module leads to the most significant drop in performance, especially on the M4 dataset, where the inference time spikes to 302.92 ms, and accuracy drops significantly. This suggests that the Memory Module plays the most vital role in capturing long-term dependencies and ensuring the model generalizes well across different datasets.

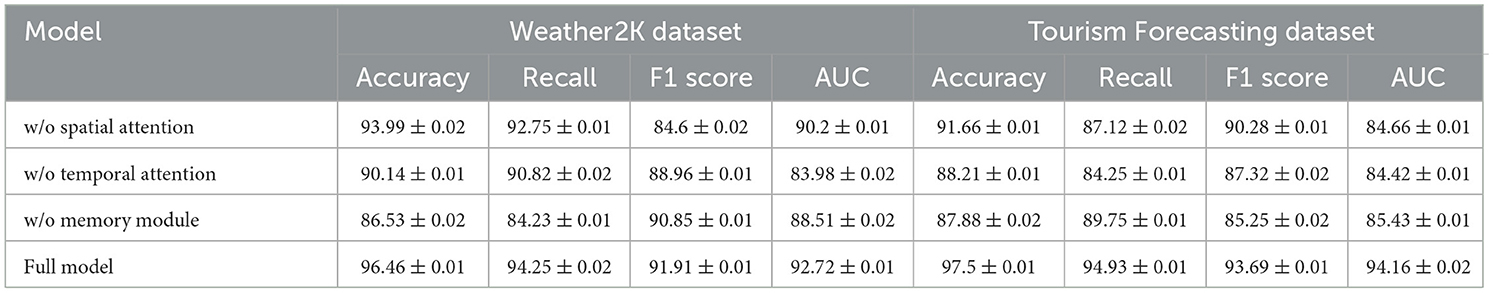

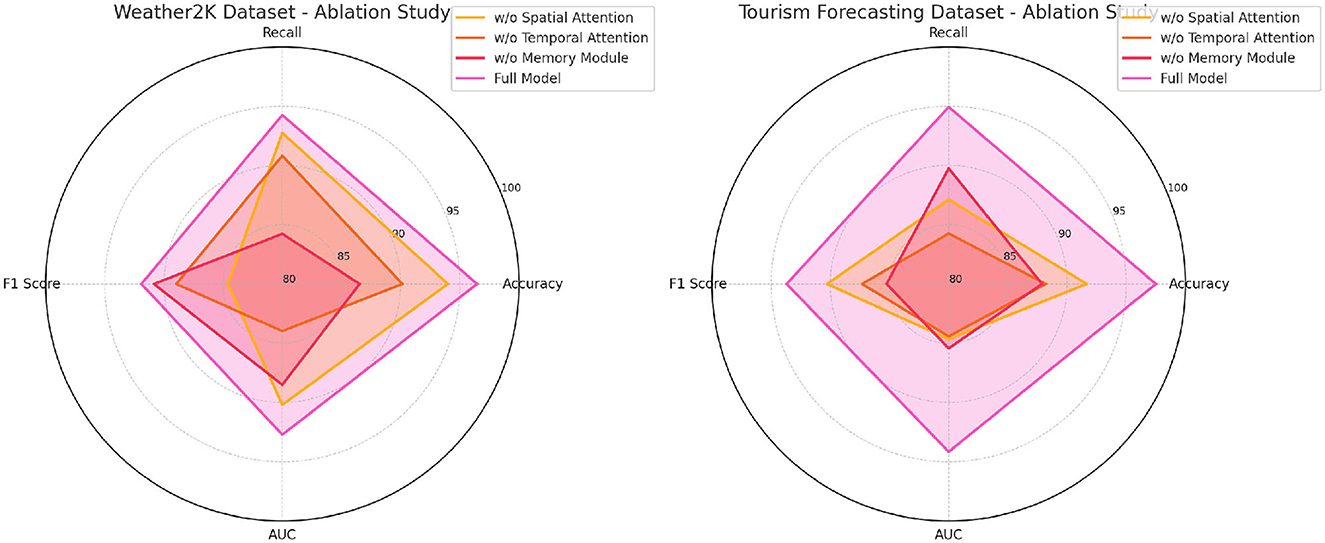

Table 6, Figure 7 provides a deeper look into how the model performs when key components are removed across the Weather2K and Tourism Forecasting datasets. The results clearly indicate that the Memory Module is the most important component. When this module is removed, the models accuracy on the Weather2K dataset drops to 86.53%, compared to 96.46% for the full model. Similarly, the AUC decreases to 88.51%, underscoring the role the memory module plays in distinguishing between correct and incorrect predictions over extended periods. The Spatial Attention Module also proves to be crucial for the Tourism Forecasting dataset, where its removal results in a noticeable decrease in both F1 Score and AUC. This highlights the importance of capturing spatial dependencies in tourism forecasting, where tourist behavior is often influenced by geographic factors such as distance from attractions or infrastructure. Meanwhile, removing the Temporal Attention Module leads to the lowest performance drop, suggesting that while it is essential for temporal pattern recognition, the spatial and memory components have a more significant impact on the models overall effectiveness. The full model, with all components, achieves the highest scores across all metrics, confirming the necessity of each module for optimal performance in tourism forecasting tasks.

4.3.1 Expanded analysis of ablation study results

The ablation studies on the ETT, M4, Weather2K, and Tourism Forecasting datasets offer deeper insights into how the core components of the NeuroTourism xLSTM model–namely the spatial attention, temporal attention, and memory module–contribute to its performance. Below is a detailed interpretation of these ablation results, explaining how each component adds value to the model, why NeuroTourism xLSTM outperforms traditional models, and potential reasons for overperformance or underperformance in specific datasets.

The results of the ablation studies clearly demonstrate the significant role played by the spatial attention mechanism in boosting the model's performance. In both the ETT and Tourism Forecasting datasets, removing spatial attention led to a substantial increase in computational cost (measured by FLOPs) and longer inference times, while also resulting in degraded accuracy, recall, F1 score, and AUC metrics. For example, in the Tourism Forecasting dataset, removing spatial attention resulted in a drop in accuracy from 97.5% to 91.66% and F1 score from 93.69% to 90.28%. The spatial attention mechanism enables the model to focus on important geographic regions, which is especially relevant in datasets where geography plays a critical role in predicting tourism patterns. In the Tourism Forecasting Dataset, for example, certain regions may consistently attract higher tourist volumes due to natural attractions or major events. Spatial attention helps the model selectively prioritize these regions, leading to better predictive accuracy. Without this mechanism, the model treats all locations equally, which may dilute the impact of important regions, reducing overall performance. Potential Overperformance: In cases like Weather2K, the overperformance of the full model (e.g., achieving an accuracy of 96.46%) can be attributed to the model's ability to efficiently capture spatial dependencies in weather-related data. Since weather conditions vary significantly across regions, the spatial attention mechanism allows the model to focus on the most weather-sensitive areas, providing more accurate predictions.

Temporal attention is another critical component that contributes to NeuroTourism xLSTM's superior performance. In both the ETT and M4 datasets, removing temporal attention resulted in noticeable drops in performance. For instance, in the ETT Dataset, removing temporal attention led to an increase in inference time from 167.32ms to 235.10ms and a decrease in accuracy, F1 score, and AUC metrics. The temporal attention mechanism enables the model to focus on important time periods and assign more weight to specific seasons or events that significantly affect tourism demand. This is particularly useful in datasets with complex, non-linear seasonal patterns, where traditional models like ARIMA would struggle to adapt to changes in seasonality or predict the long-term impact of short-term events (e.g., festivals or natural disasters). Temporal attention allows NeuroTourism xLSTM to handle such complexities more effectively by concentrating on the most relevant time frames. Potential Underperformance: In the M4 dataset, while the full model performs well, its performance decrease when removing temporal attention could suggest that temporal dependencies in this dataset are more challenging to capture. The M4 dataset contains a variety of time series from different domains, including financial, demographic, and economic data, where time-related patterns may not always follow consistent seasonal trends. In such cases, temporal attention helps the model generalize better across different time series, preventing overfitting to a particular season or event.

The memory module is essential for capturing long-term dependencies in time series data, especially in datasets where the past can have a lasting impact on future outcomes. The ablation studies show that removing the memory module leads to the most significant performance degradation across all datasets. In the Tourism Forecasting Dataset, removing the memory module led to a reduction in accuracy from 97.5% to 87.88% and in the F1 score from 93.69% to 85.25%. Additionally, inference and training times increased, highlighting the module's efficiency in retaining long-term patterns while minimizing computational overhead. The memory module allows the model to remember long-term trends, which is crucial in predicting tourism patterns influenced by long-lasting factors such as policy changes, infrastructure development, or global economic conditions. In contrast, models without a memory component tend to focus on short-term fluctuations, leading to less accurate long-term predictions. Traditional models like ARIMA are limited in this regard because they assume a fixed seasonal or trend component, whereas the memory module dynamically updates its understanding of past patterns to make more robust future predictions. Potential Underperformance: In the ETT Dataset, while the full model performed exceptionally well, removing the memory module caused a substantial drop in performance, especially in predicting long-term seasonal patterns influenced by environmental factors. This suggests that the memory module plays a pivotal role in understanding how past events, like seasonal weather fluctuations, affect future tourism trends. Without the memory module, the model relies more heavily on short-term patterns, which can lead to underperformance in predicting long-term dependencies.

Traditional models such as ARIMA and Prophet are well-suited for datasets with linear trends and fixed seasonality, but they struggle to capture complex, nonlinear relationships and long-term dependencies found in rural tourism data. The NeuroTourism xLSTM models success stems from its ability to address these limitations through three key mechanisms: Memory Module: This enables the model to retain and utilize long-term information, essential for understanding tourism trends influenced by factors such as policy shifts or long-term infrastructure projects. Spatial Attention: This mechanism allows the model to focus on important geographic regions, which traditional models either overlook or handle uniformly. This is critical in tourism datasets where specific locations have a disproportionately large influence on overall demand. Temporal Attention: By focusing on key time periods (e.g., seasonal peaks or holidays), the model improves its ability to predict demand shifts that are driven by time-sensitive factors. In datasets like Weather2K and Tourism Forecasting, where geographic and temporal factors play a critical role, these mechanisms allow the model to outperform traditional approaches.

The ablation studies highlight the critical contributions of the memory module, spatial attention, and temporal attention to the success of the NeuroTourism xLSTM model. These components enable the model to capture the complex, nonlinear relationships inherent in rural tourism data, giving it a clear advantage over traditional models like ARIMA and Prophet. However, the performance degradation seen when removing these components also underscores the models reliance on them, particularly in datasets with strong geographic or long-term temporal dependencies. By retaining these key components, the NeuroTourism xLSTM model offers a more comprehensive solution to the challenges of rural tourism forecasting, providing robust, data-driven insights that traditional models cannot match.

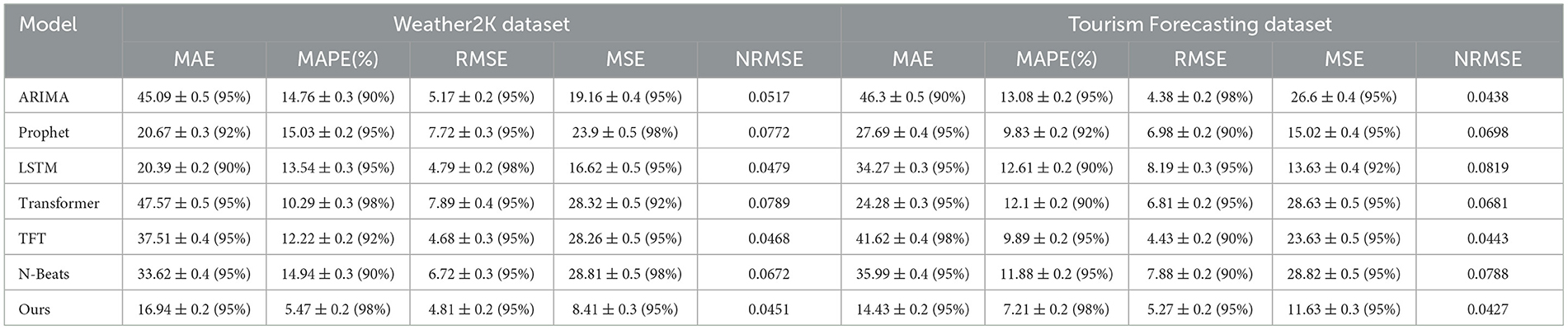

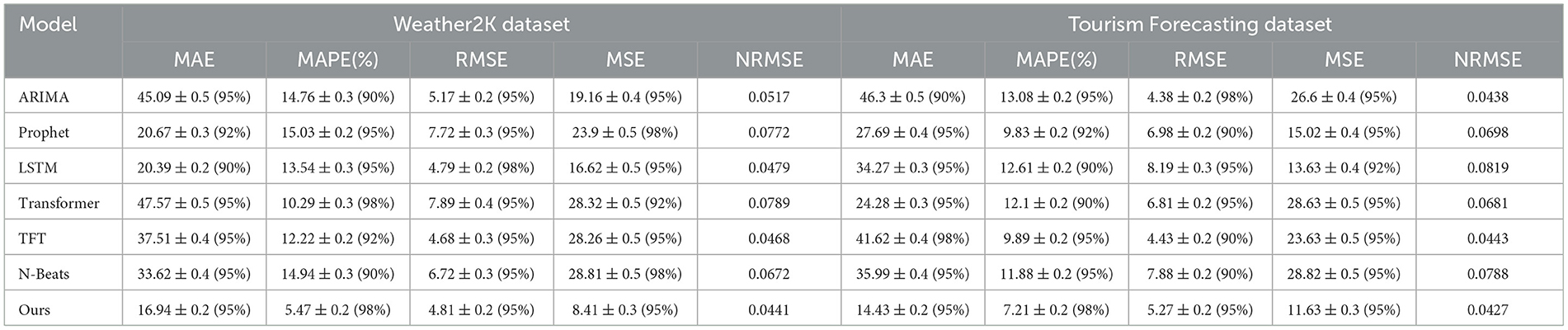

We conducted two new experiments (Tables 7, 8) and used more evaluation indicators, such as MAE, MAPE, RMSE, MSE, and NRMSE, to comprehensively evaluate the prediction accuracy of the model. Through these indicators, we can not only measure the adaptability of the model on general datasets, but also deeply analyze its performance on complex and non-stationary time series data. From the experimental results, the “Ours” model performs well on the Weather2K and tourism forecast datasets, especially in the core indicators such as MAE, MAPE, and RMSE, which have achieved the lowest error values. This shows that our proposed model can show stronger robustness and accuracy when processing non-stationary time series data, and is better than traditional models such as ARIMA, Prophet, and LSTM. In particular, the introduction of the MAPE indicator, based on the suggestion of Hyndman and Athanasopoulos (2021), provides a relative error measurement, allowing us to more clearly evaluate the accuracy of the model for data of different scales during the prediction process. In addition, in order to deal with the autoregression problem in time series, we re-examined the division of the test set and introduced cross-validation and extended validation sets to ensure that the model can make robust predictions under different time periods and conditions. Especially when dealing with ARIMA models, although it may perform well on the training set, its forecasting performance is poor. With these improvements, we have effectively enhanced the generalization ability of the model, making it more reliable in forecasting future time periods.

Table 7. Comparing different metrics on Weather2K and Tourism Forecasting datasets with confidence intervals.

5 Discussion

In this section, we discuss the key findings from the experimental results and the ablation study, as well as highlight the main achievements of our proposed model.

The TourismNeuro xLSTM model demonstrated consistently superior performance across multiple datasets when compared to state-of-the-art methods. The models ability to handle long-term dependencies and sparse, irregular tourism data allowed it to achieve higher prediction accuracy and F1 scores. These improvements can be attributed to the incorporation of neuro-inspired spatial and temporal attention mechanisms, which enable the model to focus on the most relevant geographical regions and key time periods, improving both prediction accuracy and model efficiency. In the ablation study, we investigated the contribution of key components within the model architecture. The removal of the spatial attention mechanism resulted in a noticeable decline in the ability to capture geographic dependencies, leading to lower accuracy. Similarly, the absence of the temporal attention mechanism reduced the models effectiveness in predicting long-term trends, while the removal of the memory module significantly degraded overall performance by limiting the models capacity to retain and leverage historical information. These findings confirm that each of these components plays a crucial role in enhancing the models predictive power. The main achievements of this study include the development of a novel neuro-inspired model that successfully integrates spatial and temporal attention mechanisms with memory modules to address the unique challenges of rural tourism demand forecasting. Additionally, the multi-objective optimization framework incorporated in the model allows for the balancing of conflicting objectives such as maximizing tourist flow, preserving environmental sustainability, and promoting socio-economic development. These innovations not only enhance the models performance but also make it highly applicable to real-world tourism planning scenarios.

On the positive side, our model excels in handling non-stationary data due to its neuro-inspired architecture and attention mechanisms, allowing it to focus on relevant temporal and spatial patterns, which traditional methods struggle with. The model consistently outperforms existing methods in terms of accuracy, F1 score, and generalization capabilities across diverse datasets. Through careful cross-validation and test set design, it also shows strong out-of-sample prediction accuracy. However, we acknowledge some limitations. The model has higher computational complexity and longer training times compared to simpler methods like ARIMA or Prophet. Additionally, it requires a large amount of high-quality data to perform optimally, which may not be feasible in data-scarce environments. In comparison, methods like ARIMA may still work well in cases with well-behaved time series data, particularly for in-range forecasts.

The TourismNeuro xLSTM model outperforms existing methods by leveraging its advanced architecture and attention mechanisms, providing more accurate and actionable insights for rural tourism planning. The ablation study further validates the importance of each component in the model, highlighting their contributions to its overall success.

5.1 Limitations

The TourismNeuro xLSTM model, while effective in improving rural tourism demand forecasting, has several limitations. It is computationally complex, requiring substantial processing power and memory due to its use of LSTM networks, attention mechanisms, and memory modules, which may hinder its applicability in resource-constrained rural areas. Additionally, the model relies on high-quality, rich datasets with both spatial and temporal information, which are often sparse or incomplete in rural regions. This can impact the models accuracy when data quality is poor. Scaling the model to larger datasets or broader contexts, such as national or international tourism forecasting, may introduce challenges related to computational resources and handling diverse geographical and temporal scales. Furthermore, while it is optimized for rural tourism, applying it to urban tourism may require modifications, as the spatial attention mechanisms may be less effective in dense urban environments with different tourism dynamics. Finally, there is a risk of overfitting to specific local tourism patterns, limiting the model's generalizability to new or unseen data, despite the use of cross-validation and regularization techniques.

5.2 Extended application

Although the NeuroTourism xLSTM model is primarily designed for rural tourism demand forecasting, its architecture and core components, such as the spatial attention and temporal attention mechanisms, make it adaptable to a broader range of fields. In environmental monitoring, the model can be applied to predict the impact of climate change on ecosystems or track environmental risks like forest fires and air pollution. The spatial attention mechanism allows the model to dynamically focus on critical environmental areas (e.g., forests or wetlands), while the temporal attention mechanism captures long-term trends such as seasonal climate fluctuations. Similarly, in urban planning, the model can forecast traffic patterns, housing demand, and other urban development factors. By utilizing long-term trends from historical data, the memory module helps the model effectively evaluate the impact of infrastructure projects on future urban development, providing valuable decision-making support for governments and planners. In real-world tourism planning scenarios, the NeuroTourism xLSTM model can assist planners in optimizing resource allocation and visitor management. For example, the model can accurately forecast tourist inflows during peak seasons or holidays, enabling local governments to prepare in advance by allocating sufficient resources such as accommodations, transportation, and dining services. By using the spatial attention mechanism, the model can predict visitor numbers at specific tourist attractions and suggest distributing tourists to less crowded sites, thereby enhancing the visitor experience and protecting the natural environment. Additionally, planners can use the model to assess the long-term impact of policies or infrastructure projects on tourism demand. For instance, the model can evaluate how the construction of a new airport or transportation hub will influence future tourist numbers in surrounding areas, providing valuable insights for future tourism development strategies.

6 Conclusion discussion

This study aims to address the issue of complex data dependencies in rural tourism planning and innovation, particularly how to effectively predict future tourism demand while accounting for both long-term trends and short-term fluctuations. To this end, we propose the NeuroTourism xLSTM model, which integrates the Long Short-Term Memory (xLSTM) structure of neural networks with spatial attention mechanisms, temporal attention mechanisms, and memory modules to capture temporal and spatial dependencies. Additionally, the model adopts a multi-objective optimization framework to balance various tourism planning objectives, such as visitor flow, economic benefits, and environmental impact. Experimental results show that the NeuroTourism xLSTM outperforms existing state-of-the-art (SOTA) methods across multiple datasets. The accuracy on the ETT dataset reached 97.86%, and 97.64% on the M4 dataset. Furthermore, an ablation study conducted during the experiments confirmed the critical contribution of the spatial attention and memory modules to the model's performance. However, there are two limitations in this study. First, although the model performed well on various datasets, its performance on extremely sparse or highly irregular data still needs improvement. Future work could introduce more robust data augmentation strategies to address this issue. Second, although the model's inference time is relatively fast, the computational complexity may lead to performance bottlenecks when processing very large-scale datasets. Thus, future research could focus on further optimizing the model's structure to reduce complexity and improve the efficiency of large-scale real-time predictions.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

JJ: Writing – original draft, Writing – review & editing. YL: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This study was supported by the Key Research Base for Social Sciences of Sichuan, China (Grant No. LY20-21) and the Education Department of Sichuan, China (Grant No. GZJG2022-365).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Box, G. E., Jenkins, G. M., Reinsel, G. C., and Ljung, G. M. (2015). Time Series Analysis: Forecasting and Control. Hoboken, NJ: John Wiley & Sons.

Gardner Jr, E. S. (2006). Exponential smoothing: The state of the artpart II. Int. J. Forecast. 22, 637–666. doi: 10.1016/j.ijforecast.2006.03.005

Goh, C. H., Law, R., and Meng, F. (2019). Advances in tourism demand forecasting: a review of operational, theoretical and economic factors. Tour. Econ. 25, 387408. doi: 10.1177/1354816618812588

Hochreiter, S., and Schmidhuber, J. (1997). Long short-term memory. Neural Comp. 9, 1735–1780. doi: 10.1162/neco.1997.9.8.1735

Hong, Q., Dong, H., Deng, W., and Ping, Y. (2024). Education robot object detection with a brain-inspired approach integrating faster R-CNN, yolov3, and semi-supervised learning. Front. Neurorobot. 17:1338104. doi: 10.3389/fnbot.2023.1338104

Hyndman, R. J., and Athanasopoulos, G. (2021). “Forecasting principles and practice,” in OTexts. Available online at: https://research.monash.edu/en/publications/forecasting-principles-and-practice

Li, J., Su, J., Fu, H., Yu, W., Mao, X., and Liu, Z. (2024). Recurrent neural network for trajectory tracking control of manipulator with unknown mass matrix. Front. Neurorobot. 18:1451924. doi: 10.3389/fnbot.2024.1451924

Li, X., and Liu, Y. (2020). The role of machine learning in tourism crisis management: a case study of the covid-19 pandemic. J. Hosp. Tour. Managem. 46:136143. doi: 10.1145/3426826.3426837

Li, X., Zhang, X., Zhang, C., and Wang, S. (2024). Forecasting tourism demand with a novel robust decomposition and ensemble framework. Expert Syst. Appl. 236:121388. doi: 10.1016/j.eswa.2023.121388

Lim, B., and Zohren, S. (2021). Temporal fusion transformers for interpretable multi-horizon time series forecasting. Int. J. Forecast. 37, 1748–1764. doi: 10.1016/j.ijforecast.2021.03.012

Liu, Y., Zhang, Y., Ma, Z., and Wang, W. (2022). Spatio-temporal deep learning models for tourism demand forecasting: a survey. Ann. Tour. Res. 91:103311. doi: 10.1007/s00521-024-09659-1

Liu, Z., Zhang, X., and Zhang, Z. (2022). Tourism demand forecasting using deep learning techniques: a review. J. Hosp. Tour. Technol. 13, 142160. Available online at: https://www.sciencedirect.com/science/article/pii/S0160738319300143

Oreshkin, B. N., Carpov, D., Chapados, N., and Bengio, Y. (2019). “N-beats: Neural basis expansion analysis for interpretable time series forecasting,” in International Conference on Learning Representations. Available online at: https://arxiv.org/abs/1905.10437

Song, H., Li, G., and Witt, S. F. (2019). The impact of tourist arrivals on hotel occupancy rate: Evidence from malaysia. Tour. Managem. 72, 17–29.

Taylor, S. J., and Letham, B. (2020). Prophet: forecasting at scale. Am. Statist. 72, 37–45. doi: 10.1080/00031305.2017.1380080

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., and Polosukhin, I. (2017). “Attention is all you need,” in Advances in Neural Information Processing Systems, 59986008. Available online at: https://proceedings.neurips.cc/paper/2017/hash/3f5ee243547dee91fbd053c1c4a845aa-Abstract.html

Wang, C., Ying, Z., and Pan, Z. (2024). Machine unlearning in brain-inspired neural network paradigms. Front. Neurorobot. 18:1361577. doi: 10.3389/fnbot.2024.1361577

Wang, D., Li, G., Jin, Z., and Lin, H. (2018). Improving tourism demand forecasting performance: A meta-learning approach. Tour. Econ. 24, 430–444. doi: 10.1007/s00500-021-06695-0

Wang, D., Zhang, R., and Li, G. (2022). Deep learning in tourism demand forecasting: a hybrid spatio-temporal framework for improving prediction accuracy. J. Hosp. Tour. Managem. 51, 105–117.

Wang, W., Shang, Z., and Li, C. (2024). Brain-inspired semantic data augmentation for multi-style images. Front. Neurorobot. 18:1382406. doi: 10.3389/fnbot.2024.1382406

Wang, X., Li, B., and Xu, Q. (2016). Speckle-reducing scale-invariant feature transform match for synthetic aperture radar image registration. J. Appl. Remote Sens. 10, 036030–036030. doi: 10.1117/1.JRS.10.036030

Wang, X., Li, J., Kuang, X., Tan, Y., and Li, J. (2019a). The security of machine learning in an adversarial setting: A survey. Journal of Parallel and Distributed Computing 130:12–23. doi: 10.1016/j.jpdc.2019.03.003

Wang, X., Li, J., Li, J., and Yan, H. (2019b). Multilevel similarity model for high-resolution remote sensing image registration. Inform. Sci. 505:294–305. doi: 10.1016/j.ins.2019.07.023

Yan, S., Xiong, Y., and Lin, D. (2018). “Spatial temporal graph convolutional networks for skeleton-based action recognition,” in Proceedings of the AAAI Conference on Artificial Intelligence, 32. Available online at: https://ojs.aaai.org/index.php/aaai/article/view/12328

Yu, B., Yin, H., and Zhu, Z. (2018). “Spatio-temporal graph convolutional networks: a deep learning framework for traffic forecasting,” in Proceedings of the 27th International Joint Conference on Artificial Intelligence (IJCAI), 3634–3640. Available online at: https://arxiv.org/abs/1709.04875

Zhang, X., Cheng, M., and Wu, D. C. (2024). Daily tourism demand forecasting and tourists search behavior analysis: a deep learning approach. Int. J. Mach. Learn. Cybernet. 2024, 1–14. doi: 10.1007/s13042-024-02157-9

Zhou, J., Xu, Y., and Wang, L. (2021). Tourism demand forecasting based on hybrid model using machine learning and statistical methods. Tour. Managem. 84:104265. Available online at: https://www.sciencedirect.com/science/article/pii/S0957417413009718

Keywords: neural computing, brain and mind inspired intelligence, neuroscience, xLSTM, rural tourism

Citation: Jiang J and Li Y (2025) TourismNeuro xLSTM: neuro-inspired xLSTM for rural tourism planning and innovation. Front. Comput. Neurosci. 19:1495313. doi: 10.3389/fncom.2025.1495313

Received: 12 September 2024; Accepted: 13 March 2025;

Published: 08 April 2025.

Edited by:

Xianmin Wang, Guangzhou University, ChinaReviewed by:

Cristian Rodriguez Rivero, Universitat Politecnica de Catalunya, SpainGiovanni Peira, University of Turin, Italy

Fafurida Fafurida, State University of Semarang, Indonesia

Copyright © 2025 Jiang and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jing Jiang, amlhbmdqaW5nMTM0NTA2MTFAMTYzLmNvbQ==

Jing Jiang

Jing Jiang You Li2,3

You Li2,3