- 1Glenn Biggs Institute for Alzheimer’s & Neurodegenerative Diseases, University of Texas Health Science Center, San Antonio, TX, United States

- 2Department of Neuroscience, University of Virginia, Charlottesville, VA, United States

- 3Oregon Alzheimer’s Disease Research Center, Oregon Health & Science University, Portland, OR, United States

- 4Oregon Center for Aging & Technology, Oregon Health & Science University, Portland, OR, United States

- 5Graduate School of Biomedical Sciences, University of Texas Health Science Center, San Antonio, TX, United States

- 6Department of Neurology, Boston University School of Medicine, Boston, MA, United States

- 7Department of Neurology, Cedars-Sinai Medical Center, Los Angeles, CA, United States

Introduction: Health tracking technologies hold promise as a tool for early detection of cognitive and functional decline.

Methods: This pilot study of 5 households [N = 7 residents, mean age: 74 (SD = 5) years, 71% Hispanic, 14% Black] used the Oregon Center for Aging & Technology (ORCATECH) platform to evaluate the technology and its acceptance over a one-year interval in South Texas. Cognitive assessments and other surveys were administered at baseline and end-of-study visits.

Results: Participants felt comfortable with the technology in their homes (86% Very Satisfactory or Satisfactory) and did not express privacy concerns (100% Very Satisfactory or Satisfactory).

Conclusion: Health, cognition, and activity measures did not significantly change from baseline to end-of-study. Depression scores significantly improved (p = 0.034). The ORCATECH platform was an acceptable method of analyzing health and activity in a small, but diverse older population.

Introduction

Non-Hispanic Black and Hispanic adults are at a higher risk of cognitive decline and dementia compared to non-Hispanic Whites. Nonetheless, they remain underrepresented in research on Alzheimer's disease and related dementias (ADRD). Early detection of cognitive decline using biomarkers is essential for prevention and intervention efforts, especially considering recent advances in disease-modifying therapies (1, 2).

Digital biomarkers provide an opportunity to continuously capture data relevant to cognition and daily functioning. The Oregon Center for Aging & Technology (ORCATECH) platform, which assesses functional change at home, has been developed over the last two decades and includes wearables, in-home sensors, and other devices to collect data relevant to multiple domains of functioning (3, 4). Beattie et al. demonstrated the platform to be a reliable method of collecting health data (5). However, research on user experiences in diverse populations is necessary to tailor digital health solutions to communities that have not been adequately represented in this line of research (6). Feasibility studies offer valuable insights for evaluating new technologies before larger implementation trials, particularly in underrepresented populations where technology acceptance may differ.

We sought to assess the acceptability of the ORCATECH platform in the diverse San Antonio, South Texas population. As a secondary aim, we evaluated changes in health and activity levels using the ORCATECH platform.

Methods

Participants

Participants were recruited between May 2021 and July 2021 through community outreach events and contact with previous research participants at the Glenn Biggs Institute in San Antonio, Texas. Eligible participants were 62 years or older, lived in multi-room residences with reliable internet, had basic computer/email experience, and resided alone or with one other adult. Exclusion criteria included significant mobility limitations, uncontrolled medical conditions preventing study completion, a household of more than two residents, or an inability to provide informed consent independently. The study was approved by the IRB at UT Health San Antonio. All study participants provided written informed consent.

Technology

Required technologies included passive infrared motion sensors, door contact sensors, and an actigraphy watch. Device installation protocols were derived from Beattie et al. (5). Trained research staff conducted all installations during standardized home visits within four weeks of enrollment and provided comprehensive orientation sessions for each device. Participants received detailed contact information for technical support and were encouraged to report any device malfunctions immediately. The monitoring period lasted twelve months following device installation.

Movement detection devices

Our installation team deployed a standardized sensor array in each home, beginning with passive infrared motion sensors (NYCE Control, Burnaby, BC, Canada) strategically placed in every room to capture general activity patterns. Door contact sensors from the same manufacturer were mounted on all exterior doors to monitor home exits and entries, providing insights into community engagement and daily routines.

Health activity monitoring devices

Each participant received an activity tracking watch (Withings Steel, Withings, Issy-les-Moulineaux, France) configured to monitor step count, activity levels, and sleep patterns without requiring participants to download or manage mobile applications. This device served as our primary wearable sensor for capturing both in-home and community-based activities.

Beyond these required components, participants could select from several optional monitoring devices based on their preferences and comfort level. An electronic pillbox (TimerCap, Moorpark, CA, USA) was used to analyze participants' pill-taking routines (4 of 7 participants accepted). An electronic bed mat (Emfit Quantified Sleep; Emfit Ltd, Vaajakoski, Finland) was used to detect heart rate, respiratory rate, and hours of sleep (6 of 7 participants accepted). Finally, a digital scale (Withings Body Cardio digital scale; Withings, Issy-les-Moulineaux, France) was used to measure participants' weights on a weekly basis (all 7 participants accepted).

Annual study visits

At in-person baseline and 12-month end-of-study visits, participants completed the Uniform Data Set version 3 (UDS-3) National Alzheimer's Coordinating Center (NACC) clinical assessment (7), which includes a standardized neuropsychological battery (8).

Surveys

Participants completed weekly Qualtrics surveys online that inquired about mood, changes in weekly routines, home visitors, extended home absences (e.g., vacation), and other relevant questions to gauge health and activity (9–11). If a participant did not complete the survey within three days, a reminder was emailed. At end-of-study, participants completed a user experience survey designed to elicit their opinions on the devices and their overall participation.

Data analysis

We used SAS to conduct all analyses, while figures were generated using GraphPad Prism 10. Our analytical approach reflected the study's feasibility objectives, emphasizing descriptive statistics appropriate for this preliminary investigation. We calculated descriptive statistics of the health and wellness domains at three time periods: baseline (45 days, with the exception of the initial 2-week installation period), midpoint (days 151–210), and study end (the final 60 days).
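The three analysis windows can be sketched in code. The following Python fragment is a minimal illustration only, not the SAS code used in the study. It assumes a 365-day monitoring period, a 14-day installation period, and a 45-day baseline window that starts after installation; the text could also be read as a 45-day window that includes the installation days, so these constants and the function name `assign_period` are assumptions.

```python
from typing import Optional

# All constants below are assumptions made for illustration.
INSTALL_DAYS = 14       # initial installation period, excluded from baseline
BASELINE_DAYS = 45      # assumed baseline length after installation
MIDPOINT = (151, 210)   # inclusive study days, as stated in the text
END_DAYS = 60           # final 60 days of monitoring


def assign_period(study_day: int, total_days: int = 365) -> Optional[str]:
    """Return the analysis window for a 1-indexed study day, or None."""
    if study_day < 1 or study_day > total_days:
        raise ValueError("study_day must fall within the monitoring period")
    baseline_start = INSTALL_DAYS + 1
    baseline_end = INSTALL_DAYS + BASELINE_DAYS
    if baseline_start <= study_day <= baseline_end:
        return "baseline"
    if MIDPOINT[0] <= study_day <= MIDPOINT[1]:
        return "midpoint"
    if study_day > total_days - END_DAYS:
        return "end"
    return None  # day is outside all three analysis windows
```

Days that fall outside the three windows return `None` and would simply be left out of the period-level summaries.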

For participant-level analyses, means and standard deviations of daily or weekly (scale only) measurements within each time period were calculated. For data that were not collected daily, the weekly statistics were prorated if a minimum of 2 measurements were available. Device data completeness was measured as the percentage of days or weeks during which device data were not missing. The overall mean of the study sample within a time interval of interest was the average of participant-level means, and the overall variability of the study sample was the square root of the pooled variance (i.e., the average of participant-level variances within the time interval of interest). Given our small sample size and feasibility study design, we interpreted all results as preliminary and hypothesis-generating.
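As a rough illustration of these summaries, the Python sketch below computes data completeness, participant-level means and variances, and the sample-level mean and pooled standard deviation for one time window. It is not the SAS code used in the study. The function names, the use of `None` for missing days, and the choice of the sample (n - 1) variance are assumptions, and the example values are hypothetical.

```python
from math import sqrt
from statistics import mean, variance
from typing import Dict, List, Optional, Tuple

Series = List[Optional[float]]  # one value per day or week; None = missing


def completeness(values: Series) -> float:
    """Percentage of days (or weeks) with a non-missing measurement."""
    if not values:
        return 0.0
    return 100.0 * sum(v is not None for v in values) / len(values)


def participant_summary(values: Series) -> Dict[str, float]:
    """Mean, sample variance, and completeness for one participant."""
    observed = [v for v in values if v is not None]
    return {
        "mean": mean(observed),
        "var": variance(observed),  # assumption: n - 1 denominator
        "completeness": completeness(values),
    }


def sample_summary(per_participant: Dict[str, Series]) -> Tuple[float, float]:
    """Average of participant means, and square root of the average variance."""
    summaries = [participant_summary(v) for v in per_participant.values()]
    overall_mean = mean(s["mean"] for s in summaries)
    pooled_sd = sqrt(mean(s["var"] for s in summaries))
    return overall_mean, pooled_sd


# Hypothetical daily values for two participants within one window.
example = {"p1": [1.0, 3.0, None, 5.0], "p2": [10.0, 10.0, 14.0, 14.0]}
overall_mean, pooled_sd = sample_summary(example)
```

Averaging the participant-level variances weights each participant equally, regardless of how many days of data that participant contributed.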

Results

Participant demographics

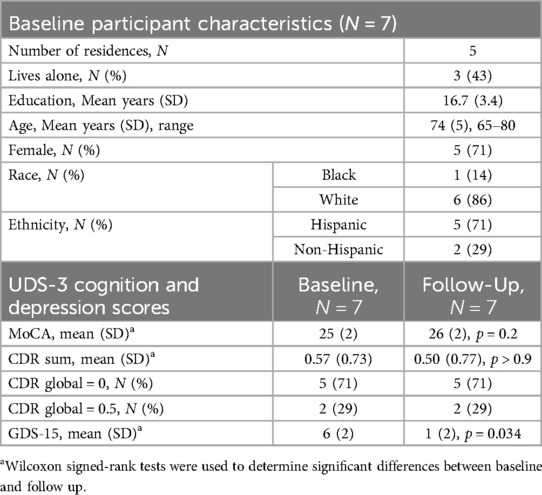

Seven participants [mean age: 74 (SD = 5) years, 71% Hispanic, 14% Black] were enrolled in the study (Table 1). Two households consisted of a male and female couple, while the remaining three households were single females.

End-of-study user experience

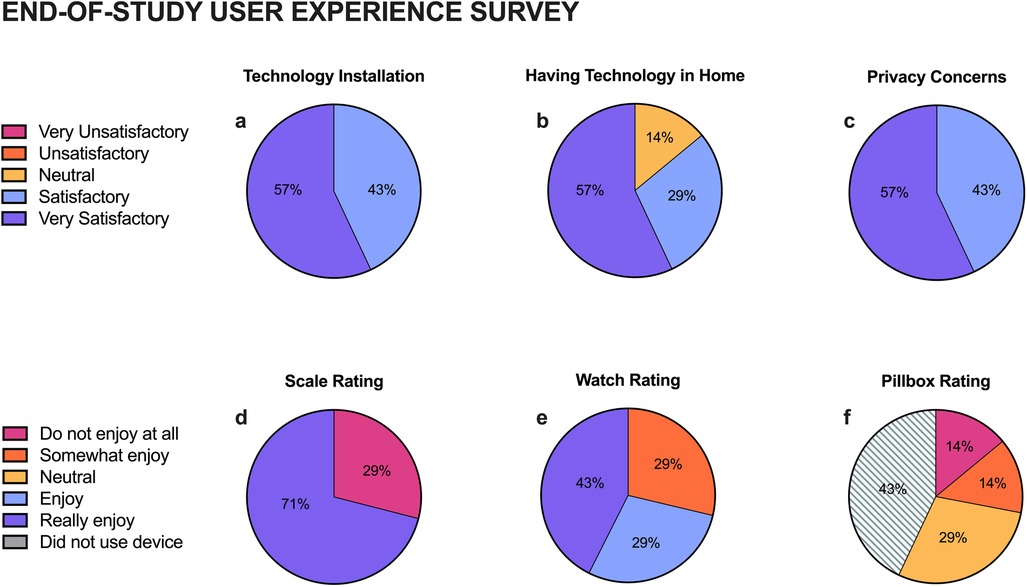

All participants completed the end-of-study survey. Participants were satisfied with the installation process (100% Very Satisfactory or Satisfactory) and the technology platform (86% Very Satisfactory or Satisfactory). Concerns related to privacy were adequately addressed (100% Very Satisfactory or Satisfactory) (Figures 1a–c). Participants generally rated the scale (71% Enjoyed) and watch (71% Enjoyed) positively but gave the pillbox neutral to low ratings (49%) (Figures 1d–f).

Figure 1. End-of-Study user experience survey results. Participants rated their satisfaction of the technology installation process (a), having technology in their home (b), and privacy (c), as well as how much they enjoyed the usable devices: scale (d), watch (e), and pillbox (f).

Participant cognition, survey, and activity results

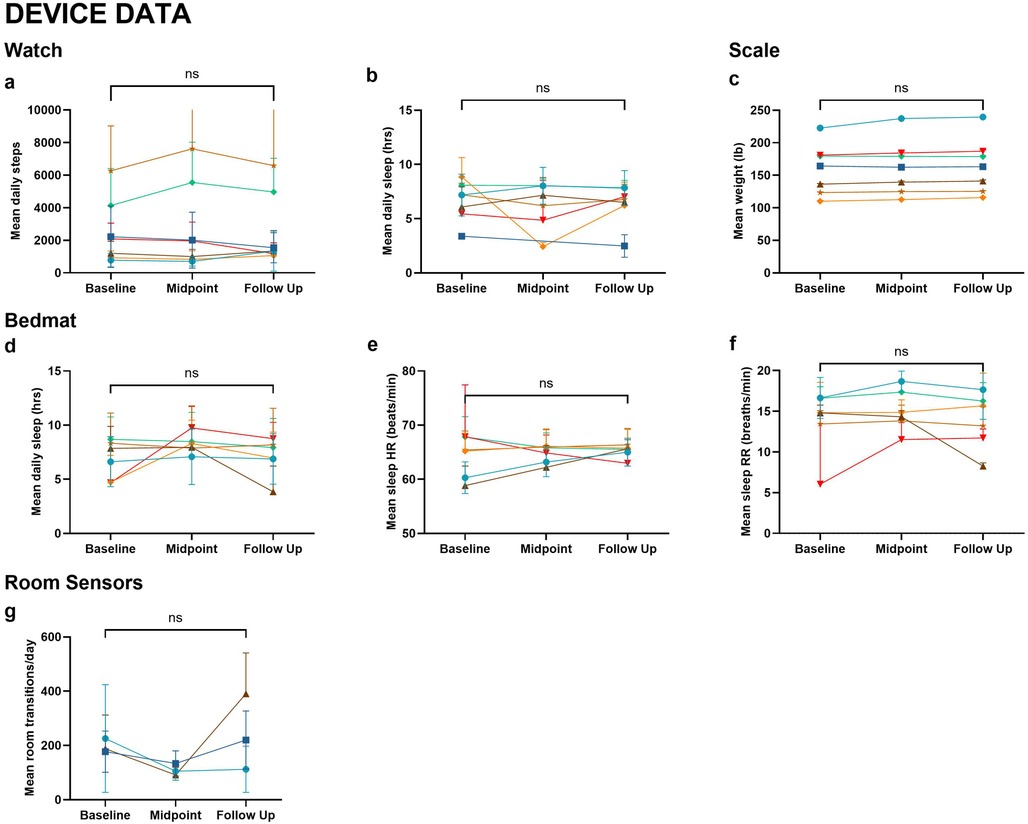

At baseline, five participants (71%) had a global Clinical Dementia Rating (CDR) score of 0 and two (29%) had a global CDR of 0.5, with no changes occurring at the one-year follow-up. Montreal Cognitive Assessment (MoCA) scores also did not significantly change over the study period. However, depression scores, as measured by the Geriatric Depression Scale 15-Item (GDS-15), significantly improved (p = 0.034) (Table 1). There were no significant changes in activity levels as measured by the devices (Figure 2).

Figure 2. Device data. Metrics collected from wearable and in-home devices across Baseline, Midpoint, and Follow-Up include: (a) Mean daily steps (watch); (b) Mean daily sleep duration (watch); (c) Mean weight (scale); (d) Mean daily sleep duration (bedmat); (e) Mean sleep heart rate (bedmat); (f) Mean sleep respiratory rate (bedmat); (g) Mean room transitions per day (room sensors). Wilcoxon signed-rank tests were used to compare baseline and follow-up measures. No statistically significant differences were observed (ns). Each line represents an individual participant, with color coding consistent across figures.
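For readers unfamiliar with the Wilcoxon signed-rank test at this sample size, the following Python sketch computes an exact two-sided p-value by enumerating every sign assignment. It is an illustration only: the study analyses were run in SAS, the source does not state whether an exact or approximate p-value was used, and the function names and input values here are hypothetical.

```python
from itertools import product
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-indexed positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_exact(before: Sequence[float], after: Sequence[float]) -> float:
    """Exact two-sided p-value; pairs with zero difference are dropped."""
    diffs = [a - b for b, a in zip(before, after) if a != b]
    if not diffs:
        return 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    total = sum(ranks)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    observed = min(w_plus, total - w_plus)
    # Under the null hypothesis every sign pattern is equally likely,
    # so count the patterns at least as extreme as the observed one.
    extreme = 0
    patterns = 0
    for signs in product((0, 1), repeat=len(ranks)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if min(w, total - w) <= observed + 1e-12:
            extreme += 1
        patterns += 1
    return extreme / patterns


# Hypothetical values: all seven participants increase by distinct amounts.
p = wilcoxon_exact([10] * 7, [11, 12, 13, 14, 15, 16, 17])
```

With seven untied, non-zero paired differences there are 128 sign patterns, so the smallest two-sided exact p-value the test can produce is 2/128 (about 0.016). This limited resolution is one reason to treat non-significant comparisons in a sample of this size as preliminary.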

Participant device and survey completion rates

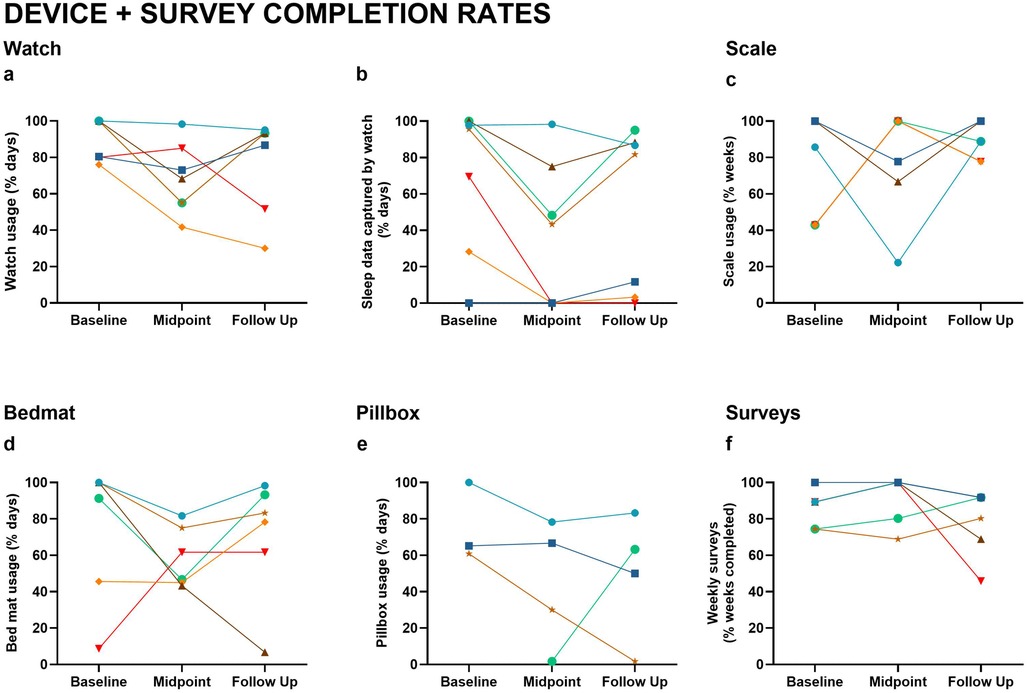

The percentage of data obtained for the watch, scale, pillbox, and bed mat varied and was inconsistent across participants and timepoints (Figure 3). Missing data were typically due to problems with the devices or user noncompliance. Survey completion rates were the most consistent over the course of the study, ranging from 74%–97%. Watch usage ranged from 49%–98%. Approximately half of the participants did not consistently wear their watch while sleeping (Figure 3), but of those who did wear their watch, compliance dipped during the study midpoint. Bed mat compliance was similar, ranging from 44%–93% with some user error (e.g., unplugging the device). Scale usage was better, ranging from 66%–93%. Two of the four participants who opted to use the pillbox had consistent data 60%–87% of the time. The pillbox frequently malfunctioned (i.e., the plastic lids were prone to breakage), resulting in a large proportion of missing data.

Figure 3. Device and survey completion rates. Usage and data capture rates across Baseline, Midpoint, and Follow-Up include: (a) Watch usage (% of days worn); (b) Sleep data captured by watch (% of days); (c) Scale usage (% of weeks); (d) Bedmat usage (% of days); (e) Pillbox usage (% of days); (f) Weekly survey completion (% of weeks). Lines represent individual participants, with consistent color coding across figures.

Discussion

This study aimed to evaluate the feasibility and acceptability of the ORCATECH platform in a small cohort of diverse individuals in an effort to gain insight from their study experiences to inform future research. We found high levels of technology acceptability, with 86% rating their experience as very satisfactory or satisfactory, suggesting an openness to engaging in digital health research. Nonetheless, consistency in digital data collection was variable across devices and individuals, indicating opportunities to better tailor the technology to meet the needs and interests of the community. In our study, the pillbox received the lowest ratings of usability from participants and had the least viable data collection over the study interval. Based on this finding, the pillbox was omitted from a larger, ongoing study (R01AG077472). This demonstrates the benefit of conducting short-term pilot studies to optimize participant experience and data completeness.

As a secondary aim, we evaluated everyday activity levels using the ORCATECH platform. We generally found no significant changes in the values of activity measurements over the 1-year study, demonstrating the reliability of the platform.

The absence of statistically significant changes in cognitive function from baseline to follow-up was expected given the largely cognitively unimpaired community dwelling cohort. However, depression scores (GDS-15) significantly improved at one-year follow-up, with 71% (n = 5) of participants scoring 5 or higher at baseline, suggesting mild depression, compared to 14% (n = 1) at follow-up (12). One possible explanation is that our study began in May 2021, when the COVID-19 pandemic was ongoing, and depression was significantly higher in older adults (13). As participants returned to their normal routines and engaged in research activities requiring home visits, their depression symptoms may have lessened through increased social connectedness.

These findings, along with our implementation experience, provide valuable preliminary insights for future research in diverse older adult populations. Our study highlights the need for dedicated, well-trained research staff who conduct weekly data monitoring, provide technology troubleshooting support, and are responsive to participant privacy concerns. For device selection, our preliminary findings suggest that digital scales and activity watches have high user acceptance and data completeness, while the challenges with the electronic pillboxes suggest that additional troubleshooting is needed prior to broader implementation.

Our pilot study had several limitations. The cohort was relatively well-educated, which may not reflect broader community attitudes toward technology. Tailored recruitment strategies are necessary to engage participants with varied educational backgrounds in order to improve generalizability. Study design considerations should also include formal assessment of within-household agreement when enrolling couples, comprehensive device orientation sessions, and realistic expectations and statistical powering for compliance variability (20%–30% range observed across devices). Implementation protocols should emphasize privacy protections and installation support, areas where we achieved 100% satisfaction, while building contingency plans for device malfunctions. Finally, outcome measurement should balance comprehensive clinical assessments with participant burden. Our 5-minute weekly surveys showed strong completion rates (74%–97%), suggesting that brief, high-frequency data collection may be capable of providing important insights on the course and frequency of fluctuating symptoms (e.g., mood, pain). These evidence-based recommendations can help optimize both participant experience and data quality in future digital health research with underrepresented populations.

In conclusion, the ORCATECH platform for home-based assessment was widely accepted amongst our diverse participants, suggesting its utility for future studies in our South Texas population. Important limitations include the small sample size (n = 7), the highly educated cohort, and the restriction to households of one or two residents, which limit statistical power and generalizability to the broader South Texas population. Additionally, acceptability was evaluated among participants who elected to enroll in the research study, which may not generalize to the broader community. Despite these limitations, our findings provide important preliminary data on technology acceptability and device performance in this South Texas community, establishing a foundation for larger studies adapted to meet specific population needs. Future directions include evaluating interests and barriers to digital technology research in larger studies, as well as collecting data to optimize the technology to the needs of individuals and the community. More broadly, these findings support the development of home-based digital biomarker platforms that could enable continuous monitoring of cognitive health in diverse older adults, facilitating earlier intervention when disease-modifying treatments are most beneficial.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by UT Health San Antonio's Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

JM: Writing – original draft. RM: Data curation, Methodology, Project administration, Writing – review & editing. NR: Conceptualization, Data curation, Methodology, Resources, Software, Writing – original draft, Writing – review & editing. TK: Conceptualization, Formal analysis, Investigation, Supervision, Writing – original draft, Writing – review & editing, Project administration, Validation. KC: Conceptualization, Data curation, Methodology, Resources, Software, Writing – review & editing, Validation. C-PW: Formal analysis, Writing – review & editing, Validation. DM: Formal analysis, Writing – review & editing, Validation. RF: Formal analysis, Writing – review & editing. SG: Conceptualization, Methodology, Writing – review & editing. NS: Conceptualization, Project administration, Writing – review & editing. LS: Writing – original draft, Writing – review & editing. VY: Writing – original draft, Writing – review & editing. LM: Conceptualization, Project administration, Resources, Writing – review & editing. SS: Conceptualization, Investigation, Supervision, Writing – review & editing. JK: Conceptualization, Funding acquisition, Investigation, Resources, Supervision, Writing – review & editing. ZB: Conceptualization, Data curation, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Writing – original draft, Writing – review & editing. MG: Conceptualization, Formal analysis, Funding acquisition, Investigation, Project administration, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the following grants: National Institutes of Health R01AG077472, National Institute on Aging P30AG066546, P30AG024978, U2CAG054397, and P30AG066518.

Acknowledgments

We would like to thank our participants for generously donating their time to this study.

Conflict of interest

ZB and JK have a financial interest in Life Analytics, Inc., a company that may have a commercial interest in the results of this research and technology. This potential conflict of interest has been reviewed and managed by OHSU.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Hale JM, Schneider DC, Mehta NK, Myrskylä M. Cognitive impairment in the U.S.: lifetime risk, age at onset, and years impaired. SSM—Popul Health. (2020) 11:100577. doi: 10.1016/j.ssmph.2020.100577

2. Babulal GM, Quiroz YT, Albensi BC, Arenaza-Urquijo E, Astell AJ, Babiloni C, et al. Perspectives on ethnic and racial disparities in Alzheimer’s disease and related dementias: update and areas of immediate need. Alzheimers Dement. (2019) 15:292–312. doi: 10.1016/j.jalz.2018.09.009

3. Kaye JA, Maxwell SA, Mattek N, Hayes TL, Dodge H, Pavel M, et al. Intelligent systems for assessing aging changes: home-based, unobtrusive, and continuous assessment of aging. J Gerontol B Psychol Sci Soc Sci. (2011) 66B:i180–90. doi: 10.1093/geronb/gbq095

4. Kaye J, Reynolds C, Bowman M, Sharma N, Riley T, Golonka O, et al. Methodology for establishing a community-wide life laboratory for capturing unobtrusive and continuous remote activity and health data. J Vis Exp. (2018) (137):56942. doi: 10.3791/56942

5. Beattie Z, Miller L, Almirola C, Au-Yeung W-T, Bernard H, Cosgrove K, et al. The collaborative aging research using technology initiative: an open, sharable, technology-agnostic platform for the research community. Digit Biomark. (2021) 4:100–18. doi: 10.1159/000512208

6. Boise L, Wild K, Mattek N, Ruhl M, Dodge HH, Kaye J. Willingness of older adults to share data and privacy concerns after exposure to unobtrusive in-home monitoring. Gerontechnology. (2013) 11:428–35. doi: 10.4017/gt.2013.11.3.001.00

7. Besser L, Kukull W, Knopman DS, Chui H, Galasko D, Weintraub S, et al. Version 3 of the national Alzheimer’s coordinating center’s uniform data set. Alzheimer Dis Assoc Disord. (2018) 32(4):351–8. doi: 10.1097/WAD.0000000000000279

8. Weintraub S, Besser L, Dodge HH, Teylan M, Ferris S, Goldstein FC, et al. Version 3 of the Alzheimer disease centers’ neuropsychological test battery in the uniform data set (UDS). Alzheimer Dis Assoc Disord. (2018) 32(1):10–7. doi: 10.1097/WAD.0000000000000223

9. Seelye A, Mattek N, Sharma N, Riley T, Austin J, Wild K, et al. Weekly observations of online survey metadata obtained through home computer use allow for detection of changes in everyday cognition before transition to mild cognitive impairment. Alzheimers Dement. (2018) 14:187–94. doi: 10.1016/j.jalz.2017.07.756

10. Seelye A, Mattek N, Howieson DB, Austin D, Wild K, Dodge HH, et al. Embedded online questionnaire measures are sensitive to identifying mild cognitive impairment. Alzheimer Dis Assoc Disord. (2016) 30(2):152–9. doi: 10.1097/WAD.0000000000000100

11. Bernstein JPK, Dorociak K, Mattek N, Leese M, Trapp C, Beattie Z, et al. Unobtrusive, in-home assessment of older adults’ everyday activities and health events: associations with cognitive performance over a brief observation period. Aging Neuropsychol Cogn. (2022) 29:781–98. doi: 10.1080/13825585.2021.1917503

12. Shin C, Park MH, Lee S-H, Ko Y-H, Kim Y-K, Han K-M, et al. Usefulness of the 15-item geriatric depression scale (GDS-15) for classifying minor and major depressive disorders among community-dwelling elders. J Affect Disord. (2019) 259:370–5. doi: 10.1016/j.jad.2019.08.053

Keywords: aging, cognition, daily life, digital biomarkers, older adults, technology, wearables

Citation: Mathews JJ, Mulavelil R, Rodrigues N, Kautz TF, Cosgrove K, Wang C-P, MacCarthy D, Fernandez RA, Gothard S, Sharma N, Serranorubio L, Young VM, Miller L, Seshadri S, Kaye J, Beattie ZT and Gonzales MM (2025) Feasibility and acceptability of an in-home digital device health and activity assessment platform in a diverse South Texas cohort: a pilot study. Front. Digit. Health 7:1603062. doi: 10.3389/fdgth.2025.1603062

Received: 31 March 2025; Accepted: 4 August 2025;

Published: 1 September 2025.

Edited by:

Andrea Caroppo, National Research Council, Italy

Reviewed by:

Gabriele Rescio, National Research Council (CNR), Italy

Elinor Schoenfeld, Stony Brook University, United States

Copyright: © 2025 Mathews, Mulavelil, Rodrigues, Kautz, Cosgrove, Wang, MacCarthy, Fernandez, Gothard, Sharma, Serranorubio, Young, Miller, Seshadri, Kaye, Beattie and Gonzales. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mitzi M. Gonzales
