- 1 Institute for Medical Epidemiology, Biometrics and Informatics, Interdisciplinary Center for Health Sciences, Medical Faculty of the Martin Luther University Halle-Wittenberg, Halle (Saale), Germany

- 2 Institute of General Practice & Family Medicine, Interdisciplinary Center of Health Sciences, Medical Faculty of the Martin Luther University Halle-Wittenberg, Halle (Saale), Germany

- 3 Institute for History and Ethics of Medicine, Interdisciplinary Center for Health Sciences, Medical Faculty of the Martin Luther University Halle-Wittenberg, Halle (Saale), Germany

- 4 Friedrich-Alexander-Universität Erlangen-Nürnberg, Institute for Medical Informatics, Biometrics and Epidemiology, Medical Informatics, Erlangen, Germany

- 5 Junior Research Group (Bio-) Medical Data Science, Medical Faculty of the Martin Luther University Halle-Wittenberg, Halle (Saale), Germany

Background: The role of artificial intelligence (AI) in medicine is rapidly expanding, with the potential to transform physicians’ working practices across various areas of medical care. As part of the PEAK project (Perspectives on the Use and Acceptance of Artificial Intelligence in Medical Care), this study aimed to investigate physicians’ attitudes towards and acceptance of AI in medical care.

Methods: Between June 2022 and January 2023, eight semi-structured focus groups (FGs) were conducted with general practitioners (GPs), recruited via email and postal mail from practices in the region of Halle/Leipzig, Germany, and with university hospital physicians from Halle and Erlangen, recruited via email. A topic guide and a video stimulus, including a definition of AI and three potential applications in medical care, were developed to conduct the FGs. Transcribed FGs and field notes were analyzed using qualitative content analysis.

Results: Thirty-nine physicians participated in eight FGs, including 15 GPs [80% male, mean age 44 years, standard deviation (SD) 10.4] and 24 hospital physicians (67% male, mean age 42 years, SD 8.6) from specialties including anesthesiology, neurosurgery, and occupational medicine. Physicians’ statements were categorized into four themes: acceptance, physician–patient relationship, AI development and implementation, and application areas. Each theme was illustrated with selected participant quotations to highlight key aspects. Key factors promoting AI acceptance included human oversight, reliance on scientific evidence, and non-profit funding. Concerns about AI's impact on the physician–patient relationship focused on reduced patient interaction time, with participants emphasizing the importance of maintaining a human connection. Key prerequisites for AI implementation included legal standards, such as clearly defined responsibilities and robust data protection measures. Most physicians were skeptical about the use of AI in tasks requiring empathy and human attention, such as psychotherapy and caregiving. Potential areas of application included early diagnosis, screening, and repetitive, data-intensive processes.

Conclusion: Most participants expressed openness to the use of AI in medicine, provided that human oversight is ensured, data protection measures are implemented, and regulatory barriers are addressed. Physicians regarded interpersonal relationships as irreplaceable by AI. Understanding physicians’ perspectives is essential for developing effective and practical AI applications for medical care settings.

Introduction

As healthcare demands increase and workforce shortages emerge (1–3), the need for digital transformation to enhance efficiency and strengthen system capacity has become increasingly evident (4). In this context, artificial intelligence (AI) has the potential to optimize workflows and care processes, addressing key healthcare challenges (2).

The integration of AI into medical care is accelerating, offering solutions to enhance diagnostic accuracy, treatment efficiency, and personalized patient care (5). AI, defined as the use of machines to simulate human reasoning and problem-solving, is designed to tackle complex problems traditionally addressed by human experts (6). Subfields such as machine learning and natural language processing enable data analysis and task automation in healthcare, helping to reduce physicians’ workload and minimize errors from overwork (7–10). At the same time, the introduction of AI in healthcare presents challenges, including data privacy risks and reliance on potentially biased algorithms, which, when trained on non-representative data, can lead to inaccurate diagnoses (11, 12).

Across various medical specialties, a growing number of AI applications are emerging (11, 13). In dermatology, for instance, AI may support the diagnosis of skin lesions and could facilitate more efficient referrals from primary to secondary care (14, 15). However, if algorithms are trained on non-representative data, they may fail to accurately diagnose conditions in individuals with darker skin tones or those with uncommon skin conditions, thereby exacerbating existing health disparities (16). In surgery, several studies have reported the increasing use of AI in robotic-assisted systems to enhance precision, improve diagnostic accuracy, and support postoperative monitoring (17, 18). A well-known example is the Da Vinci system, which employs machine learning and computer vision to facilitate minimally invasive procedures (17, 19, 20). However, concerns persist regarding high acquisition and maintenance costs, longer operative times, and limited evidence of a clear clinical benefit (19, 20).

Despite its growing integration, AI adoption remains inconsistent across medical specialties due to persistent ethical, technological, regulatory, liability, and patient safety concerns (9, 21). Addressing these challenges in the development and implementation of AI systems requires appropriate regulatory oversight (22). At the European level, the AI Act serves as a foundational legal framework, aiming to harmonize standards for safe, transparent, and human-centered AI across member states (23). Although such regulatory frameworks aim to promote trustworthy AI, physicians’ actual readiness and confidence to use these technologies vary across countries; for example, studies from Saudi Arabia and South Korea have reported variability in physician preparedness and confidence regarding the use of AI in clinical practice (24, 25).

Addressing these barriers requires the active involvement of physicians, whose acceptance is essential to achieving widespread adoption of AI-related technologies (26). Although physicians generally have favorable attitudes towards AI in medicine, several studies suggest that their experience and overall knowledge of AI applications remain limited (27–29). A recent systematic review reported that physicians and medical students were receptive to clinical AI, albeit with some concerns (27).

Despite these insights, research gaps remain in understanding physicians’ attitudes towards AI across different fields of medicine. In fact, the literature to date has primarily focused on individual medical fields (30, 31), thus failing to provide a comprehensive view of the medical profession as a whole. Additionally, while existing studies have predominantly employed quantitative methods (24, 29, 32), a qualitative approach may be more suitable for capturing physicians’ perspectives, as it allows for a more nuanced and exploratory investigation of their views (33). In this regard, conducting focus groups (FGs) can provide a deeper understanding of physicians’ views (34). As part of the PEAK project (Perspectives on the Use and Acceptance of Artificial Intelligence in Medical Care) (35), this study therefore aimed to explore physicians’ attitudes towards and acceptance of AI in medical care using a qualitative approach.

Methods

Participants and data collection

This qualitative study is part of the PEAK project, an exploratory sequential mixed-methods study designed to explore physicians’ and patients’ attitudes towards AI in medical care. For this part, we included general practitioners (GPs) and hospital physicians to represent two distinct levels of care within White et al.'s healthcare pyramid: primary care and tertiary care (36, 37). By selecting these two groups, which differ fundamentally in their clinical routines and patient populations, and potentially also in their views (38), we aimed to capture a broad spectrum of perspectives and attitudes towards AI in medical care (38, 39). Between June 2022 and January 2023, a total of eight semi-structured FGs were conducted with GPs and university hospital physicians. GPs were recruited via email and postal mail from the research network RaPHaeL (Research Practices Halle-Leipzig) (40) and the Medical Association of Saxony-Anhalt, Germany. Hospital physicians were recruited via email from the University Hospitals Halle (Saale) and Erlangen, Germany. FGs consisted of three to six participants each and were conducted separately for GPs and hospital physicians. Prior to the FGs, the participants’ socio-demographic data, level of further education, duration of medical practice, specialization, experience in their specialization, and technology affinity were collected. Technology affinity was assessed using three items on Perceived Technology Competence from a standardized questionnaire (41). Information about the study was communicated both orally and in writing to participants before the FG. They were informed about the voluntary nature of their participation, the lack of financial compensation, and their right to refrain from answering questions or to withdraw from the focus group without providing a reason.

Although no monetary incentives were offered, participants were informed in advance that light refreshments would be provided during the focus groups. Study participants gave their written informed consent. After a video stimulus was shown, the moderator used questions from the FG guide to prompt the discussion and ensured that all participants were actively engaged. The FGs were moderated by two researchers, both of whom were largely unfamiliar to the participants. Prior to the FGs, participants were informed about the moderators’ backgrounds and the aim of the study. They were given space to express their opinions openly and without disruption. All FGs were audio-recorded and lasted between 88 and 135 min; additional researchers took field notes. FGs were conducted until thematic saturation was reached, which was assessed through an emergent, iterative process. Saturation was considered achieved when no new relevant themes, insights, or codes emerged from the data. All data were handled confidentially.

Outline of topic guide and application examples

A topic guide was developed based on literature identified through a PubMed search and guided by Helfferich's methods (42) and Krueger and Casey's approach to focus group guides (43). It included open-ended questions designed to explore attitudes towards AI in medical care, including factors promoting and hindering physicians’ acceptance of AI, effects on the physician–patient relationship, challenges in implementing AI, and areas of application for AI. To stimulate discussion, a video presentation was shown that defined AI and highlighted three potential healthcare applications: (a) diagnosis: symptom check via the Ada app (44); (b) treatment: alternative medication plan (45); (c) process optimization: voice documentation (46). The order of the examples was varied across groups to reduce order bias, and all materials were pretested.

Data analysis

The audio-recorded FGs were transcribed verbatim. To ensure anonymity, any information that could identify participants was removed from the transcripts, and real names were replaced with pseudonyms. The textual material was analyzed using qualitative content analysis following Mayring (47), by systematically assigning physicians’ attitudes to a codebook. The codebook was developed collaboratively: four researchers independently coded a representative FG and then discussed and refined the codes until consensus was reached. Three of the four researchers applied the codebook to all FGs; modifications were discussed after each FG until consensus was achieved, and new codes were applied to all FGs. Main themes were generated deductively based on the FG guide, while subthemes were identified inductively from the data. All researchers used MAXQDA software. To enhance methodological credibility, coding was conducted iteratively, with regular team discussions to resolve discrepancies and refine the codebook. Consensus was achieved through collaborative coding and thorough review. Member checking was not performed, as the analysis focused on thematic patterns across groups rather than on individual perspectives.

Results

Participant characteristics

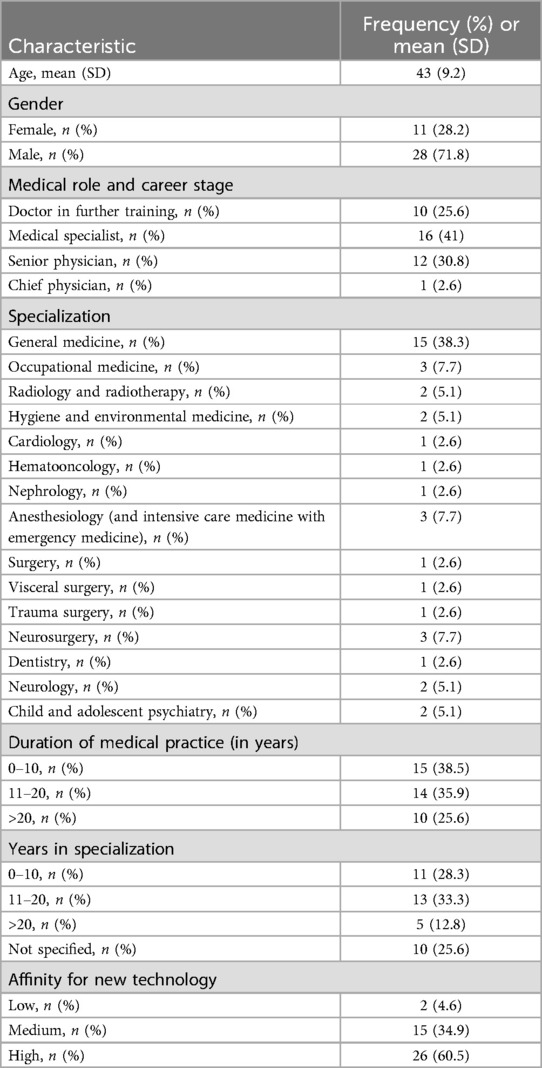

Thirty-nine physicians participated in eight FGs, including 15 GPs (80% male, mean age 44 years, SD 10.4) and 24 hospital physicians from various specialties, such as anesthesiology, neurosurgery, and occupational medicine (67% male, mean age 42 years, SD 8.6). The majority of participants (60.5%) had a high affinity for new technology, and around one third (34.9%) had a medium affinity. A more detailed description of the sample is given in Table 1.

Themes and subthemes

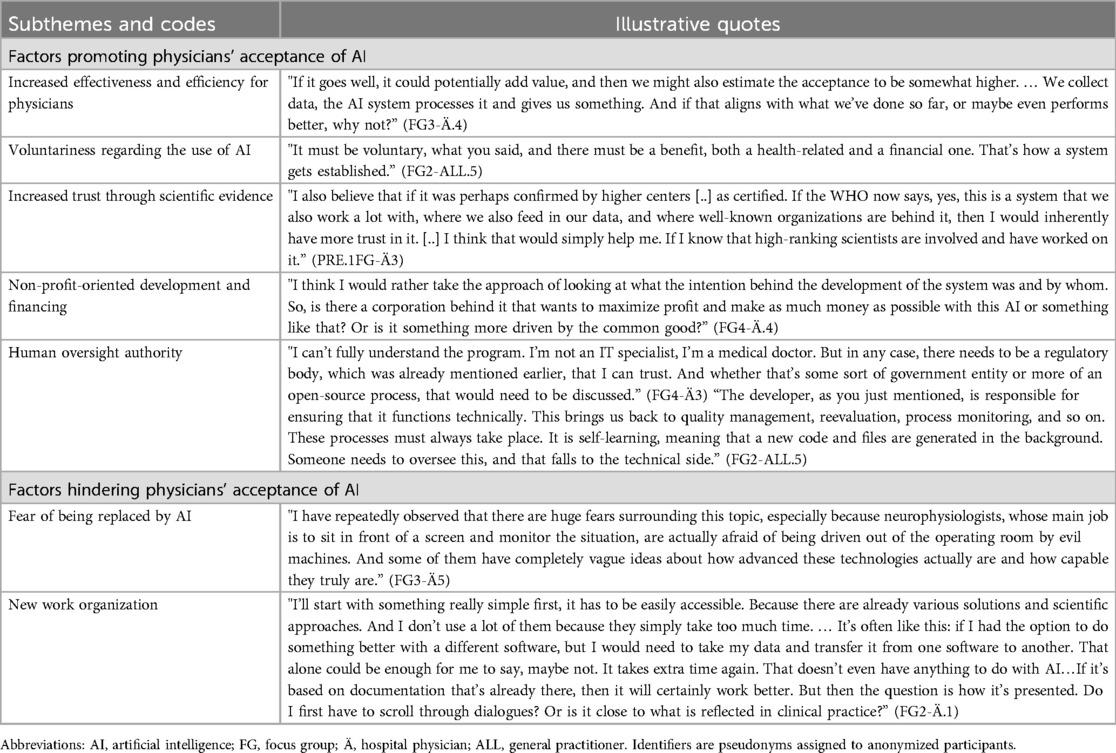

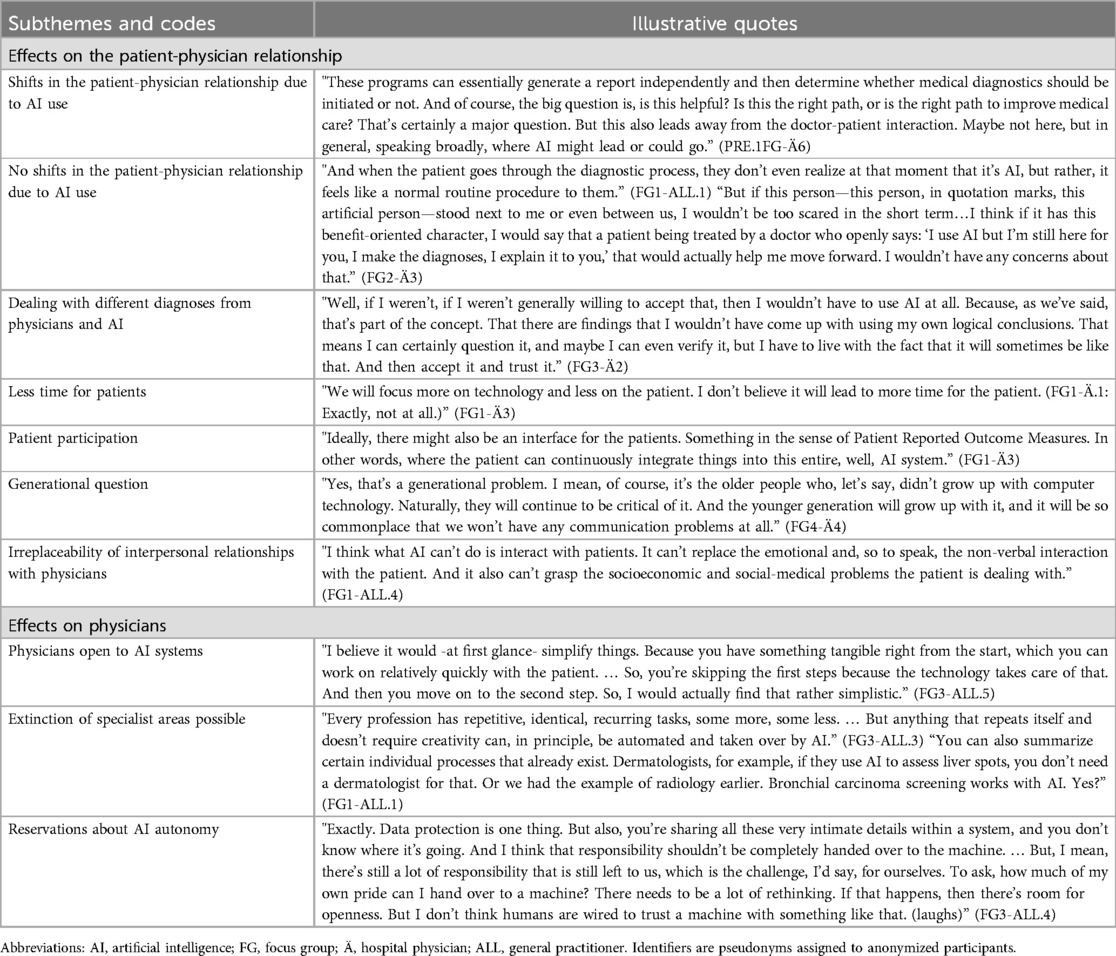

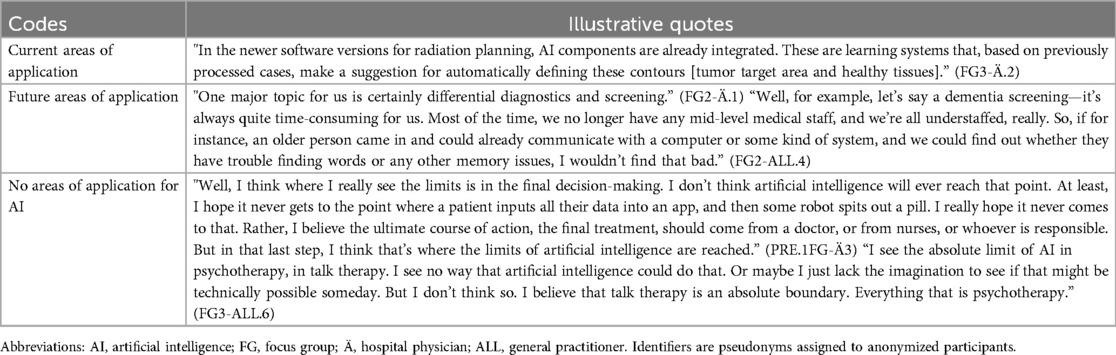

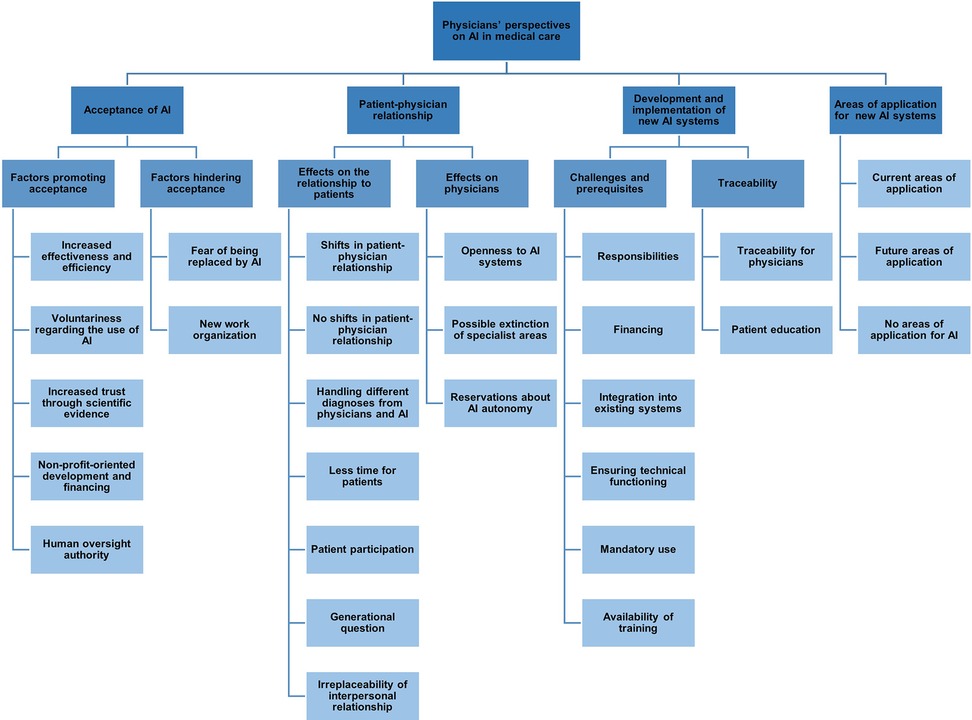

The results of this study are organized into four main themes: (1) acceptance, (2) physician–patient relationship, (3) development and implementation of new AI systems, and (4) areas of application for AI. An overview of the thematic structure is illustrated in Figure 1. Each theme and its subthemes are described in detail below; illustrative quotes are provided in Tables 2–5.

Figure 1. Thematic map of the codebook: physicians’ perspectives on artificial intelligence (AI) in medical care.

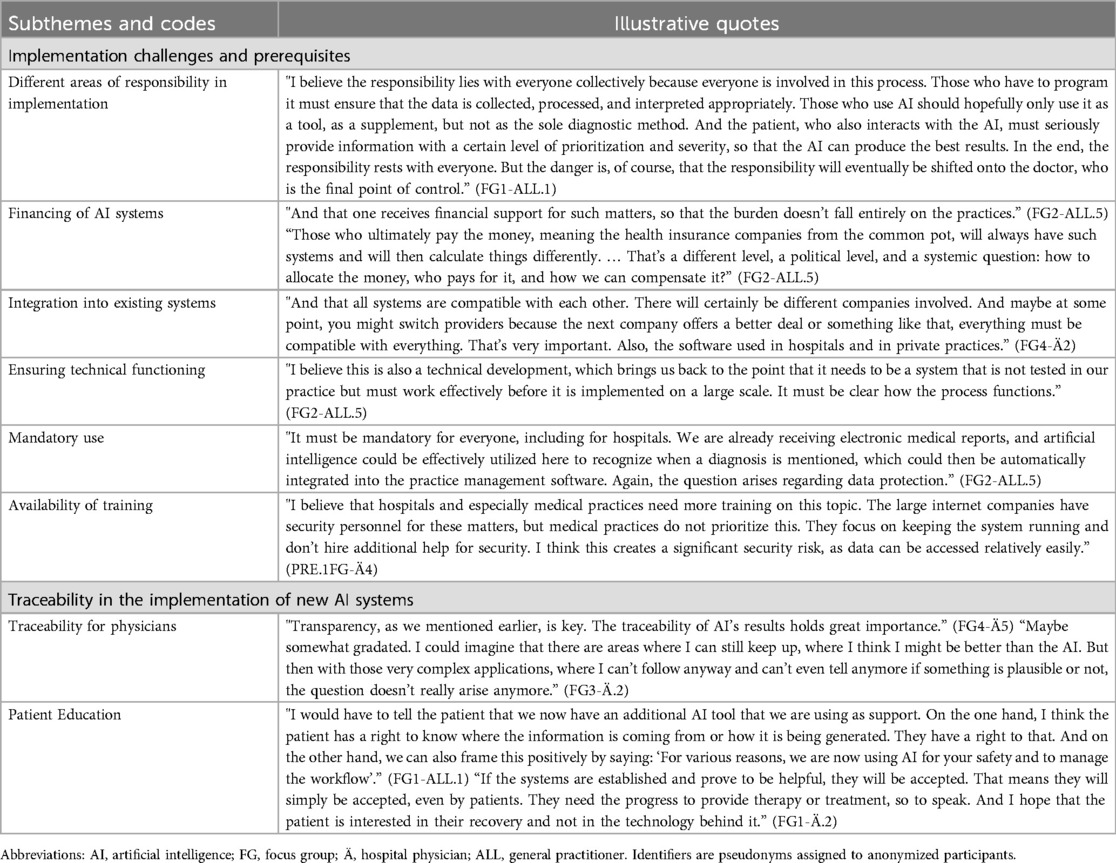

Table 4. Subthemes, codes and illustrative quotes for the theme development and implementation of new AI systems.

Theme 1: acceptance

Subtheme 1: factors promoting physicians’ acceptance of AI

In the FGs, participants considered a substantial increase in effectiveness and efficiency in their medical work a crucial precondition for accepting the use of AI in their daily routine (Table 2). Examples mentioned in this context were improvements in diagnostic accuracy and in personalized treatment. Physicians emphasized the importance of adopting AI tools at their own pace and discretion to maintain autonomy in their decision-making. Furthermore, participants noted that trust in AI and willingness to adopt it in practice increase when systems are supported by strong scientific evidence, reinforcing confidence in the efficacy and safety of these technologies. Some participants also highlighted the need for AI systems to be developed and financed without profit motives, expressing concerns that such motives could compromise the integrity of AI applications. Physicians advocated for models that prioritize ethical considerations and patient welfare over financial gain. Additionally, most participants stressed that AI systems in the medical field should not operate autonomously but should instead be supervised and controlled by experts and regulatory bodies, citing the need for human oversight to ensure safety, accuracy, and ethical standards in medical applications.

Subtheme 2: factors hindering physicians’ acceptance of AI

Participants expressed concerns about the potential for AI to replace aspects of their professional roles. Some suggested that AI could eventually perform tasks traditionally carried out by physicians, leading to fears of job displacement and a devaluation of their expertise. Participants cautioned that framing AI as a replacement for physicians could provoke resistance from both medical professionals and patients. Instead, they suggested that framing and designing AI as a supportive tool for physicians would be more likely to gain acceptance and be successfully integrated into medical practice. Some participants, particularly GPs, perceived the reorganization of work and the additional workload that might result from the introduction of AI systems as barriers to acceptance.

Theme 2: physician–patient relationship

Subtheme 1: effects on the physician–patient relationship

The potential shift in the physician–patient relationship as a result of the integration of AI systems was another concern raised by some participants (Table 3). They expressed that AI might alter this relationship, particularly in terms of trust and communication, emphasizing the importance of maintaining interpersonal relationships with their patients. Another recurring theme in this context was the fear that the use of AI could result in less time for patients, as the focus may shift toward technology-driven diagnostics and treatment planning.

In contrast, other physicians indicated that the integration of AI would not lead to significant changes in the physician–patient relationship. They regarded AI as a supportive tool that would enhance decision-making without compromising key elements such as trust, communication, and empathy, which they believed would remain central to the physician's role. While acknowledging the growing role of AI in healthcare, participants emphasized that the human connection with patients, which they identified as a key element of patient-centered care, cannot be replaced by machines. Some physicians reflected on the challenge of handling discrepancies between diagnoses made by physicians and those generated by AI, highlighting potential confusion or uncertainty for patients. Discussions also revealed that the effects of AI on the physician–patient relationship might vary across generations: younger patients might be more comfortable with AI, while older patients might place more value on personal interaction with their physicians.

Subtheme 2: effects on physicians

Regarding the effects of AI on physicians themselves, the majority of participants expressed openness towards incorporating AI systems into their practice, recognizing the potential benefits for diagnosis and treatment. However, some physicians voiced reservations, particularly regarding the autonomy of AI systems, raising concerns about the extent to which AI should be allowed to make independent decisions in patient care.

Theme 3: development and implementation of new AI systems

Subtheme 1: implementation challenges and prerequisites

Physicians discussed the prerequisites and challenges related to the development and successful implementation of new AI systems in medical practice (Table 4). A central topic was the clarification of their own and other stakeholders’ responsibilities. Participants considered themselves responsible for final decision-making, while they held AI developers responsible for ensuring the technical robustness of AI systems and for training them on diverse datasets to prevent discrimination in medical applications. Opinions diverged, however, regarding the level of responsibility attributed to patients. Some physicians suggested that patients were often unaware of the tools and technologies physicians used in the background and that such understanding could not reasonably be expected. Others argued that patients should be regarded as active participants whose consent and understanding of AI's role in their care were essential.

Physicians highlighted the challenge of ensuring collective responsibility in the implementation of AI, emphasizing the crucial roles of developers, physicians, and patients, while noting the risk that ultimate responsibility could be disproportionately shifted onto physicians as the final point of control. Another challenge mentioned by participants, particularly GPs, was the financing of AI systems. Several GPs noted that they might need to cover the costs of these systems for their practices, which could pose a financial burden. Both GPs and hospital physicians emphasized the importance of seamless compatibility with current medical workflows and the need for appropriate technical functioning of AI systems. Additionally, most participants advocated for the mandatory use of AI systems across all medical facilities (including practices and clinics) to promote standardization in medical care, provided that robust data protection measures are in place. Physicians also highlighted the necessity of adequate training to ensure the safe integration of AI systems into their daily work and raised concerns about potential security risks for medical practices associated with AI implementation.

Subtheme 2: traceability in the implementation of new AI systems

While some physicians viewed full traceability as difficult to achieve due to the complexity of AI systems, others emphasized that comprehensibility and transparency were essential to ensure accountability. Opinions were similarly divided regarding patient education: some considered it important to inform patients about the use and benefits of AI systems in their treatment to facilitate informed decision-making, whereas others saw no need to explain the technology and its underlying principles once AI systems are integrated into routine practice.

Theme 4: areas of application for AI

Finally, physicians discussed their perspectives on the current and potential future applications of AI in healthcare (Table 5). They indicated that AI is currently being introduced or has already been applied in several areas, including radiology and dermatology. In particular, several physicians highlighted the use of AI in radiotherapy, emphasizing its role in enhancing workflow efficiency by automating tasks such as the contouring of organs at risk and tumors in imaging scans. In dermatology, a few others noted AI's role in the early detection of skin cancer. Participants considered these application areas particularly well suited for AI integration due to the high degree of standardization and the availability of image-based data in these specialties.

Furthermore, physicians identified several potential future application areas for AI in medical care. They particularly highlighted early diagnosis and screening as promising fields for AI integration. According to the participants, AI systems could be valuable in detecting diseases at earlier stages, which could improve patient outcomes and reduce the burden on healthcare systems. In neurology, participants frequently noted AI's potential role in the early detection of diseases such as Alzheimer's disease, emphasizing that its predictive capabilities could meaningfully advance preventive strategies across various medical specialties. Physicians also suggested that AI could help tailor treatments to individual patients by analyzing large datasets to predict treatment outcomes more accurately. Additionally, AI's potential to assist in surgical planning and real-time decision-making during procedures was highlighted as an area that could enhance precision and patient safety.

In contrast, participants expressed clear hesitations about certain areas where they did not see AI playing a major role in healthcare. Mental health, particularly psychotherapy, was one domain where they felt AI would be less effective, citing the importance of human empathy and the nuanced understanding required in therapeutic relationships. Similarly, they considered AI unsuitable for direct patient care, where personal attention and human connection are crucial for providing comfort and emotional support. Physicians were also skeptical about AI's role in final decision-making, especially in complex medical cases where ethical considerations and the expertise of experienced physicians are paramount. Participants strongly emphasized that human interaction is irreplaceable in many aspects of healthcare, stressing that the physician–patient relationship, built on trust, communication, and compassion, cannot be replicated by machines. In discussing application areas, physicians also addressed why AI remains in its early stages in many fields, citing insufficient data sources for training AI systems, data protection concerns, and technical challenges.

Discussion

This study explored physicians’ attitudes towards and acceptance of AI in medical care, drawing on perspectives from multiple medical specialties. While most themes were discussed similarly across GPs and hospital physicians, certain differences became apparent. For instance, GPs, operating at the primary care level, voiced greater concern about the practical and financial aspects of AI implementation, which tend to be less pressing for hospital physicians working in tertiary care settings, where implementation is often handled at the institutional level. Overall, physicians’ acceptance of AI was influenced by perceived benefits such as increased efficiency and diagnostic accuracy, but also shaped by concerns regarding AI autonomy, changes to the physician–patient relationship, and broader ethical implications. Participants emphasized the need for human oversight, scientific validation, and the establishment of ethical and regulatory safeguards. While there was skepticism about using AI in empathy-driven domains such as psychotherapy and caregiving, participants recognized potential applications in early diagnosis, screening, and data-intensive, repetitive processes.

In terms of factors promoting acceptance, physicians highlighted the potential of AI to substantially enhance effectiveness and efficiency in their medical work, which aligns with previous findings emphasizing AI's role in improving diagnostic accuracy and personalized care (5, 48). Participants highlighted the need for physician-driven AI adoption to safeguard professional autonomy, aligning with broader concerns in the literature on maintaining professional independence while integrating new technologies (49). Furthermore, trust in AI was closely linked to scientific validation, with physicians advocating for robust evidence to support the efficacy and safety of these systems. Additionally, participants strongly favored human oversight of AI systems, reflecting broader discussions about the indispensable role of expert regulation in mitigating potential risks and maintaining high ethical standards (12, 50). On the other hand, barriers to acceptance were closely linked to fears of being replaced in certain tasks and the potential devaluation of physicians’ expertise. These findings align with prior research indicating that framing AI as a supportive tool rather than a replacement is key to fostering acceptance among healthcare professionals (51). Notably, GPs expressed concerns about potential disruptions to their workflow, highlighting the need for seamless integration of AI systems into existing practices. Similarly, Shamszare et al. (52) suggested that optimal integration strategies could foster clinicians’ trust, mitigate perceived risks and workload, and enhance acceptance of AI-assisted clinical decision-making. This underlines the importance of addressing organizational challenges to support the adoption of AI in medical care.

Our results revealed participants’ concerns that AI could significantly influence the physician–patient relationship by compromising trust and communication, underscoring the importance of preserving the interpersonal connection central to patient-centered care. These concerns align with findings from Kerasidou (53) and Inanaga et al. (54), who highlighted the critical role of empathy and trust in patient satisfaction and treatment adherence. While AI can streamline clinical processes (55), some participants worried that this could detract from direct patient interaction. Participants also highlighted the issue of responsibility in medical decision-making, emphasizing the need to clarify the responsibilities of all stakeholders while ensuring that physicians, alongside patients, remain the ultimate decision-makers. This perspective reflects a broader concern within the medical community about the ethical and legal implications of AI integration and was identified by physicians as a prerequisite for the successful implementation of AI systems in medical practice. Clear regulations delineating responsibilities are essential to ensure legal protection and maintain trust in medical decision-making (56, 57). This view is also consistent with a recent statement by the German Medical Association (“Bundesärztekammer”), which underscores the importance of addressing both technical and ethical challenges in the implementation of AI across various medical environments (58). These challenges include the need for robust validation of AI models across diverse patient populations, transparency to ensure comprehensibility for physicians, and a regulatory framework that enables innovation while safeguarding physicians’ responsibility for diagnosis and treatment.
Similar to previous findings in the literature (21, 59), our results indicated that while physicians, developers, and patients were all identified as key stakeholders in AI implementation, the precise allocation of responsibilities and liabilities remains unresolved. Physicians were predominantly seen as ultimately responsible for clinical decisions, while AI developers were expected to ensure technical robustness and minimize biases by utilizing diverse datasets. Perspectives on patients’ roles varied; some saw them as largely unaware of the technologies in their care, while others stressed the need for active participation and understanding of AI's role in treatment. Consistent with this, a qualitative study on patient perspectives regarding engagement in AI highlighted the significance of meaningful patient involvement, suggesting that it should serve as the gold standard for AI application development (60). These findings reflect existing concerns about regulatory gaps, given that current liability frameworks fail to clearly define stakeholder responsibilities in AI-driven healthcare, calling for urgent regulatory updates (61).

Echoing findings from a previous study (62), GPs particularly highlighted concerns about the financing of AI systems, including acquisition and operational costs, as well as the need for financial support for implementation. Beyond these concerns, the literature highlights potential benefits of implementing AI in medical practice, such as cost reductions through increased efficiency, automation of manual tasks, and lower expenses related to misdiagnoses or late diagnoses (63, 64). Furthermore, explainability and traceability of AI systems were recurring themes. In this context, many physicians emphasized the importance of comprehensible AI processes for building trust, while acknowledging the challenges posed by the ‘black box’ nature of AI. This is consistent with findings from the study by Sangers et al. (50), which focused on the views of GPs and dermatologists and found that they struggled to understand the rationale behind algorithmic decisions, making it difficult to assess their accuracy.

When participants discussed current and potential future applications of AI in healthcare, they emphasized its diverse capabilities, particularly in data-intensive specialties such as radiology and dermatology, with a focus on enhancing workflow efficiency. These findings align with earlier studies emphasizing AI's compatibility with standardized, image-based fields (15, 30, 65). Mental health, on the other hand, particularly psychotherapy, was seen as reliant on human empathy and nuanced understanding, making AI less suitable. Similarly, caregiving roles requiring emotional support were viewed as unsuitable for AI implementation. While these concerns align with previous studies emphasizing the importance of human interaction in these domains (66, 67), recent research also highlights AI's potential to support mental health care, especially through AI-driven conversational agents that provide psychoeducational resources and deliver evidence-based therapeutic techniques (68, 69). Finally, participants also discussed reasons why AI remains underutilized in some fields. Insufficient data, data protection concerns, and technical challenges were identified as major barriers. These issues reflect findings in the literature highlighting the need for robust datasets and regulatory frameworks to advance AI in healthcare (55, 70).

The findings of this study offer key insights into AI integration in medical care and highlight important implications for research and practice. They emphasize factors influencing physicians' acceptance, such as efficiency gains, while underscoring the essential role of physicians' expert judgment and autonomous decision-making authority. We suggest that a key consideration for integrating AI into medical practice is ensuring that it complements, rather than replaces, physician decision-making, thereby preserving physician autonomy and medical judgment. Concerns about AI's impact on the physician–patient relationship suggest that implementation should prioritize human interaction and trust. Additionally, challenges related to standardization and data protection require clear regulatory frameworks, such as the recently enacted AI Act, a European regulation that sets legal and ethical standards for AI development and implementation and is intended to foster the social acceptance of AI across diverse populations (23, 70). This initiative marks a step forward in building confidence among AI providers and users (71). Our study contributes to the broader discourse on the prerequisites for AI implementation and acceptance in medical care by offering a cross-specialty perspective. It thereby addresses a gap left by previous studies, which were limited to individual specialties (31, 34, 72). The study also identifies avenues for future research. These include how AI can be integrated into specific medical domains, such as mental health, and into situations involving complex clinical decisions, for example end-of-life care, resource allocation, or treatment prioritization, where patient sensitivity and moral dilemmas pose unique challenges.
Additionally, future studies could employ a quantitative methodology to determine the impact of influencing factors, such as specialization, on physicians’ attitudes and to explore the broader impact of AI integration in medical practice. As part of the broader PEAK study, patients also participated in focus groups exploring their perceptions of AI in medical care (73). Their views aligned in several respects with those of physicians, especially regarding the importance of empathy and human oversight. In light of recent advances in conversational diagnostic systems (74), future research should also investigate how patients experience empathy and communication in interactions with AI-based systems, and how these perceptions compare to those of physicians. Building on physicians’ concerns about preserving trust and human connection in the physician–patient relationship, future studies should also explore how AI might support shared decision-making by encouraging more active patient involvement in treatment discussions and supporting patient autonomy (75). This will be essential to understanding how AI-mediated shared decision-making influences adherence, satisfaction, and the evolving roles of both patients and physicians in medical care (66, 76). Given the rapid pace of AI developments in medicine, conducting repeated studies at regular intervals is recommended to capture evolving physician attitudes and implementation conditions. This is particularly relevant in light of expanding AI-related training opportunities, such as the German AI education platform KI Campus, which offers Continuing Medical Education (CME)-certified courses for physicians (77). Addressing these questions is essential to ensure the responsible and effective application of AI in medical care.

Strengths and limitations

A strength of this study is its inclusion of both hospital physicians and GPs, providing insights from multiple medical specialties on the implementation of AI in medical care. By employing a qualitative approach, the study allowed for a nuanced analysis of physicians' opinions. However, some limitations should be noted. The gender distribution among participants, particularly the overrepresentation of male GPs, may have influenced the diversity of perspectives captured. This imbalance may stem from differing levels of interest in AI-related topics or from time constraints, as the two-hour focus group duration may have posed a greater barrier for some participants (78). Previous research suggests, however, that gender does not necessarily influence attitudes towards AI (79). This study did not include physicians working in secondary care settings, such as outpatient specialists, whose perspectives may differ and should be explored in future research. As most participating physicians had little to no experience with AI in their daily medical practice, the discussions were largely based on hypothetical scenarios, which should be considered when interpreting the findings. Another limitation is the potential for selection bias: participation in the FGs was voluntary, which may have led to an overrepresentation of physicians with a particular interest in AI. Because the FGs were conducted both before and after the public release of ChatGPT in November 2022, the accompanying media attention may have further increased general interest in AI. Notably, ChatGPT was mentioned a few times when participants were asked about their initial associations with AI, indicating a general awareness of its emergence. However, the fact that the majority of study participants demonstrated a high level of technical affinity suggests that this external influence, if any, was likely minimal.
Despite these limitations, the study provides a valuable starting point for further research and identifies important gaps that must be addressed for meaningful AI implementation in medical care.

Conclusion

This qualitative study provides insights into how German physicians from various specialties perceive the use of AI in medical care, revealing both an openness toward its application and concerns about potential risks. These included organizational, regulatory, and ethical challenges, as well as the need for human oversight. Physicians emphasized interpersonal relationships as irreplaceable by AI, highlighting the importance of preserving human interaction when integrating AI into medical care. Addressing these concerns is key to successful implementation, with physicians’ attitudes central to shaping effective AI applications.

Data availability statement

The raw qualitative data supporting the conclusions of this article will be made available by the authors, in compliance with applicable ethical and privacy standards.

Ethics statement

The study involving human participants was approved by the Ethics Committee of the Medical Faculty of the Martin Luther University Halle-Wittenberg (ethics vote: 2021-229). The study was conducted in accordance with the Declaration of Helsinki and with local legislation and institutional requirements. Participants provided their written informed consent to participate in this study.

Author contributions

SN: Writing – original draft, Data curation, Methodology, Investigation, Software, Conceptualization, Formal analysis, Project administration, Writing – review & editing. JG: Formal analysis, Writing – review & editing, Investigation, Methodology. CB: Methodology, Writing – review & editing, Formal analysis. TA: Investigation, Writing – review & editing. JS: Supervision, Writing – review & editing. TF: Writing – review & editing, Supervision. JC: Supervision, Writing – review & editing. RM: Writing – review & editing, Supervision, Conceptualization, Funding acquisition.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The Innovation Fund of the Federal Joint Committee [G-BA (01VSF20017)] funds the PEAK project. In addition, we acknowledge financial support from the Open Access Publication Fund of the Martin Luther University Halle-Wittenberg. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Acknowledgments

We thank all focus group participants for their participation. The study is part of the project ‘Perspectives on the use and acceptance of Artificial Intelligence in medical care’ (PEAK) (35). Contributions of the PEAK consortium: conceptualization, funding acquisition, project administration, supervision, investigation, formal analysis and development of mock-ups and video. Thanks to further members of the PEAK consortium: Dr. Nadja Kartschmit (Institute for Outcomes Research, Center for Medical Data Science, Medical University of Vienna, Vienna, Austria): conceptualization, funding acquisition; Carsten Fluck [Institute of Medical Epidemiology, Biometrics, and Informatics (IMEBI), Medical Faculty, Martin-Luther-University Halle-Wittenberg, Halle (Saale), Germany] and Iryna Manuilova [Junior Research Group (Bio-) medical Data Science, Medical Faculty, Martin-Luther-University Halle-Wittenberg, Halle (Saale), Germany]: technical support; Johannes Ennenbach [IMEBI, Medical Faculty, Martin-Luther-University Halle-Wittenberg, Halle (Saale), Germany] and Claudius Sommer [IMEBI, Medical Faculty, Martin-Luther-University Halle-Wittenberg, Halle (Saale), Germany]: technical support and coding. Parts of this study, including interim findings, were presented as a poster at the 34th Medical Informatics Europe Conference. The abstract was published by IOS Press in the conference proceedings: doi: 10.3233/SHTI240501

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Hong C, Sun L, Liu G, Guan B, Li C, Luo Y. Response of global health towards the challenges presented by population aging. China CDC Wkly. (2023) 5:884. doi: 10.46234/ccdcw2023.168

2. Maleki Varnosfaderani S, Forouzanfar M. The role of AI in hospitals and clinics: transforming healthcare in the 21st century. Bioengineering. (2024) 11(4):337. doi: 10.3390/bioengineering11040337

3. Schmitt T, Haarmann A, Shaikh M. Strengthening health system governance in Germany: looking back, planning ahead. Health Econ Policy Law. (2023) 18:14–31. doi: 10.1017/S1744133122000123

4. Dietrich CF, Riemer-Hommel P. Challenges for the German health care system. Z Gastroenterol. (2012) 50:557–72. doi: 10.1055/s-0032-1312742

5. Javanmard S. Revolutionizing medical practice: the impact of artificial intelligence (AI) on healthcare. OA J Applied Sci Technol. (2024) 2(1):01–16. doi: 10.33140/OAJAST.02.01.07

6. Shen T, Fu X. Application and prospect of artificial intelligence in cancer diagnosis and treatment. Zhonghua Zhong Liu Za Zhi. (2018) 40:881–4. doi: 10.3760/cma.j.issn.0253-3766.2018.12.001

7. Kaul V, Enslin S, Gross SA. History of artificial intelligence in medicine. Gastrointest Endosc. (2020) 92:807–12. doi: 10.1016/j.gie.2020.06.040

8. Dongre AS, More SD, Wilson V, Singh RJ. Medical doctor’s perception of artificial intelligence during the COVID-19 era: a mixed methods study. J Family Med Prim Care. (2024) 13(5):1931–6. doi: 10.4103/jfmpc.jfmpc_1543_23

9. Shinners L, Aggar C, Grace S, Smith S. Exploring healthcare professionals’ understanding and experiences of artificial intelligence technology use in the delivery of healthcare: an integrative review. Health Informatics J. (2020) 26(2):1225–36. doi: 10.1177/1460458219874641

10. Allam Z. The Rise of Machine Intelligence in the COVID-19 Pandemic and its Impact on Health Policy. London: Elsevier (2020). p. 89.

11. Alowais SA, Alghamdi SS, Alsuhebany N, Alqahtani T, Alshaya AI, Almohareb SN, et al. Revolutionizing healthcare: the role of artificial intelligence in clinical practice. BMC Med Educ. (2023) 23:689. doi: 10.1186/s12909-023-04698-z

12. Mennella C, Maniscalco U, De Pietro G, Esposito M. Ethical and regulatory challenges of AI technologies in healthcare: a narrative review. Heliyon. (2024) 10:e26297. doi: 10.1016/j.heliyon.2024.e26297

13. Srivastava R. Applications of artificial intelligence in medicine. Explore Res Hypothesis Med. (2024) 9(2):138–46. doi: 10.14218/ERHM.2023.00048

14. Wen D, Khan SM, Xu AJ, Ibrahim H, Smith L, Caballero J, et al. Characteristics of publicly available skin cancer image datasets: a systematic review. Lancet Digit Health. (2022) 4:e64–74. doi: 10.1016/S2589-7500(21)00252-1

15. Li Z, Koban KC, Schenck TL, Giunta RE, Li Q, Sun Y. Artificial intelligence in dermatology image analysis: current developments and future trends. J Clin Med. (2022) 11:6826. doi: 10.3390/jcm11226826

16. Marko JGO, Neagu CD, Anand P. Examining inclusivity: the use of AI and diverse populations in health and social care: a systematic review. BMC Med Inform Decis Mak. (2025) 25:57. doi: 10.1186/s12911-025-02884-1

17. Ma L, Li C. Da Vinci robot-assisted surgery for deep lobe of parotid benign tumor via retroauricular hairline approach: exploration of a new surgical method for parotid tumors. Oral Oncol. (2024) 159:107043. doi: 10.1016/j.oraloncology.2024.107043

18. Knudsen JE, Ghaffar U, Ma R, Hung AJ. Clinical applications of artificial intelligence in robotic surgery. J Robot Surg. (2024) 18:102. doi: 10.1007/s11701-024-01867-0

19. Zylka-Menhorn V. Roboterassistierte Chirurgie: kostenintensiv – bei eher dünner Evidenzlage. Dtsch Arztebl Int. (2019) 116:1053–4.

20. Chatterjee S, Das S, Ganguly K, Mandal D. Advancements in robotic surgery: innovations, challenges and future prospects. J Robot Surg. (2024) 18:28. doi: 10.1007/s11701-023-01801-w

21. Ahmed MI, Spooner B, Isherwood J, Lane M, Orrock E, Dennison A. A systematic review of the barriers to the implementation of artificial intelligence in healthcare. Cureus. (2023) 15:e46454. doi: 10.7759/cureus.46454

22. Currie G, Hawk KE, Rohren EM. Ethical principles for the application of artificial intelligence (AI) in nuclear medicine. Eur J Nucl Med Mol Imaging. (2020) 47:748–52. doi: 10.1007/s00259-020-04678-1

23. European Parliament and Council. Regulation (EU) 2024/1689 of 13 June 2024 Laying Down Harmonised Rules on Artificial Intelligence and Amending Certain Union Legislative Acts (Artificial Intelligence Act). Brussels: Publications Office of the European Union (2024). Available at: https://eur-lex.europa.eu/eli/reg/2024/1689/oj/eng (Accessed January 15, 2025).

24. Oh S, Kim JH, Choi S-W, Lee HJ, Hong J, Kwon SH. Physician confidence in artificial intelligence: an online mobile survey. J Med Internet Res. (2019) 21(3):e12422. doi: 10.2196/12422

25. Radhwi OO, Khafaji MA. The wizard of artificial intelligence: are physicians prepared? J Family Community Med. (2024) 31:344–50. doi: 10.4103/jfcm.jfcm_144_24

26. Lambert SI, Madi M, Sopka S, Lenes A, Stange H, Buszello C-P, et al. An integrative review on the acceptance of artificial intelligence among healthcare professionals in hospitals. NPJ Digit Med. (2023) 6(1):111. doi: 10.1038/s41746-023-00852-5

27. Chen M, Zhang B, Cai Z, Seery S, Gonzalez MJ, Ali NM, et al. Acceptance of clinical artificial intelligence among physicians and medical students: a systematic review with cross-sectional survey. Front Med. (2022) 9:990604. doi: 10.3389/fmed.2022.990604

28. Buck C, Doctor E, Hennrich J, Jöhnk J, Eymann T. General practitioners’ attitudes toward artificial intelligence–enabled systems: interview study. J Med Internet Res. (2022) 24:e28916. doi: 10.2196/28916

29. Hamedani Z, Moradi M, Kalroozi F, Manafi Anari A, Jalalifar E, Ansari A, et al. Evaluation of acceptance, attitude, and knowledge towards artificial intelligence and its application from the point of view of physicians and nurses: a provincial survey study in Iran: a cross-sectional descriptive-analytical study. Health Sci Rep. (2023) 6(9):e1543. doi: 10.1002/hsr2.1543

30. Yu F, Moehring A, Banerjee O, Salz T, Agarwal N, Rajpurkar P. Heterogeneity and predictors of the effects of AI assistance on radiologists. Nat Med. (2024) 30(3):837–49. doi: 10.1038/s41591-024-02850-w

31. Amann J, Vayena E, Ormond KE, Frey D, Madai VI, Blasimme A. Expectations and attitudes towards medical artificial intelligence: a qualitative study in the field of stroke. PLoS One. (2023) 18(1):e0279088. doi: 10.1371/journal.pone.0279088

32. Maassen O, Fritsch S, Palm J, Deffge S, Kunze J, Marx G, et al. Future medical artificial intelligence application requirements and expectations of physicians in German university hospitals: web-based survey. J Med Internet Res. (2021) 23(3):e26646. doi: 10.2196/26646

33. Renjith V, Yesodharan R, Noronha JA, Ladd E, George A. Qualitative methods in health care research. Int J Prev Med. (2021) 12:20. doi: 10.4103/ijpvm.IJPVM_321_19

34. Blease C, Kaptchuk TJ, Bernstein MH, Mandl KD, Halamka JD, DesRoches CM. Artificial intelligence and the future of primary care: exploratory qualitative study of UK general practitioners’ views. J Med Internet Res. (2019) 21:e12802. doi: 10.2196/12802

35. Gemeinsamer Bundesausschuss. PEAK—Perspektiven des Einsatzes und Akzeptanz Künstlicher Intelligenz. Berlin: Gemeinsamer Bundesausschuss (2025). Available at: https://innovationsfonds.g-ba.de/projekte/versorgungsforschung/peak.397 (Accessed June 12, 2025).

36. White KL, Williams TF, Greenberg BG. The ecology of medical care. Bull N Y Acad Med. (1996) 73:187–212. PMID: 8804749

37. Green LA, Fryer GE Jr, Yawn BP, Lanier D, Dovey SM. The ecology of medical care revisited. N Engl J Med. (2001) 344:2021–5. doi: 10.1056/NEJM200106283442611

38. Strumann C, Flägel K, Emcke T, Steinhäuser J. Procedures performed by general practitioners and general internal medicine physicians-a comparison based on routine data from Northern Germany. BMC Fam Pract. (2018) 19(1):189. doi: 10.1186/s12875-018-0878-3

39. Perkin MR, Pearcy RM, Fraser JS. A comparison of the attitudes shown by general practitioners, hospital doctors and medical students towards alternative medicine. J R Soc Med. (1994) 87:523–5. doi: 10.1177/014107689408700914

40. Virnau L, Braesigk A, Deutsch T, Bauer A, Kroeber ES, Bleckwenn M, et al. General practitioners’ willingness to participate in research networks in Germany. Scand J Prim Health Care. (2022) 40(2):237–45. doi: 10.1080/02813432.2022.2074052

41. Kamin ST, Lang FR. The subjective technology adaptivity inventory (STAI): a motivational measure of technology usage in old age. Geron. (2013) 12(1):16–25. doi: 10.4017/gt.2013.12.1.008.00

42. Helfferich C. Die Qualität Qualitativer Daten: Manual für die Durchführung Qualitativer Interviews. 4th ed. Wiesbaden: Springer VS (2011). p. 167–94.

43. Krueger RA, Casey MA. Focus Groups: A Practical Guide for Applied Research. 5th ed. Los Angeles: Sage (2015). p. 1–186.

44. Ada. Gesundheit. Powered by Ada. (2024). Available at: https://ada.com/de/ (Accessed July 15, 2024).

45. Department of Medical Informatics, Friedrich-Alexander-Universität Erlangen-Nürnberg. P³ Personalisierte Pharmakotherapie in der Psychiatrie. (2024). Available at: https://www.imi.med.fau.de/projekte/abgeschlossene-projekte/p%C2%B3-personalisierte-pharmakotherapie-in-der-psy (Accessed July 15, 2024).

46. Fraunhofer Institute for Intelligent Analysis and Information Systems IAIS. Künstliche Intelligenz im Krankenhaus. (2020). Available at: https://www.iais.fraunhofer.de/lotte (Accessed July 15, 2024).

47. Mayring P. Qualitative Inhaltsanalyse: Grundlagen und Techniken. 13., überarbeitete Auflage. Weinheim, Basel: Beltz (2022). p. 49–124.

48. Khalifa M, Albadawy M. AI in diagnostic imaging: revolutionising accuracy and efficiency. Comput Methods Programs Biomed Update. (2024) 5:100146. doi: 10.1016/j.cmpbup.2024.100146

49. Funer F, Wiesing U. Physician’s autonomy in the face of AI support: walking the ethical tightrope. Front Med. (2024) 11:1324963. doi: 10.3389/fmed.2024.1324963

50. Sangers TE, Wakkee M, Moolenburgh FJ, Nijsten T, Lugtenberg M. Towards successful implementation of artificial intelligence in skin cancer care: a qualitative study exploring the views of dermatologists and general practitioners. Arch Dermatol Res. (2023) 315(5):1187–95. doi: 10.1007/s00403-022-02492-3

51. Sezgin E. Artificial intelligence in healthcare: complementing, not replacing, doctors and healthcare providers. Digit Health. (2023) 9:20552076231186520. doi: 10.1177/20552076231186520

52. Shamszare H, Choudhury A. Clinicians’ perceptions of artificial intelligence: focus on workload, risk, trust, clinical decision making, and clinical integration. Healthcare. (2023) 11:2308. doi: 10.3390/healthcare11162308

53. Kerasidou A. Artificial intelligence and the ongoing need for empathy, compassion and trust in healthcare. Bull W H O. (2020) 98:245. doi: 10.2471/BLT.19.237198

54. Inanaga R, Toida T, Aita T, Kanakubo Y, Ukai M, Toishi T, et al. Trust, multidimensional health literacy, and medication adherence among patients undergoing long-term hemodialysis. Clin J Am Soc Nephrol. (2023) 18:463–71. doi: 10.2215/CJN.0000000000000392

55. Bajwa J, Munir U, Nori A, Williams B. Artificial intelligence in healthcare: transforming the practice of medicine. Future Healthcare J. (2021) 8:e188–94. doi: 10.7861/fhj.2021-0095

56. Elendu C, Amaechi DC, Elendu TC, Jingwa KA, Okoye OK, Okah MJ, et al. Ethical implications of AI and robotics in healthcare: a review. Medicine (Baltimore). (2023) 102:e36671. doi: 10.1097/MD.0000000000036671

57. Kahraman F, Aktas A, Bayrakceken S, Çakar T, Tarcan HS, Bayram B, et al. Physicians’ ethical concerns about artificial intelligence in medicine: a qualitative study: “the final decision should rest with a human”. Front Public Health. (2024) 12:1428396. doi: 10.3389/fpubh.2024.1428396

58. Bundesärztekammer. Künstliche Intelligenz in der Medizin. Berlin: Bundesärztekammer (2025). doi: 10.3238/arztebl.2025.Stellungnahme_KI_Medizin (Accessed March 20, 2025).

59. Khullar D, Casalino LP, Qian Y, Lu Y, Chang E, Aneja S. Public vs physician views of liability for artificial intelligence in health care. J Am Med Inform Assoc. (2021) 28:1574–7. doi: 10.1093/jamia/ocab055

60. Adus S, Macklin J, Pinto A. Exploring patient perspectives on how they can and should be engaged in the development of artificial intelligence (AI) applications in health care. BMC Health Serv Res. (2023) 23:1163. doi: 10.1186/s12913-023-10098-2

61. Cestonaro C, Delicati A, Marcante B, Caenazzo L, Tozzo P. Defining medical liability when artificial intelligence is applied on diagnostic algorithms: a systematic review. Front Med. (2023) 10:1305756. doi: 10.3389/fmed.2023.1305756

62. Nash DM, Thorpe C, Brown JB, Kueper JK, Rayner J, Lizotte DJ, et al. Perceptions of artificial intelligence use in primary care: a qualitative study with providers and staff of Ontario community health centres. J Am Board Fam Med. (2023) 36(2):221–8. doi: 10.3122/jabfm.2022.220177R2

63. Olawade DB, David-Olawade AC, Wada OZ, Asaolu AJ, Adereni T, Ling J. Artificial intelligence in healthcare delivery: prospects and pitfalls. J Med Surg Public Health. (2024) 3:100108. doi: 10.1016/j.glmedi.2024.100108

64. Väänänen A, Haataja K, Vehviläinen-Julkunen K, Toivanen P. AI in healthcare: a narrative review. F1000Res. (2021) 10:6. doi: 10.12688/f1000research.26997.2

65. Shafi S, Parwani AV. Artificial intelligence in diagnostic pathology. Diagn Pathol. (2023) 18:109. doi: 10.1186/s13000-023-01375-z

66. Lorenzini G, Arbelaez Ossa L, Shaw DM, Elger BS. Artificial intelligence and the doctor–patient relationship expanding the paradigm of shared decision making. Bioethics. (2023) 37:424–9. doi: 10.1111/bioe.13158

67. Lee EE, Torous J, De Choudhury M, Depp CA, Graham SA, Kim H-C, et al. Artificial intelligence for mental health care: clinical applications, barriers, facilitators, and artificial wisdom. Biol Psychiatry Cogn Neurosci Neuroimaging. (2021) 6:856–64. doi: 10.1016/j.bpsc.2021.02.001

68. Auf H, Svedberg P, Nygren J, Nair M, Lundgren LE. The use of AI in mental health services to support decision-making: scoping review. J Med Internet Res. (2025) 27:e63548. doi: 10.2196/63548

69. Sedlakova J, Trachsel M. Conversational artificial intelligence in psychotherapy: a new therapeutic tool or agent? Am J Bioeth. (2023) 23(5):4–13. doi: 10.1080/15265161.2022.2048739

70. Schmidt J, Schutte NM, Buttigieg S, Novillo-Ortiz D, Sutherland E, Anderson M, et al. Mapping the regulatory landscape for artificial intelligence in health within the European union. NPJ Digit Med. (2024) 7:229. doi: 10.1038/s41746-024-01221-6

71. Ho CW-L, Caals K. How the EU AI act seeks to establish an epistemic environment of trust. Asian Bioeth Rev. (2024) 16:345–72. doi: 10.1007/s41649-024-00304-6

72. Alsharif W, Qurashi A, Toonsi F, Alanazi A, Alhazmi F, Abdulaal O, et al. A qualitative study to explore opinions of Saudi Arabian radiologists concerning AI-based applications and their impact on the future of the radiology. BJR Open. (2022) 4:20210029. doi: 10.1259/bjro.20210029

73. Gundlack J, Negash S, Thiel C, Buch C, Schildmann J, Unverzagt S, et al. Artificial intelligence in medical care–Patients’ perceptions on caregiving relationships and ethics: a qualitative study. Health Expect. (2025) 28:e70216. doi: 10.1111/hex.70216

74. Saab K, Freyberg J, Park C, Strother T, Cheng Y, Weng W-H, et al. Advancing conversational diagnostic AI with multimodal reasoning. arXiv [Preprint]. (2025). Available at: https://arxiv.org/abs/2505.04653 (Accessed June 12, 2025).

75. Sauerbrei A, Kerasidou A, Lucivero F, Hallowell N. The impact of artificial intelligence on the person-centred, doctor-patient relationship: some problems and solutions. BMC Med Inform Decis Mak. (2023) 23:73. doi: 10.1186/s12911-023-02162-y

76. Kingsford PA, Ambrose JA. Artificial intelligence and the doctor-patient relationship. Am J Med. (2024) 137:381–2. doi: 10.1016/j.amjmed.2024.01.005

77. KI-Campus. Grundlagen der Künstlichen Intelligenz in der Medizin – CME-Kurs. (2025). Available at: https://ki-campus.org/courses/drmedki_grundlagen_cme (Accessed June 12, 2025).

78. Gao S, He L, Chen Y, Li D, Lai K. Public perception of artificial intelligence in medical care: content analysis of social media. J Med Internet Res. (2020) 22:e16649. doi: 10.2196/16649

Keywords: artificial intelligence, physicians, attitudes, acceptance, medical care, healthcare

Citation: Negash S, Gundlack J, Buch C, Apfelbacher T, Schildmann J, Frese T, Christoph J and Mikolajczyk R (2025) Physicians’ attitudes and acceptance towards artificial intelligence in medical care: a qualitative study in Germany. Front. Digit. Health 7:1616827. doi: 10.3389/fdgth.2025.1616827

Received: 12 May 2025; Accepted: 23 June 2025;

Published: 14 July 2025.

Edited by:

Jorge Cancela, Roche, Switzerland

Reviewed by:

Cornelius G. Wittal, Roche Pharma AG, Germany

Osman Radhwi, King Abdulaziz University, Saudi Arabia

Copyright: © 2025 Negash, Gundlack, Buch, Apfelbacher, Schildmann, Frese, Christoph and Mikolajczyk. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rafael Mikolajczyk, rafael.mikolajczyk@uk-halle.de
