- 1Department of Joints, Tianjin Hospital of Tianjin University (Tianjin Hospital), Tianjin, China

- 2Clinical College of Orthopedics, Tianjin Medical University, Tianjin, China

- 3Tianjin University, Tianjin, China

Background: Osteoarthritis (OA) is a debilitating condition characterized by pain, stiffness, and impaired mobility, significantly affecting patients' quality of life. Health education is crucial in helping individuals understand OA and its management. In China, where OA is highly prevalent, platforms such as TikTok, WeChat, and Xiaohongshu have become prominent sources of health information. However, there is a lack of research regarding the reliability and educational quality of OA-related content on these platforms.

Methods: This study analyzed the top 100 OA-related videos on each of three major platforms: TikTok, WeChat, and Xiaohongshu. We systematically evaluated the content quality, reliability, and educational value using established tools, such as the DISCERN scale, JAMA benchmark criteria, and the Global Quality Score (GQS) system. The study also compared differences in video content across platforms, offering insights into their relevance for addressing professional needs.

Results: Video quality varied significantly between platforms. TikTok outperformed WeChat and Xiaohongshu in all scoring criteria, with mean DISCERN scores of 32.42 (SD 0.37), 24.57 (SD 0.34), and 30.21 (SD 0.10), respectively (P < 0.001). TikTok also scored higher on the JAMA (1.36, SD 0.07) and GQS (2.46, SD 0.08) scales (P < 0.001). Videos created by healthcare professionals scored higher than those created by non-professionals (P < 0.001). Disease education and symptom self-examination content were more engaging, whereas rehabilitation videos received less attention.

Conclusions: Short-video platforms have great potential for chronic disease health education, with the caveat that the quality of the videos currently varies, and the authenticity of the video content is yet to be verified. While professional doctors play a crucial role in ensuring the quality and authenticity of video content, viewers should approach it with a critical mindset. Even without medical expertise, viewers should be encouraged to question the information and consult multiple sources.

Introduction

Osteoarthritis (OA) is a chronic degenerative joint disease characterized by progressive wear and degeneration of articular cartilage, often accompanied by damage to the surrounding soft tissues (1–3). It is recognized as a major cause of disability and motor dysfunction worldwide. Data from the World Health Organization show that the global prevalence of OA is approximately 5%, and its incidence increases with age (1, 4). OA causes persistent joint pain, limitation of movement, morning stiffness, and joint swelling. In advanced stages, OA may result in joint deformity and even disability, significantly impairing patients' ability to perform daily activities and affecting their quality of life (3). Although OA remains incurable, comprehensive interventions, including standardized pharmacological treatment, physical therapy, injection therapy, exercise rehabilitation, and surgery when necessary, can effectively alleviate symptoms and significantly improve patients' quality of life (1, 2, 5–7).

Health education plays a pivotal role in the management of OA by equipping patients with the knowledge necessary to manage this chronic condition. Enhancing public awareness facilitates a better understanding of OA pathogenesis, common symptoms, and available treatment options (8, 9), thereby promoting early detection, timely intervention, and appropriate management. The rapid development of information technology and the proliferation of short-video platforms have transformed the way health education is delivered. Short videos, as an emerging form of social media, have quickly become a major vehicle for health science dissemination (10–13). Platforms such as TikTok, WeChat Video Accounts, and Xiaohongshu have transcended their purely entertainment and social functions, becoming essential platforms for health education among Chinese people (14–16).

A significant amount of OA-related content has emerged on short-form video platforms, ranging from specialized medical information to personal patient experiences and discussions of various treatment options. A major issue is that creators possess varying levels of professionalism, resulting in mixed content quality and raising concerns about the accuracy, reliability, and educational value of the videos. Studies have shown that many medical science videos lack a solid scientific foundation, and numerous content creators disseminate misleading information in pursuit of increased traffic and viewer engagement (17, 18). For instance, some so-called “special effect treatments” are not only ineffective but may also delay proper treatment. Therefore, it is crucial to conduct a comprehensive evaluation of OA-related videos on these platforms to rigorously assess their quality and credibility. This is not only a critical step in enhancing the effectiveness of public health education, but also a necessary measure to ensure the public has access to accurate and reliable health information.

In recent years, researchers have frequently utilized specialized tools, such as the DISCERN scale, the JAMA criteria, and the Global Quality Score (GQS), to assess three key dimensions of video content: reliability, completeness, and medical standardization (19–25). D’Ambrosi et al. analyzed 100 videos related to humeral epicondylitis on TikTok (26). The study found that the videos were posted by physical therapists and focused on rehabilitation exercises. However, despite the high number of clicks and interactions, the quality and credibility of the videos were questionable. Other similar studies have highlighted a paradox: short-video platforms can quickly disseminate health information, yet improving the quality of content remains a persistent challenge (27–29).

Previous studies have primarily focused on health-related videos on platforms such as YouTube and Instagram, with limited research conducted on Chinese short-video platforms. OA, a highly prevalent and disabling condition, has not yet been systematically assessed in terms of the quality of related health content, despite its undeniable importance. This study aims to critically assess the top 100 OA-related videos on Chinese short-video platforms such as TikTok, WeChat, and Xiaohongshu, using tools like DISCERN, the JAMA benchmark, and GQS to evaluate their quality, reliability, and educational value, thereby addressing gaps in previous research. Additionally, this study explores the impact of short-video platform algorithms on the visibility and quality of OA-related content, revealing how algorithm-driven recommendation mechanisms shape health education in the digital age.

Methods

Ethical considerations

This study focused solely on data obtained from publicly accessible short-video sharing platforms, with no involvement of human experimental research, thereby obviating the need for ethical approval. Furthermore, no identifiable information regarding individual users or their IDs was included in this study, ensuring privacy and confidentiality.

Search strategy and data collection

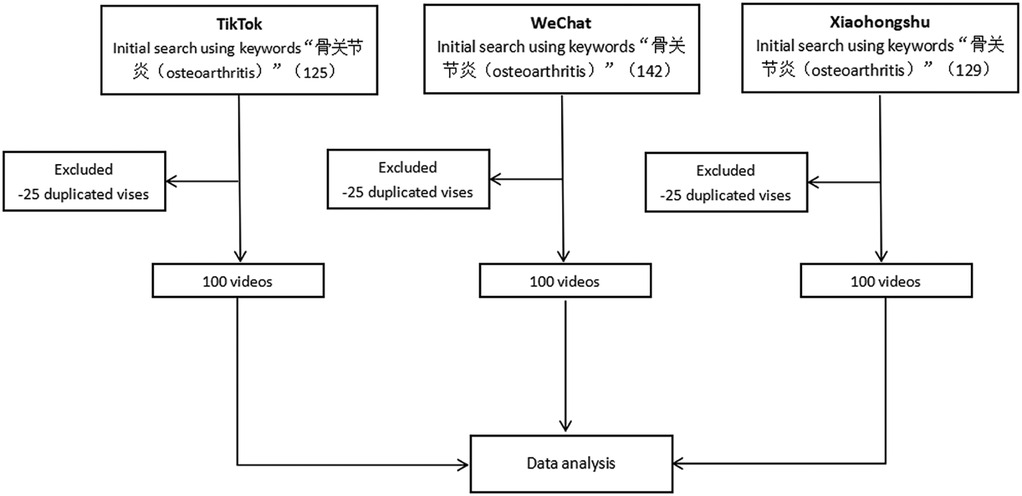

In designing our search and data collection strategy, we referred to established methodological guidelines for conducting health research using data from social media platforms, which provide a step-by-step framework for healthcare professionals and researchers to follow (30). A search was performed on TikTok, WeChat, and Xiaohongshu from March 1 to March 6, 2025, using the keyword “骨关节炎” (osteoarthritis). To minimize bias from personalized recommendation algorithms, searches were performed while logged out and using newly created accounts. The search results from each platform were sorted according to the default ranking algorithms, without applying any preset filters (e.g., relevance, most viewed). This approach ensured that the collected videos adhered to the platform's standard sorting criteria. We selected the top 100 videos for each search term to ensure a representative sample of the most relevant content (31). Inclusion criteria were as follows: (1) videos related to OA, and (2) videos in Chinese. Exclusion criteria were: (1) duplicate content, (2) advertisements, and (3) videos containing irrelevant content. For the selected videos, characteristics such as titles, number of likes, comments, collections, shares, video duration, sources, type of information, and content were recorded and analyzed.
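The screening step described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Video` record, its field names, and the use of a video ID to detect duplicates are hypothetical, since the study does not publish a data schema, and the eligibility flags were assigned by human reviewers rather than by code.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Video:
    # Hypothetical fields; each flag stands for a manual reviewer judgment.
    video_id: str
    title: str
    is_advertisement: bool
    is_about_oa: bool
    is_chinese: bool


def screen(results: Iterable[Video], target: int = 100) -> list[Video]:
    """Walk results in default rank order and keep the first `target` eligible videos."""
    kept: list[Video] = []
    seen_ids: set[str] = set()
    for video in results:
        if video.video_id in seen_ids:  # exclusion 1: duplicate content
            continue
        seen_ids.add(video.video_id)
        if video.is_advertisement:      # exclusion 2: advertisements
            continue
        if not (video.is_about_oa and video.is_chinese):  # irrelevant or non-Chinese
            continue
        kept.append(video)
        if len(kept) == target:
            break
    return kept


# Toy ranked search results (invented for illustration only).
ranked = [
    Video("a", "OA basics", False, True, True),
    Video("a", "OA basics", False, True, True),   # duplicate of the first
    Video("b", "Knee brace promo", True, True, True),
    Video("c", "OA self-check", False, True, True),
]
print([v.video_id for v in screen(ranked, target=2)])
```

Stopping once the target is reached mirrors the reported outcome of 100 retained videos per platform after exclusions.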

Primary and secondary outcomes

The primary outcomes of this study were the reliability and quality of OA-related videos, evaluated using the DISCERN scale, JAMA benchmarks, and the GQS. These outcomes aimed to assess the accuracy and overall quality of the health information presented in the videos. The secondary outcomes included engagement metrics (likes, comments, shares, collections). The understandability of the videos was also assessed using the Patient Education Materials Assessment Tool (PEMAT).

Exploratory analyses

In addition to the primary and secondary outcomes, we conducted exploratory analyses to examine differences in video quality based on the content creator type. Specifically, we compared the DISCERN, JAMA, and GQS scores for videos created by healthcare professionals (e.g., physicians, rehabilitation practitioners) and independent users. Furthermore, we examined how the content type influenced engagement metrics (likes, comments, shares, collections). We also analyzed the impact of platform algorithms on content visibility and recommendation quality through correlation analysis, exploring how algorithm-driven factors, such as user engagement (likes, comments, shares), correlated with video quality scores (DISCERN, JAMA, GQS).

Assessment tools for video reliability, validity, and quality

DISCERN instrument

The DISCERN tool is a structured assessment scale designed to evaluate the reliability and quality of information for both patients and healthcare providers. Items 1–8 form the first section, focusing on evaluating the reliability of the information. Items 9–15 form the second section, evaluating the quality of the information, while the final item (16) provides an overall quality rating. The DISCERN tool uses a five-point Likert scale for evaluation. For the first 15 items, a score of 1 indicates “no,” while a score of 5 indicates “yes.” For item 16, a score of 1 signifies “low quality with significant deficiencies,” while a score of 5 signifies “high quality with minimal deficiencies.” The total DISCERN score is calculated as the sum of the first 15 items, ranging from a minimum of 15 to a maximum of 75. A higher score indicates greater reliability and quality of the information—scores of 15–27 indicate “very poor,” 28–38 indicate “poor,” 39–50 indicate “medium,” 51–62 indicate “good,” and 63–75 indicate “excellent.” The DISCERN tool is freely accessible at http://www.discern.org.uk (19, 23).
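The scoring rule described above is simple enough to express directly. The following is a minimal sketch, not the raters' actual workflow: the function names and the example ratings are hypothetical, while the item ranges and band cut-offs are taken from the description above.

```python
from typing import Sequence

# Score bands as stated in the text: (lower bound, upper bound, label).
DISCERN_BANDS = [
    (15, 27, "very poor"),
    (28, 38, "poor"),
    (39, 50, "medium"),
    (51, 62, "good"),
    (63, 75, "excellent"),
]


def discern_total(item_scores: Sequence[int]) -> int:
    """Sum items 1-15; item 16 (overall rating) is excluded from the total."""
    if len(item_scores) != 16:
        raise ValueError("expected 16 item scores")
    if any(not 1 <= s <= 5 for s in item_scores):
        raise ValueError("each item must be scored from 1 to 5")
    return sum(item_scores[:15])


def discern_band(total: int) -> str:
    """Map a total score (15-75) to its quality band."""
    for low, high, label in DISCERN_BANDS:
        if low <= total <= high:
            return label
    raise ValueError("total must lie between 15 and 75")


ratings = [2] * 15 + [3]  # hypothetical ratings for one video
total = discern_total(ratings)
print(total, discern_band(total))  # 30 poor
```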

JAMA benchmark criteria

The JAMA benchmark criteria are among the most widely used tools for assessing medical information sourced from online platforms. They comprise four criteria: authorship, attribution, disclosure, and currency, each with a maximum score of one point, resulting in a total possible score of 4 points. In the JAMA evaluation, a score of 0–1 points indicates insufficient information, 2–3 points indicates partially sufficient information, and 4 points indicates completely sufficient information (24, 25).
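As an illustration, the four criteria and the interpretation thresholds above can be encoded as follows. The class name and the example video are hypothetical; only the criteria and cut-offs come from the text.

```python
from dataclasses import dataclass


@dataclass
class JamaAssessment:
    """One boolean per benchmark criterion; each met criterion earns one point."""
    authorship: bool
    attribution: bool
    disclosure: bool
    currency: bool

    def score(self) -> int:
        return sum([self.authorship, self.attribution, self.disclosure, self.currency])

    def interpretation(self) -> str:
        points = self.score()
        if points <= 1:
            return "insufficient"
        if points <= 3:
            return "partially sufficient"
        return "completely sufficient"


# Hypothetical video that names its author and is dated, but cites no sources
# and discloses no conflicts of interest.
video = JamaAssessment(authorship=True, attribution=False, disclosure=False, currency=True)
print(video.score(), video.interpretation())  # 2 partially sufficient
```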

Global quality score

The GQS is a scoring system developed to evaluate the instructional quality of videos. It enables the assessment of the quality, accessibility, and usability of information in online videos. In the GQS evaluation, a score of 1 indicates the lowest quality and limited usefulness for viewers, while a score of 5 indicates excellent quality and substantial usefulness (20, 21).

To further assess the understandability and actionability of the videos, the PEMAT, developed by the Agency for Healthcare Research and Quality, was employed (32). To minimize potential bias, all data collection and statistical analyses were conducted by a designated tester throughout the study. Two raters, both senior clinicians with over 10 years of experience, independently evaluated the content of each video and resolved any disagreement through discussion. When consensus could not be reached, a third rater, an experienced department head with extensive clinical expertise, provided the final score.

Statistical analysis

Descriptive statistics were used to analyze all video features, including video source, content, audio, and message type, together with the quality scores (DISCERN, JAMA, and GQS). Categorical variables were presented as absolute frequencies and percentages. Continuous variables were reported as mean ± standard deviation (SD) when normally distributed, and as median, interquartile range (IQR), and range otherwise. Comparisons between two groups were conducted using the Mann–Whitney U test or Student's t-test, while comparisons among three or more groups were performed using the Kruskal–Wallis test or one-way ANOVA. Spearman correlation analysis was used to assess the relationship between quantitative variables. A P value less than 0.05 was considered statistically significant. All statistical analyses were conducted using SPSS (version 22.0; IBM Corp) and GraphPad Prism (version 9.0; Dotmatics).
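For readers unfamiliar with the three-group nonparametric comparison, the sketch below computes a tie-corrected Kruskal–Wallis H statistic in plain Python. It is not the SPSS procedure used in the study: the function names and the toy scores are hypothetical, and the p-value uses the chi-square approximation, which for three groups (2 degrees of freedom) reduces to exp(−H/2).

```python
import math
from collections import Counter
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_3(a: Sequence[float], b: Sequence[float], c: Sequence[float]) -> tuple[float, float]:
    """Return (H, p) for exactly three independent groups."""
    groups = [list(a), list(b), list(c)]
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    weighted_rank_sums = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted_rank_sums += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * weighted_rank_sums - 3 * (n + 1)
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - tie_term / (n ** 3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    h /= correction
    # With 2 degrees of freedom the chi-square survival function is exp(-H/2).
    return h, math.exp(-h / 2)


# Toy scores for three platforms (invented for illustration only).
h, p = kruskal_wallis_3([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(round(h, 2), round(p, 4))  # 7.2 0.0273
```

With samples this small the chi-square approximation is rough; statistical packages may report an exact p-value instead.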

Results

The general characteristics of videos

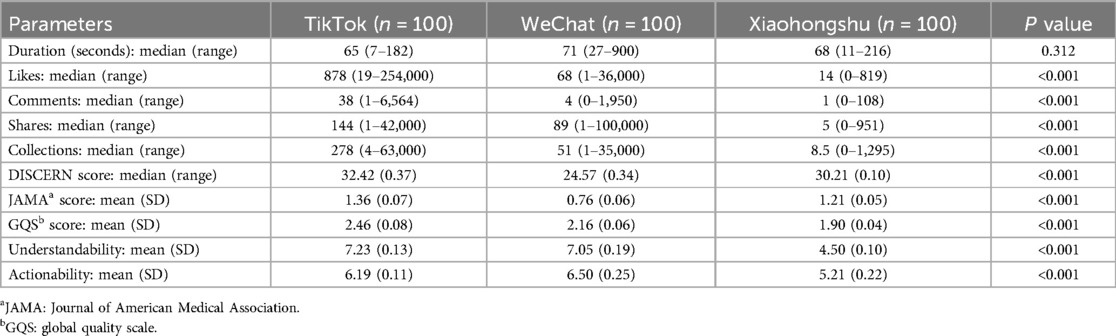

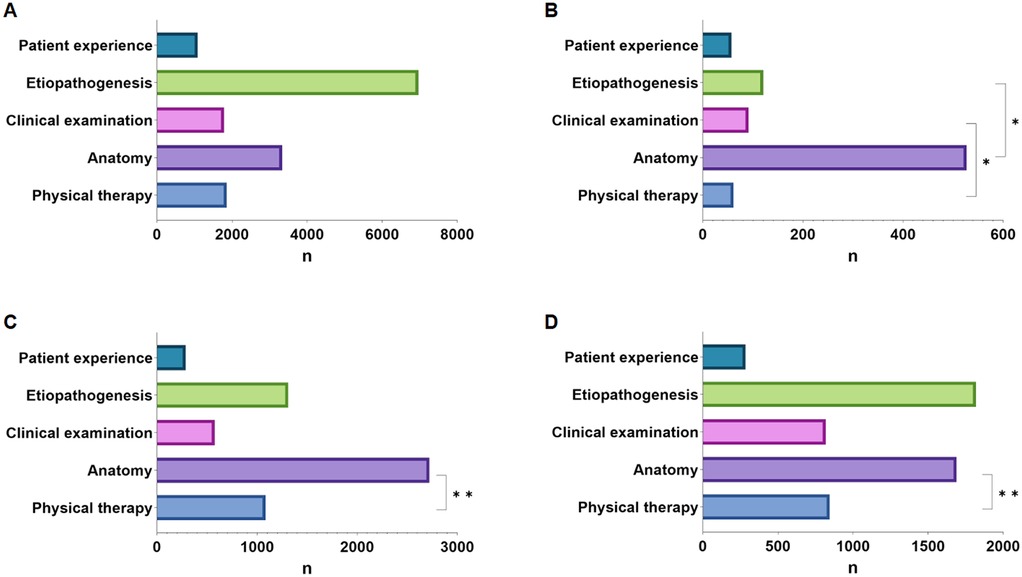

As shown in Figure 1, TikTok, WeChat, and Xiaohongshu each contained 100 videos after excluding advertisements, duplicates, and irrelevant content. Analysis of the general characteristics of the videos revealed no significant difference in video duration across the three platforms (P = 0.312). Videos on TikTok received more likes, comments, shares, and favorites than those from WeChat and Xiaohongshu (all P < 0.001). DISCERN ratings showed that TikTok videos scored higher than Xiaohongshu, which in turn scored higher than WeChat (mean 32.42, SD 0.37 vs. mean 30.21, SD 0.10 vs. mean 24.57, SD 0.34; P < 0.001). The JAMA score for TikTok videos was higher than that for Xiaohongshu, which was higher than that for WeChat (mean 1.36, SD 0.07 vs. mean 1.21, SD 0.05 vs. mean 0.76, SD 0.06; P < 0.001). The GQS score for TikTok videos was higher than that for WeChat, which was higher than that for Xiaohongshu (mean 2.46, SD 0.08 vs. mean 2.16, SD 0.06 vs. mean 1.90, SD 0.04; P < 0.001). The understandability and actionability of videos also differed significantly across platforms (P < 0.001). The detailed characteristics of OA-related videos across platforms are presented in Table 1.

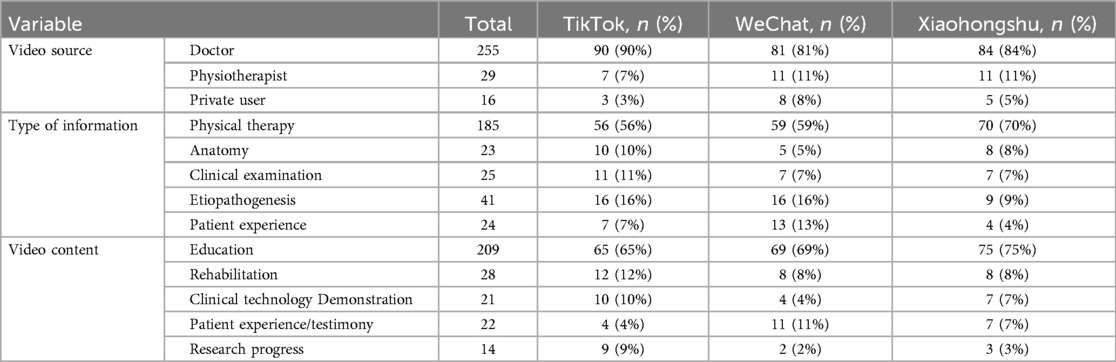

Video source and content

Table 2 presents the sources and content of OA-related videos. Doctors were the primary uploaders of videos (255/300, 85.0%), and the content primarily focused on physical therapy (184/300, 61.3%) and etiology (41/300, 13.7%). The video content predominantly focused on educational science (215/300, 71.7%) and rehabilitation guidance (28/300, 9.3%).

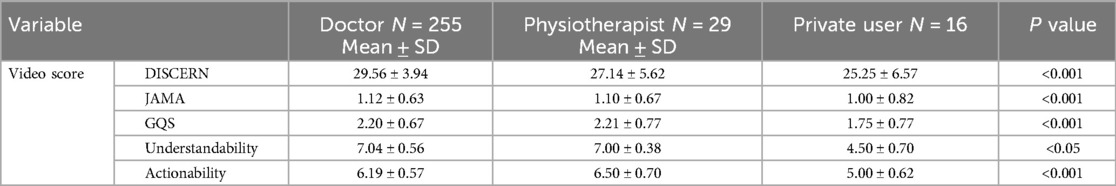

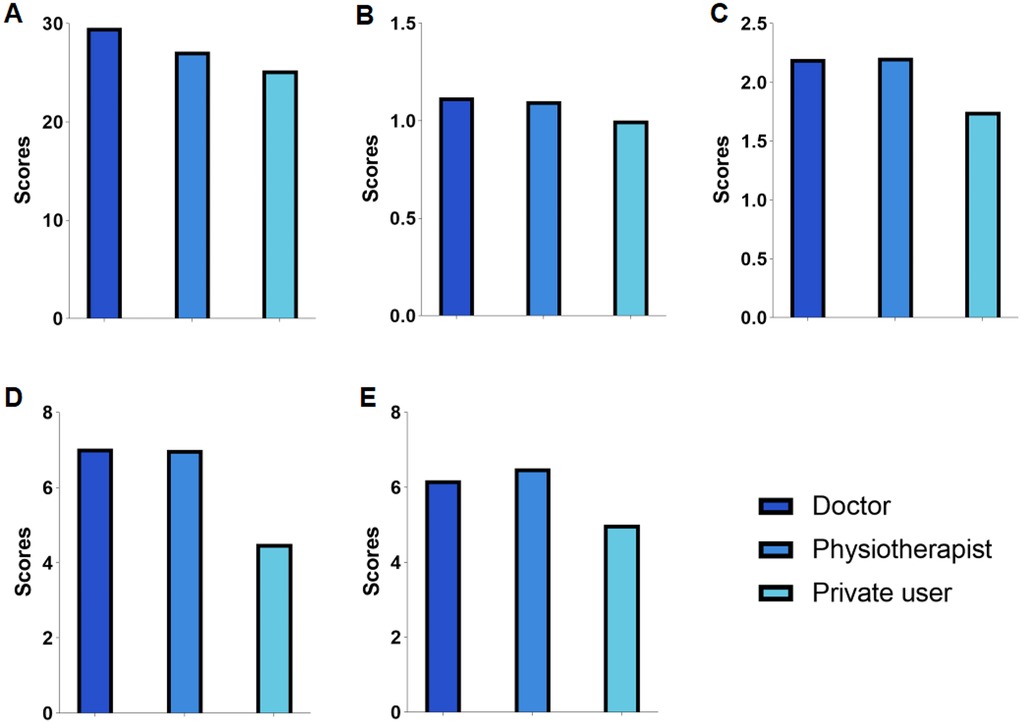

The quality and popularity of videos from different sources with different contents and different presentation forms

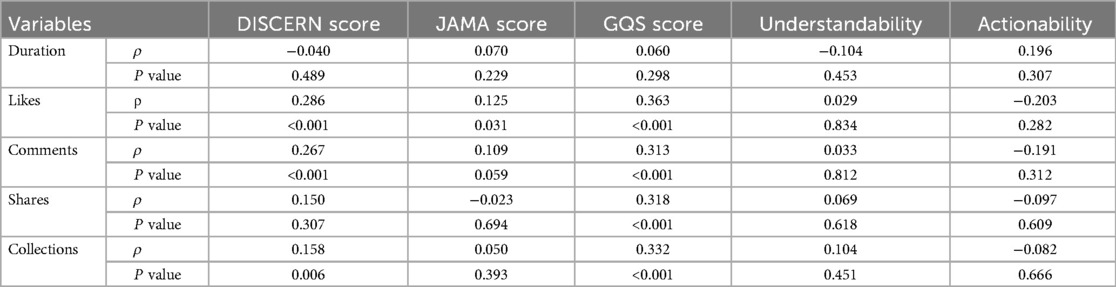

When examining differences in information publishers, we found that videos uploaded by independent users had significantly lower DISCERN, JAMA, and GQS scores than those uploaded by physicians and rehabilitation practitioners (all P < 0.001; Table 3; Figures 2A–C). Similarly, the understandability and actionability of videos uploaded by independent users were significantly lower than those uploaded by rehabilitation practitioners and physicians (P < 0.05, P < 0.001; Table 3; Figures 2D,E). When examining differences in the type of information, we found no significant difference in likes across types (P = 0.053). However, regarding the number of discussions, clinical examination outperformed physical therapy (mean 91.12, SD 156.53 vs. mean 61.55, SD 216.80; P < 0.05), and anatomy outperformed etiopathogenesis (mean 526.65, SD 1,438.78 vs. mean 120.40, SD 565.86; P < 0.05). Anatomy outperformed physical therapy in terms of shares (mean 2,720.85, SD 8,060.86 vs. mean 1,081.93, SD 7,717.04; P < 0.01). Patient experience ranked lower than anatomy in terms of number of collections (mean 284.08, SD 899.09 vs. mean 1,689.88, SD 3,875.34; P < 0.05) (Figures 3A–D).

Figure 2. Journal of the American Medical Association (JAMA) score, Global Quality Score (GQS), modified DISCERN score, Patient Education Materials Assessment Tool (PEMAT) understandability, and PEMAT actionability of osteoarthritis-related videos from different sources. (A) The JAMA score, (B) the GQS score, (C) the modified DISCERN score, (D) PEMAT-understandability, and (E) PEMAT-actionability.

Figure 3. (A) The number of likes. (B) The number of discussions. (C) The number of shares. (D) The number of collections. (*P < 0.05, **P < 0.01).

Correlation analysis

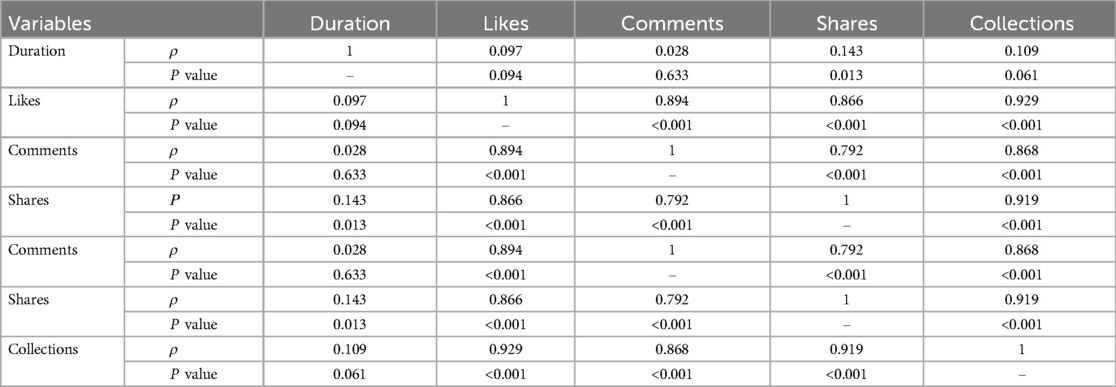

Spearman correlation (ρ) analyses identified the relationships between various video variables. The results showed a positive correlation between video duration and video sharing (P < 0.05), but no correlation between duration and the number of likes, comments, or collections (all P > 0.05). There was also a significant positive correlation between the number of likes and the numbers of comments, shares, and collections (P < 0.001; Table 4). The numbers of likes and comments were positively correlated with both the DISCERN and GQS scores (P < 0.001). The number of shares was positively correlated with the GQS score (P < 0.001). The number of collections was positively correlated with both the DISCERN and GQS scores (P < 0.01, P < 0.001; Table 5).
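To make the statistic concrete, Spearman's ρ is the Pearson correlation computed on average ranks rather than raw values. The sketch below shows this in plain Python; the function names and the like counts and GQS scores are invented for illustration and are not study data.

```python
import math
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of the average ranks of x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have equal length of at least 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mean_x, mean_y = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in rx))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in ry))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (spread_x * spread_y)


likes = [10, 250, 40, 1200, 90]  # hypothetical like counts
gqs = [1, 3, 2, 4, 2]            # hypothetical GQS scores for the same videos
print(round(spearman_rho(likes, gqs), 3))
```

Because ranks replace raw counts, a single viral video with an extreme number of likes does not dominate the coefficient, which suits heavily skewed engagement data.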

Platform algorithm impact on video visibility and quality scores

Correlation analysis revealed a significant relationship between interaction metrics and video quality scores. Specifically, videos with higher interaction rates are generally associated with higher quality scores (Table 5). On TikTok, videos with high interaction rates and quality scores are significantly more prevalent than on other platforms (P < 0.001; Table 1), possibly due to TikTok's recommendation algorithm. TikTok's algorithm tends to prioritize videos with high interaction rates, which generally receive higher quality scores. After viewing high-quality videos, viewer interaction frequency tends to increase, creating a positive feedback loop. This finding further supports the potential influence of platform recommendation algorithms on video visibility and the quality of recommended content.

Discussion

This study aims to assess the quality, reliability, and educational value of OA-related videos on Chinese short-video platforms, including TikTok, WeChat, and Xiaohongshu, thereby addressing a gap in existing research. The results indicate significant differences in video quality across these platforms, reflecting a broader trend in the dissemination of health education content via social media. The study also highlights the impact of short-video platform algorithms on video visibility and recommended content.

Significance of the findings

The evaluation using the DISCERN, JAMA, and GQS revealed that OA-related videos on TikTok significantly outperformed those on Xiaohongshu and WeChat across multiple indicators. Specifically, TikTok videos were not only more credible but also more comprehensive and practical. This finding aligns with previous studies and confirms TikTok's leading role in health science popularization (33–35). This difference also highlights the potential and significance of TikTok in public health education, offering insight into how communication media directly influence the quality of health information.

The DISCERN and JAMA assessments revealed that videos created by healthcare professionals (e.g., physicians, rehabilitation therapists) received higher ratings compared to those produced by independent users. Videos created by non-professionals typically exhibited two major shortcomings: unclear presentation of specialized knowledge and a lack of practical instructional value, often aimed more at garnering attention than providing viewer benefit. These findings are consistent with previous studies (36, 37). These results emphasize that professional qualifications are crucial for ensuring the accuracy and credibility of health information, and that increasing the involvement of medical professionals in health education is essential for disseminating both professional and practical health knowledge to the public.

This study also found that content, subject matter, and presentation style significantly influenced user engagement (likes, shares, comments). Videos providing detailed explanations of bone and joint anatomy and clinical symptoms received more engagement than those focused on rehabilitation. Viewers preferred scientific content that was information-dense, detailed, and practically applicable, offering immediate utility. These findings are consistent with previous studies (38–40).

Comparison with related literature

The results of this study are highly consistent with previous research on social media health education. D’Ambrosi et al. found that, although physical therapists garnered significant attention on TikTok, the quality of their content varied (41). Similarly, several studies have emphasized the potential of short-form video platforms in advancing public health education (42, 43); however, content quality remains inconsistent. These findings highlight a central paradox: when health discussions are dominated by laypeople, ensuring the accuracy and reliability of information becomes challenging. Our results are consistent with studies by Ozsoy and Uprak on YouTube health videos, which demonstrated that content created by healthcare professionals consistently outperformed that created by laypeople in terms of reliability and quality (37, 39). This pattern holds across platforms and regions, as an increasing number of researchers focus on online health information. The deep involvement of healthcare professionals is indispensable for ensuring that video content is both credible and beneficial.

Role of recommendation algorithms

Although recommendation algorithms significantly influence the dissemination of health information on short-video platforms, there has been limited research on the impact of platform-specific recommendation systems on the visibility and quality of health-related videos, particularly those concerning OA. This study is the first to examine how the recommendation algorithms of TikTok, WeChat, and Xiaohongshu influence the display, visibility, and dissemination effectiveness of video content. Using TikTok as an example, its algorithm primarily relies on user interaction metrics such as likes, comments, and shares to determine video recommendations (44–46). The results of this study suggest that videos with higher interaction metrics are generally associated with higher video quality. This positive feedback loop leads TikTok's algorithm to prioritize higher-quality OA-related videos, which subsequently generate more interaction. This interaction-based recommendation mechanism may cause certain health content to gain higher visibility, while content with fewer interactions may be overlooked, despite potentially possessing significant educational value. Similarly, WeChat and Xiaohongshu have their own recommendation algorithms, although their priorities may not rely solely on interaction data but also include content relevance and user behavior. Due to the differing recommendation mechanisms across these platforms, health videos about OA may display varying levels of visibility and dissemination patterns, thereby affecting the accuracy and breadth of health information accessible to users. This disparity highlights potential biases inherent in recommendation algorithms in health information dissemination. We believe that while platform recommendation algorithms can increase video exposure, they may inadvertently enhance the visibility of certain content, causing misleading videos to attract more audience attention. 
Consequently, algorithmic bias may have detrimental effects on the health education functions of these platforms (47, 48).

Limitations and future directions

While this study offers valuable insights into the quality of OA-related videos on Chinese short-video platforms, several limitations and opportunities for future research remain.

Artificial intelligence-based analysis

This study relied on manual scoring and conventional evaluation tools. Future research could leverage artificial intelligence (AI) and machine learning (ML) techniques to automate the classification and analysis of health-related videos (49). For instance, AI could be employed for content categorization, misinformation detection, and sentiment analysis, enabling large-scale analysis of videos and their potential impact on public health. Additionally, natural language processing (NLP) could be applied to analyze viewer comments and interactions, offering deeper insights into audience engagement, trust, and behavior (50).

Expansion of data set and cross-platform/multilingual comparison

This study focused on a limited set of videos from Chinese platforms. Future research could broaden the dataset to include videos from a wider range of platforms, including YouTube, Instagram, and Facebook, for a comparison of content across different platforms and regions. This would provide a more comprehensive evaluation of social media's role in global health education. Furthermore, including multilingual videos from different cultural contexts would enhance the generalizability of the findings and provide insights into the cross-cultural applicability of health communication strategies.

Algorithmic and cross-cultural research

Further research could explore how platform algorithms influence the dissemination of health information across diverse cultures. This could involve studying the differences in algorithmic impact between Western and Eastern platforms and the role of algorithms in shaping health education across culturally diverse contexts.

Academic contributions and practical implications

In summary, our study emphasizes the need for quality assessment of social media content, particularly on short-form video platforms. We identified significant disparities in the quality of OA-related videos across platforms and creators, underscoring the need for stricter content regulation and greater involvement of healthcare professionals in content creation. From a practical standpoint, enhancing the quality of health science communication on social media is essential. Short-form video platforms can play a pivotal role in chronic disease management by optimizing content presentation and advancing public health education, ultimately empowering individuals to take charge of their health.

Conclusion

While short-video platforms serve as powerful tools for disseminating health information, substantial efforts are needed to enhance content quality. Ensuring the credibility, clarity, and actionability of health-related videos is crucial for optimizing their effectiveness in advancing public health education and empowering viewers with reliable, actionable knowledge.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Ethics statement

The study was approved by the Ethics Committee of Tianjin Hospital (IRB2024 Medical Ethics Review 206). The social media data was accessed and analyzed in accordance with the platform's terms of use and all relevant institutional/national regulations.

Author contributions

Y-xZ: Data curation, Methodology, Conceptualization, Writing – original draft, Writing – review & editing. H-tY: Writing – original draft, Methodology, Validation, Conceptualization. Y-xL: Methodology, Writing – original draft, Supervision, Investigation. XF: Writing – review & editing, Data curation, Conceptualization. JL: Supervision, Funding acquisition, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Glyn-Jones S, Palmer AJR, Agricola R, Price AJ, Vincent TL, Weinans H, et al. Osteoarthritis. Lancet. (2015) 386(9991):376–87. doi: 10.1016/S0140-6736(14)60802-3

2. Briggs AM, Cross MJ, Hoy DG, Sànchez-Riera L, Blyth FM, Woolf AD, et al. Musculoskeletal health conditions represent a global threat to healthy aging: a report for the 2015 World Health Organization world report on ageing and health. Gerontologist. (2016) 56(Suppl_2):S243–55. doi: 10.1093/geront/gnw002

3. Tsang A, Korff V, Lee M, Alonso S, Karam J, Angermeyer E, et al. Common chronic pain conditions in developed and developing countries: gender and age differences and comorbidity with depression-anxiety disorders. J Pain. (2008) 9(10):883–91. doi: 10.1016/j.jpain.2008.05.005

4. Vina ER, Kwoh CK. Epidemiology of osteoarthritis: literature update. Curr Opin Rheumatol. (2018) 30(2):160–7. doi: 10.1097/BOR.0000000000000479

5. Zhang Y, Zhou Y. Advances in targeted therapies for age-related osteoarthritis: a comprehensive review of current research. Biomed Pharmacother. (2024) 179:117314. doi: 10.1016/j.biopha.2024.117314

6. Bian G, Zhao W, Zhang L. Comparative efficacy of platelet-rich plasma injection vs ultrasound-guided hyaluronic acid injection in the rehabilitation of hip osteoarthritis: an observational study. Ann Med. (2025) 57(1):2524091. doi: 10.1080/07853890.2025.2524091

7. Heredia Sulbaran AA, Gomez PA. Efficacy of hydrolyzed collagen injections compared to platelet-rich plasma and hyaluronic acid in the treatment of patients with symptomatic knee osteoarthritis: a retrospective clinical study. BMC Musculoskelet Disord. (2025) 26(1):619. doi: 10.1186/s12891-025-08811-9

8. Battista S, Recenti F, Kiadaliri A, Lohmander S, Jönsson T, Abbott A, et al. Impact of an intervention for osteoarthritis based on exercise and education on metabolic health: a register-based study using the soad cohort. RMD Open. (2025) 11(1):e005133. doi: 10.1136/rmdopen-2024-005133

9. Gan W, Ouyang J, She G, Xue Z, Zhu L, Lin A, et al. ChatGPT’s role in alleviating anxiety in total knee arthroplasty consent process: a randomized controlled trial pilot study. Int J Surg. (2025) 111(3):2546–57. doi: 10.1097/JS9.0000000000002223

10. Elhajjar S, Ouaida F. Use of social media in healthcare. Health Mark Q. (2022) 39(2):173–90. doi: 10.1080/07359683.2021.2017389

11. Zheluk A, Anderson J, Dineen-Griffin S. Analysis of acute non-specific back pain content on TikTok: an exploratory study. Cureus. (2022) 14(1):e21404. doi: 10.7759/cureus.21404

12. Kara M, Ozduran E, Mercan Kara M, Hanci V, Erkin Y. Assessing the quality and reliability of YouTube videos as a source of information on inflammatory back pain. PeerJ. (2024) 12:e17215. doi: 10.7717/peerj.17215

13. Toler I, Grubbs L. Listening to TikTok—patient voices, bias, and the medical record. N Engl J Med. (2025) 392(5):422–3. doi: 10.1056/NEJMp2410601

14. Minadeo M, Pope L. Weight-normative messaging predominates on TikTok-a qualitative content analysis. PLoS One. (2022) 17(11):e0267997. doi: 10.1371/journal.pone.0267997

15. Alatorre S, Schwarz AG, Egan KA, Feldman AR, Rosa M, Wang ML. Exploring social media preferences for healthy weight management interventions among adolescents of color: mixed methods study. JMIR Pediatr Parent. (2023) 6:e43961. doi: 10.2196/43961

16. Bhandari A, Bimo S. Why’s everyone on TikTok now? The algorithmized self and the future of self-making on social media. SMS. (2022) 8:205630512210862. doi: 10.1177/20563051221086241

17. Comp G, Dyer S, Gottlieb M. Is TikTok the next social media frontier for medicine? AEM Educ Train. (2021) 5(3). doi: 10.1002/aet2.10532

18. Kempenich JW, Willis RE, Fayyadh MA, Campi HD, Cardenas T, Hopper WA, et al. Video-based patient education improves patient attitudes toward resident participation in outpatient surgical care. J Surg Educ. (2018) 75(6):e61–7. doi: 10.1016/j.jsurg.2018.07.024

19. Charnock D, Shepperd S, Needham G, Gann R. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health. (1999) 53(2):105. doi: 10.1136/jech.53.2.105

20. Erdem MN, Karaca S. Evaluating the accuracy and quality of the information in kyphosis videos shared on YouTube. Spine. (2018) 43(22):E1334–9. doi: 10.1097/BRS.0000000000002691

21. Silberg WM, Lundberg GD, Musacchio RA. Assessing, controlling, and assuring the quality of medical information on the internet: caveant lector et viewor—let the reader and viewer beware. JAMA. (1997) 277(15):1244–5. doi: 10.1001/jama.1997.03540390074039

22. Śledzińska P, Bebyn MG, Furtak J. Quality of YouTube videos on meningioma treatment using the DISCERN instrument. World Neurosurg. (2021) 153:e179–86. doi: 10.1016/j.wneu.2021.06.072

23. Szmuda T, Alkhater A, Albrahim M, Alquraya E, Ali S, Dunquwah RA, et al. YouTube as a source of patient information for stroke: a content-quality and an audience engagement analysis. J Stroke Cerebrovasc Dis. (2020) 29(9):105065. doi: 10.1016/j.jstrokecerebrovasdis.2020.105065

24. Yurdaisik I. Analysis of the most viewed first 50 videos on YouTube about breast cancer. BioMed Res Int. (2020) 2020:2750148. doi: 10.1155/2020/2750148

25. Zhang S, Fukunaga T, Oka S, Orita H, Kaji S, Yube Y, et al. Concerns of quality, utility, and reliability of laparoscopic gastrectomy for gastric cancer in public video sharing platform. Ann Transl Med. (2020) 8(5):196. doi: 10.21037/atm.2020.01.78

26. D’Ambrosi R, Bellato E, Bullitta G, Cecere AB, Corona K, De Crescenzo A, et al. TikTok content as a source of health education regarding epicondylitis: a content analysis. J Orthop Traumatol. (2024) 25(1):14. doi: 10.1186/s10195-024-00757-3

27. Sun F, Zheng S, Wu J. Quality of information in gallstone disease videos on TikTok: cross-sectional study. J Med Internet Res. (2023) 25:e39162. doi: 10.2196/39162

28. Kang E, Ju H, Kim S, Choi J. Contents analysis of thyroid cancer-related information uploaded to YouTube by physicians in Korea: endorsing thyroid cancer screening, potentially leading to overdiagnosis. BMC Public Health. (2024) 24(1):942. doi: 10.1186/s12889-024-18403-2

29. Gong X, Dong B, Li L, Shen D, Rong Z. TikTok video as a health education source of information on heart failure in China: a content analysis. Front Public Health. (2023) 11:1315393. doi: 10.3389/fpubh.2023.1315393

30. D’Souza RS, Hooten WM, Murad MH. A proposed approach for conducting studies that use data from social media platforms. Mayo Clin Proc. (2021) 96(8):2218–29. doi: 10.1016/j.mayocp.2021.02.010

31. Morahan-Martin JM. How internet users find, evaluate, and use online health information: a cross-cultural review. Cyberpsychol Behav. (2004) 7(5):497–510. doi: 10.1089/cpb.2004.7.497

32. Shoemaker SJ, Wolf MS, Brach C. Development of the patient education materials assessment tool (PEMAT): a new measure of understandability and actionability for print and audiovisual patient information. Patient Educ Couns. (2014) 96(3):395–403. doi: 10.1016/j.pec.2014.05.027

33. Zeng M, Grgurevic J, Diyab R, Roy R. #Whatieatinaday: the quality, accuracy, and engagement of nutrition content on TikTok. Nutrients. (2025) 17(5). doi: 10.3390/nu17050781

34. Quartey A, Lipoff JB. Is TikTok the new Google? Content and source analysis of Google and TikTok as search engines for dermatologic content in 2024. J Am Acad Dermatol. (2025) 93(1):e25–6. doi: 10.1016/j.jaad.2024.10.131

35. Toler I, Grubbs L. Listening to TikTok—patient voices, bias, and the medical record. N Engl J Med. (2025) 392(5):422–3. doi: 10.1056/NEJMp2410601

36. Ergenç M, Uprak TK. YouTube as a source of information on Helicobacter pylori: content and quality analysis. Helicobacter. (2023) 28(4):e12971. doi: 10.1111/hel.12971

37. Uprak TK, Ergenç M. Assessment of esophagectomy videos on YouTube: is peer review necessary for quality? J Surg Res. (2022) 279:368–73. doi: 10.1016/j.jss.2022.06.037

38. Shuab A, Krakowiak M, Szmuda T. The quality and reliability analysis of YouTube videos about insulin resistance. Int J Med Inf. (2023) 171:104987. doi: 10.1016/j.ijmedinf.2023.104987

39. Ozsoy-Unubol T, Alanbay-Yagci E. YouTube as a source of information on fibromyalgia. Int J Rheum Dis. (2021) 24(2):197–202. doi: 10.1111/1756-185X.14043

40. Gaş S, Zincir ÖÖ, Bozkurt AP. Are YouTube videos useful for patients interested in botulinum toxin for bruxism? J Oral Maxillofac Surg. (2019) 77(9):1776–83. doi: 10.1016/j.joms.2019.04.004

41. D’Ambrosi R, Carrozzo A, Annibaldi A, Vieira TD, An J-S, Freychet B, et al. YouTube videos provide low-quality educational content about the anterolateral ligament of the knee. Arthrosc Sports Med Rehabil. (2025) 7(1):101002. doi: 10.1016/j.asmr.2024.101002

42. Ayer D, Jain A, Singh M, Tawfik A, Tadros M. Understanding TikTok’s role in young-onset colorectal cancer awareness and education. J Cancer Educ. (2025). doi: 10.1007/s13187-025-02585-3

43. Potluru A, Nikookam Y, Guckian J. Hyperpigmentation and social media: a cross-sectional study of content quality on TikTok. Clin Exp Dermatol. (2025) 50(7):1453–5. doi: 10.1093/ced/llaf084

44. Boothby-Shoemaker W, Khanna R, Khanna R, Milburn A, Walia S, Huggins RH. Social media platforms as a resource for vitiligo support. J Drugs Dermatol. (2022) 21(10):1135–6. doi: 10.36849/jdd.6801

45. Druskovich C, Landriscina A. TikTok and dermatology: questioning the data. J Drugs Dermatol. (2024) 23(8):e167–8. doi: 10.36849/jdd.7956

46. Baghdadi JD, Coffey KC, Belcher R, Frisbie J, Hassan N, Sim D, et al. #Coronavirus on TikTok: user engagement with misinformation as a potential threat to public health behavior. JAMIA Open. (2023) 6(1):ooad013. doi: 10.1093/jamiaopen/ooad013

47. Vosoughi S, Roy D, Aral S. The spread of true and false news online. Science. (2018) 359(6380):1146–51. doi: 10.1126/science.aap9559

48. Wang Y, McKee M, Torbica A, Stuckler D. Systematic literature review on the spread of health-related misinformation on social media. Soc Sci Med. (2019) 240:112552. doi: 10.1016/j.socscimed.2019.112552

49. Bini SA. Artificial intelligence, machine learning, deep learning, and cognitive computing: what do these terms mean and how will they impact health care? J Arthroplasty. (2018) 33(8):2358–61. doi: 10.1016/j.arth.2018.02.067

Keywords: osteoarthritis, short videos, health information, quality assessment, reliability

Citation: Zhang Y-x, Yin H-t, Liu Y-x, Fu X and Liu J (2025) Videos in short-video sharing platforms as sources of information on osteoarthritis: cross-sectional content analysis study. Front. Digit. Health 7:1622503. doi: 10.3389/fdgth.2025.1622503

Received: 10 June 2025; Accepted: 7 August 2025; Published: 5 September 2025.

Edited by: Andreas Kanavos, Ionian University, Greece

Copyright: © 2025 Zhang, Yin, Liu, Fu and Liu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jun Liu, liujun1968tju@163.com

†These authors have contributed equally to this work and share first authorship
