- 1Institute for Data Science and Informatics, University of Missouri, Columbia, MO, United States

- 2School of Medicine, Department of Biomedical Informatics, Biostatistics, and Medical Epidemiology, University of Missouri, Columbia, MO, United States

- 3Department of Occupational Therapy, University of Missouri, Columbia, MO, United States

Introduction: Clinical monitoring of functional decline in amyotrophic lateral sclerosis (ALS) relies on periodic assessments, which may miss critical changes that occur between visits when timely interventions are most beneficial.

Methods: To address this gap, semi-supervised regression models with pseudo-labeling were developed; these models estimated rates of decline by targeting Revised Amyotrophic Lateral Sclerosis Functional Rating Scale (ALSFRS-R) trajectories with continuous in-home sensor data from a three-patient ALS case series. Three model paradigms were compared (individual batch learning and cohort-level batch vs. incremental fine-tuned transfer learning) across linear slope, cubic polynomial, and ensembled self-attention pseudo-label interpolations.

Results: Cohort-level homogeneity was observed across functional domains. For ALSFRS-R subscales, transfer learning reduced the prediction error in 28 of 34 contrasts [mean root mean square error (RMSE) = 0.20 (0.14–0.25)]. However, for composite ALSFRS-R scores, individual batch learning was optimal for two of three participants [mean RMSE = 3.15 (2.24–4.05)]. Self-attention interpolation best captured non-linear progression, providing the lowest subscale-level error [mean RMSE = 0.19 (0.15–0.23)], and outperformed linear and cubic interpolations in 21 of 34 contrasts. Conversely, linear interpolation produced more accurate composite predictions [mean RMSE = 3.13 (2.30–3.95)]. Distinct homogeneity-heterogeneity profiles were identified across domains, with respiratory and speech functions showing patient-specific progression patterns that improved with personalized incremental fine-tuning, while swallowing and dressing functions followed cohort-level trends suited for batch transfer modeling.

Discussion: These findings indicate that dynamically matching learning and pseudo-labeling techniques to functional domain-specific homogeneity-heterogeneity profiles enhances predictive accuracy in tracking ALS progression. As an exploratory pilot, these results reflect case-level observations rather than population-wide effects. Integrating adaptive model selection into sensor platforms may enable timely interventions and support scalable deployment in future multi-center studies.

1 Introduction

Amyotrophic lateral sclerosis (ALS) is a neurodegenerative disease affecting the motor neuron system, with patients experiencing significant difficulty across a range of functions, resulting in a reduced capacity for self-care. Decline in function is measured regularly at provider visits using clinical instruments like the Revised Amyotrophic Lateral Sclerosis Functional Rating Scale (ALSFRS-R) (1). However, acute functional decline may go undetected by clinicians until the next follow-up due to the long duration between office visits. Sensor monitoring, which has been shown to be effective in supporting care for older adults living independently, offers a possible solution for tracking functional changes related to disease progression in those living with ALS. Sensor measurements may serve as predictive features to target instrument scales over interim periods between clinic visits, thereby increasing the fidelity of functional measures and helping clinicians make better-informed care decisions to guide interventions. In this study, we trained and evaluated three semi-supervised learning models (participant-level batch, cohort-level transfer with batch fine-tuning, and cohort-level transfer with incremental fine-tuning) across three pseudo-label techniques (linear, cubic, and self-attention interpolation) to predict ALSFRS-R scale trajectories from in-home sensor health features using root mean square error (RMSE) and Pearson’s correlation ($r$) as primary metrics of model accuracy and fit.

1.1 Sensor monitoring of ALS progression

Sensor-based health monitoring has been shown to improve clinical outcomes in older adult independent living residents through early illness detection, enabling them to maintain their independence longer (2). Physical deficits in older adults may mirror the functional declines observed in ALS, with community-dwelling older adults experiencing a stable physical function until a steep decline 1–3 years before death (3). Additionally, age-related frailty may involve motor unit loss (denervation) similar to ALS, which contributes to muscle wasting and could further exacerbate ALS progression in older patients (4). This evidence indicates that remote sensor monitoring technologies effective for improving care in elder populations may identify digital biomarkers for tracking ALS disease progression. Recent research has found that combining wearable sensor data with self-reported clinic assessments and environmental metrics improves predictive models targeting ALSFRS-R scales (5). Similarly, work evaluating wearable accelerometer, ECG, and digital speech sensors for tracking ALS has shown that changes in physical activity, heart rate, and speech features correlate with a decline in ALSFRS-R scales (6). More frequent, remote sensor-based tracking of changes in ALSFRS-R scales would enable clinicians to better target interventions and detect acute events, such as falls or medication changes, between clinic visits.

1.2 Clinical use of ALSFRS-R scales

ALS disease progression rates vary between patients due to a number of clinical factors including baseline functional status, disease stage at diagnosis and diagnostic delay, co-occurrence of frontotemporal dementia, gender, age and site at onset, particularly respiratory-onset, and a number of genetic and environmental factors (7–9). Disease progression also varies across functional domains within ALS patients, following non-linear rates of decline in specific areas (10). ALS progression is tracked longitudinally using the ALSFRS-R instrument as a qualitative, subjective self-reported measure of performance in functional tasks. ALSFRS-R scales are collected during clinic visits to determine the amount of change in bulbar, fine motor, gross motor, and respiratory functional domains over time. Scales are rated between 0 and 4, with 0 indicating dependence and 4 indicating no difficulty. The composite score and linear slope serve as primary metrics of functional change and decline progression and for measuring intervention effects within individuals or across treatment groups in clinical trials, with more frequent assessment improving slope estimation (11, 12). Due to the multi-dimensional aspect of the aggregate ALSFRS-R composite score, it has been suggested to use the component scales independently for measuring treatment outcomes (13). As such, there is not a one-size-fits-all approach for monitoring progression, as decline varies non-linearly among patients, and individualized clinical models are needed for tracking across ALSFRS-R functional domains.

2 Materials and methods

2.1 Parent study

Participants were recruited for a single-site, single-cohort prospective study overseen by the MU Institutional Review Board through the MU Health ALS Clinic, investigating continuous, in-home sensor monitoring for tracking between-visit functional decline (14). The in-home sensor monitoring systems, licensed by the University of Missouri to Foresite Healthcare, LLC, are composed of three modalities for continuous contactless data collection: bed mattress hydraulic transducers for recording ballistocardiogram (BCG)-derived respiration, pulse, and sleep restlessness measures (15, 16); privacy-preserving thermal depth sensors (17, 18), which detect falls and collect walking speed, stride time, and stride length measurements, although gait data were excluded due to wheelchair use; and passive infrared (PIR) motion sensors that provide room activity counts.

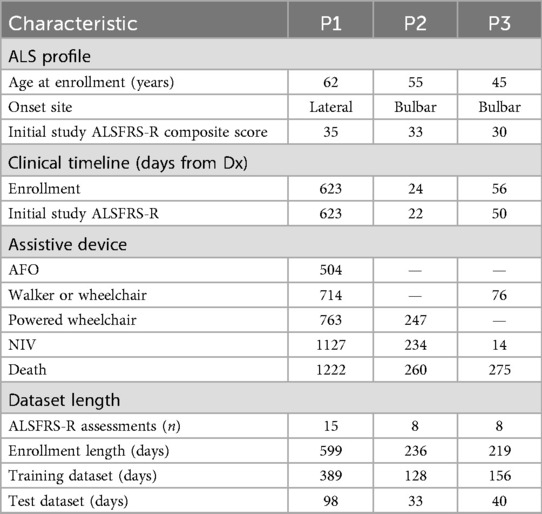

Inclusion criteria required an ALS diagnosis, residence within 100 miles of the clinic, and either a home caregiver or a Montreal Cognitive Assessment (MoCA, 8.1 Blind Version) cutoff score of 19 out of 22, corresponding to the standard cutoff of 26/30 on the full MoCA. ALSFRS-R scores were collected monthly by telephone and quarterly as pre-clinic assessments. After accounting for length of enrollment, data from three participants were of sufficient duration (at least 6 months) for case series modeling, as shown in Table 1. All three participants were non-Hispanic, white, male, Medicare recipients, who left the study due to death. At the time of enrollment, their ALSFRS-R composite scores ranged from 30 to 35, indicating moderate functional impairment. P1 presented with lateral onset (extremity weakness and spasms), P2 with cervical-bulbar and limb weakness, and P3 with bulbar speech changes accompanied by lateral weakness. Diagnosis was determined in the clinic using the revised El Escorial and Awaji diagnostic criteria workflow for ALS (19) and confirmed for study participation by the presence of SNOMED CT codes for ALS (86044005, 142653015, 62293019) and ICD10 (G12.21) in the patient’s medical chart. With regard to disease progression timelines, the interval from diagnosis to enrollment varied among participants (from 24 to 623 days), reflecting the heterogeneous nature of the disease. The use of assistive devices and non-invasive ventilation (NIV) also differed, with P1 requiring ankle-foot orthosis (AFO), walker/wheelchair, and eventually a powered wheelchair over a prolonged period (504–763 days from diagnosis). P2 and P3 were diagnosed at a later ALS stage and had more rapid progressions with shorter intervals to assistive device use (P2: powered wheelchair at 247 days, P3: walker or wheelchair at 76 days) and death. NIV initiation ranged from 14 to 1,127 days post-diagnosis.

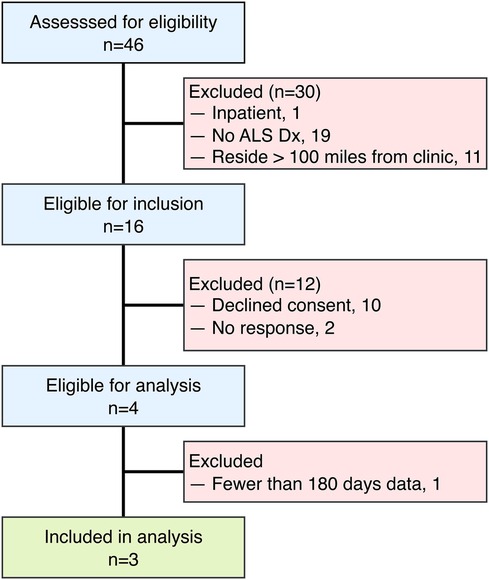

Given the small sample, these findings should be interpreted as case-level observations with limited generalizability, rather than being extrapolated to population-level ALS progression. The limited sample size ($n = 3$) of this study reflects both the rarity and rapid progression of ALS, as well as practical limitations specific to remote sensor research within this patient population. Participants were screened for eligibility during the study recruitment period, as outlined in Figure 1. Individuals were excluded for inpatient status, lack of ALS diagnosis, and residing further than 100 miles from the MU ALS Clinic. Additional exclusions occurred due to non-response to recruitment or by declining consent. The inclusion criterion requiring residence within 100 miles of the clinic was selected due to logistical considerations for sensor installation and maintenance rather than intent to restrict sampling. Only those having at least 6 months of monitoring data were included in the analytic sample. Of the 16 individuals who passed eligibility criteria, 10 declined participation due to privacy or structural concerns about sensor installation, 2 did not respond to recruiting materials, and only 3 of the 4 enrolled completed at least 6 months of data collection sufficient for analysis. Despite the small cohort size, participants contributed extensive clinical and sensor-based data, offering within-individual longitudinal detail that is characteristic of ALS observational studies. A larger, multi-site trial is being planned to address scalability and generalizability in future research.

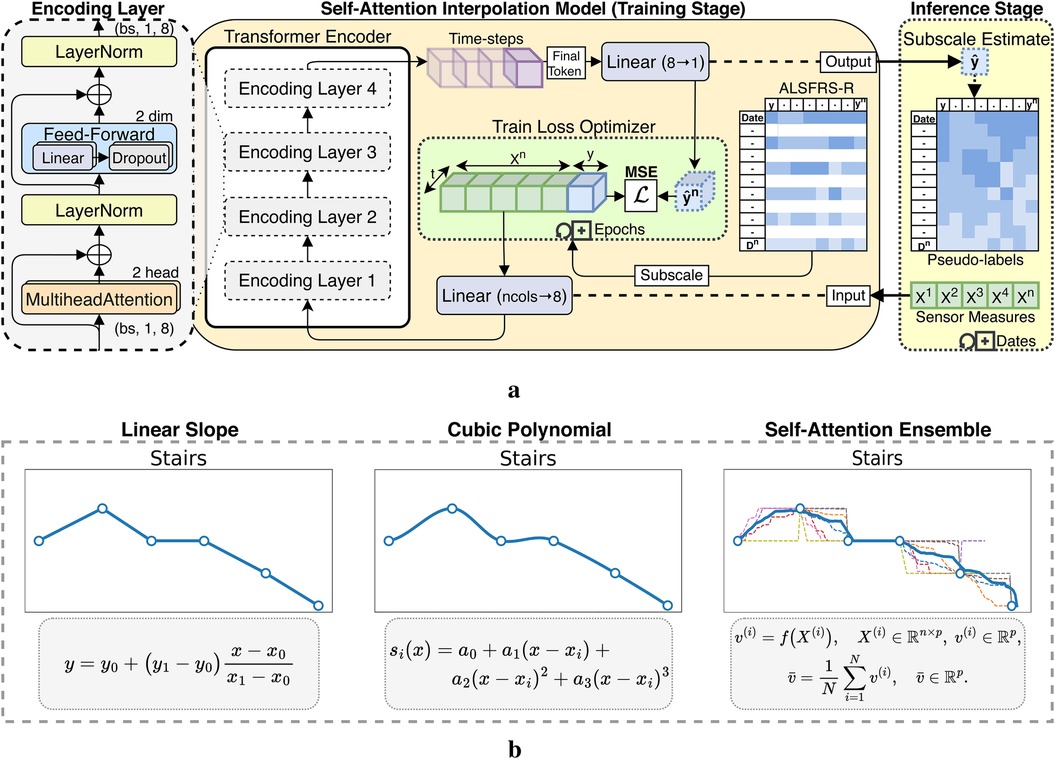

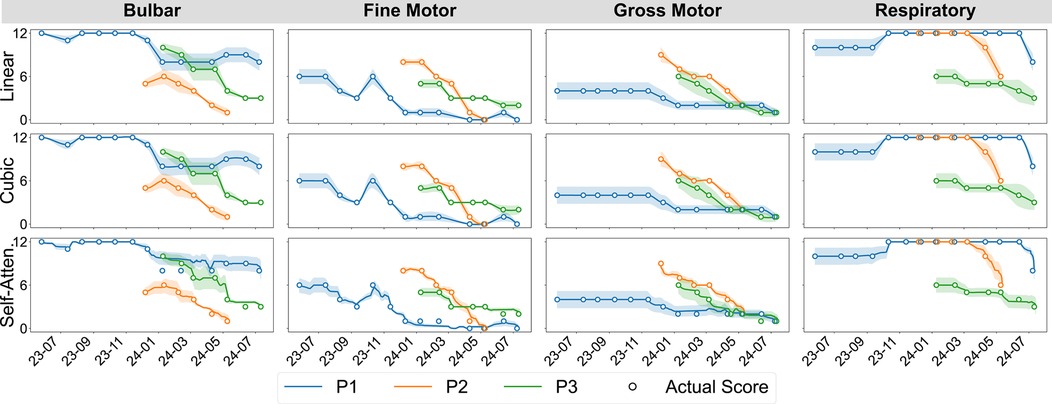

2.2 Estimating between-visit change in ALSFRS-R scales

ALSFRS-R scales were aligned at matching frequency to daily aggregated sensor measurements using pseudo-labels for semi-supervised regression, extending prior work evaluating between-visit interpolation (20). We incorporated a transformer encoder architecture for self-attention interpolation, which we compared to polynomial functions, as illustrated in Figure 2a. Linear 1D piecewise interpolation served as a baseline method, consistent with the clinical methodology for tracking ALS progression. Non-linear cubic spline interpolations were applied to evaluate more gradual rates of decline. The transformer encoder mapped date-indexed sensor vectors with known ALSFRS-R scores to estimate the amount of change occurring between collection points, with the architecture intentionally kept shallow to provide continuous values rather than predicting crisp labels with a deeper network (21). To further smooth the estimations, self-attention interpolation was applied to each sensor feature algorithm table and then ensembled by averaging, as shown by the dashed plots in Figure 2b. The resulting interpolated slopes over time for each pseudo-labeling technique, which are summated by functional area in Figure 3, demonstrate varying rates of decline unique to each participant.
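The two polynomial pseudo-labeling baselines described above can be sketched as follows. This is a minimal illustration using only the Python standard library, not the study's implementation: the function names, the example scores, and the choice of natural boundary conditions for the cubic spline are all assumptions, since the text does not specify the spline's end conditions. The self-attention interpolation is not shown.

```python
from bisect import bisect_right


def _segment(days, q):
    """Index j of the interval [days[j], days[j + 1]] used for query day q."""
    return min(max(bisect_right(days, q) - 1, 0), len(days) - 2)


def linear_pseudo_labels(days, scores, query_days):
    """Piecewise-linear interpolation between consecutive assessments."""
    labels = []
    for q in query_days:
        j = _segment(days, q)
        t = (q - days[j]) / (days[j + 1] - days[j])
        labels.append(scores[j] + t * (scores[j + 1] - scores[j]))
    return labels


def natural_cubic_pseudo_labels(days, scores, query_days):
    """Cubic spline through the assessments (natural ends: an assumption)."""
    n = len(days) - 1
    h = [days[i + 1] - days[i] for i in range(n)]
    alpha = [0.0] * (n + 1)
    for i in range(1, n):
        alpha[i] = (3 * (scores[i + 1] - scores[i]) / h[i]
                    - 3 * (scores[i] - scores[i - 1]) / h[i - 1])
    # Forward sweep of the tridiagonal system for the second-order coefficients.
    l, mu, z = [1.0] * (n + 1), [0.0] * (n + 1), [0.0] * (n + 1)
    for i in range(1, n):
        l[i] = 2 * (days[i + 1] - days[i - 1]) - h[i - 1] * mu[i - 1]
        mu[i] = h[i] / l[i]
        z[i] = (alpha[i] - h[i - 1] * z[i - 1]) / l[i]
    # Back substitution for the per-segment polynomial coefficients.
    b, c, d = [0.0] * n, [0.0] * (n + 1), [0.0] * n
    for j in range(n - 1, -1, -1):
        c[j] = z[j] - mu[j] * c[j + 1]
        b[j] = (scores[j + 1] - scores[j]) / h[j] - h[j] * (c[j + 1] + 2 * c[j]) / 3
        d[j] = (c[j + 1] - c[j]) / (3 * h[j])
    labels = []
    for q in query_days:
        j = _segment(days, q)
        dx = q - days[j]
        labels.append(scores[j] + b[j] * dx + c[j] * dx ** 2 + d[j] * dx ** 3)
    return labels


# Hypothetical subscale scores (0-4) collected on study days 0, 30, 60, and 90.
assessment_days = [0, 30, 60, 90]
subscale_scores = [4, 4, 3, 2]
daily = list(range(0, 91))
linear_labels = linear_pseudo_labels(assessment_days, subscale_scores, daily)
cubic_labels = natural_cubic_pseudo_labels(assessment_days, subscale_scores, daily)
```

Both functions return one pseudo-label per queried day and reproduce the collected scores exactly on assessment days. A cubic spline can overshoot the 0 to 4 scale range between assessments, so clipping the output may be worth considering in practice.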

Figure 2. Interpolation techniques applied to ALSFRS-R subscores for estimating sensor feature pseudo-labels. (a) Self-attention interpolation transformer architecture. (b) Comparison of interpolation effects.

Figure 3. Participant-aggregated ALSFRS-R subscores by the functional domain and interpolation technique.

2.3 Semi-supervised learning of ALSFRS-R scales

Three learning approaches for training semi-supervised regression models were compared: batch models fit on sequential participant-level data (individual) and cohort-level (transfer) models initialized on randomized observations and fine-tuned on individual-level data using batch or incremental learning (22). Participant-subscales exhibiting zero or near-zero variance in training samples were not modeled with individual batch learning.

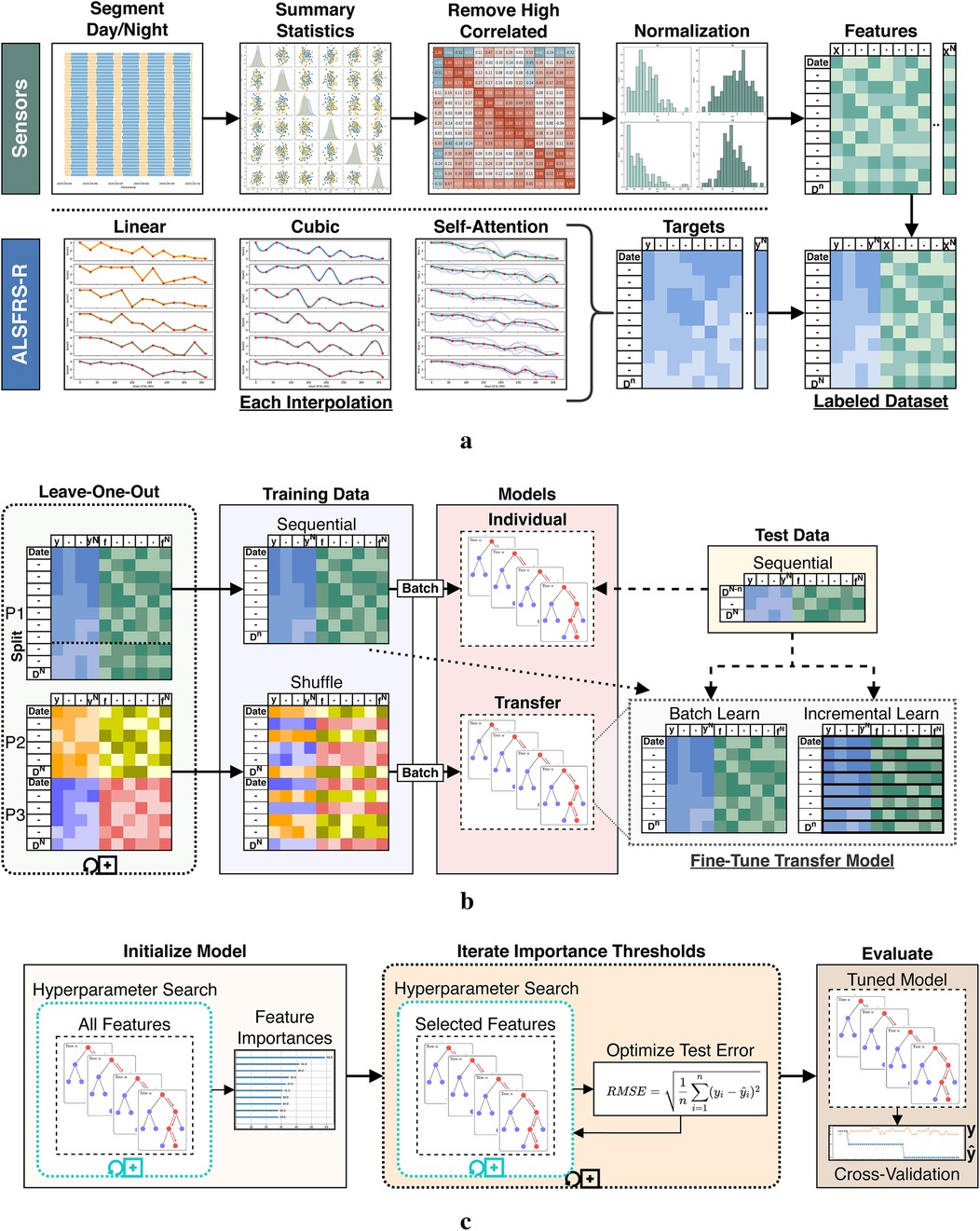

2.3.1 Data preprocessing and feature engineering

High-frequency sensor data were preprocessed using the pipeline described in Figure 4a, beginning with segmentation of the time-indexed features into day and night periods. Summary statistics were calculated over each feature channel and period for count, minimum, maximum, mean, median, mode, variance, range, skew, kurtosis, quantiles, interquartile range (IQR), coefficient of variation (CV), and entropy to better capture temporal patterns by time-of-day. The selected features were chosen based on established use of time-series features in clinical prediction and prior wearable sensor research, where summary statistics have been shown to effectively capture both overall trends and subtle changes in physiological and behavioral signals relevant to disease progression (23, 24). As a case series pilot for continuous in-home sensor monitoring in ALS, we first prioritized conventional summary statistics for baseline modeling and then relied on native feature selection to identify relevant ALSFRS-R predictors. Highly collinear features were then removed, and the resulting set was normalized feature-wise using a minimum–maximum scaling. ALSFRS-R scores were interpolated with each pseudo-labeling technique, resulting in three continuous target trajectories per participant per scale. Finally, the normalized features and interpolated labels were joined by date to produce the pseudo-labeled datasets.
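The preprocessing steps above (day/night segmentation, summary statistics, collinearity filtering, and minimum–maximum scaling) can be sketched roughly as follows. This is an illustrative outline rather than the study's pipeline: the 07:00 to 19:00 day window, the 0.95 correlation threshold, the reduced set of statistics, and all function names are assumptions introduced here.

```python
import math
import statistics


def summarize(values):
    """A subset of the summary statistics listed in the text."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)
    return {
        "count": len(values), "min": min(values), "max": max(values),
        "mean": mean, "median": statistics.median(values),
        "variance": sd ** 2, "range": max(values) - min(values),
        "iqr": q3 - q1, "cv": sd / mean if mean else float("nan"),
    }


def daily_features(readings, day_start=7, day_end=19):
    """readings: (date, hour, value) tuples for one sensor channel.
    The day window (07:00-19:00) is an assumed cutoff, not from the study."""
    buckets = {}
    for date, hour, value in readings:
        period = "day" if day_start <= hour < day_end else "night"
        buckets.setdefault((date, period), []).append(value)
    rows = {}
    for (date, period), values in buckets.items():
        for stat, result in summarize(values).items():
            rows.setdefault(date, {})[f"{period}_{stat}"] = result
    return rows


def pearson(x, y):
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy) if sxx and syy else 0.0


def drop_collinear(columns, threshold=0.95):
    """Keep a feature only if it is not highly correlated with one already kept.
    The 0.95 threshold is an assumed value."""
    kept = {}
    for name, column in columns.items():
        if all(abs(pearson(column, other)) < threshold for other in kept.values()):
            kept[name] = column
    return kept


def min_max_scale(column):
    """Feature-wise scaling to [0, 1]; constant columns map to 0."""
    lo, hi = min(column), max(column)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in column]
```

The scaled feature rows would then be joined by date with the interpolated ALSFRS-R labels to form the pseudo-labeled dataset.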

Figure 4. Data processing and model fitting pipeline. (a) Sensor and ALSFRS-R preprocessing steps. (b) Dataset segmentation for individual batch and cohort transfer learning models. (c) Iterative model tuning and evaluation stages.

2.3.2 Individual batch and cohort transfer learning

Individual- and cohort-level models were trained using a leave-one-participant-out strategy, as shown in Figure 4b. For each experiment fold, a single participant (P1, P2, or P3) was withheld as the target subject, while data from the remaining participants formed the source cohort for transfer model training. Each holdout participant’s dataset was split sequentially by 80%/20%. The earliest 80% of observations were used for both training the individual batch model and fine-tuning the cohort transfer model, while the later 20% were set aside as an unseen test set for model evaluation. This temporal partitioning was chosen to reflect the use case of predicting future ALSFRS-R scores based on prior sensor data and prevent data leakage. To ensure fair comparison between models, training and test splits were defined proportionally to each individual’s data, not by group. Individual models were trained using batch learning on the holdout participant’s sequential training data. Transfer learning was conducted by first training a cohort model on the leave-in participants’ data using batch learning with shuffled samples to capture generalizable patterns across individuals. This cross-individual split simulated the scenario of applying knowledge learned from a group to a new, unseen individual. The resulting model parameters (including learned weights and optimizer state) were serialized by pickling and storing the model object. For each participant fold, the initialized transfer model was then reloaded and further adapted using the sequential training data from the holdout participant. This adaptation was done using batch and incremental learning routines, comparatively. Transfer batch fine-tuned models were updated with a single pass through the entire training set of the holdout participant. 
Conversely, transfer incremental models were trained iteratively by predicting the current outcome label and then fitting the new observation to simulate between-visit model adaptation as additional data become available. All transferred model components were included in the fine-tuning step. Hyperparameters for these fine-tuned models (e.g., learning rate, batch size, optimizer type) were held constant from the initialized model during subsequent fine-tuning and were selected using cross-validation. As shown in Table 1, participants differed in their dataset length and number of ALSFRS-R assessments collected. To prevent bias, all model evaluations were performed within-participant, and prediction errors and outcome correlations were computed only on the holdout subject’s test data for each fold. We did not aggregate or compare metrics across participants. This approach was chosen to focus on the model’s ability to estimate patient-specific ALS disease progression and to infer homogeneous–heterogeneous profiles across ALSFRS-R scales.
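The data partitioning and the predict-then-fit loop described above can be illustrated with a short sketch. It is not the study's code: `RunningMean` is a deliberately trivial stand-in for the actual learner, and the function names and shuffle seed are hypothetical. The intent is only to show the order of operations: leave one participant out, shuffle the source cohort, split the target chronologically, and update the model after each prediction.

```python
import random


def temporal_split(rows, train_fraction=0.8):
    """Earliest 80% for training or fine-tuning, latest 20% for testing."""
    cut = int(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


def leave_one_participant_out(data, seed=0):
    """data: {participant_id: chronologically ordered rows}.
    Yields (target_id, shuffled_source_rows, target_train, target_test)."""
    rng = random.Random(seed)
    for target in data:
        source = [row for pid, rows in data.items() if pid != target for row in rows]
        rng.shuffle(source)  # the cohort model is trained on shuffled samples
        train, test = temporal_split(data[target])
        yield target, source, train, test


class RunningMean:
    """Toy online learner used only to make the loop below runnable."""

    def __init__(self):
        self.mean, self.count = 0.0, 0

    def predict(self, features):
        return self.mean

    def update(self, features, label):
        self.count += 1
        self.mean += (label - self.mean) / self.count


def predict_then_fit(model, rows):
    """Incremental routine: predict the current label, then fit on it."""
    predictions = []
    for features, label in rows:
        predictions.append(model.predict(features))
        model.update(features, label)
    return predictions
```

Because each prediction is made before the model sees the corresponding label, the loop mimics between-visit adaptation without leaking future information into earlier predictions.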

2.3.3 Iterative hyperparameter tuning and feature selection

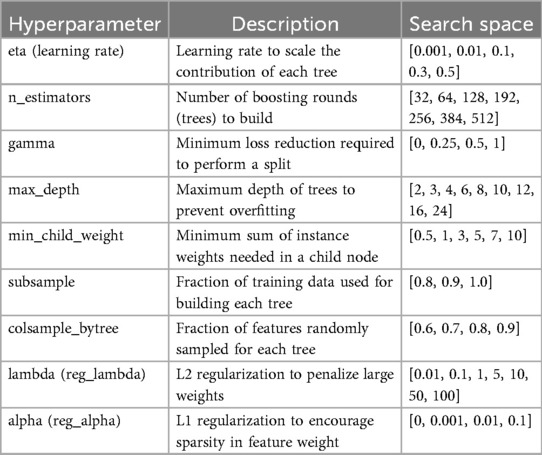

A single-learner experimental design was chosen to evaluate the effects of pseudo-label interpolation and transfer learning strategies within a consistent modeling framework, rather than to benchmark across diverse machine learning algorithms. We employed an iterative screener-learner approach combining hyperparameter optimization with feature selection, illustrated in Figure 4c, using the XGBoost algorithm (25). Models were initialized on the full set of summary features, and their hyperparameters were tuned using RandomizedSearchCV from the scikit-learn Python package. Following initialization, feature importance scores were extracted using the feature weight frequency internal to XGBoost. Importance scores were then binned through iterative precision-rounding, beginning at six decimal places and successively increasing the precision, with feature subsets capped at a maximum of 200 features to avoid overfitting. For each feature subset identified, a new model was fit using the selected features and tuned hyperparameters, as specified in Table 2. The root mean square error (RMSE) was calculated and compared across all iterations, with the model configuration yielding the lowest test RMSE selected as the optimized model. The best-performing transfer model was then fine-tuned on the holdout participant’s training set using the trained booster as a checkpoint to resume learning.
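The text does not spell out the precision-rounding rule, so the following is only one possible reading, sketched under explicit assumptions: at each precision level the importance scores are rounded, features whose rounded score is zero are dropped, and the search stops once a subset would exceed the feature cap. The function names, the upper precision bound, and the `evaluate` callback (which would refit the learner on a subset and return its RMSE) are hypothetical.

```python
def screen_feature_subsets(importances, start_precision=6, max_precision=12,
                           max_features=200):
    """Return (precision, feature_names) for each distinct candidate subset.

    Assumed rule: a feature survives a precision level when its importance
    score is still non-zero after rounding to that many decimal places.
    """
    subsets, seen = [], set()
    for precision in range(start_precision, max_precision + 1):
        selected = sorted(
            (name for name, score in importances.items()
             if round(score, precision) > 0),
            key=lambda name: -importances[name],
        )
        if len(selected) > max_features:
            break  # respect the feature cap
        key = tuple(selected)
        if selected and key not in seen:
            seen.add(key)
            subsets.append((precision, selected))
    return subsets


def select_best(subsets, evaluate):
    """Score every subset with the supplied callback and keep the lowest RMSE."""
    scored = [(evaluate(features), precision, features)
              for precision, features in subsets]
    rmse, precision, features = min(scored, key=lambda item: item[0])
    return precision, features, rmse
```

Under this reading, higher precision admits progressively weaker features, so the candidate subsets grow from a small core toward the cap, and the RMSE comparison decides where to stop.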

2.4 Model evaluation metrics and Taylor diagrams

Prediction performance metrics for the RMSE (prediction error), Pearson’s $r$ (outcome correlation), and their confidence intervals (CIs) were calculated from modeled outcomes ($\hat{y}$) against the test values ($y$) that were held out from the model training data. Confidence intervals for the Pearson correlation were obtained by first applying Fisher’s $z$-transformation to stabilize the sampling distribution and computing the standard error, before constructing the 95% limits in $z$-space, and then converting these limits back to the correlation scale. If fewer than four points were available, the correlation interval was marked as missing. For RMSE confidence intervals, we used the non-parametric bootstrap method with 1,000 resamples of the paired true and predicted values, recalculated the RMSE for each resample using the standard deviation of true and predicted values along with their absolute correlation, and then took the 2.5% and 97.5% percentiles of the resulting RMSE distribution as the confidence bounds. Average summary metrics for ALSFRS-R subscales and the composite score were calculated by taking the mean value across participants, with the 95% confidence interval estimated using the standard error of the mean and the t-distribution. This provides an interval estimate that reflects the expected variability in case series cohort model performance. Comparisons between pseudo-labeling techniques and learning methods were made using Taylor diagrams to assess prediction accuracy, correlation, and variability at the cohort level by averaging outcomes across participants for each subscale. In these Taylor diagrams, the reference point on the $x$-axis represents the actual values (the estimated ALSFRS-R scale) plotted at coordinates $(\sigma, 0)$, where $\sigma$ is the standard deviation of the actual values, while the origin represents a modeled prediction with SD 0 and to the reference vector (26).
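The two interval estimates described above can be sketched in a few lines. This is a hedged illustration, not the authors' code: the function names, the random seed, and the handling of degenerate cases (zero variance, $|r| = 1$) are assumptions. The per-resample error follows the Taylor-diagram relation $E'^2 = \sigma_y^2 + \sigma_{\hat{y}}^2 - 2\sigma_y\sigma_{\hat{y}}|r|$, which is how the text's description of combining standard deviations with the absolute correlation is read here.

```python
import math
import random
import statistics


def fisher_ci(r, n, z_crit=1.959964):
    """95% CI for Pearson's r via Fisher's z; None when it cannot be computed."""
    if n < 4 or abs(r) >= 1:  # the |r| >= 1 guard is an added assumption
        return None
    z = math.atanh(r)
    se = 1 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


def pearson(x, y):
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy) if sxx and syy else 0.0


def taylor_rmse(y_true, y_pred):
    """Centered RMS difference from the two SDs and the absolute correlation."""
    sd_t, sd_p = statistics.pstdev(y_true), statistics.pstdev(y_pred)
    r = abs(pearson(y_true, y_pred))
    return math.sqrt(max(0.0, sd_t ** 2 + sd_p ** 2 - 2 * sd_t * sd_p * r))


def bootstrap_rmse_ci(y_true, y_pred, n_resamples=1000, seed=0):
    """Percentile bootstrap over paired (true, predicted) values."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        stats.append(taylor_rmse([y_true[i] for i in idx],
                                 [y_pred[i] for i in idx]))
    stats.sort()
    # Order statistics approximating the 2.5% and 97.5% percentiles.
    return stats[int(0.025 * n_resamples)], stats[int(0.975 * n_resamples) - 1]
```

Note that the centered RMS difference does not include any offset between the means of the true and predicted values, so it can be smaller than the ordinary RMSE when predictions are biased.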

3 Results

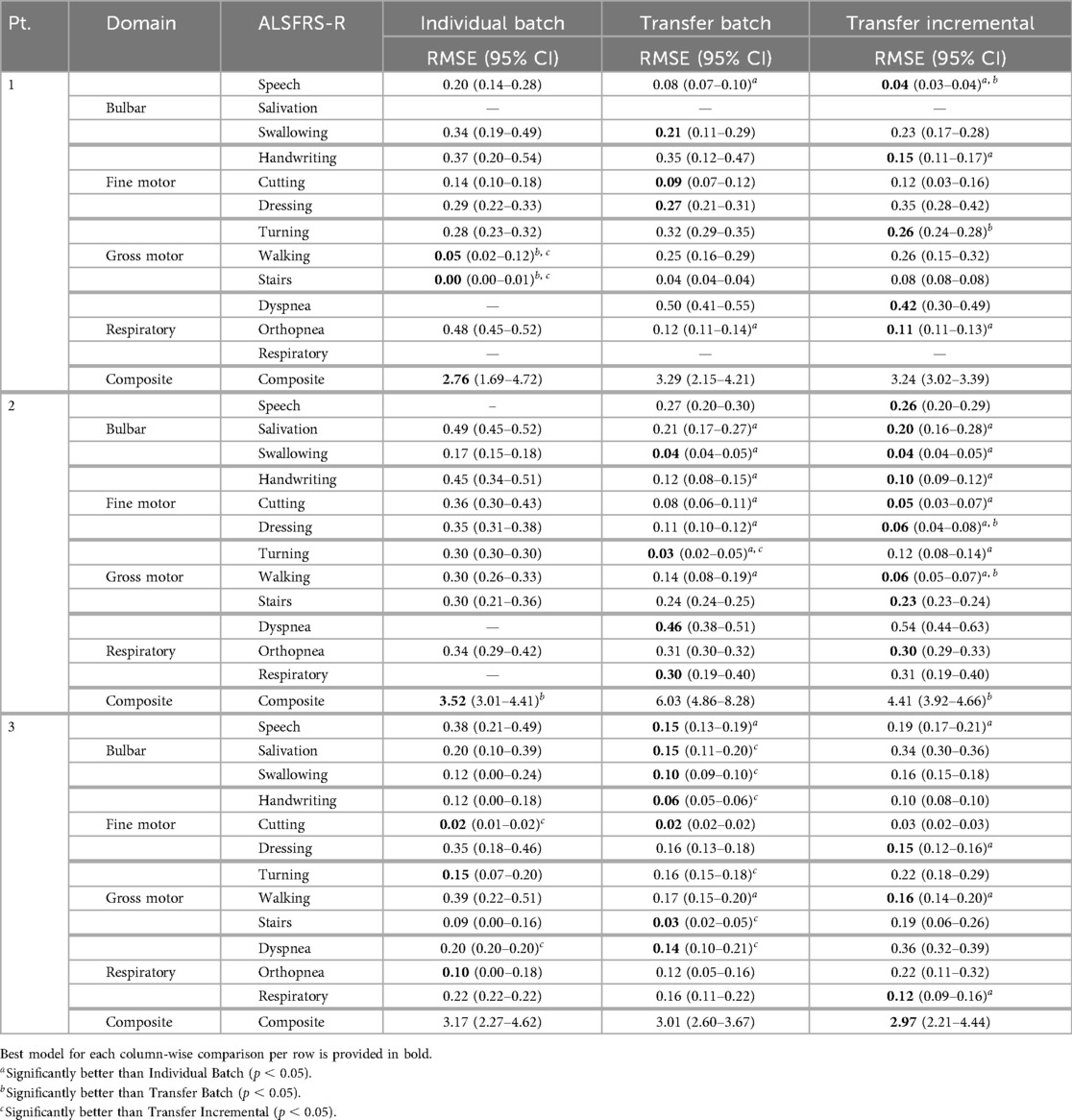

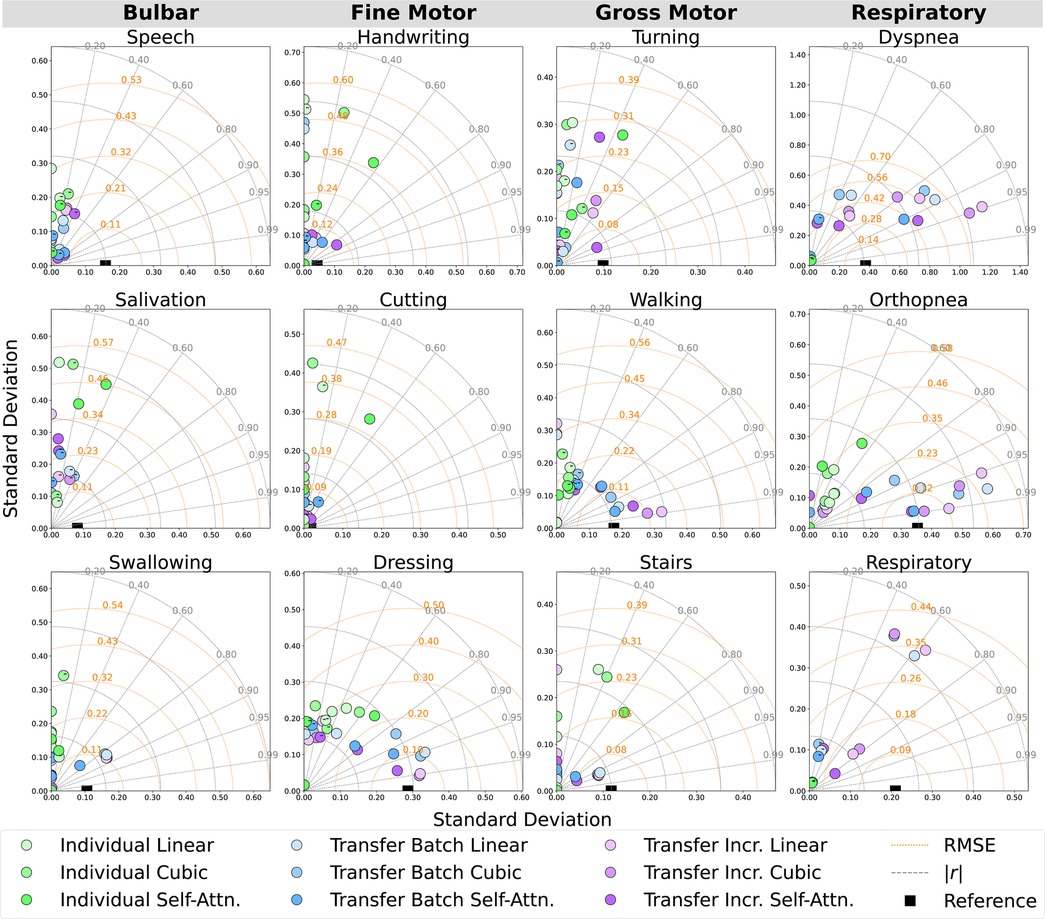

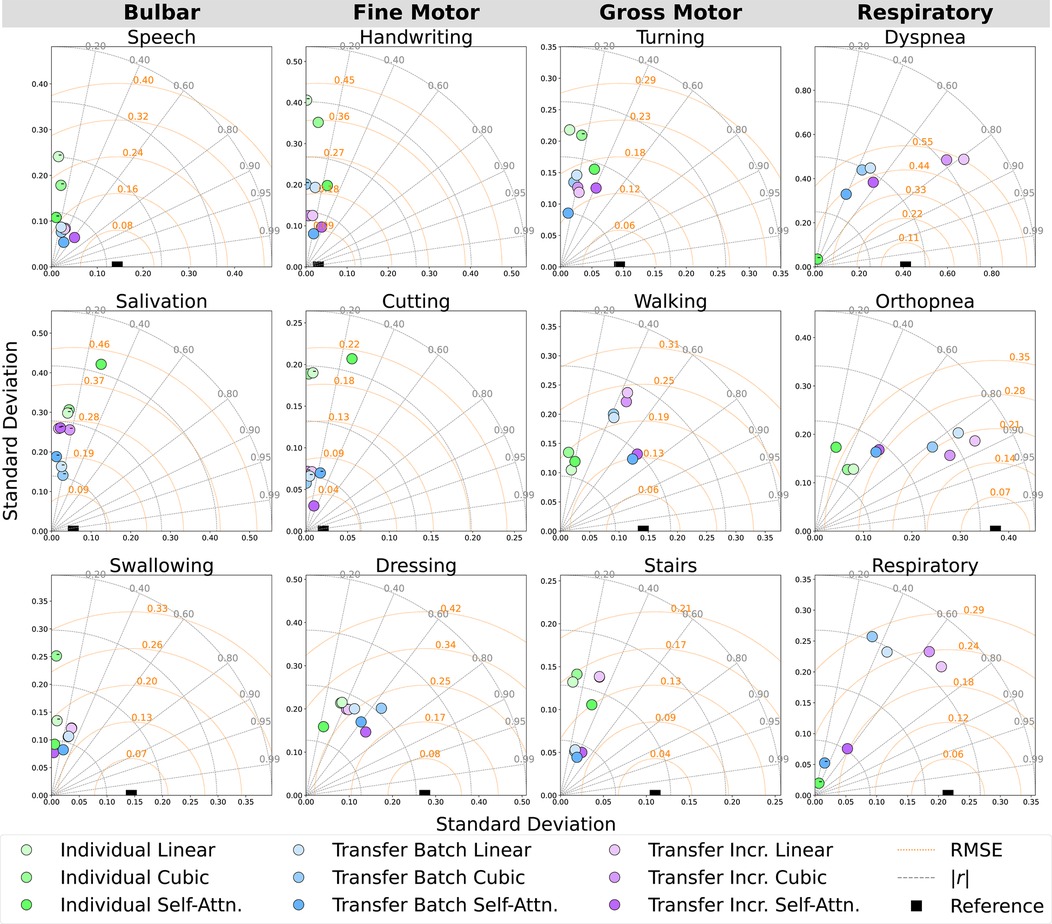

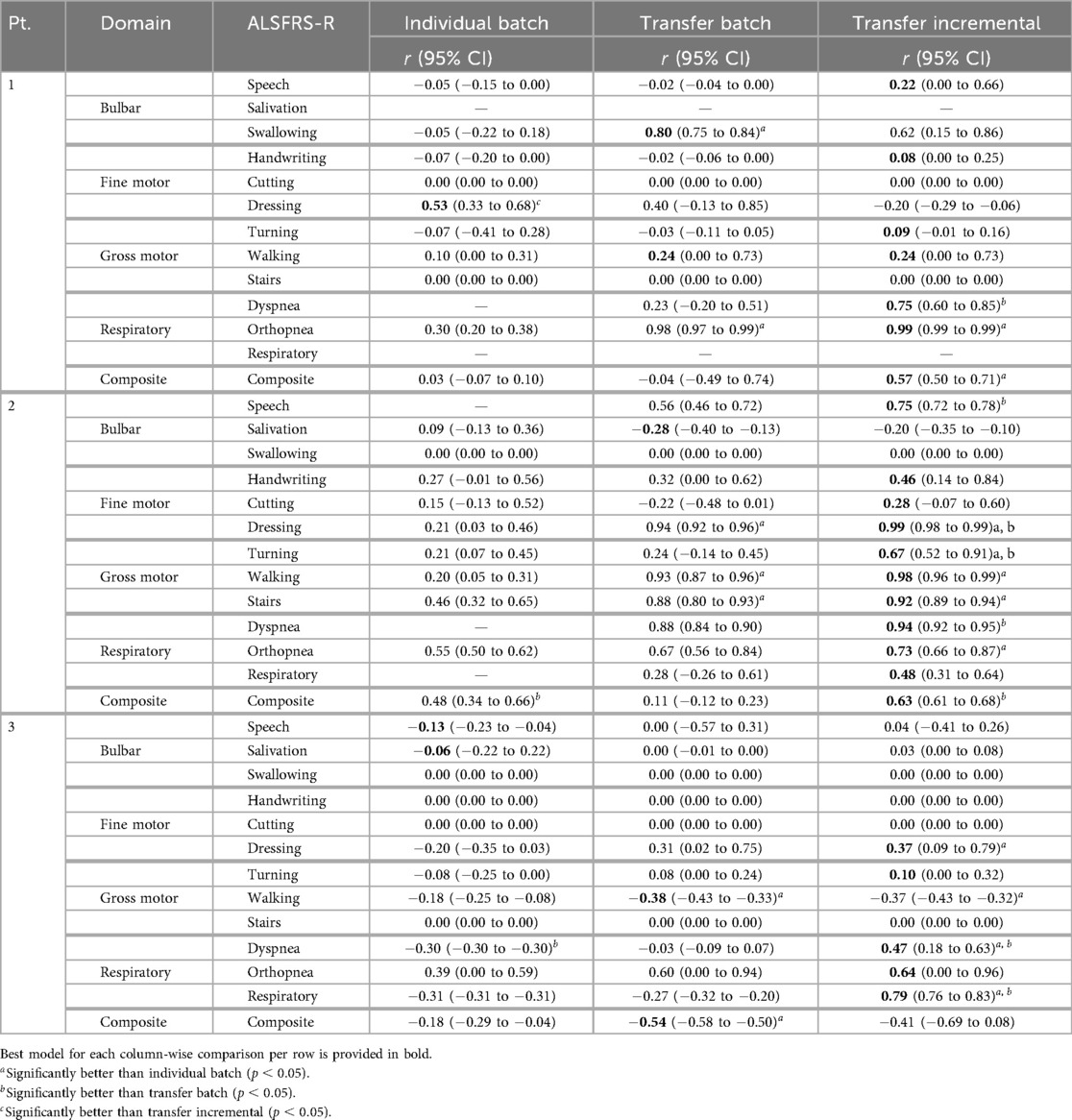

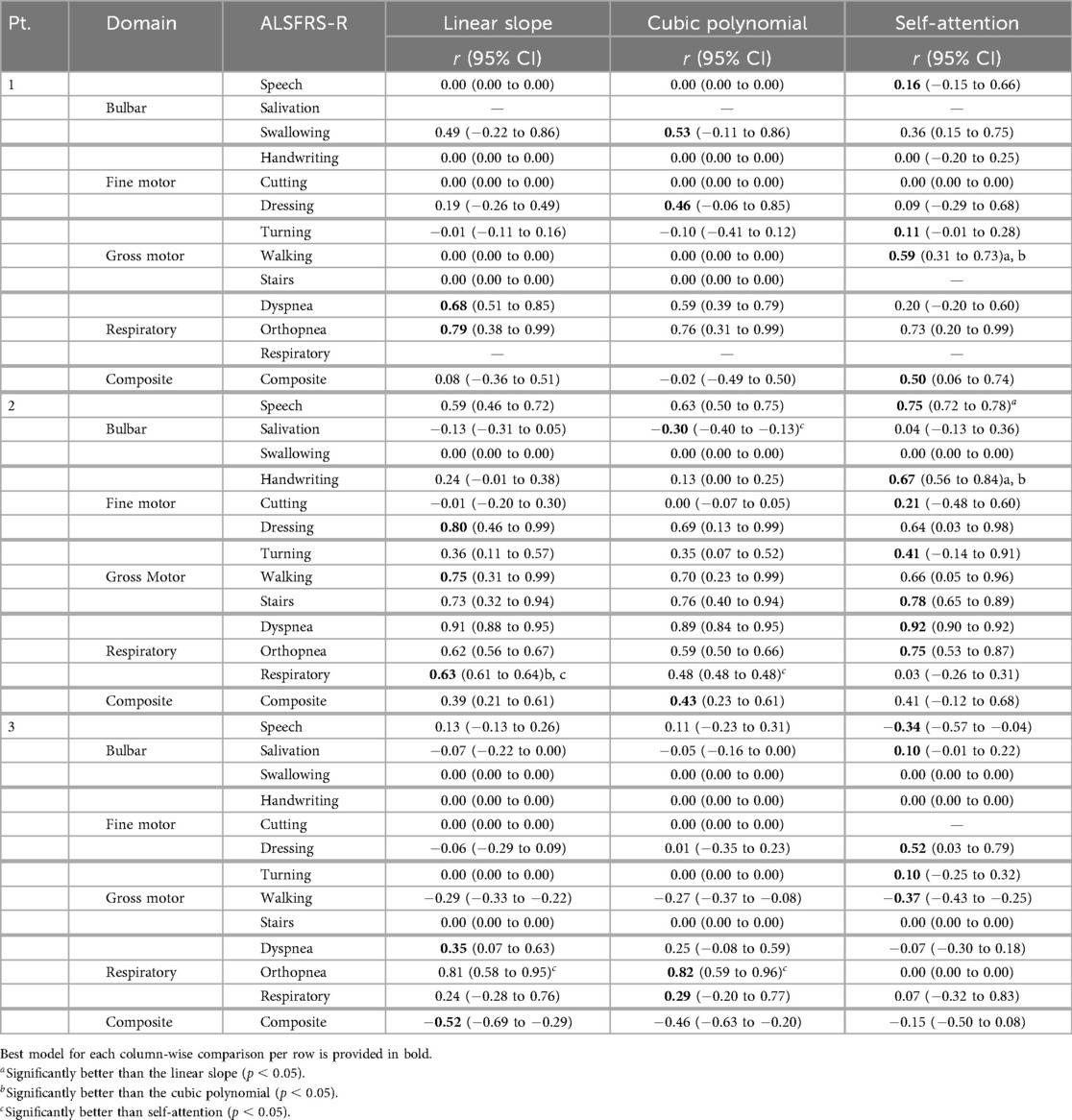

Models were evaluated across pseudo-labeling techniques (linear, cubic, self-attention interpolation) and learning methods (individual batch, transfer batch fine-tuned, transfer incremental fine-tuned) for predicting ALSFRS-R component and composite scales using in-home health sensor features; an asterisk (*) was used to mark significant improvements in these comparisons. Prediction errors and outcome correlations for ALSFRS-R subscales are illustrated by participant as Taylor diagrams in Figure 5 and cohort average in Figure 6. Low variances in collected scores, provided in Table 3, prevented the fitting of participant-subscale models for salivation in P1, speech in P2, dyspnea and respiratory for P1 and P2 for all interpolations, dyspnea and respiratory in P3 with linear and cubic interpolation, and cutting with self-attention interpolation. As P1’s salivation and respiratory scales had zero variance with static scores of 4, transfer models were not fit for these subscales.

Figure 5. Participant-level Taylor diagrams depicting the mean RMSE, absolute correlation ($|r|$), and standard deviation of the predicted outcomes for each ALSFRS-R scale, annotated by negative () correlation.

Figure 6. Cohort-averaged Taylor diagrams depicting the mean RMSE, absolute correlation ($|r|$), and standard deviation of the predicted outcomes for each ALSFRS-R scale, annotated by negative () correlation.

3.1 Performance contrast between learning methods

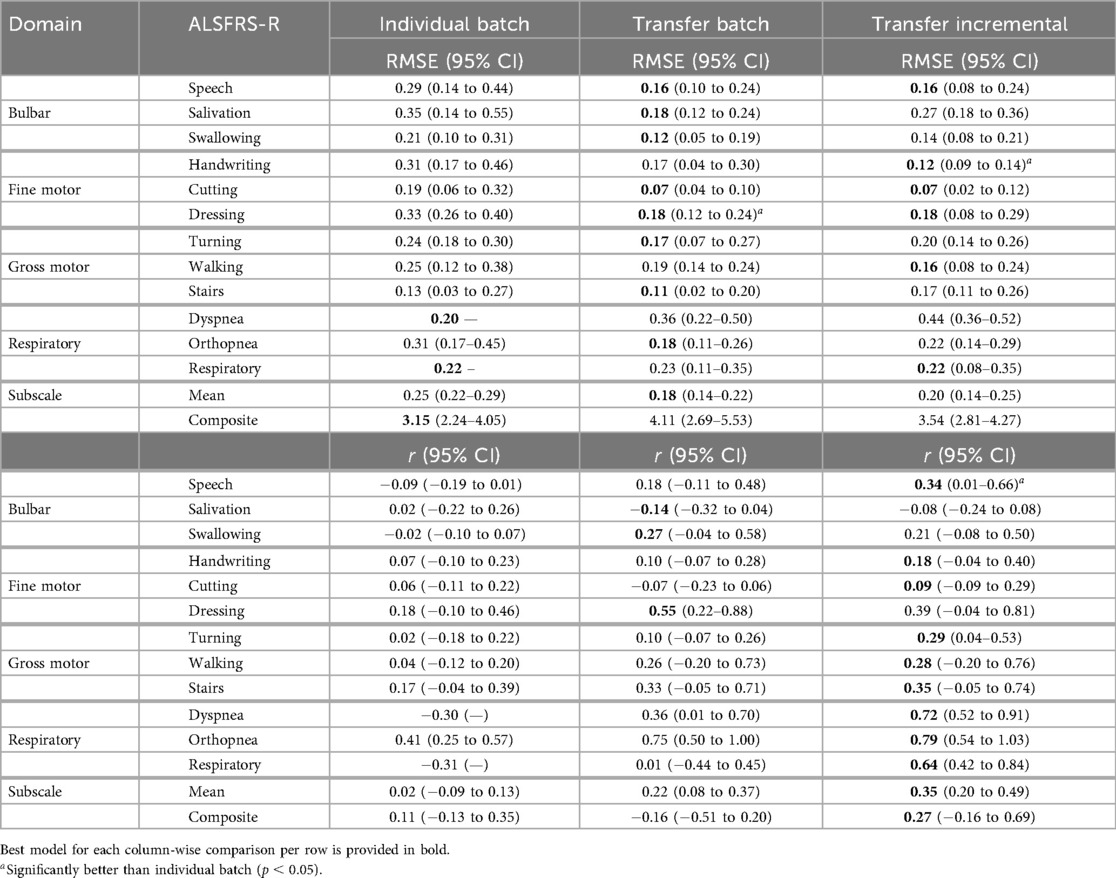

Individual batch and cohort-level transfer learning methods were compared to determine their effectiveness in improving prediction error (RMSE) and outcome correlation ($r$) within participants, as detailed in Tables 4 and 5 and as averaged across participants in Table 6. Results from individual batch and transfer incremental models revealed participant-specific heterogeneity in certain subscales. Comparing the lowest and next lowest model errors, transfer learning generally outperformed individual batch learning across most participant-scale combinations; however, there were exceptions where individual batch models provided lower prediction error (P1: walking, stairs, composite; P2: composite; P3: cutting, turning, orthopnea).

Table 5. Mean model outcome correlation ($r$) across pseudo-label interpolations for each learning method.

Table 6. Mean model prediction error (RMSE) and outcome correlation ($r$) across pseudo-label interpolations and participants for each learning method.

3.1.1 Mean performance across interpolations

The bulbar ALSFRS-R scales (speech, salivation, swallowing) demonstrated cohort-level trends, with both transfer learning models outperforming individual batch models. For speech, transfer incremental learning led to improved mean prediction error and correlation compared to transfer batch learning in P1 (RMSE , ) and P2 (RMSE , ), while P3 showed an increase in mean prediction error and a slight change in correlation (RMSE , ), indicating that speech function has shared cohort-level substrates, with participant-specific changes better captured by transfer incremental fine-tuning than by individual or transfer batch models. For salivation, transfer incremental learning resulted in a negligible change in mean prediction error in P2 (RMSE ), while the error increased in P3 (RMSE ) compared to transfer batch learning. P2 showed a minor decrease in prediction correlation () with a slight change in P3 (), suggesting slight participant heterogeneity in salivation decline. For swallowing, transfer incremental fine-tuning increased the prediction error with decreased correlation for P1 (RMSE , ) and with no change in correlation for P3 [RMSE , (0–0)]. P2 showed no change in error or correlation [RMSE (0.04–0.05), 0 (0–0)].

Fine-motor subscales (handwriting, cutting, and dressing) showed variable responses to individual batch and transfer incremental fine-tuning across participants, indicating an overall weak participant-level heterogeneity. The fact that transfer learning models outperformed individual batch models implies that fine-motor decline progression patterns are relatively homogeneous across the cohort. For handwriting, transfer incremental fine-tuning improved mean prediction error and correlation for P1 (RMSE , ) and P2 (RMSE , ), whereas P3 exhibited a slight increase in prediction error (RMSE ) with no change in correlation [(0–0)]. In contrast, the cutting subscale exhibited more cohort homogeneity, as only P2 showed improvement with transfer incremental learning (RMSE , ), while P1 (RMSE ) and P3 (RMSE ) demonstrated increased prediction error without correlation improvement [(0–0)] compared to the transfer batch model. The dressing subscale showed a moderate level of heterogeneity between participants and the cohort, with slight improvements from transfer incremental learning in mean RMSE and for P2 (RMSE , ) and P3 (RMSE , ). Conversely, both error and correlation worsened for P1 (RMSE , ).

For the gross motor ALSFRS-R scales (turning, walking, stairs), individual batch models outperformed transfer batch models in a few instances, indicating heterogeneity in these cases. Individual batch learning demonstrated lower error but with a minor correlation decrease in the turning subscale for P3 (RMSE , ) compared to transfer batch. Similarly, individual batch models had better error with lower correlation than transfer batch learning in the walking subscale for P1 (RMSE , ) and a marginal improvement in stairs for P1 [RMSE , (0–0)]. For turning, transfer incremental improved both error and correlation in P1 (RMSE , ) and increased both error and correlation in P2 (RMSE , ) compared to transfer batch. The walking subscale also showed mixed results between the transfer methods. Transfer incremental learning improved both mean error and correlation over transfer batch for P2 (RMSE , ) and only slightly improved for P3 (RMSE , ) but with a minor error increase with no change in correlation for P1 (RMSE , ). For stairs, transfer batch and incremental models showed a marginal difference in error and increased correlation for P2 (RMSE , ), while the model error increased with transfer incremental learning for P3 [RMSE , (0–0)].

Within the respiration-related ALSFRS-R scales (dyspnea, orthopnea, respiratory), the collected orthopnea and respiratory scores for P1 and P2 exhibited low variance, as presented in Table 3, which prevented the fitting of individual batch models for those participants. For dyspnea, transfer incremental learning significantly improved correlation for all participants and reduced prediction error for P1 (RMSE , ); however, it increased the error for P2 (RMSE , ) and P3 (RMSE , ). For orthopnea, transfer incremental models resulted in marginal differences in prediction error and a slight improvement in correlation for P1 (RMSE , ) and P2 (RMSE , ). However, individual batch models had slightly better error but lower correlation than transfer batch models in P3 (RMSE , ), indicating that the P3 orthopnea trajectory was not fully captured by the shared model, pointing toward participant heterogeneity, given the small improvements seen in P1 and P2 and the benefit P3 showed from individual batch learning. Similar to orthopnea, respiratory function also displayed participant heterogeneity, with transfer incremental learning resulting in a slight change in error but an increase in correlation between transfer batch and incremental learning for P2 (RMSE , ), while P3 had improved error and a significant increase in correlation (RMSE , ).

To summarize overall functional status across bulbar, fine motor, gross motor, and respiratory items, the composite ALSFRS-R scale captures decline trends as a single index. For the composite ALSFRS-R scale, individual batch learning models resulted in a lower mean prediction error compared to transfer batch learning, with a marginal difference in outcome correlation for P1 (RMSE , ) and with significant improvements in error and correlation for P2 (RMSE , ), demonstrating that modeled composite scores are mostly participant-specific. Transfer incremental learning slightly improved prediction error and decreased correlation for P3 (RMSE , ) over transfer batch models. This performance was also comparable to individual batch learning [RMSE (2.27–4.62), (0.29–0.04)].

3.1.2 Mean group-level performance across interpolations

Contrasting the mean model performances across participants and learning methods provided evidence for whether functional domains exhibit patient-specific heterogeneity or cohort-level homogeneity. Bulbar area functions demonstrated mixed patterns, with speech exhibiting a combination of cohort homogeneity and participant heterogeneity. This was evidenced by transfer incremental learning improving outcome error and correlation over individual batch models (RMSE , ). In contrast, salivation showed near-zero correlation for the individual model and persistent negative correlations for transfer models, suggesting high noise or complex participant-level patterns. Swallowing showed more cohort homogeneity, with transfer batch models [RMSE (0.05–0.19), (0.04–0.58)] outperforming both individual batch and incremental fine-tuned models. Fine motor area functions similarly demonstrated mixed results, as handwriting exhibited cohort patterns benefiting from transfer learning and participant heterogeneity, with incremental fine-tuning improving error and correlation over individual batch models (RMSE , ). Cutting displayed similar heterogeneity, with incremental fine-tuning improving correlation performance over transfer batch models () with nearly equal prediction error. Dressing had moderate cohort homogeneity, with transfer batch showing a stronger correlation than incremental fine-tuning () with no change in error. The gross motor area functions were predominantly cohort homogeneous but showed selective participant heterogeneity, particularly in walking, where incremental fine-tuning marginally improved error and correlation over fine-tuning (RMSE , ), and in stairs, where incremental fine-tuning improved both error and correlation over individual batch models (RMSE , ). These results indicate that participant variations exist within the predominant cohort patterns. 
Respiratory functions exhibited the strongest evidence of patient heterogeneity, with incremental fine-tuning of transfer models improving negative correlations from individual batch in dyspnea (), orthopnea (), and respiratory ().

3.1.3 Mean group-level performance across interpolations and ALSFRS-R scales

As shown in the Subscale Mean row of Table 6, transfer batch models demonstrated the best subscale performance with the lowest mean prediction error [RMSE (0.14–0.22)], outperforming transfer incremental [RMSE (0.14–0.25)] and individual batch learning [RMSE (0.22–0.29)]. Overall, across all participants and interpolation techniques, transfer incremental learning showed the highest mean correlation [ (0.20–0.49)] compared to transfer batch [ (0.08–0.37)] and individual batch learning [ (0.09–0.13)] for subscale prediction. On composite scales, individual batch models showed the lowest error [RMSE (2.24–4.05)] but only weak correlation [ (0.13–0.35)], while transfer batch resulted in the highest error [RMSE (2.69–5.53)] and a negative correlation [ (0.51–0.20)]. Transfer incremental models had the best balance between error and correlation for the composite scale, with a moderate error [RMSE (2.81–4.27)], slightly higher than the error from individual batch models but lower than transfer batch models, and improved correlation [ (0.16–0.69)].
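The two evaluation metrics contrasted above can diverge, which is why both are reported. The following is a minimal sketch of how RMSE and Pearson correlation might be computed for one model; the function names and the toy values are illustrative assumptions and are not taken from the study's code or data.

```python
import math


def rmse(pred, obs):
    """Root mean square error between predictions and observed scores."""
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs))


def pearson_r(pred, obs):
    """Pearson correlation; undefined (division by zero) for a constant series."""
    n = len(obs)
    mean_p, mean_o = sum(pred) / n, sum(obs) / n
    cov = sum((p - mean_p) * (o - mean_o) for p, o in zip(pred, obs))
    sd_p = math.sqrt(sum((p - mean_p) ** 2 for p in pred))
    sd_o = math.sqrt(sum((o - mean_o) ** 2 for o in obs))
    return cov / (sd_p * sd_o)


# Hypothetical subscale values: the observed score declines while the
# prediction drifts upward, yet the two stay numerically close.
observed = [4.0, 3.9, 3.8, 3.7]
predicted = [3.8, 3.85, 3.9, 3.95]

error = rmse(predicted, observed)
trend = pearson_r(predicted, observed)
```

In this toy case the error is small while the correlation is strongly negative, so a model can sit close to the observed scores and still move in the wrong direction.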

3.2 Performance contrast between pseudo-label interpolations

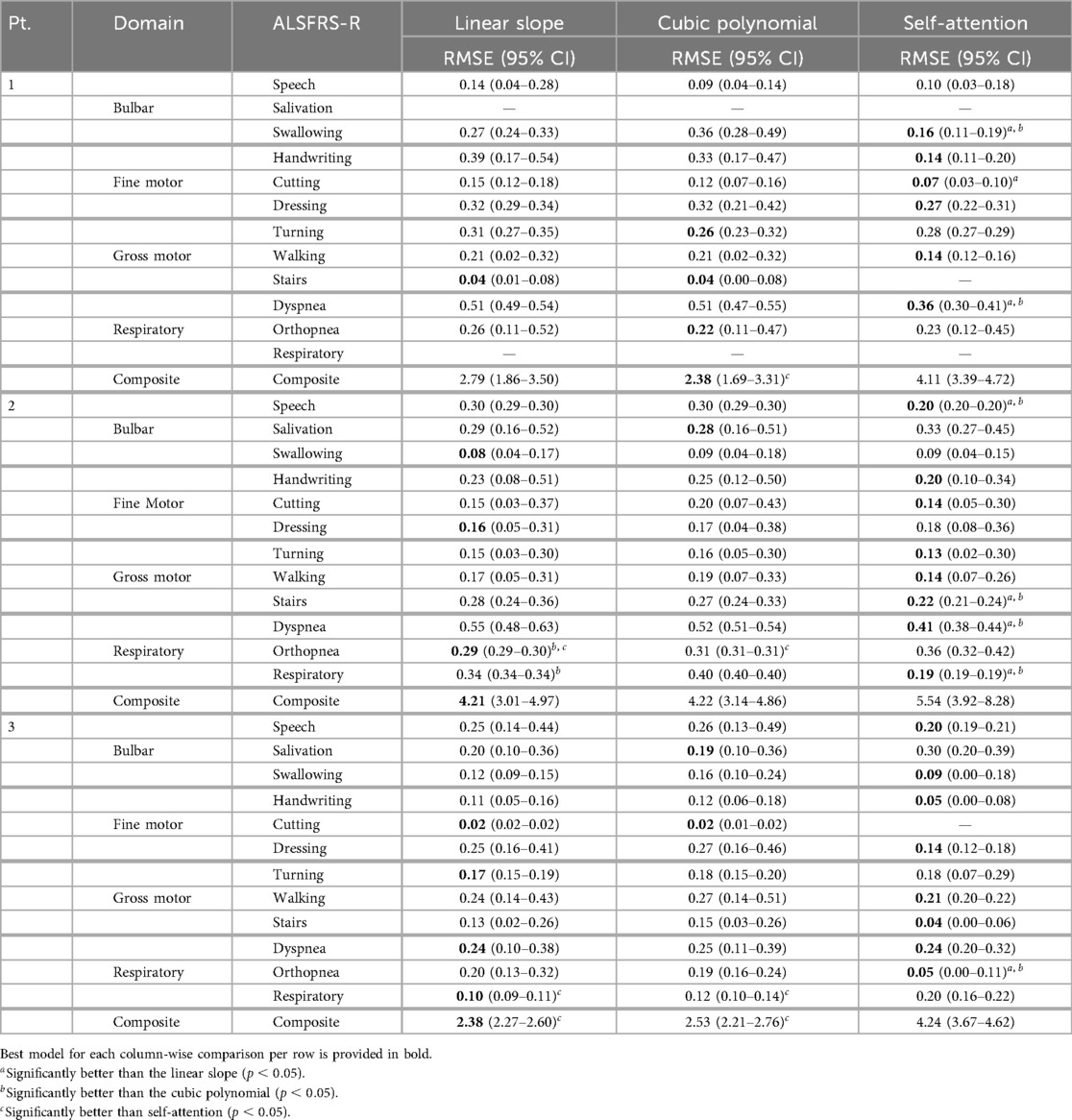

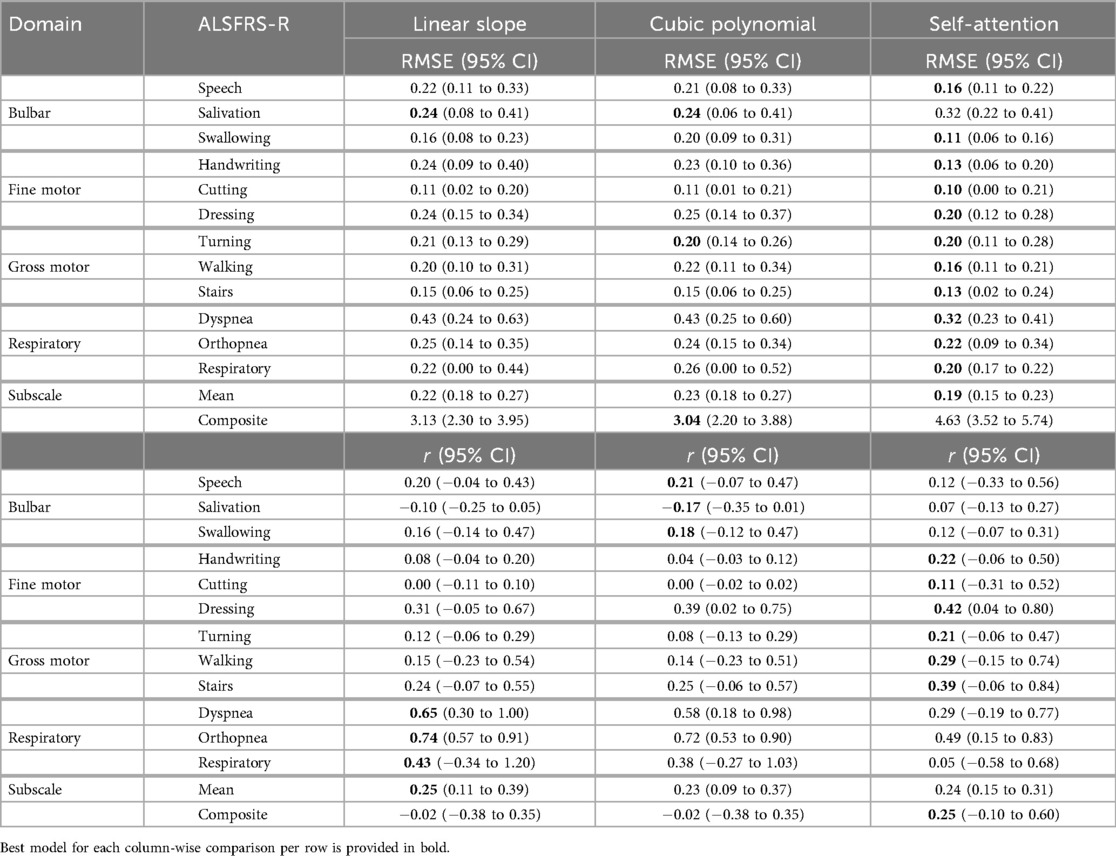

To evaluate how the pseudo-labeling interpolation approach affects model performance, prediction error and outcome correlation were averaged across learning methods, as detailed in Tables 7 and 8, and across participants in Table 9. Results demonstrate that the non-linear cubic polynomial and self-attention interpolations of ALSFRS-R scales more closely follow daily changes in in-home sensor health measurements, with a few exceptions where linear interpolation resulted in lower errors (P1: composite; P2: dressing, orthopnea, composite; P3: turning, respiratory, composite), as illustrated by the Taylor diagrams in Figure 5.
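To make the idea of pseudo-label interpolation concrete, the sketch below generates daily pseudo-labels between clinic visits using piecewise-linear interpolation. It assumes the linear variant simply connects consecutive visit scores, which may differ from the study's exact linear slope procedure; the function name, visit days, and scores are hypothetical. The cubic polynomial and self-attention variants are not shown.

```python
def linear_pseudo_labels(visit_days, visit_scores):
    """Daily pseudo-labels by piecewise-linear interpolation between visits.

    visit_days must be increasing integers (days since the first visit).
    """
    labels = {}
    visits = list(zip(visit_days, visit_scores))
    for (d0, s0), (d1, s1) in zip(visits, visits[1:]):
        slope = (s1 - s0) / (d1 - d0)  # score change per day in this interval
        for day in range(d0, d1 + 1):
            labels[day] = s0 + slope * (day - d0)
    return labels


# Hypothetical composite ALSFRS-R scores at roughly quarterly visits.
days = [0, 90, 180]
scores = [40.0, 37.0, 31.0]
daily = linear_pseudo_labels(days, scores)
```

Each day between two visits then receives a target value, so a model can be trained on daily sensor features even though scores are only observed at the visits.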

Table 7. Mean model prediction error (RMSE) across learning methods for each pseudo-labeling interpolation.

Table 8. Mean model outcome correlation () across learning methods for each pseudo-labeling interpolation.

Table 9. Mean model prediction error (RMSE) and outcome correlation () across learning methods and participants for each pseudo-labeling interpolation.

3.2.1 Mean performance across learning methods

The bulbar ALSFRS-R scales (speech, salivation, swallowing) resulted in mean improvements to model error from non-linear cubic and self-attention interpolation compared to linear interpolation. For speech, cubic reduced model error for P1 [RMSE , (0–0)], while self-attention provided improved error and correlation for P2 (RMSE , ) and improved error and correlation for P3 (RMSE , ). Salivation performed only marginally better with cubic interpolation over linear interpolation, with an increase in correlation for P2 (RMSE , ) and a minor correlation change for P3 (RMSE , ). For the swallowing subscale, self-attention interpolation provided lower error across all participants, with a decreased correlation for P1 (RMSE , ) and no change in correlation for P3 [RMSE , (0–0)], while cubic and self-attention performed the same, with a very minor difference in error for P2 [RMSE , (0–0)] compared to linear interpolation.

For fine-motor subscales (handwriting, cutting, and dressing), self-attention interpolation provided the lowest model error, with the exception of cutting for P3 and dressing for P2. For the handwriting scale, self-attention reduced error and either increased or maintained correlation for P1 [RMSE , (0–0)], P2 (RMSE , ), and P3 [RMSE , (0–0)]. Cutting also performed better on self-attention pseudo-labels, reducing error for P1 [RMSE , (0–0)] and for P2 (RMSE , ) with improved correlation, while cubic and linear models performed equally for P3 [RMSE (0.01–0.02), (0–0)]. Dressing models fit on self-attention interpolated labels had decreased model error but with lower correlation for P1 (RMSE , ) and significantly better correlation for P3 (RMSE , ), while the linear slope provided the best error and correlation compared to non-linear interpolations for P2 [RMSE (0.05–0.31), (0.46–0.99)].

For gross motor ALSFRS-R scales (turning, walking, stairs), self-attention interpolation again resulted in improved model error in most cases. The turning subscale had mixed outcomes: cubic interpolation improved error over linear but with decreased correlation for P1 (RMSE , ) and self-attention improved error and correlation for P2 (RMSE , ) and showed a minor change in error with improved correlation for P3 (RMSE , ). For walking, self-attention interpolation resulted in the lowest error for all participants, with significantly improved correlation for P1 (RMSE , ), a moderate decrease in correlation for P2 (RMSE , ), and an increase for P3 (RMSE , ). The stairs models had the lowest prediction error with self-attention interpolation, with a slight improvement in correlation for P2 (RMSE , ) and no change for P3 [RMSE , (0–0)]. However, linear slope and cubic interpolation performed equally for P1 [RMSE (0.01–0.08), (0–0)].

Within the respiration-related ALSFRS-R scales (dyspnea, orthopnea, respiratory), models fit on linear slope demonstrated better error than non-linear interpolations among all functional domains. For the dyspnea scale, self-attention interpolation improved error but with a decreased correlation for P1 (RMSE , ) and a marginal correlation change for P2 (RMSE , ). Self-attention interpolation had equal prediction error to the baseline linear slope but at a decreased correlation for P3 [RMSE (0.20–0.32), ]. For orthopnea models, transfer batch interpolation improved error but with a slight reduction in correlation for P1 (RMSE , ) compared to the linear slope. Self-attention interpolation increased model error over linear interpolation but with improved correlation for P2 (RMSE , ), while the opposite occurred for P3 (RMSE , ), reducing model error and significantly decreasing correlation in P3. Respiratory had the lowest error from self-attention but with a significant reduction in correlation for P2 (RMSE , ), while the linear slope provided a slight decrease in both error and correlation compared to cubic interpolation for P3 (RMSE , ).

For the composite ALSFRS-R scale, linear slope models provided the best prediction error but had a decreased outcome correlation compared to self-attention interpolation for P1 (RMSE , ), P2 (RMSE , ), and P3 (RMSE , ). The results suggest that while self-attention was best at capturing the overall changes within individual ALSFRS-R subscales, the summation into the ALSFRS-R composite score compensates for these changes, resulting in a linear trajectory that conforms to the clinical practice of using a linear slope to estimate the rate of functional change.

3.2.2 Mean group-level performance across learning methods and ALSFRS-R scales

Self-attention interpolation had the best subscale-specific performance with the lowest mean prediction error [RMSE (0.15–0.23), (0.15–0.31)] across participants and ALSFRS-R subscales, as shown in the Subscale Mean row of Table 9, outperforming linear and cubic interpolation in 21 of 34 subscale comparisons excluding ties, reported in bold in Table 7. Linear [RMSE (0.18–0.27), (0.11–0.39)] and cubic [RMSE (0.18–0.27), (0.09–0.37)] interpolations showed nearly identical mean performance and were optimal in only six and five subscale models, respectively. For composite scales, the pattern reversed, with linear interpolation having lower error in two of three comparisons [RMSE (2.30–3.95)] and cubic in one of three [RMSE (2.20–3.88)], while self-attention raised error [RMSE (3.52–5.74)], implying that composite trajectories are more accurately captured by a stable linear slope than by self-attention interpolation, which may have been over-responsive. However, the choice of evaluation metric also factors into pseudo-labeling selection, with self-attention providing the best correlation for composite models when prioritizing the prediction–outcome trend agreement [ (0.10 to 0.60)] compared to linear and cubic interpolation [ (0.38 to 0.35)].
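Tallies such as "21 of 34 comparisons excluding ties" can be reproduced from a table of per-contrast errors. The sketch below shows one way to count them, assuming a contrast is won by the single method with the strictly lowest RMSE and is a tie otherwise; the function name and the RMSE values are hypothetical and are not the values in Table 7.

```python
def count_wins(rmse_by_method):
    """Count contrasts each method wins with the strictly lowest RMSE.

    rmse_by_method maps a method name to a list of RMSE values, one per
    participant-subscale contrast, in the same order for every method.
    Contrasts where two or more methods share the minimum count as ties.
    """
    methods = list(rmse_by_method)
    n_contrasts = len(rmse_by_method[methods[0]])
    wins = {m: 0 for m in methods}
    ties = 0
    for i in range(n_contrasts):
        best = min(rmse_by_method[m][i] for m in methods)
        winners = [m for m in methods if rmse_by_method[m][i] == best]
        if len(winners) == 1:
            wins[winners[0]] += 1
        else:
            ties += 1
    return wins, ties


# Hypothetical RMSE values for four participant-subscale contrasts.
example = {
    "linear": [0.20, 0.10, 0.30, 0.05],
    "cubic": [0.18, 0.10, 0.31, 0.07],
    "self_attention": [0.15, 0.12, 0.25, 0.06],
}
wins, ties = count_wins(example)
```

Exact equality is used here on the assumption that ties are judged at the precision reported in the tables; values compared at full floating-point precision would rarely tie.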

4 Discussion

Semi-supervised learning approaches were evaluated for predicting ALSFRS-R scale trajectories using in-home sensor health features. We compared participant-level batch learning and cohort-initialized transfer learning, which used batch and incremental fine-tuning strategies. The results demonstrate that adapting cohort transfer learning models with additional individual-level data through incremental fine-tuning improves prediction error (RMSE) and outcome correlation (Pearson’s correlation coefficient).

4.1 ALSFRS-R scales exhibit mixed participant-cohort homogeneity

ALS decline progression varies across different ALSFRS-R functional areas, creating multi-dimensional trajectories, where some subscales decline predictably across the cohort, while others follow patient-specific trends. As illustrated in Figure 3, rates of decline in bulbar, gross motor, and respiratory area measures for P1 were marked by periods of stability followed by sudden decreases compared to the regular decline observed for P2 and P3. Similarly, fine motor measures for P1 increased around November 2023 followed by a regular rate of decline, mirroring the decreases observed for P2 and P3. Cohort-level model performances, when averaged across pseudo-label interpolations, confirmed that bulbar and gross motor scales largely follow cohort-level patterns. Transfer batch learning provided the lowest error for swallowing and gross motor measures, while subscales such as speech and handwriting benefited more from transfer incremental tuning. Conversely, respiratory functions demonstrated more participant-level heterogeneity, with transfer incremental fine-tuning inverting outcome correlations from negative in individual batch models to strongly positive. These findings suggest that tailoring learning methods to the underlying homogeneity–heterogeneity profile of each functional domain will improve model optimization, with cohort-homogeneous scales making better use of transfer learning and participant-heterogeneous scales requiring incremental fine-tuning to better capture patient-specific patterns. Additionally, mixed-profile subscales could benefit from adaptive learning approaches when participant-level patterns deviate from the cohort-baseline variability.

4.2 Transfer learning improves performance in subscale models

When results were contrasted by learning technique, incremental fine-tuning of transfer learning models provided the best balance between predictive accuracy and correlation. The Taylor diagram analysis in Figure 6 of model predictions aggregated across participants illustrated how transfer learning models outperformed participant-level batch learning across most subscales, aligning predictions closer to reference vectors with improved accuracy and correlation. Performance differences between individual batch and transfer learning models were particularly pronounced across subscales, as presented in Table 4, where transfer batch models reduced the mean prediction error in 12 of 34 comparisons and transfer incremental learning did so in 16 of 34, excluding ties. Individual batch models exhibited increased error and weaker correlations, achieving best performance in only four ALSFRS-R scale models (P1: walking, stairs; P3: turning, orthopnea). In terms of outcome correlation, transfer incremental learning increased correlation in 20 of 34 comparisons, as presented in Table 5. As such, although transfer incremental learning had a slightly higher mean prediction error, it was more effective at capturing individual trajectory patterns than transfer batch learning, suggesting that it is better at detecting temporal changes in ALS decline. Additionally, the improved performance of transfer learning approaches across subscales suggests that ALS progression follows cohort-level patterns predictive of individual trajectories despite disease heterogeneity, supporting the presence of underlying shared physiological or functional characteristics that are captured by sensor data as detected by cohort-level models. The effectiveness of incremental fine-tuning indicates that personalized ALS progression tracking should incorporate both group parameters and adaptive learning for predicting decline in individual patients.
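The contrast between a cohort-level batch model and incremental fine-tuning can be illustrated with a deliberately small example. The sketch below assumes a one-feature linear model trained by stochastic gradient descent as a stand-in for the study's regression models, which are not reproduced here; all names, data, and hyperparameters are hypothetical.

```python
import math


def sgd_update(xs, ys, w, b, lr=0.1, epochs=50):
    """Continue fitting y ~ w*x + b with per-sample gradient steps from (w, b)."""
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return w, b


def rmse(xs, ys, w, b):
    """Root mean square error of the linear model on (xs, ys)."""
    return math.sqrt(sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs))


# Hypothetical toy data: one sensor feature in [0, 1] and one subscale score.
feature = [(i * 7 % 12) / 11 for i in range(12)]
cohort_y = [4.0 - 2.0 * x for x in feature]       # shared cohort trend
participant_y = [4.0 - 3.0 * x for x in feature]  # steeper individual decline

# 1) Cohort-level batch model, used unchanged as the transfer baseline.
w0, b0 = sgd_update(feature, cohort_y, w=0.0, b=0.0, epochs=500)
rmse_transfer_batch = rmse(feature, participant_y, w0, b0)

# 2) Incremental fine-tuning: start from the cohort weights and update them
#    as each new chunk of participant data arrives, without refitting.
w, b = w0, b0
for start in range(0, len(feature), 4):
    chunk_x = feature[start:start + 4]
    chunk_y = participant_y[start:start + 4]
    w, b = sgd_update(chunk_x, chunk_y, w, b)
rmse_incremental = rmse(feature, participant_y, w, b)
```

Starting from the cohort weights rather than from zero is what makes this a transfer setting: the participant's data only has to correct the difference between the cohort trend and the individual trend.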

4.3 Integrating passive sensor analytics into personalized ALS clinical care

Although preliminary and limited in generalizability, the findings from this case series suggest that integrating passive in-home sensor monitoring into routine ALS care may help clinicians better detect and anticipate functional changes between quarterly assessments, differentiating stable periods from more rapid decline. More specifically, this study shows that combining semi-supervised transfer learning with continual fine-tuning on patient-level sensor data improves the estimation of ALSFRS-R subscale trajectories compared to batch learning, supporting the use of personalized, adaptive algorithms that track each patient's individual disease course starting from models pretrained on group-level data. The improved performance of group-initialized transfer models indicates that, even with a minimal cohort of three patients, pooling data across patients into a baseline model of disease progression, which can then be adapted to new patients over time, can leverage patterns shared across the case series cohort. The findings also underscore the need for tailoring learning strategies to specific clinical problems, whether they are subscales or composite measures, to provide more reliable indicators of disease progression. In practice, clinicians could receive near real-time notifications about deviations in a patient’s functional trajectories, an approach that is being evaluated in the parent study. Such notifications may enable proactive adjustments to respiratory support, assistive ambulatory devices, nutritional interventions, or rehabilitation schedules, rather than waiting weeks for the next in-person assessment. Sensor-based analytics may also reduce the recall bias and subjective self-assessment errors common in clinic-based evaluations while providing actionable insights into patients’ day-to-day variability, especially for those whose trajectories are unpredictable within subscales or across ALSFRS-R functional areas.
The combination of transfer and incremental learning has the potential to optimize clinical workflows and attention by identifying which patients need closer monitoring and therapies, and when. Additionally, examining modeled outcomes by the pseudo-labeling technique showed that the choice of optimal interpolation is largely outcome- and metric-specific, with performance varying across subscale and composite measures. In the context of ALS clinical trials, the continuous outcome estimates derived from semi-supervised models could serve as prognostic endpoints.

5 Conclusion

This study demonstrates that semi-supervised machine learning using in-home sensor data can effectively predict ALSFRS-R scale trajectories, with incremental fine-tuned transfer learning performing well across all functional domains. Given the three-participant case series cohort, the results demonstrate feasibility and within-participant accuracy rather than generalizable effectiveness, with further confirmation requiring a larger, multi-site cohort. The findings indicate that the choice of interpolation techniques for estimating between-visit decline should be tailored to specific clinical objectives, with self-attention interpolation performing best for subscale-level monitoring and polynomial function interpolation performing better for the summated composite ALSFRS-R score. However, the generalizability of reported modeled outcomes is limited by the small participant cohort and reliance on bed sensor and motion detection data, which lack comprehensive gait measurements that may be particularly important for assessing motor function. The models with low prediction error but low outcome correlation for bulbar and motor-related subscales (P1: handwriting, cutting, stairs; P2: swallowing; P3: swallowing, handwriting, cutting, stairs) exemplify the need for motor-related measurements. Future research could validate the learning methods applied in this analysis in a larger, multi-center study to establish broader applicability, explore complementary clinical measures such as forced vital capacity (FVC), and investigate enhanced feature engineering approaches that could improve performance for patient-heterogeneous ALSFRS-R component scales.
Additionally, developing adaptive incremental learning algorithms with patient-specific clinical feedback mechanisms for ground truth scoring and extending this framework through multi-model ensemble approaches are promising directions for advancing personalized disease progression monitoring that could transform clinical decision-making in neurodegenerative care. As part of the parent study, we will expand the participant sample and train larger cohort-level transfer models with additional training data to improve their sensitivity and specificity to between-visit changes. Overall, this work aims to enable earlier detection of clinically meaningful changes in ALS progression as support for timely interventions that address functional decline.

Data availability statement

The datasets presented in this article are not readily available because they are derived from human subjects and contain sensitive information. Requests to access the datasets should be directed to William Janes, Principal Investigator, janesw@health.missouri.edu, subject to institutional review board approval.

Ethics statement

The studies involving humans were approved by the University of Missouri Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

NM: Investigation, Writing – review & editing, Software, Visualization, Formal analysis, Writing – original draft, Validation, Methodology. WJ: Project administration, Supervision, Conceptualization, Writing – review & editing, Funding acquisition. SM: Data curation, Project administration, Writing – review & editing. MP: Funding acquisition, Methodology, Writing – review & editing, Conceptualization. XS: Conceptualization, Funding acquisition, Writing – review & editing, Methodology, Supervision.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the Department of Defense Office of the Congressionally Directed Medical Research Programs (CDMRP) through the Amyotrophic Lateral Sclerosis Research Program (ALSRP) Clinical Development Award (#W81XWH-22-1-0491) and the ALS Association (#24-AT-722). Opinions, interpretations, conclusions, and recommendations are those of the authors and are not necessarily endorsed by the Department of Defense.

Acknowledgments

The University of Missouri has a financial relationship with Foresite Healthcare, which produces the sensor platform used in this study. The authors thank Zachary Selby.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Generative AI was used in the creation of this manuscript. Preparation of manuscript tables in LaTeX was assisted by Microsoft Copilot (version 1.25042.146) delivered via Microsoft 365 Copilot for Work.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fdgth.2025.1657749/full#supplementary-material


Keywords: amyotrophic lateral sclerosis, disease progression, functional status, patient monitoring, personalized medicine, remote sensing technology, semi-supervised machine learning

Citation: Marchal N, Janes WE, Marushak S, Popescu M and Song X (2025) Enhancing ALS progression tracking with semi-supervised ALSFRS-R scores estimated from ambient home health monitoring. Front. Digit. Health 7:1657749. doi: 10.3389/fdgth.2025.1657749

Received: 2 July 2025; Accepted: 26 August 2025;

Published: 26 September 2025.

Edited by:

Ming Huang, Chinese Academy of Sciences (CAS), China

Reviewed by:

Pilar Maria Ferraro, IRCCS Ospedale Policlinico San Martino, Italy

Fangmin Sun, Chinese Academy of Sciences (CAS), China

Copyright: © 2025 Marchal, Janes, Marushak, Popescu and Song. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Noah Marchal, noah.marchal@health.missouri.edu
