- 1Centre for Pattern Recognition and Data Analytics, Deakin University, Geelong, VIC, Australia

- 2Centre for Molecular and Medical Research, Deakin University, Geelong, VIC, Australia

- 3Bioinformatics Core Research Group, Deakin University, Geelong, VIC, Australia

Since the turn of the century, researchers have sought to diagnose cancer based on gene expression signatures measured from the blood or biopsy as biomarkers. This task, known as classification, is typically solved using a suite of algorithms that learn a mathematical rule capable of discriminating one group (“cases”) from another (“controls”). However, discriminatory methods can only identify cancerous samples that resemble those that the algorithm already saw during training. As such, discriminatory methods may be ill-suited for the classification of cancer: because the possibility space of cancer is definitively large, the existence of a one-of-a-kind gene expression signature is likely. Instead, we propose using an established surveillance method that detects anomalous samples based on their deviation from a learned normal steady-state structure. By transferring this method to transcriptomic data, we can create an anomaly detector for tissue transcriptomes, a “tissue detector,” that is capable of identifying cancer without ever seeing a single cancer example. As a proof-of-concept, we train a “tissue detector” on normal GTEx samples that can classify TCGA samples with >90% AUC for 3 out of 6 tissues. Importantly, we find that the classification accuracy is improved simply by adding more healthy samples. We conclude this report by emphasizing the conceptual advantages of anomaly detection and by highlighting future directions for this field of study.

1. Introduction

Cancer is a collection of complex heterogeneous diseases with known genetic and environmental risk factors. Physicians diagnose cancer by carefully weighing evidence collected from patient history, physical examination, laboratory testing, clinical imaging, and biopsy. Computers can aid diagnosis and improve outcomes by mitigating diagnostic errors. Indeed, this objective is actively researched, where studies have shown that computers can reduce the reading errors of mammography (Rangayyan et al., 2007) and commuted tomographic (CT) (Chan et al., 2008) images. Meanwhile, researchers have also sought to use computers to diagnose cancer based on gene expression signatures measured by high-throughput assays like microarray or next-generation sequencing (Alon et al., 1999; Golub et al., 1999). Gene expression signatures are ideal biomarkers because mRNA expression is dynamically altered in response to changes in the cellular environment. However, developing molecular diagnostics requires large data sets which have only recently become available due to reduced assay costs. These data could usher in a new era in clinical diagnostics.

Within the last decade, scientists have produced large transcriptomic data sets containing thousands of clinical samples. Of these, the TCGA stands out as the most comprehensive, having sequenced more than 10,000 unique tissue samples from 33 cancers and healthy tissue controls (Weinstein et al., 2013). Meanwhile, an equally large study, GTEx, has sequenced non-cancerous samples comprising 54 unique human tissue types (Lonsdale et al., 2013). Already, a number of studies have used the TCGA data to build diagnostic classifiers that can determine whether a tissue sample is cancerous or not based only on its gene expression signature (Kourou et al., 2015). This task, known as classification, is typically solved using a suite of algorithms that learn a mathematical rule capable of discriminating one group (“cases”) from another (“controls”). This rule is learned from a large portion of the data called the “training set,” and then evaluated on withheld data called the “test set.” Discriminatory classifiers like artificial neural networks (ANNs), support vector machines (SVMs), and random forests (RFs) have become popular in the biological sciences (Jensen and Bateman, 2011). All of these work well for high-dimensional data, so long as the training set contains enough correctly labeled cases and controls.

Clinicians need to answer questions like, “Is this tissue cancerous or not?” and “Is this cancer malignant or not?” ANNs, SVMs, and RFs can all answer these questions by learning a discriminatory rule from labeled data. However, discriminative methods have two major limitations, both of which apply to cancer classification. The first limitation is theoretical: discriminative methods suffer from the problem of having to see all possible abnormalities in order to make an accurate and generalizable prediction (Sodemann et al., 2012). This is relevant to cancer because there exists countless ways in which a normal cell could become cancerous. As such, the label “cancer” does not encompass a known homogeneous group, but rather a heterogeneous collection of unknown types. It is simply not possible to anticipate the nature or extent of these “unknown unknowns” (Rumsfeld, 2002). The second limitation is practical: even for an ideal homogeneous cancer class, the tumor may occur too rarely for there to exist enough data to learn a meaningful discrimination rule. Discriminatory methods require sufficient sample sizes to learn a rule that tolerates the large variance observed in replicates of transcriptomic data (McIntyre et al., 2011). For these reasons, discriminatory methods are doomed to fail.

On the other hand, we expect that the possibility space for steady-state normal tissue is appreciably smaller than that of the aberrant tumor. By modeling this normal latent structure directly, we could learn a new rule that detects cancerous samples as a departure from normal. This follows the biological intuition that tumors themselves are anomalies of normal cellular physiology. The field of machine learning already has well-established methods that can detect anomalies in high-dimensional data, especially images, for the purpose of surveillance (Budhaditya et al., 2009). By transferring these methods to transcriptomic data, we can create an anomaly detector for tissue transcriptomes, a “tissue detector,” that is capable of identifying cancer without ever seeing a single cancer example. In this report, we show that “tissue detectors” are sensible and accurate for the classification of cancer based on gene expression signatures. We do this by training an anomaly detection model on normal GTEx samples, then using it to accurately differentiate normal from cancerous TCGA samples. In presenting these results, we highlight future research directions for the detection of anomalous gene expression signatures.

2. Methods

2.1. Data Acquisition

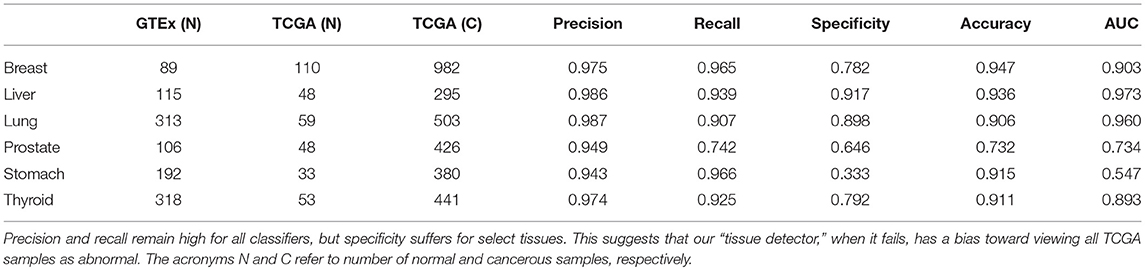

We acquired the combined GTEx and TCGA data from Wang et al. (2018), who harmonized them using quantile normalization and svaseq-based batch effect removal (Wang et al., 2018). After downloading the data in fragments per kilobase of transcript per million (FPKM), we chose six tissues that had large sample sizes in both GTEx and TCGA: breast, liver, lung, prostate, stomach, and thyroid. Table 1 shows the number of healthy and cancer samples for each tissue.

Table 1. This table shows the number of samples in each GTEx training set and TCGA test set, alongside the test set performance of that anomaly detector.

2.2. Model Training

We refer to a predictive model and its threshold as a “tissue detector,” of which we trained six (one for each tissue). To train the “tissue detector,” we z-score standardized each gene within the GTEx training set, then performed a residual analysis of the GTEx training set. Residual analysis is based on the principle that most data have an underlying structure that can be largely reconstructed using a subset of the principal components, whereby the difference between the reduced representation and the original observations are termed the residues. Residual analysis uses the squared value of the residue as a proven way to measure the degree to which an observation is an outlier. For normally distributed data, the squared value of the residues follows a non-central χ2 distribution. By comparing the norm of the residue for an unlabeled sample to a procedurally-selected threshold (corresponding to a stipulated false alarm rate), we have a predictive rule that decides whether to reject the null hypothesis and call that sample an anomaly (Jackson and Mudholkar, 1979). Our “tissue detector” method is available from https://github.com/thinng/tissue_detector.

2.3. Model Testing

After training each model on the GTEx data, we evaluated its performance on the respective TCGA data. For each sample in the test set, we calculated an anomaly score based on the distance between that sample and the model reference. We did this by projecting the sample to the principal component space and measuring its residue, where higher residue scores indicate that the sample is more anomalous. If the anomaly score is larger than the anomaly detection threshold, the sample is called abnormal (i.e., an outlier). Otherwise, the sample is called normal (i.e., an inlier). This allows us to differentiate between normal and cancerous TCGA samples without ever seeing a single cancer example. We repeated this procedure for increasingly smaller subsets of the training data, with specificity averaged across ten bootstraps each.

By using the Wang et al. data, we can evaluate the utility of the anomaly detection method with all batch effects already removed. Nevertheless, we chose to use the GTEx data as the “normal” training set so that any residual batch effects between the GTEx and TCGA data would cause the “tissue detector” to call false positives (i.e., to call the healthy TCGA abnormal). For a robust and conservative estimate of performance, we focus our discussion on specificity (which is especially penalized by false positives).

3. Results and Discussion

3.1. Cancer Is a Tissue Anomaly

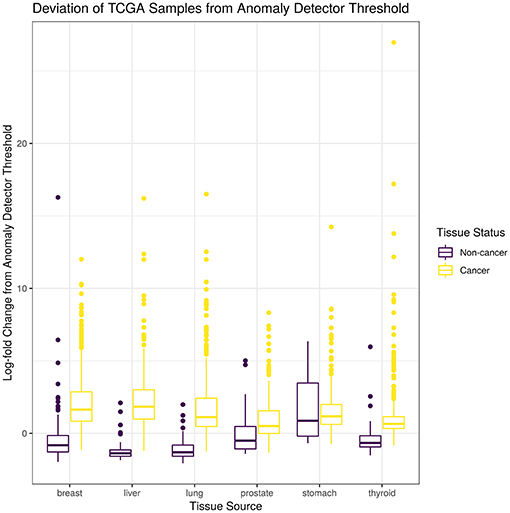

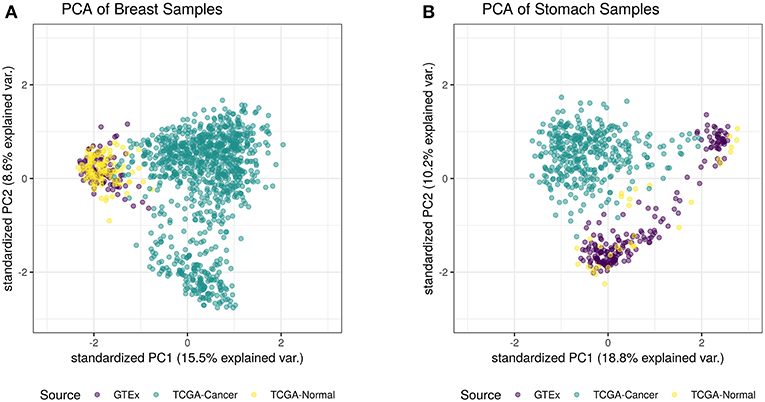

For this study, we trained a “tissue detector” on each of the six tissues described in Table 1, using only the GTEx samples for training. We then evaluated its performance on withheld TCGA data by calculating an anomaly score for each TCGA sample and comparing it against the anomaly threshold: if the score is greater than the threshold, the sample is considered an anomaly (i.e., cancerous). Figure 1 shows the (log-)ratio of per-sample anomaly scores relative to the tissue-specific anomaly threshold (y-axis) for each tissue (x-axis), faceted based on whether the sample is cancerous. Especially for breast, liver, lung, and thyroid data, our “tissue detector” not only recognizes most TCGA cancer samples as anomalies, but also recognizes most TCGA healthy samples as normal. On the other hand, anomaly detection is poor for prostate and stomach tissue. Table 1 shows the precision, recall, and specificity for each “tissue detector.” For almost all tissues, recall is better than specificity, meaning false positives are more common than false negatives. Figure 2 shows the first two principal components of the best performing tissue (breast) with the worst performing tissue (stomach).

Figure 1. This figure shows the (log-)ratio of per-sample anomaly scores relative to the tissue-specific anomaly threshold (y-axis) for each tissue (x-axis), faceted based on whether the sample is cancerous. The “tissue detector” calls any sample above the x-intercept threshold as an anomaly (i.e., cancerous). The threshold is selected procedurally during model training. This figure shows performance for TCGA test set only; no TCGA samples were included in the training set.

Figure 2. This figure shows the first two principal components of the best performing tissue (breast; A) and the worst performing tissue (stomach; B), calculated using the log of all tissue data. While the healthy TCGA breast tissue is indistinguishable from normal GTEx tissue, the healthy TCGA stomach falls slightly outside the range of normal GTEx tissue. Although the healthy TCGA stomach tissue is markedly different than the cancer tissue, many of these samples look like anomalies from the perspective of the GTEx “tissue detector”.

3.2. Detection Improves With More Normal Samples

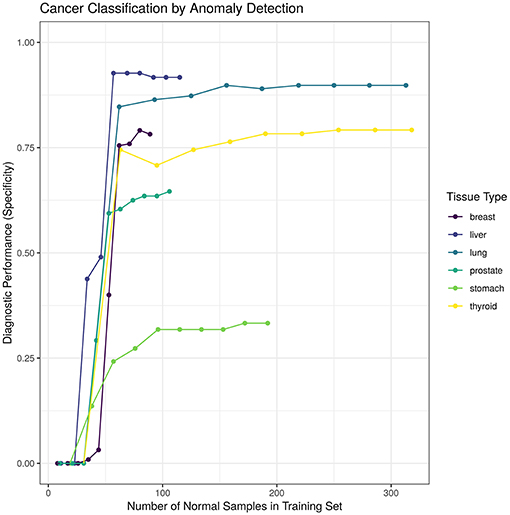

We hypothesized that increasing the number of normal samples shown to the “tissue detector” during model training would improve its specificity, especially for the poorly performing prostate and stomach detectors. To test this hypothesis, we measured the specificity of each “tissue detector” as trained on increasingly smaller subsets of the GTEx data. Figure 3 shows the specificity for each “tissue detector' (y-axis) according to the number of samples in the training set (x-axis). A pattern emerges: the inclusion of additional GTEx samples can improve the classification of TCGA samples, up until a point of diminishing returns.

Figure 3. This figure shows the specificity for each “tissue detector” (y-axis) according to the number of samples in the training set (x-axis). Performance is averaged across 10 bootstraps of the GTEx training set. This figure shows performance for TCGA test set only; no TCGA samples were included in the training set.

4. Current Challenges

4.1. Translating Concept to Clinic

In this study, we used normal GTEx samples to train a model that could classify TCGA samples. We acknowledge that there is no direct clinical application for this experiment, since it is trivial to differentiate between cancer and non-cancer tissue using simple microscopy. As a proof-of-concept, we chose to use these data to demonstrate tissue anomaly detection because the data set is sufficiently large and publicly available. However, anomaly detection could suit many health surveillance applications. By changing the class of samples used in the training set, the meaning of “anomaly” changes. For example, if we include only benign tumors in the training set, then an anomaly detector might identify whether a biopsied tumor is potentially malignant (i.e., not benign). Likewise, using a training set of blood biomarkers for patients with surgically resected tumors might yield an anomaly detector that can identify whether a primary tumor has recurred. Other novel applications might include training a “tissue detector” on healthy lymphatic tissue to screen for lymphatic metastasis or on chemotherapy-sensitive tumor biopsies to screen for emerging drug resistance. Whatever the application, anomaly detection is unique in that it only requires that there exist data for the null state that is under surveillance: it is not necessary that researchers have characterized the full spectrum of the undesired outcome.

4.2. Data Integration

One challenge faced in the detection of anomalous gene expression signatures is the limited amount of data available for training and testing. Even as data sets get larger, anomaly detection will still benefit from the combination of multiple data sets, known as horizontal data integration (Tseng et al., 2012). However, horizontal data integration is complicated because every data set has intra-batch and inter-batch effects caused by systematic or random differences in sample collection. These differences could arise from a variety of biological factors (e.g., biopsy site, age, sex) or technical factors (e.g., RNA extraction protocol, sequencing assay), including latent factors unknown to the investigator (Leek et al., 2012). Although software like ComBat and sva can remove intra-batch biases, inter-batch biases may still remain. Indeed, inter-batch biases could explain why our “tissue detectors,” when they fail, tend to view all TCGA samples as abnormal (though the “normal” TCGA samples do all come from sites adjacent to cancerous tissue). Although Wang et al. tried to harmonize the TCGA and GTEx data (Wang et al., 2018), the removal of inter-batch biases is non-trivial and further challenged by the prevailing need to preserve test set independence. Moreover, owing to how next-generation sequencing data measure the relative abundance of gene expression, these data also contain inter-sample biases that sit on top of the intra-batch and inter-batch biases (Soneson and Delorenzi, 2013; Quinn et al., 2018a). It remains an open question of how best to integrate multiple data sets. Non-parametric or compositional PCA-like methods could provide a suitable alternative to anomaly detection that is more robust to inter-batch and inter-sample biases.

4.3. Interpretability

Another challenge faced in the detection of anomalous gene expression signatures is the lack of transparency in the decision-making process. Although the concept of anomaly detection is intuitive, its implementation decomposes high-dimensional data into orthogonal eigenvectors that do not necessarily have any meaning to biologists. When examining these eigenvectors directly, it may be unclear how an anomaly detection model reached its decision. This makes it difficult to formulate new hypotheses to improve the model performance or elucidate the biological system. Future work should aim to improve the interpretability of anomaly detection methods. One approach might involve building a tool that visualizes which eigenvector components contributed maximally to each decision. If some constituent genes are consistently involved in misclassification, this could generate testable hypotheses. Similarly, one could try to characterize the biological importance of the maximally relevant eigenvectors through gene set enrichment analysis (GSEA), as done by Weighted Gene Correlation Network Analysis (Langfelder and Horvath, 2008). This would allow investigators to frame inlier and outlier distributions not only in terms of the constituent genes involved, but also in terms the biological pathways affected. This too could generate testable hypotheses. With these improvements, anomaly detection would become an interpretable and actionable classification strategy for many health surveillance applications.

5. Summary

Technological advances have made it possible to measure the global gene expression signature of any biological sample at little cost. Already, there is a growing body of evidence that gene expression signatures can be used as biomarkers to diagnose cancer (Kourou et al., 2015). In this report, we present a novel application of anomaly detection to classify cancer based on gene expression signatures. By learning the latent structure of normal gene expression from a training set of normal samples, we created a “tissue detector” that can identify cancer without having seen a single cancer example. Our method contrasts with discriminatory methods, widely used in the biological sciences, which can only identify cancerous samples that resemble those that the algorithm already saw during training. In principle, discriminatory methods do not make sense for a disease like cancer where a one-of-a-kind gene expression signature is theoretically possible. Practically speaking, anomaly detection further benefits from normal samples being more readily available and easier to collect than abnormal samples: for any cancer, many more people do not have the cancer than do. Since the inclusion of additional normal samples can improve the specificity of anomaly detection, the curation of large normal data sets could open up the possibility of building diagnostic tests for extremely rare cancers.

Data Availability

Publicly available datasets were analyzed in this study. This data can be found here: www.doi.org/10.1038/sdata.2018.61.

Author Contributions

TQ prepared the figures and drafted the manuscript. TN performed the primary analyses. SL pre-processed the data and supported primary analyses. SV supervised the project. All authors helped design the project and revise the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This manuscript has been released as a Pre-Print at Quinn et al. (2018b).

References

Alon, U., Barkai, N., Notterman, D. A., Gish, K., Ybarra, S., Mack, D., et al. (1999). Broad patterns of gene expression revealed by clustering analysis of tumor and normal colon tissues probed by oligonucleotide arrays. Proc. Natl. Acad. Sci. U.S.A. 96, 6745–6750.

Budhaditya, S., Pham, D., Lazarescu, M., and Venkatesh, S. (2009). “Effective anomaly detection in sensor networks data streams,” in 2009 Ninth IEEE International Conference on Data Mining (Washington, DC), 722–727.

Chan, H. P., Hadjiiski, L., Zhou, C., and Sahiner, B. (2008). Computer-aided diagnosis of lung cancer and pulmonary embolism in computed tomography–a review. Acad. Radiol. 15, 535–555. doi: 10.1016/j.acra.2008.01.014

Golub, T. R., Slonim, D. K., Tamayo, P., Huard, C., Gaasenbeek, M., Mesirov, J. P., et al. (1999). Molecular classification of cancer: class discovery and class prediction by gene expression monitoring. Science 286, 531–537.

Jackson, J. E., and Mudholkar, G. S. (1979). Control procedures for residuals associated with principal component analysis. Technometrics 21, 341–349.

Jensen, L. J., and Bateman, A. (2011). The rise and fall of supervised machine learning techniques. Bioinformatics 27, 3331–3332. doi: 10.1093/bioinformatics/btr585

Kourou, K., Exarchos, T. P., Exarchos, K. P., Karamouzis, M. V., and Fotiadis, D. I. (2015). Machine learning applications in cancer prognosis and prediction. Comput. Struct. Biotechnol. J. 13, 8–17. doi: 10.1016/j.csbj.2014.11.005

Langfelder, P., and Horvath, S. (2008). WGCNA: an R package for weighted correlation network analysis. BMC Bioinformatics 9:559. doi: 10.1186/1471-2105-9-559

Leek, J. T., Johnson, W. E., Parker, H. S., Jaffe, A. E., and Storey, J. D. (2012). The sva package for removing batch effects and other unwanted variation in high-throughput experiments. Bioinformatics 28, 882–883. doi: 10.1093/bioinformatics/bts034

Lonsdale, J., Thomas, J., Salvatore, M., Phillips, R., Lo, E., Shad, S., et al. (2013). The genotype-tissue expression (GTEx) project. Nat. Genet. 45:580. doi: 10.1038/ng.2653

McIntyre, L. M., Lopiano, K. K., Morse, A. M., Amin, V., Oberg, A. L., Young, L. J., et al. (2011). RNA-seq: technical variability and sampling. BMC Genomics 12:293. doi: 10.1186/1471-2164-12-293

Quinn, T. P., Erb, I., Richardson, M. F., and Crowley, T. M. (2018a). Understanding sequencing data as compositions: an outlook and review. Bioinformatics 34, 2870–2878. doi: 10.1093/bioinformatics/bty175

Quinn, T. P., Nguyen, T., Lee, S. C., and Venkatesh, S. (2018b). Cancer as a tissue anomaly: classifying tumor transcriptomes based only on healthy data. bioRxiv 426395. doi: 10.1101/426395

Rangayyan, R. M., Ayres, F. J., and Leo Desautels, J. E. (2007). A review of computer-aided diagnosis of breast cancer: toward the detection of subtle signs. J. Franklin Inst. 344, 312–348. doi: 10.1016/j.jfranklin.2006.09.003

Sodemann, A. A., Ross, M. P., and Borghetti, B. J. (2012). A review of anomaly detection in automated surveillance. IEEE Trans. Syst. Man Cybernet. 42, 1257–1272. doi: 10.1109/TSMCC.2012.2215319

Soneson, C., and Delorenzi, M. (2013). A comparison of methods for differential expression analysis of RNA-seq data. BMC Bioinformatics 14:91. doi: 10.1186/1471-2105-14-91

Tseng, G. C., Ghosh, D., and Feingold, E. (2012). Comprehensive literature review and statistical considerations for microarray meta-analysis. Nucleic Acids Res. 40, 3785–3799. doi: 10.1093/nar/gkr1265

Wang, Q., Armenia, J., Zhang, C., Penson, A. V., Reznik, E., Zhang, L., et al. (2018). Unifying cancer and normal RNA sequencing data from different sources. Sci. Data 5:180061. doi: 10.1038/sdata.2018.61

Keywords: machine learning, TCGA, anomaly detection, classification, surveillance

Citation: Quinn TP, Nguyen T, Lee SC and Venkatesh S (2019) Cancer as a Tissue Anomaly: Classifying Tumor Transcriptomes Based Only on Healthy Data. Front. Genet. 10:599. doi: 10.3389/fgene.2019.00599

Received: 06 March 2019; Accepted: 05 June 2019;

Published: 02 July 2019.

Edited by:

Angelo Facchiano, Institute of Food Sciences, National Research Council (CNR-ISA), ItalyReviewed by:

Shihao Shen, University of California, Los Angeles, United StatesRosalba Giugno, University of Verona, Italy

Copyright © 2019 Quinn, Nguyen, Lee and Venkatesh. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Thomas P. Quinn, Y29udGFjdHRvbXF1aW5uQGdtYWlsLmNvbQ==

Thomas P. Quinn

Thomas P. Quinn Thin Nguyen

Thin Nguyen Samuel C. Lee1

Samuel C. Lee1