- Department of Psychology, SVKM’s Mithibai College of Arts, Chauhan Institute of Science and Amrutben Jivanlal College of Commerce and Economics (Empowered Autonomous), Mumbai, India

Introduction: Various regulatory bodies have published ethical principles, codes, and/or guidelines for mental health practice globally. Although such guidelines may be equally relevant to digital platforms, there appears to be a paucity of directives specific to platforms such as apps that use AI-assisted chatbots to provide aid for mental health concerns. Exploring data-driven ethical principles for all stakeholders, including the practitioners/facilitators, potential consumers, and developers of such platforms, is crucial given the rapid expansion of digitized mental health support. A novel approach is proposed that undertakes a gap analysis by identifying the themes of ethical concerns from practitioners’ and consumers’ perspectives.

Method: Thematic analysis of literature on ethics in both conventional psychotherapy and digital mental health interventions was conducted to develop a comprehensive thematic framework of ethical principles for digitized mental health care. Based on these foundational themes, a content-valid 30-item research measure was developed and administered to samples of potential consumers as well as practitioners/trainees. In order to reduce the items to meaningful components of ethical considerations, rooted in the participants’ responses, separate principal components analyses were conducted on this primary data from consumers and practitioners, respectively.

Results: Principal components analysis of the consumers’ data revealed a single-component solution, i.e., the consumers perceived a variety of ethical concerns in a unidimensional manner, suggesting that more awareness is needed for them to make better-informed choices about their mental health care. Principal components analysis of the practitioners’/trainees’ data found two meaningful components. In other words, practitioners/trainees emphasized two aspects of ethical concerns: the competency, design, and accountability of a mental health app, and the rights and security that it needs to provide for its consumers.

Discussion: The current research aimed to bridge the gap in the literature with a data-driven, empirical approach to formulating ethical regulations for digitized mental health services, specifically mental health apps. The findings are proposed to benefit developers of digital mental health apps and organizations offering such services, both in ensuring ethical standards and in effectively communicating them to potential consumers.

Introduction

The emergence of AI has considerably reshaped the mental health landscape through the development of digitized mental health services such as AI-powered chatbots, virtual therapists, and mobile apps (Saeidnia et al., 2024). This has made mental health care more accessible for individuals who could not access conventional, in-person therapy services (Bond et al., 2023; Kretzschmar et al., 2019).

The COVID-19 pandemic, especially, led to an increase in the adoption of AI integration in mental health care services. This brought to the forefront both its potential and its shortcomings. On one hand, it ensured that therapeutic interventions were more accessible to people at any time, making mental health care more inclusive and seeking help less stigmatized. At the same time, concerns regarding privacy, confidentiality, transparency, safety, equitable access, efficacy, and accountability became increasingly prominent, highlighting the pressing need to address these ethical dilemmas (National Safety and Quality Digital Mental Health Standards, 2020; Bhola and Murugappan, 2020; Martinez-Martin, 2020; American Psychiatric Association, 2020; Wykes et al., 2019; Torous et al., 2018; Joint Task Force for the Development of Telepsychology Guidelines for Psychologists, 2013).

Existing ethical codes and guidelines (Avasthi et al., 2022; Contreras et al., 2021; Jarden et al., 2021; Varkey, 2020; American Psychological Association, 2016; American Counseling Association, 2014; Prentice and Dobson, 2014; Gauthier et al., 2010; Rasmussen and Lewis, 2007; EFPA, 2005; American Public Health Association, 2020; Indian Medical Council, 2002; IMA, 2020) published by various national and international regulatory bodies encompass ethics associated with conventional psychotherapy. Although their scope can be extended to the ever-increasing digitized mental health services, they do not address a unique set of challenges, such as the integration of AI in mental health care (Martinez-Martin and Kreitmair, 2018). For instance, two important concerns about ethics relating to the use of AI are that very few guidelines are specific to healthcare and that the guidelines often emphasize adherence to principles that are too abstract or broad, such as beneficence and non-maleficence, without providing steps for implementation (Solanki et al., 2022). Another significant issue is the shift from person-to-person interactions to person-device interactions. This raises a question about the nature of ‘trust’: ‘trust’ toward a chatbot differs fundamentally from ‘trust’ in a therapist (Martinez-Martin, 2020). A further concern is the collection of users’ sensitive data. AI usually relies on datasets for algorithmic decision-making, which raises concerns regarding data protection, data misuse, and algorithmic bias (Iwaya et al., 2022; Wies et al., 2021; Giota and Kleftaras, 2014). Direct-to-consumer psychotherapy apps have high market availability, but they raise concerns about informed consent due to limited professional oversight (Martinez-Martin and Kreitmair, 2018; Malhotra, 2023). Chatbots such as Anna (Happify Health), although beneficial, lack the kind of empathy and nuanced understanding a human therapist brings to the table (Khawaja and Bélisle-Pipon, 2023; Boucher et al., 2021).

Existing frameworks, thus, fall short in addressing the full spectrum of challenges associated with the integration of AI. Researchers have proposed various strategies to address these challenges. Carr (2020) emphasizes the importance of public and patient involvement (PPI) to ensure that ethical guidelines are grounded in the lived experiences of users. Still, the extent to which PPI has been implemented is limited. Researchers have also advocated for interdisciplinary approaches to AI development, such as integrating perspectives from sociology, ethics, and policy. Such integration is necessary for addressing biases and societal inequalities, and for fostering the trust of stakeholders (Solanki et al., 2022; Garibay et al., 2023).

Despite the rapid growth in digitized mental health care, in the number of mental health apps, online mental health support, and AI integration in the form of chatbots, there remains a paucity of standard regulations for ethical practice in these services. Furthermore, a clear gap in the existing literature lies in grounding ethical principles in stakeholders’ responses, as opposed to the prescriptive ethical frameworks recommended by various organizations. There also remains a lack of clarity in how established ethical guidelines address ethical concerns in, or extend their regulatory scope to, digitized mental health care services.

The current study seeks to undertake a comprehensive gap analysis of ethical concerns from the perspectives of both practitioners and potential consumers of digital mental health platforms. By doing so, this research adopts an empirical, data-driven approach to identify the ethical themes, paving the way for formation of ethical guidelines that bridge the gap between broader/abstract ethical principles and their practical implementation.

The findings from the current study aim to bridge these gaps as well as to serve as an important resource for developers, practitioners, and policymakers, offering a blueprint for the ethical design and implementation of AI-powered mental health apps.

Method

The current study aimed to propose a comprehensive set of data-driven guidelines for mental health apps by gathering insights from practitioners as well as potential consumers regarding their concerns about digitized mental health care. To this end, a multi-phased approach was undertaken. The current study followed a mixed-method approach through the sequential exploratory design (Creswell and Creswell, 2017), where the initial phase focused on gathering and analyzing secondary qualitative data, followed by the development of a research measure used to collect quantitative data on ethical concerns in the digitization of mental health care services.

Phase I: identifying ethical themes through thematic analysis

Initially, an examination of the existing ethical guidelines by national and international regulatory bodies for traditional therapy practices (face-to-face therapy) was conducted (Avasthi et al., 2022; Contreras et al., 2021; Jarden et al., 2021; Varkey, 2020; American Psychological Association, 2016; American Counseling Association, 2014; Prentice and Dobson, 2014; Gauthier et al., 2010; Rasmussen and Lewis, 2007; EFPA, 2005; American Public Health Association, 2020; Indian Medical Council, 2002; IMA, 2020). In addition, a separate review of literature focused on ethical concerns specific to digitized mental health care was conducted. The goal of this phase was to identify themes and sub-themes of ethical concerns in the context of digital mental health care services. Thus, phase I employed an inductive approach to thematic analysis. The identified themes were intended not only to guide the further phases of the study, but also to document a thematic framework of relevant ethical concerns in the context of digital mental health apps.

Phase II: item-writing and subject-matter experts’ review

Following the identification of themes of ethical concerns, items representing the subject matter of each theme were developed, forming an initial item pool of 43 statements. These statements were then shared with seven subject-matter experts who were asked to indicate the relevance of each item on a four-point ordinal scale: “1 = not relevant,” “2 = somewhat relevant,” “3 = quite relevant,” and “4 = highly relevant.” Item content validity indices (I-CVIs) were computed as the proportion of the seven experts rating each item as either “3 = quite relevant” or “4 = highly relevant.” Using the minimum I-CVI cut-off given by Lynn (1986) as the criterion, 30 of the 43 items were retained. The details of item-screening based on I-CVI are presented in Appendix 1.
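To make the I-CVI computation described above concrete, the following minimal Python sketch computes the index for a single item. It is an illustration only and not the authors' analysis script; the function name `item_cvi` and the example ratings are hypothetical and are not drawn from the study's data.

```python
def item_cvi(ratings):
    """Item content validity index: share of experts rating the item 3 or 4."""
    if not ratings:
        raise ValueError("at least one expert rating is required")
    relevant = sum(1 for rating in ratings if rating >= 3)
    return relevant / len(ratings)


# Hypothetical ratings from seven experts on the four-point relevance scale
# (1 = not relevant ... 4 = highly relevant); not taken from the study.
example_ratings = [4, 4, 3, 3, 4, 3, 2]
example_icvi = item_cvi(example_ratings)  # six of the seven experts rated 3 or 4
```

With seven experts, the index can only take the values 0/7, 1/7, ..., 7/7, so an item endorsed by six experts has an I-CVI of 6/7, or about 0.86.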

Phase III: data collection and principal components analysis

The research measure, comprising the 30 items retained on the basis of the content validity analysis, was then administered in an online survey to two different samples of stakeholders recruited through purposive and convenience sampling.

Participants

Two stakeholder groups were recruited using purposive and convenience sampling methods.

Sample A, i.e., potential consumers (N = 203), consisted of young adults of Indian nationality, ranging from 18 to 25 years of age, primarily undergraduate students from colleges based in Mumbai (mean age = 19.84 years, SD = 1.24; Females = 176, Males = 25, Other = 1, Preferred not to mention = 1). All participants were recruited through WhatsApp groups and peer-to-peer sharing. While data were not collected on specific mental health applications used by the participants, the recruitment invitation made explicit reference to AI-assisted chatbots and digital mental health platforms. Therefore, participants self-selected into the study based on familiarity, awareness, or potential usage of such technologies. The inclusion criteria for Sample A were an age of 18–25 years, Indian nationality, and residence in India.

Sample B (N = 55) included mental health professionals and trainees currently practicing in India: counseling psychologists, clinical psychologists, psychiatrists, and MPhil Clinical Psychology trainees (mean age = 28.76 years, SD = 6.67, with ages ranging from 21 to 58 years). They were recruited through WhatsApp and LinkedIn networks. Most of the respondents worked in private practice, academic settings, or clinical institutions, and were located in urban areas. Their familiarity with AI-assisted or digitally mediated mental health tools formed the basis for their inclusion. The inclusion criteria for Sample B were Indian nationality, residence in India, and professional practice or training in India.

Statistical analysis

The aim of this study was to identify simplified structures (components) of ethical concerns rooted in the responses, i.e., the data of Samples A and B, which could be specifically addressed by digital mental health care services. In order to identify such components and a simplified structure, principal components analyses (PCAs) with varimax orthogonal rotation were conducted on the responses of the two samples separately.

Prior to conducting the analysis, the suitability of both the data sets was checked using the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy as well as Bartlett’s test of sphericity.
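As an illustration of these two suitability checks, the sketch below computes the overall KMO index and Bartlett's test statistic from a correlation matrix using NumPy. It assumes the standard textbook formulas (KMO from squared correlations and squared partial correlations; Bartlett's statistic as -(n - 1 - (2p + 5)/6) ln|R| with p(p - 1)/2 degrees of freedom, which gives 435 for 30 items). The software the authors used is not stated in the text, the function names are hypothetical, and the data are simulated rather than taken from the study.

```python
import numpy as np


def kmo_overall(corr):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    inv = np.linalg.inv(corr)
    scale = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(scale, scale)      # partial (anti-image) correlations
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    q2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + q2)


def bartlett_sphericity(corr, n_obs):
    """Bartlett's test of sphericity: returns (chi-square statistic, df)."""
    p = corr.shape[0]
    _, logdet = np.linalg.slogdet(corr)          # log-determinant, numerically stable
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * logdet
    df = p * (p - 1) // 2
    return chi2, df


# Simulated responses (assumption): 203 respondents, 30 items sharing one factor.
rng = np.random.default_rng(0)
n_obs, n_items = 203, 30
common = rng.standard_normal((n_obs, 1))
responses = 0.6 * common + 0.8 * rng.standard_normal((n_obs, n_items))

corr = np.corrcoef(responses, rowvar=False)
kmo = kmo_overall(corr)
chi2, df = bartlett_sphericity(corr, n_obs)
# A p-value can be obtained from the chi-square survival function,
# e.g. scipy.stats.chi2.sf(chi2, df), if SciPy is available.
```

KMO values closer to 1 indicate that correlations among items are largely shared rather than pairwise-specific, and a significant Bartlett statistic indicates that the correlation matrix departs from an identity matrix.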

The current study aimed to contribute to the literature and regulations on ethical principles by introducing a novel approach of data-driven ethical guidelines based on the stakeholders’ (here, potential consumers and mental health practitioners/trainees) sensitivity to ethical concerns in the given context.

This novel approach to identifying ethical concerns entailed focusing on the components emerging out of (and hence, grounded in) participants’ responses, rather than relying only on existing prescriptive guidelines for ethical considerations. To this end, although phase I of the study involved thematic analysis to identify themes of ethical considerations in the existing prescriptive guidelines and/or literature, the items representing those themes were later analyzed using principal components analyses. Findings from these analyses may provide insight into the participants’ view of ethical considerations and the meaningful dimensions emerging from it. The results from this sequential exploratory design are discussed next.

Results

Thematic analysis

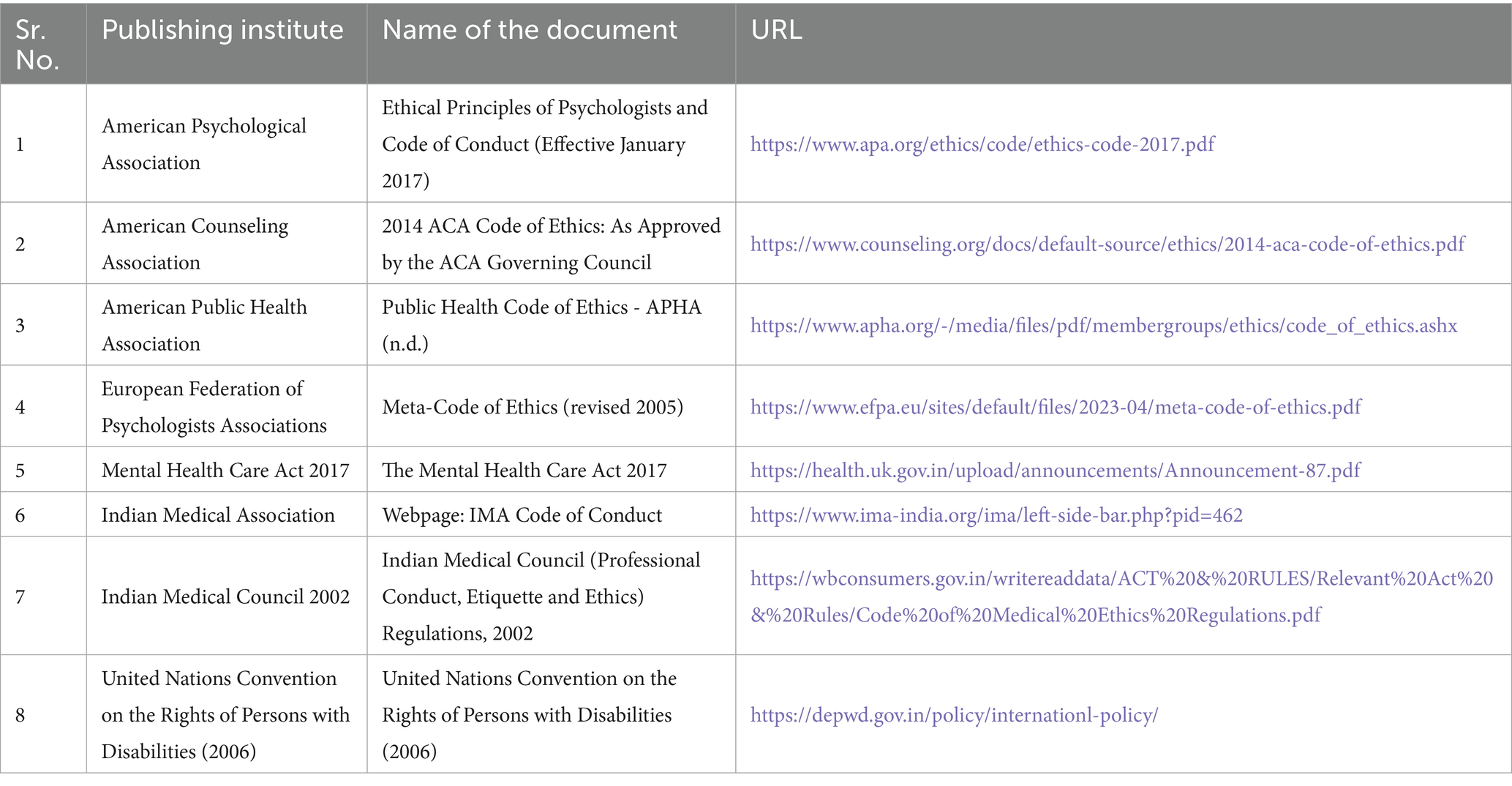

In order to identify the ethical principles in mental health care emphasized by the regulatory bodies as well as in the existing literature, a thematic analysis was conducted. This review yielded themes of ethical concerns such as “Rights” (see footnote 1), “Security,” “Self-Determination - Autonomy,” “Competence,” “Responsibility and Integrity,” “Non-maleficence,” “Fees & Financial Arrangements,” “Design of Program,” and “Research and Publication.” These themes were identified through a two-stage coding process, in which relevant descriptions (sub-themes) from these references were first identified and noted, and then categorized into the broad themes mentioned above. For most of these themes, saturation of the sub-themes was observed across various resources. Lastly, the names of the broad themes were determined and finalized based on the ethical concerns being addressed under them (see Table 1). Most often, this decision was guided by the sections of the reviewed ethical guidelines themselves.

Table 1. Themes from existing guidelines and research-based literature on traditional and digital mental health practices.

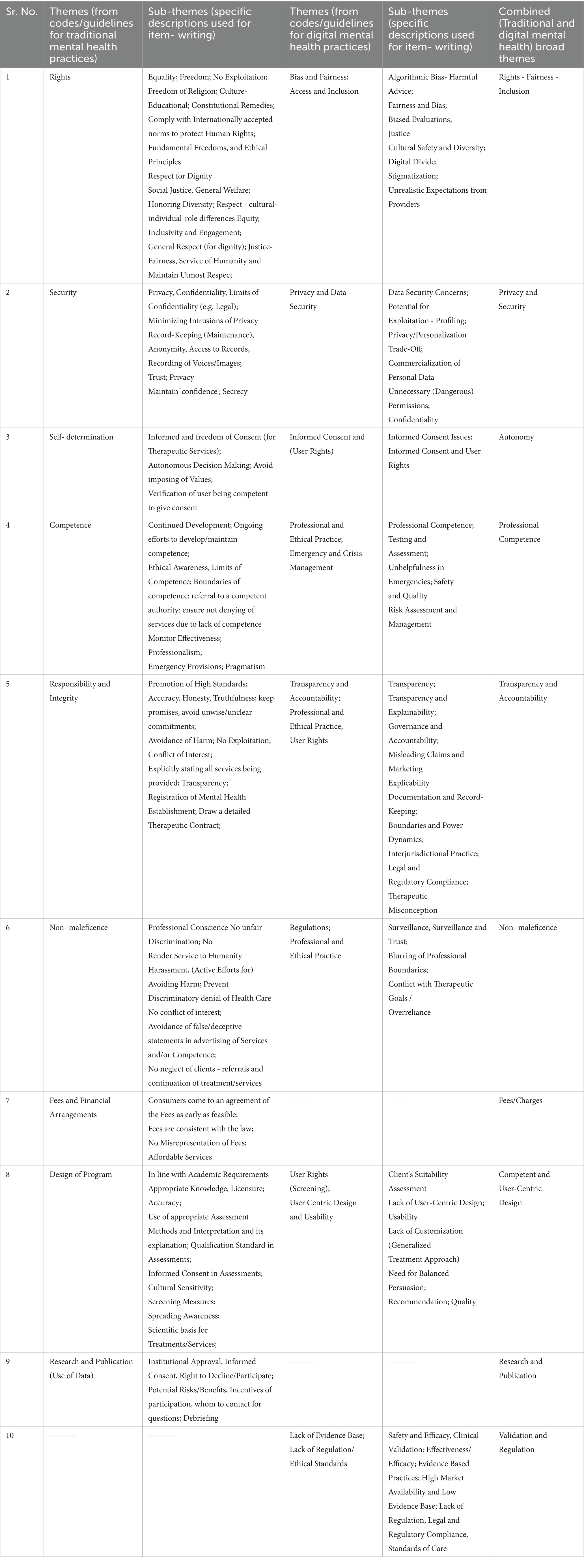

Table 2 shows the existing prescriptive guidelines employed in the thematic analysis.

A review of the existing literature on concerns specific to digitized mental health services was also conducted to identify ethical concerns associated with such modalities. This review revealed themes such as “Privacy and Data Security,” “Transparency and Accountability,” “Regulation and Ethical Standards,” “Informed Consent and User Rights,” “Bias and Fairness,” “Low Evidence Base,” “User-Centric Design and Usability,” “Professional and Ethical Practice,” “Access and Inclusion,” and “Emergency and Crisis Management.” These broad themes were identified through a two-stage coding process similar to the one described above (see Table 1).

Both the sets of broad themes (generated through ethical guidelines for mental health practice, and the literature on ethical concerns in digital mental health care services) were then compared to find the similarities in their sub-themes, i.e., in the subject matter of ethical concerns being addressed. Based on the similarities, the two sets of themes were consolidated into 10 foundational themes, i.e., combined broad themes (see Table 1).

These 10 foundational themes were coded as: “Rights - Fairness - Inclusion,” “Privacy & Security,” “Autonomy,” “Professional Competence,” “Transparency & Accountability,” “Non-maleficence,” “Fees/Charges,” “Competent & User-Centric Design,” “Research & Publication,” and “Validation & Regulation” respectively. This informed the second phase of our research - development of an initial set of statements.

Development of measure on ethical concerns

Based on the 10 foundational themes, an initial item pool of 43 statements was developed. This set of statements representing potential ethical concerns was subjected to expert review to assess their relevance. The statements and their I-CVIs are presented in Appendix 1. The final set retained 30 items; items below the criterion cut-off of I-CVI = 0.86 for seven experts were removed. The scale content validity index (S-CVI), calculated by averaging the I-CVIs of these 30 items, was 0.94 (Polit and Beck, 2006). Hence, the 30-item research measure showed more than satisfactory evidence of content validity for administration to the research sample.
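The screening rule and the averaging step can be sketched as follows. This is a minimal illustration under stated assumptions: the item labels and I-CVI values are hypothetical, the function names are not from the source, and the cut-off is applied to the index rounded to two decimals, since 6/7 (about 0.857) is conventionally reported as 0.86.

```python
def retained_items(icvi_by_item, cutoff=0.86):
    """Keep items whose I-CVI, rounded to two decimals, meets the cut-off."""
    # Rounding matters here: 6/7 = 0.857..., which is reported as 0.86.
    return [item for item, icvi in icvi_by_item.items() if round(icvi, 2) >= cutoff]


def scale_cvi_average(icvi_values):
    """S-CVI computed by averaging the item-level indices."""
    values = list(icvi_values)
    return sum(values) / len(values)


# Hypothetical I-CVIs for four items rated by seven experts (not study data).
icvis = {"item_a": 7 / 7, "item_b": 6 / 7, "item_c": 5 / 7, "item_d": 7 / 7}
kept = retained_items(icvis)                       # item_c falls below the cut-off
s_cvi = scale_cvi_average(icvis[item] for item in kept)
```

In this toy example, three of the four items are retained and the averaged index is computed over the retained items only, mirroring the way the reported S-CVI is based on the 30 retained items.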

Data-driven ethical concerns: principal components approach

Separate principal components analyses (PCAs) were run on data collected with the 30-item measure on ethical concerns in the digitization of mental health, from potential consumers (young adults in India aged 18–25 years) and from mental health practitioners/trainees.

Potential consumers’ data

The overall Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy for the data (N = 203) was found to be 0.90. Results from Bartlett’s test of sphericity, χ²(435) = 1075.712, p < 0.0001, also verified that the correlation matrix was not an identity matrix. Both these results indicated that the data were suitable for PCA.

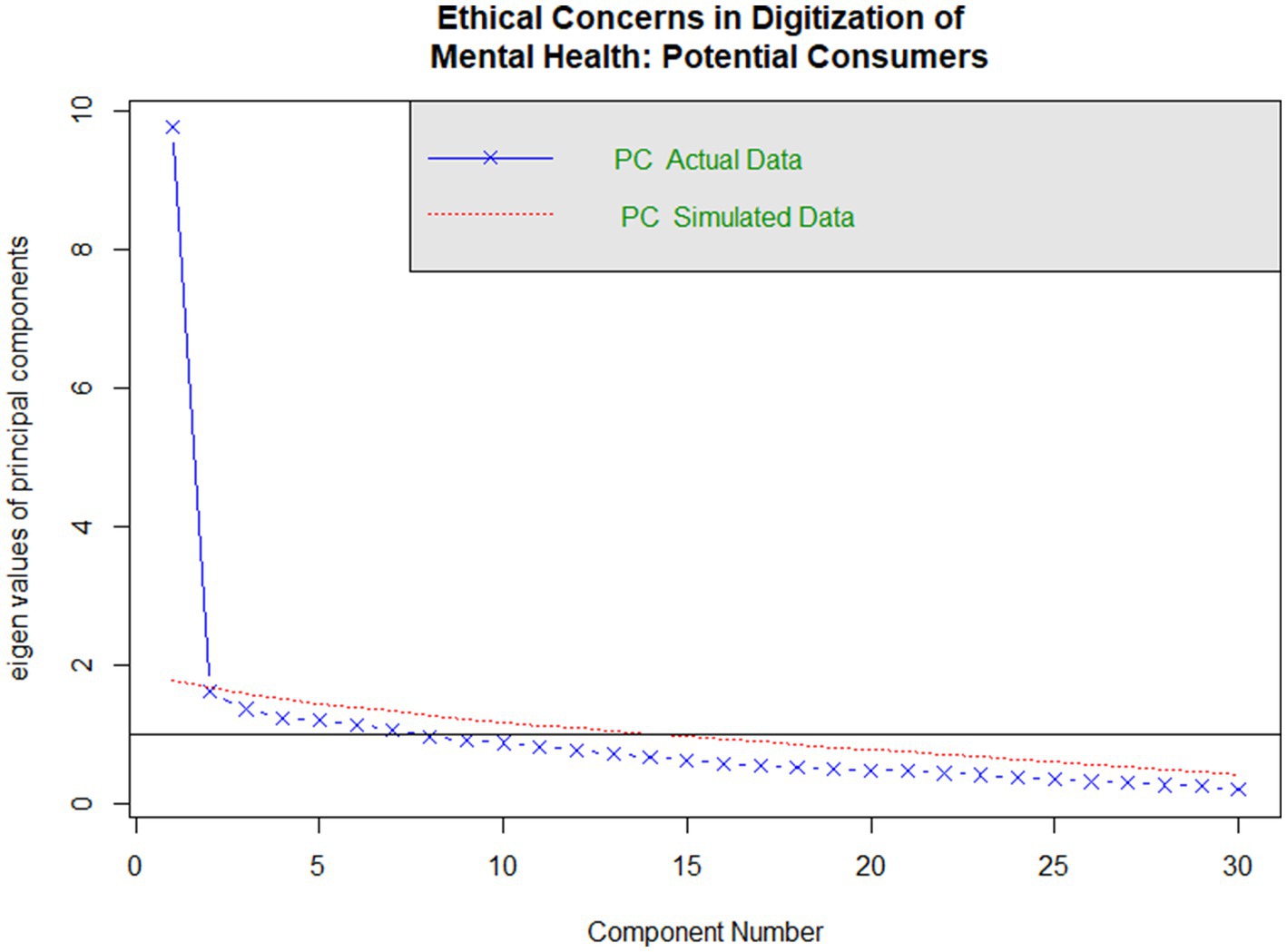

Parallel Analysis (see Figure 1) indicated that one component was sufficient to be extracted from the data.

Figure 1. Parallel analysis plot for potential consumers’ responses to ethical concerns in digitization of mental health.
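For readers unfamiliar with parallel analysis, the sketch below shows one common variant: observed eigenvalues of the correlation matrix are compared against the 95th percentile of eigenvalues obtained from random normal data of the same dimensions, and components are retained until the first one that fails to exceed its random counterpart. The exact variant and software used in the study are not stated, so the percentile, the number of simulations, the function name, and the simulated data are all assumptions.

```python
import numpy as np


def parallel_analysis(data, n_sims=200, percentile=95, seed=0):
    """Return (number of components to retain, observed eigenvalues, thresholds)."""
    rng = np.random.default_rng(seed)
    n_obs, n_items = data.shape
    observed = np.sort(np.linalg.eigvalsh(np.corrcoef(data, rowvar=False)))[::-1]

    # Eigenvalues of correlation matrices from pure-noise data of the same shape.
    simulated = np.empty((n_sims, n_items))
    for i in range(n_sims):
        noise = rng.standard_normal((n_obs, n_items))
        simulated[i] = np.sort(np.linalg.eigvalsh(np.corrcoef(noise, rowvar=False)))[::-1]

    threshold = np.percentile(simulated, percentile, axis=0)
    exceeds = observed > threshold
    # Count leading components that beat the random benchmark.
    n_keep = n_items if exceeds.all() else int(np.argmin(exceeds))
    return n_keep, observed, threshold


# Simulated one-factor responses (assumption): 203 respondents, 30 items.
demo_rng = np.random.default_rng(1)
factor = demo_rng.standard_normal((203, 1))
demo_data = 0.6 * factor + 0.8 * demo_rng.standard_normal((203, 30))
demo_n_keep, demo_observed, demo_threshold = parallel_analysis(demo_data)
```

In simulated data of this kind, where a single factor drives all items, only the first observed eigenvalue clearly exceeds its random benchmark, which is the pattern a one-component recommendation reflects.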

The one-component model was found to be sufficient, with an empirical chi-square of χ² = 834.7, p < 0.0001, accounting for 33% of the total variance. In other words, the sample of potential consumers perceived all the ethical concerns in a similar manner (see Appendix 2 for the full PCA output). This was also supported by the high internal consistency (α = 0.93) among the 30 items, showing homogeneity in their perceptions of ethical concerns sorted under various themes.
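The internal consistency coefficient reported here is Cronbach's α, which can be computed from the item variances and the variance of the total score. The sketch below uses the standard formula α = k/(k - 1) × (1 - Σ item variances / variance of totals); the function name and the small response matrix are hypothetical and serve only to show the arithmetic.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for a respondents-by-items matrix of scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1)       # sample variance per item
    total_variance = items.sum(axis=1).var(ddof=1)   # variance of summed scores
    return k / (k - 1) * (1 - item_variances.sum() / total_variance)


# Hypothetical responses: five respondents, three items (not study data).
toy_responses = [
    [4, 5, 4],
    [3, 3, 4],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
toy_alpha = cronbach_alpha(toy_responses)
```

For this toy matrix, the item variances sum to 3.8 and the total-score variance is 9.8, so α = 1.5 × (1 - 3.8/9.8), which is approximately 0.92.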

Practitioners’/trainees’ data

The overall Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy for the data (N = 55) was found to be 0.75. Results from Bartlett’s test of sphericity, χ²(435) = 2340.203, p < 0.0001, also verified that the correlation matrix was not an identity matrix. Both these results indicated that the practitioners’/trainees’ data were also suitable for PCA.

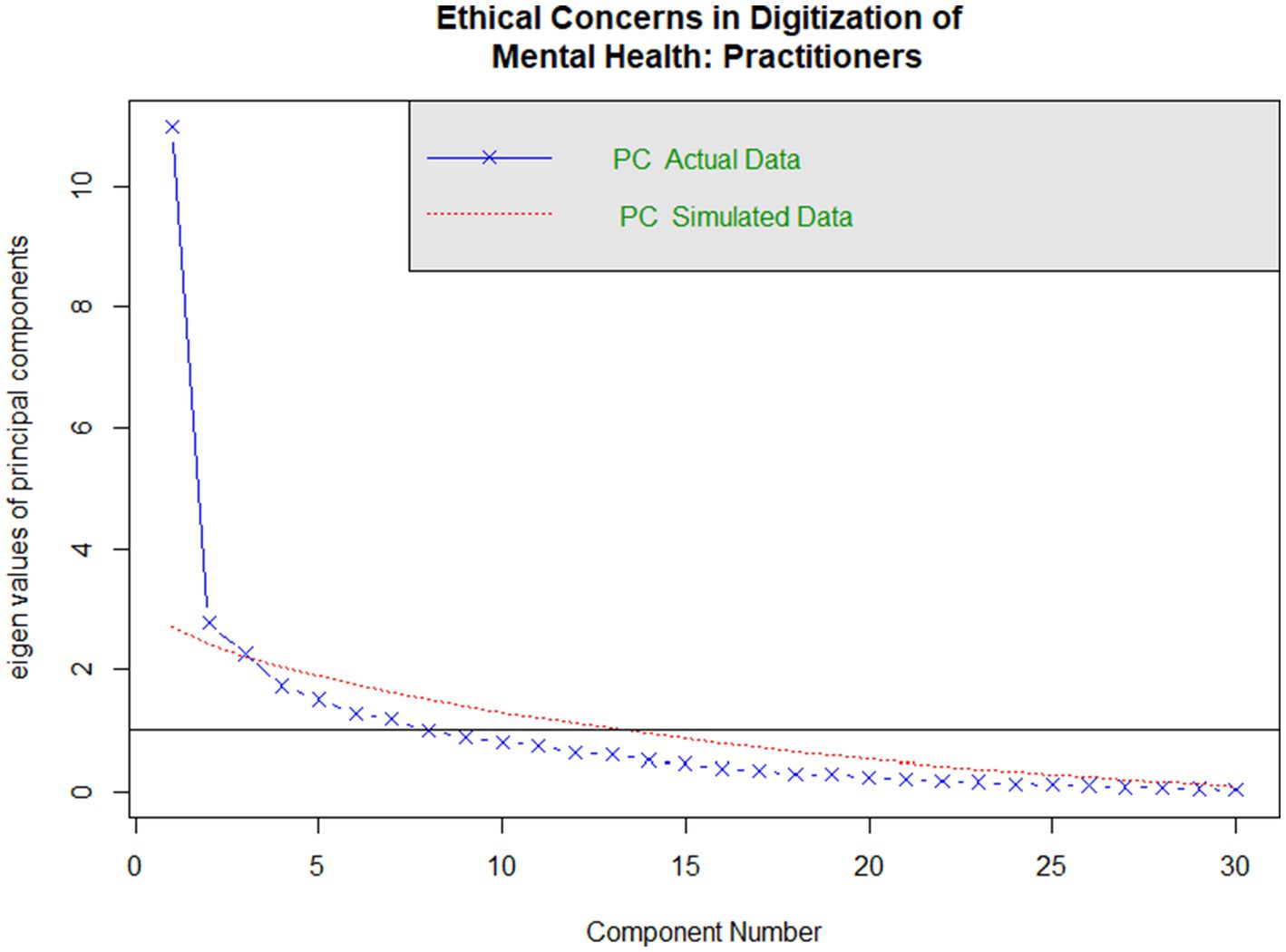

Parallel analysis (see Figure 2) indicated that two components were sufficient to be extracted from the data.

Figure 2. Parallel analysis plot for practitioners’/trainees’ responses to ethical concerns in digitization of mental health.

Appendix 3 shows the PCA output for the practitioners’/trainees’ data. PCA was used to extract two components using varimax rotation to find a parsimonious and interpretable structure. The first rotated component was named “Competency-Design-&-Accountability” based on the themes covered by the items loading on this component, whereas the second rotated component was named “Rights & Security.” A few items had shared loadings on both components, which also guided the interpretation of these two components (see Appendix 3).
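To illustrate what extracting two components with varimax rotation involves, the sketch below derives unrotated loadings from the eigendecomposition of a correlation matrix and then applies a widely used SVD-based varimax iteration. It is a sketch under stated assumptions rather than the authors' procedure: the rotation is applied without Kaiser normalization (which some statistical packages apply by default), the function names are hypothetical, and the data are simulated with two independent clusters of items.

```python
import numpy as np


def pca_loadings(corr, n_components):
    """Unrotated component loadings from a correlation matrix."""
    eigvals, eigvecs = np.linalg.eigh(corr)              # eigenvalues ascending
    order = np.argsort(eigvals)[::-1][:n_components]     # largest first
    return eigvecs[:, order] * np.sqrt(eigvals[order])


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation (raw, without Kaiser normalization)."""
    n_items, n_components = loadings.shape
    rotation = np.eye(n_components)
    previous = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated ** 3 - rotated * (np.sum(rotated ** 2, axis=0) / n_items)
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt                                # nearest orthogonal matrix
        current = s.sum()
        if previous != 0.0 and current / previous < 1 + tol:
            break
        previous = current
    return loadings @ rotation


# Simulated data (assumption): four items driven by one factor, three by another.
rng = np.random.default_rng(0)
n_obs = 500
f1 = rng.standard_normal((n_obs, 1))
f2 = rng.standard_normal((n_obs, 1))
block1 = 0.8 * f1 + 0.6 * rng.standard_normal((n_obs, 4))
block2 = 0.7 * f2 + 0.714 * rng.standard_normal((n_obs, 3))
sim_corr = np.corrcoef(np.hstack([block1, block2]), rowvar=False)

unrotated = pca_loadings(sim_corr, 2)
rotated = varimax(unrotated)
dominant = np.argmax(np.abs(rotated), axis=1)            # component each item loads on most
```

Because the rotation is orthogonal, each item's communality (the sum of its squared loadings) is unchanged; only the distribution of loadings across components shifts, which is what makes the rotated components easier to name.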

Discussion

The current study proposed a novel approach for deriving ethical guidelines through a data-driven, principal components solution. This study was conducted in the context of a clear paucity of established ethical principles that provide practical guidelines for application to the rapidly expanding field of digital mental health care. By applying Principal Components Analysis (PCA) separately to data from potential consumers and from mental health practitioners and trainees, the goal was to offer a stakeholder-sensitive approach to identifying ethical frameworks for digital mental health practice.

Among potential consumers, all 30 ethical concerns measured loaded onto a single component, indicating a unidimensional structure. This suggests that they tend to view ethical issues as part of one broad category, without separating them into different types, which points to low domain-specific awareness. This aligns with past research indicating that users, while understanding general ideas of fairness and privacy, often lack the conceptual clarity or vocabulary to articulate their concerns about AI in mental health apps in structured ways (Wies et al., 2021; Funnell et al., 2024). Additionally, their perceptions are shaped more by a general sense of trust or usability than by frameworks of professional ethics (Holtz et al., 2023). However, findings by Kretzschmar et al. (2019) show that some youth who have higher digital literacy or previous exposure to therapy do differentiate between ethical concerns. This reflects informational gaps and calls for greater awareness initiatives to help potential consumers make informed choices while seeking support on mental health apps.

Conversely, the practitioners’ and trainees’ data yielded a two-component structure, separating into Competency-Design-&-Accountability and Rights-&-Security. This shows that professionals tend to think about ethical concerns in more differentiated ways. On one hand, they were concerned about the absence of an empirical evidence base, insufficient regulatory supervision, limited crisis preparedness, and user-fit design. These mapped onto the ‘Competency-Design-&-Accountability’ component, which reflects expectations of validation, ethical robustness, and regulatory clarity. On the other hand, they were equally attuned to the protection of users’ rights, particularly in relation to data security, the clarity of consent, and fairness in the delivery of services. This dimension was captured by the ‘Rights & Security’ component. These concerns reflect the practitioners’ and trainees’ familiarity with medico-legal ethical frameworks and also echo what the existing literature calls for: more emphasis on evidence-based design and clearer regulatory frameworks for AI-driven mental health apps (Wykes et al., 2019; Martinez-Martin and Kreitmair, 2018; Solanki et al., 2022).

Many national and international ethical guidelines promote broad principles such as beneficence, non-maleficence, autonomy, justice, confidentiality, and informed consent; however, they often lack specificity in addressing the algorithmic, design-based challenges posed by digital mental health platforms (Avasthi et al., 2022; Contreras et al., 2021; Jarden et al., 2021; Varkey, 2020; American Psychological Association, 2016; American Counseling Association, 2014; Prentice and Dobson, 2014; Gauthier et al., 2010; Rasmussen and Lewis, 2007; EFPA, 2005; American Public Health Association, 2020; Indian Medical Council, 2002; IMA, 2020). For instance, APA’s telepsychology guidelines (2013) recommend adherence to confidentiality and informed consent, but they do not account for mental health applications, the limitations of chatbots, or the explainability of how AI views mental health (Joint Task Force for the Development of Telepsychology Guidelines for Psychologists, 2013). Our findings highlight this limitation, as they show practitioners and trainees actively distinguishing between ethical concerns rooted in traditional therapy and the concerns that emerge from digital contexts. This resonates with the criticism raised by Solanki et al. (2022) and Martinez-Martin and Kreitmair (2018), who advocate for operationalizing ethical principles with concrete implementation strategies. Thus, these findings suggest that app developers may benefit from designing platforms in ways that reflect both clusters. In addition, these components may be strategically emphasized during app marketing, training, and/or quality review processes.

However, these findings should be interpreted with caution, keeping in mind the potential sampling bias. Respondents in both samples were primarily from the metropolitan regions of India, with the majority from the city of Mumbai. Furthermore, the limited sample size of practitioners/trainees warrants further replication efforts before these results can be meaningfully applied as suggested above. Although the current study did not aim to uncover the latent structures underlying the ethical concerns perceived by the respective samples, future studies may alternatively consider employing exploratory factor analysis, which may be more suitable for Likert-scale responses without assuming that such data are continuous.

Scope and implications

The findings of this study offer key insights into the ethical concerns identified by various stakeholders in digital mental health care – including potential consumers and practitioners. Further research could employ the data-driven approach introduced in this study, to more clearly understand such broad ethical considerations that must be addressed as we make the leap toward accessible digitized mental health care services. Ethical considerations rooted in the stakeholders’ responses would provide a pragmatic as well as a just approach in ensuring the well-being of the consumers of mental health care.

Such data-driven insights may also inform policy-regulations and awareness initiatives for competent and ethical practice on one hand, as well as an informed consumer on the other. For policy-makers, our findings suggest that a data-driven approach that centers stakeholders, especially practitioners and trainees, who work in both traditional and digital spaces, can make guidelines more implementable, relevant and effective. Lastly, this bottom-up approach, rooted in PCA demonstrates a shift from normative, top-down ethical frameworks to more dynamic stakeholder-sensitive guidelines that are informed by the potential users of mental health technologies.

Conclusion

The novel data-driven approach employed to uncover the ethical concerns rooted in the responses of stakeholders of ever-growing digitized mental health care provided two major insights. First, potential consumers of these services tend to view all ethical concerns as part of a single overarching framework of professional ethics. Hence, they may benefit from awareness initiatives by practitioners, governing institutes, and app developers, so that they can make informed choices while seeking such services and not miss out on (or be compromised in) any ethical consideration necessary for their wellbeing. Second, the practitioners/trainees perceived pertinent ethical considerations for these services under two broad dimensions: the competency, design, and accountability of the services being delivered, and the rights and security of the consumers. Future directions may focus on similar data-driven research efforts, with larger and more representative samples, further informing policy, regulations, and awareness initiatives.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical review and approval were not required for this study involving human participants, in accordance with local legislation and institutional requirements, as the research was considered a pilot investigation. Participants provided informed consent prior to participating in the online survey. All relevant ethical considerations were ensured and communicated to participants, including the right to withdraw, the right to decline participation, confidentiality, anonymity, the absence of potential harm or risk, and the opportunity for debriefing.

Author contributions

CB: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing. SJ: Data curation, Investigation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fhumd.2025.1549325/full#supplementary-material

Footnotes

1. Since the study was focused on an Indian sample, fundamental rights mentioned in the Constitution of India were also considered under the broad theme of "Rights".

References

American Counseling Association. (2014). ACA code of ethics: As approved by the ACA governing council [Electronic version].

American Psychiatric Association. The App Evaluation Model. (2020). Available online at: https://www.psychiatry.org/psychiatrists/practice/mental-health-apps/the-app-evaluation-model.

American Psychological Association (2016). Revision of ethical standard 3.04 of the “ethical principles of psychologists and code of conduct” (2002, as amended 2010). Am. Psychol. 71:900. doi: 10.1037/amp0000102

American Public Health Association. (2020). Public Health Code of Ethics. American Public Health Association. Available online at: https://www.apha.org/about-apha/apha-code-of-ethics.

Avasthi, A., Grover, S., and Nischal, A. (2022). Ethical and legal issues in psychotherapy. Indian J. Psychiatry 64, S47–S61. doi: 10.4103/indianjpsychiatry.indianjpsychiatry_50_21

Bhola, P., and Murugappan, N. P. (2020). Guidelines for telepsychotherapy services. National Institute of Mental Health and Neuro Sciences.

Bond, R. R., Mulvenna, M. D., Potts, C., O’Neill, S., Ennis, E., and Torous, J. (2023). Digital transformation of mental health services. NPJ Ment. Health Res. 2:3. doi: 10.1038/s44184-023-00033-y

Boucher, E. M., Harake, N. R., Ward, H. E., Stoeckl, S. E., Vargas, J., Minkel, J., et al. (2021). Artificially intelligent chatbots in digital mental health interventions: a review. Expert Rev. Med. Devices 18, 37–49. doi: 10.1080/17434440.2021.2013200

Carr, S. (2020). ‘AI gone mental’: engagement and ethics in data-driven technology for mental health. J. Ment. Health 29, 125–130. doi: 10.1080/09638237.2020.1714011

Contreras, B. P., Hoffmann, A. N., and Slocum, T. A. (2021). Ethical behavior analysis: evidence-based practice as a framework for ethical decision making. Behav. Anal. Pract. 15, 619–634. doi: 10.1007/s40617-021-00658-5

Creswell, J. W., and Creswell, J. D. (2017). Research design: Qualitative, quantitative, and mixed methods approaches. Thousand Oaks, CA: Sage Publications.

EFPA. (2005). Meta-code of ethics. Available online at: https://www.efpa.eu/meta-code-ethics.

Funnell, E. L., Spadaro, B., Martin-Key, N. A., Benacek, J., and Bahn, S. (2024). Perception of apps for mental health assessment with recommendations for future design: United Kingdom semistructured interview study. JMIR Form. Res. 8:e48881. doi: 10.2196/48881

Garibay, O. O., Winslow, B., Andolina, S., Antona, M., Bodenschatz, A., Coursaris, C., et al. (2023). Six human-centered artificial intelligence grand challenges. Int. J. Hum.-Comput. Interact. 39, 391–437. doi: 10.1080/10447318.2022.2153320

Gauthier, J., Pettifor, J., and Ferrero, A. (2010). The universal declaration of ethical principles for psychologists: a culture-sensitive model for creating and reviewing a code of ethics. Ethics Behav. 20, 179–196. doi: 10.1080/10508421003798885

Giota, K. G., and Kleftaras, G. (2014). Mental health apps: innovations, risks and ethical considerations. E-Health Telecommun. Syst. Netw. 3, 19–23. doi: 10.4236/etsn.2014.33003

Holtz, B. E., Kanthawala, S., Martin, K., Nelson, V., and Parrott, S. (2023). Young adults’ adoption and use of mental health apps: efficient, effective, but no replacement for in-person care. J. Am. Coll. Heal. 73, 1–9. doi: 10.1080/07448481.2023.2227727

IMA. (2020). Statement of Ethical Professional Practice. IMA. Available online at: https://www.imanet.org/career-resources/ethics-center/statement.

Indian Medical Council. (2002). Indian medical council (professional conduct, etiquette and ethics) regulations, 2002. Gazette of India. Medical Council of India. Available online at: https://wbconsumers.gov.in/writereaddata/ACT%20&%20RULES/Relevant%20Act%20&%20Rules/Code%20of%20Medical%20Ethics%20Regulations.pdf.

Iwaya, L. H., Babar, M. A., Rashid, A., and Wijayarathna, C. (2022). On the privacy of mental health apps. Empir. Softw. Eng. 28:236. doi: 10.1007/s10664-022-10236-0

Jarden, A., Rashid, T., Roache, A., and Lomas, T. (2021). View of ethical guidelines for positive psychology practice (English: version 2). Available online at: https://www.internationaljournalofwellbeing.org/index.php/ijow/article/view/1819/1041.

Joint Task Force for the Development of Telepsychology Guidelines for Psychologists (2013). Guidelines for the practice of telepsychology. Am. Psychol. 68, 791–800. doi: 10.1037/a0035001

Khawaja, Z., and Bélisle-Pipon, J. C. (2023). Your robot therapist is not your therapist: understanding the role of AI-powered mental health chatbots. Front. Digit. Health 5:186. doi: 10.3389/fdgth.2023.1278186

Kretzschmar, K., Tyroll, H., Pavarini, G., Manzini, A., and Singh, I. (2019). Can your phone be your therapist? Young people’s ethical perspectives on the use of fully automated conversational agents (chatbots) in mental health support. Biomed. Inform. Insights 11:83. doi: 10.1177/1178222619829083

Malhotra, S. (2023). Mental health apps: a new field in community mental health care. Indian J. Soc. Psychiatry 39, 97–99. doi: 10.4103/ijsp.ijsp_145_23

Martinez-Martin, N. (2020). “Trusting the bot: addressing the ethical challenges of consumer digital mental health therapy” in Developments in neuroethics and bioethics. ed. N. Martinez-Martin (Amsterdam, Netherlands: Elsevier), 63–91.

Martinez-Martin, N., and Kreitmair, K. (2018). Ethical issues for direct-to-consumer digital psychotherapy apps: addressing accountability, data protection, and consent. JMIR Mental Health. 5:e32. doi: 10.2196/mental.9423

National Safety and Quality Digital Mental Health Standards. (2020). Australian Commission on Safety and Quality in Health Care. Available online at: https://www.safetyandquality.gov.au/standards/national-safety-and-quality-digital-mental-health-standards.

Polit, D. F., and Beck, C. T. (2006). The content validity index: are you sure you know what’s being reported? Critique and recommendations. Res. Nurs. Health 29, 489–497. doi: 10.1002/nur.20147

Prentice, J. L., and Dobson, K. S. (2014). A review of the risks and benefits associated with mobile phone applications for psychological interventions. Can. Psychol. 55:282. doi: 10.1037/a0038113

Rasmussen, M., and Lewis, O. (2007). United Nations convention on the rights of persons with disabilities. Int. Leg. Mater. 46, 441–466. doi: 10.1017/s0020782900005039

Saeidnia, H. R., Fotami, S. G. H., Lund, B., and Ghiasi, N. (2024). Ethical considerations in artificial intelligence interventions for mental health and well-being: ensuring responsible implementation and impact. Soc. Sci. 13:381. doi: 10.3390/socsci13070381

Solanki, P., Grundy, J., and Hussain, W. (2022). Operationalising ethics in artificial intelligence for healthcare: a framework for AI developers. AI Ethics 3, 223–240. doi: 10.1007/s43681-022-00195-z

Torous, J., Nicholas, J., Larsen, M. E., Firth, J., and Christensen, H. (2018). Clinical review of user engagement with mental health smartphone apps: evidence, theory and improvements. Evid. Based Ment. Health 21, 116–119. doi: 10.1136/eb-2018-102891

Varkey, B. (2020). Principles of clinical ethics and their application to practice. Med. Princ. Pract. 30, 17–28. doi: 10.1159/000509119

Wies, B., Landers, C., and Ienca, M. (2021). Digital mental health for young people: a scoping review of ethical promises and challenges. Front. Digit. Health 3:72. doi: 10.3389/fdgth.2021.697072

Keywords: mental health apps, app developers, ethical concerns, data-driven guidelines, principal components

Citation: Bapat C and Jog S (2025) Data-driven ethical guidelines for digitized mental health services: principal components analysis. Front. Hum. Dyn. 7:1549325. doi: 10.3389/fhumd.2025.1549325

Edited by:

Poonam Sharma, Vellore Institute of Technology, India

Reviewed by:

Pragya Lodha, Lokmanya Tilak Municipal General Hospital, India

Medha Wadhwa, Indian Institute of Public Health Gandhinagar (IIPHG), India

Copyright © 2025 Bapat and Jog. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chinmay Bapat, chinmay.s.bapat@gmail.com
