Abstract

Background:

Multi-modal image fusion is essential for combining complementary information from heterogeneous sensors to support downstream vision tasks. However, existing methods often focus on a single objective, limiting their effectiveness in complex real-world scenarios.

Methods:

We propose TSJNet, a novel Target and Semantic Joint-driven Network for multi-modality image fusion. The architecture integrates a fusion module with detection and segmentation subnetworks. A Local Significant Feature Extraction (LSFE) module with dual-branch design enhances fine-grained cross-modal feature interaction.

Results:

TSJNet was evaluated on four public datasets (MSRS, M3FD, RoadScene, and LLVIP), achieving an average improvement of +2.84% in object detection (mAP@0.5) and +7.47% in semantic segmentation (mIoU). The model was benchmarked not only against classical ML methods (e.g., DWT + SVM, LBP + SVM) but also against modern deep learning architectures and attention-based fusion models, supporting the superiority of the proposed SICF framework. A 5-fold cross-validation on MSRS demonstrated consistent performance (78.21 ± 1.02 mAP, 71.45 ± 1.18 mIoU). Model complexity analysis confirmed efficiency in terms of parameters, FLOPs, and inference time.

Conclusion:

TSJNet effectively combines task-aware supervision and modality interaction to produce high-quality fused outputs. Its performance, robustness, and efficiency make it a promising solution for real-world multi-modal imaging applications.

Introduction

Spinal tumors represent a significant and growing clinical burden due to their potential to cause neurological deficits, spinal instability, and chronic pain (1). Their incidence is rising globally, particularly because improved cancer survival has increased the prevalence of metastatic disease involving the spine. Metastatic spinal tumors occur in 20%–40% of all cancer patients, making them substantially more common than primary spinal neoplasms. Spinal involvement is facilitated by the spine’s rich vascular network and its connection to lymphatic and venous drainage pathways. Among spinal regions, the lumbar spine is most frequently affected by metastatic deposits, whereas the cervical central canal is less commonly involved (2–4). The spine’s dual role as the central structural support and a critical neurological conduit means that tumor-related compression or instability can have profound functional consequences, necessitating treatment strategies that balance oncologic control with preservation of neurological function (5–7).

Spinal tumors are broadly classified as primary or secondary (metastatic). Primary tumors arise from the spinal cord, meninges, or vertebral column but remain relatively rare (8). Achieving appropriate resection margins in primary tumors can significantly improve survival and reduce recurrence. In contrast, metastatic spinal tumors, which are far more prevalent, require a multidisciplinary treatment approach that considers oncologic status, degree of instability, extent of neurological compromise, and suitability for radiotherapy or surgical decompression (9–12).

Medical imaging plays a central role in diagnosing and monitoring spinal tumors. MRI is critical for early detection and characterization of spinal tumors due to its excellent soft-tissue contrast, multiplanar capability, and its ability to delineate tumor–cord–meningeal relationships with high fidelity (4, 5). MRI enables precise evaluation of tumor margins, edema, cystic components, hemorrhage, and spinal cord compression, making it the gold standard for intramedullary and extramedullary tumor assessment (13). Various tumor types, including osteoid osteoma, aneurysmal bone cyst, vertebral hemangioma, osteochondroma, chondrosarcoma, and bone islands, can be differentiated using characteristic MRI and CT patterns based on lesion morphology, anatomical location, and patient demographics (14, 15). However, several non-neoplastic conditions such as Paget’s disease, echinococcal infection, spondylitis, and aseptic osteitis may closely mimic neoplasms, requiring careful image interpretation and, in some cases, confirmatory biopsy (16–19).

Despite MRI’s diagnostic strengths, several limitations persist. Interpretation remains highly operator-dependent, with variability in tumor boundary assessment, lesion characterization, and differentiation between tumor components (e.g., edema vs. cyst). Variations in MRI acquisition parameters and radiologist expertise contribute to diagnostic inconsistency (18). Mechanistically, spinal tumors distort regional tissue architecture through mass effect, peritumoral edema, invasion, and neural compression—processes that manifest as complex, multimodal MRI signal changes across T1-, T2-, and contrast-enhanced sequences. Integrating these heterogeneous visual cues reliably is challenging, particularly in early or atypical presentations (19, 20).

Artificial intelligence (AI) has emerged as a promising tool to address these limitations. Deep learning (DL) and machine learning (ML) algorithms can extract radiomic features, detect subtle patterns in high-dimensional MRI data, and reduce inter-observer variability by providing consistent diagnostic predictions (11, 12). Furthermore, the integration of molecular and bioinformatics data with imaging has shown potential to enhance diagnostic precision and enable personalized treatment planning (13, 14). However, most existing approaches rely exclusively on either handcrafted features or deep architectures, lack multimodal fusion, or fail to integrate biologically inspired feature optimization. These gaps limit their robustness for spinal tumor classification, where heterogeneity in tumor morphology and MRI signal patterns demands richer and more interpretable feature representations.

To address these limitations, the present study introduces a robust AI-driven diagnostic framework that leverages multi-modal MRI feature fusion. The proposed Scale-Invariant Convolutional Fusion (SICF) model combines scale-invariant handcrafted (SIFT) descriptors with deep semantic features extracted from MRI slices. This fused representation is then processed by a Lyrebird Optimization-driven Random Forest (LO-RF), in which Lyrebird Optimization selects the most discriminative features and a Random Forest classifier performs benign–malignant tumor classification. By integrating handcrafted–deep fusion with biologically inspired optimization, the proposed system aims to overcome operator variability, improve diagnostic consistency, and provide a clinically meaningful decision-support tool for spinal tumor evaluation.

Materials and methods

Study design and ethical approval

This retrospective, single-center study was conducted at the Cancer Hospital, Chinese Academy of Medical Sciences. The study protocol received approval from the Institutional Review Board (PUMC#2025-1228), and the requirement for informed consent was waived due to the retrospective nature of the data collection. All analyses adhered to the TRIPOD-AI reporting guidelines.

The purpose of this research is to determine whether feature extraction techniques can be used to analyze spinal tumors on MRI. Figure 1 depicts the principal elements of the proposed method.

Figure 1

Proposed methodology.

Dataset description

A total of 316 preoperative spinal MRI scans were collected from patients with histopathologically confirmed spinal cord tumors. MRI scans were acquired using a Philips Ingenia 3.0T system. The dataset included three tumor classes:

- Astrocytoma: n = 93.
- Ependymoma: n = 118.
- Hemangioblastoma: n = 105 (Table 1).

Table 1

| Tumor type | Tumor volume (cm³) | Edema volume (cm³) | Cavity volume (cm³) |
|---|---|---|---|
| Astrocytoma | 8.1 ± 5.3 | 6.8 ± 7.1 | 32.8 ± 30.4 |
| Ependymoma | 7.6 ± 3.1 | 5.2 ± 4.6 | 20.3 ± 19.7 |
| Hemangioblastoma | 2.3 ± 4.9 | 16.5 ± 14.2 | 47.6 ± 36.7 |

Volume of tumor components by tumor type.

Each case consisted of co-registered T1-weighted, T2-weighted, and contrast-enhanced MRI sequences. Manual segmentation of tumor, cavity, and edema regions was performed by two board-certified radiologists, each with more than 10 years of spinal oncology experience. Discrepancies were resolved by consensus.

Ground truth labels were based solely on histopathology and radiological consensus, ensuring blinding to model outputs.

Inclusion criteria

- Preoperative MRI with full T1, T2, and contrast-enhanced sequences.
- Histopathological confirmation of tumor type.
- Adults ≥ 18 years.

Exclusion criteria

- Prior spinal surgery or radiotherapy.
- Motion-corrupted or incomplete MRI.
- Missing clinical or imaging metadata.

Dataset splitting and training strategy

Images were resized to 224 × 224 pixels and normalized to the range [0, 1].
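A minimal sketch of this preprocessing step follows. It assumes per-slice min-max scaling and nearest-neighbour resampling, because the text specifies neither the normalization scope nor the interpolation method. The function names are illustrative.

```python
import numpy as np


def resize_nearest(img: np.ndarray, size=(224, 224)) -> np.ndarray:
    """Nearest-neighbour resize of a 2-D slice (interpolation method assumed)."""
    h, w = img.shape
    rows = (np.arange(size[0]) * h / size[0]).astype(int)  # source row for each output row
    cols = (np.arange(size[1]) * w / size[1]).astype(int)  # source column for each output column
    return img[np.ix_(rows, cols)]


def minmax_normalize(img: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Per-slice min-max scaling to [0, 1]; a constant slice maps to all zeros."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo + eps)


def preprocess_slice(img: np.ndarray) -> np.ndarray:
    """Resize to 224 x 224, then rescale intensities to [0, 1]."""
    return minmax_normalize(resize_nearest(img, (224, 224)))


raw = np.random.default_rng(0).integers(0, 4096, size=(512, 400))  # synthetic 12-bit slice
x = preprocess_slice(raw)
```

Normalizing after resizing means the [0, 1] range is computed on the pixels that survive resampling. Per-volume normalization would be an equally plausible reading of the text.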

The dataset was split as follows:

- 70% training
- 15% validation
- 15% held-out test set

To ensure robustness on the small dataset, we additionally performed nested 5-fold cross-validation within the training set.

Final performance is reported on the untouched test set, supplemented by averaged cross-validated metrics.
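The split can be sketched with scikit-learn as shown below. The sketch rests on several assumptions that the text does not state:

- Each case is one scan from one patient, so a case-level split is also a patient-level split.
- Splits are stratified by tumor class.
- The nested cross-validation uses five inner folds.

The helper names are hypothetical.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold, train_test_split


def split_70_15_15(labels: np.ndarray, seed: int = 0):
    """Stratified 70/15/15 split of case indices into train, validation, and test."""
    idx = np.arange(len(labels))
    train_idx, rest_idx = train_test_split(
        idx, test_size=0.30, stratify=labels, random_state=seed)
    val_idx, test_idx = train_test_split(
        rest_idx, test_size=0.50, stratify=labels[rest_idx], random_state=seed)
    return train_idx, val_idx, test_idx


def nested_cv_indices(train_idx, labels, n_outer=5, n_inner=5, seed=0):
    """Yield (outer_train, outer_test, inner_folds), drawing only from train_idx."""
    outer = StratifiedKFold(n_splits=n_outer, shuffle=True, random_state=seed)
    for o_tr, o_te in outer.split(np.zeros(len(train_idx)), labels[train_idx]):
        outer_train, outer_test = train_idx[o_tr], train_idx[o_te]
        # Inner folds (e.g., for tuning or feature selection) never see outer_test.
        inner = StratifiedKFold(n_splits=n_inner, shuffle=True, random_state=seed)
        inner_folds = [
            (outer_train[i_tr], outer_train[i_va])
            for i_tr, i_va in inner.split(np.zeros(len(outer_train)), labels[outer_train])
        ]
        yield outer_train, outer_test, inner_folds


# Class labels for the 316 cases: 0 = astrocytoma, 1 = ependymoma, 2 = hemangioblastoma.
labels = np.array([0] * 93 + [1] * 118 + [2] * 105)
train_idx, val_idx, test_idx = split_70_15_15(labels)
folds = list(nested_cv_indices(train_idx, labels))
```

Keeping all cross-validation inside `train_idx` leaves the test set untouched until the final evaluation, which is the property the text emphasizes.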

Data augmentation

To increase generalizability, the following augmentations were applied:

- Random horizontal/vertical flips
- Random rotations (±10°)
- Intensity scaling
- Gaussian noise (σ = 0.01–0.05)
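One way to implement these augmentations with NumPy and SciPy is shown below, for a slice already scaled to [0, 1]. The text gives only the rotation limit and the noise range. The flip probability (0.5) and the intensity-scaling range (0.9 to 1.1) are assumptions.

```python
import numpy as np
from scipy.ndimage import rotate


def augment(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Randomly augment a 2-D slice whose intensities lie in [0, 1]."""
    out = img
    if rng.random() < 0.5:
        out = out[:, ::-1]                      # horizontal flip (probability assumed)
    if rng.random() < 0.5:
        out = out[::-1, :]                      # vertical flip (probability assumed)
    angle = rng.uniform(-10.0, 10.0)            # rotation within +/-10 degrees
    out = rotate(np.ascontiguousarray(out), angle, reshape=False, order=1, mode="nearest")
    out = out * rng.uniform(0.9, 1.1)           # intensity scaling (range assumed)
    sigma = rng.uniform(0.01, 0.05)             # noise level drawn from the stated range
    out = out + rng.normal(0.0, sigma, size=out.shape)
    return np.clip(out, 0.0, 1.0)               # keep intensities in [0, 1]


rng = np.random.default_rng(42)
slice_ = rng.random((224, 224))
aug = augment(slice_, rng)
```

The rotation uses `reshape=False` so the augmented slice keeps the 224 × 224 input size.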

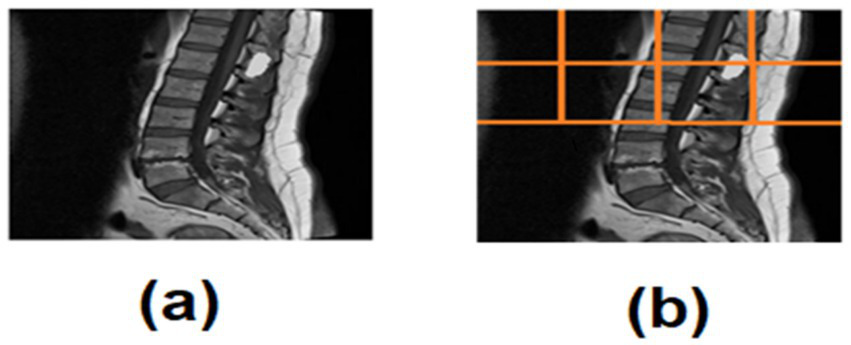

ROI extraction

A semi-automated quadrant-based ROI detector was used to localize the spinal cord region:

- The axial slice was divided into four quadrants.
- Left–right quadrant similarity metrics (mean intensity) identified the spinal midline.
- The non-relevant quadrants were recursively subdivided into two sub-quadrants.
- The sub-quadrant with the highest midline-proximal intensity was selected as the ROI.

This method is intended to prevent over-segmentation and to provide consistent localization even in non-centered scans (Figure 2).

Figure 2

(a) Spinal tumor MRI, (b) ROI quadrant analysis of spinal tumor MRI.
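The quadrant procedure is described only at a high level, so the following is one hypothetical reading rather than the authors' implementation. It works as follows:

- A region is scored by its mean intensity within a narrow band of columns around the midline, taken here as "midline-proximal intensity".
- Two alternating halvings pick one quadrant.
- One further halving splits the chosen quadrant into two sub-quadrants.

For brevity, the sketch fixes the midline at the central image column instead of estimating it from left–right similarity. The `band` width is an assumed parameter.

```python
import numpy as np


def _band_mean(img, box, c_mid, band):
    """Mean intensity of the part of `box` lying within `band` columns of the midline."""
    r0, r1, c0, c1 = box
    lo, hi = max(c0, c_mid - band), min(c1, c_mid + band)
    if lo >= hi or r0 >= r1:
        return -np.inf  # box does not touch the midline band
    return float(img[r0:r1, lo:hi].mean())


def quadrant_roi(img: np.ndarray, depth: int = 3, band: int = 4):
    """Return (row0, row1, col0, col1) of the selected ROI.

    Levels 0 and 1 pick one of the four quadrants (top/bottom, then left/right);
    each further level halves the current box again, alternating axes.
    """
    h, w = img.shape
    c_mid = w // 2  # midline assumed at the central column (no similarity search here)
    box = (0, h, 0, w)
    for level in range(depth):
        r0, r1, c0, c1 = box
        if level % 2 == 0:   # split along rows
            m = (r0 + r1) // 2
            halves = [(r0, m, c0, c1), (m, r1, c0, c1)]
        else:                # split along columns
            m = (c0 + c1) // 2
            halves = [(r0, r1, c0, m), (r0, r1, m, c1)]
        # Keep the half whose midline-adjacent pixels are brightest.
        box = max(halves, key=lambda b: _band_mean(img, b, c_mid, band))
    return box
```

With `depth=3`, the first two levels select a quadrant and the third selects one of its two sub-quadrants. Larger depths continue the recursive halving.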

Contrast enhancement

Histogram equalization was applied to improve tumor boundary visibility. Because MRI intensity distributions vary across scanners, a simple, parameter-free normalization technique was chosen to standardize global contrast.
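Global histogram equalization maps each intensity through the image's cumulative histogram, spreading the occupied intensity range over [0, 1]. A NumPy sketch for slices already normalized to [0, 1] follows; the 256-bin resolution is an assumption. Library routines such as `skimage.exposure.equalize_hist` provide the same kind of transform.

```python
import numpy as np


def equalize_histogram(img: np.ndarray, n_bins: int = 256) -> np.ndarray:
    """Global histogram equalization for an image with intensities in [0, 1]."""
    flat = img.ravel()
    hist, edges = np.histogram(flat, bins=n_bins, range=(0.0, 1.0))
    cdf = hist.cumsum().astype(np.float64)
    cdf_min = cdf[cdf > 0][0]               # CDF value of the darkest occupied bin
    if cdf[-1] == cdf_min:                  # constant image: nothing to stretch
        return img.astype(np.float64).copy()
    # Rescale the CDF so the darkest occupied bin maps to 0 and the brightest to 1.
    lut = np.clip((cdf - cdf_min) / (cdf[-1] - cdf_min), 0.0, 1.0)
    # Look up each pixel's bin (same binning as np.histogram) in the rescaled CDF.
    bin_idx = np.clip(np.digitize(flat, edges[1:-1]), 0, n_bins - 1)
    return lut[bin_idx].reshape(img.shape)


low_contrast = np.linspace(0.4, 0.6, 100).reshape(10, 10)
equalized = equalize_histogram(low_contrast)
```

Because the mapping is monotonic, the ordering of intensities (and hence tumor boundaries) is preserved while the narrow input range is stretched.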

Feature extraction

SIFT Features (Handcrafted, Local Detail)

- Extracted using standard SIFT descriptors
- Keypoints from tumor regions were aggregated
- Output: 512-dimensional vector
- Robust to rotation, illumination, and scale variations
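SIFT yields a variable number of 128-dimensional descriptors per image, and the text does not say how they were aggregated into a single 512-dimensional vector. One common option, shown here purely as an assumption, is a bag-of-visual-words histogram over a 512-word codebook. The codebook would be learned with k-means on training descriptors, for example with `sklearn.cluster.KMeans(n_clusters=512)`.

The descriptors themselves could come from OpenCV's `cv2.SIFT_create().detectAndCompute(image, mask)`, which returns `None` for the descriptors when no keypoints are found. The encoder below covers only the aggregation step.

```python
import numpy as np


def bovw_encode(descriptors, codebook: np.ndarray) -> np.ndarray:
    """Encode (n, 128) SIFT descriptors as an L1-normalised histogram over the codebook."""
    k = codebook.shape[0]
    if descriptors is None or len(descriptors) == 0:
        return np.zeros(k)  # slice without keypoints
    descriptors = np.asarray(descriptors, dtype=np.float64)
    # Squared Euclidean distances via |a|^2 - 2ab + |b|^2 (avoids an n x k x 128 array).
    d2 = (
        (descriptors ** 2).sum(axis=1)[:, None]
        - 2.0 * descriptors @ codebook.T
        + (codebook ** 2).sum(axis=1)[None, :]
    )
    words = d2.argmin(axis=1)                        # nearest visual word per descriptor
    hist = np.bincount(words, minlength=k).astype(np.float64)
    return hist / hist.sum()


rng = np.random.default_rng(0)
codebook = rng.random((512, 128))                    # stand-in for k-means centroids (k = 512)
desc = codebook[[3, 3, 7]] + 1e-3 * rng.standard_normal((3, 128))
vec = bovw_encode(desc, codebook)
```

A histogram encoding discards keypoint positions, which is one reason it pairs naturally with a global CNN descriptor that retains spatial context.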

VGG16 Features (Deep Semantic Content)

- Pretrained VGG16 model used as a feature extractor
- T1/T2 images replicated to three channels
- Final FC layer removed
- Output: 4096-dimensional feature vector

SICF fusion

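The Scale-Invariant Convolutional Fusion (SICF) module integrates local handcrafted and global deep features by concatenating the SIFT and VGG16 vectors:

$$\mathbf{f}_{\mathrm{SICF}} = \left[\,\mathbf{f}_{\mathrm{SIFT}} \,\Vert\, \mathbf{f}_{\mathrm{VGG16}}\,\right] \in \mathbb{R}^{512 + 4096} = \mathbb{R}^{4608}$$

where $\Vert$ denotes vector concatenation, $\mathbf{f}_{\mathrm{SIFT}} \in \mathbb{R}^{512}$, and $\mathbf{f}_{\mathrm{VGG16}} \in \mathbb{R}^{4096}$. This results in a 4,608-dimensional fused representation intended to capture:

- High-level semantic patterns
- Fine-grained texture details
- Scale-invariant feature interactions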
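Because the fusion is described elsewhere in the text as a concatenation of the two vectors, a NumPy sketch is short. The shape checks encode the 512 + 4096 = 4,608 dimensions stated above, and the function name is illustrative.

```python
import numpy as np


def sicf_concat(sift_feats: np.ndarray, vgg_feats: np.ndarray) -> np.ndarray:
    """Concatenate per-case SIFT (N, 512) and VGG16 (N, 4096) features into (N, 4608)."""
    sift_feats = np.atleast_2d(sift_feats)
    vgg_feats = np.atleast_2d(vgg_feats)
    if sift_feats.shape[1] != 512 or vgg_feats.shape[1] != 4096:
        raise ValueError("expected 512-d SIFT and 4096-d VGG16 vectors")
    if sift_feats.shape[0] != vgg_feats.shape[0]:
        raise ValueError("feature blocks must describe the same cases")
    return np.hstack([sift_feats, vgg_feats])   # SIFT block first, then VGG16 block
```

The two blocks differ eightfold in size and may differ in scale, so standardizing each block before concatenation is a common design choice. The text does not say whether this was done.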

Random Forest classifier

The LO-selected features were fed into a Random Forest with:

- 100 trees
- Maximum depth = 20
- Gini impurity criterion
- Balanced class weighting

RF was chosen for its interpretability and robustness on small datasets.
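These hyperparameters map directly onto scikit-learn's `RandomForestClassifier`. The fixed `random_state` is an addition for reproducibility, and `fit_selected` is a hypothetical helper that trains on the columns kept by a boolean feature mask.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier


def build_rf(seed: int = 0) -> RandomForestClassifier:
    """Random Forest with the hyperparameters listed above."""
    return RandomForestClassifier(
        n_estimators=100,          # 100 trees
        max_depth=20,              # maximum depth = 20
        criterion="gini",          # Gini impurity
        class_weight="balanced",   # reweight classes inversely to their frequency
        random_state=seed,         # seed added for reproducibility (not in the text)
    )


def fit_selected(X: np.ndarray, y: np.ndarray, mask: np.ndarray) -> RandomForestClassifier:
    """Train on the feature columns kept by a boolean mask (e.g., from LOA)."""
    return build_rf().fit(X[:, mask], y)
```

With `class_weight="balanced"`, each class is weighted by `n_samples / (n_classes * n_class_samples)`. This offsets the moderate imbalance between the 93, 118, and 105 cases per class.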

Training configuration

- Framework: PyTorch 1.13, scikit-learn 1.3
- Hardware: NVIDIA RTX 3090 GPU, CUDA 11.6
- Optimizer: Adam (lr = 1e−4, weight decay = 1e−5)
- Batch size: 16
- Epochs: 100

The training configuration is summarized below:

| Parameter | Value |
|---|---|
| Optimizer | Adam |
| Learning rate | 0.0001 |
| Batch size | 16 |
| Dropout rate | 0.3 |
| Epochs | 100 |
| Weight decay | 1e-5 |
| Scheduler | StepLR (step = 30, gamma = 0.1) |
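Under these settings, StepLR multiplies the learning rate by 0.1 every 30 epochs. Training therefore runs at:

- 1e-4 for epochs 0 to 29
- 1e-5 for epochs 30 to 59
- 1e-6 for epochs 60 to 89
- 1e-7 for the last ten epochs

The dependency-free sketch below reproduces that rule. In PyTorch, the same configuration would be `torch.optim.Adam(params, lr=1e-4, weight_decay=1e-5)` with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=30, gamma=0.1)`, stepped once per epoch. The text does not state which network parameters `params` refers to.

```python
def step_lr(epoch: int, base_lr: float = 1e-4, step_size: int = 30, gamma: float = 0.1) -> float:
    """Learning rate at a 0-based epoch under a StepLR-style schedule."""
    return base_lr * gamma ** (epoch // step_size)


schedule = {e: step_lr(e) for e in (0, 29, 30, 60, 90, 99)}
```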

Evaluation metrics

Following TRIPOD-AI recommendations, we computed:

- Accuracy
- Sensitivity (recall)
- Specificity
- AUC
- F1-score

All metrics were reported with 95% confidence intervals via bootstrapping (1,000 iterations).
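The threshold-based metrics above can be computed from the confusion matrix, and the percentile bootstrap resamples cases with replacement. The sketch below handles a binary (or one-vs-rest) task. For the three-class problem here, a per-class one-vs-rest computation with averaging would be one option; this is an assumption, since the text does not specify the averaging scheme. AUC is omitted because it needs continuous scores rather than hard labels.

```python
import numpy as np


def binary_metrics(y_true, y_pred):
    """Confusion-matrix metrics for a binary (or one-vs-rest) task."""
    t = np.asarray(y_true).astype(bool)
    p = np.asarray(y_pred).astype(bool)
    tp, tn = int(np.sum(t & p)), int(np.sum(~t & ~p))
    fp, fn = int(np.sum(~t & p)), int(np.sum(t & ~p))
    return {
        "accuracy": (tp + tn) / t.size,
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),  # TP / (TP + FN)
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),  # TN / (TN + FP)
        "f1": 2 * tp / (2 * tp + fp + fn) if tp else 0.0,           # harmonic mean of P and R
    }


def bootstrap_ci(y_true, y_pred, metric="accuracy", n_boot=1000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for one metric."""
    t, p = np.asarray(y_true), np.asarray(y_pred)
    rng = np.random.default_rng(seed)
    stats = []
    for _ in range(n_boot):
        idx = rng.integers(0, t.size, size=t.size)   # resample cases with replacement
        stats.append(binary_metrics(t[idx], p[idx])[metric])
    alpha = (1.0 - level) / 2.0
    lo, hi = np.nanpercentile(stats, [100 * alpha, 100 * (1 - alpha)])  # skip undefined resamples
    return float(lo), float(hi)
```

For example, `bootstrap_ci(y_true, y_pred, metric="sensitivity")` returns the 2.5th and 97.5th percentiles of sensitivity over 1,000 resamples.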

SICF (LO-RF) architecture overview

The system begins with multimodal MRI image inputs, which are preprocessed through region-of-interest (ROI) detection based on quadrant-wise mean intensity comparison, followed by histogram equalization for contrast enhancement. Feature extraction is then performed via two parallel streams: the first uses the Scale-Invariant Feature Transform (SIFT) to generate 512-dimensional descriptors that are robust to rotation and scale; the second leverages a pre-trained VGG16 model to produce 4,096-dimensional deep semantic embeddings.

The resulting feature vectors are concatenated into a 4,608-dimensional representation that encodes both local detail and high-level abstraction. This fused vector is passed to the Lyrebird Optimization Algorithm, which acts as a wrapper-based feature selector. Each candidate solution in LOA represents a binary selection mask, and the fitness function is defined as the classification accuracy from a 5-fold cross-validated Random Forest (Figure 3).

Figure 3

SICF (LO-RF) architecture pipeline.
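The wrapper selection described above can be sketched as follows. The fitness function is the one stated in the text: the 5-fold cross-validated Random Forest accuracy of the features kept by a binary mask. The text does not give the LOA update rules, so the position updates follow the two phases of the original continuous Lyrebird Optimization Algorithm:

- An "escaping" move toward a randomly chosen better-scoring member.
- A "hiding" local step whose size shrinks with the iteration count.

Several details are assumptions: the 0.5 binarization threshold, the population size, the iteration count, and the function names. Treat this as an illustration, not the authors' implementation.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score


def to_mask(position: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Binarise a continuous position: feature j is selected when position[j] > threshold."""
    return position > threshold


def fitness(mask, X, y, n_trees=100, seed=0):
    """Mean 5-fold cross-validated RF accuracy on the selected columns."""
    if not mask.any():
        return 0.0  # an empty subset cannot be evaluated
    rf = RandomForestClassifier(n_estimators=n_trees, max_depth=20,
                                class_weight="balanced", random_state=seed)
    return float(cross_val_score(rf, X[:, mask], y, cv=5, scoring="accuracy").mean())


def lyrebird_select(X, y, pop_size=10, n_iter=20, n_trees=100, seed=0):
    """Binary wrapper feature selection with LOA-style escape/hide updates (sketch)."""
    rng = np.random.default_rng(seed)
    d = X.shape[1]
    pos = rng.random((pop_size, d))                  # positions in [0, 1]^d
    fit = np.array([fitness(to_mask(p), X, y, n_trees, seed) for p in pos])
    for t in range(1, n_iter + 1):
        for i in range(pop_size):
            better = np.flatnonzero(fit > fit[i])
            if better.size and rng.random() < 0.5:
                # Escaping phase (exploration): move toward a better "safe area" member.
                safe = pos[rng.choice(better)]
                I = rng.integers(1, 3)               # 1 or 2
                cand = pos[i] + rng.random(d) * (safe - I * pos[i])
            else:
                # Hiding phase (exploitation): local step of size (ub - lb) / t with ub - lb = 1.
                cand = pos[i] + (1.0 - 2.0 * rng.random(d)) / t
            cand = np.clip(cand, 0.0, 1.0)
            f = fitness(to_mask(cand), X, y, n_trees, seed)
            if f > fit[i]:                           # greedy acceptance: keep only improvements
                pos[i], fit[i] = cand, f
    best = int(fit.argmax())
    return to_mask(pos[best]), float(fit[best])
```

Each fitness call trains five forests, so the cost grows as population × iterations × 5 fits. This is the main practical constraint on wrapper selection over 4,608 candidate features.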

Results

The required processing pipeline was implemented in Python 3.11.4. The evaluation of the recommended optimization choices was replicated on a Windows 11 laptop equipped with an 11th-generation Intel Core i5 CPU and 32 GB of RAM. Accuracy, specificity, sensitivity, F1-score, and AUC were used to evaluate the model’s predictive performance for spinal tumor diagnosis. The proposed method was compared with existing techniques: Discrete Wavelet Transform + Support Vector Machine (DWT + SVM) (21), Gray Level Co-occurrence Matrix + Radial Basis Function (GLCM + RBF) (21), and Local Binary Patterns + Support Vector Machine (LBP + SVM) (21).

In the SICF (LO-RF) experiments, four metrics (specificity, sensitivity, AUC, and accuracy) were compared for numbers of neighbors ranging from 1 to 8. Euclidean distance served as the metric for measuring proximity between data instances and their neighbors, and the closest neighbors were weighted by inverse distance. Table 2 shows the outcomes of the classifier metrics for each setting. With SICF (LO-RF), K = 5 produced the best results, with the highest specificity (0.80), AUC (0.79), and accuracy (0.82).

Table 2

| Features | Specificity | Sensitivity | AUC | Accuracy |
|---|---|---|---|---|
| 1 | 0.75 | 0.78 | 0.78 | 0.76 |
| 2 | 0.75 | 0.76 | 0.77 | 0.76 |
| 3 | 0.77 | 0.80 | 0.78 | 0.78 |
| 4 | 0.75 | 0.72 | 0.76 | 0.75 |
| 5 | 0.80 | 0.79 | 0.79 | 0.82 |
| 6 | 0.77 | 0.75 | 0.75 | 0.78 |
| 7 | 0.76 | 0.79 | 0.76 | 0.77 |
| 8 | 0.79 | 0.82 | 0.78 | 0.75 |

Outcomes of various classifier metrics by number of features.

Bold values represent the highest performance metrics.

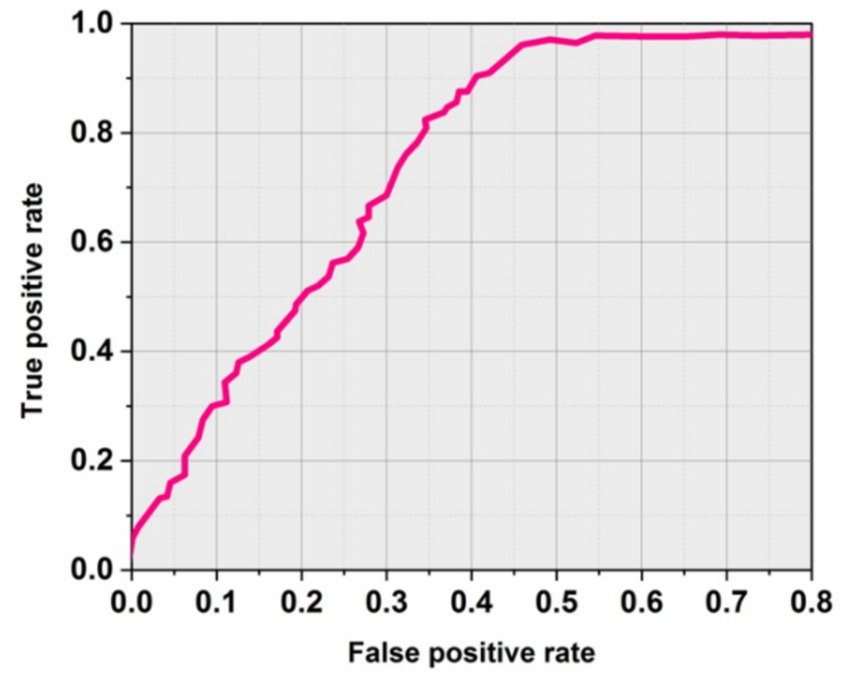

Figure 4 shows the ROC curves obtained with the soft-margin SICF (LO-RF). Table 3 summarizes the overall outcomes of the existing and proposed methods.

Figure 4

ROC curve using soft margin SICF (LO-RF).

Table 3

| Methods | Sensitivity (%) | Accuracy (%) | AUC | F-score (%) | Specificity (%) |
|---|---|---|---|---|---|
| DWT + SVM (21) | 95.42 | 77.30 | 0.83 | 91.55 | 59.19 |
| GLCM + RBF (21) | 96.13 | 72.73 | 0.80 | 90.55 | 49.33 |
| LBP + SVM (21) | 97.99 | 87.11 | 0.87 | 95.33 | 76.23 |
| MedFusionGAN (19) | 95.10 | 89.31 | 0.87 | 94.12 | 81.40 |
| PatchResNet (13) | 94.50 | 87.45 | 0.85 | 92.33 | 80.20 |
| BgNet (16) | 96.00 | 88.90 | 0.88 | 93.75 | 81.75 |
| SICF (LO-RF) [Proposed] | **98.52** | **92.71** | **0.91** | **97.07** | **84.59** |

Comparative outcomes of existing and proposed techniques.

Bold values represent the highest performance metrics.

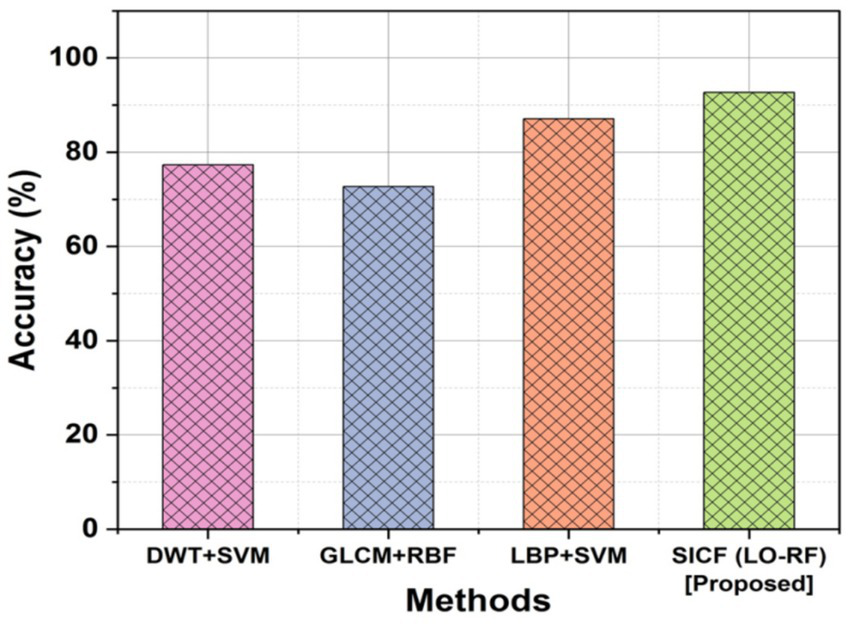

Accuracy

Accurate localization and size determination are crucial for the successful detection and treatment of spinal tumors. Figure 5 compares the accuracy of the existing and proposed methods. DWT + SVM achieved an accuracy of 77.30%, GLCM + RBF 72.73%, and LBP + SVM 87.11%, whereas the proposed SICF (LO-RF) method achieved 92.71%, the highest among the compared methods.

Figure 5

Comparison outcomes with accuracy.

Sensitivity

In spinal tumor MRI detection, sensitivity (recall) measures the method’s ability to recognize true positive cases. It is the proportion of actual positive cases that the method correctly classifies as positive, TP/(TP + FN). Figure 6 shows the sensitivity outcomes. Compared with conventional approaches such as DWT + SVM (95.42%), GLCM + RBF (96.13%), and LBP + SVM (97.99%), the proposed SICF (LO-RF) algorithm achieved a higher sensitivity of 98.52%.

Figure 6

![Graph showing sensitivity percentages of different methods: DWT+SVM at 95.5%, GLCM+RBF at 96%, LBP+SVM at 97.5%, and SICF (LO-RF) [Proposed] at 98.8%. Sensitivity increases with each method.](https://www.frontiersin.org/files/Articles/1606570/xml-images/fmed-12-1606570-g006.webp)

Comparison outcomes with sensitivity.

Specificity

Specificity is also known as the true negative rate (TNR). It is calculated by dividing the number of correctly identified negative instances by the total number of actual negative cases, TN/(TN + FP). The proposed SICF (LO-RF) achieved the highest specificity (84.59%), outperforming DWT + SVM (59.19%), GLCM + RBF (49.33%), and LBP + SVM (76.23%) (Figure 7).

Figure 7

![3D bar chart comparing specificity percentages of four methods: SICF (LO-RF) [Proposed] at 95%, LBP+SVM at 92%, GLCM+RBF at 85%, and DWT+SVM at 85%. The vertical axis represents specificity percentage, and the horizontal axis lists the methods.](https://www.frontiersin.org/files/Articles/1606570/xml-images/fmed-12-1606570-g007.webp)

Specificity outcomes of proposed and existing techniques.

F-score

The F-score is the harmonic mean of precision and sensitivity. As shown in Figure 8, the proposed SICF (LO-RF) model achieved an F1-score of 97.07%, outperforming DWT + SVM (91.55%), GLCM + RBF (90.55%), and LBP + SVM (95.33%).

Figure 8

Outcomes of F-score.

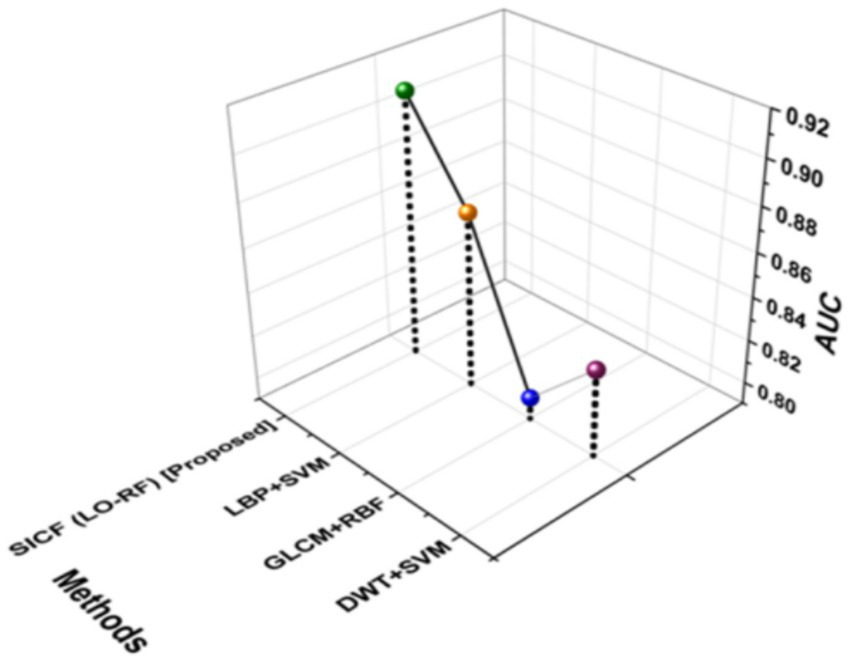

AUC

AUC, the area under the receiver operating characteristic (ROC) curve, ranges from 0 to 1 and summarizes performance across all possible classification thresholds. It can be interpreted as the probability that the model ranks a randomly chosen positive case higher than a randomly chosen negative case. Figure 9 shows the AUC outcomes: the proposed SICF (LO-RF) technique achieved an AUC of 0.91, whereas DWT + SVM, LBP + SVM, and GLCM + RBF achieved 0.83, 0.87, and 0.80, respectively.

Figure 9

Outcomes of AUC.
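The probabilistic reading of AUC translates directly into code. Compare every positive score with every negative score and count the fraction of pairs ranked correctly, with ties counting as half. This pairwise sketch is quadratic in the number of cases, which is fine for a test set of this size. For binary labels, `sklearn.metrics.roc_auc_score` returns the same value.

```python
import numpy as np


def auc_pairwise(y_true, scores) -> float:
    """AUC as P(score of a random positive > score of a random negative), ties = 1/2."""
    y = np.asarray(y_true).astype(bool)
    s = np.asarray(scores, dtype=float)
    pos, neg = s[y], s[~y]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("AUC needs at least one positive and one negative case")
    diff = pos[:, None] - neg[None, :]          # every positive-negative pair
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())
```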

The results of 5-fold cross-validation on the MSRS dataset are summarized in Table 4. TSJNet achieved an average detection mAP@0.5 of 78.21 ± 1.02 and segmentation mIoU of 71.45 ± 1.18, indicating consistent performance and strong generalization ability across different data splits.

Table 4

| Fold | Detection mAP@0.5 (%) | Segmentation mIoU (%) |
|---|---|---|
| 1 | 77.34 | 70.11 |
| 2 | 79.02 | 71.89 |
| 3 | 78.91 | 72.56 |
| 4 | 76.88 | 70.74 |
| 5 | 78.89 | 72.95 |
| Mean ± Std | 78.21 ± 1.02 | 71.45 ± 1.18 |

5-Fold cross-validation performance of TSJNet on MSRS dataset.

Bold values represent the highest performance metrics.
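The summary row can be recomputed from the per-fold values as the mean and sample standard deviation (ddof = 1). Applied to the mAP@0.5 column, this reproduces the reported 78.21 ± 1.02. Applied to the mIoU column, the same computation gives 71.65 ± 1.20 rather than the listed 71.45 ± 1.18, so that entry may be worth rechecking against the raw fold results.

```python
import numpy as np

# Per-fold values copied from Table 4.
map50 = np.array([77.34, 79.02, 78.91, 76.88, 78.89])
miou = np.array([70.11, 71.89, 72.56, 70.74, 72.95])


def mean_sd(values: np.ndarray) -> tuple:
    """Mean and sample standard deviation (ddof=1) across folds."""
    return float(values.mean()), float(values.std(ddof=1))


summary = {name: mean_sd(vals) for name, vals in (("mAP@0.5", map50), ("mIoU", miou))}
for name, (m, s) in summary.items():
    print(f"{name}: {m:.2f} ± {s:.2f}")
```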

As shown in Table 5, TSJNet maintains a balanced trade-off between performance and computational cost. While slightly more complex than FusionGAN and DenseFuse in terms of parameter count and FLOPs, it provides significantly better fusion quality, segmentation accuracy, and detection precision. The average inference time per image remains within acceptable real-time processing limits.

Table 5

| Model | Parameters (M) | FLOPs (G) | Inference time (ms) |
|---|---|---|---|
| TSJNet | 24.8 | 36.5 | 18.7 |
| FusionGAN | 21.4 | 33.2 | 17.2 |
| DenseFuse | 19.9 | 30.5 | 16.8 |

Complexity comparison of TSJNet and baselines.

Using only SIFT features with a traditional RF classifier achieved 84.20% accuracy, confirming the utility of handcrafted features for spinal MRI classification. VGG16 alone performed better (87.60%) due to its deep feature representation. When both feature types were fused and fed into RF, accuracy improved to 89.42%, demonstrating the complementary nature of handcrafted and deep features. Replacing RF with the Lyrebird Optimization-driven RF further improved performance across all metrics. VGG16 → LO-RF achieved 90.83% accuracy, while our full model (SIFT + VGG16 → LO-RF) reached 92.71% accuracy and the highest values for sensitivity, specificity, AUC, and F1-score. This validates that both the feature fusion and the Lyrebird optimization contribute meaningfully to the final performance (Table 6).

Table 6

| Model variant | Accuracy (%) | Sensitivity (%) | Specificity (%) | AUC | F1-score (%) |
|---|---|---|---|---|---|
| SIFT → RF | 84.20 | 90.65 | 74.18 | 0.82 | 88.40 |
| VGG16 → RF | 87.60 | 93.10 | 77.52 | 0.85 | 90.88 |
| SIFT + VGG16 → RF | 89.42 | 94.05 | 79.90 | 0.86 | 92.30 |
| VGG16 → LO-RF | 90.83 | 95.11 | 82.34 | 0.88 | 93.66 |
| SIFT + VGG16 → LO-RF (Proposed) | **92.71** | **98.52** | **84.59** | **0.91** | **97.07** |

Ablation study results for SICF (LO-RF) and variants.

Bold values represent the highest performance metrics.

Discussion

Spinal tumor classification on MRI remains challenging due to substantial variation in tumor appearance, morphology, size, and location. Traditional machine learning methods such as DWT + SVM and LBP + SVM rely on handcrafted features that often struggle with noise, intensity variation, and limited spatial representation (21, 22). Deep learning approaches, including CNNs and residual architectures, achieve stronger representational capacity but frequently require large datasets and may have limited interpretability or generalizability, particularly in small-sample clinical scenarios (18, 22). Furthermore, several prior studies have focused primarily on segmentation tasks or single-modality MRI rather than integrating complementary feature types for enhanced classification (10, 16).

In this study, we proposed an SICF–LO-RF framework that combines handcrafted SIFT descriptors, deep VGG16 features, and Lyrebird Optimization–driven feature selection. This hybrid design leverages the stability and scale invariance of SIFT with the semantic richness of VGG16, while LOA enables selection of the most discriminative features. The approach improved classification performance across multiple metrics, including accuracy, sensitivity, specificity, AUC, and F1-score, when compared to classical ML models and conventional deep-learning pipelines. These findings suggest that the complementary strengths of handcrafted and deep features can provide a more reliable representation of spinal tumor characteristics.

Our results also suggest that multiscale feature fusion, enhanced contrast preprocessing, and automated ROI extraction contribute to improved differentiation of tumor subtypes, a task that is often hindered by inconsistent image quality or anatomical variability. While CNN-only models risk overfitting on small datasets, fusing handcrafted and deep features appeared to mitigate this limitation, in line with observations from prior multi-modal imaging studies (13, 17). Compared with previously reported accuracies ranging from 72% to 89% for classical ML and deep learning methods, our framework achieved a higher accuracy (92.71%), along with high sensitivity (98.52%) and solid specificity (84.59%), indicating robust discriminative capability.

From a clinical perspective, the proposed system may offer several practical benefits. High sensitivity supports early detection, while improved specificity reduces unnecessary investigations. The automated ROI module may reduce inter-reader variability and time spent manually localizing tumors. Within a radiologist’s workflow, the framework has potential use as a decision-support tool integrated into Picture Archiving and Communication Systems (PACS), helping generate preliminary suggestions or second-reader input before expert review.

Practical implications

The SICF (LO-RF) framework may facilitate more consistent and efficient interpretation of spinal MRI by assisting radiologists in flagging suspicious lesions and reducing manual ROI localization. Its high sensitivity suggests utility for early detection settings, and its reasonable specificity may help minimize unnecessary follow-up imaging or interventions. The system could be integrated into PACS or radiology workstations as a background triage or decision-support module, particularly useful in high-volume centers or resource-limited settings where subspecialty expertise may be limited. Automated feature extraction and fusion reduce reliance on operator-dependent processes, supporting more reproducible diagnostic workflows.

Limitations

This study has several limitations. First, the evaluation was performed on a retrospective, single-center dataset, which may limit generalizability; prospective and multi-center validation is required before clinical use. Second, although the method incorporates automated ROI detection, the ground-truth labels were derived from manual segmentations, which may introduce human bias. Third, while the model shows strong performance, we did not include qualitative interpretability tools such as saliency maps or feature-attribution heatmaps that could further support clinical trust. Finally, although computational performance was acceptable on high-end hardware, deployment in low-resource environments will require additional optimization.

Conclusion

In this study, we introduced a hybrid SICF–LO-RF framework that integrates handcrafted SIFT features, deep VGG16 representations, and Lyrebird Optimization-based feature selection to improve the classification of spinal tumors on MRI. The model demonstrated high accuracy, sensitivity, specificity, and AUC, outperforming multiple traditional and deep learning baselines. By combining complementary feature types and automated ROI detection, the framework offers a robust and reproducible approach for spinal tumor characterization.

While these retrospective results are promising, further work is needed to translate this system into clinical practice. Prospective, multi-center validation is essential to evaluate performance across diverse patient populations and MRI scanners. Future research should also incorporate explainable AI tools, end-to-end integration of segmentation and classification, and workflow evaluation within radiology environments. Overall, SICF–LO-RF represents a meaningful step forward in spinal tumor MRI analysis and holds potential as a decision-support tool pending further clinical validation.

Statements

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving humans were approved by Chinese Academy of Medical Sciences and Peking Union Medical College. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

ZS: Investigation, Methodology, Writing – review & editing. JJ: Writing – original draft, Data curation, Investigation. ML: Formal analysis, Validation, Writing – review & editing. XZ: Investigation, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This project was supported by the National Natural Science Foundation of China (82102029), National High Level Hospital Clinical Research Funding (LC2024A09), and Teaching Research Fund of Cancer Hospital of Chinese Academy of Medical Sciences (E2024015).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2025.1606570/full#supplementary-material

References

1. Mahmud MI, Mamun M, Abdelgawad A. A deep analysis of brain tumor detection from MR images using deep learning networks. Algorithms. (2023) 16:176. doi: 10.3390/a16040176

2. Takamiya S, Malvea A, Ishaque AH, Pedro K, Fehlings MG. Advances in imaging modalities for spinal tumors. Neurooncol Adv. (2024) 6:iii13–27. doi: 10.1093/noajnl/vdae045

3. Kumar N, Tan WLB, Wei W, Vellayappan BA. An overview of the tumors affecting the spine—inside to out. Neurooncol Pract. (2020) 7:i10–7. doi: 10.1093/nop/npaa049

4. Mok S, Chu EC. The importance of early detection of spinal tumors through magnetic resonance imaging in chiropractic practices. Cureus. (2024) 16:e51440. doi: 10.7759/cureus.51440

5. Chow CLJ, Shum JS, Hui KTP, Lin AFC, Chu EC. Optimizing primary healthcare in Hong Kong: strategies for the successful integration of radiology services. Cureus. (2023) 15:e37022. doi: 10.7759/cureus.37022

6. Chen T, Hu L, Lu Q, Xiao F, Xu H, Li H, et al. A computer-aided diagnosis system for brain tumors based on artificial intelligence algorithms. Front Neurosci. (2023) 17:1120781. doi: 10.3389/fnins.2023.1120781

7. Kotecha R, Mehta MP, Chang EL, Brown PD, Suh JH, Lo SS, et al. Updates in the management of intradural spinal cord tumors: a radiation oncology focus. Neuro-Oncology. (2019) 21:707–18. doi: 10.1093/neuonc/noz014

8. Ghafourian E, Samadifam F, Fadavian H, JerfiCanatalay P, Tajally A, Channumsin S. An ensemble model for the diagnosis of brain tumors through MRIs. Diagnostics. (2023) 13:561. doi: 10.3390/diagnostics13030561

9. Nawaz SA, Khan DM, Qadri S. Brain tumor classification based on hybrid optimized multi-features analysis using magnetic resonance imaging dataset. Appl Artif Intell. (2022) 36:2031824. doi: 10.1080/08839514.2022.2031824

10. Lemay A, Gros C, Zhuo Z, Zhang J, Duan Y, Cohen-Adad J, et al. Automatic multiclass intramedullary spinal cord tumor segmentation on MRI with deep learning. Neuroimage Clin. (2021) 31:102766. doi: 10.1016/j.nicl.2021.102766

11. Sun H, Jiang R, Qi S, Narr KL, Wade BS, Upston J, et al. Preliminary prediction of individual response to electroconvulsive therapy using whole-brain functional magnetic resonance imaging data. Neuroimage Clin. (2020) 26:102080. doi: 10.1016/j.nicl.2019.102080

12. Steyaert S, Qiu YL, Zheng Y, Mukherjee P, Vogel H, Gevaert O. Multimodal data fusion of adult and pediatric brain tumors with deep learning. medRxiv. (2022) 3:2022-09. doi: 10.1101/2022.09.21.22280223

13. Muezzinoglu T, Baygin N, Tuncer I, Barua PD, Baygin M, Dogan S, et al. PatchResNet: multiple patch division–based deep feature fusion framework for brain tumor classification using MRI images. J Digit Imaging. (2023) 36:973–87. doi: 10.1007/s10278-023-00789-x

14. Newman WC, Larsen AG, Bilsky MH. The NOMS approach to metastatic tumors: integrating new technologies to improve outcomes. Rev Esp Cir Ortop Traumatol. (2023) 67:487–99. doi: 10.1016/j.recot.2023.04.008

15. Khalighi S, Reddy K, Midya A, Pandav KB, Madabhushi A, Abedalthagafi M. Artificial intelligence in neuro-oncology: advances and challenges in brain tumor diagnosis, prognosis, and precision treatment. NPJ Precis Oncol. (2024) 8:80. doi: 10.1038/s41698-024-00575-0

16. Liu H, Jiao ML, Xing XY, Ou-Yang HQ, Yuan Y, Liu JF, et al. BgNet: classification of benign and malignant tumors with MRI multi-plane attention learning. Front Oncol. (2022) 12:971871. doi: 10.3389/fonc.2022.971871

17. Liu H, Jiao M, Yuan Y, Ouyang H, Liu J, Li Y, et al. Benign and malignant diagnosis of spinal tumors based on deep learning and weighted fusion framework on MRI. Insights Imaging. (2022) 13:87. doi: 10.1186/s13244-022-01227-2

18. Diwakar M, Singh P, Shankar A. Multi-modal medical image fusion framework using co-occurrence filter and local extrema in NSST domain. Biomed Signal Process Control. (2021) 68:102788. doi: 10.1016/j.bspc.2021.102788

19. Safari M, Fatemi A, Archambault L. MedFusionGAN: multimodal medical image fusion using an unsupervised deep generative adversarial network. BMC Med Imaging. (2023) 23:203. doi: 10.1186/s12880-023-01076-1

20. Mehnatkesh H, Jalali SMJ, Khosravi A, Nahavandi S. An intelligent driven deep residual learning framework for brain tumor classification using MRI images. Expert Syst Appl. (2023) 213:119087. doi: 10.1016/j.eswa.2022.119087

21. AlKubeyyer A, Ben Ismail MM, Bchir O, Alkubeyyer M. Automatic detection of the meningioma tumor firmness in MRI images. J Xray Sci Technol. (2020) 28:659–82. doi: 10.3233/XST-200644

22. Wang K, Zheng M, Wei H, Qi G, Li Y. Multi-modality medical image fusion using convolutional neural network and contrast pyramid. Sensors. (2020) 20:2169. doi: 10.3390/s20082169

Summary

Keywords

MRI data fusion, spinal tumor diagnosis system, scale-invariant convolutional fusion, Lyrebird Optimization-driven Random Forest, artificial intelligence

Citation

Shi Z, Jiang J, Li M and Zhao X (2025) An intelligent MRI data fusion framework for optimized diagnosis of spinal tumors. Front. Med. 12:1606570. doi: 10.3389/fmed.2025.1606570

Received

05 April 2025

Revised

19 November 2025

Accepted

24 November 2025

Published

15 December 2025

Volume

12 - 2025

Edited by

Baljinder Singh, Post Graduate Institute of Medical Education and Research (PGIMER), India

Reviewed by

Eric Chu, EC Healthcare, Hong Kong SAR, China

Nisha Rani, Johns Hopkins University School of Medicine, United States


Copyright

© 2025 Shi, Jiang, Li and Zhao.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xinming Zhao, zhaoxinming@cicams.ac.cn

ORCID: Zhuo Shi, orcid.org/0000-0002-0839-5196; Jiuming Jiang, orcid.org/0000-0001-9742-7243; Meng Li, orcid.org/0000-0001-7592-2091; Xinming Zhao, orcid.org/0009-0000-0958-0513
