- 1 College of Medicine and Biological Information Engineering, Northeastern University, Shenyang, China

- 2 College of Intelligent Medicine, Chengdu University of Traditional Chinese Medicine, Chengdu, China

- 3 Department of Radiology, Shengjing Hospital of China Medical University, Shenyang, China

- 4 The University of New South Wales, Sydney, NSW, Australia

- 5 Institute of Medical Informatics, University of Luebeck, Luebeck, Germany

Background: TP53 mutations play a critical role in the clinical management and prognostic evaluation of gynecologic malignancies such as cervical, endometrial, and ovarian cancers. With the advancement of radiomics and deep learning technologies, noninvasive AI models based on medical imaging have become important tools for assessing TP53 mutation status.

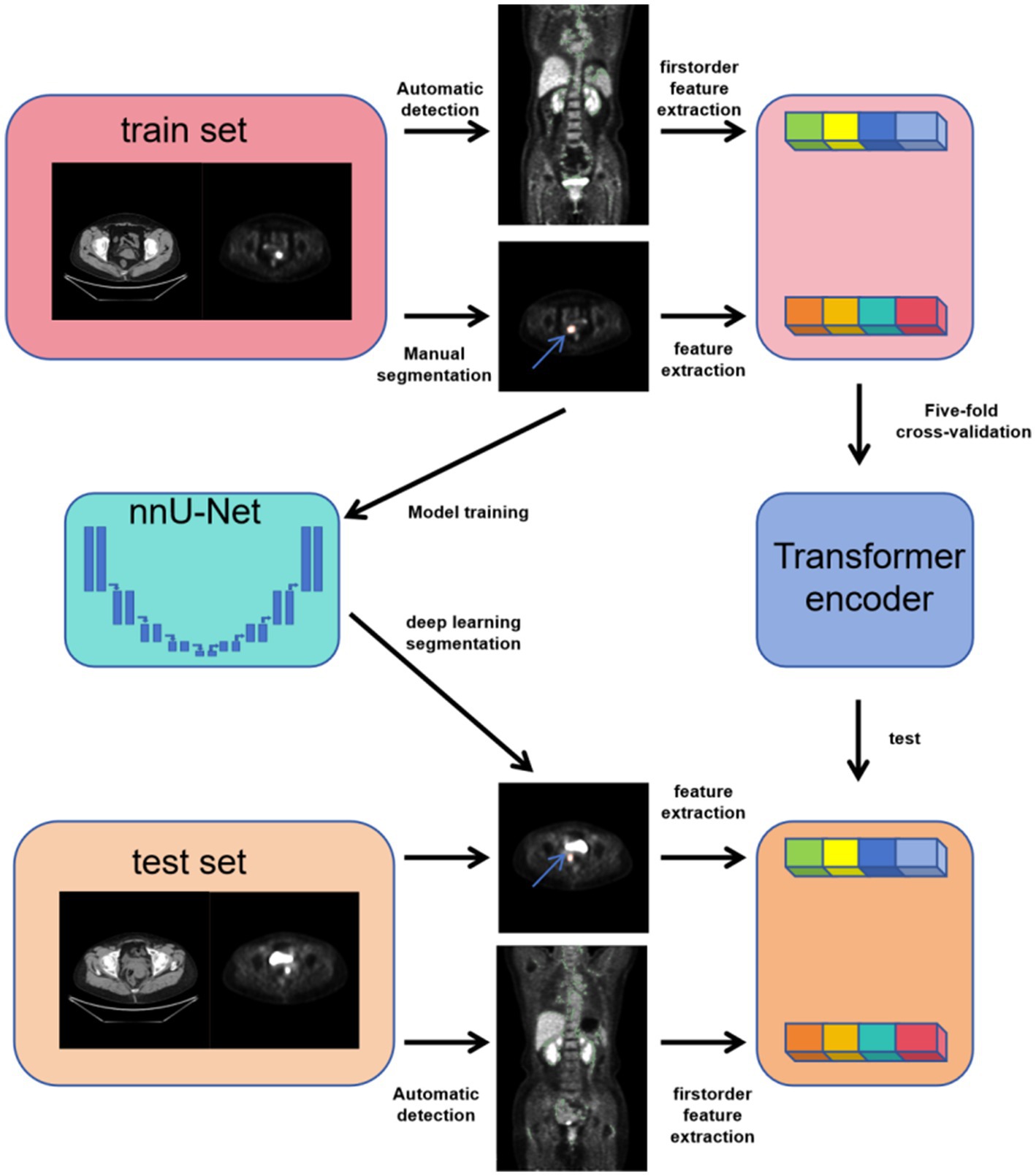

Methods: This study retrospectively analyzed 259 patients with cervical, endometrial, or ovarian cancer who underwent PET/CT before treatment. Radiomics features from tumors and brown adipose tissue (BAT) were extracted, and a Transformer-based model was developed to predict TP53 mutation by integrating imaging and clinical data. The model was trained with five-fold cross-validation, and clustering analysis was performed on deep features to explore their correlation with TP53 status.

Results: Radiomic features from tumor CT, tumor PET, BAT CT, and BAT PET images were all associated with TP53 mutation status in gynecological tumors. On the test set, the accuracies of the tumor CT, tumor PET, BAT CT, and BAT PET radiomic models were 0.7931, 0.8276, 0.7241, and 0.7931, respectively. The combined model achieved a test accuracy of 0.8620, and 0.8000 when tumors were annotated automatically with nnU-Net. Unsupervised clustering of the deep features extracted by the combined model showed that the image clustering patterns were significantly associated with TP53 mutation status (p = 0.001), suggesting that the model captured TP53-related features shared across cancer types.

Conclusion: This study demonstrates that radiomic features from tumor and brown adipose tissue CT and PET images are closely associated with TP53 mutation status in gynecological tumors. We constructed a cross-cancer TP53 prediction model: the combined multimodal imaging model captures TP53-related imaging phenotypes across cancer types, and these phenotypic patterns correlate significantly with TP53 mutation status.

1 Introduction

Cervical, endometrial, and ovarian cancers are the three major malignancies of the female reproductive system, contributing to an estimated 5 million deaths globally each year (1). Cervical cancer accounts for approximately 3.1% of new cancer cases, endometrial cancer for 2.2%, and ovarian cancer for 1.6%. The TP53 tumor suppressor gene encodes p53, a key safeguard against cancer that inhibits cell division and coordinates responses to diverse cellular stresses; consistent with this role, TP53 mutations occur frequently in human cancers (2). In cervical cancer, TP53 alterations have been attributed to HPV infection, which renders individuals susceptible to the disease (3). In endometrial cancer, TP53 mutations help identify specific high-risk tumor genotypes and phenotypes: the copy-number-high (CNH) subgroup, which carries pathogenic TP53 mutations, includes all uterine serous carcinomas and approximately 25% of high-grade endometrial cancers. Beyond its diagnostic role, TP53 status also serves as a predictive factor for chemotherapy (4). In high-grade ovarian cancer, TP53 mutations are widespread (5), and assessment of TP53 inactivation can predict intrinsic and acquired resistance to taxane-based drugs (6). Despite differences in epidemiology, genetic risk, and tumor microenvironment, TP53 mutations therefore play essential roles in all three tumor types.

TP53 mutation status in gynecologic cancers can be detected with high accuracy by p53 immunohistochemistry (IHC) (7). However, although highly specific, IHC requires additional procedures and increases the workload of pathologists. 18F-FDG is currently the most commonly used radiotracer for positron emission tomography/computed tomography (PET/CT) imaging of malignant tumors and primarily reflects glucose metabolism within tissues. By combining functional and anatomical information, PET/CT offers advantages for initial staging and response assessment in cancer patients (8). The diagnostic value of 18F-FDG PET/CT has been confirmed in various gynecologic tumors, and it has also been validated against tumor markers for assessing tumor response and for predicting patient response to treatment (9–11). Research further suggests that TP53 mutations may be associated with highly similar growth patterns across tumor types, visible in medical images from various modalities (12–14).

PET/CT provides imaging information not only on the tumor itself but also on brown adipose tissue (15). Brown adipose tissue is a mitochondria-rich fat tissue whose main function is to regulate body temperature through non-shivering thermogenesis. It is abundant in newborns; in adults it is present in smaller amounts, mainly in the neck and supraclavicular region (16). As a metabolism-related biomarker, brown adipose tissue can predict weight loss and the risk of cancer cachexia in tumor patients (17). Moreover, brown adipose tissue and the browning of white adipose tissue exert some anti-cancer effects by regulating tumor glycolytic metabolism (18). Studies have also shown that brown adipose tissue is closely associated with tumor mutation status and can serve as an independent risk factor for predicting recurrence and mortality in tumor patients (19, 20). Together, these findings make brown adipose tissue a promising subject for tumor-related research.

Artificial intelligence methods are now widely used in medicine (21), including for the analysis of pathology and radiology images (22, 23). For gynecological tumors, multimodal medical images have been used to distinguish benign from malignant tumors and to predict gene mutations, lymph node metastasis status, response to chemotherapy, patient prognosis, and other clinical information (24). Some of these techniques are already in practice; for example, deep learning-based classification of cells on cervical smears has been applied clinically to improve the efficiency of tumor screening (25–27).

This study extracted radiomic features from tumor regions and brown adipose tissue regions, analyzed their correlation with TP53 mutation status, and evaluated how these features are distributed across three major gynecological tumors: endometrial cancer, cervical cancer, and ovarian cancer. We then compared the radiomic features of tumor and brown adipose tissue regions in both PET and CT and built four deep learning models to predict TP53 mutation status in these three tumors. Next, we developed a combined model to predict TP53 mutation status, and finally extracted high-dimensional deep learning features from this model for clustering analysis.

2 Materials and methods

The institutional review board approved this study (Shengjing Ethics Committee, 2023PS1013K). Written informed consent was waived for the retrospective cohort.

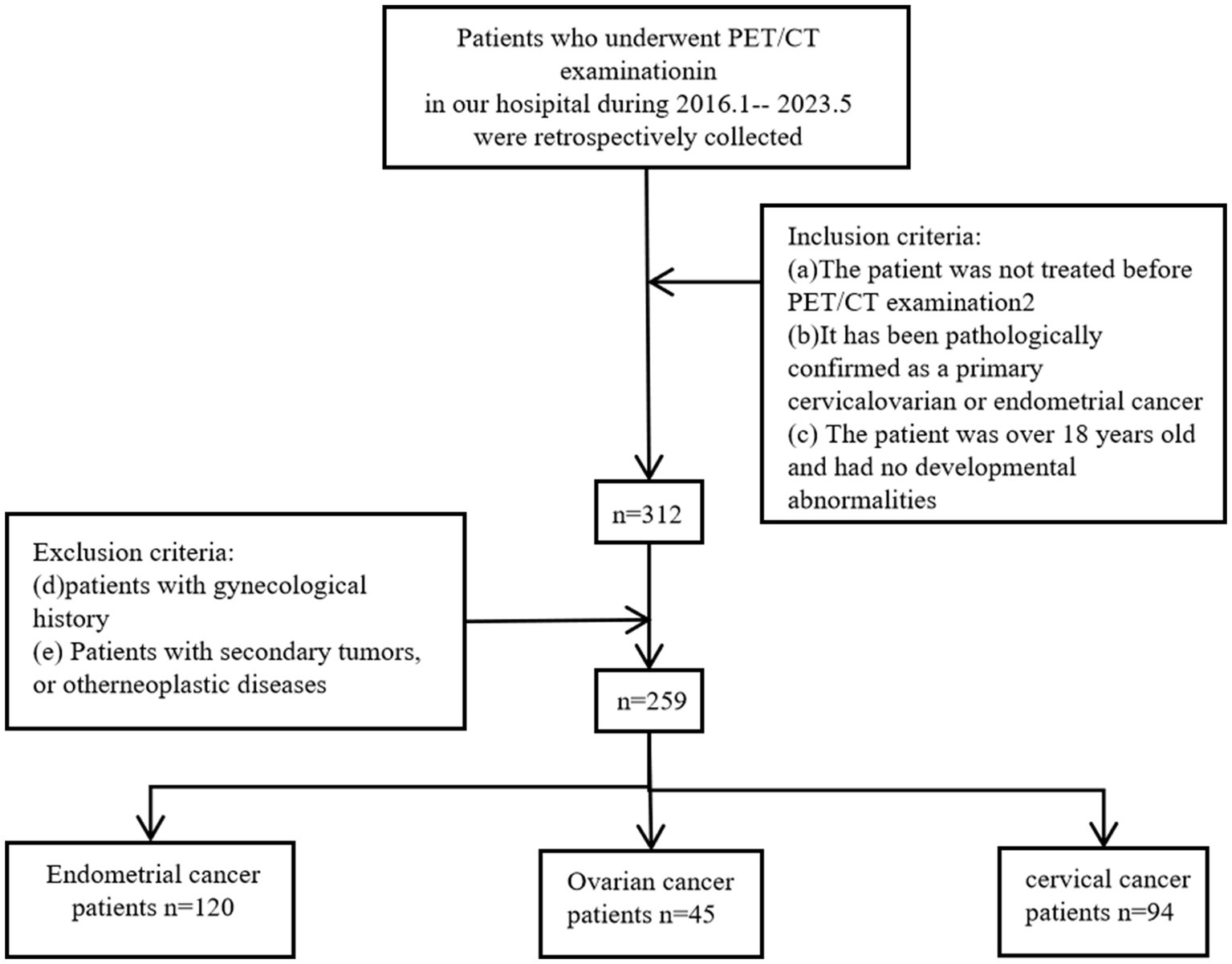

2.1 Retrospective cohort

We retrospectively retrieved patients who visited Shengjing Hospital of China Medical University from January 2016 to May 2023. As shown in Figure 1, patients were included according to the following criteria: (a) the patient underwent PET/CT within 2 weeks of initial admission and subsequently received a biopsy or surgery that pathologically confirmed primary cervical, endometrial, or ovarian cancer; (b) the patient had not received any treatment before PET/CT; and (c) the patient was over 18 years old and had no developmental abnormalities. The exclusion criteria were: (d) a history of gynecological diseases; and (e) secondary tumors or a history of other tumors. In total, 259 patients were included in this study.

Figure 1. Inclusion and exclusion criteria workflow for patient data selection in cervical, endometrial, and ovarian cancers.

For privacy protection, we de-identified the data, retaining only images and relevant imaging information.

2.2 Pathology processing and TP53 assessment

Pathological specimens were obtained from all enrolled patients, processed into hematoxylin and eosin (H&E)-stained slides using standard procedures, and photographed. Pathologists re-evaluated all images to ensure that the central area of each image contained clearly identifiable, typical tumor tissue. TP53 mutation status was then determined by IHC: paraffin-embedded sections were stained with the standard IHC SP (streptavidin-peroxidase) method, observed and photographed under a microscope, and examined by pathologists. Cases with more than 5% positive staining were classified as having a TP53 mutation. We also collected each patient's tumor stage, lymph node metastasis status, and pathological grade.

2.3 Radiomics and cross-modality tumor segmentation pipeline

Before 18F-fluorodeoxyglucose (18F-FDG) PET/CT scanning, all patients fasted for ≥6 h and had blood glucose levels of ≤7 mmol/L. They were injected with 3.70–5.55 MBq/kg 18F-FDG at rest. After 60 min, 18F-FDG PET/CT scanning was performed on a Discovery PET/CT 690 scanner (GE Healthcare, Waukesha, WI, USA) with the patient lying on the scanner bed. The scan covered the calvarium to the mid-thigh (120 s per bed position). The CT slice thickness, tube voltage, and tube current were 3.75 mm, 120–140 kVp, and 80 mA, respectively.
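As a worked example of the dosing protocol, the sketch below computes the injected activity range for a hypothetical 60 kg patient and the fraction of fluorine-18 activity remaining after the 60 min uptake period (about 68%). The 109.77 min half-life of fluorine-18 is a physical constant not stated in the protocol, and the function names are illustrative only.

```python
F18_HALF_LIFE_MIN = 109.77  # physical half-life of fluorine-18 in minutes (not given in the protocol)


def injected_activity_range_mbq(weight_kg, low_mbq_per_kg=3.70, high_mbq_per_kg=5.55):
    """Weight-based 18F-FDG activity range from the protocol (3.70-5.55 MBq/kg)."""
    return weight_kg * low_mbq_per_kg, weight_kg * high_mbq_per_kg


def decay_factor(elapsed_min, half_life_min=F18_HALF_LIFE_MIN):
    """Fraction of the injected activity still present after elapsed_min minutes."""
    return 2.0 ** (-elapsed_min / half_life_min)


# Hypothetical 60 kg patient scanned after the 60 min uptake period.
low, high = injected_activity_range_mbq(60.0)
remaining = decay_factor(60.0)
print(f"{low:.1f}-{high:.1f} MBq injected; fraction left at scan start: {remaining:.3f}")
```

The same decay factor is what decay-corrected SUV calculations rely on when the injected dose and the image are referenced to different times.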

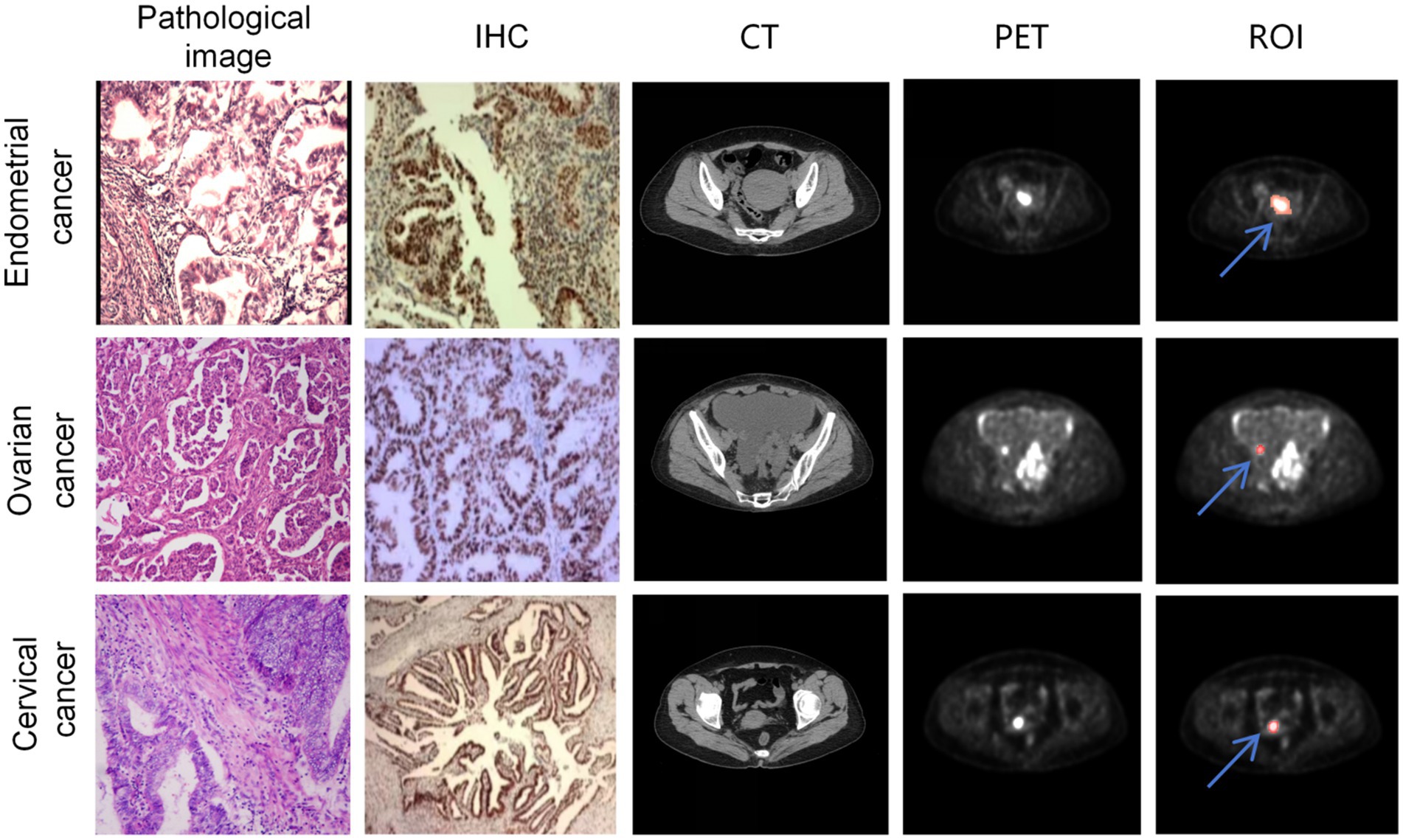

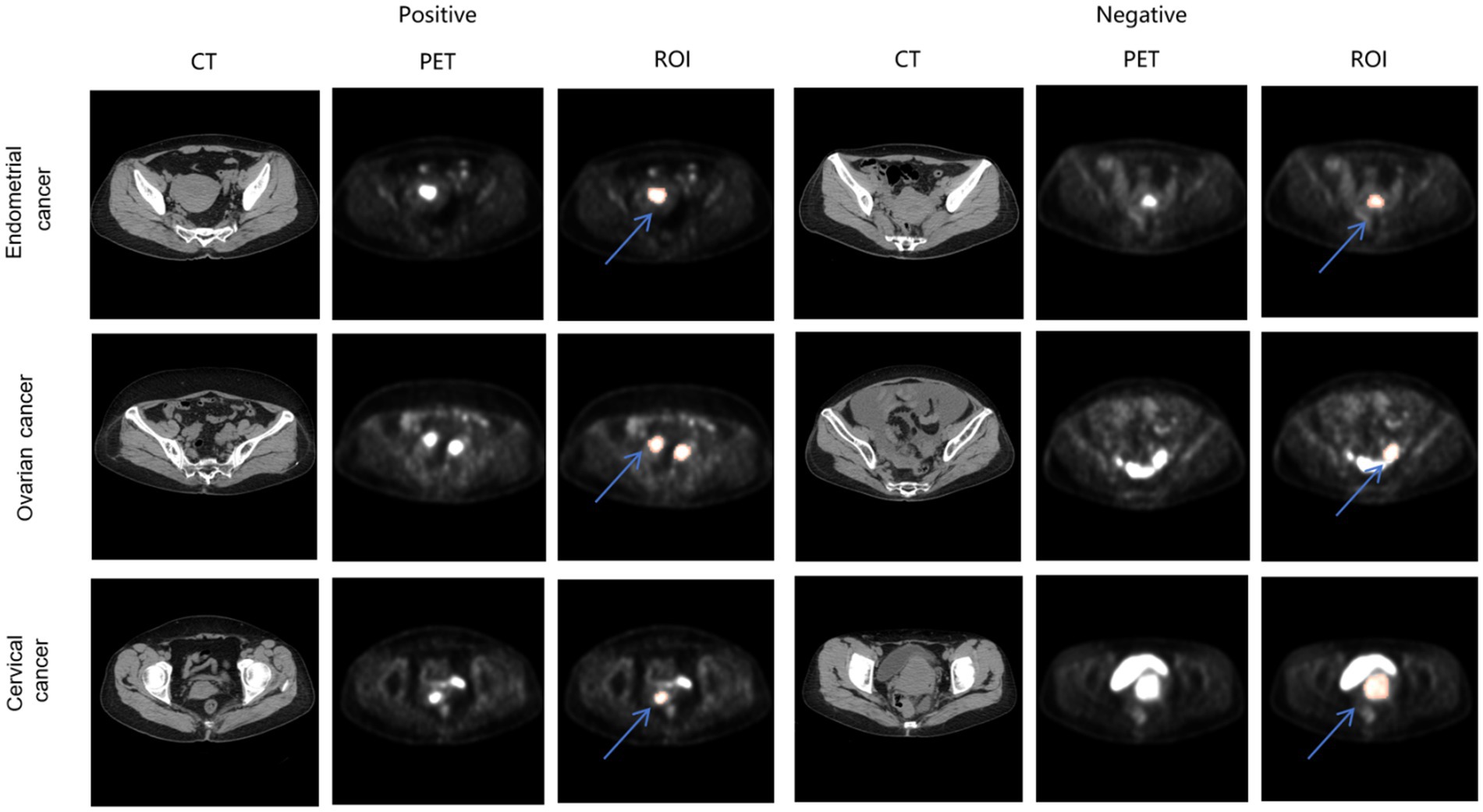

Image annotation was performed manually on the PET images in 3D Slicer by three radiologists, each with over 5 years of experience. The region of interest (ROI) was defined as the tumor area, and disagreements among the radiologists were resolved by consensus. Because PET and CT images were acquired in the same planes, the PET images were resampled to the resolution of the CT images and then cropped to 512 × 512 pixels around the image center. Both CT and PET images were then normalized; representative samples are shown in Figure 2.
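A minimal NumPy sketch of this PET-to-CT preprocessing, assuming 2D axial slices that share the same center and orientation (so that a centered crop aligns them), bilinear interpolation, and per-image z-score normalization. The study does not state its interpolation or normalization method, and the pixel spacings in the example are hypothetical; a production pipeline would resample in physical coordinates with a tool such as SimpleITK.

```python
import numpy as np


def resample_to_spacing(img, src_spacing, dst_spacing):
    """Bilinearly resample a 2D slice from src_spacing to dst_spacing (mm per pixel).

    Assumes both grids start at the same pixel-0 center and cover the same field of view.
    """
    out_shape = [int(round(n * s / d)) for n, s, d in zip(img.shape, src_spacing, dst_spacing)]
    # Continuous source-pixel coordinates of every output pixel.
    r = np.arange(out_shape[0]) * dst_spacing[0] / src_spacing[0]
    c = np.arange(out_shape[1]) * dst_spacing[1] / src_spacing[1]
    rr, cc = np.meshgrid(r, c, indexing="ij")
    r0 = np.clip(np.floor(rr).astype(int), 0, img.shape[0] - 2)
    c0 = np.clip(np.floor(cc).astype(int), 0, img.shape[1] - 2)
    fr = np.clip(rr - r0, 0.0, 1.0)  # clamping repeats the edge value beyond the last pixel
    fc = np.clip(cc - c0, 0.0, 1.0)
    top = img[r0, c0] * (1 - fc) + img[r0, c0 + 1] * fc
    bottom = img[r0 + 1, c0] * (1 - fc) + img[r0 + 1, c0 + 1] * fc
    return top * (1 - fr) + bottom * fr


def center_crop(img, size=512):
    """Crop a 2D slice to size x size around its center, zero-padding if it is smaller."""
    top = max((img.shape[0] - size) // 2, 0)
    left = max((img.shape[1] - size) // 2, 0)
    out = img[top:top + size, left:left + size]
    ph, pw = size - out.shape[0], size - out.shape[1]
    return np.pad(out, ((ph // 2, ph - ph // 2), (pw // 2, pw - pw // 2)))


def zscore(img):
    """Per-image z-score normalization (one common choice, assumed here)."""
    return (img - img.mean()) / (img.std() + 1e-8)


# Hypothetical 192 x 192 PET slice at 3.65 mm mapped onto 0.977 mm CT pixels.
pet = np.random.default_rng(0).random((192, 192))
pet_on_ct = zscore(center_crop(resample_to_spacing(pet, (3.65, 3.65), (0.977, 0.977))))
print(pet_on_ct.shape)
```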

Figure 2. Representative samples, each consisting of a patient's cross-sectional images containing the ROI. The first, second, and third rows show samples from endometrial cancer, ovarian cancer, and cervical cancer, respectively. From left to right, the columns show the H&E-stained pathological image, the p53 immunohistochemistry-stained image, the cross-sectional CT image containing the tumor, the cross-sectional PET image containing the tumor, and the ROI annotated on the PET image (the red region marked by the blue arrow).

Radiomic features were extracted separately from the CT and PET tumor images, yielding 1,781 features per modality. All features were extracted automatically from the ROIs using the PyRadiomics tool. Extraction covered the original images as well as several filtered images: square (Square), square root (SquareRoot), logarithmic (Logarithm), and exponential (Exponential) transformations, Laplacian of Gaussian filtering (LoG), wavelet transform (Wavelet), 3D local binary pattern (LBP3D), and gradient images (Gradient). The extracted features comprised 456 gray level co-occurrence matrix (GLCM) features, 342 first-order statistics features, 304 gray level run length matrix (GLRLM) features, 304 gray level size zone matrix (GLSZM) features, 266 gray level dependence matrix (GLDM) features, 95 neighboring gray tone difference matrix (NGTDM) features, and 14 shape-based features, which together characterize the tumor imaging phenotype from multiple perspectives.
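The reported per-class totals are all multiples of 19 (for example, 456 = 19 × 24 GLCM features), which is consistent with each texture and first-order class being computed on 19 images (the original plus 18 filtered images) and the shape features being computed once from the mask. The sketch below reproduces both the tumor total (1,781) and the BAT total (342) under that assumption. The per-class counts are the PyRadiomics defaults as we understand them, and the split of filtered images (two LoG sigmas, eight wavelet decompositions, three LBP3D outputs) is inferred rather than stated in the study; in practice these image types would be configured on PyRadiomics' `RadiomicsFeatureExtractor`.

```python
# Features per class computed on each image (assumed PyRadiomics default feature sets).
FEATURES_PER_CLASS = {
    "firstorder": 18, "glcm": 24, "glrlm": 16, "glszm": 16, "gldm": 14, "ngtdm": 5,
}
SHAPE_FEATURES = 14  # 3D shape features, computed once from the mask only

# Number of images produced per image type (assumed: 2 LoG sigmas, 8 wavelet bands, 3 LBP3D maps).
IMAGE_TYPES = {
    "Original": 1, "Square": 1, "SquareRoot": 1, "Logarithm": 1, "Exponential": 1,
    "Gradient": 1, "LoG": 2, "Wavelet": 8, "LBP3D": 3,
}


def feature_count(classes, include_shape):
    """Total features = images x features per image (+ shape features if enabled)."""
    n_images = sum(IMAGE_TYPES.values())
    per_image = sum(FEATURES_PER_CLASS[c] for c in classes)
    return n_images * per_image + (SHAPE_FEATURES if include_shape else 0)


tumor_total = feature_count(FEATURES_PER_CLASS, include_shape=True)  # all classes + shape
bat_total = feature_count(["firstorder"], include_shape=False)        # first-order only (Section 2.4)
print(tumor_total, bat_total)
```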

To improve the clinical usability of the diagnostic system, we developed a cross-modality gynecologic tumor segmentation model from the annotated ROIs using the nnU-Net framework (28). nnU-Net is a self-configuring segmentation method that automatically sets the network architecture, preprocessing, training, and post-processing pipelines according to the characteristics of the dataset. We used the 3D full-resolution U-Net configuration and trained it for 100 epochs, saving the model weights with the best validation Dice score for each fold. At test time, nnU-Net applied sliding-window inference with test-time augmentation (TTA) to improve prediction stability, and the final results were obtained by ensembling the predictions of the five fold models.
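nnU-Net performs checkpoint selection, test-time augmentation, and fold ensembling internally. To make these three ideas concrete, the sketch below shows a Dice score, mirroring-based TTA, and a five-fold ensemble that averages foreground probabilities before thresholding. It is a simplified illustration, not nnU-Net's code: sliding-window inference is omitted, and the voxel-wise "fold models" in the example are hypothetical stand-ins for trained networks.

```python
import numpy as np


def dice(pred, truth, eps=1e-8):
    """Dice similarity coefficient between two binary masks."""
    pred, truth = pred.astype(bool), truth.astype(bool)
    inter = np.logical_and(pred, truth).sum()
    return (2.0 * inter + eps) / (pred.sum() + truth.sum() + eps)


def mirror_tta(predict, volume):
    """Average foreground probabilities over the original volume and its flips along each axis."""
    probs = [predict(volume)]
    for axis in range(volume.ndim):
        flipped = np.flip(volume, axis=axis)
        probs.append(np.flip(predict(flipped), axis=axis))  # flip the prediction back
    return np.mean(probs, axis=0)


def ensemble_predict(fold_predictors, volume, threshold=0.5):
    """Average the TTA probabilities of all fold models, then threshold to a mask."""
    mean_prob = np.mean([mirror_tta(p, volume) for p in fold_predictors], axis=0)
    return mean_prob >= threshold


rng = np.random.default_rng(0)
volume = rng.normal(size=(8, 16, 16))
truth = volume > 0.0
# Hypothetical "fold models": voxel-wise sigmoids with slightly different offsets.
folds = [lambda v, t=t: 1.0 / (1.0 + np.exp(-4.0 * (v - t))) for t in (-0.1, -0.05, 0.0, 0.05, 0.1)]
mask = ensemble_predict(folds, volume)
print(f"Dice vs. reference: {dice(mask, truth):.3f}")
```

Averaging probabilities rather than voting on binary masks lets confident folds outweigh uncertain ones near the tumor boundary.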

2.4 Identification and radiomics feature extraction of brown adipose tissue

We detected brown adipose tissue (BAT) activated at normal ambient temperature on PET/CT images. Regions with a standardized uptake value (SUV) greater than 1.5 on PET and a CT value between −190 HU and −10 HU were identified as brown adipose tissue and annotated accordingly. Radiomic features were extracted separately from the BAT regions on the CT and PET images, yielding 342 features per modality. All features were extracted automatically from the ROIs using PyRadiomics, covering the original images and various filtered images: square, square root, logarithm, and exponential transforms, Laplacian of Gaussian (LoG), wavelet transform, 3D local binary pattern (LBP3D), and gradient images. For brown adipose tissue, only first-order statistical features were extracted. These quantify the basic imaging properties of the fat regions through simple, intuitive intensity distribution metrics such as mean gray level, intensity range, and dispersion, which reflect the overall density and uniformity of the adipose tissue and facilitate the assessment of metabolic or structural differences while avoiding the complexity and computational burden of higher-order radiomic features.
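The BAT definition above combines a PET uptake threshold with a CT fat window. The sketch below computes body-weight SUV, builds the BAT mask, and derives a few first-order statistics from the masked voxels. The SUV formula assumes the dose is decay-corrected to the image reference time and a tissue density of 1 g/mL; the statistics are a simplified re-implementation in the spirit of PyRadiomics rather than its exact output, and all voxel values are hypothetical.

```python
import numpy as np


def suv_bw(activity_bq_per_ml, weight_kg, dose_bq):
    """Body-weight SUV = concentration x body weight (g) / injected dose.

    Assumes dose_bq is decay-corrected to the image reference time and 1 g/mL tissue density.
    """
    return activity_bq_per_ml * (weight_kg * 1000.0) / dose_bq


def bat_mask(suv, hu, suv_min=1.5, hu_range=(-190.0, -10.0)):
    """Voxels with SUV > 1.5 whose CT value lies in the fat window [-190, -10] HU."""
    return (suv > suv_min) & (hu >= hu_range[0]) & (hu <= hu_range[1])


def first_order(values):
    """A few first-order statistics (simplified; not PyRadiomics' exact implementation)."""
    x = np.asarray(values, dtype=float)
    mean = x.mean()
    var = ((x - mean) ** 2).mean()  # population variance
    std = np.sqrt(var)
    return {
        "Mean": mean,
        "Minimum": x.min(),
        "Maximum": x.max(),
        "Range": x.max() - x.min(),
        "Variance": var,
        "Energy": float((x ** 2).sum()),
        "Skewness": ((x - mean) ** 3).mean() / std ** 3 if std > 0 else 0.0,
        "Kurtosis": ((x - mean) ** 4).mean() / var ** 2 if var > 0 else 0.0,
    }


# Hypothetical co-registered PET (Bq/mL) and CT (HU) voxel values.
activity = np.array([12000.0, 3000.0, 15000.0, 9000.0, 8000.0])
hu = np.array([-80.0, -100.0, 40.0, -250.0, -60.0])
suv = suv_bw(activity, weight_kg=60.0, dose_bq=300e6)
mask = bat_mask(suv, hu)
pet_stats = first_order(suv[mask])  # PET first-order features of the BAT region
ct_stats = first_order(hu[mask])    # CT first-order features of the BAT region
```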

2.5 Deep learning-based TP53 classification model

As shown in Figure 3, we designed a multimodal feature modeling framework based on the Transformer architecture to predict TP53 mutation status in three types of gynecologic tumors: cervical cancer, ovarian cancer, and endometrial cancer. The dataset was split into training and test sets at a ratio of 9:1 so that model training and evaluation were independent. Within the training set, five-fold cross-validation was used to optimize hyperparameters and to assess the model's stability and generalization: each fold's model was trained on the remaining four folds and validated on the current fold, and each resulting model was then evaluated on the test set. This design makes efficient use of the limited sample size and improves the model's robustness in real-world applications. We modeled radiomic features from tumor CT images, tumor PET images, and the CT and PET images of brown adipose tissue to evaluate the predictive power of each for TP53 mutation. For the combined model, we additionally integrated pathological information reflecting patients' biological characteristics, such as lymph node metastasis, tumor grade, and stage. Feeding these heterogeneous multimodal inputs jointly into the Transformer encoder allows the model to learn latent relationships among modalities, improving its ability to discriminate TP53 mutation status.
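The following NumPy sketch illustrates two parts of this design under stated assumptions: a class-stratified 9:1 hold-out with five cross-validation folds on the remainder, and a single untrained Transformer encoder layer that turns each modality (tumor CT, tumor PET, BAT CT, BAT PET, and clinical variables) into one token, prepends a [CLS] token, and applies self-attention. The layer sizes, single attention head, [CLS] pooling, and input dimensions are hypothetical choices rather than the study's architecture, and training is omitted.

```python
import numpy as np


def stratified_holdout_folds(labels, test_frac=0.1, n_folds=5, seed=0):
    """Hold out ~test_frac of each class as a test set; deal the rest into n_folds folds."""
    rng = np.random.default_rng(seed)
    test_idx, fold_ids = [], {}
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = int(round(test_frac * len(idx)))
        test_idx.extend(idx[:n_test].tolist())
        for k, i in enumerate(idx[n_test:]):
            fold_ids[int(i)] = k % n_folds  # round-robin keeps folds class-balanced
    return np.array(sorted(test_idx)), fold_ids


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def layer_norm(x, eps=1e-5):
    return (x - x.mean(-1, keepdims=True)) / np.sqrt(x.var(-1, keepdims=True) + eps)


class ModalityTokenEncoder:
    """One untrained, single-head Transformer encoder layer over one token per modality."""

    def __init__(self, input_dims, d_model=32, seed=0):
        rng = np.random.default_rng(seed)

        def init(n_in, n_out):
            return rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_out))

        self.proj = [init(n, d_model) for n in input_dims]  # modality features -> token
        self.cls = rng.normal(0.0, 0.02, d_model)           # would be learned in practice
        self.wq, self.wk, self.wv = (init(d_model, d_model) for _ in range(3))
        self.w1, self.w2 = init(d_model, 2 * d_model), init(2 * d_model, d_model)
        self.w_out = rng.normal(0.0, 1.0 / np.sqrt(d_model), d_model)

    def forward(self, modalities):
        tokens = [x @ p for x, p in zip(modalities, self.proj)]         # each (batch, d)
        batch = tokens[0].shape[0]
        h = np.stack([np.tile(self.cls, (batch, 1))] + tokens, axis=1)  # (batch, 1 + M, d)
        q, k, v = h @ self.wq, h @ self.wk, h @ self.wv
        attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1]))  # token-to-token weights
        h = layer_norm(h + attn @ v)                                     # attention + residual
        h = layer_norm(h + np.maximum(h @ self.w1, 0.0) @ self.w2)       # feed-forward + residual
        deep = h[:, 0]                                                    # [CLS] deep feature
        prob = 1.0 / (1.0 + np.exp(-(deep @ self.w_out)))               # P(TP53 mutant)
        return prob, deep


rng = np.random.default_rng(1)
labels = rng.integers(0, 2, size=259)           # hypothetical TP53 labels
test_idx, fold_ids = stratified_holdout_folds(labels)
dims = [1781, 1781, 342, 342, 3]                # tumor CT/PET, BAT CT/PET, clinical (assumed)
model = ModalityTokenEncoder(dims)
prob, deep = model.forward([rng.normal(size=(4, d)) for d in dims])
```

Treating each modality as a token lets attention weight modalities differently per patient, and the [CLS] output doubles as the deep feature vector used for clustering.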

2.6 Feature clustering analysis

Using the trained deep learning model, we extracted a deep feature vector for every sample and clustered these patient feature vectors with agglomerative hierarchical clustering (29). Clustering quality was evaluated with the Davies-Bouldin Index (DBI), which determined the optimal number of clusters and hence the clustering pattern of image features (30). Given the characteristics of agglomerative clustering and the requirements of the subsequent correlation analysis, the number of clusters was limited to 2–9.
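A self-contained sketch of the cluster-number selection, assuming average linkage and Euclidean distance (the study does not name its linkage criterion). It builds the merge tree once, cuts it at every k from 2 to 9, and keeps the k with the lowest Davies-Bouldin index. In practice, scikit-learn's `AgglomerativeClustering` and `davies_bouldin_score` provide the same functionality; the synthetic three-group data here is hypothetical.

```python
import numpy as np


def average_linkage_cuts(X, ks):
    """Agglomerative clustering with average linkage; returns {k: labels} for every k in ks."""
    n = len(X)
    D = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(D, np.inf)
    members = {i: [i] for i in range(n)}
    active = list(range(n))
    cuts = {}
    while len(active) > min(ks):
        sub = D[np.ix_(active, active)]
        ia, ib = np.unravel_index(np.argmin(sub), sub.shape)
        a, b = active[ia], active[ib]
        na, nb = len(members[a]), len(members[b])
        # Lance-Williams update: mean pairwise distance from the merged cluster to the others.
        D[a, :] = (na * D[a, :] + nb * D[b, :]) / (na + nb)
        D[:, a] = D[a, :]
        D[a, a] = np.inf
        members[a] += members.pop(b)
        active.remove(b)
        if len(active) in ks:
            labels = np.empty(n, dtype=int)
            for k, c in enumerate(active):
                labels[members[c]] = k
            cuts[len(active)] = labels
    return cuts


def davies_bouldin(X, labels):
    """Mean over clusters of the worst (s_i + s_j) / d(c_i, c_j); lower is better."""
    ids = np.unique(labels)
    cents = np.array([X[labels == k].mean(axis=0) for k in ids])
    scatter = np.array([np.linalg.norm(X[labels == k] - c, axis=1).mean() for k, c in zip(ids, cents)])
    sep = np.linalg.norm(cents[:, None, :] - cents[None, :, :], axis=-1)
    np.fill_diagonal(sep, np.inf)  # excludes i == j from the max
    ratio = (scatter[:, None] + scatter[None, :]) / sep
    return float(ratio.max(axis=1).mean())


def choose_n_clusters(X, ks=range(2, 10)):
    cuts = average_linkage_cuts(X, ks)
    scores = {k: davies_bouldin(X, cuts[k]) for k in ks}
    return min(scores, key=scores.get), scores


rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
X = np.vstack([c + rng.normal(scale=0.5, size=(20, 2)) for c in centers])  # hypothetical features
best_k, scores = choose_n_clusters(X)
print(best_k, {k: round(v, 3) for k, v in scores.items()})
```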

2.7 Statistical analysis

We evaluated the accuracy of the deep learning models in predicting TP53 mutation status and additionally computed precision, specificity, sensitivity (recall), and F1-score.
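For reference, these metrics follow directly from a 2 × 2 confusion matrix. The counts in the example below are hypothetical; the last call checks that the combined model's reported test F1-score (0.8750) is the harmonic mean of its reported precision (0.8235) and recall (0.9333).

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, sensitivity (recall), specificity and F1 from a 2x2 confusion matrix."""
    def ratio(num, den):
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)  # sensitivity
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "sensitivity": recall,
        "specificity": ratio(tn, tn + fp),
        "f1": ratio(2 * precision * recall, precision + recall),
    }


def f1_from(precision, recall):
    """F1-score is the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


m = classification_metrics(tp=8, fp=2, tn=12, fn=3)  # hypothetical counts
print(m)
print(round(f1_from(0.8235, 0.9333), 4))  # reported combined-model test precision and recall
```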

Statistical analysis was performed using SPSS 26.0. Normally distributed parametric data were analyzed with t-tests or analysis of variance, and non-normally distributed data with the Kruskal-Wallis H test. Categorical data were analyzed with the chi-square test, and Pearson correlation analysis was also used. Pairwise comparisons between groups were performed with the Mann–Whitney U rank-sum test. p < 0.05 was considered statistically significant. We separately assessed the association of cluster assignment with TP53 mutation status and with cancer type.
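For the 3 × 2 case relevant here (three image clusters by TP53 status), the chi-square test of independence has (3 − 1)(2 − 1) = 2 degrees of freedom, and its p-value has the closed form exp(−χ²/2). The sketch below uses that fact to avoid a statistics dependency; the counts are hypothetical, and a general r × c table would use a full implementation such as `scipy.stats.chi2_contingency`.

```python
import math

import numpy as np


def chi2_independence_3x2(table):
    """Pearson chi-square test of independence for a 3 x 2 table (e.g. cluster x TP53 status).

    With 2 degrees of freedom the chi-square survival function is exp(-x / 2).
    """
    obs = np.asarray(table, dtype=float)
    if obs.shape != (3, 2):
        raise ValueError("this sketch handles 3 x 2 tables only")
    # Expected counts under independence: row total x column total / grand total.
    expected = obs.sum(axis=1, keepdims=True) * obs.sum(axis=0, keepdims=True) / obs.sum()
    stat = float(((obs - expected) ** 2 / expected).sum())
    return stat, math.exp(-stat / 2.0)


# Hypothetical counts: rows = image clusters 1-3, columns = (TP53 wild-type, TP53 mutant).
stat, p = chi2_independence_3x2([[20, 5], [5, 20], [10, 10]])
print(f"chi2 = {stat:.2f}, p = {p:.2e}")
```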

3 Results

3.1 Baseline characteristics of patients

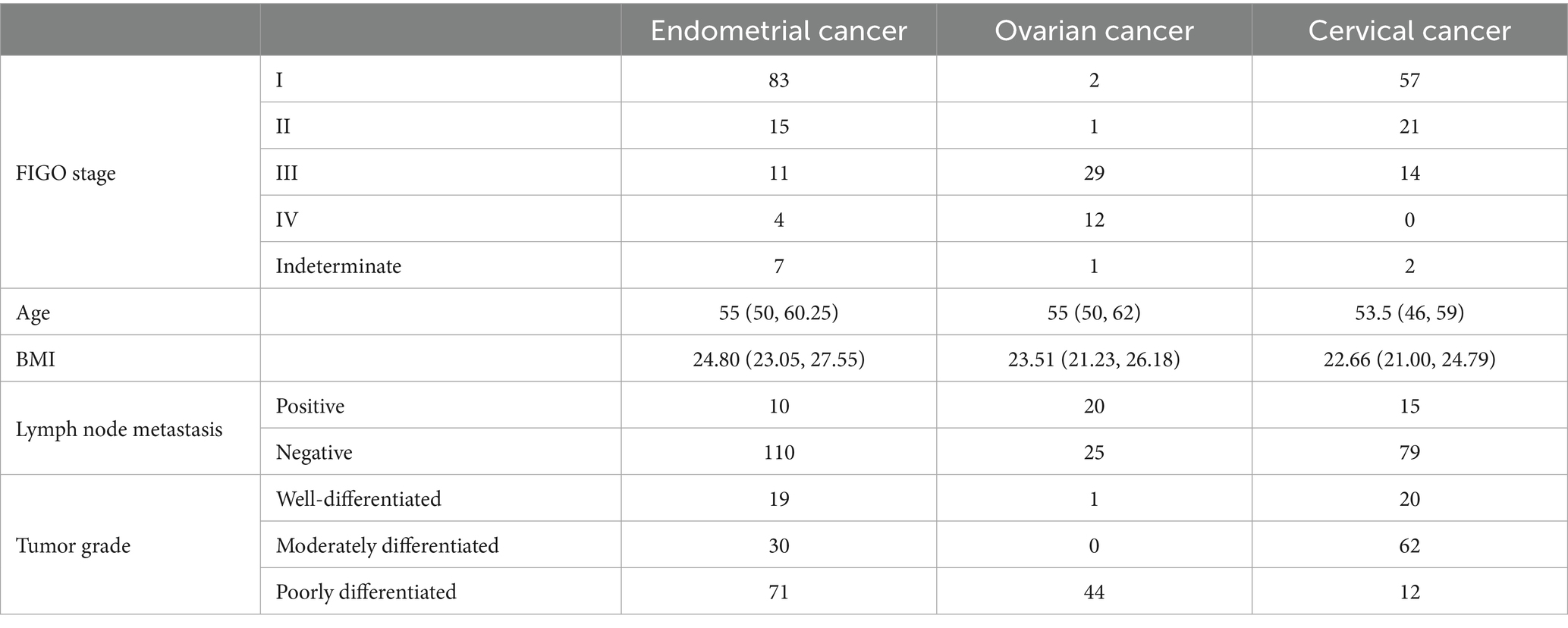

A total of 259 patients with endometrial, ovarian, or cervical cancer were included in this study. Their baseline characteristics, including age, cancer type, TP53 mutation status, BMI, lymph node metastasis, and tumor grade, are shown in Table 1.

PET/CT images of the three tumor types with different TP53 statuses are shown in Figure 4.

Figure 4. PET/CT images of endometrial cancer, cervical cancer, ovarian cancer in different TP53 states. The red region indicated by the blue arrow represents the annotated ROIs.

3.2 Correlation analysis between radiomics features and TP53 status

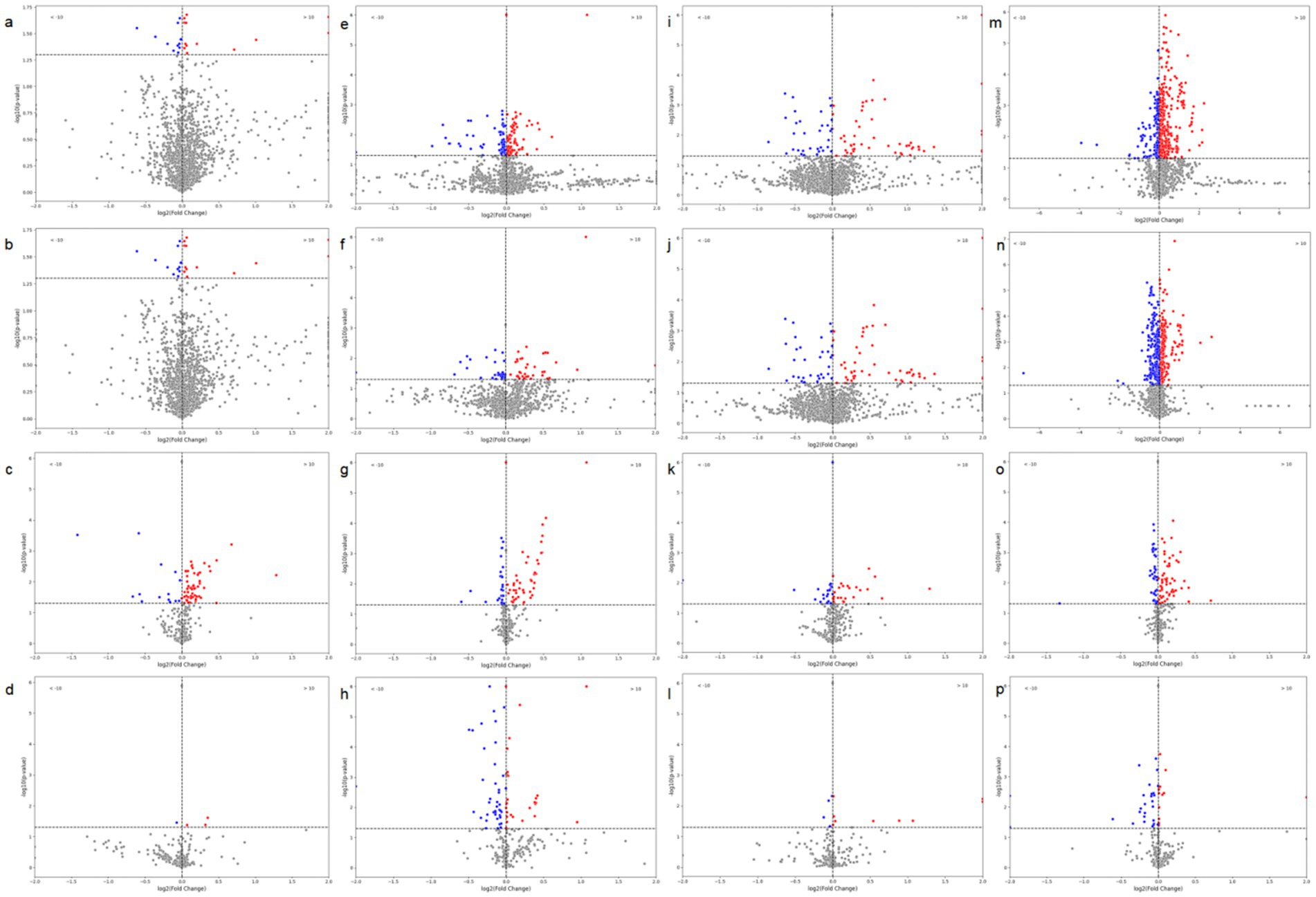

We first performed a differential analysis of radiomic features between TP53-negative and TP53-positive patients, calculating the statistical significance (p-value) and effect size of each feature. To explore whether the association between radiomic features and TP53 mutation status differs across tumor types, we conducted this analysis separately in patients with cervical, ovarian, and endometrial cancer (Figure 5, panels a–l). Volcano plots visualize the p-value and fold change of each radiomic feature between TP53 statuses for each tumor type, allowing their behavior to be compared across tumor types.
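The volcano-plot coordinates can be sketched as follows. This is an illustration under assumptions: the study does not state which test produced its p-values, so the Mann–Whitney U test listed in Section 2.7 is used here with a normal approximation and no tie correction, and features are min-max scaled before the fold change is computed so that it is defined for negative radiomic values. `scipy.stats.mannwhitneyu` offers exact and tie-corrected versions; the data are hypothetical.

```python
import math

import numpy as np


def mann_whitney_p(x, y):
    """Two-sided Mann-Whitney U p-value via the normal approximation (no tie/continuity correction)."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    n1, n2 = len(x), len(y)
    # U counts pairs where x exceeds y; ties count one half.
    u = (x[:, None] > y[None, :]).sum() + 0.5 * (x[:, None] == y[None, :]).sum()
    mu = n1 * n2 / 2.0
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (u - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2.0))


def volcano_points(features, tp53, eps=1e-6):
    """Per-feature (log2 fold change, -log10 p) for TP53-positive vs TP53-negative patients.

    Features are min-max scaled to [0, 1] first (an assumption, not the study's stated method).
    """
    f = np.asarray(features, float)
    span = f.max(axis=0) - f.min(axis=0)
    f = (f - f.min(axis=0)) / np.where(span > 0, span, 1.0)
    pos, neg = f[tp53 == 1], f[tp53 == 0]
    log2_fc = np.log2((pos.mean(axis=0) + eps) / (neg.mean(axis=0) + eps))
    pvals = np.array([mann_whitney_p(pos[:, j], neg[:, j]) for j in range(f.shape[1])])
    return log2_fc, -np.log10(pvals)


# Hypothetical data: feature 0 is shifted in TP53-positive patients, feature 1 is not.
tp53 = np.array([0] * 10 + [1] * 10)
shifted = np.concatenate([np.arange(10.0), np.arange(10.0) + 10.0])
flat = np.concatenate([np.arange(10.0), np.arange(10.0)])
fc, neglogp = volcano_points(np.column_stack([shifted, flat]), tp53)
```

Features that land far from zero on both axes are the candidates highlighted in volcano plots such as those in Figure 5.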

Figure 5. Volcano plots of radiomic features based on tumor images and brown adipose tissue (BAT) in relation to TP53. (a) CT-based radiomic features of tumors in ovarian cancer patients; (b) PET-based radiomic features of tumors in ovarian cancer patients; (c) CT-based radiomic features of BAT in ovarian cancer patients; (d) PET-based radiomic features of BAT in ovarian cancer patients; (e) CT-based radiomic features of tumors in endometrial cancer patients; (f) PET-based radiomic features of tumors in endometrial cancer patients; (g) CT-based radiomic features of BAT in endometrial cancer patients; (h) PET-based radiomic features of BAT in endometrial cancer patients; (i) CT-based radiomic features of tumors in cervical cancer patients; (j) PET-based radiomic features of tumors in cervical cancer patients; (k) CT-based radiomic features of BAT in cervical cancer patients; (l) PET-based radiomic features of BAT in cervical cancer patients; (m) CT-based radiomic features of tumors in all three cancer types; (n) PET-based radiomic features of tumors in all three cancer types; (o) CT-based radiomic features of BAT in all three cancer types; (p) PET-based radiomic features of BAT in all three cancer types.

Although the TP53-related radiomic features varied somewhat among the three tumor types, significant differences in tumor structural features (CT radiomic features) and metabolic features (PET radiomic features) between the TP53-negative and TP53-positive groups remained when cervical, ovarian, and endometrial cancer data were pooled for a unified analysis (Figure 5, panels m–p). The structural (CT) and metabolic (PET) features of patients' brown adipose tissue were also closely associated with TP53 mutation status.

3.3 Model performance evaluation

For comparison, we constructed four models from four data sources: tumor CT radiomic features, tumor PET radiomic features, brown adipose tissue CT radiomic features, and brown adipose tissue PET radiomic features. Each model was evaluated using five-fold cross-validation. We then combined all four feature types with pathological features in a unified model. Finally, we tested this model on tumors annotated automatically by our nnU-Net-based segmentation model.

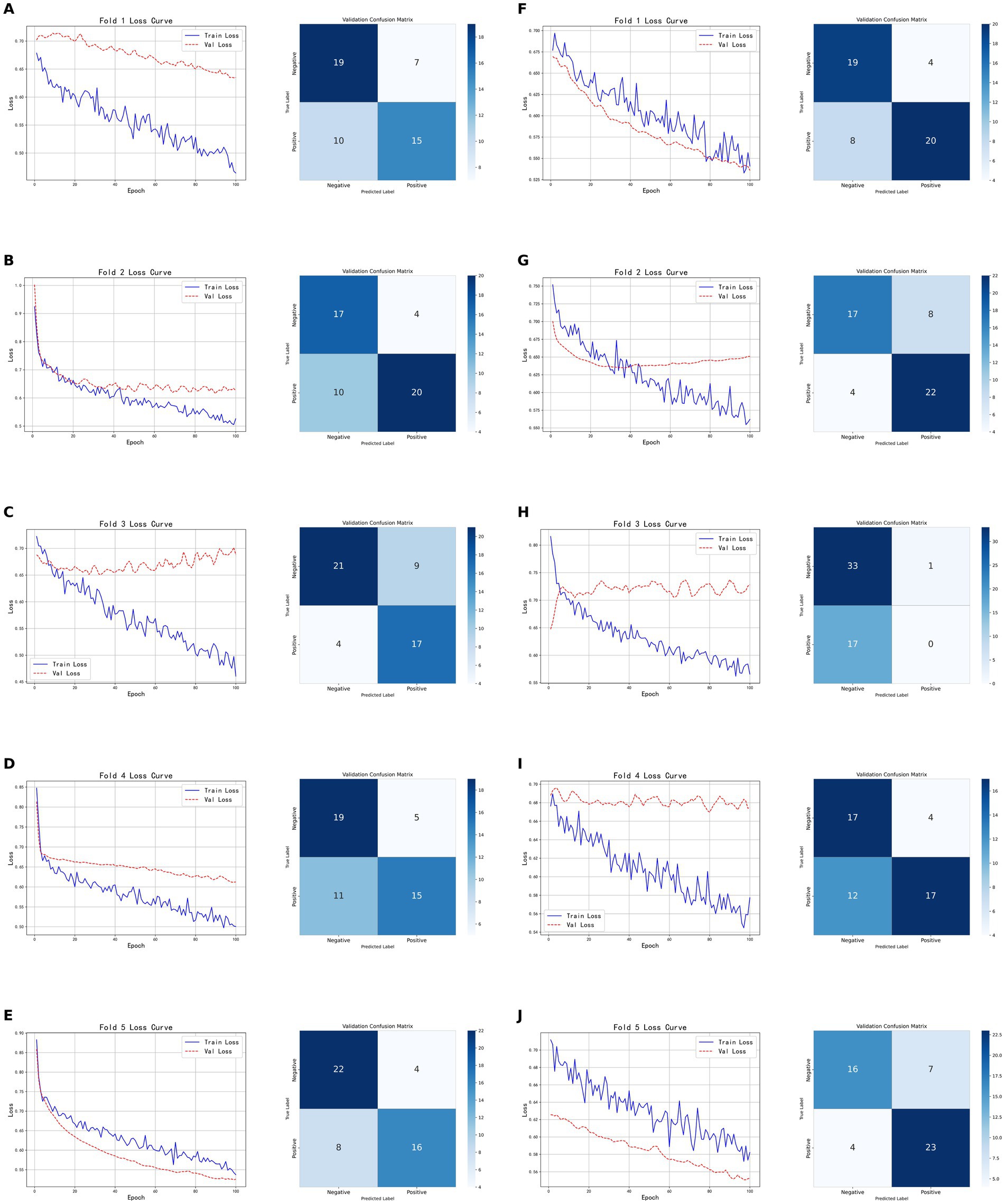

3.3.1 Model based on tumor radiomic features

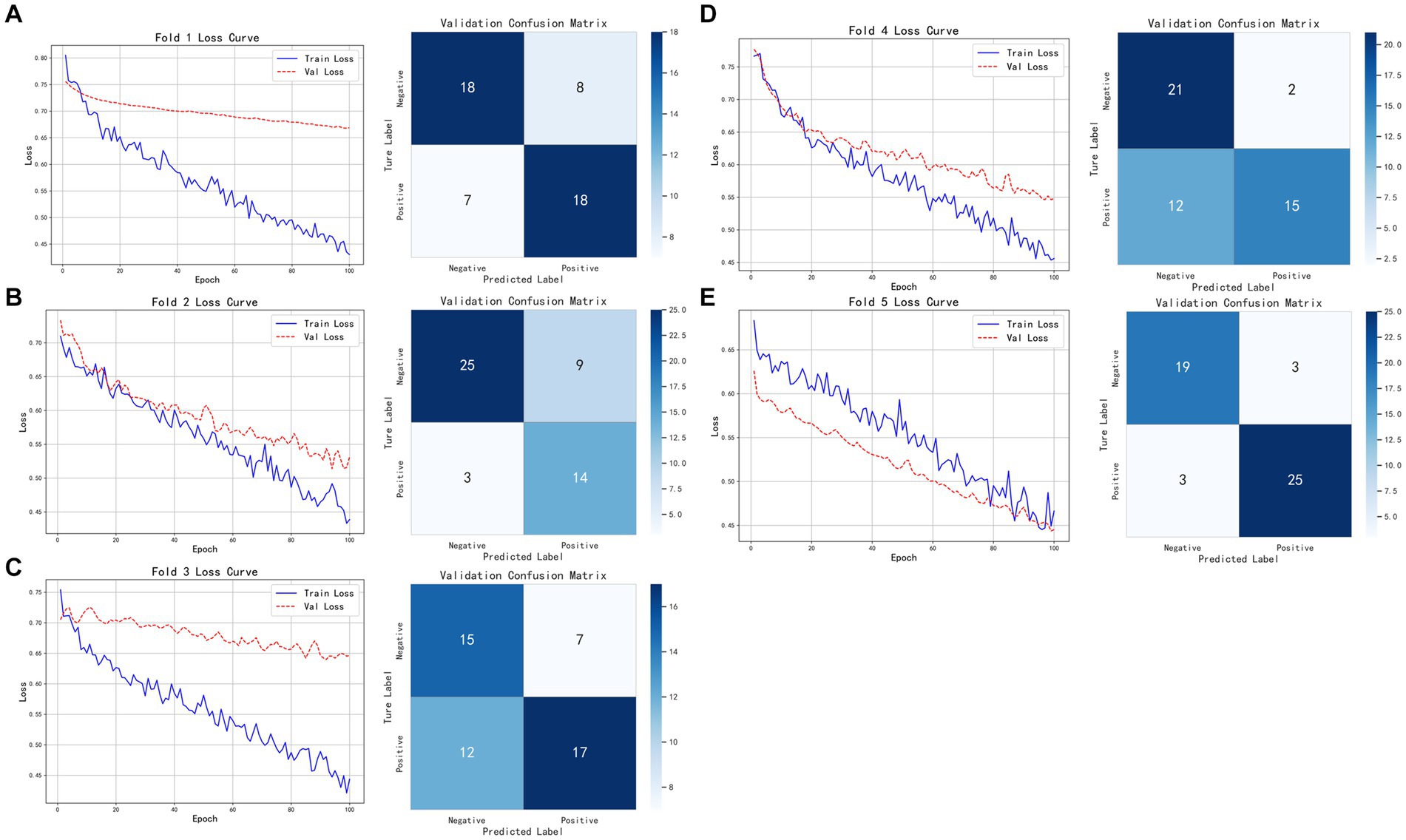

As shown in Figure 6, both the CT-based and the PET-based tumor models showed variable performance across the five folds. The CT radiomic model achieved an accuracy of 0.7600 on the validation set, with a precision of 0.8000, recall of 0.6667, and F1-score of 0.7273; on the test set, it achieved an accuracy of 0.7931, precision of 0.8462, recall of 0.7333, and F1-score of 0.7857. The PET radiomic model performed better on the validation set, with an accuracy of 0.7800, precision of 0.7667, recall of 0.8519, and F1-score of 0.8070, and also on the test set, with an accuracy of 0.8276, precision of 0.8462, recall of 0.7857, and F1-score of 0.8148. Overall, the PET model outperformed the CT model in accuracy, recall, and F1-score, indicating a better ability to identify positive samples, although its validation precision was lower.

Figure 6. Five-fold cross-validation results of classification models based on tumor radiomic features. (A–E) Show the training and validation loss curves, as well as the confusion matrices on the validation sets, for each fold of the CT-based radiomic model. (F–J) Show the training and validation loss curves for each fold of the PET-based radiomic models, as well as the corresponding confusion matrices on the validation sets.

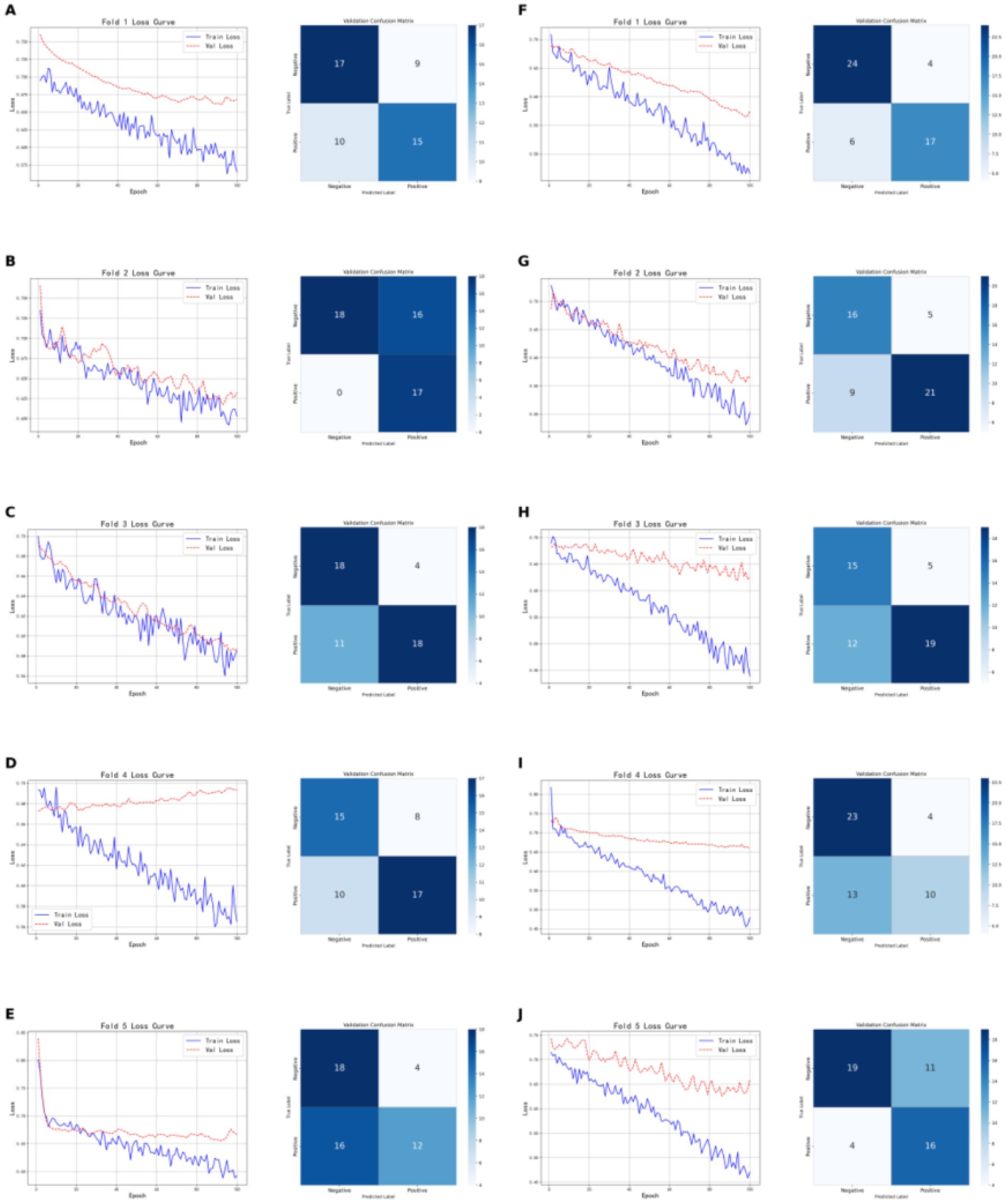

3.3.2 Models based on brown adipose tissue radiomic features

As shown in Figure 7, the models based on brown adipose tissue were more stable across the five folds than the tumor-based models, for both CT and PET. The CT radiomic model achieved an accuracy of 0.7059, precision of 0.8182, recall of 0.6207, and F1-score of 0.7059 on the validation set; on the test set, accuracy was 0.7241, precision and recall were both 0.7333, and the F1-score was 0.7333. The PET radiomic model performed better on the validation set, with an accuracy of 0.8040, precision of 0.8095, recall of 0.7391, and F1-score of 0.7727; on the test set, it achieved an accuracy of 0.7931, precision of 0.8333, recall of 0.7143, and F1-score of 0.7692. Overall, the PET model outperformed the CT model on most metrics (its validation precision and test recall were slightly lower), with clear advantages in accuracy and F1-score on the validation set, indicating better overall performance and stability in classification based on brown adipose tissue features.

Figure 7. Five-fold cross-validation results of classification models based on brown adipose tissue radiomic features. (A–E) Training and validation loss curves for each of the five folds of the CT-based radiomic model, with the confusion matrices on the validation sets. (F–J) Training and validation loss curves for each fold of the PET-based radiomic model, with the corresponding confusion matrices on the validation sets.

3.3.3 Evaluation of the combined model

As shown in Figure 8, the combined model performed relatively consistently across the five folds, and its training and validation losses closely tracked each other, indicating no marked overfitting. On the validation set, it achieved an accuracy of 0.8800, with precision, recall, and F1-score all at 0.8929. On the test set, it achieved an accuracy of 0.8620, precision of 0.8235, recall of 0.9333, and F1-score of 0.8750; the high recall shows that it identifies positive samples particularly well while maintaining high accuracy. Overall, the combined model shows good generalization and robust classification performance.

Figure 8. Five-fold cross-validation results of the classification model based on tumor imaging radiomic features, brown adipose tissue imaging radiomic features, and pathological features. (A–E) Show the training and validation loss curves for each of the five folds.

We then used nnU-Net to annotate tumors automatically and tested the classification model on these annotations. On the test set, the model achieved an accuracy of 0.8000, precision of 0.7885, recall of 0.8200, and F1-score of 0.8040. All four metrics were lower than with manual annotation, most notably recall (0.8200 vs. 0.9333), but precision and recall remained well balanced. The model using nnU-Net automatic annotations therefore retains good classification capability and robustness.

3.4 Feature clustering and analysis

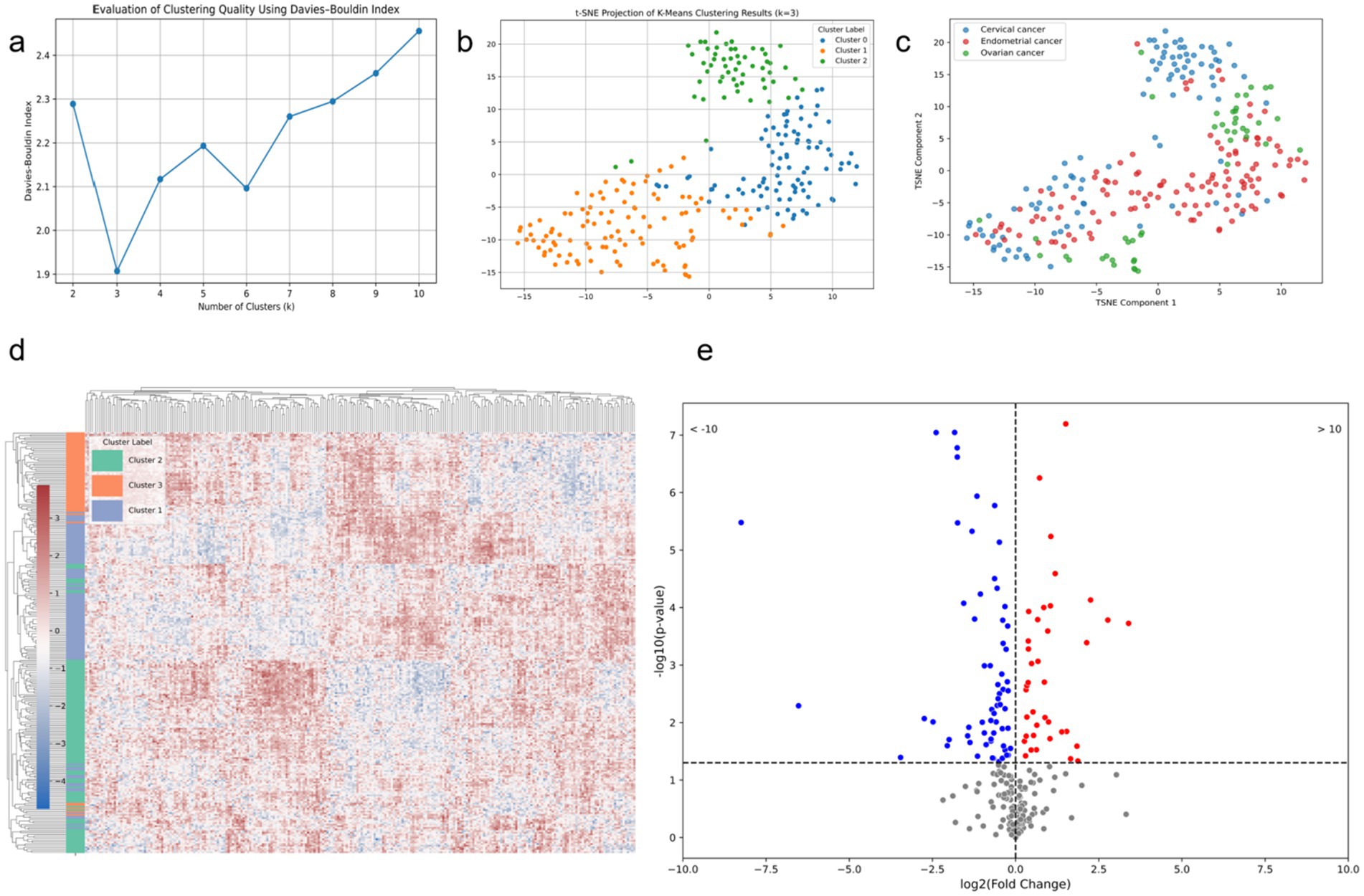

After applying t-SNE dimensionality reduction to the features extracted by the deep learning classification model, we performed unsupervised clustering analysis (Figure 9a). The DBI reached its minimum at three clusters and was markedly lower than for other cluster numbers, indicating three TP53-related image patterns. The t-SNE clustering results for these patterns are shown in Figures 9b, c. Correlation analysis revealed a significant association between the image clustering patterns and TP53 mutation status (p = 0.001), suggesting that the model captured TP53-related features shared across cancer types. As shown in Figure 9d, the clustering results after t-SNE reduction were nearly identical to those before reduction, indicating that the deep features already had strong discriminative ability in the original feature space. Figure 9e shows that a considerable number of these deep features are directly associated with TP53 mutation status.

Figure 9. Modeling results based on deep features extracted from the combined model. (a) DBI corresponding to different numbers of clusters for the deep learning features; (b) t-SNE scatter plot with the number of clusters set to 3; (c) t-SNE scatter plot of different tumor types; (d) heatmap of deep features for the three clusters based on t-SNE; (e) volcano plot of deep features and TP53 mutation status.

4 Discussion

TP53 mutations are prevalent in gynecological tumors and have a significant impact on patient prognosis (31). Studies have shown that TP53 mutation status in gynecological tumors can be predicted from data of various modalities (32, 33). In this study, we demonstrated that TP53 mutation status correlates with tumor CT and PET features and with brown adipose tissue CT and PET features in patients with gynecological tumors. On this basis, we predicted TP53 status separately from each of these four feature sets and found that the PET-based models performed better. We then developed a combined prediction model and a fast nnU-Net-based delineation method, both of which performed well on the test set. Finally, analysis of the deep features extracted by the combined model further supported that the model captured TP53-related imaging features across modalities and cancer types.

Our study demonstrates a significant association between TP53 mutation status and various radiomic features that holds across tumor types. Although radiomic features respond to TP53 mutations somewhat differently in cervical, ovarian, and endometrial cancers, integrative analysis revealed an imaging feature pattern shared across these cancer types. This shared pattern likely reflects TP53's role as a key tumor suppressor gene that regulates common biological processes, such as cell cycle control, DNA repair, and metabolic reprogramming, across gynecological tumors, and thereby manifests as recognizable features in structural and metabolic scans (34). Brown adipose tissue (BAT) plays a critical role in metabolism and has shown potential when combined with deep learning approaches (35, 36). BAT has been linked to tumor mutation characteristics and is considered directly related to tumor activity (37–39). In our study, the CT and PET features of patients' brown adipose tissue were also significantly associated with TP53 mutation status. Previous studies have shown that browning of subcutaneous adipose tissue can contribute to weight loss and cancer-associated cachexia. Additionally, thermogenic adipocytes locally activated within the tumor microenvironment may accelerate cancer progression by supplying energy and potentially inducing chemotherapy resistance (40). Our findings suggest that the impact of TP53 mutations may extend beyond the local tumor tissue: given the central role of brown adipose tissue in energy metabolism and thermoregulation, TP53 mutations might systemically influence the metabolic function and status of adipose tissue, leaving distinctive metabolic signatures at the fat tissue level.

In this study, the model built on tumor PET radiomic features outperformed the CT-based model in accuracy, recall, and F1-score, suggesting that PET imaging better reflects tumor TP53 characteristics. Similarly, for brown adipose tissue radiomic features, the PET model was more stable and performed better overall than the CT model, with notably higher accuracy and F1-score on the validation set. This suggests that the metabolic information of brown adipose tissue is more informative than its structural information for TP53-related features. The multimodal fusion model combining tumor and brown adipose tissue imaging features with pathological features further improved classification accuracy and robustness, performing consistently well on both the validation and test sets. Notably, its high recall enables more effective identification of positive samples and indicates good generalization. With tumors annotated by the nnU-Net-based automatic segmentation model, the classification model performed somewhat worse than with manual annotation but maintained a good balance among accuracy, precision, and recall. This suggests that automatic segmentation is feasible and stable for practical use, offering a fast alternative that does not require specialized medical imaging expertise.

The purpose of our study is not only to establish a model for tumor microenvironment recognition but also to extract features with a deep learning model and cluster them to reveal their correlation with TP53 status across cancer types. We hypothesize that a specific microenvironmental state, potentially characterized by multimodal imaging biomarkers extracted through deep learning, reflects the same gene mutation across different tumor types. Recent studies have demonstrated the potential of radiogenomics for predicting the mutation status of genes such as PD-1 and TP53 from CT images and molecular markers (41). Previous artificial intelligence research has mainly used single-modal data, which facilitates building stable expert models (42, 43). However, these studies did not examine whether specific gene mutations exhibit the same imaging features across different tumors. In this study, unsupervised clustering of the features extracted by the deep learning classification model showed that the Davies-Bouldin index (DBI) reached its minimum at three clusters, clearly lower than for other cluster numbers. This suggests the presence of three distinct imaging patterns closely associated with TP53 mutation status. Visualization with t-SNE dimensionality reduction showed well-separated clusters, and clustering performance was nearly identical before and after dimensionality reduction, indicating that the deep features extracted by the model are already highly discriminative in the original high-dimensional space. This finding was further supported by correlation analysis, which showed a significant association between the imaging cluster patterns and TP53 mutation status (p = 0.001), indicating that the model captured TP53-related features common across different cancer types.
Additionally, many of the extracted deep features were associated with TP53 mutations, suggesting that these features may carry biological significance and potential clinical value. These results highlight the potential of deep learning to characterize the tumor microenvironment accurately and underscore the broad applicability of genomics (44).
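The cluster-number selection and the association test described above can be sketched as follows. This is a minimal, dependency-free illustration that rests on several assumptions. It uses average-linkage agglomerative clustering (hierarchical clustering appears in the reference list, but the linkage is our assumption), a Davies-Bouldin index computed with Euclidean distances, and a Pearson chi-square test of independence between cluster labels and TP53 status. The Discussion does not name the test behind p = 0.001. The toy two-dimensional "deep features" and the TP53 labels are hypothetical, not study data.

```python
import math
from itertools import combinations


def agglomerative(points, k):
    """Naive average-linkage agglomerative clustering; returns one label per point."""
    clusters = [[i] for i in range(len(points))]
    while len(clusters) > k:
        best = None
        for (i, ci), (j, cj) in combinations(enumerate(clusters), 2):
            # average pairwise Euclidean distance between the two clusters
            d = sum(math.dist(points[a], points[b]) for a in ci for b in cj) / (len(ci) * len(cj))
            if best is None or d < best[0]:
                best = (d, i, j)
        _, i, j = best
        clusters[i] = clusters[i] + clusters[j]  # i < j, so deleting j keeps index i valid
        del clusters[j]
    labels = [0] * len(points)
    for c, members in enumerate(clusters):
        for m in members:
            labels[m] = c
    return labels


def davies_bouldin(points, labels):
    """Mean over clusters of the worst ratio (s_i + s_j) / d(c_i, c_j); lower is better."""
    ids = sorted(set(labels))
    groups = {c: [p for p, l in zip(points, labels) if l == c] for c in ids}
    cents = {c: [sum(x) / len(g) for x in zip(*g)] for c, g in groups.items()}
    scatter = {c: sum(math.dist(p, cents[c]) for p in g) / len(g) for c, g in groups.items()}
    worst = [max((scatter[a] + scatter[b]) / math.dist(cents[a], cents[b])
                 for b in ids if b != a) for a in ids]
    return sum(worst) / len(worst)


def choose_k(points, k_values):
    """Return the cluster count with the minimum DBI, plus all scores."""
    scores = {k: davies_bouldin(points, agglomerative(points, k)) for k in k_values}
    return min(scores, key=scores.get), scores


def chi2_sf(x, df):
    """Upper-tail chi-square probability using closed forms for integer df."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:  # even df: exp(-x/2) * sum_{i < df/2} (x/2)^i / i!
        term = total = 1.0
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return math.exp(-x / 2) * total
    # odd df: erfc(sqrt(x/2)) plus a finite series of normal-density terms
    total = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    return total


def chi_square_independence(labels, status):
    """Pearson chi-square (no continuity correction) for a clusters x status table."""
    rows, cols, n = sorted(set(labels)), sorted(set(status)), len(labels)
    obs = {(r, c): 0 for r in rows for c in cols}
    for r, c in zip(labels, status):
        obs[(r, c)] += 1
    row_tot = {r: sum(obs[(r, c)] for c in cols) for r in rows}
    col_tot = {c: sum(obs[(r, c)] for r in rows) for c in cols}
    stat = 0.0
    for r in rows:
        for c in cols:
            expected = row_tot[r] * col_tot[c] / n
            stat += (obs[(r, c)] - expected) ** 2 / expected
    df = (len(rows) - 1) * (len(cols) - 1)
    return stat, df, chi2_sf(stat, df)


if __name__ == "__main__":
    # Hypothetical toy features: three well-separated groups of three patients each
    feats = [(0, 0), (0, 1), (1, 0), (10, 0), (10, 1), (11, 0), (30, 0), (30, 1), (31, 0)]
    tp53 = [1, 1, 1, 0, 0, 0, 1, 1, 0]  # hypothetical: 1 = mutated, 0 = wild-type
    k, scores = choose_k(feats, range(2, 5))
    print(k, chi_square_independence(agglomerative(feats, k), tp53))
```

In practice, scikit-learn's `AgglomerativeClustering` and `davies_bouldin_score`, together with SciPy's `scipy.stats.chi2_contingency`, provide tested equivalents. Note that `chi2_contingency` applies Yates' continuity correction by default when the table has one degree of freedom, so its p-values can differ from this uncorrected sketch in the 2 x 2 case.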

Our study has several limitations. First, this was a single-center study; a more robust classification model will require a larger, multicenter cohort. Second, PET/CT at our center is not used for screening but is performed according to patients' economic status and disease stage, so the included patients had more advanced tumor stages and higher grades (45). Third, pathological images are one of the most important data types in tumor-related medical image research (46, 47), and predicting tumor gene mutations from pathological images has been shown to be feasible (48). This study used only pathology-related features rather than the pathological images themselves, because standardizing pathological images is complex and may require additional generative networks (49, 50). Moreover, whole-slide imaging (WSI) can provide more comprehensive pathological information about tumors, which could improve the model's feature extraction (51). For example, the molecular classification of endometrial cancer has been predicted from hematoxylin and eosin-stained whole-slide images with an area under the curve of 0.874 (95% CI, 0.856–0.893) (52). Although that study was successful, whole-slide images are difficult and costly to prepare and challenging to apply clinically (53). Fourth, to ensure the robustness of the extracted features, we used a fixed set of radiomic features as input, which to some extent limits the model's ability to learn features directly from images. In addition, although the features extracted by the deep learning model were shown to be associated with TP53 status, their relationship with intuitive radiological descriptors has yet to be established. Because the first-order features of BAT carry relatively clear and interpretable information, we did not extract higher-order texture or spatial features from BAT.
The stability of such higher-order features still needs to be validated in a larger cohort. Future work should also focus on interpreting the neural network to obtain more explainable diagnostic information (54). The multimodal feature fusion method used in this study (direct concatenation of feature vectors) also leaves room for improvement (55). Finally, public databases of gynecological tumors are still lacking, and we will continue to work on this (56).
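The limitations above mention two concrete design choices: first-order BAT features and direct concatenation of feature vectors for multimodal fusion. The sketch below illustrates both in plain Python. It assumes that voxel intensities inside a region of interest (ROI) are already available as a flat list. The six statistics, the bin count, and the source names (`BAT_PET`, `tumor_PET`, `clinical`) are hypothetical choices for illustration and do not reproduce the study's radiomics configuration. Standard toolkits such as PyRadiomics define many more first-order features, each with its own conventions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def first_order_features(voxels: Sequence[float], n_bins: int = 16) -> Dict[str, float]:
    """Simple first-order (histogram) statistics of the intensities inside one ROI."""
    n = len(voxels)
    mean = sum(voxels) / n
    var = sum((v - mean) ** 2 for v in voxels) / n  # population variance
    std = math.sqrt(var)
    skew = sum((v - mean) ** 3 for v in voxels) / n / std ** 3 if std > 0 else 0.0
    # kurtosis as m4 / m2^2 (not excess kurtosis)
    kurt = sum((v - mean) ** 4 for v in voxels) / n / var ** 2 if var > 0 else 0.0
    energy = sum(v * v for v in voxels)
    # Shannon entropy (bits) of a histogram with a fixed number of equal-width bins
    lo, hi = min(voxels), max(voxels)
    width = (hi - lo) / n_bins or 1.0  # constant ROI: every voxel falls in bin 0
    counts = [0] * n_bins
    for v in voxels:
        counts[min(int((v - lo) / width), n_bins - 1)] += 1
    entropy = -sum((c / n) * math.log2(c / n) for c in counts if c)
    return {"mean": mean, "variance": var, "skewness": skew,
            "kurtosis": kurt, "energy": energy, "entropy": entropy}


def concatenate_fusion(blocks: Dict[str, Dict[str, float]]) -> Tuple[List[str], List[float]]:
    """Direct concatenation fusion: flatten per-source feature dicts in a fixed sorted order."""
    names: List[str] = []
    values: List[float] = []
    for source in sorted(blocks):
        for feature in sorted(blocks[source]):
            names.append(f"{source}:{feature}")
            values.append(float(blocks[source][feature]))
    return names, values


# Hypothetical single patient: two imaging sources plus one clinical variable
patient = {
    "BAT_PET": first_order_features([1.2, 1.5, 1.1, 2.0, 1.8]),
    "tumor_PET": first_order_features([5.0, 7.5, 6.2, 9.1, 8.4, 6.8]),
    "clinical": {"age": 58.0},
}
names, vector = concatenate_fusion(patient)
print(len(vector), names[:3])
```

Sorting sources and feature names fixes the position of every feature in the fused vector, so vectors from different patients stay aligned. In a real pipeline, each feature would usually also be standardized across patients before concatenation, because raw scales (for example, energy versus entropy) can differ by orders of magnitude.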

5 Conclusion

This study shows that radiomic features derived from CT and PET images of tumors and brown adipose tissue are closely associated with TP53 mutation status in gynecological tumors. By constructing multiple Transformer-based deep learning models and integrating them into a multimodal combined model, we achieved high accuracy in predicting TP53 mutations and captured cross-cancer imaging phenotypes significantly associated with TP53 status. The proposed cross-cancer TP53 prediction model offers a promising noninvasive tool for tumor molecular subtyping, with potential applications in personalized treatment planning, early risk stratification, and the selection of targeted therapeutic strategies. This study highlights the potential of deep radiomics to bridge medical imaging and genomics and to promote precision medicine in gynecological oncology.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors without undue reservation.

Ethics statement

The studies involving humans were approved by the Ethics Committee of Shengjing Hospital. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants' legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

TD: Software, Investigation, Writing – original draft, Formal analysis, Methodology, Visualization, Validation, Conceptualization. TJ: Writing – review & editing, Funding acquisition. XL: Writing – original draft, Data curation. MR: Writing – original draft, Formal analysis. MG: Writing – review & editing, Project administration. CL: Project administration, Supervision, Writing – review & editing, Methodology, Formal analysis.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the Special Scientific Research Project of Sichuan Administration of Traditional Chinese Medicine (No. 2024zd030), and the “Haiju Program” High-end Talent Introduction Project of the Sichuan Provincial Department of Science and Technology (No. 2024JDHJ0041).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Sung, H, Ferlay, J, Siegel, RL, Laversanne, M, Soerjomataram, I, Jemal, A, et al. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin. (2021) 71:209–49. doi: 10.3322/caac.21660

2. Kastenhuber, ER, and Lowe, SW. Putting p53 in context. Cell. (2017) 170:1062–78. doi: 10.1016/j.cell.2017.08.028

3. Zhang, J, Yu, G, Yang, Y, Wang, Y, Guo, M, Yin, Q, et al. A small-molecule inhibitor of MDMX suppresses cervical cancer cells via the inhibition of E6-E6AP-p53 axis. Pharmacol Res. (2022) 177:106128. doi: 10.1016/j.phrs.2022.106128

4. Thiel, KW, Devor, EJ, Filiaci, VL, Mutch, D, Moxley, K, Secord, AA, et al. TP53 sequencing and p53 immunohistochemistry predict outcomes when bevacizumab is added to frontline chemotherapy in endometrial cancer: an NRG oncology/gynecologic oncology group study. J Clin Oncol. (2022) 40:3289–300. doi: 10.1200/JCO.21.02506

5. Ahmed, AA, Etemadmoghadam, D, Temple, J, Lynch, AG, Riad, M, Sharma, R, et al. Driver mutations in TP53 are ubiquitous in high grade serous carcinoma of the ovary. J Pathol. (2010) 221:49–56. doi: 10.1002/path.2696

6. Shu, C, Zheng, X, Wuhafu, A, Cicka, D, Doyle, S, Niu, Q, et al. Acquisition of taxane resistance by p53 inactivation in ovarian cancer cells. Acta Pharmacol Sin. (2022) 43:2419–28. doi: 10.1038/s41401-021-00847-6

7. Singh, N, Piskorz, AM, Bosse, T, Jimenez-Linan, M, Rous, B, Brenton, JD, et al. p53 immunohistochemistry is an accurate surrogate for TP53 mutational analysis in endometrial carcinoma biopsies. J Pathol. (2020) 250:336–45. doi: 10.1002/path.5375

8. Fonti, R, Conson, M, and Del Vecchio, S. PET/CT in radiation oncology. In: Seminars in oncology. WB Saunders: Elsevier (2019). 202–9.

9. Apostolova, I, Ego, K, Steffen, IG, Buchert, R, Wertzel, H, Achenbach, HJ, et al. The asphericity of the metabolic tumour volume in NSCLC: correlation with histopathology and molecular markers. Eur J Nucl Med Mol Imaging. (2016) 43:2360–73. doi: 10.1007/s00259-016-3452-z

10. Ruohua, C, Xiang, Z, Jianjun, L, and Gang, H. Relationship between 18F-FDG PET/CT findings and HER2 expression in gastric cancer. J Nucl Med. (2016) 57:1040. doi: 10.2967/jnumed.115.171165

11. Wang, X, Yang, W, Zhou, Q, Luo, H, Chen, W, Yeung, S-CJ, et al. The role of 18F-FDG PET/CT in predicting the pathological response to neoadjuvant PD-1 blockade in combination with chemotherapy for resectable esophageal squamous cell carcinoma. Eur J Nucl Med Mol Imaging. (2022) 49:4241–51. doi: 10.1007/s00259-022-05872-z

12. Chen, X, Lin, X, Shen, Q, and Qian, X. Combined spiral transformation and model-driven multi-modal deep learning scheme for automatic prediction of TP53 mutation in pancreatic cancer. IEEE Trans Med Imaging. (2020) 40:735–47. doi: 10.1109/TMI.2020.3035789

13. Du, T, Li, C, Jiang, T, Yang, J, Grzegorzek, M, and Sun, H. Multi-modal medical information based data mining for expression and characteristic pattern prediction of TP53 in endometrial carcinoma. In: 2023 IEEE international conference on big data (BigData). (2023). 4392–6.

14. Noguchi, T, Ando, T, Emoto, S, Nozawa, H, Kawai, K, Sasaki, K, et al. Artificial intelligence program to predict p53 mutations in ulcerative colitis–associated cancer or dysplasia. Inflamm Bowel Dis. (2022) 28:1072–80. doi: 10.1093/ibd/izab350

15. Ghesmati, Z, Rashid, M, Fayezi, S, Gieseler, F, Alizadeh, E, and Darabi, M. An update on the secretory functions of brown, white, and beige adipose tissue: towards therapeutic applications. Rev Endocrine Metab Disord. (2024) 25:279–308. doi: 10.1007/s11154-023-09850-0

16. Aboouf, MA, Gorr, TA, Hamdy, NM, Gassmann, M, and Thiersch, M. Myoglobin in brown adipose tissue: a multifaceted player in thermogenesis. Cells. (2023) 12:2240. doi: 10.3390/cells12182240

17. Panagiotou, G, Babazadeh, D, Mazza, DF, Azghadi, S, Cawood, JM, Rosenberg, AS, et al. Brown adipose tissue is associated with reduced weight loss and risk of cancer cachexia: a retrospective cohort study. Clin Nutr. (2025) 45:262–9. doi: 10.1016/j.clnu.2024.12.028

18. Sun, X, Sui, W, Mu, Z, Xie, S, Deng, J, Li, S, et al. Mirabegron displays anticancer effects by globally browning adipose tissues. Nat Commun. (2023) 14:7610. doi: 10.1038/s41467-023-43350-8

19. Chu, K, Bos, SA, Gill, CM, Torriani, M, and Bredella, MA. Brown adipose tissue and cancer progression. Skeletal Radiol. (2020) 49:635–9. doi: 10.1007/s00256-019-03322-w

20. Fujii, T, Yajima, R, Tatsuki, H, Oosone, K, and Kuwano, H. Implication of atypical supraclavicular F18-fluorodeoxyglucose uptake in patients with breast cancer: Association between brown adipose tissue and breast cancer. Oncol Lett. (2017) 14:7025–30. doi: 10.3892/ol.2017.6768

21. Kumar, S, Singh, J, Ravi, V, Singh, P, Al Mazroa, A, Diwakar, M, et al. Deep learning and MRI biomarkers for precise lung Cancer cell detection and diagnosis. Open Bioinf J. (2024) 17. doi: 10.2174/0118750362335415240909061539

22. Agrawal, T, Choudhary, P, Shankar, A, Singh, P, and Diwakar, M. MultiFeNet: multi-scale feature scaling in deep neural network for the brain tumour classification in MRI images. Int J Imaging Syst Technol. (2024) 34:e22956. doi: 10.1002/ima.22956

23. Wu, J, Liu, W, Li, C, Jiang, T, Shariful, IM, Yao, Y, et al. A state-of-the-art survey of U-net in microscopic image analysis: from simple usage to structure mortification. Neural Comput & Applic. (2024) 36:3317–46. doi: 10.1007/s00521-023-09284-4

24. Gao, Y, Zeng, S, Xu, X, Li, H, Yao, S, Song, K, et al. Deep learning-enabled pelvic ultrasound images for accurate diagnosis of ovarian cancer in China: a retrospective, multicentre, diagnostic study. Lancet Digit Health. (2022) 4:e179–87. doi: 10.1016/S2589-7500(21)00278-8

25. Liu, W, Li, C, Xu, N, Jiang, T, Rahaman, M, Sun, H, et al. CVM-cervix: a hybrid cervical pap-smear image classification framework using CNN, visual transformer and multilayer perceptron. Pattern Recogn. (2022) 130:108829. doi: 10.1016/j.patcog.2022.108829

26. Rahaman, M, Li, C, Yao, Y, Kulwa, F, Wu, X, Li, X, et al. DeepCervix: a deep learning-based framework for the classification of cervical cells using hybrid deep feature fusion techniques. Comput Biol Med. (2021) 136:104649. doi: 10.1016/j.compbiomed.2021.104649

27. Zhang, J, Li, C, Rahaman, M, Yao, Y, Ma, P, Zhang, J, et al. A comprehensive review of image analysis methods for microorganism counting: from classical image processing to deep learning approaches. Artif Intell Rev. (2021) 55:2875–944. doi: 10.1007/s10462-021-10082-4

28. Isensee, F, Jaeger, PF, Kohl, SA, Petersen, J, and Maier-Hein, KH. nnU-net: a self-configuring method for deep learning-based biomedical image segmentation. Nat Methods. (2021) 18:203–11. doi: 10.1038/s41592-020-01008-z

29. Murtagh, F, and Contreras, P. Algorithms for hierarchical clustering: an overview. Wiley Interdis Rev Data Min Knowl Discov. (2012) 2:86–97. doi: 10.1002/widm.53

30. Petrovic, S. A comparison between the silhouette index and the Davies-Bouldin index in labelling IDS clusters. In: Proceedings of the 11th Nordic workshop of secure IT systems. Citeseer (2006). 53–64.

31. Voskarides, K, and Giannopoulou, N. The role of TP53 in adaptation and evolution. Cells. (2023) 12:512. doi: 10.3390/cells12030512

32. Shen, L, Dai, B, Dou, S, Yan, F, Yang, T, and Wu, Y. Estimation of TP53 mutations for endometrial cancer based on diffusion-weighted imaging deep learning and radiomics features. BMC Cancer. (2025) 25:45. doi: 10.1186/s12885-025-13424-5

33. Wang, C-W, Muzakky, H, Lee, Y-C, Chung, Y-P, Wang, Y-C, Yu, M-H, et al. Interpretable multi-stage attention network to predict cancer subtype, microsatellite instability, TP53 mutation and TMB of endometrial and colorectal cancer. Comput Med Imaging Graph. (2025) 121:102499. doi: 10.1016/j.compmedimag.2025.102499

34. Donehower, LA, Soussi, T, Korkut, A, Liu, Y, Schultz, A, Cardenas, M, et al. Integrated analysis of TP53 gene and pathway alterations in the cancer genome atlas. Cell Rep. (2019) 28:1370–1384.e5. doi: 10.1016/j.celrep.2019.07.001

35. Das, SK, Roy, P, Singh, P, Diwakar, M, Singh, V, Maurya, A, et al. Diabetic foot ulcer identification: a review. Diagnostics. (2023) 13:1998. doi: 10.3390/diagnostics13121998

36. Jørgensen, K, Høi-Hansen, FE, Loos, RJ, Hinge, C, and Andersen, FL. Automated supraclavicular brown adipose tissue segmentation in computed tomography using nnU-net: integration with TotalSegmentator. Diagnostics. (2024) 14:2786. doi: 10.3390/diagnostics14242786

37. Bos, SA, Gill, CM, Martinez-Salazar, EL, Torriani, M, and Bredella, MA. Preliminary investigation of brown adipose tissue assessed by PET/CT and cancer activity. Skeletal Radiol. (2019) 48:413–9. doi: 10.1007/s00256-018-3046-x

38. Pace, L, Nicolai, E, Basso, L, Garbino, N, Soricelli, A, and Salvatore, M. Brown adipose tissue in breast cancer evaluated by [18F] FDG-PET/CT. Mol Imaging Biol. (2020) 22:1111–5. doi: 10.1007/s11307-020-01482-z

39. Rämö, JT, Kany, S, Hou, CR, Friedman, SF, Roselli, C, Nauffal, V, et al. Cardiovascular significance and genetics of epicardial and pericardial adiposity. JAMA Cardiol. (2024) 9:418–27. doi: 10.1001/jamacardio.2024.0080

40. Yin, X, Chen, Y, Ruze, R, Xu, R, Song, J, Wang, C, et al. The evolving view of thermogenic fat and its implications in cancer and metabolic diseases. Signal Transduct Target Ther. (2022) 7:324. doi: 10.1038/s41392-022-01178-6

41. Ma, C, Wang, L, Song, D, Gao, C, Jing, L, Lu, Y, et al. Multimodal-based machine learning strategy for accurate and non-invasive prediction of intramedullary glioma grade and mutation status of molecular markers: a retrospective study. BMC Med. (2023) 21:198. doi: 10.1186/s12916-023-02898-4

42. Chen, H, Li, C, Li, X, Rahaman, M, Hu, W, Li, Y, et al. IL-MCAM: an interactive learning and multi-channel attention mechanism-based weakly supervised colorectal histopathology image classification approach. Comput Biol Med. (2022) 143:105265. doi: 10.1016/j.compbiomed.2022.105265

43. Rahaman, M, Li, C, Yao, Y, Kulwa, F, Rahman, M, Wang, Q, et al. Identification of COVID-19 samples from chest X-ray images using deep learning: a comparison of transfer learning approaches. J Xray Sci Technol. (2020) 28:821–39. doi: 10.3233/XST-200715

44. Bustamante, CD, De La Vega, FM, and Burchard, EG. Genomics for the world. Nature. (2011) 475:163–5. doi: 10.1038/475163a

45. Jiang, X, Tang, H, and Chen, T. Epidemiology of gynecologic cancers in China. J Gynecol Oncol. (2018) 29:e7. doi: 10.3802/jgo.2018.29.e7

46. Jing, Y, Li, C, Du, T, Jiang, T, Sun, H, Yang, J, et al. A comprehensive survey of intestine histopathological image analysis using machine vision approaches. Comput Biol Med. (2023) 165:107388. doi: 10.1016/j.compbiomed.2023.107388

47. Zheng, Y, Li, C, Zhou, X, Chen, H, Xu, H, Li, Y, et al. Application of transfer learning and ensemble learning in image-level classification for breast histopathology. Intell Med. (2022) 3:115–28. doi: 10.1016/j.imed.2022.05.004

48. Rahaman, MM, Millar, EK, and Meijering, E. Breast cancer histopathology image based gene expression prediction using spatial transcriptomics data and deep learning. arXiv [Preprint]. (2023). arXiv:2303.09987.

49. de Haan, K, Zhang, Y, Zuckerman, JE, Liu, T, Sisk, AE, Diaz, MFP, et al. Deep learning-based transformation of H&E stained tissues into special stains. Nat Commun. (2021) 12:4884. doi: 10.1038/s41467-021-25221-2

50. Waqas, A, Bui, MM, Glassy, EF, El Naqa, I, Borkowski, P, Borkowski, AA, et al. Revolutionizing digital pathology with the power of generative artificial intelligence and foundation models. Lab Investig. (2023) 103:100255. doi: 10.1016/j.labinv.2023.100255

51. Hu, W, Li, X, Li, C, Li, R, Jiang, T, Sun, H, et al. A state-of-the-art survey of artificial neural networks for whole-slide image analysis: from popular convolutional neural networks to potential visual transformers. Comput Biol Med. (2023) 161:107034. doi: 10.1016/j.compbiomed.2023.107034

52. Fremond, S, Andani, S, Wolf, JB, Dijkstra, J, Melsbach, S, Jobsen, JJ, et al. Interpretable deep learning model to predict the molecular classification of endometrial cancer from haematoxylin and eosin-stained whole-slide images: a combined analysis of the PORTEC randomised trials and clinical cohorts. Lancet Digit Health. (2023) 5:e71–82. doi: 10.1016/S2589-7500(22)00210-2

53. Li, X, Li, C, Rahaman, M, Sun, H, Li, X, Wu, J, et al. A comprehensive review of computer-aided whole-slide image analysis: from datasets to feature extraction, segmentation, classification and detection approaches. Artif Intell Rev. (2022) 55:4809–78. doi: 10.1007/s10462-021-10121-0

54. Van der Velden, BH, Kuijf, HJ, Gilhuijs, KG, and Viergever, MA. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med Image Anal. (2022) 79:102470. doi: 10.1016/j.media.2022.102470

55. Diwakar, M, Singh, P, Singh, R, Sisodia, D, Singh, V, Maurya, A, et al. Multimodality medical image fusion using clustered dictionary learning in non-subsampled shearlet transform. Diagnostics. (2023) 13:1395. doi: 10.3390/diagnostics13081395

Keywords: PET/CT, TP53, deep learning, endometrial cancer, ovarian cancer, cervical cancer

Citation: Du T, Jiang T, Li X, Rahaman MM, Grzegorzek M and Li C (2025) Prediction of TP53 mutations across female reproductive system pan-cancers using deep multimodal PET/CT radiogenomics. Front. Med. 12:1608652. doi: 10.3389/fmed.2025.1608652

Edited by:

Chen Shanxiong, Southwest University, China

Reviewed by:

Zainab Siddiqui, Era University, India

Yongyi Shi, Rensselaer Polytechnic Institute, United States

Copyright © 2025 Du, Jiang, Li, Rahaman, Grzegorzek and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chen Li, lichen@bmie.neu.edu.cn; Tao Jiang, jiangtop@cdutcm.edu.cn

†ORCID: Chen Li, http://orcid.org/0000-0003-1545-8885
