Abstract

Background:

Traditional cadaveric dissection is considered the gold standard in anatomical education; however, its accessibility is limited by ethical, logistical, and financial constraints. Recent advancements in three-dimensional (3D) scanning technologies provide an alternative approach that enhances anatomical visualization while preserving the fidelity of real human specimens.

Aim:

This study aimed to create digitized 3D models of dissected human cadaveric specimens using a handheld structured-light scanner, thus providing a sustainable and accessible resource for educational and clinical applications.

Methods:

Eight human cadaveric specimens were dissected and scanned using the Artec 3D Spider handheld scanner. The obtained scans were processed in Artec Studio 17 Professional and then refined in Blender. Finalized 3D models were exported in .MP4 format and paired with two-dimensional (2D) images for enhanced anatomical understanding.

Results:

A total of 12 anatomical 3D models were successfully created, capturing detailed anatomical landmarks with a resolution of 0.1 mm and an accuracy of 0.05 mm. The models encompassed key anatomical regions or organs, including the brain, skull, face, neck, thorax, heart, abdomen, pelvis, and lower limb. Combining the 3D models with 2D images allowed for interactive and immersive learning and improved spatial comprehension of complex anatomical structures.

Conclusion:

The use of high-fidelity 3D scanning technology provides a promising alternative to traditional dissection by offering an accessible, sustainable, and detailed representation of spatial relationships in the human body. This approach enhances medical education and clinical practice, bridging the gap between theoretical knowledge and practical application.

1 Introduction

Detailed anatomical knowledge is one of the most significant factors in clinical excellence, regardless of medical specialty (1). Medical trainees and experienced clinicians alike are challenged by the complexity of human body structure and often return to anatomical foundations to recall key structures and their locations and to refine surgical techniques and approaches (2).

Dissecting human cadavers has been recognized as the most effective method to understand anatomical structures, spatial relationships and the individuality of each human organism (1, 3–5). However, anatomical dissection facilities face several limitations, such as the low availability and degradation of human specimens, resource-intensive and carcinogenic preservation techniques (1), and jurisdictional differences in regulatory policies (6).

Recent technological advancements have shown that virtual models are an effective resource in anatomical studies that can overcome many constraints inherent to the cadaveric method (2, 7–9). Three-dimensional (3D) visualization technology has introduced “new medicine,” enhancing medical practice through its use in surgical planning and in the education of trainees and patients (10, 11). Previous studies have shown that 3D models can supplement traditional teaching methods and are superior to two-dimensional (2D) imaging in teaching complex anatomy (7, 9). Among the many methods of creating 3D scans (12), the handheld structured-light 3D scanner acquires precise details of an object's surface characteristics by directing a light source at the object and scanning the desired target, in a quick and convenient workflow that requires no special lighting. Finally, the resulting 3D model, reconstructed from a series of 2D images, can be displayed and manipulated remotely by viewers.

Given the demanding nature of clinical schedules and varying levels of accessibility and familiarity with new technologies, there is a growing need for an educational solution that maximizes teaching efficiency and allows for integration into daily clinical practice. As anatomical knowledge is universal, it should be readily accessible, in the most reliable form based on human cadaveric specimens.

Considering the potential use of 3D models in anatomical studies and education (7, 12), the aim of this study was to make 3D models of dissected human cadaveric specimens and highlight their main anatomical landmarks. 2D images and 3D models were created to provide interactive and immersive learning content for students, researchers, and clinicians, offering an opportunity to gain a deeper understanding of the structure and function of the human body. Ultimately, this research aimed to provide a promising, sustainable solution to combine cadaver-based anatomy with 3D imaging technology.

2 Materials and methods

2.1 Ethical consideration and specimen preparation

The research was approved by the Institutional Review Board of the Jagiellonian University (no. 118.0043.1.269.2024). All human cadavers (n = 8) used in this study were provided by an educational body donation program at the Department of Anatomy, Jagiellonian University Medical College in Cracow, Poland. All cadavers included in the program were obtained within 72 h after death and had tested negative for COVID-19. All specimens are listed in Table 1, together with the form of dissection and the sex, age, and ethnicity of the deceased. All cadavers were first perfused via the femoral artery with a 10% formalin (36% formaldehyde in methanol) aqueous solution, then stored in containers filled with a 25% formalin solution for 2 years, and then dissected. Only cadavers without any macroscopically visible malformations or pathologies were selected for dissection. The specimens were dissected using surgical and microsurgical instruments, including scalpels, forceps, scissors, and tweezers. Until scanning, all isolated specimens were kept in boxes filled with 6% formalin solution, whereas the whole dissected cadaveric bodies were covered in 11% formalin solution.

Table 1

| Cadaver specimen number | Sex | Age (years) | Ethnic group | Form of dissection |
|---|---|---|---|---|
| 1 | Male | 55 | West Slavic | Isolated specimen |
| 2 | Male | 48 | West Slavic | Whole cadaveric body |
| 3 | Male | 68 | West Slavic | Whole cadaveric body |
| 4 | Female | 72 | West Slavic | Whole cadaveric body |
| 5 | Female | 74 | West Slavic | Isolated specimen |
| 6 | Male | 70 | West Slavic | Whole cadaveric body |
| 7 | Male | 57 | West Slavic | Isolated specimen |
| 8 | Female | 69 | West Slavic | Whole cadaveric body |

List of human cadaveric specimens used in the study, highlighting the form of dissection, sex, age and ethnicity of the deceased.

The final number of cadavers included in this study was determined by selecting those in which the key anatomical region was intact and best preserved.

2.2 3D scanning and model production

Each specimen was scanned repeatedly to capture key anatomical structures, using the industrial handheld scanner “Artec 3D Spider,” which is based on blue-light technology, together with the licensed software “Artec Studio 17 Professional” installed on a notebook computer. All key structures were clearly visualized with 0.1 mm resolution and 0.05 mm accuracy. Next, all scans obtained from one specimen were aligned to create a 3D model in “Artec Studio 17 Professional.” The models were exported as meshes, then opened and edited into their final versions in the free software Blender (version 4.2.3 LTS).

The final versions of the animated 3D models were saved in .MP4 format. Keyframes were captured from the resulting videos to show the most essential anatomical structures of every specimen, and these structures were labeled using valid English anatomical nomenclature.
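To give a sense of the data volume implied by the stated 0.1 mm resolution, the following minimal Python sketch estimates how many surface samples cover a flat rectangular patch. It assumes, purely for illustration, that the resolution corresponds to a uniform square sampling grid; the function name `samples_per_patch` and the patch dimensions are hypothetical and are not part of the scanner software or the workflow described above.

```python
# Illustrative estimate only: assumes a uniform square sampling grid whose
# spacing equals the stated resolution (0.1 mm = 100 micrometres).
# The function name and example patch size are hypothetical.

def samples_per_patch(width_mm: int, height_mm: int, spacing_um: int = 100) -> int:
    """Approximate number of surface samples on a flat rectangular patch.

    Lengths are converted to whole micrometres so that the arithmetic
    stays in integers and avoids floating-point rounding.
    """
    cols = (width_mm * 1000) // spacing_um   # samples along the width
    rows = (height_mm * 1000) // spacing_um  # samples along the height
    return cols * rows


if __name__ == "__main__":
    # A 50 mm x 50 mm patch sampled every 0.1 mm.
    print(samples_per_patch(50, 50))
```

Under this assumption, each square millimetre of surface holds 100 samples, so even small specimens produce dense meshes, which is consistent with the need for dedicated processing software.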

3 Results

The 3D models were generated with a resolution of 0.1 mm and an accuracy of 0.05 mm, ensuring a high level of anatomical detail. Based on the eight human cadaveric specimens described in Table 1, a total of 12 3D models were successfully created and documented in .MP4 format (Supplementary Videos S1–S11), each corresponding to a specific anatomical region. The models provided a detailed representation of key anatomical landmarks.

The 3D models were paired with 2D images (Figures 1–11) to facilitate interactive and comprehensive anatomical understanding. To avoid repetition, each figure is described in detail in its corresponding figure legend.

Figure 1

Cerebrum and spinal cord. (A) Lateral view: right lateral sulcus (1), right precentral gyrus (2), right postcentral gyrus (3), right superior temporal gyrus (4), right middle temporal gyrus (5), right inferior frontal gyrus–triangular part (6); (B) Both hemispheres: superior view with intact arachnoid mater on the left hemisphere focused on longitudinal cerebral fissure (1), precentral gyrus (2), central sulcus (3), postcentral sulcus (4), arachnoid mater (5); (C) Both hemispheres: inferior view, brainstem and cerebellum with vascularization, focus on optic chiasm (1), left facial and vestibulocochlear nerves (2), left internal carotid artery (3), basilar artery (4), left anterior inferior cerebellar artery (5), anterior spinal artery (6), left olfactory bulb (7); (D) Cerebral hemispheres: posterior view with brainstem and cerebellum, highlighting right occipital pole (1), right hemisphere of cerebellum (2), vermis of cerebellum (3), left superior cerebellar artery (4). The 3D model of this specimen is available in the supplementary material as Supplementary Video S1.

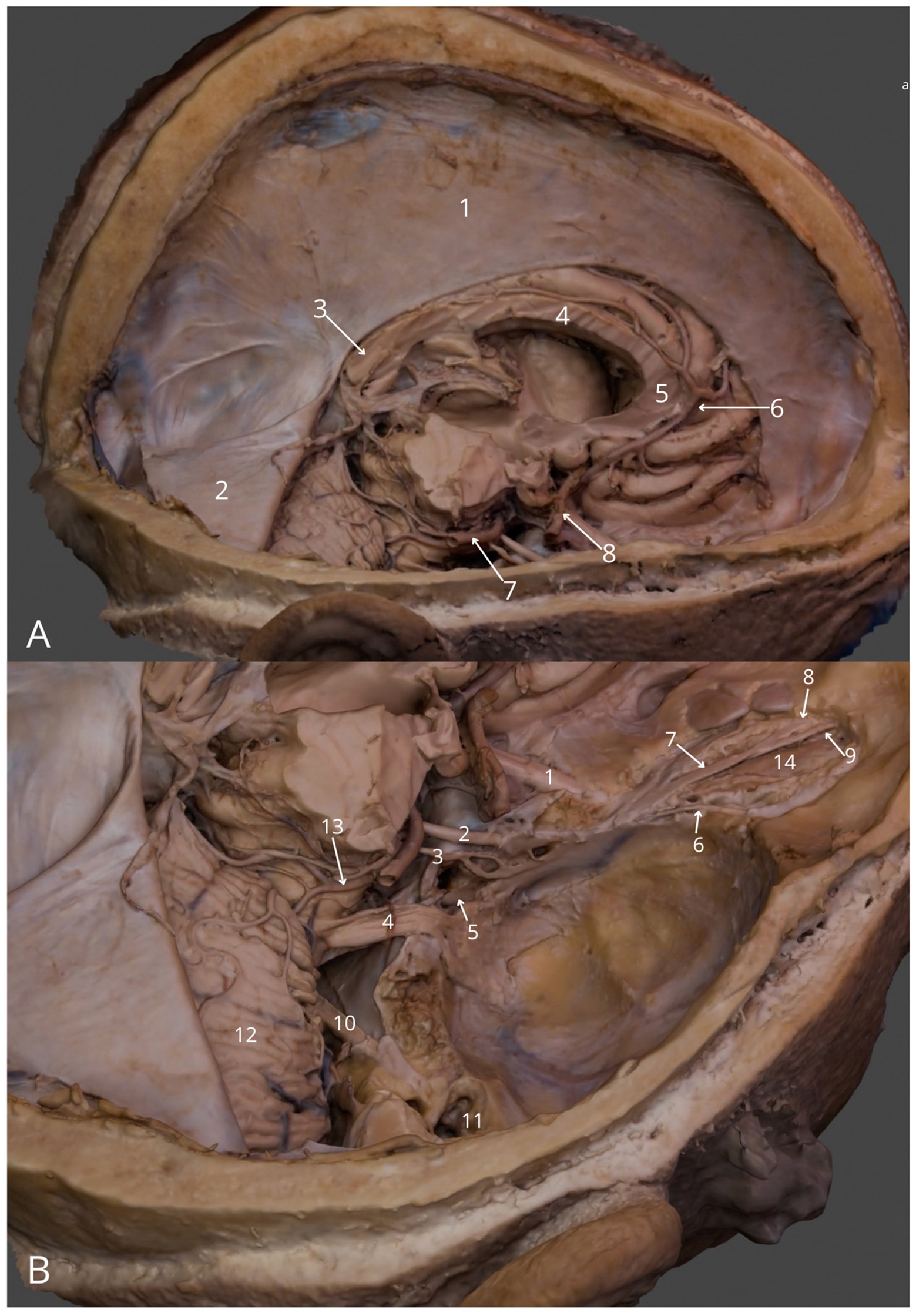

Figure 2

Intracranial structures: (A) general view (right): falx cerebri (1), right half of the tentorium cerebelli (2), splenium of corpus callosum (3), body of corpus callosum (4), genu of corpus callosum (5), right anterior cerebral artery (6), right posterior cerebral artery (7), cavernous part of right internal carotid artery (8); (B) superior posterior view into the cranial fossae: right optic nerve (1), right oculomotor nerve (2), right trochlear nerve (3), right trigeminal nerve (4), right abducens nerve (5), right lacrimal nerve (6), right frontal nerve (7), right supratrochlear nerve (8), right supraorbital nerve (9), right facial and vestibulocochlear nerves (10), right tympanic cavity (11), right cerebellar hemisphere (12), right superior cerebellar artery (13), right levator palpebrae superioris muscle (14). The 3D model of this specimen is available in the supplementary material as Supplementary Video S2.

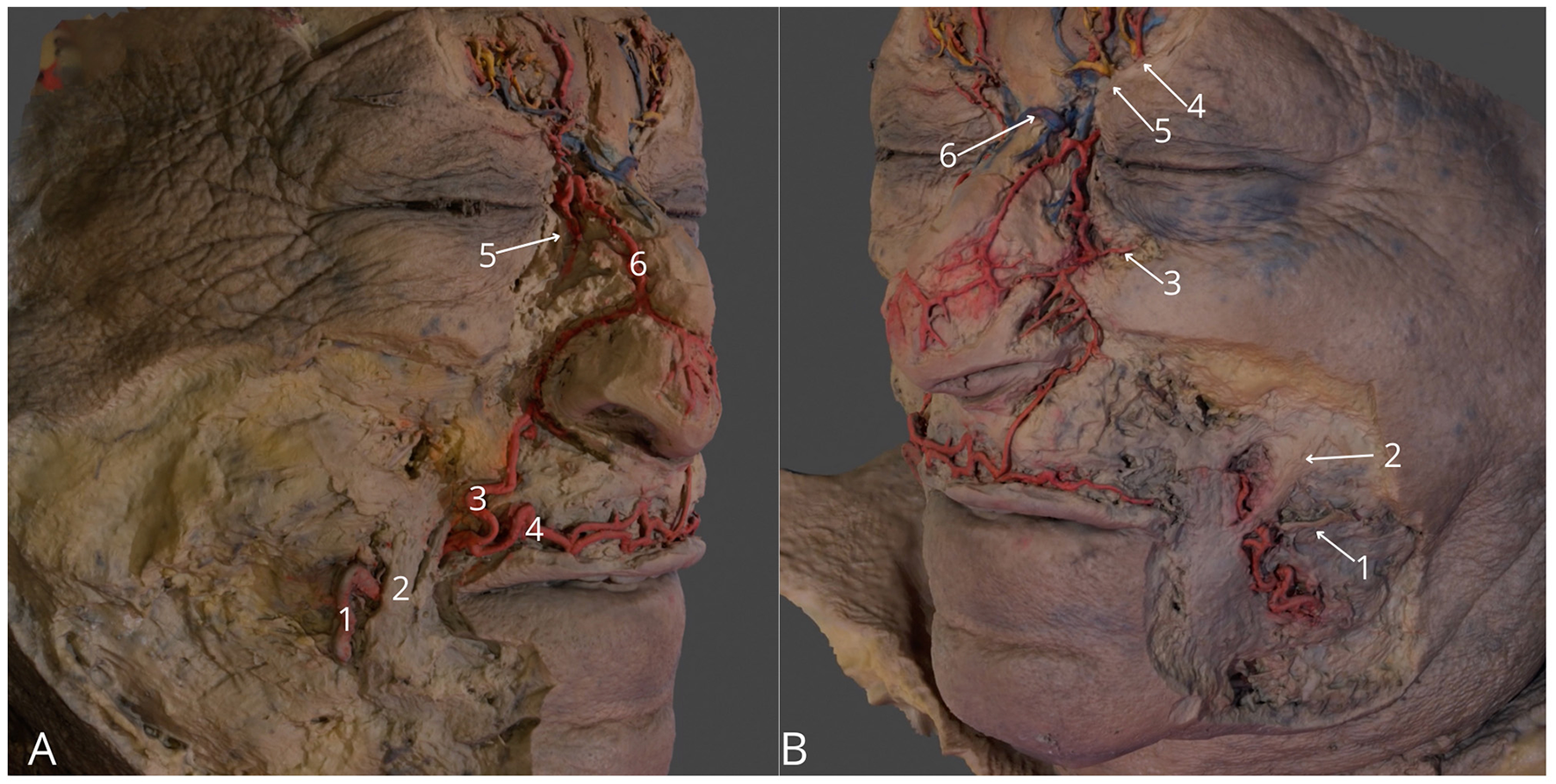

Figure 3

Region of the face. (A) Right side, highlighting facial artery (1), right angular artery (3), right superior labial artery (4), anastomosis between right angular artery and right dorsal nasal artery (5), external nasal branch of the right dorsal nasal artery (6); (B) Left side, highlighting left parotid duct (1), left zygomaticus major muscle (2), left medial palpebral artery (3), left supraorbital vessels with left supraorbital nerve (4), left supratrochlear vessels with left supratrochlear nerve (5), anastomosis between supratrochlear veins (6). The 3D model of this specimen is available in the supplementary material as Supplementary Video S3.

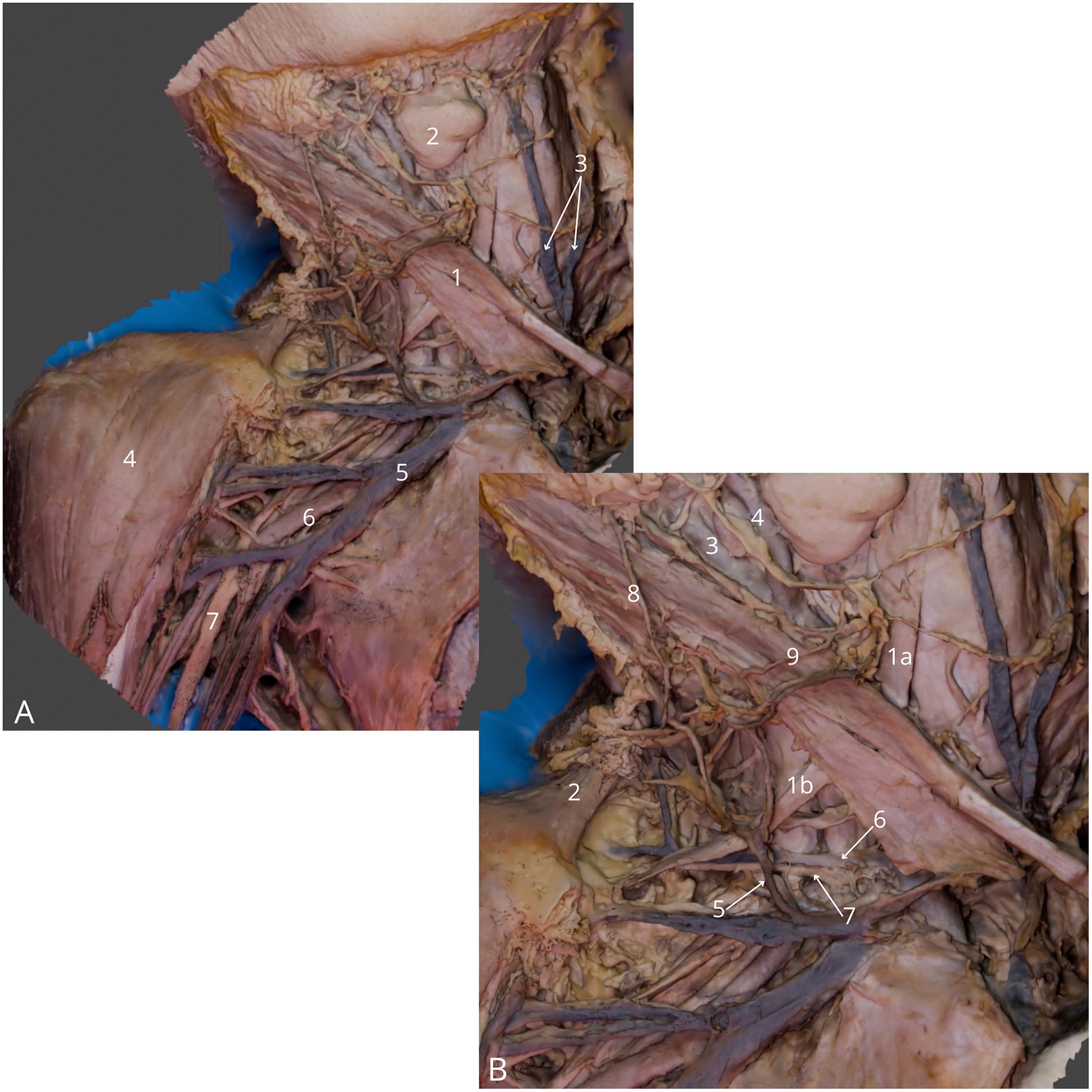

Figure 4

Neck and axillary fossa dissection. (A) General view, highlighting right sternocleidomastoid muscle (1), right submandibular gland (2), anterior jugular veins (3), right deltoid muscle (4), right axillary vein (5), right axillary artery (6), right median nerve (7); (B) Detailed view of the lateral triangle of the neck, highlighting right omohyoid muscle with superior (1a) and inferior (1b) bellies, right trapezius muscle (2), right internal carotid artery (3), right external carotid artery (4), right external jugular vein (5), right transverse cervical vein (6), right transverse cervical artery (7), right great auricular nerve (8), right transverse cervical nerve (9). The 3D model of this specimen is available in the supplementary material as Supplementary Video S4.

Figure 5

Sternocostal surface of the heart in the thoracic cavity. Lifted pericardial sac (1) with highlighted ascending aorta (2), pulmonary trunk (3), right appendage (4), right ventricle (5), right coronary artery (6) and anterior interventricular branch of left coronary artery (7). Left internal thoracic vessels (8), left phrenic nerve (9), diaphragm (10) and left lung (11). The 3D model of this specimen is available in the supplementary material as Supplementary Video S5.

Figure 6

Posterior mediastinum. (A) General view highlighting trachea (1), right main bronchus (2) and left main bronchus (3), superior vena cava, cut (4), superior lobe of the right lung (5), inferior lobe of the right lung (6), superior lobe of the left lung (7), lingula of the lung (8), esophagus (9) and diaphragm (10) with right phrenic nerve (11); (B) Detailed view of the tracheal bifurcation (1), eparterial bronchus (2), hyparterial bronchus (3), esophagus (4), hemiazygos vein (5), right inferior pulmonary vein (6), caval opening (7), thoracic aorta, cut (8), left vagus nerve (9), left recurrent laryngeal nerve (10) and left phrenic nerve (11). The 3D model of this specimen is available in the supplementary material as Supplementary Video S6.

Figure 7

Heart: four-chamber view. (A) General view: right atrium (1), left atrium (2), right ventricle (3), left ventricle (4), interventricular septum (5), apex of the heart (6) and trabeculae carneae (7); (B) Focus on the internal structure of the ventricles with ascending aorta (1), left anterior papillary muscle (2), chordae tendineae (3), mitral valve leaflet (4), tricuspid valve leaflet (5), interventricular septum with muscular part (6), and membranous part (7). The 3D model of this specimen is available in the supplementary material as Supplementary Video S7.

Figure 8

Abdominal cavity, anterior view. (A) General view with liver (1), falciform ligament of the liver (2), celiac trunk (3), greater curvature of stomach (4), epiploe (5), gastrocolic ligament (7); (B) Celiac trunk and its branches: left gastric artery (1), common hepatic artery (2), proper hepatic artery (3), gastroduodenal artery (4); also shown are the bile duct (5), portal vein (6), pylorus of stomach (7), bulb of duodenum (8), and quadrate lobe of the liver (9). The 3D model of this specimen is available in the supplementary material as Supplementary Video S8.

Figure 9

Left kidney in retroperitoneal space, anterior view. (A) General view: left kidney (1), body of pancreas (2), abdominal aorta (3), left renal vein (4), double left renal artery (5a, 5b), left ureter (6), left iliohypogastric nerve (7), left ilioinguinal nerve (8), and left lateral cutaneous nerve of thigh (9); (B) Zoom at left kidney with left ureter (1), left renal vein (2), left superior renal artery (3), inferior mesenteric vein (4), inferior mesenteric artery (5), and left testicular vein (6); (C) Zoom at the left inferior quadrant with marked left psoas major muscle (1), left genitofemoral nerve (2), and left common iliac artery (3). The 3D model of this specimen is available in the supplementary material as Supplementary Video S9.

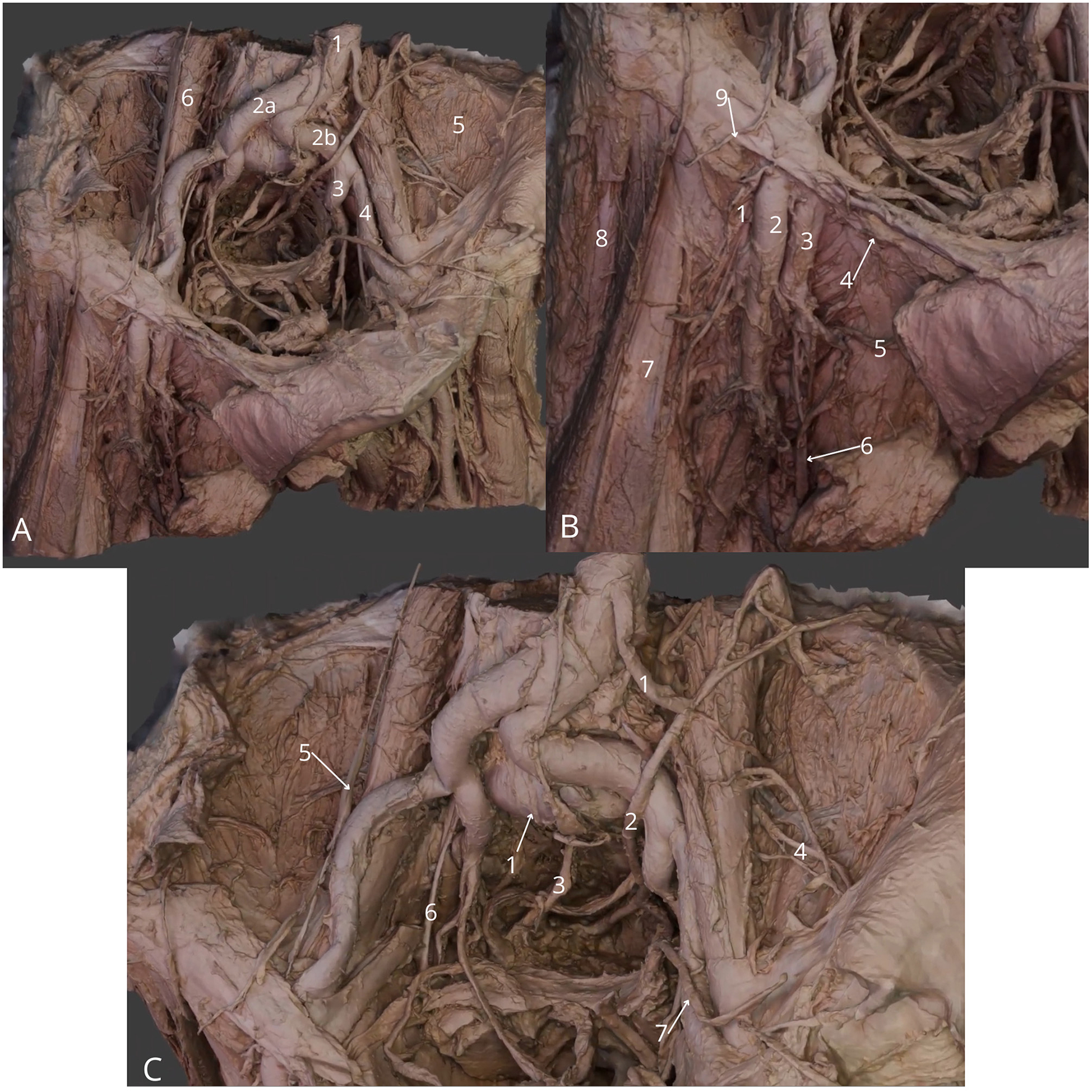

Figure 10

Male pelvic cavity dissection, femoral triangle region. (A) General view: abdominal aorta (1), right common iliac artery (2a), left common iliac artery (2b), left internal iliac artery (3), left external iliac artery (4), left iliacus muscle (5) and left psoas major muscle (6); (B) Detailed view at femoral triangle: right femoral nerve (1), right femoral artery (2), right femoral vein (3), right inguinal ligament (4), right external pudendal vein (5), right great saphenous vein (6), right sartorius muscle (7), right tensor fasciae latae muscle (8) and right lateral cutaneous nerve of thigh (9); (C) Zoom at the pelvic cavity: median sacral artery (1), left ureter (2), right hypogastric nerve (3), left lateral cutaneous nerve of thigh (4), right genitofemoral nerve (5), right obturator nerve (6), left vas deferens (7). The 3D model of this specimen is available in the supplementary material as Supplementary Video S10.

Figure 11

Left lower limb, posterior side with popliteal fossa. (A) General view: left sciatic nerve (1), left semitendinosus muscle (2), left semimembranosus muscle (3), long head of left biceps femoris (4), short head of left biceps femoris muscle (5), left plantaris muscle (6), left gastrocnemius muscle - lateral head (7) and medial head (8); (B) Zoom at popliteal fossa with visible left popliteal vein (1), left small saphenous vein (2), left posterior tibial vein (3), left popliteal artery (4), left posterior tibial artery (5), left common fibular nerve (6), left tibial nerve (7), left lateral cutaneous sural nerve (8) and left medial cutaneous sural nerve (9). The 3D model of this specimen is available in the supplementary material as Supplementary Video S11.

Table 2 outlines the models, descriptions, dissection details, and corresponding figures and videos. The videos of every specimen are available in the Supplementary materials.

Table 2

| Model number | Model description | Specimen number* | Dissection details | Corresponding figure | Corresponding video |
|---|---|---|---|---|---|
| 1 | Cerebrum and spinal cord | 1 | Arachnoid mater removed on the right hemisphere | Figures 1A–D | Supplementary Video S1 |
| 2 | Intracranial structures | 2 | Right cerebral hemisphere, right orbital roof and right tympanic tegmentum removed, the right part of tentorium cerebelli lifted | Figures 2A, B | Supplementary Video S2 |
| 3 | Region of the face | 3 | Skin removed only in areas surrounding the course of the highlighted and colored vessels and nerves (arteries highlighted in red, veins highlighted in blue, and nerves highlighted in yellow) | Figures 3A, B | Supplementary Video S3 |
| 4 | Neck and axillary fossa dissection | 4 | Right platysma removed, corpus of the right clavicle and sternum cut out | Figures 4A, B | Supplementary Video S4 |
| 5 | Sternocostal surface of the heart in the thoracic cavity | 4 | Anterior thoracic wall removed, pericardial sac lifted, left lung retracted to the left with a retractor | Figure 5 | Supplementary Video S5 |
| 6 | Posterior mediastinum | 4 | Heart within the pericardial sac, pretracheal vessels and thoracic aorta with its branches removed | Figures 6A, B | Supplementary Video S6 |
| 7 | Heart: four-chamber view | 5 | Cut longitudinally along the axis of the heart on the anterior wall, exposing both atria and ventricles | Figures 7A, B | Supplementary Video S7 |
| 8 | Abdominal cavity, anterior view | 6 | Anterior abdominal wall and the lesser omentum removed, liver with the round ligament and falciform ligament lifted cranially and to the right | Figures 8A, B | Supplementary Video S8 |
| 9 | Left kidney in retroperitoneal space, anterior view | 6 | The small intestine with transverse, descending and sigmoid colon lifted outwards and to the right, the left lateral wall of the abdomen cut longitudinally along the middle axillary line | Figures 9A–C | Supplementary Video S9 |
| 10 | Pelvic cavity dissection with femoral triangle regions | 7 | Cadaveric corpus cut horizontally at the level of L5 vertebral corpus, and horizontally through both thighs; anterior abdominal wall lifted anteriorly and to the left with other abdominal walls removed; urinary bladder, uterus and rectum removed from pelvic cavity | Figures 10A–C | Supplementary Video S10 |
| 11 | Left lower limb, posterior side with popliteal fossa | 8 | Hamstring muscles retracted outwards | Figures 11A, B | Supplementary Video S11 |

List of 3D models, highlighting the key dissection details, with corresponding figures (Figures 1–11) and videos (Supplementary Videos S1–S11).

*All dissected specimens that were scanned are listed in Table 1. The videos of every specimen are available in the Supplementary materials.
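The model-to-specimen mapping in Table 2 can also be expressed as a small lookup structure, which may be convenient when the models are hosted on a digital platform. The following is a minimal Python sketch and not part of the study's workflow: the names `MODELS`, `models_by_specimen`, and `video_label` are hypothetical, and the entries are transcribed from Table 2 as given.

```python
from collections import defaultdict

# Model number -> (model description, specimen number), transcribed from Table 2.
# The structure and all names below are illustrative assumptions.
MODELS = {
    1: ("Cerebrum and spinal cord", 1),
    2: ("Intracranial structures", 2),
    3: ("Region of the face", 3),
    4: ("Neck and axillary fossa dissection", 4),
    5: ("Sternocostal surface of the heart in the thoracic cavity", 4),
    6: ("Posterior mediastinum", 4),
    7: ("Heart: four-chamber view", 5),
    8: ("Abdominal cavity, anterior view", 6),
    9: ("Left kidney in retroperitoneal space, anterior view", 6),
    10: ("Pelvic cavity dissection with femoral triangle regions", 7),
    11: ("Left lower limb, posterior side with popliteal fossa", 8),
}


def models_by_specimen(models):
    """Group model numbers by the specimen they were scanned from."""
    grouped = defaultdict(list)
    for number, (_description, specimen) in sorted(models.items()):
        grouped[specimen].append(number)
    return dict(grouped)


def video_label(model_number):
    """Build the supplementary video label used in Table 2."""
    return f"Supplementary Video S{model_number}"


if __name__ == "__main__":
    for specimen, numbers in models_by_specimen(MODELS).items():
        labels = ", ".join(video_label(n) for n in numbers)
        print(f"Specimen {specimen}: models {numbers} ({labels})")
```

Grouping by specimen makes it easy to see that some whole-body specimens contributed several regional models, while each isolated specimen contributed one.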

4 Discussion

4.1 Technological advancements in clinical anatomical education

This study demonstrates a valuable learning tool using cutting-edge 3D digital technology for anatomical education and professional medical practice. It offers the opportunity to develop a deep understanding of the complex topographical organization of anatomical features, which is key for further analysis of pathological processes (11, 13, 14). There has been considerable debate among academics and medical trainees, not about the quantity of anatomy taught, but about the relevance and effectiveness of the teaching methods employed (15, 16). Studying the relationship between anatomical education and professional practice is intricate and demanding because of the wide variation in individual experiences and the difficulty of clearly defining knowledge and its application in professional settings. In the cognitive process, a gradual and smooth transition through successive stages of knowledge, combined with the integration of those stages and the addition of new information, plays an important role (11, 13, 17). As a result, in medical practice, it is important to revisit the basic sciences, including anatomical foundations (11, 13, 14).

Currently, readily accessible study resources mostly offer 2D representations, typically found in textbooks or atlases, which often fall short in conveying the intricacies of multiple planes and spatial relationships, thereby limiting understanding (18). Anatomy is fundamentally a three-dimensional subject (10), and the benefits of three-dimensional learning tools are now widely recognized (19). In light of this study, integrating 3D visualization technology with the traditional cadaveric method of teaching has the potential to improve spatial cognition, refine surgical techniques, and make high-fidelity training more accessible. Multiple studies have demonstrated that volumetric visualization enhances learners' ability to identify and localize anatomical structures (9, 19, 20). 3D images offer an innovative approach, enhancing both student education and the clinical training of novice trainees, especially at the target stage of operation planning, as seen in medical fields such as neuroanatomy, abdominal surgery, tumor anatomy, cardiology, rheumatology, immunology and many others (2, 21–23).

While other techniques exist for creating 3D models, including 3D segmentation from pre-acquired magnetic resonance (MR) or computed tomography (CT) images (12, 24, 25), structured-light surface 3D scanning offers the advantage of capturing more realistic features, colors, and textures of the specimen of interest in a more efficient and accessible workflow. Another advanced 3D visualization method, photogrammetry, is based on overlapping two-dimensional photographs taken from different angles and converting them into 3D digital models (26, 27). The quality of photogrammetric 3D models depends strongly on the resolution of the photographs taken and requires an expensive setup of multiple cameras and sophisticated software for 3D reconstruction (12, 27).

The 3D scanner used in this study stands out among other methods for its high precision and portable handheld design, which allow easy visualization of a wide variety of objects. According to certain studies, this method of 3D scanning provides more accurate registration of anatomical structures, and the obtained images exhibit less geometric distortion (12, 28). The 3D scanner can be used in remote locations or without a power supply (with the attached battery pack). Scanning is possible once the Artec 3D Space Spider scanner is connected to a computer with Artec Studio Professional software installed. The scanner uses hybrid geometry and color tracking for high-quality data acquisition and faster processing, which means that no targets are required to achieve accurate results.

While several prior studies have successfully demonstrated 3D scanning and photogrammetry-based reconstructions of cadaveric specimens, our study introduces distinct elements that set it apart in terms of workflow, fidelity, and applicability. Notably, previous works from Barrow, Miami, and Yeditepe Universities have produced highly impactful contributions in the field, such as developing simplified photogrammetry workflows for cadaveric specimens (29), generating detailed augmented and virtual reality (VR) simulations of cerebral white matter anatomy (30), and producing extended-reality fiber dissection models of the cerebellum and brainstem (31). These studies underscore the value of AR/VR-enhanced models for neurosurgical education and research.

In contrast, our methodology focuses on high-fidelity surface 3D scanning of formalin-fixed cadavers, which are more commonly available in standard anatomical laboratories worldwide, rather than relying exclusively on fresh specimens or highly specialized photogrammetry setups. By using a structured-light handheld scanner, we achieved accurate surface texture capture without requiring multiple camera arrays or extensive photogrammetry calibration, thereby offering a more accessible and efficient workflow for widespread adoption. Furthermore, our integration of 3D reconstructions with corresponding 2D cadaveric dissection images provides a dual-format learning tool that enhances both spatial understanding and structural recognition. This hybrid approach is relatively underexplored in the existing literature and offers a novel pedagogical advantage.

By situating our work alongside these pioneering efforts, we highlight its unique contribution: a scalable and accessible protocol for generating high-resolution 3D anatomical models from formalin-fixed specimens, complemented by integrated 2D references, making it well-suited for both anatomy education and clinical training. The presented method can contribute to the broad dissemination of anatomical knowledge by hosting the acquired 3D models on digital platforms, allowing users to access them on personal devices such as computers or mobile phones at no additional cost. Moreover, the creation of digital libraries of anatomical specimens allows documentation of anatomical variations that may otherwise be difficult to identify routinely in various laboratory settings. Therefore, students, educators, and medical professionals can gain easy access to such invaluable resources without the financial constraints of other methods, such as cadaveric dissection or anatomical literature.

Another method that has recently been gaining traction is the use of virtual reality (VR) headsets. Despite the advantage of viewing real-life 3D images integrated into reality, the costly VR equipment, regular technical maintenance, and physical discomfort experienced by users must all be taken into account. Studies have demonstrated that VR headsets induce symptoms consistent with cybersickness, such as nausea, headache, visual discomfort, and disorientation (32, 33), limiting the time for which the technology can be comfortably used and reducing the effectiveness of VR as a learning tool (34, 35). Nevertheless, 3D models such as those produced in this study could be uploaded to such headsets, supplementing the learner's experience with real-life cadaveric images.

Additionally, juxtaposing 2D images and interactive 3D models, as implemented in this study, presents a novel approach that accentuates the advantages of both imaging formats and provides a comprehensive platform for grasping anatomical complexity. This combination of imaging has the potential not only to enrich the interactive learning experience but also to enhance clarity in identifying anatomical landmarks, establishing a robust foundation for advanced clinical interpretations.

Notably, using a visually engaging and interactive learning tool like the method introduced in this study can potentially reduce the cognitive load on the learner and thus facilitate more effective learning by stimulating the repetition stage, which is necessary for the transition of information from working memory to long-term memory (11, 17). This is noted to be a crucial aspect of freeing up limited working memory, which can allow many areas of knowledge and skills to be integrated at the same time (13). The goal of this process is to develop the three-dimensional image into a mental model within the physician's mind, aiding clinical practice (11).

4.2 Integrating virtual surgical simulation and digital twin technologies

While our study primarily focuses on anatomical education using handheld 3D scanning, recent advances in virtual surgical simulation and digital twin technologies warrant further discussion. Such developments have significant implications for enhancing training and real-time surgical rehearsal, where precise anatomical visualization is essential.

Several studies have demonstrated the advantages of applying augmented reality (AR) and digital twin models to preoperative planning and intraoperative guidance in various surgical fields (36, 37). Digital twins are useful for real-time simulations that replicate patient-specific anatomy and pathology, supporting precision medicine and improving surgical outcomes (38). Visualizing small vessels, particularly if the cadaver is not fresh, presents significant challenges in terms of image quality and reproducibility; hence, contrast-enhanced imaging (39), initially validated for the purposes of virtual surgery (40, 41), has been proposed for creating digital twins for medical education, with encouraging initial results (42). VR-based microsurgical training models, particularly in neurosurgery, have also evolved significantly; for example, tools like the NeuroTouch simulator have proven useful for refining skills in microvascular anastomosis, aneurysm clipping, and tumor resection (36, 43).

These innovative technologies demonstrate how reproducible, high-fidelity virtual environments can improve procedural accuracy. Importantly, many existing platforms have achieved reproducibility and transferability across specialties such as otolaryngology, urology, and cardiothoracic surgery, validating the scalability of such virtual training models (44, 45).

We hope that in the future there will be many opportunities to integrate our cadaver-derived 3D models into such AR/VR/digital twin platforms, enabling hybrid systems that combine real anatomical data with dynamic simulation technologies. By incorporating and comparing our models with these virtual platforms, we envisage a next-generation educational platform: one that merges real anatomical accuracy, clinical context, and procedural feedback. This would provide a compelling and educationally rich environment for students and trainees across a variety of surgical disciplines.

4.3 New perspective on the cadaver-based study

The study of anatomy is inextricably linked to the use of human cadavers. Dissection offers material rich in anatomical variations (14, 46), providing an opportunity to better understand the differences and individualities occurring in particular cases.

Despite the clear advantages of cadaveric dissections, there are several disadvantages that underscore the need for more modern solutions in anatomical education. These include the low and unequal availability of cadavers, expensive preservation regimens, time-consuming specimen preparation, potential health hazards, religious or moral concerns for some, and the inability to restore damaged structures (6).

A significant debate has emerged regarding which chemicals are best for cadaver preservation to prevent rapid tissue degradation. Some non-formalin-based alternatives include ethanol-glycerin, pickling salts and a patented Bronopol solution (47). However, formalin-based solutions, containing formaldehyde as the fixing agent dissolved in alcohol, are still commonly used in facilities, despite formaldehyde being acknowledged and proven to be carcinogenic and mutagenic to humans (1, 5). Hence there is a need for formalin-free, fresh-like cadavers in cadaver-based education. Some preservation alternatives have been explored, but their results are not comparable to those obtained with formalin (47, 52). As for the accessibility of dissecting rooms, doctors lack access to these spaces once they have completed their medical studies and begun clinical work. Meanwhile, online resources presenting human cadaveric images are quite uncommon or unknown to many clinicians.

This research presents a solution: a virtual platform with models that accurately represent anatomical structures from various donors, offering important benefits for standard clinical practice. Moreover, 3D models in a digital format are sustainable, overcoming both the inevitable degradation of specimens and the dependency on body donation programmes.

4.4 Limitations

Despite the advantages of cadaveric dissection and 3D imaging technology, the method presented in this research has some limitations. The scanner used in this study is designed to operate with visible light; therefore, scan quality depends on numerous factors such as lighting conditions, the tissue's light reflectivity and hydration level, the scanning background, and the position of the device and specimen. Failure to meet these conditions can result in low-quality images, making it difficult to create lifelike reconstructions. In addition, the scanner's resolution of 0.1 mm prevented the visualization of texture details smaller than 0.1 mm. Similarly, capturing depth and the spatial relationships between parts located at different depth levels proved difficult. To address these imperfections, adjustments were made in the Blender® software to enhance image quality and resolution. However, it is worth noting that this solution demanded a nuanced understanding of the software's settings, underscoring the importance of technical proficiency when working with intricate 3D imaging technology.

Another limitation is that although the presented models are described as valuable educational tools, no direct validation with students or trainees was conducted in this study. While this was not the primary aim, future research should focus on pedagogical validation to assess the effectiveness of such models in anatomy education. Previous studies have already demonstrated that 3D models, augmented reality, and virtual reality applications can significantly enhance learning outcomes, spatial understanding, and engagement in medical education (48–51).

Despite these limitations, the integration of dissection and 3D models in anatomy education opens new horizons for understanding complex spatial anatomical relations. These tools offer clinicians and students engaging, interactive, and readily accessible learning experiences, complementing traditional 2D resources, in a format accessible to all.

5 Conclusion

Interactive 3D models of human cadavers help bridge the gap between theoretical knowledge and practical application while removing challenges that come with practicing on cadavers. In addition to fast, easy, and widespread access, low maintenance costs make this technology a strong contender in the educational and clinical sectors. Integrating advanced technologies and reliable study materials promises to strengthen anatomical fluency and improve clinical outcomes.

Statements

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

WM: Data curation, Resources, Visualization, Formal analysis, Software, Project administration, Writing – review & editing, Conceptualization, Methodology, Writing – original draft, Funding acquisition. MS: Writing – original draft, Resources. KB: Writing – original draft, Resources. MMo: Writing – original draft, Resources, Project administration. KB: Resources, Writing – original draft, Project administration. KF: Resources, Writing – original draft. KJ: Writing – original draft. SM: Software, Visualization, Writing – original draft. MP: Software, Visualization, Writing – original draft. AA: Writing – original draft. DR: Software, Writing – original draft, Project administration. JW: Conceptualization, Validation, Writing – review & editing. HD: Validation, Conceptualization, Funding acquisition, Writing – review & editing. MMa: Supervision, Writing – review & editing, Project administration.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. A commercial scanner (“Artec 3D Spider”) and licensed software (“Artec Studio 17 Professional”) were provided for this research through research funds granted within the SONATA 17 program (No. 2021/43/D/NZ4/00806), funded by the Polish National Science Center. Further research funds came from the grant prize awarded in the 4th edition of the “TechMinds” program organized by PwC Poland. The work was also supported by the Leducq Foundation (THE FANTACY 19CVD03) to HD and the British Heart Foundation (FS/PhD/25/29655, non-clinical PhD) to AA.

Acknowledgments

The authors would like to thank Prof. Mateusz Koziej MD PhD and Prof. Mateusz Hołda MD PhD, from the Department of Anatomy at Jagiellonian University Medical College in Kraków, Poland, for their technological and logistical help during the production of the 3D scans, and Mr Andrzej Dubrowski and the cadaveric laboratory workers from the same department for their guidance and cooperation during the cadaveric dissection process.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2025.1644808/full#supplementary-material

References

1.

Aung WY Sakamoto H Sato A Yi EEPN Thein ZL Nwe MS et al . Indoor formaldehyde concentration, personal formaldehyde exposure and clinical symptoms during anatomy dissection sessions, university of medicine 1, Yangon. Int J Environ Res Public Health. (2021) 18:1–18. 10.3390/ijerph18020712

2.

Bao G Yang P Yi J Peng S Liang J Li Y et al . Full-sized realistic 3D printed models of liver and tumour anatomy: a useful tool for the clinical medicine education of beginning trainees. BMC Med Educ. (2023) 23:574. 10.1186/s12909-023-04535-3

3.

Aziz MA Mckenzie JC Wilson JS Cowie RJ Ayeni SA Dunn BK . The human cadaver in the age of biomedical informatics. Anat Rec. (2002) 269:20–32. 10.1002/ar.10046

4.

Zurada A St Gielecki J Osman N Tubbs RS Loukas M Zurada-Zielińska A et al . The study techniques of Asian, American, and European medical students during gross anatomy and neuroanatomy courses in Poland. Surg Radiol Anat. (2011) 33:161–9. 10.1007/s00276-010-0721-6

5.

Adamović D Cepić Z Adamović S Stošić M Obrovski B Morača S et al . Occupational exposure to formaldehyde and cancer risk assessment in an anatomy laboratory. Int J Environ Res Public Health. (2021) 18:11198. 10.3390/ijerph182111198

6.

Kalladan A Sharma S . Review article on the pros and cons of virtual dissection versus cadaveric dissection. Int Ayurved Med J. (2022) 2021. 10.46607/iamj1610012022

7.

Ye Z Dun A Jiang H Nie C Zhao S Wang T et al . The role of 3D printed models in the teaching of human anatomy: a systematic review and meta-analysis. BMC Med Educ. (2020) 20:335. 10.1186/s12909-020-02242-x

8.

Salazar D Thompson M Rosen A Zuniga J . Using 3D printing to improve student education of complex anatomy: a systematic review and meta-analysis. Med Sci Educ. (2022) 32:1209–18. 10.1007/s40670-022-01595-w

9.

Ruisoto P Juanes JA Contador I Mayoral P Prats-Galino A . Experimental evidence for improved neuroimaging interpretation using three-dimensional graphic models. Anat Sci Educ. (2012) 5:132–7. 10.1002/ase.1275

10.

Rama M Schlegel L Wisner D Pugliese R Ramesh S Penne R et al . Using three-dimensional printed models for trainee orbital fracture education. BMC Med Educ. (2023) 23:467. 10.1186/s12909-023-04436-5

11.

Jones RA Mortimer JW Fitzgerald A Parks RW Findlater GS Sinclair DW . The Wade Programme in surgical anatomy: educational approach and 10-year review. Curr Prob Surg. (2024) 61:101641. 10.1016/j.cpsurg.2024.101641

12.

Erolin C . Interactive 3D digital models for anatomy and medical education. Adv Exp Med Biol. (2019) 1138:1–16. 10.1007/978-3-030-14227-8_1

13.

Sullivan ME . Applying the science of learning to the teaching and learning of surgical skills: the basics of surgical education. J Surg Oncol. (2020) 122:5–10. 10.1002/jso.25922

14.

Willan P . Basic surgical training 2: interactions with the undergraduate medical curriculum. Clin Anat. (1996) 9:167–70. 10.1002/(SICI)1098-2353(1996)9:3<167::AID-CA6>3.0.CO;2-D

15.

Smith CF Mathias HS . What impact does anatomy education have on clinical practice? Clin Anat. (2011) 24:113–9. 10.1002/ca.21065

16.

Fitzgerald JEF White MJ Tang SW Maxwell-Armstrong CA James DK . Are we teaching sufficient anatomy at medical school? The opinions of newly qualified doctors. Clin Anat. (2008) 21:718–24. 10.1002/ca.20662

17.

Schenarts PJ Schenkel RE Sullivan ME . The biology and psychology of surgical learning. Surg Clin North Am. (2021) 101:541–54. 10.1016/j.suc.2021.05.002

18.

Preece D Williams SB Lam R Weller R . “Let's Get Physical”: advantages of a physical model over 3D computer models and textbooks in learning imaging anatomy. Anat Sci Educ. (2013) 6:216–24. 10.1002/ase.1345

19.

Müller-Stich BP Löb N Wald D Bruckner T Meinzer HP Kadmon M et al . Regular three-dimensional presentations improve in the identification of surgical liver anatomy - a randomized study. BMC Med Educ. (2013) 13:131. 10.1186/1472-6920-13-131

20.

Azer SA Azer S . 3D Anatomy models and impact on learning: a review of the quality of the literature. Health Prof Educ. (2016) 2:80–98. 10.1016/j.hpe.2016.05.002

21.

Oliveira F Figueiredo L B L Peris-Celda . 3D Models as a Source for Neuroanatomy Education: A Stepwise White Matter Dissection Using 3D Images and Photogrammetry Scans (2024). 10.21203/rs.3.rs-3895027/v1

22.

Stephenson RS . High Resolution 3-Dimensional Imaging of the Human Cardiac Conduction System from Microanatomy to Mathematical Modeling (2017). Available online at: https://www.nature.com/scientificreports/ (Accessed 2017).

23.

Rea PM editor . Biomedical visualisation. Advances in Experimental Medicine and Biology (2019). Available online at: http://www.springer.com/series/5584 (Accessed 2023).

24.

Schiemann T Freudenberg J Pflesser B Pommert A Priesmeyer K Riemer M et al . Exploring the visible human using the VOXEL-MAN Framework. Comput Med Imaging Graph. (2000) 24:127–32. 10.1016/S0895-6111(00)00013-6

25.

Höhne KH Pflesser B Pommert A Riemer M Schubert R Schiemann T et al . A realistic model of human structure from the visible human data. Methods Inf Med. (2001) 40:83–9. 10.1055/s-0038-1634481

26.

Krause KJ Mullins DD Kist MN Goldman EM . Developing 3D models using photogrammetry for virtual reality training in anatomy. Anat Sci Educ. (2023) 16:1033–40. 10.1002/ase.2301

27.

de Benedictis A Nocerino E Menna F Remondino F Barbareschi M Rozzanigo U et al . Photogrammetry of the human brain: a novel method for three-dimensional quantitative exploration of the structural connectivity in neurosurgery and neurosciences. World Neurosurg. (2018) 115:e279–91. 10.1016/j.wneu.2018.04.036

28.

Dixit I Kennedy S Piemontesi J Kennedy B Krebs C . Which tool is best: 3D scanning or photogrammetry – It depends on the task. Adv Exp Med Biol. (2019) 1120:107–19. 10.1007/978-3-030-06070-1_9

29.

Gurses ME Gungor A Hanalioglu S Yaltirik CK Postuk HC Berker M et al . Qlone®: a simple method to create 360-degree photogrammetry-based 3-dimensional model of cadaveric specimens. Oper Neurosurg. (2021) 21:E488–93. 10.1093/ons/opab355

30.

Gurses ME Gungor A Gökalp E Hanalioglu S Karatas Okumus SY Tatar I et al . Three-dimensional modeling and augmented and virtual reality simulations of the white matter anatomy of the cerebrum. Oper Neurosurg. (2022) 23:355–66. 10.1227/ons.0000000000000361

31.

Gurses ME Gungor A Rahmanov S Gökalp E Hanalioglu S Berker M et al . Three-dimensional modeling and augmented reality and virtual reality simulation of fiber dissection of the cerebellum and brainstem. Oper Neurosurg. (2022) 23:345–54. 10.1227/ons.0000000000000358

32.

Oh H Son W . Cybersickness and its severity arising from virtual reality content: a comprehensive study. Sensors. (2022) 22:1314. 10.3390/s22041314

33.

Kolecki R Pregowska A Dabrowa J Skuciński J Pulanecki T Walecki P et al . Assessment of the utility of Mixed Reality in medical education. Transl Res Anat. (2022) 28:100214. 10.1016/j.tria.2022.100214

34.

Wainman B Aggarwal A Birk SK Gill JS Hass KS Fenesi B . Virtual dissection: an interactive anatomy learning tool. Anat Sci Educ. (2021) 14:788–98. 10.1002/ase.2035

35.

Bevizova K Falougy H el Thurzo A Harsanyi S . Is virtual reality enhancing dental anatomy education? A systematic review and meta-analysis. BMC Med Educ. (2024) 24:1395. 10.1186/s12909-024-06233-0

36.

Chumnanvej S Chumnanvej S Tripathi S . Assessing the benefits of digital twins in neurosurgery: a systematic review. Neurosurg Rev. (2024) 47:52. 10.1007/s10143-023-02260-5

37.

Mekki YM Luijten G Hagert E Belkhair S Varghese C Qadir J et al . Digital twins for the era of personalized surgery. NPJ Digit Med. (2025) 8:283. 10.1038/s41746-025-01575-5

38.

Asciak L Kyeremeh J Luo X Kazakidi A Connolly P Picard F et al . Digital twin assisted surgery, concept, opportunities, and challenges. NPJ Digit Med. (2025) 8:32. 10.1038/s41746-024-01413-0

39.

Ganau M Syrmos NC D'Arco F Ganau L Chibbaro S Prisco L et al . Enhancing contrast agents and radiotracers performance through hyaluronic acid-coating in neuroradiology and nuclear medicine. Hell J Nucl Med. (2017) 20:166–68. 10.1967/s002449910558

40.

Sboarina A Foroni RI Minicozzi A Antiga L Lupidi F Longhi M et al . Software for hepatic vessel classification: feasibility study for virtual surgery. Int J Comput Assist Radiol Surg. (2010) 5:39–48. 10.1007/s11548-009-0380-4

41.

Zaed I Chibbaro S Ganau M Tinterri B Bossi B Peschillo S et al . Simulation and virtual reality in intracranial aneurysms neurosurgical training: a systematic review. J Neurosurg Sci. (2022) 66:494–500. 10.23736/S0390-5616.22.05526-6

42.

Ou Y Chen Q Xu D Gong J Li M Tang M et al . Anatomical digital twins for medical education: a stepwise guide to create perpetual multimodal three-dimensional reconstruction of digital brain specimens. Quant Imaging Med Surg. (2025) 15:4164–79. 10.21037/qims-24-2301

43.

Alaraj A Lemole MG Finkle JH Yudkowsky R Wallace A Luciano C et al . Virtual reality training in neurosurgery: review of current status and future applications. Surg Neurol Int. (2011) 2:52. 10.4103/2152-7806.80117

44.

Naughton PA Aggarwal R Wang TT Van Herzeele I Keeling AN Darzi AW et al . Skills training after night shift work enables acquisition of endovascular technical skills on a virtual reality simulator. J Vasc Surg. (2011) 53:858–66. 10.1016/j.jvs.2010.08.016

45.

van Dongen KW van der Wal WA Rinkes IH Schijven MP Broeders IA . Virtual reality training for endoscopic surgery: voluntary or obligatory? Surg Endosc. (2008) 22:664–7. 10.1007/s00464-007-9456-9

46.

Estai M Bunt S . Best teaching practices in anatomy education: a critical review. Ann Anat. (2016) 208:151–7. 10.1016/j.aanat.2016.02.010

47.

Balta JY Cronin M Cryan JF O'Mahony SM . Human preservation techniques in anatomy: a 21st century medical education perspective. Clin Anat. (2015) 28:725–34. 10.1002/ca.22585

48.

Gonzalez-Romo NI Mignucci-Jiménez G Hanalioglu S Gurses ME Bahadir S Xu Y et al . Virtual neurosurgery anatomy laboratory: a collaborative and remote education experience in the metaverse. Surg Neurol Int. (2023) 14:90. 10.25259/SNI_162_2023

49.

Gurses ME Gökalp E Gecici NN Gungor A Berker M Ivan ME et al . Creating a neuroanatomy education model with augmented reality and virtual reality simulations of white matter tracts. J Neurosurg. (2024) 141:865–74. 10.3171/2024.2.JNS2486

50.

Gurses ME Hanalioglu S Mignucci-Jiménez G Gökalp E Gonzalez-Romo NI Gungor A et al . Three-dimensional modeling and extended reality simulations of the cross-sectional anatomy of the cerebrum, cerebellum, and brainstem. Oper Neurosurg. (2023) 25:3–10. 10.1227/ons.0000000000000703

51.

Gurses ME Gonzalez-Romo NI Xu Y Mignucci-Jiménez G Hanalioglu S Chang JE et al . Interactive microsurgical anatomy education using photogrammetry 3D models and an augmented reality cube. J Neurosurg. (2024) 141:17–26. 10.3171/2023.10.JNS23516

52.

Brenner E . Human body preservation – old and new techniques. J Anat. (2014) 224:316–44. 10.1111/joa.12160

Keywords

human anatomy, 3D scanning, cadaveric dissection, new technologies in medicine, anatomical education

Citation

Michalik W, Szczepanik M, Biel K, Mordarski M, Bak K, Fryzlewicz K, Jaszewski K, Maciaszek S, Pierzchała M, Arshad A, Rams D, Walocha J, Dobrzynski H and Mazur M (2025) High-fidelity 3D models of human cadavers and their organs with the use of handheld scanner–Alternative method in medical education and clinical practice. Front. Med. 12:1644808. doi: 10.3389/fmed.2025.1644808

Received

10 June 2025

Accepted

04 September 2025

Published

24 September 2025

Volume

12 - 2025

Edited by

Mario Ganau, Oxford University Hospitals NHS Trust, United Kingdom

Reviewed by

Nikolaos C. H. Syrmos, Aristotle University of Thessaloniki, Greece

Cesare Zoia, San Matteo Hospital Foundation (IRCCS), Italy

Copyright

© 2025 Michalik, Szczepanik, Biel, Mordarski, Bak, Fryzlewicz, Jaszewski, Maciaszek, Pierzchała, Arshad, Rams, Walocha, Dobrzynski and Mazur.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Halina Dobrzynski halina.dobrzynski@manchester.ac.uk

†These authors share senior authorship
