- 1Queen Square Institute of Neurology, University College London, London, United Kingdom

- 2VERSES Research Lab, Los Angeles, CA, United States

- 3Cognitive Computational Neuroscience, Technische Universität, Dresden, Germany

- 4Department of Informatics, University of Sussex, Brighton, United Kingdom

- 5Nuffield Department of Clinical Neurosciences, University of Oxford, Oxford, United Kingdom

This paper describes a discrete state-space model and accompanying methods for generative modeling. This model generalizes partially observed Markov decision processes to include paths as latent variables, rendering it suitable for active inference and learning in a dynamic setting. Specifically, we consider deep or hierarchical forms using the renormalization group. The ensuing renormalizing generative models (RGM) can be regarded as discrete homologs of deep convolutional neural networks or continuous state-space models in generalized coordinates of motion. By construction, these scale-invariant models can be used to learn compositionality over space and time, furnishing models of paths or orbits: that is, events of increasing temporal depth and itinerancy. This technical note illustrates the automatic discovery, learning, and deployment of RGMs using a series of applications. We start with image classification and then consider the compression and generation of movies and music. Finally, we apply the same variational principles to the learning of Atari-like games.

Introduction

This paper considers the use of discrete state-space models as generative models for classification, compression, generation, prediction, and planning. The inversion of such models can be read as inferring the latent causes of observable outcomes or content. When endowed with the consequences of action, they can be used for planning as inference (Attias, 2003; Botvinick and Toussaint, 2012; Da Costa et al., 2020). This allows one to cast any classification, prediction, or planning problem as an inference problem that, under active inference, reduces to maximizing model evidence. However, applications of active inference have been largely limited to small-scale problems. In this paper, we consider one solution to the implicit scaling problem, namely, the use of scale-free generative models and the renormalization group (Cardy, 2015; Cugliandolo and Lecomte, 2017; Hu et al., 2020; Lin et al., 2017; Schwabl, 2002; Vidal, 2007; Watson et al., 2022). The contribution of this paper is to consider discrete models that are renormalizable in state space and time.

Specifically, this paper explores the use of generalized Markov decision processes as discrete models appropriate for compressing data, generating content, or planning. The generalization in question rests on equipping a standard (partially observed) Markov decision process with random variables called paths. This affords an expressive model of dynamics, in which transitions among states are conditioned on paths, which themselves can have lawful transitions. This generalization becomes particularly important when composing Markovian processes in a deep or hierarchical architecture; for example, Friston et al. (2017b). This follows from the use of states at one level to generate the initial conditions and paths at a lower level. In effect, this means that states generate paths, which generate states, which generate paths, and so on, furnishing trajectories with deep, semi-Markovian structure; for example, Marković et al. (2022). Their recursive aspect speaks to a definitive feature of the generative models considered in this paper—renormalizability.

Intuitively, renormalizability rests on a renormalization group (RG) operator, which takes a description of the system at hand (e.g., an action and partition function) and returns a coarse-grained version that retains the properties of interest while discarding irrelevant details (Cardy, 2015; Schwabl, 2002; Watson et al., 2022). For excellent overviews of the renormalization group in machine learning, please see Hu et al. (2020) and Mehta and Schwab (2014). See also Lin et al. (2017), who appeal to the renormalization group to formalize the claim that “when the statistical process generating the data is of a certain hierarchical form prevalent in physics and machine learning, a deep neural network can be more efficient than a shallow one.” Crucially, any random dynamical system with sparse coupling and an implicit Markov blanket partition (Sakthivadivel, 2022) is renormalizable (Friston, 2019). Therefore, any generative model that recapitulates “the statistical process generating the data” must be renormalizable.

In what follows, we illustrate applications of the same renormalizing generative model (RGM) in several settings. The universality of this model calls on the apparatus of the renormalization group. In brief, its deep structure ensures that each level can be renormalized to furnish the level above. The renormalization group requires that the functional form of the dynamics (e.g., belief updating) is conserved over levels or scales. This is assured in variational inference, in the sense that the inference process itself can be cast as pursuing a path of least action (Friston K. et al., 2023), where action is the path integral of variational free energy: c.f., Mehta and Schwab (2014). The only things that change between levels are the parameters of the requisite action (e.g., sufficient statistics of various probability distributions). The relationship between the parameters at one level and the next rests on an RG operator that entails a grouping and dimension reduction, that is, coarse-graining or scaling transformation. By choosing the right kind of RG operator, one can effectively dissolve the scaling problem. In short, by ensuring each successive level of a deep generative model is renormalizable, one can, in principle, generate data at any scale and implicitly infer or learn the causes of those data. The notion of scale invariance is closely related to universality, licensing the notion of a universal generative model.

Instances of the renormalization group abound in natural and machine learning: for example, the cortical visual hierarchy in the brain, with progressive enlargement of spatiotemporal receptive fields as one ascends hierarchical levels or scales (Angelucci and Bullier, 2003; Hasson et al., 2008; Zeki and Shipp, 1988). The same kind of architectures associated with deep convolutional neural networks could almost be definitive of deep models and learning (Hu et al., 2020; Lin et al., 2017). Here, we pay special attention to the implications of universality and scale invariance for specifying the structural form of generative models and illustrate the ensuing efficiency when deployed in some typical use cases.

This paper comprises four sections. The first rehearses the variational procedures or methods used in active inference, learning, and selection, with a special focus on the selection of hierarchical model structures that can be renormalized. In brief, active inference, learning, and selection speak to the distinct sets of unknown variables that constitute a generative model, namely, latent states, parameters, and structure. On this view, model inversion corresponds to (Bayesian) belief updating at each of these levels by minimizing variational free energy, that is, maximizing an evidence lower bound (Winn and Bishop, 2005). The active part of inference, learning, and selection arises operationally through selecting or choosing those actions that minimize expected free energy, which can be decomposed in a number of ways that subsume commonly used objective functions in statistics and machine learning (Da Costa et al., 2020). The dénouement of this section considers the structural form of renormalizing architectures, illustrated by successive elaborations in the remaining sections.

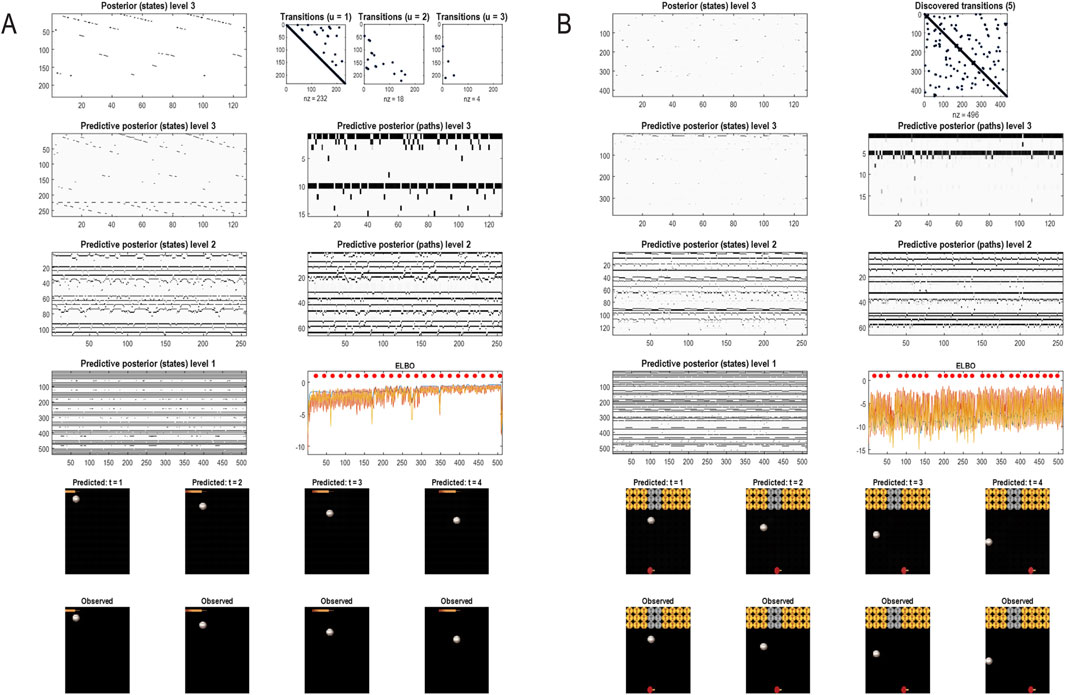

The second section starts with a simple application to models of static images that can be read as a form of image compression; that is, maximizing model evidence via minimizing model complexity through the compression afforded by successive block-spin transformations (Vidal, 2007). The implicit sample efficiency is showcased by application to the MNIST digit classification problem. This application foregrounds the representational nature of the generative model, moving from a place-coded representation at lower levels to an object-centered representation (i.e., digit classes) at the highest level. This differs significantly from approaches to generative modeling in which images depend on several objects that can be placed in different parts of a scene (Henniges, Turner et al., 2014)—in other words, that factorize the highest level into “what” and “where” things are. This is relevant to the way in which occlusions are handled. The renormalized models we propose deal with occlusions in terms of local patterns, and then of local patterns of local patterns, and so on. This means one could generate images of one object partially occluding another by exploiting the statistics of pixels at and around the occluding edge of the proximal object. The benefit of the approach we outline here is that one does not need to know, or even discover (Blei, Ng et al., 2003), the number of objects in a scene to be able to generate it. The downside is that one could not query, from the final model, what would happen were one to change the ordering of objects in the scene such that a previously occluded object becomes the occluder. The next section uses the same methods to illustrate renormalization over time, that is, modeling paths or sequences of increasing temporal depth at successively higher levels. This application can be regarded as a form of video compression illustrated using short movie files that can be used to recognize sequences of events or generate sequences in response to a prompt. In contrast to the object-centered representations afforded by application to static images, this section speaks to event-based compression suitable for classifying or generating visual or auditory scenes. The next section leverages the ability to classify or generate sequences by applying the same methods to sound files, illustrated using birdsong and music. The final section turns to planning and agency by using an RGM to learn and play Atari-like games. This application involves equipping the generative model with the capacity to act, namely, to realize the predicted consequences of action, where these predictions are based on a fast form of structure learning, effectively evincing a one-shot learning of expert play.

Although the focus on renormalization inherits from the physics of universal phenomena (Haken, 1983; Haken, 1996; Haken and Portugali, 2016; Schwabl, 2002; Vidal, 2007), we highlight the biomimetic aspects of inference and learning that emerge under these models. The implication here is that natural intelligence may have evolved renormalizing structures simply because the world features universal phenomena, such as scale invariance. This is not a machine learning paper because the objective in active inference is to maximize model evidence. Therefore, we refrain from benchmarking any of the examples in terms of performance or accuracy. However, it should be self-evident that the methods on offer are generally more sample efficient than extant machine learning schemes1.

Active inference, learning, and selection

This section overviews the model used in the numerical studies of subsequent sections. This model generalizes a partially observed Markov decision process (POMDP) by equipping it with random variables called paths that “pick out” dynamics or transitions among latent states. These models are designed to be composed hierarchically in a way that introduces a separation of temporal scales.

Generative models

Active inference rests on a generative model of observable outcomes. This model is used to infer the most likely causes of outcomes in terms of expected states of the world. These states (and paths) are latent or hidden because they can only be inferred through observations. Some paths are controllable, in that they can be realized through action. Therefore, certain observations depend on action (e.g., where one is looking), which requires the generative model to entertain expectations about outcomes under different combinations of actions (i.e., policies)2. These expectations are optimized by minimizing variational free energy. Crucially, the prior probability of a policy depends on its expected free energy. Having evaluated the expected free energy of each policy, the most likely action is selected, and the implicit perception-action cycle continues (Parr et al., 2022).

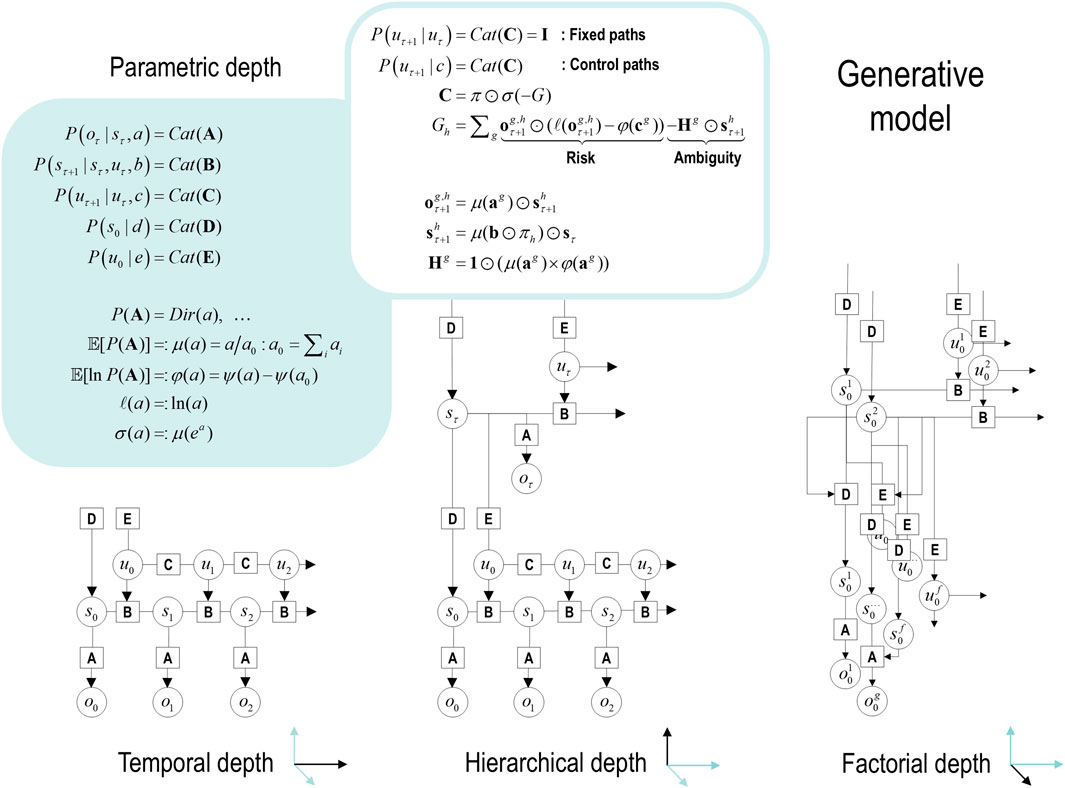

Figure 1 provides an overview of the generative model considered in this paper. It is structured such that it highlights three general motifs in the factorization of a probabilistic generative model. These are shown as three panels arranged in a single row, each with a title underneath indicating the type of factorization. The three panels use a graphical formulation for the models, which are supplemented with mathematical descriptions of their structures. Outcomes at any time depend on hidden states, while transitions among hidden states depend on paths. Note that paths are random variables that may or may not depend on action. The resulting POMDP is specified by a set of tensors. The first set A maps from hidden states to outcome modalities, for example, exteroceptive (e.g., visual) or proprioceptive (e.g., eye position) modalities. These parameters encode the likelihood of an outcome given their hidden causes. The second set B encodes transitions among the hidden states of a factor under a particular path. Factors correspond to different kinds of causes, such as the location versus the class of an object. The remaining tensors encode prior beliefs about paths C and initial conditions D and E. The tensors are generally parameterized as Dirichlet distributions, whose sufficient statistics are concentration parameters or Dirichlet counts, which count the number of times a particular combination of states and outcomes has been inferred. We will focus on learning the likelihood model, encoded by Dirichlet counts, a.

Figure 1. Generative models. A generative model specifies the joint probability of observable consequences and their hidden causes. Usually, the model is expressed in terms of a likelihood (the probability of consequences given their causes) and priors (over causes). When a prior depends on a random variable, it is called an empirical prior. Here, the likelihood is specified by a tensor A, encoding the probability of an outcome under every combination of states (s). Priors over transitions among hidden states, B, depend on paths (u), whose transition probabilities are encoded in C. Certain (control) paths are more probable a priori if they minimize their expected free energy (G), expressed in terms of risk and ambiguity (white panel). If the path is not controllable, it remains fixed over the epoch in question, where E specifies the prior over paths. The left panel provides the functional form of the generative model in terms of categorical (Cat) distributions that are themselves parameterized as Dirichlet (Dir) distributions, equipping the model with the parametric depth. The lower equalities list the various operators required for variational message passing in Figure 2. These functions are taken to operate on each column of their tensor arguments. The graph on the lower left depicts the generative model as a probabilistic graphical model that foregrounds the implicit temporal depth implied by priors over state transitions and paths. This example shows dependencies for fixed paths. When equipped with hierarchical depth, the POMDP acquires a separation of temporal scales. This follows because higher states generate a sequence of lower states, that is, the initial state (via the D tensor) and subsequent path (via the E tensor). This means higher levels unfold more slowly than lower levels, furnishing empirical priors that contextualize the dynamics of their children. At each hierarchical level, hidden states and accompanying paths are factored to endow the model with factorial depth. In other words, the model “carves nature at its joints” into factors that interact to generate outcomes (or initial states and paths at lower levels). The implicit context-sensitive contingencies are parameterized by tensors mapping from one level to the next (D and E). Subscripts pertain to time, while superscripts denote distinct factors (f), outcome modalities (g), and combinations of paths over factors (h). Tensors and matrices are denoted in uppercase bold, while posterior expectations are in lowercase bold. The matrix π encodes the probability over paths under each policy (for notational simplicity, we have assumed a single control path). The ⊙ notation implies a generalized inner (i.e., dot) product or tensor contraction, while × denotes the Hadamard (element by element) product. ψ(·) is the digamma function applied to the columns of a tensor.

The generative model in Figure 1 means that outcomes are generated as follows: first, a policy is selected using a softmax function of expected free energy. Sequences of hidden states are generated using the probability transitions specified by the selected combination of paths (i.e., policy). Finally, these hidden states generate outcomes in one or more modalities. Inference about hidden states (i.e., state estimation) corresponds to inverting a generative model, given a sequence of outcomes, while learning corresponds to updating model parameters. The requisite expectations constitute the sufficient statistics (s,u,a) of approximate posterior beliefs Q(s,u,a) = Qs(s)Qu(u)Qa(a). The implicit factorization of this approximate posterior effectively partitions model inversion into inference, planning, and learning.

Variational free energy and inference

In variational Bayesian inference (a.k.a. approximate Bayesian inference), model inversion entails the minimization of variational free energy with respect to the sufficient statistics of approximate posterior beliefs. This can be expressed as follows, where, for clarity, we will deal with a single factor, such that the policy (i.e., a combination of paths) becomes the path, π = u. Omitting dependencies on previous states, we have for model m:

Because the (KL) divergences cannot be less than 0, the penultimate equality means that free energy is 0 when the (approximate) posterior is the true posterior. At this point, the free energy becomes the negative log evidence for the generative model (Beal, 2003). This means minimizing free energy is equivalent to maximizing model evidence.

Planning emerges under active inference by placing priors over (controllable) paths to minimize expected free energy (Friston et al., 2015):

Here, Qu=Q(oτ+1,sτ+1,a|u)=P(oτ+1,sτ+1,a|u,o0,…,oτ)=P(oτ+1|sτ+1,a)Q(sτ+1,a|u) is the posterior predictive distribution over parameters, hidden states, and outcomes at the next time step, under a particular path. Note that the expectation is over observations in the future that become random variables, hence, expected free energy. This means that preferred outcomes that subtend expected cost and risk are prior beliefs that constrain the implicit planning as inference (Attias, 2003; Botvinick and Toussaint, 2012; Van Dijk and Polani, 2013).

One can also express the prior over the parameters in terms of an expected free energy, where marginalizing over paths:

where Qa = P(o|s,a)P(s|a) = P(o,s|a) is the joint distribution over outcomes and hidden states, encoded by Dirichlet parameters, and σ is the softmax (normalized exponential) function. Here and throughout, we leave the functional dependence of G on c implicit for simplicity of notation. This is only a slight simplification, in that we will treat c as if it is a fixed value and not something we expect to vary. Note that the Dirichlet parameters encode the mutual information, in the sense that they implicitly encode the joint distribution over outcomes and their hidden causes. When normalizing each column of the a tensor, we recover the likelihood distribution (as in Figure 1). However, we could normalize over every element to recover a joint distribution [as in Equation 5 later].

Expected free energy can be regarded as a universal objective function that augments mutual information with expected costs or constraints. Constraints parameterized by c reflect the fact that we are dealing with open systems with characteristic outcomes, o. This can be read as an expression of the constrained maximum entropy principle (Ramstead et al., 2022). Alternatively, it can be read as a constrained principle of maximum mutual information or minimum redundancy (Ay et al., 2008; Barlow, 1961; Linsker, 1990; Olshausen and Field, 1996). In machine learning, this kind of objective function underwrites disentanglement (Higgins et al., 2021; Sanchez et al., 2019) and generally leads to sparse representations (Gros, 2009; Olshausen and Field, 1996; Sakthivadivel, 2022; Tipping, 2001).

There are many special cases of minimizing expected free energy. For example, maximizing expected information gain maximizes (expected) Bayesian surprise (Itti and Baldi, 2009), in accordance with the principles of optimal experimental design (Lindley, 1956). This resolution of uncertainty is related to artificial curiosity (Schmidhuber, 1991; Still and Precup, 2012) and speaks to the value of information (Howard, 1966). Expected complexity or risk is the same quantity minimized in risk-sensitive or KL control (Klyubin et al., 2005; van den Broek et al., 2010) and underpins (free energy) formulations of bounded rationality based on complexity costs (Braun et al., 2011; Ortega and Braun, 2013) and related schemes in machine learning; for example, Bayesian reinforcement learning (Ghavamzadeh et al., 2016). Finally, minimizing expected cost subsumes Bayesian decision theory (Berger, 2011).

Active inference

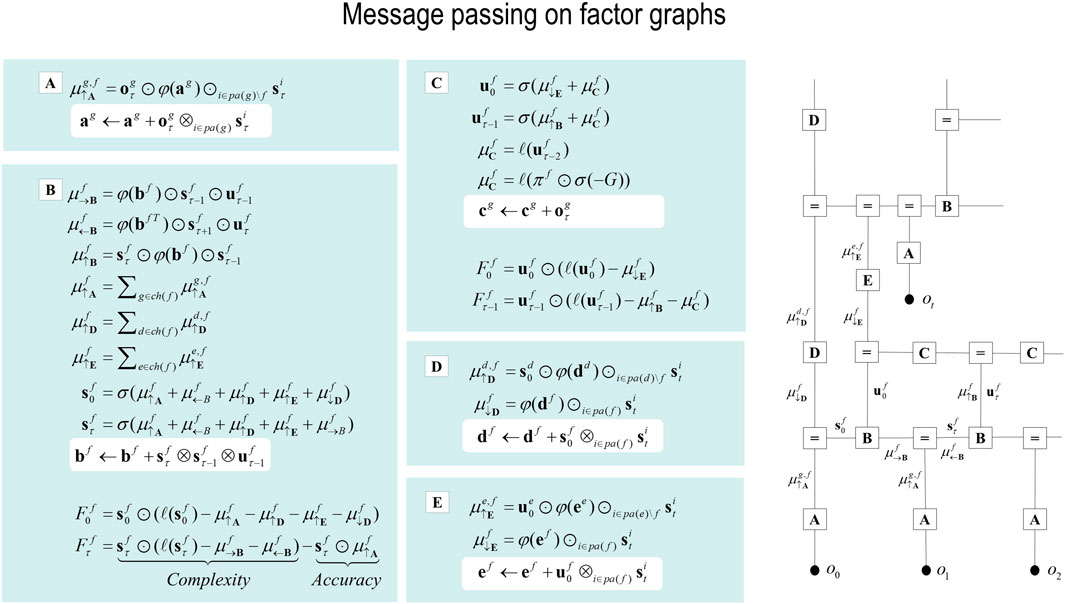

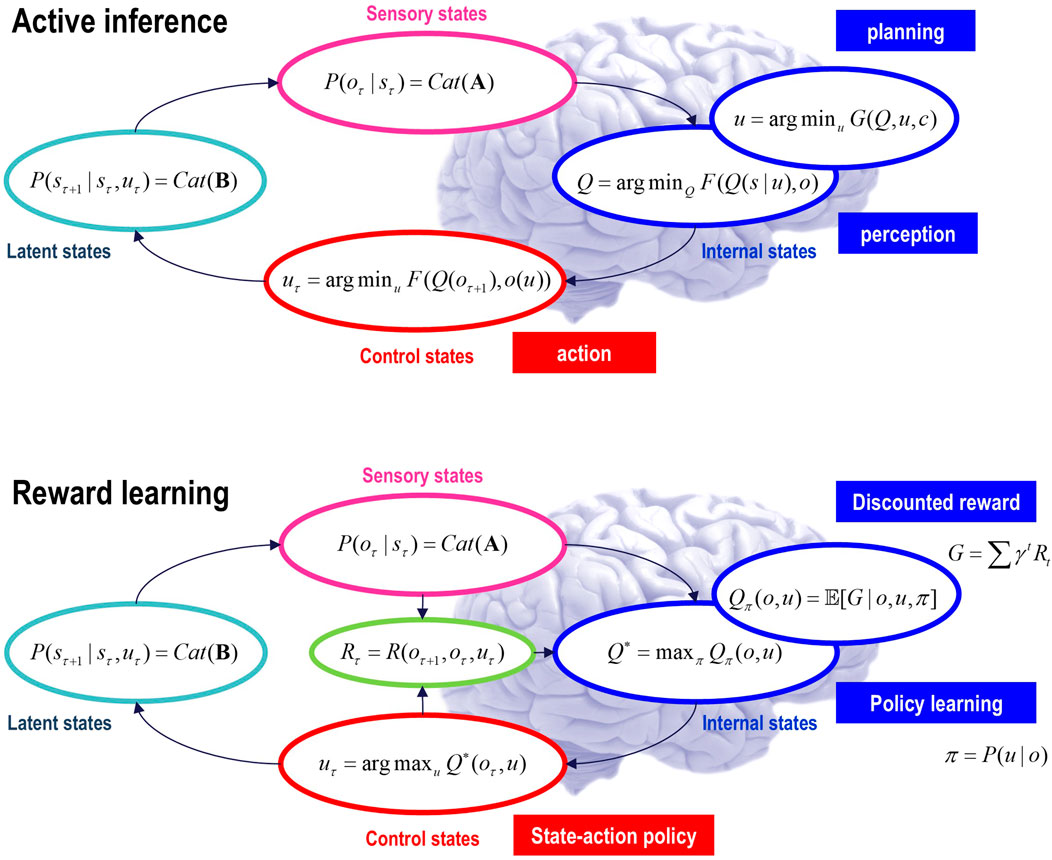

In variational inference and learning, sufficient statistics encoding posterior expectations are updated to minimize variational free energy. Figure 2 illustrates these updates in the form of variational message passing (Dauwels, 2007; Friston et al., 2017a; Winn and Bishop, 2005). For example, expectations about hidden states are a softmax function of messages that are linear combinations of other expectations and observations.

Figure 2. Belief updating and variational message passing: the right panel presents the generative model as a factor graph, where the nodes (square boxes) correspond to the factors of the generative model (labeled with the associated tensors). The edges connect factors that share dependencies on random variables. The leaves of filled circles correspond to known variables, such as observations (o). This representation is useful because it scaffolds the message passing—over the edges of the factor graph—that underwrites inference and planning. The functional forms of these messages are shown in the left-hand panels, where the panels labels (A–E) indicate the corresponding tensors in the factor graph on the right., where the panels labels (A–E) indicate the corresponding tensors in the factor graph on the right. For example, the expected path in the first equality of panel (C) is a softmax function of two messages. The first is a descending message

Here, the ascending messages from the likelihood factor are a linear mixture3 of expected states and observations, weighted by (digamma) functions of the Dirichlet counts that correspond to the parameters of the likelihood model (c.f., connection weights). The definitions of the terms that appear in Equation 4 are summarized in Figure 2 and its caption. The expressions in Figure 2 are effectively the fixed points (i.e., minima) of variational free energy. This means that message passing corresponds to a fixed-point iteration scheme that inherits the same convergence proofs of coordinate descent (Beal, 2003; Dauwels, 2007; Winn and Bishop, 2005)4.

Active learning

Active learning has a specific meaning in this paper. It implies that the updating of Dirichlet counts depends on expected free energy, namely, the mutual information encoded by the tensors: see Equation 1. This means that an update is selected in proportion to the expected information gain. Consider two actions: to update or not to update. From Figure 2, we have (dropping the modality superscript for clarity):

Here, Σ(a)=:1⊙a⊙i∈pa1 is the sum of all tensor elements. The expression on the right-hand side of the first line of Equation 5 indicates the outer product between beliefs about an outcome and the state factor i that is the parent factor of that outcome. The prior probability of committing to an update is given by the expected free energy of the respective Dirichlet parameters, which scores the expected information gain (i.e., mutual information) and cost5:

This prior over the updates furnishes a Bayesian model average of the likelihood parameters, effectively marginalizing over update policies:

In Equation 6, α plays the role of a hyperprior that determines the sensitivity to expected free energy. When this precision parameter is large, the Bayesian model average above becomes Bayesian model selection; that is, either the update is selected, or it is not.

This kind of active learning rests on treating an update as an action that is licensed if expected free energy decreases. A complementary perspective on this selective updating is that it instantiates a kind of Maxwell’s demon, selecting only those updates that maximize (constrained) mutual information. Exactly the same idea can be applied to model selection, leading to active selection.

Active selection

In contrast to learning that optimizes posteriors over parameters, Bayesian model selection or structure learning (Tenenbaum et al., 2011; Tervo et al., 2016; Tomasello, 2016) can be framed as optimizing the priors over model parameters. In this view, model selection can be implemented efficiently using Bayesian model reduction, which starts with an expressive model and removes redundant parameters. Crucially, Bayesian model reduction can be applied to posterior beliefs after the data have been assimilated. In other words, Bayesian model reduction is a post hoc optimization that refines current beliefs based on alternative models that may provide potentially simpler explanations (Friston and Penny, 2011).

Bayesian model reduction is a generalization of ubiquitous procedures in statistics (Savage, 1954). In the present setting, it reduces to something remarkably simple: by applying Bayes rules to parent and reduced models, it is straightforward to show that the change in variational free energy can be expressed in terms of posterior Dirichlet counts a, prior counts a, and the prior counts that define a reduced model a′. Using Β to denote the beta function, we have (Friston et al., 2018):

In Equation 8, a′ corresponds to the posterior that one would have obtained under the reduced priors. Please see Friston et al. (2020) and Smith et al. (2020) for worked examples in epidemiology and neuroscience, respectively.

The alternative to Bayesian model reduction is the bottom-up growth of models to accommodate new data or content. If one considers the selection of one (parent) model over another (augmented) model as an action, then one can score the prior plausibility of a model in terms of its expected free energy so that the difference in expected free energy furnishes a log prior over models that can be combined with the (variational free energy bound on) log marginal likelihoods to score their posterior probability. This can be expressed in terms of a log Bayes factor (i.e., odds ratio) comparing the likelihood of two models, given some observation, o:

Here, a and a′ denote the posterior expectations of parameters under a parent m and augmented model m′, respectively. The difference in expected free energy reflects the information gain in selecting one model over the other, following Equation 6. One can now retain or reject the parent model, depending on whether the log odds ratio is greater than or less than 0, respectively. Active model selection, therefore, finds structures with precise or unambiguous likelihood mappings. When assimilating new (e.g., training) data, one can simply equip the model with a new latent cause to explain each (unique) observation when, and only when, expected free energy decreases (Friston et al., 2023b). This affords a fast kind of structure learning. Before illustrating the above procedures, we now consider a particular structural form that characterizes the generative models used in the illustrative applications.

Renormalizing generative models

Renormalizability is feasible under the models in Figure 1. This follows because the dynamics constitute a coordinate descent on variational free energy, leading to paths of least action, namely, a path integral of variational free energy (Friston K. et al., 2023). However, we also require renormalizing transformations of model parameters from one hierarchical level to the next. These scale transformations entail a coarse graining that generally induces a separation of temporal scales, such that the dynamics—here, belief updating—slow down as one ascends levels or scales. The implicit RG flow rests on the inclusion of dynamics in the generative model.

In discrete time, the inclusion of paths means that a succession of states at any given level can be generated by specifying the initial state and successive transitions encoded by the slice of the transition tensor specified by a path. Crucially, the initial state and path can be generated from the superordinate state, which has its own dynamics and associated path. This structure can be read in a number of ways. It can be regarded as a discrete version of switching dynamical systems (Linderman et al., 2016; Olier et al., 2013), in which the switching variables (i.e., paths) change at a slower timescale than the dynamics or paths at the scale being switched. In the limit of continuous time, the composition of implicit RG operators means that one can model changes or switches in velocity—that is, acceleration—and, at the next level, changes in acceleration—that is, jerk, and so on. In continuous state-space models, this reduces to working in generalized coordinates of motion (Friston et al., 2010; Kerr and Graham, 2000).

A more intuitive view of the latitude afforded by temporal renormalization is that successively higher levels encode sequences of sequences and, implicitly, compositions of events or episodes. In other words, at a deep level, one state can generate sequences of sequences of sequences, thereby destroying the Markovian properties of content generated at the lowest level. It is this deep structure that has been leveraged in applications of active inference under continuous models of song and speech; see, for example, Friston and Kiebel (2009) or Yildiz et al. (2013). We will see the discrete homologs of the ensuing semi-Markovian processes later.

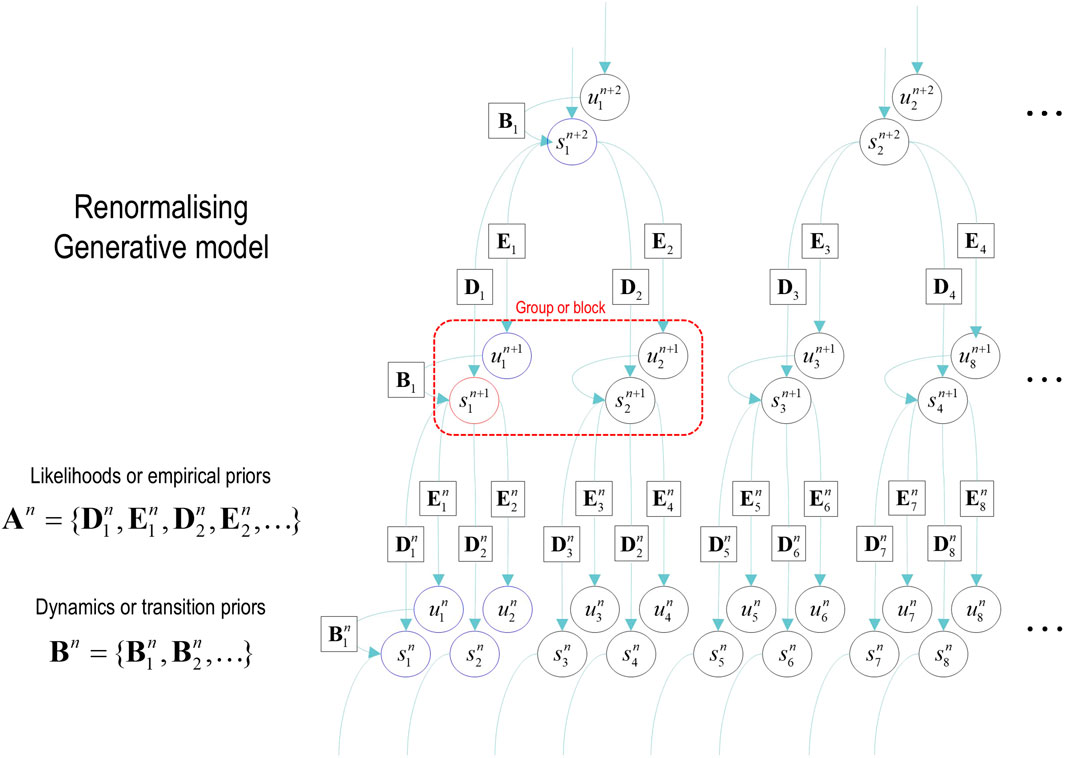

In addition to the renormalization over time, we must also consider renormalization over state space. The example in Figure 3 illustrates a graphical model in which groups of states at a lower level are generated by a single state at the higher level. In the next section, we will associate states at the lowest level with the value of pixels and successive block transformations (i.e., tessellations) with image compression. An important aspect of these models is that the states at any level never share children in the lower level. This renders latent factors at every level conditionally independent. Conditional independence follows from the fact that the Markov blanket of any given state comprises its parents, children, and parents of children; however, its children have no co-parents, rendering hidden factors D-separated when conditioned on the initial states (and paths) of the level below. In turn, this has the practical implication that the likelihood mappings that link different levels or scales (i.e., the D and E tensors in Figure 2) are low-dimensional matrices at every level of the hierarchy. Of course, if one can factorize the states at a given level into distinct state factors, these low-dimensional matrices become lower-dimensional tensors. This means that this scheme is general in the sense that one could construct matrices of the sort described above from tensorial distributions simply by taking Kronecker tensor products of state factors and reorganizing the tensors to matrices. However, it is not general in the sense that it relies on state factors at each level that predict nonoverlapping local (spatiotemporal) regions of the states at the level below.

Figure 3. Renormalizing generative model. This graphical model illustrates the architecture of renormalizing generative models (temporal renormalization has been omitted for clarity). In these models, the latent states at any given level generate the initial conditions and paths of [groups of] states at the lower level (red box). This means that the trajectory over a finite number of timesteps at the lower level is generated by a higher state. This entails a separation of temporal scales and implicit renormalization, such that higher states only change after a fixed number of lower state transitions. This kind of model can be specified in terms of (i) transition tensors (B) at each level, encoding transitions under each discrete path, and (ii) likelihood mappings between levels, corresponding to the D and E tensors of previous figures. These can be treated as subtensors of likelihood mappings

Models of this sort can be regarded as generating local dependencies within each group of states at the lower level, whereas global (between-group) dependencies are modeled at higher levels. They feature a characteristic progression from local to global as one ascends the hierarchy: c.f., Hochstein and Ahissar (2002), providing an efficient way to model data that show strong local dependencies over space and time6.

In the setting of discrete models, one can also regard an RGM as an expressive way of modeling nonlinearities in the generation of data, much in the spirit of deep neural networks with nonlinear activation or rectification functions. In the limit of precise likelihood mappings, this can be viewed as the composition of logical operators. For example, if the first two elements of the leading dimension of a D likelihood tensor were nonzero, this means that the parent state can generate the first OR second child’s initial state. Conversely, nonzero elements of two likelihood tensors generate a particular state of one child AND another child. Heuristically, one can see why an RGM can compose operators to represent or generate content that has a compositional structure. The inversion of an RGM could be construed as a simple form of abductive (e.g., modal) logic. In the ensuing example, this perspective is leveraged to learn the composition of local image features subtending global objects.

Image compression and compositionality

We start with the simplest example of renormalizing generative models appropriate for image classification and recognition. The aim is to automatically assemble an RGM to classify and generate the image content to which it is exposed. In other words, we seek procedures for automatic structure learning followed by learning the parameters of the ensuing structure, which can then be deployed to classify or generate the kind of content on which it was trained.

The first step is to quantize continuous pixel values for an image of any given size. Effectively, this involves mapping continuous pixel values to some discrete state space, whose structure can be learned automatically by recursive applications of a blocking transformation. Note that this procedure gives us the first (and lowest) level of a hierarchical model and is not required to map between higher levels as they will all deal in categorical variables. In this example, we group local pixels by tiling (i.e., tessellating) an image, partitioning it into little squares with a spin-block transformation (Vidal, 2007)7. Each group is then subject to singular value decomposition, given a training set of images, to identify an orthogonal (spatial) basis set of singular vectors. This grouping is followed by a reduction operator that retains singular variates with large singular values (here, the first 32 principal vectors based on groups of 4 × 4 pixels). Under linear transformations, this is guaranteed to maximize the mutual information in accordance with Equation 3 (given the absence of prior constraints). One way to see this is to assume a precise conditional distribution of a continuous variable I given observations that can be expressed in terms of linear weights inside a Dirac delta and identify the weights that maximize the mutual information (using ρ to indicate a mean-centered sample distribution):

The conditional entropy of the delta distribution tends to zero and is, therefore, omitted on the second line of Equation 10. The approximate equality rests on the assumption that the sample distribution for o is approximately Gaussian and uses a singular value decomposition of the concatenated samples, where the diagonal elements of S are singular values. To maximize the entropy of the marginal of o, the weights must be chosen to be proportional to the left singular vectors (columns of U) or, equivalently, the eigenvectors of the sample covariance.

The set of singular variates for each group specifies the pattern for any given image at the corresponding location. The continuous variates can then be quantized to a discrete number of levels (here, seven) to provide a discrete representation of each block. This corresponds to the first RG operator (a.k.a. blocking transformation). Given a partition of the image into quantized blocks, we now apply a second block transformation into groups of four nearest neighbors. This reduces the number of blocks by a factor of two in each image dimension. One then repeats this procedure until only one group remains at the highest level or scale.

Each application of the block transformation creates a likelihood mapping (D) from the states at a higher level to the lower level. In other words, the state of a latent factor at any level generates the states of a group at lower levels (or quantized singular variates for pixels within a group at the image level). The requisite likelihood matrices can be assembled using a fast form of structure learning based on Equation 9. This equation says that the likelihood mapping (in the absence of any constraints) should have the maximum mutual information. This is assured if each successive column of the likelihood matrix is unique. In turn, this means we can automatically assemble the requisite likelihood mappings by appending unique instances of quantized singular variates (encoded as one-hot vectors) in a set of training images. This results in one-hot likelihood arrays for each group that share the same parent at the higher level:

We have dropped En=[1,1,…] in Equation 11 because there is only one path in the absence of dynamics. In this case, the penultimate level comprises the likelihood mappings that generate each quadrant of the image in terms of quadrants of quadrants, much like a discrete wavelet decomposition. The ultimate level corresponds to priors over the (initial) states or class of image. In short, structure learning emerges from the recursive application of blocking transformations of some training images. This is a special case of fast structure learning (see Algorithm 1), described in detail in the next section.

At the first glance, this procedure may appear to generate likelihood mappings (D matrices) of increasing size because the combinatorics of increasingly large groups could explode at higher levels. However, by training on a small number of images, one upper bounds the number of states at each level. This follows from the fact that there can be no more columns of the likelihood matrix than there are unique images in the training set. In other words, one can effectively encode a finite number of images without any loss of information such that RGM inversion corresponds to lossless compression.

To generalize to a more expansive training set, one can populate the likelihood mappings with small concentration parameters and use active learning to recover an optimal lossy compression. According to Equation 7, active learning simply means accumulating appropriate Dirichlet counts in the likelihood mappings until the mutual information converges to its maximum. This offers a principled way to terminate the ingestion of training data, after which there can be no further improvement in expected free energy or mutual information.

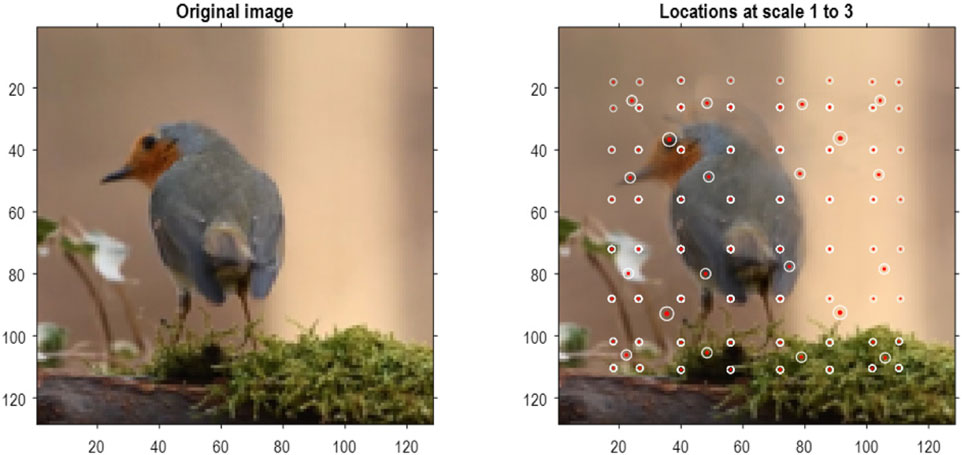

A worked example

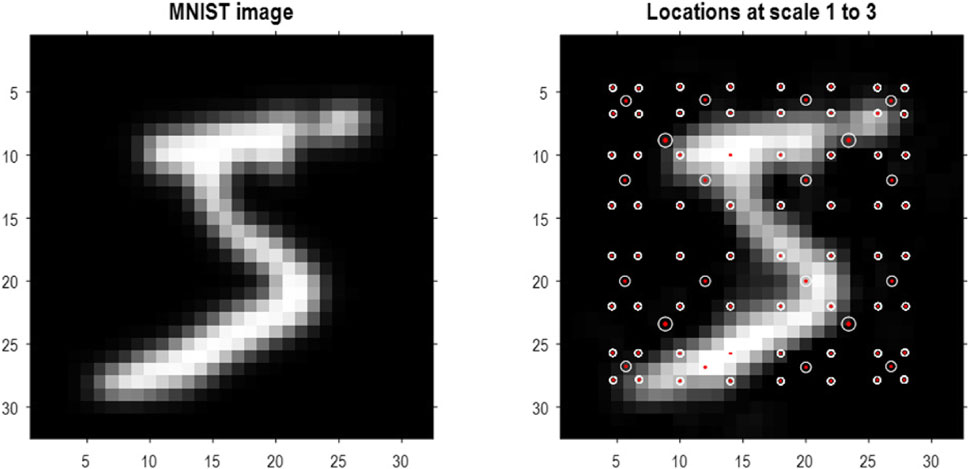

To demonstrate the above methods, they were applied to the MNIST digit classification problem (LeCun and Cortes, 2005). MNIST images were preprocessed by up-sampling to 32 pixels × 32 pixels, smoothing, and histogram equalization8. In addition, they were converted into a format suitable for video processing with three (TrueColor or RGB) channels. An exemplar image is shown in Figure 4 (left panel). The blocking transformation to discrete state space is illustrated in the right panel, which shows the reconstructed image (i.e., local mixtures of singular vectors weighted by discrete singular variates). The centroids of each group are shown with small red dots, where each group comprises pixels within a radius of four pixels.

Figure 4. Quantizing images. The left panel shows an example of an MNIST image after resizing to 32 pixels × 32 pixels, following histogram equalization. The image in the right panel corresponds to the image in pixel space generated by quantized singular variates, used to form a linear mixture of singular vectors over groups of pixels. The centers of the (4 × 4) groups of pixels are indicated by the small red dots (encircled in white). In this example, the singular variates could take seven discrete values centered on zero for a maximum of 16 singular vectors. At subsequent levels, (2 × 2) groups of groups are combined via grouping or blocking operators. The centroids of these groups (of groups) at the three successive scales are shown with successively larger red dots. At the third scale, there are four groups corresponding to the quadrants of the original image.

Based on the prior that there can be a dozen ways of writing any given number, the first 13 (Baker’s dozen) images of each digit class were used for fast structure learning. This produced an RGM with four levels. The centroids of the ensuing groups of increasing size are shown as successively larger red dots in Figure 4. At the penultimate level, this grouping is into quadrants. In this application, we equipped the last level, covering all pixel locations, with a likelihood mapping between the known digit class (i.e., label) and compressed representations at the penultimate level.

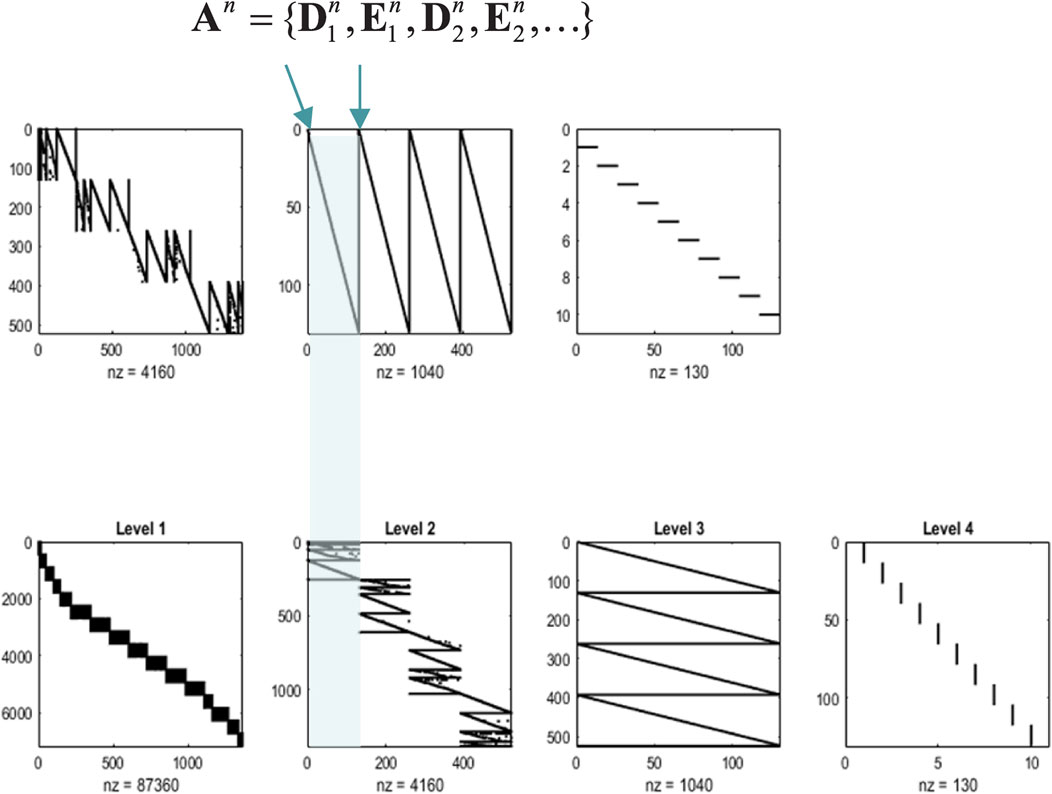

The likelihood mappings are shown in Figure 5 to illustrate the ensuing structure. The lower row shows concatenated likelihood mappings at each level. The upper row reproduces these mappings after transposing to illustrate how states at one level generate the states of groups at the subordinate level. For example, at level 1, we have 16 groups of pixels whose states are generated by four groups at level 2. Similarly, the four groups at level 2 are generated by one group at level 3. Level 4 implements our prior knowledge about digit classes, effectively providing a mapping from digit class to the (13 × 10) exemplar images that have been compressed in a lossless fashion.

Figure 5. Renormalizing likelihoods. This figure is a schematic representation of the composite likelihood mappings (comprising D and E) among the levels of an RGM, following fast structure learning. In each of these graphics, black indicates a nonzero element, and white indicates a zero element. In the lower row of matrices, the columns of the matrices are the alternative possible values of states at that level, concatenated for all state factors. The rows are the possible values for states (or observations) at the level below, similarly concatenated. By the third level, each latent state can generate an entire image via recursive application of sparse, block-diagonal matrices, where “nz” counts the number of nonzero elements. In this example, the model has been equipped with a fourth level, mapping from 10 digit classes to 130 latent states at the third level (encoding 13 images of 10 digits). These likelihood mappings (that mediate empirical priors) are assembled automatically during structure learning by accumulating unique combinations of (recursively grouped) states at subordinate levels. The upper row reproduces the matrices of the lower row after transposition to illustrate the dimension reduction inherent in the grouping of states. Each transposed matrix is shifted one to the left relative to the lower row. This means the states generated by the upper matrices are represented in the columns, aligning with the states in the conditioning sets in the columns of the matrices below. For example, the thousand or so states at the second level generate over 6,000 states at the first, which specify the mixture of singular vectors required to generate an image. Similarly, the 500 or so states at level 3 generate empirical priors over a partition of level 2 states into four subsets or groups (the first is highlighted in cyan), and so on. Crucially, by construction, the children of states at any level constitute a partition, such that every child is included in exactly one subset. This means that states at any level have only one parent, rendering the subsets of the partition at the higher level conditionally independent. In other words, there are no conditionally dependent co-parents. This enables efficient sum–product operations during model inversion because one must only compute dot products of subtensors (i.e., small matrices) specified by the parents of a group. Note that the matrices in this figure are not simple likelihood mappings: they are concatenated likelihood mappings from all hidden states at one level to all hidden states (and paths) at the subordinate level, where the sum to one constraint is applied to the states (or paths) each child could be in.

Note that the graphics in Figure 5 represent concatenated likelihood matrices. In other words, each block of the likelihood matrices generates multiple outcomes, namely, combinations of outputs for several groups. This illustrative concatenation conceals the fact that each likelihood (i.e., A, D, or E tensor) is a relatively small matrix. The implicit sparsity of these likelihoods inherits from the blocking transformations based on a partition at each level. If we had imposed the additional constraint (i.e., structural prior) that these likelihood mappings are conserved identically over groups, then one would have the discrete homolog of a convolutional neural network with an implicit weight sharing. Here, we did not impose this prior constraint because different groups of pixels show systematic, location-dependent differences. From a biomimetic perspective, this can be likened to differences in the size of receptive fields between central (i.e., foveal) and peripheral visual fields that speak to the principles of maximum mutual information and minimum redundancy (Barlow, 1961; Linsker, 1990; Olshausen and Field, 1996; Simoncelli and Olshausen, 2001). Technically, this is reflected in the fact that the peripheral groups of pixels showed little or no variation over exemplar images. This means that only a small number of singular variates were retained in the periphery and explains why the size of the groups at the first level increases toward the center of the image (see the lower left panel of Figure 5).

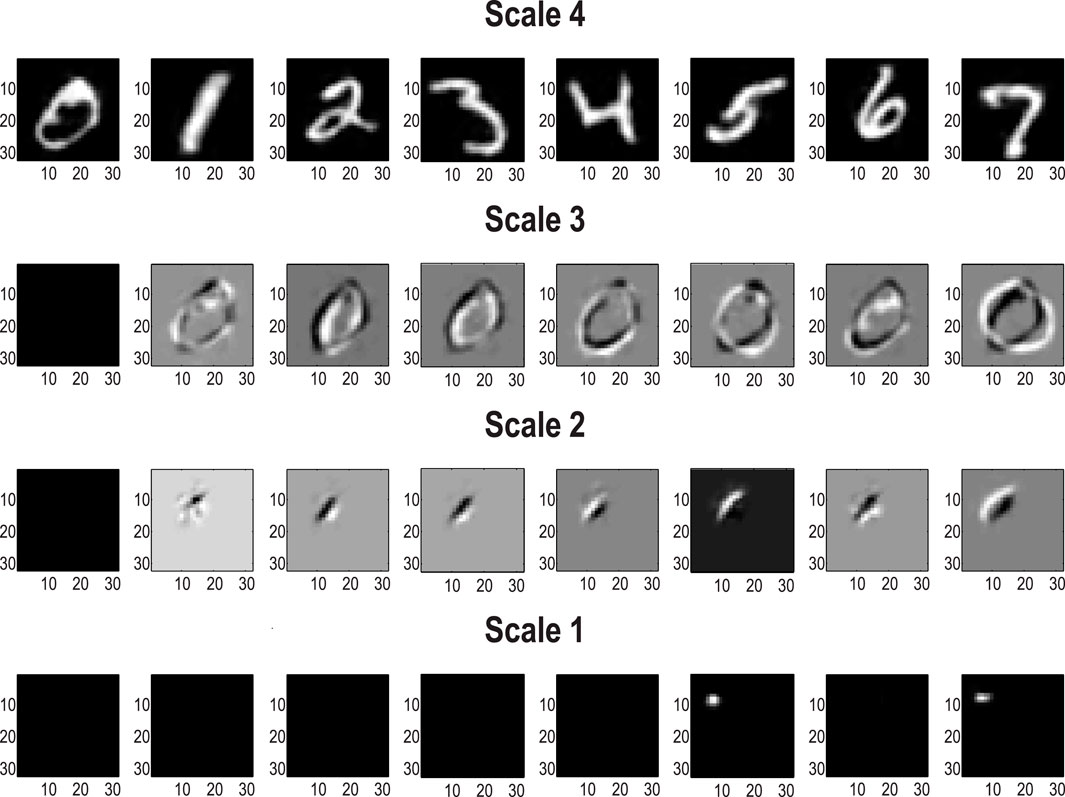

Pursuing the biomimetic theme, Figure 6 illustrates the encoding of images at successively deeper levels of the RGM. The top row (Scale 4) shows the images generated under the first eight levels of the corresponding latent factor. By construction, this factor has 10 levels corresponding to prior knowledge about the class of each digit. The subsequent rows illustrate the projective fields of particular states at lower levels. These fields can be regarded as the changes in predicted stimuli due to changes in the representations (i.e., the complement of receptive fields). In Figure 6, the first state of every factor of every level was selected to provide a baseline image. This was then subtracted from the image generated by subsequent states of the first factor. The purpose of these characterizations was to show that changing posterior expectations at the highest levels (Scales 3 and 4) produces changes everywhere in the image. In other words, these are coarse-grained global representations of the object represented. Conversely, at subordinate levels (Scales 1 and 2), the projective fields are restricted to local parts of the image. The form of these projective fields is remarkably similar to that seen in the visual system, namely, compact, simple receptive fields in the early visual cortex and complex fields (e.g., with a center–surround structure) of greater spatial extent at higher levels in the visual hierarchy (Angelucci and Bullier, 2003; Zeki and Shipp, 1988). It is noteworthy that this kind of functional specialization is an emergent property of a renormalizing generative model.

Figure 6. Renormalization and projective fields. This figure shows exemplar projective fields of the (MNIST) RGM in terms of posterior predictive densities in pixel space associated with states at successive levels (or scales) in the generative model. The top row corresponds to the posterior predictions of the first eight states at the fourth level, while subsequent rows show the differences in posterior predictions obtained by switching the first state for the subsequent eight states at each level. The key thing to note is that the sizes of the projective fields become progressively smaller and more localized as we descend scales or levels.

From a statistical or Gestalt perspective, the progressive enlargement of projective fields can also be viewed in terms of composition and the transformation from local, place-coded representations to global object-centered representations; for example, Hochstein and Ahissar (2002) and Kersten et al. (2004). This is again reminiscent of biomimetic architectures in the sense that elemental image features are encoded at the lower levels of a visual hierarchy while objects and classes (e.g., faces) are encoded at much higher levels with successive levels comprising more extended but compressed representations from lower-level representations (Alpers and Gerdes, 2007; Kersten et al., 2004; Zeki and Shipp, 1988).

The foregoing illustrates the generation of content following structure learning of a lossless sort. We now turn to inference and classification. This rests on optimizing the parameters of the RGM structure to maximize the marginal likelihood of some training data. Figure 5 illustrates the results of this active learning during exposure to the first 10,000 training images of the MNIST dataset. This training proceeded by (i) populating all the likelihood mappings with small concentration parameters9, (ii) equipping the highest level of the model with precise priors (D4) corresponding to the class labels, and (iii) accumulating Dirichlet parameters according to Equation (7), with α = 512.

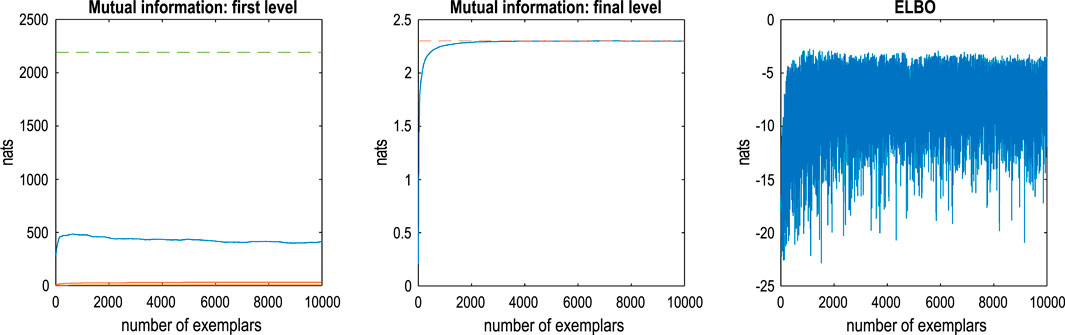

This kind of evidence accumulation converges when the expected free energy asymptotes. This convergence is illustrated in the middle panel of Figure 7, which plots the mutual information at the final level as a function of training exemplars. The broken red line corresponds to the upper bound on mutual information afforded by the fact that there are 10 classes or hidden states at the final level. The left panel shows the equivalent (summed) mutual information of lower-level likelihood mappings, while the right panel shows the accompanying variational free energy following each exemplar. One can see that there is an initial period of fast learning that asymptotes in terms of mutual information and (negative) variational free energy with little further improvement after approximately 5,000 samples. Note that the expected free energy provides a convergence criterion that quantifies the number of training exemplars required to maximize model evidence (on average).

Figure 7. Active learning. This figure reports the assimilation or active learning of the training MNIST dataset. In this example, images were assimilated if, and only if, they increased the expected free energy of the RGM. In the absence of prior preferences or constraints, this ensures minimum information loss by underwriting informative likelihood mappings at each level (i.e., maximizing mutual information). The left panel reports the mutual information as a function of ingesting 10,000 training images. The left panel reports the mutual information at the first level (blue line) and intermediate levels. The middle panel reports the mutual information at the final (fourth) level. The dashed lines correspond to the maximum mutual information that could be encoded by the likelihood mappings. The right panel shows the corresponding evidence lower bound (negative variational free energy), scored by inferring the latent states (digit class) generating each image. The fluctuations here reflect the fact that some images are more easily explained than others under this model. As the model improves, there are progressively fewer images with a very low evidence lower bound (ELBO): that is, −16 natural units or fewer.

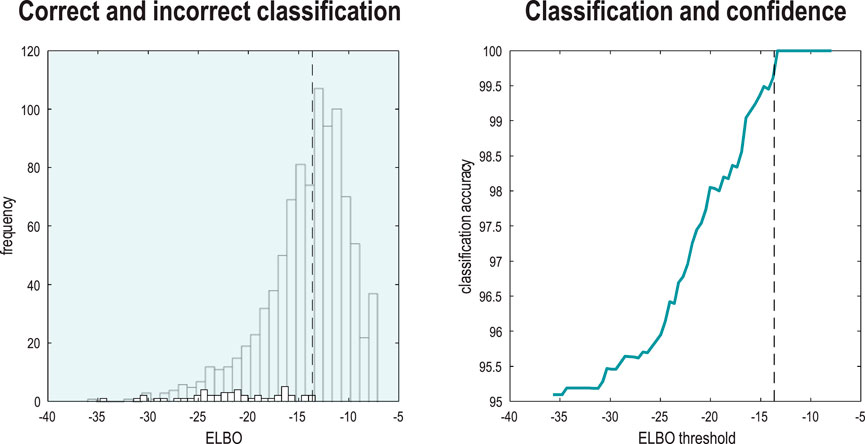

Figure 8 reports the capacity of the ensuing model to correctly infer or classify the class of each the subsequent 1,000 (unseen test) images. This figure provides a nuanced report of classification performance, which reflects the fact that we have two kinds of inference at hand. These rest on (i) the posterior distribution over digit classes and (ii) the marginal likelihood that the image is classifiable under the model. This means that one can assess the accuracy of classification conditioned on whether a particular image was classifiable, enabling a more comprehensive characterization of performance in terms of sensitivity and specificity. The format adopted in Figure 8 plots the classification accuracy as a function of the ELBO (right panel) and the distribution of ELBOs for correctly and incorrectly classified images (left panel). The thing to note here is that classification accuracy increases with the marginal likelihood that each image belongs to the class of numbers. For example, if we split the test data into two halves, the half with the highest marginal likelihood was classified with 99.8% accuracy, while the classification accuracy for the entire test set was only 95.1%

Figure 8. Classification and confidence. This figure reports classification performance following the learning described in Figure 7. Because inverting a generative model corresponds to inference, recognition, or classification, one can evaluate the posterior over latent causes—here, digit class—and the marginal likelihood (i.e., model evidence) of an image while accommodating uncertainty about its class. This means that one can score the probability that each image was caused by any digit class in terms of the ELBO. The distribution of the ELBO over the 10,000 training images is shown as a histogram in the left panel (for correctly classified images). The smaller histogram (foregrounded) shows the distribution of log-likelihoods for the subset of images that were classified incorrectly. Having access to the marginal likelihood means that one can express classification accuracy as a function of the (marginal) likelihood the image was generated by a digit. The ensuing classification accuracy is shown in the right panel as a function of a threshold (c.f., Occam’s window) on the ELBO or evidence that each image was generated by a digit. The vertical dashed lines show the median ELBO (−13.85 nats). Classification accuracy for all images was only 95.1%. However, the accuracy rises to 99.8% following a median split based on their marginal likelihoods.

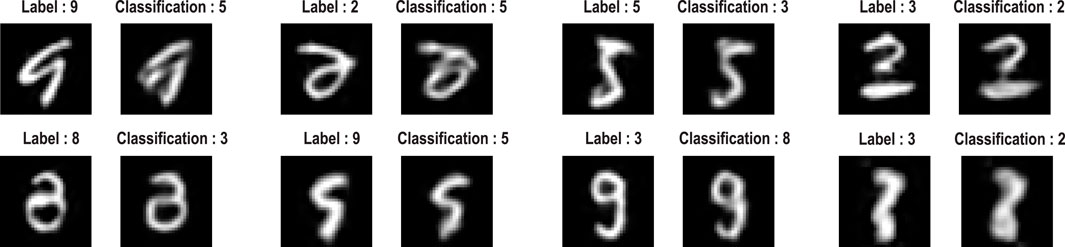

Images with a low marginal likelihood can be regarded as ambiguous or difficult to classify because they have a small likelihood of being sampled from the class of digits. Figure 9 provides some examples that speak to the potential importance of scoring the validity of classification (Bach et al., 2015).

Figure 9. Classification failures. This figure provides examples of incorrect classification of images with a small marginal likelihood. Each pair of images presents the training image with its label and the corresponding posterior prediction in pixel space and accompanying maximum a posteriori classification.

Summary

This section illustrates the use of renormalization procedures for learning the structure of a generative model for object recognition and generation in pixel space. The protocol uses a small number of exemplar images to learn a renormalizing structure appropriate for lossless compression. The ensuing structure was then generalized by active learning, that is, learning the likelihood mappings that parameterize the block transformations required to compress images sampled from a larger cohort. This active learning ensures high mutual information between the scale-invariant mapping from pixels to objects or digit classes. Finally, the RGM was used to classify test images by inferring the most likely digit class.

It is interesting to compare this approach to learning and recognition with the complementary schemes in machine learning. First, the supervision in active inference rests on supplying a generative model with prior beliefs about the causes of content. This contrasts with the use of class labels in some objective function for learning. In active inference, the objective function is a variational bound on the log evidence or marginal likelihood. Committing to this kind of (universal) objective function enables one to infer the most likely cause (e.g., digit class) of any content and whether it was generated by any cause (e.g., digit class), per se.

In classification problems of this sort, test accuracy is generally used to score how well a generative model or classification scheme performs. This is similar to the use of cross-validation accuracy based on a predictive posterior. The key intuition here is that test and cross-validation accuracy can be read as proxies for model evidence (MacKay, 2003). This follows because log evidence corresponds to accuracy minus complexity: see Equation 2. However, when we apply the posterior predictive density to evaluate the expected log-likelihood of test data, the complexity term vanishes because there is no further updating of model parameters. This means, on average, the log evidence and test or cross-validation accuracy are equivalent (provided the training and test data are sampled from the same distribution). Turning this on its head, models with the highest evidence generalize in the sense that they furnish the highest predictive validity or cross validation (i.e., test) accuracy. One might argue that the only difference between variational procedures and conventional machine learning is that variational procedures evaluate the ELBO explicitly (under the assumed functional form for the posteriors), whereas generic machine learning uses a series of devices to preclude overfitting, such as regularization, mini-batching, and other stochastic schemes. See Sengupta and Friston (2018) for further discussion.

This speaks to the sample efficiency of variational approaches that elude batching and stochastic procedures. For example, the variational procedures above attained state-of-the-art classification accuracy on a self-selected subset of test data after seeing 10,000 training images. Each training image was seen once, with continual learning (and no notion of batching). Furthermore, the number of training images actually used for learning was substantially smaller10 than 10,000 because active learning admits only those informative images that reduce expected free energy. This Maxwell’s demon aspect of selecting the right kind of data for learning will be a recurrent theme in subsequent sections.

Finally, the requisite generative model was self-specifying, given some exemplar data. In other words, the hierarchical depth and size of the requisite tensors were learned automatically within a few seconds on a personal computer. In the next section, we pursue the notion of efficiency and compression in the context of time-series and state-space generative models that are renormalized over time.

Video compression and generative AI

This section generalizes the renormalizing procedures of the previous section to include dynamics for recognizing and generating ordered sequences of images. Procedurally, this simply involves specifying a scale transformation in time and installing unique state transitions into the prior transition tensors at each level of the RGM. In this setting, structure learning reduces to quantizing images in space, color, and time to produce time-color-pixel voxels. Unique transitions among neighboring voxels are then recorded in B tensors, where each unique voxel state is recorded in the column of the corresponding A matrix of each group of voxels. The time series of quantized images is then partitioned into segments of equal lengths (e.g., pairs), and the ensuing segments are inverted (using the A and B tensors) to evaluate posterior estimates over the initial state and path of each segment11. This produces a new sequence that is coarse-grained in time. Neighboring states (and paths) are then grouped together, and the process is repeated to populate the likelihood mappings (D and E) from parents at the higher level. By repeating this process, one ends up with a representation of an image sequence in terms of paths through the states of a single factor at the highest level. Each state in this factor generates the initial state (and path) of a group at the lower level, and so on recursively until an image sequence is generated.

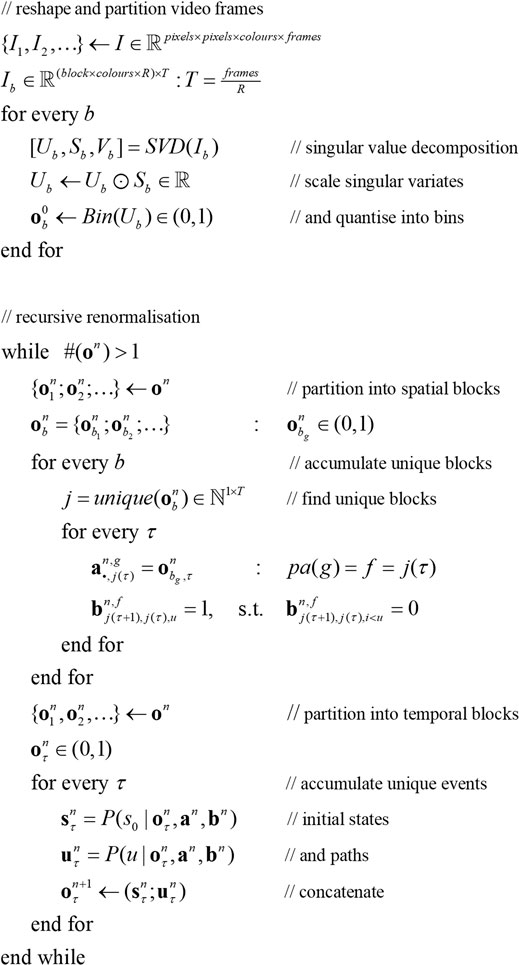

Algorithm 1 provides a pseudocode description of the procedure, where R denotes the number of frames constituting a video event and j labels successive blocks in terms of unique instances. Here, the renormalization implicit in fast structure learning is expressed in terms of the Dirichlet parameters of the requisite tensors:

By partitioning each sequence into nonoverlapping pairs during the timescale transformation at successive levels, one effectively halves the length of the sequence when ascending from one level to the next. From the perspective of generating sequences, this means that a state at one level generates two successive states at the lower level by generating the initial state and the path to the second state. The ensuing RGM acquires a definitive feature of such models, namely, a separation of temporal scales, in which there are two (or more) belief updates for every update at the level above. This means that higher levels encode sequences of sequences of sequences that can be regarded as episodes of successive events. From the perspective of recognition or classification, an image sequence is successively compressed with a coarse graining or blocking over space and time into a succession of events. This furnishes an event-based representation that generalizes the object-based representations of the preceding section. Note that this kind of RGM compresses images or scenes that may involve multiple objects or, indeed, causes that may not have the attribute of objecthood, such as textures and backgrounds.

During inference, the separation of temporal scales manifests a particular kind of reactive message passing (Bagaev and de Vries, 2021; Hewitt et al., 1973). Because each level generates a small sequence (here pairs), the variational message passing depicted in Figure 2 is scheduled as follows: at the highest level, priors are passed to the level below to generate two iterations. After two belief updates, the ascending messages are returned to the higher level to form a posterior over events. However, before the lower level can respond, it must query its lower level, waiting for two iterations before updating its beliefs, and so on, down to the first level. This means any given level receives messages or requests from the level above and responds to those requests at a slower rate than it exchanges messages with its subordinates. In short, lower levels update their beliefs more quickly, in a way that rests on an asymmetry in the frequency of hierarchical message passing—an asymmetry that characterizes message passing in real neuronal networks (Bastos et al., 2015; Hasson et al., 2008; Kiebel et al., 2008; Pefkou et al., 2017).

A worked example

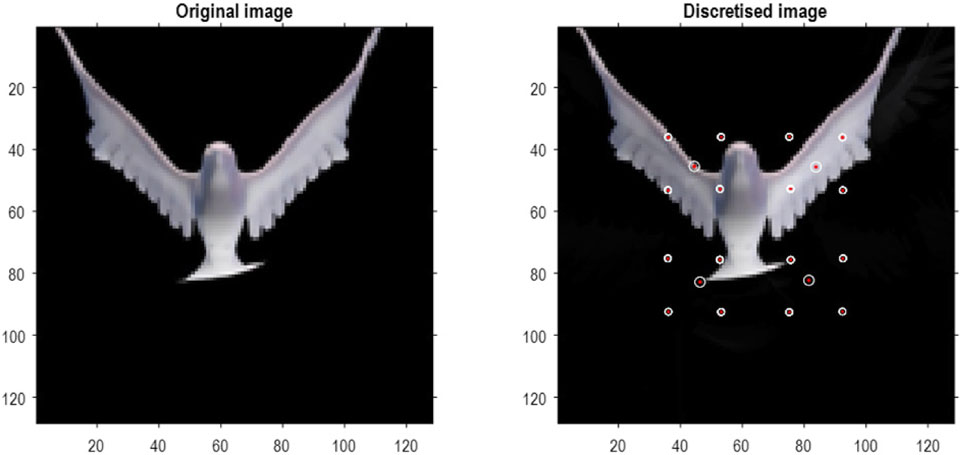

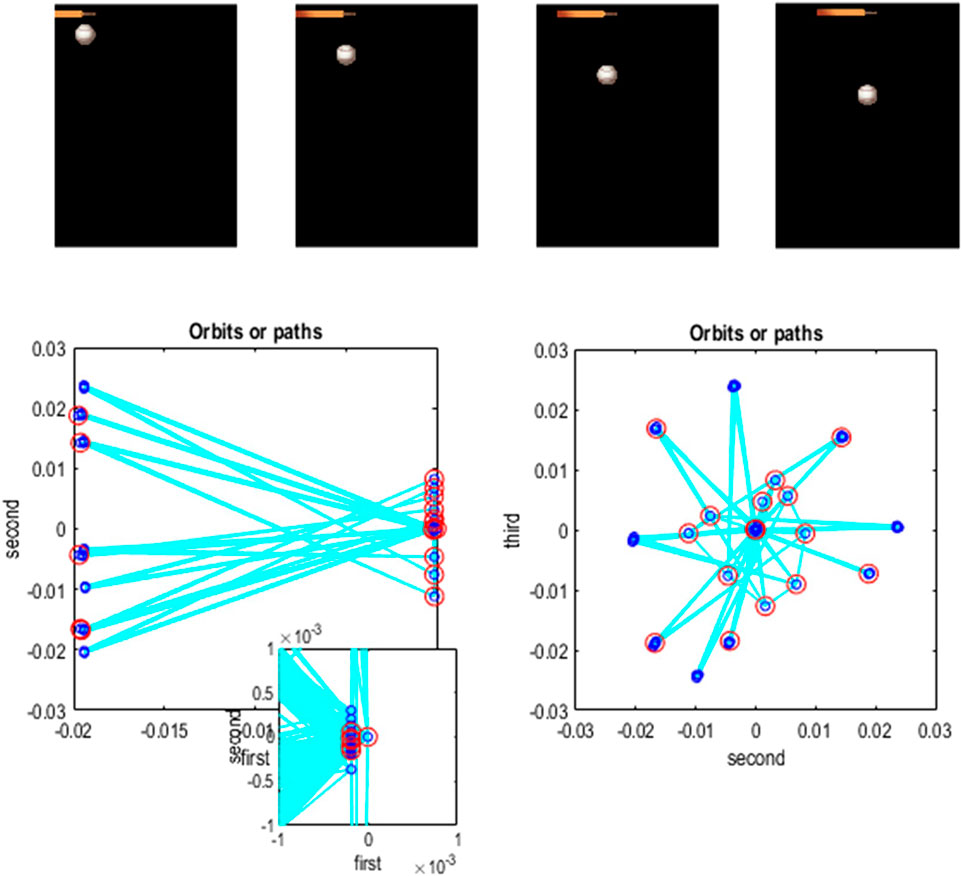

To illustrate the basic architecture of this RGM, we used a short video sequence of a dove flapping her wings. The original video12 was down-sampled to 32 frames, where each frame comprised 128 pixels × 128 pixels with three TrueColor channels. The first scaling transformation from images to discrete state space used the same nearest-neighbor block transformation as in the previous section; however, here, we blocked each image into 4 × 4 blocks, generating an RGM with two hierarchical levels. Crucially, singular value decomposition operated on successive pairs of images (c.f., spatiotemporal receptive fields). This simply involved reshaping the tensors for each image block to concatenate color and time before applying a singular-value decomposition. The ensuing singular vectors, therefore, span three colors and two time points, compressing the video into 64 time-color-pixel voxels. The remaining scaling transformations constructed the requisite likelihood and transition matrices as described in Algorithm 1.

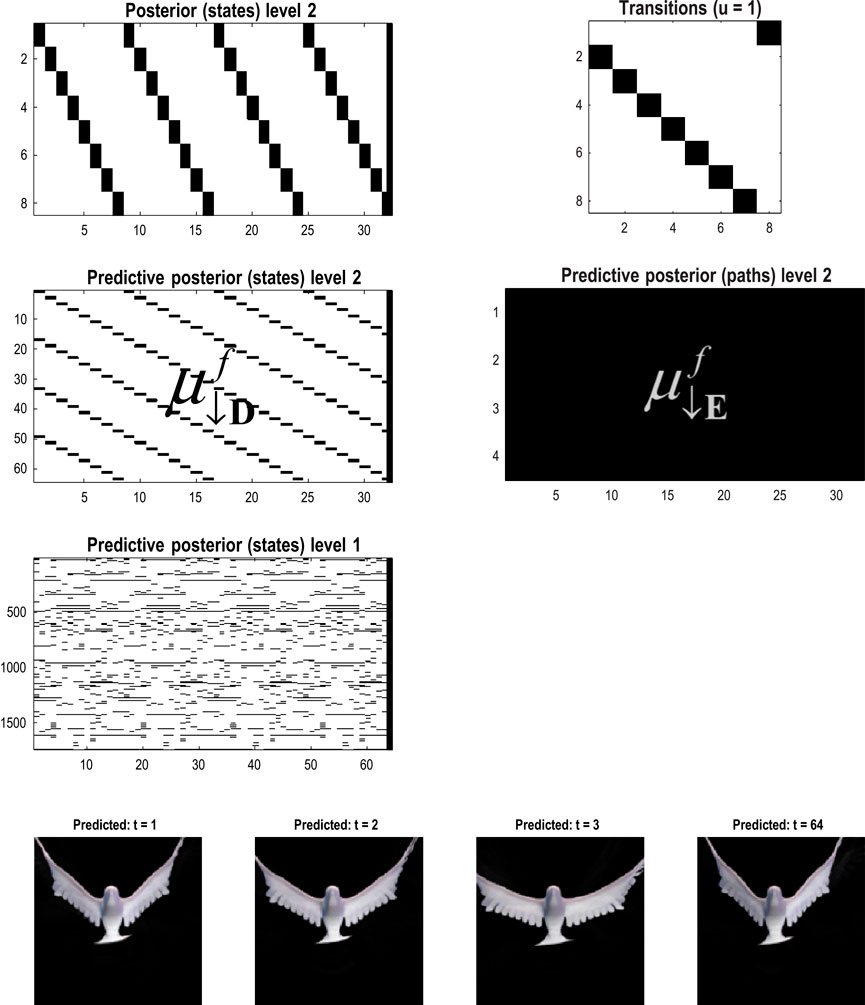

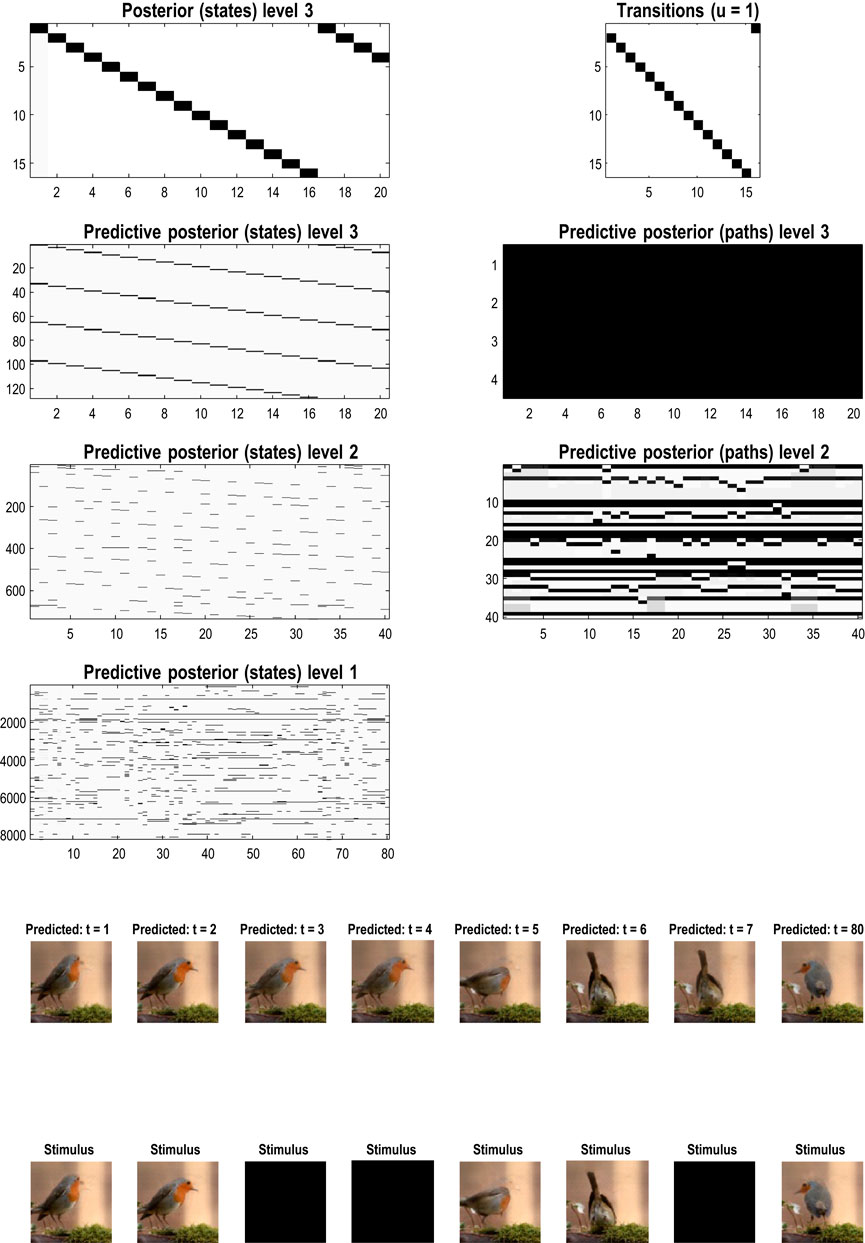

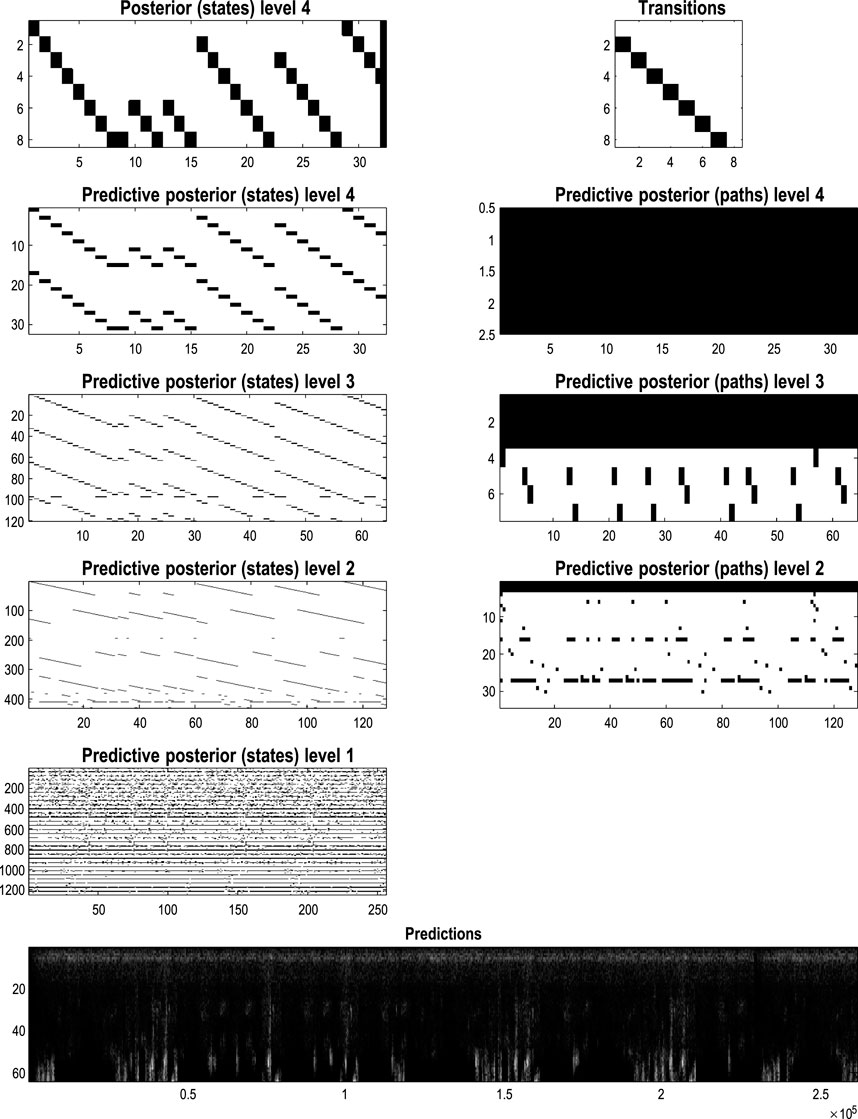

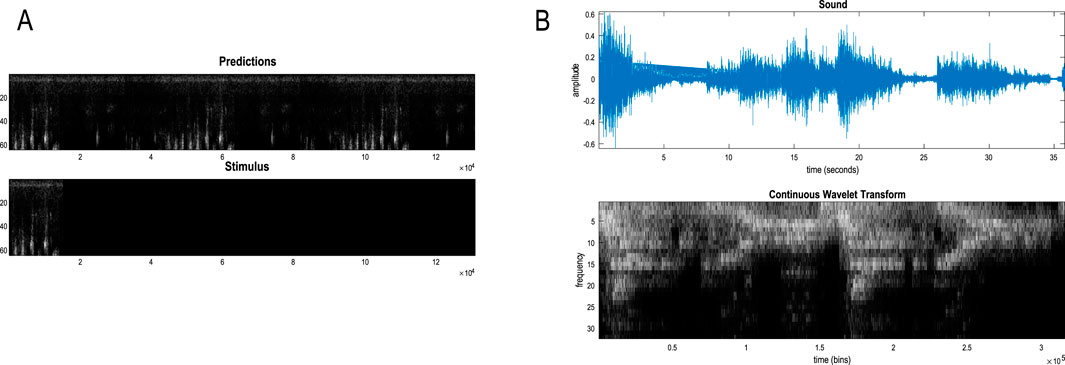

Figure 10 shows the first frame from the original video and the reconstructed frame following compression using the same format as Figure 4. Figure 11 illustrates the generation of a movie with 128 frames or 64 voxels from a structure learned from compressing a video of 64 frames (a cycle through two flaps of the wings). The RGM has compressed each cycle into eight events, repeated four times during generation. The upper right panel shows the discovered transitions among these events. In this instance, we have a simple orbit or closed path where the last event transitions to the first.

Figure 10. A dove in flight. This figure shows a frame from a movie of a (digital) dove flapping her wings. The left panel is a TrueColor (128 pixels × 128 pixels, RGB) image used for structure learning, while the right panel shows the corresponding posterior prediction following discretization. This example used a tessellation of the pixels into 32 voxels × 32 voxels, with a temporal resampling of R = 2: that is, successive pairs of (32 pixels × 32 pixels) image patches were grouped together for singular value decomposition. Singular variates took nine discrete values (centered on zero) for a maximum of 32 singular vectors. The locations of the image patches are shown with small red dots (encircled in white). The larger dots correspond to the centroids of blocks, following the first block transformation at the second level of the ensuing RGM.

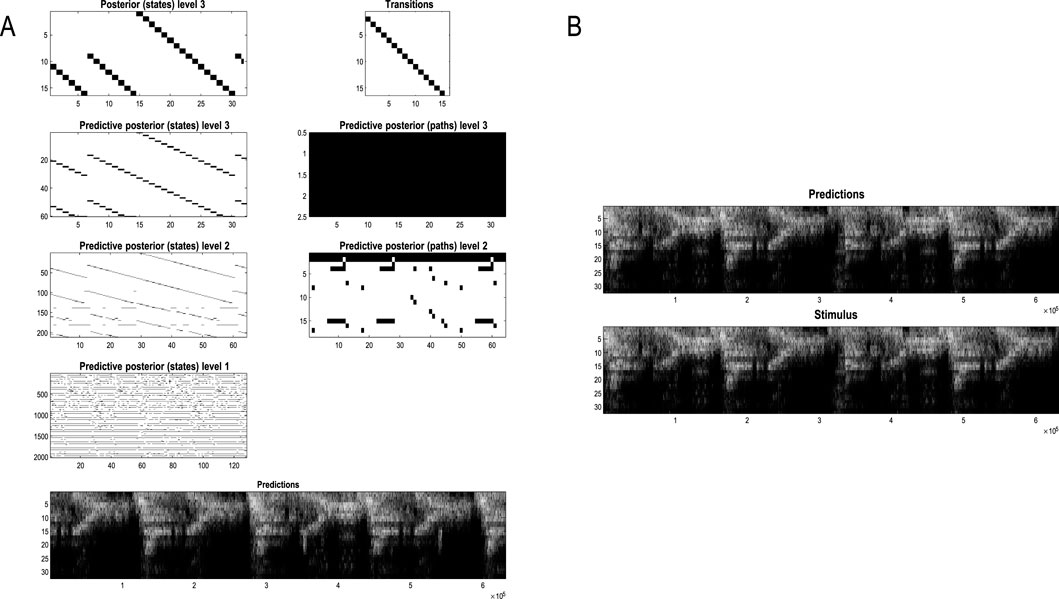

Figure 11. Generating movies. Following structure learning based on two cycles of wing flapping (i.e., 64 frames or 32 time-color-pixel voxels), an RGM was used to generate posterior predictions over 128 video frames, namely, four flaps. Each panel plots probabilities, with white representing zero and black representing one. The “Posterior” plots have an x-axis that represents time, with coarser steps at higher levels. The rows along the Y-axis are the different states we might occupy. The “Transitions” plot has columns representing the state we come from and rows representing that we go to. The posterior predictions are the messages passed down hierarchical levels (see Figure 2). The structure learned under this RGM compressed each cycle into eight events. The format of this figure will be used in subsequent examples: the upper right panel shows the discovered transitions among (high-level) events. In this instance, we have an orbit where the last state transitions to the first. The upper left panel depicts the posterior distribution over states at the highest level in image format; here, showing four cycles. These latent states then provide empirical priors over 64 initial states of the four image quadrants at the subordinate level, depicted in the predictive posterior panel below. The accompanying predictive posterior over paths at this level (on the right) shows that each of the four paths was constant over time, thereby generating predictive posteriors over the requisite states at the first level (i.e., singular variates) and, ultimately, the posterior predictions in pixel space. The first and last generated images are shown in the lower row.

The upper left panel depicts the posterior distribution over states at the highest level in image format; here, it shows four cycles. These latent states then provide empirical priors over the initial states of the four image quadrants at the subordinate level (via D). The accompanying predictive posterior over paths (via E) shows that each of the four paths was constant over time, thereby generating predictive posteriors over the requisite states at the first level (i.e., singular variates) and, ultimately, the posterior predictions in pixel space. The first and last generated images are shown in the lower row.

Figure 11 illustrates the ability of the RGM to generate video content. Conversely, Figure 12 illustrates the inference or recognition of images presented as (partial) stimuli using the same format. The upper panels show the predictive posteriors over states (on the left) and paths (on the right), respectively. The images below correspond to the predictions in pixel space at the first time points and at the last time point. The corresponding stimuli are shown in the lower row of images. This illustration of model inversion speaks to some key biomimetic aspects.

Figure 12. Image completion. This figure reproduces the previous figure but presents the model with a partial stimulus in the upper right quadrant. The likelihood mappings were equipped with small concentration parameters (of 1/32) to model any uncertainty around the events installed during structure learning. The lower rows show the posterior predictions (upper row) and stimulus (lower row) for the first and last timeframes. The key thing to take from this figure is that by the sixth video frame or third voxel (t = 3), the posterior predictive density has correctly filled in the missing quadrants and continues to predict the stimuli veridically by treating the missing data as imprecise or uninformative.

First, by generalizing scale transformations to both space and time, we induce nontrivial posteriors over paths or dynamics. The separation into predictive posteriors over states and paths has a clear homology with the segregation of processing in the visual cortical hierarchy in the brain (Ungerleider and Mishkin, 1982). This is often cast in terms of a distinction between dorsal and ventral streams, in which the dorsal stream is concerned with “where” things are in the visual scene and how they move or can be moved (Goodale et al., 2004). In contrast, the ventral stream is responsible for encoding “what” is causing visual impressions. Furthermore, higher or deeper levels of the visual hierarchy show slower stimulus-bound responses than lower levels: for example, Hasson et al. (2008). This is an emergent property of the (perceptual) inference demonstrated in the above example in terms of the successive slowing of belief updating at higher levels.

In Figure 12, the stimuli presented to the RGM were restricted to the upper left quadrant. In neurobiology, this would be like presenting a moving bar in a restricted portion of the visual field (Livingstone and Hubel, 1988). Despite this partial stimulus, the top-down predictions quickly evince a form of pattern completion, effectively seeing what was not actually presented. Indeed, by the sixth frame (i.e., third voxel), the posterior predictions have filled in the missing content. This predictive capacity is reminiscent of functional magnetic resonance imaging studies of predictive processing in human subjects, in which one can record predictive activity in the visual cortex that is induced by only providing one quadrant of the visual stimulus (Muckli et al., 2015).

Paths, orbits, and attractors

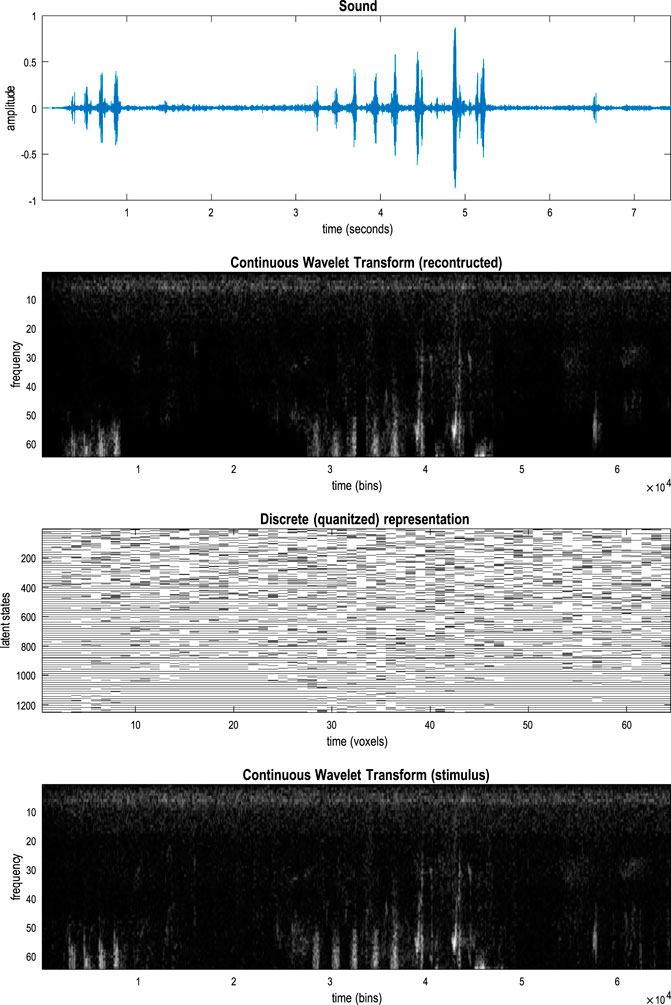

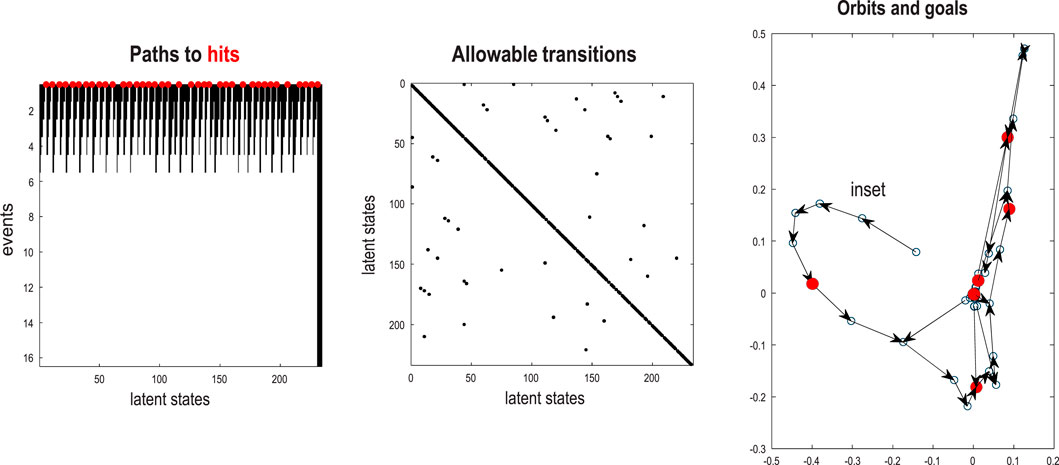

In the preceding example, the sequence of images constitutes a closed path, namely, a simple orbit. This can be regarded as a quantized representation of a periodic attractor. Here, we use the same procedures to compress and generate stochastic chaos, using images generated by a Lorenz system (Friston K. et al., 2021; Lorenz, 1963; Ma et al., 2014; Poland, 1993). The aim of this example is to show how active learning after structure learning furnishes a generative model of chaotic orbits. In this instance, the dynamics or transitions are learned to accommodate switches among paths that acquire a probabilistic aspect due to random fluctuations and exponential divergence of trajectories.

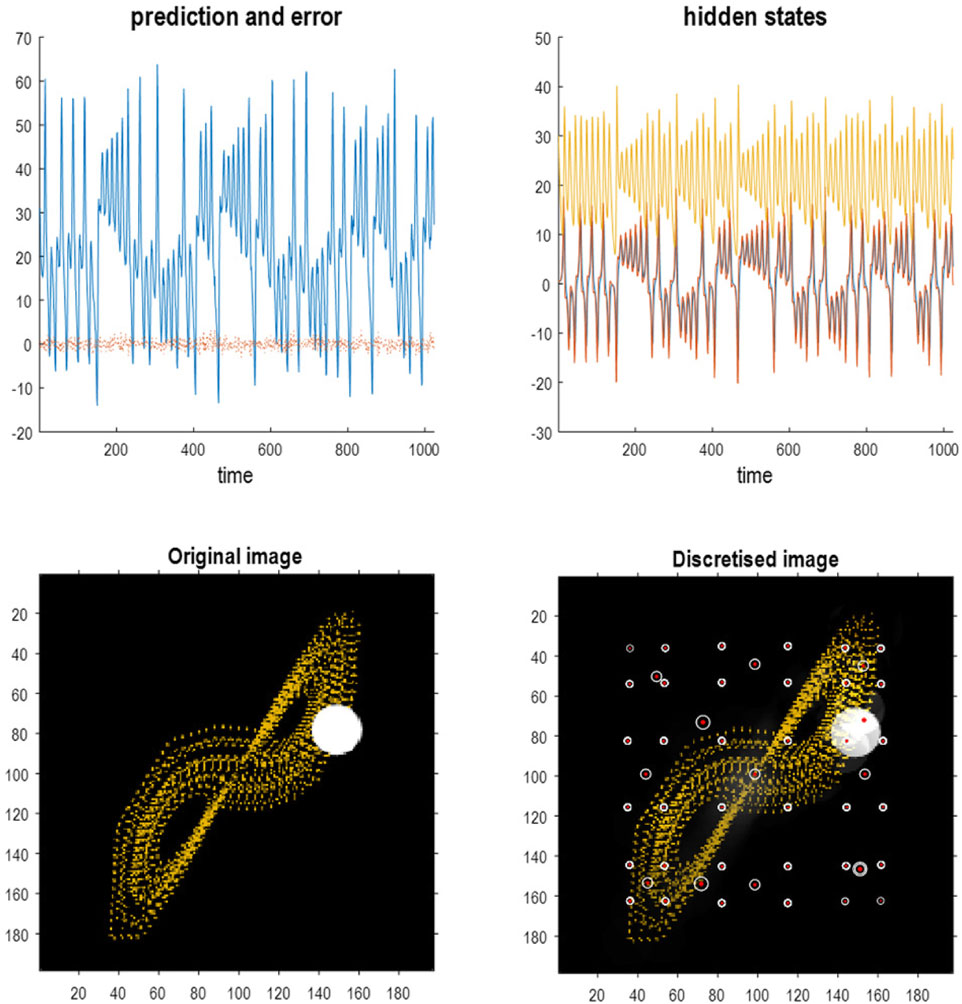

Figure 13 shows the time series used to generate training images. The upper right panel shows a sequence of hidden states over 1,024 time bins, generated by solving stochastic differential equations based on a Lorenz attractor. The upper left panel shows the random fluctuations (i.e., state noise) used in generating these states in terms of an arbitrary mixture of hidden states and stochastic fluctuations on their motion (i.e., prediction and error). The ensuing hidden states were used to generate an image sequence in which the motion of a white circle traced out the trajectory of the first two hidden states (indicated with golden dotted lines). The first half of the resulting sequence was then used for structure learning. A training image and its reconstitution following discretization are shown in the lower panels of Figure 13. The encircled red dots show the location of the groups of pixels at successive scales.

Figure 13. Stochastic chaos. This figure summarizes the quantization of images generated from stochastic differential equations based on the Lorenz system. The upper panels report the solution in terms of the three hidden states of a Lorenz system (right upper panel) and the contribution of random fluctuations, innovations, or state noise (left upper panel). This contribution is characterized in terms of an arbitrary linear mixture of the hidden states and the prediction errors induced by random fluctuations (red line). The hidden states were used to generate an image in which the position of a white ball was specified by the first two hidden states. The ensuing trajectory was used to populate the image with gold dots. One can envisage the ensuing sequence of video frames as depicting a white particle flowing in a medium whose convection is described using the Lorenz equations of motion. (Strictly speaking, the equations pertain to the eigenmodes of convection). The lower left panel shows an exemplar video frame in a TrueColor (198 pixels × 198 pixels) image. The lower right panel shows the reconstructed image generated from its quantized representation. Following the format of Figures 4, 10, the encircled red dots show the centroids of subsequent groups. In this example, the image was tessellated into (32 × 32) pixel groups with singular variates taking five discrete values for a maximum of 16 singular vectors. As previously mentioned, the temporal resampling considered successive pairs. The resulting three-level RGM is illustrated in the subsequent figure.

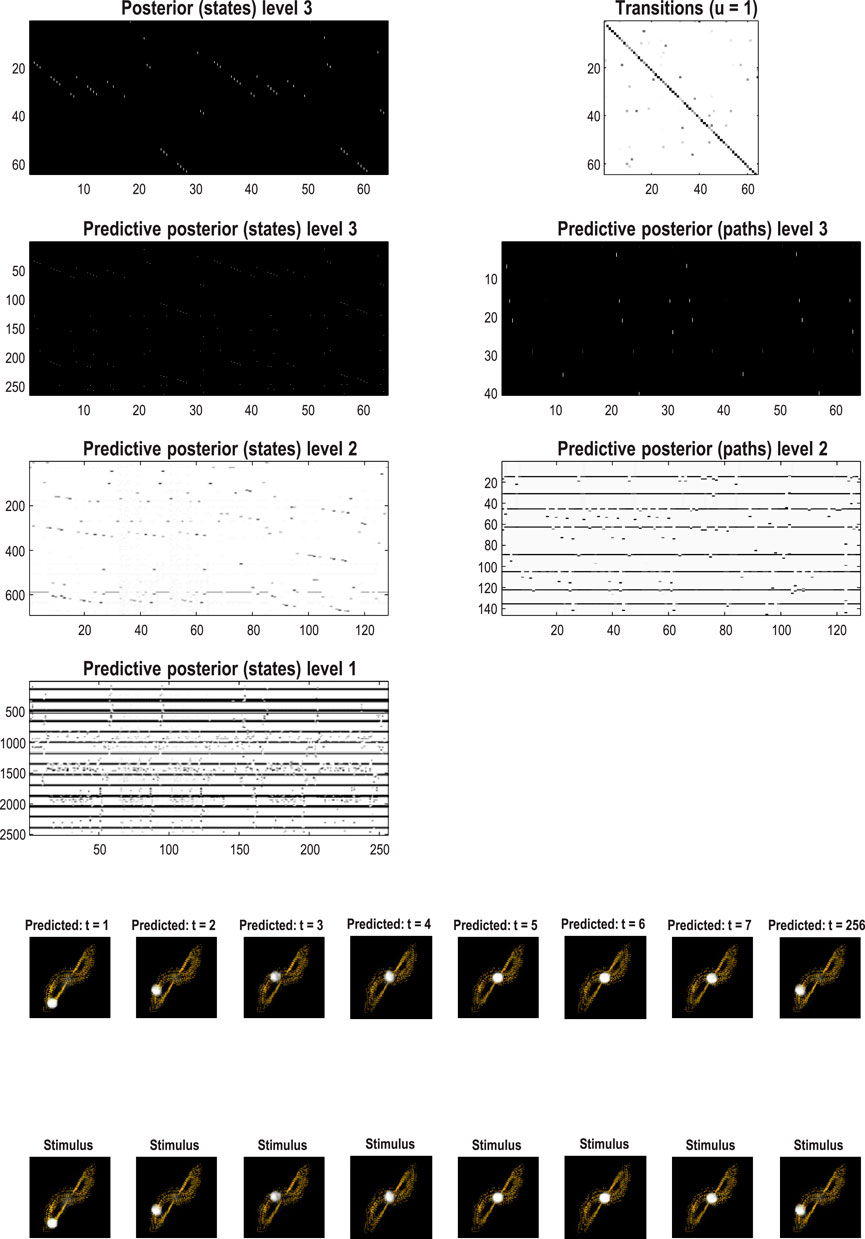

Structure learning was based on the first 512 images. Following this, the model was exposed to the subsequent 512 images to enable learning of the transition dynamics via the accumulation of appropriate Dirichlet parameters. The resulting transitions are shown in the top left of Figure 14. The resulting model has represented this dynamical system with 64 events (i.e., states at the highest level) with switching among certain events that recapitulate the stochastic switching of trajectories on the underlying Lorenz attractor. Figure 14 shows the predictive posteriors over states at successive levels and predicted images in response to a stimulus. This stimulus was a 128-image sequence from the training set. Following this initial “prompt,” the stimulus was rendered imprecise for a further 128 images and then removed completely for the remainder of the simulation period.

Figure 14. Quantized stochastic chaos. This figure uses the same format as Figure 12 to illustrate the learned transitions following fast structure learning and subsequent active learning, based on the first and second half of the image sequence depicted in Figure 13. In this example, the dynamics are summarized in terms of 64 events that pursue stochastic orbits under the discovered probability transition matrix shown on the upper left. The lower panels show the posterior predictions in pixel space and the accompanying stimuli presented for the first quarter of the simulated recognition and generation illustrated in Figure 15.

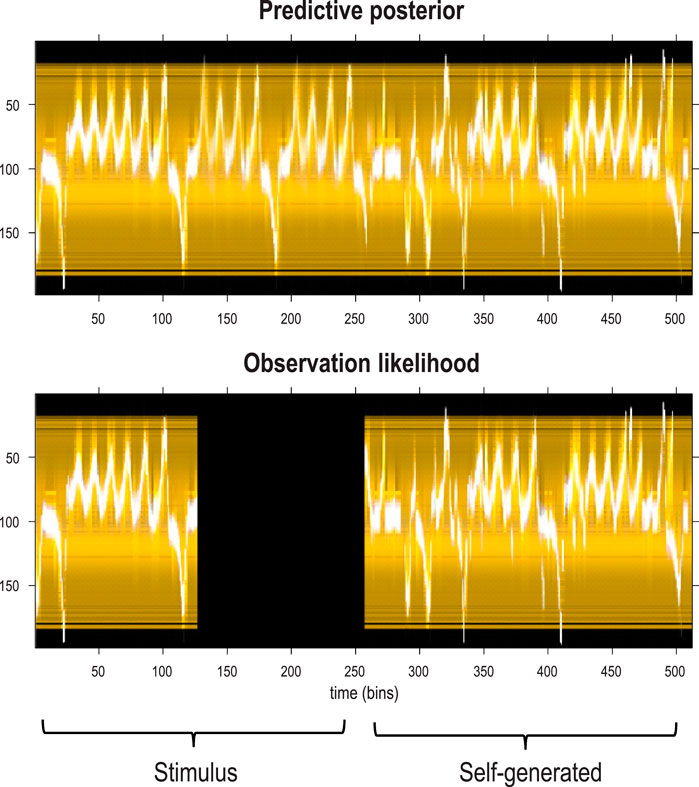

Figure 15 shows the stimuli and posterior predictions induced with this stimulation protocol. Maximum-intensity projections over the horizontal dimension were concatenated to render the fluctuations of the first hidden (Lorenz) state easily visible (compare Figure 15 with Figure 14). This format shows that the first 128 images have been recognized veridically; namely, the paths through renormalized latent state spaces have been correctly identified. When the stimulus is rendered imprecise (the dark region in the lower panel), the posterior predictions continue to produce plausible chaotic dynamics until the stimulus is removed altogether at 256 time bins. At this point, the RGM generates its own outcomes based on the learned generative model, which correctly infers the latent causes of this self-generated content. In other words, the second sequence of stimuli in the lower panel of Figure 15 is generated by the model’s discrete representation of chaotic events, as opposed to the first period, in which they were generated by solving continuous stochastic differential equations.