- 1Hamilton Glaucoma Center and Division of Ophthalmology Informatics and Data Science, Shiley Eye Institute, Viterbi Family Department of Ophthalmology, University of California (UC) San Diego, La Jolla, CA, United States

- 2Kanto Central Hospital of the Mutual Aid Association of Public School Teachers, Tokyo, Japan

- 3Tajimi Iwase Eye Clinic, Tajimi, Japan

- 4Department of Ophthalmology, Toho University Ohashi Medical Center, Tokyo, Japan

- 5Department of Ophthalmology and Visual Science, Tokyo Medical and Dental University, Tokyo, Japan

- 6Department of Ophthalmology, Graduate School of Medicine, The University of Tokyo, Tokyo, Japan

- 7Center Hospital of the National Center for Global Health and Medicine, Tokyo, Japan

- 8R&D Division, Topcon Corporation, Tokyo, Japan

- 9Department of Ophthalmology, Kanazawa University Graduate School of Medical Sciences, Kanazawa, Japan

- 10Department of Innovative Visual Science, Osaka University Graduate School of Medicine, Osaka, Japan

- 11Department of Myopia Control Research, Aichi Medical University Medical School, Nagakute, Japan

- 12Department of Ophthalmology, Tohoku University School of Medicine, Sendai, Japan

- 13Department of Ophthalmology, Seoul National University College of Medicine, Seoul National University Bundang Hospital, Seongnam, Republic of Korea

- 14Department of Ophthalmology, LKS Faculty of Medicine, The University of Hong Kong, Hong Kong, Hong Kong SAR, China

Purpose: To evaluate the diagnostic accuracy of a deep learning autoencoder-based model utilizing regions of interest (ROI) from optical coherence tomography (OCT) texture enface images for detecting glaucoma in myopic eyes.

Methods: This cross-sectional study included a total of 453 eyes from 315 participants from the multi-center "Swept-Source OCT (SS-OCT) Myopia and Glaucoma Study", composed of 268 eyes from 168 healthy individuals and 185 eyes from 147 glaucomatous individuals. All participants underwent swept-source optical coherence tomography (SS-OCT) imaging, from which texture enface images were constructed and analyzed. The study compared four methods: (1) global RNFL thickness, (2) texture enface image, (3) a single autoencoder model trained only on healthy eyes, and (4) a dual autoencoder model trained on both healthy and glaucomatous eyes. Diagnostic accuracy was assessed using the areas under the receiver operating characteristic curve (AUROC) and the precision-recall curve (AUPRC).

Results: The dual autoencoder model achieved the highest AUROC (95% CI) (0.92 [0.88, 0.95]), significantly outperforming the single autoencoder model trained only on healthy eyes (0.86 [0.83, 0.88], p = 0.01), the global RNFL thickness model (0.84 [0.80, 0.86], p = 0.003), and the texture enface model (0.83 [0.79, 0.85], p = 0.005). Using AUPRC (95% CI), the dual autoencoder model (0.86 [0.83, 0.89]) also outperformed the single autoencoder model trained only on healthy eyes (0.80 [0.78, 0.82], p = 0.02), the global RNFL thickness model (0.74 [0.70, 0.76], p = 0.001), and the texture enface model (0.71 [0.68, 0.73], p<0.001). No significant difference was observed between the global RNFL thickness measurement and the texture enface measurement (p = 0.47).

Discussion: The dual autoencoder model, which integrates reconstruction errors from both healthy and glaucomatous training data, demonstrated superior diagnostic accuracy compared to the single autoencoder model, global RNFL thickness and texture enface-based approaches. These findings suggest that deep learning models leveraging ROI-based reconstruction error from texture enface images may enhance glaucoma classification in myopic eyes, providing a robust alternative to conventional structural thickness metrics.

Introduction

Glaucoma is reportedly more prevalent in myopic eyes, particularly in highly myopic eyes (1), than in emmetropic eyes (2–4). It has been estimated that approximately 5 billion people will be affected by myopia by the year 2050. Of these, approximately 1 billion will be affected by high myopia (5). Diagnosing glaucoma in myopic eyes is challenging due to structural alterations, such as optic disc tilt, peripapillary atrophy, and thinner retinal nerve fiber layers (RNFL), which complicate standard assessments such as RNFL thickness (e.g., 6, 7). Other sources of difficulty in detecting glaucoma in myopic eyes are discussed in detail by Tan et al. (8) and Jiravarnsirikul and colleagues (9).

Recently, our group proposed texture-based enface imaging to detect glaucoma by leveraging intrinsic retinal texture properties rather than relying solely on thickness metrics (10). Results indicated that this novel texture-based analysis method can improve on standard macular ganglion cell-inner plexiform layer thickness, macular retinal nerve fiber layer thickness, and ganglion cell complex thickness maps for discriminating between highly myopic glaucomatous and highly myopic healthy eyes.

Instead of analyzing global optic nerve head (ONH) RNFL changes, as is often done, focusing on specific regions of interest (ROI) may improve detection sensitivity because glaucomatous damage tends to be localized in early stages. Bowd et al. reported that ROI-based approaches show promise in tracking glaucomatous progression by isolating regions more likely to exhibit structural deterioration (11). This method employed a single autoencoder trained on healthy eyes to detect abnormal changes based on reconstruction errors, which are deviations from learned normal patterns that suggest potential glaucomatous damage. The current study expands this approach by employing two specialized autoencoders: a Healthy Autoencoder trained on normal aging eyes to model expected age-related change, and a Glaucoma Autoencoder trained on glaucomatous eyes to capture disease-specific damage. This dual-autoencoder system enhances detection by comparing how well an input image aligns with learned normal or glaucomatous patterns.

The objective of the current study was to evaluate whether the dual-autoencoder framework improves glaucoma detection in myopic eyes compared to a single autoencoder model, global RNFL thickness, or texture enface image analysis alone.

Materials and methods

For the current analysis, 453 eyes from 315 individuals participating in the multi-center "Swept-Source OCT (SS-OCT) Myopia and Glaucoma Study" (12–14) were included: 268 eyes from 168 healthy participants and 185 eyes from 147 glaucomatous participants.

Eight institutions, primarily from Asia, participated in the Swept-Source OCT (SS-OCT) Myopia and Glaucoma Study (Kanazawa University, Osaka University, Tajimi Iwase Eye Clinic, Toho University Ohashi Medical Center, Tohoku University, Seoul National University Bundang Hospital, University of California, San Diego, and Hong Kong Eye Hospital). All study participants provided written informed consent according to their institution's requirements, fulfilled all inclusion and exclusion criteria, and were evaluated according to a standardized protocol. The methodology adhered to the tenets of the Declaration of Helsinki for research involving human subjects.

All individuals underwent comprehensive ocular examination including refraction and corneal curvature measurements (ARK-900; NIDEK), best-corrected visual acuity (BCVA) with a 5-meter Landolt chart, axial length measurements (IOL Master; Carl Zeiss Meditec, Inc), slit-lamp biomicroscopy, Goldmann applanation tonometry, dilated fundus examination, fundus photography, stereophotography, and Humphrey Field Analyzer 24–2 Swedish Interactive Threshold Algorithm Standard testing (Carl Zeiss Meditec, Inc).

Individuals were excluded if they had a family history of glaucoma, ocular or systemic diseases that could affect VF or OCT results, or a history of systemic steroid or anti-cancer drug use. Additionally, individuals with clinically significant hypertension or hypotension (treated systolic blood pressure<100 mmHg) were excluded. All individuals were between the ages of 30 and 70 years (12, 14).

Eyes were included if they had a spherical equivalent (SE) of +1 diopter (D) or less, astigmatism<2 D, axial length<28 mm, best-corrected visual acuity (BCVA) ≥20/25, and good-quality OCT images and fundus photographs as determined by expert graders.

Eyes were excluded if there was any contraindication to pupillary dilation or if they were found to have narrow anterior chamber angles defined as Shaffer grade ≤2. Unreliable visual field results, characterized by fixation loss or false negatives >20% or false positives >15%, were also excluded. Additional exclusion criteria included the presence of optic nerve or retinal abnormalities other than glaucoma, pathologic myopia or suspected pathologic myopia (e.g., eyes with pronounced optic disc ovality >1.33 on fundus examination, inverted optic discs, posterior staphyloma, focal and/or diffuse macular chorioretinal atrophy, intrachoroidal cavitation, or circular peripapillary atrophy zones), and any history of intraocular or refractive surgery. Healthy subjects with a family history of glaucoma were excluded. Ocular or systemic conditions that could affect visual field or OCT results—such as clinically significant cataract, diabetic retinopathy, age-related macular degeneration, epiretinal membrane, or systemic steroid/anti-cancer drug use—were also grounds for exclusion, as were clinically significant hypertension or hypotension.

Normal eyes had no abnormal findings OU on complete ophthalmologic assessments including slit-lamp and fundus examinations, an intraocular pressure < 21 mmHg, clinically open angles, normal optic disc appearance by stereoscopic optic disc photograph assessment (by M.A., A.I., G.T., and K.O.M.), and normal visual field (VF) results. Glaucomatous eyes were defined by the presence of a glaucomatous optic disc appearance confirmed by masked assessment of stereoscopic disc photographs (M.A., A.I., G.T., and K.O.M.) and corresponding VF defects consistent with the Hodapp–Parrish–Anderson criteria (15). Treated glaucoma patients with controlled IOP were included. All included eyes had an MD better than –12 dB, consistent with early to moderate glaucoma severity.

Axial length-based myopia definition

We defined high axial myopia by an axial length of > 26.0 mm because axial elongation can lead to morphological changes of the optic disc and the fundus (6); myopia defined by refractive error does not necessarily reflect these changes. Moreover, cataract surgery or refractive procedures can result in a refractive shift in eyes that are axially elongated but no longer classified as (highly) myopic by refractive error. To systematically categorize axial length, we used the following classification:

● Emmetropic-Mild Myopic (<24.5 mm)

● Moderately Myopic (24.5-26.0 mm)

● Highly Myopic (>26.0 mm)
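
The classification above can be written as a small helper. This is a minimal Python sketch; the function name and label strings are illustrative assumptions, and placing exactly 24.5 mm and 26.0 mm in the moderately myopic group follows the inclusive range listed above.

```python
def axial_length_category(axial_length_mm: float) -> str:
    """Map an axial length in mm to one of the three study categories."""
    if axial_length_mm < 24.5:
        return "emmetropic-mild myopic"
    if axial_length_mm <= 26.0:
        # 24.5-26.0 mm inclusive, matching the listed range
        return "moderately myopic"
    return "highly myopic"
```

For example, `axial_length_category(26.3)` falls in the highly myopic group under these cut-offs.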

Optical coherence tomography

All participants underwent swept-source optical coherence tomography (SS-OCT) imaging (DRI OCT Triton; Topcon, Inc). A 6.0 × 6.0 mm ONH raster scan was obtained in each eye to assess the ONH and peripapillary structures. This system uses a 1050-nm wavelength to achieve high-speed scanning and deeper tissue penetration. Each scan comprises 256 horizontal B-scans, each consisting of 512 A-scans. The scanning protocol has been previously described (12) and all OCT images deemed to meet acceptable quality standards were included in the analysis.

Texture enface image

Texture can be characterized as a visual pattern that reflects the spatial arrangement of pixel intensities in an image. Texture analysis captures the granularity and repetitive patterns of object surfaces. In OCT images, each retinal layer has a unique texture that can be visually distinguished. We recently proposed a new texture descriptor called SALSA-Texture, which is robust to local intensity variation caused by illumination (10). In brief, a 70 μm slab below the inner limiting membrane is created. Then, for each pixel in a B-scan, a 9 × 9 pixel neighbourhood is defined. A local normalized difference of Gaussian filter is applied to reduce noise, followed by Homogeneous-bin (H-bin) normalization to increase robustness to local contrast differences (16). In this study, the global RNFL thickness and the texture enface measurement were both calculated using the 2–6 mm grid, over which average RNFL thickness and average intensity were computed, respectively.

Region of interest map

Deep learning autoencoder for glaucoma classification

Previously, we used a deep learning autoencoder (DL-AE) to detect glaucomatous changes; see (11) for details. Briefly, the autoencoder was trained to analyze difference images between baseline and follow-up RNFL images, identifying changes indicative of glaucoma progression. The goal of the DL-AE is to reconstruct an output x′ that matches the input x as closely as possible. Because the number of nodes becomes progressively smaller (encoder) and then larger again (decoder), the model is forced to use the most important features of the image to reconstruct the input from compressed data. The reconstruction error was used as a key indicator, with higher errors suggesting deviations from learned patterns of aging changes in glaucomatous and healthy eyes.

In this study, we expand on this approach by employing two specialized autoencoders to classify each texture enface image as either "healthy-like" or "glaucoma-like":

1. Healthy Autoencoder – Trained exclusively on texture enface images from healthy eyes, learning not only common patterns of normal ONH structure but also age-related changes and machine-specific variability.

2. Glaucoma Autoencoder – Trained exclusively on texture enface images from glaucoma eyes, capturing characteristics indicative of glaucomatous damage.

Because each autoencoder is restricted to its own class, they become "specialists" in reconstructing either healthy or glaucoma-specific patterns. During inference, any texture enface image is presented to both autoencoders, generating two separate reconstructions. The reconstruction error (input map – reconstruction map) from each model provides insight into how well the image aligns with the patterns learned by each autoencoder.

The key idea is that the healthy autoencoder will reconstruct healthy-like images more accurately, leading to lower reconstruction errors for normal eyes, but higher errors when presented with glaucomatous images it has never encountered. Conversely, the glaucoma autoencoder will perform well on glaucomatous images but struggle to reconstruct healthy patterns, resulting in higher reconstruction errors for normal eyes.
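
The comparison of the two reconstructions can be sketched as follows. This is a minimal NumPy illustration, not the study's implementation: `reconstruct_healthy` and `reconstruct_glaucoma` are hypothetical callables standing in for the two trained autoencoders, the absolute difference is an assumed error measure, and the image-level call by lower mean error is a simplification (the study instead segments the error maps and computes an ROI-based score).

```python
import numpy as np

def dual_error_features(image, reconstruct_healthy, reconstruct_glaucoma):
    """Return an (H, W, 2) array of per-pixel reconstruction errors.

    Channel 0 holds the error of the healthy autoencoder, channel 1 the
    error of the glaucoma autoencoder (absolute difference is an assumption).
    """
    image = np.asarray(image, dtype=float)
    err_healthy = np.abs(image - reconstruct_healthy(image))
    err_glaucoma = np.abs(image - reconstruct_glaucoma(image))
    return np.stack([err_healthy, err_glaucoma], axis=-1)

def image_level_call(features):
    """Label an image by whichever autoencoder has the lower mean error."""
    mean_healthy, mean_glaucoma = features.reshape(-1, 2).mean(axis=0)
    return "healthy-like" if mean_healthy <= mean_glaucoma else "glaucoma-like"
```

The two-channel output corresponds to the per-pixel 2D error feature that the segmentation step later consumes.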

Similar to our previous work, we employed a 5-layer deep learning architecture (11). We used Glorot uniform initialization for the weight matrices and the tanh activation function for the hidden layers. The network was trained with the Adam optimization algorithm, which typically yields better results than the simpler stochastic gradient descent algorithm, to minimize the mean squared error (MSE) loss with a learning rate of α = 10⁻³ and a batch size of 50. The deep learning autoencoder was trained end-to-end for 150 epochs, and we selected the best model based on the lowest reconstruction error.
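
To make the architecture description concrete, the following NumPy sketch shows Glorot uniform initialization, a tanh bottleneck network, and the MSE reconstruction loss. The layer widths, the linear output layer, and all function names are assumptions made for illustration; the source does not report layer sizes. The Adam training loop is omitted, and the hyperparameters stated above are only recorded in a dictionary.

```python
import numpy as np

# Settings reported in the text; the training loop itself is not shown here.
TRAIN_CONFIG = {"optimizer": "adam", "learning_rate": 1e-3,
                "batch_size": 50, "epochs": 150, "loss": "mse"}

def glorot_uniform(rng, fan_in, fan_out):
    """Sample weights from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out))."""
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

def init_autoencoder(rng, layer_sizes):
    """Create (weight, bias) pairs for consecutive layers, e.g. [64, 32, 8, 32, 64]."""
    return [(glorot_uniform(rng, m, n), np.zeros(n))
            for m, n in zip(layer_sizes[:-1], layer_sizes[1:])]

def reconstruct(params, x):
    """Forward pass: tanh on hidden layers, linear output (an assumption)."""
    h = x
    for i, (w, b) in enumerate(params):
        h = h @ w + b
        if i < len(params) - 1:
            h = np.tanh(h)
    return h

def mse(x, x_hat):
    """Mean squared reconstruction error over all pixels and samples."""
    return float(np.mean((x - x_hat) ** 2))
```

With hypothetical widths `[64, 32, 8, 32, 64]`, the narrow middle layer is what forces the network to compress the input before reconstructing it.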

Markov-based image segmentation

The reconstruction error images were used to estimate the ROI map identifying areas most likely to correspond to glaucomatous structural damage. To incorporate spatial consistency and reduce noise, we applied a Markov random field (MRF)-based segmentation algorithm, which exploits the statistical correlation of reconstruction errors among neighbouring pixels (17). The MRF, a stochastic process, models the local characteristics of an image and integrates this information with observed data to infer the most probable segmentation.

Each input texture enface image was segmented into two classes, distinguishing healthy-like and glaucoma-like regions. Each pixel was represented by a 2D error feature, incorporating reconstruction errors from both healthy and glaucoma autoencoders. The MRF framework treated each pixel's classification label as a hidden variable, estimating the most probable classification while maintaining spatial smoothness in the segmentation process.

To optimize segmentation, we adopted a 2D prior-based MRF model, inspired by the approach of Kato and Pong (18) for color-textured image segmentation. In their work, multi-dimensional features, such as color and texture, were used as priors to enhance segmentation accuracy. Similarly, in our model, 2D reconstruction error features serve as priors within the MRF, allowing for more robust, spatially coherent segmentation of glaucoma-related structural damage.
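
The general idea of labelling pixels from a 2D error feature while encouraging neighbouring pixels to agree can be illustrated with a simple stand-in. The sketch below is not the Kato and Pong model or the study's algorithm: it uses a synchronous, ICM-style (iterated conditional modes) update with a squared-distance data term and a Potts smoothness term on 4-neighbours. The function name, `beta`, and `n_iter` are assumptions.

```python
import numpy as np

def icm_segment(features, beta=1.0, n_iter=5):
    """Two-class labelling of an (H, W, 2) error-feature image.

    Returns an (H, W) integer array: 0 = healthy-like, 1 = glaucoma-like.
    """
    feats = np.asarray(features, dtype=float)
    # Initial labels: glaucoma-like where the glaucoma autoencoder fits better.
    labels = (feats[..., 1] < feats[..., 0]).astype(int)
    for _ in range(n_iter):
        # Data term: squared distance to each class mean in error space.
        data_cost = np.empty(feats.shape[:2] + (2,))
        for k in (0, 1):
            mask = labels == k
            mean_k = feats[mask].mean(axis=0) if mask.any() else feats.mean(axis=(0, 1))
            data_cost[..., k] = ((feats - mean_k) ** 2).sum(axis=-1)
        # Potts term: number of 4-neighbours whose label differs from k.
        # Edge padding repeats border labels, so image borders add no penalty
        # for keeping the current label.
        padded = np.pad(labels, 1, mode="edge")
        neighbours = np.stack([padded[:-2, 1:-1], padded[2:, 1:-1],
                               padded[1:-1, :-2], padded[1:-1, 2:]])
        disagree = np.stack([(neighbours != k).sum(axis=0) for k in (0, 1)], axis=-1)
        new_labels = np.argmin(data_cost + beta * disagree, axis=-1)
        if np.array_equal(new_labels, labels):
            break  # labelling is stable
        labels = new_labels
    return labels
```

Larger values of `beta` favour smoother regions at the cost of suppressing small isolated defects, which is the trade-off any spatial prior of this kind introduces.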

Glaucoma classification

Instead of averaging intensity within a fixed 2–6 mm diameter region centered on the ONH using predefined concentric circles (2 mm and 6 mm), we introduce a ROI-Based Glaucoma Score that leverages the segmented region identified through MRF-based classification. This score accounts for both the extent of the affected region and the intensity reduction due to glaucomatous thinning. The ROI-Based Glaucoma Score is defined as:

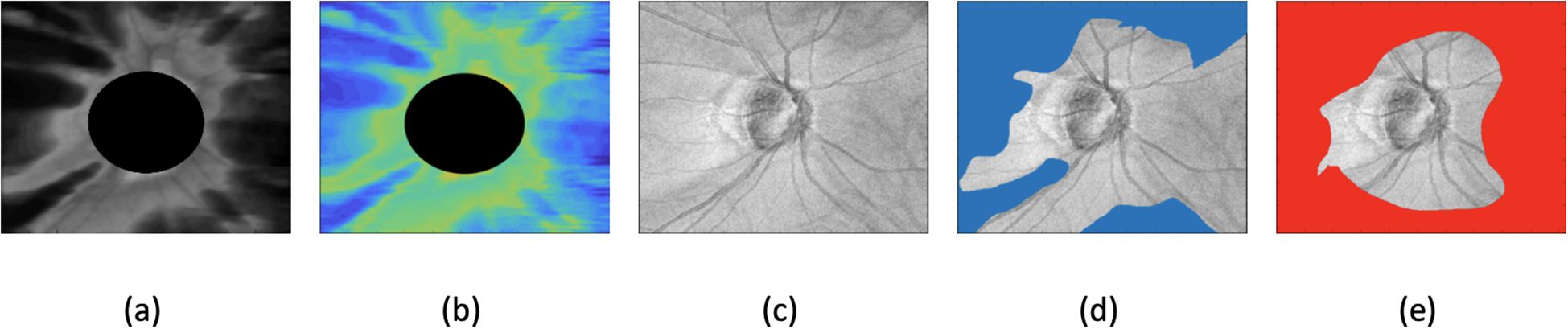

The four quantities in this expression are (1) the area of the segmented region identified as glaucoma-like, (2) the total image area, (3) the mean intensity within the glaucoma-like region, and (4) the mean intensity from a healthy training dataset. Figure 1 presents an example of a glaucomatous eye, illustrating (a) the RNFL thickness map, (b) texture image, and (c) SLO (scanning laser ophthalmoscopy) image, along with (d, e) the ROI-based maps produced by the dual and single autoencoder models.

Figure 1. Example of glaucomatous eyes, showing (a) RNFL (retinal nerve fiber layer) thickness map, (b) texture image, (c) SLO (scanning laser ophthalmoscopy) image, (d) dual autoencoder ROI-based map, and (e) single autoencoder ROI-based map.

Training and evaluation

Five-fold cross-validation was used to provide out-of-sample predictions for the autoencoder ROI models and to avoid overoptimistic estimates of classification accuracy. Both healthy and glaucoma eyes were randomly divided at the patient level into 5 subsets. For each model, we used four subsets to train the model and the fifth subset to assess model performance. This sequence was repeated 5 times, with each subset serving as the test set once, so that each tested eye was never part of its own training set and was tested only once. An augmentation procedure in the form of horizontal mirroring, similar to that used by Christopher et al., was applied to the minority class to balance the data (19).
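
A patient-level split and mirroring step of this kind could look like the sketch below. It is a hedged illustration in NumPy: the function names and seeds are hypothetical, the split would be called separately for the healthy and glaucoma groups, and applying mirroring only to the training folds is an assumption not stated in the text.

```python
import numpy as np

def patient_level_folds(patient_ids, n_folds=5, seed=0):
    """Assign each eye a fold index so both eyes of a patient share a fold."""
    patient_ids = np.asarray(patient_ids)
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(np.unique(patient_ids))
    fold_of_patient = {p: i % n_folds for i, p in enumerate(shuffled)}
    return np.array([fold_of_patient[p] for p in patient_ids])

def mirror_minority(images, labels, seed=0):
    """Balance two classes by appending horizontally mirrored minority images.

    images: (N, H, W) array; labels: (N,) array of 0/1.
    Each minority image is mirrored at most once, so full balance is only
    reached when the deficit does not exceed the minority count.
    """
    images, labels = np.asarray(images), np.asarray(labels)
    counts = np.bincount(labels, minlength=2)
    minority = int(np.argmin(counts))
    deficit = int(counts.max() - counts.min())
    idx = np.flatnonzero(labels == minority)
    rng = np.random.default_rng(seed)
    chosen = rng.choice(idx, size=min(deficit, idx.size), replace=False)
    mirrored = images[chosen][:, :, ::-1]  # flip the horizontal axis
    return (np.concatenate([images, mirrored]),
            np.concatenate([labels, np.full(chosen.size, minority)]))
```

Splitting on patient identifiers rather than eyes is what keeps a participant's fellow eye out of the training set when the other eye is being tested.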

Statistical analyses

Descriptive statistics included the calculation of the mean and standard deviation for normally distributed variables and median, first quartile, and third quartile values for non-normally distributed variables. Student's t-tests or Mann-Whitney tests were used to evaluate the statistical significance of differences in demographic and clinical parameters between glaucoma patients and healthy individuals.

Areas under the receiver operating characteristic curves (AUROCs) and precision recall curves were used to evaluate the diagnostic accuracies of the different models investigated in the study. An AUROC regression model with maximum likelihood estimator was used to adjust for the potentially confounding effects of age, image quality, axial length, and. The difference between AUROCs was assessed using a Wald test based on the bootstrap covariance (20). In addition, precision-recall curves (AUPRCs) were computed to account for class imbalance and sensitivities at fixed specificities of 80% and 95% were calculated.
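
For readers less familiar with these metrics, the sketch below computes an unadjusted AUROC and the sensitivity at a fixed specificity from per-eye scores. It is an illustration only: it assumes higher scores indicate glaucoma, uses hypothetical function names, and does not reproduce the covariate-adjusted regression model or the Stata routines used in the study.

```python
import numpy as np

def auroc(scores, labels):
    """AUROC as P(score of a glaucoma eye > score of a healthy eye); ties count 0.5."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pos, neg = scores[labels], scores[~labels]
    diff = pos[:, None] - neg[None, :]
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / (pos.size * neg.size))

def sensitivity_at_specificity(scores, labels, specificity):
    """Sensitivity at the lowest threshold whose specificity meets the target.

    An eye is called glaucomatous when its score exceeds the threshold.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pos, neg = scores[labels], scores[~labels]
    # Candidate thresholds are the observed healthy scores; the largest one
    # always yields specificity 1, so the list below is never empty.
    ok = [t for t in np.unique(neg) if np.mean(neg <= t) >= specificity]
    return float(np.mean(pos > min(ok)))
```

Fixing specificity at 80% or 95% and reporting the resulting sensitivity, as done in this study, summarizes performance at clinically relevant operating points rather than over the whole curve.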

To account for the correlation between observations from the same eye, a bootstrap resampling procedure was used to derive 95% CIs and P values, where the eye-level clusters were considered as the units of resampling.
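
A cluster bootstrap of this kind can be sketched as follows. The code resamples whole clusters with replacement and takes percentile limits of the resampled AUROCs; the percentile interval, the function names, and the generic `cluster_ids` argument are assumptions, since the text does not specify the interval type.

```python
import numpy as np

def _auroc(scores, labels):
    """Rank-based AUROC with ties counted as 0.5."""
    pos, neg = scores[labels], scores[~labels]
    diff = pos[:, None] - neg[None, :]
    return ((diff > 0).sum() + 0.5 * (diff == 0).sum()) / (pos.size * neg.size)

def cluster_bootstrap_auroc_ci(scores, labels, cluster_ids, n_boot=1000, seed=0):
    """Percentile 95% CI for AUROC, resampling clusters rather than observations."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    cluster_ids = np.asarray(cluster_ids)
    members = [np.flatnonzero(cluster_ids == c) for c in np.unique(cluster_ids)]
    rng = np.random.default_rng(seed)
    stats = []
    for _ in range(n_boot):
        drawn = rng.integers(0, len(members), size=len(members))
        idx = np.concatenate([members[i] for i in drawn])
        if labels[idx].all() or not labels[idx].any():
            continue  # skip resamples that contain only one class
        stats.append(_auroc(scores[idx], labels[idx]))
    lower, upper = np.percentile(stats, [2.5, 97.5])
    return float(lower), float(upper)
```

Because every observation in a drawn cluster enters the resample together, within-cluster correlation is preserved in each bootstrap replicate.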

Statistical analyses were performed using Stata (StataCorp LLC, College Station, TX). P values less than 0.05 were considered statistically significant.

Results

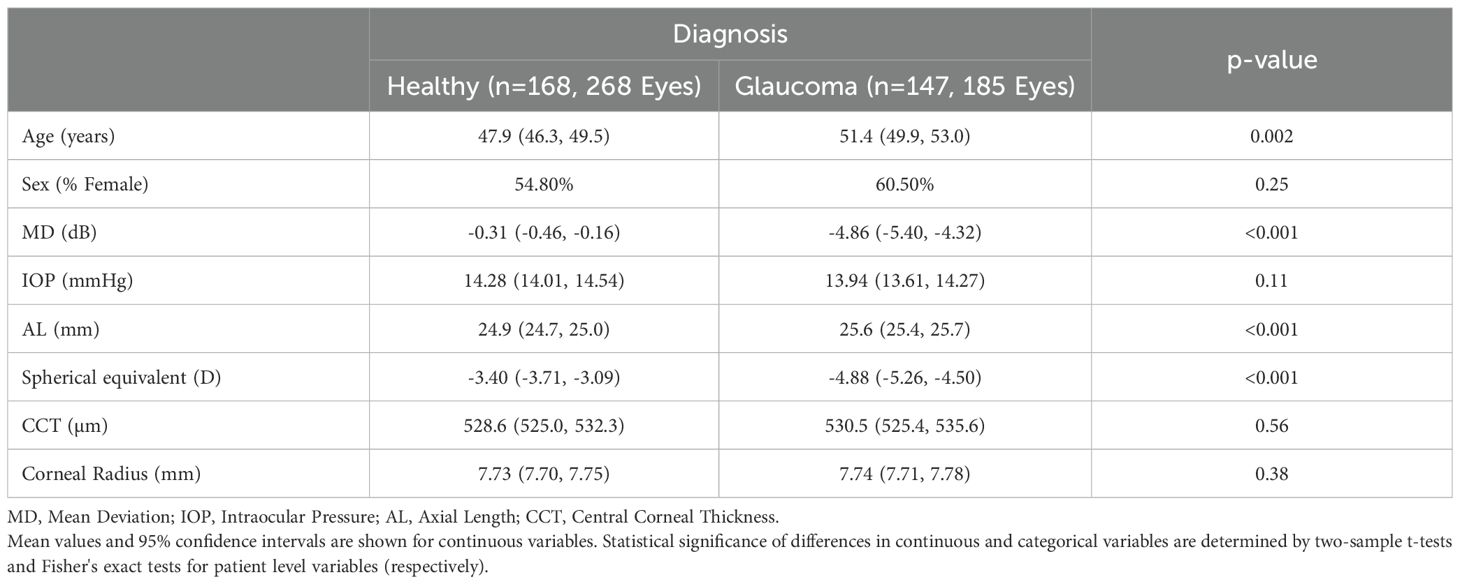

This cross-sectional study included 185 eyes from 147 patients diagnosed with POAG and 268 eyes from 168 healthy subjects. Table 1 presents the demographic and clinical characteristics of the study participants and their eyes. The mean age (95% CI) at SS-OCT imaging was 51.4 (49.9, 53.0) years for the glaucoma group and 47.9 (46.3, 49.5) years for the healthy controls (p = 0.002). POAG eyes exhibited significantly worse VF mean deviation (MD) (-4.86 [-5.40, -4.32]) compared to healthy eyes (-0.31 [-0.46, -0.16]) (p< 0.001). The proportion of females was slightly higher in the glaucoma group (60.5%) compared to the healthy group (54.8%), but this difference was not statistically significant (p = 0.357).

Mean spherical equivalent was significantly lower in POAG eyes (-4.88 [-5.26, -4.50]) than in healthy eyes (-3.40 [-3.71, -3.09]) (p< 0.001). Additionally, axial length was significantly longer in the POAG group (25.6 [25.4, 25.7] mm) compared to the healthy group (24.9 [24.7, 25.0] mm) (p< 0.001). No significant differences were found in CCT (p = 0.565), corneal radius (p = 0.381), or IOP (p = 0.114).

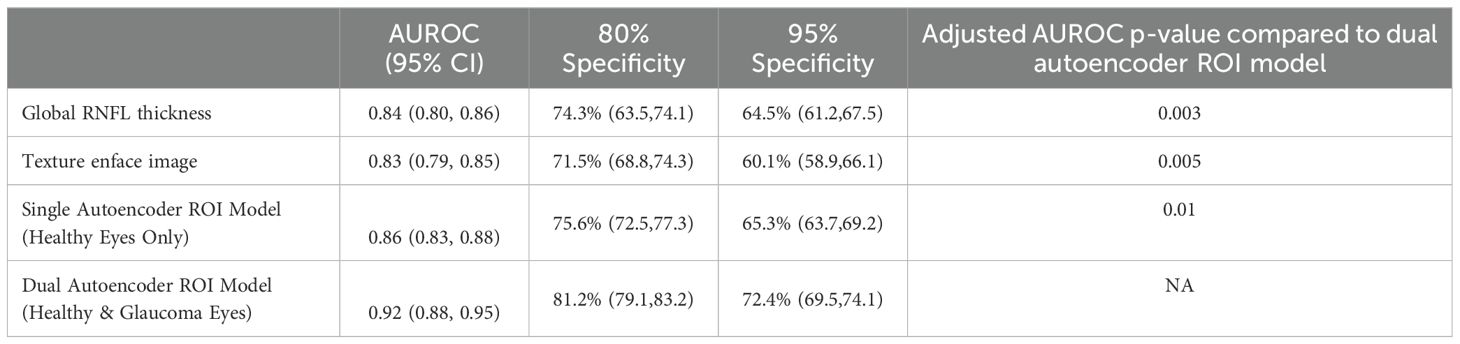

AUROCs and sensitivities at 80% and 95% specificity for the single autoencoder model (trained only on healthy eyes), the dual autoencoder model (trained on both healthy and glaucomatous eyes), the global RNFL thickness measurement, and the texture enface measurement are summarized in Table 2. The dual autoencoder model demonstrated the highest AUROC (0.92 [0.88, 0.95]), significantly outperforming the single autoencoder model trained only on healthy eyes (0.86 [0.83, 0.88], p = 0.01), global RNFL thickness measurement (0.84 [0.80, 0.86], p = 0.003), and the texture enface measurement (0.83 [0.79, 0.85], p = 0.005).

Table 2. Area under the receiver operating characteristic curve (AUROC) and sensitivities at fixed specificities for each model.

At 80% specificity, the dual autoencoder model achieved higher sensitivity (81.2% [79.1, 83.2]) than the single autoencoder model trained only on healthy eyes (75.6% [72.5, 77.3]), the global RNFL thickness measurement (74.3% [63.5, 74.1]), and the texture enface measurement (71.5% [68.8, 74.3]) (all p < 0.05).

At 95% specificity, the dual autoencoder model also demonstrated superior sensitivity (72.4% [69.5, 74.1]) compared with the single autoencoder model trained only on healthy eyes (65.3% [63.7, 69.2]), the global RNFL thickness model (64.5% [61.2, 67.5]), and the texture enface model (60.1% [58.9, 66.1]) (all p < 0.05). There was no significant difference between the global RNFL thickness measurement and the texture enface measurement in terms of AUROC (0.84 vs. 0.83, p = 0.47).

Given the class imbalance in the dataset, AUPRC was used as it better reflects model performance in imbalanced settings by emphasizing the ability to correctly identify glaucomatous eyes while minimizing false positives. Unlike AUROC, which can be overly optimistic in imbalanced datasets, AUPRC provides a more informative evaluation of precision and recall trade-offs in this study. The dual autoencoder model achieved the highest AUPRC (0.86 [0.83, 0.89]), significantly outperforming the single autoencoder model trained only on healthy eyes (0.80 [0.78, 0.82], p = 0.02), the global RNFL thickness model (0.74 [0.70, 0.76], p = 0.001), and the texture enface model (0.71 [0.68, 0.73], p<0.001).

Because of the age difference between healthy individuals and those with glaucoma, we conducted an age-matched sub-analysis in which the mean ages of healthy eyes (n = 40, 48.3 years [46.1, 50.1]) and glaucoma eyes (n = 38, 49.2 years [47.5, 51.2]) were similar (p = 0.15).

In this adjusted analysis, the dual autoencoder model (trained on both healthy and glaucomatous eyes) achieved the highest age-adjusted AUROC (0.90 [0.87, 0.93]), significantly outperforming the global RNFL thickness measurement (0.82 [0.78, 0.85], p = 0.009), the texture enface measurement (0.81 [0.77, 0.85], p = 0.004), and the single autoencoder model trained only on healthy eyes (0.85 [0.81, 0.86], p = 0.03). These results confirm that even after adjusting for age differences, the dual autoencoder model remains the most effective in distinguishing glaucoma from healthy eyes.

To assess the versatility of the dual autoencoder approach, we applied it to RNFL thickness maps instead of enface texture images. The dual autoencoder using RNFL thickness map achieved an AUROC of 0.90 [0.87, 0.93], which was similar to 0.92 [0.88, 0.95] for dual autoencoder based on using enface images (p = 0.37).

Because axial length was significantly shorter in healthy than in glaucomatous eyes (p < 0.001), we evaluated whether variations in this measurement influenced classification performance. To address this potential confounder, we performed subgroup analyses by training the model on two AL-defined groups and testing it on the third. If a patient had one eye in the training set and the other in the test set, the training eye was removed to ensure independence between training and evaluation at the patient level. Notably, 23 patients (46 eyes) had their two eyes categorized into different axial length groups.
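
The train-on-two-groups, test-on-the-third design with patient-level independence can be sketched as below. The record layout (dictionaries with `patient` and `group` keys) and the function name are assumptions made for illustration.

```python
def leave_one_group_out(eyes, test_group):
    """Split eye records into (train, test) for one held-out axial length group.

    eyes: list of dicts with at least the keys 'patient' and 'group'.
    Training eyes whose fellow eye is in the test set are dropped.
    """
    test = [e for e in eyes if e["group"] == test_group]
    test_patients = {e["patient"] for e in test}
    train = [e for e in eyes
             if e["group"] != test_group and e["patient"] not in test_patients]
    return train, test
```

Under this rule, a patient with one moderately myopic eye and one highly myopic eye contributes only the highly myopic eye when the highly myopic group is the test set.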

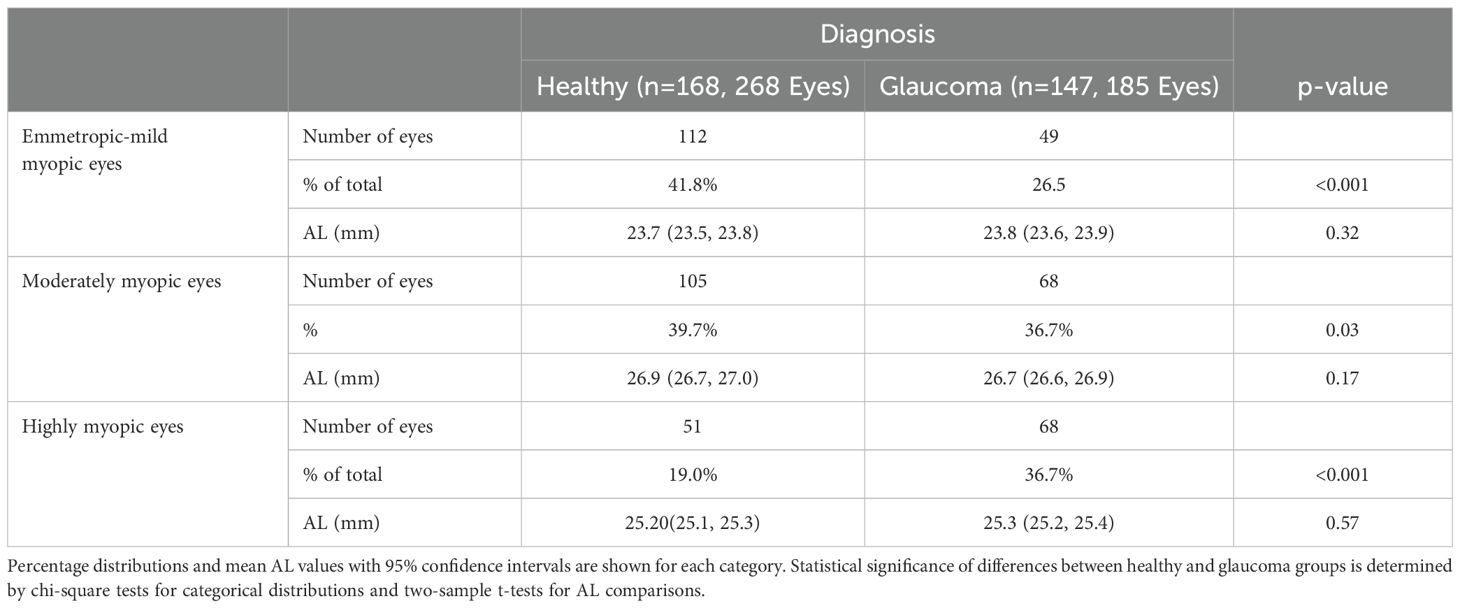

Table 3 presents the distribution of eyes by axial length category and diagnosis. Emmetropic-mild myopic eyes comprised 41.79% (112/268) of healthy eyes and 26.48% (49/185) of glaucomatous eyes (p < 0.001). The mean axial length (95% CI) in this group was 23.69 mm (23.53, 23.85) for healthy eyes and 23.79 mm (23.68, 23.89) for glaucomatous eyes (p = 0.32). Moderately myopic eyes accounted for 39.18% (105/268) of healthy eyes and 36.75% (68/185) of glaucomatous eyes (p = 0.03). The mean axial length was 25.20 mm (25.09, 25.30) in healthy eyes and 25.27 mm (25.18, 25.36) in glaucomatous eyes (p = 0.57). Highly myopic eyes represented 19.02% (51/268) of healthy eyes and 36.75% (68/185) of glaucomatous eyes (p < 0.001). The mean axial length was 26.88 mm (26.73, 27.02) in healthy eyes and 26.73 mm (26.60, 26.87) in glaucomatous eyes (p = 0.17).

For emmetropic-mild myopic eyes, the dual autoencoder model (trained on two other groups) achieved an AUROC of 0.92 [0.89, 0.94], significantly outperforming the single autoencoder model (0.86 [0.81, 0.88], p = 0.03). For moderately myopic eyes, the dual autoencoder model trained on two other groups achieved an AUROC of 0.90 [0.86, 0.92], outperforming the single autoencoder model (0.83 [0.79, 0.85], p = 0.01). For highly myopic eyes, the dual autoencoder model trained on two other groups achieved an AUROC of 0.88 [0.85, 0.91], outperforming the single autoencoder model (0.80 [0.77, 0.83], p = 0.004).

Discussion

The results of the current study indicate that the dual autoencoder approach, with one autoencoder trained on normal aging eyes to model expected age-related change and one trained on glaucomatous eyes to capture disease-specific damage, achieved the highest AUROC, significantly outperforming the single autoencoder, global RNFL thickness, and texture enface models (all p ≤ 0.01). The glaucoma-trained autoencoder accounted for disease-specific changes beyond normal aging, improving classification accuracy, particularly in myopic eyes, where normal variations can obscure glaucomatous damage.

In myopic eyes, elongated axial length alters optic nerve morphology, making traditional ONH-based diagnostic metrics less reliable, as previously discussed. By incorporating both normal aging and glaucomatous damage, the dual autoencoder differentiates between natural variations and pathology more effectively than a model trained only on healthy eyes. Further, the ROI-based method allows for targeted analysis of the regions of the ONH most susceptible to glaucomatous damage, reducing false negatives, as shown previously. From a clinical standpoint, the ROI-based dual autoencoder approach, which combines the healthy and glaucoma autoencoders, could complement standard thickness measurements or replace them in cases where conventional RNFL metrics fail to provide clear diagnostic insights.

Other studies have investigated the use of texture analysis for detecting glaucoma and myopia with varying degrees of success. Leung and colleagues introduced RNFL Optical Texture Analysis (ROTA) to reveal the optical texture and trajectories of individual axonal fiber bundles including the optic nerve head region and the macula (21). This method integrates both RNFL thickness and RNFL reflectance measurements obtained from standard OCT scans. Results indicated that papillofoveal and papillomacular bundle defects previously thought to develop late in the disease were common in early glaucoma. In addition, ROTA-defined defects significantly increased the likelihood of abnormality in the corresponding central visual field defects (Humphrey Field Analyzer 10–2 protocol) (22). With regard to myopia, the texture-based assessment PAMELA (Pathological Myopia Detection Through Peripapillary Atrophy) has been shown to automatically assess a retinal fundus image for pathological myopia using an SVM classification (23). In addition, a sensitivity of 0.90 and a specificity of 0.94 with a total accuracy of up to 92.5% was obtained for detecting pathological myopia using a modified version of PAMELA incorporating grey level analysis (24). Finally, in an earlier study, Bowd et al. (10) reported that the performance of SALSA-texture analysis used in the current study for differentiating between healthy and highly myopic glaucoma eyes was superior to that reported currently (AUROCs 0.92; 95% CI: 0.88-0.94 versus 0.83; 95% CI: 0.79-0.85). The inferior performance of texture enface images in the present study may be attributed to differences in subject demographics and characteristics.

Machine learning analysis of OCT measurements for detecting glaucoma in myopic eyes has also been reported. For instance, in one such study by Kim et al. (25), several convolutional neural network-based DL models were compared for classifying highly myopic healthy and highly myopic glaucomatous eyes with large PPA areas affecting circumpapillary OCT scans. In their study, an EfficientNet-B0 model trained and tested on macular vertical OCT measurements outperformed a model trained and tested on circumpapillary OCT measurements with AUROCs of 0.981 (0.955, 1.00) and 0.840 (0.769, 0.912), respectively. Significant differences using other models (DenseNet-121, VGG-13, ResNet-34, ResNet-101, EfficientNet-B1) were not observed.

There are several strengths to using the novel SALSA-texture auto-encoder based detection of glaucoma using OCT ONH images. First, SALSA-texture auto-encoder analysis can achieve high diagnostic accuracy, both AUROC and AUPRC, with a relatively small sample size. In addition, in contrast to ROTA (21) which requires segmentation of both the ILM and RNFL layers, SALSA-Texture requires segmentation of only 1 layer, as it is applied to tissue in a 70 micron slab below the ILM. Requiring fewer segmented ONH layers reduces the likelihood of segmentation errors which can lead to artifacts and erroneous results. Finally, the use of a dual autoencoder trained on both healthy aging eyes to model expected age-related change and glaucomatous eyes to capture disease-specific damage resulted in an improvement in glaucoma detection performance compared to a single autoencoder trained on healthy eyes alone.

The current study is not without limitations. First, the available dataset is rather small. Although the model demonstrated strong performance with a relatively small sample size, larger datasets are needed to validate its robustness. Because of the small sample size, we relied on cross-validation instead of an external dataset to test model accuracy. However, an external dataset is preferable so that the generalizability of the models can be evaluated and the likelihood of overfitting reduced. The reported findings need replication across different populations and imaging systems.

The population in the current study was largely Asian, with 5 Japanese sites, 1 Korean site, 1 Chinese site, and 1 U.S. site, limiting the generalizability of results across races. Finally, training was instrument specific: the model was trained on a single OCT device, and performance across other imaging systems remains untested. To overcome these limitations, future directions include dataset expansion, cross-device validation, and integration with functional testing. Ideally, we would (1) validate the model on a larger, more diverse multi-center cohort to ensure generalizability; (2) test performance using different OCT devices and imaging protocols to enhance applicability; and (3) combine autoencoder-based structural analysis with visual field testing to create a more comprehensive glaucoma detection framework.

Another limitation of this study is the use of RNFL thickness as the sole structural comparator for evaluating the performance of our texture-based autoencoder model. Although RNFL is commonly used in clinical glaucoma assessment, macular ganglion cell complex (GCC) parameters have shown strong diagnostic utility in highly myopic eyes. Notably, prior studies (26, 27) have reported that GCC and GCIPL metrics are often less affected by optic disc tilt and peripapillary atrophy than RNFL measures in this population. We selected a texture-based approach specifically because it requires only ILM segmentation, which tends to remain reliable even in the presence of myopia-related structural distortion—unlike segmentation of deeper layers around the ONH. Since the 6×6 mm scan used in this study captures both ONH and macular regions, future research will investigate the added value of incorporating GCC thickness as a complementary structural comparator to texture and RNFL features.

Moreover, further improvements in our ROI-based framework could be realized by integrating complementary biomarkers beyond structural texture. For example, OCT angiography-derived vessel density has been shown to decline in glaucomatous eyes with high myopia and may provide vascular insight where structural metrics are ambiguous (28). In addition, retinal ganglion cell metabolic dysfunction, especially involving mitochondrial pathways, plays a critical role in glaucomatous neurodegeneration. Imaging methods such as flavoprotein autofluorescence have been used to noninvasively assess mitochondrial activity in vivo and could offer valuable functional context to structural findings (29). Incorporating such multimodal features into future versions of our dual-autoencoder model may further optimize glaucoma detection in myopic eyes.

Another limitation of this study is that the model was developed and evaluated solely on emmetropic to myopic eyes. While this was appropriate given the study's objective to improve glaucoma detection in myopia—a group where diagnostic performance is often suboptimal—it may limit generalizability to other refractive categories such as hyperopia. Hyperopic eyes can exhibit distinct optic nerve head morphologies and retinal structures, which may influence model performance. Future validation in more diverse refractive populations, including hyperopic and astigmatic eyes, will be essential to assess the broader clinical applicability of the proposed approach.

The ROI-Based Glaucoma Score generated by the dual autoencoder framework represents the extent and severity of localized deviations in retinal texture, which may serve as a quantitative indicator of glaucomatous damage. To support clinical translation, future efforts will focus on building a normative database to establish reference ranges and confidence intervals for the score, enabling percentile-based interpretation. This will allow clinicians to contextualize patient scores within a population distribution, facilitating risk stratification and decision support. Additionally, prospective validation will be necessary to determine score thresholds for diagnostic or monitoring use in clinical workflows.
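
As a rough illustration of percentile-based interpretation, the sketch below ranks a patient score against a set of normative scores. No normative database exists yet, so the reference values are invented placeholders. The function names, the 95th-percentile cutoff, and the assumption that higher scores indicate more deviation are hypothetical as well.

```python
from bisect import bisect_right


def percentile_rank(score, normative_scores):
    """Percentage of normative scores that are less than or equal to `score`."""
    reference = sorted(normative_scores)
    return 100.0 * bisect_right(reference, score) / len(reference)


def exceeds_reference(score, normative_scores, cutoff_percentile=95.0):
    """Flag a score above the chosen percentile of the normative distribution.
    The 95th-percentile cutoff is an illustrative assumption only."""
    return percentile_rank(score, normative_scores) > cutoff_percentile


# Placeholder normative scores (synthetic; not measured values).
normative = [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09, 0.10]

print(percentile_rank(0.055, normative))
print(exceeds_reference(0.50, normative))
```

A real reference database would likely need stratification by covariates such as age and axial length before percentiles could be read clinically.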

In conclusion, the dual autoencoder ROI-based approach significantly improves glaucoma detection in myopic eyes by accounting for both normal aging and disease-specific damage. This method shows promise for improving our ability to utilize optic nerve head OCT images to detect glaucoma in myopic eyes.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by University of California, San Diego Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

CB: Writing – original draft, Writing – review & editing, Methodology, Conceptualization, Validation. AB: Methodology, Writing – original draft, Formal analysis, Conceptualization, Validation, Writing – review & editing. MC: Writing – review & editing, Methodology. MA: Data curation, Writing – review & editing. AI: Writing – review & editing, Data curation. GT: Data curation, Writing – review & editing. KO-M: Data curation, Writing – review & editing. HS: Funding acquisition, Data curation, Writing – review & editing. HM: Writing – review & editing, Data curation. TK: Writing – review & editing, Resources. KS: Writing – review & editing, Data curation. TH: Writing – review & editing, Data curation. AM: Funding acquisition, Data curation, Writing – review & editing. TN: Writing – review & editing, Data curation. MA: Data curation, Writing – review & editing. T-WK: Data curation, Writing – review & editing, Funding acquisition. CL: Funding acquisition, Writing – review & editing, Data curation. RW: Writing – review & editing, Funding acquisition, Supervision. LZ: Funding acquisition, Writing – review & editing, Project administration, Supervision.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. MC: National Eye Institute (NEI) EY030942, EY034146; HS: Japan Society for the Promotion of Science 20K18368; AM: Council for Science, Technology and Innovation; Cross-ministerial Strategic Innovation Program; RW: NEI EY029058; LZ: NEI EY027510, EY034146.

Conflict of interest

Author TK was employed by the company Topcon Corporation. HS, AM, TN, TK, CL, RW, and LZ all receive research support from Topcon.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Wang YX, Yang H, Wei CC, Xu L, Wei WB, and Jonas JB. High myopia as risk factor for the 10-year incidence of open-angle glaucoma in the Beijing Eye Study. Br J Ophthalmol. (2023) 107:935–40. doi: 10.1136/bjophthalmol-2021-320644

2. Leske MC, Connell AM, Schachat AP, Hyman L, and The Barbados Eye Study. Prevalence of open angle glaucoma. Arch Ophthalmol. (1994) 112:821–9.

3. Xu L, Wang Y, Wang S, Wang Y, and Jonas JB. High myopia and glaucoma susceptibility the Beijing Eye Study. Ophthalmology. (2007) 114:216–20. doi: 10.1016/j.ophtha.2006.06.050

4. Mitchell P, Hourihan F, Sandbach J, and Wang JJ. The relationship between glaucoma and myopia: the Blue Mountains Eye Study. Ophthalmology. (1999) 106:2010–5.

5. Holden BA, Fricke TR, Wilson DA, Jong M, Naidoo KS, Sankaridurg P, et al. Global prevalence of myopia and high myopia and temporal trends from 2000 through 2050. Ophthalmology. (2016) 123:1036–42. doi: 10.1016/j.ophtha.2016.01.006

6. Jonas JB, Ohno-Matsui K, and Panda-Jonas S. Optic nerve head histopathology in high axial myopia. J Glaucoma. (2017) 26:187–93. doi: 10.1097/Ijg.0000000000000574

7. Lee JE, Sung KR, Park JM, Yoon JY, Kang SY, Park SB, et al. Optic disc and peripapillary retinal nerve fiber layer characteristics associated with glaucomatous optic disc in young myopia. Graefes Arch Clin Exp Ophthalmol. (2017) 255:591–8. doi: 10.1007/s00417-016-3542-4

8. Tan NYQ, Sng CCA, Jonas JB, Wong TY, Jansonius NM, and Ang M. Glaucoma in myopia: diagnostic dilemmas. Br J Ophthalmol. (2019) 103:5–13. doi: 10.1136/bjophthalmol-2018-313530

9. Jiravarnsirikul A, Belghith A, Rezapour J, Bowd C, Moghimi S, Jonas JB, et al. Evaluating glaucoma in myopic eyes: Challenges and opportunities. Surv Ophthalmol. (2024) 70(3):563–852. doi: 10.1016/j.survophthal.2024.12.003

10. Bowd C, Belghith A, Rezapour J, Christopher M, Hyman L, Jonas JB, et al. Diagnostic accuracy of macular thickness map and texture en face images for detecting glaucoma in eyes with axial high myopia. Am J Ophthalmol. (2022) 242:26–35. doi: 10.1016/j.ajo.2022.04.019

11. Bowd C, Belghith A, Christopher M, Goldbaum MH, Fazio MA, Girkin CA, et al. Individualized glaucoma change detection using deep learning auto encoder-based regions of interest. Transl Vis Sci Technol. (2021) 10:19. doi: 10.1167/tvst.10.8.19

12. Saito H, Ueta T, Araie M, Enomoto N, Kambayashi M, Murata H, et al. Association of bergmeister papilla and deep optic nerve head structures with prelaminar schisis of normal and glaucomatous eyes. Am J Ophthalmol. (2024) 257:91–102. doi: 10.1016/j.ajo.2023.09.002

13. Kambayashi M, Saito H, Araie M, Enomoto N, Murata H, Kikawa T, et al. Effects of deep optic nerve head structures on Bruch's membrane opening-minimum rim width and peripapillary retinal nerve fiber layer. Am J Ophthalmol. (2024) 263:99–108. doi: 10.1016/j.ajo.2024.02.017

14. Saito H, Kambayashi M, Araie M, Murata H, Enomoto N, Kikawa T, et al. Deep optic nerve head structures associated with increasing axial length in healthy myopic eyes of moderate axial length. Am J Ophthalmol. (2023) 249:156–66. doi: 10.1016/j.ajo.2023.01.003

16. Margolin R, Zelnik-Manor L, and Tal A. OTC: A novel local descriptor for scene classification. Lect Notes Comput Sc. (2014) 8695:377–91.

17. Belghith A, Bowd C, Medeiros FA, Balasubramanian M, Weinreb RN, and Zangwill LM. Glaucoma progression detection using nonlocal Markov random field prior. J Med Imaging. (2014) 1:34504. doi: 10.1117/1.JMI.1.3.034504

18. Kato Z and Pong T-C. Markov random field image segmentation model for color textured images. Image Vis Comput. (2006) 24:1103–14. doi: 10.1016/j.imavis.2006.03.005

19. Christopher M, Bowd C, Belghith A, et al. Deep learning approaches predict glaucomatous visual field damage from OCT optic nerve head en face images and retinal nerve fiber layer thickness maps. Ophthalmology. (2020) 127:346–56. doi: 10.1016/j.ophtha.2019.09.036

20. Alonzo TA and Pepe MS. Distribution-free ROC analysis using binary regression techniques. Biostatistics. (2002) 3:421–32. doi: 10.1093/biostatistics/3.3.421

21. Leung CKS, Guo PY, and Lam AKN. Retinal nerve fiber layer optical texture analysis (ROTA): involvement of the papillomacular bundle and papillofoveal bundle in early glaucoma. Ophthalmology. (2022) 129:1043–55. doi: 10.1016/j.ophtha.2022.04.012

22. Kamalipour A, Moghimi S, Khosravi P, Tansuebchueasai N, Vasile C, Adelpour M, et al. Retinal nerve fiber layer optical texture analysis and 10–2 visual field assessment in glaucoma. Am J Ophthalmol. (2024) 266:118–34. doi: 10.1016/j.ajo.2024.05.013

23. Liu J, Wong DWK, Lim JH, Tan NM, Zhang Z, Li H, et al. Detection of pathological myopia by PAMELA with texture-based features through an SVM approach. J Healthc Eng. (2010) 1:1–11. doi: 10.1260/2040-2295.1.1.1

24. Benghai L, Wong DWK, Tan NM, Zhang Z, Lim H, Li H, et al. Fusion of pixel and texture features to detect pathological myopia. In: 2010 5th IEEE Conference on Industrial Electronics and Applications, Taichung, Taiwan. (2010). p. 2039–42.

25. Kim JA, Yoon H, Lee D, Kim M, Choi J, Lee EJ, et al. Development of a deep learning system to detect glaucoma using macular vertical optical coherence tomography scans of myopic eyes. Sci Rep. (2023) 13:8040. doi: 10.1038/s41598-023-34794-5

26. Wang W-W, Wang H-Z, Liu J-R, Zhang X-F, Li M, Huo Y-J, et al. Diagnostic ability of ganglion cell complex thickness to detect glaucoma in high myopia eyes by Fourier domain optical coherence tomography. Int J Ophthalmol. (2018) 11:791.

27. Poon LY-C, Wang C-H, Lin P-W, and Wu P-C. The prevalence of optical coherence tomography artifacts in high myopia and its influence on glaucoma diagnosis. J Glaucoma. (2023) 32:725–33. doi: 10.1097/ijg.0000000000002268

28. Chang P-Y, Wang J-Y, and Wang J-K. Optical coherence tomography angiography compared with optical coherence tomography for detection of glaucoma progression with high myopia. Sci Rep. (2025) 15:9762. doi: 10.1038/s41598-025-91880-6

Keywords: glaucoma, myopia, optical coherence tomography, deep learning, artificial intelligence, diagnosis, classification

Citation: Bowd C, Belghith A, Christopher M, Araie M, Iwase A, Tomita G, Ohno-Matsui K, Saito H, Murata H, Kikawa T, Sugiyama K, Higashide T, Miki A, Nakazawa T, Aihara M, Kim T-W, Leung CKS, Weinreb RN and Zangwill LM (2025) Glaucoma detection in myopic eyes using deep learning autoencoder-based regions of interest. Front. Ophthalmol. 5:1624015. doi: 10.3389/fopht.2025.1624015

Received: 06 May 2025; Accepted: 14 July 2025;

Published: 04 August 2025.

Edited by:

Brent Siesky, Icahn School of Medicine at Mount Sinai, United States

Reviewed by:

Keren Wood Shalem, Icahn School of Medicine at Mount Sinai, United States

Ingrida Januleviciene, Lithuanian University of Health Sciences, Lithuania

Copyright © 2025 Bowd, Belghith, Christopher, Araie, Iwase, Tomita, Ohno-Matsui, Saito, Murata, Kikawa, Sugiyama, Higashide, Miki, Nakazawa, Aihara, Kim, Leung, Weinreb and Zangwill. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Linda M. Zangwill, lzangwill@health.ucsd.edu
