- China Academy of Space Technology, Beijing, China

Accurate crop classification is essential for agricultural management, resource allocation, and food security monitoring. GF-1 Wide Field View (WFV) imagery is affected by Bidirectional Reflectance Distribution Function (BRDF) effects because of its large viewing angles (0°–48°), which reduce crop classification accuracy. To address this, this study integrates BRDF correction with deep learning. First, a BRDF correction method based on the normalized difference vegetation index (NDVI) and the anisotropic flat index (AFX) is developed to normalize radiometric discrepancies. Second, using the four spectral bands of the WFV images together with three effective vegetation indices as feature variables, a multi-feature fusion deep learning classification system is constructed. Three typical deep learning architectures, Feature Pyramid Network (FPN), Fully Convolutional Network (FCN), and UNet, are employed to perform classification experiments. Results demonstrate that BRDF correction consistently improves accuracy across models, with UNet achieving the best performance: 95.02

1 Introduction

Crop classification is a key task in remote sensing, supporting agricultural monitoring, food security, and ecological management (Ding et al., 2023; Gentry et al., 2025). The Gaofen-1 (GF-1) satellite, launched in 2013, offers large-scale land monitoring via four Wide Field View (WFV) cameras. These sensors provide 16 m resolution multispectral imagery (0.45–0.89 µm) over an 800 km swath, enabling observations of vegetation, land degradation, and water resources (Chen et al., 2022). However, due to the wide viewing angles (

To eliminate the influence of BRDF effects on crop classification, GF-1 WFV images need to be BRDF-corrected. BRDF correction normalizes surface reflectance observed from different directions to nadir-view reflectance, systematically removing the radiometric distortion caused by large observation angles and enhancing the radiometric consistency of wide-viewing-angle images. Traditional BRDF correction methods (Schläpfer et al., 2014), such as those based on the Ross Thick-Li Sparse Reciprocal model (Roujean et al., 1992), predominantly rely on kernel-driven parameters derived from coarse spatial resolution data (e.g., MODIS). However, the coarse spatial scale of these parameters makes it difficult to characterize the fine-scale anisotropic reflectance heterogeneity in high-resolution imagery (10–30 m) such as GF-1 and Landsat (Román et al., 2011). To overcome this limitation, Roy et al. (2016) used MODIS BRDF parameters to normalize Landsat reflectance to nadir BRDF-adjusted reflectance (NBAR), thereby improving data consistency. Building on this foundation, Jiang et al. (2023) and Jiang et al. (2024) demonstrated that BRDF correction enhances the radiometric consistency of WFV imagery and subsequently improves the accuracy of vegetation parameter retrieval. Jia W. et al. (2024) reported a maximum increase of 2.9

Crop classification in agricultural remote sensing generally relies on either traditional machine learning or deep learning methods. Traditional approaches (e.g., decision trees and support vector machines) depend on handcrafted spectral and geometric features and struggle with complex, high-resolution imagery. In contrast, deep learning models learn hierarchical features directly from data, generally outperform traditional approaches, and have become the mainstream choice. Notably, Fully Convolutional Networks (FCN), Feature Pyramid Networks (FPN), and UNet have shown strong potential. FCNs enable end-to-end pixel-level classification and effectively capture field boundaries (Maggiori et al., 2016). FPNs enhance multi-scale feature extraction, improving recognition of crop regions of varying sizes (Xu et al., 2023). UNet integrates high-level semantics and spatial details via skip connections and is robust to spectral variability caused by differences in crop height and planting density (Jia Y. et al., 2024; Wang et al., 2024; Chang et al., 2024). However, most of these studies overlook BRDF effects, which arise from varying observation angles. BRDF-induced spectral distortion reduces classification accuracy and weakens model generalization, especially in large-scale applications, and remains a major technical challenge for high-resolution agricultural monitoring.

Therefore, this study aims to develop a high-precision classification framework for 16 m GF-1 WFV imagery and to systematically investigate both the impact of BRDF effects on crop classification accuracy and the performance differences across deep learning architectures. An NDVI-driven BRDF parameterization is used to correct the BRDF effects in WFV images. In addition, FCN, FPN, and UNet architectures are used to exploit hierarchical spectral-spatial features. Through controlled experiments on BRDF-corrected and uncorrected data, we quantitatively evaluate how BRDF effects influence classification accuracy across crop types and assess the applicability of different deep learning methods for crop classification in WFV images.

2 Study area and data sources

2.1 Overview of the study area

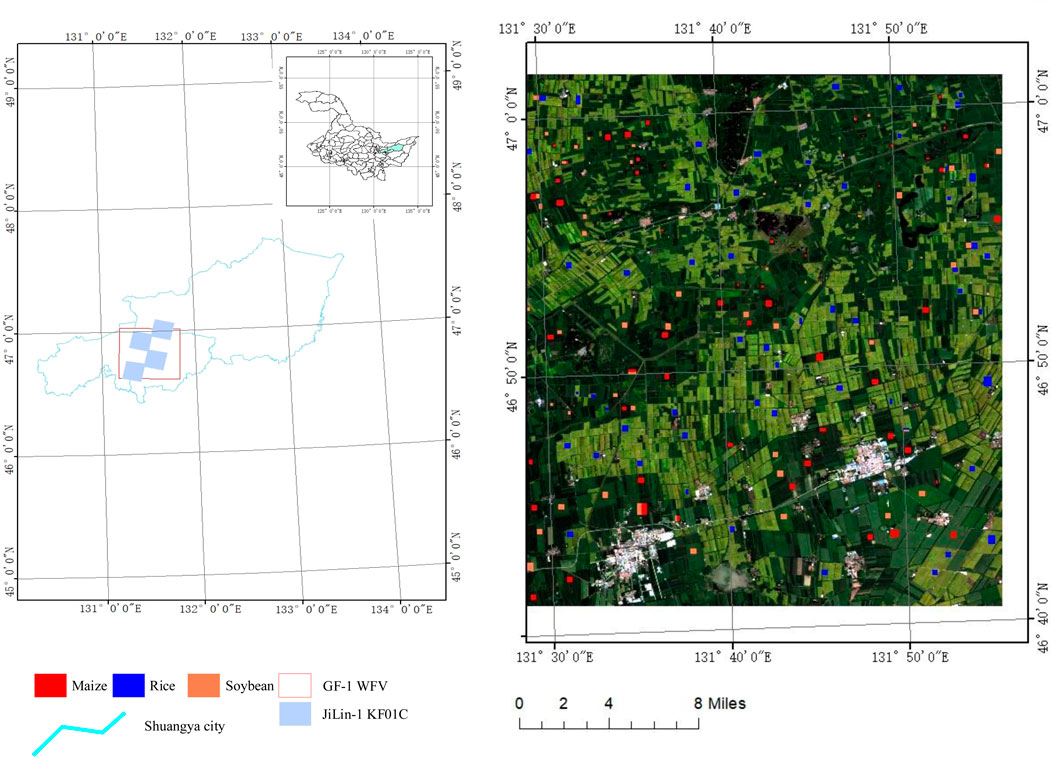

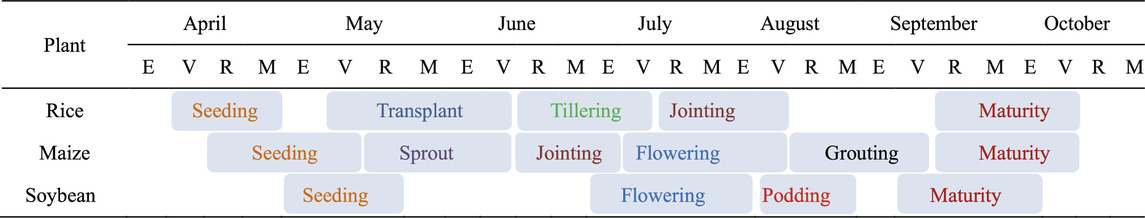

The study area is located in Shuangya City (46°40′–47°04′N, 131°30′–131°50′E), eastern Heilongjiang Province, China (Figure 1), within the core region of the Sanjiang Plain, which was formed by alluvial deposits of the Heilongjiang, Songhua, and Wusuli Rivers. The area has flat, low-lying topography with average elevations of 50–60 m and fertile black and meadow soils, and it serves as a crucial commercial grain production base in China. The region has a temperate continental climate with a mean annual temperature of 1°C–3°C and annual precipitation of 500–600 mm. With a frost-free period of 120–140 days, the climate supports the cultivation of early-maturing crops, including maize, rice, and soybean (Qu et al., 2024; Gentry et al., 2025), whose phenological stages are detailed in Table 1.

2.2 Data and preprocessing

2.2.1 GF-1 WFV data

This study used 16 m spatial resolution GF-1 WFV images comprising four spectral bands: blue (450–520 nm), green (520–590 nm), red (630–690 nm), and near-infrared (770–890 nm). A GF-1 WFV4 Level-1A image acquired on 28 August 2022 was downloaded from the China Centre for Resources Satellite Data and Application (CRESDA) (https://data.cresda.cn/), covering the core agricultural region of the Sanjiang Plain in eastern Heilongjiang Province, China. Before use, the GF-1 WFV data underwent preprocessing, including radiometric calibration, atmospheric correction, orthorectification, and geometric correction. Atmospheric correction was performed using the Fast Line-of-sight Atmospheric Analysis of Spectral Hypercubes (FLAASH) model. In addition, to conduct BRDF correction of the WFV image, the per-pixel observation geometry of the WFV image was calculated, including the solar zenith angle

2.2.2 Jilin-1 KF01C data

The Jilin-1 Wide 01C satellite, launched on 5 May 2022, has a swath width of more than 150 km and provides imagery with a panchromatic resolution of 0.5 m and a multispectral resolution of 2 m. In this study, four scenes of Level-3 Jilin-1 KF01C imagery acquired on 10 July 2022, with a resolution of 0.5 m, were downloaded from https://www.jl1mall.com/ to assist in creating crop classification labels. Level-3 Jilin-1 KF01C data are high-precision products that have undergone radiometric calibration, precise geometric correction, and image fusion, and they can be used directly for image interpretation.

2.2.3 Ground sample label data

To create labeled samples for crop classification, this study combined the labeled data of Qu et al. (2024) with Jilin-1 KF01C imagery and the WFV imagery to produce 210 labeled samples. The labels of Qu et al. (2024) were obtained from field surveys conducted from July to August 2022. In total, 210 sample points were obtained through these field investigations, evenly distributed among the three major crops: rice (70), soybean (70), and maize (70), as illustrated in Figure 1. Around each labeled point, multiple image patches of 128

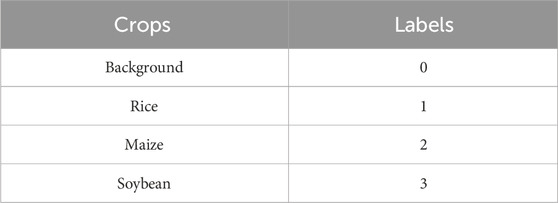

A hierarchical classification system was developed to meet agricultural monitoring requirements. Target features were categorized into three primary crop classes (rice, maize, and soybean) and a composite background class encompassing non-cultivated features such as buildings, roads, water bodies, and woodlands. Each class was assigned a unique identifier (background: 0; rice: 1; maize: 2; soybean: 3) for model training, as outlined in Table 2. To improve the visual interpretability of classification outputs, a dedicated pseudocolor scheme was designed: rice paddies in blue (RGB: 0, 0, 255), maize in red (RGB: 255, 0, 0), soybean fields in yellow (RGB: 255, 127, 80), and background features in black (RGB: 0, 0, 0). This scheme enables clear visual discrimination between target croplands and non-target regions in both dataset visualizations and manual annotations.
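The identifier and color scheme amounts to a lookup table from class ID to RGB triplet. The sketch below applies it to a label map stored as nested Python lists; `PALETTE` and `colorize` are hypothetical names, and the RGB values are copied from the scheme described above.

```python
# Class IDs and display colors from the classification scheme (Table 2).
CLASS_IDS = {"background": 0, "rice": 1, "maize": 2, "soybean": 3}
PALETTE = {
    0: (0, 0, 0),        # background: black
    1: (0, 0, 255),      # rice: blue
    2: (255, 0, 0),      # maize: red
    3: (255, 127, 80),   # soybean: RGB value as stated in the text
}


def colorize(label_map):
    """Convert a 2-D label map (rows of class IDs) into rows of RGB tuples.

    Raises KeyError if a pixel holds an ID outside the four-class scheme.
    """
    return [[PALETTE[label] for label in row] for row in label_map]


if __name__ == "__main__":
    print(colorize([[0, 1], [2, 3]]))
```

In practice the same lookup would be applied to a raster array rather than nested lists; the mapping itself is unchanged.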

3 Methods

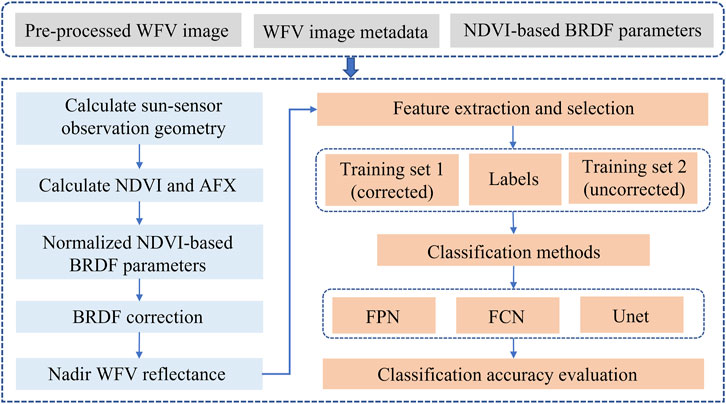

This study aims to improve crop classification accuracy in single-scene wide field-of-view (WFV) remote sensing images by constructing a classification framework based on bidirectional reflectance distribution function (BRDF) correction and deep learning (Figure 2). The implementation consists of four progressive steps. First, the observation geometry angles are calculated and the normalized difference vegetation index (NDVI) is extracted from the WFV image metadata and preprocessed data. Second, the AFX is calculated from the NDVI-based BRDF parameters and used to normalize these parameters; BRDF correction of the WFV image is then performed to obtain nadir reflectance. Third, crop classification experiments are conducted on the WFV images before and after correction, using two experimental datasets generated from the uncorrected and corrected WFV data, respectively.

Using spectral and vegetation index features, three typical deep learning models (FPN, FCN, and UNet) are selected for comparative analysis. Finally, the impact of BRDF correction on crop classification is evaluated by comparing classification accuracy before and after correction, and the differences in feature representation ability and classification performance among the models are analyzed in depth.

3.1 BRDF correction of WFV image

To eliminate the radiometric differences caused by the varying viewing angles of WFV images, BRDF correction was carried out to normalize WFV reflectance at different viewing angles to nadir observation. The correction is implemented with the RTLSR-Chen BRDF model (Chen and Cihlar, 1997), a semi-empirical kernel-driven model that accounts for the hotspot effect. The bidirectional reflectance factor (BRF) of the RTLSR-Chen model is given in Equation 1:

where

The RossThickChen kernel (Chen and Cihlar, 1997), which accounts for hotspot variations, is used to calculate volume scattering in this study, as shown in Equations 2 and 3:

where

The

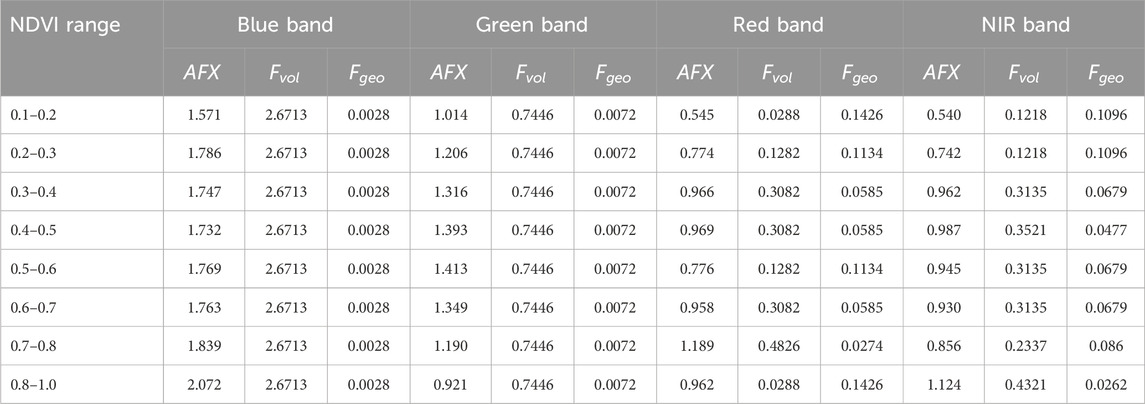
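For reference, RTLSR-type models share the linear kernel-driven form below, where $\theta_s$, $\theta_v$, and $\varphi$ are the solar zenith, view zenith, and relative azimuth angles, $\lambda$ is the band, and $f_{iso}$, $f_{vol}$, and $f_{geo}$ are the isotropic, volumetric, and geometric-optical kernel weights. The volumetric kernel shown is the standard Ross-Thick kernel of the MODIS BRDF product; the RossThickChen kernel used in this study adds a hotspot term (Chen and Cihlar, 1997) that is not reproduced here, so this is a simplified reference form and its notation may differ from Equations 1–3.

$$R(\theta_s,\theta_v,\varphi,\lambda) = f_{iso}(\lambda) + f_{vol}(\lambda)\,K_{vol}(\theta_s,\theta_v,\varphi) + f_{geo}(\lambda)\,K_{geo}(\theta_s,\theta_v,\varphi)$$

$$K_{vol} = \frac{(\pi/2-\xi)\cos\xi + \sin\xi}{\cos\theta_s + \cos\theta_v} - \frac{\pi}{4}, \qquad \cos\xi = \cos\theta_s\cos\theta_v + \sin\theta_s\sin\theta_v\cos\varphi$$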

The cropland NDVI-based BRDF parameters reported by Jiang et al. (2024) are used to calculate the AFX, following the methodology of Jiao et al. (2014). The normalized NDVI-based BRDF parameters are then derived from the AFX value in conjunction with the BRDF archetypes (Jiao et al., 2014). The final normalized

GF-1 WFV reflectance at nadir observation

where
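The AFX calculation and the nadir normalization described in this section can be sketched as follows. The sketch rests on several assumptions not stated in the text: it uses the standard MODIS Ross-Thick and Li-Sparse-Reciprocal kernels (h/b = 2, b/r = 1) instead of the RossThickChen kernel, computes AFX from the white-sky integrals of those two kernels (AFX = white-sky albedo / f_iso, Jiao et al., 2014), and converts observed reflectance to nadir with the c-factor ratio approach of Roy et al. (2016), keeping the observed solar zenith angle. The archetype-based parameter normalization is not shown, and all function names and parameter values are hypothetical.

```python
import math

# White-sky (bihemispherical) integrals of the MODIS Ross-Thick and
# Li-Sparse-Reciprocal kernels; the RossThickChen kernel would differ slightly.
H_VOL = 0.189184
H_GEO = -1.377622


def ross_thick(sza, vza, raa):
    """Ross-Thick volumetric kernel without a hotspot term (angles in radians)."""
    cos_xi = math.cos(sza) * math.cos(vza) + math.sin(sza) * math.sin(vza) * math.cos(raa)
    xi = math.acos(max(-1.0, min(1.0, cos_xi)))  # phase angle
    return ((math.pi / 2 - xi) * math.cos(xi) + math.sin(xi)) / (math.cos(sza) + math.cos(vza)) - math.pi / 4


def li_sparse_r(sza, vza, raa, hb=2.0, br=1.0):
    """Li-Sparse-Reciprocal geometric-optical kernel (angles in radians)."""
    ts, tv = math.atan(br * math.tan(sza)), math.atan(br * math.tan(vza))
    tan_s, tan_v = math.tan(ts), math.tan(tv)
    sec_s, sec_v = 1.0 / math.cos(ts), 1.0 / math.cos(tv)
    d2 = max(0.0, tan_s ** 2 + tan_v ** 2 - 2 * tan_s * tan_v * math.cos(raa))
    cos_t = hb * math.sqrt(d2 + (tan_s * tan_v * math.sin(raa)) ** 2) / (sec_s + sec_v)
    t = math.acos(max(-1.0, min(1.0, cos_t)))
    overlap = (t - math.sin(t) * math.cos(t)) * (sec_s + sec_v) / math.pi
    cos_xi = math.cos(ts) * math.cos(tv) + math.sin(ts) * math.sin(tv) * math.cos(raa)
    return overlap - sec_s - sec_v + 0.5 * (1 + cos_xi) * sec_s * sec_v


def brf(params, sza, vza, raa):
    """Kernel-driven BRF for one band; params = (f_iso, f_vol, f_geo)."""
    f_iso, f_vol, f_geo = params
    return f_iso + f_vol * ross_thick(sza, vza, raa) + f_geo * li_sparse_r(sza, vza, raa)


def afx(params):
    """Anisotropic Flat Index: white-sky albedo divided by f_iso."""
    f_iso, f_vol, f_geo = params
    return 1.0 + (f_vol / f_iso) * H_VOL + (f_geo / f_iso) * H_GEO


def to_nadir(reflectance, params, sza, vza, raa):
    """c-factor normalization: observed reflectance times modeled nadir/observed BRF."""
    c_factor = brf(params, sza, 0.0, raa) / brf(params, sza, vza, raa)
    return reflectance * c_factor


if __name__ == "__main__":
    params = (0.20, 0.05, 0.02)  # hypothetical (f_iso, f_vol, f_geo) for one band
    sza, vza, raa = (math.radians(a) for a in (40.0, 35.0, 120.0))
    print("AFX:", afx(params))
    print("nadir reflectance:", to_nadir(0.25, params, sza, vza, raa))
```

Both kernels vanish at nadir sun and view, so the c-factor equals 1 for a pixel already observed at nadir. An AFX above 1 indicates volume-scattering-dominated anisotropy and a value below 1 geometric-optical-dominated anisotropy (Jiao et al., 2014).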

3.2 Feature extraction and selection

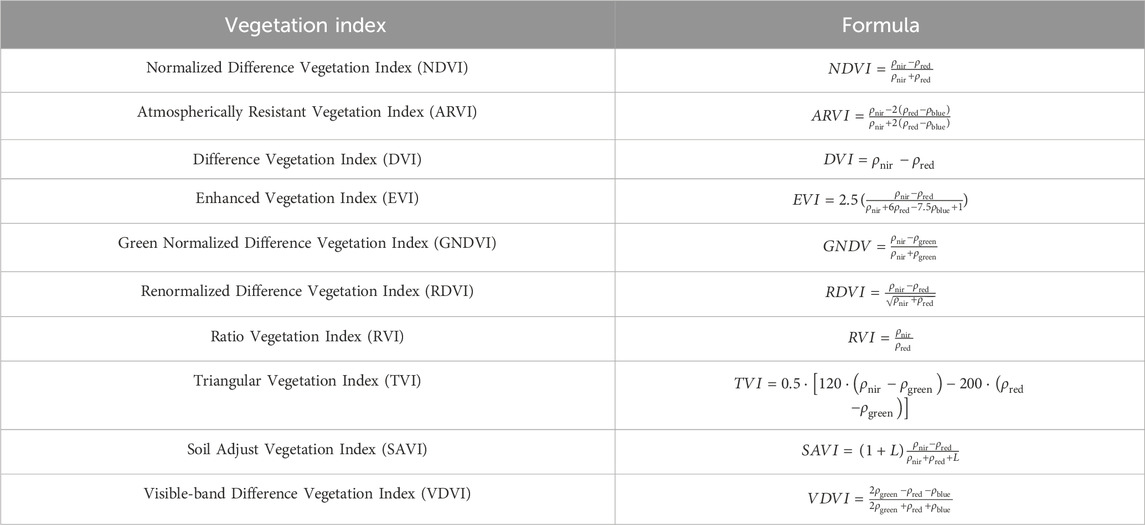

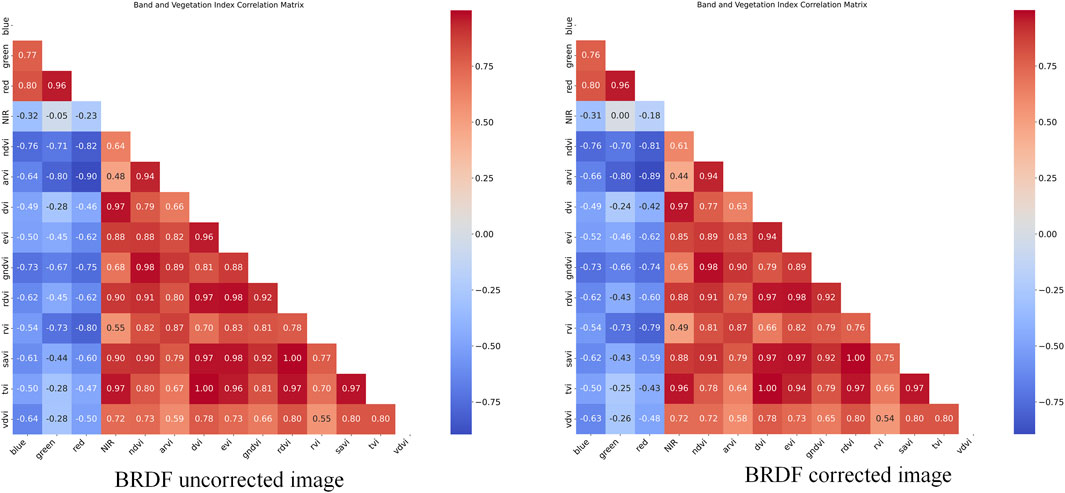

In this study, crop classification of WFV imagery was performed using spectral bands and vegetation indices as feature variables. Specifically, ten commonly used vegetation indices were calculated from both the raw and the BRDF-corrected WFV images as candidate features for the classification experiments. These indices include the Normalized Difference Vegetation Index (NDVI), Atmospherically Resistant Vegetation Index (ARVI), Difference Vegetation Index (DVI), Enhanced Vegetation Index (EVI), Green Normalized Difference Vegetation Index (GNDVI), Renormalized Difference Vegetation Index (RDVI), Ratio Vegetation Index (RVI), Triangular Vegetation Index (TVI), Soil Adjusted Vegetation Index (SAVI), and Visible-band Difference Vegetation Index (VDVI). The formulas for calculating these indices are provided in Table 4. To address multicollinearity, correlations among the features (four spectral bands and ten vegetation indices) were analyzed using Pearson’s correlation coefficient (Figure 3). Variables with pairwise correlation coefficients exceeding 0.85 were eliminated to reduce redundancy and mitigate overfitting. After this feature selection, the final input variables were the four spectral bands (blue, green, red, and NIR) and three vegetation indices with unique information contributions: NDVI, RVI, and VDVI.
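The three retained indices and the correlation filter can be sketched in plain Python. The index formulas follow the standard definitions of NDVI, RVI, and VDVI; the greedy, order-dependent elimination rule in `drop_correlated` is an assumption, since the text does not say which member of a correlated pair was removed, so the sketch is not guaranteed to reproduce the authors' selection.

```python
import math


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return (nir - red) / (nir + red)


def rvi(nir, red):
    """Ratio Vegetation Index."""
    return nir / red


def vdvi(green, red, blue):
    """Visible-band Difference Vegetation Index."""
    return (2 * green - red - blue) / (2 * green + red + blue)


def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def drop_correlated(features, threshold=0.85):
    """Greedy filter over an ordered dict mapping feature name -> samples.

    A feature is kept only if |r| <= threshold with every feature kept so far,
    so the result depends on the input order (a hypothetical tie-break rule).
    """
    kept = []
    for name, values in features.items():
        if all(abs(pearson(values, features[k])) <= threshold for k in kept):
            kept.append(name)
    return kept


if __name__ == "__main__":
    samples = {"a": [1, 2, 3, 4], "b": [2, 4, 6, 8.1], "c": [4, 1, 3, 2]}
    print(drop_correlated(samples))
```

Listing the spectral bands first in the dictionary makes the filter retain them preferentially, which matches the final variable set described above; whether the authors used that ordering is not stated.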

3.3 Classification methods

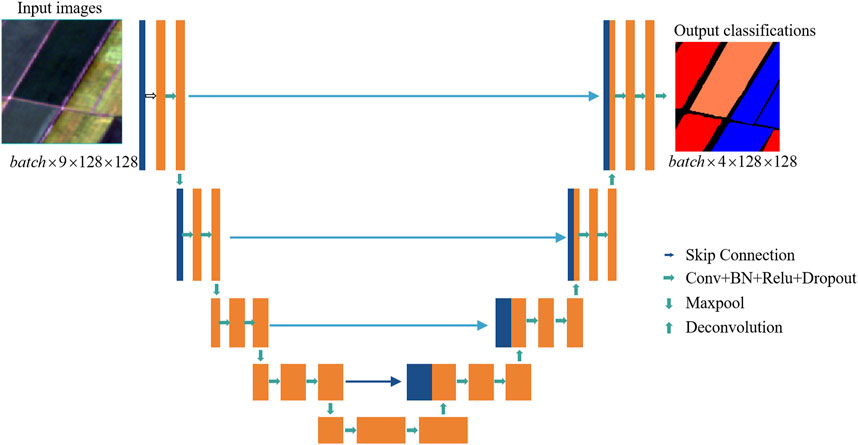

3.3.1 UNet

The UNet architecture (Ronneberger et al., 2015), an end-to-end fully convolutional network, employs an encoder-decoder structure to effectively capture multi-scale feature information, as illustrated in Figure 4. The encoder progressively extracts high-level semantic features through downsampling, while the decoder restores spatial details via upsampling. Skip connections integrate shallow texture features with deep semantic information, enabling precise image segmentation. In remote sensing-based crop classification tasks, this architecture facilitates the simultaneous extraction of local crop details (e.g., leaf textures) and global distribution patterns (e.g., field boundaries), significantly enhancing classification accuracy (Yu et al., 2022; Liu et al., 2025).
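The skip-connection bookkeeping described above can be made concrete by tracing feature-map shapes. The sketch assumes the channel widths of the original UNet (64 at the first level, doubling to 1024 at the bottleneck), "same" padding, and a 128 × 128 input whose 9 channels are mapped to 64 by the first encoder block. The depth and widths of the model used in this study may differ, and `unet_shapes` is a hypothetical helper.

```python
def unet_shapes(size=128, base=64, depth=4):
    """Trace (channels, height, width) through a UNet-style encoder-decoder.

    Assumptions: 'same'-padded 3x3 convolutions, 2x2 max-pooling, channel
    doubling per level, and 2x up-convolutions that halve the channels
    before concatenation with the matching encoder map.
    """
    if size % (2 ** depth):
        raise ValueError("size must be divisible by 2**depth")
    encoder, ch, s = [], base, size
    for _ in range(depth):
        encoder.append((ch, s, s))      # output kept for the skip connection
        ch, s = ch * 2, s // 2          # pooling halves H and W; next block doubles channels
    bottleneck = (ch, s, s)
    decoder = []
    for skip_ch, skip_s, _ in reversed(encoder):
        ch, s = ch // 2, s * 2          # up-convolution: half the channels, twice the size
        assert (ch, s) == (skip_ch, skip_s), "skip and upsampled maps must align"
        decoder.append((ch + skip_ch, s, s))  # shape after concatenation
    return encoder, bottleneck, decoder


if __name__ == "__main__":
    enc, mid, dec = unet_shapes()
    print("encoder:", enc)
    print("bottleneck:", mid)
    print("decoder (after concat):", dec)
```

The concatenated maps carry twice the channels of the matching encoder level, which is how shallow texture features reach the decoder alongside deep semantics.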

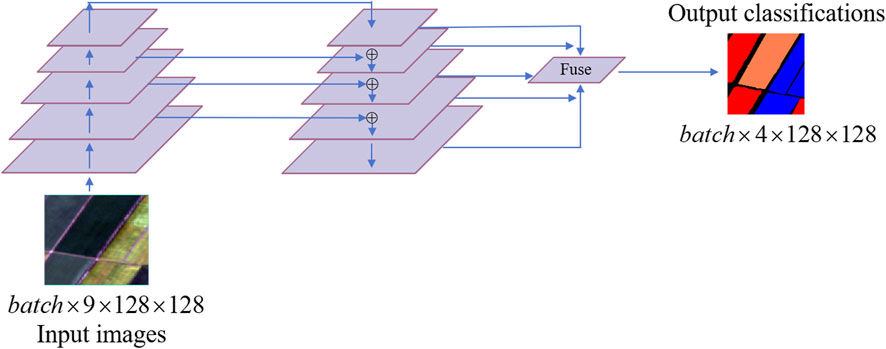

The proposed model is based on the UNet architecture and is designed for semantic segmentation of multi-channel remote sensing images. The input comprises 9-channel images with a spatial resolution of 128

3.3.2 FPN

Feature Pyramid Network (FPN) (Lin et al., 2017), originally designed for object detection tasks (e.g., Mask R-CNN), introduces multi-scale feature fusion through hierarchical pyramid construction. By combining deep semantic information with shallow spatial details, FPN enhances model sensitivity to objects of varying scales (Figure 5). When applied to remote sensing image classification, this approach effectively addresses challenges including multi-scale object coexistence, small-target omission, and complex background interference, thereby improving crop classification accuracy in heterogeneous landscapes (Xu et al., 2021).
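The top-down merge at the core of FPN can be illustrated on tiny single-channel maps. In Lin et al. (2017), each coarser level is upsampled by a factor of 2 with nearest-neighbor interpolation and added element-wise to a lateral map from the backbone. This sketch omits the 1 × 1 lateral convolutions and the 3 × 3 smoothing convolutions and uses plain nested lists; the function names are hypothetical.

```python
def upsample2x(grid):
    """Nearest-neighbor 2x upsampling of a 2-D list of numbers."""
    out = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]  # repeat each column
        out.append(wide)
        out.append(list(wide))                      # repeat each row
    return out


def add_maps(a, b):
    """Element-wise sum of two equally sized 2-D lists."""
    if len(a) != len(b) or len(a[0]) != len(b[0]):
        raise ValueError("feature maps must have the same size")
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def top_down(laterals):
    """FPN top-down pathway on single-channel lateral maps ordered fine -> coarse.

    Each output level is its lateral map plus the upsampled next-coarser output.
    """
    merged = [laterals[-1]]                     # coarsest level passes through
    for lateral in reversed(laterals[:-1]):
        merged.append(add_maps(lateral, upsample2x(merged[-1])))
    return merged[::-1]                          # back to fine -> coarse order


if __name__ == "__main__":
    levels = [[[0] * 4 for _ in range(4)], [[1, 2], [3, 4]], [[10]]]
    for level in top_down(levels):
        print(level)
```

Because every finer level receives the accumulated coarse signal, semantic context from deep layers reaches the high-resolution maps used to label small fields.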

This model, based on Lin et al. (2017), is specifically designed for semantic segmentation of multi-channel remote sensing images. The input consists of 9-channel images with a resolution of 128

3.3.3 FCN

Conventional convolutional neural networks (CNNs) typically rely on fully connected layers for category labeling, which often compromises spatial localization. In contrast, the Fully Convolutional Network (FCN) (Long et al., 2015) replaces fully connected layers with 1

The model is based on FCN-16s with a VGG16 backbone, which serves as the feature extractor and outputs multi-level features. Pixel-level classification results are then generated by fusing deep semantic features with mid-level detail features. The input of the model is a 9-channel image of 128
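For a 128 × 128 input, the FCN-16s fusion can be traced in terms of feature-map sizes: VGG16's pool4 and pool5 outputs have strides 16 and 32, the stride-32 class scores are upsampled by 2 and added to class scores computed from pool4, and the sum is upsampled by 16 to full resolution (Long et al., 2015). The sketch assumes "same" padding and four output classes and ignores the padding and cropping of the original FCN implementation; `fcn16s_shapes` is a hypothetical helper.

```python
def fcn16s_shapes(size=128, n_classes=4):
    """Shape bookkeeping for FCN-16s on a square input (simplified geometry).

    Assumes 'same' padding, so VGG16's five 2x2 poolings yield stride-16
    (pool4) and stride-32 (pool5) feature maps with 512 channels each.
    """
    if size % 32:
        raise ValueError("size must be divisible by 32")
    s4, s5 = size // 16, size // 32
    return [
        ("VGG16 pool4", (512, s4, s4)),
        ("VGG16 pool5", (512, s5, s5)),
        ("stride-32 class scores", (n_classes, s5, s5)),
        ("2x upsampled stride-32 scores", (n_classes, s4, s4)),
        ("sum with pool4 class scores (1x1 conv)", (n_classes, s4, s4)),
        ("16x upsampled output", (n_classes, size, size)),
    ]


if __name__ == "__main__":
    for step, shape in fcn16s_shapes():
        print(f"{step:40s} {shape}")
```

Fusing at stride 16 instead of predicting only from stride 32 is what distinguishes FCN-16s from FCN-32s and recovers some of the spatial detail lost by pooling.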

3.4 Metrics

This study employs seven quantitative metrics: Overall Accuracy (OA), Mean Intersection over Union (MIoU), Kappa coefficient, User’s Accuracy (UA), Producer’s Accuracy (PA), F1-score (F1), and Intersection over Union (IoU), to systematically evaluate the impact of BRDF correction on crop classification accuracy.

Define the classification task to contain four categories, and the element

where
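All seven metrics can be derived from a single confusion matrix. The sketch below assumes that rows are reference classes and columns are predicted classes, treats producer's accuracy as per-class recall and user's accuracy as per-class precision, and does not guard against classes with zero reference or predicted pixels; `classification_metrics` is a hypothetical name.

```python
def classification_metrics(cm):
    """Accuracy metrics from a square confusion matrix.

    cm[i][j] = number of pixels of reference class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    diag = [cm[i][i] for i in range(k)]
    ref = [sum(cm[i]) for i in range(k)]                        # reference totals (rows)
    pred = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted totals (columns)
    oa = sum(diag) / total
    pe = sum(r * p for r, p in zip(ref, pred)) / total ** 2     # chance agreement
    kappa = (oa - pe) / (1 - pe)
    pa = [d / r for d, r in zip(diag, ref)]                     # producer's accuracy
    ua = [d / p for d, p in zip(diag, pred)]                    # user's accuracy
    f1 = [2 * u * p / (u + p) for u, p in zip(ua, pa)]
    iou = [d / (r + p - d) for d, r, p in zip(diag, ref, pred)]
    return {"OA": oa, "Kappa": kappa, "PA": pa, "UA": ua,
            "F1": f1, "IoU": iou, "MIoU": sum(iou) / k}


if __name__ == "__main__":
    print(classification_metrics([[50, 10], [5, 35]]))
```

For the four-class task the matrix is 4 × 4 (background, rice, maize, soybean); the two-class matrix in the usage line is only a small worked example.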

4 Results

Focusing on crop classification using 16 m GF-1 imagery, a controlled-variable experimental framework was implemented with three representative deep learning models: FPN, FCN, and UNet. The analysis investigates BRDF-induced variations in classification performance along four dimensions: (1) overall crop classification performance, (2) crop-specific classification performance, (3) confusion matrix analysis, and (4) crop classification mapping. This multidimensional evaluation clarifies the influence of BRDF correction on classification accuracy and provides insights for optimizing agricultural remote sensing workflows in the presence of anisotropic reflectance.

4.1 Training settings

To ensure the fairness of model training, all experimental models were optimized using the Adam optimizer with a unified learning rate of 0.001. The cross-entropy loss function was employed as the loss criterion. The classification task involved four categories: Background, Rice, Maize, and Soybean.
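To make the training configuration concrete, the sketch below shows the per-pixel softmax cross-entropy loss over the four classes and a scalar Adam update with the stated learning rate of 0.001. The values β1 = 0.9, β2 = 0.999, and ε = 1e-8 are common framework defaults and are an assumption here, since they are not reported; deep learning frameworks apply the same update element-wise to every parameter tensor.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy for one pixel; logits has one entry per class."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


class Adam:
    """Scalar Adam optimizer; betas and eps are assumed framework defaults."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.b1, self.b2, self.eps = lr, beta1, beta2, eps
        self.m = 0.0  # first-moment (mean) estimate
        self.v = 0.0  # second-moment (uncentered variance) estimate
        self.t = 0    # step counter for bias correction

    def step(self, param, grad):
        """Return the updated parameter after one Adam step."""
        self.t += 1
        self.m = self.b1 * self.m + (1 - self.b1) * grad
        self.v = self.b2 * self.v + (1 - self.b2) * grad * grad
        m_hat = self.m / (1 - self.b1 ** self.t)
        v_hat = self.v / (1 - self.b2 ** self.t)
        return param - self.lr * m_hat / (math.sqrt(v_hat) + self.eps)


if __name__ == "__main__":
    # Background, rice, maize, soybean logits for one pixel whose label is maize (2).
    print(cross_entropy([0.1, 0.3, 2.0, 0.5], target=2))
    print(Adam().step(0.0, 2.0))
```

On the first step the bias-corrected update has magnitude close to the learning rate regardless of the gradient scale, which is one reason a single learning rate of 0.001 can be shared across the three architectures.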

4.2 Overall crop classification performance

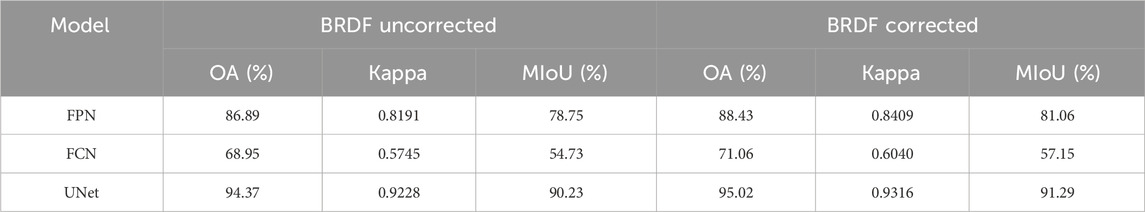

This study evaluates the impact of BRDF correction on overall crop classification performance using three metrics, OA, Kappa coefficient, and MIoU, to assess how well it improves model accuracy and mitigates directional reflectance bias. As shown in Table 5, BRDF correction improved classification performance for all models. Specifically, UNet, a representative model with an encoder-decoder architecture, achieved an increase in OA from 94.37

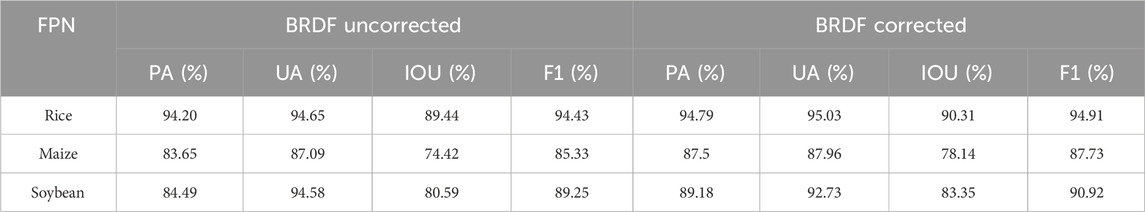

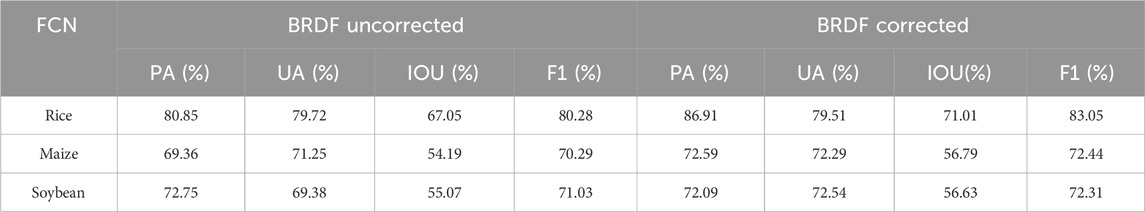

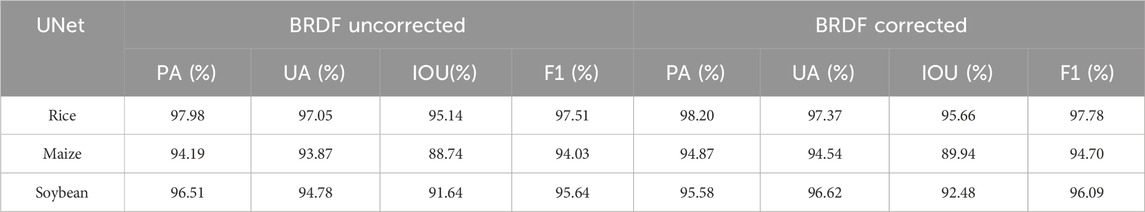

4.3 Crop-specific classification performance

A detailed analysis of individual crop categories reveals varying impacts of BRDF correction on classification performance in Tables 6–8. The correction demonstrates more pronounced benefits for high-canopy crops. For example, under the FPN model, soybean’s F1-score improves from 89.25

Table 6. Classification evaluation of different crop regions based on FPN with/without BRDF correction.

Table 7. Classification evaluation of different crop regions based on FCN with/without BRDF correction.

Table 8. Classification evaluation of different crop regions based on UNet with/without BRDF correction.

The observed variations in correction effectiveness stem from the interplay between crop growth characteristics and model architectures. BRDF correction standardizes multi-angle observation data, reducing classification ambiguity induced by canopy geometry—particularly critical for crops with distinct 3D structures (e.g., maize and soybean). For instance, FPN improves maize’s F1-score by 2.4

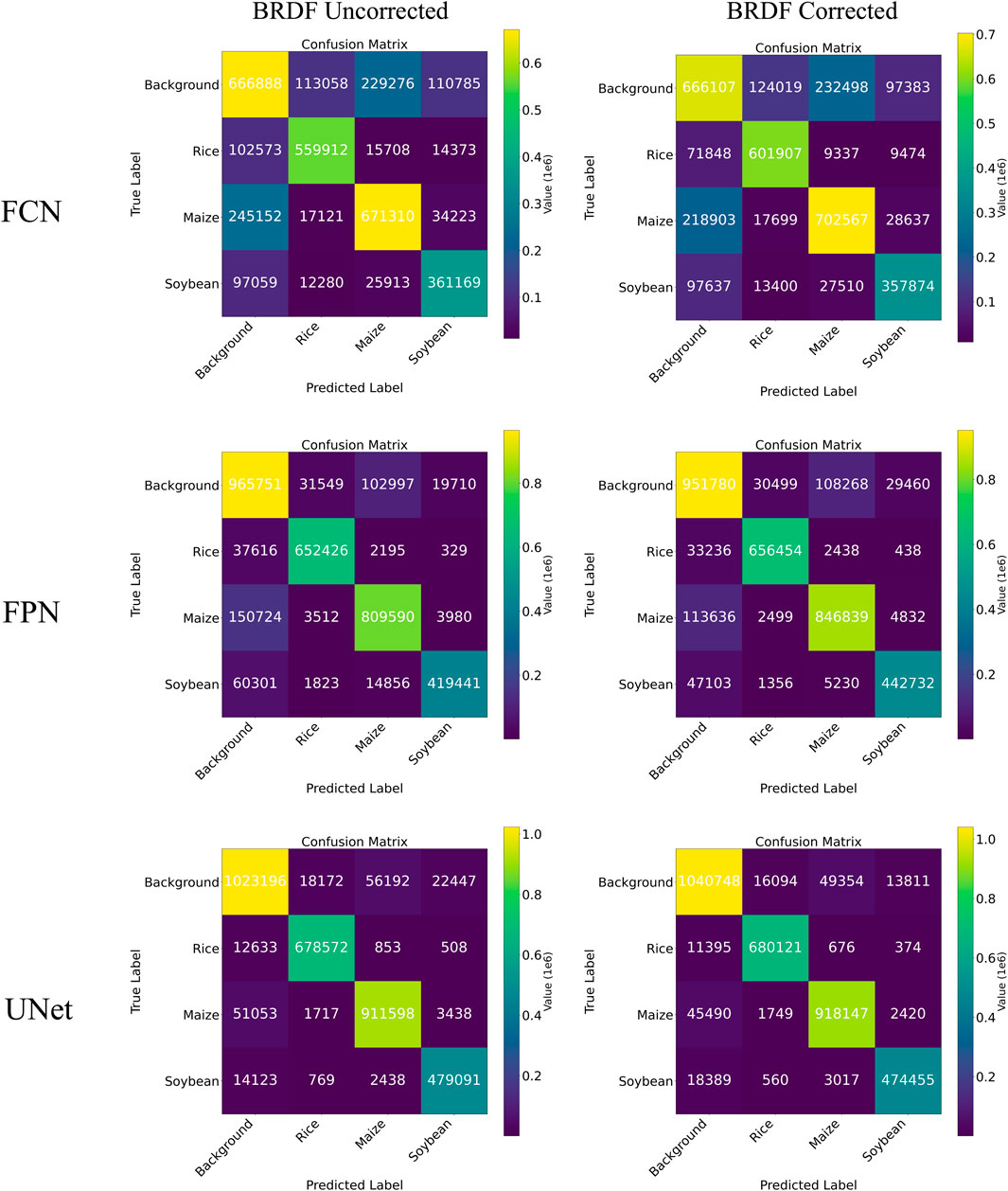

4.4 Confusion matrix analysis

As illustrated in Figure 6, BRDF correction markedly improves rice classification accuracy in FCN. Post-correction, the number of correctly predicted rice samples increases from 559,912 to 601,907, while misclassifications as maize and soybean decrease from 15,708 to 9,337 and from 14,373 to 9,474, respectively. This demonstrates effective mitigation of spectral confusion between rice and high-reflectance crops. However, background misclassification remains unresolved, with background samples misidentified as maize increasing from 229,276 to 232,498. This persistence may stem from FCN’s shallow architecture, which lacks sensitivity to the intricate spectral features of corrected data, perpetuating low discriminability between background and maize. Additionally, limited improvement in soybean classification suggests FCN’s weak representation of anisotropic reflectance in soybean canopies.

FPN exhibits balanced performance gains after BRDF correction. Correct maize predictions rise from 809,590 to 846,839, while misclassifications as background decline from 150,724 to 113,636, indicating that the correction suppresses background confusion induced by directional reflectance in maize canopies. Soybean classification improves substantially, with correct predictions increasing from 419,441 to 442,732 and the major misclassification sources (background and maize) reduced by 13,198 and 9,626, respectively, consistent with the benefit of BRDF correction for crops with 3D canopy structures. Rice classification remains stable (correct predictions: 652,426 to 656,454), although misclassifications as maize increase slightly from 2,195 to 2,438, suggesting that some spectral overlap persists after correction.

UNet achieves superior classification consistency after BRDF correction. Correct maize predictions increase notably from 911,598 to 918,147, with background misclassifications decreasing from 51,053 to 45,490, indicating deep networks’ capacity to leverage corrected spectral-spatial features and suppress directional reflectance noise. Soybean classification shows minor trade-offs: correct predictions slightly decline from 479,091 to 474,455, while background misclassifications increase from 14,123 to 18,389, likely due to over-smoothed spectral distinctions between soybean canopies and background post-correction. Rice performance continues to improve, with correct predictions rising from 678,572 to 680,121 and misclassifications as maize and soybean further reduced.

After BRDF correction, the confusion matrices of all models generally show increases along the diagonal (correctly classified samples), indicating that normalizing illumination and viewing-angle differences enhances the stability and accuracy of remote sensing image classification. Correspondingly, off-diagonal misclassifications generally decrease, and the boundary between the background and crop categories becomes clearer, further supporting the positive impact of BRDF correction on classification accuracy.

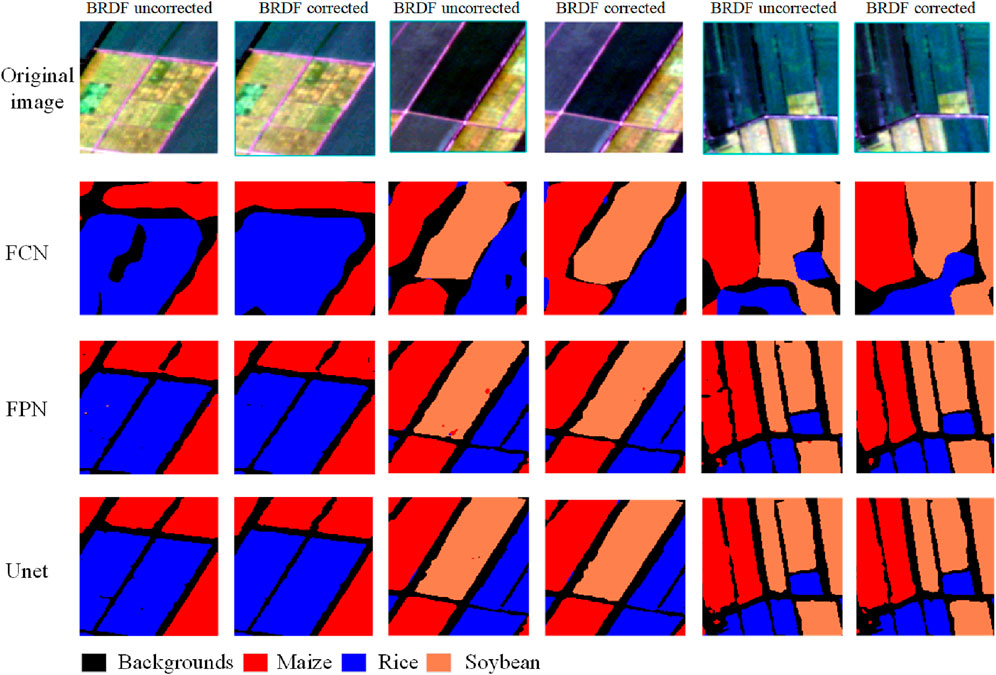

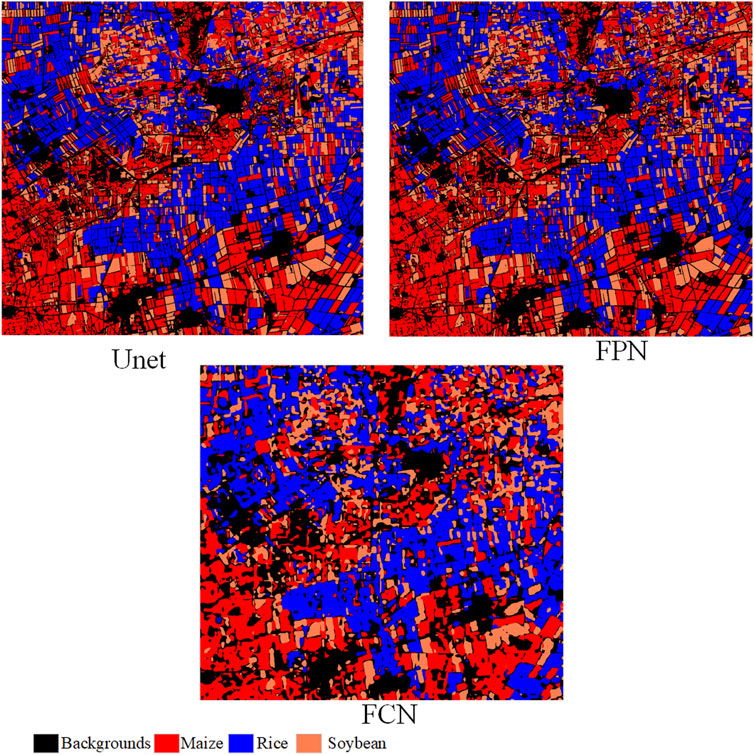

4.5 Crop Classification Mapping

The mapping results in Figure 7 reveal significant differences among the three models in feature extraction and spatial information preservation. For the FCN model, classification results from uncorrected imagery exhibit blurred regional boundaries and pronounced background noise. After BRDF correction, crop boundary clarity improves substantially, with enhanced texture in maize canopies and reduced confusion between soybean and rice. This indicates that BRDF correction effectively mitigates reflectance variations caused by changes in observation geometry, thereby improving the ability of FCN to discriminate spectrally similar crops. The FPN model shows robust multi-scale feature extraction on uncorrected images but still misclassifies some soybean and maize regions; after correction, it captures the strip-like distribution of crops more accurately. For UNet, results from uncorrected images show speckled misclassifications within soybean cultivation areas. BRDF correction improves radiometric consistency, which facilitates the fusion of low-level features with high-level semantic information. This improves internal homogeneity in soybean regions and aligns rice field boundaries more closely with the ground-truth labels.

Further comparison of the spatial segmentation performance across models, as illustrated in Figure 8, underscores the superior capability of UNet in delineating farmland boundaries. Its edge contours are notably clearer, making it particularly suitable for fine-grained classification of crops with complex canopy textures such as soybean. However, the direct concatenation of deep semantic features with shallow spatial details in UNet may result in suboptimal visual classification for small-scale targets, such as sparsely distributed maize plants, indicating a trade-off between detail preservation and scale adaptability. In contrast, the FPN model focuses on global farmland distribution patterns (e.g., large-scale rice cultivation areas), while preserving local crop textures through low-level features. Nevertheless, FPN still exhibits edge blurring effects in high-resolution regions. As a fully convolutional baseline, the FCN model suffers from severe spatial detail loss due to its single-scale feature mapping. Mapping results indicate FCN’s limited capability in suppressing background interference, manifesting as extensive classification voids within farmlands and low recognition rates for small-area crops.

5 Discussion

5.1 BRDF effect on crop classification

BRDF effects significantly affect crop classification accuracy through spectral distortion and spatial interference. In the spectral dimension, the anisotropic reflectance of vegetation canopies causes the same crop to exhibit different spectral responses under different observation geometries, and crops with erect canopy structures (such as maize) are considerably more sensitive than crops with diffuse canopies (such as soybean) or with homogeneous canopies (such as rice). The results showed that the IoU of maize increased by 3.72

5.2 Applicability of FCN, UNet, and FPN in crop classification

The three deep learning models responded quite differently to BRDF effects and crop types. With its encoder-decoder structure and multi-scale skip connections, UNet showed the strongest robustness in complex field scenarios, especially for high-precision classification of BRDF-sensitive crops (maize and soybean) (IoU

Future studies should combine crop physiological characteristics with innovations in model architecture. For BRDF-sensitive crops such as maize, UNet variants that take the three sun-sensor observation angles as additional inputs could be designed. For large-scale monitoring tasks, a cascaded framework combining FPN with a lightweight BRDF compensation module could be explored. For edge computing scenarios, dynamic-kernel convolution optimization of FCN should be investigated to suppress angular noise at low cost. Jointly optimizing crop characteristics, model architecture, and BRDF correction may substantially improve the operational efficiency of agricultural remote sensing classification systems.

5.3 Limitations

Despite the systematic investigation of BRDF effects on crop classification and model applicability, this study has several limitations. First, only three crop types in a single-scene GF-1 WFV image are considered; this limited geographical and temporal coverage restricts validation of model generality across climatic zones, growth stages, and other crop types (such as wheat or cotton). Second, the employed BRDF correction models (e.g., RTLSR) may lack adaptability to complex agricultural scenarios, such as hilly terrain or mixed-cropping systems, where local illumination-geometry relationships deviate from theoretical assumptions, potentially introducing residual errors. Third, although deep learning models such as UNet demonstrated robustness, their high computational costs and the absence of lightweight deployment strategies (e.g., pruning or quantization) hinder their practical application to large-area crop classification and resource-constrained scenarios.

In addition, the misclassification rate for the background class is higher than that for the crop classes. For example, in the FCN model before BRDF correction, a total of 668,888 pixels were misclassified as background. This phenomenon can be attributed to several inherent challenges:

Diversity of background components: unlike the relatively homogeneous crop fields, the background class comprises highly heterogeneous features such as roads, water bodies, and agricultural residues, which lack consistent spectral patterns. This variability complicates feature learning, even when ample training samples are available.

Boundary and mixed pixel effects: at the edges of crop fields or in fragmented landscapes, mixed pixels often contain signals from both crops and background elements, making deterministic classification inherently difficult. For instance, although the UNet model achieved high accuracy for crop classes (e.g., 911,598 correctly classified maize pixels), its performance on the background class was comparatively lower. This suggests that edge-related ambiguities are a major contributor to background classification errors.

To further address the challenges associated with background misclassification, future strategies could include incorporating temporal spectral trajectories to distinguish dynamic crop patterns from static background elements, or applying object-based segmentation to reduce pixel-level noise. Nevertheless, our current results demonstrate that the combination of BRDF correction and model optimization already substantially mitigates these issues. For instance, maize classification achieved an F1-score exceeding 0.92, supporting the reliability of crop mapping—the core objective of this study.

Building on these findings, future research should systematically investigate the classification impact and underlying mechanisms of BRDF effects in WFV imagery, particularly under complex terrain conditions and in regions with mixed cropping patterns. To this end, it is essential to develop a multi-temporal analysis framework based on WFV image time series, enabling the evaluation of BRDF’s dynamic influence throughout the crop growth cycle—especially its role in enhancing temporal consistency across different phenological stages.

Moreover, integrating physical BRDF models with deep learning represents a promising direction. Developing lightweight neural networks that embed prior BRDF knowledge could ensure high classification accuracy while significantly reducing computational costs, thus laying a theoretical and technical foundation for real-time crop monitoring using satellite-based remote sensing data.

Finally, future studies may leverage multi-angle satellite observations, for example, by using the off-nadir imaging capability of the GF-1 WFV sensor to acquire imagery of the same area from different viewing angles. By assessing whether BRDF-corrected reflectance values converge toward the nadir value, a physically consistent evaluation framework can be established. This would allow BRDF correction performance to be assessed without relying on ground-truth data, mitigating issues related to spatial scale mismatch and spatiotemporal heterogeneity.

6 Conclusion

Based on single-scene GF-1 WFV remote sensing imagery, this study systematically explored crop classification using BRDF correction and different deep learning models. By constructing a normalized NDVI-based BRDF parameterized correction model, the BRDF effects in the WFV image were effectively corrected. Using spectral bands and multiple vegetation indices as feature variables, crop classification experiments were carried out with three deep learning frameworks: FPN, FCN, and UNet. The results showed that BRDF correction improved the crop classification performance of WFV images, and FPN showed the largest improvement after BRDF correction, with overall accuracy and mean intersection over union (MIoU) increasing by 1.54

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

YC: Writing – review and editing, Writing – original draft, Visualization, Formal Analysis, Validation, Conceptualization, Data curation, Project administration, Supervision, Methodology, Investigation. YL: Writing – original draft, Writing – review and editing, Conceptualization, Supervision. RL: Investigation, Writing – review and editing, Writing – original draft, Methodology. CG: Writing – review and editing, Writing – original draft, Supervision, Visualization. JL: Visualization, Writing – review and editing, Writing – original draft.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Chang, Z., Xu, M., Wei, Y., Lian, J., Zhang, C., and Li, C. (2024). UNeXt: an efficient network for the semantic segmentation of high-resolution remote sensing images. Sensors 24, 6655. doi:10.3390/s24206655

Chen, J., and Cihlar, J. (1997). A hotspot function in a simple bidirectional reflectance model for satellite applications. J. Geophys. Res. Atmos. 102, 25907–25913. doi:10.1029/97jd02010

Chen, Y., Sun, L., Pei, Z., Sun, J., Li, H., Jiao, W., et al. (2022). A simple and robust spectral index for identifying lodged maize using Gaofen1 satellite data. Sensors 22, 989. doi:10.3390/s22030989

Ding, Y., Gu, X., Liu, Y., Zhang, H., Cheng, T., Li, J., et al. (2023). GF-1 WFV surface reflectance quality evaluation in countries along “the Belt and Road”. Remote Sens. 15, 5382. doi:10.3390/rs15225382

Gentry, L. E., Mitchell, C. A., Green, J. M., Guacho, C., Miller, E., Schaefer, D., et al. (2025). A diverse rotation of corn-soybean-winter wheat/double crop soybean with cereal rye after corn reduces tile nitrate loss. Front. Environ. Sci. 13, 1506113. doi:10.3389/fenvs.2025.1506113

Guan, Y., Zhou, Y., He, B., Liu, X., Zhang, H., and Feng, S. (2020). Improving land cover change detection and classification with BRDF correction and spatial feature extraction using Landsat time series: a case of urbanization in Tianjin, China. IEEE J. Sel. Top. Appl. Earth Observations Remote Sens. 13, 4166–4177. doi:10.1109/jstars.2020.3007562

Hautecœur, O., and Leroy, M. M. (1998). Surface bidirectional reflectance distribution function observed at global scale by POLDER/ADEOS. Geophys. Res. Lett. 25, 4197–4200. doi:10.1029/1998gl900111

Jia, W., Pang, Y., and Tortini, R. (2024a). The influence of BRDF effects and representativeness of training data on tree species classification using multi-flightline airborne hyperspectral imagery. ISPRS J. Photogrammetry Remote Sens. 207, 245–263. doi:10.1016/j.isprsjprs.2023.11.025

Jia, Y., Lan, H., Jia, R., Fu, K., and Su, Z. (2024b). Enhanced U-Net algorithm for typical crop classification using GF-6 WFV remote sensing images. Eng. Agrícola 44, e20230110. doi:10.1590/1809-4430-eng.agric.v44e20230110/2024

Jiang, H., Jia, K., Wang, Q., Shang, J., Liu, J., Xie, X., et al. (2023). Angular effect correction for improved LAI and FVC retrieval using GF-1 wide field view data. IEEE Trans. Geoscience Remote Sens. 61, 1–14. doi:10.1109/tgrs.2023.3304531

Jiang, H., Jia, K., Wang, Q., Yuan, B., Tao, G., Wang, G., et al. (2024). General BRDF parameters for normalizing GF-1 reflectance data to nadir reflectance to improve vegetation parameters estimation accuracy. IEEE Trans. Geoscience Remote Sens. 62, 1–14. doi:10.1109/tgrs.2024.3403523

Jiao, Z., Hill, M. J., Schaaf, C. B., Zhang, H., Wang, Z., and Li, X. (2014). An anisotropic flat index (AFX) to derive BRDF archetypes from MODIS. Remote Sens. Environ. 141, 168–187. doi:10.1016/j.rse.2013.10.017

Jiao, Z., Schaaf, C. B., Dong, Y., Román, M., Hill, M. J., Chen, J. M., et al. (2016). A method for improving hotspot directional signatures in BRDF models used for MODIS. Remote Sens. Environ. 186, 135–151. doi:10.1016/j.rse.2016.08.007

Lin, T.-Y., Dollár, P., Girshick, R., He, K., Hariharan, B., and Belongie, S. (2017). Feature pyramid networks for object detection. Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2117–2125.

Liu, S., Cao, S., Lu, X., Peng, J., Ping, L., Fan, X., et al. (2025). Lightweight deep learning model, ConvNeXt-U: an improved U-Net network for extracting cropland in complex landscapes from Gaofen-2 images. Sensors 25, 261. doi:10.3390/s25010261

Long, J., Shelhamer, E., and Darrell, T. (2015). Fully convolutional networks for semantic segmentation. Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 3431–3440.

Maggiori, E., Tarabalka, Y., Charpiat, G., and Alliez, P. (2016). Convolutional neural networks for large-scale remote-sensing image classification. IEEE Trans. Geoscience Remote Sens. 55, 645–657. doi:10.1109/tgrs.2016.2612821

Qu, T., Wang, H., Li, X., Luo, D., Yang, Y., Liu, J., et al. (2024). A fine crop classification model based on multitemporal Sentinel-2 images. Int. J. Appl. Earth Observation Geoinformation 134, 104172. doi:10.1016/j.jag.2024.104172

Román, M. O., Gatebe, C. K., Schaaf, C. B., Poudyal, R., Wang, Z., and King, M. D. (2011). Variability in surface BRDF at different spatial scales (30 m–500 m) over a mixed agricultural landscape as retrieved from airborne and satellite spectral measurements. Remote Sens. Environ. 115, 2184–2203. doi:10.1016/j.rse.2011.04.012

Ronneberger, O., Fischer, P., and Brox, T. (2015). “U-Net: convolutional networks for biomedical image segmentation,” in Medical image computing and computer-assisted intervention–MICCAI 2015: 18th international conference, Munich, Germany, October 5-9, 2015, proceedings, part III 18 (Springer), 234–241.

Roujean, J.-L., Leroy, M., and Deschamps, P.-Y. (1992). A bidirectional reflectance model of the earth’s surface for the correction of remote sensing data. J. Geophys. Res. Atmos. 97, 20455–20468. doi:10.1029/92jd01411

Roy, D. P., Zhang, H., Ju, J., Gomez-Dans, J. L., Lewis, P. E., Schaaf, C., et al. (2016). A general method to normalize Landsat reflectance data to nadir BRDF adjusted reflectance. Remote Sens. Environ. 176, 255–271. doi:10.1016/j.rse.2016.01.023

Schläpfer, D., Richter, R., and Feingersh, T. (2014). Operational BRDF effects correction for wide-field-of-view optical scanners (BREFCOR). IEEE Trans. Geoscience Remote Sens. 53, 1855–1864.

Wan, S., Yeh, M.-L., and Ma, H.-L. (2021). An innovative intelligent system with integrated CNN and SVM: considering various crops through hyperspectral image data. ISPRS Int. J. Geo-Information 10, 242. doi:10.3390/ijgi10040242

Wang, M., Ma, X., Zheng, T., and Su, Z. (2024). MSMTRIU-Net: deep learning-based method for identifying rice cultivation areas using multi-source and multi-temporal remote sensing images. Sensors 24, 6915. doi:10.3390/s24216915

Xu, Y., Xue, X., Sun, Z., Gu, W., Cui, L., Jin, Y., et al. (2023). Deriving agricultural field boundaries for crop management from satellite images using semantic feature pyramid network. Remote Sens. 15, 2937. doi:10.3390/rs15112937

Xu, Z., Zhang, W., Zhang, T., Yang, Z., and Li, J. (2021). Efficient transformer for remote sensing image segmentation. Remote Sens. 13, 3585. doi:10.3390/rs13183585

Keywords: Gaofen-1 satellite, crop classification, deep learning, BRDF, feature extraction

Citation: Chen Y, Li Y, Li R, Guo C and Li J (2025) Synergizing BRDF correction and deep learning for enhanced crop classification in GF-1 WFV imagery. Front. Remote Sens. 6:1620109. doi: 10.3389/frsen.2025.1620109

Received: 29 April 2025; Accepted: 16 June 2025;

Published: 10 July 2025.

Edited by:

Xiguang Yang, Northeast Forestry University, China

Reviewed by:

Bin Wang, Northeast Forestry University, China

Bingjie Liu, Shanxi Agricultural University, China

Haiying Jiang, Harbin Engineering University, China

Anam Sabir, Indian Institute of Technology Indore, India

Neeraj Goel, Indian Institute of Technology Ropar, India

Copyright © 2025 Chen, Li, Li, Guo and Li. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yang Li, liyangrs@126.com
