- School of Marine Science and Technology, Northwestern Polytechnical University, Xi’an, China

This paper investigates the leader-based distributed optimal control problem of discrete-time linear multi-agent systems (MASs) on directed communication topologies. In particular, the communication topology under consideration consists of only one directed spanning tree. A distributed consensus control protocol depending on the information between agents and their neighbors is designed to guarantee the consensus of MASs. In addition, the optimization of energy cost performance can be obtained using the proposed protocol. Subsequently, a numerical example is provided to demonstrate the effectiveness of the presented protocol.

1 Introduction

Inspired by biological motion in nature, the cooperative motion of multi-agent systems (MASs) has been studied extensively in the past decade (Wang et al., 2017, 2019; Wang and Sun, 2018; Wang et al., 2020b; Koru et al., 2021; Wang and Sun, 2021). Compared to a single agent, networked MASs offer fast command response and robustness. Because distributed network computing systems are highly scalable and computationally fast, the distributed cooperative control of MASs has attracted increasing attention from control scientists and robotics engineers, with applications such as mobile robots (Mu et al., 2017; Zhao et al., 2019), autonomous underwater vehicles (AUVs) (Zuo et al., 2016; Li et al., 2019), and spacecraft (Zhang et al., 2018; 2021a). A classical framework for the cooperative control of MASs with switching topologies is discussed by Olfati-Saber and Murray (2004). Ren and Beard (2005) further relaxed the conditions given by Olfati-Saber and Murray (2004) and presented some new results on the consensus of linear MASs.

In practice, because most computer systems are discrete structures, it is necessary to investigate the control problem of multi-agent systems in discrete time. In the study by Liang et al. (2017), the cooperative containment control problem of nonuniform discrete-time linear multi-agent systems is studied, and a novel internal model compensator is designed to deal with the uncertain part of the system dynamics. A solution method for the discrete-time MAS decentralized consensus problem based on linear matrix inequalities (LMIs) is given by Mahmoud et al. (2018). The problem of multi-agent consensus control based on the terminal iterative learning framework is discussed by Lv et al. (2018), where an adaptive control method based on a time-varying control input is proposed to improve the control performance of the system. Su et al. (2019) proposed a distributed control algorithm based on the low-gain feedback method and the modified algebraic Riccati equation to achieve semi-global consensus of discrete-time MASs under input saturation. A multi-agent consensus framework based on a distributed model predictive controller is proposed by Li and Li (2020), in which a self-triggering mechanism is adopted to reduce the communication cost and to handle asynchronous discrete-time information exchange. Liu et al. (2020) proposed a distributed state feedback control algorithm based on a Markov state compensator to address the problem that some followers cannot directly obtain the leader’s state information. For MASs with unknown system parameters, a distributed adaptive control protocol using local information was designed by Li et al. (2020) to ensure containment of the system. In the study by Li et al. (2021), the adaptive fault-tolerant tracking problem for a class of discrete-time MASs is studied based on reinforcement learning, in which an adaptive auxiliary signal variable is designed to compensate for the effect of actuator faults on the control system.

In practical applications, the energy cost performance of the designed protocols should be considered carefully, especially for systems with low loadability, for example, autonomous underwater vehicles and spacecraft. In the study by Zhang et al. (2017), the discrete-time MAS optimal consensus problem is discussed, and a data-driven adaptive dynamic programming method is proposed for the case in which an accurate mathematical model of the system is difficult to obtain. Wen et al. (2018) constructed a reinforcement learning framework based on fuzzy logic system (FLS) approximators for the identifier–actor–critic system to achieve optimal tracking control of MASs. An optimal signal generator is presented by Tang et al. (2019), where the generator is embedded in the feedback loop to realize the optimal output consensus of multi-agent networks. Tan (2020) transformed the distributed H∞ optimal tracking problem of a class of physically interconnected large-scale systems with a strict feedback form and saturated actuators into the equivalent control problem of MASs; a feedback control algorithm is then designed to learn the optimal control input of the system. In the study by Wang et al. (2020a), the optimal consensus problem of MASs is decomposed into three sub-problems (input optimization, consensus state optimization, and dual optimization), and a distributed control algorithm is proposed to achieve the optimal consensus of the system. The nonuniform MAS distributed optimal steady-state regulation is investigated by Tang (2020), and the results are extended, using high-gain control techniques, to the case where the system only has real-time gradient information.
The single-agent goal representation heuristic dynamic programming (GrHDP) technique is extended to the multi-agent consensus control problem by Zhong and He (2020), and an iterative learning algorithm based on GrHDP is designed to make the local performance indexes of the system converge to the optimal value. In the study by Xu et al. (2021), the optimal control problem with piecewise-constant controller gain in a random environment is solved, and an improved Hamilton–Jacobi–Bellman (HJB) partial differential equation is obtained by the splitting method and the Feynman–Kac formula.

However, to the authors’ best knowledge, there are very few studies focusing on the optimal control of discrete-time MASs only containing a directed spanning tree. In this study, the leader-based distributed optimal control problem of discrete-time linear MASs on directed communication topologies is investigated. A distributed discrete-time consensus protocol based on the directed graph is designed, and it is proved that the optimization of energy cost performance can be satisfied with the presented consensus protocol. Furthermore, the optimal solution can be obtained by solving the algebraic Riccati equation (ARE), and the design of the protocol presented in this study does not require global communication topology information and relies on only the agent dynamics and relative states of neighboring agents, which means that every agent manages its protocol in a fully distributed way.

Notation.

2 Preliminaries

2.1 Algebraic Graph Theory

A digraph

2.2 Problem Formulation

Consider a group of N agents with discrete-time dynamics described by the following equation.

where

Assumption 1. The leader agent’s index is defined as 0, and the follower agents’ indices are defined as 1, …, N. The digraph

Lemma 1. (Matrix Inversion Lemma (Horn and Johnson, 1996)): Let $E \in \mathbb{C}^{N \times N}$ and $G \in \mathbb{C}^{M \times M}$ be nonsingular matrices, and let $F \in \mathbb{C}^{N \times M}$ and $H \in \mathbb{C}^{M \times N}$ be general matrices. Then, the inverse of the matrix $(E + FGH)$ is as follows.
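As a quick sanity check of Lemma 1, the sketch below verifies the matrix inversion identity on one small example. It assumes the standard Woodbury form $(E + FGH)^{-1} = E^{-1} - E^{-1}F(G^{-1} + HE^{-1}F)^{-1}HE^{-1}$, with $E$ of size $N \times N$ and $G$ of size $M \times M$ both nonsingular. The helper functions and the test matrices are illustrative assumptions and do not come from the paper.

```python
from fractions import Fraction

def matmul(X, Y):
    """Plain matrix product of two nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def matadd(X, Y, sign=1):
    """Entrywise X + sign * Y."""
    return [[x + sign * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def inverse(X):
    """Gauss-Jordan inverse using exact rational arithmetic."""
    n = len(X)
    aug = [[Fraction(v) for v in row] + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(X)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

# Illustrative matrices (assumed, N = 3 and M = 2).
E = [[2, 0, 0], [0, 3, 0], [0, 0, 4]]   # N x N, nonsingular
F = [[1, 0], [0, 1], [1, 1]]            # N x M
G = [[1, 1], [0, 2]]                    # M x M, nonsingular
H = [[1, 0, 2], [0, 1, 0]]              # M x N

# Left-hand side: direct inverse of E + F G H.
lhs = inverse(matadd(E, matmul(matmul(F, G), H)))

# Right-hand side: Woodbury form, which only inverts E, G and an M x M matrix.
Einv = inverse(E)
core = inverse(matadd(inverse(G), matmul(matmul(H, Einv), F)))
rhs = matadd(Einv, matmul(matmul(matmul(matmul(Einv, F), core), H), Einv), sign=-1)

woodbury_holds = (lhs == rhs)
```

Exact fractions are used so that the two sides can be compared with `==` rather than with a floating-point tolerance.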

3 Main Results

In this section, a distributed optimal controller is designed to solve the consensus of the system in Eq. 1, and the optimization of cost function is achieved with the presented protocol.

Since Assumption 1 holds, the Laplacian matrix

where

Let

where

A distributed optimal controller is developed as follows.

where c represents the coupling strength, and K denotes the control gain matrix.
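To illustrate how a protocol with a coupling strength and a gain acts on a directed tree, the following minimal sketch simulates scalar agents. It assumes a relative-state protocol of the common form $u_i(k) = cK\,(x_{p(i)}(k) - x_i(k))$, where $p(i)$ is the single parent of follower $i$; this form, the scalar dynamics, the tree, and the numerical values of `c` and `K` are all assumptions chosen for illustration and are not the optimal design of Theorem 1.

```python
def simulate_tree_consensus(parent, x_init, a=1.0, b=1.0, c=2.0, K=0.25, steps=80):
    """Simulate scalar agents x_i(k+1) = a*x_i(k) + b*u_i(k).

    Agent 0 is the leader and receives no input. Each follower i uses the
    assumed protocol u_i(k) = c*K*(x_parent(k) - x_i(k)), so it needs only
    the relative state with respect to its single parent.
    """
    x = list(x_init)
    for _ in range(steps):
        u = [0.0] * len(x)
        for i, p in parent.items():
            u[i] = c * K * (x[p] - x[i])
        x = [a * xi + b * ui for xi, ui in zip(x, u)]
    return x

# Hypothetical directed tree: follower -> parent (node 0 is the leader).
parent = {1: 0, 2: 0, 3: 1, 4: 3}
x_init = [0.2, 0.1, -0.15, 0.3, -0.2]

final_states = simulate_tree_consensus(parent, x_init)
max_error = max(abs(xi - final_states[0]) for xi in final_states)
```

With `a = 1` the leader state stays constant, and each follower closes half of the gap to its parent at every step, so the error decays along the tree from the leader outward.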

The error system can be obtained by taking the difference of Eq. 3 as follows.

where

Inspired by Zhang et al. (2021b), the cost function is chosen to be

where the matrices

In Eq. 7,

where $\lambda^T(k + 1)$ represents the costate variable and

Next, the protocol presented in Eq. 4 is proved to guarantee the optimization of the energy cost performance and stability of system in Eq. 5.

Theorem 1. For the given matrices $Q = Q^T > 0$ and $R = R^T > 0$, the cost function $L(k)$ is optimized and the stability of the system in Eq. 5 can be achieved if and only if the following ARE holds:

where P is the positive definite solution of Eq. 9, and the control gain matrix is

Proof. i) Optimization of Cost Function

(i–i) Necessity

The optimal control input can be solved from the equation as follows.

Let $\lambda(k) = -(I_N \otimes P)\xi(k)$; then the optimal controller can be obtained from Eq. 10 as follows.

Let

According to Lemma 1, the expression of $U^*$ can be rewritten as

where

which indicates that

Let

which is identical to the ARE presented in Eq. 9. It is to be noted that if $c \geq 1$, we have

(i–ii) Sufficiency

Considering the following equation:

Based on

Since Eq. 5 and Eq. 15 hold true, we have

According to the following conditional equation

Then, Eq. 19 can be regarded as

Let

Let

Then, the cost function can be optimized, that is, L*(k) = −ΔV(k) with the controller

where $V(0)$ represents the initial value of $V(k)$.

(ii) The Stability of System

Based on the expressions of U*(k), we have

It is inferred from Eq. 25 that $\lim_{k \to \infty} V(k) = 0$. Then, the optimal performance index $J^*$ can be rewritten as

As a consequence, the conditions in Theorem 1 are all satisfied, which completes the proof.
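The telescoping argument behind the optimal performance index can be written out explicitly. The following is a sketch under two assumptions that the surviving text does not confirm: that the performance index is the infinite sum $J = \sum_{k=0}^{\infty} L(k)$, and that $\Delta V(k) = V(k+1) - V(k)$. It uses the relation $L^*(k) = -\Delta V(k)$ and the limit $\lim_{k \to \infty} V(k) = 0$ stated in the proof.

```latex
\begin{aligned}
J^* &= \sum_{k=0}^{\infty} L^*(k)
     = -\sum_{k=0}^{\infty} \Delta V(k)
     = -\sum_{k=0}^{\infty} \bigl( V(k+1) - V(k) \bigr) \\
    &= V(0) - \lim_{k \to \infty} V(k)
     = V(0).
\end{aligned}
```

Under these assumptions, the optimal cost depends only on the initial value $V(0)$.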

Remark 1. Theorem 1 shows that the control gain matrix $K$ depends mainly on the matrix $P$ and the coupling strength $c$, where $P$ is obtained directly by solving Eq. 9 and $c$ is a constant satisfying $c > 1$. Therefore, the design of the control protocol $u_i(k)$ in Eq. 4 does not require global communication topology information and relies only on the agent dynamics and the relative states of neighboring agents; that is, every agent manages its control protocol $u_i(k)$ in a fully distributed way.
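To make the step of solving an ARE for $P$ concrete, the sketch below iterates a scalar discrete-time Riccati recursion to its fixed point. It assumes the standard discrete-time ARE $p = a^2 p - (apb)^2/(r + b^2 p) + q$ and the standard LQR gain; the ARE in Eq. 9 and the gain of Theorem 1 may differ from these (for example, through the coupling strength $c$). The scalar dynamics `a` and `b` are hypothetical, while `q = 10` and `r = 10` mirror the weights of the numerical example.

```python
def solve_scalar_dare(a, b, q, r, tol=1e-12, max_iter=10_000):
    """Fixed-point iteration for the standard scalar discrete-time ARE.

    Solves p = a^2 p - (a p b)^2 / (r + b^2 p) + q, starting from p = q.
    """
    p = q
    for _ in range(max_iter):
        p_next = a * a * p - (a * p * b) ** 2 / (r + b * b * p) + q
        if abs(p_next - p) < tol:
            return p_next
        p = p_next
    raise RuntimeError("Riccati iteration did not converge")

# Hypothetical unstable scalar agent; q and r follow the example weights.
a, b, q, r = 1.2, 1.0, 10.0, 10.0

p = solve_scalar_dare(a, b, q, r)
# Standard LQR gain (an assumption here, not the gain stated in Theorem 1).
k_gain = (a * p * b) / (r + b * b * p)
closed_loop = a - b * k_gain
residual = abs(a * a * p - (a * p * b) ** 2 / (r + b * b * p) + q - p)
```

For matrix-valued dynamics, the same recursion applies with matrix products and inverses in place of the scalar operations, or a library ARE solver can be used instead.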

Remark 2. The topology considered in this study contains only one directed spanning tree, which means that each agent can only obtain the information of a single neighbor, and we prove the effectiveness of the proposed distributed optimal controller under this condition. In fact, the proposed controller is also applicable to more general topologies, such as those considered by Wang et al. (2017) and Wang et al. (2019).
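The single-neighbor structure described in Remark 2 is visible directly in the Laplacian matrix. The sketch below builds the Laplacian of a directed tree under the assumed convention $L = D - A$ with $a_{ij} = 1$ when agent $i$ receives information from agent $j$. The seven-node tree used here is hypothetical and is not the topology of Figure 1.

```python
def tree_laplacian(parent, n):
    """Laplacian L = D - A of a digraph in which node i listens only to parent[i].

    Assumed convention: a_ij = 1 if agent i receives information from agent j.
    """
    lap = [[0] * n for _ in range(n)]
    for i, p in parent.items():
        lap[i][i] = 1    # in-degree of a follower in a directed tree
        lap[i][p] = -1   # single incoming edge from its parent
    return lap

# Hypothetical tree with leader 0 and six followers: follower -> parent.
parent = {1: 0, 2: 1, 3: 1, 4: 0, 5: 4, 6: 5}
lap = tree_laplacian(parent, 7)

row_sums = [sum(row) for row in lap]
neighbors_per_follower = [sum(1 for v in lap[i] if v == -1) for i in range(1, 7)]
```

The leader row is zero because the leader receives no information, and every follower row contains exactly one off-diagonal entry, which is why each follower needs only one relative state.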

4 Numerical Example

In this section, a numerical example is provided to demonstrate the effectiveness of the proposed controller.

Consider a network of seven agents whose communication topology is described by Figure 1. The system parameters of each agent are given as follows (Xi et al., 2020).

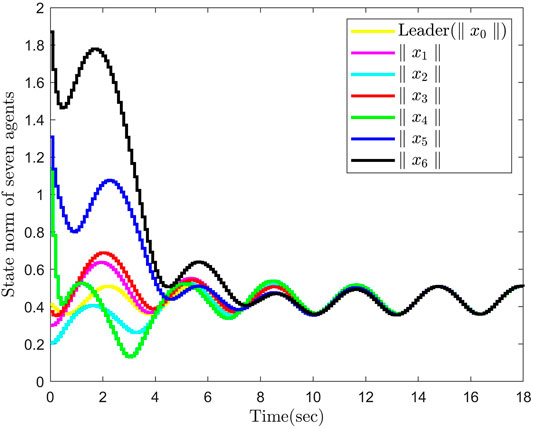

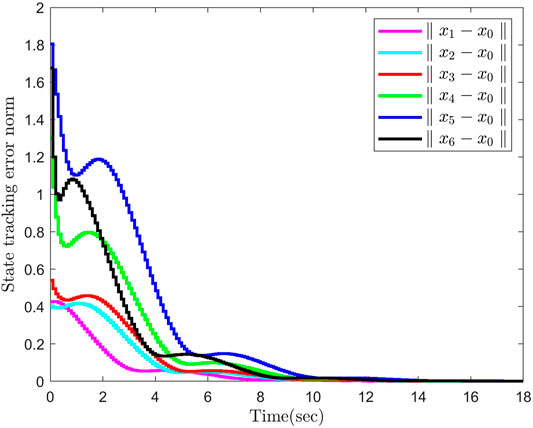

Let $R = 10$, $Q = 10 I_3$, and the coupling strength $c = 2$; then the matrix $P$ and the control gain $K$ can be calculated by Theorem 1. The initial conditions are given by $x_0(0) = [0.2, -0.2, 0.3]^T$, $x_1(0) = [0.1, 0.2, 0.2]^T$, $x_2(0) = [-0.15, -0.1, 0.1]^T$, $x_3(0) = [0.3, 0.2, 0.1]^T$, $x_4(0) = [-0.2, 0.2, -1.1]^T$, $x_5(0) = [1.3, 0.1, -0.1]^T$, and $x_6(0) = [1.0, 0.5, 1.5]^T$. Then, the trajectories of the state norm and tracking error norm are shown in Figures 2 and 3.

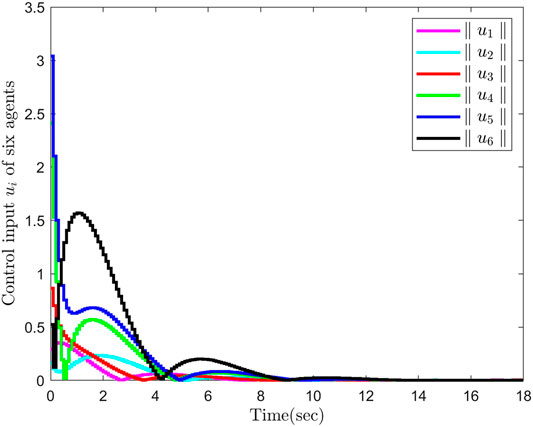

It can be seen from Figures 2 and 3 that the six followers track the leader successfully within about 11 s using the proposed optimal controller, and the steady-state tracking error is less than 2.0. In addition, Figure 4 shows that the control inputs of the six agents nearly reach zero at about 13 s.

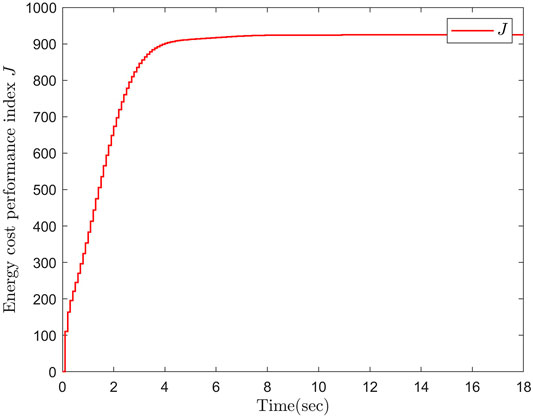

Moreover, the trajectories of energy cost performance J are displayed in Figure 5, which shows that the optimal performance of J equals 924. It can be acquired from Theorem 1 that the theoretical value of the optimal performance is

5 Conclusion

In this study, the leader-based distributed optimal control of discrete-time linear MASs only containing a directed spanning tree has been investigated. A distributed optimal consensus control protocol is presented to guarantee that multiple followers can successfully track the leader. It is proved that the proposed protocol ensures the optimization of the energy performance index with the optimal gain parameters, which can be obtained by solving the ARE. Moreover, the design of the protocol presented in this study is independent of the global topology information, which indicates that every agent manages its protocol in a fully distributed way. Finally, a numerical example illustrating the effectiveness of the designed protocol is reported.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author Contributions

GH is responsible for the simulation and the writing of this manuscript. ZZ is responsible for the design idea of this study. WY is responsible for the revision of this manuscript.

Funding

This study was supported in part by the National Key R&D Program of China 2019YFB1310303, in part by the Key R&D program of Shaanxi Province, 2021GY-289, in part by the National Natural Science Foundation of China under Grant U21B2047, Grant U1813225, Grant 61733014, and Grant 51979228.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Koru, A. T., Sarsılmaz, S. B., Yucelen, T., and Johnson, E. N. (2021). Cooperative Output Regulation of Heterogeneous Multiagent Systems: A Global Distributed Control Synthesis Approach. IEEE Trans. Automat. Contr. 66, 4289–4296. doi:10.1109/TAC.2020.3032496

Li, H., and Li, X. (2020). Distributed Model Predictive Consensus of Heterogeneous Time-Varying Multi-Agent Systems: With and without Self-Triggered Mechanism. IEEE Trans. Circuits Syst. 67, 5358–5368. doi:10.1109/TCSI.2020.3008528

Li, H., Wu, Y., and Chen, M. (2021). Adaptive Fault-Tolerant Tracking Control for Discrete-Time Multiagent Systems via Reinforcement Learning Algorithm. IEEE Trans. Cybern. 51, 1163–1174. doi:10.1109/TCYB.2020.2982168

Li, J., Du, J., and Chang, W.-J. (2019). Robust Time-Varying Formation Control for Underactuated Autonomous Underwater Vehicles with Disturbances under Input Saturation. Ocean Eng. 179, 180–188. doi:10.1016/j.oceaneng.2019.03.017

Li, N., Fei, Q., and Ma, H. (2020). Distributed Adaptive Containment Control for a Class of Discrete-Time Nonlinear Multi-Agent Systems with Unknown Parameters and Control Gains. J. Franklin Inst. 357, 8566–8590. doi:10.1016/j.jfranklin.2020.06.009

Liang, H., Li, H., Yu, Z., Li, P., and Wang, W. (2017). Cooperative Robust Containment Control for General Discrete‐time Multi‐agent Systems with External Disturbance. IET Control. Theor. Appl. 11, 1928–1937. doi:10.1049/iet-cta.2016.1475

Liu, Z., Yan, W., Li, H., and Zhang, S. (2020). Cooperative Output Regulation Problem of Discrete-Time Linear Multi-Agent Systems with Markov Switching Topologies. J. Franklin Inst. 357, 4795–4816. doi:10.1016/j.jfranklin.2020.02.020

Lv, Y., Chi, R., and Feng, Y. (2018). Adaptive Estimation‐based TILC for the Finite‐time Consensus Control of Non‐linear Discrete‐time MASs under Directed Graph. IET Control. Theor. Appl. 12, 2516–2525. doi:10.1049/iet-cta.2018.5602

Mahmoud, M. S., Khan, G. D., Yu, Z., Li, P., and Wang, W. (2018). LMI Consensus Condition for Discrete-Time Multi-Agent Systems. IEEE/CAA J. Autom. Sinica 5, 509–513. doi:10.1109/JAS.2016.7510016

Mu, B., Chen, J., Shi, Y., and Chang, Y. (2017). Design and Implementation of Nonuniform Sampling Cooperative Control on a Group of Two-Wheeled Mobile Robots. IEEE Trans. Ind. Electron. 64, 5035–5044. doi:10.1109/tie.2016.2638398

Olfati-Saber, R., and Murray, R. M. (2004). Consensus Problems in Networks of Agents with Switching Topology and Time-Delays. IEEE Trans. Automat. Contr. 49, 1520–1533. doi:10.1109/TAC.2004.834113

Su, H., Ye, Y., Qiu, Y., Cao, Y., and Chen, M. Z. Q. (2019). Semi-global Output Consensus for Discrete-Time Switching Networked Systems Subject to Input Saturation and External Disturbances. IEEE Trans. Cybern. 49, 3934–3945. doi:10.1109/TCYB.2018.2859436

Tan, L. N. (2020). Distributed H∞ Optimal Tracking Control for Strict-Feedback Nonlinear Large-Scale Systems with Disturbances and Saturating Actuators. IEEE Trans. Syst. Man. Cybern, Syst. 50, 4719–4731. doi:10.1109/TSMC.2018.2861470

Tang, Y., Deng, Z., and Hong, Y. (2019). Optimal Output Consensus of High-Order Multiagent Systems with Embedded Technique. IEEE Trans. Cybern. 49, 1768–1779. doi:10.1109/TCYB.2018.2813431

Tang, Y. (2020). Distributed Optimal Steady-State Regulation for High-Order Multiagent Systems with External Disturbances. IEEE Trans. Syst. Man. Cybern, Syst. 50, 4828–4835. doi:10.1109/TSMC.2018.2866902

Wang, B., Chen, W., and Zhang, B. (2019). Semi-global Robust Tracking Consensus for Multi-Agent Uncertain Systems with Input Saturation via Metamorphic Low-Gain Feedback. Automatica 103, 363–373. doi:10.1016/j.automatica.2019.02.002

Wang, B., Wang, J., Zhang, B., and Li, X. (2017). Global Cooperative Control Framework for Multiagent Systems Subject to Actuator Saturation with Industrial Applications. IEEE Trans. Syst. Man. Cybern, Syst. 47, 1270–1283. doi:10.1109/TSMC.2016.2573584

Wang, Q., Duan, Z., and Wang, J. (2020a). Distributed Optimal Consensus Control Algorithm for Continuous-Time Multi-Agent Systems. IEEE Trans. Circuits Syst. 67, 102–106. doi:10.1109/TCSII.2019.2900758

Wang, Q., Psillakis, H. E., and Sun, C. (2020b). Adaptive Cooperative Control with Guaranteed Convergence in Time-Varying Networks of Nonlinear Dynamical Systems. IEEE Trans. Cybern. 50, 5035–5046. doi:10.1109/TCYB.2019.2916563

Wang, Q., and Sun, C. (2018). Adaptive Consensus of Multiagent Systems with Unknown High-Frequency Gain Signs under Directed Graphs. IEEE Trans. Syst. Man, Cybernetics: Syst. 50, 2181–2186. doi:10.1109/TSMC.2018.2810089

Wang, Q., and Sun, C. (2021). Distributed Asymptotic Consensus in Directed Networks of Nonaffine Systems with Nonvanishing Disturbance. IEEE/CAA J. Autom. Sinica 8, 1133–1140. doi:10.1109/JAS.2021.1004021

Ren, W., and Beard, R. W. (2005). Consensus Seeking in Multiagent Systems under Dynamically Changing Interaction Topologies. IEEE Trans. Automat. Contr. 50, 655–661. doi:10.1109/TAC.2005.846556

Wen, G., Chen, C. L. P., Feng, J., and Zhou, N. (2018). Optimized Multi-Agent Formation Control Based on an Identifier-Actor-Critic Reinforcement Learning Algorithm. IEEE Trans. Fuzzy Syst. 26, 2719–2731. doi:10.1109/TFUZZ.2017.2787561

Xi, J., Wang, L., Zheng, J., and Yang, X. (2020). Energy-constraint Formation for Multiagent Systems with Switching Interaction Topologies. IEEE Trans. Circuits Syst. 67, 2442–2454. doi:10.1109/tcsi.2020.2975383

Xu, S., Cao, J., Liu, Q., and Rutkowski, L. (2021). Optimal Control on Finite-Time Consensus of the Leader-Following Stochastic Multiagent System with Heuristic Method. IEEE Trans. Syst. Man. Cybern, Syst. 51, 3617–3628. doi:10.1109/TSMC.2019.2930760

Zhang, H., Jiang, H., Luo, Y., and Xiao, G. (2017). Data-driven Optimal Consensus Control for Discrete-Time Multi-Agent Systems with Unknown Dynamics Using Reinforcement Learning Method. IEEE Trans. Ind. Electron. 64, 4091–4100. doi:10.1109/TIE.2016.2542134

Zhang, H., and Lewis, F. L. (2012). Adaptive Cooperative Tracking Control of Higher-Order Nonlinear Systems with Unknown Dynamics. Automatica 48, 1432–1439. doi:10.1016/j.automatica.2012.05.008

Zhang, Z., Shi, Y., and Yan, W. (2021a). A Novel Attitude-Tracking Control for Spacecraft Networks with Input Delays. IEEE Trans. Contr. Syst. Technol. 29, 1035–1047. doi:10.1109/TCST.2020.2990532

Zhang, Z., Shi, Y., Zhang, Z., Zhang, H., and Bi, S. (2018). Modified Order-Reduction Method for Distributed Control of Multi-Spacecraft Networks with Time-Varying Delays. IEEE Trans. Control. Netw. Syst. 5, 79–92. doi:10.1109/TCNS.2016.2578046

Zhang, Z., Yan, W., and Li, H. (2021b). Distributed Optimal Control for Linear Multiagent Systems on General Digraphs. IEEE Trans. Automat. Contr. 66, 322–328. doi:10.1109/TAC.2020.2974424

Zhao, S., Li, Z., and Ding, Z. (2019). Bearing-Only Formation Tracking Control of Multiagent Systems. IEEE Trans. Automat. Contr. 64, 4541–4554. doi:10.1109/TAC.2019.2903290

Zhong, X., and He, H. (2020). GrHDP Solution for Optimal Consensus Control of Multiagent Discrete-Time Systems. IEEE Trans. Syst. Man. Cybern, Syst. 50, 2362–2374. doi:10.1109/TSMC.2018.2814018

Keywords: leader-based, distributed optimal control, discrete-time, multi-agent systems, directed communication topologies

Citation: Huang G, Zhang Z and Yan W (2022) Distributed Control of Discrete-Time Linear Multi-Agent Systems With Optimal Energy Performance. Front. Control. Eng. 2:797362. doi: 10.3389/fcteg.2021.797362

Received: 18 October 2021; Accepted: 29 December 2021;

Published: 25 April 2022.

Edited by:

Chao Shen, Carleton University, Canada

Reviewed by:

Bohui Wang, Nanyang Technological University, Singapore

Qingling Wang, Southeast University, China

Copyright © 2022 Huang, Zhang and Yan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zhuo Zhang, zhuozhang@nwpu.edu.cn

Guan Huang, Zhuo Zhang, Weisheng Yan